Abstract

Background:

The quantitative analysis of microscope videos often requires instance segmentation and tracking of cellular and subcellular objects. The traditional method consists of two stages: (1) performing instance object segmentation on each frame, and (2) associating objects frame-by-frame. Recently, pixel-embedding-based deep learning approaches have addressed these two steps simultaneously as a single-stage holistic solution. Pixel-embedding-based learning encourages similar feature representations for pixels from the same object, while maximizing the difference between the feature representations of different objects. However, such deep learning methods require annotations that are consistent not only spatially (for segmentation) but also temporally (for tracking). In computer vision, obtaining training data annotated with consistent segmentation and tracking is resource intensive, and this burden is multiplied in microscopy imaging due to (1) dense objects (e.g., overlapping or touching), and (2) high dynamics (e.g., irregular motion and mitosis). Adversarial simulations have provided successful solutions to alleviate the lack of such annotations in dynamic scenes in computer vision, such as using simulated environments (e.g., computer games) to train real-world self-driving systems.

Methods:

In this paper, we propose an annotation-free synthetic instance segmentation and tracking (ASIST) method with adversarial simulation and single-stage pixel-embedding based learning.

Contribution:

The contribution of this paper is three-fold: (1) the proposed method aggregates adversarial simulations and single-stage pixel-embedding based deep learning; (2) the method is assessed with both cellular (i.e., HeLa cells) and subcellular (i.e., microvilli) objects; and (3) to the best of our knowledge, this is the first study to explore annotation-free instance segmentation and tracking for microscope videos.

Results:

The ASIST method achieved an important step forward when compared with fully supervised approaches: ASIST shows 7%–11% higher segmentation, detection and tracking performance on microvilli relative to fully supervised methods, and comparable performance on HeLa cell videos.

Keywords: Annotation free, Segmentation, Tracking, Cellular, Subcellular

1. Introduction

Capturing cellular and subcellular dynamics through microscopy approaches helps domain experts in characterizing biological processes [1] in a quantitative manner, leading to advanced biomedical applications (e.g., drug discovery) [2].

Numerous image processing approaches have been proposed for precise instance object segmentation and tracking. Most of the previous solutions [3–5] follow a similar “two-stage” strategy: I. segmentation on each frame, and II. frame-by-frame association across the video. In recent years, a new family of “single-stage” algorithms has been enabled by cutting-edge pixel-embedding based deep learning [6,7]. Such methods enforce spatiotemporally consistent pixel-wise feature embeddings for the same cellular or subcellular objects across video frames. However, training them requires pixel-wise annotations that are consistent both spatially (segmentation) and temporally (tracking). Such labeling efforts are typically expensive, and potentially unscalable, for microscope videos due to I. dense objects (e.g., overlapping or touching), and II. high dynamics (e.g., irregular motion and mitosis). Therefore, better learning strategies are desired beyond current supervised learning based on human annotation.

Adversarial simulation has provided a scalable option to create realistic synthetic environments without extensive human annotations. Particularly striking examples include a) using computer games such as Grand Theft Auto to train self-driving deep learning models [8], b) using the Gazebo simulation environment to train robots [9], and c) using the SUMO simulator to train traffic management artificial intelligence (AI) [10].

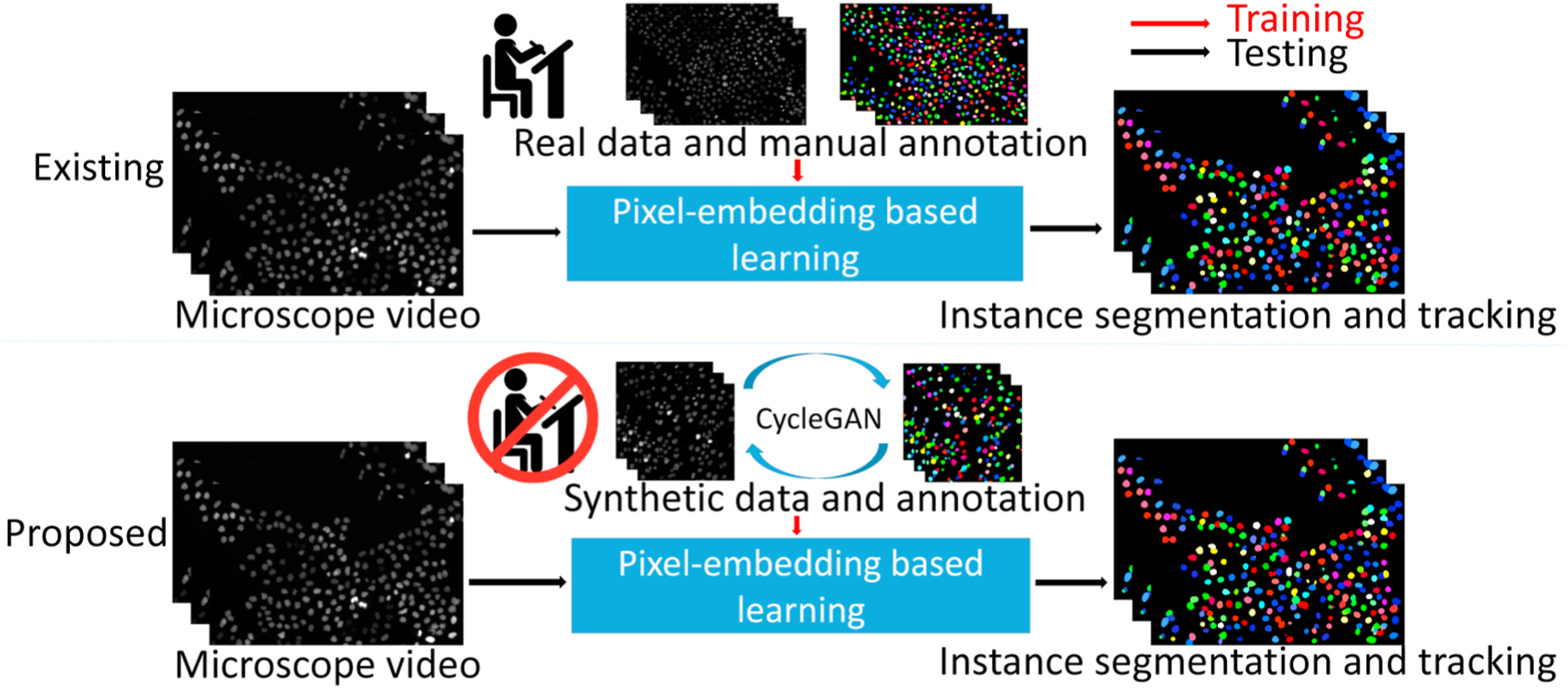

In this paper, we propose an annotation-free synthetic instance segmentation and tracking (ASIST) method with adversarial simulation and single-stage pixel-embedding based learning. Briefly, the ASIST framework consists of three major steps: I. unsupervised image-annotation synthesis, II. video and temporal annotation synthesis, and III. pixel-embedding based instance segmentation and tracking. As opposed to traditional manual annotation-based pixel embedding deep learning, the proposed ASIST method is annotation-free (Fig. 1).

Fig. 1.

The upper panel shows the existing pixel-embedding deep learning based single-stage instance segmentation and tracking method, which is trained by real microscope video and manual annotations. The lower panel presents our proposed annotation-free ASIST method, with synthesized data and annotations from adversarial simulations.

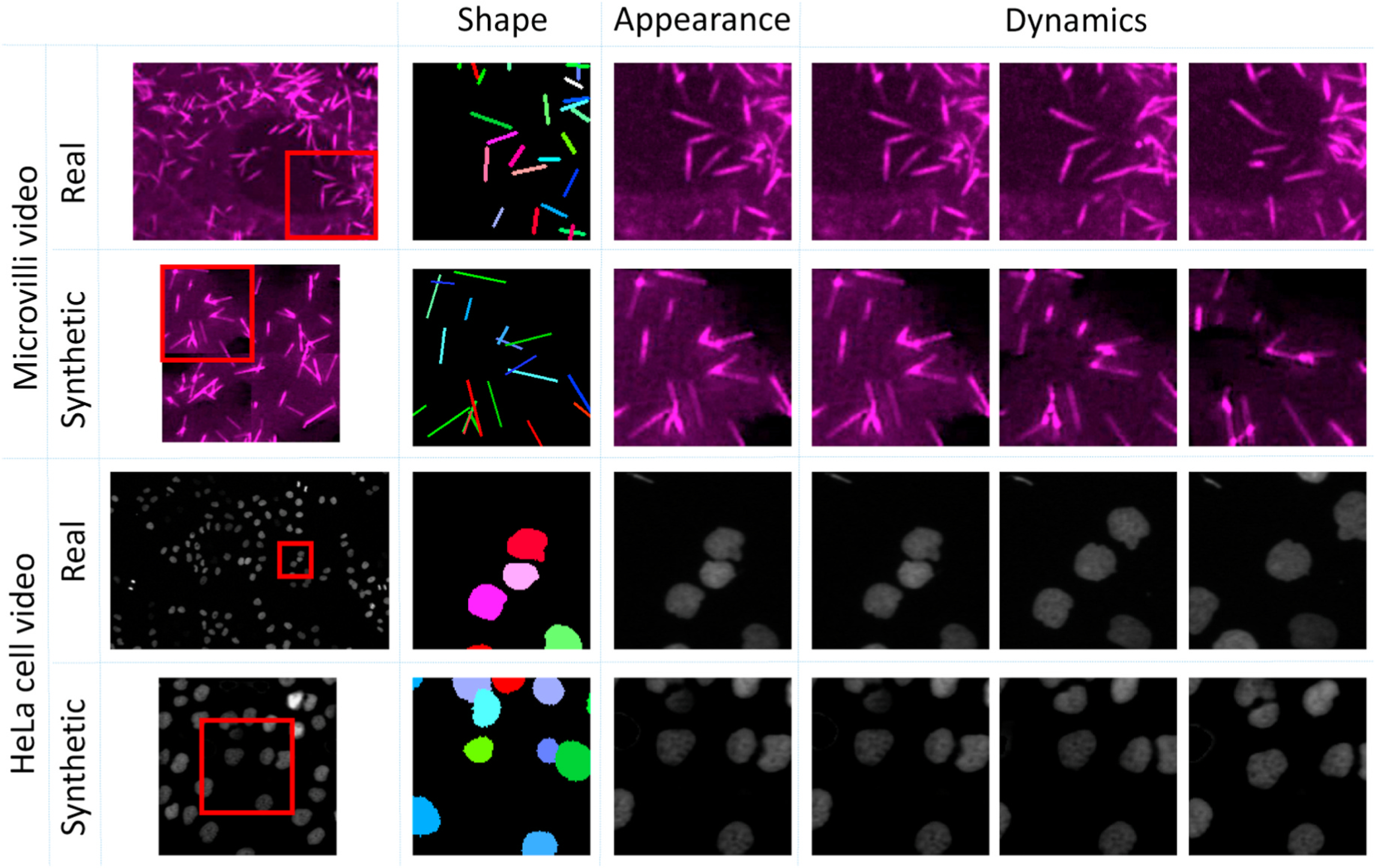

To achieve the annotation-free solution, we simulated cellular or subcellular structures with three important aspects: shape, appearance and dynamics (Fig. 2). To evaluate our proposed ASIST method, microscope videos of both cellular (i.e., HeLa cell videos from the ISBI Cell Tracking Challenge [11,12]) and subcellular (i.e., microvilli videos from in-house data) objects were included in this study. The HeLa cell videos have larger shape variations than the microvilli videos. In our results, the ASIST method achieved promising accuracy compared with fully supervised approaches.

Fig. 2.

Real and synthetic videos of HeLa cells and microvilli, characterized by three aspects: shape, appearance and dynamics. The “shape” is defined as the underlying shape of the manual annotations. The “appearance” is defined by the various appearances of objects. The “dynamics” indicates the migration of cellular and subcellular objects.

In summary, this paper has three major contributions:

We propose the ASIST annotation-free framework, aggregating adversarial simulations and single-stage pixel embedding based deep learning.

We propose a novel annotation refinement approach to simulate shape variations of cellular objects, with circles as an intermediate representation.

To the best of our knowledge, our proposed approach is the first annotation-free solution for single-stage pixel embedding deep learning based cell instance segmentation and tracking.

This research was supported by Vanderbilt Cellular, Biochemical and Molecular Sciences Training Grant 5T32GM008554-25, the NIH NIDDK National Research Service Award F31DK122692, NIH Grant R01-DK111949 and R01-DK095811.

2. Related work

2.1. Image synthesis

The simplest approach to synthesizing new images is to apply image transformations, such as flipping, rotation, resizing, and cropping. Enlarging a dataset with such synthetic images improved the accuracy of image classification on benchmark datasets [13]. Another study [14] improved the accuracy of image segmentation (Dice similarity coefficient) with synthetic images produced by data augmentation approaches such as random shearing and rotation.

A more complex alternative to image transformations is the generative adversarial network (GAN) [15], which opened a new window for synthesizing highly realistic images and has been widely used in computer vision and biomedical imaging applications. For instance, GANs have been used to synthesize retinal images by learning a mapping between retinal images and binary retinal vessel trees [16]. Synthetic images can be generated from random noise [17], with geometry constraints [18], and even in high-dimensional spaces [19]. To remove the requirement for paired training data, CycleGAN [20] was proposed, extending the GAN technique to broader applications. CycleGAN has shown promise in cross-modality synthesis [21] and microscope image synthesis [22]. DeepSynth [23] demonstrated that CycleGAN can be applied to 3D medical image synthesis.

2.2. Microscope image segmentation and tracking

Historically, early approaches used intensity-based thresholding to segment a region of interest (ROI) from the background. Ridler et al. [24] used a dynamically updated threshold to segment an object based on the mean intensities of the foreground and the background. Otsu et al. [25] set the threshold by minimizing the intraclass variance. To avoid sensitivity to all image pixels, Pratt et al. [26] proposed growing a segmented region from a seed point based on texture similarity. Given rough annotations, images can also be segmented by minimizing an energy function [27]. Among such methods, watershed segmentation is arguably the most widely used approach for intensity-based cell image segmentation [28].
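As a point of reference for the two classical thresholding rules above, the following is a minimal NumPy sketch of an iterative mean-based threshold in the spirit of Ridler et al. [24] and of Otsu's threshold [25]. It is not part of ASIST; the function names, the convergence tolerance and the 256-bin histogram are assumptions made for illustration.

```python
import numpy as np


def ridler_threshold(img, tol=0.5, max_iter=100):
    """Iteratively set t to the midpoint of the background and foreground means."""
    x = np.asarray(img, dtype=float).ravel()
    t = x.mean()
    for _ in range(max_iter):
        fg, bg = x[x > t], x[x <= t]
        if fg.size == 0 or bg.size == 0:
            break  # degenerate split: keep the current threshold
        t_new = 0.5 * (fg.mean() + bg.mean())
        if abs(t_new - t) < tol:
            return t_new
        t = t_new
    return t


def otsu_threshold(img, nbins=256):
    """Choose the histogram cut that maximizes the between-class variance,
    which is equivalent to minimizing the weighted intraclass variance."""
    x = np.asarray(img, dtype=float).ravel()
    hist, edges = np.histogram(x, bins=nbins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    p = hist / hist.sum()
    w0 = np.cumsum(p)            # weight of the dark class (bins 0..k)
    w1 = 1.0 - w0                # weight of the bright class
    m0 = np.cumsum(p * centers)  # unnormalized mean of the dark class
    mt = m0[-1]                  # global mean intensity
    valid = (w0 > 1e-12) & (w1 > 1e-12)
    between = np.zeros_like(w0)
    between[valid] = (mt * w0[valid] - m0[valid]) ** 2 / (w0[valid] * w1[valid])
    k = int(np.argmax(between))
    return edges[k + 1]          # upper edge of the last dark-class bin
```

Pixels above the returned value are treated as the ROI, e.g. `roi = img > otsu_threshold(img)`.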

Object tracking in microscope videos is challenging due to complex dynamics and vague instance boundaries at cellular or subcellular resolution. Gerlich et al. [29] used optical flow from microscope videos to track cell motion. Ray et al. [30] tracked leukocytes by computing gradient vectors of cell motion based on active contours. Sato et al. [31] designed orientation-selective filters to generate spatiotemporal information by enhancing the motion of cells. Other studies [32,33] also tracked cell motion by applying spatiotemporal analysis to microscope videos.

Recent studies have employed machine learning, especially deep learning, for cell instance segmentation and tracking. Jain et al. [34] showed the superior performance of a well-trained convolutional network. Baghli et al. [35] achieved 97% prediction accuracy using supervised machine learning approaches. To avoid relying on image annotation, Yu et al. [36] trained a convolutional neural network without annotation to track large-scale fibers in microscope images of materials. However, to the best of our knowledge, no existing study has investigated the challenging problem of quantifying cellular and subcellular dynamics through pixel-wise instance segmentation and tracking with embedding-based deep learning.

3. Methods

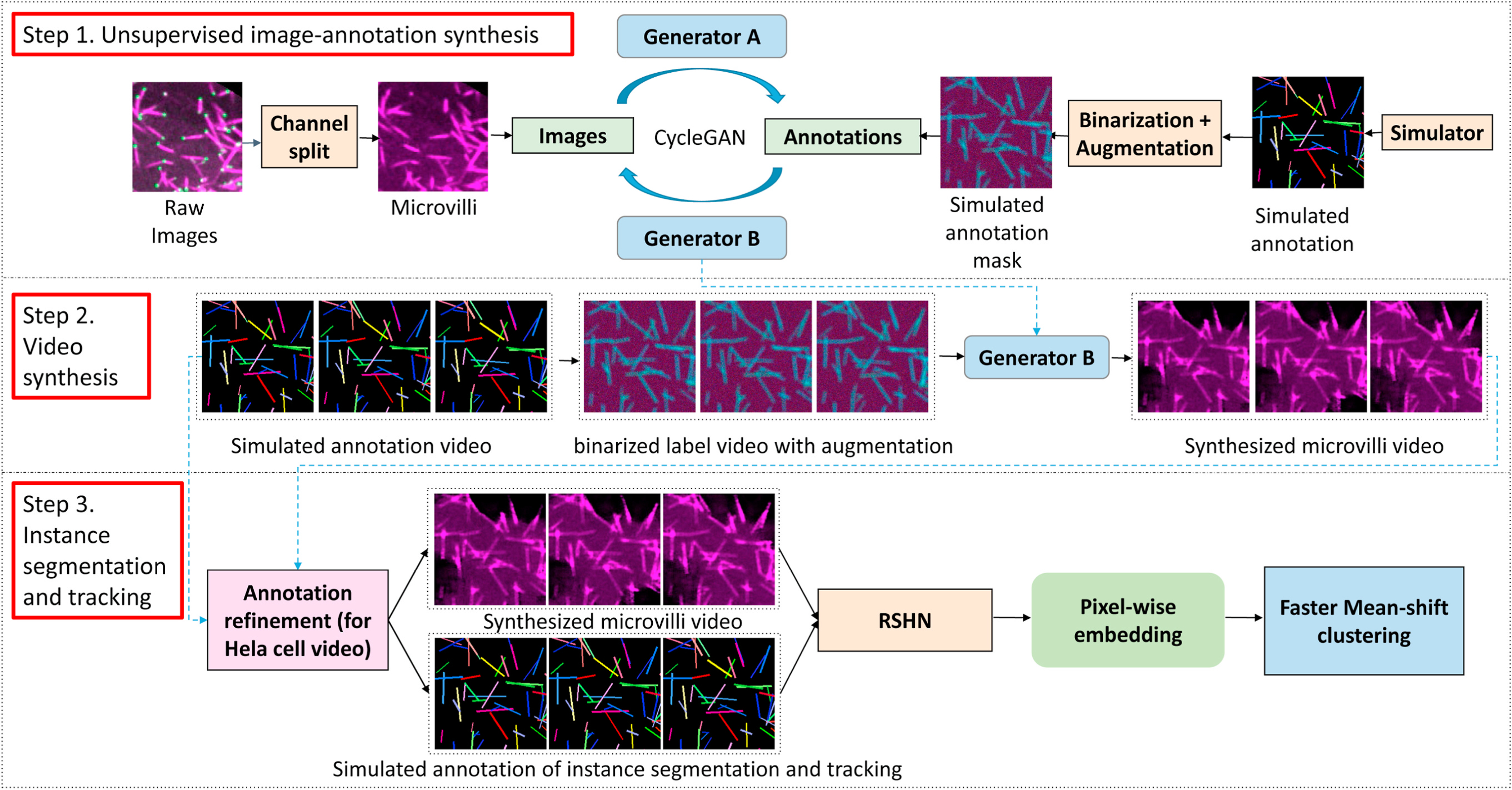

Our study has three steps: unsupervised image-annotation synthesis, video synthesis and instance segmentation and tracking (Fig. 3).

Fig. 3.

This figure shows the proposed ASIST method. First, CycleGAN based image-annotation synthesis is trained using real microscope images and simulated annotations. Second, synthesized microscope videos are generated from simulated annotation videos. Last, an embedding based instance segmentation and tracking algorithm is trained using synthetic training data. For HeLa cell videos, a new annotation refinement step is introduced to capture the larger shape variations.

3.1. Unsupervised image-annotation synthesis

The first step is to train a CycleGAN-based approach [37] to directly synthesize annotations from microscope images, and vice versa. Compared with objects in computer vision tasks, the objects in microscope images are often repetitive, with more homogeneous shapes. Therefore, using prior knowledge of the shapes of microvilli (stick-shaped) and HeLa cell nuclei (ball-shaped), we randomly generate fake annotations with repetitive sticks and circles to model the shapes of microvilli and HeLa cells, respectively. When training the CycleGAN on microvilli images, we remove the green marks (the EPS8 protein) from the raw RGB microvilli images by splitting the color channels. The network structure, training process and parameters follow [38]. The generator in CycleGAN consists of an encoder, a transformer and a decoder. We used ResNet [39] with 9 residual blocks as the encoder in both Generator A and Generator B. We also tried U-Net [40] as the encoder, as suggested by Ref. [38]. In our experience, ResNet generally performed better than U-Net. As a result, ResNet is employed as the generator in all experiments in this paper.
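A minimal NumPy sketch of how such random stick and circle annotations could be rendered as instance label maps is shown below. The object counts and the length, width and radius ranges are hypothetical placeholders, not values reported in this paper; each object receives a unique integer label, and later objects overwrite earlier ones where they overlap.

```python
import numpy as np


def draw_stick(labels, label, cy, cx, length, width, angle_deg):
    """Paint a rotated rectangle ("stick") with instance id `label`."""
    h, w = labels.shape
    yy, xx = np.mgrid[0:h, 0:w]
    theta = np.deg2rad(angle_deg)
    u = (xx - cx) * np.cos(theta) + (yy - cy) * np.sin(theta)   # along the axis
    v = -(xx - cx) * np.sin(theta) + (yy - cy) * np.cos(theta)  # across the axis
    labels[(np.abs(u) <= length / 2) & (np.abs(v) <= width / 2)] = label


def draw_circle(labels, label, cy, cx, radius):
    """Paint a disk with instance id `label`."""
    h, w = labels.shape
    yy, xx = np.mgrid[0:h, 0:w]
    labels[(yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2] = label


def random_annotation(shape=(512, 512), n_objects=100, kind="stick", seed=None):
    """Random instance label map of sticks (microvilli) or circles (HeLa)."""
    rng = np.random.default_rng(seed)
    labels = np.zeros(shape, dtype=np.int32)
    for k in range(1, n_objects + 1):
        cy, cx = rng.uniform(0, shape[0]), rng.uniform(0, shape[1])
        if kind == "stick":
            draw_stick(labels, k, cy, cx, length=rng.uniform(10, 30),
                       width=rng.uniform(2, 4), angle_deg=rng.uniform(0, 180))
        else:
            draw_circle(labels, k, cy, cx, radius=rng.uniform(8, 15))
    return labels
```

For CycleGAN training, such label maps would presumably be converted into binary (or, as Fig. 5 indicates for Generator B, Gaussian-blurred) annotation images.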

3.2. Video synthesis

Using the annotation-to-image generator (Generator B) of the above CycleGAN model, synthetic intensity images can be generated from simulated annotations. Since a video is a sequence of image frames, we extend the use of the trained Generator B from “annotation-to-image” to “annotation frames-to-video”. Briefly, simulated annotation videos are generated by our annotation simulator with variations in shape and dynamics. Then, each annotation video frame is used to generate a synthetic microscope image frame. Repeating this process for every frame of the simulated annotation videos yields synthetic microscope videos for microvilli and HeLa cells, respectively.
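The frame-by-frame use of Generator B can be sketched as follows. `generator_b` stands for any callable that maps one annotation image to one intensity image (for example, a wrapped trained network); this interface, and rendering the instance labels as a binary foreground image before passing them to the generator, are assumptions for illustration. Because the instance label video is kept alongside the synthesized frames, its per-object labels directly serve as temporally consistent training annotations.

```python
import numpy as np


def synthesize_video(annotation_video, generator_b):
    """Map a (T, H, W) instance-label video to a (T, H, W) synthetic video.

    generator_b: hypothetical callable taking an (H, W) float32 annotation
    image and returning an (H, W) synthetic microscope image.
    """
    frames = []
    for labels in annotation_video:
        # The generator only sees the foreground layout; the instance ids are
        # kept separately as the segmentation and tracking ground truth.
        annotation_image = (labels > 0).astype(np.float32)
        frames.append(np.asarray(generator_b(annotation_image)))
    return np.stack(frames, axis=0)
```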

3.2.1. Microvilli simulation

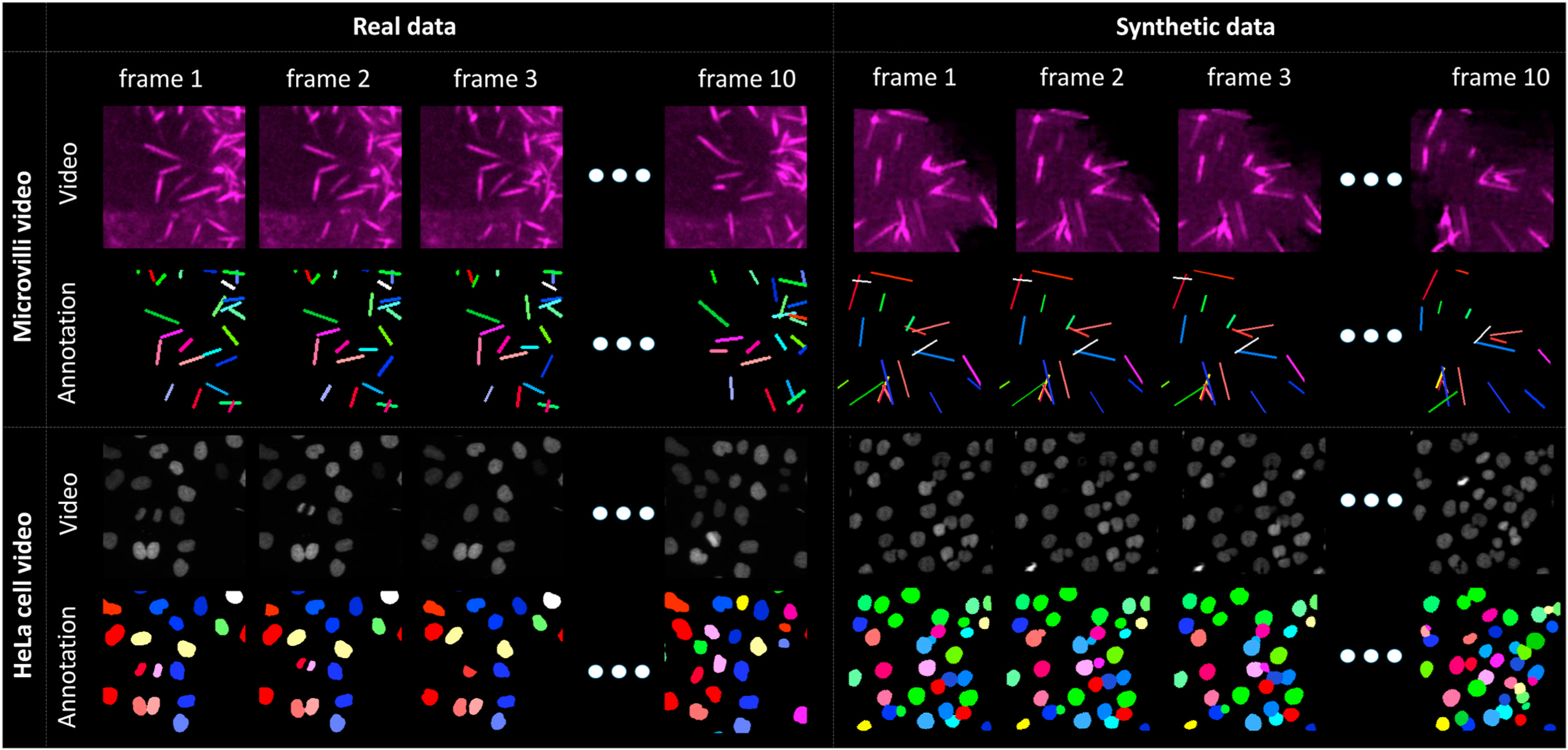

As shown in Fig. 4, we model the shape of microvilli as sticks (narrow rectangles) to simulate microvilli videos. The simulated microvilli annotation videos are determined by the following operations:

Fig. 4.

The left panel shows real microscope videos as well as manual annotations. The right panel presents our synthetic videos and simulated annotations.

Object number: Different numbers of objects are evaluated when simulating microvilli videos. The details are presented in §Experimental design.

Translation: Instance annotations are translated by 1 pixel with 50% probability.

Rotation: Each instance label is randomly rotated by 1° with 50% probability.

Shortening/Lengthening: Each object has a 50% probability of becoming longer or shorter by 1 pixel. A given object only lengthens or only shortens throughout the video.

Moving in/out: To simulate instances moving into and out of the field of view, we generate frames at a larger size (550 × 550 pixels) and center-crop them to the target size (512 × 512 pixels).
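The operations above can be combined into a short simulator. This is a sketch under stated assumptions: the initial stick length range, the fixed 3-pixel width, the uniform choice among four 1-pixel translation directions and the random sign of the 1° rotation are not specified in the text and are hypothetical. Labels stay fixed per object across frames, so the output serves as both segmentation and tracking ground truth, and sticks near the border move in and out of view through the final center crop.

```python
import numpy as np


def draw_stick(labels, label, cy, cx, length, width, angle_deg):
    """Paint a rotated rectangle (stick) with instance id `label`."""
    yy, xx = np.mgrid[0:labels.shape[0], 0:labels.shape[1]]
    t = np.deg2rad(angle_deg)
    u = (xx - cx) * np.cos(t) + (yy - cy) * np.sin(t)   # along the stick
    v = -(xx - cx) * np.sin(t) + (yy - cy) * np.cos(t)  # across the stick
    labels[(np.abs(u) <= length / 2) & (np.abs(v) <= width / 2)] = label


def center_crop(frame, size):
    top = (frame.shape[0] - size) // 2
    left = (frame.shape[1] - size) // 2
    return frame[top:top + size, left:left + size]


def simulate_microvilli(n_objects=100, n_frames=50, canvas=550, out=512, seed=0):
    """Return a (n_frames, out, out) instance-label video of moving sticks."""
    rng = np.random.default_rng(seed)
    sticks = [dict(cy=rng.uniform(0, canvas), cx=rng.uniform(0, canvas),
                   length=rng.uniform(10, 30), width=3.0,
                   angle=rng.uniform(0, 180),
                   grow=rng.choice([-1.0, 1.0]))  # fixed: only grows or only shrinks
              for _ in range(n_objects)]
    steps = [(0, 1), (0, -1), (1, 0), (-1, 0)]
    video = np.zeros((n_frames, out, out), dtype=np.int32)
    for t in range(n_frames):
        labels = np.zeros((canvas, canvas), dtype=np.int32)
        for k, s in enumerate(sticks, start=1):  # label k is kept for the whole video
            draw_stick(labels, k, s["cy"], s["cx"], s["length"], s["width"], s["angle"])
        video[t] = center_crop(labels, out)      # objects can move in/out of view
        for s in sticks:
            if rng.random() < 0.5:  # translate by 1 pixel
                dy, dx = steps[rng.integers(len(steps))]
                s["cy"] += dy
                s["cx"] += dx
            if rng.random() < 0.5:  # rotate by 1 degree
                s["angle"] += rng.choice([-1.0, 1.0])
            if rng.random() < 0.5:  # lengthen or shorten by 1 pixel
                s["length"] = max(2.0, s["length"] + s["grow"])
    return video
```

Rasterizing every stick on the full canvas keeps the sketch short but is slow for 100 sticks and 50 frames; a practical version would only rasterize a bounding box around each stick.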

3.2.2. HeLa cell simulation

HeLa cells have more degrees of freedom in shape variation than microvilli. In this study, we propose an annotation refinement strategy to generate shape-consistent synthetic HeLa cell videos and annotations, using circles as intermediate representations (Fig. 5), without introducing manual annotations. The simulated videos and annotations of HeLa cells are determined by the following operations:

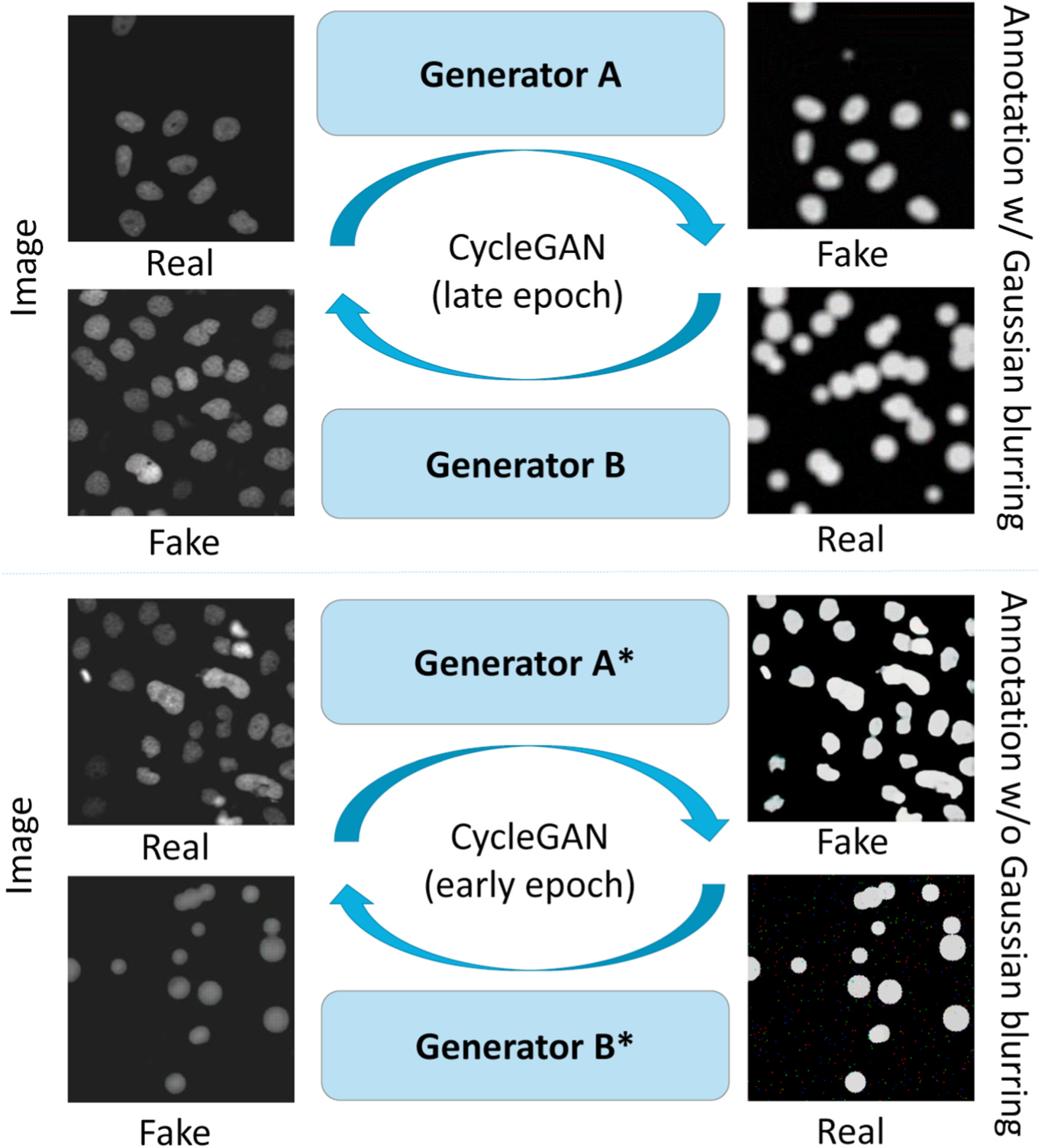

Fig. 5.

The upper panel shows the CycleGAN trained with real images and simulated annotations with Gaussian blurring. The lower panel shows the CycleGAN trained with the same data without Gaussian blurring. Generator B is used to generate synthetic videos with larger shape variations from circle representations, while Generator A* generates sharp segmentations for annotation registration.

Object number: Different numbers of objects are evaluated when simulating HeLa cell videos. The details are presented in §Experimental design.

Translation: The instance annotation center can be moved by N pixels. N will be described in §Experimental design.

Radius changing: The radius of each annotation has a 10% probability of becoming larger or smaller by 1 pixel.

Disappearing: Existing instance cells are randomly deleted from certain frames in videos.

Appearing: New cells appear at randomly chosen frames of the video and are present from their appearing frame onward.

Mitosis: Mitosis is the process of cell replication and division. To simulate HeLa cell mitosis, we randomly define “mother cells” at the nth frame. At the (n + 1)th frame, we delete the “mother cells” and randomly create two new cells nearby. Based on biological knowledge, these two new instances are typically smaller than normal instances; they then grow larger and move randomly like other instance annotations.

Overlapping: We allow partial overlap between cells. The minimum distance between two cells is set to 70% of the total diameter of the two cells.

Size change: The radius of each instance annotation has a 10% probability of becoming larger or smaller by 1 pixel.
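The HeLa operations can likewise be sketched with circles. Several details are assumptions rather than values from the paper: the shift bound `max_shift` (the text defers N to §Experimental design), the initial radius range, the daughter radius factor of 0.6, placing the two daughters on opposite sides of the mother, and reading the overlap rule as a minimum center distance of 0.7 times the sum of the two radii (the reading that still permits partial overlap). For brevity, daughter growth is only modeled through the generic random radius change.

```python
import numpy as np


def render_circles(cells, shape):
    """Rasterize circle cells into an instance label map."""
    labels = np.zeros(shape, dtype=np.int32)
    yy, xx = np.mgrid[0:shape[0], 0:shape[1]]
    for c in cells:
        labels[(yy - c["y"]) ** 2 + (xx - c["x"]) ** 2 <= c["r"] ** 2] = c["id"]
    return labels


def too_close(cand, cells, ratio=0.7):
    """Overlap rule (assumed reading): centers at least ratio * (r1 + r2) apart."""
    return any(np.hypot(cand["y"] - c["y"], cand["x"] - c["x"]) < ratio * (cand["r"] + c["r"])
               for c in cells if c["id"] != cand["id"])


def move(c, rng, cells, max_shift=2, p_radius=0.1):
    """Shift by up to max_shift pixels; change the radius by 1 px with p_radius."""
    m = dict(c)
    m["y"] += rng.integers(-max_shift, max_shift + 1)
    m["x"] += rng.integers(-max_shift, max_shift + 1)
    if rng.random() < p_radius:
        m["r"] = max(3.0, m["r"] + rng.choice([-1.0, 1.0]))
    return c if too_close(m, cells) else m  # reject moves violating the overlap rule


def simulate_hela(n_cells=150, n_frames=50, n_appear=20, n_disappear=20,
                  n_mitosis=5, shape=(512, 512), seed=0):
    rng = np.random.default_rng(seed)

    def new_cell(i):
        return dict(id=i, y=rng.uniform(0, shape[0]), x=rng.uniform(0, shape[1]),
                    r=rng.uniform(8, 14))

    cells = [new_cell(i + 1) for i in range(n_cells)]
    next_id = n_cells + 1
    # Each event type is scheduled on random frames after the first one.
    events = {k: rng.integers(1, n_frames, size=n) for k, n in
              (("appear", n_appear), ("disappear", n_disappear), ("mitosis", n_mitosis))}
    video = np.zeros((n_frames,) + tuple(shape), dtype=np.int32)
    for t in range(n_frames):
        if t > 0:
            cells = [move(c, rng, cells) for c in cells]
            for _ in range(int(np.sum(events["disappear"] == t))):
                if cells:
                    cells.pop(int(rng.integers(len(cells))))
            for _ in range(int(np.sum(events["mitosis"] == t))):
                if cells:
                    mom = cells.pop(int(rng.integers(len(cells))))  # delete the mother
                    a, r = rng.uniform(0, 2 * np.pi), 0.6 * mom["r"]
                    for s in (1, -1):  # two smaller daughters on opposite sides
                        cells.append(dict(id=next_id, r=r,
                                          y=mom["y"] + s * r * np.sin(a),
                                          x=mom["x"] + s * r * np.cos(a)))
                        next_id += 1
            for _ in range(int(np.sum(events["appear"] == t))):
                cells.append(new_cell(next_id))
                next_id += 1
        video[t] = render_circles(cells, shape)
    return video
```

Because every new cell (appearing or daughter) gets a fresh id, divisions and appearances show up as new tracks, while surviving cells keep their ids across frames.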

3.3. Annotation refinement for HeLa cell video simulation

After training the initial CycleGAN, we are able to build simulated annotation videos (with circle representations) as well as their corresponding synthetic microscope videos. However, circles do not match the exact shapes of the cells in the synthetic videos. To achieve more consistent synthetic videos and annotations, we propose an annotation refinement framework, whose workflow is shown in Fig. 6.

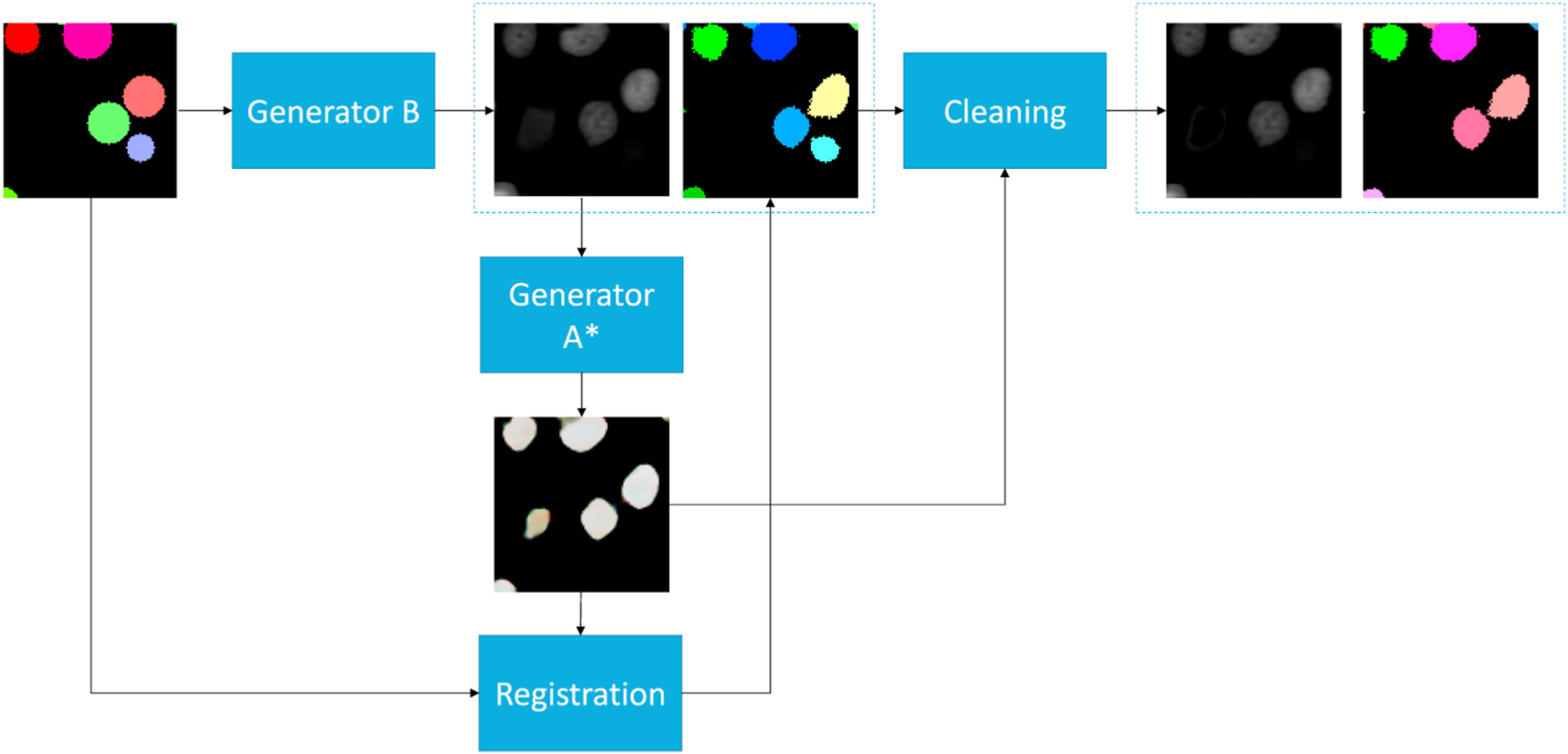

Fig. 6.

This figure shows the workflow of the annotation refinement approach. The simulated circle annotations are fed into Generator B to synthesize cell images. We used Generator A* from Fig. 5 to generate sharp binary masks from the synthetic images. Then, we registered the simulated circle annotations to the binary masks to match the shapes of cells in the synthetic images. Last, an annotation cleaning step was introduced to delete inconsistent annotations between the deformed instance object masks and the binary masks.

3.3.1. Binary mask generation

We trained a CycleGAN to generate binary masks of synthetic cell images. Unlike the CycleGAN in §Unsupervised image-annotation synthesis, we used training data without Gaussian blurring and used the model from an early epoch. In our experiments, we observed that the early epochs of CycleGAN training focused more on intensity adaptation than on shape adaptation. The trained Generator A* is used to generate sharp binary masks as templates for the subsequent annotation registration step.

3.3.2. Annotation deformation (AD)

To bridge the gap between circle representations and HeLa cell shape annotations, a non-rigid registration approach from ANTs [41] is used to deform the circles to HeLa cell shapes. Briefly, we used Generator B to synthesize cell images from our simulated annotations. In the mask generation step, we used Generator A* to generate binary masks, and then registered the circle annotations to these binary masks. In this way, we keep the label numbers of the circle representations while deforming their shapes to fit the synthetic cells.

3.3.3. Annotation cleaning (AC)

When performing image-annotation synthesis with CycleGAN without paired training data, the HeLa cell images and their annotations are likely to contain slightly different numbers of objects. To give the synthetic videos and simulated annotations more consistent numbers of objects, we introduce an annotation cleaning step (Fig. 6). First, we generate binary masks of the simulated images using Generator A*. Second, we clean up inconsistent objects and annotations by comparing the deformed simulated annotations with the binary masks. Briefly, pseudo instance annotations are obtained from the binary masks by treating each connected component as an instance. Third, if an instance object in the deformed simulated annotations is not 90% covered by the binary masks, we re-assign its label as background. Conversely, if a pseudo instance object from the binary masks is not 90% covered by the deformed simulated annotations, we replace the corresponding region in the intensity image with the average background intensity. In sum, consistent synthetic videos and deformed simulated instance annotations are obtained through annotation cleaning (Fig. 6).
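The two 90% coverage rules can be sketched for a single frame as follows. This is an illustration under assumptions: connected components use 4-connectivity (implemented with a plain breadth-first search to stay dependency-free), the average background intensity is taken as the mean of image pixels outside the binary mask, and the second rule is checked against the annotations that survive the first rule, which the text does not specify.

```python
from collections import deque

import numpy as np


def connected_components(mask):
    """Label 4-connected components of a boolean mask (1..K, 0 = background)."""
    mask = np.asarray(mask, dtype=bool)
    labels = np.zeros(mask.shape, dtype=np.int32)
    h, w = mask.shape
    current = 0
    for y0, x0 in zip(*np.nonzero(mask)):
        if labels[y0, x0]:
            continue
        current += 1
        labels[y0, x0] = current
        queue = deque([(y0, x0)])
        while queue:
            y, x = queue.popleft()
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                    labels[ny, nx] = current
                    queue.append((ny, nx))
    return labels


def clean_annotations(image, deformed_labels, binary_mask, coverage=0.9):
    """Return (cleaned image, cleaned instance labels) for one frame."""
    image = np.asarray(image, dtype=float).copy()
    labels = np.asarray(deformed_labels).copy()
    mask = np.asarray(binary_mask, dtype=bool)
    # Rule 1: an annotated instance covered < 90% by the mask becomes background.
    for k in np.unique(labels):
        if k != 0:
            region = labels == k
            if mask[region].mean() < coverage:
                labels[region] = 0
    # Rule 2: a mask component covered < 90% by annotations is painted with
    # the mean background intensity in the synthetic image.
    background = image[~mask].mean()
    pseudo = connected_components(mask)
    annotated = labels > 0
    for k in range(1, int(pseudo.max()) + 1):
        region = pseudo == k
        if annotated[region].mean() < coverage:
            image[region] = background
    return image, labels
```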

3.4. Instance segmentation and tracking

From the above stages, the synthetic videos and corresponding annotations are obtained frame-by-frame. The next step is to train our instance segmentation and tracking model. We used the recurrent stacked hourglass network (RSHN) [7] as the instance segmentation and tracking backbone to encode an embedding vector for each pixel. The RSHN is a stacked hourglass network with a convolutional gated recurrent unit to process temporal information. The ideal pixel embedding has two properties: (1) embeddings of pixels belonging to the same object should be similar across the entire video, and (2) embeddings of pixels belonging to different objects should be different. For a testing video, we employed the Faster Mean-shift algorithm [6] to cluster pixels into objects as the instance segmentation and tracking results. Embedding-based deep learning methods thus treat instance segmentation and tracking as a “single-stage” problem, which is a simple and generalizable solution across different applications [6,7].
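To make the clustering step concrete, the following is a plain flat-kernel mean-shift over pixel embeddings in NumPy. It is deliberately simpler than the GPU-accelerated Faster Mean-shift [6] used in this work (no seeding, no GPU, O(N²) memory), so it is only suitable for a small number of pixels; the bandwidth, iteration count and merge tolerance are assumed values.

```python
import numpy as np


def mean_shift_cluster(embeddings, bandwidth=0.5, n_iter=20, merge_tol=None):
    """Cluster (N, D) pixel embeddings with a flat-kernel mean-shift.

    Returns an (N,) array of instance ids starting at 0.
    """
    x = np.asarray(embeddings, dtype=float)
    modes = x.copy()
    for _ in range(n_iter):
        # Shift every mode to the mean of the points within `bandwidth` of it.
        d = np.linalg.norm(modes[:, None, :] - x[None, :, :], axis=-1)
        w = (d <= bandwidth).astype(float)
        modes = (w @ x) / w.sum(axis=1, keepdims=True)
    # Points whose modes converged to (nearly) the same location form one instance.
    merge_tol = bandwidth / 2 if merge_tol is None else merge_tol
    ids = np.empty(len(x), dtype=int)
    centers = []
    for i, m in enumerate(modes):
        for j, c in enumerate(centers):
            if np.linalg.norm(m - c) < merge_tol:
                ids[i] = j
                break
        else:
            centers.append(m)
            ids[i] = len(centers) - 1
    return ids
```

If the embeddings of all frames are stacked into one (N, D) array, pixels of the same object in different frames should converge to the same mode, which is what turns a single clustering pass into joint segmentation and tracking.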

4. Experimental design

4.1. Instance segmentation and tracking on microvilli video

4.1.1. Data

The two microvilli videos, captured by fluorescence microscopy, have a pixel resolution of 1.1 μm. The training data is one microvilli video of 512 × 512 pixels. The testing data is another microvilli video of 328 × 238 pixels. Due to the heavy load of manual annotation on video frames, we only annotated the first ten frames of both videos as the gold standard. The annotation work included two parts: 1) annotating each microvilli structure, including overlapping or densely distributed areas; and 2) assigning each instance a consistent label across all frames of the same video. Manually annotating both the training and testing data took a graduate student roughly a week. This long manual annotation process shows the value of annotation-free solutions for quantifying cellular and subcellular dynamics.

4.1.2. Experimental design

To assess the performance of our annotation-free instance segmentation and tracking model, the proposed method is compared with models trained with manual annotations on the same testing microvilli video. The experimental settings are as follows:

Self: The testing video with manual annotations was used as both training and testing data.

Real: Another real microvilli video with manual annotations was used as training data.

Microvilli-1: One simulated video which consisted of 100 instances in size of 512 × 512 pixels was used as training data. The “Microvilli-1 10 frames” indicated only 10 frames were used, while other simulated data used 50 frames.

Microvilli-5: Five simulated videos of 512 × 512 pixels were used as training data. The number of objects was empirically chosen to be between 80 and 220.

Microvilli-20: We further spatially split each 512 × 512 video in Microvilli-5 to four 256 × 256 videos to form a total of 20 simulated videos with half resolution.
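The spatial split used for Microvilli-20 can be sketched as below, assuming a (T, H, W) array layout. Each 512 × 512 video yields four 256 × 256 videos; instance ids are left unchanged, so objects cut by the split simply appear as partial objects in neighboring quadrants.

```python
import numpy as np


def split_into_quadrants(video):
    """Split a (T, H, W) video into four (T, H // 2, W // 2) videos."""
    _, h, w = video.shape
    hh, hw = h // 2, w // 2
    # Order: top-left, top-right, bottom-left, bottom-right.
    return [video[:, r:r + hh, c:c + hw] for r in (0, hh) for c in (0, hw)]
```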

4.2. Instance segmentation and tracking on HeLa cell video

4.2.1. Data

HeLa cell videos (N2DL-HeLa) were obtained from the ISBI Cell Tracking Challenge [11,12]. The cohort has two annotated 92-frame HeLa cell videos of 1100 × 700 pixels. The second video, which has complete manual annotations, is used as the testing data for all experiments.

4.2.2. Experimental design

For experiments using the annotation-free framework, synthetic videos and simulated annotations are used for training. For comparison, experiments trained with annotated data used the two annotated N2DL-HeLa videos as training data. Our experimental settings are described as follows:

Self: The testing video with manual annotations was used as both training and testing data. The patch size of 256 × 256 was used, following [6,7].

Self-HW: The testing video with manual annotations was used as both training and testing data. The patch size of 128 × 128 was used, as a half window (HW) size.

HeLa: Our training data was 10 simulated videos with 512 × 512 resolution containing approximately 150 objects, including 20 cell appearing events, 20 cell disappearing events, and 5 or 10 mitosis events. The numbers were empirically chosen. This experiment employed the circle annotations directly as the baseline performance. The patch size of 256 × 256 was used.

HeLa-AD: The above simulated data was used for training, with an extra annotation deformation (AD) step.

HeLa-AD + AC: The above simulated data was used for training, with extra AD and annotation cleaning (AC) steps.

HeLa-AD + AC + HW: The above simulated data was used for training, with extra AD and AC steps. The patch size of 128 × 128 was used, as a half window (HW) size.

4.3. Evaluation metrics

TRA, DET, and SEG are the standard metrics of the ISBI Cell Tracking Challenge [42], evaluating the performance of tracking, detection and segmentation, respectively. The challenge uses these three metrics as de facto standard measurements; DET and TRA are based on Acyclic Oriented Graph Matching (AOGM). In AOGM, instance objects are represented as the vertices of acyclic oriented graphs, while the tracking results (temporal links between objects) are modeled as the edges. Graphs are built from both the ground truth annotations and the predicted results to evaluate the accuracy of detection (DET) and tracking (TRA). SEG evaluates the overlap (Jaccard index) between predicted objects and true objects. TRA, DET and SEG range from 0 to 1, where 0 and 1 indicate the worst and best performance, respectively. Details of these metrics can be found in Ref. [42].
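As an illustration of the SEG definition, the following sketch follows the Cell Tracking Challenge rule as we understand it [42]: a reference object R is matched to the predicted object S that covers more than half of its pixels, the score for R is the Jaccard index |R ∩ S| / |R ∪ S| (0 if no such S exists), and SEG is the mean over all reference objects in all annotated frames. It is not the official evaluation software, and DET and TRA, which require AOGM graph matching, are not reproduced here.

```python
import numpy as np


def frame_jaccards(gt, pred):
    """Jaccard index of each reference object in one frame (0 if unmatched)."""
    out = []
    for r in np.unique(gt):
        if r == 0:
            continue  # 0 is background
        ref = gt == r
        labels, counts = np.unique(pred[ref], return_counts=True)
        j = 0.0
        for s, n in zip(labels, counts):
            # A match needs more than half of the reference object's pixels,
            # so at most one predicted object can qualify.
            if s != 0 and n > 0.5 * ref.sum():
                j = n / np.logical_or(ref, pred == s).sum()
        out.append(j)
    return out


def seg_score(gt_video, pred_video):
    """Mean Jaccard over all reference objects in all frames."""
    scores = [j for g, p in zip(gt_video, pred_video) for j in frame_jaccards(g, p)]
    return float(np.mean(scores)) if scores else 0.0
```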

5. Results

5.1. Instance segmentation and tracking on microvilli videos

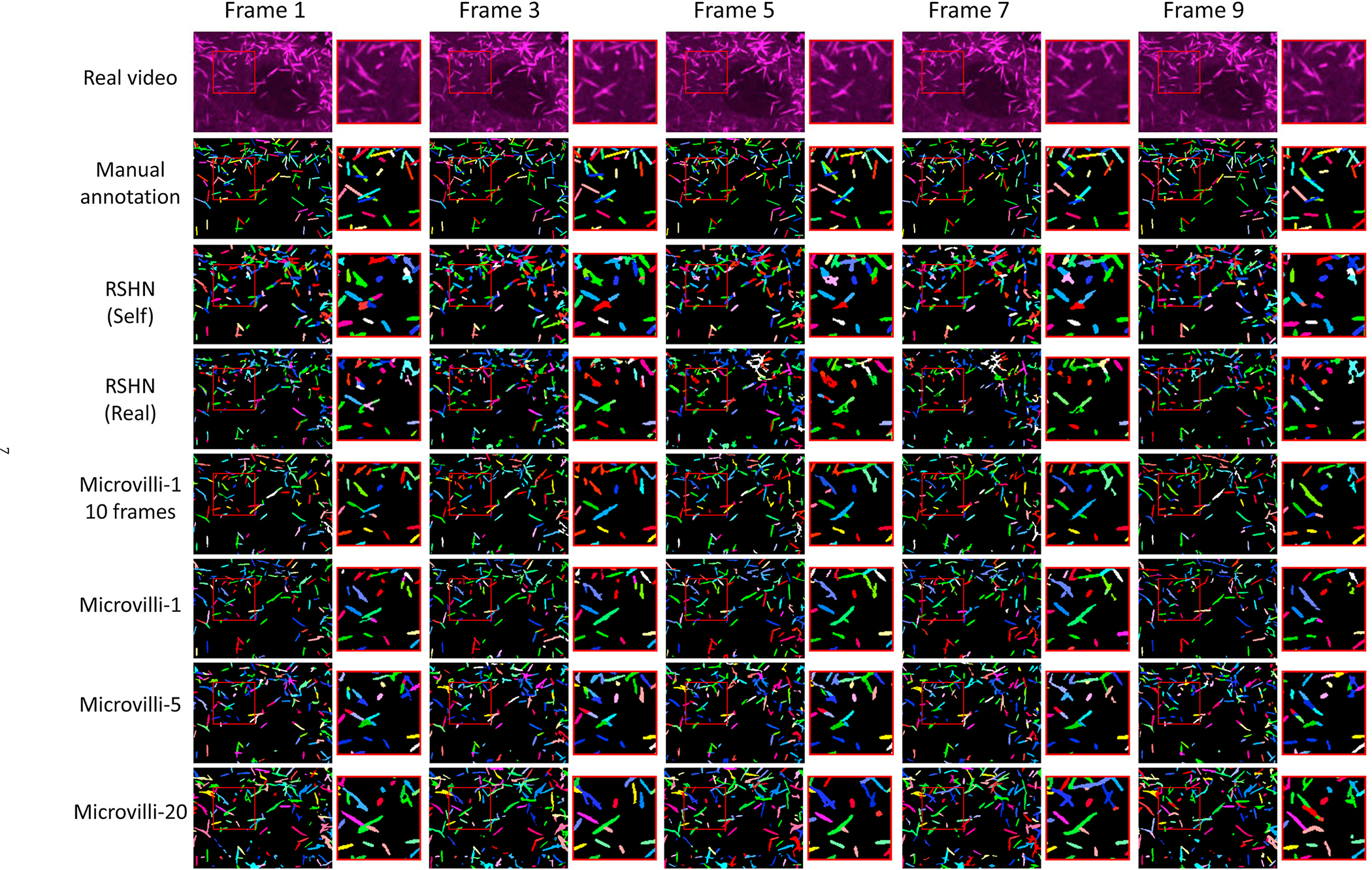

The qualitative and quantitative results are presented in Fig. 7 and Table 1, respectively. As shown in Table 1, the best DET and TRA scores were achieved by Microvilli-20 and the best SEG score by Microvilli-5, both without using manual annotations. By contrast, manually annotating the 10 frames used by RSHN (Self) and RSHN (Real) took a graduate student one week. A key factor behind the improved performance of the proposed framework appears to be the larger total number of simulated training videos.

Fig. 7.

This figure shows the instance segmentation and tracking results of the real testing microvilli video.

Table 1.

DET, SEG and TRA values of different experiments on the microvilli video.

| Exp. | T.V. | T.F. | DET | SEG | TRA |
|---|---|---|---|---|---|
| RSHN (Self) [7] | 1 | 10 | 0.662 | 0.298 | 0.629 |
| RSHN (Real) [7] | 1 | 10 | 0.357 | 0.169 | 0.334 |
| ASIST (Microvilli-1) | 1 | 10 | 0.580 | 0.306 | 0.551 |
| ASIST (Microvilli-1) | 1 | 50 | 0.586 | 0.311 | 0.556 |
| ASIST (Microvilli-5) | 5 | 50 | 0.660 | 0.338 | 0.627 |
| ASIST (Microvilli-20) | 20 | 50 | 0.715 | 0.332 | 0.674 |

T.V. is the number of training videos. T.F. is the number of training frames of each video. RSHN (Self) uses testing video for training. RSHN (Real) is the standard testing accuracy of using another independent video as training data.
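The 7%–11% range quoted in the abstract appears to correspond to the relative gains of ASIST (Microvilli-20) over RSHN (Self) in Table 1; the short calculation below makes this explicit using only the table values.

```python
# DET, SEG and TRA from Table 1.
rshn_self = {"DET": 0.662, "SEG": 0.298, "TRA": 0.629}
asist_mv20 = {"DET": 0.715, "SEG": 0.332, "TRA": 0.674}

# Relative gain in percent: (ASIST - supervised) / supervised * 100.
gain = {m: 100 * (asist_mv20[m] - rshn_self[m]) / rshn_self[m] for m in rshn_self}
for m, g in gain.items():
    print(f"{m}: {g:.1f}%")  # DET: 8.0%, SEG: 11.4%, TRA: 7.2%
```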

5.2. Instance segmentation and tracking on HeLa cell videos

Instance segmentation and tracking results on HeLa cell videos are presented in Fig. 8. As shown in Table 2, HeLa-AD + AC + HW achieved the best performance among the ASIST settings. The best performance of our annotation-free ASIST method is 5%–9% lower than the manual annotation baseline. Among the refinement steps, annotation cleaning (AC) yielded the largest improvement in DET and TRA.

Fig. 8.

This figure shows the instance segmentation and tracking results on the real HeLa cell testing video.

Table 2.

DET, SEG and TRA values of different experiments on the HeLa cell video.

| Exp. | T.V. | T.F. | DET | SEG | TRA |
|---|---|---|---|---|---|
| RSHN (Self) [7] | 2 | 92 | 0.979 | 0.884 | 0.975 |
| RSHN (Self-HW) | 2 | 92 | 0.956 | 0.809 | 0.951 |
| ASIST (HeLa) | 10 | 50 | 0.858 | 0.656 | 0.849 |
| ASIST (HeLa-AD) | 10 | 50 | 0.853 | 0.718 | 0.844 |
| ASIST (HeLa-AD + AC) | 10 | 50 | 0.919 | 0.755 | 0.911 |
| ASIST (HeLa-AD + AC + HW) | 10 | 50 | 0.939 | 0.796 | 0.928 |

T.V. is the number of training videos. T.F. is the number of training frames per video. RSHN (Self) is the upper bound of RSHN using testing video for training.

6. Discussion

In this paper, we assess the feasibility of performing pixel-embedding based instance object segmentation and tracking in an annotation-free manner, with adversarial simulations. Compared with conventional segmentation and tracking methods for microscope videos, our approach uses a pixel-embedding strategy instead of the two-step “segmentation and association” method. Our method also uses synthetic training data instead of manual annotations. According to our experimental results, our annotation-free instance segmentation and tracking model achieved superior performance on the microvilli dataset and comparable results on the HeLa dataset. These encouraging results suggest a promising path toward making pixel-embedding deep learning, which currently relies on unscalable human annotation, annotation-free. In terms of robustness, the proposed pixel-embedding based method does not require heavy parameter tuning, which is typically inevitable in traditional model-based methods. As a learning-based method, its robustness can be further improved with more heterogeneous training images.

Strengths.

The strength of our proposed ASIST method is three-fold: I. the proposed method is annotation-free, which alleviates the extensive manual effort of preparing large-scale manual annotations for training deep learning approaches; II. the proposed method does not require heavy parameter tuning; and III. the proposed ASIST method combines the strengths of both adversarial learning and pixel embedding based cell instance segmentation and tracking.

Limitations.

One major limitation of our ASIST method is that both microvilli and HeLa cells have relatively homogeneous shape and appearance variations. In the future, it will be valuable to explore more complicated cell lines and more heterogeneous microscope videos. Meanwhile, the registration based method is introduced to capture the shape variations for ball-shaped HeLa cells. For more complicated cellular and subcellular objects, deep learning based solutions might be needed, such as the shape auto-encoder.

Following the proposed ASIST framework, our long term goal is to propose more general and comprehensive algorithms that can be applied to a variety of microscope videos with pixel-level instance segmentation and tracking. This would provide new analytical tools for domain experts to characterize high spatio-temporal dynamics of cells and subcellular structures.

7. Conclusion

In this paper, we propose the ASIST method, an annotation-free instance segmentation and tracking solution to characterize cellular and subcellular dynamics in microscope videos. Our method consists of unsupervised image-annotation synthesis, video synthesis, and instance segmentation and tracking. According to the experiments on subcellular (microvilli) videos and cellular (HeLa cell) videos, ASIST achieved comparable performance to manual annotation-based strategies. The proposed approach is a novel step towards annotation-free quantification of cellular and subcellular dynamics in microscopy.


References

- [1].Meenderink LM, Gaeta IM, Postema MM, Cencer CS, Chinowsky CR, Krystofiak ES, Millis BA, Tyska MJ, Actin dynamics drive microvillar motility and clustering during brush border assembly, Dev. Cell 50 (5) (2019) 545–556. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Arbelle A, Reyes J, Chen J-Y, Lahav G, Raviv TR, A probabilistic approach to joint cell tracking and segmentation in high-throughput microscopy videos, Med. Image Anal. 47 (2018) 140–152. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Al-Kofahi Y, Zaltsman A, Graves R, Marshall W, Rusu M, A deep learning-based algorithm for 2-d cell segmentation in microscopy images, BMC Bioinf 19 (1) (2018) 1–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Korfhage N, Mühling M, Ringshandl S, Becker A, Schmeck B, Freisleben B, Detection and segmentation of morphologically complex eukaryotic cells in fluorescence microscopy images via feature pyramid fusion, PLoS Comput. Biol. 16 (9) (2020), e1008179. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5] Van Valen DA, Kudo T, Lane KM, Macklin DN, Quach NT, DeFelice MM, Maayan I, Tanouchi Y, Ashley EA, Covert MW, Deep learning automates the quantitative analysis of individual cells in live-cell imaging experiments, PLoS Comput. Biol. 12 (11) (2016) e1005177.

- [6] Zhao M, Jha A, Liu Q, Millis BA, Mahadevan-Jansen A, Lu L, Landman BA, Tyska MJ, Huo Y, Faster mean-shift: GPU-accelerated embedding-clustering for cell segmentation and tracking, arXiv preprint arXiv:2007.14283, 2020.

- [7] Payer C, Štern D, Neff T, Bischof H, Urschler M, Instance segmentation and tracking with cosine embeddings and recurrent hourglass networks, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer, 2018, pp. 3–11.

- [8] Johnson-Roberson M, Barto C, Mehta R, Sridhar SN, Rosaen K, Vasudevan R, Driving in the matrix: can virtual worlds replace human-generated annotations for real world tasks?, arXiv preprint arXiv:1610.01983, 2016.

- [9] Zamora I, Lopez NG, Vilches VM, Cordero AH, Extending the OpenAI Gym for robotics: a toolkit for reinforcement learning using ROS and Gazebo, arXiv preprint arXiv:1608.05742, 2016.

- [10] Kheterpal N, Parvate K, Wu C, Kreidieh A, Vinitsky E, Bayen A, Flow: deep reinforcement learning for control in SUMO, EPiC Series Eng. 2 (2018) 134–151.

- [11] Maška M, Ulman V, Svoboda D, Matula P, Matula P, Ederra C, Urbiola A, España T, Venkatesan S, Balak DM, et al., A benchmark for comparison of cell tracking algorithms, Bioinformatics 30 (11) (2014) 1609–1617.

- [12] Ulman V, Maška M, Magnusson KE, Ronneberger O, Haubold C, Harder N, Matula P, Matula P, Svoboda D, Radojevic M, et al., An objective comparison of cell-tracking algorithms, Nat. Methods 14 (12) (2017) 1141–1152.

- [13] Simard PY, Steinkraus D, Platt JC, et al., Best practices for convolutional neural networks applied to visual document analysis, in: ICDAR, vol. 3, 2003.

- [14] Drozdzal M, Chartrand G, Vorontsov E, Shakeri M, Di Jorio L, Tang A, Romero A, Bengio Y, Pal C, Kadoury S, Learning normalized inputs for iterative estimation in medical image segmentation, Med. Image Anal. 44 (2018) 1–13.

- [15] Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y, Generative adversarial nets, in: Advances in Neural Information Processing Systems, 2014, pp. 2672–2680.

- [16] Costa P, Galdran A, Meyer MI, Abràmoff MD, Niemeijer M, Mendonça AM, Campilho A, Towards adversarial retinal image synthesis, arXiv preprint arXiv:1701.08974, 2017.

- [17] Zhang Q, Wang H, Lu H, Won D, Yoon SW, Medical image synthesis with generative adversarial networks for tissue recognition, in: 2018 IEEE International Conference on Healthcare Informatics (ICHI), 2018, pp. 199–207.

- [18] Zhuang J, Wang D, Geometrically matched multi-source microscopic image synthesis using bidirectional adversarial networks, arXiv preprint arXiv:2010.13308, 2020.

- [19] Liu S, Gibson E, Grbic S, Xu Z, Setio AAA, Yang J, Georgescu B, Comaniciu D, Decompose to manipulate: manipulable object synthesis in 3D medical images with structured image decomposition, arXiv preprint arXiv:1812.01737, 2018.

- [20] Zhu J-Y, Park T, Isola P, Efros AA, Unpaired image-to-image translation using cycle-consistent adversarial networks, in: Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 2223–2232.

- [21] Huo Y, Xu Z, Bao S, Assad A, Abramson RG, Landman BA, Adversarial synthesis learning enables segmentation without target modality ground truth, in: 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), IEEE, 2018, pp. 1217–1220.

- [22] Ihle SJ, Reichmuth AM, Girardin S, Han H, Stauffer F, Bonnin A, Stampanoni M, Pattisapu K, Vörös J, Forró C, Unsupervised data to content transformation with histogram-matching cycle-consistent generative adversarial networks, Nat. Mach. Intell. 1 (10) (2019) 461–470.

- [23] Dunn KW, Fu C, Ho DJ, Lee S, Han S, Salama P, Delp EJ, DeepSynth: three-dimensional nuclear segmentation of biological images using neural networks trained with synthetic data, Sci. Rep. 9 (1) (2019) 1–15.

- [24] Ridler T, Calvard S, et al., Picture thresholding using an iterative selection method, IEEE Trans. Syst. Man Cybern. 8 (8) (1978) 630–632.

- [25] Otsu N, A threshold selection method from gray-level histograms, IEEE Trans. Syst. Man Cybern. 9 (1) (1979) 62–66.

- [26] Pratt W, Digital Image Processing: PIKS Scientific Inside, Wiley-Interscience, John Wiley & Sons, Inc., 2007.

- [27] Kass M, Witkin A, Terzopoulos D, Snakes: active contour models, Int. J. Comput. Vis. 1 (4) (1988) 321–331.

- [28] Kornilov AS, Safonov IV, An overview of watershed algorithm implementations in open source libraries, J. Imaging 4 (10) (2018) 123.

- [29] Gerlich D, Mattes J, Eils R, Quantitative motion analysis and visualization of cellular structures, Methods 29 (1) (2003) 3–13.

- [30] Ray N, Acton ST, Motion gradient vector flow: an external force for tracking rolling leukocytes with shape and size constrained active contours, IEEE Trans. Med. Imag. 23 (12) (2004) 1466–1478.

- [31] Sato Y, Chen J, Zoroofi RA, Harada N, Tamura S, Shiga T, Automatic extraction and measurement of leukocyte motion in microvessels using spatiotemporal image analysis, IEEE Trans. Biomed. Eng. 44 (4) (1997) 225–236.

- [32] De Hauwer C, Camby I, Darro F, Migeotte I, Decaestecker C, Verbeek C, Danguy A, Pasteels J-L, Brotchi J, Salmon I, et al., Gastrin inhibits motility, decreases cell death levels and increases proliferation in human glioblastoma cell lines, J. Neurobiol. 37 (3) (1998) 373–382.

- [33] De Hauwer C, Darro F, Camby I, Kiss R, Van Ham P, Decaestecker C, In vitro motility evaluation of aggregated cancer cells by means of automatic image processing, Cytometry: J. Int. Soc. Anal. Cytol. 36 (1) (1999) 1–10.

- [34] Jain V, Murray JF, Roth F, Turaga S, Zhigulin V, Briggman KL, Helmstaedter MN, Denk W, Seung HS, Supervised learning of image restoration with convolutional networks, in: 2007 IEEE 11th International Conference on Computer Vision, IEEE, 2007, pp. 1–8.

- [35] Baghli I, Benazzouz M, Chikh MA, Plasma cell identification based on evidential segmentation and supervised learning, Int. J. Biomed. Eng. Technol. 32 (4) (2020) 331–350.

- [36] Yu H, Guo D, Yan Z, Liu W, Simmons J, Przybyla CP, Wang S, Unsupervised learning for large-scale fiber detection and tracking in microscopic material images, arXiv preprint arXiv:1805.10256, 2018.

- [37] Zhu J-Y, Park T, Isola P, Efros AA, Unpaired image-to-image translation using cycle-consistent adversarial networks, in: Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2017.

- [38] Liu Q, Gaeta IM, Millis BA, Tyska MJ, Huo Y, GAN-based unsupervised segmentation: should we match the exact number of objects, arXiv preprint arXiv:2010.11438, 2020.

- [39] He K, Zhang X, Ren S, Sun J, Deep residual learning for image recognition, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 770–778.

- [40] Ronneberger O, Fischer P, Brox T, U-net: convolutional networks for biomedical image segmentation, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer, 2015, pp. 234–241.

- [41] Avants BB, Tustison NJ, Song G, Cook PA, Klein A, Gee JC, A reproducible evaluation of ANTs similarity metric performance in brain image registration, Neuroimage 54 (3) (2011) 2033–2044.

- [42] Matula P, Maška M, Sorokin DV, Matula P, Ortiz-de Solórzano C, Kozubek M, Cell tracking accuracy measurement based on comparison of acyclic oriented graphs, PLoS One 10 (12) (2015) e0144959.