Key Points

Question

Can deep learning models (DLMs) using routine preoperative imaging predict surgical complexity and outcomes in abdominal wall reconstruction?

Findings

In this quality improvement study, 3 DLMs were developed and validated from 369 patients and 9303 images. The DLMs predicting complexity (receiver operating curve = 0.744) and infection (receiver operating curve = 0.898) performed strongly, and the surgical complexity DLM was more accurate than expert surgeons; prediction of postoperative pulmonary failure was less effective (receiver operating curve = 0.545).

Meaning

DLMs built using routine preoperative imaging may successfully predict surgical complexity and postoperative outcomes in abdominal wall reconstruction.

This quality improvement study discusses the development and validation of a fully automated deep learning model to predict surgical complexity and postoperative outcomes in patients undergoing abdominal wall reconstruction based only on preoperative computed tomography imaging.

Abstract

Importance

Image-based deep learning models (DLMs) have been used in other disciplines, but this method has yet to be used to predict surgical outcomes.

Objective

To apply image-based deep learning to predict complexity, defined as need for component separation, and pulmonary and wound complications after abdominal wall reconstruction (AWR).

Design, Setting, and Participants

This quality improvement study was performed at an 874-bed hospital and tertiary hernia referral center from September 2019 to January 2020. A prospective database was queried for patients with ventral hernias who underwent open AWR by experienced surgeons and had preoperative computed tomography images containing the entire hernia defect. An 8-layer convolutional neural network was generated to analyze image characteristics. Images were batched into training (approximately 80%) or test sets (approximately 20%) to analyze model output. Test sets were blinded from the convolutional neural network until training was completed. For the surgical complexity model, a separate validation set of computed tomography images was evaluated by a blinded panel of 6 expert AWR surgeons and the surgical complexity DLM. Analysis started February 2020.

Exposures

Image-based DLM.

Main Outcomes and Measures

The primary outcome was model performance as measured by the area under the receiver operating characteristic curve (ROC) calculated for each model; accuracy, sensitivity, and specificity were also calculated. Measures were DLM prediction of surgical complexity, using need for component separation techniques as a surrogate, and prediction of postoperative surgical site infection and pulmonary failure. The DLM for predicting surgical complexity was compared against the prediction of 6 expert AWR surgeons.

Results

A total of 369 patients and 9303 computed tomography images were used. The mean (SD) age of patients was 57.9 (12.6) years, 232 (62.9%) were female, and 323 (87.5%) were White. The surgical complexity DLM performed well (ROC = 0.744; P < .001) and, when compared with surgeon prediction on the validation set, performed better with an accuracy of 81.3% compared with 65.0% (P < .001). Surgical site infection was predicted successfully with an ROC of 0.898 (P < .001). However, the DLM for predicting pulmonary failure was less effective with an ROC of 0.545 (P = .03).

Conclusions and Relevance

Image-based DLM using routine, preoperative computed tomography images was successful in predicting surgical complexity and more accurate than expert surgeon judgment. An additional DLM accurately predicted the development of surgical site infection.

Introduction

Artificial intelligence (AI) has been shown to accurately diagnose new lesions or abnormalities and has shown promise in analyzing complex computed tomography (CT) images to detect intracranial pathology and classify interstitial lung disease and lung nodules.1,2,3,4,5,6,7,8,9 AI can be as accurate as specialist physicians in diagnosing abnormalities, but its value in interpreting the clinical consequences of these findings and predicting outcomes is an area of possibility that has yet to be sufficiently studied.3,6,7,8,9 AI has the potential to augment the decision to perform surgery, aid in operative planning, assist surgeons in identifying patients who need referral to a specialist or specialty center, add to the informed consent process, and possibly improve outcomes.10

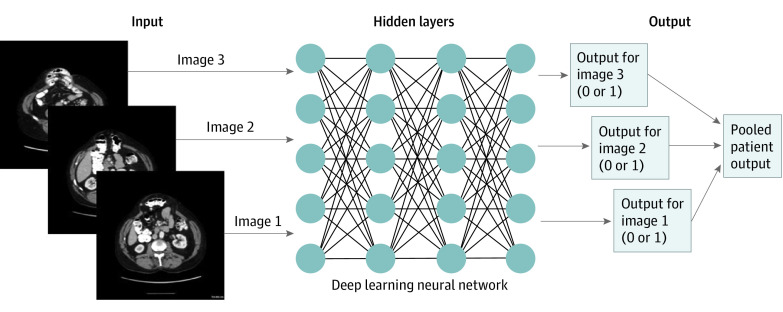

Machine learning is a subfield of AI in which a computer performs a task without explicit instructions. Deep learning is a further division of machine learning where models automatically learn without needing time-intensive handcrafted feature engineering.10 In deep learning models (DLM), the initial input and final output are connected by hidden layers containing hidden nodes with an assigned weight, with the computer automatically optimizing its algorithm and updating weights as the model is trained (Figure 1).10 DLMs of CT imaging have proven useful in prior applications in the trauma and oncologic settings, with the main focus on diagnosis.1,2,3,4,5 Despite the advancements achieved with DLMs, this technology has yet to be used to predict surgical complexity or outcomes from preoperative imaging. A surgical field that could benefit from such technology is ventral hernia repair.

Figure 1. Example of an Image-Based Deep Learning Model.

Hidden layers signify model architecture that cannot be extracted from the model. Predictive outputs are given per image, and all patient image predictions were pooled for overall patient prediction.

Ventral hernias, which occur in more than 1 in 8 patients after laparotomy, result in one of the most common surgical procedures performed in the world.11,12,13,14,15,16 Despite its frequency, when complex hernias require abdominal wall reconstruction (AWR), outcomes are marred by a high incidence of wound complications, frequent treatment failures (recurrences), increasing surgical difficulty with each operation, and significant financial burden to health care systems.15,16 Important elements of successful AWR are achieving fascial closure and minimizing surgical site infection (SSI), with failure of either increasing hernia recurrence rates by 3 to 5 fold.17,18,19,20,21 To achieve musculofascial closure, a percentage of patients who undergo AWR require unilateral or bilateral myofascial advancement flaps or component separation techniques (CST), a known surrogate of operative complexity in AWR.18,19,20 Using AI to identify patients who will require complex operations or those at high risk of surgical site or systemic complications by predicting surgical outcomes will significantly advance the field.10,22,23 Thus, the objective of this study was to develop and validate a fully automated DLM to predict surgical complexity and postoperative outcomes in patients undergoing AWR based only on preoperative CT imaging.

Methods

Study Design

Reporting for this study is based on the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD) reporting guideline.24 Following institutional review board approval from Carolinas Medical Center, patients who underwent open AWR at a tertiary care hernia referral center and had a preoperative CT scan of the abdomen and pelvis were retrospectively identified from a prospectively maintained institutional database. Patients were excluded if their preoperative CT images displayed architectural distortion (eg, artifact from an orthopedic prosthesis) or were incompletely rendered during processing, if they were younger than 18 years, or if they had an emergent operation. Patients with missing images were not included during model development. Patients were excluded from the complexity model if they received preoperative abdominal wall botulinum toxin A injection.25 Patients underwent preoperative preparation by eliminating smoking for 4 weeks prior to surgery and had a hemoglobin A1c level of 7.2% or less. Demographic data were collected for each patient including age, sex, race, body mass index, hernia defect size, comorbidities, and US Centers for Disease Control and Prevention wound class. Using preoperative CT imaging, 3 independent DLMs were developed to predict surgical complexity, SSI, and postoperative pulmonary complications. The features identified on the CT scan by the DLM were unknown and not visible to the user.

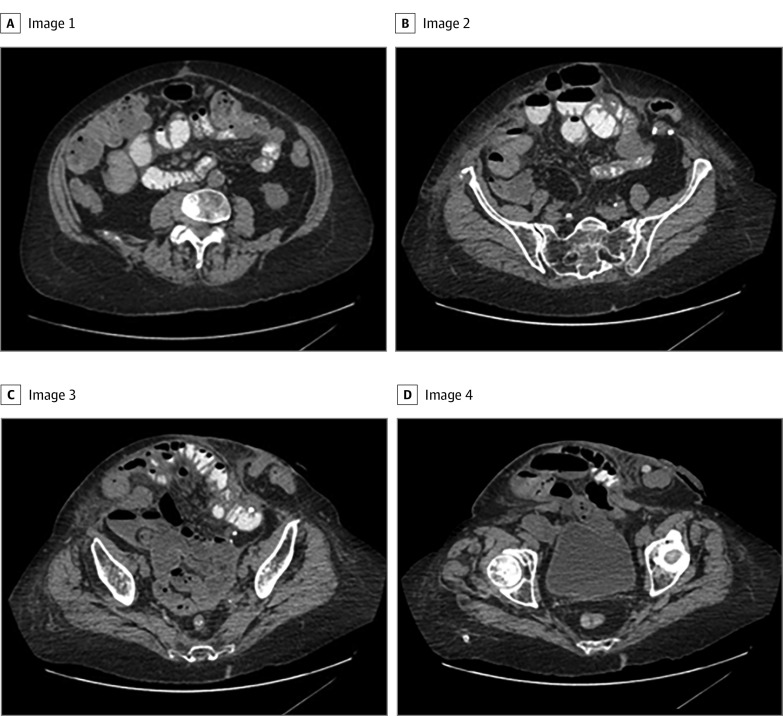

Once identified, axial cuts were deidentified and rendered into representative 3- to 5-mm slices using TeraRecon software (TeraRecon Inc) (Figure 2) to include only abdominal CT images that contained the hernia. All images were then batched, had patient identifiers removed, and were exported/stored in a secure folder used for training/development of the AI models.

Figure 2. Representative Axial Computed Tomography Images of a Patient Requiring Component Separation.

To capture the entire hernia defect for this patient, a total of 22 images were included for development of the deep learning model. Panels A through D are 4 representative computed tomography cuts in order from cranial to caudal.

DLM: Surgical Complexity, SSI, and Pulmonary Complications

For each DLM, a binary outcome was identified and associated with the image set for each patient. CST was used as a surrogate for complexity in the surgical complexity DLM and was defined as yes if the patient underwent musculofascial advancement via either an external oblique release or transversus abdominis release to enable complete abdominal wall closure. The external oblique release or transversus abdominis release could have been unilateral or bilateral. In our prospectively maintained database, SSIs are identified by trained data entry specialists based on hospital, clinic, and direct patient follow-up and imaging studies. SSI was defined as either deep or superficial wound infection. Deep infection was defined as involving the subfascial, fascial, and muscular layers, while superficial infection was an infection confined to the skin and subcutaneous tissues.26 Deep infection included deep space infection and mesh infection, while superficial infection included cellulitis and superficial wound breakdown. Pulmonary failure, retrospectively identified in the same manner as SSI, was defined as a respiratory complication requiring transfer to the intensive care unit for bilevel positive airway pressure or intubation.

Model Architecture, Training, and Validation

The 3 DLMs were derived from LeNet architecture, which included 5 convolutional layers, 1 flattened layer, and 2 fully connected layers. Batch sizes for model analysis were set to 16. Input classes were designated during training as yes and no for outcomes in each of the models. Approximately 80% of the images were used for training, and 20% were randomly chosen for a test set for later validation within the convolutional neural network (CNN). The CNN was blind to the images in the test set until validation. All images were standardized by sizing to 150 × 150 pixels with 3 color channels for analysis.
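The approximately 80/20 partition described above can be sketched as follows. This is a minimal illustration rather than the study's code: the function name `split_by_patient`, the seed, the record layout, and the choice to split at the patient level (so that slices from one patient never appear in both sets) are all assumptions. The text states only that approximately 20% of the images were randomly held out.

```python
import random
from collections import defaultdict

def split_by_patient(image_records, test_fraction=0.2, seed=0):
    """Split (patient_id, image_path) records into train and test lists.

    Assumption: the split is made per patient, so no patient
    contributes slices to both sets.
    """
    by_patient = defaultdict(list)
    for patient_id, image_path in image_records:
        by_patient[patient_id].append(image_path)

    # Shuffle patient identifiers reproducibly, then hold out a fraction.
    patient_ids = sorted(by_patient)
    random.Random(seed).shuffle(patient_ids)
    n_test = max(1, round(len(patient_ids) * test_fraction))
    test_ids = set(patient_ids[:n_test])

    train, test = [], []
    for patient_id, paths in by_patient.items():
        target = test if patient_id in test_ids else train
        target.extend((patient_id, path) for path in paths)
    return train, test

# Hypothetical records: 10 patients with 3 slices each (not study data).
records = [(f"patient_{i}", f"slice_{i}_{j}.png") for i in range(10) for j in range(3)]
train_set, test_set = split_by_patient(records)
```

Splitting by patient rather than by individual slice is one way to keep adjacent, highly similar slices from the same scan out of both sets, which would otherwise inflate test performance.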

The neural network graph parameters were specified as follows: the first 2 convolutional layers were given a filter size of 3 with 32 filters, and convolutional layers 3 to 5 were given a filter size of 3 with 64 filters. The fully connected layer size was subsequently set to 128 based on these parameter specifications. The activation function used in the first fully connected layer was the rectified linear unit. The learning rate for the CNN was set to 0.0001 to minimize potential overfitting errors associated with model construction. The model was trained for 10 000 iterations. Further training beyond 10 000 iterations was not performed because overtraining the model has been reported to reduce accuracy and increase the loss function.27
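To make the stated layer specification concrete, the sketch below tabulates the output shape and trainable parameter count of each of the 8 layers. The filter counts, filter size, input size, and fully connected size come from the text; the padding scheme ("same"), the 2 × 2 pooling after every convolutional layer, and the 2-unit output layer are assumptions that the text does not specify, so the resulting numbers are illustrative only. The function name `describe_network` is hypothetical.

```python
def describe_network(height=150, width=150, channels=3,
                     conv_filters=(32, 32, 64, 64, 64),
                     kernel=3, fc_units=128, n_classes=2):
    """Return (name, output_shape, parameter_count) for each layer.

    Assumptions (not stated in the source): 'same' padding with stride 1,
    2x2 pooling after every convolutional layer, and a 2-unit output layer
    for the yes/no classes.
    """
    layers = []
    h, w, c = height, width, channels
    for i, filters in enumerate(conv_filters, start=1):
        params = kernel * kernel * c * filters + filters  # weights + biases
        h, w, c = h // 2, w // 2, filters  # size kept by conv, halved by pooling
        layers.append((f"conv{i}", (h, w, c), params))
    flat = h * w * c
    layers.append(("flatten", (flat,), 0))
    layers.append(("fc1_relu", (fc_units,), flat * fc_units + fc_units))
    layers.append(("fc2_output", (n_classes,), fc_units * n_classes + n_classes))
    return layers

layers = describe_network()
total_params = sum(params for _, _, params in layers)
for name, shape, params in layers:
    print(f"{name:<12} output={shape} params={params}")
print("total trainable parameters:", total_params)
```

Under these assumptions most of the parameters sit in the first fully connected layer, which is typical of small LeNet-style networks.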

Once the model was sufficiently trained, internal validation of the DLMs was performed with those images assigned for testing. No recalibration of the DLMs was performed. Classification percentages for test images were calculated using the trained weights from the CNN. For each slice analyzed by the computer, a continuous number was generated between 0 and 1, with a number closer to 1 indicating a specific outcome. These values were recorded with the corresponding classification label and then evaluated for model discriminative ability through a receiver operating curve (ROC) value.
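The discriminative ability summarized by the ROC value can be computed directly from the per-slice scores and their labels. The sketch below uses the rank-based (Mann-Whitney) formulation: the area under the curve equals the probability that a randomly chosen positive slice receives a higher score than a randomly chosen negative slice, with ties counted as one-half. The function name `roc_auc` and the example values are hypothetical and are not study data; the study itself reports using Stata for this calculation.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    labels: 1 for the outcome of interest, 0 otherwise.
    scores: model outputs between 0 and 1, one per slice.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))

# Hypothetical slice-level outputs (not study data).
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.91, 0.62, 0.40, 0.55, 0.30, 0.22, 0.08]
auc = roc_auc(labels, scores)
```

A value of 0.5 corresponds to chance-level ranking, which is the reference line used in Figures 3 and 4.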

Expert Comparison for Surgical Complexity Model

A random sample of 35 patients was obtained from the preoperative CT database using a random number generator to create a validation set. Preoperative CT scans for this set of patients were analyzed by the trained neural network to determine whether these patients would require CST. Six expert surgeons (E.B.D., J.M.S., J.P.F., V.A.A., P.D.C., and B.T.H.) with fellowship training and a practice based in AWR were tested using the same validation set; surgeons were not excluded from reviewing imaging of their own patients but were blinded from patient information and identifiers. Expert predictions were not obtained for the SSI and pulmonary complication models because these are not outcomes that are routinely predicted by surgeons based on preoperative imaging.

Statistical Analyses

To determine prediction model parameters (ROC value and Brier statistic) of the DLMs, predictions for each image analyzed by the DLMs were compared against the actual outcome. Predictions were classified as a binary outcome: if the predicted probability was 50% or more in favor of an outcome, the image was considered to predict that outcome. Predictions for each patient's images were then pooled for the overall patient prediction (Figure 1). Using the predictions for all tested images, ROC values and Brier statistics for the DLMs were generated using Stata version 15 (StataCorp). Test parameters that included accuracy, sensitivity, and specificity were also calculated. The threshold for statistical significance was defined as a 2-sided P value less than .05, and significance was determined by comparing the model prediction for each image slice. No power calculation was performed because no prior studies provided data on algorithms assessing such questions. The number of images and, in turn, the number of patients needed for model development was based on previous AI models that focused on diagnostic predictions and estimated a minimum benchmark.28,29 Analysis started February 2020.
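The thresholding, test parameters, Brier statistic, and per-patient pooling described above can be sketched in a few lines. This is an illustration under stated assumptions, not the study's analysis code (which used Stata): the function names are hypothetical, the example values are invented, and pooling by averaging each patient's slice probabilities is an assumption, because the text does not specify how slice predictions were combined.

```python
from collections import defaultdict

def brier_score(labels, probs):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for y, p in zip(labels, probs)) / len(labels)

def classification_metrics(labels, probs, threshold=0.5):
    """Accuracy, sensitivity, and specificity at a probability threshold."""
    tp = fp = tn = fn = 0
    for y, p in zip(labels, probs):
        predicted = 1 if p >= threshold else 0  # "50% or more" counts as positive
        if predicted == 1 and y == 1:
            tp += 1
        elif predicted == 1 and y == 0:
            fp += 1
        elif predicted == 0 and y == 0:
            tn += 1
        else:
            fn += 1
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
    }

def pool_by_patient(patient_ids, probs, threshold=0.5):
    """Assumption: pool by averaging each patient's slice probabilities."""
    grouped = defaultdict(list)
    for pid, p in zip(patient_ids, probs):
        grouped[pid].append(p)
    return {pid: int(sum(ps) / len(ps) >= threshold) for pid, ps in grouped.items()}

# Hypothetical slice-level data for 3 patients (not study data).
patient_ids = ["a", "a", "a", "b", "b", "c", "c"]
labels = [1, 1, 1, 0, 0, 0, 0]
probs = [0.8, 0.6, 0.4, 0.3, 0.6, 0.2, 0.1]

metrics = classification_metrics(labels, probs)
brier = brier_score(labels, probs)
patient_predictions = pool_by_patient(patient_ids, probs)
```

Other pooling rules, such as a majority vote across slices, would fit the description equally well and can give different patient-level predictions when a patient's slices disagree.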

Results

Patient Demographics

In total, 369 patients who underwent AWR were included with a total of 9303 images. All patients underwent AWR by 4 surgeons (K.W.K., V.A.A., P.D.C., and B.T.H.) with a combined 75 years of experience. The mean (SD) age of patients was 57.9 (12.6) years, 232 (62.9%) were female, and 323 (87.5%) were White. These patients were representative of the AWR patient population at this institution, and complete demographics can be found in the eTable in the Supplement.

Surgical Complexity Model

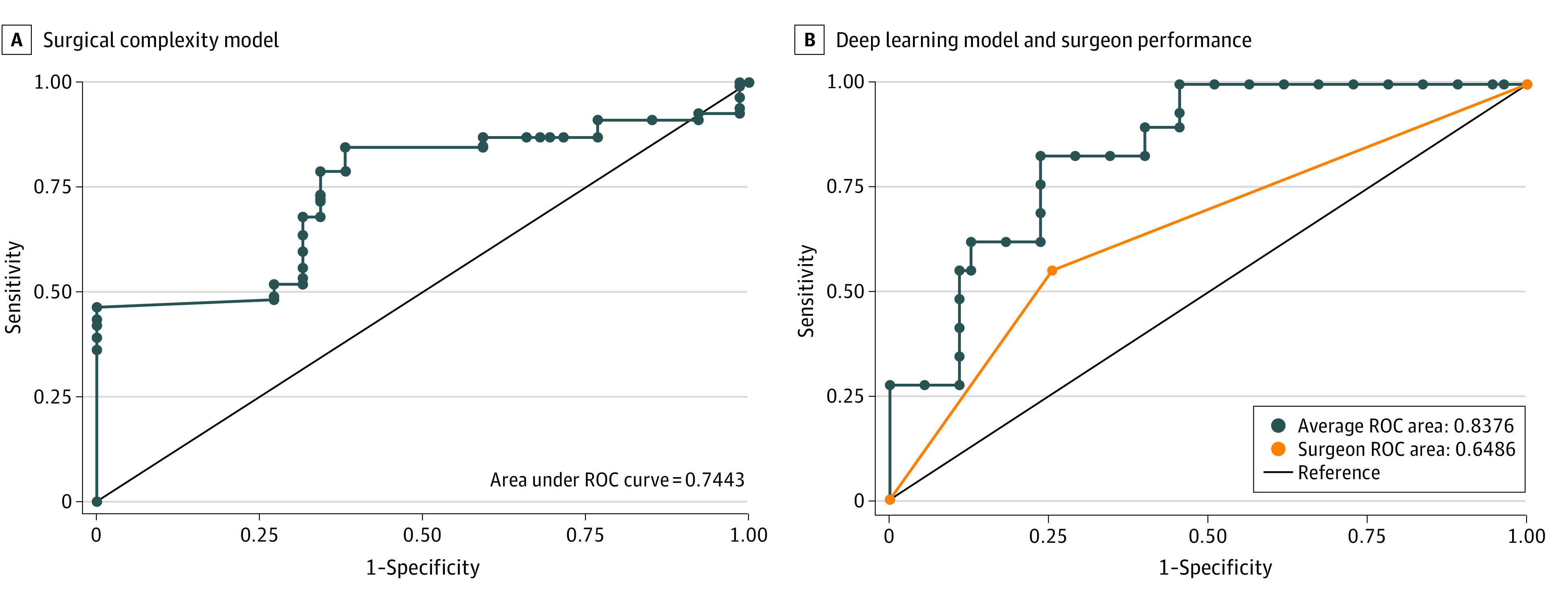

For the development of the surgical complexity model, 327 patients were included (7565 patient images) with 35 patients assigned for the validation set. Seven patients from the original cohort were excluded because of the use of preoperative abdominal wall botulinum toxin injection. Of 327 patients in the surgical complexity model, 97 (29.7%) underwent CST intraoperatively (2829 patient images). Of 66 patients in the test set, 20 (30.3%) underwent CST. The model performed with an ROC of 0.744 (95% CI, 0.718-0.770; P < .001); the ROC is demonstrated in Figure 3A. The model had an accuracy of 76.6% (95% CI, 74.3%-78.9%), sensitivity of 84.5% (95% CI, 82.0%-86.8%), and specificity of 61.9% (95% CI, 57.4%-66.3%).

Figure 3. Model Performance for Surgical Complexity.

A, Surgical complexity model performance compared with a reference receiver operating characteristic curve (ROC) of 0.5 is depicted. Model performance vs reference value: P < .001. B, Deep learning model performance (blue line) and surgeon performance (orange line). The ROC is 0.19 greater for the deep learning model vs surgeon (P < .001).

Validation Set for Surgical Complexity

Surgeon accuracy in predicting complexity based on preoperative CT images was significantly lower than that of the DLM. On the validation set, the DLM performed better than it did on the test set, with an ROC of 0.838 (95% CI, 0.783-0.892; P < .001; Figure 3B). The model had an accuracy of 81.3% (95% CI, 78.0%-84.1%), sensitivity of 88.9% (95% CI, 84.0%-91.4%), and specificity of 73.5% (95% CI, 69.2%-79.0%). The ROC for surgeons was 0.649 (95% CI, 0.582-0.715; P < .001; Figure 3B). For the group of surgeons, the accuracy was 65.0% (95% CI, 58.1%-71.4%), sensitivity was 53.3% (95% CI, 42.5%-63.9%), and specificity was 76.7% (95% CI, 68.1%-83.1%). The accuracy achieved by the individual expert surgeons ranged from 57.2% to 74.3%. Accuracy of the model was significantly higher than that of the surgeons at 81.3% (95% CI, 78.0%-84.1%) vs 65.0% (95% CI, 58.1%-71.4%) (P < .001). A comparison of the outcomes of the AI test set, AI validation set, and surgeon validation set can be found in the Table.

Table. AI and Surgeon Outcomes for Predicting Surgical Complexity.

| Test | ROC (95% CI) | Accuracy, % (95% CI) | Sensitivity, % (95% CI) | Specificity, % (95% CI) |
|---|---|---|---|---|
| AI test set | 0.744 (0.718-0.770) | 76.6 (74.3-78.9) | 84.5 (82.0-86.8) | 61.9 (57.4-66.3) |
| AI validation set | 0.838 (0.783-0.892) | 81.3 (78.0-84.1) | 88.9 (84.0-91.4) | 73.5 (69.2-79.0) |
| Surgeon validation set | 0.649 (0.582-0.715) | 65.0 (58.1-71.4) | 53.3 (42.5-63.9) | 76.7 (68.1-83.1) |

Abbreviations: AI, artificial intelligence; ROC, receiver operating characteristic curve.

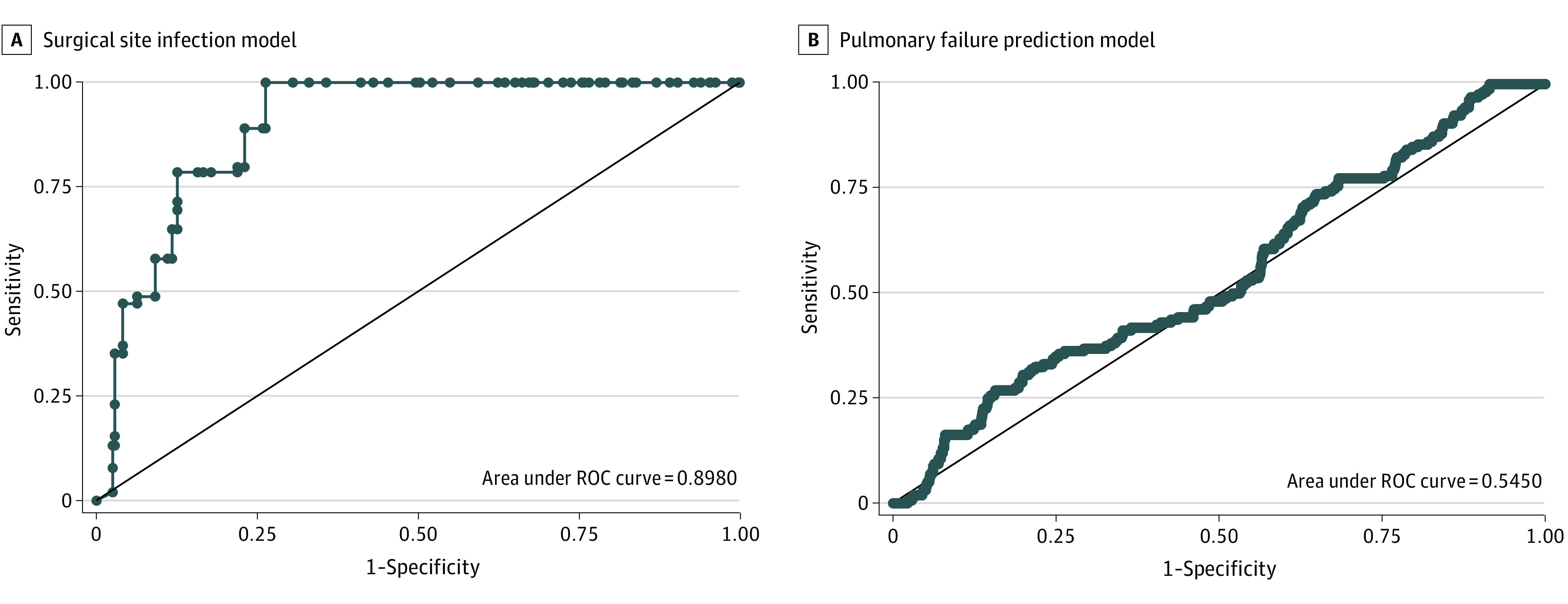

SSI and Pulmonary Failure Models

Overall, 83 of 369 patients (22.5%) had an SSI (2932 images), including 64 deep wound infections and 19 superficial wound infections. Of the deep infections, 1 was a mesh infection; of the superficial infections, 16 were cellulitis and 3 were superficial wound breakdown. In the test set, 17 of 74 patients (23.0%) had an SSI. The SSI model discriminated well between patients who did and did not develop SSI and performed the best of the 3 DLMs developed, with an ROC of 0.898 (95% CI, 0.833-0.912; P < .001; Figure 4A). For the SSI model, the accuracy was 85.2% (95% CI, 83.4%-86.9%), sensitivity was 78.5% (95% CI, 74.2%-82.4%), and specificity was 87.4% (95% CI, 85.4%-89.2%).

Figure 4. Model Performance for Surgical Site Infection and Pulmonary Failure.

A, Surgical site infection model performance compared with a reference receiver operating characteristic curve (ROC) of 0.5 is depicted. Model performance vs reference value: P < .001. B, Pulmonary failure prediction model compared with a reference ROC of 0.5 is depicted. Model performance vs reference value: P = .03.

In contrast, the pulmonary complication model was the least effective of the 3 DLMs developed. Overall, there were 29 of 369 patients (7.9%) who developed significant pulmonary complications (914 images) and 6 of 74 (8.1%) in the test set with such pulmonary complications. The ROC for the pulmonary complications DLM was 0.545 (95% CI, 0.495-0.594; P = .03; Figure 4B). The accuracy was 77.5% (95% CI, 75.3%-79.7%), sensitivity was 26.9% (95% CI, 20.2%-34.5%), and specificity was 84.0% (95% CI, 81.9%-86.0%).
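The combination of a fairly high accuracy with a low sensitivity in the pulmonary model is what one would expect for an infrequent outcome. As a rough check, accuracy is a prevalence-weighted average of sensitivity and specificity, so the fraction of positive images implied by the three reported figures can be back-calculated. The sketch below assumes the reported values are image-level and ignores rounding; the function names are hypothetical.

```python
def implied_positive_fraction(accuracy, sensitivity, specificity):
    """Solve accuracy = f * sensitivity + (1 - f) * specificity for f."""
    if sensitivity == specificity:
        raise ValueError("fraction is not identifiable when sensitivity equals specificity")
    return (specificity - accuracy) / (specificity - sensitivity)

def majority_class_accuracy(positive_fraction):
    """Accuracy of a rule that always predicts the more common class."""
    return max(positive_fraction, 1 - positive_fraction)

# Reported pulmonary failure model figures, expressed as proportions.
fraction = implied_positive_fraction(accuracy=0.775, sensitivity=0.269, specificity=0.840)
baseline = majority_class_accuracy(fraction)
print(f"implied positive fraction: {fraction:.3f}")
print(f"always-negative accuracy:  {baseline:.3f}")
```

Under these assumptions the implied positive fraction is roughly 11%, so a rule that labeled every image negative would reach an accuracy near 89%. This is why the ROC value, rather than accuracy, is the more informative summary for this model.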

Discussion

To our knowledge, the current study is the first to describe the use of DLMs to predict surgical complexity and outcomes using routinely available, objective, preoperative imaging. While DLMs have been created to accurately interpret and diagnose disease states based on CT imaging,1,2,3,4,5 herein we describe the successful use of DLMs to predict intraoperative complexity and postoperative complications. Overall, 2 of 3 DLMs performed well. The DLM for surgical complexity was validated by comparison to 6 surgeons with training, expertise, and clinical specialization in AWR. The accuracy of the DLM was superior to that of AWR surgeons in predicting which patients would require intraoperative CST to achieve complete abdominal fascial closure. While SSI was successfully predicted by the DLM, it was incapable of successfully predicting postoperative pulmonary failure in patients undergoing AWR.

These findings represent a significant innovation in the field of surgical prediction. Most important, the described successful DLMs provide a basis and proof of concept for future research aimed at predicting surgical complexity and outcomes and the ability to bolster an objective risk stratification model based on preoperative imaging. The ability to predict these aftereffects from static, objective, widely available imaging instead of the subjectivity of surgeon judgment can improve preoperative planning, patient-informed consent, resource allocation, overall surgical decision-making, and possibly outcomes. Adding to the importance of the use of such DLMs in AWR is that it is the fifth most common operation performed by general surgeons, and its end result includes a recurrence rate and SSI rate that can reach more than 30%.17,30,31

In the case of ventral hernia repair, DLMs may be able to objectively aid in delineating which patients can have surgery safely and effectively performed in a community hospital vs those who should have the hernia repaired at a specialized hernia referral center.15,17 With the evolution and specialization of hernia management, this concept has emerged to the forefront of the field.32,33,34,35 While some contend that all operations are better performed in specialized centers, the sheer volume of AWR would not allow the indiscriminate transfer of these patients to a limited number of focused surgical specialists and multidisciplinary teams.19,21,25,36,37,38,39,40 Thus, DLMs, if accurate, could objectively and efficiently define this subset of patients, identifying those with high surgical complexity or at high risk of complications. Ultimately, these tools may be able to create an objective AWR tiering system to ensure patients are cared for in a setting that will give them the best outcomes. Additionally, the knowledge of which patients may develop an SSI can allow surgeons to preemptively change operative tactics, such as perform delayed primary closures, use vacuum-assisted skin closure, or make appropriate surgical mesh choices in an attempt to avoid SSIs and improve long-term hernia outcomes.41,42 Additionally, knowledge garnered from DLMs can be used to strengthen the informed consent process.

Previous research has sought to answer such questions in an objective form; however, there remains a great deal of subjective variability or technical complexity. For example, the Carolinas Equation for Determining Associated Risk application identifies patients at risk for wound complications; however, use of this application requires a subjective assessment of whether the patient will require CST and of possible intraoperative complications (eg, enterotomy), which have yet to occur.43 Similarly, Schlosser et al44,45 used CT volumetrics to predict outcomes after hernia repair; however, volumetrics require technical computer skills and specialized software and are highly user dependent. In contrast, our DLMs use only standard CT images and analyze them independently to create a clinical decision support system that maximizes efficiency, limits input complexity, and reduces subjectivity.10,46 The information from such an algorithm should not initially be intended to replace surgical judgment or experience but rather augment the decision-making process in complex clinical scenarios, allowing for improved perioperative planning, informed consent, and better risk stratification.10,47

Existing literature has demonstrated the ability of AI to predict postoperative outcomes based on database analysis. For example, Bihorac et al48 created a machine learning algorithm to evaluate a single-institution database of surgical patients and determine risk of postoperative complications after inpatient surgery. While this model accurately predicted several complications and performed better than the physicians, this supervised machine learning model is highly dependent on human engineering and requires numerous variables as inputs.49 Conversely, in the trauma setting, Dreizin et al50 reported that deep learning-based volumetric analysis of CT imaging successfully predicted the need for intervention in patients with traumatic pelvic hematomas.

Moving forward, this study highlights the strength of image-based DLM prediction tools and lays the groundwork for prospective trials in an effort to create more broadly functional algorithms that can be distributed and tested globally. The inaccuracy of the pulmonary complication DLM may have been predictable given the stringent definition applied and its low overall incidence. A more beneficial model may be one created using a broader consensus definition of pulmonary failure based on assessing outcomes in a prospective fashion.51 Similarly, future iterations of SSI DLMs can use prospectively collected data involving blinded SSI assessors and validated patient questionnaires in an attempt to reduce SSI underreporting, while surgical complexity DLMs can be based on multicenter data to limit surgeon bias.52,53 Finally, with future creation of more accurate image-based algorithms objectively identifying high-risk and complex patients, such algorithms could possibly be used to create an objective AWR tiering system, thereby triaging patients to appropriate levels of care.

Using the concepts demonstrated in this study, the future of surgical prediction modeling is vast. When considering that many elective surgeries, such as hernia repair, are often quality-of-life operations, it may be possible to use this technology to predict which patients may or may not improve functionally or as related to discomfort. Again, this could significantly contribute to the surgical consent process and patient satisfaction. Use of such techniques can lead to broader preoperative risk stratification tools for general surgery patients based on preoperative imaging, serving similar goals as the American College of Surgeons National Surgical Quality Improvement Surgical Risk Calculator.54

Limitations

Several limitations of our study should be considered when interpreting these findings. Because the study used only data from operations performed at a single institution, the findings may reflect institution-specific perioperative decision-making. Similarly, the single-institution approach limits the number of patients and images available, and increasing the sample size could improve model strength for less frequent outcomes, such as pulmonary failure. Both of these limitations can be addressed using the aforementioned multicenter approaches to increase the number of patients and images and to control for practice tendencies. Additionally, given how rapidly AI is evolving, there is significant potential to improve our DLM algorithms using more complex architectures, for example by integrating database analysis into the existing image analysis framework to incorporate patient comorbidities. Inherent limitations also exist within the deep learning method of AI, with the most significant being the inability to decipher what the algorithm finds as important within the images, a concept inherent to all algorithms that use learning methods.8

Conclusions

This study demonstrates that DLMs developed using only preoperative CT imaging inputs may successfully predict surgical complexity and the occurrence of SSIs in patients who underwent AWR. This technology can be used for enhanced preoperative planning and informed consent, while also laying the groundwork for future research involving image-based DLM predictive algorithms and surgical outcomes.

eTable. Baseline Patient Demographics

References

- 1.Gao XW, Hui R, Tian Z. Classification of CT brain images based on deep learning networks. Comput Methods Programs Biomed. 2017;138:49-56. doi: 10.1016/j.cmpb.2016.10.007 [DOI] [PubMed] [Google Scholar]

- 2.Cheng JZ, Ni D, Chou YH, et al. Computer-aided diagnosis with deep learning architecture: applications to breast lesions in US images and pulmonary nodules in CT scans. Sci Rep. 2016;6:24454. doi: 10.1038/srep24454 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Chilamkurthy S, Ghosh R, Tanamala S, et al. Deep learning algorithms for detection of critical findings in head CT scans: a retrospective study. Lancet. 2018;392(10162):2388-2396. doi: 10.1016/S0140-6736(18)31645-3 [DOI] [PubMed] [Google Scholar]

- 4.Anthimopoulos M, Christodoulidis S, Ebner L, Christe A, Mougiakakou S. Lung pattern classification for interstitial lung diseases using a deep convolutional neural network. IEEE Trans Med Imaging. 2016;35(5):1207-1216. doi: 10.1109/TMI.2016.2535865 [DOI] [PubMed] [Google Scholar]

- 5.Caballo M, Pangallo DR, Mann RM, Sechopoulos I. Deep learning-based segmentation of breast masses in dedicated breast CT imaging: radiomic feature stability between radiologists and artificial intelligence. Comput Biol Med. 2020;118:103629. doi: 10.1016/j.compbiomed.2020.103629 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Wang P, Liu X, Berzin TM, et al. Effect of a deep-learning computer-aided detection system on adenoma detection during colonoscopy (CADe-DB trial): a double-blind randomised study. Lancet Gastroenterol Hepatol. 2020;5(4):343-351. doi: 10.1016/S2468-1253(19)30411-X [DOI] [PubMed] [Google Scholar]

- 7.Gong D, Wu L, Zhang J, et al. Detection of colorectal adenomas with a real-time computer-aided system (ENDOANGEL): a randomised controlled study. Lancet Gastroenterol Hepatol. 2020;5(4):352-361. doi: 10.1016/S2468-1253(19)30413-3 [DOI] [PubMed] [Google Scholar]

- 8.Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316(22):2402-2410. doi: 10.1001/jama.2016.17216 [DOI] [PubMed] [Google Scholar]

- 9.Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115-118. doi: 10.1038/nature21056 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Loftus TJ, Tighe PJ, Filiberto AC, et al. Artificial intelligence and surgical decision-making. JAMA Surg. 2020;155(2):148-158. doi: 10.1001/jamasurg.2019.4917 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Kingsnorth A, LeBlanc K. Hernias: inguinal and incisional. Lancet. 2003;362(9395):1561-1571. doi: 10.1016/S0140-6736(03)14746-0 [DOI] [PubMed] [Google Scholar]

- 12.Deerenberg EB, Harlaar JJ, Steyerberg EW, et al. Small bites versus large bites for closure of abdominal midline incisions (STITCH): a double-blind, multicentre, randomised controlled trial. Lancet. 2015;386(10000):1254-1260. doi: 10.1016/S0140-6736(15)60459-7 [DOI] [PubMed] [Google Scholar]

- 13.Jairam AP, Timmermans L, Eker HH, et al. ; PRIMA Trialist Group . Prevention of incisional hernia with prophylactic onlay and sublay mesh reinforcement versus primary suture only in midline laparotomies (PRIMA): 2-year follow-up of a multicentre, double-blind, randomised controlled trial. Lancet. 2017;390(10094):567-576. doi: 10.1016/S0140-6736(17)31332-6 [DOI] [PubMed] [Google Scholar]

- 14.Bosanquet DC, Ansell J, Abdelrahman T, et al. Systematic review and meta-regression of factors affecting midline incisional hernia rates: analysis of 14,618 patients. PLoS One. 2015;10(9):e0138745. doi: 10.1371/journal.pone.0138745 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Poulose BK, Shelton J, Phillips S, et al. Epidemiology and cost of ventral hernia repair: making the case for hernia research. Hernia. 2012;16(2):179-183. doi: 10.1007/s10029-011-0879-9 [DOI] [PubMed] [Google Scholar]

- 16. van Ramshorst GH, Eker HH, Hop WCJ, Jeekel J, Lange JF. Impact of incisional hernia on health-related quality of life and body image: a prospective cohort study. Am J Surg. 2012;204(2):144-150. doi: 10.1016/j.amjsurg.2012.01.012

- 17. Holihan JL, Alawadi Z, Martindale RG, et al. Adverse events after ventral hernia repair: the vicious cycle of complications. J Am Coll Surg. 2015;221(2):478-485. doi: 10.1016/j.jamcollsurg.2015.04.026

- 18. Holihan JL, Askenasy EP, Greenberg JA, et al; Ventral Hernia Outcome Collaboration Writing Group. Component separation vs. bridged repair for large ventral hernias: a multi-institutional risk-adjusted comparison, systematic review, and meta-analysis. Surg Infect (Larchmt). 2016;17(1):17-26. doi: 10.1089/sur.2015.124

- 19. Maloney SR, Schlosser KA, Prasad T, et al. Twelve years of component separation technique in abdominal wall reconstruction. Surgery. 2019;166(4):435-444. doi: 10.1016/j.surg.2019.05.043

- 20. Booth JH, Garvey PB, Baumann DP, et al. Primary fascial closure with mesh reinforcement is superior to bridged mesh repair for abdominal wall reconstruction. J Am Coll Surg. 2013;217(6):999-1009. doi: 10.1016/j.jamcollsurg.2013.08.015

- 21. Heniford BT, Ross SW, Wormer BA, et al. Preperitoneal ventral hernia repair: a decade long prospective observational study with analysis of 1023 patient outcomes. Ann Surg. 2020;271(2):364-374. doi: 10.1097/SLA.0000000000002966

- 22. Bernardi K, Adrales GL, Hope WW, et al; Ventral Hernia Outcomes Collaborative Writing Group. Abdominal wall reconstruction risk stratification tools: a systematic review of the literature. Plast Reconstr Surg. 2018;142(3)(suppl):9S-20S. doi: 10.1097/PRS.0000000000004833

- 23. Kanters AE, Krpata DM, Blatnik JA, Novitsky YM, Rosen MJ. Modified hernia grading scale to stratify surgical site occurrence after open ventral hernia repairs. J Am Coll Surg. 2012;215(6):787-793. doi: 10.1016/j.jamcollsurg.2012.08.012

- 24. Collins GS, Reitsma JB, Altman DG, Moons KGM. Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD): the TRIPOD Statement. Br J Surg. 2015;102(3):148-158. doi: 10.1002/bjs.9736

- 25. Deerenberg EB, Elhage SA, Raible RJ, et al. Image-guided botulinum toxin injection in the lateral abdominal wall prior to abdominal wall reconstruction surgery: review of techniques and results. Skeletal Radiol. 2021;50(1):1-7. doi: 10.1007/s00256-020-03533-6

- 26. Ban KA, Minei JP, Laronga C, et al. American College of Surgeons and Surgical Infection Society: surgical site infection guidelines, 2016 update. J Am Coll Surg. 2017;224(1):59-74. doi: 10.1016/j.jamcollsurg.2016.10.029

- 27. Holder CJ, Breckon TP, Wei X. From on-road to off: transfer learning within a deep convolutional neural network for segmentation and classification of off-road scenes. In: Hua G, Jégou H, eds. Computer Vision – ECCV 2016 Workshops. Springer Verlag; 2016:149-162. doi: 10.1007/978-3-319-46604-0_11

- 28. Zhang C, Bengio S, Hardt M, Recht B, Vinyals O. Understanding deep learning requires rethinking generalization. arXiv. Preprint posted online November 10, 2016. https://arxiv.org/abs/1611.03530

- 29. Gal Y, Ghahramani Z. A theoretically grounded application of dropout in recurrent neural networks. arXiv. Preprint posted online December 16, 2015. https://arxiv.org/abs/1512.05287

- 30. Decker MR, Dodgion CM, Kwok AC, et al. Specialization and the current practices of general surgeons. J Am Coll Surg. 2014;218(1):8-15. doi: 10.1016/j.jamcollsurg.2013.08.016

- 31. Carbonell AM, Criss CN, Cobb WS, Novitsky YW, Rosen MJ. Outcomes of synthetic mesh in contaminated ventral hernia repairs. J Am Coll Surg. 2013;217(6):991-998. doi: 10.1016/j.jamcollsurg.2013.07.382

- 32. Raigani S, De Silva GS, Criss CN, Novitsky YW, Rosen MJ. The impact of developing a comprehensive hernia center on the referral patterns and complexity of hernia care. Hernia. 2014;18(5):625-630. doi: 10.1007/s10029-014-1279-8

- 33. Schlosser KA, Arnold MR, Kao AM, Augenstein VA, Heniford BT. Building a multidisciplinary hospital-based abdominal wall reconstruction program: nuts and bolts. Plast Reconstr Surg. 2018;142(3)(suppl):201S-208S. doi: 10.1097/PRS.0000000000004879

- 34. Williams KB, Belyansky I, Dacey KT, et al. Impact of the establishment of a specialty hernia referral center. Surg Innov. 2014;21(6):572-579. doi: 10.1177/1553350614528579

- 35. Shao JM, Deerenberg EB, Elhage SA, et al. Recurrent incisional hernia repairs at a tertiary hernia center: are outcomes really inferior to initial repairs? Surgery. 2021;169(3):580-585. doi: 10.1016/j.surg.2020.10.009

- 36. Köckerling F, Sheen AJ, Berrevoet F, et al. The reality of general surgery training and increased complexity of abdominal wall hernia surgery. Hernia. 2019;23(6):1081-1091. doi: 10.1007/s10029-019-02062-z

- 37. Carbonell AM, Warren JA, Prabhu AS, et al. Reducing length of stay using a robotic-assisted approach for retromuscular ventral hernia repair: a comparative analysis from the Americas Hernia Society Quality Collaborative. Ann Surg. 2018;267(2):210-217. doi: 10.1097/SLA.0000000000002244

- 38. Fong Y, Gonen M, Rubin D, et al. Long-term survival is superior after resection for cancer in high-volume centers. Ann Surg. 2005;242(4):540-544. doi: 10.1097/01.sla.0000184190.20289.4b

- 39. Birkmeyer JD, Siewers AE, Finlayson EVA, et al. Hospital volume and surgical mortality in the United States. N Engl J Med. 2002;346(15):1128-1137. doi: 10.1056/NEJMsa012337

- 40. Evans M. A new factor when choosing a surgeon. The Wall Street Journal. Published September 19, 2016. Accessed November 29, 2020. https://www.wsj.com/articles/a-new-factor-when-choosing-a-surgeon-1474301023

- 41. Kao AM, Arnold MR, Augenstein VA, Heniford BT. Prevention and treatment strategies for mesh infection in abdominal wall reconstruction. Plast Reconstr Surg. 2018;142(3)(suppl):149S-155S. doi: 10.1097/PRS.0000000000004871

- 42. Bueno-Lledó J, Franco-Bernal A, Garcia-Voz-Mediano MT, Torregrosa-Gallud A, Bonafé S. Prophylactic single-use negative pressure dressing in closed surgical wounds after incisional hernia repair: a randomized, controlled trial. Ann Surg. 2021;273(6):1081-1086. doi: 10.1097/sla.0000000000004310

- 43. Augenstein VA, Colavita PD, Wormer BA, et al. CeDAR: Carolinas equation for determining associated risks. J Am Coll Surg. 2015;221(4):S65-S66. doi: 10.1016/j.jamcollsurg.2015.07.145

- 44. Schlosser KA, Maloney SR, Prasad T, Colavita PD, Augenstein VA, Heniford BT. Three-dimensional hernia analysis: the impact of size on surgical outcomes. Surg Endosc. 2020;34(4):1795-1801. doi: 10.1007/s00464-019-06931-7

- 45. Schlosser KA, Maloney SR, Prasad T, Colavita PD, Augenstein VA, Heniford BT. Too big to breathe: predictors of respiratory failure and insufficiency after open ventral hernia repair. Surg Endosc. 2020;34(9):4131-4139. doi: 10.1007/s00464-019-07181-3

- 46. Shortliffe EH, Sepúlveda MJ. Clinical decision support in the era of artificial intelligence. JAMA. 2018;320(21):2199-2200. doi: 10.1001/jama.2018.17163

- 47. Nundy S, Montgomery T, Wachter RM. Promoting trust between patients and physicians in the era of artificial intelligence. JAMA. 2019;322(6):497-498. doi: 10.1001/jama.2018.20563

- 48. Bihorac A, Ozrazgat-Baslanti T, Ebadi A, et al. MySurgeryRisk: development and validation of a machine-learning risk algorithm for major complications and death after surgery. Ann Surg. 2019;269(4):652-662. doi: 10.1097/SLA.0000000000002706

- 49. Brennan M, Puri S, Ozrazgat-Baslanti T, et al. Comparing clinical judgment with the MySurgeryRisk algorithm for preoperative risk assessment: a pilot usability study. Surgery. 2019;165(5):1035-1045. doi: 10.1016/j.surg.2019.01.002

- 50. Dreizin D, Zhou Y, Chen T, et al. Deep learning-based quantitative visualization and measurement of extraperitoneal hematoma volumes in patients with pelvic fractures: potential role in personalized forecasting and decision support. J Trauma Acute Care Surg. 2020;88(3):425-433. doi: 10.1097/TA.0000000000002566

- 51. Abbott TEF, Fowler AJ, Pelosi P, et al; StEP-COMPAC Group. A systematic review and consensus definitions for standardised end-points in perioperative medicine: pulmonary complications. Br J Anaesth. 2018;120(5):1066-1079. doi: 10.1016/j.bja.2018.02.007

- 52. Macefield R, Blazeby J, Reeves B, et al; Bluebelle Study Group. Validation of the Bluebelle Wound Healing Questionnaire for assessment of surgical-site infection in closed primary wounds after hospital discharge. Br J Surg. 2019;106(3):226-235. doi: 10.1002/bjs.11008

- 53. Matthews JH, Bhanderi S, Chapman SJ, Nepogodiev D, Pinkney T, Bhangu A. Underreporting of secondary endpoints in randomized trials: cross-sectional, observational study. Ann Surg. 2016;264(6):982-986. doi: 10.1097/SLA.0000000000001573

- 54. Bilimoria KY, Liu Y, Paruch JL, et al. Development and evaluation of the universal ACS NSQIP surgical risk calculator: a decision aid and informed consent tool for patients and surgeons. J Am Coll Surg. 2013;217(5):833-42.e1, 3. doi: 10.1016/j.jamcollsurg.2013.07.385

Associated Data

Supplementary Materials

eTable. Baseline Patient Demographics