Abstract

This article reviews deep learning applications in biomedical optics with a particular emphasis on image formation. The review is organized by imaging domains within biomedical optics and includes microscopy, fluorescence lifetime imaging, in vivo microscopy, widefield endoscopy, optical coherence tomography, photoacoustic imaging, diffuse tomography, and functional optical brain imaging. For each of these domains, we summarize how deep learning has been applied and highlight methods by which deep learning can enable new capabilities for optics in medicine. Challenges and opportunities to improve translation and adoption of deep learning in biomedical optics are also summarized.

Keywords: biomedical optics, biophotonics, deep learning, machine learning, computer aided detection, microscopy, fluorescence lifetime, in vivo microscopy, optical coherence tomography, photoacoustic imaging, diffuse tomography, functional optical brain imaging, widefield endoscopy

INTRODUCTION

Biomedical optics is the study of biological light-matter interactions with the overarching goal of developing sensing platforms that can aid in diagnostic, therapeutic, and surgical applications [1]. Within this large and active field of research, novel systems are continually being developed to exploit unique light-matter interactions that provide clinically useful signatures. These systems face inherent trade-offs in signal-to-noise ratio (SNR), acquisition speed, spatial resolution, field of view (FOV), and depth of field (DOF). These trade-offs affect the cost, performance, feasibility, and overall impact of clinical systems. The role of biomedical optics developers is to design systems which optimize or ideally overcome these trade-offs in order to appropriately meet a clinical need.

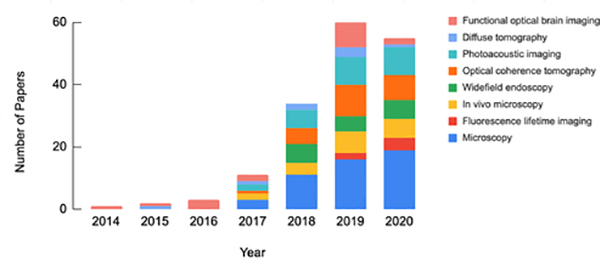

In the past few decades, biomedical optics system design, image formation, and image analysis have primarily been guided by classical physical modeling and signal processing methodologies. Recently, however, deep learning (DL) has become a major paradigm in computational modeling and demonstrated utility in numerous scientific domains and various forms of data analysis [2, 3]. As a result, DL is increasingly being utilized within biomedical optics as a data-driven approach to perform image processing tasks, solve inverse problems for image reconstruction, and provide automated interpretation of downstream images. This trend is highlighted in Fig. 1, which summarizes the articles reviewed in this paper stratified by publication year and image domain.

Fig 1:

Number of reviewed research papers which utilize DL in biomedical optics stratified by year and imaging domain.

This review focuses on the use of DL in the design and translation of novel biomedical optics systems. While image formation is the main focus of this review, DL has also been widely applied to the interpretation of downstream images, as summarized in other review articles [4, 5]. This review is organized as follows. First, a brief introduction to DL is provided by answering a set of questions related to the topic and defining key terms and concepts pertaining to the articles discussed throughout this review. Next, recent original research in the following eight optics-related imaging domains is summarized: (1) microscopy, (2) fluorescence lifetime imaging, (3) in vivo microscopy, (4) widefield endoscopy, (5) optical coherence tomography, (6) photoacoustic imaging, (7) diffuse tomography, and (8) functional optical brain imaging. Within each domain, state-of-the-art approaches which can enable new functionality for optical systems are highlighted. We then offer our perspectives on the challenges and opportunities across these eight imaging domains. Finally, we provide a summary and outlook of areas in which DL can contribute to the future development and clinical impact of biomedical optics.

DEEP LEARNING OVERVIEW

What is deep learning?

To define DL, it is helpful to start by defining machine learning (ML), as the two are closely related in their historical development and share many commonalities in their practical application. ML is the study of algorithms and statistical models which computer systems use to progressively improve their performance on a specified task [6]. To ensure the development of generalizable models, ML is commonly broken into two phases: training and testing. The purpose of the training phase is to actively update model parameters to make increasingly accurate predictions on the data, whereas the purpose of testing is to simulate a prospective evaluation of the model on future data.
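The separation between training and testing can be illustrated with a minimal sketch. The function name `train_test_split`, the 80/20 ratio, and the fixed seed are assumptions chosen for illustration and are not taken from any reviewed work; the key point is only that the held-out samples never influence the parameter updates.

```python
import random

def train_test_split(samples, test_fraction=0.2, seed=0):
    """Shuffle the samples and hold out a fraction for testing (illustrative helper)."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be strictly between 0 and 1")
    shuffled = list(samples)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    # The first n_test shuffled samples are reserved for testing only.
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical data set of 100 sample indices.
train_set, test_set = train_test_split(range(100), test_fraction=0.2)
```

In biomedical data sets, the split is often made per patient or per specimen rather than per image so that correlated samples do not appear in both sets; the sketch above does not handle that case.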

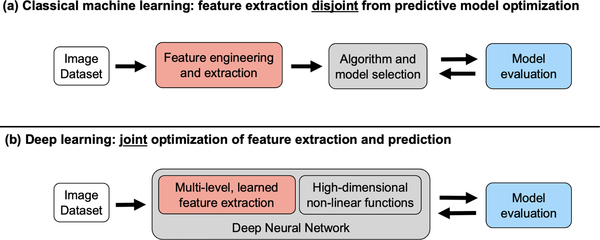

In this context, DL can be considered a subset of ML, as it is one of many heuristics for development and optimization of predictive, task-specific models [7]. In practice, DL is primarily distinguished from classical ML by the details of the underlying computational models and optimization techniques utilized. Classical ML techniques rely on careful development of task-specific image analysis features, an approach commonly referred to as “feature engineering” (Fig. 2(a)). Such approaches typically require extensive manual tuning and therefore have limited generalizability. In contrast, DL applies an “end-to-end” data-driven optimization (or “learning”) of both feature representations and model predictions [2] (Fig. 2(b)). This is achieved through training a general and versatile type of computational model, termed a deep neural network (DNN).

Fig 2:

(a) Classical machine learning uses engineered features and a model. (b) Deep learning uses learned features and predictors in an “end-to-end” deep neural network.

DNNs are composed of multiple layers which are connected through computational operations between layers, including linear weights and nonlinear “activation” functions. Thereby, each layer contains a unique feature representation of the input data. By using several layers, the model can account for both low-level and high-level representations. In the case of images, low-level representations could be textures and edges of the objects, whereas higher level representations would be object-like compositions of those features. The joint optimization of both feature representation at multiple levels of abstraction and predictive model parameters is what makes DNNs so powerful.
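The layered structure described above can be sketched in a few lines. This is a toy example under stated assumptions: the weights, biases, and input values are arbitrary numbers chosen for illustration, and ReLU is used as the activation on all but the last layer. Each entry of the returned list is the feature representation held by one layer.

```python
def relu(values):
    """Nonlinear activation: negative entries are set to zero."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """Linear layer; weights[j][i] connects input i to output j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(x, layers):
    """Return the representation at every layer, starting with the input."""
    activations = [x]
    for idx, (weights, biases) in enumerate(layers):
        z = dense(activations[-1], weights, biases)
        is_last = idx == len(layers) - 1
        # The final layer is left linear so it can output any real value.
        activations.append(z if is_last else relu(z))
    return activations

# Hypothetical parameters: a 2 -> 2 hidden layer followed by a 2 -> 1 output layer.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]),
    ([[1.0, 2.0]], [0.1]),
]
features = forward([2.0, 1.0], layers)
```

In a trained DNN, the weights and biases would be learned from data rather than set by hand.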

How is deep learning implemented?

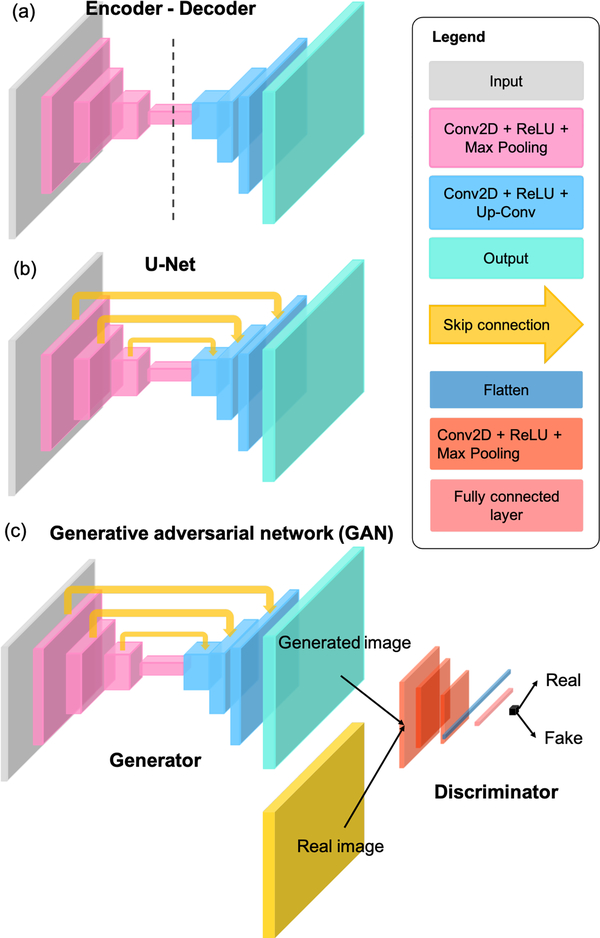

The majority of the existing DL models in biomedical optics are implemented using the supervised learning strategy. At a high level, there are three primary components needed to implement a supervised DL model: 1) labeled data, 2) model architecture, and 3) optimization strategy. Labeled data consist of the raw data inputs as well as the desired model output. Large amounts of labeled data are often needed for effective model optimization. This requirement is currently one of the main challenges for utilizing DL on small-scale biomedical data sets, although strategies to overcome this are an active topic in the literature, such as unsupervised [8], self-supervised [9], and semi-supervised learning [10]. For a typical end-to-end DL model, the model architecture defines the hypothesis class and how hierarchical information flows between each layer of the DNN. The selection of a DNN architecture depends on the desired task and is often determined empirically through comparison of various state-of-the-art architectures. Three of the most widely used DNN architectures in current biomedical optics literature are illustrated in Fig. 3.

Fig 3:

Three of the most commonly-used DNN architectures in biomedical optics: (a) Encoder-decoder, (b) U-Net, and (c) GAN.

The encoder-decoder network [11] shown in Fig. 3(a) aims to establish a mapping between the input and output images using a nearly symmetric structure with a contracting “encoder” path and an expanding “decoder” path. The encoder consists of several convolutional blocks, each followed by a down-sampling layer for reducing the spatial dimension. Each convolutional block consists of several convolutional layers (Conv2D) that stack the processed features along the last dimension, and each layer is followed by a nonlinear activation function, e.g. the Rectified Linear Unit (ReLU). The intermediate output from the encoder has a small spatial dimension but encodes rich information along the last dimension. These low-resolution “activation maps” go through the decoder, which consists of several additional convolutional blocks, each connected by an upsampling convolutional (Up-Conv) layer for increasing the spatial dimension. The output of the network typically has the same dimension as the input image.
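The contracting and expanding paths can be made concrete by tracking feature-map shapes through the network. The sketch below makes two assumptions that are common but not mandated by the text: each down-sampling step halves the spatial size, and the number of feature channels doubles at each encoder level. The function name and default values are hypothetical.

```python
def encoder_decoder_shapes(height, width, base_channels=16, depth=3, out_channels=1):
    """List (name, height, width, channels) for each stage of a symmetric encoder-decoder."""
    if height % (2 ** depth) or width % (2 ** depth):
        raise ValueError("height and width must be divisible by 2**depth")
    shapes = []
    h, w, c = height, width, base_channels
    for level in range(depth):
        shapes.append((f"enc{level}", h, w, c))
        # Down-sampling: spatial size halves, channel count doubles (assumption).
        h, w, c = h // 2, w // 2, c * 2
    shapes.append(("bottleneck", h, w, c))
    for level in reversed(range(depth)):
        # Up-sampling mirrors the encoder.
        h, w, c = h * 2, w * 2, c // 2
        shapes.append((f"dec{level}", h, w, c))
    # A final layer maps the features to the desired number of output channels.
    shapes.append(("output", h, w, out_channels))
    return shapes

shapes = encoder_decoder_shapes(256, 256)
```

For a 256 × 256 input with these defaults, the bottleneck is 32 × 32 spatially with 128 channels, and the output returns to 256 × 256.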

The U-Net [12] architecture shown in Fig. 3(b) can be thought of as an extension to the encoder-decoder network. It introduces additional “skip connections” between the encoder and decoder paths so that information across different spatial scales can be efficiently tunneled through the network, which has been shown to be particularly effective at preserving high-resolution spatial information [12].
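In the original U-Net, a skip connection concatenates an encoder feature map with the decoder feature map of the same spatial size along the channel dimension. A minimal sketch of that operation on nested lists is given below; the helper name `concat_channels` and the toy feature values are assumptions for illustration, and deep learning frameworks provide an equivalent tensor operation.

```python
def concat_channels(decoder_map, encoder_map):
    """Concatenate two H x W x C feature maps along the channel (last) axis."""
    if len(decoder_map) != len(encoder_map):
        raise ValueError("feature maps must have the same height")
    merged = []
    for dec_row, enc_row in zip(decoder_map, encoder_map):
        if len(dec_row) != len(enc_row):
            raise ValueError("feature maps must have the same width")
        # Each pixel is a list of channel values; list addition appends channels.
        merged.append([dec_px + enc_px for dec_px, enc_px in zip(dec_row, enc_row)])
    return merged

# Toy 2 x 2 maps: one decoder channel and two encoder channels per pixel.
decoder_map = [[[0.1], [0.2]], [[0.3], [0.4]]]
encoder_map = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]
merged = concat_channels(decoder_map, encoder_map)
```

The merged map keeps the spatial size and carries three channels per pixel, so the following decoder block has direct access to the fine-scale encoder features.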

The generative adversarial network (GAN) [13] shown in Fig. 3(c) is a general framework that involves adversarially training a pair of networks, the “generator” and the “discriminator”. The basic idea is to train the generator to make high-quality image predictions that are indistinguishable from real images of the same class (e.g. H&E stained lung tissue slices). To do so, the discriminator is trained to classify the generator’s output as fake, while the generator is trained to fool the discriminator. These alternating training steps iterate until convergence, at which point the discriminator can hardly distinguish whether the images produced by the generator are fake or real. When applied to biomedical optics techniques, the generator is often implemented by the U-Net. The discriminator is often implemented using an image classification network. The input image is first processed by several convolutional blocks and downsampling layers to extract high-level 2D features. These 2D features are then “flattened” to a 1D vector, which is then processed by several fully connected layers to perform additional feature synthesis and make the final classification.
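The two competing objectives can be written down compactly. The sketch below uses the binary cross-entropy formulation with the commonly used “non-saturating” generator loss; this is one standard variant and is an assumption here, since the reviewed works use a range of adversarial losses. The inputs are the discriminator's output probabilities that an image is real.

```python
import math

def bce(prob, label):
    """Binary cross-entropy for one prediction; prob is clipped to avoid log(0)."""
    eps = 1e-12
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1.0 - label) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """Discriminator wants real images scored as 1 and generated images as 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)

def generator_loss(d_fake):
    """Generator wants its generated images to be scored as real (label 1)."""
    return bce(d_fake, 1.0)

# When the discriminator cannot tell real from fake, it outputs 0.5 for both.
loss_at_equilibrium = discriminator_loss(0.5, 0.5)
```

In training, one would alternate between a gradient step that lowers `discriminator_loss` and a step that lowers `generator_loss`, each updating only the corresponding network.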

Once labeled data and model architecture have been determined, optimization of model parameters can be undertaken. Optimization strategy includes two aspects: 1) cost function, and 2) training algorithm. Definition of a cost function (a.k.a. objective function, error, or loss function) is needed to assess the accuracy of model predictions relative to the desired output and provide guidance to adjust model parameters. The training algorithm iteratively updates the model parameters to improve model accuracy. This training process is generally achieved by solving an optimization problem using variants of the gradient descent algorithm, e.g. stochastic gradient descent and Adam [14]. The optimizer utilizes the gradient of the cost function to update each layer of the DNN through the principle of “error backpropagation” [15]. Given labeled data and a model architecture, the optimization guides the model parameters towards a local minimum of the cost function, thereby optimizing model performance.
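The interplay of cost function and training algorithm can be sketched on the smallest possible model. The example below fits a single linear unit with a mean-squared-error cost using plain (full-batch) gradient descent; the data, learning rate, and step count are arbitrary assumptions. For a DNN, the same update rule applies, with backpropagation supplying the gradient for every layer via the chain rule.

```python
def train_linear(xs, ys, lr=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction errors under the current parameters.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the cost (1/n) * sum(error**2) with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to reduce the cost.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Toy labeled data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = train_linear(xs, ys)
```

Stochastic gradient descent differs only in that each step estimates the gradient from a random subset (mini-batch) of the data; Adam additionally adapts the step size per parameter.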

With the recent success of DL, several software frameworks have been developed to enable easier creation and optimization of DNNs. Many of the major technology companies have been active in this area. Two of the front-runners are TensorFlow and PyTorch, which are open-source frameworks published and maintained by Google and Facebook, respectively [16, 17]. Both frameworks enable easy construction of custom DNN models, with efficient parallelization of DNN optimization over high-performance graphics processing units (GPUs). These frameworks have enabled non-experts to train and deploy DNNs and have played a large role in the spread of DL research into many new applications, including the field of biomedical optics.

What is deep learning used for in biomedical optics?

There are two predominant tasks for which DL has been utilized in biomedical optics: 1) image formation and 2) image interpretation. Both are important applications; however, image formation is a more central focus of biomedical optics researchers and consequently is the focus of this review.

With regard to image formation, DL has proven very useful for approximating the inverse function of an imaging model in order to improve the quality of image reconstructions. Classical reconstruction techniques are built on physical models with explicit analytical formulations. To efficiently compute the inverse of these analytical models, approximations are often needed to simplify the problem, e.g. linearization. Instead, DL methods have been shown to be very effective at directly “learning” an inverse model, in the form of a DNN, from training input and output pairs. In practice, this has opened up novel opportunities to perform image formation in settings where an analytical model would otherwise be difficult to formulate. In addition, the directly learned DL inverse model can often better approximate the inverse function, which in turn leads to improved image quality, as shown in several imaging domains in this review.

Secondly, DL has been widely applied for modeling image priors for solving inverse problems across multiple imaging domains. Most image reconstruction problems are inherently ill-posed in that the useful reconstructed image signal can be overwhelmed by noise if a direct inversion is implemented. Classical image reconstruction techniques rely on regularization using parametric priors for incorporating features of the expected image. Although widely used, such models severely limit the type of features that can be effectively modeled, which in turn limits the reconstruction quality. DL-based reconstruction bypasses this limitation and does not rely on explicit parameterization of image features, but instead represents priors in the form of a DNN which is optimized (or “learned”) from a large data set of the same type as the object of interest (e.g. endoscopic images of esophagus). By doing so, DL enables better quality reconstructions.
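The noise amplification of direct inversion, and the effect of a simple parametric prior, can be seen in a toy problem. The sketch below assumes a diagonal forward model (each unknown is scaled by a known gain and corrupted by additive noise) and a quadratic (Tikhonov) penalty as the prior; the gains, noise values, and regularization weight are arbitrary choices for illustration and do not correspond to any specific imaging system.

```python
def direct_inverse(y, a):
    """Invert y_i = a_i * x_i by division; noise is amplified by 1 / a_i."""
    return [yi / ai for yi, ai in zip(y, a)]

def tikhonov_inverse(y, a, lam):
    """Per-component minimizer of (a_i * x_i - y_i)**2 + lam * x_i**2."""
    return [ai * yi / (ai * ai + lam) for yi, ai in zip(y, a)]

def squared_error(estimate, truth):
    return sum((e - t) ** 2 for e, t in zip(estimate, truth))

a = [1.0, 0.5, 0.01]           # the last component is barely measured
x_true = [1.0, 1.0, 1.0]
noise = [0.02, -0.02, 0.02]    # small, fixed measurement noise
y = [ai * xi + ni for ai, xi, ni in zip(a, x_true, noise)]

err_direct = squared_error(direct_inverse(y, a), x_true)
err_tikhonov = squared_error(tikhonov_inverse(y, a, lam=0.01), x_true)
```

The regularized estimate has a lower total error here because it suppresses the poorly measured component instead of amplifying its noise, but it does so by pulling that component towards zero. This bias illustrates why a simple quadratic prior limits reconstruction quality and why priors learned from representative data are attractive.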

Beyond achieving higher quality reconstructions, there are other practical benefits of DNNs in image formation. Classical inversion algorithms typically require an iterative process that can take minutes to hours to compute. Furthermore, they have stringent sampling requirements, which if lessened, make the inversion severely ill-posed. Due to more robust “learned” priors, DL-based techniques can accommodate highly incomplete or undersampled inputs while still providing high-quality reconstructions. Additionally, although DNNs typically require large datasets for training, the resulting models are capable of producing results in real time with a GPU. These combined capabilities allow DL-based techniques to bypass physical trade-offs (e.g., acquisition speed and imaging quality) and enable novel capabilities beyond existing solutions.

By leveraging these unique capabilities of DL methods, innovative techniques have been broadly reported across many imaging domains in biomedical optics. Examples include improving imaging performance, enabling new imaging functionalities, extracting quantitative microscopic information, and discovering new biomarkers. These and other technical developments have the potential to significantly reduce system complexity and cost, and may ultimately improve the quality, affordability, and accessibility of biomedical imaging in health care.

DEEP LEARNING APPLICATIONS IN BIOMEDICAL OPTICS

Microscopy

Overview.

Microscopy is broadly used in biomedical and clinical applications to capture cellular and tissue structures based on intrinsic (e.g. scattering, phase, and autofluorescence) or exogenous contrast (e.g. stains and fluorescent labels). Fundamental challenges exist in all forms of microscopy because of the limited information that can be extracted from the instrument. Broadly, the limitations can be categorized based on two main sources of origin. The first class is due to the physical tradeoffs between multiple competing performance parameters, such as SNR, acquisition speed, spatial resolution, FOV, and DOF. The second class is from the intrinsic sensitivity and specificity of different contrast mechanisms. DL-augmented microscopy is a fast-growing area that aims to overcome various aspects of conventional limitations by synergistically combining novel instrumentation and DL-based computational enhancement. This section focuses on DL strategies for bypassing the physical tradeoffs and augmenting the contrast in different microscopy modalities.

Overcoming physical tradeoffs.

An ideal microscopy technique often needs to satisfy several requirements, such as high resolution in order to resolve the small features in the sample, low light exposure to minimize photo-damage, and a wide FOV in order to capture information from a large portion of the sample. Traditional microscopy is fundamentally limited by the intrinsic tradeoffs between various competing imaging attributes. For example, a short light exposure reduces the SNR; a high spatial resolution requires a high-magnification objective lens that provides a small FOV and shallow DOF. This section summarizes recent achievements in leveraging DL strategies to overcome various physical tradeoffs and expand the imaging capabilities.

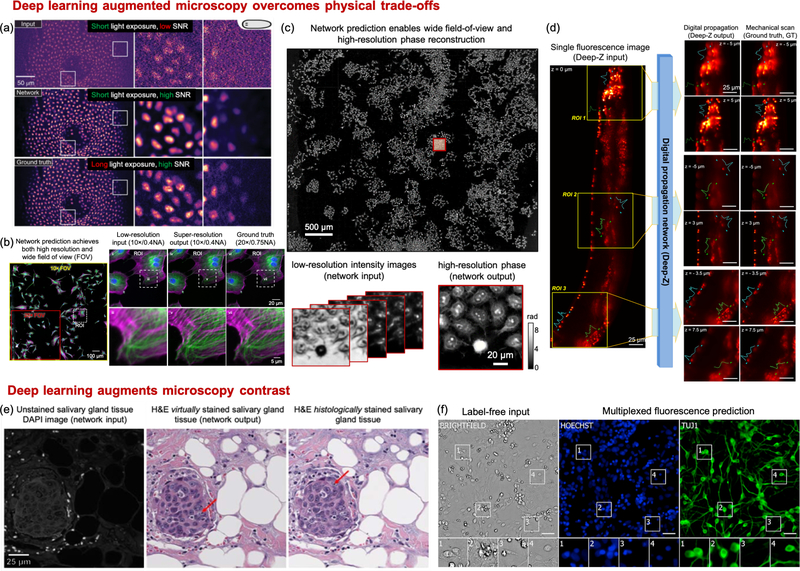

Denoising. Enhancing microscopy images by DL-based denoising has been exploited to overcome the tradeoffs between light exposure, SNR, and imaging speed, which in turn alleviates photo-bleaching and photo-toxicity. The general strategy is to train a supervised network that takes a noisy image as the input and produces the SNR-enhanced image output. Weigert et al. [18] demonstrated a practical training strategy of a U-Net on experimental microscopy data that involves taking paired images with low and high light exposures as the noisy input and high-SNR output of the network (Fig. 4(a)). This work showed that the DNN can restore the same high-SNR images with 60-fold fewer photons used during the acquisition. Similar strategies have been applied to several microscopy modalities, including widefield, confocal, light-sheet [18], structured illumination [24], and multi-photon microscopy [25].
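To give a sense of scale for the photon budget quoted above, the sketch below assumes shot-noise-limited (Poisson) detection, in which the SNR of a pixel equals the square root of its mean photon count. Under that assumption, which ignores read noise and background, a 60-fold reduction in photons lowers the raw SNR by a factor of about 7.7, and this is the gap the network must bridge. The photon counts used are hypothetical.

```python
import math

def shot_noise_snr(mean_photons):
    """SNR of a Poisson-distributed count: mean divided by standard deviation."""
    if mean_photons <= 0:
        raise ValueError("mean_photons must be positive")
    return mean_photons / math.sqrt(mean_photons)

# Hypothetical per-pixel photon counts for the high- and low-exposure images.
high_exposure_photons = 6000.0
low_exposure_photons = high_exposure_photons / 60.0

snr_ratio = shot_noise_snr(high_exposure_photons) / shot_noise_snr(low_exposure_photons)
```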

Image reconstruction. Beyond denoising, the imaging capabilities of several microscopy techniques can be greatly expanded by performing image reconstruction. To perform reconstruction by DL, the common framework is to train a DNN, such as the U-Net or GAN, that takes the raw measurements as the input and produces the reconstructed image as the output. With this DL framework, three major benefits have been demonstrated. First, Wang et al. showed that GAN-based super-resolution reconstruction allows recovering high-resolution information from low-resolution measurements, which in turn provides an enlarged FOV and an extended DOF [19] (Fig. 4(b)). For widefield imaging, they demonstrated super-resolution reconstruction using input images from a 10×/0.4-NA objective lens and producing images matching a 20×/0.75-NA objective lens [19]. In a cross-modality confocal-to-STED microscopy transformation case, they showed resolution improvement from 290 nm to 110 nm [19]. Similar results have also been reported in label-free microscopy modalities, including brightfield [26], holography [27], and quantitative phase imaging [20] (Fig. 4(c)).

Fig 4:

DL overcomes physical tradeoffs and augments microscopy contrast. (a) CARE network achieves higher SNR with reduced light exposure [18] (with permission from the authors). (b) Cross-modality super-resolution network reconstructs high-resolution images across a wide FOV [19] (with permission from the authors). (c) DL enables wide-FOV high-resolution phase reconstruction with reduced measurements (adapted from [20]). (d) Deep-Z network enables digital 3D refocusing from a single measurement [21] (with permission from the authors). (e) Virtual staining GAN transforms autofluorescence images of unstained tissue sections to virtual H&E staining [22] (with permission from the authors). (f) DL enables predicting fluorescent labels from label-free images [23] (Reprinted from Cell, 2018 Apr 19;173(3):792–803.e19, Christiansen et al., In Silico Labeling: Predicting Fluorescent Labels in Unlabeled Images, Copyright (2020), with permission from Elsevier).

Second, DL-based 3D reconstruction techniques can drastically extend the imaging depth from a single shot and thus bypass the need for physical focusing. In [21], Wu et al. demonstrated 20× DOF extension in widefield fluorescence microscopy using a conditional GAN (Fig. 4(d)). Recent work on DL-based extended DOF has also shown promising results in enabling rapid slide-free histology [28].

Third, DL significantly improves both the imaging acquisition and reconstruction speeds and reduces the number of measurements for microscopy modalities that intrinsically require multiple measurements for the image formation, as shown in quantitative phase microscopy [20, 29, 30] (Fig. 4(c)), single molecule localization microscopy [31–33], and structured illumination microscopy [24]. For example, in [20], a 97% data reduction as compared to the conventional sequential acquisition scheme was achieved for gigapixel-scale phase reconstruction based on a multiplexed acquisition scheme using a GAN.

Augmenting contrasts.

The image contrast used in different microscopy modalities can be broadly categorized into endogenous and exogenous. For example, label-free microscopy captures endogenous scattering and phase contrast, and is ideal for imaging biological samples in their natural states, but suffers from a lack of molecular specificity. Specificity is often achieved by staining with absorbing or fluorescent labels. However, applications of exogenous labeling are limited by the physical staining/labeling process and potential perturbation to the natural biological environment. Recent advances in DL-augmented microscopy have the potential to achieve the best of both label-free and labeled microscopy. This section summarizes the two most widely used frameworks for augmenting microscopy contrast with DL.

Virtual staining/labeling. The main idea of virtual staining/labeling is to digitally transform the captured label-free contrast to the target stains/labels. DL has been shown to be particularly effective at performing this “cross-modality image transformation” task. By adapting this idea to different microscopy contrasts, two emerging applications have been demonstrated. First, virtual histological staining has been demonstrated for transforming a label-free image to the brightfield image of the histologically-stained sample (Fig. 4(e)). The label-free inputs utilized for this task include autofluorescence [22, 34], phase [35, 36], multi-photon, and fluorescence lifetime [37]. The histological stains include H&E, Masson’s Trichrome, and Jones’ stain. Notably, the quality of virtual staining on tissue sections from multiple human organs of different stain types was assessed by board-certified pathologists to show superior performance [22]. A recent cross-sectional study has been carried out for clinical evaluation of unlabeled prostate core biopsy images that have been virtually stained [38]. The main benefits of the virtual staining approach include saving time and cost [22], as well as facilitating multiplexed staining [34]. Interested readers can refer to a recent review on histopathology using virtual staining [39].

Second, digital fluorescence labeling has been demonstrated for transforming label-free contrast to fluorescence labels [23, 40–43] (Fig. 4(f)). In the first demonstration [23], Christiansen et al. performed 2D digital labeling using transmission brightfield or phase contrast images to identify cell nuclei (accuracy quantified by Pearson correlation coefficient PCC = 0.87–0.93), cell death (PCC = 0.85), and to distinguish neurons from astrocytes and immature dividing cells (PCC = 0.84). A main benefit of digital fluorescence labeling is digital multiplexing of multiple subcellular fluorescence labels, which is particularly appealing for kinetic live cell imaging. This is highlighted in [40], where 3D multiplexed digital labeling using transmission brightfield or phase contrast images was demonstrated on multiple subcellular components, including nucleoli (PCC~0.9), nuclear envelope, microtubules, actin filaments (PCC~0.8), mitochondria, cell membrane, endoplasmic reticulum, DNA+ (PCC~0.7), DNA (PCC~0.6), actomyosin bundles, tight junctions (PCC~0.5), Golgi apparatus (PCC~0.2), and desmosomes (PCC~0.1). Recent advances further exploit other label-free contrasts, including polarization [41], quantitative phase map [43], and reflectance phase-contrast microscopy [42]. Beyond predicting fluorescence labels, recent advances further demonstrate multiplexed single-cell profiling using the digitally predicted labels [42].
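Because the Pearson correlation coefficient (PCC) is the accuracy metric quoted throughout this paragraph, a minimal sketch of its computation is given here. The function name and the toy pixel values are assumptions for illustration; in practice the two inputs would be the flattened predicted and measured fluorescence images.

```python
import math

def pearson_cc(pred, target):
    """Pearson correlation coefficient between two equal-length pixel lists."""
    n = len(pred)
    if n != len(target) or n < 2:
        raise ValueError("inputs must have the same length of at least 2")
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    var_p = sum((p - mean_p) ** 2 for p in pred)
    var_t = sum((t - mean_t) ** 2 for t in target)
    if var_p == 0.0 or var_t == 0.0:
        raise ValueError("PCC is undefined for a constant image")
    return cov / math.sqrt(var_p * var_t)

# A prediction that matches the target up to gain and offset scores 1.
pcc_scaled = pearson_cc([1.0, 2.0, 3.0, 4.0], [12.0, 14.0, 16.0, 18.0])
```

Note that the PCC is insensitive to a global gain or offset between prediction and target, so it measures structural agreement rather than absolute intensity accuracy.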

In both virtual histopathological staining and digital fluorescence labeling, the U-Net forms the basic architecture to perform the image transformation. GANs have also been incorporated to improve the performance [22, 38].

Classification. Instead of performing pixel-wise virtual stain/label predictions, DL is also very effective in holistically capturing complex ‘hidden’ image features for classification. This has found broad applications in augmenting label-free measurements to provide improved specificity, including classifying disease progression [44, 45], screening for cancer [46–48], and detecting cell types [49, 50], cell states [44, 51], stem cell lineage [52–54], and drug response [55]. For example, in [44], Eulenberg et al. demonstrated a classification accuracy of 98.73% for the G1/S/G2 phase, which provided a 6× improvement in error rate compared to the previous state-of-the-art method based on classical ML techniques.

Opportunities and challenges.

By overcoming the physical tradeoffs in traditional systems, DL-augmented microscopy achieves unique combinations of imaging attributes that were previously not possible. This may create new opportunities for diagnosis and screening. By augmenting the contrast using virtual histological staining techniques, DL can open up unprecedented capabilities in label-free and slide-free digital pathology. This can significantly simplify the physical process and speed up the diagnosis. Further advances in digital fluorescence labeling techniques can enable high-throughput and highly multiplexed single-cell profiling and cytometry. Beyond clinical diagnoses, this may find applications in drug screening and phenotyping.

In addition, several emerging DL techniques can further enhance the capabilities of microscopy systems. First, DL can be applied to optimize the hardware parameters used in microscopy experiments. In quantitative phase microscopy, DL was applied to optimize the illumination patterns to reduce the data requirement [30, 56]. In single molecule localization microscopy, DL was used to optimize the point spread functions to enhance the localization accuracy [33, 57]. DL has also been used to optimize the illumination power [58] and focus positions [59–61].

Second, new DL frameworks are emerging to significantly reduce the labeled data requirements in training, which is particularly useful in biomedical microscopy since acquiring a large-scale labeled training data set is often impractical. For example, a novel denoising approach, known as Noise2Noise [62], has been developed that can be trained using only independent pairs of noisy images, and bypasses the need for ground-truth clean images. Following this work, self-supervised denoising DL approaches have been advanced to further alleviate the training data requirement. Techniques such as Noise2Void, Noise2Self, and their variants can be directly trained on a noisy data set without the need for paired noisy images [63–65]. In addition, semi-supervised and unsupervised DL approaches have also been developed to reduce or completely remove the need for labeled training data, and have been demonstrated for vessel segmentation [66, 67]. Lastly, physics-embedded DL opens up a new avenue for reducing training requirements by incorporating the physical model of the microscopy technique [68, 69].
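A brief numerical illustration may help explain why noisy targets can stand in for clean ones. Under a squared-error cost, the best single prediction for a set of targets is their mean, so if the noise is zero-mean and independent of the input, training against noisy targets steers the prediction towards the clean value. The sketch below reduces this to one pixel with Gaussian noise; the clean value, noise level, and sample count are arbitrary assumptions, and this is a statistical intuition rather than an implementation of Noise2Noise itself.

```python
import random

def mse(estimate, targets):
    """Mean squared error of a constant estimate against a list of targets."""
    return sum((estimate - t) ** 2 for t in targets) / len(targets)

rng = random.Random(0)
clean_value = 5.0
# Many independent noisy observations of the same clean pixel (zero-mean noise).
noisy_targets = [clean_value + rng.gauss(0.0, 1.0) for _ in range(20000)]

# The constant that minimizes the MSE against the noisy targets is their mean.
best_estimate = sum(noisy_targets) / len(noisy_targets)
```

The same reasoning fails if the noise has a nonzero mean or is correlated between the input and target images, which is why independent noise realizations are required.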

Finally, uncertainty quantification techniques address the need for assessing the reliability of the DL model by quantifying the confidence of the predictions, and have recently been applied in quantitative phase reconstruction [20].

Fluorescence Lifetime Imaging

Overview.

Fluorescence imaging has become a central tool in biomedical studies with high sensitivity to observe endogenous molecules [70, 71] and monitor important biomarkers [72]. Increasingly, fluorescence imaging is not limited to intensity-based techniques but can extract additional information by measuring fluorophore lifetimes [73–75]. Fluorescence lifetime imaging (FLI) has become an established technique for monitoring cellular micro-environment via analysis of various intracellular parameters [76], such as metabolic state [77, 78], reactive oxygen species [79] and/or intracellular pH [80]. FLI is also a powerful technique for studying molecular interactions inside living samples, via Förster Resonance Energy Transfer (FRET) [81], enabling applications such as quantifying protein-protein interactions [82], monitoring biosensor activity [83] and ligand-receptor engagement in vivo [84]. However, FLI is not a direct imaging technique. To quantify lifetime or lifetime-derived parameters, an inverse solver is required for quantification and/or interpretation.

To date, image formation is the main utility of DL in FLI. Contributions include reconstructing quantitative lifetime images from raw FLI measurements, enabling enhanced multiplexed studies by leveraging both spectral and lifetime contrast simultaneously, and facilitating improved instrumentation with compressive measurement strategies.

Lifetime quantification, representation, and retrieval.

Conventionally, lifetime quantification is obtained at each pixel via model-based inverse-solvers, such as least-square fitting and maximum-likelihood estimation [85], or the fit-free phasor method [86, 87]. The former is time-consuming, inherently biased by user-dependent a priori settings, and requires operator expertise. The phasor method is the most widely-accepted alternative for lifetime representation [87]. However, accurate quantification using the phasor method requires careful calibration, and when considering tissues/turbid-media in FLI microscopy (FLIM) applications, additional corrections are needed [87, 88]. Therefore, it has largely remained qualitative in use.
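For readers unfamiliar with the fit-free phasor method, the sketch below shows its core computation for an idealized case. It assumes a noise-free mono-exponential decay with no instrument response function or background, for which the phasor coordinates (g, s) at angular frequency ω satisfy τ = s / (g ω). The 80 MHz frequency, 2 ns lifetime, and sampling grid are hypothetical choices, and none of the calibration or tissue corrections mentioned above are included.

```python
import math

def phasor_lifetime(decay, times_ns, freq_mhz):
    """Return phasor coordinates (g, s) and the phase lifetime in nanoseconds."""
    omega = 2.0 * math.pi * freq_mhz * 1e-3  # angular frequency in rad/ns
    total = sum(decay)
    # Intensity-normalized cosine and sine transforms of the decay.
    g = sum(i * math.cos(omega * t) for i, t in zip(decay, times_ns)) / total
    s = sum(i * math.sin(omega * t) for i, t in zip(decay, times_ns)) / total
    return g, s, s / (g * omega)

# Simulated ideal decay sampled at bin centers over 50 ns.
dt = 0.01
times = [(k + 0.5) * dt for k in range(5000)]
tau_true = 2.0
decay = [math.exp(-t / tau_true) for t in times]

g, s, tau_est = phasor_lifetime(decay, times, freq_mhz=80.0)
```

For a mono-exponential decay, the point (g, s) lies on the semicircle of radius 0.5 centered at (0.5, 0); multi-exponential decays fall inside it, which is the basis of the graphical phasor representation.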

Wu et al. [89] demonstrated a multilayer perceptron (MLP) for lifetime retrieval for ultrafast bi-exponential FLIM. The technique was 180-fold faster than conventional techniques, yet it was unable to recover the true lifetime-based values in many cases due to ambiguities caused by noise. Smith et al. [90] developed an improved 3D-CNN, FLI-Net, that can retrieve spatially independent bi-exponential lifetime parameter maps directly from the 3D (x, y, t) FLI data. By training with a model-based approach including representative noise and instrument response functions, FLI-Net was validated across a variety of biological applications. These include quantification of metabolic and FRET FLIM, as well as preclinical lifetime-based studies across the visible and near-infrared (NIR) spectra. Further, the approach was generalized across two data acquisition technologies: Time-correlated Single Photon Counting (TCSPC) and Intensified Gated CCDs (ICCD). FLI-Net has two advantages. First, it outperformed classical approaches in the presence of low photon counts, which is a common limitation in biological applications. Second, FLI-Net can output lifetime-based whole-body maps at 80 ms in wide-field pre-clinical studies, which highlights the potential of DL methods for fast and accurate lifetime-based studies. In combination with DL in silico training routines that can be crafted for many applications and technologies, DL is expected to contribute to the dissemination and translation of FLI methods as well as to impact the design and implementation of future-generation FLI instruments. An example FLI-Net output for metabolic FLI is shown in Fig. 5.

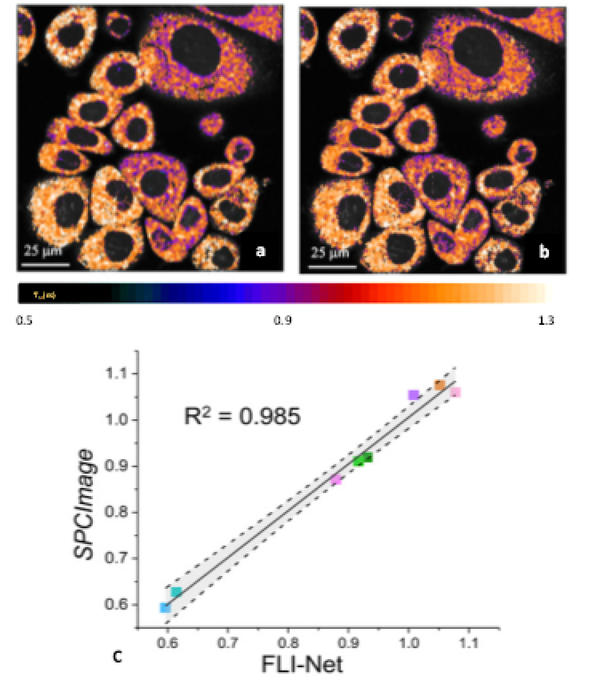

Fig 5:

Example of quantitative FLI metabolic imaging as reported by NADH τm for a breast cancer cell line (AU565) as obtained (a) with SPCImage and (b) FLI-Net. (c) Linear regression with corresponding 95% confidence band (gray shading) of averaged NADH τm values from 4 cell line data (adapted from [90]).

Emerging FLI applications using DL.

The technologies used in FLI have not fundamentally shifted over the last two decades. One bottleneck for translation is a lack of sensitive, widefield NIR detectors. Advances in computational optics have sparked development of new approaches using structured light [91], such as single-pixel methods [92]. These methods are useful when widefield detectors are lacking, such as in applications with low photon budget and when higher dimensional data are sought [93] (e.g., hyperspectral imaging [94]). However, these computational methods are based on more complex inverse models that require user expertise and input.

Yao et al. [95] developed a CNN, NetFLICS, capable of retrieving both intensity and lifetime images from single-pixel compressed sensing-based time-resolved input. NetFLICS generated superior quantitative results at low photon count levels, while being four orders of magnitude faster than existing approaches. Ochoa-Mendoza et al. [96] further developed the approach to increase its compression ratio to 99% and the reconstruction resolution to 128×128 pixels. This dramatic improvement in compression ratio enables significantly faster imaging protocols and demonstrates how DL can impact instrumentation design to improve clinical utility and workflow [97].
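To make the compression ratio concrete, the sketch below computes how many single-pixel patterns a given ratio leaves and simulates the corresponding measurements. It is an illustration only: Hadamard patterns, the choice of simply keeping the first rows, and the 32×32 test image are assumptions, not the pattern basis or ordering used in [95, 96].

```python
import numpy as np

def n_patterns_for(n_pixels, compression_ratio):
    """Number of patterns kept when a fraction `compression_ratio` is discarded."""
    return max(1, int(round(n_pixels * (1.0 - compression_ratio))))

def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n must be a power of two)."""
    h = np.array([[1.0]])
    while h.shape[0] < n:
        h = np.block([[h, h], [h, -h]])
    return h

def compressed_measurements(image, compression_ratio):
    """Single-pixel measurements y = P x using the first rows of a Hadamard basis."""
    n_pixels = image.size
    patterns = hadamard(n_pixels)[:n_patterns_for(n_pixels, compression_ratio)]
    return patterns @ image.ravel(), patterns

# Hypothetical 32 x 32 scene at 99% compression.
image = np.random.default_rng(0).random((32, 32))
y, patterns = compressed_measurements(image, 0.99)

# At 128 x 128 pixels, 99% compression leaves 164 of 16,384 patterns.
n_128 = n_patterns_for(128 * 128, 0.99)
```

Because acquisition time in a single-pixel system scales with the number of projected patterns, this count is what drives the faster imaging protocols noted above.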

Recent developments have made hyperspectral FLI imaging possible across microscopic [98] and macroscopic settings [99]. Traditionally, combining spectral and lifetime contrast analytically is performed independently or sequentially using spectral decomposition and/or iterative fitting [100]. Smith et al. [101] proposed a DNN, UNMIX-ME, to unmix multiple fluorophore species simultaneously for both spectral and temporal information. UNMIX-ME takes a 4D voxel (x, y, t, λ) as the input and outputs spatial (x, y) maps of the relative contributions of distinct fluorophore species. UNMIX-ME demonstrated higher performance during tri- and quadri-abundance coefficient retrieval. This method is expected to find utility in applications such as autofluorescence imaging in which unmixing of metabolic and structural biomarkers is challenging.

Although FLI has shown promise for deep tissue imaging in clinical scenarios, FLI information is affected by tissue optical properties. Nonetheless, there are several applications that would benefit from optical property-corrected FLI without solving the full 3D inverse problem. For optical guided surgery, Smith et al. [102] proposed a DNN that outputs 2D maps of the optical properties, lifetime quantification, and the depth of fluorescence inclusion (topography). The DNN was trained using a model-based approach in which a data simulation workflow incorporated “Monte Carlo eXtreme” [103] to account for light propagation through turbid media. The method was demonstrated experimentally, with real-time applicability over large FOVs. Both widefield time-resolved fluorescence imaging and Spatial Frequency Domain Imaging (SFDI) in its single snapshot implementation were performed with fast acquisition [91] and processing speeds [104]. Hence, their combination with DL-based image processing provides a possible future foundation for real-time intraoperative use.

While recent advances in FLI-based classification and segmentation are limited to using classical ML techniques [105–107], Sagar et al. [108] used MLPs paired with bi-exponential fitting for label-free detection of microglia. However, DL approaches often outperform such “shallow learning” classifiers. Although reports using DL for classification based on FLI data are currently absent from the literature, it is expected that DL will play a critical role in enhancing FLI classification and semantic segmentation tasks in the near future.

In vivo microscopy

Overview.

In vivo microscopy (IVM) techniques enable real-time assessment of intact tissue at magnifications similar to that of conventional histopathology [113]. As high-resolution assessment of intact tissue is desirable for many biomedical imaging applications, a number of optical techniques and systems have been developed which have trade-offs in FOV, spatial resolution, achievable sampling rates, and practical feasibility for clinical deployment [113]. However, a commonality of IVM systems used for clinical imaging is the need for image analysis strategies to support intraoperative visualization and automated diagnostic assessment of the high-resolution image data. Currently, three of the major IVM techniques for which DL is being utilized are optical coherence tomography (OCT) [114], confocal laser endomicroscopy (CLE, Fig. 6) [115], and reflectance confocal microscopy (RCM) [116]. This section focuses on DL approaches for CLE and RCM, specifically endoscopic imaging using probe-based CLE (pCLE) and dermal imaging using RCM. OCT is discussed in a subsequent section.

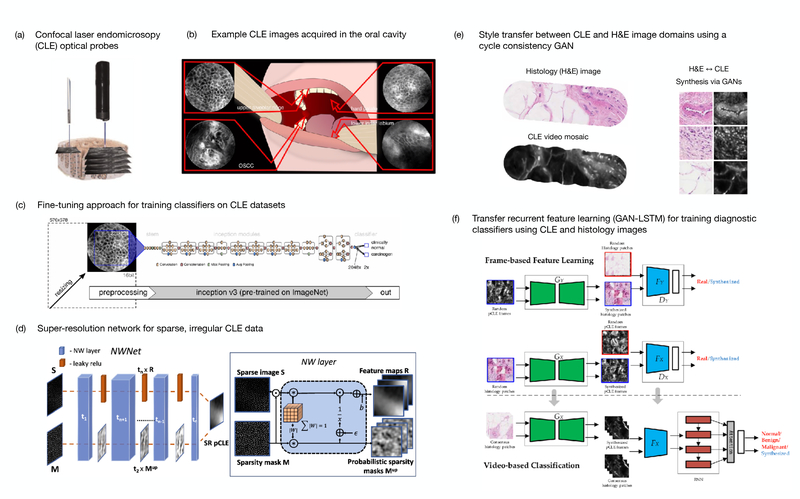

Fig 6:

DL approaches to support real-time, automated diagnostic assessment of tissues with confocal laser endomicroscopy. (a) Graphical rendering of two confocal laser endomicroscopy probes (left: Cellvizio, right: Pentax) (adapted from [109]). (b) Example CLE images obtained from four different regions of the oral cavity (adapted from [110]). (c) Fine-tuning of CNNs pre-trained using ImageNet is utilized in the majority of CLE papers reported since 2017 (adapted from [110]). (d) Super-resolution networks for probe-based CLE images incorporate novel layers to better account for the sparse, irregular structure of the images (adapted from [111]). (e) Example H&E stained histology images with corresponding CLE images. Adversarial training of GANs to transfer between these two modalities has been successful (adapted from [112]). (f) Transfer recurrent feature learning utilizes adversarially trained discriminators in conjunction with an LSTM for state-of-the-art video classification performance (adapted from [112]).

Automated diagnosis.

Automated diagnostic classification has been the earliest and most frequent application of DL within IVM. Most commonly, histopathology analysis of imaged specimens provides a ground truth categorization for assessing diagnostic accuracy. The limited size of pCLE and RCM datasets and logistical challenges in precisely correlating them with histopathology remain two ongoing challenges for training robust classifiers. To address these challenges, a variety of strategies have been applied which range from simple classification schemes (benign vs malignant) using pre-trained CNNs [110] to more complicated tasks, such as cross-domain feature learning and multi-scale encoder-decoder networks [112, 117]. The following section contrasts recent reports and methods utilizing DL for diagnostic image analysis of pCLE and RCM image datasets.

CNNs and transfer learning approaches. Early reports on DL-based image classification for CLE and RCM have demonstrated that transfer learning using pre-trained CNNs can outperform conventional image analysis approaches, especially when data is limited as is often the case for CLE and RCM [110, 118–120].

Aubreville et al. published an early and impactful study comparing the performance of two CNN-based approaches to a textural feature-based classifier (random forest) on pCLE video sequences acquired during surgical resection of oral squamous carcinoma (Fig. 6b) [110]. Of their two CNN-based approaches, one was a LeNet-5 architecture and was trained to classify sub-image patches whereas the other utilized transfer learning of a pre-trained CNN (Fig. 6c) for whole image classification. Using leave-one-out cross validation on 7,894 frames from 12 patients, the two CNN-based approaches both outperformed the textural classifier.
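Because frames from the same patient are highly correlated, cross validation on such data is usually organized so that no patient contributes to both the training and test sets. The sketch below shows one way to generate such splits; it assumes that the held-out unit is the patient, which the summary above does not state explicitly, and the patient labels are hypothetical.

```python
import numpy as np

def leave_one_patient_out(patient_ids):
    """Yield (held_out_patient, train_indices, test_indices) for each patient."""
    patient_ids = np.asarray(patient_ids)
    for held_out in np.unique(patient_ids):
        test_mask = patient_ids == held_out
        yield held_out, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)

# Hypothetical per-frame patient labels (six frames from three patients).
patient_ids = np.array([0, 0, 1, 1, 1, 2])
splits = list(leave_one_patient_out(patient_ids))
```

Splitting at the frame level instead would let near-duplicate frames from one video appear on both sides of the split and inflate the reported accuracy.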

Transfer learning is one strategy to overcome limited dataset sizes, which remain a common challenge for CLE and RCM. As larger CLE and RCM datasets become available, transfer learning is unlikely to remain an optimal strategy for image classification; however, it can still serve as a useful benchmark for the difficulty of image classification tasks on novel, small-scale datasets. The subsequent sections introduce alternatives to transfer learning which utilize video data as well as cross-domain learning.

Recurrent convolutional approaches. CLE and RCM data are typically recorded as video while the optical probe is physically or optically scanned to obtain images over a larger tissue area or at varying depths. Some reports have utilized recurrent convolutional networks to account for spatial and/or temporal context of image sequences [121–123]. The additional spatial/temporal modeling provided by recurrent networks is one promising approach to leverage video data.

Cross-domain learning. A novel approach, termed “transfer recurrent feature learning”, was developed by Gu et al. which leveraged cross-domain feature learning for classification of pCLE videos obtained from 45 breast tissue specimens [112]. Although this method relied on data acquired ex vivo, the data itself is not qualitatively different from other pCLE datasets and still provides a proof-of-principle. Their model utilized a cycle-consistent GAN (CycleGAN) to first learn feature representations between H&E microscopy and pCLE images and to identify visually similar images (Fig. 6e). The optimized discriminator from the CycleGAN is then utilized in conjunction with a recurrent neural network to classify video sequences (Fig. 6f). The method outperformed other DL methods and achieved 84% accuracy in classifying normal, benign, and malignant tissues.

Multiscale segmentation. Kose et al. [117] developed a novel segmentation architecture, “multiscale encoder-decoder network” (MED-Net), which outperformed other state-of-the-art network architectures for RCM mosaic segmentation. In addition to improving accuracy, MED-Net produced more globally consistent, less fragmented pixel-level classifications. The architecture is composed of multiple, nested encoder-decoder networks and was inspired by how pathologists often examine images at multiple scales to holistically inform their image interpretation.

Image quality assessment. A remaining limitation of many studies was some level of manual or semi-automated pre-processing of pCLE and RCM images/videos to exclude low-quality and/or non-diagnostic image data. Building on the aforementioned reports for diagnostic classification, additional work utilized similar techniques for automated image quality assessment using transfer learning [124, 125] as well as MED-Net [126].

Super-resolution.

Several IVM techniques, including pCLE, utilize flexible fiber-bundles as contact probes to illuminate and collect light from localized tissue areas [127]. Such probes are needed for minimally invasive endoscopic procedures and can be guided manually or via robotics. The FOV of fiber-optic probes is typically <1 mm² and lateral resolution is limited by the inter-core spacing of individual optical fibers, which introduces a periodic image artifact (“honeycomb patterns”) from the individual fibers.

Shao et al. [128] developed a novel super-resolution approach which outperformed maximum a posteriori (MAP) estimation using a two-stage CNN model which first estimates the motion of the probe and then reconstructs a super-resolved image using the aligned video sequence. The training data was acquired using a dual camera system, one with and one without a fiber-bundle in the optical setup, to obtain paired data.

Others have taken a more computational approach to pCLE super-resolution by using synthetic datasets. For example, Ravì et al. [129] demonstrated super-resolution of pCLE images using unpaired image data via a CycleGAN, and Szczotka et al. [111] introduced a novel Nadaraya-Watson layer to account for the irregular sparse artifacts introduced by the fiber-bundle (Fig. 6d).

Future directions.

Beyond automatic diagnosis and super-resolution approaches in IVM, recent advances also highlight ways in which DL can enable novel instrumentation development and image reconstructions to enable new functionalities for compact microscopy systems. Such examples include multispectral endomicroscopy [130], more robust mosaicking for FOV expansion [131], and end-to-end image reconstruction using disordered fiber-optic probes [132, 133]. We anticipate that similarly to ex vivo microscopy, in the coming years DL will be increasingly utilized to overcome physical constraints, augment contrast mechanisms, and enable new capabilities for IVM systems.

Widefield endoscopy

Overview.

The largest application of optics in medical imaging, by U.S. market size, is widefield endoscopy [134]. In this modality, tissue is typically imaged on the >1 cm scale, over a large working distance range, with epi-illumination and video imaging via a camera. Endoscopic and laparoscopic examinations are commonly used for screening, diagnostic, preventative, and emergency medicine. There has been extensive research in applying various DL tools for analyzing conventional endoscopy images for improving and automating image interpretation [135–138]. This section instead reviews recent DL research in image formation tasks in endoscopy, including denoising, resolution enhancement, 3D scene reconstruction, mapping of chromophore concentrations, and hyperspectral imaging.

Denoising.

A hallmark of endoscopic applications is challenging geometrical constraints. Imaging through small lumens such as the gastrointestinal tract or “keyholes” for minimally-invasive surgical applications requires optical systems with compact footprints–often on the order of 1-cm diameter. These miniaturized optical systems typically utilize small-aperture cameras with high pixel counts, wide FOVs and even smaller illumination channels. Consequently, managing the photon budget is a significant challenge in endoscopy, and there have been several recent efforts to apply DL to aid in high-quality imaging in these low-light conditions. A low-light net (LLNET) with contrast-enhancement and denoising autoencoders has been introduced to adaptively brighten images [139]. This study simulated low-light images by darkening and adding noise and found that training on this data resulted in a learned model that could enhance natural low-light images. Other work has applied a U-Net for denoising on high-speed endoscopic images of the vocal folds, also by training on high-quality images that were darkened with added noise [140]. Brightness can also be increased via laser-illumination, which allows greater coupling efficiency than incoherent sources, but results in laser speckle noise in the image from coherent interference. Conditional GANs have been applied to predict speckle-free images from laser-illumination endoscopy images by training on image pairs acquired of the same tissue with both coherent and incoherent illumination sources [141].
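The training-data strategy shared by these denoising studies, darkening a well-exposed image and adding noise, can be sketched as follows. The gamma-based darkening and additive Gaussian noise used here are one common choice and are assumptions for illustration; the exact degradation models and parameter ranges in [139, 140] may differ.

```python
import numpy as np

def simulate_low_light(image, gamma, noise_sigma, rng):
    """Darken an image in [0, 1] with gamma > 1 and add Gaussian noise.

    `gamma` and `noise_sigma` are hypothetical parameters, not values from the cited studies.
    """
    dark = np.power(image, gamma)
    noisy = dark + rng.normal(0.0, noise_sigma, size=image.shape)
    return np.clip(noisy, 0.0, 1.0)

rng = np.random.default_rng(0)
clean = rng.random((64, 64))                    # stand-in for a well-exposed frame
degraded = simulate_low_light(clean, gamma=3.0, noise_sigma=0.02, rng=rng)
# (degraded, clean) then forms one input/target training pair.
```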

Improving image quality.

In widefield endoscopy, wet tissue is often imaged in a perpendicular orientation to the optical axis, and the close positioning of the camera and light sources leads to strong specular reflections that mask underlying tissue features. GANs have been applied to reduce these specular reflections [142]. In this case, unpaired training data with and without specular reflections were used in a CycleGAN architecture with self-regularization to enforce similarity between the input specular and predicted specular-free images. Other work has found that specular reflection removal can be achieved in a simultaneous localization and mapping elastic fusion architecture enhanced by DL depth estimation [143]. Lastly, Ali et al. [144] introduced a DL framework that identifies a range of endoscopy artifacts (multi-class artifact detection), including specular reflection, blurring, bubbles, saturation, poor contrast, and miscellaneous artifacts using YOLOv3-spp with classes that were hand-labeled on endoscopy images. These artifacts were then removed and the image restored using GANs.

Resolution enhancement.

For capsule endoscopy applications, where small detectors with low pixel counts are required, DL tools have been applied for super-resolution with the goal of obtaining conventional endoscopy-like images from a capsule endoscope [145]. In this study, a conditional GAN was implemented with spatial attention blocks, using a loss function that included contributions of pixel loss, content loss, and texture loss. The intuition behind the incorporation of spatial attention blocks is that this module guides the network to prioritize the estimation of the suspicious and diagnostically relevant regions. This study also performed ablation studies and found that the content and texture loss components are especially important for estimating high-spatial frequency patterns, which becomes more important for larger upsampling ratios. With this framework, the resolution of small bowel images was increased by up to 12× with favorable quantitative metrics as well as qualitative assessment by gastroenterologists. Though this study demonstrated that the resolution of gastrointestinal images could be enhanced, it remains to be seen if preprocessing or enhancing these images provides any benefit to automated image analysis.

3D imaging and mapping.

The three-dimensional shape of the tissue being imaged via endoscopy is useful for improving navigation, lesion detection and diagnosis, as well as obtaining meaningful quality metrics for the effectiveness of the procedure [146]. However, stereo and time-of-flight solutions are challenging and expensive to implement in an endoscopic form factor. Accordingly, there has been significant work in estimating the 3D shape of an endoscopic scene from monocular images using conditional GANs trained with photorealistic synthetic data [147, 148]. Domain adaptation can be used to improve the generalizability of these models, either by making the synthetic data more realistic, or by making the real images look more like the synthetic data that the depth-estimator is trained on [149]. Researchers have also explored joint conditional random fields and CNNs in a hybrid graphical model to achieve state-of-the-art monocular depth estimation [150]. A U-Net style architecture has been implemented for simultaneously estimating depth, color, and oxygen saturation maps from a fiber-optic probe that sequentially acquired structured light and hyperspectral images [151]. Lastly, DL tools have been applied to improve simultaneous localization and mapping (SLAM) tasks in endoscopic applications, both by incorporating a monocular depth estimation prior into a SLAM algorithm for dense mapping of the gastrointestinal tract [143], and by developing a recurrent neural network to predict depth and pose in a SLAM pipeline [152].

Widefield spectroscopy.

In addition to efforts to reconstruct high-quality color and 3D maps through an endoscope, DL is also being applied to estimate bulk tissue optical properties from wide FOV images. Optical property mapping can be useful for meeting clinical needs in wound monitoring, surgical guidance, minimally-invasive procedures, and endoscopy. A major challenge to estimating optical properties in turbid media is decoupling the effects of absorption, scattering, and the scattering phase function, which all influence the widefield image measured with flood illumination. Spatial frequency domain imaging can provide additional inputs to facilitate solving this inverse problem by measuring the attenuation of different spatial frequencies [153]. Researchers have demonstrated that this inverse model can be solved orders of magnitude faster than conventional methods with a 6-layer perceptron [154]. Others have shown that tissue optical properties can be directly estimated from structured light images or widefield illumination images using content-aware conditional GANs [155]. In this application, the adversarial learning framework reduced errors in the optical property predictions by more than half when compared to the same network trained with an analytical loss function. Intuitively, the discriminator learns a more sophisticated and appropriate loss function in adversarial learning, allowing for the generation of more-realistic optical property maps. Moreover, this study found that the conditional GAN approach provided a larger performance benefit when tested on tissue types that were not represented in the training set. The authors hypothesize that this observation comes from the discriminator preventing the generator from learning from and overfitting to the context of the input image. Optical properties can also be estimated more quickly using a lighter-weight twin U-Net architecture with a GPU-optimized look-up table [104]. Further, chromophores can be computed in real-time with reduced error compared to an intermediate optical property inference by directly computing concentrations from structured illumination at multiple wavelengths using conditional GANs [156].
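For context on what the networks above replace or accelerate, the conventional first step of spatial frequency domain imaging is to demodulate the structured-light frames into AC and DC amplitude maps, which are then mapped to optical properties by a model or look-up table. The sketch below shows the standard three-phase demodulation from the SFDI literature; it is not taken from the DL studies cited here, and the synthetic 1D pattern and its parameters are hypothetical.

```python
import numpy as np

def demodulate_three_phase(i1, i2, i3):
    """Three-phase demodulation for patterns shifted by 0, 2*pi/3, and 4*pi/3."""
    m_ac = (np.sqrt(2.0) / 3.0) * np.sqrt(
        (i1 - i2) ** 2 + (i2 - i3) ** 2 + (i3 - i1) ** 2
    )
    m_dc = (i1 + i2 + i3) / 3.0
    return m_ac, m_dc

# Hypothetical 1D example: DC level 1.0, AC amplitude 0.3, 0.2 cycles per mm.
x_mm = np.linspace(0.0, 10.0, 200)
dc, ac, fx = 1.0, 0.3, 0.2
phases = (0.0, 2.0 * np.pi / 3.0, 4.0 * np.pi / 3.0)
frames = [dc + ac * np.cos(2.0 * np.pi * fx * x_mm + p) for p in phases]
m_ac, m_dc = demodulate_three_phase(*frames)
```

The ratio of the demodulated AC amplitude to that of a calibration phantom gives the attenuation at the projected spatial frequency, which is the quantity the inverse model consumes.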

Going beyond conventional color imaging, researchers are also processing 1D hyperspectral measurements through an endoscope using shallow CNNs to classify pixels into the correct color profiles, illustrating the potential to classify tissue with complex absorbance spectra [157]. The spectral resolution can be increased in dual-modality color/hyperspectral systems from sparse spectral signals with CNNs [151]. To enable quantitative spectroscopy measurements in endoscopic imaging, it may be necessary to combine hyperspectral techniques with structured illumination and 3D mapping [104, 151, 155, 158].

Future directions.

Future research in endoscopy and DL will undoubtedly explore clinical applications. Imaging systems for guiding surgery are already demonstrating clinical potential for ex-vivo tissue classification: a modified Inception-v4 CNN was demonstrated to effectively classify squamous cell carcinoma versus normal tissue at the cancer margin from ex-vivo hyperspectral images with 91 spectral bands [159]. For in-vivo applications, where generalizability may be essential and training data may be limited, future research in domain transfer [149] and semi-supervised learning [160] may become increasingly important. Moreover, for clinical validation, these solutions must be real-time, easy-to-use, and robust, highlighting the need for efficient architectures [104] and thoughtful user interface design [161].

Optical coherence tomography

Overview.

Optical coherence tomography (OCT) is a successful example of biophotonic technological translation into medicine [162, 163]. Since its introduction in 1991, OCT has revolutionized the standard-of-care in ophthalmology around the world, and it continues to thrive through technical advances and in other clinical applications, such as dermatology, neurology, cardiology, oncology, gastroenterology, gynecology, and urology [164–171].

Image segmentation.

The most common use of OCT is to quantify structural metrics via image segmentation, such as retinal anatomical layer thickness, anatomical structures, and pathological features. Conventional image processing is challenging in the case of complex pathology, where structural alterations of the tissue may not be fully accounted for when designing a rigid algorithm. Image segmentation is the earliest application of DL explored in OCT applications. Several DNNs have been reported for OCT segmentation in conjunction with manual annotations (Fig. 7(a)), including U-Net [172–174], ResNet [175], and fully-convolutional network (FCN) [176, 177]. Successful implementation of DNNs has been broadly reported in different tissues beyond the eye [178–180]. In all areas of application, the DNNs showed superior segmentation accuracy over conventional techniques. For example, Devalla et al. [175] quantified the accuracy of the proposed DRUNET (Dilated-Residual U-Net) for segmenting the retinal nerve fiber layer (RNFL), retinal layers, the retinal pigment epithelium (RPE), and choroid in both healthy and glaucoma subjects, and showed that DRUNET consistently outperformed alternative approaches on all tissues as measured by Dice coefficient, sensitivity, and specificity. The errors of all the metrics between DRUNET and the observers were within 10%, whereas the patch-based neural network always produced greater than 10% error irrespective of the observer chosen for validation. In addition, the DRUNET segmentation further allowed automatic extraction of six clinically relevant neural and connective tissue structural parameters, including the disc diameter, peripapillary RNFL thickness (p-RNFLT), peripapillary choroidal thickness (p-CT), minimum rim width (MRW), prelaminar thickness (PLT), and the prelaminar depth (PLD).
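The three segmentation metrics named above have standard definitions in terms of true/false positives and negatives, sketched here for a single binary mask. The toy masks are hypothetical, and the function assumes that both the foreground and the background are non-empty so that no denominator is zero.

```python
import numpy as np

def segmentation_metrics(pred, truth):
    """Dice coefficient, sensitivity, and specificity for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)      # foreground correctly labeled
    fp = np.sum(pred & ~truth)     # background labeled as foreground
    fn = np.sum(~pred & truth)     # foreground missed
    tn = np.sum(~pred & ~truth)    # background correctly labeled
    dice = 2.0 * tp / (2.0 * tp + fp + fn)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return dice, sensitivity, specificity

# Hypothetical 8-pixel example: TP = 2, FP = 1, FN = 1, TN = 4.
truth = np.array([1, 1, 1, 0, 0, 0, 0, 0])
pred = np.array([1, 1, 0, 1, 0, 0, 0, 0])
dice, sensitivity, specificity = segmentation_metrics(pred, truth)
```

For multi-layer retinal segmentation, these quantities would be computed per tissue class by treating each class in turn as the foreground.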

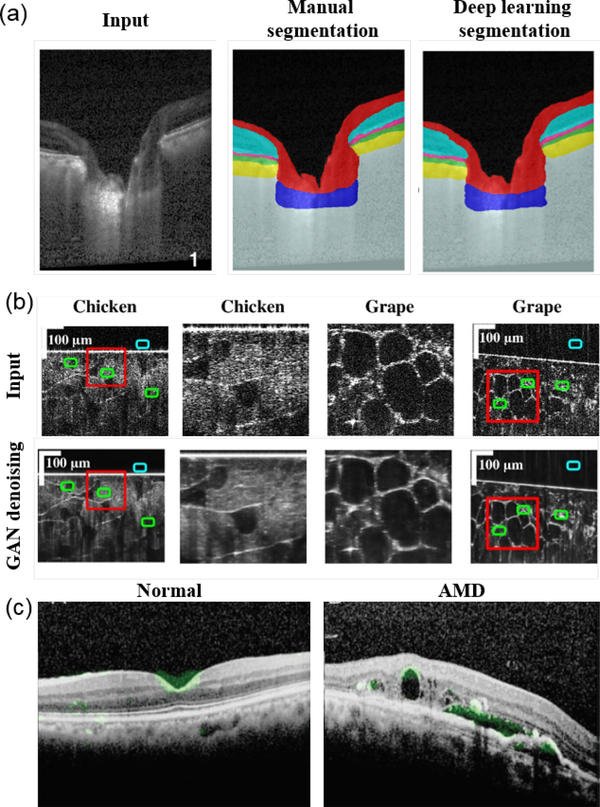

Fig 7:

(a) Example automatic retinal layer segmentation using DL compared to manual segmentation (reprinted from [175]). (b) GAN for denoising OCT images (adapted from [181]). (c) Attention map overlaid on retinal images, indicating the features that the CNN used for diagnosing normal versus age-related macular degeneration (AMD) [182] (Reproduced from Detection of features associated with neovascular age-related macular degeneration in ethnically distinct data sets by an optical coherence tomography: trained deep learning algorithm, Hyungtaek et al., Br. J. Ophthalmol. bjophthalmol-2020–316984, 2020 with permission from BMJ Publishing Group Ltd.).

Denoising and speckle removal.

OCT images suffer from speckle noise due to coherent light scattering, which degrades image quality. Other noise sources further degrade image quality when the signal level is low. Denoising and despeckling are important applications of DNNs, which are often trained in a U-Net or ResNet with an averaged, reduced-noise image as the ‘ground truth’ [183, 184]. GANs have also been applied and provided better perceptual quality than DNNs trained with only the least-squares loss function [181] (Fig. 7(b)). For example, Dong et al. [181] showed that the GAN-based denoising network outperformed state-of-the-art image-processing-based methods (e.g., BM3D and MSBTD) and several other DNNs (e.g., SRResNet and SRGAN) in terms of contrast-to-noise ratio (CNR) and peak signal-to-noise ratio (PSNR).
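The two image-quality metrics mentioned above can be computed as sketched below. PSNR has a standard definition; CNR has several variants in the OCT literature, and the pooled-variance form used here is an assumption that may differ from the definition in [181]. The test images and regions of interest are hypothetical.

```python
import numpy as np

def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range^2 / MSE)."""
    mse = np.mean((reference - estimate) ** 2)
    return 10.0 * np.log10(data_range ** 2 / mse)

def cnr(image, signal_mask, background_mask):
    """Contrast-to-noise ratio using a pooled variance (one of several conventions)."""
    s = image[signal_mask]
    b = image[background_mask]
    return np.abs(s.mean() - b.mean()) / np.sqrt(0.5 * (s.var() + b.var()))

# Hypothetical PSNR check: a constant error of 0.1 on a unit-range image gives 20 dB.
reference = np.zeros((4, 4))
estimate = np.full((4, 4), 0.1)
psnr_db = psnr(reference, estimate)

# Hypothetical CNR check: signal region {2, 4}, background region {0, 2}.
image = np.array([2.0, 4.0, 0.0, 2.0])
signal_mask = np.array([True, True, False, False])
cnr_value = cnr(image, signal_mask, ~signal_mask)
```

PSNR requires a reference image, typically the frame-averaged scan, whereas CNR is computed from regions of interest within a single image.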

Clinical diagnosis and classification.

In clinical applications using DL, a large body of literature has emerged over the past 3 years, particularly in ophthalmology. Most of the studies use a CNN to extract image features for diagnosis and classification [185]. A clear recent shift of attention is toward interpreting the DNN, for example using attention maps [182, 186] (Fig. 7(c)). The purpose is to reveal the most important structural features that the DNN used for making its predictions. This addresses a major concern of clinicians regarding the “black-box” nature of DL. Another emerging effort is to improve the generalization of a trained DNN so that it can process images from different devices, with different image qualities and other possible variations. Transfer learning has been reported to refine pre-trained DNNs for other datasets, with much reduced training and data burdens [187, 188]. Domain adaptation is another method to generalize a DNN trained on images taken by one device to another [189, 190]. We expect more innovations addressing generalization in clinical diagnosis and prediction.

Emerging applications.

Beyond segmentation, denoising, and diagnosis/classification, there are several emerging DL applications for correlating OCT measurements with vascular function. OCT angiography (OCTA) and Doppler OCT (DOCT) are two advanced methods for label-free measurement of microangiography and blood flow. While these methods normally require specific imaging protocols, the raw OCT measurements contain structural features that may be recognized by a CNN. Reports have shown that angiographic images and blood flow can be predicted from structural image input alone, without specific OCTA or DOCT protocols [191, 193, 194]. For example, Braaf et al. [191] showed that DL enabled accurate quantification of blood flow from OCT intensity time-series measurements, and was robust to vessel angle, hematocrit levels, and measurement SNR. This is appealing for generating not only anatomical features, but also functional readouts using the simplest OCT imaging protocols on any regular OCT device (Fig. 8(a)). Recent work also reports the use of a fully connected network and a CNN to extract the spectroscopic information in OCT to quantify the blood oxygen saturation (sO2) within microvasculature, an important measure of perfusion function [192] (Fig. 8(b-c)). The DL models in [192] demonstrated more than 60% error reduction for predicting sO2 as compared to the standard nonlinear least-squares fitting method. These advances present emerging directions of DL applied to OCT to extract functional metrics beyond structures.

Fig 8:

(a) Examples of using DL to predict blood flow based on structural OCT image features (reprinted from [191]). (b) Example of deep spectral learning for label-free oximetry in visible light OCT (reprinted from [192]). (c) The predicted blood oxygen saturation and the tandem prediction uncertainty from rat retina in vivo in hypoxia, normoxia and hyperoxia (reprinted from [192]).

Photoacoustic imaging and sensing

Overview.

Photoacoustic imaging relies on optical transmission, followed by sensing of the resulting acoustic response [195, 196]. This response may then be used to guide surgeries and interventions [197, 198] (among other possible uses [199]). In order to guide these surgeries and interventions, image maps corresponding to structures of high optical absorption must be formed, which is a rapidly increasing area of interest for the application of DL to photoacoustic imaging and sensing. This section focuses on many of the first reports of DL for photoacoustic source localization, image formation, and artifact removal. Techniques applied after an image has been formed (e.g., segmentation, spectral unmixing, and quantitative imaging) are also discussed, followed by a summary of emerging applications based on these DL implementations.

Source localization.

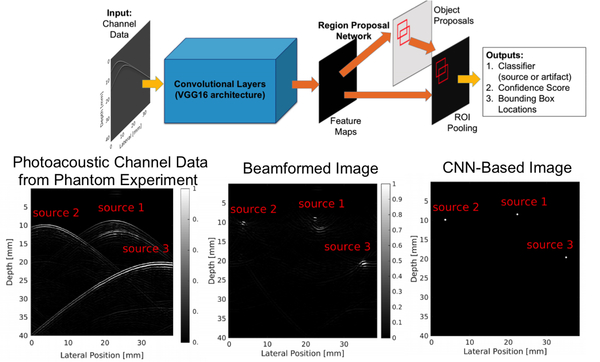

Localizing sources correctly and removing confusing artifacts from raw sensor data (also known as channel data) are two important precursors to accurate image formation. Three key papers discuss the possibility of using DL to improve source localization. Reiter and Bell [200] introduced the concept of source localization from photoacoustic channel data, relying on training data derived from simulations based on the physics of wave propagation. Allman et al. [201] built on this initial success to differentiate true photoacoustic sources from reflection artifacts based on wavefront shape appearances in raw channel data. Waves propagating spherically outward from a photoacoustic source are expected to have a unique shape based on distance from the detector, while artifacts are not expected to preserve this shape-to-depth relationship [201]. A CNN (VGG-16) was trained to demonstrate this concept, with initial results shown in Fig. 9. Johnstonbaugh et al. [202] expanded this concept by developing an encoder-decoder CNN with custom modules to accurately identify the origin of photoacoustic wavefronts inside an optically scattering deep-tissue medium. In the latter two papers [201, 202], images were created from the accurate localization of photoacoustic sources.
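The shape-to-depth relationship that these networks exploit follows from simple time-of-flight geometry: a point source at a given depth reaches each element of a linear array after a delay set by its distance to that element. The sketch below is a minimal illustration assuming one-way propagation in a homogeneous medium; the array geometry, source depths, and sound speed of 1540 m/s are hypothetical values, not those of [200–202].

```python
import numpy as np

def arrival_times(element_x_m, source_x_m, source_depth_m, sound_speed=1540.0):
    """One-way arrival time (s) from a point source to each element of a linear array."""
    dist = np.sqrt((element_x_m - source_x_m) ** 2 + source_depth_m ** 2)
    return dist / sound_speed

# Hypothetical 128-element array spanning 38 mm; sources at 10 mm and 40 mm depth.
elements = np.linspace(-0.019, 0.019, 128)
t_shallow = arrival_times(elements, 0.0, 0.01)
t_deep = arrival_times(elements, 0.0, 0.04)

# The wavefront of the shallow source is more strongly curved across the aperture.
spread_shallow = t_shallow.max() - t_shallow.min()
spread_deep = t_deep.max() - t_deep.min()
```

A reflection artifact that arrives late appears at a greater apparent depth while retaining the curvature of its original, shallower source, and that mismatch is the cue a classifier can learn to detect.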

Fig 9:

Example of point source detection as a precursor to photoacoustic image formation after identifying true sources and removing reflection artifacts, modified from [201]. (©2018 IEEE. Adapted, with permission, from Allman et al. Photoacoustic source detection and reflection artifact removal enabled by deep learning, IEEE Transactions on Medical Imaging. 2018; 37:1464–1477.)

Image formation.

Beyond source localization, DL may also be used to form photoacoustic images directly from raw channel data with real-time speed [203, 204]. This section summarizes the application of DL to four technical challenges surrounding image formation: (1) the limited view of transducer arrays [205–207] (in direct comparison to what is considered the “full view” provided by ring arrays), (2) sparse sampling of photoacoustic channel data [206, 208–210], (3) accurately estimating and compensating for the fluence differences surrounding a photoacoustic target of interest [211], and (4) addressing the traditional limited bandwidth issues associated with array detectors [212].

Limited view. Surgical applications often preclude the ability to completely surround a structure of interest. Historically, ring arrays were introduced for small animal imaging [213]. While these ring array geometries can also be used for in vivo breast cancer detection [214] or osteoarthritis detection in human finger joints [215], a full ring geometry is often not practical for many surgical applications [198]. The absence of full ring arrays often leads to what is known as “limited view” artifacts, which can appear as distortions of the true shape of circular targets or loss in the appearance of the lines in vessel targets.

DL has been implemented to address these artifacts and restore the ability to interpret the true structure of photoacoustic targets. For example, Hauptmann et al. [205] considered both backprojection followed by a learned denoiser and a learned iterative reconstruction, concluding that the learned iterative reconstruction approach sufficiently balanced speed and image quality, as demonstrated in Fig. 10. To achieve this balance, a physical model of wave propagation was incorporated into the gradient of the data fit, and an iterative algorithm consisting of several CNNs was learned. The network was demonstrated for a planar array geometry. Tong et al. [206] learned a feature projection, inspired by the AUTOMAP network [216], with the novelty of incorporating the photoacoustic forward model and universal backprojection model in the network design. The network was demonstrated for a partial ring array.
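One common way to write a learned iterative scheme of this kind is given below. The notation is an assumption introduced here for illustration: $A$ denotes the photoacoustic forward operator (the wave propagation model), $y$ the measured channel data, $x_k$ the image estimate at iteration $k$, and $G_{\theta_k}$ a CNN with its own parameters at each iteration. The exact formulation in [205] may differ in detail.

```latex
\begin{aligned}
  g_k     &= \nabla_x \tfrac{1}{2}\,\lVert A x_k - y \rVert_2^2 = A^{*}\,(A x_k - y), \\
  x_{k+1} &= G_{\theta_k}\!\left(x_k,\; g_k\right), \qquad k = 0, 1, \dots, K-1 .
\end{aligned}
```

The physical model enters only through the gradient $g_k$, while the learned update $G_{\theta_k}$ replaces the fixed step and hand-chosen regularizer of a classical gradient scheme.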

Sparse sampling. In tandem with limited view constraints, it is not always possible to sufficiently sample an entire region of interest when designing photoacoustic detectors, resulting in sparse sampling of photoacoustic responses. This challenge may also be seen as an extension of limited view challenges, considering that some of the desired viewing angles or spatial locations are missing (i.e., limited) due to sparse sampling, which often results in streak artifacts in photoacoustic images [217]. Therefore, networks that address limited view challenges can simultaneously address sparse sampling challenges [206, 218].

Antholzer et al. [208] performed image reconstruction to address sparse sampling with a CNN, modeling a filtered backprojection algorithm [219] as a linear preprocessing step (i.e., the first layer), followed by the U-Net architecture to remove undersampling artifacts (i.e., the remaining layers). Guan et al. [209] proposed pixel-wise DL (Pixel-DL) for limited-view and sparse PAT image reconstruction. The raw sensor data was first interpolated to window information of interest, then provided as an input to a CNN for image reconstruction. In contrast to previously discussed model-based approaches [205, 208], this approach does not learn prior constraints from training data; instead, the CNN uses more information directly from the sensor data to reconstruct an image. This direct use of sensor data is similar to source localization methods [197, 201, 202].

The majority of methods discussed up until this point have used simulations in the training process for photoacoustic image formation. Davoudi et al. [210] take a different approach to address sparse sampling challenges by using whole-body in vivo mouse data acquired with a high-end, high-channel count system. This approach also differs from previously discussed approaches by operating solely in the image domain (i.e., rather than converting sensor or channel data to image data).

Fluence correction. The previous sections address challenges related to sensor spacing and sensor geometries. However, challenges introduced by the laser and light delivery system limitations may also be addressed with DL. For example, Hariri et al. [211] used a multi-level wavelet-CNN to denoise photoacoustic images acquired with low input energies, by mapping these low fluence illumination source images to a corresponding high fluence excitation map.

Limited transducer bandwidth. The bandwidth of a photoacoustic detector determines the spatial frequencies that can be resolved. Awasthi et al. [212] developed a network with the goal of resolving higher spatial frequencies than those present in the ultrasound transducer. Improvements were observable as better boundary distinctions in the presented photoacoustic data. Similarly, Gutta et al. [220] used a DNN to predict missing spatial frequencies.
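The effect of a limited detector bandwidth can be illustrated with a minimal sketch that applies an ideal band-pass filter to an idealized pressure profile. The sampling rate, the 2–8 MHz passband, and the helper name `band_limit` are hypothetical choices for illustration; this is not the method of [212] or [220], only the signal loss those networks aim to compensate.

```python
import numpy as np

def band_limit(signal, fs, f_lo, f_hi):
    """Apply an ideal band-pass filter by zeroing FFT bins outside [f_lo, f_hi]."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    keep = (freqs >= f_lo) & (freqs <= f_hi)
    return np.fft.irfft(spectrum * keep, n=signal.size)

fs = 40e6                         # hypothetical sampling rate (Hz)
t = np.arange(1024) / fs          # time axis (s)

# Idealized pressure profile of a uniform absorber: a rectangular pulse.
pressure = ((t > 5e-6) & (t < 15e-6)).astype(float)

# Signal as seen through a hypothetical 2-8 MHz transducer passband.
received = band_limit(pressure, fs, 2e6, 8e6)

# Fraction of signal energy that survives the band limitation.
energy_kept = np.sum(received ** 2) / np.sum(pressure ** 2)
```

In this toy case the low-frequency content that encodes the interior of the absorber is discarded, so mainly the edges survive, which is consistent with bandwidth-enhancement results being reported as improved boundary distinction.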

Fig 10:

Example of blood vessel and tumor phantom results with multiple DL approaches. (Reprinted from [205].)

Segmentation.

After photoacoustic image formation is completed, an additional area of interest is the segmentation of various structures, which can be performed with assistance from DL. Moustakidis et al. [221] investigated the feasibility of DL to segment and identify skin layers by using pretrained models (i.e., ResNet50 [222] and AlexNet [223]) to extract features from images and by training CNN models to classify skin layers directly using images, skipping the processing, transformation, feature extraction, and feature selection steps. These DL methods were compared to other ML techniques. Boink et al. [224] explored simultaneous photoacoustic image reconstruction and segmentation for blood vessel networks. Training was based on the learned primal-dual algorithm [225] for CNNs, including spatially varying fluence rates with a weighting between image reconstruction quality and segmentation quality.

Spectral unmixing and quantitative imaging.

Photoacoustic data and images may also be used to determine or characterize the content of identified regions of interest based on data obtained from a series of optical wavelength excitations. These tasks can be completed with assistance from DL. Cai et al. [226] proposed a DL framework for quantitative photoacoustic imaging, starting with the raw sensor data received after multiple wavelength transmissions, using a residual learning mechanism adapted to the U-Net to quantify chromophore concentration and oxygen saturation.
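For context, the classical baseline that such DL frameworks are typically compared against is linear spectral unmixing, sketched below with least squares. This is not the method of [226]. The absorption values in `E` are made-up illustrative numbers rather than tabulated hemoglobin spectra, and the sketch assumes the local fluence is known and identical at every wavelength, an assumption that generally does not hold in tissue.

```python
import numpy as np

# Illustrative (NOT measured) absorption coefficients. Rows are wavelengths,
# columns are [deoxyhemoglobin, oxyhemoglobin]. Replace with tabulated spectra.
E = np.array([
    [1.4, 0.5],   # hypothetical wavelength 1
    [0.8, 0.8],   # hypothetical wavelength 2
    [0.7, 1.1],   # hypothetical wavelength 3
])

def unmix(signal, spectra):
    """Least-squares estimate of chromophore concentrations from a multi-wavelength signal."""
    conc, *_ = np.linalg.lstsq(spectra, signal, rcond=None)
    return conc

# Simulate a noise-free pixel with 30% deoxy- and 70% oxyhemoglobin.
true_conc = np.array([0.3, 0.7])
signal = E @ true_conc

hb, hbo2 = unmix(signal, E)
so2 = hbo2 / (hb + hbo2)   # oxygen saturation estimate
```

With noise-free data and known spectra, the least-squares solution recovers the simulated saturation. Wavelength-dependent fluence breaks the linear model in practice, which is one motivation for learning the mapping from data instead.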

Emerging applications.

Demonstrated applications for image formation with DL have spanned multiple spatial scales, with applications that include cellular-level imaging (e.g., microscopy [227], label-free histology), molecular imaging (e.g., low concentrations of contrast agents in vivo [211]), small animal imaging [210], clinical and diagnostic imaging, and surgical guidance [197]. In addition to applications for image formation, other practical applications in photoacoustic imaging and sensing include neuroimaging [209, 228], dermatology (e.g., clinical evaluation, monitoring, and diagnosis of diseases linked to skin inflammation, diabetes, and skin cancer [221]), real-time monitoring of contrast agent concentrations, microvasculature, and oxygen saturation during surgery [203, 226], and localization of biopsy needle tips [229], cardiac catheter tips [229–231], or prostate brachytherapy seeds [201].

Diffuse Tomography

Overview.

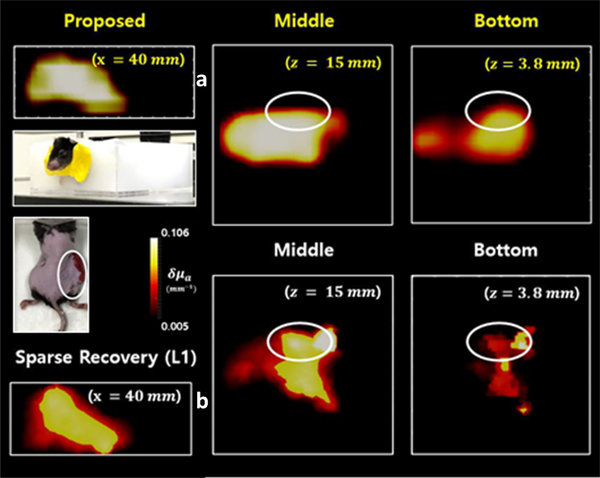

Diffuse Optical Tomography (DOT), Fluorescence Diffuse Optical Tomography (fDOT, also known as Fluorescence Molecular Tomography - FMT) and Bioluminescence Tomography (BLT) are non-invasive and nonionizing 3D diffuse optical imaging techniques [232]. They are all based on acquiring optical data from spatially resolved surface measurements and performing similar mathematical computational tasks that involve the modeling of light propagation according to the tissue attenuation properties. In DOT, the main biomarkers are related to the functional status of tissues reflected by the total blood content (HbT) and relative oxygen saturation (StO2) that can be derived from the reconstructed absorption maps [233]. DOT has found applications in numerous clinical scenarios including optical mammography [234, 235], muscle physiology [236], brain functional imaging [237] and peripheral vascular diseases monitoring. In fDOT, the inverse problem aims to retrieve the effective quantum yield distribution (related to concentration) of an exogenous contrast agent [238, 239] or reporter gene in animal models [240] while illuminated by excitation light. In BLT, the goal is to retrieve the location and strength of an embedded bioluminescent source.

The highly scattering biological tissues lead to ill-posed nonlinear inverse problems that are highly sensitive to model mismatch and noise amplification. Therefore, tomographic reconstruction in DOT/fDOT/BLT is often performed via iterative approaches [241] coupled with regularization. Moreover, the model is commonly linearized using the Rytov (DOT) or Born (DOT/fDOT) methods [242]. Additional constraints, such as preconditioning [243] and a priori information are implemented [244–247]. Further experimental constraints in DOT/fDOT are also incorporated using spectral and temporal information [248]. Despite this progress, the implementation and optimization of a regularized inverse problem is complex and requires vast computational resources. Recently, DL methods have been developed for DOT/fDOT/BLT to either aid or fully replace the classical inverse solver. These developments have focused on two main approaches, including 1) learned denoisers and 2) end-to-end solvers.
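A minimal sketch of the classical regularized inversion described above is given below, using a direct (non-iterative) Tikhonov solve on a toy linearized problem. The random matrix `J` merely stands in for a sensitivity (Jacobian) matrix from a Born or Rytov linearization; it is not derived from a light propagation model, and the problem sizes and regularization weights are arbitrary assumptions.

```python
import numpy as np

def tikhonov_solve(J, y, lam):
    """Solve min_x ||J x - y||^2 + lam * ||x||^2 via the normal equations."""
    n = J.shape[1]
    return np.linalg.solve(J.T @ J + lam * np.eye(n), J.T @ y)

rng = np.random.default_rng(0)

# Toy underdetermined problem: 20 boundary measurements, 50 unknown voxels.
J = rng.normal(size=(20, 50))
x_true = np.zeros(50)
x_true[10] = 1.0                  # single perturbed voxel
y = J @ x_true                    # noise-free measurements

x_weak = tikhonov_solve(J, y, lam=1e-3)    # weak regularization
x_strong = tikhonov_solve(J, y, lam=1e3)   # strong regularization

# Data residuals show the trade-off controlled by lam.
res_weak = np.linalg.norm(J @ x_weak - y)
res_strong = np.linalg.norm(J @ x_strong - y)
```

Stronger regularization yields a smaller, smoother solution at the cost of a worse data fit. Choosing `lam` and repeating such solves for realistic problem sizes is part of the tuning and computational burden that DL-based solvers aim to reduce.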

Learned denoisers.

Denoisers can enhance the final reconstruction by correcting for errors from model mismatch and noise amplification. Long [249] proposed a 3D CNN for enhancing the spatial accuracy of mesoscopic FMT outputs. The spatial output of a Tikhonov regularized inverse solver was translated into a binary segmentation problem to reduce the regularization-based reconstruction error. The network was trained with 600 random ellipsoids and spheres as it only aimed to reconstruct simple geometries in silico. Final results displayed improved “intersection over union” values with respect to the ground truth. Since denoising approaches still involve inverting the forward model, they can still lead to large model mismatch. Hence, there has been great interest in end-to-end solutions that directly map the raw measurements to the 3D object without any user input.
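The “intersection over union” metric mentioned above for the binary segmentation output can be computed as in the following minimal sketch. The volume size and the two cubic masks are hypothetical, and the convention of returning 1.0 when both masks are empty is an assumption.

```python
import numpy as np

def intersection_over_union(pred, truth):
    """IoU between two binary volumes of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as perfect agreement (assumed convention)
    return np.logical_and(pred, truth).sum() / union

# Ground truth: a 4x4x4 cube inside an 8x8x8 volume.
truth = np.zeros((8, 8, 8), dtype=bool)
truth[2:6, 2:6, 2:6] = True

# Prediction: the same cube shifted by one voxel along the first axis.
pred = np.zeros((8, 8, 8), dtype=bool)
pred[3:7, 2:6, 2:6] = True

iou = intersection_over_union(pred, truth)   # 48 shared voxels / 80 in the union = 0.6
```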

End-to-end solvers.

Several DNNs have been proposed to provide end-to-end inversion. Gao et al. [250] proposed an MLP for BLT inversion for tumor cells, in which the boundary measurements were input to the first layer, which has a similar number of surface nodes as a standardized mesh built using MRI and CT images of the mouse brain, and the network output the photon distribution of the bioluminescent target. Similarly, Guo et al. [251] proposed “3D-En-Decoder”, a DNN for FMT with an encoder-decoder structure that inputs photon densities and outputs the spatial distribution of the fluorophores. It was trained with simulated FMT samples. Key features of the measurements were extracted in the encoder section, and the transition of boundary photon densities to fluorophore densities was accomplished in the middle section with a fully connected layer. Finally, a 3D-Decoder output the reconstruction with better accuracy than an L1-regularized inversion method in both simulated and phantom experiments.

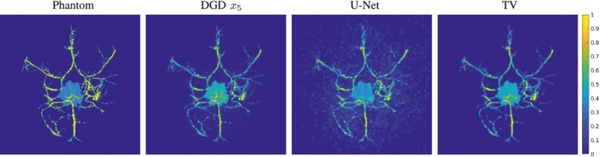

Huang et al. [253] proposed a similar CNN approach. After feature encoding, a “Gated Recurrent Unit (GRU)” combines all the output features in a single vector, and the MLP (composed of two hidden layers with dropout and ReLU activations) outputs the fluorophores’ location. Simulated samples of a mouse model (with five organs and one fluorescent tumor target) were used. In silico results displayed comparable performance to an L1 inversion method. It was also validated with single-embeddings in silico by outputting only the positions since the network does not support 3D rendering. Yoo et al. [252] proposed an encoder-decoder DNN to invert the Lippmann–Schwinger integral photon equation for DOT using the deep convolutional framelet model [254] and to learn the nonlinear scattering model through training with diffusion-equation based simulated data. Voxel domain features were learned through a fully connected layer, 3D convolutional layers and a filtering convolution. The method was tested in biomimetic phantoms and live animals with absorption-only contrast. Figure 11 shows an example reconstruction for an in vivo tumor in a mouse inside water/milk mixture media.

Fig 11:

Reconstruction for a mouse with tumor (right thigh) where higher absorption values are resolved (slices at z=15 and 3.8 mm) for the tumor area with the DNN in (a) compared to the L1-based inversion in (b). (adapted with permission from the authors from [252]).

Summary and future challenges.

DL has been demonstrated for improving (f)DOT image formation for solving complex ill-posed inverse problems. The DL models are often trained with simulated data, and in a few cases, validated in simple experimental settings. With efficient and accurate light propagation platforms such as MMC/MCX [255, 256], model-based training could become more efficient. Still, it is not obvious that such DL approaches will lead to universal solutions in DOT/FMT since many optical properties of tissues are still unknown and/or heterogeneous. Hence, further studies should aim to validate the universality of the architectures across different tissue conditions.

Functional optical brain imaging

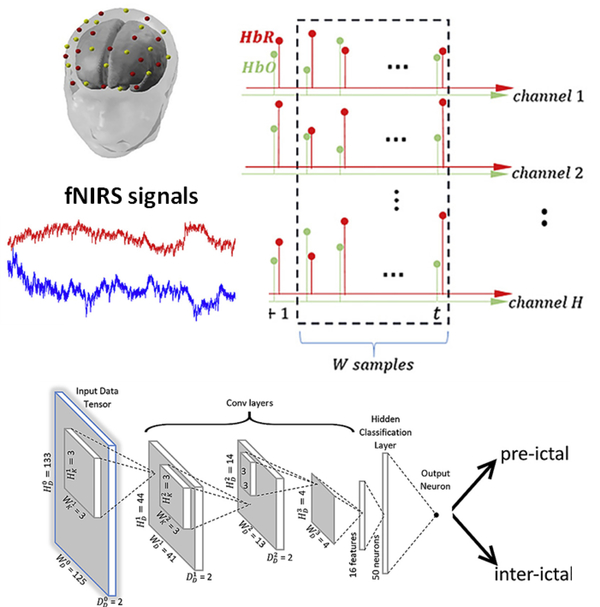

Overview.