In 2003, the Institute of Medicine reported that Black Americans receive fewer procedures and poorer-quality care than white individuals, independent of socioeconomic determinants of health. Seventeen years later, access to care and perioperative level of care assignments potentiate disparities in surgical care, particularly affecting Black patients.1 These disparities are partially attributable to implicit bias that is entrenched deeply in American culture and media, and are exacerbated when patient and physician demographics are mismatched. It is difficult to identify modifiable mechanisms of implicit bias because its latent mental constructs cannot be directly observed, but the weight of evidence suggests that many well-intentioned clinicians have two conflicting cognitive processes: one that is governed by a conscious, explicit system of beliefs and values, and one subconscious, implicit process that adapts to repeated stimuli. The former process is typically fair and equitable; the latter may drive implicit bias. Efforts to overcome implicit bias and healthcare disparities by building awareness and enacting structural changes to credentialing agencies and training curricula have yielded modest progress; additional strategies are needed. This article endeavors to impart understanding of mechanisms by which artificial intelligence can either propagate or counteract disparities, and suggests methods to tilt the balance toward fairness and equity in surgical care.

Artificial Intelligence can Propagate Disparities

Artificial intelligence algorithms learn from data; when trained on biased data, algorithms produce biased results. Injustices occur when an algorithm is applied to a patient who is poorly represented by training data. In a dataset of inpatient surgeries derived at the University of Florida Health campus in Gainesville, Florida, 14% of all subjects were Black; 73 miles away at the Jacksonville campus, 34% of all subjects were Black. It seems unlikely that a model derived from these datasets would accurately represent patients in Detroit, Michigan, where approximately 79% of the population is Black, or Rochester, Minnesota, where approximately 8% of the population is Black.2 Similarly, socioeconomic determinants of health vary substantially across geographic regions and populations; these personalized variables (e.g., residing neighborhood characteristics, education, and income) are predictive of postoperative outcomes.3 Therefore, careful model development, validation, and testing across diverse datasets are necessary to minimize the negative effects of biased source data.
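As a rough illustration of the representation problem described above, the following Python sketch compares the share of Black subjects at each training site with the share of Black residents in each candidate deployment city, using only the four percentages quoted in the text. The function names and the idea of ranking site pairs by absolute difference are assumptions made for illustration; a single demographic share is a crude proxy, and a real assessment would compare many patient characteristics and validate model performance directly.

```python
# Shares quoted in the text, expressed as fractions.
# Training figures are shares of surgical subjects; deployment figures are
# shares of the city population, so the comparison is only approximate.
TRAINING_SITES = {"Gainesville": 0.14, "Jacksonville": 0.34}
DEPLOYMENT_SITES = {"Detroit": 0.79, "Rochester": 0.08}


def representation_gaps(training, deployment):
    """Return {(training_site, deployment_site): absolute difference in share}."""
    return {
        (t_name, d_name): abs(t_share - d_share)
        for t_name, t_share in training.items()
        for d_name, d_share in deployment.items()
    }


def ranked_gaps(gaps):
    """Return (site_pair, gap) tuples sorted from largest gap to smallest."""
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for (t_name, d_name), gap in ranked_gaps(
        representation_gaps(TRAINING_SITES, DEPLOYMENT_SITES)
    ):
        print(f"{t_name} -> {d_name}: {gap:.2f}")
```

Large gaps do not prove that a model will fail at the new site, but they suggest where external validation deserves priority before deployment.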

Incorporation of clinician judgements in algorithm training can introduce implicit biases held by clinicians. For example, clinician notes contain information that can be learned by artificial intelligence models, affecting model accuracy and bias. In a study using psychiatrists’ notes to predict intensive care unit mortality and psychiatric readmission, there were significant differences in documented diagnoses across race, and model performance was worst when predicting outcomes for women and subjects with public insurance.4 This source of bias can be mitigated by using input data that is “upstream” of clinician judgements, e.g., objective vital sign and laboratory data collected by standardized methods.5 Careful management of each model input feature can minimize the risk of bias in model outputs.
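Differences in model performance across patient groups, such as those reported above, can be surfaced with a simple stratified audit. The sketch below is a minimal example under stated assumptions: the record format, the function names, and the toy records are all hypothetical and invented for illustration, and the misclassification rate stands in for whatever performance metric a given study actually uses.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Compute the misclassification rate within each group.

    records: iterable of (group, y_true, y_pred) tuples with binary labels.
    Returns {group: fraction of records where y_pred differs from y_true}.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        errors[group] += int(y_true != y_pred)
    return {group: errors[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the worst and best group error rates."""
    return max(rates.values()) - min(rates.values())


# Synthetic toy records, invented purely for illustration (not study data).
TOY_RECORDS = [
    ("private insurance", 1, 1), ("private insurance", 0, 0),
    ("private insurance", 0, 0), ("private insurance", 1, 0),
    ("public insurance", 1, 0), ("public insurance", 0, 1),
    ("public insurance", 0, 0), ("public insurance", 1, 1),
]

if __name__ == "__main__":
    rates = error_rate_by_group(TOY_RECORDS)
    print(rates)
    print("largest gap:", largest_gap(rates))
```

Reporting the per-group rates alongside the overall rate makes it harder for good aggregate performance to hide poor performance in a smaller group.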

Using race, ethnicity, sex, and gender as input variables for decision-support tools has potential benefits and harms. If the outcome of interest is influenced by these variables via a biologically proven or plausible mechanism, then ignoring them may degrade model accuracy; if not, then including these variables has the potential to introduce bias. This binary distinction becomes unrealistic when it is unknown whether demographic factors influence pathophysiology or reflect systemic bias, which is difficult to ascertain. One prominent decision-support tool incorporates the observation that Black patients have increased risk for mortality after coronary artery bypass.6 If this observation is attributable to suboptimal access to care for Black patients, then incorporating race as an input variable could decrease the likelihood that Black patients will garner the benefits of an indicated procedure.7 Therefore, it is critically important to use machine learning interpretation mechanisms, causal inference, and clinical interpretation of biologic plausibility to understand whether race, ethnicity, sex, and gender are causal, pathophysiologic mechanisms of disease, or simply indicators that the outcome of interest is influenced by systemic bias. These elements should be mandated in machine learning-specific expansions of reporting guidelines for predictive models, such as the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis statement.

Artificial Intelligence can Counteract Disparities

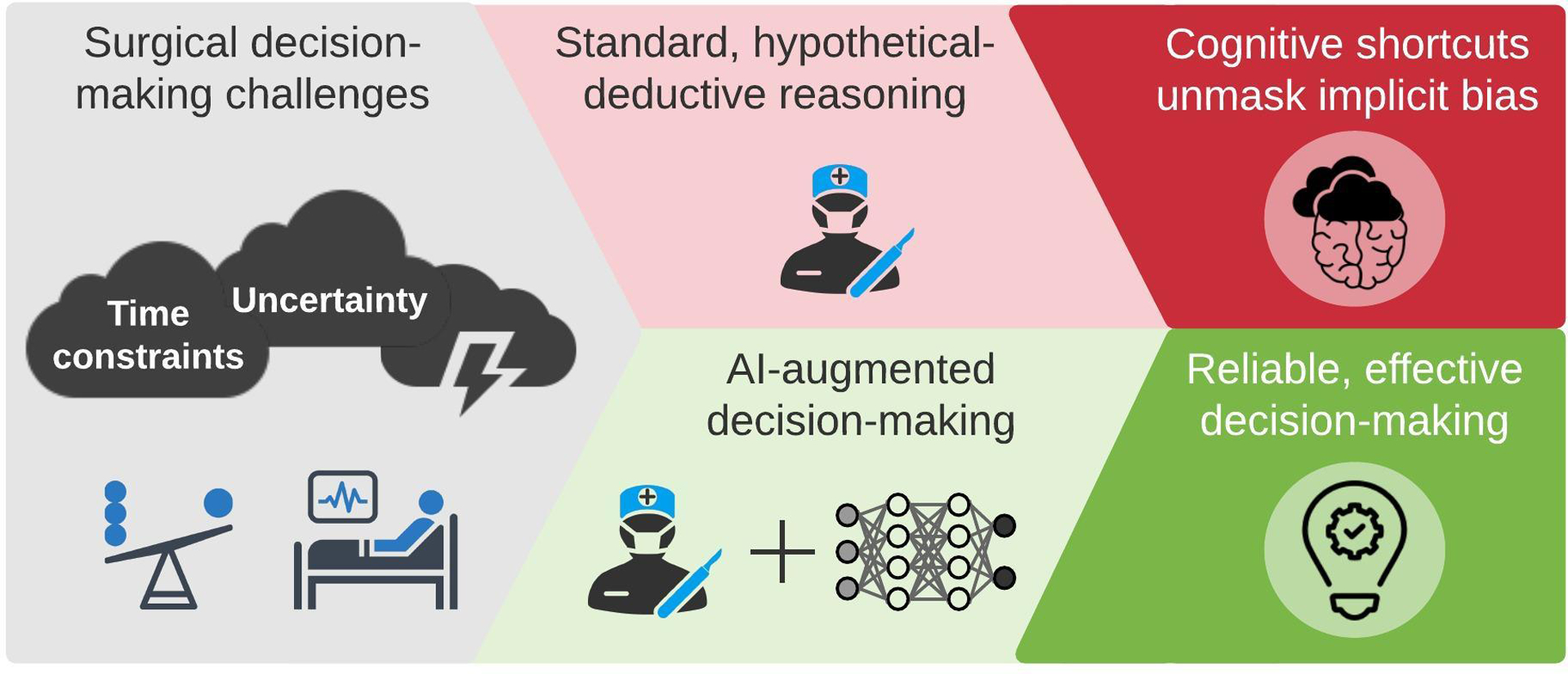

In surgical decision-making, clinicians make high-stakes decisions with incomplete information while facing time constraints. Under these conditions, humans tend to rely on heuristics, or cognitive shortcuts. Heuristics facilitate efficient decision-making and offer powerful advantages if they are guided by intuition that is honed by extensive experience with similar decision-making scenarios; unfortunately, heuristics are also influenced by implicit bias. To mitigate bias from heuristic-based decision-making under time constraints and uncertainty, decision-support tools must keep pace with clinical workflow. The emerging availability of live-streaming electronic health record data offers opportunities to generate and deliver artificial intelligence model outputs to healthcare providers in real time. These models could identify scenarios in which the decision made by a clinician is significantly different than the decision that yields the highest probability of achieving the goal of care, as illustrated in Figure 1.

Figure 1: Artificial intelligence can augment surgical decision-making by minimizing the impact of cognitive shortcuts and implicit bias.
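The idea of identifying decisions that differ substantially from the option most likely to achieve the goal of care can be sketched in a few lines of Python. This is a hypothetical illustration, not a description of any deployed system: the function name, the option labels, the probabilities, and the 0.10 margin are all assumptions, and in practice the probabilities would come from a validated model and the margin would need clinical justification.

```python
def check_discordance(option_probs, chosen, margin=0.10):
    """Compare a clinician's choice with the model's highest-probability option.

    option_probs: {option: estimated probability of achieving the goal of care}
    chosen: the option the clinician selected (must be a key of option_probs)
    margin: hypothetical minimum difference that triggers a flag
    Returns (flagged, best_option, difference).
    """
    if chosen not in option_probs:
        raise KeyError(f"unknown option: {chosen!r}")
    best_option = max(option_probs, key=option_probs.get)
    difference = option_probs[best_option] - option_probs[chosen]
    return difference > margin, best_option, difference


if __name__ == "__main__":
    # Hypothetical estimates for a postoperative level-of-care decision.
    estimates = {"ward": 0.70, "intensive care unit": 0.85}
    flagged, best, diff = check_discordance(estimates, chosen="ward")
    if flagged:
        print(f"Review suggested: '{best}' estimated {diff:.2f} higher.")
```

A flag of this kind is a prompt for reconsideration rather than an override; the clinician retains the decision, consistent with the augmented decision-making paradigm discussed in this section.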

Artificial intelligence-augmented decision-making is founded on the hypothesis that a human with efficient access to accurate, computer-enabled information will make better decisions than a human who does not have access to such information. In this paradigm, the human is the most important element. If the computer does not provide the right information at the right time in the right format, then clinicians will ignore the information. Further, computer-enabled information must align with patient-centered outcomes. A model that recommends treatments yielding the highest probability of 30-day survival may not align with patients’ values and preferences for resource intensity and quality of life after discharge. Therefore, input from patients and clinicians is essential to the design and implementation of optimal artificial intelligence-augmented decision-support tools.

Summary

Implicit bias is a major threat to surgical care. Surgery referral patterns and individual surgeon judgement are inherently affected by implicit bias, resulting in disparities; surgical decision-support tools propagate these disparities when source data and model input features are biased. Each input feature must be managed carefully to minimize the risk of bias in model outputs, including assumptions about whether relationships between outcomes and race, ethnicity, sex, and gender are attributable to pathophysiologic mechanisms or systemic bias; this should be mandated in model reporting guidelines. Artificial intelligence models can also mitigate disparities by augmenting the decision-making process when time constraints and uncertainty unmask implicit bias. To humanize this process, the computer must conform to clinician needs and patient values. Understanding and implementing these principles is necessary to shift the influence of artificial intelligence toward equity and optimal surgical care in the next 17 years and beyond.

Acknowledgements

The authors thank Chair of Surgery Gilbert Upchurch Jr., MD, for supporting diversity, equity, and inclusion as primary missions of the University of Florida Department of Surgery. AB was supported by R01 GM110240 from the National Institute of General Medical Sciences (NIGMS) and by Sepsis and Critical Illness Research Center Award P50 GM-111152 from the NIGMS. TJL was supported by K23 GM140268 from the NIGMS. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- 1. Haider AH, Scott VK, Rehman KA, et al. Racial disparities in surgical care and outcomes in the United States: a comprehensive review of patient, provider, and systemic factors. J Am Coll Surg. 2013;216(3):482–492 e412.

- 2. Gianfrancesco MA, Tamang S, Yazdany J, Schmajuk G. Potential Biases in Machine Learning Algorithms Using Electronic Health Record Data. JAMA Intern Med. 2018;178(11):1544–1547.

- 3. Bihorac A, Ozrazgat-Baslanti T, Ebadi A, et al. MySurgeryRisk: Development and Validation of a Machine-learning Risk Algorithm for Major Complications and Death After Surgery. Ann Surg. 2019;269(4):652–662.

- 4. Chen IY, Szolovits P, Ghassemi M. Can AI Help Reduce Disparities in General Medical and Mental Health Care? AMA J Ethics. 2019;21(2):E167–179.

- 5. Parikh RB, Teeple S, Navathe AS. Addressing Bias in Artificial Intelligence in Health Care. JAMA. 2019.

- 6. Shahian DM, Jacobs JP, Badhwar V, et al. The Society of Thoracic Surgeons 2018 Adult Cardiac Surgery Risk Models: Part 1-Background, Design Considerations, and Model Development. Ann Thorac Surg. 2018;105(5):1411–1418.

- 7. Vyas DA, Eisenstein LG, Jones DS. Hidden in Plain Sight - Reconsidering the Use of Race Correction in Clinical Algorithms. N Engl J Med. 2020;383(9):873–881.