Abstract

Drawing on the philosophy of psychological explanation, we suggest that psychological science, by focusing on effects, may lose sight of its primary explananda: psychological capacities. We revisit Marr’s levels-of-analysis framework, which has been remarkably productive and useful for cognitive psychological explanation. We discuss ways in which Marr’s framework may be extended to other areas of psychology, such as social, developmental, and evolutionary psychology, bringing new benefits to these fields. We then show how theoretical analyses can endow a theory with minimal plausibility even before contact with empirical data: We call this the theoretical cycle. Finally, we explain how our proposal may contribute to addressing critical issues in psychological science, including how to leverage effects to understand capacities better.

Keywords: theory development, formal modeling, computational analysis, psychological explanation, levels of explanation, computational-level theory, theoretical cycle

A substantial proportion of research effort in experimental psychology isn’t expended directly in the explanation business; it is expended in the business of discovering and confirming effects.

—Robert Cummins (2000, p. 120)

Psychological science has a preoccupation with “effects.” However, effects are explananda (things to be explained), not explanations. The Stroop effect, for instance, does not explain why naming the color of the word “red” written in green takes longer than naming the color of a green patch. That just is the Stroop effect. 1 The effect itself is in need of explanation. Moreover, effects such as we experimentally test in the laboratory are secondary explananda for psychology. Ideally, we do not construct theories just to explain effects. 2 Rather, the Stroop effect, the McGurk effect, the primacy and recency effects, and visual illusions, for example, serve to arbitrate between competing explanations of the capacities for cognitive control, speech perception, memory, and vision, respectively.

Primary explananda are key phenomena defining a field of study. They are derived from observations that span far beyond, and often even precede, the testing of effects in the lab. Cognitive psychology’s primary explananda are the cognitive capacities that humans and other animals possess. These capacities include, in addition to those already mentioned, those for learning, language, perception, concept formation, decision-making, planning, problem-solving, reasoning, and so on. 3 Only in the manner in which we postulate that such capacities are exercised do our explanations of capacities come to imply effects. An example is given by Cummins (2000):

Consider two multipliers, M1 and M2. M1 uses the standard partial products algorithm. . . . M2 uses successive addition. Both systems have the capacity to multiply. . . . But M2 also exhibits the “linearity effect”: computation is, roughly, a linear function of the size of the multiplier. It takes twice as long to compute 24 × N as it does to compute 12 × N. M1 does not exhibit the linearity effect. Its complexity profile is, roughly, a step function of the number of digits in the multiplier. (p. 123)

This example illustrates two points. First, many of the effects studied in our labs are by-products of how capacities are exercised. They may be used to test different explanations of how a system works: For example, by giving a person different pairs of numerals and by measuring response times, one can test whether the person’s timing profile fits M1 or M2, or any different M′. Second, candidate explanations of capacities (multiplication) come in the form of different algorithms (e.g., partial products or repeated addition) computing a particular function (i.e., the product of two numbers). Such algorithms are not devised to explain effects; rather, they are posited as a priori candidate procedures for realizing the target capacity.
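Cummins's two multipliers can be sketched in a few lines of code. This is a minimal illustration only: the function names are hypothetical, and counting loop iterations as a stand-in for response time is an assumption of the sketch, not something Cummins specifies.

```python
from __future__ import annotations


def multiply_successive_addition(multiplier: int, n: int) -> tuple[int, int]:
    """M2: add n to a running total `multiplier` times. Returns (product, steps)."""
    total, steps = 0, 0
    for _ in range(multiplier):
        total += n
        steps += 1
    return total, steps


def multiply_partial_products(multiplier: int, n: int) -> tuple[int, int]:
    """M1: one partial product per decimal digit of the multiplier. Returns (product, steps)."""
    total, steps = 0, 0
    for place, digit in enumerate(reversed(str(multiplier))):
        total += int(digit) * n * 10 ** place
        steps += 1
    return total, steps


if __name__ == "__main__":
    for multiplier in (12, 24, 124):
        print(multiplier,
              multiply_successive_addition(multiplier, 7),
              multiply_partial_products(multiplier, 7))
```

Both functions compute the same input-output mapping, so they are indistinguishable as accounts of the capacity to multiply. They differ only in their step profiles: under the sketch's assumptions, doubling the multiplier from 12 to 24 doubles the steps of M2 but leaves the steps of M1 unchanged, which is the kind of effect that could arbitrate between them.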

Although effects are usually discovered empirically through intricate experiments, capacities (primary explananda) do not need to be discovered in the same way (Cummins, 2000). Just as we knew that apples fall straight from the trees (rather than move upward or sideways) before we had an explanation in terms of Newton’s theory of gravity, 4 so too do we already know that humans can learn languages, interpret complex visual and social scenes, and navigate dynamic, uncertain, culturally complex social worlds. These capacities are so complex to explain computationally or mechanistically that we do not know yet how to emulate them in artificial systems at human levels of sophistication. The priority should be the discovery not of experimentally constructed effects but of plausible explanations of real-world capacities. Such explanations may then provide a theoretical vantage point from which to also explain known effects (secondary explananda) and perhaps to guide inquiry into the discovery of new informative ones.

This approach is not the one psychological science has been pursuing in recent decades; nor is it what the contemporary methodological-reform movement in psychological science has been recommending. Methodological reform so far seems to follow the tradition of focusing on establishing statistical effects, and, arguably, the reform has even been entrenching this bias. The reform movement has aimed primarily at improving methods for determining which statistical effects are replicable (cf. debates on preregistration; Nosek et al., 2019; Szollosi et al., 2020), and there has been relatively little concern for improving methods for generating and formalizing scientific explanations (for notable exceptions, see Guest & Martin, 2021; Muthukrishna & Henrich, 2019; Smaldino, 2019; van Rooij, 2019). But if we are already “overwhelmed with things to explain, and somewhat underwhelmed by things to explain them with” (Cummins, 2000, p. 120), why do psychological scientists expend so much energy hunting for more and more effects? We see two reasons besides tradition and habit.

One is that psychological scientists may believe in the need to build large collections of robust, replicable, uncontested effects before even thinking about starting to build theories. The hope is that, by collecting many reliable effects, the empirical foundations are laid on which to build theories of mind and behavior. As reasonable as this seems, without a prior theoretical framework to guide the way, collected effects are unlikely to add up and contribute to the growth of knowledge (Anderson, 1990; Cummins, 2000; Newell, 1973). An analogy may serve to bring the point home. In a sense, trying to build theories on collections of effects is much like trying to write novels by collecting sentences from randomly generated letter strings. Indeed, each novel ultimately consists of strings of letters, and theories should ultimately be compatible with effects. Still, the majority of the (infinitely possible) effects are irrelevant for the aims of theory building, just as the majority of (infinitely possible) sentences are irrelevant for writing a novel. 5 Moreover, many of the relevant effects (sentences) may never be discovered by chance, given the vast space of possibilities. 6 How can we know which effects are relevant and informative and which ones are not? To answer this question, we first need to build candidate theories and determine which effects they imply.

Another reason, not incompatible with the first, may be that psychological scientists are unsure how to even start to construct theories if those theories are not somehow based on effects. After all, building theories of capacities is a daunting task. The space of possible theories is, prima facie, at least as large as the space of effects: For any finite set of (naturalistic or controlled) observations about capacities, there exist (in principle) infinitely many theories consistent with those observations. However, we argue that theories may be built by following a constructive strategy and meeting key plausibility constraints to rule out from the start theories that are least likely to be true: We refer to this as the theoretical cycle. An added benefit of this cycle is that theories constructed in this way already have (minimal) verisimilitude before their predictions are tested: This may increase the likelihood that confirmed predicted effects turn out to be replicable (Bird, 2018). The assumptions that have to be added to theories to meet those plausibility constraints provide means for (a) making more rigorous tests of theory possible and (b) restricting the number and types of theories considered for testing, channeling empirical research toward testing effects that are most likely to be relevant (more on this later).

This article aims to make accessible ideas for doing exactly this. We present an approach for building theories of capacities that draws on a framework that has been highly successful for this purpose in cognitive science: Marr’s levels of analysis.

What Are Theories of Capacities?

A capacity is a dispositional property of a system at one of its levels of organization: For example, single neurons have capacities (firing, exciting, inhibiting) and so do minds and brains (vision, learning, reasoning) and groups of people (coordination, competition, polarization). A capacity is a more or less reliable ability (or disposition or tendency) to transform some initial state (or “input”) into a resulting state (“output”).

Marr (1982/2010) proposed that, to explain a system’s capacities, we should answer three kinds of questions: (a) What is the nature of the function defining the capacity (the input-output mapping)? (b) What is the process by which the function is computed (the algorithms computing or approximating the mapping)? (c) How is that process physically realized (e.g., the machinery running the algorithms)? Marr called these computational-level theory, algorithmic-level theory, and implementational-level theory, respectively. Marr’s scheme has occasionally been criticized (e.g., McClamrock, 1991) and variously adjusted (e.g., Anderson, 1990; Griffiths et al., 2015; Horgan & Tienson, 1996; Newell, 1982; Poggio, 2012; Pylyshyn, 1984), but its gist has been widely adopted in cognitive science and cognitive neuroscience, where it has supported critical analysis of research practices and theory building (see Baggio et al., 2012a, 2015, 2016; Isaac et al., 2014; Krakauer et al., 2017). We also see much untapped potential for it in areas of psychology outside of cognitive science.

Following Marr’s views, we propose the adoption of a top-down strategy for building theories of capacities, starting at the computational level. A top-down or function-first approach (Griffiths et al., 2010) has several benefits. First, a function-first approach is useful if the goal is to “reverse engineer” a system (Dennett, 1994; Zednik & Jäkel, 2014, 2016). As Marr stated, “an algorithm is . . . understood more readily by understanding the nature of the problem being solved than by examining the mechanism . . . in which it is embodied” (Marr, 1982/2010, p. 27; see also Marr, 1977).

Knowing a functional target (“what” a system does) may facilitate the generation of algorithmic- and implementational-level hypotheses (i.e., how the system “works” computing that function). Reconsider, for instance, the multiplication example from above: By first expressing the function characterizing the capacity to multiply (f(x,y) = xy), one can devise different algorithms realizing this computation (M1 or M2). If there is no functional target it is difficult or impossible to come up with ways of computing that target. This relates to a second benefit of a function-first approach: It allows one to assess candidate algorithmic or implementational theories for whether they indeed compute or implement that capacity as formalized (Blokpoel, 2018; Cummins, 2000). A third benefit, beyond cognitive psychology, is that social, developmental, or evolutionary psychologists may be more interested in using theories of capacities as explanations of patterns of behavior of agents or groups over time, in the world, rather than in the internal mechanisms of those capacities, say, in the brain or mind, which is more the realm of cognitive science and cognitive neuroscience.

Psychological theories of capacities should generally be (a) mathematically specified and (b) independent of the details of implementation. The strategy is to try to produce theories of capacities that meet these two requirements, unless evidence is available that this is impossible, for example, that the capacity cannot be modeled in terms of functions mapping inputs to outputs (Gigerenzer, 2020; Marr, 1977). A computational-level theory of a capacity is a specification of input states, I, and output states, O, and the theorized mapping, f: I → O. For the multiplication example, the input domain would be the set of pairs of natural numbers (ℕ × ℕ), the output domain would be the set of natural numbers (ℕ), and the function f: ℕ × ℕ → ℕ would be defined such that f(a,b) = ab, for each a, b ∈ ℕ. The mapping f need not be numerical. It can also be qualitative, structural, or logical. For instance, a computational-level theory of coherence-based belief updating could specify the input as a network N = (P, C) of possible beliefs (modeled by a set of propositions P), in which beliefs in the network may cohere or incohere with each other (modeled by positive or negative connections C in the network), a set of currently held beliefs (modeled as truth assignment T: P → {believed to be true, believed to be false}), and new information, D, that contradicts, conflicts, or is otherwise incoherent with one or more of the held beliefs. The output could be a belief revision (modeled as a new truth assignment T′) that maintains internal coherence as much as possible while accommodating new information, that is, f(N, T, D) = T′ (for applications in the domain of moral, social, legal, practical, and emotional judgments and decision-making, see Thagard, 2000, 2006; Thagard & Verbeurgt, 1998).
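To make the belief-updating mapping f(N, T, D) = T′ concrete, the sketch below spells out one possible reading of it. Several choices are assumptions of this illustration rather than part of the text: coherence is scored as the number of satisfied connections (a positive connection is satisfied when both beliefs get the same truth value, a negative one when they differ), new information D is treated as fixed, and ties are broken in favor of the fewest changes to T. The function name and the toy propositions are hypothetical.

```python
from itertools import product


def revise_beliefs(P, C, T, D):
    """Return a truth assignment T' that agrees with D and maximizes coherence.

    P: list of propositions.
    C: dict mapping a pair (p, q) to +1 (cohere) or -1 (incohere).
    T: dict mapping each proposition to True/False (currently held beliefs).
    D: dict mapping some propositions to True/False (new information, held fixed).
    """
    free = [p for p in P if p not in D]
    best, best_key = None, None
    # Brute force over all assignments to the propositions not fixed by D.
    for values in product([True, False], repeat=len(free)):
        candidate = dict(D)
        candidate.update(zip(free, values))
        satisfied = sum(
            1 for (p, q), sign in C.items()
            if (candidate[p] == candidate[q]) == (sign > 0)
        )
        changes = sum(1 for p in P if candidate[p] != T[p])
        key = (satisfied, -changes)  # most coherent first, then fewest changes
        if best_key is None or key > best_key:
            best, best_key = candidate, key
    return best


P = ["rained", "street_wet", "street_dry"]
C = {("rained", "street_wet"): +1, ("street_wet", "street_dry"): -1}
T = {"rained": True, "street_wet": True, "street_dry": False}
D = {"rained": False}

if __name__ == "__main__":
    print(revise_beliefs(P, C, T, D))
```

The sketch only fixes what is computed. Its exhaustive search is one of many procedures that could realize the mapping and is not meant as a claim about how people revise beliefs.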

Marr’s computational-level theory has often been applied to information-processing capacities as studied by cognitive psychologists. However, Marr’s framework can be extended beyond its traditional domains. First, to the extent that cognitive capacities also figure in explanations in other subfields of psychology, Marr’s framework naturally extends to these domains. A few areas in which the approach has been fruitfully pursued include (a) social cognition, for instance, in social categorization (Klapper et al., 2018), mentalizing or “theory of mind” (Baker et al., 2009; Michael & MacLeod, 2018; Mitchell, 2006; Thagard & Kunda, 1998), causal attribution (De Houwer & Moors, 2015), moral cognition (Mikhail, 2008), signaling and communication (Frank & Goodman, 2012; Moreno & Baggio, 2015), and social attachment (Chumbley & Steinhoff, 2019); (b) cognitive development, for instance, in theory of mind (Goodman et al., 2006), probabilistic and causal learning (Bonawitz et al., 2014; Gopnik & Bonawitz, 2015), self-directed learning (Gureckis & Markant, 2012), pragmatic communication (Bohn & Frank, 2019), analogical processing (Gentner, 1983, 2010), and concept formation (Carey, 2009; Kinzler & Spelke, 2007); and (c) cognitive evolution, for instance, in cognitive structures and architectures that aim to account for language, social cognition, reasoning and decision-making (Barrett, 2005; Carruthers, 2006; Cosmides & Tooby, 1995; Fodor, 2000; Lieder & Griffiths, 2020; Marcus, 2006).

Second, and this is a less conventional and less explored idea, the framework can also be applied to noncognitive or nonindividual capacities of relevance to social, developmental, and evolutionary psychology and more. Preliminary explorations into computational-level analyses of noncognitive or nonindividual capacities can be found in work by Krafft and Griffiths (2018) on distributed social processes, Huskey et al. (2020) on communication processes, Rich et al. (2020) on natural and/or cultural-evolution processes, and van Rooij (2012) on self-organized processes.

Later in this article we spell out our approach to theory building using examples. To encourage readers to envisage applications of the approach to their own domains of expertise and to more complex phenomena than those we can cover here, we provide a stepwise account of what is involved in constructing theories of psychological capacities in general. Following Marr’s (1982/2010) successful cash register example, we foresee that more abstract illustrations demonstrating general principles can encourage a wider and more creative adoption of these ideas.

First Steps: Building Theories of Capacities

We have proposed that theories of capacities may be formulated at Marr’s computational level. A computational-level theory specifies a capacity as a property-satisfying computation f. This idea applies across domains in psychology and for capacities at different levels of organization. How does one build a computational-level theory f of some capacity c? Or better yet, how does one build a good computational-level theory?

A first thought may be to derive f from observations of the input-output behavior of a system having the capacity under study. However, for anything but trivial capacities, where we can exhaustively observe (or sample) the full input domain, 7 this is unlikely to work. The reason is that computational-level theories (or any substantive theories) are grossly underdetermined by data. The problem that we cannot deduce (or even straightforwardly induce) theories from data is a limitation, or perhaps an attribute, of all empirical science (the Duhem-Quine thesis; Meehl, 1997; Stam, 1992). Still, one may abduce hypotheses, including computational-level analyses of psychological capacities. Abduction is reasoning from observations (not limited to experimental data; more below) to possible explanations (Haig, 2018; Niiniluoto, 1999; P. Thagard, 1981; P. R. Thagard, 1978). It consists of two steps: generating candidate hypotheses (abduction proper) and selecting the “best” explanatory one (inference to the best explanation, or IBE). Note, however, that IBE is only as good as the quality of the candidates: The best hypothesis might not be any good if the set does not contain any good hypotheses (Blokpoel et al., 2018; Kuipers, 2000; van Fraassen, 1985). For this reason it is worth building a set of good candidate theories before selecting from the set.

Abduction is sensitive to background knowledge. We cannot determine which hypotheses are good (verisimilar) by considering only locally obtained data (e.g., data for a toy scenario in a laboratory task). We should interpret any data in the context of our larger “web of beliefs,” which may contain anything we know or believe about the world, including scientific or commonsense knowledge. One does not posit a function f in a vacuum. What we already know or believe about the world may be used to create a first rough set of candidate hypotheses for f for any and all capacities of interest. One can either cast the net wide to capture intuitive phenomena and refine and formalize the idea in a well-defined f (Blokpoel, 2018; van Rooij, 2008) or, alternatively, make a first guess and then adjust it gradually on the basis of the constraints that one later imposes: The first sketch of an f need not be the final one; what matters is how the initial f is constrained and refined and how the rectification process can actually drive the theory forward. Theory building is a creative process involving a dialectic of divergent and convergent thinking, informal and formal thinking.

What are the first steps in the process of theory building? Theorists often start with an initial intuitive verbal theory (e.g., that decisions are based on maximizing utilities, that people tend toward internally coherent beliefs, that meaning in language has a systematic structure). The concepts used in this informal theory should then be formally defined (e.g., utilities are numbers, beliefs are propositions, and meanings of linguistic expressions can be formalized in terms of functions and arguments; numbers, propositions, functions, and arguments are all well-defined mathematical concepts). The aim of formalization is to cast initial ideas using mathematical expressions (again, of any kind, not just quantitative) so that one ends up with a well-defined function f—or at least a sketch of f. Once this is achieved, follow-up questions can be asked: Does f capture one’s initial intuitions? Is f well defined (no informal notions are left undefined)? Does f have all the requisite properties and no undesirable properties (e.g., inconsistencies)? If inconsistencies are uncovered between intuitions and formalization, theorists must ask themselves if they are to change their intuitions, the formalization, or both (van Rooij et al., 2019, Chapter 1; for a tutorial, see van Rooij & Blokpoel, 2020). In practice, it always takes several iterations to arrive at a complete, unambiguously formalized f given the initial sketch.

Let us illustrate the first steps of theory construction with an example—compositionality, a property of the meanings that can be expressed through language. Speakers of a language know intuitively that the meaning of a phrase, sentence, or discourse is codetermined by the meanings of its constituents: For example, “broken glass” means what it does by virtue of the meanings of “broken” and “glass,” plus the fact that composing an adjective (“broken”) and a noun (“glass”) in that order is licensed by the rules of English syntax. Compositionality is the notion that people can interpret the meaning of a complex linguistic expression (a sentence, etc.) as a function of the meanings of its constituents and of the way those constituents are syntactically combined (Partee, 1995). It is the task of a computational theory of syntax and semantics to formalize this intuition. Like other properties of the outputs of psychological capacities, compositionality comes in degrees (Box 1): A system has that capacity just in case it can produce outputs (meanings) showing a higher-than-chance degree of compositionality but not necessarily perfect compositionality.
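The "broken glass" example can be turned into a toy formalization. The sketch below assumes, purely for illustration, that noun meanings are sets of entities, that an adjective meaning is a function from sets to sets, and that the only licensed combination is adjective followed by noun. The lexicon, the entity names, and the function name are all hypothetical.

```python
# Toy world: entities that happen to be broken (an assumption of this sketch).
BROKEN_THINGS = frozenset({"shard", "cracked_vase"})

# Word meanings: nouns denote sets of entities, adjectives denote set-to-set functions.
LEXICON = {
    "glass": frozenset({"shard", "window"}),
    "vase": frozenset({"cracked_vase", "intact_vase"}),
    "broken": lambda noun_meaning: noun_meaning & BROKEN_THINGS,
}


def meaning(expression: str):
    """Compute the meaning of a one- or two-word expression from its parts."""
    words = expression.split()
    if len(words) == 1:
        return LEXICON[words[0]]
    if len(words) == 2:
        first, second = LEXICON[words[0]], LEXICON[words[1]]
        # Only adjective-then-noun order is licensed by this toy syntax.
        if callable(first) and not callable(second):
            return first(second)  # composition as function application
    raise ValueError(f"combination not licensed: {expression!r}")


if __name__ == "__main__":
    print(meaning("broken glass"))
    print(meaning("broken vase"))
```

Here the meaning of the phrase depends only on the meanings of its two words and on the order in which the syntax allows them to combine, which is the intuition a computational-level theory of compositionality has to capture.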

Box 1. Possible Objections.

One could object that computational-level theorizing is possible only for cognitive (sub)systems, whereas other types of systems require fundamentally different ways of theorizing. We understand this objection in two ways: First, there is something special about the biophysical realization of cognition that makes Marr’s computational level apply only to it (e.g., that brains are computational in ways other systems are not); and second, computational-level analyses intrinsically assume that capacities have functional purposes or serve goals, whereas noncognitive systems (e.g., evolution or emergent group processes) do not.

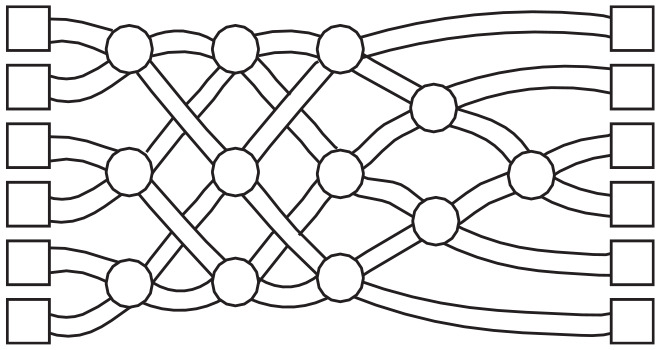

To counter the first objection, we note that multiple realizability (Chalmers, 2011; Dennett, 1995; Miłkowski, 2016) is the bedrock of Marr’s approach: Any function f can be physically realized in different systems, even at different levels of organization. Consider the capacity for sorting. It takes as input items that vary with respect to a quantity that can be ordered (e.g., the unordered list of numbers 89254631) and gives as output the items in order of magnitude (the ordered list 12345689). This ordering capacity may be physically implemented in several ways. One individual could perform the ordering, a computer program could do it, or a group of people could implement the capacity in a distributed way. In the last of these cases, individuals need not have access to the input array, nor need they even be aware that they are partaking in a collective behavior that is ordering (see figure in this box for an illustration).

A sorting network. Imagine a maze structured this way; each of six people, walking from left to right, enters a square on the left. Every time two people meet at any node (circle) they compare their height. The shorter of the two turns left next, and the taller turns right. At the end of the maze, people end up sorted by height. This holds regardless of which six people enter the maze and of what order they enter the maze. Hence, the maze (combined with the subcapacity of people for making pairwise comparisons) has the capacity for sorting people by height. Adapted from https://www.csunplugged.org, under a CC BY-SA 4.0 license.

We note that a system may produce outputs in which the target property comes only in degrees: For example, the network in the figure in this box may not always produce a perfect sorting by height if the people entering the maze do not meet at every node; even then, (a) the outputs tend to show a greater degree of ordering than is expected by chance, and (b) under relevant idealizations (i.e., people meet at every node) the system can still produce a complete, correct ordering: Together this illustrates the system’s sorting capacity. The fact that target properties come in degrees holds generally; see our discussion of compositionality in the text.
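The maze can also be simulated. The sketch below is an illustration under stated assumptions: it does not reproduce the layout of the figure but uses an odd-even transposition network, in which neighboring lanes are compared in alternating layers, because that layout is known to sort any n inputs in n layers. The function names and the `missed` parameter (nodes at which two people fail to meet) are hypothetical.

```python
from itertools import permutations


def transposition_network(n):
    """Layers of neighbor comparisons (i, i + 1), alternating even and odd start lanes."""
    return [[(i, i + 1) for i in range(layer % 2, n - 1, 2)] for layer in range(n)]


def walk_maze(heights, network, missed=frozenset()):
    """Send people through the network; at each node the shorter person takes the lower lane.

    `missed` holds (layer_index, i, j) triples for nodes where no comparison happens.
    """
    lanes = list(heights)
    for layer_index, layer in enumerate(network):
        for i, j in layer:
            if (layer_index, i, j) in missed:
                continue
            if lanes[i] > lanes[j]:
                lanes[i], lanes[j] = lanes[j], lanes[i]
    return lanes


def inversions(seq):
    """Number of out-of-order pairs: 0 means fully sorted."""
    return sum(1 for a in range(len(seq))
               for b in range(a + 1, len(seq)) if seq[a] > seq[b])


if __name__ == "__main__":
    network = transposition_network(6)
    heights = [189, 162, 175, 158, 181, 170]
    print(walk_maze(heights, network))
    partial = walk_maze(heights, network, missed=frozenset({(0, 0, 1), (5, 1, 2)}))
    print(partial, inversions(partial))
```

No individual in the simulation ever sees the whole list; each node involves only a pairwise comparison. When some meetings are missed, the output may be only partly ordered, but because every comparison in this layout is between neighboring lanes, the number of out-of-order pairs can never increase.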

Functions, so conceived, may describe capacities at any level of organization: We see no reason to reserve computational-level explanations only to levels of organization traditionally considered in cognitive science. Even within cognitive psychology, the computational level may be (and has been) applied at different levels of organization—from various neural levels (e.g., feature detection) to persons or groups (e.g., communication). A Marr-style analysis applies regardless of the level of organization at which the primary explananda are situated; hence, it need not be limited to the domain of cognitive psychology.

To counter the second objection, we note that computational-level theories are usually considered to be normative (e.g., rational or optimal, in a realist or instrumentalist “as if” sense; Chater & Oaksford, 2000; Chater et al., 2003; Danks, 2008, 2013; van Rooij et al., 2018), but that is not formally required. A computational-level analysis is a mathematical object, a function f, mapping some input domain to an output domain (Egan, 2010, 2017). Any normative interpretation of an f is just an add on—an additional, independent level of analysis (Danks, 2008; van Rooij et al., 2018). Marr did suggest that the theory “contains separate arguments about what is computed and why” (1982, p. 23), but the meaning of “why” has been altered over time by (especially, Bayesian) modelers, as requiring that computational theories are idealized optimization functions serving rational goals (Anderson, 1990, 1991; Chater & Oaksford, 1999). Such an explanatory strategy may have heuristic value for abducing computational-level theories (see text), but it is mistaken to see this strategy as a necessary feature of Marr’s scheme.

Compositionality is a useful example in this context because it holds across cognitive and noncognitive domains and has important social, cultural, and evolutionary ramifications (Table 1), as may be expected from a core property of the human-language capacity. Compositionality is therefore used here to illustrate the applicability of Marr’s framework across areas of cognitive, developmental, social, cultural, and evolutionary psychology. For example, cognitive psychologists may be interested in explaining a person’s capacity to assign compositional meaning to a given linguistic expression, just as a vision scientist may be interested in explaining how perceptual representations of visual objects arise from representations of features or parts (the “binding problem”; Table 1, row 1). In all cases covered in Table 1, a “sketch” of a computational theory can be provided as a first step in theory building. A sketch requires that the capacity of interest, the explanandum, be identified with sufficient precision to allow the specification of the inputs, or initial states, and the outputs, or resulting states, of the function f, which is to be characterized in full detail in the theoretical cycle. At this stage, we need not say much about f itself, the algorithms that compute it, or the physical systems that implement the algorithms. Moreover, the sketch need not assume anything about the goals (if any) that the capacity serves in each case (Box 1). A discussion of the goals of compositionality, for example, would instead presuppose that a sketch is in place. Are compositional languages easier to learn or to use (Kirby et al., 2008; Nowak & Baggio, 2016)? Is compositional processing one computational resource among others, harnessed only in particular circumstances (Baggio, 2018; Baggio et al., 2012b)? These questions about compositionality’s goals are easier to address when a sketch of f is in place. In general, questions about the goals and purposes of the capacity need not affect how either f or the output property is defined (Table 1; Box 1).

Table 1.

Sketches of Computational-Level Analyses of Explananda Involving Compositionality in Different Domains of Psychological Science

| Psychological domain | Example explanandum (compositionality) | Computational-level theory (sketch) f | Example explananda from other subdomains |
|---|---|---|---|
| Cognitive | The capacity to assign a compositional meaning to a linguistic expression | Input: Complex linguistic expression u1, . . ., un, with elementary parts ui<br>Output: Meaning of input μ(u1, . . ., un), such that μ(u1, . . ., un) = c(μ(u1), . . ., μ(un)), where c is a composition operation | The capacity to recognize complex perceptual objects with parts (binding problem) |
| Development | The capacity to develop comprehension and production skills for a compositional language | Input: Basic sensorimotor and cognitive capacities (e.g., memory, precursors of theory of mind), a linguistic environment<br>Output: A cognitive capacity fc for processing compositional language | The capacity to develop, e.g., fine motor control, abstract arithmetic and geometric skills, etc. |
| Learning | The capacity to learn a (second or additional) compositional language | Input: Basic sensorimotor and cognitive capacities; a linguistic environment; a cognitive capacity fc for compositional language understanding and production<br>Output: A new cognitive capacity fc′ that is also compositional | The capacity to learn a new motor skill related to one already mastered, e.g., from ice skating to skiing (skill transfer) |
| Biological evolution | The capacity to evolve comprehension and production skills for a compositional language | Input: A capacity for assigning natural or conventional meanings to signals<br>Output: A cognitive capacity fc for compositional language use | The capacity to evolve, e.g., fine motor control, spatial representation, navigation, etc. |
| Social interaction; cultural evolution | The capacity of groups and populations to jointly create new compositional communication codes | Input: An arbitrary assignment of meanings to strings<br>Output: A compositional assignment of meanings to strings | The capacity of groups or populations to jointly create structured norms and rituals (“culture”); division of labor |

Further Steps: Assessing Theories in the Theoretical Cycle

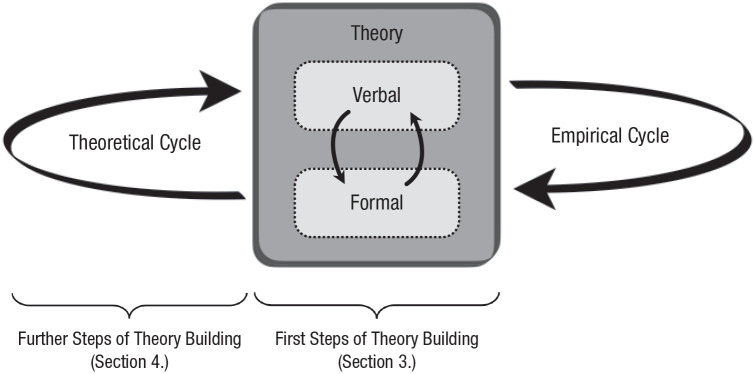

Once an initial characterization of f is in place, one must ask follow-up questions that probe the verisimilitude of f. This leads to a crucial series of steps in theory development that are often overlooked in psychological theorizing. Even if one’s intuitive ideas are on the right track and f is formalized and internally consistent, it might still lack verisimilitude. A traditional way of testing a theory’s verisimilitude is by deriving predictions from f and investigating whether they are borne out when put to an empirical test. Using empirical tests to update and fine-tune a theory is the modus operandi of the empirical cycle. We argue that even before (and interlaced with) putting computational-level theories to empirical tests, they can be put to theoretical tests, in what we call the theoretical cycle (Fig. 1), in which one assesses whether one’s formalization of intuitive, verbal theories satisfies certain theoretical constraints on a priori plausibility.

Fig. 1.

The empirical cycle is familiar to most psychological scientists: The received view is that our science progresses by postulating explanatory hypotheses, empirically testing their predictions (including, but not limited to, effects), and revising and refining the hypotheses in the process. Explanatory hypotheses often remain verbal in psychological research. The first steps of (formal) theory building include making such verbal theories formally explicit. In the process of formalization, the verbal theory may be revised and refined. Theory building does not need to proceed with empirical testing right away. Instead, theories can be subjected to rigorous theoretical tests in what we refer to as the theoretical cycle. This theoretical cycle is aimed at endowing the (revised) theory with greater a priori plausibility (verisimilitude) before assessing the theory’s empirical adequacy in the empirical cycle.

The hypothetical 8 example below from the domain of action planning appears simple, but as we demonstrate later it is actually quite complex. One can think of an organism foraging as engaging in ordering a set of sites to visit, starting and ending at its “home base,” such that the ordering has overall satisfactory value (e.g., the total cost of travel to the sites in that particular order yields a good trade-off between energy expended for travel and amount of food retrieved). This intuitive capacity can be formalized as follows 9 :

Foraging f

Input: A set of sites S = {s0, s1, s2, . . ., sn}; each site si ∈ S with i > 0 hosts a particular amount of food g(si) ∈ ℕ, and for each pair of sites si, sj ∈ S, there is a cost of travel, c(si, sj) ∈ ℕ.

Output: An ordering π(S) = [s′0, s′1, . . ., s′n, s′0] of the elements in S such that s′0 = s0 and the sum of foods collected at s′1, . . ., s′n meets or exceeds the total cost of the travel, that is,

$$\sum_{i=1}^{n} g(s'_i) \geq c(s'_n, s'_0) + \sum_{i=0}^{n-1} c(s'_i, s'_{i+1}).$$

Some arbitrary choices were made here that might matter for the theory’s explanatory value or verisimilitude. For example, we could have formalized the notion of “good trade-off” by defining it as (a) maximizing the amount of food collected given an upper bound on the cost of travel, (b) minimizing the amount of travel given a lower bound on the amount of food collected, or (c) maximizing the difference between the total amount of food collected and the cost of travel. We could also have added free parameters, weighing differentially the importance of maximizing the amount of food and of minimizing the cost of travel.
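As a minimal sketch of the foraging f formalized above, the Python function below checks whether a candidate ordering satisfies the output condition, read here as total food being at least the total cost of the round trip. The names `tour_cost`, `satisfies_foraging_f`, and `symmetric`, the assumption of symmetric travel costs, and the toy numbers are all hypothetical and serve illustration only.

```python
from typing import Dict, List, Tuple

Site = str
Cost = Dict[Tuple[Site, Site], int]


def tour_cost(order: List[Site], cost: Cost) -> int:
    """Cost of travelling through `order` and back to its first site."""
    legs = zip(order, order[1:] + order[:1])
    return sum(cost[leg] for leg in legs)


def satisfies_foraging_f(order: List[Site], home: Site,
                         food: Dict[Site, int], cost: Cost) -> bool:
    """Check the output condition: the ordering starts at home, visits each
    site exactly once, and total food is at least the round-trip cost."""
    if not order or order[0] != home:
        return False
    if sorted(order) != sorted([home, *food]):
        return False
    return sum(food.values()) >= tour_cost(order, cost)


def symmetric(pairs: Cost) -> Cost:
    """Helper: make travel costs equal in both directions (an assumption)."""
    return {**pairs, **{(b, a): v for (a, b), v in pairs.items()}}


# Hypothetical toy instance: home base s0 and two food sites.
cost = symmetric({("s0", "s1"): 2, ("s1", "s2"): 1, ("s0", "s2"): 3})
food = {"s1": 4, "s2": 3}
print(satisfies_foraging_f(["s0", "s1", "s2"], "s0", food, cost))  # True: 7 >= 6
```

Alternative readings of "good trade-off," such as options (a) to (c), would change only the final comparison in `satisfies_foraging_f`, which is one way to see how much hinges on that formalization choice.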

In the theoretical cycle, one explores the assumptions and consequences of a given set of formalization choices, thereby assessing whether a computational-level theory is making unrealistic assumptions or otherwise contradicts prior or background knowledge. As an example we use a theoretical constraint called tractability, but others may be considered (more later). Tractability is the constraint that a theory f of a real-world capacity (e.g., foraging) must be realizable by the type of system under study given minimal assumptions on its resource limitations. Tractability is useful for illustrating the theoretical cycle because it is a fundamental computational constraint, is insensitive to the type of system implementing the computation, and applies at all levels of organization (given basic background assumptions: e.g., computation takes time, its speed is limited by an upper bound). Tractability is a property of f that can be assessed independently of algorithmic- and implementational-level details (Frixione, 2001; van Rooij, 2008; van Rooij et al., 2019): For example, an organism could solve the foraging problem by deciding on an ordering before travel (planning) or it could compute a solution implicitly as it arises from local decisions made while traveling (the same applies to sorting in the figure shown in Box 1). An assessment of the tractability or intractability of f is independent of this “how” of the computation.

In relation to tractability, the foraging f (as stated) turns out to be a priori implausible. If an animal had the capacity f (as stated), then it would have a capacity for computing problems known to be intractable: The foraging f is equivalent to the known intractable (NP-hard) traveling-salesperson problem (Garey & Johnson, 1979). This problem is so hard, even to approximate (Ausiello et al., 1999; Orponen & Mannila, 1987), that all algorithms solving it require time that grows exponentially in the number of sites (n). For all but very small n values such a computation is infeasible.
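To make the combinatorial growth concrete, the sketch below implements one naive strategy: enumerating orderings until one meets the output condition. It is an illustrative brute-force search under assumed names (`find_tour`, `worst_case_orderings`) and a made-up instance, not a claim about how any organism proceeds or about the best available algorithm. In the worst case it inspects all n! orderings of the n food sites.

```python
from itertools import permutations
from math import factorial


def find_tour(home, food, cost):
    """Exhaustive search: return the first ordering starting at `home` whose
    total food is at least its round-trip cost, or None if there is none."""
    total_food = sum(food.values())
    for perm in permutations(food):              # up to n! candidate orderings
        order = (home, *perm)
        legs = zip(order, order[1:] + (home,))   # includes the trip back home
        if total_food >= sum(cost[leg] for leg in legs):
            return list(order)
    return None


def worst_case_orderings(n):
    """Number of orderings the search may have to inspect for n food sites."""
    return factorial(n)


# Hypothetical toy instance with uniform travel costs of 2 per leg.
sites = ["s0", "a", "b", "c"]
cost = {(x, y): 2 for x in sites for y in sites if x != y}
print(find_tour("s0", {"a": 3, "b": 3, "c": 3}, cost))   # food 9 >= cost 8
print(find_tour("s0", {"a": 1, "b": 1, "c": 1}, cost))   # food 3 < cost 8: None
for n in (5, 10, 20):
    print(n, worst_case_orderings(n))
```

Already at n = 20 the worst case exceeds 10^18 orderings, which illustrates why exhaustive search is feasible only for very small n.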

The intractability of an f does not necessarily mean that the computational-level theory is wholly misconceived, but it does signal it is underconstrained (van Rooij, 2015). Tractability can be achieved by introducing constraints on the input and/or output domains of f. For instance, one could assume that the animal’s foraging capacity is limited to a small number of sites (e.g., n ≤ 5) or that the animal has the general capacity for a larger number of sites, but only if the amount of food per site meets some minimum criterion (e.g., g(s) ≥ max(c(s,s′)) + max(c(s,s′))/n for all s in S). 10 In both cases, the foraging f is tractable. 11 Theoretical considerations (e.g., tractability) can constrain the theory so as to rule out its most unrealistic versions, effectively endowing it with greater a priori verisimilitude. Moreover, theoretical considerations can yield new empirical consequences, such as predictions about the conditions under which performance breaks down (i.e., n > 5 vs. g(s) ≤ max(c(s,s′)) + max(c(s,s′))/n; for further examples, see Blokpoel et al., 2013; Bourgin et al., 2017), and can constrain algorithmic-level theorizing (different algorithms exploit different tractability constraints; Blokpoel, 2018; Zednik & Jäkel, 2016). Thus, the theoretical cycle can improve both theory verisimilitude and theory testability.
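The second restriction can be illustrated with a short sketch. Assuming the minimum-food criterion g(s) ≥ max(c(s,s′)) + max(c(s,s′))/n, total food is at least (n + 1) times the maximum travel cost, which bounds the cost of any round trip with n + 1 legs. Every ordering then satisfies the output condition and no search is needed. The function names and the toy instance below are hypothetical.

```python
from itertools import permutations


def meets_food_constraint(food, cost):
    """True if g(s) >= max_cost + max_cost / n for every food site s.
    Compared as g(s) * n >= max_cost * (n + 1) to stay in integers."""
    n = len(food)
    max_cost = max(cost.values())
    return all(g * n >= max_cost * (n + 1) for g in food.values())


def forage_without_search(home, food, cost):
    """Under the constraint any ordering satisfies f, so return the sites in
    the order given; otherwise refuse, since no guarantee holds."""
    if not meets_food_constraint(food, cost):
        raise ValueError("food constraint not met: search may be needed")
    return [home, *food]


def tour_cost(order, cost):
    """Cost of travelling through `order` and back to its first site."""
    return sum(cost[leg] for leg in zip(order, order[1:] + order[:1]))


# Hypothetical instance: n = 3 sites, maximum travel cost 3, so g(s) >= 4.
sites = ["s0", "a", "b", "c"]
cost = {(x, y): 3 for x in sites for y in sites if x != y}
cost[("s0", "a")] = cost[("a", "s0")] = 1
food = {"a": 4, "b": 4, "c": 4}

print(forage_without_search("s0", food, cost))
# Every ordering meets the output condition, not just the one returned.
print(all(sum(food.values()) >= tour_cost(["s0", *p], cost)
          for p in permutations(food)))
```

The work done here is a single pass over the food amounts and travel costs, in contrast to the factorial number of orderings an exhaustive search may inspect.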

Tractability/intractability analyses apply widely, not just to simple examples such as the ones above. The approach has been used to assess constraints that render tractable/intractable computational accounts for various capacities relevant for psychological science that span across domains and levels (Table 1), such as coherence-based belief updating (van Rooij et al., 2019), action understanding and theory of mind (Blokpoel et al., 2013; van de Pol et al., 2018; Zeppi & Blokpoel, 2017), analogical processing (van Rooij et al., 2008; Veale & Keane, 1997), problem-solving (Wareham, 2017; Wareham et al., 2011), decision-making (Bossaerts & Murawski, 2017; Bossaerts et al., 2019), neural-network learning (Judd, 1990), compositionality of language (Pagin, 2003; Pagin & Westerståhl, 2010), evolution, learning or development of heuristics for decision-making (Otworowska et al., 2018; Rich et al., 2019), and evolution of cognitive architectures generally (Rich et al., 2020). This existing research (for an overview, see Compendium C in van Rooij et al., 2019) shows that tractability is a widespread concern for theories of capacities relevant for psychological science and moreover that the techniques of tractability analysis can be fruitfully applied across psychological domains.

Building on other mathematical frameworks, and depending on the psychological domain of interest (Table 1) and on one’s background assumptions, computational-level theories can also be assessed for other theoretical constraints, such as computability, physical realizability, learnability, developability, evolvability, and so on. For instance, reconsider foraging. We discussed foraging above at only the cognitive level (Table 1, row 1), but one can also ask how a foraging capacity can be learned, developed, or evolved biologically and/or socially (Table 1, rows 2–5). In some cases, these theoretical constraints can again be assessed by analyses analogous to the general form of the tractability analysis illustrated above (for learnability, see, e.g., Chater et al., 2015; Clark & Lappin, 2013; Judd, 1990; for evolvability, see Kaznatcheev, 2019; Valiant, 2009; for learnability, developability, and evolvability, see Otworowska et al., 2018; Rich et al., 2020). On the one hand, such analyses are all similar in spirit, as they assess the in-principle possibility of the existence of a computational process that yields the output states from initial states as characterized by the computational-level theory. On the other hand, they may involve additional constraints that are specific to the real-world physical implementation of the computational process under study. For instance, a learning algorithm running on the brain’s wetware needs to meet physical implementation constraints specific to neuronal processes (e.g., Lillicrap et al., 2020; Martin, 2020), evolutionary algorithms realized by Darwinian natural selection are constrained to involve biological information that can be passed on genetically across generations (Barton & Partridge, 2000), and cultural evolution is constrained to involve the social transmission of information across generations and through bottlenecks (Henrich & Boyd, 2001; Kirby, 2001; Mesoudi, 2016; Woensdregt et al., 2020). Hence, brain processes and biological and cultural evolution are all amenable to computational analyses but may have their own characteristic physical realization constraints.

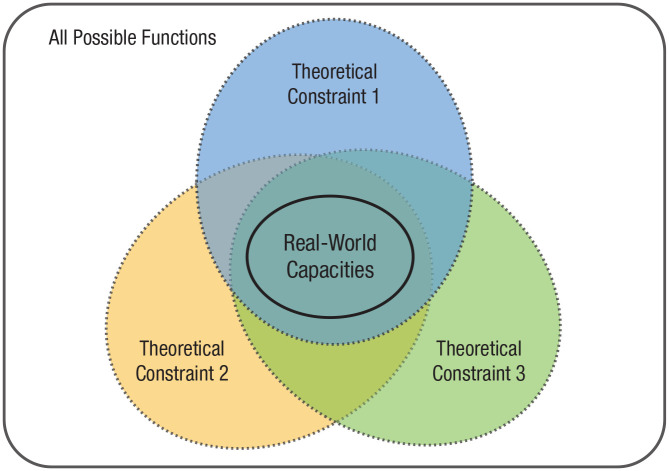

By combining different theoretical constraints one can narrow down the space of possible functions to those describing real-world capacities (Fig. 2). The theoretical cycle thus contributes to early theory validation and advances knowledge even before putting theories to an empirical test. In practice, it serves as a timely safeguard system: It allows one to detect logical or conceptual errors as soon as possible (e.g., intractability of f) and, in any case, before empirical tests are undertaken.

Fig. 2.

The universe of all possible functions (indicated by the rectangle) contains infinitely many possible functions. By applying several constraints jointly (e.g., tractability, learnability, evolvability) psychological scientists can reduce the subset of candidate functions to only those plausibly describing real-world capacities.

What Effects Can Do for Theories of Capacities

We have argued that the primary aim of psychological theory is to explain capacities. But what is the role of effects in this endeavor? How are explanations of capacities (primary explananda) and explanations of effects (secondary explananda) related? Our position, in a nutshell, is that inquiry into effects should be pursued in the context of explanatory multilevel theories of capacities and in close continuity with theory development. 12 From this perspective, the main goal of empirical research, including experimentation (e.g., testing for effects) and computational modeling or simulation, is to further narrow down the space of possible functions after relevant theoretical constraints have been applied. Specifically, good empirical inquiry assumes a set of a priori verisimilar theories of real-world capacities (the intersection in Fig. 2) and then proceeds to partition this set into n subsets. Each subset will (a fortiori) contain a high-verisimilitude theory of capacities. However, across subsets, theories may be empirically different: Theories in subset A may predict effects that are not predicted by theories in subset B, and vice versa. Empirical research, including testing for effects, may allow one to adjudicate among competing theories, thereby eliminating some a priori verisimilar ones that turned out to be implausible a posteriori. To the extent that theories do predict effects, and that those effects are testable experimentally, or via models or simulations, psychology is already well equipped to test those predictions. Here, we are interested in situating effects conceptually in a broader view of inquiry that also encompasses the theoretical cycle: What can effects do for theories of capacities? 
To answer this question, we need to accept a simple premise: that finding out that a theory is empirically inadequate is more informative if the theory is deemed verisimilar a priori than if it is already known to be implausible (e.g., the f is intractable) before the test.

Consider again the multipliers example from Cummins (2000). Multiplication is tractable, and the partial products (M1) and successive addition (M2) algorithms meet minimal constraints of learnability and physical realizability. M1 and M2 are plausible algorithmic-level accounts of the human capacity for multiplication. But depending on which algorithm is used on a particular occasion, performance (e.g., the time it takes for one to multiply two numbers) might show either the linearity effect predicted by M2 or the step-function profile predicted by M1. Note that both M1 and M2 explain the capacity for multiplication. It is not the computational-level analysis that predicts different effects (the f is the same) but rather the algorithmic-level theory. In other cases, effects could follow from one’s computational-level theory (for examples from the psychology of language and logical reasoning, see Baggio et al., 2008, 2015; Geurts & van der Slik, 2005; for examples from the psychology of action, see Blokpoel et al., 2013; Bourgin et al., 2017) or from limitations of resource usage (memory or time), details of physical realization (some effects studied in neuroscience are of this kind), and so on. So one could classify effects depending on the level of analysis from which they follow. This is not a rigid taxonomy but a stepping stone for thinking about the precise links between theory and data. For example, one should want to know why an a priori verisimilar theory of a capacity is found to be a posteriori implausible, which is essential in deciding whether and how to repair the theory. A theory could fail empirically for many reasons, including because its algorithmic- or implementational-level analyses are incorrect (e.g., the capacity f is not realized as the theory says it is), because the postulated f predicts unattested effects or does not predict known effects despite having passed relevant tests of tractability, and so on.
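The contrast between the two multipliers can be sketched in a few lines of Python. Both functions compute the same f (the product), but a step counter attached to each shows different performance profiles. The step-counting scheme is an assumption made for illustration: one step per addition for successive addition (M2), and one step per digit of the multiplier for partial products (M1), treating each single-digit partial product as a primitive operation. Inputs are assumed to be non-negative integers.

```python
def successive_addition(multiplicand, multiplier):
    """M2: add the multiplicand `multiplier` times; steps grow linearly."""
    total, steps = 0, 0
    for _ in range(multiplier):
        total += multiplicand
        steps += 1
    return total, steps


def partial_products(multiplicand, multiplier):
    """M1: one partial product per digit of the multiplier; the step count
    is a step function of the multiplier's number of digits."""
    total, steps = 0, 0
    for place, digit in enumerate(reversed(str(multiplier))):
        total += multiplicand * int(digit) * 10 ** place
        steps += 1
    return total, steps


# Same outputs, different step profiles (hypothetical step units).
for multiplier in (12, 24, 99, 100):
    print(multiplier,
          successive_addition(7, multiplier),
          partial_products(7, multiplier))
```

Doubling the multiplier from 12 to 24 doubles the steps of `successive_addition` but leaves `partial_products` at two steps; the latter changes only when the multiplier gains a digit, as from 99 to 100.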

Another dimension in the soft taxonomy of effects suggested by our approach pertains to the degree to which effects are relevant for understanding a psychological capacity. Some effects may well be implied by the theory at some level of analysis but may reflect more or less directly, or not at all, how a capacity is realized and exercised. For example, a brain at work may dissipate a certain amount of heat greater than the heat dissipated by the same brain at rest; the chassis of an old vacuum-tube computer may vibrate when it is performing its calculations. These effects (heat or vibration) can tell us something about resource use and physical constraints in these machines, but they do not illuminate how the brain or the computer processes information. These may sound like extreme examples, but the continuum between clearly irrelevant effects such as heat or vibration and the kind of effects studied by experimental psychologists is not partitioned for us in advance: The effects collected by psychological scientists cannot just be assumed to be all equally relevant for understanding capacities across levels of analysis. We may more prudently suppose that they sit on a continuum of relevance or informativeness in relation to a capacity’s theory: Some can evince how the capacity is realized or exercised, but others are in the same relation to the target capacity as heat is to brain function.

There are no steadfast rules that dictate how or where to position specific effects on that continuum, but one may envisage some diagnostic tests. Consider again classical effects, such as the Stroop effect, the McGurk effect, primacy and recency effects, visual illusions, priming, and so on. In each case, one may ask whether the effect reveals a constitutive aspect of the capacity and how it is realized and exercised. Diagnostic tests may be in the form of counterfactual questions: Take effect X and suppose X was not the case; would that entail that the system lacks the capacity attributed to it? Would it entail that the capacity is realized or exercised differently than what the algorithmic or implementation theories hypothesize? For example, would a system not subject to visual illusions (i.e., lacking their characteristic phenomenology) also lack the human capacity for visual perception? Would a system that does not show semantic priming effects also thereby lack a capacity for accessing and retrieving stored lexical meanings? Our intent here is not to suggest specific answers but to draw attention to the fact that addressing those questions should enable us to make better informed decisions on what effects we decide to leverage to understand capacities. It also matters for whether we can expect effects to be stable across situations or tests. An effect that reveals a constitutive aspect of a capacity (one for which a counterfactual question gets an affirmative answer) may be expected to occur whenever that capacity is exercised, and so do effects that are direct manifestations of how the capacity is realized: Such effects can therefore also be expected to be replicable across experimental tests.

This brings us to a further point. Tests of effects can contribute to theories of capacities to the extent that the information they provide bears on the structure of the system, whether it is the form of the f it computes or the virtual machines (algorithms) or physical machines on which it is running. The contrast between qualitative (e.g., the direction of an effect) and quantitative predictions (e.g., numerical point predictions) cuts the space of effects in a way that may be useful in natural science (e.g., physics) but not in psychology. Meehl (1990, 1997) rightly criticized the use of “weak” tests for theory appraisal, but his call for “strong” tests (i.e., tests of hypotheses with risky point predictions), if pursued systematically, would entrench the existing focus in psychology on effects, albeit requiring that effects be quantitative. The path to progress in psychological science lies not in a transition from weak qualitative to strong quantitative tests but rather in strong tests of qualitative structure, that is, tests for effects that directly tap into the workings of a system as it is exercising the capacity of interest. Computational-level theories of capacities are not quantitative but qualitative models: They reveal the internal formal structure of a system, or the invariants that allow it to exercise a particular capacity across situations, conditions, and so on (Simon, 1990). There is usually some flexibility resulting from free parameters, but, as we have argued, principled constraints on those parameters (tractability, etc.) may be established via analyses in the theoretical cycle and by explorations of qualitative structure (Navarro, 2019; Pitt et al., 2006; Roberts & Pashler, 2000).

Conclusion

Several recent proposals have emphasized the need to potentiate or improve theoretical practices in psychology (Cooper & Guest, 2014; Fried, 2020; Guest & Martin, 2021; Muthukrishna & Henrich, 2019; Smaldino, 2019; Szollosi & Donkin, 2021), whereas others have focused on clarifying the complex relationship between theory and data in scientific inference (Devezer et al., 2019, 2020; Kellen, 2019; MacEachern & Van Zandt, 2019; Navarro, 2019; Szollosi et al., 2020). Our proposal fits within this landscape but aims at making a distinctive contribution through the idea of the theoretical cycle: Before theories are even put to an empirical test, they can be assessed for their plausibility on formal, computational grounds; this requires that there is something to assess formally; that is, it requires a computational-level analysis of the capacity of interest. In a theoretical cycle, one addresses questions iteratively concerning, for example, the tractability, learnability, physical realizability, and so on, of the capacity as formalized in the theory. However, the theoretical cycle and the empirical cycle will need to always be interlaced: Abduced theories can then both be explanatory and meet plausibility constraints (i.e., have minimal verisimilitude) on testing; conversely, relevant effects can be leveraged to understand capacities better. The net result is that empirical tests are informative and can play a direct role in further improving our theories.

Acknowledgments

We thank Mark Blokpoel, Marieke Woensdregt, Gillian Ramchand, Alexander Clark, Eirini Zormpa, Johannes Algermissen, and participants of ReproducibiliTea Nijmegen for useful discussions. We are grateful to Travis Proulx and two anonymous reviewers for their constructive comments that helped us improve an earlier version of the manuscript. Parts of this article are based on van Rooij (2019).

Cummins (2000) uses the McGurk effect to make the same point. We mention the Stroop effect because it is among the least contested effects in psychology and easily replicable in a live in-class demonstration. Yet our point is that even uncontested, highly replicable effects are not primary explananda in psychology.

This is not to say that in practice this never happens (Newell, 1973). But we believe good theoretical practice has a different aim and starting position, as we explain later.

One can reasonably debate whether these capacities “carve up” the mind in the right way; and indeed this is a topic of dispute between, for example, cognitivists and enactivists (van Dijk et al., 2008). Still, few if any cognitive psychologists would maintain that the primary explananda are laboratory effects, instead of cognitive capacities, regardless of how one carves up the latter. We see the carving up as part of the activity of theory development.

We thank Ivan Toni for this analogy.

See Meehl (1997) on the “crud factor” (Lykken, 1991): In complex systems often “everything correlates with almost everything else” (p. 393); so the precise null hypothesis for statistical effects is seldom if ever true, but most effects may not provide much information about the key principles of operation of a complex mechanism; many will be by-effects of idiosyncratic conditions.

How vast is the space of possible effects? We can in principle define an unlimited number of conditions and compare them to each other. If a “condition” is some combination of values for situational variables, even if we assume only binary values (e.g., yes vs. no, presence vs. absence, greater than vs. less than), then there are 2^k distinct conditions that we can, in principle, define. For the number of situational variables (from low-level properties of the world, such as lighting conditions, to higher-order properties, such as the day of the week), k ≥ 100 is a conservative lower bound. Then there are at least 2^100 × (2^100 − 1) > 10^59 distinct comparisons we can make to test for an “effect” of condition, far more than the number of seconds since the birth of the universe (< 10^18) or the world population (< 10^10). Sampling this vast space to discover effects without any guidance of substantive theory, we are likely to “discover” many meaningless effects (“crud factor”) and fail to discover actually informative effects.

Even then, coding the input-output mapping as a look-up table is not explanatory, even if it is descriptive and possibly predictive (within limits). As, for instance, Cummins (2000) notes, the tide tables predict the tides well but do not explain them. One could make a list of (input, output) pairs, ({1, 2}, 2), ({3, 4}, 12), ({12, 3}, 36), but that is hardly an explanation. Moreover, the list does not allow predictions beyond the observed domain: Unless one hypothesizes that the capacity one is observing is “multiplication,” one would not be able to know the value of x in ({112, 3.5}, x). This is all the more pressing because any observations we would make in a laboratory task setting are typically a very small subset of all possible capacity inputs and outputs, and the functions that we are trying to abduce are much more complex than multiplication (e.g., compositionality; Baroni, 2020; Martin & Baggio, 2020).

The idea is not fully hypothetical (see Lihoreau et al., 2012), but details here are for illustration only.

The theory admits different orderings as long as they satisfy the output property (the constraint given by the inequality “≥”). Formally, functions are always one-to-one or many-to-one, so strictly speaking we are dealing here with a relation, or a computational problem. This is fine for characterizing capacities, which usually involve abilities to produce outputs that are invariant with respect to some property (“laws of qualitative structure”; Newell & Simon, 1976; Simon, 1990). So too in our foraging example: Depending on input details, there may be two or more routes of travel that meet the constraint; then producing at least one of them would be exercising the foraging capacity as defined above.

These constraints can be seen as hypothesized “normal conditions” for the proper exercise of a capacity. An intuitive argument for the g(s) ≥ max(c(s,s′)) + max(c(s,s′))/n constraint would be as follows: If the amount of food collected at each site exceeds max(c(s,s′)), the animal always collects more food than it expends energy for traveling from s0 to the n sites; to have enough food to cover traveling back from site sn to s0, it needs additionally max(c(s,s′))/n at each site.

There may be other constraints that can achieve the same result; we invite interested readers to explore this as an exercise (for guidance, see van Rooij et al., 2019).

This is implicit in our view of interactions between the theoretical and empirical cycles and has been emphasized in recent philosophy of science: For example, van Fraassen (2010) discusses “the joint evolution of experimental practice and theory,” arguing that “experimentation is the continuation of theory construction by other means” (pp. 111–112).

Footnotes

ORCID iDs: Iris van Rooij  https://orcid.org/0000-0001-6520-4635

Giosuè Baggio  https://orcid.org/0000-0001-5086-0365

Transparency

Action Editors: Travis Proulx and Richard Morey

Advisory Editor: Richard Lucas

Editor: Laura A. King

Declaration of Conflicting Interests: The author(s) declared that there were no conflicts of interest with respect to the authorship or the publication of this article.

References

- Anderson J. R. (1990). The adaptive character of thought. Erlbaum.

- Anderson J. R. (1991). Is human cognition adaptive? Behavioral and Brain Sciences, 14(3), 471–517.

- Ausiello G., Crescenzi P., Gambosi G., Kann V., Marchetti-Spaccamela A., Protasi M. (1999). Complexity and approximation: Combinatorial optimization problems and their approximability properties. Springer.

- Baggio G. (2018). Meaning in the brain. MIT Press.

- Baggio G., Stenning K., van Lambalgen M. (2016). Semantics and cognition. In Aloni M., Dekker P. (Eds.), The Cambridge handbook of formal semantics (pp. 756–774). Cambridge University Press.

- Baggio G., van Lambalgen M., Hagoort P. (2008). Computing and recomputing discourse models: An ERP study. Journal of Memory and Language, 59(1), 36–53.

- Baggio G., van Lambalgen M., Hagoort P. (2012a). Language, linguistics and cognition. In Kempson R., Fernando T., Asher N. (Eds.), Philosophy of linguistics (pp. 325–355). Elsevier.

- Baggio G., van Lambalgen M., Hagoort P. (2012b). The processing consequences of compositionality. In Werning M., Hinzen W., Machery E. (Eds.), The Oxford handbook of compositionality (pp. 655–672). Oxford University Press.

- Baggio G., van Lambalgen M., Hagoort P. (2015). Logic as Marr’s computational level: Four case studies. Topics in Cognitive Science, 7(2), 287–298.

- Baker C. L., Saxe R., Tenenbaum J. B. (2009). Action understanding as inverse planning. Cognition, 113(3), 329–349.

- Baroni M. (2020). Linguistic generalization and compositionality in modern artificial neural networks. Philosophical Transactions of the Royal Society B: Biological Sciences, 375(1791). https://doi.org/10.1098/rstb.2019.0307

- Barrett H. C. (2005). Enzymatic computation and cognitive modularity. Mind & Language, 20(3), 259–287.

- Barton N., Partridge L. (2000). Limits to natural selection. BioEssays, 22(12), 1075–1084.

- Bird A. (2018). Understanding the replication crisis as a base rate fallacy. The British Journal for the Philosophy of Science. Advance online publication. https://doi.org/10.1093/bjps/axy051

- Blokpoel M. (2018). Sculpting computational-level models. Topics in Cognitive Science, 10(3), 641–648.

- Blokpoel M., Kwisthout J., van der Weide T., Wareham T., van Rooij I. (2013). A computational-level explanation of the speed of goal inference. Journal of Mathematical Psychology, 57(3–4), 117–133.

- Blokpoel M., Wareham T., Haselager P., Toni I., van Rooij I. (2018). Deep analogical inference as the origin of hypotheses. Journal of Problem Solving, 11(1), Article 3. 10.7771/1932-6246.1197 [DOI] [Google Scholar]

- Bohn M., Frank M. C. (2019). The pervasive role of pragmatics in early language. Annual Review of Developmental Psychology, 1, 223–249. 10.1146/annurev-devpsych-121318-085037 [DOI] [Google Scholar]

- Bonawitz E., Denison S., Griffiths T., Gopnik A. (2014). Probabilistic models, learning algorithms, response variability: Sampling in cognitive development. Trends in Cognitive Sciences, 18, 497–500. 10.1016/j.tics.2014.06.006 [DOI] [PubMed] [Google Scholar]

- Bossaerts P., Murawski C. (2017). Computational complexity and human decision-making. Trends in Cognitive Sciences, 21(12), 917–929. [DOI] [PubMed] [Google Scholar]

- Bossaerts P., Yadav N., Murawski C. (2019). Uncertainty and computational complexity. Philosophical Transactions of the Royal Society B: Biological Sciences, 374(1766). 10.1098/rstb.2018.0138 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bourgin D., Lieder F., Reichman D., Talmon N., Griffiths T. (2017). The structure of goal systems predicts human performance. In Gunzelmann G., Howes A., Tenbrink T., Davelaar E. J. (Eds.), Proceedings of the 39th annual meeting of the Cognitive Science Society (pp. 1660–1665). Cognitive Science Society. https://cognitivesciencesociety.org/wp-content/uploads/2019/01/cogsci17_proceedings.pdf [Google Scholar]

- Carey S. (2009). The origin of concepts. Oxford University Press. [Google Scholar]

- Carruthers P. (2006). The architecture of the mind. Oxford University Press. [Google Scholar]

- Chalmers D. J. (2011). A computational foundation for the study of cognition. Journal of Cognitive Science, 12, 325–359. [Google Scholar]

- Chater N., Clark A., Goldsmith J. A., Perfors A. (2015). Empiricism and language learnability. Oxford University Press. [Google Scholar]

- Chater N., Oaksford M. (1999). Ten years of the rational analysis of cognition. Trends in Cognitive Sciences, 3(2), 57–65. [DOI] [PubMed] [Google Scholar]

- Chater N., Oaksford M. (2000). The rational analysis of mind and behavior. Synthese, 122, 93–131. [Google Scholar]

- Chater N., Oaksford M., Nakisa R., Redington M. (2003). Fast, frugal, and rational: How rational norms explain behavior. Organizational Behavior and Human Decision Processes, 90, 63–86. [Google Scholar]

- Chumbley J., Steinhoff A. K. (2019). A computational perspective on social attachment. Infant Behavior and Development, 54, 85–98. [DOI] [PubMed] [Google Scholar]

- Clark A., Lappin S. (2013). Complexity in language acquisition. Topics in Cognitive Science, 5(1), 89–110. [DOI] [PubMed] [Google Scholar]

- Cooper R. P., Guest O. (2014). Implementations are not specifications: Specification, replication and experimentation in computational cognitive modeling. Cognitive Systems Research, 27, 42–49.

- Cosmides L., Tooby J. (1994). Beyond intuition and instinct blindness: Toward an evolutionarily rigorous cognitive science. Cognition, 50(1–3), 41–77.

- Cummins R. (2000). “How does it work?” v. “What are the laws?” Two conceptions of psychological explanation. In Keil F., Wilson R. (Eds.), Explanation and cognition (pp. 117–145). MIT Press.

- Danks D. (2008). Rational analyses, instrumentalism, and implementations. In Chater N., Oaksford M. (Eds.), The probabilistic mind: Prospects for rational models of cognition (pp. 59–75). Oxford University Press.

- Danks D. (2013). Moving from levels and reduction to dimensions and constraints. In Knauff M., Pauen M., Sebanz N., Wachsmuth I. (Eds.), Proceedings of the 35th annual meeting of the Cognitive Science Society (pp. 2124–2129). Cognitive Science Society. https://cognitivesciencesociety.org/wp-content/uploads/2019/05/cogsci2013_proceedings.pdf

- De Houwer J., Moors A. (2015). Levels of analysis in social psychology. In Gawronski B., Bodenhausen G. (Eds.), Theory and explanation in social psychology (pp. 24–40). Guilford.

- Dennett D. (1994). Cognitive science as reverse engineering: Several meanings of ‘top-down’ and ‘bottom-up.’ In Prawitz D., Skyrms B., Westerståhl D. (Eds.), Logic, methodology & philosophy of science IX (pp. 679–689). Elsevier Science.

- Dennett D. C. (1995). Darwin’s dangerous idea: Evolution and the meanings of life. Simon & Schuster.

- Devezer B., Nardin L. G., Baumgaertner B., Buzbas E. O. (2019). Scientific discovery in a model-centric framework: Reproducibility, innovation, and epistemic diversity. PLOS ONE, 14(5), Article e0216125. 10.1371/journal.pone.0216125

- Devezer B., Navarro D. J., Vandekerckhove J., Buzbas E. O. (2020). The case for formal methodology in scientific reform. bioRxiv. 10.1101/2020.04.26.048306

- Egan F. (2010). Computational models: A modest role for content. Studies in History and Philosophy of Science, 41, 253–259.

- Egan F. (2017). Function-theoretic explanation and the search for neural mechanisms. In Kaplan D. M. (Ed.), Explanation and integration in mind and brain science (pp. 145–163). Oxford University Press.

- Fodor J. (2000). The mind doesn’t work that way: The scope and limits of evolutionary psychology. MIT Press.

- Frank M. C., Goodman N. D. (2012). Predicting pragmatic reasoning in language games. Science, 336(6084), 998.

- Fried E. I. (2020). Lack of theory building and testing impedes progress in the factor and network literature. Psychological Inquiry, 31(4), 271–288. 10.1080/1047840X.2020.1853461

- Frixione M. (2001). Tractable competence. Minds and Machines, 11(3), 379–397.

- Garey M. R., Johnson D. S. (1979). Computers and intractability: A guide to the theory of NP-completeness. Freeman.

- Gentner D. (1983). Structure-mapping: A theoretical framework for analogy. Cognitive Science, 7, 155–170.

- Gentner D. (2010). Bootstrapping the mind: Analogical processes and symbol systems. Cognitive Science, 34(5), 752–775.

- Geurts B., van der Slik F. (2005). Monotonicity and processing load. Journal of Semantics, 22(1), 97–117.

- Gigerenzer G. (2020). How to explain behavior? Topics in Cognitive Science, 12(4), 1363–1381. 10.1111/tops.12480

- Goodman N. D., Baker C. L., Bonawitz E. B., Mansinghka V. K., Gopnik A., Wellman H., Schulz L. E., Tenenbaum J. B. (2006). Intuitive theories of mind: A rational approach to false belief. In Proceedings of the 28th Annual Conference of the Cognitive Science Society (pp. 1382–1387). Erlbaum.

- Gopnik A., Bonawitz E. (2015). Bayesian models of child development. Wiley Interdisciplinary Reviews: Cognitive Science, 6(2), 75–86.

- Griffiths T. L., Chater N., Kemp C., Perfors A., Tenenbaum J. B. (2010). Probabilistic models of cognition: Exploring representations and inductive biases. Trends in Cognitive Sciences, 14(8), 357–364.

- Griffiths T. L., Lieder F., Goodman N. D. (2015). Rational use of cognitive resources: Levels of analysis between the computational and the algorithmic. Topics in Cognitive Science, 7(2), 217–229.

- Guest O., Martin A. E. (2021). How computational modeling can force theory building in psychological science. Perspectives on Psychological Science, 16(4), 789–802. 10.1177/1745691620970585

- Gureckis T. M., Markant D. B. (2012). Self-directed learning: A cognitive and computational perspective. Perspectives on Psychological Science, 7(5), 464–481.

- Haig B. D. (2018). An abductive theory of scientific method. In Method matters in psychology (pp. 35–64). Springer.

- Henrich J., Boyd R. (2001). Why people punish defectors: Weak conformist transmission can stabilize costly enforcement of norms in cooperative dilemmas. Journal of Theoretical Biology, 208(1), 79–89.

- Horgan T., Tienson J. (1996). Connectionism and the philosophy of psychology. MIT Press.

- Huskey R., Bue A. C., Eden A., Grall C., Meshi D., Prena K., Schmälzle R., Scholz C., Turner B. O., Wilcox S. (2020). Marr’s tri-level framework integrates biological explanation across communication subfields. Journal of Communication, 70, 356–378.

- Isaac A. M., Szymanik J., Verbrugge R. (2014). Logic and complexity in cognitive science. In Baltag A., Smets S. (Eds.), Johan van Benthem on logic and information dynamics (pp. 787–824). Springer.

- Judd J. S. (1990). Neural network design and the complexity of learning. MIT Press.

- Kaznatcheev A. (2019). Computational complexity as an ultimate constraint on evolution. Genetics, 212(1), 245–265.

- Kellen D. (2019). A model hierarchy for psychological science. Computational Brain & Behavior, 2(3–4), 160–165.

- Kinzler K. D., Spelke E. S. (2007). Core systems in human cognition. Progress in Brain Research, 164, 257–264.

- Kirby S. (2001). Spontaneous evolution of linguistic structure: An iterated learning model of the emergence of regularity and irregularity. IEEE Transactions on Evolutionary Computation, 5(2), 102–110. 10.1109/4235.918430

- Kirby S., Cornish H., Smith K. (2008). Cumulative cultural evolution in the laboratory: An experimental approach to the origins of structure in human language. Proceedings of the National Academy of Sciences, USA, 105(31), 10681–10686.

- Klapper A., Dotsch R., van Rooij I., Wigboldus D. (2018). Social categorization in connectionist models: A conceptual integration. Social Cognition, 36(2), 221–246.

- Krafft P. M., Griffiths T. L. (2018). Levels of analysis in computational social science. In Rogers T. T., Rau M., Zhu X., Kalish C. W. (Eds.), Proceedings of the 40th annual Cognitive Science Society meeting (pp. 1963–1968). Cognitive Science Society. https://cognitivesciencesociety.org/wp-content/uploads/2019/01/cogsci18_proceedings.pdf

- Krakauer J. W., Ghazanfar A. A., Gomez-Marin A., MacIver M. A., Poeppel D. (2017). Neuroscience needs behavior: Correcting a reductionist bias. Neuron, 93(3), 480–490.

- Kuipers T. A. F. (2000). From instrumentalism to constructive realism. Kluwer Academic Publishers.

- Lieder F., Griffiths T. L. (2020). Resource-rational analysis: Understanding human cognition as the optimal use of limited computational resources. Behavioral and Brain Sciences, 43, Article e1. 10.1017/S0140525X1900061X

- Lihoreau M., Raine N. E., Reynolds A. M., Stelzer R. J., Lim K. S., Smith A. D., Osborne J. L., Chittka L. (2012). Radar tracking and motion-sensitive cameras on flowers reveal the development of pollinator multi-destination routes over large spatial scales. PLOS Biology, 10(9), Article e1001392. 10.1371/journal.pbio.1001392

- Lillicrap T. P., Santoro A., Marris L., Akerman C. J., Hinton G. (2020). Backpropagation and the brain. Nature Reviews Neuroscience, 21, 335–346.

- Lykken D. T. (1991). What’s wrong with psychology anyway? In Cicchetti D., Grove W. M. (Eds.), Thinking clearly about psychology (Vol. 1, pp. 3–39). University of Minnesota Press.

- MacEachern S. N., Van Zandt T. (2019). Preregistration of modeling exercises may not be useful. Computational Brain & Behavior, 2, 179–182.

- Marcus G. F. (2006). Cognitive architecture and descent with modification. Cognition, 101(2), 443–465.

- Marr D. (1977). Artificial intelligence—A personal view. Artificial Intelligence, 9(1), 37–48.

- Marr D. (2010). Vision: A computational investigation into the human representation and processing of visual information. MIT Press. (Original work published 1982)

- Martin A. E. (2020). A compositional neural architecture for language. Journal of Cognitive Neuroscience, 32(8), 1407–1427. 10.1162/jocn_a_01552

- Martin A. E., Baggio G. (2020). Modelling meaning composition from formalism to mechanism. Philosophical Transactions of the Royal Society B: Biological Sciences, 375(1791). 10.1098/rstb.2019.0298

- McClamrock R. (1991). Marr’s three levels: A re-evaluation. Minds and Machines, 1(2), 185–196.

- Meehl P. E. (1990). Appraising and amending theories: The strategy of Lakatosian defense and two principles that warrant it. Psychological Inquiry, 1(2), 108–141.

- Meehl P. E. (1997). The problem is epistemology, not statistics: Replace significance tests by confidence intervals and quantify accuracy of risky numerical predictions. In Harlow L. L., Mulaik S. A., Steiger J. H. (Eds.), What if there were no significance tests? (pp. 393–425). Erlbaum.

- Mesoudi A. (2016). Cultural evolution: A review of theory, findings and controversies. Evolutionary Biology, 43(4), 481–497.

- Miłkowski M. (2016). Computation and multiple realizability. In Müller V. C. (Ed.), Fundamental issues of artificial intelligence (pp. 29–41). Springer.

- Michael J., MacLeod M. A. J. (2018). Computational approaches to social cognition. In Sprevak M., Colombo M. (Eds.), Routledge handbook of the computational mind (pp. 469–482). Routledge.

- Mikhail J. (2008). Moral cognition and computational theory. In Sinnott-Armstrong W. (Ed.), Moral psychology: The neuroscience of morality: Emotion, brain disorders, and development (Vol. 3, p. 81). MIT Press.

- Mitchell J. P. (2006). Mentalizing and Marr: An information processing approach to the study of social cognition. Brain Research, 1079(1), 66–75.

- Moreno M., Baggio G. (2015). Role asymmetry and code transmission in signaling games: An experimental and computational investigation. Cognitive Science, 39(5), 918–943.

- Muthukrishna M., Henrich J. (2019). A problem in theory. Nature Human Behaviour, 3(3), 221–229.

- Navarro D. J. (2019). Between the devil and the deep blue sea: Tensions between scientific judgement and statistical model selection. Computational Brain & Behavior, 2, 28–34.

- Newell A. (1973). You can’t play 20 questions with nature and win: Projective comments on the papers of this symposium. In Chase W. G. (Ed.), Visual information processing: Proceedings of the eighth annual Carnegie symposium on cognition, held at the Carnegie-Mellon University, Pittsburgh, Pennsylvania, May 19, 1972 (pp. 283–305). Academic Press. https://kilthub.cmu.edu/articles/journal_contribution/You_can_t_play_20_questions_with_nature_and_win_projective_comments_on_the_papers_of_this_symposium/6612977

- Newell A. (1982). The knowledge level. Artificial Intelligence, 18(1), 87–127.

- Newell A., Simon H. A. (1976). Computer science as empirical inquiry: Symbols and search. Communications of the ACM, 19(3), 113–126.

- Niiniluoto I. (1999). Defending abduction. Philosophy of Science, 66, S436–S451.

- Nosek B. A., Beck E. D., Campbell L., Flake J. K., Hardwicke T. E., Mellor D. T., van’t Veer A. E., Vazire S. (2019). Preregistration is hard, and worthwhile. Trends in Cognitive Sciences, 23(10), 815–818.

- Nowak I., Baggio G. (2016). The emergence of word order and morphology in compositional languages via multigenerational signaling games. Journal of Language Evolution, 1(2), 137–150.

- Orponen P., Mannila H. (1987). On approximation preserving reductions: Complete problems and robust measures. CiteSeerX. http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.64.7246

- Otworowska M., Blokpoel M., Sweers N., Wareham T., van Rooij I. (2018). Demons of ecological rationality. Cognitive Science, 42, 1057–1065.

- Pagin P. (2003). Communication and strong compositionality. Journal of Philosophical Logic, 32(3), 287–322.

- Pagin P., Westerståhl D. (2010). Compositionality II: Arguments and problems. Philosophy Compass, 5(3), 265–282.

- Partee B. H. (1995). Lexical semantics and compositionality. In Osherson D. (Ed.), An invitation to cognitive science (pp. 311–360). MIT Press.