Abstract

The emergence of digital technologies such as smartphones in healthcare applications has demonstrated the possibility of developing rich, continuous, and objective measures of multiple sclerosis (MS) disability that can be administered remotely and out-of-clinic. Deep Convolutional Neural Networks (DCNN) may capture a richer representation of healthy and MS-related ambulatory characteristics from raw smartphone-based inertial sensor data than standard feature-based methodologies. To overcome the typical limitations of remotely generated health data, such as low subject numbers, sparsity, and heterogeneous data, a transfer learning (TL) model from similar large open-source datasets was proposed. Our TL framework leveraged the ambulatory information learned on human activity recognition (HAR) tasks collected from wearable smartphone sensor data. Fine-tuning TL DCNN HAR models towards MS disease recognition tasks was shown to outperform previous Support Vector Machine (SVM) feature-based methods, as well as DCNN models trained end-to-end, by upwards of 8–15%. The lack of transparency of “black-box” deep networks remains one of the largest stumbling blocks to the wider acceptance of deep learning for clinical applications. Ensuing work therefore aimed to visualise DCNN decisions through relevance heatmaps obtained with Layer-Wise Relevance Propagation (LRP). Through the LRP framework, the patterns in smartphone-based inertial sensor data that reflect healthy participants versus people with MS (PwMS) could begin to be established and understood. Interpretations suggested that cadence-based measures, gait speed, and ambulation-related signal perturbations were distinct characteristics that distinguished MS disability from healthy participants. 
Robust and interpretable outcomes, generated from high-frequency out-of-clinic assessments, could greatly augment the current in-clinic assessment picture for PwMS, to inform better disease management techniques, and enable the development of better therapeutic interventions.

Subject terms: Biomedical engineering, Computer science, Multiple sclerosis

Introduction

Digital health technology assessments may enable a deeper characterisation of the symptoms of neurodegenerative diseases in at-home environments1. A wealth of recent work focuses on how digital outcomes can be captured from sensor data collected with consumer devices to represent impairment in neurodegenerative and autoimmune diseases such as multiple sclerosis (MS)2,3, Parkinson’s disease (PD)4,5, and rheumatoid arthritis (RA)6, both remotely and longitudinally. MS is a heterogeneous and highly variable disease: people with MS (PwMS) can experience symptomatic episodes (relapses) that fluctuate periodically, and impairment tends to increase over time7. Objective and frequent monitoring of the manifestations of disability in PwMS is therefore of considerable importance; digital sensor-based assessments may be more accurate than conventional clinical outcomes recorded at infrequent visits in detecting subtle, progressive sub-clinical changes or long-term disability in PwMS8. Furthermore, earlier identification of changes in impairment is important for identifying and providing better therapeutic strategies9.

Alterations in ambulation (gait) are amongst the most common indications of MS impairment10–15. PwMS can display postural instability11, gait variability12–14 and fatigue15 during various stages of disease progression.

The gold-standard assessment of disability in MS is the Expanded Disability Status Scale (EDSS)16, complemented by assessments of specific functional domains such as the Timed 25-Foot Walk (T25FW), which is part of the Multiple Sclerosis Functional Composite score17,18, and the Two-Minute Walk Test (2MWT), which also assesses physical gait function and fatigue in PwMS19.

Body-worn inertial sensor-based measurements have been proposed as objective methods to characterise gait function in PwMS13,14,20. This study builds upon our previous investigations3, in which we showed how inertial sensors contained within consumer smartphones and smartwatches can be used to characterise gait impairments in PwMS from a remotely administered Two-Minute Walk Test (2MWT)19. We demonstrated how inertial sensor-based features can be extracted from these consumer devices to develop machine learning (ML) models that can distinguish MS disability from healthy participants.

These approaches, however, are constrained transformations and approximations of ambulatory function based on prior assumptions. Hand-crafted gait features are often established signal-processing metrics re-purposed as surrogates for aspects of PwMS gait; for instance, the variance of a sensor signal may be extracted in an attempt to capture gait variability in PwMS. As such, there may be greater power in allowing an algorithm to learn its own features, termed representation learning21. Deep learning is an overarching term for representation learning in which multiple levels of representation are obtained by combining a number of stacked (hence deep) non-linear model layers. Deep learning models typically include convolutional neural networks (CNN), deep neural networks (DNN), and combined deep convolutional neural networks with fully connected layers (DCNN)21. Other architectures include recurrent neural networks (RNN), such as Long Short-Term Memory (LSTM) networks, which are especially adept at modelling sequential time-series data21. While CNNs are omnipresent in image recognition tasks, they are also often highly successful at time-series tasks22,23. For example, CNNs have been shown to act as feature extractors capable of learning temporal and spatio-temporal information directly from raw time-series signals22. The features extracted by convolutional layers can then be combined into a final output through fully connected (dense) layers. Although there is no fundamental difference between the terms ‘CNN’ and ‘DCNN’, in this manuscript we explicitly refer to the entire model as a DCNN in order to distinguish the role of the feature-extraction CNN layers from that of the fully connected classification layers.
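To make the split between convolutional feature extraction and fully connected classification concrete, the sketch below runs a single forward pass of a toy DCNN in NumPy. It is an illustrative assumption, not the architecture used in this study: the layer sizes, kernel width, global average pooling and random (untrained) weights are all hypothetical, and the input is assumed to be one 128-sample epoch with four channels (three accelerometer axes plus their magnitude).

```python
import numpy as np

def conv1d(x, kernels, bias):
    """'Valid' 1-D cross-correlation, as used by most deep learning libraries.

    x: (T, C_in) epoch, kernels: (K, C_in, C_out), bias: (C_out,)
    returns: (T - K + 1, C_out) feature maps
    """
    K = kernels.shape[0]
    windows = [x[t:t + K] for t in range(x.shape[0] - K + 1)]
    return np.stack([np.tensordot(w, kernels, axes=([0, 1], [0, 1])) for w in windows]) + bias

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    e = np.exp(z - z.max())              # subtract max for numerical stability
    return e / e.sum()

def dcnn_forward(x, p):
    # Feature extraction: stacked convolutional layers learn temporal filters from raw samples.
    h = relu(conv1d(x, p["k1"], p["b1"]))
    h = relu(conv1d(h, p["k2"], p["b2"]))
    feats = h.mean(axis=0)               # global average pooling over time -> (C_out,)
    # Classification: a fully connected (dense) layer maps features to class probabilities.
    probs = softmax(feats @ p["W"] + p["b"])
    return feats, probs

rng = np.random.default_rng(0)
params = {  # hypothetical layer sizes with random, untrained weights
    "k1": rng.normal(0, 0.1, (5, 4, 16)), "b1": np.zeros(16),
    "k2": rng.normal(0, 0.1, (5, 16, 32)), "b2": np.zeros(32),
    "W": rng.normal(0, 0.1, (32, 2)), "b": np.zeros(2),
}
epoch = rng.normal(size=(128, 4))        # one synthetic epoch: 3 axes + magnitude
feats, probs = dcnn_forward(epoch, params)
```

Everything before `feats` plays the role of the CNN feature extractor; the final dense layer is the classifier that transfer learning would typically retrain for a new task.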

Deep networks have also recently been applied to inertial sensor data for a range of activity-related tasks. For instance, the most popular, and most accurate, techniques applied to sensor-based Human Activity Recognition (HAR) incorporate deep networks23–25. Many studies are beginning to explore disease classification and symptom monitoring from wearable-generated inertial sensor data using deep networks. Representations learned using DCNNs from wearable and smartphone inertial sensors have been shown to predict gait impairment in Parkinson’s disease5,26 and falls risk in both the elderly27 and PwMS28; DCNNs have also been applied to subject identification tasks29,30.

Deep transfer learning for remote disease classification

The work presented in this study compares the performance of CNN-extracted features and DCNN models against the hand-crafted features previously introduced in3 to directly classify healthy controls (HC) and subgroups of PwMS. Despite the possibility of significant performance improvements over hand-crafted feature-based methods, deep networks require much more training data to make successful, robust and generalisable decisions21. Transfer learning (TL) is a machine learning technique that aims to overcome these challenges by transferring information learned in a source domain to a related target domain21,31.

While the data may come from different domains, or their distributions may differ between the source and target tasks, transfer learning assumes that the knowledge learned on one task and dataset will be related to, and useful for, the new target task.

Transfer learning has been successfully implemented in many computer vision tasks32 and in time-series classification tasks31, such as EEG sleep staging33,34, and, importantly, in accelerometry-based falls prediction27 and physical activity recognition35,36.
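The freeze-and-retrain idea behind transfer learning can be sketched in a few lines. In this hypothetical example, `W_src` stands in for layers already trained on a source task and is kept frozen, while only a new logistic-regression output layer is fitted on a small synthetic target dataset. This is a simplification under stated assumptions: the study fine-tunes a portion of a DCNN, whereas here a single fixed layer and synthetic data replace the HAR-trained network and the FL recordings.

```python
import numpy as np

def frozen_features(x, W_src):
    """Source-trained layer reused as-is; W_src is never updated during fine-tuning."""
    return np.maximum(x @ W_src, 0.0)

def fine_tune_head(feats, y, lr=0.1, epochs=500):
    """Fit a new logistic-regression output layer for a binary target task."""
    w, b = np.zeros(feats.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(feats @ w + b)))   # predicted Pr(class 1)
        err = p - y                                   # gradient of the log-loss w.r.t. the logits
        w -= lr * feats.T @ err / len(y)
        b -= lr * err.mean()
    return w, b

rng = np.random.default_rng(1)
# Hypothetical "pretrained" source layer: it splits each input into positive and
# negative parts, standing in for features learned on a HAR task.
W_src = np.hstack([np.eye(4), -np.eye(4)])
W_before = W_src.copy()
x_tgt = rng.normal(size=(200, 4))            # small synthetic target dataset
y_tgt = (x_tgt[:, 0] > 0).astype(float)      # synthetic binary labels
feats = frozen_features(x_tgt, W_src)
w, b = fine_tune_head(feats, y_tgt)
train_acc = np.mean(((feats @ w + b) > 0) == (y_tgt == 1))
```

Only `w` and `b` change during training; keeping `W_src` fixed is what limits the number of parameters fitted on the small target set.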

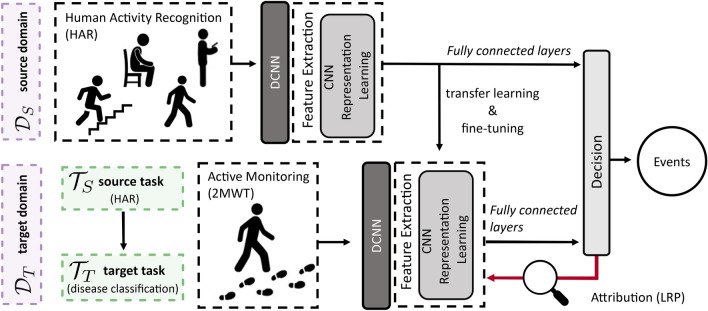

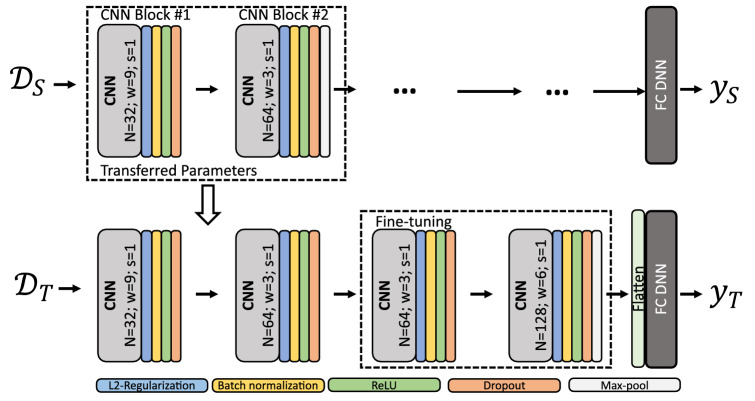

We therefore aim to use transfer learning to enhance our model’s ability to discriminate between healthy and diseased subjects in the FLOODLIGHT proof-of-concept (FL) dataset (see Table 1)3,37. Deep transfer learning was performed by first identifying relevant large (open-) source datasets from which information could be exploited. The similarity between some HAR datasets and FL, the applicability of the HAR task (which includes walking bouts), and the wealth of established HAR deep network architectures suggest that HAR may be a suitable candidate from which to transfer domain knowledge. We identified two publicly available datasets, UCI HAR38 and WISDM39, which use comparable smartphone and smartwatch devices, and device affixing locations similar to those of FL. Figure 1 schematically illustrates the transfer learning approach, in which the information learned from a HAR classification task ($\mathcal{T}_S$) and dataset ($\mathcal{D}_S$) is transferred to a disease recognition task ($\mathcal{T}_T$) within the FL dataset ($\mathcal{D}_T$). Demographic details of the UCI HAR and WISDM datasets explored in this study can be found in the accompanying supplementary material.

Table 1.

Population Demographics of the FLOODLIGHT PoC dataset. Clinical scores taken as the average per subject over the entire study, where the mean ± standard deviation across population are reported; RRMS, Relapsing-remitting MS; PPMS, Primary-progressive MS; SPMS, Secondary-progressive MS; EDSS, Expanded Disability Status Scale; T25FW, the Timed 25-Foot Walk; EDSS (amb.) refers to the ambulation sub-score as part of the EDSS; [s], indicates measurement in seconds;

| | HC (n=24) | PwMSmild (n=52) | PwMSmod (n=21) |
|---|---|---|---|
| Age | 35.6 ± 8.9 | 39.3 ± 8.3 | 40.5 ± 6.9 |
| Sex (M/F) | 18/6 | 16/36 | 7/14 |
| RRMS/PPMS/SPMS | | 52/0/0 | 14/3/4 |
| EDSS | | 1.7 ± 0.8 | 4.2 ± 0.7 |
| EDSS (amb.) | | 0.1 ± 0.3 | 1.9 ± 1.5 |
| T25FW [s] | 5.0 ± 0.9 | 5.3 ± 0.9 | 7.9 ± 2.2 |

Figure 1.

Schematic of the proposed smartphone-based remote disease classification approach. First, open-source datasets ($\mathcal{D}_S$) were used to learn a HAR classification task ($\mathcal{T}_S$) with a Deep Convolutional Neural Network (DCNN). The learned activity information was then transferred using the transfer learning (TL) framework, where a portion of the DCNN model is retrained on the FL dataset ($\mathcal{D}_T$) and its parameters are fine-tuned towards a disease recognition task ($\mathcal{T}_T$). DCNN model decisions can then be visually interpreted using attribution techniques, such as layer-wise relevance propagation (LRP), which aim to map the patterns of an input signal that are responsible for the activations within a network, and hence uncover pertinent MS-related ambulatory characteristics.

Visually interpreting smartphone-based remote sensor models through attribution

Deep networks can be highly non-linear and complex, which makes the decisions that lead to a prediction inherently difficult to interpret40,41. As such, there is considerable interest in explaining and understanding these “black-box” algorithms42; a lack of model transparency is considered a particular hindrance to the widespread uptake and acceptance of deep networks in medical contexts, compared with less powerful but interpretable linear models43. A number of techniques have been developed in recent years to help explain deep neural networks40,42,44–46. Layer-wise relevance propagation (LRP) is a backward propagation technique that has gained considerable prominence as a method to explain and interpret deep networks, with advantages over many existing techniques47,48. LRP has demonstrated clinical utility in identifying the regions of the brain responsible for predictions of Alzheimer’s disease (AD)49 and MS from MRI images50. Attribution through LRP has also been successfully applied to clinical time-series data, such as EEG trial classification for brain-computer interfacing51 and, crucially, to identifying gait patterns unique to individual subjects52,53. The study by Horst et al. reliably demonstrated how LRP could characterise temporal gait patterns, and explained in detail the nuances of the gait characteristics that distinguished individual participants53. An extension of this rationale is that there may be gait patterns characteristic of a disease, or of a diseased sub-population. Using the LRP framework, we therefore attempt to attribute, explain, and interpret the patterns of the sensor signal (and therefore the features) that the DCNN models in this study use to distinguish MS-related gait impairment from healthy ambulation (as outlined in Fig. 1).

Results

A DCNN model was first trained independently on the UCI HAR and WISDM datasets. The information learned from these HAR tasks was then transferred and fine-tuned on the FL dataset for disease recognition (classification) tasks. DCNN models trained exclusively on FL were compared with those fine-tuned from HAR and benchmarked against established feature-based approaches. Finally, DCNN model predictions were decomposed using layer-wise relevance propagation (LRP) in order to interpret the signal characteristics that influenced a prediction for various individual HC and PwMSmod 2MWT segment examples. Table 1 depicts the population demographics for the FLOODLIGHT PoC dataset.

Classification evaluation

Evaluation of activity recognition

UCI HAR activities were well differentiated (Acc: 0.905 ± 0.018, κ: 0.880 ± 0.023, MF: 0.893 ± 0.025), with much of the confusion occurring between similar “static” activities (such as sitting and standing). WISDM activities were distinguished less accurately in comparison (Acc: 0.621 ± 0.037, κ: 0.525 ± 0.0047, MF: 0.622 ± 0.038), although much of the additional confusion in WISDM occurred between similar “dynamic” activities (such as jogging and walking). Despite this, static and dynamic activities were clearly separated in both UCI HAR and WISDM.
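The three reported metrics (accuracy, Cohen's kappa and macro-F1) can all be derived from a confusion matrix. The sketch below shows one straightforward NumPy computation; the function name `classification_metrics` is hypothetical, and the study's own evaluation code is not described here.

```python
import numpy as np

def classification_metrics(y_true, y_pred, n_classes):
    """Accuracy, Cohen's kappa and macro-F1 from integer class labels."""
    cm = np.zeros((n_classes, n_classes), dtype=int)   # rows: true class, cols: predicted class
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    n = cm.sum()
    acc = np.trace(cm) / n
    # Agreement expected by chance from the true and predicted marginals
    p_e = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / n**2
    kappa = (acc - p_e) / (1.0 - p_e)
    tp = np.diag(cm).astype(float)
    col, row = cm.sum(axis=0), cm.sum(axis=1)
    precision = np.divide(tp, col, out=np.zeros(n_classes), where=col > 0)
    recall = np.divide(tp, row, out=np.zeros(n_classes), where=row > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros(n_classes), where=denom > 0)
    return acc, kappa, f1.mean()                        # macro-F1: unweighted mean over classes

acc, kappa, mf = classification_metrics([0, 0, 1, 1], [0, 1, 1, 1], n_classes=2)
# acc = 0.75, kappa = 0.5, mf ≈ 0.733
```

Kappa corrects accuracy for chance agreement and macro-F1 weights every class equally, which matters when class sizes are unbalanced (as with 24 HC versus 52 PwMSmild).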

Evaluation of MS disease recognition

Three separate classification models were constructed for binary tasks (HC vs. PwMSmild, PwMSmild vs. PwMSmod and HC vs. PwMSmod) to allow direct comparison with the hand-crafted feature-based classification outcomes assessed in3, as well as a unified multi-class model incorporating all three classes simultaneously. The implementation of the baseline SVM model and hand-crafted features has previously been described in3. Hand-crafted features included various statistical moments of the acceleration epochs and frequency content, as well as energy- and entropy-based properties of the time-frequency signal components obtained through wavelet and empirical mode decomposition. For further information we refer the reader to3. Table 2 depicts the classification outcomes for all tasks. Benchmarked against a feature-based Support Vector Machine (SVM) classifier3, DCNN (end-to-end) model performance was similar in all tasks. PwMSmod could largely be distinguished from HC and PwMSmild. HAR DCNN models evaluated directly on FL (“direct”) did not distinguish between subject groups. Transfer learning improved disease classification accuracy for all tasks examined, relative to feature-based and end-to-end models, by upwards of 8–15% in binary tasks and by 33% in the multi-class task; TL DCNNs based on either source dataset ($\mathcal{D}_S$: UCI HAR or WISDM) performed similarly for all target classification tasks (Table 2). Further results expanding on this work can be found in the accompanying supplementary material, including the parameters of the DCNN models achieving maximal classification performance in Table 2.

Table 2.

Comparison of HC vs. PwMS subgroup classification results between models for each target task ($\mathcal{T}_T$). Results are presented as (1) the posterior overall subject-wise outcome for one cross-validation (CV) run and (2), in brackets, the 2MWT test-wise median and interquartile range (IQR) across that CV. The best-performing model for each $\mathcal{T}_T$ is highlighted in bold. Acc, accuracy; κ, Cohen’s kappa statistic; MF, macro-F1 score.

| Model | Acc. | κ | MF |
|---|---|---|---|
| **HC vs. PwMSmild** | | | |
| Features + SVM | 0.671 (0.576, 0.544–0.696) | 0.212 (0.153, 0.088–0.393) | 0.605 (0.575, 0.527–0.694) |
| DCNN (end-to-end) | 0.658 (0.601, 0.517–0.641) | 0.226 (0.082, 0.037–0.194) | 0.613 (0.541, 0.494–0.588) |
| DCNN (UCI HAR → FL) | 0.776 (0.741, 0.688–0.767) | 0.510 (0.435, 0.346–0.481) | 0.754 (0.716, 0.662–0.737) |
| DCNN (WISDM → FL) | 0.763 (0.733, 0.698–0.761) | 0.486 (0.479, 0.343–0.490) | 0.741 (0.727, 0.667–0.743) |
| **PwMSmild vs. PwMSmod** | | | |
| Features + SVM | 0.849 (0.783, 0.706–0.858) | 0.627 (0.566, 0.412–0.708) | 0.813 (0.778, 0.692–0.853) |
| DCNN (end-to-end) | 0.822 (0.682, 0.617–0.763) | 0.583 (0.356, 0.166–0.444) | 0.791 (0.675, 0.562–0.721) |
| DCNN (UCI HAR → FL) | 0.904 (0.849, 0.839–0.873) | 0.776 (0.675, 0.650–0.707) | 0.888 (0.837, 0.823–0.852) |
| DCNN (WISDM → FL) | 0.918 (0.869, 0.833–0.935) | 0.810 (0.690, 0.630–0.844) | 0.905 (0.845, 0.812–0.922) |
| **HC vs. PwMSmod** | | | |
| Features + SVM | 0.800 (0.773, 0.737–0.881) | 0.595 (0.546, 0.474–0.763) | 0.796 (0.772, 0.737–0.881) |
| DCNN (end-to-end) | 0.822 (0.734, 0.663–0.831) | 0.641 (0.462, 0.292–0.657) | 0.820 (0.730, 0.618–0.828) |
| DCNN (UCI HAR → FL) | 0.889 (0.873, 0.730–0.929) | 0.777 (0.743, 0.446–0.847) | 0.889 (0.870, 0.723–0.924) |
| DCNN (WISDM → FL) | 0.911 (0.886, 0.766–0.911) | 0.821 (0.772, 0.520–0.820) | 0.911 (0.886, 0.760–0.910) |
| **HC vs. PwMSmild vs. PwMSmod** | | | |
| Features + SVM | 0.629 (0.551, 0.510–0.577) | 0.368 (0.093, 0.020–0.103) | 0.580 (0.510, 0.495–0.540) |
| DCNN (end-to-end) | 0.608 (0.503, 0.488–0.516) | 0.274 (0.106, 0.081–0.130) | 0.523 (0.446, 0.402–0.483) |
| DCNN (UCI HAR → FL) | 0.814 (0.703, 0.700–0.744) | 0.673 (0.331, 0.325–0.423) | 0.796 (0.672, 0.664–0.720) |
| DCNN (WISDM → FL) | 0.763 (0.690, 0.677–0.737) | 0.571 (0.303, 0.274–0.407) | 0.725 (0.671, 0.644–0.699) |

“Features + SVM” refers to classification performed using hand-crafted features and an SVM with the pipeline described in3;

“End-to-end” refers to a model trained and validated end-to-end exclusively on FL data ($\mathcal{D}_T$);

“$\mathcal{D}_S$ → FL” denotes the source HAR dataset ($\mathcal{D}_S$) used and transferred to FL ($\mathcal{D}_T$) and $\mathcal{T}_T$. See Fig. 6 for a more detailed description of the TL approach used in this study.
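The two summaries reported in Table 2 (a subject-wise outcome and a test-wise median with IQR) can be computed along the following lines. This is a hypothetical sketch: the aggregation rule used here, averaging epoch-level posteriors per subject and taking the most probable class, is an assumption for illustration and is not confirmed by the text.

```python
import numpy as np

def subject_prediction(epoch_probs):
    """Hypothetical aggregation: mean of epoch-level posteriors, then the most probable class."""
    return int(np.argmax(np.mean(np.asarray(epoch_probs), axis=0)))

def median_iqr(scores):
    """Median and interquartile range (25th to 75th percentile) of test-wise scores."""
    q1, med, q3 = np.percentile(scores, [25, 50, 75])
    return float(med), (float(q1), float(q3))

label = subject_prediction([[0.9, 0.1], [0.4, 0.6], [0.7, 0.3]])   # -> 0
med, (q1, q3) = median_iqr([0.5, 0.6, 0.7, 0.8, 0.9])              # -> 0.7, (0.6, 0.8)
```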

Interpreting MS remote sensor data

The results described in this section aim to interpret smartphone sensor data recorded in FL through attribution techniques. Trained models were decoded using LRP; we propose that this framework allows us to understand, at least to some extent, the classification decision for individual out-of-sample 2MWT epochs. Holistic interpretation of the disease-classification outcomes with respect to the inertial sensor data is greatly aided by integrating: (1) visualisation of the raw data, (2) its time-frequency representation using the (discretised) continuous wavelet transform (CWT), and (3) LRP attribution. The CWT measures the similarity between a signal and scaled, shifted copies of an analysing function (in this case the Morlet wavelet), providing a time-frequency representation of the signal3,54. For more information we refer the reader to the analysis performed in3.
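A minimal sketch of a discretised Morlet CWT is shown below. It assumes a 50 Hz sampling rate (implied by 128 samples per 2.56 s epoch), a central angular frequency `w0 = 6` (a common choice, not stated in the text), the scale-to-frequency mapping f = w0 / (2πs), and an amplitude-preserving 1/s normalisation; the study's exact wavelet parameters may differ. The function name `morlet_cwt` is hypothetical.

```python
import numpy as np

def morlet_cwt(x, fs, freqs, w0=6.0):
    """Complex Morlet CWT of a 1-D signal; returns coefficients of shape (len(freqs), len(x))."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                                   # remove the DC offset (e.g. gravity)
    dt = 1.0 / fs
    coeffs = np.empty((len(freqs), len(x)), dtype=complex)
    for k, f in enumerate(freqs):
        s = w0 / (2 * np.pi * f)                       # scale (seconds) whose centre frequency is f
        half = min(int(np.ceil(4 * s * fs)), (len(x) - 1) // 2)  # truncate the wavelet support
        t = np.arange(-half, half + 1) * dt
        psi = np.pi ** -0.25 * np.exp(1j * w0 * t / s) * np.exp(-0.5 * (t / s) ** 2)
        # For the Morlet wavelet, convolving with psi equals correlating with conj(psi).
        # The dt / s factor is a 1/s normalisation, so the peak tracks the signal frequency.
        coeffs[k] = np.convolve(x, psi, mode="same") * dt / s
    return coeffs

fs = 50.0
t = np.arange(500) / fs                                # 10 s synthetic signal
x = np.sin(2 * np.pi * 2.0 * t)                        # 2 Hz "gait-like" oscillation
freqs = np.arange(0.5, 5.01, 0.25)
scalogram = np.abs(morlet_cwt(x, fs, freqs))           # |CWT|, as plotted in a scalogram
peak_freq = freqs[np.argmax(scalogram.mean(axis=1))]   # 2.0 Hz for this synthetic input
```

Truncating the wavelet to the signal length keeps the output aligned with the input for short epochs, at the cost of some accuracy at the lowest frequencies.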

Relevance propagation through LRP decomposed the output of a learned function $f$, given an input $\mathbf{x}$, by attributing relevance values $R_i$ to individual input samples $x_i$. In this case, the $x_i$ were discrete sensor samples within a testing epoch, so the relevance was directly embedded in the time domain. The contribution of LRP could also be quantified across the input channels (in this case the sensor axes).
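The decomposition can be illustrated with the LRP-ε rule, which redistributes the relevance of each neuron to its inputs in proportion to their contributions, R_i = a_i Σ_j w_ij R_j / (z_j + ε·sign(z_j)). The sketch below applies it to a small dense ReLU network in NumPy. It is a simplification under stated assumptions: the network, its sizes and weights are hypothetical, convolutional layers are omitted (the same rule applies to them), biases are set to zero so that relevance is conserved, and the 128 × 4 epoch is assumed to be flattened row-major.

```python
import numpy as np

def forward(x, layers):
    """Dense ReLU network; the last layer is linear (logits). Returns all activations."""
    acts = [x]
    for k, (W, b) in enumerate(layers):
        z = acts[-1] @ W + b
        acts.append(z if k == len(layers) - 1 else np.maximum(z, 0.0))
    return acts

def lrp_epsilon(x, layers, target, eps=1e-9):
    """Propagate the target logit back to the inputs with the LRP-epsilon rule."""
    acts = forward(x, layers)
    R = np.zeros_like(acts[-1])
    R[target] = acts[-1][target]                  # start from the explained output f(x)
    for k in range(len(layers) - 1, -1, -1):
        W, b = layers[k]
        a = acts[k]
        z = a @ W + b                              # pre-activations of layer k's outputs
        s = R / (z + eps * np.where(z >= 0, 1.0, -1.0))  # stabilised ratio R_j / z_j
        R = a * (W @ s)                            # R_i = a_i * sum_j w_ij * R_j / z_j
    return R                                       # one relevance score R_i per input x_i

rng = np.random.default_rng(0)
layers = [(rng.normal(0, 0.05, (512, 64)), np.zeros(64)),   # hypothetical sizes, zero biases
          (rng.normal(0, 0.1, (64, 2)), np.zeros(2))]
x = rng.normal(size=512)                           # one flattened epoch: 128 samples x 4 channels
logits = forward(x, layers)[-1]
R = lrp_epsilon(x, layers, target=1)
relevance_per_sample = R.reshape(128, 4)           # R_i back on the time axis, per channel
```

With zero biases the relevance scores sum to the explained logit, which is what allows positive and negative $R_i$ to be read as evidence for or against the target class.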

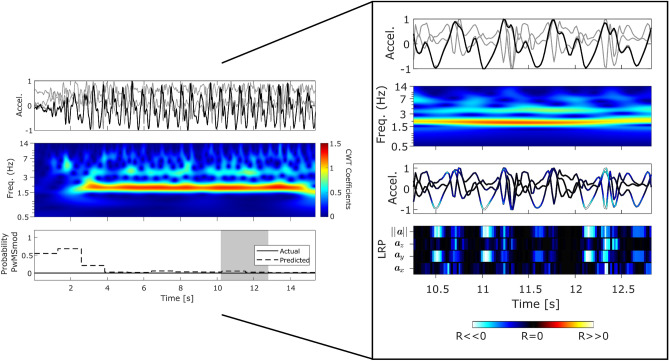

Figures 2, 3 and 4 compared the patterns and characteristics captured in the raw sensor signal, augmented with LRP-CWT analysis, for representative examples of correctly classified HC, PwMSmild and PwMSmod subjects, respectively. Figure 2 first illustrated a correctly classified HC subject’s 2MWT segment, supplemented by the raw sensor data, its CWT representation and the final disease model’s probabilistic output. In this example, the gait signal was apparent from the raw sensor data and supported by strong gait-domain energy within the CWT representation. This collection of epochs was predicted as predominantly “walking” by a HAR model and corresponded to a confident HC classification with high probability. Focusing on an overlapping example epoch from 10.3–12.8 [s], gait was clearly visible in the time-frequency domain (i.e. large CWT coefficients around 1.5 Hz) and reflected clear steps, as depicted by the magnitude of acceleration. LRP attributed high relevance scores to these steps in the vertical and orientation-invariant signals (i.e. channels 2 and 4).

Figure 2.

HC epoch: Panel plot illustrating example performance for a typical HC subject (true negative), visually interpreted using LRP decomposition and CWT frequency analysis (HC, T25FW: 4.8 ± 0.35 [s]). The top row represents a 3-axis accelerometer trace captured from a smartphone over 15.4 seconds, which corresponds to 12 epochs of length 128 samples (or 2.56 [s]) with a 50% overlap. The magnitude of the 3-axis signal is highlighted in bold. The second row depicts the top view of the CWT scalogram, i.e. the absolute value of the CWT as a function of time and frequency. The final row depicts the output disease classification probabilities. The shaded grey area represents an example epoch (n=128 samples, or 2.56 [s]) within the acceleration trace, which is examined in the larger subplot through the decomposition of DCNN input relevance values ($R_i$) using Layer-Wise Relevance Propagation (LRP). Red and hot colours identify input segments with positive relevance ($R_i > 0$), indicating MS; blue and cold hues denote negative relevance ($R_i < 0$), indicating HC; black represents inputs ($R_i \approx 0$) with little or no influence on the DCNN’s decision. LRP values are overlaid upon the accelerometer signal, and the bottom panel shows the LRP relevance per input (i.e. $R_i$).
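The epoching described in this caption (128-sample epochs, 50% overlap, plus an orientation-invariant magnitude channel) can be sketched as follows. The channel order (x, y, z, then magnitude) is an assumption consistent with the magnitude being channel 4; the function name `segment_epochs` and the synthetic trace are hypothetical. An epoch of 128 samples spanning 2.56 s implies a 50 Hz sampling rate.

```python
import numpy as np

def segment_epochs(acc_xyz, epoch_len=128, overlap=0.5):
    """Split a (T, 3) accelerometer trace into overlapping epochs with 4 channels.

    Channels are assumed to be x, y, z and the orientation-invariant magnitude |a|.
    """
    acc_xyz = np.asarray(acc_xyz, dtype=float)
    mag = np.linalg.norm(acc_xyz, axis=1, keepdims=True)    # |a| = sqrt(x^2 + y^2 + z^2)
    signal = np.concatenate([acc_xyz, mag], axis=1)         # (T, 4)
    hop = int(epoch_len * (1 - overlap))                    # 64 samples for 50% overlap
    starts = range(0, len(signal) - epoch_len + 1, hop)
    return np.stack([signal[s:s + epoch_len] for s in starts])

fs = 128 / 2.56                                             # implied sampling rate (50 Hz)
trace = np.tile([3.0, 4.0, 0.0], (832, 1))                  # constant synthetic trace
epochs = segment_epochs(trace)                              # shape (12, 128, 4)
```

The magnitude channel is unaffected by how the phone is rotated in the belt, which is why it is useful alongside the individual axes.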

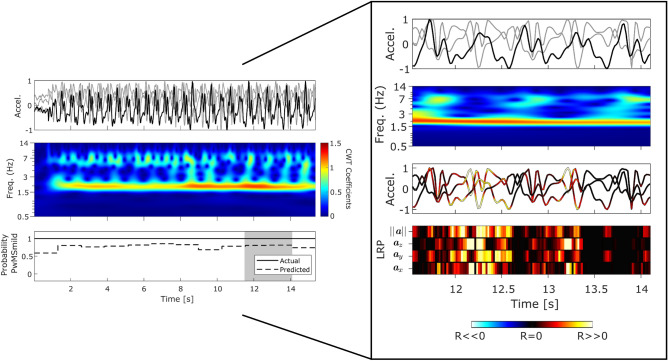

Figure 3.

PwMSmild epoch: Panel plot illustrating example performance from a section of a correctly classified PwMSmild subject’s 2MWT (true positive), visually interpreted using LRP decomposition and CWT frequency analysis (PwMSmild, EDSS: 3.25 ± 0.35; T25FW: 5.5 ± 0.53 [s]). For further information regarding the interpretation of this example, we refer the reader to Fig. 2.

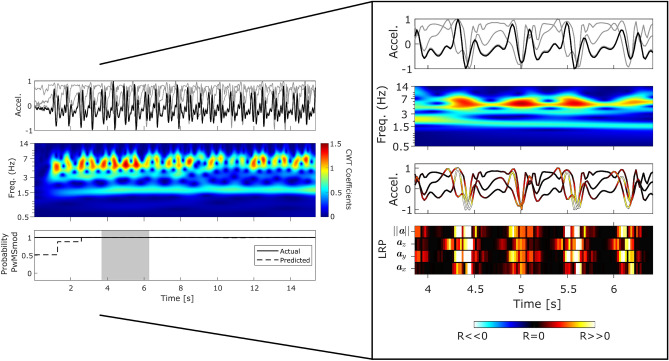

Figure 4.

PwMSmod epoch: Panel plot illustrating example performance from a section of a correctly classified PwMSmod subject’s 2MWT (true positive) which can be visually interpreted using LRP decomposition and CWT frequency analysis. (PwMSmod, EDSS: 3.8 ± 2.9; T25FW: 6.9 ± 0.5 [s]) For further information regarding the interpretation of this example, we refer the reader to Fig. 2.

Figure 3 depicted an example 2MWT from a representative correctly classified PwMSmild subject. As in the HC example, the gait signal was visible (i.e. strong gait-domain CWT energy, clear steps in the raw signal, and HAR posterior probabilities indicating “walking”). Relevance propagation for an example epoch during 11.5–14 [s] indicated that step occurrences contributed to the “mild” DCNN posterior output. Time-frequency visualisation of the gait signal through CWT analysis also revealed harmonics at frequencies above the gait domain (>3.5 Hz).

Figure 4, in contrast, depicted example performance for a typical correctly classified PwMSmod subject’s 2MWT segment. In this example, a concentration of higher-frequency disturbances temporally coincided with each step event. These gait-related perturbations were examined in the zoomed subfigure for an example epoch during 3.9–6.4 [s], highlighted by the shaded grey area within the longer gait example. Relevance decomposition of this epoch attributed all LRP-based relevance to each step and its associated high-frequency disturbance (i.e. events driving the output towards PwMSmod).

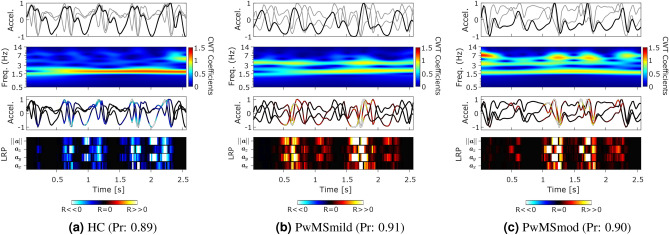

Finally, average gait epochs for HC, PwMSmild and PwMSmod groups were created using Dynamic Time Warping Barycenter Averaging55. Visualisation of each representative epoch using the LRP-CWT framework was depicted in Fig. 5. The DCNN posterior probabilities for each representative epoch were strongly predictive of the true class (HC, Pr. 0.89; PwMSmild, Pr. 0.91; and PwMSmod, Pr. 0.90).
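DTW Barycenter Averaging iteratively aligns every sequence to a current average with dynamic time warping and replaces each average sample with the mean of the samples aligned to it. The sketch below is a basic NumPy version for illustration only: it uses squared Euclidean DTW without a warping window, a fixed number of iterations, and the first sequence as the initial average (a simple choice made here for brevity); the implementation used in this study may differ. The names `dtw_path` and `dba` are hypothetical.

```python
import numpy as np

def dtw_path(a, b):
    """Optimal DTW alignment between sequences a (n, C) and b (m, C), squared Euclidean cost."""
    n, m = len(a), len(b)
    D = np.full((n + 1, m + 1), np.inf)
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = np.sum((a[i - 1] - b[j - 1]) ** 2)
            D[i, j] = cost + min(D[i - 1, j - 1], D[i - 1, j], D[i, j - 1])
    path, i, j = [], n, m
    while i > 0 and j > 0:                 # backtrack from (n, m) to (1, 1)
        path.append((i - 1, j - 1))
        step = int(np.argmin([D[i - 1, j - 1], D[i - 1, j], D[i, j - 1]]))
        if step == 0:
            i, j = i - 1, j - 1
        elif step == 1:
            i -= 1
        else:
            j -= 1
    return path[::-1]

def dba(series, n_iter=10):
    """DTW Barycenter Averaging: iteratively refine an average sequence."""
    series = [np.asarray(s, dtype=float).reshape(len(s), -1) for s in series]
    avg = series[0].copy()                 # initial average: first sequence (simple choice)
    for _ in range(n_iter):
        sums = np.zeros_like(avg)
        counts = np.zeros(len(avg))
        for s in series:
            for i, j in dtw_path(avg, s):  # each average sample collects its aligned points
                sums[i] += s[j]
                counts[i] += 1
        avg = sums / counts[:, None]
    return avg

avg = dba([np.zeros(20), 2 * np.ones(20)])  # barycenter of two flat sequences: all ones
```

Unlike a sample-by-sample mean, the DTW alignment lets step events that occur at slightly different times in different epochs be averaged together, so the representative epoch keeps a sharp step morphology.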

Figure 5.

Visualisation of the average gait signal through the LRP-CWT analysis framework. Representative average epochs (n=128 samples, 2.56 [s]) were first created using Dynamic Time Warping Barycenter Averaging (DBA) independently for sets of correctly classified epochs from HC, PwMSmild, and PwMSmod subject groups. (Pr; represents the DCNN posterior probability for that class).

Discussion

Learning a representation of MS ambulatory function

Deep networks may learn a better representation of gait function from smartphone-based inertial sensors than traditional hand-crafted features. This study leveraged DCNNs to extract unconstrained features from raw smartphone accelerometry data captured during remotely performed 2MWTs by HC and PwMS subjects. Rather than relying on hand-crafted features, which are constrained transformations and approximations of the original signal, DCNNs offer a data-driven approach to characterise ambulation-related features directly from the raw sensor data. Remote health data is often sparse and infrequently sampled3; low participant numbers and heterogeneous data can make it difficult to build reliable and robust models. To help overcome this, we have demonstrated how common gait-related characteristics can first be learned from open-source datasets, and these representations then fine-tuned to learn disease-specific ambulatory traits using transfer learning.

In this work, higher-level DCNN features learned on open-source HAR datasets were transferred to the FL domain. A DCNN model was first trained on a HAR classification task using two independent open-source HAR datasets. In accordance with other studies56, excellent discrimination of HAR activities was achieved using deep networks on the UCI HAR database. Prediction of WISDM activities was less accurate in comparison, although much of the additional confusion in WISDM occurred between similar “dynamic” activities (such as jogging and walking). Importantly, applying a HAR-trained model directly to FL did not distinguish HC and PwMS subgroups.

The results presented in this study demonstrated that DCNN models can be applied to raw smartphone-based inertial sensor data to successfully distinguish subgroups of PwMS from HC subjects. The performance of DCNN models trained end-to-end on FL alone was similar to that of the feature-based approaches.

However, models trained end-to-end using only FL data were highly susceptible to over-fitting and generalised less well than models fine-tuned from a trained HAR network. This was particularly evident in the poor end-to-end performance on the more difficult multi-class task. Transfer learning therefore improved model robustness and generalisability for recognising subgroups of PwMS versus HCs, compared with both DCNN models trained end-to-end on FL and the feature-based methods (by between 8–15% in binary tasks and up to 33% in the multi-class task). The larger gains in the multi-class task were particularly evident as improved recognition of PwMSmild versus HC.

The improved DCNN model performance through TL could be attributed to a number of factors. For instance, there is the added benefit of training on a larger and more diverse set of data, as well as the regularisation that TL induces (freezing layers mitigates over-fitting). More interestingly, both UCI HAR (n = 30) and WISDM (n = 51) consisted specifically of young, healthy individuals. In FL there were only n = 24 healthy participants (only 16 of whom contributed more than 10 unique running belt 2MWT tests). As such, initially training on more healthy examples may have allowed the DCNN to initialise a more accurate representation of “healthy” walking from inertial sensor data. Notably, models transferred from UCI HAR and WISDM performed similarly for all target classification tasks $\mathcal{T}_T$. However, transferring from WISDM tended to perform marginally better at distinguishing PwMSmod, whereas PwMSmild were slightly better identified from HC when transferring from UCI HAR. The activity patterns within each dataset may also have uniquely aided each task. For example, WISDM explicitly learned a unique “jogging” class, which could support better representation learning of faster versus slower gait; moderately disabled PwMS in particular are known to have relatively slower cadence19. Other characteristics must also be considered, such as the affixing of the phone to the waist in UCI HAR (similar to the FL running belt) versus the pocket in WISDM. Regardless, more work is needed to understand the explicit reasons for the improved performance of TL models applied to remote sensor data. In particular, further studies will be needed to fully define the attributes of a source domain $\mathcal{D}_S$ and task $\mathcal{T}_S$ that are relevant to the target domain $\mathcal{D}_T$ and task $\mathcal{T}_T$, or to determine the optimal source dataset (or combination of sources) among multiple candidates.

The DCNN architecture investigated in this study was relatively simple compared with other frameworks5,23,30,57; future work will also investigate the use of recurrent layers (such as LSTMs), which have proven beneficial for characterising the temporal nature of gait recorded within the sensor signal23,30.

Interpreting smartphone-based remote sensor models

Recent breakthroughs in visualising neural networks have paved the way for explanations of deep and complex models; for example, heatmaps of the “relevant” parts of an input can be built by decomposing a network’s prediction using layer-wise relevance propagation42,44. Visual interpretation of the factors that influence a model’s prediction may enable a deeper understanding of how healthy and disease-influenced characteristics are captured from remote smartphone-based inertial sensor data. This work aims to establish a framework for understanding healthy and MS-affected gait patterns from multiple viewpoints: the raw inertial sensor signal, its time-frequency CWT representation, and layer-wise relevance propagation. Attribution through LRP has already been successfully applied to visualise gait patterns predictive of an individual, acquired from lab-based ground reaction force plates and infrared camera-based full-body joint angles53.

The patterns of healthy gait were first visually inspected in an example HC 2MWT (Fig. 2) through the LRP-CWT framework. Comparing these healthy gait templates with PwMS examples offered a visual interpretation of the differences in the signal characteristics each classifier recognised as important for a prediction. For instance, healthy gait in FL was typically characterised by distinct steps, consistent cadence, and strong gait power in the 1.5–3 Hz range. Attribution using LRP highlighted step inflections, especially in the vertical and magnitude acceleration signals, as important predictors of HC ambulation. PwMSmild and PwMSmod examples misclassified as HC (see supplementary material) also tended to visually resemble the HC example (Fig. 2); for instance, LRP tended to attribute relevance to the vertical and magnitude channels during clear step inflections, much like the actual HC example.

The morphology of the sensor data in PwMSmod examples was visually different from that of correctly classified HC and PwMSmild examples. The PwMSmod gait epochs (Fig. 4) and the false positives (see supplementary material) exhibited distinct higher-frequency “perturbations” during gait, which the LRP decomposition attributed as temporally important for the prediction of PwMSmod in each case. Interestingly, these higher-frequency disturbances coincided temporally with each step event in the raw sensor signal. HC and PwMSmild examples misclassified as PwMSmod tended to share properties with the correctly classified example in Fig. 4, such as lower gait-domain energy (see3), higher-frequency perturbations, and a visually less well-defined step morphology in the raw sensor signal. Corroborating the factors behind these misclassifications, LRP clearly attributed positive relevance (i.e. towards PwMSmod predictions) to time points corresponding to higher-frequency signal activity.

Generating representative, correctly classified gait epochs using DTW Barycenter Averaging (DBA) painted a macro-picture of the average gait patterns of the HC, PwMSmild and PwMSmod groups. Visualising these representative epochs with the LRP-CWT framework (Fig. 5) confirmed the patterns observed in the individually classified 2MWT examples (Figs. 2–4). Importantly, the raw accelerometer signals collected from healthy controls, and the DBA-generated average cycles, were highly comparable to established characteristics of healthy walking58,59. For example, the DBA-representative HC epoch was visually observed to have clear step patterns with discernible initial and final foot contact points, and stronger power in the gait domain (0.5–3 Hz). In comparison, the average epochs predicted as mild and moderate MS tended to have a reduced (gait) signal-to-noise ratio and higher-frequency perturbations, as described previously.

Uncovering, through LRP attribution, the inertial sensor characteristics that distinguished MS-related disease from healthy ambulation enables clinical interpretation. For example, higher relevance values coinciding with distinct step inflections and higher power in the upper end of the gait domain could represent cadence-based factors associated with faster walking, which are established characteristics that may differentiate healthy versus PwMS ambulation10,19,60. Moderately disabled PwMS in particular are known to have a relatively slower cadence19. The attribution of gait disturbances suggestive of MS-related impairment could be associated with other accepted indicators, such as gait variability, which has been shown to stratify PwMS from HC12–14.

Notably, hand-crafted features capturing characteristics similar to those visually observed through the CWT-LRP framework were among the top features in our previous study3. Features such as wavelet entropy and energy, which capture predictability and energy in the faster gait domain (1.5–3.3 Hz), or (gait) signal-to-noise measures, separated the same healthy and PwMS participants.

The agreement between hand-crafted features, visual examples, and LRP-explained DCNN features supported a consistent clinical interpretation of the factors that may be sensitive to MS-related gait impairment. The hand-crafted features introduced previously in3 used established signal-based metrics as surrogates for aspects of PwMS gait function. As such, these surrogate features were not engineered to capture the complex biomechanical processes specific to PwMS gait. Data-driven measures may therefore capture the same MS-indicative characteristics more comprehensively, sensitively, or specifically than the approximations offered by constrained, hand-crafted features.

Limitations and concluding remarks

There are a number of limitations to this study. Firstly, while deep networks show great potential in many healthcare applications, such as characterising ambulatory and physical activity patterns from wearable accelerometry in this setting, the ramifications of applying these models to healthcare data should also be considered. Observational clinical studies are often small, and collecting large amounts of data from a larger number of participants at the outset can be both infeasible and costly. Although the TL framework introduced in this work helps overcome some of the difficulties of building deep networks in the presence of heterogeneity and low subject numbers, the fine-tuning and evaluation of DCNN performance could still be affected by the limitations of the data. For instance, the relatively small number of participants (n<100) with multiple repeated measurements, the differences in the number of unique tests contributed per subject, demographic biases such as the mismatched male-to-female ratios between HC and PwMS, the inclusion of various MS phenotypes, and the fact that the mild and moderate sub-groups were bluntly divided based on clinician-subjective EDSS scores are all factors that should be considered when evaluating model performance. The learned global models were therefore constrained by the diversity, representation, and size of the available data. In reality, the FLOODLIGHT PoC study (NCT02952911) was only intended as a small proof-of-concept investigation to assess the feasibility of remotely monitoring PwMS, yet it has provided many meaningful insights that can be implemented in future studies. Follow-up trials with larger, more diverse cohorts are already under way, such as FLOODLIGHT OPEN, a crowd-sourced dataset to which the general public can contribute their own data61.

Despite the clinical utility LRP could hold in visualising and interpreting neural network decisions, heatmap interpretations were evaluated only qualitatively, based on visual assessment, albeit motivated by a clinical hypothesis. For instance, the interpretation that LRP relevance coinciding with distinct step inflections and higher power in the upper end of the gait domain reflects cadence-based factors associated with faster walking19,60 was not tested quantitatively. Other, objective methods for evaluating heatmap representations have been proposed, which involve perturbing the model's inputs62. Verifying that LRP has attributed meaningful relevance is, however, inherently difficult due to the remote nature of the 2MWTs performed by participants in this study. Further studies should aim to evaluate HC and PwMS gait function in more controlled settings, such as under visual observation or with in-clinic gait measurement systems, which would allow the underlying LRP attributions to be evaluated further.

More comprehensive analyses should also compare LRP with other attribution techniques, including closely related attribution methods, when explaining smartphone-based remote sensor models. Such work could further verify the predictive patterns uncovered with one method (e.g. by confirming that another attribution method picks up the same patterns that LRP highlighted).

As an initial step, this study focused on establishing clear and concise interpretations of smartphone-based inertial sensor models characterising patterns of gait and disease-influenced ambulation, by first considering only the positive contributions towards class predictions (i.e. the LRP αβ-rule with α=1, β=0). With this baseline, further work (especially in more controlled settings) should apply LRP to more complex tasks to develop fuller explanations, such as visualising contributions that contradict the prediction of a class (e.g. using LRP αβ-rules with β>0).

In conclusion, the work presented here explored the ability of deep networks to detect impairment in PwMS from remote smartphone inertial sensor data. Transfer learning may be a useful technique to circumvent common problems associated with remotely generated health data, such as low subject numbers and heterogeneous data. TL DCNN models appeared to learn a better representation of gait function than feature-based approaches when characterising HCs and subgroups of PwMS. Further work is needed, however, to understand the underlying feature structure, along with the most applicable source datasets and methods for extracting the most appropriate information. Incorporating expert clinical knowledge through better visual interpretation techniques could substantially deepen clinicians' understanding of how disease-related ambulatory activity is captured by wearable inertial sensor data. This work proposed the use of LRP heatmaps to interpret a deep network's decisions by attributing relevance scores to the inertial sensor data, and augmented this assessment with time-frequency visual representations. The resulting domain knowledge could be used to reverse-engineer features, develop more robust models, or help refine more sensitive and specific measurements. This study, together with ongoing future work, therefore further demonstrates the clinical utility of objective, interpretable, out-of-clinic assessments for monitoring PwMS.

Methods

Data

The FLOODLIGHT (FL) proof-of-concept (PoC) study (NCT02952911) was a trial to assess the feasibility of remote patient monitoring using smartphone (and smartwatch) devices in PwMS and HC37. A total of 97 participants (24 HC; 52 mildly disabled PwMS, PwMSmild, EDSS [0–3]; 21 moderately disabled PwMS, PwMSmod, EDSS [3.5–5.5]) contributed data recorded during 2MWTs performed out-of-clinic3.

Subjects were asked to perform a 2MWT daily over a 24-week period and were clinically assessed at baseline, week 12 and week 24. For further information on the FL dataset and population demographics, we direct the reader to37 and specifically to our previous work3, upon which this study expands.

Deep transfer learning for time series classification

Model construction

In this time series classification problem, raw smartphone sensor data recorded during remote 2MWTs were partitioned into epoch sequences, and DCNNs were used to classify each sensor epoch, $\mathbf{X} = [\mathbf{a}_x, \mathbf{a}_y, \mathbf{a}_z, \mathbf{a}_{mag}]$, as having been performed by a HC, PwMSmild or PwMSmod participant, where $\mathbf{a}_x$, $\mathbf{a}_y$ and $\mathbf{a}_z$ are the acceleration vectors for the x-, y- and z-axis coordinates, each containing $N$ samples, and $\mathbf{a}_{mag}$ refers to the original orientation-invariant signal magnitude.

Three separate classification models were constructed for binary tasks (HC vs. PwMSmild, PwMSmild vs. PwMSmod and HC vs. PwMSmod) to allow direct comparison with the hand-crafted feature-based classification outcomes in3, as well as a unified multi-class model incorporating all three classes simultaneously. The population subset explored in this study is the same as reported previously in3. The accompanying supplementary material further details the DCNN model architecture, parameterisation, and evaluation.

A model architecture was first trained on a source domain $\mathcal{D}_S$ and task $\mathcal{T}_S$, in this instance a HAR classification task on the UCI HAR or WISDM dataset (see supplementary material for more information on these datasets). The parameters and learned weights of the source model were then used to initialise and train a new model on the target domain $\mathcal{D}_T$ and task $\mathcal{T}_T$, by transferring the source model layers and re-training (fine-tuning) the network towards this new target task (i.e. in this case the subject group classification between HC, PwMSmild and PwMSmod). Figure 6 schematically details the TL approach. Baseline “end-to-end” refers to a DCNN trained and validated exclusively on FL data. “Direct” transfer refers to classification performed with the full HAR-trained model and weights, after only the last fully connected (dense) layer has been replaced by the disease targets and retrained on FL data (all other layers are frozen). “Fixed” transfer refers to a HAR-trained architecture in which the convolutional blocks and their weights are frozen and act as a “fixed feature extractor”, while the subsequent dense-layer weights are fine-tuned.
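The three training regimes differ only in which layers start from HAR weights and which are updated on FL data. The sketch below encodes that distinction as a per-layer plan; the layer names, the `LayerPlan` record and the `transfer_plan` helper are hypothetical and framework-agnostic. In Keras, such a plan would typically be applied by loading the source weights and setting each layer's `trainable` attribute before compiling, but this is an illustration, not the code used in this study.

```python
from dataclasses import dataclass

@dataclass
class LayerPlan:
    name: str
    init_from_source: bool   # start from the HAR-trained weights?
    trainable: bool          # updated when fine-tuning on FL data?

def transfer_plan(layers, strategy):
    """layers: ordered (name, kind) pairs, kind in {"conv", "dense"};
    the last pair is taken to be the output (classification) layer."""
    last = len(layers) - 1
    plan = []
    for idx, (name, kind) in enumerate(layers):
        is_output = idx == last
        if strategy == "end-to-end":
            # Trained from scratch on FL data only.
            plan.append(LayerPlan(name, init_from_source=False, trainable=True))
        elif strategy == "direct":
            # Output layer replaced by the disease classes and retrained; all else frozen.
            plan.append(LayerPlan(name, init_from_source=not is_output, trainable=is_output))
        elif strategy == "fixed":
            # Conv blocks frozen as a fixed feature extractor; dense layers fine-tuned.
            plan.append(LayerPlan(name, init_from_source=not is_output,
                                  trainable=(kind == "dense")))
        else:
            raise ValueError(f"unknown strategy: {strategy!r}")
    return plan

# Hypothetical four-layer architecture (two conv blocks, one hidden dense, one output)
layers = [("conv1", "conv"), ("conv2", "conv"), ("dense1", "dense"), ("out", "dense")]
direct = transfer_plan(layers, "direct")
fixed = transfer_plan(layers, "fixed")
```

The output layer is never initialised from the source model in either transfer regime, because the HAR activity classes and the disease classes form different label spaces.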

Figure 6.

Schematic of deep transfer learning approach. $\mathcal{D}_S$ refers to input data from a source domain, in this case a HAR dataset, used to learn a task $\mathcal{T}_S$, which is represented by the label space $\mathcal{Y}_S$ (the HAR activity classes). $\mathcal{D}_T$ refers to the target domain, in this case the FLOODLIGHT data, where $\mathcal{Y}_T$ are the disease classification outputs of HC, PwMSmild or PwMSmod for target task $\mathcal{T}_T$. During transfer learning, the parameters and learned weights of the source model are used to initialise and train a model on target domain $\mathcal{D}_T$ and task $\mathcal{T}_T$. Transfer learning is performed by transferring the source model's layers (where these weights and parameters are “frozen”) and subsequently re-training (i.e. fine-tuning) a new model using data for the new target task, $\mathcal{T}_T$. Downstream layers in the network are fine-tuned towards this new target task decision.

Pre-processing

Several pre-processing steps were first performed to format the raw signals for input into the respective deep networks. To maintain consistency and comparability across TL approaches, all signals were processed according to the same structure as in3. For additional consistency with3, only subjects' 2MWTs identified using the running belt were considered for subsequent analysis in this study. All inertial sensor signals were sampled at 50 Hz; in the case of the WISDM dataset, signals were resampled to 50 Hz using shape-preserving piecewise cubic interpolation. Signals were filtered with a Butterworth filter with a cut-off frequency of 17 Hz3, and, as per previous work, the smartphone coordinate axes were aligned with the global reference frame using the technique described in29. All signals were detrended and amplitude-normalised to zero mean and unit variance29. The sensor signals from each test were then segmented into epochs using fixed-width sliding windows of 2.56 s with 50% overlap (128 samples/epoch), in accordance with the parameters used in similar studies29,30,38. The total number of observations/epochs for each constructed dataset is given in Table 3.
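The normalisation and epoching steps can be sketched in NumPy as follows, assuming the acceleration has already been filtered and aligned to the global frame. The helper `make_epochs`, the choice to compute the magnitude channel before normalisation, and the approximation of detrending by mean removal are assumptions for illustration; the Butterworth filtering and axis alignment are not reproduced here.

```python
import numpy as np

FS = 50             # Hz, sampling rate stated in the text
WIN = 128           # 2.56 s x 50 Hz samples per epoch
STEP = WIN // 2     # 50% overlap

def make_epochs(acc, win=WIN, step=STEP):
    """acc: (n_samples, 3) filtered, globally aligned tri-axial acceleration.
    Returns (n_epochs, win, 4) with channels [a_x, a_y, a_z, a_mag]."""
    acc = np.asarray(acc, dtype=float)
    mag = np.linalg.norm(acc, axis=1, keepdims=True)   # orientation-invariant magnitude
    sig = np.hstack([acc, mag])
    std = sig.std(axis=0)
    std[std == 0] = 1.0                                 # guard against flat channels
    sig = (sig - sig.mean(axis=0)) / std                # zero mean, unit variance per channel
    if len(sig) < win:
        return np.empty((0, win, sig.shape[1]))
    starts = range(0, len(sig) - win + 1, step)
    return np.stack([sig[s:s + win] for s in starts])

# A 2-minute walk at 50 Hz gives 6000 samples, i.e. 92 overlapping epochs
rng = np.random.default_rng(0)
epochs = make_epochs(rng.normal(size=(120 * FS, 3)))
```

With a 64-sample step, consecutive epochs share half their samples, so each step event typically appears in two epochs.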

Table 3.

Overview of source and target domain datasets. Datasets were constructed from the original sensor signal using 2.56 s sliding-window epochs with 50% overlap.

| (n) | UCI HAR | WISDM | FL |
|---|---|---|---|
| Subjects | 30 | 51 | 97 |
| Tests | 61 | 252 | 970 |
| Samples | 10013 | 54781 | 82450 |

See supplementary material for more information on the UCI HAR and WISDM datasets.

HC, n=24; PwMSmild, n=52; PwMSmod, n=21; see demographics Table 1 for more details.

Randomly sampling tests per subject.

Model evaluation

To determine the generalisability of our models, stratified, subject-wise 5-fold cross-validation (CV) was employed with the same seeding as in3. Subjects were randomly partitioned into k=5 folds, stratified to have equal class proportions where possible. In each CV iteration, four folds formed the training set (in-sample), from which roughly 10% of the training data was further held out, proportionally, as a validation set. The remaining fold (20% of the dataset) formed the testing set (out-of-sample), on which predictions were made.
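A subject-wise, stratified split guarantees that no subject contributes epochs to both the training and testing sets. The sketch below deals shuffled subjects of each class round-robin into k folds; the helpers `subject_stratified_folds` and `cv_splits` are hypothetical, the 10% validation hold-out is not shown, and the seeding does not reproduce the exact folds used in3.

```python
import numpy as np

def subject_stratified_folds(subject_labels, k=5, seed=0):
    """subject_labels: dict subject_id -> class label.
    Returns k lists of subject ids; every subject appears in exactly one fold."""
    rng = np.random.default_rng(seed)
    folds = [[] for _ in range(k)]
    offset = 0
    for label in sorted(set(subject_labels.values())):
        subjects = sorted(s for s, y in subject_labels.items() if y == label)
        rng.shuffle(subjects)
        for i, s in enumerate(subjects):
            folds[(offset + i) % k].append(s)   # deal round-robin to keep class ratios
        offset += len(subjects)                 # continue dealing where the last class ended
    return folds

def cv_splits(folds):
    """Yield (train_subjects, test_subjects); each fold is the test set exactly once."""
    for i, test in enumerate(folds):
        train = [s for j, f in enumerate(folds) if j != i for s in f]
        yield train, test

# Group sizes from the text: 24 HC, 52 PwMSmild, 21 PwMSmod
labels = {i: "HC" if i < 24 else ("mild" if i < 76 else "mod") for i in range(97)}
folds = subject_stratified_folds(labels)
```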

To help alleviate model biases arising from the varying number of repeated tests contributed per subject over the duration of the FL study, m 2MWTs per subject were randomly selected with replacement to create balanced datasets. The number of tests per subject, m, was parameterised using a baseline DCNN prior to building all subsequent FL models: classification performance was examined over varying dataset sizes by changing the number of daily tests sampled (with replacement) per subject, and minimal additional classification performance was observed beyond the selected m across each binary task. Class distributions in the training and validation sets were then balanced using the re-sampling approach in3. Imbalances in the HAR training and validation data were also countered using this approach. The total number of observations/epochs for each constructed dataset is given in Table 3.
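Sampling a fixed number of tests per subject with replacement prevents highly adherent subjects from dominating the training data. A minimal sketch, where the helper `sample_tests` and the subject/test identifiers are hypothetical and the class re-balancing step of3 is not reproduced:

```python
import numpy as np

def sample_tests(tests_by_subject, m, seed=0):
    """Draw m 2MWTs per subject, with replacement, so each subject contributes equally.
    tests_by_subject: dict subject_id -> list of that subject's test ids."""
    rng = np.random.default_rng(seed)
    return {
        subject: [tests[i] for i in rng.integers(0, len(tests), size=m)]
        for subject, tests in tests_by_subject.items()
    }

# Hypothetical subjects with very different adherence
tests = {"s1": ["t1", "t2"], "s2": [f"t{i}" for i in range(150)]}
balanced = sample_tests(tests, m=3)
```

Because sampling is with replacement, a subject with fewer than m tests (here `s1`) still contributes m draws, some of them repeated.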

HAR model performance was reported based on the classification of individual epochs into the correct activity class for UCI HAR and WISDM. FL and TL performance was based on the majority prediction of all epochs over a 2MWT (test-wise), and final subject-wise classification results were computed through majority voting over each subject's aggregated 2MWT predictions (see3).
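The two-level aggregation (epochs to tests, then tests to subjects) can be sketched as below. The helpers are hypothetical, and the tie-breaking rule (first label to reach the maximum count) is an assumption, since the text does not specify how ties were resolved.

```python
from collections import Counter

def majority_vote(labels):
    """Most common label; ties broken by first occurrence (an assumption)."""
    counts = Counter(labels)
    best = max(counts.values())
    for lab in labels:
        if counts[lab] == best:
            return lab

def subject_predictions(epoch_preds):
    """epoch_preds: dict subject_id -> dict test_id -> list of epoch-level predictions."""
    out = {}
    for subject, tests in epoch_preds.items():
        test_preds = [majority_vote(p) for p in tests.values()]   # test-wise vote
        out[subject] = majority_vote(test_preds)                  # subject-wise vote
    return out

preds = {
    "s1": {"t1": ["HC", "HC", "MS"], "t2": ["MS", "MS", "HC"], "t3": ["HC", "HC", "HC"]},
    "s2": {"t1": ["MS"]},
}
result = subject_predictions(preds)   # s1: two of three tests voted HC
```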

Multi-class classification metrics were reported as the 2MWT test-wise median and interquartile range over one CV, as well as the final subject-wise outcome for that CV (in the case of FL), using overall metrics such as the macro accuracy, macro F1-score (MF1) and Cohen's kappa (κ) statistic63,64.
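For reference, MF1 and Cohen's κ can both be computed from a confusion matrix. The NumPy sketch below uses hypothetical helper names and integer class labels; it follows the standard definitions (unweighted mean of per-class F1; κ = (p_o − p_e)/(1 − p_e)) and is not the evaluation code used in this study. Macro accuracy is omitted because its exact definition is not given here.

```python
import numpy as np

def confusion(y_true, y_pred, n_classes):
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1                    # rows: true class, columns: predicted class
    return cm

def macro_f1(cm):
    tp = np.diag(cm).astype(float)
    col, row = cm.sum(axis=0), cm.sum(axis=1)
    precision = np.divide(tp, col, out=np.zeros_like(tp), where=col > 0)
    recall = np.divide(tp, row, out=np.zeros_like(tp), where=row > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return f1.mean()                     # unweighted mean over classes

def cohen_kappa(cm):
    n = cm.sum()
    p_o = np.trace(cm) / n                                  # observed agreement
    p_e = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n ** 2  # chance agreement
    return (p_o - p_e) / (1 - p_e)

# Toy 3-class example (0 = HC, 1 = PwMSmild, 2 = PwMSmod); one mild test misclassified
cm = confusion([0, 0, 1, 1, 2, 2], [0, 0, 1, 2, 2, 2], n_classes=3)
```

For this toy example, p_o = 5/6 and p_e = 1/3, giving κ = 0.75, and MF1 = (1 + 2/3 + 4/5)/3 ≈ 0.822.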

Layer-wise relevance propagation

Layer-wise Relevance Propagation (LRP) back-propagates through a network to decompose the final output decision, $f(\mathbf{x})$47,48. Briefly, a trained model's activations, weights and biases are first obtained in a forward pass through the network. Then, during a backward pass through the model, LRP attributes relevance to the individual input nodes, layer by layer. For example, $R_k^{(l+1)}$ denotes the relevance of neuron $k$ in layer $l+1$, and $R_{j\leftarrow k}^{(l,l+1)}$ defines the share of $R_k^{(l+1)}$ that is redistributed to neuron $j$ in layer $l$. The fundamental concept underpinning LRP is the conservation of relevance per layer, such that $\sum_j R_{j\leftarrow k}^{(l,l+1)} = R_k^{(l+1)}$ and $R_j^{(l)} = \sum_k R_{j\leftarrow k}^{(l,l+1)}$. The conservation of total relevance per layer can also be written as:

$$f(\mathbf{x}) = \dots = \sum_{k} R_k^{(l+1)} = \sum_{j} R_j^{(l)} = \dots = \sum_{i} R_i^{(1)} \tag{1}$$

Propagation rules are implemented to uphold this conservation property. Consider a DNN in which each neuron $k$ computes $a_k = g\left(\sum_j a_j w_{jk} + b_k\right)$, where $a_j$ are the activations from the previous layer and $w_{jk}$, $b_k$ are the weight and bias parameters of the neuron. The function $g$ is a positive and monotonically increasing activation function. In the case of a component-wise non-linear activation, e.g. a ReLU, relevance is passed through unchanged ($R_j = R_k$), since the relevance of each output neuron $k$ only needs to be attributed to its single respective input $j$. The $\alpha\beta$-rule for LRP has been shown to work well at decomposing a model's decisions:

$$R_j^{(l)} = \sum_k \left( \alpha \frac{(a_j w_{jk})^+}{\sum_{j'} (a_{j'} w_{j'k})^+} - \beta \frac{(a_j w_{jk})^-}{\sum_{j'} (a_{j'} w_{j'k})^-} \right) R_k^{(l+1)} \tag{2}$$

where each term of the sum corresponds to a relevance message $R_{j\leftarrow k}^{(l,l+1)}$, $(\cdot)^+$ and $(\cdot)^-$ denote the positive and negative parts respectively, and the parameters $\alpha$ and $\beta$ are chosen subject to the constraints $\alpha - \beta = 1$ and $\beta \geq 0$. The $\alpha\beta$-rule with $\alpha = 1$, $\beta = 0$ emphasises positive contributions over inhibitory contributions (which, with $\beta = 0$, are ignored) to the prediction $f(\mathbf{x})$, and has been shown to create crisp and interpretable heatmaps in image recognition tasks48 and to explain Alzheimer's disease (AD) detection based on MRI imaging49. For this first interpretation of LRP gait heatmaps, we used $\alpha = 1$, $\beta = 0$ to focus on the morphology and characteristics of a sensor signal that influenced the prediction. To aid interpretation of the LRP examples, we standardised the heatmap colours: hot hues represent the presence of factors that influenced $f(\mathbf{x})$ towards predicting MS disease, whereas cold hues (inversely) contradicted the prediction of MS (i.e. towards HC). A small signed stabilising term $\epsilon$ can be added to the denominator (termed the $\epsilon$-rule):

$$R_j^{(l)} = \sum_k \frac{a_j w_{jk}}{\sum_{j'} a_{j'} w_{j'k} + \epsilon \cdot \operatorname{sign}\left(\sum_{j'} a_{j'} w_{j'k}\right)} R_k^{(l+1)} \tag{3}$$

The ε-rule has been shown to filter noisy heatmaps by absorbing some relevance when the contributions to the activation of neuron k are weak or contradictory47,65. For larger values of ε, only the most prominent explanatory factors are retained, yielding a sparser and less noisy explanation. In accordance with47, αβ-rules were applied to convolutional layers and the ε-rule to dense layers.
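To make the αβ-rule (Eq. 2) and the ε-rule (Eq. 3) concrete, the NumPy sketch below redistributes the relevance of a single dense layer without bias terms. It is an illustrative re-implementation of the rules as written, with hypothetical helper names and toy numbers; it is not the iNNvestigate API used in this work. With α − β = 1 the total relevance is conserved (Eq. 1), whereas ε > 0 absorbs part of it.

```python
import numpy as np

def lrp_alpha_beta(a, W, R_out, alpha=1.0, beta=0.0):
    """Redistribute relevance R_out (K,) of a dense layer to its inputs a (J,), W (J, K)."""
    assert np.isclose(alpha - beta, 1.0), "conservation requires alpha - beta = 1"
    z = a[:, None] * W                           # contributions a_j * w_jk
    zp, zn = np.maximum(z, 0), np.minimum(z, 0)  # positive and negative parts
    sp, sn = zp.sum(axis=0), zn.sum(axis=0)
    pos = np.divide(zp, sp, out=np.zeros_like(zp), where=sp != 0)
    neg = np.divide(zn, sn, out=np.zeros_like(zn), where=sn != 0)
    return (alpha * pos - beta * neg) @ R_out

def lrp_epsilon(a, W, R_out, eps=0.1):
    """epsilon-rule; sign(0) is treated as +1 so the denominator never vanishes for eps > 0."""
    z = a[:, None] * W
    s = z.sum(axis=0)
    denom = s + eps * np.where(s >= 0, 1.0, -1.0)
    return (z / denom) @ R_out

# Toy layer: three non-negative (ReLU) input activations, two output neurons
a = np.array([1.0, 2.0, 0.5])
W = np.array([[1.0, -1.0], [0.5, 2.0], [-2.0, 1.0]])
R_out = np.array([1.0, 2.0])
R_a1b0 = lrp_alpha_beta(a, W, R_out)                   # only positive contributions
R_a2b1 = lrp_alpha_beta(a, W, R_out, alpha=2.0, beta=1.0)
R_eps = lrp_epsilon(a, W, R_out, eps=0.1)
```

Here `R_a1b0` and `R_a2b1` both sum to 3.0 (the total output relevance), only `R_a2b1` contains negative (contradicting) relevance, and `R_eps` sums to slightly less than 3.0 because ε absorbs some relevance.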

In this study, individual LRP heatmaps were produced for epochs in the out-of-sample testing set using the iNNvestigate toolbox66. For more information on the theoretical and practical implementation of LRP, we direct the reader to47,66,67. Both Keras and PyTorch implementations of the LRP algorithm have been developed and can be found at http://heatmapping.org/.

Constructing representative gait signal epochs

In order to determine the population-wise gait characteristics pertinent to healthy versus mild and moderate MS disease, an average representative epoch was generated for each subject group using Dynamic Time Warping Barycenter Averaging (DBA)55. Dynamic Time Warping (DTW) is a method for measuring the similarity (distance) between two sequences in cases where the order of elements in the sequences must be considered68. DTW aligns the signals such that the distance between their matched points is minimised, producing a “warped” optimal alignment between sequences. For instance, gait cycle templates have previously been generated for PwMS using DTW69. DBA is a global averaging method for an arbitrary set of sequences under DTW, which can be used to create a macro-average sequence, in this case a representative gait epoch. An average gait epoch for each subject group was constructed by applying DBA to a random selection of correctly classified epochs (n = 2000) with a high posterior probability for that class (Pr. > 0.85), with no more than k epochs contributed from a single 2MWT. A previously trained DCNN model was then applied to each representative epoch, and relevance scores were attributed using LRP. An implementation of DBA can be found at https://github.com/fpetitjean/DBA/.
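The core of DBA alternates between aligning every sequence to the current average with DTW and replacing each average point by the mean of all points aligned to it. The pure NumPy sketch below illustrates that idea with hypothetical helper names; it initialises the average with the first sequence and runs a fixed number of iterations (both assumptions), and its O(n·m) Python loops are far slower than the reference implementation linked above.

```python
import numpy as np

def dtw_path(x, y):
    """DTW between sequences x (n, ...) and y (m, ...) using squared Euclidean cost.
    Returns (total cost, warping path as a list of (i, j) index pairs)."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    n, m = len(x), len(y)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = np.sum((x[i - 1] - y[j - 1]) ** 2)
            cost[i, j] = d + min(cost[i - 1, j - 1], cost[i - 1, j], cost[i, j - 1])
    i, j = n, m
    path = [(i - 1, j - 1)]
    while (i, j) != (1, 1):                      # backtrack along the cheapest predecessor
        i, j = min([(i - 1, j - 1), (i - 1, j), (i, j - 1)], key=lambda c: cost[c])
        path.append((i - 1, j - 1))
    return cost[n, m], path[::-1]

def dba(sequences, n_iter=10):
    """DTW Barycenter Averaging, initialised (as an assumption) with the first sequence."""
    seqs = [np.asarray(s, dtype=float) for s in sequences]
    avg = seqs[0].copy()
    for _ in range(n_iter):
        sums = np.zeros_like(avg)
        counts = np.zeros(len(avg))
        for s in seqs:
            _, path = dtw_path(avg, s)
            for i, j in path:                    # point s[j] is aligned to avg[i]
                sums[i] += s[j]
                counts[i] += 1
        avg = sums / counts.reshape((-1,) + (1,) * (avg.ndim - 1))
    return avg

# A time-shifted copy aligns at zero cost; averaging two constant sequences gives their mean
cost, path = dtw_path([0, 1, 2], [0, 0, 1, 2])
template = dba([np.ones(3), 3 * np.ones(3)])
```

The same functions accept multi-channel epochs of shape (samples, channels), since the point-wise cost sums over the channel axis.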


Acknowledgements

The authors would like to thank all staff and participants involved in capturing test data. This study was sponsored by F. Hoffmann-La Roche Ltd. This research was supported by the National Institute for Health Research (NIHR) Oxford Biomedical Research Centre (BRC). This research also received funding from the Flemish Government under the “Onderzoeksprogramma Artificiële Intelligentie (AI) Vlaanderen” programme.

Author contributions

A.C. Conceptualisation, Methodology, Software, Formal Analysis, Writing - original draft, Writing - review & editing; F.L. Conceptualisation, Methodology, Writing - review & editing, Supervision; M.L. Conceptualisation, Methodology, Writing - review & editing, Supervision; M.D.V. Conceptualisation, Methodology, Writing - review & editing, Supervision.

Data availability

Qualified researchers may request access to individual patient level data through the clinical study data request platform (https://vivli.org/). Further details on Roche’s criteria for eligible studies are available here (https://vivli.org/members/ourmembers/). For further details on Roche’s Global Policy on the Sharing of Clinical Information and how to request access to related clinical study documents, see here (https://www.roche.com/research_and_development/who_we_are_how_we_work/clinical_trials/our_commitment_to_data_sharing.htm).

Code availability

Deep networks were built using Python v3.7.4 with the Keras framework v2.2.4 and a TensorFlow v1.14 back-end. Layer-wise Relevance Propagation (LRP) heatmaps were built using the iNNvestigate toolbox: https://github.com/albermax/innvestigate, which has been developed as part of the http://heatmapping.org/ project. Visualisations were created using MATLAB v2019b. The Dynamic Time Warping Barycenter Averaging (DBA) methodology for creating average gait epochs was implemented using the code described at https://github.com/fpetitjean/DBA/. Experimental code can be found at: https://github.com/apcreagh/MS-GAIT_InterpretableDL.

Competing interests

During the completion of this work, A. P. Creagh was a Ph.D. student at the University of Oxford and acknowledges the support of F. Hoffmann-La Roche Ltd.; F. Lipsmeier is an employee of F. Hoffmann-La Roche Ltd; M. Lindemann is a consultant for F. Hoffmann-La Roche Ltd. via Inovigate; M. De Vos has nothing to disclose.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors jointly supervised: Michael Lindemann and Maarten De Vos.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-021-92776-x.

References

- 1. Taylor, K. I., Staunton, H., Lipsmeier, F., Nobbs, D. & Lindemann, M. Outcome measures based on digital health technology sensor data: data- and patient-centric approaches. NPJ Digit. Med. 3, 97, 10.1038/s41746-020-0305-8 (2020).

- 2. Creagh, A. et al. Smartphone-based remote assessment of upper extremity function for multiple sclerosis using the draw a shape test. Physiological Measurement 41, 054002, 10.1088/1361-6579/ab8771 (2020).

- 3. Creagh, A. P. et al. Smartphone- and Smartwatch-Based Remote Characterisation of Ambulation in Multiple Sclerosis During the Two-Minute Walk Test. IEEE J Biomed Health Inform 25, 838–849, 10.1109/JBHI.2020.2998187 (2021).

- 4. Prince, J., Arora, S. & de Vos, M. Big data in parkinson's disease: using smartphones to remotely detect longitudinal disease phenotypes. Physiol. Measur. 39, 044005, 10.1088/1361-6579/aab512 (2018).

- 5. Zhang, H., Deng, K., Li, H., Albin, R. L. & Guan, Y. Deep learning identifies digital biomarkers for self-reported parkinson's disease. Patterns 1, 100042, 10.1016/j.patter.2020.100042 (2020).

- 6. Crouthamel, M. et al. Using a researchkit smartphone app to collect rheumatoid arthritis symptoms from real-world participants: feasibility study. JMIR mHealth uHealth 6, e177, 10.2196/mhealth.9656 (2018).

- 7. Goldenberg, M. M. Multiple sclerosis review. Pharm. Therap. 37, 175 (2012).

- 8. Bove, R. et al. Evaluating more naturalistic outcome measures: a 1-year smartphone study in multiple sclerosis. Neurol. Neuroimmunol. Neuroinflamm. 2, e162, 10.1212/NXI.0000000000000162 (2015).

- 9. Comi, G. et al. Effect of early interferon treatment on conversion to definite multiple sclerosis: a randomised study. The Lancet 357, 1576–1582, 10.1016/S0140-6736(00)04725-5 (2001).

- 10. Sosnoff, J. J., Sandroff, B. M. & Motl, R. W. Quantifying gait abnormalities in persons with multiple sclerosis with minimal disability. Gait Post. 36, 154–156, 10.1016/j.gaitpost.2011.11.027 (2012).

- 11. Martin, C. L. et al. Gait and balance impairment in early multiple sclerosis in the absence of clinical disability. Multiple Scleros. J. 12, 620–628, 10.1177/1352458506070658 (2006).

- 12. Crenshaw, S., Royer, T., Richards, J. & Hudson, D. Gait variability in people with multiple sclerosis. Multiple Scleros. J. 12, 613–619, 10.1177/1352458505070609 (2006).

- 13. Huisinga, J. M., Mancini, M., George, R. J. S. & Horak, F. B. Accelerometry reveals differences in gait variability between patients with multiple sclerosis and healthy controls. Ann. Biomed. Eng. 41, 1670–1679, 10.1007/s10439-012-0697-y (2013).

- 14. Spain, R. I., Mancini, M., Horak, F. B. & Bourdette, D. Body-worn sensors capture variability, but not decline, of gait and balance measures in multiple sclerosis over 18 months. Gait Post. 39, 958–964, 10.1016/j.gaitpost.2013.12.010 (2014).

- 15. Motl, R. W., Sandroff, B. M., Suh, Y. & Sosnoff, J. J. Energy cost of walking and its association with gait parameters, daily activity, and fatigue in persons with mild multiple sclerosis. Neurorehabil. Neural Repair 26, 1015–1021, 10.1177/1545968312437943 (2012).

- 16. Kurtzke, J. F. Rating neurologic impairment in multiple sclerosis: an expanded disability status scale (EDSS). Neurology 33, 1444, 10.1212/WNL.33.11.1444 (1983).

- 17. Rudick, R., Cutter, G. & Reingold, S. The multiple sclerosis functional composite: a new clinical outcome measure for multiple sclerosis trials. Multiple Scleros. J. 8, 359–365, 10.1191/1352458502ms845oa (2002).

- 18. Motl, R. W. et al. Validity of the timed 25-foot walk as an ambulatory performance outcome measure for multiple sclerosis. Mult. Scler. 23, 704–710, 10.1177/1352458517690823 (2017).

- 19. Motl, R. W. et al. Evidence for the different physiological significance of the 6- and 2-minute walk tests in multiple sclerosis. BMC Neurol. 12, 6, 10.1186/1471-2377-12-6 (2012).

- 20. Spain, R. et al. Body-worn motion sensors detect balance and gait deficits in people with multiple sclerosis who have normal walking speed. Gait Post. 35, 573–578, 10.1016/j.gaitpost.2011.11.026 (2012).

- 21.Goodfellow, I., Bengio, Y. & Courville, A. Deep learning (MIT Press, 2016).

- 22.Cui, Z., Chen, W. & Chen, Y. Multi-scale convolutional neural networks for time series classification. arXiv preprint arXiv.1603.06995 (2016).

- 23.Ordóñez, F. J. & Roggen, D. Deep Convolutional and LSTM Recurrent Neural Networks for Multimodal Wearable Activity Recognition. Sensors (Basel, Switzerland)16, 115, 10.3390/s16010115 (2016). [DOI] [PMC free article] [PubMed]

- 24.Wang J, Chen Y, Hao S, Peng X, Hu L. Deep learning for sensor-based activity recognition: a survey. Pattern Recognit. Lett. 2019;119:3–11. doi: 10.1016/j.patrec.2018.02.010. [DOI] [Google Scholar]

- 25.Alsheikh, M. A. et al. Deep activity recognition models with triaxial accelerometers. arXiv preprint arXiv:1511.04664 (2015).

- 26.Cheng, W.-Y. et al. Large-scale continuous mobility monitoring of parkinson’s disease patients using smartphones. In Wireless Mobile Communication and Healthcare, pp. 12–19 (Springer, 2017).

- 27.Martinez MT, Leon PD. Falls risk classification of older adults using deep neural networks and transfer learning. IEEE J. Biomed. Health Inf. 2019;1:1. doi: 10.1109/JBHI.2019.2906499. [DOI] [PubMed] [Google Scholar]

- 28.Gong, G. & Lach, J. Deepmotion: a deep convolutional neural network on inertial body sensors for gait assessment in multiple sclerosis. In IEEE Wireless Health (WH), pp. 1–8, 10.1109/WH.2016.7764572 (IEEE, 2016).

- 29.Gadaleta M, Rossi M. Idnet: Smartphone-based gait recognition with convolutional neural networks. Pattern Recognit. 2018;74:25–37. doi: 10.1016/j.patcog.2017.09.005. [DOI] [Google Scholar]

- 30.Zou Q, Wang Y, Wang Q, Zhao Y, Li Q. Deep learning-based gait recognition using smartphones in the wild. IEEE Trans. Inf. Forens. Secur. 2020;15:3197–3212. [Google Scholar]

- 31.Fawaz, H. I., Forestier, G., Weber, J., Idoumghar, L. & Muller, P.-A. Transfer learning for time series classification. In IEEE International Conference on Big Data (Big Data), pp. 1367–1376 (IEEE, 2018).

- 32.Oquab M, Bottou L, Laptev I, Sivic J. Learning and transferring mid-level image representations using convolutional neural networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2014;1:1717–1724.

- 33.Andreotti, F. et al. Multichannel sleep stage classification and transfer learning using convolutional neural networks. In 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 171–174 (IEEE, 2018).

- 34.Phan H, et al. Towards more accurate automatic sleep staging via deep transfer learning. IEEE Trans. Biomed. Eng. 2020;1:1. doi: 10.1109/TBME.2020.3020381.

- 35.Morales, F. J. O. & Roggen, D. Deep convolutional feature transfer across mobile activity recognition domains, sensor modalities and locations. In Proceedings of the 2016 ACM International Symposium on Wearable Computers, pp. 92–99 (2016).

- 36.Kalouris, G., Zacharaki, E. I. & Megalooikonomou, V. Improving CNN-based activity recognition by data augmentation and transfer learning. In 17th International Conference on Industrial Informatics (INDIN), vol. 1, pp. 1387–1394 (IEEE, 2019).

- 37.Midaglia L, et al. Adherence and satisfaction of smartphone- and smartwatch-based remote active testing and passive monitoring in people with multiple sclerosis: nonrandomized interventional feasibility study. J. Med. Internet Res. 2019;21:e14863. doi: 10.2196/14863.

- 38.Anguita, D., Ghio, A., Oneto, L., Parra, X. & Reyes-Ortiz, J. L. A public domain dataset for human activity recognition using smartphones. In ESANN 3, 3 (2013).

- 39.Weiss GM, Yoneda K, Hayajneh T. Smartphone and smartwatch-based biometrics using activities of daily living. IEEE Access. 2019;7:133190–133202. doi: 10.1109/ACCESS.2019.2940729.

- 40.Samek, W., Montavon, G., Vedaldi, A., Hansen, L. K. & Müller, K.-R. Explainable AI: Interpreting, Explaining and Visualizing Deep Learning. Vol. 11700 (Springer Nature, 2019).

- 41.Simonyan, K., Vedaldi, A. & Zisserman, A. Deep inside convolutional networks: visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034 (2013).

- 42.Guidotti R, et al. A survey of methods for explaining black box models. ACM Comput. Surv. (CSUR). 2018;51:1–42. doi: 10.1145/3236009.

- 43.Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat. Med. 2019;25:44–56. doi: 10.1038/s41591-018-0300-7.

- 44.Montavon G, Samek W, Müller K-R. Methods for interpreting and understanding deep neural networks. Digit. Signal Process. 2018;73:1–15. doi: 10.1016/j.dsp.2017.10.011.

- 45.Zeiler, M. D. & Fergus, R. Visualizing and understanding convolutional networks. In European Conference on Computer Vision (ECCV), pp. 818–833 (Springer, 2014).

- 46.Adadi A, Berrada M. Peeking inside the black-box: a survey on explainable artificial intelligence (XAI). IEEE Access. 2018;6:52138–52160. doi: 10.1109/ACCESS.2018.2870052.

- 47.Montavon, G., Binder, A., Lapuschkin, S., Samek, W. & Müller, K.-R. Layer-wise relevance propagation: an overview. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, pp. 193–209 (Springer, 2019).

- 48.Bach S, et al. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS One. 2015;10:e0130140. doi: 10.1371/journal.pone.0130140.

- 49.Böhle M, Eitel F, Weygandt M, Ritter K. Layer-wise relevance propagation for explaining deep neural network decisions in MRI-based Alzheimer’s disease classification. Front. Aging Neurosci. 2019;11:1. doi: 10.3389/fnagi.2019.00194.

- 50.Eitel F, et al. Uncovering convolutional neural network decisions for diagnosing multiple sclerosis on conventional MRI using layer-wise relevance propagation. NeuroImage Clin. 2019;24:102003. doi: 10.1016/j.nicl.2019.102003.

- 51.Sturm I, Lapuschkin S, Samek W, Müller K-R. Interpretable deep neural networks for single-trial EEG classification. J. Neurosci. Methods. 2016;274:141–145. doi: 10.1016/j.jneumeth.2016.10.008.

- 52.Slijepcevic, D. et al. On the explanation of machine learning predictions in clinical gait analysis. arXiv preprint arXiv:1912.07737 (2019).

- 53.Horst F, Lapuschkin S, Samek W, Müller K-R, Schöllhorn WI. Explaining the unique nature of individual gait patterns with deep learning. Sci. Rep. 2019;9:2391. doi: 10.1038/s41598-019-38748-8.

- 54.Addison PS, Walker J, Guido RC. Time-frequency analysis of biosignals. IEEE Eng. Med. Biol. Mag. 2009;28:14–29. doi: 10.1109/MEMB.2009.934244.

- 55.Petitjean F, Ketterlin A, Gançarski P. A global averaging method for dynamic time warping, with applications to clustering. Pattern Recognit. 2011;44:678–693. doi: 10.1016/j.patcog.2010.09.013.

- 56.Xia K, Huang J, Wang H. LSTM-CNN architecture for human activity recognition. IEEE Access. 2020;8:56855–56866. doi: 10.1109/ACCESS.2020.2982225.

- 57.Karim F, Majumdar S, Darabi H, Chen S. LSTM fully convolutional networks for time series classification. IEEE Access. 2018;6:1662–1669. doi: 10.1109/ACCESS.2017.2779939.

- 58.Zijlstra W, Hof AL. Assessment of spatio-temporal gait parameters from trunk accelerations during human walking. Gait Posture. 2003;18:1–10. doi: 10.1016/S0966-6362(02)00190-X.

- 59.Zijlstra W. Assessment of spatio-temporal parameters during unconstrained walking. Eur. J. Appl. Physiol. 2004;92:39–44. doi: 10.1007/s00421-004-1041-5.

- 60.Pau M, et al. Clinical assessment of gait in individuals with multiple sclerosis using wearable inertial sensors: comparison with patient-based measure. Mult. Scler. Relat. Disord. 2016;10:187–191. doi: 10.1016/j.msard.2016.10.007.

- 61.van Beek, J. et al. Floodlight Open – a global, prospective, open-access study to better understand multiple sclerosis using smartphone technology. In Annual Meeting of the Consortium of Multiple Sclerosis Centers (CMSC) (2019).

- 62.Samek W, Binder A, Montavon G, Lapuschkin S, Müller K-R. Evaluating the visualization of what a deep neural network has learned. IEEE Trans. Neural Netw. Learn. Syst. 2016;28:2660–2673. doi: 10.1109/TNNLS.2016.2599820.

- 63.He H, Garcia EA. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009;21:1263–1284. doi: 10.1109/TKDE.2008.239.

- 64.Cohen J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960;20:37–46. doi: 10.1177/001316446002000104.

- 65.Kohlbrenner, M., Bauer, A., Nakajima, S., Binder, A., Samek, W. & Lapuschkin, S. Towards best practice in explaining neural network decisions with LRP. In International Joint Conference on Neural Networks (IJCNN), pp. 1–7 (IEEE, 2020).

- 66.Alber M, et al. iNNvestigate neural networks! J. Mach. Learn. Res. 2019;20:1–8.

- 67.Alber, M. Software and application patterns for explanation methods. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, pp. 399–433 (Springer, Cham, 2019).

- 68.Berndt, D. J. & Clifford, J. Using dynamic time warping to find patterns in time series. In KDD Workshop, vol. 10, 16, 359–370 (1994).

- 69.Engelhard MM, Dandu SR, Patek SD, Lach JC, Goldman MD. Quantifying six-minute walk induced gait deterioration with inertial sensors in multiple sclerosis subjects. Gait Posture. 2016;49:340–345. doi: 10.1016/j.gaitpost.2016.07.184.

Data Availability Statement

Qualified researchers may request access to individual patient level data through the clinical study data request platform (https://vivli.org/). Further details on Roche’s criteria for eligible studies are available here (https://vivli.org/members/ourmembers/). For further details on Roche’s Global Policy on the Sharing of Clinical Information and how to request access to related clinical study documents, see here (https://www.roche.com/research_and_development/who_we_are_how_we_work/clinical_trials/our_commitment_to_data_sharing.htm).

Deep networks were built using Python v3.7.4 with the Keras framework v2.2.4 and a TensorFlow v1.14 back-end. Layer-wise Relevance Propagation (LRP) heatmaps were built using the iNNvestigate toolbox (https://github.com/albermax/innvestigate), which was developed as part of the http://heatmapping.org/ project. Visualisations were created using MATLAB v2019b. The Dynamic Time Warping Barycenter Averaging (DBA) method for creating average gait epochs was implemented using the code available at https://github.com/fpetitjean/DBA/. Experimental code can be found at https://github.com/apcreagh/MS-GAIT_InterpretableDL.
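To illustrate what an LRP heatmap encodes, the following is a minimal NumPy sketch of the LRP-ε rule for a small fully connected ReLU network. It is not the iNNvestigate API and not the implementation used in this study. The function names `forward` and `lrp_epsilon`, the dense (rather than convolutional) architecture, the toy random weights, and the value of ε are all illustrative assumptions.

```python
import numpy as np


def forward(weights, biases, x):
    """Forward pass through a fully connected network (illustrative only).

    Hidden layers use ReLU; the last layer is linear so its outputs are
    class scores. Returns the input plus the output of every layer.
    """
    activations = [x]
    for i, (W, b) in enumerate(zip(weights, biases)):
        z = activations[-1] @ W + b
        if i < len(weights) - 1:  # ReLU on hidden layers only
            z = np.maximum(z, 0.0)
        activations.append(z)
    return activations


def lrp_epsilon(weights, biases, x, target, eps=1e-6):
    """Explain the score of class `target` with the LRP-epsilon rule.

    R_j = a_j * sum_k w_jk * R_k / (z_k + eps * sign(z_k)),
    applied layer by layer from the output back to the input.
    """
    activations = forward(weights, biases, x)
    # All relevance starts at the explained output neuron.
    relevance = np.zeros_like(activations[-1])
    relevance[target] = activations[-1][target]
    # Propagate backwards; `a` is the input to the current layer.
    for W, b, a in zip(reversed(weights), reversed(biases),
                       reversed(activations[:-1])):
        z = a @ W + b                              # pre-activations z_k
        z = z + eps * np.where(z >= 0, 1.0, -1.0)  # epsilon stabiliser
        s = relevance / z                          # relevance per unit of z_k
        relevance = a * (W @ s)                    # share assigned to each a_j
    return relevance  # one relevance score per input feature


# Usage: a toy 6-input, 3-class network with zero biases (hypothetical data).
rng = np.random.default_rng(0)
weights = [rng.normal(size=(6, 8)), rng.normal(size=(8, 3))]
biases = [np.zeros(8), np.zeros(3)]
x = rng.normal(size=6)
scores = forward(weights, biases, x)[-1]
target = int(np.argmax(scores))
R = lrp_epsilon(weights, biases, x, target)
print(R, R.sum(), scores[target])
```

With zero biases the input relevances sum, up to the small ε term, to the explained class score; this conservation property is what lets the heatmap be read as a decomposition of the prediction over the input samples. A convolutional layer is also linear in its inputs, so the same rule can in principle be applied over each filter's receptive field.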