Abstract

We study the assessment of the accuracy of heterogeneous treatment effect (HTE) estimation, where the HTE is not directly observable so standard computation of prediction errors is not applicable. To tackle the difficulty, we propose an assessment approach by constructing pseudo-observations of the HTE based on matching. Our contributions are three-fold: first, we introduce a novel matching distance derived from proximity scores in random forests; second, we formulate the matching problem as an average minimum-cost flow problem and provide an efficient algorithm; third, we propose a match-then-split principle for the assessment with cross-validation. We demonstrate the efficacy of the assessment approach using simulations and a real dataset.

Keywords: heterogeneous treatment effect, model assessment, matching, proximity scores

1. Introduction

Nowadays the heterogeneous treatment effect (HTE) estimation under the Neyman-Rubin potential outcome model1;2 is gaining increasing popularity due to various practical demands, such as personalized medicine3;4, personalized education5, and personalized advertisements6. There are a number of works focusing on estimating the HTE using various machine learning tools: LASSO7, random forests8, boosting9, and neural networks10. Despite the vast literature on HTE estimation, evaluating the accuracy of an HTE estimator is in general open.

An assessment approach measures the performance of estimators on future data and guides estimator comparison. Aware that a large proportion of HTE estimators involve hyper-parameters, such as the amount of penalization in LASSO-based estimators, number of trees in random-forests-based estimators, efficient model selection or tuning methods are ultra-important.

The major difficulty of the HTE assessment is attributed to the “invisibility” of HTE. Standard assessment methods evaluate the performance of a predictor by comparing predictions to observations on a validation dataset. The approach is valid since the observations are unbiased realizations of the values to be predicted. In contrast, in the potential outcome model we observe the response of a unit under treatment or control, whereas the value to be predicted, i.e., HTE, is the difference of the two. Therefore, HTE is not observable, and the standard assessment methods can not be applied.

In this paper, we design a two-step assessment approach. In the first step, we match treated and control units and regard the differences in matched pairs’ responses as the HTE pseudo-observations. In the second step, we compare predictions to the pseudo-observations and compute the prediction error. We propose a distance based on proximity scores in random forests for matching. We also introduce a matching method that minimizes the average pair distance instead of the more commonly used total distance11, and provide an efficient matching algorithm adapted from the average minimum-cost flow problem.

For conducting the assessment approach with cross-validation, we recommend a match-then-split principle. Explicitly, we first perform matching on the complete dataset, then split the matched pairs into different folds for cross-validation. Since the quality of matched pairs deteriorates as the sample size decreases, the pairs constructed by matching first are more similar than those obtained by splitting first. We remark that matching first does not snoop the data since the distance has no access to the HTE.

The organization of the paper is as follows. In Section 2, we introduce the HTE assessment background and discuss related works. In Section 3, we introduce the assessment approach with a hold-out validation dataset. In Section 4, we discuss how to conduct the assessment in the framework of cross-validation. In Section 5, we compare several assessment approaches on synthetic data. In Section 6, we illustrate the assessment approach’s performance on a real data example. In Section 7, we extend the assessment approach to handle various types of responses. In Section 8, we provide discussions on future works.

2. Background

2.1. Potential outcome model

We consider the Neyman-Rubin potential outcome model with two treatment assignments. Each unit is associated with a p dimensional covariate vector X independently sampled from an underlying distribution . Given covariates X, a binary group assignment W ∈ {0, 1} (1 for the treatment group and 0 for the control group) is generated from the Bernoulli distribution with success probability e(X), i.e., the propensity score12;13. Each unit is also associated with two potential outcomes Y (0), Y (1). We observe Y (1) if the unit is under treatment and Y (0) if the unit is under control. Let ν(x) and μ(x) be the treatment and control group mean functions respectively. We assume the potential outcomes follow

where ε is some mean zero noise independent of X, W. We define HTE as the difference of the group mean functions: τ(x) := ν(x) − μ(x). The treatment effect is heterogeneous because it depends on the covariates. We summarize the data generation model as follows,

| (1) |

Model (1) has implicitly made the following assumptions14.

Assumption 1

(Stable unit treatment value assumption). The potential outcomes for any unit do not depend on the treatments assigned to other units. There are no different versions of the treatment.

Assumption 2

(Unconfoundedness). The assignment mechanism does not depend on the potential outcomes given confounders:

2.2. Matching

Matching is commonly used in the estimation of average treatment effect on the treated (ATT) in observational studies15;16;17. The primary goal of matching is to make the treatment and control groups comparable and reduce the confounding bias. A matching method consists of two parts: matching distance and matching structure17. Matching distances describe similarities between a pair of units, and matching structures characterize matches’ skeletons.

There are plentiful options for matching distances. Arguably the most popular choice is based on the propensity score12;13. The propensity score summarizes the information to balance the covariate distribution in a scalar function, and the propensity score matching reduces the confounding bias. Another branch of distances focuses on covariates. To begin with, exact matching pairs a treated unit with a control unit only if they share the same covariates. Though exact matching produces pairs of the best quality, the method is only feasible on the dataset with a limited set of discrete covariates. To enable the matching, metrics like Mahalanobis distance18;19 reduce the dimension of covariates and encapsulate the similarity regarding covariates in scalars. An alternative distance is based on prognostic scores that summarize the covariates’ dependence on the potential outcomes. The prognostic score matching brings a desirable form of balance to uncontrolled studies20.

In terms of the matching structure, there are also several choices. Pair-matching is the simplest structure, where pairs of one treated unit and one control unit are formed. However, when the group sizes are not balanced10, pair-matching will discard a considerable number of observations. This gives birth to 1 to k (k to 1) matching21, that is each treated (control) unit is matched to k control (treated) units. Nevertheless, 1 to k (k to 1) matching poses a rigid restriction that all treated (control) units should be matched to the same number of control (treated) units. To provide more flexibility, methods allowing treated units matched to a variable number of control units have been discussed22. Nevertheless, those matching methods require each control unit to be used at most once. Full matching11;23 further relaxes the restriction allowing a set of one-to-multiple and multiple-to-one matches. Furthermore, full matching moves forward from ATT estimation to allow average treatment effect (ATE) estimation.

In the following, we introduce the notations of matching used in the paper. Assume that there are n units in total: nt treated units and nc control units . We define a match π as a function from treated units to the subsets of control units, and let be the indicators whether the treated unit ti and the control unit cj are matched. Let Π be the associated set of matched pairs

and denote the number of pairs in set Π as |Π|. There is a bijection between matches and sets of matched pairs, and we use the two notations exchangeably. We define the multiplicity number of the treatment group in match π as

and similarly we define . Let be a distance defined for each treatment-control pair (ti, cj). We denote the total distance and the average distance of a match π under by

2.3. Related works

In the literature of HTE, a number of works24;25;26;10 perform accuracy assessment by predicting the response: on the training data, the treatment and control group mean functions are estimated, and the difference of the two is regarded as the HTE estimator; on the validation data, prediction errors of group mean functions are computed and used to assess the HTE estimator’s accuracy. The drawback is that large prediction errors of group mean functions do not ruin out accurate HTE estimation. In other words, the estimators of mean group functions may be of poor quality while the difference is still a reasonably good estimator of the HTE. This may happen when the HTE enjoys better properties than the mean group functions, such as higher sparsity or smoothness27. Moreover, if an HTE estimator comes without estimates of the mean group functions, predicting the response can not be carried out.

Athey and Imbens propose an assessment method based on covariate matching28. Each unit in the validation data is paired with a unit in the opposite treatment status and close concerning the covariates. In this way, a pseudo-observation of HTE is obtained for each pair by taking the difference of the responses, and from here standard prediction error computations can be applied. The method makes considerable progress in avoiding estimating the control group mean function, but is limited to the case where the number of covariates is not too large.

Athey and Imbens also propose the honest validation for causal recursive partitioning29. Honest validation applies some tree structure to the training data and the validation data, and compares the HTE estimates based on the training and validation data. The method relies on the homogeneity of HTE at each terminal node, and it is not obvious how to generalize the method to other HTE estimators.

We finally review two assessment methods for the average treatment effect (ATE) estimation. Synth-validation30 generates synthetic data based on the observed data with a sequence of possible ATEs and evaluates the ATE estimators’ performance by comparing them to the known effects. The approach can not be easily extended to HTE evaluation since the number of possible configurations of HTE increases exponentially with regard to the covariate dimension. Another approach called within-study comparison31 contrasts ATE estimators from observational studies with those from randomized experiments. The approach is not effective for assessing HTE estimators due to the small sample size in each heterogeneity subgroup.

3. Assessment with hold-out validation dataset

3.1. General framework

In this section, we consider the HTE assessment with a hold-out validation dataset. We consider the following validation error of an HTE estimator

| (2) |

In the ideal world, for each treated unit, there is an identical copy that goes under control. We can replace in (2) by the difference of the two outcomes. In the real world, no identical copy exists. As a surrogate, we construct a match π between treated units and control units, and regard the differences in responses as the HTE pseudo-observations. We then estimate the validation error (2) by

| (3) |

The proposition below characterizes the bias and variance of the validation error estimator conditioned on the covariates and the treatment assignments. Define the oracle validation error of a match π as

| (4) |

The validation error estimator equals the oracle validation error if the match π is perfect and the potential outcomes are noiseless. For a treated unit ti and a control unit cj, define the difference in the control group mean function values as . For a match π, define the mean squared differences in the control group mean function values as .

Proposition 1.

Assuming model (1), Var(ε) = σ2, Var(ε2) = κσ4, we have

According to Proposition 1, the bias of the validation error is more problematic in match construction. The following example of random matching shows the bias may not vanish even if we have infinite data, while the variance will always go to zero. Assume there is only one binary covariate following the Bernoulli distribution with success probability one half. Let the control group mean function be , and the propensity score be e(x) = e2x−1/(1+e2x−1). On the one hand, the average squared difference of random pair-matching is (1 + e2)/(1 + e)2 in expectation, and the bias of is non-zero independent of the sample size. On the other hand, the variance upper bound is inversely proportional to the number of pairs and will vanish as long as the multiplicity numbers , go to infinity slower than the number of pairs |Π|, e.g., , are fixed at constant level.

Proposition 1 suggests that (1) a smaller average squared difference will result in smaller upper bounds for both the bias and the variance; (2) a larger multiplicity numbers , will lead to a smaller and thus a smaller bias, but possibly a larger variance. Since the bias is the primary concern, we recommend minimizing similar quantities of and enforcing constant order multiplicity numbers ,. In the following, we design a matching method following the idea.

3.2. Matching distance

Motivated by Proposition 1, we match treated and control units with similar control group mean function values. On the validation data, we first build a random forest on the control group which learns the control group mean function. Next, we compute each treatment-control pair’s proximity score: the number of trees that the two units end up in the same terminal node. We define the proximity score distance by subtracting the proximity score from the total number of trees. The proximity score distance is a pseudo-metric. A smaller proximity score distance suggests a closer pair in the eye of the random forest.

We compare the proximity score distance with other popular matching distances. Propensity score distances are of little relevance here because two units similar in the control group mean function values are not necessarily close in the propensity scores, and vice versa. Exact covariate matching serves the goal but is usually unrealistic. Besides, distances based solely on covariates often treat covariates equally and are inefficient when only a small proportion of the covariates are informative to the control group mean function.

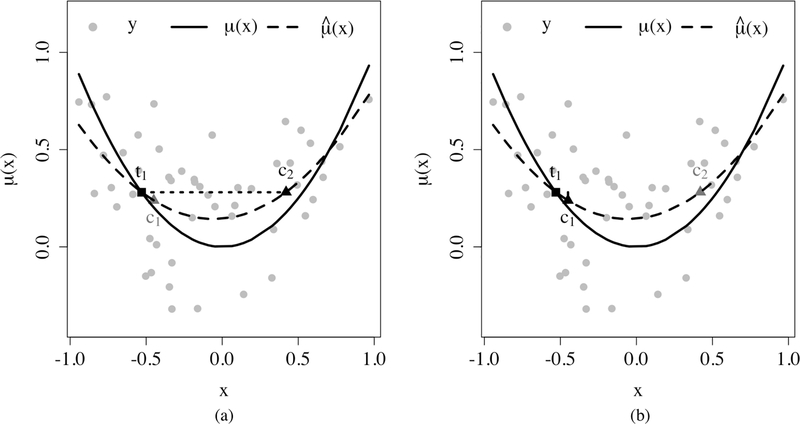

Prognostic score distances20 are the most relevant. Prognostic scores are introduced to provide a form of balance desirable for ATE estimation. Prognostic scores summarize the association between covariates and control group potential outcomes. Mathematically, we call ψ(X) a prognostic score if Y (0) ⫫ X | ψ(X). Prognostic scores are not unique, and the control group mean function is a valid prognostic score. We consider the prognostic score distance: the absolute difference of control group mean function values. In comparison with the prognostic score distance, the proximity score distance admits two advantages. First, the absolute difference of control group mean function values rely more heavily on accurate estimates and are less robust to outliers. The reason is that the proximity scores only depend on the tree structures, while the control group mean function estimates also depend on the responses at each terminal node. Second, as Figure 1 shows, matching on distances based on estimated control group mean functions may pair units close in estimates but far apart in the influential covariates, while matching on the proximity score distance will result in pairs with close estimated control group mean functions as well as similar influential covariates. The latter is less likely to produce spurious treatment-control pairs.

Figure 1.

(a): pair (t1, c2) favored by the prognostic score distance

(b): pair (t1, c1) favored by the proximity score distance

Comparison of the proximity score distance and the prognostic score distance. The blue curves are the true control group mean function, gray points are observations, and the red curves are the estimated control group mean function via least squares. For the treated unit t1, there are two candidate control units c1 and c2. Candidate c1 is closer with regard to the true control group mean function, i.e., . Candidate c2 is closer with regard to the estimated control group mean function, i.e., . In the left panel, the prognostic score distance prefers c2. In the right panel, the proximity score distance prefers c1 since there is likely to be a split between and , and thus t1 and c2 will end up in different terminal nodes.

We highlight that to ensure objectivity, only the control group is used for learning the proximity score distance. As discussed by Hansen20, models fitted only to the control units, i.e., the realizations of Y (0), in general do not carry information about the HTE, i.e., the differences Y (1) − Y (0). In other words, the proximity scores based on the control group can be viewed as nuisance to the HTE, and the theoretical foundation of conditioning on such statistics can be traced to the conditionality principles illustrated, for example, by Cox and Hinkley32. In contrast, if both the treatment and the control groups are touched in the proximity score distance construction, the distance is no longer ancillary to the HTE and is prone to data dredging.

3.3. Matching structure

Given a distance, by Proposition 1, we aim to find a match in which (1) paired control units and treated units are close regarding the provided distance; (2) as many units as possible are used; (3) no units are overused.

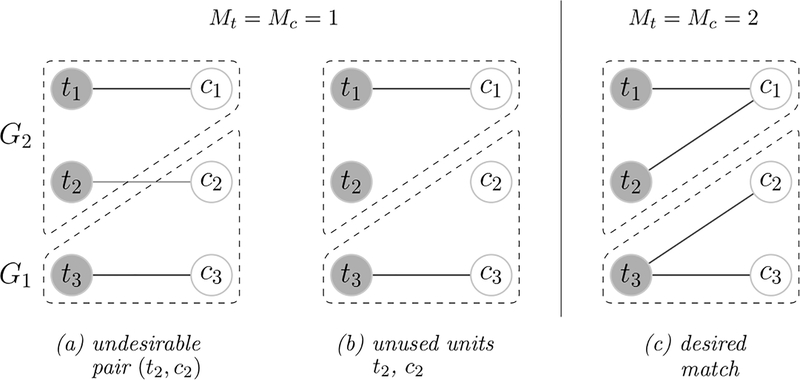

To illustrate the three criteria, we consider the example in Figure 2. There are two clusters G1, G2, where units in the same cluster share similar covariates and units in different clusters differ in covariates. As a result, control group mean function values are similar within clusters but different across clusters. We further assume that the units in cluster G2 are more likely to be treated, and the opposite for cluster G1. We observe more treated units in cluster G2 and more control units in cluster G1. There are three match candidates: in panel (a) each treated unit is matched to exactly one control unit and all the units are used, but there are undesirable matches across clusters; in panel (b) one-to-one matching is conducted and no pairs consist of units from different clusters, but part of the control units and the treated units are not used; in panel (c) there are no across-cluster pairs, every unit is matched, the treated units in cluster G1 are used twice and similarly for the control units in cluster G2. Among the three matches, panel (c) satisfies the three properties aforementioned and is the most favorable candidate.

Figure 2.

Example of matching structure. There are two equal-sized clusters G1, G2, where units in the same cluster share similar covariates and units not from the same clusters differ in covariates. Control group mean function values are similar within clusters but different across clusters. Cluster G2 has more treated units, while cluster G1 has more control units. In (a), (b) , and in panel (c) .

The example is motivated by the confounding phenomenon in observational studies. Confounders influence both the propensity score and the control group mean function. If we cluster the units according to the confounder values, control group mean function values and proportions of treated units will be different across clusters – the scenario in Figure 2.

To find a match with the desired properties, we propose the following matching structure

| (5) |

| (6) |

| (7) |

with pre-specified mc, mt, Mc, Mt ≥ 0. The lower bounds in the multiplicity constraints (6), (7) guarantee that as many units as possible are used. The upper bounds in the multiplicity constraints (6), (7) enforce that no units are matched excessively. The objective function (5), focusing on the average distance, prefers a match with more good quality pairs to fewer poor quality pairs. Particularly for the example in Figure 2, the total distance minimization may rule out panel (c) while the average distance minimization always prefers panel (c). In the following, we discuss the multiplicity constraints (6), (7), and the objective function (5) in detail.

3.3.1. Multiplicity constraints

Arguably the most common multiplicity parameters are Mt = Mc = 1, and mt = 1, mc = 0. The constraint requires each treated unit to be matched to one control unit and no control units are used multiple times. The constraint can be stringent if multiple control units are close to one treated unit and vice versa. Consider the example in Figure 2. If Mt = Mc = 1, mt = 1 are enforced, a proportion of the control units in cluster G1 will be matched to the treated units in cluster G2. If we relax mt = 1 and avoid pairs across clusters, part of the control units in cluster G1 and part of the treated units in cluster G2 will not be matched as in panel (b). If we consider Mt = Mc = 2, mt = mc = 1, there will be treated units in cluster G1 matched to multiple control units, and the same for the control units in cluster G2 as in panel (c). The match contains no pairs of units from different clusters and uses all the data. Therefore, we recommend Mt and Mc to be reasonably large and mt = 1 if nt ≤ nc.

3.3.2. Objective function

The major difference between the aforementioned matching and the full matching11 is the objective function: the former focuses on the average distance, and the latter focuses on the total distance. If the number of matched pairs is fixed, the total distance minimization and the average distance minimization are equivalent. This is the case in pair-matching where the number of matched pairs equals that of the treated units. However, when the number of matched pairs is not fixed, the average distance minimization and the total distance minimization may favor different matches.

The following proposition further illustrates how the average distance minimization and the total distance minimization are different. We call a matching method invariant to the translation of distance if for any distances d1, d2 such that d2(ti, cj) = d1(ti, cj) + c for some constant c, the resulted matches are the same. We call a matching method invariant to the scale of distance if for any distances d1, d2 such that d2(ti, cj) = c·d1(ti, cj) for some positive constant c, the resulted matches are the same.

Proposition 2.

If the optimization problem (5) is feasible,

the average distance minimization is translation and scale-invariant, and the total distance minimization is scale-invariant but not translation-invariant;

given multiplicity parameters Mt, Mc, mt, mc, let πave and πtot denote an optimal solution of the average distance minimization and the total distance minimization respectively, then

By Proposition 2, if Dave(π) is relevant to , will be less biased and variant compared to . In Section 5, we demonstrate that the method minimizes the average proximity score distance is also associated with small . Besides, the average distance minimization produces a larger number of pairs, which further reduces the variance of .

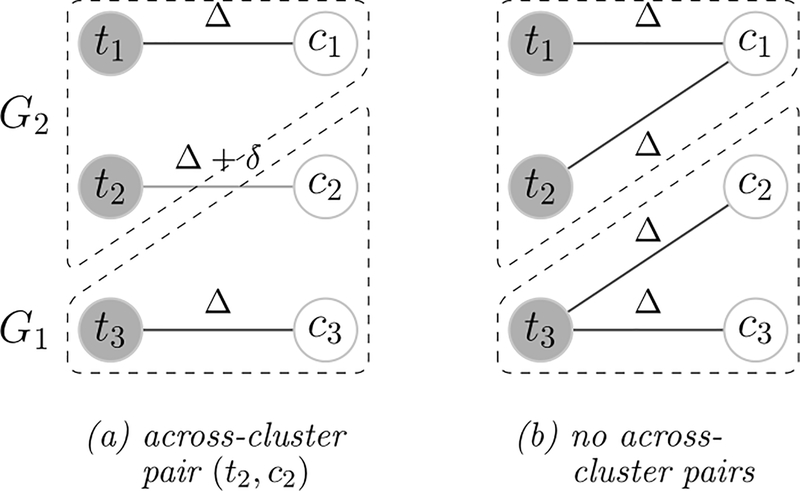

Reconsider the example in Figure 2. We further assume that distances between units in the same cluster are Δ, and those between units across clusters are Δ+δ. As demonstrated in Figure 3, there are two match candidates: in panel (a), there is one across-cluster pair, the total distance is 3Δ+δ and the average distance is Δ+δ/3; in panel (b), there are no across-cluster pairs, the total distance is 4Δ and the average distance is Δ. The average distance minimization always prefers the match with no across-cluster pairs in panel (b). On the contrary, the total distance minimization prefers the match with unfavorable across-cluster pairs in panel (a) if Δ > δ. The translation-invariance makes the average distance minimization robust to distance inflations, i.e., the distance shifts up by a constant.

Figure 3.

Comparison of the average distance minimization and the total distance minimization (continued from the example in Figure 2). Distances between units in the same cluster and across clusters are Δ and Δ + δ respectively. In panel (a), there is one across-cluster pair, the total distance is 3Δ+δ and the average distance is Δ + δ/3; in panel (b), there are no across-cluster pairs, the total distance is 4Δ and the average distance is Δ. The average distance minimization always prefers the match in panel (b), while the total distance minimization prefers the match in panel (a) if Δ > δ.

The example is motivated by multiple popular distances. Consider the semi-oracle distance . Let μ1 and μ2 be the control group mean function values in cluster G1 and G2. The expectation of the semi-oracle distance equals 2σ2 for within-cluster pairs and 2σ2 + (μ2 − μ1)2 for across-cluster pairs. As the noise magnitude increases, the distance inflates. Another motivating distance is . Suppose that the group mean function only depends on the first covariate and units are clustered according to it, then the distance is for within-cluster pairs and for across-cluster pairs. As the number of nuisance covariates increases, the covariate distance shifts up.

3.3.3. Computation

There are two major approaches to solve the matching problem with total distance minimization. The first approach reformulates the matching problem as a minimum-cost flow problem22;11. If the distances are positive, there exists a feasible integral flow achieving the minimal cost. The optimal integer flow corresponds to a solution to the aforementioned matching problem. The minimum-cost flow algorithm runs in O(n2 log(n)) on sparse graphs (constant order , as n → ∞). On dense graphs (,), finding a minimum flow takes O(n3) time.

The second approach casts the matching problem in the language of linear programming. Let πij denote whether the treated unit ti and the control unit cj are matched. The total distance minimization problem can be rewritten as

where we relax the integer constraints πij ∈ {0, 1} to the linear constraints πij ∈ [0, 1]. In fact, as shown in the following, the extremal points defined by the linear constraints are all integer vectors. Thus the simplex method will output an optimal solution of integer values. In this way, we avoid the computationally heavy integer programming.

We show that the extremal points of the feasible regions are integer-valued. We rewrite the linear constraints in the matrix form Aπ ≤ b, where both the coefficient matrix A and the coefficient vector b are integer-valued. The coefficient matrix A defined by the linear constraints is totally unimodular33, i.e., each subdeterminant of A is 0, 1, or −1. Then for any integer vector b, the polyhedron {π : Aπ ≤ b} is integral34, i.e., a convex polytope whose vertices all have integer coordinates.

Unfortunately, the two major approaches can not be directly applied to the average distance minimization problem. Standard minimum-cost flow problem requires to input the flow value, or equivalently the total number of pairs in the match. However, the flow value is not directly available in the average distance minimization. Linear programming entails a linear objective function, while the average distance is non-linear.

We propose an algorithm for the average distance minimization. The algorithm is derived from the average minimum-cost flow solver designed by Chen35. Explicitly, we search for the optimal flow value via binary search. In each sub-routine, we fix a flow value and solve a minimum-cost flow problem.

Let the time of solving one minimum flow problem on a certain graph be a unit, which ranges from O(n2 log(n)) to O(n3) depending on the graph structure. The average distance minimization problem only takes log(n(Mt+Mc)) time units. In other words, the algorithm is of the same time complexity as solving one minimum-cost flow problem up to logarithmic factors of the maximal number of pairs.

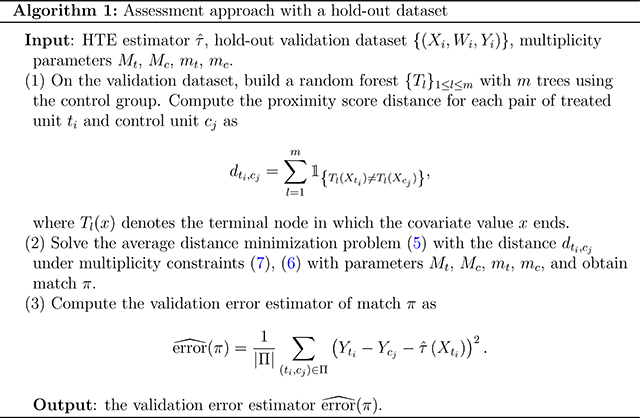

Finally, we summarize the assessment approach with a hold-out validation dataset in Algorithm 1.

4. Assessment with cross-validation

In practice, hold-out datasets may be costly. Cross-validation is a popular validation paradigm that uses the whole dataset for training while providing a reasonably good evaluation of the estimation performance. In this section, we discuss how to conduct the assessment approach under the framework of cross-validation.

The standard cross-validation consists of two steps: first, split the data into several folds randomly equally; second, train on all but one fold, conduct validation on the left-out fold, and repeat this for each fold. Naively integrating the assessment approach and the standard cross-validation framework raises the issue: the former splitting hurts the later matching. Consider the most favorable case where the samples are perfectly paired. By splitting first, we may assign two perfectly paired units to different folds, thus missing the ideal match.

To tackle this problem, we propose to do matching before splitting, short as match-then-split. Particularly, on the whole dataset, we obtain proximity score distances and solve the average distance minimization problem to obtain the optimal match. We next split the samples into folds preserving the pair-structures. In this way, we avoid assigning matched units to different folds. Recall the perfectly matched example. If we apply the match-then-split principle, all perfect pairs will be matched and stay in the same fold.

A natural concern of the match-then-split principle is data snooping. Note that the proximity score distance is obtained solely on the control group data, and the treatment group is not touched. The one-sided data provides no information for the differences between the two groups. Therefore, splitting after matching is blind to the validation target and fair.

A new difficulty arises in data splitting to keep matched units together. We represent a match by an undirected graph where each node denotes a unit. There is an edge between two nodes if and only if the two units are matched. The pair-preserving constraint means that connected components should stay together. Since each unit is allowed to be matched multiple times, there may exist large connected components as depicted in Figure 4. The graph may be connected in the extremist scenario, and splitting without breaking pairs is impossible.

Figure 4.

Example of pruning. In panel (a), the graph is a chain of alternate treated units and control units. There are three removable edges (t2, c1), (t2, c2), (t3, c2). We pick the removable edge with the maximal distance, i.e., (t2, c2), eliminate the edge and obtain panel (b). After pruning (t2, c2), edges (t2, c1) and (t3, c2) are no longer removable, and the set of removable edges is empty. Therefore, we stop pruning. In the pruned match, units can be split into two connected subgroups: {t1, t2, c1} and {t3, c2, c3}. The connected subgroups either consist of one treated unit and multiple control units or vice versa.

To enable proper splitting, we modify the average distance minimization problem. Beyond the multiplicity constraints (6), (7), we further restrict the maximal path length of the graph to be at most three – a constraint also adopted in full matching11. As proved in Lemma 1, under the maximal depth constraint, only two possible types of connected components are allowed: (1) one treated unit with multiple matched control units; (2) one control unit with multiple matched treated units. The maximal size of the connected components is upper bounded by 1+max{Mt, Mc} – usually small compared to the sample size. In this way, we can assign the connected components randomly into folds without destroying the pair-structures.

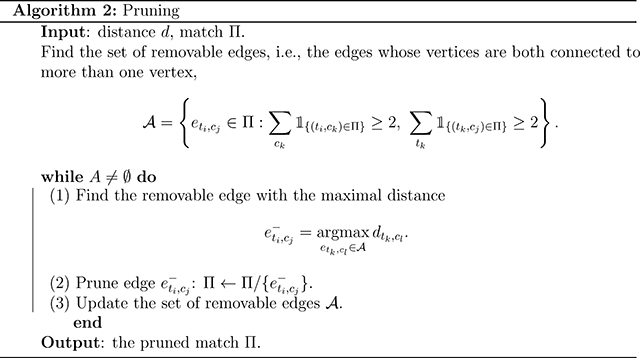

In full matching, the constraint is automatically fulfilled. However, this is not true for the average distance minimization. In fact, no known efficient network algorithm works under the path length constraint. As a surrogate, we propose the following heuristic pruning algorithm. Particularly, we start with the solution of the average distance minimization. We call an edge (ti, cj) removable if the treated unit ti is matched to more than one control unit, and the control unit cj is matched to more than one treated unit. As shown in Lemma 1, the new constraint is equivalent to the condition that there are no removable edges in the graph. We iteratively prune the highest cost removable edge until the set of removable edges is empty. See Figure 4 for an example. The approach is summarized in Algorithm 2. We call the matching with pruning “FACT matching”: “Full matching constraints” and “Average CosT minimization”.

Lemma 1.

In the graph representing a match, the following constraints are equivalent:

the maximal path length is at most three;

there are only two types of connected components: one treated unit with multiple control units and vice versa;

there is no such edge (ti,cj) that ti is connected with multiple control units and cj is connected with multiple treated units.

The pruned match possesses several appealing properties. First, pairs after pruning are a subset of the matched pairs of the average distance minimization. Thus multiplicity constraints (6) and (7) are satisfied. Second, if the match without the path-length constraint can avoid low-quality pairs, the pruned match will automatically avoid those pairs by choosing from existing pairs. Third, by eliminating the removable pair with the maximal distance each time, we are heading greedily towards the optimal solution with the path-length constraint.

5. Simulation

In this section, we compare various validation methods under the cross-validation framework on the synthetic data generated from model (1).

We consider the following validation methods for comparison.

Response prediction (prd). On the training data, we estimate the treatment and control group mean functions. On the validation data, we compare the out-of-sample predictions with the observations;

Covariate distance with FACT matching (cvr). We compute validation errors as described in Section 4 with Mahalanobis distance1 and FACT matching;

Proximity score distance with full matching2 (full). We compute validation errors as described in Section 4 with the proximity score distance and full matching;

Proximity score distance with FACT matching and the split-then-match principle (S-M). We first split samples randomly into folds, then match within folds using the proximity score distance and FACT matching. The rest of the steps are the same as described in Section 4;

Prognostic score distance with FACT matching (prgn)3. We compute estimation errors as described in Section 4 with the prognostic score distance4 and FACT matching;

Proximity score distance with FACT matching (combo). We compute estimation errors as described in Section 4 with the proximity score distance and FACT matching.

Table 1 summarizes the characteristics of the validation methods.

Table 1:

Summary of the validation methods’ characteristics.

| method abbreviation | target of comparison13 | matching distance | matching structure | split or match first |

|---|---|---|---|---|

| prd | response | NA | NA | NA |

| cvr | HTE | covariate dist. | FACT matching | match |

| full | HTE | proximity score dist. | full matching | match |

| S-M | HTE | proximity score dist. | FACT matching | split |

| prgn | HTE | prognostic score dist. | FACT matching | match |

| combo | HTE | proximity score dist. | FACT matching | match |

There are a number of tuning parameters. As for the random forests to compute the proximity score distance and the prognostic score distance, we determine the number of trees and the number of variables randomly sampled as candidates at each splits by the out-of-bag mean-squared errors. In terms of the maximal group sizes in full matching and FACT matching, we experiment with a grid of values. As the group size increases, the distance objectives first decrease then stabilize, and we choose the “elbow point”5.

We consider the following four data generation settings.

Setting I. Setting I serves as the default. There are in total 200 units and each unit is associated with 10 covariates generated i.i.d. uniformly from [−1, 1]. The HTE, treatment and control group mean functions are linear of the first 5 covariates. The treatment assignment is randomized, i.e., the propensity score is always 0.5. We use 10-fold cross-validation;

Setting II. Compared to setting I, in setting II we only increase the number of covariates from 10 to 100. The HTE, treatment and control group mean functions remain the same, and are independent of the additional covariates. Only 5% of the covariates are meaningful, and the rest are nuisances;

Setting III. Compared to setting I, in setting III we only increase the number of folds in cross-validation from 10 to 25. After splitting, there are around 8 units in each fold;

Setting IV. Compared to setting I, setting IV is more realistic with two major changes. First, we let the propensity score depend on the covariates and be correlated with the group mean functions and the HTE. Second, we also change the group mean functions to non-linear functions while keeping the HTE linear. The reason for adding non-linear terms into the control group mean function instead of the HTE is that, according to domain knowledge, the control group mean function, e.g., blood pressure, is usually influenced by more factors than the HTE, e.g., the difference in blood pressure induced by therapy, and in a more complicated way.

The signal-noise-ratios of all settings are below one. Table 2 summarizes the characteristics of the simulation settings.

Table 2:

Summary of the simulation settings’ characteristics.

| Simulation setting | group mean functions | number of covariates | propensity score | number of folds |

|---|---|---|---|---|

| I | linear | 10 | 0.5 | 10 |

| II | linear | 100 | 0.5 | 10 |

| III | linear | 10 | 0.5 | 25 |

| IV | non-linear | 10 | nonconstant | 10 |

We evaluate the tuning performance of the validation methods applied to the following LASSO-based HTE estimator7:

| (8) |

The approach (8) estimates the control group mean function by and the HTE by . We add ℓ1 penalties of α and β since the true control group mean function and the true HTE depend on only a few covariates. The approach is not the state-of-art of HTE estimation. However, our emphasis is on the validation step but not the estimation step and the estimator serves our goal well: an HTE estimator making variable selection and involving only one tuning parameter. We expect that a good validation method should be able to select the best tuning parameter for the estimator (8).

To evaluate the tuning performance, for each setting in Table 2, we run the validation methods in Table 1 with a sequence of tuning parameters Λ. We pick the tuning parameters λmethod with the minimal validation errors. We then solve (8) on the whole dataset with the selected hyper-parameters to obtain and compute the estimation error . Meanwhile, we define the oracle estimation error as . For comparison, we compute the log ratio of MSEmethod over MSEoracle:

| (9) |

referred to as relative MSE in the following. The smaller the relative MSE is, the better the validation method performs.

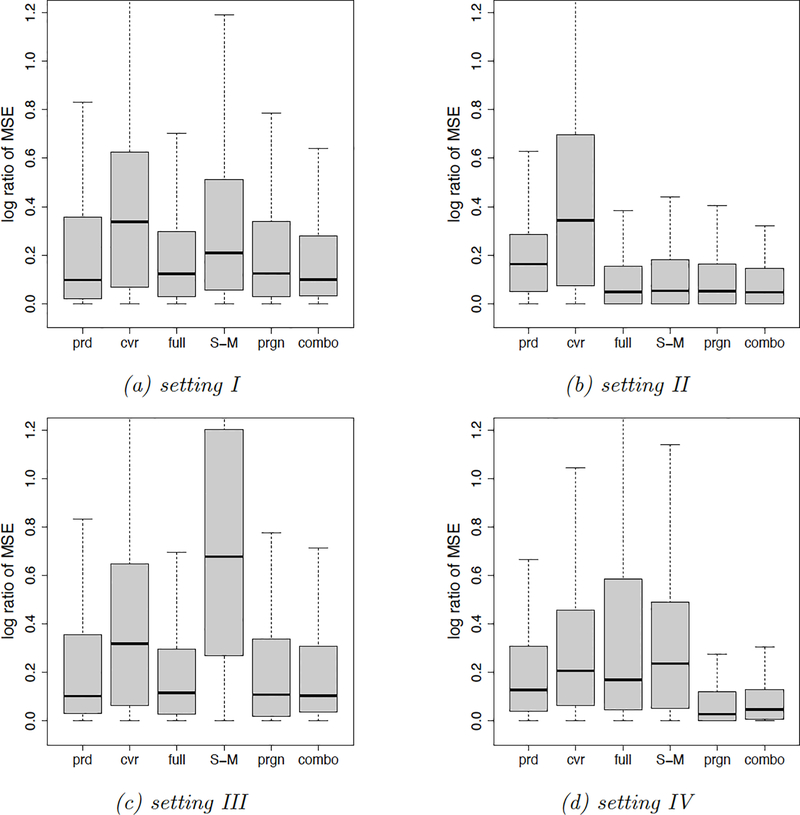

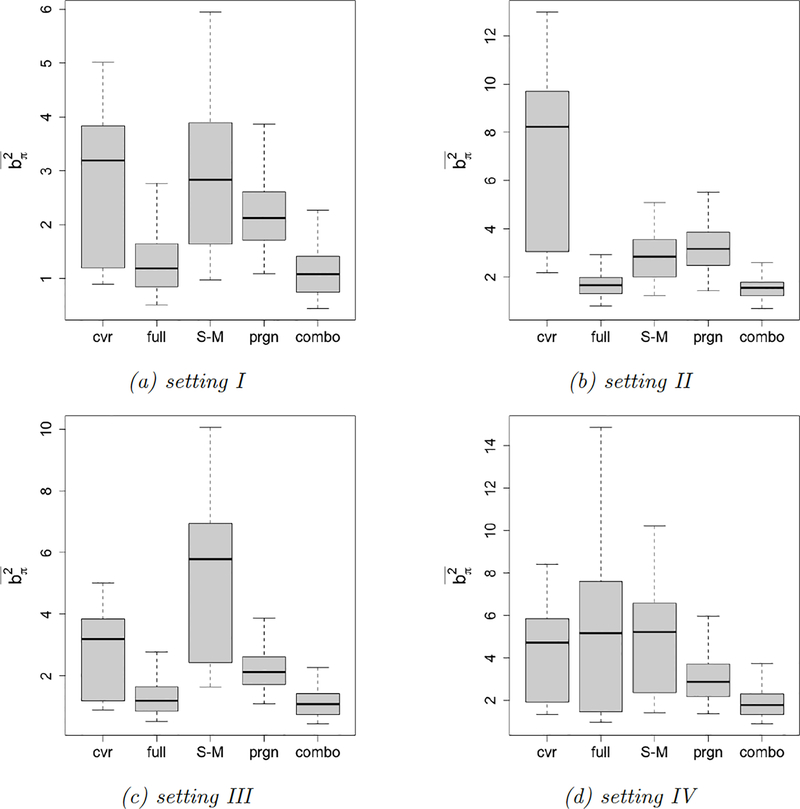

In Figure 5 we present box plots of the relative MSE. Overall, the combo and the prgn methods produce the smallest relative MSEs. The cvr method is problematic in Setting II, since the covariate distance’s quality deteriorates in the presence of many irrelevant covariates. The S-M method produces a large relative MSE in setting III, since there are fewer units in each fold and matched pairs are of lower quality. The full method is less attractive in setting IV – a setting similar to the example in Figure 3 where the average distance minimization (FACT matching) is preferred over the total distance minimization (full matching). The prd method is always dominated by the combo and the prgn methods. We also compare the mean squared differences in all simulation settings. The results of agree with those of the relative MSE, and the combo method always produces the smallest . The plots can be found in the appendix.

Figure 5.

Relative MSE box plots. We display the relative MSE of the validation methods in Table 1 under the simulation settings in Table 2. Each setting is repeated 200 times.

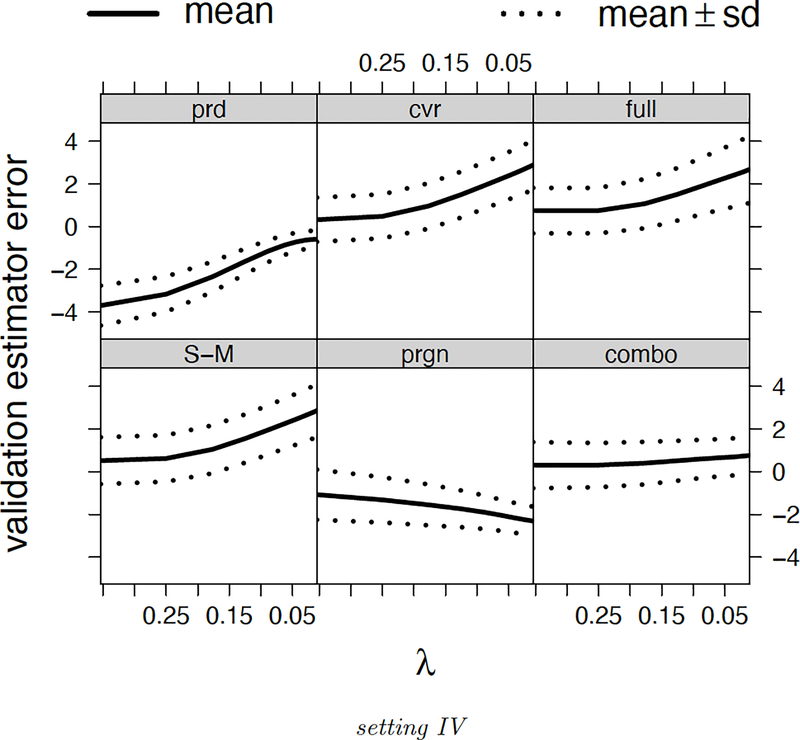

Furthermore, we compare validation error curves. Figure 6 presents the error curves of validation error estimators in the setting IV6. The combo method produces the smallest biases across different λ. The validation error estimators’ biases are more problematic than the variances. We also regress average validation error curves over the oracle error curve. In Table 3 we present coefficients and R2 of the regressions. Both ideal values are one. The results agree with those of the relative MSE: the combo method leads the performance, and the cvr method, S-M method, full method are not very promising in setting II, III and IV respectively. We also observe that compared to the combo method, the prgn method is performing worse regarding the validation error curve.

Figure 6.

Error curves of validation error estimators (setting IV). The solid curves demonstrate the validation error estimators’ biases. The dotted curves demonstrate the biases plus or minus one standard deviation of . Each setting is repeated 200 times.

Table 3:

Comparison of validation error curves. We average the MSEs of the validation methods in Table 1 at each tuning parameter in Λ under the simulation settings in Table 2. We regress validation error curves over the oracle error curve and present the coefficient, R2 of each regression.

| method | I | II | III | IV | ||||

|---|---|---|---|---|---|---|---|---|

| coef. | R2 | coef. | R2 | coef. | R2 | coef. | R2 | |

| prd | 2.97 | 0.99 | 1.32 | 0.92 | 2.95 | 0.99 | 4.15 | 0.95 |

| cvr | 1.81 | 0.88 | 0.75 | 0.88 | 1.78 | 0.89 | 2.18 | 0.81 |

| full | 1.05 | 1.00 | 1.08 | 1.00 | 1.03 | 1.00 | 1.76 | 0.90 |

| S–M | 1.56 | 0.93 | 0.96 | 0.99 | 1.12 | 0.14 | 2.02 | 0.85 |

| prgn | 0.68 | 0.99 | 1.16 | 0.98 | 0.67 | 0.99 | 0.78 | 1.00 |

| combo | 0.99 | 1.00 | 1.09 | 1.00 | 0.98 | 1.00 | 1.13 | 1.00 |

6. Real data example

We compare the validation methods on the national supported work (NSW) program data36;37. We use the validation results obtained from a randomized evaluation of the NSW program as the oracle, and compare with the results on an observational dataset combining the treated units from the NSW randomized evaluation and the non-experimental control units from the 1978 panel study of income dynamics (PSID).

The National Supported Work Demonstration is a program aiming to study whether and how employment benefits disadvantaged workers to get and hold unsubsidized jobs. Qualified applicants were randomly assigned to treatment and guaranteed with jobs for 9 to 18 months. We focus on the subset 7 with 185 treated units and 260 control units, referred to as the NSW-NSW dataset in the following. Pre-intervention variables include pre-intervention earnings, marital status, race, education and age. The distributions of pre-intervention variables in the treatment and control groups are very similar except hispanic and no-degree 8.

We follow Dehejia and Wahba’s analysis and create an observational control group based on the PSID9. The control group consists of 128 units with the same set of pre-intervention variables. We then construct an observational study dataset combining the NSW treatment group and the PSID control group, referred to as the NSW-PSID dataset in the following. Unlike the NSW-NSW dataset, the pre-intervention variables in the NSW-PSID dataset are significantly different across the treatment and control groups: the control group are on average 12.4 years older, less likely to be black (45% versus 84%), more likely to be married (70% versus 19%) and earn $1079 more in the year before the program. Table 4 summarizes the pre-intervention variables’ characteristics of the NSW-NSW and the NSW-PSID datasets.

Table 4:

Pre-intervention variables’ characteristics of the NSW-NSW and NSW-PSID datasets. Standard errors are in parentheses. The NSW-NSW dataset contains the NSW treatment group and the NSW control group. The NSW-PSID dataset contains the NSW treatment group and the PSID control group. The pre-intervention variables are: age: age in years; education: number of years of schooling; black : 1 if black and 0 otherwise; hispanic: 1 if hispanic and 0 otherwise; no degree: 1 if no high school degree and 0 otherwise; married: 1 if married and 0 otherwise; pre-intervention income: earnings (in dollars) in the calendar year 1975.

| sample size | Age | education | black | hispanic | married | no-degree | pre-intervention income ($) | |

|---|---|---|---|---|---|---|---|---|

| NSW treated | 185 | 25.82 (0.34) | 10.35 (0.10) | 0.84 (0.02) | 0.06 (0.01) | 0.19 (0.02) | 0.71 (0.02) | 1532 (153) |

| NSW control | 260 | 25.05 (0.33) | 10.09 (0.08) | 0.83 (0.02) | 0.11 (0.01) | 0.15 (0.02) | 0.83 (0.02) | 1267 (147) |

| PSID control | 128 | 38.26 (1.14) | 10.30 (0.28) | 0.45 (0.04) | 0.12 (0.03) | 0.70 (0.04) | 0.51 (0.04) | 2611 (493) |

To prepare the data for the LASSO estimator in (8), we log-transform the heavy-tailed pre-intervention income and the post-intervention income10. We center and normalize the continuous pre-intervention variables education, age and pre-intervention income in both datasets by the means and standard deviations in the NSW-NSW dataset. We add two-way interactions between the 7 pre-intervention variables, which amounts to 28 features in total.

We evaluate the validation methods in Table 1 applied to the LASSO estimator (8). On the NSW-NSW dataset, we compute as described in Section 4 the 20-fold cross-validation errors with a sequence of tuning parameters11. We also obtain the LASSO estimates based on the full NSW-NSW dataset corresponding to the sequence of tuning parameters. On the NSW-PSID dataset, we compute the hold-out validation errors of the LASSO estimates from the full NSW-NSW dataset as described in Section 3. We finally compare the LASSO estimates selected from the two datasets. For each validation method, we regard the selected estimate based on the validation errors of the NSW-NSW dataset as the oracle, and expect it to select the same estimate based on the NSW-PSID dataset. Table 5 demonstrates the results.

Table 5:

Comparison of the LASSO estimates selected from the NSW-NSW dataset and the NSW-PSID dataset by minimizing the validation errors. For each validation method, we compute – the treatment group L2 norm of the difference of the selected LASSO estimates from the NSW-NSW dataset and from the NSW-PSID dataset. The results regarding the L2 norm based on the NSW-NSW control group and the NSW-PSID control group are similar and are presented in Table 6 in the appendix.

| method | prd | cvr | full | S-M | prgn | combo |

|---|---|---|---|---|---|---|

| × 102 | 0.74 | 1.36 | 0.00 | 1.36 | 4.36 | 0.00 |

According to Table 5, the full method and the combo method select the same LASSO estimates across datasets, while the other validation methods do not pick consistent estimates. In particular, the prgn method selects the most different estimates since the response is rather noisy and the prognostic score distance produces doubtfully close pairs. Often randomized experiment data like the NSW-NSW dataset are rare and expensive to acquire compared to observational study data like the NSW-PSID dataset. With a validation method making consistent selections across datasets, we can assess an HTE estimate using observational study data while arriving at the same result as using randomized experiment data.

The selected LASSO estimates of the combo and the full methods coincide on both datasets. The selected estimate concludes that there is an overall 13.3% improvement in the post-intervention income brought by the NSW program12. The finding of the overall positive effect agrees with previous works36;37 though we analyze the log-transformed income while they focus on the original income. The LASSO estimate also suggests that subgroups black and married, young and married, young and high pre-intervention income benefit more from the program.

7. Extension to general exponential family

In the previous sections, we focus on continuous responses. There are other types of outcomes worthwhile to study. For instance, doctors measure whether the patients who underwent the operation or not survive to a certain time spot to study the effectiveness of surgery; agencies compare the times of bicycles used from automated bicycle counters before and after the policy is enforced to investigate the influence of a policy encouraging non-motor vehicles. In this section, we extend the aforementioned assessment approach to address multiple types of responses.

We generalize the model (1) to the general exponential family, which deals with a wide range of responses, including binary data and count data. Mathematically, we assume

| (10) |

where η(x, w) represents the natural parameter, ψ(η) is the cumulant generating function, and κ(y) is the carrying density. We write

and focus on the quantity τ(x) := ν(x)−μ(x). The model (10) with Gaussian distribution is a sub-case of the model (1).

Next, we extend the validation criterion, i.e. the mean squared error in (4). We first state the following result regarding conditional likelihoods.

Proposition 3.

Consider n pairs of data {(Xi1,Xi2,Wi1,Wi2, Yi1, Yi2)}, where μ(Xi1) = μ(Xi2), Wi1 = 1, Wi2 = 0, and Yi1, Yi2 are generated independently from model (10) given Xij, Wij. Then the conditional likelihood of {Yij} given {Yi1 + Yi2} does not depend on μ(x).

Proposition 3 implies that with pairs perfectly matched on control group mean function values, the conditional likelihood, which serves as a valid criterion for the HTE estimation assessment, can be evaluated without μ(x). For example, consider the Gaussian distribution, the log conditional likelihood equals up to scale.

If data comes in perfectly matched pairs, the condition μ(Xi1) = μ(Xi2) is automatically satisfied. Examples of Proposition 3 with perfectly matched pairs can be found in the book by Argesti38. When such data are not available, we can apply the matching method based on the proximity score distance to construct pairs such that μ(Xi) ≈ μ(Xj). Based on the matched pairs, we compute the conditional likelihood pretending the pairs are perfectly matched, and use the conditional likelihood as the criterion for assessment.

8. Discussion

The paper discusses an assessment approach of HTE estimators by constructing pseudo-observations based on matching. In terms of the matching, we suggest to use the proximity score distance and minimize the average distance. When conducting the assessment approach under the cross-validation framework, we suggest to match before split.

Our proposed method can be further enhanced by existing matching ideas such as exact and near-exact matching16. In particular, augmentations motivated by domain knowledge could help HTE assessment when the proximity score learning is onerous, possibly due to limited samples or a large number of covariates. For instance, in the selective serotonin reuptake inhibitors (SSRIs) example discussed by Rosenbaum17, exact matching on gender is enforced with the belief that the potential outcomes would differ significantly across male and female subjects. To incorporate the gender constraint into our method, we can partition the validation samples according to gender and match within each subgroup. A more flexible alternative is incorporating the restriction into the proximity score distance, e.g., adding a large value to the distance if a treatment-control pair differs in sex.

The pseudo-observations can also be used for data calibration. Given an estimator, the standard calibration tunes the prediction bands’ widths on the hold-out data to achieve the exact coverage. As for the calibration of an HTE estimator, observations’ coverage is not the fundamental goal. We can construct pseudo-observations as discussed and determine the prediction bands’ widths by covering a certain proportion of the pseudo-observations.

A limitation of the assessment approach is the computation of matching. Solving a matching problem exactly is generally computationally heavy. Even if in the simplest case where each treated unit is mapped to exactly one control unit and the distance matrix is not sparse, minimizing the total/average distance takes O(n3) time. Thus, fast approximate matching algorithms are desirable to make the validation method scalable.

9. Acknowledgements

This research was partially supported by grants DMS-1407548 and IIS 1837931 from the National Science Foundation, and grant 5R01 EB 001988-21 from the National Institutes of Health.

12. Appendix

12.1. Proofs

Proof of Proposition 1.

We prove for bias and variance respectively. The expectations are taken with regard to the noise ε.

For bias, under model (1)

| (11) |

By Cauchy-Schwarz inequality,

| (12) |

Plug (12) into (11), and divide both sides by error(π),

Similarly for the lower bound of the bias.

For variance, let for simplicity. Define the neighborhood of a matched pair (ti, cj) as

Since each treated unit falls into at most Mt pairs, and each control unit falls into at most Mc pairs,

| (13) |

Under model (1),

Since 2 Cov(ξ1, ξ2) ≤ Var(ξ1) + Var(ξ2) for any random variables ξ1, ξ2,

By (13),

Recall that Var(ε) = σ2, Var(ε2) = κσ4,

□

Proof of Proposition 2.

For C > 0,

thus the total distance optimization is invariant to scaling. The example in Figure 3 implies that the total distance minimization is not invariant to translation.

For C1, C2 > 0,

thus the average distance minimization is invariant to both scaling and translation.

Let πave, πtot be the optimal solution of average distance minimization. By the optimality condition,

□

Proof of Lemma 1.

We prove the equivalence by showing (1) ⟹ (2), (2) ⟹ (3), (3) ⟹ (1).

(1) ⟹ (2): we prove by negation. If (2) is not true, then there exists a connected component consisting of at least 2 treated units and 2 control units. Without loss of generality, assume the node with the highest degree in the connected component is treated, and denote the node by t1. Let t2 be a treated node other than t1, by the connectivity, there exists a path connecting t1 and t2. Notice that the graph is bipartite, then at least one control unit appears in the path. If there is more than one control unit in the path, the path is of length at least 4. Otherwise, there is only one control unit in the path, denoted by c1. Then there is another control unit c2 connected to t1 since t1 is connected to at least two control units. The path c2 −t1 −c1 −t2 is of length 4. Therefore, if the maximal path is of length at most three, then the connected components can consist of either one control or one treated unit.

(2) ⟹ (3): we prove by negation. Assume (3) is not true, i.e. there exists an edge (t1, c1) that t1 is also connected to another control unit c2, and c1 is connected to another treated unit t2. Then the connected component containing c2 − t1 − c1 − t2 consists of at least two treated and two control units.

(3) ⟹ (1): we prove by negation. Assume (1) is not true, then there exists a path of length 4 of the type c2 − t1 − c1 − t2. In this way, for edge (t1, c1), the treated unit t1 is connected with two control units and c1 is connected with two treated units. □

Proof of Proposition 3.

Since Yi1, Yi2 are generated independently from model (10), the marginal density of Zi = Yi0 + Yi1 is

Then the conditional distribution given Zi is

| (14) |

Since μ(Xi0) = μ(Xi1), then

Thus the conditional likelihood does not depend on μ(x). □

12.2. Synthetic data examples

We include the error curves of validation error estimators (Figure 7) in the setting I, II, III. In all settings, the combo method produces the smallest biases across different λ. The validation error estimators’ biases are more problematic than the variances.

Figure 7.

Error curves of validation error estimators (setting I, II, III). The solid curves demonstrate the validation error estimators’ biases. The dotted curves demonstrate the biases plus or minus one standard deviation of . Each setting is repeated 200 times.

We compare the mean squared differences of five matching-based validation methods in Figure 8. In all simulation settings, the combo method produces the smallest . The full method comes second except in the setting IV. The cvr method is not very promising in setting I in the presence of many irrelevant covariates. The S-M method is less attractive in setting III with 25-fold cross-validation. According to Proposition 1, a smaller implies a less biased and variant validation estimator. As expected, Figure 8 agrees with Figure 5.

Figure 8.

Mean squared differences box plots. We display the mean squared differences of the five matching-based validation methods in Table 1 under the simulation settings in Table 2. Each setting is repeated 200 times.

12.3. Real data example

We provide more details of the empirical study in Section 6. In complement of Table 5, we demonstrate the differences in selected LASSO estimates under alternative norms in Table 6.

Table 6:

Comparison of selected LASSO estimates from two datasets via different validation methods. For each validation method, we compute the NSW-NSW and NSW-PSID control group L2 norm of the difference of the selected LASSO estimate from the NSW-NSW dataset and from the NSW-PSID dataset.

| method | prd | cvr | full | S-M | prgn | combo |

|---|---|---|---|---|---|---|

| × 102 | 0.60 | 1.12 | 0.00 | 1.49 | 4.76 | 0.00 |

| × 102 | 0.60 | 1.12 | 0.00 | 1.53 | 5.33 | 0.00 |

12.4. Maximal group size selection

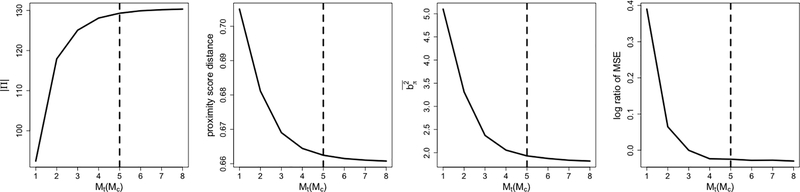

We empirically demonstrate how Mt and Mc affect the assessment of HTE estimators. In particular, we illustrate how the number of matched pairs |Π|, the average proximity score distance of the resulting match Dave(π) (distance objective), the mean squared difference , and the relative MSE (9) change as Mt and Mc increase 14. The former two are available without the underlying truth and can be used for parameter selection. The latter two can not be computed without an oracle and are used as measurements of matching quality and assessment performance. Figure 9 corresponds to setting I in Table 2, where the treatment assignment is randomized. Figure 10 addresses setting IV in Table 2, where the propensity scores vary significantly across subjects15.

Figure 9.

Selection of multiplicity upper bounds Mt, Mc in setting I. For simplicity, we set Mt = Mc. From the left to the right, we plot in solid curves the number of matched pairs |Π|, the average proximity score distance of the resulting match Dave(π), the mean squared difference , and the relative MSE (9) (all aggregated over 200 trials). The dashed lines correspond to the selected multiplicity upper bounds Mt = Mc = 3.

Figure 10.

Selection of multiplicity upper bounds Mt, Mc in setting IV. For simplicity, we set Mt = Mc. From the left to the right, we plot in solid curves the number of matched pairs |Π|, the average proximity score distance of the resulting match Dave(π), the mean squared difference , and the relative MSE (9) (all aggregated over 200 trials). The dashed lines correspond to the selected multiplicity upper bounds Mt = Mc = 5.

In both settings, the number of pairs |Π| first increases then stabilizes, and the average proximity score distance Dave(π) first decreases then stabilizes. As expected in Section 3.3.2, the mean squared difference — critical to the validation error estimator’s quality — behaves similarly to the proximity score distance Dave(π). Finally, the relative MSE first decreases then stabilizes or slightly increases. The relative MSE may rise for Mt, Mc excessively large due to additional variances.

As discussed in Section 5, we choose the “elbow point” of the average proximity score distance Dave(π) (distance objective) curve. In setting I (Figure 9), we select Mt = Mc = 3 (dashed lines), while in setting IV (Figure 10), we pick Mt = Mc = 5 (dashed lines). Setting IV prefers larger Mt, Mc because the treatment and control groups are imbalanced at subregions with propensity scores far from one half, and the units in the smaller group should be used multiple times. The necessity of larger Mt, Mc in setting IV is also illustrated in the example of Figure 2.

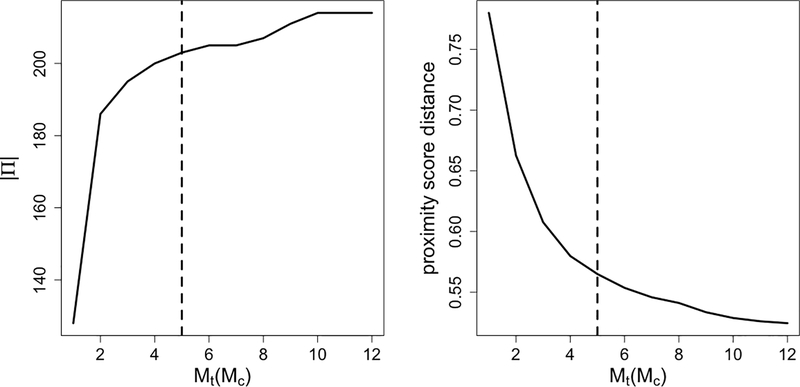

We provide more details of choosing the multiplicity upper bounds Mt, Mc in the real data example (NSW-PSID dataset). In Figure 11, from left to right, we plot the number of matched pairs |Π| and the average proximity score distance Dave(π) (distance objective) against multiplicity upper bounds Mt, Mc. The number of pairs |Π| first increases then stabilizes, and the average proximity score distance Dave(π) first decreases then stabilizes. We choose the “elbow point” Mt = Mc = 5 (dashed lines) of the average proximity score distance Dave(π) (distance objective) curve as discussed in Section 5.

Figure 11.

Selection of multiplicity upper bounds Mt, Mc in the real data example (NSW-PSID dataset). For simplicity, we set Mt = Mc. From the left to the right, we plot in solid curves the number of matched pairs |Π| and the average proximity score distance of the resulting match Dave(π). The dashed lines correspond to the selected multiplicity upper bounds Mt = Mc = 5.

Footnotes

For a treated unit ti and a control unit cj, the Mahalanobis distance is defined as , where Σ denotes the covariance matrix. When Σ is unknown, we replace Σ by the empirical covariance matrix.

We use the full matching from the R package optmatch.

The codes of proximity score distance construction and FACT matching are available at https://github.com/ZijunGao/causal-validation.

For a treated unit ti and a control unit cj, we define the prognostic score distance as . Here is the estimated control group mean function from the random forest used to compute the proximity score distance.

In simulations, we set multiplicity lower bounds mt = 1, mc = 0 if nt ≤ nc, and mt = 0, mc = 1 if nt > nc. We set multiplicity upper bounds Mt = Mc = 3 for setting I to III in Table 2, and Mt = Mc = 5 for setting IV. More details of the selection of maximal group sizes are included in the appendix.

Plots of error curves in the setting I, II, III can be found in the appendix.

We use the RE74 subset treatment and control groups considered by Dehejia and Wahba37.

None of the pre-intervention variable distributions except hispanic and no-degree are significantly different at the 5% level across the treatment and control groups.

We use the PSID-3 group considered by Dehejia and Wahba37.

We use log(1+RE75) as the pre-intervention income and log(1+RE78) as the post-intervention income, where RE75 and RE78 denote the earnings (in dollars) in the calendar year 1995 and 1998 respectively.

In the real data example, we set multiplicity lower bounds mt = 1, mc = 0 for the NSW-NSW dataset, and mt = 0, mc = 1 for the NSW-PSID dataset. We set multiplicity upper bounds Mt = Mc = 5. More details of the maximal group sizes selection can be found in the appendix.

The overall improvement is evaluated on the treatment group and computed as , for the selected by the combo and the full methods. The overall improvements evaluated on the NSW-NSW control group and the NSW-PSID control group are 10.6% and 9.5% respectively.

Since the prd method compares the observed and the estimated responses, the target of comparison is the response. Matching-based methods compare the constructed pseudo-HTEs and the estimated HTEs, and thus the target of comparison is the HTE.

In both settings, we set Mt = Mc for simplicity.

Selections in setting II and III are similar and thus omitted.

References

- [1].Rubin Donald B Estimating causal effects of treatments in randomized and nonrandomized studies. Journal of educational Psychology. 1974;66:688. [Google Scholar]

- [2].Splawa-Neyman Jerzy, Dabrowska Dorota M, Speed TP. On the application of probability theory to agricultural experiments. Essay on principles. Section 9. Statistical Science. 1990:465–472. [Google Scholar]

- [3].Sia Low Yen, Blanca Gallego, Haresh Shah Nigam. Comparing high-dimensional confounder control methods for rapid cohort studies from electronic health records Journal of comparative effectiveness research. 2016;5:179–192. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Lesko LJ. Personalized medicine: elusive dream or imminent reality? Clinical Pharmacology & Therapeutics. 2007;81:807–816. [DOI] [PubMed] [Google Scholar]

- [5].Marilyn Murphy, Sam Redding, Janet Twyman. Handbook on personalized learning for states, districts, and schools. IAP; 2016. [Google Scholar]

- [6].James Bennett, Stan Lanning, others. The netflix prize in Proceedings of KDD cup and workshop;2007:35.New York, NY, USA. 2007. [Google Scholar]

- [7].Kosuke Imai, Marc Ratkovic, others. Estimating treatment effect heterogeneity in randomized program evaluation The Annals of Applied Statistics. 2013;7:443–470. [Google Scholar]

- [8].Stefan Wager, Susan Athey. Estimation and inference of heterogeneous treatment effects using random forests Journal of the American Statistical Association. 2018;113:1228–1242. [Google Scholar]

- [9].Scott Powers, Junyang Qian, Kenneth Jung, et al. Some methods for heterogeneous treatment effect estimation in high dimensions Statistics in medicine. 2018;37:1767–1787. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Künzel Sören R, Stadie Bradly C, Vemuri Nikita, Ramakrishnan Varsha, Sekhon Jasjeet S, Abbeel Pieter. Transfer learning for estimating causal effects using neural networks arXiv preprint arXiv:1808.07804. 2018. [Google Scholar]

- [11].Rosenbaum Paul R A characterization of optimal designs for observational studies Journal of the Royal Statistical Society: Series B (Methodological). 1991;53:597–610. [Google Scholar]

- [12].Rosenbaum Paul R, Rubin Donald B. The central role of the propensity score in observational studies for causal effects Biometrika. 1983;70:41–55. [Google Scholar]

- [13].Rosenbaum Paul R, Rubin Donald B. Reducing bias in observational studies using subclassification on the propensity score Journal of the American statistical Association. 1984;79:516–524. [Google Scholar]

- [14].Imbens Guido W, Rubin Donald B. Causal inference in statistics, social, and biomedical sciences. Cambridge University Press; 2015. [Google Scholar]

- [15].Rosenbaum Paul R, others. Design of observational studies;10. Springer; 2010. [Google Scholar]

- [16].Stuart Elizabeth A Matching methods for causal inference: A review and a look forward Statistical science: a review journal of the Institute of Mathematical Statistics. 2010;25:1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [17].Rosenbaum Paul R Modern Algorithms for Matching in Observational Studies Annual Review of Statistics and Its Application. 2019;7. [Google Scholar]

- [18].Rubin Donald B Multivariate matching methods that are equal percent bias reducing, I: Some examples Biometrics. 1976:109–120. [Google Scholar]

- [19].Rubin Donald B Bias reduction using Mahalanobis-metric matching Biometrics. 1980:293–298. [Google Scholar]

- [20].Hansen Ben B The prognostic analogue of the propensity score Biometrika. 2008;95:481–488. [Google Scholar]

- [21].Rubin Donald B Matching to remove bias in observational studies Biometrics. 1973:159–183. [Google Scholar]

- [22].Rosenbaum Paul R Optimal matching for observational studies Journal of the American Statistical Association. 1989;84:1024–1032. [Google Scholar]

- [23].Hansen Ben B Full matching in an observational study of coaching for the SAT Journal of the American Statistical Association. 2004;99:609–618. [Google Scholar]

- [24].Hill Jennifer L Bayesian nonparametric modeling for causal inference Journal of Computational and Graphical Statistics. 2011;20:217–240. [Google Scholar]

- [25].Green Donald P, Kern Holger L. Modeling heterogeneous treatment effects in survey experiments with Bayesian additive regression trees Public opinion quarterly. 2012;76:491–511. [Google Scholar]

- [26].Ertefaie Ashkan, Asgharian Masoud, Stephens David A. Variable selection in causal inference using a simultaneous penalization method Journal of Causal Inference. 2018;6. [Google Scholar]

- [27].Künzel Sören R, Sekhon Jasjeet S, Bickel Peter J, Yu Bin. Metalearners for estimating heterogeneous treatment effects using machine learning Proceedings of the National Academy of Sciences. 2019;116:4156–4165. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [28].Susan Athey, Imbens Guido W. Machine learning methods for estimating heterogeneous causal effects stat. 2015;1050. [Google Scholar]

- [29].Susan Athey, Guido Imbens. Recursive partitioning for heterogeneous causal effects Proceedings of the National Academy of Sciences. 2016;113:7353–7360. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [30].Alejandro Schuler, Ken Jung, Robert Tibshirani, Trevor Hastie, Nigam Shah. Synth-Validation: Selecting the Best Causal Inference Method for a Given Dataset arXiv preprint arXiv:1711.00083. 2017. [Google Scholar]

- [31].Cook Thomas D, Shadish William R, Wong Vivian C. Three conditions under which experiments and observational studies produce comparable causal estimates: New findings from within-study comparisons Journal of Policy Analysis and Management: The Journal of the Association for Public Policy Analysis and Management. 2008;27:724–750. [Google Scholar]

- [32].Roxbee Cox David, Victor Hinkley David. Theoretical statistics. London: Chapman & Hall. 1974. [Google Scholar]

- [33].Oswald Veblen, Philip Franklin. On matrices whose elements are integers Annals of Mathematics. 1921:1–15. [Google Scholar]

- [34].Alexander Schrijver. Theory of linear and integer programming. John Wiley & Sons; 1998. [Google Scholar]

- [35].Chen YL. The minimal average cost flow problem European journal of operational research. 1995;81:561–570. [Google Scholar]

- [36].LaLonde Robert J Evaluating the econometric evaluations of training programs with experimental data The American economic review. 1986:604–620. [Google Scholar]

- [37].Dehejia Rajeev H, Wahba Sadek. Causal effects in nonexperimental studies: Reevaluating the evaluation of training programs Journal of the American statistical Association. 1999;94:1053–1062. [Google Scholar]

- [38].Alan Argesti. An introduction to categorical data analysis University of Florida. 1996. [Google Scholar]