Abstract

Open and reproducible research practices increase the reusability and impact of scientific research. The reproducibility of research results is influenced by many factors, most of which can be addressed by improved education and training. Here we describe how workshops developed by the Reproducibility for Everyone (R4E) initiative can be customized to provide researchers at all career stages and across most disciplines with education and training in reproducible research practices. The R4E initiative, which is led by volunteers, has reached more than 3000 researchers worldwide to date, and all workshop materials, including accompanying resources, are available under a CC-BY 4.0 license at https://www.repro4everyone.org/.

Research organism: None

Why is training in reproducibility needed?

Reproducibility and replicability are central to science. Reproducibility is the ability to regenerate a result using the dataset and data analysis workflow that was used in the original study, while replicability is the ability to obtain similar results in a different experimental system (Leek and Peng, 2015; Schloss, 2018). Despite their importance, studies have shown that it can be quite challenging to reproduce and replicate peer-reviewed results (Baker and Penny, 2016; Freedman et al., 2015). In the past few years, several multi-center projects have assessed the level of reproducibility and replicability in various scientific fields, and have identified major factors that are critical for repeating and confirming scientific results (Alsheikh-Ali et al., 2011; Amaral et al., 2019; Baker et al., 2014; Button et al., 2013; Cova et al., 2021; Errington et al., 2014; Friedl, 2019; Hardwicke et al., 2018; Lazic, 2010; Marqués et al., 2020; Open Science Collaboration, 2015; Shen et al., 2012; Stevens, 2017; Strasak et al., 2007; Weissgerber et al., 2019; Weissgerber et al., 2015). In the rest of this article we will use the term reproducibility as shorthand for reproducibility and replicability, as is often done in the life sciences (Barba, 2018).

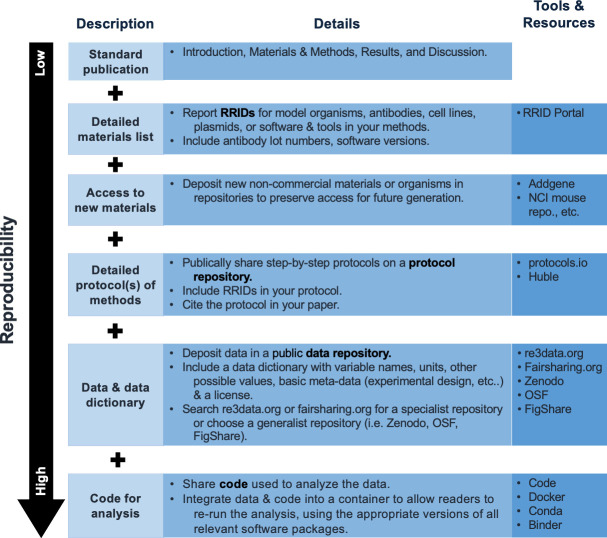

The factors that control the reproducibility of an experiment can be grouped into the four categories shown in Figure 1. The first represents technical factors, such as variability in reagents or materials used to perform research. The second category contains factors related to flaws in study design and/or statistical analysis, such as the use of inappropriate controls, sample sizes that are too small to properly power the study, and inappropriate statistical analyses, among others. The third category contains human factors, which include insufficient description of methods and the use of reagents or organisms that are not shared. In addition, scientific misconduct, such as hypothesizing after results have been obtained (HARKing; Kerr, 1998) or P-hacking (Fraser et al., 2018; Head et al., 2015; Miyakawa, 2020), is hard to detect and contributes to confirmation and publication bias. Lastly, external factors that are beyond the researchers' control can negatively impact reproducibility; these can include scientific rewards, such as a high-impact publication, or paywalls that restrict access to crucial information. Going forward, developing solutions to minimize these confounding factors will be of vital importance to improve scientific integrity and to further accelerate the advancement of the scientific enterprise (Botvinik-Nezer et al., 2020; Fomel and Claerbout, 2009; Friedl, 2019; Gentleman and Temple Lang, 2007; Mangul et al., 2019; Mesirov, 2010; NIH, 2020; Peng, 2011).

Figure 1. Factors that affect reproducibility in research.

An approximate classification of the factors that contribute to irreproducible scientific results: technical factors, human factors, errors in study design and statistical analysis, and external factors. Specific examples are listed under each category.

While the problems with experimental reproducibility have been known for decades, they have only come to the fore over the past ten years (Begley and Ellis, 2012; Munafò et al., 2017; Prinz et al., 2011). Within the scientific community, systemic solutions and tools are being developed that allow scientists to efficiently share research materials, protocols, data, and computational analysis pipelines (some of these tools are covered in our training materials, see Box 1). Despite their transformative potential, these tools are underutilized, as most researchers are unaware of their existence, or do not know how to incorporate them in their daily workflows.

Box 1.

Unit topics.

The units included in the standard introductory workshop cover a range of skills and tools needed to conduct reproducible research. Below are examples of content that has been used in previous workshops. The specific content of each workshop can vary and is adjusted to the audience and event.

1. The reproducibility framework: Reproducible research practices allow others to repeat analyses and corroborate results from a previous study. This is only possible when authors have provided all necessary data, methods and computer code (Figure 2). Our reproducibility toolbox includes reproducible practices for organization, documentation, analysis, and dissemination of scientific research.

2. Organization, data management and file naming: An effective data management plan, including clear file naming conventions, prevents problems such as lost data, difficulties identifying the most recent version of a file, the inability to locate files after team members leave the laboratory, or difficulties in finding or interpreting files years after the project is completed. This section describes techniques to ensure that all project files are easy to identify and locate and that they are appropriately documented.

3. Electronic lab notebooks: Electronic lab notebooks (ELNs) overcome many of the limitations of paper lab notebooks – they are searchable, cannot be damaged or misplaced, and are easy to back up and share with collaborators. This section discusses available electronic lab notebooks and strategies for selecting the electronic lab notebook that meets the needs of an individual research team.

4. Preregistrations and protocol sharing: Scientific publications often lack essential details needed to reproduce the methods described. Preregistrations of planned research include details of the methods and tools that will be used in the project and provide transparency of the intended analyses and outcome. Protocol repositories allow researchers to share detailed, step-by-step protocols, which can be cited in scientific papers. Repositories also make it easy to track changes in protocols over time by incorporating version control, allowing researchers to post updated versions of protocols from their own lab, or adapted versions of protocols that were originally shared by other research groups. This section describes strategies for creating effective ‘living protocols’ that other research teams can easily locate, follow, cite and adapt.

5. Biological material and reagent sharing: Laboratories regularly produce specialized materials and organisms, such as reagents, plasmids, seeds and organism strains. Access to these materials is essential to reproduce and expand upon published work. Repositories maintain reagents and biological materials deposited by scientists, and also make these materials accessible to the scientific community for a small or symbolic donation. Nonetheless, many laboratories do not use repository resources to share their materials, and thus limit their outreach and impact. This section introduces the concept of material repositories, which allow investigators access to materials without investing time and resources to recreate, maintain, verify and distribute their own or another researcher’s reagents.

6. Data visualization and figure design: Figures show the key findings of a study and should allow readers to critically evaluate the data and structures behind them. As an example, scientists routinely use the default plots of spreadsheet software, such as bar graphs, for presenting continuous data (Weissgerber et al., 2015). This is a problem, as many different data distributions can lead to the same bar or line graph, and the actual data may suggest different conclusions from the summary statistics alone. This section illustrates strategies for replacing bar graphs with more informative alternatives (e.g. dot, box, or violin plots), provides guidance on choosing the visualization best suited for various data structures and images, and provides a brief overview of tools for creating more effective, appealing and informative graphics and figures.

7. Bioinformatic tools: The sample size and number of data points (in multidimensional data) in research studies have greatly increased in the last decade. Bioinformatic tools for analyzing large data sets are essential in many fields. Unfortunately, analyses performed using these tools can only be reproduced or adapted to other study designs if authors share their code, software version and software settings. This section examines techniques and tools for reproducible data analysis, including notebooks, version control, managers for packages, dependencies and the programming environment, and containers.

8. Data sharing: Depositing data in public repositories allows other scientists to review published results and reuse the data for additional analyses or studies. All data should adhere to the principle of FAIR data: be findable, accessible, interoperable and reusable (https://fairsharing.org/). This section describes the types of information that should be shared to allow the community to interpret and use archived data. We also discuss best practices, including criteria for selecting a repository and the importance of specifying a license for data and code reuse. There are instances where data cannot be shared, for example when there are privacy concerns with genetic data from living people.
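As a small illustration of the point made in unit 6, that different data distributions can produce the same bar graph, the following Python sketch compares three samples that share a mean. The sample values and the names `samples` and `summarize` are made up for this example and are not taken from the workshop materials.

```python
from statistics import mean, median

# Three made-up samples (illustrative values only, not data from any study).
samples = {
    "tight": [4, 5, 5, 5, 5, 6],
    "bimodal": [1, 1, 1, 9, 9, 9],
    "outlier": [4, 4, 4, 4, 4, 10],
}


def summarize(values):
    """Return the mean (what a bar shows) next to statistics the bar hides."""
    return {
        "mean": mean(values),      # the height of the bar in a bar graph
        "median": median(values),  # robust center of the data
        "min": min(values),        # lower end of the observed range
        "max": max(values),        # upper end of the observed range
    }


summaries = {name: summarize(values) for name, values in samples.items()}

for name, stats in summaries.items():
    print(name, stats)
```

All three samples would be drawn as bars of the same height, yet their ranges differ considerably. A dot, box, or violin plot of the raw values would make these differences visible.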

Integrating these tools into the standard scientific workflow has the potential to shift the scientific community towards a more transparent and reproducible future. Educational initiatives with open-source materials can significantly increase the reach of teaching materials (Lawrence et al., 2015) to accelerate the uptake of best practices and existing tools for reproducible research. Several initiatives exist that offer tutorials or seminars on some aspects of reproducibility (Box 2). While they each have their strengths, none of them individually offers a scalable solution to the existing training gap in reproducibility. Here, we present Reproducibility for Everyone, a set of workshop materials and modules that can be used to train researchers in reproducible research practices. Our trainings are scalable, from an intensive workshop with a dozen attendees to an introductory workshop that a few hundred participants can attend at once, either virtually or in a large venue. The reproducibility movement is growing worldwide, and because different initiatives cover different aspects of the training process, together they can help bridge the training gap in reproducibility.

Box 2.

Resources for training in reproducible research.

Carpentries workshops (https://carpentries.org/): Workshops teaching reproducible data handling and coding skills. Intended for scientists at any career stage.

Frictionless Data Fellowship (https://fellows.frictionlessdata.io): Nine-month virtual training program on frictionless data tools and approaches. The target audience is mainly early-career researchers (ECRs). Eight fellows are selected each year and a stipend is provided.

Oxford Berlin Summer School (https://www.bihealth.org/en/notices/oxford-berlin-summer-school-on-open-research-2020): Five-day summer school covering open research and reproducibility in science.

ReproducibiliTea (https://reproducibilitea.org/): Locally run journal clubs focused on open science and reproducibility. The target audience is mainly early-career researchers. Global reach with currently 114 local groups.

Research Transparency and Reproducibility Training (RT2; https://bitss.org): Three-day training providing an overview of reproducible tools and practices for the social sciences. The target audience is social scientists at any career stage.

Project TIER (Teaching Integrity in Empirical Research) (https://www.projecttier.org/): Training in empirical research transparency and replicability for social scientists, students and faculty. Offers fellowships and workshops for faculty and graduate students.

Framework for Open and Reproducible Research Training (FORRT; https://forrt.org/): Connects educators and scholars in higher education to include open and reproducible science tenets in education. Offers the e-learning platform Nexus, with several curated resources that include sufficient context for educators to use.

Reproducibility for Everyone (R4E)

R4E was formed in 2018 to address the challenges of integrating reproducible research practices in life science laboratories across the globe. Our mission is to increase awareness of the factors that affect reproducibility, and to promote best practices for reproducible and transparent scientific research. We offer open access introductory materials and workshops to teach scientists at all career stages and across disciplines about concrete steps they can take to improve the transparency and reproducibility of their research. All workshops are offered free of charge. We developed eight modules as independent, in-depth slide sets focusing on different aspects of the day-to-day scientific workflow, allowing trainers to customize the workshop and adapt it to audiences in different disciplines (Box 1). R4E targets mainly biological and medical research practices (reagent and protocol sharing, data management) and in part computer science (bioinformatic tools) as evidenced by the range of trainings offered so far. Tools we discuss could also be useful for disciplines close to biological research like bioengineering, biophysics, (bio)chemistry, etc. Some training modules, especially Data management, Data visualization and Figure design, might be valuable for qualitative research that collects and analyzes text and other non-numerical data.

All materials, including recordings of previous R4E workshops and webinars, are available at https://www.repro4everyone.org/ (RRID:SCR_018958). The goal of R4E is to provide scientists with a clear overview of existing reproducibility-promoting tools, and to give them full access to all training materials so that they can revisit them when needed and learn at their own pace. In addition, we welcome each trainee to fine-tune the material for their own field of expertise and to train their peers. For trainees who want to help run one of our workshops we offer a train-the-trainer approach: we meet with the trainee before the workshop and decide together which section of the material the trainee will present. Then we go through the material together, share speaker notes and, if needed, practice with the trainee so that the workshop stays on time.

We have developed materials for both introductory and intensive workshop formats that are described below:

Introductory workshops are organized as two-hour sessions, including a 60- to 90-min presentation and a 30-min interactive discussion of case studies, and can be held in person or virtually with a large number of participants (>100). These introductory workshops are designed for an interdisciplinary audience and do not require prior knowledge of reproducible research practices, as they cover many different topics (Box 1). These workshops are generally presented by a team of two to four instructors.

Intensive workshops provide in-depth training in the implementation of reproducible research practices for one or more topics. These workshops take at least four hours. Depending on the number of topics covered, intensive workshops may be spread over several days. R4E members typically design these sessions to provide intensive instruction within their areas of expertise. Outside experts may also be invited to teach sessions on additional topics. This type of workshop is best suited for a smaller (<50) group of participants.

Over the years, our community has grown and diversified substantially, and now consists of scientists who have taught one or many R4E workshops. To date, we have reached more than 3000 researchers through over 30 workshops, which were predominantly held at international conferences and spanned numerous life science disciplines (e.g. ecology, biotechnology, plant sciences, neuroscience and many others). In addition, we have hosted several webinars that allowed researchers from all around the world to join, including webinars for early career scientists participating in the eLife Community Ambassadors Program. Investigators and conference organizers can request to host a workshop led by our volunteers or use our materials to learn more about responsible research practices and offer their own training.

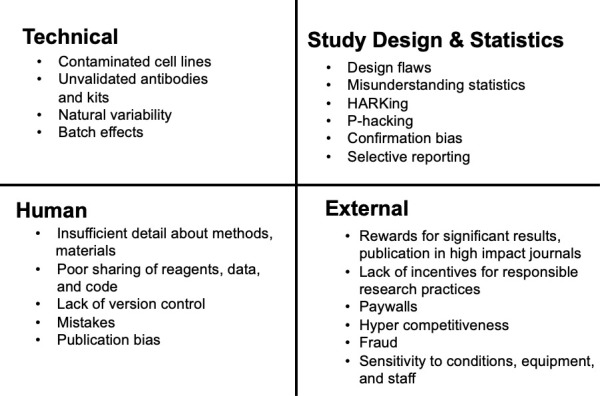

The goal of our training is to introduce participants to a reproducible scientific workflow. Individual scientists or laboratories can make their research more reproducible by implementing as many of the steps introduced in our workshops as they are comfortable with (Figure 2). Feedback on our workshops indicates that 80% of participants learned important new aspects of reproducible research practices and are very likely to implement at least some of the presented tools in their own research workflows in the future.

Figure 2. Approaches that scientists can use to increase the reproducibility of their publications.

From top to bottom, approaches that can be used on their own or in combination to increase the reproducibility of experiments, ordered from least to most reproducible. The column on the right includes details of tools and resources that can be used to help scientists take each specific approach.

It is important to point out that this will likely work best as a stepwise, iterative process, so that scientists do not feel overwhelmed by implementing too many changes at once. When writing a research paper, the largest impact on the reproducibility of your work can be made by incorporating the following changes. First, add a detailed list of materials used for the research that includes research resource identifiers (RRIDs; https://scicrunch.org/resources) and catalog numbers for all materials (kits, antibodies, seeds, cell lines, organisms, etc.) that were created or used during the study. Ideally, newly generated reagents or organisms are deposited at appropriate repositories to enable easy access for other scientists. Second, include a detailed and specific methods section, which is crucial for reproducing the research. Ideally, protocols are deposited at a repository, and the DOI of the respective protocol is incorporated in the methods section. Third, large data sets, including all metadata, should be deposited in public data repositories to generate findable, accessible, interoperable, and reusable (FAIR) data (Sansone et al., 2019). Finally, bioinformatic analysis pipelines and scripts can easily be shared via GitHub, Anaconda, or computational containers such as Singularity. At a minimum, authors should list and cite all programs used, including version numbers and parameters.
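The minimum requirement mentioned above, listing the programs used together with their version numbers, can be partly automated for analyses written in Python. The following is a minimal sketch that uses only the Python standard library; the function name `describe_environment` and the package names passed to it are hypothetical choices made for this example, not part of the R4E materials.

```python
import json
import platform
from importlib import metadata


def describe_environment(packages):
    """Collect the interpreter version, platform and package versions."""
    report = {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": {},
    }
    for name in packages:
        try:
            # Look up the installed version of the named distribution.
            report["packages"][name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Record missing packages explicitly instead of failing.
            report["packages"][name] = "not installed"
    return report


# Example package names (assumptions); replace with those your analysis uses.
print(json.dumps(describe_environment(["numpy", "pandas"]), indent=2))
```

The printed report could be saved next to the analysis scripts or pasted into the methods section. It does not replace a full environment specification or a container, but it covers the version numbers that readers need as a starting point.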

We would also like to point out that a supportive environment is critical for these efforts to be properly adopted in a research environment. Being the first one to speak up about irreproducible research practices at your lab or institute can be challenging, or in some cases even isolating. In this case, getting involved with a local ReproducibiliTea journal club or reaching out to the initiative to start a chapter of your own can help you connect with like-minded individuals. Similarly, joining the R4E community and discussing these situations with our community members can help you find solutions to convince your peers and supervisors of the importance of incorporating reproducible research practices.

How can scientists use the R4E materials?

There are several ways for researchers to take advantage of the materials presented here to teach reproducible research practices. First, researchers can request a workshop run by the Reproducibility for Everyone team for a conference via email (hello@repro4everyone.org). Alternatively, researchers can use the slides and training materials available on our website to organize their own workshops. Reproducibility can be integrated into the research curriculum by asking trainees to organize and run a poster workshop at an institutional or departmental research day. Trainees can also discuss individual topics at journal clubs or as part of a methods course, after which they can develop plans to implement the identified solutions in their own research. Upcoming workshops and other opportunities to get involved and contribute will be shared through our Twitter account (@repro4everyone) and website (https://www.repro4everyone.org/).

Conclusions

Widespread adoption of new tools and practices is urgently needed to make scientific publications more transparent and reproducible. This transition will require scalable and adaptable approaches to reproducibility education that allow scientists to efficiently learn new skills and share them with others in their lab, department and field.

R4E demonstrates how a common, public set of materials curated and maintained by a small group may form the basis for a global initiative to improve transparency and reproducibility in the life sciences. Flexible materials allow instructors to adapt both the content and workshop format to meet the needs of the audience in their discipline. Continued training on reproducibility could be promoted in the laboratory by, for instance, changing every nth journal club to an educational meeting that discusses the latest developments in the reproducibility field.

Our workshops have reached over 3000 learners on six continents and continue to expand each year, offering a unique opportunity to train the next generation of scientists. Moving forward, R4E plans to broaden its reach by translating the existing materials into different languages and bringing reproducibility training to more non-native English-speaking scientists. However, increasing training in reproducible research practices alone will not suffice to make all scientific findings reproducible. To achieve this goal, higher-level changes are needed to reduce the hypercompetitive nature of scientific research. Large structural and cultural changes are needed to transition from rewarding only breakthrough scientific findings to promoting those that were obtained using reproducible and transparent research practices.

Acknowledgements

Members of the Reproducibility for Everyone initiative would like to thank all organizers, volunteers and staff who have helped over the years with running our workshops. We would like to thank the eLife Ambassador program, Addgene, Protocols.io, the American Society of Plant Biology, the American Society of Microbiology, New England Biolabs, the Chan Zuckerberg Initiative, Dorothy Bishop, and many others for supporting the Reproducibility for Everyone initiative.

Biographies

Susann Auer is in the Department of Plant Physiology, Institute of Botany, Faculty of Biology, Technische Universität Dresden, Dresden, Germany and is an eLife ambassador

Nele A Haeltermann is in the Department of Molecular and Human Genetics, Baylor College of Medicine, Houston, United States and is an eLife ambassador

Tracey L Weissgerber is in the QUEST Center, Berlin Institute of Health, Charité Universitätsmedizin Berlin and is a member of the eLife Early-Career Advisory Group

Jeffrey C Erlich is in the NYU-ECNU Institute of Brain and Cognitive Science, NYU Shanghai and the Shanghai Key Laboratory of Brain Functional Genomics, East China Normal University, Shanghai, China and is an eLife ambassador

Damar Susilaradeya is in the Medical Technology Cluster, Indonesian Medical Education and Research Institute, Faculty of Medicine, Universitas Indonesia, Jakarta, Indonesia and is an eLife ambassador

Magdalena Julkowska is in the Boyce Thompson Institute, Ithaca, United States and is an eLife ambassador

Małgorzata Anna Gazda is in the CIBIO/InBIO, Centro de Investigação em Biodiversidade e Recursos Genéticos, Campus Agrário de Vairão and the Departamento de Biologia, Faculdade de Ciências, Universidade do Porto, Porto, Portugal and is an eLife ambassador

Benjamin Schwessinger is in the Research School of Biology, Australian National University, Canberra, Australia and is a member of the eLife Early-Career Advisory Group

Nafisa M Jadavji is in the Department of Biomedical Science, Midwestern University, Glendale, United States and in the Department of Neuroscience, Carleton University, Ottawa, Canada and is an eLife ambassador

Funding Statement

The funders had no role in study design, data collection and interpretation, or the decision to submit the work for publication.

Contributor Information

Benjamin Schwessinger, Email: benjamin.schwessinger@anu.edu.au.

Nafisa M Jadavji, Email: njadav@midwestern.edu.

Helena Pérez Valle, eLife, United Kingdom.

Peter Rodgers, eLife, United Kingdom.

Reproducibility for Everyone Team:

Angela Abitua, Anzela Niraulu, Aparna Shah, April Clyburne-Sherinb, Benoit Guiquel, Bradly Alicea, Caroline LaManna, Diep Ganguly, Eric Perkins, Helena Jambor, Ian Man Ho Li, Jennifer Tsang, Joanne Kamens, Lenny Teytelman, Mariella Paul, Michelle Cronin, Nicolas Schmelling, Peter Crisp, Rintu Kutum, Santosh Phuyal, Sarvenaz Sarabipour, Sonali Roy, Susanna M Bachle, Tuan Tran, Tyler Ford, Vicky Steeves, Vinodh Ilangovan, Ana Baburamani, and Susanna Bachle

Funding Information

This paper was supported by the following grants:

Mozilla Foundation MF-1811-05938 to Benjamin Schwessinger.

Chan Zuckerberg Initiative 223046 to Susann Auer, Nele A Haeltermann, Benjamin Schwessinger, Nafisa M Jadavji, Reproducibility for Everyone Team.

Additional information

Competing interests

No competing interests declared.

Author contributions

Formal analysis, Visualization, Methodology, Writing - original draft, Writing - review and editing.

Visualization, Writing - original draft, Project administration, Writing - review and editing.

Conceptualization, Funding acquisition, Visualization, Writing - original draft, Project administration, Writing - review and editing.

Visualization, Writing - original draft.

Visualization, Writing - original draft, Project administration.

Visualization, Writing - original draft, Writing - review and editing.

Visualization, Writing - original draft, Project administration, Writing - review and editing.

Conceptualization, Funding acquisition, Investigation, Methodology, Writing - original draft, Writing - review and editing.

Supervision, Visualization, Writing - original draft, Project administration, Writing - review and editing.

Data availability

No new data were generated in this study.

References

- Alsheikh-Ali AA, Qureshi W, Al-Mallah MH, Ioannidis JP. Public availability of published research data in high-impact journals. PLOS ONE. 2011;6:e24357. doi: 10.1371/journal.pone.0024357.

- Amaral OB, Neves K, Wasilewska-Sampaio AP, Carneiro CF. The Brazilian Reproducibility Initiative. eLife. 2019;8:e41602. doi: 10.7554/eLife.41602.

- Baker D, Lidster K, Sottomayor A, Amor S. Two years later: journals are not yet enforcing the ARRIVE guidelines on reporting standards for pre-clinical animal studies. PLOS Biology. 2014;12:e1001756. doi: 10.1371/journal.pbio.1001756.

- Baker M, Penny D. Is there a reproducibility crisis? Nature. 2016;533:452–454. doi: 10.1038/d41586-019-00067-3.

- Barba LA. Terminologies for reproducible research. arXiv. 2018 https://arxiv.org/abs/1802.03311

- Begley CG, Ellis LM. Drug development: raise standards for preclinical cancer research. Nature. 2012;483:531–533. doi: 10.1038/483531a.

- Botvinik-Nezer R, Holzmeister F, Camerer CF, Dreber A, Huber J, Johannesson M, Kirchler M, Iwanir R, Mumford JA, Adcock RA, Avesani P, Baczkowski BM, Bajracharya A, Bakst L, Ball S, Barilari M, Bault N, Beaton D, Beitner J, Benoit RG, Berkers RMWJ, Bhanji JP, Biswal BB, Bobadilla-Suarez S, Bortolini T, Bottenhorn KL, Bowring A, Braem S, Brooks HR, Brudner EG, Calderon CB, Camilleri JA, Castrellon JJ, Cecchetti L, Cieslik EC, Cole ZJ, Collignon O, Cox RW, Cunningham WA, Czoschke S, Dadi K, Davis CP, Luca AD, Delgado MR, Demetriou L, Dennison JB, Di X, Dickie EW, Dobryakova E, Donnat CL, Dukart J, Duncan NW, Durnez J, Eed A, Eickhoff SB, Erhart A, Fontanesi L, Fricke GM, Fu S, Galván A, Gau R, Genon S, Glatard T, Glerean E, Goeman JJ, Golowin SAE, González-García C, Gorgolewski KJ, Grady CL, Green MA, Guassi Moreira JF, Guest O, Hakimi S, Hamilton JP, Hancock R, Handjaras G, Harry BB, Hawco C, Herholz P, Herman G, Heunis S, Hoffstaedter F, Hogeveen J, Holmes S, Hu C-P, Huettel SA, Hughes ME, Iacovella V, Iordan AD, Isager PM, Isik AI, Jahn A, Johnson MR, Johnstone T, Joseph MJE, Juliano AC, Kable JW, Kassinopoulos M, Koba C, Kong X-Z, Koscik TR, Kucukboyaci NE, Kuhl BA, Kupek S, Laird AR, Lamm C, Langner R, Lauharatanahirun N, Lee H, Lee S, Leemans A, Leo A, Lesage E, Li F, Li MYC, Lim PC, Lintz EN, Liphardt SW, Losecaat Vermeer AB, Love BC, Mack ML, Malpica N, Marins T, Maumet C, McDonald K, McGuire JT, Melero H, Méndez Leal AS, Meyer B, Meyer KN, Mihai G, Mitsis GD, Moll J, Nielson DM, Nilsonne G, Notter MP, Olivetti E, Onicas AI, Papale P, Patil KR, Peelle JE, Pérez A, Pischedda D, Poline J-B, Prystauka Y, Ray S, Reuter-Lorenz PA, Reynolds RC, Ricciardi E, Rieck JR, Rodriguez-Thompson AM, Romyn A, Salo T, Samanez-Larkin GR, Sanz-Morales E, Schlichting ML, Schultz DH, Shen Q, Sheridan MA, Silvers JA, Skagerlund K, Smith A, Smith DV, Sokol-Hessner P, Steinkamp SR, Tashjian SM, Thirion B, Thorp JN, Tinghög G, Tisdall L, Tompson SH, Toro-Serey C, Torre Tresols JJ, 
Tozzi L, Truong V, Turella L, van ‘t Veer AE, Verguts T, Vettel JM, Vijayarajah S, Vo K, Wall MB, Weeda WD, Weis S, White DJ, Wisniewski D, Xifra-Porxas A, Yearling EA, Yoon S, Yuan R, Yuen KSL, Zhang L, Zhang X, Zosky JE, Nichols TE, Poldrack RA, Schonberg T. Variability in the analysis of a single neuroimaging dataset by many teams. Nature. 2020;582:84–88. doi: 10.1038/s41586-020-2314-9.

- Button KS, Ioannidis JP, Mokrysz C, Nosek BA, Flint J, Robinson ES, Munafò MR. Power failure: why small sample size undermines the reliability of neuroscience. Nature Reviews Neuroscience. 2013;14:365–376. doi: 10.1038/nrn3475. [DOI] [PubMed] [Google Scholar]

- Cova F, Strickland B, Abatista A, Allard A, Andow J, Attie M, Beebe J, Berniūnas R, Boudesseul J, Colombo M, Cushman F, Diaz R, N’Djaye Nikolai van Dongen N, Dranseika V, Earp BD, Torres AG, Hannikainen I, Hernández-Conde JV, Hu W, Jaquet F, Khalifa K, Kim H, Kneer M, Knobe J, Kurthy M, Lantian A, Liao S-yi, Machery E, Moerenhout T, Mott C, Phelan M, Phillips J, Rambharose N, Reuter K, Romero F, Sousa P, Sprenger J, Thalabard E, Tobia K, Viciana H, Wilkenfeld D, Zhou X. Estimating the reproducibility of experimental philosophy. Review of Philosophy and Psychology. 2021;12:9–44. doi: 10.1007/s13164-018-0400-9. [DOI] [Google Scholar]

- Errington TM, Iorns E, Gunn W, Tan FE, Lomax J, Nosek BA. An open investigation of the reproducibility of cancer biology research. eLife. 2014;3:e04333. doi: 10.7554/eLife.04333. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fomel S, Claerbout JF. Guest editors' Introduction: reproducible research. Computing in Science & Engineering. 2009;11:5–7. doi: 10.1109/MCSE.2009.14.

- Fraser H, Parker T, Nakagawa S, Barnett A, Fidler F. Questionable research practices in ecology and evolution. PLOS ONE. 2018;13:e0200303. doi: 10.1371/journal.pone.0200303.

- Freedman LP, Cockburn IM, Simcoe TS. The economics of reproducibility in preclinical research. PLOS Biology. 2015;13:e1002165. doi: 10.1371/journal.pbio.1002165.

- Friedl P. Reproducibility in cancer biology: rethinking research into metastasis. eLife. 2019;8:e53511. doi: 10.7554/eLife.53511.

- Gentleman R, Temple Lang D. Statistical analyses and reproducible research. Journal of Computational and Graphical Statistics. 2007;16:1–23. doi: 10.1198/106186007X178663.

- Hardwicke TE, Mathur MB, MacDonald K, Nilsonne G, Banks GC, Kidwell MC, Hofelich Mohr A, Clayton E, Yoon EJ, Henry Tessler M, Lenne RL, Altman S, Long B, Frank MC. Data availability, reusability, and analytic reproducibility: evaluating the impact of a mandatory open data policy at the journal Cognition. Royal Society Open Science. 2018;5:180448. doi: 10.1098/rsos.180448.

- Head ML, Holman L, Lanfear R, Kahn AT, Jennions MD. The extent and consequences of p-hacking in science. PLOS Biology. 2015;13:e1002106. doi: 10.1371/journal.pbio.1002106.

- Kerr NL. HARKing: hypothesizing after the results are known. Personality and Social Psychology Review. 1998;2:196–217. doi: 10.1207/s15327957pspr0203_4.

- Lawrence KA, Zentner M, Wilkins‐Diehr N, Wernert JA, Pierce M, Marru S, Michael S. Science gateways today and tomorrow: positive perspectives of nearly 5000 members of the research community. Concurrency and Computation: Practice and Experience. 2015;27:4252–4268. doi: 10.1002/cpe.3526.

- Lazic SE. The problem of pseudoreplication in neuroscientific studies: is it affecting your analysis? BMC Neuroscience. 2010;11:5. doi: 10.1186/1471-2202-11-5.

- Leek JT, Peng RD. Opinion: reproducible research can still be wrong: adopting a prevention approach. PNAS. 2015;112:1645–1646. doi: 10.1073/pnas.1421412111.

- Mangul S, Mosqueiro T, Abdill RJ, Duong D, Mitchell K, Sarwal V, Hill B, Brito J, Littman RJ, Statz B, Lam AK, Dayama G, Grieneisen L, Martin LS, Flint J, Eskin E, Blekhman R. Challenges and recommendations to improve the installability and archival stability of omics computational tools. PLOS Biology. 2019;17:e3000333. doi: 10.1371/journal.pbio.3000333.

- Marqués G, Pengo T, Sanders MA. Imaging methods are vastly underreported in biomedical research. eLife. 2020;9:e55133. doi: 10.7554/eLife.55133.

- Mesirov JP. Accessible reproducible research. Science. 2010;327:415–416. doi: 10.1126/science.1179653.

- Miyakawa T. No raw data, no science: another possible source of the reproducibility crisis. Molecular Brain. 2020;13:24. doi: 10.1186/s13041-020-0552-2.

- Munafò MR, Nosek BA, Bishop DVM, Button KS, Chambers CD, du Sert NP, Simonsohn U, Wagenmakers EJ, Ware JJ, Ioannidis JPA. A manifesto for reproducible science. Nature Human Behaviour. 2017;1:0021. doi: 10.1038/s41562-016-0021.

- NIH. Rigor and reproducibility. 2020. [May 28, 2021]. https://www.nih.gov/research-training/rigor-reproducibility

- Open Science Collaboration. Estimating the reproducibility of psychological science. Science. 2015;349:aac4716. doi: 10.1126/science.aac4716.

- Peng RD. Reproducible research in computational science. Science. 2011;334:1226–1227. doi: 10.1126/science.1213847.

- Prinz F, Schlange T, Asadullah K. Believe it or not: how much can we rely on published data on potential drug targets? Nature Reviews Drug Discovery. 2011;10:712. doi: 10.1038/nrd3439-c1.

- Sansone SA, McQuilton P, Rocca-Serra P, Gonzalez-Beltran A, Izzo M, Lister AL, Thurston M, FAIRsharing Community. FAIRsharing as a community approach to standards, repositories and policies. Nature Biotechnology. 2019;37:358–367. doi: 10.1038/s41587-019-0080-8.

- Schloss PD. Identifying and overcoming threats to reproducibility, replicability, robustness, and generalizability in microbiome research. mBio. 2018;9:e00525-18. doi: 10.1128/mBio.00525-18.

- Shen K, Qi Y, Song N, Tian C, Rice SD, Gabrin MJ, Brower SL, Symmans WF, O'Shaughnessy JA, Holmes FA, Asmar L, Pusztai L. Cell line derived multi-gene predictor of pathologic response to neoadjuvant chemotherapy in breast cancer: a validation study on US oncology 02-103 clinical trial. BMC Medical Genomics. 2012;5:51. doi: 10.1186/1755-8794-5-51.

- Stevens JR. Replicability and reproducibility in comparative psychology. Frontiers in Psychology. 2017;8:862. doi: 10.3389/fpsyg.2017.00862.

- Strasak AM, Zaman Q, Marinell G, Pfeiffer KP, Ulmer H. The use of statistics in medical research: a comparison of the New England Journal of Medicine and Nature Medicine. The American Statistician. 2007;61:47–55. doi: 10.1198/000313007X170242.

- Weissgerber TL, Milic NM, Winham SJ, Garovic VD. Beyond bar and line graphs: time for a new data presentation paradigm. PLOS Biology. 2015;13:e1002128. doi: 10.1371/journal.pbio.1002128.

- Weissgerber TL, Winham SJ, Heinzen EP, Milin-Lazovic JS, Garcia-Valencia O, Bukumiric Z, Savic MD, Garovic VD, Milic NM. Reveal, don't conceal: transforming data visualization to improve transparency. Circulation. 2019;140:1506–1518. doi: 10.1161/CIRCULATIONAHA.118.037777.