Abstract

Children’s gaze behavior reflects emergent linguistic knowledge and real-time language processing of speech, but little is known about naturalistic gaze behaviors while watching signed narratives. Measuring gaze patterns in signing children could uncover how they master perceptual gaze control during a time of active language learning. Gaze patterns were recorded using a Tobii X120 eye-tracker, in 31 non-signing and 30 signing hearing infants (5-14 months) and children (2-8 years) as they watched signed narratives on video. Intelligibility of the signed narratives was manipulated by presenting them naturally and in video-reversed (“low intelligibility”) conditions. This video manipulation was used because it distorts semantic content, while preserving most surface phonological features. We examined where participants looked, using linear mixed models with Language Group (non-signing vs. signing) and Video Condition (Forward vs. Reversed), controlling for trial order. Non-signing infants and children showed a preference to look at the face as well as areas below the face, possibly because their gaze was drawn to the moving articulators in signing space. Native signing infants and children demonstrated resilient, face-focused gaze behavior. Moreover, their gaze behavior was unchanged for video-reversed signed narratives, similar to what was seen for adult native signers, possibly because they already have efficient highly focused gaze behavior. The present study demonstrates that human perceptual gaze control is sensitive to visual language experience over the first year of life and emerges early, by 6 months of age. Results have implications for the critical importance of early visual language exposure for deaf infants.

Keywords: Sign language, early language acquisition, narratives, gaze, eye tracking, children, infants


Successful language acquisition requires coordination of visual-perceptual mechanisms via control of gaze behavior during real-time language processing (Lieberman, Hatrak & Mayberry, 2011). Gaze, defined as where, when, and how long one looks, is one of the ways infants and children can gather incoming linguistic input (Hessels, 2020). Their gaze behavior reflects emergent linguistic knowledge and real-time language processing of speech (e.g., Fernald, Zangl, Portillo et al., 2008), but less is known about gaze behaviors of infants and children who are acquiring signed language. Young signers must learn where to look while watching language, a task that is unique to sign language acquisition (Harris & Mohay, 1997; Lieberman, Borovsky & Mayberry, 2018; Mayberry & Squires, 2006). Sign language watching represents a unique perceptual challenge because not all articulators (the signer’s eyes, mouth, eyebrows, two independently moving hands, body posture and body contact points) can be resolved simultaneously with foveal vision. As such, when the viewer foveates on the signer’s face, the manual articulators typically fall in peripheral vision and will be seen with low spatial resolution.1 In order to perceive peripheral objects (especially small objects like the fingers) in greater detail, saccades can be made to them. This competition for overt gaze poses an interesting question about where infants and children choose to distribute gaze across multiple articulators. In the present study, we asked two questions. First, what are the gaze patterns of young native signers during sign perception across the course of development? Second, how are the gaze patterns impacted by intelligibility of signed narratives? Studying perceptual gaze control in this unique population may allow us to uncover novel insights into the biological, linguistic, and cultural factors that underlie early language acquisition.

Deaf and hearing infants with deaf signing parents start acquiring signed language early in life. Regardless of language modality – signed or spoken – young language learners have similar challenges in identifying and segmenting the language signal into phonological and lexical units that they later use for vocabulary acquisition, followed by acquiring production of syntactic structures, sentences, and discourse (Mayberry, 2002; Pichler, Lillo-Martin, de Quadros et al., 2018). Observational studies and parental reports have largely confirmed that language acquisition milestones and trajectories are similar among infants acquiring signed or spoken languages (Corina & Singleton, 2009; Harris, Clibbens, Chasin et al., 1989; Lieberman, Hatrak & Mayberry, 2014; Lillo-Martin, 1999; Mayberry & Squires, 2006; Newport & Meier, 1985; Spencer, 2000). For example, signing and speaking children both go through the productive stages of babbling, first-word, and two-word phrases at around the same ages (e.g., Newport & Meier, 1985). However, given that signed language places different demands on visual attention and gaze control compared to spoken language, it stands to reason that the development of perceptual gaze control may differ among infants and children depending on modality (visual vs. auditory) of their primary language input.

Recent studies have suggested this may be the case. Infants of deaf signing parents spend more time looking at their caregivers than do infants of hearing caregivers, regardless of whether the infants themselves are deaf or hearing (Harris & Mohay, 1997; Koester, 1995; Spencer, 2000). Recently, deaf infants of deaf signing parents were found to have accelerated maturation of gaze-following behavior. This is based on findings that deaf infants between 7 and 14 months of age are more likely to look in the direction of an adult’s gaze, compared to same-age non-signing controls, suggesting that deaf infants precociously understand the visual communicative value of facial and bodily signals of others, acquired from their deaf parents (Brooks, Singleton & Meltzoff, 2020; Harris et al., 1989). Also, during parent–child book reading interactions, toddlers with deaf parents are able to rapidly and purposefully shift gaze (Lieberman et al., 2014). Further in development, observational studies show that deaf native-signing children develop distinctive, consistent gaze-following during social engagement with adults and can demonstrate effective turn-taking behaviors during ASL conversations (Crume & Singleton, 2008; Lieberman et al., 2014).

These studies show accelerated development of enhanced gaze control during social engagement in deaf and hearing children whose first native language is sign language. Recently, gaze recorded with an eye tracker was used to measure the efficiency and speed of word recognition in young signers. With the visual world paradigm, participants watched signed stimuli on video that labels one of multiple objects, while gaze shifts to pictures of the objects are recorded (Lieberman et al., 2018; MacDonald, LaMarr, Corina et al., 2018; MacDonald, Marchman, Fernald et al., 2020). Native signing children make early, anticipatory gazes to the labelled objects just like hearing children do when they hear spoken English labels (Lieberman, Borovsky, Hatrak et al., 2015). MacDonald and colleagues (2018; 2020) found children who knew more signs were faster to look at the identified target object, showing strong relations amongst gaze direction, word recognition, and parent-reported productive vocabulary size. In these visual world paradigm studies, child signers reveal an ability to control perceptual gaze amongst competing linguistic and non-linguistic information, rapidly shifting gaze to what is most informative.

Together, these early changes to social and linguistic gaze control are believed to reflect perceptual strategies that arise because of the rich linguistic and social input provided by deaf signing parents. Deaf parents use effective social engagement strategies with their infants from an early age. Deaf parents are also particularly attuned to relating linguistic input to what is in the child’s field of vision and are able to direct the child’s focus of attention to objects that are the topic of conversation (Corina & Singleton, 2009; Harris et al., 1989; Loots, Devise & Jacquet, 2005). This early exposure to signed language may provide infants with ample opportunity to optimize their attention across multiple, competing visual targets, leading to an enhanced control of gaze behavior during social interactions and language processing. However, no study has used eye-tracking to study perceptual gaze control during sign watching. It is unknown where on the signer’s body young signers primarily look when they are watching signed narratives, or how this may change over development. Do they maintain a focus on the eyes and face, or do they select instead to focus on hands as the primary articulators in signed language?

Recent studies with adult signers reveal that gaze patterns, recorded via eye-tracking technology, do differ depending on the observer’s signed language experience (Agrafiotis et al., 2003; Bosworth, Stone & Hwang, 2020; Emmorey, Thompson & Colvin, 2009). Recently, we measured “sign-watching” behavior among a range of native to novice adult signers who learned American Sign Language (ASL) at different ages from birth to adulthood (Bosworth et al., 2020). We showed that while watching signed narratives on video, native signers maintain focus on the face, primarily on the eyes and mouth regions. In contrast, novice signers had more dispersed gaze patterns, with gaze shifted to lower regions near or on the torso where the hands often are located during signing. In the same study, we also explored how gaze behavior would change under low-intelligibility conditions; here, intelligibility was corrupted by playing the stimuli in reverse. Not surprisingly, accuracy measures of story comprehension decreased and this decrease was modest for native signers and quite drastic for signers who learned ASL later in life. Importantly, we found that the native signers’ highly face-focused gaze patterns did not change under the low-intelligibility condition, while novice signers’ gaze became more distributed downward while they struggled to comprehend the story. We hypothesized that our findings provided evidence of an “expert” gaze behavior optimized for both high- and low-intelligibility conditions and acquired only after sufficient language exposure or proficiency. Native signing adults demonstrate highly focused gaze behavior, relying on their peripheral vision to perceive the moving hands even when challenged with less intelligible input, while novice signers are more likely to shift foveal vision closer to the hands, especially when they don’t understand the input.

When and how during development this perceptual gaze control skill emerges remains unclear. On one hand, maturation of perceptual gaze control may arise from general neurocognitive maturation or low-level sensory mechanisms, and as a result, be impervious to variations in the degree to which adult caregivers attempt to structure and direct infants’ attention episodes. On the other hand, findings from the observational and experimental studies with hearing and deaf young signers summarized above put forth a hypothesis that unique sensory and linguistic experiences can change infant gaze behavior (Brooks & Meltzoff, 2008; Brooks et al., 2020; Lieberman et al., 2014; MacDonald et al., 2018; 2020; Spencer, 2000). This hypothesis is also motivated by reports that early sign exposure can lead to low-level visual and attentional advantages seen later in life (reviewed in Dye, Hauser & Bavelier, 2009). To address this hypothesis that perceptual gaze control is affected by early sign language experience, the present study compares gaze behavior in sign-exposed infants and children with similarly-aged participants who were primarily exposed to spoken English at home. The sign-exposed group were all hearing children raised by one or two deaf caregivers and exposed to ASL as the primary language starting from birth.2 Therefore, the signing and non-signing populations are similar in that they have full native language exposure and are hearing, but differ in the modality of their primary language input and in the gaze behavior modelled by their parents.

We further contrasted gaze behavior in signing and non-signing cohorts in two age groups, infants aged 5-14 months, and children, aged 2-8 years. These age cohorts were chosen to reveal when in development we might see the emergence of gaze behavior differences: during the earliest stages of language acquisition, or when children were farther along in social language development and experience. On one hand, because of full exposure to visual signed language and different sociolinguistic strategies used by deaf signing parents (described above), it is possible that young infants may already show evidence of emerging “expert” gaze behavior optimized for perceiving signed language. This would be possible only if perceptual gaze control is malleable and influenced by early language experience. There is ample evidence suggesting this could be true, where specific cultural and spoken language experiences impact where infants look on the face of a speaker (reviewed by Hessels, 2020), although this remains unknown for sign watching. If this is the case, then we would expect to see differences between signing and non-signing cohorts already evident in the younger age group.

Another possibility is that enhanced gaze control might require more time to develop and/or its emergence may be contingent on achieving a particular set of language acquisition milestones or level of sign language input. Many linguistic behaviors are acquired following a gradual process; for example, the use of classifiers, perspective shifts, pronouns, negation, verb agreement, and conditionals are mastered between the ages of 2.5 and 5 years and not before (Mayberry & Squires, 2006). By the age of 5 years, signing children can comprehend non-manual linguistic facial expressions but are inconsistent in their production of these structures, and the timing and scope of non-manual markers are not fully mastered until the age of 8 years (Mayberry & Squires, 2006; Reilly, McIntire & Bellugi, 1990). In this case, young sign-exposed children’s gaze behavior may be more similar to that of novice signers rather than native adult signers (as described above), and we would also not see any differences in gaze behavior among hearing infants who are learning a signed vs. spoken language.

The present study also addresses a hypothesis about whether deafness is a prerequisite for early perceptual adaptations. For instance, profound deafness (but not sign language experience) has been found to enhance visual attention, especially for the peripheral visual field (Bavelier, Dye & Hauser, 2006; Pavani & Bottari, 2012). It is possible that “expert” control of gaze behavior develops off this peripheral vision enhancement (or vice versa), and therefore would be observed only among deaf people. Brooks et al. (2020, p. 5) offer that the “social-linguistic ecologies” of deaf infants, necessitated by lack of access to environmental and communicative sounds, serve to entrain them to be more “visually vigilant” and attentive to important signals, such as the direction of an adult’s gaze or the movement of a hand. If this is the case, we should not see signing and non-signing infants’ gaze behavior differ, as both groups are hearing and not deaf.

To address these hypotheses in the present study, the young participants were shown signed narratives produced by a native ASL deaf signer, intended to be highly engaging and easy to understand. The narratives contained linguistic structures common to signed languages such as constructed action and dialogue, body shifts, classifiers, and facial expressions (see Appendix for the translations of the narratives used). The narratives were presented under normal and low-intelligibility conditions (i.e., reversed). Reversed speech has been used as a means to investigate early language (phonological) processing (May, Byers-Heinlein, Gervain et al., 2011; Pena et al., 2003). We applied this manipulation to signed language stimuli to make the narrative more difficult to understand by reversing phoneme and word order and thus disrupting the syntax and semantic content of narratives (Bosworth et al., 2020; Hwang, 2011; Wilbur & Petersen, 1997). This means that both the normal (forward) and low-intelligibility (reversed) conditions contain the exact same perceptual features (such as the handshapes and location), while the timing and order of those features is different. This also provided an opportunity to examine whether signing children have different gaze patterns for familiar versus unfamiliar signed stimuli, while both stimuli conditions would be equally unfamiliar to non-signing children.

Gaze data in the present study were analyzed to learn where infants and children look while sign watching. The four groups – signing and non-signing infants and children – might differ in their distribution of attention across the signer’s body, and particularly in where they elect to focus (e.g., the face or the moving hands). The face is important for conveying emotional and linguistic information in sign languages (Baker-Shenk & Cokely, 1991; Fenlon, Cormier & Brentari, 2017; Liddell, 1980). On the other hand, infants may also be especially attracted to perceptually salient attributes of the moving hands. The moving articulators are familiar to young signers but unfamiliar (and possibly novel) to non-signing infants and children. Therefore, the face, particularly the eyes and the mouth, and the hands compete for overt attention. If early sign language exposure influences the development of perceptual gaze control, we predict that we would see emerging differences between signing and non-signing infants where signing infants show increased focus on the face, given that this appears to be the most efficient way to perceive signed language in native signing adults (Bosworth et al., 2020). This “expert” gaze behavior, which is invariant to normal or low-intelligibility conditions, would also be observed, and perhaps be more refined, in signing children, but not in non-signing children. However, if sustained signed language exposure and/or expertise are required for the emergence of signing adult-like gaze control, we would not observe any differences among infants in where they look, while signing children may show immature eye gaze control.

This is the first empirical study to track the development of gaze behavior during sign watching in young signers. Eye-tracking during viewing of ASL narratives enables us to address the following questions. First, how do the gaze patterns of young native signers during sign watching change across development? Specifically, to what extent is young signers’ gaze behavior like that of mature adults? By measuring the same metric across all ages, we are able to determine whether there are age-related changes in perceptual gaze control during sign language comprehension. Second, how are young signers’ gaze patterns impacted by intelligibility of signed narratives? Specifically, is the effect of low-intelligibility in young signers the same as or different from the effect seen in adult signers? This study on the gaze patterns of young native signers during sign watching is an extension of prior research with deaf and hearing signing adults (Bosworth et al., 2020), and has important implications for how deaf children mediate gaze during language learning. Successful maturation of perceptual gaze control and visual processing is likely to promote language learning and socio-emotional and attentional self-regulation (Dye & Hauser, 2014; Dye, Hauser & Bavelier, 2008).

Methods

Participants

A total of 68 hearing participants, aged 5 months to 8 years, participated in this study. All participants were recruited from the San Diego local community via internet ads. Seven participants were not included because they failed to provide sufficient data or accurate eye tracker calibration, resulting in a sample of 61 participants for analysis. Thirty participants were exposed to sign language at home since birth, and 31 were exposed to spoken English at home.

Based on our inclusion criteria, the non-signing participants had not seen any sign language at home and had not been exposed to any baby sign instruction videos at any time; only spoken English was used at home. All signing children had at least one caregiver who reported using ASL as their primary language and communication mode on a daily basis at home. Parental sign language use was as follows: both caregivers were deaf (N = 18), sole caregiver was deaf (N = 3), one deaf and one hearing caregiver who was an ASL interpreter (N = 5), one deaf and one hearing caregiver (N = 4). Based on a questionnaire, parents reported that ASL was used at least 80% of the daytime. Prior to testing, all deaf parents completed a self-rated proficiency test, taken from Bosworth et al. (2020). Scores ranged from 0 to 5 (fluent). All deaf parents gave themselves the maximum rating of 5. Mean participant ages are shown in Table 1. There was no significant difference in mean age for non-signing vs. signing infants, t(24) = .93; p = 0.36, or for non-signing vs. signing children, t(33) = .14; p = .89. The ethnicity breakdown of non-signing and signing groups was as follows: 68% and 73% Caucasian, 13% and 17% Asian, Hispanic, or African American, and 19% and 10% undisclosed. All parents reported completing high school, and most completed college. Parents reported that all participants had no intellectual disabilities, normal hearing and vision, and none had any complications during pregnancy or delivery.

Table 1.

Demographics of the participants with age in years.

| Age Group | Infants | Infants | Children | Children |
|---|---|---|---|---|
| Language Group | Non-Signing | Signing | Non-Signing | Signing |
| Count (N = 61) | 16 | 10 | 15 | 20 |
| Female/Male | 5/11 | 7/3 | 8/7 | 10/10 |
| Age in years (Mean ± SE) | .69 ± .06 | .78 ± .07 | 4.91 ± .37 | 4.99 ± .39 |
| Range in years | .44 to 1.1 | .58 to 1.18 | 2.13 to 8.15 | 2.70 to 8.3 |

Parents of the participants provided consent in the laboratory and received a small toy and monetary compensation for the 30-minute study. The procedures were approved by the University of California, San Diego Institutional Review Board.

Apparatus

Video stimuli were presented on an HP p1230 20” monitor (1440 x 1080 pixels; 75 Hz) using Tobii Studio version 3.4.2 on a Dell Precision T5500 Workstation computer. A Tobii X120 eye tracker recorded near-infrared reflectance of both eyes. The eye tracker was positioned under the stimulus monitor, at an angle of 23.2°, aimed upward toward the infant’s face at a distance of about 55-60 cm. The x-y coordinates, averaged across both eyes for a single binocular value, corresponded to the observer’s gaze point during stimulus presentation, recorded at a sampling rate of 120 Hz. Tobii Studio Pro was used to present the stimuli, record the eye movements, and filter the gaze for analysis.

Materials

Stimuli consisted initially of four videos of an adult deaf female native signer narrating classic fairy tale stories in ASL. These same stories were used in a companion study involving deaf and hearing adults with varying ASL proficiencies (Bosworth et al., 2020). The stories were produced with natural prosody and intonation. The model was asked to narrate, without a script, the following stories, as if she was addressing young children: Goldilocks and the Three Bears, Cinderella, King Midas and the Golden Touch, and Red Riding Hood (transcripts available in Appendix). No attempt was made to restrain her word or grammar choice. All stories started and ended with the model’s hands placed together on her lower torso (i.e., “resting” position) and in view.

The size of the videos was 1080 x 720 pixels. The video image of the signer’s body spanned 15.33 degrees of visual angle in height. In a naturalistic setting, this stimulus size roughly corresponds to viewing a real person of average height viewed from ~2 meters away. The video background was changed to white because a bright display yielded the best condition for capturing eye gaze data by maximizing the corneal reflection.
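The relationship between on-screen size, viewing distance, and visual angle can be checked with a short calculation. The sketch below is illustrative only: the function names are hypothetical, and the 57.5 cm viewing distance is an assumed midpoint of the 55-60 cm range reported for this setup.

```python
import math


def size_from_visual_angle(angle_deg: float, distance: float) -> float:
    """Linear size that subtends angle_deg at the given viewing distance.

    The result is in the same unit as distance.
    """
    return 2.0 * distance * math.tan(math.radians(angle_deg) / 2.0)


def visual_angle(size: float, distance: float) -> float:
    """Visual angle in degrees subtended by an object of the given size."""
    return math.degrees(2.0 * math.atan(size / (2.0 * distance)))


# Assumed midpoint of the reported 55-60 cm viewing distance.
VIEWING_DISTANCE_CM = 57.5
SIGNER_HEIGHT_DEG = 15.33  # reported height of the signer's image

# Height of the signer's image on the screen, in cm.
on_screen_cm = size_from_visual_angle(SIGNER_HEIGHT_DEG, VIEWING_DISTANCE_CM)

# Physical extent that would subtend the same angle from 2 m, in metres.
equivalent_m = size_from_visual_angle(SIGNER_HEIGHT_DEG, 2.0)

print(f"on-screen height: {on_screen_cm:.2f} cm")
print(f"equivalent extent at 2 m: {equivalent_m:.2f} m")
```

Under these assumptions the image of the signer is roughly 15.5 cm tall on the screen, and the same visual angle at 2 m corresponds to an extent of roughly 0.54 m.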

For each story, we selected two complete sentences and produced a video clip of each. The beginning and end of each of the 8 clips had fading in/out for smoother transitions. Mean clip duration was 8.45 seconds (SD=2.2; range is 4.2 to 11.1 seconds). The durations were not identical for all clips because we based our editing of the stories on length of a complete sentence and trimming clips only when the model had finished an utterance. Mean durations are presented in Table 2.

Table 2.

Overall percent looking at video (mean and standard errors) as a function of total video exposure time, for each group and Video Direction condition.

| Age Group | Infants | Infants | Children | Children |
|---|---|---|---|---|
| Video Condition | Non-Signing | Signing | Non-Signing | Signing |
| Forward | 69.4% (3.0) | 64.8% (3.0) | 74.2% (2.8) | 77.1% (3.6) |
| Reversed | 66.1% (4.1) | 63.9% (3.6) | 70.4% (2.8) | 73.8% (2.4) |

The story clip videos were reversed in iMovie while maintaining the same number of frames and video resolution. The Video Direction condition was counterbalanced across infants, so that the infants did not see the same items in both Forward and Reversed conditions.

Procedure

In a dimly lit room, participants viewed the stimulus monitor from a distance of 55-60 cm. Infants were placed in a booster seat, on the parent’s lap. Parents wore glasses with filters to prevent the tracker from erroneously picking up the parent’s eyes and so they would not bias the child. Parents were instructed not to distract their child or point to the monitor during the main experiment.

The experiment began with a five-point eye tracker calibration routine (~10s), with small spinning circles presented in all four corners and the center of the screen (see Figure 1). If calibration was of sufficient quality, testing was initiated. Between each stimulus presentation, small puppy images were shown for at least 3 seconds. Once the child’s gaze was in the center of the puppy, the next trial started. The order of Forward and Reversed video clips alternated for each infant. Participants were alternately assigned to Group 1 or Group 2 to counterbalance the video-reversal conditions and stimuli order, and this was done keeping roughly even numbers of participants in each Group 1 and 2 for each Subject Group. No clip was seen twice; half the clips were in Forward and half were Reversed for Group 1 and this was reversed for Group 2. For analysis, the counterbalanced Groups 1 and 2 were collapsed, to minimize any order or fatigue effects.

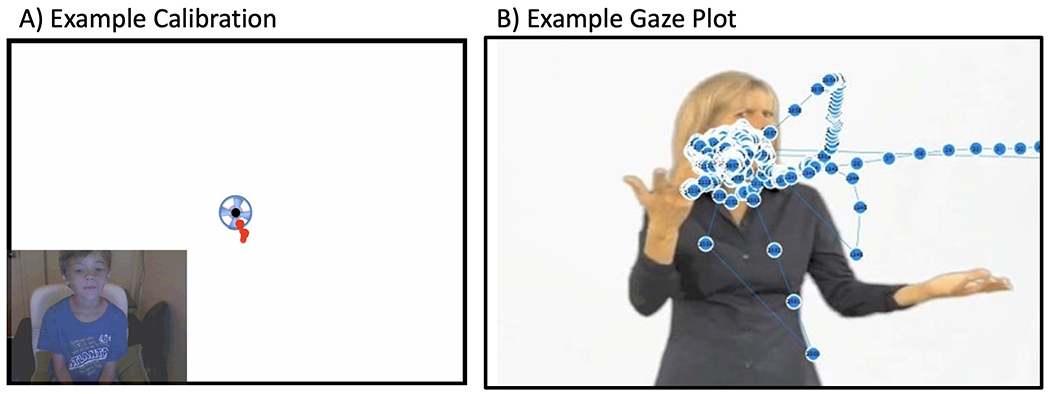

Figure 1.

A) The experiment began with a five-point eye tracker calibration routine (~10s), with small spinning circles (2.2 degrees of visual angle) presented in all four corners and the center of the screen. The duration of each calibration target was controlled by the experimenter. If a participant showed error >1.15° from the center of any target during calibration, we recalibrated until error for all targets was reduced below this value before starting the experiment. Data was included for analysis only if gaze plots showed that eye gaze had, in fact, hit each target in the three-point calibration check, as shown here. B) Example raw gaze plot from one trial.

Data Analysis

Data Processing.

For each gaze data sample, the positions of the two eyes were averaged. No spatial-temporal filter was used to define fixations, because we had no assumptions about fixation behavior of infants. Instead, all gaze points were included for analysis, because infants were allowed to move their eyes freely.3 A 3-sample noise-reduction smoothing function was applied to the raw data to smooth out effects of blinking and microsaccades.
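The two processing steps above can be sketched as follows. This is a hedged illustration, not the Tobii Studio implementation: the text does not specify the form of the 3-sample smoothing function, so a centered moving average is assumed here, and the function names are hypothetical. Samples with missing data would need separate handling.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def binocular_average(left: Point, right: Point) -> Point:
    """Average the left- and right-eye (x, y) positions into one gaze point."""
    return ((left[0] + right[0]) / 2.0, (left[1] + right[1]) / 2.0)


def smooth3(values: List[float]) -> List[float]:
    """Centered 3-sample moving average (an assumed smoothing function).

    At the first and last sample, only the two available values are averaged.
    """
    smoothed = []
    for i in range(len(values)):
        window = values[max(0, i - 1): i + 2]
        smoothed.append(sum(window) / len(window))
    return smoothed


# Hypothetical raw samples (pixel coordinates) for illustration.
left_eye = [(100.0, 200.0), (104.0, 202.0), (150.0, 260.0), (106.0, 204.0)]
right_eye = [(110.0, 210.0), (112.0, 212.0), (158.0, 268.0), (114.0, 214.0)]

gaze = [binocular_average(l, r) for l, r in zip(left_eye, right_eye)]
x_smooth = smooth3([p[0] for p in gaze])
y_smooth = smooth3([p[1] for p in gaze])
```

Smoothing each coordinate separately keeps the sketch simple; a brief spike such as the third sample above is damped rather than removed.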

All participants saw all trials, but some trials for individuals garnered few gaze points. When a participant looked at the video for less than 25% of that trial duration, that trial was eliminated. This resulted in a removal of 106 trials out of 501 total trials for all participants (21% of the data set). The first 60 samples (equal to 500 ms) were removed from each trial, as this is where participants orient to the onset of the stimulus.
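A minimal sketch of these two trial-level rules is given below. It assumes each trial is a list with one entry per 120 Hz sample, with `None` marking samples where no gaze on the video was recorded. It also assumes the 25% criterion is evaluated before the onset samples are dropped, since the text does not state the order. All names are hypothetical.

```python
from typing import List, Optional, Tuple

Sample = Optional[Tuple[float, float]]

SAMPLE_RATE_HZ = 120
ONSET_SAMPLES = 60           # first 500 ms at 120 Hz
MIN_LOOKING_FRACTION = 0.25  # trials below this are eliminated


def looking_fraction(samples: List[Sample]) -> float:
    """Fraction of the trial's samples with recorded gaze on the video."""
    if not samples:
        return 0.0
    valid = sum(1 for s in samples if s is not None)
    return valid / len(samples)


def preprocess_trial(samples: List[Sample]) -> Optional[List[Sample]]:
    """Return the trial without its onset samples, or None if it is excluded."""
    if looking_fraction(samples) < MIN_LOOKING_FRACTION:
        return None
    return samples[ONSET_SAMPLES:]


# Hypothetical 2-second trials (240 samples each).
attentive = [(500.0, 300.0)] * 100 + [None] * 140    # about 42% looking
inattentive = [(500.0, 300.0)] * 50 + [None] * 190   # about 21% looking

kept = preprocess_trial(attentive)
dropped = preprocess_trial(inattentive)
```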

We inspected all gaze points for outliers, and no extreme outliers or calibration drifts over the course of the recordings were observed. The distribution of data appeared normal.

Areas of Interest (AOIs).

Areas of interest (AOIs) were drawn using Tobii Studio (see Figure 2). We attempted to avoid making a priori specifications of where differences are expected to arise by including equal-sized AOIs across the entire video image, allowing all aspects of the video image to be given a fair chance for analysis. Total looking duration for each AOI was calculated for each participant and summed for each story. Next, we converted looking durations for each AOI to percentages of total looking time recorded for each story. For every participant, the looking percentages for all AOIs always summed to 100% for each story. This allowed for meaningful comparisons of time spent looking at AOIs regardless of differences in story length or individual variation (e.g., missing data due to individual differences in blinking, saccades, looking away, rare occlusions from moving hands across the face, or moving briefly out of eye tracker range).
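The normalization described above can be sketched in a few lines. The AOI names follow those mentioned in the text, but the durations are made up for illustration and the function name is hypothetical.

```python
from typing import Dict


def aoi_percentages(durations: Dict[str, float]) -> Dict[str, float]:
    """Convert per-AOI looking durations into percentages of total looking time.

    The percentages sum to 100 whenever any looking time was recorded.
    """
    total = sum(durations.values())
    if total == 0:
        return {aoi: 0.0 for aoi in durations}
    return {aoi: 100.0 * d / total for aoi, d in durations.items()}


# Hypothetical looking durations (seconds) for one participant and one story.
story_durations = {
    "MidFaceMiddle": 2.0,
    "MidFaceBottom": 3.0,
    "BelowChest": 1.0,
    "Belly": 0.0,
}

percentages = aoi_percentages(story_durations)
```

Because every story is rescaled by that story's own total looking time, a short clip and a long clip contribute on the same 0 to 100 scale.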

Figure 2.

Areas of Interest (AOIs) used to analyze looking time for each region. The AOI grid was dynamically re-positioned every 3 frames to align with the signer’s body position throughout the story. This ensured that each AOI consistently referred to the same part of the body. For example, the “MidFaceBottom” AOI box that encompasses the mouth always covered that part of the signer’s body.

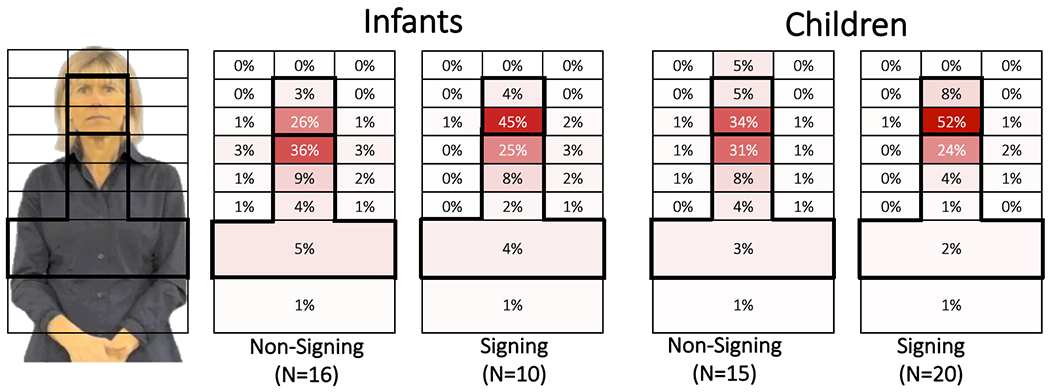

Most gaze points fell along a vertical axis of the central 6 AOIs running from the face to the lower torso (see Figure 3). For ease of discussion in the Results–AOI Analysis section, and to test hypotheses about areas on the signer that compete for overt visual attention, we grouped the mouth and eye AOIs into the “face region” and the remaining lower central AOIs into the “torso” region (see bolded lines around the face and torso region in Figure 3).

Figure 3.

Percent looking for each of the primary AOIs, for Infants (left) and Children (right), for each Non-Signing and Signing groups, with the highest concentration of gaze shown in the darkest shaded AOIs. Results were very similar for Forward and Reversed, therefore, only Forward is shown. Few gaze points (~1%) fell in the two left and right side regions, so those two AOIs are omitted. The bolded outlined AOIs were used to calculate Face Preference Index values for each subject, and were used in statistical analyses (see text).

Statistical Analysis.

We calculated a Face Preference Index (FPI) for each participant and each story by first taking the sum of looking percentages for the face region and the sum for the torso region. We did not include the MidFaceTop, lowermost Belly AOI or side AOIs because there were very few gaze points in these areas. The FPI was calculated as: (Face − Torso)/(Face + Torso), modelled on similar formulas comparing two regions of interest (Seghier, 2008; Stone, Petitto & Bosworth, 2017). A positive FPI value indicates greater looking at the face region, while a negative FPI value indicates greater looking at the torso region.
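As a worked illustration of the FPI formula, the sketch below computes the index from summed face-region and torso-region looking percentages. The function name and the example values are hypothetical. Returning `None` when both regions received no looking is an assumption of this sketch, since the formula is undefined in that case.

```python
from typing import Optional


def face_preference_index(face_pct: float, torso_pct: float) -> Optional[float]:
    """FPI = (Face - Torso) / (Face + Torso), ranging from -1 to +1.

    Returns None when neither region was looked at (index undefined).
    """
    denominator = face_pct + torso_pct
    if denominator == 0:
        return None
    return (face_pct - torso_pct) / denominator


# Hypothetical looking percentages for two participants on one story.
face_focused = face_preference_index(face_pct=60.0, torso_pct=20.0)
torso_focused = face_preference_index(face_pct=20.0, torso_pct=60.0)

print(face_focused)   # 0.5
print(torso_focused)  # -0.5
```

An FPI of +1 means all face-or-torso looking went to the face region, 0 means an even split, and −1 means all of it went to the torso region.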

Data was prepared and analyzed in R (R Core Team, 2017) using the RStudio IDE. Data preparation, cleaning, aggregation, and visualization was performed using the tidyverse, janitor, and scales R packages (Firke, 2019; Wickham, 2017) and statistical analyses using the lme4 and lmerTest R packages (Bates et al., 2015; Kuznetsova, Brockhoff & Christensen, 2017). For infants and children separately, we fitted linear mixed models to the data using Language Group, Video Direction, the interaction of Language and Video Direction, Age, and Trial Order as fixed effects, and participant and video clip as random effects (random intercepts only). P-values for LMM fixed effects were calculated using Satterthwaite’s degrees of freedom method. We present fixed effect estimates, p-values, and 95% confidence intervals when discussing significant results. Full model outputs are available in Appendix.
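Written out, the model described above has the following general form. The notation is ours, introduced for illustration: $y_{ij}$ is the outcome for participant $i$ on video clip $j$, $u_i$ and $v_j$ are the participant and clip random intercepts, and the coding of the categorical predictors is not specified in the text.

```latex
\begin{aligned}
y_{ij} ={}& \beta_0
  + \beta_1\,\mathrm{Language}_i
  + \beta_2\,\mathrm{Direction}_{ij}
  + \beta_3\,(\mathrm{Language}_i \times \mathrm{Direction}_{ij}) \\
 &+ \beta_4\,\mathrm{Age}_i
  + \beta_5\,\mathrm{TrialOrder}_{ij}
  + u_i + v_j + \varepsilon_{ij}, \\
u_i \sim{}& \mathcal{N}(0, \sigma_u^2), \qquad
v_j \sim \mathcal{N}(0, \sigma_v^2), \qquad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2).
\end{aligned}
```

In lme4 syntax this would correspond to a formula along the lines of `y ~ Language * Direction + Age + TrialOrder + (1 | participant) + (1 | clip)`, where the variable names are hypothetical.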

Results

Global Looking.

To examine the possibility that groups may differ in their “attentiveness” to the videos, we first inspected global percent looking for each Video Direction condition. These values indicate the length of time during which participants looked at the monitor (and gaze was successfully recorded), divided by total stimulus duration.4 On average, across all trials, infants and children looked at the monitor for 66.04% and 73.84% of the stimulus presentation time, respectively. Averages and standard errors of global looking are presented in Table 2.
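The global looking measure can be sketched as follows. This is an illustration under stated assumptions: looking time is approximated by the number of valid gaze samples divided by the sampling rate, and a 120 Hz rate is assumed for the Tobii X120. The function and parameter names are hypothetical.

```python
def global_percent_looking(valid_samples: int,
                           stimulus_duration_s: float,
                           sampling_rate_hz: float = 120.0) -> float:
    """Percent of the stimulus duration with gaze recorded on the monitor."""
    if stimulus_duration_s <= 0:
        raise ValueError("stimulus duration must be positive")
    # Assumption: each valid sample represents 1 / sampling_rate_hz seconds.
    looking_time_s = valid_samples / sampling_rate_hz
    return 100.0 * looking_time_s / stimulus_duration_s


# Hypothetical trial: 600 valid samples recorded during a 10-second clip.
print(global_percent_looking(600, 10.0))  # 50.0
```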

Among infants, there was a significant effect of Trial Order (p < 0.001, 95% CI: −1.5%, −0.6%) where infants’ looking time decreased 1.0% with each subsequent trial. There were no significant effects of Language Group, Video Direction, or Age, and no significant interactions (all p-values > .318). Among children, the amount of time spent looking towards the stimuli increased 3.2% with each additional year of age (p = .004, 95% CI: 1.2%, 5.4%).5 As with infants, there was also a significant effect of Trial Order (p < 0.001, 95% CI: −1.2%, −0.4%) where children’s looking time decreased 0.8% with each subsequent trial. There were no significant effects of Language Group and Video Direction, and no significant interactions (all p-values > .432).

Overall, the amount of global looking at the video stimuli was similar between non-signing and signing groups, suggesting participants did not overtly differ in level of interest, looking, blinking, cooperation or attentiveness during testing, other than fatigue effects which are expected in this population. The results also suggest that despite not understanding the signed narratives, the non-signing participants maintained high interest in watching them.

AOI Analysis.

Next, we looked at how participants allocated gaze to different regions of the image. We plotted looking percentages for each AOI using “heat grids” in Figure 3, with dark shading for high gaze concentrations and lighter or no shading for AOIs with few or no gaze points. Each grid summed to 100% looking time.6 All groups primarily looked at the six central AOIs, from eyebrows to the torso (MidFaceMiddle to BelowChest, outlined in bold in Figure 3), which accounted for between 80% and 91% of all gaze data.
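The normalization behind each heat grid can be illustrated with a short sketch. The gaze-point counts below are hypothetical; only the AOI names are taken from the text, and the function name is an assumption.

```python
from typing import Dict


def aoi_percentages(gaze_counts: Dict[str, int]) -> Dict[str, float]:
    """Convert per-AOI gaze-point counts to percentages that sum to 100."""
    total = sum(gaze_counts.values())
    if total == 0:
        raise ValueError("no gaze points recorded")
    return {aoi: 100.0 * count / total for aoi, count in gaze_counts.items()}


# Hypothetical counts; only the AOI names come from the text.
counts = {"MidFaceTop": 10, "MidFaceMiddle": 120, "BelowChest": 50, "Belly": 20}
grid = aoi_percentages(counts)
print(grid)
```

Because the percentages in a grid are constrained to sum to 100, the value for any one AOI is determined by the others, which is why the individual AOI percentages are not analyzed as independent outcomes.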

There were very few differences between Forward and Reversed looking percentages for each AOI, across all Signing and Age groups. For signing infants and children, the overall mean difference across all AOIs between Forward and Reversed was −0.024% (SD=0.7%) and −0.023% (SD=1.19%), respectively. For non-signing infants and children, the mean difference across all AOIs between Forward and Reversed was essentially zero (SD=1.3%). While these differences between AOI looking values for the two Video Directions were very small, there were more prominent differences in AOI looking values between the participant groups, which we turn to next.

Overall, signing participants looked at the facial region more than non-signing participants did. When considering the Forward condition in Figure 3, signing infants looked at the facial region 49% of the time, while non-signing infants looked at the face only 29% of the time. Likewise, signing children looked at the facial region 60% of the time, while non-signing children did so only 39% of the time. When considering eye and mouth regions separately, we noted that all participants looked primarily at the mouth. Signing infants and children looked at the mouth 45% and 52% of the time, respectively, which was more than non-signing infants and children did (26% and 34%). While eye-looking was relatively low, the signing infants and children looked at the eyes (4% and 8%) somewhat more than non-signing infants and children did (3% and 5%; see Figure 3). The effect of Language Group is statistically explored using the Face Preference Index (FPI) in the next section.

Face Preferences.

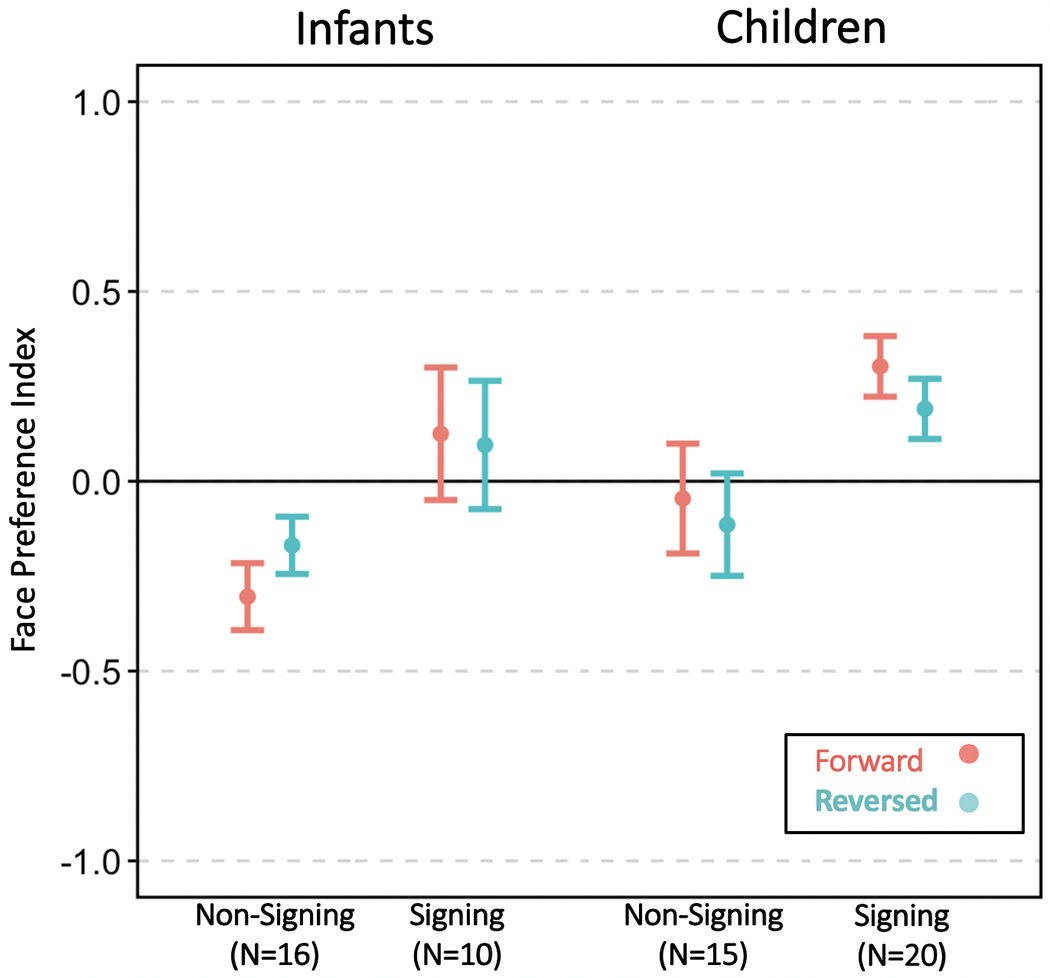

We examined how participants demonstrated different face vs. torso looking preferences by measuring their Face Preference Index (FPI). To review, FPI ranges from −1 to 1, and a positive FPI indicates greater looking time at the face than at the torso. Average FPIs for all four groups and Video Direction are shown in Figure 4.

Figure 4.

Mean Face Preference Index (FPI) values for each Language Group and Video Direction condition. FPI values at around zero mean participants look equally at the Face and Torso regions. An FPI value greater than zero indicates a face-looking bias. Signing infants and children preferred to look at the face across all conditions, which was not observed for non-signing infants or children. Error bars denote standard error of the mean.

Signing infants had a positive face preference (M = .11) while non-signing infants tended to look below the face (M = −.24). Signing infants’ mean FPI was significantly higher than non-signing infants’ mean FPI (p = .007, 95% CI: .132, .774). We also observed a significant main effect of Video Direction, where FPI for reversed videos was 0.12 higher than for forward videos (p = .026, 95% CI: 0.015, 0.232). This main effect is driven by non-signing infants per a post-hoc analysis on this group which detected a significant effect of Video Direction (p = 0.043, 95% CI: 0.004, 0.227) while no difference can be seen within signing infants in Figure 4. Finally, we also found a significant effect of Trial Order where FPI decreased by 0.015 for each subsequent trial (p = 0.003, 95% CI: −0.025, −0.005). There were no significant effects of Age or any significant interactions. Taken together, these results indicate that signing infants have stronger face preferences than non-signing infants, and this pattern held for both Forward and Reversed Video Direction conditions.
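The post-hoc pattern can also be read off the fixed-effect estimates in Table A-4. The sketch below assumes treatment (dummy) coding with Non-Signing and Forward as reference levels, as the bracketed labels in that table suggest. Under that assumption, the Video Direction coefficient is the reversal effect for non-signing infants, and adding the interaction coefficient gives the reversal effect for signing infants. The variable names are illustrative, and the arithmetic uses point estimates only, holding Age and Trial Order constant.

```python
# Fixed-effect estimates for infants' FPI, copied from Table A-4.
language_group_signing = 0.453
video_direction_reversed = 0.124
interaction = -0.129

# Assumption: treatment coding, reference levels Non-Signing and Forward.
reversal_effect_non_signing = video_direction_reversed
reversal_effect_signing = video_direction_reversed + interaction

group_gap_forward = language_group_signing
group_gap_reversed = language_group_signing + interaction

print(f"Reversal effect, non-signing infants: {reversal_effect_non_signing:+.3f}")
print(f"Reversal effect, signing infants:     {reversal_effect_signing:+.3f}")
print(f"Signing minus non-signing, Forward:   {group_gap_forward:+.3f}")
print(f"Signing minus non-signing, Reversed:  {group_gap_reversed:+.3f}")
```

Under this coding, the estimated reversal effect is about 0.12 for non-signing infants and close to zero (−0.005) for signing infants, while the estimated group difference remains positive in both conditions (0.453 and 0.324). The interaction term itself was not significant, so these differences in point estimates should be read with caution.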

Signing children had a positive face preference (M = .25) while non-signing children showed a weak preference for torso looking (M = −.08). As with infants, signing children’s mean FPI was significantly higher than non-signing children’s (p = .024, 95% CI: .05, .66). Trial Order was a significant factor where FPI decreased by 0.03 with each subsequent trial (p < 0.001, 95% CI: −0.043, −0.024), indicating fatigue effects. There were no significant effects of Video Direction, Age, or any significant interactions.

To check for age effects across the full age range tested, we ran a similar linear mixed model including all signing infants and children. While FPI did increase by 0.046 with each year of age, this relationship was not significant (p = .174, 95% CI: −.02, .11).

Discussion

Past studies have explored gaze behavior as a way to uncover emergent linguistic knowledge and real-time language processing of speech (e.g., Fernald et al., 2008), but this approach has rarely been applied to children acquiring signed language. Studying gaze behavior is well suited to reveal information-processing and learning strategies in infants and children, because it requires no overt verbal or motor response. It is also ideal as a measure that can be compared across all ages. The present study is the first to empirically track the development of gaze behavior during natural viewing of signed narratives among young signers. We addressed two questions. First, what are the gaze patterns of children exposed to sign language at home, as compared to non-signing children, over the course of early development? Second, how are their gaze patterns during visual language processing impacted by intelligibility of signed narratives? Lastly, we discuss the results in the context of sensitive vs. critical periods of development.

We first tested the hypothesis that perceptual gaze control while watching signed language is altered by early visual language experience, against an alternative hypothesis that gaze behavior is immutable or is slower to develop in response to different language experiences. We observed robust differences in gaze patterns depending on whether the participants’ home language was primarily visual-signed or auditory-spoken. Signing participants, across all ages tested, focused primarily on the face, while non-signing infants foveated on the face and on the signing space where the hands typically fall during sign production. The chief difference between the two infant groups was the modality of their home language, suggesting a change in eye gaze control as a response to their home language input. But why, given a language that is ostensibly conveyed through the hands, do infants with signed language input opt to not fixate on them while infants without this linguistic input do? Our second question addressed in this study was whether sign-exposed infants and children changed their gaze patterns for signed narratives that are highly disrupted using video reversal. We observed that these participants maintained a high focus on the face even when presented with unfamiliar and unintelligible linguistic input. In this linguistically challenging setting, why didn’t the sign-exposed participants shift focus to the hands, on which, again, the language is ostensibly conveyed?

Evidence from a companion study (Bosworth et al., 2020) suggests a possible answer to both puzzles. In that study, we recorded gaze in 52 signing adults who learned ASL at various ages from infancy to adulthood. Fifteen of the adult participants were native ASL users, who acquired ASL as their primary language from birth, and 11 were adult students learning ASL as a second language. All stimuli and methods used for adults were identical to the present study, with the exception that we also measured adults’ comprehension of these stories after viewing. We found that while reversal reduced comprehension, native adult signers maintained focus on the face for both Forward and Reversed conditions. By contrast, the novice signers showed more dispersed gaze where focus frequently shifted between the face and lower regions near or on the torso. We believe that the adult native signers demonstrated what we called “efficient sign-watching,” marked by maintenance of high face focus and video direction invariance, and likely optimized for the perception and comprehension of signed language.

In the present study, we observed in sign-exposed infants and children the very same efficient sign-watching behavior (see signers of all ages in Figure 5). The non-sign exposed groups did not demonstrate that behavior (shown in Figure 4). These findings from the adult companion study and the present study suggest that native signers, across all ages, are watching signed narratives in a manner that permits the most efficient perception and comprehension of signed language. It is likely that signers develop heightened peripheral vision to perceive the moving hands in the lower visual field, even when challenged with less intelligible input, while novice signers are more likely to shift foveal vision closer to the hands, especially when they do not understand the input. This idea that signers must “learn to look” at the sign language signal with their peripheral vision is also suggested by Dye & Thompson (2020).

Figure 5.

Signing infants’ and children’s mean FPI (from the present study) are contrasted with that of 15 adult native signers from a companion study using the same signed narratives and nearly identical methods (Bosworth et al., 2020). Note that the children are hearing, the adults are deaf, and all participants are native signers. Error bars denote standard error of the mean.

What is particularly striking is that this high “face-focused” sign-watching behavior was seen even in the youngest age tested, 5.3 months of age, and was stable across the age range tested up to 8 years, as indicated by no statistically significant relationship with age, even within the first 14 months of life. Efficient gaze control and visual attention during sign watching arises in response to very early exposure to a visual language. If early visual language experience were not a contributor, and if efficient control of eye gaze could not be learned early in life, we would not have observed differences in gaze behavior during sign watching between sign-exposed and non-sign-exposed infants.

Moreover, the native sign-exposed infants were hearing and the native signing adults in the companion study were deaf. Hence, deafness per se is not required to effect changes in visual attention during sign watching (although future studies are certainly needed with deaf infants). MacDonald et al. (2020) also found no differences between deaf and hearing native sign-exposed children’s gaze patterns when looking at objects that match sign labels, suggesting that the ability to control eye movements during word recognition is shaped by learning a visual language and not by hearing ability. Deafness and visual language experience can have independent effects on perceptual-cognitive processes. For instance, profound deafness (but not sign language experience) has been found to enhance visual attention, especially for the periphery (Bavelier et al., 2006; Bosworth & Dobkins, 2002; Pavani & Bottari, 2012). Sign language experience (for both deaf and hearing individuals) enhances performance for face discrimination tasks and shape detection for the lower visual field (Stoll & Dye, 2019; Stoll, Rodger, Lao et al., 2019). The key point is that deafness is not required to exert effects on perceptual gaze control and that being exposed to sign language (not yet producing it) is sufficient to alter perceptual gaze control at a very early age.

Another important aspect of development to consider is young signers’ sensitivity to the face, and why they are so focused on the face from such an early age. All infants are certainly attracted to faces, but why should signing infants be more so? Although not tested in the present study, we speculate that young signers have an understanding of linguistically meaningful information on the face because they are trained by their parents to engage in appropriate face-scanning behaviors (Corina & Singleton, 2009). Previous studies have shown that native-signing infants show understanding of emotional expressions by one year of age, while production of linguistic facial expressions typically appears much later (Reilly & Bellugi, 1996; Reilly, McIntire & Bellugi, 1990a; Reilly et al., 1990b). In the present study, the strong face preference was seen even in the youngest age tested, ~5.3 months of age, and was stable within the first year of life. Our results offer an intriguing possible interpretation that signing infants may understand the meaning (or at least the value) of linguistic facial expressions much earlier than reported in the literature.

We also do not believe it to be a coincidence that the development of efficient sign-watching behavior among sign-exposed infants occurs during the well-known sensitive period for acquiring knowledge about phonological patterns in language (Mayberry & Eichen, 1991; Mayberry et al., 2002; Newport, 1988; Stone et al., 2017). Studies have shown that infants in the first year of life appear to be biologically predisposed towards linguistic input over non-linguistic input, whether the input is on the hands or on the mouth (for speech: see Vouloumanos & Werker, 2007; Gervain, Berent, & Werker, 2012; for sign: Krentz & Corina, 2008; Stone et al., 2017). These studies suggest very compellingly that all infants share a potential for being sensitive to signed language input and thus acquiring signed language (Petitto, 2000). Initial and sustained exposure to a signed language via signing caregivers supports the development of efficient sign-watching behavior, which, in turn, enables more efficient perception of that signed language. The sensitive period of language acquisition also affords very rapid maturation of specific behaviors which facilitate language acquisition. Otherwise, infants, like the novice adults in our companion study, would need years of signed language experience in order to acquire mastery of eye gaze control equivalent to that of native signers.

Do these results mean that early sign input is required for efficient sign watching? They do not. The concept of “sensitive period” of development is a period when sensory experience has a relatively greater influence on behavioral and cortical development, but is not necessarily exclusive to that period. This is differentiated from a “critical period” of development, defined as a period of time that requires input, and if input is not received in that time, development does not proceed normally (which often applies to vision, Knudsen, 2004; Voss, 2013). In our companion study, adult signers, both deaf and hearing, who acquired sign language between late childhood and early teens and had been signing for about 7 years, also had highly efficient gaze patterns, despite having lower comprehension accuracy than native signers. This means there is not a critical period for perceptual gaze control development, in the sense that exposure during infancy is required for mature perceptual gaze control, but there is a sensitive period, in which infants and children are much more sensitive to visual language input and are able to acquire efficient native-like gaze patterns very early and rapidly. Note that several aspects of language acquisition certainly do follow a critical period of development, where language deficits are seen if language input is not provided within the first year of life (Mayberry et al., 2002; Mayberry & Eichen, 1991; Newport, 1988).

Our findings join the bulk of findings that show spoken language experience influences looking behaviors quite early in infancy (Birulés et al., 2019; Kubicek et al., 2013; Pons, Bosch & Lewkowicz, 2015; Pons & Lewkowicz, 2014). Many studies have shown that infants, near the age of one year, have a strong mouth-attraction, because of infants’ emerging interest in language. Studies show a shift from eye-gazing to mouth-gazing while viewing the face of (and listening to) a speaker speaking a foreign language, presumably because information provided by the mouth, more so than the eyes, is important for the development of language skills at this age when language production is rapidly emerging (Lewkowicz & Hansen-Tift, 2012; Pons et al., 2015). Our study complements this notion, but also challenges it to be broadened, as this notion is typically framed in the context of auditory speech processing. What we find instead is evidence of a broader search for relevant linguistic information in either visual or auditory modalities. This search extends to all salient linguistic articulators, on the hands or on the mouth. An important follow-up study is to ask how hearing sign-exposed infants view signers vs. speakers of familiar or unfamiliar languages, and whether their highly focused gaze patterns during sign watching carry over to viewing speakers.

There are important limitations of the present study as well as aspects of gaze behavior that need further study. First, the present study did not include measures of developmental milestones, proficiency, comprehension, or cognitive ability. This prevents us from fully understanding why video reversal does not change eye gaze, and furthermore prevents us from confirming whether the native signing children understood the video stories when presented normally (although this does not negate the main finding of significant language group differences). It is possible that young signers consider the reversed videos to still be viable input, even if it is unintelligible or unfamiliar.7 On the other hand, it is possible the reversed stimuli are far too distorted, and stimuli that vary by content difficulty, rather than intelligibility, are necessary to measure such effects on eye gaze. Future studies should note Henner, Hoffmeister, and Reis (2017) for a discussion on appropriate language proficiency assessments to use with signing children. Another important unknown is the amount of signed language input necessary to foster the development of efficient sign-watching behavior. Being born into an environment with signing parents was obviously sufficient, but future studies are needed to quantify and describe the input that sign-exposed infants receive.

A second limitation, and grounds for future extension, lies with how we defined gaze behavior. As a starting point, we aggregated all gaze points and measured their distribution across different regions of the face and body—a spatial summation. There are other ways to measure gaze patterns, some of which preserve temporal information, such as scan paths, saccades, anticipatory gazes, or temporal correlations with specific moving elements of the video, that might provide more useful information. For example, how does gaze behavior change across the unfolding of the stimuli, particularly if it contains unexpected or grammatically complex elements? The use of temporal—as well as spatial—analyses for studying eye gaze almost certainly will permit a richer understanding of what it means to “watch” sign language.

Conclusion

The present study is the first demonstration that gaze patterns during sign watching in young signers exposed to ASL from birth are very similar to those seen in adult native deaf signers and very different from those seen in infants and children whose parents used only spoken English. Within the first 6 months of life, signing infants rapidly adopt highly resilient face-focused gaze behaviors that aid in language learning. These enhanced gaze behaviors are likely to facilitate language learning throughout childhood. We believe these behaviors are a result of deaf fluent signing parents providing their infants with a “visual learning ecology that supports social, cognitive, and linguistic development” (Brooks et al., 2020). Aside from providing obvious linguistic input and socialization cues, deaf parents use explicit visual and tactile strategies to support the development of perceptual gaze control, which the child uses to direct and regulate visual attention (Corina & Singleton, 2009; Harris et al., 1989; Waxman & Spencer, 1997). This successful maturation of perceptual gaze control and visual attention will likely facilitate the mastery of vocabulary and world knowledge (Lieberman et al., 2014; 2015; Stone et al., 2015) and promote stronger socio-emotional self-regulation (Dye & Hauser, 2014; Dye et al., 2008).

Deaf infants who are not exposed to sign language from birth may be missing important learning strategies that native signers acquire shortly after birth. Deaf children who are not exposed to ASL may not learn to use their “perceptual span” to gather linguistic information effectively (an idea also suggested by Dye & Thompson, 2020). The early experiences of native signing children are in stark contrast to those of most deaf children, who are typically raised with only aided speech input (via hearing aids or cochlear implants). Often, hearing parents are advised not to expose their infant to sign language (Hall, 2017). As a consequence, most deaf children do not have full and complete access to language during the first year of life (Humphries et al., 2012). This diminished early language input has serious, detrimental repercussions for social, cognitive, and language development (Carrigan & Coppola, 2020; Davidson, Lillo-Martin & Chen Pichler, 2014; Hauser et al., 2008; Mayberry & Eichen, 1991; Mayberry & Lock, 2003; Peterson & Siegal, 2000; Prezbindowski et al., 1998; Wood, 1989). By contrast, deaf children of deaf signing parents often have full access to sign language and have robust language, cognitive, and social development (Loots et al., 2005; MacDonald et al., 2018; Meadow-Orlans et al., 2004; Newport & Meier, 1985; Peterson & Siegal, 2000; Rinaldi, Caselli, Di Renzo et al., 2014). Many researchers now believe that a spoken-language-only approach in the first year of life is insufficient for deaf and hard of hearing infants (e.g., see Humphries et al., 2014). Hearing parents certainly can adapt their communicative practices and provide their deaf and hard-of-hearing infants with signed input; however, resources need to be made available to them by medical and educational service providers (Kushalnagar et al., 2007), and more research studies are needed to inform best practices for fostering language development during early infancy.

Research Highlights.

This study is the first to measure natural gaze behavior of young hearing native signers while viewing signed narratives.

Gaze behavior in hearing infants and children exposed to American Sign Language at home was compared to that of hearing non-signers whose caregivers used only spoken English.

Non-signing infants and children looked at the face and below the face, where moving articulators primarily fall, while young native signers looked primarily at the face.

Hearing young native signers, aged 5-14 months, exhibit highly focused gaze patterns on the face that resemble those of adult native deaf signers.

Future studies should examine gaze in Deaf infants not exposed to sign language who may be missing input that native signers quickly acquire during infancy.

Acknowledgements

Special gratitude to Cindy O’Grady and So-One Hwang, Ph.D. for their help in creating the stimuli. We are grateful to the parents and their children in the San Diego community for their contributions. The research was supported by grants awarded to Rain Bosworth from the National Science Foundation (1423500) and the National Institutes of Health (R01-EY024623 from the National Eye Institute). Dr. Stone was supported by a Research Supplement to Promote Diversity in Health-Related Research from the National Eye Institute (NEI/NIH).

Appendix

Table A-1.

Story Clips and Duration in Seconds

| Clip | Duration (s) | Content |
|---|---|---|
| Cinderella | | |
| 1 | 8.44 | Once upon a time, there was a girl who was sad because she had two really mean stepsisters who teased her all the time. |
| 2 | 4.2 | Her stepmother was also mean, and she was sad and upset. |
| King Midas | | |
| 1 | 9.12 | Once upon a time, there was a king who was thinking of ways to become rich. He prayed and prayed, and suddenly he met an old woman who had magical powers. |
| 2 | 11.08 | The old woman told the king, “I heard you wanted to become rich. I’m going to give you a magical power to turn anything you touch into gold!” The king was quite excited and thanked the old woman. |
| Red Riding Hood | | |
| 1 | 8.4 | Red Riding Hood heard that her grandmother was sick. She packed a basket with food, went walking towards her grandmother’s house, and arrived there. |
| 2 | 7.72 | She opened the door and went inside. She saw her grandmother, and exclaimed, “Hello, but what big teeth you have!” |
| Goldilocks and the Three Bears | | |
| 1 | 7.52 | The three bears went outside for a long walk until they decided to go back home. They went inside and saw something surprising. |
| 2 | 11.12 | They asked, “Who ate my porridge? Who broke my chair?” They went upstairs and exclaimed, “Who’s sleeping in my bed right now?” |

Table A-2.

Linear mixed model output for effects of Age, Language Group, Video Direction, and Trial Order on infants’ overall looking time (as a percent of total stimuli duration).

Percent Looking Time for Infants

| Predictors | Estimates | Std. Error | CI | Statistic | p |
|---|---|---|---|---|---|
| (Intercept) | 0.767 | 0.089 | 0.595 – 0.939 | 8.574 | <0.001 |
| Age | 0.001 | 0.009 | −0.017 – 0.019 | 0.115 | 0.909 |
| Language Group [Signing] | −0.047 | 0.055 | −0.155 – 0.06 | −0.844 | 0.398 |
| Video Direction [Reversed] | −0.025 | 0.025 | −0.075 – 0.024 | −1.00 | 0.318 |
| Trial Order | −0.011 | 0.002 | −0.015 – −0.006 | −4.607 | <0.001 |
| Language Group [Signing] * Video Direction [Reversed] | 0.024 | 0.039 | −0.053 – 0.1 | 0.604 | 0.546 |
| Random Effects | | | | | |
| σ² | 0.03 | | | | |
| τ₀₀ name | 0.01 | | | | |
| τ₀₀ story | 0.00 | | | | |
| ICC | 0.36 | | | | |
| N name | 26 | | | | |
| N story | 8 | | | | |
| Observations | 349 | | | | |
| Marginal R² / Conditional R² | 0.052 / 0.398 | | | | |

Table A-3.

Linear mixed model output for effects of Age, Language Group, Video Direction, and Trial Order on children’s overall looking time (as a percent of total stimuli duration).

Percent Looking Time for Children

| Predictors | Estimates | Std. Error | CI | Statistic | p |
|---|---|---|---|---|---|
| (Intercept) | 0.643 | 0.066 | 0.517 – 0.77 | 9.769 | <0.001 |
| Age | 0.033 | 0.011 | 0.012 – 0.054 | 2.875 | 0.004 |
| Language Group [Signing] | 0.03 | 0.04 | −0.047 – 0.108 | 0.754 | 0.451 |
| Video Direction [Reversed] | −0.022 | 0.027 | −0.077 – 0.032 | −0.785 | 0.432 |
| Trial Order | −0.008 | 0.002 | −0.012 – −0.004 | −4.111 | <0.001 |
| Language Group [Signing] * Video Direction [Reversed] | −0.004 | 0.037 | −0.076 – 0.069 | −0.121 | 0.904 |
| Random Effects | | | | | |
| σ² | 0.04 | | | | |
| τ₀₀ name | 0.01 | | | | |
| τ₀₀ story | 0.00 | | | | |
| ICC | 0.18 | | | | |
| N name | 35 | | | | |
| N story | 8 | | | | |
| Observations | 471 | | | | |
| Marginal R² / Conditional R² | 0.088 / 0.249 | | | | |

Table A-4.

Linear mixed model output for effects of Age, Language Group, Video Direction, and Trial Order on infants’ face preference index.

Face Preference Index (FPI) for Infants

| Predictors | Estimates | Std. Error | CI | Statistic | p |
|---|---|---|---|---|---|
| (Intercept) | 0.139 | 0.265 | −0.369 – 0.658 | 0.527 | 0.598 |
| Age | −0.039 | 0.029 | −0.095 – 0.016 | −1.366 | 0.172 |
| Language Group [Signing] | 0.453 | 0.168 | 0.132 – 0.774 | 2.703 | 0.007 |
| Video Direction [Reversed] | 0.124 | 0.056 | 0.015 – 0.232 | 2.227 | 0.026 |
| Trial Order | −0.015 | 0.005 | −0.025 – −0.005 | −2.96 | 0.003 |
| Language Group [Signing] * Video Direction [Reversed] | −0.129 | 0.086 | −0.297 – 0.04 | −1.494 | 0.135 |
| Random Effects | | | | | |
| σ² | 0.15 | | | | |
| τ₀₀ name | 0.14 | | | | |
| τ₀₀ story | 0.01 | | | | |
| ICC | 0.50 | | | | |
| N name | 26 | | | | |
| N story | 8 | | | | |
| Observations | 349 | | | | |
| Marginal R² / Conditional R² | 0.135 / 0.569 | | | | |

Table A-5.

Linear mixed model output for effects of Age, Language Group, Video Direction, and Trial Order on children’s face preference index.

Face Preference Index (FPI) for Children

| Predictors | Estimates | Std. Error | CI | Statistic | p |
|---|---|---|---|---|---|
| (Intercept) | 0.198 | 0.267 | −0.318 – 0.714 | 0.739 | 0.460 |
| Age | −0.000 | 0.047 | −0.092 – 0.09 | −0.018 | 0.986 |
| Language Group [Signing] | 0.354 | 0.156 | 0.053 – 0.656 | 2.265 | 0.024 |
| Video Direction [Reversed] | −0.005 | 0.066 | −0.135 – 0.124 | −0.074 | 0.941 |
| Trial Order | −0.033 | 0.005 | −0.043 – −0.024 | −6.776 | <0.001 |
| Language Group [Signing] * Video Direction [Reversed] | −0.076 | 0.088 | −0.248 – 0.097 | −0.864 | 0.388 |
| Random Effects | | | | | |
| σ² | 0.21 | | | | |
| τ₀₀ name | 0.18 | | | | |
| τ₀₀ story | 0.01 | | | | |
| ICC | 0.47 | | | | |
| N name | 35 | | | | |
| N story | 8 | | | | |
| Observations | 471 | | | | |
| Marginal R² / Conditional R² | 0.117 / 0.531 | | | | |

Footnotes

Conflict of Interest Statement

The authors have declared no conflicts of interest with respect to their authorship or publication of this article.

Data Sharing and Data Accessibility

The data that support the findings of this study will be openly available in a data repository.

During signed conversations, adult observers typically bring a small part of the signer’s face within clear foveal vision, which spans only 1.5 degrees of visual angle, placing most of the full signing space in peripheral vision. To a perceiver, the interlocuter’s hands primarily fall, on average, 6.5 degrees, and as far as 16 degrees, below the interlocuter’s eyes (Bosworth, Wright & Dobkins, 2019). The hands are therefore seen with spatial resolution that is approximately 25% of that seen with foveal vision (Strasburger, Rentschler & Jüttner, 2011). When presented with unfamiliar handshapes at 14.4 degrees from central fixation, signers perform poorly (but still above chance) on a handshape discrimination task (Emmorey, Bosworth & Kraljic, 2009)

These groups are often identified as “Hearing of Deaf” or Children of Deaf Adults (CODA), and “Hearing of Hearing” in the literature. Hearing children of deaf parents typically grow up to self-identify as belonging to the Deaf community, with many having native sign fluency (Padden & Humphries, 2005; Singleton & Tittle, 2000).

As discussed in Hessels et al. (2018), definitions of saccades and fixations are often inconsistent and arbitrary in the field, and many algorithms for defining saccades and fixations are based on adult data, which may not apply to infants. Eliminating non-stationary gaze points would not necessarily provide a more accurate reflection of where foveal vision is directed. We chose to include all gaze points for this reason.

Data loss can be attributed to inattentiveness, looks away from the monitor, blinks and excessive head movements. We verified that data loss did not increase or decrease as the experiment unfolded over time.

This 3.2% increase in looking per year between the ages of 2 and 8 years represents an increase of 1.6 seconds per year in attention to the stimulus.

Looking percentages for AOIs cannot be analyzed statistically because they are collinear.
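The collinearity arises because AOI percentages sum to 100 within each trial, so any one AOI is fully determined by the others plus a constant. The sketch below illustrates this with made-up numbers. The AOI names and values are hypothetical and are not data from this study. A design matrix containing an intercept and all AOI columns is rank-deficient, whereas dropping one AOI restores full column rank. The rank routine is a plain Gaussian elimination written out to keep the example self-contained.

```python
def matrix_rank(rows, tol=1e-9):
    """Rank of a matrix via Gaussian elimination with partial pivoting."""
    m = [[float(x) for x in row] for row in rows]
    n_cols = len(m[0])
    rank = 0
    for col in range(n_cols):
        if rank == len(m):
            break
        # Choose the remaining row with the largest entry in this column.
        pivot = max(range(rank, len(m)), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < tol:
            continue  # no usable pivot: this column adds no new direction
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, len(m)):
            factor = m[r][col] / m[rank][col]
            for c in range(col, n_cols):
                m[r][c] -= factor * m[rank][c]
        rank += 1
    return rank


# Hypothetical per-trial looking percentages: (face, below_face, other).
trials = [(70, 20, 10), (55, 35, 10), (40, 30, 30), (80, 5, 15), (60, 25, 15)]
assert all(sum(t) == 100 for t in trials)

# Design matrix with an intercept and all three AOI columns.
full = [[1, face, below, other] for face, below, other in trials]
# Same design with the "other" AOI dropped.
reduced = [[1, face, below] for face, below, _ in trials]

print("columns:", len(full[0]), "rank:", matrix_rank(full))        # rank < columns
print("columns:", len(reduced[0]), "rank:", matrix_rank(reduced))  # full column rank
```

In this toy example the four-column design has rank 3, which is the sense in which the AOI percentages cannot all be entered as separate predictors or outcomes in one model.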

One could argue that young signers should consider reversed stimuli viable, because children need to attend to unfamiliar input in order to learn it. In fact, about 40% of our adult signers were unable to identify the distortion as video reversal and did not reject reversed signs as nonsense or impossible. This shows that reversed signs are not impossible to understand in the way that reversed speech is, and hence could be seen as viable input by young signers.

References

- Agrafiotis D, Canagarajah CN, Bull DR, Dye M, Twyford H, Kyle J, & How JC (2003). Optimized sign language video coding based on eye-tracking analysis. Paper presented at the Visual Communications and Image Processing 2003.

- Baker-Shenk CL, & Cokely D (1991). American Sign Language: A Teacher’s Resource Text on Grammar and Culture. Gallaudet University Press.

- Bates D, Maechler M, Bolker B, Walker S, Christensen RHB, Singmann H, …, & Bolker MB (2015). Package ‘lme4’. Convergence, 12(1).

- Bavelier D, Dye MW, & Hauser PC (2006). Do deaf individuals see better? Trends Cogn Sci, 10(11), 512–518. doi: 10.1016/j.tics.2006.09.006

- Birulés J, Bosch L, Brieke R, Pons F, & Lewkowicz DJ (2019). Inside bilingualism: Language background modulates selective attention to a talker’s mouth. Dev Sci, 22(3), e12755. doi: 10.1111/desc.12755

- Bosworth R, Stone A, & Hwang SO (2020). Effects of video reversal on gaze patterns during signed narrative comprehension. Journal of Deaf Studies and Deaf Education, 25(3), 283–297. doi: 10.1093/deafed/enaa007

- Bosworth RG, & Dobkins KR (2002). The effects of spatial attention on motion processing in deaf signers, hearing signers, and hearing nonsigners. Brain Cogn, 49(1), 152–169. doi: 10.1006/brcg.2001.1497

- Brooks R, & Meltzoff AN (2008). Infant gaze following and pointing predict accelerated vocabulary growth through two years of age: a longitudinal, growth curve modeling study. J Child Lang, 35(1), 207–220. doi: 10.1017/s030500090700829x

- Brooks R, Singleton JL, & Meltzoff AN (2020). Enhanced gaze-following behavior in Deaf infants of Deaf parents. Dev Sci, 23(2), e12900. doi: 10.1111/desc.12900

- Carrigan E, & Coppola M (2020). Delayed language exposure has a negative impact on receptive vocabulary skills in deaf and hard of hearing children despite early use of hearing technology. Paper presented at the Proceedings of the 44th annual Boston University Conference on Language Development, Boston.

- Corina D, & Singleton J (2009). Developmental social cognitive neuroscience: insights from deafness. Child Dev, 80(4), 952–967. doi: 10.1111/j.1467-8624.2009.01310.x

- Crume P, & Singleton J (2008). Teacher practices for promoting visual engagement of deaf children in a bilingual school. Paper presented at the Association of College Educators of the Deaf/Hard of Hearing, Monterey, CA.

- Davidson K, Lillo-Martin D, & Chen Pichler D (2014). Spoken English language development among native signing children with cochlear implants. Journal of Deaf Studies and Deaf Education, 19(2), 238–250. doi: 10.1093/deafed/ent045

- Dye MW, & Hauser PC (2014). Sustained attention, selective attention and cognitive control in deaf and hearing children. Hearing Research, 309, 94–102. doi: 10.1016/j.heares.2013.12.001

- Dye MW, Hauser PC, & Bavelier D (2008). Visual attention in deaf children and adults. Deaf Cognition: Foundations and Outcomes, 250–263.

- Dye MW, Hauser PC, & Bavelier D (2009). Is visual selective attention in deaf individuals enhanced or deficient? The case of the useful field of view. PLoS One, 4(5), e5640. doi: 10.1371/journal.pone.0005640

- Dye MW, & Thompson RL (2020). Perception and production of language in the visual modality. In Morgan G (Ed.), Understanding Deafness, Language and Cognitive Development: Essays in honour of Bencie Woll (Vol. 25, pp. 133). City University London: John Benjamins.

- Emmorey K, Thompson R, & Colvin R (2009). Eye gaze during comprehension of American Sign Language by native and beginning signers. Journal of Deaf Studies and Deaf Education, 14(2), 237–243. doi: 10.1093/deafed/enn037

- Fenlon J, Cormier K, & Brentari D (2017). The Phonology of Sign Languages. In Hannahs SJ & Bosch A (Eds.), The Routledge Handbook of Phonological Theory. London: Routledge. doi: 10.4324/9781315675428

- Fernald A, Zangl R, Portillo AL, & Marchman VA (2008). Looking while listening: Using eye movements to monitor spoken language. Developmental Psycholinguistics: On-line Methods in Children’s Language Processing, 44, 97.

- Firke S (2019). Janitor: Simple Tools for Examining and Cleaning Dirty Data (Version 2.0.1). Retrieved from https://cran.csiro.au/web/packages/janitor/index.html

- Hall WC (2017). What you don’t know can hurt you: The risk of language deprivation by impairing sign language development in deaf children. Maternal and Child Health Journal, 21(5), 961–965. doi: 10.1007/s10995-017-2287-y

- Harris M, Clibbens J, Chasin J, & Tibbitts R (1989). The social context of early sign language development. First Language, 9(25), 81–97.

- Harris M, & Mohay H (1997). Learning to look in the right place: a comparison of attentional behavior in deaf children with deaf and hearing mothers. Journal of Deaf Studies and Deaf Education, 2(2), 95–103. doi: 10.1093/oxfordjournals.deafed.a014316

- Hauser P, Lukomski J, & Hillman T (2008). Development of deaf and hard-of-hearing students’ executive function. In Marschark M & Hauser P (Eds.), Deaf Cognition: Foundations and Outcomes. New York: Oxford University Press.

- Henner J, Hoffmeister R, & Reis J (2017). Developing sign language measurements for research with deaf populations. Research in Deaf Education: Contexts, Challenges, and Considerations, 141.

- Hessels RS (2020). How does gaze to faces support face-to-face interaction? A review and perspective. Psychon Bull Rev, 1–26. doi: 10.3758/s13423-020-01715-w

- Hessels RS, Niehorster DC, Nyström M, Andersson R, & Hooge IT (2018). Is the eye-movement field confused about fixations and saccades? A survey among 124 researchers. Royal Society Open Science, 5(8), 180502.

- Humphries T, Kushalnagar P, Mathur G, Napoli DJ, Padden C, Pollard R, … Smith S (2014). What medical education can do to ensure robust language development in deaf children. Medical Science Educator, 24(4), 409–419. doi: 10.1007/s40670-014-0073-7

- Humphries T, Kushalnagar P, Mathur G, Napoli DJ, Padden C, Rathmann C, & Smith SR (2012). Language acquisition for deaf children: Reducing the harms of zero tolerance to the use of alternative approaches. Harm Reduction Journal, 9(1), 16.

- Hwang S-OK (2011). Windows into sensory integration and rates in language processing: Insights from signed and spoken languages. (Ph.D. Doctoral Dissertation). University of Maryland, Maryland.

- Koester LS (1995). Face-to-face interactions between hearing mothers and their deaf or hearing infants. Infant Behavior and Development, 18(2), 145–153.

- Kubicek C, de Boisferon AH, Dupierrix E, Lœvenbruck H, Gervain J, & Schwarzer G (2013). Face-scanning behavior to silently-talking faces in 12-month-old infants: The impact of pre-exposed auditory speech. International Journal of Behavioral Development, 37(2), 106–110. doi: 10.1177/0165025412473016

- Kushalnagar P, Krull K, Hannay J, Mehta P, Caudle S, & Oghalai J (2007). Intelligence, Parental Depression, and Behavior Adaptability in Deaf Children Being Considered for Cochlear Implantation. Journal of Deaf Studies and Deaf Education, 12(3), 335–349. doi: 10.1093/deafed/enm006

- Kuznetsova A, Brockhoff PB, & Christensen RHB (2017). lmerTest package: tests in linear mixed effects models. Journal of Statistical Software, 82(13).

- Lewkowicz DJ, & Hansen-Tift AM (2012). Infants deploy selective attention to the mouth of a talking face when learning speech. Proc Natl Acad Sci U S A, 109(5), 1431–1436. doi: 10.1073/pnas.1114783109

- Liddell SK (1980). American Sign Language Syntax (Vol. 52). Mouton De Gruyter.

- Lieberman A, Hatrak M, & Mayberry R (2011). The Development of Eye Gaze Control for Linguistic Input in Deaf Children.

- Lieberman AM, Borovsky A, Hatrak M, & Mayberry RI (2015). Real-time processing of ASL signs: Delayed first language acquisition affects organization of the mental lexicon. J Exp Psychol Learn Mem Cogn, 41(4), 1130–1139. doi: 10.1037/xlm0000088

- Lieberman AM, Borovsky A, & Mayberry RI (2018). Prediction in a visual language: real-time sentence processing in American Sign Language across development. Lang Cogn Neurosci, 33(4), 387–401. doi: 10.1080/23273798.2017.1411961

- Lieberman AM, Hatrak M, & Mayberry RI (2014). Learning to look for language: Development of joint attention in young deaf children. Lang Learn Dev, 10(1), 19–35. doi: 10.1080/15475441.2012.760381

- Lillo-Martin D (1999). Modality effects and modularity in language acquisition: The acquisition of American Sign Language. Handbook of Child Language Acquisition, 531, 567.

- Loots G, Devise I, & Jacquet W (2005). The impact of visual communication on the intersubjective development of early parent-child interaction with 18- to 24-month-old deaf toddlers. Journal of Deaf Studies and Deaf Education, 10(4), 357–375. doi: 10.1093/deafed/eni036

- MacDonald K, LaMarr T, Corina D, Marchman VA, & Fernald A (2018). Real-time lexical comprehension in young children learning American Sign Language. Dev Sci, 21(6), e12672. doi: 10.1111/desc.12672

- MacDonald K, Marchman VA, Fernald A, & Frank MC (2020). Children flexibly seek visual information to support signed and spoken language comprehension. J Exp Psychol Gen, 149(6), 1078–1096. doi: 10.1037/xge0000702

- May L, Byers-Heinlein K, Gervain J, & Werker JF (2011). Language and the newborn brain: does prenatal language experience shape the neonate neural response to speech? Front Psychol, 2, 222. doi: 10.3389/fpsyg.2011.00222

- Mayberry RI (2002). Cognitive development in deaf children: The interface of language and perception in neuropsychology. Handbook of Neuropsychology, 8, 71–107.

- Mayberry RI, & Eichen EB (1991). The long-lasting advantage of learning sign language in childhood: Another look at the critical period for language acquisition. Journal of Memory and Language, 30(4), 486–512.

- Mayberry RI, & Lock E (2003). Age constraints on first versus second language acquisition: evidence for linguistic plasticity and epigenesis. Brain and Language, 87(3), 369–384. doi: 10.1016/s0093-934x(03)00137-8

- Mayberry RI, & Squires B (2006). Sign language acquisition. Encyclopedia of Language and Linguistics, 11, 739–743.

- Meadow-Orlans KP, Spencer PE, & Koester LS (2004). The World of Deaf Infants: A Longitudinal Study. Oxford University Press, USA.

- Newport E, & Meier R (1985). The Acquisition of American Sign Language. In Slobin D (Ed.), The Crosslinguistic Study of Language Acquisition (Vol. 1, pp. 881–938). Hillsdale, NJ: Lawrence Erlbaum & Associates.