Keywords: auditory cortex, decomposition, expertise, fMRI, music

Abstract

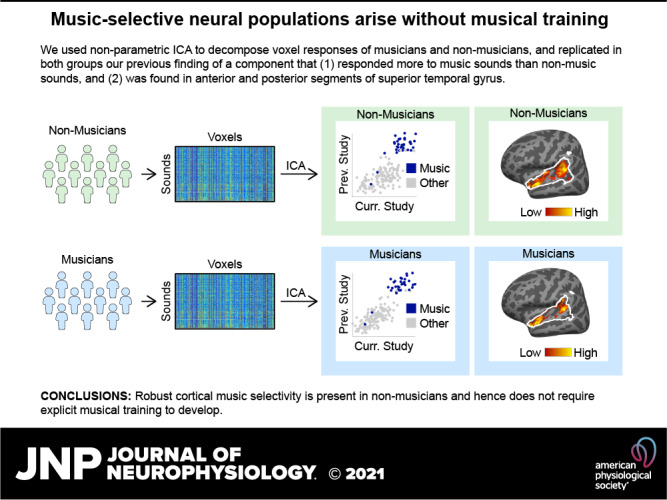

Recent work has shown that human auditory cortex contains neural populations anterior and posterior to primary auditory cortex that respond selectively to music. However, it is unknown how this selectivity for music arises. To test whether musical training is necessary, we measured fMRI responses to 192 natural sounds in 10 people with almost no musical training. When voxel responses were decomposed into underlying components, this group exhibited a music-selective component that was very similar in response profile and anatomical distribution to that previously seen in individuals with moderate musical training. We also found that musical genres that were less familiar to our participants (e.g., Balinese gamelan) produced strong responses within the music component, as did drum clips with rhythm but little melody, suggesting that these neural populations are broadly responsive to music as a whole. Our findings demonstrate that the signature properties of neural music selectivity do not require musical training to develop, showing that the music-selective neural populations are a fundamental and widespread property of the human brain.

NEW & NOTEWORTHY We show that music-selective neural populations are clearly present in people without musical training, demonstrating that they are a fundamental and widespread property of the human brain. Additionally, we show music-selective neural populations respond strongly to music from unfamiliar genres as well as music with rhythm but little pitch information, suggesting that they are broadly responsive to music as a whole.

INTRODUCTION

Music is uniquely and universally human (1), and musical abilities arise early in development (2). Recent evidence has revealed neural populations in bilateral nonprimary auditory cortex that respond selectively to music and thus seem likely to figure importantly in musical perception and behavior (3–9). How does such selectivity arise? Most members of Western societies have received at least some explicit musical training in the form of lessons or classes, and it is possible that this training leads to the emergence of music-selective neural populations. However, most Western individuals, including nonmusicians, also implicitly acquire knowledge of musical structure from a lifetime of exposure to music (10–15). Thus, another possibility is that this type of passive experience with music is sufficient for the development of cortical music selectivity. The roles of these two forms of musical experience in the neural representation of music are not understood. Here, we directly test whether explicit musical training is necessary for the development of music-selective neural responses, by testing whether music-selective responses are robustly present—with similar response characteristics and anatomical distribution—in individuals with little or no explicit training.

Why might explicit musical training be necessary for neural tuning to music? The closest analogy in the visual domain is learning to read, where several studies have shown that selectivity to visual orthography (16) arises in high-level visual cortex only after children are taught to read (17, 18). In addition, exposure to specific sounds can elicit long-term changes in auditory cortex, such as sharper tuning of individual neurons (19–21) and expansion of cortical maps (21–23). These changes occur primarily for behaviorally relevant stimulus features (23–27) related to the intrinsic reward value of the stimulus (26, 28, 29), and thus are closely linked to the neuromodulatory system (30–32). Additionally, the extent of cortical map expansion is correlated with the animal’s subsequent improvement in behavioral performance (21–23, 33–35). Most of this prior work on experience-driven plasticity in auditory cortex has been done in animals undergoing extensive training on simple sensory sound dimensions, and it has remained unclear how the results from this work might generalize to humans in more natural settings with higher-level perceptual features. Musical training in humans meets virtually all of these criteria for eliciting functional plasticity: playing music requires focused attention, fine-grained sensory-motor coordination, it is known to engage the neuromodulatory system (36–38), and expert musicians often begin training at a young age and hone their skills over many years.

Although many prior studies have measured neural changes as a result of auditory experience (39, 40), including comparing responses in musicians and nonmusicians (41–54), it remains unclear whether any tuning properties of auditory cortex depend on musical training. Previous studies have found that fMRI responses to music are larger in musicians compared with nonmusicians in posterior superior temporal gyrus (41, 48, 52). However, these responses were not shown to be selective for music, leaving the relationship between musical training and cortical music selectivity unclear.

Music selectivity is weak when measured in raw voxel responses using standard voxel-wise fMRI analyses, due to spatial overlap between music-selective neural populations and neural populations with other selectivities (e.g., pitch). To overcome these challenges, Norman-Haignere et al. (7) used voxel decomposition to infer a small number of component response profiles that collectively explained voxel responses throughout auditory cortex to large set of natural sounds. This approach makes it possible to disentangle the responses of neural populations that overlap within voxels and has previously revealed a component with clear selectivity for music compared with both other real-world sounds (7) and synthetic control stimuli matched to music in many acoustic properties (55). These results have recently been confirmed by intracranial recordings, which show individual electrodes with clear selectivity for music (6). Although Norman-Haignere et al. (7) did not include actively practicing musicians, many of the participants had substantial musical training earlier in their lives.

Here, we test whether music selectivity arises only after explicit musical training. To this end, we probed for music selectivity in people with almost no musical training. On the one hand, if explicit musical training is necessary for the existence of music-selective neural populations, music selectivity should be weak or absent in these nonmusicians. If, however, music selectivity does not require explicit training but rather is either innate or arises as a consequence of passive exposure to music, then we would expect to see robust music selectivity even in the nonmusicians. A group of highly trained musicians was also included for comparison. Using these same methods, we were also able to test whether the inferred music-selective neural population responds strongly to less familiar musical genres (e.g., Balinese gamelan), and to drum clips with rich rhythm but little melody.

Note that this is not a traditional group comparison study contrasting musicians and nonmusicians in an attempt to ascertain whether musical training has any detectable effect on music selective neural responses, as it would be unrealistic to collect the amount of data that would be necessary for a direct comparison between groups (see Direct Group Comparisons of Music Selectivity in the appendix). Rather, our goal was to ask whether the key properties of music selectivity described in our earlier study are present in each group when analyzed separately, thus determining whether or not explicit training is necessary for the emergence of music selective responses in the human brain.

MATERIALS AND METHODS

Participants

Twenty young adults (14 female, mean = 24.7 yr, SD = 3.8 yr) participated in the experiment: 10 nonmusicians (6 female, mean = 25.8 yr, SD = 4.1 yr) and 10 musicians (8 female, mean = 23.5 yr, SD = 3.3 yr). This number of participants was chosen because our previous study (7) was able to infer a music-selective component from an analysis of 10 participants. Although these previous participants were described as “nonmusicians” (defined as no formal training in the 5 years preceding the study), many of the participants had substantial musical training earlier in life. We therefore used stricter inclusion criteria to recruit 10 musicians and 10 nonmusicians for the current study.

To be classified as a nonmusician, participants were required to have less than 2 years of total music training, which could not have occurred either before the age of seven or within the last 5 years. Out of the 10 nonmusicians in our sample, eight had zero years of musical training, one had a single year of musical training (at the age of 20), and one had 2 years of training (starting at age 10). These training measures do not include any informal “music classes” included in participants’ required elementary school curriculum, because (at least in the United States) these classes are typically compulsory, are only for a small amount of time per week (e.g., 1 h), and primarily consist of simple sing-a-longs. Inclusion criteria for musicians were beginning formal training before the age of seven (56) and continuing training until the current day. Our sample of 10 musicians had an average of 16.30 years of training (ranging from 11–23 years, SD = 2.52). Details of participants’ musical experience can be found in Table 1.

Table 1.

Details of participants’ musical backgrounds and training, as measured by a self-report questionnaire

| Subject No. | Instrument | Age of Onset (Yr) | Years of Lessons | Years of Regular Practice | Years of Training | Hours of Weekly Practice | Hours of Daily Music Listening | |

|---|---|---|---|---|---|---|---|---|

| Nonmusicians | 1 | 0 | 0 | 0 | 0 | 0 | ||

| 2 | 20 | 1 | 0 | 1 | 0 | 2 | ||

| 3 | 0 | 0 | 0 | 0 | 0.25 | |||

| 4 | 0 | 0 | 0 | 0 | 5 | |||

| 5 | 0 | 0 | 0 | 0 | 1 | |||

| 6 | 0 | 0 | 0 | 0 | 1.5 | |||

| 7 | 10 | 1.5 | 0 | 1.5 | 0 | 1 | ||

| 8 | 0 | 0 | 0 | 0 | 0.1 | |||

| 9 | 0 | 0 | 0 | 0 | 0.5 | |||

| 10 | 0 | 0 | 0 | 0 | 5 | |||

| Mean | 24.4 | 0.3 | 0 | 0.3 | 0 | 1.64 | ||

| Musicians | 11 | Violin | 7 | 11 | 11 | 11 | 2 | 2 |

| 12 | Piano, French horn | 5 | 7 | 18 | 12 | 6 | 4 | |

| 13 | Piano, Bass | 6 | 12 | 18 | 18 | 2 | 2 | |

| 14 | Piano | 3 | 15 | 15 | 15 | 2 | 2.5 | |

| 15 | Piano, Violin, Viola | 5 | 17 | 19 | 20 | 3 | 4 | |

| 16 | Piano, Flute | 5 | 13 | 13 | 13 | 12 | 3 | |

| 17 | Flute | 7 | 12 | 13 | 15 | 15 | 3 | |

| 18 | Piano, Cello, Flute | 4 | 19 | 15 | 18 | 25 | 1 | |

| 19 | Violin | 3 | 18 | 18 | 18 | 4 | 10 | |

| 20 | Violin, Clarinet | 4 | 13 | 8 | 23 | 4 | 5 | |

| Mean | 5.2 | 13.7 | 14.8 | 16.3 | 7.1 | 3.65 | ||

| Grand mean | 14.8 | 7 | 7.4 | 8.3 | 3.55 | 2.64 | ||

Nonparametric Wilcoxon rank sum tests indicated that there were no significant group differences in median age (musician median = 24.0 yr, SD = 3.3, nonmusician median = 25.0 yr, SD = 4.1, Z = −1.03, P = 0.30, effect size r = −0.23), postsecondary education (i.e., formal education after high school; musician median = 6.0 yr, SD = 8.2 yr, nonmusician median = 6.5 yr, SD = 7.5 yr, Z = −0.08, P = 0.94, effect size r = −0.02), or socioeconomic status as measured by the Barrett Simplified Measure of Social Status questionnaire (BSMSS; Ref. 57; musician median = 54.8, SD = 7.1, nonmusician median = 53.6, SD = 15.4, Z = 0.30, P = 0.76, effect size r = 0.07). Note that we report the group standard deviations because this measure is more robust than the interquartile range with our modest sample size of 10 participants per group. All participants were native English speakers and had normal hearing (audiometric thresholds <25 dB HL for octave frequencies 250 Hz to 8 kHz), as confirmed by an audiogram administered during the course of this study. The study was approved by Massachusetts Institute of Technology’s (MIT) human participants review committee (Committee on the Use of Humans as Experimental Subjects), and written informed consent was obtained from all participants.

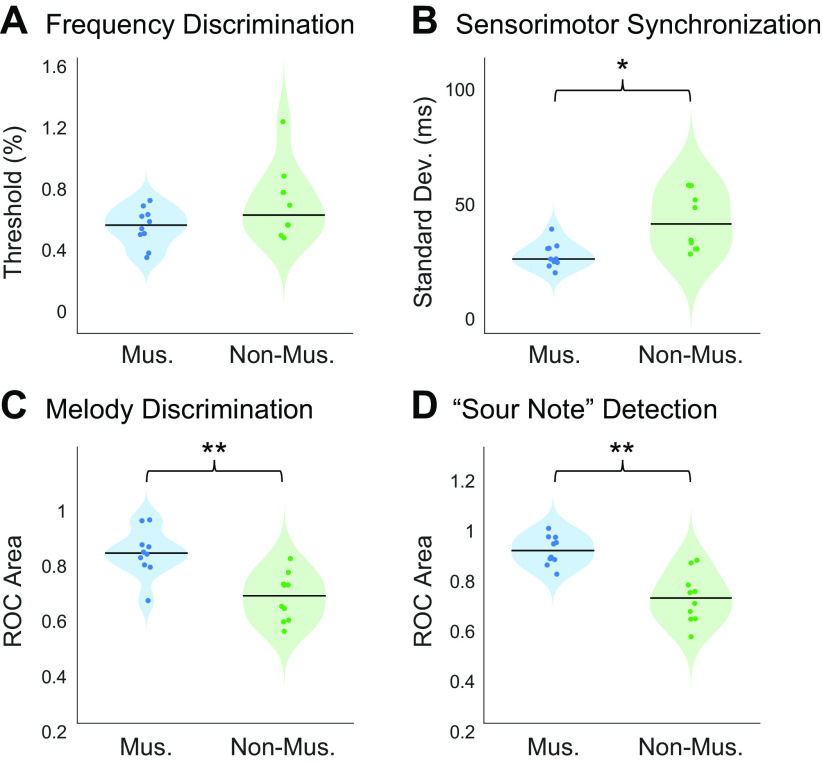

To validate participants’ self-reported musicianship, we measured participants’ abilities on a variety of psychoacoustical tasks for which prior evidence suggested that musicians would outperform nonmusicians, including frequency discrimination, sensorimotor synchronization, melody discrimination, and “sour note” detection. As predicted, musician participants outperformed nonmusician participants on all behavioral psychoacoustic tasks. See Appendix for more details, and Fig. A1 for participants’ performance on these behavioral tasks.

Study Design

Each participant underwent a 2-h behavioral testing session as well as three 2-h fMRI scanning sessions. During the behavioral session, participants completed an audiogram to rule out the possibility of hearing loss, filled out questionnaires about their musical experience, and completed a series of basic psychoacoustic tasks described in the APPENDIX.

Natural Sound Stimuli for fMRI Experiment

Stimuli consisted of 2-s clips of 192 natural sounds. These sounds included the 165-sound stimulus set used in Ref. 7, which broadly sampled frequently heard and recognizable sounds from everyday life. Examples can be seen in Fig. 1A. This set of 165 sounds was supplemented with 27 additional music and drumming clips from a variety of musical cultures, so that we could examine responses to rhythmic features of music, as well as compare responses to more versus less familiar musical genres. Stimuli were ramped on and off with a 25-ms linear ramp. During scanning, auditory stimuli were presented over MR-compatible earphones (Sensimetrics S14) at 75 dB SPL.

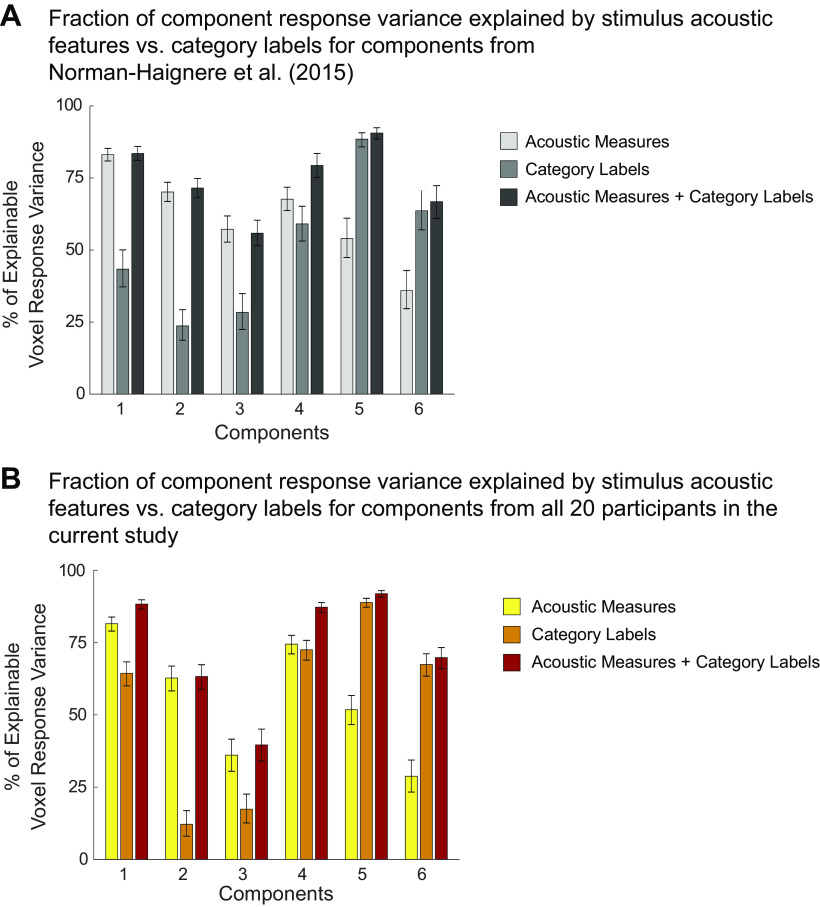

Figure 1.

Experimental design and voxel decomposition method. A: fifty examples from the original set of 165 natural sounds used in Ref. 7 and in the current study, ordered by how often participants reported hearing them in daily life. An additional 27 music stimuli were added to this set of 165 for the current experiment. B: scanning paradigm and task structure. Each 2-s sound stimulus was repeated three times consecutively, with one repetition (the second or third) being 12 dB quieter. Subjects were instructed to press a button when they detected this quieter sound. A sparse scanning sequence was used, in which one fMRI volume was acquired in the silent period between stimuli. C: diagram depicting the voxel decomposition method, reproduced from Ref. 7. The average response of each voxel to the 192 sounds is represented as a vector, and the response vector for every voxel from all 20 subjects is concatenated into a matrix (192 sounds × 26,792 voxels). This matrix is then factorized into a response profile matrix (192 sounds × N components) and a voxel weight matrix (N components × 26,792 voxels).

An online experiment (via Amazon’s Mechanical Turk) was used to assign a semantic category to each stimulus, in which 180 participants (95 females; mean age = 38.8 yr, SD = 11.9 yr) categorized each stimulus into one of 14 different categories. The categories were taken from Ref. 7, with three additional categories (“non-Western instrumental music,” “non-Western vocal music,” “drums”) added to accommodate the additional music stimuli used in this experiment.

A second Amazon Mechanical Turk experiment was run to ensure that American listeners were indeed less familiar with the non-Western music stimuli chosen for this experiment, but that they still perceived the stimuli as “music.” In this experiment, 188 participants (75 females; mean age = 36.6 yr, SD = 10.5 yr) listened to each of the 62 music stimuli and rated them based on (1) how “musical” they sounded, (2) how “familiar” they sounded, (3) how much they “liked” the stimulus, and (4) how “foreign” they sounded.

fMRI Data Acquisition and Preprocessing

Similar to the design of Ref. 7, sounds were presented during scanning in a “mini-block design,” in which each 2-s natural sound was repeated three times in a row. Sounds were repeated because we have found this makes it easier to detect reliable hemodynamic signals. We used fewer repetitions than in our prior study (3 vs. 5), because we wanted to test a larger number of sounds and because we observed similarly reliable responses using fewer repetitions in pilot experiments. Each stimulus was presented in silence, with a single fMRI volume collected between each repetition [i.e., “sparse scanning” (58)]. To encourage participants to pay attention to the sounds, either the second or third repetition in each “mini-block” was 12 dB quieter (presented at 67 dB SPL), and participants were instructed to press a button when they heard this quieter sound (Fig. 1B). Overall, participants performed well on this task (musicians: mean = 92.06%, SD = 5.47%; nonmusicians: mean = 91.47%, SD = 5.83%; no participant’s average performance across runs fell below 80%). Each of the three scanning sessions consisted of sixteen 5.5-min runs, for a total of 48 functional runs per participant. Each run consisted of 24 stimulus mini-blocks and five silent blocks during which no sounds were presented. These silent blocks were the same duration as the stimulus mini-blocks and were distributed evenly throughout each run, providing a baseline. Each specific stimulus was presented in two mini-blocks per scanning session, for a total of six mini-block repetitions per stimulus over the three scanning sessions. Stimulus order was randomly permuted across runs and across participants.

MRI data were collected at the Athinoula A. Martinos Imaging Center of the McGovern Institute for Brain Research at MIT, on a 3T Siemens Prisma with a 32-channel head coil. Each volume acquisition lasted 1 s, and the 2-s stimuli were presented during periods of silence between each acquisition, with a 200-ms buffer of silence before and after stimulus presentation. As a consequence, one brain volume was collected every 3.4 s (1 s + 2 s + 0.2*2 s; TR = 3.4 s, TA = 1.02 s, TE = 33 ms, 90 degree flip angle, 4 discarded initial acquisitions). Each functional acquisition consisted of 48 roughly axial slices (oriented parallel to the anterior-posterior commissure line) covering the whole brain, each slice being 3 mm thick and having an in-plane resolution of 2.1 × 2.1mm (96 × 96 matrix, 0.3-mm slice gap). A simultaneous multislice (SMS) acceleration factor of 4 was used to minimize acquisition time (TA = 1.02 s). To localize functional activity, a high-resolution anatomical T1-weighted image was obtained for every participant (TR = 2.53 s, voxel size: 1 mm3, 176 slices, 256 × 256 matrix).

Preprocessing and data analysis were performed using FSL software and custom Matlab scripts. Functional volumes were motion-corrected, slice-time-corrected, skull-stripped, linearly detrended, and aligned to each participant’s anatomical image (using FLIRT and BBRegister; Refs. 59, 60). Motion correction and function-to-anatomical registration was done separately for each run. Preprocessed data were then resampled to the cortical surface reconstruction computed by FreeSurfer (61) and smoothed on the surface using a 3-mm full-width half-maximum (FWHM) kernel to improve signal-to-noise ratio (SNR). The data were then downsampled to a 2-mm isotropic grid on the FreeSurfer-flattened cortical surface.

Next, we estimated the response to each of the 192 stimuli using a general linear model (GLM). Each stimulus mini-block was modeled as a boxcar function convolved with a canonical hemodynamic response function (HRF). The model also included six motion regressors and a first-order polynomial noise regressor to account for linear drift in the baseline signal. Note that this analysis differs from our prior paper (7), in which signal averaging was used in place of a GLM. We made this change because blood oxygen level-dependent (BOLD) responses were estimated more reliably using an HRF-based GLM, potentially due to the use of shorter stimulus blocks causing more overlap between BOLD responses to different stimuli.

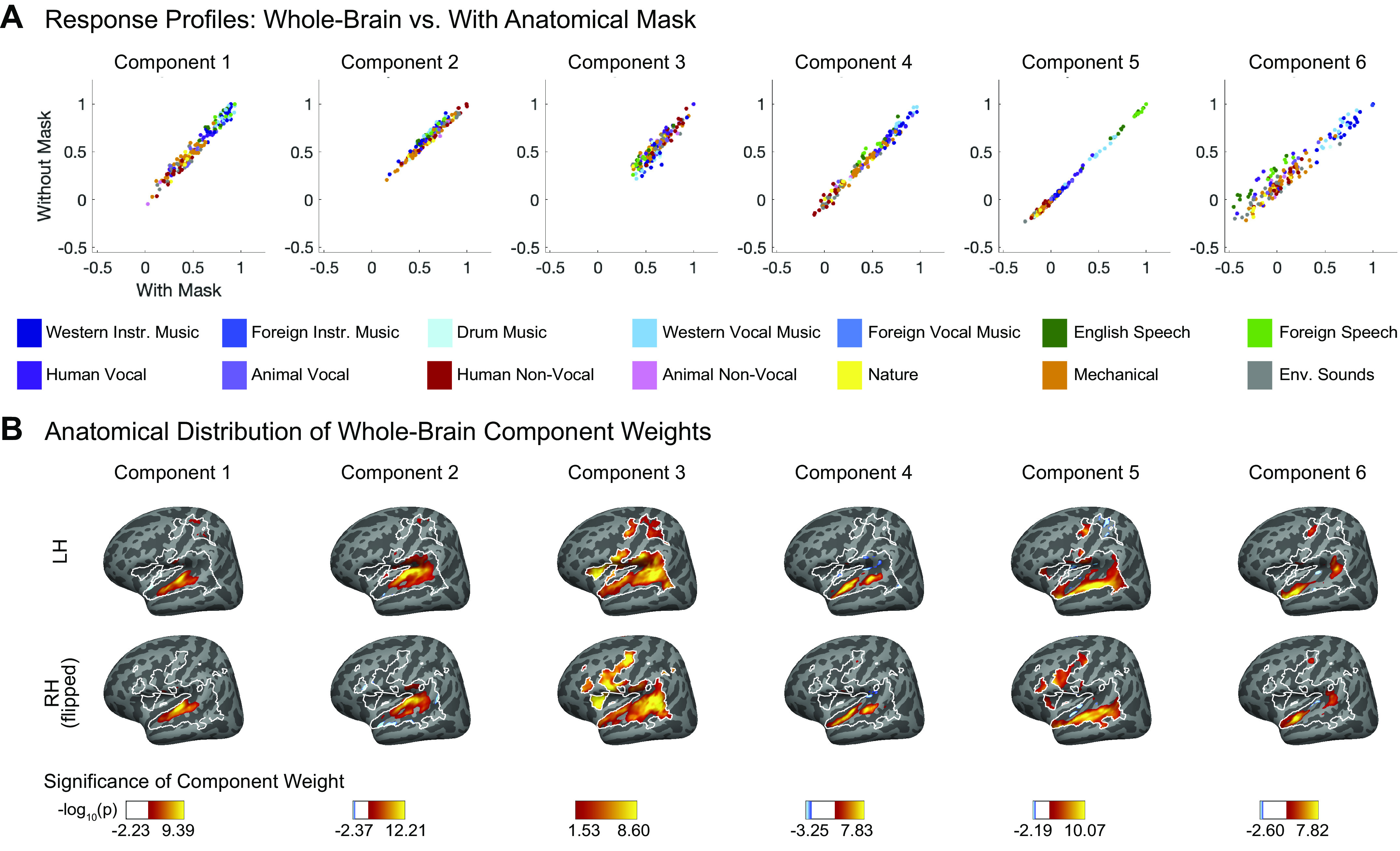

Voxel Selection

The first step of this analysis method is to determine which voxels serve as input to the decomposition algorithm. All analyses were carried out on voxels within a large anatomical constraint region encompassing bilateral superior temporal and posterior parietal cortex (Fig. A2), as in Ref. 7. In practice, the vast majority of voxels with a robust and reliable response to sound fell within this region (Fig. A3), which explains why our results were very similar with and without this anatomical constraint (Fig. A4). Within this large anatomical region, we selected voxels that met two criteria. First, they displayed a significant (P < 0.001, uncorrected) response to sound (pooling over all sounds compared with silence). This consisted of 51.45% of the total number of voxels within our large constraint region. Second, they produced a reliable response pattern to the stimuli across scanning sessions. Note that rather than using a simple correlation to determine reliability, we used the equation from Ref. 7 to measure the reliability across split halves of our data. This reliability measure differs from a Pearson correlation in that it assigns high values to voxels that respond consistently to sounds and does not penalize them even if their response does not vary much between sounds, which is the case for many voxels within primary auditory cortex:

where v1 and v2 indicate the vector of beta weights from a single voxel for the 192 sounds, estimated separately for the two halves of the data (v1 = first three repetitions from runs 1–24, v2 = last three repetitions from runs 25–48), and ‖ ‖ is the L2 norm. Note that these equations differ from Eq. 1 and 2 in Ref. 7, because the equations as reported in that paper contained a typo: the L2-norm terms were not squared. We used the same reliability cutoff as in our prior study (r > 0.3). Of the sound-responsive voxels, 54.47% of them also met the reliability criteria. Using these two selection criteria, the median number of voxels per participant = 1,286, SD = 254 (Fig. A2A). The number of selected voxels did not differ significantly between musicians (median = 1,216, SD = 200) and nonmusicians (median = 1,341, SD = 284; Z = −1.40, P = 0.16, effect size r = −0.31, two-tailed Wilcoxon rank sum test), and the anatomical location of the selected voxels was largely similar across groups (Fig. A2B). When visualizing each group's data on the cortical surface (Fig. 3, C and D), we chose which voxels to include by first averaging voxel responses across participants within each group, and then applying the same selection criteria to the averaged data.

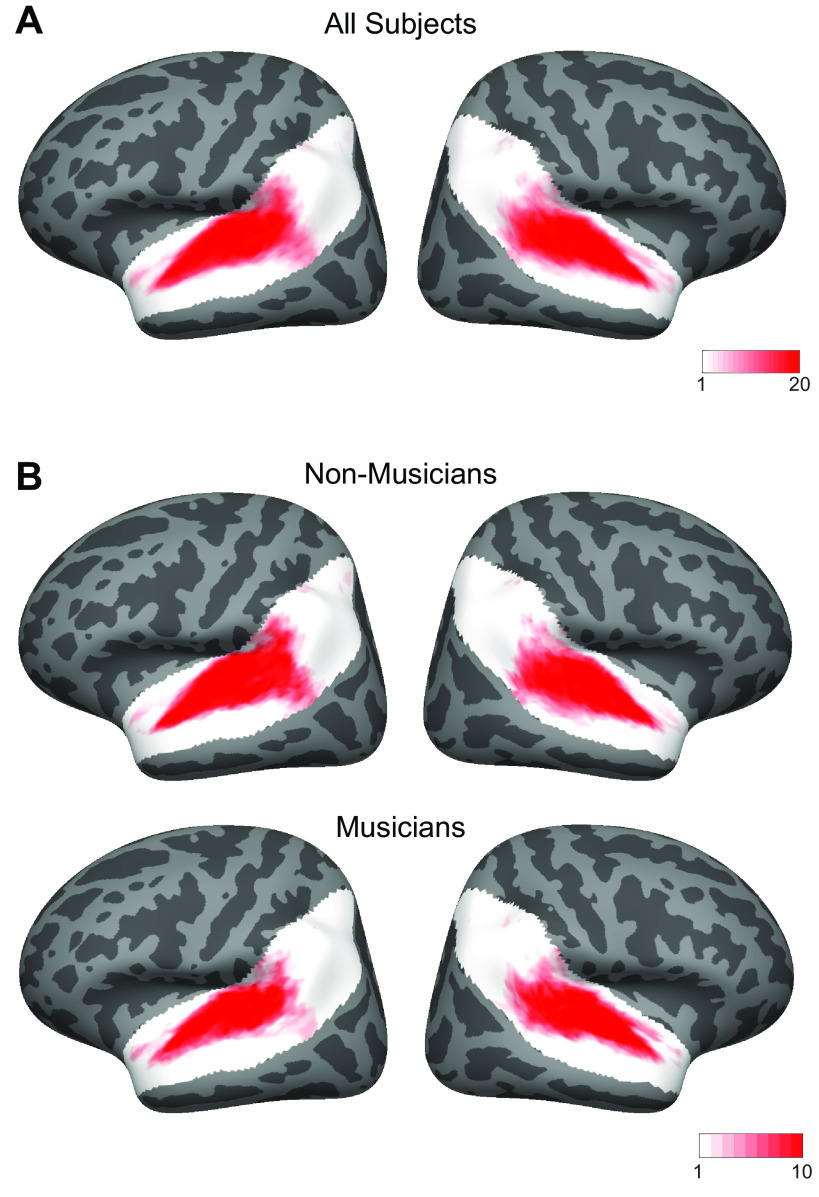

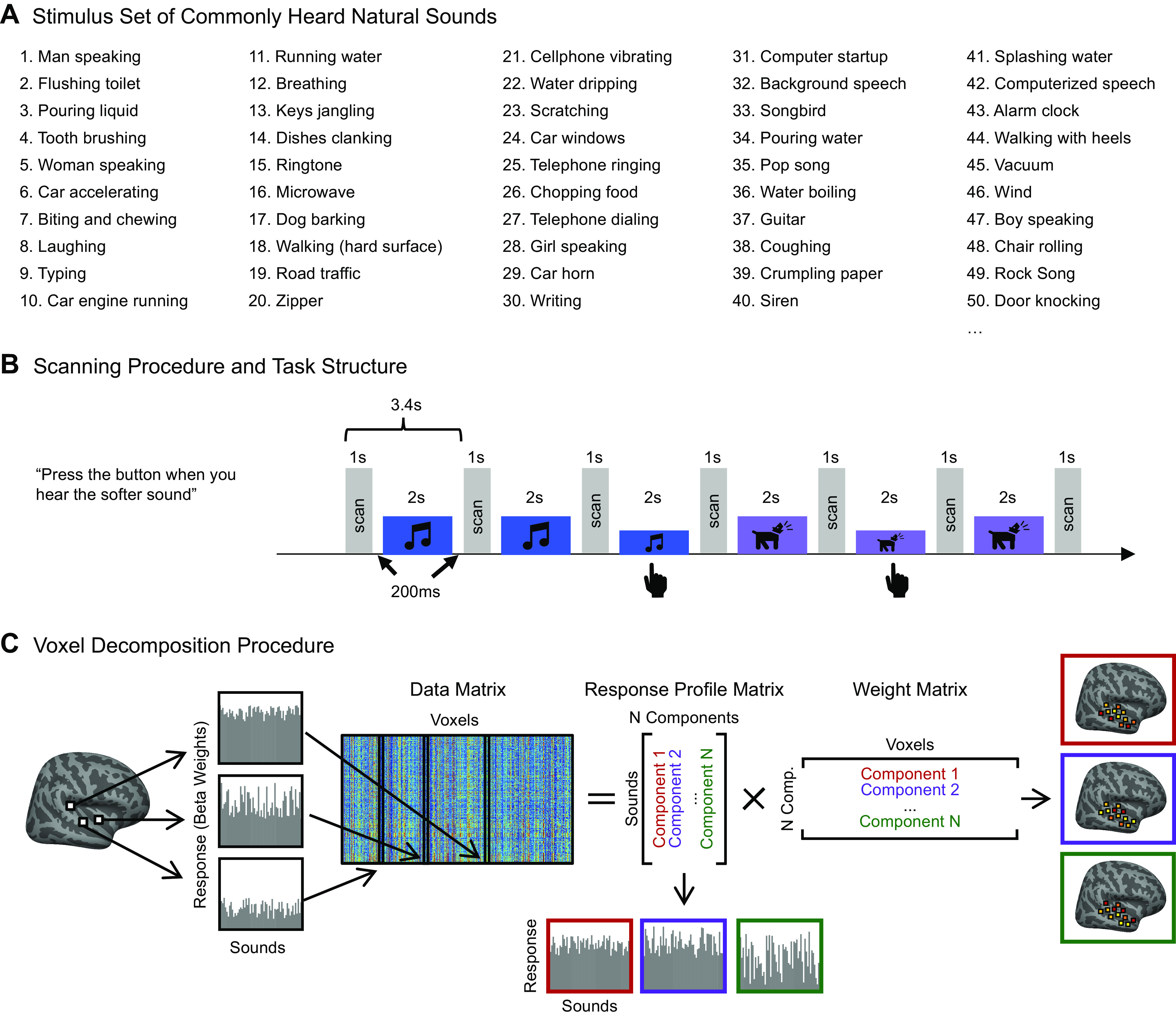

Figure 3.

Comparison of speech-selective and music-selective components for participants from previous study (n = 10), nonmusicians (n = 10), and musicians (n = 10). A and B: component response profiles averaged by sound category (as determined by raters on Amazon Mechanical Turk). A: the speech-selective component responds highly to speech and music with vocals, and minimally to all other sound categories. Shown separately for the previous study (left), nonmusicians (middle), and musicians (right). Note that the previous study contained only a subset of the stimuli (165 sounds) used in the current study (192 sounds) so some conditions were not included and are thus replaced by a gray rectangle in the plots and surrounded by a gray rectangle in the legend. B: the music-selective component (right) responds highly to both instrumental and vocal music, and less strongly to other sound categories. Note that “Western Vocal Music” stimuli were sung in English. We note that the mean response profile magnitude differs between groups, but that selectivity as measured by separability of music and nonmusic is not affected by this difference (see text for explanation). For both A and B, error bars plot one standard error of the mean across sounds from a category, computed using bootstrapping (10,000 samples). C: spatial distribution of speech-selective component voxel weights in both hemispheres. D: spatial distribution of music-selective component voxel weights. Color denotes the statistical significance of the weights, computed using a random effects analysis across subjects comparing weights against 0; P values are logarithmically transformed (−log10[P]). The white outline indicates the voxels that were both sound-responsive (sound vs. silence, P < 0.001 uncorrected) and split-half reliable (r > 0.3) at the group level (see materials and methods for details). The color scale represents voxels that are significant at FDR q = 0.05, with this threshold computed for each component separately. Voxels that do not survive FDR correction are not colored, and these values appear as white on the color bar. The right hemisphere (bottom rows) is flipped to make it easier to visually compare weight distributions across hemispheres. Note that the secondary posterior cluster of music component weights is not as prominent in this visualization of the data from Ref. 7 due to the thresholding procedure used here; we found in additional analyses that a posterior cluster emerged if a more lenient threshold is used. FDR, false discovery rate; LH, left hemisphere; RH, right hemisphere.

Unlike our prior study, we collected whole brain data in this experiment and thus were able to repeat our analyses without any anatomical constraint. Although a few additional voxels outside of the mask do meet our selection criteria (Fig. A3), the resulting components are very similar to those obtained using the anatomical mask, both in response profiles (Fig. A4A; correlations ranging from r = 0.91 to r > 0.99, SD = 0.03) and voxel weights (Fig. A4B).

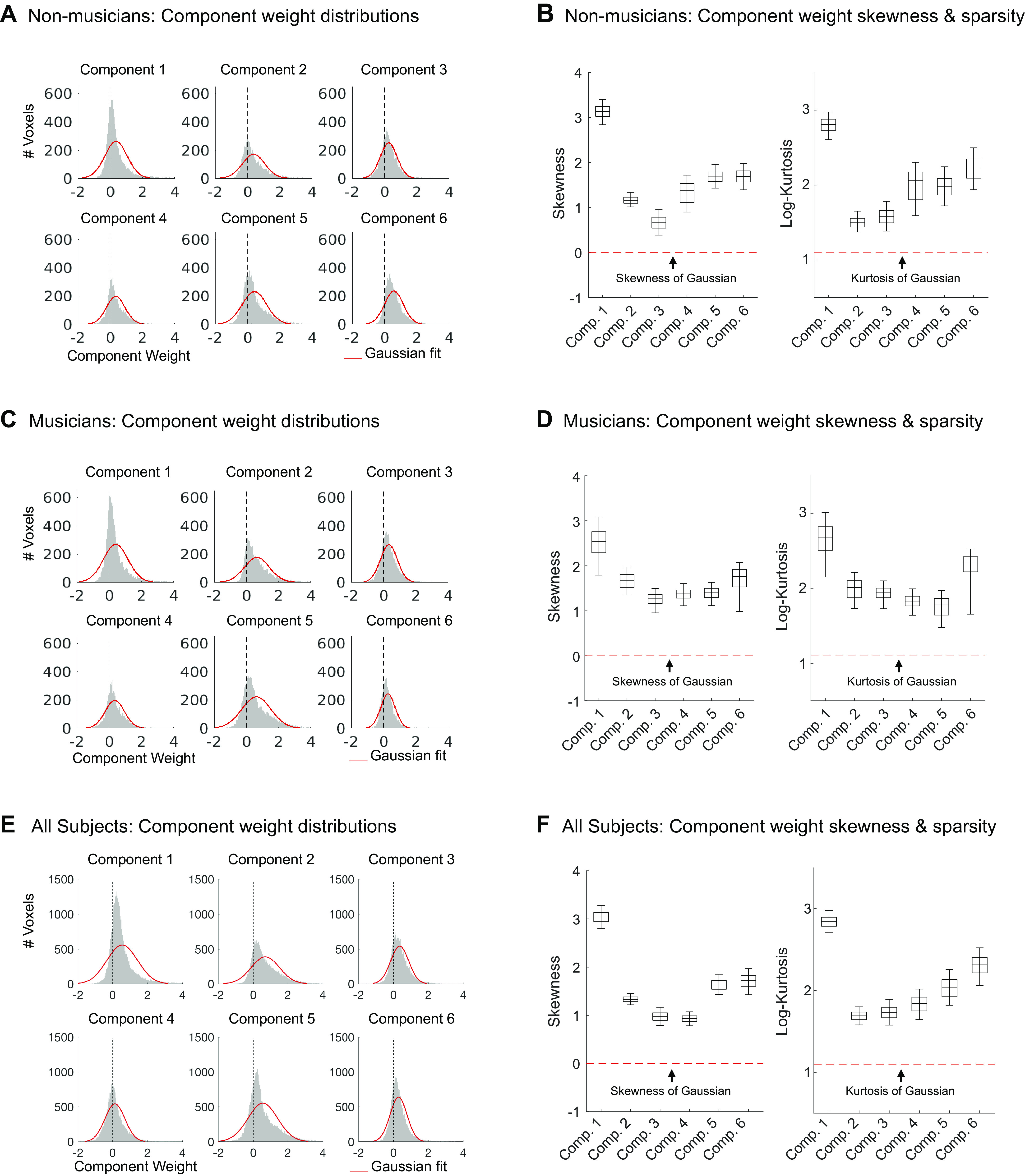

Decomposition Algorithm

The decomposition algorithm approximates the response of each voxel (v1) as the weighted sum of a small number of component response profiles that are shared across voxels (Fig. 1B):

where rk represents the kth component response profile that is shared across all voxels, wk,i represents the weight of component k in voxel i, and K is the total number of components.

We concatenated the selected voxel responses from all participants into a single data matrix D (S sounds × V voxels). We then approximated the data matrix as the product of two smaller matrices: 1) a response matrix R (S sounds × K components) containing the response profile of all inferred components to the sound set, and 2) a weight matrix W (K components × V voxels) containing the contribution of each component response profile to each voxel. Using matrix notation this yields:

The method used to infer components was described in detail in our prior paper (7) and code is available online (https://github.com/snormanhaignere/nonparametric-ica). The method is similar to standard algorithms for independent components analysis (ICA) in that it searches among the many possible solutions to the factorization problem for components that have a maximally non-Gaussian distribution of weights across voxels (the non-Gaussianity of the components inferred in this study can be seen in Fig. A5). The method differs from most standard ICA algorithms in that it maximizes non-Gaussianity by directly minimizing the entropy of the component weight distributions across voxels as measured by a histogram, which is feasible due to the large number of voxels. Entropy is a natural measure to minimize because the Gaussian distribution has maximal entropy. The algorithm achieves this goal in two steps. First, PCA is used to whiten and reduce the dimensionality of the data matrix. This was implemented using the singular value decomposition:

where Uk contains the response profiles of the top K principal components (192 sounds × K components), Vk contains the whitened voxel weights for these components (K components × 26,792 voxels), and Sk is a diagonal matrix of singular values (K × K). The number of components (K) was chosen by measuring the amount of voxel response variance explained by different numbers of components and the accuracy of the components in predicting voxel responses in left-out data. Specifically, we chose a value of K that balanced these two measures such that the set of components explained a large fraction of the voxel response variance (which increases monotonically with additional components) but still maintained good prediction accuracy (which decreases once additional components begin to cause overfitting). In practice, the plateau in the amount of explained variance coincided with the peak of the prediction accuracy.

The principal component weight matrix is then rotated to maximize the negentropy (J) summed across components:

where W is the rotated weight matrix (K × 26,792), T is an orthonormal rotation matrix (K × K), and W[c,:] is the cth row of W. We estimated entropy using a histogram-based method (62) applied to the voxel weight vector for each component (W[c,:]), and defined negentropy as the difference in entropy between the empirical weight distribution and a Gaussian distribution of the same mean and variance:

The optimization is performed by iteratively rotating pairs of components to maximize negentropy, which is a simple algorithm that does not require the computation of gradients and is feasible for small numbers of components [the number of component pairs grows as ].

This voxel decomposition analysis was carried out on three different data sets: 1) on voxels from the 10 musicians only, 2) on voxels from the 10 nonmusicians only, and 3) on voxels from all 20 participants. We note that the derivation of a set of components using this method is somewhat akin to a fixed-effects analysis, in that it concatenates participants’ data and infers a single set of components to explain the data from all participants at once. However, the majority of the analyses that we carried out using these components (as described in the following paragraphs) involve deriving participant-specific metrics and investigating the consistency of effects across participants.

Measuring Component Selectivity

To quantify the selectivity of the music component, we measured the difference in mean response profile magnitude between music and nonmusic sounds, divided by their pooled standard deviation (Cohen’s d). This measure provides a measure of the separability of the two sound categories within the response profile. We measured Cohen’s d for several different pairwise comparisons of sound categories. In each case, the significance of the separation of the two stimulus categories was determined using a permutation test (permuting stimulus labels between the two categories 10,000 times). This null distribution was then fit with a Gaussian, a P-value from which was assigned to the observed value of Cohen’s d.

Anatomical Component Weight Maps

To visualize the anatomical distribution of component weights, individual participants’ component weights were projected onto the cortical surface of the standard FsAverage template, and a random effects analysis (t test) was performed to determine whether component weights were significantly greater than zero across participants at each voxel location. To visualize the component weight maps separately for musicians and nonmusicians, a separate random effects analysis was run for the participants in each group. To correct for multiple comparisons, we adjusted the false discovery rate [FDR, c(V) = 1, q = 0.05] using the method from Genovese et al. (63).

We note that the details of the analyses and plotting conventions used for visualizing component weight maps differ from those of our previous study (7). These differences include the process involved in aggregating weights across subjects (Norman-Haignere et al. smoothed individual participants’ data and then averaged across participants, whereas there was no smoothing or averaging across participants in the current study), the use of different measures of statistical significance of the component weights (a random effects analysis across subjects in the current study, a permutation test across sounds in Norman-Haignere et al.), and different thresholding (an FDR threshold of q = 0.05 in the current study, whereas the component weight maps in Norman-Haignere et al. showed the entire weight distribution and were not thresholded). We made these changes in the current study so that we could ask about the consistency of effects across participants and better visualize which voxels’ component weights passed standard significance thresholds. However, we note that these changes cause the maps we regenerated from the Ref. 7 data (Fig. 3, C and D) to look somewhat different from those shown in the original paper (replotted in Fig. 5A).

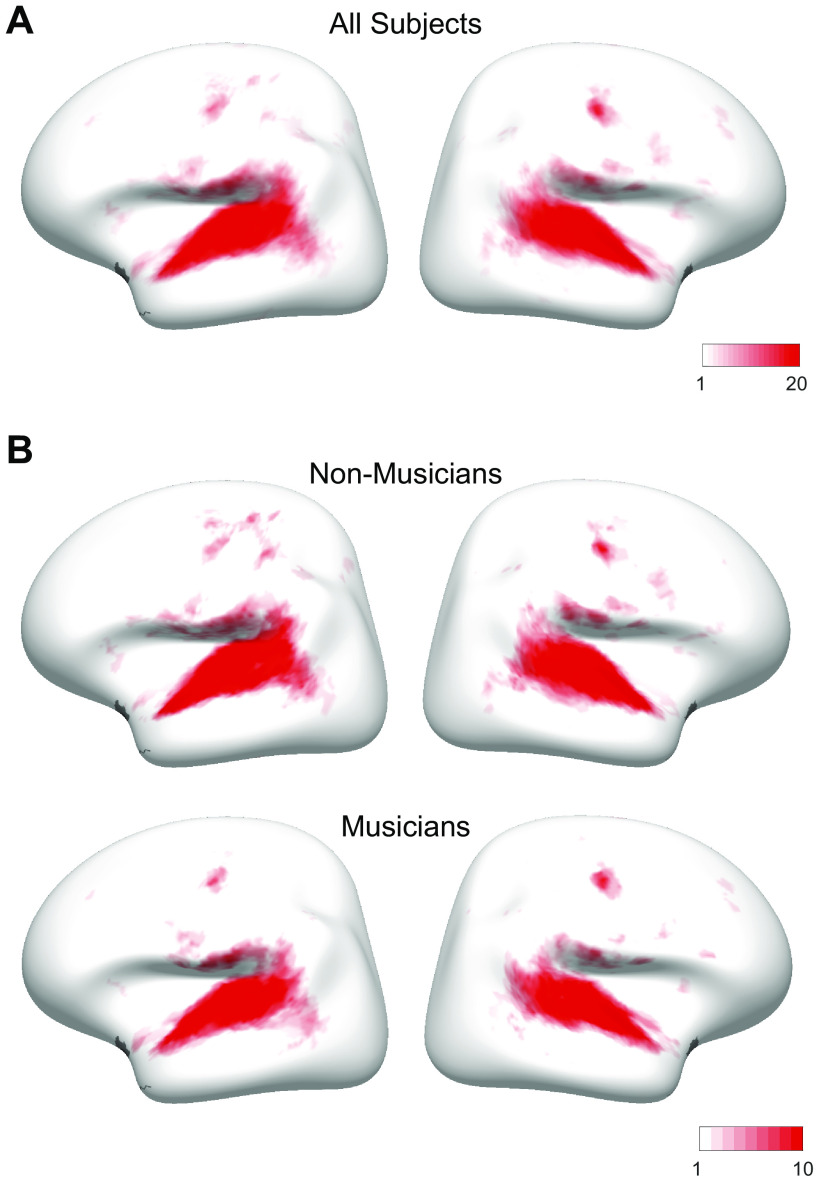

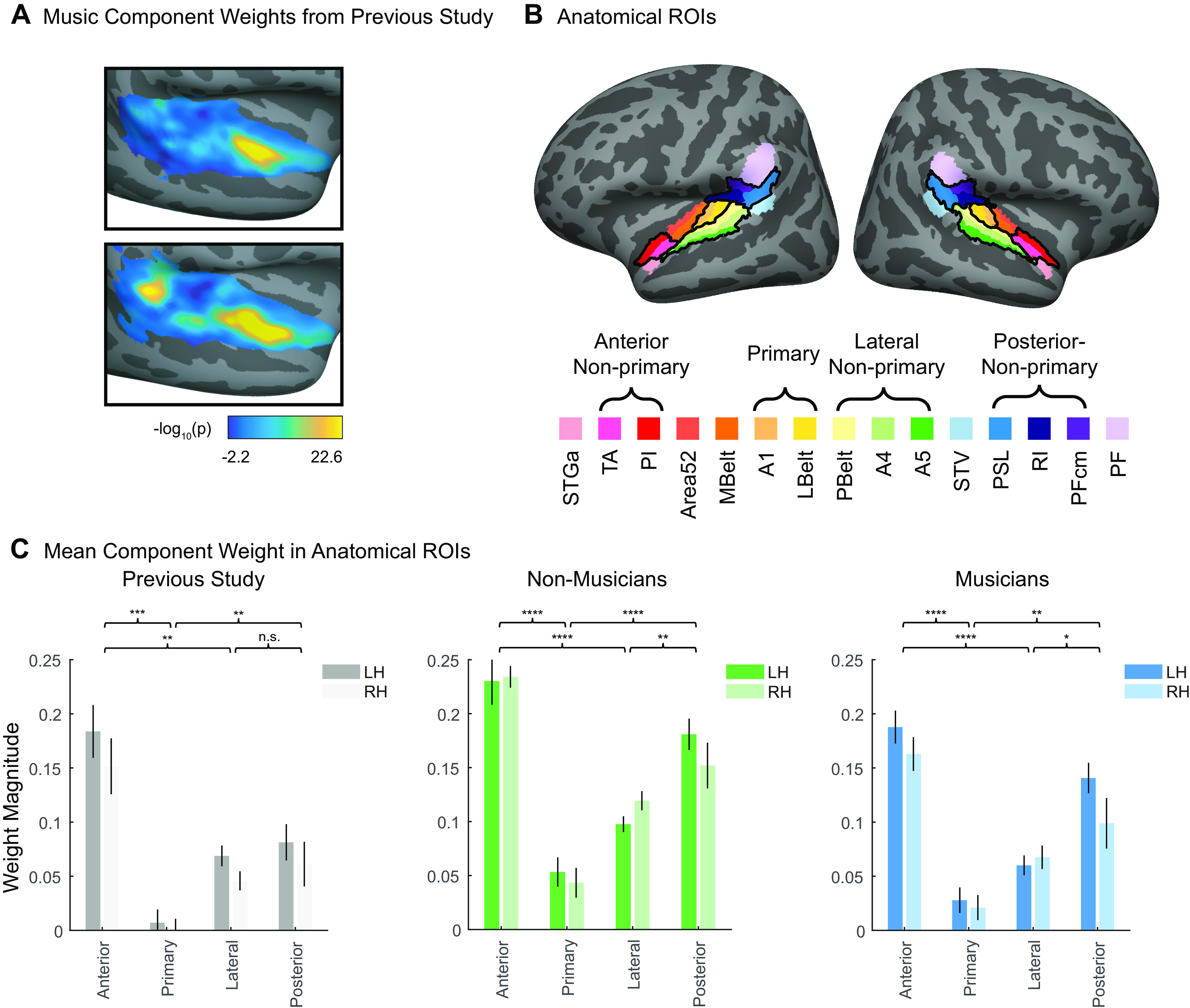

Figure 5.

Quantification of bilateral anterior/posterior concentration of voxel weights for the music-selective components inferred in nonmusicians and musicians separately. A: music component voxel weights, reproduced from Ref. 7. See materials and methods for details concerning the analysis and plotting conventions from our previous paper. B: fifteen standardized anatomical parcels were selected from Ref. 64, chosen to fully encompass the superior temporal plane and superior temporal gyrus (STG). To come up with a small set of ROIs to use to evaluate the music component weights in our current study, we superimposed these anatomical parcels onto the weights of the music component from our previously published study (7), and then defined ROIs by selecting sets of the anatomically defined parcels that correspond to regions of high (anterior nonprimary, posterior nonprimary) vs. low (primary, lateral nonprimary) music component weights. The anatomical parcels that comprise these four ROIs are indicated by the brackets and outlined in black on the cortical surface. C: mean music component weight across all voxels in each of the four anatomical ROIs, separately for each hemisphere, and separately for our previous study (n = 10; left, gray shading), nonmusicians (n = 10; center, green shading), and musicians (n = 10; right, blue shading). A repeated-measures ROI × hemisphere ANOVA was conducted for each group separately. Error bars plot one standard error of the mean across participants. Brackets represent pairwise comparisons that were conducted between ROIs with expected high vs. low component weights, averaged over hemisphere. See Table 3 for full results of pairwise comparisons, and Fig. A9 for component weights from all 15 anatomical parcels. *Significant at P < 0.05, two-tailed; **Significant at P < 0.01, two-tailed; ***Significant at P < 0.001, two-tailed; ****Significant at P < 0.0001, two-tailed. Note that because of our prior hypotheses and the significance of the omnibus F test, we did not correct for multiple comparisons. LH, left hemisphere; RH, right hemisphere; ROI, region of interest.

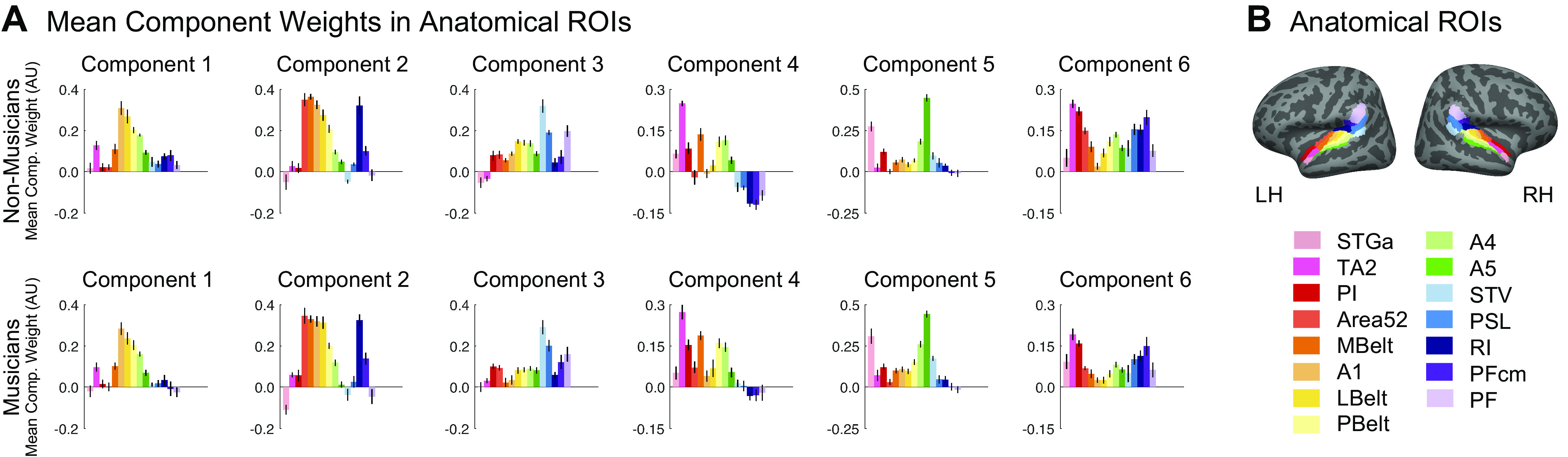

Component Voxel Weights within Anatomical ROIs

In addition to projecting the weight distributions on the cortical surface, we summarized their anatomical distribution by measuring the mean component voxel weight within a set of standardized anatomical ROIs. To create these ROIs, a set of 15 parcels were selected from an atlas (64) to fully encompass the superior temporal plane and superior temporal gyrus (STG). To identify a small set of ROIs suitable for evaluating the music component weights in our current study, we superimposed these anatomical parcels onto the weights of the music component from our previously published study (7, shown in Fig. 5A), and then defined ROIs by selecting sets of the anatomically defined parcels that best correspond to regions of high vs. low music component weights (Fig. 5B). The mean component weights within these ROIs were computed separately for each participant and then averaged across participants for visualization purposes (e.g., Fig. 5C). We then ran a 4 (ROI) × 2 (hemisphere) repeated-measures ANOVA on these weights. A separate ANOVA was run for musicians and nonmusicians, to evaluate each group separately.

To compare the magnitude of the main effect of ROI with the main effect of hemisphere, we bootstrapped across participants, resampling participants 1,000 times. We reran the repeated-measures ANOVA on each sample, each time measuring the difference in the effect size for the two main effects, i.e., ηpROI2 – ηpHemi2. We then calculated the 95% confidence interval (CI) of this distribution of effect size differences. The significance of the difference in main effects was evaluated by determining whether or not each group’s 95% CI for the difference overlapped with zero.

In addition, we ran a Bayesian repeated-measures ANOVA on these same data, implemented in JASP v.0.13.1 (78), using the default prior (Cauchy distribution, r = 0.5). Effects are reported as the Bayes Factor for inclusion (BFinc) of each main effect and/or interaction, which is the ratio between the likelihood of the data given the model including the effect in question vs. the likelihood of the next simpler model without the effect in question.

RESULTS

Our primary question was whether cortical music selectivity is present in people with almost no musical training. To that end, we scanned 10 people with almost no musical training (Table 1) and used voxel decomposition to infer a small set of response components that could explain the observed voxel responses. We also included another set of 10 participants with extensive musical training for comparison. First, we asked whether the response components across all of auditory cortex reported by Norman-Haignere et al. (7) replicate in both nonmusicians and highly trained musicians when analyzed separately. Second, we examined the detailed characteristics of music selectivity in particular, to test whether its previously documented key properties are present in both nonmusicians and highly trained musicians. Third, we took advantage of our expanded stimulus set to look at additional properties of music selectivity, such as the response to musical genres that are less familiar to our Western participants.

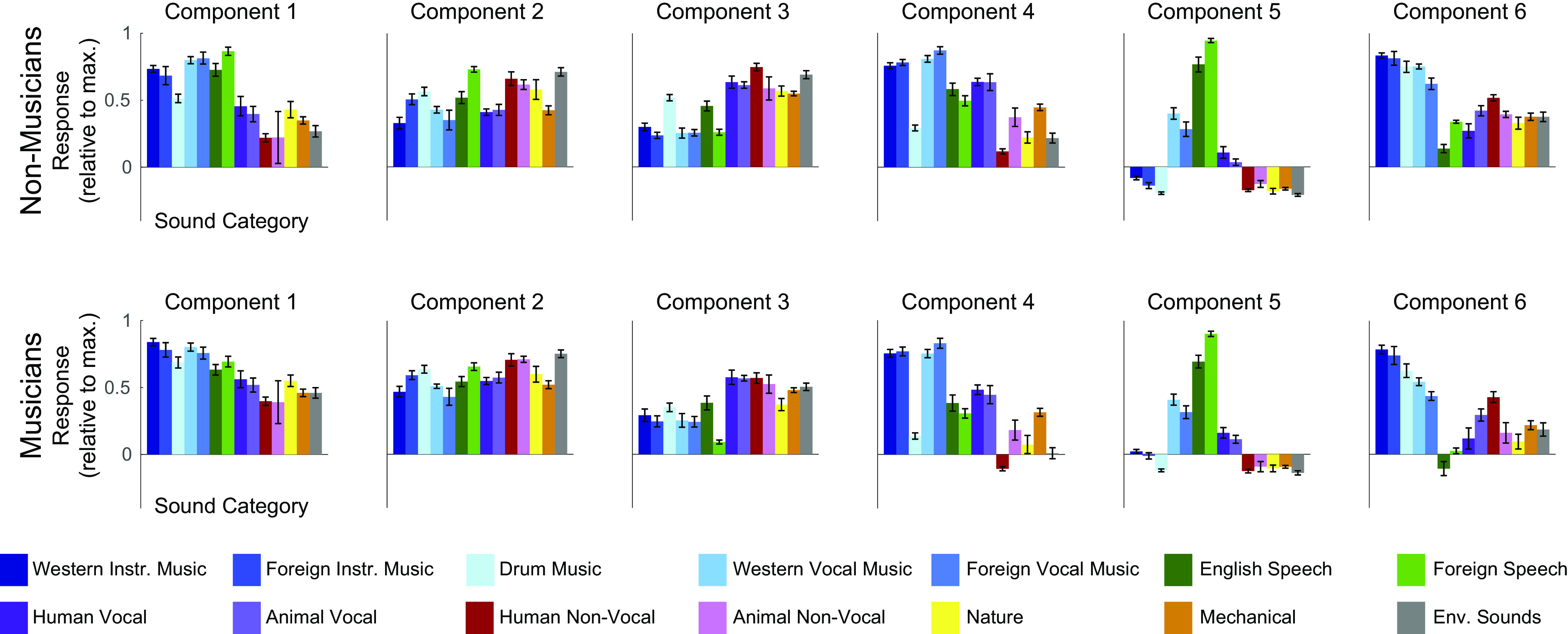

Replication of Functional Components of Auditory Cortex from Norman-Haignere et al. in Musicians and in Nonmusicians

Replication of previous voxel decomposition results.

We first tested the extent to which we would replicate the overall functional organization of auditory cortex reported by Norman-Haignere et al. (7) in people with almost no musical training, using the voxel decomposition method introduced in that paper. We also performed the same analysis on a group of highly trained musicians. Specifically, in every participant, we measured the response of voxels within auditory cortex to 192 natural sounds (Fig. 1, A and B; the average response of each voxel to each sound was estimated using a standard hemodynamic response function). Then, separately for nonmusicians and musicians, we used voxel decomposition to model the response of these voxels as the weighted sum of a small number of canonical response components (Fig. 1C). This method factorizes the voxel responses into two matrices: one containing the components’ response profiles across the sound set and the second containing voxel weights specifying the extent to which each component contributes to the response of each voxel.

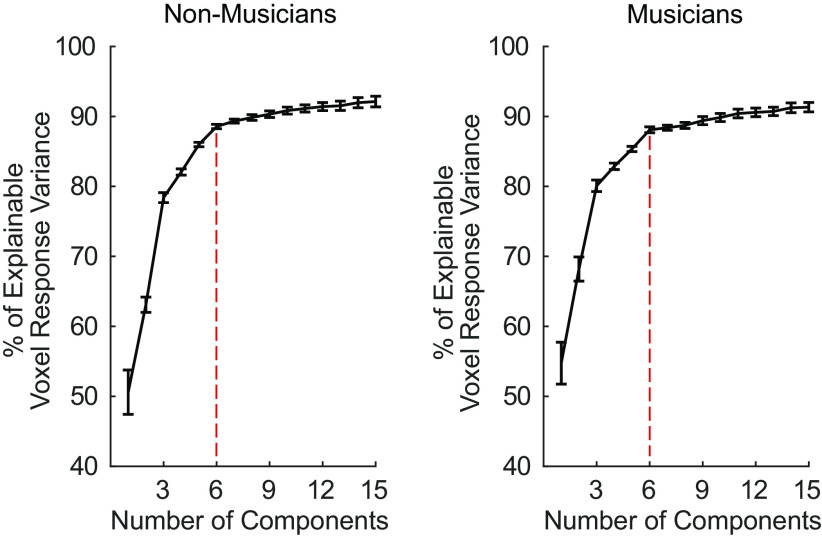

Since the only free parameter in this analysis is the number of components recovered, the optimal number of components was determined by measuring the fraction of the reliable response variance the components explain. In the previous study (7), six components were sufficient to explain over 80% of the reliable variance in voxel responses. We found the same to be true in both participant groups of the current study: six components were needed to optimally model the data from the 10 participants in separate analyses of each group. The six components explained 88.56% and 88.09% of the reliable voxel response variance for nonmusicians and musicians, respectively, after which the amount of explained variance for each additional component plateaued (Fig. A6).

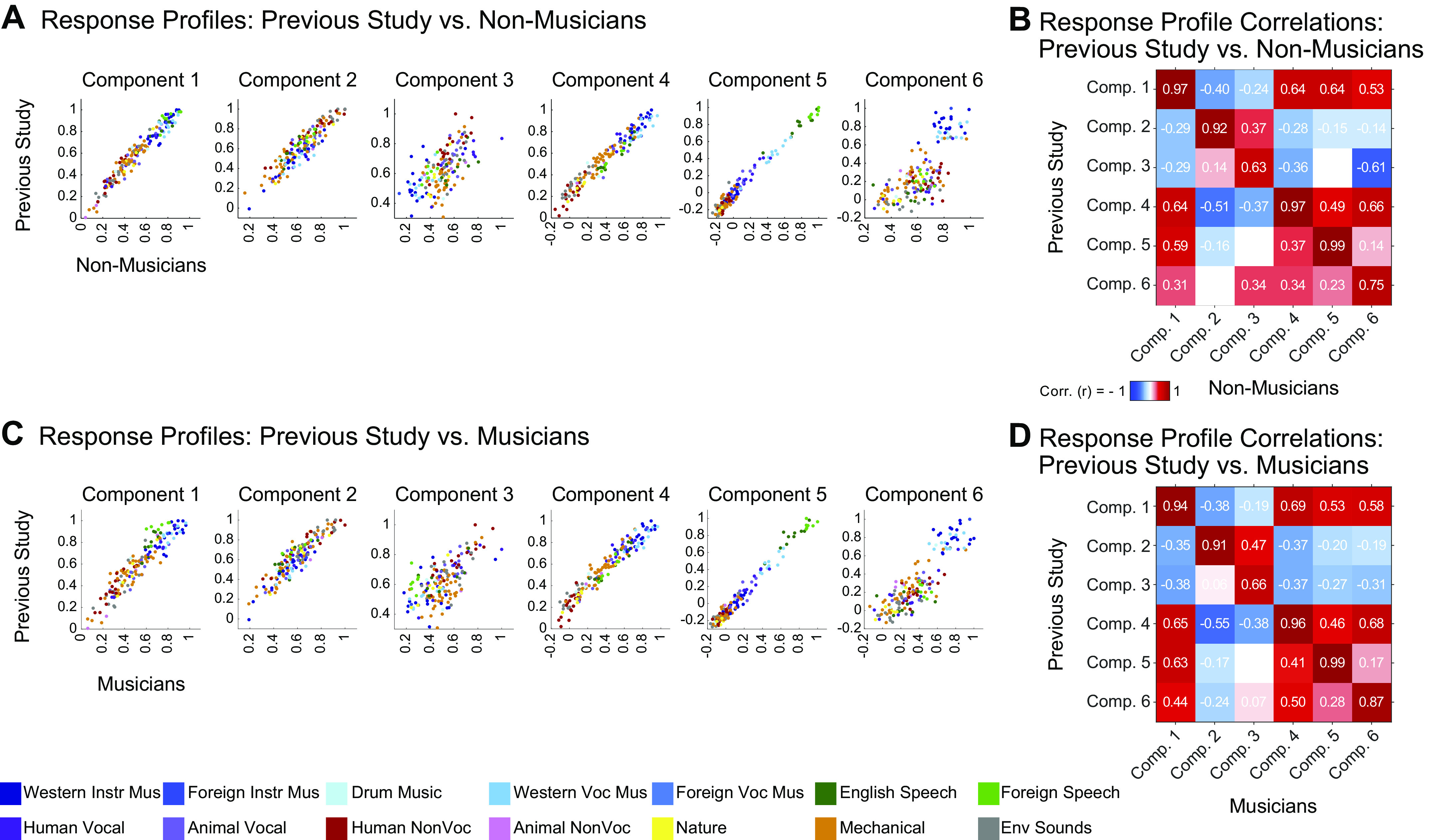

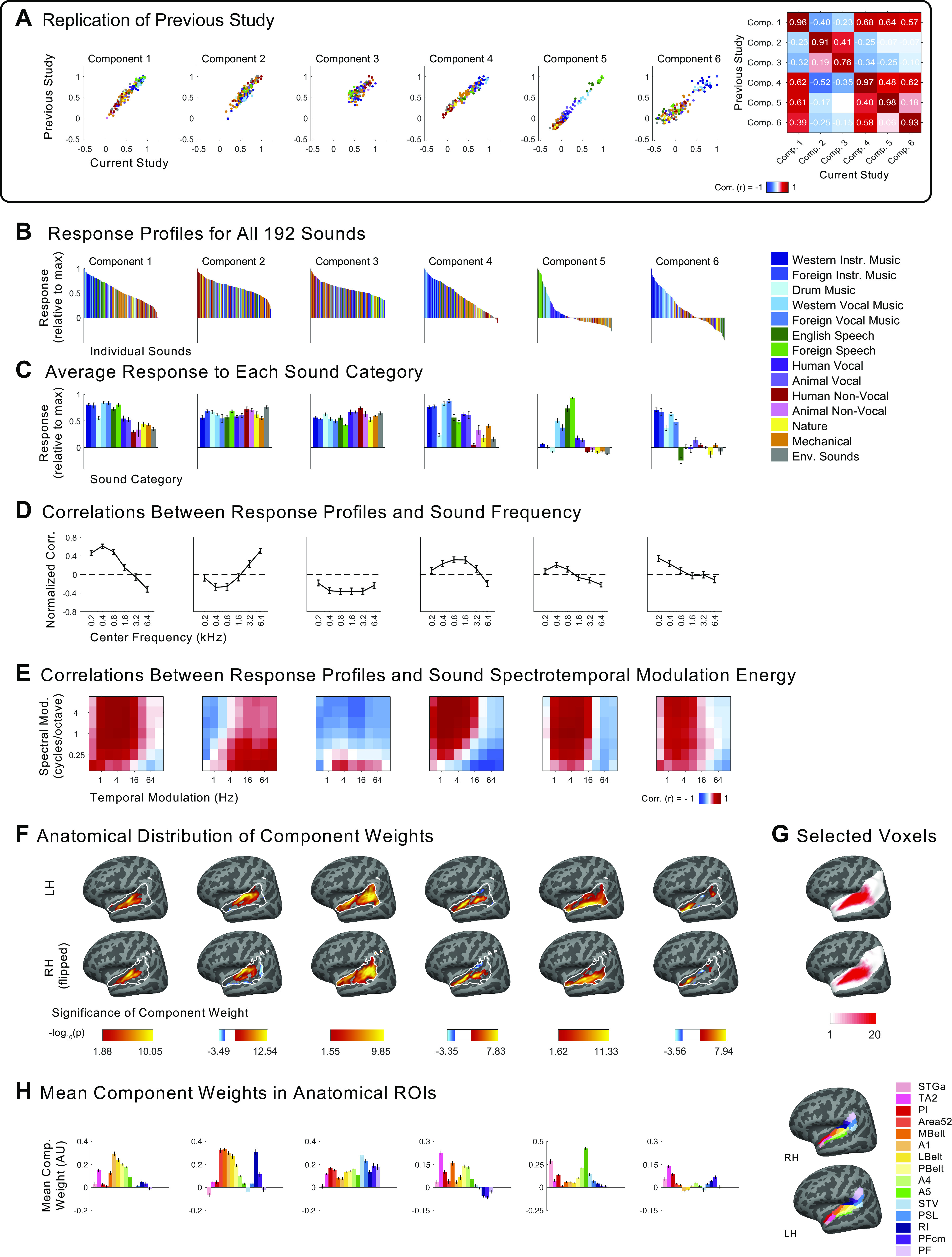

Next, we examined the similarity of the components inferred from nonmusicians to the components from our previous study, comparing their responses to the 165 sounds common to both. Because the order of the components inferred using ICA holds no significance, we first used the Hungarian algorithm (65) to optimally reorder the components, maximizing their correlation with the components from our previous study. For comparisons of the response profile matrices of two groups of subjects, we matched components using the weight matrices; conversely, for comparisons involving the voxel weights, we matched components using the response profile matrices (see materials and methods). In practice, the component matches were identical regardless of which matrices were used for matching. We also conducted the same analysis for the musicians, comparing the components derived from their data with those in our previous study.

For both nonmusicians and musicians, corresponding pairs of components were highly correlated with those from our previous study, with r values for nonmusicians’ components ranging from 0.63 to 0.99 (Fig. 2, A and B) and from 0.66 to 0.99 for musicians’ components (Fig. 2, C and D).

Figure 2.

Replication of components from Ref. 7. A: scatterplots showing the correspondence between the component response profiles from the previous study (n = 10, y-axis) and those inferred from nonmusicians (n = 10, x-axis). The 165 sounds common to both studies are colored according to their semantic category, as determined by raters on Amazon Mechanical Turk. Note that the axes differ slightly between groups to make it possible to clearly compare the pattern of responses across sounds independent of the overall response magnitude. B: correlation matrix comparing component response profiles from the previous study (y-axis) and those inferred from nonmusicians (n = 10, x-axis). C and D: same as A and B but for musicians. Comp., component.

Component response profiles and selectivity for sound categories.

Four of the six components from our previous study captured expected acoustic properties of the sound set (e.g., frequency, spectrotemporal modulation; see Fig. A8A for analyses relating the responses of these components to audio frequency and spectrotemporal modulation) and were concentrated in and around primary auditory cortex (PAC), consistent with prior results (55, 66–72). The two remaining components responded selectively to speech (Fig. 3A, left column) and music (Fig. 3B, left column), respectively, and were not well accounted for using acoustic properties alone (Fig. A8A). The corresponding components inferred from nonmusicians (Fig. 3, A and B, middle columns) and musicians (Fig. 3, A and B, right columns) also show this category selectivity.

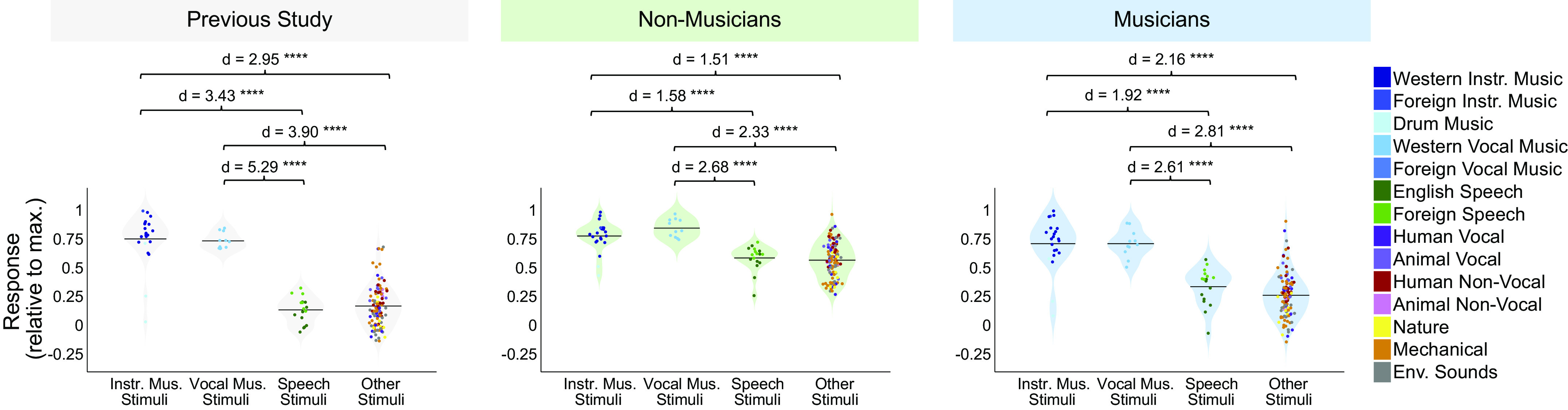

We note that the mean response profile magnitude for the music-selective component differed between groups, being lower in musicians than nonmusicians (Fig. 3B). This effect seems unlikely to be a consequence of musical training because the mean response magnitude was lower still in the previous study, whose participants had substantially less musical training than the musicians in the current study. Further, we have found that component response profile magnitude tends to vary depending on the method used to infer the components. For example, using a probabilistic parametric matrix factorization model instead of the simpler, nonparametric method presented throughout this paper resulted in components that had different mean responses despite otherwise being very similar to those obtained via ICA (see Appendix for details of the parametric model, and Fig. A10 for the component response profiles inferred using this method). Moreover, the information about music contained in the response as measured by the separability of music versus nonmusic sounds (Cohen’s d) is independent of this overall mean response (see Fig. 4). For these reasons, we do not read much into this apparent difference between groups.

Figure 4.

Separability of sound categories in music-selective components of nonmusicians and musicians. Distributions of 1) instrumental music stimuli, 2) vocal music stimuli, 3) speech stimuli, and 4) other stimuli within the music component response profiles from our previous study (n = 10; left, gray shading), as well as those inferred from nonmusicians (n = 10; center, green shading) and musicians (n = 10; right, blue shading). The mean for each stimulus category is indicated by the horizontal black line. The separability between pairs of stimulus categories (as measured using Cohen’s d) is shown above each plot. The 165 individual sounds are colored according to their semantic category. Stimuli consisted of instrumental music (n = 22), vocal music (n = 11), speech (n = 17), and other (n = 115). See Table 2 for results of pairwise comparisons indicated by brackets; ****Significant at P < 0.0001, two-tailed.

We also found similarities in the anatomical distribution of speech- and music-selective component weights between the previous study and both groups in the current study. The weights for the speech-selective component were concentrated in the middle portion of the superior temporal gyrus (midSTG, Fig. 3C), as expected based on previous reports (73–75). In contrast, the weights for the music-selective component were most prominent anterior to PAC in the planum polare, with a secondary cluster posterior to PAC in the planum temporale (Fig. 3D) (3, 7, 41, 48, 52, 76, 77).

Together, these findings show that we are able to twice replicate the overall component structure underlying auditory cortical responses described in our previous study (once for nonmusicians and once for musicians). Further, both nonmusicians and musicians show category-selective components that are largely similar to those in our previous study, including a single component that appears to be selective for music.

Characterizing Music Selectivity in Nonmusicians and Musicians Separately

We next asked whether nonmusicians exhibited the signature response characteristics of music selectivity documented in our prior paper: 1) the music component response profile showed a high response to both instrumental and vocal music and a low response to all other categories, including speech; 2) music component voxel weights were highest in anterior superior temporal gyrus (STG), with indications of a secondary concentration of weights in posterior STG, and low weights in both PAC and lateral STG; and 3) music component voxel weights had a largely bilateral distribution. We examined whether each of these properties was present in the components separately inferred from nonmusicians and musicians.

Response profiles show selectivity for both instrumental and vocal music.

The defining feature of the music-selective component from Ref. 7 was that its response profile showed a very high response to stimuli that had been categorized by humans as “music,” including both instrumental music and music with vocals, relative to nonmusic stimuli, including speech (Fig. 3B, left column). The category-averaged responses of the music-selective component showed similar effects in nonmusicians and highly trained musicians (Fig. 3B, center and right columns).

To quantify this music selectivity, we measured the difference in mean response profile magnitude between music and nonmusic sounds, divided by their pooled standard deviation (Cohen’s d). So that we could compare across our previous and current experiments, this was done using the set of 165 sounds that were common to both studies. We measured Cohen’s d separately for several different pairwise comparisons: 1) instrumental music vs. speech stimuli, 2) instrumental music vs. other nonmusic stimuli, 3) vocal music vs. speech stimuli, and 4) vocal music vs. other nonmusic stimuli (Fig. 4). In each case, the significance of the separation of the two stimulus categories was determined using a nonparametric test permuting stimulus labels 10,000 times. All four of these statistical comparisons were highly significant for nonmusicians when analyzed separately (all Ps < 10−5, Table 2). This result shows that the music component is highly music-selective in nonmusicians, in that it responds highly to both instrumental and vocal music, and significantly less to both speech and other nonmusic sounds. Similar results were also found for musicians (all Ps < 10−5, Table 2). We note that the selectivity of the music component inferred from nonmusicians seems to be slightly lower than that of the component inferred from musicians, but we are not sufficiently powered to directly test for differences in selectivity between groups (see Direct Group Comparisons of Music Selectivity in the Appendix). It’s also true that the values of Cohen’s d tend to be somewhat larger for the music component from Ref. 7 than for the components inferred from both groups of participants in the current study. It is not clear why this is the case, but it is more likely to be due to slight methodological differences between the experiments than an effect of musical training, because the participants in our previous study had intermediate levels of musical training.

Table 2.

Results of pairwise comparisons between stimulus categories shown in Fig. 4

| Subject Group | Pairwise Comparison | Stimulus Category | Mean | SD | Cohen’s d | P value |

|---|---|---|---|---|---|---|

| Nonmusicians | 1 | Instrumental music | 0.78 | 0.13 | 1.58 | 2.14E-06 |

| Speech | 0.59 | 0.11 | ||||

| 2 | Instrumental music | 0.78 | 0.13 | 1.51 | 1.88E-10 | |

| Other | 0.57 | 0.15 | ||||

| 3 | Vocal music | 0.85 | 0.08 | 2.68 | 4.54E-11 | |

| Speech | 0.59 | 0.11 | ||||

| 4 | Vocal music | 0.85 | 0.08 | 2.33 | 1.87E-12 | |

| Other | 0.57 | 0.15 | ||||

| Musicians | 1 | Instrumental music | 0.72 | 0.22 | 1.92 | 1.19E-08 |

| Speech | 0.34 | 0.16 | ||||

| 2 | Instrumental music | 0.72 | 0.22 | 2.16 | 7.40E-20 | |

| Other | 0.27 | 0.19 | ||||

| 3 | Vocal music | 0.72 | 0.12 | 2.61 | 8.38E-11 | |

| Speech | 0.34 | 0.16 | ||||

| 4 | Vocal music | 0.72 | 0.12 | 2.81 | 3.62E-18 | |

| Other | 0.27 | 0.19 |

The significance of the separation of each pair of stimulus categories was determined using a nonparametric test permuting stimulus labels 10,000 times. Stimuli consisted of instrumental music (n = 22), vocal music (n = 11), speech (n = 17), and other (n = 115).

Music component weights concentrated in anterior and posterior STG.

A second notable property of the music component from Ref. 7 was that the weights were concentrated in distinct regions of nonprimary auditory cortex, with the most prominent cluster located in anterior STG, and a secondary cluster located in posterior STG (at least in the left hemisphere). Conversely, music component weights were low in primary auditory cortex (PAC) and intermediate in nonprimary lateral STG (see Fig. 5A).

To assess whether these anatomical characteristics were evident for the music components inferred from our nonmusician and musician participants, we superimposed standardized anatomical parcels (64) on the data and defined anatomical regions of interest (ROIs) by selecting four sets of these anatomically defined parcels that best corresponded to anterior nonprimary, primary, and lateral nonprimary auditory cortex (Fig. 5B). We then calculated the average music component weight for each individual participant within each of these four anatomical ROIs, separately for each hemisphere (Fig. 5C). This was done separately for nonmusicians and musicians, using their respective music components.

For each group, a 4 (ROI) × 2 (hemisphere) repeated measures ANOVA on these mean component weights showed a significant main effect of ROI for both nonmusicians [F(3,27) = 50.12, P = 3.63e-11, η2p = 0.85] and musicians [F(3,27) = 19.62, P = 5.90e-07, η2p = 0.69]. Pairwise comparisons showed that for each group, component weights were significantly higher in the anterior and posterior nonprimary ROIs than both the primary and lateral nonprimary ROIs when averaging over hemispheres (Table 3; nonmusicians: all Ps < 0.004, musicians: all Ps < 0.03).

Table 3.

Results of pairwise comparisons between mean weights in ROIs shown in Fig. 5C

| Subject Group | Pairwise Comparison | ROI | t Value | Cohen’s d | P value |

|---|---|---|---|---|---|

| Nonmusicians | 1 | Anterior nonprimary | 15.91 | 5.03 | 6.75E-08 |

| Primary | |||||

| 2 | Anterior nonprimary | 8.99 | 2.84 | 8.61E-06 | |

| Lateral nonprimary | |||||

| 3 | Posterior nonprimary | 7.25 | 2.29 | 4.81E-05 | |

| Primary | |||||

| 4 | Posterior nonprimary | 3.84 | 1.21 | 0.0040 | |

| Lateral nonprimary | |||||

| Musicians | 1 | Anterior nonprimary | 11.40 | 3.61 | 1.19E-06 |

| Primary | |||||

| 2 | Anterior nonprimary | 7.66 | 2.42 | 3.14E-05 | |

| Lateral nonprimary | |||||

| 3 | Posterior nonprimary | 4.49 | 1.42 | 0.0015 | |

| Primary | |||||

| 4 | Posterior nonprimary | 2.72 | 0.86 | 0.0237 | |

| Lateral nonprimary |

Component weights were first averaged over hemispheres, and significance between ROI pairs was evaluated using paired t tests (n = 10). Note that because of our prior hypotheses and the significance of the omnibus F test, we did not correct for multiple comparisons. ROI, region of interest.

These results show that in both nonmusicians and musicians, music selectivity is concentrated in anterior and posterior STG and present to a lesser degree in lateral STG, and only minimally in PAC.

Music component weights are bilaterally distributed.

A third characteristic of the previously described music selectivity is that it was similarly distributed across hemispheres, with no obvious lateralization (7). The repeated-measures ANOVA described in the previous section showed no evidence of lateralization in either nonmusicians or musicians [Fig. 5C; nonmusicians: F(1,9) = 0.15, P = 0.71, η2p = 0.02; musicians: F(1,9) = 2.43, P = 0.15, η2p = 0.21]. Furthermore, for both groups, the effect size of ROI within a hemisphere was significantly larger than the effect size of hemisphere [measured by bootstrapping across participants to get 95% CIs around the difference in the effect size for the two main effects, i.e., ηpROI2 – ηpHemi2; the significance of the difference in main effects was evaluated by determining whether or not each group’s 95% CI for the difference overlapped with zero: nonmusicians’ CI: (0.37, 0.89), musicians’ CI: (0.16, 0.82)].

Because the lack of a significant main effect of hemisphere could be due to insufficient statistical power, we ran a Bayesian version of the repeated-measures ANOVA, which allows us to quantify evidence both for and against the null hypothesis that there was not a main effect of hemisphere. We used JASP (78), with its default prior (Cauchy distribution, r = 0.5), and computed the Bayes Factor for inclusion of each main effect and/or interaction (the ratio between the likelihood of the data given the model including the effect in question vs. the likelihood of the next simpler model without the effect in question, with values further from 1 providing stronger evidence in favor of one model or the other). We found no evidence for a main effect of hemisphere (Bayes factor of inclusion, BFincl = 0.77 for musicians, suggestive of weak evidence against inclusion; BFincl = 0.24 for nonmusicians, suggestive of moderate evidence against inclusion, using the guidelines suggested by Ref. 79). By contrast, the main effect of ROI was well supported (BFincl for ROI for nonmusicians = 1.02e17, and for musicians = 6.11e12, both suggestive of extreme evidence in favor of inclusion).

Neither group showed a significant ROI × hemisphere interaction [nonmusicians: F(3,27) = 1.48, P = 0.24, η2p = 0.14; musicians: F(3,27) = 2.24, P = 0.11, η2p = 0.20]. This was also the case for the Bayesian repeated-measures ANOVA, in which the Bayes Factors for the interaction between ROI and hemisphere provided weak evidence against including the interaction term in the models (BFincl = 0.38 for nonmusicians, BFincl = 0.36 for musicians).

The fact that the music component inferred from nonmusicians exhibits all of the previously described features of music selectivity (7) suggests that explicit musical training is not necessary for a music-selective neural population to arise in the human brain. In addition, the results from musicians suggest that that the signature properties of music selectivity are not drastically altered by extensive musical experience. Both groups exhibited a single response component selective for music. And in both groups, this selectivity was present for both instrumental and vocal music, was localized to anterior and posterior nonprimary auditory cortex, and was present bilaterally.

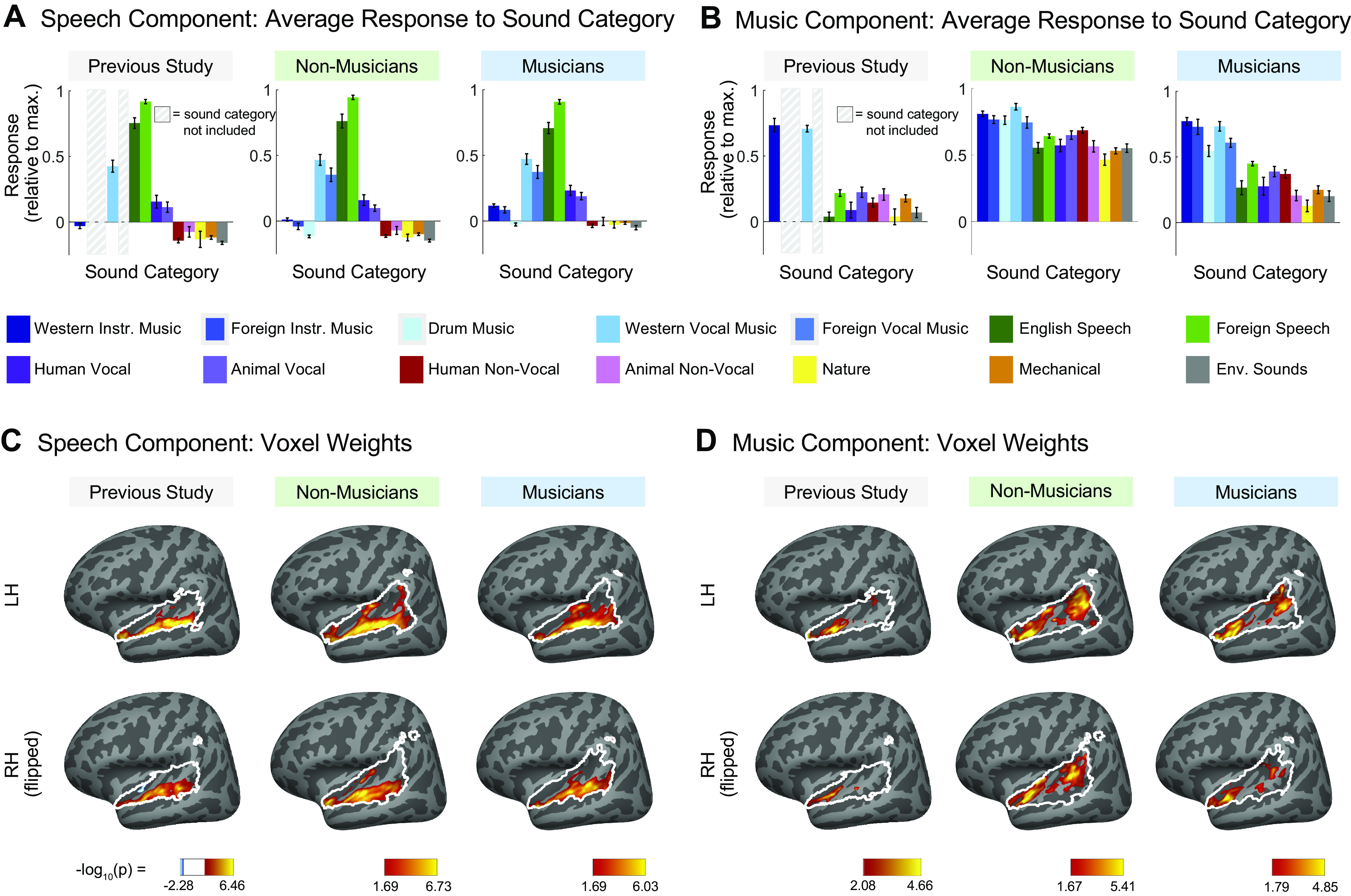

New Insights into Music Selectivity: Music-Selective Regions of Auditory Cortex Show High Responses to Drum Rhythms and Unfamiliar Musical Genres

Because our experiment utilized a broader stimulus set than the original study (7), we were able to use the inferred components to ask additional questions about the effect of experience on music selectivity, as well as gain new insights into the nature of cortical music selectivity. The set of natural sounds used in this study included a total of 60 music stimuli, spanning a variety of instruments, genres, and cultures. Using this diverse set of music stimuli, we can begin to address the questions of 1) whether music selectivity is specific to the music of one’s own culture, and 2) whether music selectivity is driven solely by features related to pitch, like the presence of a melody. Here, we analyze the music component inferred from all 20 participants since similar music components were inferred separately from musicians and nonmusicians (see Appendix and Fig. A7 for details of the components inferred from all 20 participants).

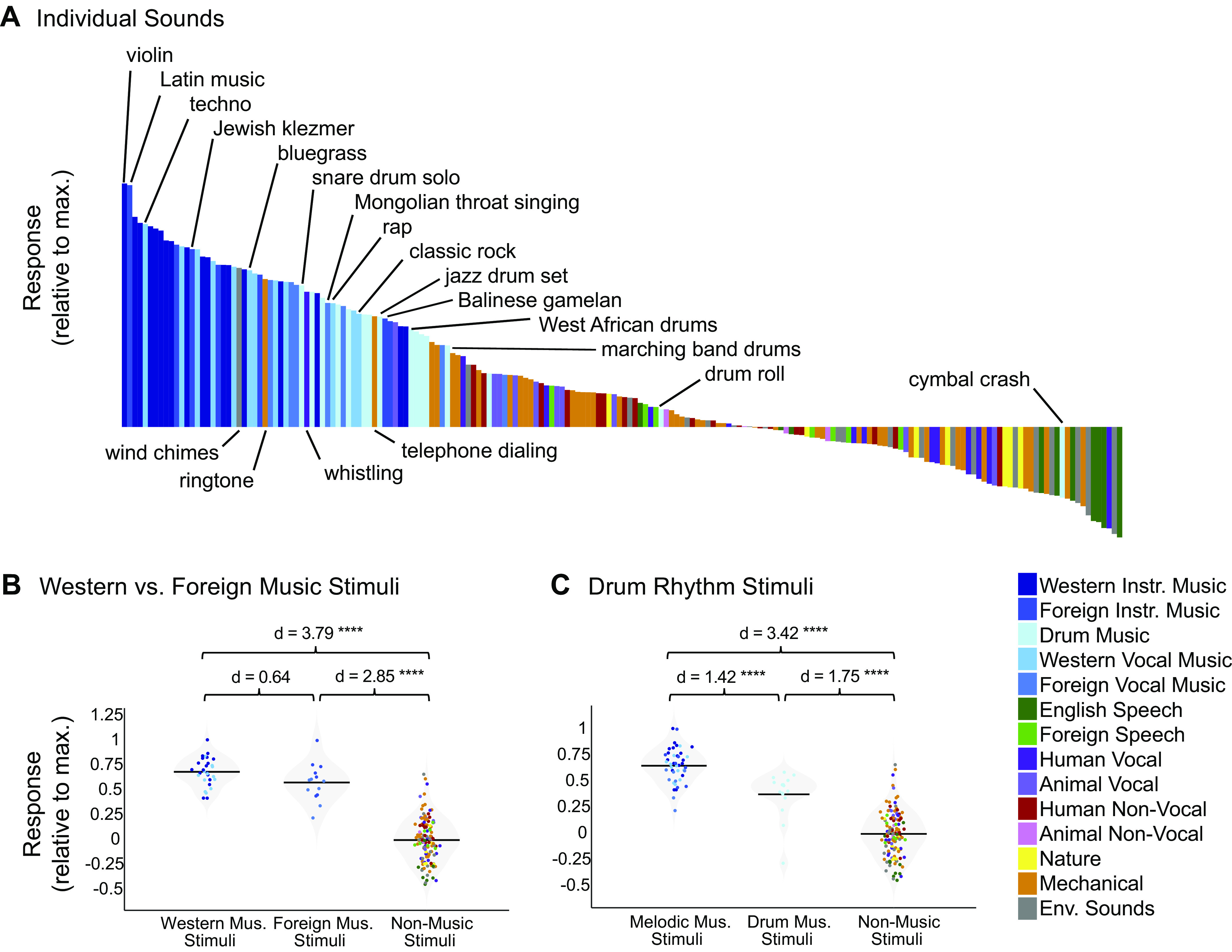

To expand beyond the original stimulus set from Ref. 7, which contained music exclusively from traditionally Western genres and artists, we selected additional music clips from several non-Western musical cultures that varied in tonality and rhythmic complexity (e.g., Indian raga, Balinese gamelan, Chinese opera, Mongolian throat singing, Jewish klezmer, Ugandan lamellophone music; Fig. 6A). We expected that our American participants would have less exposure to these musical genres, allowing us to see whether the music component makes a distinction between familiar and less familiar music. The non-Western music stimuli were rated by American Mechanical Turk participants as being similarly musical (mean rating on 1–100 scale for Western music = 86.28, SD = 7.06; non-Western music mean = 79.63, SD = 9.01; P = 0.37, 10,000 permutations) but less familiar (mean rating on 1–100 scale for Western music = 66.50, SD = 8.23; non-Western music mean = 45.50, SD = 15.83; P < 1.0e-5, 10,000 permutations) than typical Western music. Despite this difference in familiarity, the magnitude of non-Western music stimuli within the music component was only slightly smaller than the magnitude of Western music stimuli (Cohen’s d = 0.64), a difference that was only marginally significant (Fig. 6B; P = 0.052, nonparametric test permuting music stimulus labels 10,000 times). Moreover, the magnitudes of both Western and non-Western music stimuli were both much higher than nonmusic stimuli (Western music stimuli vs. nonmusic stimuli: Cohen’s d = 3.79, P < 0.0001, 10,000 permutations; non-Western music vs. nonmusic: Cohen’s d = 2.85; P < 0.0001, 10,000 permutations). Taken together, these results suggest that music-selective responses in auditory cortex occur even for relatively unfamiliar musical systems and genres.

Figure 6.

A: close-up of the response profile (192 sounds) for the music component inferred from all participants (n = 20), with example stimuli labeled. Note that there are a few “nonmusic” stimuli (categorized as such by Amazon Mechanical Turk raters) with high component rankings, but that these are all arguably musical in nature (e.g., wind chimes, ringtone). Conversely, “music” stimuli with low component rankings (e.g., “drumroll” and “cymbal crash”) do not contain salient melody or rhythm, despite being classified as “music” by human listeners. B: distributions of Western music stimuli (n = 30), non-Western music stimuli (n = 14), and nonmusic stimuli (n = 132) within the music component response profile inferred from all 20 participants, with the mean for each stimulus group indicated by the horizontal black line. The separability between categories of stimuli (as measured using Cohen’s d) is shown above the plot. Note that drum stimuli were left out of this analysis. C: distributions of melodic music stimuli (n = 44), drum rhythm stimuli (n = 16), and nonmusic stimuli (n = 132) within the music component response profile inferred from all 20 participants, with the mean for each stimulus group indicated by the horizontal black line. The separability between categories of stimuli (as measured using Cohen’s d) is shown above the plot, and significance was evaluated using a nonparametric test permuting stimulus labels 10,000 times. ****Significant at P < 0.0001, two-tailed. Sounds are colored according to their semantic category.

Which stimulus features drive music selectivity? One of the most obvious distinctions is between melody and rhythm. Although music typically involves both melody and rhythm, when assembling our music stimuli we made an attempt to pick clips that varied in the prominence and complexity of their melodic and rhythmic content. In particular, we included 13 stimuli consisting of drumming from a variety of genres and cultures, because drum music mostly isolates the rhythmic features of music while minimizing (though not eliminating) melodic features. Whether music-selective auditory cortex would respond highly to these drum stimuli was largely unknown, partially because the Norman-Haignere et al. (7) study only included two drum stimuli, one of which was just a stationary snare drum roll that produced a low response in the music component, likely because it lacks both musical rhythm and pitch structure. The drum stimuli in our study ranked below the other instrumental and vocal music category responses (Cohen’s d = 1.42, P < 8.76e-07), but higher than the other nonmusic stimulus categories (Cohen’s d = 1.75, P < 9.60e-11; Fig. 6C). This finding suggests that the music component is not simply tuned to melodic information but is also sensitive to rhythm.

DISCUSSION

Our results show that cortical music selectivity is present in nonmusicians and hence does not require explicit musical training to develop. Indeed, the same six response components that characterized human auditory cortical responses to natural sounds in our previous study were replicated twice here, once in nonmusicians, and once in musicians. Our goal in this study was not to make statistical comparisons between nonmusicians and musicians (which would have required a prohibitive amount of data, see Direct Group Comparisons of Music Selectivity in the Appendix) but rather to assess whether the key properties of music selectivity were present in each group. Thus, although we cannot rule out the possibility that there are some differences between music-selective neural responses in musicians and nonmusicians, we have shown that in both groups, voxel decomposition produced a single music-selective component, which was selective for both instrumental and vocal music, and which was concentrated bilaterally in anterior and posterior superior temporal gyrus (STG). We also observed that the music-selective component responds strongly to both drums and less familiar non-Western music. Together, these results suggest that passive exposure to music is sufficient for the development of music selectivity in nonprimary auditory cortex, and that music-selective responses extend to rhythms with little melody, and to relatively unfamiliar musical genres.

Origins of Music Selectivity

Our finding of music-selective responses in nonmusicians is inconsistent with the hypothesis that explicit training is necessary for the emergence of music selectivity in auditory cortex and suggests rather that music selectivity is either present from birth or results from passive exposure to music. If present from birth, music selectivity could in principle represent an evolutionary adaptation for music, definitive evidence for which has long been elusive (80). But it is also plausible that music-specific representations emerge over development due to the behavioral importance of music in everyday life. For example, optimizing a neural network model to solve ecological speech and music tasks yields separate processing streams for the two tasks (81), suggesting that musical tasks sometimes require music-specific features. Another possibility is that music-specific features might emerge in humans or machines without tasks per se, due to the fact that music is acoustically distinct from other natural sounds. One way of testing this hypothesis might be to use generic unsupervised learning, for instance for producing efficient representations of sound (82–84), which might produce a set of features that are activated primarily by musical sounds.

Nearly all of our participants reported listening to music on a daily basis, and in other contexts, this everyday musical experience has clear effects (10–15), providing an example of how unsupervised learning from music might alter representations in the brain. Additionally, behavioral studies of nonindustrialized societies who lack much contact with Western culture show pronounced differences from Westerners in many aspects of music perception (85–88) and might plausibly also exhibit differences in the degree or nature of cortical music selectivity. Thus, our data do not show that music selectivity in the brain is independent of experience but rather that typical exposure to music in Western culture is sufficient for cortical music selectivity to emerge. It remains possible that the brains of people who grow up with less extensive musical exposure than our participants would not display such pronounced music selectivity.

What Does Cortical Music Selectivity Represent?

The music-selective component responds strongly to a wide range of music and weakly to virtually all other sounds, demonstrating that it is driven by a set of features that are relatively specific to music. One possibility is that there are simple acoustic features that differentiate music from other types of stimuli. Speech and music are known to differ in their temporal modulation spectra, peaking at 5 Hz and 2 Hz, respectively (89), and some theories suggest that these acoustic differences lead to neural specialization for speech vs. music in different cortical regions (90). However, standard auditory models based on spectrotemporal modulation do not capture the perception of speech and music (91) or neural responses selective for speech and music (55, 74, 81, 92). In particular, the music-selective component responds substantially less to sounds that have been synthesized to have the same spectrotemporal modulation statistics as natural music, suggesting that the music component does not simply represent the audio or modulation frequencies that are prevalent in music (55).

Our finding that the music-selective component shows high responses to less familiar musical genres places some constraints on what these properties might be, as does the short duration of the stimuli used to characterize music selectivity. For instance, the music-specific features that drive the response are unlikely to be specific to Western music and must unfold over relatively short timescales. Features that are common to nearly all music, but not other types of sounds, include stable and sustained pitch organized into discrete note-like elements, and temporal patterning with regular time intervals. Because the music component anatomically overlaps with more general responses to pitch (7), it is natural to wonder if it represents higher-order aspects of pitch, such as the previously mentioned stability, or discrete jumps from one note to another. However, the high response to drum rhythms in the music component that we observed here indicates that the component is not only sensitive to pitch structure. Instead, this result suggests that melody and rhythm might be jointly analyzed, rather than dissociated, at least at the level of auditory cortex. One possibility is that the underlying neural circuits extract temporally local representations of melody and rhythm motifs that are assembled elsewhere into the representations of contour, key, meter, groove etc. that are the basis of music cognition (3, 93–96).

Limitations

Our paradigm used relatively brief stimuli since music-selective regions are present just outside of primary auditory cortex, where integration periods appear to be short (74, 97). And we intentionally used a simple task (intensity discrimination) to encourage subjects to attend to all stimuli. But because the responses of auditory cortical neurons have been known to change based on task demands (e.g., 98), it is possible that more complex stimuli or tasks would reveal additional aspects of music-selective responses, which might not be present to a similar degree in nonmusicians. One relevant point of comparison is the finding that amusic participants with striking pitch perception deficits show pitch-selective auditory cortical responses that are indistinguishable from those of control participants with univariate analyses (99). Recent evidence suggests it is nonetheless possible to discriminate amusic participants from controls and to predict participants’ behavioral performance, using fMRI data collected in the context of a pitch task (100). Utilizing a music-related task might produce larger differences between musicians and nonmusicians, as might longer music stimuli (compared with the 2-s clips used in this experiment), which could be argued to contain richer melodic, harmonic, and/or rhythmic information.

Finally, our study is limited by the resolution of fMRI. Voxel decomposition is intended to help overcome the spatial limitations of fMRI, and indeed appears to reveal responses that are not evident in raw voxel responses but can be seen with finer-grained measurement substrates such as electrocorticography (6). But the spatial and temporal resolution of the BOLD signal inevitably constrain what is detectable and place limits on the precision with which we can observe the activity of music-selective neural populations. Music-selective brain responses might well exhibit additional characteristics that would only be evident in fine-grained spatial and temporal response patterns that cannot be resolved with fMRI. Thus, we cannot rule out the possibility that there are additional aspects of music-selective neural responses that might be detectable with other neuroimaging methods (e.g., M/EEG, ECoG) and which are absent or altered in nonmusicians.

Future Directions

One of the most interesting open questions raised by our findings is whether cortical music selectivity reflects implicit knowledge gained through typical exposure to music, or whether it is present from birth. These hypotheses could be addressed by testing people with very different musical experiences from non-Western cultures or other populations whose lifetime perceptual experience with music is limited in some way (e.g., people with musical anhedonia, children of deaf adults). It would also be informative to test whether music selectivity is present in infants or young children. Finally, much remains to be learned about the nature of cortical music selectivity, such as what acoustic or musical features might be driving it. The voxel decomposition approach provides one way of answering these questions and exploring the quintessentially human ability for music.

GRANTS

This work was supported by National Science Foundation Grant BCS-1634050 to J. McDermott and NIH grant DP1HD091947 to N. Kanwisher. S. Norman-Haignere was supported by a Life Sciences Research Fellowship from the Howard Hughes Medical Institute (HHMI). The Athinoula A. Martinos Imaging Center at Massachusetts Institute of Technology is supported by the NIH Shared Instrumentation Grant S10OD021569.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

D.B., S.N-H., J.M., and N.K. conceived and designed research; D.B. performed experiments; D.B. analyzed data; D.B., S.N-H., J.M., and N.K. interpreted results of experiments; D.B. prepared figures; D.B. drafted manuscript; D.B., S.N-H., J.M., and N.K. edited and revised manuscript; D.B., S.N-H., J.M., and N.K. approved final version of manuscript.

ENDNOTE

At the request of the authors, readers are herein alerted to the fact that additional materials related to this manuscript may be found at https://github.com/snormanhaignere/nonparametric-ica. These materials are not a part of this manuscript and have not undergone peer review by the American Physiological Society (APS). APS and the journal editors take no responsibility for these materials, for the website address, or for any links to or from it.

ACKNOWLEDGMENTS

We thank the McDermott lab for useful comments on an earlier version of this manuscript.

APPENDIX

Psychoacoustic Data Acquisition and Analysis

To validate participants’ self-reported musicianship, we measured participants’ abilities on a variety of psychoacoustical tasks for which prior evidence suggested that musicians would outperform nonmusicians. For all psychoacoustic tasks, stimuli were presented using Psychtoolbox for Matlab (101). Sounds were presented to participants at 70dB SPL over circumaural Sennheiser HD280 headphones in a soundproof booth (Industrial Acoustics; SPL level was computed without any weighting across time or frequency). After each trial, participants were given feedback about whether or not they had answered correctly. Group differences for each task were measured using nonparametric Wilcoxon rank sum tests.

Pure tone frequency discrimination.

Because musicians have superior frequency discrimination abilities when compared with nonmusicians (102–104), we first measured participants’ pure tone frequency discrimination thresholds using an adaptive two-alternative forced choice (2AFC) task. In each trial, participants heard two pairs of tones. One of the tone pairs consisted of two identical 1-kHz tones, whereas the other tone pair contained a 1-kHz tone and a second tone of a different frequency. Participants determined which tone interval contained the frequency change. The magnitude of the frequency difference was varied adaptively using a 1-up 3-down procedure (105), which targets participants’ 79.4% threshold. The frequency difference was changed initially by a factor of two, which was reduced to a factor of √2 after the fourth reversal. Once 10 reversals had been measured, participants’ thresholds were estimated as the average of these 10 values. Multiple threshold estimations were obtained per participant (three threshold estimations for the first seven participants, and five for the remaining 13 participants), and then averaged.

Synchronized tapping to an isochronous beat.

Sensorimotor abilities are crucial to musicianship, and finger tapping tasks show some of the most reliable effects of musicianship (106–108). Participants were asked to tap along with an isochronous click track. They heard ten 30-s click blocks, separated by 5 s of silence. The blocks varied widely in tempo, with interstimulus intervals ranging from 200 ms to 1 s (tempos of 60 to 300 bpm). Each tempo was presented twice, and the order of tempi was permuted across participants. We recorded the timing of participants’ responses using a tapping sensor used in previous studies (85, 109). We then calculated the difference between participants’ response onsets and the actual stimulus onsets. The standard deviation of these asynchronies between corresponding stimulus and response onsets was used as a measure of sensorimotor synchronization ability (109).

Melody discrimination.

Musicians have also been reported to outperform nonmusicians on measures of melodic contour and interval discrimination (44, 110, 111). In each trial, participants heard two five-note melodies and were asked to judge whether the two melodies were the same or different. Melodies were composed of notes that were randomly drawn from a log uniform distribution of semitone steps from 150 Hz to 270 Hz. The second melody was transposed up by half an octave and was either identical to the first melody or contained a single note had that had been altered either up or down by 1 or 2 semitones. Half of the trials contained a second melody that was the same as the first melody, whereas 25% contained a pitch change that preserved the melodic contour and the remaining 25% contained a pitch change that violated the melodic contour. There were 20 trials per condition (same/different melody × same/different contour × 1/2 semitone change), for a total of 160 trials. This task was modified from McPherson and McDermott (111).

“Sour note” detection.

To measure participants’ knowledge of Western music, we also measured participants’ ability to determine whether a melody conforms to the rules of Western music theory. The melodies used in this experiment were randomly generated from a probabilistic generative model of Western tonal melodies that creates a melody on a note-by-note basis according to the principles that 1) melodies tend to be limited to a narrow pitch range, 2) note-to-note intervals tend to be small, and 3) the notes within the melody conform to a single key (112). In each trial of this task, participants heard a 16-note melody and were asked to determine whether the melody contained an out-of-key (“sour”) note. In half of the trials, one of the notes in the melody was modified so that it was rendered out of key. The modified notes were always scale degrees 1, 3, or 5 and they were increased by either 1 or 2 semitones accordingly so that they were out of key (i.e., scale degrees 1 and 5 were modified by 1 semitone, and scale degree 3 was modified by 2 semitones). Participants judged whether the melody contained a sour note (explained as a “mistake in the melody”). There were 20 trials per condition (modified or not × 3 scale degrees), for a total of 120 trials. This task was modified from McPherson and McDermott (111).

Psychoacoustic Results