Abstract

Background

Recent years have witnessed a substantial improvement in the accuracy of skin cancer classification using convolutional neural networks (CNNs). CNNs perform on par with or better than dermatologists in the classification of single images. However, in clinical practice, dermatologists also use patient data beyond the visual aspects present in a digitized image, which further increases their diagnostic accuracy. Several pilot studies have recently investigated the effects of integrating different subtypes of patient data into CNN-based skin cancer classifiers.

Objective

This systematic review focuses on the current research investigating the impact of merging information from image features and patient data on the performance of CNN-based skin cancer image classification. This study aims to explore the potential in this field of research by evaluating the types of patient data used, the ways in which the nonimage data are encoded and merged with the image features, and the impact of the integration on the classifier performance.

Methods

Google Scholar, PubMed, MEDLINE, and ScienceDirect were screened for peer-reviewed studies published in English that dealt with the integration of patient data within a CNN-based skin cancer classification. The search terms skin cancer classification, convolutional neural network(s), deep learning, lesions, melanoma, metadata, clinical information, and patient data were combined.

Results

A total of 11 publications fulfilled the inclusion criteria. All of them reported an overall improvement in different skin lesion classification tasks with patient data integration. The most commonly used patient data were age, sex, and lesion location. The patient data were mostly one-hot encoded. The studies differed in the complexity of the deep learning methods used to process the encoded patient data before and after fusing them with the image features for a combined classifier.

Conclusions

This study indicates the potential benefits of integrating patient data into CNN-based diagnostic algorithms. However, how exactly the individual patient data enhance classification performance, especially in the case of multiclass classification problems, is still unclear. Moreover, a substantial fraction of patient data used by dermatologists remains to be analyzed in the context of CNN-based skin cancer classification. Further exploratory analyses in this promising field may optimize patient data integration into CNN-based skin cancer diagnostics for patients’ benefits.

Keywords: skin cancer classification, convolutional neural networks, patient data

Introduction

Background

The incidence of skin cancer has been increasing throughout the world, resulting in substantial health and economic burdens [1]. Early detection increases the possibility of curing all types of skin cancers. However, distinguishing benign skin lesions from malignant skin lesions is challenging, even for experienced clinicians [2]. Over the past few years, different digital approaches have been proposed to assist in the detection of skin cancer [3,4]. Convolutional neural networks (CNNs) are the most successful systems for handling image classification problems [5]. Recent publications have reported CNNs that support [6] and outperform [7-9] dermatologists in challenging binary melanoma detection and multiclass skin cancer classification when only taking single images of the skin lesion as input.

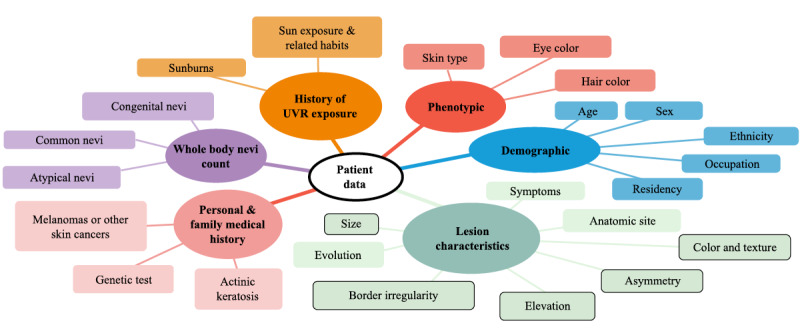

However, single-image classification does not reflect the clinical reality. In fact, dermatologists’ diagnoses are based on both the visual inspection of a single image and the integration of information from various sources. Figure 1 shows the different types of information that dermatologists may collect from their patients, including information on known risk factors for skin cancer. The corresponding references can be found in Multimedia Appendix 1 [10-32]. The complexity of this figure illustrates the diversity of patient data that can be included in the diagnosis. Roffman et al [33] and Wang et al [34] presented reasonably accurate skin cancer predictions using deep learning methods based exclusively on patient nonimage information. Haenssle et al [35] showed that dermatologists performed somewhat better in a dichotomous skin lesion classification task (benign vs malignant or premalignant) when they were provided with clinical images and textual case information, such as the patient’s age, sex, and lesion location, in addition to a dermoscopic image. This raises the question of whether a combination of CNN-based image analysis and patient data might also increase the accuracy of the classifier. The combination of image features and patient data became a topic of the International Skin Imaging Collaboration challenge in 2019, where a large repository of dermoscopic images including patient data was offered for clinical training and technical research toward automated algorithmic analysis [36].

Figure 1.

An overview of patient data considered by dermatologists while diagnosing skin lesions. The framed characteristics in the figure illustrate the fraction of patient data that can potentially be recognized by convolutional neural networks from a single image input. UVR: ultraviolet radiation.

Objective

This review presents the status quo of CNN-based skin lesion classification using image input and patient data. The included studies were analyzed with respect to the amount and type of patient data used for integration, the encoding and fusing techniques, and the reported results. The review also discusses the heterogeneity of the studies that have been conducted so far and points out the potential and challenges of such combined classifiers that should be addressed in the future.

Methods

Search Strategy

Google Scholar, PubMed, MEDLINE, and ScienceDirect were searched for peer-reviewed publications, restricted to human research published in English. The search terms skin cancer classification, convolutional neural network(s), deep learning, lesions, melanoma, metadata, clinical information, and patient data were combined.

Study Selection

This review only includes skin lesion classification studies using CNNs that consider both image and patient data. A few studies investigated the incorporation of visual and nonvisual information into skin cancer classification but did not obtain the visual features using deep learning techniques, for example, the studies by Binder et al [37], Alcon et al [38], Cheng et al [39], Liu et al [40], and Rubegni et al [41]. As this review exclusively focuses on integrating patient data with state-of-the-art CNN-based feature extractors, these studies were not considered.

Study Analysis

The objective of this review is to update practitioners on the status quo approaches toward patient data incorporation into CNN-based skin lesion diagnostics regarding all relevant practical aspects.

Type and Amount of Patient Data

The goal is to achieve better performance of the CNN-based classifier by integrating new information that cannot be extracted from a digitized image. Various types of patient data have been shown to assist dermatologists. Key question: Which and how many different types of patient data have been tested for CNN-based classification?

Encoding and Fusing Techniques

A CNN-based classifier extracts various visual features from a digitized image as the basis for its diagnosis. Patient data are nonimage data and are mostly provided as numbers or strings in tables. The patient data can be classified in a dichotomous fashion (presence of the feature: yes or no), fall into several discrete categories (eg, Fitzpatrick skin type), or be continuous (eg, patient age). This may require different, carefully chosen encoding and fusing techniques. Moreover, the weight attributed to patient data in comparison with image features can strongly influence how the different features contribute toward the decision making of the system. Key questions: What are the encoding and fusing techniques applied in the studies? Do the studies focus on the quantitative relationship between image and nonimage features?

Reported Study Results

This review aims to summarize the recent findings regarding the impact of patient data on the performance of CNN-based classifiers. Key questions: What is the classification task? Is it a binary or multiclass problem? Which skin lesions should be distinguished? How is the influence of individual and/or combined patient data documented? In the case of multiclass classification, is the impact also shown for each single class of skin lesion individually?

Applied Performance Metrics

The included publications reported different statistical metrics as the study end points. If the classes in the test set are approximately equally distributed, accuracy is a frequently used performance metric; it is the number of correctly predicted samples divided by the total number of samples in the test set. In binary classification problems with a positive and a negative class, sensitivity and specificity are further common study end points, especially if the two classes are imbalanced. Sensitivity is determined only on the basis of the actual positive samples in the test set: the number of correctly classified positive samples is divided by the total number of positive samples. In contrast, specificity is determined on the basis of the actual negative samples: the number of correctly classified negative samples is divided by the total number of negative samples. For a CNN, sensitivity and specificity depend on the selected cutoff value. If the output of the neural network is greater than the cutoff value, the input is assigned to the positive class; otherwise, it is assigned to the negative class. Thus, the cutoff value is the central parameter for the trade-off between sensitivity and specificity: lowering it increases sensitivity while decreasing specificity, and vice versa. The dependence of sensitivity and specificity on the cutoff value is shown in the receiver operating characteristic (ROC) curve, in which sensitivity is plotted against the false-positive rate (1−specificity) for each possible cutoff value. The area under the ROC curve (AUC) is used as an integral performance measure for the algorithms.
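The metrics described above can be illustrated with a minimal Python sketch. The function names and the toy scores and labels are assumptions made for illustration only; they are not taken from any of the included studies. The sketch assumes that both classes are present in the test set and that a score strictly greater than the cutoff is assigned to the positive class.

```python
def confusion_counts(scores, labels, cutoff):
    """Return (TP, FP, TN, FN); a score above the cutoff is predicted positive."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted_positive = score > cutoff
        if label == 1:
            if predicted_positive:
                tp += 1
            else:
                fn += 1
        else:
            if predicted_positive:
                fp += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def sensitivity(scores, labels, cutoff):
    """Correctly classified positives divided by all actual positives."""
    tp, _, _, fn = confusion_counts(scores, labels, cutoff)
    return tp / (tp + fn)


def specificity(scores, labels, cutoff):
    """Correctly classified negatives divided by all actual negatives."""
    _, fp, tn, _ = confusion_counts(scores, labels, cutoff)
    return tn / (tn + fp)


def accuracy(scores, labels, cutoff):
    """Correctly classified samples divided by all samples."""
    tp, _, tn, _ = confusion_counts(scores, labels, cutoff)
    return (tp + tn) / len(labels)


def roc_auc(scores, labels):
    """Area under the ROC curve, integrated with the trapezoidal rule."""
    # Sweep the cutoff from the highest score (nothing predicted positive)
    # down to minus infinity (everything predicted positive).
    cutoffs = sorted(set(scores), reverse=True) + [float("-inf")]
    points = [
        (1.0 - specificity(scores, labels, c), sensitivity(scores, labels, c))
        for c in cutoffs
    ]
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Made-up classifier outputs and ground truth (1 = positive class).
toy_scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
toy_labels = [1, 1, 0, 1, 0, 0]

for cutoff in (0.5, 0.35, 0.2):
    print(cutoff,
          sensitivity(toy_scores, toy_labels, cutoff),
          specificity(toy_scores, toy_labels, cutoff))
print("AUC:", roc_auc(toy_scores, toy_labels))
```

On this toy data, lowering the cutoff from 0.5 to 0.35 raises the sensitivity while the specificity is unchanged, and lowering it further to 0.2 reduces the specificity, which is the trade-off described above.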

Results

Classification Tasks

A total of 11 publications fulfilling the inclusion criteria are summarized in Table 1. The studies were very heterogeneous with respect to CNN architecture, classification task, included image and patient data, data augmentation (if reported), and fusion techniques, rendering a meaningful direct comparison very difficult. A total of 5 studies dealt with binary classification, with the end point being either a dichotomous melanoma or basal cell carcinoma (BCC) classification or the distinction between malignant and benign lesions in general. The remaining 6 studies distinguished between 5 and 8 different skin diseases or lesions. The malignant lesions included were melanoma (6 studies), BCC (6 studies), and squamous cell carcinoma (SCC; 3 studies). In addition, these studies differentiated among the following benign lesions: melanocytic nevus (NV; 6 studies), benign keratosis-like lesions (BKLs; 4 studies), dermatofibroma (3 studies), vascular lesions (VASCs; 3 studies), actinic keratosis (AK; 2 studies), and seborrheic keratosis (SK; 2 studies). Moreover, merged groups comprising AK and intraepithelial carcinoma or Bowen disease (2 studies) and dermatofibroma, lentigo, melanosis, miscellaneous, and VASCs (1 study) were used in some of the studies.

Table 1.

Summary table.

| Study | Patient data types | Result (without/with) | Classification task | CNNa architecture | Data set | Samples, n |
| --- | --- | --- | --- | --- | --- | --- |
| Bonechi et al [42] | 4 types: age, sex, location, and presence of melanocytic cells | Accuracy: 0.8344/0.8834 | Binary: benign or malignant (MELb, BCCc, SCCd) | ResNet50 | ISICe | 5405 |
| Chin et al [43] | 5 types: age; sex; size; how long it existed; changes in size, color, or shape including bleeding and itching | Accuracy: 0.84/0.92 | Binary: low risk or high risk for MEL | DenseNet121 | Own | 5289 |
| Gonzalez-Diaz [44] | 2 types: age and sex | Accuracy: 0.848/0.859 | Binary: MEL yes or no | ResNet50 | 2017 ISBIf challenge+interactive atlas of dermoscopy [45]+ISIC | 6302 |
| Gessert et al [46] | 3 types: age, sex, and location | Sensitivity: 0.725/0.742; specificity: data not available | 8 classes: MEL, NVg, BCC, AKh, BKLi, DFj, VASCk, SCC | EfficientNets | ISIC (HAM10000 [47], BCN_2000 [48], MSK [49])+7-point data set [50] | 27,665 |
| Kawahara et al [50] | 3 types: sex, location, and elevation | Sensitivity: 0.527/0.604; specificity: 0.902/0.910 | 5 classes: MEL, BCC, NV, MISCl, SKm | Inception V3 | 7-point data set | 808 |
| Kharazmi et al [51] | 5 types: age, sex, location, size, and elevation | Accuracy: 0.847/0.911 | Binary: BCC yes or no | Convolutional filters of learned kernel weights from a sparse autoencoder | Own | 1199 |
| Li et al [52] | 3 types: age, sex, and location | Sensitivity: 0.8544/0.8764; specificity: data not available | 7 classes: NV, MEL, BKL, BCC, AKIECn, VASC, DF | SENet154 | ISIC 2018 data set | 10,015 |
| Pacheco and Krohling [53] | 8 types: age, location, lesion itches, bleeds or has bled, pain, recently increased, changed its pattern, and elevation | Accuracy: 0.671/0.788 | 6 classes: BCC, SCC, AK, SK, MEL, NV | ResNet50 | Own | 1612 |
| Ruiz-Castilla et al [54] | 3 types: age, sex, and size | Accuracy: 0.61/0.85 | Binary: MEL yes or no | Shallow network with 2 convolutional layers | ISIC | 300 |
| Sriwong et al [55] | 3 types: age, sex, and location | Accuracy: 0.7929/0.8039 | 7 classes: AKIEC, BCC, BKL, DF, MEL, NV, VASC | AlexNet | HAM10000 | 16,720 |
| Yap et al [56] | 3 types: age, sex, and location | Mean average precision: 0.726/0.729; accuracy: 0.721/0.720 | 5 classes: BCC, SCC, MEL, BKL, NV | ResNet50 | ILSVRCo 2015 [57]+own | 2917 (only testing) |

aCNN: convolutional neural network (most of the studies had the goal of investigating the usefulness of the presented fusion technique independently of the convolutional neural network architecture and, therefore, often showed the performance of the fusion with multiple architectures; we included only the best-performing architecture).

bMEL: melanoma.

cBCC: basal cell carcinoma.

dSCC: squamous cell carcinoma.

eISIC: International Skin Imaging Collaboration.

fISBI: International Symposium on Biomedical Imaging [49].

gNV: melanocytic nevus.

hAK: actinic keratosis.

iBKL: benign keratosis-like lesion.

jDF: dermatofibroma.

kVASC: vascular lesion.

lMISC: summary of dermatofibroma, lentigo, melanosis, miscellaneous, and vascular lesion.

mSK: seborrheic keratosis.

nAKIEC: actinic keratosis and intraepithelial carcinoma.

oILSVRC: ImageNet Large Scale Visual Recognition Challenge.

Types and Amount of Patient Data

Most of the studies included three types of patient data (7/11, 64%). Compared with the diversity of potentially useful patient data illustrated in Figure 1, only a few types of patient data were considered. The most commonly included types of data were patient’s age and sex (studies: 10/11, 91%). Only Kawahara et al [50] and Pacheco and Krohling [53] did not consider age and sex, respectively. The third most commonly considered feature was lesion location (studies: 8/11, 73%). Elevation and lesion size were considered in 27% (3/11) of studies. Chin et al [43] and Pacheco and Krohling [53] included statements about symptoms such as itching, bleeding or pain. In addition, they tracked the lesion’s evolution by documenting whether the lesion increased in size or changed its shape. Furthermore, Bonechi et al [42] considered the presence of melanocytic cells as an additional potentially relevant feature.

Encoding

In most cases, the patient data were one-hot encoded. One-hot encoding represents several discrete classes as a string of bits in which exactly one bit is set to 1 for a given class and all others are set to 0 (eg, melanoma=010; BCC=100; NV=001). Different techniques were used for continuous parameters such as the patient’s age. One-hot encoding is only possible after discretizing the continuous range, as was done by Bonechi et al [42], who divided the age range of 0 to 95 years into intervals of 5 years. Gessert et al [46] tested numerical against one-hot encoding and found the former to be superior. Li et al [52] normalized the age to the range between 0 and 1 and represented it using a single value.
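The encoding options described above can be sketched in a few lines of Python. This is an illustrative sketch rather than the code of any included study: the category lists for sex and lesion location, the handling of the upper age limit, and all function names are assumptions.

```python
def one_hot(value, categories):
    """Return a bit list with a single 1 at the position of `value`."""
    if value not in categories:
        raise ValueError(f"unknown category: {value!r}")
    return [1 if category == value else 0 for category in categories]


def age_bin_index(age, bin_width=5, max_age=95):
    """Map an age in [0, max_age] to the index of its 5-year interval."""
    if not 0 <= age <= max_age:
        raise ValueError("age outside the supported range")
    n_bins = max_age // bin_width          # 19 intervals for 0 to 95 years
    # Assumption: the upper limit itself is placed in the last interval.
    return min(int(age // bin_width), n_bins - 1)


def one_hot_age(age, bin_width=5, max_age=95):
    """Discretize the age first, then one-hot encode the interval index."""
    n_bins = max_age // bin_width
    return one_hot(age_bin_index(age, bin_width, max_age), list(range(n_bins)))


def scaled_age(age, max_age=95):
    """Represent the age as a single value between 0 and 1."""
    return age / max_age


# Hypothetical category lists; real data sets define their own.
SEXES = ["female", "male"]
LOCATIONS = ["head/neck", "trunk", "upper extremity", "lower extremity"]


def encode_patient(age, sex, location):
    """Numerical age followed by one-hot encoded sex and lesion location."""
    return [scaled_age(age)] + one_hot(sex, SEXES) + one_hot(location, LOCATIONS)


print(one_hot("melanoma", ["BCC", "melanoma", "NV"]))  # [0, 1, 0]
print(encode_patient(57, "female", "trunk"))
```

With the one-hot variant, age alone occupies 19 positions of the patient data vector, whereas the numerical variant uses a single position; this difference affects the ratio of image features to patient data features after fusion.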

As patient data are rarely documented in a standardized way, the algorithm must be able to handle missing values. However, only 18% (2/11) of publications described in detail how they dealt with missing values. Gessert et al [46] suggested a negative fixed value for missing data, whereas Li et al [52] used the more common approach of filling in missing values with the average value for continuous data and the most frequent value for discrete patient data.
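Both strategies for missing values can be sketched as follows. The sketch assumes that missing entries are represented by `None`; the sentinel value of -1.0 and the function names are illustrative choices and are not taken from the cited studies.

```python
from collections import Counter


def fill_with_constant(values, sentinel=-1.0):
    """Replace missing entries with a fixed negative value."""
    return [sentinel if v is None else v for v in values]


def fill_with_mean(values):
    """Replace missing entries of a continuous feature with the observed mean."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to average")
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


def fill_with_mode(values):
    """Replace missing entries of a discrete feature with the most frequent value."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to count")
    mode = Counter(observed).most_common(1)[0][0]
    return [mode if v is None else v for v in values]


# Made-up example columns with one missing entry each.
ages = [60, None, 40]
locations = ["trunk", None, "trunk", "head/neck"]

print(fill_with_constant(ages))   # [60, -1.0, 40]
print(fill_with_mean(ages))       # [60, 50.0, 40]
print(fill_with_mode(locations))  # ['trunk', 'trunk', 'trunk', 'head/neck']
```

A fixed sentinel keeps the information that a value was missing visible to the network, whereas mean or mode imputation hides it; which behavior is preferable depends on the data set and was not compared in the included studies.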

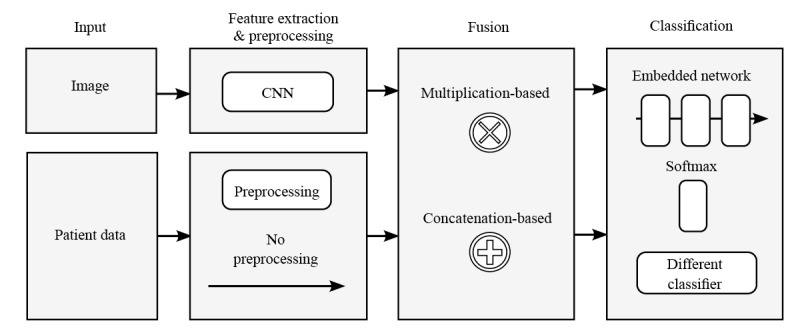

Fusing Technique

Figure 2 illustrates the main function blocks in which the studies vary with respect to the fusing techniques.

Figure 2.

Overview of the different fusing techniques in the main function blocks of the combined classifier. CNN: convolutional neural network.

The fusing techniques differ in the way they actively weigh the image and patient data. In 82% (9/11) of studies, a concatenation-based fusion was applied, that is, the feature vector extracted from the images was enlarged by attaching the encoded patient data. In this case, weighting is achieved by defining the ratio between the number of features originating from the image and from the patient data input. Common CNN architectures extract 1024, 2048, or even more features from the image input. In most studies, the authors decided to reduce the number of image features before concatenating them with the patient data. Only 27% (3/11) of studies provided sufficient information on this point, revealing a considerable variance in the ratio of image features to patient data features: 112 to 28 [53], 128 to 80 [42], and 2048 or 2×2048 to 11 [56]. However, reducing the image features should be done with care, as it is accompanied by a loss of information. Only Pacheco and Krohling [53] reported the effect of changing the ratio and showed its strong influence on the classification performance. A totally different weighting approach, a multiplication-based fusion, was introduced by Li et al [52]. Inspired by the squeeze-and-excitation operation of a SENet network [58], the authors used patient data to control the importance of each image feature channel at the last convolutional layer. Thus, the network was able to focus on specific parts of the image features based on patient data. The authors found the multiplication-based fusion to be superior to the concatenation-based approach in multiple network architectures.
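The difference between the two fusion families can be made concrete with a simplified Python sketch. It is not a reimplementation of any cited architecture: the single fully connected gating layer, the sigmoid activation, and all names and weights are assumptions chosen to keep the example small.

```python
import math


def concatenation_fusion(image_features, patient_features):
    """Attach the encoded patient data to the image feature vector."""
    return list(image_features) + list(patient_features)


def dense(inputs, weights, biases):
    """Fully connected layer; `weights` holds one row per output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_gates(patient_features, weights, biases):
    """Map patient data to one importance value in (0, 1) per image channel."""
    return [sigmoid(z) for z in dense(patient_features, weights, biases)]


def multiplication_fusion(feature_maps, patient_features, weights, biases):
    """Scale every image feature channel by its patient-data-dependent gate."""
    gates = channel_gates(patient_features, weights, biases)
    if len(gates) != len(feature_maps):
        raise ValueError("need exactly one gate per feature channel")
    return [
        [[gate * value for value in row] for row in channel]
        for channel, gate in zip(feature_maps, gates)
    ]


# Concatenation with the 112-to-28 ratio reported in [53] (values are dummies).
fused = concatenation_fusion([0.1] * 112, [1.0] * 28)
print(len(fused))  # 140

# Two made-up 1x2 feature channels gated by two patient data features.
maps = [[[1.0, 2.0]], [[3.0, 4.0]]]
zero_weights = [[0.0, 0.0], [0.0, 0.0]]
print(multiplication_fusion(maps, [1.0, 0.0], zero_weights, [0.0, 0.0]))
```

In the concatenation case, the influence of the patient data is governed by how many of the 140 positions they occupy and by the layers that follow; in the multiplication case, the patient data never enter the feature vector directly but rescale the image channels, so the learned gate weights determine which channels are emphasized.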

In addition, the studies vary in the extent to which deep learning methods were applied to the patient data before fusing or to the combined feature vector after fusing it with the image data. Sriwong et al [55] applied no further deep learning methods but used a separate support vector machine for classification, which received the image features extracted by the CNN and the encoded patient data as input. Gonzalez-Diaz [44] used a separate support vector machine for the patient data to generate a probabilistic output, which was factorized with the output of the CNN-based classifier to provide the final diagnosis of the system. Because it allows end-to-end training of the neural network, a direct fusion within the CNN architecture was used in most studies. Kharazmi et al [51] simply added the patient data before the last classifying softmax layer. More complex deep learning methods were applied either before concatenation, as by Gessert et al [46], or after concatenation in the form of an embedded network comprising multiple fully connected layers, as presented by Pacheco and Krohling [53] and Yap et al [56].
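A fusion at the level of classifier outputs can be sketched as follows. The sketch shows one possible reading of combining two probabilistic outputs, namely an elementwise product followed by renormalization; this interpretation, the function name, and the example probabilities are assumptions and do not reproduce the method of any cited study.

```python
def product_fusion(image_probs, patient_probs):
    """Multiply two class probability vectors elementwise and renormalize."""
    if len(image_probs) != len(patient_probs):
        raise ValueError("both classifiers must score the same classes")
    joint = [p * q for p, q in zip(image_probs, patient_probs)]
    total = sum(joint)
    if total == 0:
        raise ValueError("the classifiers assign zero joint probability")
    return [j / total for j in joint]


# Made-up outputs for the classes (melanoma, no melanoma).
cnn_output = [0.6, 0.4]           # image-based classifier
patient_output = [0.2, 0.8]       # classifier using patient data only

print(product_fusion(cnn_output, patient_output))
```

In this toy case, the image-based classifier alone favors the first class, whereas the fused output favors the second. Unlike a fusion inside the network, such an output-level combination does not allow the image feature extractor to be trained jointly with the patient data.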

Reported Study Results

As summarized in Table 1, all but one study reported a considerable improvement in the classification performance when patient data were used in addition to image analysis. However, the authors consistently emphasized that patient data are only a support source and the image features clearly provide the main evidence [53,56]. Yap et al [56], who considered the features of age, sex, and lesion location for a multiclass problem (BCC, SCC, melanoma, BKL, NV), concluded in the discussion that their incorporation of patient data showed only a slight but not significant improvement in accuracy and recommended testing different features, such as nevus count, proportion of atypical nevi, and history of melanoma.

Although 5 studies reported results for binary classification tasks, 55% (6/11) of studies dealt with a multiclass classification problem, distinguishing between up to 8 different skin diseases, and revealed insights into how the use of patient data influences the classification performance for an individual type of skin lesion. Table 2 shows which individual classifications benefitted from the integration of patient data and whether this was achieved at the expense of others. Among the 6 studies, Gessert et al [46], Sriwong et al [55], and Li et al [52] dealt with comparable classification tasks and used the same patient data (age, sex, and location). All 3 studies identified improvements in the classification of BKL and dermatofibroma. Li et al [52] even listed an absolute increase in the sensitivity for dermatofibroma of approximately 21 percentage points (from 63.56% to 84.55%). However, the authors did not report the corresponding specificity, which makes it difficult to draw reliable conclusions. Furthermore, Table 2 shows that the improvements may go along with a degradation of classification performance for other lesion types [52,55] or that the improvement of sensitivity for one class may be paralleled by a decrease in specificity, as shown clearly by the results of Gessert et al [46]. In contrast, Kawahara et al [50] and Pacheco and Krohling [53] used different patient data (eg, elevation of the lesion) and reported an increase in sensitivity and specificity in almost all classes. Unfortunately, a deeper insight into the study results of Yap et al [56] was not possible because the confusion matrix, including the classification performance of the single-lesion types, was not legible.

Table 2.

Influence of included patient data on the classification performance of the single skin diseases or lesionsa.

| Study, patient data, and metric | MELb | NVc | BCCd | SCCe | AKf | AKIECg | BKLh | DFi | VASCj | MISCk | SKl |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Gessert et al [46]: age, sex, location | | | | | | | | | | | |
| AUCm | +n | (+/−)o | −p | − | + | Xq | + | + | − | X | X |
| Sensitivity | − | − | − | − | − | X | − | − | − | X | X |
| Specificity | + | + | + | + | + | X | + | + | + | X | X |
| Sriwong et al [55]: age, sex, location | | | | | | | | | | | |
| Sensitivity | + | − | + | X | X | − | + | + | − | X | X |
| Specificity | − | + | − | X | X | + | + | +/− | + | X | X |
| Li et al [52]: age, sex, location | | | | | | | | | | | |
| Sensitivity | − | − | + | X | X | − | + | + | + | X | X |
| Kawahara et al [50]: sex, location, elevation | | | | | | | | | | | |
| Sensitivity | + | + | + | X | X | X | X | X | X | + | + |
| Specificity | + | + | +/− | X | X | X | X | X | X | + | + |
| Pacheco and Krohling [53]: age, location, itches, bleeds, pain, increased, changed, elevation | | | | | | | | | | | |
| Sensitivity | + | + | + | + | + | X | X | X | X | X | + |
| Specificity | + | + | + | − | + | X | X | X | X | X | + |

aThe study of Yap et al [56] is excluded because the confusion matrix was not legible. It should be noted that, for some combinations, the outcome deteriorates when patient data are included.

bMEL: melanoma.

cNV: melanocytic nevus.

dBCC: basal cell carcinoma.

eSCC: squamous cell carcinoma.

fAK: actinic keratosis.

gAKIEC: actinic keratosis and intraepithelial carcinoma.

hBKL: benign keratosis-like lesion.

iDF: dermatofibroma.

jVASC: vascular lesion.

kMISC: miscellaneous and vascular lesion.

lSK: seborrheic keratosis.

mAUC: area under the curve.

nIndicates improvement compared with classification performance without patient data.

oIndicates no change compared with classification performance without patient data.

pIndicates degradation compared with classification performance without patient data.

qThis implies that the lesion type was not considered in the classification task of the study.

In total, 36% (4/11) of studies analyzed the influence of the used patient data on the classification performance in a more differentiated way. They showed the impact of either individual patient data or special combinations of patient data on classification performance, thereby providing a more detailed insight into the contribution of individual patient data.

Only Pacheco and Krohling [53] performed an exploratory analysis of the patient data within the data set used before examining the classification results of the CNN. The authors considered eight types of patient data (age, location, lesion itches, lesion bleeds, lesion hurts [“pain”], recent increase in size, changed shape or pattern, and elevation) and six different types of skin lesions (BCC, SCC, AK, SK, melanoma, and NV). The exploratory analysis suggested that patient data parameters such as “bleeding” and “pain” were suitable to differentiate between pigmented (NV, melanoma, and SK) and nonpigmented lesions (AK, BCC, and SCC), whereas parameters such as “changed its pattern” and “elevation” helped to identify melanomas. As “pain” was always denied in the case of AK, this feature seemed to be a promising discriminator. The analyzed patient data for SCC and BCC were very similar; therefore, no improvement from the integration of patient data was expected for these 2 skin diseases. The classification results confirmed the exploratory analysis: the classifications of AK, melanoma, NV, and SK improved when patient data were incorporated into the classifiers, whereas the performance for BCC and SCC remained almost the same.

Li et al [52] considered three types of patient data (age, sex, and lesion location) and 7 skin lesion types (NV, melanoma, BKL, BCC, AK and intraepithelial carcinoma, VASC, and dermatofibroma). The study showed the overall classification performance for all possible combinations of patient data. Individually, the integration of the parameter “location” resulted in the best classification performance. The combination of the parameters “age” and “location” provided the best result overall, whereas the parameter “sex” decreased performance upon integration. The authors concluded that the rare diseases of VASC and dermatofibroma are more location specific, whereas none of the skin diseases in question occur preferentially in men or women. Therefore, the authors recommended the use of “location” and the avoidance of “sex” in the combined classifier.
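An evaluation over all possible combinations of patient data, as described above, can be organized as in the following Python sketch. The scoring function is a caller-supplied placeholder standing in for training and testing a combined classifier; the function names and the toy score are assumptions and do not represent any reported result.

```python
from itertools import combinations


def feature_subsets(features):
    """Yield every non-empty combination of the given patient data types."""
    for size in range(1, len(features) + 1):
        for subset in combinations(features, size):
            yield subset


def rank_subsets(features, evaluate):
    """Score each subset with `evaluate` and return (score, subset), best first."""
    scored = [(evaluate(subset), subset) for subset in feature_subsets(features)]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


def toy_score(subset):
    """Placeholder only: a real study would train and test a classifier here."""
    return len(subset)


patient_data_types = ["age", "sex", "location"]
print(len(list(feature_subsets(patient_data_types))))  # 7
for score, subset in rank_subsets(patient_data_types, toy_score):
    print(score, subset)
```

For three types of patient data, 7 combinations have to be evaluated; as the number of combinations roughly doubles with each additional type, an exhaustive evaluation becomes costly for the larger sets of patient data used in some of the included studies.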

Sriwong et al [55] addressed the same problem as that of Li et al [52]. However, their study only analyzed some combinations of patient data (age, age+sex, and age+sex+location). The best overall result was achieved by incorporating the combination of “age,” “sex,” and “location.” Contrary to Li et al [52], the study yielded the largest improvement for the feature “age.” Although adding “sex” did not show a considerable improvement, additionally adding “location” increased the performance slightly. The authors stated that the information of “sex” and “location” is more powerful when used in combination, thereby confirming statements in related studies [40,59].

Bonechi et al [42] considered four types of patient data individually (age, sex, location, and presence of melanocytic cells) for a binary classification (malignant yes or no). Unfortunately, the analysis results have not been reported in detail, but the authors reported the parameter “presence of melanocytic cells” to be the most informative.

Discussion

Principal Findings

Although the main evidence for a good diagnosis is still provided by the image input, all 11 publications indicate a possible benefit of integrating patient data in CNN classifiers, as illustrated in Table 1. This corresponds with the results of other approaches that combine visual and nonvisual features for skin lesion classification [37-41], thereby suggesting it as a promising avenue of research. However, publication bias favoring studies with positive results cannot be excluded.

One focus of further research into combined CNN-based classifiers should be to render their classification process transparent, easy to understand, and applicable in a clinical setting. The 11 studies published so far have dealt with these aspects only marginally. Therefore, these issues need to be addressed in future studies to reliably reveal the potential of integrating patient data.

Reproducibility, Comparability, and Generalization

No objective benchmarks exist in the field of integrating patient data into CNN-based classifiers. The heterogeneity of the studies conducted so far is substantial. This applies to the number and types of skin diseases or lesions to be classified, databases and data augmentation, CNN architectures, patient data, and fusion techniques. These aspects have a great influence on the way that the algorithm learns to diagnose the lesions in question and render it very difficult to reproduce and compare the approaches and results externally and independently. A way to solve this would be the more extensive use of external and publicly available data sets to objectively optimize the classification accuracy in an experimental setting. This needs to be done systematically in preparation for clinical trials that will be required to prove the algorithm’s generalizability and applicability in the clinic. In addition, the best way to handle missing data needs to be addressed.

Transparency and Explainability

All presented studies lack an investigation of the impact of patient data individually and in combination on single-lesion classes. Both the fusion method and weight attributed to the patient data in addition to the biological significance itself may substantially influence the classification results. Further research should be dedicated to explaining the mechanisms by which the incorporation of these factors contributes to the decision making of the CNN-based combined classifier to render the results more transparent.

Call for Extensive Exploration Analysis

As shown in Figure 1, a diversity of patient data has been shown to be useful in a clinical setting and could be considered for diagnosis by CNN-based classifiers as well. So far, researchers have mostly used patient data that are readily available and/or routinely recorded, such as age, sex, and lesion location. However, readily available factors may not be the best choice. For instance, sex was included in 91% (10/11) of studies but was stated to be of minimal benefit for the classification task where it was investigated in detail. The results of studies that considered patient data beyond these three factors indicate that the integration of other patient data may be more promising [39,42,53]. Studies analyzing the risk factors for skin cancer have so far demonstrated that patient data can be helpful in distinguishing skin lesions in binary classification tasks. Corresponding studies are available for differentiating between melanoma and nonmelanoma skin cancer [10,60] and for distinguishing between BCC and SCC [61]. Patient data such as the skin type (I, II, III vs IV) and the counts of atypical nevi (>4 vs none) and common nevi (>100 vs 0-4) are well-established criteria for melanoma risk [11,12]. To our knowledge, no extensive study has analyzed significant correlations between individual types or combinations of these patient data and the improvement of multiclass problems as considered in this review. An extensive exploration analysis in this field would help to choose patient data suitable for the considered classification task. Following the study of Haenssle et al [35], it would further be of interest to determine which type of patient data influences the clinician’s decision the most. Studies that compare the benefit of integrating specific patient data into the artificial intelligence system with their benefit for the clinician’s decision, and thereby point out the opportunities of human-algorithm integration systems, should be the subject of future research.

Conclusions

All 11 studies published so far indicate that the integration of patient data into CNN-based skin lesion classifiers may improve classification accuracy. The studies mainly used patient data that were routinely recorded (age, sex, and lesion location). Regarding the technical details, the presented approaches differ mainly in their fusing techniques. Further research should systematically evaluate the impact of incorporating individual and combined patient data into CNN-based classifiers to show the benefit reproducibly and transparently and to pave the way for the translation of these combined classifiers into the clinic.
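As a point of reference for the fusing techniques mentioned above, the following minimal Python sketch shows the simplest variant: one-hot encoding the routinely recorded patient data and concatenating the result with an image feature vector. The category lists, the age scaling, and all function names are assumptions made for illustration; the reviewed studies differ in how they encode and fuse these data, and several process the encoded patient data with additional layers before or after fusion.

```python
# Hypothetical category lists; real datasets define their own values.
SEX_CATEGORIES = ["female", "male", "unknown"]
LOCATION_CATEGORIES = [
    "head/neck", "torso", "upper extremity", "lower extremity", "unknown",
]


def one_hot(value, categories):
    """Return a one-hot list; unseen values fall into the last slot."""
    index = categories.index(value) if value in categories else len(categories) - 1
    return [1.0 if i == index else 0.0 for i in range(len(categories))]


def encode_patient(age, sex, location, max_age=100.0):
    """Encode age (scaled to [0, 1], an assumption), sex, and lesion location."""
    age_scaled = min(max(age, 0.0), max_age) / max_age
    return (
        [age_scaled]
        + one_hot(sex, SEX_CATEGORIES)
        + one_hot(location, LOCATION_CATEGORIES)
    )


def fuse_by_concatenation(image_features, patient_vector):
    """Concatenate CNN image features with the encoded patient data."""
    return list(image_features) + list(patient_vector)
```

In this sketch, the fused vector would be passed to the final classification layers; the image features themselves are assumed to come from the penultimate layer of a CNN.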

Acknowledgments

JH, A Hekler, EKH, and TJB contributed to the concept and design of the study. JH identified and analyzed studies with contributions from A Hekler and EKH. TJB oversaw the study, critically reviewed and edited the manuscript, and gave final approval. JNK, JSU, FM, FFG, A Hauschild, LF, JGS, KG, TW, HK, MH, SH, WS, DS, BS, RCM, MS, TJ, SF, and DBL substantially contributed to the conception and design, provided critical review and commentary on the draft manuscript, and approved the final version. All of the authors guaranteed the integrity and accuracy of this study. This research is funded by the Federal Ministry of Health in Germany (Skin Classification Project; grant holder: TJB).

Abbreviations

- AK: actinic keratosis

- BCC: basal cell carcinoma

- BKL: benign keratosis-like lesion

- BMS: Bristol Myers Squibb

- CNN: convolutional neural network

- MSD: Merck Sharp & Dohme

- NV: melanocytic nevus

- SCC: squamous cell carcinoma

- SK: seborrheic keratosis

- VASC: vascular lesion

Appendix

Relevant references for the overview of the patient data illustrated.

Footnotes

Conflicts of Interest: A Hauschild reports clinical trial support, speaker’s honoraria, and consultancy fees from the following companies: Amgen, Bristol Myers Squibb (BMS), Merck Serono, Merck Sharp & Dohme (MSD), Novartis, OncoSec, Philogen, Pierre Fabre, Provectus, Regeneron, Roche, Sanofi Genzyme, and Sun Pharma (outside the submitted work). BS reports advisory roles for or has received honoraria from Pierre Fabre Pharmaceuticals, Incyte, Novartis, Roche, BMS, and MSD; research funding from BMS, Pierre Fabre Pharmaceuticals, and MSD; and travel support from Novartis, Roche, BMS, Pierre Fabre Pharmaceuticals, and Amgen outside the submitted work. FM has received travel support, speaker’s fees, and/or advisor’s honoraria from Novartis, Roche, BMS, MSD, and Pierre Fabre and research funding from Novartis and Roche outside the submitted work. JSU is on the advisory board or has received honoraria and travel support from Amgen, Bristol Myers Squibb, GSK, LeoPharma, Merck Sharp and Dohme, Novartis, Pierre Fabre, and Roche outside the submitted work. SH reports advisory roles for or has received honoraria from Pierre Fabre Pharmaceuticals, Novartis, Roche, BMS, Amgen, and MSD outside the submitted work. TJB reports owning a company that develops mobile apps (Smart Health Heidelberg GmbH, Handschuhsheimer Landstr. 9/1, 69120 Heidelberg). WS received travel expenses for attending meetings and/or (speaker) honoraria from Abbvie, Almirall, Bristol Myers Squibb, Celgene, Janssen, LEO Pharma, Lilly, MSD, Novartis, Pfizer, Roche, Sanofi Genzyme, and UCB outside the submitted work.

References

- 1.Schadendorf D, van Akkooi AC, Berking C, Griewank KG, Gutzmer R, Hauschild A, Stang A, Roesch A, Ugurel S. Melanoma. Lancet. 2018 Sep;392(10151):971–84. doi: 10.1016/s0140-6736(18)31559-9. [DOI] [PubMed] [Google Scholar]

- 2.Brinker TJ, Hekler A, Hauschild A, Berking C, Schilling B, Enk AH, Haferkamp S, Karoglan A, von Kalle C, Weichenthal M, Sattler E, Schadendorf D, Gaiser MR, Klode J, Utikal JS. Comparing artificial intelligence algorithms to 157 German dermatologists: the melanoma classification benchmark. Eur J Cancer. 2019 Apr;111:30–7. doi: 10.1016/j.ejca.2018.12.016. https://linkinghub.elsevier.com/retrieve/pii/S0959-8049(18)31562-4. [DOI] [PubMed] [Google Scholar]

- 3.Dick V, Sinz C, Mittlböck M, Kittler H, Tschandl P. Accuracy of computer-aided diagnosis of melanoma: a meta-analysis. JAMA Dermatol. 2019 Nov 01;155(11):1291–9. doi: 10.1001/jamadermatol.2019.1375. http://europepmc.org/abstract/MED/31215969. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Brinker TJ, Hekler A, Utikal JS, Grabe N, Schadendorf D, Klode J, Berking C, Steeb T, Enk AH, von Kalle C. Skin cancer classification using convolutional neural networks: systematic review. J Med Internet Res. 2018 Oct 17;20(10):e11936. doi: 10.2196/11936. https://www.jmir.org/2018/10/e11936/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Rawat W, Wang Z. Deep convolutional neural networks for image classification: a comprehensive review. Neural Comput. 2017 Sep;29(9):2352–449. doi: 10.1162/neco_a_00990. [DOI] [PubMed] [Google Scholar]

- 6.Han SS, Park I, Chang SE, Lim W, Kim MS, Park GH, Chae JB, Huh CH, Na J. Augmented intelligence dermatology: deep neural networks empower medical professionals in diagnosing skin cancer and predicting treatment options for 134 skin disorders. J Invest Dermatol. 2020 Sep;140(9):1753–61. doi: 10.1016/j.jid.2020.01.019. http://paperpile.com/b/4NlRPX/lwOT. [DOI] [PubMed] [Google Scholar]

- 7.Maron R, Weichenthal M, Utikal J, Hekler A, Berking C, Hauschild A, Enk AH, Haferkamp S, Klode J, Schadendorf D, Jansen P, Holland-Letz T, Schilling B, von Kalle C, Fröhling S, Gaiser MR, Hartmann D, Gesierich A, Kähler KC, Wehkamp U, Karoglan A, Bär C, Brinker TJ, Collaborators Systematic outperformance of 112 dermatologists in multiclass skin cancer image classification by convolutional neural networks. Eur J Cancer. 2019 Sep;119:57–65. doi: 10.1016/j.ejca.2019.06.013. https://linkinghub.elsevier.com/retrieve/pii/S0959-8049(19)30381-8. [DOI] [PubMed] [Google Scholar]

- 8.Brinker T, Hekler A, Enk A, Klode J, Hauschild A, Berking C, Schilling B, Haferkamp S, Schadendorf D, Holland-Letz T, Utikal JS, von Kalle C, Collaborators Deep learning outperformed 136 of 157 dermatologists in a head-to-head dermoscopic melanoma image classification task. Eur J Cancer. 2019 May;113:47–54. doi: 10.1016/j.ejca.2019.04.001. https://linkinghub.elsevier.com/retrieve/pii/S0959-8049(19)30221-7. [DOI] [PubMed] [Google Scholar]

- 9.Tschandl P, Codella N, Akay BN, Argenziano G, Braun RP, Cabo H, Gutman D, Halpern A, Helba B, Hofmann-Wellenhof R, Lallas A, Lapins J, Longo C, Malvehy J, Marchetti MA, Marghoob A, Menzies S, Oakley A, Paoli J, Puig S, Rinner C, Rosendahl C, Scope A, Sinz C, Soyer HP, Thomas L, Zalaudek I, Kittler H. Comparison of the accuracy of human readers versus machine-learning algorithms for pigmented skin lesion classification: an open, web-based, international, diagnostic study. Lancet Oncol. 2019 Jul;20(7):938–47. doi: 10.1016/s1470-2045(19)30333-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Krensel M, Petersen J, Mohr P, Weishaupt C, Augustin J, Schäfer I. Estimating prevalence and incidence of skin cancer in Germany. J Dtsch Dermatol Ges. 2019 Dec 30;17(12):1239–49. doi: 10.1111/ddg.14002. [DOI] [PubMed] [Google Scholar]

- 11.S3 Leitlinie Prävention Hautkrebs, AWMF Registernummer: 032/052OL. Leitlinienprogramm Onkologie (Deutsche Krebsgesellschaft, Deutsche Krebshilfe, AWMF) [2021-06-07]. http://leitlinienprogrammonkologie.de/Leitlinien.7.0.html.

- 12.Bataille V, Bishop J, Sasieni P, Swerdlow A, Pinney E, Griffiths K, Cuzick J. Risk of cutaneous melanoma in relation to the numbers, types and sites of naevi: a case-control study. Br J Cancer. 1996 Jun;73(12):1605–11. doi: 10.1038/bjc.1996.302. http://europepmc.org/abstract/MED/8664138. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Consensus Development Panel National institutes of health summary of the consensus development conference on sunlight, ultraviolet radiation, and the skin. J Am Acad Dermatol. 1991 Apr;24(4):608–12. doi: 10.1016/s0190-9622(08)80159-4. [DOI] [PubMed] [Google Scholar]

- 14.IARC Working Group on the Evaluation of Carcinogenic Risks to Humans . International Agency for Research on Cancer. Geneva: World Health Organization; 1992. Solar and ultraviolet radiation. [Google Scholar]

- 15.Malignes Melanom der Haut : ICD-10 C43. Krebsdaten. [2020-04-05]. https://www.krebsdaten.de/Krebs/DE/Content/Krebsarten/Melanom/melanom_node.html.

- 16.Gandini S, Sera F, Cattaruzza MS, Pasquini P, Abeni D, Boyle P, Melchi CF. Meta-analysis of risk factors for cutaneous melanoma: I. Common and atypical naevi. Eur J Cancer. 2005 Jan;41(1):28–44. doi: 10.1016/j.ejca.2004.10.015. https://linkinghub.elsevier.com/retrieve/pii/S0959-8049(04)00832-9. [DOI] [PubMed] [Google Scholar]

- 17.Marcil I, Stern RS. Risk of developing a subsequent nonmelanoma skin cancer in patients with a history of nonmelanoma skin cancer: a critical review of the literature and meta-analysis. Arch Dermatol. 2000 Dec 01;136(12):1524–30. doi: 10.1001/archderm.136.12.1524. [DOI] [PubMed] [Google Scholar]

- 18.Salasche SJ. Epidemiology of actinic keratoses and squamous cell carcinoma. J Am Acad Dermatol. 2000 Jan;42(1 Pt 2):4–7. doi: 10.1067/mjd.2000.103342. http://paperpile.com/b/4NlRPX/rifo. [DOI] [PubMed] [Google Scholar]

- 19.Evans T, Boonchai W, Shanley S, Smyth I, Gillies S, Georgas K, Wainwright B, Chenevix-Trench G, Wicking C. The spectrum of patched mutations in a collection of Australian basal cell carcinomas. Hum Mutat. 2000 Jul;16(1):43–8. doi: 10.1002/1098-1004(200007)16:1<43::aid-humu8>3.0.co;2-7. [DOI] [PubMed] [Google Scholar]

- 20.Lock-Andersen J, Drzewiecki KT, Wulf HC. Eye and hair colour, skin type and constitutive skin pigmentation as risk factors for basal cell carcinoma and cutaneous malignant melanoma. A Danish case-control study. Acta Derm Venereol. 1999 Jan 18;79(1):74–80. doi: 10.1080/000155599750011778. https://www.medicaljournals.se/acta/content/abstract/10.1080/000155599750011778. [DOI] [PubMed] [Google Scholar]

- 21.Titus-Ernstoff L, Perry AE, Spencer SK, Gibson JJ, Cole BF, Ernstoff MS. Pigmentary characteristics and moles in relation to melanoma risk. Int J Cancer. 2005 Aug 10;116(1):144–9. doi: 10.1002/ijc.21001. [DOI] [PubMed] [Google Scholar]

- 22.Veierød MB, Weiderpass E, Thörn M, Hansson J, Lund E, Armstrong B, Adami H. A prospective study of pigmentation, sun exposure, and risk of cutaneous malignant melanoma in women. J Natl Cancer Inst. 2003 Oct 15;95(20):1530–8. doi: 10.1093/jnci/djg075. [DOI] [PubMed] [Google Scholar]

- 23.Lasithiotakis K, Krüger-Krasagakis S, Ioannidou D, Pediaditis I, Tosca A. Epidemiological differences for cutaneous melanoma in a relatively dark-skinned Caucasian population with chronic sun exposure. Eur J Cancer. 2004 Nov;40(16):2502–7. doi: 10.1016/j.ejca.2004.06.032. http://paperpile.com/b/4NlRPX/ApD4. [DOI] [PubMed] [Google Scholar]

- 24.Fargnoli MC, Piccolo D, Altobelli E, Formicone F, Chimenti S, Peris K. Constitutional and environmental risk factors for cutaneous melanoma in an Italian population. A case-control study. Melanoma Res. 2004 Apr;14(2):151–7. doi: 10.1097/00008390-200404000-00013. http://paperpile.com/b/4NlRPX/sXhk. [DOI] [PubMed] [Google Scholar]

- 25.Naldi L, Imberti GL, Parazzini F, Gallus S, La Vecchia C. Pigmentary traits, modalities of sun reaction, history of sunburns, and melanocytic nevi as risk factors for cutaneous malignant melanoma in the Italian population. Cancer. 2000 Jun 15;88(12):2703–10. doi: 10.1002/1097-0142(20000615)88:12<2703::aid-cncr8>3.0.co;2-q. [DOI] [PubMed] [Google Scholar]

- 26.Ródenas JM, Delgado-Rodríguez M, Herranz MT, Tercedor J, Serrano S. Sun exposure, pigmentary traits, and risk of cutaneous malignant melanoma: a case-control study in a Mediterranean population. Cancer Causes Control. 1996 Mar;7(2):275–83. doi: 10.1007/bf00051303. http://paperpile.com/b/4NlRPX/9QQT. [DOI] [PubMed] [Google Scholar]

- 27.Nachbar F, Stolz W, Merkle T, Cognetta AB, Vogt T, Landthaler M, Bilek P, Braun-Falco O, Plewig G. The ABCD rule of dermatoscopy: high prospective value in the diagnosis of doubtful melanocytic skin lesions. J Am Acad Dermatol. 1994 Apr;30(4):551–9. doi: 10.1016/s0190-9622(94)70061-3. [DOI] [PubMed] [Google Scholar]

- 28.Marzuka A, Book S. Basal cell carcinoma: pathogenesis, epidemiology, clinical features, diagnosis, histopathology, and management. Yale J Biol Med. 2015 Jun;88(2):167–79. http://europepmc.org/abstract/MED/26029015. [PMC free article] [PubMed] [Google Scholar]

- 29.Gordon R. Skin cancer: an overview of epidemiology and risk factors. Semin Oncol Nurs. 2013 Aug;29(3):160–9. doi: 10.1016/j.soncn.2013.06.002. [DOI] [PubMed] [Google Scholar]

- 30.Scrivener Y, Grosshans E, Cribier B. Variations of basal cell carcinomas according to gender, age, location and histopathological subtype. Br J Dermatol. 2002 Jul;147(1):41–7. doi: 10.1046/j.1365-2133.2002.04804.x. [DOI] [PubMed] [Google Scholar]

- 31.Augustin J, Kis A, Sorbe C, Schäfer I, Augustin M. Epidemiology of skin cancer in the German population: impact of socioeconomic and geographic factors. J Eur Acad Dermatol Venereol. 2018 Nov 17;32(11):1906–13. doi: 10.1111/jdv.14990. [DOI] [PubMed] [Google Scholar]

- 32.Pion I, Rigel D, Garfinkel L, Silverman M, Kopf A. Occupation and the risk of malignant melanoma. Cancer. 1995 Jan 15;75(2 Suppl):637–44. doi: 10.1002/1097-0142(19950115)75:2+<637::aid-cncr2820751404>3.0.co;2-#. [DOI] [PubMed] [Google Scholar]

- 33.Roffman D, Hart G, Girardi M, Ko CJ, Deng J. Predicting non-melanoma skin cancer via a multi-parameterized artificial neural network. Sci Rep. 2018 Jan 26;8(1):1701. doi: 10.1038/s41598-018-19907-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Wang H, Wang Y, Liang C, Li Y. Assessment of deep learning using nonimaging information and sequential medical records to develop a prediction model for nonmelanoma skin cancer. JAMA Dermatol. 2019 Sep 04;:2335. doi: 10.1001/jamadermatol.2019.2335. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Haenssle H, Fink C, Toberer F, Winkler J, Stolz W, Deinlein T, Hofmann-Wellenhof R, Lallas A, Emmert S, Buhl T, Zutt M, Blum A, Abassi M, Thomas L, Tromme I, Tschandl P, Enk A, Rosenberger A, Reader Study Level I and Level II Groups Man against machine reloaded: performance of a market-approved convolutional neural network in classifying a broad spectrum of skin lesions in comparison with 96 dermatologists working under less artificial conditions. Ann Oncol. 2020 Jan;31(1):137–43. doi: 10.1016/j.annonc.2019.10.013. https://linkinghub.elsevier.com/retrieve/pii/S0923-7534(19)35468-7. [DOI] [PubMed] [Google Scholar]

- 36.ISIC Challenge 2019. [2020-05-11]. https://challenge2019.isic-archive.com/

- 37.Binder M, Kittler H, Dreiseitl S, Ganster H, Wolff K, Pehamberger H. Computer-aided epiluminescence microscopy of pigmented skin lesions: the value of clinical data for the classification process. Melanoma Res. 2000 Dec;10(6):556–61. doi: 10.1097/00008390-200012000-00007. [DOI] [PubMed] [Google Scholar]

- 38.Alcon JF, Ciuhu C, ten Kate W, Heinrich A, Uzunbajakava N, Krekels G, Siem D, de Haan G. Automatic imaging system with decision support for inspection of pigmented skin lesions and melanoma diagnosis. IEEE J Sel Top Signal Process. 2009 Feb;3(1):14–25. doi: 10.1109/jstsp.2008.2011156. [DOI] [Google Scholar]

- 39.Cheng B, Stanley RJ, Stoecker WV, Stricklin SM, Hinton KA, Nguyen TK, Rader RK, Rabinovitz HS, Oliviero M, Moss RH. Analysis of clinical and dermoscopic features for basal cell carcinoma neural network classification. Skin Res Technol. 2013 Feb 22;19(1):217–22. doi: 10.1111/j.1600-0846.2012.00630.x. http://europepmc.org/abstract/MED/22724561. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Liu Z, Sun J, Smith M, Smith L, Warr R. Incorporating clinical metadata with digital image features for automated identification of cutaneous melanoma. Br J Dermatol. 2013 Nov 31;169(5):1034–40. doi: 10.1111/bjd.12550. [DOI] [PubMed] [Google Scholar]

- 41.Rubegni P, Feci L, Nami N, Burroni M, Taddeucci P, Miracco C, Butorano MA, Fimiani M, Cevenini G. Computer-assisted melanoma diagnosis: a new integrated system. Melanoma Res. 2015 Dec;25(6):537–42. doi: 10.1097/CMR.0000000000000209. [DOI] [PubMed] [Google Scholar]

- 42.Bonechi S, Bianchini M, Bongini P, Ciano G, Giacomini G, Rosai R, Tognetti L, Rossi A, Andreini P. Fusion of visual and anamnestic data for the classification of skin lesions with deep learning. In: Cristani M, Prati A, Lanz O, Messelodi S, Sebe N, editors. New Trends in Image Analysis and Processing - ICIAP 2019. Switzerland: Springer; 2019. pp. 211–9. [Google Scholar]

- 43.Chin Y, Hou Z, Lee M, Chu H, Wang H, Lin Y, Gittin A, Chien S, Nguyen P, Li L, Chang T, Li Y. A patient-oriented, general-practitioner-level, deep-learning-based cutaneous pigmented lesion risk classifier on a smartphone. Br J Dermatol. 2020 Jun 06;182(6):1498–500. doi: 10.1111/bjd.18859. [DOI] [PubMed] [Google Scholar]

- 44.Gonzalez-Diaz I. DermaKNet: Incorporating the knowledge of dermatologists to convolutional neural networks for skin lesion diagnosis. IEEE J Biomed Health Inform. 2019 Mar;23(2):547–59. doi: 10.1109/jbhi.2018.2806962. [DOI] [PubMed] [Google Scholar]

- 45.Argenziano G, Soyer HP, De Giorgio V, Piccolo D, Carli P, Delfinoo M, Ferrari A, Hofmann-Wellenhof R, Massi D, Mazzocchetti G, Scalvenzi M, Wolf IH. Interactive Atlas of Dermoscopy. Milan, Italy: Edra Medical Publishing & New Media; 2000. pp. 1–208. [Google Scholar]

- 46.Gessert N, Nielsen M, Shaikh M, Werner R, Schlaefer A. Skin lesion classification using ensembles of multi-resolution EfficientNets with meta data. MethodsX. 2020;7:100864. doi: 10.1016/j.mex.2020.100864. https://linkinghub.elsevier.com/retrieve/pii/S2215-0161(20)30083-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Tschandl P, Rosendahl C, Kittler H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci Data. 2018 Aug 14;5:180161. doi: 10.1038/sdata.2018.161. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Combalia M, Codella NC, Rotemberg V, Helba B, Vilaplana V, Reiter O, Carrera C, Barreiro A, Halpern AC, Puig S, Malvehy J. BCN20000: Dermoscopic lesions in the wild. arXiv:1908.02288. 2019. [2021-06-14]. https://arxiv.org/abs/1908.02288. [DOI] [PMC free article] [PubMed]

- 49.Codella NC, Gutman D, Celebi ME, Helba B, Marchetti MA, Dusza SW, Kalloo A, Liopyris K, Mishra N, Kittler H, Halpern A. Skin lesion analysis toward melanoma detection: a challenge at the 2017 international symposium on biomedical imaging (ISBI), hosted by the international skin imaging collaboration (ISIC) Proceedings of the IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018) 2018:168–72. doi: 10.1109/ISBI.2018.8363547. [DOI] [Google Scholar]

- 50.Kawahara J, Daneshvar S, Argenziano G, Hamarneh G. Seven-point checklist and skin lesion classification using multitask multimodal neural nets. IEEE J Biomed Health Inform. 2019 Mar;23(2):538–46. doi: 10.1109/jbhi.2018.2824327. http://paperpile.com/b/4NlRPX/OuJE. [DOI] [PubMed] [Google Scholar]

- 51.Kharazmi P, Kalia S, Lui H, Wang ZJ, Lee TK. A feature fusion system for basal cell carcinoma detection through data-driven feature learning and patient profile. Skin Res Technol. 2018 May 22;24(2):256–64. doi: 10.1111/srt.12422. [DOI] [PubMed] [Google Scholar]

- 52.Li W, Zhuang J, Wang R, Zhang J, Zheng W. Fusing metadata and dermoscopy images for skin disease diagnosis. Proceedings of the IEEE 17th International Symposium on Biomedical Imaging (ISBI); IEEE 17th International Symposium on Biomedical Imaging (ISBI); April 3-7, 2020; Iowa City, IA, USA. 2020. [DOI] [Google Scholar]

- 53.Pacheco AG, Krohling RA. The impact of patient clinical information on automated skin cancer detection. Comput Biol Med. 2020 Jan;116:103545. doi: 10.1016/j.compbiomed.2019.103545. [DOI] [PubMed] [Google Scholar]

- 54.Ruiz-Castilla J, Rangel-Cortes J, García-Lamont F, Trueba-Espinosa A. Intelligent Computing Theories and Application, ICIC 2019, Lecture Notes in Computer Science, Vol 11644. Switzerland: Springer; 2019. CNN and metadata for classification of benign and malignant melanomas; pp. 569–79. [Google Scholar]

- 55.Sriwong K, Bunrit S, Kerdprasop K, Kerdprasop N. Dermatological classification using deep learning of skin image and patient background knowledge. Int J Mach Learn Comput. 2019 Dec;9(6):862–7. doi: 10.18178/ijmlc.2019.9.6.884. http://paperpile.com/b/4NlRPX/EdW3. [DOI] [Google Scholar]

- 56.Yap J, Yolland W, Tschandl P. Multimodal skin lesion classification using deep learning. Exp Dermatol. 2018 Nov 27;27(11):1261–7. doi: 10.1111/exd.13777. [DOI] [PubMed] [Google Scholar]

- 57.Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Huang Z, Karpathy A, Khosla A, Bernstein M, Berg AC, Fei-Fei L. ImageNet large scale visual recognition challenge. IJCV, 2015. [2021-06-14]. https://image-net.org/challenges/LSVRC/2015/index.php.

- 58.Hu J, Shen L, Albanie S, Sun G, Wu E. Squeeze-and-excitation networks. IEEE Trans Pattern Anal Mach Intell. 2020 Aug;42(8):2011–23. doi: 10.1109/TPAMI.2019.2913372. http://paperpile.com/b/4NlRPX/mLFU. [DOI] [PubMed] [Google Scholar]

- 59.Visconti A, Ribero S, Sanna M, Spector TD, Bataille V, Falchi M. Body site-specific genetic effects influence naevus count distribution in women. Pigment Cell Melanoma Res. 2020 Mar 25;33(2):326–33. doi: 10.1111/pcmr.12820. [DOI] [PubMed] [Google Scholar]

- 60.Jerant A, Johnson J, Sheridan C, Caffrey T. Early detection and treatment of skin cancer. Am Fam Physician. 2000 Jul 15;62(2):357–68, 75. https://www.aafp.org/link_out?pmid=10929700. [PubMed] [Google Scholar]

- 61.Firnhaber J. Diagnosis and treatment of basal cell and squamous cell carcinoma. Am Fam Physician. 2012 Jul 15;86(2):161–8. https://www.aafp.org/link_out?pmid=22962928. [PubMed] [Google Scholar]
