Significance

Many real-world data are inherently multidimensional; however, often data are processed as two-dimensional arrays (matrices), even if the data are naturally represented in higher dimension. The common practice of matricizing high-dimensional data is due to the ubiquitousness and strong theoretical foundations of matrix algebra. Various tensor-based approximations have been proposed to exploit high-dimensional correlations. While these high-dimensional techniques have been effective in many applications, none have been theoretically proven to outperform matricization generically. In this study, we propose matrix-mimetic, tensor-algebraic formulations to preserve and process data in its native, multidimensional format. For a general family of tensor algebras we prove the superiority of optimal truncated tensor representations to traditional matrix-based representations with implications for other related tensorial frameworks.

Keywords: tensor, compression, multiway data, SVD, rank

Abstract

With the advent of machine learning and its overarching pervasiveness it is imperative to devise ways to represent large datasets efficiently while distilling intrinsic features necessary for subsequent analysis. The primary workhorse used in data dimensionality reduction and feature extraction has been the matrix singular value decomposition (SVD), which presupposes that data have been arranged in matrix format. A primary goal in this study is to show that high-dimensional datasets are more compressible when treated as tensors (i.e., multiway arrays) and compressed via tensor-SVDs under the tensor-tensor product constructs and its generalizations. We begin by proving Eckart–Young optimality results for families of tensor-SVDs under two different truncation strategies. Since such optimality properties can be proven in both matrix and tensor-based algebras, a fundamental question arises: Does the tensor construct subsume the matrix construct in terms of representation efficiency? The answer is positive, as proven by showing that a tensor-tensor representation of an equal dimensional spanning space can be superior to its matrix counterpart. We then use these optimality results to investigate how the compressed representation provided by the truncated tensor SVD is related both theoretically and empirically to its two closest tensor-based analogs, the truncated high-order SVD and the truncated tensor-train SVD.

1. Introduction

A. Overview.

Following the discovery of the spectral decomposition by Lagrange in 1762, the singular value decomposition (SVD) was discovered independently by Beltrami and Jordan in 1873 and 1874, respectively. Further generalizations of the decomposition came independently from Sylvester in 1889 and from Autonne in 1915 (1). Perhaps the most notable theoretical result associated with the decomposition is due to Eckart and Young, who provided the first optimality proof of the decomposition in 1936 (2).

The use of SVD in data analysis is ubiquitous. From a statistical point of view, the singular vectors of a (mean-subtracted) data matrix represent the principal component directions, i.e., the directions in which there is maximum variance corresponding to the largest singular values. However, the SVD is historically well-motivated by spectral analysis of linear operators. In typical data analysis tasks, matrices are treated as rectangular arrays of data which may not correspond directly to either statistical interpretations or representations of linear transforms. Hence, the prevalence of the SVD in data analysis applications requires further investigation.

The utility of the SVD in the context of data analysis is due to two key factors: the aforementioned Eckart–Young theorem (also known as the Eckart–Young–Mirsky theorem) and the fact that the SVD (or in some cases a partial decomposition or high-fidelity approximation) can be efficiently computed relative to the matrix dimensions and/or desired rank of the partial decomposition (see, e.g., ref. 3 and references therein). Formally, the Eckart–Young theorem offers the solution to the problem of finding the best (in the Frobenius norm or 2-norm) rank-$k$ approximation to a matrix with rank greater than $k$ in terms of the first $k$ terms of the SVD expansion of that matrix. The theorem implies, in some informal sense, that the majority of the informational content is captured by the dominant singular subspaces (i.e., the span of the singular vectors corresponding to the largest singular values), opening the door to compression, efficient representation, denoising, and so on. The SVD motivates modeling data as matrices, even in cases where a more natural model is a high-dimensional array (a tensor), a process known as matricization.
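As a minimal numerical sketch of the matrix Eckart–Young statement above, the following NumPy snippet truncates an SVD and compares the approximation error with the discarded singular values. The matrix sizes, the random data, and all variable names are assumptions made only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((8, 6))   # illustrative data matrix (assumed sizes)
k = 2                             # target rank

# Thin SVD; singular values come back in descending order.
U, s, Vh = np.linalg.svd(A, full_matrices=False)

# Truncated expansion: keep the first k singular triplets.
A_k = (U[:, :k] * s[:k]) @ Vh[:k, :]

err_fro = np.linalg.norm(A - A_k, 'fro')
err_two = np.linalg.norm(A - A_k, 2)

# Eckart-Young: both errors are determined by the discarded singular values.
tail_fro = np.sqrt(np.sum(s[k:] ** 2))
tail_two = s[k]

# Any other rank-k matrix does no better; here is one random competitor.
B = rng.standard_normal((8, k)) @ rng.standard_normal((k, 6))
err_competitor = np.linalg.norm(A - B, 'fro')
```

The Frobenius error equals the root sum of squares of the discarded singular values, and the 2-norm error equals the first discarded singular value.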

Intuitively, there is an inherent disadvantage to matricizing data that can naturally be represented as a tensor. For example, a grayscale image is intrinsically represented as a matrix of numbers, but a video is natively represented as a tensor since there is an additional dimension: time. Preserving the dimensional integrity of the data can be imperative for subsequent analysis, e.g., to account for high-dimensional correlations embedded in the structures in which the data are organized. Even so, in practice, there is often a surprising dichotomy between the data representation and the algebraic constructs employed for its processing. Thus, in the last century there have been efforts to define decompositions of tensorial structures, e.g., CANDECOMP/PARAFAC (CP) (4–6), Tucker (7), higher-order SVD (HOSVD) (8), and Tensor-Train (9). However, none of the aforementioned decompositions offers an Eckart–Young-like optimality result.

In this study, we attempt to close this gap by proving a general Eckart–Young optimality theorem for a tensor-truncated representation. An Eckart–Young-like theorem must revolve around some tensor decomposition and a metric. We consider tensor decompositions built around the idea of the t-product presented in ref. 10 and the tensor-tensor product extensions of a similar vein in ref. 11. In refs. 10 and 11 the authors define tensor-tensor products between third-order tensors and corresponding algebra in which notions of identity, orthogonality, and transpose are all well-defined. For the t-product in ref. 10, the authors show there exists an Eckart–Young type of optimality result in the Frobenius norm. Other popular tensor decompositions [e.g., Tucker, HOSVD, CP, Tensor-Train SVD (TT-SVD) (4, 7–9, 12)] do not lend themselves easily to a similar analysis, either because direct truncation does not lead to an optimal lower-term approximation or because they lack analogous definitions of orthogonality and energy preservation.

In this paper, for the generalization of the t-product, the $\star_M$-product (11), we prove two Eckart–Young optimality results. Importantly, we prove the superiority of the corresponding compressed tensor representation to the matrix compressed representation of the same data. This is a theoretical proof of superiority of compression of multiway data using tensor decomposition as opposed to treating the data as a matrix. Leveraging our algebraic framework, we show that the HOSVD can be interpreted as a special case of the class of tensor-tensor products we consider. As such, we are able to apply our tensor Eckart–Young results to explain why truncation of the HOSVD will, in general, not give an optimal compressed representation when compared to the proposed truncated tensor SVD approach. Moreover, we show how our Eckart–Young results can be applied in the context of comparison of the proposed tensor-tensor framework to a truncated TT-SVD approximation.

The $\star_M$-product-based algebra is orientation-dependent, meaning that the ability to compress the data in a meaningful way depends on the orientation of the tensor (i.e., how the data are organized in the tensor). For example, a collection of grayscale images of size $m \times n$ can be placed into a tensor as lateral slices or be rotated first and then placed as lateral slices, and so on. In many applications, there are good reasons to keep the second, lateral, dimension fixed (e.g., representing time, total number of samples, etc.), but in others there may be no obvious merit to preferentially treating one of the other two dimensions. Thus, we also consider variants of the t-product approach that offer optimal approximations to the tensorized data without handling one spatial orientation differently than another.

We primarily limit the discussion to third-order tensors, though in the final section we discuss how the ideas generalize to higher-order as well. Indeed, the potential for even greater compression gain for higher-order representations of the data exists, provided the data have higher-dimensional correlations to be exploited.

B. Paper Organization.

In Section 2, we give background notation and definitions. In Section 3, we define decompositions, the t-SVDM and its variant, the t-SVDMII, and prove an Eckart–Young-like theorem for each. In Section 5, we employ these theorems to prove the superior representation of the t-SVDM and t-SVDMII compared to the matrix SVD. To provide intuition, Section 4 and Section 5 discuss when and why some data are more amenable to optimal representation through the use of the truncated t-SVDM than through the matrix SVD. In Section 6, we relate the $\star_M$-framework to other related tensorial frameworks, including HOSVD and TT-SVD. Section 7 discusses multisided tensor compression. Section 8 contains a numerical study and highlights extensions to higher-order data. A summary and future work are the subjects of Section 9.

2. Background

For the purposes of this paper, a tensor is a multidimensional array, and the order of the tensor is defined as the number of dimensions of this array. As we are concerned with third-order tensors throughout most of the discussion, we limit our notation and definitions to the third-order case here and generalize to higher order in the final section.

A. Notation and Indexing

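A third-order tensor $\mathcal{A}$ is an object in $\mathbb{C}^{m \times p \times n}$. Its Frobenius norm, $\|\mathcal{A}\|_F$, is analogous to the matrix case, that is, $\|\mathcal{A}\|_F^2 = \sum_{i,j,k} |\mathcal{A}_{i,j,k}|^2$. We use MATLAB notation for entries: $\mathcal{A}_{i,j,k}$ denotes the entry at row $i$ and column $j$ of the matrix going $k$ “inward.” The fibers of tensor $\mathcal{A}$ are defined by fixing two indices. Of note are the tube fibers, written as $\mathcal{A}_{i,j,:}$ or $\mathbf{a}_{ij}$, $i = 1, \ldots, m$, $j = 1, \ldots, p$. A slice of a third-order tensor is a two-dimensional array defined by fixing one index. Of particular note are the frontal and lateral slices, as depicted in Fig. 1. The $i$th frontal slice is expressed as $\mathcal{A}_{:,:,i}$ and also referenced as $\mathbf{A}^{(i)}$ for convenience in later definitions. The $j$th lateral slice would be $\mathcal{A}_{:,j,:}$ or equivalently expressed as $\vec{\mathcal{A}}_j$.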

Fig. 1.

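Fibers and slices of tensor $\mathcal{A}$. Left to right: Frontal slices, denoted either $\mathcal{A}_{:,:,i}$ or $\mathbf{A}^{(i)}$; lateral slices denoted $\mathcal{A}_{:,j,:}$ or $\vec{\mathcal{A}}_j$; tube fibers $\mathcal{A}_{i,j,:}$ or $\mathbf{a}_{ij}$.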

Some other notation that we use for convenience are the vec and reshape operators that map matrices to vectors by column unwrapping, and vice versa:

We can also define invertible mappings between matrices and tensors by twisting and squeezing* (13): i.e., is related to via

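The mode-1, mode-2, and mode-3 unfoldings of $\mathcal{A} \in \mathbb{C}^{m \times p \times n}$ are $m \times np$, $p \times mn$, and $n \times mp$ matrices, respectively, and are given by

$$\mathbf{A}_{(1)} := [\mathbf{A}^{(1)}, \ldots, \mathbf{A}^{(n)}], \quad \mathbf{A}_{(2)} := [(\mathbf{A}^{(1)})^{\top}, \ldots, (\mathbf{A}^{(n)})^{\top}], \quad \mathbf{A}_{(3)} := [\mathrm{sq}(\mathcal{A}_{:,1,:})^{\top}, \ldots, \mathrm{sq}(\mathcal{A}_{:,p,:})^{\top}], \qquad [1]$$

with $\mathrm{sq}(\cdot)$ the squeeze operation that maps an $m \times 1 \times n$ lateral slice to an $m \times n$ matrix.

These are useful in defining modewise tensor-matrix products (see ref. 12). For example, $\mathcal{A} \times_3 \mathbf{F}$ for a $k \times n$ matrix $\mathbf{F}$ is equivalent to computing the matrix-matrix product $\mathbf{F}\mathbf{A}_{(3)}$, which is a $k \times mp$ matrix, and then reshaping the result to an $m \times p \times k$ tensor.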
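The mode-3 product described above can be sketched in NumPy as follows. The helper names `unfold3`, `fold3`, and `mode3_product` are hypothetical, and the column ordering of the unfolding follows NumPy's row-major layout rather than MATLAB's column-major one; this is an assumption that does not affect the result as long as folding and unfolding agree.

```python
import numpy as np

def unfold3(A):
    """Mode-3 unfolding: an n x (m*p) matrix whose columns are the tube fibers."""
    m, p, n = A.shape
    return A.reshape(m * p, n).T

def fold3(X, m, p):
    """Inverse of unfold3: reshape a k x (m*p) matrix into an m x p x k tensor."""
    return X.T.reshape(m, p, X.shape[0])

def mode3_product(A, F):
    """A x_3 F: multiply the mode-3 unfolding by F, then fold the result back."""
    m, p, _ = A.shape
    return fold3(F @ unfold3(A), m, p)

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 4, 5))   # m x p x n
F = rng.standard_normal((2, 5))      # k x n
B = mode3_product(A, F)              # m x p x k
```

Each tube fiber of `B` is `F` applied to the corresponding tube fiber of `A`, which is the sense in which the mode-3 product acts "along the tubes."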

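The HOSVD (8) can be expressed as

$$\mathcal{A} = \mathcal{C} \times_1 \mathbf{U} \times_2 \mathbf{V} \times_3 \mathbf{W}, \qquad [2]$$

where $\mathbf{U}, \mathbf{V}, \mathbf{W}$ are the matrices containing the left singular vectors, corresponding to nonzero singular values, of the matrix SVDs of $\mathbf{A}_{(1)}, \mathbf{A}_{(2)}, \mathbf{A}_{(3)}$, respectively. For an $m \times p \times n$ tensor, $\mathcal{C}$ would be $r_1 \times r_2 \times r_3$, $\mathbf{U}$ would be $m \times r_1$, $\mathbf{V}$ would be $p \times r_2$, and $\mathbf{W}$ would be $n \times r_3$, where $r_1, r_2, r_3$ are the ranks of the three respective unfoldings. Correspondingly, we say the tensor has HOSVD rank $(r_1, r_2, r_3)$. The core tensor is given by $\mathcal{C} := \mathcal{A} \times_1 \mathbf{U}^{H} \times_2 \mathbf{V}^{H} \times_3 \mathbf{W}^{H}$. While the columns of the factor matrices are orthonormal, the core need not be diagonal, and its entries need not be nonnegative. In practice, compression is achieved by truncating to an HOSVD rank $(k_1, k_2, k_3)$, but unlike the matrix case such truncation does not lead to an optimal truncated approximation in a norm sense. It does, however, offer a quasi-optimal approximation in the following sense: $\|\mathcal{A} - \mathcal{A}_{\mathbf{k}}\|_F \le \sqrt{3}\,\|\mathcal{A} - \mathcal{A}_{\mathrm{best}}\|_F$, where $\mathcal{A}_{\mathbf{k}}$ is the truncated HOSVD and $\mathcal{A}_{\mathrm{best}}$ is an optimal approximation of the same HOSVD rank, which means that if there exists an optimal solution with a small error then a truncated HOSVD will yield a bounded approximation to it (14–16).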
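A minimal NumPy sketch of the HOSVD construction just described: factor matrices from the SVDs of the three unfoldings, then the core by modewise products with their conjugate transposes. The function names and the test tensor are assumptions, and the sketch keeps all singular vectors of each unfolding (for generic data all singular values are nonzero) unless explicit truncation ranks are passed.

```python
import numpy as np

def unfold(A, mode):
    """Unfolding along `mode`: the fibers of that mode become the columns."""
    return np.moveaxis(A, mode, 0).reshape(A.shape[mode], -1)

def mode_product(A, F, mode):
    """Modewise product: F multiplies every fiber of A along `mode`."""
    return np.moveaxis(np.tensordot(F, A, axes=(1, mode)), 0, mode)

def hosvd(A, ranks=None):
    """Return (core, factors); `ranks` optionally truncates each factor."""
    factors = []
    for mode in range(A.ndim):
        U, _, _ = np.linalg.svd(unfold(A, mode), full_matrices=False)
        r = U.shape[1] if ranks is None else ranks[mode]
        factors.append(U[:, :r])
    core = A
    for mode, U in enumerate(factors):
        core = mode_product(core, U.conj().T, mode)
    return core, factors

def reconstruct(core, factors):
    A = core
    for mode, U in enumerate(factors):
        A = mode_product(A, U, mode)
    return A

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 5, 3))
core, factors = hosvd(A)                      # full HOSVD
A_full = reconstruct(core, factors)
A_trunc = reconstruct(*hosvd(A, (2, 2, 2)))   # truncated to HOSVD rank (2, 2, 2)
err_trunc_sq = np.linalg.norm(A - A_trunc) ** 2
```

The full HOSVD reproduces the tensor, while the truncated one only satisfies an error bound; it is not guaranteed to be the best approximation of that HOSVD rank.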

If are length vectors, respectively, then (i.e., ) is called a rank-1 tensor. A CP (4–6) decomposition of a tensor is an expression as a sum of rank-1 outer-products:

where the factor matrices have the as their columns, respectively, and is a diagonal matrix containing the weights. If is minimal, then is said to be the rank of the tensor; that is, is the tensor rank. Although it is known that , determining the rank of a tensor is an NP-hard problem (17). Also, the factor matrices need not have orthonormal columns, nor be full rank.† .

However, if we are given a set of factor matrices with columns such that holds, this is still a CP decomposition, even if we do not know if corresponds to, or is bigger than, the rank. In other words, given a CP decomposition where the factor matrices have columns, all we know is that the rank of the tensor represented by this decomposition has tensor rank at most .

In the remaining subsections of this section, we provide background on a tensor-tensor product representation recently developed in the literature (10, 11, 13). The primary goal of this paper is to derive provably optimal (i.e., minimal, in the Frobenius norm) approximations to tensors under this matrix mimetic framework. We compare these approximations to those derived by processing the same data in matrix form and show links between our approximations and to direct tensorial factorizations that offer a notion of orthogonality, the HOSVD and TT-SVD.

B. A Family of Tensor-Tensor Products

The first closed multiplicative operation between a pair of third-order tensors of appropriate dimension was given in ref. 10. That operation was named the t-product, and the resulting linear algebraic framework is described in refs. 10 and 13. In ref. 11, the authors followed the theme from ref. 10 by describing a new family of tensor-tensor products, called the $\star_M$-product, and gave the associated algebraic framework. As the presentation in ref. 11 includes the t-product as one example, we will introduce the class of tensor-tensor products of interest and at times throughout the paper highlight the t-product as a special case.

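Let $\mathbf{M}$ be any invertible $n \times n$ matrix, and $\mathcal{A} \in \mathbb{C}^{m \times p \times n}$. We will use hat notation to denote a tensor in the transform domain specified by $\mathbf{M}$:

$$\hat{\mathcal{A}} := \mathcal{A} \times_3 \mathbf{M},$$

where, since $\mathbf{M}$ is $n \times n$, $\hat{\mathcal{A}}$ has the same dimension as $\mathcal{A}$. Importantly, $\hat{\mathcal{A}}$ corresponds to applying $\mathbf{M}$ along all tube fibers, although it is implemented according to the definition of computing the matrix-matrix product $\mathbf{M}\mathbf{A}_{(3)}$ and reshaping the result. The “hat” notation should be understood in context relative to the $\mathbf{M}$ applied.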

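Algorithm 1:

Algorithm $\star_M$ for invertible $\mathbf{M}$ from ref. 11

| INPUT: $\mathcal{A} \in \mathbb{C}^{m \times p \times n}$, $\mathcal{B} \in \mathbb{C}^{p \times \ell \times n}$, invertible $\mathbf{M} \in \mathbb{C}^{n \times n}$ |

| 1: Define $\hat{\mathcal{A}} := \mathcal{A} \times_3 \mathbf{M}$, $\hat{\mathcal{B}} := \mathcal{B} \times_3 \mathbf{M}$ |

| 2: for $i = 1, \ldots, n$ do |

| 3: $\hat{\mathcal{C}}_{:,:,i} = \hat{\mathcal{A}}_{:,:,i}\,\hat{\mathcal{B}}_{:,:,i}$ |

| 4: end for |

| 5: Define $\mathcal{C} := \hat{\mathcal{C}} \times_3 \mathbf{M}^{-1}$. Now $\mathcal{C} = \mathcal{A} \star_M \mathcal{B}$ |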

From ref. 11, we define the $\star_M$-product between $\mathcal{A} \in \mathbb{C}^{m \times p \times n}$ and $\mathcal{B} \in \mathbb{C}^{p \times \ell \times n}$ through the steps in Algorithm 1. The for-loop (steps 2 through 4) is the facewise product, denoted $\hat{\mathcal{C}} = \hat{\mathcal{A}} \,\triangle\, \hat{\mathcal{B}}$, and is embarrassingly parallel since the matrix-matrix products in the loop are independent. Step 1 (and 5) could in theory also be performed in independent blocks of matrix-matrix products (or matrix solves, in the case of $\times_3 \mathbf{M}^{-1}$, to avoid inverse computation).

Choosing $\mathbf{M}$ as the (unnormalized) DFT matrix and comparing to the t-product algorithm in ref. 10, we see that $\star_M$ effectively reduces to the t-product operation, $*$, defined in ref. 10. Thus, the t-product is a special instance of products from the $\star_M$-product family.
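The steps of Algorithm 1 and the DFT special case can be sketched in NumPy as follows. The names `mode3`, `facewise`, `mprod`, and `tprod_reference` are hypothetical; the reference routine uses the circular-convolution form of the t-product as a point of comparison, and the tensor sizes are arbitrary.

```python
import numpy as np

def mode3(A, M):
    """Mode-3 product: apply M along every tube fiber of A."""
    return np.einsum('kn,ijn->ijk', M, A)

def facewise(A_hat, B_hat):
    """Facewise product: multiply matching frontal slices (steps 2 through 4)."""
    return np.einsum('ijk,jlk->ilk', A_hat, B_hat)

def mprod(A, B, M):
    """Sketch of the *_M product for an invertible n x n matrix M."""
    C_hat = facewise(mode3(A, M), mode3(B, M))             # steps 1 to 4
    m, l, n = C_hat.shape
    # Step 5: solve M X = C_hat along the tubes instead of forming inv(M).
    X = np.linalg.solve(M, C_hat.reshape(m * l, n).T)
    return X.T.reshape(m, l, n)

def tprod_reference(A, B):
    """t-product written directly as a circular convolution of frontal slices."""
    n = A.shape[2]
    C = np.zeros((A.shape[0], B.shape[1], n))
    for k in range(n):
        for s in range(n):
            C[:, :, k] += A[:, :, s] @ B[:, :, (k - s) % n]
    return C

rng = np.random.default_rng(0)
n = 4
A = rng.standard_normal((3, 2, n))
B = rng.standard_normal((2, 5, n))
F = np.fft.fft(np.eye(n))            # unnormalized DFT matrix
C_star = mprod(A, B, F)
C_ref = tprod_reference(A, B)
```

With the DFT matrix the result is real up to rounding and agrees with the circular-convolution form, which illustrates why the t-product is one member of the family.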

C. Tensor Algebraic Framework

Now we can introduce the remaining parts of the framework. For the t-product, the linear algebraic framework is in refs. 10 and 13. However, as the t-product is a special case of the as noted above, we will elucidate here the linear algebraic framework as described in ref. 11, with pointers to refs. 10 and 13 so we can cover all cases.

There exists a notion of conjugate transposition and an identity element:

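Definition 2.1 (Conjugate Transpose): Given $\mathcal{A} \in \mathbb{C}^{m \times p \times n}$, its $p \times m \times n$ conjugate transpose under $\star_M$, $\mathcal{B} = \mathcal{A}^{H}$, is defined such that

$$\hat{\mathcal{B}}_{:,:,i} = (\hat{\mathcal{A}}_{:,:,i})^{H}, \quad i = 1, \ldots, n.$$

As noted in ref. 11, this definition ensures the multiplication reversal property for the Hermitian transpose under $\star_M$: $(\mathcal{A} \star_M \mathcal{B})^{H} = \mathcal{B}^{H} \star_M \mathcal{A}^{H}$. This definition is consistent with the t-product transpose given in ref. 10 when $\star_M$ is defined by the DFT matrix.

Definition 2.2 (Identity Tensor; Unit-Normalized Slices): The $m \times m \times n$ identity tensor $\mathcal{I}$ satisfies $\mathcal{A} \star_M \mathcal{I} = \mathcal{A} = \mathcal{I} \star_M \mathcal{A}$ for $\mathcal{A} \in \mathbb{C}^{m \times m \times n}$. For invertible $\mathbf{M}$, this tensor always exists. Each frontal slice of $\hat{\mathcal{I}}$ is an $m \times m$ identity matrix. If $\mathcal{B}$ is $m \times 1 \times n$, and $\mathcal{B}^{H} \star_M \mathcal{B}$ is the $1 \times 1 \times n$ identity tensor under $\star_M$, we say that $\mathcal{B}$ is a unit-normalized tensor slice.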

The concept of unitary and orthogonal tensors is now straightforward:

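Definition 2.3 (Unitary/Orthogonality): Two $m \times 1 \times n$ tensors, $\mathcal{A}, \mathcal{B}$, are called $\star_M$-orthogonal slices if $\mathcal{A}^{H} \star_M \mathcal{B}$ is the zero tube fiber $\mathbf{0} \in \mathbb{C}^{1 \times 1 \times n}$. A tensor $\mathcal{Q} \in \mathbb{C}^{m \times m \times n}$ ($\mathbb{R}^{m \times m \times n}$) is called $\star_M$-unitary‡ ($\star_M$-orthogonal) if

$$\mathcal{Q}^{H} \star_M \mathcal{Q} = \mathcal{I} = \mathcal{Q} \star_M \mathcal{Q}^{H},$$

where $H$ is replaced by transpose for real-valued tensors. Note that $\mathcal{I}$ must be the identity defined under $\star_M$ as well. The diagonal tube fibers of $\mathcal{Q}^{H} \star_M \mathcal{Q}$ then show that the lateral slices of $\mathcal{Q}$ are unit-normalized, and the zero off-diagonal tube fibers show that distinct lateral slices are $\star_M$-orthogonal.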
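The transpose and identity definitions can be checked numerically with a short sketch. The helper names are hypothetical, the matrix `M` is a generic (assumed invertible) real matrix chosen only for illustration, and `np.linalg.inv` is used for brevity rather than efficiency.

```python
import numpy as np

def mode3(A, M):
    return np.einsum('kn,ijn->ijk', M, A)

def mprod(A, B, M):
    C_hat = np.einsum('ijk,jlk->ilk', mode3(A, M), mode3(B, M))
    return mode3(C_hat, np.linalg.inv(M))

def mtranspose(A, M):
    """Conjugate transpose under *_M: transpose every face in the transform domain."""
    A_hat = mode3(A, M)
    return mode3(A_hat.conj().transpose(1, 0, 2), np.linalg.inv(M))

def identity_tensor(m, M):
    """Identity under *_M: every transform-domain face is the m x m identity."""
    n = M.shape[0]
    I_hat = np.repeat(np.eye(m)[:, :, None], n, axis=2)
    return mode3(I_hat, np.linalg.inv(M))

rng = np.random.default_rng(1)
n = 4
M = rng.standard_normal((n, n)) + 2 * np.eye(n)   # generic invertible M (assumed)
A = rng.standard_normal((3, 2, n))
B = rng.standard_normal((2, 5, n))

lhs = mtranspose(mprod(A, B, M), M)                   # (A *_M B)^H
rhs = mprod(mtranspose(B, M), mtranspose(A, M), M)    # B^H *_M A^H
left_id = mprod(identity_tensor(3, M), A, M)
right_id = mprod(A, identity_tensor(2, M), M)
```

Both the reversal property and the two-sided identity property hold to rounding error for this choice of `M`.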

3. Tensor *M-SVDs and Optimal Truncated Representation

As noted in the previous section and described in more detail in ref. 11, any invertible matrix can be used to define a valid tensor-tensor product. However, in this paper we will focus on a specific class of matrices for which unitary invariance under the Frobenius norm is preserved, as we discuss in the following. We then use this feature to develop Eckart–Young theory for our tensor decompositions later in this section.

A. Unitary Invariance

Unitary invariance of the Frobenius norm of real-valued orthogonal tensors under the t-product was shown in ref. 10. Here, we prove a more general result.

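Theorem 3.1. With the choice of $\mathbf{M} = c\mathbf{W}$ for unitary (orthogonal) $\mathbf{W}$, and nonzero $c$, assume $\mathcal{Q}$ is $m \times m \times n$ and $\star_M$-unitary ($\star_M$-orthogonal). Then

$$\|\mathcal{Q} \star_M \mathcal{B}\|_F = \|\mathcal{B}\|_F, \quad \mathcal{B} \in \mathbb{C}^{m \times k \times n}.$$

Likewise, if $\mathcal{B} \in \mathbb{C}^{p \times m \times n}$, $\|\mathcal{B} \star_M \mathcal{Q}\|_F = \|\mathcal{B}\|_F$.

Proof.

Suppose $\mathbf{M} = c\mathbf{W}$ where $\mathbf{W}$ is unitary. Then, $\mathbf{M}^{-1} = \frac{1}{c}\mathbf{W}^{H}$. Next,

$$\|\hat{\mathcal{B}}\|_F = \|\mathcal{B} \times_3 \mathbf{M}\|_F = \|\mathbf{M}\mathbf{B}_{(3)}\|_F = |c|\,\|\mathbf{B}_{(3)}\|_F = |c|\,\|\mathcal{B}\|_F. \qquad [3]$$

Let $\mathcal{C} = \mathcal{Q} \star_M \mathcal{B}$. Using [3],

$$\|\mathcal{C}\|_F^2 = \frac{1}{|c|^2}\|\hat{\mathcal{C}}\|_F^2 = \frac{1}{|c|^2}\sum_{i=1}^{n}\|\hat{\mathcal{Q}}_{:,:,i}\hat{\mathcal{B}}_{:,:,i}\|_F^2 = \frac{1}{|c|^2}\|\hat{\mathcal{B}}\|_F^2 = \|\mathcal{B}\|_F^2,$$

as each $\hat{\mathcal{Q}}_{:,:,i}$ is unitary. The other direction is similar.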
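A small numerical check of the norm scaling in [3] and of the invariance in Theorem 3.1 is sketched below. The construction of the $\star_M$-orthogonal tensor (orthogonal faces built in the transform domain by QR factorizations) and all names are assumptions made for illustration.

```python
import numpy as np

def mode3(A, M):
    return np.einsum('kn,ijn->ijk', M, A)

def mprod(A, B, M):
    C_hat = np.einsum('ijk,jlk->ilk', mode3(A, M), mode3(B, M))
    return mode3(C_hat, np.linalg.inv(M))

rng = np.random.default_rng(2)
m, n, c = 4, 5, 3.0
W, _ = np.linalg.qr(rng.standard_normal((n, n)))   # real orthogonal W
M = c * W                                          # nonzero multiple of W

# Build a *_M-orthogonal Q: every transform-domain face is orthogonal.
Q_hat = np.stack(
    [np.linalg.qr(rng.standard_normal((m, m)))[0] for _ in range(n)], axis=2
)
Q = mode3(Q_hat, np.linalg.inv(M))

B = rng.standard_normal((m, 3, n))
norm_B = np.linalg.norm(B)                  # Frobenius norm of the tensor
norm_B_hat = np.linalg.norm(mode3(B, M))    # norm in the transform domain
norm_QB = np.linalg.norm(mprod(Q, B, M))
```

The transform-domain norm is scaled by $|c|$, while multiplication by `Q` leaves the Frobenius norm unchanged.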

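We now have the framework we need to describe tensor SVDs induced by a fixed $\star_M$ operator. These were defined and existence was proven in ref. 10 for the t-product over real-valued tensors and in ref. 11 for $\star_M$ more generally.

Definition 3.2 (10, 11): Let $\mathcal{A}$ be an $m \times p \times n$ tensor. The (full) $\star_M$ tensor SVD (t-SVDM) of $\mathcal{A}$ is

$$\mathcal{A} = \mathcal{U} \star_M \mathcal{S} \star_M \mathcal{V}^{H} = \sum_{i=1}^{r} \mathcal{U}_{:,i,:} \star_M \mathcal{S}_{i,i,:} \star_M \mathcal{V}_{:,i,:}^{H}, \qquad [4]$$

where $\mathcal{U} \in \mathbb{C}^{m \times m \times n}$, $\mathcal{V} \in \mathbb{C}^{p \times p \times n}$ are $\star_M$-unitary, and $\mathcal{S} \in \mathbb{C}^{m \times p \times n}$ is a tensor whose frontal slices are diagonal (such a tensor is called f-diagonal), and $r \le \min(m, p)$ is the number of nonzero tubes in $\mathcal{S}$. When $\mathbf{M}$ is the DFT matrix, this reduces to the t-product-based t-SVD introduced in ref. 10.

Note that Definition 3.2 implies each frontal slice of $\hat{\mathcal{A}}$ has rank less than or equal to $r$. Clearly, if $m > p$, from the second equality we can get a reduced t-SVDM, by restricting $\mathcal{U}$ to have only $p$ orthonormal lateral slices, and $\mathcal{S}$ to be $p \times p \times n$, as opposed to the full representation. Similarly, if $p > m$, we only need to keep the $m \times m \times n$ portion of $\mathcal{S}$ and the first $m$ lateral slices of $\mathcal{V}$ to obtain the same representation. An illustration of the decomposition is presented in Fig. 2, Top.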

Fig. 2.

(Top) Illustration of the tensor SVD. (Bottom) Example showing different truncations across the different SVDs of the faces, based on Algorithm 3.

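Algorithm 2:

Full t-SVDM from ref. 11

| INPUT: $\mathcal{A} \in \mathbb{C}^{m \times p \times n}$, invertible $\mathbf{M} \in \mathbb{C}^{n \times n}$ |

| 1: $\hat{\mathcal{A}} \leftarrow \mathcal{A} \times_3 \mathbf{M}$ |

| 2: for $i = 1, \ldots, n$ do |

| 3: $[\hat{\mathcal{U}}_{:,:,i}, \hat{\mathcal{S}}_{:,:,i}, \hat{\mathcal{V}}_{:,:,i}] = \mathrm{svd}(\hat{\mathcal{A}}_{:,:,i})$ |

| End for |

| 4: $\mathcal{U} = \hat{\mathcal{U}} \times_3 \mathbf{M}^{-1}$, $\mathcal{S} = \hat{\mathcal{S}} \times_3 \mathbf{M}^{-1}$, $\mathcal{V} = \hat{\mathcal{V}} \times_3 \mathbf{M}^{-1}$ |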
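Algorithm 2 can be sketched in NumPy as follows. The function names are hypothetical, the test uses a real orthogonal matrix scaled by 2 as $\mathbf{M}$ (an assumption made so that all quantities stay real), and `np.linalg.inv` stands in for a fast inverse transform.

```python
import numpy as np

def mode3(A, M):
    return np.einsum('kn,ijn->ijk', M, A)

def mprod(A, B, M):
    C_hat = np.einsum('ijk,jlk->ilk', mode3(A, M), mode3(B, M))
    return mode3(C_hat, np.linalg.inv(M))

def mtranspose(A, M):
    A_hat = mode3(A, M)
    return mode3(A_hat.conj().transpose(1, 0, 2), np.linalg.inv(M))

def tsvdm(A, M):
    """Full t-SVDM: one matrix SVD per frontal slice in the transform domain."""
    m, p, n = A.shape
    A_hat = mode3(A, M)                                   # step 1
    U_hat = np.zeros((m, m, n), dtype=A_hat.dtype)
    S_hat = np.zeros((m, p, n), dtype=A_hat.dtype)
    V_hat = np.zeros((p, p, n), dtype=A_hat.dtype)
    for i in range(n):                                    # steps 2 and 3
        U, s, Vh = np.linalg.svd(A_hat[:, :, i])
        U_hat[:, :, i] = U
        S_hat[:len(s), :len(s), i] = np.diag(s)
        V_hat[:, :, i] = Vh.conj().T
    M_inv = np.linalg.inv(M)                              # step 4
    return mode3(U_hat, M_inv), mode3(S_hat, M_inv), mode3(V_hat, M_inv)

rng = np.random.default_rng(3)
n = 4
W, _ = np.linalg.qr(rng.standard_normal((n, n)))
M = 2.0 * W
A = rng.standard_normal((5, 3, n))
U, S, V = tsvdm(A, M)
A_rebuilt = mprod(mprod(U, S, M), mtranspose(V, M), M)
```

The product of the three factors under the same $\mathbf{M}$ reproduces the input, and `S` is f-diagonal because only its diagonal tubes are nonzero.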

Independent of the choice of $\mathbf{M}$, the components of the t-SVDM are computed in transform space. We describe the full t-SVDM in Algorithm 2. As noted, the t-SVDM above was already proposed in ref. 11. However, when we restrict the class of $\mathbf{M}$ to nonzero multiples of unitary or orthogonal matrices, we can derive an Eckart–Young theorem for tensors in general form. To do so, we first give a new corollary for the restricted class of $\mathbf{M}$ considered.

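Corollary 3.3. Assume $\mathbf{M} = c\mathbf{W}$, where $c \neq 0$, and $\mathbf{W}$ is unitary. Then given the t-SVDM of $\mathcal{A}$ over $\star_M$ defined in Definition 3.2,

$$\|\mathcal{A}\|_F^2 = \|\mathcal{S}\|_F^2 = \sum_{i=1}^{r} \|\mathcal{S}_{i,i,:}\|_F^2.$$

Moreover, $\|\mathcal{S}_{1,1,:}\|_F^2 \ge \|\mathcal{S}_{2,2,:}\|_F^2 \ge \cdots$.

Proof: The proof of the first equality follows from Theorem 3.1, the second from the definition of Frobenius norm. To prove the ordering property, use the shorthand for each singular tube fiber as $\mathbf{s}_i := \mathcal{S}_{i,i,:}$, and note using [3] that

$$\|\mathbf{s}_i\|_F^2 = \frac{1}{|c|^2}\|\hat{\mathbf{s}}_i\|_F^2 = \frac{1}{|c|^2}\sum_{j=1}^{n} (\hat{\sigma}_i^{(j)})^2,$$

where we have used $\hat{\sigma}_i^{(j)}$ to denote the $i$th largest singular value of the $j$th frontal face of $\hat{\mathcal{A}}$. However, since $\hat{\sigma}_i^{(j)} \ge \hat{\sigma}_{i+1}^{(j)}$, the result follows.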

This observation gives rise to a new definition.

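Definition 3.4: We refer to $r$ in the t-SVDM Definition 3.2 (see the second equality in [4]) as the t-rank,§ the number of nonzero singular tubes in the t-SVDM.

We can also extend the idea of multirank in ref. 13 to the general $\star_M$ case:

Definition 3.5: The multirank of $\mathcal{A}$ under $\star_M$ is the vector $\boldsymbol{\rho}$ such that its $i$th entry $\rho_i$ denotes the rank of the $i$th frontal slice of $\hat{\mathcal{A}}$; that is, $\rho_i = \mathrm{rank}(\hat{\mathcal{A}}_{:,:,i})$.

Notice that a tensor with multirank $\boldsymbol{\rho}$ must have t-rank equal to $\max_{i = 1, \ldots, n} \rho_i$.

Definition 3.6: The implicit rank under $\star_M$ of $\mathcal{A}$ is $\|\boldsymbol{\rho}\|_1 = \sum_{i=1}^{n} \rho_i$.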

Note that is uniquely defined in Definition 3.2, thus the t-rank and multirank of a tensor are also unique.

B. Eckart–Young Theorem for Tensors

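The key aspect that has made the t-product-based t-SVD so instrumental in many applications (see, for example, refs. 18–21) is the tensor Eckart–Young theorem proven in ref. 10 for real-valued tensors under the t-product. In loose terms, truncating the t-product-based t-SVD gives an optimal low t-rank approximation in the Frobenius norm. An Eckart–Young theorem for the $\star_M$ operator was not provided in ref. 11. We give a proof below for the special case that we have been considering in which $\mathbf{M}$ is a multiple of a unitary matrix.

Theorem 3.7. Define $\mathcal{A}_k = \mathcal{U}_{:,1:k,:} \star_M \mathcal{S}_{1:k,1:k,:} \star_M \mathcal{V}_{:,1:k,:}^{H}$, where $\mathbf{M}$ is a nonzero multiple of a unitary matrix. Then, $\mathcal{A}_k$ is the best Frobenius norm approximation¶ over the set $\Gamma = \{\mathcal{C} = \mathcal{X} \star_M \mathcal{Y} \mid \mathcal{X} \in \mathbb{C}^{m \times k \times n}, \mathcal{Y} \in \mathbb{C}^{k \times p \times n}\}$, the set of all t-rank $k$ tensors under $\star_M$, of the same dimensions as $\mathcal{A}$. The squared error is $\|\mathcal{A} - \mathcal{A}_k\|_F^2 = \sum_{i=k+1}^{r} \|\mathbf{s}_i\|_F^2$, where $r$ is the t-rank of $\mathcal{A}$.

Proof: The squared error result follows easily from the results in the previous section. Now let $\mathcal{B} = \mathcal{X} \star_M \mathcal{Y} \in \Gamma$. By [3], $\|\mathcal{A} - \mathcal{B}\|_F^2 = \frac{1}{|c|^2} \sum_{i=1}^{n} \|\hat{\mathcal{A}}_{:,:,i} - \hat{\mathcal{B}}_{:,:,i}\|_F^2$. By definition, $\hat{\mathcal{B}}_{:,:,i}$ is a rank-$k$ outer product $\hat{\mathcal{X}}_{:,:,i}\hat{\mathcal{Y}}_{:,:,i}$. The best rank-$k$ approximation to $\hat{\mathcal{A}}_{:,:,i}$ is $\hat{\mathcal{U}}_{:,1:k,i}\hat{\mathcal{S}}_{1:k,1:k,i}\hat{\mathcal{V}}_{:,1:k,i}^{H}$, so $\|\hat{\mathcal{A}}_{:,:,i} - \hat{\mathcal{U}}_{:,1:k,i}\hat{\mathcal{S}}_{1:k,1:k,i}\hat{\mathcal{V}}_{:,1:k,i}^{H}\|_F^2 \le \|\hat{\mathcal{A}}_{:,:,i} - \hat{\mathcal{X}}_{:,:,i}\hat{\mathcal{Y}}_{:,:,i}\|_F^2$ for every $i$, and the result follows by summing over the frontal slices.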
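The error formula in Theorem 3.7 can be checked numerically with a short sketch. It truncates every transform-domain face to its leading $k$ singular triplets, which is the transform-domain form of the truncated t-SVDM; the function names, the sizes, and the random competitor are assumptions for illustration.

```python
import numpy as np

def mode3(A, M):
    return np.einsum('kn,ijn->ijk', M, A)

def mprod(A, B, M):
    C_hat = np.einsum('ijk,jlk->ilk', mode3(A, M), mode3(B, M))
    return mode3(C_hat, np.linalg.inv(M))

def truncated_tsvdm(A, M, k):
    """Return the t-rank-k truncation A_k and the singular tubes (one per row)."""
    A_hat = mode3(A, M)
    Ak_hat = np.zeros_like(A_hat)
    S_hat = np.zeros((min(A.shape[:2]), A.shape[2]))
    for i in range(A.shape[2]):
        U, s, Vh = np.linalg.svd(A_hat[:, :, i], full_matrices=False)
        Ak_hat[:, :, i] = (U[:, :k] * s[:k]) @ Vh[:k, :]
        S_hat[:, i] = s
    M_inv = np.linalg.inv(M)
    # Row j of S_tubes is the singular tube s_j mapped back from the transform domain.
    return mode3(Ak_hat, M_inv), S_hat @ M_inv.T

rng = np.random.default_rng(4)
n, k = 4, 2
W, _ = np.linalg.qr(rng.standard_normal((n, n)))
M = 1.5 * W                                    # multiple of an orthogonal matrix
A = rng.standard_normal((6, 5, n))

A_k, S_tubes = truncated_tsvdm(A, M, k)
err_sq = np.linalg.norm(A - A_k) ** 2
tail_sq = np.sum(S_tubes[k:, :] ** 2)          # sum of squared norms of discarded tubes

# A random competitor of t-rank at most k, for comparison.
X = rng.standard_normal((6, k, n))
Y = rng.standard_normal((k, 5, n))
err_competitor_sq = np.linalg.norm(A - mprod(X, Y, M)) ** 2
```

The squared error matches the energy in the discarded singular tubes, and the random competitor does no better.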

In ref. 18, the authors used the Eckart–Young result for the t-product for compression of facial data and a PCA-like approach to recognition. They also gained additional compression in an algorithm they called the t-SVDII (only for the t-product on real-valued tensors), which, although not described as such in that paper, is effectively reducing the multirank for further compression. Here, we provide the theoretical justification for the t-SVDII approach in ref. 18 while simultaneously extending the result to the -product family restricted to being a nonzero multiple of a unitary matrix.

Theorem 3.8. Given the t-SVDM of under , define , to be the approximation having multirank : that is,

Then is the best multirank approximation to in the Frobenius norm and

where denotes the rank of the frontal face of .

Proof: Follows similarly to the above, and is omitted.

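To use this in practice, we generalize the idea of the t-SVDII in ref. 18 to the $\star_M$-product for $\mathbf{M}$ a multiple of a unitary matrix. First, we need a suitable method to choose $\boldsymbol{\rho}$. We know

$$\|\mathcal{A}\|_F^2 = \frac{1}{|c|^2}\|\hat{\mathcal{A}}\|_F^2 = \frac{1}{|c|^2}\sum_{i=1}^{n}\sum_{j=1}^{r_i} (\hat{\sigma}_j^{(i)})^2,$$

where $r_i$ is the rank of the $i$th frontal face of $\hat{\mathcal{A}}$. Thus, there are $K = \sum_{i=1}^{n} r_i$ total nonzero singular values. Let us order the $(\hat{\sigma}_j^{(i)})^2$ values in descending order and put them into a vector $\mathbf{v}$ of length $K$. We find the first index $J$ such that $\sum_{i=1}^{J} v_i / \|\hat{\mathcal{A}}\|_F^2 \ge \gamma$. Keeping $J$ total terms thus implies an approximation of energy at least $\gamma$. Then let $\tau = \sqrt{v_J}$; this is the smallest singular value that we should include in the approximation. We run back through the faces, and for face $i$ we keep only the $\rho_i$ singular 3-tuples $(\hat{\mathbf{u}}_j^{(i)}, \hat{\sigma}_j^{(i)}, \hat{\mathbf{v}}_j^{(i)})$ such that $\hat{\sigma}_j^{(i)} \ge \tau$. In other words, the squared relative error (RE) in our approximation is given by

$$\frac{\|\mathcal{A} - \mathcal{A}_{\boldsymbol{\rho}}\|_F^2}{\|\mathcal{A}\|_F^2} = \frac{\sum_{i=1}^{n}\sum_{j=\rho_i+1}^{r_i} (\hat{\sigma}_j^{(i)})^2}{\|\hat{\mathcal{A}}\|_F^2} \le 1 - \gamma.$$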

The pseudo-code is given in Algorithm 3, and a cartoon illustration of the output is given in Fig. 2.

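Algorithm 3:

Return t-SVDMII under $\star_M$, to meet energy constraint

| INPUT: $\mathcal{A}$, $\mathbf{M}$ a multiple of unitary matrix; desired energy $\gamma \in (0, 1]$. |

| 1: Compute t-SVDM of $\mathcal{A}$. |

| 2: Concatenate $(\hat{\sigma}_j^{(i)})^2$ for all $i, j$ into a vector $\mathbf{v}$. |

| 3: $\mathbf{v} \leftarrow \mathrm{sort}(\mathbf{v}, \text{descending})$. |

| 4: Let $\mathbf{w}$ be the vector of cumulative sums: i.e., $w_k = \sum_{i=1}^{k} v_i$. |

| 5: Find the first index $J$ such that $w_J / \|\hat{\mathcal{A}}\|_F^2 \ge \gamma$. |

| 6: Define $\tau = \sqrt{v_J}$. |

| 7: for $i = 1, \ldots, n$ do |

| 8: Set $\rho_i$ as number of singular values for $\hat{\mathcal{A}}_{:,:,i}$ greater or equal to $\tau$. |

| 9: Keep only the $\hat{\mathcal{U}}_{:,1:\rho_i,i}$ and $\hat{\mathcal{S}}_{1:\rho_i,1:\rho_i,i}\hat{\mathcal{V}}_{:,1:\rho_i,i}^{H}$. |

| End for |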
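The thresholding procedure of Algorithm 3 can be sketched as below. The function name `tsvdmii` is hypothetical, the energy test uses "greater than or equal to" (an assumption about the exact inequality), and the comparison against the threshold is done on squared singular values to avoid rounding effects from the square root.

```python
import numpy as np

def mode3(A, M):
    return np.einsum('kn,ijn->ijk', M, A)

def tsvdmii(A, M, gamma):
    """Sketch of Algorithm 3: one global threshold, per-face truncation ranks."""
    A_hat = mode3(A, M)
    n = A.shape[2]
    svds = [np.linalg.svd(A_hat[:, :, i], full_matrices=False) for i in range(n)]
    v = np.sort(np.concatenate([s ** 2 for _, s, _ in svds]))[::-1]   # steps 2, 3
    w = np.cumsum(v)                                                   # step 4
    J = int(np.argmax(w / w[-1] >= gamma))                             # step 5
    tau_sq = v[J]                                                      # step 6 (squared)
    rho = np.array([int(np.sum(s ** 2 >= tau_sq)) for _, s, _ in svds])
    faces = [(U[:, :r] * s[:r]) @ Vh[:r, :] for (U, s, Vh), r in zip(svds, rho)]
    return np.stack(faces, axis=2), rho      # transform-domain approximation, multirank

rng = np.random.default_rng(5)
n, gamma = 6, 0.9
W, _ = np.linalg.qr(rng.standard_normal((n, n)))
M = 2.0 * W
A = rng.standard_normal((7, 5, n))

A_rho_hat, rho = tsvdmii(A, M, gamma)
A_rho = mode3(A_rho_hat, np.linalg.inv(M))   # back to the spatial domain, for checking only
rel_err_sq = np.linalg.norm(A - A_rho) ** 2 / np.linalg.norm(A) ** 2
implicit_rank = int(rho.sum())
```

The retained energy meets the target, and the implicit rank is the total number of singular triplets kept across all faces.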

In Section 5, we compare our Eckart–Young results for tensors with the corresponding matrix approximations obtained by using the matrix-based Eckart–Young theorem, but first we need a few results that show what structure these tensor approximations inherit from .

4. Latent Structure

To understand why the proposed tensor decompositions are efficient at compression and feature extraction we investigate the latent structure induced by the algebra in which we operate. We shall also capitalize on this structural analysis in the next section’s proofs stating how the proposed t-SVDM and t-SVDMII decompositions can be used to devise superior approximations compared to their matrix counterparts.

If , from Algorithm 1 we have

where indicates pointwise scalar products on each face. Using the definitions, this expression is tantamount to

| [5] |

Note that is mathematically equivalent to forming the tube fiber , and applied to a tube fiber works analogously to applied to that tube fiber’s column vector equivalent. Further, the transpose is a real-valued transpose, stemming from the definition of the mode-3 product.

Let be any element in and consider computing the product . In ref. 11 it was shown that the tube fiber entry in is effectively the product of the tubes . From [5] we have

| [6] |

The matrix in the parentheses on the right is an element of the space of all matrices , where is diagonal. This brings us to a major result.

Theorem 4.1. Given . Then, using

| [7] |

Thus, each lateral slice of is a weighted combination of “basis” matrices given by , but the weights, instead of being scalars, are matrices from the matrix algebra induced by the choice of . For , the DFT matrix, the matrix algebra is the algebra of circulants.
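The closing remark about circulants can be illustrated numerically. The sketch assumes the column-vector form of the tube product implied by Algorithm 1, in which multiplying by a tube $\mathbf{a}$ acts as the matrix $\mathbf{M}^{-1}\,\mathrm{diag}(\mathbf{M}\mathbf{a})\,\mathbf{M}$; the helper name `tube_matrix` and the sample vectors are assumptions.

```python
import numpy as np

def tube_matrix(a, M):
    """Matrix M^{-1} diag(M a) M that multiplies a tube (as a column vector) by a."""
    return np.linalg.inv(M) @ np.diag(M @ a) @ M

n = 4
F = np.fft.fft(np.eye(n))                  # unnormalized DFT matrix
a = np.array([1.0, 2.0, 3.0, 4.0])
b = np.array([0.5, -1.0, 2.0, 0.0])

R_a = tube_matrix(a, F)
R_b = tube_matrix(b, F)

# Circulant matrix whose first column is a (column s is a shifted down by s).
circ_a = np.column_stack([np.roll(a, s) for s in range(n)])
```

For the DFT choice the matrix is the circulant generated by the tube, and matrices of this form commute because they share the same diagonalizing transform.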

5. Tensors and Optimal Approximations

In ref. 18, the claim was made that a t-SVD to terms could be superior to a matrix SVD based compression to terms. Here, we offer a formal proof then discuss the relative meaning of . Then, in the next section, we discuss what can be done to obtain further compression.

A. Theory: T-rank vs. Matrix Rank

Let us assume that our data are a collection of , matrices . For example, might be a grayscale image, or it might be the values of a function discretized on a two-dimensional uniform grid. Let , so that has length .

We put samples into a matrix (tensor) from left to right:

Thus, represent the same data, just in different formats. It is first instructive to consider in what ways the t-rank, , of and the matrix rank of are related. Then, we will move on to relating the optimal t-rank approximation of with the optimal rank- approximation to .

Theorem 5.1. The t-rank, , of is less than or equal to the rank, , of . Additionally, since , if , then .

Proof: The problem’s dimensions necessitate and . Let be a rank-r factorization of such that is and is . From the fact , we can show the -sized frontal face of satisfies

| [8] |

Clearly, the rank of this frontal slice is bounded above by since this is the maximal rank of the matrix in parentheses. Then, the singular values of the matrix satisfy , where . As , for a particular value will be a nonzero tube fiber iff for any of the at least one is nonzero. There can be at most nonzero tube fibers, so .
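Theorem 5.1 can be illustrated with a small experiment that folds a low-rank data matrix into a tensor and compares ranks. The helper names, the rank tolerance, and the sizes are assumptions; the fold places each column, read as a column-major vectorized $m \times n$ sample, into one lateral slice.

```python
import numpy as np

def mode3(A, M):
    return np.einsum('kn,ijn->ijk', M, A)

def fold(A_mat, m, n):
    """Column j of A_mat is vec(X_j) (column-major); X_j becomes lateral slice j."""
    p = A_mat.shape[1]
    return A_mat.reshape(n, m, p).transpose(1, 2, 0)

def numerical_rank(X, tol=1e-10):
    return int(np.sum(np.linalg.svd(X, compute_uv=False) > tol))

def t_rank(T, M):
    """Number of nonzero singular tubes = largest face rank in the transform domain."""
    T_hat = mode3(T, M)
    return max(numerical_rank(T_hat[:, :, i]) for i in range(T.shape[2]))

rng = np.random.default_rng(6)
n, p, r = 5, 8, 3
W, _ = np.linalg.qr(rng.standard_normal((n, n)))
H = rng.standard_normal((r, p))

# Case 1: m = 6 >= r, so the bound t <= r applies.
A1 = rng.standard_normal((6 * n, r)) @ H
rank1 = numerical_rank(A1)
t1_identity = t_rank(fold(A1, 6, n), np.eye(n))
t1_orthogonal = t_rank(fold(A1, 6, n), W)

# Case 2: m = 2 < r, so the t-rank is strictly smaller than the matrix rank.
A2 = rng.standard_normal((2 * n, r)) @ H
rank2 = numerical_rank(A2)
t2 = t_rank(fold(A2, 2, n), np.eye(n))
```

In the second case the tensor cannot have more than $m = 2$ nonzero singular tubes, even though the matrix rank is 3.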

Note that the proof was independent of the choice of invertible . In particular, it holds for . This means that the act of “folding” the data matrix into a tensor may provide a reduced rank approximation (a rank- matrix goes to a t-rank tensor). A nonidentity choice of , though, may reveal . To make the idea concrete, let us consider an example.

Example 5.2: Let invertible, and , with be a set of independent vectors. Define such that . The t-rank is 1.

On the other hand, with the circulant downshift matrix,

The rank of the therefore is . If and have orthonormal columns, we can show for any .

B. Theory: Comparison of Optimal Approximations

In this subsection we compare the quality of approximations obtained by truncating the matrix SVD of the data matrix vs. truncating the t-SVDM of the same data as a tensor. We again assume that and that has rank .

Let be the matrix SVD of , and denote its best rank-, approximation according to

| [9] |

for where . Finally, we need the following matrix version of [9] which we reference in the proof:

| [10] |

Theorem 5.3. Given , as defined above, with having rank and having t-rank , let denote the best rank- matrix approximation to in the Frobenius norm, where . Let denote the best t-rank- tensor approximation under , where is a multiple of a unitary matrix, to in the Frobenius norm. Then

Proof: Consider [10]. The multiplication by the scalar in the sum is equivalent to multiplication from the right by . However, since for unitary , we have , where is the vector of all ones. Define the tube fiber from the matrix-vector product oriented into the third dimension. Then, . Now we observe that [10] can be equivalently expressed as

| [11] |

These can be combined into a tensor equivalent

Since , the t-rank of is . The t-rank of must also not be smaller than , by Theorem 5.1.

Thus, given the definition of as the minimizer over all such -term “outer-products” under , it follows that

Here is an example showing strict inequality is possible. Additional supporting examples are in the numerical results.

Example 5.4: Given the Haar wavelet matrix, and let

It is easily shown that . Setting , we observe

In the next subsection we discuss the level of approximation provided by the output of Algorithm 3 by relating it back to the truncated t-SVDM and also to truncated matrix SVD. First, we need a way to relate storage costs.

Theorem 5.5. Let be the t-SVDM t-rank approximation to , and suppose its implicit rank is . Define . There exists such that the t-SVDMII approximation, , obtained for this in Algorithm 3, has implicit rank less than or equal to an implicit rank of and

Proof: From Theorem 5.3 that . The proof is by construction using as the starting point; that is, assume for each frontal slice. Let be the largest singular value we truncated; that is,

Let be the union of all of the singular values that were kept in the approximation and smaller than . If is empty, then and we are done.

Otherwise, was larger than at least one of the singular values we kept. Let be the index of the frontal slice containing with corresponding rank . Let be the smallest element contained in and, by definition, . Let be the index of the frontal slice containing with corresponding rank . Note that must be the smallest singular value kept from frontal slice . Set and . Then, the error has changed by an amount .

In practice, we can decrease the implicit rank further. For convenience, assume the elements of are labeled in increasing order . Let for less than or equal to the cardinality of . Choose the largest value of such that . Again, set and reduce the values of that correspond to . Then, the error has changed by an amount and the implicit rank has decreased by .

C. Storage Comparisons

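Let us suppose that $k$ is the truncation parameter for the tensor approximation and $q$ is the truncation parameter for the matrix approximation. Table 1 gives a comparison of storage for the methods we have discussed so far. Note that for the t-SVDMII it is necessary to work only in the transform domain, as moving back to the spatial domain would cause fill and unnecessary storage.

Table 1.

Comparison of storage costs for approximations of an $m \times p \times n$ tensor

| Approximation | Basis storage | Coefficients storage | Total implicit storage |

| $q$-term truncated matrix SVD | $mnq$ | $qp$ | $q(mn + p)$ |

| $k$-term truncated t-SVDM | $mkn$ | $kpn$ | $kn(m + p)$ + st[$\mathbf{M}$] |

| $\boldsymbol{\rho}$-multirank t-SVDMII | $m\|\boldsymbol{\rho}\|_1$ | $p\|\boldsymbol{\rho}\|_1$ | $(m + p)\|\boldsymbol{\rho}\|_1$ + st[$\mathbf{M}$] |

Top-to-bottom: a $q$-term truncated matrix SVD expansion, a $k$-term truncated t-SVDM expansion, and a $\boldsymbol{\rho}$-multirank t-SVDMII. Recall that for the t-SVDMII, we store terms in the transform domain and the implicit rank for $\mathcal{A}_{\boldsymbol{\rho}}$ is $\|\boldsymbol{\rho}\|_1 = \sum_{i=1}^{n} \rho_i$. The notation st[$\mathbf{M}$] refers to the implicit storage of $\mathbf{M}$, described below.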

In practice, we can omit the storage costs of when using fast transform techniques, such as the DCT, DFT, or discrete wavelet transform. In the case where cannot be applied by fast transform the storage for for t-SVDM is bounded by the number of nonzeros in for t-SVDM. As we show in Section 5D, if has many zeros (that is, many faces in the transform domain do not contribute singular values above the threshold), we can reduce the storage to .
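The storage counts compared in this subsection can be illustrated by counting the entries of the arrays each method keeps. The following Python sketch is only a bookkeeping aid under stated assumptions: the tensor is taken to be m × p × n, its matricization is taken to be (mn) × p, the singular values are folded into the coefficients, and the function names and the `st_m` argument (the implicit storage of the transformation, zero for a fast transform) are hypothetical.

```python
def matrix_svd_storage(m, p, n, k):
    """Entries stored for a k-term truncated SVD of the (m*n) x p matricization."""
    basis = m * n * k      # k left singular vectors of length m*n
    coeffs = k * p         # k x p coefficient matrix
    return basis, coeffs, basis + coeffs


def tsvdm_storage(m, p, n, k, st_m=0):
    """Entries stored for a k-term truncated t-SVDM expansion."""
    basis = m * k * n      # m x k x n basis tensor
    coeffs = k * p * n     # k x p x n coefficient tensor
    return basis + st_m, coeffs, basis + coeffs + st_m


def tsvdmii_storage(m, p, rho, st_m=0):
    """Entries stored for a multirank-rho t-SVDMII kept in the transform domain."""
    r = sum(rho)           # implicit rank: total number of retained terms
    basis = m * r          # one length-m vector per retained term
    coeffs = r * p         # one length-p vector per retained term
    return basis + st_m, coeffs, basis + coeffs + st_m


if __name__ == "__main__":
    print(matrix_svd_storage(4, 5, 3, 2))
    print(tsvdm_storage(4, 5, 3, 2))
    print(tsvdmii_storage(4, 5, [2, 1, 0]))
```

Each function returns the triple (basis storage, coefficients storage, total implicit storage), so the three methods can be compared for any hypothetical choice of dimensions and truncation parameters.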

Discussion

If we assume we do not need to store the transformation matrix , then if , Theorem 5.3 says the approximation error of the t-SVD is at least as good as the corresponding matrix approximation. In applications where we only need to store the basis terms, e.g., to do projections, the basis for the tensor approximation is better in a relative error sense than the basis for the matrix case, for the same storage. However, unless , if we need to store both the basis and the coefficients, we will need more storage for the tensor case if we need to take . Fortunately, in practice for . Indeed, we already showed an example (see Example 5.2) where the error is zero for , but had to be much larger to achieve exact approximation. If , then the total implicit storage of the tensor approximation of terms is less than the total storage for the matrix case of terms.

Compared to the matrix SVD, the t-SVDMII approach can provide compression at an approximation level that is at least as good, as indicated by the theorem. Of course, the transformation matrix should be an appropriate one given the latent structure in the data. The t-SVDMII approach allows us to account for the more “important” features (e.g., low frequencies and multidimensional correlations) and therefore impose a larger truncation on the corresponding frontal faces because those features contribute more to the global approximation. Truncation of t-SVDM by a single truncation index, on the other hand, effectively treats all features equally and always truncates each to terms, which depending on the choice of may not be as good. This is demonstrated in Section 8.
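The contrast between the two truncation strategies can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation of Algorithm 3. It assumes a real tensor and a real orthogonal transformation (here an orthonormal DCT-II matrix built by formula), and it assumes the energy parameter selects the fewest transform-domain singular values whose squared sum reaches the requested fraction of the total. All function names are hypothetical.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix; row i is the i-th cosine basis vector."""
    idx = np.arange(n)
    M = np.sqrt(2.0 / n) * np.cos(
        np.pi * (2 * idx[None, :] + 1) * idx[:, None] / (2 * n))
    M[0, :] /= np.sqrt(2.0)
    return M


def mode3(A, M):
    """Mode-3 product: apply M along the third dimension (tube fibers) of A."""
    return np.einsum('ik,mpk->mpi', M, A)


def slice_svds(A, M):
    """Matrix SVD of every frontal slice of A in the transform domain."""
    A_hat = mode3(A, M)
    return [np.linalg.svd(A_hat[:, :, i], full_matrices=False)
            for i in range(A_hat.shape[2])]


def rebuild(svds, rho, M, shape):
    """Keep rho[i] terms on frontal slice i, then return to the spatial domain."""
    A_hat = np.zeros(shape)
    for i, ((U, s, Vh), r) in enumerate(zip(svds, rho)):
        A_hat[:, :, i] = (U[:, :r] * s[:r]) @ Vh[:r, :]
    return mode3(A_hat, M.T)  # inverse transform; valid because M is orthogonal


def tsvdm(A, M, k):
    """Single truncation index: every frontal slice keeps k terms."""
    svds = slice_svds(A, M)
    rho = [min(k, s.size) for _, s, _ in svds]
    return rebuild(svds, rho, M, A.shape), rho


def tsvdmii(A, M, gamma):
    """Global truncation: keep the largest singular values reaching energy gamma."""
    svds = slice_svds(A, M)
    energies = np.sort(np.concatenate([s**2 for _, s, _ in svds]))[::-1]
    cum = np.cumsum(energies)
    J = min(int(np.searchsorted(cum, gamma * cum[-1])) + 1, cum.size)
    tau = energies[J - 1]                      # smallest retained energy
    rho = [int(np.sum(s**2 >= tau)) for _, s, _ in svds]
    return rebuild(svds, rho, M, A.shape), rho
```

In this sketch the returned multirank `rho` can be zero on some frontal slices, which is what lets the global strategy spend its terms on the slices that carry the most energy.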

6. Comparison to Other Tensor Decompositions

Now, we compare the decompositions to other types of tensor representations as described in Section 1.

A. Comparison to truncated HOSVD

In this section, we wish to show how truncated HOSVD (tr-HOSVD) can be expressed using a -product. Then, we can compare our truncated results to the tr-HOSVD. Other truncation strategies than the one discussed below could be considered, e.g. ref. 22, but are outside the scope of this study.

The tr-HOSVD is formed by truncating to columns, respectively, the factor matrices and forming the core tensor as

where denotes the triple . The tr-HOSVD approximation then is

We now prove the following theorem that shows the tr-HOSVD can be represented under when .

Theorem 6.1. Define the matrix as (since is unitary, it follows that ), and define and tensors in the transform space according to

Then and it is easy to show that , are unitary tensors. Define as the tensor with identity matrices on faces 1 to and 0 matrices from faces to .

Let . Then

Proof: First consider

| [12] |

using properties of modewise products (see [12]). From the definitions of the modewise product the face of as defined via [12] is . However, this means that we can equivalently represent as .

Now implies . However, since for , we only need to zero-out the last frontal slices of to get to , which we do by taking the -product with on the right.

Note that in our theorem we assume is square, but the transformation is effectively truncated by postmultiplying by , which allows for the -product presentation of the tr-HOSVD. Thus, we now can compare the theoretical results from tr-HOSVD to our truncated methods.

Theorem 6.2. Given the tr-HOSVD approximation , for ,

with equality only if .

Proof: Note that the t-ranks of and are and , respectively. Since is , its t-rank cannot exceed . As such, we know can be written as a sum of outer-products of tensors under , and the result follows given the optimality of .

Corollary 6.3. Given the tr-HOSVD approximation , for there exists such that returned by Algorithm 3 has implicit rank less than or equal to and

Note this is independent of the choice of : The best tr-HOSVD approximation will occur when .

While this has theoretical value, it raises the question of whether the transformation matrix needs to be stored explicitly. When it is a matrix that can be applied quickly without explicit storage, such as a discrete cosine transform, this is not a consideration. We will say more about this at the end of this section.
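For readers who want to experiment with the tr-HOSVD discussed in this subsection, the construction (truncate the factor matrices, form the core tensor, then expand) can be sketched as follows. This is an illustrative NumPy sketch for a third-order tensor. The names `unfold` and `tr_hosvd` are hypothetical, and the factor matrices are taken to be the leading left singular vectors of the three unfoldings.

```python
import numpy as np


def unfold(A, mode):
    """Mode-`mode` unfolding: the chosen mode indexes the rows."""
    return np.moveaxis(A, mode, 0).reshape(A.shape[mode], -1)


def tr_hosvd(A, ranks):
    """Truncated HOSVD of a third-order tensor with multilinear ranks `ranks`."""
    factors = []
    for mode, k in enumerate(ranks):
        U, _, _ = np.linalg.svd(unfold(A, mode), full_matrices=False)
        factors.append(U[:, :k])               # leading k left singular vectors
    U1, U2, U3 = factors
    # Core tensor: project A onto the truncated factor matrices.
    core = np.einsum('abc,ai,bj,ck->ijk', A, U1.conj(), U2.conj(), U3.conj())
    # Approximation: expand the core back with the same factor matrices.
    approx = np.einsum('ijk,ai,bj,ck->abc', core, U1, U2, U3)
    return core, factors, approx


def tr_hosvd_storage(core, factors):
    """Number of stored entries: core tensor plus the three factor matrices."""
    return core.size + sum(F.size for F in factors)
```

Because the approximation is an orthogonal projection of the data, its error never exceeds the norm of the data, and choosing full multilinear ranks reproduces the tensor exactly.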

B. Comparison to TT-SVD

The TT-SVD was introduced in ref. 9. In recent years, the (truncated) TT-SVD has been used successfully for compressed representations of matrix operators in high-dimensional spaces and for data compression.

For a third-order tensor, the first step of the TT-SVD algorithm is to perform a truncated matrix SVD of one unfolding. Thus, the results depend upon the choice of unfolding. In order to compare with our method, we shall choose the mode-2 unfolding.# We note that , and since has been used already, we observe is the SVD of the mode-2 unfolding. The TT-SVD algorithm proceeds as follows for third-order tensors:

1. Truncate , to terms, keep (typically, as a tensor). Let , and note it is .

2. Let denote reshaped to an matrix. Compute the SVD and truncate to terms. The is folded into a tensor, and the remaining is stored as a tensor.

The truncations are chosen based on a user-defined threshold such that , where is the TT-SVD approximation. The storage cost of this implicit tensor approximation is numbers.
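The two steps above can be sketched in NumPy for a third-order tensor. This is a hedged illustration rather than the reference TT-SVD code: the truncation indices `r1` and `r2` are passed in directly instead of being derived from a user threshold, and the first unfolding is taken along the first axis, so matching the mode-2 choice made here would require permuting the tensor beforehand (for example with `np.transpose(A, (1, 0, 2))`). The function names are hypothetical.

```python
import numpy as np


def tt_svd3(A, r1, r2):
    """Two-step TT-SVD of a third-order tensor with truncation indices r1, r2."""
    n1, n2, n3 = A.shape
    # Step 1: truncated SVD of the first unfolding; keep U and form S V^H.
    U, s, Vh = np.linalg.svd(A.reshape(n1, n2 * n3), full_matrices=False)
    r1 = min(r1, s.size)
    G1 = U[:, :r1]                              # n1 x r1
    C = s[:r1, None] * Vh[:r1, :]               # r1 x (n2*n3)
    # Step 2: reshape, take a second truncated SVD, and fold U into a tensor.
    U, s, Vh = np.linalg.svd(C.reshape(r1 * n2, n3), full_matrices=False)
    r2 = min(r2, s.size)
    G2 = U[:, :r2].reshape(r1, n2, r2)          # r1 x n2 x r2
    G3 = s[:r2, None] * Vh[:r2, :]              # r2 x n3
    approx = np.einsum('ia,ajb,bk->ijk', G1, G2, G3)
    return (G1, G2, G3), approx


def tt_storage(cores):
    """Number of stored entries in the implicit TT representation."""
    return sum(G.size for G in cores)
```

With both truncation indices at their maximum values the reconstruction is exact, which gives a quick sanity check before experimenting with smaller values.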

Theorem 6.4. Let denote the truncated TT-SVD approximation, where correspond to the truncation indices that satisfy the user input threshold . Then,

with strict inequality if is less than the rank of . Furthermore, there exists such that

Proof: Let and let be the rank of and be the rank of . The steps in the algorithm imply that . Thus,

by employing unitary-invariance of the Frobenius norm.

Applying Theorem 5.5,

The inequality is strict if ; it is also strict independent of if which, as noted earlier, is achievable and is almost always the case in practice.

C. Approximation in CP Form

The purpose of the interpretation of our decomposition in CP form is with an eye toward an implicit compressed representation discussed in the next subsection. Consider the formation of in Algorithm 3. In step 10, we keep terms in the matrix SVD for frontal slice of . So

| [13] |

and is the column of the identity matrix. However, . Thus,

Next, concatenate vectors to form three matrices of columns as follows. Let similarly for . Let

| [14] |

where , so that also has columns, with repeats as dictated by the . Let contain the . Then

Note that if is an orthogonal (unitary) matrix then ( for complex ) the elements of the rightmost matrix are columns of the transpose of and hence have unit norm. The columns of also have unit norm.
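The CP interpretation can be checked numerically. The sketch below assumes an invertible transformation matrix `M`, a multirank `rho` with at least one nonzero entry, and the convention that the transform acts along the third dimension. It collects one rank-one term per retained singular triple, with the third factor taken from the corresponding column of the inverse transformation. The function names are hypothetical.

```python
import numpy as np


def mode3(A, M):
    """Mode-3 product: apply M along the third dimension (tube fibers) of A."""
    return np.einsum('ik,mpk->mpi', M, A)


def cp_factors(A, M, rho):
    """Weights and factor matrices of the CP form of a multirank-rho truncation."""
    A_hat = mode3(A, M)
    M_inv = np.linalg.inv(M)
    d, U_cols, V_cols, W_cols = [], [], [], []
    for i, r in enumerate(rho):
        U, s, Vh = np.linalg.svd(A_hat[:, :, i], full_matrices=False)
        for j in range(r):
            d.append(s[j])                     # weight of the rank-one term
            U_cols.append(U[:, j])             # first-mode vector
            V_cols.append(Vh[j, :])            # second-mode vector
            W_cols.append(M_inv[:, i])         # repeated once per term on slice i
    return (np.array(d), np.column_stack(U_cols),
            np.column_stack(V_cols), np.column_stack(W_cols))


def cp_to_tensor(d, U, V, W):
    """Sum of weighted rank-one outer products."""
    return np.einsum('r,mr,pr,kr->mpk', d, U, V, W)
```

Only the distinct columns of the third factor need to be kept, one for each frontal slice with a nonzero entry in `rho`, which is the observation the storage discussion in the next subsection builds on.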

D. Discussion: Storage of the Transformation Matrix

From [14], only columns of for which need to be stored (along with a storage-negligible vector of integer pointers) so storage drops to ; see Table 2. In the numerical results on hyperspectral data, this fact, in combination with Corollary 6.3, allows the t-SVDMII to give results superior to the tr-HOSVD in both error and storage.

Table 2.

Summary of st

| | t-SVDM | t-SVDMII |
| --- | --- | --- |
| Fast transform | 0 | 0 |
| Unstructured | nnz() | (nnz, nnz()) |

7. Multisided Tensor Compression

Consider the mapping induced by matrix-transposing (without conjugation) each of the lateral slices.∥ We use a superscript of to denote such a permuted tensor:

In this section, we define techniques for compression using both orientations of the lateral slices in order to ensure a more balanced approach to the compression of the data.

A. Optimal Convex Combinations

Given and , compress each and form

Observe that , where as before and denotes a stride permutation matrix. Since is orthogonal, the singular values and right singular vectors of are the same as those of , and the left singular vectors are row permuted by . For a truncation parameter ,

It follows that for

Similarly for the optimal t-SVDMII approximations, , where are the multiindices for each orientation, respectively, which may have been determined with different energy levels. From Theorem 3.8,

where is the rank of under , is the rank of under and are for under as well.
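A small numerical experiment can make the two-orientation idea concrete. The sketch below is an assumption-laden illustration, not the construction analyzed above: it uses orthonormal DCT matrices for both orientations, truncates each orientation with a single index, maps the second approximation back to the original orientation, and forms a convex combination with a user-chosen weight `alpha`. All function names are hypothetical.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix; row i is the i-th cosine basis vector."""
    idx = np.arange(n)
    M = np.sqrt(2.0 / n) * np.cos(
        np.pi * (2 * idx[None, :] + 1) * idx[:, None] / (2 * n))
    M[0, :] /= np.sqrt(2.0)
    return M


def mode3(A, M):
    """Mode-3 product: apply M along the third dimension of A."""
    return np.einsum('ik,mpk->mpi', M, A)


def tsvdm_truncate(A, M, k):
    """Keep k terms on every frontal slice in the transform domain."""
    A_hat = mode3(A, M)
    out = np.empty_like(A_hat)
    for i in range(A_hat.shape[2]):
        U, s, Vh = np.linalg.svd(A_hat[:, :, i], full_matrices=False)
        out[:, :, i] = (U[:, :k] * s[:k]) @ Vh[:k, :]
    return mode3(out, M.T)                     # M is orthogonal


def permute(A):
    """Transpose every lateral slice: an m x p x n tensor becomes n x p x m."""
    return np.transpose(A, (2, 1, 0))


def two_sided(A, k1, k2, alpha):
    """Convex combination of the truncations computed in the two orientations."""
    m, p, n = A.shape
    X = tsvdm_truncate(A, dct_matrix(n), k1)
    Y = permute(tsvdm_truncate(permute(A), dct_matrix(m), k2))
    return alpha * X + (1.0 - alpha) * Y, X, Y
```

By the triangle inequality, the error of the combination is bounded by the same convex combination of the two individual errors, which the sketch lets one verify for any `alpha` between 0 and 1.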

B. Sequential Compression

Sequential compression is also possible, as described in ref. 23. A second tensor SVD is applied to , making each step locally optimal. Due to space constraints, we will not elaborate on this further here.

8. Numerical Examples

In the following discussion, the compression ratio (CR) is defined as the number of floating point numbers needed to store the uncompressed data divided by the number of floating point numbers needed to store the compressed representation (in its implicit form). Thus, the larger the ratio, the better the compression. The relative error (RE) is the ratio of the Frobenius-norm difference between the original data and the approximation over the Frobenius-norm of the original data.
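Both metrics are straightforward to compute. A minimal Python sketch, with hypothetical function names, where `stored_numbers` is the count of floating point numbers in the implicit compressed representation:

```python
import numpy as np


def relative_error(original, approx):
    """Frobenius-norm relative error of an approximation."""
    original = np.asarray(original, dtype=float)
    approx = np.asarray(approx, dtype=float)
    return np.linalg.norm(original - approx) / np.linalg.norm(original)


def compression_ratio(original, stored_numbers):
    """Uncompressed entry count divided by the compressed entry count."""
    return np.asarray(original).size / stored_numbers
```

With no `ord` argument, `np.linalg.norm` treats an array of any order as a flattened vector, which is exactly the Frobenius norm of the tensor.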

A. Compression of Yale B Data

In this section, we show the power of compression for the t-SVDMII approach with appropriate choice of , that is, one that exploits structure inherent in the data. We create a third-order tensor from the Extended Yale B face database (24) by putting the training images in as lateral slices in the tensor. Then, we apply Algorithm 3, varying , for four different choices of : We choose as a random orthogonal matrix, we use as an orthogonal wavelet matrix, we use as the unnormalized DCT matrix, and we form in a data-driven approach by taking the transpose of the left factor matrix of the mode-3 unfolding. We have chosen to use a random orthogonal matrix in this experiment to show that the compression power is relative to structure that is induced through the choice of , so we do not expect, nor do we observe, value in choosing to be random. In Fig. 3, we plot the CR against the RE in the approximation. We observe that for RE on the order of 10 to 15% the margins in compression achieved by the t-SVDMII for the DCT, the wavelet transform, and the data-driven transform vs. treating the data in matrix form, or choosing a transform that, like the matrix case, does not exploit structure in the data, are quite large. To evaluate only compressibility associated with the transformation we do not count the storage of .
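Two of the four transformation choices can be built directly from the description above. The sketch below is illustrative only: it assumes real data, assumes the third dimension is no larger than the product of the first two (so the reduced SVD returns a square left factor), and uses hypothetical function names. The random orthogonal matrix is drawn via a QR factorization of a Gaussian matrix, which is one common construction and not necessarily the one used in the experiment.

```python
import numpy as np


def random_orthogonal(n, seed=0):
    """Random orthogonal matrix from the QR factorization of a Gaussian matrix."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
    return Q


def data_driven_transform(A):
    """Transpose of the left singular vectors of the mode-3 unfolding of A."""
    m, p, n = A.shape
    assert n <= m * p, "sketch assumes the third dimension is the short one"
    A3 = np.moveaxis(A, 2, 0).reshape(n, -1)   # mode-3 unfolding, n x (m*p)
    Z, _, _ = np.linalg.svd(A3, full_matrices=False)
    return Z.T


def slice_norms(A, M):
    """Frobenius norm of each frontal slice after transforming along mode 3."""
    A_hat = np.einsum('ik,mpk->mpi', M, A)
    return np.linalg.norm(A_hat.reshape(-1, A.shape[2]), axis=0)
```

With the data-driven choice, the frontal-slice norms in the transform domain equal the singular values of the mode-3 unfolding, so the energy is sorted into the leading slices. A random orthogonal matrix gives no such ordering, which is consistent with the observation that it offers little compression benefit.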

Fig. 3.

Illustration of the compressive power of the t-SVDMII in Algorithm 3 for appropriate choices of . Far more compression is achieved using either the DCT or wavelet transform to define , as well as defining in a data-driven approach since they capitalize on structural features in the data.

B. Video Frame Data

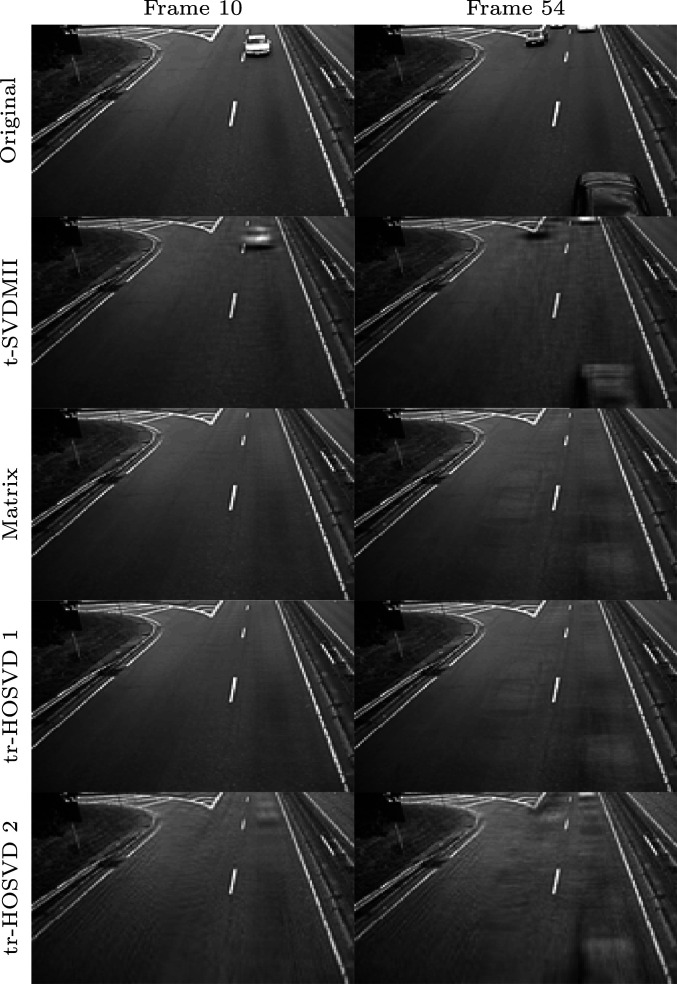

For this experiment, we use video data available in MATLAB.** The video consists of 120, frames in grayscale. The camera is positioned near one spot in the road, and cars travel on that road (more or less from the top to the bottom as the frames progress), so the only changes per frame are cars entering and disappearing.

We compare the performance of our truncated t-SVDMII, for being the DCT matrix, against the truncated matrix and truncated HOSVD approximations. We orient the frames as transposed lateral slices in the tensor to confine the change in one lateral slice to another to rows where there is car movement.†† Thus, is .

With both the truncated t-SVDMII and truncated matrix SVD approaches we can truncate based on the same energy value. Thus, we get RE in our respective approximations with about the same value, and then we can compare the relative compression. Alternatively, we can fix our energy value and compute our truncated t-SVDMII and find its CR. Then, we can compute the truncated matrix SVD approximation with similar CR and compare its RE to the tensor-based approximation. We show some results for each of these two ways of comparison.

There are many ways of choosing the truncation 3-tuple for HOSVD. Trying to choose a 3-tuple that has a comparable relative approximation to our approach would be cumbersome and would still leave ambiguities in the selection process. Thus, we employ two truncation methods that yield an approximation best matching the CRs of our tensor approximation. The indices are chosen as follows: 1) Compress only on the second mode (i.e., change , fix ) and 2) choose truncation parameters on dimensions such that the modewise compression to dimension ratios are about the same. The second option amounts to looping over , setting , and . Thus, it is possible to compute the CR for the tr-HOSVD based on the dimension in advance to find the closest match to the desired compression levels. The results are given in Table 3.
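Because the tr-HOSVD storage depends only on the dimensions and the truncation 3-tuple, the search over candidate tuples can be done before any decomposition is computed. The following sketch illustrates both selection rules under stated assumptions: storage is counted as the core tensor plus the three factor matrices, the balanced rule rounds the proportional ranks to the nearest integer, and the "closest match" is measured by absolute difference in CR. The function names are hypothetical.

```python
def hosvd_storage(dims, ranks):
    """Entries stored by a tr-HOSVD: core tensor plus three factor matrices."""
    (m, p, n), (k1, k2, k3) = dims, ranks
    return k1 * k2 * k3 + m * k1 + p * k2 + n * k3


def hosvd_cr(dims, ranks):
    """Compression ratio of a tr-HOSVD with the given truncation 3-tuple."""
    m, p, n = dims
    return m * p * n / hosvd_storage(dims, ranks)


def match_mode2_only(dims, target_cr):
    """Rule 1: truncate only the second mode; keep full rank in the others."""
    m, p, n = dims
    candidates = [(m, k2, n) for k2 in range(1, p + 1)]
    return min(candidates, key=lambda r: abs(hosvd_cr(dims, r) - target_cr))


def match_balanced(dims, target_cr):
    """Rule 2: keep the ratio of rank to dimension about equal across modes."""
    m, p, n = dims
    candidates = []
    for k2 in range(1, p + 1):
        k1 = min(m, max(1, int(round(m * k2 / p))))
        k3 = min(n, max(1, int(round(n * k2 / p))))
        candidates.append((k1, k2, k3))
    return min(candidates, key=lambda r: abs(hosvd_cr(dims, r) - target_cr))
```

Either function returns the candidate tuple whose predicted CR is nearest the target, after which the tr-HOSVD only needs to be computed once for that tuple.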

Table 3.

Video experiment results

| | t-SVDMII | Matrix | Matrix | tr-HOSVD | tr-HOSVD |
| --- | --- | --- | --- | --- | --- |
| Experiment 1 | | | | | |
| CR | 4.76 | 1.83 | 4.76 | 4.95 | 4.90 |
| RE | 0.044 | 0.045 | 0.093 | 0.098 | 0.065 |
| Experiment 2 | | | | | |
| CR | 10.10 | 2.54 | 10.87 | 10.75 | 10.42 |
| RE | 0.063 | 0.064 | 0.120 | 0.125 | 0.090 |

Matrix-based compression can either target a predefined relative energy (which affects RE) or be set to achieve a desired compression, so we performed both. For experiment 1 with , the and values that gave the same compression results were 25 and (92, 69, 69), respectively; for the second experiment these were 11 and (70, 53, 53), respectively. Truncations for the matrix case were 65 and 25 for the first experiment and 47 and 11 for the second.

We can also visualize the impact of the compression schemes. In Fig. 4 we give the corresponding reconstructed representations of frame 10 and 54 for the four methods under comparable CR for the second (columns 2 and 4 through 6, second row-block of the table, i.e., the results corresponding to the most compression). Cars disappear altogether and/or artifacts make it appear as though cars may be in the frame when they are not—at these compression levels, the matrix and tr-HOSVD suffer from a ghosting effect.

Fig. 4.

Various reconstructions for frames 10 and 54 (Left and Right). Top to bottom: Original, tr-tSVDMII with , tr-Matrix for same , tr-HOSVD , and tr-HOSVD. See Table 3 for numerical results.

C. Hyperspectral Image Compression

We compare the performance of the t-SVDMII and the truncated HOSVD based on our results in Corollary 6.3 on hyperspectral data. The hyperspectral data comes from flyover images of the Washington, DC mall with a sensor that measured 210 bands of visible infrared spectra (25). After removing the opaque atmospheric levels the data consist of 191 images corresponding to different wavelengths and each image is of size 307 × 1,280.

To reduce the computational cost for this comparison, and to allow for equal truncation in both spatial dimensions, we resize the images using MATLAB imresize to images. We store the data in a tensor of size , where the third dimension contains the wavelengths. We choose this orientation because we use , where contains the left singular vectors of . We can expect a high correlation and hence high compressibility (i.e., many will be 0) along the third dimension as they correspond to exactly the same spatial location at each wavelength.

For a fair comparison, we relate the t-SVDMII, the tr-HOSVD, and the TT-SVD via the truncation parameter of the truncated t-SVDM using . For the t-SVDMII based on Theorem 5.5 we use the energy parameter . For the tr-HOSVD based on Theorem 6.2 we use a variety of multilinear truncations and for various choices of and . For the TT-SVD based on Theorem 6.4 we choose the accuracy threshold such that the truncation of the first unfolding is . Note we first permute the tensor so that the first TT-SVD unfolding is the mode-2 unfolding. For completeness, we include the matrix SVD and apply Theorem 5.3. We display the RE-vs.-CR results in Fig. 5.

Fig. 5.

Performance of various representations for the hyperspectral data compression. Each shape represents a different method. The methods are related by the truncation parameter and each color represents a different choice of . The HOSVD results depicted by the squares correspond to multilinear rank and maximally compresses the first two dimensions such that Theorem 6.2 applies and does not compress in the third dimension to minimize the RE. The HOSVD results depicted by the asterisks are many different choices of multilinear rank such that Theorem 6.2 applies. For each , we depict all possible combinations of and either and is any larger truncation value, or vice versa. The RE depends most on the value of and the CR depends most on the value of . This is due to the orientation and structure of the data; the third dimension is highly compressible and thus truncating does not significantly worsen the approximation. Comparing shapes, the t-SVDMII produces the best results, clearly outperforming all cases of the SVD and HOSVD and outperforming the TT-SVD, most noticeably for better approximations (lower RE). Comparing colors, for a fixed value of the t-SVDMII produces the smallest RE, providing empirical support for the theory.

The results use the t-SVDMII storage method described in Section 6D. Even when counting the storage of , the t-SVDMII outperforms the matrix SVD and the tr-HOSVD and is highly competitive with the TT-SVD and outperforms for larger values of (i.e., less truncation, but better approximation quality). For example, if we compare the t-SVDMII result with (dark gray diamond) with the TT-SVD result with (light gray circle), we see that the t-SVDMII produces a better approximation for roughly the same CR. The color-coded results in Fig. 5 follow directly from writing the tr-HOSVD in our -framework and the optimality condition in Theorem 6.2 and follow from relating the TT-SVD to the matrix SVD and using our Eckart–Young result in Theorem 5.3. Specifically, we see that for each choice of the t-SVDMII approximation has a smaller RE than all other methods.

We also note that for the HOSVD in Fig. 5 a large number of combinations of multirank parameters are possible and can drastically change the approximation–compression relationship. Finding a reasonable tr-HOSVD multirank via trial and error may not be feasible in practice. In comparison, there is only one parameter for the t-SVDMII and TT-SVD which impacts the approximation–compression relationship more predictably, particularly because the approximation quality is known a priori.

D. Extension to Four Dimensions and Higher

Although the algorithms and optimality results were described for third-order tensors, the algorithmic approach and optimality theory can be extended to higher-order tensors since the definitions of the tensor-tensor products extend to higher-order tensors in a recursive fashion, as shown in ref. 26. Ideas based on its truncation for compression and high-order tensor completion and robust PCA can be found in the literature (19, 27). As noted in ref. 11, a similar recursive construct can be used for higher-order tensors for the -product, or different combinations of transform-based products can be used along different modes. We give the four-dimensional t-SVDMII algorithm in ref. 23. Due to space constraints, we do not discuss this further here.

9. Conclusions and Ongoing Work

We have proved Eckart–Young theorems for the t-SVDM and t-SVDMII. Importantly, we showed that the truncated t-SVDM and t-SVDMII representations give better approximation to the data than the corresponding matrix-based SVD compression. Although the superiority of tensor-based compression has been observed by many in the literature, our result proves this theoretically. By interpreting the HOSVD in the -framework, we developed the relationship between the HOSVD and the t-SVDM and t-SVDMII and then applied our Eckart–Young results to show how our tensor approximations improve on the Frobenius norm error. We were also able to apply our Eckart–Young theorems to compare Frobenius norm error with that of a TT-SVD approximation.

We briefly considered both multisided compression and extensions of our work to higher-order tensors. In future work, means for optimizing , , and such that the upper bound on the error is minimized while minimizing the total storage will be investigated.

The choice of the transformation matrix defining the tensor-tensor product should be tailored to the data for best compression. How best to design it to suit the dataset will therefore be pursued in future work.

Acknowledgments

M.E.K.’s work was partially supported by grants from IBM Thomas J. Watson Research Center, Tufts T-Tripods Institute (under NSF Harnessing the Data Revolution grant CCF-1934553), and NSF grant DMS-1821148.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

*If is , the MATLAB command returns the matrix.

†Since computation of the best rank- CP decomposition requires an iterative method and does not require orthogonality, CP results are not brought for comparison in this study.

‡The reader should regard the elements of the tensor as tube fibers under . This forms a free module (see ref. 11). The analogy to elemental inner-product-like definitions over the underlying free module induced by and space of tube-fibers is referenced in section 4 of ref. 11 for general -products and in section 3 of ref. 13 for the t-product. The notion of orthogonal/unitary tensors is therefore consistent with this generalization of inner-products, which is captured in the first part of the definition.

§The term “t-rank” is exclusive to the tensor decomposition and should not be confused with the rank of a tensor, which was defined in Section 1.

¶Specifically, we mean the minimizer of the Frobenius norm of the discrepancy between the original and the approximated tensor.

#If we want to choose a different mode for the first unfolding we would correspondingly permute the tensor prior to decomposing with our method.

∥In MATLAB, this would be obtained by using the command .

**The video data are built into MATLAB and can be loaded using trafficVid = VideoReader('traffic.mj2').

††Orienting with transposed frames did not affect performance significantly.

Data Availability

There are no data underlying this work.

References

- 1. Stewart G. W., On the early history of the singular value decomposition. SIAM Rev. 35, 551–566 (1993).

- 2. Eckart C., Young G., The approximation of one matrix by another of lower rank. Psychometrika 1, 211–218 (1936).

- 3. Watkins D. S., Fundamentals of Matrix Computations (Wiley, ed. 3, 2010).

- 4. Hitchcock F. L., The expression of a tensor or a polyadic as a sum of products. J. Math. Phys. 6, 164–189 (1927).

- 5. Carroll J. D., Chang J., Analysis of individual differences in multidimensional scaling via an n-way generalization of ‘Eckart-Young’ decomposition. Psychometrika 35, 283–319 (1970).

- 6. Harshman R., “Foundations of the PARAFAC procedure: Models and conditions for an ‘explanatory’ multi-modal factor analysis” (UCLA Working Papers in Phonetics, 16, 1970).

- 7. Tucker L. R., “Implications of factor analysis of three-way matrices for measurement of change” in Problems in Measuring Change, Harris C. W., Ed. (University of Wisconsin Press, Madison WI, 1963), pp. 122–137.

- 8. De Lathauwer L., De Moor B., Vandewalle J., A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. 21, 1253–1278.

- 9. Oseledets I. V., Tensor-train decomposition. SIAM J. Sci. Comput. 33, 2295–2317 (2011).

- 10. Kilmer M. E., Martin C. D., Factorization strategies for third-order tensors. Lin. Algebra Appl. 435, 641–658 (2011).

- 11. Kernfeld E., Kilmer M., Aeron S., Tensor–tensor products with invertible linear transforms. Lin. Algebra Appl. 485, 545–570 (2015).

- 12. Kolda T., Bader B., Tensor decompositions and applications. SIAM Rev. 51, 455–500 (2009).

- 13. Kilmer M. E., Braman K., Hao N., Hoover R. C., Third-order tensors as operators on matrices: A theoretical and computational framework with applications in imaging. SIAM J. Matrix Anal. Appl. 34, 148–172 (2013).

- 14. Grasedyck L., Hierarchical singular value decomposition of tensors. SIAM J. Matrix Anal. Appl. 31, 2029–2054 (2010).

- 15. Hackbusch W., Tensor Spaces and Numerical Tensor Calculus (Springer Series in Computational Mathematics, Springer, 2012), vol. 42.

- 16. Vannieuwenhoven N., Vandebril R., Meerbergen K., A new truncation strategy for the higher-order singular value decomposition. SIAM J. Sci. Comput. 34, A1027–A1052 (2012).

- 17. Hillar C. J., Lim L., Most tensor problems are NP-Hard. J. ACM 60, 1–39 (2013).

- 18. Hao N., Kilmer M. E., Braman K., Hoover R. C., Facial recognition using tensor-tensor decompositions. SIAM J. Imag. Sci. 6, 457–463 (2013).

- 19. Zhang Z., Ely G., Aeron S., Hao N., Kilmer M., “Novel methods for multilinear data completion and de-noising based on tensor-SVD” in 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (IEEE, 2014), pp. 3842–3849.

- 20. Zhang Y., et al., “Multi-view spectral clustering via tensor-SVD decomposition” in 2017 IEEE 29th International Conference on Tools with Artificial Intelligence (ICTAI, 2017), pp. 493–497.

- 21. Sagheer S. V. M., George S. N., Kurien S. K., Despeckling of 3D ultrasound image using tensor low rank approximation. Biomed. Signal Process Contr. 54, 101595 (2019).

- 22. Ballester-Ripoll R., Lindstrom P., Pajarola R., TTHRESH: Tensor compression for multidimensional visual data. arXiv [Preprint] (2018). https://arxiv.org/abs/1806.05952 (Accessed 1 December 2020).

- 23. Kilmer M. E., Horesh L., Avron H., Newman E., Tensor-tensor algebra for optimal representation and compression. arXiv [Preprint] (2019). https://arxiv.org/abs/2001.00046 (Accessed 1 December 2020).

- 24. Georghiades A. S., Belhumeur P. N., Kriegman D. J., From few to many: Illumination cone models for face recognition under variable lighting and pose. IEEE Trans. Pattern Anal. Mach. Intell. 23, 643–660 (2001).

- 25. Landgrebe D., Biehl L., An introduction and reference for MultiSpec (2019). https://engineering.purdue.edu/biehl/MultiSpec/. Accessed 1 December 2020.

- 26. Martin C. D., Shafer R., LaRue B., An order- tensor factorization with applications in imaging. SIAM J. Sci. Comput. 35, A474–A490 (2013).

- 27. Ely G., Aeron S., Hao N., Kilmer M., 5D seismic data completion and denoising using a novel class of tensor decompositions. Geophysics 80, V83–V95 (2015).
