Abstract

Objective.

Speech-related neural modulation was recently reported in the “arm/hand” area of human dorsal motor cortex, which is used as a signal source for intracortical brain-computer interfaces (iBCIs). This raises the concern that speech-related modulation might deleteriously affect the decoding of arm movement intentions, for instance by affecting velocity command outputs. This study sought to clarify whether speaking interferes with ongoing iBCI use.

Approach.

A participant in the BrainGate2 iBCI clinical trial used an iBCI to control a computer cursor, spoke short words in a stand-alone speech task, and spoke short words during ongoing iBCI use. We examined neural activity in all three behaviors and compared iBCI performance with and without concurrent speech.

Main results.

Dorsal motor cortex firing rates modulated strongly during stand-alone speech, but this activity was largely attenuated when speaking occurred during iBCI cursor control using attempted arm movements. “Decoder-potent” projections of the attenuated speech-related neural activity were small, explaining why cursor task performance was similar between iBCI use with and without concurrent speaking.

Significance.

These findings indicate that speaking does not directly interfere with iBCIs that decode attempted arm movements. This suggests that patients who are able to speak will be able to use motor cortical-driven computer interfaces or prostheses without needing to forgo speaking while using these devices.

Introduction

One application of intracortical brain-computer interfaces (iBCIs) is to restore movement and communication for people with paralysis by decoding the neural correlates of attempted arm and hand movements (e.g., Brandman et al., 2017; Slutzky, 2019). Most existing pre-clinical [3-11] and clinical [12-18] iBCI systems record neural signals from the “arm/hand area” of dorsal motor cortex. An important consideration for these systems is whether other cognitive processes may modulate the same neural population whose activity is being decoded (translated into movement commands by an algorithm, see [19]). If so, activity unrelated to the user’s movement intentions could “mask” or add to the underlying movement intention signal, thereby acting as a nuisance variable that deleteriously affects the decoder output and thus reduces iBCI performance.

We recently reported that neurons in dorsal motor cortex modulate during speaking and during movements of the mouth, lips, and tongue [20,21]. On the one hand, this speech-related activity presents an opportunity for efforts to build BCIs that restore lost speech [2,22]. On the other hand, this finding raises an immediate practical concern for iBCI applications such as controlling a prosthetic device with attempted arm and hand movements: does this speech-related activity interfere with ongoing iBCI use, for example by “leaking out” via the decoder so that whenever the person tries to speak, an unintended command is sent to the BCI-driven effector? If so, it would reduce the clinical utility of iBCI systems, since having to stay silent while using a brain-driven computer interface or prosthetic arm would be a substantial limitation. The supplementary videos and media coverage accompanying [12,14], as well as our own observations during [16], provide some anecdotal evidence that people can talk while using their iBCI. However, to the best of our knowledge this question has never been directly examined, which is not surprising given that there was previously little reason to suspect speech-related activity in dorsal motor cortex.

Here we specifically tested for speech-related interference while a BrainGate2 participant performed an iBCI task in which he used arm movement imagery to move a computer cursor to a target in a 2D workspace. We used a task design in which the participant was randomly presented with an auditory prompt during ongoing cursor control. Depending on each block’s condition, the participant was instructed either to say a short word after hearing the prompt, or to not speak when hearing the prompt. We also compared speech-evoked responses during this ongoing iBCI task to responses when speaking words during a stand-alone audio-cued speaking task. We found that speech-related neural modulation was greatly reduced during ongoing BCI use compared to stand-alone speaking, and that the speech-related firing rate changes had minimal effect on decoder output when speaking during BCI use. Consistent with this, we did not observe reduced cursor task performance during the speak-during-BCI condition.

Methods

Participant and approvals

This research was conducted within the BrainGate2 Neural Interface System pilot clinical trial (ClinicalTrials.gov Identifier: NCT00912041), whose overall purpose is to collect preliminary safety information and demonstrate proof of feasibility that an iBCI can help people with tetraplegia communicate and control external devices. Permission for the trial was granted by the U.S. Food and Drug Administration under an Investigational Device Exemption (Caution: investigational device. Limited by federal law to investigational use). The study was also approved by the Institutional Review Boards of Stanford University Medical Center (protocol #20804), Brown University (#0809992560), and Partners HealthCare and Massachusetts General Hospital (#2011P001036).

One participant (‘T5’) performed research sessions specific to this study. T5 is male, right-handed, and was 65 years old at the time of the study. He had been diagnosed with a C4 AIS-C spinal cord injury eleven years prior to these research sessions. T5 retained the ability to weakly flex his left elbow and fingers, as well as some slight and inconsistent residual movement of both the upper and lower extremities. He is able to speak and move his head. T5 gave informed consent for the research and publications resulting from the research, including consent to publish audiovisual recordings.

Behavioral tasks

We compared data from a stand-alone speaking task and two variants of a BCI Radial 8 Target Task that had randomly occurring audio prompts. Depending on the block, these audio prompts instructed the participant to either speak a word (which was specified to him before the start of the block), or to say nothing. We examined specific time epochs within the data from these tasks to isolate neural activity and/or cursor kinematics across a variety of different behavioral contexts: stand-alone speaking (figures 1 and 2); speaking in response to an audio prompt during ongoing BCI control (figures 1 and 2); BCI cursor trials without any audio prompts or speaking (figures 1-3); and BCI cursor control following an audio prompt either with or without subsequent speaking (figure 3). During all tasks, the participant was comfortably seated in his wheelchair while facing a computer monitor and microphone, as illustrated in figure 1a.

Figure 1.

Speech-related neural modulation in dorsal motor cortex is much weaker when occurring during ongoing BCI cursor control.

(a) Task setup. We recorded speaking data and neural activity from a participant with two intracortical 96-electrode arrays implanted in the ‘hand knob’ area of motor cortex during BCI cursor control, during speaking, and during speaking while using the BCI.

(b) Threshold crossing spike firing rates (mean ± s.e.) are shown for three example electrodes (rows) across three different behavioral contexts: 1) BCI cursor movement trials without any speaking (left column); 2) speaking alone (middle column); and 3) speaking at random times during ongoing BCI cursor control (right column). Cursor position traces from the corresponding trials are shown above the BCI column (one color per target). Trial-averaged acoustic spectrograms for one example word (“bat”) are shown above each speaking column. The acoustic spectrogram’s horizontal axis spans 100 ms before to 500 ms after acoustic onset, and its vertical axis spans 100 Hz to 10,000 Hz. Examples are from dataset T5.2018.12.17.

(c) Summary of population firing rate changes (speaking minus silence, time-averaged over the 2 s epoch shown) when speaking alone (green) and when speaking during BCI use (blue). Each spoken word condition contributes one datum from each of the two datasets. Bars show the mean across all the dataset-conditions within each behavior.

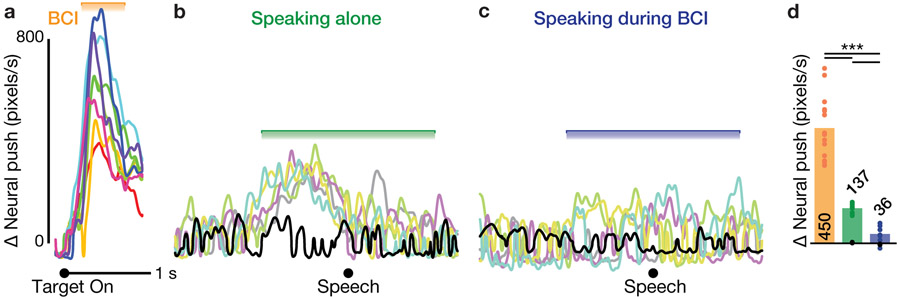

Figure 2.

Speaking during ongoing BCI use has very little effect on cursor velocity decoder output.

(a) Mean ‘neural push’ changes, i.e. the decoder-potent projection of firing rates that generated the cursor velocity, when making BCI cursor movements to each outward target (colors are the same as in figure 1b). Neural push traces shown in panels a, b, and c are from dataset T5.2018.12.17.

(b) Neural push changes that would have occurred due to firing rate changes when speaking each of the five words, or silence (black), during stand-alone speech blocks, if the velocity decoder had been active.

(c) Neural push changes aligned to speaking each of the five words, plus silence, when speaking occurred during the BCI cursor task.

(d) Summary of neural push changes across the Radial 8 targets (orange) and word speaking conditions (green and blue) in both datasets. Silence conditions are shown separately as black markers (these lie very close to 0). Each dataset-condition contributes one datum based on its mean neural push change in the epoch shown with horizontal brackets in panels a - c. Bars show the mean across all the dataset-conditions within each behavior.

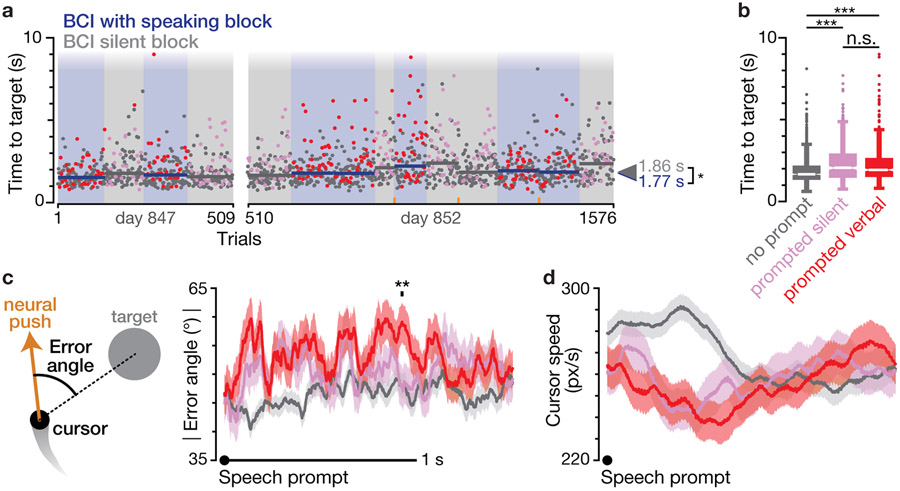

Figure 3.

Speaking during ongoing BCI use does not reduce cursor task performance.

(a) Timeline of all BCI comparison blocks across two research sessions. Blue background denotes blocks in which the participant was instructed to speak after hearing an audio prompt; gray background denotes blocks with an instruction to not speak when hearing the prompt. Each dot shows one trial’s time to target. Trials during which a prompt was played are shown in red (if during a speaking block) or pink (if during a silent block). Horizontal bars show the median time to target of each block. Arrows on the right show the median across all trials of each instruction type. Orange ticks along the abscissa show when the decoder was recalibrated.

(b) Box-and-whisker plots of times to target for ‘no prompt’ trials that did not have an audio prompt, ‘prompted silent’ trials during a BCI silent block, and ‘prompted verbal’ trials during a BCI with speaking block. For each trial type, the center white line shows the distribution median, the thick (box) portion spans the 25th to 75th percentiles, and the thin lines (whiskers) extend up to a further 1.5 times the box range. All remaining outlier points are shown as dots. Only the no prompt distribution was significantly different from those of the other two trial types (p < 0.001, rank-sum test).

(c) Mean ± s.e. absolute instantaneous cursor error angle, aligned to the audio prompt that indicated when to speak (red) or not to speak (pink). As illustrated in the schematic on the left, error angle is the angular difference between the instantaneous neural push and the vector pointing from the cursor to the target. 1 ms time bins in which there was a significant difference between the prompted silent and prompted verbal conditions are marked with a black tick above the traces (p < 0.01, rank-sum test). For comparison, no prompt trials are shown in gray (aligned to faux prompt times). Data are aggregated across both datasets.

(d) Mean ± s.e. instantaneous cursor speeds for each trial type, aligned to the audio prompt, presented similarly to panel c.

Stand-Alone Speaking Task:

This audio-cued speaking task was based on the words speaking task from [21], except that here we used a subset of five words, arbitrarily chosen from the ten words in that previous study, in order to increase the number of repetitions per word condition. A custom program written in MATLAB (The MathWorks) generated audio prompts on each trial. These consisted of two beeps to alert the participant that the trial was starting, followed 0.4 seconds later by the cued word spoken by the computer. Then, after a delay of 0.8 seconds, two clicks were played as the go cue for the participant to speak the cued word back. The inter-trial interval was 2.2 seconds. On ‘silence’ trials, no word was prompted, and the participant was instructed to say nothing in response. Words were pseudorandomly interleaved in sequences consisting of one repetition of each word (and one silence trial). The participant was instructed to look at the center of the (blank) screen in front of him and to refrain from any other movements or speech during the task.

On each of the two research sessions, we collected two blocks, each consisting of 17 repetitions of each of the five pseudorandomly interleaved words (and of silence). We did not analyze the rare trials in which the participant missed a word or misspoke, or in which the trial was interrupted by loud noise in the environment. On the first session, the number of trials for each speaking condition was: silence (34), seal (33), shot (34), more (33), bat (34), beet (34). On the second session, the trial counts were: silence (34), seal (34), shot (34), more (34), bat (33), beet (34).

BCI Radial 8 Target Task:

The core of this task was a standard BCI cursor-to-target acquisition task in which the participant controlled the velocity of a computer cursor with decoded neural activity [23]. A trial was successful if the participant kept the center of the circular cursor (45 pixels in diameter) inside a circular target (100 pixels in diameter) for a contiguous 400 ms before a 10 second trial timeout elapsed. The participant was instructed to acquire the target as quickly as possible. The target location alternated between the center of the workspace and a radial target at one of eight equally spaced locations 409 pixels from the workspace center. The overall workspace was 1920 pixels wide by 1080 pixels tall (59.8 × 33.6 cm at a distance of approximately 73 cm from the participant). Each block was 5 minutes long.

We asked the participant not to move his head during all BCI cursor tasks. Otherwise, head movement-related neural modulation might occur during the task [24] and be exploited by the cursor velocity decoder (due to correlations between head movement and target direction). If so, head movements that co-occur with speaking could also affect the cursor velocity decoder in a way that is unrelated to any neural overlap between attempted arm movements and speech. To enforce this instruction, an OptiTrack V120:Trio camera system mapped the vertical (coronal) plane position of a headband worn by the participant onto an additional on-screen “head cursor”, which was a different color from the BCI-controlled cursor. The participant was instructed to keep the head cursor within 80 pixels of the screen center, and could not acquire targets if the head-tracking cursor was outside of this boundary. Furthermore, a trial was immediately failed if the head-tracking cursor’s speed exceeded 270 pixels/second. The participant was already familiar with this “head still” BCI protocol from previous research sessions (not part of this study) and was able to keep his head still while performing the BCI task.

The novel element of the BCI task in this study was the addition of an audible speaking prompt at random times during the Radial 8 Target Task. This prompt consisted of a pair of go clicks (as in the Stand-Alone Speaking Task) delivered every 4 to 10 seconds (clipped exponential distribution with a mean interval of 7 seconds) during the Radial 8 Target Task block. The “speech prompt” in figure 3d refers to the start of the second click. At the start of each BCI task block, the participant was told either to say a specific word when he heard the audio prompt (a ‘BCI with speaking block’) or to say nothing (a ‘BCI silent block’). We refer to trials within these blocks that had an audio prompt as ‘prompted verbal’ and ‘prompted silent’ trials, respectively. The order of these block types was counterbalanced within each session, in an ‘ABAB’ sequence on the first session (A = BCI with speaking, B = BCI silent) and an ‘ABBA, BAAB, …’ sequence on the second session.

This task was designed to identify whether the act of speaking interfered with BCI cursor control, while reducing the potential effects of perceiving, and being distracted by, the prompts. Specifically, the participant was instructed to say the same word in response to the prompt throughout a given BCI with speaking block. We used a fixed pre-instructed word and a simple click prompt (rather than audio of the word) to minimize the perceptual and cognitive burden of the prompted speaking element of this task. We tried to match the prompts’ distraction effects across conditions by presenting the same click prompts during the BCI silent blocks, with presentation times drawn from the same probability distribution. We changed the instructed word between blocks in order to measure neural responses while speaking a variety of words, despite using the same word within each block.
During the first session, the words that the participant was instructed to speak in response to the BCI with speaking prompts were “beet” and “bot”, in that order across blocks. During the second session, the words were “seal”, “more”, “bat”, “shot”, and “beet”.

The participant also performed one additional block of the Radial 8 Target Task during which he was asked to tell a story of his choosing out loud while using the BCI cursor to acquire targets. There were no audio prompts during this ‘storytelling’ block. In contrast to the prompted speaking during the other blocks, this story was self-generated; it was not pre-rehearsed or read aloud from a script. Thus, this block added the cognitive demand of generating the story to the motoric element of continuous speaking.

As part of this study, we asked the participant about his subjective experience when speaking while using the BCI. At the start of the first research session and after the second research session, we asked the participant whether he thought speaking made it harder to control the BCI cursor. We also asked an open-ended “how did that block feel?” after each block.

Neural and audio recording

T5 had two 4.2 mm × 4.2 mm, 96-electrode (1.5 mm long) Utah arrays (Blackrock Microsystems) neurosurgically placed in the dorsal ‘hand knob’ area of his left motor cortex 28 months prior to this study. Array locations are shown overlaid on the participant’s MRI-derived brain anatomy in figure 1a. The naming scheme for electrodes in figure 1 is <array #>.<electrode #>, where array 1 is the more lateral array and the electrode numbers (ranging from 1 to 96) follow the manufacturer’s electrode numbering scheme. Neural signals (electrodes’ voltages with respect to a reference wire) were recorded from the arrays using the NeuroPort™ system (Blackrock Microsystems) and analog filtered from 0.3 Hz to 7.5 kHz. The signals were then digitized at 30 kHz and sent to the experiment control computers for storage and for real-time processing to implement the BCI. This real-time BCI system was implemented in custom Simulink Real-Time software.

To extract action potentials (spikes), the signal was first common average re-referenced within each array (i.e., at each time sample, we subtracted from each electrode the mean voltage across all 96 electrodes of that array), and then filtered with a 250 Hz asymmetric FIR high pass filter designed to extract spike-band activity [25]. A threshold crossing spike was detected when the voltage crossed a threshold of −4.5 × root mean square (RMS) voltage. In keeping with standard iBCI practice [14,16,17,26,27], we did not spike sort (assign threshold crossings to specific single neurons). For analysis and visualization, spike trains were smoothed with a Gaussian kernel with σ = 25 ms.
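The spike-extraction steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the real-time Simulink implementation: the voltage array is assumed to be (samples × electrodes) for one 96-electrode array at 30 kHz, the windowed-sinc FIR high-pass is a generic stand-in for the asymmetric filter of [25], spike counts are assumed to be binned at 1 ms before smoothing, and all function names are hypothetical.

```python
import numpy as np

FS_HZ = 30_000  # sampling rate of the digitized neural signal


def common_average_reference(voltage):
    """Subtract, at each sample, the mean voltage across all electrodes of one array.

    voltage: (n_samples, n_electrodes) array recorded from a single array.
    """
    return voltage - voltage.mean(axis=1, keepdims=True)


def highpass_fir(voltage, cutoff_hz=250.0, numtaps=101, fs_hz=FS_HZ):
    """Generic linear-phase windowed-sinc high-pass (a stand-in, NOT the filter of [25])."""
    if numtaps % 2 == 0:
        raise ValueError("numtaps must be odd for spectral inversion")
    n = np.arange(numtaps) - (numtaps - 1) / 2
    lowpass = np.sinc(2 * cutoff_hz / fs_hz * n) * np.hamming(numtaps)
    lowpass /= lowpass.sum()            # unit DC gain for the low-pass prototype
    highpass = -lowpass
    highpass[(numtaps - 1) // 2] += 1   # spectral inversion: delta minus low-pass
    # Filter each electrode (column) independently; 'same' keeps the original length.
    return np.apply_along_axis(lambda x: np.convolve(x, highpass, mode="same"), 0, voltage)


def detect_threshold_crossings(x, rms_multiplier=-4.5):
    """Sample indices where a 1-D spike-band signal first drops below rms_multiplier * RMS."""
    threshold = rms_multiplier * np.sqrt(np.mean(x ** 2))
    below = x < threshold
    # A crossing is a sample below threshold whose predecessor was not below it.
    return np.flatnonzero(below[1:] & ~below[:-1]) + 1


def gaussian_smoothed_rate(spike_counts, sigma_ms=25.0, bin_ms=1.0):
    """Convolve binned spike counts with a unit-area Gaussian; returns spikes/second."""
    sigma_bins = sigma_ms / bin_ms
    half_width = int(np.ceil(4 * sigma_bins))
    t = np.arange(-half_width, half_width + 1)
    kernel = np.exp(-0.5 * (t / sigma_bins) ** 2)
    kernel /= kernel.sum()
    return np.convolve(spike_counts, kernel, mode="same") / (bin_ms / 1000.0)
```

In use, one would apply `common_average_reference` and then `highpass_fir` to an array's voltages, run `detect_threshold_crossings` on each electrode, bin the crossing indices into 1 ms counts, and pass those counts to `gaussian_smoothed_rate`.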

Audio, including the participant’s voice and task-related sounds played by the experiment control computers, was recorded by a microphone (Shure SM-58) and pre-amplified by ~60 dB (ART Tube MP Studio microphone pre-amplifier). This audio signal was then recorded by the electrophysiology data acquisition system via an analog input port and digitized at 30 ksps. Each speaking event’s sound onset time (‘speech’ events in figures 1 and 2) was manually labeled from visual and auditory inspection of the recorded audio data. For the silence condition there was no sound onset time; however, for several analyses (the speech-aligned firing rates in figure 1b and the speech-aligned neural push in figure 2b,c) we wanted to compare neural activity during the overt speaking conditions to a comparable time period from the silence condition. We did this by assigning each silent trial a “faux” sound onset time equal to the median sound onset time (relative to the audio prompt time) across the overt speaking conditions from that dataset.
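The faux onset assignment amounts to a single median over the spoken trials of a dataset. A minimal sketch, assuming per-trial prompt and onset times in seconds for one dataset; the function and argument names are hypothetical.

```python
import numpy as np


def assign_faux_onsets(prompt_times_s, onset_times_s, is_silent):
    """Give each silent trial a 'faux' sound onset at the median spoken-trial latency.

    prompt_times_s: audio prompt time of every trial in one dataset (seconds).
    onset_times_s:  manually labeled sound onset per trial (ignored for silent trials).
    is_silent:      boolean mask marking silence-condition trials.
    """
    prompt_times_s = np.asarray(prompt_times_s, dtype=float)
    onset_times_s = np.asarray(onset_times_s, dtype=float)
    is_silent = np.asarray(is_silent, dtype=bool)
    # Median prompt-to-onset latency across all overt speaking trials.
    latency = np.median(onset_times_s[~is_silent] - prompt_times_s[~is_silent])
    result = onset_times_s.copy()
    result[is_silent] = prompt_times_s[is_silent] + latency
    return result
```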

BCI cursor control

The participant controlled the 2D velocity of the on-screen cursor with a Recalibrated Feedback Intention-Trained Kalman Filter (ReFIT-KF) as previously described in [8,16,23]. Decoder calibration began with the participant watching automated movements of the cursor to targets (Radial 8 Target Task) while instructed to attempt to move his arm as if he were controlling the cursor. This provided an initial set of neural and velocity data that were used to seed an initial velocity Kalman filter decoder. This decoder was then used to control the cursor in a subsequent Radial 8 Target Task block.

Successful trials from this closed-loop BCI block were then used to fit a new “recalibrated” decoder. Before being used as training data, the cursor kinematics from these trials underwent two adjustments intended to better match them to the user’s putative underlying movement intentions [8]. First, velocities during cursor movements were rotated to point towards the target (regardless of the actual instantaneous cursor velocity). Second, velocity was set to 0 while the cursor was over the target. In our second research session, we performed an additional recalibration using the closed-loop block controlled by this second decoder, because a velocity bias was observed during that block. There were no audio speaking prompts during these initial decoder calibration blocks; prompts were only included in the main task blocks, from which all the analyzed data come. To improve the consistency of the Kalman filter’s dynamics across decoder fits and facilitate reproducibility, we used a fixed smoothing and gain during the Kalman filter fitting (both from open-loop data and from closed-loop data), as described in [28]. Specifically, we used smoothing α = 0.94 and gain β = 500 pixels/s.
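The two kinematic adjustments used to build the recalibration training set can be sketched as below. This is an illustrative NumPy version, not the ReFIT-KF code of [8]; it assumes per-trial (T × 2) cursor position and velocity arrays and treats “over the target” as the cursor center lying within the target radius (50 pixels for the 100 pixel target). The function name is hypothetical.

```python
import numpy as np


def refit_intended_velocity(cursor_pos, cursor_vel, target_pos, target_radius=50.0):
    """Relabel closed-loop cursor velocities as putative intended velocities.

    cursor_pos, cursor_vel: (T, 2) arrays for one trial; target_pos: (2,) array.
    1) Rotate each velocity to point from the cursor toward the target, keeping its speed.
    2) Set velocity to zero whenever the cursor center is inside the target.
    """
    cursor_pos = np.asarray(cursor_pos, dtype=float)
    cursor_vel = np.asarray(cursor_vel, dtype=float)
    to_target = np.asarray(target_pos, dtype=float) - cursor_pos
    dist = np.linalg.norm(to_target, axis=1, keepdims=True)
    speed = np.linalg.norm(cursor_vel, axis=1, keepdims=True)
    # Unit vector toward the target (left as zero if the cursor sits exactly on it).
    unit = np.divide(to_target, dist, out=np.zeros_like(to_target), where=dist > 0)
    intended = speed * unit
    intended[dist[:, 0] <= target_radius] = 0.0
    return intended
```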

Due to within-day non-stationarities in neural recordings [29], iBCI decoder performance can be sustained by occasionally recalibrating the decoder from recently collected data [16,30]. We therefore performed several decoder recalibrations during the longer second research session; orange ticks in figure 3a indicate when a new decoder was used. These recalibrations used the previous two blocks as training data, i.e., one BCI with speaking block and one BCI silent block. Our reasoning for including one block of each task condition when recalibrating the decoder was that, if there were context-dependent differences between these two conditions, possible model mismatch due to recalibrating from just one condition (e.g., only refitting from BCI with speaking blocks) would unfairly penalize subsequent performance during the other condition (e.g., BCI silent blocks).

Once fit, the time-bin-by-time-bin operation of this decoder can be described in the standard steady-state Kalman filter form [31,32]:

$$v_t = M_1 v_{t-1} + M_2 y_t \qquad (1)$$

where $v_t$ is a 2 × 1 vector of horizontal and vertical velocities at time step t, $y_t$ is a 192 × 1 vector of each electrode’s firing rates in a 15 ms bin (after subtracting a static baseline offset rate), $M_1$ is a 2 × 2 diagonal matrix that applies temporal smoothing to the velocity [28], and $M_2$ is a 2 × 192 matrix that maps firing rates to changes in velocity by assigning each electrode a preferred direction, such that increases in that electrode’s firing rate tend to “push” the velocity more in this direction.

We use this factorization of the decoder to compute the instantaneous “neural push” [33,34], a 2 × 1 vector calculated by multiplying firing rates $y_t$ by $M_2$. This vector indicates the direct contribution of neural activity at that moment to velocity in this time step (note that neural activity at a given time also has a lingering effect on subsequent cursor velocity, due to the temporal smoothing introduced by $M_1$). We chose to examine neural push at a given time, rather than cursor velocity, because neural push is a more sensitive (less temporally smoothed) measure of transient neural changes due to, e.g., interference from speaking. To present the neural push at a more intuitive scale, we divide it by (1 − α), where α = 0.94. This scales the neural push so that it indicates how fast the neural activity would push the cursor (in units of pixels/second) if there were no smoothing applied. During actual BCI operation, the neural push magnitude is smaller, but it is integrated by $M_1$ across decoder time steps.
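To make equation (1) and the neural push rescaling concrete, here is a minimal sketch that assumes $M_1 = \alpha I$ with the fixed α = 0.94 (the text states only that $M_1$ is diagonal) and an arbitrary 2 × E matrix $M_2$. Under that assumption, a constant push $M_2 y$ drives the velocity toward the steady state $M_2 y / (1 - \alpha)$, which is why dividing the push by (1 − α) puts it on a pixels/second scale. The function name is hypothetical.

```python
import numpy as np


def run_decoder(rates, M2, alpha=0.94):
    """Iterate v_t = M1 v_{t-1} + M2 y_t, assuming M1 = alpha * I.

    rates: (T, E) baseline-subtracted firing rates, one row per decoder bin.
    M2:    (2, E) matrix mapping firing rates to velocity increments.
    Returns decoded velocities (T, 2) and neural push rescaled by 1 / (1 - alpha).
    """
    M1 = alpha * np.eye(2)
    push = rates @ M2.T                    # neural push M2 y_t for every bin
    velocity = np.zeros((rates.shape[0], 2))
    v = np.zeros(2)
    for t, p in enumerate(push):
        v = M1 @ v + p                     # smoothing integrates the push over bins
        velocity[t] = v
    return velocity, push / (1.0 - alpha)
```

With a constant firing rate vector held for a few hundred bins, the last decoded velocity converges to the rescaled push, since $\alpha^{500} \approx 10^{-13}$.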

Measuring task-related firing rate changes

We trial-averaged firing rates across trials of a given condition (for example, cursor movements towards a particular target, or speaking a particular word) aligned to either the time when the BCI task target appeared (‘target on’) or speaking sound onset time (‘speech’). These firing rates are shown in figure 1b. Note that since the speech prompts occurred at random times during the Radial 8 Target Task, the cursor task-related firing rate changes that were also occurring during speaking should average away in speech-aligned firing rates, leaving the modulation related to the speaking behavior.
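Event-aligned trial averaging reduces to stacking a fixed window around each event and averaging across trials. A minimal sketch, assuming continuous smoothed rates in 1 ms bins for a whole block and event indices at least one window away from the block edges; the function name is hypothetical.

```python
import numpy as np


def event_aligned_average(rates, event_idx, start_bin, stop_bin):
    """Trial-average continuous rates in a window around each event.

    rates:     (T, E) smoothed firing rates for the whole block.
    event_idx: bin index of the alignment event ('target on' or 'speech') per trial.
    Returns a (stop_bin - start_bin, E) array; row k is offset start_bin + k.
    """
    windows = np.stack([rates[e + start_bin:e + stop_bin] for e in event_idx])
    return windows.mean(axis=0)
```

Activity that is not time-locked to the event (such as cursor task modulation during randomly timed speech prompts) contributes with varying phase across trials and therefore tends to average toward its mean.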

To examine speech-related modulation at the neural ensemble level, we measured the differences between population firing rates during speaking each word and during the silence condition within the same behavioral context (i.e., speaking alone or speaking during BCI use). In essence (with several technical refinements described below), we took the norm of the difference between the firing rate vectors when speaking the word and when not speaking (silence condition), $\lVert y_{\text{word}} - y_{\text{silence}} \rVert$, where each y is an E-dimensional vector of electrodes’ firing rates, trial-averaged for that condition and time-averaged from 1 s before to 1 s after speech onset (or faux speech onset, in the case of silence).

The additional steps were as follows. First, we excluded very low signal-to-noise electrodes whose firing rate did not exceed 1 spike/second in either the speaking alone or the speaking during BCI context. Second, after calculating a given condition’s firing rates (e.g., firing rates across all trials where the participant said “seal” in the speaking alone context), we subtracted from them a “baseline” firing rate computed over the window from 500 ms before the audio prompt until the audio prompt on these same trials. The motivation for this was to account for and offset potential neural recording non-stationarity [29] between blocks in the speaking during BCI conditions (recall that the participant was instructed to speak the same word, or remain silent, for the duration of a 5 minute BCI block). Without this baseline subtraction, even a small firing rate drift between the BCI silent blocks and a given word’s BCI with speaking block would appear as a speech-related population firing rate change, even if firing rates did not actually change when the participant started to speak. This concern still applies despite there being multiple BCI silent blocks over the course of each research session, since these silence trials still all come from a different span of time than each word’s trials. There should be less need for baseline subtraction in the speaking alone conditions, where all five words (plus silence) were interleaved within blocks (and thus recording drifts should affect all word and silence conditions similarly). For consistency, however, we also applied baseline subtraction to the speaking alone conditions.

Third, when calculating the magnitude of a population firing rate vector difference, we used an unbiased measurement of the norm of vector differences [24,35]. This was done to avoid the problem that, since a vector norm is never negative, $\lVert y_{\text{word}} - y_{\text{silence}} \rVert$ is biased upwards, especially when firing rates are calculated from a small number of trials or when the firing rate differences are small (to illustrate this problem, consider that the population firing rate difference between two sets of trials drawn from identical distributions will always be positive due to the presence of noise, despite the true difference between the firing rate vectors being zero). Specifically, given $N_1$ trials from condition 1 and $N_2$ trials from condition 2, we can calculate an unbiased estimate of the squared vector norm of the difference in the two conditions’ mean firing rates by averaging over all combinations of leave-one-trial-from-each-condition-out sample estimates of differences in means:

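$$D = \frac{1}{N_1 N_2} \sum_{i=1}^{N_1} \sum_{j=1}^{N_2} \left(y_1^{(i)} - y_2^{(j)}\right)^{\top} \left(\bar{y}_1^{(\setminus i)} - \bar{y}_2^{(\setminus j)}\right) \qquad (2)$$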

where $y_1^{(i)}$ and $y_2^{(j)}$ are single-trial firing rate vectors from condition 1 and condition 2, respectively, and $\bar{y}_1^{(\setminus i)}$ and $\bar{y}_2^{(\setminus j)}$ are trial-averaged firing rate vectors from all the other trials of condition 1 and condition 2, respectively. The key property of this estimator is that each dot product is taken between firing rates computed from completely non-overlapping sets of trials. Unlike a standard squared distance, D can be negative. To convert it to a distance while preserving the sign, we then take $d = \operatorname{sign}(D)\sqrt{|D|}$.

This calculation is almost identical to a standard Euclidean vector norm $\lVert \bar{y}_1 - \bar{y}_2 \rVert$ if firing rates are calculated from large numbers of trials and the two conditions’ firing rates differ substantially. However, it provides a less biased estimate of the population firing rate distance when there are fewer trials or if the true difference between the two conditions’ population firing rates is close to 0. In our case, since the firing rate differences between speaking words during BCI use and silence are small, using this unbiased metric was important to avoid overestimating the degree of speech-related modulation during BCI use.

Finally, we divided this unbiased vector norm d by $\sqrt{E}$, where E is the number of included electrodes, so that the final metric was on a more intuitive “single-electrode” scale (i.e., the firing rate change magnitude that would need to be observed on each electrode if all electrodes contributed equally to the overall population vector norm).
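The four steps above can be sketched end to end as follows. This is an illustrative NumPy version under stated assumptions: continuous smoothed firing rates in 1 ms bins (rows) by included electrodes (columns), event indices given in bins at least 1 s from the block edges, and per-electrode mean rates already computed for each context. All function names are hypothetical, and the double loop follows equation (2) literally rather than efficiently.

```python
import numpy as np


def included_electrodes(mean_rate_alone_hz, mean_rate_bci_hz, min_hz=1.0):
    """Step 1: keep an electrode unless its rate fails to exceed min_hz in both contexts."""
    return (np.asarray(mean_rate_alone_hz) > min_hz) | (np.asarray(mean_rate_bci_hz) > min_hz)


def epoch_vectors(rates, speech_idx, prompt_idx, bins_per_s=1000):
    """Step 2: per-trial mean rate from 1 s before to 1 s after speech onset, minus the
    condition's trial-averaged baseline (500 ms before the audio prompt)."""
    half = bins_per_s // 2
    epoch = np.stack([rates[s - bins_per_s:s + bins_per_s].mean(axis=0) for s in speech_idx])
    baseline = np.stack([rates[p - half:p].mean(axis=0) for p in prompt_idx]).mean(axis=0)
    return epoch - baseline


def unbiased_squared_distance(x1, x2):
    """Step 3: equation (2), an unbiased estimate of ||mean(x1) - mean(x2)||^2."""
    x1 = np.asarray(x1, dtype=float)
    x2 = np.asarray(x2, dtype=float)
    n1, n2 = len(x1), len(x2)
    loo1 = (x1.sum(axis=0) - x1) / (n1 - 1)   # row i: condition-1 mean without trial i
    loo2 = (x2.sum(axis=0) - x2) / (n2 - 1)   # row j: condition-2 mean without trial j
    total = 0.0
    for i in range(n1):
        for j in range(n2):
            total += (x1[i] - x2[j]) @ (loo1[i] - loo2[j])
    return total / (n1 * n2)


def per_electrode_distance(x_word, x_silence):
    """Step 4: signed distance d = sign(D) * sqrt(|D|), divided by sqrt(E)."""
    D = unbiased_squared_distance(x_word, x_silence)
    return np.sign(D) * np.sqrt(abs(D)) / np.sqrt(np.asarray(x_word).shape[1])
```

For one word in one behavioral context, one would call `epoch_vectors` separately on the word trials and on the silence trials (each with its own prompt times, restricted to included electrodes) and pass both results to `per_electrode_distance`. Note that with only two trials per condition, values of 1 and 3 against zeros give D = 3 rather than the plug-in estimate of 4.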

To compare the aggregate population firing rate changes when speaking alone versus speaking during BCI use (figure 1c), we treated the (unbiased) population firing rate difference norm when speaking each word (compared to silence) during a given behavioral context (speaking alone or speaking during BCI), from a given dataset, as one dataset-condition datum.

Measuring the effect of neural modulation on decoder output

To quantify how neural population firing rate changes affected the decoded velocity (figure 2), we used the neural push metric described above. Neural data recorded during the BCI Radial 8 Target Task were projected into the decoder that was actually in operation at that time (this applies to both the BCI without speaking and the speaking during BCI use behavioral contexts). Neural data from the Stand-Alone Speaking Task were projected into the first decoder used for that session’s BCI tasks with prompted speaking or prompted silence (that is, the decoder used in the first four blocks shown for each session in figure 3a).

We examined changes in neural push relative to a baseline neural push prior to the behavior of interest. The baseline epoch for the neural push aligned to BCI target presentation was from 100 ms before target onset until target onset. The baseline epoch for the neural push aligned to speech onset was from 500 ms before the audio prompt until the audio prompt. Subtracting the trial-averaged baseline neural push from the neural push aligned to each trial’s event of interest helps account for the fact that neural push may be non-zero even before a given behavior begins (e.g., at the start of a cursor task trial or before speaking) because of decoder output biases that can arise from neural non-stationarity. In the case of speech-aligned neural push during ongoing BCI use, trial-averaged neural push may also be non-zero due to asymmetry in the underlying cursor task being performed. Baseline subtraction is particularly important for the stand-alone speaking condition, since these data were recorded at a different time in the research session than the decoder’s training data. The traces in figure 2 show the vector norm (a scalar value at each time point) of this baseline-subtracted neural push, which in this cursor task is a two-dimensional vector. To avoid overestimating the neural push magnitude when the true neural push is close to 0, we used the unbiased norm technique described in the previous section to calculate the neural push vector norm. In this case, the single-trial neural pushes comprised one distribution, and the zero vector [0, 0]ᵀ was the second distribution.
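The baseline subtraction and bias-corrected magnitude described above can be sketched as follows. Each trial's push trace is assumed to be a list of 2D vectors on a common, event-aligned time base, and the bias correction used here (subtracting the sampling variance of the mean, with [0, 0]ᵀ treated as a zero-variance second distribution) is an illustrative assumption rather than the exact analysis code. The example data are hypothetical and noiseless so the result is easy to check by hand.

```python
import math
import statistics


def baseline_subtract(push_trials, baseline_slice):
    """Subtract the trial-averaged baseline push from every trial and time point.

    push_trials: list of trials; each trial is a list of [x, y] neural pushes on a
    common time base aligned to the event of interest. baseline_slice selects the
    baseline samples (e.g. the 100 ms before target onset). The baseline is averaged
    over both trials and baseline samples.
    """
    baseline = [statistics.fmean(step[d] for trial in push_trials
                                 for step in trial[baseline_slice])
                for d in range(2)]
    return [[[step[d] - baseline[d] for d in range(2)] for step in trial]
            for trial in push_trials]


def unbiased_norm_vs_zero(vectors):
    """Bias-corrected norm of the mean of single-trial 2D vectors versus [0, 0]^T.

    [0, 0]^T is treated as a second distribution with zero variance, so only the
    sampling variance of the single-trial mean is subtracted (needs >= 2 trials).
    """
    n = len(vectors)
    d_sq = 0.0
    for d in range(2):
        values = [v[d] for v in vectors]
        d_sq += statistics.fmean(values) ** 2 - statistics.variance(values) / n
    return math.copysign(math.sqrt(abs(d_sq)), d_sq)


def push_change_trace(push_trials, baseline_slice):
    """Baseline-subtracted, bias-corrected neural push magnitude at each time point."""
    corrected = baseline_subtract(push_trials, baseline_slice)
    return [unbiased_norm_vs_zero([trial[t] for trial in corrected])
            for t in range(len(corrected[0]))]


# Hypothetical noiseless example: 5 trials, 2 baseline samples, then a push change of (6, 8).
trials = [[[3, 4], [3, 4], [9, 12], [9, 12]] for _ in range(5)]
print(push_change_trace(trials, slice(0, 2)))
```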

Measuring BCI performance

Our primary BCI Radial 8 Target Task performance measure was time to target, defined as the time between target onset and when the cursor entered the target prior to successful acquisition. Time to target did not include the final 400 ms target hold period required to acquire the target, but it did include any previous (unsuccessful) target holds in which the cursor left the target before the requisite 400 ms. Time to target is only defined for successful trials (> 98% of trials in these sessions). We also excluded the trial immediately after a failure, since its starting cursor position was not the previous target and thus could be very close to the current target, which would invalidate the time to target measurement. In the figures, we use the standard convention for significance stars: * p < 0.05, ** p < 0.01, *** p < 0.001.
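A sketch of the time-to-target rule, assuming each trial is summarized by its target onset time and the list of intervals during which the cursor was inside the target. This data layout is hypothetical, and times are kept in integer milliseconds to avoid floating-point edge cases at the 400 ms hold threshold.

```python
HOLD_MS = 400  # required continuous target hold time


def time_to_target_ms(onset_ms, entries):
    """Time from target onset until the cursor entered the target for the final,
    successful hold; returns None for a failed trial.

    entries: chronological (enter_ms, exit_ms) intervals during which the cursor
    was inside the target (hypothetical layout). Earlier holds shorter than
    HOLD_MS are counted in the result because timing runs until the successful
    entry; the final HOLD_MS hold itself is not counted.
    """
    for enter_ms, exit_ms in entries:
        if exit_ms - enter_ms >= HOLD_MS:
            return enter_ms - onset_ms
    return None


def valid_times_to_target(trials):
    """Times to target for successful trials, excluding the trial right after a failure."""
    times = []
    previous_failed = False
    for onset_ms, entries in trials:
        ttt = time_to_target_ms(onset_ms, entries)
        if ttt is not None and not previous_failed:
            times.append(ttt)
        previous_failed = ttt is None
    return times


# Hypothetical trials: (target onset, cursor-in-target intervals), all in ms.
trials = [
    (0, [(1200, 1350), (1500, 1900)]),  # brief entry, then successful hold
    (3000, [(4000, 4100)]),             # failed trial: never held for 400 ms
    (6000, [(6800, 7200)]),             # successful but follows a failure
    (9000, [(10000, 10400)]),           # successful
]
print(valid_times_to_target(trials))  # [1500, 1000]
```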

We wanted to be able to compare neural push error angles (figure 3c) and cursor speeds (figure 3d) of prompted silent and prompted verbal trials to what happened in non-prompted trials. We therefore generated faux speech prompts in each non-prompted trial by declaring a faux prompt at a random time within that trial (drawn from a uniform distribution). This allowed us to calculate faux speech prompt-aligned error angles and cursor speeds for no prompt trials (gray traces in figure 3c,d).
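The faux prompt procedure amounts to one uniform draw per no-prompt trial. A minimal sketch, in which the trial windows are hypothetical (start, end) times in seconds and the seed is fixed only to make the draw reproducible:

```python
import random


def faux_prompt_times(trial_windows, seed=None):
    """One faux prompt per no-prompt trial, drawn uniformly between trial start and end.

    trial_windows: list of (start_s, end_s) pairs for trials without an audio prompt.
    """
    rng = random.Random(seed)
    return [rng.uniform(start_s, end_s) for start_s, end_s in trial_windows]


# Hypothetical no-prompt trial windows, in seconds from block start.
windows = [(0.0, 2.1), (2.5, 4.0), (4.3, 7.9)]
faux = faux_prompt_times(windows, seed=1)
print([round(t, 2) for t in faux])
```

The faux prompt times can then be used exactly like real prompt times when aligning error angles or cursor speeds.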

Results

We studied the interaction between speaking and performing a target acquisition task using an iBCI-driven cursor. Participant T5 was enrolled in the BrainGate2 iBCI pilot clinical trial and had two 96-electrode Utah arrays chronically placed in his left dorsal motor cortex approximately 28 months prior to this study. T5 participated in two research sessions during which, on the same day, he performed a stand-alone speaking task and used a ReFIT-Kalman filter [16,23] velocity decoder to perform a BCI cursor control task with or without concurrent speaking (figure 1a). To control the cursor, the participant attempted to move his right (contralateral) hand in a horizontal plane, as if holding a joystick (or an automobile gearshift) from above.

Speech-related neural modulation is minimal during attempted arm movements

The key observation motivating this study is that the same neural population that is used to control the iBCI is also active during speaking. Figure 1b presents three example electrodes’ trial-averaged firing rates during the three studied behavioral contexts. The left column shows the familiar result that dorsal motor cortex modulated when the participant moved the cursor via attempted arm movements, and that firing rates were tuned to target direction [12]. Critically, the center column shows that these same electrodes, on the same day, also strongly responded when the participant spoke short words. This result is consistent with our recent report of speech-related modulation in this participant [21] and raises the question of whether such speech-related activity will interfere with decoding velocity intentions. Note that the three example electrodes shown were specifically chosen because they exhibited a variety of strong speech-related modulation patterns.

The right column shows a novel observation: the speech-related modulation was largely attenuated when the participant spoke while performing the BCI cursor task. We quantified speech-related neural response magnitudes at the population level by taking the difference between the ensemble firing rates during speaking each word and during the silence condition (see Methods). Figure 1c compares this population modulation metric for stand-alone speech and speaking during BCI. As suggested by the example electrodes, across the population of electrodes and all words from both datasets, modulation was significantly smaller when speaking during BCI use (1.85 ± 0.25 Hz, mean ± s.d.) compared to when speaking alone (6.40 ± 0.83 Hz; p < 0.001, rank-sum test). This substantial attenuation suggests that speaking may not interfere with ongoing iBCI use. In the next sections, we test this prediction more thoroughly.
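For reference, a self-contained sketch of a two-sided Wilcoxon rank-sum test using the large-sample normal approximation. The software used for the reported p-values is not specified here, and exact or tie-corrected implementations can give somewhat different p-values for samples as small as 10 dataset-conditions per group. The inputs below are hypothetical values, not the study's data.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test via the large-sample normal approximation.

    Returns (z, p). Tied values get their average rank; no tie or continuity
    correction is applied, so p-values are approximate for small samples.
    """
    pooled = sorted(list(x) + list(y))
    first_index, counts = {}, {}
    for i, v in enumerate(pooled):
        first_index.setdefault(v, i)
        counts[v] = counts.get(v, 0) + 1
    # A value first seen at 0-based index i with c copies spans ranks i+1 .. i+c.
    avg_rank = {v: first_index[v] + (counts[v] + 1) / 2 for v in counts}
    n_x, n_y = len(x), len(y)
    w = sum(avg_rank[v] for v in x)  # rank sum of the first sample
    mean_w = n_x * (n_x + n_y + 1) / 2
    sd_w = math.sqrt(n_x * n_y * (n_x + n_y + 1) / 12)
    z = (w - mean_w) / sd_w
    return z, math.erfc(abs(z) / math.sqrt(2))  # two-sided p = 2 * (1 - Phi(|z|))


# Hypothetical per-dataset-condition values (Hz), not the study's data.
during_bci = [1.5 + 0.1 * i for i in range(10)]
alone = [6.0 + 0.1 * i for i in range(10)]
print(rank_sum_test(during_bci, alone))
```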

Speaking during iBCI use minimally affects decoder output

The previous section reported speech-related firing rate modulation across electrodes. However, not all firing rate changes affect the BCI decoder equally. In principle, even modest firing rate changes could have an outsized effect on the BCI if they were well aligned with the decoder’s readout dimensions. We therefore specifically examined how these speech-related firing rate changes affected the decoded velocity output. To do so, we projected the firing rate changes described in the previous section into the 2D decoder-potent neural subspace and quantified the moment-by-moment change in the magnitude of this 2D ‘neural push’ relative to a baseline period (see Methods).
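The distinction between total firing rate change and decoder-potent change can be made concrete: only the component of a firing rate change Δy that lies in the row space of the 2 × N readout matrix K alters the decoded velocity, and the remainder is decoder-null. A minimal sketch, assuming a hypothetical full-rank K and computing the potent part as the orthogonal projection Kᵀ(KKᵀ)⁻¹KΔy:

```python
def matvec(m, v):
    """Matrix (list of rows) times vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def potent_null_split(readout, delta_rates):
    """Split a firing-rate change into decoder-potent and decoder-null components.

    readout: hypothetical 2 x N readout matrix K (two rows, assumed linearly
    independent). The potent part is the orthogonal projection of delta_rates
    onto the row space of K, K^T (K K^T)^-1 K dy; the null part is the remainder,
    which K maps to zero.
    """
    k1, k2 = readout
    # 2 x 2 Gram matrix G = K K^T and its inverse.
    g11 = sum(a * a for a in k1)
    g12 = sum(a * b for a, b in zip(k1, k2))
    g22 = sum(b * b for b in k2)
    det = g11 * g22 - g12 * g12
    g_inv = [[g22 / det, -g12 / det], [-g12 / det, g11 / det]]
    c1, c2 = matvec(g_inv, matvec(readout, delta_rates))
    potent = [c1 * a + c2 * b for a, b in zip(k1, k2)]
    null = [d - p for d, p in zip(delta_rates, potent)]
    return potent, null


K = [[1.0, 1.0, 0.0, 0.0],
     [0.0, 1.0, -1.0, 0.0]]
dy = [2.0, 0.0, 1.0, 3.0]  # hypothetical firing-rate change (Hz) on 4 electrodes
potent, null = potent_null_split(K, dy)
print(potent, null, matvec(K, null))
```

In this example the null component (1/3, −1/3, −1/3, 3) is the larger part of Δy, yet K maps it to zero, so it would not move the cursor.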

To give a sense of scale for the neural push change associated with performing the BCI task, figure 2a shows neural push magnitude changes following Radial 8 target onset for trials without any speaking or speaking prompts. Unsurprisingly, the neural push rapidly increased after the target was presented (because the participant started moving the cursor towards the target) and then decreased shortly thereafter (as the participant slowed down the cursor to acquire the target). We next compared these Radial 8 Target Task-related neural push changes to the neural push changes that can be attributed to speaking alone and to speaking during BCI use. Although no decoder was actually used during the Stand-Alone Speaking Task, firing rates recorded during that behavior can similarly be projected through a BCI decoder from the same research session. Figure 2b shows how these stand-alone speech-aligned firing rate changes would have affected the decoder had it been active. Figure 2c shows how the speech-aligned firing rate changes during BCI use actually did change neural push, based on the decoder that was active during that behavior. Comparing these neural push measurements reveals that speech-aligned neural push changes were much smaller than BCI task-aligned neural push changes, but speaking did slightly affect neural push as compared to the silent conditions (black traces).

We summarized each condition’s neural push by taking the time-averaged (mean across the epochs shown in figure 2a-c) neural push change; figure 2d aggregates across all conditions in both datasets. For movements to the BCI targets, this aggregate neural push change was 450 ± 121 pixels/s (mean ± s.d. across the 8 targets × 2 datasets = 16 dataset-conditions). Speaking during BCI use caused neural push changes that were only 8.0% of this magnitude (36 ± 34 pixels/s across 5 words × 2 datasets = 10 dataset-conditions). Stand-alone speaking would have caused a larger (but still small compared to BCI task-related modulation) neural push change: 137 ± 17 pixels/s across 10 dataset-conditions. This suggests that speaking without concurrent arm movement imagery would have a modest effect on the BCI (if the decoder were active), but — crucially — that the effect of speaking during ongoing BCI use on decoder output was very small.

Prompted speaking did not reduce iBCI cursor task performance

We next examined the Radial 8 Target Task data to determine what effect the small speech-related neural push changes described in the previous section had on cursor task performance. As shown in figure 3a, the task was performed as a sequence of blocks during which the participant either was instructed to speak when he heard the audio prompt (blue background), or he was instructed to say nothing when he heard the audio prompt (gray background). We used this task design, rather than simply omitting the audio prompt in the BCI silent blocks, to better equalize the distraction and additional cognitive demands of hearing a prompt.

We first compared performance at the broad resolution of dividing trials based on their behavioral instruction (i.e., speak versus don’t speak in response to the audio prompt). Median time to target during BCI with speaking was 1.77 s (2.07 ± 1.08 s mean ± s.d.). This performance was slightly but significantly (p = 0.017, rank-sum test) better than during BCI without speaking: the BCI silent median time to target was 1.86 s (2.20 ± 1.10 s mean ± s.d.). The somewhat counter-intuitive result that trials in the BCI without speaking condition were, on average, slightly slower may be because the audio prompt was more distracting or cognitively burdensome in this condition: the participant had to override a default impulse to speak in response to the prompt (i.e., the task had an element of inhibition [36]).

We next evaluated performance at a medium resolution by comparing trials that either: 1) did not have an audio prompt and did not follow a trial with an audio prompt (‘no prompt’); 2) had an audio prompt or followed a trial with an audio prompt in a BCI silent block (‘prompted silent’); or 3) had an audio prompt or followed a trial with an audio prompt in a BCI with speaking block (‘prompted verbal’). We treated both a prompted trial and the subsequent trial as ‘prompted’ because in many cases the verbal response started in, or continued into, the subsequent trial; even when it did not, the speaking, recovery from speaking, or distraction due to the prompt may well have extended into the subsequent trial. We thus conservatively treated both trials as “compromised” for the purpose of this analysis. Figure 3b shows the distributions of times to target for these three trial types. Times to target were significantly longer for both prompted trial types (prompted silent: 2.06 s median, 2.39 ± 1.19 s mean ± s.d.; prompted verbal: 1.93 s median, 2.29 ± 1.22 s mean ± s.d.) as compared to the no prompt trials (1.66 s median, 1.92 ± 0.91 s mean ± s.d., p << 0.001 for both comparisons, rank-sum test). However, times to target were not significantly different between the prompted verbal and prompted silent trials (p = 0.11). This indicates that the presence of an audio prompt modestly interfered with the cursor task (~20% longer times to target), but not in a way that depended on actual speaking. This is consistent with the randomly occurring audio prompt briefly distracting the participant.
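The medium-resolution trial labels can be expressed as a small rule applied within one block. A sketch, assuming a hypothetical per-trial boolean that records whether an audio prompt occurred in that trial:

```python
def label_trials(block_type, prompted):
    """Label each trial in one block for the medium-resolution analysis.

    block_type: "silent" (BCI silent block) or "verbal" (BCI with speaking block).
    prompted: list of booleans, True if that trial contained an audio prompt.
    A trial counts as prompted if it, or the trial before it, had a prompt.
    """
    if block_type not in ("silent", "verbal"):
        raise ValueError(f"unknown block type: {block_type!r}")
    labels = []
    for i, had_prompt in enumerate(prompted):
        follows_prompt = i > 0 and prompted[i - 1]
        labels.append(f"prompted {block_type}" if had_prompt or follows_prompt
                      else "no prompt")
    return labels


print(label_trials("verbal", [False, True, False, False, True]))
```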

We then zoomed in to compare these trials’ performance at a millisecond-by-millisecond resolution. We calculated the neural push error angle throughout each trial, i.e., the angular error between each time point’s neural push vector (essentially, what intended velocity was decoded from that moment’s neural activity) and the vector that pointed straight from the cursor to the center of the target. Figure 3c shows trial-averaged neural push error angle aligned to the audio speaking prompt for prompted silent (pink) and prompted verbal (red) trials. There was little difference between prompted silent and prompted verbal error angles, except for a brief increase in the prompted verbal trials’ error angles at ~0.9 s after the speech prompt. For comparison, we also generated faux speech prompt-aligned error angles for no prompt trials (gray trace) by randomly assigning these trials prompt times.
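The neural push error angle at one time step can be computed from the push vector and the cursor-to-target vector. A minimal sketch with hypothetical argument names; taking atan2 of the cross and dot products returns an unsigned angle between 0° and 180° and avoids the numerical issues of taking acos of a normalized dot product.

```python
import math


def error_angle_deg(push, cursor, target):
    """Unsigned angle in degrees (0-180) between the neural push and the
    cursor-to-target vector; NaN if either vector has zero length."""
    to_target = (target[0] - cursor[0], target[1] - cursor[1])
    if math.hypot(*push) == 0 or math.hypot(*to_target) == 0:
        return math.nan  # the angle is undefined for a zero-length vector
    dot = push[0] * to_target[0] + push[1] * to_target[1]
    cross = push[0] * to_target[1] - push[1] * to_target[0]
    return abs(math.degrees(math.atan2(cross, dot)))


print(error_angle_deg((1.0, 0.0), (0.0, 0.0), (10.0, 0.0)))   # pointing straight at the target
print(error_angle_deg((0.0, 1.0), (0.0, 0.0), (10.0, 0.0)))   # perpendicular to the target direction
print(error_angle_deg((-1.0, 0.0), (0.0, 0.0), (10.0, 0.0)))  # pointing directly away
```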

We also performed a similar analysis for the instantaneous cursor speed (i.e., how fast the cursor moved during these BCI trials, aligned to the audio speaking prompt), shown in figure 3d. This revealed that in comparison to no prompt trials, cursor movements during both prompted verbal and prompted silent trials slowed down after the speech prompt. This is consistent with both of these conditions having longer times to target than no prompt trials. We did not observe significant differences in the prompted silent and prompted verbal cursor speeds. Together, these kinematics analyses are consistent with the previously described trial-wise and block-wise times to target analyses. They indicate that having to actually speak in the prompted verbal condition did not interfere with iBCI use more than just hearing a prompt which did not require a speaking response.
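Cursor speed and its prompt-aligned average can be sketched in the same spirit, assuming cursor positions sampled at a fixed, hypothetical interval dt_s and prompt times expressed as sample indices into each trial's speed trace (which has one fewer sample than the position trace):

```python
import math
import statistics


def cursor_speed(positions, dt_s):
    """Instantaneous speed (position units per second) between successive (x, y)
    samples spaced dt_s seconds apart; one value fewer than positions."""
    return [math.dist(p0, p1) / dt_s for p0, p1 in zip(positions, positions[1:])]


def prompt_aligned_mean(traces, prompt_indices, pre, post):
    """Average per-trial traces over a window covering `pre` samples before each
    trial's prompt index and `post` samples starting at it; trials whose window
    would run off either end of their trace are skipped."""
    windows = [trace[i - pre:i + post] for trace, i in zip(traces, prompt_indices)
               if i - pre >= 0 and i + post <= len(trace)]
    return [statistics.fmean(column) for column in zip(*windows)]


# Hypothetical example: 0.5 s sampling and constant-speed movement.
print(cursor_speed([(0, 0), (3, 4), (6, 8)], 0.5))
print(prompt_aligned_mean([[1, 2, 3, 4], [3, 4, 5, 6]], [2, 1], pre=1, post=2))
```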

iBCI use during continuous, spontaneous speaking

Lastly, we wanted to demonstrate that the BCI cursor could be controlled while the user speaks spontaneously, rather than in response to a prompt. Using an iBCI while also conversing is likely to be a typical scenario for these systems’ eventual real-world use. To approximate this, at the end of the second day of the study we asked T5 to perform one more ‘storytelling’ block of the Radial 8 Target Task while telling the experimenters a story of his choice. The Supplementary Movie shows him performing the task while at the same time telling us about a recent adventure of his. This block’s performance was significantly worse than during the preceding BCI silent block (median time to target of 3.35 s vs. 2.73 s, p = 0.0010), which is not surprising given the larger cognitive load of sustained, unrehearsed storytelling. Nonetheless, this result demonstrates that the participant could use the iBCI despite nearly continuous speech.

Participant’s description of speaking while using the iBCI

After the first session’s first pair of BCI with speaking and BCI without speaking blocks, the participant reported that he did not feel any difference between the two conditions in terms of his ability to control the cursor. After the first pair of blocks in the second session, he reported that it was “distracting” to speak while moving the cursor, but that this was “not insurmountable”. When asked to rate the degree of distraction from 0 to 10, he rated it a 5. Both at the start of this study and after the conclusion of the second session, we also asked T5 whether he thought that speaking interfered with his ability to control the cursor. Both times, T5 emphatically answered that it did not.

After the spontaneous speaking block, the participant reported that the storytelling and cursor control felt like two separate tasks, and that keeping an eye on the movement of the cursor did not take as much concentration as creating a narrative. He also reported that there were a few times when he had trouble acquiring a target and had to stop thinking about his narration.

Discussion

We tested whether the presence of speech-related activity in arm/hand area of motor cortex [21] would interfere with iBCI use based on attempted arm movements, and found that it did not. This adds to a growing body of work showing that velocity decoding is robust to other processes reflected in dorsal motor cortical activity such as visual feedback [34,37], but not necessarily to proprioceptive feedback [34], activity related to object interactions [38], or other concurrent motor tasks [10] (at least without additional training). Here, this robustness stems from the observation that speech-related modulation in dorsal motor cortex, which was previously only reported during stand-alone speaking [21], is markedly reduced when it occurs concurrently with iBCI cursor control. This “robustness-through-attenuation” result is notably different from recent decoding results showing “robustness-through-orthogonality”: that is, previous studies found that neural variability due to visual feedback [37], day-to-day signal changes [39], and across-task differences [40] was substantial but did not interfere with decoding movements because this activity could be sequestered in decoder-null neural dimensions.

Attenuation of speech-related activity during attempted arm movements is consistent with a recent report that the arm contralateral to the recorded motor cortical area is the “dominant” effector which suppresses concurrent activity related to attempted movements of the ipsilateral arm, the legs, or the head [24]. The larger question of why this attenuation happens, and what function speech-related activity in dorsal motor cortex serves, remains unanswered. One possibility is that the speech-related activity reflects a coordination signal that is received by dorsal motor cortex from other areas that generate orofacial movements, and that this activity is masked or gated when concurrently generating arm movements. Another possibility is that dorsal motor cortex is only available to be recruited as an “auxiliary” computational resource to support movements of other body parts, such as the speech articulators, when it is not generating arm movements. Yet another possibility is that the brain has learned to intentionally attenuate speech-related activity (possibly over many months of prior BCI use) because it otherwise would have interfered with cursor control. Future studies could test this last hypothesis by examining speech-related neural activity during concurrent “open-loop” attempted arm movements (i.e., when the participant is not provided any sensory feedback or read-out of these movements’ neural correlates) during a BCI-naïve participant’s initial research sessions.

The attenuation of speech-related activity during concurrent attempted arm movements is advantageous for robustly decoding these arm movements, but this phenomenon also has a downside: speech information becomes largely unavailable for simultaneously driving speech and arm iBCIs. Thus, while dorsal motor cortex may potentially contribute useful signals for decoding attempted speech [20], BCIs for simultaneously restoring speech and arm movements will require additional signal sources, such as from ventral cortical areas known to have strong speech-related modulation [41-43].

There are several limitations to this study that should temper over-generalizing these interpretations. First, the results are from a single participant. Second, we tested for speech-related interference during a 2D cursor control task; while we predict that this result will extend to higher degree-of-freedom arm decoding, this remains to be confirmed. That said, our finding that speaking does not interfere with iBCI use is consistent with previous anecdotal reports from other participants [12], including participants controlling robotic arms (Collinger et al., 2013, and the NBC interview in which Nathan Copeland fist-bumped President Obama with his robot arm while simultaneously talking to him). Third, we did observe somewhat slower cursor task performance during simultaneous storytelling. We attribute this to the storytelling block’s higher cognitive demands (rather than direct speech-related decoder interference), based on the participant’s self-report that it was difficult to generate a continuous narrative and on the lack of observed iBCI interference during the cognitively less demanding prompted single-word speaking. However, our data cannot rule out that speech-related activity is less attenuated during continuous speech and/or that this activity is more decoder-potent. Future studies could test this by measuring BCI performance while participants speak a well-rehearsed continuous passage, and by making repeated measurements of the same continuous speech with and without concurrent attempted arm movements. Fourth, here we examined activity in dorsal motor cortex. There are ongoing efforts to decode arm movement intentions from other cortical areas (e.g., parietal cortex [26]), and it remains to be seen whether these areas also modulate during speaking and, if so, whether this could interfere with BCI performance.

We observed much more speech-related neural activity when the participant spoke while not engaged in the iBCI task, and this activity had decoder-potent components. This raises the concern that if an iBCI prosthesis is on and actively controllable while its user is not paying attention to it, speaking might cause unintended movement of, e.g., a robot arm. Such unintended iBCI output may be mitigated by a “gating” state decoder that identifies when the user is or is not trying to make arm movements [44].

In conclusion, we found that speaking does not interfere with ongoing use of a cursor iBCI because speech-related modulation is greatly reduced during attempted arm movements. This biological phenomenon is fortuitous from an iBCI design perspective, since it suggests that patients will be able to speak freely while controlling motor cortically-driven prostheses even without deliberate engineering of the decoder to be robust to speech-related activity.

Supplementary Material

Acknowledgements

We thank participant T5 and his caregivers for their dedicated contributions to this research, and N. Lam for administrative support.

This work was supported by the A. P. Giannini Foundation, the Wu Tsai Neurosciences Institute Interdisciplinary Scholars Fellowship, US NINDS Transformative Research Award R01NS076460, Director's Transformative Research Award (TR01) from the NIMH #5R01MH09964703, NIDCD R01DC014034, NINDS R01NS066311, NIDCD R01DC009899; Department of Veterans Affairs Rehabilitation Research and Development Service B6453R; MGH-Deane Institute for Integrated Research on Atrial Fibrillation and Stroke; Simons Foundation; Samuel and Betsy Reeves.

Footnotes

Declaration of interests

K.V.S. consults for Neuralink Corp. and is on the scientific advisory boards of CTRL-Labs Inc., MIND-X Inc., Inscopix Inc., and Heal Inc. J.M.H. is a consultant for Neuralink Corp, Proteus Biomedical and Boston Scientific, and serves on the Medical Advisory Boards of Enspire DBS and Circuit Therapeutics. All other authors have no competing interests.

References

- [1].Brandman DM, Cash SS and Hochberg LR 2017. Review: Human Intracortical Recording and Neural Decoding for Brain-Computer Interfaces IEEE Trans. Neural Syst. Rehabil. Eng 25 1687–96 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Slutzky M W 2019. Brain-Machine Interfaces: Powerful Tools for Clinical Treatment and Neuroscientific Investigations Neurosci. 25 139–54 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Serruya M, Hatsopoulos NG, Paninski L, Fellows MR and Donoghue JP 2002. Instant neural control of a movement signal Nature 416 141–2 [DOI] [PubMed] [Google Scholar]

- [4].Taylor DM, Tillery SIH and Schwartz AB 2002. Direct cortical control of 3D neuroprosthetic devices Science (80-. ) 296 1829–32 [DOI] [PubMed] [Google Scholar]

- [5].Carmena JM, Lebedev MA, Crist RE, O’Doherty JE, Santucci DM, Dimitrov DF, Patil PG, Henriquez CS and Nicolelis MAL 2003. Learning to control a brain-machine interface for reaching and grasping by primates PLoS Biol. 1 193–208 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Velliste M, Perel S, Spalding MC, Whitford AS and Schwartz AB 2008. Cortical control of a prosthetic arm for self-feeding Nature 453 1098–101 [DOI] [PubMed] [Google Scholar]

- [7].Ethier C, Oby ER, Bauman MJ and Miller LE 2012. Restoration of grasp following paralysis through brain-controlled stimulation of muscles Nature 485 368–71 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [8].Gilja V, Nuyujukian P, Chestek CA, Cunningham JP, Yu BM, Fan JM, Churchland MM, Kaufman MT, Kao JC, Ryu SI and Shenoy KV 2012. A high-performance neural prosthesis enabled by control algorithm design Nat. Neurosci 15 1752–7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [9].Flint RD, Wright ZA, Scheid MR and Slutzky MW 2013. Long term, stable brain machine interface performance using local field potentials and multiunit spikes J. Neural Eng 10 056005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Orsborn AL, Moorman HG, Overduin SA, Shanechi MM, Dimitrov DF and Carmena JM 2014. Closed-Loop Decoder Adaptation Shapes Neural Plasticity for Skillful Neuroprosthetic Control Neuron 82 1380–93 [DOI] [PubMed] [Google Scholar]

- [11].Sussillo D, Stavisky SD, Kao JC, Ryu SI and Shenoy KV 2016. Making brain–machine interfaces robust to future neural variability Nat. Commun 7 13749. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [12].Hochberg LR, Serruya MD, Friehs GM, Mukand JA, Saleh M, Caplan AH, Branner A, Chen D, Penn RD and Donoghue JP 2006. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature 442 164–71 [DOI] [PubMed] [Google Scholar]

- [13].Hochberg LR, Bacher D, Jarosiewicz B, Masse NY, Simeral JD, Vogel J, Haddadin S, Liu J, Cash SS, van der Smagt P and Donoghue JP 2012. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm Nature 485 372–5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [14].Collinger, Wodlinger B, Downey JE, Wang W, Tyler-Kabara EC, Weber DJ, McMorland AJC, Velliste M, Boninger ML and Schwartz AB 2013. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet 381 557–64 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [15].Wodlinger B, Downey JE, Tyler-Kabara EC, Schwartz AB, Boninger ML and Collinger JL 2015. Ten-dimensional anthropomorphic arm control in a human brain–machine interface: difficulties, solutions, and limitations J. Neural Eng. 12 016011. [DOI] [PubMed] [Google Scholar]

- [16].Pandarinath C, Nuyujukian P, Blabe CH, Sorice BL, Saab J, Willett FR, Hochberg LR, Shenoy KV. and Henderson JM 2017. High performance communication by people with paralysis using an intracortical brain-computer interface Elife 6 1–27 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [17].Ajiboye AB, Willett FR, Young DR, Memberg WD, Murphy BA, Miller JP, Walter BL, Sweet JA, Hoyen HA, Keith MW, Peckham PH, Simeral JD, Donoghue JP, Hochberg LR and Kirsch RF 2017. Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: a proof-of-concept demonstration Lancet 389 1821–30 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [18].Nuyujukian P, Albites Sanabria J, Saab J, Pandarinath C, Jarosiewicz B, Blabe CH, Franco B, Mernoff ST, Eskandar EN, Simeral JD, Hochberg LR, Shenoy KV. and Henderson JM 2018. Cortical control of a tablet computer by people with paralysis PLoS One 13 1–16 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [19].Kao JC, Stavisky SD, Sussillo D, Nuyujukian P and Shenoy KV 2014. Information systems opportunities in brain-machine interface decoders Proc. IEEE 102 [Google Scholar]

- [20].Stavisky SD, Rezaii P, Willett FR, Hochberg LR, Shenoy KV. and Henderson JM 2018. Decoding Speech from Intracortical Multielectrode Arrays in Dorsal “Arm/Hand Areas” of Human Motor Cortex 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (IEEE; ) pp 93–7 [DOI] [PubMed] [Google Scholar]

- [21].Stavisky SD, Willett FR, Murphy BA, Rezaii P, Memberg WD, Miller JP, Kirsch RF, Hochberg LR, Ajiboye AB, Shenoy KV. and Henderson JM 2018. Neural ensemble dynamics in dorsal motor cortex during speech in people with paralysis BioRxiv [DOI] [PMC free article] [PubMed] [Google Scholar]

- [22].Rabbani Q, Milsap G and Crone NE 2019. The Potential for a Speech Brain–Computer Interface Using Chronic Electrocorticography Neurotherapeutics 1–22 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [23].Gilja V, Pandarinath C, Blabe CH, Nuyujukian P, Simeral JD, Sarma AA, Sorice BL, Perge JA, Jarosiewicz B, Hochberg LR, Shenoy KV. and Henderson JM 2015. Clinical translation of a high-performance neural prosthesis Nat. Med 21 1142–5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [24].Willett FR, Deo DR, Avansino DT, Rezaii P, Hochberg L, Henderson J and Shenoy K 2019. Hand Knob Area of Motor Cortex in People with Tetraplegia Represents the Whole Body in a Modular Way BioRxiv [DOI] [PMC free article] [PubMed] [Google Scholar]

- [25].Masse NY, Jarosiewicz B, Simeral JD, Bacher D, Stavisky SD, Cash SS, Oakley EM, Berhanu E, Eskandar E, Friehs G, Hochberg LR and Donoghue JP 2014. Non-causal spike filtering improves decoding of movement intention for intracortical BCIs J. Neurosci. Methods 236 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [26].Aflalo T, Kellis S, Klaes C, Lee B, Shi Y, Pejsa K, Shanfield K, Hayes-Jackson S, Aisen M, Heck C, Liu C and Andersen RA 2015. Decoding motor imagery from the posterior parietal cortex of a tetraplegic human Science (80-. ) 348 906–10 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [27].Bouton CE, Shaikhouni A, Annetta NV., Bockbrader MA, Friedenberg DA, Nielson DM, Sharma G, Sederberg PB, Glenn BC, Mysiw WJ, Morgan AG, Deogaonkar M and Rezai AR 2016. Restoring cortical control of functional movement in a human with quadriplegia Nature 533 247–50 [DOI] [PubMed] [Google Scholar]

- [28].Willett FR, Murphy BA, Young D, Memberg WD, Blabe CH, Pandarinath C, Franco B, Saab J, Walter BL, Sweet JA, Miller JP, Henderson JM, Shenoy KV., Simeral JD, Jarosiewicz B, Hochberg LR, Kirsch RF and Ajiboye AB 2018. A comparison of intention estimation methods for decoder calibration in intracortical brain-computer interfaces IEEE Trans. Biomed. Eng 65 2066–78 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [29].Perge JA, Homer ML, Malik WQ, Cash S, Eskandar E, Friehs G, Donoghue JP and Hochberg LR 2013. Intra-day signal instabilities affect decoding performance in an intracortical neural interface system. J. Neural Eng 10 036004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [30].Jarosiewicz B, Sarma AA, Bacher D, Masse NY, Simeral JD, Sorice B, Oakley EM, Blabe C, Pandarinath C, Gilja V, Cash SS, Eskandar EN, Friehs G, Henderson JM, Shenoy KV, Donoghue JP and Hochberg LR 2015. Virtual typing by people with tetraplegia using a self-calibrating intracortical brain-computer interface Sci. Transl. Med 7 313ra179–313ra179 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [31].Malik WQ, Truccolo W, Brown EN and Hochberg LR 2011. Efficient decoding with steady-state Kalman filter in neural interface systems IEEE Trans. Neural Syst. Rehabil. Eng 19 25–34 [DOI] [PMC free article] [PubMed] [Google Scholar]

- [32].Kao JC, Stavisky SD, Sussillo D, Nuyujukian P and Shenoy KV 2014. Information Systems Opportunities in Brain-Machine Interface Decoders Proc. IEEE 102 666–82 [Google Scholar]

- [33].Stavisky SD, Kao JC, Nuyujukian P, Ryu SI and Shenoy KV 2015. A high performing brain–machine interface driven by low-frequency local field potentials alone and together with spikes J. Neural Eng 12 036009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [34].Stavisky SD, Kao JC, Nuyujukian P, Pandarinath C, Blabe C, Ryu SI, Hochberg LR, Henderson JM and Shenoy KV 2018. Brain-machine interface cursor position only weakly affects monkey and human motor cortical activity in the absence of arm movements Sci. Rep 8 16357

- [35].Allefeld C and Haynes JD 2014. Searchlight-based multi-voxel pattern analysis of fMRI by cross-validated MANOVA Neuroimage 89 345–57

- [36].Logan GD and Cowan WB 1984. On the ability to inhibit thought and action: A theory of an act of control Psychol. Rev 91 295–327

- [37].Stavisky SD, Kao JC, Ryu SI and Shenoy KV 2017. Motor Cortical Visuomotor Feedback Activity Is Initially Isolated from Downstream Targets in Output-Null Neural State Space Dimensions Neuron 95 195–208.e9

- [38].Downey JE, Brane L, Gaunt RA, Tyler-Kabara EC, Boninger ML and Collinger JL 2017. Motor cortical activity changes during neuroprosthetic-controlled object interaction Sci. Rep 7 16947

- [39].Flint RD, Scheid MR, Wright ZA, Solla SA and Slutzky MW 2016. Long-Term Stability of Motor Cortical Activity: Implications for Brain Machine Interfaces and Optimal Feedback Control J. Neurosci 36 3623–32

- [40].Gallego JA, Perich MG, Naufel SN, Ethier C, Solla SA and Miller LE 2018. Cortical population activity within a preserved neural manifold underlies multiple motor behaviors Nat. Commun 9 4233

- [41].Angrick M, Herff C, Mugler E, Tate MC, Slutzky MW, Krusienski DJ and Schultz T 2019. Speech synthesis from ECoG using densely connected 3D convolutional neural networks J. Neural Eng 16 036019

- [42].Moses DA, Leonard MK, Makin JG and Chang EF 2019. Real-time decoding of question-and-answer speech dialogue using human cortical activity Nat. Commun 10 3096

- [43].Anumanchipalli GK, Chartier J and Chang EF 2019. Speech synthesis from neural decoding of spoken sentences Nature 568 493–8

- [44].Velliste M, Kennedy SD, Schwartz AB, Whitford AS, Sohn J and McMorland AJC 2014. Motor cortical correlates of arm resting in the context of a reaching task and implications for prosthetic control J. Neurosci 34 6011–22
