Abstract

Radiology reports are consumed not only by referring physicians and healthcare providers, but also by patients. We assessed report readability in our enterprise and implemented a two-part quality improvement intervention with the goal of improving report accessibility. A total of 491,813 radiology reports from ten hospitals within the enterprise from May to October, 2018 were collected. We excluded echocardiograms, rehabilitation reports, administrator reports, and reports with negative scores leaving 461,219 reports and report impressions for analysis. A grade level (GL) was calculated for each report and impression by averaging four readability metrics. Next, we conducted a readability workshop and distributed weekly emails with readability GLs over a period of 6 months to each attending radiologist at our primary institution. Following this intervention, we utilized the same exclusion criteria and analyzed 473,612 reports from May to October, 2019. The mean GL for all reports and report impressions was above 13 at every hospital in the enterprise. Following our intervention, a statistically significant drop in GL for reports and impressions was demonstrated at all locations, but a larger and significant improvement was observed in impressions at our primary site. Radiology reports across the enterprise are written at an advanced reading level making them difficult for patients and their families to understand. We observed a significantly larger drop in GL for impressions at our primary site than at all other sites following our intervention. Radiologists at our home institution improved their report readability after becoming more aware of their writing practices.

Keywords: Report, Readability, Patient-centered care, Quality improvement

Introduction

The advent of patient portals has allowed patients and their families to become direct consumers of the radiology report [1]. Restructuring the report into a more accessible document can help radiologists transition from a consultant to a more direct clinician [2] as studies have demonstrated that patients find radiology reports difficult to understand [3]. Radiology report readability also has implications for future reimbursement practices. The Medicare Access and CHIP Reauthorization Act (MACRA) of 2015 created a value-based incentive program that shifted the focus of health care delivery from quantity to quality [4]. Medical imaging is one of the most visible targets for healthcare spending reductions [2], and by catering more directly to patients, radiologists can improve health outcomes [1]. Readability indices are one tool to provide objective measurements to assess radiology report accessibility. Improving readability may benefit patient communication and potentially enrich discourse with referring physicians. Previous studies have examined baseline radiology report characteristics, including readability, in various institutions [5–7], and other groups have enacted short-term interventions to improve readability [8]. Our study, in contrast, examined the effects of long-term approaches. We first assessed the state of readability in our enterprise, and then implemented a two-part intervention over 18 months to achieve improvements in report accessibility at one tertiary care hospital.

Materials and Methods

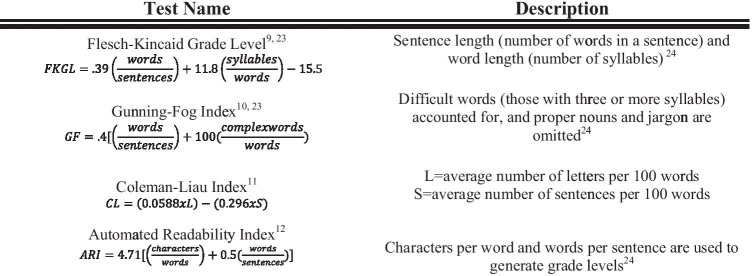

A total of 491,813 reports were generated from May 1 to October 31, 2018 at ten sites in one large hospital enterprise in the USA. The enterprise includes approximately 140 attending radiologists employed across six community hospitals and four tertiary care centers. Among the four tertiary care centers is our primary site, the academic flagship hospital of the system, where residents are trained. Radiology report structure varies across the enterprise; although there has been a greater effort to use templates at our primary site, templates have not been mandated across all sites. Reports from the rehabilitation hospital, administrator-generated reports, and echocardiograms across all institutions were excluded as these were deemed beyond the scope of the project. GLs were generated for both the whole report and the report impression using the average of four readability indices: the Flesch-Kincaid grade level [9], Gunning Fog index [10], Coleman-Liau index [11], and Automated Readability Index [12] (Fig. 1). These indices were chosen because they are commonly used to evaluate the readability of healthcare text [13]. We chose to calculate an average of the indices as this method has been used previously in the literature [6]. Additionally, we found that presenting one score to attendings participating in our intervention was simpler to understand than four scores. Upon further analysis, we observed that short impressions such as “CHF” or “no acute process” were yielding negative GLs, so these were also excluded, leaving 461,219 reports in the initial pre-intervention set. After analyzing baseline readability information, we implemented a two-part intervention. On May 1, 2019, we began distributing a weekly email to all attending radiologists at our primary site with information regarding their personal readability scores. The emails included the maximum, minimum, and average GLs for their reports and impressions for the week (Fig. 6).
The second component of the intervention was a 1-h workshop highlighting salient aspects of report readability such as brevity and word choice delivered at the primary site’s Department of Radiology monthly quality meeting. At this workshop, we began by explaining the four metrics utilized to evaluate reports, and using our own tips, we then presented examples of reports before and after they were edited. This helped our audience understand concrete steps to take to improve accessibility of their reports. Following an intervention period of 6 months, we reanalyzed the data using the same four readability formulas.
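
The GL calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the software used in the study: the tokenization rules, the vowel-run syllable heuristic, and the function names (`count_syllables`, `text_stats`, `grade_level`) are all assumptions introduced here, and the coefficients are the commonly published ones for each index. It also shows why a very short impression such as “CHF” produces a negative averaged score.

```python
import re

def count_syllables(word):
    """Rough syllable estimate (assumption): count vowel runs, drop a silent final 'e'."""
    w = word.lower()
    n = len(re.findall(r"[aeiouy]+", w))
    if w.endswith("e") and n > 1:
        n -= 1
    return max(n, 1)

def text_stats(text):
    """Count sentences, words, characters, syllables, and 3+ syllable words."""
    # Simplification: any run of . ! ? ends a sentence, so decimals split sentences.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z0-9]+", text)
    if not words:
        raise ValueError("text contains no words")
    return {
        "sentences": max(len(sentences), 1),
        "words": len(words),
        "chars": sum(len(w) for w in words),
        "syllables": sum(count_syllables(w) for w in words),
        "complex": sum(1 for w in words if count_syllables(w) >= 3),
    }

def grade_level(text):
    """Average of Flesch-Kincaid, Gunning Fog, Coleman-Liau, and ARI."""
    s = text_stats(text)
    words_per_sentence = s["words"] / s["sentences"]
    fk = 0.39 * words_per_sentence + 11.8 * s["syllables"] / s["words"] - 15.59
    fog = 0.4 * (words_per_sentence + 100 * s["complex"] / s["words"])
    letters_per_100 = 100 * s["chars"] / s["words"]
    sentences_per_100 = 100 * s["sentences"] / s["words"]
    cli = 0.0588 * letters_per_100 - 0.296 * sentences_per_100 - 15.8
    ari = 4.71 * s["chars"] / s["words"] + 0.5 * words_per_sentence - 21.43
    return (fk + fog + cli + ari) / 4

# Hypothetical impressions; the second sentence is invented for illustration.
impressions = [
    "CHF",
    "No acute cardiopulmonary abnormality is identified on this examination.",
]
# Mirror the exclusion rule in the text: drop impressions with a negative GL.
kept = [text for text in impressions if grade_level(text) >= 0]
```

With these heuristics, the one-word impression scores below zero and is dropped, while the full sentence is kept. Scores from other readability tools may differ because each handles syllables and sentence boundaries differently.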

Fig. 1.

These are the four formulas utilized in our study. All four apply different aspects of a sentence to generate a number that corresponds to a grade level; the lower the score, the more accessible the report is to a layperson
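
Because the figure is an image, the formulas are restated here in their commonly published forms; the exact presentation in the original figure is not recoverable from the text, so this should be read as a reference sketch. Here, "complex words" are words of three or more syllables, $L$ is the average number of letters per 100 words, and $S$ is the average number of sentences per 100 words. The last line is the averaged GL used throughout the study.

```latex
\begin{align*}
\text{Flesch-Kincaid grade level (FK)} &= 0.39\,\frac{\text{words}}{\text{sentences}} + 11.8\,\frac{\text{syllables}}{\text{words}} - 15.59 \\
\text{Gunning Fog index (Fog)} &= 0.4\left(\frac{\text{words}}{\text{sentences}} + 100\,\frac{\text{complex words}}{\text{words}}\right) \\
\text{Coleman-Liau index (CLI)} &= 0.0588\,L - 0.296\,S - 15.8 \\
\text{Automated Readability Index (ARI)} &= 4.71\,\frac{\text{characters}}{\text{words}} + 0.5\,\frac{\text{words}}{\text{sentences}} - 21.43 \\
\text{GL} &= \tfrac{1}{4}\left(\text{FK} + \text{Fog} + \text{CLI} + \text{ARI}\right)
\end{align*}
```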

Fig. 2.

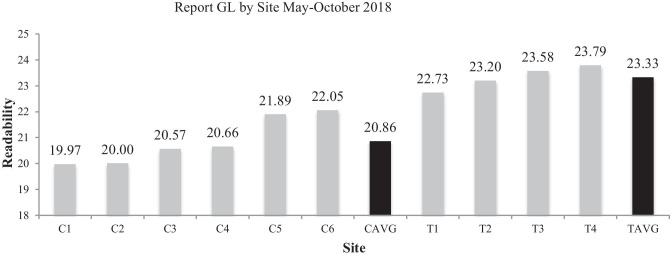

This graph shows the report GL for May–October 2018 prior to any intervention. The black bars correspond to community hospital and tertiary care center averages, respectively. Our primary site is labeled as “T2”

Our initial post-intervention set from May 1 to October 31, 2019 consisted of 491,778 reports. After excluding echocardiograms, rehabilitation reports, administrator reports, and short impression reports as in the pre-intervention set, we were left with 473,612 reports. For post-intervention analysis, we categorized reports by the site at which the attending radiologist was based to clearly delineate those who received the readability training and feedback from those who did not. Any attending who read studies at our primary hospital was labeled as a “primary” attending, and all remaining attendings were labeled as “other”. Attending IDs were used to match our pre- and post-intervention data sets. We excluded any attendings who appeared only in the pre-intervention or post-intervention set, as there was no point of comparison. Using this method, we excluded 83,820 reports (64,382 from the pre-intervention set and 19,438 from the post-intervention set) and analyzed 396,837 pre-intervention and 454,174 post-intervention reports. The average readability was calculated as the mean of the four readability indices for each report and report impression, and all statistical analyses were performed on this average score. Data characteristics were summarized with mean (GL) and standard deviation (SD). All analyses used two-sided tests at a significance level of 0.05 and were performed using RStudio (Version 0.99.902) and SAS 9.4.
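
The matching step can be illustrated with a short Python sketch. The record layout (`attending_id`, `gl`), the function names, and the toy data are hypothetical and only stand in for the study's data sets; the final lines simply reconcile the report counts stated above.

```python
def match_attendings(pre_reports, post_reports):
    """Keep only reports whose attending ID appears in both periods."""
    pre_ids = {r["attending_id"] for r in pre_reports}
    post_ids = {r["attending_id"] for r in post_reports}
    shared = pre_ids & post_ids
    pre_kept = [r for r in pre_reports if r["attending_id"] in shared]
    post_kept = [r for r in post_reports if r["attending_id"] in shared]
    return pre_kept, post_kept, shared

def label_group(attending_id, primary_ids):
    """Label an attending as 'primary' if they read at the primary site, else 'other'."""
    return "primary" if attending_id in primary_ids else "other"

# Toy data (invented): A3 appears only before and A4 only after the intervention.
pre = [
    {"attending_id": "A1", "gl": 24.0},
    {"attending_id": "A2", "gl": 21.5},
    {"attending_id": "A3", "gl": 19.0},
]
post = [
    {"attending_id": "A1", "gl": 22.0},
    {"attending_id": "A2", "gl": 20.0},
    {"attending_id": "A4", "gl": 18.0},
]
pre_kept, post_kept, shared = match_attendings(pre, post)
groups = {aid: label_group(aid, primary_ids={"A1"}) for aid in shared}

# Reconcile the counts reported in the text.
pre_analyzed = 461_219 - 64_382
post_analyzed = 473_612 - 19_438
total_excluded = 64_382 + 19_438
```

In the toy data, only A1 and A2 survive matching, which mirrors how attendings without a point of comparison were dropped.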

Results

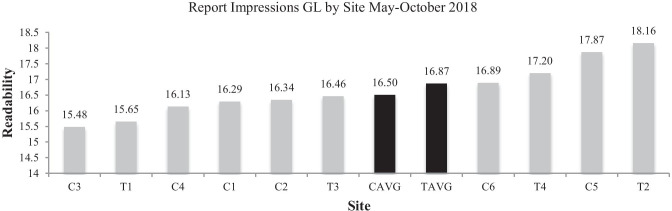

We began by classifying data by hospital type: community hospital or tertiary care center. Prior to any intervention, reports from community hospitals had a GL (SD) of 20.86 (0.91) for the entire report and 16.50 (0.80) for impressions, while those from tertiary care centers had a GL of 23.22 (0.46) and 16.87 (1.07), respectively (Figs. 2 and 3). The GL for reports at our primary site was 24.53 (6.23) and for all other sites was 22.09 (5.41) (Table 1).

Fig. 3.

This graph demonstrates report impression GL at each of the sites for May–October 2018. Again, the black bars correspond to the averages for community and tertiary hospitals

Table 1.

Univariate analysis revealed a drop in GL across sites in the enterprise for the entire body of the report. Results are reported as GL (standard deviation)

| Group | Overall | Pre-intervention | Post-intervention | p value |
|---|---|---|---|---|
| All (n = 255) | 21.72 (5.40) | 22.58 (5.66) | 20.85 (5.00) | p < 0.0001 |
| Primary (n = 51), 20% | 23.39 (6.17) | 24.53 (6.23) | 22.24 (5.95) | p < 0.0001 |
| Other (n = 204), 80% | 21.30 (5.12) | 22.09 (5.41) | 20.51 (4.69) | p < 0.0001 |

Following our intervention, community hospitals had a GL of 19.94 (0.61) and 14.43 (0.89), whereas tertiary care centers had a GL of 20.98 (1.91) and 14.39 (0.49), for overall reports and report impressions, respectively (Figs. 4 and 5). The GL for our primary site after intervention was 22.24 (5.95), and for all other sites, it was 20.51 (4.69) (Tables 1 and 2). When the impressions were examined in isolation, our primary site’s GL was 18.70 (5.22) and that of all other sites was 16.93 (2.43) prior to any intervention (Table 3). Following our intervention, our primary site’s impressions had a GL of 15.22 (2.14) and those of all other sites had a GL of 14.76 (2.40) (Table 3).

Fig. 4.

This graph demonstrates report GL across our enterprise following the implementation of the intervention

Fig. 5.

This graph demonstrates report impression GL across sites following intervention implementation

Table 2.

The mixed effect model evaluated the effect of the intervention at sites within the enterprise for the entire report. Though the site effect and the intervention effect were significant, the site × intervention interaction was not, so we could not credit the improvement in report readability to our intervention

| Effect | Num DF | Den DF | F value | Pr > F |
|---|---|---|---|---|
| Site | 1 | 253 | 7.52 | 0.0065 |
| Intervention | 1 | 253 | 38.01 | < 0.0001 |
| Site × intervention | 1 | 253 | 1.27 | 0.2601 |

Table 3.

Univariate analysis revealed a drop in impression GL across sites in the enterprise. Results are reported as GL (standard deviation)

| Group | Overall | Pre-intervention | Post-intervention | p value |
|---|---|---|---|---|
| All (n = 255) | 16.07 (3.09) | 17.28 (3.26) | 14.85 (2.35) | p < 0.0001 |
| Primary (n = 51), 20% | 16.96 (4.34) | 18.70 (5.22) | 15.22 (2.14) | p < 0.0001 |
| Other (n = 204), 80% | 15.85 (2.64) | 16.93 (2.43) | 14.76 (2.40) | p < 0.0001 |

Our analysis revealed that the GL decreased significantly at all sites for both overall reports and impressions (Tables 1 and 3). A mixed effect model was used to ascertain the significance of our intervention on site-specific data. The interaction between site and intervention was not significant for the entire report (Table 2), and therefore, we could not attribute the drop in overall report GL to our intervention. When we examined the impressions with this model, a statistically significant interaction between the intervention and site was observed (Table 4). We are therefore able to attribute the improvement in impression readability at our primary site to our intervention, namely, our emails and workshop (Fig. 6). We additionally found that the primary site impressions had a larger decrease in GL than impressions at all other sites (Table 5), with a mean drop of 3.48 points compared with 2.16 points.

Table 4.

The mixed effect model was again used to evaluate intervention effects at sites, this time for impressions. For the impressions, the site × intervention interaction was significant, so we could attribute the decrease in GL to our intervention

| Effect | Num DF | Den DF | F value | Pr > F |
|---|---|---|---|---|
| Site | 1 | 253 | 7.76 | 0.0058 |
| Intervention | 1 | 253 | 257.23 | < 0.0001 |
| Site × intervention | 1 | 253 | 13.91 | 0.0002 |

Fig. 6.

This is an example of the emails distributed to attendings at our primary institution. The attending’s report scores were included along with a histogram to underscore their personal report score distribution
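
As a rough illustration of the per-attending numbers in such an email, the sketch below computes the weekly maximum, minimum, and mean GL for reports and impressions. The field names (`attending_id`, `report_gl`, `impression_gl`), the function name, and the sample values are hypothetical; the histogram and the email delivery itself are omitted.

```python
from collections import defaultdict
from statistics import mean

def weekly_summary(reports):
    """Summarize one week of scored reports per attending (max, min, mean GL)."""
    by_attending = defaultdict(list)
    for report in reports:
        by_attending[report["attending_id"]].append(report)

    summary = {}
    for attending_id, rows in by_attending.items():
        summary[attending_id] = {}
        for field in ("report_gl", "impression_gl"):
            values = [row[field] for row in rows]
            summary[attending_id][field] = {
                "max": max(values),
                "min": min(values),
                "mean": mean(values),
            }
    return summary

# Invented scores for a single week.
week = [
    {"attending_id": "A1", "report_gl": 20.0, "impression_gl": 14.0},
    {"attending_id": "A1", "report_gl": 24.0, "impression_gl": 18.0},
    {"attending_id": "A2", "report_gl": 19.0, "impression_gl": 15.0},
]
summary = weekly_summary(week)
```

Each attending's entry in `summary` then holds the three statistics for the report body and the impression separately, matching the content the emails are described as containing.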

Table 5.

This table examines the magnitude of change in impression GL. The primary site had a greater decrease in score compared with all other sites, demonstrated in the estimate column, and this change was statistically significant (p < 0.0001)

| Group | Estimate | Standard error | DF | t value | Pr > \|t\| | Alpha |
|---|---|---|---|---|---|---|
| Primary post | −3.48 | 0.55 | 50 | −6.1 | < 0.0001 | 0.05 |
| Other post | −2.16 | 0.10 | 203 | −20.98 | < 0.0001 | 0.05 |
| Primary pre | 0 | | | | | 0.05 |
| Other pre | 0 | | | | | 0.05 |
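
The size of the site-specific effect can be checked by hand from the group means in Table 3. The sketch below is only arithmetic on those rounded means, not the mixed effect model itself, and the variable and function names are introduced here for illustration. The "other" drop comes out as 2.17 rather than the 2.16 in Table 5, presumably because Table 3 reports rounded values.

```python
# Mean impression GL by group and period, copied from Table 3.
table3 = {
    "primary": {"pre": 18.70, "post": 15.22},
    "other": {"pre": 16.93, "post": 14.76},
}

def change(group):
    """Post-intervention minus pre-intervention mean GL (negative = easier to read)."""
    return round(table3[group]["post"] - table3[group]["pre"], 2)

primary_change = change("primary")  # about -3.48
other_change = change("other")      # about -2.17

# Difference in differences: the extra drop at the primary site beyond the
# enterprise-wide trend, which is what the site x intervention term captures.
extra_drop_at_primary = round(primary_change - other_change, 2)  # about -1.31
```

Read this way, roughly 1.3 grade levels of the primary site's improvement in impressions are not shared by the other sites.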

Discussion

Due to the complex nature of medical terminology, radiology reports often have intricate terms that are difficult for the average patient and their families to comprehend [14]. Data shows that the average adult reads at an 8th grade level [15] and that the majority of health resources available online are above the level of understanding of an average patient [16]. Our results demonstrate that radiology reports are at an advanced reading level across the enterprise. The results showed that community hospitals had lower GLs for both reports and impressions compared with tertiary care centers within our enterprise. This could be a byproduct of the greater complexity of patients treated at tertiary care centers. Our post-intervention GLs decreased at all sites for both the entire report and the report impressions. This change was statistically significant, and there are a few reasons that could explain this result. First, there has been a general movement towards higher-quality reporting across institutions [17], which could have inspired attendings at all sites to improve report readability. Additionally, in our enterprise, there has been an undertaking to increase standardized radiology template use across sites, and this may have contributed to the global decrease in readability scores. Our analysis further revealed that our intervention, though unable to account for the improvement in readability for the entire reports, was a significant factor for improved impression GL at our home institution. We theorize that attendings receiving the emails may have chosen to focus on the impressions in lieu of the report body since referring physicians place more emphasis on this component of the document [18].

Though it is unrealistic to expect that radiology reports can be simplified to an eighth-grade level, some improvement in accessibility is feasible. When dictating reports, attendings can choose to focus on clarity and brevity, both important for communication [1]. Alternatively, separate reports crafted specifically for the patient can be explored; this practice is prevalent in breast imaging but has not yet expanded to other radiology disciplines [19]. Finally, one group has developed a tool that incorporates lay definitions directly in the report, and though this has not yet become fully available, it would potentially be a valuable asset for patients and radiologists [20].

Our study has several limitations, including the fact that readability formulas alone are not comprehensive tools for assessing health information quality [21]. Moreover, because our intervention only catalyzed an improvement in readability to a GL of 13 for impressions, significantly higher than the average adult’s level of comprehension, it is possible that improving radiology report readability does not have any practical value for enhancing patient experience. Additionally, we did not track which physicians attended our workshop or opened our emails; despite this, we are confident that our primary site faculty were aware of the project, as we emailed them on a weekly basis and shared readability tips through word of mouth as well. Finally, the exclusion of reports with negative GLs could contribute to bias. We chose to exclude such reports because, on closer inspection, reports with negative scores corresponded to very short impressions such as “CHF” or “no change”, and such impressions could not be edited further to improve readability. Our analysis did not reveal that any one index yielded a greater number of negative scores than any other. Another limitation is that, despite the push for report template utilization, there is variability in templates and their penetrance across our enterprise, and this could have affected our results. The next step would be to gauge the importance of readability from the point of view of patients and physicians. We are planning to have Internal Medicine and Emergency Medicine physicians evaluate reports to observe if their opinions align with the computer-generated grades and to assess whether focusing on readability affects report content. Additionally, we plan to survey radiologists about the importance of readability to gain peer input. Lastly, receiving feedback from patients would further highlight the role that readability has on their experience.

Conclusion

Readability is one of the many factors involved in radiology report quality. Our results demonstrate that reports are written at an advanced level at both community and tertiary care hospitals across our enterprise. Though our post-intervention reports demonstrated a statistically significant drop in GL at our primary site, this phenomenon was also observed at all other sites in the group. Our intervention, however, was a significant factor in improving impression scores and our primary site displayed a larger decrease in GL than observed at all other sites. Though dictating radiologists have the main duty to convey accurate information and diagnoses to ordering physicians, there is potential to improve accessibility of radiology reports to the general patient population. Additionally, radiologists can underscore their role in medicine, an action that has implications for reimbursement [19], by focusing on delivering information directly to the patient. Finally, improving report readability could allow radiologists to convey the most essential information to referring physicians, a practice in alignment with the ACR’s Imaging 3.0 Initiative [22]. We hope that our findings will contribute to the ultimate goal of improving patient care across hospitals.

Declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Bruno MA, Petscavage-Thomas JM, Mohr MJ, Bell SK, Brown SD. The “Open Letter”: radiologists reports in the era of patient web portals. Journal of the American College of Radiology. 2014;11(9):863–867. doi: 10.1016/j.jacr.2014.03.014.

- 2. Itri JN. Patient-centered radiology. RadioGraphics. 2015;35(6):1835–1846. doi: 10.1148/rg.2015150110.

- 3. Carlos RC. Patient-centered radiology. Academic Radiology. 2009;16(5):515–516. doi: 10.1016/j.acra.2009.01.025.

- 4. Silva E, Mcginty GB, Hughes DR, Duszak R. Alternative payment models in radiology: the legislative and regulatory roadmap for reform. Journal of the American College of Radiology. 2016;13(10):1176–1181. doi: 10.1016/j.jacr.2016.05.023.

- 5. Gunn AJ, Gilcrease-Garcia B, Mangano MD, Sahani DV, Boland GW, Choy G. JOURNAL CLUB: structured feedback from patients on actual radiology reports: a novel approach to improve reporting practices. American Journal of Roentgenology. 2017;208(6):1262–1270. doi: 10.2214/ajr.16.17584.

- 6. Martin-Carreras T, Cook TS, Kahn CE. Readability of radiology reports: implications for patient-centered care. Clinical Imaging. 2019;54:116–120. doi: 10.1016/j.clinimag.2018.12.006.

- 7. Yi PH, Golden SK, Harringa JB, Kliewer MA. Readability of lumbar spine MRI reports: will patients understand? American Journal of Roentgenology. 2019;212(3):602–606. doi: 10.2214/ajr.18.20197.

- 8. Chen W, Durkin C, Huang Y, Adler B, Rust S, Lin S. Simplified readability metric drives improvement of radiology reports: an experiment on ultrasound reports at a pediatric hospital. Journal of Digital Imaging. 2017;30(6):710–717. doi: 10.1007/s10278-017-9972-7.

- 9. Kincaid JP, Fishburne RP, Rogers RL, Chissom BS. Derivation of new readability formulas (Automated Readability Index, Fog Count and Flesch Reading Ease Formula) for Navy enlisted personnel. 1975. doi: 10.21236/ada006655.

- 10. Gunning R. The Technique of Clear Writing. New York: McGraw Hill; 1952.

- 11. Coleman M, Liau TL. A computer readability formula designed for machine scoring. Journal of Applied Psychology. 1975;60(2):283–284. doi: 10.1037/h0076540.

- 12. Smith EA, Kincaid JP. Derivation and validation of the automated readability index for use with technical materials. Human Factors: The Journal of the Human Factors and Ergonomics Society. 1970;12(5):457–564. doi: 10.1177/001872087001200505.

- 13. Ley P, Florio T. The use of readability formulas in health care. Psychology, Health & Medicine. 1996;1(1):7–28. doi: 10.1080/13548509608400003.

- 14. Safeer RS, Keenan J. Health literacy: the gap between physicians and patients. Am Fam Physician. 2005;72(3):463–68.

- 15. Davis TC, Wolf MS. Health literacy: implications for family medicine. Fam Med. 2004;36(8):595–8.

- 16. Hansberry DR, Agarwal N, Baker SR. Health literacy and online educational resources: an opportunity to educate patients. American Journal of Roentgenology. 2015;204(1):111–116. doi: 10.2214/ajr.14.13086.

- 17. Reiner BI, Knight N, Siegel EL. Radiology reporting, past, present, and future: the radiologist’s perspective. Journal of the American College of Radiology. 2007;4(5):313–319. doi: 10.1016/j.jacr.2007.01.015.

- 18. Camilo DMR, Tibana TK, Adôrno IF, Santos RFT, Klaesener C, Junior WG, Nunes TF. Radiology report format preferred by requesting physicians: prospective analysis in a population of physicians at a university hospital. Radiologia Brasileira. 2019;52(2):97–103. doi: 10.1590/0100-3984.2018.0026.

- 19. Brandt-Zawadski MK, Kerlan RK. Patient-centered radiology: use it or lose it! Academic Radiology. 2009;16(5):521–523. doi: 10.1016/j.acra.2009.01.027.

- 20. Oh SC, Cook TS, Kahn CE. PORTER: a prototype system for patient-oriented radiology reporting. Journal of Digital Imaging. 2016;29(4):450–454. doi: 10.1007/s10278-016-9864-2.

- 21. Jindal P, Macdermid J. Assessing reading levels of health information: uses and limitations of Flesch formula. Education for Health. 2017;30(1):84. doi: 10.4103/1357-6283.210517.

- 22. Ellenbogen PH. Imaging 3.0: what is it? Journal of the American College of Radiology. 2013:229. doi: 10.1016/j.jacr.2013.02.011.