Abstract

Caregivers modify their speech when talking to infants, adopting a speaking style known as infant-directed speech (IDS). Compared to adult-directed speech (ADS), this speaking style facilitates language learning in infants with normal hearing (NH). While infants with NH and those with cochlear implants (CIs) prefer listening to IDS over ADS, it is not yet known how CI processing may affect the acoustic distinctiveness between ADS and IDS, or the intelligibility of each speaking style. This study analyzed speech of seven female adult talkers to model the effects of simulated CI processing on (1) acoustic distinctiveness between ADS and IDS, (2) estimates of intelligibility of caregivers’ speech in ADS and IDS, and (3) individual differences in caregivers’ ADS-to-IDS modification and estimated speech intelligibility. Results suggest that CI processing is substantially detrimental to the acoustic distinctiveness between ADS and IDS, as well as to the intelligibility benefit derived from ADS-to-IDS modifications. Moreover, the observed variability across individual talkers in acoustic implementation of ADS-to-IDS modification and the estimated speech intelligibility was significantly reduced due to CI processing. The findings are discussed in the context of the link between IDS and language learning in infants with CIs.

Keywords: Infant-directed speech, cochlear implant, acoustic distance, speech intelligibility, individual differences

I. INTRODUCTION

A. Infant-directed speech and language learning

Caregivers around the world modify their speaking style from adult-directed speech (ADS) to infant-directed speech (IDS) when talking to infants (Fernald & Kuhl, 1987; Snow, 1977). Greater exposure to IDS, and to some aspects of its acoustic and linguistic properties, facilitates language learning in children with normal hearing (NH) (Cristia & Seidl, 2014; Liu et al., 2003; Song et al., 2010; Trainor et al., 2000; Weisleder & Fernald, 2013). The supportive role of IDS in infants’ language learning occurs through several pathways, such as directing and holding infants’ attention to speech (Fernald, 1985, 1989; Kitamura et al., 2001; Kuhl et al., 1997; Schachner & Hannon, 2011; Wang et al., 2017, 2018; Werker et al., 1994), while making speech clearer by providing more salient cues for speech discrimination (Karzon, 1985), word segmentation (Singh & Nestor, 2009), speech segmentation (Thiessen et al., 2005), and word learning (Ma et al., 2011). Taken together, these studies demonstrate the supportive role of IDS in infants’ early language learning. However, it is not yet clear how cochlear implants (CIs) may affect the acoustic properties of IDS and its supportive role in language learning. The present study aims to investigate the effects of simulated CI processing on acoustic information pertaining to distinguishing and recognizing words in IDS versus ADS.

B. Infants’ attention to IDS

In listening to speech, infants with NH and those with CIs demonstrate increased attention to IDS compared to ADS (The ManyBabies Consortium, 2020; Cooper et al., 1997; Fernald, 1985; Wang et al., 2017; Wang et al., 2018; Werker & McLeod, 1989; Werker et al., 1994). This effect of IDS on attention begins very early, as observed in newborns and infants as young as 4 months old (Cooper & Aslin, 1990; Fernald, 1985; Werker & McLeod, 1989), demonstrating that infants can use subtle acoustic information to distinguish IDS from ADS while having acquired only minimal linguistic knowledge. Multiple acoustic-phonetic cues have been identified as potentially supporting infants’ robust preference for IDS over ADS, such as higher pitch (Cooper & Aslin, 1990; Fernald, 1989), greater pitch fluctuation (Fernald & Simon, 1984), slower speaking rate (Leong et al., 2014; Narayan & McDermott, 2016; Song et al., 2010), and an expanded vowel space (Burnham et al., 2015) in IDS compared to ADS. These acoustic cues relevant to the distinction between IDS and ADS have also been shown for a variety of other languages (Wassink et al., 2007; Broesch & Bryant, 2015; Kitamura et al., 2001). For instance, Wassink et al. (2007) showed that IDS has a higher pitch and a greater pitch range than other clear speech styles (e.g., hyperarticulated and citation-form speech) in Jamaican English and Jamaican Creole. IDS was also acoustically distinct from clear speech and citation-form speech in terms of intensity (higher) and overall vowel duration (longer). These distinctive acoustic-phonetic cues are manifested in spectro-temporal representations of caregivers’ speech, which infants’ highly sensitive auditory systems actively use to recognize IDS as distinct from ADS (Kuhl, 2004; Maye et al., 2002; McMurray & Aslin, 2005; Telkemeyer et al., 2009).

Although a preference for IDS over ADS was observed in infants with CIs (Wang et al., 2017), it is still not clear how they differ from their peers with NH in terms of access to the spectral information involved in distinguishing IDS from ADS. Infants with CIs may face difficulties in resolving the distinctive acoustic information needed to perform this task effectively, potentially leading to a disruption in the developmental time course of their attention to IDS and to delayed language development, as compared to NH infants. These considerations suggest a benefit of examining how CI-related speech processing may affect acoustic distinctiveness and intelligibility of IDS compared with ADS, and how this acoustic distinctiveness and intelligibility may differ as a function of individual talkers’ speech patterns.

C. CI processing and acoustic distinction between IDS and ADS

Infants with CIs have access to a spectro-temporally degraded representation of speech in their language environments, due to limitations of electric stimulation in faithfully transmitting this information to the auditory nerve. The limited number of active channels and the broad current spread, which causes interaction between channels, lead to a degraded representation of spectral information in speech (e.g., Arenberg Bierer, 2010; Baskent & Gaudrain, 2016; Svirsky, 2017), as well as only partial access to temporal fine structure (TFS) cues (e.g., Mehta et al., 2020). These TFS cues are also not available in vocoded speech, owing to the limited number of frequency channels (Lorenzi & Moore, 2008; Mehta et al., 2020). However, lack of access to TFS-based timing cues may not be problematic for perception of fundamental frequency (Oxenham, 2018), which is an important acoustic dimension for the distinction between IDS and ADS. Therefore, it might be fair to assume that the degradation of spectral cues through CIs has a greater detrimental effect on speech perception than the degradation of TFS cues. Friesen et al. (2001) showed that speech recognition in listeners with NH improved as the number of spectral channels in vocoded speech increased, highlighting the effect of spectral resolution on recognition of speech in listeners with CIs (Baskent & Gaudrain, 2016; Friesen et al., 2001; Fu et al., 2005; Fu & Nogaki, 2005; Luo & Poeppel, 2007; Shannon et al., 1995; Svirsky, 2017). Temporal resolution is also reduced in CIs and is mainly limited to temporal-envelope cues, which disrupts faithful transmission of TFS in speech to the auditory nerve (Rubinstein, 2004; Svirsky, 2017). This reduced spectro-temporal resolution may negatively affect infants’ access to segmental and suprasegmental (i.e., prosodic) features of IDS.

Transmission of multiple speech cues is negatively affected by the limited resolution of CIs, including pitch (Mehta & Oxenham, 2017; Qin & Oxenham, 2005; Svirsky, 2017), timbre (Kong et al., 2011), and melody (Mehta & Oxenham, 2017; Zeng et al., 2014) in talkers’ speech. These cues have been recognized as major attributes that distinguish IDS from ADS (Fernald, 1989; Fernald & Simon, 1984; Wang et al., 2017). For example, caregivers’ pitch is identified as an important perceptual cue for children’s robust attention in listening to IDS (Fernald, 1985; Piazza et al., 2017) and was also recognized as an important cue in distinguishing IDS from ADS (Fernald & Kuhl, 1987). Higher mean pitch and wider pitch range are recognized as prosodic modifications that signal speech directed to infants as compared to adults (Garnica, 1977; Narayan & McDermott, 2016). Talkers’ pitch further contributes to lexical segmentation of speech in listeners with CIs (Spitzer et al., 2009), a task critical for word learning. Recognition of emotion from speech is another important aspect of recognizing and processing IDS (Trainor et al., 2000), which relies heavily on perceiving pitch contour and pitch fluctuation in caregivers’ speech. These cues are poorly perceived by listeners with CIs. For example, listeners with CIs performed worse in emotion recognition as the number of spectral channels in vocoded speech was decreased (Luo et al., 2007). Another instance of degraded representation of speech cues related to IDS is poor perception of melodic pitch, which has been shown to be negatively affected by reducing the number of spectral channels (Kong et al., 2004). These findings suggest that children with CIs probably have only partial access to spectro-temporal cues in speech that could contribute to the distinctiveness of IDS and ADS (e.g., pitch and intonation). This limited access to speech cues that signal IDS may interfere with the connection between recognizing and listening to IDS and developing better language skills. Therefore, it is important to understand how CI processing may impact the acoustic distinction between IDS and ADS, a major knowledge gap that the present study aimed to address based on acoustic and computational analysis of simulated CI speech.

D. Intelligibility of IDS through CI

Another possible way IDS may foster language learning is by making speech more intelligible. Prior studies showed evidence that IDS may provide acoustic cues that assist infants in parsing linguistic units in speech. Karzon (1985) showed that the exaggerated suprasegmental features of IDS may provide supportive perceptual cues for syllabification of multisyllabic sequences. In another study, infants had better long-term word recognition when the words were presented in IDS compared to ADS (Singh & Nestor, 2009). It was further shown that IDS facilitates word segmentation (Thiessen et al., 2005). Although evidence on the intelligibility benefits of IDS over ADS has been somewhat contradictory at the level of segmental speech cues (e.g., Kuhl et al., 1997; McMurray et al., 2013), there might be an intelligibility benefit associated with other properties of IDS (e.g., prosodic cues), similar to the effects observed in close analogues of a “clear speech” register (Bradlow et al., 2003; Ferguson & Kewley-Port, 2007). Caregivers’ modification of speaking style from ADS to IDS impacts aspects of speech such as pitch contour and speech rate, which have been shown to be strong predictors of speech clarity and intelligibility (Bradlow et al., 2003; Cutler et al., 1997; Ferguson & Poore, 2010; Ferguson & Quené, 2014; Spitzer et al., 2007; Watson & Schlauch, 2008). Different choices of speaking style often impact speech rate, where a slower rate is thought to contribute to enhanced intelligibility of clear speech compared to conversational speech both in typical listeners (Ferguson et al., 2010) and recipients of CIs (Li et al., 2011; Zanto et al., 2013).

Limited spectral resolution of the cochlear implant device affects the quality with which listeners with CIs receive speech cues (Croghan et al., 2017; Jain & Vipin Ghosh, 2018; Peng et al., 2019; Qin & Oxenham, 2005), which may negatively impact CI users’ ability to recognize words and phonemes, especially in children (Grieco-Calub et al., 2010; Peng et al., 2019). Therefore, any likely beneficial role of IDS in improving speech intelligibility is prone to change when caregivers’ speech is perceived through a CI device. Prior studies have shown that performance of listeners with CIs is considerably poorer than that of listeners with NH in understanding sentences spoken at a relatively higher rate (Zanto et al., 2013), and speaking rate is an acoustic dimension signaling the distinction of IDS from ADS (i.e., slower speaking rate in IDS compared to ADS). Variation of fundamental frequency (F0) is another acoustic dimension of the IDS-vs-ADS distinction that contributes to speech recognition (Spitzer et al., 2009; Spitzer et al., 2007). Findings on the effect of these acoustic properties on intelligibility of caregivers’ speech are still controversial, and little is known about the effects of CI speech processing on intelligibility of caregivers’ speech in ADS and IDS conditions. The present study uses computational modeling approaches to address whether IDS is beneficial for intelligibility improvement and how this effect may be compromised depending on listeners’ hearing status (NH vs. CI).

E. Individual differences in acoustic implementation of IDS and speech intelligibility

Listeners’ familiarity with the range of variability in the voices of individual talkers is important for robust identification and recognition of individuals’ voices (Lavan et al., 2019; Souza et al., 2013). Caregiver-specific acoustic modification is important for effective caregiver-infant interaction and significantly contributes to infants’ recognition of caregivers’ voices both before and after birth (Beauchemin et al., 2011; Kisilevsky et al., 2003), as well as to neural development of infants’ auditory cortices (Webb et al., 2015). Singh (2008) showed that exposure to IDS with relatively higher variability in vocal affect facilitates word recognition in infants by forming a relatively wider and more generalizable lexical category, highlighting the supportive role of exposure to a wide range of ADS-to-IDS acoustic variation in effective processing of caregivers’ speech. The degree of acoustic variability that caregivers introduce to their infants may vary across individuals. The acoustic characteristics of IDS reveal finer details about the unique voice timbre of individual caregivers, which potentially contributes to infants’ identification of individual caregivers (Piazza et al., 2017). It is, however, unclear how CI processing impacts the range of acoustic variability within and across caregivers due to shifts in caregivers’ speaking style (i.e., ADS to IDS); such processing likely eliminates acoustic details and potentially negatively impacts the supportive role of IDS in recognizing caregivers’ voices. CI processing may also be detrimental to the acoustic distance between the voices of different caregivers, which potentially makes distinguishing talkers from each other more challenging for infants with CIs, compared to those with NH. The present study aimed to investigate these questions by simulating the limited resolution of CIs using a noise-excited envelope vocoder and modeling the intelligibility benefit of ADS-to-IDS modification.

F. Benefits of investigations of acoustic properties of CI-related speech processing

Studies aimed at understanding speech perception in individuals with CIs can be characterized as involving one of three general approaches. The first approach examines performance of listeners with NH in response to vocoded speech to simulate hearing in listeners with CIs (Dorman et al., 1997a; Jahn et al., 2019; Mehta & Oxenham, 2017; Qin & Oxenham, 2003, 2005). The second approach involves direct study of how listeners with CIs perform in various speech recognition tasks (Brown & Bacon, 2010; Kong et al., 2005; Peng et al., 2008; Peng et al., 2019). In the third approach, which is the focus of the present paper, acoustic properties of simulated CI speech and unprocessed analogs are analyzed comparatively. This comparative analysis can be facilitated through the use of various quantitative metrics that emulate speech recognition in CI users as proxies to analyze properties of CI speech and its perception in listeners with CIs (Jain et al., 2018; Qin & Oxenham, 2003; Santos et al., 2013). Analysis of simulated CI speech and quantitative measures of speech quality and intelligibility tailored to listeners with CIs provide repeatable, automated, inexpensive, and fast tools for gaining preliminary evidence about speech perception in individuals with CIs. A comparative analysis method therefore supports efficient preliminary investigation and avoids major challenges that accompany the first two categories of studies, such as participant recruitment, time, and cost.

G. Current study

In the present study, we analyzed unprocessed and simulated CI speech of seven female talkers who spoke fifteen utterances both in ADS and IDS. These pairs of ADS and IDS speech were analyzed to examine how the limited frequency resolution of CIs may affect acoustic distinctiveness between ADS and IDS, as well as its effects on the estimated intelligibility of caregivers’ speech under modifications of speaking style (i.e., ADS to IDS). ADS and IDS stimuli of the seven female talkers were processed using a noise-excited envelope vocoder with different numbers of channels to simulate the restricted spectral resolution and the amount of distortion in CIs (Dorman et al., 1998; Dorman et al., 1997b; Friesen et al., 2001; Fu et al., 1998; Loizou et al., 1999; Mehta et al., 2020; Mehta & Oxenham, 2017; Qin & Oxenham, 2003, 2005; Rosen et al., 1999; Shannon et al., 1995).

To examine the effects of CIs on acoustic distinctiveness between IDS and ADS, we first calculated mel-frequency cepstral coefficients (MFCCs), which have been shown in multiple studies to be effective for characterizing the distinctive qualities of IDS vs. ADS (Inoue et al., 2011; Piazza et al., 2017; Sulpizio et al., 2018). Second, to quantify the acoustic distinctiveness between IDS and ADS, we calculated a Mahalanobis distance (MD) measure over MFCC features. MD is a multivariate distance metric that has been widely used to measure the distances between vectors in a variety of multidimensional feature spaces (Arjmandi et al., 2018; Masnan et al., 2015; Xiang et al., 2008). Third, to model how CIs may influence intelligibility of caregivers’ speech, we calculated the speech-to-reverberation-modulation energy ratio (SRMR) to estimate the signal-based intelligibility of speech signals delivered to listeners with NH (Falk et al., 2010), as well as its CI-tailored version (SRMR-CI) to model intelligibility of speech signals delivered to listeners with CIs (Santos et al., 2013). These measures were examined both within and across talkers to model the impacts of speaking style (IDS vs. ADS) and listener group (NH vs. CI) on signals’ distinctiveness and estimated intelligibility. All these analyses were implemented in MATLAB 2019a (The Mathworks Web-Site [http://www.mathworks.com]).

The following four specific questions were addressed in this study. First, we asked how simulated CI speech processing affects the acoustic distinctiveness of IDS compared with ADS, particularly as a function of degree of spectral degradation (i.e., number of spectral channels) in CI-simulated speech. We hypothesized that (a) CI-related speech processing significantly degrades the acoustic distinctiveness of IDS compared with ADS, and further that (b) increasing the number of channels would not compensate for degradation imposed by CI-related processing as compared to the unprocessed condition. Second, we asked whether there is signal-based evidence that IDS may be more intelligible than ADS, as gauged by the SRMR metric (to simulate a NH listening condition) or the SRMR-CI metric (to simulate a CI listening condition). We hypothesized that IDS is more intelligible than ADS, but that estimated intelligibility would vary as a function of simulated listening status (NH vs. CI). Third, we asked to what extent the acoustic distinctiveness between IDS and ADS varies across individual caregivers, as well as how such individual differences might be impacted by CI speech processing, focusing on change in the spectral resolution. We predicted individual differences across caregivers in acoustic implementation of the differences between ADS and IDS; we further predicted that CI-related speech processing would decrease the extent of acoustic distinctiveness as a function of speech style, leading to loss of intra- and inter-subject acoustic variability in terms of IDS vs. ADS distinctiveness. Fourth, and finally, we asked how estimated intelligibility differences for IDS compared with ADS vary across individual caregivers, and how such intelligibility differs as a function of hearing status (as gauged by SRMR vs. SRMR-CI differences to simulate effects of NH vs. CI listening conditions, respectively). We hypothesized that intelligibility differences for IDS vs. ADS would vary across caregivers, and that these intelligibility differences would be more similar as estimated for CI listeners, compared with NH listeners.

II. METHODS

A. Speech stimuli

Seven female adult native talkers of American English ranging in age from 21 to 24 years old spoke fifteen utterances in IDS and ADS (see Supplementary Material I for the list of stimuli). The utterances were elicited so that they could also be used in separate infant word-learning experiments, in which infants were exposed to a novel target word (i.e., “modi”) and behavioral measures of word recognition were used to assess whether infants learned the novel word better from IDS than from ADS. To elicit stimuli, the talkers were instructed to speak utterances as if talking to an infant (IDS condition) or an adult (ADS condition); this procedure was similar to that used in Wang et al. (2017, 2018). Acoustic properties of IDS and ADS stimuli were characterized using three acoustic measures: median F0 (Hz), interquartile range (IQR) of F0 (Hz), and speech rate (number of words per second) (see Appendix A). Statistical analysis of the effect of speaking style (IDS vs. ADS) on these measures demonstrated that IDS and ADS were significantly different across all three acoustic dimensions (cf. Appendix A). IDS stimuli, on average, had higher median F0, greater IQR of F0, and slower speech rate compared to ADS. These results are consistent with prior findings showing higher F0 (e.g., Wassink et al., 2007; Inoue et al., 2011), higher F0 variability (e.g., Inoue et al., 2011), and slower speech rate in IDS compared to ADS (e.g., Leong et al., 2014; Narayan & McDermott, 2016; Song et al., 2010). Finally, the IDS and ADS stimuli were checked auditorily by the senior researchers of the current study and verified perceptually to be consistent with typical IDS and ADS speech styles.

Speech stimuli were recorded using an AKG D 542 ST-S microphone in a sound booth and digitized at a sampling rate of 44.1 kHz with 16-bit resolution. The distance between talkers’ mouths and the microphone was controlled to assure the quality of the recorded stimuli. Prior to processing as discussed below, the start and end points of recorded utterances were manually identified in Praat software (Boersma & Weenink, 2001) to remove preceding and following silent portions. All participants were fully informed about the purpose and procedure of this study, and they had given informed consent to participate. This study was approved by the Institutional Review Boards of the Ohio State University and Michigan State University.

B. Creation of simulated CI speech

CI-simulated versions of the unprocessed stimuli were created using noise-excited envelope vocoder processing at six levels of spectral degradation, corresponding to 4-, 8-, 12-, 16-, 22- and 32-channel noise-vocoded stimuli. The numbers of channels were chosen to cover the channel counts of FDA-approved devices (12, 16, 22), the minimal-cue conditions used in prior studies (4, 8), and a 32-channel condition representing CI vocoder scenarios with higher spectral resolution. The natural stimuli consisting of spoken IDS or ADS were processed in AngelSim™ Cochlear Implant and Hearing Loss Simulator (Fu, 2019; Emily Shannon Fu Foundation, www.tigerspeech.com) to create the 4-, 8-, 12-, 16-, 22-, and 32-channel noise-vocoded CI-simulated stimuli; the noise vocoding method followed the procedure in Shannon et al. (1995). The original stimuli were first band-pass filtered using the Greenwood function (emulating the Greenwood frequency-place map) into N (N = {4, 8, 12, 16, 22, 32}) adjacent frequency channels ranging from 200 Hz to 8000 Hz. This was implemented in AngelSim™ software by setting the absolute lower- and higher-frequency thresholds for the analysis and carrier filters to 200 Hz and 8000 Hz, with a filter slope of 24 dB/Oct (Fu, 2019). These frequency ranges are fairly close to the corner frequencies of Cochlear Nucleus speech processors (Crew & Galvin, 2012; Winn & Litovsky, 2015), which emulate the performance of average CI listeners in speech envelope discrimination (Chatterjee & Oberzut, 2011; Chatterjee & Peng, 2008). The same Greenwood-based filters were used as both analysis and carrier filters, with white noise as the carrier signal (Greenwood, 1990). The AngelSim™ software used this setup to extract the time-varying amplitude envelope of the speech stimulus in each frequency band using half-wave rectification, and then used these envelopes to modulate independent white-noise carriers.

There were 210 unprocessed stimuli in total (7 talkers x 15 utterances x 2 speaking styles). Including the noise-vocoded versions of these utterances (at 4, 8, 12, 16, 22, and 32 channels), we analyzed 1470 utterances (210 x 7 levels of spectral degradation) in the present study.
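
The channel layout described above can be illustrated with a minimal sketch that spaces N + 1 band edges evenly along the Greenwood frequency-place map between 200 Hz and 8000 Hz. The constants below are the commonly cited human values from Greenwood (1990) and are an assumption here; the exact parameters and filter design used internally by AngelSim™ are not given in the text, and the function names are hypothetical.

```python
import math

# Assumed human Greenwood (1990) constants; AngelSim's internal values may differ.
A, ALPHA, K = 165.4, 2.1, 0.88


def freq_to_place(f_hz):
    """Relative cochlear place (0 = apex, 1 = base) for a frequency in Hz."""
    return math.log10(f_hz / A + K) / ALPHA


def place_to_freq(x):
    """Inverse map: frequency in Hz at relative cochlear place x."""
    return A * (10 ** (ALPHA * x) - K)


def band_edges(n_channels, f_lo=200.0, f_hi=8000.0):
    """Return n_channels + 1 edges equally spaced in cochlear place."""
    x_lo, x_hi = freq_to_place(f_lo), freq_to_place(f_hi)
    step = (x_hi - x_lo) / n_channels
    return [place_to_freq(x_lo + i * step) for i in range(n_channels + 1)]


if __name__ == "__main__":
    for n in (4, 8, 12, 16, 22, 32):
        edges = band_edges(n)
        print(n, "channels:", [round(e) for e in edges])
```

Adjacent edges define one analysis band and its matching noise-carrier band, so fewer channels give wider bands and coarser spectral resolution.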

C. Using MFCC features to characterize acoustic properties of IDS and ADS

We calculated 12 MFCCs for each speech stimulus to characterize its acoustic information (Hunt et al., 1980; Imai, 1983; Shaneh & Taheri, 2009). Modeling the frequency response of the human auditory system via MFCCs captures acoustic features that are involved in distinguishing IDS and ADS, such as shifts in vocal timbre (Piazza et al., 2017) and acoustic distinctiveness between IDS and ADS (Inoue et al., 2011), including in other languages (e.g., Italian and German) (Sulpizio et al., 2018). MFCCs are also able to effectively reflect caregivers’ emotional state and vocal affect (e.g., happiness vs. sadness, Sato & Obuchi, 2007; Slaney & McRoberts, 1998), which are attributes that help explain infants’ preferences for IDS over ADS (Fernald, 2018; Horowitz, 1983; Mastropieri & Turkewitz, 1999; Moore et al., 1997; Papoušek et al., 1990; Singh et al., 2002; Walker-Andrews & Grolnick, 1983; Walker-Andrews & Lennon, 1991). Furthermore, MFCCs allowed us to analyze spectral properties of vocoded speech, which was not feasible using common acoustic measures such as fundamental frequency (F0), as these cues are only partially present in CI-simulated speech (Fuller et al., 2014; Gaudrain & Baskent, 2018).

Figure 1 illustrates MFCC extraction for a sample pair of IDS-ADS stimuli as part of the process for measuring the acoustic distinctiveness between each ADS-IDS stimulus pair. To calculate MFCCs, each stimulus was re-sampled at 16 kHz, and a Hamming window of 25 ms was applied to each frame, with a frame shift of 10 ms, following the procedure used in Inoue et al. (2011). For each pair of IDS and ADS stimuli (SIDSij and SADSij in Figure 1), MFCCs were calculated for all frames of these stimuli (MFCCsIDSij and MFCCsADSij). Here, i indicates the index of the speech stimulus, i = {1,2,3,…,15}, and j is the index for the talker, j ={1,2,3,…,7}. Unlike previous studies in which a single, time-averaged MFCC vector was calculated to represent acoustic information in IDS and ADS (e.g., Piazza et al., 2017), our approach took advantage of the MFCCs derived from all frames of a speech stimulus to calculate the acoustic distinctiveness between ADS-IDS pairs of stimuli within a multidimensional feature space, thereby preserving frame-level detail about spectro-temporal information in speech. Since each analyzed pair of IDS and ADS stimuli contained identical word strings, our analysis was expected to mainly model the acoustic effects of caregivers’ speaking styles (IDS vs. ADS).
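
To make the framing parameters concrete, the following minimal sketch shows how a 16 kHz signal would be split into 25 ms Hamming-windowed frames with a 10 ms shift, which determines how many MFCC vectors a stimulus yields. It covers only the framing step: the mel filterbank and cepstral transform are omitted, the function names are hypothetical, and dropping a trailing partial frame is an assumption rather than a detail stated in the text.

```python
import math

SAMPLE_RATE_HZ = 16_000
WIN_MS, HOP_MS = 25, 10

WIN = SAMPLE_RATE_HZ * WIN_MS // 1000  # 400 samples per frame
HOP = SAMPLE_RATE_HZ * HOP_MS // 1000  # 160 samples between frame starts


def hamming(n):
    """Symmetric Hamming window of length n."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]


def frame_signal(samples):
    """Split samples into overlapping, Hamming-windowed frames.

    A trailing partial frame is dropped (an assumption of this sketch).
    """
    if len(samples) < WIN:
        return []
    window = hamming(WIN)
    n_frames = 1 + (len(samples) - WIN) // HOP
    return [
        [s * w for s, w in zip(samples[i * HOP:i * HOP + WIN], window)]
        for i in range(n_frames)
    ]


if __name__ == "__main__":
    one_second = [1.0] * SAMPLE_RATE_HZ
    frames = frame_signal(one_second)
    print(len(frames), "frames of", len(frames[0]), "samples")
```

Each frame would then yield one 12-element MFCC vector, so the number of frames sets the number of rows in the per-stimulus MFCC matrix.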

FIG. 1.

Schematic diagram of the approach used in the present study for measuring (1) MD between pairs of MFCCs derived from pairs of IDS-ADS stimuli, and (2) intelligibility of IDS and ADS stimuli as estimated by SRMR value for listeners with NH and SRMR-CI for those with CIs. Note that the dashed line denotes the process for creating and analyzing the noise-vocoded versions of the same pairs of stimuli, while N stands for the number of spectral channels in the noise-excited envelope vocoder. Blocks and lines with blue (dark gray) color indicate paths for processing ADS, while those with orange (light gray) color indicate paths for processing IDS. Note that SRMR and SRMR-CI were calculated only for unprocessed stimuli in order to estimate intelligibility for NH and CI listeners, respectively. In this figure, the waveforms and their corresponding MFCCs are from the utterance “See the modi?” spoken by one of the seven talkers both in IDS and ADS speaking styles. SIDSij and SADSij are the ith pair of IDS and ADS stimuli (i = {1,2,3,…,15}) for talker j (j ={1,2,3,…,7}). MFCCsIDSij and MFCCsADSij are MFCC features derived from SIDSij and SADSij speech stimuli, respectively. The middle two panels show MFCCs obtained from the frames of these IDS and ADS stimuli. MDij is the MD calculated to measure the acoustic distance between the two matrices for MFCCsIDSij and MFCCsADSij. SRMRIDSij and SRMRADSij are the estimated intelligibility for SIDSij and SADSij speech stimuli, respectively, as heard by listeners with NH. SRMR-CIIDSij and SRMR-CIADSij are the estimated speech intelligibility for the same SIDSij and SADSij speech stimuli, respectively, as heard by a listener with CIs.

D. Acoustic distinctiveness quantification using Mahalanobis Distance (MD) measure

After representing acoustic features of pairs of IDS and ADS stimuli by calculating their MFCCs, the acoustic distinctiveness between each pair was measured by calculating MD on the corresponding MFCC matrices (i.e., MFCCsIDSij and MFCCsADSij) using a 12-dimensional feature space (i.e., 12 MFCCs) (Maesschalck & Massart, 2000; Masnan et al., 2015; Xiang et al., 2008). MD is a multivariate statistical approach that evaluates distances between two multidimensional feature vectors or matrices that belong to two classes (here IDS vs. ADS) (Arjmandi et al., 2018; Heijden et al., 2005; Maesschalck & Massart, 2000; Masnan et al., 2015). The MD calculation returns the distance between the means of the two classes (here IDS and ADS) relative to the average per-class covariance matrix (Maesschalck & Massart, 2000). Here, the acoustic properties of each class were represented by one feature matrix of MFCCs. The larger the distance between a pair of MFCCsIDSij and MFCCsADSij feature matrices, the smaller the overlap between the two classes of IDS and ADS; this corresponds in turn to greater acoustic distance (or distinctiveness) between IDS and ADS stimuli.
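
The verbal definition above can be written compactly as follows. This is a sketch that assumes an equal-weight average of the two per-class covariance matrices; the symbols $\mu$ and $\Sigma$ are notation introduced here for the frame-wise mean vector and covariance matrix of each MFCC matrix and do not appear in the original text.

```latex
MD_{ij} = \sqrt{\left(\mu^{IDS}_{ij} - \mu^{ADS}_{ij}\right)^{\top}
          \Sigma_{ij}^{-1}
          \left(\mu^{IDS}_{ij} - \mu^{ADS}_{ij}\right)},
\qquad
\Sigma_{ij} = \tfrac{1}{2}\left(\Sigma^{IDS}_{ij} + \Sigma^{ADS}_{ij}\right)
```

Under this form, a pair of stimuli whose mean MFCC vectors differ along a direction of low within-class variance receives a larger distance than a pair differing by the same amount along a high-variance direction.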

Each row of the calculated MFCC matrix for utterance i from talker j (e.g., MFCCsIDSij or MFCCsADSij) corresponds to a frame of that utterance, and the 12 MFCCs occupy the columns of this matrix. Therefore, the dimension of each MFCC matrix was NF x 12, where NF refers to the number of frames in that speech stimulus. The acoustic distinctiveness between IDS and ADS stimuli for each female talker j was computed by averaging the fifteen MD values obtained from that talker’s fifteen IDS-ADS pairs ($\overline{MD}_j = \frac{1}{N}\sum_{i=1}^{N} MD_{ij}$, N = 15). This process was separately performed on pairs of IDS and ADS stimuli for seven levels of spectral degradation (unprocessed, and 32-, 22-, 16-, 12-, 8-, and 4-channel noise-vocoded CI-simulated stimuli) to give average MDs used to examine (1) how the acoustic distinctiveness between IDS and ADS stimuli changes as a function of CI processing (and number of spectral channels), (2) how individual caregivers vary in acoustic distinctiveness between IDS and ADS stimuli, and (3) how the limited spectral resolution in CIs may affect this pattern of individual differences across talkers.
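
The per-pair distance and per-talker averaging described above can be sketched as follows. This is an illustrative standard-library implementation under stated assumptions, not the MATLAB code used in the study: it assumes sample covariances with an n − 1 denominator, an equal-weight average of the two class covariances, and a non-singular averaged covariance matrix. All function names are hypothetical.

```python
import math


def column_means(rows):
    """Mean of each column of an n_frames x d matrix (list of lists)."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def covariance(rows):
    """Sample covariance matrix (n - 1 denominator) of an n_frames x d matrix."""
    mu, n = column_means(rows), len(rows)
    d = len(mu)
    cov = [[0.0] * d for _ in range(d)]
    for row in rows:
        dev = [x - m for x, m in zip(row, mu)]
        for a in range(d):
            for b in range(d):
                cov[a][b] += dev[a] * dev[b] / (n - 1)
    return cov


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    d = len(rhs)
    aug = [row[:] + [v] for row, v in zip(matrix, rhs)]
    for c in range(d):
        pivot = max(range(c, d), key=lambda r: abs(aug[r][c]))
        aug[c], aug[pivot] = aug[pivot], aug[c]
        for r in range(c + 1, d):
            factor = aug[r][c] / aug[c][c]
            for k in range(c, d + 1):
                aug[r][k] -= factor * aug[c][k]
    x = [0.0] * d
    for r in reversed(range(d)):
        tail = sum(aug[r][k] * x[k] for k in range(r + 1, d))
        x[r] = (aug[r][d] - tail) / aug[r][r]
    return x


def mahalanobis_distance(ids_frames, ads_frames):
    """Distance between class means relative to the averaged class covariance."""
    diff = [a - b for a, b in zip(column_means(ids_frames), column_means(ads_frames))]
    cov_ids, cov_ads = covariance(ids_frames), covariance(ads_frames)
    avg_cov = [[(a + b) / 2 for a, b in zip(ra, rb)] for ra, rb in zip(cov_ids, cov_ads)]
    weighted = solve(avg_cov, diff)  # avg_cov^{-1} @ diff without explicit inversion
    return math.sqrt(sum(d * w for d, w in zip(diff, weighted)))


def talker_average(md_values):
    """Average the per-pair MD values of one talker (fifteen pairs in the study)."""
    return sum(md_values) / len(md_values)


if __name__ == "__main__":
    # Toy 2-D "MFCC" matrices; real inputs would be NF x 12.
    ids = [[0.0, 0.0], [2.0, 0.0], [0.0, 2.0], [2.0, 2.0]]
    ads = [[3.0, 4.0], [5.0, 4.0], [3.0, 6.0], [5.0, 6.0]]
    print(mahalanobis_distance(ids, ads))
```

The two matrices may have different numbers of rows, which matters here because the IDS and ADS renditions of the same utterance differ in duration and therefore in frame count.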

E. Estimation of speech intelligibility

As shown in Figure 1, we estimated the degree of intelligibility of each speech stimulus as it might be experienced by listeners with NH or with CIs. To approximate stimulus intelligibility for listeners with NH, we calculated the speech-to-reverberation modulation energy ratio (SRMR) for the unprocessed IDS and ADS stimuli (Santos et al., 2013). This metric has previously been validated as an approximation of the intelligibility of speech to listeners with NH in behavioral tasks (Falk et al., 2015; Santos et al., 2013). As demonstrated by Falk et al. (2010), there is a high correlation (>90%) between the objective measure of speech intelligibility based on SRMR and subjective ratings of speech intelligibility obtained from NH listeners. Further, to approximate intelligibility as might be experienced by listeners with CIs, we used an adapted version of the SRMR metric, which was also developed by Falk et al. (2013) to tailor the SRMR metric for CI users; this adapted metric is known as SRMR-CI (Santos et al., 2013). The SRMR-CI metric is based on a CI-inspired filterbank that emulates the Nucleus mel-like filterbank. SRMR-CI was shown to be strongly correlated with the speech intelligibility ratings obtained from listeners with CIs (>95%; Santos et al., 2013). Together, these studies provide evidence that the two objective metrics closely reflect the perceptual performance of listeners with NH (SRMR) and those with CIs (SRMR-CI) in subjective ratings of speech intelligibility.

To calculate SRMR, an (unprocessed) speech stimulus was first filtered by a 23-channel gammatone filterbank in order to emulate cochlear function. Next, a Hilbert transform was applied to the output signal of each of the 23 filters to obtain its temporal envelope. The modulation spectral energy for each critical band was then calculated by windowing the temporal envelope and computing the discrete Fourier transform. To emulate frequency selectivity in the modulation domain, the modulation frequency bins were grouped into eight overlapping modulation bands with logarithmically spaced center frequencies between 4 and 128 Hz. Finally, the SRMR value was obtained by calculating the ratio of the average modulation energy in the first four modulation bands (~3–20 Hz) to the average modulation energy in the last four modulation bands (~20–160 Hz), as explained in Santos et al. (2013).

To calculate SRMR-CI, a 22-channel filterbank was used instead of a 23-channel filterbank in order to emulate the structure of filterbanks in Nucleus CI devices. In addition, the 4–128 Hz range of modulation filterbank center frequencies was replaced by a 4–64 Hz range to better emulate CI users’ performance (Santos et al., 2013). Although these measures were not primarily developed to estimate the effect of talkers’ speaking style on speech intelligibility, the signal processing algorithms used in these metrics estimate the distribution of energy in various frequency bands, as well as other spectral properties, and are expected to emulate the speech recognition performance of NH and CI users fairly well (Santos et al., 2013). Intelligibility of signals as estimated for different hearing statuses (NH or CI) is inherently modeled through the difference in algorithmic implementation of these metrics (SRMR vs. SRMR-CI, respectively) (Santos et al., 2013).
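The modulation-band ratio at the core of both metrics can be sketched for a single temporal envelope as follows. This is a simplified illustration, not the published SRMR or SRMR-CI implementation: the gammatone filterbank and Hilbert-envelope stages are omitted, the modulation bands are approximated by rectangular groups of DFT bins spanning 0.75 to 1.25 times each center frequency (an assumption chosen so that neighboring bands overlap), and the function name and test envelopes are hypothetical.

```python
import numpy as np

def modulation_band_ratio(envelope, fs, f_lo=4.0, f_hi=128.0, n_bands=8):
    """Ratio of mean low-band to mean high-band modulation energy for one envelope."""
    env = np.asarray(envelope, dtype=float)
    env = env - env.mean()                       # drop the DC component
    spectrum = np.fft.rfft(env * np.hanning(env.size))
    power = np.abs(spectrum) ** 2
    freqs = np.fft.rfftfreq(env.size, d=1.0 / fs)

    # Logarithmically spaced modulation-band center frequencies.
    centers = np.geomspace(f_lo, f_hi, n_bands)
    # Energy per band: sum of DFT-bin power within the assumed band edges.
    band_energy = np.array([
        power[(freqs >= 0.75 * fc) & (freqs <= 1.25 * fc)].sum()
        for fc in centers
    ])
    half = n_bands // 2
    return band_energy[:half].mean() / band_energy[half:].mean()

# Two synthetic envelopes: one dominated by slow (6 Hz) modulation,
# one dominated by fast (70 Hz) modulation.
fs = 1000.0
t = np.arange(0.0, 2.0, 1.0 / fs)
slow_env = 1.0 + 0.8 * np.sin(2 * np.pi * 6 * t) + 0.1 * np.sin(2 * np.pi * 70 * t)
fast_env = 1.0 + 0.1 * np.sin(2 * np.pi * 6 * t) + 0.8 * np.sin(2 * np.pi * 70 * t)

ratio_slow = modulation_band_ratio(slow_env, fs)
ratio_fast = modulation_band_ratio(fast_env, fs)
```

An envelope dominated by slow modulation yields a ratio above 1, and one dominated by fast modulation yields a ratio below 1. Passing `f_hi=64.0` mimics the narrower 4–64 Hz range of center frequencies described for SRMR-CI.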

As shown in Figure 1, SRMR and SRMR-CI values were separately calculated (SRMRIDSij, SRMRADSij, SRMR-CIIDSij and SRMR-CIADSij) for each pair of stimuli in IDS and ADS conditions (SIDSij and SADSij). Intelligibility of speech stimuli produced by talker j in the two conditions (ADS and IDS) was summarized by averaging the relevant SRMR-related values over the 15 stimuli spoken by this talker at each of these two conditions. Therefore, for talker j, four values were calculated: (i) $\text{SRMR}_{IDS_j} = \frac{1}{15}\sum_{i=1}^{15} \text{SRMR}_{IDS_{ij}}$, (ii) $\text{SRMR}_{ADS_j} = \frac{1}{15}\sum_{i=1}^{15} \text{SRMR}_{ADS_{ij}}$, (iii) $\text{SRMR-CI}_{IDS_j} = \frac{1}{15}\sum_{i=1}^{15} \text{SRMR-CI}_{IDS_{ij}}$, (iv) $\text{SRMR-CI}_{ADS_j} = \frac{1}{15}\sum_{i=1}^{15} \text{SRMR-CI}_{ADS_{ij}}$. These four values for each talker j provided an approximation of the intelligibility of IDS and ADS stimuli spoken by this talker, as heard by listeners with NH (SRMRIDSj and SRMRADSj) and by those with CIs (SRMR-CIIDSj and SRMR-CIADSj). These values were then used to address (1) how the intelligibility of speech is affected by the two separate factors of caregivers’ speaking styles (ADS vs. IDS), as well as by (simulated) group hearing status (NH vs. CI), (2) the extent to which the degree of impact of ADS-to-IDS speaking style modifications on intelligibility varies across individual caregivers, and (3) how this variation is affected by (simulated) hearing status (NH vs. CI).

F. Statistical analysis

We constructed two Generalized Linear Mixed Models (GLMMs) in Matlab (using the fitglme function) (Matuschek et al., 2017; Quené & van den Bergh, 2008) in order to (1) identify whether changes in acoustic distinctiveness (i.e., MD) between IDS and ADS due to CI noise vocoding were statistically significant, and (2) to examine the effect of speaking style (IDS vs. ADS) and simulated hearing status (NH vs. CI), and any potential interaction, on intelligibility of speech (as measured by SRMR and SRMR-CI to simulate NH and CI hearing statuses, respectively). For the first GLMM, the speech degradation level (i.e., 4, 8, 12, 16, 22, and 32-channel, and unprocessed) was entered into the model as a fixed predictor, and MDs were defined as the response variable. The individual female talkers and speech stimuli were defined as random effects (intercepts) in the model to account for quality differences that might exist due to talker- and stimulus-specific variation. A post hoc test using Tukey’s multiple comparison approach (multcompare, MATLAB, Mathworks) was conducted to examine statistically significant mean differences for all possible pairwise comparisons across seven levels of spectral degradation (4, 8, 12, 16, 22, 32-channels, and unprocessed). In the second GLMM, factors of speaking style (IDS or ADS) and simulated hearing status (NH or CI) were entered into the model as independent fixed variables; the estimated speech intelligibility (cf. SRMR or SRMR-CI values) corresponded to the response variable in the model. Talkers and speech stimuli were entered as random-effect intercepts in the model to account for quality and/or information differences that might exist due to talker- and stimulus-specific variation.
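One possible way to write the two models is given below. This is a notational sketch under stated assumptions: a normally distributed response with an identity link, the degradation level entered as a single numeric predictor, and binary coding of speaking style and hearing status. None of these choices, nor the symbols themselves, are specified in the text. Here $j$ indexes talkers and $i$ indexes stimuli.

$$
\text{MD}_{ijk} = \beta_0 + \beta_1\,\text{Level}_k + u_j + v_i + \varepsilon_{ijk}
$$

$$
\text{SI}_{ijsh} = \beta_0 + \beta_1\,\text{Style}_s + \beta_2\,\text{Hearing}_h + \beta_3\,(\text{Style}_s \times \text{Hearing}_h) + u_j + v_i + \varepsilon_{ijsh}
$$

In both models, $u_j \sim \mathcal{N}(0, \sigma_u^2)$ and $v_i \sim \mathcal{N}(0, \sigma_v^2)$ are the random intercepts for talker and stimulus, and $\varepsilon$ is the residual error. $\text{SI}$ stands for the estimated intelligibility (SRMR for simulated NH, SRMR-CI for simulated CI).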

Measures of central tendency and variability (i.e., mean, standard deviation, and range) were calculated as descriptive statistics to evaluate the degree of variability across caregivers in acoustic implementation of IDS and ADS. We further calculated coefficients of variation (CVs) for MD differences between ADS and IDS conditions for both unprocessed and simulated CI speech within a 22-channel noise-vocoder to examine whether variability across individual caregivers in ADS-IDS acoustic distinctiveness decreased as a function of speech degradation (unprocessed vs. 22-channel simulated CI speech). The same analysis was performed to examine whether changes in speech intelligibility – due to an ADS-to-IDS style shift – were variable across talkers and/or how variability changed as (simulated) listener hearing status changed (from NH to CI). We further calculated Pearson correlation coefficients to examine whether changes in acoustic distinctiveness and speech intelligibility due to changes in speaking style (ADS to IDS) across degradation levels (unprocessed vs. 22-channel vocoded) and hearing status (NH vs. CI) involved a linear transformation.
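As an illustration of these descriptive measures, the sketch below computes a CV and a Pearson correlation for per-talker values. The seven values in each array are hypothetical placeholders rather than the study's data, and the use of the sample standard deviation (`ddof=1`) is an assumption, since the text does not state which estimator was used.

```python
import numpy as np

def coefficient_of_variation(values):
    """Sample standard deviation expressed as a percentage of the mean."""
    values = np.asarray(values, dtype=float)
    return 100.0 * values.std(ddof=1) / values.mean()

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    return float(np.corrcoef(x, y)[0, 1])

# Hypothetical per-talker mean MDs for seven talkers (illustration only).
md_unprocessed = np.array([5.1, 9.8, 4.9, 7.6, 8.2, 8.9, 7.0])
md_vocoded_22ch = np.array([3.0, 3.4, 2.6, 3.9, 3.1, 2.9, 3.5])

cv_unprocessed = coefficient_of_variation(md_unprocessed)
cv_vocoded = coefficient_of_variation(md_vocoded_22ch)
r_across_conditions = pearson_r(md_unprocessed, md_vocoded_22ch)
```

A lower CV in the vocoded condition would indicate less between-talker variability relative to the mean. A correlation near zero would indicate that vocoding does not scale each talker's MD proportionately.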

III. RESULTS

A. Effects of CI-related spectral degradation on ADS-vs-IDS acoustic distinctiveness

Fig. 2 shows the mean and distribution of MDs obtained as a function of signal degradation that ranged from a no-degradation (i.e., unprocessed) condition to CI-simulated speech for which the number of spectral channels in the noise-vocoder was gradually reduced from 32 to 4. Overall, MDs between IDS and ADS monotonically decreased with decreasing numbers of spectral channels in the noise-vocoder, thereby highlighting the detrimental effects of limited spectral resolution in CI devices for signal fidelity. As illustrated in Fig. 2, the acoustic distinctiveness between IDS and ADS is reduced by CI speech processing systems and further reduced through decreasing the number of spectral channels. Even the highest-fidelity CI-simulated condition (32-channel noise-vocoded speech) showed a substantial drop in acoustic distinctiveness between IDS and ADS, relative to the unprocessed condition. The average MD across the six noise-vocoded conditions showed a decline of approximately 67% relative to MD at the unprocessed condition, indicating that a large portion of acoustic information involved in conveying the distinction between IDS and ADS was lost due to CI-related speech processing.

FIG. 2.

The mean (bar) and ±1 standard error (vertical error bar in black) of Mahalanobis distance (MD) across groups of IDS and ADS stimuli at seven levels of spectro-temporal degradation, ranging from no-degradation (natural/unprocessed) to 4-channel noise-vocoded stimuli. Green (dark gray) circles show the mean MDs for each talker derived by averaging MDs over 15 pairs of IDS-ADS stimuli for that talker.

Table I presents the results of the GLMM analysis testing the statistical significance of the effect of CI-related speech processing on MD. As this table shows, the detrimental effect of CI-related processing, as captured by the decrease in the number of spectral channels, on the distinctiveness between IDS and ADS was statistically significant (β = −0.886, t = −16.54, p < 0.0001). Post hoc testing using Tukey’s multiple comparison approach revealed that the decrease in MDs was statistically significant for all pairwise comparisons of speech degradation conditions except three (32 vs. 22 channels, 22 vs. 16 channels, and 16 vs. 12 channels).

TABLE I.

Statistical GLMM for modeling the effect of level of speech degradation on acoustic distinctiveness between IDS and ADS as quantified by MD.

| | β Estimate | St. Error | t | Pr(>∣t∣) |
|---|---|---|---|---|
| (intercept) | 6.694 | 0.58 | 11.45 | 0.000ᵃ |
| Level of degradation | −0.886 | 0.053 | −16.54 | 0.000ᵃ |

ᵃ The p-value was statistically significant.

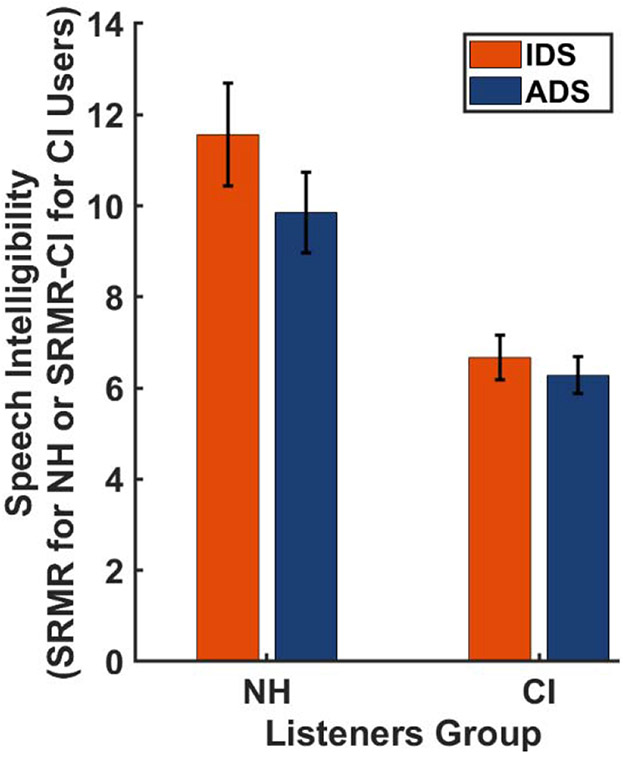

B. Effects of CI-related speech processing on intelligibility of ADS and IDS

Fig. 3 shows average SRMR or SRMR-CI values estimating intelligibility of ADS and IDS stimuli for NH and CI listeners. The bar graphs show the group data, corresponding to the means averaged across talkers. The figure suggests that speech spoken in IDS is more intelligible than matched utterances in ADS, regardless of whether intelligibility is estimated for listeners with NH or those with CIs. On average, the stimuli spoken in IDS were ~15% more intelligible than those spoken in ADS for listeners with NH, as measured by the SRMR metric. This average improvement in intelligibility of IDS stimuli over ADS stimuli was ~5.7% for listeners with CIs, as estimated by SRMR-CI, suggesting that the supportive effect of IDS on speech intelligibility is reduced through CIs.

FIG. 3.

Estimated intelligibility of speech stimuli spoken in IDS (orange; light gray) and in ADS (blue; dark gray) styles for (simulated) groups of listeners with different hearing statuses: NH (estimated by SRMR) and those with CIs (estimated by SRMR-CI). The bar graphs represent average values of SRMR or SRMR-CI over the seven talkers. The vertical error bars in black show ±1 standard error.

The results from the GLMM analysis examining the effect of talkers’ speaking style (IDS vs. ADS), listeners’ group (NH vs. CI), and their interaction on intelligibility of speech (Table II) revealed a significant effect of speaking style (β = 3.05, SE = 0.74, t = 4.14, p < 0.0001). This analysis provides new evidence that IDS likely improves the intelligibility of caregivers’ speech for both listeners with NH and those with CIs. These results further showed a significant, negative effect of CI-related processing on intelligibility of caregivers’ speech (β = −2.23, SE = 0.74, t = −3.02, p < 0.0001). In addition, the GLMM revealed a significant interaction between speaking style and listeners’ group (β = −1.337, SE = 0.466, t = −2.865, p < 0.001), reflecting the fact that hearing speech through CIs resulted in a smaller intelligibility gain from IDS relative to ADS than was observed in the NH condition.

TABLE II.

Statistical GLMM for the effects of speaking style, level of speech degradation, and their interaction on intelligibility of speech, as measured by SRMR or SRMR-CI.

| | β Estimate | St. Error | t | Pr(>∣t∣) |
|---|---|---|---|---|
| (intercept) | 10.365 | 1.339 | 7.74 | <0.00001ᵃ |
| Speaking Style | 3.054 | 0.737 | 4.14 | <0.00001ᵃ |
| Level of degradation | −2.23 | 0.737 | −3.024 | <0.0001ᵃ |
| Speaking Style:Level of degradation | −1.336 | 0.466 | −2.865 | <0.0001ᵃ |

ᵃ The p-value was statistically significant.

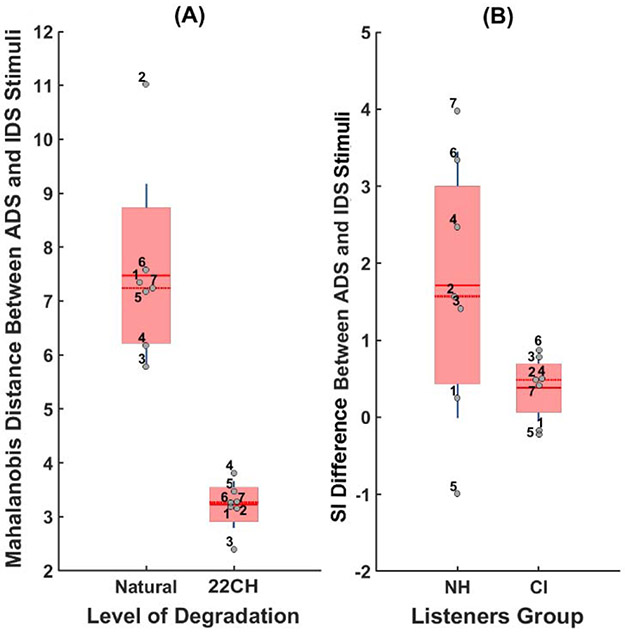

C. Individual differences across caregivers in ADS-vs-IDS acoustic distinctiveness and the effect of simulated CI processing

To examine individual differences across talkers in acoustic distinctiveness of IDS from ADS, we focused on MD data from the individual talkers in two conditions (unprocessed and 22-channel CI-simulated noise-vocoded speech), as shown in Fig. 4A (left vs. right bars, respectively). Two general observations are noticeable in this data. First, caregivers vary considerably in terms of the amount of acoustic variability created by their ADS-to-IDS modification (mean = 7.47, SD = 1.70, range = 5.23). For example, the ADS-to-IDS shift in MD for talker 2 exposes a child with NH (cf. natural, unprocessed condition) to a much wider range of acoustic information compared to that for talker 3. This, in turn, suggests that a child of talker 2 would be more advantaged in learning acoustic patterns of her caregivers’ speech compared to a child of talker 3.

FIG. 4.

Variability across seven female talkers in (A) acoustic distinctiveness between their IDS and ADS for unprocessed stimuli (Natural) and simulated CI speech within a 22-channel noise vocoder (22CH), and (B) the change in talkers’ speech intelligibility (SI) due to a change in their speaking style (ADS to IDS), as heard for two (simulated) listener groups with either NH (estimated by SRMR) or CIs (estimated by SRMR-CI). The data points (gray circles) are laid over a 1.96 standard error of the mean (95% confidence interval) in red (rectangle area with light gray) and 1 standard deviation shown by blue lines (vertical dark gray lines). The solid and dotted red lines (horizontal solid and dotted dark gray lines) show the mean and median, respectively.

The second observation is that the magnitude of this acoustic variability was significantly reduced for CI-simulated signals (mean = 3.22, SD = 0.43, range = 1.4). An analysis of the coefficient of variation (CV) revealed that the standard deviation of MD for unprocessed (natural) speech stimuli was 22.7% of its mean, whereas this value was 13.3% for CI-simulated speech stimuli from the 22-channel noise-vocoder. Comparing these two CV values shows that the relative variation in ADS-IDS acoustic distinctiveness (i.e., MD) is reduced by roughly 40% due to CI-related speech processing, highlighting a major loss of acoustic information useful for recognition of caregivers themselves and for distinguishing them from other caregivers.

If CI-simulated processing involves a linear transformation of acoustic distinctiveness across speech styles for each talker, then we should observe MD differences to be significantly correlated across natural (i.e., unprocessed) and 22-channel noise-vocoded conditions. The Pearson’s correlation coefficient was r(5) = 0.07 (p = 0.87), suggesting that CI-simulated speech processing did not impact talkers’ speech proportionately in terms of the acoustic distinctiveness between their IDS and ADS. Instead, CI-related speech processing reduced the IDS-ADS acoustic distance for some talkers (e.g., talker 6) more than others (e.g., talker 4).

D. Individual differences across caregivers in IDS and ADS intelligibility and the effect of hearing status

To examine how individual talkers vary in the effects of their ADS-to-IDS modifications on intelligibility of speech to listeners with NH and those with CIs, we focused on patterns of SRMR and SRMR-CI metrics from the individual talkers. For (simulated) listeners with NH, SRMRs of pairs of IDS and ADS stimuli were subtracted for each talker and averaged over 15 pairs of ADS-IDS stimuli in order to measure the amount of change in her speech intelligibility due to ADS-to-IDS modification (for talker j, $\Delta\text{SRMR}_j = \frac{1}{15}\sum_{i=1}^{15}\left(\text{SRMR}_{IDS_{ij}} - \text{SRMR}_{ADS_{ij}}\right)$). Likewise, for (simulated) listeners with CI, SRMR-CIs of pairs of IDS and ADS stimuli were subtracted for each talker and averaged over 15 pairs of ADS-IDS stimuli in order to quantify the amount of change in her speech intelligibility due to ADS-to-IDS modification (for talker j, $\Delta\text{SRMR-CI}_j = \frac{1}{15}\sum_{i=1}^{15}\left(\text{SRMR-CI}_{IDS_{ij}} - \text{SRMR-CI}_{ADS_{ij}}\right)$). Thus, a positive value of this difference measure represents IDS being, on average, more intelligible than ADS for a talker, showing an intelligibility benefit for IDS over ADS for that talker.

Figure 4B (right panel) shows the results for changes in intelligibility of speech of talkers due to modifications to speaking style. The bar plot on the left in Figure 4B (labeled as NH on the x-axis) presents the difference across seven talkers in IDS-ADS intelligibility as estimated for listeners with NH. The data in this scatter plot shows how much speech of a talker (e.g., talker 6) would be heard as more or less intelligible as the talker speaks the utterances or words in IDS compared to ADS.

Two patterns are observable in Figure 4B. First, the change in intelligibility of talkers’ speech due to the speaking style modification (ADS to IDS) was fairly variable across talkers for simulated listeners with NH (mean = 1.71, SD = 1.73, range = 4.97), such that this change was associated with relatively more intelligible speech for some talkers compared to others. As this plot suggests, when talkers change their speaking style from ADS to IDS, not only does this not lead to an equal amount of change in intelligibility of speech across talkers, but for one talker the direction of this effect is even slightly negative (talker 5). For the majority of talkers (6 out of 7 talkers), IDS was more intelligible than ADS (as shown by a positive difference value).

Second, relative to the size of the ADS-to-IDS shift for simulated NH listeners, the size of this ADS-to-IDS shift was overall considerably reduced for simulated listeners with CIs (mean = 0.38, SD = 0.43, range = 1.10). The bar plot on the right panel of Figure 4B (labeled as CI on the x-axis) presents the dispersion of change in speech intelligibility for each talker due to changing speaking style from ADS to IDS, as estimated for listeners with CIs. Notably, CI-related processing decreases the degree of variability in speech intelligibility, compared with that for NH, as expected. An analysis of CV showed that, although the mean change in speech intelligibility due to ADS-to-IDS modifications was smaller overall for the simulated CI condition than for the simulated NH condition, the magnitude of variability relative to the mean was almost the same for the two groups of listeners (CV ≅ 11%). Highlighting the non-linearity of the effects of CI processing on speech across different talkers, there was no significant correlation across the seven talkers for ADS-to-IDS intelligibility differences in simulated CI vs. NH listening conditions (r(5) = 0.74, p = 0.58).

IV. DISCUSSION

The present study investigated the simulated effects of CI processing on acoustic distinctiveness and intelligibility of speech signals as influenced by a change of speaking style from ADS to IDS. Results from the present study supported the hypothesis that the limited spectral resolution in CIs significantly degrades acoustic information involved in distinguishing IDS from ADS. This significant loss of acoustic information related to the distinction between IDS and ADS may negatively impact infants’ ability to recognize IDS as distinct from ADS, possibly leading to less attention to caregivers’ speech, with potential consequences for children with CIs developing relatively poorer language skills compared to those with NH.

These results are in line with prior findings demonstrating difficulties by listeners with CIs in distinguishing among various speaking styles (Tamati et al., 2019). Distinguishing caregivers’ speaking styles is tied to advances in language development (Karzon, 1985; Singh & Nestor, 2009; Thiessen et al., 2005; Wang et al., 2017), but is made challenging when speech is degraded by CIs and/or other undesirable sources, e.g., noise and reverberation (Fetterman & Domico, 2002; Hazrati & Loizou, 2012; Zheng et al., 2011). The significant elimination of IDS-related acoustic information suggested by the present study indicates that infants with CIs likely do not have access to a wide range of spectro-temporal information in caregivers’ speech to foster recognizing when speech is directed to them. As a result, these children may experience considerable difficulties in recognizing IDS as distinct from ADS, particularly in complex linguistic environments. Although infants with CI have limited access to fine-grained spectral cues in speech, they might be able to employ other well-coded cues through CI devices, such as caregivers’ speaking rate, to detect whether speech is directed to them.

Although Wang et al. (2017) showed that IDS enhanced attention to speech in infants with CIs compared to ADS, results from the present study suggest that the capability of infants with CIs to distinguish IDS from ADS and to prefer attending to IDS over ADS is probably not comparable to that of children with NH and is expected to be largely reduced. Furthermore, the fact that infants with CIs showed this preference after 12 months of CI experience in Wang et al.’s (2017) study highlights the possibility that the time course of developing certain capabilities for differentiating IDS and ADS would be longer than that of peers with NH and would depend on the amount of experience with CIs.

If the results of our simulated CI speech fairly approximate the perception of IDS and ADS utterances in infants with CIs, infants with CIs must develop a different cue-weighting system for recognition of IDS from ADS compared to infants with NH, where the type and magnitude of the relevant acoustic cues would be possibly different from what infants with NH develop. For example, in the absence of prominent acoustic cues of IDS such as F0 (Fernald & Mazzie, 1991; Kuhl & Meltzoff, 1999; Mehler, 1981), which is poorly perceived through CI devices (Mehta et al., 2020; Mehta & Oxenham, 2017; Qin & Oxenham, 2003), infants with CIs may rely more on suprasegmental cues such as speech rate (Peng et al., 2017) and/or periodicity in the temporal envelope (Fu et al., 2004; Kong et al., 2004). However, perception of pitch through periodicity in the temporal envelope is mostly limited to F0s below around 300 Hz (Carlyon et al., 2010; Kong & Carlyon, 2010), which is generally below the range of F0 variation in IDS. This lack of access to the entire spectro-temporal cue range in IDS suggests that infants with CIs are probably at high risk for missing IDS-related communicative events, and thus for developing sub-optimal language skills.

Our results corroborated our hypothesis that caregivers likely expose infants to more intelligible speech when speaking in IDS compared to ADS, providing the first evidence on the intelligibility benefit of IDS over ADS. This was shown using a novel application of a recently developed metric of intelligibility, which models intelligibility of speech for listeners with NH (i.e., SRMR) and those with CIs (i.e., SRMR-CI) (Falk et al., 2015; Santos et al., 2013). This positive effect of IDS on speech intelligibility might arise because caregivers provide clearer speech by speaking louder, slower, and/or in a hyperarticulated fashion when using IDS (Wassink et al., 2007; Hazan et al., 2018; Krause & Braida, 2002; Li et al., 2011; Liu et al., 2004). These specific speaking patterns likely lead to an intelligibility benefit compared to ADS (Janse et al., 2007; Liu et al., 2004). Greater intelligibility for IDS over ADS suggests that use of IDS during caregiver-infant spoken communication assists infants in better understanding speech, conceivably supporting infants’ word learning process through a direct link between exposure to IDS and improved speech intelligibility. However, the results of our computational study also revealed an interactive effect between caregivers’ speaking style (ADS and IDS) and listening condition (NH or CI); this may indicate that the size of this positive effect of IDS on caregivers’ speech intelligibility is smaller for infants with CIs compared to their peers with NH. This, in turn, may suggest that the limited spectral resolution of CI devices not only disrupts the communication of IDS-ADS-specific acoustic information, but also decreases the intelligibility of caregivers’ IDS, indicating that infants with CIs probably do not benefit from exposure to IDS as much as their NH peers.
These results are consistent with prior findings demonstrating greater difficulties that adult listeners with CIs incur in processing speech and accomplishing lexical access, compared to listeners with NH (McMurray et al., 2017; Nagels et al., 2020). Although prior studies demonstrated a supportive role of IDS on infants’ language outcomes, to our knowledge, this is the first study that provides evidence showing the detrimental effects of the CI speech processing on the supportive role of IDS in speech understanding.

Prior studies on speech recognition in listeners with CIs have highlighted the importance of further examining patterns of individual differences, in addition to group differences (Dilley et al., 2020; Nagels et al., 2020; Peng et al., 2019; Spencer, 2004; Szagun & Schramm, 2016). Our investigation of patterns of individual differences across caregivers in acoustic distinctiveness between IDS and ADS and the simulated effect of limited spectral resolution in CI on these patterns highlighted multiple important observations. The first observation relates to the amount of acoustic variability produced by each talker due to changing speaking style from ADS to IDS. The amount of resultant acoustic variability varied across talkers, indicating that infants would experience different language environments in terms of qualities of their caregivers’ speech. This is expected to result in some caregivers exposing infants to relatively larger ranges of acoustic information, as compared to others, which would foster their infants’ speech processing and language acquisition by assisting infants in more robust recognition of their caregivers’ voices (Beauchemin et al., 2011; Kisilevsky et al., 2003), as well as better development of auditory cortical processing for language development (Webb et al., 2015). Similar to the recent findings by Dilley et al. (2020), these results also suggest that IDS is not always readily distinguishable from ADS, due to the fact that caregivers vary in implementation of IDS. As such, these results confirm that conditions for recognition of IDS by infants are not always optimal (Piazza et al., 2017) and might differentially affect language outcomes for infants with CIs (Dilley et al., 2020).

More importantly, the size of the ADS-IDS acoustic difference was considerably reduced for each caregiver as her speech passed through a CI simulator, indicating that infants with CIs might have access to a much narrower range of acoustic variability in the voices of their caregivers, as compared to their peers with NH. This large decline in the degree of acoustic variability in caregivers’ voices may negatively impact infants’ robust identification of their caregivers’ voices, thereby preventing their gaining the maximal benefit from language input that may be available in their linguistic environments. In fact, encoding and learning these cues is crucial, as they contribute significantly both to infants’ familiarity with ranges of acoustic variation in talkers’ speech and to their robust performance in understanding speech despite multiple sources of variability, such as talker variation (Allen et al., 2009; Eskenazi, 1993), language context (Mattys, 2000; McMurray & Aslin, 2005; Miller, 1994), speech rate (Sommers et al., 1992), and background noise and/or reverberation (Hawkins, 2004). Thus, infants with CIs are probably at risk for partial learning of subtle voice cues specific to their caregivers, as reflected in the spectral and temporal fine structures of their voices.

Acoustic implementation of ADS-to-IDS modification varies across individual caregivers, which may differentially impact infants’ understanding of speech, and thus their language outcomes (Dilley et al., 2020; Hoff, 2006; Weisleder & Fernald, 2013). Results from the present study showed that individual talkers varied in the effect of their speaking style modification on estimated speech intelligibility, which may suggest that modification of speaking style from ADS to IDS does not always cause an equal degree of improvement in intelligibility of caregivers’ speech. Notably, our results showed that this variability across caregivers in the impact of their speaking style modification on the estimated speech intelligibility was reduced due to limited spectral resolution in CIs. In the present study, we only studied variability across caregivers in acoustic information and the estimated intelligibility of their IDS compared to ADS, whereas caregivers of infants with CIs may vary in other aspects of spoken communication such as gestural and proprioceptive behaviors, which very likely change the degree of intelligibility by which infants eventually perceive caregivers’ speech (Kirk et al., 2007; Kirk & Pisoni, 2002; Lachs et al., 2001).

These findings can be further interpreted in the context of spectro-temporal information available to infants’ auditory systems, which are very sensitive to subtle changes in speech acoustics (Jusczyk et al., 1999; Kuhl, 2004). When infants’ access to fine-grained spectral and temporal structures in speech is largely compromised because of limited spectral resolution in CIs and CIs’ limitation in transmission of timing cues from the temporal fine structure (TFS) of resolved harmonics, they may not be able to readily recognize and attend to rich IDS. In the absence of this spectral fine structure at the output of CI electrodes, infants must rely on coarse-grained cues in the speech envelope, such as temporal envelope periodicity (Green et al., 2002; Moore, 2003) or cues from other sensory modalities (e.g., visual and tactile; Green et al., 2010; Rohlfing et al., 2006) in order to distinguish IDS from ADS. This likely increases the cognitive load in processing speech, particularly in complex auditory environments, and could therefore contribute to the observed poor language outcomes in some children (Davidson et al., 2014; Dunn et al., 2014; Geers et al., 2011; Geers et al., 2015; Houston et al., 2003; Houston et al., 2012; Miyamoto et al., 2003; Niparko et al., 2010; Pisoni et al., 2007; Pisoni et al., 2018).

The present study used a within-talker manipulation of speech style to investigate how limited spectral resolution in CIs may affect processing of speech style shifts which are tied to language development, namely IDS vs. ADS signals. While the study involved a sizeable number of individual utterances with control of phonetic properties within talkers, the amount of speech collected from each of the seven talkers was relatively small. Further work will be needed to test how these results extend to a larger sample of talkers with utterances with more varied segmental and lexical composition. Furthermore, studying natural IDS and ADS would be ideal; however, it is difficult to control the content and quality of the speech collected from naturalistic environments. Importantly, studies based on simulated CI speech and computational models of human hearing provide valuable evidence for understanding speech perception in listeners with normal and impaired hearing (Litvak et al., 2001; Mehta et al., 2020; Rubinstein et al., 1999; Throckmorton & Collins, 2002). Findings from these studies should be viewed as evidence for general trends, rather than the actual performance of listeners with CIs, subject to further investigation. Although we simulated limitation of CIs in spectral resolution by changing the number of channels in noise-vocoded speech, the effect of other aspects of CI processing on spectral resolution, such as channel interactions (i.e., steepness of spectral slope) (Crew & Galvin, 2012; Mehta et al., 2020), requires further investigation. Additionally, metrics of speech intelligibility of SRMR and SRMR-CI have been validated for adult listeners with CIs and are not direct estimates of speech intelligibility, which is very likely different from what infants with CIs experience in terms of recognition of utterances. 
Considering these limitations, the results should not be taken as the final determination of how well children with CIs distinguish IDS from ADS, or of how ADS-to-IDS modification affects the intelligibility of caregivers’ speech to children with CIs.
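
To make the channel-count manipulation mentioned above concrete, the following is a minimal noise-vocoder sketch. It is an illustration under stated assumptions, not the processing used in this study: the function names (`fft_bandpass`, `envelope`, `noise_vocode`), the log-spaced band edges between 100 and 7000 Hz, the brick-wall FFT filters, and the 160 Hz envelope cutoff are all hypothetical choices made for brevity. Channel interaction (spectral slope) is not modeled.

```python
import numpy as np


def fft_bandpass(x, fs, lo, hi):
    """Brick-wall band-pass filter applied in the frequency domain."""
    spec = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spec[(freqs < lo) | (freqs >= hi)] = 0.0
    return np.fft.irfft(spec, n=len(x))


def envelope(band, fs, cutoff=160.0):
    """Full-wave rectify, then low-pass below `cutoff` Hz (assumed value)."""
    smoothed = fft_bandpass(np.abs(band), fs, 0.0, cutoff)
    return np.maximum(smoothed, 0.0)  # clip small negative ripple


def noise_vocode(x, fs, n_channels, f_lo=100.0, f_hi=7000.0, seed=0):
    """Replace the fine structure in each band with envelope-modulated noise."""
    x = np.asarray(x, dtype=float)
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal(len(x))
    # Assumed log-spaced analysis bands; fewer channels means coarser spectrum.
    edges = np.geomspace(f_lo, f_hi, n_channels + 1)
    out = np.zeros(len(x))
    for lo, hi in zip(edges[:-1], edges[1:]):
        env = envelope(fft_bandpass(x, fs, lo, hi), fs)
        carrier = fft_bandpass(noise, fs, lo, hi)
        # Re-filter so the modulated carrier stays inside its own band.
        out += fft_bandpass(env * carrier, fs, lo, hi)
    # Match the overall RMS level of the input.
    rms_in = np.sqrt(np.mean(x ** 2))
    rms_out = np.sqrt(np.mean(out ** 2))
    return out * (rms_in / rms_out) if rms_out > 0 else out


# Example: an amplitude-modulated 220 Hz tone, vocoded at two resolutions.
fs = 16000
t = np.arange(fs) / fs
tone = np.sin(2 * np.pi * 220 * t) * (1 + 0.5 * np.sin(2 * np.pi * 4 * t))
coarse = noise_vocode(tone, fs, n_channels=4)
fine = noise_vocode(tone, fs, n_channels=22)
```

In a sketch of this kind, lowering `n_channels` widens each analysis band, so spectral detail within a band (including resolved harmonics of F0) is replaced by noise while the slow temporal envelope is retained.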

Despite these limitations, the simulated results from the present study suggest that, compared to infants with NH, infants with CIs could be disadvantaged in perceiving IDS and acquiring spoken language due to multiple factors. First, partial transmission of spectro-temporal cues because of limited spectral resolution likely decreases the supportive role of IDS in infants’ language learning by weakening the link between attending to IDS and speech comprehension. In fact, the degraded representation in CIs of spectral information that contributes to the acoustic distinction between IDS and ADS may have detrimental effects on infants’ ability to pay attention to caregivers’ speech, a fundamental cognitive skill for spoken language acquisition (Bergeson, 2014; Glenn et al., 1981; Houston et al., 2003; Rottmann & Zobrist, 2004; Wang et al., 2018). It is worth mentioning that infants’ language learning involves incorporating information from multiple intertwined communication dimensions (i.e., visual, social, tactile, and emotional), which creates a very rich channel for learning language through infant-directed speech (Gogate et al., 2000; Nomikou & Rohlfing, 2011), even in the absence of major spectral cues such as the talker’s F0 in the output of CI electrodes. Second, our findings suggest that CIs diminish the likely benefit of exposure to more intelligible IDS, as compared to ADS, which may negatively impact infants’ abilities to process caregivers’ speech, possibly with significant downstream consequences for later language skills.
Finally, our simulated results suggest that, compared to infants with NH, infants with CIs have access to a relatively narrower range of acoustic information (corresponding to smaller acoustic variability) in their caregivers’ speech. This probably leads to greater difficulty in robustly identifying and recognizing their caregivers’ voices (Beauchemin et al., 2011; Kisilevsky et al., 2003; Lavan et al., 2019), and possibly to poorer word recognition skills (Singh, 2008) and an impaired auditory system for language processing (Webb et al., 2015). Despite the reduced ADS-vs-IDS distinctiveness, intelligibility, and variability of vocoded IDS, children with CIs may develop coping or adaptive strategies that compensate for the degraded speech input. For example, children with CIs may have higher sensitivity (i.e., lower thresholds) to the available acoustic cues than children with NH.

In summary, the current study used computational and signal processing approaches to provide new evidence for how CI-related speech processing may impact the discrimination of IDS from ADS in children with CIs, as well as how it may affect the intelligibility benefit derived from style shifts from ADS to IDS. These findings provide solid grounding for developing new perceptual studies to test the abilities of infants with CIs to recognize their caregivers’ speaking style (ADS vs. IDS) and to recognize words in caregivers’ speech. Focusing on computational metrics, as undertaken here, provides an important complement to costly, complex, labor-intensive perceptual studies. The results provide preliminary evidence for how CI-related speech processing may alter the pathway from exposure to IDS to processing speech and, by extension, the acquisition of language by children with CIs compared to that of their NH peers in two ways: (1) by possibly making it harder for children with CIs to distinguish IDS from ADS, and (2) by decreasing the ADS-to-IDS intelligibility benefit. The most direct and immediate implication of these findings is the need to improve signal processing in CI devices to ensure the faithful transmission of acoustic cues relevant to distinguishing IDS from ADS. Until then, the major clinical implication of these findings is that the maximum benefit from exposure to IDS for language learning in infants with CIs requires caregivers’ active use of multimodal (e.g., gestural, tactile, visual, social, and emotional) communicative behaviors in order to compensate for the degraded representation of acoustic information relevant to IDS and to support its robust perception.

Supplementary Material

Highlights.

Simulated cochlear-implant processing reveals a significant reduction in the acoustic distinctiveness between IDS and ADS.

The estimated intelligibility benefit of IDS over ADS is greatly reduced due to cochlear-implant processing.

Cochlear-implant processing potentially weakens the link between attending to IDS and improved language learning.

ACKNOWLEDGMENTS

Research reported in this publication was supported by the National Institute on Deafness and other Communicative Disorders of the National Institutes of Health under award number R01DC008581 to D. Houston and L. Dilley and a Dissertation Completion Award received by Meisam K. Arjmandi from Michigan State University.

Footnotes

Portions of this work were presented at the 177th and 178th Meetings of the Acoustical Society of America.

Also at: Nationwide Children’s Hospital, Columbus, OH, USA


REFERENCES

- Allen JS, Miller JL, & DeSteno D (2009). Individual talker differences in voice-onset-time: Contextual influences. The Journal of the Acoustical Society of America, 125(6), 3974–3982.

- Arenberg Bierer J (2010). Probing the electrode-neuron interface with focused cochlear implant stimulation. Trends in Amplification, 14(2), 84–95.

- Arjmandi M, Dilley LC, & Wagner SE (2018). Investigation of acoustic dimension use in dialect production: machine learning of sonorant sounds for modeling acoustic cues of African American dialect. 11th International Conference on Voice Physiology and Biomechanics, 12–13. East Lansing, USA.

- Baskent D, & Gaudrain E (2016). Perception and psychoacoustics of speech in cochlear implant users. Scientific Foundations of Audiology. Perspectives from Physics, Biology, Modelling, and Medicine, 185–320.

- Beauchemin M, Gonzalez-Frankenberger B, Tremblay J, Vannasing P, Martínez-Montes E, Belin P, Beland R, Francoeur D, Carceller AM, Wallois F, & Lassonde M (2011). Mother and stranger: An electrophysiological study of voice processing in newborns. Cerebral Cortex, 21(8), 1705–1711.

- Beckford Wassink A, Wright RA, & Franklin AD (2007). Intraspeaker variability in vowel production: An investigation of motherese, hyperspeech, and Lombard speech in Jamaican speakers. Journal of Phonetics, 35(3), 363–379.

- Bergeson TR (2014). Hearing versus listening: attention to speech and its role in language acquisition in deaf infants with cochlear implants. Lingua, 10–25.

- Boersma P, & Weenink D (2001). Praat, a system for doing phonetics by computer. Glot International, 5:9/10, 341–345.

- Bradlow AR, Kraus N, & Hayes E (2003). Speaking clearly for children with learning disabilities: Sentence perception in noise. Journal of Speech, Language, and Hearing Research, 46(1), 80–97.

- Broesch TL, & Bryant GA (2015). Prosody in infant-directed speech is similar across western and traditional cultures. Journal of Cognition and Development, 16(1), 31–43.

- Brown CA, & Bacon SP (2010). Fundamental frequency and speech intelligibility in background noise. Hearing Research, 266(1–2), 52–59.

- Burnham EB, Wieland EA, Kondaurova MV, McAuley JD, Bergeson TR, & Dilley LC (2015). Phonetic modification of vowel space in storybook speech to infants up to 2 years of age. Journal of Speech, Language, and Hearing Research, 58(2), 241–253.

- Carlyon RP, Deeks JM, & McKay CM (2010). The upper limit of temporal pitch for cochlear-implant listeners: Stimulus duration, conditioner pulses, and the number of electrodes stimulated. The Journal of the Acoustical Society of America, 127(3), 1469–1478.

- Chatterjee M, & Oberzut C (2011). Detection and rate discrimination of amplitude modulation in electrical hearing. The Journal of the Acoustical Society of America, 130(3), 1567–1580.

- Chatterjee M, & Peng SC (2008). Processing F0 with cochlear implants: Modulation frequency discrimination and speech intonation recognition. Hearing Research, 235(1–2), 143–156.

- ManyBabies Consortium (2020). Quantifying sources of variability in infancy research using the infant-directed speech preference. Advances in Methods and Practices in Psychological Science, 3(1), 24–52.

- Cooper RP, Abraham J, Berman S, & Staska M (1997). The development of infants’ preference for motherese. Infant Behavior and Development, 20(4), 477–488.

- Cooper RP, & Aslin RN (1990). Preference for infant-directed speech in the first month after birth. Child Development, 61(5), 1584–1595.

- Crew JD, & Galvin JJ (2012). Channel interaction limits melodic pitch perception in simulated cochlear implants. The Journal of the Acoustical Society of America, 132(5), EL429–EL435.

- Cristia A, & Seidl A (2014). The hyperarticulation hypothesis of infant-directed speech. Journal of Child Language, 41(4), 913–935.

- Croghan NBH, Duran SI, & Smith ZM (2017). Re-examining the relationship between number of cochlear implant channels and maximal speech intelligibility. The Journal of the Acoustical Society of America, 142(6), EL537–EL543.

- Cutler A, Dahan D, & van Donselaar W (1997). Prosody in the comprehension of spoken language: A literature review. Language and Speech, 40, 141–201.

- Davidson LS, Geers AE, & Nicholas JG (2014). The effects of audibility and novel word learning ability on vocabulary level in children with cochlear implants. Cochlear Implants International, 15(4), 211–221.

- Dilley L, Lehet M, Wieland EA, Arjmandi MK, Kondaurova M, Wang Y, Reed J, Svirsky M, Houston D, & Bergeson T (2020). Individual differences in mothers’ spontaneous infant-directed speech predict language attainment in children with cochlear implants. Journal of Speech, Language, and Hearing Research, 1–15.

- Dorman MF, Loizou PC, Fitzke J, & Tu Z (1998). The recognition of sentences in noise by normal-hearing listeners using simulations of cochlear-implant signal processors with 6–20 channels. The Journal of the Acoustical Society of America, 104(6), 3583–3585.

- Dorman MF, Loizou PC, & Rainey D (1997a). Simulating the effect of cochlear-implant electrode insertion depth on speech understanding. The Journal of the Acoustical Society of America, 102(5), 2993–2996.

- Dorman MF, Loizou PC, & Rainey D (1997b). Speech intelligibility as a function of the number of channels of stimulation for signal processors using sine-wave and noise-band outputs. The Journal of the Acoustical Society of America, 102(4), 2403–2411.

- Dunn CC, Walker EA, Oleson J, Kenworthy M, Van Voorst T, Tomblin JB, Ji H, Kirk KI, McMurray B, Hanson M, & Gantz BJ (2014). Longitudinal speech perception and language performance in pediatric cochlear implant users: The effect of age at implantation. Ear and Hearing, 35(2), 148–160.

- Eskenazi M (1993). Trends in speaking styles research. Third European Conference on Speech Communication and Technology, 501–509.

- Falk TH, Parsa V, Santos JF, Arehart K, Hazrati O, Huber R, Kates JM, & Scollie S (2015). Objective quality and intelligibility prediction for users of assistive listening devices. IEEE Signal Processing Magazine, 32(2), 114–124.