Abstract

There is a pressing need to capture and track subtle cognitive change at the preclinical stage of Alzheimer's disease (AD) rapidly, cost‐effectively, and with high sensitivity. Concurrently, the landscape of digital cognitive assessment is rapidly evolving as technology advances, older adult tech‐adoption increases, and external events (e.g., COVID‐19) necessitate remote digital assessment. Here, we provide a snapshot review of the current state of digital cognitive assessment for preclinical AD including different device platforms/assessment approaches, levels of validation, and implementation challenges. We focus on articles, grants, and recent conference proceedings specifically querying the relationship between digital cognitive assessments and established biomarkers for preclinical AD (e.g., amyloid beta and tau) in clinically normal (CN) individuals. Several digital assessments were identified across platforms (e.g., digital pens, smartphones). Digital assessments varied by intended setting (e.g., remote vs. in‐clinic), level of supervision (e.g., self vs. supervised), and device origin (personal vs. study‐provided). At least 11 publications characterize digital cognitive assessment against AD biomarkers among CN individuals. Initial data demonstrate promising validity of this approach against both conventional assessment methods (moderate to large effect sizes) and relevant biomarkers (predominantly weak to moderate effect sizes). We discuss levels of validation and issues relating to usability, data quality, data protection, and attrition. While still in its infancy, digital cognitive assessment, especially when administered remotely, will undoubtedly play a major future role in screening for and tracking preclinical AD.

Keywords: clinical assessment, clinical trials, cognition, computerized assessment, digital cognitive biomarkers, home‐based assessment, preclinical Alzheimer's disease, smartphone‐based assessment

1. INTRODUCTION

In Alzheimer's disease (AD), the major pathophysiological processes (accumulation of amyloid beta protein [Aβ] into plaques, assumed to be followed by the aggregation of hyperphosphorylated tau protein [p‐tau] into neurofibrillary tangles [NFT]) begin years to decades prior to the clinical dementia syndrome. 1 During this preclinical phase, cognitive functions are largely unaffected, 2 but as neuropathological burden increases over time, subtle cognitive decrements emerge. 3 The preclinical phase offers a promising window for preventing decline, which makes capturing these subtle cognitive changes critically important. 4

1.1. Associations between paper‐and‐pencil cognitive measures and AD biomarkers in preclinical AD

In clinically normal (CN) individuals, abnormal levels of Aβ (Aβ+), as measured in cerebrospinal fluid (CSF) (e.g., levels of Aβ42 or Aβ42/40) or with positron emission tomography (PET) neuroimaging, are considered indicative of an early AD pathological process. 2 , 3 During this preclinical stage of the disease continuum, the cross‐sectional association between Aβ and cognitive deficits is generally weak, 5 , 6 , 7 , 8 or nonsignificant. 9 , 10 , 11 However, CN individuals with higher Aβ burden (Aβ+) exhibit faster cognitive decline, 12 , 13 , 14 and often progress to a clinical stage faster than those with lower levels of Aβ. 15 , 16 , 17 , 18 This cognitive decline is subtle and detectable only over several years. In one study, Aβ+ CN participants declined at an average rate of −0.42 z‐score units per 18 months, 19 while another study showed a decline of between −0.07 and −0.15 average z‐score units per year. 20 The strongest association between AD biomarkers and cognitive decline is for memory function, 21 but there are also reports of decline across other cognitive domains, 22 , 23 including executive 24 and visuospatial functions. 25
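To compare the two decline rates cited above on a common time scale, the 18‐month figure can be annualized. This is only a rough illustration: it assumes that decline is linear over the interval, which is an assumption made here and not a claim of the cited studies.

$$
\frac{-0.42\ \text{z-score units}}{18\ \text{months}} \times 12\ \frac{\text{months}}{\text{year}} = -0.28\ \text{z-score units per year}
$$

Under that assumption, the first estimate is roughly two to four times the −0.07 to −0.15 units per year reported in the second study. The studies may differ in cohort and cognitive composite, so the comparison is indicative only.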

The second major pathogenic process in preclinical AD is tau aggregation into NFT, also measurable in CSF (e.g., p‐tau) and with PET neuroimaging. 1 Compared to Aβ, tau has been considered more closely linked to cognitive impairment during the AD process. 26 , 27 , 28 In CN individuals, tau burden has been associated with memory impairment and longitudinal cognitive decline. 16 , 29 , 30 Because tau PET is newer than Aβ PET, less is known about the longitudinal relation between tau and cognition, including later clinical progression. Generally, those with higher tau are at greater risk for longitudinal cognitive decline; importantly, however, this decline is severalfold faster in Aβ+ CN individuals. 30 , 31 , 32

1.2. Paper‐and‐pencil versus digitized cognitive assessment

The relationship between paper‐and‐pencil measures of cognition and AD biomarkers among CN older adults is complex, but observed correlations are generally weak, particularly cross‐sectionally. 8 Longitudinally, these relationships are more consistently observed and of greater magnitude, with CN older adults with elevated biomarker levels exhibiting cognitive decline. 31 , 33 Weak relationships between cognition and AD biomarkers may be partially attributable to the limitations of paper‐and‐pencil assessments, most of which were designed to detect frank impairment in clinical populations rather than subtle preclinical impairment. 34 Furthermore, normal fluctuations in cognitive performance, 35 practice effects, 36 and cognitive reserve 37 may obscure the detection of subtle cognitive decline.

The use of digital technology to assess cognition has the potential to mitigate some of the limitations of current paper‐and‐pencil assessments. 38 For example, mobile devices enable more frequent testing, resulting in more reliable and informative longitudinal data 39 and are more accessible and cost‐effective thanks to self‐administration. 40 Computerized measures that automatically generate alternative forms may help minimize practice and version effects. 41 Artificial intelligence (AI) methods such as deep learning enable faster, novel, and potentially more sensitive analysis of cognitive data. 42

Digital assessments also pose new challenges. Many studies using remote assessments struggle to maintain participant engagement. 43 Digital storage and sharing of cognitive data pose questions related to data privacy, 44 particularly when devices may collect additional identifiable personal data (e.g., voice recordings). Unsupervised digital assessments require systems to ensure the individual assigned to a remote assessment is the individual taking that assessment. Rapidly developing technologies and operating systems pose challenges to selecting and maintaining a single version of a digital assessment over time. Finally, while secular trends suggest that older adults are increasingly familiar and comfortable with new technology, 45 a non‐negligible share of the population may be excluded from research with digital assessments due to lack of familiarity, technical skills, or access.

Digital technology has not yet replaced paper‐and‐pencil assessments, particularly not in clinical trials, because multiple questions remain unanswered: Does digital technology capture cognitive information analogous to gold‐standard paper‐and‐pencil measures? Is there a fundamental difference between capturing data with a rater versus a device? How reliable and feasible is digital technology? These questions are just beginning to be addressed in a more widespread fashion as use of digital technology is rapidly evolving, 46 for instance, in research on preclinical AD.

HIGHLIGHTS

Digital assessments for preclinical Alzheimer's disease (AD) vary by intended setting (e.g., remote vs. in‐clinic), level of supervision (e.g., self vs. supervised), and device origin (personal vs. study‐provided).

At least 11 articles characterize digital cognitive assessment in biomarker‐defined preclinical AD, but the literature generally remains nascent, particularly for remote and novel assessments.

Multiple digital assessment instruments exhibit predominantly weak to moderate relationships with AD biomarkers in preclinical groups. More work is needed to confirm the concrete diagnostic potential in preclinical disease stages.

Potential benefits and challenges are discussed within the framework of future implementation in clinical trials, including recommendations for future studies.

RESEARCH IN CONTEXT

Systematic Review: The authors reviewed the literature from sources such as PubMed, Scopus, and PsycINFO, as well as grant and clinical trial databases and conference presentations. Publications reporting on novel, digital cognitive assessment methods in cognitively healthy individuals characterized as preclinical Alzheimer's disease (AD) by established biomarker evidence were appropriately cited and discussed.

Interpretation: We included and discussed different platforms and approaches used to enable both on‐site and remotely administered digital assessment to identify early cognitive impairment and decline in preclinical AD. Their associations with AD biomarkers ranged from predominantly weak to moderate. Several promising, newly developed assessment instruments that are currently under evaluation were identified. Our findings have implications for the use of these instruments for the enrichment of clinical trials with relevant participants.

Future Directions: This article emphasizes the potential of novel assessment instruments to advance cognitive assessment in the early identification of preclinical AD. Before these instruments are fully implemented in clinical practice and in screening for clinical trials, however, further research is needed to establish the concrete associations between assessment outcomes and established biomarkers sensitive to the earliest signs of AD pathology. Last, we recommend conducting feasibility studies to investigate potential barriers to future implementation.

1.3. Organization of results

In this context, our objectives were to systematically review the current landscape of digital cognitive tests for use in preclinical AD and to describe the extent of validation of these digital cognitive tests against (1) gold‐standard cognitive tests and test composites (paper‐and‐pencil measures) and (2) biomarkers of Aβ and tau pathology. Furthermore, we critically discuss the potential and pitfalls of digital cognitive assessments in the context of implementation in clinical trials and provide an outlook for the future of digital cognitive assessment. Our goal, however, was not to give an exhaustive overview of mobile technology 40 or computer testing 47 for use in elderly populations in general. Additionally, we do not address the separate field of passive monitoring to infer cognition using sensors and wearables. 48

We first describe the current understanding of the associations between cognitive performance on conventional paper‐and‐pencil measures and AD biomarkers. Subsequently, we discuss digital assessments organized into three groups based on technological platform and/or setting: (1) primarily in‐clinic computerized and tablet‐based, (2) primarily unsupervised environment and smartphone‐ or tablet‐based, and (3) novel data collection systems and analysis procedures (e.g., digital pen, eye‐tracking, and language analysis; novel methods for data analysis, e.g., using AI approaches).

For each digital assessment, validation is discussed in terms of (1) biomarker validation and (2) paper‐and‐pencil validation.

Paper‐and‐pencil validation involved comparing digital measures to conventional measures such as relevant global cognitive composites (e.g., Preclinical Alzheimer Cognitive Composite [PACC]) 17 or domain‐specific test composites.

2. METHODS

2.1. Search strategies

From January 2020 to December 2020, we searched three electronic databases (PubMed, Scopus, PsycINFO) for relevant publications (using search terms such as digital, mobile, smartphone, tablet, Alzheimer's, preclinical, amyloid) and two online registers (ClinicalTrials.gov and National Institutes of Health [NIH] research portfolio) for relevant trials and awarded grants. A second search using the names of digital tests and companies identified in the first search was performed. We also searched two conferences for any relevant preliminary results: Clinical Trials on Alzheimer's Disease conference (CTAD) 2020 and Alzheimer's Association International Conference (AAIC) 2020.

2.2. Inclusion and exclusion criteria

Published articles, ongoing studies, and clinical trials using digital cognitive assessment were selected if they involved individuals identified with preclinical AD. Preclinical AD was defined based on biomarker evidence of Aβ plaque pathology (either cortical Aβ PET ligand binding or low CSF Aβ42) and/or NFT pathology (elevated CSF p‐tau or cortical tau PET ligand binding). 1 Using the National Institute on Aging and Alzheimer's Association (NIA‐AA) Research Framework revised guidelines, 2 we defined preclinical AD as corresponding to the earliest stages in the numeric clinical staging (stages 1–2). We excluded studies that only included participants meeting criteria for clinical diagnoses, such as mild cognitive impairment (MCI) or dementia.

2.3. Procedures

Using the web‐app Rayyan, 49 we screened a total of 469 articles, of which 458 were excluded due to failure to meet inclusion criteria and 11 were included in the review. Grant applications were screened from the NIH research portfolio, but no additional study was included. Since this initial literature search, two additional newly published articles have been included. Preliminary results from seven conference presentations have also been included, specifically from CTAD 2020 and AAIC 2020. The resulting relatively small, heterogeneous, and methodologically inconsistent body of literature limited our review's methodology. Therefore, we performed a qualitative synthesis rather than a meta‐analysis.

3. RESULTS

3.1. Primarily in‐clinic computerized and tablet‐based cognitive assessment

An established area of digital development in cognitive testing is adapting traditional cognitive measures onto computerized platforms such as Pearson's Q‐interactive for the Wechsler Adult Intelligence Scale or the Montreal Cognitive Assessment (MoCA) Electronic Test. Furthermore, clinical trial data management companies such as Medavante and Clinical Ink have adapted traditional cognitive measures to be administered as electronic clinical outcome assessments. Automatic scoring and recording mitigate common error sources, but these systems, by definition, do not reimagine neuropsychological testing. A number of computerized cognitive tests have been developed to detect cognitive decline. These may include stand‐alone apps and programs as well as web‐based apps that can be completed either on personal computers (PC) or tablets. Some of these tests consist of digitized versions of traditional paper‐and‐pencil neuropsychological tests, while others involve newly developed tests designed to be completed without the active participation or presence of an examiner; these include, for example, Savonix, BrainCheck, Cogniciti, Mindmore, BAC, NIH‐Toolbox, CANTAB, and Cogstate, among others. These vary in their approach, degree of commercialization, security and regulatory readiness, and degree of “gamification.” They also differ in their respective target populations and clinical indications. Here, we focus on the systems and platforms specifically or mainly designed to detect the earliest cognitive decline in AD. See Table 1 for an overview of the validation of these types of cognitive assessment instruments. Figure 1 shows a selection of primarily in‐clinic computerized and tablet‐based cognitive assessments.

TABLE 1.

Validation of primarily in‐clinic computerized and tablet‐based cognitive assessment

| Type of validation | Instrument | Authors | Longitudinal/cross‐sectional | Platform | Validation | Effect size | Biomarker | Participants (n) |
|---|---|---|---|---|---|---|---|---|
| Biomarker validation | Cogstate C3 | Papp et al. (2020) | Cross‐sectional | Tablet | Lower scores were associated with Aβ | Small effect (d = 0.11) | [18F]florbetapir‐PET | 4486 |
| Biomarker validation | NIHTB‐CB | Snitz et al. (2020) | Cross‐sectional | Tablet | Lower scores were associated with tau in higher Braak regions | Small/moderate effect (β = –0.19 to –0.28) | [11C]PiB‐PET, [18F]AV1451‐PET | 118 |
| Biomarker validation | Cogstate CPAL | Baker et al. (2019) | Longitudinal (36 months) | Personal computer | Lower training effect in Aβ+ CN | Small effect (d = 0.25 to 0.30) | [11C]PiB‐PET | 356 |
| Biomarker validation | Cogstate CBB | Mielke et al. (2016) | Longitudinal (30 months) | Personal computer | No significant correlation between Aβ and cognition | No effect | [11C]PiB‐PET | 464 |
| Biomarker validation | CANTAB | Bischof et al. (2016) | Cross‐sectional | Personal computer | Lower memory scores were associated with higher Aβ | Moderate effect (r = 0.47 to 0.48) | [18F]florbetapir‐PET | 147 |
| Biomarker validation | Cogstate CBB | Lim et al. (2015) | Longitudinal (36 months) | Personal computer | Decline in memory was greater in Aβ+ CN | Small/moderate effect (d = 0.39 to 0.59) | [11C]PiB‐PET | 178 |
| Paper/pencil validation | NIHTB‐CB and Cogstate C3 | Buckley et al. (2017) | Cross‐sectional | Tablet | Memory tasks were associated with PACC | Moderate/large effect (ρ r = 0.49 to 0.58) | N/A | 50 |

Abbreviations: Aβ, amyloid beta; β, standardized β coefficients; CN, clinically normal; Cogstate CBB, Cogstate Brief Battery; Cogstate CPAL, Cogstate Continuous Paired Associate Learning; d, Cohen's d; NIHTB‐CB, National Institutes of Health Toolbox Cognition Battery; CANTAB, Cambridge Neuropsychological Test Automated Battery; PACC, Preclinical Alzheimer Cognitive Composite; PiB, Pittsburgh compound B positron emission tomography; ρ r, Spearman correlation; r, correlation coefficient.

Note: Only published articles are included in this table.
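Several effect sizes in Table 1 are reported as Cohen's d. As a minimal sketch of how such a value can be obtained for two independent groups, the Python example below computes d with a pooled standard deviation and maps it onto Cohen's conventional benchmarks (0.2, 0.5, 0.8). The function names and the z‐scores are hypothetical and invented purely for illustration; they are not taken from any cited study, and the labels used in the table follow the cited publications, which may not apply these exact cut‐offs.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    # statistics.variance is the sample variance (n - 1 in the denominator).
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


def label_effect(d):
    """Map |d| onto Cohen's conventional benchmarks (0.2, 0.5, 0.8)."""
    size = abs(d)
    if size < 0.2:
        return "very small"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"


# Hypothetical memory z-scores, invented for illustration only.
abeta_negative = [0.3, 0.1, -0.2, 0.5, 0.0, 0.4]
abeta_positive = [0.1, -0.1, -0.3, 0.2, -0.2, 0.3]

d = cohens_d(abeta_negative, abeta_positive)
print(f"d = {d:.2f} ({label_effect(d)})")
```

With samples this small the estimate is very imprecise; in practice a confidence interval would be reported alongside d.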

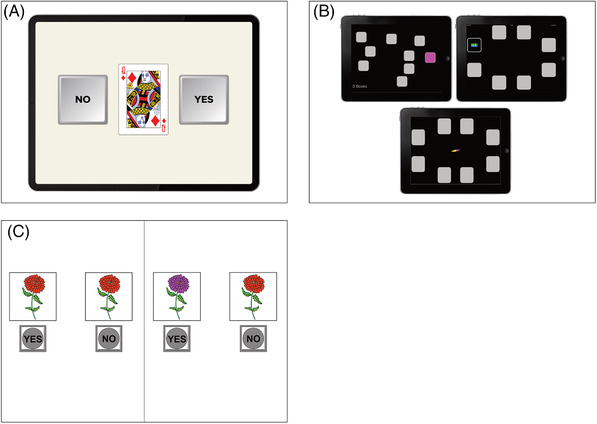

FIGURE 1.

A, Cogstate One Back tests. Copyright© 2020 Cogstate. All rights reserved. Used with Cogstate's permission. B, CANTAB Spatial Span and Paired Associates Learning. Copyright Cambridge Cognition. All rights reserved. C, NIH‐Toolbox Pattern Comparison Processing Speed Test Age 7+ v2.1. Used with permission NIH Toolbox, © 2020 National Institutes of Health and Northwestern University

3.1.1. Cogstate digital cognitive testing system

Cogstate is a commercial company based in Australia. A founding principle behind the Cogstate Brief Battery (CBB) was to mitigate the effects of language and culture on cognitive assessment. 50 , 51 , 52 Therefore, their measures of response time, working memory, and continuous visual memory are completed using the universal stimulus set of common playing cards. However, additional non–card‐playing tasks are also available (e.g., a paired associative learning task and a maze learning task). This test battery was initially developed in the early 2000s for PC (where participants would respond via keystrokes) but is now available for tablets. A second founding principle behind Cogstate tasks is a more reliable measurement of change over time through randomized alternative versions to reduce confounding practice effects. The Cogstate system was initially designed to be administered by an examiner, but there have been recent efforts for remote administration; additionally, once logged into the platform, the tasks are easy to progress through independently. Recently, the CBB has been made available for unsupervised testing using a web browser. A recent report 53 from the Healthy Brain Project in Australia showed high acceptability and usability for this unsupervised cognitive testing in a non‐clinical sample. They observed low rates of missing data and the psychometric characteristics of the CBB were similar to those collected from supervised testing.

A more recent iteration of Cogstate tasks is the C3 (Computerized Cognitive Composite), which includes the CBB in addition to two measures potentially sensitive to changes in early AD based on evidence from the cognitive neuroscience literature: the Behavioral Pattern Separation–Object Version (BPS‐O) and the Face‐Name Associative Memory Test (FNAME). Behavioral versions of the FNAME 54 and a modified version of the BPS‐O 55 were selected for inclusion in the C3 as they have been shown to be sensitive to activity in the medial temporal lobes in individuals at risk for AD based on biomarkers. 56 , 57

In a large sample of older adults (n = 4486), C3 performance was shown to be moderately correlated with cognitive performance on a composite of paper‐and‐pencil measures (PACC). 7 A smaller study similarly showed this correlation between the C3 and paper‐and‐pencil measures. 58 It also showed that the Cogstate C3 battery's memory tasks were best at identifying individuals with subtle cognitive impairment, as defined by PACC performance. Combined, these findings suggest that these computerized tasks are valid measures of cognitive function and may be used for further study of cognitive decline in preclinical AD.

The Cogstate test batteries are used in several ongoing studies and clinical trials, for example, the Wisconsin Registry for Alzheimer's Prevention (WRAP), Alzheimer's Disease Neuroimaging Initiative 3 (ADNI3), Cognitive Health in Ageing Register: Cohort Study, and the Dominantly Inherited Alzheimer Network‐Trials Unit (DIAN‐TU). The C3 is currently being used in the Anti‐Amyloid Treatment in Asymptomatic Alzheimer's Disease (A4) study and the Study to Protect Brain Health Through Lifestyle Intervention to Reduce Risk.

Preclinical AD biomarker validation

Screening data from the A4 study showed that, among a large sample of CN elderly, elevated Aβ as assessed with [18F]florbetapir‐PET was associated with slightly worse C3 performance. 7 Other observational studies have not shown associations between CBB performance and Aβ status in preclinical AD cross‐sectionally, 51 but some studies have demonstrated that Aβ+ individuals decline on CBB over time. For example, in the Australian Imaging, Biomarkers and Lifestyle (AIBL) study, decline in episodic and working memory over 36 months was associated with higher baseline Aβ burden in CN participants. 13 Researchers from the Mayo Clinic Study on Aging used similar methods in a population‐based sample and, in contrast, did not find any significant association between Aβ and CBB decline. 59 In another study from AIBL, performance on a continuous paired associative learning task (CPAL) within the Cogstate battery was explored for Aβ+ and Aβ– CN. Over 36 months, task performance improved in Aβ– participants, whereas Aβ+ participants showed no practice effect. In CN, the absence of benefit from repeated exposure over time was associated with a higher Aβ burden. 60

3.1.2. The computerized National Institutes of Health Toolbox Cognition Battery (NIH TB‐CB)

The National Institutes of Health (NIH) Toolbox Cognition Battery (TB‐CB) was designed as an easily accessible and low‐cost means to provide researchers with standard and brief cognitive measures for various settings. Development of the NIH TB‐CB was a large‐scale effort across government funding, scientists (250+), and institutions (80+). 61 It consists of seven established neuropsychological tests, selected and adapted to a digital platform by an expert panel. The NIH TB‐CB tests assess a range of cognitive domains (attention and executive functions, language, processing speed, working memory, and episodic memory). It was released in 2012 for PC, and a tablet version is now also available that has been validated against standard neuropsychological measures, 62 as well as against established cognitive composites for use in preclinical AD. 58 To ensure valid results, an examiner is still required to administer the app; however, some tests have recently been implemented for remote administration via screen sharing in a web browser.

The NIH TB‐CB is currently implemented in several clinical trials and longitudinal studies in aging and early AD, for example, in the Risk Reduction for Alzheimer's Disease study, the Comparative Effectiveness Dementia & Alzheimer's Registry, and the ongoing project Advancing Reliable Measurement in Alzheimer's Disease and Cognitive Aging (ARMADA). ARMADA is an NIH‐funded large multi‐site project in the United States, aiming to validate the NIH Toolbox in several demographically diverse CN and clinical cohorts, including previously underrepresented demographic groups. ARMADA's additional goals are to further facilitate the use of NIH TB‐CB in aging research through the formation of a consortium with the National Alzheimer's Coordinating Center and in collaboration with researchers from other existing cohorts.

Preclinical AD biomarker validation

A handful of studies have examined the NIH TB‐CB in aging and dementia populations; however, few published studies address the NIH TB‐CB and preclinical AD biomarkers. A recent study in 118 CN older adults did not find an association between AD neuroimaging markers of Aβ and any of the NIH TB‐CB cognitive tasks. However, it did find a weak association between tau pathology in higher Braak regions and measures of processing speed and executive functions. 63

3.1.3. The Cambridge Neuropsychological Test Automated Battery (CANTAB)

The Cambridge Neuropsychological Test Automated Battery (CANTAB) is intended as a language‐independent and culturally neutral cognitive assessment tool initially developed by the University of Cambridge in the 1980s, but now commercially provided by the company Cambridge Cognition. CANTAB has been used in a wide range of clinical settings and clinical trials, 64 including aging studies. 65 CANTAB mostly uses non‐verbal stimuli, and it includes measures of working memory, planning, attention, and visual episodic memory. Administration of CANTAB was initially on PC but is now available through CANTAB mobile (tablet‐based). Additionally, CANTAB offers an online platform for recruitment by pre‐screening patients using their cognitive assessment instruments.

Preclinical AD biomarker validation

In the Dallas Lifespan Brain Study, CN individuals underwent Aβ PET (with [18F]florbetapir) and the CANTAB Verbal Recognition Test, including measures of memory recall and recognition. In this test, the participants are shown a sequence of words on a touchscreen. Subsequently, the participant is asked to recall the words, and the task ends with a recognition task. The researchers found that in relatively younger adults (aged 30 to 55), higher Aβ was moderately associated with diminished memory recall and recognition, whereas the effect weakened as people aged and amyloid levels increased. 66

3.2. Remotely administered tablet‐ and smartphone‐based cognitive assessment

Demographic survey trends in the United States from 2019 indicated that 77% of Americans aged 50+ own smartphones, 67 with that number climbing annually. 68 Similar numbers are being reported from European countries. 69 Simultaneously, there has been an increase in smartphone‐based apps designed for cognitive assessment in older populations. 40 The appeal and implications of smartphone‐based cognitive assessment for detection and tracking in preclinical AD are clear. It is highly scalable, allowing for remote assessment in a much larger population compared to samples acquired through in‐clinic and supervised assessment. It allows for more frequent assessment with potentially more sensitive cognitive paradigms. 70 With mobile technology, cognitive assessment can be performed in a familiar environment and may thus increase the ecological validity (i.e., the generalizability to real‐life settings) of the task. Having a participant complete tasks on their own phone (as opposed to a study‐issued device) may be more reflective of their cognition in everyday life. Improved ecological validity of smartphone‐based assessment is timely, as researchers and regulators emphasize the importance of demonstrating the clinical meaningfulness of cognitive change in a preclinical AD population. Furthermore, the participant being in a familiar environment during cognitive assessments may reduce the risk of the “white‐coat effect” (participants underperforming on tasks in a medical environment). 71 Remote and mobile tracking of cognitive functioning provides an extra opportunity for individuals to track their own cognitive health over time, potentially leading to increased commitment to their well‐being. 72 Finally, for those willing to participate in demanding clinical trials, reducing in‐clinic visits through remote testing may mitigate the overall participant burden and encourage those in more remote areas to participate.

However, despite the potential of smartphone‐based assessment, multiple issues remain, including challenges related to (1) feasibility (e.g., older adults’ openness to completing smartphone assessments, compliance, attrition, privacy issues), (2) validity (e.g., ensuring alignment between smartphone‐based vs. gold standard cognitive assessment data, guaranteeing the identity of the examinee), and (3) reliability (e.g., variability between hardware and operating systems, diminished control over the test‐taking environment).

Given the recent rapid expansion of interest in this area, we focus on observed themes for smartphone‐based instruments that are in early (but varying) stages of development. Identified themes include (1) improving reliability of assessment through ambulatory/momentary testing, (2) using mobile and serial assessment to identify subtle decrements in learning and practice effects, (3) targeting cognitive processes more specific to decline in preclinical AD, and (4) harnessing the potential of big‐data collection. Validity data in relation to in‐clinic cognitive assessment and AD biomarkers are discussed where available. See Figure 2 for selected examples of smartphone‐based assessment applications. Table 2 displays the validation of remotely administered tablet‐ and smartphone‐based cognitive assessments.

FIGURE 2.

A, Ambulatory Research in Cognition (ARC) Symbols Test, Grids Test, and Prices Test. Used with permission from J. Hassenstab. B, neotiv Objects‐in‐Rooms Recall test. Used with permission from neotiv GmbH. C, Boston Remote Assessment for Neurocognitive Health (BRANCH). Used with permission from K. V. Papp

TABLE 2.

Validation of remotely administered tablet‐ and smartphone‐based cognitive assessment and other novel types of cognitive assessment

| Type of validation | Instrument | Authors | Longitudinal/cross‐sectional | Platform | Validation | Effect size | Biomarker | Participants (n) |
|---|---|---|---|---|---|---|---|---|
| Biomarker validation | FNAME | Samaroo et al. (2020) | Longitudinal | iPad | Diminished learning was associated with greater amyloid and tau PET burden | Moderate effect (d = 0.60) | [11C]PiB‐PET, [18F]flortaucipir‐PET | 94 |
| Biomarker validation | ORCA‐LLT | Lim et al. (2020) | Longitudinal | Any platform using web‐browser | Lower learning curves were seen in Aβ+ CN | Large effect (d = 2.22) | [11C]PiB‐PET, [18F]florbetapir‐PET, or [18F]flutemetamol‐PET | 80 |
| Biomarker validation | Sea Hero Quest | Coughlan (2019) | Cross‐sectional | Smartphone | Wayfinding discriminated between carriers and non‐carriers | Acceptable discrimination (AUC = 0.71) | APOE | 60 |
| Biomarker validation | Speech analysis | Verfaillie et al. (2019) | Cross‐sectional | Audio recorder | Fewer specific words were associated with Aβ burden | Moderate (β = 0.48 to 0.69) | CSF Aβ42, [18F]florbetapir‐PET | 63 |
| Biomarker validation | Spatial Navigation task | Allison et al. (2016) | Cross‐sectional | Computer | Lower results on wayfinding were seen in Aβ+ CN | Moderate effect (d = 0.76) | CSF Aβ42 | 71 |
| Paper/pencil validation | VPC task using eye‐tracking devices | Bott et al. (2018) | Cross‐sectional | Eye‐tracking camera and device embedded camera | Eye movement was associated with PACC and NIHTB‐CB | Moderate effect (ρ r = 0.35 to 0.39) | NA | 49 |

Abbreviations: Aβ, amyloid beta; AUC, area under the curve; β, beta interaction effect; CN, clinically normal; CSF, cerebrospinal fluid; d, Cohen's d; ORCA‐LLT, Online Repeatable Cognitive Assessment‐Language Learning Test; PiB, Pittsburgh Compound B; ρ r, Spearman's rank correlation; p r, Pearson's correlation coefficient; VPC, Visual Paired Comparison.

Note: Only published articles are included in this table.

3.2.1. Feasibility of using mobile devices to capture cognitive function

While retention in longitudinal study designs is especially challenging for studies using remotely administered testing, adherence in short studies is promising. In a recent study, 1594 CN subjects (age = 40 to 65) completed a testing session using a web‐based version of four playing card tasks within the Cogstate battery. 53 High adherence to instructions and low rates of missing data (1.9%) were observed, indicating high acceptability. Error rates were consistently low across tests and did not vary with the self‐reported environment (e.g., with others present or in a public space). Another recent study investigated adherence over 36 days using a smartphone‐based app. 73 Thirty‐five CN participants (age = 40 to 59) completed very short daily cognitive tasks; 80% completed all tasks, and 88% of the participants were still active at the end of the study. More problematic, a recent report from eight digital health studies (providing study‐app usage data from > 100,000 participants) in the United States describes substantial participant attrition (i.e., participants losing engagement over time), limiting the generalizability of the data obtained. 43 Monetary compensation improved retention and, boding well for preclinical AD studies, older age was associated with longer study participation. However, participants involved in trials that included in‐clinic visits had the highest compliance, suggesting that attrition in fully remote longitudinal studies remains a significant challenge.

3.2.2. Improving reliability: ambulatory/momentary cognitive assessment

The premise behind ambulatory/momentary cognitive assessment is that single‐timepoint assessments fail to capture the endemic variability in human cognitive performance impacted by a host of factors, including mood, stress, or time of day. 35 Capturing the most representative sample of an individual's cognition at a given interval is one promising approach to improving the sensitivity of measurement by reducing variability and increasing reliability. Using a “burst” design, a more reliable composite measure of cognitive performance is derived by averaging performance over multiple assessment timepoints administered in short succession (e.g., four assessments per day for 7 days).

Sliwinski et al. developed the brief smartphone‐based app Mobile Monitoring of Cognitive Change (M2C2) aimed at capturing cognition more frequently in an uncontrolled and naturalistic setting. 39 In a younger (age 25 to 65) but highly diverse (9% White) sample, they showed that brief smartphone‐based cognitive assessments of perceptual speed and working memory in an uncontrolled environment were correlated with in‐clinic cognitive performance. 39 The proportion of total variance in performance attributable to differences between people (accounting for within‐person variance across each test session and number of test sessions) was high, illustrating the excellent level of reliability achieved using a burst design.
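The reliability argument behind the burst design can be illustrated with a small calculation. The following is a minimal sketch, not the analysis used in the cited studies: it assumes a simple one‐way random‐effects decomposition, and the function name `burst_reliability` and the example scores are hypothetical. It estimates the share of variance attributable to differences between people for a single session and for the average of all sessions in a burst.

```python
from statistics import mean

def burst_reliability(scores):
    """Estimate between-person reliability from a burst of sessions.

    scores: dict mapping person id -> list of session scores
            (every person must have the same number of sessions).
    Returns (single_session_reliability, averaged_reliability).
    """
    people = list(scores)
    n = len(people)
    k = len(scores[people[0]])  # sessions per person

    person_means = {p: mean(scores[p]) for p in people}
    grand_mean = mean(list(person_means.values()))

    # One-way ANOVA mean squares (between and within persons).
    ms_between = k * sum((m - grand_mean) ** 2 for m in person_means.values()) / (n - 1)
    ms_within = sum(
        (x - person_means[p]) ** 2 for p in people for x in scores[p]
    ) / (n * (k - 1))

    # Variance components; the between-person estimate is floored at zero.
    var_between = max((ms_between - ms_within) / k, 0.0)
    var_within = ms_within

    single = var_between / (var_between + var_within)
    averaged = var_between / (var_between + var_within / k)
    return single, averaged

# Hypothetical scores for three people over four sessions.
example = {
    "p1": [10, 12, 11, 13],
    "p2": [20, 19, 21, 20],
    "p3": [15, 14, 16, 15],
}
single, averaged = burst_reliability(example)
print(f"single session: {single:.3f}, burst average: {averaged:.3f}")
```

Because within‐person variance is divided by the number of sessions, the reliability of the averaged score is never lower than that of a single session under these assumptions.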

Similarly, Hassenstab designed the Ambulatory Research in Cognition app (ARC) for use in the DIAN study. 74 In contrast with previous studies that have relied on study‐provided devices, 39 , 75 participants download the app onto their own devices and indicate the days and times they are available to be tested. Participants subsequently receive notifications to take ARC, which lasts a few minutes, 4 times per day for 1 week. ARC evaluates spatial working memory (Grids Test), processing speed (Symbols Test), and associative memory (Prices Test). Preliminary results suggest that ARC is reliable, correlated with in‐clinic cognitive measures and AD biomarkers, and well‐liked by participants. 74 Further work is required to determine whether ambulatory cognitive data (1) are more strongly related to AD‐biomarker burden in CN older adults than conventional in‐clinic assessments and (2) represent a more reliable measure of cognitive and clinical progression than conventional in‐clinic assessments.

3.2.3. Using mobile and serial assessment to identify subtle decrements in learning and practice effects

A diminished practice effect, that is, a lack of the characteristic improved performance on retesting, has been suggested as a subtle indicator of cognitive change prior to overt decline. 76 Mobile technology allows for much more frequent serial assessment. For example, a recent study provided 94 participants with iPads to take home and asked them to complete a challenging associative memory task requiring memory for face‐name pairs (FNAME) monthly for 1 year. 77 The authors found an association between diminished learning and greater amyloid and tau PET burden among CN individuals, with Aβ+/– group differences in memory performance emerging by the fourth exposure.

Work using a web‐based version of FNAME 78 and other memory tasks, called the Boston Remote Assessment for Neurocognitive Health (BRANCH), was designed to move learning paradigms from study‐provided tablets to smartphones and to reduce the time interval for serial assessment (e.g., from months to days). These tasks focus on cognitive processes supported by the medial temporal lobes and thus best able to characterize AD‐related memory changes. BRANCH primarily consists of measures of associative memory, pattern separation, and semantically facilitated learning and recall. BRANCH also uses paradigms and stimuli relevant to everyday cognitive tasks. 79

In a similar vein, the Online Repeatable Cognitive Assessment‐Language Learning Test (ORCA‐LLT), developed by Dr. Lim at Monash University in Australia, asks participants to learn the English word equivalents of 50 Chinese characters for 25 minutes daily over 6 days. The task is web‐based and completed on a participant's own device in their home. The authors found that learning curves were diminished in 38 Aβ+ versus 42 Aβ– CN older adults, and the magnitude of this difference was very large. 80

The assessment of learning curves over short time intervals using smartphones may serve as a cost‐effective screening tool to enrich samples for AD biomarker positivity prior to expensive assays. For example, a clinical study found that lower practice effects over 1 week were associated with a nearly 14 times higher odds of being Aβ+ on a composite measure using [18F]flutemetamol. 81 Future work with larger CN samples and further optimized learning paradigms may show similar discriminability properties of learning curves to AD biomarker positivity in a preclinical sample. Capturing learning curves over short intervals using remote smartphone‐based assessment may provide a more rapid means of assessing whether a novel treatment has beneficial effects on cognition. This could assist in more rapidly discontinuing futile treatment trials or, more importantly, trials with deleterious effects on cognition. 82 However, how the repeated measures of short‐term learning curves can be used to track cognitive progression remains unexplored. Methods to establish this relationship are in development but will require validation studies to overcome logistical and technical challenges.
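To make the notion of comparing learning curves concrete, the following minimal sketch summarizes each participant's curve as a least‐squares slope across sessions and compares two groups with Cohen's d. It is an illustration under stated assumptions, not the analysis used in the cited studies: the function names and all scores are hypothetical, and published work typically uses mixed‐effects models rather than per‐person slopes.

```python
from math import sqrt
from statistics import mean, stdev

def learning_slope(session_scores):
    """Least-squares slope of score against session index (0, 1, 2, ...)."""
    xs = list(range(len(session_scores)))
    x_bar, y_bar = mean(xs), mean(session_scores)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, session_scores))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx

def cohens_d(group_a, group_b):
    """Cohen's d using the pooled standard deviation of the two groups."""
    na, nb = len(group_a), len(group_b)
    pooled_var = (
        (na - 1) * stdev(group_a) ** 2 + (nb - 1) * stdev(group_b) ** 2
    ) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)

# Hypothetical daily scores (items recalled) over six sessions.
abeta_negative = [
    [10, 14, 18, 22, 25, 27],
    [8, 13, 17, 20, 24, 26],
    [12, 15, 19, 24, 27, 30],
]
abeta_positive = [
    [10, 11, 13, 14, 15, 16],
    [9, 11, 12, 12, 14, 15],
    [11, 12, 13, 15, 15, 17],
]

slopes_neg = [learning_slope(s) for s in abeta_negative]
slopes_pos = [learning_slope(s) for s in abeta_positive]
print("mean slope, Abeta-negative:", round(mean(slopes_neg), 2))
print("mean slope, Abeta-positive:", round(mean(slopes_pos), 2))
print("Cohen's d for slope difference:", round(cohens_d(slopes_neg, slopes_pos), 2))
```

A flatter slope in one group corresponds to the diminished learning curve described above; the effect size simply expresses the group difference in slopes in pooled standard deviation units.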

3.2.4. Targeting relevant cognitive functions

While there is significant heterogeneity in the nature and progression of cognitive decline within AD, the availability of AD biomarkers and adoption of findings from the cognitive neuroscience literature have allowed researchers to home in on cognitive processes potentially more sensitive and specific to AD. For example, researchers from the Otto‐von‐Guericke University in Magdeburg and the German Center for Neurodegenerative Diseases (DZNE) have been involved in the development of a digital platform including a mobile app for smartphones and tablets (neotiv), which consists of memory tests focused on object and scene mnemonic discrimination, 83 pattern completion, 84 face‐name association, 85 and complex scene recognition. 86 The object and scene mnemonic discrimination paradigm was designed to capture memory function associated with an object‐based (anterior‐temporal) and a spatial (posterior‐medial) memory system. 87 While functional magnetic resonance imaging (fMRI) studies have shown that both memory systems were activated in individuals performing the task, age‐related and performance‐dependent changes in functional activity have been observed in the anterior temporal lobe in older adults. 83 Furthermore, two studies in biomarker‐characterized individuals revealed that object mnemonic discrimination performance was associated with measures of tau pathology (i.e., anterior temporal tau‐PET binding and CSF p‐tau levels), while there was evidence for an association of performance in the scene mnemonic discrimination task with Aβ‐PET signal in posterior‐medial brain regions. 88 , 89 The complex scene recognition task has been shown to rely on a wider episodic memory network 86 and task performance was associated with CSF total‐tau levels. 90 All of the above tests have been implemented in a digital platform for unsupervised testing using smartphones and tablets. Recently, relationships between these tests and biomarkers for tau pathology as well as strong relationships with in‐clinic neuropsychological assessments have been demonstrated (i.e., Alzheimer's Disease Assessment Scale‐Cognitive subscale delayed word recall, PACC). 91 , 92

Participants download the app onto their own mobile devices and undergo a short introduction and training session as well as a short vision screening. Participants subsequently receive notifications to complete tests according to a predefined study schedule to acquire longitudinal trajectories with high frequency. To minimize practice effects, stimulus material has been piloted in large scale web‐based behavioral assessments, and parallel test sets with matched task difficulty have been created. 93 The neotiv platform is currently included in several AD cohort studies (e.g., DELCODE, BioFINDER‐2, and WRAP).

3.2.5. Harnessing the potential of “big data”: citizen science projects

Citizen science is a concept in which the general public is involved in collaborative projects, for example, by collecting their own data for use in research, 94 and can be a way to gather large amounts of data on individuals at risk of developing AD. One such citizen science project is The Many Brains Project, with their research platform TestMyBrain.org. 95 The Many Brains Project has yielded some of the largest samples in cognition research, with more than 2.5 million people tested since 2008; however, the study is not specific to AD. Another study, the Models of Patient Engagement for Alzheimer's Disease study, is an EU‐funded international initiative aiming to identify individuals with early AD hidden in their communities and traditionally not found in memory clinic settings. 96 Through web‐based cognitive screening instruments, individuals from the public with a heightened risk of AD are identified and invited to a memory clinic to undergo a full diagnostic evaluation.

At Oxford University's Big Data Institute, researchers have developed the smartphone app Mezurio, included in several European studies (e.g., PREVENT, GameChanger, Remote Assessment of Disease and Relapse in Alzheimer's Disease study, BioFINDER‐2). One of them, GameChanger, is a citizen science project with more than 16,000 participants from the general UK population completing remote, frequent cognitive assessments with the Mezurio app. Through this project, healthy volunteers can perform tests on their smartphones, thus providing population norms for different age groups and demographic groups. Mezurio is installed on a smartphone and has several game‐like tests examining episodic memory (Gallery Game and Story Time), connected language (Story Time), and executive function (Tilt Task), including multiple recall tasks, and longer delays of up to several days. In a recent study investigating the feasibility of Mezurio in middle‐aged participants, the participants demonstrated high compliance, indicating that this app may be suitable for longitudinal follow‐up of cognition. 73

In Germany, Dr. Berron et al. from the German Center for Neurodegenerative Diseases (DZNE) developed a Germany‐wide citizen science project (“Exploring memory together”) focused on the feasibility of unsupervised digital assessments in the general population. Besides demographic factors that affect task performance, there are several factors in everyday life (e.g., taking the test in the evening compared to during the day) that could contribute to performance on remote unsupervised cognitive assessments. Preliminary results from more than 1700 participants (ages 18 to 89) identified important factors that need to be considered in future remote studies, including time of day, the time between learning and retrieval, and (for one task) screen size of the mobile device. They concluded that investigating memory function using remote and unsupervised assessments is feasible in an adult population. 97

3.3. Novel data collection systems and analysis procedures

Other promising assessment instruments under evaluation in different studies on preclinical AD are the analyses of spoken language, eye movements, spatial navigation performance, and digital pen stroke data. Some of these tasks require stand‐alone equipment (e.g., eye‐tracker, digital pen), while others can use existing platforms or devices (e.g., device‐embedded cameras, 98 such as those in a personal laptop or the front‐facing camera on a smartphone). Figure 3 exemplifies some of these assessment instruments. Table 2 displays the validation of novel types of assessment instruments. Some instruments, such as commercial‐grade eye‐tracking cameras, or digital pens, are still not widely accessible, hampering their implementation. Predominantly passive monitoring of cognition, such as speech recording and eye movement‐tracking, may prove less stressful and time‐consuming than conventional cognitive tests. Participants complete a task of objective cognition (e.g., drawing a clock, or describing a picture) while subtle aspects of their performance are being recorded (e.g., pen strokes, eye movements, language). The result is a large quantity of data about performance, which must then be reduced or synthesized to glean relevant performance features. Using machine learning (ML) or deep learning, researchers have started to investigate whether automated analyses and classification of test performance according to specific criteria (e.g., biomarker or clinical status) can aid in sensitive screening in preclinical AD. 99 , 100 In a clinical context, ML can be used as a clinical decision support system, building prediction models that achieve high accuracy in clinical diagnosis and selecting patients for clinical trials at the early stages of dementia development. 101 , 102

FIGURE 3.

A, Sea Hero Quest Wayfinding and Path integration. Used with permission from M. Hornberger. B, Digital Maze Test from survey perspective and landmarks from a first‐person perspective. Used with permission from D. Head. C, Data and analysis process for digital Clock Drawing Test (dCDT), from data collection, the artificial intelligence (AI) analysis steps, and the machine learning (ML) analysis and reporting. Used with permission from Digital Cognition Technologies

3.3.1. Spoken language analysis and automated language processing

New technical developments have provided further insight into language deficits in preclinical AD, and several newly developed analysis instruments are now available. 103 For these instruments, speech production is usually recorded during spontaneous speech; verbal fluency tasks; or describing a picture, typically using the Cookie Theft picture from the Boston Diagnostic Aphasia Examination. 104 Speech is typically recorded using either a stand‐alone audio recorder or embedded microphone, and after that, transcribed and analyzed using computer software. For example, using a picture task, researchers can analyze speech, such as verbs and nouns in spontaneous speech; the complexity of sentences, as represented by grammatical complexity and verb usage; diversity of words; and the flow of speaking, such as speech repetitions and pauses. 105
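As a rough illustration of the kind of linguistic features described above, the following minimal sketch computes word diversity (type‐token ratio) and immediate word repetitions from a transcript. It is a simplified example under stated assumptions, not the method of any cited instrument: the function name `speech_features`, the tokenization rule, and the sample sentence are hypothetical, and real pipelines also use part‐of‐speech tagging and acoustic pause detection.

```python
import re

def speech_features(transcript):
    """Compute simple lexical features from a transcribed speech sample."""
    # Lowercase and keep alphabetic tokens (apostrophes allowed inside words).
    tokens = re.findall(r"[a-z']+", transcript.lower())
    types = set(tokens)
    # Count a repetition whenever a word is immediately followed by itself.
    repetitions = sum(1 for a, b in zip(tokens, tokens[1:]) if a == b)
    return {
        "tokens": len(tokens),
        "types": len(types),
        "type_token_ratio": len(types) / len(tokens) if tokens else 0.0,
        "immediate_repetitions": repetitions,
    }

# Hypothetical fragment of a picture description.
sample = "The boy the boy is is taking a cookie"
print(speech_features(sample))
```

A lower type‐token ratio indicates less diverse vocabulary; because the ratio depends on sample length, comparisons are only meaningful between samples of similar size.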

Transcription of speech to text, a vital part of speech analysis, is by definition time consuming, but there has been an increasing effort to apply machine‐ and deep‐learning technology to detect cognitive impairment in AD. For example, researchers from the European research project Dem@care and the EIT Digital project ELEMENT demonstrated that automated analysis of verbal fluency could distinguish between healthy aging and clinical AD, 106 and that vocal analysis using smartphone apps could automatically differentiate between different clinical AD groups. 107 In the latter, speech was recorded while participants performed short verbal cognitive tasks, including verbal fluency, picture description, counting down, and a free speech task. The recordings were then used to train automatic classifiers for detecting MCI and AD, based on ML methods. Whether these methods can also identify early cognitive impairment and decline in preclinical AD remains an open question.

Intensive development of these methods is ongoing at several research sites. For example, researchers are exploring subtle speech‐related cognitive decline in early AD through the European Deep Speech Analysis (DeepSpA) project. The DeepSpA project uses telecommunication‐based assessment instruments for early screening and monitoring in clinical trials, using remote semiautomated analysis methods. In the United States, researchers in the Framingham Heart Study are recording and analyzing speech obtained during neuropsychological assessments (from 8800+ neuropsychological examinations from 5376+ participants). Similarly, in Sweden, researchers are recording speech (1000+ participants) during neuropsychological assessments in the Gothenburg H70 Birth Cohort Studies. The studies mentioned above are in cooperation with the company ki elements, a spin‐off of the German Research Center for AI. Another company, the Canadian Winterlight Labs, has developed a tablet‐based app to identify cognitive impairment using spoken language. The app is currently being evaluated in Winterlight's Healthy Aging Study, an ongoing longitudinal normative study.

Preclinical AD biomarker validation

While most researchers have investigated MCI patients, 108 researchers from the Netherlands‐based Subjective Cognitive Impairment Cohort recorded spontaneous speech in CN individuals performing three open‐ended tasks (e.g., describing an abstract painting). After manual transcription, the researchers extracted linguistic parameters using a fully automated freely available software (T‐Scan). Using conventional neuropsychological tests, the participants performed within normal range, regardless of Aβ status (either using CSF Aβ1‐42 or [18F]florbetapir‐PET). Interestingly, a modest correlation was seen between abnormal Aβ and subtle speech changes (fewer specific words). 109

3.3.2. Eye‐tracking

In AD research, commercial‐grade eye‐tracking cameras have been shown to detect abnormal eye movements in clinical groups. 110 , 111 These high frame rate cameras collect a wealth of data on eye movement behavior, including saccades (rapid, simultaneous movements of both eyes between fixation points) and fixations (eyes focusing on areas). Eye movements can be recorded within specific tasks, for example, reading a text or performing a memory test. For example, Peltsch et al. 110 measured the ability to inhibit unwanted eye movements within a task using visual stimuli. These data can, in turn, be analyzed automatically using commercial software or inspected manually by researchers. However, eye‐tracking devices are still expensive and are not widely available in clinical settings. A solution may be the use of device‐embedded cameras (e.g., in a laptop or tablet) to capture eye movements during tasks, such as performing memory tests. Bott et al. 112 from the company Neurotrack Technologies showed that device‐embedded cameras (i.e., in a PC), which are low cost and highly scalable, can feasibly capture valid eye‐movement data of sufficient quality. In this study, eye movements were recorded in CN participants during a visual recognition memory task. The authors observed a modest association between eye movements and cognitive performance on a paper‐and‐pencil composite. Interestingly, both device‐embedded cameras and commercial‐grade eye‐tracking cameras yielded robust data of sufficient quality. This suggests that device‐embedded eye‐tracking methods may be useful for further study of AD‐related cognitive decline in CN. Besides accuracy of performance, eye trackers yield data on additional eye movement behaviors, opening opportunities for new types of potentially meaningful outcomes.

3.3.3. Digital pen

Digital pens look like regular pens but have an embedded camera and sensors that can capture position and pen stroke data with high spatial and temporal resolution. Outcomes include time in air and surface, velocity, and pressure. This results in the collection of hundreds or thousands of datapoints and variables in contrast with traditional paper‐and‐pencil measures wherein point estimates of reaction time and accuracy are the primary outcomes. Big‐data techniques such as ML can then be applied to these datasets to extract relevant signal. For example, Digital Cognition Technologies captured data from thousands of individuals completing the standard clock drawing test. They subsequently developed the digital Clock Drawing Test (dCDT), 100 which features an extensive scoring system based on ML techniques that describes performance outcomes related to information processing, simple motor functioning, and reasoning (among many others). This approach allows researchers to capture an individual's inefficiencies in completing a cognitive task despite overall intact performance, which has the potential to collect and analyze much more subtle aspects of behavior systematically.
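The pen‐derived outcomes named above (time in air, time on surface, velocity) can be sketched from timestamped pen samples. The following is a minimal illustration under stated assumptions and does not represent the dCDT scoring system: the function name `pen_features`, the sample format (time in seconds, position in millimetres, pressure), and the rule that zero pressure means the pen is in the air are all hypothetical.

```python
from math import hypot

def pen_features(samples):
    """Summarize pen stroke data.

    samples: list of (t_seconds, x_mm, y_mm, pressure) tuples ordered by time.
    An interval counts as 'on surface' only if pressure is positive at both
    of its endpoints (an assumption made for this sketch).
    """
    time_on_surface = 0.0
    time_in_air = 0.0
    path_length = 0.0
    for (t0, x0, y0, p0), (t1, x1, y1, p1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if p0 > 0 and p1 > 0:
            time_on_surface += dt
            path_length += hypot(x1 - x0, y1 - y0)
        else:
            time_in_air += dt
    mean_velocity = path_length / time_on_surface if time_on_surface else 0.0
    return {
        "time_on_surface_s": time_on_surface,
        "time_in_air_s": time_in_air,
        "path_length_mm": path_length,
        "mean_velocity_mm_per_s": mean_velocity,
    }

# Hypothetical samples: two drawing intervals, then the pen is lifted and moved.
samples = [
    (0.0, 0, 0, 0.5),
    (0.5, 3, 4, 0.6),
    (1.0, 6, 8, 0.4),
    (1.5, 6, 8, 0.0),
    (2.0, 10, 8, 0.0),
    (2.5, 10, 8, 0.7),
]
print(pen_features(samples))
```

Features of this kind, computed per stroke or per drawing phase, are the sort of high‐dimensional input to which ML techniques can then be applied.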

Preclinical AD biomarker validation

Preliminary results from a study of older adults found that worse performance on dCDT, particularly on a visuospatial reasoning subscore, was associated with greater Aβ burden on PET and exhibited better discrimination between those with high versus low Aβ in contrast with standard neuropsychological tests included in a multi‐domain cognitive composite. 113

3.3.4. Virtual reality and spatial navigation

In virtual reality (VR)‐based tests, participants perform tasks of varying complexity in computer‐generated environments. These tasks are traditionally presented on computer screens (e.g., laptops or tablets) with which participants interact using a joystick, keyboard, touch screen, or VR head‐mounted display. The Four Mountains Test is an example of a VR‐based test, available for use on iPad. It measures spatial function by alternating viewpoints and textures of the four mountains’ topographical layout within a computer‐generated landscape. The usefulness of the test has been demonstrated in clinical studies; 114 , 115 however, its relation to preclinical AD biomarkers is still unknown. Another example is a VR path integration task, developed by researchers from Cambridge University. In this task, participants are asked to explore virtual open arena environments. Using a professional‐grade VR headset, the participants are then asked to walk back to specific locations. In a clinical study, 116 it was superior to other cognitive assessments in differentiating MCI from CN, and was correlated with CSF biomarkers (total tau and Aβ). This task is currently being evaluated in a preclinical population with biomarker data.

The launch of the online mobile game Sea Hero Quest yielded great interest, and as of today, > 4.3 million people have played it. Deutsche Telekom collaborated with scientists from University College London and University of East Anglia to create this mobile game. The idea is to gather data to create population norms from several countries, enabling the development of easily administered spatial navigation tasks to detect AD. Preliminary results suggest that Sea Hero Quest is comparable to real‐world navigation experiments, suggesting that it does not only capture mere video gaming skills. 117

Preclinical AD biomarker validation

In a recent study, performance on the Sea Hero Quest mobile game was found to discriminate individuals at genetic risk of AD from those without this risk. The authors examined Sea Hero Quest performance in a smaller apolipoprotein E (APOE) genotyped cohort. Despite having no clinically detectable cognitive deficits, individuals genetically at risk performed worse on spatial navigation. Wayfinding performance was able to discriminate between APOE carriers and non‐carriers. 118
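Discrimination between two groups, as summarized by the area under the curve (AUC) in Table 2, can be illustrated with a short calculation. The following minimal sketch uses the rank‐based (Mann‐Whitney) formulation: the AUC equals the probability that a randomly chosen member of one group scores higher than a randomly chosen member of the other, with ties counted as one half. The function name `auc` and all scores are hypothetical; they are not data from the cited study.

```python
def auc(positive_scores, negative_scores):
    """Area under the ROC curve via pairwise comparison of scores.

    Higher scores are assumed to indicate membership in the positive group.
    """
    wins = 0.0
    for p in positive_scores:
        for n in negative_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties contribute half a win
    return wins / (len(positive_scores) * len(negative_scores))

# Hypothetical wayfinding distances (longer path = worse navigation).
carriers = [52, 61, 58, 70, 49]
non_carriers = [45, 50, 55, 47, 60]
print("AUC:", auc(carriers, non_carriers))
```

An AUC of 0.5 corresponds to chance‐level discrimination and 1.0 to perfect separation of the two groups.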

In another study, participants from the Knight Alzheimer's Disease Research Center underwent a virtual maze task measuring spatial navigation. This virtual maze was created using commercial software and is presented on a laptop, with participants maneuvering using a joystick. The mazes consist of a series of interconnected hallways with several landmarks. The findings indicated that Aβ positivity (CSF Aβ42+) was associated with lower wayfinding performance. For inclusion in future studies, the spatial navigation task has been made available for remote use through a web‐based interface. 119

4. DISCUSSION

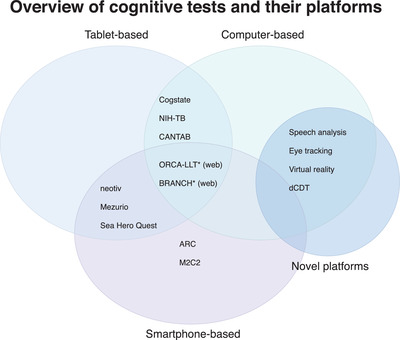

In this systematic review, several digital assessments were identified on multiple delivery platforms (e.g., tablets, smartphones, and external hardware), intended to measure cognition or behaviors in preclinical AD (see Figure 4 for an overview of assessments and their platforms). These assessment instruments varied by intended setting (e.g., remote vs. in‐clinic), level of supervision (e.g., self vs. supervised), and device origin (personal vs. study‐provided). Studies validating assessment instruments for more established platforms (e.g., PC, tablet) are more common than those developed for more novel platforms (e.g., smartphone). However, many of these newly developed tests are currently being evaluated in several biomarker studies.

FIGURE 4.

Overview of cognitive tests and their platforms. BRANCH, Boston Remote Assessment for Neurocognitive Health; ORCA‐LLT, Online Repeatable Cognitive Assessment‐Language Learning Test; NIH‐TB, National Institutes of Health Toolbox; CANTAB, Cambridge Neuropsychological Test Automated Battery; ARC, Ambulatory Research in Cognition; M2C2, Mobile Monitoring of Cognitive Change; dCDT, digital Clock Drawing Test. *Available for use through a web browser

4.1. Validation with biomarkers of AD pathology

A critical part of early detection of preclinical AD in CN is the ability of cognitive tests to identify evidence of subtle cognitive impairment or decline over time. Primarily in‐clinic administered tests have demonstrated a cross‐sectional relationship to preclinical AD biomarkers, on par with traditional neuropsychological assessment, with weak to moderate effect sizes. Findings from longitudinal studies using conventional paper‐and‐pencil assessments are mixed, but most demonstrate subtle declines in preclinical phases of AD. Remotely administered tests, on the other hand, have been less explored, but work from several preclinical biomarker studies is underway. A handful of validation studies in the literature and preliminary results of a smartphone‐based memory test show a relationship to tau pathology. 91 In a small but promising study using a remotely administered web‐based assessment of learning, learning curves in Aβ+ were significantly slower than those in Aβ–, warranting further study of this approach. 80 Other novel assessment instruments, including speech analysis, 109 eye‐tracking, 112 and VR, 118 , 119 have demonstrated potential usefulness for further study of relevant preclinical AD biomarkers. Interestingly, preliminary results using a digital clock drawing test have shown high sensitivity to changes among CN individuals with positive AD biomarkers. 113

Future longitudinal studies should include longitudinal biomarker data, also exploring the validity for changing biomarkers over time. Future studies also need to investigate the ability to detect clinical progression, that is, from preclinical AD to MCI and dementia, warranting extensive longitudinal studies of CN individuals.

4.2. Validation with established cognitive composites

In addition to validation using preclinical AD biomarkers, an alternative means of validation is to compare digital assessments against conventional cognitive measures used in large‐scale studies. This type of validation can supplement biomarker studies or provide important preliminary data before using more costly biomarker studies. A handful of assessment instruments, including a tablet‐based test, 58 eye‐tracking assessment, 112 and a smartphone app, 92 have been validated against relevant global cognitive composites, indicating some validity for further study of biomarkers in preclinical AD. However, as the correlation between conventional composites and preclinical AD is already weak, pen‐and‐paper validation alone is not enough to be able to claim that a test is suitable as a measure of subtle cognitive changes in preclinical AD.

4.3. Potential of digital cognitive assessment instruments in different settings

The contexts in which new technology is being used impose different requirements on a test's capabilities. The requirements are, for instance, higher if the test results are outcomes in a clinical study than if used for participant selection for inclusion into studies. Tablet‐based tests, similar to traditional cognitive test batteries, have already been implemented in clinical trials. They are primarily intended to be administered with the help of a trained rater. Unsupervised and remotely administered tests have not yet shown sufficient robustness to be used in this context, and there remain concerns regarding reliability, adherence, privacy, and user identification.

The different digital assessment instruments discussed in this review enable different usage. Supervised digital assessment instruments could provide robust outcomes in clinical trials, with benefits such as automatic recording of response and scoring, making it easier to follow study protocols, reducing the risk of error, and increasing inter‐rater reliability. Remotely administered tests could serve as a cost‐effective pre‐screening before more expensive and invasive examinations, such as lumbar puncture and brain imaging, are recommended. In clinical trials, mobile devices could be used to identify individuals at greatest risk of cognitive decline, who are most likely to benefit from a specific intervention. Close follow‐up of people's cognitive function from their home environment may also enable high‐quality evaluation of interventions.

4.4. Importance of data security, privacy, and adherence

As cognitive testing becomes increasingly digitized, regulatory authorities have raised issues of data security and privacy. Pharmaceutical companies have also emphasized the importance of these issues. One such consideration is data storage and transmission between servers, which is crucial when data are to be stored and processed on servers that are not under the direct control of the study. When commercial companies are involved, questions can arise regarding ownership of data and conflicts of interest.

Data protection in the United States is governed by several laws enacted on federal and state levels (e.g., Patient Safety and Quality Improvement Act and Health Information Technology for Economic and Clinical Health Act). In the European Union (EU), the General Data Protection Regulation (GDPR) governs data storage and processing in EU countries. It affects scientific cooperation between countries inside and outside the EU. Technological development places increased demands on developers and researchers to familiarize themselves with regulatory issues, especially now that new types of personal data are gathered to a greater extent and across country borders.

Finally, an important and necessary focus is to ensure that data captured remotely, in an uncontrolled environment, are reliable and accurately reflect an individual's cognitive functioning. Adherence also matters here: although there is increasing evidence that unsupervised testing is feasible, large longitudinal health studies indicate significant problems with participant attrition. Work remains to ensure valid and reliable results for participants performing unsupervised testing in large clinical trials.

5. CONCLUSION

This review highlights the wealth of digital assessment instruments currently being evaluated in preclinical populations. Digital technology can be used to assess the subtle cognitive decline that defines biomarker‐confirmed preclinical AD. Potential benefits include increased sensitivity and reliability, and it could add value for individuals through increased accessibility, greater engagement, and reduced participant burden. Digital assessments may have implications for clinical trials by optimizing screening, facilitating case finding, and providing more sensitive clinical outcomes. Several promising tests are currently in development and undergoing validation, but work remains before many of these can be considered alongside conventional in‐clinic cognitive assessments. We have begun to understand the reliability and validity of cognitive assessments obtained in naturalistic environments, which is required before beginning cognitive testing outside research centers on a large scale. Last, more feasibility studies investigating potential barriers to implementation are needed, including the challenges of adherence, privacy, and data security.

CONFLICTS OF INTEREST

Fredrik Öhman declares no conflict of interest. Jason Hassenstab is a paid consultant for Lundbeck, Biogen, Roche, and Takeda, outside the scope of this work. David Berron has co‐founded neotiv GmbH and owns shares. Kathryn V. Papp has served as a paid consultant for Biogen Idec and Digital Cognition Technologies. Michael Schöll has served on a scientific advisory board for Servier and received speaker honoraria from Genentech, outside the scope of this work.

ACKNOWLEDGMENTS

This work was funded by the Knut and Alice Wallenberg Foundation (Wallenberg Centre for Molecular and Translational Medicine), the Swedish Research Council, and the Swedish state under the agreement between the Swedish government and the County Councils, the ALF‐agreement. Fredrik Öhman is supported by the Sahlgrenska Academy, Anna‐Lisa och Bror Björnssons Foundation, Handlanden Hjalmar Svensson Foundation, Carin Mannheimers Prize for Junior Researchers, Gun & Bertil Stohnes Foundation, Fredrik och Ingrid Thurings Foundation, and the Swedish Neuropsychological Society (all paid to the institution). Jason Hassenstab is supported by the NIH (R01 AG057840 Payments to institution; P01 AG003991 Payments to institution; BrightFocus Foundation A2018202S Payments to institution). Michael Schöll is supported by the Knut and Alice Wallenberg Foundation (Wallenberg Centre for Molecular and Translational Medicine Fellow; KAW 2014.0363), the Swedish Research Council (#2017‐02869), and the Swedish state under the agreement between the Swedish government and the County Councils, the ALF‐agreement (#ALFGBG‐813971) (all paid to the institution). David Berron is supported by the European Union's Horizon 2020 research and innovation programme under the Marie Skłodowska‐Curie (grant agreement No 843074), Kockska foundation, Alzheimerfonden and BrightFocus Foundation (all paid to the institution). Kathryn V. Papp is supported by the NIA (K23 Award), paid to the institution.

Öhman F, Hassenstab J, Berron D, et al. Current advances in digital cognitive assessment for preclinical Alzheimer's disease. Alzheimer's Dement. 2021;13:e12217. 10.1002/dad2.12217

Michael Schöll and Kathryn V. Papp contributed equally to this study.

REFERENCES

- 1. Jack CR, Holtzman DM. Biomarker modeling of Alzheimer's disease. Neuron. 2013;80(6):1347‐1358.

- 2. Jack CR, Bennett DA, Blennow K, et al. NIA‐AA Research Framework: toward a biological definition of Alzheimer's disease. Alzheimer's Dement. 2018;14(4):535‐562.

- 3. Sperling RA, Aisen PS, Beckett LA, et al. Toward defining the preclinical stages of Alzheimer's disease: recommendations from the National Institute on Aging‐Alzheimer's Association workgroups on diagnostic guidelines for Alzheimer's disease. Alzheimer's Dement. 2011;7(3):280‐292.

- 4. Weintraub S, Carrillo MC, Farias ST, et al. Measuring cognition and function in the preclinical stage of Alzheimer's disease. Alzheimer's Dement Transl Res Clin Interv. 2018;4:64‐75.

- 5. Perrotin A, Mormino EC, Madison CM, Hayenga AO, Jagust WJ. Subjective cognition and amyloid deposition imaging: a Pittsburgh compound B positron emission tomography study in normal elderly individuals. Arch Neurol. 2012;69(2):223‐229.

- 6. Sperling RA, Johnson KA, Doraiswamy PM, et al. Amyloid deposition detected with florbetapir F 18 (18F‐AV‐45) is related to lower episodic memory performance in clinically normal older individuals. Neurobiol Aging. 2013;34(3):822‐831.

- 7. Papp KV, Rentz DM, Maruff P, et al. The computerized cognitive composite (C3) in A4, an Alzheimer's disease secondary prevention trial. J Prev Alzheimer's Dis. 2020;8(1):59‐67.

- 8. Duke Han S, Nguyen CP, Stricker NH, Nation DA. Detectable neuropsychological differences in early preclinical Alzheimer's disease: a meta‐analysis. Neuropsychol Rev. 2017;27(4):305‐325.

- 9. Aizenstein HJ, Nebes RD, Saxton JA, et al. Frequent amyloid deposition without significant cognitive impairment among the elderly. Arch Neurol. 2008;65(11):1509‐1517.

- 10. Ying Lim Y, Ellis KA, Harrington K, et al. Cognitive consequences of high Aβ amyloid in mild cognitive impairment and healthy older adults: implications for early detection of Alzheimer's disease. Neuropsychology. 2013;27(3):322‐332.

- 11. Song Z, Insel PS, Buckley S, et al. Brain amyloid‐β burden is associated with disruption of intrinsic functional connectivity within the medial temporal lobe in cognitively normal elderly. J Neurosci. 2015;35(7):3240‐3247.

- 12. Harrington KD, Lim YY, Ames D, et al. Amyloid β‐associated cognitive decline in the absence of clinical disease progression and systemic illness. Alzheimer's Dement. 2017;8:156‐164.

- 13. Lim YY, Pietrzak RH, Bourgeat P, et al. Relationships between performance on the Cogstate Brief Battery, neurodegeneration, and Aβ accumulation in cognitively normal older adults and adults with MCI. Arch Clin Neuropsychol. 2015;30(1):49‐58.

- 14. Papp KV, Rentz DM, Orlovsky I, Sperling RA, Mormino EC. Optimizing the preclinical Alzheimer's cognitive composite with semantic processing: the PACC5. Alzheimer's Dement Transl Res Clin Interv. 2017;3(4):668‐677.

- 15. Rowe CC, Bourgeat P, Ellis KA, et al. Predicting Alzheimer disease with β‐amyloid imaging: results from the Australian imaging, biomarkers, and lifestyle study of ageing. Ann Neurol. 2013;74(6):905‐913.

- 16. Hanseeuw BJ, Betensky RA, Jacobs HIL, et al. Association of amyloid and tau with cognition in preclinical Alzheimer disease: a longitudinal study. JAMA Neurol. 2019;76(8):915‐924.

- 17. Donohue MC, Sperling RA, Salmon DP, et al. The preclinical Alzheimer cognitive composite: measuring amyloid‐related decline. JAMA Neurol. 2014;71(8):961‐970.

- 18. Papp KV, Rentz DM, Mormino EC, et al. Cued memory decline in biomarker‐defined preclinical Alzheimer disease. Neurology. 2017;88(15):1431‐1438.

- 19. Bransby L, Lim YY, Ames D, et al. Sensitivity of a Preclinical Alzheimer's Cognitive Composite (PACC) to amyloid β load in preclinical Alzheimer's disease. J Clin Exp Neuropsychol. 2019;41(6):591‐600.

- 20. Mormino EC, Papp KV, Rentz DM, et al. Early and late change on the preclinical Alzheimer's cognitive composite in clinically normal older individuals with elevated amyloid β. Alzheimer's Dement. 2017;13(9):1004‐1012.

- 21. Hedden T, Oh H, Younger AP, Patel TA. Meta‐analysis of amyloid‐cognition relations in cognitively normal older adults. Neurology. 2013;80(14):1341‐1348.

- 22. Mortamais M, Ash JA, Harrison J, et al. Detecting cognitive changes in preclinical Alzheimer's disease: a review of its feasibility. Alzheimer's Dement. 2017;13(4):468‐492.

- 23. Baker JE, Lim YY, Pietrzak RH, et al. Cognitive impairment and decline in cognitively normal older adults with high amyloid‐β: a meta‐analysis. Alzheimer's Dement. 2017;6:108‐121.

- 24. Petersen RC, Wiste HJ, Weigand SD, et al. Association of elevated amyloid levels with cognition and biomarkers in cognitively normal people from the community. JAMA Neurol. 2016;73(1):85‐92.

- 25. Johnson DK, Storandt M, Morris JC, Galvin JE. Longitudinal study of the transition from healthy aging to Alzheimer disease. Arch Neurol. 2009;66(10):1254‐1259.

- 26. Nelson PT, Alafuzoff I, Bigio EH, et al. Correlation of Alzheimer disease neuropathologic changes with cognitive status: a review of the literature. J Neuropathol Exp Neurol. 2012;71(5):362‐381.

- 27. Ossenkoppele R, Schonhaut DR, Schöll M, et al. Tau PET patterns mirror clinical and neuroanatomical variability in Alzheimer's disease. Brain. 2016;139(5):1551‐1567.

- 28. Bejanin A, Schonhaut DR, La Joie R, et al. Tau pathology and neurodegeneration contribute to cognitive impairment in Alzheimer's disease. Brain. 2017;140(12):3286‐3300.

- 29. Maass A, Lockhart SN, Harrison TM, et al. Entorhinal tau pathology, episodic memory decline, and neurodegeneration in aging. J Neurosci. 2018;38(3):530‐543.

- 30. Schöll M, Lockhart SN, Schonhaut DR, et al. PET imaging of tau deposition in the aging human brain. Neuron. 2016;89(5):971‐982.

- 31. Schöll M, Maass A. Does early cognitive decline require the presence of both tau and amyloid‐β? Brain. 2020;143(1):10‐13.

- 32. Betthauser TJ, Koscik RL, Jonaitis EM, et al. Amyloid and tau imaging biomarkers explain cognitive decline from late middle‐age. Brain. 2020;143(1):320‐335.

- 33. Mormino EC, Papp KV. Amyloid accumulation and cognitive decline in clinically normal older individuals: implications for aging and early Alzheimer's disease. J Alzheimer's Dis. 2018;64(s1):S633‐S646.

- 34. Rentz DM, Parra Rodriguez MA, Amariglio R, Stern Y, Sperling R, Ferris S. Promising developments in neuropsychological approaches for the detection of preclinical Alzheimer's disease: a selective review. Alzheimer's Res Ther. 2013;5(6):1.

- 35. Sliwinski MJ. Measurement‐burst designs for social health research. Soc Personal Psychol Compass. 2008;2(1):245‐261.

- 36. Goldberg TE, Harvey PD, Wesnes KA, Snyder PJ, Schneider LS. Practice effects due to serial cognitive assessment: implications for preclinical Alzheimer's disease randomized controlled trials. Alzheimer's Dement. 2015;1(1):103‐111.

- 37. Soldan A, Pettigrew C, Albert M. Evaluating cognitive reserve through the prism of preclinical Alzheimer disease. Psychiatr Clin North Am. 2018;41(1):65‐77.

- 38. Schatz P, Browndyke J. Applications of computer‐based neuropsychological assessment. J Head Trauma Rehabil. 2002;17(5):395‐410.

- 39. Sliwinski MJ, Mogle JA, Hyun J, Munoz E, Smyth JM, Lipton RB. Reliability and validity of ambulatory cognitive assessments. Assessment. 2018;25(1):14‐30.

- 40. Koo BM, Vizer LM. Mobile technology for cognitive assessment of older adults: a scoping review. Innov Aging. 2019;3(1):1‐14.

- 41. Miller JB, Barr WB. The technology crisis in neuropsychology. Arch Clin Neuropsychol. 2017;32(5):541‐554.

- 42. Laske C, Sohrabi HR, Frost SM, et al. Innovative diagnostic tools for early detection of Alzheimer's disease. Alzheimer's Dement. 2015;11(5):561‐578.

- 43. Pratap A, Neto EC, Snyder P, et al. Indicators of retention in remote digital health studies: a cross‐study evaluation of 100,000 participants. npj Digit Med. 2020;3(1):1‐10.

- 44. Bush SS. A Casebook of Ethical Challenges in Neuropsychology. Taylor & Francis; 2004.

- 45. Anderson BYM, Perrin A. PI_2017.05.17_Older‐Americans‐Tech_FINAL. Pew Res Cent. 2017;(May):1‐22.