Abstract

Over the course of 2 years a global technology education nonprofit engaged ~ 20,000 under-resourced 3rd-8th grade students, parents and educators from 13 countries in a multi-week AI competition. Families worked together with the help of educators to identify meaningful problems in their communities and developed AI-prototypes to address them. Key findings included: (1) Identifying a high level of interest in underserved communities to develop and apply AI-literacy skills; (2) Determining curricular and program implementation elements that enable families to apply AI knowledge and skills to real problems; (3) Identifying effective methods of engaging industry mentors to support participants; (4) Measuring and identifying changes in self-efficacy and ability to apply AI-based tools to real-world problems; (5) Determining effective curricula around value-sensitive design and ethical innovation.

Keywords: Under-resourced families, Mentoring, AI-ethics curriculum

Introduction

Our greatest challenges, from climate change to fast-moving pandemics, will require complex systems thinking and real-world problem-solving skills. We need to move beyond the traditional “command and control” approach that merely increases the speed with which students develop literacy, numeracy, coding skills, and now artificial intelligence (AI) literacy [1]. Content knowledge skills are relatively easy to learn, standardize, and measure, but they are also easy to automate. As Stuart Elliott points out, “AI literacy programs surpassed 30% of workers in developed countries in 2016, and by 2026, this number will be 60%. Similarly current math and data analysis systems outperform nearly all workers” [2]. The top three skills needed in 2025, according to the World Economic Forum, are cognitive abilities, systems skills and complex problem-solving [3].

Background

Nurturing Resilient Interest in Computer Science in Diverse Learners

Efforts to prepare students (especially those from under-resourced communities) with twenty-first century skills, such as computational thinking, are not new, and we can learn from prior efforts as we work to improve engagement of underserved groups in technology. A notable example is the coding movement, which is still underway worldwide. One goal of this movement has been to engage and equip students from underrepresented groups such as women and minorities. However, over the past 10 years the percentage of women among Computer Science undergraduates in the United States has declined by 2 percentage points, from 20.7% to 18.7% [4]. The percentage of African American Computer Science undergraduates also decreased, from 11.8% to 9.3%. The percentage of Hispanic Computer Science undergraduates increased slightly (+ 3%), which could be due to the Hispanic population being the fastest growing demographic (increasing by almost 30% over the past 10 years, compared with 5% for whites and 12% for African Americans).

These numbers have decreased despite investing millions of dollars into focused efforts towards increasing the number of girls and minorities in Computer Science.

A comprehensive literature review (150 + research articles spanning Creativity, Creativity Training, Gamification, Team Science, Complex Decision Making, Computer Science Education, Computational Thinking, Gender Equity and Social-Emotional learning) surfaced the following four issues:

A syntax-first approach doesn’t result in resilient change in attitudes, interest and identity [5–9].

Learning how to code is not the same as being able to solve a problem with code. Studies show that students who have knowledge of coding fundamentals still have no ability to apply this knowledge to real-world problems [10, 11].

Students drop out at every level if there is inadequate social and technical support for their efforts. Education systems, and online platforms have shown that it is possible to build technical capabilities, even at large scale. However, building scalable social support systems is much more messy and unpredictable [12–17].

Students drop out if they do not find an authentic purpose to their learning [18, 19].

In summary, what was easiest to measure (i.e. content knowledge, or learning to code) ended up becoming the goal and the focus of significant collective effort and analysis. The larger questions of nurturing resilient interest in diverse learners were not addressed, and as a result, even after a decade of investment in CS education, the proportions of women and minorities in CS are decreasing. The problem of diversity and inclusion in CS remains at all levels, and in fact is worsening with the scale, speed and impact of technologies [20].

Parental Engagement Leads to Sustainable Impact

It is broadly recognized that childhood development and learning are sociocultural processes, and that children’s emerging skills, knowledge, and beliefs are strongly scaffolded and co-regulated by parents and caregivers [21–24]. Parents are the single factor that helps a child improve academically, aim higher in postsecondary education, develop social competence and strong career aspirations, and avoid adolescent high-risk behavior [25–30].

Parents understand that they are primarily responsible for their child’s physical health needs, attending to their spiritual and emotional needs, helping establish their value systems and so on. However, parents rely on schools and educators to plan and lead their child’s education and especially technology education [31]. Herein lies an opportunity to build capacity at multiple levels in under-resourced communities. By engaging parents as co-learners in an education program, the hypothesis is that students will continue to be supported by invested parents after the intervention ends. Through the subsequent increased dosage and skill building, the family will change their attitudes towards science, technology, engineering and math (STEM)—seeing these fields as viable and accessible career choices for their children. And finally, parents themselves will begin to learn more about these fields, fueling and sustaining their own curiosity and interest.

Engaging Parents as Co-learners in a Technology Program

Technovation, a global technology education nonprofit, started in 2006 to engage parents as co-learners in engineering and technology education programs, alongside children. It began testing the hypothesis that parents would continue to support their child’s learning, and that they themselves would change their attitudes and interest in these fields.

Strategies that Technovation used to engage parents were offering meals during the program; allowing the whole family (including younger children) to participate and learn, reducing the need for childcare; ensuring that parents learn new concepts and increase their own sense of self-efficacy; and bringing in new social capital in the form of industry and university mentors.

After 14 years of engaging 12,500 parents and 100,000 students (70% of whom are from under-resourced communities), across 100 + countries, the main finding was that parents, even those from under-resourced communities, do more hands-on science projects at home and take their children to more enrichment programs—after Technovation’s programs—thereby continuing to support their child’s interest and skills [32].

Through a 5-week program (10 h), Technovation had some success in changing the family's attitudes towards potential careers in STEM for their children, and increasing a family’s interest in STEM, but the change was not deep, with insufficient skill development [33].

According to Hidi and Renninger, higher dosage and practice enable learners to move from “situational interest” (participating in a program because it is offered in their community) to “well-developed, individual interest” (where the activity becomes a core part of their identity) [8]. The 5-week program was insufficient to help learners develop a resilient identity as problem solvers.

To motivate families to dedicate considerable time and energy to learning, Technovation adapted Bandura’s self-efficacy theory, which outlines four pillars for successful behavior change interventions:

Exposure to mentors and stories modeling lifelong learning

Multi-exposure learning experiences that are personally relevant, engaging and meaningful

Supportive cheerleaders who hold learners to high expectations while providing necessary support

High-energy, dramatic, social gatherings/competitions that help the learners feel collective pride (and adrenaline) at their accomplishments, cementing the learning experience in their memory [9].

Keeping these principles in mind, Technovation launched and implemented a 15-week AI-entrepreneurship competition for families in 2018, encouraging them to identify pressing problems in their communities and develop AI-based prototypes to address these problems.

After conducting impact analysis, the Technovation team identified areas of improvement, namely, reduction in program length, improvement in parent training materials, connecting technical mentors to sites, improving the curriculum to be more hands-on, engaging, and better illustrative of the concepts, and inclusion of guidance on ethical innovation [34]. Technovation made the appropriate curriculum and programmatic changes before implementing the AI-entrepreneurship program for families again in 2019. The following research questions guided areas of focus, data collection and analysis:

Research Question 1 (RQ1): How can industry mentors be engaged to support participants?

Research Question 2 (RQ2): What impact do the second year program model changes have on participant content knowledge, interest and attitudes towards AI/technology?

Research Question 3 (RQ3): How can we introduce value-sensitive design and ethical innovation to learners?

This study outlines the research questions, the curriculum, and the findings from pre- and post-surveys and AI-prototype judge reviews in 2019, and draws insights from comparisons to results from 2018. We also compare 2018 and 2019 parent survey results to those from parents in previous 5-week-long Technovation programs to see if the increased dosage and real-world problem-solving competition model resulted in significant differences in learning outcomes.

Methods

Technovation implemented the AI education program, Technovation Families, for the second year in 2019 across 62 sites in 13 countries, engaging ~ 9200 under-resourced 3rd-8th grade students, parents and educators. In total, 247 families submitted prototypes, ranging from fire safety tools inspired by the Amazonian fire and wearable devices to protect children against kidnapping to tools that help people with disabilities better access our world.

The following section outlines the recruitment, adoption, curriculum, methods of implementation, data collection, and analysis.

Recruitment

Technovation recruited participants through its network of global partners and offered a financial incentive ($1000 USD in 2019, vs $5000 in 2018). The partner stipends were reduced in order to increase the financial sustainability of the overall endeavor. The stipends were unrestricted and allowed local partners to adapt the program to local needs and contexts. For instance, stipends were used to obtain materials for activities, internet hotspots and meals for the families, or prizes for those who completed a certain number of sessions.

Selection criteria: most sites were schools or after-school organizations. Sites were required to engage under-resourced communities, engage 20–30 families, have access to computers and wifi for each family, and have 1–2 staff members who could spend 30 + hours training for and leading the program. Local mentors were recruited from industry and university partners, both by local partners and by Technovation.

Table 1 compares the number of participants who registered for the program and those who completed in each of the 2 years. There was a slight increase in the number of families who completed the whole program and submitted AI-prototypes in 2019 (+ 5%) as compared to 2018.

Table 1.

Participant registration and completion in 2018 and 2019

| | Students | Parents | Coaches | Mentors | Total Program Participants | AI prototypes submitted | Judges |
|---|---|---|---|---|---|---|---|
| 2019 | | | | | | | |
| Registered | 4101 | 2696 | 2420 | 277 | 9494 | 247 | 495 |
| Completed | 2460 | 1097 | 120 | 20 | 3697 | 258 | |
| 2018 | | | | | | | |
| Registered | 6227 | 3287 | 1255 | 0 | 10,769 | 205 | |
| Completed | 4940 | 1290 | 250 | 0 | 6480 | 175 | |
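The registered and completed counts in Table 1 can be turned into completion rates per role. The following is a minimal sketch that takes the table's counts at face value; the names `completion_rate` and `rates_for` are illustrative and not part of the study.

```python
# Registered and completed counts copied from Table 1.
REGISTERED = {
    2019: {"students": 4101, "parents": 2696, "coaches": 2420, "mentors": 277},
    2018: {"students": 6227, "parents": 3287, "coaches": 1255, "mentors": 0},
}
COMPLETED = {
    2019: {"students": 2460, "parents": 1097, "coaches": 120, "mentors": 20},
    2018: {"students": 4940, "parents": 1290, "coaches": 250, "mentors": 0},
}


def completion_rate(completed, registered):
    """Return completed/registered as a percentage, or None if nobody registered."""
    if registered == 0:
        return None
    return 100.0 * completed / registered


def rates_for(year):
    """Per-role and overall completion rates (in percent) for one program year."""
    reg, done = REGISTERED[year], COMPLETED[year]
    rates = {role: completion_rate(done[role], reg[role]) for role in reg}
    rates["total"] = completion_rate(sum(done.values()), sum(reg.values()))
    return rates


if __name__ == "__main__":
    for year in (2018, 2019):
        for role, rate in rates_for(year).items():
            label = "n/a" if rate is None else f"{rate:.1f}%"
            print(year, role, label)
```

Returning `None` for roles with no registrations (mentors in 2018) avoids a division by zero and keeps "not applicable" distinct from a 0% rate.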

Adoption and Retention

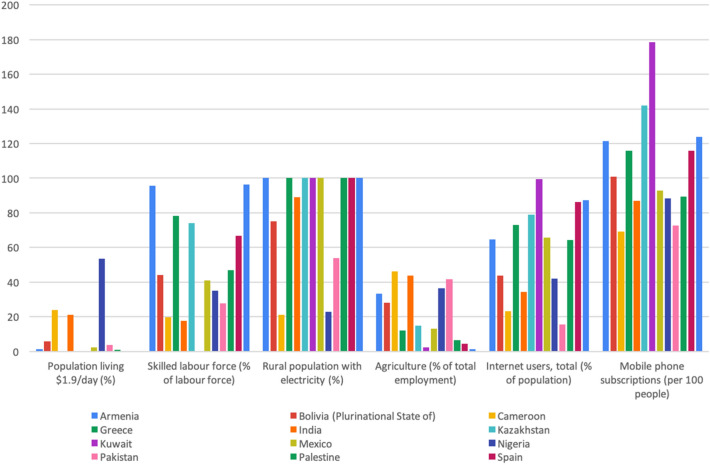

Participants from 62 sites across 13 countries (Armenia, Bolivia, Cameroon, Greece, India, Kazakhstan, Kuwait, Mexico, Nigeria, Pakistan, Palestine, Spain and USA) submitted AI-prototypes addressing local problems. 22 sites from 9 countries returned from 2018 (~ 35%). Figure 1 graphs the Human Development Index (HDI) for participating countries, providing country-level information on resources, employment, access to technology, and financial stability. The HDI is defined by the United Nations Development Programme (UNDP) as the ability of an individual to lead a long, healthy life and have access to education that enables them to have a decent standard of living [35]. Participating country indices are as follows: Armenia = 0.76, Bolivia = 0.70, Cameroon = 0.56, Greece = 0.87, India = 0.65, Kazakhstan = 0.82, Kuwait = 0.81, Mexico = 0.77, Nigeria = 0.53, Pakistan = 0.56, Palestine = 0.69, Spain = 0.89, USA = 0.92.

Fig. 1.

Poverty and employment metrics from the Human Development Index of participating countries, 2019

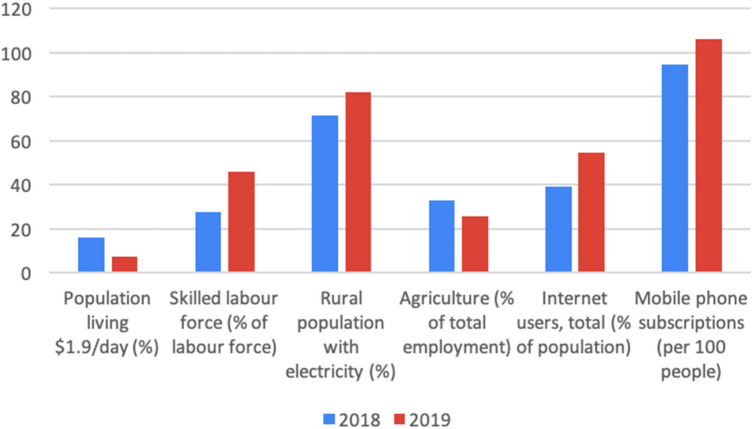

The average HDI of countries participating in 2019 was 0.73, and the 2018 average was 0.72. However, when removing countries with HDI > 0.85, the adjusted average HDI in 2019 was 0.69, a 7.8% increase from 0.64 in 2018. The adjusted average values of poverty and employment metrics in Fig. 2 indicate higher levels of skilled labor, financial stability, and access to technology in countries participating in 2019 than in 2018. The reduction in stipends provided to partners most likely accounts for why only more developed countries (in comparison to 2018) were able to participate in the second year of the program.
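The 2019 averages quoted above can be reproduced from the per-country indices listed earlier in this section. The sketch below is illustrative only: the function names are ours, Armenia's index is taken as 0.76, and the 2018 adjusted average (0.64) is used as reported because the 2018 country list is not given here.

```python
from statistics import mean

# HDI values for the 2019 participating countries, as listed in the text
# (Armenia is taken as 0.76).
HDI_2019 = {
    "Armenia": 0.76, "Bolivia": 0.70, "Cameroon": 0.56, "Greece": 0.87,
    "India": 0.65, "Kazakhstan": 0.82, "Kuwait": 0.81, "Mexico": 0.77,
    "Nigeria": 0.53, "Pakistan": 0.56, "Palestine": 0.69, "Spain": 0.89,
    "USA": 0.92,
}


def adjusted_mean(hdi, cutoff=0.85):
    """Mean HDI after dropping countries whose HDI exceeds the cutoff."""
    return mean(v for v in hdi.values() if v <= cutoff)


def relative_change(before, after):
    """Relative change from before to after, in percent."""
    return 100.0 * (after - before) / before


if __name__ == "__main__":
    print(f"average HDI 2019:  {mean(HDI_2019.values()):.3f}")
    print(f"adjusted HDI 2019: {adjusted_mean(HDI_2019):.3f}")
    # 0.64 is the 2018 adjusted average reported in the text.
    print(f"change vs 2018:    {relative_change(0.64, 0.69):.1f}%")
```

With the cutoff at 0.85, Greece, Spain and the USA are excluded, leaving ten countries in the adjusted average.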

Fig. 2.

Comparison of adjusted average poverty and employment metrics, 2018–2019

Overall, 9217 participants (students, parents, educators and mentors) registered for the program, ~ 30% fewer than in 2018. A total of 277 mentors registered for online mentor training.

Curriculum and Training

The Technovation team was successful in implementing a few of the recommendations from the 2018 program analysis: (1) Improved curriculum around identification of meaningful problems; (2) Providing better analogies and explanations for fundamental concepts of machine learning; (3) More guidance to determine which problems were suited to machine learning based solutions; (4) Connecting technical mentors with site facilitators and families to provide technical guidance [36]. 20 AI researchers were also gathered from different industry and research institutions around the world to advise on which AI concepts to explain in the lessons and how.

Sites accessed an online curriculum (https://www.curiositymachine.org/lessons/lesson/) with 10 lessons (reduced from 15 in 2018) introducing problem identification, datasets, machine learning and training models to recognize images, text and emotions through an IBM-Watson based platform Machine Learning for Kids (https://machinelearningforkids.co.uk/). Two big differences from 2018 were the removal of 5 hands-on design challenges (to reduce the length of the program commitment) and compressing the 10 sessions into one fall season, instead of spreading them out over the full calendar year.

A new ethics module (https://www.technovation.org/stem-explorers/ai-ethics/) was created in partnership with a legal firm, Hogan Lovells, that provided a set of 12 questions (or checklist) for participants to use to consider their invention’s impacts on diverse peoples and communities.

The Technovation team trained sites through online webinars, while providing access to detailed lesson plans and customizable slide-decks. Following the training, local site educators and facilitators engaged 3rd-8th grade students and parents meeting weekly for 2 h over 10 weeks.

The Judging Rubric (https://s3.amazonaws.com/devcuriositymachine/images/sources/final%20T%20Families%20Season%202%20rubric.pdf) was updated to emphasize understanding of the AI concepts, the appropriate use of AI, Responsible Innovation and personal growth.

Data Collection and Analysis

Four types of quantitative and qualitative data were collected to assess program impact: pre- and post-surveys with parents, educators/coaches and mentors; interviews with participants; student responses to multiple-choice questions; and judges’ scores on families’ prototypes. In 2019, surveys were offered only to English-speaking participants, whereas in 2018 all participants were asked to fill out the surveys. All program participants signed consent forms that explained in simple language what type of data was being collected and why. The sign test was used to test for statistically significant differences between each family’s paired pre- and post-program survey results.
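For readers unfamiliar with the sign test, the following is a minimal sketch of an exact two-sided version for paired responses. It is not the authors' analysis code: the function name and the example Likert-style responses are hypothetical, and tied pairs are dropped, which is a common convention that the paper does not specify.

```python
from math import comb


def sign_test(pre, post):
    """Exact two-sided sign test for paired observations.

    Pairs with no change (post == pre) are dropped.
    Returns (n_positive, n_negative, p_value).
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        return 0, 0, 1.0
    n_pos = sum(d > 0 for d in diffs)
    n_neg = n - n_pos
    k = min(n_pos, n_neg)
    # Under the null hypothesis each non-tied pair is equally likely to be
    # an increase or a decrease, so the counts follow Binomial(n, 1/2).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return n_pos, n_neg, min(1.0, 2 * tail)


if __name__ == "__main__":
    # Hypothetical paired survey responses on a 1-5 scale (not study data).
    pre = [3, 4, 2, 3, 3, 4, 2, 3, 4, 3]
    post = [4, 4, 3, 4, 4, 5, 3, 4, 4, 4]
    print(sign_test(pre, post))
```

The test uses only the direction of each paired change, not its size, which suits ordinal survey scales.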

Results

Website Traffic

The curriculum was made freely available on Technovation’s hands-on design challenge website that hosts science and engineering projects for students, in addition to the AI curriculum. Table 2 shows the website traffic and unique pageviews to the AI curriculum in 2018 and 2019, as well as the overall traffic to the other design challenges. There was a significant increase in traffic to the AI curriculum in 2019 despite a decrease in traffic to the other design challenges. This could be due to an overall increased interest in AI in the public.

Table 2.

Total web traffic and unique pageviews on design challenges and AI family challenge lessons, 2018–2019

| | 2018 | 2019 |
|---|---|---|
| Design challenge total traffic | 452,469 | 355,109 |
| Design challenge unique views | 208,246 | 162,303 |
| AI lessons total traffic | 15,719 | 111,373 |
| AI lessons unique views | 4,533 | 33,538 |
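The size of the shift in Table 2 is easier to see as year-over-year percentage changes. The sketch below computes them from the table's counts; the helper name `percent_change` is illustrative.

```python
# Page-view counts copied from Table 2 as (2018, 2019) pairs.
TRAFFIC = {
    "Design challenge total traffic": (452_469, 355_109),
    "Design challenge unique views": (208_246, 162_303),
    "AI lessons total traffic": (15_719, 111_373),
    "AI lessons unique views": (4_533, 33_538),
}


def percent_change(before, after):
    """Year-over-year change in percent; negative values indicate a decline."""
    return 100.0 * (after - before) / before


if __name__ == "__main__":
    for label, (y2018, y2019) in TRAFFIC.items():
        print(f"{label}: {percent_change(y2018, y2019):+.1f}%")
```

On these counts, both AI lesson metrics grew roughly sevenfold while both design challenge metrics fell by a little over a fifth.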

Survey Results

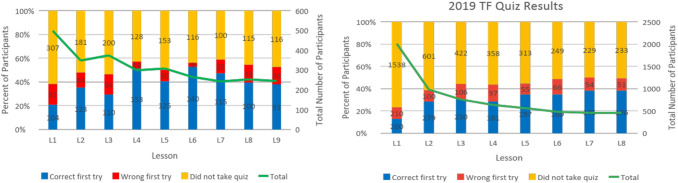

For both years, the majority of guardians that came with the students were mothers, grandmothers, aunts and older sisters. 53% of 2019 guardians were mothers, up from 45% in 2018 (Figs. 3, 4).

Fig. 3.

Guardian relation to child, 2019

Fig. 4.

Age of guardian, 2019

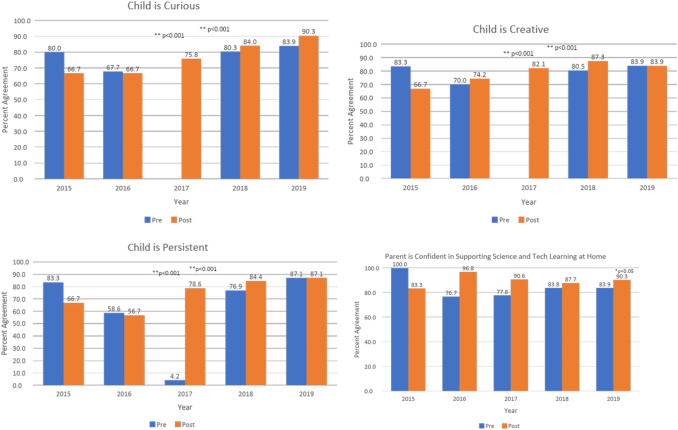

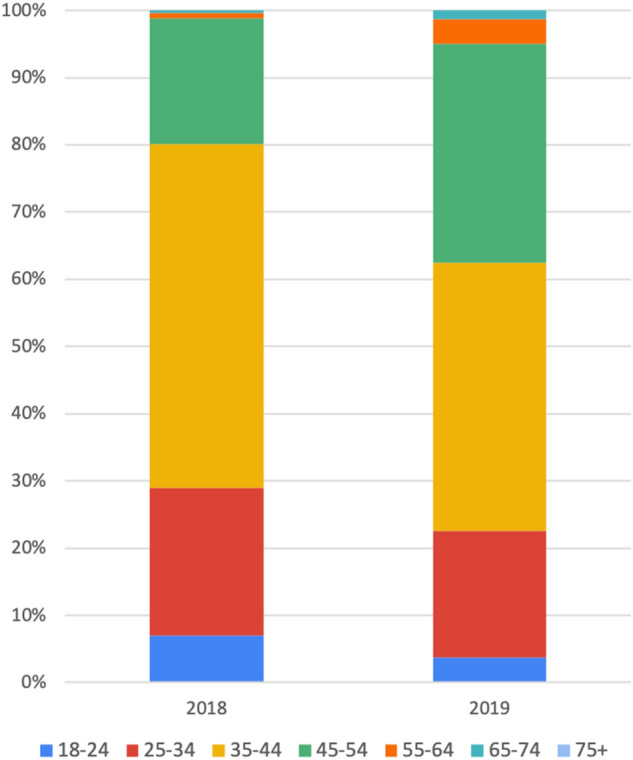

Comparison of Learning Gains Across 5 years of Family Learning Programs

To test whether higher dosage of engagement led to higher learning gains, parent survey responses from 2015–2019 were compared (Fig. 5). The programs in 2015, 2016 and 2017 were 5 weeks long, with families spending 6–10 h on hands-on STEM projects. The program in 2018 was the AI technology competition for families, extending for 15 weeks across the year, while the AI competition in 2019 was 10 weeks in length. All the programs appeared to attract parents who wanted their children to learn more about Science and Technology; however, the AI competitions attracted families with a higher level of pre-program self-efficacy. This was expected, as participation in a global AI competition requires a threshold level of interest, curiosity and self-efficacy.

Fig. 5.

Pre and post survey results from programs implemented over 5 years, measuring changes in students’ curiosity, creativity and persistence as reported by parents, and changes in parents’ confidence in supporting children’s technology learning at home

There were 463 paired pre- and post-survey respondents in 2018 and 31 in 2019. The significant reduction in post-survey completion in 2019 was due to participants not being required to complete post-surveys. The Sign Test was used to test for significant differences between paired pre- and post-survey responses.

Parents perceived their child to have a high degree of self-efficacy (Curiosity, Creativity, Persistence) before the programs, with the exception of 2017, when fewer than 5% of respondents reported this. Significant gains were seen in 2017 and 2018. Parents reported they were confident in supporting their child’s Science and Technology learning at home before the program, and this was maintained after the program. This result was not surprising, given their decision to enroll their child in such a program. In conclusion, the programs appeared to attract parents who wanted their child to learn more about Science and Technology, and for the 2019 program specifically, parents showed a significant increase in their confidence to support their child’s science and technology learning at home.

Coaches

For the 2019 season, 2400 coaches registered to implement the program locally, compared to 1255 in 2018. Following the training, coaches engaged with almost 6800 3rd-8th grade students and parents, meeting weekly for 2 h over a 10-week period. Coaches were mostly educators who were proficient in science, technology, and math, but less so in coding, engineering, and electronics.

At the outset of the 2018 program, coaches wanted to further develop their STEM instruction abilities, and these abilities did change significantly after the program (paired pre- and post-survey, n = 40). There was also a significant improvement in their confidence to stimulate a child’s STEM interest after participating in the program.

In 2019, 100% of the survey respondents (paired pre- and post-survey, n = 15) said they learned better ways to stimulate a student’s interest in STEM (AI, Coding, Technology and Engineering) after the program, and 80% of the coaches said they were able to help children develop and explore new skills.

The competition element of the program provided the usual combination of pros and cons: time-based deadline that motivated families to persist and submit their prototypes, excitement of competing at a global level counterbalanced by stress, frustration, impatience, and forced deliberation.

Pre-Survey Results with Mentors

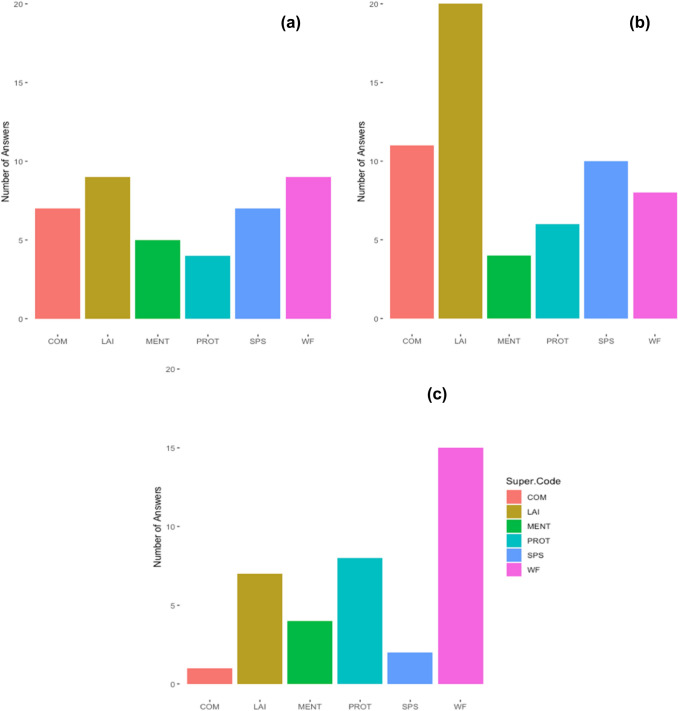

The Know-Center at TU Graz partnered with Technovation to improve mentor retention over the course of the program. The Know-Center conducted a clustering analysis on pre-surveys completed by industry mentors (n = 90). Figures 6a–c illustrate three clusters that were determined based on interests and desires to gain different skills: COM: Communication skills; LAI: Learning AI; MENT: Mentoring; PROT: Prototype development; SPS: Solving problem skills; WF: Working with families.

Fig. 6.

a Cluster 1: the interests were evenly distributed with respondents scoring 4 in all of the dimensions in the pre-survey. b Cluster 2: average scores were high (5) in all dimensions, particularly in intrinsic motivation and interest in learning AI and developing communication skills. c Cluster 3: scores were between 4 and 5 in most dimensions in the pre-survey and represented an interest in working with families and developing prototypes

These three clusters (Fig. 6a–c) indicate that differentiated strategies could be used to better train and retain mentors through the program, enabling them to gain the skills they were looking to develop. Only about 20 mentors from industry were able to connect with families due to challenges in geographical matching. Most of the families were supported by their site educator, who in turn was trained and supported by the Technovation team.

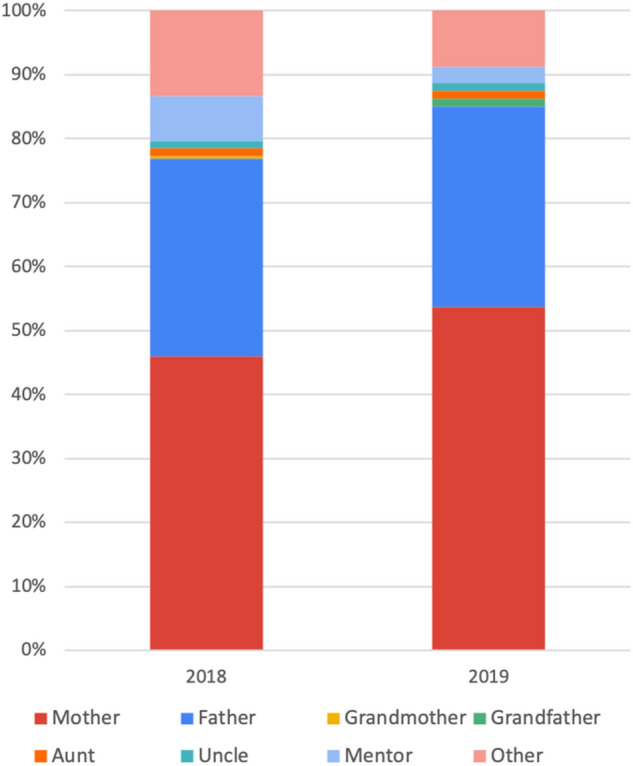

Quizzes

Students tested their understanding of concepts through selected response questions on the curriculum platform. If they selected the wrong answer, they were prompted to try again.

For both seasons, there was a decline in the number of participants who completed quizzes after the first lesson, from 498 in Lesson 1 to 348 in Lesson 2 for Season 1, and from 2008 in Lesson 1 to 980 in Lesson 2 for Season 2 (Fig. 7). Nearly half of the participants who completed lessons did not take the quiz. Those who continued to take the quizzes improved their ability to answer questions correctly as the lessons progressed, as shown by the increasing percentage of correct first tries, suggesting that comprehension increases with higher dosage and program participation.

Fig. 7.

Quiz results and total number of students completing each quiz, 2018–2019

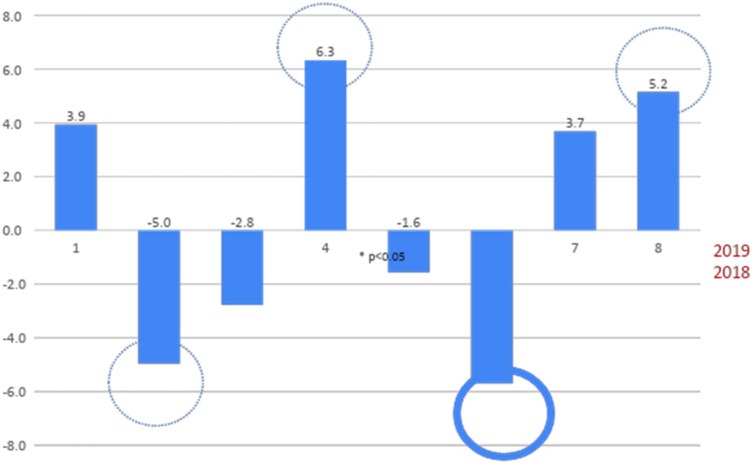

Judge Scoring

For each of the two seasons, at least three judges gave an impartial assessment of the quality of the AI-prototype based on a rubric that targeted the desired outcomes of the program. Based on the analysis of 2018 data, changes were made to improve the curriculum and rubric. Four of the core categories did not change: problem definition, Use of AI, Innovation or Uniqueness of the solution, and Project Execution. A new section was added around Responsible Invention. A significant decline of 5.7% was seen in the scoring for Problem Definition and the quality of the solution addressing the problem (Table 3). This may be due to ethical constraints newly added to the 2019 season, which may have introduced a product specification that not all teams were able to meet adequately. However, improvements to the curriculum appeared to result in a trend of better execution of the project, and improved likelihood of a successful invention and product.

Table 3.

Percentage of relative change in judge scoring from 2018 to 2019

| 2018 | 2019 | Relative change (%) | p |
|---|---|---|---|
| Ideation 1 | Ideation 1 | 3.9 | > 0.1 |
| Ideation 3 | Ideation 2 | −5.0 | 0.06 |
| PD 1 | AI 1 | −2.8 | > 0.1 |
| PD 2 | AI 2 | 6.3 | 0.07 |
| PD 3 | AI 3 | −1.6 | > 0.1 |
| Pitch 1 | OI 1 | −5.7 | 0.02 |
| Pitch 3 | OI 2 | 3.7 | > 0.1 |
| OI 1 | OI 3 | 5.2 | 0.08 |

p < 0.05 indicates statistical significance

The coefficient of variation (CV = standard deviation/mean) was examined as a way to assess the consistency of judges' assessments and to identify areas of improvement in the curriculum. There was a wide distribution in judges’ scores for Responsible Innovation (ethical design), with a CV of 37%, indicating that judges did not interpret or evaluate the prototypes in the same way.
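As a concrete illustration of the CV calculation, the sketch below computes it for one rubric category. The scores are hypothetical rather than study data, and the use of the sample standard deviation is an assumption, since the text does not say whether the sample or population form was used.

```python
from statistics import mean, stdev


def coefficient_of_variation(scores):
    """CV = sample standard deviation / mean, expressed as a percentage."""
    m = mean(scores)
    if m == 0:
        raise ValueError("CV is undefined when the mean is zero")
    return 100.0 * stdev(scores) / m


if __name__ == "__main__":
    # Hypothetical judge scores for one rubric category (not study data).
    responsible_innovation = [2, 4, 3, 5, 1]
    print(f"CV = {coefficient_of_variation(responsible_innovation):.1f}%")
```

Because the CV is scaled by the mean, it allows spread to be compared across rubric categories whose average scores differ.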

Interviews

After the 2018 program, 34 interviews with families in the US, Cameroon, and Bolivia were conducted to gain a better understanding of why families signed up for the program, what they were expecting from the experience and what enabled them to finish it successfully. Universal themes across the three countries and socio-economic groups were that parents wanted their children to work on something that they were passionate about, leading them to happiness and success; the AI program was a way for parents to learn more about their children as well as themselves; and parents appreciated increasing their own problem-solving and technological abilities at the same time as their child. For all, the biggest barrier to participation was time. Keeping these findings in mind, the length of the program was reduced from 15 to 10 weeks in 2019, and more emphasis was placed on improving the AI-focused curriculum modules so that families could still be successful in developing working AI-prototypes within the compressed timeframe.

For 2019, six interviews were conducted with families and mentors from India, Pakistan and Cambodia. Themes that emerged were that the compressed curriculum (without the hands-on design challenges) was less engaging for the families. Mentors and parents recommended more case studies, examples of AI being used to tackle local problems and flexibility in choosing topics and content according to interest and skill level.

Discussion

Comparing 2 years of implementation of a global AI competition engaging under-resourced families revealed a few insights and areas of further exploration that are relevant to organizations and groups interested in AI literacy, upskilling and helping all communities develop future-ready skills. Table 4 lists the programmatic and curricular differences between the 2018 and 2019 programs.

Table 4.

Program and assessment changes from 2018 to 2019

| | 2018 | 2019 |
|---|---|---|
| No. of lessons | 15 | 10 |
| Duration | 5 weeks in Spring, 10 weeks in Fall | 10 weeks in Fall |
| Lesson type | 5 lessons in Spring used hands-on materials | All focused on using online tools |
| Partner Stipends | $5000 | $1000 |
| Adjusted Average Human Development Index of countries (removing those with HDI > 0.85) | 0.64 | 0.69 |
| No. of registered participants | 10,769 | 9494 |
| Surveys | Everyone was asked to complete | English speaking participants were encouraged |
| No. of AI prototypes submitted | 205 | 247 |
| Judges’ scores | | A significant decline of 5.7% was seen in scores for problem identification and the quality of the solution. Increased scores for project execution and feasibility. Returning sites (e.g. Palestine) demonstrated greater understanding of what problems could be solved with AI/ML |

Research Question 1 (RQ1): How Can Industry Mentors be Engaged to Support Participants?

Mentor recruitment proved to be challenging despite a high-visibility launch of the program in May 2019 at the UN AI for Good Summit in Geneva, with 7 industry partners and 2 associations: NVIDIA, Google, General Motors, Cisco, Intel, Amazon, ThoughtWorks, the Association for the Advancement of Artificial Intelligence, and the Society of Hispanic Professional Engineers. A logistical lesson was that recruiting industry mentors over the summer is neither effective nor efficient because of differing vacation schedules.

Mentor engagement proved to be even more challenging, with only 7% of mentors successfully matching with and mentoring families. Key features of successful mentoring were a strong community partner located geographically close to an industry partner and mentors with prior mentoring experience. Community partner liaisons also needed to be flexible and good communicators.

The mentor research analysis conducted by the Know-Center revealed that: (1) Technovation’s mentors are highly motivated professionals and self-directed learners, as indicated by high scores in the following attributes: intrinsic motivation, career motivation, self-efficacy, goal setting, strategic planning, critical thinking, help seeking, and self-reflection; (2) Participants with high intrinsic motivation were more interested in personal development: learning AI, communication skills and problem-solving skills; (3) Participants with high scores across all categories were most interested in developing an AI-prototype and working with families. Based on these findings, one program improvement could be to use the pre-survey attributes to direct volunteers to specific volunteering opportunities to maximize engagement and positive impact.

Research Question 2 (RQ2): What Impact do the Second Year Program Model Changes have on Participant Content Knowledge, Interest and Attitudes Towards AI/Technology?

Figures 5, 7 and 8 illustrate that the families did make gains in content knowledge, creativity and persistence, although the gains were smaller in comparison to 2018. The difference in learning gains across the 2 years could be due to higher economic development in the 2019 cohort (as the program implementation stipend from Technovation was significantly reduced)—resulting in a higher baseline and lower net gains (in creativity and persistence).

Fig. 8.

Percentage of relative change in judge scoring from 2018 to 2019

Research Question 3 (RQ3): How can we Introduce Value-Sensitive Design and Ethical Innovation?

The 12-question responsible invention module was a first step in helping participants reflect on various consequences of their invention for different user groups. However, based on the large variance in judges’ scores of this feature, an area of improvement is to provide more engaging, accessible, localized case stories and video content, illustrating complex systems thinking and value-sensitive design. Improved content would enable more participants to better understand and apply the value-sensitive design principles, leading to an improved prototype.

Conclusion

The following are key features of a successful AI-education program model for under-resourced communities. They combine best practices from the literature with the experience of running an AI-entrepreneurship program for 20,000 participants from under-resourced communities across 17 countries for 2 years:

Curriculum

Beyond content, towards purpose—with the continued rise in interest in AI, and online learning due to COVID, the emphasis for education programs and platforms needs to move beyond just content knowledge. Interviews with participants show that learners need to see the value and application of knowledge to real-world problems, while building their own sense of purpose and self-efficacy as problem solvers, entrepreneurs and leaders.

Making learning engaging—following online programming tutorials is not engaging for novices. The retention rate in the Technovation AI Families program was similar to that of MOOCs, where retention rates are typically < 13% [36, 37]. Strategies to improve retention include providing a variety of project-based learning lessons, starting with hands-on, unplugged activities and then moving on to software projects. Feedback also needs to be more frequent, at least weekly [38]. This can be accomplished by recruiting and training mentors and matching them with the families. Geographical barriers can be overcome by encouraging virtual mentorship.

Complex systems thinking and responsible AI—since participants are tackling complex social problems, better support needs to be provided through engaging case studies and real-world examples. These examples should illustrate the steps of identifying and tackling complex, real-world problems, and developing technology solutions that take into account the values of direct and indirect stakeholders, and the positive and negative feedback loops that could be triggered due to large-scale technologies [39].

Capacity Building

Focus on under-resourced communities—as the UNDP HDR 2019 report pointed out, the conversation needs to move beyond basic skills. The COVID-19 pandemic has brought to light additional barriers for society’s most vulnerable communities. Technology access, which was previously a luxury for many underserved communities, is now a necessity for vulnerable families to continue their education and improve their life choices [35]. This problem, however, runs deeper than simply providing access. For underserved communities, most remote education offerings are not sufficient to overcome the lack of knowledge and social capital, and deeply entrenched systemic inequities. Implementing the AI competition in such communities at global scale demonstrates that there is interest in participating and persisting, and that early adopters (coaches, mentors and parents) are highly motivated individuals who will continue to lead and build AI capacity and capabilities in their communities.

Building communities of learners—many technology programs focus on students and educators, but parents and other family members need to be engaged to build broader communities of learners and support a culture of lifelong learning [40, 41]. This also helps increase the sustainability of the program and impact on the child, making the intervention more cost-effective.

Building social capital—engaging mentors from industry is logistically complex, but it is an effective way to increase social capital in under-resourced communities because it brings individuals from different social groups, such as race and class, closer together. These interactions also bring more demographic diversity, novel information and resources that can assist individuals in advancing in society [42]. However, mentors need sufficient and appropriate training to ensure they are effective as mentors [43].

Bringing financial capital—sufficient funding is needed to build the infrastructure, capacity and social capital in under-resourced communities so that they can succeed in a technology education program. On average it costs Technovation $150 to provide an individual from an under-resourced community with access and exposure to an AI program, and 15 times that amount to support that individual in completing the entire program and creating an AI-prototype tackling a real-world problem. Funds cover recruiting, training and supporting community partners, including disbursement of stipends (21%); recruiting, training and supporting mentors (7%); dissemination and marketing of the program (23%); software development (20%); assessment (3%); organizing events (3%); curriculum development (2%); and finance, insurance and fundraising (19%). This level of financial commitment is needed over multiple years to truly make the change we aim for.
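To make the arithmetic in the paragraph above concrete, the following minimal sketch computes the per-participant cost of completing the full program and splits it by budget category. It assumes, purely for illustration, that the reported budget shares apply uniformly to the per-participant cost; the names `ACCESS_COST_USD`, `COMPLETION_MULTIPLIER`, `BUDGET_SHARES` and both functions are hypothetical, and only the figures come from the text. The shares as reported add up to 98%, so the sketch leaves the remainder unallocated rather than guessing where it belongs.

```python
# Figures taken from the text; all names are illustrative assumptions.
ACCESS_COST_USD = 150        # access and exposure, per participant
COMPLETION_MULTIPLIER = 15   # full-program support costs 15 times as much

# Budget shares as reported in the text (percent of funds).
BUDGET_SHARES = {
    "community partners, including stipends": 21,
    "mentors": 7,
    "dissemination and marketing": 23,
    "software development": 20,
    "assessment": 3,
    "events": 3,
    "curriculum development": 2,
    "finance, insurance and fundraising": 19,
}


def completion_cost(access_cost=ACCESS_COST_USD, multiplier=COMPLETION_MULTIPLIER):
    """Per-participant cost of completing the whole program."""
    return access_cost * multiplier


def cost_by_category(total, shares=BUDGET_SHARES):
    """Split a total across categories using the reported percentage shares."""
    return {name: total * pct / 100 for name, pct in shares.items()}


if __name__ == "__main__":
    total = completion_cost()
    breakdown = cost_by_category(total)
    for name, amount in breakdown.items():
        print(f"{name}: ${amount:,.2f}")
    # Whatever the reported shares do not cover is shown separately.
    print(f"unallocated: ${total - sum(breakdown.values()):,.2f}")
```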

Measuring success—learning from the coding movement again, a recommendation is to move beyond measuring change in interest and content knowledge, to measuring tangible improvements in resources, voice, influence, agency and achievements for all participants—children and adults [44]. This framework sets us on the path to building resilient communities.

Patience and commitment—It takes ~ 3–5 years to iteratively develop fun, engaging, effective curriculum, training and scalable program delivery methods. This level of patience and commitment is needed from all community and industry partners and funders.

Future Work

Technovation will be launching the third year of the AI family challenge in October 2021. Key improvements and model changes are based on lessons and research findings over the past years, and include: (1) Virtual mentoring to overcome geographical barriers; (2) Providing increased financial support to interested community partners from under-resourced communities; (3) Improving curriculum materials, especially those supporting Responsible Innovation and complex systems thinking, by adding more engaging videos, case stories and unplugged activities.

The key will be to not just focus on building capabilities, but to also motivate and build agency at the same time, so that we are nurturing resilient learners and problem solvers [45].

Contributor Information

Tara Chklovski, Email: tara@technovation.org.

Richard Jung, Email: richard@technovation.org.

Rebecca Anderson, Email: rebecca@technovation.org.

Kathryn Young, Email: kathryn@technovation.org.

References

1. Gunderson LH, Holling CS. Panarchy: understanding transformations in human and natural systems. Washington, DC: Island Press; 2002.

2. Elliott S. Computers and the future of skill demand, educational research and innovation. Paris: OECD Publishing; 2017.

3. World Economic Forum (2016) The Future of Jobs. http://www3.weforum.org/docs/WEF_Future_of_Jobs.pdf. Accessed 17 July 2020

4. National Science Foundation (2019) Women, Minorities, and Persons with Disabilities in Science and Engineering. NSF. https://ncses.nsf.gov/pubs/nsf19304/data. Accessed 17 July 2020

5. National Research Council. How people learn: brain, mind, experience, and school (Expanded edition). Washington, DC: The National Academies Press; 2000.

6. Ericsson KA, Hoffman RR, Kozbelt A, Williams AM. The Cambridge Handbook of expertise and expert performance. 2nd ed. Cambridge: Cambridge University Press; 2018.

7. Hoidn S, Kärkkäinen K. Promoting skills for innovation in higher education: a literature review on the effectiveness of problem-based learning and of teaching behaviours. OECD education working papers, no. 100. Paris: OECD Publishing; 2014.

8. Hidi S, Renninger KA. The four-phase model of interest development. Edu Psychol. 2010;41(2):111–127. doi: 10.1207/s15326985ep4102_4.

9. Bandura A. Self-efficacy: the exercise of control. 1st ed. New York: Worth Publishers; 1997.

10. Pintrich PR, Schunk DH (1996) Motivation in Education: Theory, Research, and Applications. Pearson College Div

11. Astrachan O, Reed D (1995) AAA and CS 1 The Applied Apprenticeship Approach to CS 1. SIGCSE. https://users.cs.duke.edu/~ola/papers/aaacs1.pdf. Accessed 17 July 2020

12. Valian V. Why So Slow? The advancement of women. Cambridge: The MIT Press; 1997.

13. Margolis J, Fisher A. Unlocking the clubhouse: women in computing. Cambridge: The MIT Press; 2001.

14. Margolis J. Stuck in the shallow end: education, race, and computing. Cambridge: The MIT Press; 2010.

15. Shen R, Wohn DY, Lee MJ (2019) Comparison of Learning Programming between Interactive Computer Tutors and Human Teachers. CompEd '19: Proceedings of the ACM Conference on Global Computing Education. 10.1145/3300115.3309506

16. Wilson BC. Contributing to success in an introductory computer science course: a study of twelve factors. ACM SIGCSE Bull. 2001;33(1):184–188. doi: 10.1145/366413.364581.

17. Kinnunen P, Malmi L (2006) Why students drop out CS1 course? ICER '06: Proceedings of the second international workshop on Computing education research 90–108. 10.1145/1151588.1151604

18. Tissenbaum M, Sheldon J, Abelson H. From computational thinking to computational action. Commun ACM. 2019;62(3):34–36. doi: 10.1145/3265747.

19. Land R, Reimann N, Meyer J (2005) Enhancing Learning and Teaching in Economics: A Digest of Research Findings and their Implications. ETL Project. www.ed.ac.uk/etl/publications.htm. Accessed 17 July 2020

20. United Nations Development Programme (2020) Human Development Report 2020. http://hdr.undp.org/sites/default/files/hdr_2020_overview_english.pdf. Accessed 17 Jan 2021

21. Institute of Medicine and National Research Council (2012) From neurons to neighborhoods: An update: Workshop summary. Washington, DC

22. National Research Council. From neurons to neighborhoods: the science of early child development. Washington, DC: National Research Council; 2000.

23. Rogoff B, Paradise R, Arauz RM, Correa-Chávez M, Angelillo C. Firsthand learning through intent participation. Annu Rev Psychol. 2003;54(1):175–203. doi: 10.1146/annurev.psych.54.101601.145118.

24. Vygotskiĭ LS. Mind in society. In: Cole M, editor. The development of higher psychological processes. Cambridge: Harvard University Press; 1978.

25. Caplan J, Hall C, Lubin S, Fleming R (1997) Parent involvement: Literature review of school-family partnerships. http://www.ncrel.org/sdrs/areas/pa0cont.html. Accessed 17 July 2020

26. Webster-Stratton C, Reid MJ, Hammond M. Preventing conduct problems, promoting social competence: a parent and teacher training partnership in Head Start. J Clin Child Adolesc Psychol. 2010;30:283–302. doi: 10.1207/S15374424JCCP3003_2.

27. Christenson SL, Sheridan SM. Schools and families: creating essential connections for learning. New York: Guilford Press; 2001.

28. Henderson AT, Mapp KL. A new wave of evidence: The impact of school, family, and community connections on student achievement. Austin: National Center for Family and Community Connections with Schools; 2002.

29. Patrikakou EN (2004) Adolescence: Are parents relevant to students' high school achievement and post-secondary attainment? Global Family Research Project. www.hfrp.org/publications-resources/browse-our-publications/adolescence-are-parents-relevant-to-students-high-school-achievement-and-post-secondary-attainment. Accessed 17 July 2020

30. Jeynes WH. A meta-analysis of the relation of parental involvement to urban elementary school student academic achievement. Urban Edu. 2005;40:237–269. doi: 10.1177/0042085905274540.

31. Taylor C, Wright J (2019) Building Home Reading Habitats: Behavioral Insights for Creating and Strengthening Literacy Programs. Ideas42. https://www.ideas42.org/wp-content/uploads/2019/04/I42-1089_At-Home-Reading_Jan11.pdf. Accessed 17 July 2020

32. Pierson E, Momoh L, Hupert N (2015) Summative Evaluation Report for the Be A Scientist! Project’s Family Science Program. EDC Center for Children and Technology. https://iridescentlearning.org/wp-content/uploads/2014/01/BAS-2015-Eval-FINAL-3.pdf. Accessed 21 Jan 2021

33. Bonebrake V, Riedinger K, Storksdieck M (2019) Investigating the Impact of Curiosity Machine Classroom Implementation: Year 2 Study Findings. Technical Report. Corvallis, OR: Oregon State University. https://www.technovation.org/wp-content/uploads/2019/08/Curiosity-Machine_Research-Report_FINAL.pdf. Accessed 17 July 2020

34. Chklovski T, Jung R, Fofang JB, Gonzales P (2019) Implementing a 15-week AI-education program with under-resourced families across 13 global communities. Technovation. https://www.technovation.org/wp-content/uploads/2019/09/Chklovski_et_al.pdf. Accessed 17 July 2020

35. United Nations Development Programme (2019) Human Development Report 2019. UNDP. New York, NY. http://hdr.undp.org/sites/default/files/hdr2019.pdf. Accessed 17 July 2020

36. Onah DFO, Sinclair J, Boyatt R (2014) Dropout rates of massive open online courses: behavioural patterns. 6th International Conference on Education and New Learning Technologies, Barcelona, Spain, 7–9 Jul 2014. EDULEARN14 Proceedings: 5825–5834

37. Taylor C, Veeramachaneni K, O’Reilly U (2014) Likely to stop? Predicting Stopout in Massive Open Online Courses. MIT. https://dai.lids.mit.edu/wp-content/uploads/2017/10/1408.3382v1.pdf. Accessed 17 July 2020

38. Gee JP (2007) Good video games and good learning: collected essays on video games, learning, and literacy. Peter Lang Inc., International Academic Publishers

39. Friedman B, Hendry DG. Value sensitive design: shaping technology with moral imagination. Cambridge: MIT Press; 2019.

40. Wilson GA. Community resilience and environmental transitions. Abingdon: Routledge; 2012.

41. Sharifi A. A critical review of selected tools for assessing community resilience. Ecol Ind. 2016;69:629–647. doi: 10.1016/j.ecolind.2016.05.023.

42. Granovetter M. The strength of weak ties: a network theory revisited. Sociol Theory. 1983;1:201–233. doi: 10.2307/202051.

43. Kozlowski SWJ, Gully SM, Salas E, Cannon-Bowers JA. Team leadership and development: theory, principles and guidelines for training leaders and teams. Advances in interdisciplinary studies of work teams. Greenwich: Elsevier Science/JAI Press; 1996. pp. 253–291.

44. Kabeer N. Resources, agency, and achievements: reflections on the measurement of women’s empowerment. Dev Chang. 1999;30:435–464. doi: 10.1111/1467-7660.00125.

45. Bennett NJ, Whitty TS, Finkbeiner E, Pittman J, Bassett H, Gelcich S, Allison EH. Environmental stewardship: a conceptual review and analytical framework. Environ Manage. 2018;61(4):597–614. doi: 10.1007/s00267-017-0993-2.