SUMMARY

Humans and animals can be strongly motivated to seek information to resolve uncertainty about rewards and punishments. However, despite its clinical and societal relevance, very little is known about information-seeking about punishments. We show that attitudes toward information about punishments and rewards are distinct and separable, at both the behavioral and neuronal levels. We demonstrate the existence of prefrontal neuronal populations that anticipate opportunities to gain information in a relatively valence-specific manner, separately anticipating information about either punishments or rewards. These neurons are located in anatomically interconnected subregions of anterior cingulate (ACC) and ventrolateral prefrontal cortex (vlPFC) in area 12o/47. Unlike ACC, vlPFC also contains a population of neurons that integrate attitudes toward both reward- and punishment-information, to encode the overall preference for information in a bivalent manner. This cortical network is well-suited to mediate information-seeking by integrating the desire to resolve uncertainty about multiple, distinct motivational outcomes.

Short (E-TOC) paragraph

Despite its clinical and societal relevance, very little is known about how the brain regulates information seeking about aversive punishments. Here, the authors uncovered a prefrontal network, including the anterior cingulate and the ventral lateral prefrontal cortex, that tracks uncertainty of, and mediates information seeking about, uncertain rewards and punishments.

INTRODUCTION

One of our most fundamental strategies for coping with an uncertain future is to seek information about what rewards and hazards the future holds. Indeed, humans and animals are often willing to pay to obtain information to resolve their uncertainty about future rewards, even when they cannot use this knowledge to change the outcome in any way (Blanchard et al., 2015, Bromberg-Martin and Hikosaka, 2009, Bromberg-Martin and Monosov, 2020, Gottlieb et al., 2013, Kobayashi et al., 2019, Monosov, 2020, White et al., 2019). This non-instrumental preference for information about rewards is also reflected in the gaze behavior of monkeys and humans (Bromberg-Martin and Monosov, 2020, Daddaoua et al., 2016, Hunt et al., 2018, Monosov, 2020, Stewart et al., 2016, White et al., 2019). That is, when primates know that an object will provide a visual cue with information about future rewards, they are prone to hold their gaze on that object in anticipation of viewing the cue and resolving their reward uncertainty as early as possible (White et al., 2019).

Taking advantage of these behavioral observations, the field is beginning to learn how the brain controls our desire to know what rewards our future holds (Bromberg-Martin and Monosov, 2020, Gottlieb et al., 2020, Monosov, 2020, Taghizadeh et al., 2020). For example, we found that the anterior cingulate cortex (ACC) contains a population of cells that predict reward-uncertainty driven information seeking behavior (White et al., 2019), and that ACC projection targets in the basal ganglia causally contribute to the motivation to make information seeking eye movements (Bromberg-Martin and Monosov, 2020, Monosov, 2020, White et al., 2019). However, the cortico-cortical networks that participate with ACC in controlling information seeking are as yet unknown.

In stark contrast to information seeking about uncertain rewards, very little is known about how the brain regulates information seeking about uncertain punishments (here defined as physically aversive or noxious outcomes). This is striking given how our individual strategies for coping with uncertain future punishments impact our everyday lives and society as a whole, depending on whether we eagerly seek knowledge or ‘stick our heads in the sand’ to avoid knowledge about them. Behaviorally, we know that humans show variability in their desire for non-instrumental information to resolve uncertainty about future punishments (Miller, 1987). For example, in clinical settings, some human patients want to know if they are likely to suffer various debilitating diseases, while others choose to avoid or see little value in obtaining this information prior to possible symptoms (Lerman et al., 1998, Miller, 1995). Relatedly, humans display variability in their curiosity to experience noxious stimuli and negatively valenced information in the form of aversive or scary images (Niehoff and Oosterwijk, 2020, Oosterwijk, 2017, Oosterwijk et al., 2020). Similarly, even humans who seek information about uncertain monetary gains can do so less consistently for uncertain monetary losses (Charpentier et al., 2018). Attitudes toward information about future punishments have also been investigated in animal models (Badia et al., 1979, Fanselow, 1979, Miller et al., 1983, Tsuda et al., 1989). However, the neuronal basis of these preferences remains unclear, because there has been no investigation of which neurons generate this preference, and what neuronal code they use to do so. Furthermore, information preferences for punishments and rewards have predominantly been studied in isolation from each other (Bromberg-Martin and Monosov, 2020, Monosov, 2020), so little is known about whether and how attitudes toward these two types of information are linked to each other, at both the behavioral and neuronal levels.

To address these open questions, we designed an experiment to simultaneously measure the behavioral attitudes of monkeys toward information about punishments and rewards, and investigated the prefrontal cortical substrates of these preferences. We discovered that attitudes toward punishment and reward information are not strictly tied to each other – individuals with similar preferences for reward information can have strikingly different attitudes toward punishment information. Furthermore, the ACC, as well as an anatomically interconnected subregion of the ventrolateral prefrontal cortex (vlPFC), contained neural populations that anticipated opportunities to gain uncertainty-resolving information in a valence-specific manner – anticipating information about either uncertain punishments or uncertain rewards. Crucially, vlPFC also contained a subpopulation of neurons that integrated attitudes toward punishment and reward information, consistent with encoding the overall preference for information in a bivalent manner suitable for guiding motivation and behavior. Indeed, neural information signals in both ACC and vlPFC reflected individual differences in the monkeys’ preferences for the corresponding types of information, and trial-by-trial fluctuations in neural signals anticipating punishment information predicted the strength of information-anticipatory behavior. These results uncover a cortical network well-suited to mediate information seeking to reduce uncertainty in a flexible manner by integrating distinct motivational outcomes.

RESULTS

Information preferences to resolve punishment and reward uncertainty are dissociable in individual animals.

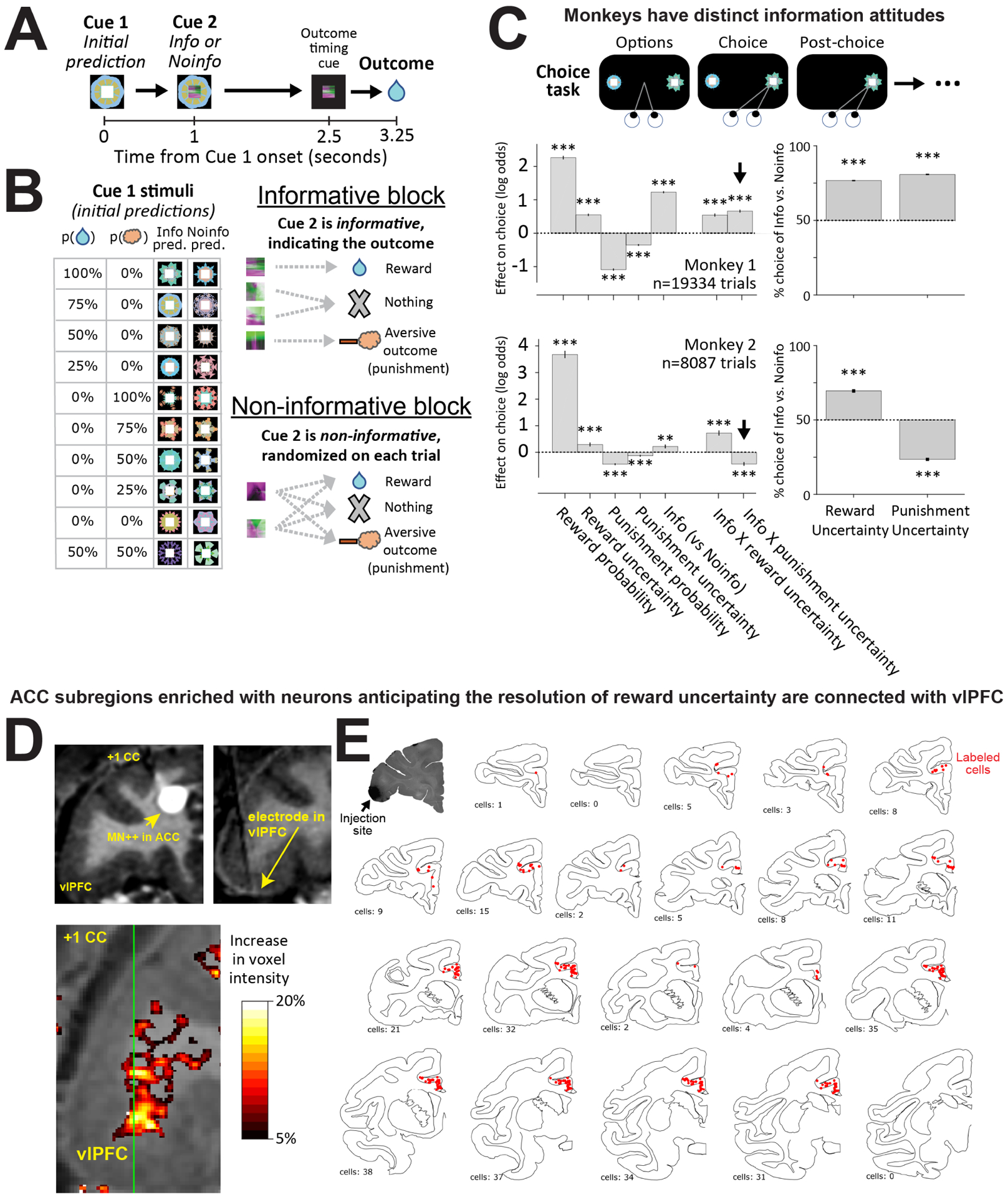

To study the mechanisms of information seeking and preference, we trained monkeys to associate visual fractal cues with different probabilities that a juice reward or airpuff punishment would be delivered in the future (Figure 1A). The first visual fractal cue indicated the initial probabilities that rewards and punishments would be delivered in 3.25 seconds (Figure 1A, Cue1, “Initial prediction”). This included reward-predictive cues associated with 25, 50, 75, and 100% probabilities of reward; punishment-predictive cues associated with 25, 50, 75, and 100% probabilities of punishment; a neutral cue that was not followed by either punishment or reward; and a bivalent cue associated with a 50% chance of reward and a 50% chance of punishment (Figure 1B). This design allowed us to dissociate between high expectations of reward and punishment (maximal at 100%) and high amounts of uncertainty about reward and punishment (maximal at 50%) (Monosov, 2017, White et al., 2019, White and Monosov, 2016).
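The dissociation between expectation and uncertainty can be made concrete with a short sketch. It assumes each outcome is coded as a binary event (delivered = 1, omitted = 0) with unit magnitude, so that uncertainty, operationalized as the standard deviation, is that of a Bernoulli variable. The function name `bernoulli_sd` is hypothetical and is used here for illustration only.

```python
import math

def bernoulli_sd(p):
    """Standard deviation of a 0/1 outcome delivered with probability p.

    Assumption for illustration: the outcome has unit magnitude.
    """
    return math.sqrt(p * (1.0 - p))

# Outcome probabilities used for the reward- and punishment-predictive cues,
# plus 0% for comparison.
probabilities = [0.0, 0.25, 0.5, 0.75, 1.0]

for p in probabilities:
    # Expectation grows monotonically with p, whereas uncertainty peaks at
    # p = 0.5 and vanishes at p = 0 and p = 1.
    print(f"p = {p:.2f}  expectation = {p:.2f}  uncertainty (SD) = {bernoulli_sd(p):.3f}")
```

Under this coding, the 100% cues carry the highest expectation and zero uncertainty, while the 50% cues carry the highest uncertainty, which is what allows the two quantities to be separated.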

Figure 1. Investigating information seeking to resolve uncertainty about rewards and punishments.

(A) Timeline of events in the reward and punishment information task. (B) In the informative block, 10 Cue1s yield 10 different chances of reward and punishment (air puffs), and are followed by informative Cue2s that indicate the outcome. In the non-informative block 10 other Cue1s yield the same outcome probabilities, but are followed by non-informative Cue2s that do not predict the outcome, so uncertainty is not resolved until outcome delivery. (C) Choice version of the task. After choosing a Cue1, the same sequence of events occurred as in the non-choice task (including Cue2, the pre-outcome timing cue, and the outcome). Plotted are the weights from a logistic GLM fit to each monkey’s choices based on the attributes of each Cue1, including outcome probability, uncertainty (operationalized as standard deviation), information-predictiveness, and key interactions. Both monkeys were fit with similar patterns of weights, including positive weights of Info × Reward Uncertainty, with one exception: Monkey 1 had a positive weight of Info × Punishment Uncertainty, while Monkey 2 had a negative weight (black arrows). Error bars are ±1 SE; **, *** indicate p < 0.01, 0.001. Right: % choice of informative versus non-informative Cue1 for trials where both options had reward uncertainty only (left bar) or punishment uncertainty only (right bar). (D) Top-left, 150 µl injection of manganese chloride anterograde tracer into the ACC region of Monkey 1 that was enriched in information anticipating neurons (White et al., 2019). Bottom-left, % increase of voxel intensity (MEMRI labeling) shown in a coronal plane 24 hours after injection in the vlPFC. Color axis: greater or equal to 20% change is shown as white, while black represents 5%. Top-right, MRI with an electrode in Monkey 1 targeting the MEMRI-identified region of vlPFC. (E) Schematic chartings of the distribution of retrogradely labeled cells (red dots) in the ACC (areas 32 and 24) after LY injection in the vlPFC (area 12/47). Top-left: the vlPFC tracer injection was placed in a similar location to the vlPFC electrode in D. A cluster of labeled cells is observed over the same subregion injected with Mn2+ in the ACC.

Crucially, this initial cue (Cue1) was always followed after 1 second by a second visual fractal cue, which could either provide additional information to resolve uncertainty about the outcome, or could provide no new information (Figure 1B, Cue2, “Info or noinfo”). Thus, the identity of Cue1 indicated both the probabilities of reward and punishment and indicated whether the upcoming Cue2 would provide information about the outcome (Figure 1B). Specifically, an information-predictive Cue1 was always followed by an informative Cue2 that provided complete and accurate information about whether the trial’s outcome would be a punishment, a reward, or neither (Figure 1B). A non-information-predictive Cue1 was always followed by a non-informative Cue2 whose identity was randomized and hence uncorrelated with the upcoming outcome (Figure 1B).

These two trial types were presented in separate blocks (Figure 1B, “Informative block” and “Non-informative block”). After training on the task, both monkeys had stable conditioned responses indicating that they learned the meanings of the task stimuli, by updating their predictions about rewards and punishments using both Cue1 and the informative Cue2s (Figure S1).

To test how these outcome predictions translated into behavioral preferences during decision making, we allowed monkeys to choose between all possible pairs of Cue1s in a two-alternative forced choice task (Figure 1C). We then used logistic regression to model their log odds of choosing each Cue1 as a linear weighted combination of its attributes, including its information-predictiveness, its reward and punishment probabilities, and its reward and punishment uncertainties (operationalized here as their standard deviations) (Figure 1C). These attributes were chosen to replicate previous work on information seeking and risk seeking, to show that the animals understood the stimuli and assigned negative subjective values to punishments and positive subjective values to rewards, and to measure their attitudes towards reward-related and punishment-related uncertainty resolution. This allowed us to measure how animals subjectively valued the alternatives – and in particular, whether and how they valued information to resolve uncertainty about future outcomes.
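The structure of such a choice model can be sketched as follows. This is a minimal illustration, not the fitted model: the attribute names, the specific interaction terms, and all weight values are hypothetical, chosen only so that their signs mimic an information-seeking or an information-averse attitude toward punishment uncertainty. The sketch also assumes unit outcome magnitudes, so that uncertainty is the Bernoulli standard deviation.

```python
import math

def cue_attributes(info, p_reward, p_punish):
    """Attribute vector for one Cue1 (hypothetical encoding).

    info is 1 for an information-predictive cue and 0 otherwise.
    """
    reward_unc = math.sqrt(p_reward * (1.0 - p_reward))
    punish_unc = math.sqrt(p_punish * (1.0 - p_punish))
    return {
        "info": info,
        "reward_prob": p_reward,
        "punish_prob": p_punish,
        "reward_unc": reward_unc,
        "punish_unc": punish_unc,
        "info_x_reward_unc": info * reward_unc,
        "info_x_punish_unc": info * punish_unc,
    }

def choice_probability(weights, cue_a, cue_b):
    """P(choose A over B): logistic function of weighted attribute differences."""
    log_odds = sum(w * (cue_a[name] - cue_b[name]) for name, w in weights.items())
    return 1.0 / (1.0 + math.exp(-log_odds))

# Illustrative weights only (not the values reported in Figure 1C).
base = {"info": 0.2, "reward_prob": 3.0, "punish_prob": -3.0,
        "reward_unc": 1.0, "punish_unc": -1.0, "info_x_reward_unc": 2.0}
seeker = dict(base, info_x_punish_unc=2.0)    # positive Info x Punishment Uncertainty
avoider = dict(base, info_x_punish_unc=-2.0)  # negative Info x Punishment Uncertainty

# Two cues with identical 50% punishment probability; only A predicts information.
cue_a = cue_attributes(info=1, p_reward=0.0, p_punish=0.5)
cue_b = cue_attributes(info=0, p_reward=0.0, p_punish=0.5)

print(choice_probability(seeker, cue_a, cue_b))   # above 0.5: prefers the informative cue
print(choice_probability(avoider, cue_a, cue_b))  # below 0.5: prefers the non-informative cue
```

Because the two cues differ only in informativeness, the outcome probability and uncertainty terms cancel, and the predicted choice depends only on the main Info weight and the Info × Uncertainty interaction.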

The results corroborated our analysis of conditioned responses and were consistent with previous studies (Figure 1C). As expected, the choices of both monkeys were best fit by significant positive weights for reward probability and negative weights for punishment probability – i.e. monkeys were reward seeking and punishment averse. Also, as expected, both monkeys were fit with significant positive weights for reward uncertainty – i.e. they were risk seeking for rewards (Christopoulos et al., 2009, Ledbetter et al., 2016b, Monosov, 2020, So and Stuphorn, 2010). We also found that both monkeys were fit with significant negative weights for punishment uncertainty. This demonstrates that monkeys have attitudes toward punishment uncertainty, and specifically, indicates that they can be risk averse for punishments. Finally, both monkeys were fit with significant positive weights for informativeness, indicating a general preference for advance information about future outcomes.

We next asked the key behavioral questions of our experiment: (1) Do monkeys have behavioral attitudes toward gaining information to resolve punishment uncertainty? (2) If so, what is the nature of their attitudes? That is, do they seek or avoid information about punishments? (3) What is the relationship between attitudes toward information about punishments and rewards? One hypothesis is that attitudes toward information are generated by the same neural algorithm for all types of uncertain motivational outcomes (for instance, by a neural process that follows the convention of many reinforcement learning models of simply treating punishments as ‘negative rewards’ (Sutton and Barto, 1998)). If so, then attitudes toward information about rewards and punishments should move in lock-step with each other. Alternately, attitudes toward information about rewards and punishments could be generated by distinct neuronal processes. In that case, they might also be dissociable at the level of behavioral preferences.

To answer these questions, we examined the model’s weights for the interactions between informativeness and outcome uncertainty (Figure 1C, right). In other words, how did the preference for information scale with outcome uncertainty? This produced a striking dissociation. For rewards, both animals had a significantly greater preference for information for outcomes with a high degree of reward uncertainty (Figure 1C, Info × Reward Uncertainty; Figure S2). For punishments, however, the two animals behaved quite differently from each other, with distinct and actually opposite informational preferences. Monkey 1 was best fit with a significant positive weight for the corresponding interaction term in the model, indicating that its information preference was enhanced by punishment uncertainty (Figure 1C, Info × Punishment Uncertainty). In contrast, Monkey 2 was best fit with a significant negative weight, indicating that its information preference was reduced by punishment uncertainty (Figure 1C). Importantly, their different attitudes toward obtaining information to resolve punishment uncertainty were not due to the animals having different attitudes toward encountering punishment uncertainty per se; both animals were best fit with significant negative weights of punishment uncertainty (Figure 1C). We observed similar results when examining raw choice percentages: on trials where both options had punishment uncertainty but only one option provided information, Monkey 1 was significantly more likely to choose the informative option (information seeking) while Monkey 2 was significantly more likely to choose the non-informative option (information averse) (Figure 1C, right). Furthermore, both monkeys’ information preferences were significantly stronger for uncertain outcomes than certain outcomes, consistent with the hypothesis that their information preferences were motivated to resolve uncertainty (Figure S2). Also, their distinct preferences were not due to the information having any objective, instrumental value in preparing for the air puffs (Figure S1). Thus, the behavior of these animals was consistent with observations in humans showing that some individuals cope with uncertain future punishments by seeking information about them, while others purposefully avoid information (Charpentier et al., 2018, Lerman et al., 1998, Miller, 1987, 1995).

Thus, these data answer the three key questions posed above: (1) monkeys can have strong attitudes toward gaining information to resolve punishment uncertainty, (2) the nature of their information attitude varies across individuals, and can consist of either preferences or aversions, (3) attitudes toward information about punishments and rewards are distinct and strongly dissociable, such that an individual animal’s attitude toward the former can even be opposite to their attitude toward the latter.

Given this behavioral dissociation between attitudes toward information to resolve uncertainty about rewards vs. punishments, we hypothesized that these preferences are generated by dissociable neural mechanisms. To test this idea, we built on a recent finding that information seeking to resolve reward uncertainty is controlled by a population of neurons that anticipatorily ramp towards the time of uncertainty resolution (Bromberg-Martin and Monosov, 2020, Monosov, 2020, White et al., 2019). These information preference-related neurons were found in the ACC and in specific regions of the basal ganglia (BG) that receive strong projections from the ACC. We previously found that the ACC had a particularly important role in this cortical-BG circuit. Both ACC and BG neurons predicted future information seeking behaviors, but ACC neurons did so early in advance, while BG neurons did so only just before the behavior was carried out. Hence, we hypothesized that the ACC may interact with other prefrontal regions to control information preference before the basal ganglia translate information preference into the motivation to act.

Anterior cingulate regions enriched with information anticipating neurons form an anatomical network with the ventral lateral prefrontal cortex (vlPFC).

To identify regions that receive particularly strong inputs from the ACC we turned to manganese-enhanced magnetic resonance imaging (MEMRI) (Monosov et al., 2015, Murayama et al., 2006, Saleem et al., 2002, Simmons et al., 2008). Our studies and those of others indicate that MEMRI reliably detects major outputs of regions injected with manganese chloride (Monosov et al., 2015, Murayama et al., 2006, Saleem et al., 2002, Simmons et al., 2008). We utilized MEMRI to identify candidate regions for receiving information-related signals from the ACC. To this end, we electrophysiologically identified a region within the ACC of one of our animals that was particularly enriched in neurons that anticipated reward uncertainty (Figure 1D) and injected it with manganese chloride. Subsequent MR imaging and analyses revealed several hotspots receiving ACC inputs. Consistent with our recent work, one hotspot was the internal capsule bordering dorsal striatum (icbDS; Figure S3A), a region that we previously identified as an anatomical target of ACC projections (Figure S3B) and as a key site of BG neural signals that anticipate information about uncertain future rewards (White et al., 2019).

A second hotspot was the vlPFC, especially areas 47/12 where intense MR signal was observed (Figure 1D). We sought to confirm this result, because MEMRI is not as precise as classical anatomical methods and can miss diffuse or weak projections (Monosov et al., 2015, Murayama et al., 2006, Saleem et al., 2002, Simmons et al., 2008). We therefore turned to retrograde and anterograde tracing. We injected retrograde tracer into the area of the vlPFC that received strong MEMRI-identified inputs from the ACC. This produced robust labeling in the ACC (Figure 1E), particularly in the same regions that we identified as enriched with information-related neurons (Monosov, 2017, White et al., 2019). Additional analyses of tracer injections confirmed the bidirectional nature of the ACC-vlPFC circuit (Figure S4). In addition, analysis of retrograde tracer injection into the basal ganglia confirmed that both ACC and vlPFC project to the same region of icbDS that contains activity anticipating information about uncertain rewards (Figure S3).

Single ACC and vlPFC neurons anticipate information to resolve reward and punishment uncertainty.

We next used targeted electrophysiology to examine how the ACC and vlPFC participate in information seeking to resolve reward and punishment uncertainty. We studied task-responsive neurons (Kruskal-Wallis test; p < 0.01; Methods) in the ACC and vlPFC of the same monkeys whose behavior was shown in Figure 1 while they participated in the task in Figure 1A (M1 ACC n = 149, vlPFC n = 259; M2 ACC n = 128, vlPFC n = 275). We then quantified how single neurons responded to uncertainty about rewards and punishments, and whether they anticipated information to resolve that uncertainty.

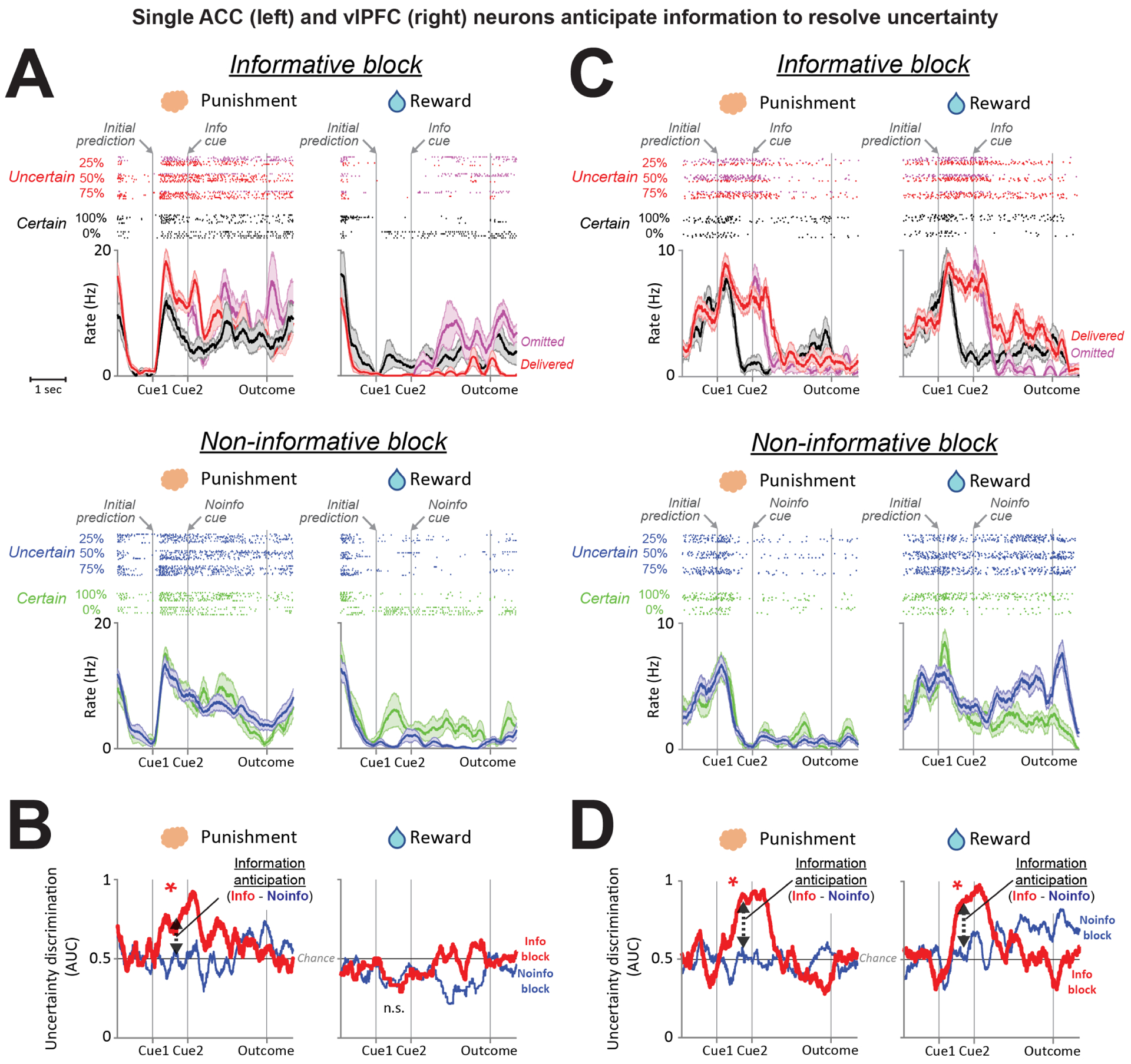

We defined neural signals that anticipated information to resolve uncertainty as activity that (i) selectively differentiated task epochs when outcomes were uncertain vs. certain and (ii) was enhanced when animals were anticipating information that would resolve that uncertainty. Information anticipation was therefore indexed by neural signals that were enhanced on uncertain information trials in a time window immediately before the informative cue appeared (White et al., 2019).

We found that both ACC and the vlPFC contained single neurons that anticipated information to resolve reward and punishment uncertainty. An example of one such ACC neuron is shown in Figure 2A. In the Informative block, the neuron was most strongly excited by Cue1s associated with punishment uncertainty (Figure 2A top – red rasters and curves indicating 25, 50, 75% chance of air puff > black rasters and curves indicating 0% and 100% chance of air puff). This uncertainty signal was strongest just before the informative cue appeared to resolve the uncertainty, and then rapidly diminished afterward. In contrast, during the Non-informative block, the same neuron did not discriminate between certain vs. uncertain punishment predictions in advance of the non-informative cue. Furthermore, this neuron only showed this information anticipatory activity for punishments; it had no similar anticipation of informative cues about uncertain rewards. Therefore, this neuron selectively anticipated the resolution of punishment uncertainty but not reward uncertainty.

Figure 2. Example single ACC and vlPFC neurons that anticipate information to resolve uncertainty.

(A-B) A single ACC neuron anticipates information to resolve punishment uncertainty. (A) Activity is shown separately for the informative and non-informative blocks (top, bottom), for punishment and reward trials (left, right), and for certain vs. uncertain outcomes (colored rasters and spike density functions). Shaded area is SEM. After informative cues, activity is split into trials when outcomes were going to be delivered or omitted (red, magenta). (B) Information Anticipation Index displayed in time. During punishment trials, the uncertainty signal increases in anticipation of the informative cue that will resolve the monkey’s uncertainty (red), but not in anticipation of non-informative cues (blue). The Information Anticipation Index is quantified as the difference between uncertainty signals before onset of informative vs. non-informative Cue2s (Methods). (C-D) A single vlPFC neuron anticipates information to resolve uncertainty about both punishments and rewards. Same format as A-B.

While some neurons only anticipated information about one type of outcome (see additional example neurons in Figure S5), other neurons anticipated information about both punishments and rewards. This can be seen in a second example neuron, from the vlPFC (Figure 2C). During the Informative block, this neuron was activated by both punishment and reward uncertainty in similar manners. This signal was particularly strong in the moments before the informative cue appeared, and then rapidly diminished after the cue appeared and resolved the uncertainty. During the Non-informative block, the same neuron had little or no discrimination between certain vs. uncertain outcome predictions in advance of the non-informative cue.

To quantify this selective information anticipation signal on a neuron-by-neuron basis we followed the approach of White et al. (2019). We first computed an index quantifying the strength of uncertainty signals at each time during the task, using the ROC area for discriminating neural activity from uncertain trials vs. certain trials. We then computed this uncertainty index in a time window before Cue2 onset, and calculated the difference between the index on trials where that cue was informative vs. non-informative. This analysis is illustrated for the example neurons in Figures 2B and 2D. This approach identifies neurons with selective uncertainty signals that are stronger in anticipation of informative cues than non-informative cues.
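The logic of this index can be sketched in a few lines. The sketch below is an illustration under stated assumptions rather than the analysis code: it takes the uncertainty index to be the raw ROC area computed from per-trial firing rates in a single pre-Cue2 window (any rescaling or windowing specified in Methods is not reproduced), and the function names and toy firing rates are hypothetical.

```python
def roc_area(uncertain_rates, certain_rates):
    """ROC area: probability that a randomly drawn uncertain-trial rate
    exceeds a randomly drawn certain-trial rate (ties count as one half)."""
    wins = 0.0
    for u in uncertain_rates:
        for c in certain_rates:
            if u > c:
                wins += 1.0
            elif u == c:
                wins += 0.5
    return wins / (len(uncertain_rates) * len(certain_rates))

def information_anticipation_index(info_uncertain, info_certain,
                                   noinfo_uncertain, noinfo_certain):
    """Uncertainty signal before informative Cue2s minus the same signal
    before non-informative Cue2s."""
    return (roc_area(info_uncertain, info_certain)
            - roc_area(noinfo_uncertain, noinfo_certain))

# Toy pre-Cue2 firing rates (spikes/s), invented for illustration.
info_uncertain = [12.0, 15.0, 14.0, 13.0]   # informative block, uncertain Cue1
info_certain = [5.0, 6.0, 7.0, 4.0]         # informative block, certain Cue1
noinfo_uncertain = [6.0, 5.0, 7.0, 8.0]     # non-informative block, uncertain Cue1
noinfo_certain = [6.0, 5.0, 7.0, 8.0]       # non-informative block, certain Cue1

index = information_anticipation_index(info_uncertain, info_certain,
                                       noinfo_uncertain, noinfo_certain)
print(index)  # positive: uncertainty signal is stronger before informative cues
```

A neuron that discriminates uncertain from certain trials equally in both blocks yields an index near zero, so the index isolates uncertainty signals that are specific to the anticipation of information.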

Using this approach, we next asked two key questions. (1) Is information to resolve reward and punishment uncertainty generally anticipated in similar manners and by the same neural population, or by distinct populations? And is this the same in ACC and vlPFC? (2) Are there significant differences in the neural codes of these populations reflecting individual differences in information attitudes, especially attitudes toward information about uncertain punishments, which were strikingly different between animals?

Information seeking preferences are reflected in distinct manners across the ACC-vlPFC network.

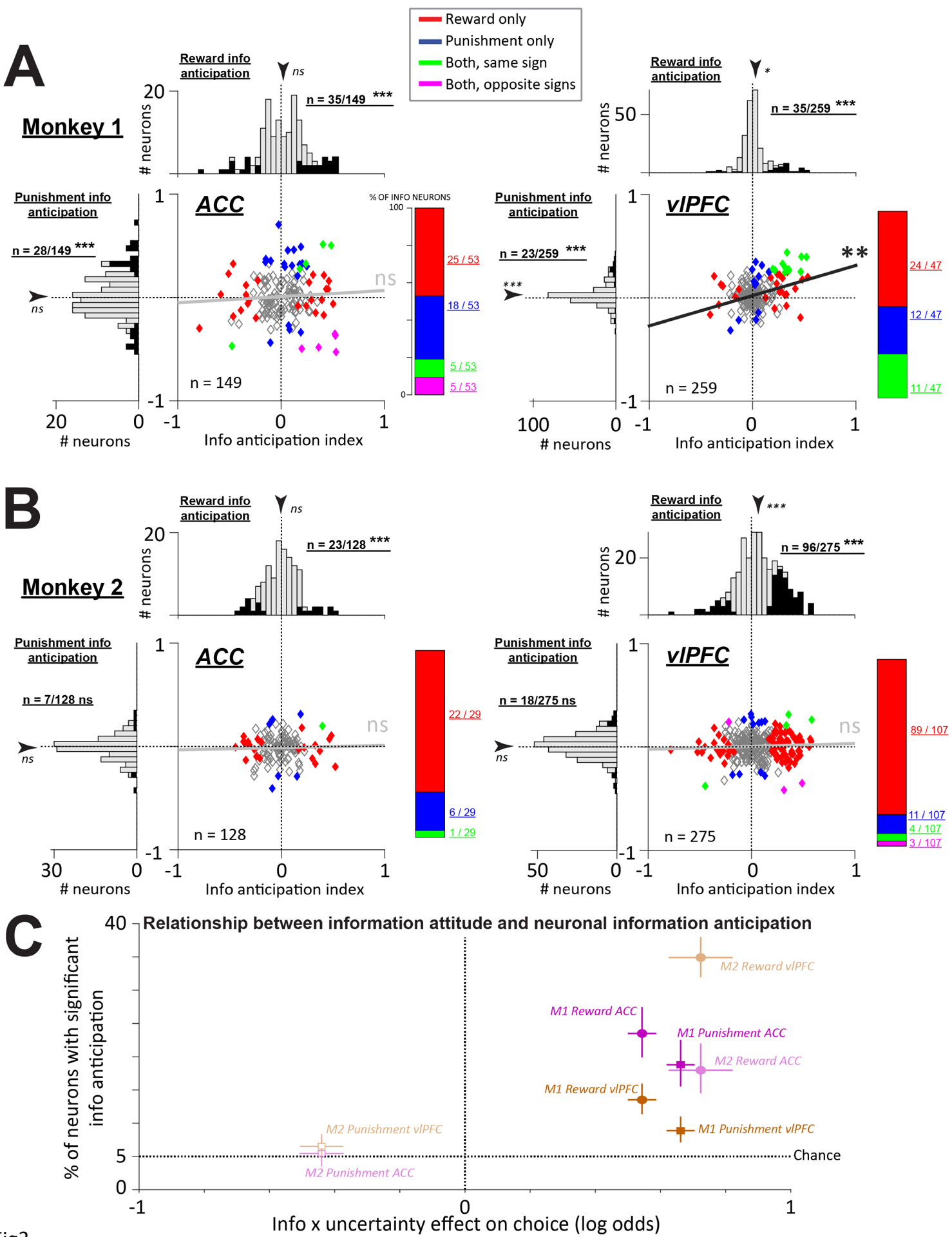

We found that in both monkeys, many task-responsive ACC neurons significantly anticipated reward information, and the proportion of these neurons was much greater than would be expected by chance (Figure 3A–B, left, binomial test, p < 10⁻⁶ in both animals). In Monkey 1, which preferred information about both rewards and punishments, there were also neurons that significantly anticipated punishment information, and their proportion was much greater than expected by chance (Figure 3A, left; binomial test, p < 10⁻⁸). However, these signals anticipating reward and punishment information appeared to be distributed independently across the neural population. That is, the reward and punishment information anticipation signals were not significantly correlated with each other (Figure 3A, left; rho=0.074; p=0.3705), and the number of individual neurons that anticipated both types of information (n=10/149 (6.7%)) was not significantly greater than or less than what would be expected under the null hypothesis that these two forms of anticipation were independent of each other (Methods; permutation test, p = 0.18). The ACC neurons that did anticipate both types of information were equally likely to anticipate them with the same sign (n=5) or opposite signs (n=5). Finally, in Monkey 2, which did not prefer information about punishments, there was no significant neural anticipation of information about punishments (Figure 3B, left; n=7/128 (5.5%) of task-responsive neurons; binomial test, p = 0.92). Thus, when comparing the two animals in terms of the proportion of their ACC neurons that anticipated information, the proportion was similar for reward information (Fisher’s exact test, p = 0.34), but was significantly higher in Monkey 1 than Monkey 2 for punishment information (Fisher’s exact test, p = 0.001).
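The reasoning behind comparing an observed proportion of significant neurons to chance can be illustrated with an exact binomial tail probability. The sketch below is an assumption-laden illustration, not the test specified in Methods: it assumes a per-neuron false-positive rate of 5% and a one-sided test, so its p-values need not match the reported ones. The function name `binomial_tail` is hypothetical.

```python
from math import comb

def binomial_tail(k, n, p):
    """One-sided P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1.0 - p)**(n - i) for i in range(k, n + 1))

# Assumed chance level: each neuron has a 5% probability of passing the
# significance criterion when no true signal is present.
chance = 0.05

# Example counts taken from the text: 7 of 128 ACC neurons in Monkey 2
# significantly anticipated punishment information.
p_value = binomial_tail(7, 128, chance)
print(p_value)  # well above 0.05: the count is compatible with chance
```

With an expected count of 6.4 neurons under this assumed chance level, observing 7 is unremarkable, consistent with the absence of significant punishment-information anticipation in this animal's ACC.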

Figure 3. ACC and vlPFC neurons anticipate information to resolve uncertainty about rewards and punishments and reflect information attitudes.

(A) Neural information anticipation indexes for resolving uncertainty about rewards and punishments (x- and y-axes) in Monkey 1. Each data point is a neuron in the ACC (left) or vlPFC (right). Colors indicate neurons that significantly anticipate information to resolve uncertainty about punishments (blue), rewards (red), both with the same coding sign (green), or both with opposite coding signs (magenta). To the right of each scatter, a bar plot shows the relative proportions of these groups. Marginal histograms summarize the single neuron indexes. Arrow is the mean, asterisks indicate significance (signed-rank test). Filled bars indicate significant neurons, and text next to each marginal histogram indicates whether that number of neurons is significantly more than expected by chance (binomial test). Both ACC and vlPFC had significant numbers of neurons anticipating information to resolve reward and punishment uncertainty, and vlPFC had a substantial population that anticipated both with the same sign (green). Moreover, across the population, the reward and punishment information anticipation indexes were correlated. *, **, *** indicate p < 0.05, 0.01, 0.001. (B) Same plots for Monkey 2. Unlike Monkey 1, neither region had a significant number of neurons anticipating information to resolve punishment uncertainty or a significant correlation across valences. (C) Summary of the relationship between choice preferences for information to resolve uncertainty (x-axis; log odds from the analyses in Figure 1) and neural anticipation of information (y-axis; % of neurons with significant information anticipation). Data are shown for each brain area, monkey, and valence. Error bars are bootstrap 68% CIs. Filled data points have significant neural information anticipation (p < 0.05, binomial test).

These results show that ACC contains distinct neuronal processes suitable to mediate preferences for reward and punishment uncertainty resolution. We previously suggested that valence specificity in value processing in ACC could support flexible context-dependent choice behavior (Monosov, 2017). Similarly, the distinct processes reflected in single ACC neurons that anticipate reward and punishment information are well-suited to support the individual differences in preferences that we observed in Figure 1C.

The vlPFC also contained substantial populations of task-responsive neurons that significantly anticipated either reward or punishment information (Figure 3A–B, right). However, the vlPFC displayed a very different pattern of results than ACC. First, in Monkey 1 which preferred information about both rewards and punishments, vlPFC contained a notable subpopulation of neurons that significantly anticipated the resolution of both rewards and punishments (Figure 3A, right; n=11/259, 4.25%) of task-responsive neurons, significantly higher than the 0.25% of neurons expected by chance; binomial test, p < 10−10). Indeed, signals anticipating reward and punishment information had a highly significant tendency to co-occur in the same neurons, and to be coded in similar manners. Across all task-responsive neurons, the reward and punishment anticipation signals were significantly correlated with each other (Figure 3A, right; rho=0.174; p=0.0052), and there was a significant tendency for neurons to anticipate both reward and punishment information, compared to the null hypothesis that the two were independent (permutation test, p < 0.001). Notably, all of these vlPFC neurons anticipated both reward and punishment information by changing their activity in the same direction (Figure 3A, right; anticipatory activity taking the form of increases in activity, indicated by neurons in the upper right quadrant). Thus, there was a highly above chance proportion of neurons that anticipated both types of information with the same sign, compared to the null hypothesis that reward and punishment coding were independent of each other (permutation test, p < 0.001), and this was significantly greater than the proportion of neurons that anticipated reward and punishment information with opposite signs (permutation test, p < 0.001). These properties were in stark contrast to the same animal’s ACC. 
Comparing the two areas, vlPFC had a significantly greater difference between the proportion of joint coding neurons and the proportion expected by chance under the null of independent coding (permutation test, p < 0.034), and this was specifically driven by neurons that anticipated reward and punishment information with the same sign (permutation test of proportion of joint coding neurons with the same sign, p < 0.001; analogous test for neurons with opposite signs, p = 0.11).
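As a rough check of the joint-coding statistic reported for Monkey 1, the following sketch computes a binomial tail probability for 11 of 259 neurons against the 0.25% chance rate quoted in the text. It assumes a one-sided test, which this section does not specify, and the function name is illustrative rather than taken from the authors' code.

```python
from math import comb

def binomial_upper_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p), summed exactly term by term."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

# Values taken from the text: 11 of 259 task-responsive vlPFC neurons (Monkey 1),
# compared with a 0.25% chance rate of joint coding.
# Assumption: a one-sided (upper-tail) test; the text only says "binomial test".
n_joint, n_total, chance = 11, 259, 0.0025
p_value = binomial_upper_tail(n_joint, n_total, chance)
print(f"observed fraction = {n_joint / n_total:.4f}, one-sided p = {p_value:.2e}")
```

With roughly 0.65 joint-coding neurons expected by chance in a population of 259, observing 11 is far into the upper tail, which is why the reported p-value is so small.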

Monkey 2 also preferred information to resolve reward uncertainty, but unlike Monkey 1, did not prefer information to resolve punishment uncertainty (Figure 1C). The vlPFC of Monkey 2 reflected these behavioral biases: it contained many neurons anticipating information about rewards (Figure 3B, right; binomial test, p < 10⁻¹⁰) but not information about punishments (n=18/275 (6.6%) of task-responsive neurons; not different from chance, binomial test, p = 0.30). Indeed, unlike Monkey 1, there was no significant correlation between reward and punishment anticipation signals (Figure 3B, right; rho=0.04; p=0.4808), and there was no significant tendency for reward and punishment signals to occur in the same neurons (permutation test, p = 0.83) or to have the same sign (permutation test, p = 0.92). Thus, when comparing the two animals, Monkey 1 had a significantly higher proportion of neurons that jointly anticipated reward and punishment information (permutation test, p < 0.001), and a significantly greater difference between the proportion of neurons that anticipated them with the same sign vs. anticipated them with opposite signs (permutation test, p < 0.001). Taken together, these data show that ACC and vlPFC contain neural populations that anticipate information about uncertain rewards and punishments.
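The permutation tests of joint coding can be illustrated with a minimal sketch. It assumes the null distribution is built by shuffling the punishment-significance labels across neurons, which preserves the number of significant neurons for each outcome type while breaking their pairing. The exact shuffling scheme used in the study is not described in this section, and the population below is synthetic.

```python
import random

def joint_coding_permutation_test(reward_sig, punish_sig, n_perm=2000, seed=0):
    """One-sided permutation p-value for co-occurrence of two binary labels.

    Shuffling one label vector across neurons breaks any neuron-level pairing
    while preserving how many neurons are significant for each outcome type.
    """
    rng = random.Random(seed)
    observed = sum(r and p for r, p in zip(reward_sig, punish_sig))
    shuffled = list(punish_sig)
    at_least_as_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        joint = sum(r and p for r, p in zip(reward_sig, shuffled))
        if joint >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return observed, (at_least_as_extreme + 1) / (n_perm + 1)

# Synthetic toy population (not data from the study): 100 neurons,
# 20 reward-significant, 20 punishment-significant, 15 significant for both.
reward_sig = [True] * 20 + [False] * 80
punish_sig = [True] * 15 + [False] * 5 + [True] * 5 + [False] * 75
observed, p_value = joint_coding_permutation_test(reward_sig, punish_sig)
print(observed, p_value)
```

The same scheme could be extended to the sign-specific comparisons by counting only neurons whose two anticipation signals share (or differ in) sign.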

In addition, these results suggest a possible relationship between information anticipatory activity and behavioral information preferences. To visualize this, we plotted the prevalence of significant neuronal information anticipatory activity as a function of the behavioral preference to seek information to resolve uncertainty, for each of the 2×2×2 combinations of animals, areas, and outcome types (Figure 3C). The behavioral preference was quantified using the fitted weight of Information × Uncertainty from the behavioral GLM (Figure 1C). In all six cases where animals expressed a preference for information to resolve uncertainty, ACC and vlPFC contained neurons that anticipated that type of information, at significantly above chance levels. When, on average, an animal did not prefer information (Monkey 2 for punishment information), both ACC and vlPFC had few neurons that anticipated that type of information, with a prevalence that was not significantly above chance. Accordingly, the correlation between reward and punishment information anticipation signals in the vlPFC was not observed in that animal (Figure 3A–B, right). This suggests that the ACC-vlPFC network may be especially engaged when animals are motivated to seek information.

Trial-to-trial relationship between fluctuations in neuronal and behavioral information anticipation.

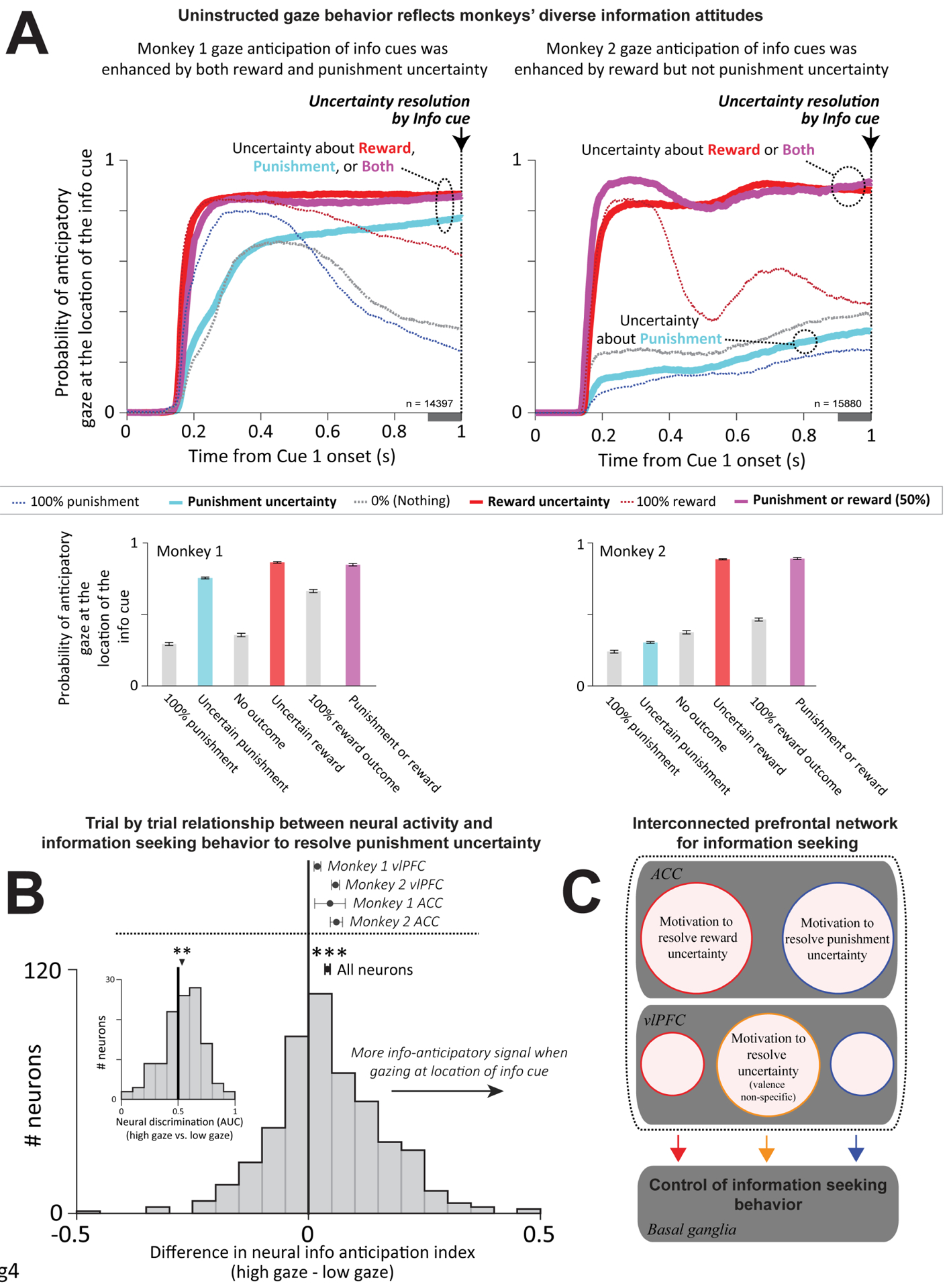

To study the relationship of information seeking and neural activity on a trial-by-trial basis, we leveraged our recent finding that monkeys express a form of information seeking behavior by anticipatorily gazing at spatial locations where informative visual cues will appear to resolve reward uncertainty (Monosov, 2020,White et al., 2019). In the current data, we replicated those results, and in addition, we found that this information-anticipatory gaze mirrored the behavioral information preferences in our choice-task experiment (Figure 1C), consistent with a desire to resolve reward uncertainty. That is, in the realm of rewards, both monkeys had strong anticipatory gaze toward the location of upcoming informative cues, and did so selectively when they were in a state of reward uncertainty (Figure 4A), persistently holding their gaze at the cue location until the cue appeared and their uncertainty was resolved.

Figure 4. Single trial relationship of neural activity to information seeking behavior to resolve punishment uncertainty, and summary of ACC-vlPFC circuit.

(A) During Cue1 and subsequent cue presentations monkeys were allowed to gaze freely; their gaze had no influence on the outcome. As shown previously (White et al., 2019), gaze during the informative block is attracted to the location of the upcoming informative cue before it appeared to resolve reward uncertainty (top, thick red line). Crucially, anticipatory gaze also reflected attitudes to information to resolve punishment uncertainty. Monkey 1 (left) had prominent information-anticipatory gaze during punishment uncertainty (thick cyan line; summary in bar plots below). In contrast, Monkey 2 (right) only had prominent information-anticipatory gaze during reward uncertainty. In fact, Monkey 2 had more anticipatory gaze on no-outcome trials (gray) than uncertain punishment trials (p < 0.05, signed-rank test). Error bars indicate SEM. Gray bar below the x axis is the analysis time window (100 milliseconds before uncertainty resolution). (B) Difference in each neuron’s information anticipation index for uncertain punishments, when computed using trials with high vs. low anticipatory gaze. Dot and error bars above histogram indicate mean and SEM pooling all neurons; *** indicates significant difference from 0 (p < 0.001, signed-rank test). Mean and SEM for each area and each monkey are also shown. Pooled across areas, both monkeys had significant effects (p < 0.001). Monkey 2 had significantly stronger effects (p < 0.01, rank-sum test) consistent with the greater trial-to-trial variability this monkey displayed in gaze behavior (A). Pooled across monkeys, both areas had significant effects (p < 0.001) with no difference between areas (p = 0.22, rank-sum test). Inset: Neural firing rate discrimination between punishment uncertainty trials that had high vs. low anticipatory gaze, based on separate calculations for each punishment condition (Methods). (C) Summary of the ACC-vlPFC circuit coding for information seeking to resolve reward and punishment uncertainty. 
Anatomical and functional connections with the basal ganglia are based on our previous work showing that the basal ganglia, in particular the internal capsule bordering dorsal striatum (White et al., 2019), control gaze shifts to seek advance information about uncertain rewards. The sizes of the circles do not denote the percentage of neurons within each region.

We next asked whether the same relationship between information seeking choice and gaze also held in the realm of punishments. Indeed, there was a clear correspondence between choice and anticipatory gaze: only Monkey 1 had a choice preference for information to resolve punishment uncertainty, and only Monkey 1 had selective anticipatory gaze toward informative cues to resolve punishment uncertainty (Figure 4A). Finally, we examined how reward and punishment uncertainty interacted to drive information anticipatory gaze, using the bivalent 50% reward / 50% punishment condition that presented both types of uncertainty at the same time. The results indicated that animals had strong information-anticipatory gaze as long as they preferred to resolve at least one of the two types of uncertainty. Notably, Monkey 2 strongly anticipated information to resolve combined reward + punishment uncertainty despite not doing so for punishment uncertainty alone (Figure 4A, right). These data show that the monkeys’ information-anticipatory gaze is a sensitive index of their motivation to seek information to resolve uncertainty.

With these findings in hand, we sought to relate the animal’s motivation to seek information to information-anticipatory neural activity. In the realm of reward uncertainty, we previously showed that trial-to-trial fluctuations in ACC information-anticipatory activity predicted corresponding fluctuations in information seeking gaze behavior (Monosov et al., 2020,White et al., 2019). Here, we asked whether a similar neural-behavioral link is true in the realm of punishment uncertainty; whether it extends to both ACC and vlPFC neurons; and whether this link only occurs in an animal with a net preference for this information (Monkey 1), or also occurs in an animal that did not have a net preference for this information (Monkey 2). To answer these questions, we took advantage of the fact that both monkeys had trial-to-trial variability in their anticipatory gaze for information to resolve punishment uncertainty, such that on some trials they gazed at the upcoming cue location and on other trials they did not (Figure 4A, “Uncertain punishment”). Hence, for each neuron, we quantified how neural information anticipation was related to gaze by splitting each animal’s data into ‘high gaze’ and ‘low gaze’ trials, calculating the neural punishment information anticipation index separately for each group, and then taking the difference between the two indexes (Methods). Thus, a positive difference between indexes indicates that neural punishment information anticipatory signals were stronger on trials with more anticipatory gaze.

The results were clear: there were significantly stronger neural information anticipatory signals on trials when animals had stronger behavioral measures of information seeking to resolve punishment uncertainty (Figure 4B). This effect was significant in each individual animal (p < 0.001 in each animal, signed-rank tests), and was significant or had a consistent trend in each individual combination of animal and area (Figure 4B, top; vlPFC, Monkey 1 p = 0.002, Monkey 2 p < 0.001; ACC, Monkey 1 p = 0.16, Monkey 2 p < 0.001, signed-rank tests). The effect tended to be somewhat stronger in Monkey 2, perhaps because there was greater power to detect neural-behavioral relationships in that animal due to greater variation in behavior during punishment uncertainty (Figure 4A, Monkey 1 is closer to ceiling while Monkey 2 had a more equal mix of ‘high gaze’ and ‘low gaze’ trials). Finally, we confirmed this finding by using ROC analysis to directly compare neural firing rates between ‘high gaze’ vs. ‘low gaze’ trials, while controlling for any potential differences in neural activity or behavior across the different punishment probability conditions (Methods). This analysis revealed significantly higher neural activity on trials when monkeys expressed high motivation to reduce punishment uncertainty (Figure 4B, inset).
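The ROC comparison of ‘high gaze’ vs. ‘low gaze’ trials can be sketched as follows. This is a minimal illustration of the area under the ROC curve for a single neuron within a single punishment condition, using synthetic firing rates. How the per-condition values were combined in the study is described in the Methods and is not reproduced here.

```python
def roc_area(high_gaze_rates, low_gaze_rates):
    """Probability that a random high-gaze trial has a higher firing rate
    than a random low-gaze trial, counting ties as one half."""
    wins = 0.0
    for hi in high_gaze_rates:
        for lo in low_gaze_rates:
            if hi > lo:
                wins += 1.0
            elif hi == lo:
                wins += 0.5
    return wins / (len(high_gaze_rates) * len(low_gaze_rates))

# Synthetic firing rates in spikes/s (not data from the study).
high = [12.0, 15.0, 9.0, 14.0]
low = [8.0, 11.0, 9.0, 7.0]
print(roc_area(high, low))
```

An area of 0.5 indicates no discrimination between the two trial groups, while values above 0.5 indicate higher activity on high-gaze trials.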

Importantly, while enhanced information-anticipatory activity was linked to enhanced information-anticipatory behavior, information-anticipatory activity was not simply the result of neurons encoding specific behavioral responses, such as licking, blinking, or gazing at the cue. First, consistent with previous research, these behaviors were all strongly influenced by the probability of reward or punishment (Figure 4, S1) while information-anticipatory activity was primarily driven by uncertainty (Figure 2, 3, S6). Second, the time courses of licking and blinking were distinct from the time course of information-anticipatory activity (Figure S1, S7). Finally, information-anticipatory activity was still highly significant after controlling for gaze state (Methods). Thus, the link between neurons and behavior was consistent with a motivational signal rather than a sensory or motor signal.

DISCUSSION

We performed the first neuronal investigation of informational preferences to resolve uncertainty about future rewards and punishments. We found that informational attitudes about rewards and punishments are dissociable at both the behavioral and neuronal levels. This result mirrors important observations in human behavior: while most people prefer to resolve their uncertainty about future rewards, they display considerable variability in preferences for information about punishments or other undesirable outcomes (Charpentier et al., 2018,Miller, 1987, 1995,Niehoff and Oosterwijk, 2020,Oosterwijk, 2017).

We identified an ACC-vlPFC network containing neurons that selectively encode opportunities to gain information to resolve uncertainty. Furthermore, their neural code was closely linked to informational preferences, both in terms of individual differences in decision making and trial-to-trial fluctuations in information seeking behavior. This information-anticipatory activity was not simply due to neurons encoding behavioral responses.

Within this network we found a key difference between ACC and vlPFC. While both regions contained neurons that anticipated information to resolve uncertainty, the ACC contained largely independent activations for each specific valence – anticipating information about punishments but not rewards, or vice versa. These results are consistent with our and others’ previous work indicating that ACC supports behavioral control flexibly and in a valence-specific manner: that is, ACC neurons can signal decision- and learning-related variables in relation to either future rewards or future punishments (Monosov, 2017,Monosov et al., 2020). By contrast, the vlPFC also had a significant subpopulation of neurons that anticipated information in a bivalent manner, with similar neural codes for resolving uncertainty about both punishments and rewards (Figures 2 and 4C).

We found differences among monkeys in the neural signatures of the motivation to resolve punishment uncertainty that reflected differences in their behavioral attitudes (Figure 1C). Importantly, these differences were likely not due to differences in recording locations. We used imaging and functional electrophysiological mapping to find the same regions in the ACC that were preferentially enriched with neurons that anticipated reward uncertainty resolution. These areas matched our previous data (White et al., 2019). At these sites, in ACC, we found that punishment- and reward-uncertainty resolution anticipating neurons were highly intermingled; there were no apparent anatomical differences in their locations (also see (Monosov, 2017)). This fits well with previous reports showing that positive and negative value neurons and valence-specific neurons are not anatomically separable within area 24 which was the subject of this study (Hosokawa et al., 2013,Kennerley et al., 2011,Kennerley et al., 2009,Monosov, 2017,Monosov et al., 2020,Wallis and Kennerley, 2010). In turn, vlPFC recordings were verified by imaging and aimed directly at the location of dense ACC projections (Figure 1).

In addition, these differences were not due to differences across the two animals in training or understanding the task. In fact, the monkeys had remarkably similar attitudes towards most attributes of the stimuli, including reward probability, punishment probability, reward uncertainty, punishment uncertainty, and the main effect of information. Furthermore, neural activity in the ACC-vlPFC network strongly reflected both monkeys’ desire to resolve reward uncertainty. The key difference was that Monkey 1 sought information to resolve punishment uncertainty and Monkey 2 did not – and this was also differentiated by neural representations in ACC and vlPFC. One other difference between the monkeys that warrants consideration was that the effect of punishment probability on choice was relatively smaller in Monkey 2 than in Monkey 1. Humans show variability in information attitudes across many distinct contexts, particularly about the resolution of uncertainty about punishments. It is therefore possible that changing the subjective aversiveness of punishments could impact the individual differences we observe. If so, we would predict that these changes would also be reflected in the ACC-vlPFC network. In fact, this conjecture is supported by the results of Figure 4. Both monkeys had variability in their trial-by-trial information attitudes that was linked to concomitant changes in ACC-vlPFC network activity. Hence, in both animals the activity in this network was related to trial-by-trial information attitudes.

An important question is how much ACC-vlPFC activity anticipates opportunities to obtain information per se, versus anticipates the subjective value of that information. Our data does not conclusively resolve this, but several lines of evidence are consistent with the latter: (1) information signals and information preferences were both strongest for uncertain outcomes (Figure S6), (2) information signals were only significant when information was preferred, (3) vlPFC neurons only significantly anticipated reward and punishment information with the same sign when both types of information were preferred, (4) punishment information signals were linked to trial-to-trial fluctuations in information-anticipatory behavior, which in turn matched behavioral preferences.

To date, little is known about single neurons’ activity in the vlPFC areas we recorded, particularly anterior ventral 47/12o. This region is anterior-ventral to the auditory domain within vlPFC (Romanski and Goldman-Rakic, 2002). And, to our knowledge, it is lateral (and in some cases ventral) to regions studied in previous electrophysiological experiments in lateral orbitofrontal recordings in monkeys, which concentrated most prominently on area 13 (Blanchard et al., 2015,Cai and Padoa-Schioppa, 2012,Hosokawa et al., 2013,Kennerley et al., 2011,Kennerley and Wallis, 2009,Luk and Wallis, 2013,Padoa-Schioppa and Cai, 2011,Rich and Wallis, 2014). A recent study lesioned vlPFC in monkeys and found deficits in trials in which the animals were required to track reward probability to assess how available or not available reward might be (Murray and Rudebeck, 2018,Rudebeck et al., 2017). Another study showed that the lateral regions of the orbital bank somewhat overlapping with our region-of-interest derive their trial-to-trial value information from external stimuli relatively more so than from internal representations (Rich and Wallis, 2014). Our work adds to these previous findings by showing that many single neurons in vlPFC regions 47/12o have distinct signals that encode opportunities to gain advance information about future uncertain outcomes, which in our study indeed is obtained from external visual cues. Notably, this information-related activity we observed was not related to the animal’s overall preference for a particular stimulus or to its expected reward value; instead, this activity was specifically related to the animals’ preference to gain information to resolve uncertainty, about both rewards and punishments.

These data are related to a line of work that suggests that vlPFC is involved widely in surprise processing (Grohn et al., 2020) and participates in credit assignment in probabilistic and dynamic settings (Noonan et al., 2011,Noonan et al., 2017). Our data suggests that these processes may be supported by information-seeking related functions of vlPFC neurons. For example, one can interpret their bivalent information-anticipatory activity as anticipating the moment of surprise (or an absolute reward prediction error) when uncertainty will be resolved and the trial’s outcome will be revealed. This would permit vlPFC to highlight the key moments in a task when cognitive processes must be recruited to interpret new feedback, perform credit assignment, and promote learning and adjustments in behavior.

Here and in our previous work (White et al., 2019), similar overlapping populations of neurons anticipated the resolution of reward uncertainty by both (i) informative visual cues and (ii) the delivery (or omission) of uncertain reward outcomes (Figure S7). Interestingly, our data suggests that distinct populations of neurons perform these functions for aversive/noxious punishments: one population anticipates informative cues, while a second tonically signals the level of uncertainty leading up to uncertain punishment outcomes (Figures S7–8). Future studies must resolve how these distinct populations support behavior related to punishment uncertainty.

Of the diverse theoretical mechanisms that have been proposed to motivate non-instrumental information seeking, many can be divided into two types: explicit motivation to resolve uncertainty, and overweighting of desirable vs. undesirable information (Bromberg-Martin and Monosov, 2020). Our data show that the ACC-vlPFC network is highly suitable for the former type of mechanism. The latter type of mechanism could be implemented by distinct or overlapping brain networks (e.g. (Iigaya et al., 2020)), since multiple mechanisms may operate in parallel to motivate information seeking (Bromberg-Martin and Monosov, 2020,Kobayashi et al., 2019,Sharot and Sunstein, 2020). We believe that the emerging field of research on information seeking will shed further light on the distinct circuits and motivational mechanisms that govern these informational preferences.

STAR Methods

RESOURCE AVAILABILITY

Lead contact

Further information and requests for resources, data and code should be directed to the Lead Contact, Dr. Ilya E. Monosov (ilya.monosov@gmail.com).

Material sharing

This study did not generate new unique reagents.

Data and code availability

Original source data is available upon reasonable request from the Lead Contact.

EXPERIMENTAL MODEL AND SUBJECT DETAILS

Two adult sexually mature male monkeys (Macaca mulatta; Monkey B and Monkey Sb; ages: 7–10 years old) were used for recording and imaging experiments. All these procedures conform to the Guide for the Care and Use of Laboratory Animals and were approved by the Institutional Animal Care and Use Committee at Washington University. Anatomical tracer experiments were carried out on separate animals from those used for electrophysiology. Procedures were conducted in accordance with the Institute of Laboratory Animal Resources Guide for the Care and Use of Laboratory Animals and approved by the University Committee on Animal Resources at the University of Rochester and by Washington University Institutional Animal Care and Use Committee. Two adult sexually mature male macaque monkeys (Macaca mulatta) were used for these experiments.

METHOD DETAILS

A plastic head holder and plastic recording chamber were fixed to the skull under general anesthesia and sterile surgical conditions. For ACC, the chambers were tilted laterally by 35° and aimed at the anterior cingulate and the anterior regions of the basal ganglia. After the monkeys recovered from surgery, they participated in the behavioral and neurophysiological experiments. For vlPFC, novel grids were manufactured to approach vlPFC at an extreme lateral angle (up to 20 degrees) such that electrodes can be accurately targeted to the sites of strongest anatomical connectivity with the ACC.

Data acquisition.

While the monkeys participated in the behavioral procedure, we recorded single neurons in the anterior cingulate and the ventral lateral prefrontal cortex, areas 47/12o. The recording sites were determined with a 1 mm-spacing grid system and with the aid of MR (3T) and CT images. This imaging-based estimation of neuron recording locations was aided by custom-built software (PyElectrode). Single-unit recording was performed using glass-coated electrodes (Alpha Omega) and 16-channel linear arrays (v-probes, Plexon). These devices were inserted into the brain through a stainless-steel guide tube and advanced by an oil-driven micromanipulator (MO-97A, Narishige).

Signal acquisition (including amplification and filtering) was performed using a Plexon 40 kHz recording system. Action potential waveforms were identified online by multiple time-amplitude windows, and the isolation was refined offline (Plexon Offline Sorter) by clustering on the first three principal components and non-linear energy.

Because in Monkey 1 (M1) we used electrophysiological identification of information-anticipating neurons to perform in-vivo tracing (MEMRI), we functionally mapped M1 ACC using single unit (single channel) electrophysiology, the most precise method that we have used in the past to target anatomical injections (Monosov et al., 2015). In M1, as previously, we recorded only neurons that were judged online to be task responsive, that is, exhibiting inhibition or excitation to any task PS (Monosov, 2017,White et al., 2019,White and Monosov, 2016). This yielded 162 neurons, of which 149 were task responsive (Kruskal-Wallis test across all PS epochs, p<0.01). For Monkey 2 (M2), we used linear arrays and included any well-isolated neuron, yielding 128/334 neurons that were task responsive (38%). This matched our previous measurements in M1 (White et al., 2019). Next, after we carefully targeted the tracer in M1 using single unit electrophysiology to identify the vlPFC region receiving ACC inputs, and confirmed MEMRI accuracy using chemical tracing, we recorded in vlPFC using linear arrays and confirmed accuracy using imaging in both monkeys. This yielded 259/711 task-responsive neurons in M1 and 275/452 in M2.

ACC recordings were performed in the range of ~8–15mm anterior (mean: ~12mm) in B and ~7–14mm anterior (mean: ~11mm) in Sb relative to the center of the anterior commissure (AC; located ~21 mm anterior to the interaural 0). The medial-lateral extent of ACC recording spanned the lateral bank of the ACC matching our previous studies (Monosov, 2017,White et al., 2019). vlPFC recordings were performed ~14mm from the center of the AC in B, and in the range of ~8–14mm anterior (mean: ~11mm) in Sb relative to the center of the AC. The medial-lateral extent of vlPFC recording was from ~1mm medial-2mm lateral relative to the location of the electrode in Figure 1D. Recording locations were verified by MRI and CT imaging (Daye et al., 2013).

Eye position was obtained with an infrared video camera (Eyelink, SR Research). Behavioral events and visual stimuli were controlled by Matlab (Mathworks, Natick, MA) with Psychophysics Toolbox extensions. Juice, used as reward, was delivered with a solenoid delivery reward system (CRIST Instruments). Juice-related licking was measured and quantified using previously described methods (Ledbetter et al., 2016a,Monosov, 2017,Monosov and Hikosaka, 2013,White et al., 2019). Airpuff (~35 psi) was delivered through a narrow tube placed ~ 6–8 cm from the monkey’s face (Monosov, 2017).

Chemical tracer injections.

For retrograde tracing experiments (Figure 1D and Figure S4), two adult male macaque monkeys were used (Macaca mulatta). The monkey received ketamine 10 mg/kg, diazepam 0.25 mg/kg, and atropine 0.04 mg/kg IM in the cage. A surgical plane of anesthesia was maintained via 1–3% isoflurane in 100% oxygen via vaporizer. Temperature, heart rate, and respiration were monitored throughout the surgery. The monkey was placed in a Kopf stereotaxic, a midline scalp incision was made, and the muscle and fascia were displaced laterally to expose the skull. A craniotomy (~2–3 cm²) was made over the region of interest, and small dural incisions were made only at injection sites. The monkey received an injection of Lucifer Yellow (LY) tracer (40 nl, 10% in 0.1 M phosphate buffer (PB), pH 7.4; Invitrogen).

The tracer was pressure-injected over 10 min using a 0.5-μl Hamilton syringe. Following each injection, the syringe remained in situ for 20–30 min. Twelve to 14 days after surgery, the monkey was again deeply anesthetized and perfused with saline followed by a 4% paraformaldehyde/1.5% sucrose solution in 0.1 M PB, pH 7.4. The brain was postfixed overnight, and cryoprotected in increasing gradients of sucrose (10, 20, and 30%). Serial sections of 50 μm were cut on a freezing microtome into 0.1 M PB or cryoprotectant solution. One in eight sections was processed free-floating for immunocytochemistry to visualize the tracers. Tissue was incubated in primary anti-LY (1:3000 dilution; Invitrogen) in 10% NGS and 0.3% Triton X-100 (Sigma-Aldrich) in PB for four nights at 4 °C. Following extensive rinsing, the tissue was incubated for 40 min in biotinylated secondary anti-rabbit antibody made in goat (1:200; Vector BA-1000) followed by incubation with the avidin–biotin complex solution (Vectastain ABC kit, Vector Laboratories). Immunoreactivity was visualized using standard DAB procedures. Staining was intensified by incubating the tissue for 5–15 s in a solution of 0.05% 3,3′-diaminobenzidine tetra-hydrochloride, 0.025% cobalt chloride, 0.02% nickel ammonium sulfate, and 0.01% H2O2. Sections were mounted onto gel-coated slides, dehydrated, defatted in xylene, and cover-slipped with Permount. Retrogradely labeled input cells were identified under brightfield microscopy (x20). StereoInvestigator software (Micro-BrightField) was used to stereologically count labeled cells with an even sampling (1.2 mm of interval between slides).

Anterograde cases in the Supplemental Materials come from the newly-digitized collection of anatomical tracers from the laboratories of Dr. Joel Price. For those injections, BDA (10,000 kDA) was used as anterograde tracer and processed as described previously (An et al., 1998,Ferry et al., 2000,Hsu and Price, 2007, 2009,Ongur and Price, 2000).

Manganese enhanced magnetic resonance imaging tracing.

To localize where the ACC information anticipation related functional hotspot most strongly projects, we used manganese-enhanced MRI tracing (MEMRI). The method relies on two properties of manganese ions: (1) the manganese ion (Mn2+) is a calcium ion analog and is therefore taken up by neurons and transported in an anterograde fashion; and (2) Mn2+ increases the MR intensity of voxels (Pautler et al., 1998,Saleem et al., 2002,Simmons et al., 2008). Therefore, by injecting Mn2+ directly into the brain, we can trace neuronal pathways using standard T1-weighted MRI. To choose the injection site, we recorded neuronal activity in the ACC before the injection and verified that the information anticipation neurons were there, and used T1 MRI to double check that this location roughly matched the region we previously quantitatively identified as enriched in information anticipation related neurons (White et al., 2019). We then used a small metal cannula to target the injection to the depth with a high concentration of information anticipation related neurons. We injected 0.15 μl of 150 mM solution of manganese chloride. This concentration was previously shown to be nontoxic (Eschenko et al., 2010,Simmons et al., 2008). The injection was made over approximately 30 minutes. We then waited approximately 15 minutes before retracting. We first retracted 0.5 mm and waited for approximately 10 minutes, and then retracted completely from the brain and prepared the monkey for MRI.

MR anatomical images were acquired in a 3T horizontal scanner. The monkey’s head was placed in the scanner in stereotactic position. To minimize changes in RF across scans, as previously (Monosov et al., 2015), we made sure that the MR surface coil was always in roughly the same location (relative to the monkey’s head) before acquiring a 3D volume with a 0.5 mm isotropic voxel size. Each 3D volume took ~15 min to acquire without averaging. Five baseline pre-injection scans were collected a week earlier. Five post-injection scans were collected 24 hours after the injection. Because in our previous studies we found that Mn2+ accumulates in subcortical structures over time (unpublished), we also scanned 96 hours after the injection to further validate our methods and this observation (data available upon request). All scans were obtained using the same acquisition under isoflurane gas anesthesia. On the injection day, a single T1 scan was collected ~1 h after the injection was finished to visualize Mn2+.

As in previous monkey MEMRI experiments, we could not detect manganese transport by eye 24 h after the injection (Simmons et al., 2008). To visualize the transport, we calculated the percentage increase of voxel intensity after the injection by comparing the averaged pre-injection scans with the averaged post-injection scans. This was the same procedure and analysis that we developed with the Leopold laboratory (Monosov et al., 2015). Before doing this, the averaged MRIs were processed by the Analysis of Functional NeuroImages (AFNI) toolkit (Cox, 1996). First, the image nonuniformity was reduced (AFNI function: 3dUniformize). Second, the images were resampled, doubling the number of voxels (AFNI function: 3dresample). Third, the post-injection scans were aligned with the average pre-injection baseline scan using affine transforms (AFNI function: 3dAllineate). Fourth, the averaged images (pre and post) were smoothed with a 3D Gaussian filter (σ = 0.2 mm). Last, before calculating the percentage increase, each average scan was normalized by dividing by the average intensity value of 5 mm³ of cortex (Monosov et al., 2015).
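The final normalization and percentage-increase step can be sketched as follows. This is a minimal illustration on synthetic intensities. It assumes the percentage increase is computed voxel-wise as 100 × (post − pre) / pre after each averaged scan is divided by its own reference-cortex mean; the function and variable names are hypothetical.

```python
def percent_increase(pre, post, pre_ref, post_ref):
    """Voxel-wise percentage signal increase after reference normalization.

    pre, post: averaged voxel intensities before and after injection.
    pre_ref, post_ref: mean intensity of the reference cortex in each average.
    """
    out = []
    for a, b in zip(pre, post):
        a_norm = a / pre_ref
        b_norm = b / post_ref
        out.append(100.0 * (b_norm - a_norm) / a_norm)
    return out

# Synthetic intensities in arbitrary units (not data from the study).
pre_voxels = [100.0, 120.0, 80.0]
post_voxels = [110.0, 132.0, 104.0]
increase = percent_increase(pre_voxels, post_voxels, pre_ref=100.0, post_ref=110.0)
print(increase)
```

In this toy case the first two voxels brighten only in proportion to the global scan intensity, so they show no increase after normalization, whereas the third voxel shows a genuine relative enhancement.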

Behavioral tasks.

The information task used in this study is shown in Figure 1A. The task began with the appearance of a small circular trial start cue at the center of the screen. This trial start cue then disappeared and was followed by a donut-shaped visual fractal Cue1 (~4° radius) that was displayed for a fixed duration (1 second). Then a square-shaped fractal Cue2 appeared inside Cue1, filling its blank central space. After 1.5 seconds, the donut-shaped Cue1 disappeared, indicating to the monkeys that the trial’s scheduled outcome would occur in 0.75 seconds. Then, the screen became empty and simultaneously the outcome was delivered.

The stimuli were presented randomly on either the left or right side of the screen (~12.5°). The Cue1s came in two types. The information-predictive Cue1s predicted juice reward (typically 0.4 mL) or punishment (air puff) with different probabilities, and were followed by informative cues whose color or texture indicated the trial’s outcome (Figure 1A). The non-information-predictive Cue1s also yielded rewards and punishments with matched size and probability, but were followed by noninformative cues whose colors or textures were randomized on each trial and hence did not convey any information about the trial’s outcome. The Cue1s were presented in a pseudorandom order in 40-trial blocks of Info and Noinfo trials. We also used a second version of the task in which monkeys were allowed to choose among the Cue1s. Each trial started with the presentation of a trial-start cue at the center of the screen. Then, after 1 second, a choice array was presented consisting of two fractals used in the information procedure in Figure 1A. Monkeys then made a saccadic eye movement to their preferred fractal and fixated it for 0.75 seconds to indicate their choice. Then, the unchosen stimulus disappeared, and the trial proceeded through the same sequence of events as in Figure 1A. Following these trials, during the intertrial interval, another visually distinct fixation spot appeared. When the monkeys fixated this spot, a small reward was delivered (typically 0.125 mL of juice). This kept the monkeys engaged, particularly when they encountered many trials in which only punishments were available (see Barberini et al., 2012).

QUANTIFICATION AND STATISTICAL ANALYSIS

All statistical tests were two-tailed and non-parametric unless otherwise noted. A significance threshold of p<0.05 was used unless otherwise noted. Recorded neurons were included in analyses if they displayed significant task responsiveness, defined as significant variation in neural activity across all task Cue1s (Kruskal-Wallis test, p<0.01). For this analysis, activity was measured in a broad time window encompassing the task period in order to avoid making any assumptions about the time course of neural responses (from 0.1 s after Cue1 onset to 3.25 s after Cue1 onset).

Neural activity was converted to normalized activity as follows. Each neuron’s spiking activity was smoothed with a Gaussian kernel (mean = 50 ms) and then z-scored. To z-score the activity, the neuron’s average activity time course aligned at PS onset was calculated for each condition (defined here as each combination of PS and cue). These average activity time courses from the different conditions were all concatenated into a single vector, and its mean and standard deviation were calculated and used to z-score the data. All subsequent analyses converted that neuron’s firing rates to normalized activity by (1) subtracting the mean of that vector and (2) dividing by the standard deviation of that vector (White et al., 2019).
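The z-scoring step can be sketched as follows. This is an illustrative example only: the function names and toy values are hypothetical, the smoothing step is omitted, and the use of the population (rather than sample) standard deviation is an assumption, since the text does not specify which was used.

```python
from statistics import mean, pstdev


def zscore_params(condition_averages):
    """Concatenate the per-condition average time courses into one vector
    and return that vector's mean and standard deviation."""
    concatenated = [x for course in condition_averages for x in course]
    # Assumption: population SD; the source does not say which SD was used.
    return mean(concatenated), pstdev(concatenated)


def normalize(rates, mu, sd):
    """Convert firing rates to normalized activity: (rate - mean) / SD."""
    return [(r - mu) / sd for r in rates]


# Toy average time courses for two conditions (hypothetical values).
condition_averages = [[2.0, 4.0], [6.0, 8.0]]
mu, sd = zscore_params(condition_averages)
normalized = normalize([5.0, 10.0], mu, sd)
```

Because the mean and standard deviation come from the concatenated condition averages, every condition is expressed on the same per-neuron scale.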

The Informative Cue Anticipation Index (White et al., 2019), referred to here as the Information Anticipation Index, was defined as the difference between a neuron’s uncertainty signal for Info and Noinfo trials in a 0.5 s pre-cue time window (Figure 2). Hence the index was positive if a neuron had a higher uncertainty signal in anticipation of Info PSs, and negative if a neuron had a higher uncertainty signal in anticipation of Noinfo PSs. Neurons were classified as information-responsive if their Information Anticipation Index was significantly different from 0 (p < 0.05, permutation tests conducted by comparing the index calculated on the true data to the distribution of indexes calculated on 20,000 permuted data sets which shuffled the assignment of trials to Info and Noinfo conditions). Exactly as previously, the uncertainty signal was measured by an ROC area comparing certain and uncertain trials, separately in Info and Noinfo conditions (White et al., 2019).
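A minimal sketch of the index and its permutation test is given below. Several details are assumptions made for illustration: the trial representation (Info flag, uncertainty flag, pre-cue firing rate), the two-sided comparison of absolute index values, and the choice to shuffle Info/Noinfo labels separately within certain and uncertain trials so that no condition becomes empty. All names and toy values are hypothetical.

```python
import random


def roc_area(neg, pos):
    """Probability that a value drawn from pos exceeds one drawn from neg
    (ties count as 0.5)."""
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def anticipation_index(trials):
    """trials: list of (is_info, is_uncertain, firing_rate) tuples."""
    def uncertainty_signal(info_flag):
        certain = [r for i, u, r in trials if i == info_flag and not u]
        uncertain = [r for i, u, r in trials if i == info_flag and u]
        return roc_area(certain, uncertain)
    return uncertainty_signal(True) - uncertainty_signal(False)


def permutation_p(trials, n_perm=20000, seed=0):
    """Two-sided permutation p-value for the index differing from 0."""
    rng = random.Random(seed)
    observed = anticipation_index(trials)
    extreme = 0
    for _ in range(n_perm):
        permuted = []
        # Assumption: shuffle Info labels within each certainty class.
        for flag in (False, True):
            group = [t for t in trials if t[1] == flag]
            labels = [t[0] for t in group]
            rng.shuffle(labels)
            permuted += [(lab, u, r) for lab, (_old, u, r) in zip(labels, group)]
        if abs(anticipation_index(permuted)) >= abs(observed):
            extreme += 1
    return observed, extreme / n_perm


# Toy data (hypothetical): uncertainty raises firing only on Info trials.
trials = (
    [(True, False, r) for r in (1, 2, 3)]
    + [(True, True, r) for r in (7, 8, 9)]
    + [(False, False, r) for r in (1, 2, 3)]
    + [(False, True, r) for r in (1, 2, 3)]
)
index, p_value = permutation_p(trials, n_perm=200)
```

Here the Info uncertainty signal is an ROC area of 1.0 and the Noinfo signal is 0.5, so the index is positive, matching the sign convention described above.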

Analysis of behavioral choice.

To model choice behavior, each animal’s choice data were fit with a generalized linear model (GLM) with a logistic link function for binomial data. The dependent variable on each trial was the animal’s choice (0 if they chose the left option, 1 if they chose the right option). The regressors were the differences between the right and left options in each specific attribute of the options. The attributes used for the regressors were: the main effects of expected reward value (i.e. reward probability), reward uncertainty (SD of reward distribution), expected aversive value (i.e. aversive outcome probability), aversive uncertainty (SD of aversive outcome distribution), and informativeness (0 if the option was non-informative, 1 if it was informative); and the pairwise interactions between informativeness and each of the following variables: expected reward value, reward uncertainty, expected aversive value, and aversive uncertainty. In order to obtain regression coefficients in standardized units, all nonbinary attributes were standardized by z-scoring before being used to construct the regressors. Thus, their fitted regression weights can be interpreted as the increase in the log odds of choosing an option as a result of increasing the option’s attribute by an amount equal to one standard deviation of the distribution of that attribute over all options in the task. Finally, to allow for the possibility of interactions between simultaneous expectations of rewarding and aversive outcomes, we modeled the bivalent 50% reward/50% punishment options by setting all of the above attributes to 0 and instead including two additional attributes that were binary indicator variables, one that was 1 if and only if the option was the 50/50 non-informative option, and one that was 1 if and only if the option was the 50/50 informative option.
We focused on the Info × Uncertainty terms as measures of each animal’s attitudes toward reward and punishment information, as we found that information attitudes were greatly enhanced under uncertainty, such that these terms provided the best explanation of information-related choice behavior (Figure S2). Specifically, when compared to a baseline model in which the only information-related term was a main effect of Info, the model’s ability to predict behavior (measured by cross-validated log likelihood assessed by 10-fold cross-validation, by AIC, or by BIC) was improved to a greater extent by adding Info × Uncertainty terms (i.e. Info × Reward Uncertainty and Info × Punishment Uncertainty) than by adding either Info × Probability terms (i.e. Info × Reward Probability and Info × Punishment Probability) or Info × Valence terms (i.e. Info × R and Info × P, where R is an indicator variable equal to 1 when reward is possible and 0 otherwise, and P is an analogous indicator variable for punishment).
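The construction of the regressors described above can be sketched as follows. This is not the fitting code used in the study: model fitting itself is omitted, the bivalent 50/50 indicator variables are left out for brevity, and computing each interaction regressor as the right-minus-left difference of the per-option product (informativeness × standardized attribute) is an assumption. The attribute names, toy options, and weights are hypothetical.

```python
import math
from statistics import mean, pstdev

ATTRS = ["reward_prob", "reward_sd", "punish_prob", "punish_sd"]


def standardize(options):
    """Z-score each non-binary attribute over all options in the task."""
    out = [dict(o) for o in options]
    for a in ATTRS:
        vals = [o[a] for o in options]
        mu, sd = mean(vals), pstdev(vals)
        for o in out:
            o[a] = (o[a] - mu) / sd
    return out


def features(option):
    """Main effects plus Info x attribute interactions for one option."""
    f = {a: option[a] for a in ATTRS}
    f["info"] = option["info"]
    for a in ATTRS:
        f["info_x_" + a] = option["info"] * option[a]
    return f


def regressors(left, right):
    """Right-minus-left difference in every feature."""
    fl, fr = features(left), features(right)
    return {k: fr[k] - fl[k] for k in fr}


def p_choose_right(x, weights, intercept=0.0):
    """Logistic link: regression weights act on the log odds of choosing right."""
    z = intercept + sum(weights.get(k, 0.0) * v for k, v in x.items())
    return 1.0 / (1.0 + math.exp(-z))


# Toy option set (hypothetical values).
options = [
    {"reward_prob": 0.5, "reward_sd": 0.5, "punish_prob": 0.0, "punish_sd": 0.0, "info": 1},
    {"reward_prob": 0.5, "reward_sd": 0.5, "punish_prob": 0.0, "punish_sd": 0.0, "info": 0},
    {"reward_prob": 1.0, "reward_sd": 0.0, "punish_prob": 0.0, "punish_sd": 0.0, "info": 0},
    {"reward_prob": 0.0, "reward_sd": 0.0, "punish_prob": 0.5, "punish_sd": 0.5, "info": 1},
]
std = standardize(options)
x = regressors(left=std[1], right=std[0])  # Noinfo on the left, Info on the right
p_right = p_choose_right(x, {"info_x_reward_sd": 1.0})  # hypothetical weight
```

In this toy comparison the two options differ only in informativeness, so the main-effect regressors are zero and a positive Info × Reward Uncertainty weight alone pushes the predicted choice toward the informative option.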

Analysis of neural coding prevalence in each neural population.

For each animal, area, and outcome type (reward or aversive), we tested whether the fraction of neurons with significant information-anticipatory activity for that outcome type was greater than expected by chance (binomial test, chance = 0.05). Then, for each animal and area, we classified each neuron as either non-significant or as having one of four coding types: significant reward only, significant aversive only, significant reward and aversive with the same coding sign, and significant reward and aversive with opposite coding signs. We tested whether the fraction of neurons with each coding type was significantly greater than expected under the null hypothesis that no cells had true reward or aversive coding (binomial test, chance = (1–0.05)*0.05 for reward-only and aversive-only and 0.05*0.05*0.5 for same-sign and opposite-signs). Finally, we tested whether, among neurons classified as having one of these four coding types, the fraction of neurons with each coding type was significantly different from that expected under the null hypothesis that neurons had the same probabilities as in the actual data of having significant reward coding and significant aversive coding, but that reward and aversive coding were independent of each other (permutation test, carried out by comparing the observed fraction of neurons with each coding type to the fractions from 1000 permuted datasets in which reward coding and punishment coding properties were each independently shuffled across neurons). We also used these results to perform an analogous test pooling same-sign and opposite-sign neurons to test the overall proportion of cells that anticipated both reward and punishment information, as well as a test based on the difference between the proportions of same-sign and opposite-sign neurons to test whether these neurons had an above-chance tendency to anticipate them with the same sign.
We also used an analogous permutation test to determine whether there was a significant difference between vlPFC and ACC in the difference between the proportion of neurons with specific coding properties (e.g. significant reward coding and significant aversive coding with the same sign) and the proportion expected by chance under the null hypothesis of independent coding. Latency of uncertainty and info signals was computed in several ways utilizing previously described methods and produced similar results (Monosov, 2017,Monosov and Hikosaka, 2013,Monosov et al., 2008,White et al., 2019).
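The chance levels used in the binomial tests of coding-type prevalence follow directly from the 0.05 significance threshold, as the sketch below illustrates. The upper-tail (one-sided) form of the test is an assumption chosen to match the phrase "greater than expected by chance"; the neuron counts and all names are hypothetical.

```python
from math import comb


def binom_upper_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


ALPHA = 0.05  # per-test false positive rate under the null
chance = {
    # significant for one outcome type and not for the other
    "reward_only": (1 - ALPHA) * ALPHA,
    "aversive_only": (1 - ALPHA) * ALPHA,
    # significant for both; same vs. opposite sign equally likely under the null
    "same_sign": ALPHA * ALPHA * 0.5,
    "opposite_sign": ALPHA * ALPHA * 0.5,
}

# Hypothetical counts out of 100 recorded neurons (not real data).
n_neurons = 100
counts = {"reward_only": 12, "aversive_only": 10, "same_sign": 6, "opposite_sign": 1}
p_values = {k: binom_upper_tail(counts[k], n_neurons, chance[k]) for k in chance}
```

The resulting chance rates are 0.0475 for the single-outcome coding types and 0.00125 for each of the two dual-coding types.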

Trial-by-trial relationship of activity and Info Anticipation.

For the analyses in Figure 4B we calculated the Information Anticipation Index separately using (1) high-gaze trials in which the animal gazed at the cue at least 80% of the time in the final 100 ms of the pre-Cue2 window (i.e. a window from 0.9–1.0 s after Cue1 onset) at the location at which Cue2 would be presented and (2) low-gaze trials in which the animal gazed at the cue during at most 20% of that time window. We applied this trial selection only to uncertain trials to avoid biasing the index with behavioral differences relative to certain trials. We then visualized the within-neuron difference between these two gaze indices for the population of neurons (Figure 4B). Differences for neurons that displayed negative Information Anticipation Indices (< 0) were sign flipped, so that positive differences indicate that a neuron had greater activity in the same manner that it normally anticipates information (i.e. indicates higher activity for cells that were normally excited before information, and indicates lower activity for cells that were normally inhibited before information).
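The trial selection and sign-flipping convention can be summarized in a short sketch. The function names and the representation of gaze as a per-trial fraction of the 100 ms window are hypothetical; the 80% and 20% thresholds are those stated above.

```python
def gaze_class(fraction_on_cue, high=0.8, low=0.2):
    """Classify a trial by the fraction of the final 100 ms spent gazing at the cue."""
    if fraction_on_cue >= high:
        return "high"
    if fraction_on_cue <= low:
        return "low"
    return None  # intermediate trials are used in neither index


def aligned_gaze_difference(index_all, index_high, index_low):
    """High-gaze minus low-gaze index, sign flipped for neurons whose overall
    Information Anticipation Index is negative."""
    diff = index_high - index_low
    return -diff if index_all < 0 else diff


# Hypothetical example neurons.
excited = aligned_gaze_difference(index_all=0.3, index_high=0.5, index_low=0.2)
inhibited = aligned_gaze_difference(index_all=-0.2, index_high=-0.3, index_low=-0.1)
```

Both example neurons yield a positive aligned difference, since each shows a stronger version of its usual information-anticipatory signal on high-gaze trials.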

To confirm the robustness of these results, we performed an additional version of this analysis that directly compared neural firing rates between high-gaze vs. low-gaze trials, while treating each uncertain punishment condition separately. This is to control for any possibility that the apparent neural-behavioral relationships could be due to mean differences in gaze behavior and/or neural activity across the punishment conditions. To do this, for each neuron, we computed the ROC area for its pre-Cue2 firing rate to discriminate between ‘high gaze’ and ‘low gaze’ trials (defined here as trials in which monkeys looked more than 60% of the time vs. less than 40% of the time). We computed this separately for each information-predictive Cue1 that was associated with punishment uncertainty, and then averaged these ROC areas to get an overall index of the relationship between that neuron’s activity and behavior (Figure 4B, inset). For this analysis we used all neurons with task variability across uncertain punishment conditions, and for each neuron we only used the uncertain punishment conditions where there was behavioral variability while that neuron was recorded, so that the ROC area could be computed.

Analysis of neural activity as a function of gaze state.

As a further test of whether information-anticipatory neurons were simply encoding gaze, we directly measured the strength of information-anticipatory activity separately for times during the cue period when the animal’s gaze was on the cue vs. off the cue. If these neurons were simply encoding gaze state, then information-anticipatory activity should be greatly reduced or abolished after controlling for gaze state, either by restricting the analysis to times when gaze was on the cue or to times when gaze was off the cue. By contrast, if neurons were truly anticipating information, they should have significant information signals in all cases.