Abstract

Each of our theories of mental representation provides some insight into how the mind works. However, these insights often seem incompatible, as the debates between symbolic, dynamical, emergentist, sub-symbolic, and grounded approaches to cognition attest. Mental representations—whatever they are—must share many features with each of our theories of representation, and yet there are few hypotheses about how a synthesis could be possible. Here, I develop a theory of the underpinnings of symbolic cognition that shows how sub-symbolic dynamics may give rise to higher-level cognitive representations of structures, systems of knowledge, and algorithmic processes. This theory implements a version of conceptual role semantics by positing an internal universal representation language in which learners may create mental models to capture dynamics they observe in the world. The theory formalizes one account of how truly novel conceptual content may arise, allowing us to explain how even elementary logical and computational operations may be learned from a more primitive basis. I provide an implementation that learns to represent a variety of structures, including logic, number, kinship trees, regular languages, context-free languages, domains of theories like magnetism, dominance hierarchies, list structures, quantification, and computational primitives like repetition, reversal, and recursion. This account is based on simple discrete dynamical processes that could be implemented in a variety of different physical or biological systems. In particular, I describe how the required dynamics can be directly implemented in a connectionist framework. The resulting theory provides an “assembly language” for cognition, where high-level theories of symbolic computation can be implemented in simple dynamics that themselves could be encoded in biologically plausible systems.

1. Introduction

At the heart of cognitive science is an embarrassing truth: we do not know what mental representations are like. Many ideas have been developed, from the field’s origins in symbolic AI (Newell & Simon, 1976), to parallel and distributed representations of connectionism (Rumelhart & McClelland, 1986; Smolensky & Legendre, 2006), embodied theories that emphasize the grounded nature of the mental (Barsalou, 2008, 2010), Bayesian accounts of structure learning and inference (Griffiths, Chater, Kemp, Perfors, & Tenenbaum, 2010; Tenenbaum, Kemp, Griffiths, & Goodman, 2011), theories of cognitive architecture (Newell, 1994; Anderson, Matessa, & Lebiere, 1997; Anderson et al., 2004), and accounts based on mental models, simulation (Craik, 1967; Johnson-Laird, 1983; Battaglia, Hamrick, & Tenenbaum, 2013), or analogy (Gentner & Stevens, 1983; Gentner & Forbus, 2011; Gentner & Markman, 1997; Hummel & Holyoak, 1997). These research programs have developed in concert with foundational debates in the philosophy of mind about what kinds of things concepts may be (e.g. Margolis & Laurence, 1999), with similarly diverse and seemingly incompatible answers.

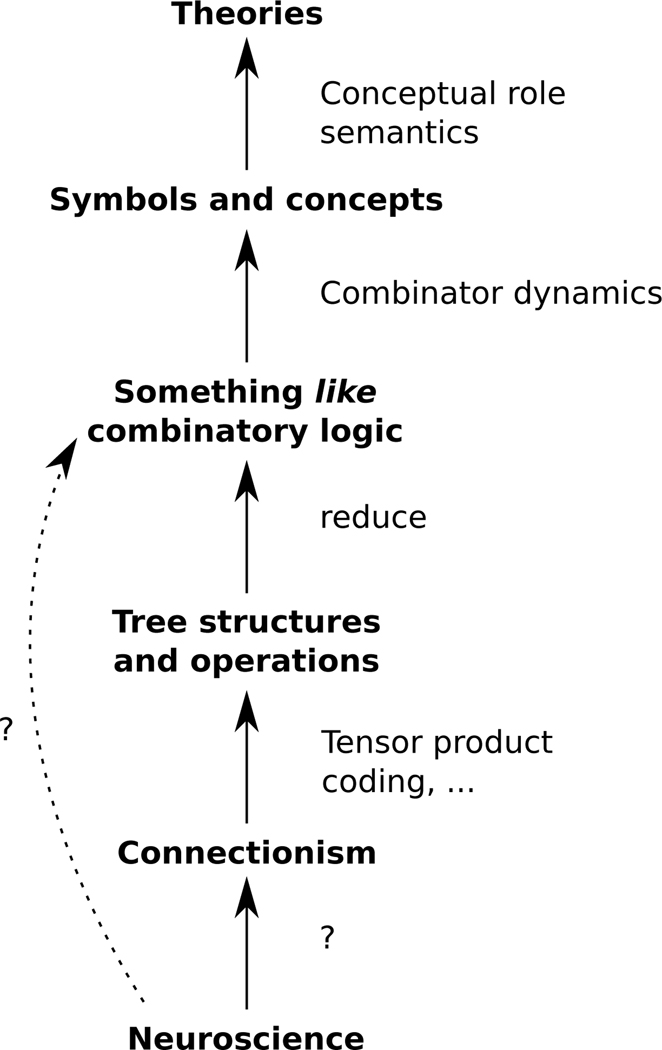

This paper develops an approach that attempts to unify a variety of ideas about how mental representations may work. I argue that what is needed is an intermediate bridging theory that lives below the level of symbols and above the level of neurons or neural network nodes, and that in fact such a system can be found in a pre-existing formalism of mathematical logic. The system I present shows how it is possible to implement high-level symbolic constructs permitting essentially arbitrary (Turing-complete) computation while remaining simple and parallelizable, and while addressing foundational questions about meaning. Although the particular instantiation I describe is an extreme simplification, its general principles, I’ll argue, are likely to be close to the truth.

The theory I describe is a little unusual in that it is built almost exclusively out of ideas that have been independently developed in fields adjacent to cognitive science. The logical formalism comes from mathematical logic and computer science in the early 1900s. The philosophical and conceptual framework has been well articulated in prior debates. The inferential theory builds on work in theoretical AI and Bayesian cognitive science. The overall goal of finding symbolic cognition below the level of “symbols” comes from connectionism and other sub-symbolic accounts. The insistence on eventually arriving at genuine symbols comes from the remarkable properties of human symbolic thought and language, including centuries of argumentation in philosophy and mathematics about what kinds of formal systems may capture the core properties of humanlike thinking. What is new is the unification of these ideas into a framework that can be shown to learn complex symbolic processes and representations that are grounded in simple underlying dynamics, without dodging key questions about meaning and representation. The resulting theory intentionally muddies the waters between traditional symbolic and non-symbolic approaches by studying a system that is both simple to implement in neural systems and one in which high-level cognitive processes are simple to implement. Such a system, I argue, represents a promising and concrete avenue for spanning the chasm between neural and cognitive systems.

The resulting theory formulates hypotheses about how fundamental conceptual change can occur, both computationally and philosophically. A pressing mystery is how children might start with whatever initial knowledge they are born with and come to develop the rich and sophisticated systems of representation we find in adults (Rule, Tenenbaum, & Piantadosi, under review). The problem is perhaps deepest when we consider simple logical capacities, for instance the ability to represent Boolean values, compute syllogisms, follow logical/deductive rules, use number, or process conditionals and quantifiers. If infants lack some of these abilities, we need a learning theory that can explain where such computational processes might come from. Yet it is hard to imagine how a computational system that lacks them could function at all. From what starting point could learners possibly construct Boolean logic, for instance? It is hard to imagine what a computer would look like if it did not already have notions like and, or, not, true, and false. Is it even possible to “compute” without having concepts like logic or number? The answer provided in this paper is emphatically yes: computation is possible without any explicit form of these operations. In fact, learning systems can be built that construct and test these logical systems as hypotheses, genuinely without presupposing them. My goal here is to show how.

The paper is organized as follows. In the next section, I describe a leading example of symbolic cognitive science, Fodor’s Language of Thought (LOT) theory (Fodor, 1975, 2008). The LOT motivates the need for structured, compositional representations, but leaves unanswered at its core the questions of how the symbols in these representations come to have meaning and how they may be implemented in neural systems. To address this, I then discuss conceptual role semantics (CRS) as an account of how meaningful symbols may arise. The problem with CRS is that it has no implementations, leaving its actual mechanics vague and unspecified. To address this, I connect the idea of CRS to formalizations in computer science that effectively use CRS-like methods to show how logical systems can encode other processes. Specifically, I describe a mathematical formalism, combinatory logic, and a key operation in it, Church encoding, which permits the modeling of one system (e.g. the world) within another (e.g. a mental logic). This reduces the problem of learning meaningful symbols to that of constructing the right structure in a mental language like combinatory logic. I then address the question of learning these representations, drawing on work in theoretical artificial intelligence. The inferential technique allows learners to acquire computationally complex representations while largely avoiding the primary difficulty that faces learners who operate on spaces of computations: the halting problem. Providing a GPL-licensed implementation of the inference scheme, I then demonstrate how learners could acquire a large variety of mental representations found across human and non-human cognition. In each of these cases, the core symbolic aspect of representation is built only out of extraordinarily simple and mechanistic dynamics, from which the meanings emerge in an interconnected system of concepts. I argue that whatever mental representations are, they must be like these kinds of objects, where symbolic meanings for algorithms, structures, and relations arise out of the sub-symbolic dynamics that implement these processes. I then describe how the system can be implemented straightforwardly in existing connectionist frameworks, and discuss broader philosophical implications.

1.1. Representation in the Language of Thought

There is a lot going for the theory that human cognition uses, among other things, a structured symbolic system of representation analogous to language. The idea that something like a mental logic describes key cognitive processes dates back at least to Gottfried Leibniz, who tried to systematize knowledge and reasoning in his own universal formal language, the characteristica universalis, and to Boole (1854), who described his logic as capturing “the laws of thought.” As a psychological theory, the LOT reached prominence through the work of Jerry Fodor, who argued for a compositional system of mental representation, analogous to human language, called a Language of Thought (LOT) (Fodor, 1975, 2008). The LOT has had numerous incarnations throughout the history of AI and cognitive science (Newell & Simon, 1976; Penn, Holyoak, & Povinelli, 2008; Fodor, 2008; Kemp, 2012; Goodman, Tenenbaum, & Gerstenberg, 2015; Piantadosi, Tenenbaum, & Goodman, 2016; Rule et al., under review; Chater & Oaksford, 2013; Siskind, 1996). Most recent versions focus on combining language-like (or program-like) representations with Bayesian probabilistic inference to explain concept induction in empirical tasks (Goodman, Tenenbaum, Feldman, & Griffiths, 2008; Goodman, Mansinghka, Roy, Bonawitz, & Tenenbaum, 2008; Yildirim & Jacobs, 2013; Erdogan, Yildirim, & Jacobs, 2015; Goodman et al., 2015; Piantadosi & Jacobs, 2016; Rothe, Lake, & Gureckis, 2017; Lake, Salakhutdinov, & Tenenbaum, 2015; Overlan, Jacobs, & Piantadosi, 2017; Romano et al., 2017, 2018; Amalric et al., 2017; Depeweg, Rothkopf, & Jäkel, 2018).1

If mentalese is like a program, its primitives are humans’ most basic mental operations, a view of conceptual representation that has roots and branches in psychology and computer science. Miller and Johnson-Laird (1976) developed a theory of language understanding based on structured, program-like representations. Formal theories of commonsense knowledge in domains like objects, beliefs, physical reasoning, and time also draw on logical representations (Davis, 1990), mirroring psychological theorizing about the LOT. Modern incarnations of conceptual representations can be found in programming languages like Church that aim to capture phenomena like the gradience of concepts through a semantics centered on probability and conditioning (Goodman, Mansinghka, et al., 2008; Goodman et al., 2015). In computer science, the program metaphor has been applied in computational semantics under the name procedural semantics, in which representations of linguistic meaning are taken to be programs that compute something about the meaning of the sentence (Woods, 1968; Davies & Isard, 1972; Johnson-Laird, 1977; Woods, 1981). For instance, the meaning of “How many US presidents have had a first name starting with the letter ‘T’?” might be captured by a program that searches for an answer to this question in a database. This approach has been elaborated in a variety of modern machine learning models, many of which draw on logical tools closely akin to the LOT (e.g. Zettlemoyer & Collins, 2005; Wong & Mooney, 2007; Liang, Jordan, & Klein, 2010; Kwiatkowski, Zettlemoyer, Goldwater, & Steedman, 2010; Kwiatkowski, Goldwater, Zettlemoyer, & Steedman, 2012).

A LOT would explain some of the richness of human thinking by positing a combinatorial capacity through which a small set of built-in cognitive operations can be combined to express new concepts. For instance, in the word learning model of Siskind (1996), the meaning of the word “lift” might be captured as

Lift (x, y) = CAUSE (x, GO (y, UP))

where CAUSE, GO and UP are “primitive” conceptual representations—possibly innate—that are composed to express a new word meaning. This compositionality allows theories to posit relatively little innate content, with the heavy lifting of conceptual development accomplished by combining existing operations productively in new ways. To discover what composition is “best” to explain their observed data, learners may engage in hypothesis testing or Bayesian inference (Siskind, 1996; Goodman, Tenenbaum, et al., 2008; Piantadosi, Tenenbaum, & Goodman, 2012; Ullman, Goodman, & Tenenbaum, 2012; Mollica & Piantadosi, 2015).
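A minimal sketch of this kind of composition, assuming (purely for illustration) that a thought is a nested tuple of primitive names. CAUSE, GO, and UP are treated as opaque constructors, and the helper `contains` is a hypothetical name introduced here. It shows the sense in which composition makes structure explicit: the representation built for Lift literally contains a GO sub-expression.

```python
def CAUSE(agent, event):
    """Opaque primitive: build the structure CAUSE(agent, event)."""
    return ("CAUSE", agent, event)

def GO(thing, direction):
    """Opaque primitive: build the structure GO(thing, direction)."""
    return ("GO", thing, direction)

UP = ("UP",)  # a primitive that takes no arguments

def lift(x, y):
    """Lift(x, y) = CAUSE(x, GO(y, UP)), composed from existing primitives."""
    return CAUSE(x, GO(y, UP))

def contains(term, sub):
    """True if `sub` occurs anywhere inside the nested tuple `term`."""
    if term == sub:
        return True
    return isinstance(term, tuple) and any(contains(t, sub) for t in term)

thought = lift("mary", "box")
print(thought)                           # ('CAUSE', 'mary', ('GO', 'box', ('UP',)))
print(contains(thought, GO("box", UP)))  # True
```

Nothing in this sketch says what CAUSE or GO mean; they are only labels, which is exactly the gap raised a few paragraphs later.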

The content that a LOT assumes is distinctly symbolic, can be used compositionally to generate new thoughts, and obeys systematic patterns made explicit in the symbol structures. For example, it would be impossible to think that x was lifted without also thinking that x was caused to go up. Though the psychological tenability of systematicity (Johnson, 2004) and compositionality (Clapp, 2012) are debated, classically such compositionality, productivity, and systematicity have been argued to be desirable features of cognitive theories (Fodor & Pylyshyn, 1988), and lacking in connectionism (for ensuing discussion, see Smolensky, 1988, 1989; Chater & Oaksford, 1990; Fodor & McLaughlin, 1990; Chalmers, 1990; Van Gelder, 1990; Aydede, 1997; Fodor, 1997; Jackendoff, 2002; Van Der Velde & De Kamps, 2006; Edelman & Intrator, 2003).

However, work on the LOT as a psychological theory has progressed despite a serious problem lurking at its foundation: how is it that symbols themselves come to have meaning? It is far from obvious what would make a symbol GO mean go and CAUSE mean cause. This is especially troublesome when we recognize that even ordinary concepts like these are notoriously difficult to formalize, perhaps even lacking definitions (Fodor, 1975). Certainly there is nothing inherent in the symbol itself (the G and the O) that gives it this meaning; in some cases the symbols do not even refer to anything external that could ground their meaning, as is the case for most function words in language (e.g. “for”, “too”, “seven”). This problem appears even more pernicious when we consider what meanings might be to a physical brain. If we look at neural spike trains, for instance, how would we find a meaning like CAUSE?2

1.2. Meaning through conceptual role

The framework developed here builds on an approach to meaning in philosophy of mind and language known as conceptual role semantics (CRS) (Field, 1977; Loar, 1982; Block, 1987; Harman, 1987; Block, 1997; Greenberg & Harman, 2005). CRS holds that mental tokens get their meaning through their relationship with other symbols, operations, and uses, an idea dating back at least to Newell and Simon (1976). There is nothing inherently disjunctive about your mental representation of the mental operation OR. What distinguishes it from AND is that the two interact differently with other mental tokens, in particular TRUE and FALSE. The idea extends to more ordinary concepts: a concept of an “accordion” might be inherently about its role in a greater conceptual system, perhaps inseparable from the inferences it licenses about the player, its means of producing sound, its likely origin, etc. An example from Block (1987) is that of learning the system of concepts involved in physics:

One way to see what the CRS approach comes to is to reflect on how one learned the concepts of elementary physics, or anyway, how I did. When I took my first physics course, I was confronted with quite a bit of new terminology all at once: ‘energy’, ‘momentum’, ‘acceleration’, ‘mass’, and the like. ... I never learned any definitions of these new terms in terms I already knew. Rather, what I learned was how to use the new terminology—I learned certain relations among the new terms themselves (e.g., the relation between force and mass, neither of which can be defined in old terms), some relations between the new terms and the old terms, and, most importantly, how to generate the right numbers in answers to questions posed in the new terminology.

Indeed, almost everyone would be hard-pressed to define a term like “force” in any rigorous way, other than by appealing to other terminology like “mass” and “acceleration” (e.g., f = m · a). This emphasis on the role of concepts in systems of other concepts leads CRS to be closely related to the Theory Theory in development (see Brigandt, 2004), as well as to work in psychology emphasizing the role of entire systems of knowledge (theories) in conceptualization, categorization, and cognitive development (Carey, 1985; Murphy & Medin, 1985; Wellman & Gelman, 1992; Wisniewski & Medin, 1994; Gopnik & Meltzoff, 1997; Kemp, Tenenbaum, Griffiths, Yamada, & Ueda, 2006; Tenenbaum, Griffiths, & Kemp, 2006; Tenenbaum, Kemp, & Shafto, 2007; Carey, 2009; Kemp, Tenenbaum, Niyogi, & Griffiths, 2010; Ullman et al., 2012; Bonawitz, Schijndel, Friel, & Schulz, 2012; Gopnik & Wellman, 2012). Empirical studies of how human learners use systems of knowledge suggest that concepts cannot be studied in isolation: our inferences depend not only on simple perceptual factors, but also on the way in which our internal systems of knowledge interrelate.

Block (1987) further argues that CRS satisfies several key desiderata for a theory of mental representation, including its ability to handle truth, reference, meaning, compositionality, and the relativity of meaning. Some authors focus on the inferential role of concepts, meaning the way in which they can be used to discover new knowledge. For instance, the concept of conjunction AND may be defined by its ability to permit use of the “elimination rule” P&Q → P (Sellars, 1963). Here I will use the term CRS in a general way, but my implementation will make the specific meaning unambiguous later. In the version of CRS I describe, concepts will be associated with some, but perhaps not all, of these inferential roles, as well as some other relations that are less obviously inferential. The view that concepts are defined in terms of their relationships to other concepts has close connections to accounts of meaning given in early theories of intelligence (Newell & Simon, 1976), as well as to implicit assumptions of computer science. Operations in a computer only come to have meaning in virtue of how they interact with the architecture, memory, and other instructions. For example, nearly all modern computers represent negative numbers using two’s complement, where a number is negated by swapping the 1s and 0s and adding 1. A five-bit processor, for instance, might represent 5 as 00101 and −5 as 11010 + 1 = 11011. Then −5 plus one (00001) is 11011 + 00001 = 11100, which is the representation for −4. The use of two’s complement is only a convention, and equally mathematically correct systems have been considered throughout the history of computer science, including using the first bit to represent sign, “ones’ complement” (swapping zeros and ones), and analog systems. If we just looked inside an alien’s computer and saw the bit pattern 11010, we could not form a good theory of what it meant unless we also understood how it was treated by operations like negation and addition. The meanings of symbols are inextricable from their use.
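The five-bit arithmetic above can be checked with a short sketch. Python integers are unbounded, so the code simulates a five-bit register by masking to the low five bits; the helper names (`fmt`, `negate`, `add`, `decode`) are illustrative choices, not part of any particular system. The last line makes the paragraph’s point directly: the same stored pattern reads as 27 or as −5 depending on which convention the surrounding operations assume.

```python
BITS = 5
MASK = (1 << BITS) - 1  # 0b11111: keep only the low five bits

def fmt(n):
    """Render the low five bits of n as a bit string."""
    return format(n & MASK, f"0{BITS}b")

def negate(n):
    """Two's complement negation: flip every bit, then add 1 (mod 2**BITS)."""
    return ((n ^ MASK) + 1) & MASK

def add(a, b):
    """Binary addition; any carry out of the fifth bit is discarded."""
    return (a + b) & MASK

def decode(n):
    """Read a five-bit pattern as a signed two's complement integer."""
    n &= MASK
    return n - (1 << BITS) if n & (1 << (BITS - 1)) else n

five = 0b00101
minus_five = negate(five)
print(fmt(five), fmt(five ^ MASK), fmt(minus_five))  # 00101 11010 11011
total = add(minus_five, 0b00001)
print(fmt(total), decode(total))                     # 11100 -4
# The same bits mean different numbers under different conventions:
print(minus_five, decode(minus_five))                # 27 -5
```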

A primary shortcoming of conceptual role theories as cognitive accounts is that they lack a computational backbone, leaving it vague what a “role” might be. The lack of a computational implementation has given rise to a variety of philosophical debates about what is possible for CRS, but, as I argue, at least some of these issues become less problematic once we consider a concrete implementation. The lack of implementations also makes it difficult for experimental psychology to probe the particular representations and processes of a CRS, because there are few ideas about what, formally, a role might be. A primary goal of this paper is to give conceptual role semantics a computational backbone by using a version of the LOT as a framework in which roles can be formalized, learned, and studied explicitly.

1.3. Isomorphism and representation

Any CRS theory will have to start by saying which mental representations we create and why. Here, it will be assumed that the mental representations we construct are likely to correspond to evolutionarily relevant structures, relations, and dynamics present in the real world.3 This notion of correspondence between mental representations and the world can be captured with the mathematical idea of isomorphism. Roughly, systems X and Y are isomorphic if operations in X do “the same thing” as the corresponding operations in Y and vice versa. For instance, the ticking of a second hand is isomorphic to the ticking of a minute hand: both take 60 steps and then loop around to the beginning. How one state leads to the next is “the same” even though the details differ, since one ticks every second and the other every minute. Scientific theories form isomorphisms in that they attempt to construct formal systems that capture the key dynamics of the system under study. A simple case to have in mind is Newtonian gravitation, where the behavior of a real physical object is captured by constructing an isomorphism into vectors of real numbers, which themselves represent position, velocity, etc. The dynamics of updating these numbers with Newton’s equations is the same as the dynamics of the real objects, which is the whole reason the equations are useful.4
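For finite, discrete systems the “same dynamics” condition can be made concrete: a map h from the states of X to the states of Y is an isomorphism of their dynamics if it is one-to-one and onto, and if stepping X and then mapping gives the same result as mapping and then stepping Y. The sketch below assumes each system is a finite collection of states plus a deterministic step function; `is_isomorphism`, `tick`, and `turn` are hypothetical names. It compares a 60-position dial counted in ticks with the same dial measured in degrees.

```python
def is_isomorphism(states_x, step_x, states_y, step_y, h):
    """Check that h is a one-to-one, onto map from X's states to Y's states that
    commutes with the dynamics: h(step_x(s)) == step_y(h(s)) for every state s."""
    states_x, states_y = list(states_x), list(states_y)
    images = [h(s) for s in states_x]
    if len(set(images)) != len(images) or set(images) != set(states_y):
        return False
    return all(h(step_x(s)) == step_y(h(s)) for s in states_x)

def tick(position):
    """Dial counted in ticks: positions 0..59, wrapping around after 59."""
    return (position + 1) % 60

def turn(angle):
    """The same dial measured in degrees: 0, 6, ..., 354, advancing 6 degrees."""
    return (angle + 6) % 360

ticks = range(60)
angles = range(0, 360, 6)

print(is_isomorphism(ticks, tick, angles, turn, lambda p: 6 * p))          # True
print(is_isomorphism(ticks, tick, angles, turn, lambda p: (42 * p) % 360))  # False
```

The second mapping is a valid one-to-one relabeling of the 60 positions, yet it fails because it does not preserve which state follows which: a correspondence between states alone is not enough.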

The notion that the mind contains structures isomorphic to the world lies at the heart of many theories of mental content (Craik, 1952; McNamee & Wolpert, 2019; Gallistel, 1998; Shepard & Chipman, 1970; Hummel & Holyoak, 1997). Shepard and Chipman (1970) emphasized that while mental representations need not be structurally similar to what they represent, the relationships between internal representations must be “parallel” to the relationships between the real world objects. Gallistel (1998) writes,

A mental representation is a functioning isomorphism between a set of processes in the brain and a behaviorally important aspect of the world. This way of defining a representation is taken directly from the mathematical definition of a representation. To establish a representation in mathematics is to establish an isomorphism (formal correspondence) between two systems of mathematical investigation (for example, between geometry and algebra) that permits one to use one system to establish truths about the other (as in analytic geometry, where algebraic methods are used to prove geometric theorems).

In this case, mental representations could be used to establish truths about the world without having to alter the world. The notion of isomorphism is also deeply connected to the ability of the brain to usefully interact with the world. Conant and Ashby (1970) show that if a system X (e.g. the brain) wishes to control the dynamics of another system Y (e.g. the world), and X does so well (in a precise, information-theoretic sense), then X must have an isomorphism of Y (see Scholten, 2010, 2011). This result developed out of cybernetics and control theory and is not well-known in cognitive science and neuroscience. Yet, the authors recognized its relevance, noting that the result “has the interesting corollary that the living brain, so far as it is to be successful and efficient as a regulator for survival, must proceed, in learning, by the formation of a model (or models) of its environment.”

What is mysterious about the brain, though, is that we are able to encode a staggering array of different isomorphisms—from language, to social reasoning, physics, logical deduction, artistic expression, causal understanding, meta-cognition, etc. (Rule et al., under review). Such breadth suggests that our conceptual system can support essentially any computation or construct any isomorphism. Moreover, little of this knowledge could possibly be innate because it is so clearly driven by the right set of experiences. Yet, the question of how systems might encode, process, and learn isomorphisms in general has barely been addressed in cognitive science. Indeed, work on the LOT has typically made ad-hoc choices about what primitives should be considered in hypotheses in any given context, thus failing to provide a demonstrably generalized theory of learning that takes the breadth of human cognition seriously. The representational system below develops a universal framework for isomorphism, a mental system in which we can construct, in principle, a representation of anything else. Unsurprisingly, the existence of such a formalism is closely connected to the existence of universal computation.

1.4. The general theory

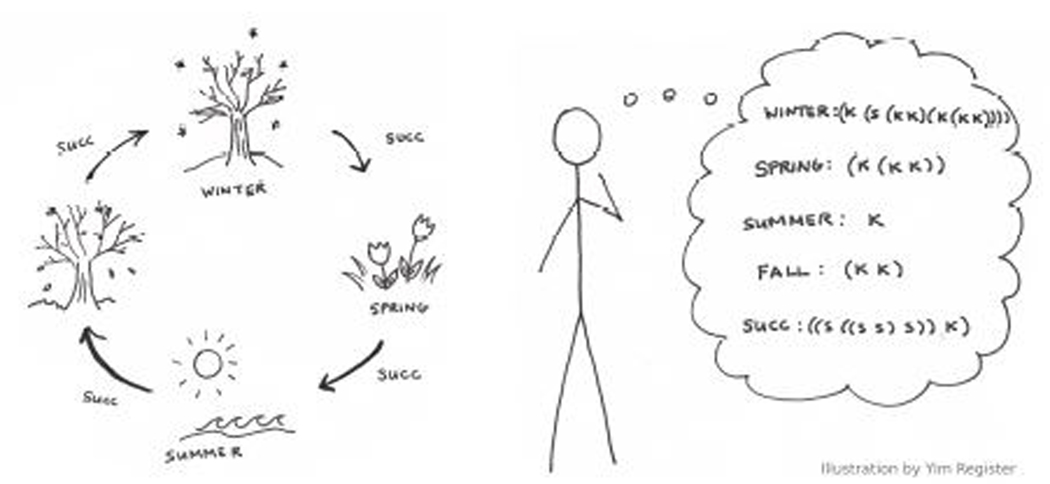

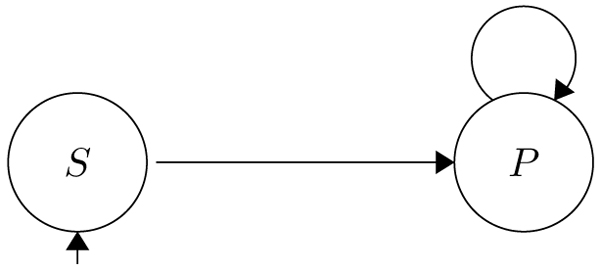

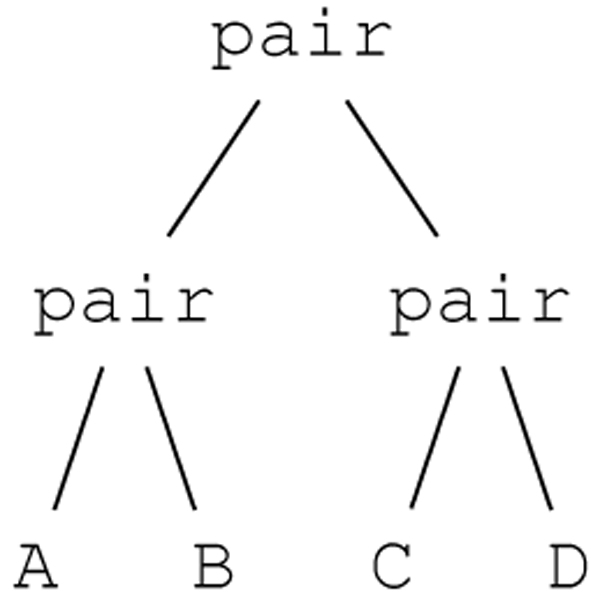

We are now ready to put together some pieces. The overall setup is illustrated in Figure 1. We assume that learners observe a structure in the world. In this case, the learner sees the circular structure of the seasons, where the successor (succ) of spring is summer, the successor of summer is fall, etc. Learners are assumed to have access to this relational information between tokens shown on the left. Their job is to internalize (mentally represent) each symbol and relation by mapping symbols to expressions in their LOT that obey the right relations, as shown on the right. The mapping will effectively construct an internal isomorphism of the observations, written in the language of mentalese.

Figure 1: Learners observe relations in the world, like the successor relationship between seasons. Their goal is to create an internal mental representation that obeys the same dynamics. This is achieved by mapping each observed token to a simple expression written in a universal mental language whose constructs/concepts specify interactions between elements. This system uses a logic of function composition that is capable of universal computation.

A little more concretely, the relations in Figure 1 might be captured with the following facts,

(succ winter) → spring
(succ spring) → summer
(succ summer) → fall
(succ fall) → winter

Here, I have written the relations as functions, where for instance the first line means that succ is a function applied to winter, that returns the value spring. To represent these, we must map each of these symbols to a mental LOT expression that obeys the same relational structure. So, if succ, winter, and spring get mapped to mental representations ψsucc, ψwinter, and ψspring respectively, then the first fact means that

(ψsucc ψwinter) → ψspring

also holds. Though this statement looks simple, it actually involves some subtlety. It says that whatever internal representation succ gets mapped to, this representation must also be able to be used internally as a function. The return value when this mental function is evaluated on ψwinter has to be the same as how spring is represented mentally. Each token participates in several roles and must simultaneously yield the correct answer in each, providing a full mental isomorphism of the observed relations.
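The constraint can be stated as a single check that runs over every observed fact at once. In the sketch below, the facts are (function, argument, result) triples. As a stand-in for mentalese, ψ maps the seasons to integers mod 4 and succ to “add 1 mod 4”; this target is an assumption chosen for readability, whereas in the theory the targets are combinatory logic expressions rather than numbers and Python functions. The names `FACTS`, `satisfies`, and `bad` are hypothetical.

```python
# Observed facts, written as (function, argument, result) triples.
FACTS = [
    ("succ", "winter", "spring"),
    ("succ", "spring", "summer"),
    ("succ", "summer", "fall"),
    ("succ", "fall", "winter"),
]

def satisfies(psi, facts):
    """True if applying psi[f] to psi[x] yields psi[y] for every fact (f x) -> y."""
    return all(psi[f](psi[x]) == psi[y] for f, x, y in facts)

# Hypothetical stand-in for mentalese: seasons as integers mod 4, succ as +1 mod 4.
psi = {
    "winter": 0, "spring": 1, "summer": 2, "fall": 3,
    "succ": lambda n: (n + 1) % 4,
}
print(satisfies(psi, FACTS))  # True

# A mapping whose succ ignores the relational structure fails the same check.
bad = dict(psi, succ=lambda n: n)
print(satisfies(bad, FACTS))  # False

# A derived fact that was never observed: two steps after winter is summer.
print(psi["succ"](psi["succ"](psi["winter"])) == psi["summer"])  # True
```

The last line is a fact that was never in the observations, which is the sense in which an internal model can yield more than a table of its inputs.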

One might immediately ask why we need anything other than the facts: isn’t it enough to know that (succ winter) → spring in that purely symbolic form? A Prolog program might directly encode the relations (e.g. ψspring is a symbol “SPRING”) and look up facts in what is essentially a symbolic database. Such a program could even answer questions like “The successor of which season is spring?” by compiling these questions into the appropriate database query. Of course, if this worked well, good old-fashioned AI would have yielded striking successes. Unfortunately, several limitations of such purely symbolic encoding are clear. First, it is not apparent how looked-up symbols get their meaning, a version of the problem highlighted by Searle’s (1980) Chinese Room. It is not enough to know these symbolic relationships; what matters is the semantic content that they correspond to, and there is no semantic content in a database lookup of the answer. Second, architectures for processing symbols seem decidedly unbiological, and the problem of how these symbols may be grounded in a biological or neural system has plagued theories of representation and meaning. Third, in many of the cases I’ll consider, what matters is not the symbols themselves but the computations they correspond to, that is, what they do to other symbols. For instance, consider a simple algorithm like repetition. Your internal concept of repetition must encode the process of repetition; but what mental format could do so? Fourth, our cognitive systems must go beyond memorization of facts: we are able to generalize beyond what we have observed, extracting regularities and abstract rules. What might representations be like such that they allow us to deduce more than what we’ve already seen? Each of these four goals (meaning, implementation, computation, and induction) can be met with the logical system described below.
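For contrast, here is a minimal sketch of the purely symbolic encoding described above, with a Python dictionary standing in for a Prolog-style database (an assumption made only for illustration; `SUCC`, `successor_of`, and `predecessors_of` are hypothetical names). It answers both the forward question and the reverse query, but each answer is just another opaque string, and asking about a token that is not in the table simply raises a `KeyError`.

```python
# Symbolic "database": each season is an opaque string; succ is a lookup table.
SUCC = {"WINTER": "SPRING", "SPRING": "SUMMER", "SUMMER": "FALL", "FALL": "WINTER"}

def successor_of(season):
    """Forward query (succ season) -> ?  Raises KeyError for unlisted tokens."""
    return SUCC[season]

def predecessors_of(season):
    """Reverse query: 'The successor of which season is <season>?'"""
    return [s for s, nxt in SUCC.items() if nxt == season]

print(successor_of("WINTER"))     # SPRING
print(predecessors_of("SPRING"))  # ['WINTER']
```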

The core hypothesis developed in this paper is that symbols like succ and winter get mapped to LOT expressions that are dynamical and computational objects supporting function composition. ψsucc is represented as an object that, when applied via function composition to ψwinter, gives back the expression for ψspring. These meanings are specified in a language of pure computational dynamics, absent any additional primitives or meanings. This is shown with the minimalist set of primitives in Figure 1, where each token is mapped to some structure built of S and K, special symbols whose “meanings” are discussed below. In this setup, symbols like spring come to have meaning as CRS supposes, by virtue of how the structures they are mapped to act on other symbols. Learners are able to derive new facts by applying their internal expressions to each other in novel ways. As I show, this can give rise to rich systems of knowledge that span classes of computations and permit learners to extend a few simple observations into the domain of richer cognitive theories.

2. Combinatory logic as a language for universal isomorphism

A mathematical system known as combinatory logic provides the formal tool we’ll use to construct a universal isomorphism language as a hypothesized LOT. Combinatory logic was developed in the early- and mid-1900s in order to allow logicians to work with expressions that did not require variables like “x” and “y”, yet had the same expressive power (Hindley & Seldin, 1986). Combinatory logic’s usefulness is demonstrated by the fact that it was invented at least three independent times by mathematicians, including Moses Schönfinkel, John von Neumann, and Haskell Curry (Cardone & Hindley, 2006). The main advantages of combinatory logic are its simplicity (allowing us to posit very minimal built-in machinery) and its power (allowing us to model symbols, structures, and relations). In cognitive research, combinatory logic is primarily seen in formal theories of natural language semantics (Steedman, 2001; Jacobson, 1999), although its relevance has also been argued in other domains like motor planning (Steedman, 2002). The use of combinatory logic as a representational substrate, moreover, fits with the idea that tree-like structures are fundamental to human-like thinking (e.g. Fitch, 2014).

The next two sections are central to understanding the formalism used in the remainder of the paper and are therefore presented as a tutorial. This first section will illustrate how combinatory logic can write down a simple function. This illustrates only some of its basic properties, such as its simplicity (involving only two primitives), its ability to handle variables, and its ability to express arbitrary compositions of operations. The more powerful view of combinatory logic comes later, where I describe how we may use combinatory logic to create something essentially new.

2.1. A very brief introduction to combinatory logic

To illustrate the basics of combinatory logic, consider the simple function definition,

$$f(x) = x + 1 \qquad (1)$$

The challenge with expressions like (1) is that the use of a variable x adds bookkeeping to a computational system because one has to keep track of what variables are allowed where. Compare (1) to a function of two variables g(x,y) = .... When we define f, we are permitted to use x. When we define g, we are permitted to use both x and y. But when we define f, it would be nonsensical to use y, assuming y is not defined elsewhere. Analogously in a programming language—or cognitive/logical theories that look like programs—we can only use variables that are defined in the appropriate context (scope). The syntax of what symbols are allowed changes in different places in a representation—and this creates a nightmare for the bookkeeping required in implementation. In combinatory logic’s rival formalism, lambda calculus (Church, 1936), most of the formal machinery is spent ensuring that variable names are distinct and only used in the appropriate places, and that substitution does not incorrectly handle variable names.

What logicians discovered was that this situation could be avoided by using combinators to glue together the primitive components + and 1 without ever explicitly creating a variable x (e.g. Schönfinkel, 1967). A combinator is a higher-order function (a function whose arguments are functions) that, in this case, routes arguments to the correct places. For instance, using := to denote a definition, let

f := (S + (K 1))

This defines f in terms of the functions S&K, in addition to the operator + and the number 1. Notably, there is no x in the above expression for f, even though f does take an argument, as we will see. The functions S&K are just symbols, and when they are evaluated, they have very simple definitions:

(K x y) → x
(S x y z) → ((x z) (y z))

Here, the arrow (→) indicates a process of evaluation, or moving one step forward in the computation. The combinator K takes two arguments x and y and ignores y, a constant (Konstant) function. S is a function of three arguments, x, y, and z, that passes z to each of x and y and then applies the first result to the second. In other notation, S might be written as S(x, y, z) := x(z, y(z)). Note that both S and K return expressions which are themselves structured compositions of whatever their arguments happened to be.

In this notation, if a function does not have enough arguments it may take the next one in line. For instance in ((K x) y) the K only has one argument. But, because there is nothing else in the way, it can grab the y as its second argument, meaning that computation proceeds,

((K x) y) → (K x y) → x

This operation must respect the grouping of terms, so that ((K x) (y z)) becomes (K x (y z)). The capacity to take the next argument is known in logic as currying, although Curry attributed it to Schönfinkel, and it was more likely first invented by Frege (Cardone & Hindley, 2006). Together, S&K and currying define a logical system that is much more powerful than it first appears.

To see how the combinator definition of f works, we can apply f to an argument. For instance, to evaluate f on the number 7, we can substitute the definition of f into the expression (f 7):

(f 7) = ((S + (K 1)) 7)        ; Definition of f
      → (S + (K 1) 7)          ; Currying
      → ((+ 7) ((K 1) 7))      ; Definition of S
      → (+ 7 ((K 1) 7))        ; Currying
      → (+ 7 (K 1 7))          ; Currying
      → (+ 7 1)                ; Definition of K

Essentially what has happened is that S&K have shuttled 7 around to the places where x would have appeared. They have done so merely by their compositional structure and definitions, without ever requiring the variable x in f(x) = x + 1 to be explicitly written. Schönfinkel—and other independent discoverers of combinatory logic—proved the non-obvious fact that any function composition could be expressed this way, meaning any structure with written variables has an equivalent combinatory logic expression without them.
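To make the reduction rules concrete, here is a minimal sketch of an S&K evaluator in Python. It is not Churiso; the term representation (atoms as strings, applications as 2-tuples) and the helper names `parse`, `step`, `normalize`, and `show` are our own illustrative choices. It always contracts the leftmost-outermost redex and treats any symbol other than S and K (such as +, 1, or 7) as inert, so it reproduces the (f 7) derivation above. Only S and K contractions are counted as steps; the currying rewrites are implicit in the nested-pair representation.

```python
def parse(src):
    """Parse the paper's notation: (a b c) means ((a b) c); atoms stay strings."""
    toks = src.replace("(", " ( ").replace(")", " ) ").split()

    def seq(i):
        items = []
        while i < len(toks) and toks[i] != ")":
            if toks[i] == "(":
                t, i = seq(i + 1)
                i += 1  # skip the matching ")"
            else:
                t, i = toks[i], i + 1
            items.append(t)
        t = items[0]
        for a in items[1:]:  # application is left-associative (currying)
            t = (t, a)
        return t, i

    return seq(0)[0]


def step(t):
    """One leftmost-outermost S/K contraction, or None if t is in normal form."""
    args = []
    while isinstance(t, tuple):  # unwind the spine: (h a1 a2 ...) -> h, [a1, a2, ...]
        t, a = t
        args.insert(0, a)
    if t == "K" and len(args) >= 2:  # (K x y) -> x
        new, rest = args[0], args[2:]
    elif t == "S" and len(args) >= 3:  # (S x y z) -> ((x z) (y z))
        x, y, z = args[:3]
        new, rest = ((x, z), (y, z)), args[3:]
    else:  # no redex at the head: reduce the leftmost reducible argument
        for i, a in enumerate(args):
            if (r := step(a)) is not None:
                args[i] = r
                break
        else:
            return None
        new, rest = t, args
    for a in rest:  # re-attach any surplus arguments
        new = (new, a)
    return new


def normalize(t, max_steps=1000):
    """Reduce to normal form: (term, steps), or (None, steps) if the budget runs out."""
    steps = 0
    while (r := step(t)) is not None:
        steps += 1
        if steps > max_steps:
            return None, steps
        t = r
    return t, steps


def show(t):
    """Print a term in the paper's flat curried style, e.g. (+ 7 1)."""
    if not isinstance(t, tuple):
        return t
    args = []
    while isinstance(t, tuple):
        t, a = t
        args.insert(0, a)
    return "(" + " ".join(show(x) for x in [t] + args) + ")"


result, steps = normalize(parse("((S + (K 1)) 7)"))
print(show(result), steps)  # (+ 7 1) 2
```

The two counted steps are the S and K contractions in the derivation; + is left unevaluated because nothing in S&K gives it a meaning.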

Terminologically, the process of applying the rules of combinatory logic (the definitions of S and K above) is known as reduction. The question of whether a computation halts is equivalent to whether or not reduction leads to a normal form in which none of the combinators have enough arguments to continue reduction. In terms of computational power, combinatory logic is equivalent to lambda calculus (see Hindley & Seldin, 1986), both of which are capable of expressing any computation through function composition (Turing, 1937). This means that any typical program (e.g. in Python or C++) can be written as a composition of these combinators, and the combinators reduce to a normal form if and only if the program halts. Equivalently, any system that implements these very simple rules for S&K is, potentially, as powerful as any computer. This is a remarkable result in mathematical logic because it means that computation can be expressed with the simplest syntax imaginable, compositions of S&K with no extra variables or syntactic operations. Evaluation is equally simple and requires no special machinery beyond the ability to perform S&K’s simple definitions, which are themselves just transformations of binary trees. It is this uniformity and simplicity of syntax that opens the door for implementation in physical or biological systems.

2.2. Church Encoding

The example above uses primitive operations like + and objects like the number 1. It therefore fits well within the traditional LOT view where mental representations correspond to compositions of intrinsically meaningful primitive functions. The primary point of this paper, however, is to argue that the right metaphor for mental representations is actually not structures like (1) or its combinator version, but rather structures without any cognitive primitives at all—that is, structures that contain only S&K.

The technique behind this is known as Church encoding. The idea is that if symbols and operations are encoded as pure combinator structures, they may act on each other via the laws of combinatory logic alone to produce equivalent algorithms to those that act on numbers, boolean operators, trees, or any other formal structure. As Pierce (2002) writes,

[S]uppose we have a program that does some complicated calculation with numbers to yield a boolean result. If we replace all the numbers and arithmetic operations with [combinator]-terms representing them and evaluate the program, we will get the same result. Thus, in terms of their effects on the overall result of programs, there is no observable difference between the real numbers and their Church-[encoded ]numeral representations.

A simple, yet philosophically profound, demonstration is to construct a combinator structure that implements boolean logic. One possible way to do this is to define

true := (K K)
false := K
and := ((S (S (S S))) (K (K K)))
or := ((S S) (K (K K)))
not := ((S ((S K) S)) (K K))

Defined this way, these combinator structures obey the laws of Boolean logic: (not true) → false, (or true false) → true, etc. The meaning of mental symbols like true and not is given entirely in terms of how these little algorithmic chunks operate on each other. To illustrate, the latter computation would proceed as

(or true false) = (((S S) (K (K K))) (K K) K)    ; Definition of or, true, false
  → (((S S) (K (K K)) (K K)) K)                  ; Currying rule
  → ((S S (K (K K)) (K K)) K)                    ; Currying rule twice
  → ((S (K K) ((K (K K)) (K K))) K)              ; Definition of S
  → ((S (K K) (K (K K) (K K))) K)                ; Currying
  → ((S (K K) (K K)) K)                          ; Definition of K
  → (S (K K) (K K) K)                            ; Currying
  → ((K K) K ((K K) K))                          ; Definition of S
  → ((K K K) ((K K) K))                          ; Currying
  → (K ((K K) K))                                ; Definition of K
  → (K (K K K))                                  ; Currying
  → (K K)                                        ; Definition of K

resulting in an expression, (K K), which is the same as the definition of true! Readers may also verify other relations, like that (and true true) → true and (or (not false) false) → true, etc.
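These relations can also be checked mechanically. The sketch below repeats a minimal leftmost-outermost S&K reducer (our own illustrative code, not Churiso), expands each symbol into the definitions given above, and compares normal forms as trees, so differently parenthesized spellings such as ((S S) (K (K K))) and (S S (K (K K))) count as the same expression. The helper names `expand` and `evaluate` are hypothetical.

```python
def parse(src):
    """Parse the paper's notation: (a b c) means ((a b) c); atoms stay strings."""
    toks = src.replace("(", " ( ").replace(")", " ) ").split()

    def seq(i):
        items = []
        while i < len(toks) and toks[i] != ")":
            if toks[i] == "(":
                t, i = seq(i + 1)
                i += 1  # skip the matching ")"
            else:
                t, i = toks[i], i + 1
            items.append(t)
        t = items[0]
        for a in items[1:]:  # left-associative application
            t = (t, a)
        return t, i

    return seq(0)[0]


def step(t):
    """One leftmost-outermost S/K contraction, or None if t is in normal form."""
    args = []
    while isinstance(t, tuple):
        t, a = t
        args.insert(0, a)
    if t == "K" and len(args) >= 2:  # (K x y) -> x
        new, rest = args[0], args[2:]
    elif t == "S" and len(args) >= 3:  # (S x y z) -> ((x z) (y z))
        x, y, z = args[:3]
        new, rest = ((x, z), (y, z)), args[3:]
    else:
        for i, a in enumerate(args):
            if (r := step(a)) is not None:
                args[i] = r
                break
        else:
            return None
        new, rest = t, args
    for a in rest:
        new = (new, a)
    return new


def normalize(t, max_steps=1000):
    """Reduce to normal form: (term, steps), or (None, steps) if the budget runs out."""
    steps = 0
    while (r := step(t)) is not None:
        steps += 1
        if steps > max_steps:
            return None, steps
        t = r
    return t, steps


# The Church encoding of boolean logic given in the text.
DEFS = {
    "true": "(K K)",
    "false": "K",
    "and": "((S (S (S S))) (K (K K)))",
    "or": "((S S) (K (K K)))",
    "not": "((S ((S K) S)) (K K))",
}


def expand(t):
    """Replace each defined symbol by its S&K structure."""
    if isinstance(t, tuple):
        return (expand(t[0]), expand(t[1]))
    return parse(DEFS[t]) if t in DEFS else t


def evaluate(src):
    """Normal form of an expression built from the boolean symbols."""
    return normalize(expand(parse(src)))[0]


TRUE, FALSE = parse(DEFS["true"]), parse(DEFS["false"])
CHECKS = [
    ("(not true)", FALSE),
    ("(not false)", TRUE),
    ("(or true false)", TRUE),
    ("(and true true)", TRUE),
    ("(and true false)", FALSE),
    ("(or (not false) false)", TRUE),
]
for expr, expected in CHECKS:
    assert evaluate(expr) == expected, expr
print("all boolean checks pass")
```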

The Church encoding has essentially tricked S&K’s boring default dynamics into doing something useful—implementing a theory of simple boolean logic. This is a CRS because the symbols have no intrinsic meaning beyond that which is specified by their dynamics and interaction. The meaning of each of these terms is, as in a CRS, critically dependent on the form of the others. The capacity to do this reflects a more general idea in dynamical systems—one which is likely central to understanding how minds represent other systems—which is that sufficiently powerful dynamical systems permit encoding or embedding of other computations (e.g. Sinha & Ditto, 1998; Ditto, Murali, & Sinha, 2008; Lu & Bassett, 2018). The ability to use S&K to perform useful computations is very general, allowing us to encode complex data types, operations, and a huge variety of other logical systems. Appendix A sketches a simple proof of conditions under which combinatory logic is capable of representing any consistent set of facts or relations, with a few assumptions, making it essentially a universal isomorphism language.

2.3. An inferential theory from the probabilistic LOT

The capacity to represent anything is, of course, not enough. A cognitive theory must also have the ability to construct the right particular representations when data (perhaps partial data) are observed. The data that we will consider are sets of base facts like those shown in Figure 1, (succ winter) → spring, etc. These facts may be viewed as structured or relational representations of perceptual observations, for instance, the observation that some season (spring) comes after (succ) another (winter). Note, though, that the meanings of these symbols are not specified by these facts; all we know is that spring (whatever that is) comes after (whatever that is) the season winter (whatever that is). Apart from any perceptual links, that knowledge is structurally no different from (father jim) → billy. Because these symbols do not yet have meanings, knowledge of the base facts is much like knowledge of a placeholder structure (Carey, 2009), or concepts whose meanings are yet to be filled in, even though some of their conceptual role is known.

The goal of the learner is to assign each symbol a combinator structure so that the structures in the base facts are satisfied.⁵ For this one rule, (succ winter) → spring, we could assign succ := (K S), winter := K, and spring := S, since then

(succ winter) = ((K S) K) → (K S K) → S = spring

Only some mappings of symbols to combinator structures will be valid. For instance, if spring := K instead, we’d have that

(succ winter) = ((K S) K) → (K S K) → S ≠ spring.
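The check just performed by hand (reduce the left-hand side of each base fact under a candidate assignment, then compare the result with the structure assigned to the right-hand symbol) can be sketched as follows. The helpers `parse`, `step`, `normalize`, `substitute`, and `satisfies` are our own illustrative names, not Churiso’s interface, and comparison is done on parsed trees.

```python
def parse(src):
    """Parse the paper's notation: (a b c) means ((a b) c); atoms stay strings."""
    toks = src.replace("(", " ( ").replace(")", " ) ").split()

    def seq(i):
        items = []
        while i < len(toks) and toks[i] != ")":
            if toks[i] == "(":
                t, i = seq(i + 1)
                i += 1  # skip the matching ")"
            else:
                t, i = toks[i], i + 1
            items.append(t)
        t = items[0]
        for a in items[1:]:
            t = (t, a)
        return t, i

    return seq(0)[0]


def step(t):
    """One leftmost-outermost S/K contraction, or None if t is in normal form."""
    args = []
    while isinstance(t, tuple):
        t, a = t
        args.insert(0, a)
    if t == "K" and len(args) >= 2:
        new, rest = args[0], args[2:]
    elif t == "S" and len(args) >= 3:
        x, y, z = args[:3]
        new, rest = ((x, z), (y, z)), args[3:]
    else:
        for i, a in enumerate(args):
            if (r := step(a)) is not None:
                args[i] = r
                break
        else:
            return None
        new, rest = t, args
    for a in rest:
        new = (new, a)
    return new


def normalize(t, max_steps=1000):
    """Reduce to normal form: (term, steps), or (None, steps) if the budget runs out."""
    steps = 0
    while (r := step(t)) is not None:
        steps += 1
        if steps > max_steps:
            return None, steps
        t = r
    return t, steps


def substitute(t, assignment):
    """Replace each assigned symbol by its parsed combinator structure."""
    if isinstance(t, tuple):
        return (substitute(t[0], assignment), substitute(t[1], assignment))
    return parse(assignment[t]) if t in assignment else t


def satisfies(assignment, facts):
    """True if every base fact (lhs, rhs) holds under the assignment."""
    for lhs, rhs in facts:
        nf, _ = normalize(substitute(parse(lhs), assignment))
        if nf is None or nf != parse(assignment[rhs]):
            return False
    return True


FACTS = [("(succ winter)", "spring")]
good = {"succ": "(K S)", "winter": "K", "spring": "S"}
bad = dict(good, spring="K")
print(satisfies(good, FACTS), satisfies(bad, FACTS))  # True False
```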

The real challenge a learner faces in each domain is to find a mapping of symbols to combinators that satisfies all of the facts simultaneously. Such a solution provides an internal model (a Church encoding) whose computational dynamics capture the observed relations under repeated reduction via S&K. Often, the mapping of symbols to combinators will also be required to be unique, meaning that we can always tell which symbol a combinator output stands for. In addition, once symbols are mapped to combinators satisfying the observed base facts, learners or reasoners may derive new generalizations that go beyond these facts.

The choice of which combinator each symbol should be mapped to is made here using ideas about how to solve the problem of induction well. In particular, our approach is motivated in large part by Solomonoff’s theory of inductive inference, where learners observe data and try to find a concise Turing machine that describes the data (Solomonoff, 1964a, 1964b). Indeed, human learners prefer to induce hypotheses that have a shorter description length in logic (Feldman, 2000, 2003a; Goodman, Tenenbaum, et al., 2008), with simplicity preferences perhaps a governing principle of cognitive systems (Feldman, 2003b; Chater & Vitányi, 2003). Simplicity-based preferences have been used to structure the priors in standard LOT models (Goodman, Tenenbaum, et al., 2008; Katz, Goodman, Kersting, Kemp, & Tenenbaum, 2008; Ullman et al., 2012; Piantadosi et al., 2012; Piantadosi, 2011; Kemp, 2012; Yildirim & Jacobs, 2014; Erdogan et al., 2015), and they have close connections to the idea of minimum description length (Grünwald, 2007). Since S&K are equivalent in power to a Turing machine, finding a concise S&K expression for a domain corresponds to finding a short program (up to an additive constant, as in Kolmogorov complexity (Li & Vitányi, 2008)) that computes its dynamics; finding an S&K expression that evaluates quickly is tantamount to finding a fast-running program.

One problem with theories based on description length is that they can easily run into computability problems: short programs or logical expressions often do not halt,⁶ meaning that we may not be able to even evaluate every given logical hypothesis to see if it yields the correct answer, according to the base facts. A solution is to instead base our preferences in part on how quickly each combinator arrives at the correct answer. We can assign a prior to a hypothesis h that is proportional to $(1/2)^{t(h)+l(h)}$, where t(h) is the number of steps it takes h to reduce the base facts to normal form and l(h) is the number of combinators in h (i.e. its description length). Similar time-based priors have been developed in theories of artificial intelligence (Levin, 1973, 1984; Schmidhuber, 1995, 2002; Hutter, 2005; Schmidhuber, 2007) or as a measure of complexity (Bennett, 1995). These priors allow inductive inference to avoid difficulties with the halting problem because, essentially, any finite amount of computation will allow us to upper-bound a hypothesis’ probability, even if it does not halt. For instance, a machine that has not halted or a combinator that has not finished evaluation in 1000 steps will have a prior probability of at most $(1/2)^{1000}$. Thus, as a computation runs, its probability drops, meaning that long-running expressions can effectively be pruned out of searches without knowing whether they might eventually halt (thus, evaluation of all computations, halting or not, can be dovetailed). This fact can be used in Markov chain Monte Carlo techniques like the Metropolis-Hastings algorithm to implement Bayesian inference over these spaces by rejecting proposed hypotheses once their probability drops too low (Piantadosi & Jacobs, 2016). Here, we search over assignments of symbols in the base facts to combinators and disregard those whose probability is too low, either in complexity or in evaluation time when run on the given base facts.
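A minimal sketch of the time-plus-length prior, assuming the same leftmost-outermost reducer as in the earlier sketches: `log2_prior_bound` (a hypothetical helper) returns the unnormalized $\log_2$ prior $-(t(h) + l(h))$ for an assignment h. If some base fact has not reached normal form within the step budget, the value is only an upper bound, which is exactly what allows non-halting hypotheses to be pruned. The looping hypothesis uses the classic non-terminating term (S I I)(S I I) with I = (S K K); it is our illustration, not a hypothesis from the paper, and consistency with the base facts is not checked here.

```python
def parse(src):
    """Parse the paper's notation: (a b c) means ((a b) c); atoms stay strings."""
    toks = src.replace("(", " ( ").replace(")", " ) ").split()

    def seq(i):
        items = []
        while i < len(toks) and toks[i] != ")":
            if toks[i] == "(":
                t, i = seq(i + 1)
                i += 1  # skip the matching ")"
            else:
                t, i = toks[i], i + 1
            items.append(t)
        t = items[0]
        for a in items[1:]:
            t = (t, a)
        return t, i

    return seq(0)[0]


def step(t):
    """One leftmost-outermost S/K contraction, or None if t is in normal form."""
    args = []
    while isinstance(t, tuple):
        t, a = t
        args.insert(0, a)
    if t == "K" and len(args) >= 2:
        new, rest = args[0], args[2:]
    elif t == "S" and len(args) >= 3:
        x, y, z = args[:3]
        new, rest = ((x, z), (y, z)), args[3:]
    else:
        for i, a in enumerate(args):
            if (r := step(a)) is not None:
                args[i] = r
                break
        else:
            return None
        new, rest = t, args
    for a in rest:
        new = (new, a)
    return new


def normalize(t, max_steps=1000):
    """Reduce to normal form: (term, steps), or (None, steps) if the budget runs out."""
    steps = 0
    while (r := step(t)) is not None:
        steps += 1
        if steps > max_steps:
            return None, steps
        t = r
    return t, steps


def size(t):
    """Description length l: the number of S/K leaves in a term."""
    return size(t[0]) + size(t[1]) if isinstance(t, tuple) else 1


def substitute(t, assignment):
    """Replace each assigned symbol by its parsed combinator structure."""
    if isinstance(t, tuple):
        return (substitute(t[0], assignment), substitute(t[1], assignment))
    return parse(assignment[t]) if t in assignment else t


def log2_prior_bound(assignment, facts, max_steps=200):
    """Unnormalized log2 prior -(t(h) + l(h)). If a fact exhausts the budget, the
    result is an upper bound: further evaluation could only lower it."""
    l = sum(size(parse(v)) for v in assignment.values())
    t = 0
    for lhs, _rhs in facts:
        _nf, steps = normalize(substitute(parse(lhs), assignment), max_steps)
        t += steps
    return -(t + l)


FACTS = [("(succ winter)", "spring")]
h1 = {"succ": "(K S)", "winter": "K", "spring": "S"}
omega = "(S (S K K) (S K K))"  # (S I I) with I = (S K K); (omega omega) never halts
h2 = {"succ": omega, "winter": omega, "spring": "S"}
print(log2_prior_bound(h1, FACTS))  # -5: l = 4 combinators, t = 1 step
print(log2_prior_bound(h2, FACTS))  # -216: budget exhausted, so prior <= 2**-216
```

Raising `max_steps` only pushes the bound for h2 lower, so a search can discard it without ever deciding whether it halts.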

Since so much other work has explored the probabilistic details of LOT theories, and I intend to provide a simple demonstration, I’ll make two simplifying assumptions in this paper. First, I assume that learners want only the fastest-running combinator string which describes their data, ignoring the gradience of fully Bayesian accounts. Second, it will be assumed that only theories that are consistent with the data are considered. This assumes that the learner’s data are noise-free, although the general inferential mechanisms can readily be extended to noisy data (see LOT citations above).

2.4. Details of the implementation

The problem of finding a concise mapping of the symbols to combinators that obey these laws is solved here using a custom implementation named Churiso (pronounced like the sausage “chorizo”), available under a GPL license in both Scheme and Python.⁷ The implementation was provided with base facts and searched for mappings from symbols to combinators that satisfy those constraints under the combinator dynamics defined above. Among all mappings of symbols to combinators that are consistent with the base facts, those with the fastest running time are preferred.

The implementation uses a backtracking algorithm that exhaustively tries pairing symbols and combinator structures (ordered in terms of increasing description-length complexity), rejecting a partial solution if it is found to violate a constraint. Several optimizations are provided in the implementation. First, the set of combinators considered for each symbol can be limited to those already in normal form to avoid re-searching equivalent combinators. Second, the algorithm uses a simple form of constraint propagation in order to rapidly reject assignments of symbols to combinator strings that would violate a later constraint. For instance, if a constraint says that (f x) must reduce to y, and f and x are determined, then the resulting value is pushed as the assignment for y. A search order is chosen that maximizes the number of constraints which can be propagated in this way. In order to explore the space, Churiso also allows us to define and include other combinators either as base primitives or as structures derived from S&K. The results in this paper use the search algorithm including several other standard combinators (B, C, I) to increase the search effectiveness, but each is converted to S&K in evaluating a potential hypothesis. Notably, however, search likely still scales exponentially in the number of symbols in base facts: trying to find how to assign c combinators to n symbols (assuming none can be determined through constraint propagation above) takes $c^n$ search steps.⁸ This form of backtracking shares much with, for example, implementations of the Prolog programming language (see, e.g., Bratko, 2001). However, it is important to note that we do not intend this backtracking search to be an implementation-level theory of how people themselves might find these representations. The implementation that people use to do something like Church encoding will depend on the specifics of the representation they possess. Instead, this algorithm is only intended to provide a computational-level theory (Marr & Poggio, 1976; Marr, 1982) that says if a learner chooses representations of the base facts that are fast-evaluating and concise, it will be able to represent and generalize similarly to people. Thus, our primary concern is whether this algorithm finds any solutions, not whether in doing so it searches the space in a way similar to how biological organisms might.
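The search can be sketched in miniature, with two loud caveats: this is a brute-force simplification rather than Churiso’s backtracking, and the two chained successor facts form a hypothetical toy problem. The sketch enumerates every normal-form S&K term up to a small size for the symbols that cannot be propagated (here succ and winter), fills in the remaining symbols by constraint propagation, enforces the uniqueness constraint, and keeps the assignment with the smallest t(h) + l(h). Facts are written directly as nested tuples, so no parser is needed; all helper names are our own.

```python
from itertools import product


def step(t):
    """One leftmost-outermost S/K contraction, or None if t is in normal form."""
    args = []
    while isinstance(t, tuple):
        t, a = t
        args.insert(0, a)
    if t == "K" and len(args) >= 2:
        new, rest = args[0], args[2:]
    elif t == "S" and len(args) >= 3:
        x, y, z = args[:3]
        new, rest = ((x, z), (y, z)), args[3:]
    else:
        for i, a in enumerate(args):
            if (r := step(a)) is not None:
                args[i] = r
                break
        else:
            return None
        new, rest = t, args
    for a in rest:
        new = (new, a)
    return new


def normalize(t, max_steps=100):
    """Reduce to normal form: (term, steps), or (None, steps) if the budget runs out."""
    steps = 0
    while (r := step(t)) is not None:
        steps += 1
        if steps > max_steps:
            return None, steps
        t = r
    return t, steps


def size(t):
    return size(t[0]) + size(t[1]) if isinstance(t, tuple) else 1


def subst(t, assignment):
    if isinstance(t, tuple):
        return (subst(t[0], assignment), subst(t[1], assignment))
    return assignment.get(t, t)


def symbols(t):
    return symbols(t[0]) | symbols(t[1]) if isinstance(t, tuple) else {t}


def normal_terms(max_leaves):
    """S&K terms in normal form with at most max_leaves leaves, smallest first."""
    by_size = {1: ["S", "K"]}
    for n in range(2, max_leaves + 1):
        by_size[n] = [(f, a) for k in range(1, n) for f in by_size[k] for a in by_size[n - k]]
    return [t for n in sorted(by_size) for t in by_size[n] if step(t) is None]


def propagate(assignment, facts, max_steps):
    """Push the normal form of each fully assigned left-hand side onto (or check it
    against) the right-hand symbol. Returns (assignment, total steps) or None."""
    assignment, total, pending = dict(assignment), 0, list(facts)
    while pending:
        ready = [f for f in pending if symbols(f[0]).issubset(assignment)]
        if not ready:
            return None
        for lhs, rhs in ready:
            nf, steps = normalize(subst(lhs, assignment), max_steps)
            if nf is None or (rhs in assignment and assignment[rhs] != nf):
                return None
            assignment[rhs], total = nf, total + steps
        pending = [f for f in pending if f not in ready]
    return assignment, total


def search(facts, free, max_leaves=3, max_steps=100):
    """Try every candidate for the free symbols; keep the best-scoring valid solution."""
    best = None
    for combo in product(normal_terms(max_leaves), repeat=len(free)):
        result = propagate(dict(zip(free, combo)), facts, max_steps)
        if result is None:
            continue
        assignment, t = result
        values = list(assignment.values())
        if len(set(values)) < len(values):  # uniqueness constraint
            continue
        score = t + sum(size(v) for v in values)  # t(h) + l(h)
        if best is None or score < best[0]:
            best = (score, assignment)
    return best


FACTS = [(("succ", "winter"), "spring"), (("succ", "spring"), "summer")]
best = search(FACTS, free=["succ", "winter"])
print(best)
```

Because spring and summer are always determined by propagation, only succ and winter are enumerated, which is the kind of saving the text describes.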

In a testament to the simplicity and parsimony of combinatory logic, the full implementation requires only a few hundred lines of code, including algorithms for reading the base facts, searching for constraints, and evaluating combinator structures. Ediger (2011) provides an independent combinatory logic implementation that includes several abstraction algorithms and was used to validate implementation of combinatory logic in Churiso.

3. Applications to cognitive domains

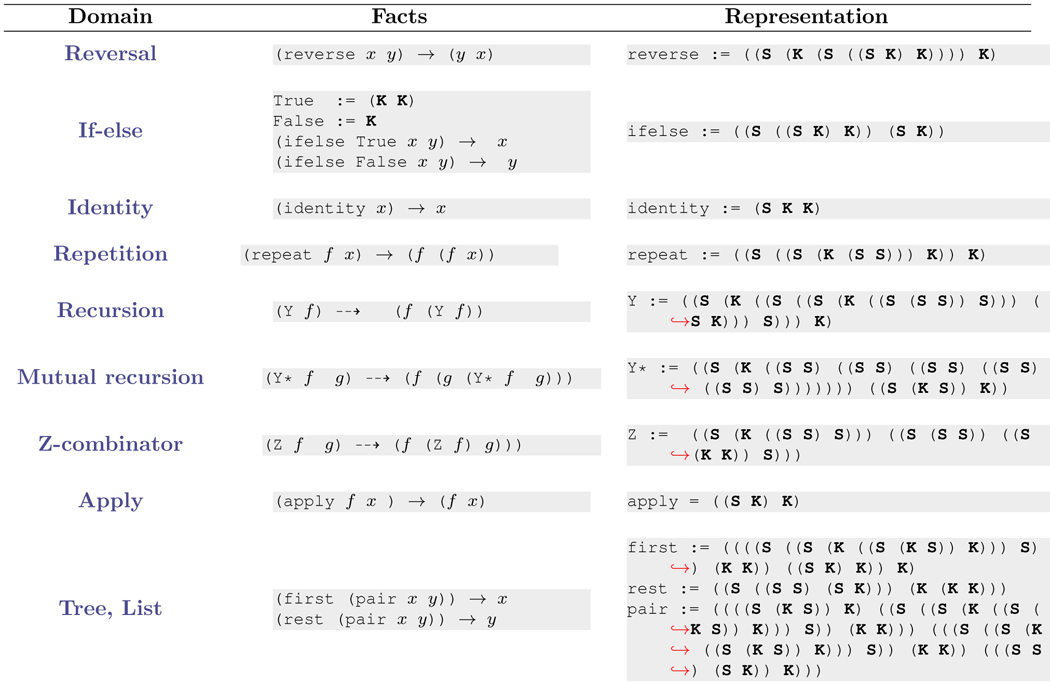

This section presents a number of examples of using the inferential setup to discover combinator structures for a variety of domains. In each example, I will provide the base facts and then the fastest-running combinator structure (Church encoding of the base facts) that was discovered by Churiso. The examples have been chosen to illustrate a variety of different domains that have been emphasized in human and animal psychology. The first section shows that theories can represent or encode relational structures. The second examines conceptual structures that involve generalization, meaning that we are primarily interested in how the combinator structure extends to compute new relations not in the set of base facts. In each of these cases, the generalization fits simple intuitions and permits derivation of new knowledge in the form of “correct” predictions about unobserved new structures. The third section looks at using combinatory logic to represent new computations in the form of components that could be used in new mental algorithms.

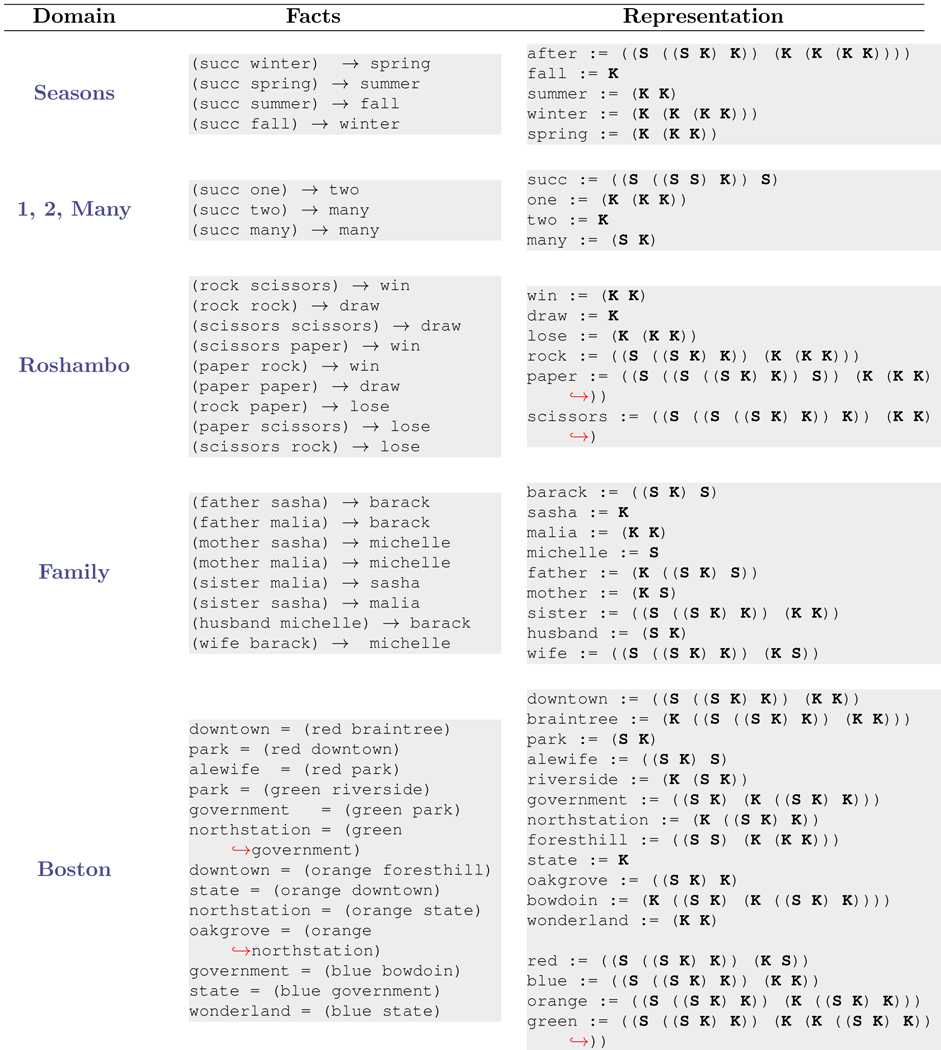

3.1. Representation

Table 1 shows five domains with very different structural properties and how they may be represented with S&K. The middle column shows the base facts that were provided to Churiso and the right-hand column shows the most efficient combinator structure. The seasons example shows a circular system of labeling, where the successor (succ) of each season loops around in a mod-4 type of system. The 1, 2, many concept similarly has a successor, but the successor of any number above two is just the token many, a counting system found in many languages of the world. Roshambo (also called “Rock, paper, scissors”) is an interesting case where each object represents a function that operates on each other object and returns an outcome in a discrete set (“rock” beats “scissors”, etc.). This game has been studied in primate neuroscience (Abe & Lee, 2011). It illustrates a type of circular dominance structure, but one which is dependent on which object is considered the operator (e.g. first in the parentheses) and which is the operand. The family example shows a case where simple relations like mother and husband can be defined and are functions that yield the correct individual. Interestingly, realization of just these representations does not automatically correspond to representation of a “tree”; instead, the requirement to represent only these relations yields a simpler composition of combinators without an explicit tree structure. Later examples (tree, list) show how trees themselves might be learned; an interesting challenge is to discover a latent tree structure from examples like in family (for work on learning the general case with LOT theories, see Katz et al. (2008); Mollica and Piantadosi (2015)). Note that in all examples, the combinator structures Churiso discovers should not be expected to be intuitively obvious to us: these combinator structures are not the symbols we are used to thinking about (like father and many), certainly not in conscious awareness. Instead, the base facts should sound obvious; the S&K structures are the stuff out of which the symbols in the base facts are made. The Boston example shows encoding of a graph structure, a simplified version of Boston’s subway map. Here, there are 4 relations, red, green, orange, and blue, which map some stations to other stations. This structure, too, can be encoded into S&K.

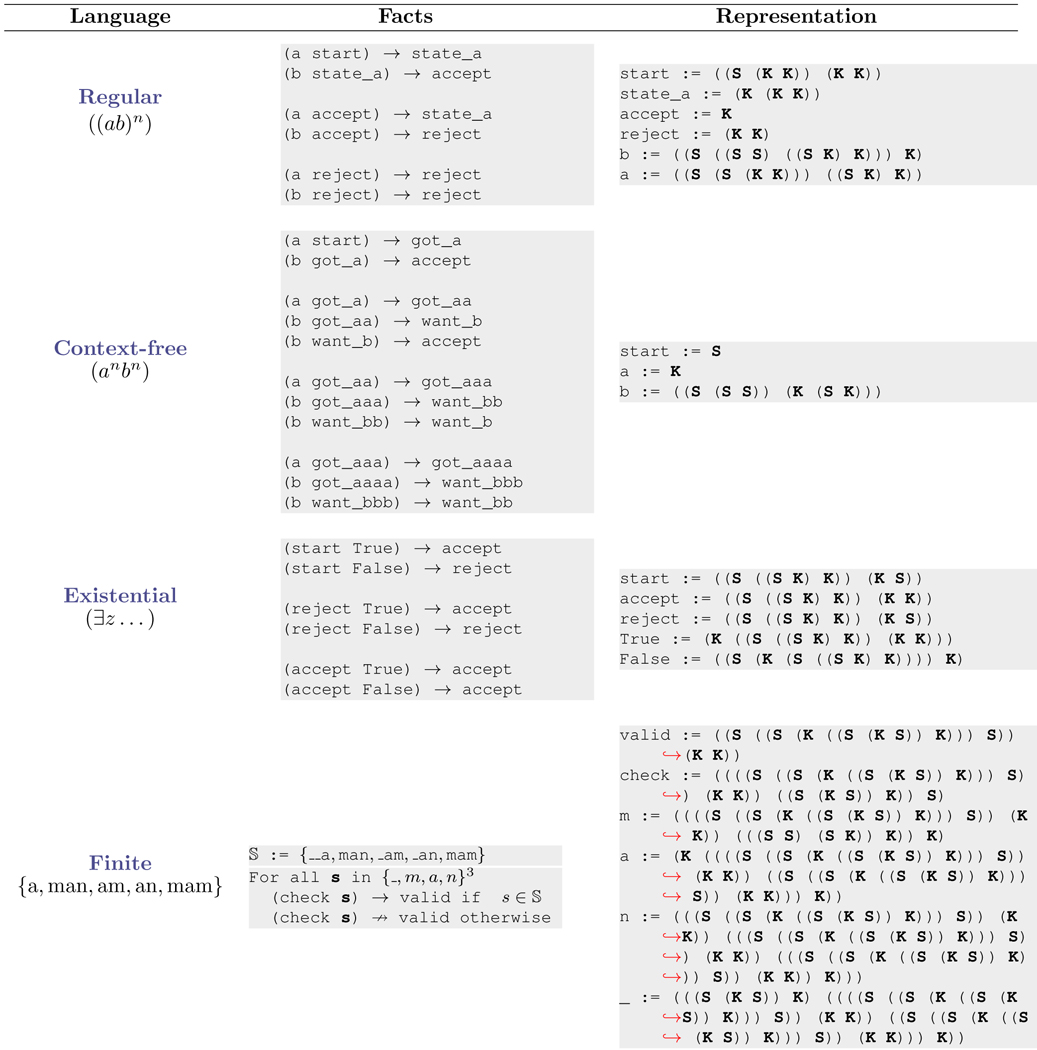

Table 1: Church encoding inferred from the base facts that permit representation of several logical structures common in psychology.

One challenge for theories like this may be learning multiple representations at the same time. It is indeed possible to do so while enforcing uniqueness constraints among the symbols. For example, if we simultaneously try to encode seasons, the 1, 2, many system, and family in a single set of facts, where symbols in each must be unique, Churiso finds the solution

after := ((S ((S S) S)) K)
fall := K
summer := (K (K K))
winter := (K K)
spring := (K ((S (K K)) (K (K K))))
succ := ((S ((S S) K)) S)
one := (K (K (S (K K))))
two := (S (K K))
many := (K ((S (S (K K))) (S (K K))))
barack := S
father := (K S)
sasha := ((S K) S)
malia := (K ((S K) S))
michelle := ((S S) S)
mother := (K ((S S) S))
sister := ((S ((S K) S)) K)
husband := ((S (K (K S))) S)
wife := (S S)

This illustrates that managing the complexity of multiple concepts may, in some situations, be tractable even with current methods.
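The joint solution can be spot-checked with a small reducer (our own sketch, not Churiso). Table 1’s base facts are not reproduced in this text, so the relations below are our own guesses at facts of the kind the three domains contain (for example, that after maps winter to spring and succ maps one to two); the check confirms that the solution above reduces them as expected and that no two symbols share a structure.

```python
def parse(src):
    """Parse the paper's notation: (a b c) means ((a b) c); atoms stay strings."""
    toks = src.replace("(", " ( ").replace(")", " ) ").split()

    def seq(i):
        items = []
        while i < len(toks) and toks[i] != ")":
            if toks[i] == "(":
                t, i = seq(i + 1)
                i += 1  # skip the matching ")"
            else:
                t, i = toks[i], i + 1
            items.append(t)
        t = items[0]
        for a in items[1:]:
            t = (t, a)
        return t, i

    return seq(0)[0]


def step(t):
    """One leftmost-outermost S/K contraction, or None if t is in normal form."""
    args = []
    while isinstance(t, tuple):
        t, a = t
        args.insert(0, a)
    if t == "K" and len(args) >= 2:
        new, rest = args[0], args[2:]
    elif t == "S" and len(args) >= 3:
        x, y, z = args[:3]
        new, rest = ((x, z), (y, z)), args[3:]
    else:
        for i, a in enumerate(args):
            if (r := step(a)) is not None:
                args[i] = r
                break
        else:
            return None
        new, rest = t, args
    for a in rest:
        new = (new, a)
    return new


def normalize(t, max_steps=1000):
    steps = 0
    while (r := step(t)) is not None:
        steps += 1
        if steps > max_steps:
            return None, steps
        t = r
    return t, steps


SOLUTION = """
after := ((S ((S S) S)) K)
fall := K
summer := (K (K K))
winter := (K K)
spring := (K ((S (K K)) (K (K K))))
succ := ((S ((S S) K)) S)
one := (K (K (S (K K))))
two := (S (K K))
many := (K ((S (S (K K))) (S (K K))))
barack := S
father := (K S)
sasha := ((S K) S)
malia := (K ((S K) S))
michelle := ((S S) S)
mother := (K ((S S) S))
sister := ((S ((S K) S)) K)
husband := ((S (K (K S))) S)
wife := (S S)
"""
DEFS = {}
for line in SOLUTION.strip().splitlines():
    name, body = line.split(":=")
    DEFS[name.strip()] = parse(body)


def evaluate(src):
    """Normal form of an expression after expanding every defined symbol."""
    def expand(t):
        if isinstance(t, tuple):
            return (expand(t[0]), expand(t[1]))
        return DEFS.get(t, t)
    return normalize(expand(parse(src)))[0]


# Illustrative facts: our guesses, not the actual contents of Table 1.
CHECKS = [("(after winter)", "spring"), ("(succ one)", "two"), ("(succ two)", "many"),
          ("(father sasha)", "barack"), ("(mother sasha)", "michelle"),
          ("(wife barack)", "michelle"), ("(husband michelle)", "barack"),
          ("(sister sasha)", "malia"), ("(sister malia)", "sasha")]
for expr, expected in CHECKS:
    assert evaluate(expr) == DEFS[expected], expr
assert len(set(DEFS.values())) == len(DEFS)  # uniqueness: no two symbols share a structure
print("all", len(CHECKS), "facts hold; all", len(DEFS), "structures are distinct")
```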

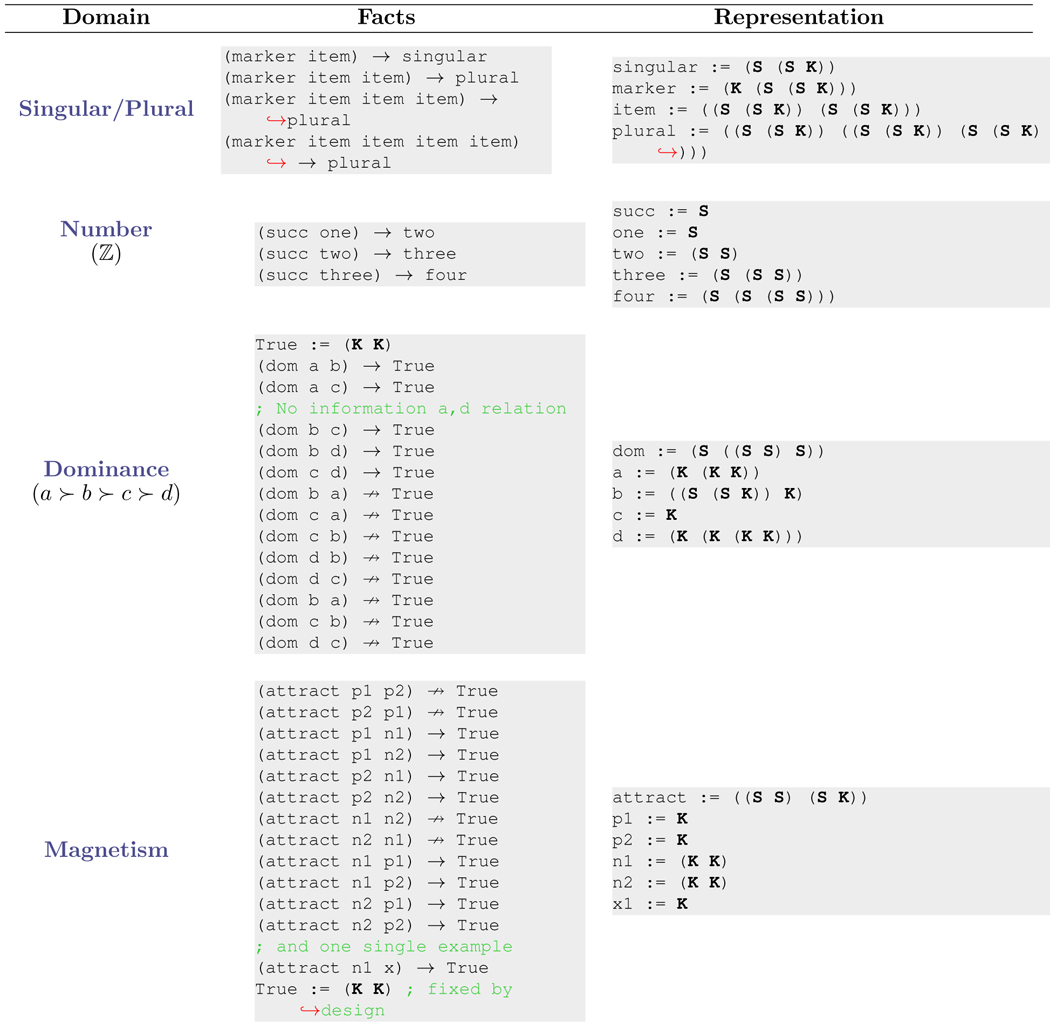

3.2. Generalization

The examples in Table 1 mainly show how learners might memorize facts and relations using S&K. But equally or more important to cognitive systems is generalization: given some data, can we learn a representation whose properties allow us to infer new and unseen facts? What this means in the context of Churiso is that we are able to form new combinations of functions, ones whose outcome is not specified in the base facts. Table 2 shows some examples. The first of these is a representation of a singular/plural system like those found in natural language. Here, there is a relation marker that takes some number of arguments (item, item, etc.) and returns singular if it receives one argument and plural if it receives more than one. This requires generalization because the base facts only show the output for up to four items. However, the learned representation extends to any number of items beyond the base facts. For instance,

(marker item item item item item item item) → ((S (S K)) (S (S K))) = plural

Table 2: S&K structures in domains involving interesting generalization, where the combinator structures allow deduction beyond the base facts.

Intuitively, the first time marker is applied to item, we get

(marker item) → ((S (K (S K K))) (S (S K))) = singular

When this is applied to another item, you get the expression for plural:

(marker item item) = ((marker item) item) → (singular item) → plural

And then plural has the property that it returns itself when given one more item:

(plural item) → plural

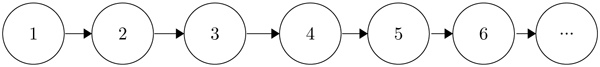

So, plural is a “fixed point” for further applications of item, allowing it to generalize to any number of arguments. In other words, what Churiso discovers is a system that is functionally equivalent to a simple finite-state machine: starting from marker, one item leads to singular, a second item leads to plural, and each further item loops back to plural.

Note that this finite-state machine is not an explicitly specified hypothesis that learners have, but only emerges implicitly through the appropriate composition of S&K.
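The fixed-point behavior can be checked with a small reducer, under one loudly flagged assumption: Table 2 is not reproduced here, so the sketch takes item := (S (S K)). That value is our own choice, picked because it makes the two reductions stated above, (singular item) → plural and (plural item) → plural, come out as described; Churiso’s actual structure for item may differ. The structures for singular and plural are the ones given in the text.

```python
def parse(src):
    """Parse the paper's notation: (a b c) means ((a b) c); atoms stay strings."""
    toks = src.replace("(", " ( ").replace(")", " ) ").split()

    def seq(i):
        items = []
        while i < len(toks) and toks[i] != ")":
            if toks[i] == "(":
                t, i = seq(i + 1)
                i += 1  # skip the matching ")"
            else:
                t, i = toks[i], i + 1
            items.append(t)
        t = items[0]
        for a in items[1:]:
            t = (t, a)
        return t, i

    return seq(0)[0]


def step(t):
    """One leftmost-outermost S/K contraction, or None if t is in normal form."""
    args = []
    while isinstance(t, tuple):
        t, a = t
        args.insert(0, a)
    if t == "K" and len(args) >= 2:
        new, rest = args[0], args[2:]
    elif t == "S" and len(args) >= 3:
        x, y, z = args[:3]
        new, rest = ((x, z), (y, z)), args[3:]
    else:
        for i, a in enumerate(args):
            if (r := step(a)) is not None:
                args[i] = r
                break
        else:
            return None
        new, rest = t, args
    for a in rest:
        new = (new, a)
    return new


def normalize(t, max_steps=1000):
    steps = 0
    while (r := step(t)) is not None:
        steps += 1
        if steps > max_steps:
            return None, steps
        t = r
    return t, steps


SINGULAR = parse("((S (K (S K K))) (S (S K)))")  # from the text
PLURAL = parse("((S (S K)) (S (S K)))")          # from the text
ITEM = parse("(S (S K))")                        # ASSUMPTION: hypothetical value for item


def after_items(state, n):
    """Normal form of (state item ... item) with n copies of item."""
    for _ in range(n):
        state = (state, ITEM)
    return normalize(state)[0]


assert normalize((SINGULAR, ITEM))[0] == PLURAL  # (singular item) -> plural
assert normalize((PLURAL, ITEM))[0] == PLURAL    # plural is a fixed point
print([after_items(SINGULAR, n) == PLURAL for n in range(1, 6)])  # [True, True, True, True, True]
```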

The next domain, number, shows a generalization that builds an infinite structure. Intuitively, the learner is given a successor relationship between the first few words. The critical question is whether this is enough to learn an S&K structure for succ that will continue to generalize beyond the meaning of four. The results show that it is: the form of succ that is learned is essentially one that builds a “larger” representation for each successive number. The way this works is extremely simple: K requires more than one argument. But the structure represented in the relation (succ x) for x = one, two, ... only provides it a single argument (here called x). Thus, the nth number in this Church encoding is the one that needs n more arguments to successfully evaluate (or reduce to nothing). This makes (succ four) a new concept (representation) and (succ (succ four)) yet another, generalizing to infinitely many numbers. In this case, S&K create from the three base facts a structure isomorphic to the natural numbers.

The assumed base facts correspond to the kind of evidence that might be available to learners of counting (Carey, 2009). This theory is close to Piantadosi et al.’s (2012) LOT model of number acquisition, which was created to solve the inductive riddle posed by Rips and colleagues (Rips, Asmuth, & Bloomfield, 2006, 2008; Rips, Bloomfield, & Asmuth, 2008) about what might constrain children’s generalization in learning number. The difference is that Piantadosi et al.’s (2012) LOT representations were based in primitive cognitive content, like an ability to represent sets and perform operations on them. Here, what is learned is not a counting algorithm, but rather an internal conceptual structure that is generative of the concepts themselves, providing a possible answer to the question of where the structure itself may come from (see Rips, Asmuth, & Bloomfield, 2013). It is interesting to contrast number, 1 2 many, and seasons. In each of these, there is a “successor” function, but which function is learned depends on the structure of the base facts. This means that notions like “successorship” cannot be defined narrowly by the relationship between a few elements, but will critically rely on the role this concept plays in a larger collection of operations.

The dominance concept in Table 2 shows another interesting case of generalization. Dominance structures are common in animal cognition (see, e.g., Drews, 1993). For instance, Grosenick, Clement, and Fernald (2007) show that fish can make transitive inferences consistent with a dominance hierarchy: if they observe a ≻ b and b ≻ c, then they know that a ≻ c, where ≻ is a relation specifying who dominates in a pairwise interaction. The base facts for dominance encode slightly more information, corresponding to almost all of the dominance relationships between some mental tokens a, b, c, and d.⁹ This example illustrates another feature of Churiso: we are able to specify constraints in terms of evaluations not yielding some relations. So, for instance,

(dom b a) ↛ True

means that (dom b a) evaluates to something other than True. Relaxing constraints so that they are only partially specified often helps to learn more concise representations in S&K. Critically, the relation between a and d is not specified in the base facts. This relation could be anything, and any possible value could be encoded by S&K. The simplicity bias of the inference, however, prefers combinator structures for these symbols such that the unseen relation (dom a d) → True but (dom d a) does not. Thus, the S&K encoding of the base facts gives learners an internal representation that automatically generalizes to an unseen relation.

The magnetism example is motivated by Ullman et al.’s (2012) model of the learning of entire systems of knowledge (theories) in conceptual development. In magnetism, we know about different kinds of materials (positive, negative, non-magnetic) and that these follow some simple relationships, like that positives attract negatives and that positives repel each other, etc. The magnetism example provides base facts giving the pairwise interactions of two positives (p1, p2) and two negatives (n1, n2). But from the point of view of the inference, these four symbols are not categorized into “positives” and “negatives”; they are just arbitrary symbols. In this example, I have also dropped the uniqueness requirement to allow grouping of these symbols into “types”, as shown by their learned combinator structures: p1 and p2 get mapped to the same structure, and n1 and n2 get mapped to a different one. To test generalization, we can provide the model with one single additional fact, that n1 and x attract each other. The model automatically infers that x has the same S&K structure as p1 and p2, meaning that it learns from a single observation that x is a “positive”, including all of the associated predictions such as that (attract n1 x) → True.

3.3. Computational process

The examples above respectively show representation and generalization, but they do not yet illustrate one of the most remarkable properties of thinking: we appear able to discover a wide variety of computational processes. The concepts in Table 3 are ones that implement some simple and intuitive algorithmic components. Here, I have introduced some new symbols to the base facts: f, x, and y. These are treated as universally quantified variables, meaning that the constraint must hold for all values (combinator expressions) they can take. The learning model’s discovery of how to encode these facts corresponds to the creation of fundamental algorithmic representations using only the facts’ simple description of what the algorithm must do.

Table 3: S&K structures that implement computational operations.

An example is provided by if-else. A convenient feature of many computational systems is that when they reach a conditional branch (“if” statement), they only have to evaluate the corresponding branch of the program. The shown base facts make if-else return x if it receives a true first argument and y otherwise, regardless of what x and y happen to be. Even though conditional branching is a basic computation, it can be learned from even more primitive components, S&K.

The identity example illustrates the distinction between implicit and explicit knowledge in the system. We can define identity := (S K K) so,

(identity x) = ((S K K) x) → (S K K x) → ((K x) (K x)) → (K x (K x)) → x.
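The derivation above can be replayed mechanically. This is a minimal sketch, assuming terms are encoded as nested Python tuples (an atom is a string, an application (M N) is the pair (M, N)); this representation and the helper names app, spine, step, and show are chosen here for illustration and are not Churiso's. The reducer applies the two rules K x y → x and S x y z → (x z) (y z) in leftmost-outermost order.

```python
def app(*terms):
    """Left-associated application: app(a, b, c) is ((a b) c)."""
    t = terms[0]
    for u in terms[1:]:
        t = (t, u)
    return t

def spine(t):
    """Split t into its head atom and its arguments, left to right."""
    args = []
    while isinstance(t, tuple):
        t, a = t
        args.append(a)
    return t, args[::-1]

def step(t):
    """Perform one leftmost-outermost S/K step; return (term, changed)."""
    head, args = spine(t)
    if head == "K" and len(args) >= 2:          # K x y -> x
        return app(args[0], *args[2:]), True
    if head == "S" and len(args) >= 3:          # S x y z -> x z (y z)
        x, y, z = args[:3]
        return app(app(x, z), app(y, z), *args[3:]), True
    for i, a in enumerate(args):                # otherwise reduce inside arguments
        new, changed = step(a)
        if changed:
            return app(head, *args[:i], new, *args[i + 1:]), True
    return t, False

def show(t):
    """Print a term in the paper's notation, e.g. (K x (K x))."""
    if isinstance(t, str):
        return t
    head, args = spine(t)
    return "(" + " ".join([head] + [show(a) for a in args]) + ")"

t = app("S", "K", "K", "x")     # (identity x) with identity := (S K K)
trace = [t]
while True:
    t, changed = step(t)
    if not changed:
        break
    trace.append(t)

print(" → ".join(show(u) for u in trace))   # (S K K x) → (K x (K x)) → x
```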

It may be surprising that we could construct a cognitive system without any notion of identity. Surely to even perform a computation on a representation x, the identity of x must be respected! In S&K, this is true in one sense: the default dynamics of S and K do respect representational identity. But in another sense, such a system comes with no “built-in” cognitive representation of the identity function. Instead, that representation can be learned.

A more complex example is repetition. Here, we seek a function repeat that takes two arguments f and x and applies f twice to x. Humans clearly have cognitive representations of a concept like repeating a computation; “again” is an early-learned word, and repetition is often marked morphologically in the world’s languages through reduplication. As the preceding examples suggest, the concept of repetition need not be built into a computational system.
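One S&K structure that satisfies the repeat facts is (S B I), where B := (S (K S) K) composes two functions, (B f g x) → (f (g x)), and I := (S K K). Then (S B I f x) → (B f (I f) x) → (B f f x) → (f (f x)). This is a standard construction offered for illustration; the structure Churiso learned (Table 3) may differ. The sketch checks it with S and K written as curried Python functions.

```python
S = lambda x: lambda y: lambda z: x(z)(y(z))
K = lambda x: lambda y: x
I = S(K)(K)                  # identity
B = S(K(S))(K)               # composition: B f g x -> f (g x)
repeat = S(B)(I)             # repeat f x -> B f (I f) x -> f (f x)

print(repeat(lambda n: n + 1)(0))       # 2
print(repeat(lambda s: s + "!")("hi"))  # hi!!
```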

Related to repetition, or doing an operation “again”, is the ability to represent recursion, a computational ability that has been hypothesized to be the key defining characteristic of human cognition (Hauser, Chomsky, & Fitch, 2002; see Tabor (2011) for a study of recursion in neural networks). One way to implement recursion in combinatory logic is the Y-combinator,

Y = (S (K (S I I)) (S (S (K S) K) (K (S I I)))),

a function famous enough in mathematical logic to have been the subject of at least one logician’s arm tattoo. Like other concepts, the Y-combinator can be built from S&K alone, since I abbreviates the identity combinator (S K K). It works by “making” a function recursive, passing the function to itself as an argument. The details of this clever mechanism are beyond the scope of this paper (see Pierce, 2002). One challenge in learning Y is that, by definition, it has no normal form when applied to a function. To address this, we introduce a new kind of constraint, --->, which holds if partially evaluating the left- and right-hand sides yields expressions that are equal up to a given fixed depth. To learn recursion, we require that applying Y to f be the same as applying f to this expression itself,

(Y f) ---> (f (Y f))

Neither side reduces to a normal form, but the special arrow means that when we run both sides, we obtain the same structure, which in this case happens to be the (infinite) recursion of f composed with itself,

(f (f (f (f ... ))))

Churiso learns a slightly longer form than the typical Y-combinator due to the details of its search procedure (for the most concise recursive combinator possible, see Tromp, 2007). (Note that in these searches, runtime is ignored, since the combinator never stops evaluating.) The ability to represent Y permits us to capture algorithms, some of which may never halt. For instance, if we apply Y to the definition of successor from the number example, we get back a concept that counts forever, continually adding one to its result: (Y succ). The ability to learn recursion as a computation from a simple constraint might be surprising to programmers and cognitive scientists alike, for whom recursion may seem like an aspect of computation that has to be “built in” explicitly. It need not be, if what we mean by learning “recursion” is coming up with a Church encoding implementation of it.
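Because (Y f) has no normal form, its recursion can only be observed by running the reduction for a bounded number of steps, in the spirit of the ---> constraint. The sketch below works under stated assumptions: terms are nested Python tuples, I is expanded to (S K K), reduction is leftmost-outermost, and f is a free variable. The helper names (app, spine, step, run, f_depth) are hypothetical. It shows that the number of f's wrapped around the outside of the term keeps growing as more steps are run; it does not reproduce Churiso's exact depth-bounded comparison.

```python
def app(*terms):
    """Left-associated application: app(a, b, c) is ((a b) c)."""
    t = terms[0]
    for u in terms[1:]:
        t = (t, u)
    return t

def spine(t):
    """Split t into its head atom and its arguments, left to right."""
    args = []
    while isinstance(t, tuple):
        t, a = t
        args.append(a)
    return t, args[::-1]

def step(t):
    """Perform one leftmost-outermost S/K step; return (term, changed)."""
    head, args = spine(t)
    if head == "K" and len(args) >= 2:          # K x y -> x
        return app(args[0], *args[2:]), True
    if head == "S" and len(args) >= 3:          # S x y z -> x z (y z)
        x, y, z = args[:3]
        return app(app(x, z), app(y, z), *args[3:]), True
    for i, a in enumerate(args):                # otherwise reduce inside arguments
        new, changed = step(a)
        if changed:
            return app(head, *args[:i], new, *args[i + 1:]), True
    return t, False

def run(t, steps):
    """Run at most `steps` reduction steps (Y f never reaches a normal form)."""
    for _ in range(steps):
        t, changed = step(t)
        if not changed:
            break
    return t

def f_depth(t):
    """Count how many times the term has the literal shape (f (f (...))) outside."""
    n = 0
    while isinstance(t, tuple) and t[0] == "f":
        n += 1
        t = t[1]
    return n

I = app("S", "K", "K")
SII = app("S", I, I)
# Y = (S (K (S I I)) (S (S (K S) K) (K (S I I))))
Y = app("S", app("K", SII),
        app("S", app("S", app("K", "S"), "K"), app("K", SII)))

for steps in (30, 100, 300):
    print(steps, f_depth(run(app(Y, "f"), steps)))
```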

The mutual recursion case shows a recursive operator of two functions, known as the Y*-combinator, that yields an infinite alternating composition of f and g,

(f (g (f (g (f (g ... ))))))

This is the analog of the Y-combinator for mutually recursive functions, where f is defined in terms of g and g is defined in terms of f. This illustrates that even more sophisticated kinds of algorithmic processes can be discovered and implemented in S&K.
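One simple way to obtain this alternating structure, assumed here for illustration rather than taken from Churiso's output, is to apply the Y-combinator to the composition (B f g), where B := (S (K S) K) so that (B f g x) → (f (g x)); then (Y (B f g)) unfolds to (f (g (Y (B f g)))). The sketch uses a small tuple-based S&K reducer (hypothetical helper names) and checks that, after a bounded number of steps, the outside of the term reads f, g, f, g, and so on.

```python
def app(*terms):
    """Left-associated application: app(a, b, c) is ((a b) c)."""
    t = terms[0]
    for u in terms[1:]:
        t = (t, u)
    return t

def spine(t):
    """Split t into its head atom and its arguments, left to right."""
    args = []
    while isinstance(t, tuple):
        t, a = t
        args.append(a)
    return t, args[::-1]

def step(t):
    """Perform one leftmost-outermost S/K step; return (term, changed)."""
    head, args = spine(t)
    if head == "K" and len(args) >= 2:          # K x y -> x
        return app(args[0], *args[2:]), True
    if head == "S" and len(args) >= 3:          # S x y z -> x z (y z)
        x, y, z = args[:3]
        return app(app(x, z), app(y, z), *args[3:]), True
    for i, a in enumerate(args):                # otherwise reduce inside arguments
        new, changed = step(a)
        if changed:
            return app(head, *args[:i], new, *args[i + 1:]), True
    return t, False

def run(t, steps):
    """Run at most `steps` reduction steps."""
    for _ in range(steps):
        t, changed = step(t)
        if not changed:
            break
    return t

def outer_pattern(t):
    """Read off the run of f's and g's wrapped around the outside of t."""
    out = []
    while isinstance(t, tuple) and t[0] in ("f", "g"):
        out.append(t[0])
        t = t[1]
    return out

I = app("S", "K", "K")
SII = app("S", I, I)
Y = app("S", app("K", SII),
        app("S", app("S", app("K", "S"), "K"), app("K", SII)))
B = app("S", app("K", "S"), "K")        # composition: B f g x -> f (g x)

pattern = outer_pattern(run(app(Y, app(B, "f", "g")), 400))
print(" ".join(pattern))
```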

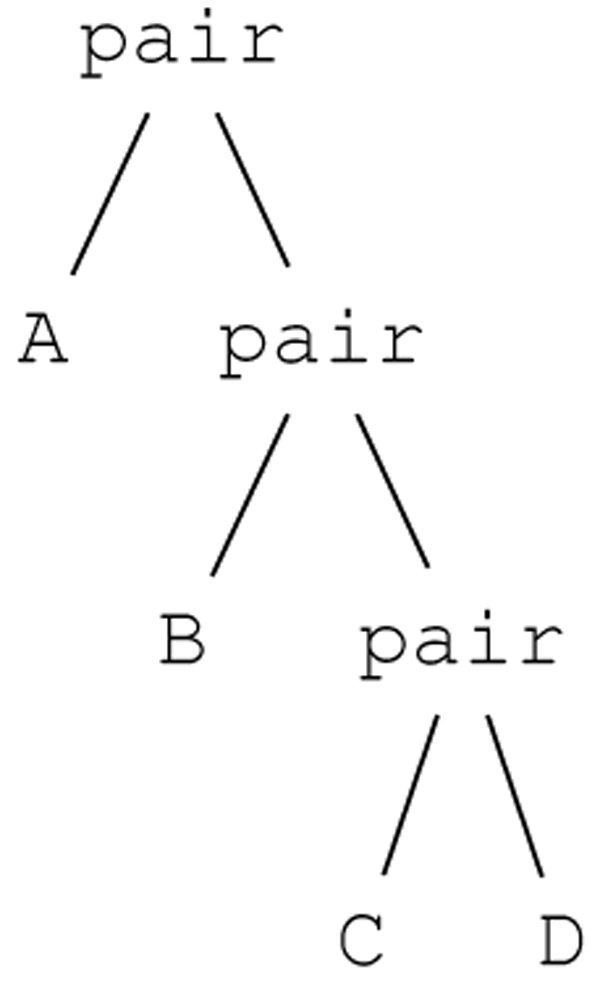

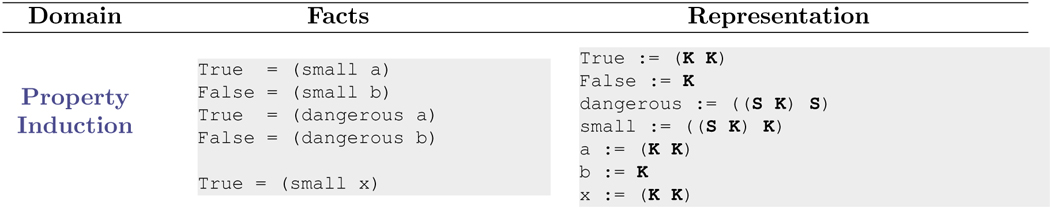

From surprisingly small base facts, Churiso is also able to discover primitives first, rest, and pair, corresponding to the structure-building operations with memory. The arguments for pair are “remembered” by the combinator structure until they are later accessed by either first or rest. As a result, they can build common data structures. For instance, a list may be constructed by combining pair:

L = (pair A (pair B (pair C D)))

Or, a binary tree may be encoded,

T = (pair (pair A B) (pair C D))

An element such as C may then be accessed with (first (rest T)), the first element of the second grouping in the tree. These data structures are so fundamental that they form the basic built-in data type in programming languages like Scheme and Lisp (where the operations are called car, cdr, and cons for historical reasons), and thus support a huge variety of other data structures and algorithms (Abelson & Sussman, 1996; Okasaki, 1999). By showing how learners might internalize these concepts, we can therefore demonstrate in principle how many algorithms and data structures could be represented as well.
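The “remembering” behavior of pair can be illustrated with the standard Church encoding of pairs, in which (pair a b) waits for a selector and passes it both stored elements; first supplies a selector that keeps the first element (it behaves like K), and rest supplies one that keeps the second (like (K I)). This sketch writes these directly as Python lambdas rather than as the S&K structures Churiso found, which may differ.

```python
# Church-encoded pairs as curried Python lambdas (an illustrative encoding).
pair = lambda a: lambda b: lambda sel: sel(a)(b)   # remember a and b
first = lambda p: p(lambda a: lambda b: a)         # selector acts like K
rest = lambda p: p(lambda a: lambda b: b)          # selector acts like (K I)

L = pair("A")(pair("B")(pair("C")("D")))           # list
T = pair(pair("A")("B"))(pair("C")("D"))           # binary tree

print(first(rest(T)))          # C
print(first(rest(rest(L))))    # C
```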

3.4. Formal languages

One especially interesting case to consider is how S&K handles concepts that correspond to (potentially) infinite sets of strings, or formal languages. Theoretical distinctions between classes of formal languages form the basis of computational theories of human language (e.g. Chomsky, 1956, 1957) as well as of computation itself (see Hopcroft, Motwani, & Ullman, 1979). To implement each, Table 4 provides base facts giving the transitions between computational states for processing languages. The regular example provides the transition table for a simple finite-state machine that recognizes the language {ab, abab, ababab, ...}. The existential example also describes a finite-state machine, one that can implement first-order quantification; this connection between quantifiers and automata is potentially useful in natural language semantics (van Benthem, 1984; Mostowski, 1998; Tiede, 1999; Costa Florêncio, 2002; Gierasimczuk, 2007).
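The transition tables that Table 4 encodes as combinators can also be written out explicitly. This is a minimal sketch, with state names and the T/F input alphabet chosen here for illustration (they are not Table 4's symbols): each machine is a dictionary from (state, symbol) to the next state. In the Church-encoded version, states and symbols are instead S&K structures and a transition is an application.

```python
def recognizes(table, string, start, accepting):
    """Run a deterministic finite-state machine given as a transition table."""
    state = start
    for symbol in string:
        state = table.get((state, symbol))
        if state is None:        # no transition defined: reject
            return False
    return state in accepting

# Hypothetical states for the regular language {ab, abab, ababab, ...}.
REGULAR = {
    ("start", "a"): "got_a",
    ("got_a", "b"): "accept",
    ("accept", "a"): "got_a",
}

# Existential quantification over a sequence of truth values ("T"/"F"):
# move to "some" the first time a true element is seen, then stay there.
EXISTS = {
    ("none", "F"): "none",
    ("none", "T"): "some",
    ("some", "F"): "some",
    ("some", "T"): "some",
}

print(recognizes(REGULAR, "abab", "start", {"accept"}))  # True
print(recognizes(REGULAR, "aba", "start", {"accept"}))   # False
print(recognizes(EXISTS, "FFTF", "none", {"some"}))      # True
print(recognizes(EXISTS, "FFF", "none", {"some"}))       # False
```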

Table 4:

Church encoding of several formal language constructs.


The most interesting example is provided by context-free, the language {ab, aabb, aaabbb, ...}, which provably cannot be recognized by any finite-state machine (that is, it is not a regular language). Instead, the learned mapping essentially implements, from the base facts, a computational device with an infinite number of states. For instance, the states after observing one, two, three, and four a’s are,

got_a := ((S (K K)) S)

got_aa := ((S (K K)) (S (K K) S))

got_aaa := ((S (K K)) (S (K K) (S (K K) S)))

got_aaaa := ((S (K K)) (S (K K) (S (K K) (S (K K) S))))

Each additional a adds another layer to this structure. Then, each incoming b removes one:

(b got_aaaa) = want_bbb = (K (K (K (K (S S)))))

(b got_aaa) = want_bb = (K (K (K (S S))))

(b got_aa) = want_b = (K (K (S S)))

(b want_b) = accept = (K (S S))

This works precisely like a stack in a parser, even though such a stack is not explicitly encoded into S&K or the base facts. Thus, this mapping generalizes infinitely to strings of arbitrary length, far beyond the input base facts’ length of four (Note that the base facts and combinators ensure correct recognition, but do not guarantee correct rejection).
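These equations can be checked mechanically. As a sketch, one combinator consistent with all four b facts is b := (S I (K S)) with I := (S K K), which simply applies its argument to S, so that (b g) → (g S). This candidate is an assumption introduced for illustration; it is not necessarily the structure Churiso learned, and the transition for a is omitted because it is not given above. Terms are nested Python tuples reduced leftmost-outermost to normal form; the helper names are hypothetical.

```python
def app(*terms):
    """Left-associated application: app(a, b, c) is ((a b) c)."""
    t = terms[0]
    for u in terms[1:]:
        t = (t, u)
    return t

def spine(t):
    """Split t into its head atom and its arguments, left to right."""
    args = []
    while isinstance(t, tuple):
        t, a = t
        args.append(a)
    return t, args[::-1]

def step(t):
    """Perform one leftmost-outermost S/K step; return (term, changed)."""
    head, args = spine(t)
    if head == "K" and len(args) >= 2:          # K x y -> x
        return app(args[0], *args[2:]), True
    if head == "S" and len(args) >= 3:          # S x y z -> x z (y z)
        x, y, z = args[:3]
        return app(app(x, z), app(y, z), *args[3:]), True
    for i, a in enumerate(args):                # otherwise reduce inside arguments
        new, changed = step(a)
        if changed:
            return app(head, *args[:i], new, *args[i + 1:]), True
    return t, False

def normalize(t, max_steps=10_000):
    """Reduce to normal form, giving up after max_steps steps."""
    for _ in range(max_steps):
        t, changed = step(t)
        if not changed:
            return t
    raise RuntimeError("no normal form within the step budget")

I = app("S", "K", "K")
b = app("S", I, app("K", "S"))          # hypothetical candidate: (b g) -> (g S)

# got[n]: the state after n a's, built exactly as listed in the text.
got = {1: app("S", app("K", "K"), "S")}
for n in range(2, 5):
    got[n] = app("S", app("K", "K"), got[n - 1])

accept = app("K", app("S", "S"))        # (K (S S))
want_b = app("K", accept)               # (K (K (S S)))
want_bb = app("K", want_b)              # (K (K (K (S S))))
want_bbb = app("K", want_bb)            # (K (K (K (K (S S)))))

checks = {
    "(b got_aaaa) = want_bbb": normalize(app(b, got[4])) == want_bbb,
    "(b got_aaa) = want_bb": normalize(app(b, got[3])) == want_bb,
    "(b got_aa) = want_b": normalize(app(b, got[2])) == want_b,
    "(b want_b) = accept": normalize(app(b, want_b)) == accept,
}
for fact, ok in checks.items():
    print(fact, ok)
```

Under this candidate, (b got_a) also normalizes to accept, which is consistent with ab being in the language.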

Finally, the finite example shows an encoding of the set of strings over the letters “m”, “a”, “n” and space (“_”) that form valid English words, {a, man, am, an, mam}. These can be encoded by assigning each character a combinator structure, but the resulting structures are quite complex. Note, too, that these base facts do not guarantee correct generalization to longer character sequences. This example illustrates that while Church encoding can represent such information, it is unwieldy for rote memorization. Church encoding is more likely to be useful for algorithmic processes and conceptual systems with many patterns. Memorized facts (or sets) may instead rely on specialized systems of memory representation.

The ability to represent formal languages like these is important because they correspond to provably different levels of computational power; a single system for learning and representation across these levels is a defining strength of this approach (for LOT work along these lines, see Yang & Piantadosi (in prep); for language learning on Turing-complete spaces in general, see Chater and Vitányi (2007); Hsu and Chater (2010); Hsu, Chater, and Vitányi (2011)). In the examples, we have taught Churiso the full algorithm by showing it a few steps, from which it generalizes the appropriate algorithm. This ability demonstrates the induction of a novel dynamical system from a few simple observations, in many ways reminiscent of work encoding structure in continuous dynamical systems (Tabor, Juliano, & Tanenhaus, 1997; Tabor, 2009, 2011; Lu & Bassett, 2018).

3.5. Summary of computational results

The results of this section have shown that learners can in principle start with only representations of S&K and construct much richer types of knowledge. Not only can they represent structured knowledge, but doing so permits the derivation of fundamentally new knowledge and types of information processing. The ability of a simple search algorithm to actually discover these kinds of representations shows that the resulting representational and inductive system can “really work” on a wide variety of domains. However, the main contributions of this work are the general lessons that we can extract from considering systems like S&K.

4. Mental representations are like combinatory logic (LCL)

My intention is not to claim that combinatory logic is the solution to mental representation; it would be pretty lucky if logicians of the early 20th century happened to hit on the right theory of how a biological system works. Rather, I see combinatory logic as a metaphor with some of the right properties. I will refer to the more general form of the theory as “like Combinatory-Logic”, or LCL, and describe some of its core components.

4.0.1. LCL theories have no cognitive primitives

The primitives used in LCL theories (like S&K) specify only the dynamical properties of a representation: how each structure interacts with any other. LCL therefore has no built-in cognitive representations, or symbols like CAUSE and True. This is the primary difference between LCL and LOT theories, whose bread and butter is components with intrinsic meaning. The lack of such primitives is beneficial because LCL therefore leaves no lingering questions about how mental tokens may come to have meaning. The challenge for LCL is then to eventually specify how a token such as CAUSE comes to have its meaning, by formalizing the necessary and sufficient relations to other concepts that fully characterize its semantics.

4.0.2. LCL theories are Turing-complete

Though it is not widely appreciated in cognitive science or philosophy of mind, humans excel at learning, internalizing, creating, and communicating algorithmic processes of incredible complexity (Rule et al., under review). This is most apparent in domains of expertise: a good car mechanic or numerical analyst has a remarkable level of technical and computational knowledge, including not only domain-specific facts, but knowledge of specific algorithms, processes, causal pathways, and causal interventions. The developmental mystery is how humans start with what a baby knows and build the complex algorithms and representations that adults understand. The power of LCL systems comes from starting with a small basis of computational elements that can express arbitrary computations, and applying a powerful learning theory that can operate on such spaces.

Though combinatory logic does not differ from Turing machines in computational power, it does differ in qualitative character. There are several key differences that make combinatory logic a better architecture for thinking about cognition, even beyond its simplicity and uniformity. First, it is a formalism for computation that is entirely compositional, motivated here by the compositional nature of language and other human abilities. Turing machines are simply an architecture that Turing thought of in trying to formulate a theory of “effective procedures”, and it does not seem particularly natural to connect biological neurons to Turing machines, efforts to do so in artificial neural networks notwithstanding (Graves, Wayne, & Danihelka, 2014; Trask et al., 2018).

4.0.3. LCL theories are compositional

The compositionality of natural language and natural thinking indicates that mental representations must themselves support composition (Fodor, 1975). Semantic formalisms in language (e.g. Montague, 1973; Heim & Kratzer, 1998; Steedman, 2001; Blackburn & Bos, 2005) rely centrally on compositionality, dating back to Frege (1892). It is no accident that these theories formalize meaning through function composition, using a system (λ-calculus) that is formally equivalent to combinatory logic. The inherently compositional architecture of LCL contrasts with Turing machines and von Neumann architectures, which have been dominant conceptual frameworks primarily because they are easy for us to conceptualize and implement in hardware. When we consider the apparent compositionality of thought, a computational formalism based on composition becomes a more plausible starting point.

4.0.4. LCL theories are structured