Abstract

Artificial intelligence (AI) is being increasingly adopted in medical research and applications. Medical AI devices continue to be approved by the Food and Drug Administration in the United States and by the responsible institutions of other countries. Ultrasound (US) imaging is commonly used in an extensive range of medical fields. However, AI-based US imaging analysis and its clinical implementation have progressed more slowly than for other medical imaging modalities. Characteristic issues of US imaging, namely its manual operation and acoustic shadows, make image quality control difficult. In this review, we introduce the global trends of medical AI research in US imaging from both clinical and basic perspectives. We also discuss US image preprocessing, ingenious algorithms that are suitable for US imaging analysis, AI explainability for obtaining informed consent, the approval process of medical AI devices, and future perspectives towards the clinical application of AI-based US diagnostic support technologies.

Keywords: ultrasound imaging, artificial intelligence, machine learning, deep learning, preprocessing, classification, detection, segmentation, explainability

1. Introduction

Ultrasound (US) imaging is superior to other medical imaging modalities in terms of its convenience, non-invasiveness, and real-time properties. In contrast, computed tomography (CT) has a risk of radiation exposure, and magnetic resonance imaging (MRI) is non-invasive but costly and time-consuming. Therefore, US imaging is commonly used for screening as well as definitive diagnosis in numerous medical fields [1]. Current advances in image rendering technologies and the miniaturization of ultrasonic diagnostic equipment have led to its use in point-of-care testing in emergency medical care, palliative care, and home medical care [2]. Combining US diagnostic capabilities with laboratory tests is also worth considering as a multi-biomarker strategy for predicting clinical outcomes [3]. However, US imaging exhibits characteristic issues relating to image quality control. In CT and MRI, image acquisition is performed automatically for a given patient, with a fixed measurement time and consistent image settings. On the other hand, US images are acquired through manual sweep scanning; thus, image quality depends on the skill level of the examiner [4]. Furthermore, acoustic shadows owing to obstructions such as bones affect the image quality and diagnostic accuracy [5]. US diagnostic support technologies are therefore required to resolve the practical difficulties that arise in normalizing sweep scanning techniques and image quality.

In recent years, artificial intelligence (AI), which includes machine learning and deep learning, has been developing rapidly, and AI is increasingly being adopted in medical research and applications [6,7,8,9,10,11,12,13,14,15,16]. Deep learning is a leading subset of machine learning, which is defined by non-programmed learning from a large amount of data with convolutional neural networks (CNNs) [17]. Such state-of-the-art technologies offer the potential to achieve tasks more rapidly and accurately than humans in particular areas such as imaging and pattern recognition [18,19,20]. In particular, medical imaging analysis is compatible with AI, where classification, detection, and segmentation are used as the fundamental tasks in AI-based imaging analyses [21,22,23]. Furthermore, many AI-powered medical devices have been approved by the Food and Drug Administration (FDA) in the United States [24,25].

The abovementioned clinical issues have slowed the progress of medical AI research and development in US imaging compared to other modalities [26,27]. Table 1 shows the AI-powered medical devices for US imaging that have been approved by the FDA as of April 2021 (https://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfpmn/pmn.cfm, accessed on 10 May 2021). Deep learning requires sufficient, quality-controlled datasets on both normal and abnormal subjects for different diseases. It is necessary to assess the input data quality and to accumulate robust technologies, including effective data structuring and algorithm development, to facilitate the clinical implementation of AI devices. Another concern is the AI black box problem, whereby it is unclear how the complicated synaptic weighting in the hidden layers of CNNs leads to a decision [28]. To establish valid AI-based US diagnostic technologies in clinical practice, examiners need to understand the rationale for a diagnosis and explain it objectively to patients when obtaining informed consent.

Table 1.

List of FDA-approved medical AI devices for US imaging.

| No. | FDA Approval Number | Product Name/Company | Description | Body Area | Decision Date | Regulatory Class/Submission Type |
|---|---|---|---|---|---|---|

| 1 | K161959 | ClearView cCAD/ClearView Diagnostics, Inc., Piscataway, NJ, USA | Automatically classifies the shape and orientation characteristics of user-selected ROIs in breast US images with the BI-RADS category using machine learning. | Breast | 28 December 2016 | Class II/510(k) |

| 2 | K162574 | AmCAD-US/AmCAD BioMed Corporation, Taipei, Taiwan | Visualizes and quantifies US image data with backscattered signals echoed by tissue compositions. | Thyroid | 30 May 2017 | Class II/510(k) |

| 3 | K173780 | EchoMD AutoEF software/Bay Labs, Inc., San Francisco, CA, USA | Provides automated estimation of the LVEF on previously acquired cardiac US images using machine learning. | Heart | 14 June 2018 | Class II/510(k) |

| 4 | K180006 | AmCAD-UT Detection 2.2/AmCad BioMed Corporation, Taipei, Taiwan | Analyzes thyroid US images of user-selected ROIs. Provides detailed information with the quantification and visualization of US characteristics of thyroid nodules. | Thyroid | 31 August 2018 | Class II/510(k) |

| 5 | K190442 | Koios DS/Koios Medical, Inc., New York, NY, USA | Diagnostic aid using machine learning to characterize US image features with user-provided ROIs to generate categorical output that aligns to BI-RADS and the auto-classified shape and orientation. | Breast | 3 July 2019 | Class II/510(k) |

| 6 | K191171 | EchoGo Core/Ultromics Ltd., Oxford, UK | Automatically measures cardiac US parameters including EF, Global Longitudinal Strain, and LV volume using machine learning. | Heart | 13 November 2019 | Class II/510(k) |

| 7 | DEN190040 | Caption Guidance/Caption Health, Inc., Brisbane, CA, USA | Assists in the acquisition of anatomically correct cardiac US images that represent standard 2D echocardiographic diagnostic views and orientations using deep learning. | Heart | 7 February 2020 | Class II/De Novo |

| 8 | K200356 | MEDO ARIA/Medo.ai, Inc., Edmonton, Canada | Views and quantifies US image data to aid trained medical professionals in the diagnosis of developmental dysplasia of the hip using machine learning. | Hip | 11 June 2020 | Class II/510(k) |

| 9 | K200980 | Auto 3D Bladder Volume Tool/Butterfly Network, Inc., Guilford, CT, USA | Views, quantifies, and reports the results acquired on Butterfly Network US systems using machine learning-based 3D volume measurements of the bladder. | Bladder | 11 June 2020 | Class II/510(k) |

| 10 | K200621 | Caption Interpretation Automated Ejection Fraction Software/Caption Health, Inc., Brisbane, CA, USA | Processes previously acquired cardiac US images and provides machine learning-based estimation of the LVEF. | Heart | 22 July 2020 | Class II/510(k) |

| 11 | K201369 | AVA (Augmented Vascular Analysis)/See-Mode Technologies Pte. Ltd., Singapore, Singapore | Analyzes vascular US scans including vessel wall segmentation and measurement of the intima-media thickness of the carotid artery using machine learning. | Carotid artery | 16 September 2020 | Class II/510(k) |

| 12 | K201555 | EchoGo Pro/Ultromics Ltd., Oxford, UK | Decision support system for diagnostic stress ECG using machine learning to assess the severity of CAD using LV segmentation of cardiac US images. | Heart | 18 December 2020 | Class II/510(k) |

| 13 | K210053 | LVivo software application/DiA Imaging Analysis Ltd., Beer Sheva, Israel | Evaluates the LVEF using deep learning-based LV segmentation in cardiac US images. | Heart | 5 February 2021 | Class II/510(k) |

Abbreviations: ROI, region of interest; BI-RADS, Breast Imaging Reporting and Data System; LVEF, left ventricular ejection fraction; ECG, echocardiography; CAD, coronary artery disease.

This review introduces the current efforts and trends of medical AI research in US imaging. Moreover, future perspectives are discussed to establish the clinical applications of AI for US diagnostic support.

2. US Image Preprocessing

US imaging typically exhibits low spatial resolution and numerous artifacts owing to ultrasonic diffraction. These characteristics affect not only the US examination and diagnosis but also AI-based image processing and recognition. Therefore, several methods have been proposed for US image preprocessing, which eliminates noise that hinders accurate feature extraction before US image processing. In this section, we present two representative methods: US image quality improvement and acoustic shadow detection.

Firstly, various techniques have been developed for US image quality improvement at the time of image data acquisition by reducing speckle, clutter, and other artifacts [29]. Real-time spatial compound imaging using ultrasonic beam steering of a transducer array to acquire several multiangle scans of an object has been presented [30]. Furthermore, harmonic imaging using endogenously generated low frequency to reduce the attenuation and improve the image contrast was proposed [31]. Several methods for US image enhancement using traditional image processing have been reported [32]. Despeckling is the representative research subject on filtering or removing punctate artifacts in US imaging [33]. In this method, the cause of the image quality degradation is eliminated during the US image generation phase or the noise characteristics are modeled along with the US image generation process following close examination. Current approaches for US image quality improvement using machine learning or deep learning include methods for improving the despeckling performance [34,35], and enhancing the overall image quality [36]. Such data-driven methods offer the significant advantage that it is not necessary to create a model for each domain. However, substantial training data with targeted high quality are required to improve the US image quality, and because the preparation of such a dataset is generally difficult, critical issues arise in clinical application.
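As a point of reference for the traditional image processing approaches mentioned above, the following minimal sketch applies a median filter, a classical baseline for suppressing punctate noise. It is not the method of any cited study; the function name `median_despeckle` and the window size are illustrative assumptions, and the image is assumed to be a 2D grayscale array.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_despeckle(image, size=3):
    """Apply a size x size median filter to a 2D grayscale image.

    Hypothetical helper for illustration; a median filter is only a
    simple baseline, not a speckle-model-based method.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    pad = size // 2
    # Edge padding keeps the output the same shape as the input.
    padded = np.pad(np.asarray(image, dtype=float), pad, mode="edge")
    # windows has shape (H, W, size, size): one neighborhood per pixel.
    windows = sliding_window_view(padded, (size, size))
    return np.median(windows, axis=(-2, -1))
```

A median filter removes isolated bright or dark pixels but also blurs fine texture, which is one reason speckle-specific and data-driven methods are studied.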

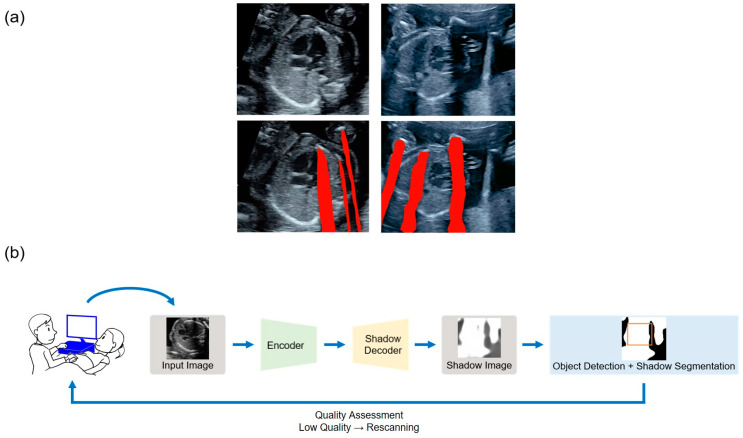

Secondly, acoustic shadow detection is also a well-known US image preprocessing method. An acoustic shadow is one of the most representative artifacts, which is caused by several reflectors blocking the ultrasonic beams with rectilinear propagation from a transducer. Useful artifacts exist, such as the comet-tail artifact (B-line), which may provide diagnostic clues for COVID-19 infection in point-of-care lung US [37]. However, acoustic shadows are depicted in black with missing information in that region, and obstruct the examination and AI-based image recognition of the target organs in US imaging. Therefore, performing acoustic shadow detection prior to US imaging analysis may enable a judgment to be made on whether an acquired image is suitable as the input data. Traditional image processing methods for acoustic shadow detection include automatic geometrical and statistical methods using rupture detection of the brightness value along the scanning line [38], and random walk-based approaches [39,40]. In these methods, the parameters and models need to be carefully changed in response to a domain shift. However, deep learning-based methods can be applied to a wider range of domains. The preparation of the training dataset remains challenging as the pixel-level annotation of acoustic shadows is highly costly and difficult owing to their translucency and blurred boundaries. Meng et al. employed weakly supervised estimation of confidence maps using labels for each image with or without acoustic shadows [41,42]. Yasutomi et al. proposed a semi-supervised approach for integrating domain knowledge into a data-driven model using the pseudo-labeling of plausible synthetic shadows that were superimposed onto US imaging (Figure 1) [43].

Figure 1.

Acoustic shadow detection: (a) The red areas represent the segmented acoustic shadows using the semi-supervised approach [43]. (b) As a candidate for clinical application, examiners can evaluate whether the current acquired US imaging is suitable for diagnosis in real time. In the case of low image quality, rescanning can be performed in the same examination time. This application may improve the workflow of examiners and reduce the patient burden.
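The idea of examining brightness along each scanning line can be sketched in a heavily simplified form. The code below is not the rupture detection method of [38] or any of the learning-based methods; it assumes a linear-array geometry in which each image column is one scanline, intensities scaled to [0, 1], and hypothetical thresholds `dark_thresh` and `min_run`. A pixel is marked as shadow when it and every deeper pixel on the same scanline are dark.

```python
import numpy as np


def shadow_mask(image, dark_thresh=0.1, min_run=20):
    """Mark dark tails that extend to the bottom of each scanline.

    image: 2D array, rows = depth, columns = scanlines (assumption).
    dark_thresh, min_run: illustrative values, not taken from the literature.
    """
    dark = (np.asarray(image, dtype=float) < dark_thresh).astype(np.uint8)
    # Walking up from the deepest row, a pixel stays 1 only while
    # every deeper pixel on the same scanline is also dark.
    tail = np.minimum.accumulate(dark[::-1], axis=0)[::-1].astype(bool)
    # Ignore short dark tails, which are more likely ordinary attenuation.
    long_enough = tail.sum(axis=0) >= min_run
    return tail & long_enough[None, :]


def shadow_fraction(mask):
    """Fraction of the image covered by the detected shadow mask."""
    return float(np.mean(mask))
```

A frame could then be flagged for rescanning when `shadow_fraction` exceeds a limit chosen for the target organ; that limit is likewise an assumption and would need clinical validation.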

3. Algorithms for US Imaging Analysis

In this section, we briefly present the fundamental machine learning algorithms for US imaging, along with other medical imaging modalities. Thereafter, we focus on specialized algorithms for US imaging analysis to overcome the noisy artifacts as well as the instability of the viewpoint and cross-section owing to manual operation.

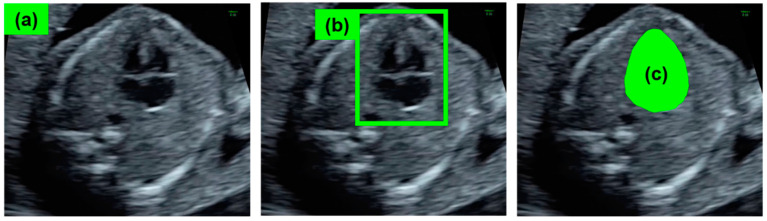

Classification, detection, and segmentation have generally been used as the fundamental algorithms in US imaging analysis (Figure 2). Classification estimates one or more labels for the entire image, and it has typically been used to seek the standard scanning planes for screening or diagnosis in US imaging analysis. ResNet [44] and Visual Geometry Group (VGG) [45] are examples of classification methods. Detection is mainly used to estimate lesions and anatomical structures. YOLO [46] and the single-shot multibox detector (SSD) [47] are popular detection algorithms. Segmentation is used for the further precise measurement of lesions and organ structures in pixels as well as index calculations of the lengths, areas, and volumes. U-Net [48] and DeepLab [49,50] are representative algorithms for segmentation. These standard algorithms are often used as baselines to evaluate the performance of specialized algorithms for US imaging analysis.

Figure 2.

Fundamental algorithms generally used in US imaging analysis. (a) Image classification of whether the fetal US image contains a diagnostically useful cross-section such as a four-chamber view (4CV). (b) Detection of the fetal heart for evaluation of fetal heart structure. (c) Segmentation of the boundaries or regions of the fetal heart to measure the fetal cardiac index such as cardiothoracic area ratio (CTAR).
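To illustrate how a segmentation output becomes a clinical index, the sketch below computes the CTAR as the ratio of the cardiac area to the thoracic area from two binary masks. The function name `ctar` and the mask inputs are assumptions for illustration; because both areas are measured in the same image, the pixel spacing cancels out.

```python
import numpy as np


def ctar(heart_mask, thorax_mask):
    """Cardiothoracic area ratio from two binary masks of equal shape."""
    heart = np.asarray(heart_mask, dtype=bool)
    thorax = np.asarray(thorax_mask, dtype=bool)
    if heart.shape != thorax.shape:
        raise ValueError("masks must have the same shape")
    thorax_area = int(thorax.sum())
    if thorax_area == 0:
        raise ValueError("thorax mask is empty")
    # Areas are pixel counts; the physical pixel size cancels in the ratio.
    return int(heart.sum()) / thorax_area
```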

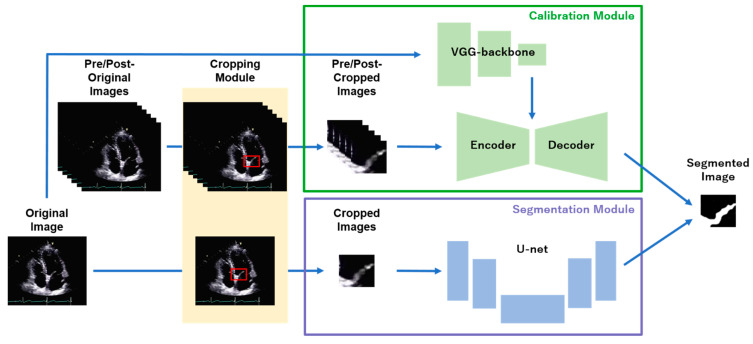

We introduce the specialized algorithms for US imaging analysis to address the performance deterioration owing to noisy artifacts. Cropping–segmentation–calibration (CSC) [51] and the multi-frame + cylinder method (MFCY) [52] use time-series information to reduce noisy artifacts and to perform accurate segmentation in US videos (Figure 3). Deep attention networks have also been proposed for improved segmentation performance in US imaging, such as the attention-guided dual-path network [53] and a U-Net-based network combining a channel attention module and VGG [54]. A contrastive learning-based framework [55] and a framework based on the generative adversarial network (GAN) [56] with progressive learning have been reported to improve the boundary estimation in US imaging [57].

Figure 3.

Use of time-series information to reduce noisy artifacts and to perform accurate segmentation in US videos. CSC employs the time-series information of US videos and specific section information to calibrate the output of U-Net [51].
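The general benefit of time-series information can be shown with a deliberately simple stand-in: averaging per-frame foreground probabilities over neighboring frames before thresholding. This is not CSC or MFCY, which are considerably more elaborate; the function name `temporal_smooth`, the `(T, H, W)` input layout, and the window size are assumptions.

```python
import numpy as np


def temporal_smooth(probs, window=3):
    """Average per-frame probability maps over a sliding temporal window.

    probs: array of shape (T, H, W) with foreground probabilities,
    e.g., the per-frame output of a 2D segmentation network (assumption).
    """
    probs = np.asarray(probs, dtype=float)
    n_frames = probs.shape[0]
    half = window // 2
    out = np.empty_like(probs)
    for t in range(n_frames):
        lo, hi = max(0, t - half), min(n_frames, t + half + 1)
        # The window is truncated at the start and end of the video.
        out[t] = probs[lo:hi].mean(axis=0)
    return out
```

A frame in which an artifact briefly suppresses the prediction can be recovered when the adjacent frames agree, at the cost of blurring fast motion.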

The critical issues resulting from the instability of the viewpoint and cross-section often become apparent when the clinical indexes are calculated using segmentation. One traditional US image processing method is the reconstruction of three-dimensional (3D) volumes [58]. Direct segmentation methods for conventional 3D volumes, including 3D U-Net [59], are useful for accurate volume quantification; however, their labeling is very expensive and time-consuming. The interactive few-shot Siamese network uses a Siamese network and a recurrent neural network to perform 3D segmentation training from few-annotated two-dimensional (2D) US images [60]. Another research subject is the extraction of 2D US images involving standard scanning planes from the 3D US volume. The iterative transformation network was proposed to guide the current plane towards the location of the standard scanning planes in the 3D US volume [61]. Moreover, Duque et al. proposed a semi-automatic segmentation algorithm for a freehand 3D US volume, which is a continuum of 2D cross-sections, by employing an encoder–decoder architecture with 2D US images and several 2D labels [62]. We summarize the abovementioned segmentation algorithms for US imaging analysis in Table 2.

Table 2.

List of segmentation algorithms for US imaging analysis.

| Algorithm Name | Description | Ref. |

|---|---|---|

| U-Net | Based on a fully convolutional network and achieves more accurate segmentation using smaller amounts of training data compared with the other methods. Several studies have reported superior segmentation performances using their models based on U-Net, which is particularly suitable for biomedical image segmentation. | [48] |

| DeepLab | Utilizes atrous convolution and demonstrates its state-of-the-art segmentation performance. DeepLabv3+ is the latest version developed by combining pyramidal pooling modules with an encoder-decoder model. | [49,50] |

| CSC | Utilizes time-series information to reduce noisy artifacts and performs accurate segmentation on a small and deformable organ in US videos. | [51] |

| MFCY | Uses time-series information and demonstrates high-performance segmentation on a target organ with a cylindrical shape in US videos. | [52] |

| AIDAN | The attention-guided dual-path network improves segmentation performance in US imaging. | [53] |

| Deep attention network | A U-Net-based network combining a channel attention module and VGG improves segmentation performance in US imaging. | [54] |

| Contrastive rendering | A contrastive learning-based framework improves the boundary estimation in US imaging. | [55] |

| GAN-based method | A GAN-based framework with progressive learning improves the boundary estimation in US imaging. | [57] |

| 3D U-Net | The representative direct segmentation method for conventional 3D volumes is useful for accurate volume quantification. | [59] |

| IFSS-NET | The interactive few-shot Siamese network uses a Siamese network and a recurrent neural network to perform 3D segmentation training from few-annotated 2D US images. | [60] |

| Encoder–decoder architecture | A semi-automatic segmentation algorithm for a freehand 3D US volume by employing an encoder–decoder architecture with 2D US images and several 2D labels. | [62] |

Abbreviations: CSC, cropping–segmentation–calibration; MFCY, multi-frame + cylinder method; AIDAN, attention-guided dual-path network; GAN, generative adversarial network; IFSS-NET, interactive few-shot Siamese network.

4. Medical AI Research in US Imaging

4.1. Oncology

4.1.1. Breast Cancer

Breast cancer is the most common cancer in women globally [63]. US imaging is used extensively for breast cancer screening in addition to mammography. Various efforts have been made to date regarding the classification of benign and malignant breast tumors in US imaging. Han et al. trained a CNN model to differentiate between benign and malignant breast tumors [64]. The Inception model, which is a CNN model with batch normalization, exhibited equivalent or superior diagnostic performance compared to radiologists [65]. Byra et al. introduced a matching layer to convert grayscale US images into RGB to leverage the discriminative power of the CNN more efficiently [66]. Antropova et al. employed VGG and the support vector machine for classification using the CNN features and conventional computer-aided diagnosis features [67]. A mass-level classification method enabled the construction of an ensemble network by combining VGG and ResNet to classify a given mass using all views [68]. Considering that both thyroid and breast cancers exhibit several similar high-frequency US characteristics, Zhu et al. developed a generic VGG-based framework to classify thyroid and breast lesions in US imaging [69]. A model constructed with features extracted from all three transferred models achieved the highest overall performance [70]. The Breast Imaging Reporting and Data System (BI-RADS) provides guidance and criteria for physicians to determine breast tumor categories based on medical images in clinical settings. Zhang et al. proposed a novel network that integrates the BI-RADS features into task-oriented semi-supervised deep learning for accurate diagnosis using US images with a small training dataset [71]. Huang et al. developed the ROI-CNN (ROI identification network) and the subsequent G-CNN (tumor categorization network) to generate effective features for classifying the identified ROIs into five categories [72].
The Inception model achieved the best performance in predicting lymph node metastasis from US images in patients with primary breast cancer [73].

Yap et al. investigated the use of three deep learning approaches for breast lesion detection in US imaging. The performances were evaluated on two datasets and the different methods achieved the highest performance for each dataset [74]. An experimental study was performed to evaluate the different CNN architectures on breast lesion detection and classification in US imaging, in which SSD for breast lesion detection and DenseNet [75] for classification exhibited the best performance [76].

Several ingenious segmentation methods for breast lesions in US imaging have been reported. Kumar et al. demonstrated the performance of the Multi-U-Net segmentation algorithm for suspicious breast masses in US imaging [77]. A novel automatic tumor segmentation method that combines a dilated fully convolutional network (FCN) with a phase-based active contour model was proposed [78]. Residual-dilated-attention-gate-U-Net is based on the conventional U-Net, but the plain neural units are replaced with residual units to enhance the edge information [79]. Vakanski et al. introduced attention blocks into the U-Net architecture to learn feature representations that prioritize spatial regions with high saliency levels [80]. Singh et al. proposed automatic tumor segmentation in breast US images using contextual-information-aware GAN architecture. The proposed model achieved competitive results compared to other segmentation models in terms of the Dice and intersection over union metrics [81].
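For readers unfamiliar with the two overlap metrics named above, the following sketch computes the Dice coefficient and the intersection over union (IoU) for a pair of binary masks. The function names are illustrative, and returning 1.0 when both masks are empty is a convention assumed here.

```python
import numpy as np


def dice(pred, target):
    """Dice coefficient: 2|A ∩ B| / (|A| + |B|)."""
    a = np.asarray(pred, dtype=bool)
    b = np.asarray(target, dtype=bool)
    total = int(a.sum()) + int(b.sum())
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * int((a & b).sum()) / total


def iou(pred, target):
    """Intersection over union: |A ∩ B| / |A ∪ B|."""
    a = np.asarray(pred, dtype=bool)
    b = np.asarray(target, dtype=bool)
    union = int((a | b).sum())
    if union == 0:
        return 1.0  # assumed convention, as above
    return int((a & b).sum()) / union
```

The two metrics are monotonically related (Dice = 2·IoU / (1 + IoU)), so they rank models identically on a single image, although averages over a dataset can differ.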

4.1.2. Thyroid Cancer

The incidence of thyroid cancer has been increasing globally as a result of overdiagnosis and overtreatment owing to the sensitive imaging techniques that are used for screening [82]. A CNN with the addition of a spatial constrained layer was proposed to develop a detection method that is suitable for papillary thyroid carcinoma in US imaging [83]. The Inception model achieved excellent diagnostic efficiency in differentiating between papillary thyroid carcinomas and benign nodules in US images. It could provide more accurate diagnosis of nodules that were 0.5 to 1.0 cm in size, with microcalcification and a taller shape [84]. Ko et al. designed CNNs that exhibited comparable diagnostic performance to that of experienced radiologists in differentiating thyroid malignancy in US imaging [85]. Furthermore, a fine-tuning approach based on ResNet was proposed, which outperformed VGG in terms of the classification accuracy of thyroid nodules [86]. Li et al. used CNNs for the US image classification of thyroid nodules. Their model exhibited similar sensitivity and improved specificity in identifying patients with thyroid cancer compared to a group of skilled radiologists [82].

4.1.3. Ovarian Cancer

Ovarian cancer is the most lethal gynecological malignancy because it exhibits few early symptoms and generally presents at an advanced stage [87]. The screening methods for ovarian cysts using imaging techniques need to be improved to overcome the poor prognosis of ovarian cancer. Zhang et al. proposed an image diagnosis system for classifying ovarian cysts in color US images using the high-level deep features that were extracted by the fine-tuned CNN and the low-level rotation-invariant uniform local binary pattern features [88]. US imaging analysis using an ensemble model of CNNs demonstrated comparable diagnostic performance to human expert examiners in classifying ovarian tumors as benign or malignant [89].

4.1.4. Prostate Cancer

Feng et al. presented a 3D CNN model to detect prostate cancer in sequential contrast-enhanced US (CEUS) imaging. The framework consisted of three convolutional layers, two sub-sampling pooling layers, and one fully connected classification layer. Their method achieved a specificity of over 91% and an average accuracy of 90% over the targeted CEUS images for prostate cancer detection [90]. A random forest-based classifier for the multiparametric localization of prostate cancer lesions based on B-mode, shear-wave elastography, and dynamic contrast-enhanced US radiomics was developed [91]. A segmentation method was proposed for the clinical target volume (CTV) in the transrectal US image-guided intraoperative process for permanent prostate brachytherapy. A CNN was employed to construct the CTV shape in advance from automatically sampled pseudo-landmarks, along with an encoder–decoder CNN architecture for low-level feature extraction. This method achieved a mean accuracy of 96% and a mean surface distance error of 0.10 mm [92].
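Specificity and accuracy, as reported for the detection study above, are derived from the confusion matrix of a binary classifier. The sketch below shows the standard definitions; the function name `binary_metrics` and the dictionary output are illustrative choices, and no values from the cited studies are reproduced.

```python
import numpy as np


def binary_metrics(y_true, y_pred):
    """Sensitivity, specificity, and accuracy for binary labels (1 = disease)."""
    t = np.asarray(y_true, dtype=bool)
    p = np.asarray(y_pred, dtype=bool)
    tp = int(np.sum(t & p))    # diseased, predicted diseased
    tn = int(np.sum(~t & ~p))  # healthy, predicted healthy
    fp = int(np.sum(~t & p))   # healthy, predicted diseased
    fn = int(np.sum(t & ~p))   # diseased, predicted healthy
    return {
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
        "accuracy": (tp + tn) / t.size if t.size else float("nan"),
    }
```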

4.1.5. Other Cancers

Hassan et al. developed a stacked sparse auto-encoder and softmax classifier architecture for US image classification of focal liver diseases into a benign cyst, hemangioma, and hepatocellular carcinoma along with the normal liver [93]. Schmauch et al. proposed a deep learning model based on ResNet for the detection and classification of focal liver lesions into the abovementioned diseases, as well as focal nodular hyperplasia and metastasis in liver US images [94]. An ensemble model of CNNs was proposed for kidney US image classification into four classes, namely normal, cyst, stone, and tumor. This method achieved a maximum classification accuracy of 96% in testing with quality images and 95% in testing with noisy images [95].

4.2. Cardiovascular Medicine

4.2.1. Cardiology

Echocardiography is the most common imaging modality in cardiovascular medicine, and it is frequently used for the screening as well as diagnosis and management of cardiovascular diseases [96]. Current technological innovations in echocardiography, such as the assessments of 3D US volumes and global longitudinal strain, are remarkable. Clinical evidence has been accumulating for the utilization of 3D echocardiography. However, 3D US volumes are still inferior to 2D US images in spatial and temporal resolution. To utilize these latest technologies, examiners must first be skilled at acquiring high-quality images in 2D echocardiography. In addition, echocardiography has become the primary point-of-care imaging modality for the early diagnosis of the cardiac symptoms of COVID-19 [97,98]. Therefore, it is expected that the clinical applications of AI will improve the diagnostic accuracy and workflow in echocardiography. To our knowledge, among the AI-powered medical devices for US imaging that the FDA has approved, those for echocardiography are the most numerous.

Abdi et al. developed a CNN to reduce the user variability in data acquisition by automatically computing a score of the US image quality of the apical four-chamber view for examiner feedback [99]. Liao et al. proposed a quality assessment method for cardiac US images through modeling the label uncertainty in CNNs resulting from intra-observer variability in the labeling [100]. Deep learning-based view classification has also been reported. EchoNet could accurately identify the presence of pacemaker leads, an enlarged left atrium, and left ventricular (LV) hypertrophy by analyzing the local cardiac structures. In this study, the LV end systolic and diastolic volumes, and ejection fraction (EF), as well as the systemic phenotypes of age, sex, weight, and height, were also estimated [101]. Zhang et al. proposed a deep learning-based pipeline for the fully automated analysis of cardiac US images, including view classification, chamber segmentation, measurements of the LV structure and function, and the detection of specific myocardial diseases [102].

The assessment of regional wall motion abnormalities (RWMAs) is an important testing process in echocardiography, which can localize ischemia or infarction of coronary arteries. Strain imaging, including the speckle tracking method, has been used extensively to evaluate LV function in clinical practice. Ahn et al. proposed an unsupervised motion tracking framework using U-Net [103]. Kusunose et al. compared the area under the curve (AUC) obtained by several CNNs and physicians for detecting the presence of RWMAs. The CNN achieved an equivalent AUC to that of an expert, which was significantly higher than that of resident physicians [104].
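The AUC used in such comparisons can be computed directly from classifier scores as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The sketch below uses this rank-based definition; the function name `auc` is illustrative, and the pairwise comparison is quadratic in the number of cases, which is acceptable only for small test sets.

```python
import numpy as np


def auc(scores, labels):
    """Area under the ROC curve via pairwise comparison of scores."""
    s = np.asarray(scores, dtype=float)
    y = np.asarray(labels, dtype=bool)
    pos, neg = s[y], s[~y]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative case")
    # diff[i, j] > 0 means positive case i is ranked above negative case j.
    diff = pos[:, None] - neg[None, :]
    wins = np.sum(diff > 0) + 0.5 * np.sum(diff == 0)
    return float(wins / (pos.size * neg.size))
```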

4.2.2. Angiology

Lekadir et al. proposed a CNN for extracting the optimal information to identify the different plaque constituents from carotid US images. The results of cross-validation experiments demonstrated a correlation of approximately 0.90 with the clinical assessment for the estimation of the lipid core, fibrous cap, and calcified tissue areas [105]. A deep learning model was developed for the classification of the carotid intima-media thickness to enable reliable early detection of atherosclerosis [106]. Araki et al. introduced an automated segmentation system for both the near and far walls of the carotid artery using grayscale US morphology of the plaque for stroke risk assessment [107]. A segmentation method that integrated the random forest and an auto-context model could segment the plaque effectively, in combination with the features extracted from US images as well as iteratively estimated probability maps [108]. The quantification of carotid plaques by measuring the vessel wall volume using the boundary segmentation of the media-adventitia (MAB) and lumen-intima (LIB) is sensitive to temporal changes in the carotid plaque burden. Zhou et al. proposed a semi-automatic segmentation method based on carotid 3D US images using a dynamic CNN for MAB segmentation and an improved U-Net for LIB segmentation [109]. Biswas et al. performed boundary segmentation of the MAB and LIB, incorporating a machine learning-based joint coefficient method for fine-tuning of the border extraction, to measure the carotid intima-media thickness from carotid 2D US images [110]. The application of a CNN and FCN to automated lumen detection and lumen diameter measurement was also presented [111]. The deep learning-based boundary detection and compensation technique enabled the segmentation of vessel boundaries by harnessing the CNN and wall motion compensation in the analysis of near-wall flow dynamics in US imaging [112]. 
Towards the cost-effective diagnosis of deep vein thrombosis, Kainz et al. employed a machine learning model for the detection and segmentation of the representative veins and the prediction of their vessel compression status [113].
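Once the LIB and MAB have been delineated, the carotid intima-media thickness follows from the distance between the two boundaries. The sketch below is a simplified illustration rather than the method of any cited study: it assumes a roughly horizontal vessel in a 2D image, boundaries given as one depth (row) index per image column, and a known isotropic pixel spacing. The name `mean_imt_mm` is hypothetical.

```python
import numpy as np


def mean_imt_mm(lib_rows, mab_rows, pixel_spacing_mm):
    """Mean vertical LIB-to-MAB distance in millimeters.

    lib_rows, mab_rows: depth index of each boundary per image column
    (assumed input format). Vertical distance approximates the true
    thickness only when the vessel wall is close to horizontal.
    """
    lib = np.asarray(lib_rows, dtype=float)
    mab = np.asarray(mab_rows, dtype=float)
    if lib.shape != mab.shape or lib.size == 0:
        raise ValueError("boundaries must be non-empty and of equal length")
    return float(np.mean(np.abs(mab - lib)) * pixel_spacing_mm)
```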

4.3. Obstetrics

US imaging plays the most important role in medical diagnostic imaging in the obstetrics field. The non-invasiveness and real-time properties of US imaging enable fetal morphological and functional evaluations to be performed effectively. US imaging is used for the screening of congenital diseases, the assessment of fetal development and well-being, and the detection of obstetric complications [114]. Transvaginal US enables the clear observation of the fetus and other organs including the uterus, ovaries, and fallopian tubes, which are mainly located on the pelvic floor during the first trimester. Moreover, transabdominal US is useful for observing fetal growth over the course of gestation.

During fetal US imaging, numerous small and moving anatomical structures are observed simultaneously in clinical practice. Medical AI research has been conducted on the development of algorithms that are applicable to the US imaging analysis of the fetus or fetal appendages. Dozen et al. improved the segmentation performance of the ventricular septum in fetal cardiac US videos using cropped and original image information in addition to time-series information [51]. Their method, CSC, can also be applied to the segmentation of other organs that are small and change shape dynamically with the heartbeat, such as the heart valves. Shozu et al. proposed a novel model-agnostic method to improve the segmentation performance of the thoracic wall in fetal US videos. This method was based on ensemble learning of the time-series information of US videos and the shape information of the thoracic wall [52]. Medical AI research has also been conducted on the measurement of fetal anatomical segments in US imaging [115,116,117,118]. A scale attention pyramid deep neural network using multi-scale information could fuse local and global information to infer skull boundaries that contained speckle noise or discontinuities. The elliptic geometric axes were modified by a regression network to obtain the fetal head circumference, biparietal diameter, and occipitofrontal diameter more accurately [119]. Kim et al. proposed a machine learning-based method for the automatic identification of the fetal abdominal circumference [120]. The localizing region-based active contour method, which was integrated with a hybrid speckle noise-reducing technique, was implemented for the automatic extraction and calculation of the fetal femur length [121]. Computer-aided detection frameworks for the automatic measurement of fetal lateral ventricles [122] and amniotic fluid volume [123] were also developed.
The fully automated and real-time segmentation of the placenta from 3D US volumes could potentially enable the use of the placental volume to screen for an increased risk of pregnancy complications [124].
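The relationship between the fitted ellipse axes and the fetal head circumference can be sketched as follows. This is a simplified illustration that treats the biparietal and occipitofrontal diameters as the minor and major axes of an ellipse and uses Ramanujan's approximation for the ellipse perimeter. It is an assumption made for illustration and does not reproduce the regression network or the exact measurement conventions of the cited work [119].

```python
import math


def head_circumference_mm(bpd_mm, ofd_mm):
    """Approximate the head circumference from two ellipse diameters.

    bpd_mm: biparietal diameter, used here as the minor axis of the ellipse.
    ofd_mm: occipitofrontal diameter, used here as the major axis.
    The perimeter uses Ramanujan's approximation for an ellipse with
    semi-axes a and b: pi * (3(a + b) - sqrt((3a + b)(a + 3b))).
    """
    if bpd_mm <= 0 or ofd_mm <= 0:
        raise ValueError("diameters must be positive")
    a = ofd_mm / 2.0
    b = bpd_mm / 2.0
    return math.pi * (3.0 * (a + b) - math.sqrt((3.0 * a + b) * (a + 3.0 * b)))


# Sanity check: for a circle, the formula reduces to pi times the diameter.
circle_hc = head_circumference_mm(100.0, 100.0)
```

Because the circumference is derived from the axes, small errors in the estimated axes carry over directly into the measurement, which is one motivation for refining the axes before computing it.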

The acquisition of optimal US images for diagnosis in fetal US imaging is dependent on the skill levels of the examiners [4]. Therefore, it is essential to evaluate whether the acquired US images have a suitable cross-section for diagnosis. Furthermore, when labeling a large number of US images for AI-based image processing, it is necessary to classify the acquired US images and to assess whether their image quality is suitable for use as input data. Burgos-Artizzu et al. evaluated a wide variety of CNNs for the automatic classification of a large dataset containing over 12,400 images from 1792 patients that were routinely acquired during maternal-fetal US screening [125]. An automatic recognition method using deep learning for the fetal facial standard planes, including the axial, coronal, and sagittal planes, was reported [126]. Moreover, automated partitioning and characterization of an unlabeled full-length fetal US video into 20 anatomical or activity categories was performed [127]. A generic deep learning framework for the automatic quality control of fetal US cardiac four-chamber views [128] as well as a framework for tracking the key variables that described the contents of each frame of freehand 2D US scanning videos of a healthy fetal heart [129] were developed. Wang et al. presented a deep learning framework for differentiating operator skills during fetal US scanning using probe motion tracking [130].

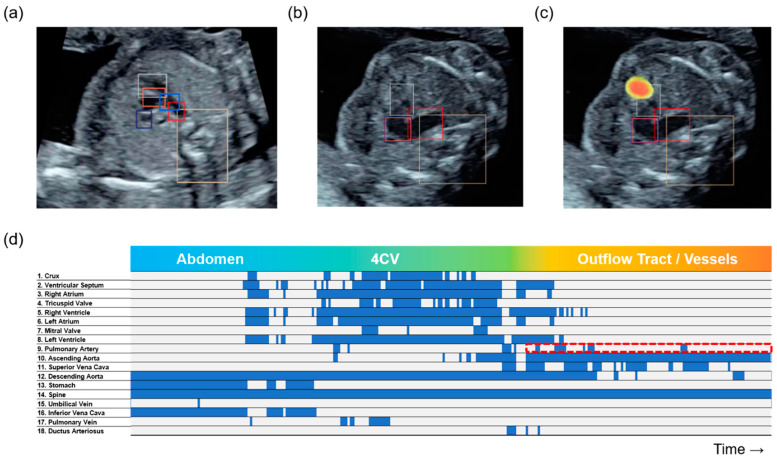

AI-based abnormality detection and classification in fetal US imaging remain challenging owing to the wide variety and relatively low incidence of congenital diseases. Xie et al. proposed deep learning algorithms for the segmentation and classification of normal and abnormal fetal brain US images in the standard axial planes. Furthermore, they provided heat maps for lesion localization using gradient-weighted class activation mapping [131]. An ensemble of neural networks, which was trained using 107,823 images from 1326 retrospective fetal cardiac US studies, could identify the recommended cardiac views as well as distinguish between normal hearts and complex congenital heart diseases. Segmentation models were also proposed to calculate standard fetal cardiothoracic measurements [132]. Komatsu et al. proposed the CNN-based architecture known as supervised object detection with normal data only (SONO) to detect 18 cardiac substructures and structural abnormalities in fetal cardiac US videos. The abnormality score was calculated using the probability of the cardiac substructure detection. SONO enables abnormalities to be detected based on the difference from the correct anatomical localization of normal structures, thereby addressing the challenge of the low incidence of congenital heart diseases. Furthermore, in our previous work, the above probabilities were visualized as a barcode-like timeline. This timeline was useful in terms of AI explainability when detecting cardiac structural abnormalities in fetal cardiac US videos (Figure 4) [133].

Figure 4.

Possible techniques for AI explainability. The cardiac substructures were detected with colored bounding boxes in a three-vessel trachea view in (a) a normal case, and (b) a tetralogy of Fallot (TOF) case. (c) An image of the class-specific heatmap indicates the discriminative regions of the image that caused the particular class activity of interest. (d) Barcode-like timeline in a TOF case. The vertical axis represents the 18 selected substructures and the horizontal axis represents the examination timeline in the rightward direction. A probability of ≥0.01 was set as well-detected and is indicated as the blue bar, whereas <0.01 was set as non-detected and is indicated by the gray bar in each frame. The pulmonary artery was not detected (red dotted box).
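The thresholding behind the barcode-like timeline in panel (d) can be sketched in a few lines. The example below assumes that per-frame detection probabilities are already available for each substructure and applies the 0.01 threshold given in the caption. The function names and the input layout are hypothetical, and the sketch does not reproduce the detection model or the abnormality score of the original work [133].

```python
def barcode_timeline(probabilities, threshold=0.01):
    """Convert detection probabilities into a binary barcode.

    probabilities[s][t] is the detection probability of substructure s in
    frame t (hypothetical layout). A cell is True ("well-detected") when the
    probability is at least the threshold, and False ("non-detected") otherwise.
    """
    return [[p >= threshold for p in row] for row in probabilities]


def undetected_substructures(barcode, names):
    """Return the substructures that were not detected in any frame."""
    return [name for name, row in zip(names, barcode) if not any(row)]


# Toy example with two substructures over three frames.
names = ["aorta", "pulmonary artery"]
probs = [
    [0.90, 0.50, 0.00],    # detected in the first two frames
    [0.001, 0.000, 0.005], # never reaches the threshold
]
barcode = barcode_timeline(probs)
missing = undetected_substructures(barcode, names)
```

Rows of the barcode correspond to substructures and columns to frames, so a row that stays below the threshold throughout the sweep, as for the pulmonary artery in the TOF case, is immediately visible.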

For AI-based fetal functional evaluation, deep learning-based software applied to fetal lung US imaging improved the prediction of neonatal respiratory morbidity caused by respiratory distress syndrome or transient tachypnea of the newborn [134].

5. Discussion and Future Directions

In this review, we have introduced various areas of medical AI research with a focus on US imaging analysis to understand the global trends and future research subjects from both the clinical and basic perspectives. As with other medical imaging modalities, classification, detection, and segmentation are the fundamental tasks of AI-based image analysis. However, US imaging exhibits several issues in terms of image quality control. Thus, US image preprocessing needs to be performed and ingenious algorithm combinations are required.

Acoustic shadow detection is a characteristic task in US imaging analysis. Although deep learning-based methods can be applied to a wide range of domains, the preparation of training datasets remains challenging. Therefore, weakly or semi-supervised methods offer the advantage of cost-effectiveness for labeling [41,42,43]. Once acoustic shadow detection methods are applied in clinical practice, examiners will be able to evaluate in real time whether the currently acquired US images are suitable for diagnosis. If not, rescanning can be performed during the same examination. This application may improve the workflow of examiners and reduce the patient burden. Several frameworks relating to specialized algorithms for US imaging analysis have been proposed, in which the time-series information in US videos [51,52] or a channel attention module [53,54] has been integrated with conventional algorithms to overcome the performance deterioration owing to noisy artifacts. Furthermore, the AI-based analysis of 3D US volumes is expected to resolve the problem of the viewpoint and cross-section instability resulting from manual operation.

From a clinical perspective, breast cancer and cardiovascular diseases are the medical fields in which substantial research efforts in AI-based US imaging analysis have been made to date, resulting in more medical AI devices being approved. Considering the clinical background of these two medical fields in which US imaging is commonly used, the potential exists to develop medical AI research and technologies in obstetrics as well. However, AI-based US imaging analysis remains challenging and few medical AI devices are available for this purpose. Therefore, deep learning-based methods that are applicable to cross-disciplinary studies and a wide range of domains need to be learned and incorporated. According to our review, several ingenious segmentation methods for target lesions or structures in US imaging may be applicable across oncology, cardiovascular medicine, and obstetrics. For example, CSC can be applied to the segmentation of other small and deformable organs using time-series information of US videos. Valid US diagnostic support technologies can be established in clinical practice by accumulating AI-based US image analyses. Automated image quality assessment and detection can lead to the development of a scanning guide and training material for examiners. Accurate volume quantification as well as the measurement of lesions and indexes can result in an improved workflow and a reduction in examiner bias. AI-based abnormality detection is expected to be used for the objective evaluation of lesions or abnormalities and for preventing oversights. However, it remains challenging to prepare sufficient datasets on both normal and abnormal subjects for the target diseases. To address the data preparation issue, it is possible to implement AI-based abnormality detection using the correct anatomical localization and morphologies of normal structures as a baseline [133].

Furthermore, AI explainability is key to the clinical application of AI-based US diagnostic support technologies. It is necessary for examiners to understand and explain their rationale for diagnosis to patients when obtaining informed consent. Class activation mapping is a popular technique for AI explainability, which enables the computation of class-specific heatmaps indicating the discriminative regions of the image that caused the particular class activity of interest [135]. Zhang et al. provided an interpretation for regression saliency maps, as well as an adaptation of the perturbation-based quantitative evaluation of explanation methods [136]. ExplainGAN is a generative model that produces visually perceptible decision-boundary crossing transformations, which provide high-level conceptual insights that illustrate the manner in which a model makes decisions [137]. We proposed a barcode-like timeline to visualize how the probability of substructure detection changes over the course of sweep scanning in US videos. This technique was demonstrated to be useful in terms of AI explainability when we detected cardiac structural abnormalities in fetal cardiac US videos. Moreover, the barcode-like timeline diagram is informative and understandable, thereby enabling examiners of all skill levels to consult with experts knowledgeably [133].
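The idea behind class activation mapping can be illustrated with a minimal sketch. It assumes a network whose final convolutional feature maps are globally average-pooled and fed to a linear classifier, so that the heatmap for one class is the sum of the feature maps weighted by that class's classifier weights. The function names and the plain nested-list data layout are illustrative assumptions, not an implementation from the cited studies.

```python
def class_activation_map(feature_maps, class_weights):
    """Compute a class-specific heatmap as a weighted sum of feature maps.

    feature_maps: list of K feature maps, each a 2D list of size H x W.
    class_weights: list of K classifier weights for the class of interest.
    """
    if len(feature_maps) != len(class_weights):
        raise ValueError("one weight is required per feature map")
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    return cam


def normalize_heatmap(cam):
    """Rescale a heatmap to the range [0, 1] for display as an overlay."""
    low = min(min(row) for row in cam)
    high = max(max(row) for row in cam)
    if high == low:
        return [[0.0 for _ in row] for row in cam]
    return [[(value - low) / (high - low) for value in row] for row in cam]


# Toy example: two 2x2 feature maps and the weights of one class.
toy_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
toy_cam = class_activation_map(toy_maps, [1.0, 0.5])
toy_heatmap = normalize_heatmap(toy_cam)
```

In practice, the low-resolution heatmap is upsampled to the input image size and overlaid on the US image so that examiners can see which regions contributed to the predicted class.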

Towards the clinical application of medical AI algorithms and devices, it is important to understand the approval processes and regulations of the US FDA, the Japan Pharmaceuticals and Medical Devices Agency, and the responsible institutions of other countries. Furthermore, knowledge of the acts on the protection of personal information and the guidelines for handling all types of medical data, including the clinical information of patients and medical imaging data, should be kept up to date. Wu et al. compiled a comprehensive overview of medical AI devices that are approved by the FDA and pointed out the limitations of the evaluation process that may mask the vulnerabilities of devices when they are deployed on patients [25]. In the majority of evaluations, only retrospective studies have been performed. These authors recommended the performance evaluation of medical AI devices in multiple clinical sites, prospective studies, and post-market surveillance. Moreover, industry–academia–medicine collaboration is required to share valuable concepts in the development of medical AI devices for patients and examiners, and in their actual use in clinical practice.

The utilization of AI and internet of things (IoT) technologies, along with advanced networks such as 5G, is expected to accelerate infrastructure development in the medical field, including remote medical care and regional medical cooperation. The current COVID-19 pandemic has also provided an opportunity to promote such developments. US imaging is the most common medical imaging modality in an extensive range of medical fields. However, stronger support for examiners in terms of image quality control should be considered. The clinical implementation of AI-based US diagnostic support technologies is expected to correct the medical disparities between regions through examiner training or by remote diagnosis using cloud-based systems.

Acknowledgments

We would like to thank all members of the Hamamoto Laboratory, who provided valuable advice and a comfortable research environment.

Author Contributions

Conceptualization, M.K. and R.H.; investigation, M.K., A.S., A.D., K.S., S.Y. and R.H.; writing—original draft preparation, M.K., A.S., A.D., K.S., S.Y. and R.H.; writing—review and editing, M.K., A.S., A.D., K.S., S.Y., H.M., K.A., S.K. and R.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the subsidy for Advanced Integrated Intelligence Platform (MEXT) and the commissioned projects income for the RIKEN AIP-FUJITSU Collaboration Center.

Institutional Review Board Statement

The studies were conducted according to the guidelines of the Declaration of Helsinki. The study for fetal ultrasound was approved by the Institutional Review Board (IRB) of RIKEN, Fujitsu Ltd., Showa University, and the National Cancer Center (approval ID: Wako1 29-4). The study for adult echocardiography was approved by the IRB of RIKEN, Fujitsu Ltd., Tokyo Medical and Dental University, and the National Cancer Center (approval ID: Wako3 2019-36).

Informed Consent Statement

The research protocol for each study was approved by the medical ethics committees of the collaborating research facilities. Data collection was conducted in an opt-out manner in the study for fetal ultrasound. Informed consent was obtained from all subjects involved in the study for adult echocardiography.

Data Availability Statement

Data sharing is not applicable owing to the patient privacy rights.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Reddy U.M., Filly R.A., Copel J.A. Prenatal imaging: Ultrasonography and magnetic resonance imaging. Obstet. Gynecol. 2008;112:145–157. doi: 10.1097/01.AOG.0000318871.95090.d9.

- 2. Zhang L., Wang B., Zhou J., Kirkpatrick J., Xie M., Johri A.M. Bedside Focused Cardiac Ultrasound in COVID-19 from the Wuhan Epicenter: The Role of Cardiac Point-of-Care Ultrasound, Limited Transthoracic Echocardiography, and Critical Care Echocardiography. J. Am. Soc. Echocardiogr. 2020;33:676–682. doi: 10.1016/j.echo.2020.04.004.

- 3. Duchnowski P., Hryniewiecki T., Kuśmierczyk M., Szymański P. The usefulness of selected biomarkers in patients with valve disease. Biomark. Med. 2018;12:1341–1346. doi: 10.2217/bmm-2018-0101.

- 4. Donofrio M.T., Moon-Grady A.J., Hornberger L.K., Copel J.A., Sklansky M.S., Abuhamad A., Cuneo B.F., Huhta J.C., Jonas R.A., Krishnan A., et al. Diagnosis and treatment of fetal cardiac disease: A scientific statement from the American Heart Association. Circulation. 2014;129:2183–2242. doi: 10.1161/01.cir.0000437597.44550.5d.

- 5. Feldman M.K., Katyal S., Blackwood M.S. US artifacts. Radiographics. 2009;29:1179–1189. doi: 10.1148/rg.294085199.

- 6. Asada K., Kaneko S., Takasawa K., Machino H., Takahashi S., Shinkai N., Shimoyama R., Komatsu M., Hamamoto R. Integrated Analysis of Whole Genome and Epigenome Data Using Machine Learning Technology: Toward the Establishment of Precision Oncology. Front. Oncol. 2021;11:666937. doi: 10.3389/fonc.2021.666937.

- 7. Asada K., Kobayashi K., Joutard S., Tubaki M., Takahashi S., Takasawa K., Komatsu M., Kaneko S., Sese J., Hamamoto R. Uncovering Prognosis-Related Genes and Pathways by Multi-Omics Analysis in Lung Cancer. Biomolecules. 2020;10:524. doi: 10.3390/biom10040524.

- 8. Jinnai S., Yamazaki N., Hirano Y., Sugawara Y., Ohe Y., Hamamoto R. The Development of a Skin Cancer Classification System for Pigmented Skin Lesions Using Deep Learning. Biomolecules. 2020;10:1123. doi: 10.3390/biom10081123.

- 9. Kobayashi K., Bolatkan A., Shiina S., Hamamoto R. Fully-Connected Neural Networks with Reduced Parameterization for Predicting Histological Types of Lung Cancer from Somatic Mutations. Biomolecules. 2020;10:1249. doi: 10.3390/biom10091249.

- 10. Hamamoto R., Komatsu M., Takasawa K., Asada K., Kaneko S. Epigenetics Analysis and Integrated Analysis of Multiomics Data, Including Epigenetic Data, Using Artificial Intelligence in the Era of Precision Medicine. Biomolecules. 2020;10:62. doi: 10.3390/biom10010062.

- 11. Takahashi S., Asada K., Takasawa K., Shimoyama R., Sakai A., Bolatkan A., Shinkai N., Kobayashi K., Komatsu M., Kaneko S., et al. Predicting Deep Learning Based Multi-Omics Parallel Integration Survival Subtypes in Lung Cancer Using Reverse Phase Protein Array Data. Biomolecules. 2020;10:1460. doi: 10.3390/biom10101460.

- 12. Takahashi S., Takahashi M., Kinoshita M., Miyake M., Kawaguchi R., Shinojima N., Mukasa A., Saito K., Nagane M., Otani R., et al. Fine-Tuning Approach for Segmentation of Gliomas in Brain Magnetic Resonance Images with a Machine Learning Method to Normalize Image Differences among Facilities. Cancers. 2021;13:1415. doi: 10.3390/cancers13061415.

- 13. Takahashi S., Takahashi M., Tanaka S., Takayanagi S., Takami H., Yamazawa E., Nambu S., Miyake M., Satomi K., Ichimura K., et al. A New Era of Neuro-Oncology Research Pioneered by Multi-Omics Analysis and Machine Learning. Biomolecules. 2021;11:565. doi: 10.3390/biom11040565.

- 14. Yamada M., Saito Y., Imaoka H., Saiko M., Yamada S., Kondo H., Takamaru H., Sakamoto T., Sese J., Kuchiba A., et al. Development of a real-time endoscopic image diagnosis support system using deep learning technology in colonoscopy. Sci. Rep. 2019;9:14465. doi: 10.1038/s41598-019-50567-5.

- 15. Yamada M., Saito Y., Yamada S., Kondo H., Hamamoto R. Detection of flat colorectal neoplasia by artificial intelligence: A systematic review. Best Pract. Res. Clin. Gastroenterol. 2021;52–53:101745. doi: 10.1016/j.bpg.2021.101745.

- 16. Hamamoto R. Application of Artificial Intelligence for Medical Research. Biomolecules. 2021;11:90. doi: 10.3390/biom11010090.

- 17. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 18. Chen Y., Logan P., Avitabile P., Dodson J. Non-Model Based Expansion from Limited Points to an Augmented Set of Points Using Chebyshev Polynomials. Exp. Tech. 2019;43:521–543. doi: 10.1007/s40799-018-00300-0.

- 19. Chen Y. Ph.D. Thesis. University of Massachusetts Lowell; Lowell, MA, USA: 2019. A Non-Model Based Expansion Methodology for Dynamic Characterization.

- 20. Chen Y., Avitabile P., Page C., Dodson J. A polynomial based dynamic expansion and data consistency assessment and modification for cylindrical shell structures. Mech. Syst. Signal Process. 2021;154:107574. doi: 10.1016/j.ymssp.2020.107574.

- 21. Gröhl J., Schellenberg M., Dreher K., Maier-Hein L. Deep learning for biomedical photoacoustic imaging: A review. Photoacoustics. 2021;22:100241. doi: 10.1016/j.pacs.2021.100241.

- 22. Esteva A., Kuprel B., Novoa R.A., Ko J., Swetter S.M., Blau H.M., Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056.

- 23. De Fauw J., Ledsam J.R., Romera-Paredes B., Nikolov S., Tomasev N., Blackwell S., Askham H., Glorot X., O’Donoghue B., Visentin D., et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat. Med. 2018;24:1342–1350. doi: 10.1038/s41591-018-0107-6.

- 24. Hamamoto R., Suvarna K., Yamada M., Kobayashi K., Shinkai N., Miyake M., Takahashi M., Jinnai S., Shimoyama R., Sakai A., et al. Application of Artificial Intelligence Technology in Oncology: Towards the Establishment of Precision Medicine. Cancers. 2020;12:3532. doi: 10.3390/cancers12123532.

- 25. Wu E., Wu K., Daneshjou R., Ouyang D., Ho D.E., Zou J. How medical AI devices are evaluated: Limitations and recommendations from an analysis of FDA approvals. Nat. Med. 2021;27:582–584. doi: 10.1038/s41591-021-01312-x.

- 26. Litjens G., Kooi T., Bejnordi B.E., Setio A.A.A., Ciompi F., Ghafoorian M., van der Laak J.A.W.M., van Ginneken B., Sánchez C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

- 27. Pesapane F., Codari M., Sardanelli F. Artificial intelligence in medical imaging: Threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur. Radiol. Exp. 2018;2:35. doi: 10.1186/s41747-018-0061-6.

- 28. Chereda H., Bleckmann A., Menck K., Perera-Bel J., Stegmaier P., Auer F., Kramer F., Leha A., Beißbarth T. Explaining decisions of graph convolutional neural networks: Patient-specific molecular subnetworks responsible for metastasis prediction in breast cancer. Genome Med. 2021;13:42. doi: 10.1186/s13073-021-00845-7.

- 29. Sassaroli E., Crake C., Scorza A., Kim D.S., Park M.A. Image quality evaluation of ultrasound imaging systems: Advanced B-modes. J. Appl. Clin. Med Phys. 2019;20:115–124. doi: 10.1002/acm2.12544.

- 30. Entrekin R.R., Porter B.A., Sillesen H.H., Wong A.D., Cooperberg P.L., Fix C.H. Real-time spatial compound imaging: Application to breast, vascular, and musculoskeletal ultrasound. Semin. Ultrasound CT MRI. 2001;22:50–64. doi: 10.1016/S0887-2171(01)90018-6.

- 31. Desser T.S., Jeffrey Jr R.B., Lane M.J., Ralls P.W. Tissue harmonic imaging: Utility in abdominal and pelvic sonography. J. Clin. Ultrasound. 1999;27:135–142. doi: 10.1002/(SICI)1097-0096(199903/04)27:3<135::AID-JCU6>3.0.CO;2-P.

- 32. Ortiz S.H.C., Chiu T., Fox M.D. Ultrasound image enhancement: A review. Biomed. Signal Process. Control. 2012;7:419–428. doi: 10.1016/j.bspc.2012.02.002.

- 33. Joel T., Sivakumar R. Despeckling of ultrasound medical images: A survey. J. Image Graph. 2013;1:161–165. doi: 10.12720/joig.1.3.161-165.

- 34. Li B., Xu K., Feng D., Mi H., Wang H., Zhu J. Denoising Convolutional Autoencoder Based B-mode Ultrasound Tongue Image Feature Extraction; Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); Brighton, UK. 12–17 May 2019; pp. 7130–7134.

- 35. Kokil P., Sudharson S. Despeckling of clinical ultrasound images using deep residual learning. Comput. Methods Programs Biomed. 2020;194:105477. doi: 10.1016/j.cmpb.2020.105477.

- 36. Perdios D., Vonlanthen M., Besson A., Martinez F., Arditi M., Thiran J.-P. Deep convolutional neural network for ultrasound image enhancement; Proceedings of the 2018 IEEE International Ultrasonics Symposium (IUS); Kobe, Japan. 22–25 October 2018; pp. 1–4.

- 37. Di Serafino M., Notaro M., Rea G., Iacobellis F., Paoli V.D., Acampora C., Ianniello S., Brunese L., Romano L., Vallone G. The lung ultrasound: Facts or artifacts? In the era of COVID-19 outbreak. La Radiol. Med. 2020;125:738–753. doi: 10.1007/s11547-020-01236-5.

- 38. Hellier P., Coupé P., Morandi X., Collins D.L. An automatic geometrical and statistical method to detect acoustic shadows in intraoperative ultrasound brain images. Med. Image Anal. 2010;14:195–204. doi: 10.1016/j.media.2009.10.007.

- 39. Karamalis A., Wein W., Klein T., Navab N. Ultrasound confidence maps using random walks. Med. Image Anal. 2012;16:1101–1112. doi: 10.1016/j.media.2012.07.005.

- 40. Hacihaliloglu I. Enhancement of bone shadow region using local phase-based ultrasound transmission maps. Int. J. Comput. Assist. Radiol. Surg. 2017;12:951–960. doi: 10.1007/s11548-017-1556-y.

- 41.Meng Q., Baumgartner C., Sinclair M., Housden J., Rajchl M., Gomez A., Hou B., Toussaint N., Zimmer V., Tan J. Data Driven Treatment Response Assessment and Preterm, Perinatal, and Paediatric Image Analysis. Springer; Cham, Switzerland: 2018. Automatic shadow detection in 2d ultrasound images; pp. 66–75. [DOI] [Google Scholar]

- 42.Meng Q., Sinclair M., Zimmer V., Hou B., Rajchl M., Toussaint N., Oktay O., Schlemper J., Gomez A., Housden J. Weakly supervised estimation of shadow confidence maps in fetal ultrasound imaging. IEEE Trans. Med. Imaging. 2019;38:2755–2767. doi: 10.1109/TMI.2019.2913311. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Yasutomi S., Arakaki T., Matsuoka R., Sakai A., Komatsu R., Shozu K., Dozen A., Machino H., Asada K., Kaneko S., et al. Shadow Estimation for Ultrasound Images Using Auto-Encoding Structures and Synthetic Shadows. Appl. Sci. 2021;11:1127. doi: 10.3390/app11031127. [DOI] [Google Scholar]

- 44.He K., Zhang X., Ren S., Sun J. Deep Residual Learning for Image Recognition; Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778. [DOI] [Google Scholar]

- 45.Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv. 20141409.1556 [Google Scholar]

- 46.Redmon J., Divvala S., Girshick R., Farhadi A. You Only Look Once: Unified, Real-Time Object Detection; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 779–788. [DOI] [Google Scholar]

- 47.Liu W., Anguelov D., Erhan D., Szegedy C., Reed S., Fu C.-Y., Berg A.C. SSD: Single Shot MultiBox Detector; Proceedings of the Computer Vision; Amsterdam, The Netherlands. 11–14 October 2016; pp. 21–37. [DOI] [Google Scholar]

- 48.Ronneberger O., Fischer P., Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Munich, Germany. 5–9 October 2015; pp. 234–241. [DOI] [Google Scholar]

- 49.Chen L.-C., Papandreou G., Kokkinos I., Murphy K., Yuille A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017;40:834–848. doi: 10.1109/TPAMI.2017.2699184. [DOI] [PubMed] [Google Scholar]

- 50.Chen L.-C., Zhu Y., Papandreou G., Schroff F., Adam H. Lecture Notes in Computer Science. Volume 11211. Springer; Cham, Switzerland: 2018. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation; pp. 801–818. [DOI] [Google Scholar]

- 51.Dozen A., Komatsu M., Sakai A., Komatsu R., Shozu K., Machino H., Yasutomi S., Arakaki T., Asada K., Kaneko S. Image Segmentation of the Ventricular Septum in Fetal Cardiac Ultrasound Videos Based on Deep Learning Using Time-Series Information. Biomolecules. 2020;10:1526. doi: 10.3390/biom10111526. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Shozu K., Komatsu M., Sakai A., Komatsu R., Dozen A., Machino H., Yasutomi S., Arakaki T., Asada K., Kaneko S. Model-Agnostic Method for Thoracic Wall Segmentation in Fetal Ultrasound Videos. Biomolecules. 2020;10:1691. doi: 10.3390/biom10121691. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Hu Y., Xia B., Mao M., Jin Z., Du J., Guo L., Frangi A.F., Lei B., Wang T. AIDAN: An Attention-Guided Dual-Path Network for Pediatric Echocardiography Segmentation. IEEE Access. 2020;8:29176–29187. doi: 10.1109/ACCESS.2020.2971383. [DOI] [Google Scholar]

- 54.Wu Y., Shen K., Chen Z., Wu J. Automatic Measurement of Fetal Cavum Septum Pellucidum From Ultrasound Images Using Deep Attention Network; Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP); Abu Dhabi, United Arab Emirates. 25–28 October 2020; pp. 2511–2515. [DOI] [Google Scholar]

- 55.Li H., Yang X., Liang J., Shi W., Chen C., Dou H., Li R., Gao R., Zhou G., Fang J., et al. Contrastive Rendering for Ultrasound Image Segmentation; Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2020; Lima, Peru. 4–8 October 2020; pp. 563–572. [DOI] [Google Scholar]

- 56.Goodfellow I., Pouget-Abadie J., Mirza M., Xu B., Warde-Farley D., Ozair S., Courville A., Bengio Y. Generative adversarial networks. Commun. ACM. 2020;63:139–144. doi: 10.1145/3422622. [DOI] [Google Scholar]

- 57.Liang J., Yang X., Li H., Wang Y., Van M.T., Dou H., Chen C., Fang J., Liang X., Mai Z., et al. Synthesis and Edition of Ultrasound Images via Sketch Guided Progressive Growing GANS; Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI); Iowa City, IA, USA. 3–7 April 2020; pp. 1793–1797. [DOI] [Google Scholar]

- 58.Huang Q., Zeng Z. A review on real-time 3D ultrasound imaging technology. BioMed Res. Int. 2017;2017:6027029. doi: 10.1155/2017/6027029. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Çiçek Ö., Abdulkadir A., Lienkamp S.S., Brox T., Ronneberger O. 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Istanbul, Turkey. 17–21 October 2016; pp. 424–432. [DOI] [Google Scholar]

- 60. Al Chanti D., Duque V.G., Crouzier M., Nordez A., Lacourpaille L., Mateus D. IFSS-Net: Interactive few-shot siamese network for faster muscle segmentation and propagation in volumetric ultrasound. IEEE Trans. Med. Imaging. 2021;40:1–14. doi: 10.1109/TMI.2021.3058303.

- 61. Li Y., Khanal B., Hou B., Alansary A., Cerrolaza J.J., Sinclair M., Matthew J., Gupta C., Knight C., Kainz B., et al. Standard Plane Detection in 3D Fetal Ultrasound Using an Iterative Transformation Network; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Granada, Spain. 16–20 September 2018; pp. 392–400.

- 62. Gonzalez Duque V., Al Chanti D., Crouzier M., Nordez A., Lacourpaille L., Mateus D. Spatio-Temporal Consistency and Negative Label Transfer for 3D Freehand US Segmentation; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Lima, Peru. 4–8 October 2020; pp. 710–720.

- 63. Loibl S., Poortmans P., Morrow M., Denkert C., Curigliano G. Breast cancer. Lancet. 2021. doi: 10.1016/S0140-6736(20)32381-3.

- 64. Han S., Kang H.-K., Jeong J.-Y., Park M.-H., Kim W., Bang W.-C., Seong Y.-K. A deep learning framework for supporting the classification of breast lesions in ultrasound images. Phys. Med. Biol. 2017;62:7714–7728. doi: 10.1088/1361-6560/aa82ec.

- 65. Fujioka T., Kubota K., Mori M., Kikuchi Y., Katsuta L., Kasahara M., Oda G., Ishiba T., Nakagawa T., Tateishi U. Distinction between benign and malignant breast masses at breast ultrasound using deep learning method with convolutional neural network. Jpn. J. Radiol. 2019;37:466–472. doi: 10.1007/s11604-019-00831-5.

- 66. Byra M., Galperin M., Ojeda-Fournier H., Olson L., O’Boyle M., Comstock C., Andre M. Breast mass classification in sonography with transfer learning using a deep convolutional neural network and color conversion. Med. Phys. 2019;46:746–755. doi: 10.1002/mp.13361.

- 67. Antropova N., Huynh B.Q., Giger M.L. A deep feature fusion methodology for breast cancer diagnosis demonstrated on three imaging modality datasets. Med. Phys. 2017;44:5162–5171. doi: 10.1002/mp.12453.

- 68. Tanaka H., Chiu S.-W., Watanabe T., Kaoku S., Yamaguchi T. Computer-aided diagnosis system for breast ultrasound images using deep learning. Phys. Med. Biol. 2019;64:235013. doi: 10.1088/1361-6560/ab5093.

- 69. Zhu Y.-C., AlZoubi A., Jassim S., Jiang Q., Zhang Y., Wang Y.-B., Ye X.-D., Du H. A generic deep learning framework to classify thyroid and breast lesions in ultrasound images. Ultrasonics. 2021;110:106300. doi: 10.1016/j.ultras.2020.106300.

- 70. Xiao T., Liu L., Li K., Qin W., Yu S., Li Z. Comparison of Transferred Deep Neural Networks in Ultrasonic Breast Masses Discrimination. BioMed Res. Int. 2018;2018:4605191. doi: 10.1155/2018/4605191.

- 71. Zhang E., Seiler S., Chen M., Lu W., Gu X. BIRADS features-oriented semi-supervised deep learning for breast ultrasound computer-aided diagnosis. Phys. Med. Biol. 2020;65:125005. doi: 10.1088/1361-6560/ab7e7d.

- 72. Huang Y., Han L., Dou H., Luo H., Yuan Z., Liu Q., Zhang J., Yin G. Two-stage CNNs for computerized BI-RADS categorization in breast ultrasound images. Biomed. Eng. Online. 2019;18:8. doi: 10.1186/s12938-019-0626-5.

- 73. Zhou L.-Q., Wu X.-L., Huang S.-Y., Wu G.-G., Ye H.-R., Wei Q., Bao L.-Y., Deng Y.-B., Li X.-R., Cui X.-W., et al. Lymph Node Metastasis Prediction from Primary Breast Cancer US Images Using Deep Learning. Radiology. 2020;294:19–28. doi: 10.1148/radiol.2019190372.

- 74. Yap M.H., Pons G., Martí J., Ganau S., Sentís M., Zwiggelaar R., Davison A.K., Martí R. Automated Breast Ultrasound Lesions Detection Using Convolutional Neural Networks. IEEE J. Biomed. Health Inform. 2018;22:1218–1226. doi: 10.1109/JBHI.2017.2731873.

- 75. Huang G., Liu Z., Van Der Maaten L., Weinberger K.Q. Densely Connected Convolutional Networks; Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Honolulu, HI, USA. 21–26 July 2017; pp. 2261–2269.

- 76. Cao Z., Duan L., Yang G., Yue T., Chen Q. An experimental study on breast lesion detection and classification from ultrasound images using deep learning architectures. BMC Med. Imaging. 2019;19:51. doi: 10.1186/s12880-019-0349-x.

- 77. Kumar V., Webb J.M., Gregory A., Denis M., Meixner D.D., Bayat M., Whaley D.H., Fatemi M., Alizad A. Automated and real-time segmentation of suspicious breast masses using convolutional neural network. PLoS ONE. 2018;13:e0195816. doi: 10.1371/journal.pone.0195816.

- 78. Hu Y., Guo Y., Wang Y., Yu J., Li J., Zhou S., Chang C. Automatic tumor segmentation in breast ultrasound images using a dilated fully convolutional network combined with an active contour model. Med. Phys. 2019;46:215–228. doi: 10.1002/mp.13268.

- 79. Zhuang Z., Li N., Joseph Raj A.N., Mahesh V.G.V., Qiu S. An RDAU-NET model for lesion segmentation in breast ultrasound images. PLoS ONE. 2019;14:e0221535. doi: 10.1371/journal.pone.0221535.

- 80. Vakanski A., Xian M., Freer P.E. Attention-Enriched Deep Learning Model for Breast Tumor Segmentation in Ultrasound Images. Ultrasound Med. Biol. 2020;46:2819–2833. doi: 10.1016/j.ultrasmedbio.2020.06.015.

- 81. Singh V.K., Abdel-Nasser M., Akram F., Rashwan H.A., Sarker M.M.K., Pandey N., Romani S., Puig D. Breast tumor segmentation in ultrasound images using contextual-information-aware deep adversarial learning framework. Expert Syst. Appl. 2020;162:113870. doi: 10.1016/j.eswa.2020.113870.

- 82. Li X., Zhang S., Zhang Q., Wei X., Pan Y., Zhao J., Xin X., Qin C., Wang X., Li J., et al. Diagnosis of thyroid cancer using deep convolutional neural network models applied to sonographic images: A retrospective, multicohort, diagnostic study. Lancet Oncol. 2019;20:193–201. doi: 10.1016/S1470-2045(18)30762-9.

- 83. Li H., Weng J., Shi Y., Gu W., Mao Y., Wang Y., Liu W., Zhang J. An improved deep learning approach for detection of thyroid papillary cancer in ultrasound images. Sci. Rep. 2018;8:6600. doi: 10.1038/s41598-018-25005-7.

- 84. Guan Q., Wang Y., Du J., Qin Y., Lu H., Xiang J., Wang F. Deep learning based classification of ultrasound images for thyroid nodules: A large scale of pilot study. Ann. Transl. Med. 2019;7:137. doi: 10.21037/atm.2019.04.34.

- 85. Ko S.Y., Lee J.H., Yoon J.H., Na H., Hong E., Han K., Jung I., Kim E.-K., Moon H.J., Park V.Y., et al. Deep convolutional neural network for the diagnosis of thyroid nodules on ultrasound. Head Neck. 2019;41:885–891. doi: 10.1002/hed.25415.

- 86. Moussa O., Khachnaoui H., Guetari R., Khlifa N. Thyroid nodules classification and diagnosis in ultrasound images using fine-tuning deep convolutional neural network. Int. J. Imaging Syst. Technol. 2020;30:185–195. doi: 10.1002/ima.22363.

- 87. Lheureux S., Gourley C., Vergote I., Oza A.M. Epithelial ovarian cancer. Lancet. 2019;393:1240–1253. doi: 10.1016/S0140-6736(18)32552-2.

- 88. Zhang L., Huang J., Liu L. Improved Deep Learning Network Based in combination with Cost-sensitive Learning for Early Detection of Ovarian Cancer in Color Ultrasound Detecting System. J. Med. Syst. 2019;43:251. doi: 10.1007/s10916-019-1356-8.

- 89. Christiansen F., Epstein E.L., Smedberg E., Åkerlund M., Smith K., Epstein E. Ultrasound image analysis using deep neural networks for discriminating between benign and malignant ovarian tumors: Comparison with expert subjective assessment. Ultrasound Obstet. Gynecol. 2021;57:155–163. doi: 10.1002/uog.23530.

- 90. Feng Y., Yang F., Zhou X., Guo Y., Tang F., Ren F., Guo J., Ji S. A Deep Learning Approach for Targeted Contrast-Enhanced Ultrasound Based Prostate Cancer Detection. IEEE/ACM Trans. Comput. Biol. Bioinform. 2019;16:1794–1801. doi: 10.1109/TCBB.2018.2835444.

- 91. Wildeboer R.R., Mannaerts C.K., van Sloun R.J.G., Budäus L., Tilki D., Wijkstra H., Salomon G., Mischi M. Automated multiparametric localization of prostate cancer based on B-mode, shear-wave elastography, and contrast-enhanced ultrasound radiomics. Eur. Radiol. 2020;30:806–815. doi: 10.1007/s00330-019-06436-w.

- 92. Girum K.B., Lalande A., Hussain R., Créhange G. A deep learning method for real-time intraoperative US image segmentation in prostate brachytherapy. Int. J. Comput. Assist. Radiol. Surg. 2020;15:1467–1476. doi: 10.1007/s11548-020-02231-x.

- 93. Hassan T.M., Elmogy M., Sallam E.-S. Diagnosis of Focal Liver Diseases Based on Deep Learning Technique for Ultrasound Images. Arab. J. Sci. Eng. 2017;42:3127–3140. doi: 10.1007/s13369-016-2387-9.

- 94. Schmauch B., Herent P., Jehanno P., Dehaene O., Saillard C., Aubé C., Luciani A., Lassau N., Jégou S. Diagnosis of focal liver lesions from ultrasound using deep learning. Diagn. Interv. Imaging. 2019;100:227–233. doi: 10.1016/j.diii.2019.02.009.

- 95. Sudharson S., Kokil P. An ensemble of deep neural networks for kidney ultrasound image classification. Comput. Methods Programs Biomed. 2020;197:105709. doi: 10.1016/j.cmpb.2020.105709.

- 96. Douglas P.S., Garcia M.J., Haines D.E., Lai W.W., Manning W.J., Patel A.R., Picard M.H., Polk D.M., Ragosta M., Ward R.P., et al. ACCF/ASE/AHA/ASNC/HFSA/HRS/SCAI/SCCM/SCCT/SCMR 2011 Appropriate Use Criteria for Echocardiography. J. Am. Coll. Cardiol. 2011;57:1126–1166. doi: 10.1016/j.jacc.2010.11.002.

- 97. Puntmann V.O., Carerj M.L., Wieters I., Fahim M., Arendt C., Hoffmann J., Shchendrygina A., Escher F., Vasa-Nicotera M., Zeiher A.M., et al. Outcomes of Cardiovascular Magnetic Resonance Imaging in Patients Recently Recovered From Coronavirus Disease 2019 (COVID-19). JAMA Cardiol. 2020;5:1265–1273. doi: 10.1001/jamacardio.2020.3557.

- 98. Bonow R.O., O’Gara P.T., Yancy C.W. Cardiology and COVID-19. JAMA. 2020;324:1131–1132. doi: 10.1001/jama.2020.15088.

- 99. Abdi A.H., Luong C., Tsang T., Allan G., Nouranian S., Jue J., Hawley D., Fleming S., Gin K., Swift J. Automatic quality assessment of echocardiograms using convolutional neural networks: Feasibility on the apical four-chamber view. IEEE Trans. Med. Imaging. 2017;36:1221–1230. doi: 10.1109/TMI.2017.2690836.

- 100. Liao Z., Girgis H., Abdi A., Vaseli H., Hetherington J., Rohling R., Gin K., Tsang T., Abolmaesumi P. On modelling label uncertainty in deep neural networks: Automatic estimation of intra-observer variability in 2D echocardiography quality assessment. IEEE Trans. Med. Imaging. 2019;39:1868–1883. doi: 10.1109/TMI.2019.2959209.

- 101. Ghorbani A., Ouyang D., Abid A., He B., Chen J.H., Harrington R.A., Liang D.H., Ashley E.A., Zou J.Y. Deep learning interpretation of echocardiograms. NPJ Digit. Med. 2020;3:10. doi: 10.1038/s41746-019-0216-8.

- 102. Zhang J., Gajjala S., Agrawal P., Tison G.H., Hallock L.A., Beussink-Nelson L., Lassen M.H., Fan E., Aras M.A., Jordan C. Fully automated echocardiogram interpretation in clinical practice: Feasibility and diagnostic accuracy. Circulation. 2018;138:1623–1635. doi: 10.1161/CIRCULATIONAHA.118.034338.

- 103. Ahn S., Ta K., Lu A., Stendahl J.C., Sinusas A.J., Duncan J.S., Ruiter N.V., Byram B.C. Unsupervised motion tracking of left ventricle in echocardiography. Ultrason. Imaging Tomogr. 2020:36. doi: 10.1117/12.2549572.

- 104. Kusunose K., Abe T., Haga A., Fukuda D., Yamada H., Harada M., Sata M. A deep learning approach for assessment of regional wall motion abnormality from echocardiographic images. JACC Cardiovasc. Imaging. 2020;13:374–381. doi: 10.1016/j.jcmg.2019.02.024.

- 105. Lekadir K., Galimzianova A., Betriu A., Del Mar Vila M., Igual L., Rubin D.L., Fernandez E., Radeva P., Napel S. A Convolutional Neural Network for Automatic Characterization of Plaque Composition in Carotid Ultrasound. IEEE J. Biomed. Health Inform. 2017;21:48–55. doi: 10.1109/JBHI.2016.2631401.

- 106. Savaş S., Topaloğlu N., Kazcı Ö., Koşar P.N. Classification of Carotid Artery Intima Media Thickness Ultrasound Images with Deep Learning. J. Med. Syst. 2019;43:273. doi: 10.1007/s10916-019-1406-2.

- 107. Araki T., Jain P.K., Suri H.S., Londhe N.D., Ikeda N., El-Baz A., Shrivastava V.K., Saba L., Nicolaides A., Shafique S., et al. Stroke Risk Stratification and its Validation using Ultrasonic Echolucent Carotid Wall Plaque Morphology: A Machine Learning Paradigm. Comput. Biol. Med. 2017;80:77–96. doi: 10.1016/j.compbiomed.2016.11.011.

- 108. Qian C., Yang X. An integrated method for atherosclerotic carotid plaque segmentation in ultrasound image. Comput. Methods Programs Biomed. 2018;153:19–32. doi: 10.1016/j.cmpb.2017.10.002.

- 109. Zhou R., Fenster A., Xia Y., Spence J.D., Ding M. Deep learning-based carotid media-adventitia and lumen-intima boundary segmentation from three-dimensional ultrasound images. Med. Phys. 2019;46:3180–3193. doi: 10.1002/mp.13581.

- 110. Biswas M., Kuppili V., Araki T., Edla D.R., Godia E.C., Saba L., Suri H.S., Omerzu T., Laird J.R., Khanna N.N., et al. Deep learning strategy for accurate carotid intima-media thickness measurement: An ultrasound study on Japanese diabetic cohort. Comput. Biol. Med. 2018;98:100–117. doi: 10.1016/j.compbiomed.2018.05.014.