Abstract

The purpose of this study was to directly and quantitatively measure bone mineral density (BMD) from cone-beam CT (CBCT) images by enhancing the linearity and uniformity of the bone intensities with a hybrid deep-learning model (QCBCT-NET) that combines a generative adversarial network (Cycle-GAN) and U-Net, and to compare the bone images enhanced by the QCBCT-NET with those enhanced by Cycle-GAN and U-Net. We used two phantoms of human skulls encased in acrylic, one for the training and validation datasets, and the other for the test dataset. We proposed the QCBCT-NET consisting of Cycle-GAN with residual blocks and a multi-channel U-Net using paired training data of quantitative CT (QCT) and CBCT images. The BMD images produced by QCBCT-NET significantly outperformed the images produced by the Cycle-GAN or the U-Net in mean absolute difference (MAD), peak signal to noise ratio (PSNR), normalized cross-correlation (NCC), structural similarity (SSIM), and linearity when compared to the original QCT image. The QCBCT-NET improved the contrast of the bone images by reflecting the original BMD distribution of the QCT image locally using the Cycle-GAN, and improved the spatial uniformity of the bone images by globally suppressing image artifacts and noise using the two-channel U-Net. The QCBCT-NET substantially enhanced the linearity, uniformity, and contrast as well as the anatomical and quantitative accuracy of the bone images, and was more accurate than the Cycle-GAN and the U-Net for quantitatively measuring BMD in CBCT.

Subject terms: Osteoporosis, Dental diseases, Medical imaging

Introduction

Trabecular bone density, a determinant of bone strength, is important for the diagnosis of bone quality in bone diseases1,2. Bone mineral density (BMD) measurements are a direct method of estimating human bone mass for diagnosing osteoporosis and predicting future fracture risk3,4. Generally, volumetric BMD can be assessed quantitatively through the calibration of Hounsfield Units (HU) in CT, which is a method known as quantitative CT (QCT)5,6. The multi-detector CT (MDCT) with rapid acquisition of 3D volume images enables QCT to be applied to clinically important sites for assessing BMD7.

For dental implant treatment, precise in vivo measurement of alveolar bone quality is very important in determining the primary stability of dental implants8. Therefore, the alveolar bone quality of the implant site needs to be measured before surgery to determine whether the BMD is sufficient to support the implant9. Recently, cone-beam CT (CBCT) systems have been widely used for dental treatment and planning as they offer many advantages over MDCTs, including a lower radiation dose to the patient, shorter acquisition times, better resolution, and greater detail10–15. However, the voxel intensity values in CBCT systems are arbitrary and do not allow for the assessment of bone quality, as the systems do not correctly show HUs16–20. The ability of CBCT to assess bone density is limited because the HUs derived from CBCT data are clearly different from those derived from MDCT data5,17–19,21. Several studies have been performed to resolve the discrepancy in HUs between MDCT and CBCT data15–17,22. Some studies investigated the relationship between CBCT voxel intensity values and MDCT HUs using a BMD calibration phantom with material inserts of different attenuation coefficients17,23–27. These studies showed that correlating CBCT voxel intensities with HUs using such phantoms is difficult because of the non-uniformity of the measurements and the nonlinear relationship between CBCT voxel intensities and HUs15.

CBCTs have also been widely used for accurate patient setups in image-guided radiation therapy28. Many methods for correcting CBCT images with high quality have been proposed to produce quantitative CBCTs in the radiation therapy field, which do not require a calibration phantom during an object scan. These methods can be classified as hardware corrections such as anti-scatter grids, and model-based methods using Monte Carlo techniques to model the scatter to CBCT projections29–34. Recently, the generative adversarial network (GAN), a deep neural network model, has shown state-of-the-art performance in many image processing tasks28,35,36. The GAN is composed of two networks trained simultaneously with one focused on image generation and the other on discrimination. The GAN has the capability of data generation without explicitly modelling the probability density function37. In one study, a deep learning-based method using a modified GAN improved image quality for generating corrected CBCT images, which integrated a residual block concept into a Cycle-GAN framework38. Moreover, the U-Net model of U-shape encoder-decoder architecture is widely applied in biomedical image segmentation, image denoising39–41, and image synthesis42–44. The U-Net based approach could efficiently synthesize artifact-suppressed CT-like CBCT images from CBCT images containing global scattering and local artifacts43,44.

To date, these deep learning-based studies have mainly focused on improving the voxel values of the soft tissues in CBCT images. As far as we know, no previous studies have quantitatively measured BMD from CBCT images by improving the bone image using deep learning. We hypothesized that a deep learning-based method could generate QCT-like CBCT images from CBCT images for directly measuring BMD by learning the pixel-wise mapping between QCT and CBCT images. The purpose of this study was to directly and quantitatively measure BMD from CBCT images by enhancing the linearity and uniformity of the bone intensities with a hybrid deep-learning model (QCBCT-NET) that combines a generative adversarial network (Cycle-GAN) and U-Net, and to compare the bone images enhanced by the QCBCT-NET with those enhanced by Cycle-GAN and U-Net.

Materials and methods

Data acquisition and preparation

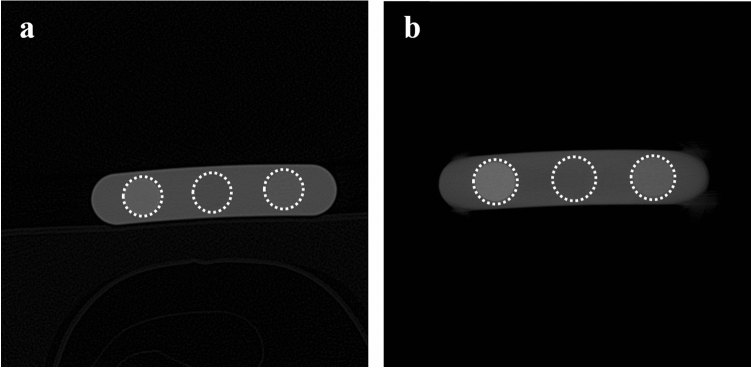

We used two phantoms of human skulls encased in acrylic articulated for medical use (Erler Zimmer Co., Lauf, Germany), one with and the other without metal restorations causing streak artifacts. The phantoms have been used in our previous studies45–48. The images of the phantoms were obtained with an MDCT (Somatom Sensation 10, Siemens AG, Erlangen, Germany) and a CBCT (CS 9300, Carestream Health, Inc., Rochester, US). We acquired the CT images with voxel sizes of 0.469 × 0.469 × 0.5 mm³, dimensions of 512 × 512 pixels, and 16-bit depth at 120 kVp and 130 mA, while the CBCT images were obtained with voxel sizes of 0.3 × 0.3 × 0.3 mm³, dimensions of 559 × 559 pixels, and 16-bit depth under combinations of 80 or 90 kVp and 8 or 10 mA. In addition, CT and CBCT images of a BMD calibration phantom (QRM-BDC Phantom 200 mm length, QRM GmbH, Moehrendorf, Germany) with calcium hydroxyapatite inserts of three densities (0 (water), 100, and 200 mg/cm³) were also obtained under the same conditions (Fig. 1). The CT images of the skull phantoms were then converted into quantitative CT (QCT) images based on Hounsfield Units (HU) by linear calibration using the CT images of the BMD calibration phantom. The CBCT images of the skull phantoms were also converted into calibrated CBCT (CAL_CBCT) images using the corresponding images of the BMD calibration phantom, for later comparison with the deep learning results.
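The linear calibration step described above can be illustrated with a minimal sketch. It fits a least-squares line through the mean intensities measured in the three hydroxyapatite inserts and applies it to other voxel values. The insert intensities below are hypothetical placeholders, not measurements from this study, and the function names are illustrative only.

```python
def fit_linear_calibration(intensities, densities):
    """Least-squares fit of density = slope * intensity + intercept."""
    n = len(intensities)
    mean_x = sum(intensities) / n
    mean_y = sum(densities) / n
    sxx = sum((x - mean_x) ** 2 for x in intensities)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(intensities, densities))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Known insert densities of the calibration phantom (mg/cm^3), from the text.
insert_density = [0.0, 100.0, 200.0]
# Hypothetical mean ROI intensities for the three inserts (assumed values).
insert_intensity = [0.0, 130.0, 260.0]

slope, intercept = fit_linear_calibration(insert_intensity, insert_density)


def to_bmd(voxel_value):
    """Map a raw voxel intensity to a calibrated BMD value (mg/cm^3)."""
    return slope * voxel_value + intercept
```

With only three calibration points, the fit is sensitive to how the insert ROIs are drawn, which is one reason calibration alone (CAL_CBCT) serves here only as a baseline.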

Figure 1.

(a) MDCT and (b) CBCT images of the BMD calibration phantom with calcium hydroxyapatite inserts of three densities (0 (center circle), 100 (right circle), and 200 (left circle) mg/cm³).

The CT image of the skull phantom was matched to the CBCT image by paired-point registration using 3D Slicer software (MIT, Massachusetts, US), where six landmarks were localized manually at the vertex on the lateral incisors, the buccal cusps of the first premolars, and the distobuccal cusps of the first molars49. The matched CT and CBCT images, each a matrix of 559 × 559 × 264 pixels, were cropped to 559 × 559 × 200 pixels centered at the maxillomandibular region, and then resized to 256 × 256 × 200 pixels. To avoid adverse impacts from non-anatomical regions during training, binary masks were applied to the CT and CBCT images to separate the maxillomandibular region from the non-anatomical regions44. The binary mask images were generated using thresholding and morphological operations. The edges of anatomical regions were extracted by applying a local range filter to the paired CBCT and CT images50, and the morphological operations of opening and flood fill were applied to the binarized edges obtained by thresholding to remove small blobs and fill the inner area. The corresponding CBCT and CT images were multiplied by the intersection of the two binary masks from the CBCT and CT images. The voxel values outside the masked region were replaced with a Hounsfield Unit (HU) value of −1000.
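The final masking step (intersecting the two binary masks and filling the outside with −1000 HU) can be sketched as follows. This is a toy illustration on nested lists for a single slice; the function name is hypothetical, and the mask generation itself (range filter, thresholding, opening, flood fill) is assumed to have been done beforehand.

```python
BACKGROUND_HU = -1000  # fill value for voxels outside the joint mask


def apply_joint_mask(ct, cbct, ct_mask, cbct_mask, background=BACKGROUND_HU):
    """Keep voxels inside BOTH masks; set all other voxels to `background`."""
    masked_ct, masked_cbct = [], []
    for ct_row, cbct_row, m_ct, m_cbct in zip(ct, cbct, ct_mask, cbct_mask):
        # Intersection of the CT and CBCT masks for this row.
        keep = [bool(a) and bool(b) for a, b in zip(m_ct, m_cbct)]
        masked_ct.append([v if k else background
                          for v, k in zip(ct_row, keep)])
        masked_cbct.append([v if k else background
                            for v, k in zip(cbct_row, keep)])
    return masked_ct, masked_cbct


# Toy 2 x 2 slice with made-up intensities and masks.
ct_slice = [[50, 300], [1200, -200]]
cbct_slice = [[40, 280], [1100, -150]]
ct_mask = [[0, 1], [1, 1]]
cbct_mask = [[1, 1], [1, 0]]

masked_ct, masked_cbct = apply_joint_mask(ct_slice, cbct_slice,
                                          ct_mask, cbct_mask)
```

Using the intersection rather than the union means that a voxel contributes to training only when both modalities agree it is anatomical.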

For deep learning, we prepared 800 pairs of axial QCT and CBCT slice images from the skull phantom without metal restorations for the training and validation datasets (obtained under four conditions combining 80 or 90 kVp with 8 or 10 mA), and, independently, another 400 pairs of QCT and CBCT slice images from the skull phantom with metal restorations for the test dataset (obtained under two conditions: 80 kVp and 8 mA, and 90 kVp and 10 mA).

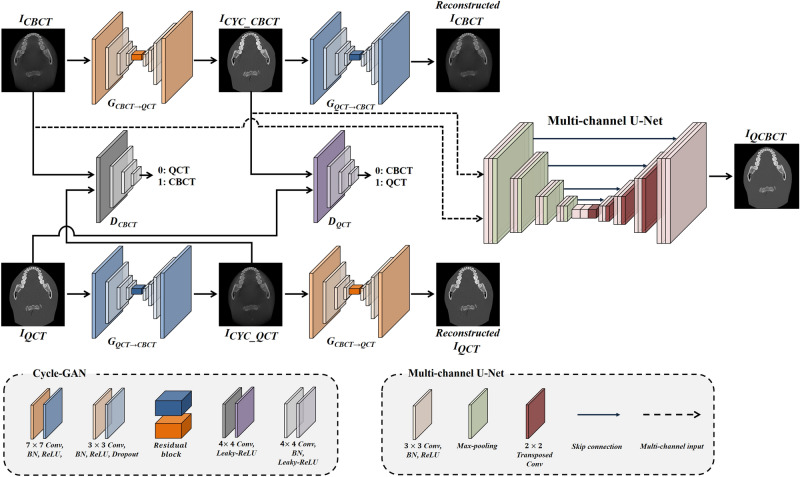

Hybrid deep-learning model (QCBCT-NET) for quantitative CBCT images

We designed a hybrid deep-learning architecture (QCBCT-NET) consisting of Cycle-GAN and U-Net to generate QCT-like images from the conventional CBCT images (Fig. 2). For performance comparisons, we also built a stand-alone Cycle-GAN and a stand-alone U-Net with the same architectures as used in QCBCT-NET. We implemented Cycle-GAN with the residual blocks38 combined with a multi-channel U-Net model using paired training data. The Cycle-GAN architecture contained two generators for yielding the CBCT-to-QCT and QCT-to-CBCT mappings, and two discriminators for distinguishing between real and generated images. We adopted a ResNet architecture with nine residual blocks for the generators, and a PatchGAN with a 70 × 70 patch for the discriminators.
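As a brief aside on where the 70 × 70 patch size comes from, the sketch below computes the receptive field of a stack of convolutions. The layer list is an assumption: it is the commonly used PatchGAN configuration of five 4 × 4 convolutions with strides 2, 2, 2, 1, 1, which the text above does not spell out.

```python
def receptive_field(layers):
    """Receptive field of one output unit for a stack of (kernel, stride) convs."""
    rf = 1
    # Walk from the last layer back to the input.
    for kernel, stride in reversed(layers):
        rf = (rf - 1) * stride + kernel
    return rf


# Assumed PatchGAN layout: (kernel size, stride) per convolution layer.
PATCHGAN_LAYERS = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]

patch_size = receptive_field(PATCHGAN_LAYERS)
print(patch_size)  # 70
```

Each discriminator output therefore judges a 70 × 70 region of the input rather than the whole image, which keeps the discriminator focused on local texture.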

Figure 2.

The QCBCT-NET architecture combining Cycle-GAN and the multi-channel U-Net. The Cycle-GAN consisted of two generators and two discriminators. In the generators, the convolution block consisted of 7 × 7 and 3 × 3 convolution layers with batch normalization and ReLU activation, and residual blocks were embedded in the middle of the down-sampling and up-sampling layers. In the discriminators, the convolution block consisted of 4 × 4 convolution layers with batch normalization and leaky ReLU activation followed by down-sampling layers. The multi-channel U-Net had two-channel inputs of CBCT and corresponding CYC_CBCT images, consisted of 3 × 3 convolution layers with batch normalization and ReLU activation, and had skip connections at each layer level. Max-pooling was used for down-sampling and transposed convolution was used for up-sampling. Consequently, the QCBCT-NET generated QCBCT images from CBCT images to quantitatively measure BMD in CBCTs.

The Cycle-GAN model was optimized using a two-part loss function consisting of an adversarial loss and a cycle consistency loss36. The adversarial loss function relied on the output of the discriminators, and was defined as:

where the source-domain image was the CBCT image and the target-domain image was the QCT image.

To avoid mode collapse issues, we added a cycle consistency loss that reduced the space of mapping functions. The cycle consistency loss was defined as:

where, as before, the source-domain image was the CBCT image and the target-domain image was the QCT image.

Finally, the loss function of Cycle-GAN was defined as:

where λ controlled the relative importance of the adversarial and cycle consistency losses, and was set to 10.
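For reference, the standard Cycle-GAN objective takes the form sketched below. The notation is assumed here rather than taken from this study: $x$ is a CBCT image, $y$ a QCT image, $G$ the CBCT-to-QCT generator, $F$ the QCT-to-CBCT generator, and $D_X$, $D_Y$ the discriminators for the CBCT and QCT domains. The exact variant used in this study (for example, log-likelihood versus least-squares adversarial terms) may differ.

```latex
% Adversarial loss for the CBCT-to-QCT direction (the reverse direction is analogous)
\mathcal{L}_{\mathrm{GAN}}(G, D_Y) =
  \mathbb{E}_{y}\left[\log D_Y(y)\right]
  + \mathbb{E}_{x}\left[\log\left(1 - D_Y(G(x))\right)\right]

% Cycle consistency loss
\mathcal{L}_{\mathrm{cyc}}(G, F) =
  \mathbb{E}_{x}\left[\lVert F(G(x)) - x \rVert_1\right]
  + \mathbb{E}_{y}\left[\lVert G(F(y)) - y \rVert_1\right]

% Full objective, with \lambda = 10 weighting the cycle consistency term
\mathcal{L}(G, F, D_X, D_Y) =
  \mathcal{L}_{\mathrm{GAN}}(G, D_Y)
  + \mathcal{L}_{\mathrm{GAN}}(F, D_X)
  + \lambda \, \mathcal{L}_{\mathrm{cyc}}(G, F)
```

In this standard form, λ multiplies the cycle consistency term, so a value of 10 places a strong penalty on images that do not map back to their source.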

To generate QCBCT images, we implemented the multi-channel U-Net with four skip connections between the encoder and the decoder, one at each resolution level, using two-channel inputs consisting of the original CBCT image and the corresponding output of the Cycle-GAN. The multi-channel U-Net was optimized with a loss function consisting of the mean absolute difference (MAD) and a structural similarity (SSIM) term between QCBCT and QCT images43, which were defined as:

where the two images compared were the QCBCT image and the QCT image, µ denoted the mean, σ² the variance, and C1 and C2 were constants that stabilize the division when the denominator is weak.

Finally, the loss function of the multi-channel U-Net was defined as:

where the weighting factor between the two loss terms was set to 0.6.
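For reference, the commonly used definitions of the two terms are sketched below, with notation assumed here: $x$ is the QCBCT image, $y$ the QCT image, $N$ the number of voxels, $\sigma_{xy}$ the covariance, and $\alpha$ the weighting factor. How the value 0.6 is assigned between the two terms is not recoverable from the text, so the convex combination in the last line is only one plausible arrangement.

```latex
% Mean absolute difference
\mathcal{L}_{\mathrm{MAD}}(x, y) = \frac{1}{N} \sum_{i=1}^{N} \lvert x_i - y_i \rvert

% Structural similarity index
\mathrm{SSIM}(x, y) =
  \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}
       {(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}

% Assumed combination, with \alpha = 0.6
\mathcal{L}_{\mathrm{U\text{-}Net}} =
  \alpha \, \mathcal{L}_{\mathrm{MAD}}(x, y)
  + (1 - \alpha) \left(1 - \mathrm{SSIM}(x, y)\right)
```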

The deep learning models were trained and tested on a workstation with four Nvidia GeForce GTX 1080 Ti GPUs, each with 11 GB of VRAM. The Cycle-GAN model was trained with the Adam optimizer using a mini-batch size of 8 for 200 epochs. For the first 100 epochs, the learning rate was maintained at 0.0002, and it was then decreased linearly toward zero over the next 100 epochs. The U-Net model was trained with the Adam optimizer using a mini-batch size of 8 for 200 epochs. The learning rate was set to 0.0001 with a momentum term of 0.9 to stabilize the training.
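The Cycle-GAN learning-rate schedule described above (constant, then linear decay) can be written as a small function. This is a sketch under the assumption that epochs are counted from 0 and that the rate reaches exactly zero at epoch 200; the function name and the exact end-point convention are illustrative, not taken from the study.

```python
def cycle_gan_learning_rate(epoch, base_lr=0.0002,
                            constant_epochs=100, decay_epochs=100):
    """Learning rate for a 0-indexed epoch: flat, then linear decay to zero."""
    if epoch < constant_epochs:
        return base_lr
    # Number of epochs left until the end of the decay phase.
    remaining = constant_epochs + decay_epochs - epoch
    return base_lr * max(remaining, 0) / decay_epochs


# Full schedule for the 200 training epochs.
schedule = [cycle_gan_learning_rate(e) for e in range(200)]
```

In a training framework, the same rule would typically be passed to a scheduler as a multiplicative factor on the base learning rate.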

To compare the performance of measuring BMD from QCBCT images produced by the QCBCT-NET with that from images produced by the Cycle-GAN or the U-Net, we used the same settings as for QCBCT-NET for the stand-alone Cycle-GAN and U-Net, and trained each network with only the CBCT image as its input.

Evaluation of quantitative CBCT images for measuring BMD

To quantitatively evaluate the performance of measuring BMD from CBCT images by the different deep learning models, we compared the mean absolute difference (MAD), peak signal to noise ratio (PSNR), normalized cross correlation (NCC), and structural similarity (SSIM) between the original QCT image (the ground truth) and each of the following: the QCBCT image produced by QCBCT-NET, the CYC_CBCT image produced by Cycle-GAN, the U_CBCT image produced by U-Net, and the CAL_CBCT image produced by calibration only, for the CBCT images of the test dataset obtained under two scanning conditions. The MAD was defined as the mean of the absolute differences between the intensities of the QCT and CBCT images; the PSNR as the logarithm of the ratio of the maximum possible intensity (MAX) to the root mean squared error (RMSE) between the intensities of the QCT and CBCT images; the NCC as the product of the intensities of the QCT and CBCT images divided by their standard deviations; and the SSIM as described above. The quantitative measurements in each slice were averaged over the whole maxilla and mandible. Higher values of PSNR, SSIM, and NCC, and a lower MAD indicated better performance for BMD measurement from CBCT images.
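The three intensity-based metrics can be sketched on flattened lists of voxel values as follows. The PSNR is written in decibels as 20·log10(MAX/RMSE), and the NCC subtracts the mean of each image before multiplying; both are the usual conventions and are assumptions here, since the exact expressions are not shown above.

```python
import math


def mad(ref, img):
    """Mean absolute difference between two equally sized voxel lists."""
    return sum(abs(r - i) for r, i in zip(ref, img)) / len(ref)


def psnr(ref, img, max_value):
    """Peak signal-to-noise ratio in dB: 20 * log10(MAX / RMSE)."""
    mse = sum((r - i) ** 2 for r, i in zip(ref, img)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 20 * math.log10(max_value / math.sqrt(mse))


def ncc(ref, img):
    """Normalized cross correlation (zero-mean form, assumed convention)."""
    n = len(ref)
    mean_r = sum(ref) / n
    mean_i = sum(img) / n
    cov = sum((r - mean_r) * (i - mean_i) for r, i in zip(ref, img)) / n
    std_r = math.sqrt(sum((r - mean_r) ** 2 for r in ref) / n)
    std_i = math.sqrt(sum((i - mean_i) ** 2 for i in img) / n)
    return cov / (std_r * std_i)


# Toy voxel values (made up) standing in for a QCT slice and a CBCT slice.
qct_values = [0.0, 100.0, 200.0, 300.0]
cbct_values = [10.0, 90.0, 210.0, 290.0]

example_mad = mad(qct_values, cbct_values)
example_psnr = psnr(qct_values, cbct_values, max_value=300.0)
example_ncc = ncc(qct_values, cbct_values)
```

Note that NCC is insensitive to a global scale or offset, so it complements MAD and PSNR, which penalize absolute intensity errors.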

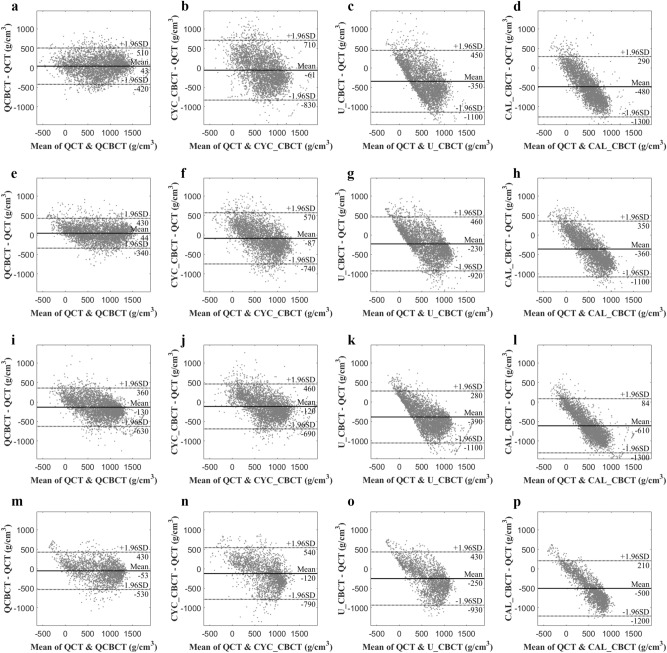

Spatial nonuniformity (SNU) of the CBCT images was measured as the absolute difference between the maximum and the minimum of the BMD values in rectangular ROIs around the maxilla and mandible. To evaluate the linearity of BMD measurements in the CBCT images, we analyzed the relationship between the voxel intensities of the QCT (the ground truth) and CBCT images through a linear regression of the voxel intensities (Slope, slope of linear regression) at the maxilla and mandible, respectively. The lower SNU, and the higher Slope indicated better performance for BMD measurement from CBCT images. We also performed the Bland–Altman analysis to analyze the bias and agreement limits of the BMD between QCT (the ground truth) and CBCT images at the maxilla and mandible.
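A minimal sketch of the three analyses in this paragraph is given below. It assumes that SNU is computed from the mean BMD of each ROI, that Slope comes from an ordinary least-squares regression of CBCT values on QCT values, and that the Bland–Altman agreement limits are the bias ± 1.96 standard deviations of the differences; these conventions and all names are assumptions, and the numbers are made up.

```python
import statistics


def spatial_nonuniformity(roi_means):
    """Absolute difference between the largest and smallest ROI mean."""
    return abs(max(roi_means) - min(roi_means))


def regression_slope(qct, cbct):
    """Least-squares slope of CBCT values regressed on QCT values."""
    mean_x = statistics.mean(qct)
    mean_y = statistics.mean(cbct)
    sxx = sum((x - mean_x) ** 2 for x in qct)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(qct, cbct))
    return sxy / sxx


def bland_altman(qct, cbct):
    """Return (bias, lower limit, upper limit) of the CBCT - QCT differences."""
    diffs = [c - q for q, c in zip(qct, cbct)]
    bias = statistics.mean(diffs)
    spread = 1.96 * statistics.stdev(diffs)  # sample standard deviation
    return bias, bias - spread, bias + spread


# Made-up values for illustration only.
roi_means = [410.0, 395.0, 402.0]
qct_values = [100.0, 200.0, 300.0, 400.0]
cbct_values = [90.0, 170.0, 250.0, 330.0]

snu = spatial_nonuniformity(roi_means)
slope = regression_slope(qct_values, cbct_values)
bias, lower, upper = bland_altman(qct_values, cbct_values)
```

A slope below 1, as in this toy example, corresponds to compressed bone contrast relative to the QCT, which is the behaviour the Slope metric is meant to expose.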

We compared the performances between QCBCT and other CBCT images at the maxilla and mandible under two conditions of 80 kVp and 8 mA, and 90 kVp and 10 mA with respect to the variations of BMD values of a bone depending on their relative positions51, and those affected by scanning conditions. Paired two-tailed t-tests were used (SPSS v26, SPSS Inc., Chicago, IL, USA) to compare the quantitative performances between QCBCT and CYC_CBCT images, between QCBCT and U_CBCT images, and between QCBCT and CAL_CBCT images. Statistical significance level was set at 0.01.

Results

Table 1 summarizes the means of the quantitative performance results for measuring BMD from QCBCT images produced by QCBCT-NET, CYC_CBCT produced by Cycle-GAN, U_CBCT produced by U-Net, and CAL_CBCT produced by calibration for the CBCT images of test datasets acquired for the skull phantom with metal restorations under conditions of 80 kVp and 8 mA, and 90 kVp and 10 mA. The BMD images of QCBCTs significantly outperformed the CYC_CBCT and U_CBCT images in MAD, PSNR, SSIM, and NCC at both the maxilla and mandible when compared to the original QCT images (Table 1). All performance measures from the QCBCT images differed significantly from those of the CYC_CBCT or U_CBCT images at the maxilla and mandible (p < 0.01), except for the SNU from the U_CBCT (p = 0.04) (Table 1). Compared to the BMD measurements from the CYC_CBCT image, the BMD from the QCBCT showed improvements of 38% in MAD, 20% in PSNR, 45% in SSIM, 40% in NCC, 80% in SNU, and 84% in Slope at the maxilla, and 39% in MAD, 20% in PSNR, 50% in SSIM, 40% in NCC, 47% in SNU, and 102% in Slope at the mandible for CBCT images at 80 kVp and 8 mA (Table 2). Compared to the BMD measurements from the U_CBCT image, the QCBCT showed changes of 59% in MAD, 41% in PSNR, 112% in SSIM, 58% in NCC, −17% in SNU, and 167% in Slope at the maxilla, and 49% in MAD, 33% in PSNR, 81% in SSIM, 54% in NCC, −25% in SNU, and 142% in Slope at the mandible for CBCT images at 80 kVp and 8 mA, where positive values indicate better performance of the QCBCT and negative values indicate worse performance (Table 2). Under the higher dose condition of 90 kVp and 10 mA, the BMD from the QCBCT also showed higher performance at both the maxilla and mandible compared to the CYC_CBCT and U_CBCT (Table 2). Therefore, the BMDs from the QCBCT were more accurate than those from the CYC_CBCT and U_CBCT regardless of the relative positions of the bone or the scanning conditions.

Table 1.

Quantitative performance of CBCT images produced by QCBCT-NET, Cycle-GAN, U-Net, and CAL_CBCT compared to the original QCT images for measuring BMD values at the maxilla (1–81 slices) and mandible (82–200 slices) for test datasets under conditions of 80 kVp and 8 mA, and 90 kVp and 10 mA.

| Condition | Method | Maxilla MAD | Maxilla PSNR | Maxilla SSIM | Maxilla NCC | Maxilla SNU | Maxilla Slope | Mandible MAD | Mandible PSNR | Mandible SSIM | Mandible NCC | Mandible SNU | Mandible Slope |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 kVp 8 mA | QCBCT-NET | 203.45 ± 27.24*†‡ | 23.87 ± 1.34*†‡ | 0.87 ± 0.02*†‡ | 0.87 ± 0.02*†‡ | 15.60 ± 7.85†‡ | 0.83 ± 0.04*†‡ | 190.79 ± 34.46*†‡ | 24.58 ± 1.39*†‡ | 0.87 ± 0.07*†‡ | 0.88 ± 0.06*†‡ | 21.85 ± 7.72†‡ | 0.85 ± 0.16*†‡ |
| 80 kVp 8 mA | Cycle-GAN (p-value) | 328.91 ± 55.12 (0.00) | 19.94 ± 1.63 (0.00) | 0.60 ± 0.07 (0.00) | 0.62 ± 0.08 (0.00) | 79.04 ± 13.48 (0.00) | 0.45 ± 0.06 (0.00) | 313.14 ± 58.68 (0.00) | 20.52 ± 1.42 (0.00) | 0.58 ± 0.08 (0.00) | 0.63 ± 0.11 (0.00) | 41.59 ± 10.56 (0.00) | 0.42 ± 0.09 (0.00) |
| 80 kVp 8 mA | U-Net (p-value) | 493.91 ± 45.14 (0.00) | 16.93 ± 0.86 (0.00) | 0.41 ± 0.07 (0.00) | 0.55 ± 0.08 (0.00) | 13.39 ± 3.22 (0.04) | 0.31 ± 0.06 (0.00) | 371.00 ± 36.81 (0.00) | 18.54 ± 1.31 (0.00) | 0.48 ± 0.08 (0.00) | 0.57 ± 0.12 (0.00) | 17.54 ± 2.84* (0.00) | 0.35 ± 0.08 (0.00) |
| 80 kVp 8 mA | CAL_CBCT (p-value) | 592.40 ± 53.76 (0.00) | 15.63 ± 0.80 (0.00) | 0.31 ± 0.08 (0.00) | 0.61 ± 0.08 (0.00) | 69.30 ± 15.05 (0.00) | 0.26 ± 0.06 (0.00) | 491.44 ± 95.51 (0.00) | 17.33 ± 1.52 (0.00) | 0.40 ± 0.05 (0.00) | 0.62 ± 0.11 (0.00) | 39.19 ± 11.14 (0.00) | 0.30 ± 0.08 (0.00) |
| 90 kVp 10 mA | QCBCT-NET | 265.4 ± 63.41*†‡ | 21.92 ± 1.98*†‡ | 0.79 ± 0.02*†‡ | 0.84 ± 0.02*†‡ | 27.09 ± 38.42†‡ | 0.62 ± 0.04*†‡ | 236.25 ± 68.62*†‡ | 22.98 ± 2.36*†‡ | 0.79 ± 0.08*†‡ | 0.80 ± 0.15*†‡ | 15.87 ± 4.24†‡ | 0.66 ± 0.11*†‡ |
| 90 kVp 10 mA | Cycle-GAN (p-value) | 296.82 ± 53.03 (0.00) | 21.08 ± 1.39 (0.00) | 0.72 ± 0.04 (0.00) | 0.76 ± 0.05 (0.00) | 68.91 ± 47.76 (0.00) | 0.55 ± 0.05 (0.00) | 288.28 ± 61.30 (0.00) | 21.38 ± 2.17 (0.00) | 0.69 ± 0.07 (0.00) | 0.71 ± 0.14 (0.00) | 36.22 ± 8.96 (0.00) | 0.53 ± 0.09 (0.00) |
| 90 kVp 10 mA | U-Net (p-value) | 474.15 ± 52.87 (0.00) | 17.40 ± 0.88 (0.00) | 0.50 ± 0.06 (0.00) | 0.68 ± 0.09 (0.00) | 16.02 ± 30.39* (0.00) | 0.38 ± 0.04 (0.00) | 370.59 ± 104.16 (0.00) | 19.66 ± 2.68 (0.00) | 0.57 ± 0.07 (0.00) | 0.67 ± 0.14 (0.00) | 12.80 ± 4.08* (0.00) | 0.40 ± 0.06 (0.00) |
| 90 kVp 10 mA | CAL_CBCT (p-value) | 661.48 ± 61.59 (0.00) | 14.87 ± 0.78 (0.00) | 0.29 ± 0.08 (0.00) | 0.75 ± 0.05 (0.00) | 52.71 ± 23.00 (0.00) | 0.31 ± 0.05 (0.00) | 573.25 ± 93.37 (0.00) | 16.15 ± 1.42 (0.00) | 0.37 ± 0.08 (0.00) | 0.72 ± 0.13 (0.00) | 72.44 ± 30.46 (0.00) | 0.31 ± 0.06 (0.00) |

MAD mean absolute difference, PSNR peak signal to noise ratio, SSIM structural similarity, NCC normalized cross correlation, SNU spatial nonuniformity, Slope slope of linear regression between the voxel intensities.

Mean ± SD.

*Significant difference (p < 0.01) between QCBCT-NET and U-Net, †(p < 0.01) between QCBCT-NET and Cycle-GAN, and ‡(p < 0.01) between QCBCT-NET and CAL_CBCT.

Table 2.

Percentage increases of QCBCT-NET performance compared to Cycle-GAN and U-Net for measuring BMD values at the maxilla (1–81 slices) and mandible (82–200 slices) for CBCT images of test datasets under conditions of 80 kVp and 8 mA, and 90 kVp and 10 mA.

| Condition | Comparison | Maxilla MAD (%) | Maxilla PSNR (%) | Maxilla SSIM (%) | Maxilla NCC (%) | Maxilla SNU (%) | Maxilla Slope (%) | Mandible MAD (%) | Mandible PSNR (%) | Mandible SSIM (%) | Mandible NCC (%) | Mandible SNU (%) | Mandible Slope (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 80 kVp 8 mA | vs. Cycle-GAN | 38.14 | 19.71 | 45.00 | 40.32 | 80.26 | 84.44 | 39.07 | 19.79 | 50.00 | 39.68 | 47.46 | 102.38 |
| 80 kVp 8 mA | vs. U-Net | 58.81 | 40.99 | 112.20 | 58.18 | −16.50 | 167.74 | 48.57 | 32.58 | 81.25 | 54.39 | −24.57 | 142.86 |
| 90 kVp 10 mA | vs. Cycle-GAN | 10.59 | 3.98 | 9.72 | 10.53 | 59.10 | 12.73 | 17.69 | 7.48 | 14.49 | 12.68 | 56.18 | 24.53 |
| 90 kVp 10 mA | vs. U-Net | 44.03 | 25.98 | 58.00 | 23.53 | −73.47 | 63.16 | 36.40 | 16.89 | 38.60 | 19.40 | −23.98 | 65.00 |

MAD mean absolute difference, PSNR peak signal to noise ratio, SSIM structural similarity, NCC normalized cross correlation, SNU spatial nonuniformity, Slope slope of linear regression between the voxel intensities.
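The percentages in Table 2 can be reproduced from the means in Table 1 with the small helper below. The convention, inferred from the tabulated values rather than stated in the text, is that the change is expressed relative to the comparison method and signed so that positive means QCBCT-NET performed better. The function name is illustrative.

```python
def percent_improvement(qcbct, baseline, lower_is_better=False):
    """Signed improvement of QCBCT-NET over a baseline, in percent of baseline."""
    if lower_is_better:
        # For MAD and SNU, a smaller value is an improvement.
        return (baseline - qcbct) / baseline * 100
    # For PSNR, SSIM, NCC, and Slope, a larger value is an improvement.
    return (qcbct - baseline) / baseline * 100


# Maxilla, 80 kVp and 8 mA, means taken from Table 1.
mad_vs_cycle = percent_improvement(203.45, 328.91, lower_is_better=True)
psnr_vs_cycle = percent_improvement(23.87, 19.94)
snu_vs_cycle = percent_improvement(15.60, 79.04, lower_is_better=True)
snu_vs_unet = percent_improvement(15.60, 13.39, lower_is_better=True)

print(round(mad_vs_cycle, 2), round(psnr_vs_cycle, 2),
      round(snu_vs_cycle, 2), round(snu_vs_unet, 2))
```

The negative SNU entries against U-Net therefore mean that the stand-alone U-Net produced more spatially uniform images than QCBCT-NET, even though it performed worse on every other metric.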

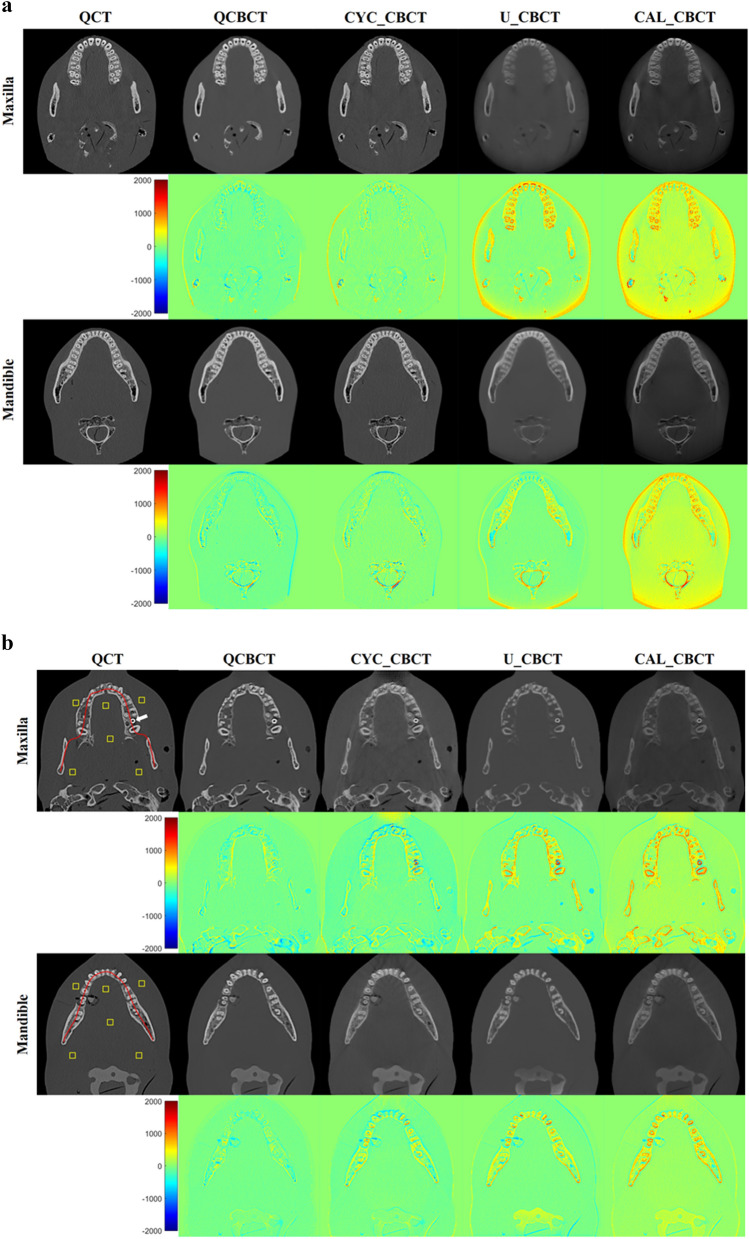

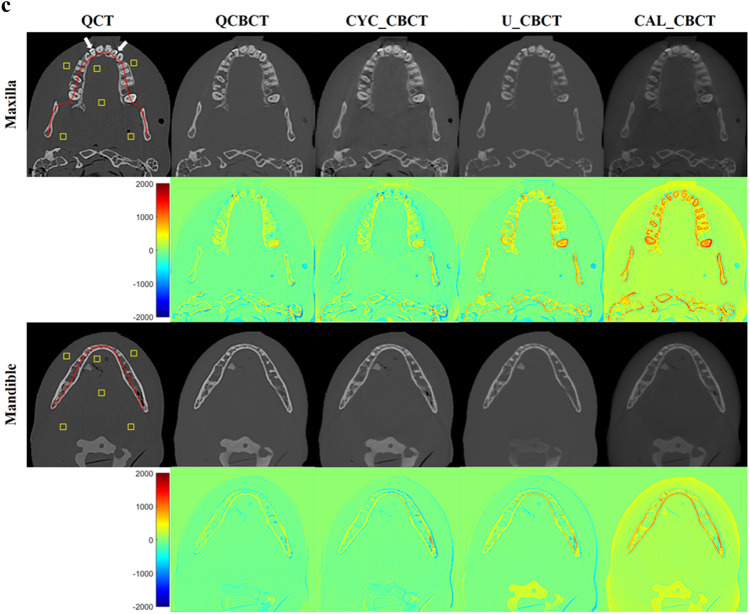

Figure 3 shows the axial slices of the BMD images from the original QCT, QCBCT, CYC_CBCT, U_CBCT, and CAL_CBCT at the maxilla and mandible. As shown in the subtraction images in Fig. 3, the BMD image quality of the QCBCTs for the two regions exhibited substantial improvement over those of CYC_CBCT, U_CBCT, and CAL_CBCT in terms of BMD (voxel intensity) differences compared to the original QCT images. The large differences around the teeth and dense bone of higher voxel intensities (BMD) seen in the CAL_CBCT were more reduced in the QCBCT than in the CYC_CBCT or U_CBCT images.

Figure 3.

The axial slices of BMD images from the original QCT, their generations by deep learning methods (the first and third rows), and their subtractions from the original QCT images (the second and fourth rows) at the maxilla and the mandible. QCBCT produced by QCBCT-NET, CYC_CBCT by Cycle-GAN, U_CBCT by U-Net, and CAL_CBCT by calibration only from (a) training datasets at 90 kVp and 10 mA, (b) test datasets at 80 kVp and 8 mA, and (c) test datasets at 90 kVp and 10 mA. The yellow squares shown in the QCT image were the ROIs for calculation of the spatial nonuniformity (SNU), the red curve shown in the QCT image was the dental arch for the BMD (voxel intensity) profiles, and the white arrows shown in the QCT images indicated the dental implant at the maxilla in (b), and the dental restorations at the maxilla in (c).

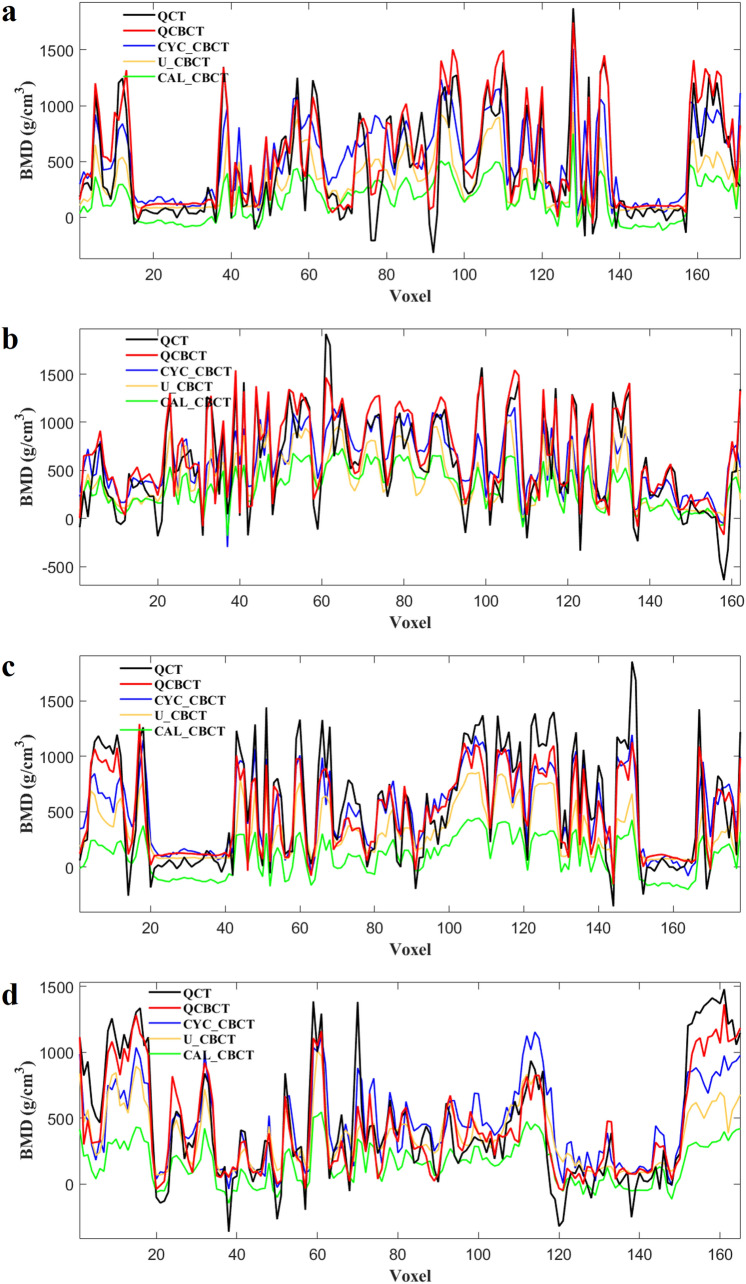

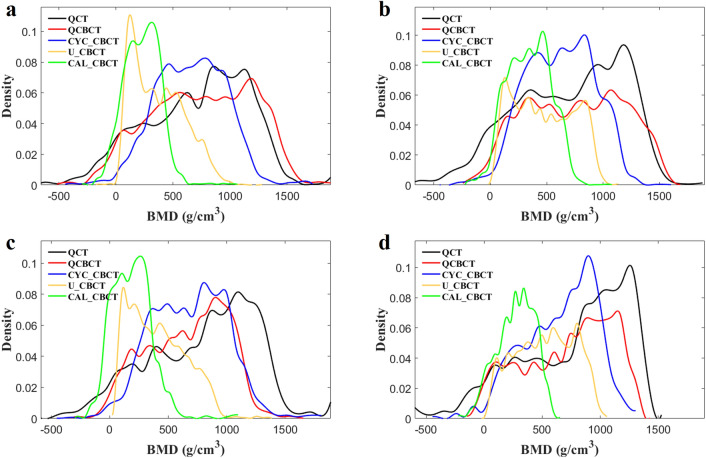

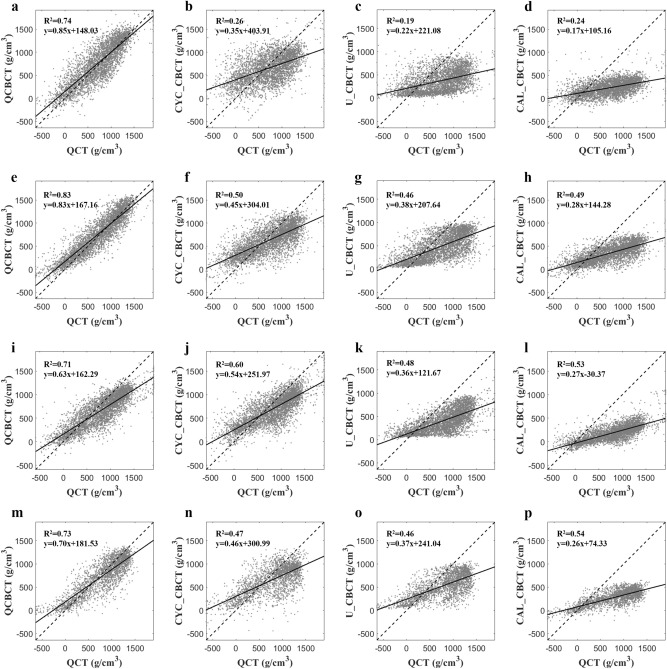

Figure 4 shows the BMD (voxel intensity) profiles that were acquired along the dental arch at the maxilla and mandible in the QCT and CBCT images as shown in Fig. 3. The BMD profile from the QCBCT images reflected the original QCT more closely than those from the CYC_CBCT and U_CBCT images, with higher correlations with the QCT than the other CBCT images, although the dental implant and restorations showed higher voxel intensities compared to other anatomical structures (Fig. 4). Therefore, the QCBCT image exhibited better structural preservation and edge sharpness of the bone than the CYC_CBCT and U_CBCT images at both the maxilla and mandible. The BMD distribution of the QCBCT also restored the original QCT more closely than that of the CYC_CBCT and U_CBCT images in an axial slice at the maxilla and mandible (Fig. 5). The linear relationship between the QCT and QCBCT images showed more contrast and correlation than that between the QCT and other CBCT images, with a larger slope and better goodness of fit (Fig. 6). The Bland–Altman plot between QCT and QCBCT images also showed higher linear relationships and better agreement limits than that between QCT and other CBCT images (Fig. 7). Therefore, the QCBCT images better preserved the original distribution and linear relationship of the BMD values compared to the CYC_CBCT and U_CBCT images.

Figure 4.

The BMD (voxel intensity) profiles along the dental arch at the maxilla and the mandible in the QCT, and QCBCT, CYC_CBCT, U_CBCT, and CAL_CBCT images shown in Fig. 3. Pearson correlation coefficients of QCBCT, CYC_CBCT, U_CBCT, and CAL_CBCT with the original QCT were (a) 0.92, 0.65, 0.60, and 0.65, respectively, for the profile at the maxilla, and (b) 0.93, 0.70, 0.65, and 0.69, respectively, for the profile at the mandible shown in Fig. 3b, and (c) 0.92, 0.89, 0.84, and 0.88, respectively, for the profile at the maxilla, and (d) 0.93, 0.81, 0.82, and 0.82, respectively, for the profile at the mandible shown in Fig. 3c.

Figure 5.

The BMD distribution in an axial slice of the original QCT, and QCBCT, CYC_CBCT, U_CBCT, and CAL_CBCT images. (a) CBCT images at the maxilla under condition of 80 kVp and 8 mA, (b) at the mandible under condition of 80 kVp and 8 mA, (c) at the maxilla under condition of 90 kVp and 10 mA, and (d) at the mandible under condition of 90 kVp and 10 mA.

Figure 6.

The linear relationships between the original QCT, and QCBCT, CYC_CBCT, U_CBCT, and CAL_CBCT images. (a–d) CBCT images at the maxilla under condition of 80 kVp and 8 mA, (e–h) at the mandible under condition of 80 kVp and 8 mA, (i–l) at the maxilla under condition of 90 kVp and 10 mA, and (m–p) at the mandible under condition of 90 kVp and 10 mA.

Figure 7.

The Bland–Altman plots between the original QCT, and QCBCT, CYC_CBCT, U_CBCT, and CAL_CBCT images. (a–d) CBCT images at the maxilla under condition of 80 kVp and 8 mA, (e–h) at the mandible under condition of 80 kVp and 8 mA, (i–l) at the maxilla under condition of 90 kVp and 10 mA, and (m–p) at the mandible under condition of 90 kVp and 10 mA.

Discussion

We developed a hybrid deep-learning model (QCBCT-NET) consisting of Cycle-GAN and U-Net to quantitatively and directly measure BMD from CBCT images. The BMD measurements of QCBCT images produced by QCBCT-NET significantly outperformed the CYC_CBCT images produced by Cycle-GAN and U_CBCT images produced by U-Net at both the maxilla and mandible area when compared to the original QCT. We used paired training data in the Cycle-GAN implementation with the residual blocks, which forced the network to focus on reducing image artifacts and enhancing bone contrast, rather than focusing on bone structural mismatches. Through the residual blocks in the generator architecture of the Cycle-GAN, the network could learn the difference between the source and target based on the residual image and generate corrected bone images more accurately52. In a study, a Cycle-GAN was used to capture the relationship from CBCT to CT images while simultaneously supervising an inverse of the CT to CBCT transformation model36. The Cycle-GAN doubled the process of a typical GAN by enforcing an inverse transformation, which doubly constrained the model and increased accuracy in the output images38. In our study, the Cycle-GAN can learn both intensity and textural mapping from a source distribution of the CBCT bone image to a target distribution of the QCT bone image.

In previous studies, U-Net architectures were used to directly synthesize CT-like CBCT images for their corresponding CT images especially on paired datasets43,44. The U-Net could suppress global scattering artifacts and local artifacts derived from CBCT images by capturing both global and local features in the image spatial domain43. In addition, the spatial uniformity of CT-like CBCT images was enhanced close to those of corresponding CT images while maintaining the anatomical structures on the CBCT images44. Therefore, in our results, the spatial uniformity of CBCT images produced by U-Net was improved, but the contrast of the bone images was reduced when compared to the CYC_CBCT images by Cycle-GAN.

In our study, the two-channel U-Net, which learned spatial information of CBCTs and corresponding CYC_CBCT images simultaneously, could improve image contrast and uniformity by suppressing beam hardening artifacts and scattering noise43. The CYC_CBCT image, as one of the two inputs, helped the U-Net to focus on learning pixel-wise correspondence (or mapping) between QCT and CBCT images while maintaining the original intensity distribution of the bone structures. The combined loss of MAD and SSIM in the U-Net facilitated faster convergence and better accuracy by accounting for both pixel-wise errors and structural similarity. As a result, the BMDs (voxel intensities) from the QCBCT were more accurate than those from the CYC_CBCT and U_CBCT regardless of the relative positions of the bone in the image volume51, or of the different radiation doses or scanning conditions used in clinical settings.

We combined the Cycle-GAN with the two-channel U-Net model to further improve the contrast and uniformity of the CBCT bone images. The Cycle-GAN improved the contrast of the bone images by reflecting the original BMD distribution of the QCT images locally, while the two-channel U-Net improved the spatial uniformity of the bone images by globally suppressing the image artifacts and noise. As a result, the Cycle-GAN and two-channel U-Net worked to provide complementary benefits in improving the contrast and uniformity of the bone image locally and globally. Consequently, the QCBCT-NET could substantially enhance the linearity, uniformity, and contrast as well as the anatomical and quantitative accuracy of the bone images in order to quantitatively measure BMD in CBCT. Although the BMD linear relationships and agreement limits of QCBCT images were superior to those of CYC_CBCT and U_CBCT images, the accuracy of our method should be further improved for clinical applications.

Our study had some limitations. First, because paired CBCT and CT images are typically acquired under different imaging conditions, the bone structures of the images were not perfectly aligned even after registration. Therefore, the registration error between CBCT and CT images might have had adverse impacts during network training. Second, our study had a potential limitation in generalization ability because of the relatively small training dataset. Overfitting of the trained CNN model, which results in the model learning statistical regularities specific to the training dataset, could negatively impact the model's ability to generalize to a new dataset53. Third, the results presented in this study were based on two human skull phantoms with and without metal restorations instead of actual patients. Our method needs to be validated on datasets from actual patients with dental fillings and restorations before its application in clinical research and practice, and compared to the conventional scatter-based method in future studies.

Conclusions

We proposed QCBCT-NET to directly and quantitatively measure BMD from CBCT images based on a hybrid deep-learning model that combines a generative adversarial network (Cycle-GAN) and U-Net. The Cycle-GAN and two-channel U-Net in QCBCT-NET provided complementary benefits of improving the contrast and uniformity of the bone image locally and globally. The BMD images produced by QCBCT-NET significantly outperformed the images produced by Cycle-GAN or U-Net in MAD, PSNR, SSIM, NCC, and linearity when compared to the original QCT. The QCBCT-NET substantially enhanced the linearity, uniformity, and contrast as well as the anatomical and quantitative accuracy of the bone images, and was more accurate than the Cycle-GAN and the U-Net for quantitatively measuring BMD in CBCT. In future studies, we plan to evaluate the proposed method on actual patient datasets to establish its clinical efficacy.

Acknowledgements

This work was supported by the National Research Foundation of Korea (NRF) Grant funded by the Korea government (MSIT) (No. 2019R1A2C2008365), and by the Korea Medical Device Development Fund Grant funded by the Korea government (the Ministry of Science and ICT, the Ministry of Trade, Industry and Energy, the Ministry of Health & Welfare, the Ministry of Food and Drug Safety) (No. 1711138289, KMDF_PR_20200901_0147, 1711137883, KMDF_PR_20200901_0011).

Author contributions

T.-H.Y. and W.-J.Y.: Contributed to conception and design, data acquisition, analysis and interpretation, and drafted and critically revised the manuscript. S.Y.: Contributed to conception and design, data analysis and interpretation, and drafted and critically revised the manuscript. S.-J.L.: Contributed to data analysis and interpretation, and drafted the manuscript. C.P.: Contributed to data analysis and interpretation. J.-E.K., K.-H.H., S.-S.L. and M.-S.H.: Contributed to conception and design, data interpretation, and drafted the manuscript. All authors gave their final approval and agree to be accountable for all aspects of the work.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Tae-Hoon Yong and Su Yang.

References

- 1. Ammann P, Rizzoli R. Bone strength and its determinants. Osteoporos Int. 2003;14(Suppl 3):S13–S18. doi: 10.1007/s00198-002-1345-4.

- 2. Seeman E. Bone quality: The material and structural basis of bone strength. J. Bone Miner. Metab. 2008;26:1–8. doi: 10.1007/s00774-007-0793-5.

- 3. Kanis JA, et al. Assessment of fracture risk and its application to screening for postmenopausal osteoporosis—Synopsis of a WHO report. Osteoporosis Int. 1994;4:368–381. doi: 10.1007/BF01622200.

- 4. Budoff MJ, et al. Measurement of thoracic bone mineral density with quantitative CT. Radiology. 2010;257:434–440. doi: 10.1148/radiol.10100132.

- 5. Cann CE. Quantitative CT for determination of bone mineral density: A review. Radiology. 1988;166:509–522. doi: 10.1148/radiology.166.2.3275985.

- 6. Giambini H, et al. The effect of quantitative computed tomography acquisition protocols on bone mineral density estimation. J. Biomech. Eng. 2015;137:114502. doi: 10.1115/1.4031572.

- 7. Adams JE. Quantitative computed tomography. Eur. J. Radiol. 2009;71:415–424. doi: 10.1016/j.ejrad.2009.04.074.

- 8. Rues S, et al. Effect of bone quality and quantity on the primary stability of dental implants in a simulated bicortical placement. Clin. Oral Investig. 2021;25:1265–1272. doi: 10.1007/s00784-020-03432-z.

- 9. Turkyilmaz I, McGlumphy EA. Influence of bone density on implant stability parameters and implant success: A retrospective clinical study. BMC Oral Health. 2008;8:1–8. doi: 10.1186/1472-6831-8-32.

- 10. Kiljunen T, Kaasalainen T, Suomalainen A, Kortesniemi M. Dental cone beam CT: A review. Phys. Med. 2015;31:844–860. doi: 10.1016/j.ejmp.2015.09.004.

- 11. Kamburoglu K. Use of dentomaxillofacial cone beam computed tomography in dentistry. World J. Radiol. 2015;7:128–130. doi: 10.4329/wjr.v7.i6.128.

- 12. Dalessandri D, et al. Advantages of cone beam computed tomography (CBCT) in the orthodontic treatment planning of cleidocranial dysplasia patients: A case report. Head Face Med. 2011;7:1–9. doi: 10.1186/1746-160X-7-6.

- 13. Kapila SD, Nervina JM. CBCT in orthodontics: Assessment of treatment outcomes and indications for its use. Dentomaxillofac. Radiol. 2015;44:1–19. doi: 10.1259/dmfr.20140282.

- 14. Woelber JP, Fleiner J, Rau J, Ratka-Kruger P, Hannig C. Accuracy and usefulness of CBCT in periodontology: A systematic review of the literature. Int. J. Periodontics Restorat. Dent. 2018;38:289–297. doi: 10.11607/prd.2751.

- 15. Kim DG. Can dental cone beam computed tomography assess bone mineral density? J. Bone Metab. 2014;21:117–126. doi: 10.11005/jbm.2014.21.2.117.

- 16. Pauwels R, Jacobs R, Singer SR, Mupparapu M. CBCT-based bone quality assessment: Are Hounsfield units applicable? Dentomaxillofac. Radiol. 2015;44:20140238. doi: 10.1259/dmfr.20140238.

- 17. Mah P, Reeves TE, McDavid WD. Deriving Hounsfield units using grey levels in cone beam computed tomography. Dentomaxillofac. Radiol. 2010;39:323–335. doi: 10.1259/dmfr/19603304.

- 18. Molteni R. Prospects and challenges of rendering tissue density in Hounsfield units for cone beam computed tomography. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2013;116:105–119. doi: 10.1016/j.oooo.2013.04.013.

- 19. Schulze R, et al. Artefacts in CBCT: A review. Dentomaxillofac. Radiol. 2011;40:265–273. doi: 10.1259/dmfr/30642039.

- 20. Katsumata A, et al. Relationship between density variability and imaging volume size in cone-beam computerized tomographic scanning of the maxillofacial region: An in vitro study. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2009;107:420–425. doi: 10.1016/j.tripleo.2008.05.049.

- 21. Reeves TE, Mah P, McDavid WD. Deriving Hounsfield units using grey levels in cone beam CT: A clinical application. Dentomaxillofac. Radiol. 2012;41:500–508. doi: 10.1259/dmfr/31640433.

- 22. Silva IM, Freitas DQ, Ambrosano GM, Boscolo FN, Almeida SM. Bone density: Comparative evaluation of Hounsfield units in multislice and cone-beam computed tomography. Braz. Oral Res. 2012;26:550–556. doi: 10.1590/S1806-83242012000600011.

- 23. Nomura Y, Watanabe H, Honda E, Kurabayashi T. Reliability of voxel values from cone-beam computed tomography for dental use in evaluating bone mineral density. Clin. Oral Implant Res. 2010;21:558–562. doi: 10.1111/j.1600-0501.2009.01896.x.

- 24. Naitoh M, Hirukawa A, Katsumata A, Ariji E. Evaluation of voxel values in mandibular cancellous bone: Relationship between cone-beam computed tomography and multislice helical computed tomography. Clin. Oral Implant Res. 2009;20:503–506. doi: 10.1111/j.1600-0501.2008.01672.x.

- 25. Parsa A, et al. Reliability of voxel gray values in cone beam computed tomography for preoperative implant planning assessment. Int. J. Oral Maxillofac. Implants. 2012;27:1438–1442.

- 26. Parsa A, Ibrahim N, Hassan B, van der Stelt P, Wismeijer D. Bone quality evaluation at dental implant site using multislice CT, micro-CT, and cone beam CT. Clin. Oral Implant Res. 2015;26:E1–E7. doi: 10.1111/clr.12315.

- 27. Cha JY, Kil JK, Yoon TM, Hwang CJ. Miniscrew stability evaluated with computerized tomography scanning. Am. J. Orthod. Dentofacial Orthop. 2010;137:73–79. doi: 10.1016/j.ajodo.2008.03.024.

- 28. Tao Xu, P. Z. et al. Attngan: Fine-grained text to image generation with attentional generative adversarial networks. Preprint at http://arXiv.org/1711.10485 (2017).

- 29. Li Y, Garrett J, Chen GH. Reduction of beam hardening artifacts in cone-beam CT Imaging via SMART-RECON algorithm. Med. Imaging 2016 Phys. Med. Imaging. 2016;9783:97830W. doi: 10.1117/12.2216882.

- 30. Bechara BB, Moore WS, McMahan CA, Noujeim M. Metal artefact reduction with cone beam CT: An in vitro study. Dentomaxillofac. Radiol. 2012;41:248–253. doi: 10.1259/dmfr/80899839.

- 31. Wu M, et al. Metal artifact correction for X-ray computed tomography using kV and selective MV imaging. Med. Phys. 2014;41:1–17. doi: 10.1118/1.4901551.

- 32. Zhu L, Xie Y, Wang J, Xing L. Scatter correction for cone-beam CT in radiation therapy. Med. Phys. 2009;36:2258–2268. doi: 10.1118/1.3130047.

- 33. Xu Y, et al. A practical cone-beam CT scatter correction method with optimized Monte Carlo simulations for image-guided radiation therapy. Phys. Med. Biol. 2015;60:3567–3587. doi: 10.1088/0031-9155/60/9/3567.

- 34. Cao Q, Sisniega A, Stayman JW, Yorkston J, Siewerdsen JH, Zbijewski W. Quantitative cone-beam CT of bone mineral density using model-based reconstruction. Med. Imaging 2019 Phys. Med. Imaging. 2019;10948:109480Y. doi: 10.1117/12.2513216.

- 35. Ledig, C. et al. Photo-realistic single image super-resolution using a generative adversarial network. Preprint at http://arXiv.org/1609.04802 (2017).

- 36. Zhu, J.-Y., Park, T., Isola, P. & Efros, A. A. Unpaired Image-to-image translation using cycle-consistent adversarial networks. In Proc. IEEE International Conference on Computer Vision 2223–2232 (2017).

- 37. Yi X, Walia E, Babyn P. Generative adversarial network in medical imaging: A review. Med. Image Anal. 2019;58:1–20. doi: 10.1016/j.media.2019.101552.

- 38. Harms J, et al. Paired cycle-GAN-based image correction for quantitative cone-beam computed tomography. Med. Phys. 2019;46:3998–4009. doi: 10.1002/mp.13656.

- 39. Ronneberger O, Fischer P, Brox T, et al. U-net: Convolutional networks for biomedical image segmentation. In: Navab N, et al., editors. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2015.

- 40. Tian C, et al. Deep learning for image denoising: A survey. In: Pan J-S, et al., editors. International Conference on Genetic and Evolutionary Computing. Springer; 2018.

- 41. Do WJ, et al. Reconstruction of multicontrast MR images through deep learning. Med. Phys. 2020;47:983–997. doi: 10.1002/mp.14006.

- 42. Liu, G. Photographic image synthesis with improved U-net. In 2018 Tenth International Conference on Advanced Computational Intelligence (ICACI). IEEE (2018).

- 43. Chen L, Liang X, Shen C, Jiang S, Wang J. Synthetic CT generation from CBCT images via deep learning. Med. Phys. 2020;47:1115–1125. doi: 10.1002/mp.13978.

- 44. Kida S, et al. Cone beam computed tomography image quality improvement using a deep convolutional neural network. Cureus. 2018;10:1–15. doi: 10.7759/cureus.2548.

- 45. Choi JW. Analysis of the priority of anatomic structures according to the diagnostic task in cone-beam computed tomographic images. Imaging Sci. Dent. 2016;46:245–249. doi: 10.5624/isd.2016.46.4.245.

- 46. Choi JW, et al. Relationship between physical factors and subjective image quality of cone-beam computed tomography images according to diagnostic task. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2015;119:357–365. doi: 10.1016/j.oooo.2014.11.010.

- 47. Shin JM, et al. Contrast reference values in panoramic radiographic images using an arch-form phantom stand. Imaging Sci. Dent. 2016;46:203–210. doi: 10.5624/isd.2016.46.3.203.

- 48. Dh C, et al. Reference line-pair values of panoramic radiographs using an arch-form phantom stand to assess clinical image quality. Imaging Sci. Dent. 2013;43:7–15. doi: 10.5624/isd.2013.43.1.7.

- 49. Lee SJ, et al. Virtual skeletal complex model- and landmark-guided orthognathic surgery system. J. Craniomaxillofac. Surg. 2016;44:557–568. doi: 10.1016/j.jcms.2016.02.009.

- 50. Bailey DG, Hodgson RM. Range filters—Local intensity subrange filters and their properties. Image Vis. Comput. 1985;3:99–110. doi: 10.1016/0262-8856(85)90058-7.

- 51. Swennen GR, Schutyser F. Three-dimensional cephalometry: Spiral multi-slice vs cone-beam computed tomography. Am. J. Orthod. Dentofacial Orthop. 2006;130:410–416. doi: 10.1016/j.ajodo.2005.11.035.

- 52. He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In The IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

- 53. Kwon O, et al. Automatic diagnosis for cysts and tumors of both jaws on panoramic radiographs using a deep convolution neural network. Dentomaxillofac. Radiol. 2020;49:1–9. doi: 10.1259/dmfr.20200185.
