Abstract

Paradoxically, risk assessments for the majority of chemicals lack any quantitative characterization as to the likelihood, incidence, or severity of the risks involved. The relatively few cases where “risk” is truly quantified are based on either epidemiologic data or extrapolation of experimental animal cancer bioassay data. The paucity of chemicals and health endpoints for which such data are available severely limits the ability of decision-makers to account for the impacts of chemical exposures on human health. The development by the World Health Organization International Programme on Chemical Safety (WHO/IPCS) in 2014 of a comprehensive framework for probabilistic dose-response assessment has opened the door to a myriad of potential advances to better support decision-making. Building on the pioneering work of Evans, Hattis, and Slob from the 1990s, the WHO/IPCS framework provides both a firm conceptual foundation as well as practical implementation tools to simultaneously assess uncertainty, variability, and severity of effect as a function of exposure. Moreover, such approaches do not depend on the availability of epidemiologic data, nor are they limited to cancer endpoints. Recent work has demonstrated the broad feasibility of such approaches in order to estimate the functional relationship between exposure level and the incidence or severity of health effects. While challenges remain, such as better characterization of the relationship between endpoints observed in experimental animal or in vitro studies and human health effects, the WHO/IPCS framework provides a strong basis for expanding the breadth of risk management decision contexts supported by chemical risk assessment.

Keywords: Dose-response assessment, probabilistic methods, benefit-cost analysis

Summary:

NASEM’s Science and Decisions called for Probabilistic and Harmonized Dose-Response for chemicals. New tools, implementing and extending the WHO/IPCS methods, make risk-based decision-making possible.

1. INTRODUCTION

Most current risk assessments, particularly of non-cancer effects, are actually more akin to “safety” assessments, where the goal is to ensure the “absence” of (any) effect. A typical output of such assessments is a “Reference Dose,” which is defined as follows.

RfD: an estimate (with uncertainty spanning perhaps an order of magnitude) of a daily oral exposure to the human population (including sensitive subgroups) that is likely to be without an appreciable risk of deleterious effects during a lifetime. It can be derived from a NOAEL, LOAEL, or BMD, with UFs generally applied to reflect limitations of the data used. Generally used in EPA’s noncancer health assessments. (U.S. EPA, 2002)

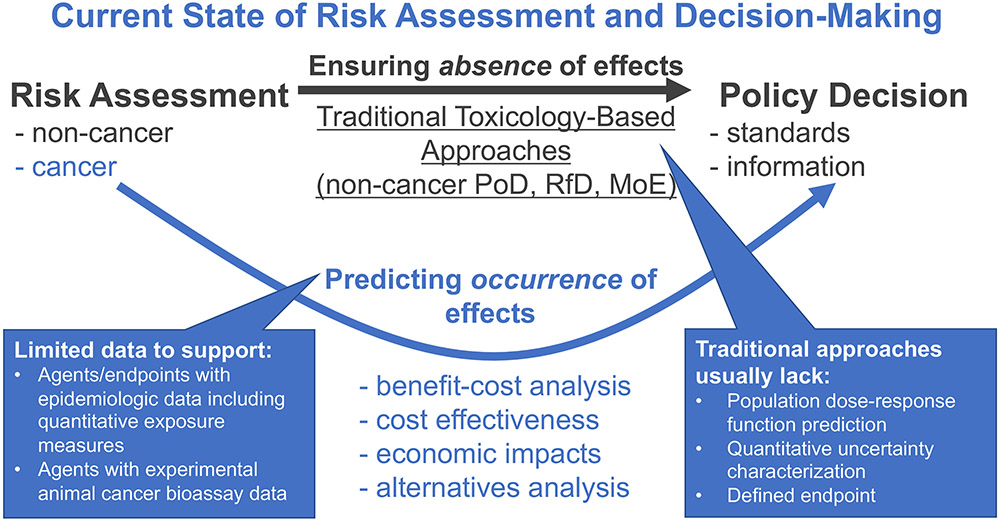

Such assessments, while useful in some decision contexts, are not suitable for analyses of benefit-cost, cost effectiveness, or economic impacts, which necessitate a “counterfactual” paradigm wherein the occurrence (often as an expected value) of particular effects differs under different policy options. For example, as depicted in Figure 1, traditional approaches using toxicological data usually lack three key components needed to support socioeconomic analyses: a prediction of the population dose-response relationship throughout a range of exposure levels; quantitative characterization of uncertainty in order to calculate central or expected values; and defined endpoints that can be assigned economic values. Such information is only available for a limited number of agents and endpoints that either have adequate epidemiologic data or experimental animal cancer bioassays. As a result, socioeconomic benefits analyses, which can be conducted to support or augment a variety of regulatory activities, ranging from particulate matter standards to drinking water maximum contaminant levels, only cover a dozen or so agents for epidemiology and a few hundred for animal cancer bioassays. This situation stems from the fact that the methods traditionally used to conduct dose-response assessment of environmental chemicals for non-cancer effects presume a “population threshold” concept, and thus do not provide the risk characterization needed to conduct such analyses. Thus, the basic premise of non-cancer risk assessment is that a “safe dose” is treated as absolutely without risk, even though the definitions of toxicity values such as the RfD do not rule out a residual level of risk, and in fact are silent as to what the magnitude of that residual risk may be.

Figure 1. Current state of risk assessment and decision-making.

Traditional risk assessment approaches, such as those based on deriving points of departure (PoDs), margins of exposure (MoEs), or reference doses (RfDs), are focused on ensuring the absence of adverse effects at some (usually imprecisely specified) level of confidence. However, economic analysis usually necessitates prediction of the occurrence of adverse effects in order to characterize societal impacts under different policy options or scenarios, and current risk assessment methods have limited data available to support such predictions.

Indeed, the need to better support socioeconomic analyses was cited by the National Academies in its Science and Decisions report (NAS, 2009) as one of the reasons for recommending that risk assessments redefine traditional toxicity values such as the RfD as a probabilistically-derived “risk-specific dose,” which characterizes risks quantitatively as a function of dose. Interestingly, there is a long history of previous attempts to define probabilistic approaches to dose-response assessment dating back to the 1990s (Baird, Cohen, Graham, Shlyakhter, & Evans, 1996; Evans, Rhomberg, Williams, Wilson, & Baird, 2001; Hattis, Baird, & Goble, 2002; Slob & Pieters, 1998; Swartout, Price, Dourson, Carlson-Lynch, & Keenan, 1998), but efforts have, thus far, had little effect in changing risk assessment practice.

The context of benefit-cost analysis in support of regulatory proposals is the best-known and most frequently argued basis for the need for non-cancer assessment to be capable of predicting levels of risk to exposed populations below, at, or above the RfD or similar toxicity values. However, there are many other decision-support contexts which also suffer from the lack of capability to predict levels of risk and the benefits of risk reduction. In both private and public sector contexts, there are significant investments in the operational (as distinct from rule-making) aspects of chemical risk control. Operational aspects include monitoring chemical exposures of workers and the public, conducting inspections and audits, product formulation, remediation of contaminated sites, and a host of other activities. For a significant part of that very large investment, the actual level of benefits accruing to the public, workers, the environment, and the corporations themselves cannot be estimated. The impact of this gap extends from the inability to provide direct quantitative characterization of “how much risk” there is due to a particular source to the associated trade-offs with respect to cost and/or competing risks that are part and parcel of many risk management and decision-making contexts. Chemical alternatives assessment, as currently practiced, is largely non-quantitative in describing the risk reductions expected, but may be moving slowly toward a more quantitative portrayal of the relative harms of the target chemical and its proposed alternatives (NAS, 2014).

Around the same time that Science and Decisions (NAS, 2009) was released, the Harmonization Project of the World Health Organization International Programme on Chemical Safety initiated a working group to develop a framework for probabilistic hazard characterization. Although the initial impetus for the project was as a companion to the Guidance document on characterizing and communicating uncertainty in exposure assessment, published as part of a harmonization document on exposure in 2008 (WHO/IPCS, 2008), the working group soon recognized that this effort could also address the recommendations from the Science and Decisions (NAS, 2009) report. First published in 2014, with an open-access journal article in 2015 and a second edition released in 2017, the resulting international guidance document presented a comprehensive framework for addressing uncertainty and variability in human health dose-response assessment of chemicals (Chiu & Slob, 2015; WHO/IPCS, 2014, 2017).

This new framework has opened the door to broader application of probabilistic approaches than earlier efforts, in part because it was developed through an extended multi-year process that included input from researchers, risk assessors, and regulators, as well as ongoing training in multiple professional fora. In this article, we begin with a brief overview of the WHO/IPCS framework, focusing on the new conceptual foundation that underlies the approach. Next, we describe some of the recent advances and applications since the publication of the framework. We then discuss some challenges to its broader application. We conclude that the WHO/IPCS framework provides a firm foundation for expanding the utility of risk assessment beyond the usual context of an “assurance of safety,” encompassing the broader suite of decision contexts encountered in both private and public sector investments in chemical risk control.

2. OVERVIEW OF THE WHO/IPCS FRAMEWORK

The key concept underlying the WHO/IPCS approach is the replacement of quantities such as the RfD with a so-called target human dose.

Target Human Dose (HDMI): the estimated human dose at which effects with magnitude M occur in the population with an incidence I.

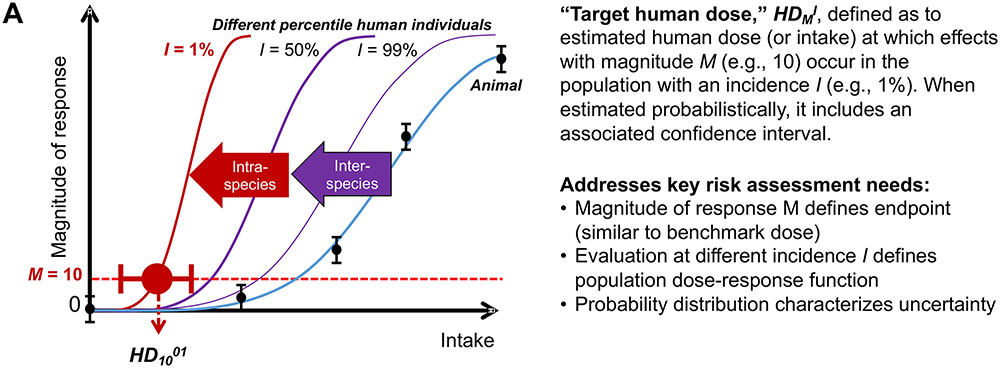

A conceptual illustration of the HDMI is shown in Figure 2A. Specifically, this approach posits that experimental animal data can be extrapolated to dose-response curves at the individual level, with human population variation leading to a family of different dose-response curves at different percentiles of the population. The HDMI expresses the relationship between the three quantities of human dose (HD), magnitude of effect (M), and percentile of the population (I), and can be mathematically re-expressed as needed with different variables being treated as the independent versus dependent variables.

Figure 2. Summary of WHO/IPCS probabilistic approach to characterize quantitative uncertainty and variability in dose-response assessment.

A: Illustration of the key concept of the “target human dose,” HDMI, which replaces traditional toxicity values such as the Reference Dose (RfD). B: General approach to deriving HDMI probabilistically, in comparison to deriving a traditional deterministic RfD, along with how it addresses key needs for economic analyses. C: Illustration of the key concept of HDMI when expressed as population incidence for a specific magnitude of effect. For additional details, see WHO/IPCS (2014) and Chiu and Slob (2015).

The derivation of the HDMI along with its associated confidence interval involves the following steps, also illustrated in Figure 2B:

Conduct benchmark dose (BMD) modeling. The BMD, introduced by Crump (1984), is the dose associated with a specific size of effect, the benchmark response (BMRM), corresponding to the magnitude of effect M. The BMDM is estimated, with an associated statistical distribution, by statistical model fitting to dose-response data. This approach contrasts with using a NOAEL as a starting point for toxicological risk assessment, the limitations of which have been recognized for decades (EFSA, 2009; NAS, 2001; U.S. EPA, 1995, 2012; WHO/IPCS, 2009). Additionally, rather than using only a single point, such as the lower confidence limit (BMDL), the entire uncertainty distribution is utilized.

Derive probabilistic interspecies extrapolation factors. Interspecies extrapolation involves two components. The first component, denoted as the dosimetric adjustment factor (DAF), converts experimental animal exposures to “human equivalent” exposures, either through toxicokinetic (TK) modeling (Corley et al., 2012; Dorman et al., 2008; Schroeter et al., 2008; Teeguarden, Bogdanffy, Covington, Tan, & Jarabek, 2008), or through generic approaches such as allometric scaling by body mass (U.S. EPA, 1994; West, Brown, & Enquist, 1999). The second component, which is denoted UFA, accounts for unknown chemical-specific interspecies differences. Preliminary default distributions were derived as part of the WHO/IPCS framework based on a review of previously published analyses of historical data (WHO/IPCS, 2014).

Derive probabilistic human variability extrapolation factors. In the WHO/IPCS framework, the generic human variability uncertainty factor UFH is replaced with a factor that depends on the population incidence I, denoted UFH,I, which reflects TK and toxicodynamic (TD) differences between individuals at the median and individuals at the Ith percentile of the population distribution (Chiu & Slob, 2015; WHO/IPCS, 2014). As with interspecies extrapolation, preliminary default distributions were derived as part of the WHO/IPCS framework based on a review of previously published analyses of historical data (WHO/IPCS, 2014).

Combine the components probabilistically to derive an intake-response function and its uncertainty. The integration of BMD modeling, interspecies extrapolation, and human variability extrapolation, is captured in the HDMI, which disaggregates “risk” into the distinct concepts of the magnitude of effect (M), the incidence of effect (I), and uncertainty (reflected in the confidence interval). The HDMI can furthermore be mathematically “inverted” to derive an intake-response function for a specified fraction I of the population (Chiu & Slob, 2015).
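The four steps above can be sketched as a small Monte Carlo calculation. This is only an illustration under stated assumptions: every numerical value is a hypothetical placeholder rather than a WHO/IPCS default, each uncertain input is assumed lognormal and specified by its median and P95/P50 ratio, human variability is assumed lognormal across individuals, and all extrapolation factors are treated as divisors of the BMD. The function and variable names are invented for this sketch.

```python
import math
import random
from statistics import NormalDist, quantiles

STD_NORMAL = NormalDist()
Z95 = STD_NORMAL.inv_cdf(0.95)  # z-score of the 95th percentile


def draw_lognormal(rng, p50, p95_over_p50):
    """One draw from a lognormal specified by its median and P95/P50 ratio."""
    sigma = math.log(p95_over_p50) / Z95
    return p50 * math.exp(rng.gauss(0.0, sigma))


def sample_hd_mi(incidence, n_draws=20000, seed=1):
    """Monte Carlo uncertainty sample of the target human dose for incidence I."""
    rng = random.Random(seed)
    # z-score separating the median individual from the individual at the
    # most sensitive I-th percentile (assumes lognormal human variability).
    z_i = STD_NORMAL.inv_cdf(1.0 - incidence)
    draws = []
    for _ in range(n_draws):
        # All values below are hypothetical placeholders, not framework defaults.
        bmd = draw_lognormal(rng, 10.0, 3.0)      # step 1: BMD uncertainty (mg/kg-day)
        af_size = draw_lognormal(rng, 4.0, 1.3)   # step 2a: dosimetric (body size) adjustment
        uf_a = draw_lognormal(rng, 1.0, 3.0)      # step 2b: remaining interspecies differences
        sigma_h = draw_lognormal(rng, 0.7, 2.0)   # step 3: uncertain ln-scale SD of human variability
        uf_h_i = math.exp(z_i * sigma_h)          # ratio of median to I-th percentile individual
        draws.append(bmd / (af_size * uf_a * uf_h_i))  # step 4: combine
    return draws


def summarize(draws):
    """Return the 5th, 50th, and 95th percentiles of a sample."""
    cuts = quantiles(draws, n=20)  # 19 cut points at 5%, 10%, ..., 95%
    return {"p05": cuts[0], "p50": cuts[9], "p95": cuts[18]}


hd_01 = summarize(sample_hd_mi(incidence=0.01))
hd_05 = summarize(sample_hd_mi(incidence=0.05))
print("HDMI, I=1%:", hd_01)
print("HDMI, I=5%:", hd_05)
```

In this sketch the lower percentile of the sample (`p05`) plays the role of a lower confidence bound on the target human dose, and protecting a smaller fraction of the population (I=1% rather than 5%) yields a lower dose.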

Thus, the result is a family of dose-response functions where one independent variable is the usual dose, but the “response” can be either magnitude of effect (at each value for the percentile of the population, as illustrated in Figure 2A) or incidence (for a specific value for magnitude of effect, as illustrated in Figure 2C), in addition to uncertainty bounds (Chiu & Slob, 2015). As noted by Science and Decisions (NAS, 2009), these types of “risk-specific dose”-type metrics are precisely what is needed “to be more formally incorporated into risk-tradeoffs and benefit-cost analyses.” The recent advances described next include additional tools to facilitate application, recent case studies illustrating implementation, and new methods to address the key data gaps in characterizing human variability.

3. RECENT ADVANCES IN APPLYING PROBABILISTIC DOSE-RESPONSE APPROACHES

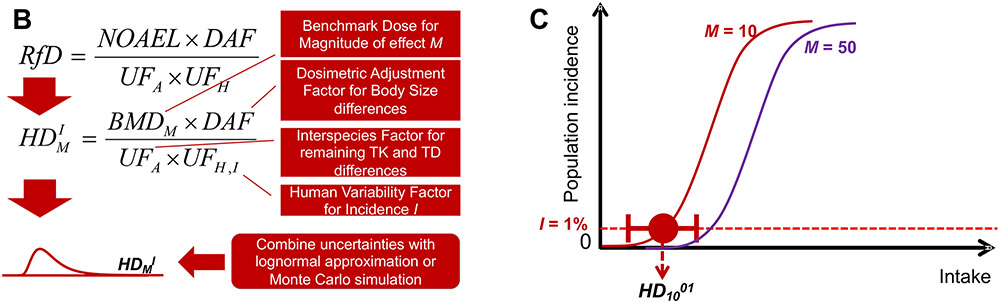

3.1. Web-based tools

One of the original components of the WHO/IPCS framework was the parallel development of a spreadsheet tool “APROBA” to implement the approach. This tool was recently extended in a version called “APROBA-Plus” to include semi-quantitative comparison to probabilistic exposure estimates (Bokkers, Mengelers, Bakker, Chiu, & Slob, 2017). Additionally, recognizing the shift towards online portals for conducting a variety of analyses, two web-based tools that implement these approaches have also been recently developed (Figure 3). First, the spreadsheet tool APROBA was implemented through an R “shiny” web application “APROBAweb” (https://wchiu.shinyapps.io/APROBAweb/). APROBAweb is appropriate for users new to probabilistic dose-response assessment, and also includes some of the published results described below. Second, for more advanced users, an online Bayesian benchmark dose (BBMD) system available at https://benchmarkdose.org (Shao & Shapiro, 2018) has been augmented with a Monte Carlo simulation-based implementation of the probabilistic framework described in Chiu and Slob (2015). This tool replaces two simplifying assumptions that were required in APROBA and APROBAweb:

Figure 3. Screen shots of web-based tools for conducting probabilistic dose-response analyses.

A: Rshiny “APROBAweb” tool (https://wchiu.shinyapps.io/APROBAweb/). This tool uses the lognormal approximation implemented in the WHO/IPCS APROBA Excel spreadsheet. Results from Chiu et al. (2018) can be viewed and/or loaded. B: Bayesian Benchmark Dose (BBMD) tool, which includes a probabilistic RfD module (https://benchmarkdose.org/). This tool uses Monte Carlo Simulation instead of a lognormal approximation, and can utilize posterior samples from the Bayesian Benchmark Dose calculations as input.

First, whereas APROBA assumes the BMD is distributed lognormally, BBMD utilizes Bayesian posterior samples of the BMD generated from a Bayesian model averaging approach, and thus provides a more data-driven uncertainty distribution for the BMD.

Second, APROBA assumes that the uncertainty in the ratio between the median and the Ith percentile of the population is lognormally distributed, whereas the original data suggest that the log-transformed standard deviation is, itself, lognormally distributed. The Monte Carlo-based approach of the BBMD thus incorporates the original distribution.

Thus, the calculations in the BBMD system rely on fewer assumptions, especially with respect to the shape of the underlying probability distributions, as compared to the approximate approach. The main benefit, though, of the BBMD system is that it automatically integrates with BMD modeling, the lack of which (i.e., relying on NOAELs or LOAELs) will frequently be a major contributor to uncertainty.
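The second assumption can be illustrated numerically. The sketch below is not taken from either tool: it assumes lognormal human variability, uses hypothetical values for the uncertain log-scale standard deviation, and assumes that the lognormal approximation is fitted by matching the 5th and 95th percentiles of the factor. It then compares the median implied by that approximation with the median obtained by sampling the standard deviation directly.

```python
import math
import random
from statistics import NormalDist, quantiles

Z95 = NormalDist().inv_cdf(0.95)
Z_I = NormalDist().inv_cdf(0.99)  # z-score for the most sensitive 1% (I = 1%)


def sample_uf_h(n_draws=20000, sigma_p50=0.7, sigma_ratio=2.0, seed=2):
    """Sample the human variability factor when the ln-scale SD is itself lognormal.

    sigma_p50 and sigma_ratio (median and P95/P50 of the SD) are hypothetical.
    """
    rng = random.Random(seed)
    sd_of_log_sigma = math.log(sigma_ratio) / Z95
    factors = []
    for _ in range(n_draws):
        sigma_h = sigma_p50 * math.exp(rng.gauss(0.0, sd_of_log_sigma))
        factors.append(math.exp(Z_I * sigma_h))  # median / 1st-percentile ratio
    return factors


cuts = quantiles(sample_uf_h(), n=20)  # cut points at 5%, 10%, ..., 95%
mc_p05, mc_p50, mc_p95 = cuts[0], cuts[9], cuts[18]

# A lognormal forced through the same P05 and P95 has their geometric mean as its median.
approx_p50 = math.sqrt(mc_p05 * mc_p95)

print(f"Monte Carlo:          P05={mc_p05:.2f}  P50={mc_p50:.2f}  P95={mc_p95:.2f}")
print(f"Lognormal fit median: {approx_p50:.2f}")
```

Because the logarithm of the factor is right-skewed when the standard deviation is lognormal, the fitted lognormal places its median above the sampled median in this sketch, which is the kind of shape discrepancy that a Monte Carlo implementation avoids.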

3.2. Feasibility of broadly applying the probabilistic framework to non-cancer endpoints

Chiu et al. (2018) assessed the feasibility and quantitative implications of broadly applying the WHO/IPCS framework to replace traditional RfDs with probabilistic estimates of the HDMI. After curating peer-reviewed toxicity values from U.S. government sources, they identified 1464 traditional RfDs and endpoints suitable for probabilistic estimation, covering 608 chemicals, 351 of which had multiple RfDs or endpoints. They then implemented a four-step, automated workflow that took existing RfDs and calculated the corresponding HDMI values:

If needed, convert to endpoint-specific RfD by removing consideration of database uncertainty. This step was necessary for 317 out of 1464 RfDs because the probabilistic dose-response framework aims to make predictions related to the specific effects associated with the POD, rather than a general statement about lack of “deleterious effects.” Because the database factor is meant to cover the case where a different effect might be observed in an as yet unperformed study, it is not meaningful to include in deriving the HDMI. Thus, this step re-interprets the RfDs as endpoint-specific, so no “incomplete database” adjustment would be applied.

Assign conceptual model(s) and magnitude(s) of effect to each endpoint-specific RfD. Each endpoint is assigned a “conceptual model” based on the type of effect (continuous or dichotomous, reflecting a stochastic process or not) associated with the POD. This choice, along with the benchmark response (BMR) for PODs that are BMDLs, determines the magnitude of effect (M). In some cases, such as when a NOAEL is reported for effects that might include both continuous and quantal endpoints, multiple conceptual models are assigned.

Assign uncertainty distributions for each POD and uncertainty factor. Approaches and default distributions are described above.

Combine uncertainties probabilistically using the WHO/IPCS (2014) approximate lognormal approach. This approximation was previously implemented in a spreadsheet tool “APROBA” that is available from the WHO/IPCS web page, and was reprogrammed in R for “batch” processing. Results from the lognormal approximation were confirmed to be within 20-30% of those calculated by Monte Carlo simulation (see Chiu et al. (2018) Supplemental Figure S2).
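The final step of this workflow can be sketched in closed form. The example assumes that the POD and every extrapolation factor are independent and lognormal, each summarized by its median (P50) and its P95/P50 ratio, so that medians combine by division and the log-scale spreads add in quadrature. The function name and all numbers are hypothetical, and the code is an illustration of this kind of approximation rather than a transcription of APROBA.

```python
import math


def combine_lognormal(pod, divisors):
    """Approximate lognormal combination of a POD and its extrapolation factors.

    pod      -- (P50, P95/P50) of the point of departure
    divisors -- list of (P50, P95/P50), one per factor the POD is divided by
    Returns (P05, P50, P95) of the resulting quotient.
    """
    pod_p50, pod_ratio = pod
    # Medians of independent lognormals multiply/divide directly.
    log_p50 = math.log(pod_p50) - sum(math.log(p50) for p50, _ in divisors)
    # Log-scale spreads of independent lognormals add in quadrature.
    log_ratio = math.sqrt(
        math.log(pod_ratio) ** 2 + sum(math.log(ratio) ** 2 for _, ratio in divisors)
    )
    p50 = math.exp(log_p50)
    ratio = math.exp(log_ratio)
    return p50 / ratio, p50, p50 * ratio


# Hypothetical inputs: a POD of 10 mg/kg-day and three extrapolation factors.
p05, p50, p95 = combine_lognormal(
    pod=(10.0, 3.0),
    divisors=[(4.0, 1.3), (1.0, 3.0), (5.0, 2.5)],
)
print(f"P05={p05:.3f}  P50={p50:.3f}  P95={p95:.3f}")
```

Because no sampling is involved, a calculation of this form is well suited to batch processing of many RfDs, at the cost of the shape assumptions discussed in Section 3.1.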

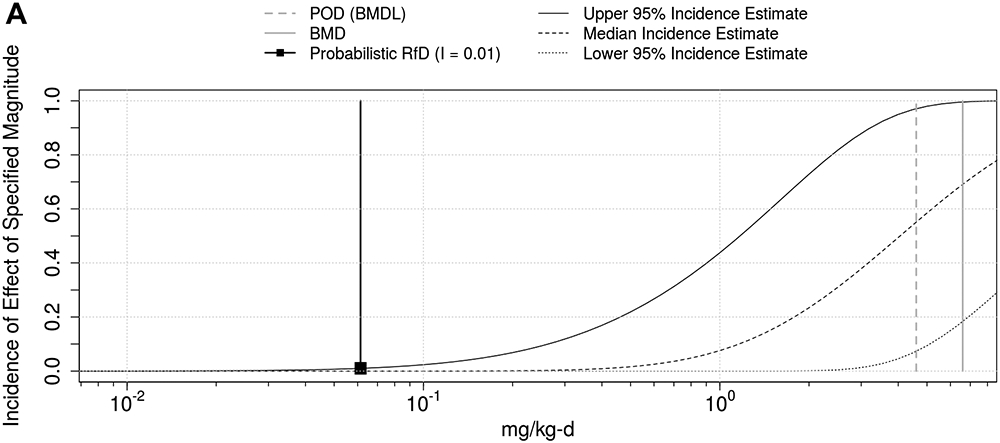

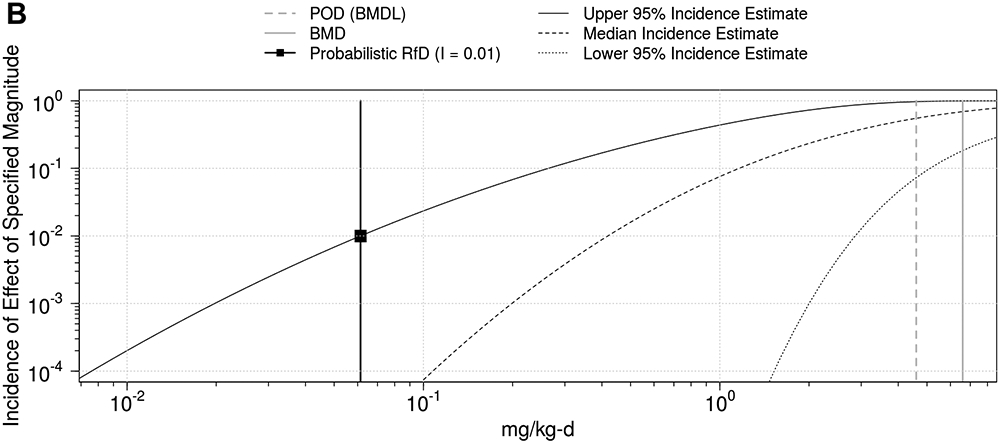

Outputs of this analysis included not only the uncertainty distribution for HDMI at I=1%, from which any statistic of interest (e.g., mean, median, confidence interval) or weighted average can be derived, but also a distribution for the incidence of effect as a function of dose, which is needed for any type of comparative analysis of different risk management options with differing degrees of exposure mitigation. An example for dieldrin liver toxicity is given in Figure 4.

Figure 4. Population dose-response prediction for dieldrin liver toxicity using WHO/IPCS Framework.

Incidence is shown both on natural scale (A) and log-scale (B).
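A dose-incidence prediction of this kind can be sketched by inverting the target human dose relationship. The example assumes lognormal human variability, so that the incidence at a given dose is a normal cumulative probability on the log-dose scale, and it propagates uncertainty in the median human dose and in the variability standard deviation by Monte Carlo sampling. All parameter values are hypothetical placeholders and are not the dieldrin inputs.

```python
import math
import random
from statistics import NormalDist, quantiles

STD_NORMAL = NormalDist()
Z95 = STD_NORMAL.inv_cdf(0.95)


def incidence_at_dose(dose, hd_m50, sigma_h):
    """Fraction of the population responding with magnitude M at this dose.

    hd_m50  -- dose producing effect M in the median individual
    sigma_h -- ln-scale SD of human variability (lognormal assumption)
    """
    return STD_NORMAL.cdf(math.log(dose / hd_m50) / sigma_h)


def incidence_uncertainty(dose, n_draws=20000, seed=3):
    """Median and upper 95% confidence bound of incidence at a given dose."""
    rng = random.Random(seed)
    values = []
    for _ in range(n_draws):
        # Hypothetical uncertainty distributions (median, P95/P50 ratio).
        hd_m50 = 2.5 * math.exp(rng.gauss(0.0, math.log(5.0) / Z95))
        sigma_h = 0.7 * math.exp(rng.gauss(0.0, math.log(2.0) / Z95))
        values.append(incidence_at_dose(dose, hd_m50, sigma_h))
    cuts = quantiles(values, n=20)  # cut points at 5%, 10%, ..., 95%
    return cuts[9], cuts[18]


curve = {dose: incidence_uncertainty(dose) for dose in (0.1, 1.0, 10.0)}
for dose, (median, upper) in curve.items():
    print(f"dose={dose:>5} mg/kg-day  median incidence={median:.2e}  upper 95%={upper:.2e}")
```

At low doses the median and upper-bound incidences in such a calculation can differ by orders of magnitude, which is why the choice of summary statistic matters for comparing risk management options.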

Some key conclusions from the analysis from Chiu et al. (2018) of approximately 1500 chemical-non-cancer endpoint combinations are as follows:

Traditional RfDs tended to correlate with probabilistic RfDs (defined as the lower 95% confidence bound of the HDMI for I=1%), with a majority of the differences being 10-fold or less.

Estimated upper confidence bounds on incidence (“residual risk”) at exposures equal to traditional RfDs (i.e., at hazard quotient, HQ=1) are typically a few percent, but a quarter are greater than 3%. These estimates of incidence increase substantially for exposures above the RfD, with wide variation across RfDs.

The median estimates from the uncertainty distribution of incidence for exposures at the RfD are generally very small (<0.01% in almost all cases).

The severity of the endpoint assessed varies greatly across RfDs, ranging, for instance, from “mild irritation” to “hemorrhage” or “increased mortality.”

More importantly, however, the work by Chiu et al. (2018) demonstrated the feasibility of broadly applying probabilistic dose-response assessment to non-cancer endpoints as a replacement for the traditional, deterministic process of calculating RfDs. Moreover, the ability to make predictions of dose-response as a function of exposure can therefore be used to support the broad range of risk management contexts, including benefit-cost analysis. Of course, it must be recognized that these are a priori, predictive estimates based on a mix of chemical-specific data for the effects observed and a modest set of chemicals with historical data on inter-species or intra-species differences. They have not been calibrated or compared to chemical-specific data on variability in human responses after in vivo chemical exposure. However, below we describe some new in vitro/in silico approaches for predicting population dose-response that provide a useful comparison.

3.3. New in vitro and in silico approaches to predict population dose-response

One of the additional key conclusions from the Chiu et al. (2018) analysis was that the uncertainty in human variability is one of the greatest contributors to overall uncertainty. Indeed, the WHO/IPCS (2014) framework relies on a fairly small dataset of human in vivo population variability data compiled by Hattis and Lynch (2007) in order to develop a default preliminary distribution. More recently, however, a number of new methods for addressing population variability have become more widely available, particularly on a chemical-specific basis. As reviewed by Chiu and Rusyn (2018), as well as the other articles in a special issue of Mammalian Genome (Rusyn, Kleeberger, McAllister, French, & Svenson, 2018), these include in vivo (e.g., rodent), in vitro (e.g., human), and in silico models for population variability in susceptibility to chemicals.

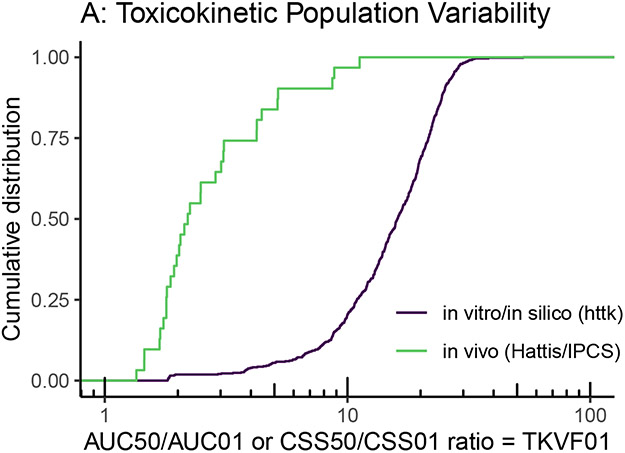

To illustrate the potential for such “new approach methods” (NAMs) to address population variability, we can compare them with the data underlying the WHO/IPCS (2014) default distributions for TK and TD variability. For instance, the “high throughput toxicokinetics” (httk) R package can calculate population-based TK for several hundred chemicals based on in vitro measurements of clearance and protein binding (Pearce et al., 2017; Ring, Pearce, Setzer, Wetmore, & Wambaugh, 2017). Importantly, Ring et al. (2017) developed a Monte Carlo sampler for population variability based on sampling from a representative NHANES population. While the primary use of these in silico models has been to conduct “reverse” TK to convert in vitro bioactivity measurements to oral dose equivalents, the same models can be run in the “forward” direction to predict population variation in the steady-state concentration (Css) of a chemical given a uniform oral dose. Figure 5A compares the results from httk (n=582) and WHO/IPCS (2014) (n=31) for the TK variability factor TKVF01, the ratio between the median and most sensitive 1st percentile of the population. The larger population variability exhibited by httk is possibly due to the more diverse population being sampled, as the NHANES sample includes a variety of ethnicities and anthropometric characteristics, whereas most of the data used by WHO/IPCS consisted of smaller and more homogeneous groups of human subjects.
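As a rough illustration of how a factor such as TKVF01 can be computed from a simulated population, the sketch below draws hypothetical Css values from a lognormal distribution and takes the ratio of the 99th percentile to the median. It does not call httk (which is an R package); the simulated Css values, the lognormal assumption, and the convention that the most sensitive 1% are the individuals with the highest Css at a fixed dose are all assumptions of this sketch.

```python
import math
import random
from statistics import NormalDist, quantiles


def tkvf01(css_values):
    """TK variability factor for the most sensitive 1% of the population.

    Assumes that, at a fixed oral dose, the most sensitive individuals are
    those with the highest steady-state concentration (Css).
    """
    cuts = quantiles(css_values, n=100)  # 99 cut points at 1%, 2%, ..., 99%
    css_p50 = cuts[49]
    css_p99 = cuts[98]
    return css_p99 / css_p50


# Hypothetical population: Css per unit dose, lognormal with ln-scale SD of 0.5.
rng = random.Random(4)
css = [rng.lognormvariate(0.0, 0.5) for _ in range(20000)]

estimate = tkvf01(css)
analytic = math.exp(NormalDist().inv_cdf(0.99) * 0.5)  # exact value for this lognormal
print(f"TKVF01 from sample: {estimate:.2f}; analytic value: {analytic:.2f}")
```

With a real population sampler in place of the simulated draws, the same percentile ratio would be computed chemical by chemical to build a distribution like the one compared in Figure 5A.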

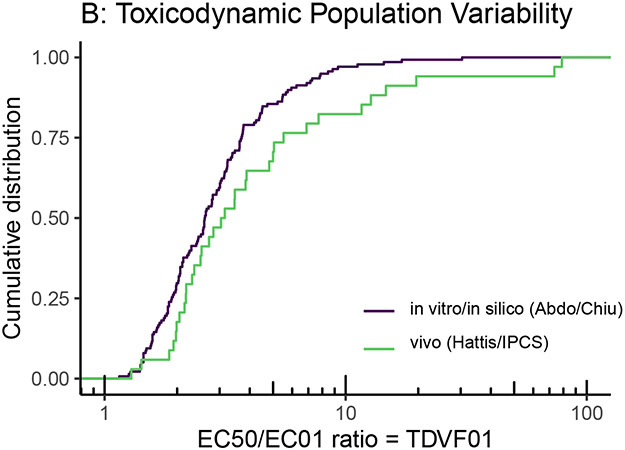

Figure 5.

Comparison of toxicokinetic (A) and toxicodynamic (B) variability from the limited number of traditional in vivo human studies compiled by Hattis and Lynch (2007) and WHO/IPCS (2014) with those from more recent in vitro/in silico models and methods, such as high throughput toxicokinetics (httk) and cytotoxicity screening of ~1000 cell lines derived from different individuals (Abdo et al., 2015; Chiu et al., 2017).

With respect to TD, one of the most promising NAMs is the use of in vitro cell lines derived from a diverse population. For instance, Abdo et al. (2015) used over 1000 lymphoblastoid cell lines from the 1000 Genomes project in order to assess TD variation in cytotoxic response to over 100 chemicals. Chiu, Wright, and Rusyn (2017) performed a Bayesian re-analysis of these data to more clearly separate measurement and statistical errors from true population heterogeneity. Figure 5B compares the results from the Chiu et al. (2017) analysis (n=138) and WHO/IPCS (2014) (n=34) for the TD variability factor TDVF01, the ratio between the median and most sensitive 1st percentile of the population. The in vitro data showed slightly less variation, possibly due to a combination of measurement errors in the in vivo data, the lack of non-genetic sources of variability in the in vitro data, and the single in vitro assessed endpoint of cytotoxicity.

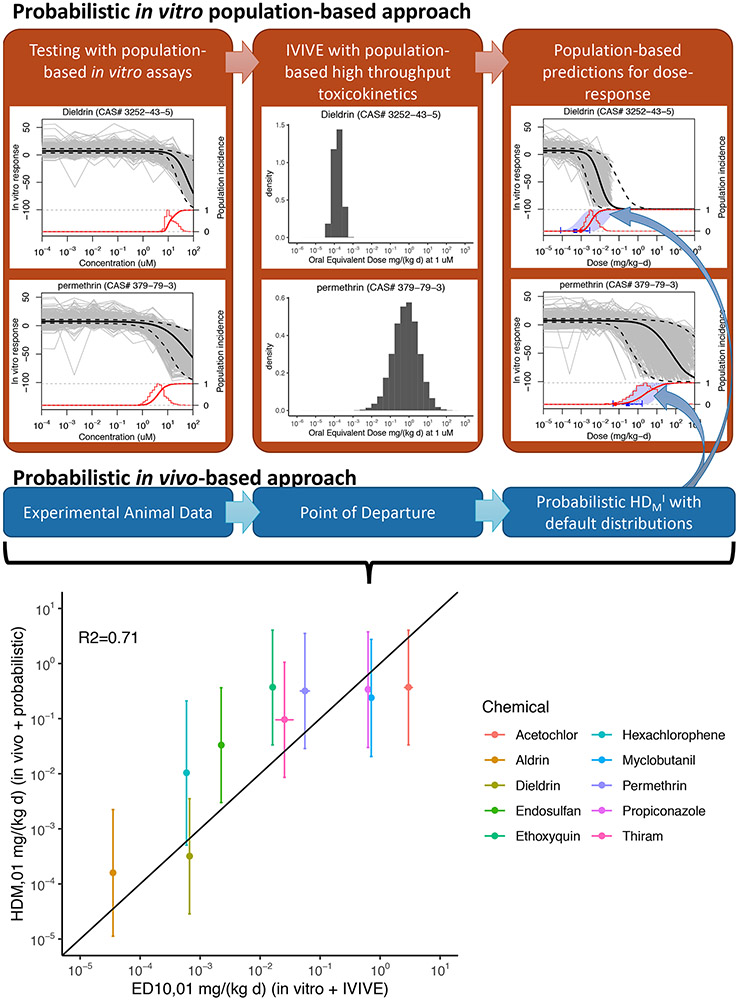

Interestingly, combining httk for TK and the 1000 Genomes approach for TD could actually yield a population of individual dose-response curves, as well as an overall dose-population incidence curve, like the ones illustrated in Figures 2A and 2C. Thus, a fully “in vitro/in silico” TK-TD model can be developed within the WHO/IPCS (2014) framework, as illustrated in the top half of Figure 6. Unfortunately, to date, only a handful of chemicals overlap (n=14) between the httk and 1000 Genomes datasets, but they still provide a proof of principle. Of the overlapping chemicals, two (DEHP and styrene) are known to require metabolic activation for toxicity, which is not tracked by httk and occurs only to a limited extent in the lymphoblastoid cells tested in vitro. For an additional two chemicals (aldicarb and dichlorvos), the critical effect is acetylcholinesterase inhibition, for which lymphoblastoid cells are not an appropriate model. After dropping these four chemicals, which are outside the “applicability domain” of this approach, the remaining ten chemicals were each analyzed as follows.

Figure 6.

Comparison between a probabilistic in vitro/in silico approach to predict population dose-response and the probabilistic WHO/IPCS (2014) approach based on experimental in vivo data. Scatter plot compares the predictions of the two methods for the dose eliciting a response in the 1% most sensitive individual in the population.

First, the fitted concentration-response for all ~1000 individuals was converted to a dose-response by using httk to determine the oral equivalent dose at each concentration, leading to a population of individual dose-response curves as conceptualized in Figure 2A. Then, the dose eliciting a 10% cytotoxic response (ED10) was found for each individual from the fitted concentration-response. The cumulative distribution of ED10 values then represents the dose-population incidence curve as conceptualized in Figure 2C. This dose-incidence curve can then be compared to the probabilistic predictions from the WHO/IPCS (2014) framework using the in vivo experimental animal data and the default distributions, as described in the previous section. As a point of comparison, the dose eliciting the response for the 1% most sensitive individual (HDM01) was compared between the two approaches. As shown in the scatter plot in the bottom of Figure 6, in eight out of ten cases, the 90% confidence interval for the HDM01 using the WHO/IPCS (2014) approach overlapped with the value from the in vitro/in silico model, with an R² = 0.71 for the correlation between the median estimates of the two approaches.
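The sequence just described can be sketched with simulated data. The example below is an illustration only: it assumes a Hill-type concentration-response for each individual, linear TK (so that concentration divided by the Css per unit dose gives the oral equivalent dose), and random pairing of TD and TK individuals. All parameter values and names are hypothetical and do not come from the httk or 1000 Genomes datasets.

```python
import math
import random
from statistics import median, quantiles


def ec10_from_hill(ec50, hill_n):
    """Concentration giving a 10% response under a Hill model with maximum 100%."""
    # Solve c^n / (ec50^n + c^n) = 0.1 for c.
    return ec50 * (0.1 / 0.9) ** (1.0 / hill_n)


rng = random.Random(5)
ed10 = []
for _ in range(1000):  # one entry per simulated individual
    ec50 = rng.lognormvariate(math.log(50.0), 0.4)              # uM, TD variability (hypothetical)
    css_per_unit_dose = rng.lognormvariate(math.log(2.0), 0.5)  # uM per mg/kg-day, TK variability (hypothetical)
    # Linear TK assumption: oral equivalent dose = concentration / Css per unit dose.
    ed10.append(ec10_from_hill(ec50, hill_n=1.5) / css_per_unit_dose)


def incidence(dose):
    """Empirical dose-incidence curve: fraction of individuals with ED10 <= dose."""
    return sum(value <= dose for value in ed10) / len(ed10)


hd_m01 = quantiles(ed10, n=100)[0]  # dose at the most sensitive 1st percentile
print(f"HDM01 = {hd_m01:.2f} mg/kg-day; median ED10 = {median(ed10):.2f} mg/kg-day")
print(f"Incidence at 5 mg/kg-day = {incidence(5.0):.3f}")
```

The value `hd_m01` corresponds to the single point on the dose-incidence curve that was compared against the WHO/IPCS (2014) prediction for each chemical.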

These results suggest that NAMs, specifically population-based in vitro and in silico models, not only have great potential to enhance our characterization of human variability, but may even be able to make predictions as to the overall population dose-response relationship. However, further work is needed to address some of the limitations of the currently available NAMs. For instance, most in vitro systems are unable to make TK or TD predictions for compounds whose toxicity requires metabolic bioactivation. Furthermore, additional population-based NAMs are needed to address variability in unique toxic endpoints for which cytotoxicity is a poor surrogate, such as acetylcholinesterase inhibition and receptor-mediated effects. Finally, it should be emphasized that population-based in vitro models to date only address the genetic component of population variability. As described in Zeise et al. (2013), there are many other potential contributors to TD variability in a diverse population, including life-stage, nutrition, non-chemical stressors, and chemical-chemical interactions. Thus, results from existing in vitro models for population variability that address only genetic variability could be but a lower bound for overall TD variability.

4. CONCLUSIONS: REMAINING CHALLENGES AND FUTURE PROSPECTS

There is considerable opportunity to fundamentally change the nature of chemical risk management. However, there are a number of challenges to be overcome before the full potential can be realized. These challenges can be considered to fall into several categories: (a) challenges in quantification; (b) institutional challenges; and (c) communication-related challenges.

4.1. Challenges in Quantification

A major challenge is that the vast majority of chemicals in commerce and to which populations are exposed lack the epidemiologic or experimental animal data that have been traditionally used to develop toxicity values. However, in addition to the NAMs described above for characterizing population variability, major efforts have been undertaken in the field of toxicology to develop surrogate toxicity information using computational in silico methods (Wignall et al., 2018), high-throughput in vitro data (e.g., Wambaugh et al., 2015), and more detailed mechanistic frameworks such as adverse outcome pathways (AOPs) (Ankley et al., 2010). In addition, there is a long history of “data poor” approaches, such as the threshold of toxicological concern (TTC) (e.g., Kroes, Kleiner, & Renwick, 2005) and read-across (Patlewicz, Helman, Pradeep, & Shah, 2017; Stuard & Heinonen). In the medium term, these approaches could be used to derive points of departure for use in the WHO/IPCS framework. While all of these approaches entail significant uncertainties, these uncertainties may be preferable to the alternative, which is the systematic omission of data-poor chemicals from risk assessments, an omission that essentially ascribes a value of zero to the impact of these chemicals and assigns zero benefit to any attempt to reduce exposure to them. Another challenge will continue to be the ability to quantify uncertainty in the level of human variability at very low doses (e.g., a dose at which less than 1 person in 10,000 might have an adverse health outcome). One strategy might be to set a default level of incidence below which it is not recommended to quantify uncertainty unless a decision-maker is thought to be particularly interested in such an estimate.

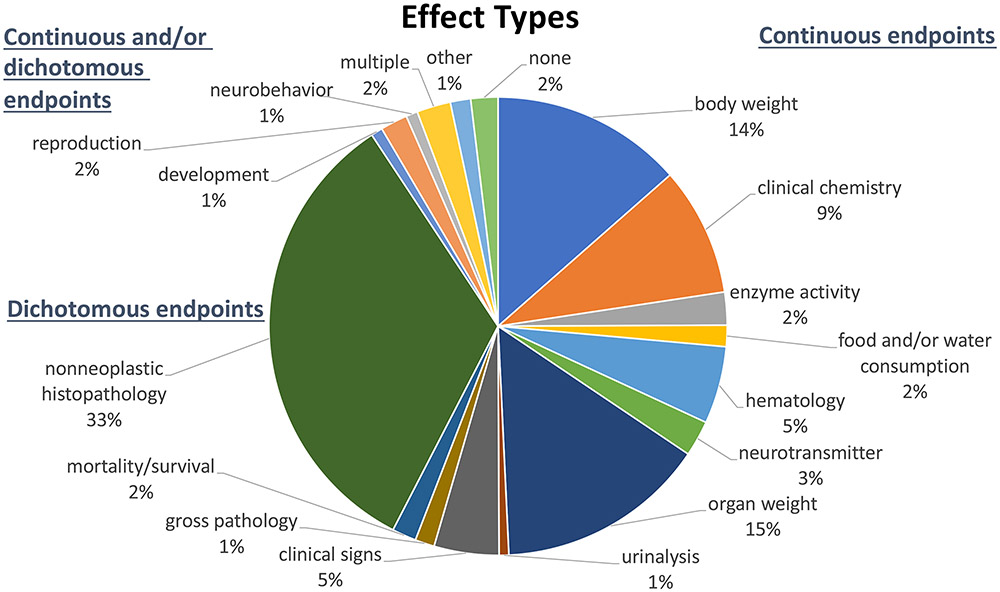

Additional challenges relate to the current inability to convert the incidence of predicted adverse health responses into estimates of the burden of disease (e.g., QALYs, DALYs, etc.) for the great majority of adverse health outcomes associated with the health response being predicted. In one approach, an “effect” can be defined as a specified value of the effect magnitude M along with a corresponding economic value, such as an estimate of a loss in Disability-Adjusted Life Years (DALYs) or a monetized value. The incidence of this “effect” as a function of dose can then be predicted to provide the dose-response function needed to calculate the marginal benefits of different policy options. Alternatively, if different levels of M correspond to different levels of economic value, the population average M at each dose can be calculated by integrating over the population of individual dose-response functions, and this population dose-response can then be used to calculate marginal benefits. In either case, it will be necessary to fully describe the health burden to the population and to monetize the benefit of preventing some fraction of this burden. Unfortunately, as noted by Chiu et al. (2018), critical effect endpoints from animal studies used for the construction of non-cancer dose-response curves span a wide range of severity, including alterations in clinical chemistry parameters, changes in organ or body weights, alterations in histology, changes in food or water consumption, and even hemorrhage and death (Figure 7). Few of these endpoints correspond directly to a frank disease state in humans, and this disconnect inhibits the ability to assign economically meaningful values, such as DALYs or monetized units. Thus, enabling this large range of outcome severity to contribute more formally and quantitatively to determining an appropriate level of protection, and the cost-effectiveness of prevention, would be a very important advance.
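The two routes to a population dose-response function described above can be illustrated with a minimal Monte Carlo sketch. Everything in it is an assumption made for illustration: the saturating form of the individual dose-response, the lognormal spread of individual ED50 values, the threshold `m_star`, and the monetary value per case are hypothetical and are not taken from the WHO/IPCS framework or from Chiu et al. (2018).

```python
import math
import random
import statistics

def effect_magnitude(dose, ed50):
    """Hypothetical individual dose-response: fractional effect M in [0, 1)."""
    return dose / (ed50 + dose) if dose > 0 else 0.0

def sample_individual_ed50s(n, median_ed50, gsd, seed=0):
    """Draw one ED50 per simulated person from an assumed lognormal distribution."""
    rng = random.Random(seed)
    return [rng.lognormvariate(math.log(median_ed50), math.log(gsd)) for _ in range(n)]

def incidence(dose, ed50s, m_star):
    """Route 1: fraction of people whose effect magnitude reaches m_star."""
    return sum(effect_magnitude(dose, e) >= m_star for e in ed50s) / len(ed50s)

def population_average_m(dose, ed50s):
    """Route 2: mean effect magnitude, a Monte Carlo integral over individuals."""
    return statistics.fmean(effect_magnitude(dose, e) for e in ed50s)

def monetized_benefit(dose_before, dose_after, ed50s, m_star,
                      population_size, value_per_case):
    """Benefit of lowering exposure: avoided cases times an assumed value per case."""
    avoided = incidence(dose_before, ed50s, m_star) - incidence(dose_after, ed50s, m_star)
    return avoided * population_size * value_per_case

# Illustrative inputs only; none of these values come from the article.
ed50s = sample_individual_ed50s(n=20_000, median_ed50=50.0, gsd=3.0)
doses = [0.5, 5.0, 50.0]  # mg/kg-day
incidences = [incidence(d, ed50s, m_star=0.10) for d in doses]
average_ms = [population_average_m(d, ed50s) for d in doses]
benefit = monetized_benefit(5.0, 0.5, ed50s, m_star=0.10,
                            population_size=1_000_000, value_per_case=1_000.0)

for d, inc, avg in zip(doses, incidences, average_ms):
    print(f"dose={d:>5} mg/kg-day  incidence={inc:.4f}  average M={avg:.4f}")
print(f"benefit of reducing exposure from 5.0 to 0.5 mg/kg-day: {benefit:,.0f}")
```

The first route needs a single economic value attached to the chosen effect threshold, whereas the second would need a mapping from every level of M to a value, which is exactly where the endpoint-to-disease disconnect discussed above becomes limiting.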

Figure 7.

Effect types for 1464 reference dose (RfD) derivations for different health outcomes for 608 chemicals, based on the analysis by Chiu et al. (2018).

It should be further noted that none of these quantification challenges is brought about by the particular methods or tools applied here (APROBA, BBMD, etc.). Rather, the approach described above exposes those inherent challenges where they are otherwise left unacknowledged in the classical RfD-based approach.

4.2. Institutional Challenges

Some of the key institutional challenges stem from the need to build capacity to describe uncertainties and to quantify the previously unquantified. While easy-to-use and freely available tools make the analytical task much less burdensome, achieving a conceptual understanding of the quantitative components of the HDMI and its related measures will require a sustained effort. The conceptual separation of uncertainty and variability, the use of multiple probability distributions, and Monte Carlo simulation and related techniques are not part of the standard training of a significant proportion of the workforce that normally uses reference values. Continuing professional education will therefore be needed until these topics become part of standard training in toxicology and related disciplines.

A more fundamental institutional challenge (applying to both private and public sector organizations) is the set of barriers that prevent an institution from explicitly establishing quantified goals for health protection (and for the level of confidence in the level of protection achieved). The transition involves converting from the definition of the RfD, which includes multiple layers of ambiguity, to a statement like: “We propose to allow levels of contaminant X in drinking water up to the level where we can be 99% confident that less than 1% of the exposed population are expected to experience mild adverse effects with daily consumption.” Overcoming this particular challenge involves preparing decision-makers and communicators to move from a binary concept of safe/unsafe to explicit judgements regarding societal tolerance for non-zero risk and for a significant level of uncertainty when estimating low levels of risk. The potential difficulties in making this transition are evident in the analogous challenges associated with moving from the NOAEL to the BMD in defining the POD, a move that is still ongoing due to both technical and institutional challenges. Like the HDMI, the BMD required being more explicit about what level of response was considered “acceptable” or “minimally adverse,” and it took several decades to develop guidance and policies to establish consistent and appropriate BMRs for regulatory decision-making. While a similar long-term approach is likely to be required to enable widespread and routine use of the HDMI, there may be areas, such as socio-economic analyses, where probabilistic methods represent a “fit for purpose” approach to fill a critical need in regulatory analysis.
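To make the structure of such a statement concrete, the following is a minimal sketch of how a dose that is “99% confident, less than 1% affected” could be read off a Monte Carlo simulation that keeps uncertainty and variability separate. It is not the APROBA or BBMD implementation. The lognormal forms, the parameter values, and the function names are all hypothetical, chosen only to show the logic: each draw represents one possible state of knowledge (uncertainty), and within each draw the incidence I is converted to a dose through an assumed lognormal distribution of human sensitivity (variability).

```python
import math
import random
from statistics import NormalDist

def sample_hdmi(n_draws, incidence, seed=1):
    """Return uncertainty draws of the human dose at which a fraction
    `incidence` of the population shows the effect of magnitude M."""
    rng = random.Random(seed)
    # z-score locating the sensitive tail of the population (variability).
    z = NormalDist().inv_cdf(1.0 - incidence)
    draws = []
    for _ in range(n_draws):
        # Uncertainty: hypothetical lognormal distributions, illustrative only.
        pod = rng.lognormvariate(math.log(10.0), math.log(1.5))      # animal POD, mg/kg-day
        af_inter = rng.lognormvariate(math.log(4.0), math.log(2.0))  # animal to median human
        sigma_h = rng.lognormvariate(math.log(0.7), math.log(1.5))   # ln-scale SD of human sensitivity
        # Variability: ratio of the median human dose to the dose for the
        # person at the `incidence` quantile of sensitivity.
        af_intra = math.exp(z * sigma_h)
        draws.append(pod / (af_inter * af_intra))
    return draws

def lower_confidence_bound(draws, confidence):
    """Dose that the true value exceeds in a fraction `confidence` of draws."""
    ordered = sorted(draws)
    index = min(int((1.0 - confidence) * len(ordered)), len(ordered) - 1)
    return ordered[index]

hd_01 = sample_hdmi(20_000, incidence=0.01)   # 1% of the population affected
hd_10 = sample_hdmi(20_000, incidence=0.10)   # 10% of the population affected

print("I = 1%,  99% confidence:", round(lower_confidence_bound(hd_01, 0.99), 4))
print("I = 1%,  95% confidence:", round(lower_confidence_bound(hd_01, 0.95), 4))
print("I = 1%,  median estimate:", round(lower_confidence_bound(hd_01, 0.50), 4))
print("I = 10%, median estimate:", round(lower_confidence_bound(hd_10, 0.50), 4))
```

In this sketch the choice of incidence (1% versus 10%) and of confidence (99% versus 95%) are separate inputs, which mirrors the point that they are risk management judgements rather than outputs of the assessment.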

More broadly, if the molecular and genetic underpinnings of variability in susceptibility begin to be revealed, maintaining a non-zero risk tolerance may become more difficult, because it may then be possible to identify the individuals who would be adversely affected by, or have intolerably high individual risks from, particular toxicant effects. Such issues will require social, ethical, and political discussions to resolve, akin to the issues surrounding personalized risk/premium stratification in the insurance sector.

4.3. Communication Challenges

Arguably, the communication burden associated with this transformation is significant. One of those challenges relates to overcoming concerns, where appropriate, that are based in expectations (public, political, media, stakeholders, workers) that current exposure limits (RfDs, etc.) delineate a “bright line” between safe and unsafe exposures. Ultimately, it may be difficult to explain why it is deemed reasonable to tolerate exposures in which a small but non-zero fraction of the population (or a fraction of the population of workers) can be predicted to experience varying degrees of harm.

The explanation for this apparent “disconnect” may be that, in a sense, the current application of non-cancer risk assessment “violates” the conceptual separation between risk assessment and risk management advocated by the foundational NRC “Red Book” (NAS, 1983). Various judgments expected of the risk management function are subsumed within the risk assessment function’s selection of adverse outcomes and the accumulation of generic and chemical-specific adjustment factors with no explicit requirement to achieve a level of protection (and with a level of confidence) that the risk manager has determined to be desirable, nor the expectation that the resulting level of public protection will even be formally stated.

For this reason, a concerted effort, perhaps using Chiu et al. (2018) as a starting point, should be undertaken to understand the societal consequences of the limitations of current approaches. At one extreme, the consequences could be found to be minimal with the finding that current risk management decisions are generally appropriate and investments in public and private chemical risk control are appropriately allocated. At the other extreme, the consequences could be found to be substantial with respect to, for example, some combination of human health impacts, misallocation of financial expenditures in public and private sectors and questions of the appropriate governance of this class of risks to the public. It is also reasonable to expect that the truth lies somewhere in between those extremes.

4.4. Future Prospects

While this review demonstrates that the WHO/IPCS framework for probabilistic dose-response is broadly applicable and that tools are already available, it will not be possible (and may not be desirable) to apply such a fundamental change in all cases at the same time. As such, it would be useful to consider where the modified process and conceptual approach can be most fruitfully applied. Examples of these considerations could include: (a) where the societal cost is predicted to be significant; (b) when applying or requiring chemical substitutions; (c) where known exposures are within an order of magnitude of existing risk management criteria; (d) for new chemicals only; (e) based on ease of application; or (f) in relatively non-controversial contexts to gain more practical experience in the management of the process, the interactions with decision-makers and stakeholders during the process, and in the communication of the results of the process.

Overall, although challenges remain, such as better characterization of the relationship between endpoints observed in experimental studies and human health effects, the WHO/IPCS framework provides a firm foundation for expanding the utility of chemical risk assessment beyond the usual context of an “assurance of safety” to encompass the broader suite of risk management contexts encountered in either the private or public sector, and ultimately for better risk assessment support for risk-based decision-making.

Acknowledgments:

An early draft of this work was presented at the Risk Assessment, Economic Evaluation, and Decisions Workshop held September 26-27, 2019, hosted by the Harvard Center for Risk Analysis. The authors wish to thank the Workshop participants, members of the WHO/IPCS Workgroup on Characterizing Uncertainty in Hazard Characterisation, and members of the UNEP/SETAC Life Cycle Initiative for important input into the work described in this article. This work was supported, in part, by NIH grant P42 ES027704 to Texas A&M University.

Acronyms

- AOP: Adverse Outcome Pathway

- APROBA: Approximate probabilistic approach

- BBMD: Bayesian Benchmark Dose

- BMD: Benchmark Dose

- BMDL: Benchmark Dose Lower Confidence Limit

- BMR: Benchmark Response

- Css: Steady-state concentration

- DAF: Dosimetric Adjustment Factor

- DALY: Disability-Adjusted Life Years

- DEHP: Di(2-ethylhexyl) phthalate

- ED10: Effective dose for a 10% response

- EPA: United States Environmental Protection Agency

- HDMI: Human Dose causing effect magnitude M with incidence I

- httk: High-throughput toxicokinetic

- HQ: Hazard quotient

- IPCS: International Programme on Chemical Safety

- IVIVE: In vitro-in vivo extrapolation

- LOAEL: Lowest Observed Adverse Effect Level

- MoE: Margin of Exposure

- NAM: New Approach Method

- NAS: National Academy of Sciences

- NHANES: National Health and Nutrition Examination Survey

- NOAEL: No Observed Adverse Effect Level

- NRC: National Research Council

- PoD: Point of Departure

- QALY: Quality-adjusted life-years

- RfD: Reference Dose

- TD: Toxicodynamic

- TDVF: Toxicodynamic variability factor

- TK: Toxicokinetic

- TKVF: Toxicokinetic variability factor

- TTC: Threshold of toxicological concern

- UF: Uncertainty Factor

- UFA: Uncertainty Factor for Animal-to-Human Extrapolation

- WHO/IPCS: World Health Organization/International Programme on Chemical Safety

REFERENCES

- Abdo N, Xia M, Brown CC, Kosyk O, Huang R, Sakamuru S, … Wright FA (2015). Population-based in vitro hazard and concentration-response assessment of chemicals: the 1000 genomes high-throughput screening study. Environ Health Perspect, 123(5), 458–466. doi: 10.1289/ehp.1408775

- Ankley GT, Bennett RS, Erickson RJ, Hoff DJ, Hornung MW, Johnson RD, … Villeneuve DL (2010). Adverse outcome pathways: A conceptual framework to support ecotoxicology research and risk assessment. Environmental Toxicology and Chemistry, 29(3), 730–741. doi: 10.1002/etc.34

- Baird SJS, Cohen JT, Graham JD, Shlyakhter AI, & Evans JS (1996). Noncancer risk assessment: A probabilistic alternative to current practice. Human and Ecological Risk Assessment, 2(1), 79–102.

- Bokkers BGH, Mengelers MJ, Bakker MI, Chiu WA, & Slob W (2017). APROBA-Plus: A probabilistic tool to evaluate and express uncertainty in hazard characterization and exposure assessment of substances. Food Chem Toxicol, 110, 408–417. doi: 10.1016/j.fct.2017.10.038

- Chiu WA, Axelrad DA, Dalaijamts C, Dockins C, Shao K, Shapiro AJ, & Paoli G (2018). Beyond the RfD: Broad Application of a Probabilistic Approach to Improve Chemical Dose-Response Assessments for Noncancer Effects. Environ Health Perspect, 126(6), 067009. doi: 10.1289/EHP3368

- Chiu WA, & Rusyn I (2018). Advancing chemical risk assessment decision-making with population variability data: challenges and opportunities. Mamm Genome. doi: 10.1007/s00335-017-9731-6

- Chiu WA, & Slob W (2015). A Unified Probabilistic Framework for Dose-Response Assessment of Human Health Effects. Environ Health Perspect, 123(12), 1241–1254. doi: 10.1289/ehp.1409385

- Chiu WA, Wright FA, & Rusyn I (2017). A tiered, Bayesian approach to estimating population variability for regulatory decision-making. ALTEX, 34(3), 377–388. doi: 10.14573/altex.1608251

- Corley RA, Kabilan S, Kuprat AP, Carson JP, Minard KR, Jacob RE, … Einstein DR (2012). Comparative computational modeling of airflows and vapor dosimetry in the respiratory tracts of rat, monkey, and human. Toxicol Sci, 128(2), 500–516. doi: 10.1093/toxsci/kfs168

- Crump KS (1984). A new method for determining allowable daily intakes. Fundam Appl Toxicol, 4, 854–871.

- Dorman DC, Struve MF, Wong BA, Gross EA, Parkinson C, Willson GA, … Andersen ME (2008). Derivation of an inhalation reference concentration based upon olfactory neuronal loss in male rats following subchronic acetaldehyde inhalation. Inhal Toxicol, 20(3), 245–256. doi: 10.1080/08958370701864250

- EFSA. (2009). Guidance of the Scientific Committee on Use of the benchmark dose approach in risk assessment. Parma: European Food Safety Authority.

- Evans JS, Rhomberg LR, Williams PL, Wilson AM, & Baird SJ (2001). Reproductive and developmental risks from ethylene oxide: a probabilistic characterization of possible regulatory thresholds. Risk Anal, 21(4), 697–717.

- Hattis D, Baird S, & Goble R (2002). A straw man proposal for a quantitative definition of the RfD. Drug Chem Toxicol, 25(4), 403–436. doi: 10.1081/dct-120014793

- Hattis D, & Lynch MK (2007). Empirically observed distributions of pharmacokinetic and pharmacodynamic variability in humans—implications for the derivation of single point component uncertainty factors providing equivalent protection as existing RfDs. In Lipscomb JC & Ohanian EV (Eds.), Toxicokinetics in Risk Assessment (pp. 69–93). New York: Informa Healthcare USA Inc.

- Kroes R, Kleiner J, & Renwick A (2005). The threshold of toxicological concern concept in risk assessment. Toxicological Sciences, 86(2), 226–230. doi: 10.1093/toxsci/kfi169

- NAS. (1983). Risk Assessment in the Federal Government: Managing the Process. Washington, DC: National Academy Press.

- NAS. (2001). Standard Operating Procedures for Developing Acute Exposure Guideline Levels. Washington, DC: National Academies Press.

- NAS. (2009). Science and Decisions: Advancing Risk Assessment. Washington, DC: National Academies Press.

- NAS. (2014). A Framework to Guide Selection of Chemical Alternatives. Washington, DC: National Academy Press.

- Patlewicz G, Helman G, Pradeep P, & Shah I (2017). Navigating through the minefield of read-across tools: A review of in silico tools for grouping. Computational Toxicology, 3, 1–18. doi: 10.1016/j.comtox.2017.05.003

- Ring CL, Pearce RG, Setzer RW, Wetmore BA, & Wambaugh JF (2017). Identifying populations sensitive to environmental chemicals by simulating toxicokinetic variability. Environ Int, 106, 105–118. doi: 10.1016/j.envint.2017.06.004

- Rusyn I, Kleeberger SR, McAllister KA, French JE, & Svenson KL (2018). Introduction to mammalian genome special issue: the combined role of genetics and environment relevant to human disease outcomes. Mamm Genome, 29(1-2), 1–4. doi: 10.1007/s00335-018-9740-0

- Schroeter JD, Kimbell JS, Gross EA, Willson GA, Dorman DC, Tan YM, & Clewell HJ 3rd. (2008). Application of physiological computational fluid dynamics models to predict interspecies nasal dosimetry of inhaled acrolein. Inhal Toxicol, 20(3), 227–243. doi: 10.1080/08958370701864235

- Shao K, & Shapiro AJ (2018). A Web-Based System for Bayesian Benchmark Dose Estimation. Environ Health Perspect, 126(1), 017002. doi: 10.1289/ehp1289

- Slob W, & Pieters MN (1998). A probabilistic approach for deriving acceptable human intake limits and human health risks from toxicological studies: general framework. Risk Anal, 18(6), 787–798.

- Stuard SB, & Heinonen T (2018). Relevance and application of read-across - Mini review of European consensus platform for alternatives and Scandinavian society for cell toxicology 2017 workshop session. Basic and Clinical Pharmacology and Toxicology. doi: 10.1111/bcpt.13006

- Swartout JC, Price PS, Dourson ML, Carlson-Lynch HL, & Keenan RE (1998). A probabilistic framework for the reference dose (probabilistic RfD). Risk Anal, 18(3), 271–282.

- Teeguarden JG, Bogdanffy MS, Covington TR, Tan C, & Jarabek AM (2008). A PBPK model for evaluating the impact of aldehyde dehydrogenase polymorphisms on comparative rat and human nasal tissue acetaldehyde dosimetry. Inhal Toxicol, 20(4), 375–390. doi: 10.1080/08958370801903750

- U.S. EPA. (1994). Methods for Derivation of Inhalation Reference Concentrations (RfCs) and Application of Inhalation Dosimetry. Washington, DC: U.S. Environmental Protection Agency.

- U.S. EPA. (1995). The Use of the Benchmark Dose Approach in Health Risk Assessment. (EPA/630/R-94/007). Washington, DC: U.S. Environmental Protection Agency.

- U.S. EPA. (2002). A Review of the Reference Dose and Reference Concentration Processes. (EPA/630/P-02/002F). Washington, DC: U.S. Environmental Protection Agency.

- U.S. EPA. (2011). Recommended Use of Body Weight 3/4 as the Default Method in Derivation of the Oral Reference Dose. Washington, DC: U.S. Environmental Protection Agency.

- U.S. EPA. (2012). Benchmark Dose Technical Guidance. Washington, DC: U.S. Environmental Protection Agency.

- Wambaugh JF, Wetmore BA, Pearce R, Strope C, Goldsmith R, Sluka JP, … Setzer RW (2015). Toxicokinetic triage for environmental chemicals. Toxicological Sciences, 147(1), 55–67. doi: 10.1093/toxsci/kfv118

- West GB, Brown JH, & Enquist BJ (1999). The fourth dimension of life: fractal geometry and allometric scaling of organisms. Science, 284(5420), 1677–1679.

- WHO/IPCS. (2008). Uncertainty and Data Quality in Exposure Assessment. Geneva: World Health Organization International Programme on Chemical Safety.

- WHO/IPCS. (2009). Principles for modelling dose-response for the risk assessment of chemicals. Geneva: World Health Organization International Programme on Chemical Safety.

- WHO/IPCS. (2014). Guidance Document on Evaluating and Expressing Uncertainty in Hazard Characterization. Geneva: World Health Organization International Programme on Chemical Safety. Retrieved from http://www.who.int/ipcs/methods/harmonization/areas/hazard_assessment/en/.

- WHO/IPCS. (2017). Guidance Document on Evaluating and Expressing Uncertainty in Hazard Characterization. Geneva: World Health Organization International Programme on Chemical Safety. Retrieved from http://www.who.int/ipcs/methods/harmonization/areas/hazard_assessment/en/.

- Wignall JA, Muratov E, Sedykh A, Guyton KZ, Tropsha A, Rusyn I, & Chiu WA (2018). Conditional Toxicity Value (CTV) Predictor: An In Silico Approach for Generating Quantitative Risk Estimates for Chemicals. Environ Health Perspect, 126(5), 057008. doi: 10.1289/EHP2998

- Zeise L, Bois FY, Chiu WA, Hattis D, Rusyn I, & Guyton KZ (2013). Addressing human variability in next-generation human health risk assessments of environmental chemicals. Environ Health Perspect, 121(1), 23–31. doi: 10.1289/ehp.1205687