Abstract

Within the field of human rehabilitation, robotic machines are used both to rehabilitate the body and to perform functional tasks. Robotics autonomy able to perceive the external world and reason about high-level control decisions, however, seldom is present in these machines. For functional tasks in particular, autonomy could help to decrease the operational burden on the human and perhaps even to increase access—and this potential only grows as human motor impairments become more severe. There are however serious, and often subtle, considerations to introducing clinically-feasible robotics autonomy to rehabilitation robots and machines. Today the fields of robotics autonomy and rehabilitation robotics are largely separate. The topic of this article is at the intersection of these fields: the introduction of clinically-feasible autonomy solutions to rehabilitation robots, and opportunities for autonomy within the rehabilitation domain.

Keywords: robotics autonomy, rehabilitation robotics, control interfaces, human-robot interaction, human rehabilitation

1. INTRODUCTION

Assistive machines used to regain or replace lost human motor function are crucial to facilitating the independence of those with severe motor impairments. Machines such as powered therapy orthoses and manipulandums aim towards rehabilitation of the body, and work with the patient to move their own arms and legs to regain diminished or lost function. Exoskeletons worn on the body provide additional structure, support and power that enables a patient to use weakened or paralyzed limbs—and the hope is for such machines to be used for everyday manipulation and mobility, in addition to the therapeutic role they play today. Prostheses are robotic limbs worn on the body as replacements for missing limbs. Powered wheelchairs offer non-anthropomorphic mobility for people with impairments ranging from muscle weakness to cervical paralysis. Robotic arms mounted to wheelchairs promise enhanced manipulation capabilities for wheelchair operators with upper-body impairments. Each of these machines can extend the mobility and manipulation abilities of persons with motor limitations in their own legs and arms, and in some cases even provide ability where there is none.

In their clinical form, each of the machines listed above involves no robotics autonomy—that is, the ability to make higher-level decisions based on observations of the external world. (Though there do exist elements of automation, discussed further in Section 4.3.1.) However, the potential impact for robotics autonomy on the field of rehabilitation is considerable. The handful of application areas to date—robotic “smart” wheelchairs (1), stationary and mobile intelligent robotic arms (2), the burgeoning area of autonomous robotic aides (3)—only begin to scratch the surface. Autonomous robots already synthetically sense the world, generate motion and compute cognition—any of which might be adapted to address sensory, motor and cognitive impairments in humans.

Within the field of rehabilitation robotics1 research, autonomy is engaged infrequently. However, 10-15 years ago the field saw a comparatively stronger presence from autonomy, particularly on the topic of robotic wheelchairs and assistive arms mounted to them. In the years since, research within the rehabilitation robotics community shifted away from autonomy, though we are beginning to see the pendulum swing back. Certainly the field of robotics autonomy has made significant advances in the intervening years, so there is a heightened potential to make new, perhaps more impactful, contributions.

The intersection of rehabilitation with even more advanced robotics autonomy technologies, including artificial intelligence and machine learning, is ripe for exploitation. The purpose of this article is to examine this intersection between the fields of robotics autonomy and rehabilitation robotics. Therefore, throughout, the discussion of rehabilitation robotics is skewed towards topics where autonomy has played a role or might in the future. For this reason, the article is not a comprehensive survey of the field of rehabilitation robotics. For such a review, we refer the reader to the topic-specific surveys highlighted throughout the article.

This article begins with an introduction to the types of robots used within the domain of human rehabilitation to regain lost function (Sec. 2). It continues with a description of the fundamental challenge of capturing and interpreting control signals from a human with motor impairments, for the purpose of operating a machine used to replace lost function (Sec. 3). We then survey research that introduces autonomy to such machines in order to help overcome these operational challenges, including potential areas for future impact (Sec. 4). A discussion of the societal adoption and acceptance of autonomy in rehabilitation robots follows (Sec. 5), and then our conclusions (Sec. 6).

2. REHABILITATION: REGAINING LOST FUNCTION

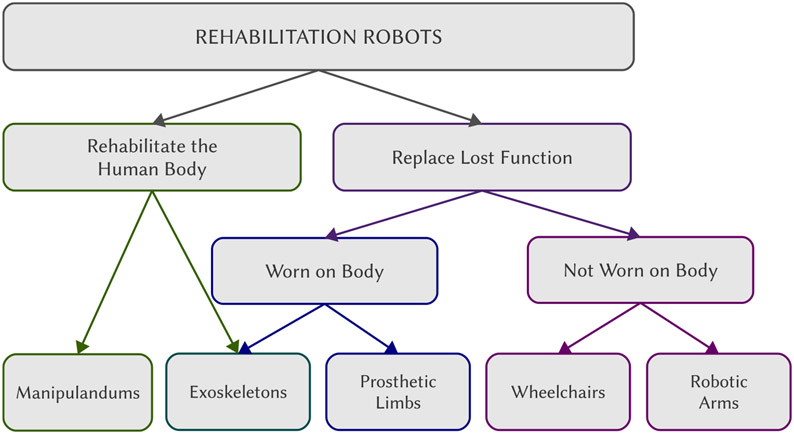

We begin with a brief overview of machines used for human rehabilitation. These include machines whose purpose is to rehabilitate the body (Sec. 2.1) as well as machines used to replace lost motor function, which might be mounted on the body (Sec. 2.2) or unattached to the body (Sec. 2.3). A schematic of this categorization is shown in Figure 1.

Figure 1.

Categorization of robots used for human rehabilitation. Low-level automation is seen primarily in robots used to rehabilitate the body and robots worn on the body to replace lost function. Higher-level autonomy so far has been introduced (within research, never clinically) primarily to robots not worn on the body—in part due to practical considerations like where to mount additional sensors and computational infrastructure.

2.1. With the Human Body

Physical therapy aims to rehabilitate the human body in order to regain lost motor function. It is founded on the ideas of neuroplasticity in the brain and motor learning and strength training in the body, and can help to address impairments such as partial paralysis as a result of stroke or incomplete spinal cord injury. The worldwide incidence of stroke is estimated to be 1% of the population (4), and even after intensive rehabilitation only 5-20% of stroke survivors recover full function (5) with 25-74% relying on a caregiver for basic functional tasks (6). Robotic devices play a role in therapy-enabled rehabilitation by providing physical guidance to the body’s movements, either through manipulandums or haptic feedback interfaces in contact with some part of the body (usually the hand), or through powered orthoses and exoskeletons attached to and/or supporting the body (7).2 The benefits of robot-assisted therapy include standardized treatment and assessment, relief for therapists from repetitive-motion therapies and longer training sessions (11).

Early work using robots for physical therapy was characterized by pre-recorded motion trajectories (for lower-body rehabilitation) and constrained reaching movements (for upper-body rehabilitation). Today the field has advanced to functional tasks and shared-control strategies that engage more active patient participation (11), and how much participation often is individualized based on patient needs and therapy goals. A lot of research in therapy robots investigates when and in what manner the automation assistance should step in to most effectively engage neurorehabilitation (7, 12).

A powered exoskeleton is a motorized rigid or flexible orthosis attached to the body (13), and currently its primary use is within clinical therapy environments (14). Exoskeletons provide guidance and support during upper- and lower-body motor rehabilitation exercises, including body-weight supported gait training on a treadmill. Lower-body exoskeletons also are being employed clinically by paraplegics for physiological benefits (rather than rehabilitated motor ability) such as reduced bone loss and improved digestion. A goal however is for powered exoskeletons also to be widely employed as mobility and manipulation devices outside of the clinic (14), and thus additionally to replace lost function. Powered exoskeletons therefore straddle the topics of rehabilitating the body and using a machine attached to the body to replace lost function (discussed next).

For a complete review of the area of robots used to rehabilitate the human body, we refer the reader to this collection (11) of articles on the topic of neurorehabilitation, as well as these survey articles (13, 14) on lower-limb exoskeletons.

2.2. With a Machine Attached to the Body

There are conditions for which a functional gap remains after the body has undergone rehabilitation therapy. For such conditions, machines can be employed in day-to-day life to bridge this functional gap and facilitate independent mobility and manipulation, which correlates with improved self-esteem, social connections and societal participation (15). One such condition is limb loss.

A prosthetic limb is a device attached to the body as a replacement for a lost limb. An estimated 1.6 million people in the United States alone are living with limb loss (16), and prosthetic limb use is correlated with positive measures like improved quality of life, restored function and increased employment likelihood (17).

The overwhelming majority of today’s prosthesis users employ a body-powered prosthesis, which contains no motors and is controlled either actively (e.g. using a harness attached to the opposing shoulder) or passively (e.g. a cantilevered foot). Upper-limb body-powered prostheses typically terminate in a single degree of freedom effector (hand or hook), and accomplish only a subset of the lost functionality (18, 19). Robotic arms and legs mounted on the body as a replacement for lost limbs—electric prostheses—are an incredibly promising, and yet surprisingly underutilized, technology.

There exist many hypotheses as to why robotic prostheses have not been adopted more broadly, ranging from energy expenditure when operating the device (a particular concern for lower-limb prostheses), to difficulties with hardware maintenance, to operational challenges that limit functionality (a particular challenge for upper-limb prostheses) (18, 20). Regardless of how functional, a device simply will not be adopted if it is too heavy, expends more energy than it produces or has an uncomfortable socket connection (21). Many upper-limb robotic (and body-powered) prostheses are used only aesthetically, not functionally, and for an overwhelming number of tasks the human simply uses their other hand (for unilateral amputations) (18, 19, 20). Accordingly, much of the research in the area of upper- and lower-limb prostheses focuses on the physical hardware design, and on the interpretation of signals from the human to control these machines.

For an in-depth review of the area of robotic prostheses, we refer the reader to survey articles on prosthesis use (18) and design (21), and myoelectric control (22).

2.3. With a Machine Unattached to the Body

While prostheses are machines mounted to the body to replace lost limb function, there also exist assistive machines that aim to replace lost function and likewise are operated by the human, but are not mounted to the human body.3

The powered wheelchair is by far the most ubiquitous assistive machine in use throughout society. There is evidence that powered mobility offers increased freedom, independence and social engagement (25). However, there are impairments which can make driving a powered wheelchair a challenge, including upper-body physical impairments (ataxia, bradykinesia, dystonia, weakness/fatigue, spasticity, tremor and paralysis), cognitive impairments (deficits in executive reasoning, impaired attention, agitation and impulse control) and also perceptual impairments (low vision or blindness, limitations in head, neck or eye movement, visual field loss and visual field neglect) (26). Thus while many individuals do achieve sufficient mobility using wheelchairs, many others do not. A survey of clinicians in the United States found 10-40% of candidate users unable to be prescribed a powered wheelchair (26) because of sensory, motor or cognitive deficits that impede safe driving—leaving those individuals reliant on a caretaker for mobility.

One major confound is the fact that the more severe a person’s motor impairment, the more limited are their options for control interfaces—and accordingly also the control information that might be transmitted through these interfaces. Industry and academia alike continue to innovate on the design of control interfaces for wheelchairs (discussed further in Section 3.1.2), as well as on the design of the wheelchair itself. For example, standing wheelchairs (27, 28) are developed for the health, functional and social benefits of the body being upright and at the height of standing adults.

The area of assistive robotic arms is less mature than that of powered wheelchairs, yet recently is gaining momentum. In the last 25 years there have been three major commercial assistive robotic arms.4 While wheelchair-mounted robotic arms arguably offer the most flexibility in terms of where and when they provide assistance (29), standalone and mobile manipulators, workstation robots and automated feeding devices also have been proposed (2).

3. THE CHALLENGE: CAPTURING CONTROL SIGNALS FROM HUMANS WITH MOTOR IMPAIRMENTS

For assistive machines that aim to fill a gap in lost function, whether attached to the body or not, a major challenge is how to capture and interpret control signals from the human. A significant factor is that with increasingly severe motor impairments, there is a diminishing ability on the part of the human to generate control commands, and fewer interface options for how to capture these control commands. Often as machines become functionally more complex to meet the need of increasingly severe motor impairments, so too do these machines become more complex to control. Thus, in addition to motor impairments, operation is challenged by the limited control interfaces which are available to those with severe impairments and also the complexity of the machine to be controlled.

3.1. Clinical Standards

Human motor impairments often translate into limitations—in bandwidth, in duration, in strength, in sources on the body—in the control signals that person can produce. For prosthesis control, there exists a fundamental confound that the higher the level of amputation, the more Degrees-of-Freedom (DoF) there are to control on the prosthesis, and yet fewer residual muscles to generate the control signals. Many traditional interfaces used to operate wheelchairs, like the 2-axis joystick, are inaccessible to those with severe motor impairments such as paralysis as a result of injury (e.g. cervical spinal cord injury) or degenerative disease (e.g. Amyotrophic Lateral Sclerosis, ALS). Moreover, the interfaces which are accessible to those with severe motor impairments already struggle to control machines like powered wheelchairs, and are fairly untenable for more complex machines like robotic arms.

A significant effort in both academia and industry has gone into creating control interfaces that are both effective in operating assistive machines yet also able to be operated by the target end-users of those machines (Fig. 2). Here we overview control interfaces and paradigms commonly employed on commercially-available machines used to replace lost function: prosthetic limbs (Sec. 3.1.1), wheelchairs (Sec. 3.1.2) and robotic arms (Sec. 3.1.3).

Figure 2.

Examples of clinical standards in control interfaces for prostheses, wheelchairs and robotic arms. Left to right: Body-powered prosthesis harness, EMG electrodes embedded within a prosthesis socket, joystick with visual feedback display, sip-and-puff and array of switches embedded within a headrest. Photo credits, left to right: Ottobock (Duderstadt, Germany), Ottobock, Quickie Wheelchairs (Phoenix, Arizona, United States), Adaptive Switch Laboratories (Spicewood, Texas, United States) and Permobil (Timrå, Sweden).

3.1.1. Prosthetic Limb Control.

The control paradigms and sensors used to operate prostheses differ for the upper and lower limbs—for functional reasons like the types of movements produced by that limb, and physiological reasons like where the robotic limb is mounted and what signals from the human are used to operate it.

A common sensor interface employed on robotic prostheses is the myoelectric interface that detects, using electrodes placed on the surface of the skin, electromyographic (EMG) signals produced as a result of muscle activation. For purposes of physiological intuition, EMG signals typically are detected from residual limb muscles.

Discriminating control commands from myoelectric signals can be a challenge for reasons ranging from consistent sensor placement day-to-day after donning/doffing the device, to sensor drift due to physiological factors like sweat and swelling, to low signal-to-noise ratios in the detectable electrical signals. These challenges are exacerbated in upper-limb prostheses because a higher dimensional control signal is required: the number of controllable DoF in the human arm and hand is much greater than that of the leg and foot. Recent innovations seek to modify the human body, rather than the interface (Sec. 3.2.1), in an effort to find alternate ways to produce these signals. For example, Targeted Muscle Reinnervation (TMR) (30, 31) is a procedure that surgically relocates nerves from the amputated limb to reinnervate muscles that no longer are biomechanically functional (e.g. the pectoral or serratus muscles after a shoulder disarticulation) and are larger than the muscles of the residual limb, effectively producing a biological amplification of the EMG signal.

The commercial standards in upper-limb myoelectric prostheses typically operate one controllable DoF each for the hand, elbow and wrist. A direct control paradigm maps a pair of EMG electrodes to control a single DoF (22, 32). For example, an electrode pair detects the (thresholded) difference in biceps/triceps contraction to control prosthetic elbow flexion/extension. When placed on agonist-antagonist muscles of the residual arm, there is an advantage of physiological intuition (32). Typically a (mechanical or EMG) switch is used to divert control from one function to another (e.g. from the elbow to the hand).
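The direct control paradigm described above can be sketched in a few lines. This is only a minimal illustration, not a clinical controller: the class name `DirectController`, the threshold and gain values, and the list of switchable functions are all assumptions made for the example, and the two inputs are assumed to be EMG amplitudes that have already been rectified and smoothed.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical set of functions the user can cycle through with a switch.
FUNCTIONS = ("elbow", "wrist", "hand")


@dataclass
class DirectController:
    """Sketch of direct myoelectric control: one electrode pair, one DoF at a time."""

    threshold: float = 0.1  # assumed deadband on the agonist/antagonist difference
    gain: float = 1.0       # assumed scaling from EMG difference to joint velocity
    active: int = 0         # index into FUNCTIONS of the DoF currently controlled

    def switch(self) -> str:
        """Divert control to the next function (e.g. from the elbow to the wrist)."""
        self.active = (self.active + 1) % len(FUNCTIONS)
        return FUNCTIONS[self.active]

    def command(self, agonist: float, antagonist: float) -> Tuple[str, float]:
        """Map a pair of EMG amplitudes to a signed velocity for the active DoF."""
        diff = agonist - antagonist
        if abs(diff) < self.threshold:
            # Below threshold: treat as no intended movement.
            return FUNCTIONS[self.active], 0.0
        return FUNCTIONS[self.active], self.gain * diff
```

In this sketch a stronger agonist (e.g. biceps) signal yields a positive velocity and a stronger antagonist (e.g. triceps) signal a negative one, while calling `switch()` stands in for the mechanical or EMG switch that moves control to another function.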

As prostheses become more articulated, the upper limit on the number of controllable DoF becomes an inhibitor. A pattern-recognition paradigm is able to handle a larger number of control classes, using machine learning techniques to map the signals from an array of electrodes to a set of movement classes that typically each control one half of a single DoF (22, 32). Each decoded class maps to a distinct function, and the most advanced decoding techniques (which, importantly, also are usable by the human) discern 10-12 motion classes (22, 33). For example, elbow flexion and extension each would be individual classes. The simultaneous control of multiple DoF remains a significant challenge (34).
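To make the pattern-recognition idea concrete, the sketch below maps windows of multi-channel EMG to movement classes. It is a deliberately simple stand-in and not the decoders surveyed in the cited work: the feature (mean absolute value per channel) and the nearest-centroid classifier are assumptions chosen for brevity, and the class labels and toy data are hypothetical.

```python
from math import dist
from statistics import fmean


def mean_absolute_value(window):
    """Compute one feature per electrode channel.

    `window` is a list of samples; each sample holds one value per channel.
    """
    n_channels = len(window[0])
    return [fmean(abs(sample[c]) for sample in window) for c in range(n_channels)]


class NearestCentroidDecoder:
    """Assign a window to the class whose mean training feature vector is closest."""

    def __init__(self):
        self.centroids = {}

    def fit(self, windows, labels):
        features_by_class = {}
        for window, label in zip(windows, labels):
            features_by_class.setdefault(label, []).append(mean_absolute_value(window))
        for label, features in features_by_class.items():
            # Average each feature dimension over the class's training windows.
            self.centroids[label] = [fmean(column) for column in zip(*features)]
        return self

    def predict(self, window):
        feature = mean_absolute_value(window)
        return min(self.centroids, key=lambda label: dist(feature, self.centroids[label]))
```

Here each decoded label (for instance a hypothetical `"elbow_flexion"`) would correspond to one half of a single DoF, matching the description above; adding more electrodes simply lengthens each sample.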

Control in lower-limb robotic prostheses typically consists of a set of finite-state machines. Each state machine is a pre-programmed gait—an automated controller that a therapist tunes to the gait of the user—and each state is a posture of that gait (35, 36). The pre-programmed gaits include for example flat surface walking, incline walking and stair ascent/descent. Interface decoding therefore consists of predicting when to trigger a switch between the finite-state machines. Mechanical sensors such as force sensors and joint encoders are the standard inputs to these decoders, with more recent developments also incorporating EMG (37, 38, 39).
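The structure described above, a set of finite-state machines with a decoder that triggers switches between them, might be sketched as follows. The gait names echo the examples in the text, but the phase names, the class `GaitController` and its methods are hypothetical, and a real controller would attach therapist-tuned joint behavior to each state.

```python
# Hypothetical gait modes; each is a cyclic sequence of phases (the states).
GAITS = {
    "level_walking": ("stance", "swing"),
    "stair_ascent": ("weight_acceptance", "pull_up", "swing"),
}


class GaitController:
    """Sketch: one finite-state machine per gait, plus switching between them."""

    def __init__(self, gait="level_walking"):
        self.gait = gait
        self.phase_index = 0

    @property
    def phase(self):
        return GAITS[self.gait][self.phase_index]

    def advance(self):
        """Move to the next phase of the current gait (e.g. on a sensed event)."""
        self.phase_index = (self.phase_index + 1) % len(GAITS[self.gait])
        return self.phase

    def switch_gait(self, predicted_gait):
        """Switch state machines when the decoder predicts a different gait."""
        if predicted_gait not in GAITS:
            raise ValueError(f"unknown gait: {predicted_gait}")
        if predicted_gait != self.gait:
            self.gait = predicted_gait
            self.phase_index = 0  # assumption: a new gait starts at its first phase
        return self.phase
```

In this framing, the interface decoding problem reduces to producing the `predicted_gait` argument at the right moment from force, encoder or EMG data.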

3.1.2. Wheelchair Control.

There are wheelchair control devices and interfaces specifically designed to address some of the physical, cognitive and visual impairments mentioned above (Sec. 2.3). Typically extensive hand-tuning by an occupational therapist occurs when a person is being fit for a powered wheelchair, for example to dampen gains to address tremor or to narrow the deadzone of a joystick to address motion limitations. A lot of expertise and user assessment stands behind the design of these interfaces.

Joysticks, which fully cover the 2-DoF control space (speed, heading) of the wheelchair, are by far the most common interface used to operate powered wheelchairs. Standard joysticks are operated by the hands; however, miniature limited-throw joysticks are employed broadly and operated by many other parts of the body (e.g. toe, chin, tongue). A joystick is a proportional control interface, which generates control signals that scale with the magnitude of the user input (i.e. amount of joystick deflection).

The commercial control interfaces that are accessible to those with severe motor impairments are limited in both the dimensionality of the control signals they are able to simultaneously issue (generally 1-D, occasionally 2-D), and also the continuity of that control signal. Such interfaces include the sip-and-puff, which is operated by respiration, and arrays of mechanical or proximity switches embedded within the wheelchair headrest. These interfaces typically offer non-proportional control, which issues control signals in preset amounts that do not scale with the magnitude of the signal provided by the human.5 To change the value of the control signal (or power level) instead involves navigating through a menu displayed on a small screen mounted near the armrest. Some maneuvers require multiple changes in power level, for example driving up a ramp into a van (higher power, to overcome the incline) and then positioning inside the van (lower power, for precise positioning).
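The distinction between proportional and non-proportional control can be illustrated with two small functions. This is a sketch under stated assumptions: the function names, the normalized input range of -1 to 1, the default deadzone and the preset speed values are all hypothetical and do not describe any commercial controller.

```python
def proportional_command(deflection, deadzone=0.1, max_speed=1.0):
    """Proportional interface: output scales with the magnitude of the input.

    `deflection` is a normalized joystick deflection in [-1, 1].
    """
    deflection = max(-1.0, min(1.0, deflection))
    magnitude = abs(deflection)
    if magnitude <= deadzone:
        return 0.0  # small deflections (e.g. from tremor) are ignored
    # Rescale so the output ramps from 0 at the deadzone edge to max_speed at 1.
    scaled = (magnitude - deadzone) / (1.0 - deadzone)
    return max_speed * scaled if deflection > 0 else -max_speed * scaled


def non_proportional_command(active, power_level, preset_speeds=(0.25, 0.5, 1.0)):
    """Non-proportional interface: a preset speed whenever the switch is active.

    The output depends only on the selected power level, not on how strongly
    the user activates the interface.
    """
    return preset_speeds[power_level] if active else 0.0
```

With the non-proportional function, the only way to change speed is to change `power_level`, which corresponds to the menu navigation described above.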

3.1.3. Robotic Arm Control.

Robotic arms attached to wheelchairs are still a burgeoning, but growing, commercial area. To date, there are no commercial interfaces specifically designed for robotic arm control. The interfaces in use are those which have been developed to operate wheelchairs. For example, the Kinova JACO is sold with a 3-axis joystick using buttons to switch between control modes.

Control of end-effector position (3-D) and orientation (3-D) for a robotic arm nominally exists in 6-D. This is a higher dimensionality than that provided by standard joysticks, which offer 2-D or 3-D control. The standard solution is to partition the control space into control modes that operate within only a subset of the control space at a given time. To operate a different subset requires a switch to a different control mode (e.g. through a button press). Modal control of a robotic arm already is a challenge with 2-D and 3-D interfaces. With the more limited wheelchair interfaces (1-D, discrete) available to those with severe motor impairments, control becomes fairly untenable, and in practice such interfaces rarely are used to operate robotic arms. This presents an irony: robotic arm operation becomes inaccessible to those who arguably are most in need of its assistance.
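Modal control as described above can be sketched as a mapping from a low-dimensional interface input into a 6-D end-effector command. The partition below (a translation mode and an orientation mode for a 3-axis input) is a hypothetical example chosen for illustration; the class name `ModalMapper` and the ordering of the six dimensions are likewise assumptions.

```python
# Assumed ordering of the 6-D end-effector command: (x, y, z, roll, pitch, yaw).
# Hypothetical partition for a 3-axis interface: two modes of three dimensions.
MODES_3AXIS = {"translation": (0, 1, 2), "orientation": (3, 4, 5)}


class ModalMapper:
    """Sketch: route interface input to the subset of dimensions in the active mode."""

    def __init__(self, modes):
        self.modes = dict(modes)
        self.names = list(self.modes)
        self.current = 0

    def switch_mode(self):
        """Cycle to the next control mode (e.g. on a button press)."""
        self.current = (self.current + 1) % len(self.names)
        return self.names[self.current]

    def to_end_effector(self, interface_input):
        """Place the interface input into the active dimensions; zero the rest."""
        dims = self.modes[self.names[self.current]]
        if len(interface_input) != len(dims):
            raise ValueError("input size does not match the active mode")
        command = [0.0] * 6
        for dim, value in zip(dims, interface_input):
            command[dim] = value
        return command
```

Under the same scheme, a 1-D interface would need a separate mode for each of the six dimensions, which gives a sense of why the mode-switching burden grows as the interface becomes more limited.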

3.2. Progress in Research

Despite the success of assistive machines in facilitating independence for many, there are circumstances under which control remains a challenge, to the point even of making the machine entirely inaccessible. There are two broad options for addressing this challenge: either to capture more complex control signals from the human, or for the machine operation to require less complex input from the human, by designing simpler machines or offloading some of the burden to autonomous controllers.6 That is:

Easier Control. To design machines with high functional complexity, and yet low control complexity.

Richer Control Signals. To design novel control interfaces and decoding paradigms able to capture high-complexity control signals despite severe motor impairments.

Robotics Autonomy. To introduce robotics autonomy that augments, interprets or modifies low-complexity control signals from the human.

While the operational challenges associated with assistive and rehabilitation robots are broader, and include factors like energy expenditure and socket fit, we focus in this section on the question of control: of capturing and interpreting control signals from the human, and integrating these signals within control paradigms on the robot. The topic of autonomy in rehabilitation machines and robots will be discussed in Section 4.

3.2.1. Design Innovations in Prostheses.

Research in prosthesis control focuses on decoding signals from the human, mapping these signals to alternative control spaces and novel hardware designs that require simpler control. All of these approaches aim to simplify or improve control, or enable more complex control.

For commercial upper-limb robotic prostheses, paradigms that control 1-DoF at a time are the norm. Such control paradigms are robust and straightforward to use, but do not scale well to high-DoF systems or complex coordinated motions (22). Therefore, a large body of pattern-recognition research focuses on advancing EMG decoding paradigms—evaluating a variety, even cascades (40), of machine learning algorithms (41, 42) and different input features for these algorithms (41, 42, 43), as well as exploring different output classes (44, 45, 46) including simultaneous multi-DoF control (34). Prosthesis operation furthermore is a target application domain for cortical neural interfaces (47, 48)—an extremely promising technology with the potential to capture complex control signals in the absence of movement ability, but still with many hurdles to adoption.

Sensor decoding research also features prominently in lower-limb prostheses, where here superior decoding aims to better predict transitions between finite-state machines. Arguably the stakes for classification errors with this hardware are higher than for upper-limb prostheses—since a mismatch between the selected automated gait and the human’s intended gait can result in a fall. Lower-limb decoders take as input information from mechanical sensors (e.g. force sensors, joint encoders) (39) or EMG data (37); and today, most often a combination of both (38, 49). Recent work is beginning to explore algorithms that perceive the external world, rather than just the human body, to anticipate environmental triggers of a state-machine switch (e.g. detection of a staircase (50)).

Academic work in simultaneous (multi-DoF) control for upper-limb prostheses furthermore explores alternative mapping paradigms. For example, such approaches might have each decoded class map to a set of multi-DoF motions (51) or force functions for each joint (52), or employ direct control within a reduced dimensionality space (53). This work at times is motivated by the idea of muscle synergies, in which muscles activate in coordination rather than individually. There is evidence of synergies in the human body that coordinate groups of muscles (54), posture and force in the hand (55, 56) and movement at the spinal cord (57).

There also exists work that aims to simplify control through mechanical design, rather than interface design. For example, robotic hand designs (58, 59) that mechanically encode synergies are being piloted on prosthetic arms, and variable stiffness prosthetic ankles increase functionality without increasing control complexity (60). Designs also aim to provide sensory feedback to the human, widely believed to be a necessity for advanced manipulation, by adding sensors to the prosthesis (61) or somatosensory neural interfaces (62).

3.2.2. Interface Innovations in Wheelchairs.

Much of the research in interfaces for wheelchair operation focuses on capturing control signals from alternate sites on the body. Control signals are captured from head gestures (63, 64, 65) and facial movements (65, 66, 67), shoulder and body movements (68, 69), and eye gaze (70). Biophysical signals such as electrooculography (71), electromyography (64) and electroencephalography (EEG) (72, 73) all are used to provide control input to wheelchairs. In addition to these noninvasive neural interfaces, cortical neural interfaces (47, 48) also might be used to operate wheelchairs and wheelchair-mounted robotic arms—with transformative potential for patients with locked-in syndrome, for example. All of these interfaces have the advantage of not requiring the user to adapt to the physical form factor of the interface, unlike commercial interfaces like the joystick, sip-and-puff and headarrays.

The field of wheelchair research also has introduced interfaces designed specifically to be paired with autonomy paradigms (discussed further in Section 4). For example, there are interfaces that employ haptic feedback to guide the user’s trajectory or alert them to obstacles (74, 75), touch-based graphical user interfaces to capture control commands (76) or locations of interest (65, 77), control command selections via blinking diodes and EEG (78), and speech interfaces to capture low- and high-level control commands (64, 79, 80) and environment annotations (81).

4. AUTONOMY IN HUMAN-OPERATED ASSISTIVE MACHINES

When a person has a motor impairment as a result of injury, disease or limb loss, our first line of defense is rehabilitation—to regain as much function as is possible with their own body. When rehabilitation however has reached its limits and a gap in function remains, we turn to assistive devices and machines to fill that functional gap. The idea behind then adding robotics autonomy is to make these machines even more accessible—to reduce operational burden and perhaps even create access where currently there is none.

The fundamental components of an autonomy system include sensors to observe the external world, and intelligence paradigms that reason about this information and generate control signals able to be executed by the hardware platform. Accordingly, components such as perception, obstacle-free path planning and motion controllers are standard on autonomous systems—all of which require additional sensing and computational infrastructure. Largely for this reason, to date within rehabilitation the majority of robotics autonomy has been introduced to machines that are not mounted to the body. (The introduction of autonomy to machines worn on the body is discussed further in Section 4.3.1.)

While full autonomy is an option for assistive machines, for reasons of robustness (82, 83) and user acceptance (84) it seldom is the architecture of choice today. An important observation is that users of assistive devices overwhelmingly prefer to retain as much control as possible, and cede only a minimum amount of control authority to the machine (18, 85).

The alternative then is to share control between the human and robotics autonomy. While at one end of the shared-control spectrum lies fully manual control and at the other fully automated control, in between lies a continuum of shared-control paradigms that integrate inputs from manual control and automated controllers. Within robotics, typically the goal of shared-control paradigms is to find a sweet spot along this continuum (86, 87, 88); ideally, where sharing control makes the system more capable than at either of the extremes. When one considers how able, or willing, the human is to accommodate limitations of the robot, however, users of assistive machines potentially are very different from more traditional human-robot teams (e.g. search-and-rescue or manufacturing).
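One simple way to picture the continuum between manual and automated control is a linear blend of the two command sources. The sketch below is only one of many possible control-sharing schemes and is not attributed to any of the cited works; the function name `blend` and the weighting parameter `alpha` are assumptions introduced for illustration.

```python
def blend(human_cmd, autonomy_cmd, alpha):
    """Linearly blend two command vectors of equal length.

    alpha = 0.0 gives fully manual control and alpha = 1.0 fully automated
    control; values in between share control authority.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(human_cmd) != len(autonomy_cmd):
        raise ValueError("commands must have the same dimension")
    return [(1.0 - alpha) * h + alpha * a for h, a in zip(human_cmd, autonomy_cmd)]
```

How `alpha` should be chosen, and whether it should vary with inferred intent or context, is exactly the kind of design question on which shared-control paradigms differ.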

Fundamental questions that any assistive shared-control autonomy system must address include: (1) What is the intent of the human? Without an explicit indication from the human of their intended task or goal, this information must be inferred. (2) How to appropriately share control between the autonomy and human control commands? The assistive robotics literature offers numerous paradigms for how to accomplish control sharing—and yet surprisingly few comparative studies of these paradigms (89, 90). Even when paradigms perform similarly according to typical robotics metrics like success, efficiency and safety, this does not mean the paradigms will rate similarly in user acceptance or adoption.

4.1. Robotic Wheelchairs

By far the most common rehabilitation machine to which autonomy has been introduced is the powered wheelchair. The potential for robotic “smart” wheelchairs to aid in the mobility of those with motor, or cognitive, impairments has been recognized for decades (1). A survey (26) of epidemiological data estimates that between 1.4 and 2.1 million individuals would benefit from a robotic wheelchair at least some of the time. Despite significant advances in capabilities, control and interfaces, there has been limited⁷ success in transitioning robotic wheelchair technologies to the general public, so much so that the clinical world remains largely unaware that this technology even exists.

4.1.1. System Design and Autonomy Behaviors.

Historically, the general trend in robotic wheelchairs was to offer a complete system that was very capable, but that also involved a fair amount of infrastructure and costly components. Many were developed in their entirety from the ground up, including the wheelchair hardware and software systems (91). Early robotic wheelchairs often relied on modifications to their environment, such as fiducial landmarks (92) or visual/magnetic lines (93). As sensors and algorithms improved, however, so did the autonomy capabilities.

Many recent works take more modular approaches to both hardware and software development. Modular software is used to accommodate multiple control interfaces (91, 76) and sensors (94). Modular hardware is used to interface the autonomy system with a variety of commercial powered wheelchair models (76, 95, 96, 97), or to address different levels of human ability (91).

For reasons of user, bystander and wheelchair safety, collision avoidance is one of the most common autonomy behaviors implemented within robotic wheelchair systems (96, 98, 99, 100). Collision avoidance can assist with human impairments ranging from spasticity to bradykinesia to visual field neglect, and can help with spatially-constrained maneuvers such as driving in crowds (25). Approaches for how to avoid collisions include stopping (93, 101), alerting the user (e.g. via joystick force feedback (74)), steering away from the obstacle (102, 103) and engaging a path planner to circumvent the obstacle (85, 104).

Additional autonomy behaviors on robotic wheelchairs include person following (105), wheelchair convoying (106), automated docking (107), wall following (95, 108, 109, 110) and doorway traversal (89, 99, 110, 111, 112). Approaches that automate route planning (77, 113) can be especially appropriate for users with cognitive impairments (114).

How to generate appropriate motion trajectories also is a topic of interest—for example, trajectories that take into account human comfort (115) or social considerations such as how to pass a pedestrian (116).

4.1.2. Shared Control.

Recognizing that the user often is dissatisfied when the machine takes over more control than is necessary—effectively forcing the user to cede more control authority to the machine than needed—many approaches offer a variety, often a hierarchy, of autonomous and semi-autonomous control modes within their shared-control schemes.

In early robotic wheelchairs, shared-control frequently triggered a discrete shift from human control to autonomous control (117) or placed the high-level control (e.g. goal selection) with the human and low-level control (e.g. trajectory planning and execution) with the autonomy (80, 91, 108, 118). This latter partitioning remains widely employed in more recent works as well (67, 76, 119, 110). A smaller number of works blended commands from the user and the autonomy (85, 111, 120)—which increasingly is adopted today.

Today research efforts explore methods to intelligently and smoothly accomplish control sharing such that the user retains more control while safety is maintained. The function for determining how much control lies with the human versus the autonomy often is based on safety metrics such as distance to an obstacle (96, 97, 99) and uncertainty (90), or human-centric metrics like comfort and transparency (121, 96, 122), or is inferred using machine learning techniques (123). An approach fundamentally different from control blending is to partition the control space. For example, paradigms allocate control of heading to the autonomy and linear speed to the human (100, 111, 117, 124).
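To make the two ideas above concrete, the sketch below shows (a) an allocation function that shifts authority to the autonomy as an obstacle gets closer, and (b) a partition in which the human commands linear speed and the autonomy commands heading. This is an illustrative sketch only; the function names and the thresholds `d_near` and `d_far` are hypothetical and are not taken from the cited systems.

```python
from typing import Tuple


def autonomy_authority(distance_m: float,
                       d_near: float = 0.3,
                       d_far: float = 1.5) -> float:
    """Fraction of control given to the autonomy, from obstacle distance.

    Hypothetical thresholds: at or below d_near the autonomy has full
    authority (1.0); at or beyond d_far the human has full authority (0.0);
    in between the authority falls off linearly.
    """
    if d_near >= d_far:
        raise ValueError("d_near must be smaller than d_far")
    if distance_m <= d_near:
        return 1.0
    if distance_m >= d_far:
        return 0.0
    return (d_far - distance_m) / (d_far - d_near)


def partitioned_command(human_linear_speed: float,
                        autonomy_turn_rate: float) -> Tuple[float, float]:
    """Partition the control space instead of blending it.

    The human supplies linear speed and the autonomy supplies heading
    (as a turn rate); neither input is mixed with the other.
    """
    return (human_linear_speed, autonomy_turn_rate)
```

The value returned by `autonomy_authority` could serve as the weight in a blending scheme, whereas `partitioned_command` involves no weight at all, which is what makes partitioning fundamentally different from blending.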

There exist approaches that incorporate an explicit estimate of user intent into their shared-control paradigm, in order to decide what is the human’s goal (97, 125), which shared-control paradigm to use (98) and when the autonomy should step in (99, 126); also to smoothly blend with the autonomy controls (127) and filter noisy input signals (128).
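As a hedged illustration of what an explicit estimate of user intent can look like, the sketch below maintains a probability over candidate goals and updates it with Bayes' rule each time the user issues a motion command. The likelihood model (commands pointing toward a goal are more probable under that goal) and the concentration parameter `kappa` are assumptions made for this example, not the formulation of any cited work.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def update_goal_belief(belief: Dict[str, float],
                       goals: Dict[str, Point],
                       position: Point,
                       command: Point,
                       kappa: float = 2.0) -> Dict[str, float]:
    """One Bayesian update of the belief over goals given a 2-D command.

    Assumed likelihood: proportional to exp(kappa * cos(angle)), where angle
    is between the command and the direction from the robot to the goal.
    """
    cmd_norm = math.hypot(command[0], command[1])
    if cmd_norm == 0.0:
        return dict(belief)  # a zero command carries no directional evidence
    posterior = {}
    for name, prior in belief.items():
        dx = goals[name][0] - position[0]
        dy = goals[name][1] - position[1]
        dist = math.hypot(dx, dy)
        if dist == 0.0:
            cos_angle = 0.0  # already at this goal: treat as neutral evidence
        else:
            cos_angle = (dx * command[0] + dy * command[1]) / (dist * cmd_norm)
        posterior[name] = prior * math.exp(kappa * cos_angle)
    total = sum(posterior.values())
    return {name: p / total for name, p in posterior.items()}
```

The resulting belief could then inform the decisions listed above, for example which goal to assist toward or when the autonomy should step in once one goal becomes sufficiently probable.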

The importance of customizing the control sharing to individual operators has gained interest in recent years (97, 125, 129, 130). There are architectures that aim to develop individualized models of assistance (125, 129), and there is preliminary evidence that human preference over assistance paradigms changes over time (89).

Studies which are longitudinal and in-situ are critical, and remain absent from the literature: that is, studies which examine and compare control sharing use and preference, and intent inference performance, over extended periods of time and in real-world dynamic environments, both indoors and outdoors.

4.2. Robotic Arms

Robotic arms mounted to powered wheelchairs remain a burgeoning commercial area today, though within academia several long-term projects have developed assistive systems with integrated mobility and manipulation (68, 131, 132). Arguably the introduction of autonomy will be a necessity for the widespread adoption of assistive robotic arms, because their higher-dimensional control makes operation a prohibitive challenge for those with severe motor impairments. Even with the advent of neural interfaces able to capture richer control signals from the human, it is very likely that autonomy still will help to ease operation. While no commercial arms offer autonomy to date, this is an active area of research.

4.2.1. Potential and Challenges.

Autonomy in robotic arms can help to accomplish Activities of Daily Living (ADL) manipulation tasks, such as personal hygiene, meal preparation and feeding, as well as basic functionality like picking up objects from high and low surfaces (133). In the United States alone there are 10.7 million people who require assistance with one or more ADL tasks.⁸

While from one perspective the question of intent inference is perhaps easier than for wheelchairs (because the workspace is smaller), from another perspective it is more challenging—there exists much greater variety in the types of motions we employ to accomplish manipulation tasks. Motion generation on robotic arms also must address issues like solving for inverse kinematics and singularities, which might be complicated by the human guiding the arm into configuration-infeasible parts of the control space. Perceptual, inference and motion generation challenges all still serve as impediments to the robustness of autonomy-endowed assistive robotic arms—and are bottlenecks in their use to accomplish ADL tasks with motor-impaired operators in complex real-world environments.

The fact that the human signals are lower dimensional than the full robot control space raises multiple decisions for the system designer. One decision is the human signal mapping: that is, to what control space the human signals should map. For example, the human and autonomy might operate entirely different controllers (e.g. the human controls position and the autonomy controls gripper force (134)). More commonly, the human signals map directly to control robot end-effector (or joint) position or velocity, but of course only to a subset of that control, as dictated by the currently engaged control mode. Whether the autonomy is restricted to operate within the same control dimensions as the human, or free to augment the human’s signal in the uncovered control dimensions, is another design decision.
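The mapping from a low-dimensional interface to a subset of the robot control space can be sketched as follows. The example assumes a 2-D interface (such as a joystick) and a 6-D end-effector velocity command; the mode names and their axis assignments are hypothetical and chosen only to illustrate modal control.

```python
from typing import Dict, List, Tuple

# Six end-effector velocity dimensions (an assumed control space).
AXES = ("x", "y", "z", "roll", "pitch", "yaw")

# Hypothetical control modes: each maps the two interface dimensions
# onto two of the six robot control dimensions.
MODES: Dict[str, Tuple[str, str]] = {
    "translate_xy": ("x", "y"),
    "translate_z_yaw": ("z", "yaw"),
    "rotate_roll_pitch": ("roll", "pitch"),
}


def map_to_control_space(signal: Tuple[float, float], mode: str) -> List[float]:
    """Expand a 2-D human signal into a 6-D command for the engaged mode.

    Dimensions not covered by the mode are left at zero; these are the
    uncovered dimensions that an autonomy could optionally fill in.
    """
    if mode not in MODES:
        raise KeyError(f"unknown control mode: {mode}")
    command = [0.0] * len(AXES)
    for value, axis in zip(signal, MODES[mode]):
        command[AXES.index(axis)] = value
    return command


def next_mode(mode: str) -> str:
    """Cycle to the next control mode, as a manual mode switch would."""
    names = list(MODES)
    return names[(names.index(mode) + 1) % len(names)]
```

With this kind of mapping, reaching an arbitrary pose requires repeated calls to something like `next_mode`, which is the operational burden that modal control places on the user.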

The challenges of modal control create additional opportunities for autonomy. For example, instead of issuing control commands, the autonomy might instead anticipate and facilitate switches between the control subspaces, to ease the burden of mode switching (135) or to better estimate human intent (136). Such an approach is reminiscent of function switching in upper-limb prostheses (that is, of diverting control to operate different joints), which is an identified opportunity for autonomy (137).

4.2.2. Shared Control.

As with robotic wheelchairs, for wheelchair-mounted robotic arms there is a question of how to design paradigms for control sharing. Earlier work saw a similar high/low-level control partition, where the user selected the task or goal, which then was executed by the autonomy system (29, 138). In some works the human additionally intervened to provide pose corrections (68, 78, 139) or assist the autonomy (83). Partitioning the control dimensions between the autonomy and the human also was explored (140).

Recent work investigates blended control and inferred operator intent. What form the function allocating control should take, and on what it should depend, is an open research question (141). Intent inference often plays a role in this control allocation, and research explores improving (136, 142) and even influencing (143) this inference.

Much of the evaluation of assistive robotic arms for which the goal or task is not defined a priori (or explicitly indicated by the human) is limited to reaching or pick-and-place tasks. More empirical evaluations of complex, multi-step ADL tasks are critically needed.

4.3. The Future

The introduction of autonomy transforms an assistive machine into a kind of shared-control robot that allows the human to offload some of their operational burden. Autonomy to date largely has been employed on wheelchairs and robotic arms, and never deployed clinically. However, in the future we do anticipate clinical and societal adoption (discussed further in Section 5.1), as well as opportunities for autonomy on a greater variety of clinical devices and machines. With this, we also anticipate a need for autonomy that is customized to individual users, and adapts with them as their abilities and preferences change.

4.3.1. Autonomy in More Assistive Machines.

While today within the field of rehabilitation robotics there exists little autonomy, there do exist elements of automation—such as the automated gait controllers seen in lower-limb prostheses (35) and exoskeletons (14), automated grasps implemented on prosthetic hands (144) and automated assistive behaviors implemented on wheelchair-mounted robotic arms (68). Another area in which automation plays a role is assist-as-needed controllers (145, 146) that adapt how much control assistance is provided during robot-enabled physical therapy. There likely exist opportunities to advance each of these elements with additional sensors and intelligence.

Another opportunity area is assistive devices that are not themselves machines, but which might integrate autonomy technologies that provide feedback to the human user. For example, academia and industry integrate sensors into long canes and walkers used by those with low-vision and blindness, to provide the user with obstacle information (147).

The potential for robotics autonomy to help with the control challenges of upper-limb prostheses appears strikingly evident—there is a societal expectation that an artificial limb will replace the full functionality of a lost limb, and yet for the overwhelming majority of prosthesis users this still is far from reality. However there are fundamental challenges to integrating autonomy into prosthetic limbs, and in particular prosthetic arms—challenges which often are unacknowledged by the robotics autonomy community. Prosthetic arms and hands differ from robotic ones in important ways. Crucially, weight is a deal-breaking factor—upper-limb prostheses must be physically supported, for hours at a time, by the user’s own shoulder and residual limb—with several important consequences. One is an absence of sensors; typically on commercial prosthetic arms, not even joint encoders are present. Control, as a result, is exclusively open-loop. Second is less actuation power; batteries must be light, small enough to be contained within the arm and able to operate for hours or even days without recharging. Third is fewer computational resources; again, limited by constraints on weight, size and power. There also are practical considerations like how to keep sensors clean, or how to don the device with precision if required for sensor calibration or kinematic transforms. All of these factors help to explain why advances in robotics autonomy overwhelmingly have not been clinically applicable to prosthetic limbs.

While these considerations do present considerable challenges, they do not necessarily rule out all opportunities for autonomy. For example, simple tactile sensors employed in reactive control paradigms that maintain grip force or achieve force closure (144) would not require extensive sensing or computation. Sensors worn on other parts of the body also are becoming more commonplace within society. We also note efforts within academic research to constrain autonomy proposals considerably according to clinical viability (137, 148).
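To illustrate how little computation a reactive grip-force paradigm can require, the sketch below computes a bounded proportional correction from a single tactile force reading. It is a minimal sketch under assumed units and gains; the function name, `gain` and `max_step` are hypothetical, and it does not describe the controller of any cited prosthetic hand.

```python
def grip_adjustment(measured_force_n: float,
                    target_force_n: float,
                    gain: float = 0.5,
                    max_step: float = 1.0) -> float:
    """Return a bounded correction to the grip command.

    A positive value means tighten and a negative value means loosen.
    The correction is proportional to the force error and clipped to
    +/- max_step so that a single noisy reading cannot cause a large jump.
    """
    error = target_force_n - measured_force_n
    step = gain * error
    return max(-max_step, min(max_step, step))
```

Each control cycle needs one sensor reading, one subtraction, one multiplication and one clip, which is compatible in principle with the tight weight, power and computation budgets described above.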

The above constraints on sensors, actuation power and computation are less severe for prosthetic legs. Lower-limb prostheses are larger, support the body rather than being supported by it and often do integrate sensors such as joint encoders. To introduce additional sensors that perceive the external world to improve automation, or even introduce some element of autonomy, is more clinically viable than for upper-limb prostheses, and is currently being explored within academia (50).

4.3.2. Customized and Adaptive Autonomy.

Users of rehabilitation machines are unique in their physical abilities (motor, sensory or cognitive) as well as in their personal preferences. This uniqueness strongly suggests a need for customized, even individualized, assistance solutions, specifically with regard to how control is shared (141). The question of how to achieve customization that moreover is viable to deploy within clinical populations is formidable. While the assistive autonomy likely can be tuned in the clinic (as when fit for a wheelchair by an occupational therapist), such a solution is not able to respond to changing patient needs.

To fully meet the needs and preferences of the user, we anticipate a requirement that the level and form of assistance be dynamic and able to adapt autonomously—which of course presents both a major opportunity and major challenge for machine learning. In particular, we expect this human-robot team to be changing over time for the following reasons:

Changing human ability. It is expected that the user’s needed or desired amount of assistance will be extremely non-static: hopefully due to successful rehabilitation, but also possibly due to the degenerative nature of a disease.

Changing human preference. A given user might prefer different levels of assistance on different days or throughout the day, depending on factors like pain or fatigue. The human naturally also will learn and adapt to the machine, changing how they behave as an operator as they become more familiar with the autonomy.

Changing environments. The performance of the robot and needs of the human are likely to change as they are introduced to new scenarios. If we want the robot to go where the human goes, it will encounter novel environments.

To achieve dynamic assistance, there are questions of where the adaptation signals come from, when and in response to what adaptation should happen, and how to accomplish the adaptation. Users communicate with the robot through interfaces that effectively filter (and thus remove information content from) their control signals, and these control signals furthermore are likely to be noisy, sparse and often to mask (by impairment or interface) the human’s true motor intent. Suitable machine learning algorithms therefore will be required to extract information from sparse and noisy signals, provided by non-experts, and be judicious in deciding what is temporary variability and what are actual changes in need, ability or preference. In short, they must adapt appropriately to the changing needs and wants of the user, without overfitting to trial-by-trial variations and noise.
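One very simple way to separate temporary variability from sustained change is to smooth a per-session performance score and adapt only after the smoothed value has stayed outside a tolerance band for several consecutive sessions. The sketch below illustrates that idea only; it is not a proposed solution, and the class name, the notion of a per-session `score` in [0, 1] and all parameter values are hypothetical.

```python
class AssistanceAdapter:
    """Adapt an assistance level slowly, ignoring short-lived fluctuations."""

    def __init__(self, level: float = 0.5, smoothing: float = 0.2,
                 tolerance: float = 0.1, patience: int = 3,
                 step: float = 0.1) -> None:
        self.level = level          # current assistance level in [0, 1]
        self.smoothing = smoothing  # weight of each new score in the average
        self.tolerance = tolerance  # drift treated as ordinary variability
        self.patience = patience    # consecutive sessions required to adapt
        self.step = step            # size of each assistance change
        self._average = None        # smoothed score
        self._baseline = None       # smoothed score at the last adaptation
        self._streak = 0            # signed count of out-of-band sessions

    def observe(self, score: float) -> float:
        """Record one session score (higher = more able unaided); return level."""
        if self._average is None:
            self._average = self._baseline = score
            return self.level
        # Exponential moving average damps trial-by-trial noise.
        self._average += self.smoothing * (score - self._average)
        drift = self._average - self._baseline
        if drift > self.tolerance:
            self._streak = self._streak + 1 if self._streak > 0 else 1
        elif drift < -self.tolerance:
            self._streak = self._streak - 1 if self._streak < 0 else -1
        else:
            self._streak = 0
        if abs(self._streak) >= self.patience:
            # Sustained improvement lowers assistance; sustained decline raises it.
            direction = -1.0 if self._streak > 0 else 1.0
            self.level = min(1.0, max(0.0, self.level + direction * self.step))
            self._baseline = self._average
            self._streak = 0
        return self.level
```

In this sketch a single outlier session leaves the assistance level unchanged, whereas several sessions of consistently higher scores reduce it; the signals and algorithms actually suited to clinical populations remain the open research question described above.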

Engineering research is necessary to develop and design these algorithms, and long-term studies with end-users are required to evaluate their efficacy and iterate upon their design. Eventually, it will be necessary for these studies to be performed in-situ, outside of the lab and out in general society, which will present an entirely new set of challenges.

5. DISCUSSION

We conclude with a discussion of the adoption and acceptance of autonomy in rehabilitation robots, and propose a challenge to the community to break down the partition between machines that rehabilitate the body and machines that fill functional gaps.

5.1. Societal Adoption and Acceptance of Autonomy

User acceptance is always a factor in the adoption of new technologies. However, users of assistive devices rely very intimately on these machines, which physically support or are attached to their bodies, and understandably can be very particular in their tolerance for innovation. The final measure of success for any assistive device is to what extent it improves the quality of life and abilities of the user, and no matter how elegant the engineering, this measure will be zero if the device is never adopted. The consideration of user acceptance thus is made all the more relevant.

It is critical when developing assistive technologies to consider appearance, regulations and cost. Any infrastructure (sensors, computers) added to the assistive machine should be evaluated for whether, and to what extent, it contributes to the appearance of impairment. Dimension or weight additions need to be evaluated for practicality: for example, even if the expanded width of a wheelchair remains ADA⁹ compliant, it might significantly increase the difficulty of traversing through doorways. In conversations with clinicians and patients, cost always emerges as a dominating factor. At least in the short term, these technologies will not be covered by insurance plans in most countries, and so any system that is going to be of practical benefit should be reasonable to finance out of pocket. Even as the cost of sensors and computing power rapidly declines, this still places some technologies outside the realm of feasibility, calling into question the utility of research which depends on such technologies.

We argue that the deployment of robotics autonomy in traditionally human-operated assistive machines is particularly timely. With the advent of driver-assist technologies (such as emergency braking and lane assistance) in standard automobiles, we now have a domain in which traditionally human-operated machines have incorporated robotics autonomy and become shared-control. Furthermore, the use of these machines is widespread, throughout all facets of society. In a very concrete way never before seen, people are primed to accept and adopt assistance from robotics autonomy. Given this societal familiarity, and lowered barriers to high-quality sensor information, with industry engagement it would be feasible to envision autonomy technology deploying on assistive machines this decade.

5.2. Gold Standard: Assistive Robots that Rehabilitate

The beginning of this article presented a categorization that partitioned rehabilitation robots into machines which aim to rehabilitate bodies and those which aim to replace lost function. However, we might envision that this bifurcation would not always exist. Instead, the operation of an assistive machine that replaces lost function also might be used to regain lost function. We are starting to see this explored with novel interfaces that provide rehabilitation benefits through their operation (149), and are used to control computers and wheelchairs. A therapy paradigm might adapt how much and what sort of autonomy assistance is provided by the robot, in order to encourage the recovery of motor function, as we have seen in machines whose purpose it is to rehabilitate the body (145, 146). A breakdown of this partition, and the advent of robots that provide both functional capabilities and rehabilitation benefits by virtue of their operation, could be seen as a gold standard for rehabilitation robotics, and thus a grand challenge for the field.

6. CONCLUSION

When our ability to rehabilitate motor impairments in the human body has reached a plateau, we turn to assistive machines and devices to fill that functional gap. While such machines do successfully facilitate independent mobility and manipulation for many, there are many others for whom the operation of these machines is made difficult, or entirely inaccessible, by their motor impairments and the control interfaces which are available to them. This confound only increases as motor impairments become more severe, interfaces become more limited and machines become more complex to control.

Robotics autonomy holds the potential to aid in this difficulty, by offloading some operational burden from the human to the autonomy. Within academic research, autonomy has been introduced primarily to powered wheelchairs and robotic arms. There are many subtle and critical factors to introducing clinically-feasible autonomy solutions to rehabilitation machines, which moreover often go unacknowledged by (or are unknown to) the robotics autonomy community. No autonomy technology that has not given proper consideration to these factors will be adopted by the end-user.

Today there is little overlap between the communities of rehabilitation robotics and robotics autonomy, and little representation from robotics autonomy within clinical or research work in human rehabilitation. In the future, however, greater cross-pollination between these communities could enable autonomy to be introduced to, and to facilitate access to, a wide variety of human-operated assistive machines. We propose a grand challenge for these communities to integrate even further, and design interfaces and control paradigms that rehabilitate the body through the operation of assistive robots that perform functional tasks.

ACKNOWLEDGMENTS

This material is based upon work supported by the National Science Foundation under Grant IIS 1552706 and Grant CNS 15544741, the U.S. Office of Naval Research under Award Number N00014-16-1-2247, and the National Institute of Biomedical Imaging and Bioengineering and the National Institute of Child Health and Human Development of the National Institutes of Health under Award Number R01-EB01933. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author and do not necessarily reflect the views of the aforementioned institutions.

Footnotes

DISCLOSURE STATEMENT

The author is not aware of any affiliations, memberships, funding or financial holdings that might be perceived as affecting the objectivity of this review.

1. We identify the field of rehabilitation robotics to include research involving the types of robots in actual clinical use (prostheses, exoskeletons, manipulandums and orthoses) and/or that participates in the rehabilitation robotics community (e.g. the IEEE Conference on Rehabilitation Robotics). This is a largely separate community from the robotics autonomy community, with the primary difference being the strong biomedical engineering perspective of the former and the strong computer science perspective of the latter (with mechanical engineering heavily represented in both).

2. A related technology is Functional Electrical Stimulation (FES) (8), where movement of the limbs is accomplished through electrical stimulation of the muscles (rather than a robotic orthosis), perhaps triggered by neural signals from the patient (9). FES, wearable sensors and virtual reality are all technologies developed for neurorehabilitation which, however, fall outside the scope of this article, as they do not involve the use of a robot. Also related, but out of scope, is a branch of robotics research (Socially Assistive Robotics (10)) that aims to socially (rather than physically) support the patient during therapy exercise.

3. The next step along this trajectory of course is independent robotic assistants (3, 23, 24) that serve as body surrogates without being in contact with, or even co-located with, the operator’s own body. Such technologies have demonstrated promise, but still are quite far from adoption within the clinical world of rehabilitation, and therefore fall outside of the scope of this article.

4. The MANUS (later iARM, Exact Dynamics, Arnhem, Netherlands), Raptor (Applied Resources Corporation, New Jersey, United States) and JACO (Kinova Robotics, Quebec, Canada).

5. Though very recently a proportional control sip-and-puff has entered the market (Stealth Robotics, Colorado, United States).

6. An additional avenue is to modify the human body itself, rather than the machine side of the interface, as is done in the TMR surgery mentioned in Section 2.2.

7. The discontinued Wheelchair Pathfinder (Nurion-Raycal Industries, Pennsylvania, United States) provided obstacle feedback to people with low-vision/blindness, and the TAO-7 Intelligent Wheelchair Base (AAI Canada, Ontario, Canada) is marketed as a research testbed.

8. United States Census Bureau 2010 disability data: www.census.gov/people/disability/

9. The Americans with Disabilities Act and its associated regulations: www.ada.gov/

LITERATURE CITED

- 1.Simpson R 2005. Smart wheelchairs: A literature review. Journal of Rehabilitation Research & Development 42:423–438 [DOI] [PubMed] [Google Scholar]

- 2.Hillman M 2006. Rehabilitation robotics from past to present – A historical perspective. In Lecture Notes in Control and Information Science, Advances in Rehabilitation Robotics, eds. Bien ZZ, Stefanov D, vol. 306. Springer, Berlin, Heidelberg, 25–44 [Google Scholar]

- 3.Chen TL, Ciocarlie M, Cousins S, Grice PM, Hawkins K, et al. 2013. Robots for humanity: Using assistive robotics to empower people with disabilities. Robotics and Automation Magazine 20:30–39 [Google Scholar]

- 4.Vos T, Barber R, Ryan M, Bell B, Bertozzi-Villa A, et al. 2015. Global, regional, and national incidence, prevalence, and years lived with disability for 301 acute and chronic diseases and injuries in 188 countries, 1990–2013: A systematic analysis for the Global Burden of Disease Study 2013. The Lancet 386:743–800 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Nakayama H, Jørgensen H, Raaschou H, Olsen T. 1994. Recovery of upper extremity function in stroke patients: The Copenhagen Stroke Study. Archives of Physical Medicine and Rehabilitation 75:394–398 [DOI] [PubMed] [Google Scholar]

- 6.Miller EL, Murray L, Richards L, Zorowitz RD, Bakas T, et al. 2010. Comprehensive overview of nursing and interdisciplinary rehabilitation care of the stroke patient: A scientific statement from the American Heart Association. Stroke 41:2402–2448 [DOI] [PubMed] [Google Scholar]

- 7.Harwin WS, Patton JL, Edgerton VR. 2006. Challenges and opportunities for robot-mediated neurorehabilitation. Proceedings of the IEEE 94:1717–1726 [Google Scholar]

- 8.Peckham PH, Knutson JS. 2005. Functional Electrical Stimulation for neuromuscular applications. Annual Review of Biomedical Engineering 7:327–360 [DOI] [PubMed] [Google Scholar]

- 9.Jackson A, Zimmermann JB. 2012. Neural interfaces for the brain and spinal cord—restoring motor function. Nature Reviews Neurology 8:690–699 [DOI] [PubMed] [Google Scholar]

- 10.Feil-Seifer D, Matarić MJ. 2011. Socially assistive roboics. IEEE Robotics & Automation Magazine 18:24–31 [Google Scholar]

- 11.Reinkensmeyer DJ, Dietz V, eds. 2016. Neurorehabilitation technology. Switzerland: Springer International Publishing [Google Scholar]

- 12.Pennycott A, Wyss D, Vallery H, Klamroth-Marganska V, Riener R. 2012. Towards more effective robotic gait training for stroke rehabilitation: A review. Journal of NeuroEngineering and Rehabilitation 9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Young AJ, Ferris DP. 2016. State-of-the-art and future directions for lower limb robotic exoskeletons. IEEE Transactions on Neural Systems and Rehabilitation Engineering 25:171–182 [DOI] [PubMed] [Google Scholar]

- 14.Esquenazi A, Talaty M, Jayaraman A. 2017. Powered exoskeletons for walking assistance in persons with central nervous system injuries: A narrative review. American Academy of Physical Medicine and Rehabilitation 9:46–62 [DOI] [PubMed] [Google Scholar]

- 15.Finlayson M, van Denend T. 2003. Experiencing the loss of mobility: Perspectives of older adults with MS. Disability & Rehabilitation 25 [DOI] [PubMed] [Google Scholar]

- 16.Ziegler-Graham K, MacKenzie EJ, Ephraim PL, Travison TG, Brookmeyer R. 2008. Estimating the prevalence of limb loss in the United States: 2005 to 2050. Archives of Physical Medicine and Rehabilitation 89:422–429 [DOI] [PubMed] [Google Scholar]

- 17.Raichle KA, Hanley MA, Molton I, Kadel NJ, Campbell K, et al. 2008. Prosthesis use in persons with lower- and upper-limb amputation. Journal of Rehabilitation Resesearch and Development 45:961–972 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Biddiss EA, Chau TT. 2007. Upper limb prosthesis use and abandonment: A survey of the last 25 years. Prosthetics and Orthotics International 31:236–257 [DOI] [PubMed] [Google Scholar]

- 19.Micera S, Carpaneto J, Raspopovic S. 2010. Control of hand prostheses using peripheral information. IEEE Reviews in Biomedical Engineering 3:48–68 [DOI] [PubMed] [Google Scholar]

- 20.Atkins D, Heard D, Donovan W. 1996. Epidemiologic overview of individuals with upper-limb loss and their reported research priorities. Journal of Prosthetics and Orthotics 8:2–11 [Google Scholar]

- 21.ff. Weir RF, Sensinger JW. 2009. The design of artificial arms and hands for prosthetic applications. In Standard handbook of biomedical engineering and design, chap. 32. McGraw-Hill [Google Scholar]

- 22.Scheme E, Englehart K. 2011. Electromyogram pattern recognition for control of powered upper-limb prostheses: State of the art and challenges for clinical use. Journal of Rehabilitation Research & Development 48:643–660 [DOI] [PubMed] [Google Scholar]

- 23.Reiser U, Connette C, Fischer J, Kubacki J, Bubeck A, et al. 2009. Care-O-bot® 3 - creating a product vision for service robot applications by integrating design and technology. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) [Google Scholar]

- 24.Leeb R, Tonin L, Rohm M, Desideri L, Carlson T, del R. Millán J. 2015. Towards independence: A BCI telepresence robot for people with severe motor disabilities. Proceedings of the IEEE 103:969–982 [Google Scholar]

- 25.Edwards K, McCluskey A. 2010. A survey of adult power wheelchair and scooter users. Disability & Rehabilitation: Assistive Technology 5:411–419 [DOI] [PubMed] [Google Scholar]

- 26.Simpson R, LoPresti E, Cooper R. 2008. How many people would benefit from a smart wheelchair? Journal of Rehabilitation Research & Development 45:53–72 [DOI] [PubMed] [Google Scholar]

- 27.Churchward R 1985. The development of a standing wheelchair. Applied Ergonomics 16:55–62 [DOI] [PubMed] [Google Scholar]

- 28.Arva J, Paleg G, Lange M, Lieberman J, Schmeler M, et al. 2009. RESNA position on the application of wheelchair standing devices. Assistive Technology 21:161–168 [DOI] [PubMed] [Google Scholar]

- 29.Dune C, Leroux C, Marchand E. 2007. Intuitive human interaction with an arm robot for severely handicapped people a one click approach. In Proceedings of the IEEE International Conference on Rehabilitation Robotics (ICORR) [Google Scholar]

- 30.Kuiken TA, Dumanian GA, Lipschutz RD, Miller LA, Stubblefield KA. 2004. The use of targeted muscle reinnervation for improved myoelectric prosthesis control in a bilateral shoulder disarticulation amputee. Prosthetics and Orthotics International 28:245–53

- 31.Kuiken TA, Li G, Lock BA, Lipschutz RD, Miller LA, et al. 2009. Targeted muscle reinnervation for real-time myoelectric control of multifunction artificial arms. The Journal of the American Medical Association 301:619–28

- 32.Parker P, Englehart K, Hudgins B. 2006. Myoelectric signal processing for control of powered limb prostheses. Journal of Electromyography and Kinesiology 16:541–548

- 33.Tenore FVG, Ramos A, Fahmy A, Acharya S, Etienne-Cummings R, Thakor NV. 2009. Decoding of individuated finger movements using surface electromyography. IEEE Transactions on Biomedical Engineering 56:1427–1434

- 34.Young AJ, Smith LH, Rouse EJ, Hargrove LJ. 2013. Classification of simultaneous movements using surface EMG pattern recognition. IEEE Transactions on Biomedical Engineering 60:1250–1258

- 35.Sup F, Bohara A, Goldfarb M. 2008. Design and control of a powered transfemoral prosthesis. International Journal of Robotics Research 27:263–273

- 36.Rouse EJ, Mooney LM, Herr HM. 2014. Clutchable series-elastic actuator: Implications for prosthetic knee design. International Journal of Robotics Research 33:1611–1625

- 37.Huang H, Kuiken T, Lipschutz R. 2009. A strategy for identifying locomotion modes using surface electromyography. IEEE Transactions on Biomedical Engineering 56:65–73

- 38.Spanias JA, Perreault EJ, Hargrove LJ. 2016. Detection of and compensation for EMG disturbances for powered lower limb prosthesis control. IEEE Transactions on Neural Systems and Rehabilitation Engineering 24:226–234

- 39.Varol HA, Sup F, Goldfarb M. 2010. Multiclass real-time intent recognition of a powered lower limb prosthesis. IEEE Transactions on Biomedical Engineering 57:542–551

- 40.Chu JU, Moon I, Mun MS. 2006. A real-time EMG pattern recognition system based on linear-nonlinear feature projection for a multifunction myoelectric hand. IEEE Transactions on Biomedical Engineering 53:2232–2239

- 41.Huang Y, Englehart KB, Hudgins B, Chan ADC. 2005. A Gaussian mixture model based classification scheme for myoelectric control of powered upper limb prostheses. IEEE Transactions on Biomedical Engineering 52:1801–1811

- 42.León M, Gutiérrez J, Leija L, Muñoz R, de la Cruz J, Santos M. 2011. Multiclass motion identification using myoelectric signals and support vector machines. In Third World Congress on Nature and Biologically Inspired Computing

- 43.Englehart K, Hudgins B. 2003. A robust, real-time control scheme for multifunction myoelectric control. IEEE Transactions on Biomedical Engineering 50:848–854

- 44.Tenore F, Armiger RS, Vogelstein RJ, Wenstrand DS, Harshbarger SD, Englehart K. 2008. An embedded controller for a 7-degree of freedom prosthetic arm. In Proceedings of the International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC)

- 45.Ajiboye AB, ff. Weir RF. 2005. A heuristic fuzzy logic approach to EMG pattern recognition for multifunctional prosthesis control. IEEE Transactions on Neural Systems and Rehabilitation Engineering 13

- 46.Simon AM, Hargrove LJ, Lock BA, Kuiken TA. 2011. Target achievement control test: Evaluating real-time myoelectric pattern-recognition control of multifunctional upper-limb prostheses. Journal of Rehabilitation Research & Development 48:619–628

- 47.Lebedev MA, Nicolelis MA. 2006. Brain-machine interfaces: Past, present and future. TRENDS in Neurosciences 29:536–546

- 48.Chaudhary U, Birbaumer N, Ramos-Murguialday A. 2016. Brain-computer interfaces for communication and rehabilitation. Nature Reviews Neurology 12:513–525

- 49.Huang H, Zhang F, Hargrove LJ, Dou Z, Rogers DR, Englehart KB. 2010. Continuous locomotion-mode identification for prosthetic legs based on neuromuscular-mechanical fusion. IEEE Transactions on Biomedical Engineering 58:2867–2875

- 50.Krausz NE, Lenzi T, Hargrove LJ. 2015. Depth sensing for improved control of lower limb prostheses. IEEE Transactions on Biomedical Engineering 62:2576–2587

- 51.Shima K, Tsuji T. 2010. Classification of combined motions in human joints through learning of individual motions based on muscle synergy theory. In Proceedings of the IEEE/SICE International Symposium on System Integration (SII)

- 52.Jiang N, Englehart KB, Parker PA. 2009. Extracting simultaneous and proportional neural control information for multiple-DOF prostheses from the surface electromyographic signal. IEEE Transactions on Biomedical Engineering 56

- 53.Matrone G, Cipriani C, Secco E, Magenes G, Carrozza M. 2010. Principal components analysis based on control of a multi-DOF underactuated prosthetic hand. Journal of NeuroEngineering and Rehabilitation 7

- 54.Tresch MC, Jarc A. 2009. The case for and against muscle synergies. Current Opinion in Neurobiology 19:601–607

- 55.Santello M, Flanders M, Soechting JF. 1998. Postural hand synergies for tool use. The Journal of Neuroscience 18:10105–10115

- 56.Castellini C, van der Smagt P. 2013. Evidence of muscle synergies during human grasping. Biological Cybernetics 107:233–245

- 57.Calancie B, Needham-Shropshire B, Jacobs P, Willer K, Zych G, Green B. 1994. Involuntary stepping after chronic spinal cord injury: Evidence for a central rhythm generator for locomotion in man. Brain 117:1143–1159

- 58.Bicchi A, Gabiccini M, Santello M. 2011. Modeling natural and artificial hands with synergies. Philosophical Transactions of the Royal Society B 366:3153–3161

- 59.Gioioso G, Salvietti G, Malvezzi M, Prattichizzo D. 2013. Mapping synergies from human to robotic hands with dissimilar kinematics: An approach in the object domain. IEEE Transactions on Robotics 29:825–837

- 60.Shepherd MK, Rouse EJ. 2017. The VSPA foot: A quasi-passive ankle-foot prosthesis with continuously variable stiffness. IEEE Transactions on Neural Systems and Rehabilitation Engineering In press

- 61.ff. Weir RF, Heckathorne CW, Childress DS. 2001. Cineplasty as a control input for externally powered prosthetic components. Journal of Rehabilitation Research and Development 38:357–363

- 62.Weber DJ, Friesen R, Miller LE. 2012. Interfacing the somatosensory system to restore touch and proprioception: Essential considerations. Journal of Motor Behavior 44:403–418

- 63.Jaffe DL, Harris HL, Leung SK. 1990. Ultrasonic head controlled wheelchair/interface: A case study in development and technology transfer. In Proceedings of the Annual International Conference on Assistive Technology for People with Disabilities (RESNA)

- 64.Moon I, Lee M, Ryu J, Mun M. 2003. Intelligent robotic wheelchair with EMG-, gesture-, and voice-based interfaces. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

- 65.Bley F, Rous M, Canzler U, Kraiss KF. 2004. Supervised navigation and manipulation for impaired wheelchair users. In Proceedings of the International Conference on Systems, Man and Cybernetics (SMC)

- 66.Touati Y, Ali-Cherif A, Achili B. 2009. Smart wheelchair design and monitoring via wired and wireless networks. In Proceedings of the IEEE Symposium on Industrial Electronics & Applications (ISIEA)

- 67.Escobedo A, Spalanzani A, Laugier C. 2013. Multimodal control of a robotic wheelchair: Using contextual information for usability improvement. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

- 68.Bien Z, Chung MJ, Chang PH, Kwon DS, Kim DJ, et al. 2004. Integration of a rehabilitation robotic system (KARES II) with human-friendly man-machine interaction units. Autonomous Robots 16:165–191

- 69.Thorp EB, Abdollahi F, Chen D, Farshchiansadegh A, Lee MH, et al. 2016. Upper body-based power wheelchair control interface for individuals with tetraplegia. IEEE Transactions on Neural Systems and Rehabilitation Engineering 24:249–260

- 70.Adachi Y, Goto K, Matsumoto Y, Ogasawara T. 2003. Development of control assistant system for robotic wheelchair-estimation of user’s behavior based on measurements of gaze and environment. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA)

- 71.Barea R, Boquete L, Mazo M, López E. 2002. System for assisted mobility using eye movements based on electrooculography. IEEE Transactions on Neural Systems and Rehabilitation Engineering 10

- 72.Carlson T, del R. Millán J. 2013. Brain-controlled wheelchairs: A robotic architecture. IEEE Robotics & Automation Magazine 20:65–73

- 73.Katsura S, Ohnishi K. 2004. Human cooperative wheelchair for haptic interaction based on dual compliance control. IEEE Transactions on Industrial Electronics 51:221–228

- 74.Luo RC, Hu CY, Chen TM, Lin MH. 1999. Force reflective feedback control for intelligent wheelchairs. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

- 75.Kitagawa L, Kobayashi T, Beppu T, Terashima K. 2001. Semi-autonomous obstacle avoidance of omnidirectional wheelchair by joystick impedance control. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

- 76.Braga RA, Petry M, Reis LP, Moreira AP. 2011. Intellwheels: Modular development platform for intelligent wheelchairs. Journal of Rehabilitation Research and Development 48

- 77.Wang Y, Chen W. 2011. Hybrid map-based navigation for intelligent wheelchair. In Proceedings of IEEE International Conference on Robotics and Automation (ICRA)

- 78.Lüth T, Ojdanić D, Friman O, Prenzel O, Gräser A. 2007. Low level control in a semi-autonomous rehabilitation robotic system via a brain-computer interface. In Proceedings of the IEEE International Conference on Rehabilitation Robotics (ICORR)

- 79.Simpson RC, Levine SP. 2002. Voice control of a powered wheelchair. IEEE Transactions on Neural Systems and Rehabilitation Engineering 10:122–125

- 80.Yanco HA. 1998. Wheelesley, a robotic wheelchair system: Indoor navigation and user interface. In Assistive Technology and Artificial Intelligence, vol. 1458 of Lecture Notes in Computer Science. Springer-Verlag

- 81.Walter MR, Hemachandra S, Homberg B, Tellex S, Teller S. 2013. Learning semantic maps from natural language descriptions. In Proceedings of Robotics: Science and Systems (R:SS)

- 82.Busnel M, Cammoun R, Coulon-Lauture F, Détriché JM, Claire GL, Lesigne B. 1999. The robotized workstation “MASTER” for users with tetraplegia: Description and evaluation. Journal of Rehabilitation Research and Development 36:217–229

- 83.Volosyak I, Ivlev O, Gräser A. 2005. Rehabilitation robot FRIEND II - the general concept and current implementation. In Proceedings of the IEEE International Conference on Rehabilitation Robotics (ICORR)

- 84.Kim DJ, Hazlett-Knudsen R, Culver-Godfrey H, Rucks G, Cunningham T, et al. 2011. How autonomy impacts performance and satisfaction: Results from a study with spinal cord injured subjects using an assistive robot. IEEE Transactions on Systems, Man, and Cybernetics - Part A: Systems and Humans 42:2–14

- 85.Lankenau A, Röfer T. 2001. A versatile and safe mobility assistant. IEEE Robotics & Automation Magazine 8:29–37

- 86.Sheridan T. 1992. Telerobotics, automation, and human supervisory control. MIT Press

- 87.Crandall JW, Goodrich MA, Olsen DR Jr., Nielsen CW. 2005. Validating human-robot interaction schemes in multitasking environments. IEEE Transactions on Systems, Man and Cybernetics 35:438–449

- 88.Fong T, Thorpe C, Baur C. 2001. Advanced interfaces for vehicle teleoperation: Collaborative control, sensor fusion displays, and remote driving tools. Autonomous Robots 11:77–85

- 89.Erdogan A, Argall B. 2017. The effect of robotic wheelchair control paradigm and interface on user performance, effort and preference: An experimental assessment. Robotics and Autonomous Systems 94:282–297

- 90.Ezeh C, Trautman P, Devigne L, Bureau V, Babel M, Carlson T. 2017. Probabilistic vs linear blending approaches to shared control for wheelchair driving. In Proceedings of the IEEE International Conference on Rehabilitation Robotics (ICORR)

- 91.Mazo M. 2001. An integral system for assisted mobility. IEEE Robotics & Automation Magazine 8:46–56

- 92.Madarasz RL, Heiny LC, Cromp RF, Mazur NM. 1986. Design of an autonomous vehicle for the disabled. IEEE Journal of Robotics and Automation 2:117–126