Abstract

Perceptions of social categories, emotions, and personality traits from others’ faces have each been studied extensively but in relative isolation. We synthesize emerging findings suggesting that, in each of these domains of social perception, both a variety of bottom-up facial features and top-down social cognitive processes play a part in driving initial perceptions. Among such top-down processes, social-conceptual knowledge in particular can have a fundamental structuring role in how we perceive others’ faces. Extending the Dynamic Interactive framework (Freeman & Ambady, 2011), we outline a perspective whereby the perception of social categories, emotions, and traits from faces can all be conceived as emerging from an integrated system relying on domain-general cognitive properties. Such an account would treat perception as a rapid, but gradual, process of negotiation between the variety of visual cues inherent to a person and the social cognitive knowledge an individual perceiver brings to the perceptual process. We describe growing evidence in support of this perspective as well as its theoretical implications for social psychology.

Although often warned not to judge a book by its cover, we cannot help but form any number of judgments on encountering the people around us. From facial features alone, we seem to perceive, almost immediately, the social categories to which they belong (e.g., gender, race), their current emotional state (e.g., sad), and the personality characteristics they likely possess (e.g., trustworthy, intelligent). The field of social psychology has taken great interest in these judgments, as each domain of judgment has consistently been shown to portend wide-ranging effects on social interaction and sometimes society at large. Social category judgments tend to spontaneously activate related stereotypes, attitudes, and goals and can bear a number of cognitive, affective, and behavioral consequences, such as providing a basis for subsequent prejudice and discrimination (Brewer, 1988; Fiske & Neuberg, 1990; Macrae & Bodenhausen, 2000). Emotion judgments, of course, have long been noted to drive nonverbal communication and provide critical signals for upcoming behaviors (Darwin, 1872; Ekman & Friesen, 1971; Ekman, Sorensen, & Friesen, 1969). Finally, trait judgments from facial features alone occur spontaneously and outside awareness, and they can impact evaluations, behavior, and real-world outcomes ranging from political elections to financial success and criminal-sentencing decisions (for review, Todorov, Olivola, Dotsch, & Mende-Siedlecki, 2015).

Given the implications, early work in the field of social psychology focused on the products of these judgments and the variety of downstream effects that ensue. At the same time, research in the cognitive, neural, and vision sciences was aiming to characterize the underlying visual cues and basic mechanisms driving face perception. Recently, a unified ‘social vision’ approach has formed (Adams, Ambady, Nakayama, & Shimojo, 2011; Balcetis & Lassiter, 2010; Freeman & Ambady, 2011), in which the process of social perception is integrated with the products that follow. This approach stands in contrast to a more traditional divide wherein these levels of analysis are studied by fairly separate disciplines.

Although social categorization, emotion recognition, and trait attribution have each been studied in relatively isolated literatures, traditionally these literatures have shared a similar feed-forward emphasis (although with exceptions). In a feed-forward approach, perceptual cues activate an internal representation (e.g., social category, emotion, trait), which in turn drives subsequent cognitive, affective, motivational, and behavioral processes. For instance, classic and influential models of social categorization treated a fully formed categorization (e.g., man, Black person) as the initial starting point (Brewer, 1988; Fiske & Neuberg, 1990; Macrae & Bodenhausen, 2000); prominent basic emotion theories treated emotion percepts (e.g., angry) as if directly “read out” from specific combinations of facial action units in a universal, genetically determined fashion (Ekman, 1993); and popular models of face-based trait impressions have tended to focus on specific sets of facial features that produce specific impressions in a bottom-up fashion (Oosterhof & Todorov, 2008a; Zebrowitz & Montepare, 2008a). In each case, the face itself was treated as directly conveying a social judgment, and little attention was paid to processes harbored within perceivers.

There has been an increasing recognition of such processes and of the important role that top-down social cognitive factors, such as stereotypes, attitudes, and goals, play in “initial” social perceptions (Freeman & Johnson, 2016a; Hehman, Stolier, Freeman, Flake, & Xie, 2019). In the context of perceiving social categories and their interplay with stereotype processes, the Dynamic Interactive (DI) theory provided a framework and computational model to understand the mutual interplay of bottom-up visual cues and top-down social cognitive factors in driving perceptions (Freeman & Ambady, 2011). Here we extend the DI theory to provide an understanding of a similar mutual interplay in the context of perceiving emotions and personality traits as well. We aim to show that an integrated system relying on domain-general cognitive principles may provide a helpful model of visually based social perception broadly.

1. Dynamic Interactive (DI) Theory

The DI framework (Freeman & Ambady, 2011) uses domain-general cognitive and computational principles, such as recurrent processing and mutual constraint satisfaction, to argue that an initial social perception (e.g., male, Black, happy) is a rapid, yet gradual, process of negotiation between the multiple visual features inherent to a person (e.g., facial and bodily cues) and the baggage a perceiver brings to the perceptual process (e.g., stereotypes, attitudes, goals). Accordingly, initial categorizations are not discrete “read outs” of facial features; they evolve over hundreds of milliseconds, in competition with other partially active perceptions, and may be dynamically shaped by context and one’s stereotypes, attitudes, and goals.

Why might this be the case? At the neural level, the representation of a social category would be reflected by a pattern of activity distributed across a large population of neurons. Thus, activating a social category representation would involve continuous changes in a pattern of neuronal activity (Smith & Ratcliff, 2004; Spivey & Dale, 2006; Usher & McClelland, 2001). Neuronal recordings in nonhuman primates have shown that almost 50% of a face’s visual information rapidly accumulates in the brain’s perceptual system within 80 ms, while the remaining 50% gradually accumulates over the following hundreds of milliseconds (Rolls & Tovee, 1995). As such, during early moments of perception when only a “gist” is available, the transient representation of a face is partially consistent with multiple interpretations (e.g., both male and female). As information accumulates and representations become more fine-grained, the pattern of neuronal activity dynamically sharpens into an increasingly stable and complete representation (e.g., male), while other, competing representations (e.g., female) are pushed out (Freeman & Ambady, 2011; Freeman, Stolier, Brooks, & Stillerman, 2018).

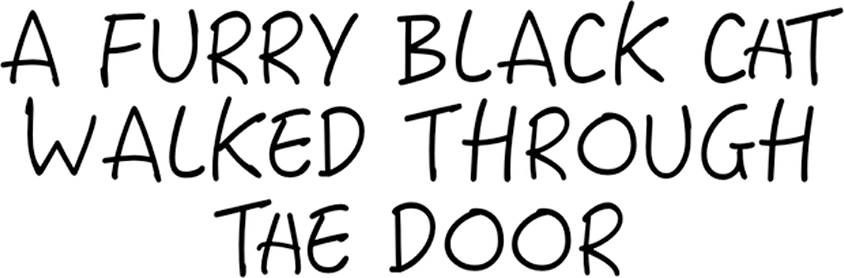

According to the DI theory, this dynamic competition inherent to the perceptual process is important because it allows the perceptual system to take the natural diversity in others’ visual cues (e.g., masculine features on a woman’s face) and slot it into stable categories to perceive others. A central premise of the theory is that, during the hundreds of milliseconds it takes for the neuronal activity to achieve a stable pattern (~100% male or ~100% female), top-down factors, such as attitudes, goals, and perhaps most notably stereotypes or social-conceptual knowledge more generally, can also exert an influence, thereby partly determining the pattern that the system settles into (Freeman & Ambady, 2011). Accordingly, social category perception is rendered a compromise between the perceptual cues “actually” there and the baggage perceivers bring to the perceptual process. Just as expectations from prior knowledge are effortlessly and rapidly brought to bear on perceiving an ambiguous A/H letter to align with one’s assumptions (see Fig. 1), so too may social expectations and social-conceptual knowledge shape face perception. Indeed, simulations with the computational model derived from the DI theory account well for a wide range of phenomena, such as ambiguity resolution in perceiving social categories, top-down stereotype effects on gender and race perception, intersectional effects and phenotype resemblance effects on social category perception (e.g., “race is gendered”), and effects of interracial exposure, among numerous others (Freeman & Ambady, 2011; Freeman, Pauker, & Sanchez, 2016; Freeman, Penner, Saperstein, Scheutz, & Ambady, 2011).
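The gradual competition described above can be illustrated with a leaky competing accumulator, a model in the spirit of Usher and McClelland (2001) rather than the DI model itself. In this minimal sketch, which omits the noise such models usually include, two alternatives (labeled male and female for illustration) accumulate activation from slightly unbalanced bottom-up evidence while inhibiting each other. The `top_down` argument is a hypothetical stand-in for an expectation signal, and all parameter values are arbitrary assumptions chosen only to make the dynamics visible.

```python
import numpy as np

def compete(evidence, top_down=(0.0, 0.0), steps=100, dt=0.1, leak=0.2, inhibition=0.6):
    """Deterministic leaky competing accumulator (after Usher & McClelland, 2001).

    evidence: bottom-up support for each alternative, e.g. (male, female).
    top_down: hypothetical expectation bias added to each alternative's input.
    Returns activations over time with shape (steps + 1, n_alternatives).
    """
    drive = np.asarray(evidence, dtype=float) + np.asarray(top_down, dtype=float)
    x = np.zeros_like(drive)
    history = [x.copy()]
    for _ in range(steps):
        rivals = x.sum() - x  # summed activation of the competing alternatives
        # Input drives each unit, activation leaks, rivals inhibit; no negative activation.
        x = np.maximum(x + dt * (drive - leak * x - inhibition * rivals), 0.0)
        history.append(x.copy())
    return np.array(history)

def proportion_first(history):
    """Share of total activation held by the first alternative (0.5 when nothing is active)."""
    totals = history.sum(axis=1)
    return np.divide(history[:, 0], totals, out=np.full(len(totals), 0.5), where=totals > 0)

face = (0.55, 0.45)  # slightly more masculine than feminine cues
no_bias = proportion_first(compete(face))
with_bias = proportion_first(compete(face, top_down=(0.0, 0.15)))
print("no bias:  ", no_bias[[1, 10, 50, 100]])
print("with bias:", with_bias[[1, 10, 50, 100]])
```

Early in processing both alternatives are partially active; over later steps one is pushed out entirely. With these assumed parameters, a modest top-down bias flips which alternative the system settles into for this ambiguous input, whereas the same bias would not flip a strongly unbalanced input such as (0.9, 0.1).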

Figure 1.

Perceivers readily and involuntarily perceive the words “CAT” and “THE”, rather than “CHT” and “TAE”, due to top-down knowledge of such words, even though the middle A/H is identical. Figure taken from Gaspelin and Luck (2018).

Although in theory any number of social cognitive processes may shape initial perceptions, the top-down impact of stereotypes has the strongest backing from a mechanistic perspective. It also connects social perception to a wider literature on the interplay of conceptual knowledge and visual perception. Stereotypes, after all, are merely semantic associations activated by social category representations. A central argument of the DI theory is that stereotypes are semantic activations that can become implicit expectations during perception, and that they thereby take on the ability to influence perception (Freeman & Ambady, 2011; Freeman, Penner, et al., 2011; Johnson, Freeman, & Pauker, 2012b). See Fig. 2. Moreover, we believe that the theoretical and computational basis for understanding the interplay of facial features and stereotypes in social category perception (which was the focus of the DI theory) sets the stage for understanding the interplay of visual features and conceptual knowledge in driving social perception more broadly.

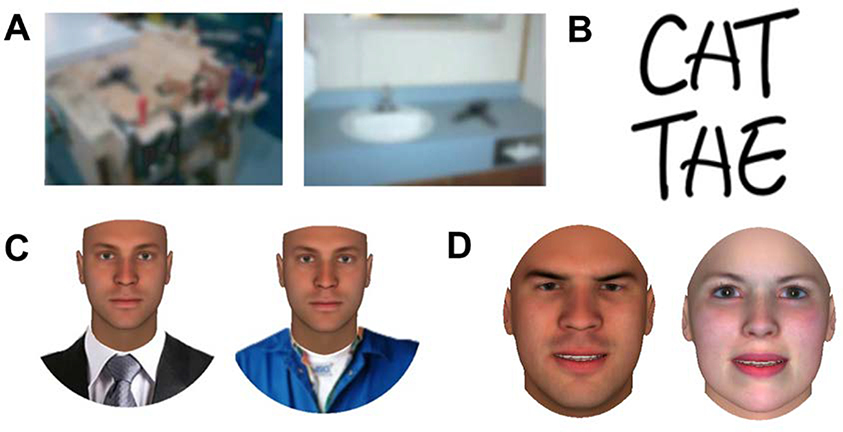

Figure 2.

The impact of social-conceptual knowledge on face perception shares a fundamental similarity with more general top-down impacts of conceptual associations in perception. (A) Conceptual knowledge about hairdryers and drills and about garages and bathrooms leads an ambiguous object to be readily disambiguated by the context (Bar, 2004). (B) The “CAT” and “THE” example from Fig. 1, where stored representations of “CAT” and “THE” lead to opposite interpretations of the same letter. (C) Contextual attire cues bias perception of a racially ambiguous face to be White when surrounded by high-status attire but to be Black when surrounded by low-status attire, due to stereotypic associations between race and social status (Freeman, Penner, et al., 2011). (D) An emotionally ambiguous face is perceived to be angry when male but happy when female, due to stereotypic associations linking men to anger and women to joy (Hess, Adams, & Kleck, 2004). Adapted from Freeman and Johnson (2016).

1.1. Conceptual knowledge in visual perception

Intuitively, we might expect that our perception of a visual stimulus such as a face would be immune to conceptual knowledge and other top-down factors, instead reflecting a veridical representation of the perceptual information before our eyes (Marr, 1982). This was long argued to be the case (Fodor, 1983; Pylyshyn, 1984) and is still an assumption of many popular feed-forward models of object recognition (Riesenhuber & Poggio, 1999). An important exception historically was the ‘New Look’ perspective that emerged over a half-century ago, arguing that motives can impact perception (i.e., we see what we want to see) and providing evidence that, for example, poor children overestimate the size of coins (Bruner & Goodman, 1947). However, the perspective soon lost favor. Today, many researchers view perception as an active and constructive process, where context and prior knowledge adaptively constrain perception. While few would dispute top-down influences on perceptual decision-making generally, debate continues as to whether these influences operate at the level of perception itself, rather than on attentional or post-perceptual decision processes (Firestone & Scholl, 2015; Pylyshyn, 1999). In our view, top-down influences are likely to manifest at all stages, including multiple levels of perceptual processing itself, and continued arguments for the cognitive impenetrability of perception are difficult to reconcile with swaths of empirical findings and a modern understanding of the neuroscience of perception (see Vinson et al., 2016).

Indeed, numerous findings now support the notion that top-down conceptual knowledge plays an important role in visual perception. And while the initial DI theory incorporated such insights to focus on stereotypes’ impact on face perception, we aim to show here that the theory and the conceptually situated nature of perception can be extended to understand other domains of social perception more generally. Evidence for the conceptual scaffolding of perception is now quite vast (for review, Collins & Olson, 2014). Large-scale neural oscillations allow visual perception to arise from both bottom-up feed-forward and top-down feedback influences (Engel, Fries, & Singer, 2001; Gilbert & Sigman, 2007), and even the earliest responses in early visual cortex (V1-V4) are sensitive to learning and altered by top-down knowledge (Damaraju, Huang, Barrett, & Pessoa, 2009; Li, Piëch, & Gilbert, 2004).

With respect to conceptual knowledge, learning about a novel category has consistently been shown to facilitate the recognition of objects (Collins & Curby, 2013; Curby, Hayward, & Gauthier, 2004; Gauthier, James, Curby, & Tarr, 2003) and to change the discriminability of faces’ category-specifying features (Goldstone, Lippa, & Shiffrin, 2001). Detailed semantic knowledge, such as stories about a stimulus, can facilitate the recognition of objects and faces, and such influences manifest as early as 100 ms after visual exposure (Abdel-Rahman & Sommer, 2008; Abdel-Rahman & Sommer, 2012). A brain region central to object and face perception, the fusiform gyrus (FG), is sensitive to such knowledge and learning (Tarr & Gauthier, 2000) and is readily modulated by perceptual ‘priors’ and top-down expectation signals from ventral-frontal regions, notably the orbitofrontal cortex (OFC) (Bar, 2004; Bar, Kassam, et al., 2006; Freeman et al., 2015; Summerfield & Egner, 2009) (see Fig. 3). For instance, when participants have an expectation about a face, top-down effective connectivity from the OFC to the FG is enhanced, suggesting that expectation signals available in the OFC may play a role in modulating FG visual representations (Summerfield & Egner, 2009; Summerfield et al., 2006). Moreover, when presented with objects, activity related to successful object recognition is present in the OFC 50–85 ms earlier than in regions involved in object perception, again suggesting a role for OFC expectation signals that may affect FG perceptual processing (Kveraga, Boshyan, & Bar, 2007).

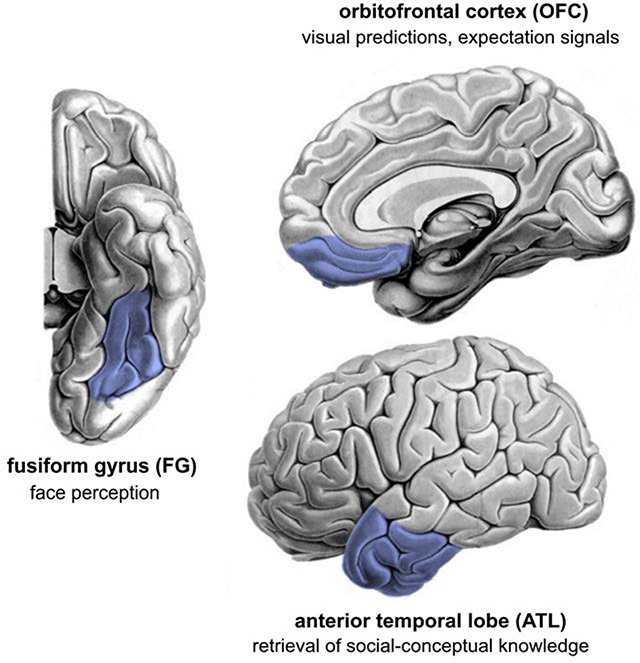

Figure 3.

Freeman and Johnson (2016) posited that the fusiform gyrus (FG), orbitofrontal cortex (OFC), and anterior temporal lobe (ATL) together play an important role in the coordination of sensory and social processes during perception. The FG is centrally involved in visual processing of faces, the ATL broadly involved in semantic storage and retrieval processes, and the OFC involved in visual predictions and top-down expectation signals. In this perspective, when perceiving another person’s face, evolving representations in the FG lead the ATL to retrieve social-conceptual associations related to tentatively perceived characteristics. This social-conceptual information available in the ATL, in turn, is used by the OFC to implement top-down visual predictions (e.g., based on social-conceptual knowledge) that can flexibly modulate FG representations of faces more in line with those predictions. Such a network would support a flexible integration of bottom-up facial cues and higher-order social cognitive processes. Adapted from Freeman and Johnson (2016).

We have shown that, when participants view faces, the representational structure of activity patterns in the face-processing FG partly reflects stereotypical expectations (Stolier & Freeman, 2016a) and emotion concepts (Brooks, Chikazoe, Sadato, & Freeman, in press). Such findings are consistent more broadly with growing evidence that perceptual representations in object-processing brain regions do not reflect processing of visual cues alone, but additionally reflect abstract semantic relationships between perceptual categories (Jozwik, Kriegeskorte, Storrs, & Mur, 2017; Khaligh-Razavi & Kriegeskorte, 2014; Storrs, Mehrer, Walter, & Kriegeskorte, 2017). More generally, the face-processing FG has been shown to be sensitive to a variety of other top-down social cognitive processes, such as goals (Kaul, Ratner, & Van Bavel, 2013) and intergroup processes (Brosch, Bar-David, & Phelps, 2013; Kaul, Ratner, & Van Bavel, 2014; Van Bavel, Packer, & Cunningham, 2008).

1.2. An extended DI model

How could we account for such findings and understand the conceptual scaffolding of perceiving social categories, emotions, and traits? In considering the underlying representations involved, early models in social perception (e.g., Brewer, 1988; Fiske & Neuberg, 1990; Hamilton, Katz, & Leirer, 1980; Smith, 1984; Srull & Wyer, 1989), inspired by information-processing approaches and a rising interest in the digital computer, viewed representations as discrete symbolic units, manipulated through propositions and logical rules in what can be described as a ‘physical symbol system’ (Newell, 1980). This included the popular ‘spreading activation’ associative networks, which are highly valuable in understanding phenomena such as stereotype activation and priming (e.g., Blair & Banaji, 1996; Dovidio, Evans, & Tyler, 1986).

Distributed neural-network models (and localist approximations) in social cognition (Freeman & Ambady, 2011; Kunda & Thagard, 1996; Read & Miller, 1998; Smith & DeCoster, 1998), including the DI model, assume that representations are not encapsulated by any single static unit, but instead reflect a unique pattern distributed over a population of units. It is the distributed pattern, dynamically re-instantiated in every new instance, that serves as the unique ‘code’ for a given social category, stereotype, trait, or memory. Such models have considerably higher neural plausibility (Rumelhart, Hinton, & McClelland, 1986; Smolensky, 1989), as multi-cell recordings have now made clear it is the communal activity of a population of neurons – a specific pattern of firing rates – that provides the ‘code’ for various kinds of sensory and abstract cognitive information (i.e., a ‘population code’; Averbeck, Latham, & Pouget, 2006).

The DI model (Freeman & Ambady, 2011) is a recurrent connectionist network with stochastic activation (McClelland, 1991; McClelland & Rumelhart, 1981; Rogers & McClelland, 2004). When a face is presented to the network, facial feature detectors in the Cue level activate social categories in the Category level, which in turn activate stereotype attributes in the Stereotype level; in parallel, top-down attentional processes activate task demands in the Higher-Order level, which amplify or attenuate certain pools of social categories in the Category level. At every moment in time, a node has a transient activation level, which can be interpreted as the strength of a tentative hypothesis that the node is represented in the input. After the system is initially stimulated by bottom-up and top-down inputs (e.g., a face and a given task demand), activation flows among all nodes at the same time (as a function of their particular connection weights).

Because processing is recurrent and nodes are all bidirectionally connected, this results in a dynamic back-and-forth flow of activation among many nodes in the system, leading them gradually to readjust each other’s activation as they mutually constrain one another over time. This causes the system to stabilize gradually onto an overall pattern of activation that best fits the inputs and maximally satisfies the system’s constraints (the inputs and the relationships among nodes). The model thereby captures the notion that perceptions of social categories dynamically evolve over fractions of a second, emerging from the interaction between bottom-up sensory cues and top-down social cognitive factors. As such, perceptions of social categories are a gradual process of negotiation between visual cues and perceiver knowledge. Although many neural systems would be involved, recent extensions of the DI framework (Freeman & Johnson, 2016a) propose that this integration of bottom-up and top-down information in initial social perception centers on the interplay of the FG (involved in face perception), OFC (involved in top-down expectation signals), and anterior temporal lobe (ATL; involved in the storage and retrieval of semantic associations; Olson, McCoy, Klobusicky, & Ross, 2012). See Fig. 3.
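To make the settling process concrete, here is a minimal sketch of a small recurrent network with bidirectional connections spanning Cue, Category, Stereotype, and Higher-Order levels. It is not the published DI model: the nodes, the weights, and the deterministic leaky tanh update (used in place of the model's stochastic activation function) are all illustrative assumptions. The face is gender-ambiguous, and the only asymmetry is a hypothetical Black-Aggressive stereotype link, echoing the “race is gendered” example discussed in Section 1.3.

```python
import numpy as np

# Illustrative nodes at four levels (names and weights are assumptions).
NODES = ["masc_cue", "fem_cue", "race_cue",   # Cue level
         "Male", "Female", "Black",          # Category level
         "Aggressive", "Caring",             # Stereotype level
         "sex_task"]                         # Higher-Order level (task demand)
IDX = {name: i for i, name in enumerate(NODES)}

def build_weights(black_aggressive):
    """Symmetric weight matrix: every connection runs in both directions."""
    W = np.zeros((len(NODES), len(NODES)))

    def link(a, b, w):
        W[IDX[a], IDX[b]] = W[IDX[b], IDX[a]] = w

    link("masc_cue", "Male", 0.6)
    link("fem_cue", "Female", 0.6)
    link("race_cue", "Black", 0.6)
    link("Male", "Female", -0.2)                   # competing categories inhibit each other
    link("Male", "Aggressive", 0.4)
    link("Male", "Caring", -0.4)
    link("Female", "Caring", 0.4)
    link("Female", "Aggressive", -0.4)
    link("Black", "Aggressive", black_aggressive)  # the stereotype link being varied
    link("sex_task", "Male", 0.3)                  # task demand amplifies the gender pool
    link("sex_task", "Female", 0.3)
    return W

def settle(W, external, steps=300, dt=0.05):
    """All nodes update simultaneously until the pattern approximately stabilizes."""
    a = np.zeros(len(NODES))
    for _ in range(steps):
        a = a + dt * (-a + np.tanh(W @ a + external))
    return a

# Inputs: equally strong masculine and feminine cues, a race cue, and a sex-judgment task.
EXTERNAL = np.zeros(len(NODES))
EXTERNAL[[IDX["masc_cue"], IDX["fem_cue"], IDX["race_cue"]]] = 1.0
EXTERNAL[IDX["sex_task"]] = 0.5

def gender_bias(black_aggressive):
    """Settled Male minus Female activation for the gender-ambiguous face."""
    a = settle(build_weights(black_aggressive), EXTERNAL)
    return a[IDX["Male"]] - a[IDX["Female"]]

print(f"without link: {gender_bias(0.0):+.3f}, with link: {gender_bias(0.4):+.3f}")
```

Without the stereotype link, Male and Female settle to the same activation because the network is symmetric between them. With it, activation fed back from Aggressive tips the ambiguous face toward Male, a toy version of conceptual associations partly determining the pattern the system settles into.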

In order to extend from social category perception to a more comprehensive system for emotion perception and trait perception as well, we can conceive of the Category and Stereotype levels as a single level. While separate levels in the original model, the Category and Stereotype levels both reflect knowledge structures or attributes; combining them into a single level is nearly functionally equivalent from the perspective of the model. As in Fig. 4, this single level of an extended DI model would include categories (e.g., Male, Asian), emotions (e.g., Happy, Angry), and traits (e.g., Trustworthy, Dominant). Stereotype attributes (e.g., Aggressive, Caring) in this case are equivalent to traits (also see Kunda & Thagard, 1996). As in the original model, nodes that are conceptually related (e.g., Male – Aggressive, Trustworthy – Likeable, Happy – Trustworthy) have positive excitatory connections, and those that have an opposite conceptual relationship (e.g., Female – Aggressive) have negative inhibitory connections; those unrelated have no connection. Based on task instructions in a particular context, Higher-Order task demand nodes (e.g., Race Task Demand, Emotion Task Demand, Dominance Task Demand) will excite the pool of nodes relevant for the task (i.e., the response set) and inhibit those irrelevant for the task, thereby allowing certain sets of social categories, emotions, or traits to come “to the fore” for the judgment currently at hand (see Fig. 4).
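The single attribute level described above can be sketched as one signed, symmetric weight matrix over a shared pool of categories, emotions, and traits, plus a task-demand input that excites the current response set and inhibits everything else. The attribute names, the relation list (beyond the examples named in the text), and the 0.5 strengths below are hypothetical illustrations, not the model's actual parameters.

```python
import numpy as np

# One pool holding categories, emotions, and traits (stereotype attributes count as traits).
ATTRIBUTES = ["Male", "Female", "Asian", "Happy", "Angry",
              "Trustworthy", "Dominant", "Likeable", "Aggressive", "Caring"]
IDX = {name: i for i, name in enumerate(ATTRIBUTES)}

# Hypothetical conceptual relations: +1 related, -1 opposite; unlisted pairs stay unconnected.
RELATIONS = [
    ("Male", "Aggressive", +1), ("Female", "Caring", +1), ("Female", "Aggressive", -1),
    ("Trustworthy", "Likeable", +1), ("Happy", "Trustworthy", +1),
    ("Angry", "Aggressive", +1), ("Male", "Female", -1), ("Happy", "Angry", -1),
]

# Hypothetical response sets for Higher-Order task demand nodes.
RESPONSE_SETS = {
    "sex": {"Male", "Female"},
    "emotion": {"Happy", "Angry"},
    "dominance": {"Dominant"},
}

def weight_matrix(relations, strength=0.5):
    """Excitatory (+) and inhibitory (-) connections, identical in both directions."""
    W = np.zeros((len(ATTRIBUTES), len(ATTRIBUTES)))
    for a, b, sign in relations:
        W[IDX[a], IDX[b]] = W[IDX[b], IDX[a]] = sign * strength
    return W

def task_input(task, excite=0.5, inhibit=-0.5):
    """Task demand: amplify the response set, attenuate all other attributes."""
    return np.array([excite if name in RESPONSE_SETS[task] else inhibit
                     for name in ATTRIBUTES])

def net_input(W, activation, task):
    """Recurrent input from the pool plus the current task demand."""
    return W @ activation + task_input(task)

W = weight_matrix(RELATIONS)
active_male = np.zeros(len(ATTRIBUTES))
active_male[IDX["Male"]] = 1.0
print(dict(zip(ATTRIBUTES, net_input(W, active_male, "emotion"))))
```

With Male active during an emotion task, Aggressive receives +0.5 through its conceptual link but -0.5 from the task demand, so its net input is zero, while Happy and Angry, as the response set, keep a positive net input. Unrelated attributes such as Asian receive only the task-level inhibition.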

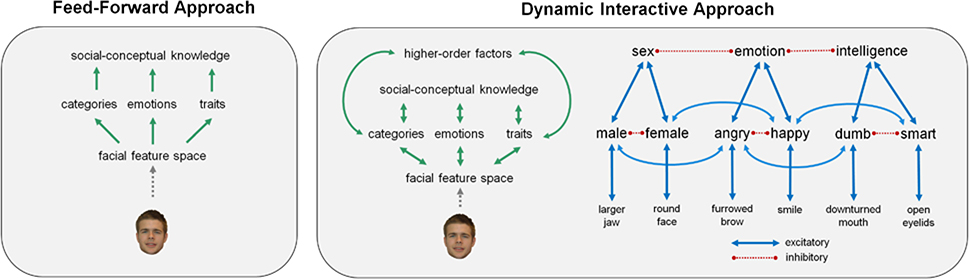

Figure 4.

In a Feed-Forward Approach, facial features are represented in a facial feature space, which in turn activates social categories, emotions, and traits, thereafter activating related social-conceptual knowledge and impacting subsequent processing and behavior. In an extended DI framework, during perception, as facial features begin activating categories (e.g., male), emotions (e.g., angry), and/or traits (e.g., smart), related social-conceptual attributes will be activated as well, but they will also feed excitatory and inhibitory pressures back on the earlier activated representations. The continuous, recurrent flow of activation among all internal representations of categories, emotions, traits, and social-conceptual attributes (here all organized into a single processing level) leads social-conceptual knowledge to have a structuring effect on perceptions and even featural representation. During this process, higher-order task demands (e.g., sex, emotion, intelligence) amplify and attenuate representations so as to bring task-relevant attributes to the fore for the specific task context at hand. Note that this depiction is highly simplified; a limitless number of other attributes and their connections could be included, and a number of excitatory and inhibitory connections are omitted here for simplicity. Also note that facial feature space can be modeled using a range of approaches, from simplified sets of facial features, as seen here, to more complex computational approaches based on the brain’s visual-processing stream (Riesenhuber & Poggio, 1999); multiple levels of visual processing could be included, and not all levels of visual processing need be bidirectional.

1.3. Social-conceptual structure becomes perceptual structure

A central implication of the DI model and its extension here is that social-conceptual structure is always in an intimate exchange with perceptual structure, and thus how we think about social groups (i.e., stereotypes), emotions, or personality traits helps determine how we visually perceive them in other people. One well-studied example is “race is gendered” effects, whereby perceptual judgments of Black faces are biased toward male judgments and Asian faces biased toward female judgments. These perceptual effects have been demonstrated using a variety of paradigms and have been related to individual differences in the strength of overlapping stereotype associations between Black and Male stereotypes (e.g., aggressive, hostile) and Asian and Female stereotypes (e.g., docile, communal) (Johnson et al., 2012b). Recently such social-conceptual biasing of perceiving gender and race was shown to be reflected in neural-representational patterns of the face-processing FG region while viewing faces, showing that it is reflected in the basic perceptual processing of those faces (Stolier & Freeman, 2016a). Simulations with the DI model showed that such effects naturally arise out of the recurrent interactions between cue, category, and stereotype representations inherent to the system (Freeman & Ambady, 2011; Phenomenon 3). As facial cues (e.g., larger jaw) activate categories (e.g., male) that in turn activate stereotypes (e.g., aggressive) during perception, all conceptually related attributes (i.e., stereotypes) begin feeding back excitatory and inhibitory pressures to category representations (e.g., male and female) and lower-level cue representations (e.g., larger jaw and round face). This has the effect of causing the conceptual similarity of any two categories (or by extension, any two emotions or two traits) to scaffold that pair’s perceptual similarity.

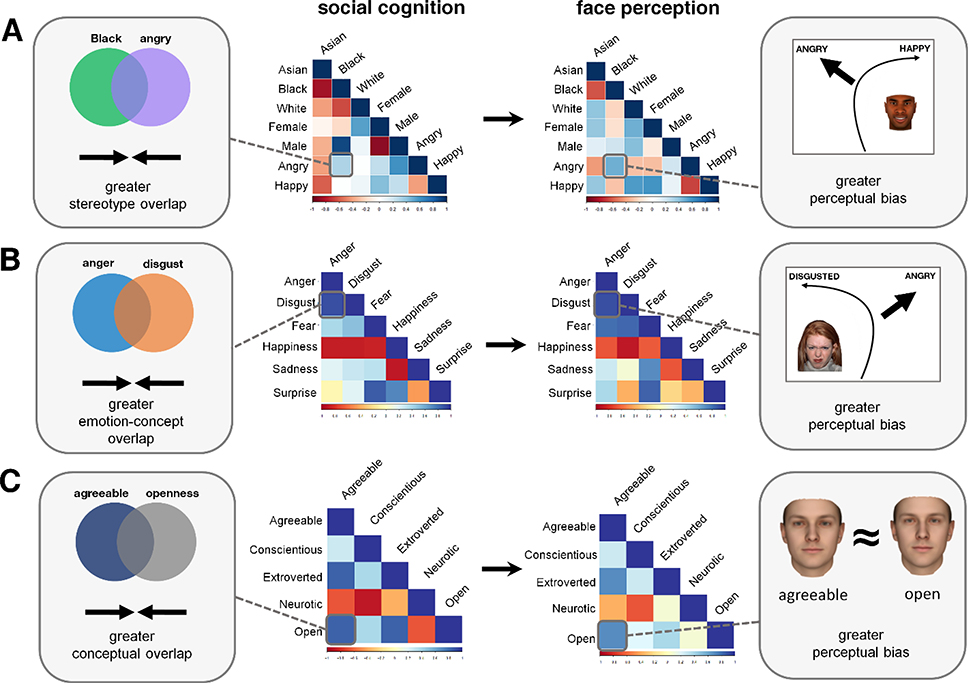

Our recent research has tested this social-conceptual scaffolding of perceiving faces in the context of perceiving social categories, emotions, and traits more comprehensively using a technique called representational similarity analysis (RSA). Using RSA, we can examine how representational geometry (i.e., the pairwise similarities among representations) is conserved across conceptual, perceptual, and/or physical representational spaces to test whether conceptual structure is reflected in perceptual structure, even when acknowledging the contribution of physical structure in faces themselves (for more on the approach, Freeman et al., 2018). Indeed, in one set of studies examining social category perception, we found that for any given pair of gender, race, or emotion categories (e.g., Black and Male, Female and Happy), a greater biased similarity in stereotype knowledge between the two categories was associated with a greater bias to perceive faces belonging to those categories more similarly (Stolier & Freeman, 2016a) (see Fig. 5A).

Figure 5. Social-conceptual structure shapes face perception.

Representational dissimilarity matrices (RDMs) comprise all pairwise similarities/dissimilarities and are estimated for both conceptual knowledge and perceptual judgments. Unique values below the diagonal are vectorized, with each vector reflecting the geometry of the representational space, and a correlation or regression then tests the relationship between the vectors. (A) Participants’ stereotype RDM (stereotype content task) predicted their perceptual RDM (mouse-tracking), showing that a biased similarity between two social categories in stereotype knowledge was associated with a bias to see faces belonging to those categories more similarly, which in turn was reflected in FG neural-pattern structure (Stolier & Freeman, 2016a). (B) Participants’ emotion-concept RDM (emotion ratings task) predicted their perceptual RDM (mouse-tracking), showing that an increased similarity between two emotion categories in emotion-concept knowledge was associated with a tendency to perceive those facial expressions more similarly (Brooks & Freeman, 2018), which was also reflected in FG pattern structure (Brooks & Freeman, in press). (C) Participants’ conceptual RDM (trait ratings task) predicted their perceptual RDM (reverse correlation task), showing that an increased tendency to believe two traits are conceptually more similar is associated with using more similar facial features to make inferences about those traits (Stolier, Hehman, Keller, et al., 2018). Figure adapted from Freeman et al. (2018).
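The RSA logic in this caption can be sketched in a few lines of NumPy. In this minimal, hypothetical example, RDMs are computed as 1 minus the Pearson correlation between condition patterns, only the values below the diagonal are compared, and a least-squares regression estimates each predictor RDM's contribution while holding the other constant, one way to ask whether conceptual structure relates to perceptual structure above and beyond physical structure. The data are simulated, and the distance measure, correlation type, and inference procedure (e.g., permutation tests) used in the published studies may differ.

```python
import numpy as np

def rdm(patterns):
    """Representational dissimilarity matrix: 1 - Pearson r between every pair of rows."""
    return 1.0 - np.corrcoef(patterns)

def lower_triangle(m):
    """Vectorize the unique values below the diagonal."""
    rows, cols = np.tril_indices(m.shape[0], k=-1)
    return m[rows, cols]

def rdm_correlation(a, b):
    """Pearson correlation between two vectorized RDMs."""
    return np.corrcoef(lower_triangle(a), lower_triangle(b))[0, 1]

def rdm_regression(target, *predictors):
    """OLS weight of each predictor RDM on the target RDM (intercept estimated, then dropped)."""
    y = lower_triangle(target)
    X = np.column_stack([np.ones_like(y)] + [lower_triangle(p) for p in predictors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[1:]

# Simulated data: 6 categories rated on 10 hypothetical stereotype traits,
# and 6 hypothetical physical descriptors of the face stimuli (20 features each).
rng = np.random.default_rng(0)
conceptual = rdm(rng.normal(size=(6, 10)))
physical = rdm(rng.normal(size=(6, 20)))
# A toy perceptual RDM built as a known mix of the two, so the answer is known.
perceptual = 0.5 * conceptual + 0.3 * physical + 0.1

print("conceptual vs perceptual r:", rdm_correlation(conceptual, perceptual))
print("unique weights (conceptual, physical):", rdm_regression(perceptual, conceptual, physical))
```

Because the toy perceptual RDM is an exact linear mix of the other two, the regression recovers the weights 0.5 and 0.3; with real data, the weights would be noisy estimates whose reliability must be assessed separately.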

To assess perceptual similarity in this study, we used computer mouse-tracking, which provides a window into the real-time dynamics leading up to social perceptual judgments (or any other kind of forced-choice response; Freeman, 2018). Specifically, by examining how a participant’s hand settles into a response over time, and may be partially pulled toward other potential responses, numerous studies have leveraged mouse-tracking to chart out the real-time dynamics through which social categories, emotions, stereotypes, attitudes, and traits activate and resolve over hundreds of milliseconds (Freeman, 2018; Freeman, Dale, & Farmer, 2011; Stillman, Shen, & Ferguson, 2018). During two-choice tasks (e.g., Male vs. Female), deviation in a subject’s hand trajectory toward each category response provides an indirect measure of the degree to which that category was activated during perception. If conceptual knowledge links one category to another (e.g., Black to Male), subjects’ perceptions should be biased toward that category and, consequently, their hand trajectories should deviate toward that category response. Thus, a greater deviation in hand movement toward the opposite response serves as a measure of how strongly the current stimulus is perceived as consistent with that opposite response, even if it is not explicitly selected (see Fig. 5).
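A common summary of a trajectory's pull toward the unselected response is its maximum deviation (MD): the largest perpendicular distance between the observed path and an idealized straight line from its start point to its end point. The minimal sketch below uses a sign convention chosen here for illustration (positive means the path bowed to the left of the direct route, i.e., toward a left-side alternative when the selected response is on the right). Analysis pipelines commonly also remap and time-normalize trajectories (for example, into 101 time steps) before computing such measures; that step is omitted.

```python
import numpy as np

def max_deviation(x, y):
    """Signed maximum perpendicular deviation from the straight start-to-end line.

    Positive values mean the path bowed to the left of the direct route
    (an illustrative convention, not a fixed standard).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx, dy = x[-1] - x[0], y[-1] - y[0]
    length = np.hypot(dx, dy)
    # 2-D cross product gives each point's signed distance from the direct path.
    signed = (dx * (y - y[0]) - dy * (x - x[0])) / length
    return signed[np.argmax(np.abs(signed))]

# A straight reach to a top-right response versus one that bows leftward on the way.
straight = max_deviation([0.0, 0.5, 1.0], [0.0, 0.75, 1.5])
curved = max_deviation([0.0, -0.2, 0.0, 1.0], [0.0, 0.6, 1.2, 1.5])
print(straight, curved)
```

The straight path yields zero, while the curved path yields a positive MD, which under the assumed layout would be read as partial attraction toward the left-side (unselected) response.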

We recently conducted a similar comprehensive test of the conceptual scaffolding of emotion. In a series of studies examining the six “basic” emotion categories (i.e., Angry, Happy, Sad, Disgusted, Surprised, Fearful), we demonstrated that a greater similarity between any two emotion categories in emotion-concept knowledge was associated with a tendency to perceive those facial expressions more similarly (Brooks & Freeman, 2018). In some studies, perceptual similarity was assessed using mouse-tracking, such as when a perceiver’s greater conceptual similarity between Anger and Disgust leads to a greater attraction to select a “Disgusted” response for an angry face (or vice-versa) (Fig. 5B). A reverse correlation technique able to estimate perceivers’ visual prototypes for emotions converged with such findings, revealing more physically resembling visual prototypes for any pair of emotions that were viewed as conceptually more similar in the mind of a perceiver. For both social category and emotion perception, we additionally demonstrated that this conceptual shaping of perceptual structure was evident in neural patterns of the face-processing regions in the brain’s perceptual system (FG) when perceivers viewed faces. Moreover, the correlation of conceptual structure and perceptual structure held above and beyond any inherent physical resemblances in the face stimuli themselves (Brooks & Freeman, in press; Stolier & Freeman, 2016a). Such findings suggest that the locus of conceptual shaping of perceptual structure is at relatively early perceptual stages of processing, rather than reflecting a mere response bias or post-perceptual decision processes.
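Reverse correlation can be illustrated with a simulated observer. In a common two-image variant, each trial pairs a base face with random noise added versus the same noise subtracted; averaging the noise behind the chosen images yields a classification image that approximates the observer's internal prototype. The sketch below uses an abstract 64-value noise vector instead of images and a noiseless hypothetical observer whose prototype is a random template; both are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(1)
N_PIXELS, N_TRIALS = 64, 2000

# Hypothetical observer: an internal visual prototype (template), e.g., for "Angry".
template = rng.normal(size=N_PIXELS)

def run_observer(template, n_trials, rng):
    """Simulate a two-image task: each trial shows base+noise vs. base-noise.

    The observer picks the image whose noise better matches the template;
    returns the noise pattern underlying each chosen image.
    """
    noise = rng.normal(size=(n_trials, template.size))
    choose_positive = noise @ template > 0
    return np.where(choose_positive[:, None], noise, -noise)

chosen = run_observer(template, N_TRIALS, rng)
# The base face is common to both images, so averaging the chosen noise suffices.
classification_image = chosen.mean(axis=0)
r = np.corrcoef(classification_image, template)[0, 1]
print(f"recovered image vs. hidden template: r = {r:.2f}")
```

Computing classification images for several emotions (or traits) and comparing them pairwise would give a perceptual similarity structure of the kind that can enter an RSA like the one summarized in Fig. 5.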

Finally, an additional set of studies tested whether conceptual similarity shapes perceptual similarity in face-based trait impressions as well. Using several techniques, including perceptual ratings and reverse correlation, here again we found that a stronger belief that two traits (e.g., openness and agreeableness) are conceptually similar predicted greater similarity in the actual facial features used to infer those traits, that is, in what makes a face appear open or agreeable to a perceiver (Stolier, Hehman, Keller, Walker, & Freeman, 2018) (Fig. 5C).

The DI framework could parsimoniously account for such findings through a single recurrent system wherein perceptions of social categories, emotions, and traits all emerge out of the basic interactions among cues, social cognitive representations, and higher-order cognitive states (see Fig. 4).

Below, we review in turn, and in greater depth, recent research on perceiving social categories, emotions, and traits, including the role that social-conceptual knowledge and other social cognitive processes play. Certainly, the phenomena of social categorization, emotion perception, and trait inference have important differences; at the same time, the DI approach argues that theoretical and empirical advances may be gained by conceiving of them as emerging from a single recurrent system for social perception that relies on domain-general cognitive properties (at least insofar as these phenomena operate as social perceptual judgments). On this view, perceptions of social categories, emotions, and traits are all scaffolded by social-conceptual knowledge in a similar fashion because they all emerge out of basic domain-general interactions among cues, social cognitive representations, and higher-order cognitive states.

2. Perceiving Social Categories

Given the complexity of navigating the social world, people streamline mental processing by placing others into social categories. Perceivers maintain conceptual categories of others, each tied to rich sets of information that help them predict behavior. These categories span any dimension along which we divide one another, such as demographic categories including race, gender, and age (Macrae & Bodenhausen, 2000), abstract in- and out-groups (Tajfel, 1981), and cultural and occupational groups (Fiske, Cuddy, Glick, & Xu, 2002). Seminal work by Allport (1954) argued that individuals perceive others via spontaneous, perhaps inevitable, category-based impressions that are highly efficient and designed to economize on mental resources. As described earlier, a vast array of studies has since demonstrated that such category-based impressions bring about a host of cognitive, affective, and behavioral outcomes, changing how we think and feel about others and behave with them, often in ways that may operate non-consciously (e.g., Bargh & Chartrand, 1999; Brewer, 1988; Devine, 1989; Dovidio, Kawakami, Johnson, Johnson, & Howard, 1997; Fazio, Jackson, Dunton, & Williams, 1995; Fiske, Lin, & Neuberg, 1999; Gilbert & Hixon, 1991). A traditional emphasis has therefore been to document the downstream implications of person categorization and its myriad outcomes for social interaction.

About 15 years ago, social psychologists began to examine the perceptual determinants of social categorization, such as how processing of stimulus features maps onto higher-level stages of the social categorization pipeline. For example, one series of studies showed that perceivers more efficiently extract facial category vs. identity cues, which was interpreted as perhaps an important factor setting the stage for categorical thinking at later stages of person perception (Cloutier, Mason, & Macrae, 2005). The downstream consequences of perceiving category cues were further evidenced by findings showing that such cues can function independently of category membership itself in automatic evaluations (Livingston & Brewer, 2002) and stereotypic attributions (Blair, 2002; Blair, Judd, Sadler, & Jenkins, 2002). Moreover, category-relevant features in isolation (e.g., hair) were shown to automatically trigger category activation (Martin & Macrae, 2007), and even within-category variation in the prototypicality of race-related cues (Blair, Judd, & Fallman, 2004; Blair et al., 2002; Freeman, Pauker, Apfelbaum, & Ambady, 2010) or sex-related cues (Freeman, Ambady, Rule, & Johnson, 2008) was shown to powerfully shape perceptions.

One consequence of such within-category variation (the natural diversity in the category cues of our social world) is that it often leads multiple categories to become simultaneously active during initial perceptions (Freeman & Ambady, 2011; Freeman & Johnson, 2016a). Such social category co-activations, often indexed using the mouse-tracking technique described earlier, may not be innocuous peculiarities of the perceptual system, but rather consequential social perceptual phenomena. These partial and parallel co-activations of categories, which are not observed in explicit responses, lead to differences in stereotyping (Freeman & Ambady, 2009; Mason, Cloutier, & Macrae, 2006) and social evaluation (Johnson, Lick, & Carpinella, 2015; Livingston & Brewer, 2002). Importantly, these transient influences on perception can lead to consequential downstream outcomes. For instance, Black individuals with more prototypically Black faces tend to receive harsher criminal sentences (Blair et al., 2004a, 2004b), including capital punishment (Johnson, Eberhardt, Davies, & Purdie-Vaughns, 2006). Similarly, American female politicians with less prototypically female facial features (i.e., more masculine cues) are less likely to be elected in conservative American states (Carpinella, Hehman, Freeman, & Johnson, 2016; Hehman, Carpinella, Johnson, Leitner, & Freeman, 2014), and this effect is predicted by the perceptual biasing effect that occurs when individuals categorize the politicians by sex (i.e., co-activation of the “male” category; Hehman, Carpinella, et al., 2014).

In addition to within-category variation, social perception is also sensitive to a number of other forms of extraneous perceptual input in the environment. For example, race categorization shows sensitivity to context such that targets are more likely to be categorized as White or Asian if they are seen in a culturally congruent visual context (Freeman, Ma, Han, & Ambady, 2013). Even cues inherent to the individual (e.g., hair and clothing) can supply a source of expectation and prediction that may impact face processing. For example, clothing can bias race categorization by serving as a contextual cue to an individual’s social status, eliciting visual predictions about the person’s race. One study presented subjects with faces morphed along a Black–White continuum, each with low-status attire (e.g., a janitor uniform) or high-status attire (e.g., a business suit). Subjects categorized the faces as White or Black while their mouse trajectories were recorded. Low-status attire biased perceptions toward the Black category, whereas high-status attire biased perceptions toward the White category. When race and status were stereotypically incongruent (e.g., a White face with low-status attire or a Black face with high-status attire), participants’ mouse movements showed a continuous attraction to the opposite category, suggesting that the social status associated with clothing exerted a top-down influence on race categorization (Freeman, Penner, et al., 2011).

In addition to external cues in the environment, social perception also shows sensitivity to inputs from the perceiver. These include motivations and expectations that bias the processing of novel stimuli, as well as preexisting perceptual heuristics used to make sense of ongoing sensory input. One such abstract top-down factor that can impact social perception is a perceiver’s own goals and motivations, which can bear weight on perception even when they reside outside of conscious awareness. In this sense, perception is chronically “motivated” to pick up on whatever aspects of the environment are most relevant or useful to current processing goals. For example, transient sexual desire can increase the speed and accuracy of sex categorization (Brinsmead-Stockham, Johnston, Miles, & Macrae, 2008). Notable and consequential effects of motivated social categorization occur in the case of race perception. For example, situations of economic scarcity lead White subjects to rate Black faces as more Black and to more often rate mixed-race faces as Black (Krosch & Amodio, 2014). Other studies have found that subjects are more likely to identify an impoverished image of a gun as a gun when primed with a Black face, due to stereotypical associations (Eberhardt, Goff, Purdie-Vaughns, & Davies, 2004). Similar effects emerge for group identity, which produces a strong chronic motivational state to perceptually categorize others differently based on their in- or out-group status (Xiao, Coppin, & Van Bavel, 2016a, 2016b). For example, political group identity leads subjects to represent a biracial candidate’s skin tone as lighter when the candidate belongs to their own political group and darker when the candidate belongs to a different one (Caruso, Mead, & Balcetis, 2009).

2.2. Conceptual influences on social categorization

Stereotypes are, in essence, conceptual knowledge related to social categories; they have been extensively studied in social psychology and were traditionally considered to be triggered after a target person has been categorized (Allport, 1954; Brewer, 1988; Fiske & Neuberg, 1990; Macrae & Bodenhausen, 2000). As described earlier, only fairly recently have approaches considered the influence that stereotypes can have on a visual percept before it has fully stabilized (Freeman & Ambady, 2011; Freeman & Johnson, 2016b; MacLin & Malpass, 2001b). One set of studies demonstrated that prior race labels alter the perceived lightness of a face, such that knowing a person is Black makes the face’s skin tone appear darker (Levin & Banaji, 2006). Another set of studies found that racially ambiguous faces were more likely to be categorized as Black, and judged to have Afrocentric facial features, if they had a stereotypically Black hairstyle (MacLin & Malpass, 2001a). As suggested by the DI model’s simulations of the analogous effect of status stereotypes on race perception via attire cues described above (Freeman, Penner, et al., 2011), such effects of hairstyle cues are likely driven by stereotype associations. But for such findings involving stereotypical contexts, it is difficult to know whether biased perceptual decisions reflect a bias in perception itself or merely a bias at a post-perceptual decision stage. The latter would suggest that stereotypes affect how perceivers think about targets but not how they “see” them.

While a post-perceptual explanation cannot be entirely ruled out, recent work has been able to more closely investigate stereotype impacts on social category perception by examining how stereotypes bind ostensibly unrelated categories together (Freeman & Ambady, 2011; Freeman & Johnson, 2016b). Just as hairstyle or other visual cues can shape social categorization by activating conceptual associations, so can one category serve as context for perception of another category, even one on a seemingly unrelated dimension. This line of work has demonstrated the inherent intersection of race and sex, such that certain pairs of race and sex categories share stereotypes (e.g., the categories “Asian” and “female” sharing conceptual associations with docility and submissiveness; the categories “Black” and “male” sharing conceptual associations with hostility and physical ability) and are consequently biased to be perceived together (Carpinella, Chen, Hamilton, & Johnson, 2015; Johnson, Freeman, & Pauker, 2012a). An important consequence is that individuals who do not fit the expected stereotype-congruent combination of social categories (e.g., Asian men and Black women) are subject to biased stereotypic expectations that can negatively influence their experiences in dating, university life, and the workforce (Galinsky, Hall, & Cuddy, 2013).

Providing evidence for top-down conceptual structuring can be difficult when intrinsic physical resemblances are also at play. For example, stereotypes prescribe men as angrier and women as happier, and men’s faces are more readily perceived as angry and women’s faces as happy (Hess et al., 2004; Hess et al., 2000). However, evolutionary psychologists have suggested that this is driven by intrinsic physical overlap in the facial features specifying anger and masculinity (e.g., a furrowed brow) and joy and femininity (e.g., roundness) (Becker, Kenrick, Neuberg, Blackwell, & Smith, 2007). Indeed, DI model simulations account well for such physical resemblance effects (Freeman & Ambady, 2011; Phenomenon 4), regardless of whether the resemblance exists due to distal evolutionary pressures (e.g., for men to be dominant and women to be submissive; Becker et al., 2007) or simply arbitrary physical covariation.

Nevertheless, given both potential factors at play, it is difficult to isolate top-down stereotypic drivers in the perceptual privileging of male anger and female joy. However, unconstrained, data-driven tasks have been valuable for isolating the effect of stereotypes on binding sex and emotion categories together (Brooks & Freeman, 2018). In one set of studies, we used reverse correlation to produce visual prototype faces for each subject for the categories Male, Female, Angry, and Happy. Reverse correlation allows a visual estimation of the cues that individuals expect to see for a given face category (Dotsch, Wigboldus, Langner, & van Knippenberg, 2008; Todorov, Dotsch, Wigboldus, & Said, 2011). Independent raters then rated the prototype Male, Female, Angry, and Happy faces on sex and emotion categories. We found that the reverse-correlated face prototypes showed a systematic bias in their appearance that was consistent with stereotypes, with Female prototypes biased toward Happy (and vice versa) and Male prototypes biased toward Angry (and vice versa). In follow-up studies, we found that this effect was strongly predicted by a given individual’s conceptual associations between those categories. That is, the more a subject harbored stereotype-congruent knowledge about sex and emotion categories (i.e., high overlap between Female-Happy and Male-Angry), the more likely they were to yield visual prototypes for those categories that were biased in appearance (Brooks, Stolier, & Freeman, 2018). Importantly, because each category was attended to in isolation during the reverse correlation task, it is unlikely that subjects were conceptually primed to produce biased responses.
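The reverse correlation logic described above can be sketched as follows, assuming a two-image forced-choice design in which each trial pairs a base face plus a random noise pattern with the same base face minus that pattern, and the observer picks the image that better fits the target category (e.g., Female). Averaging the chosen noise patterns (sign-flipped when the "minus" image was chosen) gives a classification image that, superimposed on the base face, approximates the observer's visual prototype. For brevity this uses independent Gaussian pixel noise and a simulated observer passed in as a callback; both are hypothetical stand-ins, and the studies described here relied on their own stimuli and noise patterns.

```python
import random
from typing import Callable, List, Sequence

Image = List[float]  # a flattened grayscale image, one float per pixel


def classification_image(
    base: Sequence[float],
    n_trials: int,
    choose: Callable[[Image, Image], int],
    noise_sd: float = 0.1,
    seed: int = 0,
) -> Image:
    """Average the noise patterns selected across 2-image forced-choice trials.

    `choose(a, b)` returns 0 if image `a` (base + noise) looks more like the
    target category, or 1 if image `b` (base - noise) does.
    """
    rng = random.Random(seed)
    totals = [0.0] * len(base)
    for _ in range(n_trials):
        noise = [rng.gauss(0.0, noise_sd) for _ in base]
        plus = [b + e for b, e in zip(base, noise)]
        minus = [b - e for b, e in zip(base, noise)]
        # Choosing the "minus" image is equivalent to selecting the inverted noise.
        sign = 1.0 if choose(plus, minus) == 0 else -1.0
        totals = [t + sign * e for t, e in zip(totals, noise)]
    return [t / n_trials for t in totals]


def prototype(base: Sequence[float], ci: Sequence[float], scale: float = 1.0) -> Image:
    """Superimpose a (scaled) classification image on the base face."""
    return [b + scale * c for b, c in zip(base, ci)]


def brighter_first_pixel(a: Image, b: Image) -> int:
    """Hypothetical simulated observer whose 'template' is a bright pixel 0."""
    return 0 if a[0] > b[0] else 1


ci = classification_image([0.5] * 4, n_trials=500, choose=brighter_first_pixel)
proto = prototype([0.5] * 4, ci)
```

With this simulated observer, the classification image is clearly positive at pixel 0 and hovers near zero elsewhere, recovering the "template" the observer used without ever asking about it directly.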

Neuroimaging can be highly valuable in addressing the perceptual vs. post-perceptual question, in that it can identify the levels of neural representation at which top-down influences manifest (Freeman et al., 2018; Stolier & Freeman, 2015). In two studies, we measured the overlap of social categories at three levels: in their conceptual structure as related by similar trait stereotypes, measured via explicit surveys; in their visual perception from faces, through a perceptual categorization task; and in their neural representation, by comparing the similarity of the categories’ representational patterns across the brain (Stolier & Freeman, 2016b). Conceptual similarity was measured as the similarity in stereotype associations of each category (e.g., the categories Black and Male may both be high in hostility and sociability stereotypes but low in affectionate stereotypes). Perceptual similarity was measured with mouse-tracking, in which participants categorized faces along each category dimension in a two-choice task (e.g., Male vs. Female), and similarity was calculated as the degree to which participants’ mouse trajectories were drawn toward a given category response regardless of their final response (e.g., trajectories drawn toward ‘Male’ while categorizing Black female faces). Lastly, we measured the neural similarity of each category pair as the similarity of their multi-voxel neural patterns.

Indeed, in both studies, we found that social categories more similar in conceptual knowledge were perceived more similarly. Category pairs more related in stereotypes were also more interdependent during perceptual categorization. For instance, consistent with prior work, the stereotype content task indicated greater conceptual overlap between the Black and Male categories than between the Black and Female categories (Johnson et al., 2012a). As seen in Fig. 5A, when categorizing faces belonging to these categories (e.g., Black female faces by sex), participants were more drawn toward the stereotype-consistent category response, regardless of their explicit response (e.g., mouse trajectories were drawn more toward ‘Male’ en route to the ‘Female’ response). Moreover, these conceptually entangled category pairs were also more similar in their multi-voxel neural patterns in regions involved in perception (FG) and top-down expectation signals (orbitofrontal cortex, OFC). These findings survived analyses that controlled for the intrinsic physical similarity of the faces themselves. This suggests that, even in face-processing regions within the brain’s perceptual system, the representation of a face’s social categories is shaped by social-conceptual knowledge, namely stereotypes about those categories (Stolier & Freeman, 2016b).

2.4. Summary

Although social categorization was long treated as a starting point, with only its downstream products taking theoretical center stage, the past 15 years have increasingly zoomed in on the categorization process itself. Such work has found that perceptions of a face’s social categories are susceptible to a range of social cognitive factors, such as stereotypes, attitudes, and goals, which are often presumed to operate only downstream of categorization. Stereotypes, i.e., social-conceptual knowledge, can have a pronounced impact in structuring perceptions, and a growing body of findings supports the close interplay between perceiver knowledge and facial features in driving initial perceptions, a premise central to the framework outlined here.

3. Perceiving Emotions

Humans have the impressive social-perceptual ability to infer someone else’s emotional state from perceptual information on their face: a scowling person looks “angry”, a frowning person looks “sad”, a smiling person looks “happy”. The perceptual operations that lead to categorizations of others’ emotional states are just as transparent as those that lead to other social categories such as gender or standard object categorizations – no effortful deliberation is required to perceive emotion from facial expressions. And yet, perceiving and categorizing emotions in others affords incredibly rich social inferences, allowing us to anticipate others’ future actions and mental states and plan our own behavior accordingly.

Due to the ease and fluency with which we make rich inferences from facial actions, there is long-standing interest in facial expressions and how they are perceived – experimental psychologists have been studying facial emotion since the field’s inception in the 19th century. Early theoretical assumptions and intuitions about facial emotion were largely influenced by Darwin (1872) with his book The Expression of the Emotions in Man and Animals. Darwin viewed the study of facial expressions as a test case for the theory of evolution, and wanted to discover and document potential evolutionary “principles” for the existence of facial expressions. Darwin pioneered a number of methods, and made a number of theoretical assumptions, that persist in the field today. These include aiming to build a taxonomy of facial expressions, examining which facial expressions people can reliably recognize by having them categorize pictures, and assuming that cross-cultural data can address questions of innateness or universality in facial expressions (Darwin, 1872; Gendron & Barrett, 2017). This approach inspired an early body of empirical work which largely studied facial emotion perception by having subjects place static posed images of facial expressions into a fixed set of categories. These studies built taxonomies of facial displays that could be reliably “recognized” as specific emotions, and explored the boundary conditions that influenced perceiver agreement (Allport, 1924; Feleky, 1914; for a review of this early period of research, see Gendron & Barrett, 2017).

Darwin’s approach persisted further into the 20th century with the highly influential “basic emotion” approach (Ekman, 1972; Ekman & Cordaro, 2011; Izard, 1971; Izard, 2011; Tracy & Randles, 2011). Ekman (1972) had a particularly influential approach to studying facial expressions. This involved closely associating facial actions with information about the underlying facial musculature, and delineating the specific combinations of facial actions (facial action units) that lead subjects to categorize a face as an emotion like “angry” or “afraid” (Friesen & Ekman, 1978). The goal of this research was to build taxonomies of emotions that could be considered psychologically “basic” by studying consensus between perceivers in how facial expressions were categorized. Informed by greater study of the facial expressions themselves, studies continued to consist mainly of showing posed facial expressions to subjects who were asked to label them (Ekman, Friesen, & Ellsworth, 2013). A great deal of work using this approach shows that perceivers are typically fast, accurate, and largely consensual in their categorizations of facial expressions associated with a small number of “basic” emotions (most commonly Anger, Disgust, Fear, Happiness, Sadness, and Surprise; Ekman & Friesen, 1971; Ekman, Sorenson, & Friesen, 1969; Izard, 1971; Tracy & Randles, 2011). In general, this approach assumes that the facial expressions associated with the “basic” emotions are so evolutionarily old and motivationally relevant that they trigger a direct “read-out” of visual features that should be fairly invariant between individuals (Smith, Cottrell, Gosselin, & Schyns, 2005). However, a growing body of work instead suggests that there are a number of contextual and perceiver-dependent factors that weigh in on how facial expressions are visually perceived.

For instance, research shows that facial emotion perception is extremely sensitive to – and even shaped by – the surrounding context. These contextual factors can be as simple as visual aspects of the person displaying an emotion (e.g. their body), multimodal aspects like the person’s voice, the surrounding scene, or more abstract characteristics of the context like the perceiver’s current goals. This body of work is a major factor motivating more recent theories of emotion to treat facial emotion perception as an embedded and situated phenomenon (e.g., Wilson-Mendenhall, Barrett, Simmons, & Barsalou, 2011), and to consider different ways of studying it as a result.

In one sense, it is entirely unsurprising that facial emotion perception would be heavily influenced by the surrounding context, since most instances of perceiving facial emotion occur in particular social contexts or scenes, alongside vocal and bodily cues that convey a wealth of information. But because of the surprising strength of these effects, the findings pose a serious challenge to classic views of emotion that heavily emphasize the diagnosticity of facial cues. In many cases, aspects of the visual and even auditory context can completely dominate input from the face. For example, when someone’s body posture is incongruent with the emotion ostensibly signaled by their face, the ultimate emotion categorization is often consistent with bodily rather than facial cues (for review, see de Gelder et al., 2005; Van den Stock, Righart, & de Gelder, 2007). While it is unclear whether this means that body posture actually carries more diagnostic or important information about emotional states, it does indicate that perceivers rely heavily on cues from the body. Some work does indicate that bodily motion conveys information about specific emotion categories, since perceivers are highly consensual in their emotion categorizations for point-light displays (Atkinson, Dittrich, Gemmell, & Young, 2004).

Similarly notable effects have emerged for vocal cues, such that stereotypically Sad facial expressions are perceived as Happy when they are accompanied by a Happy voice, even when participants are instructed to disregard the voice (de Gelder & Vroomen, 2000). As with body cues, some researchers suggest that this reflects vocal cues being more diagnostic or informative about emotional states than the face (Scherer, 2003). A great deal of evidence also suggests that identical facial expressions are perceived differently depending on the visual scene in which they are encountered (e.g., a neutral context, such as standing in a field, or a fearful context, such as a car crash; Righart & de Gelder, 2008). Similar effects occur when participants are merely given prior knowledge about the social context in which the emotional facial expressions were originally displayed (Carroll & Russell, 1996). Social information immediately present in a scene can also influence emotion perception, such that emotion perception is shaped by the facial expressions of other individuals in a visual scene (Masuda et al., 2008).

This growing body of evidence suggests that perceivers spontaneously make use of any information available to them to categorize someone else’s emotional state, and that the face is just one factor weighing in on these perceptions. This has led some researchers to propose that the face itself is “inherently ambiguous” (Hassin, Aviezer, & Bentin, 2013). Indeed, these results are widely consistent with insights from vision science that ambiguous stimuli are particularly subject to expectations and associations guided by the environment (Bar, 2004; Summerfield & Egner, 2009). At the very least, this work suggests that experimental designs using isolated posed facial expressions are not able to capture the full range of processes that weigh in on facial emotion perception.

3.1. Perceiver-dependent theories of emotion perception

Classic theories of emotion, most famously the “basic emotion” approach, assume that facial emotion perception occurs as a direct bottom-up read-out of facial cues that are inherently tied to their relevant emotion categories. For example, experiencing a given emotion such as “anger” yields a reliable and specific combination of facial cues that can be automatically extracted by a perceiver and effortlessly recognized as “anger” (Ekman et al., 2013; Izard, 2011; Smith et al., 2005). An explicit assumption of these models of emotion is that the “basic” emotions are universally recognized across cultures (Ekman, 1972; Ekman & Friesen, 1971). However, the profound susceptibility of facial emotion perception to context, and the readiness with which perceivers make use of any contextual or associative content available to them in order to categorize facial expressions, have led recent theories of emotion and social perception to consider the idea that individual perceivers may serve as their own form of “context”.

The basic idea that aspects of the perceiver can sometimes influence emotion perception is not particularly controversial. A large body of work shows that dispositional factors such as attachment anxiety (Fraley, Niedenthal, Marks, Brumbaugh, & Vicary, 2006), stigma consciousness (Pinel, 1999), and implicit racial prejudice (Hugenberg & Bodenhausen, 2004; Hutchings & Haddock, 2008) can impact visual processing of facial expressions. Recent approaches further argue that perceiver-dependence is a fundamental characteristic of emotion perception rather than an occasional biasing factor (Barrett, 2017; Freeman & Ambady, 2011; Freeman & Johnson, 2016b; Lindquist, 2013). For example, the Theory of Constructed Emotion holds that facial displays of emotion can only be placed into a given category such as “anger” or “fear” when conceptual knowledge about those emotion categories is rapidly and implicitly integrated into the perceptual process (Barrett, 2017), which is highly consistent with the DI theory’s premise of the conceptual scaffolding of various instances of social perceptual judgments. As discussed earlier, the DI framework predicts that a wealth of contextual and conceptual input implicitly informs perception before a face is placed into a stable response (e.g., category, emotion, or trait judgment), allowing for a great deal of influence from the conceptual structure of emotion categories on the ongoing processing of visual displays of emotion.

A natural consequence of this theoretical approach would be substantial variability between individuals, given the variety of different prior experiences, conceptual associations, and dispositional qualities that reside within each individual. As a result, perceiver-dependent theories place less of an emphasis on specific facial expressions being tied to specific discrete emotion categories. If one assumes variability is the norm in emotion, then taxonomies of “basic” emotions are more of a catalog of consensus judgments linked to particular category labels, rather than a definitive account of universal categories. Indeed, meta-analyses show a remarkable lack of consistency between individuals and studies in the neural representation of emotional experiences and perceptions (Kober et al., 2008; Lindquist, Wager, Kober, Bliss-Moreau, & Barrett, 2012) as well as their physiological signatures (Siegel et al., 2018) and associated facial actions in spontaneous displays (Durán & Fernández-Dols, 2018; Durán, Reisenzein, & Fernández-Dols, 2017). If this degree of variability exists in emotion experience and expression, the perceptual system would have to be flexible, making rapid use of available contextual factors and cognitive resources to make sense of facial emotion displays. As a result, an emerging body of research has begun to directly investigate the role of conceptual knowledge in facial emotion perception.

3.2. Conceptual influences on emotion perception

A major thread in recent debates about emotion perception concerns the manner in which conceptual knowledge about emotions is involved in emotion perception. Classic theories would assume that categorizing a face as “angry” (through a direct read-out of cues assumed to inherently signal Anger) would lead to Anger-related conceptual knowledge being subsequently activated in service of predicting an angry individual’s behavior. In contrast, if facial emotion perception were influenced by conceptual knowledge, that would require rapid and implicit access to conceptual associations before a percept has stabilized. Thus, most work on the relationship between conceptual knowledge and emotion perception has focused on manipulating access to conceptual knowledge and measuring how this impacts performance in standard emotion perception tasks. Much of this work involves the “semantic satiation” technique (Balota & Black, 1997; Black, 2001), in which target trials require subjects to repeat a word (e.g., “angry”) 30 or more times, temporarily reducing access to the associated concept, before making a response for which the concept is hypothesized to be necessary. When access to emotion concepts is reduced in this way, subjects show impaired accuracy in emotion categorization (Lindquist, Barrett, Bliss-Moreau, & Russell, 2006), and emotional facial expressions no longer serve as primes for other face stimuli from their category (Gendron, Lindquist, Barsalou, & Barrett, 2012a), indicating a relatively low-level perceptual role for conceptual knowledge. On the other hand, increasing access to emotion concept knowledge increases speed and accuracy in emotion perception tasks (Carroll & Young, 2005; Nook, Lindquist, & Zaki, 2015) and shapes perceptual memory for facial expressions (Doyle & Lindquist, 2018; Fugate, Gendron, Nakashima, & Barrett, 2018). Additionally, semantic dementia patients, who have dramatically reduced access to emotion concept knowledge, seemingly fail to perceive discrete emotions at all, instead categorizing facial expressions by broad valence categories (Lindquist, Gendron, Barrett, & Dickerson, 2014).

Many attempts to measure the influence of conceptual knowledge on facial emotion perception have used language (i.e., emotion category labels such as “anger”) as a proxy for emotion concepts. More generally, language plays an important role in constructionist theories of emotion, which hold that language is responsible for the commonsense intuition that emotion is organized into discrete categories (Doyle & Lindquist, 2017; Lindquist, 2017). Certainly, language is an important factor in how we perceive and categorize emotion. A recent neuroimaging meta-analysis showed that when emotion category labels such as “sadness” and “surprise” are incorporated into experimental tasks, the amygdala is less frequently active (Brooks et al., 2017). This supports the idea that facial expressions are to some degree ambiguous and that category labels provide immediate access to conceptual knowledge, reducing perceptual uncertainty. However, because most existing work on conceptually scaffolded emotion perception has explicitly manipulated language or conceptual knowledge within tasks, it has been difficult to capture the implicit influence of conceptual knowledge that these theories assume is involved in every instance of emotion perception.

As already discussed, assessing representational geometries with representational similarity analysis (RSA) is one way to measure the overall influence of conceptual knowledge globally, without directly manipulating it (see Fig. 5). Existing uses of RSA to study emotion perception have been fruitful, suggesting that more abstract conceptual information may affect the perceptual representation of emotion. For example, neuroimaging work has been able to adjudicate between dimensional and appraisal models of how emotional situations are represented in the brain (Skerry & Saxe, 2015). This line of work also used RSA to show a correspondence between the neural representations of valence perceived from facial expressions and valence inferred from situations (Skerry & Saxe, 2014). Another study measured the representational similarity between emotion categories as perceived from faces and from voices (Kuhn, Wydell, Lavan, McGettigan, & Garrido, 2017), showing high correspondence between modalities even when controlling for low-level stimulus features. In general, these studies suggest that the representational structure of emotion perception may be shaped by more abstract conceptual features.
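To make the logic of RSA concrete, the sketch below compares two representational dissimilarity matrices (RDMs) by rank-correlating their off-diagonal upper triangles, and shows one way to control for a third, low-level RDM with a first-order partial rank correlation. It is a minimal plain-Python illustration under stated assumptions: the matrices are invented toy values, the helper names are hypothetical, and the partial correlation is only one of several ways of “controlling for” stimulus features (the studies cited here may instead have used regression or permutation-based tests).

```python
from itertools import combinations
from math import sqrt


def upper_triangle(rdm):
    """Flatten the off-diagonal upper triangle of a square dissimilarity matrix."""
    n = len(rdm)
    return [rdm[i][j] for i, j in combinations(range(n), 2)]


def ranks(values):
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman(x, y):
    """Spearman rank correlation: the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def partial_spearman(x, y, z):
    """Rank correlation of x and y with z partialled out (first-order formula)."""
    rxy, rxz, ryz = spearman(x, y), spearman(x, z), spearman(y, z)
    return (rxy - rxz * ryz) / sqrt((1 - rxz ** 2) * (1 - ryz ** 2))


# Hypothetical 4-category dissimilarity matrices (0 = identical), for illustration only.
conceptual = [[0, 2, 5, 6], [2, 0, 4, 5], [5, 4, 0, 3], [6, 5, 3, 0]]
perceptual = [[0, 1, 6, 5], [1, 0, 4, 6], [6, 4, 0, 2], [5, 6, 2, 0]]
visual = [[0, 3, 4, 4], [3, 0, 5, 3], [4, 5, 0, 4], [4, 3, 4, 0]]

c, p, v = (upper_triangle(m) for m in (conceptual, perceptual, visual))
print("conceptual ~ perceptual:", round(spearman(c, p), 3))
print("controlling for visual:", round(partial_spearman(c, p, v), 3))
```

Rank correlation is used because RSA typically assumes only that dissimilarities are ordinally comparable across measurement types (ratings, mouse-tracking, or neural patterns), not that they share a scale.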

In one set of studies, we used RSA to measure the correspondence between subjects’ conceptual and perceptual representational spaces for commonly studied emotion categories: Anger, Disgust, Fear, Happiness, Sadness, and Surprise (Brooks & Freeman, 2018). All studies measured how conceptually similar subjects found each pair of emotions and used this idiosyncratic conceptual similarity space to predict their perceptual similarity space. In two studies, we measured perceptual similarity using computer mouse-tracking. On each trial, subjects saw a face stimulus displaying a stereotyped emotional facial expression (e.g., a scowl for Anger) and categorized it as one of two emotion categories by clicking on response options on the screen (e.g., ‘Anger’, ‘Disgust’; one response option always corresponded to the intended/posed emotion display). We used mouse-trajectory deviation toward the unselected category response as a measure of perceptual similarity. We found that conceptual similarity significantly predicted perceptual similarity, even when statistically controlling for intrinsic physical similarity in the stimuli themselves. Thus, the more conceptually similar a subject found a given pair of emotions (e.g., Anger and Disgust), the more strongly the two categories were coactivated during perception, even though ostensibly only one emotion was being conveyed by the face stimulus (Fig. 5B). Moreover, this effect could not be explained by how similar the two categories are in their associated visual properties.
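Mouse-trajectory deviation can be quantified in several ways. A minimal sketch, assuming the common maximum-deviation (MD) index: the largest perpendicular distance between the cursor path and the straight line from its start point to the clicked response, signed so that positive values point toward the unselected response. The function names and the toy trial are hypothetical, and the exact index and preprocessing (e.g., time normalization or remapping of trajectories) used in the cited studies may differ.

```python
from math import hypot


def signed_distance(point, start, end):
    """Signed perpendicular distance of `point` from the line through start and end.

    With y pointing up, positive values lie to the left of the start->end
    direction; the sign flips in y-down screen coordinates, which does not
    matter below because only the consistency of the sign is used.
    """
    (sx, sy), (ex, ey), (px, py) = start, end, point
    dx, dy = ex - sx, ey - sy
    return (dx * (py - sy) - dy * (px - sx)) / hypot(dx, dy)


def max_deviation(trajectory, unselected):
    """Largest deviation of a cursor path toward the unselected response.

    `trajectory` is a list of (x, y) samples from the start point to the clicked
    response; `unselected` is the (x, y) location of the other response option.
    """
    start, end = trajectory[0], trajectory[-1]
    # +1 if the unselected option lies on the positive side of the straight path.
    side = 1 if signed_distance(unselected, start, end) >= 0 else -1
    return max(side * signed_distance(p, start, end) for p in trajectory)


# Hypothetical trial (y-up coordinates): start at bottom center, click 'Anger'
# at upper right while the unselected 'Disgust' option sits at upper left.
trial = [(0.0, 0.0), (-0.1, 0.4), (0.1, 0.9), (0.8, 1.4), (1.0, 1.5)]
print(round(max_deviation(trial, unselected=(-1.0, 1.5)), 3))
```

Because both endpoints lie on the straight line, this version never returns a value below zero. Averaging such values over the trials for a given category pair would yield one cell of a perceptual similarity matrix that could then be compared with the conceptual one.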

In an additional study, we repeated this approach but used reverse correlation to measure perceptual similarity. As discussed earlier, reverse correlation allows a visual estimation of the cues that individuals expect to see for a given face category (Dotsch et al., 2008; Todorov et al., 2011). Each subject in this study was randomly assigned to an emotion category pair (e.g., Anger-Disgust) and completed the reverse correlation task for these two categories, as well as a task measuring the conceptual similarity between them. Perceptual similarity was measured in two ways: independent raters judged how similar each pair of images (both generated by the same subject) looked, and the inherent visual similarity of the images themselves was computed on a pixel-by-pixel basis. We found that the more conceptually similar a subject held two emotion categories to be, the more physically similar their reverse-correlated visual prototypes for those categories were. Reverse correlation allowed a less constrained test of the relationship between conceptual and perceptual similarity, since on the reverse correlation trials each subject attended to only one emotion in isolation. These results therefore provide strong evidence for conceptually scaffolded emotion perception, because the task is data-driven and does not rely on a particular stimulus set, emotion category labels, or any normative assumptions about how different facial emotion expressions should appear.
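The two computations in this paragraph can be sketched as follows, assuming a common two-image variant of reverse correlation in which each trial pairs a base face plus random noise with the same base face minus that noise. The classification image is the choice-signed average of the noise patterns, and pixel-by-pixel similarity is taken here to be the Pearson correlation between flattened images. That metric, the function names, the tiny 4x4 “face,” and the simulated decision rules are illustrative assumptions, not the procedure used in the study.

```python
import random
from math import sqrt


def classification_image(noise_patterns, choices):
    """Choice-signed average of the trial noise patterns.

    Each hypothetical trial shows base+noise and base-noise; choices[t] is +1 if
    base+noise was picked on trial t and -1 if base-noise was picked.
    """
    n_trials, n_pixels = len(noise_patterns), len(noise_patterns[0])
    return [
        sum(c * noise[k] for noise, c in zip(noise_patterns, choices)) / n_trials
        for k in range(n_pixels)
    ]


def prototype(base_face, ci, scale=1.0):
    """Visualize a classification image by adding it back onto the base face."""
    return [b + scale * d for b, d in zip(base_face, ci)]


def pixel_similarity(img_a, img_b):
    """Pearson correlation between two images flattened into pixel lists."""
    n = len(img_a)
    ma, mb = sum(img_a) / n, sum(img_b) / n
    cov = sum((a - ma) * (b - mb) for a, b in zip(img_a, img_b))
    var_a = sum((a - ma) ** 2 for a in img_a)
    var_b = sum((b - mb) ** 2 for b in img_b)
    return cov / sqrt(var_a * var_b)


random.seed(0)
base = [0.5] * 16  # hypothetical 4x4 grayscale base face, flattened
noise = [[random.gauss(0.0, 0.1) for _ in base] for _ in range(500)]

# Hypothetical decision rules for one subject's two categories, with partly
# overlapping diagnostic pixels (one noise set is reused for brevity).
anger_choices = [1 if sum(n[0:8]) > 0 else -1 for n in noise]
disgust_choices = [1 if sum(n[4:12]) > 0 else -1 for n in noise]

anger_img = prototype(base, classification_image(noise, anger_choices), scale=2.0)
disgust_img = prototype(base, classification_image(noise, disgust_choices), scale=2.0)
print(round(pixel_similarity(anger_img, disgust_img), 3))
```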

To identify the level of neural representation at which such conceptual scaffolding of facial emotion manifests, we had subjects complete an fMRI task in which they passively viewed facial expressions of Anger, Disgust, Fear, Happiness, Sadness, and Surprise. We again used a conceptual ratings task to measure the conceptual similarity of each pair of emotions. We found that the representational structure of neural patterns in the face-processing FG region was significantly predicted by idiosyncratic conceptual structure. These findings demonstrate that representations of facial emotion categories in the brain’s perceptual system are organized in a way that partially conforms to how perceivers structure those categories conceptually. Such results are consistent with the stereotype scaffolding of a face’s social categories, which also manifests in the FG (Stolier & Freeman, 2016a). These results show conceptual impacts on how the brain represents facial emotion categories at a relatively basic level of visual processing. Overall, this growing line of work suggests that the brain’s representation of facial emotion, or of a face’s social categories, does not reflect facial cues alone; it is also partly shaped by the conceptual meaning of those emotions or social categories.

3.3. Summary

The traditional view has been that there is a fixed number of emotion categories that can be reliably and automatically recognized from human faces. However, research increasingly suggests that this approach has ignored idiosyncratic, perceiver-dependent factors that shape emotion perception. Facial actions undeniably convey important information about internal states, but there is little evidence that real-world instances of emotion experience yield the specific, discrete facial displays usually studied in psychological research (Durán et al., 2017). Real-world facial displays of emotion are typically much more subtle and brief (Barrett, Mesquita, & Gendron, 2011a; Durán et al., 2017; Russell, Bachorowski, & Fernández-Dols, 2003), and interpreting them requires myriad contextual and associative top-down factors to weigh in on visual processing. Growing evidence demonstrates that one such top-down factor is each perceiver’s idiosyncratic conceptual knowledge about emotion, yielding a highly flexible process of facial emotion perception that may vary substantially between individuals.

4. Perceiving Traits

While it may be obvious that perceivers track social categories and emotions from faces, they also readily draw personality inferences from facial appearance alone. These inferences are not arbitrary; they tend to be highly correlated across perceivers, even at brief exposures (Bar, Neta, & Linz, 2006; Willis & Todorov, 2006), and often occur automatically and beyond our conscious control (Zebrowitz & Montepare, 2008a). For instance, responses in the amygdala, a subcortical region important for a variety of social and emotional processes, track a face’s level of trustworthiness even when the face is presented outside of conscious awareness using backward masking (Freeman, Stolier, Ingbretsen, & Hehman, 2014). Despite the generally limited accuracy of face-based impressions (Todorov et al., 2015; Tskhay & Rule, 2013), they can powerfully guide our interactions with others and predict real-world consequences such as electoral outcomes (Todorov, Mandisodza, Goren, & Hall, 2005) and capital-sentencing decisions (Wilson & Rule, 2015), among others (for review, Todorov et al., 2015).

Beyond face-based impressions, social psychologists have long studied impression formation and trait attribution, dating back to Estes (1938) and Asch (1946). Decades of research explored the cognitive mechanisms involved in making dispositional inferences about others and in other forms of social reasoning (e.g., Skowronski & Carlston, 1987, 1989; Uleman & Kressel, 2013; Uleman, Newman, & Moskowitz, 1996; Winter & Uleman, 1984; Wyer Jr & Carlston, 2018). Countless other studies examined “zero-acquaintance” judgments in interpersonal encounters, focusing on how accurately people deduce others’ personality upon first meeting them (e.g., Albright et al., 1997; Ambady, Bernieri, & Richeson, 2000; Ambady, Hallahan, & Rosenthal, 1995; Ambady & Rosenthal, 1992; Kenny, 1994; Kenny & La Voie, 1984). However, only fairly recently have social psychologists begun to investigate face-based impressions in particular more seriously (Bodenhausen & Macrae, 2006; Zebrowitz, 2006).

Researchers have now linked a large array of facial features with specific impressions, such as facial width, eye size and eyelid openness, symmetry, emotion, head posture, sexual dimorphism, averageness, and numerous others (for reviews, Hehman et al., 2019; Olivola & Todorov, 2017; Todorov et al., 2015; Zebrowitz & Montepare, 2008a). Discovering the links between all facial features and all traits is challenging, and computational and data-driven approaches can provide more comprehensive assessments (Adolphs, Nummenmaa, Todorov, & Haxby, 2016). Seminal research by Oosterhof and Todorov (2008b) took such an approach to characterize the specific features that underlie a range of face impressions. In this work, participants viewed a large set of randomly varying computer-generated faces and evaluated the faces along different personality traits. Principal component analyses identified two fundamental dimensions: trustworthiness and dominance. These dimensions are consistent with the perspective that perceivers tend to place others along two primary dimensions: their intentions to help or harm (warmth) and their ability to enact those intentions (competence) (Fiske, Cuddy, & Glick, 2007; Fiske et al., 2002; Rosenberg, Nelson, & Vivekananthan, 1968).
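As a rough illustration of the principal component step, the sketch below computes a trait-by-trait correlation matrix from face ratings and extracts its leading components by power iteration with deflation. It is a from-scratch sketch, not the analysis pipeline of Oosterhof and Todorov (2008b); the ratings, trait names, and function names are hypothetical, and in practice one would rely on an established linear-algebra library.

```python
from math import sqrt


def correlation_matrix(ratings):
    """Trait-by-trait Pearson correlations; one row per face, one column per trait."""
    n_faces, n_traits = len(ratings), len(ratings[0])
    cols = [[row[j] for row in ratings] for j in range(n_traits)]
    dev = [[x - sum(c) / n_faces for x in c] for c in cols]
    norms = [sqrt(sum(x * x for x in d)) for d in dev]
    return [
        [sum(a * b for a, b in zip(dev[i], dev[j])) / (norms[i] * norms[j])
         for j in range(n_traits)]
        for i in range(n_traits)
    ]


def principal_components(matrix, k=2, iterations=1000):
    """Top-k (eigenvalue, eigenvector) pairs of a symmetric PSD matrix.

    Uses power iteration, then deflation so the next pass finds the next component.
    """
    n = len(matrix)
    m = [row[:] for row in matrix]
    components = []
    for _ in range(k):
        # Non-uniform start; fails only if exactly orthogonal to the target eigenvector.
        v = [1.0 + 0.1 * i for i in range(n)]
        for _ in range(iterations):
            w = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = sqrt(sum(x * x for x in w))
            if norm == 0:
                break
            v = [x / norm for x in w]
        eigenvalue = sum(v[i] * sum(m[i][j] * v[j] for j in range(n)) for i in range(n))
        components.append((eigenvalue, v))
        # Deflation: subtract the component just found from the matrix.
        m = [[m[i][j] - eigenvalue * v[i] * v[j] for j in range(n)] for i in range(n)]
    return components


traits = ["trustworthy", "friendly", "dominant", "aggressive"]  # hypothetical traits
ratings = [  # hypothetical 1-7 ratings: one row per face, one column per trait
    [6, 6, 2, 2],
    [5, 6, 5, 4],
    [2, 3, 6, 5],
    [3, 2, 3, 3],
    [6, 5, 6, 6],
    [1, 2, 2, 1],
]
for value, loadings in principal_components(correlation_matrix(ratings), k=2):
    print(round(value / len(traits), 3), [round(x, 2) for x in loadings])
```

For a correlation matrix the eigenvalues sum to the number of traits, so each eigenvalue divided by that number is the proportion of rating variance its component explains; the loadings show which traits each component groups together.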

With respect to the cues underlying these two fundamental dimensions, Oosterhof and Todorov (2008b) found that the trustworthiness dimension was characterized by faces varying in their resemblance to happy versus negative emotional expressions, even though the faces themselves had neutral expressions. The dominance dimension roughly corresponded to cues of physical strength and facial maturity. Such findings can be explained by overgeneralization theory (Zebrowitz & Montepare, 2008b), which posits that perceivers rely on functionally adaptive, evolutionarily shaped facial cues (e.g., emotion, facial maturity) and “overgeneralize” them to ostensibly unrelated traits (e.g., trustworthiness) because of the cue’s association with that trait (e.g., trustworthiness from happy cues; dominance from age cues) (Said, Sebe, & Todorov, 2009; Zebrowitz, Fellous, Mignault, & Andreoletti, 2003). Such research has made important advances in understanding the specific arrangements of facial features that reliably evoke particular trait impressions.

More recently, researchers have begun to document the myriad factors within perceivers that also help determine face impressions. Remarkably, at least for a number of common face impressions, the bulk of their variance is accounted for by idiosyncratic differences in how perceivers make inferences about faces (Hehman et al., 2019; Hehman, Sutherland, Flake, & Slepian, 2017; Xie, Flake, & Hehman, 2018). Indeed, other research has demonstrated that the fundamental dimensions of trustworthiness and dominance can shift or disappear entirely depending on perceiver factors. For instance, dominance cues elicit more negative and untrustworthy evaluations when perceivers judge female rather than male targets (Oh, Buck, & Todorov, 2019; Sutherland, Young, Mootz, & Oldmeadow, 2015), likely due to stereotypic expectations of women as submissive, i.e., benevolent sexism (Glick & Fiske, 1996). Thus, the trustworthiness and dominance dimensions cease to be independent. On older adult faces, facial dominance takes on new meaning (e.g., wisdom), likely due to stereotypes of older adults’ physical frailty, which are inconsistent with the notion of dominance and hostility (Hehman, Leitner, & Freeman, 2014). Perceptions of trustworthiness rely more or less heavily on facial typicality or attractiveness cues depending on whether the target is from the perceiver’s own culture or a different one (Sofer et al., 2017).
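To illustrate what it means for impression variance to be “accounted for” by perceivers rather than by faces, the sketch below splits a fully crossed perceiver-by-face rating matrix into shares attributable to faces (consensus), to perceivers’ overall rating tendencies, and to the perceiver-by-face residual (idiosyncratic impressions). This is a simplified sums-of-squares decomposition under stated assumptions, not the model-based variance partitioning used in the cited work; with one rating per cell, idiosyncratic perceiver-by-face variance cannot be separated from measurement error, and the ratings and the function name are hypothetical.

```python
def partition_ratings(ratings):
    """Share of rating variation due to faces, perceivers, and their interaction.

    Rows are perceivers, columns are faces, one rating per cell (balanced design).
    Assumes the ratings are not all identical (otherwise total variation is zero).
    """
    n_p, n_t = len(ratings), len(ratings[0])
    grand = sum(map(sum, ratings)) / (n_p * n_t)
    p_means = [sum(row) / n_t for row in ratings]
    t_means = [sum(row[t] for row in ratings) / n_p for t in range(n_t)]
    ss_target = n_p * sum((m - grand) ** 2 for m in t_means)
    ss_perceiver = n_t * sum((m - grand) ** 2 for m in p_means)
    # Residual after removing both main effects: perceiver-by-face idiosyncrasy plus error.
    ss_resid = sum(
        (ratings[p][t] - p_means[p] - t_means[t] + grand) ** 2
        for p in range(n_p)
        for t in range(n_t)
    )
    total = ss_target + ss_perceiver + ss_resid  # equals total SS in a balanced design
    return {
        "target": ss_target / total,
        "perceiver": ss_perceiver / total,
        "perceiver_x_target": ss_resid / total,
    }


# Hypothetical trustworthiness ratings: 3 perceivers (rows) x 4 faces (columns).
ratings = [
    [5, 3, 6, 2],
    [4, 4, 6, 1],
    [6, 2, 5, 3],
]
print(partition_ratings(ratings))
```

A large `perceiver_x_target` share relative to `target` is the pattern the cited findings describe: perceivers agree less about which faces look trustworthy than their idiosyncratic impressions differ.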