Abstract

With the rapid expansion of hardware options in the extended realities (XRs), there has been widespread development of applications throughout many fields, including engineering, entertainment and medicine. Medical applications for the XRs carry a unique set of considerations during development and human factors testing. Additionally, understanding the constraints of the user and the use case allows for iterative improvement. In this manuscript, the authors discuss the considerations when developing and performing human factors testing for XR applications, using the Enhanced ELectrophysiology Visualization and Interaction System (ĒLVIS) as an example. Additionally, usability and critical interpersonal interaction data from first-in-human testing of ĒLVIS are presented.

Keywords: Extended Reality, Mixed Reality, Medical Applications, Cardiac Electrophysiology, Ablation Accuracy

1. Introduction

The rapid expansion and development of extended reality (XR) hardware (including virtual, augmented and mixed realities) has resulted in a corresponding expansion in medical applications and clinical studies utilizing XR, ranging from medical education and patient rehabilitation to surgical guidance2. Each of these XR technologies has specific benefits and challenges that will drive usability, acceptance, and adoption. Developing novel solutions that match the technologies to the clinical needs and unique context is central to success.

The authors have developed a mixed reality (MxR) solution to empower physicians who perform minimally invasive cardiac procedures. The Enhanced ELectrophysiology Visualization and Interaction System (ĒLVIS) has been developed to address the unmet needs in the cardiac electrophysiology (EP) laboratory. In this manuscript, we discuss the development and human factors testing of ĒLVIS as an example of matching an appropriate technology with an unmet clinical need, along with practical design considerations in development and human factors testing, and usability data from first-in-human testing.

2. Extended Reality Spectrum

The XRs encompass the continuum from fully immersive virtual realities, through mixed realities, to minimally intrusive augmented realities. While the XRs represent a spectrum, there are discrete technological advantages and limitations that constrain appropriate use cases (see Table 1).

Table 1.

Descriptions of the extended realities are illustrated as a progression from virtual to mixed reality, along with an example of a head mounted display (HMD), and technologic advantages and disadvantages.

| | Virtual Reality (VR) | Augmented Reality (AR) | Mixed Reality (MxR) | Yet to Come... |
|---|---|---|---|---|
| Description & Images | Digital Replaces Physical | Digital Separated from Physical | Digital Integrates with Physical | |
| Example of Head Mounted Display | | | | |
| Advantages | Price; Graphics; Immersive environment; Several hardware options | Ability to see and interact with natural environment | Ability to see and interact with natural and digital environments | |
| Disadvantages | Price; Limited hardware options | Price; Limited hardware options | | |

2.1. Virtual Reality.

Virtual Reality (VR) offers the user an immersive experience where the user can have meaningful interactions in a completely digital, virtual environment but is no longer able to interact with their native, or natural, environment. The level of immersion of VR experiences is dependent on the class of system used. Most frequently, VR hardware utilizes head mounted displays (HMDs) which can be sub-classified as mobile, tethered or stand-alone.

Phone-based, mobile VR displays, such as Google Daydream View and Samsung Gear VR, require a phone to be inserted into the HMD. This class of display only enables the user to experience their environment from a single position at a time, and therefore tends to provide the user with a less immersive experience. The advantage of mobile VR HMDs is that by being more affordable and portable, the applications based on mobile VR offer increased accessibility to a wider number of users, including those with limited mobility. There has not been significant development of medical applications using mobile VR headsets.

Standalone VR HMDs, such as the Oculus Quest and Go, provide some of the accessibility of mobile VR but with some of the technical capabilities of tethered VR to bridge the transition between the device classes. While an expensive option compared to mobile VR HMDs, these standalone headsets offer some of the improved performance of tethered HMDs and remove some of the limitations of mobile based VR.

Tethered HMDs, such as the Valve Index, Varjo VR-2, Oculus Rift and HTC Vive Cosmos, have a physical cable connection to a high-capability computer to provide graphical processing for higher fidelity displays. These headsets tend to have excellent resolution and field of view when compared to standalone VR and offer a superior immersive, visual user experience. However, tethering the user to a computer limits potential use cases for medical application development by restricting mobility and introducing snag or trip hazards. For example, use of a tethered VR system in a clinic setting may be quite tenable if a station is set up for users to wear the HMD in that location. In use cases where mobility is required, this platform would be challenging.

An example of a VR application in medicine is the Stanford Virtual Heart Project3 in conjunction with Lighthaus, Inc. They have developed a VR application for educating medical students about the complexities of congenital heart disease. In this experience, the user is given handheld controllers to navigate through the cardiac anatomy to better understand the anatomy and the potential surgical repairs.

2.2. Augmented Reality.

In augmented reality (AR), the user remains in their natural environment and has the ability to import and anchor digital images into their environment. These digital images enhance, or augment, the environment rather than providing a fully immersive experience akin to the VR HMDs. Google Glass is an example of an AR HMD and has been used in medical applications, including surgical and nonsurgical environments4,5. To date, the results from feasibility, usability and adoption testing have been promising, particularly in patient-centric studies and medical education settings4.

Echopixel was one of the early 3D displays to have clearance from the US Food and Drug Administration for their True 3D system, which is integrated into a DICOM workstation to display 3D images of patient radiologic images. The system utilizes a 3D display and polarized glasses with a stylus to manipulate the image. The initial use case in cardiology was the pre-procedural assessment of patients with congenital heart disease for surgical planning6. Specifically, cardiologists were asked to evaluate images from 9 patients who underwent computed tomography angiography, using either the 3D display or a traditional display to interpret the imaging. Interpretation times were faster using the Echopixel True 3D display as compared to the traditional display (13 min vs 22 min), with similarly accurate interpretations.

2.3. Mixed Reality.

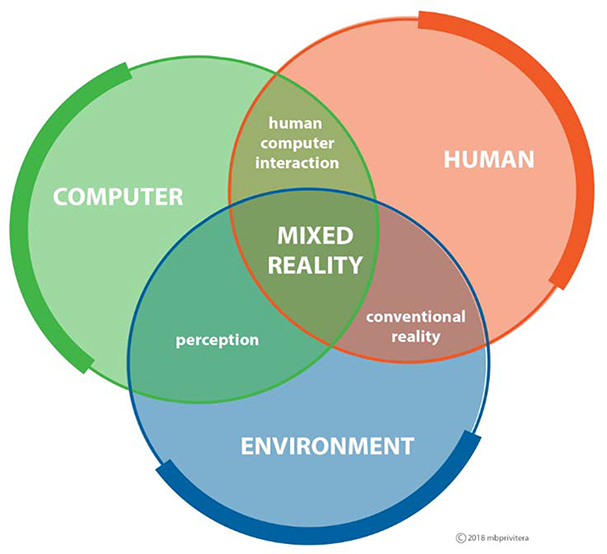

Mixed reality (MxR) allows the user to remain in their natural environment (see Figure 1) and to import and anchor digital images to augment their environment, with the additional ability to interact with these digital images, predominantly using HMDs. This enhancement to traditional AR displays has been particularly innovative for the medical community, enabling multiple new applications over the past few years7–9. Medical applications leveraging MxR have seen the most research and development on the Microsoft HoloLens10, though there are other available MxR HMDs with medical applications, such as the Magic Leap.

Figure 1.

Mixed Reality resides at the intersection of human-computer-environment by overlaying content in the natural environment, or real world1.

We have been developing a mixed reality system, the Enhanced Electrophysiology Visualization and Interaction System (Project ĒLVIS)7,11,12. System details are presented below (see section 4).

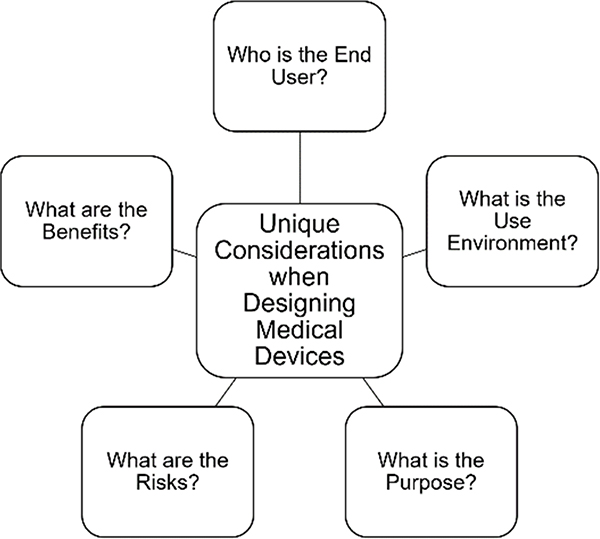

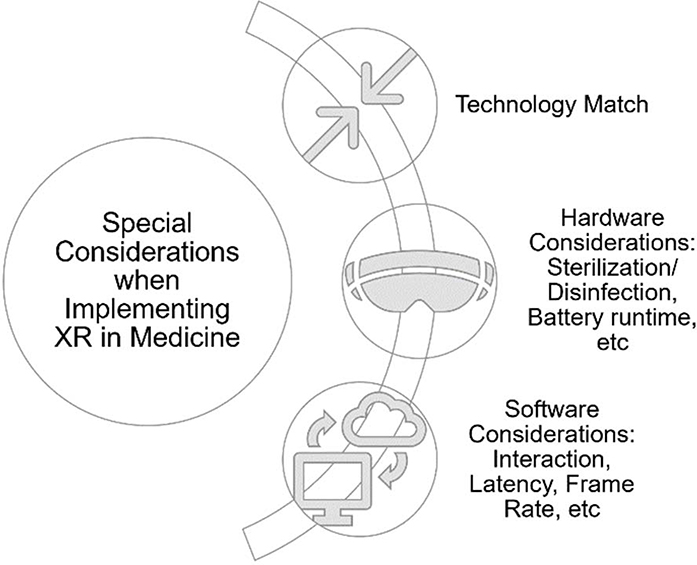

3. Unique Requirements in Medicine

There are unique considerations when designing and innovating medical devices (see Figure 2). Early in development, it is critical to identify the end user of the device and understand the current workflow and unmet need(s). For instance, devices that are used by physicians (physician-facing) will have a different set of use requirements than devices that are used by patients (patient-facing). Additionally, devices implemented in sterile versus non-sterile use environments will have different standards to consider. Devices deployed in sterile environments have considerations of disinfection and sanitation prior to use in those environments whereas devices used in a nonsterile space may not need to be tested for these standards. Additionally, devices may be used for diagnostic, therapeutic or combined purposes. For instance, AR devices used in radiologic applications assist the user with visual-spatial relationships to improve interpretation of images13. Other devices are used for therapeutic purposes, such as MindMaze’s Mindmotion14 which is used for both inpatient and outpatient neurorehabilitation post stroke. Perhaps one of the most demanding use cases in medicine is the implementation of an AR medical device for use during procedures, including invasive and minimally invasive procedures.

Figure 2.

There are several unique considerations when designing medical devices. Each input requires careful investigation to design a successful tool.

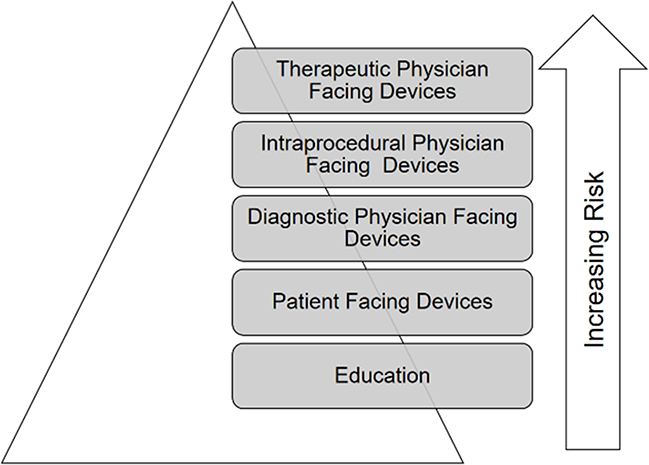

Medical devices for intraprocedural use inherently pose a higher risk to patients, and are often tied to therapeutic decision making (see Figure 3). As such, devices that will be used intraprocedurally for therapeutic purposes likely have higher regulatory requirements. Faults in these medical device applications may result in incorrect or incomplete data being presented to the physician, which in turn may result in a negative patient outcome. For these devices, where misrepresented or misunderstood data may result in harm to the patient, standards surrounding implementation should be high, requiring rigor and reproducibility around the testing of the device.

Figure 3.

There are increasing levels of risk associated with various applications. Student education tools pose the least risk, with increasing levels of risk for physician-facing devices from diagnostic to intra-procedural to therapeutic devices. With increasing risk, there are increased levels of scrutiny and regulation.

For instance, applications used during patient procedures may assist or guide operating physicians in better understanding the patient’s unique 3-dimensional anatomies by providing improved visual-spatial communication, improved comprehension, and greater value. Conversely, if the application distracts the operating physician or distorts the geometry, any added value may be negated.

4. The Enhanced Electrophysiology Visualization and Interaction System (ĒLVIS)

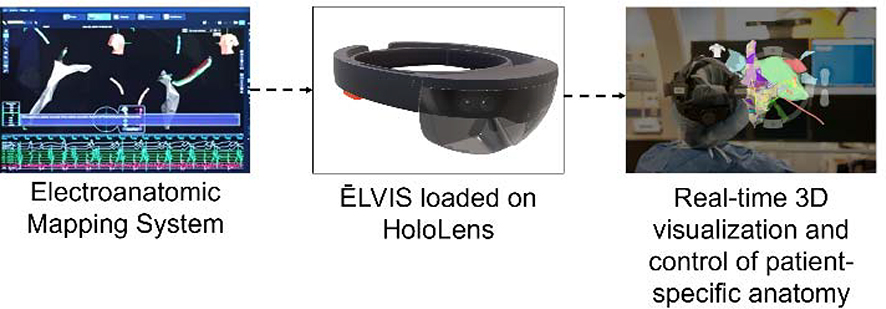

We have developed the Enhanced Electrophysiology Visualization and Interaction System (ĒLVIS), which uses a MxR HMD (Microsoft HoloLens) to provide physicians with a real-time 3-dimensional display of patient specific data during minimally invasive cardiac electrophysiology procedures (see Figure 4). Cardiac electrophysiology studies (EPS) are performed in patients with known or suspected heart rhythm abnormalities. These procedures are performed via catheters that are introduced into the body through blood vessels and navigated into the heart using the vascular system. Once in the heart, these catheters, which have electrodes at the distal end, record the electrical signals in the heart. Using commercially available electroanatomic mapping systems (EAMS), these catheters generate geometries of various cardiac chambers by sweeping the area within the chamber and generating an anatomic shell. The electrophysiologic data is overlaid onto the anatomic shell, resulting in electroanatomic maps. These electroanatomic maps have been widely adopted and are used in the majority of EPS15,16. Within these maps, physicians can visualize the electrode tips of the catheters, allowing the physician to understand the real-time movement of the catheters and the relationship of the catheters to each other and the cardiac anatomy. Current workflows in the EP laboratory require multiple people positioned at multiple device workstations to control their particular device and data display, including the electroanatomic mapping system workstation. The generated electroanatomic maps are displayed on a screen, often in orthogonal views, requiring the electrophysiologist (EP) to mentally recreate a 3-dimensional representation of this data. Repositioning the electroanatomic map to enhance the physician's understanding requires coordinated, precise communication between the physician and the technician at the EAMS workstation.

Figure 4.

Workflow for the Enhanced Electrophysiology Visualization and Interaction System, or ĒLVIS. Data flows from the electroanatomic mapping system to the ĒLVIS application, which runs on a Microsoft HoloLens. From here, the real-time patient specific data is displayed in true 3-dimensions for the physician to visualize and interact with, to best understand the data during the procedure and optimize patient outcome.

The ĒLVIS interface provides physicians the ability to see a patient’s unique electroanatomic maps in true 3D and allows the physician to have control over the patient data (e.g., projection, rotation, size) using a hands-free, gaze-dwell interface, allowing for maintenance of the sterile field during EPS. Thus, the two problems the current ĒLVIS system solves are: 1) current 3D data is compressed to be displayed on a 2D screen, and 2) control of data during the procedure is decentralized from the person performing the procedure.

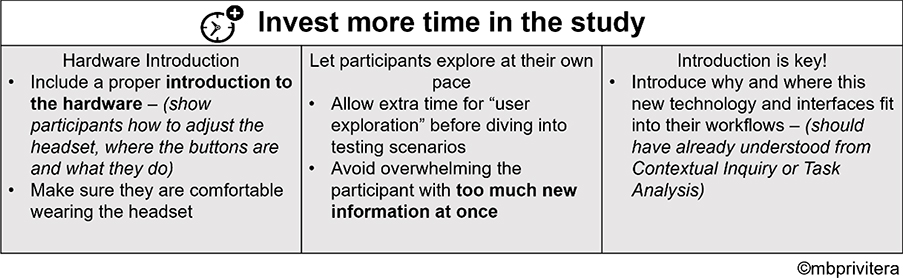

Throughout the design and development phases, the usability of the ĒLVIS system was carefully analyzed using task analysis, heuristic evaluations, expert reviews and usability studies. This practice meets the requirements for regulatory submission as indicated by FDA Human Factors Guidance (2016) and IEC 62366 (2015). Usability testing of the user interface included formative and summative human factors testing. Feedback from formative human factors testing clarified the needs of the end user, specifically regarding the interface method of control. The interface went from a mix of gaze, gaze/gesture, and voice to a more uniform gaze/dwell interface. In addition, users provided feedback on the menu hierarchy, visual balance, legibility, iconography and the identification of potential use errors. Further, this testing demonstrated the need to ensure study participants (the end users) were familiar with the underlying technology of the HMD prior to conducting a formal human factors (summative) validation study. Allowing users to become familiar with the hardware prior to the evaluation of interface usability required additional time for device introduction. Participants required a demonstration of the adjustment controls of the hardware, as the ability to wear an HMD comfortably might be the difference between acceptance and reluctance in the evaluation (see Figure 5).

Figure 5.

Best practices for usability assessments of mixed reality devices1.

5. Practical (and Special) Design Considerations for Implementing Extended Realities in Medicine

Identifying the technology-use case match is the first branch point in decision making when developing XR medical applications. Once the appropriate candidate use case has been identified, the additional hardware and software considerations for the development and testing of XR medical devices can be examined (see Figure 6).

Figure 6.

In addition to the usual considerations when designing a medical device, there are unique considerations when developing an extended reality application in the medical field. In addition to the technology-use case match, there are hardware and software considerations.

5.1. Hardware considerations.

XR hardware used in higher-risk medical applications will be subject to unique considerations, as are the majority of tools in these medical environments. The system must balance the user need for immersion, performance and image quality with mobility, battery life and ergonomics. Generally, the more mobile the system, the lower the fidelity of the visualization. Additionally, increases in the number and quality of interaction modes (e.g. voice, eye, hand) correspond with an increase in cost and complexity. Finally, concerns about biocompatibility, sterilization, and disinfection must be addressed17.

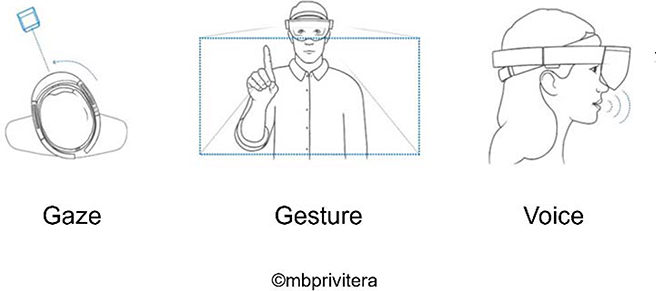

5.2. Software considerations.

XR applications in the medical space require highly testable and stable software that will behave in predictable ways, as they may impact or be directly involved in patient care. Perhaps the most significant software consideration is the interface interaction method. Augmented and mixed reality allow for designing interaction methods that will be comfortable to the end user, as they build upon current, widely accepted interaction methods. Various medical contexts (e.g. operating room, emergency room, outpatient clinics) will have special considerations for optimizing interaction methods (gesture, gaze, eye tracking and voice, as mentioned above), and these must be considered during the design process (Figure 3).

For instance, applications used during surgical procedures must consider hands-free methods of interaction, as those that require hand gestures may negatively impact workflow. Empowering medical providers through these interaction methods gives them direct control over data used to inform clinical decision-making. This kind of empowerment, through control mechanisms, may ultimately improve workflows and may contribute to the reduction of medical errors through improved, accurate and high-quality communication (see Figure 7).

Figure 7.

Interaction modes for consideration during the design process1.

5.3. Hand gestures.

Implementation of a hand gesture interface has special design considerations. While avoiding some of the pitfalls of the voice interface (see below), hand gesture interfaces must consider the system’s ability to see the hand, as well as the initiation, mechanics and termination of the gesture. Rigorous testing of the system’s interpretation of sudden or jerky movements versus expected movements must be undertaken in various use environments. Gestures must be mechanically distinct enough from other gestures to avoid confusing the system, but must be easy for end users to adopt. Careful design consideration must be given to gestures that end sessions, as inadvertent activation of these may have a significant impact in the use environment. Additionally, hand gesture interfaces are limited to those use cases where the end user’s hands are not being used for other tasks. When implementing an interface for the ĒLVIS system, hand gestures would not be effective, as the physician wearing the system is using their hands to guide catheters and perform the procedure. As such, understanding the use environment for the system helped rule out certain interaction methods.

5.4. Voice commands.

Seemingly, the implementation of voice commands in AR/MxR should be an intuitive interaction method for the end user, as it closely replicates real world experiences. Voice command interfaces require the end user to verbally express what they would like to happen with the interface. To practically implement such a system, “wake” words need to start the command, notifying the interface that an action is required. Real world examples of this include Amazon’s “Alexa,” Apple’s “Siri” and Microsoft’s “Cortana.” Notably, these wake words should not be common words in the use environment, to minimize inadvertent actions. These words should be easy to remember yet distinctive enough to avoid inadvertent activation. (Consider that “Alexander” may activate “Alexa.”) Additionally, command libraries must be created that use simple, easy to remember commands to increase adoption by the end user. Testing of a voice command interface must be done with an international user base to account for various accents. When testing the ĒLVIS application, we found that voice commands could be effective for certain users but were not universally reliable due to variability in end user accents and challenges in achieving an acceptable false positive rate with sufficient sensitivity. Overcoming this hurdle will require improvements in the vocabulary libraries that these devices are trained on.
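The trade-off between sensitivity and false positive rate described above can be made concrete with a small calculation. The following is only an illustrative sketch: the function name `wake_word_metrics` and all of the counts are hypothetical and are not taken from ĒLVIS testing.

```python
def wake_word_metrics(true_pos: int, false_neg: int, false_pos: int, true_neg: int) -> dict:
    """Summarize wake-word detection performance from a labeled test session.

    true_pos:  wake word spoken and detected
    false_neg: wake word spoken but missed
    false_pos: wake word not spoken but the system activated anyway
    true_neg:  wake word not spoken and the system stayed idle
    """
    sensitivity = true_pos / (true_pos + false_neg)
    false_positive_rate = false_pos / (false_pos + true_neg)
    return {"sensitivity": sensitivity, "false_positive_rate": false_positive_rate}


# Hypothetical counts, for illustration only (not study data).
metrics = wake_word_metrics(true_pos=45, false_neg=5, false_pos=4, true_neg=196)
print(metrics)
```

Computing both numbers per user group (for example, per accent) would show whether a single detection threshold is acceptable for all end users.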

5.5. Gaze and Gaze Dwell.

People often gaze at objects relating to their current task and objects being manipulated. Gaze interactions are an extension of this natural interaction and provide an intuitive interface mode18. Gaze refers to looking steadily at an object. This method of interaction provides a potentially faster interaction than a computer mouse when an end user’s hands are required for other tasks18. Once the user has gazed at an object, the object is selected. There are various methods for “confirmation,” including hand gesture (i.e. “tapping” on the object gazed at), voice (i.e. voice command “select” at the object gazed at) or dwell. Gaze-dwell interfaces are completely independent of hand gestures and voice commands, allowing the user to gaze at the object and then hold the gaze (or dwell) over the object for selection. The dwell time for selection of the object varies from milliseconds to seconds and should be tested in the use environment to optimize responsiveness and sensitivity19. The gaze-dwell interface was implemented in the ĒLVIS system and was the appropriate fit given the use environment (the electrophysiology laboratory) and end users (electrophysiologists).
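The gaze-dwell confirmation described above can be sketched as a small timer-based state machine. This is a minimal illustration and not the ĒLVIS implementation: the class name `DwellSelector`, the 1.0 second threshold, and the target names are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellSelector:
    """Selects a target once gaze has rested on it for `dwell_seconds`."""
    dwell_seconds: float = 1.0      # hypothetical threshold; tune in the use environment
    _target: Optional[str] = None   # target currently under the gaze cursor
    _since: float = 0.0             # time at which gaze first landed on that target
    _fired: bool = False            # prevents repeated selection within one dwell

    def update(self, target: Optional[str], now: float) -> Optional[str]:
        """Feed one gaze sample; return the target name at the moment it is selected."""
        if target != self._target:
            # Gaze moved to a new target (or to empty space): restart the timer.
            self._target, self._since, self._fired = target, now, False
            return None
        if target is not None and not self._fired and now - self._since >= self.dwell_seconds:
            self._fired = True
            return target
        return None


# Hypothetical gaze samples as (target under gaze, timestamp in seconds).
selector = DwellSelector(dwell_seconds=1.0)
samples = [("rotate", 0.0), ("rotate", 0.5), ("zoom", 0.8),
           ("zoom", 1.2), ("zoom", 1.9), ("zoom", 2.5)]
selections = [s for s in (selector.update(t, ts) for t, ts in samples) if s is not None]
print(selections)  # ['zoom']
```

A shorter `dwell_seconds` makes the interface more responsive but raises the chance of unintended selections, which is why the threshold should be tested in the actual use environment.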

5.6. Eye tracking.

Recent hardware developments are now allowing for new interaction methods, such as eye tracking. Tracking eye movements is an intuitive mode of interaction for similar reasons to gaze tracking. There may be human eye physiologic variants or certain types of contact lenses or glasses that do not allow for proper calibration prior to implementing eye tracking, requiring careful user screening. Eye tracking does allow for applications to track where the user is looking in real time, opening a wide array of potential future applications. The method of selecting an object should invoke previously mentioned commands, such as voice, hand gesture or dwell. Use of blinking is generally considered not to be an ideal mode of interaction, as it is not always a deliberate input and can be a reflexive response of the end user20.

5.7. Human Computer Interface.

Design considerations of the human computer interface are integral to the usability and adoption of these technologies in medicine. Transitioning highly trained and extensively experienced healthcare providers towards adoption of these new technologies requires consideration of existing interfaces which are used daily by the user. As a starting point for the XR application, the user interface (UI) design can build from traditional UI development but must consider the unique advantages of the extended realities. For example, use of familiar icons and symbols within the extended realities will likely increase adoption and decrease learning curves during adoption and implementation. In taking this approach, users are eased into the integration of mixed reality technologies, in that the technologies themselves enhance the familiar.

6. Clinical Testing of the ĒLVIS System

6.1. Methods.

After approval from the Western Institutional Review Board (IRB), usability of the fully engineered ĒLVIS system was tested prospectively in a first-in-human series performed at Washington University in St Louis/St Louis Children’s Hospital in the Cardiac Augmented REality (CARE) Study.

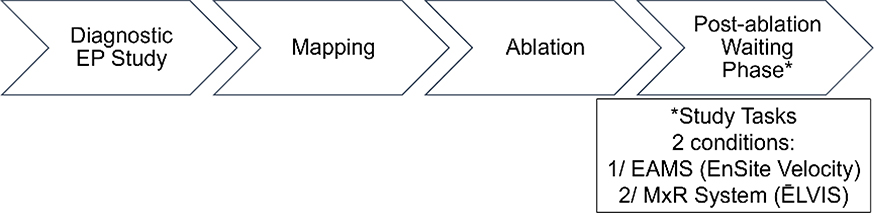

Pediatric patients, ages 6–21 years, with a clinical indication for EP study were eligible for enrollment in the CARE study. Exclusion criteria included: 1) mechanical support, such as ventricular assist device, at time of procedure, 2) age <6 years, 3) patients in foster care/wards of the state, or 4) pregnancy. Patients underwent a standard EPS as clinically indicated, and physicians had the ability to use ĒLVIS during the procedure per their discretion. During the routine post-ablation waiting phase of the EPS, physicians were asked to complete a series of study tasks under two conditions: 1) using a standard electroanatomic mapping system (EAMS), and 2) using ĒLVIS, in random order (see Figure 8). Study tasks included: 1) creation of a single, high-density cardiac chamber, and 2) sequential point navigation within the generated chamber. Physicians were given the option to create any chamber under the abovementioned 2 conditions, given a 5-minute time limit per condition. Next, 5 target markers were placed within the chamber and physicians were asked to sequentially navigate to those targets under the 2 conditions, with a time limit of 60 seconds per target. Physicians were able to move or rotate the geometries, or ask for a mapping technician to move/rotate as needed to enhance their understanding. The number of interactions between the physician and mapping technician was recorded for each study task to determine if use of ĒLVIS changed the communication dynamic in the EP laboratory.

Figure 8.

The Cardiac Augmented REality (CARE) Study workflow.

At the conclusion of the procedure, physician users were asked to complete a 7-question, Likert-based exit survey specifically designed to better understand the usability of the ĒLVIS system. Additionally, data were collected during study tasks to understand the number of interactions between team members, specifically the physician performing the procedure and the technician controlling the EAMS.

6.2. Results.

In total, 16 patients were enrolled in the study with 3 physician end users participating in the studies.

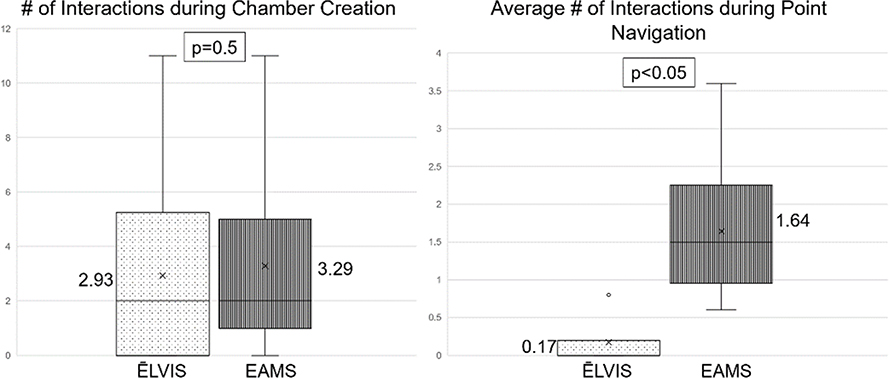

Interactions.

Electrophysiology procedures are complex, stressful procedures requiring sustained attention to detail while performing intricate tasks, similar to surgical procedures21. Interactions during the EPS occur routinely and can be a source for potential errors22, particularly during increasingly complicated procedures. To understand how use of the ĒLVIS system affected interactions between the performing electrophysiologist and the mapping technician during the procedure, the number of interactions was counted during cardiac chamber creation and point navigation (see Figure 9) under the 2 study conditions, ĒLVIS and EAMS. During chamber creation, there was no significant difference between the number of interactions under the 2 study conditions (paired Student's t-test, p=0.5). In contrast, during point navigation, where the physician was given a target in the chamber to navigate to, there was a significantly reduced number of interactions with use of the ĒLVIS system when compared to EAMS (p<0.05). It was observed that during this portion of the procedure, the physician had the ability to manipulate the geometry and confirm their location rather than relying on another person, the mapping technician, to confirm the catheter location. This reduction in interactions during point navigation may impact efficiency, workflow and team dynamics in the EP laboratory.

Figure 9.

Interaction data obtained during the first-in-human studies. The number of interactions was recorded when the physician was asked to perform 2 discrete tasks, chamber creation and point navigation, under 2 conditions, using the ĒLVIS system or using a standard electroanatomic mapping system (EAMS).
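For readers less familiar with the paired comparison used for the interaction counts, the test statistic can be sketched as follows. This is an illustration only: the function name `paired_t_statistic` and the interaction counts are hypothetical and are not the CARE study data. The sketch uses only the Python standard library and stops at the t statistic and degrees of freedom; the p-value would then come from a Student's t distribution.

```python
import math
from statistics import mean, stdev


def paired_t_statistic(a, b):
    """Return (t, degrees_of_freedom) for a paired Student's t-test on a vs b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally sized samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]      # per-pair differences
    sd = stdev(diffs)                          # sample standard deviation of differences
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical interaction counts per procedure (NOT study data):
# the same procedures measured under each condition.
eams_counts = [4, 3, 5, 2, 4, 3]
elvis_counts = [1, 2, 2, 1, 3, 1]
t, df = paired_t_statistic(eams_counts, elvis_counts)
print(f"t = {t:.2f}, df = {df}")
```

The pairing matters because each physician-procedure acts as its own control, so the test is run on the per-pair differences rather than on the two groups independently.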

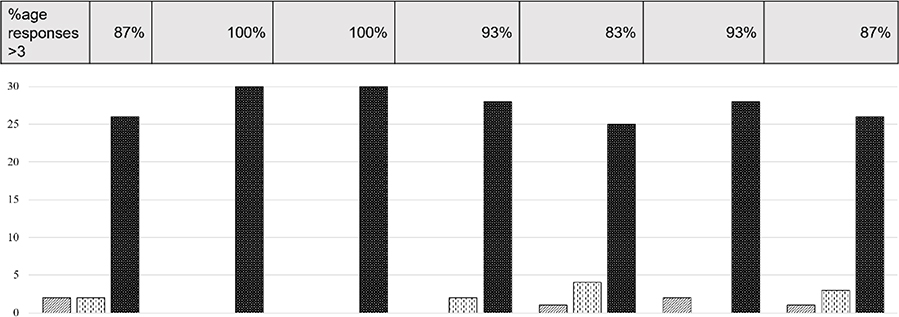

Usability.

Results from the physician exit usability survey (see Figure 10) demonstrated that, overwhelmingly, the physicians found the system comfortable (87% agree/strongly agree) and easy to use (100% agree/strongly agree) with readily accessible tools (100% agree/strongly agree). Most physicians (93%) used all the features in the interface, and 83% found that the ability to control or manipulate the data was the most important feature of the system. One can reason from this that the power of the ĒLVIS system comes from the 3D view of the patient anatomy and catheter locations in conjunction with the ability of the physician to control and manipulate the data to maximize their understanding. Importantly, 93% of physicians found that data, when presented in true 3-dimensions with the ability to control the viewing angle, were easier to interpret than the current standard of care, which is displayed on 2-dimensional monitors in orthogonal views. Lastly, 87% of physicians found that they learned something new about the anatomy when viewing the data in 3D.

Figure 10.

Results from the physician exit usability survey, based on a Likert scale (1=strongly disagree, 3=neutral, 5=strongly agree). The percentage of responders who answered >3 on each of the 7 questions is shown in the row above the bar graph.
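The "percentage of responders who answered >3" summary can be computed as in the sketch below. The function name `percent_agree` and the response values are hypothetical and serve only to illustrate the calculation; they are not the survey data.

```python
def percent_agree(responses, threshold=3):
    """Percentage of Likert responses strictly above `threshold` (agree or strongly agree)."""
    if not responses:
        raise ValueError("no responses provided")
    agree = sum(1 for r in responses if r > threshold)
    return 100.0 * agree / len(responses)


# Hypothetical responses to one survey question on the 1-5 scale (NOT study data).
responses = [5, 4, 4, 3, 5, 2, 4, 5]
print(percent_agree(responses))  # 75.0
```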

7. Future Directions

Future hardware development in the XRs will impact application development. With continued testing and increased regulatory guidance for the development of these applications, more applications will be developed for higher-risk uses, including intraprocedural, intra-surgical, and therapeutic uses. Incorporation of new interaction modalities, such as voice control with natural language processing23, will allow for more intuitive, seamless interfaces, which will also increase adoption.

Building the electrophysiology laboratory of the future will encompass not only an augmented reality system but also include clinical decision support, including intraprocedural decision support and predictive analytic support, and robotic control which will eventually require minimal assistance of a human operator. By combining machine learning with computer vision algorithms, the entire workflow of the EP lab will change for the benefit of the patient.

8. Conclusion

Overall, the number of medical applications in development utilizing the extended realities is increasing. To best harness the potential of these technologies, an understanding of each use context is necessary in defining the design options to meet those needs. Once technical solutions have been chosen, design considerations regarding interaction methods of the user interface are critical in the development of high-quality solutions. Medical applications of the extended realities will require intuitive interfaces with seamless interaction methods in order to improve current clinical workflows. Our work with creation of the ĒLVIS system demonstrates that implementation of a mixed reality system can be achieved in the most exacting of settings, assisting intraprocedural use by taking data whose interpretation is typically a complex cognitive task and translating it into an interactive, exploratory, enhanced experience. Ultimately, this system can immediately add value to the medical team, and this value will improve patient outcomes.

9. References

- 1. Cox K, Privitera Mary Beth, Alden Tor, Silva Jonathan, Silva Jennifer. in Applied Human Factors in Medical Device Design 327–337 (2019).

- 2. ClinicalTrials.gov, <https://clinicaltrials.gov/ct2/results?cond=&term=virtual+reality&cntry=&state=&city=&dist=> (2020).

- 3. Stanford Children’s Health, L. P. C. s. H. S. The Stanford Virtual Heart - Revolutionizing Education on Congenital Heart Defects, <www.stanfordchildrens/org/en/innovation/virtual-reality/stanford-virtual-reality.com> (2020).

- 4. Dougherty B & Badawy SM Using Google Glass in Nonsurgical Medical Settings: Systematic Review. JMIR Mhealth Uhealth 5, e159, doi: 10.2196/mhealth.8671 (2017).

- 5. Wei NJ, Dougherty B, Myers A & Badawy SM Using Google Glass in Surgical Settings: Systematic Review. JMIR Mhealth Uhealth 6, e54, doi: 10.2196/mhealth.9409 (2018).

- 6. Chan F, A. S., Bauser-Heaton H, Hanley F, Perry S. (Radiological Society of North America 2013. Scientific Assembly and Annual Meeting).

- 7. Silva J & Silva J (Google Patents, 2019).

- 8. Silva JNA, Southworth M, Raptis C & Silva J Emerging Applications of Virtual Reality in Cardiovascular Medicine. JACC Basic Transl Sci 3, 420–430, doi: 10.1016/j.jacbts.2017.11.009 (2018).

- 9. McJunkin JL et al. Development of a Mixed Reality Platform for Lateral Skull Base Anatomy. Otol Neurotol 39, e1137–e1142, doi: 10.1097/MAO.0000000000001995 (2018).

- 10. Corporation, N. (ed Merrill Doug) (2018).

- 11. Southworth MK, Silva JR & Silva JN A. Use of extended realities in cardiology. Trends Cardiovasc Med, doi: 10.1016/j.tcm.2019.04.005 (2019).

- 12. Andrews C, Southworth MK, Silva JNA & Silva JR Extended Reality in Medical Practice. Curr Treat Options Cardiovasc Med 21, 18, doi: 10.1007/s11936-019-0722-7 (2019).

- 13. Molina CA et al. Augmented reality-assisted pedicle screw insertion: a cadaveric proof-of-concept study. J Neurosurg Spine, 1–8, doi: 10.3171/2018.12.SPINE181142 (2019).

- 14. SA MTGM (ed Bourriquet Sylvian) (2018).

- 15. Pedrote A, Fontenla A, Garcia-Fernandez J & Spanish Catheter Ablation Registry, c. Spanish Catheter Ablation Registry. 15th Official Report of the Spanish Society of Cardiology Working Group on Electrophysiology and Arrhythmias (2015). Rev Esp Cardiol (Engl Ed) 69, 1061–1070, doi: 10.1016/j.rec.2016.06.009 (2016).

- 16. Bhakta D & Miller JM Principles of electroanatomic mapping. Indian Pacing Electrophysiol J 8, 32–50 (2008).

- 17. Commission, I. E. (2011).

- 18. Sibert LE, J. R. J. K. in SIGCHI Conference on Human Factors in Computing Systems 281–288.

- 19. Holmqvist K, Nystrom M, Andersson R, Dewhurst R, Jarodzka H, de Weijer J Eye tracking: A Comprehensive Guide to Methods and Measures. (Oxford: OUP., 2011).

- 20. Virtual Environments and Advanced Interface Design. (Oxford University Press, Inc., 1995).

- 21. Ng R, Chahine S, Lanting B & Howard J Unpacking the Literature on Stress and Resiliency: A Narrative Review Focused on Learners in the Operating Room. J Surg Educ 76, 343–353, doi: 10.1016/j.jsurg.2018.07.025 (2019).

- 22. Ahmed Z, Saada M, Jones AM & Al-Hamid AM Medical errors: Healthcare professionals’ perspective at a tertiary hospital in Kuwait. PLoS One 14, e0217023, doi: 10.1371/journal.pone.0217023 (2019).

- 23. Sardar P et al. Impact of Artificial Intelligence on Interventional Cardiology: From Decision-Making Aid to Advanced Interventional Procedure Assistance. JACC Cardiovasc Interv 12, 1293–1303, doi: 10.1016/j.jcin.2019.04.048 (2019).