Abstract

Artificial intelligence (AI) is evolving in the field of diagnostic medical imaging, including echocardiography. Although the dynamic nature of echocardiography presents challenges beyond those of static images from X-ray, computed tomography, magnetic resonance, and radioisotope imaging, AI has influenced all steps of echocardiography, from image acquisition to automatic measurement and interpretation. Considering that echocardiography is often affected by inter-observer variability and shows a strong dependence on the level of experience, AI could be extremely advantageous in minimizing observer variation and providing reproducible measures, enabling accurate diagnosis. Currently, most reported AI applications in echocardiographic measurement have focused on improved image acquisition and automation of repetitive and tedious tasks; however, the role of AI applications should not be limited to conventional processes. Rather, AI could provide clinically important insights from subtle and non-specific data, such as changes in myocardial texture in patients with myocardial disease. Recent initiatives to develop large echocardiographic databases can facilitate development of AI applications. The ultimate goal of applying AI to echocardiography is automation of the entire process of echocardiogram analysis. Once automatic analysis becomes reliable, workflows in clinical echocardiographic laboratories will change radically. The human expert will remain the master controlling the overall diagnostic process, will not be replaced by AI, and will obtain significant support from AI systems to guide acquisition, perform measurements, and integrate and compare data on request.

Keywords: Artificial intelligence, Deep learning, Echocardiography, Machine learning

INTRODUCTION

There is widespread acknowledgment that artificial intelligence (AI) will transform the healthcare system, particularly in the field of diagnostic medical imaging.1) The use of AI in diagnostic medical imaging has undergone extensive evolution, and there are currently computer vision systems that perform some clinical image interpretation tasks at the level of expert physicians.2),3) Furthermore, the potential applications of AI are not limited to image interpretation, but also include image acquisition, processing, reporting, and follow-up planning.4) Although AI has been applied mostly to static images from X-ray, computed tomography (CT), magnetic resonance (MR), and radioisotope imaging, many companies and investigators have recently sought to address challenges such as the dynamic nature of echocardiography through AI.5),6) Many areas in echocardiography have been impacted by AI, and AI-based software that aids in echocardiography measurement is commercially available.

Because of the continuously increasing number of patients with cardiovascular disease and the parallel growth in demand for echocardiographic studies, there is a supply-demand mismatch between patient volume and the number of experts available to interpret echocardiographic studies, not only in the laboratory but also at the point of care.7) Therefore, AI, which can offer sustainable solutions to reduce workloads (e.g., aid in the repetitive and tedious tasks involved in echocardiography measurement and interpretation), should be viewed as a growth opportunity rather than as a disruption.8) However, many issues must be addressed before AI applications can be implemented in daily clinical practice. In this review, we provide an overview of the current state of AI in echocardiography and the challenges that are being confronted. We also analyze various aspects of incorporating AI in the clinical workflow and provide insight into the future of echocardiography.

TERMINOLOGY

In broad terms, AI comprises the engineering of computerized systems able to perform tasks that typically require human intelligence. Machine learning (ML) provides systems the ability to learn and improve performance from experience without being explicitly programmed. Deep learning (DL) is a subfield of ML concerned with algorithms inspired by the structure and function of the human brain, known as artificial neural networks. Conventional ML usually requires derivation of knowledge-based hand-crafted features extracted from input images.9) However, DL networks can learn high-level features directly from big data, rather than relying on “feature engineering” processes. When a DL network is trained, all trainable weights are updated using backpropagation algorithms to minimize the objective function, which quantifies the difference between the ground truth and the prediction. In the inference phase, the trained network predicts results from the input image itself, without the need for hand-crafted imaging features.10) Especially in the field of imaging, convolutional neural networks (CNNs) are able to extract detailed low-level information from original images and combine it to form higher-order structural information, enabling identification of complex entities in unprocessed images. This technique has dramatically improved the accuracy of ML models, especially in the fields of visual object recognition and object detection.6) While CNNs successfully encode the spatial features of a single frame, recurrent neural networks (RNNs) are designed to interpret temporal or sequential information from videos, which are essentially a sequence of individual images.11),12) RNNs enable encoding of temporal dependencies between sequential images. 
Although RNNs have not been fully exploited, combined CNNs and RNNs have been designed to consider spatio-temporal features to achieve an accurate evaluation of echocardiograms by simultaneously extracting spatial features and retaining memory across image sequences.13)
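The training and inference phases described above can be illustrated with a deliberately small sketch. The example below fits a two-parameter linear model by gradient descent on a mean-squared-error objective. The toy data, learning rate, and function names are assumptions made for illustration only; real DL networks have millions of weights and obtain the same kind of gradients through backpropagation across many layers.

```python
def mse(w, b, xs, ys):
    """Objective: mean squared difference between prediction and ground truth."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs, ys, lr=0.05, steps=2000):
    """Training phase: repeatedly move the weights against the gradient."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of the MSE objective with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Inference phase: apply the trained weights to a new input."""
    return w * x + b


# Toy "ground truth" generated from y = 2x + 1 (hypothetical data).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = train(xs, ys)
```

After training, `predict(w, b, 4.0)` should be close to 9, since the fitted weights approach the values used to generate the toy data.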

AI learning can be classified into three categories: supervised learning, unsupervised learning, and reinforcement learning.6) The main difference between supervised and unsupervised learning is that unsupervised learning uses unlabeled data, whereas supervised learning uses labeled data. Supervised learning analyzes labeled training data and produces an inferred function, which can be used for classification or prediction of new (untaught) input. Supervised learning techniques have shown great promise in medical image analysis, and the availability of large amounts of training data with manual labels has been crucial in many of these successes.14) For example, after training an algorithm with thousands of apical echocardiographic images with known left ventricular ejection fraction (LVEF), the algorithm was able to analyze images and produce an accurate LVEF identification.15) On the other hand, unsupervised learning aims to infer, from unlabeled data, the unknown natural structure or pattern present within a set of data points. However, supervised and unsupervised learning are not mutually exclusive. ML can integrate supervised and unsupervised learning as appropriate for semi-supervised learning.11) The final AI learning technique, reinforcement learning, is a method of learning from actions taken in surrounding environments to maximize cumulative rewards through trial and error.11)
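To make the distinction between labeled and unlabeled training concrete, the sketch below applies both approaches to the same toy feature. The values, the label names, and the choice of a nearest-centroid classifier and a two-cluster k-means are illustrative assumptions, not methods taken from the cited studies.

```python
def fit_centroids(values, labels):
    """Supervised: use the labels to compute one mean per class."""
    groups = {}
    for v, lab in zip(values, labels):
        groups.setdefault(lab, []).append(v)
    return {lab: sum(vs) / len(vs) for lab, vs in groups.items()}


def classify(centroids, v):
    """Predict the class whose centroid is closest to the new value."""
    return min(centroids, key=lambda lab: abs(centroids[lab] - v))


def two_means(values, iters=20):
    """Unsupervised: find two cluster centers without any labels.

    Assumes the values contain at least two distinct numbers.
    """
    c0, c1 = min(values), max(values)
    for _ in range(iters):
        g0 = [v for v in values if abs(v - c0) <= abs(v - c1)]
        g1 = [v for v in values if abs(v - c0) > abs(v - c1)]
        c0, c1 = sum(g0) / len(g0), sum(g1) / len(g1)
    return c0, c1


# Hypothetical scalar feature (for example, an LVEF-like percentage).
values = [30.0, 35.0, 32.0, 60.0, 62.0, 65.0]
labels = ["reduced", "reduced", "reduced", "preserved", "preserved", "preserved"]

centroids = fit_centroids(values, labels)   # needs labels
clusters = two_means(values)                # ignores labels
```

In this toy case both approaches recover the same two groups, but only the supervised model can name them; the unsupervised result is a pair of unlabeled cluster centers that still requires interpretation.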

ECHOCARDIOGRAPHIC IMAGE ACQUISITION

The first step in application of AI to echocardiography is image acquisition. Compared to static images that are based on radiation, image acquisition in echocardiography is much more experience-dependent. AI can help minimize inter- and intra-observer variation and aid in acquisition of standard images. For example, Narang et al.16) recently introduced a novel DL-derived technology that combines real-time image quality assessment with adaptive anatomic guidance to allow users with limited echocardiography training to acquire standard echocardiography images. The U.S. Food and Drug Administration (FDA) approved this AI-based echocardiographic device in February 2020, as the first such device to receive FDA approval.17) This new paradigm shift in AI applications can facilitate access to echocardiographic data in resource-limited settings or clinical situations requiring immediate interrogation of cardiac structure and function (e.g., emergency departments, primary care clinics, remote environments, and the developing world). In these situations, given that nonexpert users can perform limited echocardiographic examinations using handheld or portable machines with nonuniform quality and risk of nondiagnostic and misleading imaging, the potential use of AI guidance to improve the yield of point-of-care ultrasound has tremendous appeal. The current condition for use of this AI-based echocardiographic device is that a cardiologist must review and approve the acquired images for patient evaluation, rather than leaving everything to the machine.6) The extent to which AI-based devices can be trusted is an important issue that should be discussed over time.

VIEW IDENTIFICATION

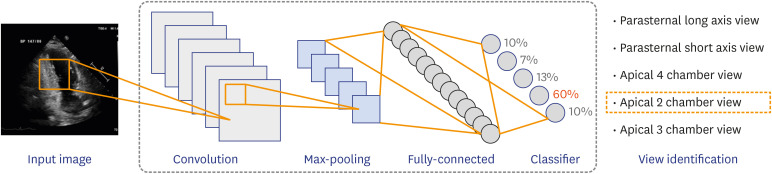

A typical 2-dimensional (2D) transthoracic echocardiogram collects close to 70 videos from different viewpoints to visualize the complex 3-dimensional (3D) cardiac structure from 2D cross-sectional images. As such video clips do not necessarily have labeled views, view identification is an essential step in AI applications before training the deep neural network and proceeding with automatic measurement and image interpretation (Figure 1). Correct view identification must come first to ensure an accurate evaluation of the echocardiogram. Furthermore, considering that a massive amount of labeled data is a prerequisite for DL algorithm training, a view identification algorithm that is able to handle this time-consuming and laborious preprocessing step is expected to facilitate the development of AI applications in echocardiography.

Figure 1. Illustration of a deep learning-based model for view identification. The model comprises convolutional layers that learn features for visual cues with a fully connected layer for view identification.
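The structure in Figure 1 (convolutional feature extraction followed by a fully connected layer that outputs a view) can be sketched as a tiny forward pass. Everything specific here is an assumption for illustration: the hand-set kernels and weights, the 4×4 toy image, and the two view names. A real model learns its kernels and weights from labeled clips and uses many more layers.

```python
import math


def conv2d(image, kernel):
    """Valid 2D cross-correlation of a grayscale image with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)] for i in range(out_h)]


def relu_mean(fmap):
    """ReLU followed by global average pooling: one number per feature map."""
    vals = [max(0.0, v) for row in fmap for v in row]
    return sum(vals) / len(vals)


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_view(image, kernels, fc_weights, view_names):
    """Convolutional features -> fully connected layer -> most probable view."""
    features = [relu_mean(conv2d(image, k)) for k in kernels]
    scores = [sum(w * f for w, f in zip(row, features)) for row in fc_weights]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return view_names[best], probs


# Hypothetical inputs: a toy image with a vertical edge, two hand-set
# edge-detecting kernels, and an identity fully connected layer.
image = [[0, 0, 1, 1]] * 4
kernels = [[[-1, 1], [-1, 1]],    # responds to vertical edges
           [[-1, -1], [1, 1]]]    # responds to horizontal edges
fc_weights = [[1.0, 0.0], [0.0, 1.0]]
views = ["parasternal long-axis", "apical 4-chamber"]

view, probs = classify_view(image, kernels, fc_weights, views)
```

The design point is that the final layer only sees pooled feature responses, so classification quality depends entirely on how well the learned kernels capture view-specific visual cues.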

Previous studies have proposed view classification models with accuracies around 91%–94% for 15-view classification.18),19) Although these models seem to work well, it is important to recognize that errors in the view classification step can cascade into errors in measurement or interpretation. Recently, Kusunose et al.20) reported on a CNN-based view classification algorithm with an overall accuracy of 98.1%. The authors then tested the prediction model for LVEF using learning data with a 1.9% error rate. Since LVEF prediction accuracy was verified, even with training data containing 1.9% mislabeled images, they suggested that their view classification model is feasible clinically. Although this previous study evaluated only the impact of view classification errors on LVEF prediction, it highlighted that view classification is not an independent step but a foundational step for automatic analysis of echocardiographic images.

View classification algorithms can change the way echocardiograms are read. Standard echocardiographic acquisitions often start with parasternal views, followed by apical views, and finally subcostal views. Therefore, acquisitions from multiple nonconsecutively acquired views must be integrated to arrive at a conclusion or diagnosis. For example, to evaluate aortic stenosis severity, the left ventricular (LV) outflow tract diameter from the parasternal long-axis view must be integrated with Doppler tracings of the LV outflow tract and aortic valve obtained from apical 3- or 5-chamber views and, occasionally, suprasternal views. Simultaneously, cardiologists must pay attention to LVEF, typically obtained from apical 2- and 4-chamber views. Advancement in AI-automated classification algorithms would allow echocardiograms to be read according to the particular valve or chamber being evaluated instead of by the sequence in which the images were acquired. If one submits a query for aortic valve area assessment, all relevant images would be displayed together, despite not being acquired consecutively. The same principle would apply to the parameters of LVEF, diastolic function, and valvular regurgitation.

SEGMENTATION AND MEASUREMENT

Once appropriate views have been determined, the next step is to measure the cardiac chamber size and function. To date, automatic cardiac segmentation in 2D and 3D transthoracic echocardiograms has focused on the LV due to the emerging needs for assessing left heart function, represented by the LVEF.21),22),23) Echocardiographic quantification of the LVEF requires accurate delineation of the LV endocardium in both end-diastole and end-systole. In daily clinical practice, semi-automatic or manual identification of endocardial borders is routine due to the current lack of accuracy and reproducibility for fully automatic cardiac segmentation methods.23) Therefore, the field suffers from time-consuming tasks that are prone to intra- and inter-observer variability,24) indicating the need for a fast, accurate, and automated analysis of echocardiograms.
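The dependence of LVEF on both end-diastolic and end-systolic delineation follows directly from its standard definition, where EDV and ESV denote the LV end-diastolic and end-systolic volumes obtained from the traced endocardial borders:

$$
\mathrm{LVEF}\,(\%) = \frac{\mathrm{EDV} - \mathrm{ESV}}{\mathrm{EDV}} \times 100
$$

As an illustration with hypothetical values, EDV = 120 mL and ESV = 50 mL give LVEF = (120 − 50) / 120 × 100 ≈ 58.3%. Because both volumes enter the ratio, a border-tracing error in either phase propagates into the result.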

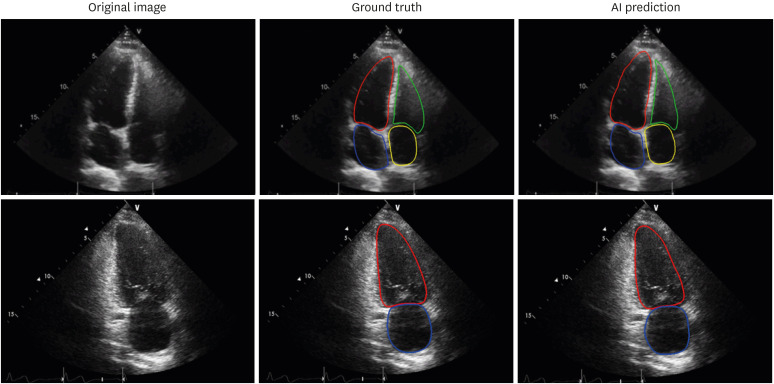

Despite remarkable advances in the quality of echocardiograms, issues of inhomogeneity in intensity, edge dropout, and low signal-to-noise ratio historically have impeded the clinical use of automatic segmentation platforms for an accurate and clinically acceptable calculation of chamber volumes. Nevertheless, multiple vendors, and even investigators, are addressing these issues and have demonstrated the ability to perform automated echocardiographic recognition and measure common 2D structural parameters (Figure 2).19),21),22),23) Automatic measurements are expected to become more time-efficient, representative, and reproducible.19),25) Furthermore, automatic measurements by algorithms need not be limited to a specific phase (i.e., end-diastole or end-systole); rather, they can allow a frame-by-frame analysis of multiple cardiac cycles to provide a more precise evaluation.26) However, to date, such algorithms have been trained on private datasets, and their generalizability to other independent datasets remains undertested. In addition to the difficulty of obtaining a large number of echocardiographic images that have been carefully annotated by cardiologists, measurement variation associated with intrinsic beat-to-beat variability in cardiac function, as well as variability from approximating a 3D object using 2D cross-sectional images, has hampered the advent of clinically applicable algorithms. No automated method can be better than the images inputted. Considering that a 2D echocardiographic measure of chamber volume is limited by geometric assumptions and foreshortening, automated 2D echocardiographic volume measurement has innate limitations that cannot be overcome by the algorithms.

Figure 2. Examples of endocardial border detection. Artificial intelligence algorithms show robust segmentation results (right columns) compared to the ground truth as determined by manual annotation (left columns). Left ventricle (red), left atrium (blue), right ventricle (green), right atrium (yellow).
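Agreement between an automatic segmentation and a manual annotation, as shown in Figure 2, is often summarized with an overlap score. The sketch below uses the Dice coefficient as one common choice; this is an assumption, since the text does not specify which metric the cited studies used, and the 3×3 masks are hypothetical.

```python
def dice(pred, truth):
    """Dice overlap between two binary masks given as nested lists of 0/1."""
    p = [v for row in pred for v in row]
    t = [v for row in truth for v in row]
    inter = sum(1 for a, b in zip(p, t) if a and b)
    total = sum(p) + sum(t)
    # Two empty masks agree perfectly by convention.
    return 1.0 if total == 0 else 2.0 * inter / total


# Hypothetical masks: 1 marks pixels inside the chamber cavity.
truth = [[0, 1, 1],
         [0, 1, 1],
         [0, 0, 0]]
pred = [[0, 1, 1],
        [0, 1, 0],
        [0, 0, 0]]

score = dice(pred, truth)  # 2 * 3 / (3 + 4)
```

A score of 1 means identical masks and 0 means no overlap; here the prediction misses one annotated pixel.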

Due to the avoidance of both geometric assumptions and the risk of underestimating chamber volumes associated with foreshortened views, 3D transthoracic echocardiographic measurements of cardiac chamber volumes are well-recognized to be superior in accuracy and reproducibility compared to 2D techniques.27) Therefore, recent guidelines recommend 3D echocardiographic quantification of the left heart chambers when possible.28) However, this methodology has not been widely embraced nor fully integrated into routine clinical work in most centers due to its extensive user input dependence, need for 3D expertise, and time-consuming workflow; most centers continue to rely on traditional 2D echocardiographic assessments of cardiac chamber volume and function. Therefore, the ultimate target of automated volume measurement algorithms should be 3D echocardiography rather than 2D echocardiography. The availability of a reasonably accurate and reproducible automated cardiac chamber quantification technique, requiring minimal or no manual correction of the endocardial borders, would potentially allow integration of 3D echocardiographic volumetric measurements into routine clinical practice.

Although 3D echocardiography has suffered from inferior image quality compared to that of contemporary 2D echocardiographic images,27) utilizing volumetric information can inform the boundaries by reference to adjacent slices. Furthermore, it is more straightforward to develop automated volumetric measurement algorithms for 3D echocardiography than for 2D echocardiography due to its volumetric nature, which is free from the approximation process for volume estimation in 2D echocardiography using Simpson's method. Automated software (HeartModelA.I.; Philips Healthcare, Amsterdam, Netherlands) based on an adaptive analytic algorithm that provides LV and left atrial volumetric quantification is commercially available.29) Validation of this automated 3D transthoracic echocardiography software has shown its good accuracy and reproducibility, with a promising time-saving potential in patients with good images.30),31),32),33) Although border correction is required in patients with low image quality, to improve the accuracy of the automated measure,34) multiple refinements have been made in the continued development of the algorithm, resulting in improved endocardial boundary detection that requires minimal to no manual corrections, as well as an improved ability to analyze a larger percentage of the patient population.30) Furthermore, this methodology has been recently expanded to automatic measurement of chamber volumes throughout the cardiac cycle35) and to automated LV mass determination.36)
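The approximation that 2D echocardiography relies on, Simpson's biplane method of disks, can be sketched as follows. The function name and the test geometry are illustrative assumptions; the formula is the standard summation of elliptical disks whose two diameters come from orthogonal apical views.

```python
import math


def biplane_simpson_volume(diam_4ch, diam_2ch, length):
    """Estimate chamber volume by stacking elliptical disks.

    diam_4ch, diam_2ch: disk diameters (cm) measured at matching levels in
    the apical 4- and 2-chamber views; length: long-axis length (cm).
    Returns volume in mL (1 cm^3 = 1 mL).
    """
    n = len(diam_4ch)
    if n == 0 or n != len(diam_2ch):
        raise ValueError("both views need the same non-zero number of disks")
    disk_height = length / n
    # Each disk is an ellipse of area (pi / 4) * a * b times its height.
    return math.pi / 4.0 * disk_height * sum(
        a * b for a, b in zip(diam_4ch, diam_2ch))


# Sanity check on a hypothetical cylinder: diameter 4 cm, length 8 cm,
# split into 20 disks. The exact volume is pi * 2^2 * 8 = 32 * pi mL.
volume = biplane_simpson_volume([4.0] * 20, [4.0] * 20, 8.0)
```

The sketch makes the geometric assumption explicit: every cross-section is treated as an ellipse and the long axis is taken as measured, which is why foreshortened views lead to underestimated volumes. A 3D dataset avoids this step because the volume is measured directly.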

INTERPRETATION

Echocardiography is not only skill-dependent in the acquisition of images; its interpretation also remains highly subjective. AI holds promise in echocardiographic interpretation as it has the potential to extract information not readily apparent to observers. For instance, assessing regional wall motion abnormalities (RWMAs), based on a visual interpretation of endocardial excursion and myocardial thickening, is subjective and experience-dependent. The development of an objective classification model is expected to improve the detection of RWMAs in clinical practice. In a recent study, automated diagnosis of RWMA by a DL-based algorithm showed diagnostic accuracy comparable to the expert consensus assessment.37) Echocardiographic RWMA assessment with AI might not be necessary for experts. However, the availability of an objective and quantitative assessment of RWMAs, free of intra-observer variability, has tremendous appeal considering its potential to improve workflow efficiency in daily clinical practice. If these models can be improved using a larger cohort, the clinical applications would not be limited to rest echocardiography, but also could be expanded to stress echocardiography.

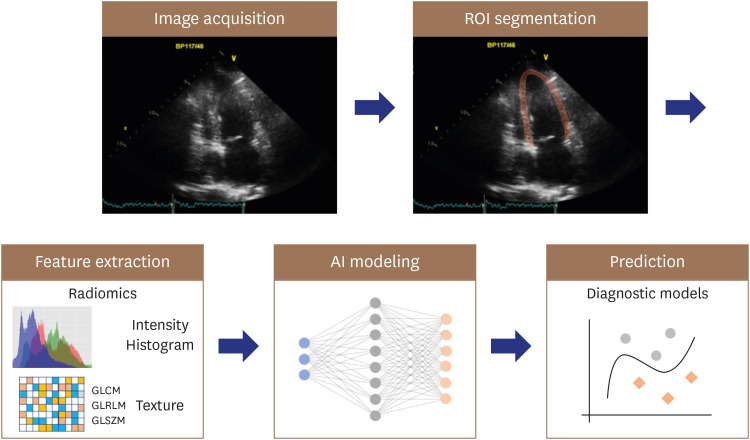

Myocardial textural characterization is another target for AI applications. Although the echocardiographic myocardial texture is dependent upon the ultrasound instrument and transducer, it also is influenced by alterations in tissue pathology.38) Therefore, abnormal myocardial texture on echocardiography often provides important clues regarding myocardial disease.39) However, such myocardial tissue characterizations rely heavily on experience and remain subjective. The application of radiomics and AI-based algorithms holds promise in echocardiographic interpretation of myocardial textural changes, which are often subtle, non-specific, and challenging to quantify.40),41) Radiomics is a process that converts digital medical images into mineable high-dimensional data, motivated by the concept that biomedical images contain information reflecting the underlying pathophysiology, and that relationships can be revealed via quantitative image analyses (Figure 3).42) Although radiomics was initiated in oncology using CT, MR, and positron emission tomography images,43),44),45) it is potentially applicable to echocardiography for evaluation of cardiovascular disease.40),41)

Figure 3. Application of radiomics and AI-based algorithms for myocardial textural change. A ROI is extracted from the image for radiomics feature analysis, including intensity and texture features. AI models integrate radiomics features in development of the diagnostic model.

AI: artificial intelligence, ROI: region of interest, GLCM: gray-level co-occurrence matrix, GLRLM: gray-level run length matrix, GLSZM: gray-level size zone matrix.
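As a minimal sketch of one texture feature family named in Figure 3, the code below builds a gray-level co-occurrence matrix (GLCM) for a small region of interest and derives the contrast feature from it. The 3×3 ROI, the three gray levels, and the single horizontal offset are assumptions chosen to keep the example readable; radiomics toolkits typically quantize real images into more levels and aggregate several offsets.

```python
def glcm(image, levels, dx=1, dy=0):
    """Count co-occurrences of gray levels (i, j) at pixel offset (dx, dy)."""
    matrix = [[0] * levels for _ in range(levels)]
    h, w = len(image), len(image[0])
    for y in range(h):
        for x in range(w):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < h and 0 <= x2 < w:
                matrix[image[y][x]][image[y2][x2]] += 1
    return matrix


def contrast(matrix):
    """GLCM contrast: mean squared gray-level difference between pixel pairs."""
    total = sum(sum(row) for row in matrix)
    return sum((i - j) ** 2 * c
               for i, row in enumerate(matrix)
               for j, c in enumerate(row)) / total


# Hypothetical ROI already quantized to gray levels 0, 1, 2.
roi = [[0, 0, 1],
       [0, 1, 1],
       [2, 2, 2]]

matrix = glcm(roi, levels=3)       # horizontal neighbor pairs only
texture_contrast = contrast(matrix)
```

A homogeneous region yields a contrast near 0, whereas a speckled or heterogeneous region yields a higher value; features of this kind are what the AI model in Figure 3 takes as input.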

Although most of the studies have employed supervised ML models to mimic interpretation by human expertise, unsupervised clustering algorithms also provide important insights into disease by segregating patient groups with similar traits and assigning them into clusters. This enables the exploration of possible heterogeneity within a disease category that has historically been considered homogeneous.11) For example, in patients with aortic stenosis, an unsupervised clustering algorithm applied to echocardiographic features with and without clinical features might allow the phenotyping of aortic stenosis and prediction of differential outcomes.46),47) Uncovering heterogeneity that would otherwise be undetected using traditional classification methods can improve risk stratification and aid in cost-effective healthcare utilization toward better diagnosis and treatment.48)

THE CHALLENGES

The most significant barrier to progress in AI applications for echocardiography is the lack of large, publicly available, and well-annotated echocardiographic datasets for training.6),23),49) Although small datasets can be sufficient for training AI algorithms in research settings, large datasets are essential for training, validating, and testing commercial AI applications.50) While healthcare organizations store vast amounts of echocardiographic data that could be exploited to train AI algorithms, the data usually are stored at an on-premise server, which is safe but limits the potential of sharing data with other institutions. Cloud-based storage, which is becoming more secure, is expected to improve data sharing as well as data backup.50) Even when a large echocardiographic dataset is available for training, it might be unusable because most datasets are not curated, organized, anonymized, or appropriately annotated and are rarely linked to a ground truth diagnosis. Furthermore, definition of the ground truth depends on the desired task or purpose of a given AI algorithm. If the echocardiographic finding is the reference standard, annotation performed by experts can be considered as ground truth. On the other hand, the ground truth might require confirmatory clinical labeling beyond echocardiographic reports, such as a pathologic report, clinical outcome, or both.50) Therefore, the task of data preparation for AI algorithm training is inevitably time- and labor-intensive. 
Additionally, to translate AI into broader clinical practice, these databases must include sufficient “real-world” heterogeneity, reflecting the spectrum of practice and imaging protocols in the field.49) Since the data quality and scope determine the applicability and accuracy of AI algorithms regardless of their approach, we hope that recent initiatives to develop large echocardiographic databases will facilitate the development of AI applications for echocardiography.6),23) Finally, for AI solutions to be properly utilized in daily clinical practice and improve the quality of care, not only is development of AI applications needed, but the relevant information technology, legal, and institutional environment also should be prepared.

FUTURE EXPECTATIONS

The ultimate goal of AI applications in echocardiography is automation of the entire process of echocardiographic image analysis. In a recent paper, Zhang et al.19) proposed a solution that automatically recognizes echocardiographic views, segments and labels the cardiac chambers (including the cavity and myocardium of the LV), calculates classical echocardiographic functional parameters and global longitudinal strain, and classifies certain cardiac pathologies. Although further studies are required to understand the true performance of the proposed automatic analysis pipeline, this study provides a preview of the future echocardiographic laboratory. Once the automatic analysis of images has become reliable, workflow in clinical echocardiographic laboratories will change radically.25) Whereas the expert currently spends much of their time extracting parameters from images and mentally integrating them to reach a diagnosis (while applying consensus recommendations), the future echocardiographic laboratory automatically will extract all relevant numbers and feed them directly into diagnostic algorithms to support decision-making. The human operator will be the master controlling the overall diagnostic process, while computers perform measurements and integrate and compare data on request.

Furthermore, in this system, diagnostic criteria and decision trees will be continuously refined and improved by integrating ever-expanding databases so that a given patient’s echocardiographic profile can be matched against millions of validated cases to provide not only a diagnosis, but also an estimate of the risk and long-term outcomes related to different therapeutic options. Although unsupervised and reinforcement learning are not actively used in the field of medical imaging,6),11) they hold promise for development of automated risk prediction algorithms that can be used to guide clinical care. Unsupervised learning techniques could be used for more precise phenotyping of complex diseases, and reinforcement learning algorithms can intelligently augment healthcare providers.51)

CONCLUSION

With the continual evolution of technology, healthcare providers should become well versed and able to harness the new knowledge provided by AI. Despite some opinions voiced in the public space, AI is unlikely to replace human expertise. Rather, the echocardiographic specialist will obtain significant support from computing technology, which will greatly reduce the time spent on extracting and integrating data, and in turn, render the healthcare system more sustainable.

Footnotes

Funding: This work was supported by the Korea Medical Device Development Fund grant funded by the Korean government (Ministry of Science and ICT; Ministry of Trade, Industry, and Energy; Ministry of Health & Welfare; Ministry of Food and Drug Safety) (project No. 202016B02).

Conflict of Interest: The authors have no financial conflicts of interest.

- Conceptualization: Yoon YE, Chang HJ.

- Funding acquisition: Yoon YE.

- Investigation: Kim S.

- Methodology: Kim S.

- Resources: Chang HJ.

- Software: Kim S.

- Supervision: Chang HJ.

- Writing - original draft: Yoon YE, Kim S.

- Writing - review & editing: Yoon YE, Kim S, Chang HJ.

References

- 1.Lakhani P, Prater AB, Hutson RK, et al. Machine learning in radiology: applications beyond image interpretation. J Am Coll Radiol. 2018;15:350–359. doi: 10.1016/j.jacr.2017.09.044. [DOI] [PubMed] [Google Scholar]

- 2.Yassin NIR, Omran S, El Houby EMF, Allam H. Machine learning techniques for breast cancer computer aided diagnosis using different image modalities: a systematic review. Comput Methods Programs Biomed. 2018;156:25–45. doi: 10.1016/j.cmpb.2017.12.012. [DOI] [PubMed] [Google Scholar]

- 3.Qin C, Yao D, Shi Y, Song Z. Computer-aided detection in chest radiography based on artificial intelligence: a survey. Biomed Eng Online. 2018;17:113. doi: 10.1186/s12938-018-0544-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Pesapane F, Codari M, Sardanelli F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp. 2018;2:35. doi: 10.1186/s41747-018-0061-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Davis A, Billick K, Horton K, et al. Artificial intelligence and echocardiography: a primer for cardiac sonographers. J Am Soc Echocardiogr. 2020;33:1061–1066. doi: 10.1016/j.echo.2020.04.025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Kusunose K. Steps to use artificial intelligence in echocardiography. J Echocardiogr. 2021;19:21–27. doi: 10.1007/s12574-020-00496-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Narang A, Sinha SS, Rajagopalan B, et al. The supply and demand of the cardiovascular workforce: striking the right balance. J Am Coll Cardiol. 2016;68:1680–1689. doi: 10.1016/j.jacc.2016.06.070. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Sengupta PP, Adjeroh DA. Will artificial intelligence replace the human echocardiographer? Circulation. 2018;138:1639–1642. doi: 10.1161/CIRCULATIONAHA.118.037095. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Jordan MI, Mitchell TM. Machine learning: trends, perspectives, and prospects. Science. 2015;349:255–260. doi: 10.1126/science.aaa8415. [DOI] [PubMed] [Google Scholar]

- 10.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539. [DOI] [PubMed] [Google Scholar]

- 11.Sevakula RK, Au-Yeung WM, Singh JP, Heist EK, Isselbacher EM, Armoundas AA. State-of-the-art machine learning techniques aiming to improve patient outcomes pertaining to the cardiovascular system. J Am Heart Assoc. 2020;9:e013924. doi: 10.1161/JAHA.119.013924. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Taheri Dezaki F, Liao Z, Luong C, et al. Cardiac phase detection in echocardiograms with densely gated recurrent neural networks and global extrema loss. IEEE Trans Med Imaging. 2019;38:1821–1832. doi: 10.1109/TMI.2018.2888807. [DOI] [PubMed] [Google Scholar]

- 13.Goto S, Delling FN, Deo RC. A hybrid convolutional and recurrent neural network architecture accurately detects patients with mitral valve prolapse from echocardiography. Circulation. 2019;140:A13968 [Google Scholar]

- 14.de Bruijne M. Machine learning approaches in medical image analysis: From detection to diagnosis. Med Image Anal. 2016;33:94–97. doi: 10.1016/j.media.2016.06.032. [DOI] [PubMed] [Google Scholar]

- 15.Asch FM, Poilvert N, Abraham T, et al. Automated echocardiographic quantification of left ventricular ejection fraction without volume measurements using a machine learning algorithm mimicking a human expert. Circ Cardiovasc Imaging. 2019;12:e009303. doi: 10.1161/CIRCIMAGING.119.009303. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Narang A, Bae R, Hong H, et al. Utility of a deep-learning algorithm to guide novices to acquire echocardiograms for limited diagnostic use. JAMA Cardiol. 2021 doi: 10.1001/jamacardio.2021.0185. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Voelker R. Cardiac ultrasound uses artificial intelligence to produce images. JAMA. 2020;323:1034. doi: 10.1001/jama.2020.2547. [DOI] [PubMed] [Google Scholar]

- 18.Madani A, Arnaout R, Mofrad M, Arnaout R. Fast and accurate view classification of echocardiograms using deep learning. NPJ Digit Med. 2018;1:6. doi: 10.1038/s41746-017-0013-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Zhang J, Gajjala S, Agrawal P, et al. Fully automated echocardiogram interpretation in clinical practice. Circulation. 2018;138:1623–1635. doi: 10.1161/CIRCULATIONAHA.118.034338. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Kusunose K, Haga A, Inoue M, Fukuda D, Yamada H, Sata M. Clinically feasible and accurate view classification of echocardiographic images using deep learning. Biomolecules. 2020;10:665. doi: 10.3390/biom10050665. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Jiang W, Lee KH, Liu Z, Fan Y, Kwok KW, Lee AP. Deep learning algorithms to automate left ventricular ejection fraction assessments on 2-dimensional echocardiography. J Am Coll Cardiol. 2019;73:1610. [Google Scholar]

- 22.Knackstedt C, Bekkers SCAM, Schummers G, et al. Fully automated versus standard tracking of left ventricular ejection fraction and longitudinal strain: the FAST-EFs multicenter study. J Am Coll Cardiol. 2015;66:1456–1466. doi: 10.1016/j.jacc.2015.07.052.

- 23.Leclerc S, Smistad E, Pedrosa J, et al. Deep learning for segmentation using an open large-scale dataset in 2D echocardiography. IEEE Trans Med Imaging. 2019;38:2198–2210. doi: 10.1109/TMI.2019.2900516.

- 24.Thorstensen A, Dalen H, Amundsen BH, Aase SA, Stoylen A. Reproducibility in echocardiographic assessment of the left ventricular global and regional function, the HUNT study. Eur J Echocardiogr. 2010;11:149–156. doi: 10.1093/ejechocard/jep188.

- 25.D'hooge J, Fraser AG. Learning about machine learning to create a self-driving echocardiographic laboratory. Circulation. 2018;138:1636–1638. doi: 10.1161/CIRCULATIONAHA.118.037094.

- 26.Ouyang D, He B, Ghorbani A, et al. Video-based AI for beat-to-beat assessment of cardiac function. Nature. 2020;580:252–256. doi: 10.1038/s41586-020-2145-8.

- 27.Lang RM, Addetia K, Narang A, Mor-Avi V. 3-Dimensional echocardiography: latest developments and future directions. JACC Cardiovasc Imaging. 2018;11:1854–1878. doi: 10.1016/j.jcmg.2018.06.024.

- 28.Lang RM, Badano LP, Mor-Avi V, et al. Recommendations for cardiac chamber quantification by echocardiography in adults: an update from the American Society of Echocardiography and the European Association of Cardiovascular Imaging. J Am Soc Echocardiogr. 2015;28:1–39.e14. doi: 10.1016/j.echo.2014.10.003.

- 29.Tsang W, Salgo IS, Medvedofsky D, et al. Transthoracic 3D echocardiographic left heart chamber quantification using an automated adaptive analytics algorithm. JACC Cardiovasc Imaging. 2016;9:769–782. doi: 10.1016/j.jcmg.2015.12.020.

- 30.Medvedofsky D, Mor-Avi V, Amzulescu M, et al. Three-dimensional echocardiographic quantification of the left-heart chambers using an automated adaptive analytics algorithm: multicentre validation study. Eur Heart J Cardiovasc Imaging. 2018;19:47–58. doi: 10.1093/ehjci/jew328.

- 31.Tamborini G, Piazzese C, Lang RM, et al. Feasibility and accuracy of automated software for transthoracic three-dimensional left ventricular volume and function analysis: comparisons with two-dimensional echocardiography, three-dimensional transthoracic manual method, and cardiac magnetic resonance imaging. J Am Soc Echocardiogr. 2017;30:1049–1058. doi: 10.1016/j.echo.2017.06.026.

- 32.Levy F, Dan Schouver E, Iacuzio L, et al. Performance of new automated transthoracic three-dimensional echocardiographic software for left ventricular volumes and function assessment in routine clinical practice: comparison with 3 Tesla cardiac magnetic resonance. Arch Cardiovasc Dis. 2017;110:580–589. doi: 10.1016/j.acvd.2016.12.015.

- 33.Sun L, Feng H, Ni L, Wang H, Gao D. Realization of fully automated quantification of left ventricular volumes and systolic function using transthoracic 3D echocardiography. Cardiovasc Ultrasound. 2018;16:2. doi: 10.1186/s12947-017-0121-8.

- 34.Medvedofsky D, Mor-Avi V, Byku I, et al. Three-dimensional echocardiographic automated quantification of left heart chamber volumes using an adaptive analytics algorithm: feasibility and impact of image quality in nonselected patients. J Am Soc Echocardiogr. 2017;30:879–885. doi: 10.1016/j.echo.2017.05.018.

- 35.Narang A, Mor-Avi V, Prado A, et al. Machine learning based automated dynamic quantification of left heart chamber volumes. Eur Heart J Cardiovasc Imaging. 2019;20:541–549. doi: 10.1093/ehjci/jey137.

- 36.Volpato V, Mor-Avi V, Narang A, et al. Automated, machine learning-based, 3D echocardiographic quantification of left ventricular mass. Echocardiography. 2019;36:312–319. doi: 10.1111/echo.14234.

- 37.Kusunose K, Abe T, Haga A, et al. A deep learning approach for assessment of regional wall motion abnormality from echocardiographic images. JACC Cardiovasc Imaging. 2020;13:374–381. doi: 10.1016/j.jcmg.2019.02.024.

- 38.Skorton DJ, Collins SM, Woskoff SD, Bean JA, Melton HE Jr. Range- and azimuth-dependent variability of image texture in two-dimensional echocardiograms. Circulation. 1983;68:834–840. doi: 10.1161/01.cir.68.4.834.

- 39.Koyama J, Minamisawa M, Sekijima Y, Kuwahara K, Katsuyama T, Maruyama K. Role of echocardiography in assessing cardiac amyloidoses: a systematic review. J Echocardiogr. 2019;17:64–75. doi: 10.1007/s12574-019-00420-5.

- 40.Yu F, Huang H, Yu Q, Ma Y, Zhang Q, Zhang B. Artificial intelligence-based myocardial texture analysis in etiological differentiation of left ventricular hypertrophy. Ann Transl Med. 2021;9:108. doi: 10.21037/atm-20-4891.

- 41.Kagiyama N, Shrestha S, Cho JS, et al. A low-cost texture-based pipeline for predicting myocardial tissue remodeling and fibrosis using cardiac ultrasound. EBioMedicine. 2020;54:102726. doi: 10.1016/j.ebiom.2020.102726.

- 42.Gillies RJ, Kinahan PE, Hricak H. Radiomics: images are more than pictures, they are data. Radiology. 2016;278:563–577. doi: 10.1148/radiol.2015151169.

- 43.Hassani C, Saremi F, Varghese BA, Duddalwar V. Myocardial radiomics in cardiac MRI. AJR Am J Roentgenol. 2020;214:536–545. doi: 10.2214/AJR.19.21986.

- 44.Schofield R, Ganeshan B, Fontana M, et al. Texture analysis of cardiovascular magnetic resonance cine images differentiates aetiologies of left ventricular hypertrophy. Clin Radiol. 2019;74:140–149. doi: 10.1016/j.crad.2018.09.016.

- 45.Mannil M, von Spiczak J, Manka R, Alkadhi H. Texture analysis and machine learning for detecting myocardial infarction in noncontrast low-dose computed tomography: unveiling the invisible. Invest Radiol. 2018;53:338–343. doi: 10.1097/RLI.0000000000000448.

- 46.Kwak S, Lee Y, Ko T, et al. Unsupervised cluster analysis of patients with aortic stenosis reveals distinct population with different phenotypes and outcomes. Circ Cardiovasc Imaging. 2020;13:e009707. doi: 10.1161/CIRCIMAGING.119.009707.

- 47.Casaclang-Verzosa G, Shrestha S, Khalil MJ, et al. Network tomography for understanding phenotypic presentations in aortic stenosis. JACC Cardiovasc Imaging. 2019;12:236–248. doi: 10.1016/j.jcmg.2018.11.025.

- 48.Omar AMS, Ramirez R, Haddadin F, et al. Unsupervised clustering for phenotypic stratification of clinical, demographic, and stress attributes of cardiac risk in patients with nonischemic exercise stress echocardiography. Echocardiography. 2020;37:505–519. doi: 10.1111/echo.14638.

- 49.Dey D, Slomka PJ, Leeson P, et al. Artificial intelligence in cardiovascular imaging: JACC state-of-the-art review. J Am Coll Cardiol. 2019;73:1317–1335. doi: 10.1016/j.jacc.2018.12.054.

- 50.Willemink MJ, Koszek WA, Hardell C, et al. Preparing medical imaging data for machine learning. Radiology. 2020;295:4–15. doi: 10.1148/radiol.2020192224.

- 51.Shameer K, Johnson KW, Glicksberg BS, Dudley JT, Sengupta PP. Machine learning in cardiovascular medicine: are we there yet? Heart. 2018;104:1156–1164. doi: 10.1136/heartjnl-2017-311198.