Abstract

Brain-computer interfaces (BCIs) represent a new frontier in the effort to maximize the ability of individuals with profound motor impairments to interact and communicate. While much literature points to BCIs' promise as an alternative access pathway, there have historically been few applications involving children and young adults with severe physical disabilities. As research is emerging in this sphere, this article aims to evaluate the current state of translating BCIs to the pediatric population. A systematic review was conducted using the Scopus, PubMed, and Ovid Medline databases. Studies of children and adolescents that reported BCI performance and were published in English in peer-reviewed journals between 2008 and May 2020 were included. Twelve publications were identified, providing strong evidence for continued research in pediatric BCIs. Research evidence was generally at the multiple case study or exploratory study level, with modest sample sizes. Seven studies focused on BCIs for communication and five on mobility. Articles were categorized and grouped based on the type of measurement (i.e., non-invasive or invasive) and the type of brain signal (i.e., sensory evoked potentials or movement-related potentials). Strengths and limitations of the studies were identified and used to provide requirements for clinical translation of pediatric BCIs. This systematic review presents the state of the art of pediatric BCIs focused on developing advanced technology to support children and youth with communication disabilities or limited manual ability. Although few research studies have addressed the application of BCIs for communication and mobility in children, results are encouraging, and future work should focus on customizable pediatric access technologies based on brain activity.

Keywords: brain-computer interface, children, youth, assistive technology, severe disability, communication, environmental control

Introduction

Technology is often exploited as a tool to support children affected by severe brain disorders or injury in their daily activities. These technologies are especially pertinent to children who are not capable of using speech to communicate or who are limited in motor skills and require mobility aids. Worldwide, only 1 in 10 people have access to assistive technology devices when required [World Health Organization (WHO), 2020] and in Canada 95% of 3,775,920 individuals living with a disability use at least one aid or device to assist movement, communication, learning, or daily activities of life (Berardi et al., 2020).

The need for novel assistive technology and techniques for neurorehabilitation effective for children is high (Mikołajewska and Mikołajewski, 2014). One of the most advanced technical solutions is the brain-computer interface (BCI). BCIs can be defined as a link between the brain and an extra-corporeal apparatus, whereby signals from the brain can directly control the external device entirely bypassing the peripheral nervous system (Wolpaw et al., 2000). BCIs utilize changes in brain activity occurring when we react to stimuli, perform specific mental tasks, or experience different psychological or emotional states. Non-invasive BCIs typically detect and utilize electromagnetic potentials directly related to ensemble neuronal firing, or the associated hemodynamic changes including regional changes in relative oxyhemoglobin and deoxyhemoglobin concentrations (Proulx et al., 2018; Schudlo and Chau, 2018; Sereshkeh et al., 2018, 2019) and changes in arterial blood flow velocities (Myrden et al., 2011, 2012; Goyal et al., 2016), due to neurovascular coupling. Clinically, BCIs enable brain-based control of communication aids and environmental technologies (Moghimi et al., 2013; Rupp et al., 2014), assist in diagnosis (De Venuto et al., 2016; Lech et al., 2019), and enhance rehabilitation therapies (Daly and Wolpaw, 2008; Pichiorri and Mattia, 2020).

A long-term objective of translational BCI research is providing a channel for communication and environmental control for people with severe and multiple physical disabilities who otherwise lack the means to interact with people and the environment around them (Wolpaw et al., 2002). Hence, BCI-based control has been explored for: computer cursors (Wolpaw et al., 2002; Wirth et al., 2020); virtual keyboards (Birbaumer et al., 1999; Thompson et al., 2014a; Hosni et al., 2019); augmentative and alternative access systems (Thompson et al., 2013, 2014b; Brumberg et al., 2018); prosthetic devices (McFarland and Wolpaw, 2008; Vilela and Hochberg, 2020); wheelchairs (Punsawad and Wongsawat, 2013; Yu et al., 2017); entertainment/gaming (Holz et al., 2013; Van de Laar et al., 2013; Cattan et al., 2020); Internet browsing (Mugler et al., 2010; Milsap et al., 2019); and painting (Münßinger et al., 2010; Zickler et al., 2013; Kübler and Botrel, 2019). Given these explorations, BCIs have potential to serve as an alternative access method for people with severe motor deficits (Huggins et al., 2014), who are not well-served by commercially available access solutions. Nonetheless, research on novel BCI solutions for target populations has been limited to laboratory settings (Fager et al., 2012; Wolpaw and Wolpaw, 2012; Guy et al., 2018) and able-bodied adults (Pires et al., 2011; Oken et al., 2018). A modest subset of BCI studies has recruited adults with disabilities, including: amyotrophic lateral sclerosis (Nijboer et al., 2008; Huggins et al., 2011; Oken et al., 2014); multiple sclerosis (Papatheodorou et al., 2019); brainstem stroke (Sellers et al., 2014); muscular dystrophy (Zickler et al., 2011); acquired brain injury (Huang et al., 2019) and cerebral palsy (CP) (Taherian et al., 2016).

The adult BCI focus is at least partially attributable to the relative ease of acquiring robust, well-characterized brain signals from this population. While the findings of adult studies are promising, BCI algorithms optimized for adults cannot be directly applied to pediatric users due, in part, to age-related differences in the brain responses of interest (Volosyak et al., 2017; Manning et al., 2021). For example, compared to adults, children exhibit less language lateralization (Holland et al., 2001), attenuated movement-related cortical potentials (MRCPs) (Pangelinan et al., 2011), and greater attentional effects on the latencies of auditory evoked potentials (Choudhury et al., 2015). Well-established BCI tasks for adults, such as verbal fluency, a verbal working memory task that requires the participant to recall from memory words associated with a common criterion (Schudlo and Chau, 2018), are not suitable for children without developmentally appropriate modifications (Gaillard et al., 2003; Schudlo and Chau, 2018). Children with congenital impairments may have atypical brain anatomy and functional organization that preclude the simple translation of time-honored BCI protocols, including those validated in adults with acquired impairments.

Developmental differences may also manifest behaviorally. Children may experience difficulties maintaining focus (Gavin and Davies, 2007; Kinney-Lang et al., 2020) and their brain signals can contain excessive movement artifacts (Bell and Wolfe, 2007). It is imperative that research expands beyond able-bodied adults and involves more end-users, including children, ensuring any new developments are optimized from an individual's perspective. Differences in brain structure, topography, cognitive processing pathways and psycho-behavioral predisposition ought to be considered (Weyand and Chau, 2017).

Mikołajewska and Mikołajewski (2014) published a mini-review of BCI applications in children, identifying several issues unique to pediatric applications of BCI and a paucity of research thereof. These pediatric-specific challenges included the absence of guidelines for processing brain signals from children, heightened neural plasticity including evolving cortical organization and frequency content of signals, and child engagement considerations such as fear, comfort, and positioning (Mikołajewska and Mikołajewski, 2014). Notwithstanding these concerns, the need for pediatric BCI research remains high given the lack of viable access technologies for children and youth with severe and multiple disabilities (Myrden et al., 2014).

Brain Computer Interfaces

BCI systems deploy either invasive or non-invasive signal acquisition modalities. Invasive BCIs monitor brain activity on the cortical surface using electrocorticography (ECoG), within the gray matter using intracortical microelectrodes (Simeral et al., 2011), or in deep subcortical structures using depth electrodes (Krusienski and Shih, 2011; Herff et al., 2020). Non-invasive BCIs instead measure electrophysiological activity with electroencephalography (EEG) or magnetoencephalography (MEG), or hemodynamic activity using magnetic resonance imaging (MRI), near-infrared spectroscopy (NIRS), or transcranial Doppler ultrasound (Myrden et al., 2011, 2012). A third category, the hybrid BCI, comprises systems that use two or more measurement modalities, such as NIRS-EEG and EEG-electrocardiogram (Pfurtscheller et al., 2010; Zephaniah and Kim, 2014), either simultaneously or sequentially.

BCIs can be categorized according to the paradigm invoked for eliciting machine-discernible brain signals. Reactive BCI paradigms elicit an event-related potential (ERP). Popular ERPs leveraged in BCIs include the P300, which is evoked by an oddball stimulus and characterized by a large positive deflection that occurs from 200–250 ms to 700–750 ms after stimulus onset (Amiri et al., 2013; He et al., 2020), and the steady-state visual evoked potential (SSVEP) and auditory steady-state response, wherein brain responses are evoked, respectively, by flickering lights or pure tones at specific frequencies. Active BCI paradigms elicit machine-discernible brain signals for BCI control via deliberate mental tasks such as: motor imagery (MI), which involves the mental rehearsal of a given movement (Rejer, 2012); mental arithmetic and music imagery (Weyand and Chau, 2015); spelling (Obermaier et al., 2003); covert speech (Birbaumer et al., 2010); and observing pictures (Kushki et al., 2012), among others. Typical BCI taxonomies also include passive BCIs, which simply monitor the user's psychological state (Myrden and Chau, 2016, 2017).

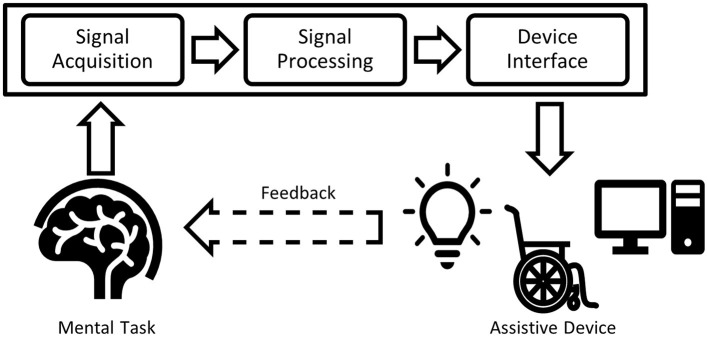

Once acquired, signals generated by a BCI task are fed through a processing pipeline. Signal processing procedures for BCIs can be “offline” (retrospective) or “online” (suitable for real-time applications). A typical BCI processing pipeline (Bamdad et al., 2015) for communication and mobility is depicted in Figure 1. Typical pipeline elements include algorithms to suppress artifacts, extract features, and classify the signals. Pipeline outputs are then used to control an assistive device that supports communication or mobility.

Figure 1.

Typical BCI processing pipeline. The input signal acquired from the human brain is filtered (signal processing), classified and transferred to an output device (device interface), forming the BCI application.
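The three pipeline stages named above (artifact suppression, feature extraction, classification) can be illustrated with a deliberately simplified sketch. Everything in it is hypothetical and chosen only for illustration: the amplitude-rejection threshold, the variance feature, the fixed decision threshold, and all function names. Real BCI pipelines use far richer filtering, features, and trained classifiers.

```python
from statistics import mean, pvariance


def suppress_artifacts(trial, max_abs=100.0):
    """Reject a trial containing any sample above max_abs (hypothetical
    artifact criterion); otherwise return the baseline-corrected trial."""
    if any(abs(v) > max_abs for v in trial):
        return None
    baseline = mean(trial)
    return [v - baseline for v in trial]


def extract_feature(trial):
    """Use signal power (population variance) as a single toy feature."""
    return pvariance(trial)


def classify(feature, threshold=1.0):
    """Map the feature to a device command with a fixed, illustrative threshold."""
    return "select" if feature > threshold else "rest"


def run_pipeline(trial, threshold=1.0):
    """Chain the three stages; return None when the trial is rejected."""
    clean = suppress_artifacts(trial)
    if clean is None:
        return None
    return classify(extract_feature(clean), threshold)


# Toy single-channel trials (arbitrary units).
strong_trial = [0, 3, 0, -3] * 10
weak_trial = [0.1, -0.1] * 20
artifact_trial = [0, 500, 0]

print(run_pipeline(strong_trial))
print(run_pipeline(weak_trial))
print(run_pipeline(artifact_trial))
```

In an online system the returned command would be passed to the device interface; in an offline analysis the same functions would be applied retrospectively to stored trials.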

Objectives and Research Question

This article appraises the pediatric BCI literature systematically, considering specific inclusion criteria and highlighting the current information processing methods applied to pediatric brain signals. Through this systematic review, we set out to address two research questions: (1) What is the state of science in applying BCIs to support communication and manual ability in the pediatric population?; (2) What is current knowledge about the necessary considerations to render BCIs suitable for children?

Methods

Study Design

This systematic review included all levels of research evidence and followed best-practice systematic review methodology as set out in the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Moher et al., 2009).

Search Strategy

Identification Process

Based on a preliminary search string tested in the PubMed database, the syntax was developed for the search across three databases during May 2020: Scopus, PubMed, and Ovid. The SPIDER (Sample, Phenomenon of Interest, Design, Evaluation, Research type) tool was used to structure the search related to the research questions (Cooke et al., 2012). Electronic database searches were performed using the following key terms related to “Sample”: pediatric or paediatric; or child or children; or youth(s) or adolescent(s) or teen(s) or teenager(s). These were combined with the following “Phenomenon of Interest” terms: BCI or brain computer interface or brain-computer interface or brain-machine interface or brain machine interface or mind machine interface or direct neural interface or neural control interface. The search strategy did not specify design, evaluation, or research type in order to capture all potentially relevant articles. These terms were instead considered in the inclusion and exclusion criteria. After retrieving studies from the searches, duplicates were removed and the paper titles, abstracts, and associated meta-data were compiled into a single table for further review.
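As an illustration of how the “Sample” and “Phenomenon of Interest” terms combine, the sketch below assembles a generic Boolean query. It is not the exact syntax submitted to Scopus, PubMed, or Ovid, each of which has its own field tags and operators. The helper names are hypothetical, and the British spelling "paediatric" is included as an assumed spelling variant.

```python
# Term lists taken from the search strategy described above.
SAMPLE_TERMS = [
    "pediatric", "paediatric",  # second spelling is an assumed variant
    "child", "children", "youth", "youths",
    "adolescent", "adolescents", "teen", "teens",
    "teenager", "teenagers",
]
PHENOMENON_TERMS = [
    "BCI", "brain computer interface", "brain-computer interface",
    "brain-machine interface", "brain machine interface",
    "mind machine interface", "direct neural interface",
    "neural control interface",
]


def or_block(terms):
    """Join terms with OR, quoting multi-word or hyphenated phrases."""
    quoted = [f'"{t}"' if (" " in t or "-" in t) else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(sample_terms, phenomenon_terms):
    """Combine the two SPIDER blocks with a single AND."""
    return or_block(sample_terms) + " AND " + or_block(phenomenon_terms)


query = build_query(SAMPLE_TERMS, PHENOMENON_TERMS)
print(query)
```

Design, Evaluation, and Research type are deliberately absent from the query, mirroring the decision to apply them later as inclusion and exclusion criteria.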

Screening Process: Inclusion and Exclusion Criteria

All research within Oxford levels of evidence I–IV (Howick et al., 2011), including case studies and single-case experimental design studies reporting objective outcome measures, was eligible for inclusion if the studies: (1) were reported in full text; (2) were published in English in peer-reviewed journals between January 2008 and May 2020; (3) included children and adolescents, under the age of 19 (Sawyer et al., 2018), using BCIs; (4) described the design of the protocol used for data collection (“Design”); (5) measured outcomes related to the performance of the BCI (“Evaluation”); and (6) included quantitative methods (“Research type”). Studies that presented only aggregate results from participating adults and children were excluded. Studies that related to the general diagnosis of brain disorders or diseases were excluded. Publications related to passive BCI without a final goal of developing assistive technology devices were excluded. Gray literature and unpublished works were not eligible for inclusion. Strictly qualitative research, book chapters, review articles, and conference publications were excluded.

Eligibility Process: Study Selection, Data Collection Process, and Synthesis of Results

Two of the five authors (SO and SCH) conducted the search across the databases and produced a list of articles based on the title and abstract according to the inclusion criteria. A two-step procedure was carried out independently by four authors (SO, SCH, PK, RS) to identify articles for inclusion. The first step involved screening titles and abstracts for potential eligibility and, thereafter, screening the full text of potentially eligible articles. Four authors independently completed data extraction. An almost perfect level of agreement was obtained for title and abstract screening (Cohen's kappa coefficient, k = 0.96, percentage of agreement 98%). After a full-text review of the eligible papers, articles were excluded for any of the following reasons: the performance was reported for a heterogeneous group composed of adults and children without a two-group comparison (e.g., only averaged accuracy was reported, or children and adults' classification performance was not distinguishable); BCIs were not developed for pediatric participants; BCIs developed for adults but included a limited number of children (only one or two adolescents not sufficient for a two-group comparison); only adult participants were included in the study; results were not reported in terms of BCI performance; the study was not related to BCIs; passive BCIs were applied; the study did not include participants' data; participants' ages were not reported. Twelve articles remained eligible for further review.
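For readers unfamiliar with the agreement statistics quoted above, the following sketch shows how Cohen's kappa and the percentage of agreement are computed for two raters. The screening decisions in the example are invented for illustration and are not the review's data; the function name is likewise hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Return (kappa, observed agreement) for two raters' categorical labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty item set.")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0, observed
    return (observed - expected) / (1.0 - expected), observed


# Hypothetical title/abstract screening decisions for ten records.
rater_1 = ["include"] * 4 + ["exclude"] * 6
rater_2 = ["include"] * 3 + ["exclude"] * 7

kappa, agreement = cohens_kappa(rater_1, rater_2)
print(f"kappa = {kappa:.2f}, agreement = {agreement:.0%}")
```

Kappa is lower than raw agreement because it discounts the agreement expected by chance from each rater's label frequencies.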

Data Extraction and Analysis

For each eligible study, the following data were extracted: number of participants and their ages; study design and data acquisition protocol; signal features; classifier; and performance metrics. It was not appropriate to conduct a meta-analysis or any statistical analyses of the results due to the small number and heterogeneity of the included studies. Instead, key findings were summarized and presented narratively clustering the selected full-text papers into two sub-groups based on the functional activities identified (communication or manual ability) and the type of measurement applied (non-invasive or invasive). No additional articles were found by consulting the references of the included full-text articles.

Quality Appraisal and Risk of Bias

Considering the heterogeneity of the 12 articles, the “QualSyst” quality assessment tool (Alberta Heritage Foundation for Medical Research) was used to gauge the quality of the overall body of evidence (Kmet et al., 2004). We applied a 14-criteria checklist for quantitative studies, where raters scored each criterion as being fully (2 points), partially (1 point), or not (0 points) fulfilled. A summary score was calculated for each paper as the sum of the scores across all applicable criteria and expressed as a percentage of the total possible score. When a specific criterion was not applicable to a given study, the criterion was omitted from the calculation of the summary score. Two reviewers (SO and SCH) independently assessed the quality (inter-rater reliability, k = 0.77 and 86% level of agreement) and the risk of bias for all the included studies. The sample sizes of the multiple-case-study articles were reported in terms of the number of pediatric participants recruited in the studies. Adult participants were not included in Tables 1–6. To further elucidate the overall quality of the evidence, each of the included articles received a quality grade as: limited (score of ≤50%); adequate (>50 and ≤70%); good (>70 and ≤80%); or strong (>80%) (Lee et al., 2008). Discrepancies were discussed between the two reviewers and consensus was reached. The risk of bias was identified for each study by two authors (SO and SCH) using the Agency for Healthcare Research and Quality (AHRQ) criteria (Viswanathan et al., 2017). The risk of bias was assessed through the evaluation and discussion of each article in terms of selection, performance, attrition, detection, and reporting (inter-rater reliability, k = 0.94 and 95% level of agreement). 
Responses for each criterion were scored as “low risk,” “high risk,” “unclear,” and “not applicable.” Low risk of bias was assumed when studies met all the risk-of-bias criteria, medium risk of bias if at least one of the risk-of-bias criteria was not met and high risk of bias if three or more risk-of-bias criteria were not fulfilled. An unknown risk of bias was considered as high risk.
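The QualSyst summary score and the quality grades described above can be expressed compactly. The sketch below follows the stated rules (2, 1, or 0 points per criterion, non-applicable criteria omitted, score expressed as a percentage of the possible total, then graded by the stated cut-offs). The function names and the example ratings are hypothetical.

```python
def qualsyst_summary(scores):
    """Percentage score over applicable criteria.

    scores: one entry per checklist criterion, each 2 (fully met),
    1 (partially met), 0 (not met), or None (not applicable).
    """
    applicable = [s for s in scores if s is not None]
    if not applicable:
        raise ValueError("At least one criterion must be applicable.")
    if any(s not in (0, 1, 2) for s in applicable):
        raise ValueError("Scores must be 0, 1, 2, or None.")
    return 100.0 * sum(applicable) / (2 * len(applicable))


def quality_grade(percent):
    """Map a summary score to the quality grade cut-offs stated in the text."""
    if percent <= 50:
        return "limited"
    if percent <= 70:
        return "adequate"
    if percent <= 80:
        return "good"
    return "strong"


# Hypothetical ratings for one paper on the 14-criteria checklist.
example = [2, 2, 1, 2, None, None, None, 2, 1, 2, 2, 1, 2, 2]
score = qualsyst_summary(example)
print(f"{score:.1f}% -> {quality_grade(score)}")
```

Omitting non-applicable criteria from both numerator and denominator keeps scores comparable across study designs with different numbers of relevant criteria.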

Table 1.

Research articles on pediatric non-invasive BCIs: study objectives and data collection details.

| References | Study objective | Sample size [females] | Age (years) | Diagnosis | Applications | BCI paradigm | Mode of operation | Signal type | Data acquisition | Task and sessions |
|---|---|---|---|---|---|---|---|---|---|---|
| Ehlers et al. (2012) | Examine age-related performance differences on an SSVEP-BCI | N = 51 [31]; Pediatric: Group 1: N = 11 [6]; Group 2: N = 12 [9]; Group 3: N = 14 [3] | 6–33; Pediatric: avg. 6.73; avg. 8.08; avg. 9.86 | TD | Mouse control/spelling | SSVEP; # stimuli: 3; Low: 7–11 Hz; Medium: 13–17 Hz; High: 30, 32, 34, 36, 38 Hz | Synch | EEG | Location: parietal and occipital (PZ, PO3, PO4, O1, OZ, O2, O9, O10); # Channels: 8; Hardware and software: Ag/AgCl EEG electrodes, wet system, BCI2000, C++ (Bremen BCI) | Task: cursor control to complete a spelling task; # Sessions: 1; Session duration: 45 min; Task duration: 2 min per run (6 words and at least 20 commands per word) |
| Norton et al. (2018) | Compare the performance of 9–11-year-old children using SSVEP-based BCI to adults | N = 26 [n/a]; Pediatric: N = 15 [n/a] | 9–68; Pediatric: 9–11 | TD | Graphical interface comprising three white circle targets | SSVEP; # stimuli: 3 [6.2, 7.7, 10 Hz] | Synch | EEG | Location: PO3, POZ, PO4, O1, OZ, O2; # Channels: 6; Hardware and software: tin electrodes, wet system, BCI2000 | Task: focus visual attention on one of three white circle targets; # Sessions: 1; Session duration: 5 trials/stimulus (15 trials total) for training, 20 trials/stimulus (60 trials total) for testing; Task duration: 5 s |
| Beveridge et al. (2017) | Evaluate mVEP paradigm for BCI-controlled video game | Pediatric: N = 15 [4] | Pediatric: 13–16 | TD | Neurogaming | mVEP; # stimuli: 5 | Synch | EEG | Location: Cz, TP7, CPz, TP8, P7, P3, Pz, P4, P8, O1, Oz, and O2; # Channels: 12; Hardware and software: g.LADYbird, g.BSamp and g.GAMMAbox, MATLAB®, Unity 3D | Task: 3D car-racing video game; # Sessions: 1; Session duration: 1 h; Task duration: 1,000 ms to activate 5 stimuli (300 trials per calibration and 60 per testing) |
| Beveridge et al. (2019) | Study trade-off between accuracy of control and gameplay speed using an mVEP BCI | N = 48 [10]; Pediatric: N = 15 [4] | 13–40; Pediatric: 13–16 | Pediatric data is pulled from Beveridge et al. (2017) to compare to newly collected adult data. Adult protocol differed slightly from pediatric protocol (e.g., slow, medium, and fast laps rather than 3 slow laps, plus a comparison of experienced vs. naive adults). | | | | | | |
| Vařeka (2020) | Compare CNN with baseline classifiers using large subject P300 BCI dataset | Pediatric: N = 250 [112] | 7–17 | No identifying physical symptoms were asked or recorded | Guess the number game | P300; # stimuli: 1–9 flashings | Synch | EEG | Location: Fz, Cz, Pz; # Channels: 3; Hardware and software: BrainVision standard V-Amp, Neurobehavioural Systems Inc., BrainVision Recorder, MATLAB® | Task: P300; # Sessions: 1; Session duration: ; Task duration: 1,000 ms (532 trials) |
| Taherian et al. (2017) | Employ a commercial EEG based BCI with people with CP | N = 8 [4]; Pediatric: N = 5 [2] | 7–43; Pediatric: P1: 17, P2: 17, P3: 7, P4: 9, P5: 9 | Spastic quadriplegic CP | Puzzle games | MI-ERD | Synch | EEG | Location: unknown number (includes C3 and C4); # Channels: 14; Hardware and software: Emotiv EPOC BCI headset, saline felt electrodes, Emotiv software | Task: imagined arm movements associated with the ability to move a virtual cube; # Sessions: 5–7; Session duration: 30 min; Task duration: 16–55 trials of 8 s each |
| Jochumsen et al. (2018) | Movement intention detection in adolescents with CP from single-trial EEG | Pediatric: N = 8 [1] | 11–17; P1: 16, P2: 15, P3: 11, P4: 15, P5: 13, P6: 15, P7: 17, P8: 16 | Hemiplegia or diplegia CP with GMFCS of I-V | Neurorehabilitation | Movement preparation (MRCP); dorsiflexions of the ankle joint | Asynch (self-paced) | EEG | Location: F3, FZ, F4, C3, CZ, C4, P3, PZ, P4; # Channels: 9; Hardware and software: Neuroscan EEG amplifiers, wet system, EMG for movement detection, MATLAB® | Task: dorsiflexion of the ankle joint; # Sessions: 1; Session duration: 15 min (avg. 65 ± 18 movements performed per participant); Task duration: 4–6 s |
| Zhang et al. (2019) | Evaluate if children can use simple BCIs | Pediatric: N = 26 [7] | 6–18; P1–2: 6, P3: 8, P4: 9, P5–7: 10, P8–9: 11, P10–11: 12, P12–14: 13, P15–16: 14, P17: 15, P18–19: 16, P20–22: 17, P23–26: 18 | TD | Mouse control and remote-controlled car | MI and goal-oriented thinking (ERD) | Synch | EEG | Location: AF3, AF4, F3, F4, F7, F8, FC5, FC6, P7, P8, T7, T8, O1, O2; # Channels: 14; Hardware and software: Emotiv EPOC BCI headset, saline felt electrodes, Emotiv software | Tasks: imagine opening and closing both hands, “goal-oriented thoughts,” and rest; # Sessions: 2; Session duration: <1 h; Task duration: eight 8 s trials per task for training; 10–20 s trials with 5 s rest for testing |

CP, cerebral palsy; avg., average; MI, motor imagery; N, number of participants; P#, pediatric participant number; SSVEP, steady state visual evoked potential; synch, synchronous; asynch, asynchronous; TD, typically developing; CNN, convolutional neural network; mVEP, motion-onset visual evoked potential; EEG, electroencephalography; EMG, electromyography; ERD, event-related desynchronization; MRCP, movement-related cortical potential; GMFCS, gross motor function classification system; n/a, not applicable.

Table 6.

Risk of bias.

| References | Selection bias | Performance bias | Attrition bias | Detection bias | Reporting bias | Overall bias |
|---|---|---|---|---|---|---|
| Sanchez et al. (2008) | + | – | ? | + | – | High |
| Breshears et al. (2011) | + | – | ? | + | – | High |
| Ehlers et al. (2012) | – | – | + | + | – | Medium |
| Pistohl et al. (2012) | + | – | ? | + | – | High |
| Pistohl et al. (2013) | + | – | ? | + | – | High |
| Beveridge et al. (2017) | – | – | ? | + | – | Medium |
| Taherian et al. (2017) | + | + | + | + | + | High |
| Norton et al. (2018) | – | – | – | + | – | Low |
| Jochumsen et al. (2018) | – | – | ? | + | – | Medium |
| Beveridge et al. (2019) | – | – | ? | + | – | Medium |
| Zhang et al. (2019) | – | – | + | – | – | Low |
| Vařeka (2020) | + | – | ? | + | – | High |

+, high-risk of bias; ?, uncertain/non-applicable risk of bias; –, low-risk of bias.

Results

Study Selection and Taxonomy

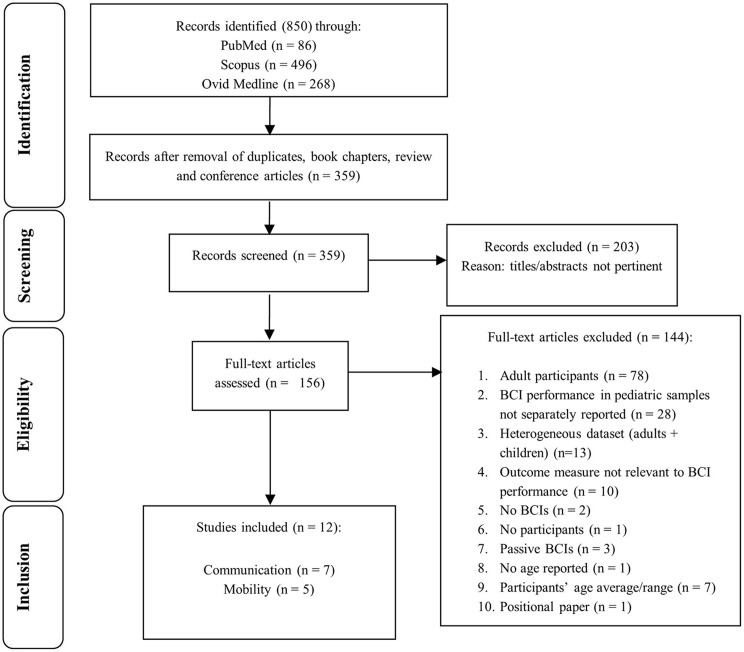

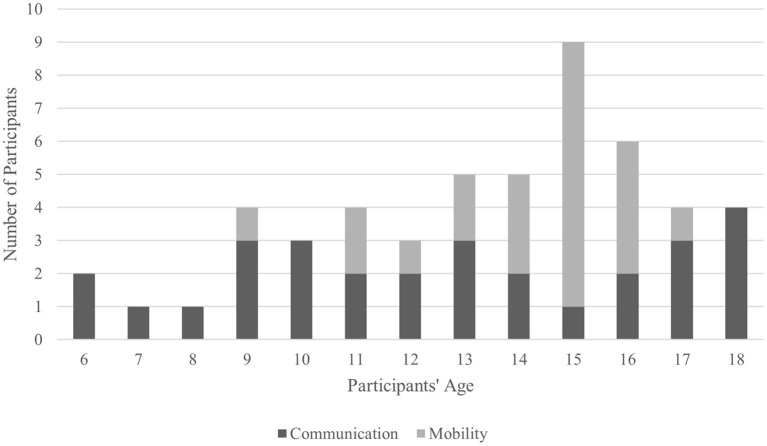

The search strategy identified 850 potential papers; 340 duplicates and 151 reviews, book chapters, and conference articles were removed. Then 359 titles and abstracts were reviewed and 203 were removed according to the inclusion criteria (section Screening Process: Inclusion and Exclusion Criteria), leaving 156 articles that required full-text review. Twelve articles were subsequently identified as eligible for inclusion and grouped into sub-categories: seven relating to communication (Ehlers et al., 2012; Beveridge et al., 2017, 2019; Taherian et al., 2017; Norton et al., 2018; Zhang et al., 2019; Vařeka, 2020); and five concerning mobility (Sanchez et al., 2008; Breshears et al., 2011; Pistohl et al., 2012, 2013; Jochumsen et al., 2018). The flowchart in Figure 2 details the outcomes of the identification, screening, eligibility, and inclusion steps.
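The record counts reported above can be checked with simple arithmetic. The short sketch below reproduces the flow using only the figures stated in the text; the number excluded at full-text review is not stated explicitly and is derived here by subtraction. Variable names are illustrative.

```python
# Counts stated in the text.
identified = 850
duplicates_removed = 340
non_eligible_types_removed = 151   # reviews, book chapters, conference articles
excluded_at_screening = 203
included = 12

# Derived counts at each stage of the flow.
screened = identified - duplicates_removed - non_eligible_types_removed
full_text_reviewed = screened - excluded_at_screening
excluded_at_full_text = full_text_reviewed - included  # derived, not stated in the text

print(f"Titles/abstracts screened: {screened}")
print(f"Full texts reviewed:       {full_text_reviewed}")
print(f"Excluded at full text:     {excluded_at_full_text}")
print(f"Included:                  {included}")
```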

Figure 2.

Study selection flowchart. The flow diagram describes identification, screening, eligibility, and inclusion procedures.

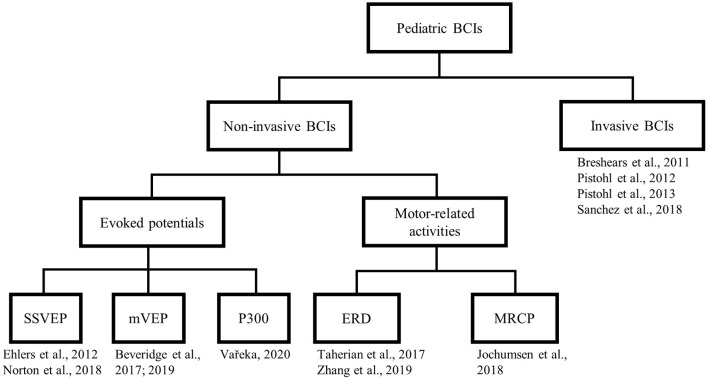

We categorized the selected papers (see Figure 3). At the first level of the taxonomy, we grouped papers according to the type of measurement, either non-invasive or invasive. Under the non-invasive category, we further subdivided papers by the type of brain signals harnessed, which includes three types of sensory evoked potentials, MRCP, or event-related desynchronization (ERD). This taxonomy roughly reflects the readiness for clinical translation, with the non-invasive alternatives being more readily implementable. For each study, we adhere to a uniform presentation structure, highlighting the participants, task paradigm, analytical approach, and key findings.

Figure 3.

Taxonomy of the selected articles. SSVEP, steady state visual evoked potential; mVEP, motion-onset visual evoked potential; ERD, event-related desynchronization; MRCP, movement-related cortical potential.

Objectives, participants' information (e.g., age, health conditions, participant number), methods and findings related to online and offline BCI performance are reported in Tables 1–4.

Table 4.

Research articles on pediatric invasive BCIs: signal processing techniques and results (only for pediatric age).

| References | Online/offline/# of classes | Signal processing and features | Classifier/outcome measures | Results |
|---|---|---|---|---|
| Sanchez et al. (2008) | n/a; Chance level: n/a | Fs: 381.5 Hz; Filtering: FIR filter (1–6 kHz); Features: (i) Equiripple FIR filter: 1–60 Hz, 60–100 Hz, 100–300 Hz, 300 Hz–6 kHz; (ii) FIR filter topology trained using the Wiener solution | Classifier: n/a; Outcome measure: (i) Pearson's r | Offline accuracy: n/a; Online accuracy: n/a; Additional measures: Pearson's r (X-position, Y-position): P1: 0.39 ± 0.26, 0.48 ± 0.27; P2: 0.42 ± 0.26, 0.45 ± 0.25; Highest r achieved with 300 Hz–6 kHz feature |
| Breshears et al. (2011) | Online only; 2 classes: imagined or performed motor movement vs. rest; Chance level: 50% (theoretical chance level) | Fs: 1,200 Hz; Filtering: autoregressive spectral coefficients in 2 Hz frequency bins from 0 to 250 Hz for each electrode; Features: (i) Spectral power of filtered frequency bins; (ii) Spectral power of electrodes | Classifier: real-time translational algorithm based on the weighted linear summation of the identified features (features showing power increases were assigned positive weights and those showing power decreases were assigned negative weights); Outcome measure: (i) Accuracy for each action | Offline accuracy: n/a; Online accuracy: P1: 70.8–99.0%, P2: 72.7–77.4%, P3: 82.7–85.1%, P4: 75.0–100%, P5: 88.8%, P6: 93.3%; Additional measures: n/a |
| Pistohl et al. (2012) | Offline; 2 classes: precision grip, whole-hand grip; 10-fold cross-validation (20 repetitions); Chance level: 50% (theoretical chance level) | Fs: 256 Hz; Filtering: re-referenced to common average, average voltage subtracted, normalized voltage, low-pass filtered component (fc ~5 Hz); Features: (i) Signal components | Classifier: rLDA; Outcome measures: (i) Decoding accuracy; (ii) Temporal evolution of grasp discriminability | Offline accuracy: P1: 97%, P2: 84%, P3: 96%; Online accuracy: n/a; Additional measures: temporal evolution of grasp discriminability: 0.2 s |
| Pistohl et al. (2013) | Offline only; 2 classes: grasp and no grasp; Chance level: n/a | Fs: 256 or 1,024 Hz; Filtering: LFC: 2nd order Savitzky-Golay filter (window length: 250 ms); Features: (i) LFC; (ii) Frequency band amplitudes within consecutive bands of 4 Hz width from 0 to 128 Hz, band-pass filtered by a 4th order elliptic digital filter design | Classifier: rLDA in 10-fold cross-validation; Outcome measures: (i) True positive ratio (TPR); (ii) False positive ratio (FPR); (iii) False positives min⁻¹ (FP-rate) | Offline accuracy (TPR/FPR/FP-rate): after 0.25 s: P1: 0.75/0.26/2.5, P2: 0.50/0.36/2.7, P3: 0.75/0.25/3.1; after 0.50 s: P1: 0.92/0.10/0.9, P2: 0.69/0.12/0.9, P3: 0.91/0.08/1.0; after 0.75 s: P1: 0.97/0.05/0.4, P2: 0.74/0.05/0.4, P3: 0.96/0.03/0.4; Online accuracy: n/a; Additional measures: n/a |

Fs, sampling frequency; fc, cut-off frequency; rLDA, regularized linear discriminant analysis; FPR, false positive ratio; TPR, true positive ratio; FP-rate, false positive rate; P#, pediatric participant number; LFC, low-pass filtered component; FIR, finite impulse response; n/a, not applicable.

Non-invasive Pediatric BCIs

Eight studies (Ehlers et al., 2012; Beveridge et al., 2017, 2019; Taherian et al., 2017; Jochumsen et al., 2018; Norton et al., 2018; Zhang et al., 2019; Vařeka, 2020) in this category used EEG as the non-invasive modality for interrogating the pediatric brain. The study by Jochumsen et al. (2018) is the only one on non-invasive pediatric BCI related to manual ability. The other seven non-invasive BCI studies (Ehlers et al., 2012; Beveridge et al., 2017, 2019; Taherian et al., 2017; Norton et al., 2018; Zhang et al., 2019; Vařeka, 2020) focused on new systems to support communication and computer interaction.

Evoked Potentials

Five studies harnessed brain responses evoked by the presentation of a visual stimulus: steady-state visual evoked potential (SSVEP) (Ehlers et al., 2012; Norton et al., 2018); motion-onset visual evoked potential (mVEP) (Beveridge et al., 2017, 2019); and P300 (Vařeka, 2020).

Ehlers et al. (2012) investigated the influence of development-specific changes in the background EEG on stimulus-driven BCI with 37 typically developing (TD) children and 14 adults, aged 6–33, using SSVEPs and mouse control and spelling tasks. Only online results but no chance level were reported. Participants navigated a letter matrix to spell six words, two in three different stimuli conditions (low, medium, high frequency), by focusing on one of five target LEDs (corresponding to four directions and a select command) placed around a screen where the letter in the middle of the matrix was highlighted. Participants practiced by spelling their names; however, the youngest participants were assisted by the investigator in locating the target LED given their less developed visual searching abilities. Ehlers et al. (2012) used the Bremen-BCI (Friman et al., 2007) to classify five different SSVEP targets. Poor signals due to insufficient electrode contact were given a low weight or ignored. Classification of signals used to generate the correct-to-complete commands ratio was based on a 2 s sliding window every 125 ms. Accuracies, regarding correct-to-complete commands ratio, were lower than 60% for pediatric participants. Results showed low classification performance (accuracy: ~40%) for the young subjects (age 7–10 years), based on stimulation of 7 and 11 Hz. When a low-frequency (7–11 Hz) visual stimulus was presented to participants, adults consistently achieved higher accuracies (~78%) than those achieved by the three groups of children (group 1 accuracy: ~40%; groups 2 and 3: ~50%). In the medium frequency (13–17 Hz) condition, differences in achieved accuracies were found only between the adults (accuracy: ~78%) and youngest group of children with an average age of 6.73 years (group 1 accuracy: ~55%; group 2: 50%; group 3: 75%). 
In contrast, no difference between the four groups was found when a high-frequency (30–48 Hz) stimulus was presented (group 1 accuracy: ~38%; group 2: ~45%; group 3: 55%; adults: ~62%). An age-specific shift was observed in the peak synchronization frequency, which increased from 8–9 Hz in the lowest age group to 10–11 Hz in adults. Aborted attempts decreased with increasing age and increased as the accuracy level decreased (particularly evident in the high drop-out rates of the youngest age group under low-frequency stimulation). Lastly, the authors reported an inability to adequately control the BCI at low stimulation frequencies, with the influence of age growing as the stimulation frequency decreases.
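
The 2 s sliding window advanced every 125 ms can be sketched as follows. This is only an illustration of the windowing arithmetic, not the Bremen-BCI implementation: the function name `sliding_windows` and the 10 s recording length are assumptions, while the 2,048 Hz sampling rate is the value listed for this study in Table 2.

```python
def sliding_windows(n_samples, fs, window_s=2.0, step_s=0.125):
    """Yield (start, stop) sample indices of overlapping analysis windows."""
    window = int(round(window_s * fs))  # window length in samples
    step = int(round(step_s * fs))      # hop between consecutive windows
    for start in range(0, n_samples - window + 1, step):
        yield start, start + window


fs = 2048                 # sampling rate reported in Table 2
n_samples = 10 * fs       # hypothetical 10 s recording
windows = list(sliding_windows(n_samples, fs))
first_window = windows[0]
hop = windows[1][0] - windows[0][0]
```

With these settings each window spans 4,096 samples and consecutive windows overlap heavily, so a new classification can be issued eight times per second once the first 2 s of data are available.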

Similarly, Norton et al. (2018) used the SSVEP paradigm to compare the performance of 15 TD children (aged 9–11) and 11 adults (aged 19–68) in a laboratory environment using a graphical interface. Offline and online results but no chance level were reported. The authors set a minimum offline accuracy requirement for the online analysis. They did not specify the number of sessions in their study; we assumed that participants performed only one session preceded by BCI calibration. Participants were asked to focus their attention on one of three circles, each alternating between white and black at a different frequency (6.2, 7.7, or 10 Hz) on the screen. During calibration, participants were directed to focus on the circle highlighted by an on-screen arrow. Participants subsequently repeated the same task without the arrow to test the system online. Norton et al. (2018) used a calibration phase followed by a longer experimental phase to classify three different SSVEP targets. If the calibration phase accuracy was <85%, the participant could not proceed to the experimental (online) phase. Eleven children and all adults met the minimum accuracy requirement. Children and adults achieved similar performance during the experimental phase (accuracy: 79 vs. 78%; latency: 2.1 vs. 1.9 s; bitrate: 0.50 vs. 0.56 bits/s). Feature extraction and classification were based on canonical correlation analysis (CCA), which was used to determine the SSVEP targets. Norton et al. (2018) used a method similar to that applied by Lin et al. (2006), wherein EEG signals from multiple channels were used to calculate the CCA coefficients for each of the stimulus frequencies in the system. The frequency with the highest coefficient indicates the SSVEP frequency. This study demonstrated that children can use an SSVEP-based BCI with higher accuracy (average accuracy: 79%) than reported by Ehlers et al. (2012) when a low-frequency stimulus is applied.
However, this better performance may reflect differences between the two studies: the experimental environments differed, participants completed a target selection task rather than a text-entry task, and different stimulus frequencies were applied.
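
The CCA-based target identification described above can be illustrated with a minimal sketch. It is not the authors' code: the function names, the use of two harmonics, the 4 s window, and the synthetic three-channel signal are all assumptions made for the example, while the stimulus frequencies and the 128 Hz sampling rate come from the text and Table 2. Canonical correlations are computed here through QR decomposition followed by a singular value decomposition, which is one standard way to obtain them.

```python
import numpy as np


def reference_signals(freq, n_samples, fs, n_harmonics=2):
    """Sine/cosine references at a stimulus frequency and its harmonics."""
    t = np.arange(n_samples) / fs
    refs = []
    for h in range(1, n_harmonics + 1):
        refs.append(np.sin(2 * np.pi * h * freq * t))
        refs.append(np.cos(2 * np.pi * h * freq * t))
    return np.column_stack(refs)


def max_canonical_correlation(X, Y):
    """Largest canonical correlation between the columns of X and Y."""
    X = X - X.mean(axis=0)
    Y = Y - Y.mean(axis=0)
    qx, _ = np.linalg.qr(X)
    qy, _ = np.linalg.qr(Y)
    s = np.linalg.svd(qx.T @ qy, compute_uv=False)
    return float(min(s[0], 1.0))


def detect_ssvep(eeg, fs, candidate_freqs):
    """Return the candidate frequency with the highest CCA coefficient."""
    scores = {
        f: max_canonical_correlation(eeg, reference_signals(f, eeg.shape[0], fs))
        for f in candidate_freqs
    }
    return max(scores, key=scores.get), scores


# Synthetic demo: three noisy channels driven by a 7.7 Hz stimulus (assumed data).
rng = np.random.default_rng(0)
fs = 128
t = np.arange(4 * fs) / fs
eeg = np.column_stack([np.sin(2 * np.pi * 7.7 * t + p) for p in (0.0, 0.5, 1.0)])
eeg = eeg + rng.normal(scale=1.0, size=eeg.shape)
detected, scores = detect_ssvep(eeg, fs, (6.2, 7.7, 10.0))
```

In a real system the winning coefficient would typically also be compared against a threshold before a selection is issued, which is consistent with the threshold and window-length parameters listed for this study in Table 2.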

Beveridge et al. (2017) evaluated whether 15 TD adolescents (aged 13–16) could gain control of an mVEP-BCI paradigm for video game playing. Offline and online accuracy of target vs. non-target stimuli classification (chance level: 50%) and 5-class discrimination results (chance level: 20%) were reported. Participants played a 3D car racing video game that involved changing lanes at several checkpoints over three laps. As participants approached a checkpoint, one of five motion stimuli was presented above each of the five lanes, with an arrow indicating the target lane for positioning the car. Participants attended to the motion stimulus associated with the target lane. If the target lane was selected correctly by the BCI, participants were rewarded with points and a speed boost. The authors collected 300 mVEP trials from 12 gel-based EEG electrodes to calibrate a classifier, which was tested with an additional 300 trials. Beveridge et al. (2019) subsequently reported performance achieved by BCI-naïve and BCI-experienced adults with a near-identical protocol. For this review, Beveridge et al. (2017) and Beveridge et al. (2019) were considered identical, as both report results from a single adolescent mVEP dataset. The collected mVEP data were resampled from 250 to 20 Hz and filtered. Data were averaged over five trials to generate 12 feature vectors for each stimulus, corresponding to the 12 EEG channels. Offline and online accuracies and information transfer rate (ITR) were reported for each participant. Participants achieved 85.17% offline accuracy through a leave-one-out cross-validation, and 68% accuracy and 11 bits per minute during online trials. The authors reported Cz, P7, and O1 as the most discriminative channels across participants. Beveridge et al. (2019) also compared the group of BCI-naïve teenagers with nine BCI-naïve adults.
Adults achieved higher classification accuracies than teenagers (average accuracies across 3 laps: 75.4 vs. 68%), but the difference between adults and teenagers was significant only in the third lap.
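
To make the relation between accuracy, number of classes, and ITR concrete, the sketch below uses the widely used Wolpaw definition of bits per selection. This is an assumption: the review does not state which ITR definition the authors applied, and the selection time passed to `itr_bits_per_minute` is a hypothetical parameter, so the example does not attempt to reproduce the reported 11 bits per minute.

```python
import math


def wolpaw_bits_per_selection(n_classes, accuracy):
    """Wolpaw information per selection; set to 0 at or below chance level."""
    if accuracy <= 1.0 / n_classes:
        return 0.0
    if accuracy >= 1.0:
        return math.log2(n_classes)
    return (
        math.log2(n_classes)
        + accuracy * math.log2(accuracy)
        + (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_classes - 1))
    )


def itr_bits_per_minute(n_classes, accuracy, seconds_per_selection):
    """Scale bits per selection by the (assumed) time needed per selection."""
    bits = wolpaw_bits_per_selection(n_classes, accuracy)
    return bits * 60.0 / seconds_per_selection


bits_at_chance = wolpaw_bits_per_selection(5, 0.20)   # five lanes, 20% accuracy
bits_at_online = wolpaw_bits_per_selection(5, 0.68)   # five lanes, 68% accuracy
```

Under this definition a five-class system operating at the 20% chance level carries no information, matching the "20% (0 bpm)" entry in Table 2, while 68% accuracy corresponds to roughly 0.78 bits per selection.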

Lastly, Vařeka (2020) performed a large-scale offline analysis of visually evoked P300 signals collected from 250 children (aged 7–17) without any identifying physical symptoms, playing “Guess the Number.” This game requires participants to focus on a self-selected number between 1 and 9 as a series of numbers (1–9) flash on a screen in random order. When flashed, the selected number elicits a P300 response, which allows the algorithm to predict the selected number. Offline results but no chance levels were reported. Vařeka (2020) collected 532 trials using three channels. The study aimed to compare convolutional neural networks (CNNs) against linear discriminant analysis (LDA) and support vector machines (SVM). The author baseline-corrected each epoch and eliminated epochs containing amplitudes exceeding 100 μV. Epochs were divided into 20 equal-sized intervals within which the amplitudes were averaged. Features were classified separately using CNN, LDA, and SVM. All classifiers produced similar classification accuracies. Single-trial classification accuracies ranged between 62 and 64%, while averaging groups of up to six neighboring epochs raised accuracies to 76–79%. Precision, recall, and area under the receiver operating characteristic (ROC) curve (AUC) were 61.5–63.5%, 60.5–67.5%, and 62–66%, respectively, for single-trial classification.
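
The preprocessing steps described above (baseline correction, rejection of epochs exceeding 100 μV, and averaging within 20 equal-sized intervals) can be sketched as below. This is a hedged illustration rather than the published pipeline: the function names, the 200-sample baseline, the restriction of the intervals to the post-stimulus part of the epoch, and the random test data are assumptions.

```python
import numpy as np


def extract_features(epochs, baseline_samples, n_intervals=20, reject_uv=100.0):
    """epochs: array (n_epochs, n_channels, n_samples) in microvolts."""
    # Baseline correction: subtract each channel's mean pre-stimulus amplitude.
    baseline = epochs[:, :, :baseline_samples].mean(axis=2, keepdims=True)
    corrected = epochs - baseline
    # Reject epochs whose absolute amplitude exceeds the threshold anywhere.
    keep = np.abs(corrected).max(axis=(1, 2)) <= reject_uv
    post = corrected[keep][:, :, baseline_samples:]
    n_epochs, n_channels, n_post = post.shape
    usable = (n_post // n_intervals) * n_intervals
    # Average amplitudes inside each of the equal-sized intervals.
    binned = post[:, :, :usable].reshape(n_epochs, n_channels, n_intervals, -1)
    return binned.mean(axis=3).reshape(n_epochs, -1), keep


def average_neighbors(features, group_size):
    """Average consecutive feature vectors (caller passes one class only)."""
    n_groups = len(features) // group_size
    grouped = features[: n_groups * group_size].reshape(n_groups, group_size, -1)
    return grouped.mean(axis=1)


# Synthetic demo: 10 epochs, 3 channels, 1.2 s at 1,000 Hz (assumed layout).
rng = np.random.default_rng(1)
epochs = rng.normal(scale=5.0, size=(10, 3, 1200))
epochs[0, 0, 500] = 500.0  # artificial artifact that should be rejected
features, keep = extract_features(epochs, baseline_samples=200)
averaged = average_neighbors(features, group_size=3)
```

Each retained epoch yields 3 channels × 20 intervals = 60 features, and averaging neighboring epochs trades the number of available examples for a cleaner P300 estimate.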

Motor-Related Activities

Three studies investigated motor-related activities with children (Taherian et al., 2017; Jochumsen et al., 2018; Zhang et al., 2019).

Two studies applied a MI paradigm (Taherian et al., 2017; Zhang et al., 2019) with the EMOTIV system, a commercially available headset. Although both studies reported the use of the same EEG device (EMOTIV Epoc®), Zhang et al. (2019) referred to a dry system while Taherian et al. (2017) described a wet device. Zhang et al. (2019) reported that the electrode foam pads were immersed in a saline solution to ensure reliable connection before being placed on the child's head. Both studies should have described the EMOTIV Epoc® as a headset with saline-soaked felt pads: these sensors are not wet in the traditional sense, but neither are they truly dry. Both studies explored the possibility of implementing an EEG-based visual motion BCI and used the Emotiv Software Development Kit (SDK) for analysis and classification. Both studies extracted modulation features, and classification results were based on the ERD phenomenon.

Taherian et al. (2017) evaluated the feasibility of implementing an EEG-based BCI using the 14-electrode saline-based version of this headset in five children (aged 9–17) with spastic quadriplegic CP. The EMOTIV is packaged with software that provides visual feedback of cognitive tasks and a gamified training protocol. Participants donned the EMOTIV Epoc® headset and completed six 30-min training sessions where they were guided through the EMOTIV software to move a virtual cube up, down, left, or right using MI of the limbs. EEG signals were processed using the Cognitiv™ Suite provided with the EMOTIV Epoc®. The Cognitiv system processes the brainwaves and matches them to the trained patterns of thought, relying on ERD detected in the EEG signals within the 0.2–43 Hz frequency range (Lang, 2012). The authors developed a puzzle game and participants were asked to complete the puzzle after each training session. The puzzle was completed in an online paradigm using the same MI tasks from the training sessions while continuous visual feedback was provided. When participants were able to produce MI tasks with precision, they were rewarded with a puzzle piece. Participants completed five to seven sessions. Unfortunately, Taherian et al. (2017) reported only online performance scores for the left and right arm, for some participants and in graphs, and did not report accuracy, latency, or bitrate. Performance values were therefore approximated from graph readings. All participants experienced challenges following the protocol for various reasons related to their condition, including difficulties focusing, seizures during trials, anxiety, equipment discomfort, and lack of enjoyment when playing. All pediatric participants demonstrated inconsistent and unreliable control of the BCI. The authors concluded that existing commercial BCIs are not designed according to the needs of end-users with CP.

Zhang et al. (2019) conducted a cross-over interventional study on 26 TD children (aged 6–18) to estimate performance on two tasks (driving a remote-control car and moving a computer cursor). Children participated in two sessions where they performed a MI task (imagery of opening and closing both hands to move the car or the cursor) and a “goal-oriented thought” task (thinking of moving the car or the cursor toward a target). During each session, participants completed eight trials as training data for the BCI followed by 10 testing trials to evaluate the system performance. The BCI's goal was to complete the designated task (car or cursor) using one of two strategies (MI or goal-oriented thought). We assumed that Zhang et al. (2019) used the Emotiv SDK to extract ERD features using an 8-s window. They reported good performance using the EPOC headset and a Radial Basis Probabilistic Neural Network to distinguish between baseline and training epochs. Zhang et al. (2019) reported online results (chance level: 70% classification accuracy, 0.4 Cohen's kappa) in terms of Cohen's kappa scores (range 0.025–0.90), with a Cohen's kappa of 0.4 or higher indicating successful control. Performance correlated positively with age but was not associated with sex.
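
The correspondence between 70% classification accuracy and a Cohen's kappa of 0.4 follows from the definition of kappa for a balanced two-class problem, where the expected agreement by chance is 50%. The sketch below shows the calculation; the confusion matrix is a hypothetical example with ten test trials per class, not data from the study.

```python
def cohens_kappa(confusion):
    """Cohen's kappa from a square confusion matrix (rows: true, columns: predicted)."""
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    observed = sum(confusion[i][i] for i in range(n_classes)) / total
    expected = sum(
        sum(confusion[i]) * sum(row[i] for row in confusion)
        for i in range(n_classes)
    ) / total ** 2
    return (observed - expected) / (1.0 - expected)


# Hypothetical balanced two-class result: 14 of 20 trials classified correctly.
confusion = [[7, 3],
             [3, 7]]
accuracy = (confusion[0][0] + confusion[1][1]) / 20
kappa = cohens_kappa(confusion)
```

Here the observed agreement is 0.7 and the chance agreement is 0.5, so kappa = (0.7 − 0.5) / (1 − 0.5) = 0.4, the threshold the authors used for successful control.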

Jochumsen et al. (2018) deployed motor execution tasks to elicit MRCPs in the motor cortex. The MRCP is an event-related potential locked to the onset of a movement, reflecting preparatory brain activity. They detected MRCPs via EEG in eight adolescents with hemiplegic or diplegic CP (aged 11–17), with the goal of maximizing motor learning by temporally aligning afferent feedback with the cortical manifestation of movement intention. Offline classification accuracy (chance level: 60–65%) was reported. Participants dorsiflexed an ankle at a self-determined pace during a single 15-min session. The electromyographic signal was used to divide the continuous EEG into epochs. Mean amplitudes, absolute band power, and template matching features were extracted from each channel after filtering. Template matching was performed by calculating the cross-correlation between the template of the movement epochs (averaged epochs for each channel for each participant) and the individual epochs. A random forest discriminated movement intention epochs from idle epochs using a leave-one-out cross-validation and achieved up to 85% accuracy.
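
The template matching feature can be illustrated for a single channel as below. This is a simplified sketch under stated assumptions: it uses the zero-lag normalized cross-correlation (the review does not specify lags or normalization), the function names are hypothetical, and the MRCP-like negative drift and noise levels are synthetic.

```python
import numpy as np


def build_template(movement_epochs):
    """Template = average of the movement epochs of one channel."""
    return movement_epochs.mean(axis=0)


def template_match(epochs, template):
    """Zero-lag normalized cross-correlation of each epoch with the template."""
    e = epochs - epochs.mean(axis=1, keepdims=True)
    t = template - template.mean()
    return (e @ t) / (np.linalg.norm(e, axis=1) * np.linalg.norm(t))


# Synthetic single-channel data: a slow negative drift stands in for the MRCP.
rng = np.random.default_rng(2)
n_samples = 200
mrcp = -np.linspace(0.0, 10.0, n_samples)
movement = mrcp + rng.normal(scale=2.0, size=(40, n_samples))
idle = rng.normal(scale=2.0, size=(40, n_samples))

template = build_template(movement[:30])           # built from training epochs only
move_scores = template_match(movement[30:], template)
idle_scores = template_match(idle, template)
```

Building the template only from training epochs, as done here, avoids leaking the test epoch into its own feature, which matters when the feature is evaluated with leave-one-out cross-validation.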

For study objective, population, and tasks of non-invasive BCIs see Table 1. For signal processing techniques and results see Table 2.

Table 2.

Research articles on pediatric non-invasive BCIs: signal processing techniques and results (only for pediatric age).

| References | Online/offline/# of classes | Signal processing and features | Classifier or analysis | Results |
|---|---|---|---|---|
| Ehlers et al. (2012) | Online only; 5 classes: 5 visual stimulation frequency targets in low, medium, or high frequency range; Chance level: n/a | Fs: 2,048 Hz; Filtering: high-pass (fc = 0.1 Hz), low-pass (fc = 552.96 Hz); Features: minimum energy combination spatial filter | Classifier: Bremen-BCI; Outcome measure: (i) Accuracy | Offline accuracy: n/a; Online accuracy: low-frequency stimulation, Group 1: 40%, Group 2: 50%, Group 3: 50%; medium-frequency stimulation, Group 1: 55%, Group 2: 50%, Group 3: 75%; high-frequency stimulation, Group 1: 38%, Group 2: 45%, Group 3: 55%; Additional measures: n/a |
| Norton et al. (2018) | Offline and online; 3 classes: 3 SSVEP targets; Chance level: n/a | Fs: 128 Hz; Filtering: bandpass 1–30 Hz; Features: (i) Threshold (ii) Window-length | Classifier: canonical correlation analysis; Outcome measure: (i) Classification accuracy (ii) Latency (iii) Nykopp bitrate | Offline accuracy: 11 of 14 children exceeded the threshold of success: 40–100%; Online accuracy: P: 79%; Additional measures: latency 2.106 s, Nykopp bitrate 0.5 bits/s |
| Beveridge et al. (2017) | Offline: 5 classes (5 target locations for mVEP) and 2 classes (leave-one-out cross-validation among the 5 targets); Online: 5 target locations classified using target vs. non-target binary classification; Chance level: 20% (0 bpm) for 5-class classifier, 50% for 2-class classifier (theoretical chance level) | Fs: 250 Hz, resampled to 20 Hz; Filtering: baseline-corrected, low-pass filter (fc = 10 Hz); Features: (i) mVEP components (e.g., P100, N200, and P300): data averaged over 5 trials (12 feature vectors per stimulus) | Classifier: LDA; Outcome measure: (i) Classification accuracy (ii) ITR | Offline accuracy (LOOCV & 5-class): P1: 84.58 & 76.67; P2: 94.58 & 96.67; P3: 84.79 & 81.67; P4: 83.54 & 70.00; P5: 86.46 & 85.00; P6: 77.29 & 76.67; P7: 88.96 & 85.00; P8: 91.88 & 81.67; P9: 94.79 & 98.33; P10: 78.75 & 71.67; P11: 90.42 & 91.67; P12: 77.50 & 68.33; P13: 88.75 & 85.00; P14: 82.29 & 75.00; P15: 72.92 & 70.00; Mean: 85.17 & 80.89. Online accuracy: P1: 54%\*; P2: 88%\*; P3: 45%\*; P4: 65%\*; P5: 83%\*; P6: 61%\*; P7: 82%\*; P8: 92%\*; P9: 75%\*; P10: 51%\*; P11: 58%\*; P12: 60%\*; P13: 85%\*; P14: 82%\*; P15: 40%\*; Mean: 68%\*\*. Additional measures (ITR): P1: 4 bpm\*; P2: 19 bpm\*; P3: 3 bpm\*; P4: 8 bpm\*; P5: 16 bpm\*; P6: 7 bpm\*; P7: 16 bpm\*; P8: 21 bpm\*; P9: 13 bpm\*; P10: 4 bpm\*; P11: 6 bpm\*; P12: 7 bpm\*; P13: 17 bpm\*; P14: 16 bpm\*; P15: 2 bpm\*; Mean: 11 bpm\*\* |
| Beveridge et al. (2019) | Offline: 5 classes (5 target locations for mVEP) and 2 classes (LOOCV among the 5 targets); Online: 5 target locations classified using target vs. non-target binary classification; Chance level: 20% (0 bpm) for 5-class classifier, 50% for 2-class classifier (theoretical chance level) | Fs: 250 Hz, resampled to 20 Hz; Filtering: baseline-corrected, low-pass filter (fc = 10 Hz); Features: (i) mVEP components (e.g., P100, N200, and P300): data averaged over 5 trials (12 feature vectors per stimulus) | Classifier: LDA; Outcome measure: (i) Classification accuracy (ii) ITR (iii) mVEP latency (iv) mVEP amplitude | Same results as Beveridge et al. (2017); online results showed that BCI naïve adults achieved higher accuracies than BCI naïve children (the difference is not always statistically significant) |
| Vařeka (2020) | Offline only; 10 classes: 10 P300 targets; Chance level: n/a | Fs: 1,000 Hz; Filtering: baseline-corrected, amplitude threshold 100 μV; Features: (i) Averaged time intervals and feature scaled to zero mean and unit variance | Classifier: LDA, SVM, and CNN in leave-one-out cross-validation; Outcome measure: (i) Accuracy (ii) Precision (iii) Recall (iv) AUC | Offline accuracy: single-trial classification accuracy 62–64%, accuracy with trial averaging 76–79%; Online accuracy: n/a; Additional measures (single-trial classification): precision 61.5–63.5%\*\*\*, recall 60.5–67.5%\*\*\*, AUC 62–66%\*\*\*; all tested models achieved comparable classification results |
| Taherian et al. (2017) | Online only; 2 classes: left and right arm motor imagery; Chance level: n/a | Fs: n/a; Filtering: proprietary Emotiv software; Features: proprietary Emotiv software (Cognitiv suite), ERD | Classifier: Emotiv classifier (proprietary output from Emotiv Software Development Kit); Outcome measure: (i) Peak performance score | Offline accuracy: n/a; Online accuracy: n/a; Additional measures: peak performance score for left and right arm |
| Jochumsen et al. (2018) | Offline only; 2 classes: idle vs. movement-related activity; Chance level: 60–65% | Fs: 1,000 Hz; Filtering: 4th order zero phase shift Butterworth bandpass 0.1–45 Hz, baseline correction; Features: (i) Mean amplitudes (ii) Absolute band power (iii) Template matching (iv) All features combined | Classifier: random forest classifier in LOOCV; Outcome measure: (i) Classification accuracy | Offline accuracy: 75–85%; Online accuracy: n/a; Additional measures: n/a |
| Zhang et al. (2019) | Online only; 2 classes: MI/goal-oriented thought and rest; Chance level: 70% (0.40 Cohen's kappa) | Fs: 2,048 Hz resampled to 128 Hz; Filtering: proprietary Emotiv software; Features: proprietary Emotiv software, ERD | Classifier: Emotiv classifier (PNN and RBF); Outcome measure: (i) Cohen's kappa | Offline accuracy: n/a; Online accuracy: n/a; Additional measures: average kappa score of 0.46, range of 0.025–0.9 |

Fs, sampling frequency; fc, cut-off frequency; SSVEP, steady state visually evoked potential; mVEP, motion-onset visual evoked potential; MI, motor imagery; ITR, information transfer rate; LOOCV, leave-one-out cross-validation; CNN, convolutional neural network; LDA, linear discriminant analysis; SVM, support vector machine; AUC, area under the ROC curve; ERD, event-related desynchronization; PNN, probabilistic neural network; RBF, radial basis function; bpm, bits per minute; P#, pediatric participant number; n/a, not applicable.

\*Averaged across all 3 laps (estimated from bar graph). \*\*Averaged across all 3 laps. \*\*\*Estimated from bar graph; range includes achieved averages for all three classifier results.

Invasive Pediatric BCIs

Four articles (Sanchez et al., 2008; Breshears et al., 2011; Pistohl et al., 2012, 2013) included in this category used ECoG as invasive modality for interrogating the pediatric brain. Three of these studies applied motor execution paradigms while one additionally invoked MI (Breshears et al., 2011). Studies recruited individuals with epilepsy who had implanted electrode grids used to monitor brain activity prior to surgery.

The first invasive pediatric BCI study was presented by Sanchez et al. (2008). They explored a motor control paradigm with two adolescents aged 14 and 15 years who had an electrode array implanted to monitor their intractable epilepsy prior to surgery. Participants engaged in six repetitions of arm reaching and pointing tasks. ECoG signals from pre-motor, motor, somatosensory, and parietal cortices were decoded using a linear adaptive finite impulse response (FIR) filter trained with the Wiener solution. Sanchez et al. (2008) reported the first example of decoding pediatric ECoG signals for an online BCI model. They processed ECoG signals collected during the reaching and pointing task by first filtering the data between 1 Hz and 6 kHz. Features from each channel were fed into the FIR filter topology to generate an estimate of the arm trajectory. Pearson's correlation was used to determine how closely the decoded signals matched the true arm trajectory. The highest Pearson's correlations were achieved using the 300 Hz–6 kHz frequency band feature.
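
A linear FIR decoder trained with the Wiener solution and scored with Pearson's correlation can be sketched as follows. This is an illustrative reconstruction, not the authors' code: the function names, the number of taps, the small ridge term added for numerical stability, and the synthetic features and trajectory are all assumptions.

```python
import numpy as np


def lagged_design(features, n_taps):
    """Stack the current and previous n_taps - 1 samples of every feature channel."""
    n_samples = features.shape[0]
    rows = n_samples - n_taps + 1
    cols = [features[n_taps - 1 - lag : n_taps - 1 - lag + rows] for lag in range(n_taps)]
    return np.hstack(cols)


def fit_wiener(features, target, n_taps, ridge=1e-6):
    """Wiener solution w = R^-1 p (the ridge term is an added assumption)."""
    X = lagged_design(features, n_taps)
    y = target[n_taps - 1:]
    R = X.T @ X / len(X)   # autocorrelation matrix of the lagged inputs
    p = X.T @ y / len(X)   # cross-correlation between inputs and target
    return np.linalg.solve(R + ridge * np.eye(R.shape[0]), p)


def predict(features, weights, n_taps):
    return lagged_design(features, n_taps) @ weights


# Synthetic demo: a trajectory that depends on current and delayed features.
rng = np.random.default_rng(3)
features = rng.normal(size=(2000, 4))
target = (0.5 * features[:, 0]
          + 0.3 * np.roll(features[:, 1], 1)
          - 0.2 * np.roll(features[:, 2], 2)
          + 0.1 * rng.normal(size=2000))

n_taps = 3
weights = fit_wiener(features[:1500], target[:1500], n_taps)
decoded = predict(features[1500:], weights, n_taps)
truth = target[1500 + n_taps - 1:]
pearson_r = np.corrcoef(decoded, truth)[0, 1]
```

Training on one segment and correlating the decoded and true trajectories on a held-out segment mirrors the evaluation described above, in which Pearson's correlation quantified how closely the decoded signal followed the arm.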

Breshears et al. (2011) demonstrated the decodable nature of ECoG signals from motor and/or language (Wernicke's or Broca's area) cortices in six pediatric participants (aged 9–15) who required invasive monitoring for intractable epilepsy. To move a cursor on the screen, children performed a motor (e.g., hand opening and closing, repetitive tongue protrusion) or phoneme articulation (oo, ah, eh, ee) task. Participants were asked to move the cursor along one dimension to hit a target on the opposite side of the screen during a single online session. Trials were grouped into runs of up to 3 min with a rest period of <1 min. Breshears et al. (2011) applied an autoregressive spectral estimation in 2 Hz bins ranging between 0 and 250 Hz to decode ECoG signals. For each electrode and frequency bin, significant task-related increases or decreases in spectral power were identified by calculating the r² correlation between baseline spectra and activity spectra for each active task. Online performance accuracy (chance level: 50%) was calculated as the number of successes (i.e., target hits) divided by the total number of movements after each block. Results were compared to previous studies (Leuthardt et al., 2004; Wisneski et al., 2008) conducted with five adult participants (aged 23–46). The results showed that the pediatric participants' performance matched that of the adults and that pediatric brain signals can be decoded and affected in the same way as adult brain signals. Within 9 min of training, children achieved 70–99% target accuracy in experiments where multiple cognitive modalities were used to achieve an imagined action to control a cursor on the screen. Children controlled the cursor with hand movements using the β (15–40 Hz) and γ (60–130 Hz) frequency ranges, and two did so with tongue movements using the high-γ (107.5–155 Hz) frequency range. Four of the six participants began with achieved accuracies <70%.
Two participants were able to generate BCI control using imagined movement rather than overt performance of the task. The mean accuracy was 81% and the mean training time was 11.6 min, whereas the adult group required 12.5 min and reported a mean accuracy of 72%. Table 4 outlines the range of accuracies for each participant using one or different movements. These findings form a proof of concept that decoding signals from the pediatric cortex is possible and may be used for BCI control.
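
The r² screening of electrode and frequency-bin features can be illustrated with a small sketch. It assumes the common formulation in which r² is the squared correlation between the spectral power values and a binary task/rest label, with the sign indicating a power increase or decrease; the review does not give the exact formula, and the function name and synthetic power values are hypothetical.

```python
import numpy as np


def signed_r2(active_power, rest_power):
    """Squared correlation between power and condition label, signed by direction."""
    values = np.concatenate([active_power, rest_power])
    labels = np.concatenate([np.ones(len(active_power)), np.zeros(len(rest_power))])
    r = np.corrcoef(values, labels)[0, 1]
    return float(np.sign(r) * r ** 2)


# Synthetic power values for one electrode and one 2 Hz bin (assumed data).
rng = np.random.default_rng(4)
active = rng.normal(loc=2.0, scale=1.0, size=50)   # power during the task
rest = rng.normal(loc=0.0, scale=1.0, size=50)     # power during baseline
increase = signed_r2(active, rest)
decrease = signed_r2(rest, active)
```

Features with a large absolute value are the ones whose power best separates task from rest, which is the property needed for a control signal; online accuracy is then simply the number of target hits divided by the number of movements.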

Turning attention specifically to grasping movements, Pistohl et al. (2012) conducted a single-session study with three pediatric participants (aged 14–16) who had electrodes implanted for pre-surgical epilepsy diagnostics. Participants performed self-initiated reach-to-grasp movements toward a cup with either a precision or whole-hand grip, relocated the cup, and finally returned their hand to a central resting position. Participants completed between 303 and 338 trials. Pistohl et al. (2012) focused on offline two-class classification of precision grip vs. whole-hand grip (chance level: 50% classification accuracy). Common average referencing and low-pass filtering were applied. The authors utilized an rLDA classifier and reported decoding accuracy and the temporal evolution of grasp discriminability. The three participants achieved between 84 and 97% decoding accuracy. Temporal evolution of grasp discriminability was 0.2 s.

Subsequently, Pistohl et al. (2013) utilized the same data to automatically detect the time of grasping movements within a continuous ECoG recording. After filtering, the authors extracted frequency band amplitudes and trained an rLDA classifier to distinguish two classes: occurrence of a grasp and no grasp. Ten-fold cross-validation was used to test detection performance for each subject. Offline results were reported. The authors did not report a chance level; based on the previous work, we assumed it was 50%. Results showed that amplitudes recorded in the high-gamma range from hand-arm motor-related channels achieved the best performance. Local maxima were found at 56–128 Hz and 16–28 Hz. Low-pass filtered components and the 16–28 and 56–128 Hz amplitudes gave the best classification results when used together as classifier inputs. Sensitivity and specificity depended on the temporal precision of detection and on the delay between event detection and when the event occurred.
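
The dependence of sensitivity on temporal precision can be made concrete with a tolerance-based event matching sketch. This is not the scoring procedure of Pistohl et al. (2013), whose details are not given in this review: the function name, the greedy one-to-one matching rule, and the event times are hypothetical.

```python
def score_detections(true_times, detected_times, tolerance_s, duration_s):
    """Match detections to true events within +/- tolerance_s (greedy, one-to-one)."""
    unmatched = sorted(detected_times)
    hits = 0
    for t in sorted(true_times):
        match = next((d for d in unmatched if abs(d - t) <= tolerance_s), None)
        if match is not None:
            hits += 1
            unmatched.remove(match)
    true_positive_rate = hits / len(true_times)
    false_positives_per_min = len(unmatched) / (duration_s / 60.0)
    return true_positive_rate, false_positives_per_min


# Hypothetical grasp onsets and detector outputs (seconds) in a 60 s recording.
true_times = [5.0, 12.0, 20.0, 31.0]
detected_times = [5.1, 12.6, 19.9, 25.0]
strict = score_detections(true_times, detected_times, tolerance_s=0.25, duration_s=60.0)
lenient = score_detections(true_times, detected_times, tolerance_s=1.0, duration_s=60.0)
```

Tightening the tolerance turns a late detection into both a miss and a false positive, which is why reported sensitivity and false-positive rates are only comparable when the required temporal precision is stated.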

For study objective, population, and tasks of invasive BCIs see Table 3. For signal processing techniques and results see Table 4.

Table 3.

Research articles on pediatric invasive BCIs: Study objectives and data collection details.

| References | Study objective | Sample size [females] | Age (years) | Diagnosis | Applications | BCI paradigm | Mode of operation | Signal type | Data acquisition | Task and sessions |
|---|---|---|---|---|---|---|---|---|---|---|
| Sanchez et al. (2008) | Present techniques to spatially localize motor potentials | Pediatric N = 2 [2] | 14–15; P1: 14, P2: 15 | Intractable epilepsy | Neuroprosthetics | Arm reaching and pointing | Synch | ECoG | Location: sensorimotor cortex; # Channels: 36 and 32; Hardware and software: MATLAB® | Task: arm reaching and pointing; # Sessions: 1; Session duration: 6 task repetitions; Task duration: 5 s |
| Breshears et al. (2011) | Decodable nature of pediatric brain signals for the purpose of neuroprosthetic control | N = 11 [n/a]; Pediatric N = 6 [1] | 9–46; Pediatric 9–15; P1: 15, P2: 11, P3: 15, P4: 9, P5: 12, P6: 13 | Intractable epilepsy | Neuroprosthetics/mouse control | MI or motor execution (hand opening/closing, tongue protrusions, phoneme articulation) | Synch | ECoG | Location: motor, temporal, and prefrontal areas, depending on the patient; # Channels: 48 or 64; Hardware and software: AdTech electrode arrays, g.tec amplifier, BCI2000, MATLAB® | Task: move a cursor on a screen along one dimension using motor execution or imagined movement; # Sessions: 1; Session duration: 10–37 min; Task duration: 2–3 s |
| Pistohl et al. (2012) | ECoG signal decoding for hand configurations in an everyday environment | Pediatric N = 3 [3] | 14–16; P1: 14, P2: 16, P3: 15 | Epilepsy | Neuroprosthetics/reach-to-grasp | Motor execution | Asynch (self-paced) | ECoG | Location: electrodes residing over hand-arm motor cortex as identified through anatomical location and electrical stimulation; # Channels: 48 or 64; Hardware and software: IT-Med clinical EEG-System | Task: reach-to-grasp movements (self-paced and largely self-chosen movements); # Sessions: 1; Session duration: n/a; Time of analyzed data: P1: 32 min (303 grasps), P2: 35.3 min (338 grasps), P3: 25.4 min (320 grasps); Task duration: 60 ms per grasp |
| Pistohl et al. (2013) | Time of grasps from human ECoG recording from the motor cortex during a sequence of natural and continuous reach-to-grasp movements | Pediatric N = 3 [3] | 14–16; P1: 14, P2: 16, P3: 15 | Same as Pistohl et al. (2012), as the participants and experimental paradigm are the same. | | | | | | |

N, number of participants; ECoG, electrocorticography; P#, pediatric participant number; MI, motor imagery; synch, synchronous; asynch, asynchronous; n/a, not applicable.

Quality Assessment and Risk of Bias

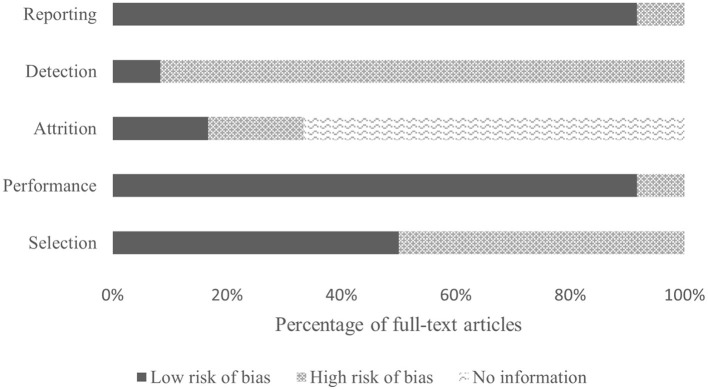

The quality of the included studies ranged from 45% (Taherian et al., 2017) to 91% (Zhang et al., 2019), with a median score of 70% and an interquartile range of 60–76%. For a breakdown of the quality appraisal markings, see Table 5. Ten included papers present primary exploratory research using a multiple case study design, and two present cross-sectional studies (Ehlers et al., 2012; Zhang et al., 2019). Overall, the quality of the studies was adequate. One study was assessed as limited (Taherian et al., 2017), six as adequate (Sanchez et al., 2008; Breshears et al., 2011; Pistohl et al., 2013; Beveridge et al., 2017, 2019; Norton et al., 2018), three as good (Ehlers et al., 2012; Pistohl et al., 2012; Jochumsen et al., 2018), and two as strong (Zhang et al., 2019; Vařeka, 2020). However, some of the algorithms used by Zhang et al. (2019) are proprietary, which hinders reproducibility of the experiments because the EMOTIV SDK may require purchasing a license to access some of the APIs. For the quality assessment of the signal processing, we considered whether the features extracted and the classification algorithms applied were reported in sufficient detail. We did not take into account open science (data and software/code sharing) or the reproducibility of the obtained results in the quality assessment. We highlight that Vařeka (2020) is the only included study that made its data and software code publicly available. Table 6 and Figure 4 report the domain-level judgments for each study and a summary bar plot of the distribution of the risk-of-bias assessment within each bias domain. Breshears et al. (2011), Ehlers et al. (2012), Norton et al. (2018), and Beveridge et al. (2019) are the only studies comparing children to adults.
Only six studies (Ehlers et al., 2012; Beveridge et al., 2017, 2019; Jochumsen et al., 2018; Norton et al., 2018; Zhang et al., 2019) considered important inclusion and exclusion criteria for selection bias: information related to the dominant hand; previous experience with BCI; use of medication; individual participants' age; gender; history of brain injury. Four studies (Sanchez et al., 2008; Breshears et al., 2011; Pistohl et al., 2012, 2013) included children with epilepsy but did not control for epilepsy-related brain activity or differences in the electrode positioning. Vařeka (2020) recruited a large number of participants but did not report individual participants' ages or handedness, nor results for male vs. female participants. In terms of maintaining fidelity to the study protocol, nine studies implemented the same protocol consistently across participants. Three studies applied protocols with a different number of sessions and trials across participants (Breshears et al., 2011; Ehlers et al., 2012; Taherian et al., 2017). Missing data (e.g., participants who dropped out, or trials or low performances excluded by the researchers) were considered and handled appropriately in only one study (Norton et al., 2018). Three studies took missing data into consideration but did not discuss or analyze them appropriately (Ehlers et al., 2012; Taherian et al., 2017; Zhang et al., 2019). We did not consider questions related to assessors blinded to the intervention or exposure status of participants, because blinding is not appropriate for BCI studies. Two studies did not assess outcomes using valid and reliable measures (Ehlers et al., 2012; Taherian et al., 2017). Ehlers et al. (2012) reported an unclear definition of the performance evaluation (e.g., correct-to-complete commands ratio). Taherian et al. (2017) did not include a reliable measure in their protocol (i.e., performance score). Table 7 summarizes the performance evaluation metrics used in the 12 studies in this review.
In terms of confounding variables assessed, we considered participants' age, gender, fatigue, and psychological factors. Only one study did not introduce bias through confounding variables (Zhang et al., 2019). One article did not pre-specify outcomes (Taherian et al., 2017). Regarding bias that might affect these 12 studies, we highlight that only five studies reported concerns due to small sample sizes (Sanchez et al., 2008; Breshears et al., 2011; Jochumsen et al., 2018; Zhang et al., 2019). Vařeka (2020) is the only study that recruited many participants. Self-reporting risk of bias was applicable to only one study (Zhang et al., 2019), which included questionnaires on psychological and cognitive information and on BCI workload.

Table 5.

QualSyst scores for quantitative papers.

| References | Question/objective sufficiently described | Study design evident and appropriate | Method of subject/comparison group selection or source of information/input variable described and appropriate | Subject characteristic sufficiently described | If interventional and random allocation was possible, was it described? | If interventional and blinding of investigators was possible, was it reported? | If interventional and blinding of subjects was possible, was it reported? | Outcomes and (if applicable) exposure measures well-defined and robust to measurement/misclassification bias? Means of assessment reported? | Sample size appropriate (pediatric population) | Analytic methods described/justified and appropriate | Some estimate of variance is reported for the main results | Controlled for confounding | Results reported in sufficient detail | Conclusions supported by the results | Score (%) | Quality grade |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sanchez et al. (2008) | 2 | 2 | 1 | 2 | N/A | N/A | N/A | 1 | 0 | 1 | 1 | 0 | 2 | 2 | 70 | Adequate |
| Breshears et al. (2011) | 2 | 2 | 1 | 2 | N/A | N/A | N/A | 2 | 0 | 1 | 0 | N/A | 1 | 1 | 60 | Adequate |
| Ehlers et al. (2012) | 2 | 2 | 1 | 2 | N/A | N/A | N/A | 1 | 0 | 2 | 1 | 1 | 2 | 2 | 73 | Good |
| Pistohl et al. (2012) | 2 | 2 | 1 | 2 | N/A | N/A | N/A | 2 | 0 | 2 | 0 | 0 | 2 | 2 | 75 | Good |
| Pistohl et al. (2013) | 1 | 1 | 1 | 2 | N/A | N/A | N/A | 2 | 0 | 2 | 1 | N/A | 2 | 2 | 70 | Adequate |
| Beveridge et al. (2017) | 1 | 2 | 1 | 2 | N/A | N/A | N/A | 1 | 0 | 1 | 0 | 1 | 1 | 2 | 60 | Adequate |
| Taherian et al. (2017) | 2 | 2 | 1 | 2 | N/A | N/A | N/A | 0 | 0 | 0 | 0 | N/A | 0 | 2 | 45 | Limited |
| Jochumsen et al. (2018) | 2 | 2 | 1 | 2 | N/A | N/A | N/A | 2 | 0 | 2 | 1 | N/A | 2 | 2 | 80 | Good |
| Norton et al. (2018) | 2 | 2 | 1 | 1 | N/A | N/A | N/A | 2 | 1 | 1 | 1 | 1 | 1 | 2 | 68 | Adequate |
| Beveridge et al. (2019) | 1 | 2 | 1 | 2 | N/A | N/A | N/A | 1 | 0 | 1 | 0 | 1 | 1 | 2 | 60 | Adequate |
| Zhang et al. (2019) | 2 | 2 | 2 | 1 | N/A | N/A | N/A | 2 | 1 | 2 | 2 | 2 | 2 | 2 | 91 | Strong |
| Vařeka (2020) | 2 | 2 | 1 | 1 | N/A | N/A | N/A | 1 | 2 | 2 | 2 | 1 | 2 | 2 | 90 | Strong |

Each criterion was scored 2, 1, or 0 (2 = yes, 1 = partial, 0 = no), or N/A if it was not applicable to the paper. To make them comparable, overall scores are presented as percentages. Quality grade: limited (score of ≤50%), adequate (>50% and ≤70%), good (>70% and ≤80%), strong (>80%) (Lee et al., 2008).
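The scoring scheme in the table note can be illustrated with a minimal sketch. It assumes the conventional QualSyst summary score (sum of item scores divided by the maximum attainable over the applicable items), which the text does not state explicitly; the function names are hypothetical. The two example rows are taken from Table 5.

```python
from typing import Optional, Sequence


def qualsyst_score(item_scores: Sequence[Optional[int]]) -> float:
    """Return the summary score in percent; None marks an N/A criterion.

    Assumption: score = sum of items / (2 * number of applicable items).
    """
    applicable = [s for s in item_scores if s is not None]
    if not applicable:
        raise ValueError("at least one criterion must be applicable")
    if any(s not in (0, 1, 2) for s in applicable):
        raise ValueError("item scores must be 0, 1, 2, or None (N/A)")
    return 100.0 * sum(applicable) / (2 * len(applicable))


def quality_grade(score_pct: float) -> str:
    """Map a percentage to the grade bands given in the table note."""
    if score_pct <= 50:
        return "Limited"
    if score_pct <= 70:
        return "Adequate"
    if score_pct <= 80:
        return "Good"
    return "Strong"


NA = None  # shorthand for a criterion that does not apply

# Item scores copied from Table 5, in column order.
breshears_2011 = [2, 2, 1, 2, NA, NA, NA, 2, 0, 1, 0, NA, 1, 1]
zhang_2019 = [2, 2, 2, 1, NA, NA, NA, 2, 1, 2, 2, 2, 2, 2]

for name, items in [("Breshears et al. (2011)", breshears_2011),
                    ("Zhang et al. (2019)", zhang_2019)]:
    pct = qualsyst_score(items)
    print(f"{name}: {round(pct)}% ({quality_grade(pct)})")
```

Because N/A items are removed from both the numerator and the denominator, papers with different numbers of applicable criteria remain comparable on the same percentage scale.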

Figure 4.

Bar plot visualization of risk-of-bias assessments.

Table 7.

Performance evaluation metrics used in the 12 studies on pediatric BCIs.

| References | Performance metrics |
|---|---|
| Sanchez et al. (2008) | Accuracy |
| Breshears et al. (2011) | Accuracy |
| Ehlers et al. (2012) | Accuracy |
| Pistohl et al. (2012) | Correlation coefficients |
| Pistohl et al. (2013) | TPR/FPR/FP-rate |
| Beveridge et al. (2017) | Accuracy, ITR |
| Taherian et al. (2017) | Performance score |
| Jochumsen et al. (2018) | Accuracy |
| Norton et al. (2018) | Accuracy, Latency, Bitrate |
| Beveridge et al. (2019) | Accuracy, ITR, Latency |
| Zhang et al. (2019) | Cohen's kappa |
| Vařeka (2020) | Accuracy, Precision, Recall, AUC |

FPR, false positive ratio; TPR, true positive ratio; FP-rate, false positive rate; AUC, area under ROC curve; ITR, information transfer rate.
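As a rough illustration of how several of the metrics in Table 7 relate to one another, the sketch below derives accuracy, TPR, FPR, precision, and Cohen's kappa from the counts of a binary confusion matrix. It uses common textbook definitions, which may differ from the exact definitions adopted in the individual studies; the function name and the example counts are hypothetical.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Compute common classification metrics from binary confusion counts."""
    n = tp + fp + fn + tn
    if min(tp, fp, fn, tn) < 0 or n == 0:
        raise ValueError("counts must be non-negative and not all zero")
    if (tp + fn) == 0 or (fp + tn) == 0 or (tp + fp) == 0:
        raise ValueError("a ratio is undefined for these counts")

    accuracy = (tp + tn) / n
    tpr = tp / (tp + fn)        # true positive ratio (also called recall)
    fpr = fp / (fp + tn)        # false positive ratio
    precision = tp / (tp + fp)

    # Cohen's kappa corrects observed agreement (accuracy) for the
    # agreement p_e expected by chance given the marginal totals.
    p_e = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (accuracy - p_e) / (1.0 - p_e)

    return {
        "accuracy": accuracy,
        "tpr": tpr,
        "fpr": fpr,
        "precision": precision,
        "kappa": kappa,
    }


# Hypothetical counts for a balanced two-class BCI task.
metrics = binary_metrics(tp=40, fp=10, fn=10, tn=40)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

In this balanced example an accuracy of 0.80 corresponds to a kappa of 0.60, which shows why kappa values are not directly comparable with raw accuracies when studies report different metrics.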

Evaluation Factors

Evaluation factors usually reported for BCI studies are usability, performance, user satisfaction, psychological factors, brain workload, fatigue, quality of life, and cognitive evaluation (Nicolas-Alonso and Gomez-Gil, 2012; Choi et al., 2017). Only one study reported subject fatigue, using a 5-point Likert scale questionnaire (Zhang et al., 2019); the same study also used a self-report questionnaire to investigate participants' psychological factors during BCI experiments. Taherian et al. (2017) attributed the absence of self-report questionnaires to the severity of participants' conditions and their limited communication capabilities. In terms of performance, most of the studies reported accuracy rates (see Table 7). Norton et al. (2018) was the only study with a self-report questionnaire on the usability of the BCI system.

Discussion

Pediatric BCIs

The primary objective of this systematic review was to examine studies related to the use of BCIs in pediatric populations. We described the current state-of-the-art for pediatric BCIs and assessed the quality and the risk of bias of the 12 articles. The included studies raise several challenges, which we address in the following sections together with considerations for future research to make BCI technologies suitable for children. We also identify requirements to render BCIs suitable for clinical translation.

BCIs for Communication: State-of-the-Art

Among the studies investigating BCIs for communication, a wide range of methods was implemented, with varying levels of success. Seven studies involved non-invasive EEG as the signal acquisition modality (Ehlers et al., 2012; Beveridge et al., 2017, 2019; Taherian et al., 2017; Norton et al., 2018; Zhang et al., 2019; Vařeka, 2020). Five studies analyzed evoked potentials (Ehlers et al., 2012; Beveridge et al., 2017, 2019; Norton et al., 2018; Vařeka, 2020) and two used movement-related potentials as the control signal (Taherian et al., 2017; Zhang et al., 2019).

Two studies used MI as an active mental task (Taherian et al., 2017; Zhang et al., 2019). Taherian et al. (2017) deployed a consumer-grade EEG headset with five youth with spastic quadriplegic CP to decode left and right arm MI. This was the first study of computer access by children with a commercial EEG-BCI, using the EMOTIV Epoc® hardware, but participants performed poorly (peak performance scores ranged from 0.08 to 0.56). Zhang et al. (2019) used the same low-cost commercial EEG headset as Taherian et al. (2017) to compare MI and “goal-oriented thinking” as tasks to control either a toy car or a computer cursor with TD children. Participants achieved an online Cohen's kappa score of 0.46, pointing to successful control of the BCI. Importantly, performance was higher when users controlled the toy car than when they controlled the computer cursor, which the authors attributed to the children's increased engagement when controlling the car. This study points to the potential of low-cost BCIs being used successfully as a binary switch by pediatric users. It is unclear whether the poor results achieved by Taherian et al. (2017) are due to neurological differences between children with CP and TD children. It is possible that the physical and cognitive limitations common among children with CP were the source of the differences in achieved accuracies.

Many limitations of current methods emerge when translating BCIs for communication to the target population (e.g., children with severe disabilities). For example, in the study by Taherian et al. (2017), participating children had unique head shapes that limited the ability of the BCI electrodes to make contact with the scalp. Additionally, individuals with CP are known to produce large muscular artifacts due to involuntary movements. Since the authors were unable to record raw EEG data, it is unclear whether artifacts disrupted signal acquisition and thereby affected their training data. The embedded EMOTIV system used by Taherian et al. (2017) may not have adequate artifact reduction methods, which would significantly affect the classification of the signals. Lastly, the authors reported a further issue related to the severity of participants' conditions: they had considerable difficulty conducting 30 min training sessions and could not collect enough EEG data to adequately train the classifiers. Moreover, participants were unable to learn to reproduce specific MI tasks within the timeframe of the study. In contrast, Zhang et al. (2019) demonstrated that a goal-oriented strategy works better than an MI task with children, and it may be useful for teaching MI tasks to children with disabilities. They found a higher BCI illiteracy rate in children than in adults and emphasized the difficulty children may experience in reproducing their thought strategy in each trial. These issues might be resolved by including additional training phases in the acquisition protocol for pediatric BCIs. The lack of customization of commercial headsets for pediatric head sizes may also explain the reduced performance of children compared to adults. For this reason, Zhang et al. (2019) had considerable difficulty placing the electrodes at the locations dictated by the international 10–20 system.

The other five communication-focused studies used the reactive tasks known as SSVEP (Ehlers et al., 2012; Norton et al., 2018), mVEP (Beveridge et al., 2017, 2019), and P300 (Vařeka, 2020). The two studies investigating SSVEP (i.e., where the user visually fixates on a flashing target to indicate its selection) used EEG as the signal acquisition modality and achieved mixed results. There are three main performance measurements of an SSVEP-based BCI: accuracy (probability of the predicted target matching the actual target), latency (mean time from target onset to classification), and bit rate (information transfer rate, i.e., the amount of information conveyed per unit of time). Ehlers et al. (2012) tested an SSVEP-BCI with five visual targets in 40 TD children and obtained fairly poor results, with accuracies ranging from 38 to 75%. Their results demonstrated that mean accuracy rates depend on age and on the frequency of the stimulation (10–11 Hz). The adult comparison group obtained consistently higher accuracy rates than all three child samples, and an age-specific shift can be seen in the peak synchronization frequency. Their findings align with those reported by Roland et al. (2011). Using an ECoG and EEG-BCI, Roland et al. (2011) found that higher frequency bands show significant correlations with age in participants aged 11–59 years. That study was excluded from this review because the authors did not report BCI performance. Norton et al. (2018) built upon the work of Ehlers et al. (2012) by improving an SSVEP-BCI for TD children. Eleven of the 15 children exceeded the threshold of successful BCI control during offline sessions and attained an average of 79% online classification accuracy. This result was statistically similar to the results achieved by the participating adult cohort. While the bit rates achieved by pediatric participants were lower than those of adults, this study points to the promise of successful control of SSVEP-BCIs by pediatric users.
As noted in Norton et al. (2018), there are many methodological differences between their study and that of Ehlers et al. (2012), which may explain the differences in results. These include differences in the task controlled by the BCI, slightly different stimulation frequencies, the involvement of a calibration phase, dissimilar environmental settings, and the exclusion of participants after a poor calibration phase. Lastly, Norton et al. (2018) is the only study in which performance is reported in terms of accuracy, latency, and bit rate. Latency is the average amount of time between the onset of the stimuli and the classification of the predicted target (Norton et al., 2018). Norton et al. (2018) showed that children were slower than adults, although this difference was not significant.
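The three SSVEP performance measures described above can be sketched as follows. Accuracy and latency are computed directly from per-trial records; for bit rate the sketch assumes the widely used Wolpaw information transfer rate formula, which is not necessarily the exact definition used by Norton et al. (2018). The function names and the trial data are hypothetical.

```python
import math
from typing import List, Tuple

# One trial: (predicted target, actual target, latency in seconds).
Trial = Tuple[int, int, float]


def wolpaw_bits_per_selection(n_targets: int, accuracy: float) -> float:
    """Bits conveyed per selection under the Wolpaw ITR formula.

    The formula is normally applied for accuracies at or above chance.
    """
    if n_targets < 2:
        raise ValueError("need at least two targets")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    bits = math.log2(n_targets)
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))
    return bits


def summarize_trials(trials: List[Trial], n_targets: int) -> Tuple[float, float, float]:
    """Return (accuracy, mean latency in s, bit rate in bits/min)."""
    if not trials:
        raise ValueError("no trials given")
    hits = [1.0 if predicted == actual else 0.0 for predicted, actual, _ in trials]
    latencies = [latency for _, _, latency in trials]
    accuracy = sum(hits) / len(hits)
    mean_latency = sum(latencies) / len(latencies)
    bit_rate = wolpaw_bits_per_selection(n_targets, accuracy) * 60.0 / mean_latency
    return accuracy, mean_latency, bit_rate


# Hypothetical session with five targets and five trials (one error).
session = [(1, 1, 3.8), (2, 2, 4.1), (3, 5, 4.6), (4, 4, 3.9), (5, 5, 4.0)]
acc, lat, rate = summarize_trials(session, n_targets=5)
print(f"accuracy={acc:.2f}, latency={lat:.2f} s, bit rate={rate:.1f} bits/min")
```

Because bit rate depends on both accuracy and latency, a group can reach similar accuracy yet a lower bit rate if its selections take longer, which is consistent with the pattern described for children and adults above.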

The two studies by Beveridge's group (Beveridge et al., 2017, 2019) involve racetrack video games and are the first studies with pediatric subjects using mVEP-BCI applications. The overarching goal of these two articles is to explore a BCI task that is less visually fatiguing than commonly investigated alternatives such as SSVEP and P300. They demonstrated the feasibility of the mVEP paradigm, achieving an average accuracy of up to 72%, but did not use any measurement or questionnaire to evaluate visual fatigue among participants. The absence of a qualitative or quantitative evaluation of visual fatigue limits the reliability of these two papers despite the high performance reported.