Abstract

Insurers and policymakers have created health care price transparency websites to facilitate price shopping and reduce spending. However, price transparency efforts to date have been plagued by low use. It is unclear whether this low use reflects lack of interest or lack of awareness. We launched a large online advertising campaign to increase consumer awareness about insurer-specific negotiated price information available on New Hampshire’s public price transparency website. Our campaign led to a more than 700 percent increase in visits to the website. However, in our analysis of health plan claims, this increased use did not translate to increased use of lower-priced providers. Our findings imply that the limited success of price transparency tools in reducing health care spending to date is driven by structural factors that limit consumer interest in health care price information, rather than only a lack of awareness about price transparency tools.

INTRODUCTION

Many policymakers and payers view health care price transparency as critical to facilitating informed patient decision making, reducing the burden of out-of-pocket spending, and decreasing overall health care spending.1 For example, the federal government recently issued new hospital price transparency requirements that direct hospitals to post negotiated prices for select services online.2 These requirements build upon price transparency websites offered by many states and private insurers.3–5

The assumption underlying these efforts is that patients will use this newly available price information when choosing where to get care. When introducing the new hospital price transparency initiative, Secretary of Health and Human Services Alex Azar described a vision of “a patient-centered system that puts you in control and provides the affordability you need, the options and control you want, and the quality you deserve. Providing patients with clear, accessible information about the price of their care is a vital piece of delivering on that vision.”6 In national surveys, Americans echo these views and support more availability of health care price information.7,8

Despite this stated interest, price transparency efforts to date have been plagued by low consumer engagement. For example, in three studies examining different price transparency websites, only 1 to 15 percent of the individuals who were offered access used a price transparency tool even once in the year following its introduction.9–11 In New Hampshire, which offers one of the most sophisticated publicly available websites, roughly 1 percent of state residents used the website over the 3 years after its introduction.12 Given these low percentages, it is not surprising that most evaluations of price transparency efforts have not demonstrated decreased spending or decreased out-of-pocket costs.9,10,13 It is unclear whether engagement is low because people are unaware that price information is available or because they are uninterested, despite survey responses suggesting the contrary.

To answer this question, we launched an experiment. We implemented a large, targeted online advertising campaign using Google Ads to increase awareness of and engagement with New Hampshire’s price transparency website.14 We evaluated the impact of this campaign on use of the website. Then, using claims data from one of the state’s largest insurers, we assessed whether the campaign increased use of lower-priced care for three shoppable services. Our results indicate the extent to which lack of awareness explains why price transparency efforts have so far had limited impact on price shopping and, in turn, on health care spending.

METHODS

Price transparency website

We launched an online informational advertising campaign to increase awareness of and engagement with NH Health Cost, the publicly available price transparency website developed by the State of New Hampshire. NH Health Cost, rated as one of the best state price transparency websites in the country,3 provides insurer- and provider-specific negotiated prices that reflect the actual amount a provider would be paid for more than 100 services.14 These price data are based on the state’s all-payer claims database.15 On the price transparency website, patients can enter their insurer and cost-sharing obligations (e.g., deductible) to estimate their expected out-of-pocket cost for a service across providers.

Intervention: Online advertising campaign

The online advertisement campaign focused on three categories of services: imaging services, emergency department visits, and physical therapy services. We selected these three service categories because they are common and are among the most frequently searched services on price transparency websites.9,12 Also, these services on average cost less than a typical deductible in a high-deductible health plan and are therefore more likely to be subject to patient cost sharing. Imaging and physical therapy services are also non-emergent, and many emergency department visits could be addressed in an alternative, cheaper setting such as an urgent care center.16

Advertisements were shown to people from the state of New Hampshire who were using Google’s search engine (“search ads” in Google parlance) or on a website with content considered relevant (“display ads”). Each user’s location was determined using the Internet Protocol address (IP address). The search terms that triggered a search ad were refined over the campaign both by an independent Google advertisements consultant and automatically by the Google advertisements algorithm. Once a search term is determined to be relevant to the advertisement content, the advertisement is entered into an automated auction with competing advertisements. The search advertisement was displayed if it won the automated auction (see Supplementary Appendix Section 1 for details17). The algorithm “learned” over time to better target search terms and users. Display advertisements appeared on websites with content related to the three service categories or on websites visited by individuals determined to have relevant search or web usage patterns.
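The auction step can be illustrated with the textbook generalized second-price (GSP) model, in which competing ads are ranked by bid multiplied by a quality score and each winner pays just enough per click to keep its position. This is a minimal sketch for intuition only: Google's actual Ad Rank uses additional signals not described here, and the advertiser names, bids, and quality scores below are hypothetical, not campaign data.

```python
from dataclasses import dataclass


@dataclass
class AdBid:
    advertiser: str
    max_cpc: float  # maximum cost-per-click bid, in dollars (hypothetical)
    quality: float  # hypothetical quality score in (0, 1]


def run_gsp_auction(bids, slots):
    """Toy generalized second-price auction.

    Ads are ranked by bid x quality; each winner pays the smallest
    per-click price that would still keep it above the next-ranked ad.
    """
    ranked = sorted(bids, key=lambda b: b.max_cpc * b.quality, reverse=True)
    winners = []
    for position, bid in enumerate(ranked[:slots]):
        if position + 1 < len(ranked):
            runner_up = ranked[position + 1]
            price = runner_up.max_cpc * runner_up.quality / bid.quality
        else:
            price = 0.0  # no lower-ranked competitor; reserve price assumed to be zero
        winners.append((bid.advertiser, round(price, 2)))
    return winners


bids = [
    AdBid("price_transparency_ad", max_cpc=3.00, quality=0.6),
    AdBid("competitor_a", max_cpc=2.00, quality=0.7),
    AdBid("competitor_b", max_cpc=1.00, quality=0.9),
]
print(run_gsp_auction(bids, slots=2))
# [('price_transparency_ad', 2.33), ('competitor_a', 1.29)]
```

In this toy example the price transparency ad wins the top slot despite not having the highest quality score, because its bid-times-quality score (1.8) exceeds the others, and it pays less than its maximum bid.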

The advertisements directed users to the price transparency website. See Appendix Exhibit A1 for screenshots of the advertisements.17 If an individual clicked on the advertisement, they would be directed to the NH Health Cost website where they could select their insurer (or uninsured status) and a health care service. They were then shown insurer-specific negotiated prices and their estimated out-of-pocket costs across providers in New Hampshire. A total of $39,060 was spent on the advertising campaign in the 6 months (January 1, 2019 to June 30, 2019) during which the campaign was fully implemented. In the 6 months prior to that (July 1, 2018 to December 31, 2018), we piloted the advertisements on a small scale.

Analytic plan: Impacts of the intervention on price transparency website usage

To assess whether the campaign increased awareness and use of the NH Health Cost website, we examined advertisement metrics as well as website visit patterns before and during the campaign. Importantly, we limited analyses to visits to the price transparency-related pages of the website and excluded visits to other pages on the NH Health Cost website (e.g., educational information about health insurance coverage, information on health care spending for employers, quality ratings).

Using Google Ads statistics, we analyzed the number of times the ads were shown by each targeted service category and the “click rate”, the percent of advertisements that resulted in a click that directed the user to the NH Health Cost website.
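As a simple illustration of the click-rate definition, the sketch below applies it to the per-category impression and click totals reported in the Results. It assumes those totals pool search and display ads, so the resulting blended rates are not the search-only or display-only rates reported there.

```python
def click_rate(clicks, impressions):
    """Click rate: percent of ad impressions that produced a click."""
    return 100.0 * clicks / impressions


# (clicks, impressions) per service category, as reported in the Results;
# assumed here to pool search and display ads.
counts = {
    "emergency department": (7_862, 2_136_665),
    "imaging": (8_612, 2_149_554),
    "physical therapy": (14_234, 2_927_163),
}
for category, (clicks, impressions) in counts.items():
    print(f"{category}: {click_rate(clicks, impressions):.2f}%")
```

The blended rates (about 0.37 to 0.49 percent) fall between the search-ad and display-ad rates, as expected for a mix of the two ad types.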

Using NH Health Cost website data, we tracked the number of visits from New Hampshire users who viewed the price transparency components of the website. To measure changes in viewership following the intervention, we compared average weekly visits to the website in the pre-intervention period with those in the post-intervention period (excluding the pilot phase). We also tracked the average amount of time spent on the website and the number of pages viewed. We separately examined the number of visits by insurance status.
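The change in viewership is a relative change in average weekly visits. A minimal sketch of that arithmetic, using the pre-intervention and implementation-phase averages reported in the Results:

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return 100.0 * (after - before) / before


# Average weekly visits reported in the Results (pre-intervention vs. implementation phase).
print(f"{percent_change(139, 1_166):.0f}%")  # 739%
```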

Analytic plan: Impacts of the intervention on health care prices paid

For this analysis, we partnered with HealthCore Inc, a health outcomes research subsidiary of Anthem, the market-leading insurer in New Hampshire with about 40% market share among the privately insured.18 We used the HealthCore Integrated Research Database (HIRD), a longitudinally integrated medical claims database drawn from health care encounters of members enrolled in 14 Anthem health insurance plans across the United States. We accessed a limited data set for which data use agreements were in place with the covered entities in compliance with the Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule.

We studied whether the campaign was associated with changes in the use of lower-priced care among privately insured enrollees in New Hampshire (intervention group) relative to enrollees in the neighboring states of Connecticut, Maine, and New York (control group). During our study period, no control state had a publicly available price transparency website that reported insurer- and provider-specific negotiated prices. Massachusetts was excluded because our claims data included very few individuals in that state.

Our study population consisted of enrollees aged 18 to 65 with medical coverage under an Anthem plan at any point from January 1, 2017 to June 30, 2019 who used an imaging service, emergency department visit, or physical therapy service for which price information was available on the price transparency website (the Current Procedural Terminology (CPT) codes included are listed in Supplementary Appendix Exhibit A2).17 We excluded enrollees with supplemental sources of health insurance coverage, including Medicare, to ensure we could capture all utilization for any given individual. We divided the study period, January 1, 2017 to June 30, 2019, into 6-month increments.

Our primary outcome was the total price paid for a service, defined as the sum of all payments owed to the provider by the insurer and the patient for that service. Additional details about how we constructed the primary outcome variable are presented in Supplementary Appendix Section 2.17 Our secondary outcome was the patient’s out-of-pocket cost for the service, which is the total of any copayment, coinsurance, and deductible paid. We excluded costs above the 99th percentile to limit the impact of outliers. We log-transformed both outcomes to estimate relative (percentage) effects and added $0.01 to observations of $0.00, consistent with prior studies.10
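The outcome construction described above (trim at the 99th percentile, replace $0.00 with $0.01, take logs) can be sketched as follows. This is an illustrative sketch, not the study's code: it assumes trimming is applied once to whatever set of amounts is passed in, whereas the paper does not state whether trimming was done separately by service category or outcome, and the example amounts are hypothetical.

```python
import numpy as np


def prepare_log_outcome(amounts):
    """Trim values above the 99th percentile, set $0.00 to $0.01, and take logs.

    Assumption: trimming is applied once to the pooled amounts passed in.
    """
    amounts = np.asarray(amounts, dtype=float)
    cutoff = np.percentile(amounts, 99)
    kept = amounts[amounts <= cutoff]         # drop outliers above the 99th percentile
    kept = np.where(kept == 0.0, 0.01, kept)  # adding $0.01 to a $0.00 observation
    return np.log(kept)


# Hypothetical out-of-pocket amounts in dollars, including a zero and one extreme value.
example = [0.0] + [50.0] * 97 + [120.0, 120.0, 9_000.0]
logged = prepare_log_outcome(example)
print(len(logged), round(float(logged.min()), 3))  # 100 -4.605
```

Note that a $0.01 floor maps zero-cost services to a log value of about -4.6, far below any positive payment, which is one reason log-point estimates on out-of-pocket costs can be sensitive to the share of zero-cost claims.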

We estimated intention-to-treat effects which assess the impact on all Anthem-insured individuals in New Hampshire, rather than only those who saw the advertisement. The intention-to-treat estimates mitigate bias from differences between individuals who search and do not search for health care services online. Minimizing selection bias was relevant because research suggests that individuals who search for health care-related topics online or use price transparency websites may differ from those who do not.19 Moreover, our data did not allow us to link enrollee-level data to Google search activity or online visits to the NH Health Cost website.

In a difference-in-difference analysis, we compared changes in outcomes in New Hampshire to changes in outcomes in control states before versus after the implementation of the online campaign. Details on our models are in Appendix Section 3.17 In brief, we stratified analyses by the 3 service categories of interest. We fit regression models predicting each outcome as a function of an interaction term between indicators for the 6-month intervention period and whether the service was received in New Hampshire (our differences-in-differences estimate of interest). The model also included an interaction term between an indicator for the 6-month pilot period and whether the service was received in New Hampshire, demographic and clinical characteristics for the patients, fixed effects for the service received (given by the CPT code), and year fixed effects. Heterogeneity-robust standard errors clustered at the state level are reported.20
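A stylized version of the regression described above can be written as follows, in our own notation. Here $Y_{ijt}$ is the total price (or out-of-pocket cost, with $0.01 substituted for zero values) for service $i$ received in state $j$ in period $t$; $\mathrm{NH}_j$, $\mathrm{Post}_t$, and $\mathrm{Pilot}_t$ are indicators for New Hampshire, the 6-month intervention period, and the 6-month pilot period; $\mathbf{X}_i$ holds patient demographic and clinical characteristics; $\delta_{c(i)}$ and $\tau_{y(t)}$ are CPT-code and year fixed effects. The New Hampshire main effect $\alpha$ is our assumption; the exact specification is in Appendix Section 3.

```latex
\[
\ln Y_{ijt} = \beta_0
  + \beta_1 \,(\mathrm{NH}_j \times \mathrm{Post}_t)
  + \beta_2 \,(\mathrm{NH}_j \times \mathrm{Pilot}_t)
  + \alpha \,\mathrm{NH}_j
  + \mathbf{X}_i'\boldsymbol{\gamma}
  + \delta_{c(i)} + \tau_{y(t)}
  + \varepsilon_{ijt}
\]
```

In this notation, $\beta_1$ is the difference-in-difference estimate of interest, and $\beta_2$ separates any pilot-period change from the full-campaign effect.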

The difference-in-difference estimate can be interpreted as the percentage change in the amount paid (total or out-of-pocket) associated with the online advertisements campaign.
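Because the outcomes are logged, a coefficient β corresponds exactly to a 100 × (exp(β) − 1) percent change; multiplying β by 100, as in the Exhibit 2 notes, is a first-order approximation that is close for small coefficients. A quick check, assuming the Exhibit 2 total-price estimates are the raw coefficients multiplied by 100:

```python
import math


def approx_percent(beta):
    """Approximation used in Exhibit 2: log-point coefficient x 100."""
    return 100.0 * beta


def exact_percent(beta):
    """Exact percent change implied by a coefficient on a log outcome."""
    return 100.0 * (math.exp(beta) - 1.0)


# Total-price coefficients implied by Exhibit 2 (reported estimate / 100).
for service, beta in [("emergency department", 0.044), ("imaging", 0.003), ("physical therapy", 0.183)]:
    print(f"{service}: approx {approx_percent(beta):.1f}%, exact {exact_percent(beta):.1f}%")
```

The two versions agree closely for the emergency department and imaging estimates; for physical therapy the exact transformation gives about 20.1 percent rather than 18.3 percent. The transformation does not change the p-values.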

One of the assumptions in our difference-in-difference analysis is that, in the absence of the intervention, the intervention and control populations would have followed similar trends in prices paid (i.e., the parallel trends assumption). In additional analyses, we tested this assumption by comparing pre-intervention outcome trends in New Hampshire with those in control states (details in Appendix Section 4).17
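The core of a pre-trend comparison is the coefficient on a New Hampshire × time interaction estimated on pre-intervention data only. The sketch below recovers that coefficient by ordinary least squares on synthetic, noise-free data; the 18-month pre-period, the intercepts, and the -1 percent monthly gap are hypothetical choices for illustration. It returns only a point estimate: a formal test would also require the state-clustered standard errors described above, which are not shown.

```python
import numpy as np


def differential_pre_trend(y, nh, t):
    """OLS of y on [1, NH, t, NH x t] using pre-intervention data only.

    Returns the NH x t coefficient: how much faster (or slower) the outcome
    trended in New Hampshire than in control states before the campaign.
    """
    y, nh, t = (np.asarray(v, dtype=float) for v in (y, nh, t))
    X = np.column_stack([np.ones_like(t), nh, t, nh * t])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[3]


# Synthetic, noise-free example: 18 hypothetical pre-period months per group,
# with New Hampshire's log outcome drifting 1 percent per month below controls.
months = np.tile(np.arange(18), 2)
is_nh = np.repeat([0, 1], 18)
log_outcome = 5.0 + 0.2 * is_nh + 0.002 * months - 0.010 * is_nh * months
print(round(float(differential_pre_trend(log_outcome, is_nh, months)), 4))
```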

In secondary analyses, we examined whether the intervention affected service-level utilization by quantifying the number of times each service was received in each month in our study population. We also examined total and out-of-pocket spending as alternate measures of utilization. We conducted sub-analyses limited to the subset of our study population in high-deductible health plans, as these enrollees have the most incentive to use price information to select lower-priced providers. We conducted an additional test for use of lower-priced providers during the part of the year when enrollees in high-deductible health plans are most likely not to have met their deductible. To do so, we further limited the sample to services received from January through March, since deductibles reset at the beginning of the calendar year for the majority of enrollees. Details on these secondary analyses are in Appendix Sections 5 and 6.
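The early-year restriction is a simple sample filter. A minimal sketch, assuming each service record carries a service date and a high-deductible plan flag; the `ServiceRecord` type and its field names are hypothetical, not the HIRD schema:

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ServiceRecord:
    enrollee_id: str
    service_date: date
    in_hdhp: bool  # hypothetical flag: enrolled in a high-deductible health plan


def early_year_hdhp_services(records):
    """Keep HDHP services received January through March, when deductibles
    (reset at the start of the calendar year for most enrollees) are most
    likely not yet met."""
    return [r for r in records if r.in_hdhp and r.service_date.month <= 3]


records = [
    ServiceRecord("a", date(2019, 2, 14), True),
    ServiceRecord("b", date(2019, 5, 3), True),
    ServiceRecord("c", date(2019, 1, 20), False),
]
print([r.enrollee_id for r in early_year_hdhp_services(records)])  # ['a']
```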

Limitations

Our analyses had several limitations. First, we could not link Google’s data on who clicked on the ads with claims data on who received related care. Therefore, we were not able to evaluate the extent to which our intervention reached those who went on to receive care, which precluded us from estimating the effect of the intervention among individuals who saw the ad. This is a general limitation that would likely be faced in evaluating any public price transparency website. However, we conducted a back-of-the-envelope calculation to estimate the proportion of individuals receiving a service who visited the price transparency website due to our intervention. To do so, we multiplied the number of ad clicks from New Hampshire in each service category by the proportion of clicks where the user viewed Anthem-specific price information (our estimate of the proportion of viewers who were insured by Anthem) and divided the result by the number of services of that type received in our claims data during the intervention period. This estimate assumes that everyone who clicked on the ad received a service. We recognize that this is unlikely to be the case, but it is unknown what percentage of users actually went on to receive care. This calculation suggests that, in a best-case scenario, 77%, 13%, and 54% of individuals who had an ED, imaging, or physical therapy service in our claims data, respectively, clicked on an ad for the NH Health Cost website. Nonetheless, the increased use of the website following our intervention suggests that Google ads are relatively successful at targeting users who are interested in the data.
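The back-of-the-envelope calculation can be reproduced from figures reported in the Results: ad clicks per category, the number of services in our claims data during the implementation period, and the share of website users who selected Anthem. Using 0.53 for the Anthem share is our assumption about which input was used; it does reproduce the published 77, 13, and 54 percent figures.

```python
def best_case_reach(ad_clicks, anthem_share, services_in_claims):
    """Upper-bound share of services preceded by an ad click, assuming every
    Anthem-insured clicker went on to receive a service of that type."""
    return ad_clicks * anthem_share / services_in_claims


ANTHEM_SHARE = 0.53  # share of intervention-period users who selected Anthem (assumed input)
inputs = {  # category: (ad clicks, services in claims data)
    "emergency department": (7_862, 5_418),
    "imaging": (8_612, 36_442),
    "physical therapy": (14_234, 13_989),
}
for category, (clicks, services) in inputs.items():
    print(f"{category}: {best_case_reach(clicks, ANTHEM_SHARE, services):.0%}")
```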

Second, our analysis was limited to the shorter-term effects of the intervention. It is possible that many individuals learned about the tool during our six-month intervention but did not return to it until after our study period, when they required care. Future analyses could test for longer-term effects of such an intervention.

Third, our analyses focused on three service categories, and consumers may have been more responsive to price information for other services. However, the service categories we focused on are considered shoppable.22

Fourth, because our claims-based analysis only allowed us to observe individuals who received a service, our analysis would not capture effects from individuals who decided to forgo a service after viewing health care price information, though we do not observe a change in overall rates of utilization of the studied services after our intervention. Finally, our study population comes from one market leading private insurer in the state, and therefore, does not examine individuals who are uninsured or covered by other payers.

RESULTS

Advertisements

Advertisements were displayed for Google searches conducted from New Hampshire for a set of terms relevant to the three service categories of interest. Common search terms deemed relevant for each service category are reported in Appendix Exhibit A3. Emergency department-focused ads were displayed a total of 2,136,665 times during the 6-month post-period, imaging services-focused ads were displayed 2,149,554 times, and physical therapy-focused ads were displayed 2,927,163 times. The total number of clicks was 7,862 for emergency department ads, 8,612 for imaging ads, and 14,234 for physical therapy ads. The percent of search ads that users clicked on was 1.9%, 4.2%, and 2.3% for emergency department, imaging, and physical therapy ads, respectively. For display ads, the click rate was lower: 0.32%, 0.31%, and 0.42% for emergency department, imaging, and physical therapy services, respectively.
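A rough sense of campaign cost-effectiveness follows from dividing the $39,060 spend reported in the Methods by these click totals. This sketch assumes the spend corresponds exactly to the clicks counted here, and it approximates the additional website visits as 26 weeks at the difference between the implementation-phase and pre-intervention weekly averages reported below, which ignores any other trends in visits.

```python
AD_SPEND = 39_060  # dollars spent during the 6-month implementation period
total_clicks = 7_862 + 8_612 + 14_234  # ED, imaging, and physical therapy ad clicks

# Hypothetical approximation: 26 weeks at (1,166 - 139) extra visits per week.
extra_visits = (1_166 - 139) * 26

print(f"cost per click: ${AD_SPEND / total_clicks:.2f}")  # cost per click: $1.27
print(f"cost per additional visit: ${AD_SPEND / extra_visits:.2f}")  # cost per additional visit: $1.46
```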

In comparison, during the six-month implementation period, our study population from this one insurer in New Hampshire had 5,418 emergency department visits, 36,442 imaging services, and 13,989 physical therapy visits.

Use of the price transparency website

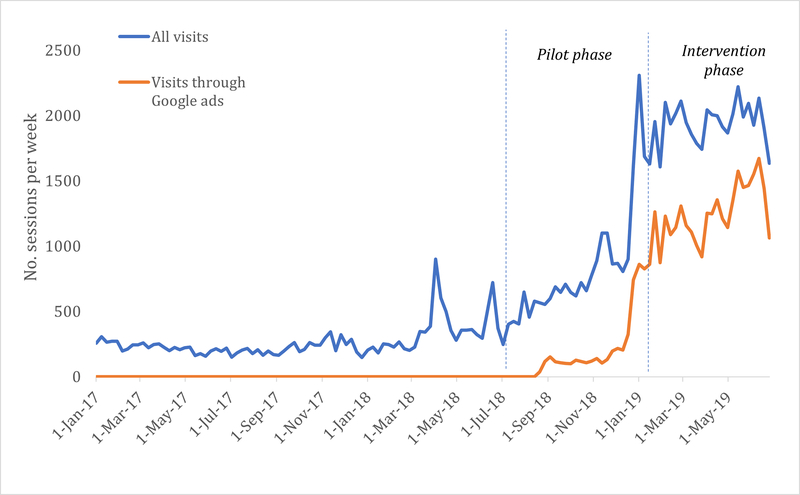

The average number of visits per week to the website was 139 in the pre-intervention phase and 1,166 in the implementation phase (Exhibit 1), a 739 percent increase in weekly visits. On average, the share of weekly visits that came through the Google ads (“paid visits”) was 0 percent in the pre-intervention phase and 84 percent in the implementation phase.

Exhibit 1 (figure): The number of visits per week to price transparency website substantially increased during the advertising campaign.

Source: Authors’ calculations from Google Ads and Google Analytics data on the NH Health Cost website and advertisement campaign.

Notes: The exhibit presents weekly visits to the NH Health Cost price transparency website. Total visits and visits that came through clicks on a Google advertisement are presented. The data are limited to visits from a New Hampshire-based Internet Protocol (IP) address. Moreover, only visits to the price transparency components of the website are included; visits to other parts of the website (e.g., pages with educational information about health insurance) were excluded. Weekly data from January 1, 2017 to December 31, 2019 are presented. The pilot phase (July 1, 2018 to December 31, 2018) is the 6-month period during which the online advertisements were piloted on a small scale. The intervention phase (January 1, 2019 to June 30, 2019) was the period during which the intervention was fully implemented and corresponds to the post-intervention study period of interest in our analysis.

Among visitors to the NH Health Cost website, the average number of page views per session changed from 3.0 to 1.7 from the pre-intervention period to the intervention period. Among visitors who clicked through the ads, the average number of page views in the intervention period was similar at 1.6. The average duration per session went from 1.8 to 0.9 minutes among all visitors and was 0.5 minutes for visitors who came through Google ads.

Users of the price transparency website selected their insurer to view price information. Based on these data, during the intervention period, 53 percent of users were Anthem enrollees, 3 percent were Cigna enrollees, 5 percent were covered by other insurers, and 39 percent were uninsured individuals. Weekly metrics on visits by insurer are reported in Appendix Exhibit A4.17

Use of lower-priced providers

In analyses that used claims data to examine the effects of the ads campaign on prices paid for services, our sample included a total of 1,270,397 enrollees, 87.4 percent of whom received an imaging service, 21.5 percent an emergency department visit, and 29 percent physical therapy services. Appendix Exhibit A5 presents descriptive characteristics of our study population.17 Mean prices paid weighted by utilization within each CPT code in New Hampshire versus control states in each study period are presented in Appendix Exhibit A6.17

The average prices paid by enrollees in New Hampshire for each of the three sets of services were not differentially lower during the advertising campaign than before it (Exhibit 2). We estimate that the intervention was associated with a 4 percent increase in the price paid for emergency department visits, which was not statistically significant (p=0.07). Our estimates implied no change in the price paid for imaging services (estimate=0.3 percent, p=0.26) and a statistically nonsignificant 18 percent increase for physical therapy services (p=0.16).

Exhibit 2: Impact of the online advertisement campaign on total costs and out-of-pocket costs by service category

| Outcome | Emergency department visit: estimate | Emergency department visit: p-value | Imaging services: estimate | Imaging services: p-value | Physical therapy: estimate | Physical therapy: p-value |
|---|---|---|---|---|---|---|
| Differential change in total prices paid (percent change) | 4.4% | 0.07 | 0.3% | 0.26 | 18.3% | 0.16 |
| Differential change in out-of-pocket costs (percent change) | 3.1% | 0.08 | −1.6% | 0.01 | 18.2% | 0.17 |

Source: Authors’ estimates from the HIRD database.

Note: Difference-in-difference coefficient estimates and p-values from the main analysis are presented for the primary and secondary outcomes across the 3 service categories of interest. The estimates represent the differential percentage change in the outcome in the post-intervention period (January 1, 2019 – June 30, 2019) relative to the pre-intervention period in New Hampshire compared with control states. Coefficient estimates are multiplied by 100 to approximate percentage changes. Heterogeneity-robust standard errors clustered at the state level were estimated.

For analyses focused on out-of-pocket costs, our estimates implied that the advertisement campaign was associated with a 3 percent increase in out-of-pocket costs for emergency department visits that was not statistically significant (p=0.08) and a 1.6 percent decrease for imaging services (p=0.01). The estimate for physical therapy services was not statistically significant. Regression estimates for additional explanatory variables are reported in Appendix Exhibit A7.

On all but one outcome, our analyses suggested that the parallel trends assumption holds (Exhibit A8). For out-of-pocket costs for imaging services, analyses suggested that monthly changes in the outcome during the period prior to the intervention were 1.0 percent (p-value=0.01) lower than that in control states. This suggests that the 1.6 percent difference-in-difference estimate in out-of-pocket costs for imaging services in the main results likely reflected declines that would be expected in the absence of the intervention (due to pre-existing trend differences in New Hampshire relative to the control states for this outcome) rather than causal effects of the advertising campaign.

We also did not find evidence that the intervention was associated with changes in the quantity of services utilized (Exhibit A9). Sub-analyses limited to enrollees in high-deductible health plans yielded conclusions that were similar to the main results (Exhibit A10).

DISCUSSION

Our online advertisement campaign was successful in that it led to more than a 700 percent increase in use of New Hampshire’s price transparency website, though the absolute number of users as a share of the overall New Hampshire population remained small. However, despite this increase, we did not find that the advertising campaign was associated with greater use of lower-priced services.

Our analyses suggest that lack of awareness may be one driver of low use of price transparency websites. Our relatively low-cost, simple Google Ads campaign was effective in increasing use. Governments and commercial payers could pursue similar strategies to increase traffic to publicly available health care price transparency websites. Consistent with consumer surveys indicating that people want this type of information, the page views and time on the website suggest that users were interested in the information provided. As the campaign continued, we also observed growth in visits that did not come from a Google ad, which may also be attributable to greater awareness of the website resulting from the intervention. One important caveat that all online advertising campaigns struggle with is reaching high-“quality” users. As the number of users per week steadily increased, the time they spent on the website steadily fell. This implies that many of the incremental users who visited the website via the advertisements later in the campaign found the information less useful. Nonetheless, although the average time spent on the website during our advertising campaign (54 seconds) is short, anecdotal evidence suggests that it is longer than the average time spent on websites (45 seconds).23

However, despite the dramatic increase in use of the price transparency website, we did not observe any impact on health care prices paid in New Hampshire in the three service categories our campaign targeted. Our findings emphasize that awareness of prices does not simply translate into price shopping and lower spending. There are numerous barriers to using price information. Individuals may not know the details of their benefit design well enough to infer their out-of-pocket costs, so customized out-of-pocket cost estimates may be critical.9,24 It is also possible that, although many people accessed the website, the ad campaign did not reach the optimal audience: those who were most likely to be price sensitive and to go on to receive health care services.

The lack of effect on prices paid may also be due to more fundamental factors, unrelated to the implementation of the ad campaign, that hinder the effectiveness of price transparency to lower spending. For example, even among those in high-deductible health plans, patients may still lack sufficient incentive to choose lower cost care.7,25 People may also place relatively less weight on health care prices compared to other factors when selecting a health care provider. Patients’ choice of provider may be strongly influenced by their provider’s referrals, recommendations from others, previous experience, or other factors. It is also possible that in some cases the availability of price information actually led to use of higher-priced providers, and this cancelled out savings from patients who switched to lower-priced providers. Though survey work has shown that most Americans do not believe higher prices in health care imply higher quality, some portion of Americans do believe they are positively correlated.26 Increases in prices paid following introduction of a price transparency tool have been observed in past studies.9

Though it may not drive greater use of lower-priced providers, price transparency may have other benefits. Patients may be more aware of their out-of-pocket costs ahead of time and they may be able to plan accordingly. These data can be used in negotiations between insurers and providers and lead to lower prices.18,27,28 Our intervention, however, was not designed to identify such “supply-side” effects on negotiated prices, which would be expected to occur over an interval longer than our 6-month intervention period.

CONCLUSION

Increasing price transparency is viewed by many as a critical strategy to reduce spending. Prior initiatives have been plagued by low use. We find that a simple advertising campaign dramatically increased use of a price transparency website, but this did not translate into lower prices paid consistent with price shopping behavior. Our findings provide a cautionary note for those who believe that availability of price information alone will reduce spending.

Supplementary Material

Acknowledgments

Grant Support: This work was supported by a grant from the Donaghue Foundation.

Footnotes

Disclosures: The authors have no potential conflicts of interest to disclose.

References

1. Reinhardt UE. The Disruptive Innovation of Price Transparency in Health Care. JAMA. 2013;310(18):1927–8.

2. Centers for Medicare and Medicaid Services. Hospital Outpatient Prospective Payment System Policy Changes: Hospital Price Transparency Requirements (CMS-1717-F2) [Internet]. CMS.gov. 2020 [cited 2020 Mar 1]. p. 1–8. Available from: https://www.cms.gov/newsroom/fact-sheets/cy-2020-hospital-outpatient-prospective-payment-system-opps-policy-changes-hospital-price

3. de Brantes F, Delbanco S, Butto E, Patino-Mazmanian K, Tessitore L. Price Transparency & Physician Quality Report Card 2017 [Internet]. 2017 [cited 2019 May 20]. p. 1–13. Available from: https://www.catalyze.org/product/2017-price-transparency-physician-quality-report-card/

4. Sinaiko AD, Chien AT, Rosenthal MB. The Role of States in Improving Price Transparency in Health Care. JAMA Intern Med. 2015;175(6):886–7.

5. Kullgren JT, Duey KA, Werner RM. A census of state health care price transparency websites. JAMA. 2013;309(23):2437–8.

6. Secretary Azar Statement on Proposed Rule for Hospital Price Transparency [Internet]. HHS.gov. 2020 [cited 2020 Mar 5]. p. 1. Available from: https://www.hhs.gov/about/news/2019/07/29/secretary-azar-statement-proposed-rule-hospital-price-transparency.html

7. Sinaiko AD, Mehrotra A, Sood N. Cost-Sharing Obligations, High-Deductible Health Plan Growth, and Shopping for Health Care: Enrollees With Skin in the Game. JAMA Intern Med. 2016;176(3):1–3.

8. Silliman R. Let’s Talk Numbers: Americans Want Price Transparency and Cost Conversations [Internet]. 2017 [cited 2019 May 20]. p. 1–2. Available from: https://health.oliverwyman.com/2017/05/let_s_talk_numbers.html

9. Desai S, Hatfield LA, Hicks AL, Chernew ME, Mehrotra A. Association Between Availability of a Price Transparency Tool and Outpatient Spending. JAMA. 2016;315(17):1874.

10. Desai S, Hatfield LA, Hicks AL, Sinaiko AD, Chernew ME, Cowling D, et al. Offering a price transparency tool did not reduce overall spending among California public employees and retirees. Health Aff. 2017;36(8):1401–7.

11. Sinaiko AD, Joynt KE, Rosenthal MB. Association Between Viewing Health Care Price Information and Choice of Health Care Facility. JAMA Intern Med. 2016;1–3.

12. Mehrotra A, Brannen T, Sinaiko AD. Use Patterns of a State Health Care Price Transparency Web Site: What Do Patients Shop For? Inquiry. 2014;51.

13. Sinaiko AD, Rosenthal MB. Examining a health care price transparency tool: Who uses it, and how they shop for care. Health Aff. 2016;35(4):662–70.

14. New Hampshire Insurance Department. NH Health Cost [Internet]. Available from: https://nhhealthcost.nh.gov/

15. New Hampshire Comprehensive Health Care Information System [Internet]. [cited 2020 Mar 1]. Available from: https://nhchis.com/

16. Weinick RM, Burns RM, Mehrotra A. Many emergency department visits could be managed at urgent care centers and retail clinics. Health Aff. 2010;29(9):1630–6.

17. To access the Appendix, click on the Details tab of the article online.

18. Tu H, Gourevitch R. Moving Markets: Lessons from New Hampshire’s Health Care Price Transparency Experiment [Internet]. 2014 [cited 2019 Dec 1]. Available from: http://www.chcf.org/publications/2014/04/moving-markets-new-hampshire

19. Gourevitch RA, Desai S, Hicks AL, Hatfield LA, Chernew ME, Mehrotra A. Who Uses a Price Transparency Tool? Implications for Increasing Consumer Engagement. Inquiry. 2017;54:004695801770910.

20. Wooldridge JM. Econometric Analysis of Cross Section and Panel Data. MIT Press; 2010. 752 p.

21. To access the Appendix, click on the Details tab of the article online.

22. Frost A, Newman D. Spending on Shoppable Services in Health Care [Internet]. 2016 [cited 2016 Mar 2]. Available from: http://www.healthcostinstitute.org/files/Shoppable Services IB 3.2.16_0.pdf

23. Sevell+Sevell. How much time should someone spend on your website? [Internet]. [cited 2020 Mar 1]. Available from: https://www.sevell.com/news/average-session-duration

24. Whaley C, Chafen JS, Pinkard S, Kellerman G, Bravata D, Kocher R, et al. Association Between Availability of Health Service Prices and Payments for These Services. JAMA. 2014;312(16):1670.

25. Zhang X, Haviland A, Mehrotra A, Huckfeldt P, Wagner Z, Sood N. Does Enrollment in High-Deductible Health Plans Encourage Price Shopping? Health Serv Res. 2017;53(S1):2718–34.

26. Phillips KA, Schleifer D, Hagelskamp C. Most Americans Do Not Believe That There Is An Association Between Health Care Prices And Quality Of Care. Health Aff (Millwood). 2016;35(4):647–53.

27. Brown ZY. An Empirical Model of Price Transparency and Markups in Health Care. 2016 Jan. Available from: http://www-personal.umich.edu/~zachb/zbrown_empirical_model_price_transparency.pdf

28. Whaley CM. Provider responses to online price transparency. J Health Econ. 2019;66:241–59.
