Abstract

Background:

Delivering physical and behavioral health services in a single setting is associated with improved quality of care and reduced health care costs. Few health systems implementing integrated care develop conceptual models and targeted measurement strategies a priori with an eye toward adoption, implementation, sustainment, and evaluation. This is a broad challenge in the field, which can make it difficult to disentangle why implementation is or is not successful.

Method:

This article discusses strategic implementation and evaluation planning for a pediatric integrated care program in a large health system. Our team developed a logic model, which defines resources and community characteristics, program components, evaluation activities, short-term activities, and intermediate and anticipated long-term patient-, clinician-, and practice-related outcomes. The model was designed based on research and stakeholder input to support strategic implementation and evaluation of the program. For each aspect of the logic model, a measurement battery was selected. Initial implementation data and intermediate outcomes from a pilot in five practices in a 30-practice pediatric primary care network are presented to illustrate how the logic model and evaluation plan have been used to guide the iterative process of program development.

Results:

A total of 4,619 office visits were completed during the 2 years of the pilot. Primary care clinicians were highly satisfied with the integrated primary care program and provided feedback on ways to further improve the program. Members of the primary care team and behavioral health providers rated the program as being relatively well integrated into the practices after the second year of the pilot.

Conclusion:

This logic model and evaluation plan provide a template for future projects integrating behavioral health services in non-specialty mental health settings, including pediatric primary care, and can be used broadly to provide structure to implementation and evaluation activities and promote replication of effective initiatives.

Plain language abstract:

Up to 1 in 5 youth have difficulties with mental health; however, the majority of these youth do not receive the care they need. Many youth seek support from their primary care clinicians. Pediatric primary care practices have increasingly integrated behavioral health clinicians into the care team to improve access to services and encourage high-quality team-based care. Definitions of “behavioral health integration” vary across disciplines and organizations, and little is known about how integrated behavioral health care is actually implemented in most pediatric settings. In addition, program evaluation activities have not included a thorough examination of long-term outcomes. This article provides detailed information on the implementation planning and evaluation activities for an integrated behavioral health program in pediatric primary care. This work has been guided by a logic model, an important implementation science tool to guide the development and evaluation of new programs and promote replication. The logic model and measurement plan we developed provide a guide for policy makers, researchers, and clinicians seeking to develop and evaluate similar programs in other systems and community settings. This work will enable greater adoption, implementation, and sustainment of integrated care models and increase access to high-quality care.

Keywords: Integrated care, pediatric primary care, logic model, program evaluation, implementation, children/child and adolescent/youth/family, behavioral health treatment

Mental health disorders occur in 20% of youth; however, fewer than 30% of youth with significant mental health needs receive services (Merikangas et al., 2010). Up to half of all pediatric primary care office visits involve a psychosocial concern (Martini et al., 2012), which has resulted in primary care clinicians (PCCs) being considered de facto mental health clinicians. However, PCCs are often unable to provide necessary services due to time constraints, limitations in training, and concerns about billing and reimbursement (Horwitz et al., 2015). To address these challenges, models of integrated care, which include behavioral health professionals working collaboratively within pediatric primary care practices, have been developed (e.g., Asarnow et al., 2017; Godoy et al., 2017). These models hold promise for addressing gaps in mental health service delivery. Additional benefits of delivering physical and behavioral health services in a single setting include improved quality of care and patient outcomes and reduced health care costs (e.g., Asarnow et al., 2015; Wright et al., 2016).

Given the evidence for integrated behavioral health services, it is not surprising that health systems and primary care practices have expressed interest in implementing these models (Asarnow et al., 2017). However, definitions of “behavioral health integration” vary widely (Peek & National Integration Academy Council, 2013). In addition, little is known about how clinics and health systems implement integrated behavioral health care (American Psychiatric Association & Academy of Psychosomatic Medicine, 2016), particularly in pediatric settings. Few health systems develop conceptual models and targeted measurement strategies a priori with an eye toward adoption, implementation, and evaluation of costs and other factors that may affect sustainment. This challenge is not unique to integrated behavioral health. The field of dissemination and implementation science more broadly has noted that, when new evidence-based practices or programs are implemented, there is often a lack of strategic implementation planning and attention to causal theory (Bauer et al., 2015; Lewis et al., 2018), making it difficult to disentangle why implementations of new programs do or do not succeed.

This article discusses the collaborative, iterative process of program development with a focus on implementation and evaluation activities for a pediatric integrated care initiative in a large health system, including developing a logic model and associated measurement battery. Logic models are an important implementation science tool to guide the development and evaluation of new programs (Smith et al., 2020). To illustrate the use of the logic model, the implementation context, and initial outcomes, we present data from a 2-year clinical pilot.

Overview of the Healthy Minds, Healthy Kids program

In the summer of 2016, Healthy Minds, Healthy Kids (HMHK) launched as a pilot program in five pediatric primary care practices in a 30-practice network owned by a children’s hospital in the Northeast of the United States. The program was designed as one component of a multi-level response to improving behavioral health care access for children in the region.

A psychiatrist and a psychologist co-directed HMHK during the 2-year pilot and were responsible for developing and maintaining partnerships with primary care practices, creating and implementing clinical workflows, and overseeing hiring and training of clinicians. In each pilot practice, a “behavioral health champion” was identified (typically the practice medical director), and the practice managers worked closely with HMHK leadership to ensure development of procedures that were well-aligned with practice culture and workflow and replicable throughout the primary care network.

During the initial pilot year, health system leadership indicated interest in expanding to additional sites and evaluating HMHK more systematically. At that point, we established an implementation planning team, consisting of experts in pediatric psychology, integrated behavioral health services, implementation science, and health economics. This implementation team led strategic planning activities for HMHK, including developing a logic model and evaluation plan to support implementation and expansion. This work was guided by the Exploration, Adoption/Preparation, Implementation, Sustainment (EPIS) framework (Aarons et al., 2011). The team met monthly over the course of a year to review progress related to program development, develop the logic model for the program, identify key metrics, and select measures for program evaluation.

Developing the logic model and measurement battery

A logic model is a useful program management and evaluation tool for illustrating relationships among resources, activities, intended outcomes, and overall impact of a program (Centers for Disease Control and Prevention, 2019). Logic models are used to build a shared understanding of program goals among all stakeholders, support clear communication about the program and its expected impact, and guide program evaluation and improvement efforts (McLaughlin & Jordan, 1999; Smith et al., 2020). Use of logic models for program development and evaluation ensures that activities are consistent with stakeholder preferences, increases the likelihood that program goals are attained (Hayes et al., 2011; Tremblay et al., 2017), promotes replicability, and identifies linkages between intermediate and longer-term outcomes (Smith et al., 2020). As program implementation activities are completed, the logic model and evaluation plan are used interactively, resulting in data-based program revisions and more rigorous program evaluation. For example, if intended outcomes are not achieved, logic model inputs or activities might need to be revised to more accurately capture actual implementation. Alternatively, the measurement plan might require revision to ensure reliable and valid evaluation activities.

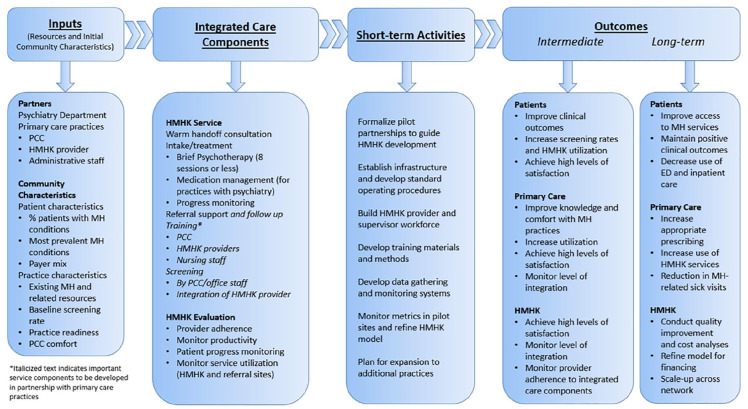

With input from key stakeholders, including administrators and colleagues in bioinformatics and information technology, the implementation team developed a logic model that included inputs (i.e., necessary resources), components of the integrated care model, short-term activities, and intermediate and long-term patient-, clinician-, and practice-related outcomes that were deemed to be of greatest interest to the stakeholder group (see Figure 1). The implementation team identified specific metrics and validated measures associated with each construct in the logic model. These metrics were selected based on a review of the pediatric, integrated care, implementation science, and health economics literatures and the resources provided by the Center for Integrated Health Solutions (https://www.samhsa.gov/integrated-health-solutions) and the Advancing Integrated Mental Health Solutions (AIMS) Center (https://aims.uw.edu/).

Figure 1.

Logic model for the Healthy Minds, Healthy Kids (HMHK) program.

Note: PCC: primary care clinician; HMHK: Healthy Minds, Healthy Kids; MH: mental health; ED: emergency department.

In this article, we describe each component of the logic model, including the guiding conceptual framework, inputs, components of the integrated care model, short-term activities, intermediate outcomes, and long-term outcomes. For each component, we highlight the metrics and measures that were implemented as part of this process and pilot data, when available. We conclude with a discussion about how this strategic implementation planning has informed program development, rollout, and plans for expansion.
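For readers who track program planning in software, the column structure of the logic model described above can be sketched as a simple data structure. The following Python sketch is illustrative only and is not part of the HMHK program's tooling: the class name `LogicModel`, its field names, the `unmeasured` helper, and the short entry labels are all assumptions chosen for this example, with the entries loosely paraphrased from the components named in this article.

```python
from dataclasses import dataclass, field


@dataclass
class LogicModel:
    """Hypothetical container mirroring the columns of a program logic model."""
    inputs: list[str] = field(default_factory=list)
    components: list[str] = field(default_factory=list)
    short_term_activities: list[str] = field(default_factory=list)
    intermediate_outcomes: list[str] = field(default_factory=list)
    long_term_outcomes: list[str] = field(default_factory=list)

    def unmeasured(self, measured: set[str]) -> list[str]:
        """Return outcomes (in model order) that have no metric assigned yet."""
        outcomes = self.intermediate_outcomes + self.long_term_outcomes
        return [o for o in outcomes if o not in measured]


# Entry labels are shorthand for constructs named in the article (assumed wording).
hmhk = LogicModel(
    inputs=["Partners", "Community characteristics"],
    components=["Service model", "Evaluation model"],
    short_term_activities=[
        "Data tracking systems",
        "Standard operating procedures",
        "Workforce development",
    ],
    intermediate_outcomes=[
        "Program utilization",
        "Program satisfaction",
        "Level of integration",
    ],
    long_term_outcomes=["ED visits with MH concern"],
)

# Outcomes that already have a metric in this toy example.
covered = {"Program utilization", "Program satisfaction", "Level of integration"}
gaps = hmhk.unmeasured(covered)
```

Keeping outcomes and their assigned metrics side by side in this way makes it easy to spot constructs that still lack a measure, which is the kind of gap the measurement battery is intended to close.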

Guiding conceptual framework

The development of HMHK aligns with the EPIS model (Aarons et al., 2011), which provided a roadmap for the implementation process. EPIS delineates four phases of the implementation process and acknowledges the importance of inner and outer setting constructs and bridging and innovation factors that influence implementation. The four EPIS phases are: Exploration (i.e., needs and readiness assessment, evaluation of resources), Preparation (i.e., development of implementation plan and plan for rollout), Implementation (i.e., execution of the plan and monitoring of successes and opportunities for improvement), and Sustainment (i.e., ongoing program implementation without external supports).

In the Exploration phase, we identified relevant inputs and resources. Then, we moved to Preparation, focusing on identifying the key components of integrated care, the implementation plan, and priority metrics. HMHK is currently in the Implementation phase, and key short-term metrics are being tracked. Longer-term metrics will be tracked as the program expands and ultimately moves from Implementation to Sustainment. Although the current logic model does not include explicit links to the Sustainment phase of EPIS (Aarons et al., 2011), all outcomes denoted in this model are necessary for ultimately sustaining and scaling up HMHK.

Inputs

The implementation team began by delineating the program inputs: the available personnel and non-personnel resources that can contribute to the development of a successful program (McLaughlin & Jordan, 1999). The team determined the key inputs were as follows:

Partners

HMHK represents a partnership between the primary care network owned by a children’s hospital and the psychiatry department in the same health system. A Steering Committee, composed of primary care network and psychiatry department leadership, psychologists, psychiatrists, PCCs, and administrators, met several times per year during the pilot to discuss program development and implementation and to ensure that program goals and activities aligned with the strategic plans of the primary care network, psychiatry department, and larger health system.

Community characteristics

Community characteristics include patient characteristics (e.g., number of patients served and payer mix) and practice characteristics (e.g., practice readiness, existing mental health resources). The five practices in the pilot served between 6,474 and 15,695 patients and included 6 to 21 PCCs. One of the practices was in the largest city in the state, classified as a large central metro area (Ingram & Franco, 2013; hereafter referred to as an “urban” practice). The remaining four practices were located in large fringe metro areas (Ingram & Franco, 2013; hereafter referred to as “suburban” practices). See Table 1 for details about practice size, race and ethnicity of the patients, and proportion of patients insured by Medicaid. Pilot sites were selected based on input from the medical director and administrative leaders of the primary care network, practice leaders’ interest, insurance payer mix of the patients in the practices, and availability of space in the practices for a behavioral health clinician.

Table 1.

Patient demographics and clinical information for the five pilot practices.

| | Practice 1 | Practice 2 | Practice 3 | Practice 4 | Practice 5 |
|---|---|---|---|---|---|
| Patient race (%) | | | | | |
| American Indian or Alaska Native | 0.1 | 0.1 | 0.1 | 0.2 | 0.1 |
| Asian | 11.5 | 7.6 | 3.3 | 6.7 | 8.8 |
| Black | 38.3 | 3.8 | 1.1 | 8.4 | 3.7 |
| Multiple races | 2.5 | 1.5 | 1.7 | 1.6 | 1.8 |
| Native Hawaiian or Other Pacific Islander | 0.1 | 0.1 | 0.0 | 0.1 | 0.0 |
| Other | 21.9 | 14.6 | 14.9 | 7.8 | 15.6 |
| White | 25.5 | 72.1 | 78.7 | 75.2 | 69.7 |
| Patient ethnicity (%) | | | | | |
| Hispanic or Latino | 18.0 | 2.3 | 5.1 | 4.0 | 4.1 |
| Not Hispanic or Latino | 81.8 | 96.6 | 94.0 | 95.8 | 95.4 |
| Medicaid insurance (%) | 68.5 | 7.8 | 11.6 | 8.9 | 12.3 |
| Number of PCCs | 21 | 6 | 10 | 14 | 9 |
| Number of patients | 15,695 | 6,474 | 11,902 | 15,297 | 10,078 |
| Practice location NCHS classification | Large central metro | Large fringe metro | Large fringe metro | Large fringe metro | Large fringe metro |

Note: Some race/ethnicity frequencies do not sum to 100% due to a combination of rounding and missing data. PCC: primary care clinician; NCHS: National Center for Health Statistics.

During the pilot, the HMHK leadership team considered community characteristics, a review of the literature, and benchmarking interviews conducted with peer institutions to guide the staffing plan for the program. For example, the team determined that staffing ratios would need to differ across practices based on a variety of factors (e.g., geographic location of the practice, number of PCCs in the practice). The pilot staffing plan included a part-time behavioral health clinician for each practice. Payer mix also influenced staffing decisions. At the time of the pilot, the insurance contract relevant to most patients in the urban practice required licensed psychologists rather than licensed clinical social workers (LCSWs). Administrative leaders preferred to staff the suburban practices with LCSWs to reduce personnel costs. As HMHK has expanded to other practices since the pilot, we have continued to examine community and practice characteristics and program utilization to revise our staffing plans. For example, although most new sites continue to launch with part-time behavioral health clinicians, we have increased staffing in our largest sites due to high patient volumes. We also plan to examine whether these variables affect implementation.

In addition to metrics that could be easily captured through the electronic health record (EHR) and other data sources (e.g., practice size, HMHK utilization rates), the implementation team identified several measures to evaluate relevant practice characteristics. The Organizational Readiness for Implementing Change (ORIC; Shea et al., 2014) and Organizational Readiness to Change Assessment (ORCA; Helfrich et al., 2009) were selected to assess practice readiness for HMHK implementation. See Table 2 for details about the selected measures. We started using these measures after implementation began in the five pilot sites, so readiness data are not available. As the program expands, the readiness assessment will be completed with all members of the primary care team in prospective practices. We will use scores to inform program rollout activities, including partnership development with practice leadership and training for clinicians and office staff to prepare for implementation.

Table 2.

Validated self-report instruments and other data elements included in the measurement plan.

Self-report measures

| Name of measure | Component of logic model | Brief description | Item scaling/response options | Respondent | Timing of administration |
|---|---|---|---|---|---|
| Organizational Readiness for Implementing Change (Shea et al., 2014) | Community Characteristics | Reliable and valid 12-item measure of organizational readiness for change | 5-point Likert scale (1 = disagree to 5 = agree) | All members of primary care team (clinicians and office staff) | Pre-implementation |
| Organizational Readiness to Change Assessment (Helfrich et al., 2009) | Community Characteristics | 77-item measure of organizational strengths and weaknesses to support EBP implementation; utilizing the Strength of Evidence and Quality of Context scales | Evidence scale rated on 5-point Likert scale (1 = very weak to 5 = very strong); Context scale rated on 5-point Likert scale (1 = strongly disagree to 5 = strongly agree) | All members of primary care team (clinicians, nurses, medical assistants, and office staff) | Pre-implementation |
| Comfort and Practice (Burka et al., 2014) | Community Characteristics & Intermediate Outcome | 19 items to assess PCC comfort and practice behavior related to attention-deficit/hyperactivity disorder, depression, anxiety, and autism spectrum disorders | 6-point Likert scale (0 = never to 5 = very frequently) | PCCs | Pre-implementation and annually |
| Level of Integration Measure (Fauth et al., 2010) | Intermediate Outcome | 35-item measure to evaluate extent to which the BH clinician or program is integrated into primary care team | 5-point Likert scale (1 = strongly disagree to 5 = strongly agree) | PCCs, nurses, medical assistants | Annually |
| Program Satisfaction (adapted from Hine et al., 2017) | Intermediate Outcome | 16 items assessing primary care team members’ perceptions of acceptability and utility of HMHK | 5-point Likert scale for acceptability (1 = not at all satisfied to 5 = highly satisfied) and utility (1 = strongly disagree to 5 = strongly agree) | All members of primary care team (clinicians, nurses, medical assistants, and office staff) | Annually |
| Patient Centered Integrated Behavioral Health Care Principles and Tasks (AIMS Center, 2012) | Intermediate Outcome | 31 items in two domains: Principles of Care (extent to which the care team employs a patient-centered, population-based approach to deliver evidence-based care) and Core Components and Tasks (aspects of effective integrated care programs) | 3-point Likert scale to indicate proportion of patients with whom the principles and components/tasks are employed (0 = none to 2 = most/all) | HMHK clinicians | Annually |
| Collaboration with Primary Care Clinician | Intermediate Outcome | 7-item scale developed for this project to evaluate HMHK clinician perceptions of the extent to which PCCs and HMHK clinicians engage in team-based collaborative care | 4-point Likert scale (1 = never to 4 = always) | HMHK clinicians | Annually |

Electronic health record data

| Data element | Component of logic model | Brief description | Target | Timing of extraction |
|---|---|---|---|---|
| Patients with MH conditions | Community Characteristics | Number and percent of patients in each practice who have existing MH conditions to identify anticipated volume/need and most prevalent conditions in each practice location | Patients | Pre-implementation and annually |
| Payer mix | Community Characteristics | Type of insurance (i.e., public, commercial) and specific vendor to identify proportion of patients in the practice with in-network benefits | Patients | Pre-implementation and annually |
| Utilization of screening measures | Intermediate Outcome | Number and percent of patients per practice for whom PCC used a relevant MH screening measure during an office visit | Patients, PCCs | Pre-implementation and annually |
| Referrals to HMHK; scheduled and completed office visits with HMHK | Intermediate Outcome & Long-term Outcome | Number of patients per practice referred to HMHK and number of scheduled and completed office visits as indicators of program utilization | Patients, PCCs, HMHK clinicians | Continuously monitored |
| Medications prescribed by PCCs for MH concerns | Long-term Outcome | Types and frequency of prescriptions written by PCCs for MH concerns as an indicator of PCC behavior related to MH conditions | Patients, PCCs | Pre-implementation and annually |
| Primary care sick visits with MH concern and ED visits with MH concern | Long-term Outcome | Number and percent of patients per practice with sick visits and ED visits primarily due to MH concerns as an indicator of secondary health care utilization | Patients | Pre-implementation and annually |

Note: EBP: evidence-based practice; PCC: primary care clinician; HMHK: Healthy Minds, Healthy Kids program; AIMS: Advancing Integrated Mental Health Solutions; MH: mental health; ED: emergency department; BH: behavioral health.
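A measurement plan such as the one in Table 2 can also be encoded as a small lookup structure so that a team can list which self-report instruments are due at a given time point. The sketch below is a minimal illustration under stated assumptions, not a tool used by the HMHK team: the `Measure` type, the `due_at` helper, and the shortened measure names are hypothetical, while the logic model components and administration timing are transcribed from the self-report rows of Table 2.

```python
from typing import NamedTuple


class Measure(NamedTuple):
    """One self-report row of the measurement plan (hypothetical representation)."""
    name: str
    components: tuple[str, ...]  # logic model component(s) the measure informs
    timing: tuple[str, ...]      # when the measure is administered


# Shortened names are assumptions; components and timing follow Table 2.
PLAN = [
    Measure("ORIC", ("Community Characteristics",), ("pre-implementation",)),
    Measure("ORCA", ("Community Characteristics",), ("pre-implementation",)),
    Measure(
        "Comfort and Practice",
        ("Community Characteristics", "Intermediate Outcome"),
        ("pre-implementation", "annually"),
    ),
    Measure("Level of Integration Measure", ("Intermediate Outcome",), ("annually",)),
    Measure("Program Satisfaction", ("Intermediate Outcome",), ("annually",)),
    Measure("PCIBH Principles and Tasks", ("Intermediate Outcome",), ("annually",)),
    Measure("Collaboration with PCC", ("Intermediate Outcome",), ("annually",)),
]


def due_at(plan: list[Measure], timepoint: str) -> list[str]:
    """Return the names of measures administered at the given time point."""
    return [m.name for m in plan if timepoint in m.timing]


readiness_battery = due_at(PLAN, "pre-implementation")
annual_battery = due_at(PLAN, "annually")
```

The same structure could be extended with the EHR data elements from Table 2, which follow the same pattern of component and extraction timing.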

In addition, we chose a survey to assess PCC self-reported comfort and involvement in diagnosing and treating common pediatric mental health conditions, which we refer to as the Comfort and Practice survey (Burka et al., 2014). The survey is administered to all members of the medical team to evaluate changes in PCC comfort and practice behavior. Because we started using this measure after the start of the pilot, we did not obtain baseline ratings. See Table 3 and the Primary care subsection under Intermediate outcomes for data from Year 2 of the pilot.

Table 3.

Intermediate outcomes for five pilot sites after year 2.

| | N | M | SD | Potential range |
|---|---|---|---|---|
| Primary care | | | | |
| LIM | 97 | 4.0 | 0.49 | 1–5 |
| PCC comfort | 46 | 2.4 | 0.88 | 0–5 |
| PCC practice | 41 | 2.6 | 0.63 | 0–5 |
| Program acceptability | 135 | 4.5 | 0.62 | 1–5 |
| Program utility | 93 | 4.4 | 0.60 | 1–5 |
| HMHK | | | | |
| LIM | 5 | 4.0 | 0.28 | 1–5 |
| PCIBH principles | 5 | 1.7 | 0.23 | 0–2 |
| PCIBH core components and tasks | 5 | 1.4 | 0.12 | 0–2 |
| Collaborative care | 5 | 2.9 | 0.27 | 1–4 |

Note: SD: standard deviation; LIM: level of integration measure; PCC: primary care clinician; HMHK: Healthy Minds, Healthy Kids; PCIBH: patient-centered integrated behavioral health.

Components of the HMHK integrated care model

Next, the implementation team proceeded to clearly define the components of the HMHK service model in preparation for implementation.

Service model

The clinical care model for HMHK was developed based on review of the literature related to integrated primary care practice and feedback from key stakeholders. The model includes consultation to PCCs and other members of the primary care team (i.e., clinician-to-clinician consultation), consultation to patients and families (i.e., warm handoff consultation), and brief behavioral health interventions (i.e., 8–10 sessions) offered in the primary care office or via telehealth, which was implemented in response to the COVID-19 pandemic. If patients require a higher level of care, the HMHK clinician works closely with the care team to identify community resources and support a referral.

To encourage full integration of HMHK clinicians into the care team, PCCs serve as gatekeepers; families may not self-refer to HMHK. PCCs can refer to HMHK by requesting a same-day warm handoff consultation from the HMHK clinician; the HMHK clinician joins the office visit to screen the patient, identify preliminary treatment goals, and provide initial recommendations. If the HMHK clinician is not available, PCCs can also refer through the EHR.

Patients and families who are seen for follow-up visits with HMHK receive brief evidence-based behavioral health interventions (e.g., cognitive behavioral or behavioral). To maximize clinician availability and patient access, HMHK clinicians see patients and families for a 1-hour initial intake and 30-minute follow-up sessions. At each session, patients receive homework assignments designed to support development of skills to reduce impairment.

Evaluation model

A core component of the HMHK model is ongoing progress monitoring. Ongoing program evaluation activities are critical to understand clinician adherence to the HMHK model, clinician productivity, and program utilization by PCCs and families.

During the pilot, program leaders collaborated with partners in the hospital’s finance department to access existing data, including practice payer mix, billable charges, and rates of reimbursement, to inform budget planning for program expansion. These data will also be used to evaluate whether the program meets financial targets. As the HMHK program expands, the team will engage in quality improvement (QI) to evaluate additional intermediate and long-term outcomes (e.g., patient clinical outcomes, HMHK clinician fidelity to the model, patient service use, program cost). To date, QI projects have included revision of progress note templates and templates for between-session homework assignments to support standardization of care and clinician adherence to evidence-based practice.

Short-term activities

After establishing a clear understanding of program resources and reaching agreement on the key components of the model, the implementation team identified several key activities and outcomes to support early-stage implementation (McLaughlin & Jordan, 1999; Smith et al., 2020). Some activities were already in progress (e.g., pilot partnerships), while others were identified as necessary next steps (e.g., develop additional data systems). During the pilot, additional data tracking systems were developed to monitor program utilization (e.g., number and type of referrals, availability of appointments, scheduled and completed office visits, diagnoses). In addition, an operations manager was hired for HMHK, and she worked closely with the co-directors to develop standard operating procedures to guide program implementation and expansion. She was also responsible for working with administrative leaders in the psychiatry and finance departments to develop financial targets and the operating budget.

The HMHK leadership team recognized the need to support workforce development efforts to prepare for expected personnel needs once expansion began. In partnership with training program leaders in the psychiatry department, the HMHK co-directors refined training rotations for trainees (Njoroge et al., 2017). A training and supervision plan was established to support the development of clinical competencies in integrated behavioral health in pediatric primary care (e.g., Hoffses et al., 2016) and encourage fidelity to evidence-based strategies.

Outcomes

The final step in logic model development involved defining key outcomes of interest. The implementation team distinguished between intermediate outcomes (i.e., impacts more directly connected to HMHK implementation) and longer-term outcomes (i.e., impacts that likely result from the additive benefit of intermediate outcomes; McLaughlin & Jordan, 1999). Together, these outcomes reflect concerted efforts to ensure the sustainability of HMHK. The team identified measurement strategies for each metric of interest, using a combination of health system/EHR data, econometric data, and validated self-report instruments.

Intermediate outcomes

The implementation team determined that key intermediate outcomes were related to patient-, primary care-, and HMHK program–level constructs.

Patients

During the first year of the pilot, which rolled out in stages over the course of the year, 723 patients were seen for 1,481 office visits in the five practices. This represents approximately 1.5% of patients in the practices. Most patients (n = 490; 67.8%) were referred to HMHK through the EHR after the patient’s visit with the PCC. An additional 233 (32.2%) engaged with HMHK through a warm handoff conducted the same day as the primary care office visit. Most of these warm handoffs (n = 181; 77.7%) were conducted in the urban practice. This was likely because the no-show rate for scheduled HMHK sessions in that setting was substantially higher than in the other sites (28% vs. 4%–12%), which allowed more clinician time for warm handoff consultations with patients who were in the office for visits with PCCs. In the second year of the pilot, the team saw 1,360 patients for 3,138 office visits, meaning 2.8% of patients in the practices engaged with HMHK. Again, most patients (n = 1,156; 85.0%) were referred through the EHR; 204 (15.0%) were referred via warm handoff, and the majority (n = 134; 65.7%) of warm handoffs occurred in the urban practice. As in year 1, the no-show rate in the urban location was higher than in the other sites (29% vs. 4%–10%). Across years, among patients who attended an HMHK intake and at least one follow-up session, the mean number of total visits attended was 3.7. The most common referral concerns included anxiety, attention-deficit/hyperactivity disorder (ADHD), disruptive behavior (including tantrums), sleep problems, school problems (including academic concerns and bullying), and mood problems.
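The referral-pathway figures above follow directly from the reported counts, and the arithmetic can be reproduced in a few lines. The sketch below is a reader-side cross-check rather than part of the original analysis; the dictionary keys and the helper `pct` are illustrative names, and only counts stated in this section are used.

```python
def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Counts reported for each pilot year (patients seen, office visits,
# EHR referrals, warm handoffs, and warm handoffs in the urban practice).
pilot = {
    "year 1": {"patients": 723, "visits": 1481, "ehr": 490, "warm": 233, "warm_urban": 181},
    "year 2": {"patients": 1360, "visits": 3138, "ehr": 1156, "warm": 204, "warm_urban": 134},
}

summary = {}
for label, y in pilot.items():
    # The two referral pathways should account for every patient seen.
    assert y["ehr"] + y["warm"] == y["patients"]
    summary[label] = {
        "ehr_pct": pct(y["ehr"], y["patients"]),
        "warm_pct": pct(y["warm"], y["patients"]),
        "urban_share_of_warm_pct": pct(y["warm_urban"], y["warm"]),
    }

# Total completed office visits across both pilot years.
total_visits = sum(y["visits"] for y in pilot.values())

for label, row in summary.items():
    print(label, row)
print("total visits:", total_visits)
```

The recomputed percentages agree with the values reported in the text, and the two yearly visit counts sum to the 4,619 office visits cited in the abstract.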

Primary care

An electronic survey is distributed to all staff (PCCs, nurses, medical assistants, office staff) annually to gather staff perspectives about the program. At the conclusion of the first year of the pilot, only program satisfaction data were collected; during year 2, the survey included the program satisfaction measure (adapted from Hine et al., 2017), the level of integration measure (LIM; Fauth et al., 2010), and the questionnaire related to PCC comfort and practice with mental health conditions (Burka et al., 2014).

After year 1, participating practices indicated a high level of acceptability and utility of the HMHK program (acceptability M = 4.4 on a 5-point scale; SD = 0.64; utility M = 4.4; SD = 0.56). At the conclusion of year 2, ratings from the pilot practices were similar (acceptability M = 4.5; SD = 0.62 and utility M = 4.4; SD = 0.60; see Table 3). These findings are consistent with those of Hine and colleagues (2017), who found that PCCs were generally satisfied with integrated behavioral health services in pediatric primary care.

The satisfaction survey also included open-ended questions about program strengths and areas for growth. With regard to program strengths, respondents frequently made comments related to (a) reduction in stigma (e.g., “our patients’ parents are more comfortable because it’s here at our location”); (b) ease of access (e.g., “families actually use service as it is convenient”); and (c) opportunities for consultation (e.g., “it is great being able to do a warm handoff the day of visit”). With regard to opportunities for growth, respondents made comments related to (a) HMHK clinician availability (e.g., “making appointment availability more visible to clinicians,” “increasing availability for point-of-care curbside visits”); and (b) HMHK operating procedures (e.g., referral process “feels a bit cumbersome,” “opportunities for improvements in communication”). These comments, many of which echo the main themes highlighted in the extant literature on integrated primary care (Arora et al., 2017; Godoy et al., 2017; Hine et al., 2017), were used to refine procedures during the pilot. The Steering Committee, stakeholder leaders, and practices were informed about the ways in which practice-level feedback was used to inform program improvements. For example, the referral process was redesigned to align with procedures in the EHR used by other programs within the hospital, and HMHK clinicians began sending their initial visit notes to PCCs in the EHR to share their impressions and preliminary treatment plans. Program satisfaction information will continue to be utilized as HMHK expands to further refine the service model and improve workflow.

After year 2, members of the primary care team felt that HMHK was relatively well integrated into each of the primary care practices (LIM M = 4.00; SD = 0.49; see Table 3). With respect to PCC comfort, respondents, on average, indicated that they “rarely” to “occasionally” felt comfortable with managing common pediatric mental health conditions (M = 2.4 on the 0–5 scale; SD = 0.88). Of note, members of the medical team indicated higher levels of comfort with ADHD (M = 3.4; SD = 1.07) than depression (M = 2.2; SD = 1.36), anxiety (M = 2.1; SD = 1.22), and autism spectrum disorder (ASD; M = 2.8; SD = 1.27). This is consistent with expectations, given that the PCCs in the primary care network had previously been exposed to a substantial amount of training and education related to the diagnosis and management of ADHD (Fiks et al., 2017), and no similar work had been completed related to other behavioral health concerns. These data will be used to inform educational activities that are being developed in partnership with psychiatry and primary care leadership. With respect to actual practice behavior, PCCs provided responses that align with comfort ratings overall (M = 2.6 on the 0–5 scale; SD = 0.63) and for common presenting concerns (ADHD M = 3.4; SD = 1.09; depression M = 2.6; SD = 0.83; anxiety M = 2.2; SD = 0.87; ASD M = 2.4; SD = 0.84).

HMHK

To obtain information about HMHK clinician perspectives, three measures were selected, including the LIM (Fauth et al., 2010), the Patient Centered Integrated Behavioral Health Care Principles and Tasks (AIMS Center, 2012), and a measure developed by the implementation team related to HMHK clinician perceptions of the extent to which PCCs and HMHK clinicians engage in team-based collaborative care. These measures were administered to the five HMHK clinicians after year 2. The HMHK clinicians had similar ratings to the primary care team regarding level of integration (LIM M = 4.0; SD = 0.27). On average, HMHK clinicians indicated that the teams used patient-centered approaches with most patients (Principles M = 1.7; SD = 0.23), and aspects of effective integrated care programs were employed with some to most of the patients (Core Components and Tasks M = 1.4; SD = 0.12). Finally, on average, HMHK clinicians indicated that they “often” engaged in team-based, collaborative care with their primary care colleagues (M = 2.9; SD = 0.28; Table 3). These measures will be administered annually to monitor changes in level of integration, use of team-based care strategies, and implementation of patient-centered, integrated care approaches. In addition, these data will be used to inform QI initiatives to ensure that high levels of integration are achieved across sites.

Long-term outcomes

In addition to continuing to collect relevant intermediate outcome metrics to understand long-term utilization (e.g., referrals, engagement in care) and effectiveness outcomes, the implementation team identified several additional discrete long-term outcomes.

Patients

The ultimate long-term goal of HMHK is improved patient health, both mental and physical. Specific long-term health outcomes of interest will vary depending on subgroups of the population, although primary health outcomes include improvements in behavioral health conditions (e.g., depression and anxiety) as well as changes in health behaviors related to physical health conditions (e.g., adherence to an asthma care plan). Other patient outcomes cut across diagnoses and populations, such as improvement in quality of life. In the long term, HMHK also aims to reduce avoidable emergency department (ED) visits and hospitalizations. We plan to access patients’ health care service claims data to assess whether the use of integrated behavioral health services results in reduced ED visits and hospitalizations.

In addition, improved patient access to and utilization of mental health services is a long-term objective and indicator of HMHK’s success. We recently evaluated patient- and service-related factors associated with engagement in HMHK treatment (Mautone et al., 2020). We will also collaborate with leaders in other programs in the hospital system (e.g., outpatient specialty mental health programs) to evaluate access to care more broadly across the health system. For instance, in our pilot work, we noted that HMHK resulted in more patients being seen within the primary care sites as expected, but also an increase in referrals to our outpatient specialty mental health clinics. It will be important to continue to monitor service utilization over time to ensure that patients are receiving the right services at the right time and in the right setting to best address their needs.

Primary care

In addition to evaluating changes in PCC self-reported comfort and practice related to management of mental health conditions, the implementation team determined that it would be ideal to evaluate actual clinician behavior (e.g., medication prescribing practices). The team will continue to collaborate with colleagues in informatics to identify data collection methods through the EHR that can capture changes in clinician behaviors.

As noted above, approximately half of primary care office visits are related to a behavioral health concern (Martini et al., 2012). As HMHK grows, we expect that there will be a reduction in behavioral health-related sick visits scheduled in HMHK practices. This can also be evaluated through the EHR.

HMHK

Cost is an important determinant of adoption, sustainment, and scalability of the HMHK program. Evaluation of program cost will include an analysis of resources and costs required to develop and run the program and the potential additional costs or cost offsets resulting from an increase or decrease in secondary-care utilization (i.e., sick visits, ED visits, hospitalizations). The cost estimation will primarily aim to identify the categories of costs (i.e., program set-up and ongoing costs) and the scale of resources needed for each category. We will use CostIt (Costing Interventions Templates; Adam et al., 2007), software designed by the World Health Organization to record and analyze cost data. In addition, we will access patients’ health care service claims data to assess whether engagement in integrated services results in reduced costs or cost offsets due to decreased health care utilization. We will compare total expenses for patients engaged in HMHK with expenses for patients with similar profiles who were not in the program. We also will track all services billed and monitor denials of claims to become more informed about reimbursement options (e.g., use of Collaborative Care billing codes; Press et al., 2017). Finally, we will explore alternative payment models to the traditional fee-for-service model currently in place.

Future directions

Because of the iterative nature of this work, the HMHK service model has continued to be refined, particularly as we collect data on the various components of the logic model. Although several components of the program development and expansion plan have been delayed due to the COVID-19 pandemic (e.g., a hiring freeze delayed the addition of new practices), the following are examples of ongoing and future program development activities.

First, the team is focusing on developing strategies for training PCCs, nursing staff, and office staff to enhance team knowledge of behavioral health and team-based care. To date, HMHK leadership has further developed the practice orientation process to include a more detailed training related to integrated behavioral health and HMHK. Also, HMHK clinicians are working with each practice to incorporate practice-based learning opportunities (e.g., lunch and learn sessions) focused on mental health issues, and the psychiatry department is working with the primary care network to implement a mental health-focused curriculum for PCCs (e.g., Laraque et al., 2009). The data we collect on PCCs’ comfort and practice with behavioral health issues will inform these efforts.

Second, to address the needs of more complex patients and to support PCC skill development related to psychiatric medication management, the HMHK leadership team is identifying opportunities for improving access to psychiatry services in primary care. In one practice, psychiatry trainees are present for a rotation related to integrated behavioral health, and PCCs and families can obtain psychiatric medication consultation directly from the psychiatry trainees and attending psychiatrist. Other practices are increasingly using telephonic consultation through a state-funded program offered by the psychiatry department, modeled after established programs (e.g., Straus & Sarvet, 2014). Also, a telepsychiatry service has been developed to improve family access to psychiatric care. As these consultative opportunities are refined and scaled, we will evaluate whether they increase PCC satisfaction with HMHK and PCC comfort and practices related to managing behavioral health conditions.

Third, HMHK leadership is collaborating with content experts in the psychiatry department to offer a professional development series for HMHK clinicians to support fidelity to evidence-based practices. Concurrently, the leadership team is working on strategies for monitoring clinician fidelity and providing ongoing performance feedback.

Fourth, the HMHK team is refining referral procedures, patient tracking systems, and progress monitoring procedures to improve clinical workflows and evaluation efficiency. Several groups throughout the enterprise are focused on screening patients for behavioral health concerns and linking these patients to services (e.g., Farley et al., 2020). HMHK leadership will work closely with the behavioral health champions in each practice to ensure that appropriate patients who are identified through these screening efforts are referred to HMHK. Leadership also is working to ensure that referrals to higher levels of care and patient progress can be easily monitored in the EHR.

Fifth, one of the inherent goals of creating HMHK was to promote health equity by increasing access to quality care. We will continue to use program evaluation data, alongside recommendations in the literature (e.g., Arora et al., 2017), to inform infrastructure development, clinician training, and clinical practice to address identified health disparities. Members of the HMHK team have joined the newly established Health Equity Working Group for the health system. This will help ensure that ongoing program development and evaluation activities are aligned with hospital priorities and that HMHK continues to promote access to high-quality care for children and families from diverse racial and ethnic groups.

Sixth, the team will continue to use program evaluation data, including referral rates, clinician productivity, and family and clinician satisfaction data, to inform staffing plans and patient schedules for each practice. During the 2-year pilot, HMHK clinicians saw fewer than 3% of patients in the participating practices. Given that up to 20% of youth have behavioral health concerns (Merikangas et al., 2010) and that half of primary care office visits include a behavioral or emotional concern (Martini et al., 2012), there are additional opportunities for program expansion. In addition, follow-up sessions for HMHK are 30 minutes; outpatient behavioral health sessions are typically 45 to 60 minutes. Anecdotally, clinicians have indicated that 30-minute sessions are insufficient for some patients. As a result, clinicians have piloted longer sessions, and the leadership team is evaluating options for altering visit durations.

Seventh, consultation to families via warm handoff and consultation to PCCs are critical components of the HMHK model. The leadership team monitors the number of warm handoff consultations; however, there is currently no mechanism for quantifying clinician-to-clinician consultation. Such a mechanism will be included in future data-tracking systems.

Finally, we will continue to explore opportunities under federal and state payment reform initiatives to ensure the financial sustainability of HMHK. We are evaluating the current business model and insurance contracts to identify opportunities for increased reimbursement, which will be essential as we expand.

Conclusion

Our work developing a logic model and associated evaluation plan for HMHK serves two key functions. First, this process has allowed us to develop a highly tailored implementation and evaluation plan to support scale-up of a new program in a manner that is responsive to the specific context and health system priorities. Tailored implementation approaches have been recommended (Powell et al., 2017), as they provide structure while allowing for modifications to the implementation planning activities. Second, it is our hope that this work will serve as a model for other health systems seeking to integrate behavioral health services in pediatrics, as well as to implement new programs more broadly. Integrated behavioral health programs are expanding rapidly in pediatric primary care throughout the United States (e.g., Briggs et al., 2016; Godoy et al., 2017). Although we provide a specific example from our work with HMHK, we view our process as generalizable to other contexts. As has been noted by others (e.g., Bauer et al., 2015; Lewis et al., 2018), programs are often implemented without strategic implementation planning, making it difficult to disentangle why implementations do or do not succeed. The use of logic models and a priori evaluation plans can move the field of implementation science forward, while also increasing the likelihood that integrated behavioral health services, and other evidence-based practices, will be successful and sustainable.

Acknowledgments

The authors thank the network of primary care clinicians, their patients, and families for their contribution to this work and clinical research facilitated through the Pediatric Research Consortium (PeRC) at Children’s Hospital of Philadelphia.

Footnotes

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was completed with support from the Chair of the Department of Child & Adolescent Psychiatry & Behavioral Sciences at Children’s Hospital of Philadelphia. M.F.D. is supported by a National Institute of Mental Health Training Fellowship (grant no. T32 MH109433).

ORCID iD: Jennifer A Mautone https://orcid.org/0000-0002-2712-5255

References

- Aarons G. A., Hurlburt M., Horwitz S. M. (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research, 38, 4–23. https://doi.org/10.1007/s10488-010-0327-7

- Adam T., Aikins M., Evans D. (2007). CostIt (Costing interventions templates) software (Version 4.5). World Health Organization. http://www.who.int/choice/toolkit/cost_it/en/

- Advancing Integrated Mental Health Solutions Center. (2012). Patient Centered Integrated Behavioral Health Care Principles and Tasks. Psychiatry & Behavioral Sciences Division of Population Health, University of Washington. Retrieved January 5, 2021, from https://aims.uw.edu/sites/default/files/CollaborativeCarePrinciplesAndComponents_2014-12-23.pdf

- American Psychiatric Association & Academy of Psychosomatic Medicine. (2016). Dissemination of integrated care within adult primary care settings: The collaborative care model. https://www.psychiatry.org/psychiatrists/practice/professional-interests/integrated-care/learn

- Arora P., Godoy L., Hodgkinson S. (2017). Serving the underserved: Cultural considerations in behavioral health integration in pediatric primary care. Professional Psychology: Research and Practice, 48(3), 139–148.

- Asarnow J., Kolko D. J., Miranda J., Kazak A. E. (2017). The pediatric patient-centered medical home: Innovative models for improving behavioral health. American Psychologist, 72, 13–27. https://doi.org/10.1037/a0040411

- Asarnow J., Rozenman M., Wiblin J., Zeltzer L. (2015). Integrated medical-behavioral care compared with usual primary care for child and adolescent behavioral health: A meta-analysis. JAMA Pediatrics, 169, 929–937. https://doi.org/10.1001/jamapediatrics.2015.1141

- Bauer M. S., Damschroder L., Hagedorn H., Smith J., Kilbourne A. M. (2015). An introduction to implementation science for the non-specialist. BMC Psychology, 3, Article 32. https://doi.org/10.1186/s40359-015-0089-9

- Briggs R. D., German M., Schrag Hershberg R., Cirilli C., Crawford D. E., Racine A. D. (2016). Integrated pediatric behavioral health: Implications for training and intervention models. Professional Psychology: Research and Practice, 47(4), 312–319.

- Burka S. D., Van Cleve S. N., Shafer S., Barkin J. L. (2014). Integration of pediatric mental health care: An evidence-based workshop for primary care providers. Journal of Pediatric Health Care, 28(1), 23–34. https://doi.org/10.1016/j.pedhc.2012.10.006

- Centers for Disease Control and Prevention. (2019). Logic models. https://www.cdc.gov/eval/logicmodels/index.htm

- Farley A. M., Gallop R. J., Brooks E. S., Gerdes M., Bush M. L., Young J. F. (2020). Identification and management of adolescent depression in a large pediatric care network. Journal of Developmental and Behavioral Pediatrics, 41, 85–94. https://doi.org/10.1097/DBP.0000000000000750

- Fauth J., Tremblay G., Blanchard A., Austin J. (2010, June 23–27). Participatory practice based research for improving clinical practice from the ground up: The integrated care evaluation project [Conference session]. International Meeting of the Society for Psychotherapy Research, Asilomar, CA, United States.

- Fiks A. G., Mayne S. L., Michel J. J., Miller J., Abraham M., Suh A., Jawad A. F., Guevara J. P., Grundmeier R. W., Blum N. J., Power T. J. (2017). Distance-learning, ADHD quality improvement in primary care: A cluster-randomized trial. Journal of Developmental & Behavioral Pediatrics, 38, 573–583. https://doi.org/10.1097/DBP.0000000000000490

- Godoy L., Long M., Marschall D., Hodgkinson S., Bokor B., Rhodes H., Crumpton H., Weissman M., Beers L. (2017). Behavioral health integration in health care settings: Lessons learned from a pediatric hospital primary care system. Journal of Clinical Psychology in Medical Settings, 24, 245–258. https://doi.org/10.1007/s10880-017-9509-8

- Hayes H., Parchman M. L., Howard R. (2011). A logic model framework for evaluation and planning in a primary care practice-based research network (PBRN). Journal of the American Board of Family Medicine, 24, 576–582. https://doi.org/10.3122/jabfm.2011.05.110043

- Helfrich C. D., Li Y.-F., Sharp N. D., Sales A. E. (2009). Organizational readiness to change assessment (ORCA): Development of an instrument based on the Promoting Action on Research in Health Services (PARIHS) framework. Implementation Science, 4, 38. https://doi.org/10.1186/1748-5908-4-38

- Hine J. F., Grennan A. Q., Menousek K. M., Robertson G., Valleley R. J., Evans J. H. (2017). Physician satisfaction with integrated behavioral health in pediatric primary care. Journal of Primary Care Community Health, 8, 89–93. https://doi.org/10.1177/2150131916668115

- Hoffses K. W., Ramirez L. Y., Berdan L., Tunick R., Honaker S. M., Meadows T. J., Shaffer L., Robins P. M., Stancin T., Sturm L. (2016). Topical review: Building competency: Professional skills for pediatric psychologists in integrated primary care settings. Journal of Pediatric Psychology, 41, 1144–1160. https://doi.org/10.1093/jpepsy/jsw066

- Horwitz S. M., Storfer-Isser A., Kerker B. D., Szilagyi M., Garner A., O’Connor K. G., Hoagwood K. E., Stein R. E. (2015). Barriers to the identification and management of psychosocial problems: Changes from 2004 to 2013. Academic Pediatrics, 15, 613–620. https://doi.org/10.1016/j.acap.2015.08.006

- Ingram D. D., Franco S. J. (2013). NCHS Urban-rural classification scheme for counties. Vital and Health Statistics, 2(166), 1–73.

- Laraque D., Adams R., Steinbaum D., Zuckerbrot R., Schonfeld D., Jensen P. S., Demaria T., Barrett M., Dela-Cruz M., Boscarino J. A. (2009). Reported physician skills in the management of children’s mental health problems following an educational intervention. Academic Pediatrics, 9(3), 164–171. https://doi.org/10.1016/j.acap.2009.01.009

- Lewis C. C., Klasnja P., Powell B. J., Lyon A. R., Tuzzio L., Jones S., Walsh-Bailey C., Weiner B. (2018). From classification to causality: Advancing understanding of mechanisms of change in implementation science. Frontiers in Public Health, 6, Article 136. https://doi.org/10.3389/fpubh.2018.00136

- Martini R., Hilt R., Marx L., Chenven M., Naylor M., Sarvet B., Ptakowski K. K. (2012). Best principles for integration of child psychiatry into the pediatric health home. American Academy of Child & Adolescent Psychiatry.

- Mautone J. A., Cabello B., Egan T. E., Rodrigues N. P., Davis M., Figge C. J., Sass A. J., Williamson A. A. (2020). Exploring predictors of treatment engagement in urban integrated primary care. Clinical Practice in Pediatric Psychology, 8(3), 228–240. https://doi.org/10.1037/cpp0000366

- McLaughlin J., Jordan G. (1999). Logic models: A tool for telling your program’s performance story. Evaluation and Program Planning, 22, 65–72. https://doi.org/10.1016/S0149-7189(98)00042-1

- Merikangas K. R., He J.-p., Burstein M., Swanson S. A., Avenevoli S., Cui L., Benjet C., Georgiades K., Swendsen J. (2010). Lifetime prevalence of mental disorders in US adolescents: Results from the National Comorbidity Survey Replication–Adolescent Supplement (NCS-A). Journal of the American Academy of Child & Adolescent Psychiatry, 49(10), 980–989. https://doi.org/10.1016/j.jaac.2010.05.017

- Njoroge W. F. M., Williamson A. A., Mautone J. A., Robins P. M., Benton T. D. (2017). Competencies and training guidelines for behavioral health providers in pediatric primary care. Child and Adolescent Psychiatric Clinics of North America, 26, 717–731.

- Peek C. J., & National Integration Academy Council. (2013). Lexicon for behavioral health and primary care integration: Concepts and definitions developed by expert consensus (AHRQ Publication No.13-IP001-EF). https://pip.w3.uvm.edu/Lexicon.pdf

- Powell B. J., Beidas R. S., Lewis C. C., Aarons G. A., McMillen J. C., Proctor E. K., Mandell D. S. (2017). Methods to improve the selection and tailoring of implementation strategies. Journal of Behavioral Health Services Research, 44, 177–194. https://doi.org/10.1007/s11414-015-9475-6

- Press M. J., Howe R., Schoenbaum M., Cavanaugh S., Marshall A., Baldwin L., Conway P. H. (2017). Medicare payment for behavioral health integration. The New England Journal of Medicine, 376, 405–407. https://doi.org/10.1056/NEJMp1614134

- Shea C. M., Jacobs S. R., Esserman D. A., Bruce K., Weiner B. J. (2014). Organizational readiness for implementing change: A psychometric assessment of a new measure. Implementation Science, 9, Article 7. https://doi.org/10.1186/1748-5908-9-7

- Smith J. D., Li D., Rafferty M. R. (2020, April 16). The Implementation Research Logic Model: A method for planning, executing, reporting, and synthesizing implementation projects. medRxiv. https://doi.org/10.1101/2020.04.05.20054379

- Straus J. H., Sarvet B. (2014). Behavioral health care for children: The Massachusetts child psychiatry access project. Health Affairs, 33, 2153–2161. https://doi.org/10.1377/hlthaff.2014.0896

- Tremblay C., Coulombe V., Briand C. (2017). Users’ involvement in mental health services: Programme logic model of an innovative initiative in integrated care. International Journal of Mental Health Systems, 11, Article 9. https://doi.org/10.1186/s13033-016-0111-5

- Wright D. R., Haaland W. R., Ludman E., McCauley E., Lindenbaum J., Richardson L. P. (2016). The costs and cost-effectiveness of collaborative care for adolescents with depression in primary care settings: A randomized clinical trial. JAMA Pediatrics, 170, 1048–1054. https://doi.org/10.1001/jamapediatrics.2016.1721