Abstract

Robotics is one of the fastest-emerging technologies today and is used in a wide variety of applications, ranging from complex rocket technology to crop monitoring in agriculture. Robots can be exceptionally useful in a smart hospital environment, provided that they are equipped with improved vision capabilities for detecting and avoiding obstacles in their path, which allows them to perform their tasks without disturbance. For Autonomous Nursing Robots in particular, the essential issues are effective path planning for delivering medicines to patients, measuring patient body parameters through sensors, and interacting with patients through voice-based modules to inform them about the doctors' visiting schedule, their body parameter details, etc. In this paper, we present a new, complete Autonomous Nursing Robot system that supports all of these tasks and is capable of operating in a smart hospital environment. The objective of the system is to identify the patient room, perform path planning for the delivery of medicines to a patient, and measure the patient's body parameters, using a wireless BLE (Bluetooth Low Energy) beacon receiver on the robot and BLE beacon transmitters in the respective patient rooms. Assuming that a wireless beacon is placed in each patient room, the robot follows the beacon's signal, identifies the respective room and delivers the required medicine to the patient. A new fuzzy controller system, consisting of three ultrasonic sensors and one camera, is developed to determine the optimal robot path and to avoid collisions with stationary and moving obstacles. The fuzzy controller effectively detects obstacles in the robot's vicinity and makes appropriate decisions for avoiding them. The navigation of the robot is implemented on a BLE tag module by using the AOA (Angle of Arrival) method.
The robot uses sensors to measure the patient's body parameters and uploads these data to the hospital patient database system in a private cloud. It also uses Google Assistant to interact with the patients. The robotic system was implemented on the Raspberry Pi using Matlab 2018b. The system performance was evaluated on a PC with an Intel Core i5 processor, and solar power was used to power the system. Several sensors, namely an HC-SR04 ultrasonic sensor, a Logitech HD 720p image sensor, a temperature sensor and a heart rate sensor, are used together with a camera to generate datasets for testing the proposed system. In particular, the system was tested on operations taking place in a private hospital in Tirunelveli, Tamilnadu, India. A detailed comparison, using performance metrics such as Correlation, Root Mean Square Error (RMSE) and Mean Absolute Percentage Error (MAPE), is performed against the related works of Deepu et al., Huh and Seo, Chinmayi et al., Alli et al., Xu, Ran et al., and Lee et al. The experimental validation showed that the fuzzy controller achieves very high accuracy in obstacle detection and avoidance, with very low computational time for directional decisions. Moreover, the experimental results demonstrated that the robotic system detects and avoids obstacles more accurately than the comparable systems presented in the related works.

Keywords: Robotics, Smart hospital, Ultrasonic sensor, Fuzzy logic, Bluetooth low energy beacon, Raspberry pi, Wireless beacon network

Introduction

Autonomous Nursing Robots

Healthcare workers continuously face serious workplace hazards. During the COVID-19 pandemic, healthcare workers have an increased risk of contracting COVID-19 through their contact with infected patients. In addition, workers in healthcare settings may be exposed to flu germs, bloodborne pathogens (such as HBV and HIV), hand-washing-related infections and many other healthcare-associated infections and diseases. Staff shortages, and nursing shortages in particular, are an additional major concern in many hospitals. In the near future, healthcare organizations will likely face even more severe nursing shortages than today [5]. Because of these shortages, nurses often have to considerably extend their shifts or even work overtime. Nurses are therefore at high risk of fatigue and burnout, which may in turn negatively affect patient care [40]. With the number of caretakers and nurses constantly decreasing, hospital management struggles to provide quality treatment to patients, even in tier-1 hospitals.

Many technological developments aim at automating hospital processes and reducing risks in the hospital environment. Robots, for example, have started to be used in smart hospitals to perform various activities. In particular, patient caretaker robots have the potential to solve some of the aforementioned problems [13]. Caretaker robots can help decrease nurses' workload and efficiently minimize non-value-added nursing activities [23]. Nevertheless, the essential issues for Autonomous Nursing Robots are effective path planning for delivering medicines to patients, measuring patient body parameters through sensors, and interacting with patients through voice-based modules to inform them about the doctors' visiting schedule, their body parameter details, etc. All these requirements should be addressed together, rather than each one separately.

Because a robot may have power restrictions and limited capacity (e.g., regarding vision, memory and movement), it is important to use Autonomous Nursing Robot solutions that offer a variety of effective actions at low cost. In the context of multimedia technology, combining various media technologies inside robots would facilitate their use in various scenarios, as illustrated by case studies of autonomous nursing robots in the relevant literature. Media technologies include tools for processing a broad range of media, from audio, video and images to interactive media, video games, virtual reality and augmented reality. For instance, a robot could use a privacy-preserving face recognition module to detect the patient and link him/her to a disease database hosted on a remote cloud server, retrieving the disease information in privacy mode [17]. The robot could then map the environment and locate other robots through the Simultaneous Localization and Mapping (SLAM) method [15] in order to circulate the patient's disease information in a secure setting. Path planning could be set up for the robot to take the patient to the emergency room, which is especially relevant during the COVID-19 pandemic [28]. The interactive environment could be sensed through the robot's virtual reality and augmented reality capabilities, so that the robot can respond better to its environment. Voice synthesis can produce audio responses to the information patients request, such as their medicines and treatment. There is thus a close connection between multimedia technology and Autonomous Nursing Robots.

This paper presents a complete Autonomous Nursing Robot that supports all of the aforementioned tasks.

Literature review

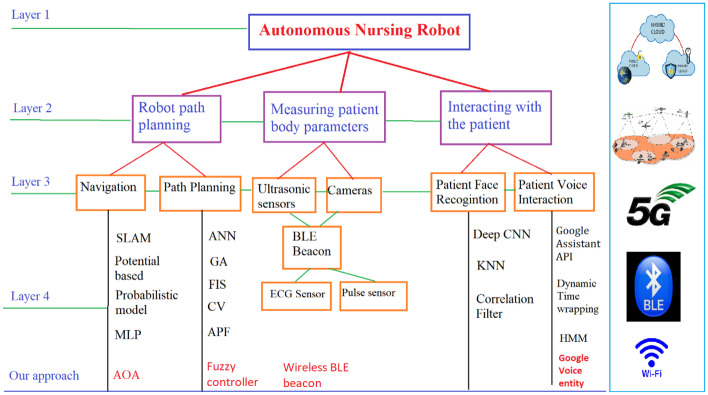

The literature offers a plethora of artificial intelligence (AI) methods, network technologies (WiFi, Bluetooth Low Energy (BLE), etc.), and devices (ultrasonic sensors, cameras, BLE beacons, etc.) for effective robot positioning, path planning and navigation. The AI techniques include computer vision (CV) methods, Artificial Neural Networks (ANNs), Genetic Algorithms (GAs), Fuzzy Inference Systems (FISs), Deep Learning algorithms, etc. The diagram in Fig. 1 classifies Autonomous Robot types according to these AI techniques, network technologies and devices. Layer 1 of the figure represents the object of study, and Layer 2 shows the main functionalities of an Autonomous Nursing Robot: robot path planning, measuring patient body temperature, and interacting with the patient. Layer 3 shows the sub-functions of each main function in Layer 2. For example, the robot path planning function has two sub-functions, Navigation and Path planning: the Navigation sub-function finds the shortest path on a map, while the Path planning sub-function employs AI algorithms such as ANNs, GAs and FISs to avoid obstacles in the robot's path.

Fig. 1.

Review of other related approaches vs. Ours

Layer 4 shows typical techniques used in the literature to implement each sub-function. For example, the Navigation sub-function has been implemented with techniques such as the SLAM method [15], potential-based approaches [31], probabilistic models [19], and methods based on the Multilayer Perceptron (MLP) [39]. All these techniques and devices are connected through a network (e.g., WiFi, BLE, 5G, satellite network) to a (hybrid) cloud service that performs the sub-tasks of an Autonomous Nursing Robot. The last layer of the figure shows our approach for each sub-function. These approaches are described in detail in Sect. 2; we include them in this figure to highlight the differences and the novelty of our approach compared with the related ones.

In what follows, we briefly describe some of the typical methods shown in Layer 4 of the diagram presented in Fig. 1.

Robot navigation McGuire et al. [27] presented a literature survey and a comparative study of Bug Algorithms (BAs) for robot navigation. Unlike classical path planning algorithms such as Dijkstra and A*, BAs do not require the environment to be defined in advance. Variants of BA include Bug1 [25], Bug2 [26], Com1 and Alg2 [37], DistBug [16], Rev1 and Rev2 [12], and others. The survey also discusses the choice of Bug variant for a given robot application. Bera et al. [4] described a real-time method for socially aware robot navigation that uses potential-based approaches [31] for path planning in dynamic environments. Other techniques based on probabilistic or Bayesian velocity obstacles have also been reported in the literature [19]. Singh and Khelchandra [39] used an MLP to determine a collision-free area that controls the robot speed in each motion. A review of SLAM algorithms for robot navigation is given in [20].

Robot path planning Dirik et al. [9] proposed a global path planning method based on ANNs and GAs that provides effective path planning and obstacle avoidance. An ANN can represent the environmental information in the robot workspace in order to find an obstacle-avoiding path that leads the robot to the target neuron, which corresponds to the target location. The global optimal path is then determined by a GA. Fusing GA and ANN yields an effective path planning trajectory for the mobile robot. Zhang et al. [43] investigated a vision-based, gaze-guided robot method that avoids obstacles based on the user's eye movements. This method implements vision detection and robot motion control by applying a type-2 FIS to control the robot based on eye gaze data obtained from the user through an eye gaze tracking device. Avirup et al. [2] described a road-following system that guides mobile robots in outdoor environments using a monocular camera. The system consists of road detection and road tracking subsystems. The road detection subsystem fuses the results of two computer vision (CV) methods (a color-based road detector and a texture line detector). The road tracking subsystem provides tracking waypoints for the robot by transforming the road-following position from camera coordinates into world coordinates, and sends the appropriate control commands to the robot motion controller. A fuzzy algorithm was proposed in [29] to guide a mobile robot equipped with a non-calibrated camera through an uneven outdoor environment without colliding with obstacles. In this method, a feature selection/tracking algorithm selects the most significant feature points, and an FIS analyzes the feature motion and makes navigation decisions. In [36], a modified artificial potential field (APF) algorithm was implemented to avoid collisions with unknown objects in the robot's path.

Robot measurement by devices Contemporary robotic systems are also required to perform advanced real-time indoor positioning, localization and navigation of people and/or robots by using sensors and other wireless devices. Several applications use Wi-Fi, Radio-Frequency Identification (RFID), Bluetooth Low Energy (BLE) and other technologies to support equipment tracking, real-time personnel detection, patient localization, navigation of people with disabilities and many other activities. For example, [18] proposed an indoor location-based control system that uses a BLE beacon in a Wireless LAN environment to determine the user's current indoor location. The system offers the user different services depending on his/her current location and controls household devices based on this location information. In [33], a real-time localization system was described that uses a number of smart BLE beacons and wireless beacon receivers in an airport building. The system continuously tracks the real-time location of all passengers, so even if a passenger moves away from the location where he/she submitted a request, the airline team can still contact the passenger at his/her current location. The beacon system also raises an alert if a passenger tries to enter a restricted area. Indoor localization of a moving object using a Bluetooth signal was also proposed in [3]. This method uses the Received Signal Strength Indicator (RSSI) of the Bluetooth protocol to estimate the distance between the sender node and the receiver node in a wireless network: it removes RSSI outliers and computes a moving average of the RSSI values, from which the distance between the beacon and the moving object is estimated. In [34], the location of a nursing home patient is traced using the RSSI technique and trilateration.
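The RSSI-based ranging idea summarized above for [3] can be illustrated with a minimal Python sketch. The outlier rule (distance from the median), the window length, and the log-distance path-loss model used to turn the smoothed RSSI into metres are all assumptions; the text only states that outliers are removed, a moving average is taken and a distance is estimated.

```python
from statistics import median


def remove_outliers(rssi_dbm, max_dev_db=10.0):
    """Drop RSSI samples more than max_dev_db away from the median.

    The median-deviation rule and the 10 dB default are assumptions;
    the exact outlier criterion of [3] is not given in the text.
    """
    if not rssi_dbm:
        return []
    m = median(rssi_dbm)
    return [r for r in rssi_dbm if abs(r - m) <= max_dev_db]


def moving_average(values, window=5):
    """Simple moving average over a sliding window of `window` samples."""
    if not values:
        return []
    if len(values) < window:
        # Not enough samples for a full window: average what we have.
        return [sum(values) / len(values)]
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]


def rssi_to_distance(rssi_dbm, tx_power_dbm=-59.0, path_loss_exp=2.0):
    """Assumed log-distance path-loss model: d = 10^((P_1m - RSSI) / (10 n)).

    tx_power_dbm is the RSSI expected at 1 m; path_loss_exp = 2 is free space.
    """
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10 * path_loss_exp))


samples = [-60, -61, -59, -90, -60, -62]   # -90 dBm is a spurious reading
smoothed = moving_average(remove_outliers(samples))
print(smoothed, rssi_to_distance(smoothed[-1]))
```

The 1 m reference power and the path-loss exponent would normally be calibrated per beacon and per building, since walls and people change both.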
In [10], a tracking robot is presented that achieves stable tracking by attaching a wireless beacon to the target and using a Radio Frequency (RF) wireless system. The robot applies a localization method based on a single receiving station to obtain the target location. It also uses sonar sensors to measure distances to obstacles and avoid them. An Internet of Things (IoT)-based patient health monitoring system is proposed in [11], with various wearable sensors, such as a temperature sensor, an ECG (electrocardiogram) sensor and a pulse sensor, for measuring the patient's temperature, pressure and heart rate. The system also includes a GSM (Global System for Mobile Communications) module through which a doctor can be informed about the patient's health by a mobile phone text message. An Android application was also developed to send notifications about the patient's body temperature to the doctor's mobile phone.

Patient face recognition Various sensors (e.g., RGB, depth, EEG, thermal, and wearable inertial sensors) may provide additional information to face recognition systems for identifying patients' faces in both static images and video sequences. Kortli et al. [21] reviewed face recognition algorithms, among which Correlation Filters (CFs), Convolutional Neural Networks (CNNs) and clustering approaches based on the k-nearest neighbor (K-NN) technique are the most typical. Recognition algorithms can be classified into local, holistic and hybrid approaches. The article [32] also confirms the strength of these methodologies for improving the accuracy of face recognition.

Patient voice interaction In [8], a prototype voice assistant-based navigation system that uses the Google Assistant API on a Raspberry Pi was described for interacting with visually impaired persons. The system allows users to interact with home appliances through speech and gesture commands. In [22], another voice-based assistant system for home automation was presented, which implements natural language processing and speech recognition to receive voice commands from a USB microphone connected to the Raspberry Pi. The Raspberry Pi processes the user's speech input from the microphone, converts it into text and executes the command. Various security issues related to home digital voice assistants, and Amazon Alexa in particular, were discussed in [41]. The authors examined vulnerabilities to acoustic attacks and presented proof-of-concept attacks showing that Alexa is vulnerable to this type of attack. A review of speech recognition systems can be found in [38].

Contributions of this paper

Motivation

Previous related methods either concentrate on a specific sub-function of the Autonomous Nursing Robot or perform poorly in some scenarios. For example, path planning based on GAs performs well in non-real-time scenarios, but it may be ineffective in an emergency, for example in a hospital, because it is often slow. On the other hand, missing or poor support for voice-based modules is a shortcoming of robots operating in a hospital environment, especially during a pandemic such as COVID-19, when hospitalized COVID-19 patients need step-by-step instructions. Consequently, a complete Autonomous Nursing Robot system that fully supports all the aforementioned tasks is needed.

The contributions of the current paper

In this paper, we build upon the reviewed research and technological developments to design and implement an innovative patient caretaker robot capable of operating in a smart hospital environment. The presented robot can perform activities such as: (i) delivering medicines to patients at the correct time, (ii) periodically measuring patient body parameters (such as temperature) and updating those details in the hospital database wirelessly through IoT, and (iii) passing important instructions to the patient through a Google-based voice entity incorporated in the robot. By performing these tasks, the robot ultimately aims to reduce nurses' workload in a hospital environment and to support the safety of healthcare professionals, particularly in critical hospital situations during a pandemic outbreak. The contributions of this paper are organized below according to the robot sub-functions shown in the diagram in Fig. 1.

System design The objective of the presented system is to identify the patient room, perform robot path planning for the delivery of medicines to a patient and measure the patient body parameters through a wireless BLE beacon receiver and the BLE beacon transmitter at the respective patient rooms.

Robot path planning Obstacles in the robot's path are effectively detected by ultrasonic and vision sensors. A new fuzzy controller system, consisting of three ultrasonic sensors and one camera, is developed to determine the optimal robot path and to avoid collisions with stationary and moving obstacles. The outputs of the ultrasonic sensors and the processed camera images are given as inputs to the fuzzy controller, which recognizes obstacles and performs robot navigation. Each image captured by the camera image sensor is transferred to the central computing system, which runs on an Intel Core i5 processor. The fuzzy controller is responsible for steering the robot around any obstacle in its path while it moves towards the transmitter beacon.

Robot navigation The navigation of the robot is implemented on a BLE tag module by using the AOA (Angle of Arrival) method [30]. This BLE tag transmits the signal in real-time from a single antenna.

Robot measurement by devices The robot uses sensors to measure the patient's body parameters and uploads these data to the hospital patient database system in a private cloud. A BLE (Bluetooth Low Energy) beacon device is used to identify the patient's location. The robot identifies the respective patient room from a unique beacon transmitter placed in that room; it follows the beacon signal, reaches the room and, finally, delivers the medicine to the patient. While following the beacon signal, the proposed system effectively detects obstacles in the robot's vicinity and makes decisions to avoid them. The schedules for drug delivery and for recording the patient's body parameters are programmed into the robot control software. The robotic system is implemented on the Raspberry Pi using Matlab 2018b on a PC with an Intel Core i5 processor, and solar power is used to power the whole system.

Patient voice interaction The robot uses Google Assistant to interact with the patients. A Google-based voice entity is incorporated into the robot so that patients can interact with it and be informed about the doctors' visiting schedule, their body parameter details, etc. The robot fetches the analyzed details from the server and relays them to the patient.

Organization of this paper

Section 2 introduces the proposed method, and Sect. 3 validates the proposed Autonomous Nursing Robot system experimentally through the ThingSpeak cloud, an open-source IoT analytics platform service that allows users to stream live data to the cloud and analyze it there. Several sensors, namely an HC-SR04 ultrasonic sensor, a Logitech HD 720p image sensor, a temperature sensor and a heart rate sensor, are used together with a camera to generate datasets for evaluating the proposed system. In particular, the system was tested on operations taking place in a private hospital in Tirunelveli, Tamilnadu, India. A detailed comparison, using performance metrics such as Correlation, Root Mean Square Error (RMSE) and Mean Absolute Percentage Error (MAPE), is performed against the related works of Deepu et al. [7], Huh and Seo [14], Chinmayi et al. [6], Alli et al. [1], Xu [42], Ran et al. [35], and Lee et al. [24].

Description of the proposed Autonomous Nursing Robot system

System design

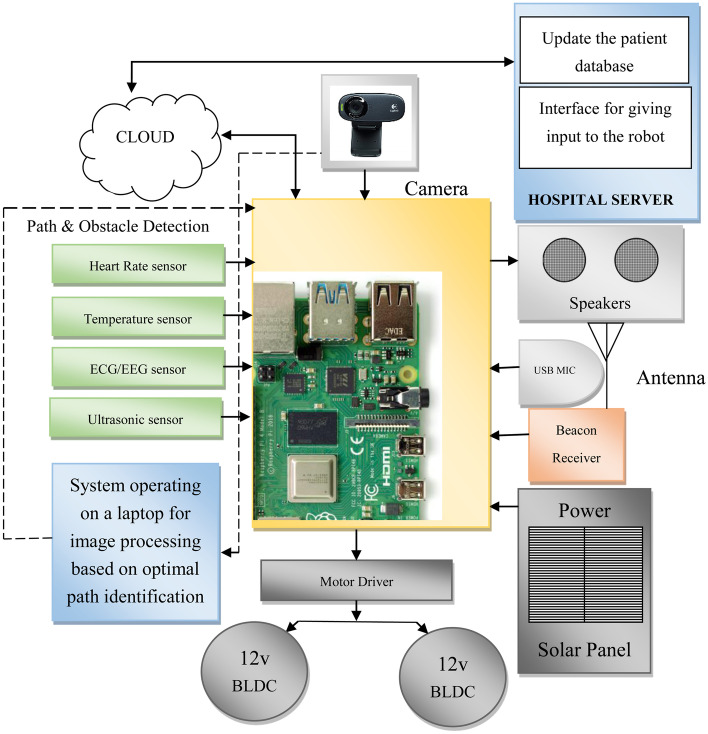

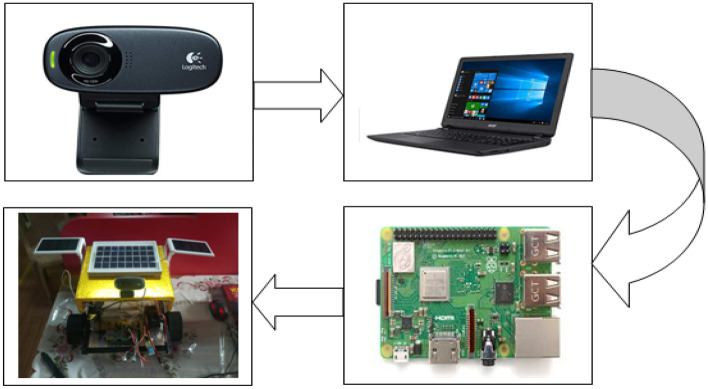

The architecture of the Autonomous Nursing Robot system is presented in Fig. 2. The robot is controlled by a Raspberry Pi 4 with a Broadcom processor clocked at 1.8 GHz and 4 GB of memory, running Raspbian OS. The system is mounted on a metal robotic chassis balanced on four 15 cm wheels, which are driven by a 12 V brushless DC motor controlled by a 12 V DC, 15 A, 500 W motor driver module.

Fig. 2.

The robotic system architecture

System objective

The robot is designed to support the work of nurses and thus reduce human workload in a hospital environment, so that simple tasks can be assigned to the robot and patient management failures can be considerably reduced. The robot can deliver medicines to each patient on a timely basis. It periodically measures the patient's body temperature, pressure and heart rate and records these parameters in a patient database in a private cloud. To measure the body parameters, a MAX30205 temperature sensor, an AD8232 pressure sensor and a MAX30100 heart rate sensor are used. These sensors are mounted on the robot's user-system interface. The patient places his/her hand on the user-system interface area of the robot, and all these parameters are measured at the same time.
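Sect. 3 validates the system through the ThingSpeak cloud. As a hedged illustration of how one set of measured parameters might be pushed there, the sketch below builds a request to ThingSpeak's HTTP update endpoint. The write API key, the assignment of temperature, pressure and heart rate to `field1` to `field3`, and the function names are hypothetical; the paper does not describe its upload code.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# ThingSpeak's HTTP update endpoint; the field assignment below is hypothetical.
THINGSPEAK_UPDATE_URL = "https://api.thingspeak.com/update"


def build_update_url(write_api_key, temperature_c, pressure, heart_rate_bpm):
    """Build a ThingSpeak channel-update URL for one set of readings."""
    params = {
        "api_key": write_api_key,
        "field1": temperature_c,   # body temperature (MAX30205 in the text)
        "field2": pressure,        # pressure reading (AD8232 in the text)
        "field3": heart_rate_bpm,  # heart rate (MAX30100 in the text)
    }
    return f"{THINGSPEAK_UPDATE_URL}?{urlencode(params)}"


def upload_readings(write_api_key, temperature_c, pressure, heart_rate_bpm):
    """Send the readings; ThingSpeak replies with the new entry ID, or 0 on failure."""
    url = build_update_url(write_api_key, temperature_c, pressure, heart_rate_bpm)
    with urlopen(url, timeout=10) as response:
        return int(response.read().decode().strip())
```

Separating URL construction from the network call keeps the request format testable on the robot without network access; a private-cloud deployment would swap only the endpoint.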

Main tasks

The main tasks of the robot are to identify the patient room, plan the path for delivering medicines to a patient, and measure the patient's body parameters, using a wireless BLE beacon receiver on the robot and BLE beacon transmitters in the respective patient rooms. The robot follows the transmitter beacon signal and moves towards the patient room. A UUID (Universally Unique Identifier) is assigned to each beacon as its unique ID, to distinguish it from the other beacons connected in the hospital network. These BLE beacons are programmable devices that generate beacon signals and can be triggered wirelessly. The receiver on the robot receives a small piece of data, follows the transmitter beacon signal and moves the robot towards the patient room.
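The text does not specify the beacon frame format. Assuming, purely for illustration, that the transmitters advertise Apple's iBeacon format (a common way of carrying a 16-byte UUID in BLE advertisements), the receiver side of the UUID-based room identification could look like the following sketch. The room table and function names are hypothetical.

```python
import struct
import uuid

# Hypothetical mapping from beacon UUID to patient room.
ROOM_BY_UUID = {
    uuid.UUID("12345678-1234-5678-1234-567812345678"): "Room 101",
}


def parse_ibeacon(payload):
    """Parse iBeacon manufacturer data (Apple company ID 0x004C already stripped).

    Layout: 0x02 0x15 | 16-byte UUID | major (uint16, big-endian)
            | minor (uint16, big-endian) | measured power at 1 m (int8).
    Returns (uuid, major, minor, tx_power), or None if not an iBeacon frame.
    """
    if len(payload) != 23 or payload[0] != 0x02 or payload[1] != 0x15:
        return None
    beacon_id = uuid.UUID(bytes=bytes(payload[2:18]))
    major, minor, tx_power = struct.unpack(">HHb", payload[18:23])
    return beacon_id, major, minor, tx_power


def room_for(payload):
    """Return the patient room advertised by a beacon frame, if it is known."""
    parsed = parse_ibeacon(payload)
    return ROOM_BY_UUID.get(parsed[0]) if parsed else None
```

The measured-power byte is also what an RSSI-based distance estimate would typically use as its 1 m reference.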

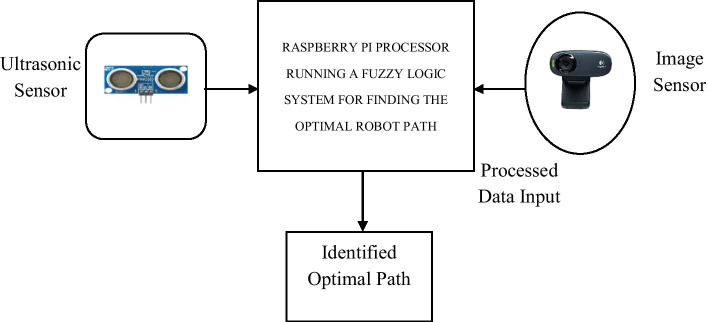

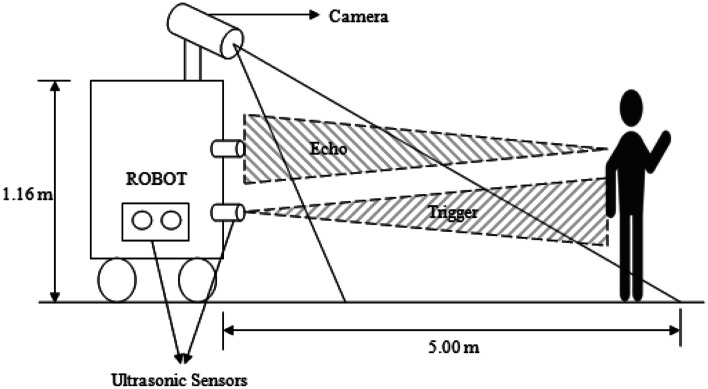

While the robot moves towards the transmitter beacon, its fuzzy controller is responsible for avoiding any obstacle in its path. This fuzzy controller uses three ultrasonic sensors and one camera (Fig. 3). The outputs of the ultrasonic sensors are the first-level inputs to the fuzzy controller. Each image captured by the camera image sensor is transferred to the central system, which runs on an Intel Core i5 processor. There, each image is first converted to a grayscale image and then to a binary image by thresholding. Each binary image is partitioned into left and right visual images, and the navigation direction is estimated from the average binary levels of the left and right visual images. Using the ultrasonic sensor outputs and the serial output of the image processing, the fuzzy controller identifies the optimal robot direction. A voice entity module is also developed on the robot to provide instructions to the patient regarding medication. When the robot is about to enter a patient room whose door is closed, the voice entity module is also used to ask the patient's attendant to open the door.

Fig. 3.

Robot navigation

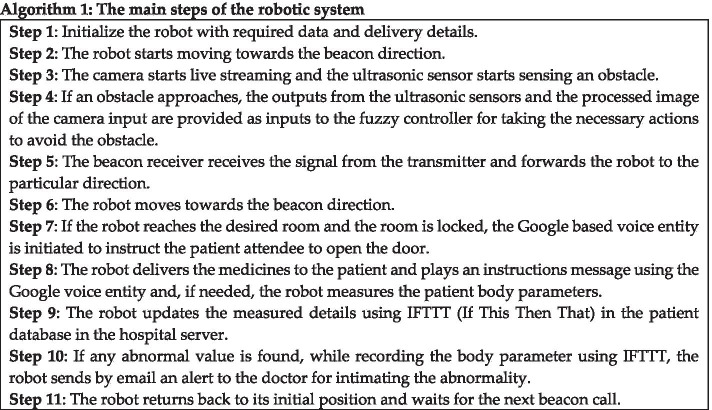

Main steps

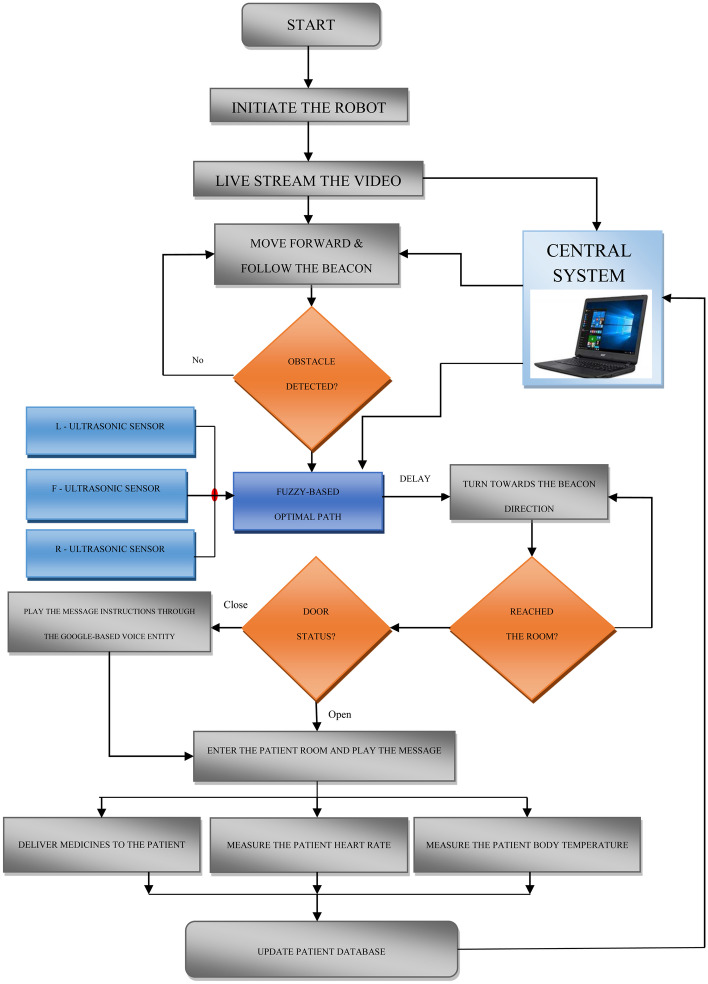

The flowchart of the robot operations is shown in Fig. 4. In particular, the robot performs the following steps (Algorithm 1):

Fig. 4.

Flowchart of the robot operation

Main functions

In what follows, we describe the main functions of the proposed Autonomous Nursing Robot system described in Figs. 2–4.

Robot navigation

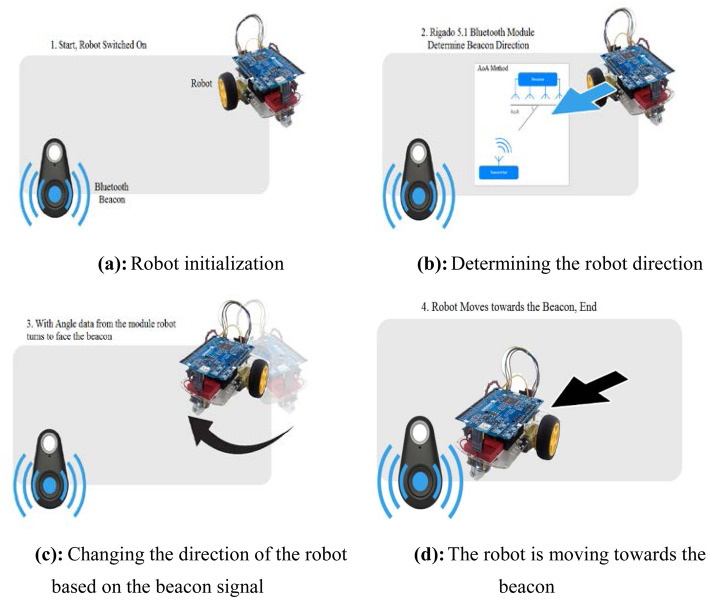

The system consists of a low-power BLE beacon, which is used as a transmitter and located in the patient's room, and a multiple-antenna receiver mounted on the robot. When the transmitter BLE beacon is triggered, it wirelessly sends the position details of the patient's room. The navigation of the robot is implemented on a BLE tag module by using the AOA (Angle of Arrival) method [30]. This BLE tag transmits the signal in real time from a single antenna. The receiver contains multiple antennas arranged so that the receiver observes a phase difference caused by the different distances from each receiving antenna to the broadcasting antenna, as shown in Fig. 5b. On receiving the beacon signal from the transmitter, the robot turns its “face” towards the transmitter beacon, as shown in Fig. 5c. Finally, the robot starts moving towards the beacon, as shown in Fig. 5d.
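The phase-difference principle behind AOA can be written down for the simplest case of two receiving antennas spaced d apart: a signal arriving at angle θ from broadside travels an extra d·sin θ to the farther antenna, so Δφ = 2πd·sin θ/λ. The sketch below inverts this relation. The two-antenna far-field model, the 2.44 GHz carrier, and the steering rule with its sign convention are assumptions, not details taken from the paper.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def angle_of_arrival(delta_phi_rad, antenna_spacing_m, freq_hz=2.44e9):
    """Estimate the AoA in degrees from broadside for a two-antenna array.

    Far-field model: delta_phi = 2*pi*d*sin(theta)/lambda, hence
    theta = asin(delta_phi * lambda / (2*pi*d)).
    """
    wavelength = SPEED_OF_LIGHT / freq_hz
    s = delta_phi_rad * wavelength / (2 * math.pi * antenna_spacing_m)
    if not -1.0 <= s <= 1.0:
        raise ValueError("phase difference inconsistent with antenna spacing")
    return math.degrees(math.asin(s))


def turn_command(theta_deg, tolerance_deg=5.0):
    """Hypothetical steering rule: rotate until the beacon is roughly ahead.

    The sign convention (positive angle means beacon to the left) is assumed.
    """
    if abs(theta_deg) <= tolerance_deg:
        return "forward"
    return "left" if theta_deg > 0 else "right"
```

Keeping the antenna spacing at or below λ/2 (about 6 cm at 2.4 GHz) avoids ambiguous angles, because the measured phase then never wraps around within ±90°.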

Fig. 5.

(a): Robot initialization (b): Determining the robot direction (c): Changing the direction of the robot based on the beacon signal (d): The robot is moving towards the beacon

Robot path planning

a) Fuzzy-based obstacle detection

When the robot is navigating from an initial position towards the transmitter beacon, it may face various obstacles, such as moving objects and fixed objects. In order to avoid these obstacles, a fuzzy-based obstacle avoidance system is implemented with the following input data.

A corresponding ultrasonic sensor is used for each direction in which the robot can move. The sensors identify obstacles ahead of the robot and help it avoid collisions with stationary or moving objects. Details of how the fuzzy membership function parameters are set up for obstacle avoidance based on the ultrasonic sensor outputs are given in Sect. 2.2.2.b.

The camera mounted on the front of the robot streams live video to the central system, which processes the real-time video and returns the image processing results to the robot in the form of direction commands. The algorithm for obstacle avoidance based on the image sensor outputs is described in Sect. 2.2.2.c.

The corresponding ultrasonic sensor measures the robot's distance from the obstacle and sends it to the Raspberry Pi 4 processor, where the fuzzy controller is executed to determine the optimal direction of the robot's movement (see Fig. 6 and the corresponding description in Sect. 2.2.2.d).

Fig. 6.

Obstacle detection robot geometry

b) Fuzzy membership functions for obstacle avoidance based on ultrasonic sensors

The robot chooses its direction using both the inputs from the ultrasonic sensors and the processed data from the image sensor. Three ultrasonic sensors connected to the Raspberry Pi 4 are placed on three faces of the robot (left, right and front). Each ultrasonic sensor emits high-frequency sound waves and waits for them to reflect back after hitting an obstacle; the distance to the obstacle is then obtained from the time the ultrasound takes to return to the sensor. A measurement is started through the sensor's trigger pin: the pin is first set LOW for 2 µs, then HIGH for 10 µs, and then LOW again, which tells the sensor to emit the signal. When the echo returns to the sensor, the sensor's echo output indicates the round-trip travel time of the sound. Sound travels at about 340 m/s, i.e., about 29 µs per cm. The formulae applied to convert duration values into distance values are given below, while Tables 1, 2 and 3 show the conditions and corresponding decisions for avoiding a front obstacle, a right obstacle and a left obstacle, respectively.

| 1 |

| 2 |

| 3 |
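The duration-to-distance conversion described above can be sketched as follows. Since formulae (1) to (3) are not legible in this copy, the sketch assumes the usual HC-SR04 form, distance = echo duration / 29 / 2, where 29 µs/cm is the value quoted in the text and the division by two accounts for the round trip. Driving the trigger pulse through the Raspberry Pi GPIO is omitted, and the helper names are hypothetical.

```python
US_PER_CM = 29.0  # sound at ~340 m/s covers 1 cm in ~29 µs


def echo_to_distance_cm(echo_duration_us):
    """Convert an HC-SR04 echo pulse width (round trip, µs) to one-way distance (cm)."""
    return echo_duration_us / US_PER_CM / 2.0


def read_three_sensors(durations_us):
    """Map {'front': t, 'left': t, 'right': t} pulse widths (µs) to distances in cm."""
    return {side: echo_to_distance_cm(t) for side, t in durations_us.items()}
```

For example, a 580 µs echo corresponds to a 10 cm obstacle distance.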

Table 1.

Conditions for avoiding front obstacle

| Condition | Decision |
|---|---|
| Front distance (m) < threshold front distance | Close |
| Left distance (m) < right distance (m) | Right |
| Left distance (m) > right distance (m) | Left |
| Else | Forward |

Table 2.

Conditions for avoiding right obstacle

| Condition | Decision |
|---|---|
| Right distance (m) < threshold right distance | Close |
| Right distance (m) > left distance (m) | Forward |
| Right distance (m) < left distance (m) | Left |

Table 3.

Conditions for avoiding left obstacle

| Condition | Decision |
|---|---|
| Left distance (m) < threshold left distance | Close |
| Left distance (m) > right distance (m) | Forward |
| Left distance (m) < right distance (m) | Right |
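Tables 1 to 3 can be read as ordered rule lists. The sketch below encodes a literal reading in which rows are evaluated top to bottom and the first matching row gives the decision. The evaluation order, the handling of equal side distances in Tables 2 and 3 (where no row matches), and the function names are assumptions; the tables do not say what action the robot takes for a "Close" decision, so it is returned unchanged.

```python
def front_decision(front_m, left_m, right_m, threshold_front_m):
    """Table 1, rows evaluated top to bottom; the first matching row wins."""
    if front_m < threshold_front_m:
        return "Close"
    if left_m < right_m:
        return "Right"
    if left_m > right_m:
        return "Left"
    return "Forward"


def right_decision(right_m, left_m, threshold_right_m):
    """Table 2; returns None when no row matches (equal side distances)."""
    if right_m < threshold_right_m:
        return "Close"
    if right_m > left_m:
        return "Forward"
    if right_m < left_m:
        return "Left"
    return None


def left_decision(left_m, right_m, threshold_left_m):
    """Table 3; returns None when no row matches (equal side distances)."""
    if left_m < threshold_left_m:
        return "Close"
    if left_m > right_m:
        return "Forward"
    if left_m < right_m:
        return "Right"
    return None
```

In each table the side with more free space wins, which is why a crisp comparison of two distances is enough to express the rules before any fuzzification.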

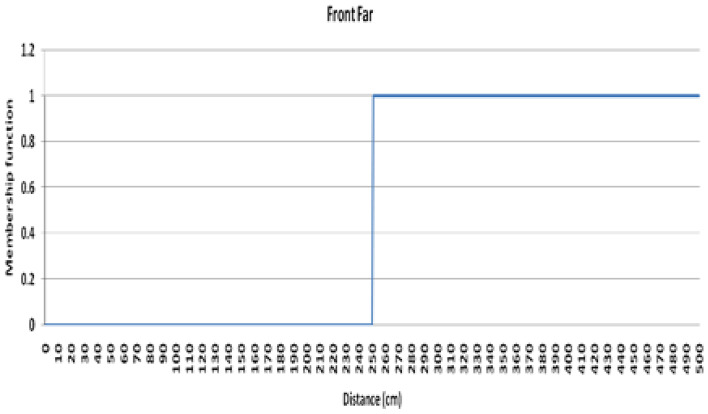

The fuzzy membership functions for the front ultrasonic sensor are as follows:

| 4 |

| 5 |

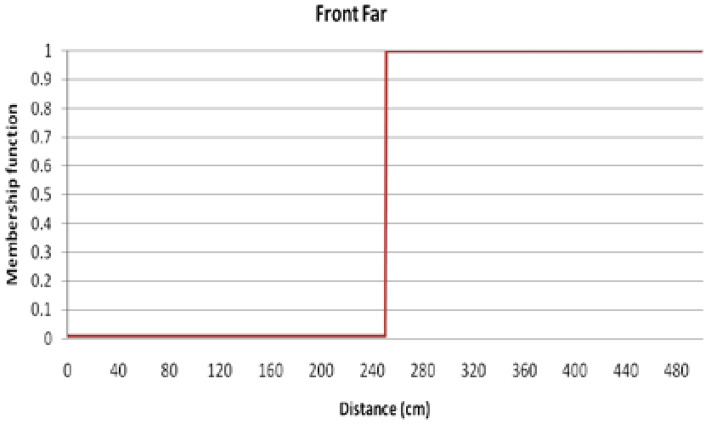

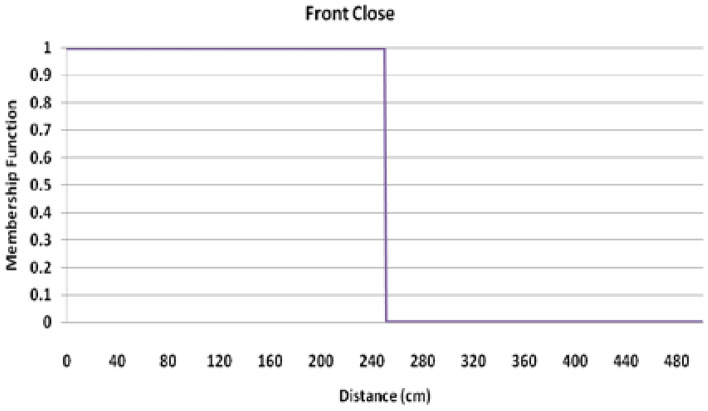

Figure 7 shows that the membership function for the Far state of the front sensor is equal to 1 when the distance is between 250 and 500 cm and equal to 0 when the distance is less than 250 cm. Figure 8 shows that the membership function for the Close state is equal to 0 when the distance is between 250 and 500 cm and equal to 1 when the distance is less than 250 cm.
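As described for Figs. 7 and 8, the two front-sensor membership functions are crisp steps at 250 cm that complement each other. A minimal sketch under that reading; treating readings above 500 cm as Far is an assumption, since the text only covers distances up to 500 cm, and the names are hypothetical:

```python
BREAKPOINT_CM = 250.0  # boundary described for Figs. 7 and 8


def mu_far(d_cm: float) -> float:
    """Front sensor 'Far' membership: 1 from 250 cm upward, 0 below.
    Readings above 500 cm are also treated as Far (assumption)."""
    return 1.0 if d_cm >= BREAKPOINT_CM else 0.0


def mu_close(d_cm: float) -> float:
    """Front sensor 'Close' membership: the complement of mu_far."""
    return 1.0 - mu_far(d_cm)


def front_label(d_cm: float) -> str:
    """Return the linguistic label with the larger membership degree."""
    return "Far" if mu_far(d_cm) >= mu_close(d_cm) else "Close"
```

With step functions each reading belongs fully to one label. Replacing the steps with overlapping ramps (trapezoids) would give graded degrees near 250 cm, which is what lets a fuzzy controller blend neighbouring rules.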

Fig. 7.

Membership function for the Far state of the front sensor

Fig. 8.

Membership function for the Close state of the front sensor

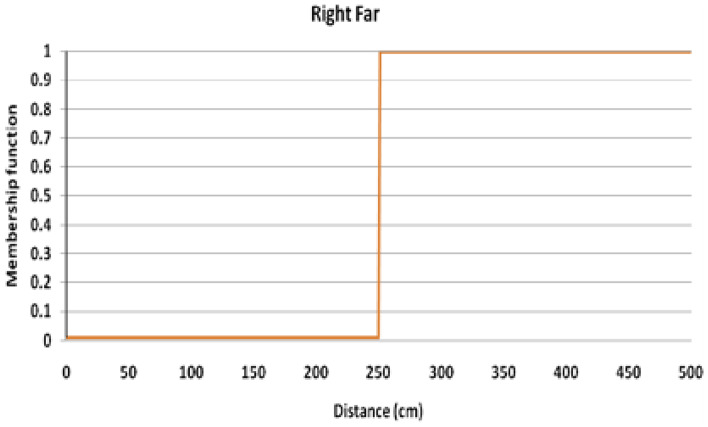

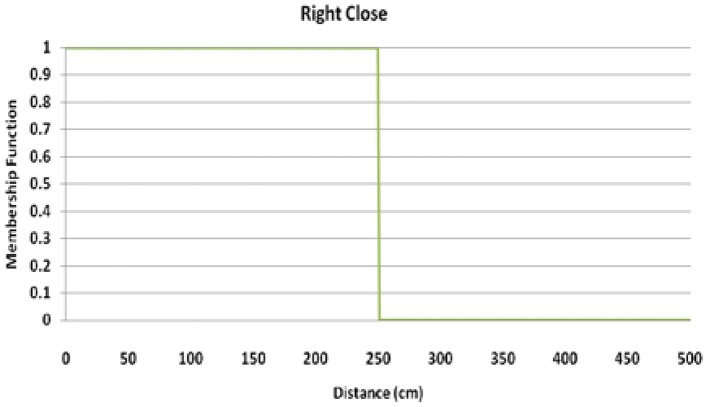

Similarly, the fuzzy membership functions for the left and the right ultrasonic sensors are as follows:

| 6 |

| 7 |

| 8 |

| 9 |

Figures 9 and 10 present the fuzzy membership functions for and , respectively, while Figs. 11 and 12, demonstrate the membership functions for and , respectively.

Fig. 9.

Membership function for

Fig. 10.

Membership function for

Fig. 11.

Membership function for

Fig. 12.

Membership function for

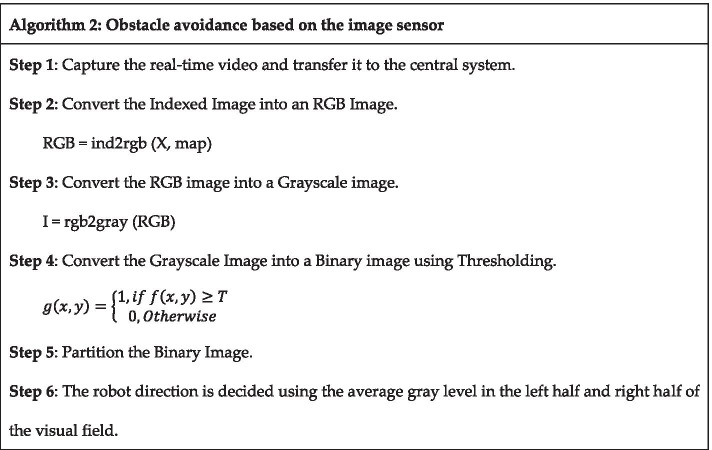

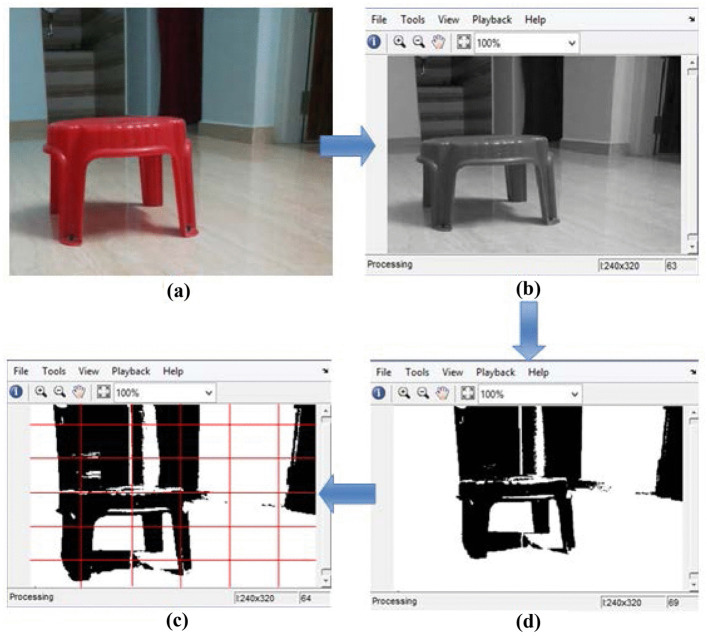

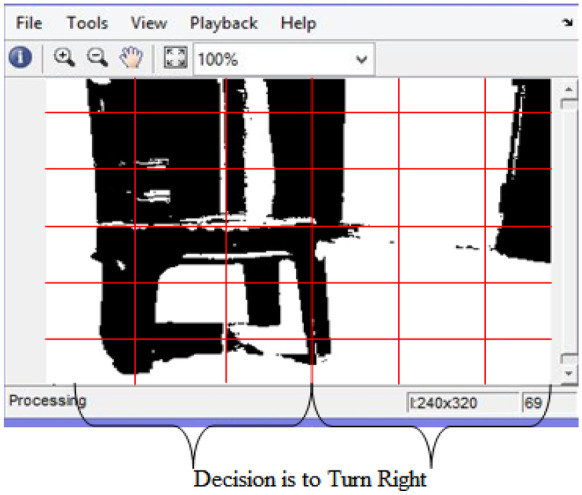

c) Obstacle avoidance based on the image sensor

A vision-based navigation system is implemented using a 720p HD Logitech USB camera connected to the Raspberry Pi 4. Figure 13 shows the flow of the image sensor-based obstacle detection process. The live video is captured by the camera and transferred wirelessly to the central cloud system, where the images are processed using MATLAB 2017b. The input image shown in Fig. 14a is first converted into a grayscale image by eliminating the hue and saturation information while retaining the luminance, as shown in Fig. 14b. The grayscale image is then converted into a binary image using a threshold value, as shown in Fig. 14c. The binary image is partitioned by a clustering algorithm such as grid-based clustering, as shown in Fig. 14d. This image is used as input to the controller, which decides the navigation direction of the robot based on the average level of binary zeros among the pixels in the left and right visual fields. If the left half of the image contains more binary zeros than binary ones, the robot turns to the right; if the right half contains more binary zeros than binary ones, the robot turns to the left (Fig. 15). The steps of the algorithm for obstacle avoidance based on the image sensor are shown in Algorithm 2.
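The grayscale, binarization and left/right comparison steps above can be sketched with NumPy. This is an illustration, not the authors' MATLAB code: the luminance weights are those used by MATLAB's `rgb2gray`, the threshold level 0.5 (the default of MATLAB's `im2bw`) is an assumption, the grid-based clustering step is omitted, and the behaviour when neither or both halves are dominated by zeros is also an assumption.

```python
import numpy as np

# Luminance weights used by MATLAB's rgb2gray (ITU-R BT.601 coefficients).
LUMA = np.array([0.2989, 0.5870, 0.1140])


def to_binary(rgb: np.ndarray, level: float = 0.5) -> np.ndarray:
    """Grayscale conversion followed by thresholding.

    rgb is an H x W x 3 array with values in [0, 1]; level 0.5 is an assumption.
    """
    gray = rgb[..., :3] @ LUMA               # keep luminance, drop hue and saturation
    return (gray > level).astype(np.uint8)   # 1 = bright pixel, 0 = dark pixel


def image_direction(binary: np.ndarray) -> str:
    """Compare zeros and ones in the left and right halves of the binary image."""
    w = binary.shape[1]
    halves = {"left": binary[:, : w // 2], "right": binary[:, w // 2:]}
    more_zeros = {}
    for name, half in halves.items():
        ones = int(half.sum())
        more_zeros[name] = (half.size - ones) > ones
    if more_zeros["left"]:
        return "Right"    # left half dominated by zeros: turn right (checked first)
    if more_zeros["right"]:
        return "Left"     # right half dominated by zeros: turn left
    return "Forward"      # neither half dominated by zeros (assumption)
```

A frame whose left half is dark yields "Right", matching the rule stated in the text.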

Fig. 13.

Image sensor-based obstacle detection

Fig. 14.

(a) Input image, (b) Grayscale image, (c) Binary image, (d) Partitioned image

Fig. 15.

Robot navigation based on inputs from the image sensor

d) Fuzzy rules for performing robot navigation according to the ultrasonic sensor and the image sensor

The direction of the robot is controlled by varying the speeds of its DC motors, most commonly by means of PWM (Pulse Width Modulation) signals. The direction rules are listed in Tables 4 and 5.
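Steering by motor speed amounts to mapping each direction label to a pair of PWM duty cycles for the left and right motors of a differential-drive base. The duty-cycle values below are hypothetical placeholders, not values from the paper:

```python
# Hypothetical duty cycles (percent of full speed) for the (left, right) DC motors.
DUTY_CYCLES = {
    "Forward": (80.0, 80.0),
    "Half Right Turn": (80.0, 40.0),   # left wheel faster than the right one
    "Right Turn": (80.0, 0.0),         # pivot about the right wheel
    "Half Left Turn": (40.0, 80.0),
    "Left Turn": (0.0, 80.0),
}


def wheel_duty_cycles(direction: str) -> tuple:
    """Return (left_duty, right_duty) for a direction label of Table 4."""
    try:
        return DUTY_CYCLES[direction]
    except KeyError:
        raise ValueError(f"unknown direction: {direction!r}") from None
```

On a Raspberry Pi, each value could be passed to the `ChangeDutyCycle` method of an `RPi.GPIO.PWM` object driving the corresponding motor; the pin numbers and PWM frequency depend on the motor driver and are not given in the paper.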

Table 4.

Fuzzy rule base for robot navigation based on the three ultrasonic sensors

| Rule | Left Sensor | Centre Sensor | Right Sensor | Direction of motion |
|---|---|---|---|---|
| 1 | Close | Close | Close | Right Turn |
| 2 | Close | Close | Far | Half Right Turn |
| 3 | Close | Far | Close | Forward |
| 4 | Close | Far | Far | Half Right Turn |
| 5 | Far | Close | Close | Half Left Turn |
| 6 | Far | Close | Far | Right Turn |
| 7 | Far | Far | Close | Half Left Turn |
| 8 | Far | Far | Far | Forward |
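With crisp Close/Far labels, the rule base of Table 4 reduces to a lookup keyed by the three sensor states. A minimal sketch, assuming the 250 cm boundary described for the front sensor is reused for all three sensors (an assumption) and using hypothetical names:

```python
# Table 4: (left, centre, right) sensor states -> direction of motion.
RULES = {
    ("Close", "Close", "Close"): "Right Turn",
    ("Close", "Close", "Far"): "Half Right Turn",
    ("Close", "Far", "Close"): "Forward",
    ("Close", "Far", "Far"): "Half Right Turn",
    ("Far", "Close", "Close"): "Half Left Turn",
    ("Far", "Close", "Far"): "Right Turn",
    ("Far", "Far", "Close"): "Half Left Turn",
    ("Far", "Far", "Far"): "Forward",
}

CLOSE_BELOW_CM = 250.0  # boundary reused for all three sensors (assumption)


def state(d_cm: float) -> str:
    return "Close" if d_cm < CLOSE_BELOW_CM else "Far"


def ultrasonic_direction(left_cm: float, centre_cm: float, right_cm: float) -> str:
    """Classify each reading and look up the matching rule of Table 4."""
    return RULES[(state(left_cm), state(centre_cm), state(right_cm))]
```

With graded memberships, every rule would instead fire with a strength (commonly the minimum of its three membership degrees) and the outputs would be combined; the lookup is the special case where exactly one rule has strength 1.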

Table 5.

Fuzzy rule base for robot navigation based on both the ultrasonic sensor decision and the image sensor decision

| Rule | Ultrasonic Sensor Decision | Image Sensor Decision | Direction of motion |
|---|---|---|---|
| 1 | Right | Right | Right Turn |
| 2 | Half Right | Right | Half Right |
| 3 | Left | Left | Left Turn |
| 4 | Half Left | Left | Half Left |
| 5 | Forward | Forward | Forward |
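Table 5 combines the two decisions through five explicit rules. A sketch of that fusion step follows; Table 5 does not say what happens for unlisted combinations (for example, ultrasonic Left with image Right), so falling back to the ultrasonic decision is a hypothetical policy, as are the names:

```python
# Table 5: (ultrasonic decision, image decision) -> direction of motion.
FUSION_RULES = {
    ("Right", "Right"): "Right Turn",
    ("Half Right", "Right"): "Half Right",
    ("Left", "Left"): "Left Turn",
    ("Half Left", "Left"): "Half Left",
    ("Forward", "Forward"): "Forward",
}


def fused_direction(ultrasonic: str, image: str) -> str:
    """Apply Table 5; unlisted combinations fall back to the ultrasonic
    decision (hypothetical policy, not stated in the paper)."""
    return FUSION_RULES.get((ultrasonic, image), ultrasonic)
```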

Robot measurement by devices

The robot uses sensors to measure the patient's body parameters and uploads these data to the hospital patient database system in private cloud mode. A dedicated device implements the wireless BLE beacon used to identify the patient's location.

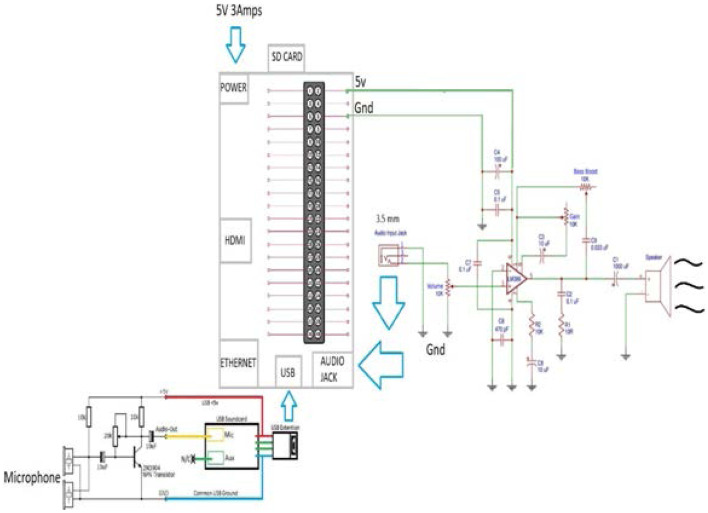

Patient Voice Interaction

The Patient Voice Interaction is a special module incorporated into the robot so that patients can interact with it, for example to be informed about the doctors' visiting schedule or their body parameter details. The robot fetches the analyzed details from the server and conveys them to the patient. For this implementation, we developed a service on the Google Cloud Platform, using the API manager to enable the Google Assistant API and selecting OAuth client ID credentials. The circuit diagram for the module implementation is shown in Fig. 16.

Fig. 16.

Circuit diagram of Google based voice entity

To measure the patient's body parameters, we use two sensors: the MAX30205 for body temperature and the MAX30100 for pulse rate. The ThingSpeak IoT platform is used to store and retrieve the sensed data via the HTTP protocol over WLAN. Webhooks provide a way for an application to pass information to other applications.
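Uploading a reading to ThingSpeak over HTTP can be done through its channel update endpoint, which takes the channel's write API key and up to eight `fieldN` values. The sketch below assumes a channel where field1 holds body temperature and field2 holds pulse rate; that channel layout and the function names are hypothetical, and the key is a placeholder:

```python
from urllib.parse import urlencode
from urllib.request import urlopen

THINGSPEAK_UPDATE_URL = "https://api.thingspeak.com/update"


def build_update_url(write_api_key: str, temperature_c: float, pulse_bpm: float) -> str:
    """Build a ThingSpeak update request; the field assignment is an assumption."""
    query = urlencode({"api_key": write_api_key, "field1": temperature_c, "field2": pulse_bpm})
    return f"{THINGSPEAK_UPDATE_URL}?{query}"


def send_update(url: str, timeout_s: float = 10.0) -> int:
    """Send the request; ThingSpeak answers with the new entry id, or 0 if rejected."""
    with urlopen(url, timeout=timeout_s) as resp:
        return int(resp.read().decode().strip())
```

For example, `send_update(build_update_url("YOUR_WRITE_KEY", 36.8, 72))` would post one temperature and one pulse reading. ThingSpeak rate-limits updates per channel, so the robot would batch or space out its uploads.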

Remarks

The proposed system is designed as a nursing robot system whose main capabilities are robot path planning for navigation and robot interaction with hospital patients. It is a promising solution for the medical sector, aiming to exploit the strengths of autonomous systems in emergency situations such as the COVID-19 pandemic. The integrated solutions are implemented in an actual autonomous nursing robot, which could be of significant value to a hospital's community.

Experimental results

Environmental setup

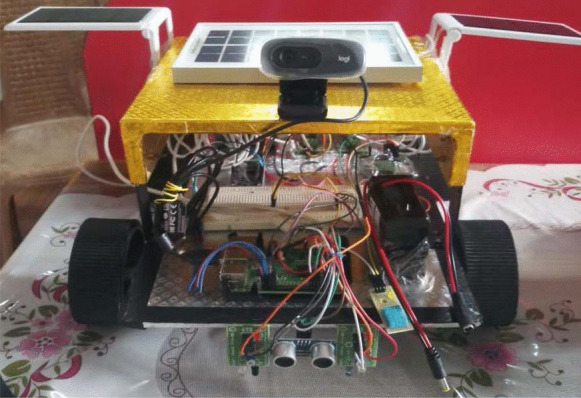

The robotic system is implemented on the Raspberry Pi, which runs on a Broadcom processor, using MATLAB 2018b. The system performance was evaluated on a PC with an Intel Core i5 processor, while solar power was used to power the system. Several sensors, namely the HC-SR04 ultrasonic sensor, the Logitech HD 720p image sensor, the temperature sensor and the heart rate sensor, are used together with a camera to generate the datasets for testing the proposed system on operations taking place in a private hospital in Tirunelveli, Tamilnadu, India. Owing to limited time and the conditions imposed by COVID-19, we captured a reasonable amount of data in this hospital through the sensors. The camera mounted on top of the robot records video and sends it wirelessly to the PC with the Intel i5 processor. Figure 17 shows the experimental setup of the robot prototype model. The comparison against the related works is performed through performance metrics such as Correlation, Root Mean Square Error (RMSE), and Mean Absolute Percentage Error (MAPE). The dataset is created using both the HC-SR04 ultrasonic sensor and the Logitech HD 720p image sensor over several trials. The formulas of these performance metrics are given below.

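- Correlation: shows how closely the actual distance and the measured distance are related. The correlation indicates a predictive relationship that can be exploited in approximations. The correlation of A and B is given as

$$\mathrm{Corr}(A,B)=\frac{\sum_{i=1}^{n}(A_i-\bar{A})(B_i-\bar{B})}{\sqrt{\sum_{i=1}^{n}(A_i-\bar{A})^2}\,\sqrt{\sum_{i=1}^{n}(B_i-\bar{B})^2}} \tag{10}$$

- Root Mean Square Error: RMSE is widely used to evaluate the difference between the actual observed values and the values predicted by a model.

$$\mathrm{RMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}(A_i-B_i)^2} \tag{11}$$

- Mean Absolute Percentage Error: MAPE is a statistical measure of the accuracy of a prediction method.

$$\mathrm{MAPE}=\frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{A_i-B_i}{A_i}\right| \tag{12}$$

Here $A_i$ and $B_i$ denote the $i$-th actual and measured distances, $\bar{A}$ and $\bar{B}$ their means, and $n$ the number of samples.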
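The three metrics can be computed directly from paired lists of actual and measured distances. A minimal sketch using the standard definitions (Pearson correlation, RMSE, and MAPE in percent); the sample values in the usage note are illustrative, not data from the experiments:

```python
import math


def correlation(actual, measured):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(actual)
    if n != len(measured) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    ma, mb = sum(actual) / n, sum(measured) / n
    cov = sum((a - ma) * (b - mb) for a, b in zip(actual, measured))
    va = sum((a - ma) ** 2 for a in actual)
    vb = sum((b - mb) ** 2 for b in measured)
    return cov / math.sqrt(va * vb)


def rmse(actual, measured):
    """Root mean square error."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(actual, measured)) / len(actual))


def mape(actual, measured):
    """Mean absolute percentage error in percent (actual values must be non-zero)."""
    return 100.0 * sum(abs((a - b) / a) for a, b in zip(actual, measured)) / len(actual)
```

For actual distances `[100, 200, 300]` and measurements `[102, 196, 303]`, the errors are -2, 4 and -3, giving an RMSE of sqrt(29/3), about 3.11, and a MAPE of about 1.67%.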

Fig. 17.

Robot prototype model

Results

MATLAB 2018, installed on the PC, segments the video into individual image frames and processes them to extract the navigation information. The outputs of the ultrasonic sensors are sent to the fuzzy controller to find the optimal path for robot navigation. A wireless BLE beacon is installed in every patient room. The robot follows the beacon signal coming from the room and identifies the patient.

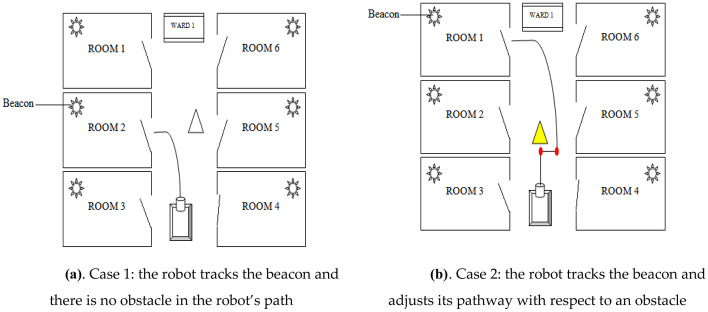

Figure 18a shows case 1, where there is no obstacle in the robot's path: the robot identifies the beacon signal and moves towards the patient room by tracking it. Figure 18b shows case 2, where some obstacles are present in the robot's path. Here, the robot uses the fuzzy controller system to avoid the obstacle, moves to a new location, and after a delay of a few seconds turns back toward the beacon direction.

Fig. 18.

(a) Case 1: the robot tracks the beacon and there is no obstacle in the robot's path; (b) Case 2: the robot tracks the beacon and adjusts its pathway with respect to an obstacle

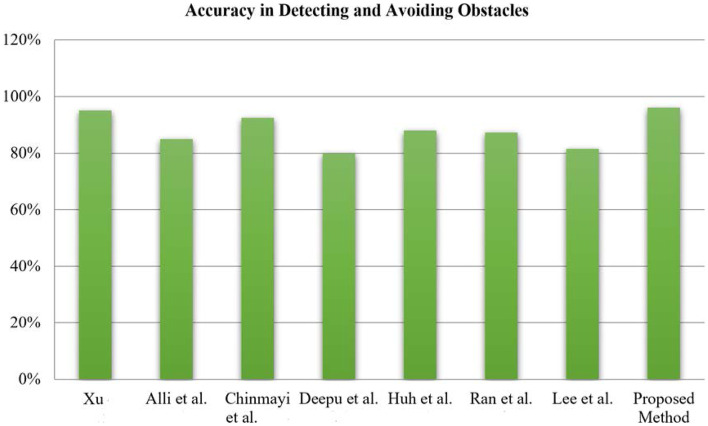

Experiments with the robot showed that the accuracy of the implemented method in detecting and avoiding obstacles is 96.05%, with an average computational time of 87.9 ms for taking a directional decision. After this task, the robot delivers the medicines to the patient. If the patient's body parameters, such as temperature, pressure and heart rate, need to be measured, the robot measures them and uploads the data to the central server system. Figure 19 shows the updated values from the temperature sensor and the heart rate sensor in the ThingSpeak cloud, an open-source IoT analytics platform service that allows users to live-stream and analyze data in the cloud. Once the update is done, the robot returns to its initial position.

Fig. 19.

Body temperature and pulse rate monitor in the ThingSpeak cloud

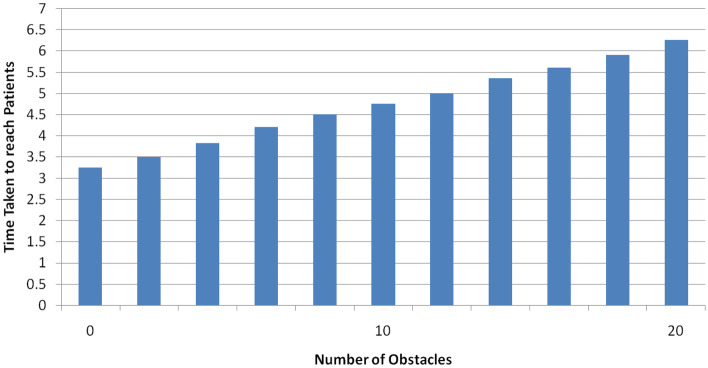

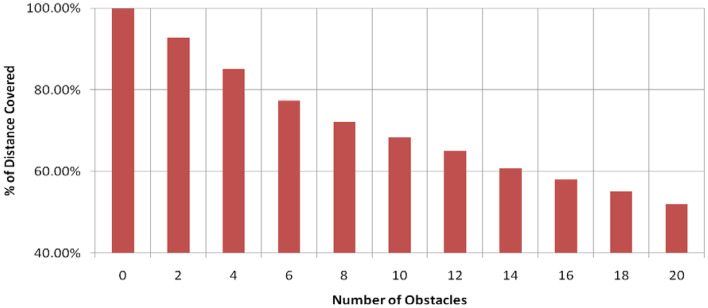

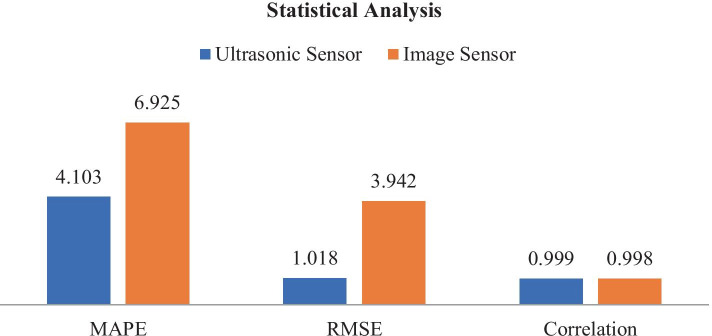

Figure 20 illustrates the time taken to reach the patient with respect to the number of obstacles: the higher the number of obstacles, the longer the time taken to reach the patient. Figure 21 shows the percentage of distance covered with respect to the number of obstacles; again, when the number of obstacles is high, the time taken to reach the patient is high. Figure 22 presents a statistical analysis of the ultrasonic sensor data and the image sensor (USB camera) data in terms of MAPE, RMSE and Correlation values.

Fig. 20.

Time taken to reach the patient with respect to number of obstacles (ms)

Fig. 21.

Distance covered with respect to number of obstacles (%)

Fig. 22.

Statistical analysis of ultrasonic sensor & image sensor (USB Camera)

Table 6 lists the MAPE, RMSE and Correlation values obtained for the ultrasonic sensor and the image sensor. Figure 23 presents a comparative analysis of the proposed work against the existing methods (Deepu et al. [7]; Huh and Seo [14]; Chinmayi et al. [6]; Alli et al. [1]; Xu [42]; Ran et al. [35]; Lee et al. [24]). The proposed method achieves higher accuracy in detecting and avoiding obstacles than the other methods.

Table 6.

Correlation of the ultrasonic sensor and image sensor

| Sensor Type | MAPE | RMSE | Correlation |
|---|---|---|---|
| Ultrasonic Sensor | 4.103 | 1.018 | 0.999 |
| Image Sensor | 6.925 | 3.942 | 0.998 |

Fig. 23.

Comparison of the proposed work with the other existing methods

Conclusions

The vision of a robot plays a vital role in its working environment. Since distance sensors alone cannot provide detailed information about the robot's vicinity, we use an image segmentation process that gives fuller details about the robot's surroundings, allowing it to navigate without collision. In this paper, we aimed to design a robot capable of operating in a smart hospital and performing activities such as delivering medicines to patients on time, periodically measuring a patient's body parameters (such as temperature), updating these details in the hospital database wirelessly through IoT, and passing important instructions to the patient through a Google-based voice entity incorporated in the robot. A fuzzy controller system consisting of three ultrasonic sensors and a camera is developed. The outputs from the ultrasonic sensors and the processed images from the camera serve as inputs to the fuzzy controller for obstacle detection and robot navigation. The robot identifies the patient room based on the wireless beacon kept in that room: it follows the beacon, identifies the room and delivers the medicine to the patient. The proposed system effectively detects obstacles in the robot's vicinity and, together with the beacon signal, makes decisions to avoid them.

The proposed fuzzy-based obstacle avoidance method takes inputs from three ultrasonic sensors and from the processed images captured by the camera. The results show that the accuracy of the proposed method in detecting and avoiding obstacles is about 96.05%, with an average computational time of 87.9 ms for taking a directional decision. As a result, the robot can move freely inside the hospital environment while following the beacon signal from the patient room to reach the patient. The Google-based voice entity in the robotic system is used to interact with the patient about the measured health parameters and other general information. The experimental results demonstrated the advantages of the proposed system in comparison to other related approaches, such as the methods suggested by Deepu et al. [7], Huh and Seo [14], Chinmayi et al. [6], Alli et al. [1], Xu [42], Ran et al. [35], and Lee et al. [24]. The proposed system aims to give a helping hand to healthcare professionals during emergency situations such as the current pandemic.

Although some preliminary results have been achieved in developing the Autonomous Nursing Robot system, more work is needed to obtain a high-performance system. Our future aim is to develop an AI-based obstacle avoidance scheme that replaces the proposed fuzzy-based decisions, so that decisions made over time can be used to choose alternative paths and avoid obstacles more efficiently. Moreover, we aim to make the robot's direction decisions more effective by analyzing details of unknown surfaces. Finally, it is essential to enhance the interaction of Autonomous Nursing Robots through multimedia technologies in order to achieve a complete solution. A robot could connect to a social network to gather and synthesize information on diseases such as COVID-19 around the globe and disseminate it to patients in a hospital, with digital media and dynamic content updated on the robot regularly for urgent responses to the environment. Image vision should also be enhanced, as it plays an important role in guiding the robot's path besides the information from the sensors. It has been observed that microAI, which combines AI and edge computing, could bring more feasible solutions for robots in real contexts. Therefore, we will utilize this kind of technology combined with media technology in further studies.

Acknowledgements

This research is funded by Vietnam National Foundation for Science and Technology Development (NAFOSTED) under grant number 102.05-2018.02.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

K. Lakshmi Narayanan, Email: kyelyen@gmail.com.

R. Santhana Krishnan, Email: santhanakrishnan86@gmail.com.

Le Hoang Son, Email: sonlh@vnu.edu.vn.

Nguyen Thanh Tung, Email: tungnt@isvnu.vn.

E. Golden Julie, Email: goldenjuliephd@gmail.com.

Y. Harold Robinson, Email: yhrobinphd@gmail.com.

Raghvendra Kumar, Email: raghvendraagrawal7@gmail.com.

Vassilis C. Gerogiannis, Email: vgerogian@uth.gr

References

- 1. Alli KS, Onibonoje MO, Oluwole AS, Ogunlade MA, Mmonyi AC, Ayamolowo O, Dada SO. Development of an Arduino-based obstacle avoidance robotic system for an unmanned vehicle. ARPN J Eng Appl Sci. 2018;13:886–892.

- 2. Avirup KG, Subrat KS, Sudhansu KM (2019) Improved turning angle calculation for an unmanned ground vehicle. IEEE conference International Conference on Vision Towards Emerging Trends in Communication and Networking

- 3. Bae Y. Robust Localization for Robot and IoT Using RSSI. Energies. 2019;12:1–18.

- 4. Bera A, Tanmay R, Rohan P, Dinesh M (2017) Sociosense: Robot navigation amongst pedestrians with social and psychological constraints. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 7018–7025. IEEE

- 5. Bialous SA, Baltzell K. The World Needs 6 Million More Nurses: What are we Waiting for? Am J Trop Med Hyg. 2020;103(1):1–2. doi: 10.4269/ajtmh.20-0451.

- 6. Chinmayi R, Jayam YK, Tunuguntla V, Dammuru JV, Nadella H, Anudeep DSSK, Nair JG (2018) Obstacle detection and avoidance robot. 2018 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC)

- 7. Deepu R, Honnaraju B, Murali S (2014) Path Generation for Robot Navigation using a Single Camera. International Conference on Information and Communication Technologies 1425–1432

- 8. Dhiraj SK, Ramteke PL (2019) Personal google API assistant system using raspberry Pi. Int Res J Eng Technol 6(2):1144–1149

- 9. Dirik M, Castillo O, Kocamaz AF. Gaze-Guided Control of an Autonomous Mobile Robot Using Type-2 Fuzzy Logic. Appl Syst Innov. 2019;2(14):1–16.

- 10. Fang Z, Deng L, Ou Y, Wu X (2010) A Tracking Robot Based on Wireless Beacon. In: Liu H., Ding H., Xiong Z., Zhu X. (eds) Intelligent Robotics and Applications. ICIRA 2010. Lecture Notes in Computer Science, vol 6425. Springer, Berlin, Heidelberg

- 11. Ganesh EN (2019) Health monitoring system using raspberry Pi and IoT. Oriental J Comput Sci Technol 12(1):08–13

- 12. Horiuchi Y, Hiroshi N (2001) Evaluation of path length made in sensor-based path-planning with the alternative following. In Proceedings 2001 ICRA. IEEE International Conference on Robotics and Automation (Cat. No. 01CH37164) 2:1728–1735. IEEE

- 13. Huang S, Tanioka T, Locsin R, Parker M, Masory O (2011) Functions of a Caring Robot in Nursing. Proceedings of 7th International Conference on Natural Language Processing and Knowledge Engineering (NLP-KE), Tokushima 425–429. doi: 10.1109/nlpke.2011.6138237

- 14. Huh J-H, Seo K. An Indoor Location-Based Control System Using Bluetooth Beacons for IoT Systems. Sensors. 2017;17:1–22. doi: 10.3390/s17122917.

- 15. Jiang Z, Zhu J, Jin C, Xu S, Zhou Y, Pang S. Simultaneously merging multi-robot grid maps at different resolutions. Multimed Tools Appl. 2020;79(21):14553–14572. doi: 10.1007/s11042-018-7109-8.

- 16. Kamon I, Rivlin E. Sensory-based motion planning with global proofs. IEEE Trans Robot Autom. 1997;13(6):814–822. doi: 10.1109/70.650160.

- 17. Karri C (2021) Secure robot face recognition in cloud environments. Multimed Tools Appl 1–16. doi: 10.1007/s11042-020-10253-5

- 18. Karwa R, Saluja I, Nehete C. Realtime Indoor Location-Based Passenger Tracking System using Bluetooth Beacon for Airport Authority. International Journal for Research in Applied Science & Engineering Technology. 2019;7(4):3617–3626. doi: 10.22214/ijraset.2019.4607.

- 19. Kim S, Stephen JG, Wenxi L, David W, Rynson WHL, Ming CL, Dinesh M (2015) Brvo: Predicting pedestrian trajectories using velocity-space reasoning. Int J Robot Res 34(2): 201–217

- 20. Kolhatkar C, Kranti W (2021) Review of SLAM algorithms for indoor mobile robot with LIDAR and RGB-D camera technology. Innovations in Electrical and Electronic Engineering 397–409

- 21. Kortli Y, Maher J, Ayman AF, Mohamed A (2020) Face recognition systems: A Survey. Sensors 20(2):342

- 22. Kumar R, Jayalakshmi J, Karthik PRA. A Python based Virtual Assistant using Raspberry Pi for Home Automation. Int J Electron Commun Eng. 2018;5(7):22–27. doi: 10.14445/23488549/IJECE-V5I7P105.

- 23. Lee JY, Song YA, Jung JY, Kim HJ, Kim BR, Do HK, et al. Nurses' Needs for Care Robots in Integrated Nursing Care Services. J Adv Nurs. 2018;74(9):2094–2105. doi: 10.1111/jan.13711.

- 24. Lee TJ, Yi DH, Cho DI (2016) A monocular vision sensor-based obstacle detection algorithm for autonomous robots. Sensors 16:311

- 25. Lumelsky V, Stepanov A. Dynamic path planning for a mobile automaton with limited information on the environment. IEEE Trans Autom Control. 1986;31(11):1058–1063. doi: 10.1109/TAC.1986.1104175.

- 26. Lumelsky VJ, Alexander AS (1990) Path-planning strategies for a point mobile automaton moving amidst unknown obstacles of arbitrary shape. In Autonomous robot vehicles, pp. 363–390. Springer, New York, NY

- 27. McGuire KN, de Croon GCHE, Karl T (2019) A comparative study of bug algorithms for robot navigation. Robot Auton Syst 121:103261

- 28. Mohsin AH, Zaidan AA, Zaidan BB, Mohammed KI, Albahri OS, Albahri AS, Alsalem MA (2021) PSO–Blockchain-based image steganography: towards a new method to secure updating and sharing COVID-19 data in decentralised hospitals intelligence architecture. Multimed Tools Appl 1–25

- 29. Norouzzadeh R, Taghirad HD, Tamjidi AH (2009) Vision-Based Fuzzy Navigation of Mobile Robots in Grassland Environments. International Conference on Advanced Intelligent Mechatronics 14–17

- 30. Peng R, Sichitiu ML (2006) Angle of arrival localization for wireless sensor networks. Proceedings of the 3rd Annual IEEE Communications Society on Sensor and Ad Hoc Communications and Networks, 2006. SECON'06 1:374–382

- 31. Pérez-Higueras N, Ramón-Vigo R, Fernando C, Luis M (2014) Robot local navigation with learned social cost functions. In 2014 11th International Conference on Informatics in Control, Automation and Robotics (ICINCO), vol. 2, pp. 618–625. IEEE

- 32. Quang-Minh K, Dung-Nhan H, My-Ha L (2020) Receptionist and Security Robot Using Face Recognition with Standardized Data Collecting Method. In 2020 5th International Conference on Green Technology and Sustainable Development (GTSD), pp. 597–603. IEEE

- 33. Rajashree HS, Sahana B, Surabhi S, Apoorva TN, Parameshachari BD. Indoor Localization using BLE Technology. International Journal of Engineering Research & Technology. 2018;6(13):1–6.

- 34. Rakshith H, Adithi R, Vinodhini M, Jayashree MO (2019) 2D Mapping robot using ultrasonic sensor and processing IDE. IEEE Conference, International Conference on Vision Towards Emerging Trends in Communication and Networking

- 35. Ran L, Zhang Y, Zhang Q, Yang T. Convolutional Neural Network-Based Robot Navigation Using Uncalibrated Spherical Images. Sensors. 2017;17:1341. doi: 10.3390/s17061341.

- 36. Rostami SMH, Sangaiah AK, Wang J, et al. Obstacle avoidance of mobile robots using modified artificial potential field algorithm. J Wireless Com Network. 2019;2019:70. doi: 10.1186/s13638-019-1396-2.

- 37. Sankaranarayanan A, Vidyasagar M (1990) A new path planning algorithm for moving a point object amidst unknown obstacles in a plane. In Proceedings., IEEE International Conference on Robotics and Automation pp. 1930–1936. IEEE

- 38. Shaikh NS, Deshmukh RR (2016) Speech recognition system—a review. IOSR J Comput Eng 18(4):3–8

- 39. Singh NH, Khelchandra T (2018) Mobile robot navigation using MLP-BP approaches in dynamic environments. Arab J Sci Eng 43(12):8013–8028

- 40. Stimpfel AW, Sloane DM, Aiken LH. The Longer the Shifts for Hospital Nurses, the Higher the Levels of Burnout and Patient Dissatisfaction. Health Aff (Millwood) 2012;31(11):2501–2509. doi: 10.1377/hlthaff.2011.1377.

- 41. Xinyu L, Guan-Hua T, Alex XL, Kamran A, Chi-Yu L, Tian X (2018) The Insecurity of Home Digital Voice Assistants – Amazon Alexa as a Case Study CNS

- 42. Xu R. Path planning of mobile robot based on multi-sensor information fusion. J Wireless Com Network. 2019;2019:44. doi: 10.1186/s13638-019-1352-1.

- 43. Zhang H, Hernandez DE, Su Z, Su B. A Low Cost Vision-Based Road-Following System for Mobile Robots. Appl Sci. 2018;8:1–17.