Abstract

Variable importance (VI) tools describe how much covariates contribute to a prediction model’s accuracy. However, important variables for one well-performing model (for example, a linear model f (x) = xT β with a fixed coefficient vector β) may be unimportant for another model. In this paper, we propose model class reliance (MCR) as the range of VI values across all well-performing model in a prespecified class. Thus, MCR gives a more comprehensive description of importance by accounting for the fact that many prediction models, possibly of different parametric forms, may fit the data well. In the process of deriving MCR, we show several informative results for permutation-based VI estimates, based on the VI measures used in Random Forests. Specifically, we derive connections between permutation importance estimates for a single prediction model, U-statistics, conditional variable importance, conditional causal effects, and linear model coefficients. We then give probabilistic bounds for MCR, using a novel, generalizable technique. We apply MCR to a public data set of Broward County criminal records to study the reliance of recidivism prediction models on sex and race. In this application, MCR can be used to help inform VI for unknown, proprietary models.

Keywords: Rashomon, permutation importance, conditional variable importance, U-statistics, transparency, interpretable models

1. Introduction

Variable importance (VI) tools describe how much a prediction model’s accuracy depends on the information in each covariate. For example, in Random Forests, VI is measured by the decrease in prediction accuracy when a covariate is permuted (Breiman, 2001; Breiman et al., 2001; see also Strobl et al., 2008; Altmann et al., 2010; Zhu et al., 2015; Gregorutti et al., 2015; Datta et al., 2016; Gregorutti et al., 2017). A similar “Perturb” VI measure has been used for neural networks, where noise is added to covariates (Recknagel et al., 1997; Yao et al., 1998; Scardi and Harding, 1999; Gevrey et al., 2003). Such tools can be useful for identifying covariates that must be measured with high precision, for improving the transparency of a “black box” prediction model (see also Rudin, 2019), or for determining what scenarios may cause the model to fail.

However, existing VI measures do not generally account for the fact that many prediction models may fit the data almost equally well. In such cases, the model used by one analyst may rely on entirely different covariate information than the model used by another analyst. This common scenario has been called the “Rashomon” effect of statistics (Breiman et al., 2001; see also Lecué, 2011; Statnikov et al., 2013; Tulabandhula and Rudin, 2014; Nevo and Ritov, 2017; Letham et al., 2016). The term is inspired by the 1950 Kurosawa film of the same name, in which four witnesses offer different descriptions and explanations for the same encounter. Under the Rashomon effect, how should analysts give comprehensive descriptions of the importance of each covariate? How well can one analyst recover the conclusions of another? Will the model that gives the best predictions necessarily give the most accurate interpretation?

To address these concerns, we analyze the set of prediction models that provide near-optimal accuracy, which we refer to as a Rashomon set. This approach stands in contrast to training to select a single prediction model, among a prespecified class of candidate models. Our motivation is that Rashomon sets (defined formally below) summarize the range of effective prediction strategies that an analyst might choose. Additionally, even if the candidate models do not contain the true data generating process, we may hope that some of these models function in similar ways to the data generating process. In particular, we may hope there exist well performing candidate models that place the same importance on a variable of interest as the underlying data generating process does. If so, then studying sets of well-performing models will allow us to deduce information about the data generating process.

Applying this approach to study variable importance, we define model class reliance (MCR) as the highest and lowest degree to which any well-performing model within a given class may rely on a variable of interest for prediction accuracy. Roughly speaking, MCR captures the range of explanations, or mechanisms, associated with well-performing models. Because the resulting range summarizes many prediction models simultaneously, rather a single model, we expect this range to be less affected by the choices that an individual analyst makes during the model-fitting process. Instead of reflecting these choices, MCR aims to reflect the nature of the prediction problem itself.

We make several, specific technical contributions in deriving MCR. First, we review a core measure of how much an individual prediction model relies on covariates of interest for its accuracy, which we call model reliance (MR). This measure is based on permutation importance measures for Random Forests (Breiman et al., 2001; Breiman, 2001), and can be expanded to describe conditional importance (see Section 8, as well as Strobl et al. 2008). We draw a connection between permutation-based importance estimates (MR) and U-statistics, which facilitates later theoretical results. Additionally, we derive connections between MR, conditional causal effects, and coefficients for additive models. Expanding on MR, we propose MCR, which generalizes the definition of MR for a class of models. We derive finite-sample bounds for MCR, which motivate an intuitive estimator of MCR. Finally, we propose computational procedures for this estimator.

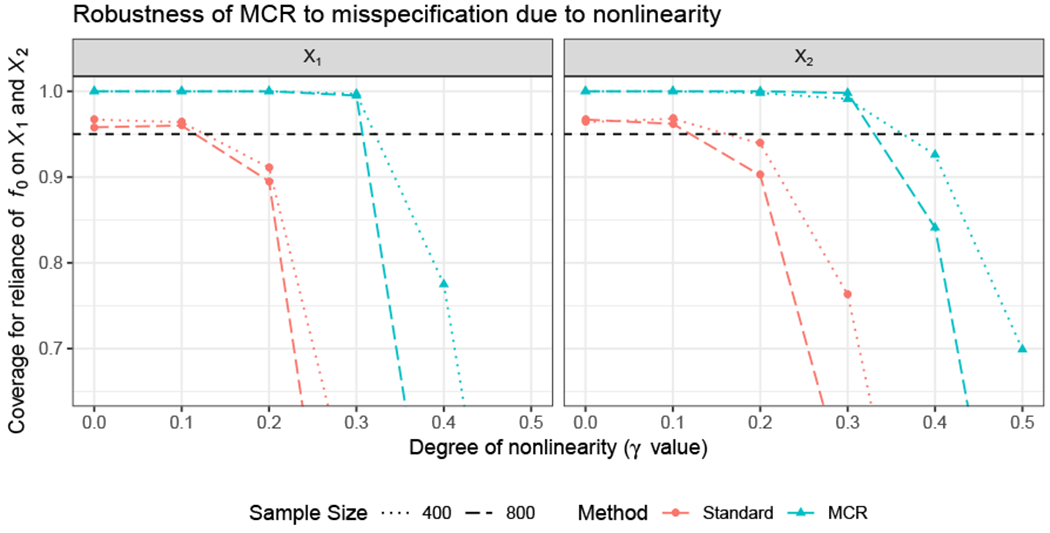

The tools we develop to study Rashomon sets are quite general, and can be used to make finite-sample inferences for arbitrary characteristics of well-performing models. For example, beyond describing variable importance, these tools can describe the range of risk predictions that well-fitting models assign to a particular covariate profile, or the variance of predictions made by well-fitting models. In some cases, these novel techniques may provide finite-sample confidence intervals (CIs) where none have previously existed (see Section 5).

MCR and the Rashomon effect become especially relevant in the context of criminal recidivism prediction. Proprietary recidivism risk models trained from criminal records data are increasingly being used in U.S. courtrooms. One concern is that these models may be relying on information that would otherwise be considered unacceptable (for example, race, sex, or proxies for these variables), in order to estimate recidivism risk. The relevant models are often proprietary, and cannot be studied directly. Still, in cases where the predictions made by these models are publicly available, it may be possible to identify alternative prediction models that are sufficiently similar to the proprietary model of interest.

In this paper, we specifically consider the proprietary model COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), developed by the company Northpointe Inc. (subsequently, in 2017, Northpointe Inc.,Courtview Justice Solutions Inc., and Constellation Justice Systems Inc. joined together under the name Equivant). Our goal is to estimate how much COMPAS relies on either race, sex, or proxies for these variables not measured in our data set. To this end, we apply a broad class of flexible, kernel-based prediction models to predict COMPAS score. In this setting, the MCR interval reflects the highest and lowest degree to which any prediction model in our class can rely on race and sex while still predicting COMPAS score relatively accurately. Equipped with MCR, we can relax the common assumption of being able to correctly specify the unknown model of interest (here, COMPAS) up to a parametric form. Instead, rather than assuming that the COMPAS model itself is contained in our class, we assume that our class contains at least one well-performing alternative model that relies on sensitive covariates to the same degree that COMPAS does. Under this assumption, the MCR interval will contain the VI value for COMPAS. Applying our approach, we find that race, sex, and their potential proxy variables, are likely not the dominant predictive factors in the COMPAS score (see analysis and discussion in Section 10).

The remainder of this paper is organized as follows. In Section 2 we introduce notation, and give a high level summary of our approach, illustrated with visualizations. In Sections 3 and 4 we formally present MR and MCR respectively, and derive theoretical properties of each. We also review related variable importance practices in the literature, such as retraining a model after removing one of the covariates. In Section 5, we discuss general applicability of our approach for determining finite-sample CIs for other problems. In Section 6, we present a general procedure for computing MCR. In Section 7, we give specific implementations of this procedure for (regularized) linear models, and linear models in a reproducing kernel Hilbert space. We also show that, for additive models, MR can be expressed in terms of the model’s coefficients. In Section 8 we outline connections between MR, causal inference, and conditional variable importance. In Section 9, we illustrate MR and MCR with a simulated toy example, to aid intuition. We also present simulation studies for the task of estimating MR for an unknown, underlying conditional expectation function, under misspecification. We analyze a well-known public data set on recidivism in Section 10, described above. All proofs are presented in the appendices.

2. Notation & Technical Summary

The label of “variable importance” measure has been broadly used to describe approaches for either inference (van der Laan, 2006; Díaz et al., 2015; Williamson et al., 2017) or prediction. While these two goals are highly related, we primarily focus on how much prediction models rely on covariates to achieve accuracy. We use terms such as “model reliance” rather than “importance” to clarify this context.

In order to evaluate how much prediction models rely on variables, we now introduce notation for random variables, data, classes of prediction models, and loss functions for evaluating predictions. Let be a random variable with outcome and covariates , where the covariate subsets and may each be multivariate. We assume that observations of Z are iid, that n ≥ 2, and that solutions to arg min and arg max operations exist whenever optimizing over sets mentioned in this paper (for example, in Theorem 4, below). Our goal is to study how much different prediction models rely on X1 to predict Y.

We refer to our data set as Z = [ y X ], a matrix composed of a n-length outcome vector y in the first column, and a n × p covariate matrix X = [ X1 X2 ] in the remaining columns. In general, for a given vector v, let v[j] denote its jth element(s). For a given matrix A, let A′, A[i,·], A[·,j] and A[i,j] respectively denote the transpose of A, the ith row(s) of A, the jth column(s) of A, and the element(s) in the ith row(s) and jth column(s) of A.

We use the term model class to refer to a prespecified subset of the measurable functions from to . We refer to member functions as prediction models, or simply as models. Given a model f, we evaluate its performance using a nonnegative loss function . For example, L may be the squared error loss Lse(f, (y, x1, x2)) = (y − f (x1, x2))2 for regression, or the hinge loss Lh(f, (y, x1, x2)) = (1 − y f (x1, x2))+ for classification. We use the term algorithm to refer to any procedure that takes a data set as input and returns a model as output.

2.1. Summary of Rashomon Sets & Model Class Reliance

Many traditional statistical estimates come from descriptions of a single, fitted prediction model. In contrast, in this section, we summarize our approach for studying a set of near-optimal models. To define this set, we require a prespecified “reference” model, denoted by fref, to serve as a benchmark for predictive performance. For example, fref may come from a flowchart used to predict injury severity in a hospital’s emergency room, or from another quantitative decision rule that is currently implemented in practice. Given a reference model fref, we define a population ϵ-Rashomon set as the subset of models with expected loss no more than ϵ above that of fref. We denote this set as , where denotes expectations with respect to the population distribution. This set can be thought of as representing models that might be arrived at due to differences in data measurement, processing, filtering, model parameterization, covariate selection, or other analysis choices (see Section 4).

Figure 1–A illustrates a hypothetical example of a population ϵ-Rashomon set. Here, the y-axis shows the expected loss of each model , and the x-axis shows how much each model relies on X1 for its predictive accuracy. More specifically, given a prediction model f, the x-axis shows the percent increase in f’s expected loss when noise is added to X1. We refer to this measure as the model reliance (MR) of f on X1, written informally as

| (2.1) |

The added noise must satisfy certain properties, namely, it must render X1 completely uninformative of the outcome Y, without altering the marginal distribution of X1 (for details, see Section 3, as well as Breiman, 2001; Breiman et al., 2001).

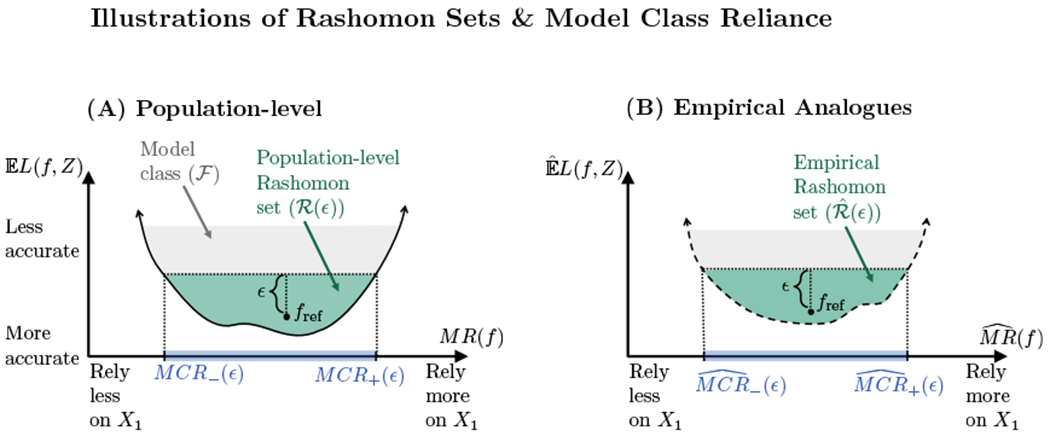

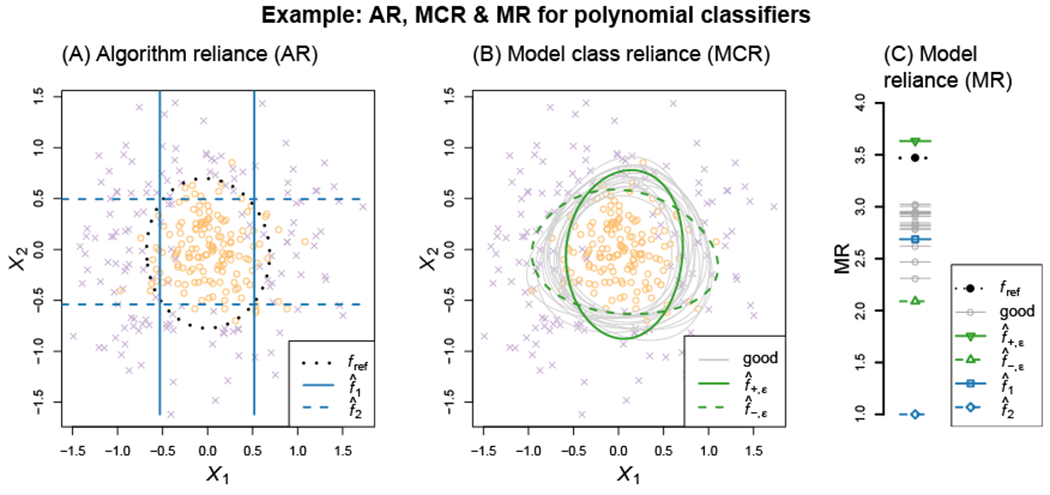

Figure 1:

Rashomon sets and model class reliance – Panel (A) illustrates a hypothetical Rashomon set , within a model class . The y-axis shows the expected loss of each model , and the x-axis shows how much each model f relies on X1 (defined formally in Section 3). Along the x-axis, the population-level MCR range is highlighted in blue, showing the values of MR corresponding to well-performing models (see Section 4). Panel (B) shows the in-sample analogue of Panel (A). Here, the y-axis denotes the in-sample loss, ; the x-axis shows the empirical model reliance of each model on X1 (see Section 3); and the highlighted portion of the x-axis shows empirical MCR (see Section 4).

Our central goal is to understand how much, or how little, models may rely on covariates of interest (X1) while still predicting well. In Figure 1–A, this range of possible MR values is shown by the highlighted interval along the x-axis. We refer to an interval of this type as a population-level model class reliance (MCR) range (see Section 4), formally defined as

| (2.2) |

To estimate this range, we use empirical analogues of the population ϵ-Rashomon set, and of MR, based on observed data (Figure 1–B). We define an empirical ϵ-Rashomon set as the set of models with in-sample loss no more than ϵ above that of fref, and denote this set by . Informally, we define the empirical MR of a model f on X1 as

| (2.3) |

that is, the extent to which f appears to rely on X1 in a given sample (see Section 3 for details). Finally, we define the empirical model class reliance as the range of empirical MR values corresponding to models with strong in-sample performance (see Section 4), formally written as

| (2.4) |

In Figure 1–B, the above range is shown by the highlighted portion of the x-axis.

We make several technical contributions in the process of developing MCR.

Estimation of MR, and population-level MCR: Given f, we show desirable properties of as an estimator of MR(f), using results for U-statistics (Section 3.1 and Theorem 5). We also derive finite sample bounds for population-level MCR, some of which require a limit on the complexity of in the form of a covering number. These bounds demonstrate that, under fairly weak conditions, empirical MCR provides a sensible estimate of population-level MCR (see Section 4 for details).

Computation of empirical MCR: Although empirical MCR is fully determined given a sample, the minimization and maximization in Eq 2.4 require nontrivial computations. To address this, we outline a general optimization procedure for MCR (Section 6). We give detailed implementations of this procedure for cases when the model class is a set of (regularized) linear regression models, or a set of regression models in a reproducing kernel Hilbert space (Section 7). The output of our proposed procedure is a closed-form, convex envelope containing , which can be used to approximate empirical MCR for any performance level ϵ (see Figure 2 for an illustration). Still, for complex model classes where standard empirical loss minimization is an open problem (for example, neural networks), computing empirical MCR remains an open problem as well.

Interpretation of MR in terms of model coefficients, and causal effects: We show that MR for an additive model can be written as a function of the model’s coefficients (Proposition 15), and that MR for a binary covariate X1 can be written as a function of the conditional causal effects of X1 on Y (Proposition 19).

Extensions to conditional importance: We provide an extension of MR that is analogous to the notion of conditional importance (Strobl et al., 2008). This extension describes how much a model relies on the specific information in X1 that cannot otherwise be gleaned from X2 (Section 8.2).

Generalizations for Rashomon sets: Beyond notions of variable importance, we also generalize our finite sample results for MCR to describe arbitrary characterizations of models in a population ϵ-Rashomon set. As we discuss in concurrent work (Coker et al., 2018), this generalization is analogous to the profile likelihood interval, and can, for example, be used to bound the range of risk predictions that well-performing prediction models may assign to a particular set of covariates (Section 5).

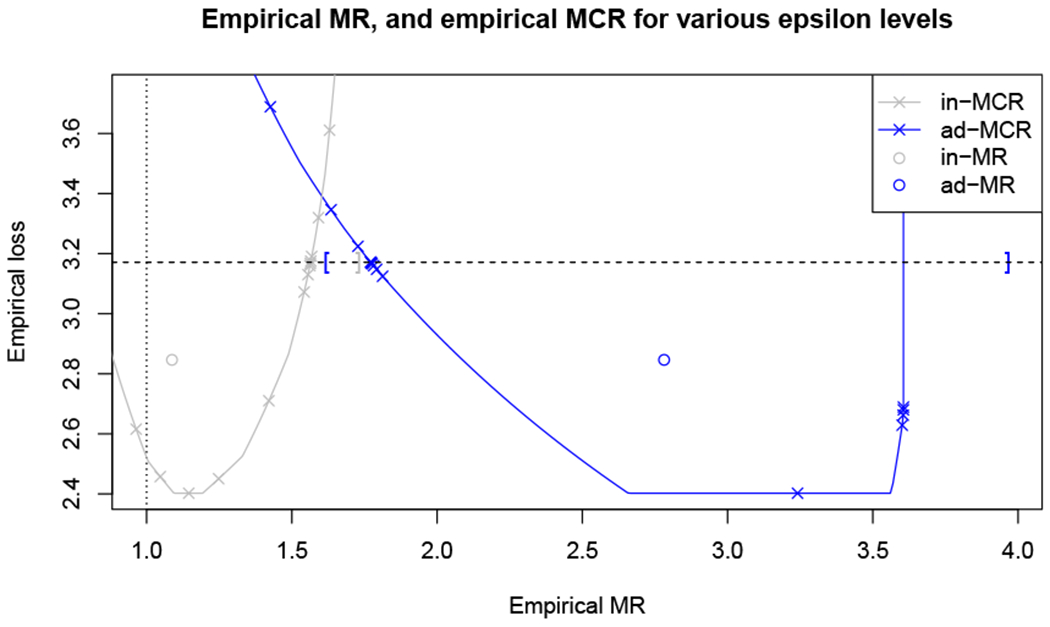

Figure 2:

Illustration of output from our empirical MCR computational procedure – Our computation procedure produces a closed-form, convex envelope that contains (shown above as the solid, purple line), which bounds empirical MCR for any value of ϵ (see Eq 2.4). The procedure works sequentially, tightening these bounds as much as possible near the ϵ value of interest (Section 6). The results from our data analysis (Figure 8) are presented in the same format as the above purple envelope.

We begin in the next section by formally reviewing model reliance.

3. Model Reliance

To formally describe how much the expected accuracy of a fixed prediction model f relies on the random variable X1, we use the notion of a “switched” loss where X1 is rendered uninformative. Throughout this section, we will treat f as a pre-specified prediction model of interest (as in Hooker, 2007). Let and be independent random variables, each following the same distribution as Z = (Y, X1, X2). We define

as representing the expected loss of model f across pairs of observations in which the values of and have been switched. To see this interpretation of the above equation, note that we have used the variables from , but we have used the variable from an independent copy Z(b). This is why we say that and have been switched; the values of () do not relate to each other as they would if they had been chosen together. An alternative interpretation of eswitch(f) is as the expected loss of f when noise is added to X1 in such a way that X1 becomes completely uninformative of Y, but that the marginal distribution of X1 is unchanged.

As a reference point, we compare eswitch(f) against the standard expected loss when none of the variables are switched, From these two quantities, we formally define model reliance (MR) as the ratio,

| (3.1) |

as we alluded to in Eq 2.1. Higher values of MR(f) signify greater reliance of f on X1. For example, an MR(f) value of 2 means that the model relies heavily on X1, in the sense that its loss doubles when X1 is scrambled. An MR(f) value of 1 signifies no reliance on X1, in the sense that the model’s loss does not change when X1 is scrambled. Models with reliance values strictly less than 1 are more difficult to interpret, as they rely less on the variable of interest than a random guess. Interestingly, it is possible to have models with reliance less than one. For instance, a model f′ may satisfy MR(f′) < 1 if it treats X1 and Y as positively correlated when they are in fact negatively correlated. However, in many cases, the existence of a model satisfying implies the existence of another, better performing model satisfying and eorig(f″) ≤ eorig(f′). That is, although models may exist with MR values less than 1, they will typically be suboptimal (see Appendix A.2).

Model reliance could alternatively be defined as a difference rather than a ratio, that is, as In Appendix A.5, we discuss how many of our results remain similar under either definition.

3.1. Estimating Model Reliance with U-statistics, and Connections to Permutation-based Variable Importance

Given a model f and data set , we estimate MR(f) by separately estimating the numerator and denominator of Eq 3.1. We estimate eorig(f) with the standard empirical loss,

| (3.2) |

We estimate eswitch(f) by performing a “switch” operation across all observed pairs, as in

| (3.3) |

Above, we have aggregated over all possible combinations of the observed values for (Y, X2) and for X1, excluding pairings that are actually observed in the original sample. If the summation over all possible pairs (Eq 3.3) is computationally prohibitive due to sample size, another estimator of eswitch(f) is

| (3.4) |

| (3.5) |

Here, rather than summing over all pairs, we divide the sample in half. We then match the first half’s values for (Y, X2) with the second half’s values for X1 (Line 3.4), and vice versa (Line 3.5). All three of the above estimators (Eqs 3.2, 3.3 & 3.5) are unbiased for their respective estimands, as we discuss in more detail shortly.

Finally, we can estimate MR(f) with the plug-in estimator

| (3.6) |

which we define as the empirical model reliance of f on X1. In this way, we formalize the empirical MR definition in Eq 2.3.

Again, our definition of empirical MR is very similar to the permutation-based variable importance approach of Breiman (2001), where Breiman uses a single random permutation and we consider all possible pairs. To compare these two approaches more precisely, let (π1, … , πn!} be a set of n-length vectors, each containing a different permutation of the set (1, …, n}. The approach of Breiman (2001) is analogous to computing the loss for a randomly chosen permutation vector πl ∈ {π1, … , πn!}. Similarly, our calculation in Eq 3.3 is proportional to the sum of losses over all possible (n!) permutations, excluding the n unique combinations of the rows of X1 and the rows of that appear in the original sample (see Appendix A.3). Excluding these observations is necessary to preserve the (finite-sample) unbiasedness of .

The estimators , and all belong to the well-studied class of U-statistics. Thus, under fairly minor conditions, these estimators are unbiased, asymptotically normal, and have finite-sample probabilistic bounds (Hoeffding, 1948, 1963; Serfling, 1980; see also DeLong et al., 1988 for an early use of U-statistics in machine learning, as well as caveats in Demler et al., 2012). To our knowledge, connections between permutation-based importance and U-statistics have not been previously established.

While the above results from U-statistics depend on the model f being fixed a priori, we can also leverage these results to create uniform bounds on the MR estimation error for all models in a sufficiently regularized class . We formally present this bound in Section 4 (Theorem 5), after introducing required conditions on model class complexity. The existence of this uniform bound implies that it is feasible to train a model and to evaluate its importance using the same data. This differs from the classical VI approach of Random Forests (Breiman, 2001), which avoids in-sample importance estimation. There, each tree in the ensemble is fit on a random subset of data, and VI for the tree is estimated using the held-out data. The tree-specific VI estimates are then aggregated to obtain a VI estimate for the overall ensemble. Although sample-splitting approaches such as this are helpful in many cases, the uniform bound for MR suggests that they are not strictly necessary, depending on the sample size and the complexity of .

3.2. Limitations of Existing Variable Importance Methods

Several common approaches for variable selection, or for describing relationships between variables, do not necessarily capture a variable’s importance. Null hypothesis testing methods may identify a relationship, but do not describe the relationship’s strength. Similarly, checking whether a variable is included by a sparse model-fitting algorithm, such as the Lasso (Hastie et al., 2009), does not describe the extent to which the variable is relied on. Partial dependence plots (Breiman et al., 2001; Hastie et al., 2009) can be difficult to interpret if multiple variables are of interest, or if the prediction model contains interaction effects.

Another common VI procedure is to run a model-fitting algorithm twice, first on all of the data, and then again after removing X1 from the data set. The losses for the two resulting models are then compared to determine the importance, or “necessity,” of X1 (Gevrey et al., 2003). Because this measure is a function of two prediction models rather than one, it does not measure how much either individual model relies on X1. We refer to this approach as measuring empirical Algorithm Reliance (AR) on X1, as the model-fitting algorithm is the common attribute between the two models. Related procedures were proposed by Breiman et al. (2001); Breiman (2001), which measure the sufficiency of X1.

As we discuss in Section 3.1, the permutation-based VI measure from RFs (Breiman, 2001; Breiman et al., 2001) forms the inspiration for our definition of MR. This RF VI measure has been the topic of empirical studies (Archer and Kimes, 2008; Calle and Urrea, 2010; Wang et al., 2016), and several variations of the measure have been proposed (Strobl et al., 2007, 2008; Altmann et al., 2010; Hapfelmeier et al., 2014). Mentch and Hooker (2016) use U-statistics to study predictions of ensemble models fit to subsamples, similar to the bootstrap aggregation used in RFs. Procedures related to “Mean Difference Impurity,” another VI measure derived for RFs, have been studied theoretically by Louppe et al. (2013); Kazemitabar et al. (2017). All of this literature focuses on VI measures for RFs, for ensembles, or for individual trees. Our estimator for model reliance differs from the traditional RF VI measure (Breiman, 2001) in that we permute inputs to the overall model, rather than permuting the inputs to each individual ensemble member. Thus, our approach can be used generally, and is not limited to trees or ensemble models.

Outside of the context of RF VI, Zhu et al. (2015) propose an estimand similar to our definition of model reliance, and Gregorutti et al. (2015, 2017) propose an estimand analogous to eswitch(f) − eorig(f). These recent works focus on the model reliance of f on X1 specifically when f is equal to the conditional expectation function of Y (that is, ). In contrast, we consider model reliance for arbitrary prediction models f. Datta et al. (2016) study the extent to which a model’s predictions are expected to change when a subset of variables is permuted, regardless of whether the permutation affects a loss function L. These VI approaches are specific to a single prediction model, as is MR. In the next section, we consider a more general conception of importance: how much any model in a particular set may rely on the variable of interest.

4. Model Class Reliance

Like many statistical procedures, our MR measure (Section 3) produces a description of a single predictive model. Given a model with high predictive accuracy, MR describes how much the model’s performance hinges on covariates of interest (X1). However, there will often be many other models that perform similarly well, and that rely on X1 to different degrees. With this notion in mind, we now study how much any well-performing model from a prespecified class may rely on covariates of interest.

Recall from Section 2.1 that, in order to define a population ϵ-Rashomon set of near-optimal models, we must choose a “reference” model fref to serve as a performance benchmark. In order to discuss this choice, we now introduce more explicit notation for the population ϵ-Rashomon set, written as

| (4.1) |

Note that we write and interchangeably when fref and are clear from context. Similarly, we occasionally write empirical ϵ-Rashomon sets using the more explicit notation , but typically abbreviate these sets as .

While fref could be selected by minimizing the in-sample loss, the theoretical study of is simplified under the assumption that fref is prespecified. For example, fref may come from a flowchart used to predict injury severity in a hospital’s emergency room, or from another quantitative decision rule that is currently implemented in practice. The model fref can also be selected using sample splitting. In some cases it may be desirable to fix fref equal to the best-in-class model , but this is generally infeasible because f⋆ is unknown. Still, for any , the Rashomon set defined using fref will always be conservative in the sense that it contains the Rashomon set defined using f⋆.

We can now formalize our definitions of population-level MCR and empirical MCR by simply plugging in our definitions for MR(f) and (Section 3) into Eqs 2.2 & 2.4 respectively. Studying population-level MCR (Eq 2.2) is the main focus of this paper, as it provides a more comprehensive view of importance than measures from a single model. If MCR+(ϵ) is low, then no well-performing model in places high importance on X1, and X1 can be discarded at low cost regardless of future modeling decisions. If MCR−(ϵ) is large, then every well-performing model in must rely substantially on X1, and X1 should be given careful attention during the modeling process. Here, may itself consist of several parametric model forms (for example, all linear models and all decision tree models with less than 6 single-split nodes). We stress that the range [MCR−(ϵ), MCR+(ϵ)| does not depend on the fitting algorithm used to select a model . The range is valid for any algorithm producing models in F, and applies for any .

In the remainder of this section, we derive finite sample bounds for population-level MCR, from which we argue that empirical MCR provides reasonable estimates of population-level MCR (Section 4.1). In Appendix B.7 we consider an alternate formulation of Rashomon sets and MCR where we replace the relative loss threshold in the definition of with an absolute loss threshold. This alternate formulation can be similar in practice, but still requires the specification of a reference function fref to ensure that and are nonempty.

4.1. Motivating Empirical Estimators of MCR by Deriving Finite-sample Bounds

In this section we derive finite-sample, probabilistic bounds for MCR+(ϵ) and MCR−(ϵ). Our results imply that, under minimal assumptions, and are respectively within a neighborhood of MCR+ (ϵ) and MCR−(ϵ) with high probability. However, the weakness of our assumptions (which are typical for statistical-learning-theoretic analysis) renders the width of our resulting CIs to be impractically large, and so we use these results only to show conditions under which and form sensible point estimates. In Sections 9.1 & 10, below, we apply a bootstrap procedure to account for sampling variability.

To derive these results we introduce three bounded loss assumptions, each of which can be assessed empirically. Let borig, Bind, Bref, be known constants.

Assumption 1 (Bounded individual loss) For a given model , assume that .

Assumption 2 (Bounded relative loss) For a given model , assume that .

Assumption 3 (Bounded aggregate loss) For a given model , assume that .

Each assumption is a property of a specific model . The notation Bind and Bref refer to bounds for any individual observation, and the notation borig and Bswitch refer to bounds on the aggregated loss L in a sample. These boundedness assumptions are central to our finite sample guarantees, shown below.

Crucially, loss functions L that are unbounded in general may be used so long as L(f, (y, x1, x2)) is bounded on a particular domain. For example, the squared-error loss can be used if is contained within a known range, and predictions f (x1, x2) are contained within the same range for We give example methods of determining Bind in Sections 7.3.2 & 7.4.2. For Assumption 3, we can approximate borig by training a highly flexible model to the data, and setting borig equal to half (or any positive fraction) of the resulting cross-validated loss. To determine Bswitch we can simply set Bswitch = Bind, although this may be conservative. For example, in the case of binary classification models for non-separable groups (see Section 9.1), no linear classifier can misclassify all observations, particularly after a covariate is permuted. Thus, it must hold that Bind > Bswitch. Similarly, if fref satisfies Assumption 1, then Bref may be conservatively set equal to Bind. If model reliance is redefined as a difference rather than a ratio, then a similar form of the results in this section will apply without Assumption 3 (see Appendix A.5).

Based on these assumptions, we can create a finite-sample upper bound for MCR+(ϵ) and lower bound for MCR−(ϵ). In other words, we create an “outer” bound that contains the interval [MCR−(ϵ),MCR+(ϵ)| with high probability.

Theorem 4 (“Outer” MCR Bounds) Given a constant ϵ ≥ 0, let and be prediction models that attain the highest and lowest model reliance among models in . If f+,ϵ and f−,ϵ satisfy Assumptions 1, 2 & 3, then

| (4.2) |

| (4.3) |

where .

Eq 4.2 states that, with high probability, MCR+(ϵ) is no higher than added to an error term . As n increases, ϵout approaches ϵ and approaches zero. One practical implication is that, roughly speaking, if , then the empirical estimator is unlikely to substantially underestimate MCR+(ϵ). By similar reasoning, we can conclude from Eq 4.3 that if , then is unlikely to substantially overestimate MCR−(ϵ). By setting ϵ = 0, Theorem 4 can also be used to create a finite-sample bound for the reliance of the unique (unknown) best-in-class model on X1 (see Corollary 22 in Appendix A.4), although describing individual models is not the main focus of this paper.

We provide a visual illustration of Theorem 4 in Figure 3. A brief sketch of the proof is as follows. First, we enlarge the empirical ϵ-Rashomon set by increasing ϵ to ϵout, such that, by Hoeffding’s inequality, with high probability. When , we know that by the definition of . Next, the term leverages finite-sample results for U-statistics to account for estimation error of MR(f+,ϵ) = MCR+(ϵ) when using the estimator . Thus, we can relate to both and MCR+(ϵ) in order to obtain Eq 4.2. Similar steps can be applied to obtain Eq 4.3.

Figure 3:

Illustration of terms in Theorems 4 and 6 – Above we show the relation between empirical MR (x-axis) and empirical loss (y-axis) for models f in a hypothetical model class . We mark fref by the black point. For each possible model reliance value r ≥ 0, the curved, dashed line shows the lowest possible empirical loss for a function in satisfying . The set contains all models in within the dotted gray lines. To create the bounds from Theorem 4, we expand the empirical ϵ-Rashomon set by increasing ϵ to ϵout, such that f+,ϵ (or f−,ϵ) is contained in with high probability. We then add (or subtract) to account for estimation error of (or ). These steps are illustrated above in blue, with the final bounds shown by the blue bracket symbols along the x-axis. To create the bounds for MCR+(ϵ) (and MCR−(ϵ)) in Theorem 6, we constrict the empirical ϵ-Rashomon set by decreasing ϵ to ϵin, such that all models with high expected loss are simultaneously excluded from with high probability. We then subtract (or add) to simultaneously account for MR estimation error for models in . These steps are illustrated above in purple, with the final bounds shown by the purple bracket symbols along the x-axis. For emphasis, below this figure we show a copy of the x-axis with selected annotations, from which it is clear that and are always within the bounds produced by Theorems 4 and 6. With high probability, and are within a neighborhood of MCR−(ϵ) and MCR+(ϵ) respectively.

The bounds in Theorem 4 naturally account for potential overfitting without an explicit limit on model class complexity (such as a covering number, Rademacher complexity, or VC dimension). Instead, these bounds depend on being able to fully optimize MR across sets in the form of . If we allow our model class to become more flexible, then the size of will also increase. Because the bounds in Theorem 4 result from optimizing over , increasing the size of results in wider, more conservative bounds. In this way, Eqs 4.2 and 4.3 implicitly capture model class complexity.

So far, Theorem 4 lets us bound the range of MR values corresponding to models that predict well, but it does not tell us whether these bounds are actually attained. Similarly, we can conclude from Theorem 4 that [MCR−(ϵ), MCR+(ϵ)] is unlikely to exceed the estimated range [, ] by a substantial margin, but we cannot determine whether this estimated range is unnecessarily wide. For example, consider the models that drive the estimator: the models with strong in-sample accuracy, and high empirical reliance on X1. These models’ in-sample performance could merely be the result of overfitting, in which case they do not tell us direct information about . Alternatively, even if all of these models truly do perform well on expectation (that is, even if they are contained in ), the model with the highest empirical reliance on X1 may merely be the model for which our empirical MR estimate contains the most error. Either of these scenarios can cause to be unnecessarily high, relative to MCR+(ϵ).

Fortunately, both problematic scenarios are solved by requiring a limit on the complexity of . We propose a complexity measure in the form of a covering number, which allows us control a worst case scenario of either overfitting or MR estimation error. Specifically, we define the set of functions as an r-margin-expectation-cover if for any and any distribution D, there exists such that

| (4.4) |

We define the covering number to be the size of the smallest r-margin-expectation-cover for . In general, we use and to denote probabilities and expectations with respect to a random variable V following the distribution D. We abbreviate these quantities accordingly when V or D are clear from context, for example, as , , or simply . Unless otherwise stated, all expectations and probabilities are taken with respect to the (unknown) population distribution.

We first show that this complexity measure allows us to control the worst case MR estimation error, that is, the covering number provides a uniform bound on the error of for all .

Theorem 5 (Uniform bound for ) Given r > 0, if Assumptions 1 and 3 hold for all , then

where

| (4.5) |

Theorem 5 states that, with high probability, the largest possible estimation error for MR(f) across all models in is bounded by q(δ, r, n), which can be made arbitrarily small by increasing n and decreasing r. As we noted in Section 3.1, this means that it is possible to train a model and estimate its reliance on variables without using sample-splitting.

The covering number can also be used to limit the extent of overfitting (see Appendix B.5.1). As a result, it is possible to set an in-sample performance threshold low enough so that it will only be met by models with strong expected performance (that is, by models truly within ). To implement this idea of a stricter performance threshold, we contract the empirical ϵ-Rashomon set by subtracting a buffer term from ϵ. This requires that we generalize the definition of an empirical ϵ-Rashomon set to for , where the explicit inclusion of fref now ensures that is nonempty, even for ϵ < 0. As before, we typically omit the notation fref and , writing instead.

We are now prepared to answer the questions of whether the bounds from Theorem 4 are actually attained, and of whether the estimated range is unnecessarily wide. Our answer comes in the form of an upper bound on MCR−(ϵ), and a lower bound on MCR+(ϵ).

Theorem 6 (“Inner” MCR Bounds) Given constants ϵ ≥ 0 and r > 0, if Assumptions 1, 2 and 3 hold for all , and then

| (4.6) |

| (4.7) |

where , as defined in Eq 4.5.

Theorem 6 can allow us to infer an “inner” bound that is contained within the interval [MCR−(ϵ), MCR+(ϵ)] with high probability. In Figure 3, we illustrate the result of Theorem 6, and give a sketch of the proof. This proof follows a similar structure to that of Theorem 4, but incorporates Theorem 5’s uniform bound on MR estimation error ( term), as well as an additional uniform bound on the probability that any model has in-sample loss too far from its expected loss (ϵin term).

A practical implication of Theorem 6 is that, roughly speaking, if then it is unlikely for the empirical estimator to substantially underestimate MCR+(ϵ). Taken together with Theorem 4, we can conclude that, if , then the estimator is unlikely either to overestimate or to underestimate MCR+(ϵ) by very much. In large samples, it may be plausible to expect the condition to hold, since ϵin and ϵout both approach ϵ as n increases. In the same way, if , we can conclude from Eqs 4.3 & 4.7 that the empirical estimator is unlikely to either overestimate or underestimate MCR−(ϵ) by very much. For this reason, we argue that and form sensible estimates of population-level MCR – each is contained within a neighborhood of its respective estimand, with high probability. The secondary x-axis of Figure 3 gives an illustration of this argument.

5. Extensions of Rashomon Sets Beyond Variable Importance

In this section we generalize the Rashomon set approach beyond the study of MR. In Section 5.1, we create finite-sample CIs for other summary characterizations of near-optimal, or best-in-class models. The generalization also helps to illustrate a core aspect of the argument underlying Theorem 4: models with near-optimal performance in the population tend to have relatively good performance in random samples.

In Section 5.2, we review existing literature on near-optimal models.

5.1. Finite-sample Confidence Intervals from Rashomon Sets

Rather than describing how much a model relies on X1, here we assume the analyst is interested in an arbitrary characteristic of a model. We denote this characteristic of interest as . For example, if fβ is the linear model then ϕ may be defined as the norm of the associated coefficient vector (that is, or the prediction fβ would assign given a specific covariate profile xnew (that is ).

Given a descriptor ϕ, we now show a general result that allows creation of finite-sample CIs for the best performing models . The resulting CIs are themselves based on empirical Rashomon sets.

Proposition 7 (Finite sample CIs from Rashomon sets) Let , let , and let .

If Assumption 2 holds for all , then

Proposition 7 generates a finite-sample CI for the range of values ϕ(f) corresponding to well-performing models, . This CI, denoted by can itself be interpreted as the range of values ϕ(f) corresponding to models f with empirical loss not substantially above that of fref. Thus, the interval has both a rigorous coverage rate and a coherent in-sample interpretation. The proof of Proposition 7 uses Hoeffding’s inequality to show that models in are contained in with high probability, that is, that models with good expected performance tend to perform well in random samples.

An immediate corollary of Proposition 7 is that we can generate finite-sample CIs for all best-in-class models by setting ϵ = 0. This corollary can be further strengthened if a single model f⋆ is assumed to uniquely minimize over (see Appendix B.6).

Note that Proposition 7 implicitly assumes that ϕ(f) can be determined exactly for any model , in order for the interval to be precisely determined. This assumption does not hold, for example, if ϕ(f) = MR(f), or if ϕ(f) = Var{f(X1, X2)}, as these quantities depend on both f and the (unknown) population distribution. In such cases, an additional correction factor must be incorporated to account for estimation error of ϕ(f), such as the term in Theorem 4.

In concurrent work, Coker et al. (2018) show that profile likelihood intervals take the same form as the interval in Proposition 7. This means that a profile likelihood interval can also be expressed by minimizing and maximizing over an empirical Rashomon set. More specifically, consider the case where the loss function L is the negative of the known log likelihood function, and where fref is the maximum likelihood estimate of the “true model,” which in this case is f⋆. If additional minor assumptions are met (see Appendix A.6 for details), then the (1 − δ)-level profile likelihood interval for ϕ(f⋆) is equal to , where ( and are defined as in Proposition 7, and is the 1 − δ percentile of a chi-square distribution with 1 degree of freedom.

Relative to a profile likelihood approach, the advantage of Proposition 7 is that it does not require asymptotics, it does not require that the likelihood be known up to a parametric form, and it can be extended to study the set of near-optimal prediction models , rather than a single, potentially misspecified prediction model f⋆. This is especially useful when different near-optimal models accurately describe different aspects of the underlying data generating process, but none capture it completely. The disadvantage of Proposition 7 is that the required performance threshold of decreases more slowly than the performance threshold of required in a profile likelihood interval. Because our results from Section 4.1 carry a similar disadvantage, we use these results primarily to motivate point estimates describing the Rashomon set .

Still, it is worth emphasizing the generality of Proposition 7. Through this result, Rashomon sets allow us to reframe a wide set of finite-sample inference problems as in-sample optimization problems. The implied CIs are not necessarily in closed form, but the approach still opens an exciting pathway for deriving non-asymptotic results. For example, they imply that existing methods for profile likelihood intervals might be able to be reapplied to achieve finite-sample results. For highly complex model classes where profile likelihoods are difficult to compute, such as neural networks or random forests, approximate inference is sometimes achieved via approximate optimization procedures (for example, Markov chain Monte Carlo for Bayesian additive regression trees, in Chipman et al., 2010). Proposition 7 shows that similar approximate optimization methods could be repurposed to establish approximate, finite-sample inferences for the same model classes.

5.2. Related Literature on the Rashomon Effect

Breiman et al. (2001) introduced the “Rashomon effect” of statistics as a problem of ambiguity: if many models fit the data well, it is unclear which model we should try to interpret. Breiman suggests that the ensembling many well-performing models together can resolve this ambiguity, as the new ensemble model may perform better than any of its individual members. However, this approach may only push the problem from the member level to the ensemble level, as there may also be many different ensemble models that fit the data well.

The Rashomon effect has also been considered in several subject areas outside of VI, including those in non-statistical academic disciplines (Heider, 1988; Roth and Mehta, 2002). Tulabandhula and Rudin (2014) optimize a decision rule to perform well under the predicted range of outcomes from any well-performing model. Statnikov et al. (2013) propose an algorithm to discover multiple Markov boundaries, that is, minimal sets of covariates such that conditioning on any one set induces independence between the outcome and the remaining covariates. Nevo and Ritov (2017) report interpretations corresponding to a set of well-fitting, sparse linear models. Meinshausen and Bühlmann (2010) estimate structural aspects of an underlying model (such as the variables included in that model) based on how stable those aspects are across a set of well-fitting models. This set of well-fitting models is identified by repeating an estimation procedure in a series of perturbed samples, using varying levels of regularization (see also Azen et al., 2001). Letham et al. (2016) search for a pair of well-fitting dynamical systems models that give maximally different predictions.

6. Calculating Empirical Estimates of Model Class Reliance

In this section, we propose a binary search procedure to bound the values of and (see Eq 2.4), which respectively serve as estimates of MCR−(ϵ) and MCR+(ϵ) (see Section 4.1). Each step of this search consists of minimizing a linear combination of êorig(f) and êswitch(f) across . Our approach is related to the fractional programming approach of Dinkelbach (1967), but accounts for the fact that the problem is constrained by the value of the denominator, êorig(f). We additionally show that, for many model classes, computing only requires that we minimize convex combinations of êorig(f) and êswitch(f), which is no more difficult than minimizing the average loss over an expanded and reweighted sample (See Eq 6.2 & Proposition 11).

Computing however will require that we are able to minimize arbitrary linear combinations of êorig(f) and êswitch(f). In Section 6.3, we outline how this can be done for convex model classes – classes for which the loss function is convex in the model parameter. Later, in Section 7, we give more specific computational procedures for when is the class of linear models, regularized linear models, or linear models in a reproducing kernel Hilbert space (RKHS). We summarize the tractability of computing empirical MCR for different model classes in Table 1.

Table 1:

Tractability of empirical MCR computation for different model classes - For each case, we describe the tractability of computing and using our proposed approaches. Computing empirical MCR can be reduced to a sequence of optimization problems, the form of which are noted in parentheses within the above table.

| Model class and loss function () | Computing | Computing |

|---|---|---|

| (L2 Regularized) Linear models, with the squared error loss | Highly tractable (QP1QC, see Sections 7.2 & 7.3) | Highly tractable (QP1QC, see Sections 7.2 & 7.3) |

| Linear models in a reproducing kernel Hilbert space, with the squared error loss | Moderately tractable (QP1QC, see Section 7.4.1) | Moderately tractable (QP1QC, see Section 7.4.1) |

| Cases where irrelevant covariates do not improve predictions | Moderately tractable (Convex optimization problems, see Proposition 11) | Potentially intractable |

| Cases where minimizing the empirical loss is a convex optimization problem | Potentially intractable (DC programs, see Section 6.3) | Potentially intractable (DC programs, see Section 6.3) |

To simplify notation associated with the reference model fref, we present our computational results in terms of bounds on empirical MR subject to performance thresholds on the absolute scale. More specifically, we present bound functions b− and b+ satisfying simultaneously for all (Figures 2 & 8 show examples of these bounds). The binary search procedures we propose can be used to tighten these boundaries at a particular value ϵabs of interest.

Figure 8:

Empirical MR and MCR for Broward County criminal records data set - For any prediction model f, the y-axis shows empirical loss and the x-axis shows empirical reliance on each covariate subset. Null reliance (MR equal to 1.0) is marked by the vertical dotted line. Reliances on different covariate subsets are marked by color (“admissible” = blue; “inadmissible” = gray). For example, model reliance values for fref are shown by the two circular points, one for “admissible” variables and one for “inadmissible” variables. MCR for different values of ϵ can be represented as boundaries on this coordinate space. To this end, for each covariate subset, we compute conservative boundary functions (shown as solid lines, or “bowls”) guaranteed to contain all models in the class (see Section 6). Specifically, all models in are guaranteed to have an empirical loss and empirical MR value for “inadmissible variables” corresponding to a point within the gray bowl. Likewise, all models in are guaranteed to have an empirical loss and empirical MR value for “admissible variables” corresponding to a point within the blue bowl. Points shown as “×” represent additional models in discovered during our computational procedure, and thus show where the “bowl” boundary is tight. The goal of our computation procedure (see Section 6) is to tighten the boundary as much as possible near the ϵ value of interest, shown by the dashed horizontal line above. This dashed line has a y-intercept equal to the loss of the reference model plus the ϵ value of interest. Bootstrap CIs for MCR−(ϵ) and MCR+(ϵ) are marked by brackets.

We briefly note that as an alternative to the global optimization procedures we discuss below, heuristic optimization procedures such as simulated annealing can also prove useful in bounding empirical MCR. By definition, the empirical MR for any model in forms a lower bound for , and an upper bound for . Heuristic maximization and minimization of empirical MR can be used to tighten these boundaries.

Throughout this section, we assume that , to ensure that MR is finite.

6.1. Binary Search for Empirical MR Lower Bound

Before describing our binary search procedure, we introduce additional notation used in this section. Given a constant and prediction model , we define the linear combination , and its minimizers (for example, ), as

We do not require that is uniquely minimized, and we frequently use the abbreviated notation when is clear from context.

Our goal in this section is to derive a lower bound on for subsets of in the form of . We achieve this by minimizing a series of linear objective functions in the form of , using a similar method to that of Dinkelbach (1967). Often, minimizing the linear combination is more tractable than minimizing the MR ratio directly.

Almost all of the results shown in this section, and those in Section 6.2, also hold if we replace with throughout (see Eq 3.5), including in the definition of and . The exception is Proposition 11, below, which we may still expect to approximately hold if we replace with .

Given an observed sample, we define the following condition for a pair of values , and argmin function :

Condition 8 (Criteria to continue search for lower bound) and .

We are now equipped to determine conditions under which we can tractably create a lower bound for empirical MR.

Lemma 9 (Lower bound for ) If satisfies , then

| (6.1) |

for all satisfying . It also follows that

Additionally, if and at least one of the inequalities in Condition 8 holds with equality, then Eq 6.1 holds with equality.

Lemma 9 reduces the challenge of lower-bounding to the task of minimizing the linear combination . The result of Lemma 9 is not only a single boundary for a particular value of ϵabs, but a boundary function that holds all values of ϵabs > 0, with lower values of ϵabs leading to more restrictive lower bounds on .

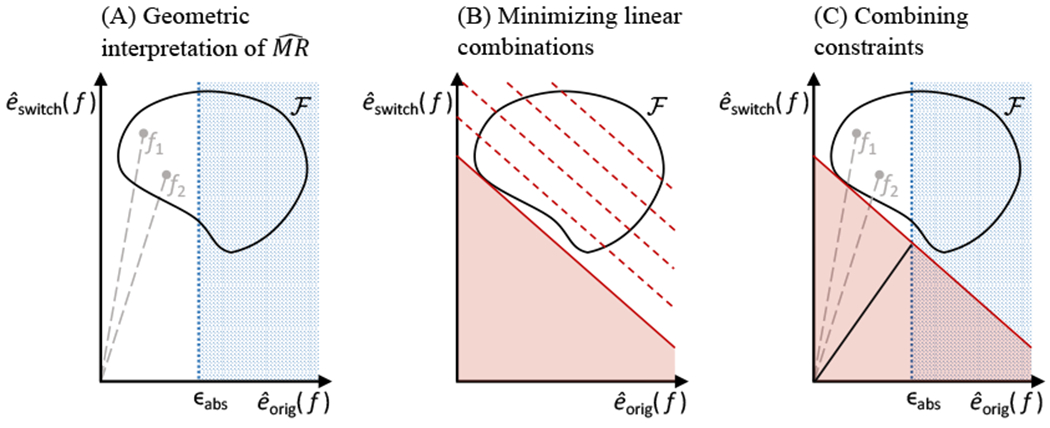

In addition to the formal proof for Lemma 9, we provide a heuristic illustration of the result in Figure 4, to aid intuition.

Figure 4:

Above, we illustrate the geometric intuition for Lemma 9. In Panel (A), we show an example of a hypothetical model class , marked by the enclosed region. For each model , the x-axis shows and the y-axis shows . Here, we can see that the condition holds. The blue dotted region marks models with higher empirical loss. We mark two example models within as f1 and f2. The slopes of the lines connecting the origin to f1 and f2 are equal to and respectively. Our goal is to lower-bound the slope corresponding to for any model f satisfying êorig(f) ≤ ϵabs. In Panel (B), we consider the linear combination for γ = 1. Above, contour lines of are shown in red. The solid red line indicates the smallest possible value of across . Specifically, its y-intercept equals . If we can determine this minimum, we can determine a linear border constraint on , that is, we will know that no points corresponding to models may lie in the shaded region above. Additionally, if (see Lemma 9), then we know that the origin is either excluded by this linear constraint, or is on the boundary. In Panel (C), we combine the two constraints from Panels (A) & (B) to see that models satisfying êorig(f) ≤ ϵabs must, correspond to points in the white, unshaded region above. Thus, as long as the unshaded region does not contain the origin, any line connecting the origin to the a model f satisfying êorig(f) < ϵabs (for example, here, f1, f2) must, have a. slope at least, as high as that of the solid black line above. It. can be shown algebraically that the black line has slope equal to the left-hand side of Eq 6.1. Thus the left-hand side of Eq 6.1 is a lower bound for for all .

It remains to determine which value of γ should be used in Eq 6.1. The following lemma implies that this value can be determined by a binary search, given a particular value of interest for ϵabs.

Lemma 10 (Monotonicity for lower bound binary search) The following monotonicity results hold:

is monotonically increasing in γ.

is monotonically decreasing in γ.

Given ϵabs, the lower bound from Eq 6.1, , is monotonically decreasing in γ in the range where , and increasing otherwise.

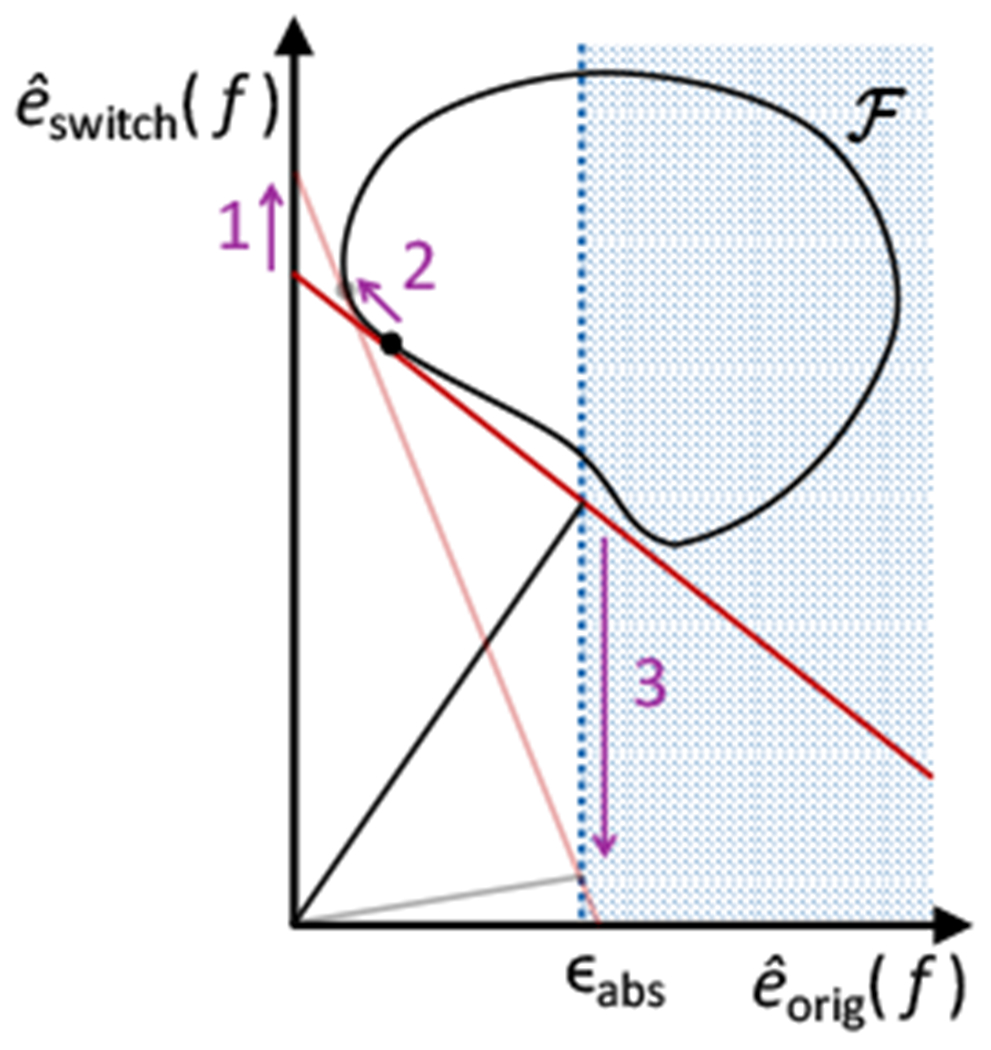

Given a particular performance level of interest, ϵabs, Point 3 of Lemma 10 tells us that the value of γ resulting in the tightest lower bound from Eq 6.1 occurs when γ is as low as possible while still satisfying Condition 8. Points 1 and 2 show that if γ0 satisfies Condition 8, and one of the equalities in Condition 8 holds with equality, then Condition 8 holds for all γ ≥ γ0. Together, these results imply that we can use a binary search to determine the value of γ to be used in Lemma 9, reducing this value until Condition 8 is no longer met. In addition to the formal proof for Lemma 10, we provide an illustration of the result in Figure 5 to aid intuition.

Figure 5:

Monotonicity for binary search. Above we show a version of Figure 4–C for two alternative values of γ. This figure is meant, to add intuition for the monotonicity results in Lemma 10, in addition to the formal proof. Increasing γ is equivalent to decreasing the slope of the red line in Figure 4–C. We define two values γ1 < γ2, where γ1 corresponds to the solid red line, above, and γ2 corresponds to the semi-transparent, red line. The y-intercept values of these lines are equal to and respectively (see Figure 4–C caption). The solid and semi-transparent, black dots mark and respectively. Plugging γ1 and γ2 into Eq 6.1 yields two lower bounds for , marked by the slopes of the solid and semi-transparent, black lines respectively (see Figure 4–C caption). We see that (1) , that (2) , and that (3) the left-hand side of Eq 6.1 is decreasing in γ when . These three conclusions are marked by arrows in the above figure, with numbering matching the enumerated list in Lemma 10.

Next we present simple conditions under which the binary search for values of γ can be restricted to the nonnegative real line. This result substantially extends the computational tractability of our approach, as minimizing for γ ≥ 0 is equivalent to minimizing a reweighted empirical loss over an expanded sample of size n2:

| (6.2) |

where .

Proposition 11 (Nonnegative weights for lower bound binary search) Assume that L and satisfy the following conditions.

(Predictions are sufficient for computing the loss) The loss L{f, (Y, X1, X2)} depends on the covariates (X1, X2) only via the prediction function f, that is, whenever .

- (Irrelevant information does not improve predictions) For any distribution D satisfying X1 ⊥D (X2, Y), there exists a function fD satisfying

and(6.3)

Let γ = 0. Under the above assumptions, it follows that either (i) there exists a function minimizing that does not. satisfy Condition 8, or (ii) for any function minimizing .

The implication of Proposition 11 is that, when the conditions of Proposition 11 are met, the search region for γ can be limited to the nonnegative real line, and minimizing will be no harder than minimizing a reweighted empirical loss over an expanded sample (Eq 6.2). To see this, recall that for a fixed value of ϵabs we can tighten the boundary in Lemma 9 by conducting a binary search for the smallest, value of γ that satisfies Condition 8. If setting γ equal to 0 does not satisfy Condition 8, and the search for γ can be restricted to the nonnegative real line, where minimizing is more tractable (see Eq 6.2). Alternatively, if , then we have identified a well-performing model g−,0 with empirical MR no greater than 1. For ϵabs = êorig(fref) + ϵ, this implies that , which is a sufficiently precise conclusion for most, interpretational purposes (see Appendix A.2).

Because of the fixed pairing structure used in êdivide, Proposition 11 will not necessarily hold if we replace êswitch with êdivide throughout (see Appendix C.3). However, since êdivide approximates êswitch, we can expect Proposition 11 to hold approximately. The bound from Eq 6.1 still remains valid if we replace êswitch with êdivide and limit γ to the nonnegative reals, although in some cases it may not be as tight.

6.2. Binary Search for Empirical MR Upper Bound

We now briefly present a binary search procedure to upper bound , which mirrors the procedure from Section 6.1. Given a constant and prediction model , we define the linear combination , and its minimizers (for example, ), as

As in Section 6.1, need not be uniquely minimized, and we generally abbreviate as when is clear from context.

Given an observed sample, we define the following condition for a pair of values , and argmin function :

Condition 12 (Criteria to continue search for upper bound) and .

We can now develop a procedure to upper bound , as shown in the next lemma.

Lemma 13 (Upper bound for ) If satisfies γ ≤ 0 and , then

| (6.4) |

for all satisfying êorig(f) ≤ ϵabs. It also follows that

| (6.5) |

Additionally, if and at least one of the inequalities in Condition 12 holds with equality, then Eq 6.4 holds with equality.

As in Section 6.1, it remains to determine the value of γ to use in Lemma 13, given a value of interest for . The next lemma tells us that the boundary from Lemma 13 is tightest when γ is as low as possible while still satisfying Condition 12.

Lemma 14 (Monotonicity for upper bound binary search) The following monotonicity results hold:

is monotonically increasing in γ.

is monotonically decreasing in γ for γ ≤ 0, and Condition 12 holds for γ = 0 and .

Given ϵabs, the upper boundary is monotonically increasing in γ in the range where and γ < 0, and decreasing in the range where and γ < 0.

Together, the results from Lemma 14 imply that we can use a binary search across to tighten the boundary on from Lemma 13.

6.3. Convex Models

In this section we show that empirical MCR can be conservatively computed when the loss function is convex in the model parameters – that is, when the models are indexed by a d-dimensional parameter , and when the loss function L(fθ, (y, x1, x2)) is convex in θ for all .

Fortunately, neither Lemma 9 nor Lemma 13 require an exact minimum for or . For Lemma 9, any lower bound on is sufficient to determine a lower bound on MR(f). Likewise, for Lemma 13, any lower bound on is sufficient to determine an upper bound on MR(f).

To find these lower bounds, we note that for “convex” model classes (defined above) the optimization problems in Sections 6.1 & 6.2 can be written either as convex optimization problems, or as difference convex function (DC) programs. A DC program is one that can be written as

where cDC is a constraint function, , and gDC, hDC, and cDC are convex. Although precise solutions to DC problems are not always tractable, lower bounds can be attained by branch-and-bound (B&B) methods (Horst and Thoai, 1999). A simple B&B approach is to partition Θ into a set of simplexes. Within the jth simplex, a lower bound on gDC(θ)−hDC(θ) can be determined by replacing hDC with the hyperplane function hj satisfying hj (υ) = hDC(υ) at each vertex υ of the jth simplex. Within this partition, gDC(θ) − hDC(θ) is lower bounded by lj := minθ gDC(θ) − hj(θ), which can be computed as the solution to a convex optimization problem. Any partition for which lj is found to be too high is disregarded. Once a bound lj is computed for each partition, the partition with the lowest value lj is selected to be subdivided further, and additional lower bounds are recomputed for each new, resulting partition. This procedure continues until a sufficiently tight lower bound is attained (for more detailed procedures, see Horst and Thoai, 1999).

This approach allows us to conservatively approximate bounds on in the form of Eq 6.1 & 6.4 by replacing and with lower bounds from the B&B procedure. Although it will always yield valid bounds, the procedure may converge slowly when the dimension of Θ is large, giving highly conservative results. For some special cases of model classes however, even high dimensional DC problems simplify greatly. We discuss these cases in the next section.

7. MR & MCR for Linear Models, Additive Models, and Regression Models in a Reproducing Kernel Hilbert Space

For linear or additive models, many simplifications can be made to our approaches for MR and MCR. To simplify the interpretation of MR, we show below that population-level MR for a linear model can be expressed in terms of the model’s coefficients (Section 7.1). To simplify computation, we show that the cost of computing empirical MR for a linear model grows only linearly in n (Section 7.1), even though the number of terms in the definition of empirical MR grows quadratically (see Eqs 3.3 & 3.6).

Moving on from MR, we show how empirical MCR can be computed for the class of linear models (Section 7.2), for regularized linear models (Section 7.3), and for regression models in a reproducing kernel Hilbert space (RKHS, Section 7.4). To do this, we build on the approach in Section 6 by giving approaches for minimizing arbitrary combinations of êswitch(f) and êorig(f) across . Even when the associated objective functions are non-convex, we can tractably obtain global minima for these model classes. We also discuss procedures to determine an upper bound Bind on the loss for any observation when using these model classes (see Assumption 1).

Throughout this section, we assume that for , that , and that L is the squared error loss function L(f, (y, x1, x2) = (y − f(x1, x2))2. As in Section 6, we also assume that , to ensure that empirical MR is finite.

7.1. Interpreting and Computing MR for Linear or Additive Models

We begin by considering MR for linear models evaluated with the squared error loss. For this setting, we can show both an interpretable definition of MR, as well as a computationally efficient formula for êswitch(f).

Proposition 15 (Interpreting MR, and computing empirical MR for linear models) For any prediction model f, let eorig(f), eswitch(f), êorig(f), and êswith(f) be defined based on the squared error loss L(f, (y, x1, x2)) := (y − f (x1, x2))2 for , , and , where p1 and p2 are positive integers. Let β = (β1, β2) and fβ satisfy , , and . Then

| (7.1) |

and, for finite samples,

| (7.2) |

where , 1n is the n-length vector of ones, and In is the n × n identity matrix.

Eq 7.1 shows that model reliance for linear models can be interpreted in terms of the population covariances, the model coefficients, and the model’s accuracy. Gregorutti et al. (2017) show an equivalent formulation of Eq 7.1 under the stronger assumptions that fβ is equal to the conditional expectation function of Y (that is, ), and the covariates X1 and X2 are centered.

Eq 7.2 shows that, although the number of terms in the definition of êswitch grows quadratically in n (see Eq 3.3), the computational complexity of êswitch(fβ) for a linear model fβ grows only linearly in n. Specifically, the terms and in Eq 7.2 can be computed as and respectively, where the computational complexity of each term in parentheses grows linearly in n.

As in Gregorutti et al. (2017), both results in Proposition 15 readily generalize to additive models of the form fg1,g2 (X1,X2) := g1 (X1) + g2(X2), since permuting X1 is equivalent to permuting g1(X1).

7.2. Computing Empirical MCR for Linear Models

Building on the computational result from the previous section, we now consider empirical MCR computation for linear model classes of the form

In order to implement the computational procedure from Sections 6.1 and 6.2, we must be able to minimize arbitrary linear combinations of êorig(fβ) and êswitch(fβ). Fortunately, for linear models, this minimization reduces to a quadratic program, as we show in the next remark.

Remark 16 (Tractability of empirical MCR for linear model classes) For any and any fixed coefficients , the linear combination

| (7.3) |

is proportional in β to the quadratic function − 2q′β + β′Qβ, where

and . Thus, minimizing ξorigêorig(fβ) + ξswitchêswitch(fβ) is equivalent to an unconstrained (possibly non-convex) quadratic program.

Because our empirical MCR computation procedure from Sections 6.1 and 6.2 consists of minimizing a sequence of objective functions in the form of Eq 7.3, Remark 16 shows us that this procedure is tractable for the class of unconstrained linear models.

7.3. Regularized Linear Models

Next, we continue to build on the results from Section 7.2 to calculate boundaries on for regularized linear models. We consider model classes formed by quadratically constrained subsets of , defined as

| (7.4) |

where Mlm and rlm are pre-specified. Again, this class describes linear models with a quadratic constraint on the coefficient vector.

7.3.1. Calculating MCR

As in Section 7.2, calculating bounds on via Lemmas 9 & 13 requires that are able to minimizing linear combinations ξorigêorig(fβ) + ξswitchêswitch (fβ) across for arbitrary . Applying Remark 16, we can again equivalently minimize −2q′β + β′Qβ subject to the constraint in Eq 7.4:

| (7.5) |

The resulting optimization problem is a (possibly non-convex) quadratic program with one quadratic constraint (QP1QC). This problem is well-studied, and is related to the trust region problem (Boyd and Vandenberghe, 2004; Pólik and Terlaky, 2007; Park and Boyd, 2017). Thus, the bounds on MCR presented in Sections 6.1 and 6.2 again become computationally tractable for the class of quadratically constrained linear models.

7.3.2. Upper Bounding the Loss

One benefit of constraining the coefficient vector (β′Mlmβ ≤ rlm) is that it facilitates determining an upper bound Bind on the loss function L(fβ, (y, x)) = (y − x′β)2, which automatically satisfies Assumption 1 for all . The following lemma gives sufficient conditions to determine Bind.

Lemma 17 (Loss upper bound for linear models) If Mlm is positive definite, Y is bounded within a known range, and there exists a known constant such that for all , then Assumption 1 holds for the model class , the squared error loss function, and the constant

In practice, the constant can be approximated by the empirical distribution of X and Y. The motivation behind the restriction in Lemma 17 is to create complementary constraints on X and β. For example, if Mlm is diagonal, then the smallest elements of Mlm correspond to directions along which β is least restricted by β′Mlmβ ≤ rlm (Eq 7.5), as well as the directions along which x is most restricted by (Lemma 17).

7.4. Regression Models in a Reproducing Kernel Hilbert Space (RKHS)

We now expand our scope of model classes by considering regression models in a reproducing kernel Hilbert space (RKHS), which allow for nonlinear and nonadditive features of the covariates. We show that, as in Section 7.3, minimizing a linear combination of êorig(f) and êswitch(f) across models f in this class can be expressed as a QP1QC, which allows us to implement the binary search procedure of Sections 6.1 & 6.2.

First we introduce notation required to describe regression in a RKHS. Let D be a (R×p) matrix representing a pre-specified dictionary of R reference points, such that each row of D is contained in . Let k be a pre-specified positive definite kernel function, and let μ be a prespecified estimate of . Let KD be the R × R matrix with KD[i,j] = k(D[i,·], D[j,·]). We consider prediction models of the following form, where the distance to each reference point is used as a regression feature:

| (7.6) |

Above, the norm ‖fα‖k is defined as

| (7.7) |

In the next two sections, we show that bounds on empirical MCR can again be tractably computed for this class, and that the loss for models in this class can be feasibly upper bounded.

7.4.1. Calculating MCR

Again, calculating bounds on from Lemmas 9 & 13 requires us to be able to minimize arbitrary linear combinations of êorig(fα) and êswitch(fα).

Given a size-n sample of test observations Z = [ y X ], let Korig be the n × R matrix with elements Korig[i,j] = k (X[i,·], D[j,·]). Let Zswitch = [ yswitch Xswitch ] be the (n(n − 1)) × (1 + p) matrix with rows that contain the set {(y[i], X1[j,·], X2[i,·]) : i, j ∈ {1, … , n} and i ≠ j}. Finally, let Kswitch be the n(n − 1) × R matrix with Kswitch[i,j] = k (Xswitch[i,·], D[j,·]).

For any two constants , we can show that minimizing the linear combination over is equivalent to the minimization problem

| (7.8) |

| (7.9) |

Like Problem 7.5, Problem 7.8-7.9 is a QP1QC. To show Eqs 7.8–7.9, we first write êorig(fα) as

| (7.10) |

| (7.11) |

Following similar steps, we can obtain

Thus, for any two constants , we can see that ξorigêorig(fα)+ξswitchêswitch(fα) is quadratic in α. This means that we can tractably compute bounds on empirical MCR for this class as well.

7.4.2. Upper Bounding the Loss

Using similar steps as in Section 7.3.2, the following lemma gives sufficient conditions to determine Bind for the case of regression in a RKHS.

Lemma 18 (Loss upper bound for regression in a RKHS) Assume that Y is bounded within a known range, and there exists a known constant rD such that for all , where is the function satisfying υ(x)[i] = k(x, D[i,·]). Under these conditions, Assumption 1 holds for the model class , the squared error loss function, and the constant

Thus, for regression models in a RKHS, we can satisfy Assumption 1 for all models in the class.

8. Connections Between MR and Causality

Our MR approach can be fundamentally described as studying how a model’s behavior changes under an intervention on the underlying data. We aim to study the causal effect of this intervention on the model’s performance. This goal mirror’s the conventional causal inference goal of studying how an intervention on variables will change outcomes generated by a process in nature.

This section explores this connection to causal inference further. Section 8.1 shows that when the prediction model in question is the conditional expectation function from nature itself, MR reduces to commonly studied quantities in the causal literature. Section 8.2 proposes an alternative to MR that focuses on interventions, or data perturbations, that are likely to occur in the underlying data generating process.

8.1. Model Reliance and Causal Effects

In this section, we show a connection between population-level model reliance and the conditional average causal effect. For consistency with the causal inference literature, we temporarily rename the random variables (Y, X1, X2) as (Y, T, C), with realizations (y, t, c). Here, T := X1 represents a binary treatment indicator, C := X2 represents a set of baseline covariates (“C” is for “covariates”), and Y represents an outcome of interest. Under this notation, represents the expected loss of a prediction function f, and denotes the expected loss in a pair of observations in which the treatment has been switched. Let be the (unknown) conditional expectation function for Y, where we place no restrictions on the functional form of f0.

Let Y1 and Y0 be potential outcomes under treatment and control respectively, such that . The treatment effect for an individual is defined as Y1 − Y0, and the average treatment effect is defined as . Let be the (unknown) conditional average treatment effect of T for all patients with C = c. Causal inference methods typically assume (conditional ignorability), and for all values of c (positivity), in order for f0 and CATE to be well defined and identifiable.

The next proposition quantifies the relation between the conditional average treatment effect function (CATE) and the model reliance of f0 on X1.

Proposition 19 (Causal interpretations of MR) For any prediction model f, let eorig(f) and eswitch(f) be defined based on the squared error loss .

If (conditional ignorability) and for all values of c (positivity), then MR(f0) is equal to

| (8.1) |

where Var(T) is the marginal variance of the treatment assignment.