Abstract

Deep learning has produced state-of-the-art results for a variety of tasks. While such approaches for supervised learning have performed well, they assume that training and testing data are drawn from the same distribution, which may not always be the case. As a complement to this challenge, single-source unsupervised domain adaptation can handle situations where a network is trained on labeled data from a source domain and unlabeled data from a related but different target domain with the goal of performing well at test-time on the target domain. Many single-source and typically homogeneous unsupervised deep domain adaptation approaches have thus been developed, combining the powerful, hierarchical representations from deep learning with domain adaptation to reduce reliance on potentially-costly target data labels. This survey will compare these approaches by examining alternative methods, the unique and common elements, results, and theoretical insights. We follow this with a look at application areas and open research directions.

1. INTRODUCTION

Supervised learning is arguably the most prevalent type of machine learning and has enjoyed much success across diverse application areas. However, many supervised learning methods make a common assumption: the training and testing data are drawn from the same distribution. When this constraint is violated, a classifier trained on the source domain will likely experience a drop in performance when tested on the target domain due to the differences between domains [182]. Single-source domain adaptation refers to the goal of learning a concept from labeled data in a source domain that performs well on a different but related target domain [73, 80, 180]. Unsupervised domain adaptation specifically addresses the situation where there are labeled source data and only unlabeled target data available for use during training [73, 147].

Because of its ability to adapt labeled data for use in a new application, domain adaptation can reduce the need for costly labeled data in the target domain. As an example, consider the problem of semantically segmenting images. Each real image in the Cityscapes dataset required approximately 1.5 hours to annotate for semantic segmentation [46]. In this case, human annotation time could be spared by training an image semantic segmentation model on synthetic street view images (the source domain) since these can be cheaply generated, then adapting and testing for real street view images (the target domain, here the Cityscapes dataset).

An undeniable trend in machine learning is the increased usage of deep neural networks. Deep networks have produced many state-of-the-art results for a variety of machine learning tasks [73, 80] such as image classification, speech recognition, machine translation, and image generation [79, 80]. When trained on large amounts of data, these many-layer neural networks can learn powerful, hierarchical representations [80, 147, 182, 226] and can be highly scalable [76]. At the same time, these networks can also experience performance drops due to domain shifts [72, 226]. Thus, much research has gone into adapting such networks from large labeled datasets to domains where little (or possibly no) labeled training data are available (for a list, see [257]). These single-source and typically homogeneous unsupervised deep domain adaptation approaches, which combine the benefit of deep learning with the very practical use of domain adaptation to remove the reliance on potentially costly target data labels, will be the focus of this survey.

A number of surveys have been created on the topic of domain adaptation [12, 24, 42, 48, 49, 121, 122, 162, 182, 227, 246, 285] and more generally transfer learning [45, 128, 152, 180, 216, 232, 235, 252, 273], of which domain adaptation can be viewed as a special case [182]. Previous domain adaptation surveys lack depth of coverage and comparison of unsupervised deep domain adaptation approaches. In some cases, prior surveys do not discuss domain mapping [48, 49, 121], normalization statistic-based [48, 49, 121, 285], or ensemble-based [48, 49, 121, 246, 285] methods. In other cases, they do not survey deep learning approaches [12, 122, 162, 182]. Still others are application-centric, focusing on a single use case such as machine translation [24, 42]. One earlier survey focuses on the multi-source scenario [227], while we focus on the more prevalent single-source scenario. Transfer learning is a broader topic to cover, thus surveys provide minimal coverage and comparison of the deep learning methods that have been designed for unsupervised domain adaptation [152, 180, 216, 232, 252, 273], or they focus on tasks such as activity recognition [45] or reinforcement learning [128, 235]. The goal of this survey is to discuss, highlight unique components, and compare approaches to single-source homogeneous unsupervised deep domain adaptation.

We first provide background on where domain adaptation fits into the more general problem of transfer learning. We follow this with an overview of generative adversarial networks (GANs) to provide background for the increasingly widespread use of adversarial techniques in domain adaptation. Next, we investigate the various domain adaptation methods, the components of those methods, and the results. Then, we overview domain adaptation theory and discuss what we can learn from the theoretical results. Finally, we look at application areas and identify future research directions for domain adaptation.

2. BACKGROUND

2.1. Transfer Learning

The focus of this survey is domain adaptation. Because domain adaptation can be viewed as a special case of transfer learning [182], we first review transfer learning to highlight the role of domain adaptation within this topic. Transfer learning is defined as the learning scenario where a model is trained on a source domain or task and evaluated on a different but related target domain or task, where either the tasks or domains (or both) differ [61, 80, 180, 252]. For instance, we may wish to learn a model on a handwritten digit dataset (e.g., MNIST [130]) with the goal of using it to recognize house numbers (e.g., SVHN [175]). Or, we may wish to learn a model on a synthetic, cheap-to-generate traffic sign dataset [168] with the goal of using it to classify real traffic signs (e.g., GTSRB [224]). In these examples, the source dataset used to train the model is related but different from the target dataset used to test the model – both are digits and signs respectively, but each dataset looks significantly different. When the source and target differ but are related, then transfer learning can be applied to obtain higher accuracy on the target data.

2.1.1. Categorizing Methods.

In a transfer learning survey paper, Pan et al. [180] defined two terms to help classify various transfer learning techniques: “domain” and “task.” A domain consists of a feature space and a marginal probability distribution (i.e., the features of the data and the distribution of those features in the dataset). A task consists of a label space and an objective predictive function (i.e., the set of labels and a predictive function that is learned from the training data). Thus, a transfer learning problem might be either transferring knowledge from a source domain to a different target domain or transferring knowledge from a source task to a different target task (or a combination of the two) [61, 180, 252].

By this definition, a change in domain may result from either a change in feature space or a change in the marginal probability distribution. When classifying documents using text mining, a change in the feature space may result from a change in language (e.g., English to Spanish), whereas a change in the marginal probability distribution may result from a change in document topics (e.g., computer science to English literature) [180]. Similarly, a change in task may result from either a change in the label space or a change in the objective predictive function. In the case of document classification, a change in the label space may result from a change in the number of classes (e.g., from a set of 10 topic labels to a set of 100 topic labels). Similarly, a change in the objective predictive function may result from a substantial change in the distribution of the labels (e.g., the source domain has 100 instances of class A and 10,000 of class B, whereas the target has 10,000 instances of A and 100 of B) [180].

To classify transfer learning algorithms based on whether the task or domain differs between source and target, Pan et al. [180] introduced three terms: “inductive”, “transductive”, and “unsupervised” transfer learning. In inductive transfer learning, the target and source tasks are different, the domains may or may not differ, and some labeled target data are required. In transductive transfer learning, the tasks remain the same while the domains are different, and both labeled source data and unlabeled target data are required. Finally, in unsupervised transfer learning, the tasks differ as in the inductive case, but there is no requirement of labeled data in either the source domain or the target domain.

2.1.2. Domain Adaptation.

One popular type of transfer learning is domain adaptation, which will be the focus of our survey. Domain adaptation is a type of transductive transfer learning. Here, the target task remains the same as the source, but the domain differs [55, 180, 182]. Homogeneous domain adaptation is the case where the domain feature space also remains the same, and heterogeneous domain adaptation is the case where the feature spaces differ [182].

In addition to the previous terminology, machine learning techniques are often categorized based on whether or not labeled training data are available. Supervised learning assumes labeled data are available, semi-supervised learning uses both labeled data and unlabeled data, and unsupervised learning uses only unlabeled data. However, domain adaptation assumes data comes from both a source domain and a target domain. Thus, prepending one of these three terms to “domain adaptation” is ambiguous since it may refer to labeled data being available in the source or target domains.

Authors apply these terms in various ways to domain adaptation [54, 111, 180, 204, 252]. In this paper, we will refer to “unsupervised” domain adaptation as the case in which both labeled source data and unlabeled target data are available, “semi-supervised” domain adaptation as the case in which labeled source data in addition to some labeled target data are available, and “supervised” domain adaptation as the case in which both labeled source and target data are available [12]. The distinction between these categories describes the target domain, but only describe situations in which labeled data are available for the source domain. These definitions are commonly used in the methods surveyed in this paper as well as others [27, 73, 76, 147, 204, 226].

2.1.3. Related Problems.

Multi-domain learning [61, 113] and multi-task learning [29] are related to transfer learning and domain adaptation. In contrast to transfer learning, the goal of these learning approaches is obtaining high performance on all specified domains (or tasks) rather than just on a single target domain (or task) [180, 261]. For example, often it is assumed that the training data are drawn in an independent and identically distributed (i.i.d.) fashion, which may not be the case [113]. One such example is the task of developing a spam filter for users who disagree on what is considered spam. If all the users’ data are combined, the training data will be drawn from multiple domains. While each individual domain may be i.i.d., the aggregated dataset may not be. If the data are split by user, then there may be too little data to learn a model for each user. Multi-domain learning can take advantage of the entire dataset to learn individual user preferences [61, 113]. Some researchers have developed adversarial strategies to tackle this multi-domain learning challenge [89, 213].

When working with multiple tasks, instead of training models separately for different tasks (e.g., one model for detecting shapes in an image and one model for detecting text in an image), multi-task learning will learn these separate but related tasks simultaneously so that they can mutually benefit from the training data of other tasks through a (partially) shared representation [29]. If there are both multiple tasks and domains, then these approaches can be combined into multi-domain multi-task learning, as is described by Yang et al. [261].

Another related problem is domain generalization, in which a model is trained on multiple source domains with labeled data and then tested on a separate target domain that was not seen during training [173]. This contrasts with domain adaptation where target examples (possibly unlabeled) are available during training. Some approaches related to those surveyed in this paper have been designed to address this situation. Examples include an adversarial method introduced by Zhao et al. [284] and an autoencoder approach by Ghifary et al. [75] discussed in Section 7.4.

2.2. Generative Adversarial Networks

Many deep domain adaptation methods that we will discuss in the next section incorporate adversarial training. We use the term adversarial training broadly to refer to any method that utilizes an adversary or an adversarial process during training. Before other adversarial methods were developed, the term was narrowly applied to training designed to improve the robustness of a model by utilizing adversarial examples, e.g. image inputs with small worst-case perturbations that lead to misclassification [82, 230]. Subsequently, other techniques have arisen that also utilize an adversary during training, including generative-adversarial training of generative adversarial networks (GANs) [81] and domain-adversarial training of domain adversarial neural networks (DANN) [73], both of which have been used for domain adaptation. To provide background for the domain adaptation methods utilizing these techniques, we will first discuss GANs and later when discussing DANN note the differences.

In recent years there has been a large and growing interest in GANs. Pitting two well-matched neural networks against each other (hence “adversarial”), playing the roles of a data discriminator and a data generator, the pair is able to refine each player’s abilities in order to perform functions such as synthetic data generation. Goodfellow et al. [81] proposed this technique in 2014. Since that time, hundreds of papers have been published on the topic [91, 271]. GANs have traditionally been applied to synthetic image generation, but recently researchers have been exploring other novel use cases such as domain adaptation.

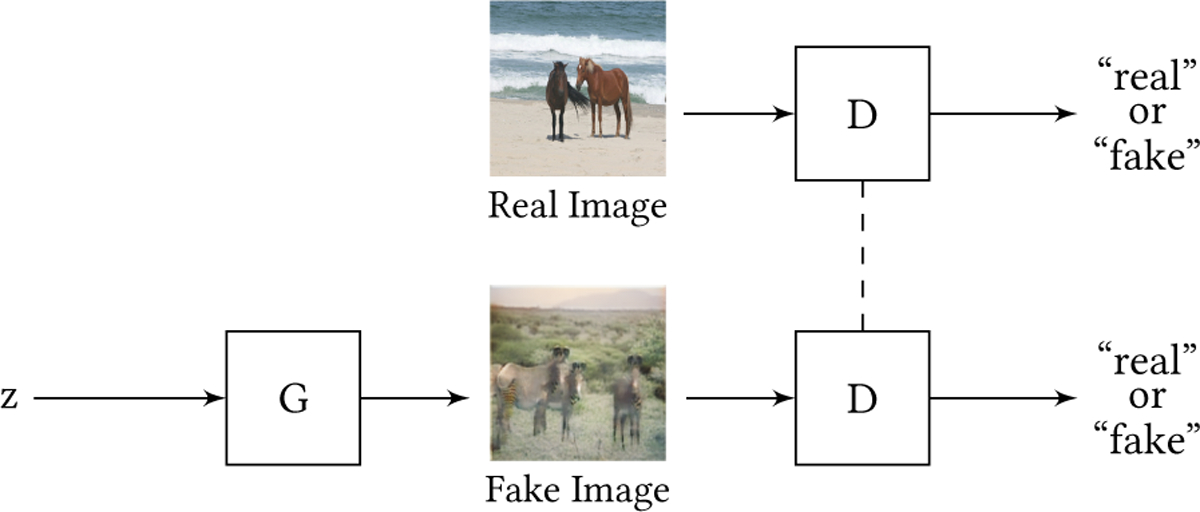

GANs are a type of deep generative model [81]. For synthetic image generation, a training dataset of images must be available. Popular datasets include human faces (CelebA [146]), handwritten digits (MNIST [130]), bedrooms (LSUN [268]), and sets of other objects (CIFAR-10 [123] and ImageNet [56, 200]). After training, the generative model will be able to generate synthetic images that resemble those in the training data. For example, a generator trained with CelebA will generate images of human faces that look realistic but are not images of real people, as shown in Figure 1. To learn to do this, GANs utilize two neural networks competing against each other [81]. One network represents a generator. The generator accepts a noise vector as input, which contains random values drawn from some distribution such as normal or uniform. The goal of the generator network is to output a vector that is indistinguishable from the real training data. The other network represents a discriminator, which accepts as input either a real sample from the training data or a fake sample from the generator. The goal of the discriminator is to determine the probability that the input sample is real. During training, these two networks play a minimax game, where the generator tries to fool the discriminator and the discriminator attempts to not be fooled.

Fig. 1.

Realistic but entirely synthetic images of human faces generated by a GAN trained on the CelebA-HQ dataset [116].

Using the notation from Goodfellow et al. [81], we define a value function V (G, D) employed by the minimax game between the two networks:

| (1) |

Here, x ~ pdata(x) draws a sample from the real data distribution, z ~ pz (z) draws a sample from the input noise, D(x; θd) is the discriminator, and G(z; θg) is the generator. As shown in the equation, the goal is to find the parameters θd that maximize the log probability of correctly discriminating between real (x) and fake (G(z)) samples while at the same time finding the parameters θg that minimize the log probability of 1 − D(G(z)). The term D(G(z)) represents the probability that generated data G(z) is real. If the discriminator correctly classifies a fake input then D(G(z)) = 0. Equation 1 minimizes the quantity 1 − D(G(z)). This occurs when D(G(z)) = 1, or when the discriminator misclassifies the generator’s output as a real sample. Thus the discriminator’s mission is to learn to correctly classify the input as real or fake while the generator tries to fool the discriminator into thinking that its generated output is real. This process is illustrated in Figure 2.

Fig. 2.

Illustration of the GAN generator G and discriminator D networks. The dashed line between the D networks indicates that they share weights (or are the same network). In the top row, a real image from the training data (horses ↔ zebras dataset by Zhu et al. [290]) is fed to the discriminator, and the goal of D is to make D(x) = 1 (correctly classify as real). In the bottom row, a fake image from the generator is fed to the discriminator, and the goal of D is to make D(G(z)) = 0 (correctly classify as fake), which competes with the goal of G to make D(G(z)) = 1 (misclassify as real).

2.2.1. Training.

In recent years there have been impressive results from GANs. At the same time, this research faces some challenges since training a GAN can encounter problems such as difficulty converging [6, 79], mode collapse where the generator only learns to generate realistic samples for a few specialized modes of the data distribution [79], and vanishing gradients [81]. Many methods have been proposed to resolve these training challenges using a variety of tricks [81, 90, 179, 207, 219, 229], network architecture choices [116, 190, 207], objective modifications [5, 15, 66, 87, 112, 120, 161, 163, 165, 176–178, 283], mixtures or ensembles [6, 63, 78, 93, 117, 170, 181, 237, 270], maximum mean discrepancy (MMD) [16, 64, 135, 139, 228], making a connection to reinforcement learning [67, 186], or a combination of these modifications [90, 166, 269]. For an in-depth discussion of these techniques, there are a number of survey papers directed at GAN variants that include a discussion of training challenges and work [92, 99, 158]. These techniques can be employed in the domain adaptation methods that utilize GANs [20, 21, 41, 96, 143, 160, 208, 219, 245, 250]. While these training stability methods could similarly be applied to other adversarial domain adaptation approaches, they are not typically needed for the non-GAN methods surveyed here.

2.2.2. Evaluation.

Once successfully trained, a GAN model can be difficult to evaluate and compare with other models. Multiple approaches and measures have been introduced to evaluate GAN performance. Often researchers have evaluated their models through visual inspection [210] such as performing user studies where participants mark which images they think look more realistic [207]. However, ideally a more automated metric could be found. Past generative models were evaluated by computing log-likelihood [236], but this is not necessarily tractable in GANs [79]. A proxy for log-likelihood is a Parzen window estimate, which was used for early GAN evaluation [81, 156, 177, 236], but in high dimensions (such as images), this could be far from the actual log-likelihood and not even rank models correctly [85, 236]. Thus, there has been much work proposing various evaluation methods for GANs: methods for detecting memorization [15, 60, 81, 156, 190, 236], determining diversity [7, 90, 179, 210], measuring realism [16, 90, 145, 207], and approximating log-likelihood [253]. Xu et al. [258] and Borji [19] survey and compare many of these GAN evaluation methods.

These techniques can be used for evaluating domain adaptation methods used for image translation (a form of image generation but conditioned on an input image) from one domain to another [14, 41, 197, 264, 265, 290]. However, many domain adaptation methods (even those that are adversarial such as those using GANs) are not used for generation but rather for tasks with more easily-defined loss functions, making these techniques largely not needed for adversarial domain adaptation methods. For example, accuracy [14, 21, 22, 41, 69, 73, 96, 143, 241] or AUC scores [189] can be used to evaluate classification, intersection over union or pixel accuracy can be used to evaluate image segmentation [14, 69, 96, 138, 185], and absolute difference can be used to evaluate regression [219].

3. METHODS

In recent years, numerous new unsupervised domain adaptation methods have been proposed, with a growing emphasis on neural network-based approaches. Distinct lines of research have emerged. These include aligning the source domain and target domain distributions, mapping between domains, separating normalization statistics, designing ensemble-based methods, or focusing on making the model target discriminative by moving the decision boundary into regions of lower data density. In addition, others have explored combinations of these approaches. We will describe each of these categories together with recent methods that fall into these categories.

In this survey, we will focus on homogeneous domain adaptation consisting of one source and one target domain, as is most commonly studied. Another case is multi-source domain adaptation, where there are multiple source domains but still only one target domain. Sun et al. [227] survey multi-source domain adaptation, and since then a number of other methods [28, 88, 95, 157, 184, 192, 256, 259, 281] have been developed for this case. It is also possible to perform multi-target domain adaptation [77], though this case is even more rarely studied. Similarly, we focus on homogeneous domain adaptation due to its prevalence, though some heterogeneous methods have been developed [62, 105, 137, 244, 263, 289].

3.1. Domain-Invariant Feature Learning

Most recent domain adaptation methods align source and target domains by creating a domain-invariant feature representation, typically in the form of a feature extractor neural network. A feature representation is domain-invariant if the features follow the same distribution regardless of whether the input data are from the source or target domain [280]. If a classifier can be trained to perform well on the source data using domain-invariant features, then the classifier may generalize well to the target domain since the features of the target data match those on which the classifier was trained. However, these methods assume that such a feature representation exists and the marginal label distributions do not differ significantly (Section 6).

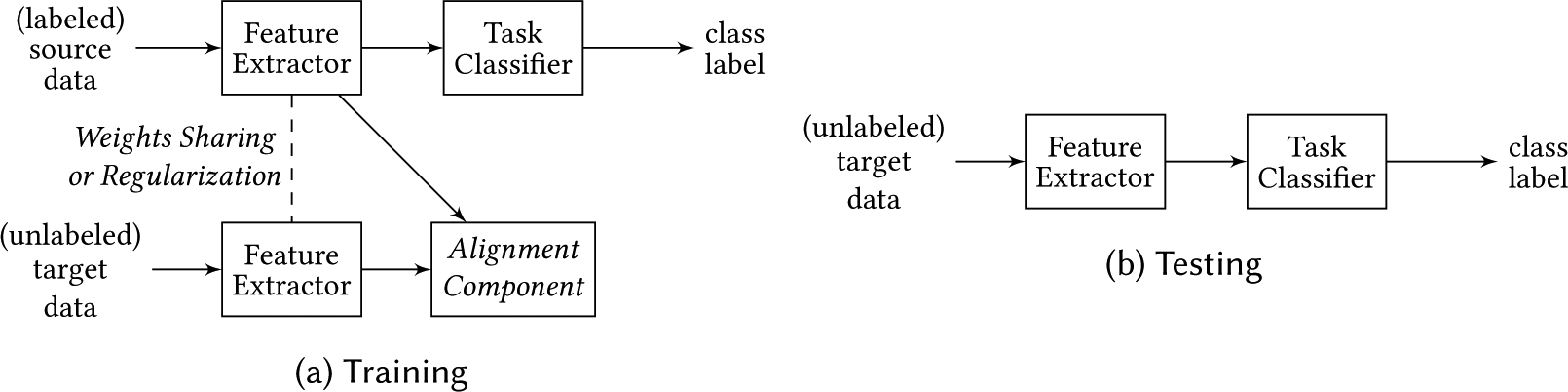

The general training and testing setup of these methods is illustrated in Figure 3. Methods differ in how they align the domains (the Alignment Component in the figure). Some minimize divergence, some perform reconstruction, and some employ adversarial training. In addition, they differ in weight sharing choices, which will be discussed in Section 4.3. We discuss the various alignment methods below.

Fig. 3.

General network setup for domain adaptation methods learning domain-invariant features. (a) Methods differ in regard to how the domains are aligned during training (the Alignment Component) and whether the feature extractors used on each domain share none, some, or all of the weights between domains. (b) The target data are fed to the domain-invariant feature extractor and then to the task classifier.

3.1.1. Divergence.

One method of aligning distributions is through minimizing a divergence that measures the distance between the distributions. Choices for the divergence measure include maximum mean discrepancy, correlation alignment, contrastive domain discrepancy, the Wasserstein metric, and a graph matching loss.

Maximum mean discrepancy (MMD) [83, 84] is a two-sample statistical test of the hypothesis that two distributions are equal based on observed samples from the two distributions. The test is computed from the difference between the mean values of a smooth function on the two domains’ samples. If the means are different, then the samples are likely not from the same distribution. The smooth functions chosen for MMD are unit balls in characteristic reproducing kernel Hilbert spaces (RKHS) since it can be proven that the population MMD is zero if and only if the two distributions are equal [84].

To use MMD for domain adaptation, the alignment component can be another classifier similar to the task classifier. MMD can then be computed and minimized between the outputs of these classifiers’ corresponding layers (a slightly different setup than that in Figure 3). Rozantsev et al. [199] employ MMD, Long et al. [147] investigate a multiple kernel variant of MMD (MK-MMD), and later Long et al. [147] develop a joint MMD (JMMD) method [151]. Bousmalis et al. [22] also tried MMD but found using an adversarial objective performed better in their experiments.

Correlation alignment (CORAL) [225] is similar to MMD with a polynomial kernel, computed from the distance between second-order statistics (covariances) of the source and target features. For domain adaptation, the alignment component consists of computing the CORAL loss between the two feature extractors’ outputs (in order to minimize the distance). A variety of distances have been used: Sun et al. [226] use a squared matrix Frobenius norm in Deep CORAL, Zhang et al. [278] use a Euclidean distance in mapped correlation alignment (MCA), others have used log-Euclidean distances in LogCORAL [249] and Log D-CORAL[172], and Morerio et al. [171] use geodesic distances. Zhang et al. [279] generalize correlation alignment to possibly infinite-dimensional covariance matrices in RKHS. Chen et al. [34] align statistics beyond the first and second orders.

Contrastive domain discrepancy (CCD) [114] is based on MMD but looks at the conditional distributions in order to incorporate label information (unlike CORAL or ordinary MMD). When minimizing CCD, intra-class discrepancy is minimized while inter-class margin is maximized. This has the problem of requiring target labels though, so Kang et al. [114] propose contrastive adaptation networks (CAN) that minimize cross-entropy loss on the labeled target data while alternating between estimating labels for target samples (via clustering) with adapting the feature extractor with the now-computable CCD (using the clusters). This approach outperforms the other methods on the Office dataset as shown in Table 3.

Table 3.

Classification accuracy (source → target, mean ± std %) of different neural network based domain adaptation methods on the Office computer vision dataset. Adversarial approaches denoted by *.

| Name | Office (Amazon, DSLR, Webcam) | |||||

|---|---|---|---|---|---|---|

| A→W | D→W | W→D | A→D | D→A | W→A | |

| CAN[114]a | 94.5 ± 0.3 | 99.1 ± 0.2 | 99.8 ± 0.2 | 95.0 ± 0.3 | 78.0 ± 0.3 | 77.0 ± 0.3 |

| Gen. to Adapt[208]a* | 89.5 ± 0.5 | 97.9 ± 0.3 | 99.8 ± 0.4 | 87.7 ± 0.5 | 72.8 ± 0.3 | 71.4 ± 0.4 |

| SimNet[187]a* | 88.6 ± 0.5 | 98.2 ± 0.2 | 99.7 ± 0.2 | 85.3 ± 0.3 | 73.4 ± 0.8 | 71.8 ± 0.6 |

| MADA[183]a* | 90.0 ± 0.1 | 97.4 ± 0.1 | 99.6 ± 0.1 | 87.8 ± 0.2 | 70.3 ± 0.3 | 66.4 ± 0.3 |

| AutoDIAL[27]bc | 84.2 | 97.9 | 99.9 | 82.3 | 64.6 | 64.2 |

| CCN++[101]d* | 78.2 | 97.4 | 98.6 | 73.5 | 62.8 | 60.6 |

| Rozantsev et al.[199] | 76.0 | 96.7 | 99.6 | |||

| AdaBN[145]b | 74.2 | 95.7 | 99.8 | 73.1 | 59.8 | 57.4 |

| JAN-A[151]a* | 86.0 ± 0.4 | 96.7 ± 0.3 | 99.7 ± 0.1 | 85.1 ± 0.4 | 69.2 ± 0.4 | 70.7 ± 0.5 |

| LogCORAL[249] | 70.2 ± 0.6 | 95.5 ± 0.1 | 99.5 ± 0.3 | 69.4 ± 0.5 | 51.2 ± 0.3 | 51.6 ± 0.5 |

| Log D-CORAL[172] | 68.5 | 95.3 | 98.7 | 62.0 | 40.6 | 40.6 |

| ADDA[241]a* | 75.1 | 97.0 | 99.6 | |||

| Sener et al.[214] | 81.1 | 96.4 | 99.2 | 84.1 | 58.3 | 63.8 |

| DRCN[76] | 68.7 ± 0.3 | 96.4 ± 0.3 | 99.0 ± 0.2 | 66.8 ± 0.5 | 56.0 ± 0.5 | 54.9 ± 0.5 |

| Deep CORAL[226] | 66.4 ± 0.4 | 95.7 ± 0.3 | 99.2 ± 0.1 | 66.8 ± 0.6 | 52.8 ± 0.2 | 51.5 ± 0.3 |

| 73.0 [199, 241] | 96.4 [199, 241] | 99.2 [199, 241] | ||||

| DAN[147] | ||||||

| 68.5 [241] | 96.0 [241] | 99.0 [241] | ||||

| Tzeng et al.[240]e* | 59.3 ± 0.6 | 90.0 ± 0.2 | 97.5 ± 0.1 | 68.0 ± 0.5 | 43.1 ± 0.2 | 40.5 ± 0.2 |

| Source only (i.e., no adaptation) | 62.6 [241]a | 96.1 [241]a | 98.6 [241]a | |||

with ResNet-50 network

with Inception-based network

hyperparameter tuned on one W labeled example per class on A →W task (see [150])

with ResNet-18 network

semi-supervised for some classes, but evaluated on 16 hold-out categories for which the labels were not seen during training

A problem known as “optimal transport” was originally proposed for studying resource allocation such as finding an optimal way to move material from mines to factories [169, 193], but it can also be used to measure the distances between distributions. If the cost of moving each point is a norm (e.g., Euclidean), then the solution to a discrete optimal transport problem can be viewed as a distance: the Wasserstein distance [50] (also known as the earth mover’s distance). To align feature and label distributions with this distance, Courty et al. [47] propose joint distribution optimal transport (JDOT). To incorporate this into a neural network, Damodaran et al. [50] propose DeepJDOT.

Another divergence measure arises from graph matching: the problem of finding an optimal correspondence between graphs [260]. A feature extractor’s output on a batch of samples can be viewed as an undirected graph (in the form of an adjacency matrix), where similar samples in the batch are connected. Given the graph from a batch of source data fed through the feature extractor and similarly a graph from a batch of target data, then the cost of aligning these graphs can be used as a divergence, as proposed by Das et al. [51–53].

3.1.2. Reconstruction.

Rather than minimizing a divergence, Ghifary et al. [76] and Bousmalis et al. [22] hypothesize that alignment can be accomplished by learning a representation that both classifies the labeled source domain data well and can be used to reconstruct either the target domain data (Ghifary et al.) or both the source and target domain data (Bousmalis et al.). The alignment component in these setups is a reconstruction network – the opposite of the feature extractor network – that takes the feature extractor output and recreates the feature extractor’s input (in this case, an image). Ghifary et al. [76] propose deep reconstruction-classification networks (DRCN), using a pair-wise squared reconstruction loss. Bousmalis et al. [22] propose domain separation networks (DSN), using a scale-invariant mean squared error reconstruction loss.

3.1.3. Adversarial.

Several varieties of feature-level adversarial domain adaptation methods have been introduced in the literature. In most the alignment component consists of a domain classifier. In one paper this component is instead represented by a network learning an approximate Wasserstein distance, and in another paper the component is a GAN.

A domain classifier is a classifier that outputs whether the feature representation was generated from source or target data. Recall that GANs include a discriminator that tries to accurately predict whether a sample is from the real data distribution or from the generator. In other words, the discriminator differentiates between two distributions, one real and one fake. A discriminator could similarly be designed to differentiate two distributions which instead represent a source distribution and a target distribution, as is done with a domain classifier. Note though that an adversarial domain classifier is used for adaptation, whereas a GAN is used for data generation. The domain classifier is trained to correctly classify the domain (source or target). In this scenario, the feature extractor is trained such that the domain classifier is unable to classify from which domain the feature representation originated. This is a type of zero-sum two-player game [280] as in a GAN (Section 2.2). Typically, these networks are adversarially trained by alternating between these two steps. The feature extractor can be trained to make the domain classifier perform poorly by negating the gradient from the domain classifier with a gradient reversal layer [72] when performing back propagation to update the feature extractor weights (e.g., in DANN [1, 72, 73] and VRADA [189]), maximally confusing the domain classifier (when it outputs a uniform distribution over binary labels [240]), or inverting the labels (in ADDA [241]). Because data distributions are often multi-modal, results may be improved by conditioning the domain classifier on a multilinear map of the feature representation and the task classifier predictions, which takes into account the multi-modal nature of the distributions [148].

Shen et al. [217] created WDGRL, a modification of DANN, by replacing the domain classifier with a network that learns an approximate Wasserstein distance. This distance is then minimized between source and target domains, which they found to yield an improvement. This method is similar to the divergence methods except here the divergence is learned with a network rather than computed based on statistics (e.g., using mean in MMD or covariance in CORAL). This method outperforms the other methods on the Amazon review dataset as shown in Table 4.

Table 4.

Classification accuracy comparison for domain adaptation methods for sentiment analysis (positive or negative review) on the Amazon review dataset [18]a with domains books (B), DVD (D), electronics (E), and kitchen (K). Adversarial approaches denoted by *.

| Source→Target | DANN[73]b* | DANN[73]c* | CORAL[225]d | ATT[204]c | WDGRL[217]ce* | No Adapt.[225]f |

|---|---|---|---|---|---|---|

| B→D | 82.9 | 78.4 | 80.7 | 83.1 | ||

| B→E | 80.4 | 73.3 | 76.3 | 79.8 | 83.3 | 74.7 |

| B→K | 84.3 | 77.9 | 82.5 | 85.5 | ||

| D→B | 82.5 | 72.3 | 78.3 | 73.2 | 80.7 | 76.9 |

| D→E | 80.9 | 75.4 | 77.0 | 83.6 | ||

| D→K | 84.9 | 78.3 | 82.5 | 86.2 | ||

| E→B | 77.4 | 71.3 | 73.2 | 77.2 | ||

| E→D | 78.1 | 73.8 | 72.9 | 78.3 | ||

| E→K | 88.1 | 85.4 | 83.6 | 86.9 | 88.2 | 82.8 |

| K→B | 71.8 | 70.9 | 72.5 | 77.2 | ||

| K→D | 78.9 | 74.0 | 73.9 | 74.9 | 79.9 | 72.2 |

| K→E | 85.6 | 84.3 | 84.6 | 86.3 |

using 30,000-dimensional feature vectors from marginalized stacked denoising autoencoders (mSDA) by Chen et al. [36], which is an unsupervised method of learning a feature representation from the training data

using 5000-dimensional unigram and bigram feature vectors

using bag-of-words feature vectors including only the top 400 words, but suggest using deep text features in future work

the best results on target data for various hyperparameters

using bag-of-words feature vectors

Sankaranarayanan et al. [208] propose Generate to Adapt that uses a GAN as the alignment component. The feature extractor output is both fed to a classifier trained to predict the label (if the input is from the source domain) and also to a GAN trained to generate source-like images (regardless of if the input is source or target). For training stability, they use an AC-GAN [179]. They note one downside of using a GAN for adaptation is that it requires a large training dataset, but a common strategy is to use a pretrained network on a large dataset such as ImageNet. Using this pretraining, even on small datasets (e.g., Office) where the generated images are poor, the network still learns adaptation satisfactorily. Sankaranarayanan et al. [209] similarly develop a similar approach for semantic segmentation.

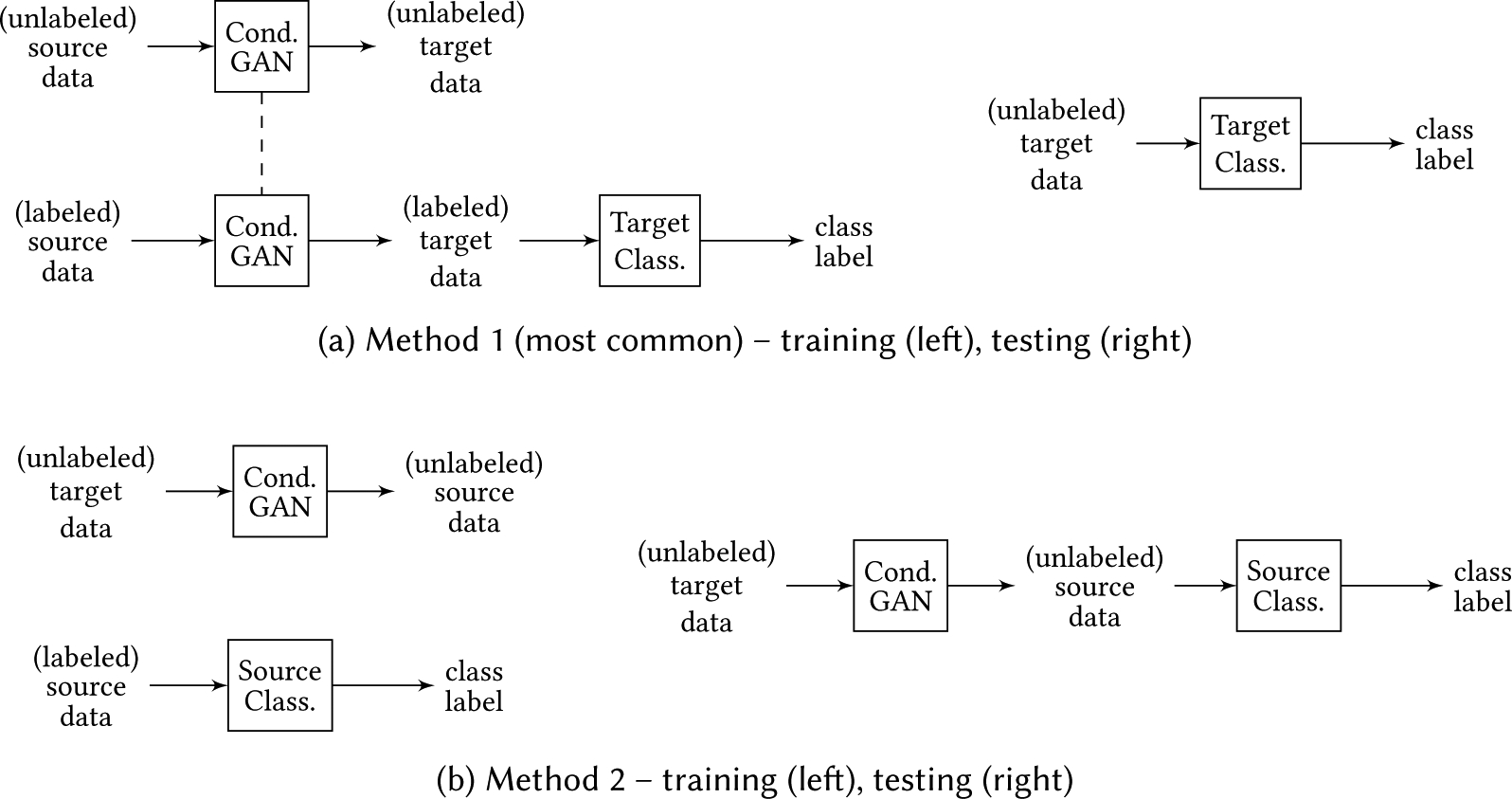

3.2. Domain Mapping

An alternative to creating a domain-invariant feature representation is mapping from one domain to another. The mapping is typically created adversarially and at the pixel level (i.e., pixel-level adversarial domain adaptation), but not always, as discussed at the end of this section. This mapping can be accomplished with a conditional GAN. The generator performs adaptation at the pixel level by translating a source input image to an image that closely resembles the target distribution. For example, the GAN could change from a synthetic vehicle driving image to one that looks realistic as shown in Figure 4 [41, 96, 197, 265, 290]. A classifier can then be trained on the source data mapped to the target domain using the known source labels [219] or jointly trained with the GAN [21, 96]. We will first discuss how a conditional GAN works followed by the ways it can be employed for domain adaptation.

Fig. 4.

Synthetic vehicle driving image (left) adapted to look realistic (right) [96].

3.2.1. Conditional GAN for Image-to-Image Translation.

The original formulation of a GAN was unconditional, where a GAN only accepted a noise vector as input. Conditional GANs, on the other hand, accept as input other information such as a class label, image, or other data [59, 74, 81, 164]. In the case of image generation, this means that a particular type of image to generate can be specified. One such example is to generate an image of a particular class within an image dataset such as “cat” rather than a random object from the dataset. Another example is conditioning on an input image such as in Figure 4, mapping an input driving image from one domain (synthetic) to an output image in another domain (realistic). Other uses include: transferring style (e.g., make a photo look like a Van Gogh painting) [118, 264, 290], colorizing images [109], generating satellite images from Google Maps data (or vice versa) [109, 264, 290], generating images of clothing from images of people wearing the clothing [265], generating cartoon faces from real faces [197, 231], converting labels to photos (e.g., semantic segmentation output to a photo) [109, 264, 290], learning disentangled representations [37], improving GAN training stability [179], and domain adaptation, which will be discussed in Section 3.2.2.

GANs conditioned on an input image can be used to perform image-to-image translation. These networks can be trained with varying levels of supervision: the dataset may contain corresponding images in the domains (supervised [109, 265]), only a few corresponding images (semi-supervised [71]), or no corresponding images (unsupervised [118, 264, 290]). A popular and general-purpose supervised method is pix2pix, developed by Isola et al. [109]. A commonly used unsupervised method is CycleGAN [290], which is based on pix2pix, or methods similar to CycleGAN including DualGAN [264] and DiscoGAN [118].

Numerous modifications to these approaches have been proposed: one that is multi-modal is MUNIT, a multi-modal unsupervised image-to-image translator [104]. By assuming a decomposition into style (domain-specific) and content (domain-invariant) codes, MUNIT can generate diverse outputs for a given input image (e.g., multiple possible output images corresponding to the same input image). A modification to CycleGAN explored by Li et al. [136] uses separate batch normalization for each domain (an idea similar to AdaBN discussed in Section 3.3). Mejjati et al. [3] and Chen et al. [38] improve results with attention, learning which areas of the images on which to focus. Shang et al. [215] improve results by feeding the mapped images into a denoising autoencoder. While CycleGAN and similar approaches use two generators, one for each mapping direction, Benaim et al. [14] developed a method for one-sided mapping that maintains distances between pairs of samples when mapped from the source to the target domain rather than (or in addition to) using a cycle consistency loss, and Fu et al. [69] developed an alternative one-sided mapping using a geometric constraint (e.g., vertical flipping or 90 degree rotation). Royer et al. [197] propose XGAN, a dual adversarial autoencoder capable of handling large domain shifts, where possibly an image in the source domain may correspond to multiple images in the target domain or vice versa. They tested mapping human faces to cartoon faces, which was a shift larger than CycleGAN could adequately handle. Choi et al. [41] propose StarGAN, a method for handling multiple domains with a single GAN. Approaches like CycleGAN need a separate generator (or two, one for each direction) for each pair of domains, which is not a scalable solution to many domains. StarGAN, on the other hand, only needs a single generator. This has the added benefit of allowing the generator to learn using all the available data rather than only the data in a specific pair of domains. During training they randomly pick a target domain at each iteration so the generator learns to generate images in all the domains. Anoosheh et al. [4] propose an approach designed for the same purpose as StarGAN but using one generator per domain.

3.2.2. Image-to-Image Translation for Domain Adaptation.

While the above approaches map images from one domain to another without the explicit purpose of performing domain adaptation, they can also be used for domain adaptation. For example, the original CycleGAN paper was application agnostic, but others have experimented with applying CycleGAN to domain adaptation [14, 69, 96]. It is important to note though that these image-to-image translation approaches assume that the domain differences are primarily low-level [20, 21, 241].

If unsupervised domain adaptation is performed for classification, adaptation can be accomplished by training an image-to-image translation GAN to map data from source to target, training a classifier on the mapped source images with known labels, and then subsequently testing by feeding unlabeled target through this target-domain classifier [20, 138, 219], as done in SimGAN [219] and illustrated in Figure 5a. Alternatively, rather than learning a mapping from source to target, the opposite could be done: learn a mapping from target to source, train a classifier on the source images with known labels, and test by feeding target images to the image-to-image translation model (to make them look like source images) followed by the source-domain classifier [32], as illustrated in Figure 5b.

Fig. 5.

Two possible configurations using image-to-image translation for domain adaptation. The conditional GAN and classifier can be trained separately or jointly. Method 1 is the most common. Method 2 is used by one paper. A combination of methods 1 and 2 is used in one paper. The dashed lines between networks indicate that they share weights (or are the same network). Note: this figure does not illustrate the many variants of the conditional GAN component, which often train a generator in each direction (one source to target and one target to source) and use additional losses such as cycle consistency.

In either of these approaches, if the mapping and the classification models are learned independently, the class assignments may not be preserved. For instance, class 1 may end up being “renamed” to class 2 after the mapping since the mapping was learned ignoring the class labels. This issue can be resolved by incorporating a semantic consistency loss (see Section 4.1) and training the mapping and classification models jointly [22, 96], as done in PixelDA [21].

If there is a way to perform hyperparameter tuning, a third option is possible (combination of Figure 5a and 5b): train a target-domain classifier on the source-to-target GAN (for which the GAN is not used during testing) and a source-domain classifier on the target-to-source GAN (for which the GAN is used during testing). The algorithm may then output a linear combination of the prediction results from the two classifiers [201]. While this approach does improve results, it requires a method of hyperparameter training (see Section 4.7).

All of the above approaches perform pixel-level mapping. An alternative approach is to perform feature-level mapping. Hong et al. [98] use a conditional GAN to learn to make the source features look more like the target features (a distinctly different idea than making the features domain invariant, which was discussed in Section 3.1). They found this particularly helpful for structured domain adaptation (e.g., semantic segmentation, in their case).

Up to this point, these domain mapping methods have used image-to-image translation to map images (or in one case features) from one domain to another and thereby improve domain adaptation performance. Another line of research using pixel-level image generation for domain adaptation is to use a GAN to generate corresponding images in multiple domains and then employ all but the last layer of the discriminator as a feature extractor for a classifier [143, 160]. Liu et al. [143] train a pair of GANs called CoGAN on two domains of images. Mao et al. [160] propose RegCGAN using only one generator and discriminator but including a domain label prepended to the input noise vector.

3.3. Normalization Statistics

Normalization layers such as batch norm [107] are used in most neural networks [211]. These have benefits including allowing for higher learning rates and thus faster training [107], reducing initialization sensitivity [107], smoothing the optimization landscape and making the gradients more Lipschitz [211], and allowing for deeper networks to converge [80, 254]. Each batch norm layer normalizes its input to have zero mean and unit variance. At test time, running averages of the batch norm parameters can be used. Alternatives have been developed including instance norm allowing use in recurrent neural networks [10] and group norm removing the dependence on batch size [254]. However, none of these normalization techniques were developed with domain adaptation in mind. In the case of domain adaptation, the normalization statistics for each domain likely differ. Another line of domain adaptation research involves using per-domain batch normalization statistics.

Li et al. [141] assume that the neural net layer weights learn task knowledge and the batch norm statistics learn domain knowledge. If this is the case, then domain adaptation can be performed by modulating all the batch norm layers’ statistics from the source to target domain, a technique they call AdaBN. This has the benefit of being simple, parameter free, and complementary to other adaptation methods.

Carlucci et al. [27] propose AutoDIAL, a generalization of AdaBN. In AdaBN, the target data are not used to learn the network weights but only for adjusting the batch norm statistics. AutoDIAL can utilize the target data for learning the network weights by coupling network parameters between source and target domains. They do this through adding domain alignment layers (DA-layers) that differ for source and target input data before each of the batch norm layers. Generally, batch norm computes a moving average of the statistics on a batch of the layer’s input data. However, in AutoDIAL, source and target input data to DA-layers are mixed by a learnable amount before feeding this to batch norm (meaning that the batch norm statistics are now computed over some source and some target data rather than just source data or just target data). This allows the network to automatically learn how much alignment is needed at various points in the network.

3.4. Ensemble Methods

Given a base model such as a neural network or decision tree, an ensemble consisting of multiple models can often outperform a single model by averaging together the models’ outputs (e.g., regression) or taking a vote (e.g., classification) [65, 80]. This is because if the models are diverse then each individual model will likely make different mistakes [80]. However, this performance gain corresponds with an increase in computation cost due to the large number of models to evaluate for each ensemble prediction, making ensembles common for some use cases such as competitions but uncommon when comparing models [80]. Despite the incurred cost, several ensemble-based methods have been developed for domain adaptation either using the ensemble predictions to guide learning or using the ensemble to measure prediction confidence for pseudo-labeling target data.

3.4.1. Self-Ensembling.

An alternative to using multiple instances of a base model as the ensemble is using only a single model but “evaluating” (via a history or average) the models in the ensemble at multiple points in time during training – a technique called self-ensembling. This can be done by averaging over past predictions for each example (by recording previous predictions) [126] or past network weights (by maintaining a running average) [234]. Since an ensemble requires diverse models, these self-ensembling approaches require high stochasticity in the networks, which is provided by extensive data augmentation, varying the augmentation parameters, and including dropout. These methods were originally developed for semi-supervised learning.

French et al. [68] modify and extend these prior self-ensembling methods for unsupervised domain adaptation. They use two networks: a student network and a teacher network. Input images are fed first to stochastic data augmentation (Gaussian noise, translations, horizontal flips, affine transforms, etc.) before being input to both networks. Because the method is stochastic, the augmented images fed to the networks will differ. The student network is trained with gradient descent while the teacher network weights are an exponential moving average (EMA) of the student network’s weights. This method outperforms the other methods on the datasets in Table 2. Athiwaratkun et al. [9] show that in at least one experiment stochastic weight averaging [110] can further improve these results.

Table 2.

Classification accuracy (source → target, mean ± std %) of different neural network based domain adaptation methods on various computer vision datasets (only including those used in > 2 papers). Adversarial approaches denoted by *.

| Name | MNIST and USPS | MNIST and SVHN | MNIST[-M] | Synthetic to Real | |||

|---|---|---|---|---|---|---|---|

| MN→US | US→MN | SV→MN | MN→SV | MN→MN-M | SYNN→SV | SYNS→GTSRB | |

| Target only (i.e., if we had the target labels) | 96.3 ± 0.1 [96] 96.5 [21] |

99.2 ± 0.1 [96] | 99.2 ± 0.1 [96] 99.5 [22] 99.51 [72] |

96.4 [21] 98.7 [22] 98.91 [72] |

92.44 [72] 92.4 [22] |

99.87 [72] 99.8 [22] |

|

| French et al.[68] | 98.2 | 99.5 | 99.3 | 37.5 97.0a |

97.1 | 99.4 | |

| Co-DA[124]b* | 98.6 | 81.7 | 97.5 | 96.0 | |||

| DIRT-T[220]b* | 99.4 | 76.5 | 98.7 | 96.2 | 99.6 | ||

| VADA[220]b* | 94.5 | 73.3 | 95.7 | 94.9 | 99.2 | ||

| DeepJDOT[50] | 95.7 | 96.4 | 96.7 | 92.4 | |||

| CyCADA[96]* | 95.6 ± 0.2 | 96.5 ± 0.1 | 90.4 ± 0.4 | ||||

| Gen. to Adapt[208]* | 92.8 ± 0.9 | 90.8 ± 1.3 | 92.4 ± 0.9 | ||||

| SimNet[187]* | 96.4 | 95.6 | 90.5 | ||||

| MCD[206]* | 96.5 ± 0.3 | 94.1 ± 0.3 | 96.2 ± 0.4 | 94.4 ± 0.3 | |||

| GAGL[250]b* | 96.7 | 74.6 | 94.9 | 93.1 | 97.6 | ||

| SBADA-GAN[201]b* | 97.6 | 95.0 | 76.1 | 61.1 | 99.4 | 96.7 | |

| MCA[278] | 96.6 | 96.8 | 89.0 | ||||

| CCN++[101]* | 89.1 | ||||||

| M-ADDA[127]* | 98 | 97 | |||||

| Rozantsev et al.[199] | 60.7 | 67.3 | |||||

| PixelDA[21]* | 95.9 | 98.2 | |||||

| ATT[204] | 85.0 | 52.8 | 94.0 | 92.9 | 96.2 | ||

| ADDA[241]* | 89.4 ± 0.2 | 90.1 ± 0.8 | 76.0 ± 1.8 | ||||

| RegCGAN[160]* | 93.1 ± 0.7 | 89.5 ± 0.9 | |||||

| DTN[231]* | 84.4 | ||||||

| Sener et al.[214] | 78.8 | 40.3 | 86.7 | ||||

| DSN[22]b* | 91.3 [21] | 82.7 | 83.2 | 91.2 | 93.1 | ||

| DRCN[76] | 91.80 ± 0.09 | 73.67 ± 0.04 | 81.97 ± 0.16 | 40.05 ± 0.07 | |||

| CoGAN[143]* | 91.2 ± 0.8 | 89.1 ± 0.8 | 62.0 [21] | ||||

| DANN[72, 73]* | 85.1 [21] | 71.07 70.7 [22] 71.1 [204] 73.6 [96] |

35.7 [204] | 81.49 77.4 [22] 81.5 [204] |

90.48 90.3 [22, 204] |

88.66 88.7 [204] 92.9 [22] |

|

| DAN[147] | 81.1 [21] | 71.1 [22] | 76.9 [22] | 88.0 [22] | 91.1 [22] | ||

| Source only (i.e., no adaptation) | 78.9 [21] 82.2 ± 0.8 [96] |

69.6 ± 3.8 [96] | 59.19 [72] 59.2 [22] 67.1 ± 0.6 [96] |

56.6 [22] 57.49 [72] 63.6 [21] |

86.65 [72] 86.7 [22] |

74.00 [72] 85.1 [22] |

|

problem-specific hyperparameter tuning of data augmentation to match pixel intensities of target domain images

hyperparameter tuned on some labeled target data

3.4.2. Pseudo-Labeling.

Rather than voting or averaging the outputs of the models in an ensemble, the individual model predictions could be compared to determine the ensemble’s confidence in that prediction. The more models in the ensemble that agree, the higher the ensemble’s confidence in that prediction. In addition, if performing classification on a particular example, an individual model’s confidence can be determined by looking at the last layer’s softmax distribution: uniform indicates uncertainty whereas one class’s probability much higher than the rest indicates higher confidence. Applying this to domain adaptation, a diverse ensemble trained on source data may be used to label target data. Then, if the ensemble is highly confident, those now-labeled target examples can be used to train a classifier for target data.

This is the method Saito et al. [204] developed called asymmetric tri-training (ATT). Two networks sharing a feature extractor are trained on the labeled source data (i.e., the ensemble in this case is of size two). Those two networks then predict the labels for the unlabeled target data, and if the two agree on the label and have high enough confidence on a particular instance, then the predicted label for that example is assumed to be the true label. After the target data are labeled by the first two networks, the third network (also sharing the same feature extractor) can be trained using the assumed-true labels (pseudo-labels). Diversity in the ensemble is handled with an additional loss (see Section 4.1).

Instead of using an ensemble, Zou et al. [291] rely on just the softmax distribution for the confidence measure. When working with semantic segmentation, they found relying on the prediction confidence for pseudo-labeling results in transferring primarily easy classes while ignoring harder classes. Thus, they additionally propose adding a class-wise weighting term when pseudo-labeling to normalize the class-wise confidence levels and thus balance out the class distribution.

3.5. Target Discriminative Methods

One assumption that has led to successes in semi-supervised learning algorithms is the cluster assumption [30]: that data points are distributed in separate clusters and the samples in each cluster have a common label [220]. If this is the case, then decision boundaries should lie in low density regions (i.e., should not pass through regions where there are many data points) [30]. A variety of domain adaptation methods have been explored to move decision boundaries into density regions of lower density. These have typically been trained adversarially.

Shu et al. [220] in virtual adversarial domain adaptation (VADA) and Kumar et al. [124] in co-regularized alignment (Co-DA) both use a combination of variational adversarial training (VAT) developed by Miyato et al. [167] and conditional entropy loss. They are used in combination because VAT without the entropy loss may result in overfitting to the unlabeled data points [124] and the entropy loss without VAT may result in the network not being locally-Lipschitz and thus not resulting in moving the decision boundary away from the data points [220]. Shu et al. [220] additionally propose a decision-boundary iterative refinement step with a teacher (DIRT-T) for use after training to further refine the decision boundaries on the target data, allowing for a slight improvement over VADA. An entropy loss was also used in AutoDIAL [27] but without VAT.

In generative adversarial guided learning (GAGL), Wei et al. [250] propose to let a GAN move decision boundaries into lower-density regions. Using domain alignment methods that learn domain-invariant features like DANN (Section 3.1), typically the data fed to the feature extractor is either source or target data. However, Wei et al. propose to alternate this with feeding generated (fake) images and appending a “fake” label to the task classifier, thus repurposing the task classifier as a GAN discriminator. They found this to have the effect of moving the decision boundaries in the target domain into areas of lower density with a GAN, promoting target-discriminative features as a result.

Saito et al. [205] propose adversarial dropout regularization. Since dropout is stochastic, when they create two instances of the task classifier containing dropout, the resulting networks may produce different predictions. The difference between these predictions can be viewed as a discriminator. Using this discriminator to adversarially train the feature extractor has the effect of producing target discriminative features. Lee et al. [134] alter adversarial dropout to better handle convolutional layers by dropping channel-wise rather than element-wise.

3.6. Combinations

In recent work, researchers have proposed various combinations of the above methods. Domain mapping has been combined with domain-invariant feature learning methods either trained separately (in GraspGAN [20]) or jointly (in CyCADA [96]). Following AdaBN, many researchers started employing domain-specific batch normalization [20, 68, 114, 124, 136]. Kumar et al. [124] propose co-regularized alignment (Co-DA), an approach in which two separate adversarial domain-invariant feature networks are learned with different feature spaces, drawing on ensemble-based methods. Kang et al. [115] combine domain mapping with aligning the models’ attention by minimizing an attention-based discrepancy. Deng et al. [58] combine target discriminative methods with self-ensembling. Lee et al. [132] combine target discriminative methods and domain-invariant feature learning with a sliced Wasserstein metric.

Multi-adversarial domain adaptation (MADA) [183] combines adversarial domain-invariant feature learning with ensemble methods for the purpose of better handling multi-modal data. This is accomplished by incorporating a separate discriminator for each class and using the task classifier’s softmax probability to weight the loss from each discriminator for unlabeled target samples.

Saito et al. [206] combine elements of adversarial domain-invariant feature learning, ensemble methods, and target discriminative features in their maximum classifier discrepancy (MCD) method. They propose using a shared feature extractor followed by an ensemble (of size two) of task-specific classifiers, where the discrepancy between predictions measures how far outside the support of the source domain the target samples lie. The discriminator in this setup is the combination of the two classifiers. The feature extractor is trained to minimize the discrepancy (i.e., fool the classifiers that the samples are from the source domain) while the classifiers are trained to maximize the discrepancy on the target samples.

4. COMPONENTS

Table 1 summarizes the neural network-based domain adaptation methods we discuss showing components each method uses including what type of adaptation, which loss functions, whether the method uses a generator, and which weights are shared. Below we discuss each of these aspects followed by how the networks are trained, what types of networks can be used, multi-level adaptation techniques, and how to tune the hyperparameters of these methods.

Table 1.

Comparison of different neural network based domain adaptation methods based on method of adaptation (domain-invariant feature learning [DI], domain mapping [DM], normalization [N], ensemble [En], target discriminative [TD]), various loss functions (distance, promoting different features, cycle consistency, semantic consistency, task, feature- or pixel-level adversarial), usage of a generator, and which weights are shared (in the feature extractor).

| Name | Method | Loss Functions | Adversarial Loss | Generator | Shared Weights | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| Distance | Diff. | Cycle | Sem. | Task | Feature | Pixel | ||||

| CAN[114] | DI,N | CCD | ✓ | not BN | ||||||

| French et al.[68] | En,N | sq. diff. | ✓ | EMA | ||||||

| Co-DA[124]a | DI,En,N,TD | L1 | ✓ | ✓ | ✓ | optional | ||||

| VADA[220]a | DI,TD | ✓ | ✓ | ✓ | ||||||

| DeepJDOT[50] | DI | JDOT | ✓ | ✓ | ||||||

| CyCADA[96] | DI,DM | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||

| Gen. to Adapt[208] | DI | ✓ | ✓ | ✓ | ✓ | |||||

| SimNet[187] | DI | prototypes | ✓ | |||||||

| MADA[183] | DI,En | ✓ | ✓ | ✓ | ||||||

| MCD[206] | DI,En,TD | ✓ | ✓ | ✓ | ✓ | |||||

| GAGL[250] | DI,TD | ✓ | ✓ | ✓ | ✓ | ✓ | ||||

| SBADA-GAN[201]b | DM | ✓ | ✓ | ✓ | ✓ | |||||

| MCA[278] | DI | MCA | ✓ | ✓ | ||||||

| CCN++[101] | DI | clusters | ✓ | ✓ | ||||||

| M-ADDA[127] | DI | clusters | ✓ | ✓ | ||||||

| Rozant. et al.[199] | DI | MMD | ✓ | regularize | ||||||

| XGAN[197] | DM | ✓ | ✓ | ✓ | ✓ | some | ||||

| StarGAN[41] | DM | ✓ | ✓ | ✓ | ✓ | |||||

| PixelDA[21] | DM | ✓ | ✓ | ✓ | ✓ | ✓ | ||||

| AutoDIAL[27] | N,TD | ✓ | not BN | |||||||

| AdaBN[145] | N | not BN | ||||||||

| JAN-A[151] | DI | JMMD | ✓ | ✓ | ✓ | |||||

| LogCORAL[249] | DI | logCOR, mean | ✓ | ✓ | ||||||

| Log D-CORAL[172] | DI | logDCOR | ✓ | ✓ | ||||||

| VRADA[189] | DI | ✓ | ✓ | ✓ | ||||||

| ATT[204] | En | ✓ | ✓ | ✓ | ||||||

| SimGAN[219] | DM | ✓ | ✓ | N/Ac | ||||||

| ADDA[241] | DI | ✓ | ✓ | |||||||

| CycleGAN[290] | DM | ✓ | ✓ | ✓ | d | |||||

| RegCGAN[160] | DM | ✓ | ✓ | ✓ | ✓ | |||||

| Sener et al.[214] | DI | k-NN | ✓ | |||||||

| DSN[22] | DI | ✓ | ✓ | ✓ | ✓ | some | ||||

| DRCN[76] | DI | ✓ | ✓ | ✓ | ||||||

| CoGAN[143] | DM | ✓ | ✓ | ✓ | some | |||||

| Deep CORAL[226] | DI | CORAL | ✓ | ✓ | ||||||

| DANN[1, 72, 73] | DI | ✓ | ✓ | ✓ | ||||||

| DAN[147] | DI | MK-MMD | ✓ | low | ||||||

| Tzeng et al.[240]e | DI | ✓ | ✓ | ✓ | ||||||

also incorporate virtual adversarial training [167]

also a self-labeled classification loss (learn label on source images, pseudo-label mapped target to source)

maps to target domain so only have feature extractor for target (part of the classifier)

semi-supervised for some classes, i.e., requires some labeled target data for some of the classes

4.1. Losses

4.1.1. Distance.

Distance functions play a variety of roles in domain adaptation losses. A distance loss can be used to align two distributions by minimizing a distance function (e.g., MMD) as explained in Section 3.1. If using an ensemble, minimizing a distance function can align the outputs of the ensemble’s models: an L1 loss of the difference in predicted target class probabilities from two networks in Co-DA [124] or a squared difference between the predictions of the student and teacher networks in self-ensembling [68]. (Note the squared difference loss is confidence thresholded, i.e., if the max predicted output is below a certain threshold then the squared difference loss is set to zero.)

Some of the described methods have been altered replacing the task loss with one of similarity. Laradji et al. [127] propose M-ADDA, a metric-learning modification to ADDA but with the goal of maximizing the margin between clusters of data points’ embeddings. Based on DANN, Pinheiro [187] proposes SimNet, classifying based on how close an embedding is to the embeddings of a random subset of source images for each class. Hsu et al. [101] propose CCN++ incorporating a pairwise similarity network (trained with the same class is similar and different classes are dissimilar).

4.1.2. Promote Differences.

Methods that rely on multiple networks learning different features (such as to make an ensemble diverse) do so by promoting differences between the networks. Saito et al. [204] train the two classifiers labeling unlabeled data to use different features by adding a norm of the product of the two classifiers’ weights. Bousmalis et al. [22] promote different features between two private feature extractors with a soft subspace orthogonality constraint, which is similarly used by Liu et al. [144] for text classification. Kumar et al. [124] train the feature extractors to be different by pushing minibatch means apart. Saito et al. [206] maximize the discrepancy between two classifiers using a fixed, shared feature extractor to promote using different features.

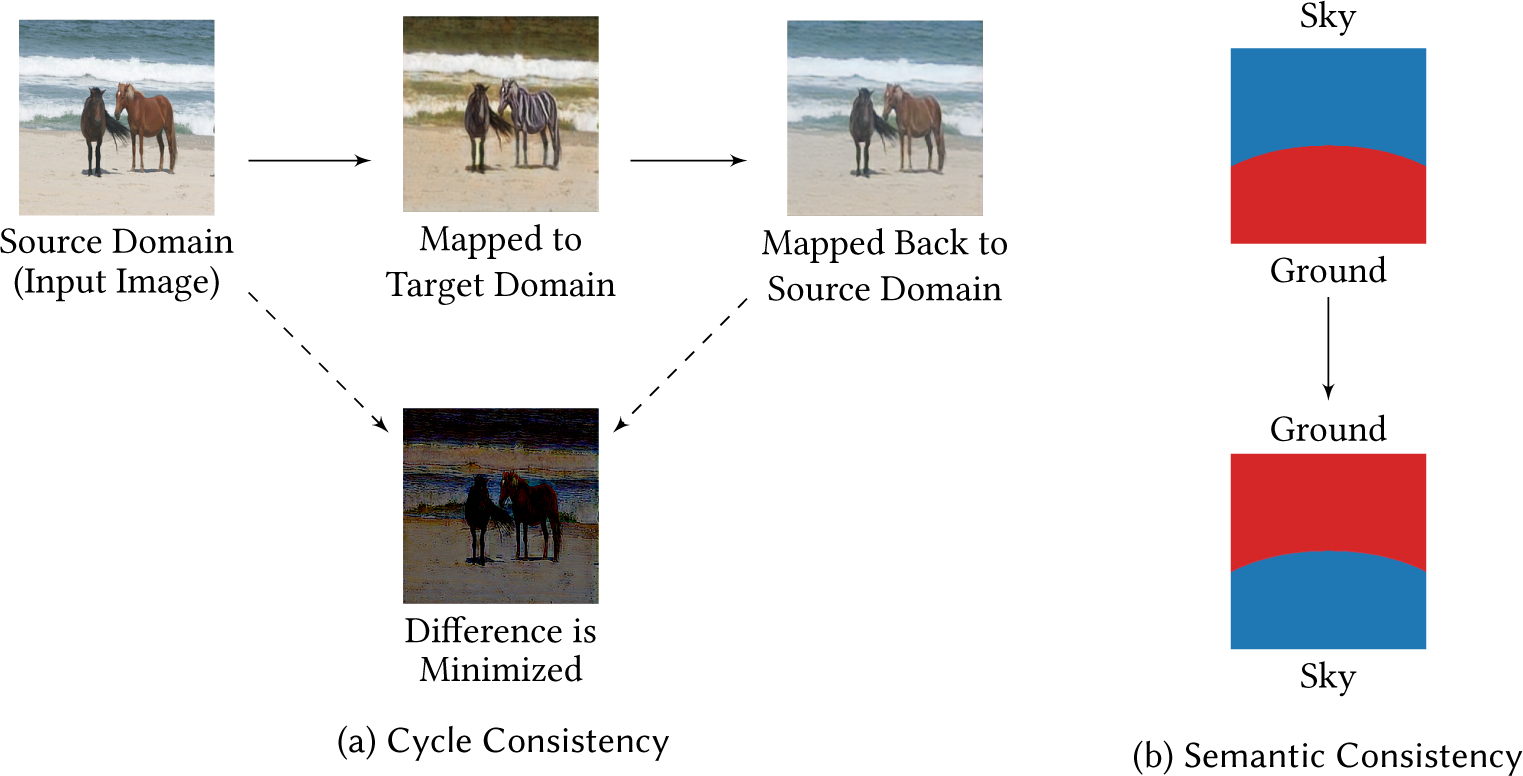

4.1.3. Cycle Consistency / Reconstruction.

A cycle consistency loss or reconstruction loss is commonly used in domain mapping methods to avoid requiring a dataset of corresponding images to be available in both domains. This is how CycleGAN [290], DualGAN [264], and DiscoGAN [118] can be unsupervised. This means that after translating an image from one domain (e.g., horses) to another (e.g., zebras), the new image can be translated back to reconstruct the original image, as illustrated in Figure 6a. Some variants of this have been proposed such as an L1 loss with a transformation function (e.g., identity, image derivatives, mean of color channels) [219], a feature-level cycle-consistency loss (mapping from source to embedding to target then back to embedding resulting in the same embeddings) [197], or using the loss in one [41] or both directions [96, 197]. Sener et al. [214] enforce cycle consistency in their k-nearest neighbors (k-NN) approach by requiring the distance between any source and target point labeled the same to be less than the distance between any source and target point labeled differently and derive a rule they can solve with stochastic gradient descent.

Fig. 6.

(a) Illustration of a cycle-consistency loss using the horses ↔ zebras dataset by Zhu et al. [290]. The difference between the original source image and the reconstructed image (source to target and back to source) is minimized. (b) Example semantic segmentation situation in which the class names are swapped between the input image and the mapped image that would be prevented by including a semantic-consistency loss. The semantic-consistency loss requires that the class assignments are preserved.

4.1.4. Semantic Consistency.

A semantic consistency loss can be used to preserve class assignments as illustrated in Figure 6b (a segmentation example). The semantic consistency loss requires that a classifier output (or semantic segmentation labeling) from the original source image is the same as the same classifier’s output on the pixel-level mapped target output.

4.1.5. Task.

Nearly all of the domain adaptation methods include some form of task loss that helps the network learn to perform the desired task. For example, for classification, the goal is to output the ground truth source label, or for semantic segmentation, to label each pixel with the correct ground truth source label. The task loss used is generally a cross-entropy loss, or more specifically the negative log likelihood of a softmax distribution [80] when using a softmax output layer. The exceptions not including a task loss are SimNet [187] that classify based on distance to prototypes of each class, the work by Sener et al. [214] that uses k nearest neighbors, and AdaBN [141] that only adjusts the batch norm layers to the target domain. In addition, the image-to-image translation methods are application agnostic unless trained jointly for domain adaptation.

4.1.6. Adversarial.

A variety of methods use a discriminator (or critic) for learning domain-invariant features, realistic image generation, or promoting target discriminative features by forcing a network (either a feature extractor or generator) to produce outputs indistinguishable between two domains (source and target or real and fake). This loss is different than the other losses discussed in this section because this adversarial loss is learned [79, 109] (where learning is more than a hyperparameter search) rather than being provided as a predefined function. During training, gradients from the discriminator are used to train the feature extractor or generator (e.g., negated by a gradient reversal layer, Section 3.1.3). This alternates with updating the discriminator itself to make the correct domain classification.

4.1.7. Additions for Specific Problems.

Some research focusing on specific problems has resulted in additional losses. For semantic segmentation, Li et al. [138] develop a loss making segmentation boundaries sharper to help when the mapped image-to-image translation images will be used for segmentation, Chen et al. [40] develop a distillation loss in addition to performing location-aware alignment (e.g., “road” is usually at the bottom of each image), Hoffman et al. [97] develop a class-aware constrained multiple instance loss, Zhang et al. [276] develop a curriculum where after learning some high-level properties on easy tasks the segmentation network is forced to follow those properties (interpretations include student-teacher setup or posterior regularization), and Perone et al. [185] apply the self-ensembling method [68] replacing the cross-entropy loss with a consistency loss. For object detection, Chen et al. [39] use two domain classifiers (one on an image-level representation and the other on an instance-level representation) with a consistency regularization between them. For adaptation from synthetic images where it is known which pixels are foreground in the source images, Bousmalis et al. [21] and Bak et al. [11] mask certain losses to only penalize foreground pixel differences. For person re-identification, Wei et al. [251] include a person identity-keeping constraint in their domain mapping GAN.

4.2. Low-Confidence or Low-Relevance Rejection

Given a measure of confidence, performance may increase if we can reject data points for training the target classifier that are not of sufficient confidence. This, of course, assumes our confidence measurement is accurate enough. Saito et al. [204] used the label agreement of an ensemble combined with the softmax distribution output (uniform is not confident, one probability much higher than the rest is confident). Sener et al. [214] used the label agreement of the k nearest source data points. If the confidence is to low, then the example is rejected and not used in training until if later on when re-evaluated it is determined to be sufficiently confident. Inoue et al. [106] used an object detector’s prediction probability as a measure of confidence, only using high-confidence detections for fine-tuning an object detection network. Similarly, a rejection approach could be used if we have a measure of relevance. For text classification, Zhang et al. [275] weight examples by their relevance to their target aspect based on a small set of positive and negative keywords (a form of weak supervision).

4.3. Weight Sharing

Methods employ different amounts of sharing network weights between domains or regularizing the weights to be similar. Most methods completely share weights between the feature extractors used on the source and target domains (as shown in Table 1). However, some techniques do not. Since deep networks consist of many layers, allowing them to represent hierarchical features, Long et al. [147] propose copying the lower layers from a network trained on the source domain and adapting higher layers to the target domain with MK-MMD since higher layers do not transfer well between domains. In CoGAN, Liu et al. [143] share the first few layers of the generators and the last few layers of the discriminators, making the assumption that the domains share high-level representations. In AdaBN, Li et al. [141] assume domain knowledge is stored in the batch norm statistics, so they share all weights except for the batch norm statistics. French et al. [68] define the teacher network as an exponential moving average of the student network’s weights (a type of ensemble). Instead of sharing weights, Rozantsev et al. [198, 199] propose two variants: regularizing weights to be similar but not penalizing linear transformations and transforming the weights from the source network to the target network with small residual networks. Bousmalis et al. [22] propose domain separation networks (DSN): learning source-specific, target-specific, and shared features where the “shared” source domain encoder and “shared” target domain encoder do share weights, but the “private” source domain encoder and “private” target domain encoders do not. Others have similarly explored this idea of shared vs. specific features [25, 144, 194].

4.4. Training Stages

Some have trained networks for domain adaptation in stages. Tzeng et al. [241] train a source classifier first followed by adaptation. Taigman et al. [231] use a pre-trained encoder during adaptation. Bousmalis et al. [20] in GraspGAN first train the domain-mapping network followed by the domain-adversarial network. Hoffman et al. [96] in CyCADA train their many components in stages because it would not all fit into GPU memory at once.

Other methods train the domain adaptation networks jointly, which using an adversarial approach is done by alternating between training the discriminator and the rest of the networks (Sections 2.2 and 3.1.3). However, variations exist for some other methods. Saito et al. [204] in ATT cycle through generating training the source networks, generating pseudo-labels, and training the target network. Zou et al. [291] alternate between pseudo-labeling the target data and re-training the model using the labels (a form of self-training). Wei et al. [250] in GAGL alternate between feeding in real source and target data and the fake images generated by a GAN. Sener et al. [214] alternate between k-nearest neighbors and performing gradient descent.

4.5. Multi-Level

Some adaptation methods perform adaptation at more than one level. As discussed in Section 3.6, GraspGAN [20] and CyCADA [96] perform pixel-level adaptation with domain mapping and feature-level adaptation with domain-invariant feature learning. Hoffman et al. [96] found that performing both levels of adaptation significantly improves accuracy: using domain mapping to capture low-level image domain shifts and learning domain-invariant features to handle larger domain shifts than what pure domain mapping methods can support. Following this idea, Tsai et al. [239] make semantic segmentation predictions and perform domain-invariant feature learning at multiple levels in their semantic segmentation network, and Zhang et al. [274] perform domain-invariant feature learning at multiple levels while automatically learning how much to align to each level. Chen et al. [39] perform domain-invariant feature learning at both image and instance levels for object detection but also include a consistency regularization between the two domain classifiers.

4.6. Types of Networks

Nearly all of the surveyed approaches focus on learning from image data and use convolutional neural networks (CNNs) such as ResNet-50 or Inception (Table 3). Wang et al. [247] explore the use of attention networks, Kang et al. [115] a combination of CNNs and attention, Ma et al. [154] graph convolutional networks, and Kurmi et al. [125] Bayesian neural networks. In the case of time-series data, Purushotham et al. [189] propose instead using a variational recurrent neural network (RNN) [43] or LSTM (a type of RNN) [94] rather than a CNN. The RNN learns the temporal relationships while adversarial training is used to achieve domain adaptation. For text classification (a type of natural language processing), Liu et al. [144] also use LSTMs while Zhang et al. [275] found a CNN to work just as well as RNNs or bi-LSTMs in their experiments. For relation extraction (another type of natural language processing), Fu et al. [70] also use a CNN. For time-series speech recognition, Zhao et al. [282] use bi-LSTMs while Hosseini-Asl et al. [100] used a combination of CNNs and RNNs. In the related problem of domain generalization, a combination of CNNs and RNNs have been used for handling a radio spectrogram changing through time to identify sleep stages [284].

4.7. Hyperparameter Tuning

Normal supervised learning-based hyperparamenter tuning methods do not carry over to unsupervised domain adaptation [22, 73, 149, 150, 171, 185, 245]. A common supervised learning approach is to split the training data into a smaller training set and a validation set. After repeatedly altering the hyperparameters, retraining the model, and testing on this validation set for each set of hyperparameters, the model yielding the highest validation set accuracy is selected. Another option is cross validation. However, in unsupervised domain adaptation, there are now two domains, and the data for the target domain may not include any labels. When evaluating domain adaptation approaches on common datasets, generally the target data does contain labels, so work by some groups [22, 27, 124, 201, 220, 245, 250] do use some labeled target data (or all of it [149, 217]) for hyperparameter tuning, which can be interpreted as an upper bound on how well the method could perform [245]. For example, some [27, 150] tuned for Office on one W labeled example per class on the A →W task, while others [201, 250] tuned with a validation set of 1000 randomly sampled target examples. Using any labeled target data is not ideal because real-world testing will not include labels for tuning (unless it is semi-supervised, in which case semi-supervised learning is recommended in Section 6).