Abstract

Several imaging algorithms, including patch-based image denoising, image time series recovery, and convolutional neural networks, can be thought of as methods that exploit the manifold structure of signals. While the empirical performance of these algorithms is impressive, the recovery of signals and functions that live on manifolds is less well understood. In this paper, we focus on the recovery of signals that live on a union of surfaces. In particular, we consider signals living on a union of smooth band-limited surfaces in high dimensions. We show that an exponential mapping transforms the data to a union of low-dimensional subspaces. Using this relation, we introduce a sampling theoretical framework for the recovery of smooth surfaces from few samples and for the learning of functions living on smooth surfaces. The low-rank property of the features is used to determine the number of measurements needed to recover the surface. Moreover, the low-rank property of the features also provides an efficient approach, which resembles a neural network, for the local representation of multidimensional functions on the surface. The direct representation of such a function in high dimensions often suffers from the curse of dimensionality; the large number of parameters would translate to the need for extensive training data. The low-rank property of the features can significantly reduce the number of parameters, which makes the computational structure attractive for learning and inference from limited labeled training data.

Keywords: level set, surface recovery, function representation, image denoising, neural networks

1. Introduction

Several imaging algorithms were introduced to exploit the extensive redundancy within images to recover them from noisy and possibly undersampled measurements. For instance, several patch-based image denoising methods were introduced in the recent past. Algorithms such as non-local means average similar patches within the image to achieve denoising [6]. Similar patch-based regularization strategies are used for image recovery from undersampled data [27,56], and for the recovery of images in a time series by exploiting their non-local similarity [36,38]. The success of these methods could be attributed to the manifold assumption [11,47], which states that signals in real-world datasets (e.g., patches in images) are restricted to smooth manifolds in high-dimensional spaces. In particular, the regularization penalty used in non-local methods can be viewed as the energy of the signal gradients on the patch manifold rather than in the original domain, facilitating the collective recovery of the patch manifold from noisy measurements [4]. Specifically, non-local methods estimate the interpatch weights used for denoising; these weights are equivalent to the manifold Laplacian, which captures the structure of the manifold. Similarly, image denoising approaches such as BM3D [8], which cluster patches followed by PCA approximations of each cluster, can also be viewed as modeling the tangent subspaces of the patch manifold in each neighborhood. Patch dictionary based schemes, which allow the coefficients to be adapted to the specific patch, could also be viewed as tangent subspace approximation methods.

Convolutional neural networks are now emerging as very powerful alternatives for image denoising [52, 58] and image recovery [2,17]. Rather than averaging similar patches, neural networks learn how to denoise the image neighborhoods from example pairs of noisy and noise-free patches. These frameworks can be viewed as learning a multidimensional function in high dimensional patch spaces. In particular, the inputs to the network are noisy patches and the corresponding outputs are the denoised patches/pixels. We note that the learning of such functions using conventional methods will suffer from the curse of dimensionality. Specifically, large amounts of training data may be needed to learn the parameters of such a high-dimensional function, if represented using conventional methods. While the empirical performance of neural networks is impressive, the mathematical understanding of why and how they can learn complex multidimensional functions in high-dimensional spaces from relatively limited training data is still emerging. We note that the manifold assumption is also used in the CNN literature to explain the good performance of neural networks.

With the goal of understanding the above algorithms from a geometric perspective, we consider the following conceptual problems: (a) when can we learn and recover a manifold or surface in a high-dimensional space from few samples or training data; (b) when can we exactly learn and recover a function that lives on a surface from few input-output examples; and (c) can these results explain the good performance of imaging algorithms that use manifold structure? We note that many different surface models, including parametric shape models [18,22], local and multi-resolution representations [34,44], and implicit level-set shape representations [21,32,43], have been used in low-dimensional settings (e.g., 2D/3D). Our main focus in this paper is on surface recovery in high-dimensional spaces, with applications to machine learning and to learning surfaces of patches and images. To the best of our knowledge, the utility of the above algorithms in such high-dimensional applications has not been well studied; the direct extension of the low-dimensional algorithms is expected to be associated with high computational complexity. We consider the implicit level set representation of the surface to deal with shapes of arbitrary topology. Specifically, we model the surface or union of surfaces as the zero level set of a multidimensional function ψ. To restrict the degrees of freedom of the surface, we consider the level set function to be bandlimited. The bandwidth of ψ can be viewed as a measure of the complexity of the surface; a more band-limited function will translate to a smoother surface. We refer the reader to our earlier works [30,37,38,59,60] for examples of 2D/3D shape recovery, where the above representation is used to represent and recover shapes with sharp corners and edges.

We show that, under the above assumptions on the surface, the images of the points on the surface under a non-linear mapping live on a low-dimensional feature subspace, whose dimension depends on the complexity of the surface. Specifically, one can transform each data point to a feature vector, whose size is equal to the number of basis functions used for the surface representation. Since we use a linear combination of complex exponentials to represent the surface, the lifting in our setting is an exponential mapping. We use the low-rank property of the feature matrix to estimate the surface from few of its samples. Our sampling results show that an irreducible surface can be perfectly recovered from very few samples, whose number depends on the bandwidth. Our experiments in these settings show good recovery of the surfaces from few noisy points. Our results also show that a union of irreducible surfaces can be recovered from few samples, provided each of the irreducible components is adequately sampled. We also use a kernel low-rank algorithm to recover a surface from its noisy samples, which bears close similarity to the non-local means algorithms widely used in image processing.

We also show that the low-rank property can be used to efficiently represent multidimensional functions of points living on the surface. In particular, we are only interested in a good representation of the function when the input is on or in the vicinity of the surface. We assume the functions are linear combinations of the same basis functions (exponentials in our case). Since such representations are linear in the feature space, the low-rank nature of the exponential features provides an elegant approach to represent the function using considerably fewer parameters. In particular, we show that the feature vectors of a few anchor points on the surface span the feature space, which allows us to efficiently represent the function as the interpolation of the function values at the anchor points using a Dirichlet kernel. The significant reduction in the number of free parameters offered by this local representation makes the learning of the function from finite samples tractable. We note that the computational structure of the representation is essentially a one-layer kernel network. Note that the approximation is highly local; the true function and the local representation match only on the surface, while they may deviate significantly at points that are not on the surface. We demonstrate the preliminary utility of this network in denoising, which shows improved performance compared to some state-of-the-art methods. Here, we model the denoiser as a function that provides the noise-free center pixel of a p × p noisy patch. The noisy patch is assumed to be a point in p²-dimensional space, close to the low-dimensional patch surface or union of surfaces. We also show that this framework can be used to learn a manifold, which can be viewed as the signal subspace version of the null-space based kernel low-rank algorithm considered above. In this case, the network structure is an auto-encoder.

This work is related to kernel methods, which are widely used for the approximation of functions [4,7,24]. It is well known that an arbitrary function can be approximated using kernel methods, and the computational structure resembles a single hidden layer neural network. Our work has two key distinctions from the above approaches: (a) unlike most kernel methods that choose infinite bandwidth kernels (e.g., Gaussians), we restrict our attention to band-limited kernels; (b) we focus on a restrictive data model, where the data samples are localized on or close to the zero set of a band-limited function. These two restrictions allow us to derive theoretical results on when such a surface can be perfectly recovered from few samples. The results also provide clues on how many training data pairs are needed to learn functions on such surfaces. To the best of our knowledge, such sampling theoretical results are not available in the kernel literature. This work is inspired by the recent work on algebraic varieties [31], which also considers surfaces with finite degrees of freedom. The main distinction of this paper is the novel theoretical guarantees on the recovery of the surface and of functions living on the surface, which go beyond the empirical results in [31]. We focus on bandlimited surfaces in this work to borrow the theoretical tools from [37,38,60]. This work extends the results in [37,38,60] in three important ways: (i) the planar results are generalized to the high-dimensional setting; (ii) the worst-case sampling conditions are replaced by high-probability results, which are far less conservative and are in good agreement with experimental results; and (iii) the sampling results are extended to the local representation of functions.
While we focus on bandlimited functions to derive theoretical bounds, the results could be generalized to surface representations using most other basis functions, such as the polynomial basis functions considered in [31,53] and shift invariant representations [5,54]. We note that this work uses parametric level-set representations, unlike non-parametric level-set models (e.g., [21,43] for image segmentation). The narrow-band evolution used by these approaches to manage computational complexity makes these algorithms highly sensitive to the initial guess, unlike our algorithms, as illustrated in [60]. While we illustrate our algorithms in 2D/3D applications for visualization purposes, we stress that our main focus is on high-dimensional (≫ 3) extensions of the level set approach and their generalization to shape recovery. Non-parametric and even parametric level-set methods [5,54] would be associated with very high computational complexity in this setting without the proposed computational approaches, and such extensions have not been reported, to the best of our knowledge.

1.1. Terminology and Notation

We introduce some terminology and notation used throughout the paper. We term the zero level set of a trigonometric polynomial a surface. Lower-case Greek letters ψ, η, etc. are usually used to represent trigonometric polynomials. Calligraphic letters are used to represent the zero level sets of the trigonometric polynomials, and hence the surfaces. The bold lower-case letter x denotes the real variable in [0,1)^n and sometimes the points on the surface. The indexed bold lower-case letters xi represent the samples on the surface. Upper-case Greek letters denote the bandwidth of the trigonometric polynomials, i.e., the index set of their coefficients. The coefficient set is denoted by {ck : k ∈ Λ}. The cardinality of the bandwidth Λ is denoted by |Λ|, which will serve as a measure of the complexity of the surface. The notation Γ ⊝ Λ indicates the set of all possible uniform shifts of the set Λ within the set Γ. The Greek letter Φ (sometimes with subscripts identifying the corresponding bandwidth) is used to represent the lifting map (feature map) of the points on the surface. The notation Φ(X) denotes the feature matrix of the sampling set X.

1.2. Background on non-local means; reinterpretation as manifold regularization

Non-local means (NLM) methods average patches in an image based on their similarity to obtain a denoised image. In particular, they compute a weight matrix W, whose entries are $w_{r,s} = \exp\left(-\|P_r(f) - P_s(f)\|^2/\sigma^2\right)$, where $P_r(f)$ denotes a patch in the image f, centered at r, and σ is a bandwidth parameter. The smoothing approach in NLM can be viewed as the minimization problem

| (1) |

This optimization problem can be viewed as the discretization of the manifold smoothness regularization strategy used in machine learning [4], which considers the recovery of a multidimensional function f(s) on a manifold from its noisy samples f(sk) = yk:

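$$\min_{f} \; \sum_k |f(s_k) - y_k|^2 + \lambda \int_{\mathcal{M}} \left\|\nabla_{\mathcal{M}} f(s)\right\|^2 ds \tag{2}$$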

Here, $\mathcal{M}$ is a smooth surface/manifold and $\nabla_{\mathcal{M}} f$ denotes the gradient of the function on the manifold. The weight matrix W captures the geometry of the patch manifold in (1). Specifically, closer point pairs on $\mathcal{M}$ will have higher weights, while distant point pairs will have smaller weights. The equivalence with NLM can be seen by viewing the noisy patches as noisy samples yk on the patch manifold. We note that the weighted sum is often expressed in a compact form as

$$\sum_{r,s} w_{r,s}\,\|P_r(f) - P_s(f)\|^2 = 2\,\mathrm{trace}\left(\mathbf{P}^T L\,\mathbf{P}\right).$$

Here, $\mathbf{P}$ is the matrix whose rows are the vectorized patches $P_r(f)$, and L is the Laplacian matrix L = D − W, which captures the structure of the manifold, where D is a diagonal matrix with entries $D_{r,r} = \sum_s w_{r,s}$. L can be viewed as the discrete approximation of the Laplace-Beltrami operator on the continuous surface/manifold [4].
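As a minimal numerical check of the compact form of the weighted sum, the Python sketch below assumes Gaussian NLM weights $w_{r,s} = \exp(-\|P_r(f)-P_s(f)\|^2/\sigma^2)$ and uses random toy patches in place of patches from a real image. It builds W, the degree matrix D, and L = D − W, and confirms that the weighted sum of squared patch differences equals twice the trace of PᵀLP when the patches are stacked as the rows of P. The function name `nlm_laplacian` and the value of `sigma` are illustrative choices, not part of the original method.

```python
import numpy as np


def nlm_laplacian(patches: np.ndarray, sigma: float):
    """Return the NLM-style weight matrix W and the Laplacian L = D - W.

    patches: (M, d) array; row r is the vectorized patch P_r(f).
    sigma:   illustrative bandwidth of the Gaussian patch weights.
    """
    diff = patches[:, None, :] - patches[None, :, :]
    sq_dist = np.sum(diff**2, axis=-1)        # ||P_r(f) - P_s(f)||^2
    W = np.exp(-sq_dist / sigma**2)           # symmetric weights w_{r,s}
    D = np.diag(W.sum(axis=1))                # D_{r,r} = sum_s w_{r,s}
    return W, D - W


rng = np.random.default_rng(0)
patches = rng.standard_normal((20, 9))        # 20 toy 3x3 patches
W, L = nlm_laplacian(patches, sigma=3.0)

sq_dist = np.sum((patches[:, None, :] - patches[None, :, :]) ** 2, axis=-1)
weighted_sum = np.sum(W * sq_dist)                       # sum_{r,s} w_{r,s} ||.||^2
compact_form = 2.0 * np.trace(patches.T @ L @ patches)   # 2 trace(P^T L P)
print(np.isclose(weighted_sum, compact_form))            # True
```

The identity holds for any symmetric W, since the diagonal terms r = s contribute nothing to either side.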

2. Parametric surface representation

In this work, we use the level set representation to describe a (hyper-)surface. We model a (hyper-)surface in [0,1)^n, n ≥ 2, as the zero level set of a function ψ:

$$\mathcal{S}[\psi] = \left\{x \in [0,1)^n : \psi(x) = 0\right\}. \tag{3}$$

For example, when n = 2, $\mathcal{S}[\psi]$ is a (hyper-)surface of dimension 1, which is typically a curve. We note that the level set representation is widely used in image segmentation [21]. The normal practice in image segmentation is the non-parametric level set representation of a time-dependent evolution function ψ, which results in PDE-driven models. Note that the initialization of these models affects the stability and the rate of convergence of the methods; hence, a good initialization of the level set function is usually required for good segmentation.

Several authors have recently proposed to represent the level set function ψ as a linear combination of basis functions φk(x) [5,54]. These schemes argue that the reduced number of parameters translates to fast and efficient algorithms. Besides, a good initialization is not required in this setting. Motivated by these schemes, we represent ψ(x) as

$$\psi(x) = \sum_{k} c_k\, \varphi_k(x). \tag{4}$$

Since the level set function is a linear combination of basis functions, we term the corresponding zero level set a parametric zero level set. We note that the surface properties depend on the specific basis functions, which also decide the type of kernel used in the algorithms in Section 4.3. We now provide some examples of parametric representations, depending on the choice of basis functions.

2.1. Shift invariant surface representation

A popular choice for the basis functions is the shift invariant representation, where compactly supported basis functions such as B-splines are used. Specifically, the basis functions are shifted copies of a template φ, denoted

$$\varphi_k(x) = \varphi\left(\frac{x}{T} - k\right), \quad k \in \mathbb{Z}^n. \tag{5}$$

Here T is the grid spacing, which controls the degrees of freedom of the representation; the number of B-splines needed to cover [0,1)^n scales as (1/T)^n. One may also choose a multi-resolution or sparse wavelet surface representation, in which the basis functions are shifted and dilated copies of a template. This approach allows the surface to have different smoothness properties in different spatial regions.
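The following Python sketch evaluates a two-dimensional instance of the shift invariant model (5). It assumes that the template φ is the centered cubic B-spline β3 (p = 3, supported on [−2, 2]) and that the coefficients are stored in an array aligned with a list of integer shifts k; all helper names are hypothetical. Setting every coefficient to one reproduces the constant function 1 (the partition-of-unity property of uniform B-splines), and at a generic point only (p + 1)^2 = 16 of the shifted basis functions are nonzero. This compact support is what the rank argument of Section 3.1 relies on.

```python
import numpy as np
from itertools import product


def cubic_bspline(t):
    """Centered cubic B-spline beta^3(t); zero outside [-2, 2]."""
    a = np.abs(t)
    inner = 2.0 / 3.0 - a**2 + a**3 / 2.0     # branch for |t| < 1
    outer = (2.0 - a) ** 3 / 6.0              # branch for 1 <= |t| < 2
    return np.where(a < 1, inner, np.where(a < 2, outer, 0.0))


def basis_values(x, T, shifts):
    """phi_k(x) = prod_i beta^3(x_i / T - k_i) for every shift k.

    x: (N, 2) points in [0,1)^2; shifts: (K, 2) integer array.
    Returns an (N, K) array.
    """
    t = x[:, None, :] / T - shifts[None, :, :]
    return np.prod(cubic_bspline(t), axis=-1)


def psi_shift_invariant(x, coeffs, T, shifts):
    """psi(x) = sum_k c_k phi_k(x), with coeffs aligned to `shifts`."""
    return basis_values(x, T, shifts) @ coeffs


T = 0.1
n_grid = int(round(1 / T))
# all shifts whose support can reach [0,1)^2
shifts = np.array(list(product(range(-2, n_grid + 3), repeat=2)))
x = np.random.default_rng(1).random((50, 2))

ones = psi_shift_invariant(x, np.ones(len(shifts)), T, shifts)
active = np.count_nonzero(basis_values(x[:1], T, shifts))
print(np.allclose(ones, 1.0), active)   # True 16
```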

2.2. Polynomial surface representation

The surface can also be represented as a linear combination of polynomials [31]. The polynomial degree controls the degrees of freedom in this setting. This work is inspired by [31]; however, we note that the recovery of the surface and of functions from few points is not considered in the polynomial setting. In addition, the stability of the high-degree polynomial representations that may be needed to represent complex surfaces is not fully clear; we note that low-degree polynomials were considered in the examples in [31].

2.3. Band-limited surface representation

We assume that the surface is within [0,1)^n. A well-studied representation for support-limited functions is the Fourier exponential basis, which is widely used in digital image processing [33,50,60], biomedical image processing [30,40,51], and geophysics [41]. The level set function can be assumed to be band-limited [30], when ψ is expressed as a Fourier series:

$$\psi(x) = \sum_{k\in\Lambda} c_k\, e^{j2\pi k^T x}, \quad x \in [0,1)^n. \tag{6}$$

In the above representation, the set Λ denotes the bandwidth of the Fourier coefficients c = {ck : k ∈ Λ}; its cardinality |Λ| is the number of free parameters in the surface representation. We refer to Λ as the Fourier support of ψ, and we always choose the support to be symmetric with respect to the origin. This choice is governed by the relation of this representation with polynomials, described in the next subsection. The extent of Λ governs the degree of the polynomial.
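To make (6) concrete, the sketch below evaluates a trigonometric polynomial in n = 2 dimensions with the symmetric box support Λ = {−K, ..., K}^2. The toy coefficients are an assumption chosen for illustration: they give ψ(x) = cos(2πx1) + cos(2πx2) − 0.5, whose zero set is a closed curve on the periodic domain. Because these coefficients satisfy c_{−k} = conj(c_k), the resulting ψ is real-valued. The helper names `box_bandwidth` and `eval_trig_poly` are hypothetical.

```python
import numpy as np
from itertools import product


def box_bandwidth(K, n=2):
    """Symmetric Fourier support {-K, ..., K}^n as an (|Lambda|, n) integer array."""
    return np.array(list(product(range(-K, K + 1), repeat=n)))


def eval_trig_poly(x, Lam, c):
    """psi(x) = sum_{k in Lambda} c_k exp(j 2 pi k^T x) for each row of x."""
    x = np.atleast_2d(x)
    return np.exp(2j * np.pi * (x @ Lam.T)) @ c


Lam = box_bandwidth(1)                       # |Lambda| = 9
toy = {(1, 0): 0.5, (-1, 0): 0.5, (0, 1): 0.5, (0, -1): 0.5, (0, 0): -0.5}
c = np.array([toy.get(tuple(k), 0.0) for k in Lam], dtype=complex)

x = np.random.default_rng(2).random((100, 2))
psi = eval_trig_poly(x, Lam, c)
reference = np.cos(2 * np.pi * x[:, 0]) + np.cos(2 * np.pi * x[:, 1]) - 0.5
print(np.allclose(psi.real, reference), np.allclose(psi.imag, 0.0))  # True True
```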

In this work, we focus on the Fourier series representation due to its key benefits, including well-developed theoretical tools, fast algorithms such as the fast Fourier transform, orthogonality, and the property |exp(j2πkTx)| = 1, which results in stable representations and also facilitates the theory. In particular, this representation allows us to borrow the theoretical tools from our past work [37,38,60]. We note that these results may be extended to other basis sets, but this is beyond the scope of this work. We now review some of the properties of this representation, which we will use in the following sections.

2.3.1. Relation of bandlimited representation with polynomials

We also note that bandlimited representations (6) have an intimate relation with polynomials [30]. In particular, one can relate the exponential basis to the polynomial basis through the one-to-one mapping

$$v: x \mapsto z = (z_1, \dots, z_n), \quad z_i = e^{j2\pi x_i}, \quad i = 1, \dots, n. \tag{7}$$

We will make use of this correspondence to study the properties of the zero sets of (6). With this transformation, the representation (6) simplifies to the complex polynomial $\tilde\psi(z)$, which is of the form

$$\tilde\psi(z) = \sum_{k\in\Lambda} c_k\, z_1^{k_1} z_2^{k_2} \cdots z_n^{k_n}. \tag{8}$$

Since the polynomial in (8) involves powers of the zi, which are specified by the trigonometric mapping (7), we term the expansion in (6) a trigonometric polynomial.

We note that the mapping defined by (7) is a bijection from [0,1)^n onto the complex unit torus $\mathbb{T}^n = \{z \in \mathbb{C}^n : |z_1| = \cdots = |z_n| = 1\}$. Hence,

$$\psi(x) = \tilde\psi\big(v(x)\big), \quad \text{so that} \quad \psi(x) = 0 \iff \tilde\psi\big(v(x)\big) = 0, \tag{9}$$

which implies that there is a one-to-one correspondence between the zero set of ψ and the zeros of $\tilde\psi$ on the unit torus. Accordingly, we can study the algebraic properties of trigonometric polynomials and their zero sets by studying their corresponding complex polynomials under the mapping v.
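The correspondence (9) can be checked numerically. The sketch below assumes the toy coefficients of ψ(x) = cos(2πx1) + cos(2πx2) − 0.5 on a 3 × 3 box support, and its helper names are hypothetical. `torus_map` implements v from (7), and `eval_complex_poly` evaluates the polynomial of (8). Because Λ is symmetric, negative exponents appear, so this is strictly a Laurent polynomial; multiplying it by a monomial, which has no zeros on the torus, turns it into an ordinary polynomial with the same zeros on the torus.

```python
import numpy as np
from itertools import product


def torus_map(x):
    """v(x) = (exp(j 2 pi x_1), ..., exp(j 2 pi x_n)) for each row of x."""
    return np.exp(2j * np.pi * np.atleast_2d(x))


def eval_complex_poly(z, Lam, c):
    """sum_k c_k z_1^{k_1} ... z_n^{k_n}; negative k_i give Laurent monomials."""
    monomials = np.prod(z[:, None, :] ** Lam[None, :, :], axis=-1)
    return monomials @ c


def eval_trig_poly(x, Lam, c):
    """psi(x) = sum_{k in Lambda} c_k exp(j 2 pi k^T x)."""
    return np.exp(2j * np.pi * (np.atleast_2d(x) @ Lam.T)) @ c


Lam = np.array(list(product(range(-1, 2), repeat=2)))
toy = {(1, 0): 0.5, (-1, 0): 0.5, (0, 1): 0.5, (0, -1): 0.5, (0, 0): -0.5}
c = np.array([toy.get(tuple(k), 0.0) for k in Lam], dtype=complex)

x = np.random.default_rng(3).random((100, 2))
z = torus_map(x)
on_torus = np.allclose(np.abs(z), 1.0)
same_values = np.allclose(eval_complex_poly(z, Lam, c), eval_trig_poly(x, Lam, c))
print(on_torus, same_values)  # True True
```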

2.3.2. Non-uniqueness of level-set representation

We first show that the level set representation of a surface in (6) may not be unique, when the bandwidth of the representation is larger than the minimal one required to represent the surface. We first note that the function ψ(x) with bandwidth Λ in (6) can be expressed with a larger bandwidth Γ ⊃ Λ by zero filling the additional Fourier coefficients:

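$$\psi(x) = \sum_{k\in\Gamma} \tilde c_k\, e^{j2\pi k^T x}, \tag{10}$$

where the coefficient set $\{\tilde c_k : k \in \Gamma\}$ is the zero-filled version of the vector c, denoted by $\tilde c$:

$$\tilde c_k = \begin{cases} c_k, & k \in \Lambda, \\ 0, & k \in \Gamma \setminus \Lambda. \end{cases} \tag{11}$$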

We note that the representation of the surface by functions with the larger bandwidth Γ is not unique. In particular, any uniform shift of the coefficients in the Fourier domain corresponds to a phase multiplication in the space domain:

$$\psi'(x) = e^{j2\pi k_0^T x}\,\psi(x) = \sum_{k\in\Lambda} c_k\, e^{j2\pi (k+k_0)^T x}. \tag{12}$$

Since $|e^{j2\pi k_0^T x}| = 1 \neq 0$, the zero set of ψ′ is identical to that of ψ.

Because the exponentials are orthogonal to each other, the shifted functions ψ′ that have the same zero set as ψ span a subspace of dimension |Γ ⊝ Λ|. Here, Γ ⊝ Λ denotes the set of all valid uniform shifts k0 of Λ, i.e., the shifts for which Λ + k0 is contained in Γ. We will introduce the set Γ ⊝ Λ in more detail in §3.2.2.
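The phase relation (12) can be illustrated with a short sketch. It assumes the toy curve cos(2πx1) + cos(2πx2) = 0.5 with Λ = {−1, 0, 1}^2 and a larger box Γ = {−2, ..., 2}^2; all helper names are hypothetical. Placing the coefficients at Λ + k0 inside Γ multiplies ψ by the non-vanishing phase exp(j2πk0ᵀx), so the shifted function still vanishes at exact samples of the curve. The sampler draws x1 uniformly and solves for x2, so the samples are not uniformly distributed along the curve, which is sufficient here.

```python
import numpy as np
from itertools import product


def box(K, n=2):
    return np.array(list(product(range(-K, K + 1), repeat=n)))


def eval_trig_poly(x, Lam, c):
    return np.exp(2j * np.pi * (np.atleast_2d(x) @ Lam.T)) @ c


def sample_toy_curve(N, rng):
    """Exact points on cos(2 pi x1) + cos(2 pi x2) = 0.5 in [0,1)^2."""
    x1 = rng.random(4 * N)
    r = 0.5 - np.cos(2 * np.pi * x1)          # required value of cos(2 pi x2)
    keep = np.abs(r) <= 1.0
    x1, r = x1[keep][:N], r[keep][:N]
    x2 = np.arccos(r) / (2 * np.pi)           # branch in [0, 1/2]
    flip = rng.random(x2.size) < 0.5          # mirrored branch at random
    return np.column_stack([x1, np.where(flip, 1.0 - x2, x2) % 1.0])


def zero_fill_shift(Lam, c, Gam, k0):
    """Coefficient vector on Gamma with c_k placed at k + k0 (zero elsewhere)."""
    pos = {tuple(k): i for i, k in enumerate(Gam)}
    out = np.zeros(len(Gam), dtype=complex)
    for k, ck in zip(Lam, c):
        out[pos[tuple(k + k0)]] = ck          # KeyError if Lambda + k0 leaves Gamma
    return out


Lam, Gam = box(1), box(2)
toy = {(1, 0): 0.5, (-1, 0): 0.5, (0, 1): 0.5, (0, -1): 0.5, (0, 0): -0.5}
c = np.array([toy.get(tuple(k), 0.0) for k in Lam], dtype=complex)

rng = np.random.default_rng(4)
x_curve = sample_toy_curve(40, rng)
x_any = rng.random((40, 2))
k0 = np.array([1, -1])
c_shift = zero_fill_shift(Lam, c, Gam, k0)

phase = np.exp(2j * np.pi * (x_any @ k0))
same_function = np.allclose(eval_trig_poly(x_any, Gam, c_shift),
                            phase * eval_trig_poly(x_any, Lam, c))
same_zeros = np.allclose(eval_trig_poly(x_curve, Gam, c_shift), 0.0, atol=1e-10)
print(same_function, same_zeros)  # True True
```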

2.3.3. Minimal bandwidth representation of a surface

We note from the previous section that the multiplication with the phase term in (12) corresponds to multiplying the polynomial $\tilde\psi$ in (8) by the monomial $z^{k_0} = z_1^{k_{0,1}} \cdots z_n^{k_{0,n}}$; the degree of the resulting polynomial will be greater than that of $\tilde\psi$. In this section, we show that out of all these polynomials, the one with the smallest degree is unique. More importantly, the bandwidth of this minimal polynomial can be used as a measure of the complexity of the surface. Specifically, a more complex surface would correspond to a polynomial with a larger bandwidth.

The following result shows that for any given surface $\mathcal{S}$, there exists a unique level set function ψ whose coefficient set {ck : k ∈ Λ} has the smallest bandwidth.

Proposition 1.

For every (hyper-)surface $\mathcal{S}$ given by the zero level set of (10), there is a unique (up to scaling) minimal trigonometric polynomial ψ, which satisfies $\mathcal{S} = \mathcal{S}[\psi]$. Any other trigonometric polynomial ψ1 that also satisfies $\mathcal{S} = \mathcal{S}[\psi_1]$ will have BW(ψ1) ⊇ BW(ψ). Here, BW(ψ) denotes the bandwidth of the function ψ.

As seen from (12), the coefficients of ψ1 can be shifted versions of the coefficients of ψ. Thus, the Fourier support of ψ1 is larger than (contains) the Fourier support of ψ; the degree of the trigonometric polynomial ψ1 is larger than the degree of the minimal polynomial ψ, which has the smallest degree or, equivalently, bandwidth. In this sense, the minimal polynomial ψ is unique, up to scaling. The proof of this result is given in Appendix 9.1. We refer to the ψ of the form (6) with the minimal bandwidth Λ that satisfies

$$\mathcal{S} = \mathcal{S}[\psi] \tag{13}$$

as the minimal trigonometric polynomial of the surface $\mathcal{S}$.

In other words, when ψ is the minimal trigonometric polynomial of a surface $\mathcal{S}$, it does not have a factor with no zeros (i.e., a factor that never vanishes, or vanishes only at isolated points, on [0,1)^n). In particular, if a polynomial has such a factor, one can remove this factor and obtain a polynomial with a smaller bandwidth and the same zero set. Note from (8) that the minimal trigonometric polynomial corresponds to $\tilde\psi$ being a polynomial with the minimal degree.

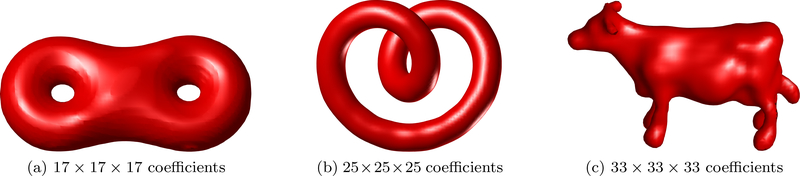

As mentioned at the beginning of this section, the bandwidth Λ of the minimal polynomial of the surface $\mathcal{S}$ grows with the complexity of $\mathcal{S}$; a more oscillatory surface with a lot of detail corresponds to a high-bandwidth minimal polynomial, while a simple and highly smooth surface corresponds to a low-bandwidth minimal polynomial. We hence consider |Λ| as a complexity measure of the surface. Furthermore, we note that the surface model can approximate an arbitrary closed surface with any degree of accuracy, as long as the bandwidth is large enough [30]. We refer the reader to Fig. 2 in [30] for an illustration in 2D and to Fig. 1 for an illustration in 3D. We illustrate this idea in 2D/3D for simplicity, but the approach applies to any dimension.

Figure 1:

Illustration of the versatility of our level set representation model in 3D. The three examples show that our model is capable of capturing the geometry of shapes with complicated topologies, which demonstrates that the representation is not restrictive.

2.3.4. Irreducible bandlimited surfaces

We now introduce the concept of irreducible polynomials, which is important for our results. We term a surface irreducible if its minimal trigonometric polynomial is irreducible. A polynomial is irreducible if it cannot be factorized into smaller factors whose zero sets are within [0,1)^n. Most irreducible surfaces are connected (i.e., consist of a single connected component). Intuitively, a general surface may be composed of several connected components, where each connected component is irreducible. In this case, we term the surface a union of irreducible surfaces. The minimal polynomial of a union of irreducible surfaces is the product of the irreducible minimal polynomials of the individual connected components. The following definitions put the above explanations into more concrete terms:

Definition 2.

A surface is termed as irreducible, if it is the zero set of an irreducible trigonometric polynomial.

Definition 3.

A trigonometric polynomial ψ(x) is said to be irreducible if the corresponding polynomial $\tilde\psi(z)$ is irreducible in $\mathbb{C}[z_1, \dots, z_n]$. A polynomial p is irreducible over the field of complex numbers if it cannot be expressed as the product of two or more non-constant polynomials with complex coefficients.

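When ψ can be written as the product of several irreducible components, $\psi = \psi_1 \psi_2 \cdots \psi_M$, then $\mathcal{S}[\psi]$ is essentially the union of irreducible surfaces:

$$\mathcal{S}[\psi] = \bigcup_{i=1}^{M} \mathcal{S}[\psi_i]. \tag{14}$$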
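The following minimal sketch illustrates (14). It assumes two toy trigonometric polynomials on [0,1)^2 stored as centered coefficient arrays (a hypothetical layout in which entry [a, b] holds c_k for k = (a − K1, b − K2)): ψ1 = cos(2πx1) + cos(2πx2) − 0.5 and its translate by (1/2, 1/2), ψ2 = −cos(2πx1) − cos(2πx2) − 0.5. Multiplying trigonometric polynomials convolves their coefficient arrays, so the Fourier support of ψ = ψ1ψ2 grows to the Minkowski sum of the individual supports. The product then vanishes on samples of either component.

```python
import numpy as np


def eval_coeff_array(x, C):
    """psi(x) for a centered (2K1+1) x (2K2+1) coefficient array C."""
    k1 = np.arange(C.shape[0]) - (C.shape[0] - 1) // 2
    k2 = np.arange(C.shape[1]) - (C.shape[1] - 1) // 2
    E1 = np.exp(2j * np.pi * np.outer(x[:, 0], k1))     # (N, 2K1+1)
    E2 = np.exp(2j * np.pi * np.outer(x[:, 1], k2))     # (N, 2K2+1)
    return np.einsum("na,ab,nb->n", E1, C, E2)


def poly_product(C1, C2):
    """Coefficients of psi1 * psi2: full 2-D convolution of C1 and C2."""
    m1, n1 = C1.shape
    m2, n2 = C2.shape
    out = np.zeros((m1 + m2 - 1, n1 + n2 - 1), dtype=complex)
    for a in range(m1):
        for b in range(n1):
            out[a:a + m2, b:b + n2] += C1[a, b] * C2
    return out


def sample_toy_curve(N, rng):
    """Exact points on cos(2 pi x1) + cos(2 pi x2) = 0.5."""
    x1 = rng.random(4 * N)
    r = 0.5 - np.cos(2 * np.pi * x1)
    keep = np.abs(r) <= 1.0
    x1, r = x1[keep][:N], r[keep][:N]
    x2 = np.arccos(r) / (2 * np.pi)
    flip = rng.random(x2.size) < 0.5
    return np.column_stack([x1, np.where(flip, 1.0 - x2, x2) % 1.0])


# psi1 = cos(2 pi x1) + cos(2 pi x2) - 0.5; psi2 is psi1 translated by (1/2, 1/2)
C1 = np.array([[0, 0.5, 0], [0.5, -0.5, 0.5], [0, 0.5, 0]], dtype=complex)
C2 = np.array([[0, -0.5, 0], [-0.5, -0.5, -0.5], [0, -0.5, 0]], dtype=complex)
C = poly_product(C1, C2)                                 # 5 x 5 support

rng = np.random.default_rng(5)
pts1 = sample_toy_curve(30, rng)
pts2 = (sample_toy_curve(30, rng) + 0.5) % 1.0           # points with psi2 = 0
x_any = rng.random((30, 2))

prod_ok = np.allclose(eval_coeff_array(x_any, C),
                      eval_coeff_array(x_any, C1) * eval_coeff_array(x_any, C2))
zeros_ok = (np.allclose(eval_coeff_array(pts1, C), 0.0, atol=1e-10)
            and np.allclose(eval_coeff_array(pts2, C), 0.0, atol=1e-10))
print(C.shape, prod_ok, zeros_ok)  # (5, 5) True True
```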

3. Lifting mapping and low-dimensional feature spaces

In this section, we show that there exists a non-linear transformation that maps the points on an irreducible surface to a low-dimensional subspace. The transformation is intimately tied to the specific choice of basis functions used to represent the surface. Our results show that the dimension of the subspace depends on the complexity of the surface or, equivalently, on the bandwidth of the minimal polynomial. We can use the rank of the feature matrix as a surrogate for the complexity of the surface to recover it, much like sparsity is used to recover signals in compressed sensing.

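Consider the non-linear lifting mapping $\Phi_\Gamma : [0,1)^n \to \mathbb{C}^{|\Gamma|}$, obtained by evaluating the basis functions at x:

$$\Phi_\Gamma(x) = \left[\varphi_k(x)\right]_{k\in\Gamma}. \tag{15}$$

We can view ΦΓ(x) as the feature vector of the point x, analogous to the ones used in kernel methods [45]. Here, |Γ| denotes the cardinality of the set Γ. We denote the span of the feature vectors of the points on the surface,

$$\mathcal{V}_\Gamma(\mathcal{S}) = \operatorname{span}\left\{\Phi_\Gamma(x) : x \in \mathcal{S}\right\}, \tag{16}$$

as the feature space of the surface $\mathcal{S}$. Since any point on a surface satisfies (3), the feature vectors of points from $\mathcal{S}$ satisfy

$$c^T \Phi_\Lambda(x) = 0, \quad \forall x \in \mathcal{S}, \tag{17}$$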

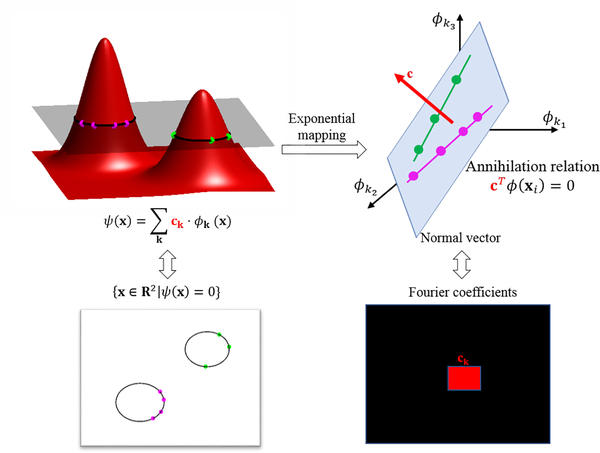

where c is the coefficients vector in the representation of ψ in (6). The above relation is illustrated in Fig. 2.

Figure 2:

Illustration of the annihilation relation (17) in 2D. Assume that the curve is the zero set of a band-limited function ψ(x), shown in the top left. The Fourier coefficients of ψ, denoted by c, are supported in Λ, denoted by the red square in the bottom right. Each point on the curve satisfies ψ(xi) = 0. Using the representation (6), we have cTΦΛ(xi) = 0. This means that the feature map lifts each point in the level set to the (|Λ| − 1)-dimensional subspace whose normal vector is specified by c, as illustrated by the plane and the red vector c in the top right. Note that if more than one closed curve is present, each curve is lifted to a lower-dimensional subspace in the feature space, as shown by the two lines in the plane, and these lower-dimensional subspaces together span the (|Λ| − 1)-dimensional subspace. (Figure courtesy of Q. Zou, reprinted from [60] with permission from IEEE.)

The relation (17) also implies that c is orthogonal to the feature vectors of all points living on $\mathcal{S}$, and hence a feature matrix constructed from points on the surface is rank deficient by at least one; i.e., the dimension of the feature space is at most |Γ| − 1. Moreover, we now show that the feature matrix is often significantly low-rank, depending on the geometry of the surface and the specific representation of the surface.
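The annihilation relation (17) and the resulting rank deficiency can be observed numerically. The sketch below assumes the toy curve cos(2πx1) + cos(2πx2) = 0.5 (Λ = {−1, 0, 1}^2, so |Λ| = 9) and uses hypothetical helper names. It stacks the exponential features of exact curve samples as the columns of Φ_Λ(X), checks that cᵀΦ_Λ(X) ≈ 0, and compares the numerical rank with that of the features of random points in [0,1)^2, which are not on the curve. For this irreducible toy curve, the curve features have rank |Λ| − 1 = 8, whereas the random points give full rank.

```python
import numpy as np
from itertools import product


def box(K, n=2):
    return np.array(list(product(range(-K, K + 1), repeat=n)))


def features(X, Gam):
    """Phi_Gamma(X): column i is [exp(j 2 pi k^T x_i)]_{k in Gamma}."""
    return np.exp(2j * np.pi * (Gam @ X.T))


def numerical_rank(A, rel_tol=1e-8):
    """Count singular values above rel_tol times the largest one."""
    s = np.linalg.svd(A, compute_uv=False)
    return int(np.sum(s > rel_tol * s[0]))


def sample_toy_curve(N, rng):
    """Exact points on cos(2 pi x1) + cos(2 pi x2) = 0.5."""
    x1 = rng.random(4 * N)
    r = 0.5 - np.cos(2 * np.pi * x1)
    keep = np.abs(r) <= 1.0
    x1, r = x1[keep][:N], r[keep][:N]
    x2 = np.arccos(r) / (2 * np.pi)
    flip = rng.random(x2.size) < 0.5
    return np.column_stack([x1, np.where(flip, 1.0 - x2, x2) % 1.0])


Lam = box(1)
toy = {(1, 0): 0.5, (-1, 0): 0.5, (0, 1): 0.5, (0, -1): 0.5, (0, 0): -0.5}
c = np.array([toy.get(tuple(k), 0.0) for k in Lam], dtype=complex)

rng = np.random.default_rng(6)
Phi_curve = features(sample_toy_curve(50, rng), Lam)
Phi_random = features(rng.random((50, 2)), Lam)

residual = np.max(np.abs(c @ Phi_curve))     # max_i |c^T Phi_Lambda(x_i)|
print(residual < 1e-10, numerical_rank(Phi_curve), numerical_rank(Phi_random))
```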

3.1. Shift invariant representation

We now show that if the level set function is represented using a shift invariant representation (e.g., B-splines), the dimension of the lifted feature points depends on the area of the surface. We consider $\varphi_k(x) = \beta_p(x/T - k)$ to be the pth-degree tensor-product B-spline function. Note that βp(x) is support limited in [−(p+1)/2, (p+1)/2]^n. If the support of φk(x) does not overlap with $\mathcal{S}$, we have φk(x) = 0 for all x ∈ $\mathcal{S}$. Hence, we have

$$\mathbf{i}_k^T \Phi_\Gamma(x) = 0, \quad \forall x \in \mathcal{S}, \tag{18}$$

where $\mathbf{i}_k$ is the indicator vector whose kth entry is one and whose remaining entries are zero. Note that all of these indicator vectors are linearly independent. The number of basis functions whose support does not overlap with $\mathcal{S}$ depends on the area of $\mathcal{S}$ as well as on the support of the basis functions. Thus, the dimension of $\mathcal{V}_\Gamma(\mathcal{S})$ is a measure of the area of the surface $\mathcal{S}$, and satisfies

$$\dim \mathcal{V}_\Gamma(\mathcal{S}) \le |\Gamma| - P, \tag{19}$$

where P is the number of basis functions whose support does not overlap with $\mathcal{S}$.
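To illustrate (19) under stated assumptions, the sketch below lifts dense samples of two circles of different radii (a toy choice) with a tensor-product cubic B-spline basis on a grid of spacing T = 0.05. Here P is estimated as the number of basis functions that are zero at every sample, so it can slightly overcount when a support barely touches the curve. The longer circle activates more basis functions, which is consistent with the dimension of the feature space growing with the area (here, the length) of the surface. Helper names are hypothetical.

```python
import numpy as np
from itertools import product


def cubic_bspline(t):
    """Centered cubic B-spline beta^3(t); zero outside [-2, 2]."""
    a = np.abs(t)
    return np.where(a < 1, 2.0 / 3.0 - a**2 + a**3 / 2.0,
                    np.where(a < 2, (2.0 - a) ** 3 / 6.0, 0.0))


def bspline_features(X, T, shifts):
    """Column i holds phi_k(x_i) = prod_j beta^3(x_ij / T - k_j) for all shifts k."""
    t = X[None, :, :] / T - shifts[:, None, :]    # (K, N, 2)
    return np.prod(cubic_bspline(t), axis=-1)     # (K, N)


def circle(radius, N=400, center=(0.5, 0.5)):
    theta = np.linspace(0.0, 2.0 * np.pi, N, endpoint=False)
    return np.column_stack([center[0] + radius * np.cos(theta),
                            center[1] + radius * np.sin(theta)])


T = 0.05
shifts = np.array(list(product(range(-2, int(round(1 / T)) + 3), repeat=2)))
results = {}
for radius in (0.1, 0.3):
    Phi = bspline_features(circle(radius), T, shifts)
    P = int(np.sum(np.all(Phi == 0.0, axis=1)))   # basis functions missing the curve
    results[radius] = (len(shifts) - P, int(np.linalg.matrix_rank(Phi)))
print(results)   # {radius: (|Gamma| - P, rank)}
```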

3.2. Band-limited surface representation

We now consider the case of an arbitrary point x on the zero level set of ψ(x) with bandwidth Λ. Using (10), the lifting is specified by

$$\Phi_\Gamma(x) = \left[e^{j2\pi k^T x}\right]_{k\in\Gamma}. \tag{20}$$

We note from (20) that the lifting Φ can be evaluated with a larger bandwidth Γ ⊃ Λ. When the lifting is performed with the minimal bandwidth (i.e., Γ = Λ), we term the corresponding lifting as the minimal lifting.

We now analyze the dimension of the feature space for the minimal (Γ = Λ) and non-minimal (Λ ⊂ Γ) lifting cases. In both cases, we will show that the feature space is low-dimensional and is a subspace of $\mathbb{C}^{|\Gamma|}$.

3.2.1. Irreducible surface with minimal lifting (Γ = Λ)

We first focus on the case where ψ is an irreducible trigonometric polynomial and the bandwidth of the lifting is specified by Λ, which is the bandwidth of the minimal polynomial. The annihilation relation (17) implies that c is orthogonal to the feature vectors ΦΛ(x). This implies that

$$\dim \mathcal{V}_\Lambda(\mathcal{S}) \le |\Lambda| - 1. \tag{21}$$

3.2.2. Irreducible surface with non-minimal lifting (Γ ⊃ Λ)

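We now consider the setting where the non-linear lifting is specified by ΦΓ(x), where Λ ⊂ Γ. Because of the annihilation relation, we have $\tilde c^T \Phi_\Gamma(x) = 0$ for all $x \in \mathcal{S}$, where $\tilde c$ denotes the zero-filled coefficients in (11). Since the zero set of the function $e^{j2\pi k_0^T x}\psi(x)$ is exactly the same as that of ψ, we have

$$\sum_{k\in\Lambda} c_k\, e^{j2\pi (k+k_0)^T x} = 0, \quad \forall x \in \mathcal{S}, \; \Lambda + k_0 \subseteq \Gamma. \tag{22}$$

This implies that any shift of $\tilde c$ within Γ ⊝ Λ, denoted by $\tilde c_{k_0}$, will satisfy $\tilde c_{k_0}^T \Phi_\Gamma(x) = 0$. It is straightforward to see that the vectors $\tilde c_{k_0}$ are linearly independent for distinct values of k0. We denote the set of possible shifts k0 for which the shifted set Λ + k0 is still within Γ by Γ ⊝ Λ, and its cardinality by |Γ ⊝ Λ|:

$$\Gamma \ominus \Lambda = \left\{k_0 \in \mathbb{Z}^n : \Lambda + k_0 \subseteq \Gamma\right\}. \tag{23}$$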

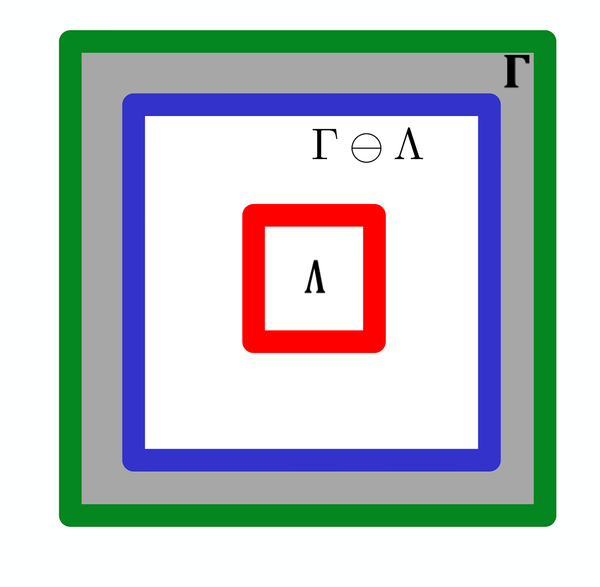

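This set is illustrated in Fig. 3 along with Γ and Λ. Since the vectors $\tilde c_{k_0}$, k0 ∈ Γ ⊝ Λ, are linearly independent and annihilate every feature vector ΦΓ(x) with x on $\mathcal{S}$, the dimension of the feature space is bounded by

$$\dim \mathcal{V}_\Gamma(\mathcal{S}) \le |\Gamma| - |\Gamma \ominus \Lambda|. \tag{24}$$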

Figure 3:

The non-minimal filter bandwidth Γ (green) is illustrated along with the minimal filter bandwidth Λ (red). The set Γ ⊝ Λ (blue) contains all indices at which Λ can be centered, while remaining inside Γ.
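The bound (24) can be sketched numerically, assuming the toy curve cos(2πx1) + cos(2πx2) = 0.5 with the minimal support Λ = {−1, 0, 1}^2 and an over-estimated box Γ = {−2, ..., 2}^2; the helper names are hypothetical. `shift_set` enumerates Γ ⊝ Λ directly from definition (23): any valid shift must map the first element of Λ into Γ, so it suffices to test the candidates Γ − λ1. Here |Γ| = 25 and |Γ ⊝ Λ| = 9, so the numerical rank of Φ_Γ(X) should not exceed 16.

```python
import numpy as np
from itertools import product


def box(K, n=2):
    return np.array(list(product(range(-K, K + 1), repeat=n)))


def features(X, Gam):
    """Phi_Gamma(X): column i is [exp(j 2 pi k^T x_i)]_{k in Gamma}."""
    return np.exp(2j * np.pi * (Gam @ X.T))


def numerical_rank(A, rel_tol=1e-8):
    s = np.linalg.svd(A, compute_uv=False)
    return int(np.sum(s > rel_tol * s[0]))


def sample_toy_curve(N, rng):
    """Exact points on cos(2 pi x1) + cos(2 pi x2) = 0.5."""
    x1 = rng.random(4 * N)
    r = 0.5 - np.cos(2 * np.pi * x1)
    keep = np.abs(r) <= 1.0
    x1, r = x1[keep][:N], r[keep][:N]
    x2 = np.arccos(r) / (2 * np.pi)
    flip = rng.random(x2.size) < 0.5
    return np.column_stack([x1, np.where(flip, 1.0 - x2, x2) % 1.0])


def shift_set(Gam, Lam):
    """All shifts k0 with Lambda + k0 contained in Gamma (definition (23))."""
    G = {tuple(k) for k in Gam}
    candidates = Gam - Lam[0]          # a valid k0 must map Lam[0] into Gamma
    return [k0 for k0 in candidates if all(tuple(k + k0) in G for k in Lam)]


Lam, Gam = box(1), box(2)
shifts = shift_set(Gam, Lam)
X = sample_toy_curve(100, np.random.default_rng(7))
r = numerical_rank(features(X, Gam))
print(len(Gam), len(shifts), r, len(Gam) - len(shifts))
```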

3.2.3. Union of irreducible surfaces with Γ ⊃ Λi

When $\psi = \psi_1 \psi_2 \cdots \psi_M$ with Λi ⊂ Γ, each irreducible surface $\mathcal{S}[\psi_i]$ will be mapped to a subspace of dimension at most |Γ| − |Γ ⊝ Λi|. This implies that the non-linear lifting transforms the union of irreducible surfaces to the well-studied union of subspaces model [14,23,25].
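A small sketch of the union-of-subspaces picture follows. It assumes two disjoint toy curves, ψ1 = cos(2πx1) + cos(2πx2) − 0.5 and its translate by (1/2, 1/2), ψ2 = −cos(2πx1) − cos(2πx2) − 0.5, both lifted with Γ = Λ = {−1, 0, 1}^2; helper names are hypothetical. The features of each curve occupy their own 8-dimensional subspace, namely the vectors annihilated by that curve's coefficient vector, while the combined samples span all nine dimensions. The coefficient vector c1 annihilates the first curve but not the second; on the second curve, ψ1 equals −1 identically.

```python
import numpy as np
from itertools import product


def box(K, n=2):
    return np.array(list(product(range(-K, K + 1), repeat=n)))


def features(X, Gam):
    return np.exp(2j * np.pi * (Gam @ X.T))


def numerical_rank(A, rel_tol=1e-8):
    s = np.linalg.svd(A, compute_uv=False)
    return int(np.sum(s > rel_tol * s[0]))


def sample_toy_curve(N, rng):
    """Exact points on cos(2 pi x1) + cos(2 pi x2) = 0.5."""
    x1 = rng.random(4 * N)
    r = 0.5 - np.cos(2 * np.pi * x1)
    keep = np.abs(r) <= 1.0
    x1, r = x1[keep][:N], r[keep][:N]
    x2 = np.arccos(r) / (2 * np.pi)
    flip = rng.random(x2.size) < 0.5
    return np.column_stack([x1, np.where(flip, 1.0 - x2, x2) % 1.0])


Lam = box(1)
toy1 = {(1, 0): 0.5, (-1, 0): 0.5, (0, 1): 0.5, (0, -1): 0.5, (0, 0): -0.5}
toy2 = {k: -0.5 for k in toy1}           # psi2(x) = psi1(x - (1/2, 1/2))
c1 = np.array([toy1.get(tuple(k), 0.0) for k in Lam], dtype=complex)
c2 = np.array([toy2.get(tuple(k), 0.0) for k in Lam], dtype=complex)

rng = np.random.default_rng(8)
Phi1 = features(sample_toy_curve(50, rng), Lam)
Phi2 = features((sample_toy_curve(50, rng) + 0.5) % 1.0, Lam)

ranks = (numerical_rank(Phi1), numerical_rank(Phi2),
         numerical_rank(np.hstack([Phi1, Phi2])))
cross = (np.max(np.abs(c1 @ Phi1)), np.min(np.abs(c1 @ Phi2)))
print(ranks, cross)
```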

4. Surface recovery from samples

In this section, we will use the low-rank structure of the feature maps of the points to recover the surface. As discussed in the introduction, the recovery of a surface/manifold from point clouds is an important problem in denoising, machine learning, shape recovery from point clouds, and image segmentation. For presentation purposes, we consider different cases in increasing order of complexity. In particular, we consider irreducible (single connected component) surfaces with minimal lifting, unions of irreducible components with minimal lifting, and finally the case with non-minimal lifting. Note that in practice, the bandwidth of the surface is not known a priori, and hence one has to over-estimate the bandwidth; this translates to the non-minimal lifting setting. Our results in this section show that irreducible surfaces can be recovered from very few samples, as long as the number of samples exceeds a number proportional to the bandwidth. Unions of irreducible surfaces can also be recovered from few samples, but each of the irreducible components needs to be sampled adequately to guarantee perfect recovery.

4.1. Sampling theorems

We consider the recovery of the surface from its samples xi, i = 1, ⋯, N. According to the analysis in the previous section, if a sampling point xi lies on the zero level set of ψ(x), it satisfies the annihilation relation (17). Note that (17) is a linear equation in the unknown coefficients c. Since all the samples xi, i = 1, ⋯, N, satisfy the annihilation relation (17), we have

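$$\mathbf{c}^T \Phi_\Lambda(\mathbf{X}) = \mathbf{0}, \qquad \Phi_\Lambda(\mathbf{X}) = \left[\Phi_\Lambda(\mathbf{x}_1), \cdots, \Phi_\Lambda(\mathbf{x}_N)\right] \qquad (25)$$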

We call ΦΛ(X) the feature matrix of the sampling set X = {x1, ⋯, xN}. We propose to estimate the coefficients c, and hence the surface, using the linear relation (25). Since (25) is invariant to the scale of c, we can, without loss of generality, reformulate the estimation of the surface as the solution to the system of equations

$$\mathbf{c}^T \Phi_\Lambda(\mathbf{X}) = \mathbf{0}, \qquad \|\mathbf{c}\|_F = 1 \qquad (26)$$

Without the constraint ||c||F = 1, the relation cᵀ ΦΛ(X) = 0 would admit the trivial solution c = 0. The norm constraint allows us to solve the problem using an eigendecomposition (equivalently, a singular value decomposition) of the feature matrix. The above estimation scheme yields a unique solution (up to scaling) if ΦΛ(X) has a single null-space basis vector. We now determine the number of samples N, and their distribution on the surface, that guarantee unique recovery of the surface. We consider the lifting scenarios introduced in Section 3 separately. As we will see, in some of the cases below the null space has a large dimension; however, the minimal null-space vector (the coefficients with the minimal bandwidth) still uniquely identifies the surface, provided the sampling conditions are satisfied.

4.1.1. Case 1: Irreducible surfaces with minimal lifting

Suppose ψ(x) is an irreducible trigonometric polynomial with bandwidth Λ, and consider the lifting specified by the minimal bandwidth Λ. We see from (21) that rank(ΦΛ(X)) ≤ |Λ| − 1. The following result shows when this inequality becomes an equality.

Proposition 4.

Let {x1, ⋯, xN} be N independent and uniformly distributed random samples on the zero level set of ψ(x), where ψ(x) is an irreducible (minimal) trigonometric polynomial with bandwidth Λ. Then, for almost all such surfaces, the feature matrix ΦΛ(X) has rank |Λ| − 1 if

N ≥ |Λ| − 1.

The above result holds for almost all surfaces; that is, the surfaces for which it fails form a set of measure zero [10]. The proposition guarantees that, with probability one, the solution to the system of equations (26) is unique (up to scaling) when the number of samples satisfies N ≥ |Λ| − 1. The proof of this proposition can be found in Appendix 9.2. Proposition 4 yields the following sampling theorem.

Theorem 5 (Irreducible surfaces of any dimension).

Let ψ(x), x ∈ [0,1]ⁿ, n ≥ 2, be an irreducible trigonometric polynomial with bandwidth Λ. If we are given N ≥ |Λ| − 1 random samples on the zero level set of ψ(x), then almost all such surfaces can be recovered.

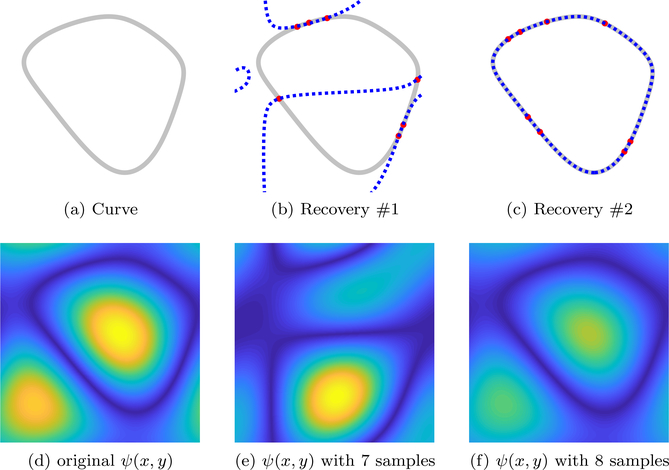

This theorem generalizes the results in [60] to any dimension n ≥ 2 and is illustrated in Fig. 4 and Fig. 5.

Figure 4:

Illustration of Theorem 5 in 2D. The irreducible curve in (a) is the zero level set of a trigonometric polynomial with bandwidth 3 × 3. According to Theorem 5, at least 3 · 3 − 1 = 8 samples are needed to recover the curve. In (b), we randomly choose 7 samples (red dots) on the original curve (gray). The blue dashed curve is the curve recovered from these 7 samples; since the sampling condition is not satisfied, the recovery fails. In (c), we randomly choose 8 points (red dots); the recovered curve (blue dashed) overlaps the original curve (gray), meaning that the curve is recovered perfectly. Panels (d)-(f) show the original trigonometric polynomial, the polynomial obtained from 7 samples, and the polynomial obtained from 8 samples.
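The estimate (26) can be sketched numerically in the setting of Fig. 4. This is a minimal NumPy sketch, not the authors' implementation: it assumes the lifting ΦΛ(x) has entries exp(j2πkᵀx), k ∈ Λ, and uses a hypothetical test curve ψ(x) = cos(2πx1) + cos(2πx2) − 1/2, which is irreducible and has a 3 × 3 bandwidth. The null-space vector is read off the SVD of ΦΛ(X)ᵀ, which is equivalent to the eigendecomposition mentioned in Section 4.1.

```python
import numpy as np

# Minimal bandwidth Λ = {-1, 0, 1}^2, i.e. a 3 x 3 box with |Λ| = 9.
LAM = np.array([(a, b) for a in (-1, 0, 1) for b in (-1, 0, 1)])

def features(X, idx):
    """Assumed lifting: entry (k, i) = exp(j 2π k^T x_i); shape |idx| x N."""
    return np.exp(2j * np.pi * (idx @ X.T))

def true_coeffs():
    """Coefficients on LAM of the test curve ψ(x) = cos(2πx1) + cos(2πx2) - 1/2."""
    vals = {(1, 0): 0.5, (-1, 0): 0.5, (0, 1): 0.5, (0, -1): 0.5, (0, 0): -0.5}
    return np.array([vals.get((int(k[0]), int(k[1])), 0.0) for k in LAM])

def sample_curve(N, rng):
    """N random points on the zero level set of the test ψ (solve for x2 given x1)."""
    pts = []
    while len(pts) < N:
        x1 = rng.random()
        t = 0.5 - np.cos(2 * np.pi * x1)          # need cos(2π x2) = t
        if abs(t) <= 1.0:
            x2 = rng.choice([-1.0, 1.0]) * np.arccos(t) / (2 * np.pi)
            pts.append((x1, x2 % 1.0))
    return np.array(pts)

def null_vectors(Phi, tol=1e-8):
    """Orthonormal basis (columns) of {c : c^T Phi = 0}, from the SVD of Phi^T."""
    _, s, Vh = np.linalg.svd(Phi.T)               # full_matrices=True, so Vh is square
    rank = int(np.sum(s > tol * s[0]))
    return Vh[rank:].conj().T

def recover(N, seed=0):
    """Return (null-space dimension, |cosine| between the estimate and the true c)."""
    C = null_vectors(features(sample_curve(N, np.random.default_rng(seed)), LAM))
    c = true_coeffs()
    return C.shape[1], abs(np.vdot(c, C[:, -1])) / np.linalg.norm(c)

print(recover(7))   # two null vectors: the curve is not identifiable from 7 samples
print(recover(8))   # one null vector, parallel to the true coefficients
```

With 7 samples the null space is two-dimensional, so (26) does not single out the curve; with 8 samples it is one-dimensional and the estimate matches the true coefficients up to scale, in line with Theorem 5.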

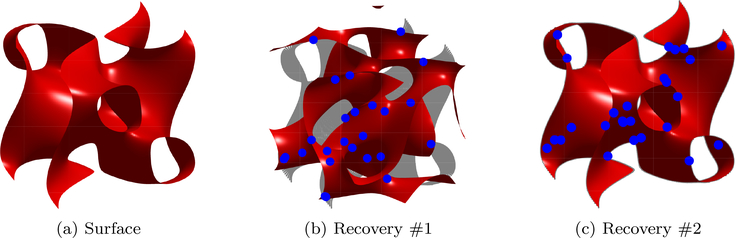

Figure 5:

Illustration of Theorem 5 in 3D. The irreducible surface given by (a) is the original surface, which is given by the zero level set of a trigonometric polynomial whose bandwidth is 3 × 3 × 3. According to Theorem 5, we will need at least 26 samples to recover the surface. In (b), we randomly choose 25 samples (the blue dots) on the original surface (the gray part). The red surface is what we recovered from the 25 samples. Since the sampling condition is not satisfied, the recovery failed. In (c), we randomly choose 26 points (the blue dots). From (c), we see that the red surface (recovered surface) overlaps the gray surface (the original surface), meaning that we recover the surface perfectly.

When n = 2, the zero level set of ψ is a planar curve. If the bandwidth Λ of ψ is a rectangular region of size k1 × k2, the sampling theorem guarantees perfect recovery with probability one when the number of random samples on the curve is at least k1 · k2 − 1. Note that the number of degrees of freedom in the representation (6) is k1 · k2 − 1 once the constraint ||c||F = 1 is imposed. Hence, perfect recovery is obtained as soon as the number of samples matches the number of degrees of freedom. These results are significantly less conservative than the ones in [60], which required a minimum of (k1 + k2)² samples. However, the results in [60] are worst-case guarantees: they ensure recovery of the curve from any (k1 + k2)² samples. By contrast, the current results hold with probability one; there may exist sets of N ≥ k1 · k2 − 1 samples (forming a set of measure zero) from which unique recovery is not possible.
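Plugging the bandwidths used in Figs. 4 and 6 into the two bounds gives a sense of the gap (simple arithmetic on the expressions above):

```latex
\begin{aligned}
k_1 = k_2 = 3:&\quad k_1 k_2 - 1 = 8,  &\quad (k_1 + k_2)^2 = 36,\\
k_1 = k_2 = 5:&\quad k_1 k_2 - 1 = 24, &\quad (k_1 + k_2)^2 = 100.
\end{aligned}
```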

The current work is motivated by the phase transition experiments (Fig. 5) in [60], which show that the curve can be recovered in most cases once the number of samples reaches k1 · k2 − 1, rather than the conservative bound of (k1 + k2)². It is not straightforward to extend the proof in [60] beyond n = 2: that proof relied on Bezout's inequality, which does not generalize easily to higher dimensions.

4.1.2. Case 2: Union of irreducible surfaces with minimal lifting

We now consider a union of irreducible surfaces, where ψ has several irreducible factors ψ(x) = ψ1(x)⋯ψM(x). The zero level set of ψ is then the union of the zero level sets of the factors ψi. Suppose the bandwidth of ψ(x) is Λ and the bandwidth of each factor ψi(x) is Λi. We have the following result for this setting.

Proposition 6.

Let ψ(x) be a trigonometric polynomial with M irreducible factors, i.e.,

$$\psi(\mathbf{x}) = \prod_{i=1}^{M} \psi_i(\mathbf{x}) \qquad (27)$$

Suppose the bandwidth of each factor ψi(x) is Λi and the bandwidth of ψ is Λ. Assume that {x1, ⋯, xN} are N independently chosen, uniformly distributed random samples on the zero level set of ψ. Then, with probability 1, the feature matrix ΦΛ(X) has rank |Λ| − 1 for almost all ψ if

each irreducible factor is randomly sampled with Ni ≥ |Λi| − 1 points, and

the total number of samples satisfies N ≥ |Λ| − 1.

As with the previous proposition, the above result holds for almost all ψ; the set of ψ for which it fails has measure zero [10]. The proof of this result can be found in Appendix 9.2.3. Based on this proposition, we obtain the following sampling theorem.

Theorem 7 (Union of irreducible surfaces of any dimension).

Let ψ(x) be a trigonometric polynomial with M irreducible factors as in (27). If the samples x1, ⋯, xN satisfy the conditions in Proposition 6, then the zero level set of ψ can be uniquely recovered by solving (26) for almost all ψ.

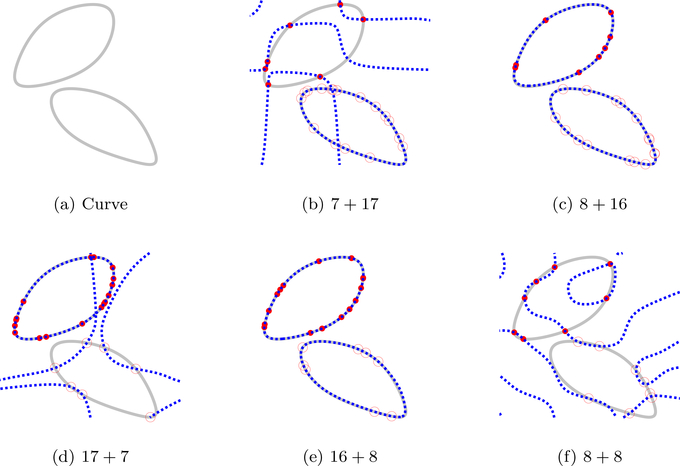

Unlike Theorem 5, which imposes no constraints on how the samples are distributed, the above result requires each component to be sampled at a minimum rate set by the degrees of freedom of that component. We illustrate this result in Fig. 6 in 2D (n = 2), where the curve is the union of two irreducible curves, each with bandwidth 3 × 3. The theorem shows that if each of these irreducible curves is sampled with at least eight points and the total number of samples is at least 24, we can uniquely identify the union of curves. The experiments show that the recovery fails if either condition is violated; by contrast, when the randomly chosen points satisfy both conditions, we obtain perfect recovery.
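The two conditions of Proposition 6 are simple to check. The sketch below assumes box-shaped bandwidths and that the bandwidth of a product is the Minkowski sum of the factor boxes (so two 3 × 3 factors give 5 × 5, as in Fig. 6); the function names are hypothetical. Run on the sample splits used in Fig. 6(b)-(f), it reproduces the observed failures and successes.

```python
import math

def product_shape(*shapes):
    """Box bandwidth of a product of box-shaped factors (Minkowski sum of the boxes)."""
    return tuple(sum(sides) - (len(shapes) - 1) for sides in zip(*shapes))

def union_conditions(factor_shapes, counts):
    """Check the two sampling conditions of Proposition 6 for box-shaped bandwidths."""
    per_factor = all(n_i >= math.prod(s) - 1 for s, n_i in zip(factor_shapes, counts))
    total = sum(counts) >= math.prod(product_shape(*factor_shapes)) - 1
    return per_factor and total

shapes = [(3, 3), (3, 3)]            # two 3 x 3 factors; their product is 5 x 5
for counts in [(7, 17), (8, 16), (17, 7), (16, 8), (8, 8)]:
    print(counts, union_conditions(shapes, counts))
```

Only the (8, 16) and (16, 8) splits pass: (7, 17) and (17, 7) under-sample one component, and (8, 8) has only 16 < 24 samples in total.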

Figure 6:

Illustration of Theorem 7. The original curve (a) is the zero set of a reducible trigonometric polynomial with bandwidth 5 × 5, which is the product of two trigonometric polynomials with bandwidth 3 × 3. According to the sampling theorem, we need at least 24 samples in total, with at least 8 samples on each component. We first choose 7 samples (red dots) on the first component and 17 samples (red circles) on the second one. The gray curve in (b) is the original curve and the blue dashed curve is the one recovered from the 7 + 17 = 24 samples; since the sampling condition is not satisfied, the recovery fails. In (c), we choose 8 samples (red dots) on the first component and 16 samples (red circles) on the second one; the original curve (gray) overlaps the recovered curve (blue dashed), meaning that the curve is recovered successfully. In (d), we choose 17 samples on the first component and 7 samples on the second; the recovery is not successful. In (e), we have 16 samples on the first component and 8 samples on the second; the original curve overlaps the recovered one, so the recovery is perfect. Lastly, we choose 8 samples on each component, for a total of only 16, and the recovery fails, as shown in (f). Note that the recovered curves pass through the samples in all cases.

4.1.3. Case 3: Non-minimal lifting

In Sections 4.1.1 and 4.1.2, we introduced theoretical guarantees for the perfect recovery of the surface in any dimension. These sampling theorems assume that the bandwidth of the surface, or of the union of surfaces, is known exactly. In practice, however, the true bandwidth of the surface is usually unknown. We now consider the recovery of the surface when the bandwidth is over-estimated, or equivalently when the lifting is performed with Γ ⊃ Λ. As discussed in Section 3.2.2, the dimension of the subspace spanned by the lifted features of points on the surface is upper bounded by |Γ| − |Γ ⊝ Λ|, which implies that

$$\operatorname{rank}\left(\Phi_\Gamma(\mathbf{X})\right) \le |\Gamma| - |\Gamma \ominus \Lambda|,$$

where |Γ ⊝ Λ| is the number of valid shifts of Λ within Γ, as discussed in Section 3.2.2.

The following two propositions show when the above rank inequality becomes an equality, in which case the surface can be recovered.

Proposition 8 (Irreducible surface with non-minimal lifting).

Let ψ(x) be an irreducible trigonometric polynomial with true bandwidth Λ, and let {x1, ⋯, xN} be N independently chosen random samples on its zero level set. Suppose the lifting is performed using a bandwidth Γ ⊃ Λ. Then rank(ΦΓ(X)) = |Γ| − |Γ ⊝ Λ| for almost all ψ if N ≥ |Γ| − |Γ ⊝ Λ|.

The proof of this proposition can be found in Appendix 9.2.4.

Proposition 9 (Union of irreducible surfaces with non-minimal lifting).

Let ψ(x) be a randomly chosen trigonometric polynomial with M irreducible factors, i.e.,

$$\psi(\mathbf{x}) = \prod_{i=1}^{M} \psi_i(\mathbf{x}) \qquad (28)$$

Suppose the bandwidth of each factor ψi(x) is Λi and the bandwidth of ψ is Λ. Let Γi ⊃ Λi be the non-minimal bandwidth of each factor ψi(x), and let Γ ⊃ Λ be the bandwidth of the non-minimal lifting. Assume that {x1, ⋯, xN} are N independently chosen random samples on the zero level set of ψ. Then the feature matrix ΦΓ(X) has rank |Γ| − |Γ ⊝ Λ| for almost all ψ if

each irreducible factor is randomly sampled with Ni ≥ |Γi| − |Γi ⊝ Λi| points, and

the total number of samples satisfies N ≥ |Γ| − |Γ ⊝ Λ|.

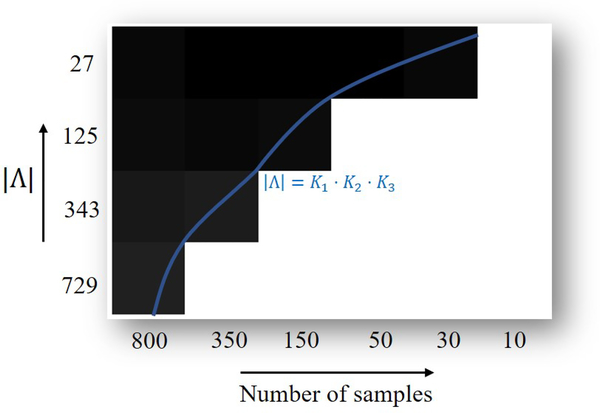

We prove this result in Appendix 9.2.5. In practice, when a non-minimal lifting is performed, we randomly sample approximately |Γ| − |Γ ⊝ Λ| positions on the surface. This random strategy tends to distribute the samples across the factors, roughly satisfying the conditions in Proposition 9. We study this proposition empirically in Fig. 7: we generated several random surfaces by choosing random coefficients, each with a different bandwidth, and considered their recovery from different numbers of samples. The resulting phase transition plot in Fig. 7 agrees well with the theory.

Figure 7:

Effect of the number of sampled points on the surface reconstruction error. We randomly generated several surfaces with different bandwidths and numbers of sampled points, and tried to recover the surfaces from these samples. The phase transition plot shows the reconstruction errors, averaged over several trials, as a function of the bandwidth and the number of sampled points. Black indicates that the true surfaces were recovered in all of the experiments, while white indicates that they were recovered in none of them. The surfaces can almost always be recovered from |Λ| = k1 · k2 · k3 samples.

4.2. Surface recovery algorithm for the non-minimal setting

The two propositions in Section 4.1.3 show that ΦΓ(X) has |Γ ⊝ Λ| null-space basis vectors when the non-minimal lifting with bandwidth Γ is performed. The following result from [60] shows that these null-space vectors are related to the minimal polynomial of the surface; in particular, all of the corresponding polynomials have the minimal polynomial as a factor. We use this property to extract the surface from the null-space vectors as their greatest common divisor. We also introduce a simpler computational strategy, which relies on the sum of squares of the null-space functions.

Proposition 10 (Proposition 9 in [60]).

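The coefficients of any trigonometric polynomial of the form

$$\theta_{\mathbf{k}}(\mathbf{x}) = \psi(\mathbf{x})\, e^{j2\pi \mathbf{k}^T \mathbf{x}}, \qquad \mathbf{k} \in \Gamma \ominus \Lambda,$$

form a null-space vector of ΦΓ(X).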

Note that the coefficients of θk(x) are shifted versions of the coefficients of ψ, and hence are linearly independent for distinct k. We also note that each such function is a valid annihilating function for points on the surface, since it vanishes wherever ψ does. When the dimension of the null space is |Γ ⊝ Λ|, these coefficient vectors form a basis for the null space. Therefore, any function in the null space can be expressed as

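$$\mu(\mathbf{x}) = \sum_{\mathbf{k} \in \Gamma \ominus \Lambda} \alpha_{\mathbf{k}}\, \theta_{\mathbf{k}}(\mathbf{x}) \qquad (29)$$

$$\mu(\mathbf{x}) = \psi(\mathbf{x})\, \gamma(\mathbf{x}), \qquad \gamma(\mathbf{x}) = \sum_{\mathbf{k} \in \Gamma \ominus \Lambda} \alpha_{\mathbf{k}}\, e^{j2\pi \mathbf{k}^T \mathbf{x}} \qquad (30)$$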

where the αk are arbitrary coefficients and γ(x) is the resulting band-limited function. Note that all of the functions obtained from the null-space vectors share ψ as a common factor.

Accordingly, ψ(x) is the greatest common divisor of the polynomials μi(x) = niᵀ ΦΓ(x), where the ni are the null-space vectors of ΦΓ(X), which can be estimated using a singular value decomposition (SVD). Since these are polynomials of several variables, computing their greatest common divisor is computationally expensive. However, we are not interested in recovering the minimal polynomial itself, but only in finding the common zeros of the μi(x). We hence propose to recover the original surface as the zero set of the sum-of-squares (SoS) polynomial Σi |μi(x)|², which vanishes exactly at the common zeros of the μi(x).

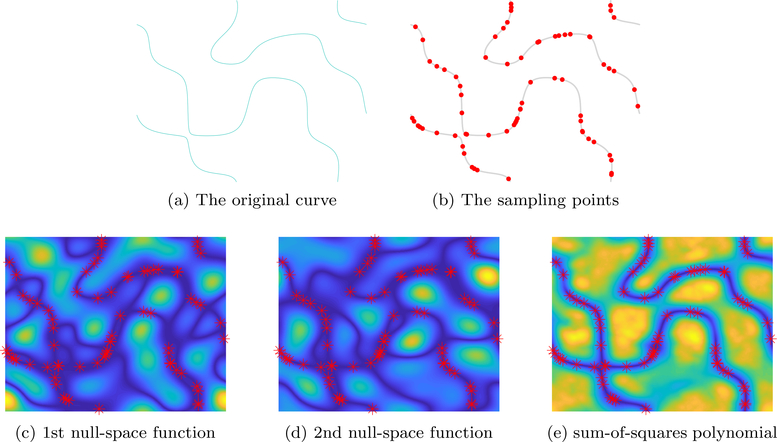

Note that the rank guarantees in Propositions 8 and 9 ensure that the entire null space is identified by the feature matrix. Combined with Proposition 10, we conclude that the above algorithm (SVD, followed by the sum of squares of the inverse Fourier transforms of the null-space coefficients) gives perfect recovery of the surface under noiseless conditions. The algorithm is illustrated in Fig. 8.
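The null-space plus sum-of-squares procedure can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the paper's code: the lifting is assumed to use exp(j2πkᵀx), the test curve ψ(x) = cos(2πx1) + cos(2πx2) − 1/2 (bandwidth 3 × 3) is hypothetical, and the lifting is over-estimated as 5 × 5, so |Γ ⊝ Λ| = 9 and, by Proposition 8, 25 − 9 = 16 samples suffice.

```python
import numpy as np

# Non-minimal lifting Γ = {-2, ..., 2}^2 (|Γ| = 25) for a curve whose true bandwidth is 3 x 3,
# so |Γ ⊝ Λ| = 9 and the rank bound is 25 - 9 = 16.
GAMMA = np.array([(a, b) for a in range(-2, 3) for b in range(-2, 3)])

def features(X, idx=GAMMA):
    """Assumed lifting: entry (k, i) = exp(j 2π k^T x_i)."""
    return np.exp(2j * np.pi * (idx @ X.T))

def sample_curve(N, rng):
    """N random points on the hypothetical curve cos(2πx1) + cos(2πx2) - 1/2 = 0."""
    pts = []
    while len(pts) < N:
        x1 = rng.random()
        t = 0.5 - np.cos(2 * np.pi * x1)          # need cos(2π x2) = t
        if abs(t) <= 1.0:
            pts.append((x1, (rng.choice([-1.0, 1.0]) * np.arccos(t) / (2 * np.pi)) % 1.0))
    return np.array(pts)

def null_vectors(Phi, tol=1e-8):
    """Orthonormal basis (columns) of {c : c^T Phi = 0}, from the SVD of Phi^T."""
    _, s, Vh = np.linalg.svd(Phi.T)
    rank = int(np.sum(s > tol * s[0]))
    return Vh[rank:].conj().T

def sos(X, C):
    """Sum of squares Σ_i |μ_i(x)|^2, with μ_i(x) = n_i^T Φ_Γ(x) for the columns n_i of C."""
    return np.sum(np.abs(C.T @ features(X)) ** 2, axis=0)

rng = np.random.default_rng(1)
C = null_vectors(features(sample_curve(20, rng)))   # 20 >= 16 samples
on_curve = sos(sample_curve(5, rng), C)             # fresh points on the curve
off_curve = sos(np.array([[0.5, 0.5]]), C)          # ψ(0.5, 0.5) = -2.5, off the curve
print(C.shape[1], on_curve.max(), off_curve[0])
```

With 20 samples, the sketch should find 9 null vectors; the SoS vanishes (up to round-off) on fresh points of the curve and stays bounded away from zero at (0.5, 0.5), where ψ = −2.5.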

Figure 8:

Illustration of the sampling scheme for a non-minimal bandwidth. We consider the curve shown in (a), which is the zero level set of a trigonometric polynomial with bandwidth 5 × 5, and choose the non-minimal bandwidth Γ as 11 × 11. According to the sampling condition for non-minimal bandwidth, we sample 11² − 7² = 72 random locations. We randomly choose two null-space vectors of the feature matrix of the sampling set, which give the functions in (c) and (d). All of these functions vanish on the original zero set, in addition to possessing several other zeros. The sum-of-squares function, shown in (e), reveals the common zeros, which specify the original curve.

4.3. Surface recovery from noisy samples

The analysis in Section 4.1.3 shows that when the bandwidth of the surface is small, the feature matrix is low rank. In practice, the sampling points are usually corrupted by noise. We denote the noisy sampling set by Y = X + N, where N is the noise. We propose to exploit the low-rank structure of the feature matrix to recover the points from noisy measurements. Specifically, when the sampling set X is corrupted by noise, the points deviate from the original surface, and hence the features are no longer low rank. We impose a nuclear norm penalty on the feature matrix, which pushes the feature vectors toward a subspace; since the feature vectors are related to the points by the exponential mapping, the points in turn move toward the surface. In practice, it is expensive to compute the feature map explicitly. We hence rely on an iteratively reweighted least-squares algorithm, coupled with the kernel trick, to avoid computing the features. Since the cost function is non-linear (due to the non-linear kernel), we use a steepest-descent-like algorithm to minimize it. Each iteration of this algorithm resembles non-local means algorithms, which first estimate the weight (Laplacian) matrix from the patches and then perform a smoothing step. This approach also has conceptual similarities to kernel low-rank algorithms used in MRI and computer vision [28, 29]; these algorithms rely on explicit polynomial mappings and low-rank approximation of the features, followed by analytical evaluation of the pre-images, which is possible for polynomial kernels.

We pose the denoising as:

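$$\mathbf{X}^* = \arg\min_{\mathbf{X}}\; \|\mathbf{X} - \mathbf{Y}\|_F^2 + \lambda\, \|\Phi_\Gamma(\mathbf{X})\|_* \qquad (31)$$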

where the nuclear norm of the feature matrix of the sampling set serves as a regularizer. Unlike traditional convex nuclear norm formulations, the above scheme is non-convex, because the feature matrix is a non-linear function of the points.

We adapt the kernel low-rank algorithm in [31,38] to the high dimensional setting to solve (31). This algorithm relies on an iteratively reweighted least squares (IRLS) approach [12, 26] which alternates between the following two steps:

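$$\mathbf{X}^{(n)} = \arg\min_{\mathbf{X}}\; \|\mathbf{X} - \mathbf{Y}\|_F^2 + \lambda\, \operatorname{trace}\left[\Phi_\Gamma(\mathbf{X})\, \mathbf{P}^{(n-1)}\, \Phi_\Gamma(\mathbf{X})^H\right] \qquad (32)$$

and

$$\mathbf{P}^{(n)} = \left[\mathbf{K}(\mathbf{X}^{(n)}) + \epsilon^{(n)} \mathbf{I}\right]^{-1/2} \qquad (33)$$

where ε⁽ⁿ⁾ = ε⁽ⁿ⁻¹⁾/η and η > 1 is a constant. Here, K(X) = ΦΓ(X)ᴴ ΦΓ(X) is the kernel matrix of the points. We use the kernel trick to evaluate K(X): we do not need to evaluate the features explicitly, because each entry of this matrix corresponds to an inner product in feature space: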

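$$\left[\Phi_\Gamma(\mathbf{X})^H \Phi_\Gamma(\mathbf{X})\right]_{i,j} = \Phi_\Gamma(\mathbf{x}_i)^H\, \Phi_\Gamma(\mathbf{x}_j) = \kappa(\mathbf{x}_i, \mathbf{x}_j) \qquad (34)$$

These entries can be evaluated efficiently in ℝⁿ using the non-linear function κ, termed the kernel function, without forming the features.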
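To illustrate the kernel trick in (34), the sketch below compares the explicit product ΦΓ(X)ᴴΦΓ(X) with a closed-form kernel. It assumes the lifting entries are exp(j2πkᵀx) and a cubic bandwidth Γ = {−d, …, d}ⁿ (the q = ∞ case of Section 5.3), for which the kernel factorizes into one-dimensional Dirichlet kernels; for circular bandwidth sets it does not factorize this way. The helper names are hypothetical.

```python
import itertools
import numpy as np

def dirichlet_1d(t, d):
    """D_d(t) = Σ_{k=-d}^{d} exp(j2πkt) = sin((2d+1)πt) / sin(πt), equal to 2d+1 at integers."""
    t = np.asarray(t, dtype=float)
    den = np.sin(np.pi * t)
    out = np.full(t.shape, 2.0 * d + 1.0)
    safe = np.abs(den) > 1e-12
    out[safe] = np.sin((2 * d + 1) * np.pi * t[safe]) / den[safe]
    return out

def kernel_matrix(X, d):
    """Kernel trick for cubic Γ: [K]_ij = Π_m D_d(x_jm - x_im), without forming features."""
    diff = X[None, :, :] - X[:, None, :]          # diff[i, j] = x_j - x_i
    return np.prod(dirichlet_1d(diff, d), axis=-1)

d, n = 3, 3
idx = np.array(list(itertools.product(range(-d, d + 1), repeat=n)))   # |Γ| = 7^3 = 343
X = np.random.default_rng(2).random((6, n))
Phi = np.exp(2j * np.pi * (idx @ X.T))            # explicit |Γ| x N feature matrix
K_explicit = Phi.conj().T @ Phi                   # [K]_ij = Φ(x_i)^H Φ(x_j)
print(np.allclose(K_explicit, kernel_matrix(X, d)))   # True
```

The explicit route costs O(|Γ| N²) with |Γ| = (2d + 1)ⁿ, whereas the kernel route costs O(n N²), which is the point of avoiding the features.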

The dependence of the kernel function on the lifting is detailed in Section 5.3. Since the above problem in (32) is not quadratic, we propose to solve it using gradient descent as in [60]. We note that the cost function in (32) can be rewritten as

| (35) |

where Pi,j are the entries of the matrix P⁽ⁿ⁻¹⁾. As discussed in detail in Section 5.3, the exponential kernel for a circular support, as in Fig. 11(b), can be approximated by a circularly symmetric kernel, i.e., one that depends only on the distance between its two arguments. In this case, the partial derivatives of (32) with respect to one of the vectors xi are

| (36) |

| (37) |

Figure 11:

Bandwidth sets Γ for different values of q.

Here,

| (38) |

Thus, the gradient of the cost function (35) is:

| (39) |

Here, L = D − W is the Laplacian matrix obtained from the weights W, and D is a diagonal matrix whose diagonal entries are the row sums of W, i.e., Dii = Σj Wij.

We note that the gradient of (32) specified by (39) is also the gradient of the cost function

| (40) |

which is used in approaches such as non-local means (NLM) [6] and graph regularization [48]. For a fixed Laplacian, this optimization problem is quadratic and hence has an analytical solution. We thus alternate between solving (40) and updating the weights, and hence the Laplacian matrix, using (38), where P is specified by (33). Despite the similarity to NLM, NLM approaches use a fixed Laplacian, unlike the iterative approach in our work; in addition, the expression for the Laplacian is very different. We refer the reader to [60] for a comparison of the proposed scheme with the above graph-regularized algorithm. Once the points are denoised using the above algorithm, we can use the sum-of-squares approach described in Section 4.2 to recover the surfaces. The algorithm is not very sensitive to the choice of the kernel bandwidth Γ, as long as it over-estimates the true bandwidth Λ of the surface.
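For a fixed weight matrix W, a graph-regularized quadratic of the kind used in NLM and graph regularization has a closed-form minimizer. The sketch below assumes that (40) takes the form ‖X − Y‖²F + λ tr(XᵀLX) with points stored as rows, which may differ from the paper's exact scaling; the Gaussian weights are a hypothetical stand-in for the weights in (38), which the proposed scheme updates between steps.

```python
import numpy as np

def laplacian(W):
    """Graph Laplacian L = D - W, with D the diagonal matrix of row sums of W."""
    return np.diag(W.sum(axis=1)) - W

def graph_regularized_step(Y, W, lam):
    """Closed-form minimizer of ||X - Y||_F^2 + lam * tr(X^T L X) for fixed, symmetric W.

    Rows of X and Y are points; this quadratic is an assumed stand-in for (40).
    Setting the gradient 2(X - Y) + 2 lam L X to zero gives (I + lam L) X = Y.
    """
    L = laplacian(W)
    return np.linalg.solve(np.eye(len(Y)) + lam * L, Y)

def energy(Z, W):
    """Laplacian (smoothness) energy tr(Z^T L Z) = (1/2) Σ_ij W_ij ||z_i - z_j||^2."""
    return np.trace(Z.T @ laplacian(W) @ Z)

rng = np.random.default_rng(4)
Y = rng.random((50, 3))                              # hypothetical noisy points in 3D
sq = ((Y[:, None, :] - Y[None, :, :]) ** 2).sum(-1)  # pairwise squared distances
W = np.exp(-sq / 0.05)                               # hypothetical Gaussian weights
np.fill_diagonal(W, 0.0)
X = graph_regularized_step(Y, W, lam=0.5)
print(energy(Y, W), energy(X, W))                    # the step lowers the smoothness energy
```

Because X minimizes the cost while Y is a feasible candidate, the Laplacian energy of X can never exceed that of Y; the iterative scheme repeats this solve after each weight update.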

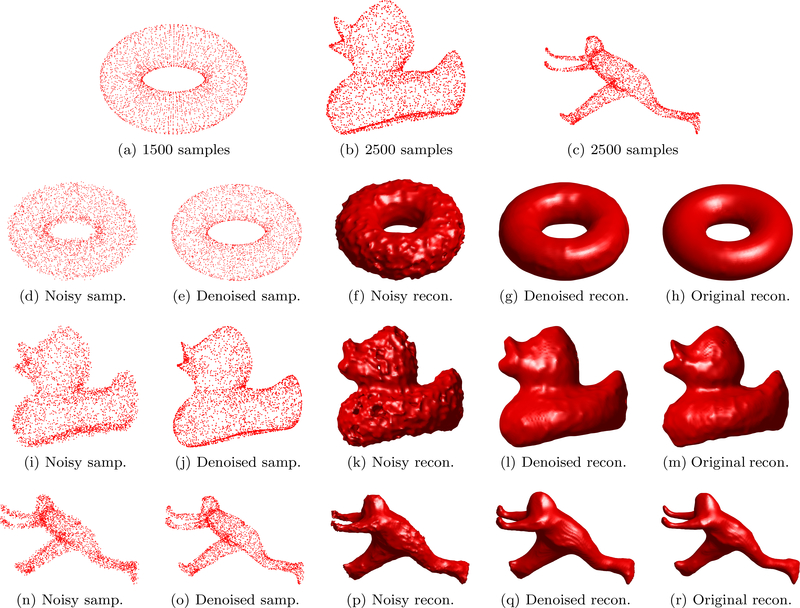

We illustrate this approach in the context of recovering 3D shapes from noisy point clouds in Fig. 9. The data sets are obtained from AIM@SHAPE [1]. The direct approach, where the null-space vectors are computed from the noisy feature matrix, often results in perturbed shapes. By contrast, the nuclear norm prior is able to regularize the recovery.

Figure 9:

Illustration of the point cloud denoising algorithm and the surface recovery algorithm with unknown bandwidth. The first row shows the samples drawn from three surfaces. Noise is added to the samples (see (d), (i), (n)), and the proposed algorithm is then used to denoise the points. The parameter λ in (31) is set to 1.4, and the denoising algorithm is run for 30 iterations. The surfaces recovered from the noisy samples and from the denoised samples are also presented for comparison. The bandwidth was chosen as 31 × 31 × 31 for all the experiments.

5. Recovery of functions on surfaces

As discussed in the introduction, modern machine learning algorithms learn functions from given input-output data pairs [19]. For example, CNN-based denoising approaches, which provide state-of-the-art results, essentially learn to generate noise-free pixels or patches from training data consisting of several noisy and noise-free patch pairs [52,58]. The problem can be formulated as estimating a non-linear function y = f(x), given input-output pairs (xi, yi), i = 1, ⋯, Ntrain. A challenge in representing such a high dimensional function is the large number of parameters, which is often termed the curse of dimensionality. Kernel methods [35], random forests [46], and neural networks [57] provide a powerful class of machine learning models for learning highly non-linear functions, and these models have been widely used in many machine learning tasks [16].

We now show that the results in the previous sections provide an attractive option to compactly represent functions when the data lie on a smooth surface or manifold in a high dimensional space. The manifold assumption is widely used in a range of machine learning problems [11, 13]. Specifically, if the data lie on a smooth surface in a high dimensional space, multidimensional functions on it can be represented very efficiently using few parameters.

We model the function using the same basis functions used to represent the level set function. In our case, we model it as a band-limited multidimensional function (see footnote 2):

| (41) |

The number of free parameters in the above representation is |Γ|, where Γ is the bandwidth of the function. Note that |Γ| grows rapidly with the dimension n; for instance, a cubic bandwidth with d indices along each axis has |Γ| = dⁿ entries. The large number of parameters needed for such a representation makes it difficult to learn such functions from few labeled data points. We now show that if the points lie on the union of irreducible surfaces as in (14), where the bandwidth of ψ is Λ ⊂ Γ, functions of the form (41) can be represented efficiently.

5.1. Compact representation of features using anchor points

We use the upper bound (24) on the dimension of the feature space to derive an efficient representation of functions of the form (41). The bound (24) implies that the features of points on the surface lie in a subspace of dimension at most r = |Γ| − |Γ ⊝ Λ|, which is far smaller than |Γ| especially when the dimension n is large. Kernel methods often approximate the feature space using a few eigenvectors of kernel PCA. However, there is no guarantee that these basis vectors are feature maps of points on the surface. Hence, it is common practice to use all the training samples to capture the low-dimensional feature space in kernel PCA. We now show that it is possible to find a set of N ≥ r anchor points a1, ⋯, aN on the surface such that the features of all points on the surface lie in span{ΦΓ(a1), ⋯, ΦΓ(aN)}. This result is a corollary of Proposition 9.

Corollary 11.

Let ψ(x) be a randomly chosen trigonometric polynomial with M irreducible factors as in (28). Suppose Γi ⊃ Λi is the non-minimal bandwidth of each factor ψi(x) and Γ ⊃ Λ is the total bandwidth. Let {a1, ⋯, aN} be N randomly chosen anchor points on the zero level set of ψ satisfying

each irreducible factor is sampled with Ni ≥ |Γi| − |Γi ⊝ Λi| points, and

the total number of samples satisfies N ≥ |Γ| − |Γ ⊝ Λ|.

Then,

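$$\Phi_\Gamma(\mathbf{x}) \in \operatorname{span}\left\{\Phi_\Gamma(\mathbf{a}_1), \cdots, \Phi_\Gamma(\mathbf{a}_N)\right\} \quad \text{for all } \mathbf{x} \text{ with } \psi(\mathbf{x}) = 0 \qquad (42)$$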

with probability 1.

As discussed in Section 4.1.3, if we randomly choose N ≥ |Γ| − |Γ ⊝ Λ| points on the surface, the anchor points will typically satisfy the conditions in Corollary 11, in which case (42) holds with unit probability. This relation implies that the feature vector of any point x on the surface can be expressed as a linear combination of the features of the anchor points ΦΓ(ai), i = 1, ⋯, N:

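$$\Phi_\Gamma(\mathbf{x}) = \sum_{i=1}^{N} \alpha_i(\mathbf{x})\, \Phi_\Gamma(\mathbf{a}_i) \qquad (43)$$

$$\Phi_\Gamma(\mathbf{x}) = \Phi_\Gamma(\mathbf{A})\, \boldsymbol{\alpha}(\mathbf{x}), \qquad \Phi_\Gamma(\mathbf{A}) = \left[\Phi_\Gamma(\mathbf{a}_1), \cdots, \Phi_\Gamma(\mathbf{a}_N)\right] \qquad (44)$$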

Here, αi(x) are the coefficients of the representation. Note that the complexity of this representation depends on N, which is much smaller than |Γ| when the surface is highly band-limited. The compact representation is exact only for points x on the surface, and not for arbitrary x ∈ [0,1]ⁿ; the representation (44) is invalid for points off the surface.

However, this direct approach requires the computation of the high dimensional feature matrix, and hence may not be computationally feasible for high dimensional problems. We hence consider the normal equations and solve for α(x) as

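$$\boldsymbol{\alpha}(\mathbf{x}) = \left[\Phi_\Gamma(\mathbf{A})^H \Phi_\Gamma(\mathbf{A})\right]^{\dagger} \Phi_\Gamma(\mathbf{A})^H \Phi_\Gamma(\mathbf{x}) = \mathbf{K}_A^{\dagger}\, \mathbf{k}_A(\mathbf{x}) \qquad (45)$$

where (·)† denotes the pseudo-inverse, ΦΓ(A) = [ΦΓ(a1), ⋯, ΦΓ(aN)], KA = ΦΓ(A)ᴴ ΦΓ(A) is the N × N kernel matrix of the anchor points, and kA(x) = ΦΓ(A)ᴴ ΦΓ(x).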

5.2. Representation and learning of functions

Using (41), (44), and (45), the function can be written as

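$$\mathbf{f}(\mathbf{x}) = \mathbf{F}(\mathbf{A})\, \mathbf{K}_A^{\dagger}\, \mathbf{k}_A(\mathbf{x}) \qquad (46)$$

$$\mathbf{f}(\mathbf{x}) = \mathbf{F}\, \mathbf{k}_A(\mathbf{x}), \qquad \mathbf{F} = \mathbf{F}(\mathbf{A})\, \mathbf{K}_A^{\dagger} \qquad (47)$$

where F(A) = [f(a1), ⋯, f(aN)] collects the function values at the anchor points.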

Here, f(x) is an M × 1 vector, F is an M × N matrix, the kernel matrix of the anchor points (with entries ΦΓ(ai)ᴴ ΦΓ(aj)) is N × N, and kA(x) is an N × 1 vector. Thus, if the function values f(ai), i = 1, ⋯, N, at the anchor points are known, the function can be computed at any point on the surface.
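The exactness of the anchor representation (46) on the surface, and its failure off the surface, can be checked numerically. This sketch assumes an exponential lifting with entries exp(j2πkᵀx), a hypothetical 3 × 3 test curve ψ(x) = cos(2πx1) + cos(2πx2) − 1/2, and a random band-limited function with a 5 × 5 bandwidth Γ (so r = 25 − 9 = 16). It forms ΦΓ(A) explicitly for clarity, whereas in practice the kernel trick would be used; K_A denotes the N × N anchor kernel matrix.

```python
import numpy as np

GAMMA = np.array([(a, b) for a in range(-2, 3) for b in range(-2, 3)])   # |Γ| = 25

def features(X):
    """Assumed lifting Φ_Γ(x): entry (k, i) = exp(j 2π k^T x_i)."""
    return np.exp(2j * np.pi * (GAMMA @ X.T))

def sample_curve(N, rng):
    """N random points on the hypothetical curve cos(2πx1) + cos(2πx2) - 1/2 = 0."""
    pts = []
    while len(pts) < N:
        x1 = rng.random()
        t = 0.5 - np.cos(2 * np.pi * x1)
        if abs(t) <= 1.0:
            pts.append((x1, (rng.choice([-1.0, 1.0]) * np.arccos(t) / (2 * np.pi)) % 1.0))
    return np.array(pts)

rng = np.random.default_rng(3)
beta = rng.standard_normal(25) + 1j * rng.standard_normal(25)

def f(X):
    """A random band-limited function f(x) = β^T Φ_Γ(x) with bandwidth Γ, as in (41)."""
    return beta @ features(X)

A = sample_curve(24, rng)                      # 24 anchors >= r = 25 - 9 = 16
PhiA = features(A)
K_A = PhiA.conj().T @ PhiA                     # N x N anchor kernel matrix
K_pinv = np.linalg.pinv(K_A, rcond=1e-12)      # pseudo-inverse, as in (45)

def f_hat(X):
    """Anchor representation f(A) K_A^† k_A(x), with k_A(x) = Φ_Γ(A)^H Φ_Γ(x)."""
    return f(A) @ K_pinv @ (PhiA.conj().T @ features(X))

X_on, X_off = sample_curve(10, rng), rng.random((10, 2))
err_on = np.abs(f_hat(X_on) - f(X_on)).max()     # round-off level: exact on the curve
err_off = np.abs(f_hat(X_off) - f(X_off)).max()  # not small: invalid off the curve
print(err_on, err_off)
```

Only the 24 function values f(ai) are used to evaluate f on the curve, compared with the 25 coefficients of β; the savings grow with the ratio of |Γ| to r.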

The direct representation of a function in (41) requires |Γ| parameters, which can be viewed as the area of the green box in Fig. 3. By contrast, the above representation requires only |Γ| − |Γ ⊝ Λ| anchor points, which can be viewed as the area of the green region not covered by the blue set Γ ⊝ Λ in Fig. 3. This more compact representation allows complex functions to be learned from few data points, especially in high dimensional applications.

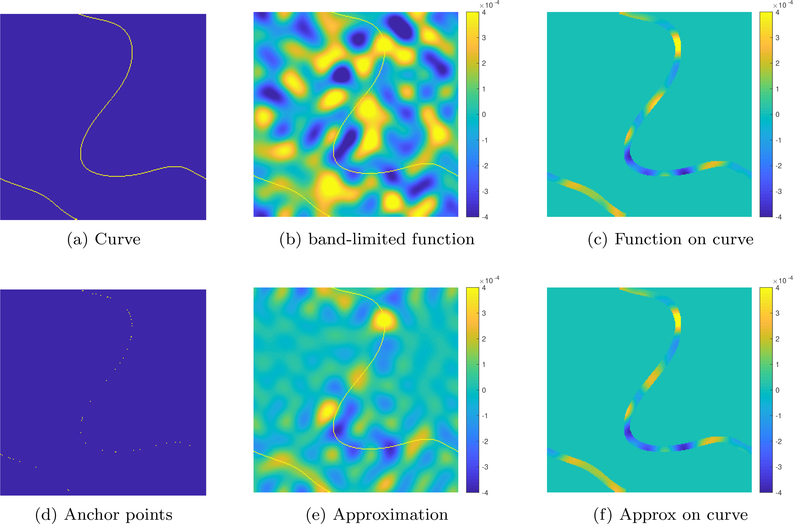

We demonstrate the above local function representation in a 2D setting in Fig. 10. Specifically, the original band-limited function has bandwidth 13 × 13, so its direct representation has 13 × 13 = 169 degrees of freedom. If we only care about points on a curve with bandwidth 3 × 3, the same function restricted to the curve can be represented exactly using 13² − 11² = 48 anchor points, which significantly reduces the degrees of freedom. Note, however, that this representation is only exact on the curve; the represented function goes to zero as one moves away from the curve.

Figure 10:

Illustration of the local representation of functions in 2D. We consider the local approximation of the band-limited function in (b), with a bandwidth of 13 × 13, living on the band-limited curve shown in (a). The bandwidth of the curve is 3 × 3. The curve is overlaid on the function in (b) in yellow. The restriction of the function to the vicinity of the curve is shown in (c). Our results suggest that the local function approximation requires 13² − 11² = 48 anchor points. We randomly select the points on the curve, as shown in (d). Interpolating the function values at these points yields the global function shown in (e). The restriction of this function to the curve, shown in (f), indicates that the approximation is good.

The choice of anchor points depends on the geometry of the surface, including the number of irreducible components. For arbitrary training samples, we can estimate the unknowns F in (47) from the linear relations

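$$\underbrace{\left[\mathbf{y}_1, \cdots, \mathbf{y}_{N_{train}}\right]}_{\mathbf{Y}} = \mathbf{F}\, \underbrace{\left[\mathbf{k}_A(\mathbf{x}_1), \cdots, \mathbf{k}_A(\mathbf{x}_{N_{train}})\right]}_{\mathbf{Z}} \qquad (48)$$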

as F = YZᴴ(ZZᴴ)†. This recovery is exact when N = r anchor points are used, because Z then has full rank: Equation (44) implies rank(Z) ≥ N, while (45) implies rank(Z) ≤ N, so rank(Z) = N and ZZᴴ is invertible. When N > r, F is obtained using the pseudo-inverse, which corresponds to a least-squares approximation.
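Learning F from training pairs via (48) can be sketched as follows, under the assumptions of an exponential lifting with entries exp(j2πkᵀx), a hypothetical 3 × 3 test curve ψ(x) = cos(2πx1) + cos(2πx2) − 1/2, and a hypothetical target function with a 5 × 5 bandwidth. It uses N = r = 16 anchor points and solves Y = FZ with np.linalg.lstsq, which gives the same least-squares solution as YZᴴ(ZZᴴ)† when Z has full rank, without forming ZZᴴ.

```python
import numpy as np

GAMMA = np.array([(a, b) for a in range(-2, 3) for b in range(-2, 3)])   # |Γ| = 25

def features(X):
    """Assumed lifting Φ_Γ(x): entry (k, i) = exp(j 2π k^T x_i)."""
    return np.exp(2j * np.pi * (GAMMA @ X.T))

def sample_curve(N, rng):
    """N random points on the hypothetical curve cos(2πx1) + cos(2πx2) - 1/2 = 0."""
    pts = []
    while len(pts) < N:
        x1 = rng.random()
        t = 0.5 - np.cos(2 * np.pi * x1)
        if abs(t) <= 1.0:
            pts.append((x1, (rng.choice([-1.0, 1.0]) * np.arccos(t) / (2 * np.pi)) % 1.0))
    return np.array(pts)

rng = np.random.default_rng(5)
beta = rng.standard_normal(25) + 1j * rng.standard_normal(25)

def f(X):
    """Hypothetical target: a random band-limited function with bandwidth Γ."""
    return beta @ features(X)

A = sample_curve(16, rng)                  # N = r = 25 - 9 = 16 anchor points
PhiA_H = features(A).conj().T              # Φ_Γ(A)^H, so k_A(x) = Φ_Γ(A)^H Φ_Γ(x)

X_train = sample_curve(40, rng)
Z = PhiA_H @ features(X_train)             # N x N_train, columns k_A(x_i)
Y = f(X_train)[None, :]                    # 1 x N_train training outputs (M = 1)

# Least-squares solution of Y = F Z, computed from the transposed system Z^T F^T = Y^T.
F = np.linalg.lstsq(Z.T, Y.T, rcond=1e-10)[0].T

X_test = sample_curve(10, rng)
err = np.abs(F @ (PhiA_H @ features(X_test)) - f(X_test)).max()
print(F.shape, err)                        # err should be at round-off level on the curve
```

Note that the function values at the anchor points are never used here: F is learned purely from the 40 training pairs, and the anchors only define the hidden features kA(x).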

5.3. Efficient computation using kernel trick

We use the kernel trick to evaluate the anchor kernel matrix and kA(x), thus eliminating the need to explicitly evaluate the features of the anchor points and of x. Each entry of the anchor kernel matrix is computed as in (34), while the vector kA(x) is specified by:

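$$\left[\mathbf{k}_A(\mathbf{x})\right]_i = \Phi_\Gamma(\mathbf{a}_i)^H\, \Phi_\Gamma(\mathbf{x}) = \kappa(\mathbf{a}_i, \mathbf{x}), \qquad i = 1, \cdots, N \qquad (49)$$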

which can be evaluated efficiently using the kernel function κ, without forming the features. We now consider the kernel function κ for specific choices of the lifting.

Using the lifting in (20), we obtain the kernel as

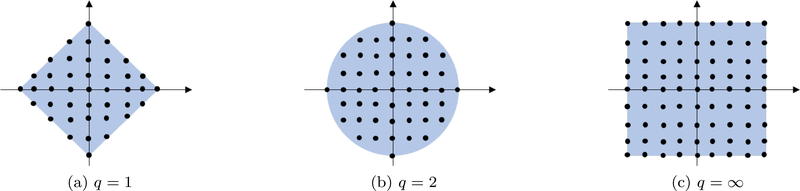

Note that the kernel is shift invariant in this setting. Since it is an n-dimensional function, evaluating and storing it is often challenging in multidimensional applications. We now focus on approximating the kernel efficiently for fast computation, and consider the impact of the shape of the bandwidth set Γ on the shape of the kernel. Specifically, we consider sets of the form

$$\Gamma = \left\{\mathbf{k} \in \mathbb{Z}^n : \|\mathbf{k}\|_q \le d\right\} \qquad (50)$$

where d denotes the size of the bandwidth. The parameter q specifies the shape of Γ [55], which in turn determines the shape of the kernel

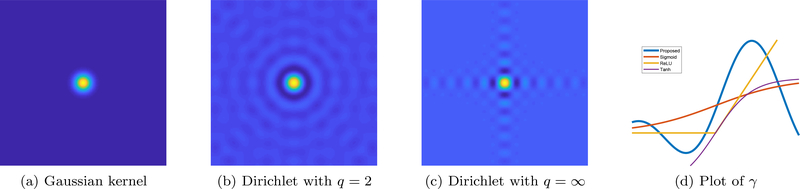

| (51) |

We term the kernels obtained with q = 1, q = 2, and q = ∞ the diamond, circular, and cubic Dirichlet kernels, respectively. See Fig. 11 for the bandwidth sets and Fig. 12 for the associated kernels.
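The sets in (50) and the corresponding kernels can be generated directly. The sketch assumes the kernel DΓ(t) = Σk∈Γ exp(j2πkᵀt), consistent with an exponential lifting; the function names are hypothetical. It counts |Γ| for the three shapes and prints the kernel along an axis and along a diagonal at the same radius, as a rough numerical counterpart to Fig. 12; no particular kernel values are claimed here.

```python
import itertools
import math
import numpy as np

def bandwidth_set(d, n, q):
    """Γ = {k ∈ Z^n : ||k||_q <= d} as in (50); q is 1, 2, or math.inf."""
    cube = itertools.product(range(-d, d + 1), repeat=n)
    if q == math.inf:
        return np.array(list(cube))                 # cubic: max_i |k_i| <= d
    return np.array([k for k in cube if sum(abs(v) ** q for v in k) <= d ** q])

def dirichlet(t, gamma):
    """Kernel D_Γ(t) = Σ_{k∈Γ} exp(j2π k^T t); real because Γ is symmetric about 0."""
    return np.cos(2 * np.pi * (gamma @ np.asarray(t, dtype=float))).sum()

for name, q in [("diamond", 1), ("circular", 2), ("cubic", math.inf)]:
    g = bandwidth_set(5, 2, q)
    # Compare the kernel along an axis and along a diagonal at the same radius 0.1.
    axis = dirichlet([0.1, 0.0], g)
    diag = dirichlet([0.1 / math.sqrt(2)] * 2, g)
    print(name, len(g), round(axis, 2), round(diag, 2))
```

For d = 5 in 2D, the diamond, circular, and cubic sets contain 61, 81, and 121 indices, respectively; the kernel at the origin always equals |Γ|.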

Figure 12:

Visualization of the kernels, and of the non-linear function γ together with some commonly used activation functions.

The above figures show that the circular Dirichlet kernel (q = 2) is roughly circularly symmetric, unlike the cubic (q = ∞) and diamond (q = 1) kernels. This suggests that we can approximate it as

| (52) |

where g is a one-dimensional function. This approximation significantly reduces the computation in the multidimensional case, since g may be stored in a look-up table or computed analytically. We use this approach to speed up the computation of multidimensional functions in Section 6.

An additional simplification is to assume that x and y are unit-norm vectors. In this case, we can approximate

| (53) |

where γ(z) = g(1 − z/2). Here, we term γ the activation function. While we do not make this simplifying assumption in our computations, it highlights the similarity between the computational structure of (46) and current neural networks. The plot of this activation function, along with commonly used activation functions, is shown in Fig. 12(d).

With the aforementioned analysis, we can then rewrite (46) as

| (54) |

| (55) |

In the second step, we used the approximation in (53).

5.4. Optimization of the anchor points and coefficients

The above results show the existence of a computational structure of the form (55), with N anchor points a1, ⋯, aN on the surface and corresponding coefficients, that represents the function exactly. The anchor points need not be selected as a subset of the training data. Corollary 11 guarantees that the kernel matrix of the anchor points has full rank when N = r. However, the condition number of this matrix may be poor, depending on the choice of the anchor points. It may therefore be worthwhile to choose the anchors such that the condition number of this matrix is low, which reduces the noise amplification in (45).

We hence propose to solve for the anchor points A and the corresponding coefficients that minimize the least-squares error on the training data:

| (56) |

We propose to minimize the above expression using stochastic gradient descent. This approach allows the anchor points a1, …, aN to be optimized jointly with the coefficients rather than fixed in advance.
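The optimization of (56) can be sketched with plain minibatch SGD and hand-derived gradients. This is a minimal sketch under stated assumptions, not the authors' implementation:

- The activation exp(β(z − 1)) is a stand-in for γ.
- The training inputs lie on the unit circle.
- The target sin(3θ) is hypothetical.
- The anchors are renormalized after each step to keep them on the surface, which is an extra constraint added here.

```python
import numpy as np

BETA = 5.0  # hypothetical sharpness of the stand-in activation

def gamma(z):
    """Stand-in activation exp(BETA (z - 1)); a Gaussian of the distance for unit-norm inputs."""
    return np.exp(BETA * (z - 1.0))

def predict(X, A, c):
    """f(x) = sum_i c_i gamma(<a_i, x>): inner products, activation, linear read-out."""
    return gamma(X @ A.T) @ c

def loss(X, y, A, c):
    return np.mean((predict(X, A, c) - y) ** 2)

rng = np.random.default_rng(0)
theta = rng.uniform(0, 2 * np.pi, 1000)
X = np.stack([np.cos(theta), np.sin(theta)], axis=1)   # training inputs on the unit circle
y = np.sin(3 * theta)                                  # hypothetical target function

N = 20
A = rng.standard_normal((N, 2))
A /= np.linalg.norm(A, axis=1, keepdims=True)          # anchors start on the surface
c = np.zeros(N)
initial_loss = loss(X, y, A, c)

lr_c, lr_a, batch = 1e-2, 1e-3, 32
for step in range(3000):
    idx = rng.integers(0, len(X), batch)
    Xb, yb = X[idx], y[idx]
    G = gamma(Xb @ A.T)                                # (batch, N) hidden activations
    r = G @ c - yb                                     # residuals on the minibatch
    grad_c = 2.0 / batch * G.T @ r
    # d f / d a_i = c_i * BETA * gamma(<a_i, x>) * x
    grad_A = 2.0 / batch * ((r[:, None] * G * c[None, :] * BETA).T @ Xb)
    c -= lr_c * grad_c
    A -= lr_a * grad_A
    A /= np.linalg.norm(A, axis=1, keepdims=True)      # project anchors back onto the circle

final_loss = loss(X, y, A, c)
print(initial_loss, final_loss)
```

The two learning rates are kept separate because the anchor gradients scale with the coefficients c, which can become large.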

6. Relation to neural networks

We now briefly discuss the close relation between the proposed framework and neural networks. We consider the function learning setting of Section 5 and show that its computational structure closely mimics a neural network with one hidden layer. We also briefly discuss how depth can improve the representation. We then show that the framework can be used to learn a manifold from data. This can be viewed as a signal-subspace alternative to the null-space approach of Section 4, and the resulting computational structure closely mimics an auto-encoder.

6.1. Task/function learning from input output pairs

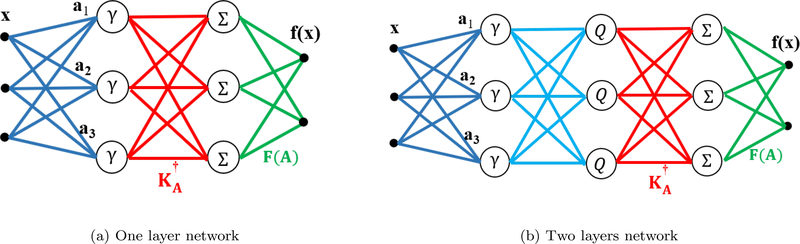

We now focus on learning the function (54) from training data pairs and show its equivalence with neural networks. The computation involves the inner products of the input signal x with the templates ai, i = 1, …, N, followed by the non-linear activation function γ, to obtain kA(x). These terms are then weighted by the fully connected layer A, followed by the second fully connected layer . See Fig. 13 for an illustration.

Figure 13:

Computational structure of function evaluation. (a) corresponds to (46), which computes the band-limited multidimensional function f on . The inner products between the input vector x and the anchor templates on the surface are evaluated, followed by the non-linear activation function γ, to obtain the coefficients αi(x). These coefficients are then processed by the fully connected linear layers and F(A), which can be combined into a single fully connected layer . Note that this structure closely mimics a neural network with a single hidden layer. (b) uses an additional quadratic layer, which combines functions of lower bandwidth to obtain a function of higher bandwidth.
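The structure in Fig. 13(a) can be sketched as a forward pass with three stages: inner products with the anchors, a pointwise activation, and two linear layers that can be merged into one. As a hypothetical choice, the first linear layer here is the pseudo-inverse of the anchor kernel matrix, so the network interpolates hypothetical samples f(ai). The activation is again a stand-in.

```python
import numpy as np

def gamma(z):
    # Hypothetical stand-in activation; for unit-norm inputs exp(4 (z - 1)) = exp(-2 ||x - a||^2).
    return np.exp(4.0 * (z - 1.0))

rng = np.random.default_rng(2)
n, N = 8, 16                                       # input dimension, number of anchors
anchors = rng.standard_normal((N, n))
anchors /= np.linalg.norm(anchors, axis=1, keepdims=True)
f_at_anchors = rng.standard_normal(N)              # hypothetical samples f(a_i)

K = gamma(anchors @ anchors.T)                     # anchor-to-anchor kernel matrix
W1 = np.linalg.pinv(K)                             # first fully connected layer
w2 = f_at_anchors                                  # second fully connected layer
w_merged = w2 @ W1                                 # both layers combined into one

def network(X):
    """Hidden layer gamma(<a_i, x>) followed by the merged linear read-out."""
    return gamma(X @ anchors.T) @ w_merged

x = rng.standard_normal(n)
x /= np.linalg.norm(x)
alpha = gamma(anchors @ x)                         # hidden activations alpha_i(x)
two_layers = w2 @ (W1 @ alpha)
one_layer = network(x[None, :])[0]
print(two_layers, one_layer)
```

Merging is just associativity of matrix products. Only the hidden non-linearity separates this from a purely linear model.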

As noted above, the representation using anchor points significantly reduces the degrees of freedom compared to the direct representation. However, the number of parameters needed to represent a high-bandwidth function in high dimensions is still large. We now provide some intuition on how low-rank tensor approximation of functions and composition can explain the benefit of common operations in deep networks.

We now consider the case when the band-limited multidimensional function in (41) can be approximated as

| (57) |

Since the spectrum of a product of functions is the convolution of their spectra, the bandwidth of f is almost twice that of , showing the benefit of adding layers. While an arbitrary function with the same bandwidth as f cannot be represented as in (57), one may be able to approximate it closely. If the function has the form (57), the new layer has a quadratic non-linearity Q. Note that one may use an arbitrary non-linearity in place of the quadratic one in (57).
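The bandwidth-doubling effect of the quadratic layer can be seen in one dimension. Squaring a real trigonometric polynomial of bandwidth 5 yields one of bandwidth 10. The random polynomial and the helper names are hypothetical.

```python
import numpy as np

def random_trig_poly(bandwidth, t, rng):
    """Real trigonometric polynomial with frequencies 0..bandwidth (a 1-D band-limited function)."""
    k = np.arange(bandwidth + 1)[:, None]
    a = rng.standard_normal((bandwidth + 1, 1))
    b = rng.standard_normal((bandwidth + 1, 1))
    return np.sum(a * np.cos(2 * np.pi * k * t) + b * np.sin(2 * np.pi * k * t), axis=0)

def highest_frequency(samples, tol=1e-8):
    """Largest frequency index with a non-negligible DFT coefficient."""
    spectrum = np.abs(np.fft.rfft(samples)) / len(samples)
    return int(np.nonzero(spectrum > tol)[0].max())

rng = np.random.default_rng(3)
t = np.arange(64) / 64                 # 64 samples: no aliasing for frequencies up to 31
h = random_trig_poly(5, t, rng)        # lower-bandwidth function
f = h ** 2                             # quadratic non-linearity Q applied to h
print(highest_frequency(h), highest_frequency(f))  # 5 10
```

In the multidimensional case, the spectral support of the product is the sum of the two supports. For circular frequency sets of radius Γ, this sum is a disk of radius 2Γ.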

Similarly, one may perform a low-rank tensor approximation of an arbitrary N-dimensional function . Specifically, the function is approximated by a sum of products of 1-D functions:

| (58) |

where . The above sum of products can also be realized by taking weighted linear combinations of 1-D functions, followed by a non-linearity as in (57). This allows a hierarchical structure, in which lower-dimensional functions are pooled together to represent a multidimensional function.
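Here is a 2-D sketch of the sum-of-products representation in (58), using hypothetical random factor functions sampled on an M-point grid. It also shows one way to realize the products with linear combinations followed by a quadratic non-linearity, through the polarization identity pq = ((p + q)² − (p − q)²)/4. This identity is our illustrative choice and not necessarily the construction intended in (57).

```python
import numpy as np

rng = np.random.default_rng(4)
M, R = 32, 3                      # grid points per axis, number of rank-one terms
u = rng.standard_normal((R, M))   # hypothetical 1-D factors phi_{r,1} sampled on a grid
v = rng.standard_normal((R, M))   # hypothetical 1-D factors phi_{r,2} sampled on a grid

# Sum of products of 1-D functions: F[i, j] = sum_r u[r, i] * v[r, j].
F = np.einsum("ri,rj->ij", u, v)

# The same products via linear combinations followed by squaring (polarization identity).
U, V = u[:, :, None], v[:, None, :]
F_quadratic = np.sum(((U + V) ** 2 - (U - V) ** 2) / 4.0, axis=0)

# Rank of F, parameters of the full grid, parameters of the low-rank form.
print(np.linalg.matrix_rank(F), M * M, R * 2 * M)  # 3 1024 192
```

The full grid needs M² values, while the rank-R form needs only R · 2 · M. In N dimensions, the gap grows to Mᴺ versus R · N · M.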

In image processing applications, the functions to be learned are typically shift-invariant. This allows one to learn functions of small image patches (e.g. 3 × 3) of a specified dimension at each layer. The functions at nearby output pixels thus carry information from different 3 × 3 neighborhoods. The low-dimensional functions from non-overlapping 3 × 3 neighborhoods can be combined with downsampling, as in (58), to represent a high-dimensional function of a larger (e.g. 9 × 9) neighborhood. The process can be repeated to improve the efficiency of the representation.
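The growth of the neighborhood described above follows standard receptive-field bookkeeping for stacked convolutions. In this sketch, the non-overlapping 3 × 3 pooling is modeled by giving the first 3 × 3 layer a stride of 3, which is an assumption about how the downsampling is realized.

```python
def receptive_field(layers):
    """Receptive field (in pixels) of a stack of (kernel_size, stride) convolution layers."""
    field, jump = 1, 1
    for kernel_size, stride in layers:
        field += (kernel_size - 1) * jump   # extra input pixels covered by this layer
        jump *= stride                      # input-pixel spacing between adjacent outputs
    return field

# 3x3 functions on non-overlapping 3x3 blocks (stride 3), then a 3x3 function of those outputs.
print(receptive_field([(3, 3), (3, 1)]))   # 9, i.e. a 9x9 neighborhood
# Without downsampling, two 3x3 layers only reach a 5x5 neighborhood.
print(receptive_field([(3, 1), (3, 1)]))   # 5
```

Repeating the pattern once more, `[(3, 3), (3, 3), (3, 1)]`, gives a 27 × 27 neighborhood while each layer still learns only 9-dimensional functions.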

6.2. Relation to auto-encoders

We note that the space of band-limited functions of the form (41) can reasonably approximate low-order polynomials in for a sufficiently high bandwidth Γ [49]. In particular, let us assume that there exists a set of coefficients β such that

| (59) |

In this case, the above results imply that one can represent any point on the surface as

| (60) |

The resulting network is hence essentially an auto-encoder. Specifically, the inner products between the feature vectors of x and of the anchor points ai, denoted by α(x), can be viewed as latent features or a compact code. As described previously, the coefficients capture the geometry of the surface, while the top layer A is the decoder that recovers the signal from its latent vector.

We note that the surface recovery algorithms in Section 4 follow a null-space approach: we identify the null-space of the feature matrix, or equivalently the annihilation functions, from the samples of the surface. Specifically, the sum of squares of the null-space functions in Section 4.2 measures the energy of the projection of the feature vector onto the null-space of the feature matrix.

| (61) |

| (62) |

where ni are the null-space vectors. The projection energy is zero if the point x is on and is high when it is far from it.
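The following sketch makes the projection energy concrete by recovering the unit circle from samples with a null-space computation. It swaps in degree-2 monomial features [1, x, y, x², xy, y²] for the exponential features ΦΓ, so the single annihilating polynomial is proportional to x² + y² − 1. The feature map, the tolerance `rel_tol` and the helper names are hypothetical.

```python
import numpy as np

def features(points):
    """Hypothetical degree-2 monomial features (stand-in for the exponential features)."""
    x, y = points[:, 0], points[:, 1]
    return np.stack([np.ones_like(x), x, y, x**2, x * y, y**2], axis=1)

def null_space_basis(feature_matrix, rel_tol=1e-10):
    """Right singular vectors whose singular values are numerically zero."""
    _, s, vt = np.linalg.svd(feature_matrix)
    s_full = np.zeros(vt.shape[0])
    s_full[: len(s)] = s
    return vt[s_full <= rel_tol * s.max()]

def projection_energy(points, null_vectors):
    """Sum over null-space vectors n_i of |<n_i, Phi(x)>|^2."""
    return np.sum((features(points) @ null_vectors.T) ** 2, axis=1)

theta = np.linspace(0, 2 * np.pi, 50, endpoint=False)
samples = np.stack([np.cos(theta), np.sin(theta)], axis=1)   # samples of the unit circle
N_basis = null_space_basis(features(samples))                 # annihilating polynomial(s)
print(N_basis.shape)   # (1, 6)

queries = np.array([[1.0, 0.0], [0.0, -1.0], [2.0, 0.0], [0.0, 0.0]])
E = projection_energy(queries, N_basis)
print(E)               # near zero for the first two (on the circle), positive for the others
```

Because the single null vector is ±(x² + y² − 1)/√3, the energy off the curve equals (x² + y² − 1)²/3. This gives 3 at (2, 0) and 1/3 at the origin.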

By contrast, the auto-encoder approach can be viewed as a signal-subspace approach, where we project the samples onto the subspace spanned by the feature vectors of the anchors ΦΓ(ai). Specifically, we use the non-linearity specified by (53) and train the network parameters (A as well as the weights of the inner products) using stochastic gradient descent. The training data correspond to randomly drawn points on the surface. To ensure that the network learns a projection, we train it as a denoising auto-encoder: the inputs are samples on the surface corrupted with Gaussian noise, while the labels are the true samples. Once training is complete, we plot the approximation error

| (63) |

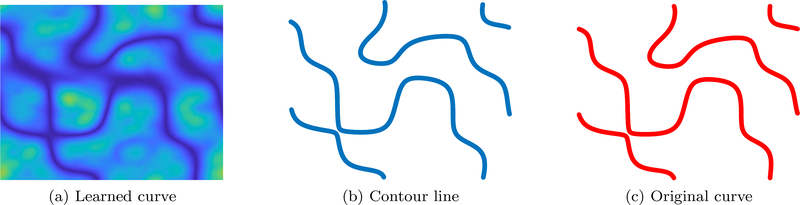

as a function of the input point in Fig. 14.

Figure 14:

Illustration of the surface learning network using the curve in Fig. 8. (a) and (b) show the learned results. (c) compares the learned curve (blue) with the original curve (red); the two curves nearly coincide, indicating that the learned network performs well.

We trained the network using the exemplar curve shown in Fig. 8. We randomly chose 1000 points on the curve as training data and used 250 features in the middle layer. The bandwidth of the Dirichlet kernel was set to 15. The trained network was then used to estimate the curve; the results, shown in Fig. 14, indicate that the proposed learning framework performs well. The projection error is close to zero on the surface and high away from it, closely mimicking the plot in Fig. 8. Once trained, the network allows the surface to be estimated in low-dimensional settings as the zero set of the projection error, as shown in Fig. 14(b), which closely approximates the true curve in (c). We note that can be viewed as a residual denoising auto-encoder. Once trained, this network can be used as a prior in inverse problems as in [2], where we have used the null-space network in Section 6. We have also used the null-space prior (61) in our prior work [39], where the null-space basis was learned as described in Section 4.3.
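The denoising auto-encoder experiment can be mimicked on a toy scale. This sketch is not the network used for Fig. 14. It differs in three ways:

- It uses a unit circle instead of the curve in Fig. 8.
- Its latent code is Gaussian similarities to 50 fixed anchors, not the Dirichlet-kernel features.
- Its decoder is fitted in closed form by ridge regression instead of by SGD.

The noise level, kernel width and regularization weight are hypothetical.

```python
import numpy as np

def encode(X, anchors, width=0.2):
    """Latent code alpha(x): Gaussian similarities to the anchors (stand-in for the paper's features)."""
    sq = np.sum((X[:, None, :] - anchors[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2 * width**2))

def circle(angles, radius=1.0):
    return radius * np.stack([np.cos(angles), np.sin(angles)], axis=1)

rng = np.random.default_rng(5)
clean = circle(rng.uniform(0, 2 * np.pi, 1000))            # training points on the curve
noisy = clean + 0.05 * rng.standard_normal(clean.shape)     # denoising auto-encoder inputs
anchors = circle(np.linspace(0, 2 * np.pi, 50, endpoint=False))

# Linear decoder fitted in closed form (ridge regression) instead of by SGD.
Phi = encode(noisy, anchors)
lam = 1e-3
decoder = np.linalg.solve(Phi.T @ Phi + lam * np.eye(len(anchors)), Phi.T @ clean)

def projection_error(X):
    """||x - decoder(encoder(x))||: small on the curve, large away from it."""
    return np.linalg.norm(X - encode(X, anchors) @ decoder, axis=1)

test_angles = np.linspace(0, 2 * np.pi, 200, endpoint=False)
on_curve = projection_error(circle(test_angles)).mean()
off_curve = projection_error(circle(test_angles, radius=2.0)).mean()
print(on_curve, off_curve)
```

Far from the anchors the latent code vanishes, so the reconstruction is close to zero and the error approaches ‖x‖. This is why the points at radius 2 give an error near 2.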

7. Illustration in denoising

We now give a preliminary illustration of the utility of the proposed network in image denoising. Specifically, we consider learning a function , which predicts the denoised center pixel of a patch from the noisy p × p patch. The function f on a p²-dimensional space is associated with a large number of free parameters, and learning these unknowns is challenging due to the curse of dimensionality. The results in the previous sections offer a workaround: the function can be expressed as a linear combination of the features of "anchor patches", weighted by p.

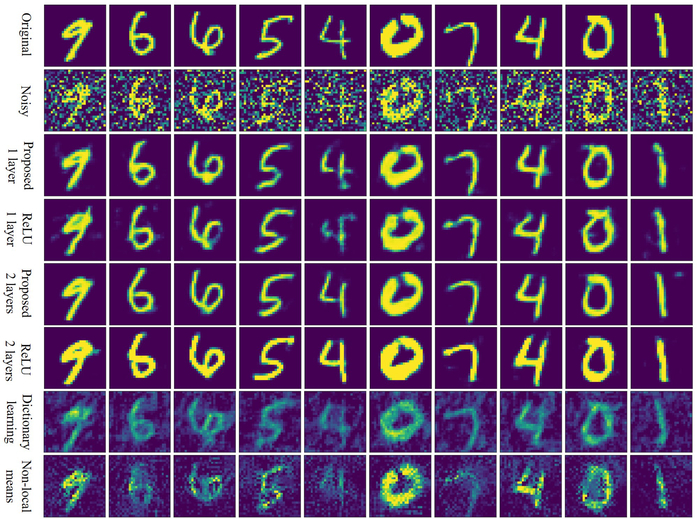

We propose to learn the anchor patches ai and the function values f(ai) from exemplar data using stochastic gradient descent to minimize (56). Because the learned representation is valid for any patch, the proposed scheme is essentially a convolutional neural network (CNN). Our structure in (55) differs from commonly used CNN structures in the activation function γ. For the single-layer network, we replaced the ReLU non-linearity with the proposed function γ; for the two-layer network, we replaced the ReLU non-linearities with γ and Q as indicated in (57).
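The following sketch shows why the patch-wise function amounts to a CNN. Applying f(patch) = Σi wi γ(⟨ai, patch⟩) to every p × p patch is equivalent to three operations:

- a bank of N correlations with p × p filters,
- a pointwise activation,
- a 1 × 1 linear combination.

The anchors, weights, stand-in activation and reflect padding are hypothetical. A loop-based reference checks the vectorized version. `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def gamma(z):
    # Hypothetical stand-in activation applied to patch/anchor inner products.
    return np.exp(z - 1.0)

def center_pixel_net(img, anchors, weights, p):
    """Apply f(patch) = sum_i w_i gamma(<a_i, patch>) to every p x p patch (reflect padding)."""
    pad = p // 2
    patches = sliding_window_view(np.pad(img, pad, mode="reflect"), (p, p))  # (H, W, p, p)
    hidden = gamma(patches.reshape(img.shape[0], img.shape[1], p * p) @ anchors.T)
    return hidden @ weights                                                 # (H, W)

rng = np.random.default_rng(6)
p, N = 7, 16
img = rng.uniform(0, 1, (20, 24))
anchors = 0.05 * rng.standard_normal((N, p * p))   # hypothetical learned anchor patches
weights = rng.standard_normal(N)                   # hypothetical learned output weights

out = center_pixel_net(img, anchors, weights, p)

# Reference: evaluate the same function patch by patch with explicit loops.
padded = np.pad(img, p // 2, mode="reflect")
ref = np.array([[gamma(anchors @ padded[i:i + p, j:j + p].ravel()) @ weights
                 for j in range(img.shape[1])] for i in range(img.shape[0])])
print(np.max(np.abs(out - ref)))
```

Because the same anchors and weights are shared by all patches, the parameter count is independent of the image size, as in any convolutional layer.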

We first tested the performance of the network on the MNIST dataset [20]. In these experiments, we chose a patch size of 7 × 7 and d = 7 in (51). For comparison, we also trained a ReLU network with the same parameters. In addition, we compared the proposed scheme against non-local means (NLM) and dictionary learning (DL) [9]. All algorithms except NLM were trained on the MNIST training set provided in TensorFlow. The proposed and ReLU networks were trained for 300 epochs, and the dictionary learning method used 500 iterations to learn the dictionaries. The test results are shown in Figure 15, and the corresponding PSNR values are reported in its caption. The results show that the neural network based approaches outperform dictionary learning and non-local means. The proposed one-layer network performs comparably to the one-layer ReLU network, while the proposed two-layer network outperforms the two-layer ReLU network. The proposed two-layer network also improves upon the proposed single-layer network (20.86 dB vs. 19.68 dB).

Figure 15:

Comparison of our learned denoiser using the proposed activation function and using the ReLU activation function. The test results show that the denoising performance with the proposed activation function is comparable to that with ReLU. The eight rows in the figure correspond to the original images, the noisy images, and the images denoised using the proposed one-layer network, the one-layer ReLU network, the proposed two-layer network, the two-layer ReLU network, dictionary learning, and non-local means. The average PSNR values of the images denoised using the proposed one-layer network, one-layer ReLU network, proposed two-layer network, two-layer ReLU network, dictionary learning and non-local means are 19.68 dB, 20.03 dB, 20.86 dB, 17.48 dB, 14.76 dB and 14.28 dB, respectively. Quantitatively, the proposed one-layer network performs comparably to the one-layer ReLU network. The proposed two-layer network improves further, both quantitatively and visually. The two-layer ReLU network is visually better than the one-layer ReLU network, but its PSNR is lower, mainly because of changes in the pixel values within each handwritten digit.

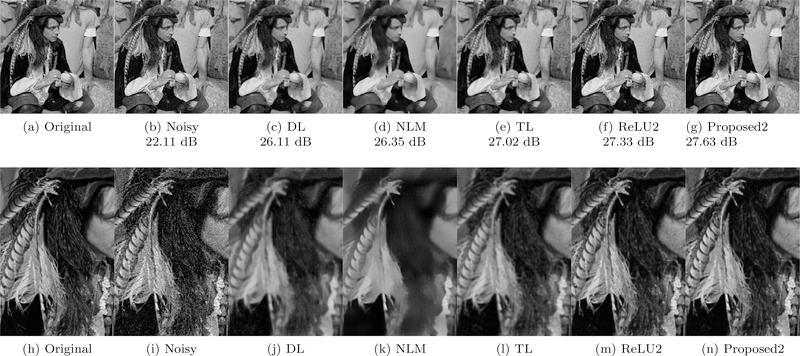

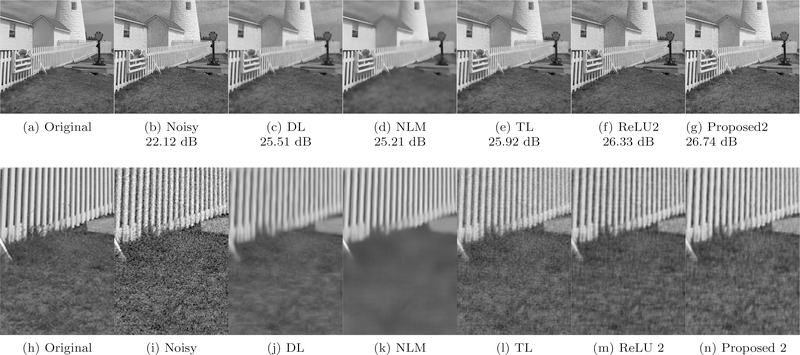

MNIST images are small (28 × 28 pixels). To better demonstrate the performance of the proposed network, we also applied the proposed scheme to the denoising of natural images. The algorithm was trained on the images Hill, Cameraman, Couple, Bridge, Barbara and Boat at three different noise levels. In the natural image setting, the noise is assumed to be additive white Gaussian noise. We compared the proposed scheme against dictionary learning (DL), non-local means (NLM) and transform learning (TL) [42]. In these experiments, the patch size was chosen as 9 × 9 and d = 7 in (51). The proposed and ReLU networks were trained for 300, 400 and 450 epochs for the noise levels σ = 10, 20 and 100, respectively, and the dictionary learning method used 500 iterations to learn the dictionaries. We then tested the denoising performance on two natural images: Man and Lighthouse.
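For reference, here is a sketch of the standard additive white Gaussian noise model and the PSNR definition, which we assume underlie these comparisons. The peak value of 255 (8-bit images) and the absence of clipping are also assumptions. For images scaled to [0, 1], such as MNIST digits, `peak=1.0` would be the analogous choice. The test image is a random placeholder.

```python
import numpy as np

def add_awgn(img, sigma, rng):
    """Additive white Gaussian noise with standard deviation sigma (same intensity scale as img)."""
    return img + sigma * rng.standard_normal(img.shape)

def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB: 10 log10(peak^2 / MSE)."""
    mse = np.mean((np.asarray(reference, float) - np.asarray(estimate, float)) ** 2)
    return 10.0 * np.log10(peak**2 / mse)

rng = np.random.default_rng(7)
clean = rng.uniform(0, 255, (64, 64))   # placeholder image on an 8-bit intensity scale
for sigma in (10, 20, 100):
    noisy = add_awgn(clean, sigma, rng)
    print(sigma, round(psnr(clean, noisy), 2))
```

Without clipping, the noisy-input PSNR is close to 20 log10(255/σ), about 28.1 dB, 22.1 dB and 8.1 dB for the three noise levels.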

The quantitative results (PSNR) are shown in Table 1, while the results on Man and Lighthouse with noise standard deviation σ = 20 are shown in Fig. 16 and Fig. 17. In Table 1, Fig. 16 and Fig. 17, "ReLU1" and "ReLU2" denote the one-layer and two-layer ReLU networks, while "Proposed1" and "Proposed2" denote the proposed one-layer and two-layer networks. The neural network schemes outperform the classical methods in all settings but one (Lighthouse at σ = 10, where NLM exceeds ReLU1). The proposed networks provide comparable or slightly better performance than the ReLU networks.

Table 1:

The PSNR (dB) of the denoised results for the two test natural images at different noise levels.

| Image | σ | DL | NLM | TL | ReLU1 | ReLU2 | Proposed1 | Proposed2 |
|---|---|---|---|---|---|---|---|---|
| Man | 10 | 26.63 | 26.64 | 27.41 | 30.29 | 31.11 | 30.99 | 31.19 |
| Man | 20 | 26.11 | 26.35 | 27.02 | 27.47 | 27.33 | 27.25 | 27.63 |
| Man | 100 | 19.69 | 20.95 | 21.65 | 21.85 | 22.11 | 21.91 | 22.06 |
| Lighthouse | 10 | 27.08 | 29.08 | 28.71 | 28.88 | 29.27 | 30.05 | 30.28 |
| Lighthouse | 20 | 25.51 | 25.21 | 25.92 | 26.25 | 26.33 | 26.69 | 26.74 |
| Lighthouse | 100 | 19.14 | 20.14 | 20.15 | 20.21 | 20.46 | 20.35 | 20.47 |

Figure 16: