Abstract

Objective

We quantify the use of clinical decision support (CDS) and the specific barriers reported by ambulatory clinics and examine whether CDS utilization and barriers differed based on clinics’ affiliation with health systems, providing a benchmark for future empirical research and policies related to this topic.

Materials and Methods

Despite much discussion at the theoretical level, the existing literature provides little empirical understanding of barriers to using CDS in ambulatory care. We analyze data from 821 clinics in 117 medical groups, drawn from Minnesota Community Measurement’s annual Health Information Technology Survey (2014-2016). We examine clinics’ use of 7 CDS tools, along with 7 barriers in 3 areas (resources, user acceptance, and technology). Employing linear probability models, we examine factors associated with CDS barriers.

Results

Clinics in health systems used more CDS tools than did clinics not in systems (24 percentage points higher in automated reminders), but they also reported more barriers related to resources and user acceptance (26 percentage points higher in barriers to implementation and 33 points higher in disruptive alarms). Barriers related to workflow redesign increased in clinics affiliated with health systems (33 points higher). Rural clinics were more likely to report barriers to training.

Conclusions

CDS barriers related to resources and user acceptance remained substantial. Health systems, while being effective in promoting CDS tools, may need to provide further assistance to their affiliated ambulatory clinics to overcome barriers, especially the requirement to redesign workflow. Rural clinics may need more resources for training.

Keywords: clinical decision support, adoption barriers, user acceptance, ambulatory care, health systems

INTRODUCTION

Clinical decision support (CDS), a key component of an electronic health record (EHR), generally refers to tools that enhance decision making in the clinical workflow.1 Examples of CDS tools include computerized alerts and reminders to care providers and patients, and pre-established condition-specific order sets based on clinical guidelines that can improve the receipt of recommended care by targeted patients.1 Because of its potential for reducing medical errors and improving quality of care,2 CDS featured prominently in the Centers for Medicare & Medicaid Services (CMS) Meaningful Use (MU) program, first established in 2009 to provide financial incentives to hospitals and physicians for adopting and using EHRs. To receive funding in each stage of MU, healthcare providers had to meet specific CDS requirements, including the number of decision support rules, medication alerts, and the use of patient registries.3 Partly driven by government incentive policies, the variety of CDS tools and the proportion of providers using CDS increased significantly in recent years.4 While the majority of published studies of CDS reported positive effects on clinical and patient outcomes, in some cases the benefits were not as large as expected or were not realized at all.5 The varying effectiveness of CDS has been attributed to particular contextual or implementation factors, such as provider education and training.5 The existing literature has discussed challenges and barriers to adopting and using CDS,6 but empirical evidence is mostly limited to case studies,7 qualitative analyses,8 and surveys focusing on physician satisfaction.9 In particular, to our knowledge, no studies have quantified the magnitude of major barriers to using CDS in ambulatory care.

The rapidly changing landscape of healthcare delivery, especially the significant expansion of health systems due to an increase in hospital mergers and hospitals’ acquisitions of physician practices,10 will likely affect whether and how providers use CDS. On the one hand, health systems, functioning as parent organizations of hospitals, ambulatory clinics, and other care-providing units (such as nursing homes), may facilitate the implementation and sharing of enterprise-wide EHRs that include CDS as a component, and thereby reduce barriers faced by providers in using the technology. On the other hand, being part of a health system may require an ambulatory clinic to quickly “migrate” to a new EHR with a different CDS design, which potentially entails changes in care processes and thus creates barriers to using CDS.

In recent years, there has been important progress in CDS technologies and standards, such as CDS Hooks11 and platforms based on Fast Healthcare Interoperability Resources (FHIR).12 These advances can potentially facilitate the integration of decision support mechanisms into existing EHRs, the exchange of patient information among care providers, and the use of a more current and expanded evidence base. However, CDS Hooks and FHIR are still in the early stages of adoption,13 and their impact on care delivery has not yet been empirically assessed.

We analyze a unique dataset containing information about the use of CDS and barriers reported by ambulatory clinics in Minnesota, one of the states leading the adoption of EHRs and CDS. These clinics’ experience with CDS can be valuable for providers in other regions as CDS adoption increases nationwide. This study expands the empirical literature on CDS in 3 ways. First, we document the longitudinal trends (2014-2016) in CDS use and barriers reported by ambulatory clinics, allowing examination of change over time. Given the lack of understanding in the existing literature of the magnitude of barriers to CDS adoption and use in ambulatory care, our findings serve as an important benchmark. Second, we examine 7 specific barriers relevant to 3 distinct aspects of using CDS: resource barriers, user acceptance barriers, and technology barriers. Third, given the increasing role of health systems in care delivery14 and EHR implementation,10 we compare CDS use and barriers in clinics that were affiliated with health systems to those that were not. Although our data extend only to 2016, the information these clinics reported on CDS use and barriers, such as issues related to clinical workflows and false alarms, is consistent with major ongoing concerns in the field.15–17 Moreover, as CDS continues to be adopted by ambulatory providers and the influence of health systems increases, the key insights from this study remain relevant, even if the magnitude of some barriers may have changed after 2016. Our findings can inform care providers, EHR vendors, health system administrators, and future policies to improve CDS utilization.

MATERIALS AND METHODS

Data and sample

We use 2014-2016 data from the Minnesota Health Information Technology Ambulatory Clinic Survey (MN-HIT), conducted annually by Minnesota Community Measurement.18 The first wave of the MN-HIT was completed in 2014 by 1316 clinics in 234 medical groups, with a response rate of 90%. More than half of these clinics were affiliated with 20 health systems. The survey successfully retained responses from most of these clinics in 2015 and 2016, with 928 clinics (71% of the 2014 sample) responding in all 3 years. The data contain detailed information on the adoption and use of EHRs and CDS, as well as barriers to using CDS. The same data source has been used and discussed in previous studies on related topics.19,20

To identify whether a particular clinic belongs to a health system, we match the clinics and medical groups in the MN-HIT against the Medicare Provider Enrollment, Chain, and Ownership System (PECOS) database21 and the Healthcare Information and Management Systems Society (HIMSS) annual surveys of ambulatory clinics, provided by the HIMSS Analytics LOGIC Market Intelligence Platform. We define a health system as an organization that owns at least 1 hospital and 1 group of physicians, following the definitions of health systems developed by the Agency for Healthcare Research and Quality and the RAND Center of Excellence on Health System Performance.22,23 Both PECOS and HIMSS identify affiliations with health systems by ownership or management.20 For clinics and medical groups that cannot be located in either PECOS or HIMSS, we use information from these provider organizations’ websites to determine whether they belong to health systems. Because we aim to understand barriers among CDS users, we include only clinics that reported using CDS in all 3 years. The final study sample is a balanced panel of 821 clinics (62% of the 2014 sample) in 117 medical groups from 2014 to 2016.
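The two sample rules in this paragraph (the health system definition and the balanced-panel restriction to clinics using CDS in every year) can be sketched in Python. This is a minimal illustration under assumed inputs, not the authors’ code: the record fields (`clinic_id`, `year`, `uses_cds`) and the helper names are hypothetical, and the actual matching against PECOS, HIMSS, and organization websites is not reproduced.

```python
# Hypothetical sketch of the sample rules; field and function names are
# illustrative assumptions, not MN-HIT variable names.

STUDY_YEARS = {2014, 2015, 2016}


def is_health_system(n_hospitals, n_physician_groups):
    """Definition used in the text: owns >= 1 hospital and >= 1 physician group."""
    return n_hospitals >= 1 and n_physician_groups >= 1


def balanced_cds_panel(records):
    """Keep clinic-year records only for clinics reporting CDS use in every study year."""
    years_using_cds = {}
    for r in records:
        if r["uses_cds"]:
            years_using_cds.setdefault(r["clinic_id"], set()).add(r["year"])
    # A clinic qualifies only if the set of CDS-using years covers all study years.
    keep = {cid for cid, yrs in years_using_cds.items() if STUDY_YEARS <= yrs}
    return [r for r in records if r["clinic_id"] in keep and r["year"] in STUDY_YEARS]
```

A clinic that skipped a survey wave, or answered that it did not use CDS in any one year, drops out entirely, which is what makes the resulting panel balanced.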

Outcomes: use of CDS tools and barriers

Several frameworks of innovation and diffusion have theorized the major types of barriers to adopting and using new technology.24 The Technology Acceptance Model (TAM)25 and the Resource-Based Theory (RBT)26 are particularly relevant in the context of CDS adoption and use. The TAM links the level and success of technology use to the interaction between the (perceived) usefulness of the technology and the behavioral intention of the targeted users. Under the TAM, the key barriers to using technology include user resistance or unwillingness due to (perceived) limited effectiveness or high cost (eg, disruption of existing processes).27 By contrast, the RBT focuses on the resources, readiness, and competencies of the potential recipients of technology (eg, available resources for training and maintenance). In the MN-HIT survey, clinics reported whether they used the following CDS tools in each year (Table 1): automated reminders for missing labs and tests, chronic disease care plans and flow sheets, clinical guidelines based on patient problems list, high-tech diagnostic imaging decision support tools, medication guides/alerts, patient- or condition-specific reminders, and preventive care services reminders. Additionally, the survey asked all clinics whether they faced the following 7 specific barriers when using decision support at the point of care (Table 1): lack of resources to build/implement, lack of staff and/or provider training, requires a redesign of workflow processes, too many false alarms/too disruptive, requires a system upgrade, software not available, and hardware issues. Following the TAM and RBT, we categorize the 7 reported barriers (Table 1) by whether they are mainly related to resources, user acceptance, or technology. From the responses to these questions (yes/no), we create an indicator for each CDS tool and barrier, as well as a count variable for the total number of barriers in each year. These indicators and the count of barriers are the main outcomes for the analysis.
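A minimal sketch of how the yes/no barrier responses for one clinic-year might be turned into the outcome variables. The shortened barrier keys are hypothetical labels for the 7 survey items in Table 1. Treating a missing answer as “no” and the per-category `any_*` flags are assumptions added for illustration, since the text defines only per-barrier indicators and a total count.

```python
# Hypothetical keys for the 7 survey barriers, mapped to the 3 categories in Table 1.
BARRIER_CATEGORY = {
    "lack_resources_build_implement": "resource",
    "lack_staff_provider_training": "resource",
    "requires_workflow_redesign": "user_acceptance",
    "too_many_false_alarms": "user_acceptance",
    "requires_system_upgrade": "technology",
    "software_not_available": "technology",
    "hardware_issues": "technology",
}


def barrier_outcomes(responses):
    """Convert one clinic-year's yes/no answers into 0/1 indicators and counts."""
    out = {}
    for barrier in BARRIER_CATEGORY:
        # Assumption: anything other than an explicit "yes" (including missing) is 0.
        answer = responses.get(barrier, "").strip().lower()
        out[barrier] = 1 if answer == "yes" else 0
    # Total number of barriers reported (range 0-7).
    out["n_barriers"] = sum(out[b] for b in BARRIER_CATEGORY)
    # Illustrative extra: whether any barrier in each category was reported.
    for cat in ("resource", "user_acceptance", "technology"):
        out["any_" + cat] = int(any(out[b] for b, c in BARRIER_CATEGORY.items() if c == cat))
    return out
```

For example, a clinic answering “Yes” to false alarms and to training, and “no” to hardware issues, gets `n_barriers == 2`, with a resource and a user acceptance barrier but no technology barrier.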

Table 1.

List of CDS tools and barriers included in the survey

| Category | Item |
|---|---|
| CDS tools used by clinics | Automated reminders |
| | Chronic disease care plans and flow sheets |
| | Clinical guidelines based on patient problems list, gender, and age |
| | High-tech diagnostic imaging |
| | Medication guides/alerts |
| | Patient-specific or condition-specific reminders |
| | Preventive care services reminders |
| Barriers related to resources | Lack of resources to build/implement |
| | Lack of staff and/or provider training |
| Barriers related to user acceptance | Requires a redesign of workflow processes |
| | Too many false alarms/too disruptive |
| Barriers related to technology | Requires a system upgrade |
| | Software not available |
| | Hardware issues |

CDS: clinical decision support.

Explanatory variables: Characteristics of clinics and medical groups

We examine the association between reported CDS barriers and characteristics of clinics and medical groups, including clinic type (primary care vs specialty or mixed), location (urban vs rural), number of physicians in a clinic (1-19 vs 20 or more), number of clinics in a medical group (1; 2-10; 11-20; 21 or more), and an indicator for a clinic’s affiliation with a health system (yes/no).

Additionally, we include in our analysis 4 important measures that reflect the comprehensiveness of a clinic’s CDS and EHR system, and a clinic’s length of experience in using EHR: (1) a count variable (range, 0-7) based on whether a clinic has each of the 7 CDS tools; (2) a count variable (range, 0-7) based on whether a clinic has a set of core EHR functionalities; (3) a count variable (range, 0-6) reflecting the scope of the e-prescribing functions; and (4) the timing of EHR implementation (2011 or before; 2012-2013; 2014-2016), used as a proxy for a clinic’s overall experience with health information technology. The details of the 2 measures for EHR comprehensiveness can be found in Supplementary Tables A1 and A2.
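The cut points for the categorical covariates in these two paragraphs can be written out as small helper functions. The function names are hypothetical, and the sketch assumes that raw physician counts, clinic counts per medical group, and EHR implementation years are available for each clinic.

```python
# Hypothetical helpers encoding the cut points stated in the text.


def physician_size_cat(n_physicians):
    """Clinic size: 1-19 vs 20 or more physicians."""
    return "1-19" if n_physicians <= 19 else "20+"


def group_size_cat(n_clinics):
    """Medical group size: 1; 2-10; 11-20; 21 or more clinics."""
    if n_clinics <= 1:
        return "1"
    if n_clinics <= 10:
        return "2-10"
    if n_clinics <= 20:
        return "11-20"
    return "21+"


def ehr_timing_cat(implementation_year):
    """EHR implementation timing, a proxy for health IT experience."""
    if implementation_year <= 2011:
        return "2011 or before"
    if implementation_year <= 2013:
        return "2012-2013"
    return "2014-2016"


def count_scale(flags):
    """Count-type comprehensiveness scale, eg number of the 7 CDS tools present."""
    return sum(bool(f) for f in flags)
```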

Finally, we control for clinics’ EHR vendors in our analysis, using an indicator for each vendor with at least a 3% share of our sample in any given year. The remaining vendors (ie, those with a share below 3% in every year) are grouped together. Recent studies have shown that hospital performance on MU criteria varies by EHR vendor,28 so vendors may also affect the extent to which the productivity gains of health information technology are realized in general.
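One way to implement the vendor rule, sketched under the assumption that each survey year is available as a list of vendor names (one entry per clinic); the input shape and function names are hypothetical. Because the rule is “at least 3% in any year,” a vendor such as Centricity in Table 2 (2.8% in 2014, 3.2% in 2015) keeps its own indicator.

```python
from collections import Counter

# Hypothetical input: vendor_by_year maps survey year -> list of vendor names,
# one entry per clinic in that year's sample.


def vendors_to_keep(vendor_by_year, threshold=0.03):
    """Vendors whose sample share reaches `threshold` in at least one year."""
    keep = set()
    for vendors in vendor_by_year.values():
        counts = Counter(vendors)
        n = len(vendors)
        keep |= {v for v, c in counts.items() if c / n >= threshold}
    return keep


def recode_vendor(vendor, keep):
    """Collapse vendors that stay below the threshold in every year into 'Other'."""
    return vendor if vendor in keep else "Other"
```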

The Human Subjects Protection Committee, which is the Institutional Review Board of RAND Corporation, has determined that the study is minimal risk and approved a waiver of consent for using the corresponding secondary data.

Analysis

We first examine the trends of CDS utilization and barriers reported by the clinics in our sample and test whether the changes over time are statistically significant. Linear probability (regression) models are then employed to test whether the reported barriers are associated with clinic and medical group characteristics. Next, using the subgroup of the clinics that reported at least 1 barrier in 2014, we explore possible predictors of change in the count of barriers over time, also with a linear probability model. Specifically, we create an indicator for barrier reduction by comparing the number of barriers reported in 2014 to that reported in 2016 (1 = fewer barriers reported in 2016; 0 = same number or more barriers reported in 2016) and use the same set of explanatory variables from 2014. Also, considering the important differences between large (with 21 or more clinics) and small medical groups (with 20 or fewer clinics), we conduct a subsample analysis using clinics in small medical groups with the same specifications. Finally, to ensure that our results are robust, we conduct sensitivity analyses using logistic regression models.
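The outcome for the change analysis and the small-group subsample can be sketched as follows. Field names (`n_barriers_2014`, `n_barriers_2016`, `clinics_in_group`) are hypothetical; as the text specifies, the explanatory variables for this analysis would be taken from 2014.

```python
# Hypothetical sketch of the barrier-reduction outcome and subsample filters.


def barrier_reduction(n_2014, n_2016):
    """1 = fewer barriers reported in 2016 than in 2014; 0 = same number or more."""
    return int(n_2016 < n_2014)


def change_analysis_rows(clinics):
    """Clinics with at least 1 barrier in 2014, with the reduction indicator attached."""
    rows = []
    for c in clinics:
        if c["n_barriers_2014"] >= 1:
            reduced = barrier_reduction(c["n_barriers_2014"], c["n_barriers_2016"])
            rows.append({**c, "reduced": reduced})
    return rows


def small_group_subsample(rows):
    """Clinics in small medical groups (20 or fewer clinics)."""
    return [r for r in rows if r["clinics_in_group"] <= 20]
```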

Fixed effects for time periods are included in all models. Standard errors are clustered at the medical group level to adjust for intragroup and serial correlations.
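To make the estimator concrete, here is a dependency-free sketch of a linear probability model: ordinary least squares on a 0/1 outcome, with year fixed effects entered as dummies (2014 as the base year) and standard errors clustered by medical group using the common CR1 sandwich formula V = c (X′X)⁻¹ (Σ_g X_g′u_g u_g′X_g) (X′X)⁻¹, where c = G/(G−1) × (N−1)/(N−K). The text does not state which small-sample correction, if any, was applied, so the CR1 factor is an assumption, and all function names are hypothetical; in practice a statistics package would be used.

```python
import math

# Minimal pure-Python LPM with year dummies and CR1 cluster-robust SEs.


def _solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        p = m[col][col]
        if abs(p) < 1e-12:
            raise ValueError("design matrix is singular")
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] for i in range(n)]


def _inverse(a):
    n = len(a)
    cols = [_solve(a, [1.0 if i == j else 0.0 for i in range(n)]) for j in range(n)]
    return [[cols[j][i] for j in range(n)] for i in range(n)]


def _matmul(a, b):
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def design_row(covariates, year, fe_years=(2015, 2016)):
    """Intercept + covariates + year dummies (2014 is the omitted base year)."""
    return [1.0] + [float(c) for c in covariates] + [1.0 if year == y else 0.0 for y in fe_years]


def lpm_cluster(y, X, clusters):
    """Return (coefficients, cluster-robust SEs) on the probability scale."""
    n, k = len(X), len(X[0])
    xtx = [[sum(X[i][a] * X[i][b] for i in range(n)) for b in range(k)] for a in range(k)]
    xty = [sum(X[i][a] * y[i] for i in range(n)) for a in range(k)]
    beta = _solve(xtx, xty)
    resid = [y[i] - sum(X[i][j] * beta[j] for j in range(k)) for i in range(n)]
    # Sum the score vectors X_i * u_i within each cluster (medical group).
    scores = {}
    for i, g in enumerate(clusters):
        s = scores.setdefault(g, [0.0] * k)
        for j in range(k):
            s[j] += X[i][j] * resid[i]
    meat = [[sum(s[a] * s[b] for s in scores.values()) for b in range(k)] for a in range(k)]
    bread = _inverse(xtx)
    G = len(scores)
    cr1 = G / (G - 1) * (n - 1) / (n - k)  # assumed small-sample adjustment
    V = _matmul(_matmul(bread, meat), bread)
    return beta, [math.sqrt(cr1 * V[j][j]) for j in range(k)]
```

Coefficients are on the probability scale, so multiplying by 100 gives percentage points; a coefficient of 0.209, for instance, corresponds to the 20.9 percentage points reported for health system affiliation in Table 3.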

RESULTS

Table 2 presents the characteristics of the study sample by year. The majority of the clinics in our sample provided at least some specialty care (57%), had 19 or fewer physicians (86%), were in medical groups with 21 or more clinics (58%), and were located in urban areas (82%). Over the study period, the proportion of clinics affiliated with (owned or managed by) health systems increased slightly, from 60% in 2014 to 62% in 2016. Most clinics (87%) implemented EHR in 2011 or earlier. Among all EHR vendors reported in our data, Epic (Epic, Verona, WI) had the dominant share, which increased over time (48% in 2014 and 59% in 2016).

Table 2.

Characteristics of clinics and medical groups by year (N = 821)

| Characteristic | 2014 | 2015 | 2016 | 2014-2016 change (percentage points; scale points for the 2 scales) |
|---|---|---|---|---|
| Health system affiliation (by ownership or management) | | | | |
| No | 39.8% | 38.7% | 37.8% | −2.0 |
| Yes | 60.2% | 61.3% | 62.2% | 2.0 |
| Geographic location | | | | |
| Rural | 17.8% | 17.9% | 17.9% | 0.1 |
| Urban | 82.2% | 82.1% | 82.1% | −0.1 |
| Type of practice | | | | |
| Primary care only | 43.1% | 42.9% | 44.7% | 1.6 |
| Specialty/mixed | 56.9% | 57.1% | 55.3% | −1.6 |
| Clinic size | | | | |
| 1-19 physicians | 85.9% | 83.3% | 84.0% | −1.9 |
| 20 or more physicians | 14.1% | 16.7% | 16.0% | 1.9 |
| Medical group size (number of clinics) | | | | |
| 1 | 5.0% | 5.0% | 5.0% | 0 |
| 2-10 | 26.4% | 26.4% | 26.4% | 0 |
| 11-20 | 10.7% | 10.7% | 10.7% | 0 |
| 21+ | 57.9% | 57.9% | 57.9% | 0 |
| Electronic health record implementation | | | | |
| 2011 or before | 87.1% | 87.1% | 87.1% | 0 |
| 2012-2013 | 9.9% | 9.9% | 9.9% | 0 |
| 2014-2016 | 3.0% | 3.0% | 3.0% | 0 |
| EHR vendor | | | | |
| Other | 17.3% | 16.2% | 14.0% | −3.3 |
| Allscripts | 6.5% | 4.5% | 5.6% | −0.9 |
| Centricity | 2.8% | 3.2% | 1.7% | −1.1 |
| Cerner | 5.7% | 6.0% | 5.8% | 0.1 |
| eClinicalWorks | 5.6% | 4.1% | 5.7% | 0.1 |
| Epic | 47.9% | 58.0% | 59.2% | 11.3 |
| Greenway | 9.7% | 4.1% | 4.3% | −5.4 |
| NextGen | 4.5% | 3.9% | 3.7% | −0.8 |
| Electronic health record comprehensiveness | | | | |
| EHR functionality scale (0-7) | 4.7 | 4.7 | 5.0 | 0.3 |
| E-prescribing scale (0-6) | 4.6 | 4.7 | 5.0 | 0.4 |

None of the 2014-2016 changes is statistically significant.

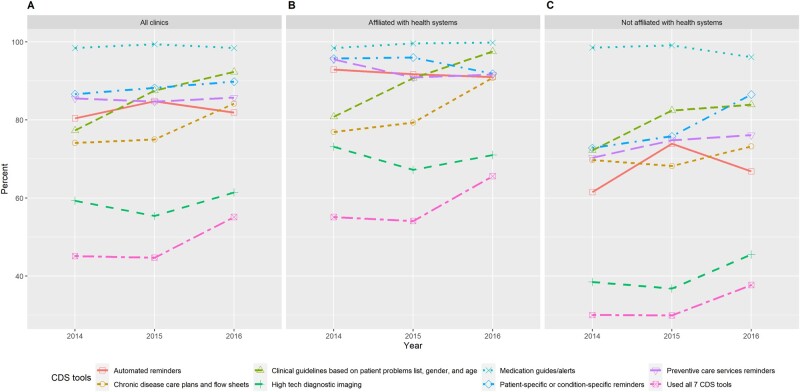

The use of different CDS tools and the reported barriers are summarized in Figures 1 and 2, with additional results available in Supplementary Table A3. By 2016, almost all clinics (98%) used electronic medication guides and alerts, whereas only 61% of clinics used high-tech diagnostic imaging. While the use of all CDS tools increased over time, the largest increases occurred in the use of clinical guidelines based on patient problem list (from 77% in 2014 to 92% in 2016) and chronic disease care plans (from 74% in 2014 to 84% in 2016). Overall, clinics affiliated with health systems used more CDS tools than did those not in health systems. In 2014, 55% of system-affiliated clinics used all 7 CDS tools, and the proportion increased to 66% in 2016. In comparison, among clinics outside health systems, only 30% used all 7 CDS tools in 2014 and 38% in 2016.

Figure 1.

Reported use of clinical decision support (CDS) tools for (A) all ambulatory clinics in the sample, (B) ambulatory clinics affiliated with health systems, and (C) ambulatory clinics not affiliated with health systems by year.

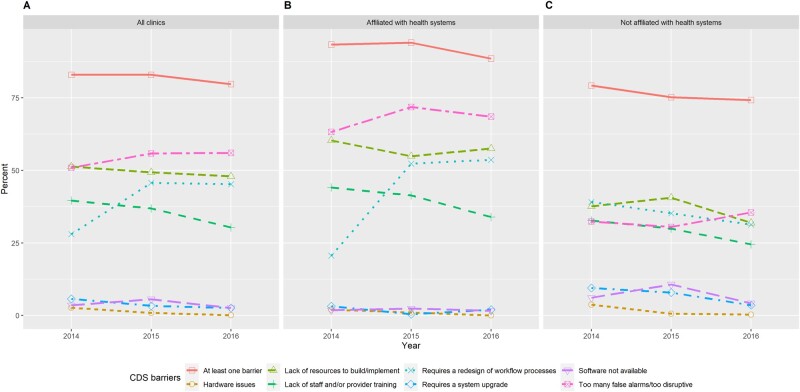

Figure 2.

Reported barriers to using clinical decision support (CDS) tools for (A) all ambulatory clinics in the sample, (B) ambulatory clinics affiliated with health systems, and (C) ambulatory clinics not affiliated with health systems by year.

In 2014, 83% of the clinics reported at least 1 barrier, and the proportion decreased slightly to 80% in 2016. Barriers related to user acceptance and resources were substantial. By large margins, the 4 most commonly reported barriers were “too many false alarms or too disruptive” (51% in 2014 and 56% in 2016), “lack of resources to build or implement” (51% in 2014 and 48% in 2016), “requires a redesign of workflow processes” (28% in 2014 and 45% in 2016), and “lack of staff or provider training” (40% in 2014 and 30% in 2016). In contrast, barriers related to technology were reported by only a small fraction of the clinics in 2014, and they decreased further in 2016. Over time, the 2 resource barriers decreased while both user acceptance barriers increased. Compared with clinics outside health systems, those affiliated with health systems were more likely to report barriers related to resources and user acceptance but less likely to report technology barriers. For example, 54% of clinics affiliated with health systems reported barriers due to workflow redesign in 2016, whereas for clinics without system affiliation, the proportion was only 31% in the same year. On average, system-affiliated clinics reported 2.0 barriers in 2014, which increased to 2.2 in 2016. In contrast, clinics not affiliated with health systems reported an average of 1.8 barriers in 2014, which decreased to 1.5 in 2016.
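Because the 2014 versus 2016 comparisons above involve the same clinics in both years, a paired test is one natural way to check whether a change in a barrier’s prevalence is statistically significant. The text does not say which test was used; the McNemar test below is a substitute chosen for illustration, it ignores clustering within medical groups, and the function names and any example counts are hypothetical.

```python
import math


def discordant_counts(pairs):
    """pairs: (reported_2014, reported_2016) 0/1 tuples, one per clinic."""
    yes_to_no = sum(1 for a, b in pairs if a == 1 and b == 0)
    no_to_yes = sum(1 for a, b in pairs if a == 0 and b == 1)
    return yes_to_no, no_to_yes


def mcnemar(yes_to_no, no_to_yes):
    """McNemar chi-square (1 df, no continuity correction) and its p-value."""
    discordant = yes_to_no + no_to_yes
    if discordant == 0:
        return 0.0, 1.0
    chi2 = (yes_to_no - no_to_yes) ** 2 / discordant
    # For 1 df, P(chi2 > x) = erfc(sqrt(x / 2)).
    return chi2, math.erfc(math.sqrt(chi2 / 2))
```

Only clinics whose answer changed between the 2 years contribute to the statistic; clinics reporting the barrier in both years (or in neither) carry no information about the direction of change.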

Based on the estimates from the linear probability models using the whole sample (Table 3), several factors were significant predictors of barriers related to user acceptance. Clinics in rural areas were less likely than urban clinics to report issues in workflow redesign (13 percentage points lower). Clinics affiliated with health systems were more likely to report excessive or disruptive alarms (21 percentage points higher), as were clinics in large medical groups (43 percentage points higher for medical groups with 21 or more clinics). Clinics using more EHR functionalities were less likely to report workflow redesign issues (15 percentage points lower for each additional EHR functionality), and reports of workflow redesign barriers were about 21 percentage points higher in 2015 and 2016 than in 2014.

Table 3.

Factors significantly associated with barriers to using CDS: coefficients (percentage points) and P values (in parentheses) from linear probability models

| Barriers to using CDS | Associated factors | Coefficient (P value) |
|---|---|---|
| Entire sample (N = 821) | | |
| Resource barriers | | |
| Resources to implement | Clinics from large medical groups (21+ clinics) | 25.9 (.05) |
| | EHR vendor eClinicalWorks compared with other vendors | −21.4 (.02) |
| Staff and provider training | Clinics located in rural areas | 22.0 (<.01) |
| User acceptance barriers | | |
| Workflow redesign | Clinics located in rural areas | −12.7 (.03) |
| | EHR functionality scale | −14.6 (<.01) |
| | Year of survey: 2015 | 21.3 (.014) |
| | Year of survey: 2016 | 21.5 (<.01) |
| Too many alarms or disruptive | Clinics affiliated with a health system | 20.9 (.05) |
| | Clinics from large medical groups (21+ clinics) | 43.1 (<.01) |
| | EHR vendor Centricity compared with other vendors | 37.8 (.02) |
| | EHR vendor Greenway compared with other vendors | 34.7 (<.01) |
| Reduction of barriers | EHR vendor Allscripts compared with other vendors | 46.2 (.05) |
| Small medical groups, 20 or fewer clinics (n = 346) | | |
| Resource barriers | | |
| Resources to implement | Clinics affiliated with a health system | 21.2 (<.01) |
| | Clinics with 20 or more physicians | −18.9 (<.01) |
| | Year of survey: 2016 | −14.1 (.04) |
| Staff and provider training | Clinics affiliated with a health system | 16.2 (.03) |
| | Clinics with 20 or more physicians | −12.2 (.03) |
| User acceptance barriers | | |
| Workflow redesign | EHR system implemented in 2014-2016 | −19.2 (.05) |
| | EHR vendor Greenway compared with other vendors | −25.1 (<.01) |
| Too many alarms or disruptive | EHR vendor Centricity compared with other vendors | 32 (.04) |
| Reduction of barriers | No significantly associated factors | – |

The full results from the linear probability model can be found in Supplementary Tables A4 and A5.

CDS: clinical decision support; EHR: electronic health record.

Resource barriers were associated with 2 important factors. Clinics in large medical groups were more likely than single clinics to report resource issues in implementation (26 percentage points higher), whereas clinics in rural areas were more likely to report issues in staff and provider training (22 percentage points higher). In the model of longitudinal change in the number of barriers, most factors included in the analysis were not associated with barrier reduction over time; the exception was the Allscripts EHR, which was associated with a 46 percentage point higher probability of reporting fewer barriers in 2016 than in 2014, compared with other vendors (Table 3).

The results from analyzing the subsample of clinics in small medical groups (with 20 or fewer clinics) show that health system affiliation is associated with increased resource barriers related to implementation (21 percentage points higher) and training (16 percentage points higher) (Table 3). Also, in this subsample, more recent EHR implementation, between 2014 and 2016, was associated with a lower probability of reporting CDS barriers related to workflow redesign (19 percentage points lower), compared with EHR implementation in 2011 or earlier. Full results from the linear probability models can be found in Supplementary Tables A4 and A5.

Finally, in our sensitivity analyses, the marginal effects estimated from the logistic regressions were similar to the coefficients from the linear probability models (Supplementary Tables A6 and A7), although their magnitudes and statistical significance were slightly greater.

DISCUSSION

While the use of CDS tools proliferated among ambulatory clinics between 2014 and 2016, there was still much room to expand the scope of their use. By 2016, among clinics not affiliated with health systems, only 38% were using all 7 CDS tools included in the survey. The limited use of decision support for high-tech diagnostic imaging in both groups was not surprising, as the cost-effectiveness of such tools has been increasingly questioned.29,30 However, the relatively low use of automated reminders and chronic disease care plans by clinics not affiliated with health systems is concerning, as these 2 CDS functions may be effective in improving patient outcomes.31,32 The level of CDS use found in our data is consistent with another recent study based on a different data source.33

Our findings show that, despite the accumulation of experience with CDS by these clinics, some important barriers were consistently reported. Workflow redesign and false alarms or disruptions, 2 major barriers related to user acceptance, increased significantly over time. Prior research on CDS highlighted these 2 barriers as significant factors associated with provider resistance and frustration.34,35 Our findings quantify their prevalence as reported by ambulatory clinics in Minnesota. Considering that all clinics in our sample had at least 3 years of experience using CDS by the end of 2016, these barriers seem to be persistent. Although we do not have information on the trends of these barriers after 2016, the observation from our data is consistent with the recent and current focus of CDS policy and research: workflow redesign and alert fatigue continue to be the 2 main issues in conceptualizing and understanding CDS effectiveness.15–17

Integrating CDS (and EHRs in general) into clinical workflows is key to using decision support successfully, as outlined in the Five Rights framework of CDS implementation.36 Among clinics affiliated with health systems, reported barriers due to required workflow redesign increased by 32 percentage points between 2014 and 2015 and rose further in 2016. This increase could reflect difficulties clinics faced in working with vendors to customize CDS and in configuring the adopted CDS tools effectively. Barriers to redesigning clinical workflows may not become apparent until some time after initial adoption and can become more conspicuous once clinics begin efforts to optimize CDS. Interestingly, clinics not in health systems reported a much lower level of workflow redesign issues, with a decreasing trend, which might reflect these clinics’ lower need to adapt given their slower expansion of CDS use. Although our data lack sufficient information to explain the exact reasons behind the clinical workflow issues, our results indicate that, among clinics in small medical groups, more recent EHR implementation was associated with fewer barriers related to workflows. This finding is somewhat puzzling, as it often takes years for providers to fully optimize an EHR, and clinics with less experience would presumably face more challenges in integrating CDS into workflows. Previous research suggested that late EHR adopters were able to learn from their peers’ earlier experiences and were thus better prepared.37,38 Another possibility is that clinics with more recent EHR implementation did not immediately start using some of the important features embedded in these CDS tools that require workflow redesign, and hence reported fewer barriers. Similarly, our finding that rural clinics were less likely to report workflow redesign issues might also be due to the more limited scope of CDS use among these clinics. Future research could investigate this phenomenon to better understand workflow redesign in the context of using CDS. Some providers’ early experiences with CDS Hooks and FHIR suggest that clinical workflow integration might improve with these new tools and emerging standards.13 Our findings support efforts to further their adoption and use.

Barriers related to resources for implementation and training, although decreasing, were still substantial by the end of the study period. By contrast, technological barriers stemming from hardware, software, and system upgrades were reported by few clinics, possibly owing to overall improvements in health information technology and vendor services. The greater tendency of clinics in large medical groups to report resource barriers might reflect the complexity of implementing and standardizing CDS in multiclinic settings, as well as the cost implications.39 Having the same CDS across all clinics within a medical group or a health system can produce efficiencies, but if providers in these clinics vary significantly in their clinical processes, some may face barriers when using such tools. Building a good alert and reminder system in CDS requires high levels of specificity and sensitivity,40,41 which can be particularly difficult to achieve in large medical groups with considerably heterogeneous providers. We also find that rural clinics were more likely to report resource barriers related to staff and provider training, suggesting that these clinics may need targeted assistance after CDS adoption to fully leverage the technology in their care delivery.
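The link between specificity and false alarms can be made concrete through positive predictive value (PPV), the share of fired alerts that are true positives: when the condition an alert targets is uncommon, even a fairly specific rule produces mostly false alarms. The inputs below are hypothetical, not MN-HIT data, and are chosen only to illustrate the arithmetic.

```python
def alert_ppv(sensitivity, specificity, prevalence):
    """Share of fired alerts that are true positives (positive predictive value)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical inputs for illustration only.
ppv = alert_ppv(sensitivity=0.90, specificity=0.95, prevalence=0.02)
false_alarm_share = 1.0 - ppv
```

With these inputs, roughly 73% of alerts would be false alarms; raising specificity to 0.99 lifts the PPV to about 0.65. Heterogeneous providers make it harder to tune a single rule set to that level of specificity for everyone.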

Finally, clinics in health systems showed a much higher level of using alerts, reminders, and chronic disease care plans, which also seemed to have led to more frequent reporting of false alarms and disruptions. Although health systems may become the driving force for EHR and CDS adoption in ambulatory care,42 their effectiveness in reducing barriers, and thus improving the actual experience of using CDS, was not reflected in our data. On the contrary, among clinics in small medical groups, affiliation with a health system was associated with significantly increased resource barriers, possibly due to their need to quickly adapt to the health system’s enterprise-wide EHR and CDS, but without sufficient resources allocated for implementation and training.

In recent years, the growing use of CDS that incorporates artificial intelligence (AI)43 and of large-scale health data exchange partnerships, such as Observational Health Data Sciences and Informatics (OHDSI),44 may help care providers overcome some of the key barriers to using decision support. For example, AI and machine learning tools can potentially tailor alerts and reminders to the specific preferences and expertise of physicians,45 whereas OHDSI and other similar partnerships enable the use of a more expanded evidence base and more standardized data exchange.46 As a result, CDS use may be less constrained by providers’ own EHRs and the need to change clinical workflows. However, implementing these technological advances in CDS effectively and safely can be challenging and requires rigorous, real-time evaluation.43 More research is needed to fully understand the impacts of AI, OHDSI, and FHIR on CDS use.

Limitations

Our study has important limitations. First, our data do not extend beyond 2016 and may not capture the trends in CDS use and barriers afterward. Nonetheless, the main insights from our analyses are unlikely to have changed in more recent years, as new CDS technologies have not yet been widely adopted, while managerial practices that can reduce user acceptance barriers take considerable time to establish. Second, we only have data from Minnesota, and our findings may not be generalizable to clinics in other states. However, Minnesota is considered one of the leading states in health information technology,47 and the experience of its clinics may provide useful lessons to other regions as CDS adoption continues. Third, the MN-HIT data do not contain important organizational characteristics such as leadership, governance structures, and information technology management processes, which can significantly affect CDS use and the potential barriers encountered. Fourth, the count variables measuring the comprehensiveness of CDS and EHR used in the analysis may not fully characterize their complexity and heterogeneity across clinics, because the same CDS tool or EHR functionality can be designed and configured in different ways. Finally, the main outcomes in this study, the use of CDS and barriers, are based on binary responses to survey questions at the clinic level, which are subject to measurement error and do not capture the intensity of CDS use or the severity of barriers.20

CONCLUSION

Despite the continuing diffusion of CDS in ambulatory care, a substantial proportion of ambulatory clinics reported barriers to using the technology. Barriers related to clinical workflows and resources persisted or even increased over time. Affiliation with a health system was associated with more reported barriers. When allocating resources for implementation and training, health systems may need to target particular clinics. They may also need to help those clinics bridge the gap between CDS and clinical workflows and reduce alert fatigue. Rural clinics may need more training resources. Our findings also echo calls to establish federal leadership in advancing CDS standards and creating a national CDS repository,48 which could help facilitate and coordinate CDS implementation, training, and workflow redesign across the board. For clinics outside health systems, policies that further incentivize expanded CDS use may be needed.

FUNDING

This work was supported through the RAND Center of Excellence on Health System Performance, which is funded through a cooperative agreement (1U19HS024067-01) between the RAND Corporation and the Agency for Healthcare Research and Quality.

AUTHOR CONTRIBUTIONS

YS, AA-R, RSR, SHF, and PS contributed to the concept and design. YS, PS, and CLD contributed to the acquisition of data. YS, AA-R, RSR, SHF, and PS contributed to the analysis and interpretation of data. YS, AA-R, DS, and PS drafted the manuscript. YS, AA-R, RSR, SHF, PS, DS, and CLD contributed to critical revision of the manuscript for important intellectual content. YS and AA-R contributed to statistical analysis. YS, RSR, DS, PS, and CLD obtained funding. AA-R contributed to administrative, technical, or logistic support. YS, DS, PS, and CLD contributed to supervision.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

ACKNOWLEDGMENTS

The authors thank Donald Miller at the Pennsylvania State University for his research assistance.

DATA AVAILABILITY

The data underlying this article were obtained from Minnesota Community Measurement under a data use agreement, which does not allow the authors to share the data publicly. Anyone interested in accessing these data should contact Minnesota Community Measurement.

CONFLICT OF INTEREST STATEMENT

The authors report no financial interest with any entity that would pose a conflict of interest with the subject matter of this article.

REFERENCES

- 1. Office of the National Coordinator for Health Information Technology. Clinical decision support. 2018. https://www.healthit.gov/topic/safety/clinical-decision-support. Accessed May 21, 2019.

- 2. Garg AX, Adhikari NKJ, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005; 293 (10): 1223–38.

- 3. Centers for Medicare and Medicaid Services. Stage 2: Eligible Professional Meaningful Use Core Measures Measure 17 of 17: Use Secure Electronic Messaging. 2012. https://www.cms.gov/Regulations-and-Guidance/Legislation/EHRIncentivePrograms/downloads/Stage2_EPCore_17_UseSecureElectronicMessaging.pdf. Accessed August 14, 2020.

- 4. Middleton B, Sittig DF, Wright A. Clinical decision support: a 25 year retrospective and a 25 year vision. Yearb Med Inform 2016; Suppl 1: S103–16.

- 5. Jones SS, Rudin RS, Perry T, et al. Health information technology: an updated systematic review with a focus on meaningful use. Ann Intern Med 2014; 160 (1): 48–54.

- 6. Sittig DF, Wright A, Osheroff JA, et al. Grand challenges in clinical decision support. J Biomed Inform 2008; 41 (2): 387–92.

- 7. Ash JS, Sittig DF, Guappone KP, et al. Recommended practices for computerized clinical decision support and knowledge management in community settings: a qualitative study. BMC Med Inform Decis Mak 2012; 12: 6.

- 8. Moja L, Liberati EG, Galuppo L, et al. Barriers and facilitators to the uptake of computerized clinical decision support systems in specialty hospitals: protocol for a qualitative cross-sectional study. Implement Sci 2014; 9: 105.

- 9. Khairat S, Marc D, Crosby W, et al. Reasons for physicians not adopting clinical decision support systems: critical analysis. JMIR Med Inform 2018; 6 (2): e24.

- 10. Furukawa MF, Machta RM, Barrett KA, et al. Landscape of health systems in the United States. Med Care Res Rev 2020; 77 (4): 357–66.

- 11. Dolin RH, Boxwala A, Shalaby J. A pharmacogenomics clinical decision support service based on FHIR and CDS Hooks. Methods Inf Med 2018; 57 (S 02): e115–23.

- 12. Boussadi A, Zapletal E. A Fast Healthcare Interoperability Resources (FHIR) layer implemented over i2b2. BMC Med Inform Decis Mak 2017; 17 (1): 120.

- 13. Semenov I, Osenev R, Gerasimov S, et al. Experience in developing an FHIR medical data management platform to provide clinical decision support. Int J Environ Res Public Health 2019; 17 (1): 73.

- 14. Ahluwalia SC, Damberg CL, Silverman M, et al. What defines a high-performing healthcare delivery system: a systematic review. Jt Comm J Qual Patient Saf 2017; 43 (9): 450–9.

- 15. Greenes RA, Bates DW, Kawamoto K, et al. Clinical decision support models and frameworks: Seeking to address research issues underlying implementation successes and failures. J Biomed Inform 2018; 78: 134–43.

- 16. Hussain MI, Reynolds TL, Zheng K. Medication safety alert fatigue may be reduced via interaction design and clinical role tailoring: a systematic review. J Am Med Inform Assoc 2019; 26 (10): 1141–9.

- 17. Sutton RT, Pincock D, Baumgart DC, et al. An overview of clinical decision support systems: benefits, risks, and strategies for success. NPJ Digit Med 2020; 3: 17.

- 18. Minnesota Department of Health. 2018 Minnesota Health IT Clinic Survey. https://www.health.state.mn.us/facilities/ehealth/assessment/docs/survey2018clinic.pdf. Accessed September 6, 2020.

- 19. Ranganathan C, Balaji S. Key factors affecting the adoption of telemedicine by ambulatory clinics: insights from a statewide survey. Telemed J E Health 2020; 26 (2): 218–25.

- 20. Rudin RS, Shi Y, Fischer SH, et al. Level of agreement on health information technology adoption and use in survey data: a mixed-methods analysis of ambulatory clinics in 1 US state. JAMIA Open 2019; 2 (2): 231–7.

- 21. Centers for Medicare and Medicaid Services. PECOS for physicians and NPPs. 2019. https://www.cms.gov/Outreach-and-Education/Medicare-Learning-Network-MLN/MLNProducts/Downloads/MedEnroll_PECOS_PhysNonPhys_FactSheet_ICN903764.pdf. Accessed December 11, 2020.

- 22. Agency for Healthcare Research and Quality. Defining health systems. 2017. http://www.ahrq.gov/chsp/chsp-reports/resources-for-understanding-health-systems/defining-health-systems.html. Accessed August 16, 2020.

- 23. Ridgely MS, Duffy E, Wolf L, et al. Understanding U.S. Health Systems: using mixed methods to unpack organizational complexity. EGEMS (Wash DC) 2019; 7 (1): 39.

- 24. Daim TU, Behkami N, Basoglu N, et al. Healthcare Technology Innovation Adoption. Cham, Switzerland: Springer International; 2016.

- 25. Venkatesh V, Bala H. Technology Acceptance Model 3 and a research agenda on interventions. Decis Sci 2008; 39 (2): 273–315.

- 26. Mitchell R. Resource-based theory: creating and sustaining competitive advantage, edited by J.B. Barney and D.N. Clark. Oxford University Press, Oxford, Paperback, 2007; 316 pages, ISBN 978-019-927769-8. J Publ Aff 2008; 8 (4): 309–13.

- 27. Venkatesh V, Morris MG, Davis GB, et al. User acceptance of information technology: toward a unified view. MIS Quarterly 2003; 27 (3): 425–78. doi:10.2307/30036540

- 28. Holmgren AJ, Adler-Milstein J, McCullough J. Are all certified EHRs created equal? Assessing the relationship between EHR vendor and hospital meaningful use performance. J Am Med Inform Assoc 2018; 25 (6): 654–60. doi:10.1093/jamia/ocx135

- 29. Hillman BJ, Goldsmith JC. The uncritical use of high-tech medical imaging. N Engl J Med 2010; 363 (1): 4–6.

- 30. Rao VM, Levin DC. The overuse of diagnostic imaging and the Choosing Wisely initiative. Ann Intern Med 2012; 157 (8): 574–6.

- 31. Perri-Moore S, Kapsandoy S, Doyon K, et al. Automated alerts and reminders targeting patients: a review of the literature. Patient Educ Couns 2016; 99 (6): 953–9.

- 32. Roshanov PS, Misra S, Gerstein HC, et al.; CCDSS Systematic Review Team. Computerized clinical decision support systems for chronic disease management: a decision-maker-researcher partnership systematic review. Implement Sci 2011; 6: 92.

- 33. Rudin RS, Fischer SH, Shi Y, et al. Trends in the use of clinical decision support by health system–affiliated ambulatory clinics in the United States, 2014-2016. Am J Account Care 2019; 7: 4–10.

- 34. Doebbeling BN, Saleem J, Haggstrom D, et al. Integrating Clinical Decision Support Into Workflow—Final Report. Rockville, MD: Agency for Healthcare Research and Quality; 2011. https://healthit.ahrq.gov/sites/default/files/docs/citation/IntegratingCDSIntoWorkflow.pdf. Accessed May 3, 2019.

- 35. Gardner RL, Cooper E, Haskell J, et al. Physician stress and burnout: the impact of health information technology. J Am Med Inform Assoc 2019; 26 (2): 106–14.

- 36. Osheroff JA, Teich JM, Levick D, et al. Improving Outcomes with Clinical Decision Support: An Implementer’s Guide. 2nd ed. Boca Raton, FL: CRC Press.

- 37. Boan L. HIT Think Why being a late EHR adopter isn’t a bad thing. Health Data Management. 2017. https://www.healthdatamanagement.com/opinion/why-being-a-late-ehr-adopter-isnt-a-bad-thing. Accessed May 21, 2019.

- 38. MOS Medical Reviews. How a later EHR adoption has been proven advantageous to healthcare providers. 2017. https://www.mosmedicalrecordreview.com/blog/late-ehr-adoption-advantageous-for-healthcare-providers/. Accessed May 21, 2019.

- 39. Pearl RM. What health systems, hospitals, and physicians need to know about implementing electronic health records. Harvard Business Review. 2017. https://hbr.org/2017/06/what-health-systems-hospitals-and-physicians-need-to-know-about-implementing-electronic-health-records. Accessed May 10, 2019.

- 40. Coleman JJ, van der Sijs H, Haefeli WE, et al. On the alert: future priorities for alerts in clinical decision support for computerized physician order entry identified from a European workshop. BMC Med Inform Decis Mak 2013; 13: 111.

- 41. van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc 2006; 13 (2): 138–47.

- 42. Shi Y, Amill-Rosario A, Rudin RS, et al. Health information technology for ambulatory care in health systems. Am J Manag Care 2020; 26 (1): 32–8.

- 43. Magrabi F, Ammenwerth E, McNair JB, et al. Artificial intelligence in clinical decision support: challenges for evaluating AI and practical implications. Yearb Med Inform 2019; 28 (1): 128–34.

- 44. Dixon BE, Wen C, French T, et al. Extending an open-source tool to measure data quality: case report on Observational Health Data Science and Informatics (OHDSI). BMJ Health Care Inform 2020; 27 (1): e100054.

- 45. Garcia-Vidal C, Sanjuan G, Puerta-Alcalde P, et al. Artificial intelligence to support clinical decision-making processes. EBioMedicine 2019; 46: 27–9.

- 46. Garza M, Del Fiol G, Tenenbaum J, et al. Evaluating common data models for use with a longitudinal community registry. J Biomed Inform 2016; 64: 333–41.

- 47. Castro D, New J, Wu J. The best states for data innovation. Center for Data Innovation. 2017. https://www.datainnovation.org/2017/07/the-best-states-for-data-innovation/. Accessed May 5, 2019.

- 48. Tcheng J, Bakken S, Bates D, et al. Optimizing Strategies for Clinical Decision Support: Summary of a Meeting Series. Washington, DC: National Academy of Medicine; 2017.
