Abstract

A hierarchical model of temporal dynamics was examined in adults (n=34) and youth (n=46) across the stages of face processing during perception of static and dynamic faces. Three ERP components (P100, N170, N250) and spectral power in the mu range were extracted, corresponding with cognitive stages of face processing: low-level vision processing, structural encoding, higher-order processing, and action understanding. Youth and adults exhibited similar yet distinct patterns of hierarchical temporal dynamics such that earlier cognitive stages predicted later stages, directly and indirectly. However, latent factors indicated unique profiles related to behavioral performance for adults and youth and age as a continuous factor. The application of path analysis to electrophysiological data can yield novel insights into the cortical dynamics of social information processing.

Keywords: temporal dynamics, electroencephalography (EEG), event-related potentials (ERP), face processing, path analysis

1. INTRODUCTION

The ability to rapidly process and interpret the information contained in the human face is a crucial and early-emerging social ability. Behavioral and brain specialization for face processing is evident within the first months (de Haan et al. 2003; Leppänen and Nelson 2008), and, by adolescence, specialized face processing strategies are evident (Taylor et al. 2004). Neuroimaging studies have described face-selective, right-lateralized activity within the fusiform gyrus (Haxby et al. 1994; Puce et al. 1995; Kanwisher and Yovel 2006), supported by intracranial electrophysiological detection of face-related activity within this region (Allison et al. 1994). Extracranial electroencephalography (EEG) and event-related potential (ERP) studies provide the temporal sensitivity to characterize a sequence of perceptual stages that are indexed by distinct ERP components with specific scalp topography and chronology (Wong et al. 2009; Luo et al. 2010) aligned with face-processing functional stages, from early visual processing to action understanding (Haxby et al. 2000; Young and Bruce 2011).

Despite understanding of these stages, temporal dynamics among them (i.e., relative serial versus parallel processing; influence of early stages on subsequent stages) remain unclear. Neuroimaging studies applying functional connectivity measures to quantify the relative co-activation of spatially distinct regions (Lee et al. 2003) have provided information about the regional network involved in face processing; however, the limited temporal resolution of fMRI precludes clarification of the regional underpinnings of processing stages and cannot distinguish among functional processes subserved by the same brain region within short periods of time (e.g., <2–3 seconds). Effective connectivity is an alternative approach that examines the direct influence of one neural system on another by testing a causal model with theoretically constrained connections specified in advance, including neuroanatomical, neurofunctional, and neuropsychological considerations (Rolls, 1992; Lai et al., 2014; Büchel and Friston 2000). Seminal work identified a feed-forward mechanism such that the fusiform gyrus exerts a dominant influence on downstream cortical regions, including the amygdala, orbitofrontal cortex, superior temporal sulcus, and inferior occipital gyrus (Fairhall & Ishai, 2007). These effective connections are known to be distinct on the basis of individual differences (e.g., handedness, Frässle et al., 2016) and may be modulated by emotional content of the face (Dima et al., 2011).

Work utilizing simultaneously recorded fMRI and EEG supports the engagement and specification of distinct effective networks relative to one ERP feature, the N170 (Nguyen et al., 2014). Yet, little is known about the temporal dynamics between different EEG features (i.e., between P100, N170, N250, or spectral features), despite calls to investigate these relationships (Olivares et al., 2015). Spectral analysis of EEG has targeted coherence across the scalp thought to reflect temporal or causal shifts (Varotto et al. 2014; Di Lorenzo et al. 2015), and EEG has been used to generate effective connectivity maps from computational models of face detection (Bae et al., 2017; Chang et al., 2020). Other work has linked distinct EEG features to early visual system processes, as they pertain to frequency of face presentation (Collins, Robinson, & Behrmann, 2018). Yet, although the advantages of EEG to separate discrete cognitive processes are clear (see Hudac & Sommerville, 2019), to date, no work has examined how distinct ERP and spectral components are linked in time or how these temporal dynamics relate to face processing behaviors.

1.1. Objective of the study.

The aim of this study was to extend our understanding of developmental face processing by applying temporal dynamics to describe discrete neural processes. We utilize confirmatory factor analysis (CFA), a long-standing analytic approach capable of generating hypothetical variables (i.e., inferred through mathematical modeling) called latent variables (see Loehlin, 1987; Rammsayer et al., 2014) that relate to the hierarchical stages of face processing. In this way, we can input observed variables well-characterized in face processing literature (ERP amplitudes, ERP latencies, and mu rhythm) as predictors of latent variables corresponding to discrete face processing stages. We hypothesized that face-related neural activity at early stages would be predictive of subsequent stages in a hierarchical fashion (Haxby et al. 2000; Young and Bruce 2011) and that these processes would predict behaviorally assessed social cognitive abilities, including face recognition and mental state attribution. To better understand temporal dynamic differences across developmental stages in this exploratory study, we tested these models in youth (age 8–18 years) and adults (age 19–33 years). In this way, the results focus on temporal dynamics within the conceptual framework rather than the specific contributions of data features (i.e., time and frequency).

We targeted the following stages of face processing.

1.1.1. Basic visual processing (P100).

A positive-going P100 component at parieto-occipital leads occurs approximately 100 milliseconds after viewing the face (Key et al. 2005). The P100 indexes basic visual processing of the low-level features of visual stimuli and originates in the visual cortex (Taylor 2002; Wong et al. 2009). Early maturation and gradual specialization of face processing over the first year of life (Nelson and De Haan 1996; De Haan et al. 2002a; 2002b; de Haan et al. 2003; Grossmann et al. 2007) is marked by a decrease in amplitude and latency of the P100 over childhood into adulthood (Batty and Taylor 2002; Taylor et al. 2004; Batty and Taylor 2006) suggesting a transition in processing efficiency.

1.1.2. Face structural encoding (N170).

A negative-going N170 component, recorded over parieto-occipital scalp regions, occurs approximately 170 milliseconds after viewing a face (Bentin et al. 1996; 1999). The N170 reflects structural information encoding, an early stage of face processing preceding higher-order processing such as identity (Bentin and Deouell 2000; Eimer 2000) and emotion recognition (Münte et al. 1998; Eimer and Holmes 2002; Eimer et al. 2003). Developmental studies have found that N170 latency and amplitude decrease until adolescence and then increase again into adulthood (Taylor et al. 1999; Itier and Taylor 2004; Taylor et al. 2004; Batty and Taylor 2006; Miki et al. 2015). The N170 becomes more right lateralized over development (Taylor et al. 2004), suggesting a gradual and quantitative maturation in face processing throughout childhood into adulthood.

1.1.3. Higher-order processing (N250).

A negative-going N250 component over fronto-central scalp occurs between 200 and 300 milliseconds after observing complex stimuli such as an emotionally expressive face (Carretié et al. 2001; Turetsky et al. 2007; Wynn et al. 2008). The N250 reflects higher-order face processing, such as affect decoding (Luo et al. 2010). The development of this component is not well studied; however, research investigating emotional face processing among infants, youth, and adults suggests increased amplitude of mid-latency negativity elicited by emotional faces, especially those portraying negative valence (Balconi and Pozzoli 2003; De Haan et al. 2004; Bar-Haim et al. 2005).

1.1.4. Action understanding (Mu rhythm):

The mu rhythm is defined as brain activity oscillating in the 8–13 Hz frequency range recorded from central electrodes and reflects the detection of intentions and prediction of potential future actions of others (Muthukumaraswamy and Johnson 2004; Muthukumaraswamy et al. 2004). During action observation and execution, the underlying cortical generators of the mu rhythm desynchronize. In this way, attenuation (i.e., reduction of oscillatory power) of mu activity serves as an indicator of an execution/observation matching system involved in action-perception (Hari 2006) and has been proposed to reflect the system’s translation and understanding of actions (Pineda 2005). These findings have been replicated in adults, youth, and infants as young as 8 months of age (Nyström 2008; Liao et al. 2015), suggesting mu rhythm is a robust indicator of action understanding across development. Although there is evidence of an age-related increase in mu attenuation between 6–17 years of age when observing actions (Oberman et al. 2013), it is unclear how such changes are associated with lower-level and high-level social perception processes and behavior.

1.2. Hypotheses:

First, we sought to confirm known developmental differences in ERP and spectral components, predicting a larger response in youth relative to adults (Batty and Taylor 2002; Taylor et al. 2004; Batty and Taylor 2006). In addition, we tested interrelationships among observed neural processes with the prediction that earlier processes (e.g., P100, N170) would be related to temporally subsequent processes (e.g., N250, mu rhythm) consistent with prior work (Pourtois et al. 2010). We recognize that ERP and spectral components are not perfectly correlated (Luck and Kappenman 2013). For instance, the initial visual processing neural response may be differentially sensitive to magnitude (i.e., P100 amplitude) and speed of processing (i.e., P100 latency). Thus, we did not expect complete congruence between types of measurement. Ultimately, our objective was to characterize the latent neural structure of both amplitude and latency thus representing the combined features of face processing.

Second, we examined patterns of temporal dynamics among substages of face processing to identify developmental changes from childhood to adulthood. We examined these temporal dynamics in a hierarchical fashion, aligned with prior models (Haxby et al. 2000; Young and Bruce 2011) that highlight early visual and structural encoding followed by higher-level processing to extract meaning (e.g., emotion or identity discrimination). As such, we hypothesized that temporally early neural stages would influence temporally later stages (McPartland et al. 2014), specifically that P100 → N170 → N250 → Mu attenuation. This approach examined the extent to which processing stages act independently and/or exhibit downstream effects across substages. Given work suggesting similar functionally connected networks but reduced strength in children measured via resting state MRI networks (Uddin et al. 2011), it is possible that the strength of influence from one substage to downstream substages (i.e., magnitude of each temporal dynamic path) would be reduced in youth relative to adults. Lastly, we tested how neural processes covary with face processing abilities (e.g., mental state understanding, face recognition). Although ERP and spectral components can be differentially associated with social traits (Kang et al. 2017), we hypothesized that higher-order substages (e.g., N250, mu attenuation) would index more abstract aspects of social perception, and thus, would be more reflective of behavioral performance. In contrast, we hypothesized that lower-order substages would index low-level visual features for both developmental groups.

2. MATERIALS AND METHODS

2.1. Participants:

Typically developing adults (n=34, 20 female, age range 19–33, M age =22.97, SD age =2.84 years) and youth (n=46, 12 female, age range 8–18 years, M age =11.93, SD age =2.66 years) participated in this study. Participants were recruited from ongoing research studies taking place at the University of Washington and Yale University. All participants had 20/20 (corrected) vision and were screened for active psychiatric disorders. Participants were excluded from participation if there was presence of seizures, neurological disease, history of serious head injury, sensory or motor impairment that would impede completion of the study protocol, or use of medications known to affect brain electrophysiology. Informed consent was obtained for all participants in accordance with local ethical review board procedures (University of Washington #35313; Yale University #0711003222).

2.2. General procedures:

Testing was conducted over the course of one session, lasting approximately 70 minutes. Data were collected at two sites (University of Washington and Yale University) using identical EEG recording systems, sensor arrays, and data collection protocols consistent with best practices (Picton et al. 2000; Webb et al. 2015). In addition to identical data collection and processing procedures, both labs employed identical equipment monitoring protocols to maintain consistency in stimuli display calibration and system timing. Systems monitoring was cross-referenced at each site to ensure data collection and processing systems were consistent and identical at both sites.

2.3. Behavioral outcomes:

Participants were administered two social behavioral tasks. First, the Benton Facial Recognition Test (BFRT) (Benton et al. 1994) was used as a standardized measure of face recognition. In this task, participants are given a target face and instructed to identify the target from an array of six adult faces presented simultaneously. Participants are given an unlimited amount of time to choose, and the maximum score is 54. Low scores (i.e., < 41) on the BFRT reflect poorer performance and have been associated with neural impairment within the inferior posterior parietal region involved in face processing (Malhotra et al. 2009; Tranel et al. 2009). For our analyses, raw scores were used as the dependent outcome variable for path modeling.

Second, the Reading the Mind in the Eyes Task – Revised (RMET) (Baron-Cohen et al. 2001) was used as a measure of mental state attribution. In this task, participants are instructed to match a photograph of a pair of adult eyes to the appropriate emotional labels. For each of the 36 trials, there is one target label and three distractor labels. Scores are generated based upon the number of trials for which the participant correctly chose the target (maximum of 36). For our analyses, raw scores were used as the dependent outcome variable for path modeling.

2.4. Experimental paradigm.

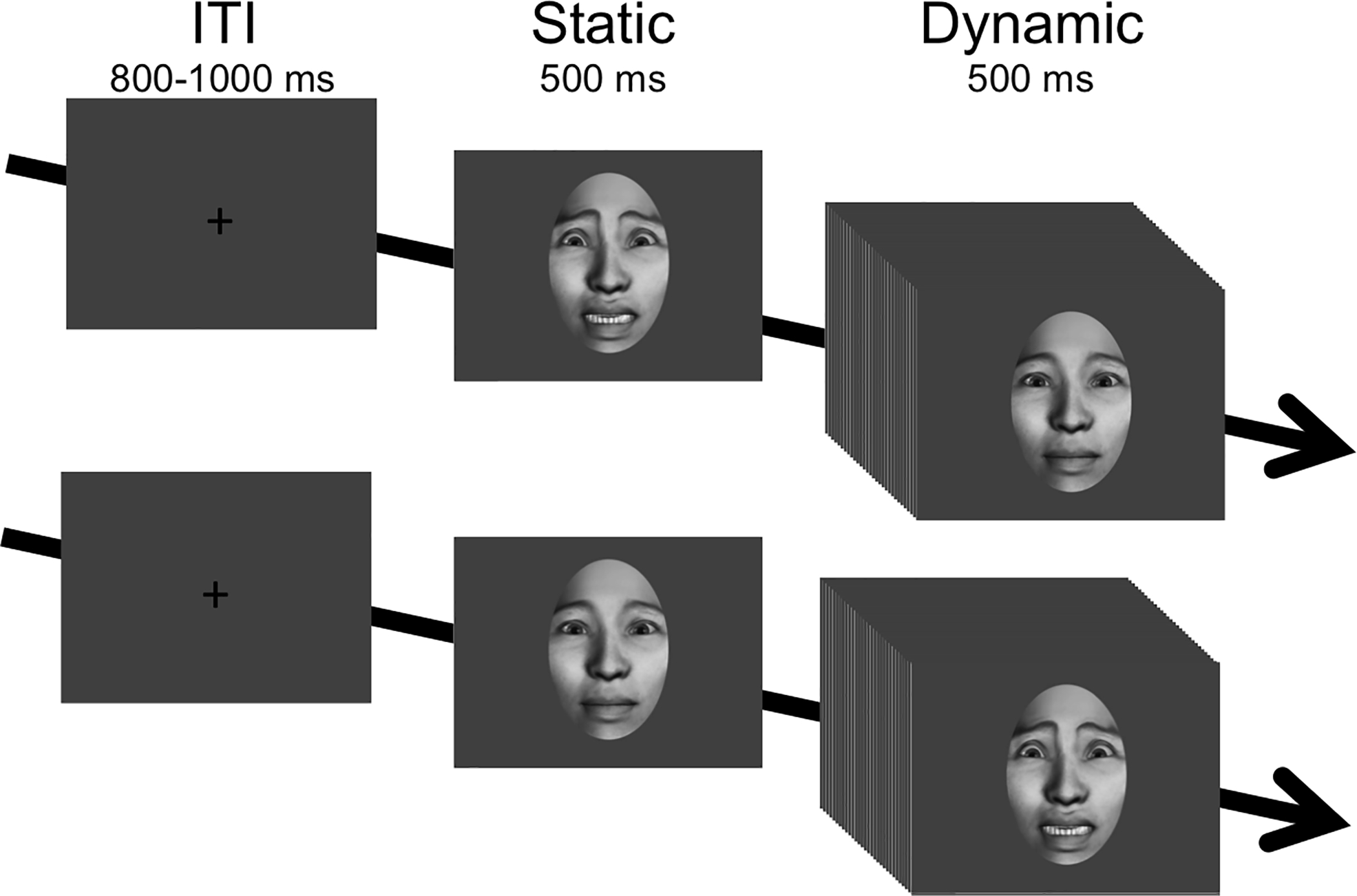

Stimulus design and validation have been previously described in depth (Naples et al. 2014). Photorealistic, computer generated faces were presented during each trial as a static initial pose for 500 ms, followed by 500 ms of motion as the face transitioned between emotional expressions (see Figure 1). In this way, static images permitted derivation of ERPs while the dynamic movement conditions permitted assessment of spectral power in the EEG mu rhythm. There were 75 stimuli each in 3 different categories of movement sequence controlling for both affective expression and familiarity (225 total stimuli): (1) affective movement (fearful face); (2) neutral movement (close lipped, puffed cheeks with open eyes); and (3) biologically impossible movement (upward dislocation of eyes and mouth). Each sequence was presented in both forward and reverse orders (e.g., forward=neutral to fear face; reverse=fear face to neutral) yielding a total of 450 trials. To ensure attention, subjects performed a target detection task that involved pressing a button at each of the three target trials (i.e., ball bouncing). Trials were preceded by a jittered intertrial interval (800–1000 ms). Three minutes of resting EEG were collected before and after the paradigm. Here, we focus on the face processing response to fearful expressions, as these facial expressions are known to elicit increased brain activity as early as infancy (Leppänen and Nelson 2012) and continue to develop into childhood and adulthood (Batty and Taylor 2003).

Figure 1. Experimental design.

Condition exemplars are presented for a reverse trial (fear face to neutral face) and forward trial (neutral face to fear face). ERP components were extracted in response to the static face presentation. Mu attenuation was extracted in response to the dynamic face presentation.

2.5. Data collection and processing.

Participants were seated 90 centimeters from a stimulus-display monitor. EEG was recorded continuously from the scalp from a 128-channel geodesic sensor array at 500 Hz throughout each stimulus presentation trial via Net Station 4.3 software (Electrical Geodesic Inc., Eugene OR; also used for data editing and reduction). The EEG signal was amplified and collected with a 0.1 Hz high-pass filter and a 200 Hz elliptical low-pass filter. The conditioned signal from all channels was multiplexed and digitized at 1000 Hz using an analog-to-digital converter and a dedicated computer (a second, synchronized computer generated stimuli and registered stimulus on/offset for offline segmentation). The vertex electrode was used as a reference during acquisition.

Processing of data consisted of the following steps, presented in temporal order (see Supplemental Figure 1). ERPs were generated following digital filtering (low-pass Butterworth 30 Hz; consistent with Leppänen et al., 2008) by segmenting from 100 ms pre-static face onset to 500 ms post-static onset, prior to the movement sequence. EEG epochs were generated without filtering by segmenting from the beginning of the movement sequence to 500 ms post-movement onset (500 ms total per epoch). All epochs were subsequently subjected to artifact detection for signal deflections greater than 200 μV due to a bad channel or 140 μV due to an eye blink or eye movement. Manual visual inspection confirmed or corrected automatic detection results. Spline interpolation of data from neighboring sites was used to replace data from electrodes for which greater than 40 percent of the trials were rejected. ERPs were transformed to correct for baseline shifts and re-referenced to the average reference. All participants had an adequate number of ERP trials (Minimum trials: Adults, 53; Youth, 52. Trials excluded on average: Adults, 29.33%; Youth, 30.67%) and EEG trials (Minimum trials: Adults, 46; Youth, 48. Trials excluded on average: Adults, 38.7%; Youth, 36%). There were no group differences in the amount of ERP data retained, F(1,81)=.13, p=.73, or the amount of EEG data retained, F(1,81)=.84, p=.36.
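As an illustration of the threshold-based screening described above, the following minimal sketch flags epochs and identifies electrodes that would qualify for interpolation. It is not the Net Station procedure: the peak-to-peak criterion, the function names, and the data layout are assumptions made for the example; only the 200 μV, 140 μV, and 40 percent values come from the text.

```python
# Thresholds from the text; everything else here is a hypothetical illustration.
BAD_CHANNEL_UV = 200.0        # deflection attributed to a bad channel
EYE_ARTIFACT_UV = 140.0       # deflection attributed to a blink or eye movement
MAX_REJECTED_FRACTION = 0.40  # electrodes above this fraction are interpolated


def peak_to_peak(samples_uv):
    """Assumed deflection measure: range of the epoch in microvolts."""
    return max(samples_uv) - min(samples_uv)


def exceeds(samples_uv, threshold_uv):
    """True when the epoch's deflection is greater than the threshold."""
    return peak_to_peak(samples_uv) > threshold_uv


def channels_to_interpolate(trials_by_channel):
    """Return electrodes with more than 40 percent of trials rejected."""
    flagged = []
    for channel, trials in trials_by_channel.items():
        rejected = sum(1 for trial in trials if exceeds(trial, BAD_CHANNEL_UV))
        if rejected / len(trials) > MAX_REJECTED_FRACTION:
            flagged.append(channel)
    return flagged


example = {
    "E1": [[0, 250], [0, 10], [0, 300], [0, 260], [0, 5]],  # 3 of 5 rejected
    "E2": [[0, 250], [0, 10], [0, 300], [0, 20], [0, 5]],   # 2 of 5 rejected
}
print(channels_to_interpolate(example))
```

In this toy input, electrode E2 sits at exactly 40 percent and is therefore not flagged, because the text specifies "greater than 40 percent".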

2.5. Single-trial data extraction procedures.

For all analyses, a single-trial approach was used to ensure adequate power given the substantial parameters necessary for CFA (87 parameters, including factor loadings, factor and error variances, and factor covariances). Single-trial procedures (see Figure S1, top section) were similar to prior work evaluating trial level ERP (Regtvoort et al. 2006; Paiva et al. 2016; Volpert-Esmond et al. 2017; Hudac et al. 2018) and EEG (Billinger et al. 2013) data.

For ERP components, electrodes of interest (see Table S1) were selected based on precedent (e.g., corresponding to posterior electrodes P7/P8, P9/P10, PO9/PO10 for P100 and N170; and fronto-central electrodes C1/C2, C3/C4, F3/F4, and FC1/FC2 for N250) and aligned with prior work using this task (Naples et al. 2014). Visual inspection of within-subject grand-averaged data (Figure S2) confirmed that time windows and topographies accommodated the anticipated latency differences between youth and adults. Subsequently, at the single-trial level, peak amplitudes (i.e., maximum value for P100; minimum value for N170 and N250) and peak latencies within each time window were extracted for each participant across both left and right hemispheres (see Supplemental Figure 2): P100 between 60 and 180 ms; N170 between 120 and 250 ms; and N250 between 200 and 400 ms.
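The single-trial peak extraction can be sketched as follows. The time windows and peak polarities are those stated above; the function name, the list-based data layout, and the default sampling rate and epoch start (500 Hz, −100 ms) are assumptions for illustration rather than the authors' implementation.

```python
# Time windows (ms) and peak polarity per component, as stated in the text.
WINDOWS_MS = {"P100": (60, 180), "N170": (120, 250), "N250": (200, 400)}
PEAK_PICKER = {"P100": max, "N170": min, "N250": min}


def extract_peak(trial_uv, component, epoch_start_ms=-100.0, sample_rate_hz=500.0):
    """Return (peak amplitude in microvolts, peak latency in ms) for one trial.

    trial_uv is a sequence of voltages for a single electrode cluster;
    epoch_start_ms and sample_rate_hz are assumed defaults.
    """
    step_ms = 1000.0 / sample_rate_hz
    window_start, window_end = WINDOWS_MS[component]
    candidates = []
    for index, value in enumerate(trial_uv):
        time_ms = epoch_start_ms + index * step_ms
        if window_start <= time_ms <= window_end:
            candidates.append((value, time_ms))
    # max for positive-going components, min for negative-going components
    return PEAK_PICKER[component](candidates, key=lambda pair: pair[0])


# Synthetic trial: 300 samples spanning -100 to 498 ms at 500 Hz.
trial = [0.0] * 300
trial[105] = 6.0    # 110 ms
trial[140] = -7.0   # 180 ms
trial[200] = -5.0   # 300 ms
for name in ("P100", "N170", "N250"):
    print(name, extract_peak(trial, name))
```

Because the windows overlap (e.g., 120–180 ms belongs to both the P100 and N170 windows), the polarity rule is what separates the components in this sketch.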

For mu rhythm suppression, fast Fourier transforms were performed on data recorded from central electrodes (e.g., corresponding approximately to C1/C2, C3/C4, and CP1/CP2)(Muthukumaraswamy et al. 2004) during the observation of facial movement. Degree of mu rhythm attenuation was defined as the ratio of power in the 8–13 Hz frequency band during action observation (i.e., dynamic fear facial expression) relative to resting EEG with eyes open. Subsequently, a value of zero reflects no mu rhythm attenuation relative to baseline and larger negative values represent greater power attenuation. Mu attenuation was computed at the single-trial level for trials deemed artifact-free based upon processing procedures described above.
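The attenuation index can be sketched as below. Two assumptions are made loudly here: (1) the ratio is log-transformed, which is one common convention that would make zero correspond to no attenuation and negative values to greater attenuation, as described above, but the text does not state the transform; and (2) a plain discrete Fourier transform is used in place of an FFT library call to keep the example dependency-free (the coefficients are the same). Function names are hypothetical.

```python
import cmath
import math


def band_power(signal, sample_rate_hz, low_hz=8.0, high_hz=13.0):
    """Sum of squared DFT magnitudes for bins inside [low_hz, high_hz]."""
    n = len(signal)
    total = 0.0
    for k in range(1, n // 2 + 1):
        freq_hz = k * sample_rate_hz / n
        if low_hz <= freq_hz <= high_hz:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(signal)
            )
            total += abs(coeff) ** 2 / n ** 2  # normalize by length squared
    return total


def mu_attenuation(observation, rest, sample_rate_hz):
    """Assumed index: natural log of observation power over resting power."""
    return math.log(
        band_power(observation, sample_rate_hz) / band_power(rest, sample_rate_hz)
    )


# Synthetic 500 ms epochs at 500 Hz containing a 10 Hz oscillation.
fs, n = 500.0, 250
rest = [2.0 * math.sin(2 * math.pi * 10 * i / fs) for i in range(n)]
observation = [1.0 * math.sin(2 * math.pi * 10 * i / fs) for i in range(n)]
print(mu_attenuation(observation, rest, fs))  # negative: power is attenuated
```

Halving the oscillation amplitude quarters its power, so the synthetic example yields a negative index; identical signals yield zero. The sketch does not guard against a resting power of zero.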

Preliminary multilevel model (MLM) analyses examining the potential for site differences indicated a lack of consistent site differences (site differences in group effects were found in only 3 out of 32 comparisons; see Table S2). Considering that site differences were diffused across measurements (i.e., not all associated with one stage, such as VP or SE), we did not further account for site.

2.6. Analytic Plan:

A visual representation of our analytic plan is provided in Figure S1 (bottom section). We anticipated developmental changes between groups (adult vs youth) at a macrolevel, but also predicted more granular developmental differences. Thus, models were first produced at the group level, and individual differences related to age as a continuous factor were evaluated after final path models were established.

First, we evaluated overall developmental group differences for each neural and behavioral measurement. A series of descriptive analyses were conducted via SAS 9.04 (SAS Institute, Cary, NC) using multilevel models estimated with restricted maximum likelihood, with single-trial ERP component measurements as the unit of analysis and each measurement predicted as the dependent outcome. The residual degrees of freedom were partitioned into between- and within-participants as appropriate for a repeated measures design (i.e., using DDFM=BETWITHIN). A series of models were generated using PROC MIXED to describe the variances and covariances associated with measurement, separately for each ERP component (P100, N170, N250), EEG measure (mu rhythm), and behavior (BFRT, RMET). Models included fixed effects for group (2: adult, youth), and models for neural measurements also included fixed effects for hemisphere (2: right, left) and the subsequent group by hemisphere interaction. A priori pairwise comparisons are reported to describe group differences.

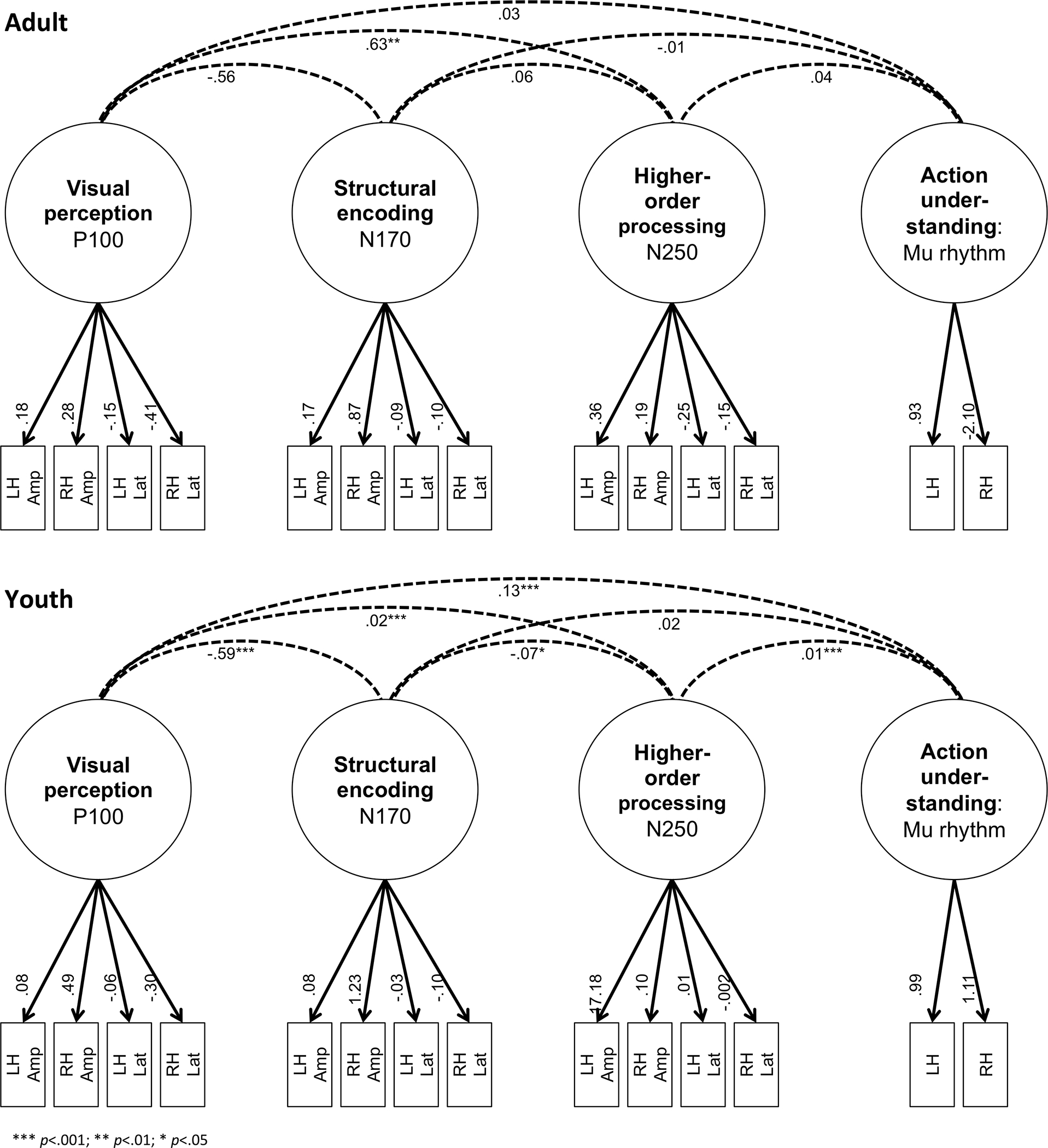

Second, we confirmed the validity of latent factors describing each neural process to ensure each latent factor represented a distinct aspect of face processing. Single-trial measurements were averaged across hemisphere and within subject, and Pearson correlations were computed using the R package “corr” (version 3.3.1) with correction for multiple comparisons via Bonferroni correction. Then, a confirmatory factor analysis (CFA) was conducted within the R package “lavaan” (version 3.3.1) using robust maximum likelihood estimation. Latent structures representing each neural process were estimated based upon four measurements per ERP process (left amplitude, right amplitude, left latency, right latency) and two measurements per spectral process (left mu rhythm, right mu rhythm). Marker variables for latent structures were selected on the basis of the largest factor loading from a preliminary model across all subjects (collapsed across age). Then, models were fit separately for each group to generate group-specific factor loadings. According to proposed guidelines (Hu and Bentler 1999; Steiger 2007; Hooper et al. 2008), goodness-of-fit was assessed by examining root mean squared error of approximation (RMSEA; good fit < .07, acceptable fit < .10), comparative fit index (CFI; good fit > .95, acceptable fit > .90), and the standardized root mean squared residual (SRMR; good fit < .08). Standardized regression coefficients (i.e., factor loadings) are reported.
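The goodness-of-fit cutoffs listed above can be expressed as a small decision rule. This is a minimal sketch with a hypothetical function name; the "poor" label for values outside the stated ranges is an assumption, since the text defines only "good" and "acceptable" bands (and only a "good" band for SRMR). The example inputs are the adult and youth CFA indices reported in Section 3.2.

```python
def classify_fit(rmsea, cfi, srmr):
    """Label each fit index using the cutoffs stated in the analytic plan."""
    if rmsea < 0.07:
        rmsea_label = "good"
    elif rmsea < 0.10:
        rmsea_label = "acceptable"
    else:
        rmsea_label = "poor"  # assumed label; not defined in the text

    if cfi > 0.95:
        cfi_label = "good"
    elif cfi > 0.90:
        cfi_label = "acceptable"
    else:
        cfi_label = "poor"  # assumed label; not defined in the text

    srmr_label = "good" if srmr < 0.08 else "poor"  # only one cutoff is given

    return {"RMSEA": rmsea_label, "CFI": cfi_label, "SRMR": srmr_label}


print(classify_fit(rmsea=0.057, cfi=0.94, srmr=0.04))   # adult CFA
print(classify_fit(rmsea=0.074, cfi=0.93, srmr=0.057))  # youth CFA
```

Under these cutoffs, both reported models mix "good" and "acceptable" indices, which is consistent with their description as moderately good fits.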

Third, temporal dynamics were measured using path analysis. Aligned with proposed functional stages of face processing (Haxby et al. 2000; Young and Bruce 2011), we tested an a priori hierarchical processing sequence: low-level visual processing, structural encoding, higher-order processing, and action understanding. To this end, path analyses were conducted in which regressions were restricted in such a way that early stages predicted later stages of face processing and behavioral outcomes. Due to power limitations, a full structural equation model could not be generated; thus, for data reduction purposes, factor scores were extracted from the CFAs separately for each individual and then averaged across trials. The data entered into the path analysis were therefore a single value for each variable (VP, SE, HP, AU) derived from the predicted latent value from the CFA (i.e., circles depicted in Figure 2). In this way, the input data accounted for data from each hemisphere (right, left) and each measurement type (amplitude, latency), and the same model was fitted in both adult and youth groups. Path analysis was conducted within the R package “lavaan” and model fit was confirmed via identical procedures to the CFA, now inputting the predicted latent variable values rather than observed values. Continuous predictors were added to the group models for face recognition and mental state abilities. Between-group differences were assessed via chi-square tests comparing the original model to modified models sequentially constraining one path regression coefficient at a time. In this way, a series of 14 models were estimated (one for each path, see Table 2) and tested against the original, full model to determine if there were significant group differences for each path.
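To make the structure of these comparisons concrete, the sketch below enumerates the hierarchical paths and converts a chi-square difference into a p-value. It assumes each comparison has one degree of freedom (one path coefficient constrained at a time), which the text implies but does not state; function names are hypothetical, and the actual model fitting was done in lavaan, not in this code.

```python
import math

STAGES = ["VP", "SE", "HP", "AU"]  # ordered from earliest to latest stage
OUTCOMES = ["FR", "MS"]            # behavioral outcomes


def hierarchical_paths(stages=STAGES, outcomes=OUTCOMES):
    """Each stage predicts every later stage and every behavioral outcome."""
    paths = [
        (earlier, later)
        for i, earlier in enumerate(stages)
        for later in stages[i + 1:]
    ]
    paths += [(stage, outcome) for outcome in outcomes for stage in stages]
    return paths


def chi_square_p_df1(chi_square):
    """Upper-tail p-value for a chi-square statistic with 1 df (assumed).

    For 1 df, P(X > x) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(chi_square / 2.0))


paths = hierarchical_paths()
print(len(paths))
print(" ".join(f"{a}->{b}" for a, b in paths))
print(chi_square_p_df1(8.01))  # chi-square reported for VP->SE in Table 2
```

The enumeration yields 6 stage-to-stage paths plus 8 stage-to-behavior paths, matching the 14 constrained models, and the one-degree-of-freedom p-values agree with the rounded values in Table 2 (e.g., χ2=8.01 gives p≈.005).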

Figure 2. Confirmatory factor analysis measurement model.

Confirmatory factor analysis results for adult (top) and youth (bottom) models. Latent factors are depicted by circles and observed variables are depicted by boxes, numbered to indicate the specific hemisphere and measurement. Dashed lines depict latent factor covariance, and significance is noted with asterisks based upon p-value (*** p<.001; ** p<.01; * p<.05). Abbreviations: LH, left hemisphere. RH, right hemisphere. Amp, amplitude. Lat, latency.

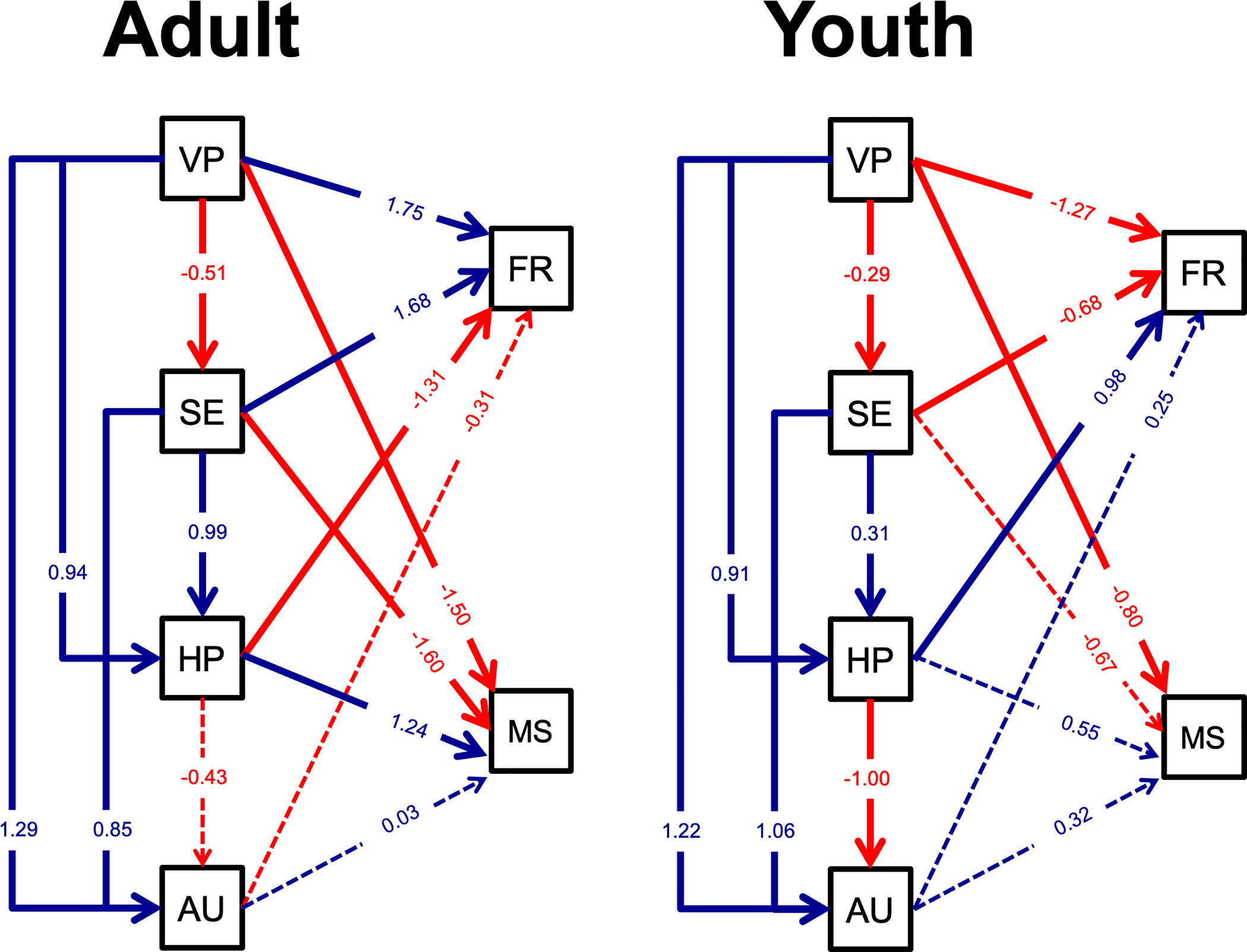

Table 2. Hierarchical path regression coefficients from path analyses.

Standardized regression coefficients and chi-square group difference results are reported for adult and youth path analyses. Abbreviations: VP, low-level vision perception; SE, structural encoding; HP, higher-order processing; AU, action understanding; FR, facial recognition behavior via the Benton Facial Recognition Test; MS, mental state behavior via the Reading the Mind in the Eyes Task.

| Path | Adult coeff. | Adult p-value | Youth coeff. | Youth p-value | χ2 | χ2 p-value |
|---|---|---|---|---|---|---|
| Visual perception (VP) regressions | | | | | | |
| VP→SE | −0.51 | <.001 | −0.29 | 0.027 | 8.01 | 0.005 |
| VP→HP | 0.94 | <.001 | 0.91 | <.001 | 1.16 | 0.282 |
| VP→AU | 1.29 | <.001 | 1.22 | <.001 | 4.57 | 0.033 |
| Information encoding (SE) regressions | | | | | | |
| SE→HP | 0.99 | <.001 | 0.31 | 0.001 | 0.18 | 0.673 |
| SE→AU | 0.85 | 0.023 | 1.06 | <.001 | 4.54 | 0.033 |
| Higher-order processing (HP) regression | | | | | | |
| HP→AU | −0.43 | 0.193 | −1.00 | <.001 | 0 | 0.957 |
| Predictors of facial recognition (FR) | | | | | | |
| VP→FR | 1.75 | 0.006 | −1.27 | 0.001 | 13.13 | <.001 |
| SE→FR | 1.68 | 0.006 | −0.68 | 0.021 | 10.54 | 0.001 |
| HP→FR | −1.31 | 0.016 | 0.98 | 0.005 | 10.61 | 0.001 |
| AU→FR | −0.31 | 0.174 | 0.25 | 0.178 | 2.13 | 0.144 |
| Predictors of mental state (MS) behavior | | | | | | |
| VP→MS | −1.50 | 0.004 | −0.80 | 0.040 | 4.75 | 0.029 |
| SE→MS | −1.60 | 0.001 | −0.67 | 0.052 | 1.87 | 0.172 |
| HP→MS | 1.24 | 0.003 | 0.55 | 0.067 | 4.30 | 0.038 |
| AU→MS | 0.03 | 0.919 | 0.32 | 0.233 | 0.38 | 0.536 |

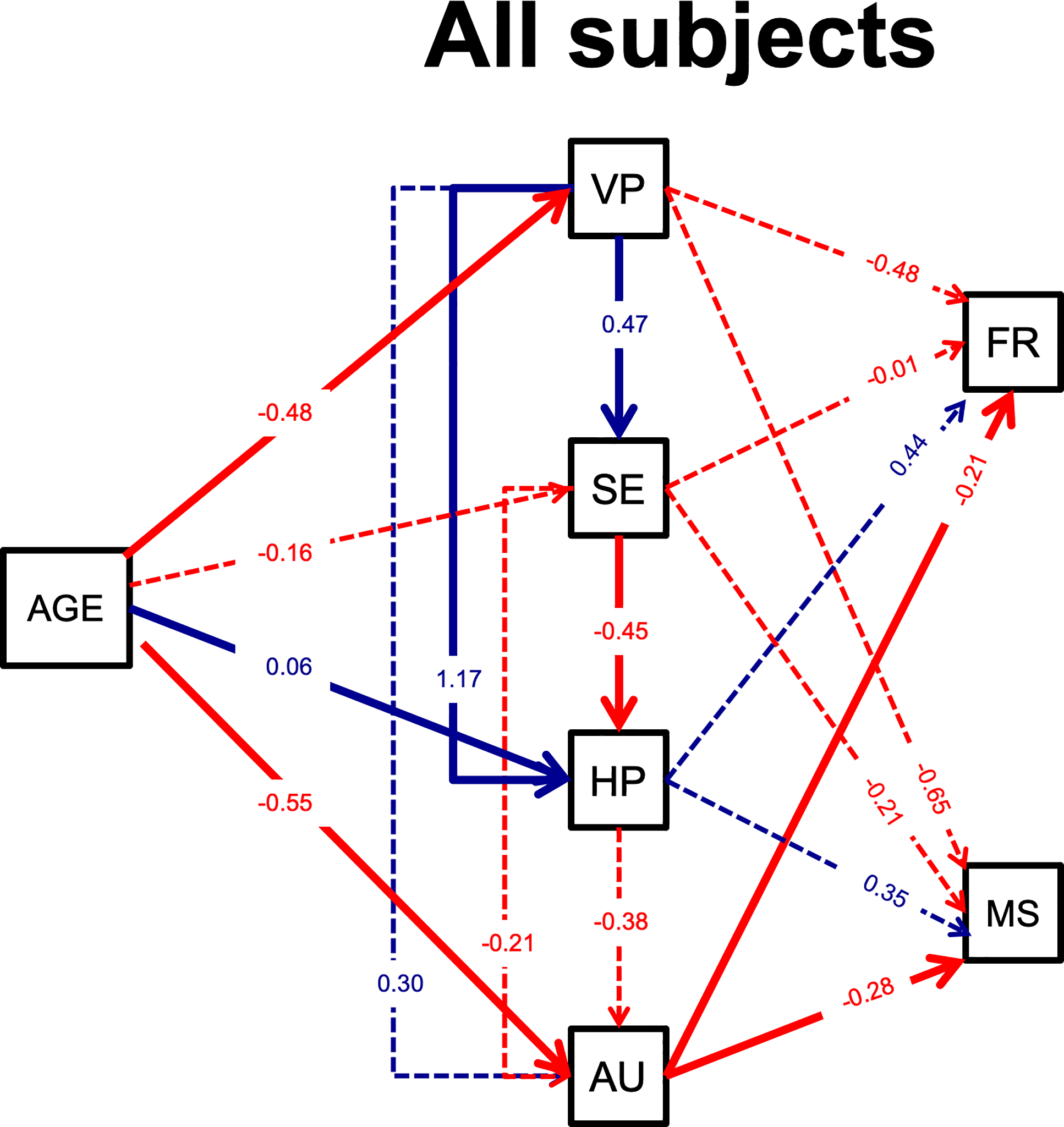

Lastly, a final path model (all subjects with neural and behavioral predictors) tested the influence of age as a predictor.

3. RESULTS

3.1. Group differences in neural and behavioral measurements.

Group means and pairwise comparisons (reported in Table 1) with corrections for multiple comparisons using Bonferroni adjustments (Westfall and Troendle 2008) indicated that overall, youth exhibited larger amplitudes than adults. For the P100, youth exhibited greater amplitudes (differences > 7.98 μV) and longer latencies (difference > 13.17 ms) than adults for both left and right hemispheres. Youth also exhibited longer N170 latencies (differences > 14.17 ms), greater N250 amplitudes in both hemispheres (i.e., more negative; differences > 5.71 μV), and a longer N250 latency in the left hemisphere (difference=10.49 ms). Mu rhythm was more attenuated in youth than adults in the left hemisphere (difference=−.81 μV). An extensive description of the interrelationships among observed neural processes is available in SM1 and Table S3.

Table 1. Descriptive statistics and group comparisons for each neural and behavioral measure.

Units for each measurement are reported as microvolts (amplitude), milliseconds (latency), relative power (mu rhythm), and total scaled score (social behavior predictors). A priori pairwise comparisons are reported for each outcome, adjusted for multiple comparisons using Bonferroni correction. Abbreviations: SE=Standard error; LH=left hemisphere; RH=right hemisphere; BFRT=Benton Facial Recognition Test; RMET=Reading the Mind in the Eyes Task.

| Measure | Hemisphere | Adult mean | Adult SE | Youth mean | Youth SE | t | df | Bonferroni-corrected p |
|---|---|---|---|---|---|---|---|---|
| Low-level vision perception | | | | | | | | |
| P100 amplitude | LH | 6.04 | 0.13 | 14.02 | 0.22 | −8.16 | 77.0 | <.0001 |
| P100 amplitude | RH | 6.14 | 0.12 | 15.04 | 0.22 | −9.10 | 77.0 | <.0001 |
| P100 latency | LH | 112.59 | 0.60 | 125.76 | 0.47 | −5.45 | 77.0 | <.0001 |
| P100 latency | RH | 112.78 | 0.58 | 127.18 | 0.46 | −5.89 | 77.0 | <.0001 |
| Structural encoding | | | | | | | | |
| N170 amplitude | LH | −6.37 | 0.13 | −8.16 | 0.23 | 2.34 | 77.0 | 0.1316 |
| N170 amplitude | RH | −6.14 | 0.13 | −6.62 | 0.23 | 0.63 | 77.0 | 1.0000 |
| N170 latency | LH | 180.40 | 0.67 | 194.57 | 0.57 | −5.13 | 77.0 | <.0001 |
| N170 latency | RH | 179.00 | 0.64 | 194.55 | 0.58 | −5.66 | 77.0 | <.0001 |
| Higher-order processing | | | | | | | | |
| N250 amplitude | LH | −5.93 | 0.11 | −11.64 | 0.18 | 7.27 | 77.0 | <.0001 |
| N250 amplitude | RH | −5.51 | 0.11 | −11.44 | 0.18 | 7.54 | 77.0 | <.0001 |
| N250 latency | LH | 296.99 | 1.04 | 307.47 | 0.95 | −3.54 | 77.0 | 0.0041 |
| N250 latency | RH | 296.29 | 1.04 | 302.42 | 0.93 | −2.06 | 77.0 | 0.2568 |
| Action understanding | | | | | | | | |
| Mu rhythm | LH | −6.10 | 0.02 | −6.91 | 0.02 | 4.34 | 77.0 | 0.0003 |
| Mu rhythm | RH | −6.66 | 0.02 | −7.15 | 0.02 | 2.54 | 77.0 | 0.0779 |
| Social behavior | | | | | | | | |
| BFRT | | 42.79 | 0.62 | 40.71 | 0.70 | 2.15 | 77.0 | 0.0345 |
| RMET | | 26.76 | 0.78 | 20.16 | 0.35 | 8.43 | 77.0 | <.0001 |

Table 1 also reports group differences and pairwise comparisons in behavioral outcomes that indicate stronger performance in adults relative to youths. Regarding facial recognition (BFRT), the majority of adults scored within the normal range (76.5% with scores > 41; range 36–52); in contrast, only 50.0% of the youth had scores above 41 (range 30–53). Pairwise comparisons indicated that youth scored 2.08 points lower than adults. Regarding mental state attribution (RMET), youth scored in a range of 16–25 while the adult score range was 17–35. It is important to note that both the BFRT and RMET were developed and normed with adults; thus, the reduced scores of youth are not surprising and likely due to development.
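The Bonferroni adjustment applied to the pairwise comparisons in Table 1 can be illustrated with a minimal sketch. The function name `bonferroni`, the number of comparisons, and the raw p-values below are hypothetical and are not taken from the study; the sketch only shows the general rule of multiplying each unadjusted p-value by the number of comparisons and capping the result at 1.

```python
def bonferroni(p_values, n_comparisons=None):
    """Return Bonferroni-adjusted p-values (raw p times m, capped at 1.0).

    n_comparisons defaults to the number of p-values supplied; pass it
    explicitly when the family of tests is larger than the list given.
    """
    m = len(p_values) if n_comparisons is None else n_comparisons
    return [min(1.0, p * m) for p in p_values]


# Hypothetical unadjusted p-values for a family of three comparisons.
raw = [0.001, 0.02, 0.30]
adjusted = bonferroni(raw)
print(adjusted)
```

An adjusted value of exactly 1.0, as in one row of Table 1, is what this capping produces whenever the raw p-value times the number of comparisons exceeds 1.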

3.2. Interrelationships between latent structures.

CFAs were conducted to determine correlations between latent neural processes (as described in Section 2.6). The CFAs revealed a moderately good fit for both adults (RMSEA=.057; CFI=.94; SRMR=.04) and youth (RMSEA=.074; CFI=.93; SRMR=.057), which indicated that the observed indicators adequately measured the latent structures. The measurement model is presented in Figure 2, including covariance between latent structures. Of note, covariances across latent structures were generally small or non-significant, highlighting that the latent structures captured distinct information. For the youth model, a negative covariance (−.59, p < .001) between low-level vision processing and structural encoding indicated that these latent structures are slightly less. In contrast, a positive covariance (.13, p < .001) between vision processing and action understanding indicated a small amount of shared information between latent variables. For the adult model, a positive covariance (.63, p < .001) indicated that a moderate amount of information is shared between vision processing and higher-order processing.
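For readers less familiar with the fit indices reported above, the following sketch shows one common way RMSEA, CFI, and SRMR are defined. It is not the authors' software: the function names and all input values are hypothetical, the RMSEA uses the N − 1 convention (some packages use N), and the SRMR is computed on correlation matrices including the diagonal.

```python
import math


def rmsea(chi2, df, n):
    """Root mean square error of approximation (N - 1 convention)."""
    if df <= 0:
        return 0.0  # a model with no degrees of freedom has no misfit to estimate
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model, df_model, chi2_base, df_base):
    """Comparative fit index relative to a baseline (independence) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, 0.0)
    denom = max(d_model, d_base)
    return 1.0 if denom == 0 else 1.0 - d_model / denom


def srmr(observed_corr, implied_corr):
    """Standardized root mean square residual over the lower triangle."""
    p = len(observed_corr)
    squared = [
        (observed_corr[i][j] - implied_corr[i][j]) ** 2
        for i in range(p)
        for j in range(i + 1)
    ]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical inputs, chosen only to exercise the formulas.
print(rmsea(chi2=30.0, df=20, n=101))
print(cfi(chi2_model=30.0, df_model=20, chi2_base=220.0, df_base=28))
print(srmr([[1.0, 0.5], [0.5, 1.0]], [[1.0, 0.4], [0.4, 1.0]]))
```

Lower RMSEA and SRMR and higher CFI indicate closer agreement between the model-implied and observed covariance structure.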

3.3. Temporal dynamics of neural processes between groups.

Just-identified path models (i.e., models with as many free parameters as observed variances and covariances) were generated separately for each group using the predicted latent values from each group's CFA (i.e., the circles depicted in Figure 2). As expected for just-identified models, fit was perfect for both adults and youth (RMSEAs=0, CFIs=1, SRMRs=0). Considering our objective of comparing direct and indirect paths between adult and youth models (Bamber and van Santen 2000), these just-identified models provided a unique solution for all parameters, permitting group comparisons of paths. Path model results with standardized regression coefficients are illustrated in Figure 3. To test group differences, we conducted chi-square tests and report these results with standardized regression coefficients and significance values in Table 2. Here, we characterize these results:

Figure 3. Path analysis results.

Path analysis results for adult and youth models. Within each model, standardized factor loadings are reported. Solid lines indicate significant paths (p < .05); dashed lines indicate paths that did not meet the significance criterion. Positive paths (blue) indicate that a greater neural response is associated with an increased neural response downstream or more accurate social behavior. Negative paths (red) indicate that a greater neural response is associated with a decreased downstream response or less accurate behavior. Abbreviations: VP, low-level visual perception; SE, structural encoding; HP, higher-order processing; AU, action understanding; FR, facial recognition behavior via the Benton Facial Recognition Task; MS, mental state behavior via the Reading the Mind in the Eyes Task.
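As background for the just-identified models described in this section, the sketch below counts model degrees of freedom as the number of unique observed variances and covariances minus the number of free parameters. The helper `model_df` and the parameter count are hypothetical; only the six-variable layout (VP, SE, HP, AU, FR, MS) follows Figure 3.

```python
def model_df(n_observed, n_free_params):
    """Degrees of freedom: unique variances/covariances minus free parameters."""
    n_moments = n_observed * (n_observed + 1) // 2
    return n_moments - n_free_params


# Six variables give 6 * 7 / 2 = 21 unique variances and covariances.
# A model that freely estimates 21 parameters (hypothetical count) is just-identified.
df = model_df(n_observed=6, n_free_params=21)
print(df)
```

With zero degrees of freedom a model reproduces the observed covariances exactly, which is why fit indices for such models take their boundary values and carry no information about fit quality.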

First, within the direct paths of the proposed hierarchical model, there was early correspondence across groups, as indicated by significant VP→SE regression coefficients for both groups. This path suggests that increased visual processing (VP, i.e., more positive P100 amplitudes, faster P100 latencies) predicted reduced structural encoding activity (SE, i.e., less negative N170 amplitudes, slower N170 latencies). However, χ2 tests indicated that this effect was stronger for adults than youth, p=.005. Second, significant SE→HP regression coefficients for both groups suggested that increased structural encoding (SE, i.e., more negative N170 amplitudes, slower N170 latencies) predicted increased higher-order processing (HP, i.e., more negative N250 amplitudes, slower N250 latencies). There were no differences in magnitude or direction between groups, p=.67. Third, a pattern of increased HP (i.e., more negative N250 amplitudes, faster N250 latencies) predicting reduced action understanding (AU, i.e., more negative mu attenuation) was supported by a significant regression coefficient in youth (p<.001), but not in adults (p=.18). However, χ2 tests indicated no group differences.

Lastly, the indirect paths indicated a similar pattern for adults and youth: both groups had significant regression coefficients indicating that increased early stages predicted increased later stages (e.g., VP→HP, VP→AU, SE→AU). χ2 tests identified two group differences, such that both VP→AU and SE→AU were stronger in magnitude for adults than youth by 0.07 and 0.21, respectively.
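In conventional path analysis, an indirect effect along a chain of stages is the product of the standardized coefficients on each link. The text does not state whether the indirect paths reported here were computed this way, so the following is only an illustration of that general rule; the helper `indirect_effect` and every coefficient value are hypothetical.

```python
from math import prod


def indirect_effect(paths, chain):
    """Multiply the coefficients along consecutive links of a chain."""
    return prod(paths[(a, b)] for a, b in zip(chain, chain[1:]))


# Hypothetical standardized coefficients for the direct links.
paths = {
    ("VP", "SE"): -0.50,
    ("SE", "HP"): 0.40,
    ("HP", "AU"): -0.30,
}

vp_to_au = indirect_effect(paths, ["VP", "SE", "HP", "AU"])
se_to_au = indirect_effect(paths, ["SE", "HP", "AU"])
print(vp_to_au, se_to_au)
```

Because coefficients multiply, two negative links yield a positive indirect effect, so the sign of an indirect path need not match the sign of any single link.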

3.4. Relationship between neural processes and behavior.

Covariance between the two social behaviors indicated a non-significant relationship in youth (standardized coefficient=.16, p=.16) and a negative relationship in adults (standardized coefficient=−.39, p=.037). The paths between latent factors representing the neural processes and behavioral measures exhibited distinct patterns between developmental groups. Chi-square model comparisons confirmed significant group differences in prediction of facial recognition performance from early neural factors (low-level vision processing and structural encoding, p’s <.034). Opposing effects were found for early neural factors such that increased VP and SE indicated better facial recognition (FR) performance in adults but worse in youth. Adults also exhibited reduced mental state (MS) understanding performance with increased early neural factors (VP→MS, SE→MS) and the VP→MS effect was stronger by 0.70 in adults than youth. Finally, increased HP predicted better MS performance (adults) and better FR performance (youth), but increased HP predicted reduced FR in adults.

To assess whether these effects were related to developmental differences and not due to reduced performance within the youth group, post-hoc analyses were conducted for a subset of the adults with similarly poor performance (see SM2, Figure S3, Tables S4-5). The results indicated that adults with poorer mental state understanding (RMET) shared similar temporal dynamics with youth (i.e., greater responses from early neural factors predicted reduced RMET performance). In contrast, adults who scored below normal on the BFRT exhibited a more adult-like pattern, such that increased VP and SE predicted better FR, but the underlying indirect effective connectivity model was disrupted, such that increased VP predicted reduced AU. Although we caution against fully interpreting results from such a small sample, the findings suggest that individual differences on face processing tasks might be related to unique neural signatures of temporal dynamics.
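The chi-square group comparisons reported in Sections 3.3 and 3.4 are not described in detail. One standard approach is a chi-square difference test, which compares a model constraining a path to be equal across groups against a model leaving it free. The sketch below assumes that approach with a single constrained path (1 degree of freedom); the function names and test statistics are hypothetical.

```python
import math


def chi2_sf_df1(x):
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(x / 2.0))


def chi2_difference_test(chi2_constrained, chi2_free):
    """Difference test for one equality constraint (delta df = 1)."""
    delta = max(chi2_constrained - chi2_free, 0.0)
    return delta, chi2_sf_df1(delta)


# Hypothetical values: the free multi-group model is just-identified (chi2 = 0),
# and constraining one path to equality across groups raises chi2 to 7.88.
delta, p_value = chi2_difference_test(chi2_constrained=7.88, chi2_free=0.0)
print(delta, p_value)
```

A small p-value indicates that forcing the path to be equal across groups significantly worsens fit, which is read as a group difference in that path.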

3.5. Understanding development as a continuous feature of age.

Lastly, a final model collapsed across all subjects assessed age as a continuous factor. Model fit was acceptable per CFI (0.962) and SRMR (0.043) but not RMSEA (0.293); thus, results should be interpreted cautiously. Standardized coefficients and p-values are reported in Table S6 and illustrated in Figure 4. Adding age as a factor changed the overall model of temporal dynamics, such that none of the early neural factors predicted AU and only AU predicted behavioral performance. Increased age was associated with decreased VP, increased HP, and decreased AU.

Figure 4. Path analysis results for all subjects with age in years as continuous predictor.

Standardized factor loadings are reported. Solid lines indicate significant paths (p < .05); dashed lines indicate paths that did not meet the significance criterion. Positive paths (blue) indicate that a greater neural response is associated with an increased neural response downstream or more accurate social behavior. Negative paths (red) indicate that a greater neural response is associated with a decreased downstream response or less accurate behavior. Abbreviations: VP, low-level visual perception; SE, structural encoding; HP, higher-order processing; AU, action understanding; FR, facial recognition behavior via the Benton Facial Recognition Task; MS, mental state behavior via the Reading the Mind in the Eyes Task.

4. DISCUSSION

Our objective was to characterize developmental differences across distinct stages of face processing by assessing EEG patterns of temporal dynamics in youth and adults. We targeted latent factors corresponding to low-level vision processing (P100), structural encoding (N170), higher-order processing (N250), and action understanding (mu rhythm). Individual neural responses were greater for youth, as predicted from prior work (Batty and Taylor 2002; Taylor et al. 2004; Batty and Taylor 2006). Our novel approach indicates patterns of temporal dynamics that were distinct for youth and adults, specifically related to face recognition and mental state understanding (i.e., higher-order social information extraction) and to age as a continuous factor.

The current findings are consistent with existing evidence indicating that youth exhibit greater electrophysiological responses to faces than adults across all stages of face processing. Other work has highlighted developmental shifts towards a decrease in ERP amplitude and latency for early components, including the P100 and N170 (Batty and Taylor 2002; Taylor et al. 2004; Batty and Taylor 2006). Although previous work has not reported N250 changes across development, our findings provide initial evidence of increased N250 amplitude and latency in youth relative to adults, paralleling differences observed for other components in previous research. The mu rhythm response was also increased in youth in this study. Although this aligns with our ERP results, it is the opposite of the pattern reported in a previous study (Oberman et al. 2013); this discrepancy may be due to methodological differences (e.g., biological motion stimuli, different baseline control conditions, and age of the developmental group). In addition to demonstrating increased neural responses, youth also performed worse on social behavior tasks than adults. Taken together, these results imply that youth require increased effort during face processing, which may reflect developing integration of skills that influence basic perceptual tasks, ultimately reducing behavioral face processing performance.

One of the primary innovations of this study addressed this uncertainty by evaluating the hierarchical contribution of specific cognitive processes using a theory-driven, systems-level approach. A hierarchical model was adapted from prior work (Haxby et al. 2000; Young and Bruce 2011) suggesting a functional progression from low-level vision processing through structural encoding and higher-order processing to action understanding, consistent with developmental and comparative theories of fearful face processing more specifically (Leppänen and Nelson 2012). We generated latent variables for each neural process across hemisphere and measurement (i.e., amplitude and latency for ERP). Although amplitude and latency measurements provide potentially unique information, this strategy assessed the general impact of each specific stage of the face processing system. In this way, we evaluated the independent contribution of each stage to determine effects on subsequent stages. Indeed, the latent structures for each neural process exhibited very low amounts of covariance, which indicated that these latent structures captured distinct information.

Temporal dynamics between latent factors representing neural processes indicated divergent patterns between youth and adults. Generally, most direct (linear) and indirect (non-linear) paths significantly predicted later hierarchical stages, which is aligned with prior evidence indicating that earlier stages modulate ongoing face processing mechanisms (Pourtois et al. 2010). An advantage of the current study is the confirmation of these mechanisms using a dynamic strategy within single trials, supporting prior work delineating specific roles for substages of face processing (Scott et al. 2006; 2008). Several direct paths (both groups: VP→SE; youth only: SE→HP) were negative, implying opposite engagement of the two face processing stages. For instance, low levels of vision processing (i.e., P100) in turn modulate increased information processing (i.e., N170), suggesting a need to increase downstream activation levels of the subsequent stage for both youth and adults. This may be indicative of requiring additional information for appropriate representation and encoding of the face. Alternatively, this may also showcase a relative proportion of effort between these two stages. In other words, it may be the case that the P100 is transmitting information to a more expansive network of brain regions that elicit the N170 that in turn require relatively more effort during structural encoding than low-level vision processing. Similarly, for youth, the negative path between structural encoding and higher-order processing may indicate an increase of activation for the subsequent stage in youth, whereas adults exhibit stronger temporal patterns. The lack of feed-forward connectivity for adults may indicate reduced need for communication between later face processing stages.

One of the most important and surprising discoveries of this study is that the strength of temporal dynamics was not globally reduced in youth relative to adults. Within the direct and indirect paths, adults exhibited a greater magnitude for certain paths (VP→AU, VP→SE), youth exhibited a greater magnitude for other paths (SE→AU), and others were similar across groups (SE→HP, HP→AU). Basic patterns were consistent across groups as well, such that increased early low-level visual perception (VP) predicted reduced structural encoding (SE). We had predicted that temporal dynamics would be uniformly reduced across all stages of face processing in youth, aligned with theories that the face processing system continually matures through adulthood (Germine et al. 2010). Instead, these results indicate that the relationships between stages in youth are similar to those in adults but that magnitude differences suggest age-related changes in organization and/or dynamics of the face processing system. Work from fMRI indicates that structural connections between social brain regions continue to strengthen over development (Wang et al. 2017), in concert with the increasing specificity for faces within cortical regions (Behrmann et al. 2016). For instance, one fMRI study confirmed that the functional activation of the fusiform gyrus is directly related to an individual’s pattern of structural connectivity between social brain regions (Saygin et al. 2012). Taken together, our findings contribute to a growing body of research evaluating structural and functional connectivity by uniquely examining the associated temporal dynamics.

In addition, factor scores representing neural processes differentially predicted social behaviors between adults and youth. Specifically, better lower-order face processing skills, as measured by face recognition, were predicted by increased early stages in adults (VP, SE), but a later stage in youth (HP). In contrast, better higher-order mental state understanding skills were predicted by increased mid-stage in adults (HP) but not significantly predictive in youth. One of the reasons that youth performed poorly on behavioral tasks compared to adults may be related to overly responsive early neural processing (as indicated by negative predictions of VP and SE on MS and FR), which may reduce performance on behavioral tasks. This may be indicative of increased demands for bottom-up processing, whereas adults and youth with more successful face recognition utilize top-down processes (Bar 2003; Bitan et al. 2006; Gazzaley et al. 2008).

An alternative possibility is that performance on behavioral tasks is confounded by group membership, such that the neural factors reflect performance more so than development or age. We attempted to address this concern by subgrouping the adult sample based upon performance on behavioral outcomes (see Tables S4-5). There was not a clear-cut indication that youth have a distinct profile from low-performing adults. Specifically, regardless of whether adults performed poorly on BFRT or RMET, the low-performing adults exhibited an adult-like negative path between low-level VP and SE on FR and MS. However, low-performing adults on the BFRT exhibited inconsistent negative indirect paths between VP on AU and SE on AU. Although we caution against over-interpretation of these preliminary results given the reduced sample, these findings suggest that paths are based upon concomitant face processing abilities. However, outstanding questions remain, including a poor understanding of why some individuals (both adult and youth) may have performed poorly on the behavioral measurements. It is helpful to note that the BFRT, in particular, was not meant as a diagnostic test and it is unclear whether a subclinical range exists. Future work would benefit from continued exploration of the neural mechanisms supporting these behaviors, in addition to efforts exploring how constellations of different face processing and social cognitive abilities may reflect unique temporal dynamics.

The findings and methodological innovations in the current study are pertinent for better understanding disorders with atypical social behavior. For instance, social processing is a key determinant of the functional abilities of patients with schizophrenia (Sergi et al. 2006; Turetsky et al. 2007) and youth and adults with developmental disorders such as autism spectrum disorders (Elgar and Campbell 2001; Klin et al. 2015). Although clinical electrophysiology studies have identified reductions in the key neural responses discussed here (McPartland et al. 2004; Webb et al. 2006; Wynn et al. 2008), it is unclear which particular stage of face processing is most impacted and whether subsequent stages are affected. By implementing the strategies of the current paper, it would be possible to evaluate possible hypotheses regarding whether impairments are evident within a discrete portion of the face processing system that may interfere with downstream transmission of information.

One caveat is that we exclusively tested a feed-forward, hierarchical system (Haxby et al. 2000; Young and Bruce 2011) due to analytical constraints. Future work should also explore feedback dynamics of temporal dynamics across trials to evaluate whether middle processing stages (e.g., SE or HP) may indeed modulate the early processing stages (e.g., VP) of subsequent trials. For instance, the posterior superior temporal sulcus (pSTS) has both feed-forward and feedback projections with the amygdala (Oram and Richmond 1999; Sugase et al. 1999), which is a critical anatomical connection for face processing and fear processing more specifically (Allison et al. 2000). Considering that the pSTS is implicated during face processing (Herrmann et al. 2004; Itier and Taylor 2004), it is important to address how feedback modulates ongoing social brain processing.

It is important to note that these developmental shifts are specific to perception within one context (i.e., fearful expression). The aim of this study was not to isolate functional subsystems within the face processing system (e.g., emotion processing, identity detection), but rather to identify how a single trial can elucidate different aspects of the general system. Continued work will evaluate these mechanisms across a variety of contexts to better assess how and what information is communicated via effective connections between social processes. In addition, we acknowledge that the face processing system is continually developing across childhood, potentially at an accelerated rate during puberty due to the structural and functional maturation of the cerebral cortex (Casey et al. 2000). Although the current cross-sectional study cannot address the developmental trajectories of the face processing system and is not adequately powered to make conclusions regarding age as a continuous factor, these findings highlight distinct differences between developmental groups.

4.1. Limitations:

Within this exploratory study, several population differences limit our ability to fully interpret the developmental effects. For one, there is a gender disparity between the youth (1 female to 3 male) and adult (3 female to 2 male) groups. Considering known sex differences in face processing across development (McClure 2000), this may have disproportionately biased our developmental group differences. Another individual difference to note is handedness, given known lateralization differences between right- and left-handed individuals (Bukowski et al., 2013; Rangarajan & Parvizi, 2016). Additionally, we included a larger sample of youth (n=46) relative to adults (n=34), and developmental results may be improperly influenced by differential statistical power. Lastly, and arguably the most critical individual difference consideration, the age range within our youth group (8–18 years) includes a period of functional and structural brain development associated with improvements in face processing (Blakemore 2008; Burnett et al. 2011).

In addition, there are experimental considerations. First, there may be carry-over influences from the static face, since mu rhythm was extracted from the dynamic face, which was always presented second. Counterbalancing the presentation order may help determine whether that changes the hierarchical model. An additional consideration relates to preprocessing of the data: although single-trial analytics are becoming more widely used (e.g., Hudac et al., 2018) and data were processed here according to the extant literature, the influence of particular EEG processing decisions (e.g., filtering range) is not fully vetted.

In future efforts, researchers need to consider the influence of age and sex on the emerging changes in temporal dynamics of the face processing system from childhood to adulthood.

5. CONCLUSION

This is the first study to systematically address the relationships between neural processes and a hierarchical model of temporal dynamics supporting face processing in youth and adults. Our findings demonstrate that temporal dynamics across development are associated with distinct patterns for youth relative to adults, particularly within early face processing stages. Neural processes have differential predictions on social behaviors that are more evident in adults than youth, further highlighting potentially reduced face processing mechanisms in youth. This study utilized a novel methodology to capture unique neural stages and establish the effective connections across substages of the face processing system. Although the results address and build upon established theoretical frameworks (Haxby et al. 2000; Young and Bruce 2011), we acknowledge that the specific contribution of different data features (i.e., time and frequency) is difficult to interpret. However, this strategy will be valuable for researchers studying typical and atypical development in testing theory-driven aspects of complex cognitive systems.

Acknowledgements

This work was supported by the National Institutes of Health [R21 MH091309 to JMCP and RAB; R01 MH100047 to RAB; R01 MH100173 and R01 MH107426 to JMCP].

Declaration of interest statement

None of the authors have potential conflicts of interest to be disclosed.

REFERENCES

- Allison T, McCarthy G, Nobre A, Puce A, Belger A. Human Extrastriate Visual Cortex and the Perception of Faces, Words, Numbers, and Colors. Cerebral Cortex. Oxford University Press; 1994. September 1;4(5):544–54.

- Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends in Cognitive Sciences. 2000. July;4(7):267–78.

- Bae Y, Yoo BW, Lee JC, & Kim HC (2017). Automated network analysis to measure brain effective connectivity estimated from EEG data of patients with alcoholism. Physiological measurement, 38(5), 759.

- Balconi M, Pozzoli U. Face-selective processing and the effect of pleasant and unpleasant emotional expressions on ERP correlates. International Journal of Psychophysiology. 2003. July;49(1):67–74.

- Bamber D, van Santen J. How to assess a model’s testability and identifiability. Journal of Mathematical Psychology. 2000. March;44(1):20–40.

- Bar M. A cortical mechanism for triggering top-down facilitation in visual object recognition. J Cogn Neurosci. 2003;15(4):600–9.

- Bar-Haim Y, Lamy D, Glickman S. Attentional bias in anxiety: a behavioral and ERP study. Brain and Cognition. 2005. October;59(1):11–22.

- Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. The “Reading the Mind in the Eyes” Test revised version: a study with normal adults, and adults with Asperger syndrome or high-functioning autism. J Child Psychol & Psychiat. 2001. February;42(2):241–51.

- Batty M, Taylor MJ. Visual categorization during childhood: an ERP study. Psychophysiol. 2002. July;39(4):482–90.

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Brain Res Cogn Brain Res. 2003. October;17(3):613–20.

- Batty M, Taylor MJ. The development of emotional face processing during childhood. Developmental Science. 2006. March;9(2):207–20.

- Behrmann M, Scherf KS, Avidan G. Neural mechanisms of face perception, their emergence over development, and their breakdown. WIREs Cogn Sci. John Wiley & Sons, Inc; 2016. July;7(4):247–63.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological Studies of Face Perception in Humans. J Cogn Neurosci. 1996. November;8(6):551–65.

- Bentin S, Deouell LY. Structural encoding and identification in face processing: erp evidence for separate mechanisms. Cognitive Neuropsychology. Taylor & Francis Group; 2000. February 1;17(1):35–55.

- Bentin S, Deouell LY, Soroker N. Selective visual streaming in face recognition: evidence from developmental prosopagnosia. Neuroreport. 1999. March 17;10(4):823–7.

- Benton A, Sivan A, Hamsher K, Varney NR, Spreen O. Contributions to neuropsychological assessment. Psychological Assessment Resources, Inc; 1994.

- Billinger M, Brunner C, Scherer R, Müller-Putz GR. Estimating Brain Connectivity from Single-Trial EEG Recordings. Biomedical Engineering / Biomedizinische Technik. 2013. September 4;:1–2.

- Bitan T, Burman DD, Lu D, Cone NE, Gitelman DR, Mesulam M-M, et al. Weaker top–down modulation from the left inferior frontal gyrus in children. NeuroImage. 2006. November;33(3):991–8.

- Blakemore S-J. The social brain in adolescence. Nat Rev Neurosci. Nature Publishing Group; 2008. April 1;9(4):267–77.

- Blakemore S-J, Choudhury S. Development of the adolescent brain: implications for executive function and social cognition. J Child Psychol & Psychiat. Blackwell Publishing Ltd; 2006. March;47(3–4):296–312.

- Burnett S, Bird G, Moll J, Frith C, Blakemore SJ. Development during adolescence of the neural processing of social emotion. J Cogn Neurosci. MIT Press; 2009;21(9):1736–50.

- Burnett S, Sebastian C, Cohen Kadosh K, Blakemore S-J. The social brain in adolescence: Evidence from functional magnetic resonance imaging and behavioural studies. Neuroscience & Biobehavioral Reviews. 2011. August 1;35(8):1654–64.

- Büchel C, Friston K. Assessing interactions among neuronal systems using functional neuroimaging. Neural Netw [Internet]. 2000. October;13(8–9):871–82. Available from: http://eutils.ncbi.nlm.nih.gov/entrez/eutils/elink.fcgi?dbfrom=pubmed&id=11156198&retmode=ref&cmd=prlinks

- Bukowski H, Dricot L, Hanseeuw B, & Rossion B (2013). Cerebral lateralization of face-sensitive areas in left-handers: only the FFA does not get it right. Cortex, 49(9), 2583–2589.

- Carretié L, Martin-Loeches M, Hinojosa JA, Mercado F. Emotion and attention interaction studied through event-related potentials. J Cogn Neurosci. MIT Press; 2001. November 15;13(8):1109–28.

- Casey BJ, Giedd JN, Thomas KM. Structural and functional brain development and its relation to cognitive development. Biol Psychol. 2000. October;54(1–3):241–57.

- Chang W, Wang H, Yan G, & Liu C (2020). An EEG based familiar and unfamiliar person identification and classification system using feature extraction and directed functional brain network. Expert Systems with Applications, 158, 113448.

- Collins E, Robinson AK, & Behrmann M (2018). Distinct neural processes for the perception of familiar versus unfamiliar faces along the visual hierarchy revealed by EEG. NeuroImage, 181, 120–131.

- De Haan M, Belsky J, Reid V, Volein A, Johnson MH. Maternal personality and infants’ neural and visual responsivity to facial expressions of emotion. J Child Psychol Psychiatry. Blackwell Publishing; 2004. September 30;45(7):1209–18.

- De Haan M, Humphreys K, Johnson MH. Developing a brain specialized for face perception: a converging methods approach. Developmental Psychobiology. 2002a. April;40(3):200–12.

- de Haan M, Johnson MH, Halit H. Development of face-sensitive event-related potentials during infancy: a review. International Journal of Psychophysiology. 2003. December;51(1):45–58.

- De Haan M, Pascalis O, Johnson MH. Specialization of neural mechanisms underlying face recognition in human infants. J Cogn Neurosci. MIT Press; 2002b. February 15;14(2):199–209.

- Di Lorenzo G, Daverio A, Ferrentino F, Santarnecchi E, Ciabattini F, Monaco L, et al. Altered resting-state EEG source functional connectivity in schizophrenia: the effect of illness duration. Front Hum Neurosci. 2015. May 5;9:1–11.

- Dima D, Stephan KE, Roiser JP, Friston KJ, & Frangou S (2011). Effective connectivity during processing of facial affect: evidence for multiple parallel pathways. Journal of Neuroscience, 31(40), 14378–14385. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eimer M Event-related brain potentials distinguish processing stages involved in face perception and recognition. Clinical Neurophysiology. 2000. April;111(4):694–705. [DOI] [PubMed] [Google Scholar]

- Eimer M, Holmes A. An ERP study on the time course of emotional face processing. Neuroreport. 2002. March 25;13(4):427. [DOI] [PubMed] [Google Scholar]

- Eimer M, Holmes A, McGlone FP. The role of spatial attention in the processing of facial expression: an ERP study of rapid brain responses to six basic emotions. Cogn Affect Behav Neurosci. 2003. June;3(2):97–110. [DOI] [PubMed] [Google Scholar]

- Elgar K, Campbell R. Annotation: The cognitive neuroscience of face recognition: Implications for developmental disorders. J Child Psychol & Psychiat. Wiley Online Library; 2001;42(6):705–17. [DOI] [PubMed] [Google Scholar]

- Fairhall SL, & Ishai A (2007). Effective connectivity within the distributed cortical network for face perception. Cerebral Cortex, 17(10), 2400–2406. [DOI] [PubMed] [Google Scholar]

- Frässle S, Krach S, Paulus FM, & Jansen A (2016). Handedness is related to neural mechanisms underlying hemispheric lateralization of face processing. Scientific Reports, 6(1), 1–17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gazzaley A, Clapp W, Kelley J, McEvoy K, Knight RT, D’Esposito M. Age-Related Top-Down Suppression Deficit in the Early Stages of Cortical Visual Memory Processing. P Natl Acad Sci USA. National Academy of Sciences; 2008. September 2;105(35):13122–6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Germine LT, Duchaine B, Nakayama K. Where cognitive development and aging meet: Face learning ability peaks after age 30. Cognition. Elsevier B.V; 2010. December 2;:1–10. [DOI] [PubMed] [Google Scholar]

- Grossmann T, Striano T, Friederici AD. Developmental changes in infants’ processing of happy and angry facial expressions: a neurobehavioral study. Brain and Cognition. 2007. June;64(1):30–41. [DOI] [PubMed] [Google Scholar]

- Hari R. Action-perception connection and the cortical mu rhythm. Prog Brain Res. Elsevier; 2006;159:253–60. [DOI] [PubMed] [Google Scholar]

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000. June;4(6):223–33. Available from: http://linkinghub.elsevier.com/retrieve/pii/S1364661300014820 [DOI] [PubMed] [Google Scholar]

- Haxby JV, Horwitz B, Ungerleider LG. The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. Journal of …. 1994. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Herrmann MJ, Ehlis A-C, Ellgring H, Fallgatter AJ. Early stages (P100) of face perception in humans as measured with event-related potentials (ERPs). J Neural Transm. 2004. December 7;112(8):1073–81. [DOI] [PubMed] [Google Scholar]

- Hooper D, Coughlan J, Mullen M. Structural equation modelling: Guidelines for determining model fit. Articles. 2008;:2. [Google Scholar]

- Hu L-T, Bentler PM. Cutoff Criteria for Fit Indexes in Covariance Structure Analysis: Conventional Criteria Versus New Alternatives. Structural Equation Modeling: A Multidisciplinary Journal. Taylor & Francis Group; 1999;6(1):1–55. [Google Scholar]

- Hudac CM, DesChamps TD, Arnett AB, Cairney BE, Ma R, Webb SJ, et al. Early enhanced processing and delayed habituation to deviance sounds in autism spectrum disorder. Brain and Cognition. 2018. March 15;123:110–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hudac CM, & Sommerville JA (2020). Understanding Others’ Minds and Morals: Progress and Innovation of Infant Electrophysiology. In The Social Brain: A Developmental Perspective (pp. 179–197). MIT Press; Cambridge, MA. [Google Scholar]

- Ishai A, Schmidt CF, Boesiger P. Face perception is mediated by a distributed cortical network. Brain Res Bull. 2005. September;67(1–2):87–93. [DOI] [PubMed] [Google Scholar]

- Itier RJ, Taylor MJ. Source analysis of the N170 to faces and objects. Neuroreport. 2004. June;15(8):1261–5. [DOI] [PubMed] [Google Scholar]

- Kang E, Keifer CM, Levy EJ, Foss-Feig JH, McPartland JC, Lerner MD. Atypicality of the N170 Event-Related Potential in Autism Spectrum Disorder: A Meta-analysis. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging. Elsevier Inc; 2017. December 27;:1–10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kanwisher N, Yovel G. The fusiform face area: a cortical region specialized for the perception of faces. Philosophical Transactions of the Royal Society B: Biological Sciences. The Royal Society; 2006. December 29;361(1476):2109–28. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Key APF, Dove GO, Maguire MJ. Linking brainwaves to the brain: an ERP primer. Developmental Neuropsychology. Lawrence Erlbaum Associates, Inc; 2005;27(2):183–215. [DOI] [PubMed] [Google Scholar]

- Klin A, Shultz S, Jones W. Social visual engagement in infants and toddlers with autism: Early developmental transitions and a model of pathogenesis. Neuroscience & Biobehavioral Reviews. Elsevier Ltd; 2015. March 1;50:189–203. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lai J, Pancaroglu R, Oruc I, Barton JJ, & Davies-Thompson J (2014). Neuroanatomic correlates of the feature-salience hierarchy in face processing: An fMRI-adaptation study. Neuropsychologia, 53, 274–283. [DOI] [PubMed] [Google Scholar]

- Lee L, Harrison LM, Mechelli A. A report of the functional connectivity workshop, Dusseldorf 2002. Neuroimage. 2003. pp. 457–65. [DOI] [PubMed] [Google Scholar]

- Leppänen JM, Nelson CA. Tuning the developing brain to social signals of emotions. Nature Reviews Neuroscience. Nature Publishing Group; 2008. December 3;10(1):37–47. Available from: http://www.nature.com/nrn/journal/vaop/ncurrent/full/nrn2554.html [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leppänen JM, Nelson CA. Early Development of Fear Processing. Curr Dir Psychol Sci. SAGE Publications; 2012. May 30;21(3):200–4. [Google Scholar]

- Liao Y, Acar ZA, Makeig S, Deak G. EEG imaging of toddlers during dyadic turn-taking: Mu-rhythm modulation while producing or observing social actions. NeuroImage. Elsevier Inc; 2015;112(C):52–60. [DOI] [PubMed] [Google Scholar]

- Loehlin JC (1987). Latent variable models: An introduction to factor, path, and structural analysis. Lawrence Erlbaum Associates, Inc. [Google Scholar]

- Luck SJ, Kappenman ES. The Oxford Handbook of Event-Related Potential Components. Oxford University Press; 2013. [Google Scholar]

- Luo W, Feng W, He W, Wang N-Y, Luo Y-J. Three stages of facial expression processing: ERP study with rapid serial visual presentation. NeuroImage. 2010. January 15;49(2):1857–67. doi: 10.1016/j.neuroimage.2009.09.018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Malhotra P, Coulthard EJ, Husain M. Role of right posterior parietal cortex in maintaining attention to spatial locations over time. Brain. Oxford University Press; 2009. March;132(Pt 3):645–60. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McClure EB. A meta-analytic review of sex differences in facial expression processing and their development in infants, children, and adolescents. Psychological Bulletin. 2000. May;126(3):424–53. [DOI] [PubMed] [Google Scholar]

- McPartland J, Dawson G, Webb SJ, Panagiotides H, Carver LJ. Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. J Child Psychol & Psychiat. 2004. October;45(7):1235–45. [DOI] [PubMed] [Google Scholar]

- McPartland JC, Bernier R, South M. Realizing the Translational Promise of Psychophysiological Research in ASD. Journal of Autism and Developmental Disorders. 2014. November 28;45(2):277–82. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Miki K, Honda Y, Takeshima Y, Watanabe S, Kakigi R. Differential age-related changes in N170 responses to upright faces, inverted faces, and eyes in Japanese children. Front Hum Neurosci. Frontiers; 2015;9:263. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Muthukumaraswamy SD, Johnson BW. Changes in rolandic mu rhythm during observation of a precision grip. Psychophysiol. 2004. January;41(1):152–6. doi: 10.1046/j.1469-8986.2003.00129.x [DOI] [PubMed] [Google Scholar]

- Muthukumaraswamy SD, Johnson BW, McNair NA. Mu rhythm modulation during observation of an object-directed grasp. Cognitive Brain Research. 2004. April;19(2):195–201. [DOI] [PubMed] [Google Scholar]

- Münte TF, Brack M, Grootheer O, Wieringa BM, Matzke M, Johannes S. Brain potentials reveal the timing of face identity and expression judgments. Neurosci Res. 1998. January;30(1):25–34. [DOI] [PubMed] [Google Scholar]

- Naples A, Nguyen-Phuc A, Coffman M, Kresse A, Faja S, Bernier R, et al. A computer-generated animated face stimulus set for psychophysiological research. Behav Res Methods. Springer US; 2014;47(2):562–70. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nelson CA, De Haan M. Neural correlates of infants’ visual responsiveness to facial expressions of emotion. Developmental Psychobiology. John Wiley & Sons, Inc; 1996;29(7):577–95. [DOI] [PubMed] [Google Scholar]

- Nguyen VT, Breakspear M, & Cunnington R (2014). Fusing concurrent EEG–fMRI with dynamic causal modeling: Application to effective connectivity during face perception. Neuroimage, 102, 60–70. [DOI] [PubMed] [Google Scholar]

- Nyström P. The infant mirror neuron system studied with high density EEG. Social Neuroscience. 2008. September;3(3–4):334–47. [DOI] [PubMed] [Google Scholar]

- Oberman LM, McCleery JP, Hubbard EM, Bernier R, Wiersema JR, Raymaekers R, et al. Developmental changes in mu suppression to observed and executed actions in autism spectrum disorders. Soc Cogn Affect Neurosci. Oxford University Press; 2013. March;8(3):300–4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Olivares EI, Iglesias J, Saavedra C, Trujillo-Barreto NJ, & Valdés-Sosa M (2015). Brain signals of face processing as revealed by event-related potentials. Behavioural Neurology, 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oram MW, Richmond BJ. I see a face-a happy face. Nat Neurosci. 1999. October;2(10):856–8. [DOI] [PubMed] [Google Scholar]

- Paiva TO, Almeida PR, Ferreira-Santos F, Vieira JB, Silveira C, Chaves PL, et al. Similar sound intensity dependence of the N1 and P2 components of the auditory ERP: Averaged and single trial evidence. Clinical Neurophysiology. 2016. January;127(1):499–508. [DOI] [PubMed] [Google Scholar]

- Passarotti AM, Paul BM, Bussiere JR, Buxton RB, Wong EC, Stiles J. The development of face and location processing: an fMRI study. Developmental Science. Blackwell Publishing Ltd; 2003. February 1;6(1):100–17. [Google Scholar]

- Picton TW, Bentin S, Berg P, Donchin E, Hillyard SA, Johnson R, et al. Guidelines for using human event-related potentials to study cognition: recording standards and publication criteria. Psychophysiology. 2000;37:127–52. [PubMed] [Google Scholar]

- Pineda JA. The functional significance of mu rhythms: translating “seeing” and “hearing” into “doing”. Brain Res Brain Res Rev. 2005. December 1;50(1):57–68. [DOI] [PubMed] [Google Scholar]

- Pourtois G, Spinelli L, Seeck M, Vuilleumier P. Modulation of Face Processing by Emotional Expression and Gaze Direction during Intracranial Recordings in Right Fusiform Cortex. J Cogn Neurosci. MIT Press; 2010. September;22(9):2086–107. [DOI] [PubMed] [Google Scholar]

- Puce A, Allison T, Gore JC, McCarthy G. Face-Sensitive Regions in Human Extrastriate Cortex Studied by Functional MRI. J Neurophysiol. 1995. September;74(3):1192–9. [DOI] [PubMed] [Google Scholar]

- Rammsayer TH, & Troche SJ (2014). Elucidating the internal structure of psychophysical timing performance in the sub-second and second range by utilizing confirmatory factor analysis. In Neurobiology of interval timing (pp. 33–47). Springer, New York, NY. [DOI] [PubMed] [Google Scholar]

- Rangarajan V, & Parvizi J (2016). Functional asymmetry between the left and right human fusiform gyrus explored through electrical brain stimulation. Neuropsychologia, 83, 29–36. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Regtvoort AGFM, van Leeuwen TH, Stoel RD, van der Leij A. Efficiency of visual information processing in children at-risk for dyslexia: Habituation of single-trial ERPs. Brain and Language. 2006. September;98(3):319–31. [DOI] [PubMed] [Google Scholar]

- Rolls ET (1992). Neurophysiological mechanisms underlying face processing within and beyond the temporal cortical visual areas. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences, 335(1273), 11–21. [DOI] [PubMed] [Google Scholar]

- Saygin ZM, Osher DE, Koldewyn K, Reynolds G, Gabrieli JDE, Saxe RR. Anatomical connectivity patterns predict face selectivity in the fusiform gyrus. Nat Neurosci. Nature Publishing Group; 2012. February;15(2):321–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Scott LS, Tanaka JW, Sheinberg DL, Curran T. A reevaluation of the electrophysiological correlates of expert object processing. J Cogn Neurosci. 2006. September;18(9):1453–65. [DOI] [PubMed] [Google Scholar]

- Scott LS, Tanaka JW, Sheinberg DL, Curran T. The role of category learning in the acquisition and retention of perceptual expertise: A behavioral and neurophysiological study. Brain Research. 2008. May;1210:204–15. [DOI] [PubMed] [Google Scholar]

- Sergi MJ, Rassovsky Y, Nuechterlein KH, Green MF. Social perception as a mediator of the influence of early visual processing on functional status in schizophrenia. Am J Psychiatry. American Psychiatric Publishing; 2006. March;163(3):448–54. [DOI] [PubMed] [Google Scholar]

- Steiger JH. Understanding the limitations of global fit assessment in structural equation modeling. Personality and Individual Differences. 2007. May;42(5):893–8. [Google Scholar]

- Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999. August 26;400(6747):869–73. [DOI] [PubMed] [Google Scholar]

- Taylor MJ. Non-spatial attentional effects on P1. Clinical Neurophysiology. 2002. December;113(12):1903–8. [DOI] [PubMed] [Google Scholar]

- Taylor MJ, Batty M, Itier RJ. The faces of development: a review of early face processing over childhood. J Cogn Neurosci. MIT Press; 2004. October;16(8):1426–42. [DOI] [PubMed] [Google Scholar]

- Taylor MJ, McCarthy G, Saliba E, Degiovanni E. ERP evidence of developmental changes in processing of faces. Clinical Neurophysiology. 1999. May;110(5):910–5. [DOI] [PubMed] [Google Scholar]