Abstract

Background:

Nurses often document patient symptoms in narrative notes.

Purpose:

This study used a technique called natural language processing (NLP) to (1) automatically identify documentation of seven common symptoms (anxiety, cognitive disturbance, depressed mood, fatigue, sleep disturbance, pain, and well-being) in homecare narrative nursing notes and (2) examine the association between symptoms and emergency department visits or hospital admissions from homecare.

Method:

NLP was applied to a large subset of narrative notes (2.5 million notes) documented for 89,825 patients admitted to one large homecare agency in the Northeast United States.

Findings:

NLP accurately identified symptoms in narrative notes. Patients with more documented symptom categories had higher risk of emergency department visit or hospital admission.

Discussion:

Further research is needed to explore additional symptoms and to implement NLP systems in the homecare setting to enable early identification of concerning patient trends leading to emergency department visits or hospital admissions.

Background

Introduction

Symptoms are subjective indications of disease and include concepts such as fatigue, pain, and disturbed sleep (Corwin et al., 2014). Patients, their families, and health systems all play a role in alleviating the burden of symptoms (Corwin et al., 2014). Nurses are the largest group of health care providers; currently in the United States (US), approximately 4 million nurses provide care in a diverse range of clinical settings (Haddad & Toney-Butler, 2019). Nurses are often charged with managing symptoms (National Institute of Nursing Research, 2017). The National Institute of Nursing Research (NINR) recognized the centrality of symptom management in clinical practice and named symptom science as one of its four key strategic priorities (Corwin et al., 2014; National Institute of Nursing Research, 2017). To standardize symptom assessment and research at scale, NINR has recently developed a set of symptom-related common data elements in the areas of pain, sleep, fatigue, and affective and cognitive symptoms (Redeker et al., 2015).

Symptom Assessment and Management is Critical in Homecare

Symptom assessment and management by nurses is of critical importance, especially in settings where nurses provide the majority of patient care. Homecare is an example of such a setting. It is estimated that up to 12 million individuals receive care from homecare agencies annually (The Medicare Payment Advisory Commission, 2018; The National Association for Home Care and Hospice, 2010) – a number that will likely rise with the aging of the US population and growing emphasis on moving care from the acute sector into community settings (Caffrey et al., 2011). Patients admitted to homecare are often clinically complex, with more than six comorbid conditions and eight prescribed medications on average (Medicare Payment Advisory Commission, 2019).

Symptom management is one of the key objectives of homecare services, and nurses often implement multifaceted interventions to help patients alleviate a diverse range of symptoms. Failure to manage symptoms is one of the common reasons for emergency department (ED) visits or hospital admissions during homecare (Ma, Shang, Miner, Lennox, & Squires, 2018). With homecare patients accounting for approximately 17% of hospital admissions, there are multiple efforts underway to reduce avoidable hospital readmissions (Centers for Medicare and Medicaid Services, 2019).

A limited number of previous studies have focused on identifying and describing symptoms among homecare patients. For example, some studies examined end-of-life symptoms among patients receiving palliative homecare (Götze, Brähler, Gansera, Polze, & Köhler, 2014; Howell et al., 2011) or explored depressive and other psychiatric symptoms (Diefenbach, Tolin, & Gilliam, 2012; Markle-Reid et al., 2014). Most of the existing studies, however, used qualitative methodologies to interview small samples of patients about their symptoms. Although such studies provide in-depth insight into personal symptom experiences, they lack generalizability and cannot be used to estimate symptom prevalence in homecare patients, a critical foundation for intervention development.

Natural Language Processing Can Help Extract Symptom Information at Scale

Symptom information is limited in currently available structured (i.e., highly organized, formatted) homecare performance data sets, including the widely used Centers for Medicare and Medicaid Services (CMS) Outcome and Assessment Information Set (OASIS) (Centers for Medicare & Medicaid Services, 2005). While CMS requires US homecare agencies to collect standardized assessment data (e.g., sociodemographics, clinical characteristics, hospitalizations, ED visits) on all patients admitted to homecare, OASIS does not provide detailed symptom data. Furthermore, OASIS data collection is completed only at the first and last homecare visits, limiting its coverage of symptoms over time (O’Connor & Davitt, 2012). Moreover, while an important foundational step, the NINR common data element initiative is nascent (Redeker et al., 2015). Alternative data sources, in particular free-text clinical narratives from electronic health records, may complement OASIS data and help to estimate symptom prevalence among homecare patients.

Recent studies, mostly conducted in inpatient settings, show that nursing narratives can help shed light on symptom prevalence (Koleck et al., 2020). To process narrative nursing data at scale, a data science technique called natural language processing (NLP) can be applied. NLP includes a variety of computational approaches that help to automatically extract meaning from clinical notes (Demner-Fushman, Chapman, & McDonald, 2009).

In general, NLP is implemented via a series of computer algorithms. The specific approaches and tools used to perform NLP, however, vary. In some cases, NLP algorithms rely mostly on manually curated, rule-based vocabularies generated by subject matter experts. For example, a recent study aimed to identify social risk factors among patients discharged from hospitals using discharge summaries (Navathe et al., 2018). To accomplish this task, study team experts (including physicians, nurses, and pharmacologists) first developed comprehensive vocabularies of words and expressions that describe several key social risk factor categories, including tobacco use, drug abuse, depression, and housing instability. Example words and expressions representing the concept of “drug abuse” include IVDU (abbreviation for “intravenous drug use”), amphetamines abuse, cocaine abuse, and heroin dependence. Next, NLP was used to search discharge summaries (n = ~100,000) for the manually curated, rule-based terms related to one or more social risk factor categories.

Alternatively, NLP can rely on text patterns learned by machine learning classification algorithms rather than on manually curated, rule-based vocabularies. For example, although providing adequate clinical information with radiology orders is important for accurate interpretation of imaging studies, a high percentage of radiology orders lack adequate order information (i.e., why an order was placed). Assad et al. (2017) applied machine learning to evaluate the adequacy of information in radiology orders. First, study experts manually classified a subset (n = ~2,000) of chest computed tomography (CT) orders as containing adequate or inadequate order information based on clinical guidelines. Then, machine learning algorithms were used to learn features (i.e., characteristics) of the text that distinguish an adequate from an inadequate CT order. This process allowed the team to automate order adequacy evaluation with high accuracy. NLP approaches can also combine manually curated rule-based and classification methods.

Previous NLP studies have highlighted the extent of valuable information that can be found in clinical narratives. For example, our team used NLP to extract information about patient falls from homecare clinical notes (Topaz et al., 2016), as well as information about wound characteristics (Topaz et al., 2019) and drug and alcohol abuse (Topaz et al., 2019) from inpatient clinical notes. Other studies have implemented NLP in the domains of radiology (Cai et al., n.d.; Hassanpour & Langlotz, 2016; Pons, Braun, Hunink, & Kors, 2016), mental health (Althoff, Clark, & Leskovec, 2016; Le, Van, Montgomery, Kirkby, & Scanlan, 2018; Zhou et al., 2015a), oncology (Kreimeyer et al., 2017; Yim, Yetisgen, Harris, & Kwan, 2016), and others (Acker et al., 2017; Assad et al., 2017; Goss et al., 2014; Plasek et al., 2016; Zhou et al., 2015b; Zhou et al., 2014). An emerging body of research has used NLP to identify symptoms; however, a recent systematic review found only one study focused specifically on nursing data and no studies conducted in homecare (Koleck et al., 2019).

Value of Narrative Data Captured by Nurses in Clinical Notes

Clinical narratives contain valuable information that is critical for patient risk identification. For example, our previous study used NLP to examine associations between heart failure self-management and rehospitalizations (Topaz et al., 2017). The study developed an NLP algorithm to mine free-text clinical notes and identify heart failure patients with suboptimal self-management behaviors in the domains of diet, physical activity, medication adherence, and adherence to clinician appointments. Adjusted regression analyses showed that poor self-management (e.g., poor diet or low medication adherence) was significantly associated with preventable 30-day rehospitalizations. Other studies used NLP of nursing notes to identify patients at risk for sepsis (Horng et al., 2017), cardiac arrest outcomes (Collins & Vawdrey, 2012), and out-of-hospital mortality (Waudby-Smith, Tran, Dubin, & Lee, 2018). No previous study has used NLP to estimate homecare patients’ risk for poor outcomes, such as hospitalizations or ED visits.

This study aims to address these evidence gaps and explore nursing symptom documentation in homecare via the following specific aims: (a) develop and validate an NLP algorithm to identify documentation of symptoms recommended by NINR as common data elements (anxiety, cognitive disturbance, depressed mood, fatigue, sleep disturbance, pain, and well-being) in homecare narrative nursing notes; (b) apply the NLP algorithm to examine the prevalence of symptoms in a cohort of patients admitted to a large urban homecare agency (n = 89,825 patients; ~2.5 million clinical notes); and (c) examine the association between symptoms and ED visits or hospital admissions from homecare.

Theoretical Framework

This study is guided by the Nursing Science Precision Health Model (Hickey et al., 2019) and specifically addresses precision in symptom prevalence measurement. Symptom science is essential to precision health approaches for disease treatment and prevention that take into account individual variability in genes, environment, and lifestyle (Hickey et al., 2019). For this study, as an initial step in a program of research aimed at creating a data science infrastructure for precision health, we developed and validated an NLP algorithm. The algorithm identifies symptoms in clinical notes as a strategy to improve precision in symptom measurement. Examining the relationship between symptom prevalence and patient outcomes (hospitalizations and ED visits) will further inform the identification of intervention targets.

Methods

Study Methods Overview

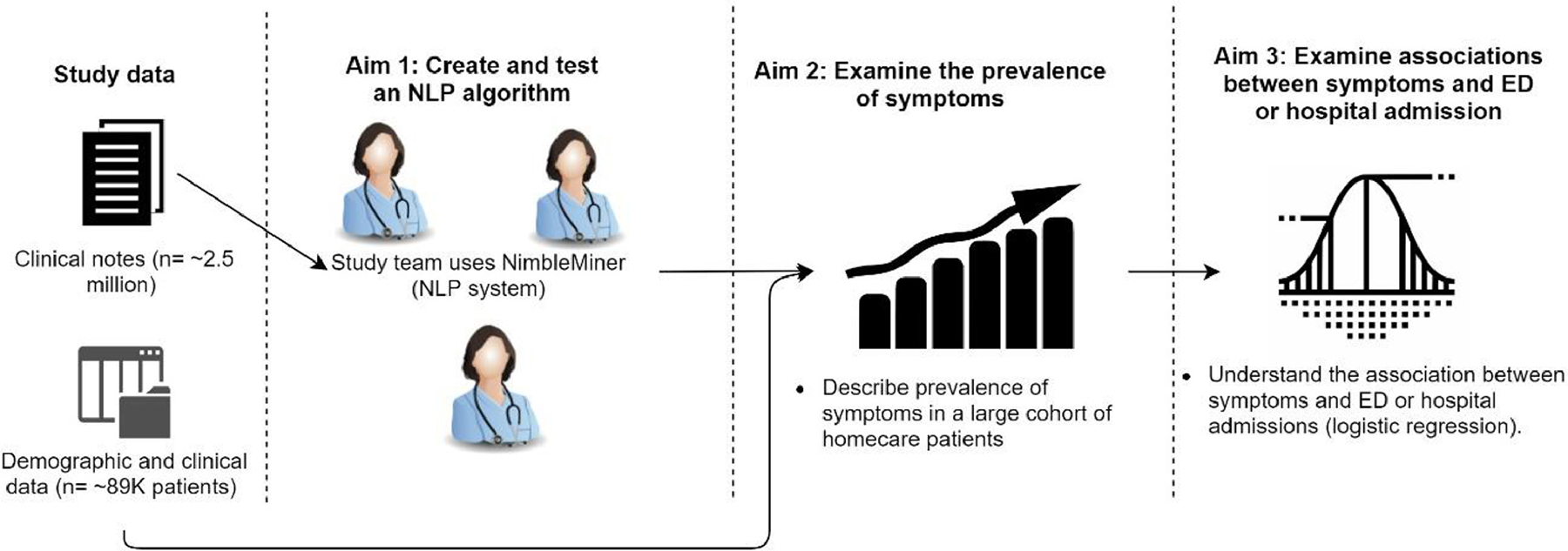

Figure 1 describes the general flow of the study methods. First, the study used NimbleMiner, an open-source and free NLP system (Topaz et al., 2019), to generate an NLP algorithm that can help identify symptoms. This algorithm was tested on a “gold-standard,” expert-validated subset of clinical notes (Aim 1; see the NLP System Testing section). The NLP algorithm was then applied to examine the prevalence of symptoms in a large cohort of homecare patients (Aim 2). Finally, logistic regression was used to understand the association between symptoms and ED visits or hospital admissions (Aim 3).

Figure 1 –

Study methods overview.

Setting and Sample

This study used electronic health record data from the Visiting Nurse Service of New York (VNSNY), the largest not-for-profit homecare provider in the US. VNSNY makes more than 1.3 million professional nursing visits per year to patients in New York City and the surrounding suburban counties. The study sample included 113,515 homecare episodes for 89,825 unique patients admitted to VNSNY from January 1 to December 31, 2014. Homecare episode length ranged between 10 and 60 days.

Patient sociodemographics, clinical characteristics, and study outcomes (hospitalizations and ED visits) were extracted from OASIS. OASIS assessments are conducted at both the patient’s homecare admission and discharge (Table 1). The study was approved by the Institutional Review Boards of Columbia University Irving Medical Center and VNSNY.

Table 1 –

Data Format, Sources, Domains, and Data Elements

| Format | Sources | Domains | Data Elements |
|---|---|---|---|
| Structured data | OASIS (start of care assessment) | Socio-demographic characteristics of patients | Age, gender, race/ethnicity, living situation |
| | OASIS (start of care assessment) | Clinical characteristics of patients | Primary diagnoses, comorbid conditions, cognitive functioning, need for assistance with activities of daily living, urinary and bowel incontinence |
| | OASIS (end of care assessment)* | Outcomes (service use) | ED visit or hospitalization |
| Unstructured data (NLP analysis) | Clinical notes (visit & care coordination notes) | Symptom presence | Anxiety, cognitive disturbance, depressed mood, fatigue, sleep disturbance, pain, and poor well-being |

* End of care OASIS assessments are conducted when a patient’s homecare services end or are interrupted by a hospitalization or ED visit.

Clinical Notes

For this study population, we extracted ~2.5 million homecare notes (n = 1,149,586 visit notes and n = 1,461,171 care coordination notes) documented in the VNSNY electronic health record. Notes were documented by homecare clinicians during or after a patient visit. Visit notes ranged from lengthy admission notes (often written by a registered nurse) to shorter progress notes (e.g., nurse follow-up notes). Care coordination notes included documentation of communication with interdisciplinary care team members (e.g., primary care physician, social work), orders for supplies or equipment (e.g., oxygen, wheelchair), and other care-related information. The average visit note was 150 words long, while the average care coordination note was 99 words long. All clinical notes were documented electronically; hence, the text of the notes was available in a machine-readable plain-text format (txt).

Aim 1: Develop and Validate an NLP Algorithm to Identify Documentation of Symptoms

Symptoms Included as NINR Common Data Elements

We focused on the seven symptoms selected for inclusion as NINR common data elements: anxiety, cognitive disturbance, depressed mood, fatigue, sleep disturbance, pain, and well-being. Although our NLP approach is intended to complement, not replace, structured common data elements, we reviewed the NINR common data element questionnaires for each symptom, when applicable, as a vocabulary source for NLP algorithm development. Because we wanted to explore associations between symptoms and ED visits or hospital admissions from homecare, we developed a vocabulary of words and expressions for well-being that indicate a lack of well-being or poor well-being rather than a comfortable, healthy state.

NLP System Development: Previous Development of Vocabularies for Symptoms

Our team previously developed and used NimbleMiner (Topaz et al., 2019), an open-source and free NLP RStudio Shiny application, to create comprehensive vocabularies and NLP algorithms to automatically extract symptom-related information from electronic health record clinical narrative text (Koleck et al., 2020). A detailed step-by-step overview of the NimbleMiner system is published elsewhere and readers who want to explore applying the system are encouraged to review this article (Topaz et al., 2019).

Briefly, we first identified preliminary synonyms and expressions for each unique symptom using the Unified Medical Language System (UMLS) Metathesaurus Browser – a very large biomedical vocabulary database that includes standardized terminologies, such as the Systematized Nomenclature of Medicine (SNOMED) (SNOMED, 2016), the International Classification of Diseases, 10th Revision (ICD-10) (WHO, 2014), and the International Classification for Nursing Practice (ICNP) (International Council of Nurses, 2018), and is organized by concept (Bodenreider, 2004). Nurse clinician scientists reviewed and revised the synonymous words and expressions for each symptom.

Then, we expanded the symptom vocabularies using NimbleMiner and two complementary bodies of text: (1) electronic health record clinical notes from a diverse array of specialties, settings, and providers and (2) PubMed abstracts containing Medical Subject Heading or keyword symptom-related terms. After uploading the text and the preliminary UMLS/expert-informed synonyms into NimbleMiner, we built word embedding language models (i.e., statistical representations of a body of text). Essentially, word embedding models use neighboring words to identify other potential synonyms (i.e., words or expressions that appear in the same contexts). Based on the models, NimbleMiner suggests 50 similar words or expressions for each imported synonym via an intuitive user interface (Topaz et al., 2019). Two users with expertise in symptoms (registered nurses), plus a third adjudicator (registered nurse), iteratively reviewed and accepted NimbleMiner-suggested synonyms or expressions until no new relevant synonyms could be identified for each symptom. Words or expressions included abbreviations, misspellings, and unique multiword combinations (specific examples are provided in the Findings section).
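The suggestion step can be illustrated with a minimal sketch. It assumes that term vectors have already been trained on the text (for example, by a word2vec-style model); NimbleMiner's internal implementation is not described here, so the function name `suggest_similar` and the toy three-dimensional vectors are hypothetical. Candidate terms are ranked by cosine similarity to a seed synonym, and terms that have already been accepted can be excluded so that reviewers see only new candidates.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def suggest_similar(seed, embeddings, k=50, exclude=()):
    """Return up to k (term, similarity) pairs most similar to `seed`.

    `embeddings` maps each term to its vector. The seed itself and any
    terms in `exclude` (e.g., synonyms already accepted) are skipped.
    """
    seed_vec = embeddings[seed]
    skip = set(exclude) | {seed}
    scored = [
        (term, cosine(seed_vec, vec))
        for term, vec in embeddings.items()
        if term not in skip
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical toy vectors, invented for illustration only.
toy_embeddings = {
    "fatigue": [0.90, 0.10, 0.00],
    "tired": [0.85, 0.15, 0.05],
    "exhausted": [0.80, 0.20, 0.00],
    "wheelchair": [0.00, 0.10, 0.95],
}

for term, similarity in suggest_similar("fatigue", toy_embeddings, k=2):
    print(f"{term}: {similarity:.3f}")
```

With these toy vectors, "tired" and "exhausted" rank above "wheelchair" because they point in nearly the same direction as "fatigue". The default `k=50` mirrors the 50 suggestions per synonym described above; as in the study, human reviewers would still decide which suggestions to accept.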

Using this process, we developed comprehensive symptom vocabularies for five of the seven symptoms of interest for this study: anxiety, depressed mood, fatigue, sleep disturbance, and pain. For the remaining, more ambiguous symptom categories (i.e., cognitive disturbance and well-being), we combined vocabularies from multiple symptom concepts based on the questions that make up the common data element questionnaires for these symptoms. The cognitive disturbance category included words and expressions related to the symptom concepts of impaired memory, impaired cognition, and impaired attention/executive function. The poor well-being category included words and expressions from the symptom concepts of malaise, emotional lability, suicidal ideation, agitation, anger, and difficulty coping. The candidate word and expression lists were reviewed and revised by the members of the research team until a final list was compiled for each symptom.

Expanding Vocabularies for Homecare-Specific Symptom Synonyms

We then developed a homecare-specific language model using all clinical notes available for the study (~2.5 million homecare notes). Using the synonym lists developed in the previous step as a baseline, we identified additional synonyms for each symptom concept/category based on homecare-specific data. These additional homecare-specific synonyms were identified by an expert in homecare nursing.

NLP System Testing

We created a gold-standard, human-annotated testing set of 500 clinical notes randomly selected from the full sample of notes. Each note was annotated by two reviewers for the presence of symptom(s). Inter-rater agreement was high (kappa = 0.91), indicating strong agreement (McHugh, 2012). All disagreements were resolved through discussion.
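Cohen's kappa compares the observed agreement between two reviewers with the agreement expected by chance from each reviewer's label frequencies. This section does not state whether kappa was computed per symptom or pooled across symptoms, so the sketch below is a generic two-rater version; the reviewer labels in the example are hypothetical.

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labeling the same items."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items both raters labeled identically.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label proportions.
    categories = set(rater_a) | set(rater_b)
    p_expected = sum(
        (rater_a.count(c) / n) * (rater_b.count(c) / n) for c in categories
    )
    if p_expected == 1:
        raise ValueError("kappa is undefined when both raters use one identical label")
    return (p_observed - p_expected) / (1 - p_expected)

# Hypothetical present (1) / absent (0) labels for one symptom on ten notes.
reviewer_1 = [1, 1, 1, 0, 0, 0, 1, 0, 1, 1]
reviewer_2 = [1, 1, 0, 0, 0, 0, 1, 0, 1, 1]
print(round(cohens_kappa(reviewer_1, reviewer_2), 2))  # 0.8
```

Here the reviewers agree on 9 of 10 notes (0.90), but their label frequencies alone would produce 0.50 agreement by chance, so kappa is (0.90 − 0.50) / (1 − 0.50) = 0.80.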

We applied the NLP system to the gold-standard testing set and calculated precision (the number of true positives out of the total number of predicted positives), recall (the number of true positives out of the actual number of positives), and F-score (the harmonic mean of precision and recall) for each symptom (Beger, 2016). All metrics range between 0 and 1, with higher scores indicating better NLP performance. An F-score >0.8 generally indicates that an NLP system performs well in identifying symptoms.
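The three metrics reduce to simple arithmetic on per-symptom counts of true positives (TP), false positives (FP), and false negatives (FN). The sketch below assumes the balanced F-score (F1), which is consistent with the values reported in Table 3; the counts in the first example are hypothetical.

```python
def f1_from(precision, recall):
    """Balanced F-score: harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from TP, FP, and FN counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0  # TP / predicted positives
    recall = tp / (tp + fn) if tp + fn else 0.0     # TP / actual positives
    return precision, recall, f1_from(precision, recall)

# Hypothetical counts for one symptom in the testing set.
print(precision_recall_f1(tp=45, fp=5, fn=5))

# Rounded fatigue values from Table 3 (precision 0.95, recall 0.92).
print(round(f1_from(0.95, 0.92), 2))  # 0.93
```

Because the harmonic mean is pulled toward the smaller of the two inputs, a system cannot reach a high F-score by maximizing only precision or only recall.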

Aim 2: Apply the NLP Algorithm to Examine the Prevalence of Symptoms

We applied our NLP algorithm to all clinical notes in the sample (excluding the 500 notes in the testing set) using NimbleMiner software. NimbleMiner identifies positive instances of a symptom in text using regular expressions (i.e., search patterns that describe sets of text strings) and accounts for negated symptoms (e.g., denies fatigue, no pain). For each note, we generated an indicator of symptom presence (present or absent). We then aggregated this information at the level of a homecare episode for each patient. We summarized symptom prevalence quantitatively and also examined co-occurrence of symptoms.
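A minimal sketch of note-level detection and episode-level aggregation follows. NimbleMiner's exact pattern construction and negation rules are not described in this section, so this is a simplified, NegEx-inspired approximation: the vocabularies, the negation cue list, and the four-word look-back window are hypothetical placeholders, and the look-back stops at the start of the current sentence.

```python
import re
from collections import defaultdict

# Hypothetical mini-vocabularies; the study's lists hold hundreds of terms each.
VOCAB = {
    "fatigue": ["fatigue", "tired", "lack of energy"],
    "pain": ["pain", "ache", "it hurts"],
}

# Hypothetical negation cues, searched for just before each match.
NEGATION_CUES = re.compile(r"\b(?:no|denies|denied|without|negative for)\b", re.IGNORECASE)

def compile_vocab(vocab):
    """Build one case-insensitive pattern per symptom, longest terms first."""
    patterns = {}
    for symptom, terms in vocab.items():
        ordered = sorted(terms, key=len, reverse=True)
        alternation = "|".join(re.escape(term) for term in ordered)
        patterns[symptom] = re.compile(rf"\b(?:{alternation})\b", re.IGNORECASE)
    return patterns

PATTERNS = compile_vocab(VOCAB)

def symptoms_in_note(text, window=4):
    """Return the symptoms with at least one non-negated mention in `text`."""
    found = set()
    for symptom, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            # Look back at most `window` words, without crossing a sentence break.
            sentence_so_far = re.split(r"[.;\n]", text[: match.start()])[-1]
            preceding = " ".join(sentence_so_far.split()[-window:])
            if not NEGATION_CUES.search(preceding):
                found.add(symptom)
                break
    return found

def symptoms_by_episode(notes):
    """Aggregate (episode_id, note_text) pairs into episode -> set of symptoms."""
    episodes = defaultdict(set)
    for episode_id, text in notes:
        # Union across notes; episodes with no symptoms keep an empty set.
        episodes[episode_id] |= symptoms_in_note(text)
    return dict(episodes)

notes = [
    ("ep1", "Pt denies pain. Reports feeling tired after PT."),
    ("ep1", "No fatigue today."),
    ("ep2", "Patient denies pain or shortness of breath."),
]
print(symptoms_by_episode(notes))  # {'ep1': {'fatigue'}, 'ep2': set()}
```

Keeping episodes with an empty symptom set matters for prevalence: they belong in the denominator even though no symptom was found. An episode counts as having a symptom if any of its notes contains a non-negated mention, which matches the present/absent episode indicator described above.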

Aim 3: Examine the Association Between Symptoms and ED or Hospital Admissions From Homecare

We developed logistic regression models (Hosmer, Lemeshow, & Sturdivant, 2013), adjusted for all other patient sociodemographic and clinical characteristics described in Table 1, to examine whether the presence of a symptom was significantly associated with higher odds of an ED visit or hospital admission. The presence of each symptom was represented by a binary indicator of whether the symptom was present or absent in each homecare episode. Because we had a relatively large number of variables and observations in the analysis, we implemented logistic regression with stepwise forward selection, in which only variables with p-values <.01 were retained in the final model (Hosmer et al., 2013).
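The selection rule can be sketched generically. The paper does not name the software used, so the sketch below leaves model fitting to a hypothetical user-supplied function, `pvalue_if_added`. In practice, this function would wrap a logistic regression fit. The sketch implements only the forward-entry loop with the p < .01 criterion. Some stepwise implementations also re-test and drop variables after each addition; that step is omitted here.

```python
def forward_select(candidates, pvalue_if_added, threshold=0.01):
    """Greedy forward selection on entry p-values.

    `pvalue_if_added(selected, candidate)` is a user-supplied function that
    fits a model containing `selected` plus `candidate` and returns the
    candidate's p-value. At each step the candidate with the smallest
    p-value is added, as long as that p-value is below `threshold`.
    """
    selected = []
    remaining = list(candidates)
    while remaining:
        pvalues = {c: pvalue_if_added(selected, c) for c in remaining}
        best = min(pvalues, key=pvalues.get)
        if pvalues[best] >= threshold:
            break  # no remaining candidate meets the entry criterion
        selected.append(best)
        remaining.remove(best)
    return selected

# Hypothetical stand-in for a real model fit: fixed p-values per variable.
fake_pvalues = {"fatigue": 0.001, "pain": 0.004, "cognitive disturbance": 0.20}
print(forward_select(fake_pvalues, lambda selected, c: fake_pvalues[c]))  # ['fatigue', 'pain']
```

Passing the already-selected variables to `pvalue_if_added` matters because a variable's adjusted p-value can change once correlated variables enter the model.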

Findings

Table 2 summarizes the socio-demographic and clinical characteristics of the sample. The patient sample (n = 89,825 unique patients, n = 113,515 homecare episodes) had a mean age of 70.8 years, was 60.8% female, and was racially and ethnically diverse. About one-third of patients lived alone (37.6%), and a similar proportion had limited cognitive functioning (32.7%). The vast majority of the sample (97.8%) needed assistance with activities of daily living. The most common comorbid conditions were hypertension (63.9%), diabetes (35.2%), and arthritis (17.8%).

Table 2 –

Sociodemographic and Clinical Characteristics of the Sample

| Variable | N (113,515 homecare episodes) | % |
|---|---|---|
| Gender: Female | 69,068 | 60.8% |
| Mean age (SD) | 70.8 (16.2) | |
| Race/ethnicity | | |
| White | 48,303 | 42.6% |
| Black or African-American | 30,218 | 26.6% |
| Hispanic/Latino | 26,725 | 23.5% |
| Asian | 6,980 | 6.1% |
| Other | 1,147 | 1.0% |
| Patient living situation | | |
| Lives alone | 42,698 | 37.6% |
| Lives with other | 68,082 | 60.0% |
| Lives in congregate situation (e.g., assisted living) | 2,517 | 2.2% |
| Common comorbid conditions | | |
| Hypertension | 72,535 | 63.9% |
| Diabetes | 39,936 | 35.2% |
| Arthritis | 20,201 | 17.8% |
| Acute myocardial infarction | 19,418 | 17.1% |
| Chronic obstructive pulmonary disease | 18,798 | 16.6% |
| Heart failure | 15,972 | 14.1% |
| Depression | 13,165 | 11.6% |
| Renal disease | 11,646 | 10.3% |
| Any skin ulcer | 11,256 | 9.9% |
| Dementia | 10,661 | 9.4% |
| Stroke | 10,122 | 8.9% |
| Any cancer | 6,819 | 6.0% |
| Neurological disease | 5,751 | 5.1% |
| Peripheral vascular disease | 3,736 | 3.3% |
| AIDS | 2,642 | 2.3% |
| Limited cognitive functioning | 37,173 | 32.7% |
| Need assistance with activities of daily living | 110,972 | 97.8% |
| Urinary and/or bowel incontinence | 21,215 | 18.7% |
| ED visit or hospitalization | 16,923 | 14.9% |

Aim 1: Develop and Validate an NLP Algorithm to Identify Documentation of Symptoms

In total, we identified:

797 synonyms for anxiety (e.g., anxious, anxious [misspelling], constantly worried about, emotional distress, excessive worry, expressed fear, nervous overwhelmed);

349 synonyms for cognitive disturbance (e.g., attention impairment, bad memory, difficulty focusing, forgetfulness, mild cognitive decline, MCI [abbreviation for mild cognitive impairment], poor cognition, trouble concentrating);

819 synonyms for depressed mood (e.g., anhedonic, depressed, endorses feeling depressed, feeling blue, he feels sad, hopelessness, mood depressed, still feels sad);

769 synonyms for fatigue (e.g., exhausted, fatigue [misspelling], fatigue [misspelling], fatigue [misspelling], feel tired, groggy, just feel tired, lack of energy);

252 synonyms for sleep disturbance (e.g., disturbed sleep, difficulty sleeping, I can’t sleep, impaired sleep, insomnia, insomonia [misspelling], restless sleep, very poor sleep);

1,751 synonyms for pain (e.g., ache, backaches, becomes uncomfortable, discomfort, it hurts, myalgia, nerve pains, soreness);

1,250 synonyms for poor well-being (e.g., agitation, angers, dysphoria, endorsed S I [abbreviation], feeling unwell, labile mood, malaise, restlessness, self-harm).

Overall, the NLP system achieved high symptom identification accuracy (F = 0.87) when tested on the gold-standard, human-reviewed set of 500 clinical notes (see Table 3). The highest accuracy was achieved for fatigue and cognitive disturbance (F = 0.93 for both).

Table 3 –

Natural Language Processing System Accuracy in Identifying Symptoms

| Symptom | Recall | Precision | F-measure |
|---|---|---|---|
| Sleep disturbance | 0.86 | 0.94 | 0.90 |
| Fatigue | 0.92 | 0.95 | 0.93 |
| Depressed mood | 0.79 | 0.80 | 0.80 |
| Anxiety | 0.92 | 0.89 | 0.90 |
| Poor well-being | 0.81 | 0.80 | 0.81 |
| Cognitive disturbance | 0.91 | 0.94 | 0.93 |
| Pain | 0.91 | 0.80 | 0.84 |
| Overall | 0.87 | 0.87 | 0.87 |

Aim 2: Apply the NLP Algorithm to Examine the Prevalence of Symptoms

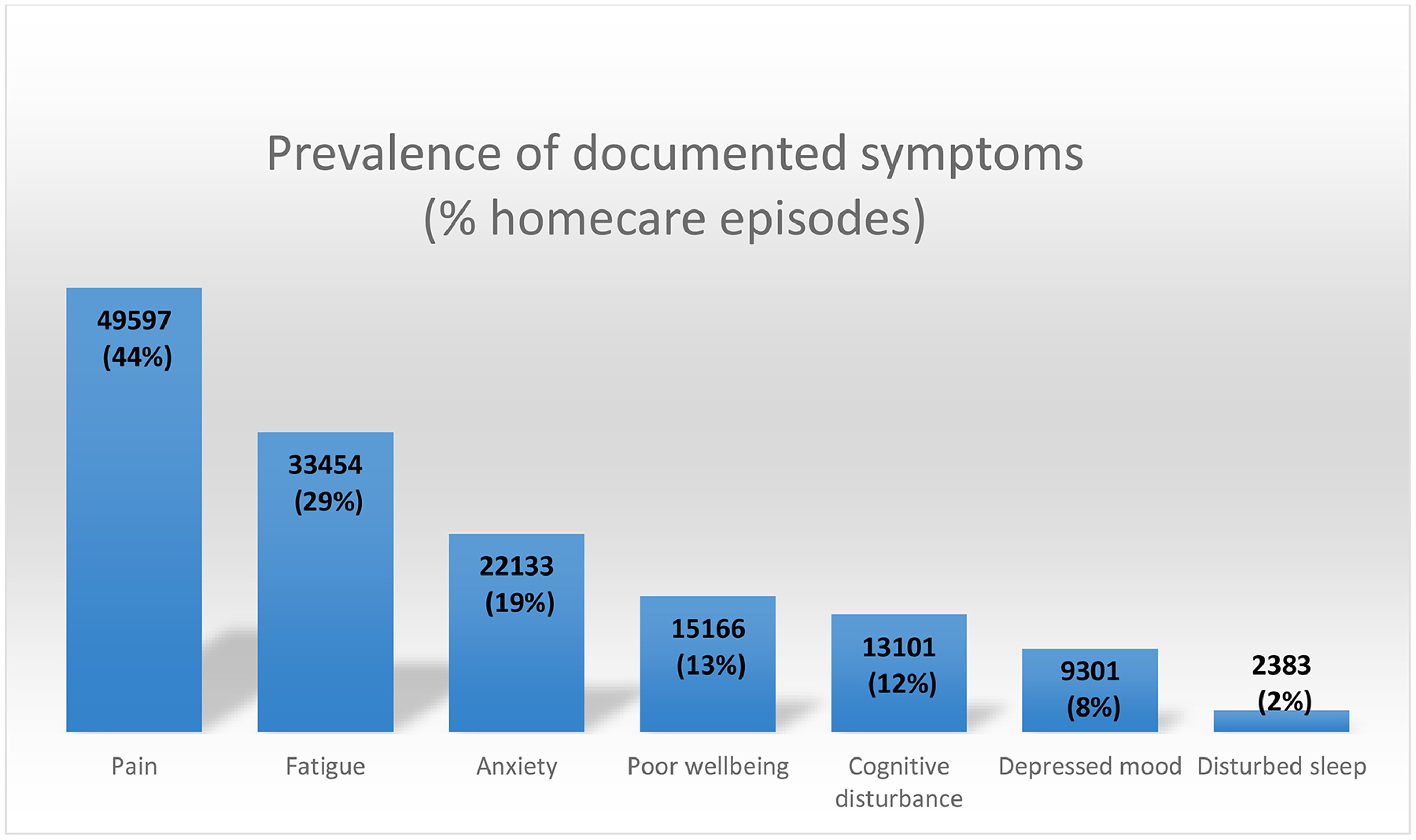

Figure 2 describes the prevalence of symptoms by homecare episode. The most prevalent symptoms were pain and fatigue (reported in 44% and 29% of homecare episodes, respectively) while the least prevalent symptom was disturbed sleep (reported in 2% of homecare episodes).

Figure 2 –

Prevalence of symptoms by homecare episode.

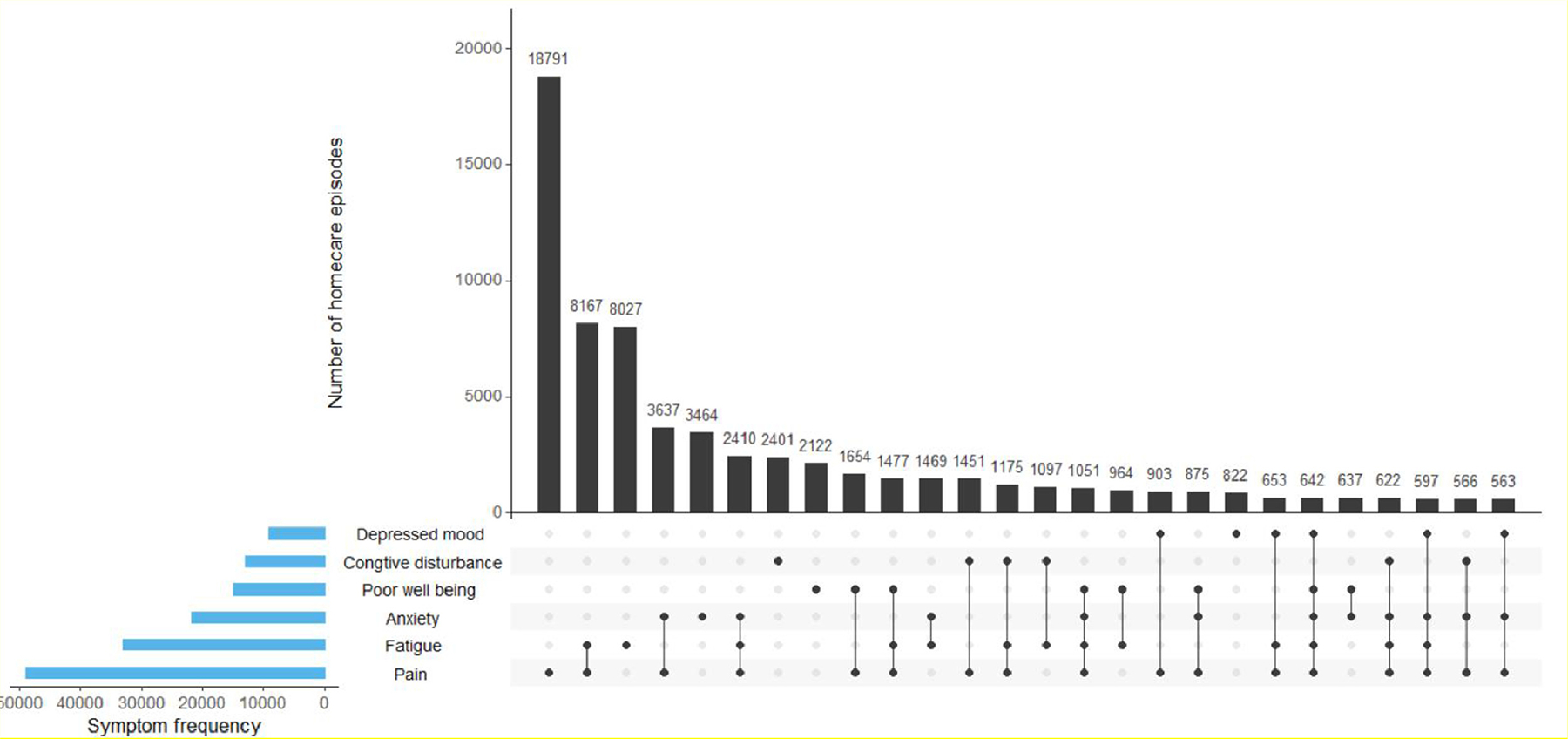

Figure 3 visualizes co-occurrence of symptoms within homecare episodes. The most frequent 2-symptom clusters were fatigue and pain (observed in n = 8,167 homecare episodes) and anxiety and pain (observed in n = 3,637 homecare episodes). The most frequent 3-symptom clusters were anxiety, fatigue, and pain (observed in n = 2,410 homecare episodes) and poor well-being, fatigue, and pain (observed in n = 1,477 homecare episodes).

Aim 3: Examine the Association Between Symptoms and ED Visits or Hospital Admissions

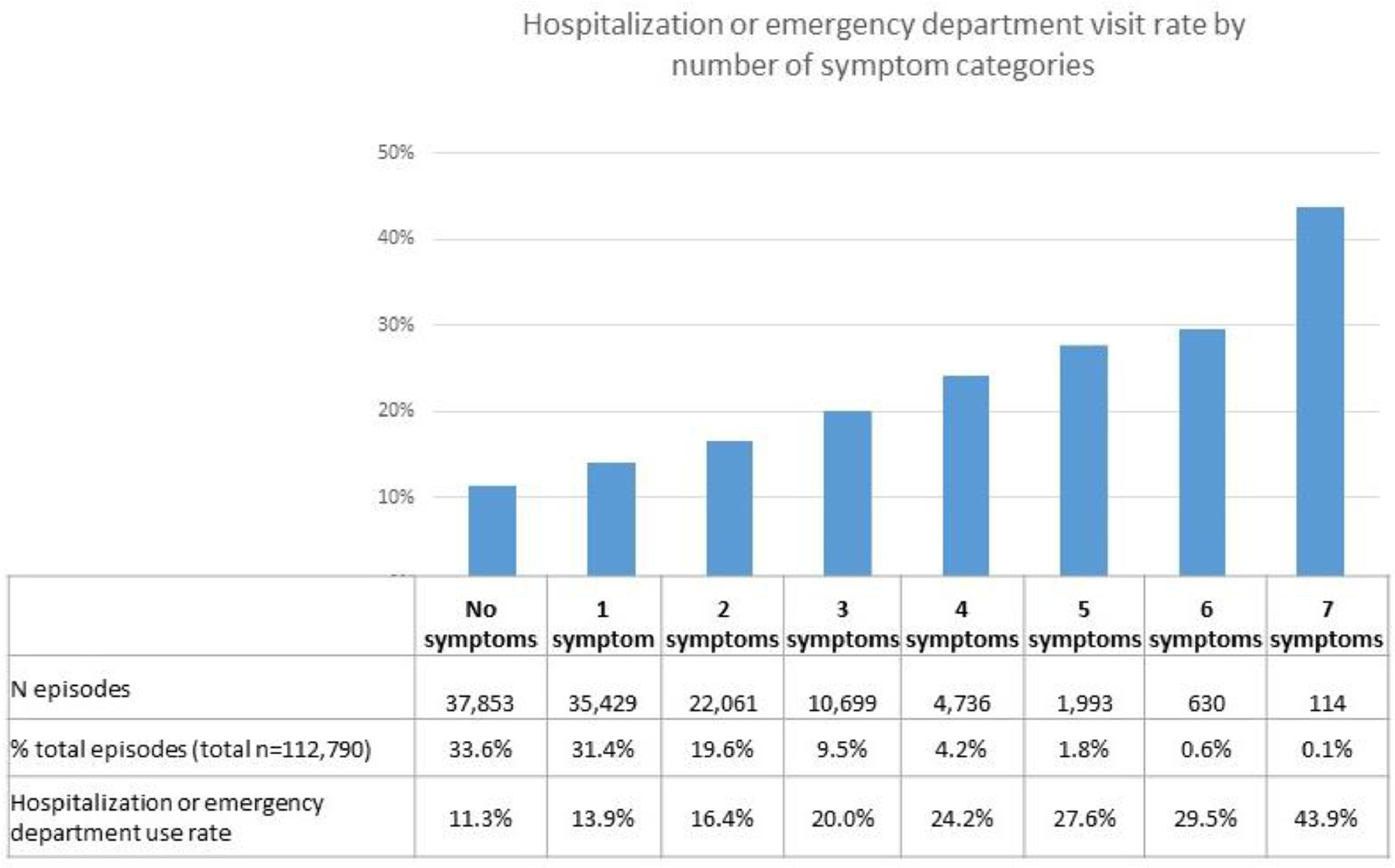

Overall, 14.9% (n = 16,923) of homecare episodes had a hospitalization or ED visit (one patient could have several episodes). Figure 3 displays co-occurrence of symptoms in homecare episodes. For example, 18,791 homecare episodes had documentation of pain only, while 8,167 homecare episodes had documentation of pain + fatigue. Figure 3 presents symptom co-occurrence groups that were present in >0.05% of homecare episodes. It also shows that episodes with a higher number of documented symptom categories had significantly higher rates of hospitalizations or ED visits (p < .01). While only about one in ten (11.3%) episodes with no documented symptoms had a hospitalization or ED visit, nearly one in three (29.5%) episodes with 6 symptom categories and more than two in five (43.9%) episodes with all 7 symptom categories had a hospitalization or ED visit.

Figure 3 –

Co-occurrence of symptoms by homecare episode.
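Two quantities in the paragraph above can be computed from episode-level symptom sets like those the NLP step produces. The first is the count of exact symptom combinations. The second is the hospitalization or ED visit rate by number of documented symptom categories. The sketch below is a hypothetical illustration on invented episodes, not the study's analysis code. It does not include the significance test.

```python
from collections import Counter, defaultdict

def combination_counts(episodes):
    """Count exact symptom combinations across episodes with at least one symptom.

    An episode with pain and fatigue counts toward {fatigue, pain} only,
    not toward {pain} alone.
    """
    return Counter(frozenset(s) for s in episodes.values() if s)

def outcome_rate_by_symptom_count(episodes, outcomes):
    """Share of episodes with the outcome, grouped by number of symptom categories."""
    totals = defaultdict(int)
    events = defaultdict(int)
    for episode_id, symptoms in episodes.items():
        k = len(symptoms)
        totals[k] += 1
        events[k] += int(outcomes[episode_id])
    return {k: events[k] / totals[k] for k in sorted(totals)}

# Hypothetical episodes and outcomes (True = hospitalization or ED visit).
episodes = {
    "e1": {"pain"},
    "e2": {"pain", "fatigue"},
    "e3": set(),
    "e4": {"pain"},
    "e5": {"pain", "fatigue", "anxiety"},
}
outcomes = {"e1": False, "e2": True, "e3": False, "e4": True, "e5": True}

print(combination_counts(episodes).most_common(1))
print(outcome_rate_by_symptom_count(episodes, outcomes))  # {0: 0.0, 1: 0.5, 2: 1.0, 3: 1.0}
```

Using `frozenset` as the key makes each combination order-independent, so {pain, fatigue} and {fatigue, pain} are counted together.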

Table 4 presents the final results of the stepwise forward selection logistic regression, adjusted for patient socio-demographic and clinical characteristics. Overall, the final model included 20 variables significantly associated with risk of hospitalization or ED visit. Five of the NLP-extracted symptoms were retained in the model: poor well-being, fatigue, pain, sleep disturbance, and anxiety. Two symptoms, depressed mood and cognitive disturbance, were not significantly associated with risk of hospitalization or ED visit. Homecare episodes with documented poor well-being or fatigue had about 40% higher odds of hospitalization or ED visit (odds ratios of 1.43 and 1.39, respectively).

Table 4 –

Results of Stepwise Logistic Regression to Select a Subset of Variables Significantly Associated with Risk of Hospitalization or ED Visit

| Variable | Odds Ratio | Std. Err. | Z | p | 95% CI (lower) | 95% CI (upper) |
|---|---|---|---|---|---|---|
| Any cancer | 2.16 | 0.07 | 24.55 | <.001 | 2.03 | 2.30 |
| Presence of urinary catheter | 2.10 | 0.13 | 12.2 | <.001 | 1.86 | 2.36 |
| Any skin ulcer | 1.90 | 0.05 | 25.41 | <.001 | 1.81 | 2.00 |
| Renal disease | 1.70 | 0.04 | 20.97 | <.001 | 1.61 | 1.78 |
| Heart failure | 1.49 | 0.03 | 17.31 | <.001 | 1.43 | 1.56 |
| Poor well-being* | 1.43 | 0.03 | 15.38 | <.001 | 1.37 | 1.50 |
| Fatigue* | 1.39 | 0.03 | 17.69 | <.001 | 1.34 | 1.44 |
| AIDS | 1.31 | 0.07 | 5.06 | <.001 | 1.18 | 1.46 |
| Peripheral vascular disease | 1.31 | 0.06 | 6.31 | <.001 | 1.21 | 1.43 |
| Chronic obstructive pulmonary disease | 1.30 | 0.03 | 11.95 | <.001 | 1.25 | 1.36 |
| DX: diabetes | 1.28 | 0.02 | 13.58 | <.001 | 1.23 | 1.32 |
| Pain* | 1.25 | 0.02 | 12.28 | <.001 | 1.20 | 1.29 |
| Urinary incontinence | 1.24 | 0.03 | 9.77 | <.001 | 1.18 | 1.29 |
| Disturbed sleep* | 1.18 | 0.06 | 3.17 | .002 | 1.06 | 1.31 |
| Any limitation in cognitive functioning | 1.14 | 0.01 | 11.85 | <.001 | 1.12 | 1.17 |
| Anxiety* | 1.14 | 0.02 | 6.1 | <.001 | 1.09 | 1.19 |
| DX: acute myocardial infarction | 1.13 | 0.03 | 5.48 | <.001 | 1.08 | 1.18 |
| DX: depression | 1.10 | 0.03 | 3.61 | <.001 | 1.04 | 1.16 |
| Assistance need in any activity of daily living | 1.09 | 0.01 | 16.51 | <.001 | 1.07 | 1.10 |
| Age | 0.99 | 0.00 | −10.26 | <.001 | 0.99 | 0.99 |

* Variables extracted via NLP of clinical notes.
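The odds ratios and confidence intervals in Table 4 are linked by simple arithmetic. The Z values in the table suggest that the Std. Err. column reports the standard error of the odds ratio itself (delta method) rather than the standard error of the log-odds coefficient. Under that assumption, the sketch below recovers an approximate 95% Wald interval. Because the table's inputs are rounded, the result can differ from the published bounds in the second decimal place.

```python
import math

def wald_ci_from_or(odds_ratio, se_or, z=1.959964):
    """Approximate 95% Wald CI for an odds ratio.

    Assumes `se_or` is the standard error of the odds ratio (delta method),
    so the log-odds standard error is se_or / odds_ratio.
    """
    beta = math.log(odds_ratio)   # log-odds coefficient
    se_beta = se_or / odds_ratio  # delta-method back-transformation
    return math.exp(beta - z * se_beta), math.exp(beta + z * se_beta)

# Poor well-being row of Table 4: OR 1.43, Std. Err. 0.03.
low, high = wald_ci_from_or(1.43, 0.03)
print(f"95% CI {low:.2f} to {high:.2f}; odds {100 * (1.43 - 1):.0f}% higher")
```

For the poor well-being row, this gives roughly 1.37 to 1.49, close to the reported 1.37 to 1.50. The "about 40% higher odds" in the text corresponds to (OR − 1) × 100 for odds ratios of 1.43 and 1.39.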

Discussion

This study developed and implemented an automated NLP algorithm that can identify mentions of symptoms included as NINR common data elements in homecare clinical notes. Compared with symptom identification by clinician reviewers (the gold standard), the NLP algorithm achieved relatively high accuracy. NLP symptom identification accuracy was similar to or higher than that of other NLP algorithms developed and applied in other clinical settings (Dreisbach, Koleck, Bourne, & Bakken, 2019). These results are promising and warrant further research on the feasibility of NLP in homecare settings.

Our findings indicate that two-thirds of homecare episodes had at least one NINR common data element symptom category documented. Interestingly, these results are consistent with a recent analysis of the National Health and Aging Trends Study that examined the prevalence of symptoms in community-dwelling adults 65 years and older. That study focused on six symptoms: pain, fatigue, sleeping difficulty, breathing difficulty, depressed mood, and anxiety (Patel et al., 2019). Five of these symptoms overlapped with symptoms explored in our study, namely pain, fatigue, sleeping difficulty, depressed mood, and anxiety. Similar to our study, the national survey found that roughly two-thirds of older adults reported experiencing at least one symptom, with 27% reporting one symptom (31% in our study), 21% reporting two symptoms (19% in our study), 14% reporting three symptoms (10% in our study), and the rest reporting four or more symptoms. Although the study methods were very different (we used NLP of homecare clinical notes, while the National Health and Aging Trends Study used self-report of older adults), the similarity in results is reassuring and may suggest that nurses’ documentation of symptoms accurately reflects patient self-report.

The prevalence of individual symptoms in our study was also mostly comparable to that reported in other studies. For example, previous research suggests that up to one-half of community-dwelling older adults in the US experience pain (Hunt et al., 2015; Patel et al., 2019), which is very similar to our finding (44%). Likewise, we found that fatigue was documented for roughly one-third of homecare episodes, a rate consistent with other investigations of community-dwelling older adults (de Rekeneire, Leo-Summers, Han, & Gill, 2014; Patel et al., 2019; Zengarini et al., 2015). In addition, the prevalence of anxiety and depressed mood in our study was similar to that reported for the US older adult population (Centers for Disease Control and Prevention, 2019; Patel et al., 2019).

On the other hand, the prevalence of poor sleep in this study was substantially lower than reported in previous studies. For example, a recent national observational study in the US found that about one in three older adults experience sleeping difficulty (Patel et al., 2019), with other estimates as high as 50% (Miner & Kryger, 2017). There are several potential explanations for this discrepancy. First, although our NLP vocabularies performed well on the expert-annotated sample of clinical notes, we might have missed other ways in which homecare clinicians describe disturbed sleep patterns. Second, if our NLP algorithms are accurate, homecare clinicians might be under-assessing and/or under-reporting sleep issues among homecare patients. Further investigation is needed to understand this discrepancy. Approaches could include incorporating additional data sources to identify disturbed sleep, such as sleep medications, or conducting qualitative interviews with homecare clinicians to understand why disturbed sleep is not documented more often.

We identified a diverse range of symptom co-occurrence combinations, including commonly occurring symptom dyads (e.g., fatigue + pain) and triads (e.g., anxiety + fatigue + pain). This finding suggests an opportunity to study disease-specific or multiple chronic condition symptom clusters in the homecare population using nursing documentation.

In terms of ED visits and hospitalizations, we found that homecare encounters with more documented symptom categories had higher rates of ED visits or hospitalizations. These results are consistent with the National Health and Aging Trends Study, which showed that the incidence of several negative outcomes (including recurrent falls, hospitalization, and disability) increased with greater symptom count (Patel et al., 2019). For example, older adults reporting three symptoms had twice the odds of hospitalization compared with older adults with no symptoms. In our study, homecare encounters with three symptoms also had double the rate of ED visits or hospitalizations of encounters with no symptoms (20% vs. 10%). These findings are perhaps not unexpected, as nurses may document more symptom information for sicker patients, who are more likely to eventually visit the ED or be hospitalized. A detailed examination of this trend, focusing on individual symptoms or on symptom clusters within specific conditions, should be conducted to improve prediction of ED visits and hospitalizations and to facilitate understanding of the biological underpinnings of symptom manifestation.
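The comparison above juxtaposes an odds ratio (Patel et al., 2019) with a ratio of rates (our 20% versus 10%). The two measures are not interchangeable: the short sketch below, using only the two unadjusted rates quoted in the text, shows that a doubling of the rate corresponds to an odds ratio of 2.25. The helper names are illustrative only.

```python
def rate_ratio(p_exposed: float, p_reference: float) -> float:
    """Ratio of two proportions (risk or rate ratio)."""
    return p_exposed / p_reference


def odds_ratio(p_exposed: float, p_reference: float) -> float:
    """Ratio of the odds p / (1 - p) in the two groups."""
    def odds(p: float) -> float:
        return p / (1.0 - p)

    return odds(p_exposed) / odds(p_reference)


# Unadjusted ED visit or hospitalization rates quoted in the text.
three_symptoms, no_symptoms = 0.20, 0.10
print(f"rate ratio = {rate_ratio(three_symptoms, no_symptoms):.2f}")
print(f"odds ratio = {odds_ratio(three_symptoms, no_symptoms):.2f}")
```

Because both outcomes are fairly uncommon, the two measures stay close (2.00 versus 2.25), so the comparison with the national survey remains broadly reasonable even though it mixes effect measures.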

In adjusted analyses using stepwise logistic regression, the presence of five of the seven NLP-extracted symptom categories was significantly associated with higher odds of an ED visit or hospitalization. For example, patients with documentation of poor well-being or of fatigue had 40% higher odds of these negative outcomes than patients without these symptoms.
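"40% higher odds" corresponds to an odds ratio of 1.4, or a logistic regression coefficient of ln(1.4) ≈ 0.336. To give a rough sense of scale, the sketch below applies that odds ratio to an assumed baseline risk of 10% (the unadjusted rate for encounters without documented symptoms). Combining an adjusted odds ratio with an unadjusted baseline is a simplification for illustration, not a model-based prediction, and the function names are hypothetical.

```python
import math


def pct_change_in_odds(odds_ratio: float) -> float:
    """Percentage change in odds implied by an odds ratio (e.g., 1.4 -> +40%)."""
    return (odds_ratio - 1.0) * 100.0


def apply_odds_ratio(baseline_prob: float, odds_ratio: float) -> float:
    """Probability obtained by multiplying the baseline odds by an odds ratio."""
    baseline_odds = baseline_prob / (1.0 - baseline_prob)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1.0 + new_odds)


or_fatigue = 1.4               # "40% higher odds", as stated in the text
beta = math.log(or_fatigue)    # equivalent logistic regression coefficient
print(f"beta = {beta:.3f}, change in odds = {pct_change_in_odds(or_fatigue):+.0f}%")
# Assumed baseline risk of 10% (illustrative assumption, not a model estimate).
print(f"risk: 10.0% -> {apply_odds_ratio(0.10, or_fatigue):.1%}")
```

Under that assumption the risk rises from 10% to about 13.5%, a smaller relative increase than the 40% change in odds.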

This study had several limitations. In addition to those discussed above (i.e., the algorithm to identify disturbed sleep may be insufficient, and nurse documentation practices may vary with patient acuity), we chose to prioritize symptoms with NINR common data element questionnaires. Including additional symptoms (e.g., shortness of breath, chest pain) or risk factors should be considered and may improve prediction of ED visits or hospitalizations. Another limitation is that study data were collected in 2014 and may not reflect more recent symptom documentation or management trends. A final limitation relates to the longitudinal nature of the data. In the current study, we used retrospective data to identify patients at risk for hospitalization and ED visits. If such NLP algorithms are applied to real-time patient encounter data, however, a cumulative count of symptoms across multiple homecare visits would not yet be available. In addition, patient symptom counts may fluctuate from visit to visit, representing a moving target of decreased or increased risk for negative outcomes. Thus, future work is needed to explore how to apply such NLP algorithms prospectively, using approaches such as time-series or survival analyses to examine symptom trajectories and enable early identification of patients at high risk for decline.
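The difference between the retrospective, episode-level symptom count and what a real-time system would observe can be illustrated with a small sketch. It assumes each visit's notes have already been reduced to a set of symptom categories; the episode below and the function name are hypothetical.

```python
from collections.abc import Iterable


def per_visit_and_cumulative_counts(visits: Iterable[set[str]]) -> list[tuple[int, int]]:
    """For each visit, return (number of categories documented at this visit,
    number of distinct categories documented so far in the episode)."""
    seen: set[str] = set()
    result: list[tuple[int, int]] = []
    for categories in visits:
        seen |= categories                      # running union across visits
        result.append((len(categories), len(seen)))
    return result


# Hypothetical homecare episode: symptom categories flagged in each visit's notes.
episode = [
    {"pain"},
    {"pain", "fatigue"},
    set(),
    {"anxiety"},
]
for visit_no, (now, so_far) in enumerate(per_visit_and_cumulative_counts(episode), start=1):
    print(f"visit {visit_no}: {now} at this visit, {so_far} cumulative")
```

The per-visit count rises and falls (1, 2, 0, 1), while the cumulative count (1, 2, 2, 3) only grows and reaches its final value at the last visit, which is why an episode-level total is not available to a prospective system.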

Overall, our results support the hypothesis that nursing documentation of patients’ symptoms is associated with negative outcomes, and they have implications for homecare practice. We envision an automated NLP system, integrated into the homecare electronic health record, that scans nursing notes and identifies documentation of symptoms in real time. Once risky symptoms or symptom clusters are identified, the system could automatically alert the nurse or care manager to the concerning trend and recommend tailored nursing interventions and self- or caregiver-management strategies (e.g., resting throughout the day or taking naps to mitigate fatigue; taking scheduled and as-needed medications to manage pain) to be delivered at the point of care to prevent ED visits and hospitalization (Hickey et al., 2019). The NLP system could also be programmed to flag patients whose symptoms resemble those of patients who are more likely to visit the ED or be hospitalized. Such early and personalized alerts could inform timely interventions to avoid negative outcomes in the homecare setting.
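A toy version of such a pipeline (scanning a note, mapping terms to symptom categories, and raising an alert when several categories are present) is sketched below. It is not the NLP algorithm used in this study: the vocabularies, the simple negation rule, the function names, and the alert threshold of three categories are all hypothetical placeholders chosen for illustration.

```python
import re

# Hypothetical, deliberately tiny vocabularies; a real system would need much larger,
# validated term lists for each symptom category.
VOCAB = {
    "pain": ["pain", "aching", "sore"],
    "fatigue": ["fatigue", "tired", "exhausted"],
    "anxiety": ["anxious", "anxiety", "worried"],
    "sleep disturbance": ["insomnia", "unable to sleep", "poor sleep"],
}
# Crude negation rule (assumption): a cue word followed only by letters/spaces up to
# the term; punctuation such as "." ends the negation scope.
NEGATION = re.compile(r"\b(no|denies|not|without)\b[\w\s]{0,20}$", re.IGNORECASE)


def find_symptoms(note: str) -> set[str]:
    """Return the symptom categories mentioned (and not negated) in a note."""
    found: set[str] = set()
    for category, terms in VOCAB.items():
        for term in terms:
            for match in re.finditer(rf"\b{re.escape(term)}\b", note, re.IGNORECASE):
                preceding = note[max(0, match.start() - 25):match.start()]
                if not NEGATION.search(preceding):
                    found.add(category)
    return found


def should_alert(note: str, threshold: int = 3) -> bool:
    """Flag a note that documents at least `threshold` symptom categories."""
    return len(find_symptoms(note)) >= threshold


note = "Pt c/o pain in left knee, feels tired and anxious about surgery. Denies insomnia."
print(sorted(find_symptoms(note)))  # ['anxiety', 'fatigue', 'pain']
print(should_alert(note))           # True
```

A production system would need validated vocabularies, more robust negation and context handling, and alert thresholds derived from outcome data rather than the fixed cut-off assumed here.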

Conclusions

This study was the first to develop, test, and implement an NLP system to identify NINR common data element symptoms documented by homecare nurses for a large cohort of patients. Our results serve as an initial step in a program of research with the goal of creating a data science infrastructure for precision health. Study findings indicate that NLP system accuracy in symptom identification was relatively high compared with expert review. Our findings also show that patients with more documented symptom categories have higher risk of ED visits and hospitalizations. Further research is needed to explore additional symptoms and implement NLP systems in the homecare setting to enable early identification of concerning patient trends.

Figure 4 – Hospitalization or ED visit rate by number of symptom categories documented during a homecare episode.

Funding Sources:

This research was funded as a pilot study (Maxim Topaz, PI) of the Precision in Symptom Self-Management Center (P30 NR016587), in conjunction with R00NR017651, "Advancing chronic condition symptom cluster science through use of electronic health records and data science techniques."

REFERENCES

- Acker WW, Plasek JM, Blumenthal KG, Lai KH, Topaz M, Seger DL, …, Zhou L (2017). Prevalence of food allergies and intolerances documented in electronic health records. Journal of Allergy and Clinical Immunology, 140(6). 10.1016/j.jaci.2017.04.006.

- Althoff T, Clark K, & Leskovec J (2016). Large-scale analysis of counseling conversations: An application of natural language processing to mental health. Transactions of the Association for Computational Linguistics, 4, 463–476. http://www.ncbi.nlm.nih.gov/pubmed/28344978.

- Al Assad W, Topaz M, Tu J, & Zhou L (2017). The application of machine learning to evaluate the adequacy of information in radiology orders. 2017 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) (pp. 305–310). 10.1109/BIBM.2017.8217668.

- Beger A (2016). Precision-recall curves. SSRN Electronic Journal. 10.2139/ssrn.2765419.

- Bodenreider O (2004). The Unified Medical Language System (UMLS): Integrating biomedical terminology. Nucleic Acids Research, 32(Database issue), D267–D270. 10.1093/nar/gkh061.

- Caffrey C, Sengupta M, Moss A, Harris-Kojetin L, & Valverde R (2011). Home health care and discharged hospice care patients: United States, 2000 and 2007.

- Cai T, Giannopoulos AA, Yu S, Kelil T, Ripley B, Kumamaru KK, …, Mitsouras D (2016). Natural language processing technologies in radiology research and clinical applications. RadioGraphics, 36(1), 176–191. 10.1148/rg.2016150080.

- Centers for Disease Control and Prevention. (2019). Depression is Not a Normal Part of Growing Older. Retrieved from https://www.cdc.gov/aging/mentalhealth/depression.htm

- Centers for Medicare and Medicaid Services. (2019). Home Health Compare. Retrieved from https://www.medicare.gov/homehealthcompare/search.html

- Collins SA, & Vawdrey DK (2012). Reading between the lines of flow sheet data: Nurses’ optional documentation associated with cardiac arrest outcomes. Applied Nursing Research, 25(4), 251–257. 10.1016/j.apnr.2011.06.002.

- Corwin EJ, Berg JA, Armstrong TS, DeVito Dabbs A, Lee KA, Meek P, & Redeker N (2014). Envisioning the future in symptom science. Nursing Outlook, 62(5), 346–351. 10.1016/j.outlook.2014.06.006.

- de Rekeneire N, Leo-Summers L, Han L, & Gill TM (2014). Epidemiology of restricting fatigue in older adults: The Precipitating Events Project. Journal of the American Geriatrics Society, 62(3), 476–481. 10.1111/jgs.12685.

- Demner-Fushman D, Chapman WW, & McDonald CJ (2009). What can natural language processing do for clinical decision support? Journal of Biomedical Informatics, 42(5), 760–772. 10.1016/j.jbi.2009.08.007.

- Diefenbach GJ, Tolin DF, & Gilliam CM (2012). Impairments in life quality among clients in geriatric home care: Associations with depressive and anxiety symptoms. International Journal of Geriatric Psychiatry, 27(8), 828–835. 10.1002/gps.2791.

- Dreisbach C, Koleck TA, Bourne PE, & Bakken S (2019). A systematic review of natural language processing and text mining of symptoms from electronic patient-authored text data. International Journal of Medical Informatics, 125, 37–46. 10.1016/j.ijmedinf.2019.02.008.

- Goss F, Plasek J, Lau J, Seger D, Chang F, & Zhou L (2014). An evaluation of a natural language processing tool for identifying and encoding allergy information in emergency department clinical notes. AMIA Annual Symposium Proceedings, 1, 580–588.

- Götze H, Brähler E, Gansera L, Polze N, & Köhler N (2014). Psychological distress and quality of life of palliative cancer patients and their caring relatives during home care. Supportive Care in Cancer, 22(10), 2775–2782. 10.1007/s00520-014-2257-5.

- Haddad LM, & Toney-Butler TJ (2019). Nursing shortage. In StatPearls. StatPearls Publishing. http://www.ncbi.nlm.nih.gov/pubmed/29630227.

- Hassanpour S, & Langlotz CP (2016). Information extraction from multi-institutional radiology reports. Artificial Intelligence in Medicine, 66, 29–39. 10.1016/j.artmed.2015.09.007.

- Hickey KT, Bakken S, Byrne MW, Bailey DE, Demiris G, Docherty SL, …, Grady PA (2019). Precision health: Advancing symptom and self-management science. Nursing Outlook. 10.1016/j.outlook.2019.01.003.

- Horng S, Sontag DA, Halpern Y, Jernite Y, Shapiro NI, & Nathanson LA (2017). Creating an automated trigger for sepsis clinical decision support at emergency department triage using machine learning. PLoS One, 12(4), e0174708. 10.1371/journal.pone.0174708.

- Hosmer DW, Lemeshow S, & Sturdivant RX (2013). Applied logistic regression (3rd ed.). Wiley. http://books.google.com/books?id=wGO5h0Upk9gC.

- Howell D, Marshall D, Brazil K, Taniguchi A, Howard M, Foster G, & Thabane L (2011). A shared care model pilot for palliative home care in a rural area: Impact on symptoms, distress, and place of death. Journal of Pain and Symptom Management, 42(1), 60–75. 10.1016/j.jpainsymman.2010.09.022.

- Hunt LJ, Covinsky KE, Yaffe K, Stephens CE, Miao Y, Boscardin WJ, & Smith AK (2015). Pain in community-dwelling older adults with dementia: Results from the National Health and Aging Trends Study. Journal of the American Geriatrics Society, 63(8), 1503–1511. 10.1111/jgs.13536.

- International Council of Nurses. (2018). About ICNP. Retrieved from https://www.icn.ch/what-we-do/projects/ehealth-icnp/abouticnp

- Koleck TA, Dreisbach C, Bourne PE, & Bakken S (2019). Natural language processing of symptoms documented in free-text narratives of electronic health records: A systematic review. Journal of the American Medical Informatics Association, 26(4), 364–379. 10.1093/jamia/ocy173.

- Koleck TA, Tatonetti NP, Bakken S, Mitha S, Henderson MM, George M, Miaskowski C, Smaldone A, & Topaz M (2020). Identifying symptom information in clinical notes using natural language processing. Nursing Research. 10.1097/NNR.0000000000000488.

- Kreimeyer K, Foster M, Pandey A, Arya N, Halford G, Jones SF, …, Botsis T (2017). Natural language processing systems for capturing and standardizing unstructured clinical information: A systematic review. Journal of Biomedical Informatics, 73, 14–29. 10.1016/j.jbi.2017.07.012.

- Le DV, Montgomery J, Kirkby KC, & Scanlan J (2018). Risk prediction using natural language processing of electronic mental health records in an inpatient forensic psychiatry setting. Journal of Biomedical Informatics, 86, 49–58. 10.1016/j.jbi.2018.08.007.

- Ma C, Shang J, Miner S, Lennox L, & Squires A (2018). The prevalence, reasons, and risk factors for hospital readmissions among home health care patients: A systematic review. Home Health Care Management & Practice, 30(2), 83–92. 10.1177/1084822317741622.

- Markle-Reid M, McAiney C, Forbes D, Thabane L, Gibson M, Browne G, …, Busing B (2014). An interprofessional nurse-led mental health promotion intervention for older home care clients with depressive symptoms. BMC Geriatrics, 14(1), 62. 10.1186/1471-2318-14-62.

- McHugh ML (2012). Interrater reliability: The kappa statistic. Biochemia Medica, 22(3), 276–282. http://www.ncbi.nlm.nih.gov/pubmed/23092060.

- Medicare Payment Advisory Commission. (2019). March 2019 Report to the Congress: Medicare Payment Policy. http://www.medpac.gov/-documents-/reports.

- Miner B, & Kryger MH (2017). Sleep in the aging population. Sleep Medicine Clinics, 12(1), 31–38. 10.1016/j.jsmc.2016.10.008.

- National Institute of Nursing Research. (2017). Symptom Science. https://www.ninr.nih.gov/newsandinformation/iq/symptom-science-workshop.

- Navathe AS, Zhong F, Lei VJ, Chang FY, Sordo M, Topaz M, …, Zhou L (2018). Hospital readmission and social risk factors identified from physician notes. Health Services Research, 53(2), 1110–1136. 10.1111/1475-6773.12670.

- O’Connor M, & Davitt JK (2012). The Outcome and Assessment Information Set (OASIS): A review of validity and reliability. Home Health Care Services Quarterly, 31(4), 267–301. 10.1080/01621424.2012.703908.

- Patel KV, Guralnik JM, Phelan EA, Gell NM, …, Wallace RB (2019). Symptom burden among community-dwelling older adults in the United States. Journal of the American Geriatrics Society, 67(2), 223–231. 10.1111/jgs.15673.

- Plasek JM, Goss FR, Lai KH, Lau JJ, Seger DL, Blumenthal KG, …, Zhou L (2016). Food entries in a large allergy data repository. Journal of the American Medical Informatics Association, 23(e1). 10.1093/jamia/ocv128.

- Pons E, Braun LMM, Hunink MGM, & Kors JA (2016). Natural language processing in radiology: A systematic review. Radiology, 279(2), 329–343. 10.1148/radiol.16142770.

- Redeker NS, Anderson R, Bakken S, Corwin E, Docherty S, …, Dorsey SG (2015). Advancing symptom science through use of common data elements. Journal of Nursing Scholarship, 47(5), 379–388. 10.1111/jnu.12155.

- SNOMED. (2016). SNOMED CT. U.S. National Library of Medicine.

- The Medicare Payment Advisory Commission. (2018). Home health care services. Retrieved from http://www.medpac.gov/docs/default-source/reports/mar18_medpac_ch9_sec.pdf?sfvrsn=0

- The National Association for Home Care and Hospice. (2010). Basic Statistics About Home Care Medicare-Certified Agencies.

- Topaz M, Lai K, Dowding D, Lei VJ, Zisberg A, Bowles KH, & Zhou L (2016). Automated identification of wound information in clinical notes of patients with heart diseases: Developing and validating a natural language processing application. International Journal of Nursing Studies, 64, 25–31. 10.1016/j.ijnurstu.2016.09.013.

- Topaz M, Radhakrishnan K, Blackley S, Lei V, Lai K, & Zhou L (2017). Studying associations between heart failure self-management and rehospitalizations using natural language processing. Western Journal of Nursing Research, 39(1), 147–165. 10.1177/0193945916668493.

- Topaz M, Murga L, Gaddis KM, McDonald MV, Bar-Bachar O, Goldberg Y, & Bowles KH (2019). Mining fall-related information in clinical notes: Comparison of rule-based and novel word embedding-based machine learning approaches. Journal of Biomedical Informatics, 90, 103103. 10.1016/j.jbi.2019.103103.

- Topaz M, Murga L, Bar-Bachar O, Cato K, & Collins S (2019). Extracting alcohol and substance abuse status from clinical notes: The added value of nursing data. Studies in Health Technology and Informatics, 264, 1056–1060. 10.3233/SHTI190386.

- Topaz M, Murga L, Bar-Bachar O, McDonald M, & Bowles K (2019). NimbleMiner: An open-source nursing-sensitive natural language processing system based on word embedding. CIN: Computers, Informatics, Nursing, 37(11), 583–590. 10.1097/CIN.0000000000000557.

- Waudby-Smith IER, Tran N, Dubin JA, & Lee J (2018). Sentiment in nursing notes as an indicator of out-of-hospital mortality in intensive care patients. PLoS One, 13(6), e0198687. 10.1371/journal.pone.0198687.

- WHO. (2014). International Classification of Diseases (ICD). World Health Organization. http://www.who.int/classifications/icd/en/.

- Yim W, Yetisgen M, Harris WP, & Kwan SW (2016). Natural language processing in oncology. JAMA Oncology, 2(6), 797. 10.1001/jamaoncol.2016.0213.

- Zengarini E, Ruggiero C, Pérez-Zepeda MU, Hoogendijk EO, Vellas B, Mecocci P, & Cesari M (2015). Fatigue: Relevance and implications in the aging population. Experimental Gerontology, 70, 78–83. 10.1016/j.exger.2015.07.011.

- Zhou L, Baughman AW, Lei VJ, Lai KH, Navathe AS, …, Chang F (2015a). Identifying patients with depression using free-text clinical documents. Studies in Health Technology and Informatics, 216. 10.3233/978-1-61499-564-7-629.

- Zhou L, Baughman AW, Lei VJ, Lai KH, Navathe AS, …, Chang F (2015b). Identifying patients with depression using free-text clinical documents. Studies in Health Technology and Informatics, 216, 629–633. http://www.ncbi.nlm.nih.gov/pubmed/26262127.

- Zhou L, Lu Y, Vitale CJ, Mar PL, Chang F, Dhopeshwarkar N, & Rocha RA (2014). Representation of information about family relatives as structured data in electronic health records. Applied Clinical Informatics, 5(2), 349–367. 10.4338/ACI-2013-10-RA-0080.