Abstract

Purpose

To develop a deep learning model to detect incorrect organ segmentations at CT.

Materials and Methods

In this retrospective study, a deep learning method was developed using variational autoencoders (VAEs) to identify problematic organ segmentations. First, three different three-dimensional (3D) U-Nets were trained on segmented CT images of the liver (n = 141), spleen (n = 51), and kidney (n = 66). A total of 12 495 CT images were then segmented by the 3D U-Nets, and the output segmentations were used to train three different VAEs for the detection of problematic segmentations. Automatic reconstruction errors (Dice scores) were then calculated. A random sample of 2510 segmented images each for the liver, spleen, and kidney models was assessed manually by a human reader to identify problematic and correct segmentations. The ability of the VAEs to identify unusual or problematic segmentations was evaluated using receiver operating characteristic curve analysis and compared with traditional non–deep learning methods for outlier detection. Using the VAE outputs, passive and active learning approaches were applied to the original 3D U-Nets to determine whether additional training could decrease segmentation error rates (15 CT scans were added to the original training data according to each approach).

Results

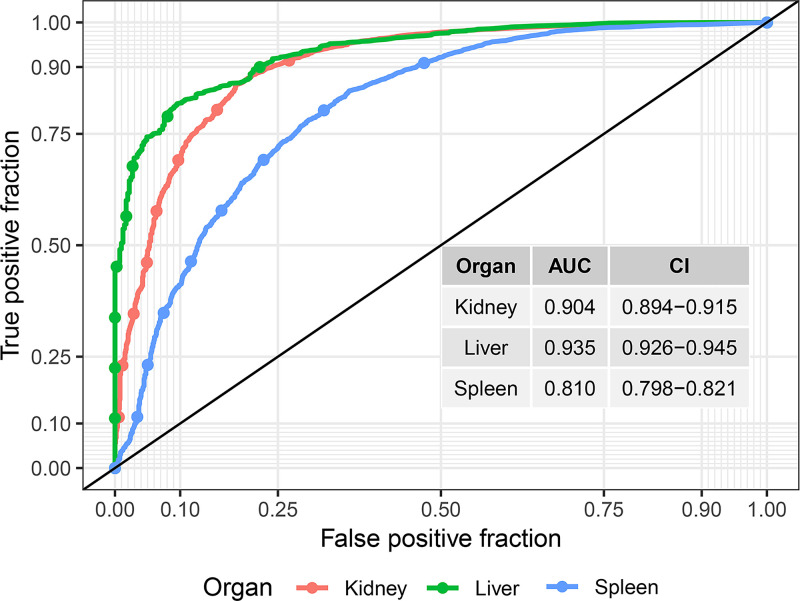

The mean area under the receiver operating characteristic curve (AUC) for detecting problematic segmentations using the VAE method was 0.90 (95% CI: 0.89, 0.92) for kidney, 0.94 (95% CI: 0.93, 0.95) for liver, and 0.81 (95% CI: 0.80, 0.82) for spleen. The VAE performance was higher compared with traditional methods in most cases. For example, for liver segmentation, the highest performing non–deep learning method for outlier detection had an AUC of 0.83 (95% CI: 0.77, 0.90) compared with 0.94 (95% CI: 0.93, 0.95) using the VAE method (P < .05). Using the information on problematic segmentations for active learning approaches decreased 3D U-Net segmentation error rates (original error rate, 7.1%; passive learning, 6.0%; active learning, 5.7%).

Conclusion

A method was developed to screen for unusual and problematic automatic organ segmentations using a 3D VAE.

Keywords: Convolutional Neural Network (CNN), Deep Learning Algorithms, Machine Learning Algorithms, Segmentation, CT

© RSNA, 2021

Summary

A model was developed to detect problematic automatic segmentations of the liver, spleen, and kidney in an unsupervised manner.

Key Points

■ The area under the receiver operating characteristic curve for detecting problematic segmentations using the variational autoencoder method was 0.90 for kidney, 0.94 for liver, and 0.81 for spleen.

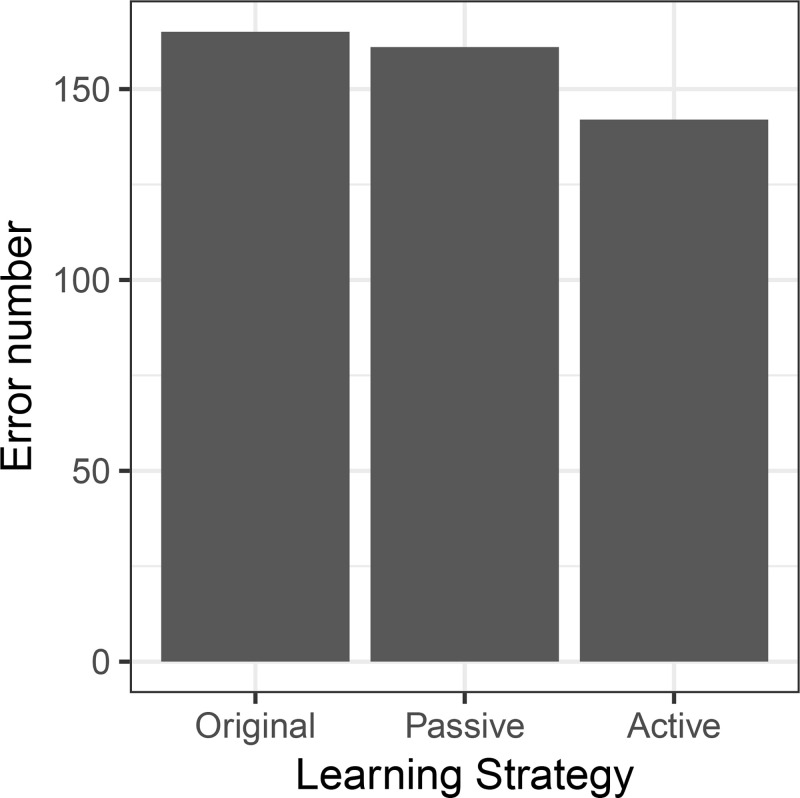

■ Comparison of a passive learning approach with an active learning approach resulted in a numerically lower rate of resulting problematic segmentations in an unseen sample of 2480 CT scans (6.0% and 5.7%, respectively).

Introduction

Organ segmentation on CT data using three-dimensional (3D) convolutional neural networks is highly effective, but generating training labels is quite time-consuming. An insufficient number of labels impairs the performance of the trained algorithm, especially in regard to generalization to clinical routine.

Commonly available small-to-moderate–size datasets (50–750 segmentations [1]) are sufficient to achieve good “in-dataset” performance. However, owing to the nature of human anatomy and pathologic conditions, many variants and pathologic conditions are not captured in such small training data, which may explain in part the observed gap between expected performance and performance using real-world data. Even if trained on a larger number of datasets, differences will exist between the training distribution and the real-world distribution, which may include technical aspects, patient characteristics, or rarer pathologic conditions. Images that differ from the training dataset distribution can cause surprisingly severe failures of the segmentation algorithm. It would be desirable to be able to screen automatic segmentations for problematic cases to perform quality control or to use these selected cases for active learning. Our intent was to use variational autoencoders (VAEs) to enable such automatic screening—specifically, to detect unusual (problematic or erroneous) segmentations.

Classic autoencoders encode input data and attempt to reconstruct those data using a decoder. Between encoder and decoder, an information bottleneck usually occurs. In VAEs—a more recent development—there is a probabilistic component at the bottleneck, where the bottleneck describes random distributions from which a sample is obtained and then given to the decoder. In addition, in VAEs, a loss is applied to the bottleneck variable that penalizes deviations from a unit normal distribution; this ensures that only valuable information (ie, valuable for reconstruction) is encoded. These additions have quite beneficial effects on the way in which autoencoders function (2). VAEs have been used to detect abnormalities in informatics and in medical images (3,4). In work related to medical images, the image data were used as input, and differences between reconstructed images and the input were considered to represent anomalies. In the current work, we focus on segmentation abnormalities and use the segmentation as input to the autoencoder.

In this exploratory study, we hypothesize that failed or problematic segmentations can be detected automatically using VAE without additional supervision and that this method will perform better than traditional non–deep learning techniques. Specifically, we hypothesize that the reconstruction error of a VAE will be high in problematic cases since atypical CT segmentations deviate from the learned distribution and cannot be reconstructed accurately. Of note, input for previously used autoencoder-based methods in medical imaging consists of the actual image data, whereas in our approach, the autoencoder processes the segmentation data.

Materials and Methods

Study Overview

Figure 1 shows an overview of the experimental setup. To begin, a segmentation 3D U-Net was trained on CT data with voxel-wise organ labels. Then, unlabeled CT data were automatically segmented by the trained 3D U-Net. These automatically labeled data were used to train a 3D VAE in an unsupervised fashion. The trained VAE was then used to calculate reconstruction errors of the segmentations based on the Dice score between VAE input and output. A human reviewer (V.S.) evaluated the automatically generated labels and classified the results as either problematic or acceptable. The performance of reconstruction error to detect problematic segmentations was evaluated using receiver operating characteristic (ROC) curves and area under the ROC curve (AUC) metrics. Table 1 shows an overview of patient datasets used in the development of the various models.

Figure 1:

Overview of the experimental setup. (A) A three-dimensional (3D) U-Net was trained using labeled data. (B) A large unlabeled dataset was automatically segmented by the U-Net. (C) The automatically generated labels were used for unsupervised training of the variational autoencoder (VAE), which in turn was used to generate reconstruction errors for the automatic segmentations. (D) A human observer evaluated the automatic segmentations and labeled them as problematic or acceptable (E). The hypothesis was that the reconstruction errors can be used to detect problematic segmentations. (F) This was tested using human evaluations and area under the receiver operating characteristic curve (AUC) values.

Table 1:

Patient Datasets for Training and Testing 3D U-Net and VAE Models

Patient Datasets

This study was performed retrospectively on available data. This investigation was Health Insurance Portability and Accountability Act (HIPAA) compliant and approved by the institutional review board at the National Institutes of Health (NIH) and the Office of Human Subjects Research Protection at the NIH. The requirement for signed informed consent was waived.

Training data for segmentation.— For liver and spleen segmentation, data from the Medical Segmentation Decathlon were used for training (liver, n = 131; spleen, n = 41) (1). Kidney segmentation data were from an internal dataset (n = 56). In addition to each dataset, 10 segmentations from the CT colonography data (see below) for each organ were added. These data were applied to train three segmentation models (in total, n = 141 for the liver, n = 51 for the spleen, and n = 66 for the kidney).

Training set for anomaly detection.— Consecutive asymptomatic outpatients aged 18 years and older who were generally healthy and undergoing low-dose unenhanced abdominal CT for colorectal cancer screening as part of routine health maintenance at the University of Wisconsin Hospital and Clinics (Madison, Wis) were included. Low-dose, noncontrast multidetector CT acquisition was performed at 120 kVp using scanners from a single vendor (GE Healthcare), with modulated tube current to achieve a vendor-specific noise index of 50, typically resulting in an effective dose of 2 mSv to 3 mSv (n = 12 495; HIPAA-compliant anonymization). We chose this dataset for its high number of included studies to ensure that sample size did not limit our fully automatic approach. All datasets were included in this study. This dataset and patient cohort have been described in prior epidemiologic studies (5); the current study focuses on technical aspects and does not overlap with that work. CT images were segmented (liver, spleen, and kidney) using a 3D U-Net, and all segmentation results were used for training a VAE. There were no missing data.

Networks

U-Net development.— A modified 3D U-Net (6) with residual connections was trained on the labeled kidney, spleen, and liver data (Dice score, 0.93 in the in-distribution test data) (7,8). Details on the training data used for the models are provided in Table 1. Domain adaptation to noncontrast CT was performed using generative adversarial networks as described previously (9). Briefly, CycleGAN was trained to transform contrast-enhanced CT images (ie, images acquired with intravenous iodine contrast agent) into noncontrast CT images (10). This was done to improve segmentation performance of the U-Net at noncontrast CT, because the training data for the U-Net were contrast-enhanced CT images. Automatic organ segmentation was performed on 12 495 abdominal CT scans.

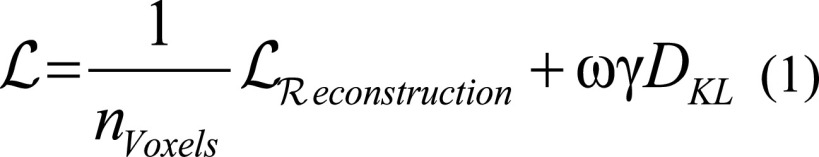

VAE development.— A 3D autoencoder with a latent vector of size 10 was used (Fig 2), based on the two-dimensional joint-VAE implementation (11). Input resolution was 64 × 64 × 64; inputs were rescaled to this resolution, and no cropping or other preprocessing was performed. We modified the original loss function by including a division by the number of voxels, which normalizes the reconstruction error to the input volume size; with this modification, the size of the network can be changed while preserving the VAE characteristics (Equations [1] and [2]). For reconstruction loss, we used cross-entropy loss. The VAE was trained for 100 epochs. To stabilize training, a linearly increasing weighting factor (ω, 0–1) of the Kullback-Leibler loss term was used for the first 25 000 iterations. Gamma (γ) was set to 0.001, and batch size was 4. No systematic hyperparameter tuning was performed, and identical hyperparameters were used for the three organs. A single model was trained for each organ. Table 1 provides information on the data used for model development.
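As a rough illustration of the loss described above, the following sketch combines a voxel-normalized cross-entropy reconstruction term with a Kullback-Leibler term whose weight ω ramps linearly from 0 to 1 over the first 25 000 iterations. The exact way γ and ω enter Equations (1) and (2) is not reproduced here; the combined form `reconstruction / n_voxels + ω · γ · KL` and all function names are assumptions made for illustration only.

```python
import numpy as np

WARMUP_ITERS = 25000  # from the text: KL weight ramps over the first 25 000 iterations
GAMMA = 0.001         # from the text; how it enters the loss is an assumption here


def kl_weight(iteration, warmup_iters=WARMUP_ITERS):
    """Linearly increasing weight (omega) from 0 to 1, constant at 1 afterwards."""
    return min(1.0, iteration / warmup_iters)


def kl_to_unit_normal(mu, sigma):
    """Closed-form KL divergence of N(mu, sigma^2) from N(0, 1), summed over latent dims."""
    mu, sigma = np.asarray(mu, dtype=float), np.asarray(sigma, dtype=float)
    return 0.5 * np.sum(mu ** 2 + sigma ** 2 - 2.0 * np.log(sigma) - 1.0)


def voxel_normalized_bce(x, y_prob, eps=1e-7):
    """Binary cross-entropy summed over voxels, then divided by the number of voxels."""
    y_prob = np.clip(y_prob, eps, 1.0 - eps)
    bce = -(x * np.log(y_prob) + (1.0 - x) * np.log(1.0 - y_prob))
    return bce.sum() / x.size


def vae_loss(x, y_prob, mu, sigma, iteration):
    """Hypothetical total loss: normalized reconstruction + omega * gamma * KL."""
    kl_term = kl_weight(iteration) * GAMMA * kl_to_unit_normal(mu, sigma)
    return voxel_normalized_bce(x, y_prob) + kl_term
```

Because the reconstruction term is divided by the voxel count, its magnitude stays comparable if the input grid is changed (for example, from 64³ to 32³), which is the property the normalization is meant to provide.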

Figure 2:

An overview of the three-dimensional (3D) variational autoencoder is shown. Input and output dimensions are 64 × 64 × 64. Segmentations were downsampled to this size. Of note, downsampling may diminish the usefulness in applications in which fine details are segmented, but this may be overcome with a patch-based approach. Given that the focus in this work was on organ segmentations, which usually have a simple shape, this was not performed. The latent vector z has a length of 10. Each encoding step consists of a 3D convolution with kernel size 4 and stride 2 followed by a rectified linear unit (ReLU). At a size of 4 × 4 × 4, a fully connected (FC) layer with size 256 follows. Then two fully connected layers generate the mean and standard deviation of the hidden vector with a length of 10. Simplified, each pair of μ and σ describes a Gaussian distribution from which the vector z is sampled. From z, the decoder decodes the 3D matrix analogous to the encoder using transposed 3D convolutions with the same kernel size and stride followed by a ReLU activation. There is no weight sharing between the encoder and decoder.
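The spatial sizes in the caption can be checked with the standard convolution output-size formula. The short sketch below assumes a padding of 1 voxel per side, which is not stated in the caption but is the value that makes a kernel of 4 with stride 2 halve each edge exactly; the helper names are hypothetical.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Output edge length of a convolution along one axis (standard formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def encoder_sizes(input_size=64, bottleneck_size=4):
    """Edge lengths after each stride-2 encoding step until the bottleneck is reached."""
    sizes = [input_size]
    while sizes[-1] > bottleneck_size:
        sizes.append(conv_out(sizes[-1]))
    return sizes


print(encoder_sizes())  # [64, 32, 16, 8, 4]
```

Under this assumption, four encoding steps reduce the 64 × 64 × 64 input to the 4 × 4 × 4 grid that feeds the fully connected layer.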

|

|

Conventional Methods for Outlier Detection

For comparison, we performed traditional outlier detection of the organ segmentation using the following predefined features: volume of the segmentation, number of connected components in the segmentation, and average CT attenuation of the CT volume described by the segmentation (CT number). We attempted unsupervised outlier detection using various outlier detection methods, such as isolation forest (12), one-class support vector machine (13), “local outlier factor” (14), and elliptic envelope (15). Results were evaluated using ROC curves.
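A minimal sketch of such a feature-based comparison is shown below, assuming the scikit-learn implementations of the four detectors with near-default settings. The study's actual software and hyperparameters for this step are not stated, and the feature values here are synthetic stand-ins rather than study data.

```python
import numpy as np
from sklearn.covariance import EllipticEnvelope
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor
from sklearn.svm import OneClassSVM


def outlier_scores(features, seed=0):
    """Return {method name: anomaly score per row}; higher means more anomalous."""
    # Standardize so volume, component count, and attenuation share one scale.
    z = (features - features.mean(axis=0)) / features.std(axis=0)
    # scikit-learn's score_samples is "higher = more normal", hence the sign flips.
    scores = {
        "isolation_forest": -IsolationForest(random_state=seed).fit(z).score_samples(z),
        "one_class_svm": -OneClassSVM(gamma="scale").fit(z).score_samples(z),
        "elliptic_envelope": -EllipticEnvelope(random_state=seed).fit(z).score_samples(z),
    }
    lof = LocalOutlierFactor(n_neighbors=20).fit(z)
    scores["local_outlier_factor"] = -lof.negative_outlier_factor_
    return scores


# Synthetic stand-in data, NOT study data. Columns: volume (mL),
# number of connected components, mean attenuation (HU).
rng = np.random.default_rng(0)
typical = np.column_stack([
    rng.normal(1500, 150, 300),
    rng.integers(1, 4, 300),
    rng.normal(55, 5, 300),
])
atypical = np.column_stack([
    rng.normal(3000, 300, 10),
    rng.integers(6, 10, 10),
    rng.normal(10, 5, 10),
])
features = np.vstack([typical, atypical])
scores = outlier_scores(features)
for name, s in scores.items():
    print(name, "typical:", s[:300].mean().round(2), "atypical:", s[300:].mean().round(2))
```

Each method yields a continuous anomaly score per segmentation, which can be compared against the human labels with an ROC curve in the same way as the VAE reconstruction error.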

Evaluation

A random selection of 2510 segmentations was evaluated by a physician (V.S., > 5 years of imaging experience) for failure of the segmentation (part of the target organ not segmented or segmentations of tissue that is clearly not the specified organ [ie, problematic segmentation]).

For automatic evaluation, the original segmentations were run through the VAE, and the reconstruction error was calculated as Dice score according to Equation (3) between VAE input (X) and output (Y).

$$\mathrm{Dice}(X, Y) = \frac{2\,|X \cap Y|}{|X| + |Y|} \tag{3}$$
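The Dice-based reconstruction score can be sketched in a few lines of NumPy. The 0.5 threshold used to binarize the VAE output and the convention for two empty volumes are assumptions, and the toy volumes are constructed only to mimic a segmentation with a detached spurious region that the VAE fails to reproduce.

```python
import numpy as np


def dice_score(x, y, threshold=0.5):
    """Dice overlap between two volumes; values above `threshold` count as foreground."""
    x_bin = np.asarray(x) > threshold
    y_bin = np.asarray(y) > threshold
    denom = x_bin.sum() + y_bin.sum()
    if denom == 0:
        return 1.0  # both empty: treated as perfect agreement (assumed convention)
    return float(2.0 * np.logical_and(x_bin, y_bin).sum() / denom)


# Toy example: VAE input with an organ-like cube plus a detached spurious blob.
vae_input = np.zeros((64, 64, 64))
vae_input[10:30, 10:30, 10:30] = 1   # 8000 voxels, the "organ"
vae_input[45:55, 45:55, 45:55] = 1   # 1000 voxels, the erroneous region

# Hypothetical VAE output that reproduces only the organ.
vae_output = np.zeros((64, 64, 64))
vae_output[10:30, 10:30, 10:30] = 1

print(round(dice_score(vae_input, vae_output), 3))  # 0.941
```

A lower Dice score between input and output corresponds to a larger reconstruction error, so cases such as this one would rank as more suspicious than a segmentation the VAE reproduces faithfully.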

Active and Passive Learning Approach

We compared active and passive learning approaches on the spleen segmentation U-Net. Datasets used for these models are shown in Table 1. For active learning, we sampled CT scan segmentations with high VAE reconstruction errors as those cases likely were problematic for the U-Net to segment. We sampled 15 CT images using stratified sampling to ensure that there was some variety of reconstruction errors (R package splitstackshape, stratified by reconstruction error). For passive learning, we sampled 15 random CT images.
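The two selection strategies can be sketched as follows. The study used the R package splitstackshape; this Python analogue, the choice of five strata, and the restriction to the 10% of cases with the lowest Dice scores are all assumptions introduced for illustration.

```python
import numpy as np


def passive_sample(n_cases, k=15, seed=0):
    """Passive learning: k case indices drawn uniformly at random."""
    rng = np.random.default_rng(seed)
    return rng.choice(n_cases, size=k, replace=False)


def active_sample(dice, k=15, n_strata=5, candidate_fraction=0.1, seed=0):
    """Active learning: k cases with low Dice (high error), spread over error strata."""
    if k % n_strata != 0:
        raise ValueError("k must be divisible by n_strata")
    rng = np.random.default_rng(seed)
    dice = np.asarray(dice)
    n_candidates = max(k, int(len(dice) * candidate_fraction))
    candidates = np.argsort(dice)[:n_candidates]    # worst reconstructions first
    strata = np.array_split(candidates, n_strata)   # contiguous bands of error
    per_stratum = k // n_strata
    picks = [rng.choice(s, size=per_stratum, replace=False) for s in strata]
    return np.concatenate(picks)


# Synthetic reconstruction Dice scores, NOT study data.
demo_dice = np.random.default_rng(1).uniform(0.5, 0.9, 1000)
active_idx = active_sample(demo_dice)
passive_idx = passive_sample(len(demo_dice))
print("active  mean Dice:", demo_dice[active_idx].mean().round(3))
print("passive mean Dice:", demo_dice[passive_idx].mean().round(3))
```

Stratifying within the high-error candidates avoids selecting only the most extreme failures, which is one way to obtain the variety of reconstruction errors mentioned above.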

These selected CT images were manually segmented and combined with the preexisting training data, yielding two new training datasets. A U-Net was trained on each dataset separately. The trained U-Nets were again used to segment 2480 previously unseen datasets from the CT colonography cohort (excluding the images that were used for training). Visual assessment was performed of all segmentations by a human reviewer (V.S., physician with > 5 years of imaging experience) in a blinded fashion and in random order.

Statistical Analysis

We evaluated the performance of the VAE reconstruction error (Dice score) as a proxy to identify problematic segmentations, using human evaluation as the reference standard. AUCs and their CIs were calculated with the DeLong method using the pROC R package (https://cran.r-project.org/web/packages/pROC/pROC.pdf). For comparison of AUCs between methods, statistical significance was determined based on CIs: if the 95% CIs did not overlap, the difference was considered statistically significant at the level of P ≤ .05. In general, an AUC of 0.5 suggests no discrimination, 0.7–0.8 is considered acceptable, 0.8–0.9 is considered excellent, and greater than 0.9 is considered outstanding (16). For comparison of paired binary data, the McNemar χ² test was used.
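For orientation, the sketch below computes an AUC directly from its rank interpretation and applies the CI-overlap rule to intervals reported in this article. It does not implement the DeLong CI estimation performed by pROC, and the per-case Dice values and labels are made up for illustration.

```python
import numpy as np


def roc_auc(scores, labels):
    """AUC as the probability that a problematic case scores higher than an acceptable one."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    diff = pos[:, None] - neg[None, :]   # all problematic-vs-acceptable pairs
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)


def cis_overlap(ci_a, ci_b):
    """True if two (lower, upper) confidence intervals overlap."""
    return ci_a[0] <= ci_b[1] and ci_b[0] <= ci_a[1]


# Toy data: reconstruction Dice per case and human label (1 = problematic).
dice = np.array([0.55, 0.62, 0.80, 0.78, 0.84, 0.86, 0.88])
problematic = np.array([1, 1, 1, 0, 0, 0, 0])
error = 1.0 - dice                       # low Dice means high reconstruction error
print(round(roc_auc(error, problematic), 3))  # 0.917

# CI-overlap rule applied to intervals reported in this article.
liver_significant = not cis_overlap((0.93, 0.95), (0.77, 0.90))    # VAE vs elliptic envelope
kidney_significant = not cis_overlap((0.89, 0.92), (0.85, 0.92))   # VAE vs isolation forest
print(liver_significant, kidney_significant)  # True False
```

Non-overlap of two 95% CIs is a conservative criterion: intervals can overlap slightly even when a direct test of the difference would reach significance.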

Technical Implementation

All code was written in Python 3.5 using the PyTorch 1.0 library (https://pytorch.org). For computation, 4× graphics processing unit nodes of the NIH Biowulf high-performance computing system were used (4× NVIDIA P100). Inference processing time was less than 200 msec per dataset. Training the VAE took 1.5 hours.

Results

Overview

An overview of the autoencoder reconstruction error and percentage of problematic segmentations is shown in Table 2. Overall, the percentage of problematic segmentations was low for the liver and moderate for the kidney and spleen (1.6%, 4.1%, and 7.1%, respectively). The majority of reconstruction errors (Dice scores) was in a narrow band ranging from 0.70 to 0.88. Note that these Dice scores are pure autoencoder error measurements and are not the original segmentation Dice scores (which would be related to a ground truth segmentation, which was not available in the case of new test data).

Table 2:

Reconstruction Errors as Dice Scores and Problematic Segmentations for Each Organ

Detection of Problematic Segmentations

The reconstruction errors were significantly different between the successful and the problematic segmentations, as seen in the density plots in Figure 3. We assessed the ability to detect problematic segmentations using the ROC curves shown in Figure 4. The AUC was good to excellent for all segmentations (mean AUC, 0.94 [95% CI: 0.93, 0.95] for liver, 0.90 [95% CI: 0.89, 0.92] for kidney, and 0.81 [95% CI: 0.80, 0.82] for spleen; Table 3).

Figure 3:

Density plots for the reconstruction error (Dice score) for each organ. Red indicates problematic segmentations, and blue indicates correct segmentations.

Figure 4:

For each organ, a receiver operating characteristic (ROC) curve is shown. The area under the ROC (AUC) values are good to excellent (see Table 3).

Table 3:

Area Under the Receiver Operating Characteristic Curves for Outlier Detection Performance of Various Algorithms and the VAE

An example of a segmentation and autoencoder output is shown in Figure 5. In this case, the colon had an unusually homogeneous attenuation similar to that of the liver and was erroneously segmented as liver. After passing through the VAE, the problematic area was not reconstructed and disappeared. This causes an increased reconstruction error and flags the segmentation as problematic. Because a 3D U-Net functions as a voxel classifier and has no concept of the shape of its prediction, it is prone to errors such as the one shown in Figure 5.

Figure 5:

Example liver segmentation. Upper panel: A CT image with a problematic liver segmentation is shown (left upper panel) alongside the original CT image (right upper panel). The liver is truncated at the top, giving the liver segmentation a flat-top appearance, resulting from the clinical CT scanning protocol. The liver is segmented correctly, but an area of the ascending colon is erroneously also segmented as liver. In this case, the colon had an unusually homogeneous attenuation similar to that of the liver and is in close proximity to the liver. Left lower panel: A three-dimensional image of the segmentation is shown, with an arrow pointing to the erroneously segmented area of the ascending colon. Right lower panel: After the segmentation was passed through the variational autoencoder, the problematic area disappeared (arrow). Intuitively, the autoencoder must reduce the input segmentation to 10 features and decode it again from these features. This forced information reduction does not allow for uncommon segmentations to be reflected; thus, the autoencoder can only reproduce the liver segmentation but cannot re-create the erroneous area.

Comparison with Conventional Outlier Detection Methods

We compared the VAE-based detection of problematic segmentations with conventional outlier detection methods. The AUCs of the tested outlier detection algorithms and of the VAE are shown in Table 3. For all organs, the VAE method had the highest AUC. For example, the highest-performing conventional algorithm for detecting problematic liver segmentations (elliptic envelope) had a mean AUC of 0.83 (95% CI: 0.77, 0.90), which was lower than the mean AUC of 0.94 (95% CI: 0.93, 0.95) for the VAE (P < .05). The highest-performing conventional method for detecting problematic spleen segmentations (isolation forest) had a mean AUC of 0.71 (95% CI: 0.67, 0.75), which was also lower than the mean AUC of 0.81 (95% CI: 0.80, 0.82) for the VAE (P < .05). The difference was statistically significant for all comparisons except for the kidney, for which the isolation forest method yielded a mean AUC of 0.89 (95% CI: 0.85, 0.92) compared with a mean of 0.90 (95% CI: 0.89, 0.92) for the VAE method (not statistically significant). Of the conventional methods tested, elliptic envelope and isolation forest generally had the highest performance.

Active versus Passive Learning

We compared active and passive learning approaches for improving spleen segmentations. In both approaches, we added 15 new segmentations to the preexisting segmentation dataset. In the active approach, those segmentations were chosen on the basis of high reconstruction error, whereas in the passive approach, there was random sampling. The error rates for these approaches are shown in Figure 6. Segmentation error rates in 2510 assessed CT images were numerically lower in the active learning approach (5.7% [n = 142]) compared with the passive learning approach (6.0% [n = 151]), but this difference was not statistically significant (original error rate for reference, 7.1% [n = 178]).
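To make the paired comparison concrete, the following sketch implements the McNemar χ² test from the two discordant counts. The article does not report these counts; the values of 40 and 31 used below are hypothetical and were chosen only so that their difference matches the reported net difference of nine problematic segmentations (151 vs 142). The continuity correction is likewise an assumption.

```python
import math


def mcnemar_test(b, c, continuity=True):
    """McNemar chi-square test from discordant counts b and c.

    b: cases problematic with method A only; c: cases problematic with method B only.
    Returns (statistic, p_value), using a chi-square distribution with 1 degree of freedom.
    """
    if b + c == 0:
        return 0.0, 1.0
    diff = abs(b - c) - (1.0 if continuity else 0.0)
    stat = max(diff, 0.0) ** 2 / (b + c)
    p_value = math.erfc(math.sqrt(stat / 2.0))  # chi-square (1 df) survival function
    return stat, p_value


# Hypothetical discordant counts (NOT reported in the article):
# 40 cases problematic only with passive learning, 31 only with active learning.
stat, p_value = mcnemar_test(40, 31)
print(round(stat, 3), p_value > 0.05)  # 0.901 True
```

Only the discordant pairs enter the test, so a small net difference between two error rates measured on the same cases can easily fail to reach significance.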

Figure 6:

Error rates for the active and passive learning approaches in spleen segmentation. The passive learning approach (adding 15 random segmentations to the training data) resulted in only a small improvement in error rate, while active learning (adding an automatic sample of 15 high–reconstruction error images) led to a substantial improvement of the performance in unseen data.

Discussion

We present a universal algorithm that can detect unusual, abnormal, or grossly incorrect segmentations automatically at CT using a VAE and reconstruction error. The model functions as an abnormality detection approach with the assumption that unusual segmentations are likely incorrect. We showed that our method can detect problematic segmentations with high accuracy (mean AUC of 0.94 for liver, 0.90 for kidney, and 0.81 for spleen). Our method may be useful for continuous quality monitoring and for active learning. An important aspect of the developed method is that it does not require any expert knowledge or manual creation of detection rules to achieve this task as compared with traditional methods. We also showed that the VAE-based outlier detection outperformed conventional feature-based outlier detection methods.

The use of deep learning–based segmentation in medical imaging has been increasing rapidly. The 3D U-Net is a de facto standard for 3D medical segmentation that has proved its universal applicability (17). These algorithms are powerful but not very robust regarding CT images with unexpected characteristics, which may cause the algorithms to fail. Each organ or task poses different challenges to automatic segmentation methods, which are detailed in prior work (9). This is a particular concern in a scenario in which training data do not reflect the real world, and the models do not perform well in general patient populations, which is a major issue for critical applications, especially in medical imaging (9).

One approach to increasing reliability of deep learning methods is to use larger training datasets. However, in medicine, there is often an overwhelming number of images that are “normal” or that show common pathologic conditions and therefore, at some point, do not provide additional knowledge for training. Rare and abnormal images (edge cases) must be added into training datasets to reduce the risk of unexpected failures. For example, in clinical radiology, the concept of a so-called teaching file exists, in which unusual or important cases are collected, which can greatly enhance radiologists’ learning.

The use of unsupervised methods in medical imaging is an area of continuous investigation. Of note, Uzunova et al (4) used a 3D VAE to directly detect pathologic conditions in medical images by training the VAE on normal images. A similar approach was used by Baur et al (18). They achieved noteworthy results, but it should be noted that supervised methods usually greatly surpass unsupervised methods at clinical segmentation challenges with the current technology. Baur et al (18) point out difficulties with autoencoders for pathologic condition detection, stating that some models cannot reconstruct anomalies (which is desirable) but at the same time lack the capability to reconstruct important fine imaging details. Nevertheless, major thought leaders in the artificial intelligence world believe that the key to a major increase in capabilities of deep learning lies in unsupervised learning, and the appeal clearly is to be able to use vast amounts of available unlabeled data (19).

In contrast to the aforementioned studies, in our work, we focus on detecting outliers in the segmentations, rather than in the imaging data themselves. Segmentation errors are a much more constrained problem, as the segmentations contain much less information and detail and have substantially less variation compared with the actual CT images.

An important question that remains is whether the VAE should be trained on the initial ground truth segmentations or on a large number of automatic segmentations without ground truth. We opted for the latter, for two reasons. First, the number of segmentations with ground truth may be too low for successful training (often < 100). Second, frequently there are translational or scaling differences between the training data and the unseen data (eg, different field of view, different Z-coverage) for which a convolutional network by design can easily account, but a VAE cannot, owing to the nature of its feature vector. Of note, this method requires that the majority of automatic segmentations be largely correct, but this is usually the case for a reasonably performing convolutional neural network in medical imaging.

On the basis of these observations, we used our method in an active learning workflow and compared the results with a passive learning approach. For active learning, images with high VAE reconstruction error were manually segmented, while in passive learning, random images were selected (n = 15 for each approach). The active learning approach yielded a numerically lower error rate than the passive learning approach, although the difference was not statistically significant. On the basis of our experience, we believe the described method would be useful for evaluating segmentations of a wide spectrum of other organs or anatomic structures, provided that the structure is of sufficient size (owing to resolution constraints) and has a common shape; examples include the bladder, bowel, and skeletal features. Future studies may also aim to directly use a VAE reconstruction error as an additional loss in mixed supervised and unsupervised deep learning.

There were several notable limitations. The concept of abnormality detection to find problematic segmentations relies on the assumption that the majority of automatic segmentations will be largely correct. This is frequently the case for medical convolutional neural network applications but may need prior visual verification. In addition, our approach assumes that the shape of the segmentations has some inherent regularity. While this is true for most anatomic structures, it may not be true for pathologic features such as tumors. An additional concern that applies to other CT-based deep learning tasks as well is the variability of image appearance owing to different scanner types and settings. We believe that the effect on this project is low because the input data are segmentation maps without CT numbers and because the substantial downscaling that was performed further mitigates scanner variability. Owing to technical limitations, the input and output size for the VAE was 64 × 64 × 64 and segmentations were downsampled to this size, which impairs the usefulness for applications in which fine detail is of importance.

In summary, deep learning methods are vulnerable to failure when confronted with unexpected cases. This is a critical issue for clinical uses. Our method has the potential to detect such failure without supervision. We also showed the value of the method in an active learning scenario. This method would be quite useful for active learning approaches or quality assurance purposes with the aim to increase real-world performance of deep learning systems in radiology. Active learning may greatly reduce the amount of work needed for manual labeling, and therefore reduce cost of development of future medical imaging applications.

Acknowledgment

This work used the computational resources of the NIH HPC Biowulf cluster (http://hpc.nih.gov).

Authors declared no funding for this work.

Disclosures of Conflicts of Interest: V.S. Activities related to the present article: institution receives grant from NIH (intramural program). Activities not related to the present article: disclosed no relevant relationships. Other relationships: disclosed no relevant relationships. K.Y. Activities related to the present article: received grant from NIH (intramural funding). Activities not related to the present article: disclosed no relevant relationships. Other relationships: disclosed no relevant relationships. P.M.G. Activities related to the present article: consulting fee or honorarium from Madison Club. Activities not related to the present article: disclosed no relevant relationships. Other relationships: disclosed no relevant relationships. P.J.P. Activities related to the present article: consulting fee or honorarium from University of Wisconsin. Activities not related to the present article: consultant for Bracco and Zebra; stock/stock options from SHINE and Elucent. Other relationships: disclosed no relevant relationships. R.M.S. Activities related to the present article: associate editor of Radiology: Artificial Intelligence. Activities not related to the present article: author receives royalties from iCAD, ScanMed, PingAn, Philips, Translation Holdings (patent royalties or software licenses); author has cooperative research and development agreement with PingAn; author receives GPU card donations from NVIDIA. Other relationships: disclosed no relevant relationships.

Abbreviations:

- AUC: area under the ROC curve

- NIH: National Institutes of Health

- ROC: receiver operating characteristic

- 3D: three-dimensional

- VAE: variational autoencoder

References

- 1. Medical Segmentation Decathlon. http://medicaldecathlon.com/. Accessed September 8, 2020.

- 2. Burgess CP, Higgins I, Pal A, et al. Understanding disentangling in β-VAE. arXiv 1804.03599 [preprint] https://arxiv.org/abs/1804.03599. Posted April 10, 2018. Accessed December 2020.

- 3. Cao VL, Nicolau M, McDermott J. Learning Neural Representations for Network Anomaly Detection. IEEE Trans Cybern 2019;49(8):3074–3087.

- 4. Uzunova H, Schultz S, Handels H, Ehrhardt J. Unsupervised pathology detection in medical images using conditional variational autoencoders. Int J Comput Assist Radiol Surg 2019;14(3):451–461.

- 5. Pickhardt PJ, Graffy PM, Zea R, et al. Automated CT biomarkers for opportunistic prediction of future cardiovascular events and mortality in an asymptomatic screening population: a retrospective cohort study. Lancet Digit Health 2020;2(4):e192–e200.

- 6. Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, Ronneberger O. 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation. In: Ourselin S, Joskowicz L, Sabuncu M, Unal G, Wells W, eds. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016. MICCAI 2016. Lecture Notes in Computer Science, vol 9901. Cham, Switzerland: Springer, 2016; 424–432.

- 7. Kayalibay B, Jensen G, van der Smagt P. CNN-based Segmentation of Medical Imaging Data. arXiv 1701.03056 [preprint] https://arxiv.org/abs/1701.03056. Posted January 11, 2017. Accessed December 2020.

- 8. Isensee F, Kickingereder P, Wick W, Bendszus M, Maier-Hein KH. Brain Tumor Segmentation and Radiomics Survival Prediction: Contribution to the BRATS 2017 Challenge. In: Crimi A, Bakas S, Kuijf H, Menze B, Reyes M, eds. Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries. BrainLes 2017. Lecture Notes in Computer Science, vol 10670. Cham, Switzerland: Springer, 2017; 287–.

- 9. Sandfort V, Yan K, Pickhardt PJ, Summers RM. Data augmentation using generative adversarial networks (CycleGAN) to improve generalizability in CT segmentation tasks. Sci Rep 2019;9(1):16884.

- 10. Zhu JY, Park T, Isola P, Efros AA. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In: 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, October 22–29, 2017. Piscataway, NJ: IEEE, 2017.

- 11. Dupont E. Learning Disentangled Joint Continuous and Discrete Representations. arXiv 1804.00104 [preprint] https://arxiv.org/abs/1804.00104. Posted March 31, 2018. Accessed December 2020.

- 12. Liu FT, Ting KM, Zhou ZH. Isolation Forest. In: 2008 Eighth IEEE International Conference on Data Mining, Pisa, Italy, December 15–19, 2008. Piscataway, NJ: IEEE, 2008.

- 13. Mourão-Miranda J, Hardoon DR, Hahn T, et al. Patient classification as an outlier detection problem: an application of the One-Class Support Vector Machine. Neuroimage 2011;58(3):793–804.

- 14. Breunig MM, Kriegel HP, Ng RT, Sander J. LOF: identifying density-based local outliers. In: Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data - SIGMOD ’00. New York, NY: ACM, 2000; 93–104.

- 15. Rousseeuw PJ, Van Driessen K. A Fast Algorithm for the Minimum Covariance Determinant Estimator. Technometrics 1999;41(3):212–223.

- 16. Mandrekar JN. Receiver operating characteristic curve in diagnostic test assessment. J Thorac Oncol 2010;5(9):1315–1316.

- 17. Isensee F, Petersen J, Klein A, et al. Abstract: nnU-Net: Self-adapting Framework for U-Net-Based Medical Image Segmentation. In: Handels H, Deserno T, Maier A, Maier-Hein K, Palm C, Tolxdorff T, eds. Bildverarbeitung für die Medizin 2019. Informatik aktuell. Wiesbaden, Germany: Springer Vieweg, 2019; 22.

- 18. Baur C, Wiestler B, Albarqouni S, Navab N. Deep Autoencoding Models for Unsupervised Anomaly Segmentation in Brain MR Images. In: Crimi A, Bakas S, Kuijf H, Keyvan F, Reyes M, van Walsum T, eds. Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries. BrainLes 2018. Lecture Notes in Computer Science, vol 11383. Cham, Switzerland: Springer, 2019; 161–169.

- 19. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015;521(7553):436–444.