Abstract

Previous research based on the longitudinal Health and Retirement Study (HRS) has argued that the prevalence of cognitive impairment has declined in recent years in the United States. A recent article published in Epidemiology by Hale et al., however, suggests this finding is biased by unmeasured panel conditioning (improvement in cognitive scores resulting from repeat assessment). After adjusting for test number, Hale and colleagues found that the prevalence of cognitive impairment had actually increased between 1996 and 2014. In this commentary, we argue that simply adjusting for test number is not an appropriate way to handle panel conditioning in this instance because it fails to account for selective attrition (the tendency for cognitively high-functioning respondents to remain in the sample for a longer time). We reanalyze HRS data using models that simultaneously adjust for panel conditioning and selective attrition. Contrary to Hale et al., we find that the prevalence of cognitive impairment has indeed declined in the United States in recent decades.

Keywords: Cognitive impairment, trends, panel conditioning, selective attrition

A growing body of evidence suggests that age-specific dementia prevalence is declining in the United States.1-3 A paper recently published in Epidemiology by Hale et al. casts doubt on this trend.4 Hale and colleagues argue that the apparent decrease in dementia risk observed between 1996 and 2014 in the Health and Retirement Study (HRS) is an artifact of unmeasured panel conditioning. Respondents in the HRS complete the same cognitive assessments (e.g., word recall, serial 7s subtraction, and backwards counting) every two years. Previous research demonstrates that repeated exposure to tests like these, usually at shorter intervals, improves test performance as respondents become familiar with the questions, potentially biasing results.5-8

Studies that have used HRS data to estimate secular dementia trends have not accounted for the fact that people observed in more recent years have, on average, taken the cognitive tests more times than people observed in earlier waves of the study. In their analysis of HRS data, Hale et al. find that the secular trend in dementia risk reverses direction, from negative to positive, after adjusting for the number of times respondents have completed the HRS cognitive assessments. Based on this provocative and important result, they conclude that dementia prevalence has actually increased in the U.S. over time, perhaps because of lengthening life expectancy and people living longer with dementia.

Critique of Hale et al. (2020)

The workhorse of Hale et al.’s analysis is a regression model that expresses the log odds of dementia as a function of time (i.e., survey year); the number of cognitive assessments the HRS respondent has completed; and the respondent’s age, gender, race/ethnicity, and educational attainment. Their estimates show a positive relationship between the amount of prior survey experience and respondents’ performance on the cognitive assessment, with effect sizes that grow monotonically the longer a respondent remains in the HRS panel. Hale et al.4 attribute this finding to practice effects, or what we refer to as “panel conditioning.”9-10
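In symbols, the specification just described can be sketched as the logit model below. The notation is ours: $D_{it}$ indicates dementia for respondent $i$ at wave $t$, $T_t$ is survey year, $E_{it}$ is the number of cognitive assessments completed as of wave $t$, and $\mathbf{x}_{it}$ collects age, gender, race/ethnicity, and education. Entering $E_{it}$ as a set of indicators is an assumption made for illustration; Hale et al.’s exact coding may differ.

$$
\operatorname{logit} \Pr(D_{it} = 1) = \beta_0 + \beta_1 T_t + \sum_{k \ge 2} \gamma_k \, \mathbf{1}[E_{it} = k] + \mathbf{x}_{it}^{\top} \boldsymbol{\delta}
$$

In this notation, $\beta_1$ is the secular trend, and the panel conditioning interpretation rests on reading the $\gamma_k$ as causal effects of repeated testing.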

In our view, Hale et al. have not provided sufficient evidence for this interpretation. Panel conditioning usually occurs alongside non-random panel attrition, making it difficult to differentiate practice effects from gradual compositional changes to the sample.7, 10-12 A strong inverse relationship between prior survey exposure and dementia risk could be a sign of panel conditioning, as Hale et al. suggest, or it may be an artifact of differential attrition. People with cognitive impairments are less likely to remain in the HRS across surveys, lowering the likelihood that they complete a large number of assessments. Using panel weights may alleviate this problem, but only insofar as the weights capture all the relevant differences (observed or otherwise) between respondents with varying propensities to persist in the HRS study. The HRS’s weights—which include a post-stratification adjustment that aligns the sample margins to sociodemographic control totals obtained from the American Community Survey (ACS)—are unlikely to satisfy this requirement.

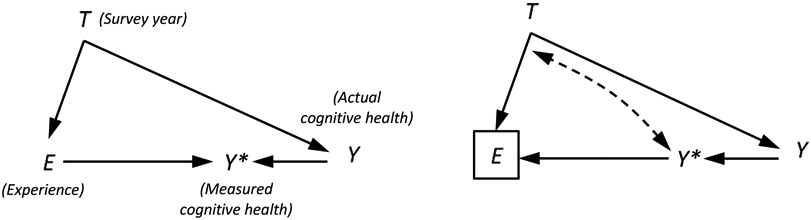

This issue could have serious consequences for inference. Consider a simple example in which E represents a respondent’s survey experience, Y* their measured cognitive health, Y their actual cognitive health, and T the year of the survey (t = 1996,…, 2014 in the HRS). If the amount of survey experience a respondent accumulates is a consequence of their cognitive health (as in the right panel of Figure 1), rather than a cause of test performance (as in the left panel of Figure 1), then conditioning on E (through stratification or by including it in a regression model) would be inappropriate. Under this scenario, survey experience would be a “collider” on the path from year to cognitive health (T→E←Y*←Y), introducing the possibility of collider bias.13 Importantly, this collider bias would affect not only the estimates of panel conditioning (i.e., the estimated coefficients describing the relationship between survey experience and cognition)14 but also the estimates of the time trend in cognition, which are of primary concern to Hale et al.

Figure 1.

Causal diagrams depicting the relationship between survey year, T, survey experience, E, measured cognitive health, Y*, and actual cognitive health, Y. In the graph on the left, cognitive health and experience are functions of year (T→Y and T→E), actual cognitive health predicts measured health scores (Y→Y*), and prior experience predicts health scores (E→Y*) but not actual cognitive health, as would be the case if there is panel conditioning. In the graph on the right, cognitive health predicts survey experience (E←Y*←Y), as would be the case if healthier individuals remain in the panel longer (because they have fewer comorbidities and/or find the survey-taking experience less demanding). In the second scenario, experience is a collider (T→E←Y*←Y) because it is a common effect of year and cognitive health. Conditioning on a collider (indicated by the box around E) induces a non-causal association between the variables that cause it. We illustrate this association with a bidirectional dashed line. See the text for more details.
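To make the collider argument concrete, the simulation below is a minimal sketch under invented assumptions; it is not a model of the HRS. Cognitive health `y` has no time trend by construction, survey experience `e` rises with both survey year `t` and `y`, and `e` has no effect on `y`. A continuous outcome with ordinary least squares stands in for the logistic model to keep the example short, and all function names and parameter values are hypothetical.

```python
import numpy as np


def ols_coefs(y, *covariates):
    """Return OLS coefficients [intercept, b1, b2, ...] via least squares."""
    X = np.column_stack([np.ones_like(y), *covariates])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


def simulate_collider(n=100_000, seed=2020):
    """Toy version of the right panel of Figure 1 (hypothetical parameters)."""
    rng = np.random.default_rng(seed)
    # Survey year index 0..9: a stand-in for T.
    t = rng.integers(0, 10, size=n).astype(float)
    # Cognitive health, independent of year: no true secular trend.
    y = rng.normal(size=n)
    # Experience depends on year AND health (selective retention) but does
    # not affect health, so E is a common effect (collider) of T and Y.
    e = 0.5 * t + y + rng.normal(size=n)
    unadjusted = ols_coefs(y, t)[1]    # slope on t, E omitted
    adjusted = ols_coefs(y, t, e)[1]   # slope on t, conditioning on E
    return unadjusted, adjusted


unadjusted, adjusted = simulate_collider()
print(f"year slope ignoring E:  {unadjusted:+.3f}")
print(f"year slope adjusting E: {adjusted:+.3f}")
```

With these made-up parameters, the year slope should be close to zero when `e` is omitted and close to -0.25 when `e` is included: conditioning on the collider manufactures an apparent decline in cognitive health over time, the same direction as the shift Hale et al. report after adjusting for test number.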

Hale et al. acknowledge the possibility that test experience is endogenous in their model of cognitive impairment, but they assume that conditioning on it has no bearing on their estimates of the effects of other covariates on dementia. This assumption is valid for the estimated effects of variables that are unrelated to the number of interviews a respondent has completed (because test experience is not a collider in these instances), but not for the estimated effects of variables that predict it. Survey year falls into the latter category. Indeed, Hale et al.’s motivation for adjusting for survey experience is precisely that it is related to survey year.

A Different Approach

Circumventing this problem is not easy. In prior studies, researchers have sought to distinguish panel conditioning bias from bias introduced by panel attrition by comparing respondents who have different levels of survey exposure at time t but the same propensity to remain in the panel.10, 15 Respondents with the same propensity to remain in the panel can be identified by counting the number of times each respondent completes the survey, where the count covers every wave: waves before time t, wave t itself, and waves after it. This variable captures, though does not specify, the broad set of individual selection factors that influence survey attrition. We refer to this strategy as a between-person comparison across time-in-survey groups because it isolates the effect of prior survey exposure, yielding more credible estimates of panel conditioning.
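The two counts this strategy relies on can be built from long-format interview records. The sketch below assumes one record per completed interview, identified by a respondent ID and wave year; the function and field names are hypothetical, and the bins mirror the groupings used in Table 2.

```python
from collections import defaultdict


def exposure_counts(interviews):
    """Attach exposure counts to each completed interview.

    interviews: iterable of (person_id, wave_year) pairs, one per completed
    interview (hypothetical input format).
    interview_number counts completed interviews up to and including the
    current wave; total_interviews counts every wave the person completes,
    including waves after the current one.
    """
    years_by_person = defaultdict(list)
    for person_id, year in interviews:
        years_by_person[person_id].append(year)

    rows = []
    for person_id, years in years_by_person.items():
        years.sort()
        total = len(years)
        for k, year in enumerate(years, start=1):
            rows.append({"person_id": person_id, "year": year,
                         "interview_number": k, "total_interviews": total})
    return rows


def exposure_category(count):
    """Bin a count into the groups used in Table 2: 1, 2, 3 or 4, 5 to 7, 8+."""
    if count < 1:
        raise ValueError("count must be at least 1")
    if count <= 2:
        return str(count)
    if count <= 4:
        return "3 or 4"
    if count <= 7:
        return "5 to 7"
    return "8+"


interviews = [("A", 1998), ("A", 2000), ("A", 2002), ("B", 1998)]
for row in exposure_counts(interviews):
    print(row["person_id"], row["year"],
          exposure_category(row["interview_number"]),
          exposure_category(row["total_interviews"]))
```

In the usage lines, respondents A and B are both on their first interview in 1998 but differ in total interviews (3 versus 1); comparisons of this kind are what let prior exposure be separated from the propensity to stay in the panel.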

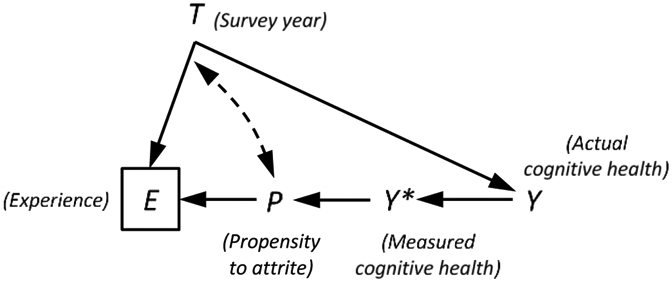

We can use a similar strategy to adjust for panel conditioning in models predicting cognitive impairment. By including measures of the number of surveys a respondent ever completes and the number completed as of a given wave (i.e., at E = e), we can hold survey experience constant while adjusting for the respondent’s propensity to remain in the sample in later waves. Under this approach, the number of times a person has been assessed is still a function of year (T→E), but we have blocked the path that connects it to cognitive health by conditioning on an intermediate variable: the respondent’s propensity to remain in the sample (P in Figure 2). This allows for a cleaner test of panel conditioning while also providing more defensible estimates of the time trend in dementia prevalence. In the section that follows, we reproduce the findings obtained by Hale et al. and then show how they change in analyses that use this design.
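A schematic version of this design, in notation of our own choosing, adds the total interview count $N_i$ (the number of waves respondent $i$ completes between 1995 and 2014, which does not vary across waves) to a logit model that already contains the interview number $E_{it}$ as of wave $t$. Here $D_{it}$ is dementia status, $T_t$ survey year, and $\mathbf{x}_{it}$ the remaining covariates. Indicator coding of both counts is assumed, and the proxy-by-interview-number interactions used in the models below are omitted for brevity.

$$
\operatorname{logit} \Pr(D_{it} = 1) = \beta_0 + \beta_1 T_t + \sum_{k \ge 2} \gamma_k \, \mathbf{1}[E_{it} = k] + \sum_{m \ge 2} \lambda_m \, \mathbf{1}[N_i = m] + \mathbf{x}_{it}^{\top} \boldsymbol{\delta}, \qquad 1 \le E_{it} \le N_i
$$

Comparing respondents with the same $N_i$ but different $E_{it}$ is what separates the conditioning effects $\gamma_k$ from selective retention, which is absorbed by the $\lambda_m$.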

Figure 2.

Revised causal diagram depicting the relationship between survey year, T, survey experience, E, measured cognitive health, Y*, actual cognitive health, Y, and a respondent’s propensity to remain in the panel, P. Our concern is that healthier respondents accumulate more survey experience because they tend to stay in the sample longer than respondents with poorer cognitive functioning. We can close this path (through P) by entering a control for total survey experience, defined as the number of surveys a respondent completes across all waves in which they appear in the sample (including future waves). This returns us to a situation in which we can estimate a time trend in dementia prevalence that is free of collider bias. See the text for more details.
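A toy simulation of the structure in Figure 2 shows why conditioning on P matters. This is a sketch with invented parameters: health `y` has no true trend, propensity `p` rises with `y`, and experience `e` depends only on year and `p`. The simulation conditions on `p` itself, whereas in the HRS the total interview count serves as an observable stand-in for it; OLS on a continuous outcome again replaces the logistic model, and all names are hypothetical.

```python
import numpy as np


def ols_coefs(y, *covariates):
    """Return OLS coefficients [intercept, b1, b2, ...] via least squares."""
    X = np.column_stack([np.ones_like(y), *covariates])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


def simulate_propensity(n=100_000, seed=2021):
    """Toy version of Figure 2 (hypothetical parameters)."""
    rng = np.random.default_rng(seed)
    t = rng.integers(0, 10, size=n).astype(float)  # survey year index 0..9
    y = rng.normal(size=n)                          # cognitive health, no true trend
    p = y + rng.normal(size=n)                      # healthier -> higher propensity to stay
    e = 0.5 * t + p + rng.normal(size=n)            # experience: year and propensity only
    # Each entry is the estimated slope on the year index t.
    return {
        "ignore E": ols_coefs(y, t)[1],
        "adjust E": ols_coefs(y, t, e)[1],
        "adjust E and P": ols_coefs(y, t, e, p)[1],
    }


for spec, slope in simulate_propensity().items():
    print(f"{spec:<15} year slope {slope:+.3f}")
```

Under these assumptions the year slope should be near zero when `e` is omitted, near -1/6 when only `e` is included, and back near zero once `p` is added, because given `p` the experience variable carries no information about health. This illustrates the logic behind Model 3 below; it does not reproduce its estimates.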

Revisiting Trends in the Risk of Cognitive Impairment in the United States

Our reassessment of Hale et al.’s findings relied on the same HRS data used in their study. For self-respondents, cognitive status in the HRS is commonly assessed using cut-off scores from a modified version of the Telephone Interview for Cognitive Status (TICS). TICS scores range from 0 (lowest functioning) to 27 (highest functioning), with a score of 6 or lower indicating dementia.16 For respondents who rely on proxies (usually a spouse or child) to answer interview questions, cognitive status is ascertained by a combination of proxy report of memory, difficulty with five instrumental activities of daily living, and interviewer assessment of cognitive impairment.
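For self-respondents, the rule above reduces to a single cut-off on the 0 to 27 scale. The minimal sketch below uses a hypothetical function name and also rejects out-of-range scores; the proxy-based classification is not sketched because its scoring details are not given here.

```python
def tics_indicates_dementia(score: int) -> bool:
    """Return True if a modified-TICS score (0-27) is at or below the dementia cut-off of 6."""
    if not 0 <= score <= 27:
        raise ValueError(f"modified TICS score must be between 0 and 27, got {score}")
    return score <= 6


print([tics_indicates_dementia(s) for s in (0, 6, 7, 27)])  # [True, True, False, False]
```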

We combined HRS waves from 1995 through 2014, which contain 209,279 interviews from 36,414 participants. We removed observations for which cognitive status was not ascertained (n=15,012). Following Hale et al., we also removed observations from respondents younger than 50 (n=6,206); observations with missing data on key covariates, namely age (n=1), race/ethnicity (n=133), sex (n=7), education (n=25), and survey mode (n=259); and observations with a sampling weight of 0 (n=7,474). The final analytic sample included 180,162 observations of 33,099 individuals. Descriptive statistics for the sample, weighted using RAND’s complex survey weights (variable RwWTCRNH), are presented in Table 1.
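The exclusion counts above can be checked against the final sample size with simple arithmetic. This sketch assumes, as the matching total suggests, that each count refers to observations removed sequentially and that no observation is counted twice; the function name is hypothetical.

```python
def sample_flow(start, exclusions):
    """Apply exclusions in order; return (reason, removed, remaining) for each step."""
    remaining = start
    steps = []
    for reason, removed in exclusions.items():
        remaining -= removed
        steps.append((reason, removed, remaining))
    return steps


# Observation counts as reported in the text.
exclusions = {
    "cognitive status not ascertained": 15_012,
    "younger than 50": 6_206,
    "missing age": 1,
    "missing race/ethnicity": 133,
    "missing sex": 7,
    "missing education": 25,
    "missing survey mode": 259,
    "sampling weight of 0": 7_474,
}

for reason, removed, remaining in sample_flow(209_279, exclusions):
    print(f"{reason:<34} -{removed:>6,}  -> {remaining:>7,}")
```

The last line should show 180,162 observations remaining, matching the analytic sample.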

Table 1.

Characteristics of the Health and Retirement Study sample (n=33,106).

| Characteristic | Value |
|---|---|
| Age, Mean (SD) | 60.5 (10.0) |
| Sex | |
| Female | 53% |
| Male | 47% |
| Race/Ethnicity | |
| White | 78% |
| Black | 11% |
| Hispanic | 8% |
| Other | 3% |
| Education | |
| < High School | 20% |
| High School | 33% |
| College | 47% |

Note: Data come from Health and Retirement Study (1995-2014). Estimates are weighted to be nationally representative of Americans aged 50 and older. Baseline year is defined as the first year a respondent was observed between 1995 and 2014.

Table 2 presents coefficients from a series of logistic regression models of the log odds of dementia, expressed as a function of year, age, age squared, sex, race/ethnicity, interview mode, proxy response (and its interaction with sex), practice effects (measured by the number of tests taken as of the observed wave), and propensity to attrite (measured by the total number of tests ever taken from 1995 to 2014). Standard errors are clustered at the respondent level. Model 1 replicates previous studies that have ignored panel conditioning. We observe significantly reduced odds of dementia over time (OR: 0.89; 95% CI: 0.84-0.95 per decade). In Model 2, we adopt Hale et al.’s approach of adjusting for the number of tests taken to account for panel conditioning. Because proxy respondents are likely to be affected by survey experience differently from self-respondents (or not at all), we interact proxy status with interview number. This adjustment erases the time trend (OR: 1.01; 95% CI: 0.87-1.17 per decade), but it is subject to the methodological concerns raised above. In Model 3, we address these concerns by adjusting for the total number of interviews respondents completed between 1995 and 2014. This model produces a negative and significant relationship between time and dementia prevalence (OR: 0.72; 95% CI: 0.63-0.81 per decade), with effect sizes generally on par with those observed in prior U.S.-based studies.2 That is, dementia risk has declined over time.
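Because time is entered in 10-year units, the per-decade ORs can be converted to per-year ORs, and approximate standard errors can be recovered from the reported intervals. The sketch below assumes time enters the logit linearly and that each 95% CI is symmetric on the log scale; the helper names are hypothetical, and the inputs are the time-trend rows of Table 2.

```python
import math

Z_975 = 1.959964  # standard normal quantile for a two-sided 95% interval


def per_year_or(or_per_decade):
    """OR for a one-year change, assuming time enters the logit linearly in 10-year units."""
    return or_per_decade ** 0.1


def log_se_from_ci(lower, upper):
    """Approximate SE of log(OR), assuming the 95% CI is symmetric on the log scale."""
    return (math.log(upper) - math.log(lower)) / (2 * Z_975)


# Time-trend estimates per decade from Table 2: (OR, lower, upper).
time_trend = {
    "Model 1": (0.89, 0.84, 0.95),
    "Model 2": (1.01, 0.87, 1.17),
    "Model 3": (0.72, 0.63, 0.81),
    "Model 4": (0.86, 0.77, 0.97),
}

for model, (odds_ratio, lower, upper) in time_trend.items():
    z = math.log(odds_ratio) / log_se_from_ci(lower, upper)
    print(f"{model}: per-year OR {per_year_or(odds_ratio):.4f}, approximate z {z:+.2f}")
```

Since the published ORs and limits are rounded to two decimals, the recovered z statistics are only approximate. For Model 3, the per-year OR is about 0.968, or roughly 3% lower odds of dementia per year.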

Table 2.

Logistic regressions of dementia (N = 180,162)

| Variable | Model 1 OR | Model 1 95% CI | Model 2 OR | Model 2 95% CI | Model 3 OR | Model 3 95% CI | Model 4 OR | Model 4 95% CI |
|---|---|---|---|---|---|---|---|---|
| Time (Unit: 10 years) | 0.89 | (0.84, 0.95) | 1.01 | (0.87, 1.17) | 0.72 | (0.63, 0.81) | 0.86 | (0.77, 0.97) |
| Race/Ethnicity (ref. White) | | | | | | | | |
| Black | 4.38 | (3.84, 4.99) | 4.36 | (3.81, 4.99) | 4.39 | (3.82, 5.04) | 3.19 | (2.85, 3.57) |
| Hispanic | 3.26 | (2.75, 3.85) | 3.26 | (2.77, 3.84) | 3.34 | (2.84, 3.93) | 1.89 | (1.60, 2.24) |
| Other | 1.92 | (1.11, 3.34) | 1.94 | (1.11, 3.38) | 1.88 | (1.07, 3.31) | 1.65 | (1.00, 2.73) |
| Male (ref. female) | 1.03 | (0.96, 1.11) | 1.02 | (0.95, 1.10) | 0.98 | (0.91, 1.06) | 1.01 | (0.94, 1.08) |
| Age (Unit: 10 years) | 2.44 | (2.07, 2.87) | 2.70 | (2.29, 3.18) | 2.63 | (2.22, 3.13) | 2.09 | (1.74, 2.51) |
| Age² (Unit: 10 years) | 1.02 | (0.99, 1.05) | 1.00 | (0.97, 1.04) | 1.00 | (0.97, 1.03) | 1.03 | (0.99, 1.06) |
| Phone Survey (ref. face-to-face) | 0.79 | (0.74, 0.84) | 0.76 | (0.71, 0.81) | 0.77 | (0.72, 0.83) | 0.81 | (0.76, 0.87) |
| Proxy Response (ref. Self-response) | 22.05 | (19.52, 24.89) | 9.36 | (7.62, 11.50) | 7.98 | (6.45, 9.87) | 7.46 | (5.94, 9.36) |
| Proxy Response × Male | 0.35 | (0.31, 0.40) | 0.37 | (0.32, 0.42) | 0.39 | (0.34, 0.45) | 0.35 | (0.30, 0.40) |
| Education (ref. < High School) | | | | | | | | |
| High School | | | | | | | 0.39 | (0.35, 0.44) |
| College | | | | | | | 0.23 | (0.20, 0.25) |
| Interview Number (ref. 1st) | | | | | | | | |
| 2nd | | | 0.98 | (0.89, 1.09) | 1.18 | (1.07, 1.31) | 1.18 | (1.06, 1.31) |
| 3 or 4 | | | 0.77 | (0.68, 0.87) | 1.20 | (1.07, 1.34) | 1.19 | (1.07, 1.33) |
| 5 to 7 | | | 0.65 | (0.54, 0.78) | 1.53 | (1.32, 1.79) | 1.53 | (1.31, 1.77) |
| 8+ | | | 0.54 | (0.43, 0.70) | 1.85 | (1.52, 2.26) | 1.82 | (1.51, 2.20) |
| Proxy × Interview Number | | | | | | | | |
| Proxy × 2nd | | | 1.24 | (0.97, 1.59) | 1.27 | (0.98, 1.64) | 1.28 | (0.98, 1.68) |
| Proxy × 3 or 4 | | | 2.25 | (1.86, 2.74) | 2.31 | (1.88, 2.83) | 2.35 | (1.90, 2.91) |
| Proxy × 5 to 7 | | | 3.05 | (2.49, 3.74) | 3.15 | (2.53, 3.91) | 3.29 | (2.59, 4.19) |
| Proxy × 8+ | | | 4.79 | (3.63, 6.32) | 5.37 | (4.04, 7.14) | 5.71 | (4.16, 7.86) |
| Total Interviews (ref. 1) | | | | | | | | |
| 2 | | | | | 1.33 | (0.96, 1.84) | 1.35 | (0.97, 1.88) |
| 3 or 4 | | | | | 0.95 | (0.70, 1.30) | 0.94 | (0.68, 1.28) |
| 5 to 7 | | | | | 0.70 | (0.51, 0.95) | 0.70 | (0.51, 0.96) |
| 8+ | | | | | 0.36 | (0.26, 0.49) | 0.37 | (0.26, 0.51) |

Increases in education appear to partially explain the secular decline in dementia prevalence in the HRS. In Model 4 of Table 2, we further adjust for educational attainment (coded as less than high school, high school graduate, or college). This model produces an estimated time trend in dementia prevalence that is somewhat attenuated, though still negative and significant (OR: 0.86; 95% CI: 0.77-0.97 per decade). This is consistent with previous research indicating that educational expansion is a key driver of declining dementia prevalence in the U.S.17-18

We present several additional models with different specifications in the appendix. Average marginal effects (AMEs)—which, unlike ORs, allow for easily interpretable cross-model comparisons—show similar patterns to those reported above.19 We also include models that estimate a non-parametric year trend in dementia risk and models that estimate a trend for self-respondents and proxy respondents separately. Overall, these models point to a single coherent finding: There has been modest improvement in cognitive functioning among Americans in recent years, and this trend is robust to proper adjustment for panel conditioning and selective attrition.
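For a continuous covariate that enters a logit model linearly (as time does in Table 2), the AME is the sample average of the derivative of the predicted probability with respect to that covariate. The NumPy sketch below computes it; the function names, design matrix, and coefficients in the usage lines are hypothetical, and the appendix estimates may use survey weights or other refinements not shown here.

```python
import numpy as np


def logistic(z):
    """Inverse logit."""
    return 1.0 / (1.0 + np.exp(-z))


def ame_linear_term(X, beta, j):
    """Average marginal effect of column j of X in a logit model with coefficients beta.

    Valid only when column j enters linearly (no squares or interactions):
    dp_i/dx_ij = beta_j * p_i * (1 - p_i), averaged over observations.
    """
    p = logistic(X @ beta)
    return float(np.mean(beta[j] * p * (1.0 - p)))


# Hypothetical example: intercept plus time in decades (0 to 1.8 spans 1996-2014).
rng = np.random.default_rng(0)
time_decades = rng.uniform(0.0, 1.8, size=1_000)
X = np.column_stack([np.ones_like(time_decades), time_decades])
beta = np.array([-2.5, np.log(0.72)])  # made-up intercept; slope from an OR of 0.72
print(f"AME of time: {ame_linear_term(X, beta, 1):+.4f} change in probability per decade")
```

Unlike an OR, this quantity is on the probability scale, which is why it can be compared across models with different covariate sets.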

Conclusion

Hale et al.’s conclusion is provocative. Contrary to a great deal of prior evidence, they argue that the prevalence of cognitive impairment is increasing in the United States. If that were true, the scientific community should prioritize research on the factors driving the increase.

We share Hale et al.’s concern that practice effects—also known as panel conditioning effects in other literatures—may complicate our understanding of trends in the prevalence of cognitive impairment in cohort studies like the HRS. What we take issue with is their modeling approach for understanding this important methodological problem. Specifically, Hale et al. have conflated panel conditioning effects with bias introduced by non-random panel attrition. After replicating Hale et al.’s core findings, we report the results of alternate models that more accurately distinguish panel conditioning from panel attrition. Our findings reproduce previous results that show declines in the prevalence of cognitive impairment. In line with other prior research, we also show that much of the decline is due to educational expansion over time.

Like Hale et al., we implore the research community to consider the possibility that panel conditioning may bias inferences about within-person trends over time in cognitive functioning and other outcomes. However, assessing panel conditioning effects requires a research design that also accounts for panel attrition.

Supplementary Material

Contributor Information

Mark Lee, Department of Sociology, Minnesota Population Center, University of Minnesota.

Andrew Halpern-Manners, Department of Sociology, Indiana University.

John Robert Warren, Department of Sociology, Minnesota Population Center, University of Minnesota.

References

1. Hudomiet P, Hurd MD, Rohwedder S. Dementia prevalence in the United States in 2000 and 2012: estimates based on a nationally representative study. J Gerontol B-Psychol. 2018;73(S1):S10–S19.

2. Langa KM, Larson EB, Crimmins EM, et al. A comparison of the prevalence of dementia in the United States in 2000 and 2012. JAMA Intern Med. 2017;177(1):51–58.

3. Wu YT, Beiser AS, Breteler MB, et al. The changing prevalence and incidence of dementia over time – current evidence. Nat Rev Neurol. 2017;13(6):327–379.

4. Hale JM, Schneider DC, Gampe J, et al. Trends in the risk of cognitive impairment in the United States, 1996-2014. Epidemiology. 2020;31(5):745–754.

5. Calamia M, Markon K, Tranel D. Scoring higher the second time around: meta-analyses of practice effects in neuropsychological assessment. Clin Neuropsychol. 2012;26(4):543–570.

6. Goldberg TE, Harvey PD, Wesnes KA, et al. Practice effects due to serial cognitive assessment: implications for preclinical Alzheimer’s Disease randomized controlled trials. Alzheimer’s Dement. 2015;1(1):103–111.

7. Rabbitt P, Diggle P, Holland F, McInnes L. Practice and drop-out effects during a 17-year longitudinal study of cognitive aging. J Gerontol B-Psychol. 2004;59(2):P84–P97.

8. Wesnes K, Pincock C. Practice effects on cognitive tasks: a major problem? Lancet Neurol. 2002;1(8):473.

9. Halpern-Manners A, Warren JR. Panel conditioning in longitudinal studies: evidence from labor force items in the Current Population Survey. Demography. 2012;49:1499–1519.

10. Warren JR, Halpern-Manners A. Panel conditioning effects in longitudinal social science surveys. Sociol Methods Res. 2012;41(4):491–534.

11. Cantor D. A review and summary of studies on panel conditioning. In: Menard S, ed. Handbook of Longitudinal Research: Design, Measurement, and Analysis. Burlington, MA: Academic Press; 2008:123–138.

12. Das M, Toepoel V, van Soest A. Nonparametric tests of panel conditioning and attrition bias in panel surveys. Sociol Methods Res. 2011;40(1):32–56.

13. Pearl J, Glymour MM, Jewell NP. Causal Inference in Statistics: A Primer. John Wiley & Sons; 2016.

14. Elwert F, Winship C. Endogenous selection bias: the problem of conditioning on a collider variable. Annu Rev Sociol. 2014;40:31–53.

15. Halpern-Manners A, Warren JR, Torche F. Panel conditioning in the General Social Survey. Sociol Methods Res. 2017;46(1):103–124.

16. Brandt J, Spencer M, Folstein M. The Telephone Interview for Cognitive Status. Neuropsychiatry Neuropsychol Behav Neurol. 1988;1(2):111–117.

17. Langa KM. Is the risk of Alzheimer’s disease and dementia declining? Alzheimer’s Res Ther. 2015;7:34.

18. Langa KM, Larson EB, Karlawish JH, et al. Trends in the prevalence and mortality of cognitive impairment in the United States: Is there evidence of a compression of cognitive morbidity? Alzheimer’s Dement. 2008;4(2):134–144.

19. Mize TD, Doan L, Long JS. A general framework for comparing predictions and marginal effects across models. Sociol Methodol. 2019;49(1):152–189.
