Abstract

Current pathology workflow involves staining thin tissue slices, which would otherwise be transparent, followed by manual investigation under the microscope by a trained pathologist. While the hematoxylin and eosin (H&E) stain is a well-established and cost-effective method for visualizing histology slides, its color variability across preparations and the subjectivity of its interpretation across clinicians remain unaddressed challenges. To mitigate these challenges, we recently demonstrated that spatial light interference microscopy (SLIM) can provide a path to intrinsic, objective markers that are independent of preparation and human bias. Additionally, the sensitivity of SLIM to collagen fibers yields information relevant to patient outcome, which is not available in H&E. Here, we show that deep learning and SLIM can form a powerful combination for screening applications: training on 1,660 SLIM images of colon glands and validating on 144 glands, we obtained a benign vs. cancer classification accuracy of 97%, with an area under the ROC curve of 0.98 on the validation set and 0.99 on a separate 40-gland test set. We envision that the SLIM whole slide scanner presented here, paired with artificial intelligence algorithms, may prove valuable as a pre-screening method, economizing the clinician’s time and effort.

Introduction

Quantitative phase imaging (QPI) [1] has emerged as a powerful label-free method for biomedical applications [2]. More recently, due to its high sensitivity to tissue nanoarchitecture and its quantitative output, QPI has proven valuable in pathology [3, 4]. Combining spatial light interference microscopy (SLIM, [5, 6]) with dedicated software for whole slide imaging (WSI) allowed us to demonstrate the value of tissue refractive index as an intrinsic marker for diagnosis and prognosis [7–14]. So far, we have used various metrics derived from the QPI map to obtain clinically relevant information. For example, we found that translating the data into tissue scattering coefficients can help predict disease recurrence after prostatectomy. SLIM’s sensitivity to collagen fibers proved useful in the diagnosis and prognosis of breast cancer [12, 14, 15]. While this “feature engineering” approach has the advantage of giving physical significance to the computed markers, it covers only a limited range of the parameters available from our data. In other words, some useful parameters may never be computed at all, and this restricted analysis is likely to limit the ultimate performance of our procedure.

Recently, artificial intelligence (AI) has received significant interest from the biomedical community [16–20]. In image processing, AI provides an exciting opportunity to extract more information from a given set of data, with high throughput [20]. In contrast to feature engineering, a deep convolutional neural network learns a very large number of features directly from the images, which can improve performance on the task at hand. Here, we apply, for the first time to our knowledge, SLIM and AI to classify colorectal tissue as cancer or benign.

Genetic mutations accumulating over the course of 5–10 years lead to the development of colorectal cancer from benign adenomatous polyps [21]. Early diagnosis reduces disease-specific mortality: cancers diagnosed early (while still localized) have an 89.8% 5-year survival rate, compared with a 12.9% 5-year survival rate for patients with distant metastasis or late-stage disease [22]. Colonoscopy is the preferred form of screening in the U.S. From 2002 to 2010, the percentage of persons aged 50–75 years who underwent colorectal cancer screening increased from 54% to 65% [23]. Among all individuals undergoing colonoscopy, the prevalence of adenoma is 25–27%, and the prevalence of high-grade dysplasia and colorectal cancer is 1–3.3% [24, 25]. Because current screening methods cannot distinguish adenomas from benign polyps with high accuracy, a biopsy or polyp removal is performed in 50% of all colonoscopies [26]. A pathologist then examines the excised polyps to determine whether the tissue is benign, dysplastic, or cancerous.

New technologies for quantitative and automated tissue investigation are needed to reduce the dependence on manual examination and to enable large-scale screening strategies. As a successful precedent, the Papanicolaou test (Pap smear) for cervical cancer screening has benefited from computational screening tools [27]. The staining procedure, which is critical to the proper operation of such systems, is designed to match their calibration thresholds [28].

We used a SLIM-based tissue scanner in combination with AI to classify cancer and benign cases. We demonstrate the clinical value of the new method by performing automatic colon screening, using intrinsic tissue markers. Importantly, such a measurement does not require staining or calibration. Therefore, in contrast to current staining markers, signatures developed from the phase information can be shared across laboratories and instruments, without modification.

Results and methods

SLIM whole slide scanner

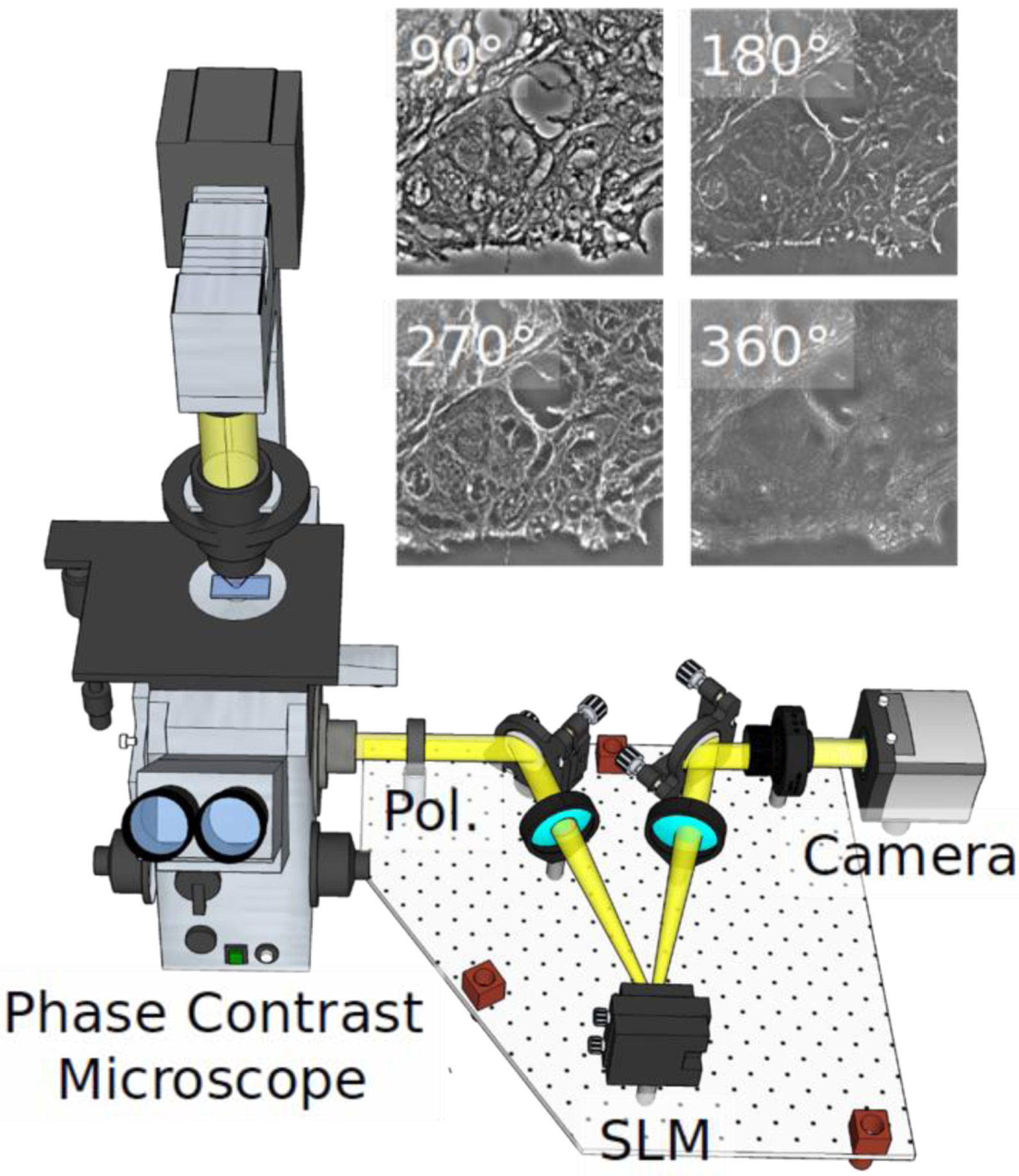

Our label-free SLIM scanner, consisting of dedicated hardware and software, is described in more detail in [29]. Figure 1 illustrates the SLIM module (Cell Vista SLIM Pro, Phi Optics, Inc.), which is added to an existing phase contrast microscope. In essence, SLIM works by making the phase shift of the ring in the phase contrast objective pupil tunable. To achieve this, the image output by the phase contrast microscope is Fourier transformed onto the plane of a spatial light modulator (SLM), which produces pure phase modulation. At this plane, the image of the phase contrast ring is precisely matched to the SLM phase mask, which is shifted in increments of 90° (Fig. 1). From the four intensity images corresponding to the four ring phase shifts, the quantitative phase image is retrieved uniquely at each point in the field of view. Figure 2 shows examples of SLIM images of tissue cores and glands for cancer and normal colon cases.
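The retrieval from four phase-shifted frames can be illustrated with a minimal NumPy sketch. It assumes an idealized two-beam interference model, I(δ) = |U0|² + |U1|² + 2|U0||U1|cos(Δφ + δ) for ring shifts δ = 0, π/2, π, 3π/2, where U0 is the unscattered and U1 the scattered field. The function name `slim_phase` and the pixel-wise estimate of β = |U1|/|U0| (taking the root β ≤ 1) are our own illustrative assumptions; the actual scanner software [29] may include additional corrections not shown here.

```python
import numpy as np

def slim_phase(i_0, i_90, i_180, i_270):
    """Hypothetical four-step phase retrieval under an idealized interference model.

    Each frame is assumed to be I(delta) = A + B*cos(dphi + delta), with
    A = |U0|^2 + |U1|^2 and B = 2|U0||U1|, for delta = 0, pi/2, pi, 3*pi/2.
    Returns the phase of the total field U0 + U1 relative to U0.
    """
    i_0, i_90, i_180, i_270 = (np.asarray(f, dtype=float) for f in (i_0, i_90, i_180, i_270))
    c = i_0 - i_180                       # = 2B cos(dphi)
    s = i_270 - i_90                      # = 2B sin(dphi)
    dphi = np.arctan2(s, c)               # phase of U1 relative to U0
    a = (i_0 + i_90 + i_180 + i_270) / 4  # = A, the cosine terms cancel
    b = np.hypot(c, s) / 2                # = B
    # beta = |U1|/|U0| solves b/a = 2*beta/(1 + beta^2); keep the root with beta <= 1
    safe_b = np.where(b > 0, b, 1.0)
    beta = np.where(b > 0, (a - np.sqrt(np.maximum(a**2 - b**2, 0.0))) / safe_b, 0.0)
    # U0 + U1 is proportional to 1 + beta*exp(i*dphi); return its argument
    return np.arctan2(beta * np.sin(dphi), 1.0 + beta * np.cos(dphi))
```

The angle Δφ alone is the phase of the scattered field relative to the unscattered one; combining it with β gives the phase of the total field.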

Fig. 1.

SLIM system implemented as an add-on to an existing phase contrast microscope. Pol: polarizer; SLM: spatial light modulator. The four independent frames corresponding to the four phase shifts imparted by the SLM are shown for a tissue sample.

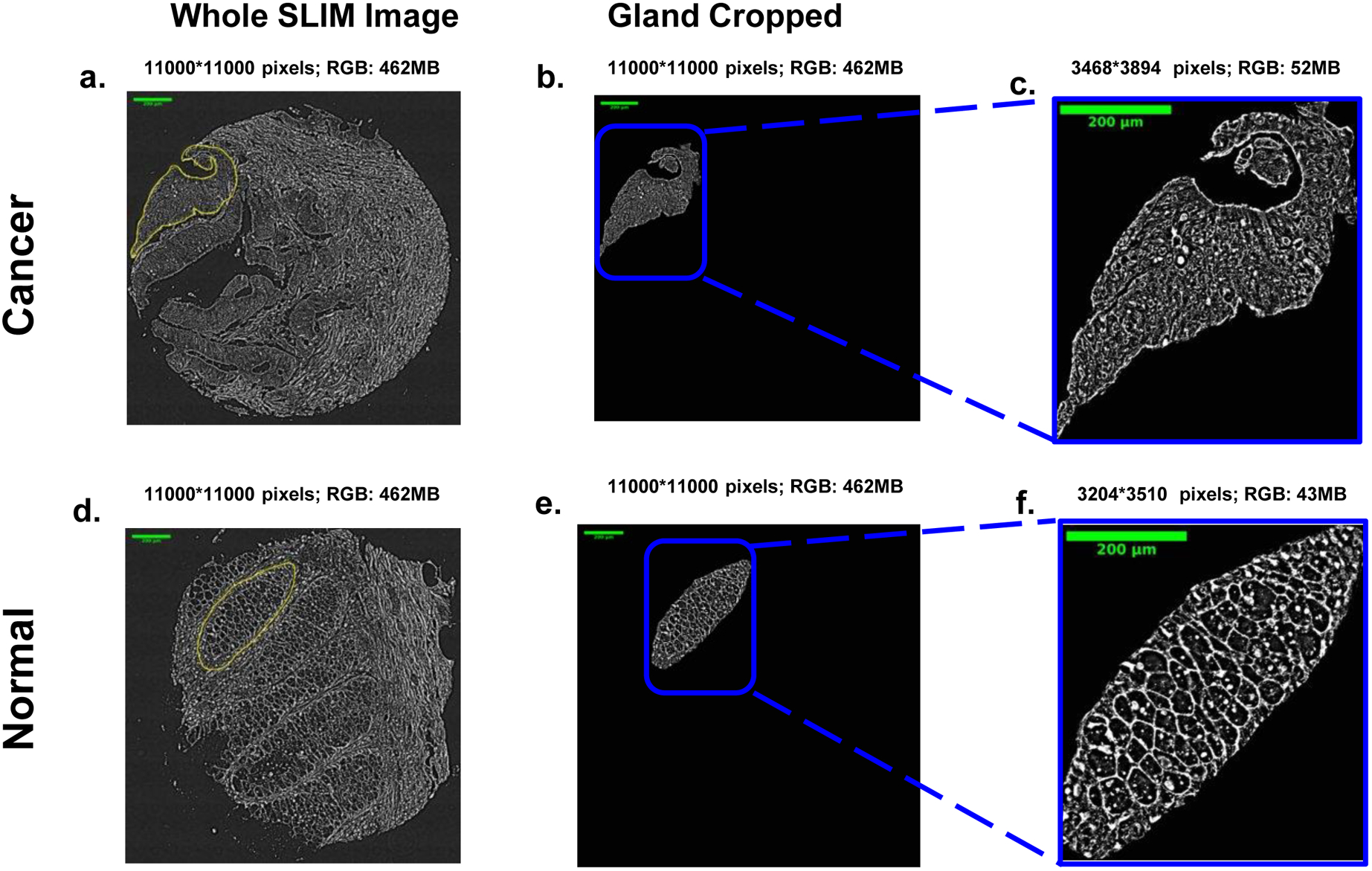

Fig. 2.

Examples of cancer (a–c) and normal (d–f) tissue from a microarray of colon patients. Classification is performed at the glandular scale; glands are manually annotated for ground truth, as illustrated in b–c and e–f.

The SLIM tissue scanner can acquire the four intensity images, process them, and display the phase image, all in real time. This is possible owing to novel acquisition software that seamlessly combines CPU and GPU processing [29]. The SLIM phase retrieval computation runs on a separate thread while the microscope stage moves to the next position. Scanning large fields of view, e.g., entire microscope slides, and assembling the resulting images into single files required the development of new dedicated software tools [29]. The final SLIM images (Fig. 2) are displayed in real time at up to 15 frames per second, limited by the refresh rate of the spatial light modulator, which must run 4× faster because each phase image requires four modulated frames.
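The overlap between stage motion and phase retrieval can be sketched as a producer-consumer pipeline. This is a minimal illustration using Python’s standard `threading` and `queue` modules, not the CPU/GPU scheme of the actual software [29]; `acquire` and `process` are hypothetical callables standing in for hardware control and phase retrieval.

```python
import queue
import threading

def scan_mosaic(positions, acquire, process, buffer_size=2):
    """Acquire tiles on the calling thread while a worker thread processes them.

    acquire(pos) -> raw frames for one tile (hypothetical: stage move + 4 exposures)
    process(frames) -> processed tile (hypothetical: phase retrieval)
    """
    work = queue.Queue(maxsize=buffer_size)  # bounded, so acquisition cannot run far ahead
    results = {}

    def worker():
        while True:
            item = work.get()
            if item is None:                 # sentinel: no more tiles
                return
            pos, frames = item
            results[pos] = process(frames)

    thread = threading.Thread(target=worker)
    thread.start()
    for pos in positions:
        work.put((pos, acquire(pos)))        # blocks only if the worker falls behind
    work.put(None)
    thread.join()                            # every tile has been processed after this
    return results
```

While the worker processes tile k, the main thread is already moving to and acquiring tile k + 1, so processing time is hidden behind acquisition as long as it is shorter.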

Deep learning model

The images used for deep learning training were prepared as follows. Our specimens consisted of a tissue microarray (TMA) prepared from archival pathology material collected from 131 patients. All patients underwent colon resection for treatment of colon cancer at the University of Illinois at Chicago (UIC) between 1993 and 1999. For each case, tissue cores of 0.6 mm diameter, corresponding to “tumor, normal mucosa, dysplastic mucosa, and hyperplastic mucosa”, were retrieved. The tissue cores were transferred into a high-density array composed of primary colon cancer (n=127 patients) and normal (n=131 patients), dysplastic (n=33 patients), and hyperplastic (n=86 patients) colon mucosa.

Two 4-μm thick sections were cut from each of the four tissue blocks. The first section was deparaffinized, stained with hematoxylin and eosin (H&E), and imaged using the NanoZoomer (bright-field slide scanner, Hamamatsu Corporation). A pathologist made a diagnosis for all tissue cores in the TMA set, which served as the “ground truth” for our analysis. A second, adjacent section was prepared in a similar way but without the staining step, and this slide was sent to our laboratory for imaging. These studies followed the protocols outlined in the procedures approved by the Institutional Review Board at the University of Illinois at Urbana-Champaign (IRB Protocol No. 13900). Prior to imaging, the tissue slices were deparaffinized and cover-slipped with an aqueous mounting medium. The tissue microarray image was assembled from mosaic tiles acquired using a conventional microscope (Zeiss Axio Observer, 40x/0.75, AxioCam MRm 1.4MP CCD). Overlapping tiles were composited on a per-core basis using ImageJ’s stitching functionality. For comparison with other approaches, an updated version of this instrument (Cell Vista SLIM Pro, Phi Optics, Inc.) can currently acquire a core within four seconds. Specifically, a 1.2 mm × 1.2 mm region consisting of 4 × 4 mosaic tiles can be acquired within four seconds at 0.4 μm resolution. In this estimate, we allow 100 ms for stage motion, 30 ms for SLM stabilization, and 10 ms for exposure. The resulting large image file was then cropped into 176 images of 10,000 × 10,000 pixels, each corresponding to a 1587.3 × 1587.3 μm² field of view.
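The four-second figure can be checked with a back-of-the-envelope model. This sketch assumes (our assumption, not stated above) that the stage moves once per tile and that each of the four SLM-modulated frames needs its own 30 ms stabilization and 10 ms exposure, with no overlap between steps; the function name is hypothetical.

```python
def core_acquisition_time_s(tiles_x=4, tiles_y=4, stage_ms=100.0,
                            slm_settle_ms=30.0, exposure_ms=10.0, frames_per_tile=4):
    """Rough time (s) to acquire one tiled core, assuming no overlap between steps."""
    # Assumption: one stage move per tile, then four SLM phase shifts,
    # each needing its own settling time and exposure.
    per_tile_ms = stage_ms + frames_per_tile * (slm_settle_ms + exposure_ms)
    return tiles_x * tiles_y * per_tile_ms / 1000.0

print(f"{core_acquisition_time_s():.2f} s per 4 x 4 mosaic")  # 4.16 s per 4 x 4 mosaic

# Sampling of each cropped 10,000 x 10,000-pixel image (1587.3 um field of view)
pixel_um = 1587.3 / 10_000
print(f"{pixel_um:.4f} um per pixel")                         # 0.1587 um per pixel
```

Under these assumptions, each tile takes 260 ms and a 4 × 4 mosaic about 4.2 s, in line with the roughly four seconds quoted above.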

Manual segmentation of glands was performed on these cropped images using ImageJ. We selected all cancer and normal images, manually segmented each gland, and saved it as an independent gland image. In total, 1844 colon gland images were obtained: 922 cancer gland images and 922 normal gland images. Of these, 1660 gland images were assigned to a training dataset, 144 to a validation dataset, and 40 to a test dataset. Each of the three datasets contains equal numbers of cancer and normal images.
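A class-balanced split of this kind can be sketched as follows. The sketch assumes a random per-class shuffle with a fixed seed; the text above does not state how glands were assigned to each subset (for example, whether glands from the same patient were kept together), so the hypothetical `balanced_split` function is illustrative only.

```python
import numpy as np

def balanced_split(labels, sizes_per_class=(830, 72, 20), seed=0):
    """Split indices into train/val/test with equal counts of each class.

    labels: 1-D array of class labels (e.g. 0 = normal, 1 = cancer).
    sizes_per_class: number of images of EACH class in train, val, test.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    splits = [[], [], []]
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        if len(idx) < sum(sizes_per_class):
            raise ValueError(f"class {cls} has only {len(idx)} images")
        start = 0
        for split, n in zip(splits, sizes_per_class):
            split.extend(idx[start:start + n].tolist())
            start += n
    return [np.array(s) for s in splits]

labels = np.array([1] * 922 + [0] * 922)  # 922 cancer + 922 normal glands
train, val, test = balanced_split(labels)
print(len(train), len(val), len(test))    # 1660 144 40
```

With 830 + 72 + 20 = 922 images per class, every gland is used exactly once.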

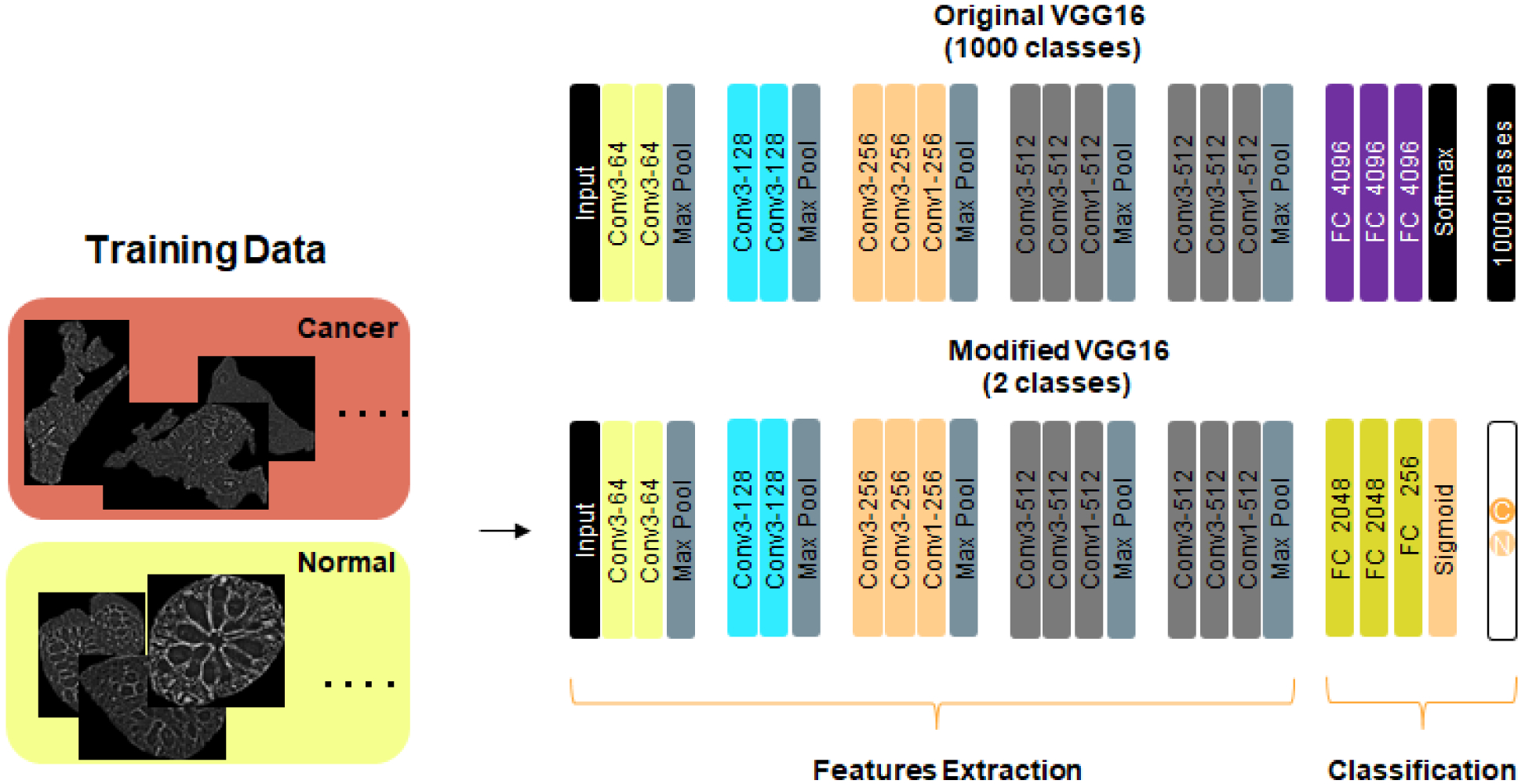

To build our deep learning classifier, we used a transfer learning approach that leverages pretrained convolutional networks (convnets). This approach is especially useful when only a limited amount of data is available to train a model. We selected the VGG16 network, pretrained on a large dataset (ImageNet, over a million images of various sizes in 1000 classes; see Fig. 3). Among the long list of pretrained models, such as ResNet, Inception, Inception-ResNet, Xception, and MobileNet, we chose VGG16 due to its rich feature-extraction capabilities (see the network in Fig. 3 and Ref. [30]). VGG16 has about 138M parameters, occupying over 528 MB of storage, in only 16 weight layers. We reuse VGG16’s parameters in its convolutional base, i.e., the first five blocks of the network, to extract the rich features hidden within each gland image. The “top layer” of the VGG16 network is replaced by several fully connected layers and a final sigmoid activation unit, whose output is the class probability predicted by the network. This prediction is fed into a cross-entropy loss function. Stochastic gradient descent methods are used to update the weights of the new network in two steps. In the first step, we import the VGG16 weights and replace the top layer (three fully connected layers and a softmax classifier) with our fully connected layers and a sigmoid for binary classification. During this step, we “freeze” the weights of the convolutional layers and update only the weights of the newly added top layers. In the second and final step, we “unfreeze” the weights of the convolutional layers and fine-tune all the weights (convolutional and top layers). The original input image size of VGG16 was 224×224×3; we increased it to 256×256×3.
Since our images are grayscale and VGG16 accepts only RGB images as input, we copied each image three times and placed the three copies in the R, G, and B channels. The new fully connected (FC) layers have 2048 units each and are followed by a dense layer with 256 units; a dropout of 0.5 is applied immediately after the first 2048-unit FC layer. This selection of FC layers and dropout resulted in the best performance on our validation and test sets.
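The two-step transfer learning procedure can be sketched in TensorFlow/Keras. This is a minimal illustration under our own assumptions, not the authors’ code: we assume exactly two 2048-unit FC layers, a `Flatten` layer between the convolutional base and the FC layers, ReLU activations, and SGD with momentum at the hypothetical learning rates shown. The helper names `to_three_channels`, `build_classifier`, and `unfreeze_for_fine_tuning` are hypothetical; `tf.keras.applications.VGG16` with `include_top=False` supplies the five pretrained convolutional blocks.

```python
import numpy as np
import tensorflow as tf

def to_three_channels(gray):
    """Replicate a (H, W) grayscale SLIM image into (H, W, 3) for VGG16."""
    gray = np.asarray(gray, dtype=np.float32)
    return np.repeat(gray[..., np.newaxis], 3, axis=-1)

def _compile(model, learning_rate):
    model.compile(
        optimizer=tf.keras.optimizers.SGD(learning_rate=learning_rate, momentum=0.9),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )

def build_classifier(weights="imagenet", input_shape=(256, 256, 3)):
    # Convolutional base: the five VGG16 blocks without the original top layers.
    base = tf.keras.applications.VGG16(
        weights=weights, include_top=False, input_shape=input_shape)
    base.trainable = False  # step 1: freeze the pretrained convolutional weights

    x = tf.keras.layers.Flatten()(base.output)
    x = tf.keras.layers.Dense(2048, activation="relu")(x)
    x = tf.keras.layers.Dropout(0.5)(x)  # dropout only after the first FC layer
    x = tf.keras.layers.Dense(2048, activation="relu")(x)
    x = tf.keras.layers.Dense(256, activation="relu")(x)
    out = tf.keras.layers.Dense(1, activation="sigmoid")(x)  # assumed P(cancer), label 1

    model = tf.keras.Model(base.input, out)
    _compile(model, learning_rate=1e-3)  # hypothetical learning rate
    return model, base

def unfreeze_for_fine_tuning(model, base, learning_rate=1e-5):
    # Step 2: unfreeze the base and fine-tune all weights; recompile so it takes effect.
    base.trainable = True
    _compile(model, learning_rate)
    return model
```

In use, one would call `model.fit` after `build_classifier`, then call `unfreeze_for_fine_tuning` and `model.fit` again. A much smaller learning rate for fine-tuning is a common choice to avoid destroying the pretrained features; it is not a value reported in this work.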

Fig. 3.

Modified VGG16 network. Input image size is 256 × 256 × 3. A pad of length 1 is added before each Max Pool layer. Conv1: convolutional layer with 1×1 filter; Conv3: convolutional layer with 3×3 filter; Max Pool: maximum pooling layer over 2×2 pixels (stride = 2). All hidden layers are followed by ReLU activation. The first FC layer is followed by a 0.5 dropout. The 1660 training images are split equally into 830 “normal” and 830 “cancer” images; the validation and test images are likewise split equally between the two classes.

Model accuracy and loss

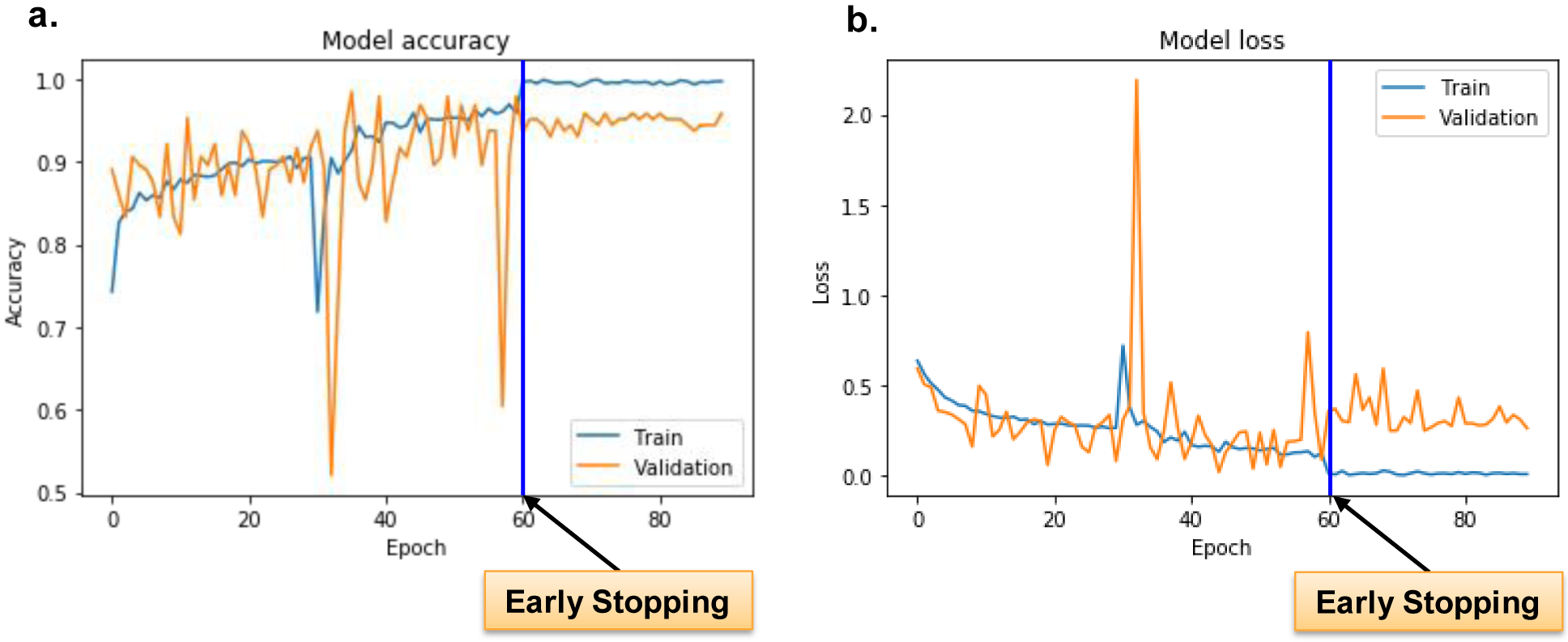

Model accuracy and losses are shown in Fig. 4. The shape of the loss curves is a good proxy for assessing whether a model is “underfitting”, “overfitting”, or “just right”. In general, a deep learning model is said to underfit when it fails to make efficient use of the training data; in this case, the training loss plateaus at a non-zero value beyond a certain epoch. Similarly, a model is said to overfit the training data when the training loss keeps decreasing while the validation loss stalls and then starts to increase. Both underfitting and overfitting are signs of poor generalizability. For “just-right” models, on the other hand, the training and validation loss curves tend to follow each other closely and converge towards zero or very small values. We stopped the training at epoch 60 (a practice known as early stopping), when the network was no longer improving its generalization (i.e., the validation loss had started to increase). Early stopping is implemented by saving the trained weights of our network at the point where the validation loss is lowest during the entire training run. In our training, the “best” model was saved at epoch 36, where the validation accuracy reached its highest value of 0.98.
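The checkpoint-and-stop logic described above can be sketched in plain Python. This is an illustration only: the `patience` parameter (how many epochs without improvement to tolerate) and the function name are our assumptions, since the text does not specify the exact stopping rule. In Keras, the same behavior is typically obtained with the `ModelCheckpoint` (with `save_best_only=True`) and `EarlyStopping` callbacks monitoring `val_loss`.

```python
def early_stopping(val_losses, patience):
    """Return (best_epoch, stop_epoch), both 1-indexed.

    best_epoch: epoch whose weights would be checkpointed (lowest validation loss).
    stop_epoch: epoch at which training halts after `patience` epochs without
    improvement, or the last epoch if that never happens.
    """
    best_loss = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch  # new best: "save" the weights here
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch             # no improvement for `patience` epochs
    return best_epoch, len(val_losses)

val_loss = [0.9, 0.5, 0.3, 0.4, 0.35, 0.6]
print(early_stopping(val_loss, patience=3))  # (3, 6)
```

Training stops at epoch 6, but the weights reported are those from epoch 3, which mirrors how the stopping epoch and the saved “best” epoch differ in Fig. 4.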

Fig. 4.

Model accuracy and loss. Training and validation accuracy and loss, with moderate data augmentation. a.) The training accuracy ranges from 0.7186 to 0.9695, while the validation accuracy ranges from 0.5208 to 0.9844. b.) The training loss ranges from 0.1029 to 0.7192, while the validation loss ranges from 0.019 to 2.1918. The largest validation loss, 2.1918, occurs at epoch 33, where the validation accuracy also reaches its lowest value, 0.5208. The blue line in panels a and b marks the early-stopping point: after epoch 60, the validation loss no longer improves. Only the best model is saved during training.

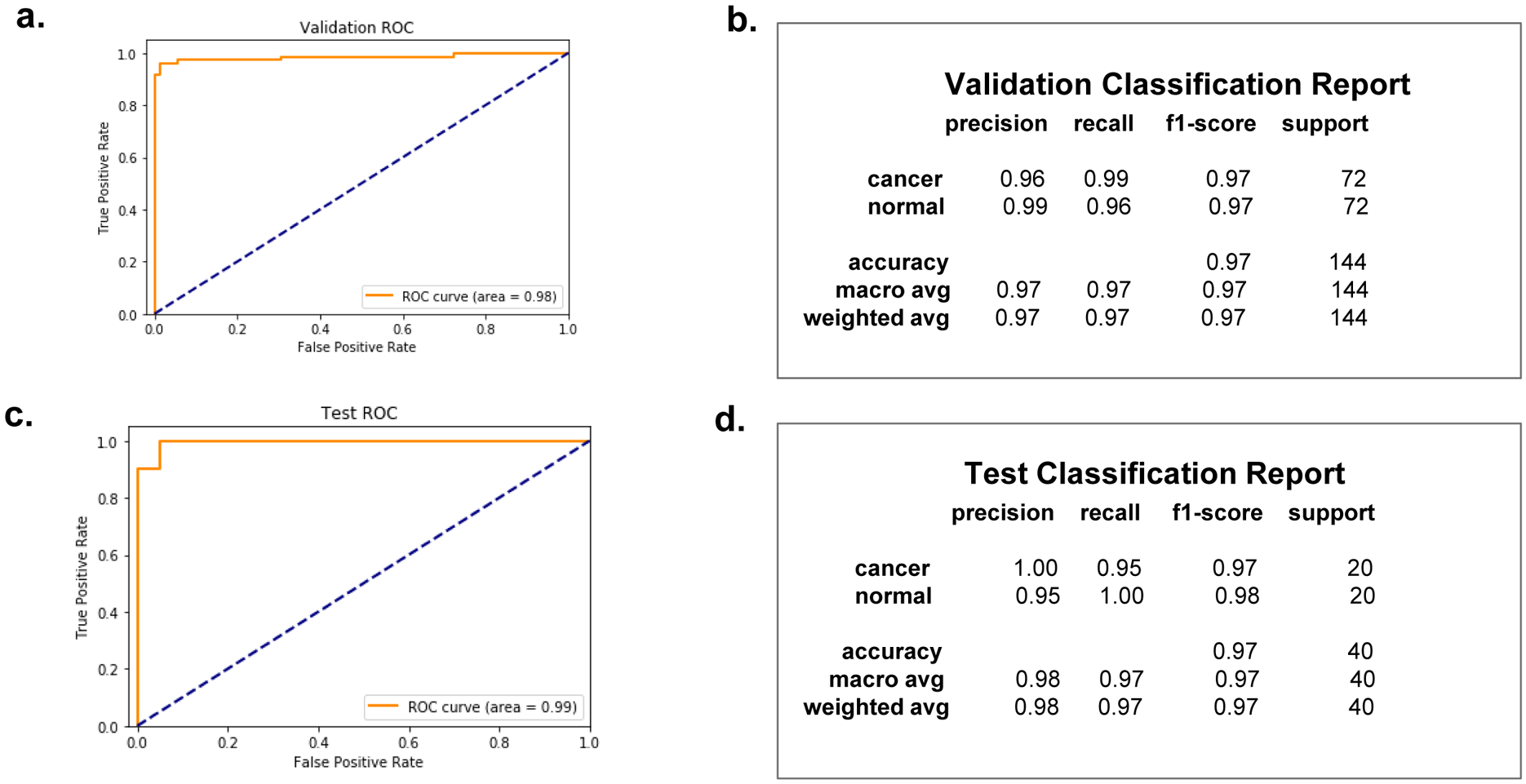

ROC, AUC, and classification reports for validation and test

The receiver operating characteristic (ROC) curve and the area under the curve (AUC) are the two metrics we use to report the performance of our network on the validation and test datasets (Fig. 5). The ROC curve displays the performance of our deep learning classifier at various decision thresholds, plotting the true positive rate on the y-axis against the false positive rate on the x-axis. Figure 5a shows the ROC curves for the validation and test datasets. The AUC is 0.98 on the validation set and 0.99 on the test set, as also shown in Fig. 5d. The accuracy for both the validation and test datasets was 97%.
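The sketch below shows how an ROC curve and its AUC can be computed from a network’s sigmoid outputs with NumPy. It assumes label 1 = “cancer” (positive) and 0 = “normal”, and integrates the ROC points with the trapezoidal rule; the function names are hypothetical, and library routines such as scikit-learn’s `roc_curve` and `roc_auc_score` would normally be used instead.

```python
import numpy as np

def roc_points(labels, scores):
    """False/true positive rates obtained by sweeping the threshold over all scores.

    labels: 1 for "cancer" (positive), 0 for "normal"; scores: sigmoid outputs.
    """
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    n_pos, n_neg = labels.sum(), (~labels).sum()
    fpr, tpr = [0.0], [0.0]
    for t in np.unique(scores)[::-1]:        # distinct thresholds, high to low
        predicted_pos = scores >= t
        tpr.append(np.sum(predicted_pos & labels) / n_pos)
        fpr.append(np.sum(predicted_pos & ~labels) / n_neg)
    return np.array(fpr), np.array(tpr)

def auc(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))

fpr, tpr = roc_points([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(auc(fpr, tpr))  # 0.75
```

The AUC equals the probability that a randomly chosen cancer gland receives a higher score than a randomly chosen normal gland, which is why it is insensitive to the particular threshold used to report accuracy.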

Fig. 5.

ROC (receiver operating characteristic) curves with AUC (area under the curve), and classification reports, for the validation dataset and the test dataset, respectively. The AUC is 0.98 for the validation dataset and 0.99 for the test dataset, as indicated. The two classes, cancer and normal, have balanced support in both datasets: 72 instances per class in the validation dataset and 20 instances per class in the test dataset. The accuracy is 97% for both the validation and the test datasets.

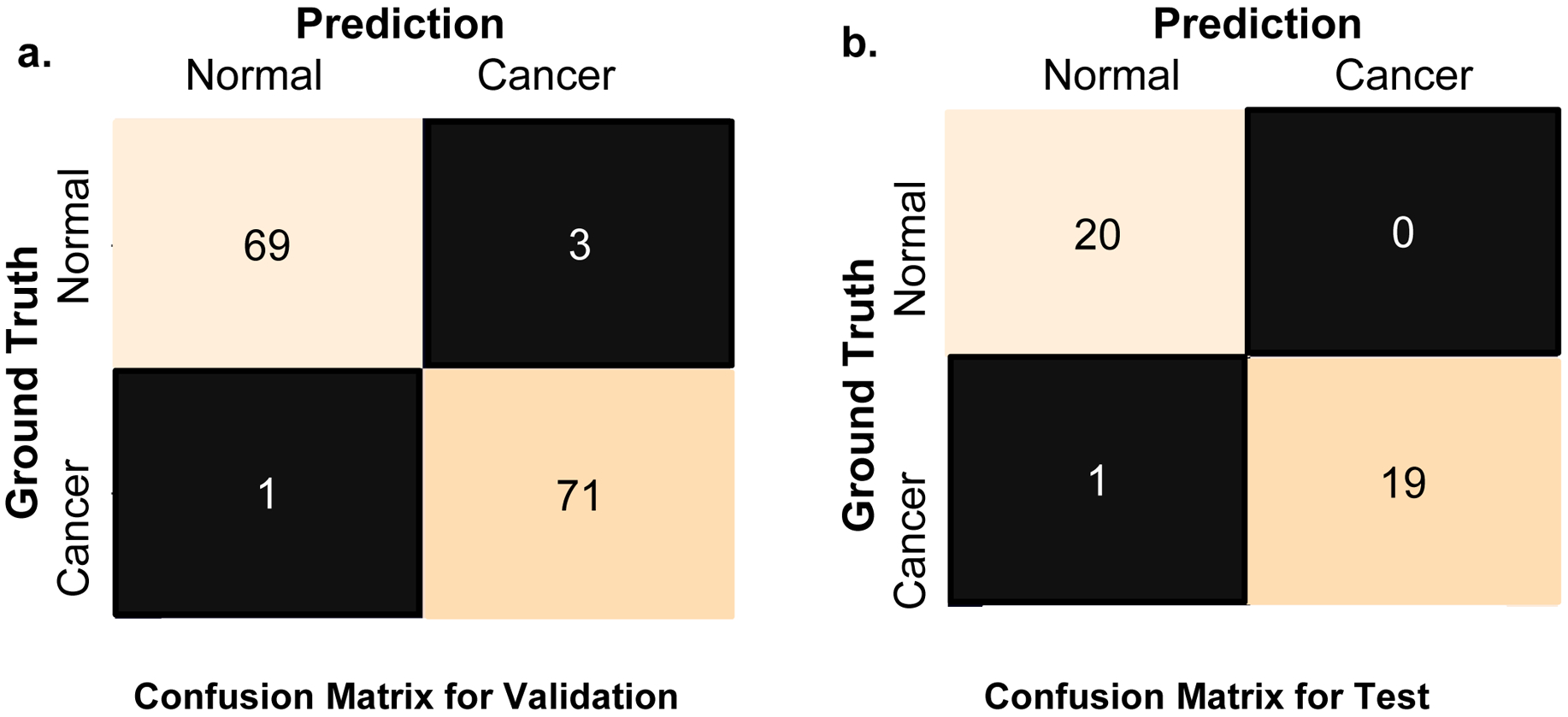

Confusion matrix for validation and test

The confusion matrix provides a quantitative measure of the performance of a binary classifier. There are two classes in our confusion matrix: “normal” and “cancer” gland. A confusion matrix captures two types of errors: a Type I error is a false positive, where “normal” is classified as “cancer”, and a Type II error is a false negative, where “cancer” is classified as “normal”. The confusion matrix of a perfect classifier is diagonal, i.e., it contains only true negatives and true positives.

The confusion matrices for the validation and test datasets are shown in Figs. 6a and 6b, respectively. In the first row of Fig. 6a, 69 of the 72 “normal” instances are correctly predicted (true negatives), and 3 of the 72 are wrongly predicted as “cancer” (false positives, Type I error). In the second row of Fig. 6a, 71 of the 72 “cancer” instances are correctly predicted as “cancer” (true positives), and 1 is wrongly predicted as “normal” (false negative, Type II error). In the first row of Fig. 6b, all 20 “normal” instances are correctly predicted as “normal”, and none is wrongly predicted as “cancer”. In the second row of Fig. 6b, 19 of the 20 “cancer” instances are correctly predicted as “cancer”, and only 1 is wrongly predicted as “normal” (false negative, Type II error).
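The counts in Fig. 6 determine the usual summary metrics directly. The sketch below builds a 2 × 2 confusion matrix with rows ordered as in Fig. 6 (actual “normal” first, then “cancer”), assuming label 0 = normal and 1 = cancer, and derives accuracy, sensitivity, and specificity. The helper names are hypothetical, and the per-sample labels are reconstructed from the reported validation counts purely for illustration.

```python
import numpy as np

def confusion_matrix_2x2(y_true, y_pred):
    """Rows: actual class (0 = normal, 1 = cancer); columns: predicted class.
    Returns [[TN, FP], [FN, TP]]."""
    cm = np.zeros((2, 2), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm

def summary_metrics(cm):
    (tn, fp), (fn, tp) = cm
    return {
        "accuracy": (tp + tn) / cm.sum(),
        "sensitivity": tp / (tp + fn),  # true positive rate for "cancer"
        "specificity": tn / (tn + fp),  # true negative rate for "normal"
    }

# Validation counts from Fig. 6a: 69 TN, 3 FP, 1 FN, 71 TP
y_true = [0] * 72 + [1] * 72
y_pred = [0] * 69 + [1] * 3 + [0] * 1 + [1] * 71
cm = confusion_matrix_2x2(y_true, y_pred)
print(cm.tolist())  # [[69, 3], [1, 71]]
print(summary_metrics(cm))
```

For the validation set this gives an accuracy of 140/144 ≈ 97.2%, a sensitivity of 71/72 ≈ 98.6%, and a specificity of 69/72 ≈ 95.8%; the test counts (20 TN, 0 FP, 1 FN, 19 TP) give 39/40 = 97.5% accuracy.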

Fig. 6. Confusion Matrix for Validation (a) and Test (b).

a.) For class cancer, 71 of the 72 images were correctly classified as cancer, while for class normal, 69 of the 72 images were correctly classified as normal. b.) For class cancer, 19 of the 20 images were correctly classified as cancer, while for class normal, all 20 images were correctly classified as normal.

Summary and discussion

In summary, we showed that applying AI (deep transfer learning) to SLIM images yields excellent performance in classifying cancer and benign tissue. The gland-scale areas under the ROC curve of 0.98 (validation dataset) and 0.99 (test dataset), together with 97% accuracy on both, suggest that this approach may prove valuable, especially for screening applications. The SLIM module can be added to existing microscopes already in use in pathology laboratories around the world. Thus, this new tool could be readily adopted at a large scale as a prescreening tool, enabling the pathologist to screen through cases quickly. In the future, this approach can be applied to more difficult tasks, such as quantifying the aggressiveness of the disease [12], and to other types of cancer, with proper optimization of the network.

It has been shown in a different context that the inference step can be implemented in the SLIM acquisition software [Kandel et al., under review]. Because inference is faster than the acquisition of a SLIM frame and can be performed in parallel, we anticipate that classification can be performed in real time. The overall throughput of the SLIM tissue scanner is comparable with that of commercial whole slide scanners that only perform bright-field imaging of stained tissue sections [31]. In principle, the classification results, with areas of interest highlighted for the clinician, could be available as soon as the scan is complete, within a couple of minutes. In the next phase of this project, we plan to work with clinicians to further assess the performance of our classifier against experts.

Acknowledgements

We are grateful to Mikhail Kandel, Shamira Sridharan, and Andre Balla for imaging, annotating and diagnosing the tissues used in this study. This work was funded by NSF 0939511, R01 GM129709, R01 CA238191, R43GM133280-01.

Footnotes

Data available on request from the authors.

References and Notes:

- 1. Popescu G, Quantitative phase imaging of cells and tissues. 2011: McGraw Hill Professional.

- 2. Park Y, Depeursinge C, and Popescu G, Quantitative phase imaging in biomedicine. Nature Photonics, 2018. 12(10): p. 578.

- 3. Uttam S, et al., Early prediction of cancer progression by depth-resolved nanoscale mapping of nuclear architecture from unstained tissue specimens. Cancer Research, 2015. 75(22): p. 4718–4727.

- 4. Majeed H, et al., Quantitative phase imaging for medical diagnosis. Journal of Biophotonics, 2017. 10(2): p. 177–205.

- 5. Wang Z, et al., Spatial light interference microscopy (SLIM). Optics Express, 2011. 19(2): p. 1016–1026.

- 6. Kim T, et al., White-light diffraction tomography of unlabelled live cells. Nature Photonics, 2014. 8(3): p. 256.

- 7. Wang Z, et al., Tissue refractive index as marker of disease. Journal of Biomedical Optics, 2011. 16(11): p. 116017.

- 8. Nguyen TH, et al., Automatic Gleason grading of prostate cancer using quantitative phase imaging and machine learning. Journal of Biomedical Optics, 2017. 22(3): p. 036015.

- 9. Sridharan S, et al., Prediction of prostate cancer recurrence using quantitative phase imaging: Validation on a general population. Scientific Reports, 2016. 6(1): p. 1–9.

- 10. Sridharan S, et al., Prediction of prostate cancer recurrence using quantitative phase imaging. Scientific Reports, 2015. 5(1): p. 1–10.

- 11. Takabayashi M, et al., Tissue spatial correlation as cancer marker. Journal of Biomedical Optics, 2019. 24(1): p. 016502.

- 12. Majeed H, et al., Quantitative histopathology of stained tissues using color spatial light interference microscopy (cSLIM). Scientific Reports, 2019. 9.

- 13. Takabayashi M, et al., Disorder strength measured by quantitative phase imaging as intrinsic cancer marker in fixed tissue biopsies. PLoS ONE, 2018. 13(3).

- 14. Majeed H, et al., Label-free quantitative evaluation of breast tissue using Spatial Light Interference Microscopy (SLIM). Scientific Reports, 2018. 8(1): p. 1–9.

- 15. Majeed H, et al., Quantifying collagen fiber orientation in breast cancer using quantitative phase imaging. Journal of Biomedical Optics, 2017. 22(4): p. 046004.

- 16. Christiansen EM, et al., In silico labeling: Predicting fluorescent labels in unlabeled images. Cell, 2018. 173(3): p. 792–803.e19.

- 17. Ounkomol C, et al., Label-free prediction of three-dimensional fluorescence images from transmitted-light microscopy. Nature Methods, 2018. 15(11): p. 917–920.

- 18. Rivenson Y, et al., PhaseStain: the digital staining of label-free quantitative phase microscopy images using deep learning. Light: Science & Applications, 2019. 8(1): p. 1–11.

- 19. Rivenson Y, et al., Virtual histological staining of unlabelled tissue-autofluorescence images via deep learning. Nature Biomedical Engineering, 2019. 3(6): p. 466.

- 20. Mahjoubfar A, Chen CL, and Jalali B, Deep learning and classification, in Artificial Intelligence in Label-free Microscopy. 2017, Springer. p. 73–85.

- 21. Muto T, Bussey H, and Morson B, The evolution of cancer of the colon and rectum. Cancer, 1975. 36(6): p. 2251–2270.

- 22. Howlader N, et al., SEER Cancer Statistics Review, 1975–2011. 2014: National Cancer Institute, Bethesda, MD.

- 23. Klabunde CN, et al., Vital signs: colorectal cancer screening test use, United States, 2012. MMWR. Morbidity and Mortality Weekly Report, 2013. 62(44): p. 881.

- 24. Giacosa A, Frascio F, and Munizzi F, Epidemiology of colorectal polyps. Techniques in Coloproctology, 2004. 8(2): p. s243–s247.

- 25. Winawer SJ, Natural history of colorectal cancer. The American Journal of Medicine, 1999. 106(1): p. 3–6.

- 26. Pollitz K, et al., Coverage of colonoscopies under the Affordable Care Act’s prevention benefit. The Henry J. Kaiser Family Foundation, American Cancer Society, and National Colorectal Cancer Roundtable (September 2012). Accessed at http://kaiserfamilyfoundation.files.wordpress.com/2013/01/8351.pdf, 2012.

- 27. Pantanowitz L, Automated pap tests, in Practical Informatics for Cytopathology. 2014, Springer. p. 147–155.

- 28. Chen YC, et al., X-Ray Computed Tomography of Holographically Fabricated Three-Dimensional Photonic Crystals. Advanced Materials, 2012. 24(21): p. 2863–2868.

- 29. Kandel ME, et al., Label-free tissue scanner for colorectal cancer screening. Journal of Biomedical Optics, 2017. 22(6): p. 066016.

- 30. Simonyan K and Zisserman A, Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- 31. Kandel ME, et al., Label-free tissue scanner for colorectal cancer screening. Journal of Biomedical Optics, 2017. 22(6): p. 066016.

- 32. The data that support the findings of this study are available from the corresponding author upon reasonable request.