Abstract

Aims

China lacks primary-care ophthalmologists, so basic-level hospitals are often unable to diagnose pterygium. To address this problem, this study proposes an intelligent-assisted lightweight pterygium diagnosis model based on anterior segment images.

Methods

Pterygium is a common ophthalmic disease, and fibrous tissue hyperplasia is both a diagnostic and a surgical biomarker; the model diagnoses pterygium on the basis of these biomarkers. First, 436 anterior segment images were collected; then, two intelligent-assisted lightweight pterygium diagnosis models (MobileNet 1 and MobileNet 2), based on raw and augmented data respectively, were trained via transfer learning. The results of the lightweight models were compared with the clinical diagnoses. The classic models AlexNet, VGG16, and ResNet18 were also trained and tested, and their results were compared with those of the lightweight models. A total of 188 anterior segment images were used for testing. The evaluation indicators were sensitivity, specificity, F1-score, accuracy, kappa, area under the receiver operating characteristic curve (AUC), 95% CI, model size, and number of parameters.

Results

A total of 188 anterior segment images were used to test the five intelligent-assisted pterygium diagnosis models. MobileNet 2 performed best overall. For normal anterior segment images, its sensitivity, specificity, F1-score, and AUC were 96.72%, 98.43%, 96.72%, and 0.976, respectively; for pterygium observation period images, they were 83.87%, 90.48%, 82.54%, and 0.872; and for pterygium surgery period images, they were 84.62%, 93.50%, 85.94%, and 0.891. MobileNet 2 had a kappa value of 82.44% and an accuracy of 88.30%; its model size was 13.5 MB, and it had 4.2 million parameters.

Conclusion

This study used deep learning methods to propose a three-category intelligent lightweight-assisted pterygium diagnosis model. The model can be used for initial pterygium screening, to provide reasonable suggestions, and to support timely referral. It can help primary doctors improve pterygium diagnosis, confer social benefits, and lay the foundation for future models embedded in mobile devices.

1. Introduction

Pterygium is a common ophthalmic disease in which degenerated conjunctival fibrovascular tissue grows onto the cornea, usually causing astigmatism and dry eye. When it covers the pupil area, it can cause a significant decrease in vision. The main treatment is surgical resection [1]. Pterygium can usually be diagnosed from anterior segment images [1], which are taken with a slit-lamp digital microscope using diffuse illumination at 10× magnification; professional ophthalmologists often diagnose ocular surface diseases by examining such images. The incidence of pterygium in China is currently 9.84% [2], yet county-level and lower hospitals, community hospitals, and other basic-level hospitals have few professional ophthalmologists, so they struggle to meet the needs of the large number of pterygium patients. To address this problem, this study proposes an intelligent lightweight-assisted diagnosis model based on anterior segment images. The model can help nonspecialist doctors in primary hospitals make preliminary diagnoses of pterygium and grade each case into one of three categories (normal, observation, or surgery) so that patients are referred appropriately. The model could also be embedded in mobile phones to help users screen themselves. Where primary doctors cannot provide effective services to pterygium patients, this model can help fill the gap.

Ophthalmology and artificial intelligence (AI) have become increasingly closely integrated as AI has developed [3–9]. In 2016, a Google team proposed a deep learning model that automatically diagnoses diabetic retinopathy (DR) from fundus images [10]. Since then, many researchers have used deep learning models to diagnose DR [11–14]. Beyond DR, researchers have applied deep learning to detect common fundus diseases, including glaucoma [15–17], retinal vein occlusion [18, 19], and age-related macular degeneration [20–22], and even to classify multiple common fundus diseases [23]. These studies obtained good results.

Although many studies have used deep learning to diagnose fundus diseases, relatively few address ocular surface diseases. Pterygium is a common ocular surface disease, and AI research on it has focused mainly on pterygium detection. Traditional machine learning methods mainly extract pterygium features from anterior segment images: researchers have used methods such as adaptive nonlinear enhancement and support vector machines (SVMs) to segment the relevant tissue and detect pterygium [24–26]. In recent years, researchers such as Mohd Asyraf Zulkifley have used neural networks, DeepLab V2, and other deep learning methods to detect and segment pterygium [27–29], and Zamani et al. have used a variety of deep learning models for two-class pterygium detection [30]. Most existing work therefore performs two-class detection (pterygium or no pterygium) from anterior segment images and does not determine whether the pterygium requires surgery, which falls short of the needs of precision medicine. Moreover, the deep learning models currently used for pterygium detection are mostly classic architectures whose large number of parameters requires considerable storage, so they cannot run on mobile terminals or low-configuration devices.

In this study, transfer learning was used to design an intelligent lightweight-assisted pterygium diagnosis model that classifies anterior segment images as normal, pterygium observation period, or pterygium surgery period. The model was also compared with classic models, as reported below.

2. Materials and Methods

2.1. Data Source

The Affiliated Eye Hospital of Nanjing Medical University provided the anterior segment images for this study, which were obtained with two models of slit-lamp digital microscope. A total of 436 anterior segment images were used to train the intelligent lightweight-assisted pterygium diagnosis models: 142 normal, 144 pterygium observation period, and 150 pterygium surgery period images. A further 188 images were used for testing: 61 normal, 62 pterygium observation period, and 65 pterygium surgery period images. Patient sex and age were not restricted when selecting the images, and all personal patient information was removed from the images to protect patient privacy.

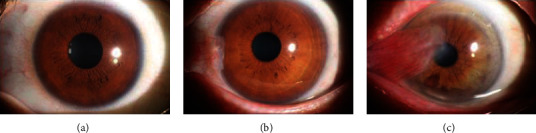

The selected images were of high quality, so ophthalmologists could easily determine whether an image showed a normal anterior segment or pterygium. Each selected image was assigned exactly one label: normal, pterygium observation period, or pterygium surgery period. Images were labelled according to the following criteria [31]. A normal anterior segment image shows no obvious conjunctival hyperemia or proliferation, and the cornea is transparent; a pterygium observation period image shows pterygium head tissue invading across the corneal limbus by a horizontal length of <3 mm; and a pterygium surgery period image shows a horizontal invasion length of ≥3 mm. The three types of anterior segment images are shown in Figure 1. Two professional ophthalmologists diagnosed the images independently. If their diagnoses agreed, that diagnosis was taken as the final clinical diagnosis; if they differed, an expert ophthalmologist gave the final clinical diagnosis.
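The labelling criteria and the two-grader adjudication rule above are simple enough to express as code. The following Python sketch is only an illustration: the function names and the use of `None` for "no pterygium tissue" are assumptions, and in the study the invasion length was judged by ophthalmologists from the images rather than measured by software.

```python
from typing import Optional

# Grading threshold from the labelling criteria [31]; names are illustrative.
SURGERY_THRESHOLD_MM = 3.0


def grade_from_invasion(invasion_mm: Optional[float]) -> str:
    """Map the horizontal invasion length of the pterygium head past the limbus to a label.

    None stands for "no pterygium tissue" (normal anterior segment), an assumed encoding.
    """
    if invasion_mm is None:
        return "normal"
    if invasion_mm < SURGERY_THRESHOLD_MM:
        return "observation"
    return "surgery"


def final_label(grader_a: str, grader_b: str, expert: Optional[str] = None) -> str:
    """Two ophthalmologists grade independently; an expert resolves any disagreement."""
    if grader_a == grader_b:
        return grader_a
    if expert is None:
        raise ValueError("graders disagree; an expert label is required")
    return expert


print(grade_from_invasion(2.5), grade_from_invasion(4.0))
print(final_label("observation", "surgery", expert="surgery"))
```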

Figure 1.

Three types of anterior segment images.

Figure 1(a) is the normal anterior segment image; Figure 1(b) is the pterygium observation period anterior segment image; Figure 1(c) is the pterygium surgery period anterior segment image.

2.2. Data Augmentation

Because the training set was small, the original images were augmented by flipping and rotation. Each original image was first flipped horizontally; the original and flipped images were then each rotated by 1° and 2° in both the clockwise and counterclockwise directions. These small angles ensure that the augmented images retain the original medical characteristics. Together with the original and flipped images, this gives 10 images per original (436 × 10 = 4,360). An original image and its augmented images are shown in Figure 2.
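A minimal pure-Python sketch of the flip-and-rotate scheme described above, operating on a small grayscale image stored as nested lists. Nearest-neighbour sampling, zero fill for pixels rotated in from outside the frame, and all function names are assumptions (the text does not specify interpolation or padding); a real pipeline would use an image library. Counting the original and flipped images together with their eight rotated copies gives 10 images per original, consistent with 436 × 10 = 4,360.

```python
import math
from typing import List

# Illustrative stand-in: a grayscale image as a list of rows of pixel values.
Image = List[List[float]]


def flip_horizontal(img: Image) -> Image:
    """Mirror each row left to right."""
    return [list(reversed(row)) for row in img]


def rotate(img: Image, degrees: float, fill: float = 0.0) -> Image:
    """Rotate about the image centre with nearest-neighbour sampling.

    Positive and negative angles rotate in opposite directions. Output pixels
    whose source falls outside the frame are set to `fill` (assumed padding).
    """
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    theta = math.radians(degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: find where this output pixel comes from in the input.
            dx, dy = x - cx, y - cy
            sx = cos_t * dx - sin_t * dy + cx
            sy = sin_t * dx + cos_t * dy + cy
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = img[iy][ix]
    return out


def augment(img: Image) -> List[Image]:
    """Original and horizontal flip, plus each of them rotated by +/-1 and +/-2 degrees."""
    bases = [img, flip_horizontal(img)]
    out = list(bases)
    for base in bases:
        for angle in (1, -1, 2, -2):
            out.append(rotate(base, angle))
    return out


demo = [[float(5 * r + c) for c in range(5)] for r in range(5)]
print(len(augment(demo)), "images per original;", 436 * len(augment(demo)), "in total")
```

Keeping the angles at ±1° and ±2° means each output differs only slightly from its source, which preserves the clinical features but also limits how much new variation the augmented set adds.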

Figure 2.

Original image and its augmented images.

2.3. Lightweight Model Training

This study uses a MobileNet [32] model whose initial parameters were pretrained on the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [33] dataset. The 436 original anterior segment images and the 4,360 augmented anterior segment images were used to train two intelligent lightweight-assisted diagnosis models for pterygium grading. During transfer learning, the network structure was unchanged except that the final output was changed to 3 categories.

MobileNet is a lightweight model designed specifically for mobile and embedded devices. This study applies transfer learning to the MobileNet model with an inverted residual structure (MobileNetV2 [32]). Its basic network structure mainly consists of convolutional layers, bottleneck layers, and an average pooling layer. The bottleneck structure is shown in Figure 3 [32]: a 1 × 1 pointwise convolution expands the channels, a 3 × 3 depthwise convolution filters each channel separately, and a linear 1 × 1 pointwise convolution projects back to fewer channels; when the stride is 1 and the input and output have the same number of channels, the input is added to the output. The full MobileNetV2 architecture is given in [32].
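To make the bottleneck structure concrete, the sketch below counts the convolution weights in one MobileNetV2-style inverted residual block and states when the shortcut is used. It ignores batch-normalization parameters and biases, and the channel sizes in the example are illustrative rather than taken from the study.

```python
from dataclasses import dataclass


@dataclass
class InvertedResidual:
    """Weight count for one MobileNetV2-style bottleneck (batch norm and biases ignored)."""
    c_in: int
    c_out: int
    expansion: int  # expansion factor t
    stride: int
    kernel: int = 3

    @property
    def hidden(self) -> int:
        return self.c_in * self.expansion

    def params(self) -> int:
        # 1x1 pointwise expansion (skipped when t == 1, as in MobileNetV2).
        expand = self.c_in * self.hidden if self.expansion != 1 else 0
        # k x k depthwise convolution: one filter per channel.
        depthwise = self.hidden * self.kernel * self.kernel
        # 1x1 linear projection back to c_out channels (no activation afterwards).
        project = self.hidden * self.c_out
        return expand + depthwise + project

    def uses_residual(self) -> bool:
        # The input is added to the output only when the tensor shape is preserved.
        return self.stride == 1 and self.c_in == self.c_out


block = InvertedResidual(c_in=24, c_out=24, expansion=6, stride=1)
print(block.params(), block.uses_residual())
```

Most of the weights sit in the two 1 × 1 convolutions; the depthwise step adds comparatively few because each channel gets only one small filter.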

Figure 3.

Bottleneck structure.

MobileNet 1 was trained on the 436 original anterior segment images and MobileNet 2 on the 4,360 augmented images. Images were input to both lightweight models at 224 × 224 pixels. Training yielded the two intelligent lightweight-assisted diagnosis models.

2.4. Classic Model Training

AlexNet [34], VGG16 [35], and ResNet18 [36] are three classic deep learning classification models. The 4,360 augmented anterior segment images were used to train three classic-model-based intelligent-assisted diagnosis models for pterygium grading. Each model used its standard network structure with initial parameters pretrained on the ILSVRC [33] dataset; during transfer learning, only the final output was changed to 3 categories. Images were input to the three models at 224 × 224 pixels. The results of these three models were compared with those of the lightweight models.
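Changing only the final output to 3 categories means replacing the last fully connected layer of each pretrained network. The sketch below does the parameter bookkeeping for that swap, assuming the final-layer input widths of the standard ImageNet versions of these architectures (1280 for MobileNetV2, 4096 for AlexNet and VGG16, 512 for ResNet18, as in the torchvision implementations). The text does not say whether the remaining layers were frozen or fine-tuned, so the sketch makes no assumption about that.

```python
from typing import Dict, Tuple

# Input width of the last fully connected layer in the standard ImageNet models
# (torchvision values; assumed to match the models used in the study).
FINAL_FEATURES: Dict[str, int] = {
    "MobileNetV2": 1280,
    "AlexNet": 4096,
    "VGG16": 4096,
    "ResNet18": 512,
}


def linear_params(in_features: int, out_features: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


def head_change(model: str, old_classes: int = 1000, new_classes: int = 3) -> Tuple[int, int]:
    """Final-layer parameters before and after replacing 1000 ImageNet classes with 3 grades."""
    features = FINAL_FEATURES[model]
    return linear_params(features, old_classes), linear_params(features, new_classes)


for name in FINAL_FEATURES:
    before, after = head_change(name)
    print(f"{name}: {before:,} -> {after:,} final-layer parameters")
```

In torchvision, the corresponding layer is `model.fc` for ResNet18 and `model.classifier[-1]` for AlexNet, VGG16, and MobileNetV2.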

A server was used to train and test the five models. Because basic-level hospitals usually do not have servers, a computer was also used to test the five models. The server had an Intel(R) Xeon(R) Gold 5118 CPU at 2.3 GHz, a Tesla V100 graphics card with 32 GB of video memory, and the Ubuntu 18.04 operating system. The computer had an Intel(R) Core(TM) i5-4200M CPU at 2.5 GHz, an NVIDIA GeForce GT 720M graphics card with 1 GB of video memory, and the Windows 10 operating system.

2.5. Statistical Analysis

SPSS 22.0 statistical software was used to analyze the results. For each pterygium diagnosis model, the accuracy, model size, number of parameters, and test time were recorded, and the sensitivity, specificity, F1-score, and AUC were calculated separately for normal, pterygium observation period, and pterygium surgery period anterior segment images; ROC curves were then plotted. Agreement between the expert and each model was evaluated using the kappa value.
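A short Python sketch of the one-vs-rest metrics listed above, computed from a 3 × 3 confusion matrix (rows: clinical diagnosis; columns: model diagnosis). The study used SPSS; this code is only an independent check. With hard class labels, each class's ROC curve has a single operating point, so the AUC reduces to (sensitivity + specificity)/2. The AUC values reported in Table 3 are consistent with that calculation, although the text does not state how they were obtained.

```python
from typing import Dict, List

CLASSES = ["normal", "observation", "surgery"]


def per_class_metrics(cm: List[List[int]]) -> Dict[str, Dict[str, float]]:
    """One-vs-rest metrics; rows are clinical labels, columns are model outputs."""
    n = sum(sum(row) for row in cm)
    results = {}
    for k, name in enumerate(CLASSES):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                 # class-k images assigned to another class
        fp = sum(row[k] for row in cm) - tp  # other images assigned to class k
        tn = n - tp - fn - fp
        sens = tp / (tp + fn)
        spec = tn / (tn + fp)
        f1 = 2 * tp / (2 * tp + fp + fn)     # harmonic mean of precision and recall
        # Hard labels give one ROC operating point, so AUC = (sensitivity + specificity) / 2.
        results[name] = {"sensitivity": sens, "specificity": spec, "f1": f1, "auc": (sens + spec) / 2}
    return results


table2 = [[59, 2, 0], [2, 52, 8], [0, 10, 55]]  # MobileNet 2 confusion matrix (Table 2)
for name, m in per_class_metrics(table2).items():
    print(name, {key: round(value, 4) for key, value in m.items()})
```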

3. Results

A total of 188 anterior segment images were used to test the intelligent lightweight-assisted pterygium diagnosis models trained on original data (MobileNet 1) and augmented data (MobileNet 2). The expert diagnosed 61 images as normal, 62 as pterygium observation period, and 65 as pterygium surgery period. MobileNet 1 diagnosed 64, 55, and 69 images, and MobileNet 2 diagnosed 61, 64, and 63 images, as normal, pterygium observation period, and pterygium surgery period, respectively. The diagnostic results of the two models are shown in Tables 1 and 2.

Table 1.

Diagnostic results of MobileNet 1 (original data).

| Clinical diagnosis | MobileNet 1: Normal | MobileNet 1: Observe | MobileNet 1: Surgery | Total |
|---|---|---|---|---|
| Normal | 59 | 2 | 0 | 61 |
| Observe | 4 | 45 | 13 | 62 |
| Surgery | 1 | 8 | 56 | 65 |
| Total | 64 | 55 | 69 | 188 |

Table 2.

Diagnostic results of MobileNet 2 (augmented data).

| Clinical diagnosis | MobileNet 2: Normal | MobileNet 2: Observe | MobileNet 2: Surgery | Total |
|---|---|---|---|---|
| Normal | 59 | 2 | 0 | 61 |
| Observe | 2 | 52 | 8 | 62 |
| Surgery | 0 | 10 | 55 | 65 |
| Total | 61 | 64 | 63 | 188 |
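The accuracy and kappa values reported in Table 3 for the two MobileNet models can be recomputed from the confusion matrices in Tables 1 and 2. The sketch below uses Cohen's kappa, the standard chance-corrected agreement statistic; the text does not name the kappa variant, so this is an assumption, but it reproduces the reported values.

```python
from typing import List


def accuracy(cm: List[List[int]]) -> float:
    """Fraction of images on the diagonal (model agrees with the clinical diagnosis)."""
    n = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / n


def cohen_kappa(cm: List[List[int]]) -> float:
    """Agreement between clinical labels (rows) and model outputs (columns), corrected for chance."""
    n = sum(sum(row) for row in cm)
    p_o = accuracy(cm)
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(col) for col in zip(*cm)]
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    return (p_o - p_e) / (1 - p_e)


mobilenet1 = [[59, 2, 0], [4, 45, 13], [1, 8, 56]]  # Table 1
mobilenet2 = [[59, 2, 0], [2, 52, 8], [0, 10, 55]]  # Table 2
for name, cm in [("MobileNet 1", mobilenet1), ("MobileNet 2", mobilenet2)]:
    print(f"{name}: accuracy {accuracy(cm):.2%}, kappa {cohen_kappa(cm):.2%}")
# MobileNet 1: accuracy 85.11%, kappa 77.64%
# MobileNet 2: accuracy 88.30%, kappa 82.44%
```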

The three classic intelligent-assisted pterygium diagnosis models (AlexNet, VGG16, and ResNet18), trained on augmented data, were also tested on the 188 anterior segment images, and their results were compared with those of MobileNet 1 and MobileNet 2. Taking the expert diagnosis as the reference, all models except ResNet18 had a sensitivity above 90% for normal images; the highest sensitivity for the pterygium observation period was 83.87% (AlexNet and MobileNet 2), and the highest sensitivity for the pterygium surgery period was 86.15% (MobileNet 1). Most specificities of the five models for the normal, pterygium observation period, and pterygium surgery period categories were above 85%. The specificities of MobileNet 2 for all three categories exceeded 90%, indicating a low misdiagnosis rate. MobileNet 2 also had the highest AUC values among the five models for the normal, pterygium observation period, and pterygium surgery period categories (0.976, 0.872, and 0.891, respectively; AlexNet matched it for the surgery period). The evaluation results of the five models are compared in Table 3.

Table 3.

The five models' evaluation results.

| Model | Evaluation indicator | Normal | Observe | Surgery |
|---|---|---|---|---|
| MobileNet 1 (original data) | Sensitivity | 96.72% | 72.58% | 86.15% |
| | Specificity | 96.06% | 92.06% | 89.43% |
| | F1-score | 94.40% | 76.92% | 83.58% |
| | AUC | 0.964 | 0.823 | 0.878 |
| | 95% CI | 0.931-0.996 | 0.751-0.895 | 0.820-0.936 |
| | Kappa | 77.64% | | |
| | Accuracy | 85.11% | | |
| | Size (MB) | 13.5 | | |
| | Parameters (million) | 4.2 | | |
| | Time-S (ms) | 5.86 | | |
| | Time-C (ms) | 473.37 | | |
| MobileNet 2 (augmented data) | Sensitivity | 96.72% | 83.87% | 84.62% |
| | Specificity | 98.43% | 90.48% | 93.50% |
| | F1-score | 96.72% | 82.54% | 85.94% |
| | AUC | 0.976 | 0.872 | 0.891 |
| | 95% CI | 0.947-1.000 | 0.811-0.933 | 0.833-0.948 |
| | Kappa | 82.44% | | |
| | Accuracy | 88.30% | | |
| | Size (MB) | 13.5 | | |
| | Parameters (million) | 4.2 | | |
| | Time-S (ms) | 5.75 | | |
| | Time-C (ms) | 465.53 | | |
| AlexNet | Sensitivity | 91.80% | 83.87% | 84.62% |
| | Specificity | 98.43% | 88.10% | 93.50% |
| | F1-score | 94.12% | 80.62% | 85.94% |
| | AUC | 0.951 | 0.860 | 0.891 |
| | 95% CI | 0.909-0.993 | 0.797-0.922 | 0.833-0.948 |
| | Kappa | 80.05% | | |
| | Accuracy | 86.70% | | |
| | Size (MB) | 233 | | |
| | Parameters (million) | 60 | | |
| | Time-S (ms) | 1.06 | | |
| | Time-C (ms) | 64.63 | | |
| VGG16 | Sensitivity | 96.72% | 79.03% | 67.69% |
| | Specificity | 92.13% | 81.75% | 97.56% |
| | F1-score | 90.77% | 73.13% | 78.57% |
| | AUC | 0.944 | 0.804 | 0.826 |
| | 95% CI | 0.907-0.982 | 0.733-0.874 | 0.754-0.899 |
| | Kappa | 71.34% | | |
| | Accuracy | 80.85% | | |
| | Size (MB) | 527 | | |
| | Parameters (million) | 138 | | |
| | Time-S (ms) | 1.72 | | |
| | Time-C (ms) | 1020.11 | | |
| ResNet18 | Sensitivity | 81.97% | 66.13% | 75.38% |
| | Specificity | 95.28% | 81.75% | 84.55% |
| | F1-score | 85.47% | 65.08% | 73.68% |
| | AUC | 0.886 | 0.739 | 0.800 |
| | 95% CI | 0.825-0.947 | 0.660-0.819 | 0.728-0.871 |
| | Kappa | 61.67% | | |
| | Accuracy | 74.47% | | |
| | Size (MB) | 44.6 | | |
| | Parameters (million) | 33 | | |
| | Time-S (ms) | 2.53 | | |
| | Time-C (ms) | 170.88 | | |

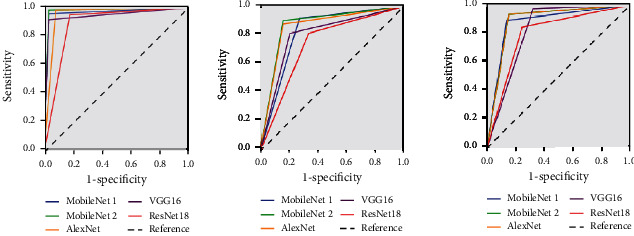

Table 3 also compares the sizes and parameter counts of the five models. MobileNet 1 and MobileNet 2 were the smallest, at 13.5 MB and 4.2 million parameters, whereas VGG16 was the largest, at 527 MB and 138 million parameters. Despite its small size and few parameters, MobileNet 2 still performed well on sensitivity, specificity, F1-score, AUC, kappa value, and accuracy, and its AUC, kappa value, and accuracy were the best among the five models. Its per-image test time was not the shortest, however: AlexNet was faster on both the server and the computer. The ROC curves of the five models for the normal, pterygium observation period, and pterygium surgery period categories are compared in Figure 4.

Figure 4.

ROC of the five models for normal, pterygium observation period, and surgery period.

In Table 3, Time-S is the time to test one image on the server, and Time-C is the time to test one image on the computer.
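The text does not describe how the per-image test times were measured. The sketch below assumes wall-clock timing with `time.perf_counter`, a few untimed warm-up calls, and averaging over the test images; `dummy_predict` is a hypothetical placeholder for a trained model's forward pass.

```python
import time
from typing import Any, Callable, Sequence


def mean_latency_ms(predict: Callable[[Any], Any], images: Sequence[Any], warmup: int = 3) -> float:
    """Mean wall-clock time per image, in milliseconds, for one-image-at-a-time inference."""
    for img in images[:warmup]:
        predict(img)  # untimed warm-up calls (assumed), e.g. to absorb lazy initialisation
    start = time.perf_counter()
    for img in images:
        predict(img)
    return (time.perf_counter() - start) * 1000 / len(images)


def dummy_predict(img: Any) -> int:
    """Hypothetical placeholder for a trained model's forward pass."""
    return sum(img) % 3


print(f"{mean_latency_ms(dummy_predict, [[1, 2, 3]] * 188):.4f} ms per image")
```

Running the same measurement on both machines is what allows the server (Time-S) and computer (Time-C) columns to be compared directly.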

4. Discussion

Pterygium is a common ocular surface disease that can cause vision loss and affect appearance. Its incidence is higher among people who work outdoors in rural and remote areas, such as fishermen and farmers. In the vast rural and remote areas that lack professional ophthalmic resources, the intelligent-assisted diagnosis model can give local patients a convenient means of pterygium screening. It can spare patients unnecessary trips to county or prefectural hospitals and reduce their financial burden. The model also provides treatment suggestions that help basic-level hospitals refer patients who need surgery, which supports a more reasonable allocation of medical resources.

In 2012, AlexNet [34] won the classification task of the ILSVRC competition. AlexNet has 8 learned layers (5 convolutional and 3 fully connected); VGG [35] has up to 19 layers, and researchers commonly use the 16-layer VGG16; ResNet [36] has up to 152 layers, and researchers commonly use ResNet18 and ResNet50. These deep networks are suited to extracting complex image features, but as classic models they occupy a large amount of space and have many parameters. MobileNet, by contrast, is a lightweight model: it uses depthwise separable convolutions and linear bottlenecks to reduce the number of parameters, so the model occupies little space. Because anterior segment images are relatively simple, AlexNet, the shallowest of the classic models, obtained better results than the other two classic models. MobileNet, which simplifies the classic architectures further, obtained the best diagnostic results of all the models.
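The parameter savings of depthwise separable convolution follow from simple arithmetic: a standard k × k convolution needs k²·C_in·C_out weights, whereas a k × k depthwise convolution followed by a 1 × 1 pointwise convolution needs k²·C_in + C_in·C_out. The sketch below compares the two for a few illustrative channel sizes (biases omitted); for 3 × 3 kernels the saving approaches ninefold as the number of output channels grows.

```python
from typing import List, Tuple


def standard_conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights of a k x k convolution mapping c_in to c_out channels (biases omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int = 3) -> int:
    """A k x k depthwise filter per input channel, then a 1 x 1 pointwise convolution."""
    return k * k * c_in + c_in * c_out


examples: List[Tuple[int, int]] = [(32, 64), (128, 128), (512, 512)]  # illustrative sizes
for c_in, c_out in examples:
    std = standard_conv_params(c_in, c_out)
    sep = depthwise_separable_params(c_in, c_out)
    print(f"{c_in}->{c_out}: standard {std:,}, separable {sep:,}, ratio {std / sep:.1f}x")
```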

MobileNet 2 had the best overall diagnostic results among the five models, but its sensitivity for the pterygium observation period and pterygium surgery period was only 83.87% and 84.62%, respectively. The sensitivity was limited because only 436 original training images were available. Although the images were augmented tenfold, the augmented images were very similar to the originals, so performance improved only slightly.

As shown in Table 2, MobileNet 2 did not misclassify any pterygium surgery period image as normal; the only pterygium images it classified as normal were from the observation period. Most of its other errors were confusions between the pterygium observation period and the pterygium surgery period. Patients diagnosed as being in either the observation period or the surgery period were advised to visit a higher-level hospital for confirmation, where the correct diagnosis was obtained after referral. Because some observation period images were misdiagnosed as normal, after returning a normal result the model asked the user to repeat the test or asked whether to upload the image to a doctor. If the user had doubts about the result, the user could upload the image for a doctor to confirm.
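The follow-up workflow described above can be written as a small decision rule. This is a hypothetical sketch of the logic, not the authors' application code; the function name, the return strings, and the `user_has_doubts` flag are illustrative.

```python
def next_step(predicted_grade: str, user_has_doubts: bool = False) -> str:
    """Hypothetical follow-up rule for a model prediction."""
    if predicted_grade in ("observation", "surgery"):
        # Both pterygium grades are referred for confirmation.
        return "refer to a higher-level hospital for confirmation"
    if predicted_grade == "normal":
        # Some observation-period eyes were misread as normal, so a normal result is re-checked.
        if user_has_doubts:
            return "upload the image for review by a doctor"
        return "repeat the test"
    raise ValueError(f"unknown grade: {predicted_grade!r}")


for grade in ("normal", "observation", "surgery"):
    print(grade, "->", next_step(grade))
```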

In this study, the MobileNet lightweight model had only 4.2 million parameters and a size of 13.5 MB, making it suitable for embedding in mobile devices and for offline operation on embedded devices. Users at basic-level medical institutions can photograph the anterior segment with a camera and have the image diagnosed on the device and on a local medical computer simultaneously, so pterygium screening can be performed at basic-level hospitals. However, MobileNet 2 was trained on images from slit-lamp digital microscopes, which differ somewhat from anterior segment images taken with a mobile device camera. In the future, more anterior segment images taken with mobile device cameras will be collected to improve the model and make it more suitable for mobile applications. People in the vast rural and remote mountainous areas of China have difficulty seeing a doctor; self-screening on mobile devices would make it convenient for them to monitor their ocular surface health at any time.

5. Conclusion

This study used deep learning methods to propose MobileNet 2, a three-category intelligent lightweight-assisted pterygium diagnosis model trained on augmented data, and compared its results with those of three classic deep learning models (AlexNet, VGG16, and ResNet18). MobileNet 2 had the fewest parameters and the best overall evaluation results. It can run on low-configuration computers at basic-level hospitals and help primary doctors carry out preliminary pterygium screening from anterior segment images. It can also provide suitable recommendations and timely referrals, improve the level of primary ophthalmic diagnosis, and deliver social benefits. In addition, the small size and few parameters of the lightweight model lay the foundation for embedding future models in mobile devices, making it convenient for mobile users to screen themselves for pterygium.

Acknowledgments

This study was supported by the National Natural Science Foundation of China (No.61906066), Natural Science Foundation of Zhejiang Province (No.LQ18F020002), Science and Technology Planning Project of Huzhou Municipality (No.2016YZ02), and Nanjing Enterprise Expert Team Project.

Contributor Information

Chenghu Wang, Email: wangchenghu1226@163.com.

Weihua Yang, Email: benben0606@139.com.

Data Availability

The datasets used and/or analysed during the present study are available from the corresponding author on reasonable request.

Conflicts of Interest

All the authors declare that there is no conflict of interest regarding the publication of this article.

Authors' Contributions

Bo Zheng and Yunfang Liu contributed equally to this study.

References

1. Wu X., Li M., Xu F. Research progress of treatment techniques for pterygium. Recent Advances in Ophthalmology. 2021;41(3):296–300.

2. Ren H., Li X., Cai Y. Current status and prospect of individualized clinical treatment of pterygium. Yan Ke Xue Bao. 2020;35(4):255–261.

3. Poplin R., Varadarajan A. V., Blumer K., et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nature Biomedical Engineering. 2018;2(3):158–164. doi: 10.1038/s41551-018-0195-0.

4. Peng Y., Dharssi S., Chen Q., et al. DeepSeeNet: A Deep Learning Model for Automated Classification of Patient-based Age-related Macular Degeneration Severity from Color Fundus Photographs. Ophthalmology. 2019;126(4). doi: 10.1016/j.ophtha.2018.11.015.

5. Rohm M., Tresp V., Müller M., et al. Predicting visual acuity by using machine learning in patients treated for neovascular age-related macular degeneration. Ophthalmology. 2018;125(7):1028–1036. doi: 10.1016/j.ophtha.2017.12.034.

6. Yang W. H., Zheng B., Wu M. N., et al. An evaluation system of fundus photograph-based intelligent diagnostic technology for diabetic retinopathy and applicability for research. Diabetes Therapy. 2019;10(5):1811–1822. doi: 10.1007/s13300-019-0652-0.

7. Xu J., Wan C., Yang W., et al. A novel multi-modal fundus image fusion method for guiding the laser surgery of central serous chorioretinopathy. Mathematical Biosciences and Engineering. 2021;18(4):4797–4816. doi: 10.3934/mbe.2021244.

8. Dai Q., Liu X., Lin X., et al. A novel meibomian gland morphology analytic system based on a convolutional neural network. IEEE Access. 2021;9(1):23083–23094. doi: 10.1109/access.2021.3056234.

9. Zhang H., Niu K., Xiong Y., Yang W., He Z. Q., Song H. Automatic cataract grading methods based on deep learning. Computer Methods and Programs in Biomedicine. 2019;182:104978. doi: 10.1016/j.cmpb.2019.07.006.

10. Gulshan V., Peng L., Coram M., et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316(22):2402–2410. doi: 10.1001/jama.2016.17216.

11. Raman R., Srinivasan S., Virmani S., Sivaprasad S., Rao C., Rajalakshmi R. Fundus photograph-based deep learning algorithms in detecting diabetic retinopathy. Eye (London, England). 2019;33(1):97–109. doi: 10.1038/s41433-018-0269-y.

12. Kermany S., Goldbaum M., Cai W., et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell. 2018;172(5):1122–1131.e9. doi: 10.1016/j.cell.2018.02.010.

13. Raju M., Pagidimarri V., Barreto R., Kadam A., Kasivajjala V., Aswath A. Development of a deep learning algorithm for automatic diagnosis of diabetic retinopathy. Studies in Health Technology and Informatics. 2017;245(1):559–563. doi: 10.3233/978-1-61499-830-3-559.

14. Schmidt-Erfurth U., Sadeghipour A., Gerendas B. S., Waldstein S. M., Bogunović H. Artificial intelligence in retina. Progress in Retinal and Eye Research. 2018;67:1–29. doi: 10.1016/j.preteyeres.2018.07.004.

15. Christopher M., Belghith A., Bowd C., et al. Performance of deep learning architectures and transfer learning for detecting glaucomatous optic neuropathy in fundus photographs. Scientific Reports. 2018;8(1):16685. doi: 10.1038/s41598-018-35044-9.

16. Li Z., He Y., Keel S., Meng W., Chang R. T., He M. Efficacy of a deep learning system for detecting glaucomatous optic neuropathy based on color fundus photographs. Ophthalmology. 2018;125(8):1199–1206. doi: 10.1016/j.ophtha.2018.01.023.

17. Medeiros F. A., Jammal A. A., Mariottoni E. B. Detection of progressive glaucomatous optic nerve damage on fundus photographs with deep learning. Ophthalmology. 2021;128(3):383–392. doi: 10.1016/j.ophtha.2020.07.045.

18. Nagasato D., Tabuchi H., Ohsugi H., et al. Deep neural network-based method for detecting central retinal vein occlusion using ultrawide-field fundus ophthalmoscopy. Journal of Ophthalmology. 2018;2018:1875431. doi: 10.1155/2018/1875431.

19. Nagasato D., Tabuchi H., Ohsugi H., et al. Deep-learning classifier with ultrawide-field fundus ophthalmoscopy for detecting branch retinal vein occlusion. International Journal of Ophthalmology. 2019;12(1):98–103. doi: 10.18240/ijo.2019.01.15.

20. Burlina P., Joshi N., Pacheco K. D., Freund D. E., Kong J., Bressler N. M. Utility of deep learning methods for referability classification of age-related macular degeneration. JAMA Ophthalmology. 2018;136(11):1305–1307. doi: 10.1001/jamaophthalmol.2018.3799.

21. Yim J., Chopra R., Spitz T., et al. Predicting conversion to wet age-related macular degeneration using deep learning. Nature Medicine. 2020;26(6):892–899. doi: 10.1038/s41591-020-0867-7.

22. Yan Q., Weeks D. E., Xin H., et al. Deep-learning-based prediction of late age-related macular degeneration progression. Nature Machine Intelligence. 2020;2(2):141–150. doi: 10.1038/s42256-020-0154-9.

23. Zheng B., Jiang Q., Lu B., et al. Five-category intelligent auxiliary diagnosis model of common fundus diseases based on fundus images. Translational Vision Science & Technology. 2021;10(7). doi: 10.1167/tvst.10.7.20.

24. Abdani S. R., Zaki W. M. D. W., Mustapha A., Hussain A. Iris segmentation method of pterygium anterior segment photographed image. 2015 IEEE Symposium on Computer Applications & Industrial Electronics (ISCAIE); 2015; Langkawi, Malaysia. pp. 69–72.

25. Abdani S. R., Zaki W. M. D. W., Hussain A., Mustapha A. An adaptive nonlinear enhancement method using sigmoid function for iris segmentation in pterygium cases. 2015 International Electronics Symposium (IES); 2015; Surabaya, Indonesia. pp. 53–57.

26. Zaki W. M. D. W., Daud M. M., Abdani S. R., Hussain A., Mutalib H. A. Automated pterygium detection method of anterior segment photographed images. Computer Methods and Programs in Biomedicine. 2018;154:71–78. doi: 10.1016/j.cmpb.2017.10.026.

27. Abdani S. R., Zulkifley M. A., Hussain A. Compact convolutional neural networks for pterygium classification using transfer learning. 2019 IEEE International Conference on Signal and Image Processing Applications (ICSIPA); 2019; Kuala Lumpur, Malaysia. pp. 140–143.

28. Zulkifley M. A., Abdani S. R., Zulkifley N. H. Pterygium-Net: a deep learning approach to pterygium detection and localization. Multimedia Tools and Applications. 2019;78(24):34563–34584. doi: 10.1007/s11042-019-08130-x.

29. Abdani S. R., Zulkifley M. A., Moubark A. M. Pterygium tissues segmentation using densely connected DeepLab. 2020 IEEE 10th Symposium on Computer Applications & Industrial Electronics (ISCAIE); 2020; Malaysia. pp. 229–232.

30. Zamani N. S. M., Zaki W. M. D. W., Huddin A. B., Hussain A., Mutalib H. A., Ali A. Automated pterygium detection using deep neural network. IEEE Access. 2020;8:191659–191672. doi: 10.1109/ACCESS.2020.3030787.

31. Gumus K., Erkilic K., Topaktas D., Colin J. Effect of pterygia on refractive indices, corneal topography, and ocular aberrations. Cornea. 2011;30(1):24–29. doi: 10.1097/ico.0b013e3181dc814e.

32. Sandler M., Howard A., Zhu M., Zhmoginov A., Chen L.-C. MobileNetV2: inverted residuals and linear bottlenecks. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2018; Salt Lake City, UT, USA. pp. 4510–4520.

33. Deng J., Dong W., Socher R., Li L.-J., Li K., Fei-Fei L. ImageNet: A Large-Scale Hierarchical Image Database. 2009 IEEE Conference on Computer Vision and Pattern Recognition; 2009; Miami, FL, USA. pp. 248–255.

34. Krizhevsky A., Sutskever I., Hinton G. ImageNet classification with deep convolutional neural networks. Proceedings of the 25th International Conference on Neural Information Processing Systems (NIPS); 2012; Lake Tahoe, Nevada, US. pp. 1097–1105.

35. Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. https://arxiv.org/abs/1409.1556.pdf.

36. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016; Las Vegas, NV, USA. pp. 770–778.
