Abstract

The Euclidean scattering transform was introduced nearly a decade ago to improve the mathematical understanding of convolutional neural networks. Inspired by recent interest in geometric deep learning, which aims to generalize convolutional neural networks to manifold and graph-structured domains, we define a geometric scattering transform on manifolds. Similar to the Euclidean scattering transform, the geometric scattering transform is based on a cascade of wavelet filters and pointwise nonlinearities. It is invariant to local isometries and stable to certain types of diffeomorphisms. Empirical results demonstrate its utility on several geometric learning tasks. Our results generalize the deformation stability and local translation invariance of Euclidean scattering, and demonstrate the importance of linking the filter structures used to the underlying geometry of the data.

Keywords: geometric deep learning, wavelet scattering, spectral geometry

1. Introduction

In an effort to improve our mathematical understanding of deep convolutional networks and their learned features, Mallat (2010, 2012) introduced the scattering transform for signals on $\mathbb{R}^d$. This transform has an architecture similar to convolutional neural networks (ConvNets), based on a cascade of convolutional filters and simple pointwise nonlinearities. However, unlike many other deep learning methods, this transform uses the complex modulus as its nonlinearity and does not learn its filters from data, but instead uses designed filters. As shown in Mallat (2012), with properly chosen wavelet filters, the scattering transform is provably invariant to the actions of certain Lie groups, such as the translation group, and is also provably Lipschitz stable to small diffeomorphisms, where the size of a diffeomorphism is quantified by its deviation from a translation. These notions were applied in Bruna and Mallat (2011, 2013); Sifre and Mallat (2012, 2013, 2014); Oyallon and Mallat (2015) using groups of translations, rotations, and scaling operations, with applications in image and texture classification. Additionally, the scattering transform and its deep filter bank approach have proven to be effective in several other fields, such as audio processing (Andén and Mallat, 2011, 2014; Wolf et al., 2014, 2015; Andén et al., 2019), medical signal processing (Chudáček et al., 2014), and quantum chemistry (Hirn et al., 2017; Eickenberg et al., 2017, 2018; Brumwell et al., 2018). Mathematical generalizations to non-wavelet filters have also been studied, including Gabor filters as in the short-time Fourier transform (Czaja and Li, 2019) and more general classes of semi-discrete frames (Grohs et al., 2016; Wiatowski and Bölcskei, 2015, 2018).

However, many data sets of interest have an intrinsically non-Euclidean structure and are better modeled by graphs or manifolds. Indeed, manifold learning models (e.g., Tenenbaum et al., 2000; Coifman and Lafon, 2006a; van der Maaten and Hinton, 2008) are commonly used for representing high-dimensional data in which unsupervised algorithms infer data-driven geometries to capture intrinsic structure in data. Furthermore, signals supported on manifolds are becoming increasingly prevalent, for example, in shape matching and computer graphics. As such, a large body of work has emerged to explore the generalization of spectral and signal processing notions to manifolds (Coifman and Lafon, 2006b) and graphs (Shuman et al., 2013a, and references therein). In these settings, functions are supported on the manifold or the vertices of the graph, and the eigenfunctions of the Laplace-Beltrami operator, or the eigenvectors of the graph Laplacian, serve as the Fourier harmonics. This increasing interest in non-Euclidean data geometries has led to a new research direction known as geometric deep learning, which aims to generalize convolutional networks to graph and manifold structured data (Bronstein et al., 2017, and references therein).

Inspired by geometric deep learning, recent works have also proposed an extension of the scattering transform to graph domains. These mostly focused on finding features that represent a graph structure (given a fixed set of signals on it) while being stable to graph perturbations. In Gama et al. (2019b), a cascade of diffusion wavelets from Coifman and Maggioni (2006) was proposed, and its Lipschitz stability was shown with respect to a global diffusion-inspired distance between graphs. These results were generalized in Gama et al. (2019a) to graph wavelets constructed from more general graph shift operators. A similar construction discussed in Zou and Lerman (2019) was shown to be stable to permutations of vertex indices, and to small perturbations of edge weights. Gao et al. (2019) established the viability of graph scattering coefficients as universal graph features for data analysis tasks (e.g., in social networks and biochemistry data). The wavelets used in Gao et al. (2019) are similar to those used in Gama et al. (2019b), but are constructed from an asymmetric lazy random walk matrix (the wavelets in Gama et al. (2019b) are constructed from a symmetric matrix). The constructions of Gama et al. (2019b) and Gao et al. (2019) were unified in Perlmutter et al. (2019), which introduced a large family of graph wavelets, including both those from Gama et al. (2019b) and Gao et al. (2019) as special cases, and showed that the resulting scattering transforms enjoyed many of the same theoretical properties as in Gama et al. (2019b).

In this paper we consider the manifold aspect of geometric deep learning. There are two basic tasks in this setting: (1) classification of multiple signals over a single, fixed manifold; and (2) classification of multiple manifolds. Beyond these two tasks, there are additional problems of interest such as manifold alignment, partial manifold reconstruction, and generative models. Fundamental to all of these tasks, both in the approach described here and in other papers, is the need to process signals over a manifold. Indeed, even in manifold classification tasks and related problems such as manifold alignment, one often begins with a set of universal features that can be defined on any manifold, and which are processed in such a way that allows for comparison of two or more manifolds. In order to carry out these tasks, a representation of manifold-supported signals needs to be stable to orientations, noise, and deformations over the manifold geometry. Working towards these goals, we define a scattering transform on compact smooth Riemannian manifolds without boundary, which we call geometric scattering. Our construction is based on convolutional filters defined spectrally via the eigendecomposition of the Laplace-Beltrami operator over the manifold, as discussed in Section 2. We show that these convolutional operators can be used to construct a wavelet frame similar to the diffusion wavelets constructed in Coifman and Maggioni (2006). Then, in Section 3, we construct a cascade of these generalized convolutions and pointwise absolute value operations that is used to map signals on the manifold to scattering coefficients that encode approximate local invariance to isometries, which correspond to translations, rotations, and reflections in Euclidean space.
We then show in Section 4 that our scattering coefficients are also stable to the action of diffeomorphisms with a notion of stability analogous to the Lipschitz stability considered in Mallat (2012) on Euclidean space. Our results provide a path forward for utilizing the scattering mathematical framework to analyze and understand geometric deep learning, while also shedding light on the challenges involved in such generalization to non-Euclidean domains. Indeed, while these results are analogous to those obtained for the Euclidean scattering transform, we emphasize that the underlying mathematical techniques are derived from spectral geometry, which plays no role in the Euclidean analysis. Numerical results in Section 5 show that geometric scattering coefficients achieve competitive results for signal classification on a single manifold and for classification of different manifolds. We demonstrate that the geometric scattering method can capture both local and global features to generate useful latent representations for various downstream tasks. Proofs of technical results are provided in the appendices.

1.1. Notation

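Let $\mathcal{M}$ denote a compact, smooth, connected d-dimensional Riemannian manifold without boundary contained in $\mathbb{R}^n$, and let $\mathbf{L}^2(\mathcal{M})$ denote the set of functions $f:\mathcal{M}\to\mathbb{C}$ that are square integrable with respect to the Riemannian volume dx. Let r(x, x′) denote the geodesic distance between two points, and let Δ denote the Laplace-Beltrami operator on $\mathcal{M}$. Let $\mathrm{Isom}(\mathcal{M},\mathcal{M}')$ denote the set of all isometries between two manifolds $\mathcal{M}$ and $\mathcal{M}'$, and set $\mathrm{Isom}(\mathcal{M}):=\mathrm{Isom}(\mathcal{M},\mathcal{M})$ to be the isometry group of $\mathcal{M}$. Likewise, we set $\mathrm{Diff}(\mathcal{M})$ to be the diffeomorphism group on $\mathcal{M}$. For $\zeta\in\mathrm{Diff}(\mathcal{M})$, we let $\|\zeta\|_\infty:=\sup_{x\in\mathcal{M}}r(x,\zeta(x))$ denote its maximum displacement.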

2. Geometric wavelet transforms on manifolds

The Euclidean scattering transform is constructed using wavelet and low-pass filters defined on $\mathbb{R}^d$. In Section 2.1, we extend the notion of convolution against a filter (wavelet, low-pass, or otherwise) to manifolds using notions from spectral geometry. Many of the notions described in this section are geometric analogues of similar constructions used in graph signal processing (Shuman et al., 2013b). Section 2.2 utilizes these constructions to define Littlewood-Paley frames for $\mathbf{L}^2(\mathcal{M})$, and Section 2.3 describes a specific class of Littlewood-Paley frames which we call geometric wavelets.

2.1. Convolution on manifolds

On $\mathbb{R}^d$, the convolution of a signal $f\in\mathbf{L}^2(\mathbb{R}^d)$ with a filter $h\in\mathbf{L}^1(\mathbb{R}^d)$ is defined by translating h against f; however, translations are not well-defined on generic manifolds. Nevertheless, convolution can also be characterized using the Fourier convolution theorem, i.e., $\widehat{f*h}(\omega)=\hat f(\omega)\,\hat h(\omega)$. Fourier analysis can be defined on $\mathcal{M}$ using the spectral decomposition of −Δ. Since $\mathcal{M}$ is compact and connected, −Δ has countably many eigenvalues which we enumerate as $0=\lambda_0<\lambda_1\le\lambda_2\le\cdots$ (repeating those with multiplicity greater than one), and there exists a sequence of eigenfunctions φ0, φ1, φ2, … such that {φk}k≥0 is an orthonormal basis for $\mathbf{L}^2(\mathcal{M})$ and −Δφk = λkφk. While one can take each φk to be real valued, we do not assume this choice of eigenbasis. One can show that φ0 is constant, which implies, by orthogonality, that φk has mean zero for k ≥ 1. We consider the eigenfunctions {φk}k≥0 as the Fourier modes of the manifold $\mathcal{M}$, and define the Fourier series of $f\in\mathbf{L}^2(\mathcal{M})$ as

$$\hat f(k):=\langle f,\varphi_k\rangle=\int_{\mathcal{M}}f(x)\,\overline{\varphi_k(x)}\,dx,\qquad k\ge0.$$

Since φ0, φ1, φ2, … form an orthonormal basis, we have

$$f=\sum_{k\ge0}\hat f(k)\,\varphi_k.\qquad(1)$$
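The spectral definitions above can be tried out on a discrete stand-in. The minimal sketch below assumes the manifold is the unit circle $S^1$, discretized as a cycle graph with n vertices whose scaled graph Laplacian replaces −Δ, with the counting measure in place of dx (so norms differ from continuous ones by a constant factor). The helper `circle_laplacian` and the test signal are illustrative assumptions, not part of the paper. It checks the reconstruction formula (1), that φ0 is constant, and that nonzero eigenvalues repeat.

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


n = 64
lam, phi = np.linalg.eigh(circle_laplacian(n))  # ascending eigenvalues, orthonormal eigenvectors

theta = 2 * np.pi * np.arange(n) / n
f = np.exp(np.cos(theta)) + 0.5 * np.sin(3 * theta)  # an arbitrary test signal

f_hat = phi.conj().T @ f  # Fourier coefficients <f, phi_k>
f_rec = phi @ f_hat       # Eq. (1): f = sum_k f_hat(k) phi_k

print(np.allclose(f_rec, f))                                   # the eigenbasis is complete
print(abs(lam[0]) < 1e-8, np.allclose(phi[:, 0], phi[0, 0]))   # lambda_0 = 0 with constant phi_0
print(np.allclose(phi[:, 1:].sum(axis=0), 0.0, atol=1e-10))    # phi_k has mean zero for k >= 1
print(lam[1:5])                                                # close to 1, 1, 4, 4: repeated eigenvalues
```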

For $f,h\in\mathbf{L}^2(\mathcal{M})$, we define the convolution ∗ over $\mathcal{M}$ between f and h as

$$f*h(x):=\sum_{k\ge0}\hat f(k)\,\hat h(k)\,\varphi_k(x).\qquad(2)$$

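We let $T_h:\mathbf{L}^2(\mathcal{M})\to\mathbf{L}^2(\mathcal{M})$ be the corresponding operator Thf(x) := f ∗ h(x) and note that we may write

$$T_hf(x)=\int_{\mathcal{M}}K_h(x,y)\,f(y)\,dy,$$

where

$$K_h(x,y):=\sum_{k\ge0}\hat h(k)\,\varphi_k(x)\,\overline{\varphi_k(y)}.$$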

It is well known that convolution on $\mathbb{R}^d$ commutes with translations. This equivariance property is fundamental to Euclidean ConvNets, and has spurred the development of equivariant neural networks on other spaces, e.g., Cohen and Welling (2016); Kondor and Trivedi (2018); Thomas et al. (2018); Kondor et al. (2018a); Cohen et al. (2018); Kondor et al. (2018b); Weiler et al. (2018). Since translations are not well-defined on $\mathcal{M}$, we instead seek to construct a family of operators which commute with isometries. Towards this end, we say a filter $h\in\mathbf{L}^2(\mathcal{M})$ is a spectral filter if λk = λℓ implies $\hat h(k)=\hat h(\ell)$. In this case, there exists a function $H:[0,\infty)\to\mathbb{C}$, which we refer to as the spectral function of h, such that

$$\hat h(k)=H(\lambda_k)\quad\text{for all }k\ge0.$$

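In the proofs of our theorems, it will be convenient to group together eigenfunctions belonging to the same eigenspace. This motivates us to define

$$\Lambda:=\{\lambda:\lambda=\lambda_k\text{ for some }k\ge0\}$$

as the set of all eigenvalues of −Δ, and to let

$$E_\lambda:=\{\varphi_k:-\Delta\varphi_k=\lambda\varphi_k\},\qquad\pi_\lambda(x,y):=\sum_{\varphi_k\in E_\lambda}\varphi_k(x)\,\overline{\varphi_k(y)}\qquad(3)$$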

for each λ ∈ Λ. We note that if h is a spectral filter, then we may write

$$K_h(x,y)=\sum_{\lambda\in\Lambda}H(\lambda)\,\pi_\lambda(x,y).\qquad(4)$$
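The convolution (2), its kernel form, and the eigenspace grouping (4) can be compared numerically. This is a sketch under the same assumptions as a discrete illustration: $S^1$ is replaced by a cycle graph whose scaled Laplacian stands in for −Δ, and the spectral function `H` is a hypothetical smooth low-pass response. Because `eigh` returns real eigenvectors here, complex conjugates are omitted. Eigenvalues are grouped into eigenspaces with a small numerical tolerance.

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


n = 48
lam, phi = np.linalg.eigh(circle_laplacian(n))


def H(x):
    """Hypothetical spectral function of a smooth low-pass filter."""
    return np.exp(-0.1 * x)


h_hat = H(lam)  # a spectral filter: equal eigenvalues receive equal responses
rng = np.random.default_rng(0)
f = rng.standard_normal(n)

conv_spectral = phi @ (h_hat * (phi.T @ f))  # Eq. (2): multiply Fourier coefficients

K_h = (phi * h_hat) @ phi.T  # K_h(x, y) = sum_k h_hat(k) phi_k(x) phi_k(y)
conv_kernel = K_h @ f        # T_h f(x) = sum_y K_h(x, y) f(y)

# Eq. (4): sum H(lambda) times the projection kernel pi_lambda over the distinct eigenvalues.
K_proj = np.zeros((n, n))
n_spaces = 0
k = 0
while k < n:
    in_space = np.isclose(lam, lam[k], rtol=0.0, atol=1e-6)  # indices of E_lambda, lambda = lam[k]
    basis = phi[:, in_space]
    K_proj += H(lam[k]) * (basis @ basis.T)                  # H(lambda) * pi_lambda(x, y)
    n_spaces += 1
    k = np.flatnonzero(in_space).max() + 1

print(np.allclose(conv_spectral, conv_kernel), np.allclose(K_h, K_proj), n_spaces)
```

On the 48-cycle the zero eigenvalue and the top eigenvalue are simple and all others come in pairs, which is why 25 eigenspaces are found.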

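For a diffeomorphism $\zeta\in\mathrm{Diff}(\mathcal{M})$ we define the operator $V_\zeta:\mathbf{L}^2(\mathcal{M})\to\mathbf{L}^2(\mathcal{M})$ as

$$V_\zeta f(x):=f(\zeta^{-1}(x)).$$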

The operator Vζ deforms the function according to the diffeomorphism ζ of the underlying manifold . The following theorem shows that Th and Vζ commute if ζ is an isometry and h is a spectral filter. We note the assumption that h is a spectral filter is critical and in general Th does not commute with isometries if h is not a spectral filter. The proof is in Appendix A.
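The commutation property described above, and its failure for non-spectral filters, can be checked on a discrete stand-in. In this sketch (an assumption for illustration) $S^1$ is a cycle graph, rotation by one grid step and the reflection θ → −θ play the role of isometries, and the non-spectral filter assigns random, hypothetical responses to individual eigenvectors, so responses differ inside a two-dimensional eigenspace.

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


n = 48
lam, phi = np.linalg.eigh(circle_laplacian(n))
idx = np.arange(n)
V_rot = np.eye(n)[(idx - 1) % n]  # (V f)[i] = f[i - 1]: rotation by one grid step
V_ref = np.eye(n)[(-idx) % n]     # (V f)[i] = f[-i]: reflection theta -> -theta


def T(h_hat):
    """Matrix of T_h f = f * h for the Fourier multipliers h_hat."""
    return (phi * h_hat) @ phi.T


def comm_norm(A, B):
    """Spectral norm of the commutator AB - BA."""
    return np.linalg.norm(A @ B - B @ A, 2)


T_spectral = T(np.exp(-0.05 * lam))                      # spectral filter
T_generic = T(np.random.default_rng(1).uniform(size=n))  # not a spectral filter

for name, V in [("rotation", V_rot), ("reflection", V_ref)]:
    print(name, comm_norm(T_spectral, V), comm_norm(T_generic, V))
```

The spectral filter gives commutators at round-off level; the generic filter does not commute with the rotation.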

Theorem 2.1

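For every spectral filter $h\in\mathbf{L}^2(\mathcal{M})$ and for every $\zeta\in\mathrm{Isom}(\mathcal{M})$,

$$T_hV_\zeta f=V_\zeta T_hf\quad\text{for all }f\in\mathbf{L}^2(\mathcal{M}).$$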

2.2. Littlewood-Paley frames over manifolds

A family of spectral filters {hγ : γ ∈ Γ} (with Γ countable) is called a Littlewood-Paley frame if it satisfies the following condition, which implies that the hγ cover the frequencies of $\mathcal{M}$ evenly:

$$\sum_{\gamma\in\Gamma}|\hat h_\gamma(k)|^2=1\quad\text{for all }k\ge0.\qquad(5)$$

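We define the corresponding frame analysis operator, $\mathcal{H}:\mathbf{L}^2(\mathcal{M})\to\ell^2(\mathbf{L}^2(\mathcal{M}))$, by

$$\mathcal{H}f:=\{f*h_\gamma:\gamma\in\Gamma\}.$$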

The following proposition shows that if (5) holds, then $\mathcal{H}$ preserves the energy of f.

Proposition 2.2

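If {hγ : γ ∈ Γ} satisfies (5), then $\mathcal{H}$ is an isometry, i.e.,

$$\|\mathcal{H}f\|_{2,2}^2:=\sum_{\gamma\in\Gamma}\|f*h_\gamma\|_2^2=\|f\|_2^2\quad\text{for all }f\in\mathbf{L}^2(\mathcal{M}).$$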

The proof of Proposition 2.2 is nearly identical to the corresponding result in the Euclidean case. For the sake of completeness, we provide full details in Appendix B. Since the operator $\mathcal{H}$ is linear, Proposition 2.2 also shows the operator is non-expansive, i.e., $\|\mathcal{H}f_1-\mathcal{H}f_2\|_{2,2}\le\|f_1-f_2\|_2$. This property is directly related to the L2 stability of a ConvNet of the form $\sigma_m(\mathcal{H}_m\cdots\sigma_2(\mathcal{H}_2\,\sigma_1(\mathcal{H}_1f)))$, where the σℓ are nonlinear functions. Indeed, if all the frame analysis operators $\mathcal{H}_\ell$ and all the nonlinear operators σℓ are non-expansive, then the entire network is non-expansive as well.
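Proposition 2.2 and the non-expansiveness of one "filter bank, then modulus" layer can be illustrated with a small Littlewood-Paley frame. The sketch assumes a cycle-graph discretization of $S^1$ and a hypothetical three-filter partition of unity (weights that sum to 1 at every eigenvalue), so that condition (5) holds exactly.

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


n = 64
lam, phi = np.linalg.eigh(circle_laplacian(n))
lam = np.clip(lam, 0.0, None)  # remove tiny negative round-off in lambda_0 before square roots

# Hypothetical partition of unity: (1 - a) + a(1 - b) + ab = 1 for every lambda.
a = 1.0 - np.exp(-lam / 10.0)
b = 1.0 - np.exp(-lam / 50.0)
weights = [1.0 - a, a * (1.0 - b), a * b]
filters = [np.sqrt(w) for w in weights]  # |h_gamma(k)|^2 = w_gamma(lambda_k), so (5) holds


def analysis(g):
    """Frame analysis operator g -> {g * h_gamma}, stacked as rows."""
    g_hat = phi.T @ g
    return np.stack([phi @ (h * g_hat) for h in filters])


rng = np.random.default_rng(0)
f1, f2 = rng.standard_normal((2, n))

energy_in = np.sum(f1**2)
energy_out = np.sum(analysis(f1) ** 2)
print(np.isclose(energy_in, energy_out))  # Proposition 2.2

# The modulus is 1-Lipschitz pointwise, so "filter bank, then |.|" is non-expansive.
lhs = np.linalg.norm(np.abs(analysis(f1)) - np.abs(analysis(f2)))
print(lhs <= np.linalg.norm(f1 - f2) + 1e-12)
```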

2.3. Geometric wavelet transforms on manifolds

The geometric wavelet transform is a special type of Littlewood-Paley frame analysis operator in which the filters group the frequencies of $\mathcal{M}$ into dyadic packets. A spectral filter $\phi\in\mathbf{L}^2(\mathcal{M})$ is said to be a low-pass filter if $\hat\phi(0)=1$ and $|\hat\phi(k)|$ is non-increasing with respect to k. Typically, $|\hat\phi(k)|$ decays rapidly as k grows large. Thus, low-pass filtering, Tϕf := f ∗ ϕ, retains the low frequencies of f while suppressing the high frequencies. A wavelet is a spectral filter $\psi\in\mathbf{L}^2(\mathcal{M})$ such that $\hat\psi(0)=0$. Unlike low-pass filters, wavelets have no frequency response at k = 0, but are generally well localized in the frequency domain away from k = 0.

We shall define a family of low-pass and wavelet filters, using the difference between low-pass filters at consecutive dyadic scales, in a manner which mimics standard wavelet constructions (see, e.g., Meyer, 1993). Let $G:[0,\infty)\to\mathbb{R}$ be a non-negative, non-increasing function with G(0) = 1. Define a low-pass spectral filter ϕ and its dilation at scale $2^j$ for $j\in\mathbb{Z}$ by:

$$\hat\phi(k):=G(\lambda_k),\qquad\hat\phi_j(k):=G(2^j\lambda_k).$$

Given the dilated low-pass filters, $\{\phi_j\}_{j\in\mathbb{Z}}$, we define our wavelet filters by

$$\hat\psi_j(k):=\left[|\hat\phi_{j-1}(k)|^2-|\hat\phi_j(k)|^2\right]^{1/2}.\qquad(6)$$

For $J\in\mathbb{Z}$, we let AJf := f ∗ ϕJ and Ψjf := f ∗ ψj. We then define the geometric wavelet transform as

$$W_Jf:=\{A_Jf,\ \Psi_jf:j\le J\}.$$

The geometric wavelet transform extracts the low-frequency, slow transitions of f over $\mathcal{M}$ through AJf, and groups the high-frequency, sharp transitions of f over $\mathcal{M}$ into different dyadic frequency bands via the collection {Ψjf : j ≤ J}. The following proposition can be proved by observing that {ϕJ, ψj : j ≤ J} forms a Littlewood-Paley frame and applying Proposition 2.2. The proof is nearly identical to the corresponding result in the Euclidean case; however, we provide full details in Appendix C in order to help keep this paper self-contained.
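The Littlewood-Paley property behind the next proposition comes from a telescoping sum: $|\hat\phi_J|^2+\sum_{j=j_0}^{J}|\hat\psi_j|^2=|\hat\phi_{j_0-1}|^2$, which tends to 1 as $j_0\to-\infty$. The sketch below assumes G(λ) = e−λ, a cycle-graph discretization of $S^1$, and a hypothetical finest scale `j0` at which the infinite family {ψj : j ≤ J} is truncated, so energy is preserved only up to that truncation.

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


n, J, j0 = 64, 2, -20  # grid size, coarsest scale J, finest wavelet scale j0 (hypothetical)
lam, phi = np.linalg.eigh(circle_laplacian(n))
lam = np.clip(lam, 0.0, None)  # drop tiny negative round-off in lambda_0


def G(x):
    return np.exp(-x)


def phi_hat(j):
    return G(2.0**j * lam)  # low-pass filter dilated to scale 2^j


def psi_hat(j):
    return np.sqrt(np.clip(phi_hat(j - 1) ** 2 - phi_hat(j) ** 2, 0.0, None))  # Eq. (6)


scales = range(j0, J + 1)
lp_sum = phi_hat(J) ** 2 + sum(psi_hat(j) ** 2 for j in scales)
print(np.allclose(lp_sum, phi_hat(j0 - 1) ** 2))  # telescoping identity

rng = np.random.default_rng(0)
f = rng.standard_normal(n)
f_hat = phi.T @ f
coeffs = [phi @ (phi_hat(J) * f_hat)] + [phi @ (psi_hat(j) * f_hat) for j in scales]
energy_ratio = sum(np.sum(c**2) for c in coeffs) / np.sum(f**2)
print(energy_ratio)  # close to 1, cf. Proposition 2.3 (up to truncation at j0)
```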

Proposition 2.3

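For any $J\in\mathbb{Z}$, $W_J$ is an isometry, i.e.,

$$\|W_Jf\|_{2,2}^2:=\|A_Jf\|_2^2+\sum_{j\le J}\|\Psi_jf\|_2^2=\|f\|_2^2\quad\text{for all }f\in\mathbf{L}^2(\mathcal{M}).$$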

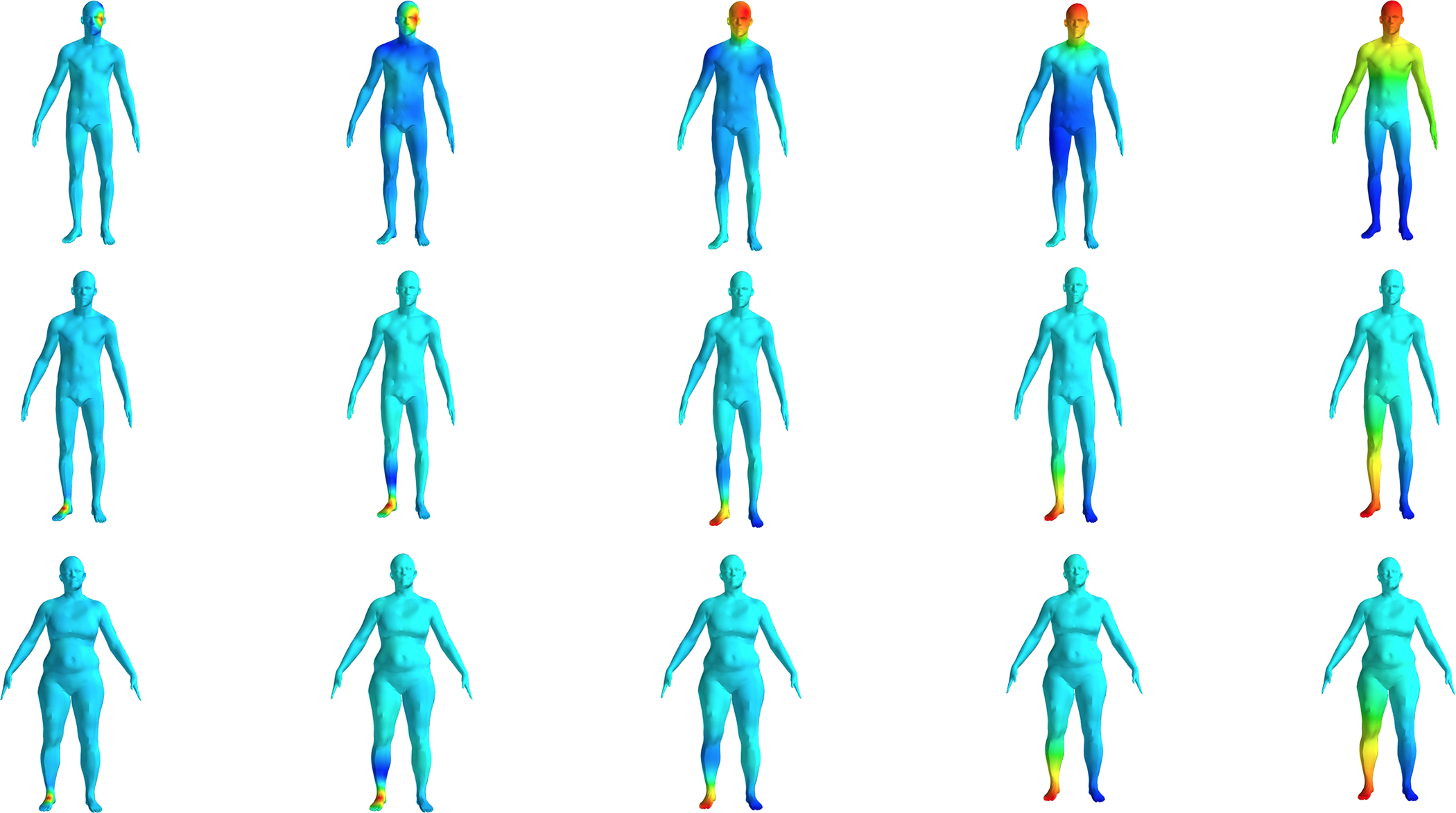

An important example is G(λ) = e−λ. In this case the low-pass kernel $K_{\phi_J}$ is the heat kernel on $\mathcal{M}$ at diffusion time $t=2^J$, and the wavelet operators Ψj are similar to the diffusion wavelets introduced in Coifman and Maggioni (2006). We also note that wavelet constructions similar to ours were used in Hammond et al. (2011) and Dong (2017). Figure 1 depicts these wavelets over manifolds from the FAUST data set (Bogo et al., 2014). Unlike many wavelets commonly used in computer vision, our wavelets are not directional. Indeed, on a generic manifold the concept of directional wavelets is not well-defined since the isometry group cannot be decomposed into translations, rotations, and reflections. Instead, our wavelets have a donut-like shape which is somewhat similar to the wavelet obtained by applying the Laplacian operator on $\mathbb{R}^d$ to a d-dimensional Gaussian.
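The heat-kernel interpretation for G(λ) = e−λ can be checked independently of the eigendecomposition. In this sketch (assuming a cycle-graph discretization of $S^1$) the spectral low-pass operator at $t=2^J$ is compared with many small explicit Euler steps of the discrete heat equation du/dt = −(Laplacian)u; the step count `m` is a hypothetical choice small enough in step size to keep Euler stable.

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


n, J = 64, 2
lap = circle_laplacian(n)
lam, phi = np.linalg.eigh(lap)
t = 2.0**J  # diffusion time t = 2^J

# Spectral low-pass kernel with G(lambda) = exp(-lambda): sum_k exp(-t lambda_k) phi_k phi_k^T
K_spec = (phi * np.exp(-t * lam)) @ phi.T

# Independent check: (I - (t/m) lap)^m approximates exp(-t lap) for large m (explicit Euler).
m = 2**20
K_euler = np.linalg.matrix_power(np.eye(n) - (t / m) * lap, m)

print(np.allclose(K_spec, K_euler, atol=1e-6))
print(np.allclose(K_spec.sum(axis=1), 1.0))  # heat flow conserves mass
print(K_spec.min() >= -1e-12)                # and keeps nonnegative data nonnegative
```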

Figure 1:

Geometric wavelets on the FAUST mesh with G(λ) = e−λ. From left to right j = −1,−3,−5,−7,−9. Positive values are colored red, while negative values are dark blue.

3. The geometric wavelet scattering transform

The geometric wavelet scattering transform is a type of geometric ConvNet, constructed in a manner analogous to the Euclidean scattering transform (Mallat, 2012) as an alternating cascade of geometric wavelet transforms (defined in Section 2.3) and nonlinearities. As we shall show in Sections 3.3, 3.4, and 4, this transformation enjoys several desirable properties for processing data consisting of signals defined on a fixed manifold $\mathcal{M}$, in addition to tasks in which each data point is a different manifold and one is required to compare and classify manifolds. Tasks of the latter form are approachable due to the use of geometric wavelets that are derived from a universal frequency function that is defined independently of $\mathcal{M}$. Motivation for these invariance and stability properties is given in Section 3.1, and the geometric wavelet scattering transform is defined in Section 3.2. We note that much of our analysis remains valid when our wavelets are replaced with a general Littlewood-Paley frame. However, we will focus on the wavelet case for ease of exposition and to emphasize the connections between the manifold scattering transform and its Euclidean analogue.

3.1. The role of invariance and stability

Invariance and stability play a fundamental role in many machine learning tasks, particularly in computer vision. For classification and regression, one often wants to consider two signals $f_1,f_2\in\mathbf{L}^2(\mathcal{M})$, or two manifolds $\mathcal{M}$ and $\mathcal{M}'$, to be equivalent if they differ by the action of a global isometry. Similarly, it is desirable that the action of small diffeomorphisms on f, or on the underlying manifold $\mathcal{M}$, should not have a large impact on the representation of the input signal.

Thus, we seek to construct a family of representations, (Θt)t∈(0,∞), which are invariant to isometric transformations up to the scale t. In the case of analyzing multiple signals on a fixed manifold, such a representation should satisfy a condition of the form:

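$$\|\Theta_t(f)-\Theta_t(V_\zeta f)\|\le\alpha(\zeta)\,\beta(t)\,\|f\|_2\quad\text{for all }f\in\mathbf{L}^2(\mathcal{M}),\ \zeta\in\mathrm{Isom}(\mathcal{M}),\qquad(7)$$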

where α(ζ) measures the size of the isometry with α(id) = 0, and β(t) decreases to zero as the scale t grows to infinity. For diffeomorphisms, invariance is too strong a property, since we are often interested in non-isometric differences between signals on a fixed manifold, or geometric differences between multiple manifolds, not just topological differences; e.g., we often wish to classify a doughnut differently from a coffee mug, even though they are both topologically a 2-torus. Instead, we want a family of representations that is stable to diffeomorphism actions, but not invariant. Combining this requirement with the isometry invariance condition (7) leads us to seek, for the case of a fixed manifold $\mathcal{M}$, a condition of the form:

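$$\|\Theta_t(f)-\Theta_t(V_\zeta f)\|\le\left[\alpha(\zeta)\,\beta(t)+A(\zeta)\right]\|f\|_2\quad\text{for all }f\in\mathbf{L}^2(\mathcal{M}),\ \zeta\in\mathrm{Diff}(\mathcal{M}),\qquad(8)$$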

where A(ζ) measures how much ζ differs from being an isometry, with A(ζ) = 0 if $\zeta\in\mathrm{Isom}(\mathcal{M})$ and A(ζ) > 0 if $\zeta\notin\mathrm{Isom}(\mathcal{M})$. We also develop analogous conditions for isometries in the case of multiple manifolds in Section 3.4.

At the same time, the representations (Θt)t∈(0,∞) should not be trivial. Different classes or types of signals are often distinguished by their high-frequency content, i.e., $|\hat f(k)|$ for large k. The same may also be true of two manifolds $\mathcal{M}$ and $\mathcal{M}'$, in that differences between them are only readily apparent when comparing the high-frequency eigenfunctions of their respective Laplace-Beltrami operators. Our problem is thus to find a family of representations for data defined on a manifold that is stable to diffeomorphisms, allows one to control the scale of isometric invariance, and discriminates between different types of signals, in both high and low frequencies. The wavelet scattering transform of Mallat (2012) achieves goals analogous to the ones presented here, but for Euclidean supported signals. We seek to construct a geometric version of the scattering transform, using filters corresponding to the spectral geometry of $\mathcal{M}$, and to show it has similar properties.

3.2. Defining the geometric wavelet scattering transform

The geometric wavelet scattering transform is a nonlinear operator constructed through an alternating cascade of geometric wavelet transforms WJ and nonlinearities. Its construction is motivated by the desire to obtain localized isometry invariance and stability to diffeomorphisms, as formulated in Section 3.1.

A simple way to obtain a locally isometry invariant representation of a signal is to apply the low-pass averaging operator AJ. If G(λ) ≤ e−λ, then one can use Theorem 2.1 to show that

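$$\|A_Jf-A_JV_\zeta f\|_2\le C(\mathcal{M})\,2^{-dJ}\,\|\zeta\|_\infty\,\|f\|_2\quad\text{for all }f\in\mathbf{L}^2(\mathcal{M}),\ \zeta\in\mathrm{Isom}(\mathcal{M}).\qquad(9)$$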

In other words, for a unit-energy signal f (i.e., ||f||2 = 1), the L2 difference between f ∗ ϕJ and Vζf ∗ ϕJ is no more than the size ||ζ||∞ of the isometry, reduced by a factor of $2^{dJ}$ and multiplied by a constant $C(\mathcal{M})$ that depends only on $\mathcal{M}$. Thus, the parameter J controls the degree of invariance.
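The role of J as an invariance knob can be seen numerically. The sketch assumes a cycle-graph discretization of $S^1$, G(λ) = e−λ, and a rotation by five grid steps as the isometry. Since $\|A_J(f-V_\zeta f)\|_2^2=\sum_{\lambda\in\Lambda}e^{-2\cdot2^J\lambda}\|\pi_\lambda(f-V_\zeta f)\|_2^2$ and a rotation preserves the mean, the error is non-increasing in J and vanishes as J grows.

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


n = 64
lam, phi = np.linalg.eigh(circle_laplacian(n))
rng = np.random.default_rng(0)
f = rng.standard_normal(n)
f /= np.linalg.norm(f)  # unit energy, as in (9)
Vf = np.roll(f, 5)      # isometry: rotate the circle by 5 grid steps


def A(J, g):
    """Low-pass operator A_J with G(lambda) = exp(-lambda)."""
    return phi @ (np.exp(-(2.0**J) * lam) * (phi.T @ g))


Js = np.arange(-4, 7)
errs = np.array([np.linalg.norm(A(J, f) - A(J, Vf)) for J in Js])
for J, e in zip(Js, errs):
    print(f"J={J:2d}  ||A_J f - A_J V f||_2 = {e:.3e}")
```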

However, by definition $|\hat\phi_J(k)|=G(2^J\lambda_k)\le e^{-2^J\lambda_k}$, and so if $\lambda_k\gg2^{-J}$, we see the high-frequency content of f is lost in the representation AJf. The high frequencies of f are recovered with the wavelet coefficients {Ψjf = f ∗ ψj : j ≤ J}, which are guaranteed to capture the remaining frequency content of f. However, the wavelet coefficients Ψjf are not isometry invariant and thus do not satisfy any bound analogous to (9). If we apply the averaging operator in addition to the wavelet coefficient operator, we obtain:

$$\Psi_jf*\phi_J=f*\psi_j*\phi_J=\sum_{k\ge0}\hat f(k)\,\hat\psi_j(k)\,\hat\phi_J(k)\,\varphi_k,$$

but by design the sequences $\{\hat\psi_j(k)\}_{k\ge0}$ and $\{\hat\phi_J(k)\}_{k\ge0}$ have small overlapping support in order to satisfy the Littlewood-Paley condition (5), particularly in their largest responses, and thus f ∗ ψj ∗ ϕJ ≈ 0. In order to obtain a non-trivial invariant that also retains some of the high-frequency information in the signal f, we need to apply a nonlinear operator. Because it is non-expansive and commutes with isometries, we choose the absolute value (complex modulus) function as our nonlinearity, and let

$$U_J[j]f:=|\Psi_jf|=|f*\psi_j|.$$

We then convolve UJ[j]f with the low-pass filter ϕJ to obtain locally invariant descriptions of f, which we refer to as the first-order scattering coefficients:

$$S_J[j]f:=U_J[j]f*\phi_J=|f*\psi_j|*\phi_J.\qquad(10)$$
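The need for the modulus can be seen by comparing the linear cascade f ∗ ψj ∗ ϕJ with the first-order coefficient (10). This sketch assumes a cycle-graph discretization of $S^1$, G(λ) = e−λ, and the hypothetical scales J = 4 and j = −2; `filt` is an illustrative helper applying a filter through its Fourier multipliers.

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


n, J, j = 64, 4, -2  # hypothetical scales
lam, phi = np.linalg.eigh(circle_laplacian(n))
lam = np.clip(lam, 0.0, None)


def filt(response, g):
    """Spectral convolution g * h, given the Fourier multipliers of h."""
    return phi @ (response * (phi.T @ g))


phi_J = np.exp(-(2.0**J) * lam)
# Eq. (6) with G(x) = exp(-x): |psi_j|^2 = exp(-2 * 2^(j-1) lam) - exp(-2 * 2^j lam)
psi_j = np.sqrt(np.clip(np.exp(-2 * 2.0 ** (j - 1) * lam) - np.exp(-2 * 2.0**j * lam), 0.0, None))

rng = np.random.default_rng(0)
f = rng.standard_normal(n)

linear = filt(phi_J, filt(psi_j, f))               # f * psi_j * phi_J: nearly zero
first_order = filt(phi_J, np.abs(filt(psi_j, f)))  # S_J[j] f = |f * psi_j| * phi_J, Eq. (10)
print(np.linalg.norm(linear), np.linalg.norm(first_order))
```

The linear cascade is orders of magnitude smaller because the two filters barely overlap in frequency, whereas the modulus moves energy of f ∗ ψj down to frequencies that ϕJ retains.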

The collection of all such coefficients can be written as

$$S_J[\Lambda_J]f:=\{S_J[j]f:j\in\Lambda_J\},$$

where

$$\Lambda_J:=\{j\in\mathbb{Z}:j\le J\}.$$

These coefficients satisfy a local invariance bound similar to (9), but encode multiscale characteristics of f over the manifold geometry, which are not contained in AJf. Nevertheless, the geometric scattering representation still loses information contained in the signal f. Indeed, even with the absolute value, the functions |f ∗ ψj| have frequency information not captured by the low-pass ϕJ. Iterating the geometric wavelet transform WJ recovers this information by computing $W_J|f*\psi_{j_1}|=\{|f*\psi_{j_1}|*\phi_J,\ |f*\psi_{j_1}|*\psi_{j_2}:j_2\le J\}$, which contains the first-order invariants (10) but also retains the high frequencies of $|f*\psi_{j_1}|$. We then obtain second-order geometric wavelet scattering coefficients given by

$$S_J[j_1,j_2]f:=U_J[j_1,j_2]f*\phi_J=\big||f*\psi_{j_1}|*\psi_{j_2}\big|*\phi_J,$$

the collection of which can be written as $S_J[\Lambda_J^2]f:=\{S_J[j_1,j_2]f:j_1,j_2\in\Lambda_J\}$. The corresponding geometric scattering transform up to order m = 2 computes $\{A_Jf,\ S_J[\Lambda_J]f,\ S_J[\Lambda_J^2]f\}$, which can be thought of as a three-layer geometric ConvNet that extracts invariant representations of the input signal at each layer. Second-order coefficients, in particular, decompose the interference patterns in |f ∗ ψj1| into dyadic frequency bands via a second wavelet transform. This second-order transform has the effect of coupling two scales $2^{j_1}$ and $2^{j_2}$ over the geometry of the manifold $\mathcal{M}$.

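The general geometric scattering transform iterates the wavelet transform and absolute value (complex modulus) operators up to an arbitrary depth. Formally, for $m\ge0$ and $j_1,\dots,j_m\le J$ we let

$$U_J[j_1,\dots,j_m]f:=\Big|\cdots\big|\,|f*\psi_{j_1}|*\psi_{j_2}\big|*\cdots*\psi_{j_m}\Big|$$

when m ≥ 1, and we let UJ[∅]f = f when m = 0. Likewise, we define

$$S_J[j_1,\dots,j_m]f:=U_J[j_1,\dots,j_m]f*\phi_J$$

and let SJ[∅]f = f ∗ ϕJ. We then consider the maps $U_J$ and $S_J$ given by

$$U_Jf:=\{U_J[j_1,\dots,j_m]f:m\ge0,\ j_1,\dots,j_m\le J\}$$

and

$$S_Jf:=\{S_J[j_1,\dots,j_m]f:m\ge0,\ j_1,\dots,j_m\le J\}.$$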

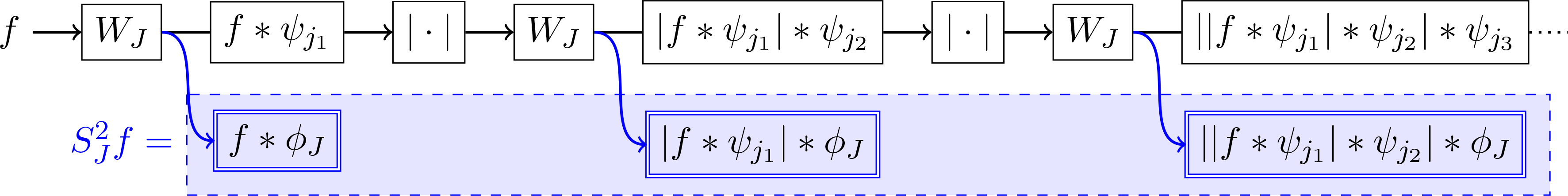

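An illustration of the map SJ, which we refer to as the geometric scattering transform at scale $2^J$, is given by Figure 2. In practice, one only uses finitely many layers, which motivates us to also consider the L-layer versions of UJ and SJ defined for L ≥ 0 by

$$U_J^{(L)}f:=\{U_J[j_1,\dots,j_m]f:0\le m\le L,\ j_1,\dots,j_m\le J\}$$

and

$$S_J^{(L)}f:=\{S_J[j_1,\dots,j_m]f:0\le m\le L,\ j_1,\dots,j_m\le J\}.$$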

Figure 2:

The geometric wavelet scattering transform $S_J^{(L)}$, illustrated for L = 2.

The invariance properties of SJ and $S_J^{(L)}$ are described in Sections 3.3 and 3.4, whereas their diffeomorphism stability properties are described in Section 4. The following proposition shows that both SJ and $S_J^{(L)}$ are non-expansive.

Proposition 3.1

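Both the finite-layer and infinite-layer geometric wavelet scattering transforms are non-expansive. Specifically, for all $f_1,f_2\in\mathbf{L}^2(\mathcal{M})$,

$$\|S_J^{(L)}f_1-S_J^{(L)}f_2\|_{2,2}\le\|f_1-f_2\|_2\quad\text{and}\quad\|S_Jf_1-S_Jf_2\|_{2,2}\le\|f_1-f_2\|_2.$$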

The first inequality is trivial. The proof of the second inequality is nearly identical to Mallat (2012, Proposition 2.5), and is thus omitted.
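A compact L-layer implementation makes the cascade and Proposition 3.1 concrete. This is a sketch under stated assumptions, not the authors' code: $S^1$ is discretized as a cycle graph, G(λ) = e−λ, and the family {ψj : j ≤ J} is truncated to the hypothetical range j0 ≤ j ≤ J, which keeps the frame sub-tight and hence preserves non-expansiveness. It also checks the bound $\|U_J^{(L)}f\|_{2,2}\le\sqrt{L+1}\,\|f\|_2$ used for (12).

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


n, J, j0, L = 32, 2, -7, 2  # hypothetical grid size, scales j0 <= j <= J, and depth L
lam, phi = np.linalg.eigh(circle_laplacian(n))
lam = np.clip(lam, 0.0, None)


def G(x):
    return np.exp(-x)


def conv(response, g):
    """Spectral convolution g * h, where `response` holds the Fourier multipliers of h."""
    return phi @ (response * (phi.T @ g))


lowpass = G(2.0**J * lam)
wavelets = {j: np.sqrt(np.clip(G(2.0 ** (j - 1) * lam) ** 2 - G(2.0**j * lam) ** 2, 0.0, None))
            for j in range(j0, J + 1)}


def scattering(f):
    """Return {path: U_J[path] f} and {path: S_J[path] f} for all paths of length <= L."""
    U = {(): f}
    frontier = {(): f}
    for _ in range(L):
        frontier = {path + (j,): np.abs(conv(w, u))
                    for path, u in frontier.items() for j, w in wavelets.items()}
        U.update(frontier)
    S = {path: conv(lowpass, u) for path, u in U.items()}
    return U, S


def norm22(coeffs):
    """The ||.||_{2,2} norm of a collection of functions."""
    return np.sqrt(sum(np.sum(c**2) for c in coeffs.values()))


rng = np.random.default_rng(0)
f1, f2 = rng.standard_normal((2, n))
U1, S1 = scattering(f1)
_, S2 = scattering(f2)

diff = np.sqrt(sum(np.sum((S1[p] - S2[p]) ** 2) for p in S1))
print(len(S1))                                                    # 1 + 10 + 100 paths
print(diff <= np.linalg.norm(f1 - f2))                            # Proposition 3.1
print(norm22(U1) <= np.sqrt(L + 1) * np.linalg.norm(f1))          # the bound used for (12)
```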

3.3. Isometric invariance

The geometric wavelet scattering transform is invariant to the action of the isometry group on the input signal f up to a factor that depends upon the frequency decay of the low-pass spectral filter ϕJ. In particular, the following theorem establishes isometric invariance up to the scale $2^J$ under the assumption that $G(\lambda)\le e^{-\lambda}$. The proof of Theorem 3.2 is given in Appendix D.

Theorem 3.2

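Let $\zeta\in\mathrm{Isom}(\mathcal{M})$ and suppose G(λ) ≤ e−λ. Then there is a constant $C(\mathcal{M})>0$ such that for all $f\in\mathbf{L}^2(\mathcal{M})$,

$$\|S_Jf-S_JV_\zeta f\|_{2,2}\le C(\mathcal{M})\,2^{-dJ}\,\|\zeta\|_\infty\,\|U_Jf\|_{2,2}\qquad(11)$$

and

$$\|S_J^{(L)}f-S_J^{(L)}V_\zeta f\|_{2,2}\le C(\mathcal{M})\,2^{-dJ}\sqrt{L+1}\,\|\zeta\|_\infty\,\|f\|_2.\qquad(12)$$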

The factor ||UJf||2,2 for an infinite-depth network is hard to bound in terms of ||f||2, which is also true for the Euclidean scattering transform (Mallat, 2012). However, for finite-depth networks, a simple argument shows that $\|U_J^{(L)}f\|_{2,2}\le\sqrt{L+1}\,\|f\|_2$, which yields (12).

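For manifold classification (or any task requiring rigid invariance), we take J → ∞. This limit is equivalent to replacing the low-pass operator AJ with an integration over $\mathcal{M}$, since for any $f\in\mathbf{L}^2(\mathcal{M})$,

$$\lim_{J\to\infty}A_Jf(x)=\frac{1}{\mathrm{vol}(\mathcal{M})}\int_{\mathcal{M}}f(y)\,dy.\qquad(13)$$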

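Equation (13) motivates the definition of a non-windowed geometric scattering transform,

$$\bar Sf:=\{\bar S[j_1,\dots,j_m]f:m\ge0,\ j_1,\dots,j_m\in\mathbb{Z}\},\qquad\bar S[j_1,\dots,j_m]f:=\int_{\mathcal{M}}U[j_1,\dots,j_m]f(x)\,dx,$$

where $U[j_1,\dots,j_m]f$ is defined as $U_J[j_1,\dots,j_m]f$ but with the scales $j_i$ ranging over all of $\mathbb{Z}$.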

We also define $\bar S^{(L)}$ as the L-layer version of $\bar S$, analogous to $S_J^{(L)}$ defined in Section 3.2. Unlike $S_J$, which consists of a countable collection of functions, $\bar S$ consists of a countable collection of scalar values. Theorem 3.2 and (13) show that these values are invariant to global isometries acting on f. The following theorem shows that they form a sequence in ℓ2. We give a proof in Appendix E.
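Both the limit (13) and the global invariance of non-windowed coefficients can be illustrated in a discrete setting. In this sketch (assumptions: a cycle-graph discretization of $S^1$, G(λ) = e−λ, vertex sums in place of the integral, and the range j ∈ ℤ truncated to a hypothetical window −7 ≤ j ≤ 6), the coefficients are compared for a signal, a rotated copy, and a reflected copy.

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


n, L = 32, 2
lam, phi = np.linalg.eigh(circle_laplacian(n))
lam = np.clip(lam, 0.0, None)


def G(x):
    return np.exp(-x)


def conv(response, g):
    return phi @ (response * (phi.T @ g))


rng = np.random.default_rng(0)
f = rng.standard_normal(n)

# (13): for large J, A_J f is numerically the constant function equal to the mean of f.
A_large = conv(G(2.0**8 * lam), f)
print(np.allclose(A_large, f.mean()))

scales = range(-7, 7)  # hypothetical truncation of j in Z
wav = [np.sqrt(np.clip(G(2.0 ** (j - 1) * lam) ** 2 - G(2.0**j * lam) ** 2, 0.0, None)) for j in scales]


def nonwindowed(g):
    """Sum U[j_1, ..., j_m] g over the vertices for all paths of length <= L."""
    coeffs, layer = [g.sum()], [g]
    for _ in range(L):
        layer = [np.abs(conv(w, u)) for u in layer for w in wav]
        coeffs.extend(u.sum() for u in layer)
    return np.array(coeffs)


rotated = np.roll(f, 7)             # rotation by 7 grid steps
reflected = f[(-np.arange(n)) % n]  # reflection theta -> -theta
S_f = nonwindowed(f)
print(S_f.shape)
print(np.allclose(S_f, nonwindowed(rotated)), np.allclose(S_f, nonwindowed(reflected)))
```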

Theorem 3.3

If $f\in\mathbf{L}^2(\mathcal{M})$, then $\bar S^{(L)}f\in\ell^2$ when $L<\infty$.

3.4. Isometric invariance between different manifolds

Let $\mathcal{M}$ and $\mathcal{M}'$ be isometric manifolds. For shape matching tasks in which $\mathcal{M}$ and $\mathcal{M}'$ should be identified as the same shape, it is appropriate to let J → ∞ and, inspired by (13), use the representation $\bar S$ to carry out the computation; see Section 5.2 for numerical results along these lines. In such tasks, one selects a signal f that is defined intrinsically in terms of the geometry of $\mathcal{M}$, i.e., one that is chosen in such a way that, given f on $\mathcal{M}$, one can compute a corresponding signal f′ = Vζf on $\mathcal{M}'$ without explicit knowledge of ζ. For example, if $\mathcal{M}$ is a two-dimensional surface embedded in $\mathbb{R}^3$, and $\mathcal{M}'$ is a three-dimensional rotation of $\mathcal{M}$ by ζ ∈ SO(3), then the coordinate function is such a function. Indeed, let $x=(x_1,x_2,x_3)\in\mathcal{M}$ and suppose f(x) = xi is the coordinate function on $\mathcal{M}$ for a fixed coordinate 1 ≤ i ≤ 3. Then the coordinate function on $\mathcal{M}'$ is given by f′ = Vζf. More sophisticated examples include the SHOT features of Tombari et al. (2010); Prakhya et al. (2015). The following proposition shows that the non-windowed geometric scattering transform produces a representation that is invariant to isometries $\zeta\in\mathrm{Isom}(\mathcal{M},\mathcal{M}')$. We give a proof in Appendix F.
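A discrete analogue of this invariance replaces the isometry between two manifolds with a relabeling of graph vertices: the relabeled cycle graph has Laplacian $PLP^{\top}$ for a permutation matrix P, and the corresponding signal is Pf. The sketch below (assumptions: cycle-graph discretization of $S^1$, G(λ) = e−λ, vertex sums in place of integrals, truncated scale range) computes the non-windowed coefficients separately on each graph, each with its own eigendecomposition, and compares them.

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


def nonwindowed(lap, g, scales=range(-6, 7), depth=2):
    """Non-windowed scattering on the graph with Laplacian `lap`, summing over vertices."""
    lam, phi = np.linalg.eigh(lap)
    lam = np.clip(lam, 0.0, None)
    # With G(x) = exp(-x), Eq. (6) gives |psi_j|^2 = exp(-2^j lam) - exp(-2^(j+1) lam).
    wav = [np.sqrt(np.clip(np.exp(-(2.0**j) * lam) - np.exp(-(2.0 ** (j + 1)) * lam), 0.0, None))
           for j in scales]
    coeffs, layer = [g.sum()], [g]
    for _ in range(depth):
        layer = [np.abs(phi @ (w * (phi.T @ u))) for u in layer for w in wav]
        coeffs.extend(u.sum() for u in layer)
    return np.array(coeffs)


n = 32
lap = circle_laplacian(n)
rng = np.random.default_rng(0)
P = np.eye(n)[rng.permutation(n)]  # relabel the vertices: a discrete stand-in for an isometry
lap_prime = P @ lap @ P.T          # Laplacian of the relabeled graph M'
f = rng.standard_normal(n)
f_prime = P @ f                    # the corresponding signal on M'

S_M = nonwindowed(lap, f)
S_M_prime = nonwindowed(lap_prime, f_prime)
print(np.allclose(S_M, S_M_prime))
```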

Proposition 3.4

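Let $\zeta\in\mathrm{Isom}(\mathcal{M},\mathcal{M}')$, let $f\in\mathbf{L}^2(\mathcal{M})$, and let f′ := Vζf be the corresponding signal defined on $\mathcal{M}'$. Then $\bar Sf=\bar S'f'$, where $\bar S'$ denotes the non-windowed geometric scattering transform on $\mathcal{M}'$.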

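In other tasks, one may wish to have local isometric invariance between $\mathcal{M}$ and $\mathcal{M}'$. We thus extend Theorem 3.2 in the following way. If $\zeta\in\mathrm{Isom}(\mathcal{M},\mathcal{M}')$, then the operator $V_\zeta$ maps $\mathbf{L}^2(\mathcal{M})$ into $\mathbf{L}^2(\mathcal{M}')$. We wish to estimate how much (SJ)′Vζf differs from SJf, where (SJ)′ denotes the geometric wavelet scattering transform on $\mathcal{M}'$. However, the difference SJf − (SJ)′Vζf is not well-defined since SJf is a countable collection of functions defined on $\mathcal{M}$ and (SJ)′Vζf is a collection of functions defined on $\mathcal{M}'$. Therefore, in Theorem 3.5 we let ζ2 be a second isometry from $\mathcal{M}$ to $\mathcal{M}'$ and estimate $\|S_Jf-V_{\zeta_2}^{-1}(S_J)'V_\zeta f\|_{2,2}$, where $V_{\zeta_2}^{-1}$ is applied to each function in $(S_J)'V_\zeta f$ to map it back to $\mathcal{M}$; see Appendix G for the proof.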

Theorem 3.5

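Let $\zeta,\zeta_2\in\mathrm{Isom}(\mathcal{M},\mathcal{M}')$ and assume that G(λ) ≤ e−λ. Then there is a constant $C(\mathcal{M})>0$ such that for all $f\in\mathbf{L}^2(\mathcal{M})$,

$$\|S_Jf-V_{\zeta_2}^{-1}(S_J)'V_\zeta f\|_{2,2}\le C(\mathcal{M})\,2^{-dJ}\,\|\zeta_2^{-1}\circ\zeta\|_\infty\,\|U_Jf\|_{2,2}\qquad(14)$$

and

$$\|S_J^{(L)}f-V_{\zeta_2}^{-1}(S_J^{(L)})'V_\zeta f\|_{2,2}\le C(\mathcal{M})\,2^{-dJ}\sqrt{L+1}\,\|\zeta_2^{-1}\circ\zeta\|_\infty\,\|f\|_2.\qquad(15)$$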

It is worthwhile to contrast Proposition 3.4 and Theorem 3.5 with Theorem 3.2 stated in Section 3.3. As mentioned in the introduction, two of the basic tasks we consider are the classification of multiple signals over a single, fixed manifold and the classification of multiple manifolds. Since Theorem 3.2 considers an isometry $\zeta\in\mathrm{Isom}(\mathcal{M})$, it shows that the manifold scattering transform is well-suited for the former task. Proposition 3.4 and Theorem 3.5, on the other hand, assume that ζ is an isometry from one manifold $\mathcal{M}$ to another manifold $\mathcal{M}'$, and therefore indicate that the manifold scattering transform is well-suited to the latter task as well.

4. Stability to Diffeomorphisms

In this section we show the scattering transform is stable to the action of diffeomorphisms on a signal $f\in\mathbf{L}^2(\mathcal{M})$. In Section 4.1, we show that when restricted to bandlimited functions, the geometric scattering transform is stable to diffeomorphisms. In Section 4.2, we show that, under certain assumptions on $\mathcal{M}$, spectral filters are stable to diffeomorphisms (even if f is not bandlimited). As a consequence, finite-width (i.e., a finite number of wavelets per layer) scattering networks are stable to diffeomorphisms on these manifolds.

4.1. Stability for bandlimited functions

Analogously to the Lipschitz diffeomorphism stability in Mallat (2012, Section 2.5), we wish to show the geometric scattering coefficients are stable to diffeomorphisms that are close to being an isometry. Similarly to Wiatowski and Bölcskei (2015); Czaja and Li (2019), we will assume the input signal f is λ-bandlimited for some λ > 0. That is, $\hat f(k)=0$ whenever λk > λ.
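A bandlimited input and a smooth non-isometric deformation can be set up concretely as follows. This sketch is an illustration under assumptions, not a verification of Theorem 4.1: $S^1$ is discretized as a cycle graph (on which samples of cos(kθ) and sin(kθ) are exact Laplacian eigenvectors, so a trigonometric polynomial of degree K is bandlimited), the diffeomorphism is the hypothetical ζ(u) = u + ε sin u, and only a one-layer windowed transform with G(λ) = e−λ is used. It prints the scattering distance next to ||f − Vζf||2; the former can never exceed the latter by non-expansiveness.

```python
import numpy as np


def circle_laplacian(n):
    """Cycle-graph Laplacian on n equispaced points of S^1, scaled to approximate -d^2/dtheta^2."""
    eye = np.eye(n)
    lap = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)
    return lap * (n / (2 * np.pi)) ** 2


n, J, K = 64, 3, 4  # hypothetical grid size, scale J, and trigonometric degree K
lam, phi = np.linalg.eigh(circle_laplacian(n))
lam = np.clip(lam, 0.0, None)
theta = 2 * np.pi * np.arange(n) / n


def f_cont(t):
    """A band-limited signal on S^1: a trigonometric polynomial of degree K."""
    return sum(np.cos(k * t) / k + np.sin(k * t) / (k + 1) for k in range(1, K + 1))


f = f_cont(theta)
lam_K = (n / (2 * np.pi)) ** 2 * (2 - 2 * np.cos(2 * np.pi * K / n))  # eigenvalue of degree-K modes
out_of_band = np.sum((phi[:, lam > lam_K + 1e-6].T @ f) ** 2)       # energy where lambda_k > lambda_K
print(out_of_band)  # numerically zero


def V_zeta(eps):
    """Samples of f(zeta^{-1}(x)) for zeta(u) = u + eps*sin(u), a diffeomorphism of S^1 when eps < 1."""
    u = theta.copy()
    for _ in range(100):  # fixed-point iteration for zeta(u) = theta
        u = theta - eps * np.sin(u)
    return f_cont(u)


def conv(response, g):
    return phi @ (response * (phi.T @ g))


lowpass = np.exp(-(2.0**J) * lam)
# With G(x) = exp(-x), Eq. (6) gives |psi_j|^2 = exp(-2^j lam) - exp(-2^(j+1) lam).
wavelets = [np.sqrt(np.clip(np.exp(-(2.0**j) * lam) - np.exp(-(2.0 ** (j + 1)) * lam), 0.0, None))
            for j in range(-8, J + 1)]


def first_layer(g):
    """S_J^{(1)} g = {A_J g, |g * psi_j| * phi_J}, concatenated into one vector."""
    return np.concatenate([conv(lowpass, g)] + [conv(lowpass, np.abs(conv(w, g))) for w in wavelets])


results = []
for eps in [0.0, 0.05, 0.1, 0.2]:
    g = V_zeta(eps)
    results.append((eps, np.linalg.norm(first_layer(f) - first_layer(g)), np.linalg.norm(f - g)))
    print(results[-1])
```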

Theorem 4.1

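Let $\zeta\in\mathrm{Diff}(\mathcal{M})$, and assume G(λ) ≤ e−λ. Then there is a constant $C(\mathcal{M})>0$ such that if ζ = ζ1 ∘ ζ2 for some isometry $\zeta_1\in\mathrm{Isom}(\mathcal{M})$ and diffeomorphism $\zeta_2\in\mathrm{Diff}(\mathcal{M})$, then

$$\|S_Jf-S_JV_\zeta f\|_{2,2}\le C(\mathcal{M})\left(2^{-dJ}\|\zeta_1\|_\infty+\lambda^{d}\|\zeta_2\|_\infty\right)\|U_Jf\|_{2,2}\qquad(16)$$

and

$$\|S_J^{(L)}f-S_J^{(L)}V_\zeta f\|_{2,2}\le C(\mathcal{M})\left(2^{-dJ}\|\zeta_1\|_\infty+\lambda^{d}\|\zeta_2\|_\infty\right)\sqrt{L+1}\,\|f\|_2\qquad(17)$$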

for all functions $f\in\mathbf{L}^2(\mathcal{M})$ such that $\hat f(k)=0$ whenever λk > λ.

Theorem 4.1 achieves the goal set forth by (8), with two exceptions: (i) we restrict to bandlimited functions; and (ii) the infinite-depth network has the term ||UJf||2,2 in the upper bound. We leave the resolution of these issues largely to future work, although Section 4.2 takes some initial steps toward resolving (i). We also leave for future work the case of quantifying the stability of the scattering transform for two diffeomorphic manifolds $\mathcal{M}$ and $\mathcal{M}'$. When ζ is an isometry, Theorem 4.1 reduces to Theorem 3.2, since in this case we may choose ζ1 = ζ, ζ2 = id and note that ||id||∞ = 0. For a general diffeomorphism ζ, taking the infimum of ||ζ2||∞ over all factorizations ζ = ζ1 ∘ ζ2 leads to a bound where the first term depends on the scale of the isometric invariance and the second term depends on the distance from ζ to the isometry group in the uniform norm. Letting J → ∞, we may also prove an analogous theorem for the non-windowed scattering transform.

Theorem 4.2

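Let $\zeta\in\mathrm{Diff}(\mathcal{M})$, and assume G(λ) ≤ e−λ. Then there is a constant $C(\mathcal{M})>0$ such that if ζ = ζ1 ∘ ζ2 for some isometry $\zeta_1\in\mathrm{Isom}(\mathcal{M})$ and diffeomorphism $\zeta_2\in\mathrm{Diff}(\mathcal{M})$, then

$$\|\bar S^{(L)}f-\bar S^{(L)}V_\zeta f\|_{2}\le C(\mathcal{M})\,\lambda^{d}\,\|\zeta_2\|_\infty\sqrt{L+1}\,\|f\|_2\qquad(18)$$

for all functions $f\in\mathbf{L}^2(\mathcal{M})$ such that $\hat f(k)=0$ whenever λk > λ.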

The proofs of Theorems 4.1 and 4.2 are given in Appendix H.

4.2. Single-Filter Stability

Theorems 4.1 and 4.2 prove diffeomorphism stability for the geometric wavelet scattering transform, but their proof techniques rely on f being bandlimited. In this section we discuss a possible approach to proving a stability result for all $f\in\mathbf{L}^2(\mathcal{M})$.

As stated in Theorem 2.1, spectral integral operators are equivariant to the action of isometries. This fact is crucial to proving Theorem 3.2 because it allows us to estimate

$$\|S_Jf-V_\zeta S_Jf\|_{2,2}\qquad(19)$$

instead of

$$\|S_Jf-S_JV_\zeta f\|_{2,2}.\qquad(20)$$

In Mallat (2012), it is shown that the Euclidean scattering transform SEuc is stable to the action of certain diffeomorphisms which are close to being translations. A key step in the proof is a bound on the commutator norm ||[SEuc,Vζ]||, which then allows the author to bound a quantity analogous to (19) instead of bounding (20) directly. This motivates us to study the commutator of spectral integral operators with Vζ for diffeomorphisms which are close to being isometries.

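For technical reasons, we will assume that $\mathcal{M}$ is two-point homogeneous, that is, for any two pairs of points (x1, x2), (y1, y2) such that r(x1, x2) = r(y1, y2), there exists an isometry $\zeta\in\mathrm{Isom}(\mathcal{M})$ such that ζ(x1) = y1 and ζ(x2) = y2. In order to quantify how far a diffeomorphism $\zeta\in\mathrm{Diff}(\mathcal{M})$ differs from being an isometry, we will consider two quantities:

$$A_1(\zeta):=\sup_{x,y\in\mathcal{M},\,x\ne y}\frac{|r(\zeta(x),\zeta(y))-r(x,y)|}{r(x,y)}\qquad(21)$$

and

$$A_2(\zeta):=\sup_{x\in\mathcal{M}}\big|1-|\det D\zeta(x)|\big|.\qquad(22)$$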

Intuitively, A1 measures how much ζ distorts distances, and A2 measures how much ζ distorts volumes. We let A(ζ) = max{A1(ζ),A2(ζ)} and note that if ζ is an isometry, then A(ζ) = 0. We remark that A(ζ) differs from the notion of diffeomorphism size used in Theorems 4.1 and 4.2. It is an interesting research direction to understand the differences between these formulations and, more generally, to determine with respect to which notions of diffeomorphism size geometric deep networks are stable. The following theorem, which is proved in Appendix I, bounds the operator norm of [Th, Vζ] in terms of A(ζ) and a quantity depending upon h.

Theorem 4.3

Assume that M is two-point homogeneous, and let h be a spectral filter. Then there exists a constant such that for any diffeomorphism ζ,

where

Theorem 4.3 leads to the following corollary, which we prove in Appendix J.

Corollary 4.4

Assume that M is two-point homogeneous and that G(λ) ≤ e−λ. Then

where

In practice, the wavelet transform is implemented using finitely many wavelets. By the triangle inequality, Corollary 4.4 leads to a commutator estimate for the finite wavelet transform. Therefore, by the arguments used in the proof of Theorem 2.12 in Mallat (2012), it follows that the geometric scattering transform is stable to diffeomorphisms on two-point homogeneous manifolds when implemented with finitely many wavelets at each layer. We note, however, that B(ψj) increases exponentially as j decreases to −∞, so this argument applies only to a finite-wavelet implementation of the geometric scattering transform. Mallat (2012) overcomes this difficulty in the Euclidean setting using an almost orthogonality argument. Future work might seek to adapt these techniques to the manifold setting, although doing so raises numerous technical difficulties that are not present in the Euclidean setting.
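The distortion quantities A1(ζ) and A2(ζ) of Section 4.2 can be made concrete on the unit circle, which is two-point homogeneous. The sketch below uses assumed, illustrative versions of them: A1 as the largest relative change of geodesic distances, sup over x ≠ y of |r(ζ(x), ζ(y)) − r(x, y)| / r(x, y), and A2 as the largest deviation of the Jacobian determinant from one, sup_x |1 − |det Dζ(x)||, which on the circle is |1 − |ζ′(x)||. Both are estimated on a grid, and the function names are hypothetical.

```python
import numpy as np


def circle_dist(a, b):
    """Geodesic distance between angles a and b on the unit circle."""
    d = np.mod(a - b, 2 * np.pi)
    return np.minimum(d, 2 * np.pi - d)


def distortion_A1(zeta, x):
    """Assumed proxy for A1: largest relative change of pairwise geodesic distances."""
    X, Y = np.meshgrid(x, x, indexing="ij")
    r = circle_dist(X, Y)
    r_zeta = circle_dist(zeta(X), zeta(Y))
    off_diag = r > 0
    return float(np.max(np.abs(r_zeta[off_diag] - r[off_diag]) / r[off_diag]))


def distortion_A2(zeta, x, h=1e-6):
    """Assumed proxy for A2: sup |1 - |zeta'(x)||, the volume distortion in one dimension."""
    jacobian = (zeta(x + h) - zeta(x - h)) / (2 * h)  # central difference estimate of zeta'
    return float(np.max(np.abs(1.0 - np.abs(jacobian))))


def distortion_A(zeta, x):
    """A(zeta) = max{A1(zeta), A2(zeta)}."""
    return max(distortion_A1(zeta, x), distortion_A2(zeta, x))


x = np.linspace(0.0, 2 * np.pi, 200, endpoint=False)
rotation = lambda y: y + 0.7             # an isometry: A should vanish
warp = lambda y: y + 0.05 * np.sin(y)    # a small diffeomorphism of the circle
print(distortion_A(rotation, x))         # zero up to rounding
print(distortion_A1(warp, x), distortion_A2(warp, x))
```

For the warp, ζ′(x) = 1 + 0.05 cos x, so the A2 proxy equals 0.05; the A1 proxy is positive and, since arc lengths are stretched by at most 5%, does not exceed 0.05 either.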

5. Numerical results

In this section, we describe two numerical experiments to illustrate the utility of the geometric wavelet scattering transform. We consider both traditional geometric learning tasks, in which we compare to other geometric deep learning methods, as well as limited training tasks in which the unsupervised nature of the transform is particularly useful. In the former set of tasks, empirical results are not state-of-the-art, but they show that the geometric scattering model is a good mathematical model for geometric deep learning. Specifically, in Section 5.1 we classify signals, corresponding to digits, on a fixed manifold, the two-dimensional sphere. Then, in Section 5.2 we classify different manifolds which correspond to ten different people whose bodies are positioned in ten different ways. The back-end classifier for all experiments is an RBF kernel SVM.

In order to carry out our numerical experiments, it was necessary to discretize our manifolds and represent them as graphs. We use triangle meshes for all manifolds in this paper, which allows us to approximate the Laplace-Beltrami operator and integration on each manifold via the approach described in Solomon et al. (2014). We emphasize that this approximation of the Laplace-Beltrami operator is not the standard graph Laplacian of the triangle mesh, and thus the discretized geometric scattering transform is not the same as the versions of the graph scattering transform reported in Zou and Lerman (2019); Gama et al. (2019b,a); Gao et al. (2019).
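The mesh discretization can be sketched as follows. Solomon et al. (2014) describe several variants; the block below assumes the common cotangent stiffness matrix L together with a lumped (barycentric) mass matrix M, and solves the generalized eigenproblem Lφ = λMφ, whose eigenpairs approximate those of −Δ. Because M is diagonal, the problem is symmetrized with M^(−1/2) so that only NumPy is needed. The function names are hypothetical, and a regular octahedron serves as a toy mesh instead of the 642-vertex sphere mesh of Section 5.1.

```python
import numpy as np


def cotan_laplacian(V, F):
    """Cotangent stiffness matrix L and lumped (barycentric) vertex areas of a triangle mesh."""
    n = len(V)
    L = np.zeros((n, n))
    mass = np.zeros(n)
    for tri in F:
        for k in range(3):
            i, j, o = tri[k], tri[(k + 1) % 3], tri[(k + 2) % 3]
            u, v = V[i] - V[o], V[j] - V[o]  # edges leaving the vertex opposite edge (i, j)
            w = 0.5 * np.dot(u, v) / np.linalg.norm(np.cross(u, v))  # half the cotangent
            L[i, j] -= w
            L[j, i] -= w
            L[i, i] += w
            L[j, j] += w
        a, b, c = V[tri]
        mass[tri] += np.linalg.norm(np.cross(b - a, c - a)) / 6.0  # one third of the area
    return L, mass


def laplace_beltrami_eigs(V, F):
    """Solve L phi = lam M phi with M = diag(mass); the columns of phi are M-orthonormal."""
    L, mass = cotan_laplacian(V, F)
    s = 1.0 / np.sqrt(mass)
    lam, U = np.linalg.eigh(s[:, None] * L * s[None, :])
    return lam, s[:, None] * U, mass


# Toy mesh: the regular octahedron inscribed in the unit sphere.
V = np.array([[1, 0, 0], [-1, 0, 0], [0, 1, 0], [0, -1, 0], [0, 0, 1], [0, 0, -1]], dtype=float)
F = np.array([[i, j, k] for i in (0, 1) for j in (2, 3) for k in (4, 5)])
lam, phi, mass = laplace_beltrami_eigs(V, F)
print(lam)  # approximately [0, 2, 2, 2, 3, 3]
```

On this toy mesh, the first nonzero eigenvalue, 2 with multiplicity 3, happens to coincide with the first nonzero eigenvalue of −Δ on the unit sphere. Unlike the combinatorial graph Laplacian, the weights depend on the angles and areas of the mesh, which is the distinction drawn in the paragraph above.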

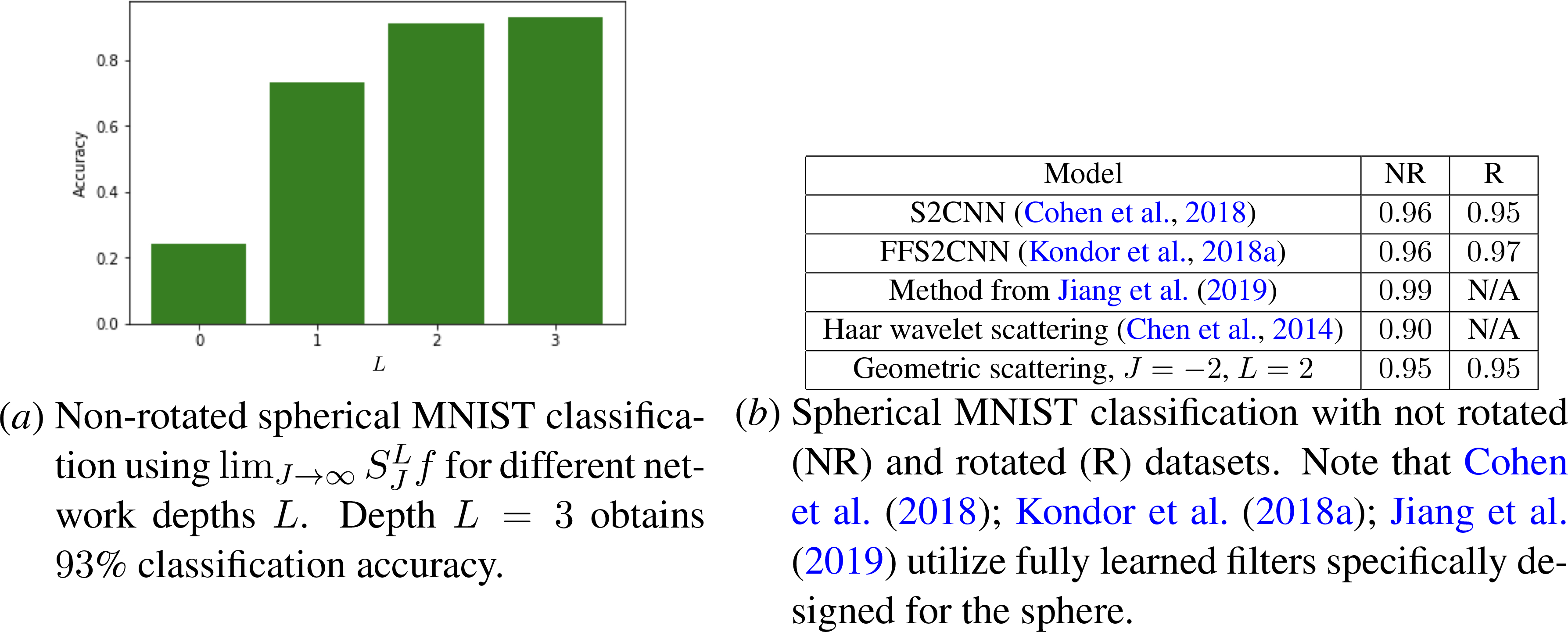

5.1. Spherical MNIST

In the first experiment, we project the MNIST dataset from Euclidean space onto a two-dimensional sphere using a triangle mesh with 642 vertices. During the projection, we generate two datasets consisting of non-rotated (NR) and randomly rotated (R) digits. Using the NR spherical MNIST database, we first investigate in Figure 3(a) the power of the globally invariant wavelet scattering coefficients for different network depths L and with J → ∞, which is equivalent to using the globally invariant representation of f defined in Section 3.3. Here f is the projection of the digit onto the sphere. We observe increasing accuracy, but with diminishing returns, across the range 0 ≤ L ≤ 3. Then, on both the NR and R spherical MNIST datasets, we calculate the geometric scattering coefficients for J = −2 and L = 2. Results for other values of J are reported in Appendix K, together with further details on how the spherical MNIST classification experiments were conducted. From Theorem 4.1, we know that the scattering transform is stable to randomly generated rotations, and Figure 3(b) shows that the scattering coefficients capture enough rotational information to correctly classify the digits.

Figure 3:

Spherical MNIST classification results.
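The globally invariant coefficients used in Figure 3(a) (the limit J → ∞, in which the low-pass average becomes, up to normalization, an integral over the manifold) can be sketched in a few lines once eigenpairs of a discretized Laplace-Beltrami operator are available. This is an illustration under stated assumptions, not the authors' code: a uniformly sampled unit circle with a second-difference Laplacian stands in for the sphere mesh, G(λ) = e^(−λ), and each wavelet ψj is assumed to have spectral multiplier (G(4^(j−1) λ)² − G(4^j λ)²)^(1/2). All pairs of scales are kept at second order, and every function name is hypothetical.

```python
import numpy as np


def circle_eigenpairs(n):
    """Eigenpairs of the second-difference Laplacian on n equispaced points of the unit circle."""
    h = 2 * np.pi / n
    eye = np.eye(n)
    lap = (2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)) / h**2
    lam, phi = np.linalg.eigh(lap)
    return lam, phi, h


def wavelet_multipliers(lam, scales):
    """Assumed wavelets: psi_j(lam) = sqrt(G(4^(j-1) lam)^2 - G(4^j lam)^2) with G = exp(-.)."""
    # G(a)^2 = exp(-2a), so the difference of squares is written with exponentials directly.
    return [np.sqrt(np.maximum(np.exp(-2 * 4.0 ** (j - 1) * lam) - np.exp(-2 * 4.0 ** j * lam), 0.0))
            for j in scales]


def spectral_filter(mult, f, phi):
    """Apply the spectral filter with multiplier `mult` (one value per eigenpair) to f."""
    return phi @ (mult * (phi.T @ f))


def global_scattering(f, lam, phi, h, scales):
    """Globally invariant scattering moments up to order two (all pairs of scales)."""
    psis = wavelet_multipliers(lam, scales)

    def integrate(g):
        return h * np.sum(g)  # Riemann sum over the circle

    first = [np.abs(spectral_filter(p, f, phi)) for p in psis]  # U[j1] f
    coeffs = [integrate(f)] + [integrate(u) for u in first]
    for u in first:
        coeffs += [integrate(np.abs(spectral_filter(p, u, phi))) for p in psis]  # U[j1, j2] f
    return np.array(coeffs)


lam, phi, h = circle_eigenpairs(128)
scales = range(-3, 1)  # hypothetical choice: four wavelets, j = -3, ..., 0
f = np.random.default_rng(0).standard_normal(128)
S = global_scattering(f, lam, phi, h, scales)
S_rot = global_scattering(np.roll(f, 17), lam, phi, h, scales)  # f moved by a grid rotation
print(S.shape)                    # (21,): 1 + 4 + 4 * 4 paths
print(np.max(np.abs(S - S_rot)))  # rounding error only
```

A grid rotation (np.roll) is an isometry of the sampled circle that commutes with the discrete Laplacian, so the two coefficient vectors agree to rounding error, mirroring the isometry invariance that makes these features suitable for the randomly rotated digits.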

5.2. FAUST

The FAUST dataset (Bogo et al., 2014) contains ten poses from ten people, resulting in a total of 100 manifolds represented by triangle meshes. We first consider the problem of classifying poses. This task requires globally invariant features, and thus we compute the globally invariant geometric wavelet scattering transform of Section 3.3. Following the common practice of other geometric deep learning methods (see, e.g., Litany et al., 2017; Lim et al., 2019), we use 352 SHOT features (Tombari et al., 2010; Prakhya et al., 2015) as initial node features f. We used 5-fold cross validation for the classification tests, with nested cross validation to tune the hyper-parameters of the RBF kernel SVM as well as the network depth L. We remark that tuning the network depth of the geometric scattering transform is relatively simple compared to fully learned geometric deep networks, since the filters are predefined geometric wavelets. This is particularly important for smaller data sets such as FAUST, where there is a limited amount of training data. As indicated in Table 1, we achieve 95% overall accuracy using the geometric scattering features, compared to 92% accuracy achieved using only the integrals of SHOT features (i.e., restricting to L = 0). We note that Masci et al. (2015) also considered pose classification, but the authors used a different training/test split (50% for training and 50% for test in a leave-one-out fashion), so our results are not directly comparable.

As a second task, we attempt to classify the people. This task is even more challenging than classifying the poses since some of the people are very similar to each other. We again performed 5-fold cross-validation, with each fold containing two poses from each person to ensure the folds are evenly distributed. As shown in Table 1, we achieved 76% accuracy on this task compared to the 61% accuracy using only integrals of SHOT features. In order to further emphasize the difference between the discretized geometric scattering transform and the graph scattering transform, we also attempted this task using the graph scattering transform derived from the graph Laplacian of the manifold mesh, applied to the SHOT features of each manifold. For this approach, which is representative of the aforementioned graph scattering papers, we obtained 58% accuracy. This result is similar to the 61% accuracy obtained by the baseline SHOT feature approach and empirically indicates the importance of encoding geometric information into the scattering transform for manifold-based tasks. More details regarding both tasks are in Appendix K.

Table 1:

Manifold classification accuracy on the FAUST dataset for two tasks.

| Task/Model | SHOT only | Geometric scattering |
|---|---|---|
| Pose classification | 0.92 | 0.95 |
| Person classification | 0.61 | 0.76 |

6. Conclusion

We have constructed a geometric version of the scattering transform on a large class of Riemannian manifolds and shown this transform is non-expansive, invariant to isometries, and stable to diffeomorphisms. Our construction uses the spectral decomposition of the Laplace-Beltrami operator to construct a class of spectral filtering operators that generalize convolution on Euclidean space. While our numerical examples demonstrate geometric scattering on two-dimensional manifolds, our theory remains valid for manifolds of any dimension d, and therefore can be naturally extended and applied to higher-dimensional manifolds in future work. Finally, our construction provides a mathematical framework that enables future analysis and understanding of geometric deep learning.

Acknowledgments

This research was partially funded by: grant P42 ES004911, through the National Institute of Environmental Health Sciences of the NIH, supporting F.G.; IVADO (l’institut de valorisation des données) [G.W.]; the Alfred P. Sloan Fellowship (grant FG-2016-6607), the DARPA Young Faculty Award (grant D16AP00117), the NSF CAREER award (grant 1845856), and NSF grant 1620216 [M.H.]; NIH grant R01GM135929 [M.H. & G.W.]. The content provided here is solely the responsibility of the authors and does not necessarily represent the official views of the funding agencies.

Appendix A. Proof of Theorem 2.1

Theorem 2.1

For every spectral filter h and for every isometry ζ,

We will prove a result that generalizes Theorem 2.1 to isometries between different manifolds. This more general result will be needed to prove Theorem 3.5.

Before stating our more general result, we introduce some notation. Let M and M′ be smooth compact connected Riemannian manifolds without boundary, and let ζ: M → M′ be an isometry. Since M and M′ are isometric, their Laplace-Beltrami operators Δ and Δ′ have the same eigenvalues, and we enumerate the eigenvalues of −Δ (and also of −Δ′) in increasing order (repeating those with multiplicity greater than one) as 0 = λ0 < λ1 ≤ λ2 ≤ … Recall that if h is a spectral filter, then by definition there exists a function such that

and that

Therefore, we may define an operator acting on functions on M′, which we consider the analogue of Th, as integration against the kernel

where the functions φ′k, k ≥ 0, form an orthonormal basis of eigenfunctions of −Δ′ on M′, with φ′k corresponding to the eigenvalue λk. With this notation, we may now state a generalized version of Theorem 2.1 (to recover Theorem 2.1, we set M′ = M).

Theorem A.1

Let ζ: M → M′ be an isometry. Then for every spectral filter h and every f ∈ L2(M),

Proof

For λ ∈ Λ, let πλ be the operator which projects a function onto the corresponding eigenspace Eλ, and let π′λ be the analogous operator defined on M′. Since {φk : λk = λ} forms an orthonormal basis for Eλ, we may write πλ as integration against the kernel K(λ)(x, y) defined in (3), i.e.,

Recalling from (4) that

we see that

and likewise,

Therefore, by the linearity of Vζ, it suffices to show that

for all and all λ ∈ Λ. Let and write

where . Since ζ is an isometry, we have and .

Therefore,

as desired. ■
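The proof of Theorem A.1 rests on two facts: the kernel K(λ) of πλ does not depend on which orthonormal basis of Eλ is used, and composing the eigenfunctions with an isometry yields another orthonormal basis of the same eigenspace. A small numerical check of both facts is sketched below, assuming that a cyclic shift of an equispaced circle grid stands in for the isometry ζ (with M′ = M); the function names are hypothetical.

```python
import numpy as np


def cycle_laplacian(n):
    """Second-difference (graph) Laplacian of an n-cycle, a stand-in for -Delta on a circle."""
    eye = np.eye(n)
    return 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)


def projector(basis):
    """Kernel K(x, y) = sum_k phi_k(x) phi_k(y) for orthonormal columns phi_k."""
    return basis @ basis.T


n = 16
lam, phi = np.linalg.eigh(cycle_laplacian(n))
E = phi[:, 1:3]  # basis of the two-dimensional eigenspace with lam[1] = lam[2]
theta = 0.3
Q = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
P = projector(E)
P_rotated_basis = projector(E @ Q)            # a different orthonormal basis of the same space
P_shifted = projector(np.roll(E, 5, axis=0))  # eigenfunctions composed with a grid rotation
print(np.allclose(P, P_rotated_basis), np.allclose(P, P_shifted))  # True True
```

The second comparison is the discrete counterpart of the identity used in the proof: the projector is unchanged when its eigenfunctions are transported by the isometry, so πλ commutes with Vζ.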

Appendix B. Proof of Proposition 2.2

Proposition 2.2

If {hγ : γ ∈ Γ} satisfies (5), then is an isometry, i.e.,

Proof

Analogously to Parseval’s theorem, it follows from the Fourier inversion formula (1) and the fact that {φk}k≥0 is an orthonormal basis, that

Similarly, it follows from (2) that

Therefore, using the Littlewood Paley condition (5), we see

■

Appendix C. Proof of Proposition 2.3

Proposition 2.3

For any , is an isometry, i.e.,

Proof

We will show that the frame {ϕJ , ψj : j ≤ J} satisfies the Littlewood Paley condition (5), i.e. that

The result will then follow from Proposition 2.2. Recall that ϕJ is defined by for some non-negative, non-increasing function G such that G(0) = 1. Therefore, from (6), we see that

and so,

■
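The telescoping argument in the proof of Proposition 2.3 can be checked numerically. The sketch assumes G(λ) = e^(−λ), a low-pass filter ϕJ with multiplier G(4^J λ), and wavelets ψj with multipliers (G(4^(j−1) λ)² − G(4^j λ)²)^(1/2); this is one construction satisfying the hypotheses on G, and the precise form of (6) may differ. The infinite family {ψj : j ≤ J} is truncated at j = −40, where the omitted part of the sum is below double precision for the eigenvalues considered. The second half checks the resulting energy identity of Proposition 2.2 on a discretized circle; all names are hypothetical.

```python
import numpy as np


def G(lam):
    """Assumed profile G(lam) = exp(-lam): non-negative, non-increasing, G(0) = 1."""
    return np.exp(-lam)


def multipliers(lam, J, j_min):
    """Multipliers of phi_J and of psi_j for j_min <= j <= J under the assumed scaling."""
    phi_J = G(4.0 ** J * lam)
    psis = [np.sqrt(np.maximum(G(4.0 ** (j - 1) * lam) ** 2 - G(4.0 ** j * lam) ** 2, 0.0))
            for j in range(j_min, J + 1)]
    return [phi_J] + psis


# Littlewood-Paley sum |phi_J|^2 + sum_j |psi_j|^2 on a grid of eigenvalues.
lam_grid = np.linspace(0.0, 1000.0, 10001)
lp = sum(m ** 2 for m in multipliers(lam_grid, J=2, j_min=-40))
print(np.max(np.abs(lp - 1.0)))  # rounding error only: the sum telescopes

# Energy preservation (Proposition 2.2) on a 64-point discretized circle.
n = 64
eye = np.eye(n)
lap = (2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)) * (n / (2 * np.pi)) ** 2
ev, phi = np.linalg.eigh(lap)
f = np.random.default_rng(1).standard_normal(n)
f_hat = phi.T @ f  # coefficients in the orthonormal eigenbasis
energy = sum(np.sum((m * f_hat) ** 2) for m in multipliers(ev, J=2, j_min=-40))
print(energy, np.sum(f ** 2))  # equal up to rounding
```

With these multipliers, the sum over j_min ≤ j ≤ J collapses to G(4^(j_min−1) λ)², which tends to G(0)² = 1 as j_min → −∞; this is exactly why the frame satisfies the Littlewood-Paley condition (5).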

Appendix D. The Proof of Theorem 3.2

Theorem 3.2

Let ζ be an isometry of M and suppose G(λ) ≤ e−λ. Then there is a constant such that for all f ∈ L2(M),

| (11) |

and

| (12) |

The proof of Theorem 3.2 relies on the following two lemmas.

Lemma D.1

There exists a constant such that for every spectral filter h and for every ,

Moreover, if , then there exists a constant such that for any

Lemma D.2

For any ,

Proof

[The Proof of Theorem 3.2] Theorem 2.1 proves that spectral filter convolution operators commute with isometries. Since the absolute value operator does as well, it follows that , and therefore

Since , we see that

| (23) |

Since AJ = TϕJ and , Lemma D.1 shows that

Equation (12) follows from Lemma D.2, and (11) follows by letting L increase to infinity in (23). Therefore, the proof is complete pending the proof of Lemmas D.1 and D.2. ■

Proof

[The Proof of Lemma D.2] Let

Then, by construction,

| (24) |

where we adopt the convention that . Since the wavelet transform and the absolute value operator are both non-expansive, it follows that is non-expansive as well. Therefore, since , we see

Therefore, (24) implies

as desired. ■

In order to prove Lemma D.1, we will first prove the following lemma.

Lemma D.3

For λ ∈ Λ, let K(λ) be the kernel defined as in (3), and let m(λ) be the multiplicity of λ. Then, there exists a constant such that

| (25) |

As a consequence, if Kh is a spectral kernel, then

| (26) |

Furthermore, if M is homogeneous, i.e., if for all x, y ∈ M there exists an isometry mapping x to y, then

| (27) |

and thus,

| (28) |

Proof

For any k such that λk = λ, it is a consequence of Hörmander’s local Weyl law (Hörmander, 1968; see also Shi and Xu, 2010) that

Theorem 1 of Shi and Xu (2010) shows that

Therefore,

This implies (25). Now, if we assume that M is homogeneous, then Theorem 3.2 of Giné (1975) shows that

Substituting this into the above string of inequalities yields (27). Equations (26) and (28) follow by recalling from (4) that

and applying the triangle inequality. ■

Now we may prove Lemma D.1.

Proof [The Proof of Lemma D.1] Let Kh be the kernel of Th. Then by the Cauchy-Schwarz inequality and the fact that ,

It follows that

| (29) |

Lemma D.3 shows

and therefore

Now suppose that G(λ) ≤ e−λ. Theorem 2.4 of Bérard et al. (1994) proves that for any , α ≥ 0, and t > 0,

| (30) |

Integrating both sides over M yields:

| (31) |

Using the assumption that , (29) and (31) with α = d/2 and t = 2J, we see

■
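Integrating a pointwise heat-kernel bound over M, as in the step from (30) to (31), leads to sums over the spectrum weighted by e^(−tλk). The simplest such sum is the heat trace Σk e^(−tλk), which on a compact d-dimensional manifold grows like vol(M)(4πt)^(−d/2) as t → 0; this t^(−d/2) growth is the source of the dependence on the scale 2^J. On the unit circle (d = 1, eigenvalues k² for k ∈ ℤ), the heat trace is close to √(π/t) for small t, which the pure-Python sketch below checks. It illustrates only the order of growth, not the constants in (30) or (31); the function names are hypothetical.

```python
import math


def circle_heat_trace(t):
    """sum over k in Z of exp(-t k^2): the heat trace of the unit circle (eigenvalues k^2)."""
    k_max = math.ceil(math.sqrt(60.0 / t))  # omitted terms are below exp(-60)
    return sum(math.exp(-t * k * k) for k in range(-k_max, k_max + 1))


def leading_heat_trace(t, d, vol):
    """Leading small-t term vol(M) (4 pi t)^(-d/2) of the heat trace."""
    return vol * (4 * math.pi * t) ** (-d / 2)


for t in (1.0, 0.1, 0.01, 0.001):
    exact = circle_heat_trace(t)
    print(t, exact, exact / leading_heat_trace(t, d=1, vol=2 * math.pi))
```

The printed ratios approach 1 rapidly as t decreases, so halving t multiplies the heat trace by roughly √2, the t^(−1/2) behaviour expected for d = 1.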

Appendix E. The Proof of Theorem 3.3

Theorem 3.3

If, then when

Proof

Let p = ∅ or p = (j1, …, jm) denote a scattering path, and let

| (32) |

denote the set of all scattering paths. Denote by the set of all paths with scales no larger than J,

Using (13), Fatou’s Lemma implies that for any ,

| (33) |

We now integrate both sides of (33) over , and apply Fatou’s Lemma and Tonelli’s Theorem to obtain

where the last inequality follows from Proposition 3.1 by setting f1 = f and f2 = 0. ■

Appendix F. The Proof of Proposition 3.4

Proposition 3.4

Let , let , and let f′ := Vζf be the corresponding signal defined on . Then .

Proof

Recall the set of all scattering paths from (32). We need to prove for all . If p = ∅ then since ζ is an isometry. Theorem A.1, stated in Appendix A, proves that for any spectral filter , where is the analogue of Th on (defined precisely in Appendix A). Since the modulus operator also commutes with isometries, it follows that U′[p]Vζf = VζU[p]f for any . Thus, since ζ is an isometry,

■

Appendix G. The Proof of Theorem 3.5

Theorem 3.5

Let and assume that G(λ) ≤ e−λ. Then there is a constant such that

| (14) |

and

| (15) |

Proof

As in the proof of Theorem 3.2, we observe that since spectral filter convolution operators and the absolute value operator both commute with isometries, it follows that .

Therefore

Equation (15) now follows by applying (12). The proof of (14) is similar and follows by applying (11). ■

Appendix H. The Proof of Theorems 4.1 and 4.2

Theorem 4.1

Let ζ be a diffeomorphism of M, and assume G(λ) ≤ e−λ. Then there is a constant such that if ζ = ζ1 ∘ ζ2 for some isometry ζ1 and diffeomorphism ζ2 of M, then

| (16) |

and

| (17) |

for all functions f ∈ L2(M) such that ⟨f, φk⟩ = 0 whenever λk > λ.

Theorem 4.2

Let ζ be a diffeomorphism of M, and assume G(λ) ≤ e−λ. Then there is a constant such that if ζ = ζ1 ∘ ζ2 for some isometry ζ1 and diffeomorphism ζ2 of M, then

| (18) |

for all functions f ∈ L2(M) such that ⟨f, φk⟩ = 0 whenever λk > λ.

In order to prove Theorem 4.1, we will need the following lemma.

Lemma H.1

If f ∈ L2(M) is λ-bandlimited, i.e., ⟨f, φk⟩ = 0 whenever λk > λ, then there exists a constant such that

for all .

Proof

As in the proof of Theorem A.1, let Λ denote the set of unique eigenvalues of −Δ, and let πλ be the operator that projects a function onto the eigenspace Eλ. Let

be the operator which projects a function onto all eigenspaces with eigenvalues less than or equal to λ. Note that Pλ can be written as integration against the kernel

where K(λ) is defined as in (3). If f is any λ-bandlimited function in L2(M), then Pλf = f, and so, similarly to the proof of Lemma D.1, we see that

which implies

Lemma D.3 shows that for all

Therefore,

where N(λ) is the number of eigenvalues less than or equal to λ. Weyl’s law (see, for example, Ivrii, 2016) implies that

and so

■
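The proof of Lemma H.1 uses Weyl's law for the eigenvalue counting function N(λ). Its standard asymptotic form is N(λ) ~ ωd vol(M) λ^(d/2) / (2π)^d, where ωd is the volume of the unit ball in ℝ^d and eigenvalues are counted with multiplicity. The pure-Python sketch below compares this with exact counts on two manifolds with explicit spectra: the unit circle (eigenvalues k², with 0 simple and the others of multiplicity 2) and the unit sphere S² used in Section 5.1 (eigenvalues ℓ(ℓ+1) with multiplicity 2ℓ+1). The function names are hypothetical.

```python
import math


def weyl_prediction(lam, d, vol):
    """Leading Weyl term omega_d vol(M) lam^(d/2) / (2 pi)^d."""
    omega_d = math.pi ** (d / 2) / math.gamma(d / 2 + 1)  # volume of the unit ball in R^d
    return omega_d * vol * lam ** (d / 2) / (2 * math.pi) ** d


def count_circle(lam):
    """Eigenvalues <= lam of -Delta on the unit circle: k^2, with 0 simple and k >= 1 double."""
    return 1 + 2 * math.isqrt(int(lam))


def count_sphere(lam):
    """Eigenvalues <= lam of -Delta on the unit sphere: ell(ell + 1), multiplicity 2 ell + 1."""
    count, ell = 0, 0
    while ell * (ell + 1) <= lam:
        count += 2 * ell + 1
        ell += 1
    return count


for lam in (1e2, 1e4, 1e6):
    print(lam,
          count_circle(lam) / weyl_prediction(lam, d=1, vol=2 * math.pi),
          count_sphere(lam) / weyl_prediction(lam, d=2, vol=4 * math.pi))
```

Both ratios approach 1, consistent with a bound of the form N(λ) ≤ C λ^(d/2) for large λ.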

Proof [The Proof of Theorem 4.1]

Let ζ = ζ2 ∘ ζ1 be a factorization of ζ such that ζ1 is an isometry and ζ2 is a diffeomorphism. Then since Vζf = f ∘ ζ−1, we see that Vζ = Vζ2Vζ1. Therefore, for all λ-bandlimited functions f

By (12), we have that

and by Proposition 3.1 and Lemma H.1 we see

Since ζ1 is an isometry, we observe that ||Vζ1f||2 = ||f||2. Combining this with the two inequalities above completes the proof of (17). The proof of (16) is similar, but uses (11) instead of (12). ■

Proof [The Proof of Theorem 4.2]

Repeating the proof of Proposition 3.4 we see that

Therefore, the result follows from Theorem 4.1 by taking J → ∞ on the right hand side of (16). ■

Appendix I. The Proof of Theorem 4.3

Theorem 4.3 Assume that M is two-point homogeneous, and let h be a spectral filter. Then there exists a constant such that for any diffeomorphism ζ,

where

In order to prove Theorem 4.3, we will need an auxiliary result, which provides a commutator estimate for operators with radial kernels. We will say that a kernel operator

is radial if

for some . The following theorem establishes a commutator estimate for operators with radial kernels.

Theorem I.1

Let T be a kernel integral operator with a radial kernel K(x, y) = κ(r(x, y)) for some κ ∈ C1. Then there exist constants and such that

Here A1(ζ) and A2(ζ) are defined as in (21) and (22) respectively,

and

Proof We first compute

Therefore, by the Cauchy-Schwarz inequality,

We may bound the first integral by observing

To bound the second integral, observe that, by the mean value theorem and the assumption that K is radial, we have

Lastly, since K(x, y) = κ(r(x, y)), we see that

which completes the proof. ■

Proof

[The Proof of Theorem 4.3] We write T = Th and K = Kh. If M is two-point homogeneous and r(x, y) = r(x′, y′), then by the definition of two-point homogeneity there exists an isometry ζ mapping x ↦ x′ and y ↦ y′. Therefore, we may use the proof of Theorem 2.1 to see that K(x′, y′) = K(x, y). It follows that K(x, y) is radial, and so we may write K(x, y) = κ(r(x, y)) for some κ ∈ C1.

Applying Theorem I.1, we see that

Lemma D.3 implies that

and since forms an orthonormal basis for , it can be checked that

Therefore, the proof is complete since

and

■
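The first step of the proof of Theorem 4.3, that two-point homogeneity forces the spectral kernel to be radial, can be seen directly on an equispaced circle grid. Rotations and reflections of the grid act two-point homogeneously on the sample points, so every entry of the discrete kernel matrix should depend only on the cyclic grid distance between its two points. The sketch assumes the heat multiplier h(λ) = e^(−tλ) as the spectral filter; the function names are hypothetical.

```python
import numpy as np


def heat_kernel_matrix(n, t):
    """Kernel of the spectral filter h(lam) = exp(-t lam) on an n-point circle grid."""
    step = 2 * np.pi / n
    eye = np.eye(n)
    lap = (2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)) / step**2
    lam, phi = np.linalg.eigh(lap)
    return (phi * np.exp(-t * lam)) @ phi.T


def radial_profile(K):
    """Average K over pairs at each cyclic grid distance, and report the largest spread."""
    n = K.shape[0]
    i, j = np.indices((n, n))
    dist = np.minimum(np.abs(i - j), n - np.abs(i - j))
    kappa, spread = [], 0.0
    for m in range(n // 2 + 1):
        vals = K[dist == m]
        kappa.append(vals.mean())
        spread = max(spread, vals.max() - vals.min())
    return np.array(kappa), spread


K = heat_kernel_matrix(n=40, t=0.05)
kappa, spread = radial_profile(K)
print(spread)     # rounding error only: K(x, y) depends on r(x, y) alone
print(kappa[:4])  # the radial profile decreases with distance
```

The array kappa plays the role of the function κ in K(x, y) = κ(r(x, y)), sampled at the grid distances.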

Appendix J. The Proof of Corollary 4.4

Corollary 4.4

Assume that M is two-point homogeneous and that G(λ) ≤ e−λ. Then

where

Proof

By the definition of and the assumption that G(λ) ≤ e−λ, we have that and

for k ≥ 1. Therefore, by Theorem 4.3,

where,

Equation (30) implies that

and that

thus completing the proof. ■
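The commutator estimates of Theorem 4.3 and Corollary 4.4 can be probed numerically on the circle, which is two-point homogeneous. The sketch below is illustrative only. It discretizes the circle with a second-difference Laplacian, uses heat multipliers e^(−tλ) as spectral filters, implements Vζ f = f ∘ ζ^(−1) by periodic linear interpolation (with ζ^(−1)(y) = y + ε sin y specified directly, a diffeomorphism for |ε| < 1), and measures operator norms with the matrix 2-norm. It shows that [Th, Vζ] vanishes to rounding error for a grid rotation and grows with ε, and that the commutator of a finite filter bank is bounded by the sum of the individual commutator norms, which is the triangle-inequality step invoked after Corollary 4.4. All names are hypothetical.

```python
import numpy as np

n = 128
x = np.linspace(0.0, 2 * np.pi, n, endpoint=False)
h = 2 * np.pi / n
eye = np.eye(n)
lap = (2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)) / h**2
lam, phi = np.linalg.eigh(lap)


def filter_matrix(t):
    """Spectral filter with the (assumed) heat multiplier exp(-t * lam)."""
    return (phi * np.exp(-t * lam)) @ phi.T


def deformation_matrix(zeta_inv):
    """Matrix of V_zeta f = f o zeta^{-1}, using periodic linear interpolation."""
    q = zeta_inv(x)
    return np.column_stack([np.interp(q, x, e, period=2 * np.pi) for e in eye])


def commutator_norm(T, V):
    """Spectral norm of T V - V T."""
    return np.linalg.norm(T @ V - V @ T, 2)


T = filter_matrix(0.01)

# A rotation by five grid steps is an isometry of the sampled circle.
shift = deformation_matrix(lambda y: y - 5 * h)
print(commutator_norm(T, shift))  # rounding error only

# Small warps with zeta^{-1}(y) = y + eps * sin(y).
norms = {}
for eps in (0.02, 0.05, 0.1):
    norms[eps] = commutator_norm(T, deformation_matrix(lambda y: y + eps * np.sin(y)))
print(norms)  # grows with eps

# Triangle inequality for a finite filter bank stacked into one operator.
bank = [filter_matrix(t) for t in (0.001, 0.01, 0.1)]
V = deformation_matrix(lambda y: y + 0.05 * np.sin(y))
bank_norm = np.linalg.norm(np.vstack([B @ V - V @ B for B in bank]), 2)
bound = sum(commutator_norm(B, V) for B in bank)
print(bank_norm, bound)  # bank_norm <= bound
```

The stacked matrix is the commutator of the whole filter bank with Vζ (acting on every output channel), so its norm is controlled by the per-filter norms; with infinitely many wavelets this sum need not converge, which is the obstruction noted after Corollary 4.4.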

Appendix K. Additional details of classification experiments

K.1. Spherical MNIST

For all spherical MNIST experiments, including those reported in Section 5.1, we used the following procedure. Since the digits six and nine are impossible to distinguish in spherical MNIST, we removed the digit six from the dataset. The mesh on the sphere consisted of 642 vertices, and to construct the wavelets on the sphere, all 642 eigenvalues and eigenfunctions of the approximate Laplace-Beltrami operator were used. For the range of scales we chose −8 ≤ j ≤ min(0, J). Training and testing were conducted using the standard MNIST training set of 60,000 digits and the standard testing set of 10,000 digits, projected onto the sphere. The training set was randomly divided into five folds, of which four were used to train the RBF kernel SVM, taking as input the relevant geometric scattering representation of each spherically projected digit, and one was used to validate the hyper-parameters of the RBF kernel SVM (see Appendix K.3).

On both the non-rotated and randomly rotated spherical MNIST datasets, we calculated the geometric scattering coefficients and downsampled the resulting scattering coefficient functions (e.g., f ∗ ϕJ(x) and |f ∗ ψj| ∗ ϕJ(x)). For J → ∞ we selected one coefficient, since they are all the same (recall (13)). With J = 0, we selected 4 coefficients per function; with J = −1, we selected 16 coefficients; with J = −2, we selected 64 coefficients. The selected coefficients were determined by finding 4, 16, and 64 nearly equidistant points x on the sphere. Classification results on the test set for a fixed network depth of L = 2 and for the different values of J are reported in Table 2 below. Figure 3(a) reports classification results for J → ∞ and for 0 ≤ L ≤ 3.

Table 2:

Geometric wavelet scattering classification accuracy with network depth L = 2 for different values of J on non-rotated (NR) and rotated (R) spherical MNIST.

| J | NR | R |
|---|---|---|
| J → ∞ | 0.91 | 0.91 |
| J = 0 | 0.94 | 0.94 |
| J = −1 | 0.95 | 0.95 |
| J = −2 | 0.95 | 0.95 |
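Appendix K.1 does not specify how the 4, 16, and 64 nearly equidistant points on the sphere were found. One common construction, shown here purely as an assumption, is a Fibonacci (golden-angle) lattice; each lattice point can then be snapped to its nearest mesh vertex to decide which values of a scattering coefficient function to keep. The helper names are hypothetical, and random unit vectors stand in for the 642-vertex sphere mesh.

```python
import numpy as np


def fibonacci_sphere(m):
    """m nearly equidistant points on the unit sphere (golden-angle spiral)."""
    i = np.arange(m) + 0.5
    z = 1.0 - 2.0 * i / m                      # equal-area spacing in height
    radius = np.sqrt(1.0 - z**2)
    theta = np.pi * (3.0 - np.sqrt(5.0)) * i   # successive points differ by the golden angle
    return np.column_stack([radius * np.cos(theta), radius * np.sin(theta), z])


def downsample(coeff_fn, vertices, m):
    """Values of a coefficient function at the mesh vertices nearest to m lattice points."""
    targets = fibonacci_sphere(m)
    d2 = ((targets[:, None, :] - vertices[None, :, :]) ** 2).sum(axis=-1)
    idx = d2.argmin(axis=1)
    return coeff_fn[idx], idx


rng = np.random.default_rng(0)
verts = rng.standard_normal((642, 3))
verts /= np.linalg.norm(verts, axis=1, keepdims=True)  # stand-in vertices on the sphere
coeff = verts[:, 2] ** 2  # stand-in for a coefficient function such as |f * psi_j| * phi_J
for m in (4, 16, 64):
    values, idx = downsample(coeff, verts, m)
    print(m, values.shape)
```

The number of retained samples per coefficient function (1, 4, 16, or 64) only changes m, so the same helper covers every value of J in Table 2.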

K.2. FAUST

The FAUST dataset (Bogo et al., 2014) consists of 100 manifolds corresponding to ten distinct people in ten distinct poses. Each manifold is approximated by a mesh with 6890 vertices. We used the 512 smallest eigenvalues and corresponding eigenfunctions to construct the geometric wavelets. During cross validation, in addition to cross validating the SVM parameters (see Section K.3 below), we also cross validated the depth of the scattering network for 0 ≤ L ≤ 2. For the classification test, we performed 5-fold cross validation with a training/validation/test split of 70%/10%/20% for both pose classification and person classification. The range of j is chosen as −11 ≤ j ≤ 0.

We report the frequency of each network depth L selected during the hyperparameter cross validation stage. Since there are five test folds and eight validation folds, the depth is selected 40 times per task. For pose classification, L = 0 was selected 19 times, L = 1 was selected 11 times, and L = 2 was selected 10 times. For person classification, L = 0 was selected 5 times, L = 1 was selected 29 times, and L = 2 was selected 6 times. The results indicate the importance of avoiding overfitting with needlessly deep scattering networks, while at the same time highlighting the task dependent nature of the network depth (compare as well to the MNIST results reported above and in the main text).
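The paragraph above states that the depth is selected 40 times per task (five test folds times eight validation folds) with a 70%/10%/20% training/validation/test split. The pure-Python sketch below reproduces that bookkeeping for 10 people × 10 poses under an assumed fold assignment: each outer test fold holds two poses of every person (as described for person classification in Section 5.2), and each inner validation fold holds one of the remaining poses of every person. The exact assignment used in the experiments is not stated, so this layout and the function name are hypothetical.

```python
from itertools import product


def faust_nested_splits(n_people=10, n_poses=10, n_outer=5):
    """Hypothetical nested cross-validation splits over (person, pose) pairs."""
    items = list(product(range(n_people), range(n_poses)))
    poses_per_fold = n_poses // n_outer
    splits = []
    for k in range(n_outer):
        test_poses = set(range(k * poses_per_fold, (k + 1) * poses_per_fold))
        remaining = [p for p in range(n_poses) if p not in test_poses]
        for val_pose in remaining:  # one inner validation fold per remaining pose
            test = [it for it in items if it[1] in test_poses]
            val = [it for it in items if it[1] == val_pose]
            train = [it for it in items if it[1] not in test_poses and it[1] != val_pose]
            splits.append((train, val, test))
    return splits


splits = faust_nested_splits()
print(len(splits))                           # 40
print({tuple(map(len, s)) for s in splits})  # {(70, 10, 20)}
```

Each of the 40 (test fold, validation fold) pairs yields one depth selection, which matches the tallies reported above: 19 + 11 + 10 = 40 for pose classification and 5 + 29 + 6 = 40 for person classification.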

K.3. Parameters for RBF kernel SVM

We used an RBF kernel SVM for all classification tasks and cross validated the hyperparameters. In the two FAUST classification tasks of Section 5.2, we chose the kernel width γ from {0.001, 0.005, 0.01, 0.02, 0.04} and the penalty C from {50, 100, 250, 400, 500}. For the spherical MNIST classification task of Section 5.1, we chose γ from {0.00001, 0.0001, 0.001} and C from {25, 100, 250, 500}.

Contributor Information

Michael Perlmutter, Michigan State University, Department of Computational Mathematics, Science & Engineering, East Lansing, Michigan, USA.

Feng Gao, Yale University, Department of Genetics, New Haven, Connecticut, USA.

Guy Wolf, Université de Montréal, Department of Mathematics and Statistics, Mila – Quebec Artificial Intelligence Institute, Montréal, Québec, Canada.

Matthew Hirn, Michigan State University, Department of Computational Mathematics, Science & Engineering, Department of Mathematics, Center for Quantum Computing, Science & Engineering, East Lansing, Michigan, USA.

References

- Joakim Andén and Stéphane Mallat. Multiscale scattering for audio classification. In Proceedings of the ISMIR 2011 conference, pages 657–662, 2011. [Google Scholar]

- Joakim Andén and Stéphane Mallat. Deep scattering spectrum. IEEE Transactions on Signal Processing, 62(16):4114–4128, August 2014. [Google Scholar]

- Joakim Andén, Vincent Lostanlen, and Mallat Stéphane. Joint time-frequency scattering. IEEE Transactions on Signal Processing, 67(14):3704–3718, 2019. [Google Scholar]

- Pierre Bérard, Gérard Besson, and Gallot Sylvain. Embedding Riemannian manifolds by their heat kernel. Geometric and Functional Analysis, 4(4):373–398, 1994. [Google Scholar]

- Bogo Federica, Romero Javier, Loper Matthew, and Black Michael J.. FAUST: Dataset and evaluation for 3D mesh registration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Piscataway, NJ, USA, 2014. [Google Scholar]

- Bronstein Michael M., Bruna Joan, Yann LeCun, Arthur Szlam, and Vandergheynst Pierre. Geometric deep learning: Going beyond euclidean data. IEEE Signal Processing Magazine, 34(4): 18–42, 2017. [Google Scholar]

- Brumwell Xavier, Sinz Paul, Kwang Jin Kim, Yue Qi, and Hirn Matthew. Steerable wavelet scattering for 3D atomic systems with application to Li-Si energy prediction. In NeurIPS Workshop on Machine Learning for Molecules and Materials, 2018. arXiv:1812.02320. [Google Scholar]

- Bruna Joan and Mallat Stéphane. Classification with scattering operators. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 1561–1566, 2011. [Google Scholar]

- Bruna Joan and Mallat Stéphane. Invariant scattering convolution networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(8):1872–1886, August 2013. [DOI] [PubMed] [Google Scholar]

- Chen Xu, Cheng Xiuyuan, and Mallat Stéphane. Unsupervised deep Haar scattering on graphs. In Advances in Neural Information Processing Systems 27, pages 1709–1717, 2014. [Google Scholar]

- Václav Chudácek, Ronen Talmon, Joakim Andén, Stéphane Mallat, Coifman Ronald R, Patrice Abry, and Doret Muriel. Low dimensional manifold embedding for scattering coefficients of intrapartum fetale heart rate variability. In the 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pages 6373–6376, 2014. [DOI] [PubMed] [Google Scholar]

- Cohen Taco and Welling Max. Group equivariant convolutional networks. In Proceedings of The 33rd International Conference on Machine Learning (ICML), volume 48 of Proceedings of Machine Learning Research, pages 2990–2999, 2016. [Google Scholar]

- Cohen Taco S., Geiger Mario, Koehler Jonas, and Welling Max. Spherical CNNs. In Proceedings of the 6th International Conference on Learning Representations, 2018. [Google Scholar]

- Coifman Ronald R. and Lafon Stéphane. Diffusion maps. Applied and Computational Harmonic Analysis, 21:5–30, 2006a. [Google Scholar]

- Coifman Ronald R. and Lafon Stéphane. Geometric harmonics: A novel tool for multiscale out-of-sample extension of empirical functions. Applied and Computational Harmonic Analysis, 21(1): 31–52, July 2006b. [Google Scholar]

- Coifman Ronald R. and Maggioni Mauro. Diffusion wavelets. Applied and Computational Harmonic Analysis, 21(1):53–94, 2006. [Google Scholar]

- Czaja Wojciech and Li Weilin. Analysis of time-frequency scattering transforms. Applied and Computational Harmonic Analysis, 47(1):149–171, 2019. [Google Scholar]

- Dong Bin. Sparse representation on graphs by tight wavelet frames and applications. Applied and Computational Harmonic Analysis, 42(3):452–479, 2017. [Google Scholar]

- Eickenberg Michael, Exarchakis Georgios, Hirn Matthew, and Mallat Stéphane. Solid harmonic wavelet scattering: Predicting quantum molecular energy from invariant descriptors of 3D electronic densities. In Advances in Neural Information Processing Systems 30 (NIPS 2017), pages 6540–6549, 2017. [Google Scholar]

- Eickenberg Michael, Exarchakis Georgios, Hirn Matthew, Mallat Stéphane, and Thiry Louis. Solid harmonic wavelet scattering for predictions of molecule properties. Journal of Chemical Physics, 148:241732, 2018. [DOI] [PubMed] [Google Scholar]

- Evariste Giné M.. The addition formula for the eigenfunctions of the Laplacian. Advances in Mathematics, 18(1):102–107, 1975. [Google Scholar]

- Gama Fernando, Bruna Joan, and Ribeiro Alejandro. Stability of graph scattering transforms. In Advances in Neural Information Processing Systems 33, 2019a. [Google Scholar]

- Gama Fernando, Ribeiro Alejandro, and Bruna Joan. Diffusion scattering transforms on graphs. In International Conference on Learning Representations, 2019b. [Google Scholar]

- Gao Feng, Wolf Guy, and Hirn Matthew. Geometric scattering for graph data analysis. In Proceedings of the 36th International Conference on Machine Learning (ICML), volume 97 of Proceedings of Machine Learning Research, pages 2122–2131, Long Beach, California, USA, 2019. [Google Scholar]

- Grohs Philipp, Wiatowski Thomas, and Helmut Bölcskei. Deep convolutional neural networks on cartoon functions. In IEEE International Symposium on Information Theory, pages 1163–1167, 2016. [Google Scholar]

- Hammond David K., Vandergheynst Pierre, and Gribonval Rémi. Wavelets on graphs via spectral graph theory. Applied and Computational Harmonic Analysis, 30:129–150, 2011. [Google Scholar]

- Hirn Matthew, Mallat Stéphane, and Poilvert Nicolas. Wavelet scattering regression of quantum chemical energies. Multiscale Modeling and Simulation, 15(2):827–863, 2017. [Google Scholar]

- Lars Hörmander. The spectral function of an elliptic operator. Acta Mathematica, 121:193–218, 1968. [Google Scholar]

- Ivrii Victor. 100 years of Weyl’s law. Bulletin of Mathematical Sciences, 6(3):379–452, 2016. [Google Scholar]

- Chiyu Max Jiang, Jingwei Huang, Kashinath Karthik, Prabhat Philip Marcus, and Niessner Matthias. Spherical CNNs on unstructured grids. In International Conference on Learning Representations, 2019. [Google Scholar]

- Kondor Risi and Trivedi Shubhendu. On the generalization of equivariance and convolution in neural networks to the action of compact groups. In Proceedings of the 35th International Conference on Machine Learning (ICML), volume 80 of Proceedings of Machine Learning Research, pages 2747–2755, Stockholmsmässan, Stockholm Sweden, 2018. [Google Scholar]

- Kondor Risi, Lin Zhen, and Trivedi Shubhendu. Clebsch-Gordan nets: a fully Fourier space spherical convolutional neural network. In Advances in Neural Information Processing Systems 31, pages 10117–10126, 2018a. [Google Scholar]

- Kondor Risi, Son Hy Truong, Horace Pan, Anderson Brandon, and Trivedi Shubhendu. Covariant compositional networks for learning graphs. In International Conference on Learning Representations (ICLR) Workshop, 2018b. arXiv:1801.02144. [Google Scholar]

- Lim Isaak, Dielen Alexander, Campen Marcel, and Kobbelt Leif. A simple approach to intrinsic correspondence learning on unstructured 3d meshes. In Laursa Leal-Taixé and Stefan Roth, editors, Computer Vision – ECCV 2018 Workshops, pages 349–362, Cham, 2019. Springer International Publishing. ISBN 978–3-030–11015-4. [Google Scholar]

- Litany Or, Remez Tal, Emanuele Rodolà, Alex Bronstein, and Bronstein Michael. Deep functional maps: Structured prediction for dense shape correspondence. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), pages 5660–5668, 10 2017. [Google Scholar]

- Mallat Stéphane. Recursive interferometric representations. In 18th European Signal Processing Conference (EUSIPCO-2010), Aalborg, Denmark, 2010. [Google Scholar]

- Mallat Stéphane. Group invariant scattering. Communications on Pure and Applied Mathematics, 65(10):1331–1398, October 2012. [Google Scholar]

- Masci Jonathan, Boscaini Davide, Bronstein Michael M., and Vandergheynst Pierre. Shapenet: Convolutional neural networks on non-Euclidean manifolds. CoRR, arxiv:1501.06297, 2015. [Google Scholar]

- Meyer Yves. Wavelets and Operators, volume 1. Cambridge University Press, 1993. [Google Scholar]

- Oyallon Edouard and Mallat Stéphane. Deep roto-translation scattering for object classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015. arXiv:1412.8659. [Google Scholar]

- Michael Perlmutter, Feng Gao, Guy Wolf, and Matthew Hirn. Understanding graph neural networks with asymmetric geometric scattering transforms. arXiv:1911.06253, 2019.

- Sai Manoj Prakhya, Bingbing Liu, and Weisi Lin. B-SHOT: A binary feature descriptor for fast and efficient keypoint matching on 3D point clouds. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 1929–1934, 2015.

- Yiqian Shi and Bin Xu. Gradient estimate of an eigenfunction on a compact Riemannian manifold without boundary. Annals of Global Analysis and Geometry, 38:21–26, 2010.

- David I. Shuman, Sunil K. Narang, Pascal Frossard, Antonio Ortega, and Pierre Vandergheynst. The emerging field of signal processing on graphs: Extending high-dimensional data analysis to networks and other irregular domains. IEEE Signal Processing Magazine, 30(3):83–98, 2013a.

- David I. Shuman, Sunil K. Narang, Pascal Frossard, Antonio Ortega, and Pierre Vandergheynst. The emerging field of signal processing on graphs. IEEE Signal Processing Magazine, pages 83–98, May 2013b.

- Laurent Sifre and Stéphane Mallat. Combined scattering for rotation invariant texture analysis. In Proceedings of the ESANN 2012 conference, 2012.

- Laurent Sifre and Stéphane Mallat. Rotation, scaling and deformation invariant scattering for texture discrimination. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2013.

- Laurent Sifre and Stéphane Mallat. Rigid-motion scattering for texture classification. arXiv:1403.1687, 2014.

- Justin Solomon, Keenan Crane, and Etienne Vouga. Laplace-Beltrami: The Swiss army knife of geometry processing. 12th Symposium on Geometry Processing Tutorial, 2014.

- Joshua B. Tenenbaum, Vin de Silva, and John C. Langford. A global geometric framework for nonlinear dimensionality reduction. Science, 290(5500):2319–2323, 2000.

- Nathaniel Thomas, Tess Smidt, Steven Kearnes, Lusann Yang, Li Li, Kai Kohlhoff, and Patrick Riley. Tensor field networks: Rotation- and translation-equivariant neural networks for 3D point clouds. arXiv:1802.08219, 2018.

- Federico Tombari, Samuele Salti, and Luigi Di Stefano. Unique signatures of histograms for local surface description. In European Conference on Computer Vision, pages 356–369. Springer, 2010.

- Laurens van der Maaten and Geoffrey Hinton. Visualizing high-dimensional data using t-SNE. Journal of Machine Learning Research, 9:2579–2605, 2008.

- Maurice Weiler, Mario Geiger, Max Welling, Wouter Boomsma, and Taco Cohen. 3D steerable CNNs: Learning rotationally equivariant features in volumetric data. In Advances in Neural Information Processing Systems 31, pages 10381–10392, 2018.

- Thomas Wiatowski and Helmut Bölcskei. Deep convolutional neural networks based on semi-discrete frames. In Proceedings of IEEE International Symposium on Information Theory, pages 1212–1216, 2015.

- Thomas Wiatowski and Helmut Bölcskei. A mathematical theory of deep convolutional neural networks for feature extraction. IEEE Transactions on Information Theory, 64(3):1845–1866, 2018.

- Guy Wolf, Stéphane Mallat, and Shihab A. Shamma. Audio source separation with time-frequency velocities. In 2014 IEEE International Workshop on Machine Learning for Signal Processing (MLSP), Reims, France, 2014.

- Guy Wolf, Stéphane Mallat, and Shihab A. Shamma. Rigid motion model for audio source separation. IEEE Transactions on Signal Processing, 64(7):1822–1831, 2015.

- Dongmian Zou and Gilad Lerman. Graph convolutional neural networks via scattering. Applied and Computational Harmonic Analysis, 2019.