Abstract

Implementation planning typically incorporates stakeholder input. Quality improvement efforts provide data-based feedback regarding progress. Participatory system dynamics modeling (PSD) triangulates stakeholder expertise, data and simulation of implementation plans prior to attempting change. Frontline staff in one VA outpatient mental health system used PSD to examine policy and procedural “mechanisms” they believe underlie local capacity to implement evidence-based psychotherapies (EBPs) for PTSD and depression. We piloted the PSD process, simulating implementation plans to improve EBP reach. Findings indicate PSD is a feasible, useful strategy for building stakeholder consensus, and may save time and effort as compared to trial-and-error EBP implementation planning.

Keywords: Implementation science, Mental health, Evidence-based practices, System dynamics, Participatory stakeholder engagement

Introduction

System-wide policies to implement best practices are enacted within local sub-systems of healthcare providers and programs. Implementation strategies such as external facilitation (Rycroft-Malone 2004; Rycroft-Malone et al. 2002) elicit stakeholders’ perspectives or “mental models” regarding these implementation contexts. However, individual stakeholders’ views may be incorrect or incomplete, and divergent stakeholder perspectives may remain irreconcilable unless empirical data help resolve disputes (Simon 1991).

Lean management uses stakeholder input and data systems to develop incremental change efforts that are iteratively refined using Plan-Do-Study-Act cycles (PDSA; DelliFraine et al. 2010; Mazzocato et al. 2010; Vest and Gamm 2009). However, without a sufficiently precise accounting of system interdependence during planning, stakeholders may select strategies using PDSA that prove ineffectual or produce unintended consequences. Like many implementation planning approaches, the PDSA cycle is inefficient, because one cannot “Study” the stakeholder assumptions that underlie the “Plan” until after the change strategy has been implemented in the “Do” phase. Data are then used to evaluate implementation impacts retrospectively, rather than to forecast the anticipated benefits or consequences during planning.

Participatory system dynamics modeling (PSD) offers an alternative, triangulating stakeholder expertise, healthcare data and model simulations to refine implementation strategies prior to attempting change (Hovmand 2014; Sterman 2006). PSD empowers frontline clinic leadership and staff by defining and evaluating the policy and procedural “mechanisms” that determine local capacity for EBP implementation in a visual model, making system interdependence and complexity more tractable. Stakeholders evaluate the likely impacts of implementation plans and determine which approaches are most likely to achieve desired outcomes. We report our experiences with PSD in one Veterans Health Administration (VA) outpatient mental health system. We used PSD to determine whether two implementation plans were likely to increase the reach of evidence-based psychotherapies (EBPs) for posttraumatic stress disorder (PTSD) and depression. Before describing PSD in more detail, we first describe our implementation contexts in the VA.

Dynamics of Outer and Inner VA Mental Health Contexts

Implementation of EBPs is conceptualized to occur within interdependent outer and inner contexts (“EPIS Model,” Aarons et al. 2011). We focused on programs and service teams (inner context) delivering depression and PTSD EBPs according to national VA policies and performance measures (outer context). On the one hand, this outer context creates considerable demand for EBP implementation. Mental health programs are expected to be in the active implementation phase, and there is considerable tension for local change at underperforming facilities (Aarons et al. 2011). At the same time, adoption of team-based VA mental health delivery, competing EBP implementation demands and limited local implementation supports can create countervailing influences on implementation (Chard et al. 2012; Cook et al. 2013; Finley et al. 2015). Our partnership with local stakeholders to improve the reach of PTSD and depression EBPs occurred in the following contexts.

National Evidence-Based Psychotherapy Dissemination

PTSD and depression are highly prevalent, debilitating mental health disorders that, along with substance use disorders, are primary reasons Veterans seek care in VA (Department of Veterans Affairs 2014; Elbogen et al. 2013). In addition, patient demand for VA mental health services continues to increase each year (Hermes et al. 2014; Mott et al. 2014). To meet patient demand with high-quality care, VA has worked over the last eight years to increase the number of providers trained in evidence-based psychotherapies (EBPs) for depression and PTSD (Karlin and Cross 2014). EBPs are recommended in VA/DOD clinical practice guidelines, mandated in the VA Uniform Mental Health Services Handbook, and assessed with VA quality measures (Department of Veterans Affairs 2008; Department of Veterans Affairs & Department of Defense 2009a, 2010). Nationwide resources and infrastructure facilitate EBP adoption among VA providers, including national EBP trainings and EBP note templates for tracking EBP sessions in electronic record systems (Ruzek et al. 2012; Watts et al. 2014). EBPs led to significant improvements in the health and well-being of patients who received treatment. Patients who received cognitive behavioral therapy (CBT) for depression experienced a 40 % reduction in depression symptoms (Karlin et al. 2012), and 60 % of Veterans who received prolonged exposure (PE) experienced a clinically significant improvement in PTSD (Eftekhari et al. 2013).

Despite nationwide investments in provider adoption, EBPs have limited reach among the VA mental health population (Shiner et al. 2013). This prompted us to examine system factors that reduce or enhance local capacity to expand EBP reach among patients. In settings where a majority of providers have already adopted EBPs, we posited that EBP reach would best be conceptualized as a function of interdependent staffing, scheduling and referral practices operating in the local system. Despite national mandates, measures and initiatives, these policy and procedural “mechanisms” are often locally specific.

Inner Contexts: Equifinality and Local EBP Reach

Our local collaborators were engaged in EBP national trainings, and many mental health providers adopted EBPs in their programs. Yet, when our partnership began, psychotherapy initiation was still 8–10 % below VA national averages (Table 1). VA performance data identified this gap, but aggregated data from ten diverse locations in the regional VA Palo Alto Health Care System, so local managers lacked specificity regarding which clinic programs and policies to target for change. As a step toward improving EBP reach, outpatient staff at the Menlo Park VA agreed that all new PTSD and depression patients should be scheduled for two psychotherapy appointments at their intake. This led to an increase in appointment scheduling but had only modest effects on psychotherapy initiation (see Fig. 1).

Table 1.

Context of outpatient mental health implementation: reach of psychotherapies for addiction and mental health in VA nationally and in pilot sites. Source: Department of Veterans Affairs, Veterans Health Administration, Office of Mental Health Operations, Mental Health Evaluation Center Information System (FY2014). Retrieved September 28, 2015 from VHA Support Service Center

| Condition | VA national: Initiate (%) | VA national: 8 sessions (%) | VA national: Prescription (%) | Local system: Initiate (%) | Local system: 8 sessions (%) | Local system: Prescription (%) | Difference: Initiate (%) | Difference: 8 sessions (%) | Difference: Prescription (%) |
|---|---|---|---|---|---|---|---|---|---|
| Depression | 46 | 6 | 28 | 38 | 8 | 28 | −8 | +2 | −6 |
| PTSD | 66 | 14 | - | 56 | 16 | - | −10 | +2 | - |
| SUD | 44 | 22 | Listed below | 44 | 18 | Listed below | 0 | −4 | Listed below |
| AUD | - | - | 4 | - | - | 5 | - | - | +1 |
| OUD | - | - | 29 | - | - | 16 | - | - | −13 |

Note: PTSD, posttraumatic stress disorder; SUD, substance use disorder; AUD, alcohol use disorder; OUD, opioid use disorder. Within the SUD category, prescription rates are listed separately for AUD and OUD in the rows above

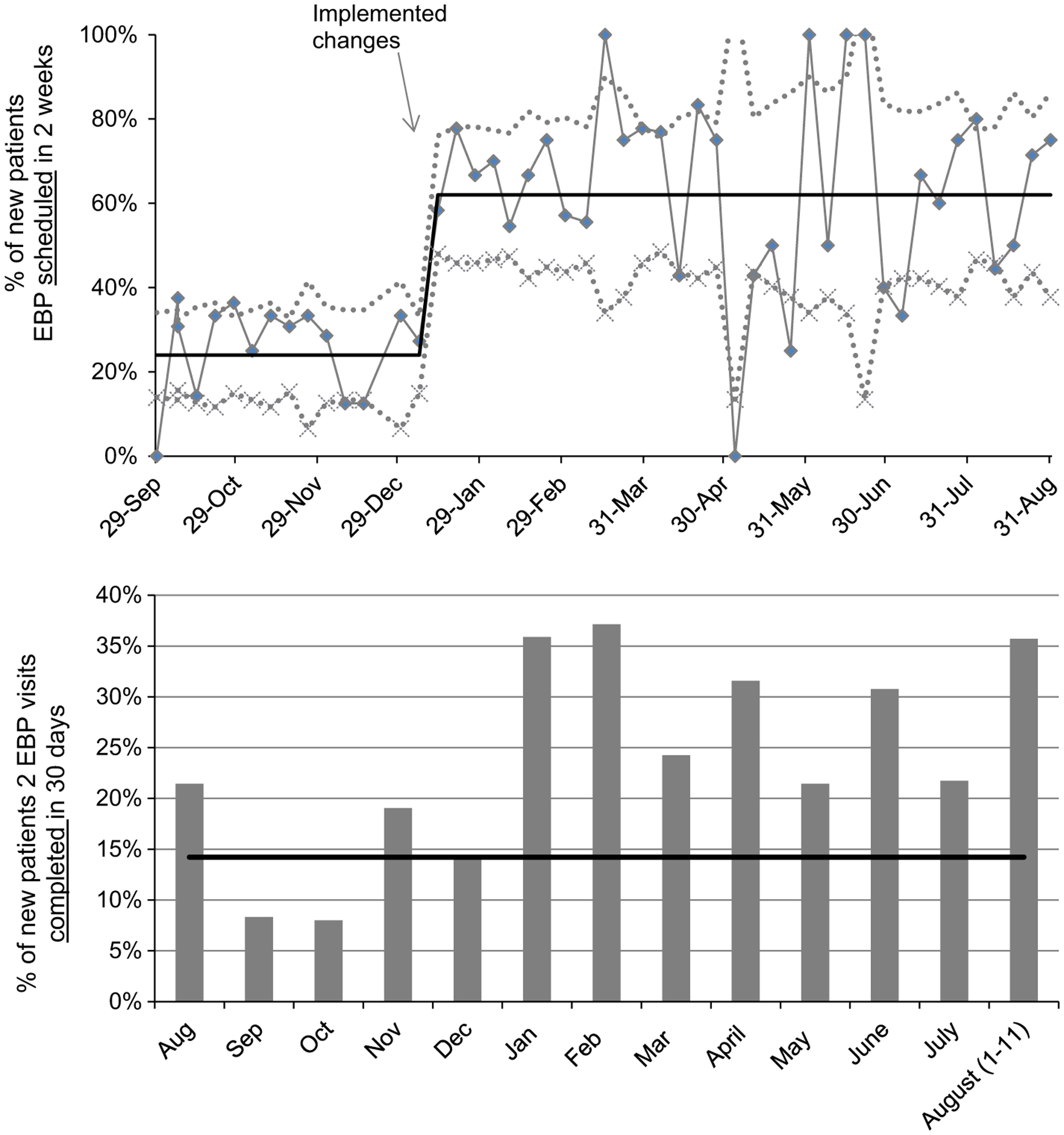

Fig. 1.

Quality improvement: significant increase in EBP scheduling without explaining variability or gap between scheduling and completion

The new scheduling policy may have had a different impact in another setting, but in this setting, fixing a service delivery problem (scheduling psychotherapy appointments) was only one component of achieving the desired implementation outcome (EBP reach) (Proctor et al. 2011). Across settings, any desired implementation outcome is likely equifinal: varying initial conditions and organizational configurations may achieve similar outcomes (Doty et al. 1993). Organizational equifinality aligns with the emphasis on the contextual “fit” of EBPs in the implementation literature (Damschroder et al. 2009; Glasgow et al. 2012). We hypothesized that improving EBP reach in this context would require a more precise system definition of EBP fit.

Improving EBP reach required insights into the local determinants of system behavior, which were difficult to see with the VA data sources available. Although local performance had improved, retrospective data did not explain how gains were achieved or the mechanisms by which they would be sustained. Staff did not know what caused the discrepancy between referral or scheduling rates and the appointment completion rate, which was only 30–35 % during the most successful months (Fig. 1). This was only the upstream part of the problem. Downstream, although 46 % of depressed patients and 66 % of PTSD patients were initiating psychotherapy in VA nationally (including non-EBPs), only 6–22 % completed a therapeutic eight-session dose necessary for clinical benefit (see Table 1).

Table 2 displays structural, setting-level factors that vary between two of our partner sites, the Menlo Park and Stockton outpatient systems. In Table 2, structural differences between these two settings are listed, and the implications for EBP reach are listed below in italics. These differences illustrate the strengths of PSD for implementation planning: a process in which stakeholders formulate and evaluate a local theory of their system. Stakeholders’ inability to identify implementation strategies that achieved their goals—despite a sustained emphasis—suggests the structure of the system may be preventing a solution (Meadows and Wright 2008). Using PSD, stakeholders propose and test implementation plans specific to their own policies and staffing resources via simulation. Without this tool, imported implementation strategies from other settings may fail due to incompatibility with local capacities and constraints.

Table 2.

System capacity: barriers and facilitators influencing local timing and reach of evidence-based practices (EBPs)

| Menlo Park | Stockton |
|---|---|
| 3548 unique patients/year | 2043 unique patients/year |
| Lower caseload per provider | Higher caseload per provider |
| Rare wait for initial appointment | Occasional waitlist to get into clinic |
| 5.2 psychiatrists per 9 EBPsy providers | 3.0 psychiatrists per 4 EBPsy providers |
| Higher EBPsy provider/MD ratio | Lower EBPsy provider/MD ratio |
| Higher EBPsy base rate | Higher EBPharm base rate |
| Providers often self-refer for EBPs | Referrals to other providers by necessity |
| Multiple on-site specialty programs | Only telehealth specialty care |
| Training program site, multiple disciplines | No trainees providing care |
| Most groups “open” (ongoing enrollment) | Most groups “closed” (infrequent opening) |
| Shorter time to next available appointment | Longer time to next available appointment |

Note: Structural differences between sites are listed in plain text. The implications of each structural factor for EBP reach are listed in italics below the structural difference

EBPsy, evidence-based psychotherapy; EBPharm, evidence-based pharmacotherapy

Inner Contexts: Multifinality and Local EBP Reach

In this study, we focused on evidence-based psychotherapies for PTSD and depression. However, like most mental health systems, VA mandates multiple evidence-based practices (Department of Veterans Affairs and Department of Defense 2009b; Harris et al. 2009). Psychotherapy and pharmacotherapy data in Table 1 illustrate this “multiple EBP” reality. Maximally, implementation plans should improve the reach of multiple EBPs. Minimally, they should identify means to improve a particular EBP implementation while interfering as little as possible with other health system goals (Kopetz et al. 2011). Multiple EBPs are just one source of complexity. In any large integrated healthcare system, policies and practices change constantly and rapidly (Aarons et al. 2011; Chambers et al. 2013). VA adoption of the Behavioral Health Interdisciplinary Program (BHIP), a delivery model allocating 5.5 full-time equivalent staff for every 1000 patients, is another factor influencing EBP reach. BHIP teams include psychiatrists, psychologists, social workers, nurses and other disciplines, but only some of these disciplines provide evidence-based psychotherapies (EBPs) for depression and PTSD. Therefore, improving EBP reach requires coordinated staffing and scheduling, coordinated referrals among providers within and across teams, and congruence between national requirements and local realities. PSD addresses the multifinal complexity inherent in implementation planning, examining dynamics that standardized staffing plans and national mandates alone leave unresolved. Aided by health system data, mathematical specification and computer-assisted simulation, stakeholders examine the current state of the system, account for interacting and potentially competing objectives, and can see the many plausible intended and unintended consequences of change.
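The BHIP staffing ratio is simple arithmetic, and a short sketch makes the scaling explicit. For illustration only, it treats each site’s unique-patient count from Table 2 as a BHIP panel size; the text does not make that claim, and the function name is hypothetical.

```python
# Illustrative only: applies the BHIP ratio of 5.5 full-time staff per
# 1000 patients. Using a site's unique-patient count as its BHIP panel
# is an assumption made for this example, not a figure from the study.
BHIP_STAFF_PER_1000 = 5.5


def bhip_staff_required(panel_size: int) -> float:
    """Return the full-time staffing implied by the BHIP ratio."""
    if panel_size < 0:
        raise ValueError("panel_size must be non-negative")
    return panel_size * BHIP_STAFF_PER_1000 / 1000


for site, patients in {"Menlo Park": 3548, "Stockton": 2043}.items():
    print(f"{site}: {bhip_staff_required(patients):.1f} FTE")
# Menlo Park: 19.5 FTE
# Stockton: 11.2 FTE
```

Because only some BHIP disciplines deliver EBPs for depression and PTSD, a headcount like this overstates EBP capacity, which is why staffing ratios alone cannot settle questions of EBP reach.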

Summary

Local stakeholders wanted implementation plans that would account for system interdependencies. VA information systems highlighted local implementation problems, but not what stakeholders could do about them. Stakeholders needed a way to integrate VA data systems and make them locally actionable. Given this context, we believed that stakeholder buy-in to EBP implementation planning would be enhanced when all mental health providers and their priorities (i.e., all EBPs, all disciplines, all patient populations) were included. We propose that PSD is a uniquely inclusive approach to managing the local system factors driving EBP reach.

Participatory System Dynamics Modeling

Implementation strategies become part of the organizational culture and shape how stakeholders understand implementation problems (Aarons et al. 2011). We selected PSD as our planning process and tool for improving EBP reach. PSD has been used to optimize organizational change initiatives by studying how system structures create delays (e.g., wait-times) and accumulations (e.g., patients in the system) and drive overall system behavior, such as EBP reach over time (Forrester 1985; Morecroft and Sterman 1994; Rahmandad et al. 2009; Sterman 2006). For example, system dynamics modeling was used in VA to expand the EBPs that reduced Veteran homelessness by 33 % over four years (Glasser et al. 2014; U.S. Interagency Council on Homelessness 2013; U.S. Department of Housing and Urban Development 2014). System dynamics simulations have also compared the population impacts of alternative system-wide EBP implementations (Lich et al. 2014; Lyon et al. 2015). A recent Institute of Medicine and Centers for Medicare and Medicaid Services report to the VA identified (1) misaligned patient demand and system resources, (2) uneven healthcare processes, (3) non-integrated data tools, and (4) lack of leader empowerment in VA, and recommended system dynamics simulations to improve patient access to care (Centers for Medicare & Medicaid Services Alliance to Modernize Healthcare (CAMH) 2015). Yet, despite 30 years of scholarship and application (see System Dynamics Review), PSD is underutilized by implementation scientists.

Stakeholder Engagement

PSD model building, or “group model building,” is inherently mixed methods (Hovmand 2014). PSD best practices include an iterative process in which the end-users of PSD models co-create model development, testing and refinement (Vennix 1996). Stakeholders develop a testable local theory of the system, proceeding from an initial qualitative understanding of the system to rigorous mathematical evaluation of system behavior (Sterman 1994). PSD comprises six phases: (1) participate, (2) calibrate, (3) simulate, (4) translate (implement), (5) evaluate and (6) iterate. Iteration is not reserved for the end; it is possible between any two components of the process. In this way, PSD is consistent with the process elements of the Consolidated Framework for Implementation Research (CFIR): planning, engaging, enacting and evaluating/reflecting (Damschroder et al. 2009). PSD is innovative for evaluating implementation impacts during planning, prior to execution. To our knowledge, no other exemplars exist for empowering frontline healthcare providers to use data-based forecasts that better align existing resources to improve EBP reach.
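The six phases and the possibility of iterating between them can be written down as a small transition rule. This is a minimal sketch, assuming (our interpretation, not a rule stated in the text) that a team may either advance to the next phase or loop back to any earlier one; `Phase` and `next_phases` are hypothetical names.

```python
from enum import Enum


class Phase(Enum):
    """The six PSD phases, in nominal order."""
    PARTICIPATE = 1
    CALIBRATE = 2
    SIMULATE = 3
    TRANSLATE = 4  # implement
    EVALUATE = 5
    ITERATE = 6


def next_phases(current: Phase) -> list:
    """Phases a team may move to from `current`.

    Assumed rule for illustration: advance one phase, or return to any
    earlier phase, so iteration can occur between components rather
    than only at the end of the process.
    """
    forward = [p for p in Phase if p.value == current.value + 1]
    backward = [p for p in Phase if p.value < current.value]
    return forward + backward
```

Under this rule, `next_phases(Phase.SIMULATE)` allows moving on to `TRANSLATE` or returning to `PARTICIPATE` or `CALIBRATE` when a simulation raises a structural question that stakeholders need to revisit.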

When enlisted during implementation planning, PSD can optimize local capacity for expanding EBP reach via restructuring: re-aligning roles, teams, procedures and data systems in the inner setting (Powell et al. 2012). Using model simulation, stakeholders assess the clinic redesign scenarios proposed in their implementation plans, evaluating potential mechanisms by which EBP reach could be improved. In this way, PSD specifies EBP reach as a function of formally defined general and EBP-specific system capacities, key drivers of implementation outcomes (Flaspohler et al. 2008; Scaccia et al. 2015).

As compared to “implementation-as-usual,” PSD simulation offers a particularly valuable scientific advance for settings that have adopted an EBP but have no new resources to improve implementation (e.g., new staff, new trainings). Without modeling, other participatory strategies require implementation trial-and-error, which can breed cynicism among staff and reduce the buy-in necessary to sustain restructuring interventions (DelliFraine et al. 2010; Mazzocato et al. 2010; Rycroft-Malone 2004; Rycroft-Malone et al. 2002; Stetler et al. 2006; Vest and Gamm 2009). The holism of PSD means that implementation of multiple EBPs can be improved at once. PSD helps frontline staff evaluate and resolve conflicts about competing implementation plans via simulation, and stakeholders can assess multiple desirable and undesirable outcomes. In this way, PSD comprises the theoretical framework and methodological basis for a “learning organization” (Senge 2006; Sterman 1994). The central value is the participatory process for learning from model building.

The Present Study

Implementation scientists from the VA National Center for PTSD partnered with VA Palo Alto Health Care System to improve EBP reach. We piloted the PSD participation, calibration and simulation phases (phases 1–3) to evaluate two EBP implementation plans that VA mental health stakeholders believed would improve reach of EBPs for PTSD and depression. Stakeholders hypothesized that standard 60-min intake evaluations would increase access, and thereby increase the number of patients beginning EBPs, as compared to the prior standard of 90-min intakes. Stakeholders also hypothesized that EBP initiation and completion would increase with restructured referrals and staffing. In the redesign scenario, PTSD patients would begin their first EBP session one week after their BHIP intake evaluation, whereas the status quo referred patients first to an intermediary PTSD information group to learn about PTSD EBP options. We provide an overview of our PSD process, describing procedures and findings so that readers can consider PSD for improving EBP implementation planning in other healthcare systems.

Methods

Procedures

Phase I Participate: Stakeholder Expertise and Consensus-Building

Stakeholders

Based on the axiom that every stakeholder has relevant expertise, the core modeling group comprised one “champion” provider or leader from each service delivery team, including managers, nurses, psychiatrists, social workers and psychologists. Leadership offered workload credit to this volunteer PSD committee, which met for 1 h twice a month over 5 months. These six service delivery teams included multidisciplinary, generalist Behavioral Health Interdisciplinary Program (BHIP) teams and specialty care teams or programs (e.g., PTSD Clinical Team, Women’s Counseling Center).

Modeling Group Sessions

Local leadership and frontline staff shaped the model by describing local clinic procedures. An iterative process helped to ensure everyone had multiple opportunities for input. The sessions were unstructured, with an agenda established at the beginning of each meeting. In order to model the problem of “limited EBP reach,” the first modeling session began by asking providers, “How do you identify the mental health need of a new patient during an intake?” Next, providers were asked, “What makes it a good intake process?” and “What makes it a bad intake process?” Providers were then asked, “What data or evidence is available to evaluate the intake process?” Finally, providers were asked, “What is upstream (in terms of patient flow) of the intake?” and “What is downstream from an intake?” These initial discussions produced preliminary stock and flow substructures for each program or team. The basic model represents stakeholders’ understanding of patient flow through care as defined by the programs, policies and procedures of the outpatient system. This group spent approximately 14 h on the PSD process across all three phases. We reminded staff frequently that we were modeling to learn together, and not simply to produce model results.

Staff Meetings

PSD was the focus of one 1-h staff meeting (with all staff) each month (4 total). The first staff meeting oriented staff to systems thinking and systems modeling. The second staff meeting presented the modeling structures developed in the smaller group. The third staff meeting reviewed data sources being used to inform model parameters, clarifying how providers entered data for services in health records; participants were asked to generate hypotheses about changes the clinic could make (i.e., implementation plans) that they believed would increase the number of new patients who received psychotherapy. Staff were encouraged to frame hypotheses as “What if scenarios,” such as, “What if the intake duration was 60-min instead of 90-min?” During the fourth staff meeting, hypotheses were reviewed and staff were asked to rank them by priority for evaluation via model simulation.

Formative Evaluation

To guide the process, anonymous staff feedback was solicited after the first staff meeting and after the fourth staff meeting, considered the “mid-point” of the six PSD phases. Pre- and mid-point evaluation forms included 15 items and are available by request.

Veteran Patients

Veterans with lived recovery experiences, who have used VA mental health services and now work as VA patient advocates/navigators, met with the project facilitator for 1 h each month (Veteran Advisory Partnership for Operations and Research, VAPOR). Initial sessions focused on establishing the partnership mission and clarifying roles and expectations. VAPOR members were shown PSD milestones and relayed relevant personal experiences and observations from working with Veteran patients, which guided project priorities.

Study Sites

We completed phases 1 through 3 of the PSD process with programs located in the Menlo Park outpatient system of VA Palo Alto Health Care System. Our pilot was conducted over 5 months. Given our goal to improve EBP reach across all outpatient clinics in the VA Palo Alto Health Care System, we began in Menlo Park because it had the highest level of program complexity. We started with the most complex site, anticipating that our model would be streamlined when scaling to other locations. Substructures for specialty or training programs can simply be “turned off” at sites without these programs. In this way, a generally standard PSD model can be tailored to local clinics while retaining the feasibility of scaling to multiple locations. Diversity within and across healthcare systems (see Table 2) demonstrates the value of PSD for representing local perspectives and data while remaining generalizable.
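One way to picture “turning off” substructures is a per-site configuration that switches optional model components on or off around a shared core. The sketch below is hypothetical throughout: the substructure names are loosely drawn from program types mentioned in the text, and this is not how the study’s simulation software stores models.

```python
from dataclasses import dataclass, field

# Hypothetical substructure names, loosely based on programs named in the text.
CORE = ["intake", "bhip_psychotherapy", "medication_management"]
OPTIONAL = ["specialty_ptsd_program", "womens_counseling", "trainee_clinic"]


@dataclass
class SiteConfig:
    """Switches for optional model substructures at one site."""
    name: str
    enabled: dict = field(default_factory=dict)


def active_substructures(site: SiteConfig) -> list:
    """Shared core substructures plus optional ones switched on for the site."""
    return CORE + [s for s in OPTIONAL if site.enabled.get(s, False)]


# Menlo Park: on-site specialty and training programs, so all options on.
menlo = SiteConfig("Menlo Park", {s: True for s in OPTIONAL})
# Stockton: no on-site specialty programs or trainees, so options stay off.
stockton = SiteConfig("Stockton")
```

Keeping the core identical across sites is what would let a model built at the most complex site be reused elsewhere with only site-specific data and switches changed.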

EBP Definition

We collaborated with local stakeholders to improve local implementation of five EBPs. Three were EBPs for depression [cognitive behavioral therapy for depression (CBT-D), acceptance and commitment therapy (ACT), interpersonal psychotherapy (IPT)], and two were EBPs for PTSD [prolonged exposure (PE), cognitive processing therapy (CPT)]. These EBPs have demonstrated clinical efficacy and are part of the VA national dissemination program, but few VA patients initiate these EBPs, even in specialty programs (Shiner et al. 2013).

Although we are focused on depression and PTSD EBPs in this manuscript, in order to adequately address the system context of EBP implementation, we sought to determine how many Veterans receive all mental health services in each service delivery team over any given period of time. In addition to EBPs delivered in individual psychotherapy, we also examined intake evaluations, group psychotherapy, medication management, and care coordination/case management appointments.

Ethics Review: Quality Improvement

We submitted our pilot protocol to our institutional review board (IRB), and our activities were determined to be quality improvement, not requiring IRB oversight. Quality improvement (QI) includes systematic, data-guided activities designed to improve health care delivery processes, and our aim was to bring our local healthcare system up to established VA quality standards for EBP implementation. By design, our use of existing health system data did not increase any patient risks beyond the potential risk to confidentiality already associated with capturing personal health information during routine clinical care. Feedback collected from former Veteran patients and providers was designed to directly improve patient care via the PSD process. While future larger scale evaluations of PSD may require IRB approvals, the fact that the basic protocol was deemed QI usefully keeps the present pilot study process and findings close to “real world” PSD use in non-research contexts.

Phase II Calibrate: Data Inputs and Model Calibration

System Structure and Behavior

Through synthesis of administrative data and stakeholder estimates, a stock and flow model of system structure and behavior was developed. We built model structures of the outpatient system so that we could test hypotheses about how EBP implementation problems came to be and evaluate potential solutions to these problems. The dynamics (system behavior over time) of our problem were the rates, proportions and accumulations of patient flows in the clinic over time. Model building, validation and simulation tests were all performed with computer simulation software that has a graphical user interface and can represent variable relationships that are difficult to visualize or to model with other approaches, whether (1) stochastic or deterministic, (2) continuous or discrete, (3) linear or non-linear, or (4) simultaneous or lagged (Rahmandad et al. 2009; Ventana Systems, Inc. 2014).

Capacity and Mechanism Formulation

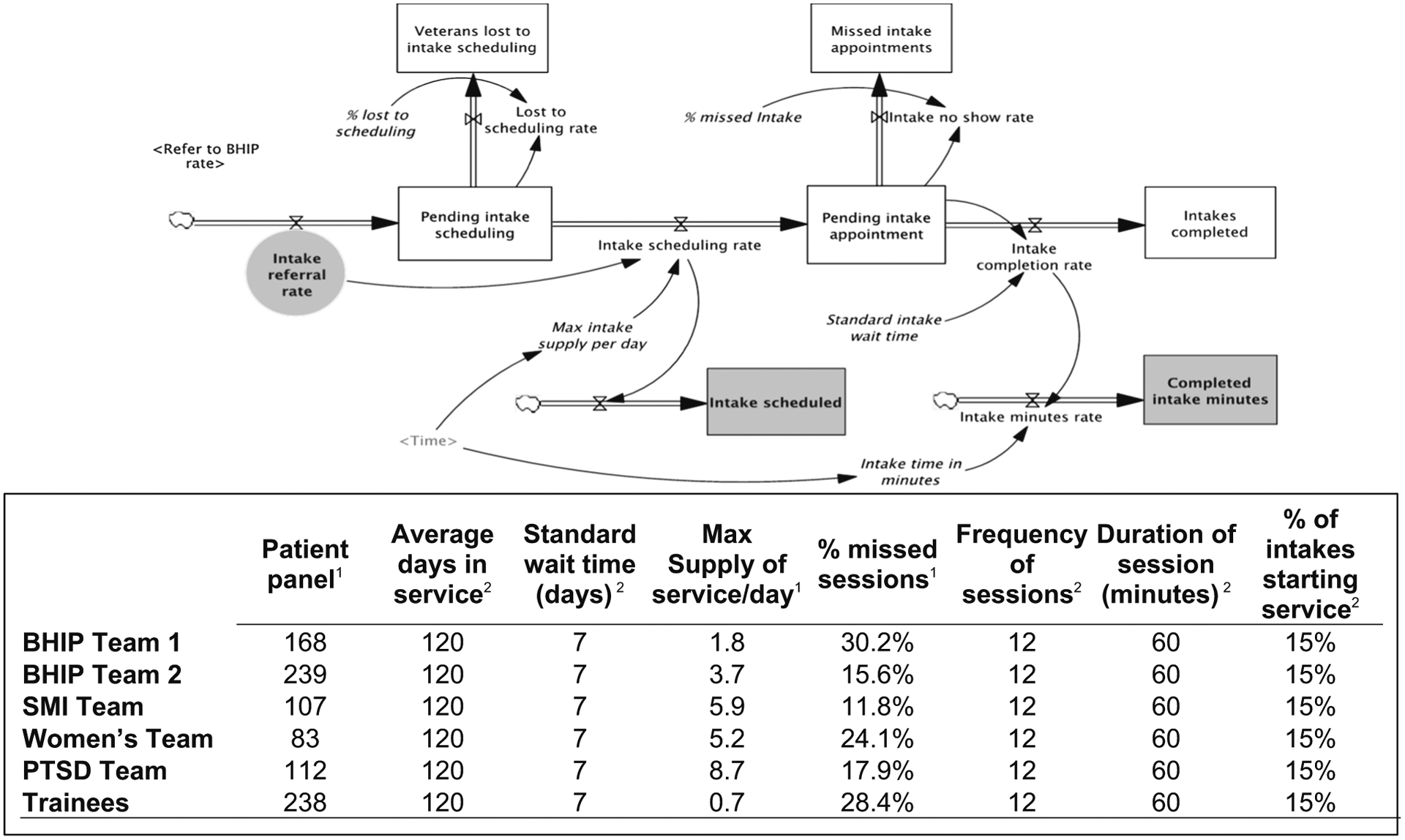

We formally defined the system EBP capacity for expanding EBP reach using causal modeling equations of hypothesized system mechanisms driving EBP implementation. Detail was added equation by equation in collaboration with staff, and the mathematical model was expressed as a stock-and-flow diagram. The complete stock-and-flow model and equations are available by request; please email the corresponding author. The PSD facilitator and PSD modeler met weekly via teleconference to translate qualitative stakeholder discussions into models or simulations that were presented back to stakeholders at later meetings. Figure 2 displays an excerpted stock-and-flow substructure for the intake appointment process. The model can be understood as a hypothesis of system capacity to provide EBPs as a function of patient demand and initial system conditions. Stocks (depicted by a rectangle) represent the level (prevalence) of a variable at any given time, such as the number of patients on any given day pending any service. Flows (double-lined arrow with ‘faucet’ icon) increase or decrease a stock over time (incidence). On their own, stocks provide a snapshot of the clinic; many clinic managers have data snapshots like these. But, by also specifying rates or flows, PSD estimates whether levels are increasing/decreasing and what system factors are driving specific increases or decreases. Managers can ‘zoom in’ on any part of the process, up or downstream, such as to differentiate referrals from scheduling (see grey boxes in Fig. 2).

Fig. 2.

System dynamics modeling: stock and flow diagram (intakes) and Electronic health record parameters (psychotherapy)
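The stock and flow logic described above can be sketched as a one-stock simulation: a stock changes only through its flows, integrated step by step (Euler integration with a one-day time step). This is a minimal illustration, not the study’s model; the function name and all parameter values are hypothetical.

```python
def simulate_pending_intakes(days: int, referrals_per_day: float,
                             intake_slots_per_day: float,
                             initial_pending: float = 0.0) -> list:
    """Euler-integrate one stock (patients pending intake) with dt = 1 day.

    Stock(t + 1) = Stock(t) + inflow(t) - outflow(t). The inflow is a
    constant referral rate; the outflow (intakes completed) cannot exceed
    the patients available that day or the intake slots offered.
    """
    pending = initial_pending
    history = [pending]
    for _ in range(days):
        inflow = referrals_per_day
        outflow = min(pending + inflow, intake_slots_per_day)
        pending = pending + inflow - outflow
        history.append(pending)
    return history
```

With 10 referrals and 8 intake slots per day, the stock rises 0, 2, 4, 6 over three days. A single snapshot would show only the current backlog; specifying the flows reveals that the backlog is growing and why (a persistent gap of 2 referrals per day over capacity).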

Note that the direction of patient flow is determined by local programs, procedures and policies and can be redesigned. In Fig. 2, the desired patient ‘flow’ is through the stocks in the center. The rate at which patients move through this sub-system is a function of the available service supply at the bottom and the rate of patients who ‘no show’ out of flows at the top. By formulating these health system characteristics, the model displays and compares simulated trends for every variable, showing changes in service dynamics per day, within any section of the clinic system, over any time horizon, making data newly actionable for implementation planning.
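The dependence of the central flow on service supply and no-shows can be illustrated with a single daily step. This is a minimal sketch under stated assumptions (a fixed no-show fraction, and no-shows leaving this sub-system rather than rebooking); `daily_step` and its parameters are hypothetical, not variables from the study model.

```python
def daily_step(waiting: float, referrals: float, supply: float,
               no_show_rate: float) -> tuple:
    """One day (dt = 1) of a single service step, such as an intake.

    Assumptions for illustration: referrals join the waiting stock; the
    clinic fills up to `supply` slots from that stock; a fixed fraction of
    booked patients no-show and leave this sub-system; the rest complete.
    Returns (waiting_after, completed, no_shows).
    """
    if not 0.0 <= no_show_rate <= 1.0:
        raise ValueError("no_show_rate must be between 0 and 1")
    waiting += referrals
    booked = min(waiting, supply)       # service supply caps the flow
    no_shows = booked * no_show_rate    # outflow at the top of the diagram
    completed = booked - no_shows       # desired flow through the center
    return waiting - booked, completed, no_shows
```

In this sketch, raising `supply` both increases completions and drains the waiting stock faster, whereas lowering `no_show_rate` increases completions without changing the queue, because no-shows exit the sub-system instead of returning to it. Which assumption holds locally is exactly the kind of procedural detail stakeholders supply.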

Health System Data

The previous 12 months of data were entered to create a baseline or “base case” for comparison with implementation alternatives. Our simulations did not require any new data collection. Because the model uses both endogenous (calculated) and exogenous (entered) parameters, model structures for implementation plans can be developed even when available data are limited. In the Fig. 2 stock-and-flow diagram, italics indicate variables entered from VA data systems, whereas variables for which we did not enter data are calculated within the model (in regular font). The table in Fig. 2 displays an example of data inputs from either existing VA information systems or stakeholder estimates. The table displays parameters for the number of patients who completed 60-min psychotherapy appointments during the prior year in each team. These parameters are available in any healthcare system. Stakeholders evaluated data inputs for reasonableness. It is common for problems with data quality or availability to gain new salience for stakeholders as the model is calibrated, and the need for more data or higher quality data may be newly prioritized.
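The split between entered (exogenous) and calculated (endogenous) variables can be sketched as follows. Team names, counts, the stakeholder fallback value and the 250 working days per year are all hypothetical, chosen only to show how a stakeholder estimate can stand in where record data are missing.

```python
# Exogenous inputs: prior-year completed 60-min psychotherapy appointments
# per team, entered from health-record data. All values are hypothetical.
exogenous = {
    "team_a": 2400,
    "team_b": 1250,
    "team_c": None,  # not available in the data system
}
# Hypothetical stakeholder estimate used where record data are missing.
STAKEHOLDER_ESTIMATES = {"team_c": 600}
WORKING_DAYS_PER_YEAR = 250  # assumption for illustration


def daily_completion_rate(team: str) -> float:
    """Endogenous variable: calculated within the model, never entered."""
    annual = exogenous[team]
    if annual is None:
        annual = STAKEHOLDER_ESTIMATES[team]
    return annual / WORKING_DAYS_PER_YEAR
```

Marking which inputs came from stakeholder estimates, as this sketch does implicitly through the fallback dictionary, also records where better data would most improve the model.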

Model Calibration and Validation

During the calibration phase, simulations of system behavior were calibrated against historical data. Sensitivity analyses were conducted to inform model structures and validity and, later, to guide implementation decisions. We selected ranges for parameters exogenous to the model, which stakeholders reviewed for validity, and checked that the equations specifying “endogenous” variables (i.e., calculated within the model) replicated the historical observations of the number of patients receiving each service, in each team, over the last year. For example, the “base case” for the intake duration (60 vs. 90 min) scenario described below required us to determine the exact date on which leaders mandated the change to 60-min appointments. Only when we entered the exact date of this practice change did the model accurately replicate the service delivery variables we were checking.
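The intake-duration example can be illustrated with a toy calibration check: an exogenous step input switches intakes from 90 to 60 min on a change day, and the fit to observed data is best only when that day is correct. Everything here is hypothetical: the 360 clinician-minutes per day, the assumption that demand always exceeds capacity, and the observed series, which is invented for illustration and is not study data.

```python
def intake_duration(day: int, change_day: int) -> int:
    """Exogenous step input: 90-min intakes before the mandate, 60 after."""
    return 90 if day < change_day else 60


def simulated_intakes(days: int, change_day: int,
                      clinician_minutes_per_day: int = 360) -> list:
    # Assumes demand always exceeds capacity, so completions equal capacity.
    return [clinician_minutes_per_day / intake_duration(d, change_day)
            for d in range(days)]


def mean_abs_error(sim: list, obs: list) -> float:
    """Average absolute gap between simulated and observed values."""
    return sum(abs(s - o) for s, o in zip(sim, obs)) / len(obs)


# Hypothetical observed daily intakes (invented, not study data).
observed = [4, 4, 4, 4, 4, 4, 6, 6, 6, 6]

# Try every candidate change day and keep the one that fits best.
errors = {cd: mean_abs_error(simulated_intakes(len(observed), cd), observed)
          for cd in range(len(observed) + 1)}
best_change_day = min(errors, key=errors.get)
```

Here only a change on day 6 reproduces the series exactly (error 0); placing it on day 3 gives a mean absolute error of 0.6 intakes per day. Scanning a parameter across a plausible range in this way is also a simple form of sensitivity analysis.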

Phase III Simulate: System Dynamics Modeling Analyses

Simulation of Alternative EBP Implementations Via Redesign

The model design prioritizes the EBP reach problem and considers all practical implementation strategies that might be useful, using comparison models with alternative structures. Once these adapted models were developed, stakeholders tested the whole-system impacts of potential procedural changes (e.g., restructuring strategies) in the “virtual world” of the models. During implementation planning, simulation is designed to help stakeholders choose among competing implementation trade-offs that are difficult to compare without an empirical model (Sterman 2000). This is particularly crucial when stakeholders are at a stalemate, or when prior implementation plans failed or were not sustained. Simulation tests also estimate when stakeholders can expect to observe improvement, a level of specificity unavailable in many implementation approaches.

Hypotheses

Hypothesis 1: More depression and PTSD patients will receive EBP treatments with a 60-min intake evaluation policy than with 90-min intakes.

Hypothesis 2: More PTSD patients will begin EBPs with referrals from BHIP intakes directly to EBP session 1 in specialty programs than with referrals routed first through the PTSD information group.

Looking at any single rate in relation to these hypotheses, such as referrals, scheduling, or EBP completion, is “zooming in” on one part of the process. The referral rate is upstream of the accumulations of patients scheduled for or completing appointments. Patient “no shows” and other system processes (phone calls, reminder systems; not shown) co-determine the appointments scheduled and completed downstream.

Results

Qualitative Formative Evaluation

We asked staff about their concerns and modeling goals with an anonymous feedback evaluation (see Table 3a). Their concerns mapped well onto the PSD models, which precisely defined the system factors represented in their goals: the proportion of patients seen by each provider/team, patient referral flows across programs, clinic processes influencing access to services, etc. Despite regular staff meetings and communication, staff did not necessarily agree about proposals for change. Staff agreed to a shared goal of expanding EBP reach, but some service delivery teams did not feel that their workflows and patient panels were reflected in this goal (e.g., evidence-based pharmacotherapy providers/prescribers; providers focused on different patient populations, such as patients with serious mental illness). In contrast, the depression and PTSD EBP providers were carrying the burden of the highly emphasized EBP implementation goal in their schedules. In some cases, they may have felt they were doing so single-handedly. Staff reported concerns about the unknown or unintended impacts of their own and their colleagues’ proposals. No single, shared strategy emerged from qualitative work alone.

Table 3.

Formative evaluation of participatory system dynamics (PSD) modeling: stakeholder participation and perspectives


BHIP Behavioral Health Integration Program, PCT PTSD Clinical Team

Staff were interested in how two potential new policies would affect EBP reach, and Table 3b displays the two hypotheses staff wanted to test first using the model. In fact, staff provided thirty hypotheses for testing and ranked them by priority. The large number of stakeholder hypotheses underscores the value of modeling: stakeholders needed an ex ante model to resolve their lack of consensus about proposed plans. Evaluating all thirty hypotheses (or even fifteen or five) via trial-and-error would have been infeasible. Stakeholders found PSD acceptable for their goals and reported many useful aspects of the process for addressing these challenges (see Table 3c).

Simulation Analyses: Hypothesis Tests of Implementation Plans

We evaluated the two strategies stakeholders ranked highest for improving EBP timing and reach (see Table 2b). The first reduced intake appointments by 30 min, a change local leadership had recently imposed, creating tension among staff. The top of Fig. 3 displays the program differences (BHIP versus specialty care, or “SC”) between the 60- and 90-min intake scenarios. For hypothesis 1, we show data for scheduled appointments but not completed appointments (completion is shown for hypothesis 2). The scheduling difference between the 60- and 90-min scenarios was 141 EBP appointments (SC) and 655 EBP appointments (BHIP), or 796 sessions total. The total number of completed EBP sessions over 1 year would increase by 424 with 60-min intake evaluations, considerably fewer than the scheduling difference alone would suggest. We found that 60-min intakes would improve EBP reach, more so in the generalist BHIP teams than in the specialty mental health programs.
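The following short arithmetic check uses the hypothesis 1 figures from the paragraph above. The ratios are derived here, not reported by the authors. The check assumes that both differences refer to the same one-year horizon described in the Fig. 3 note. It shows that BHIP accounts for most of the scheduling gain and that roughly half of the additional scheduled sessions translate into completed ones:

```latex
\Delta_{\text{scheduled}} = \underbrace{141}_{\text{SC}} + \underbrace{655}_{\text{BHIP}} = 796,
\qquad
\frac{655}{796} \approx 0.82,
\qquad
\frac{\Delta_{\text{completed}}}{\Delta_{\text{scheduled}}} = \frac{424}{796} \approx 0.53
```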

Fig. 3.

Implementation scenario simulations: stakeholder hypotheses for improving evidence-based psychotherapy reach. Note: The simulated output presented in this figure is synthetic data. It compares the plausible future number of EBP sessions scheduled and completed over 1 year under the status quo, or “base case,” of current implementation with one of two alternative implementation plans: 60-min intakes and PTSD Streamline. Specialty care (SC) includes the Women’s Program and PTSD program. Behavioral Health Integration Program (BHIP) is generalist mental health care. Not shown: New Patient Referrals to Evidence-based Psychotherapy.

In Fig. 3, comparing the simulated output in the two system behavior-over-time graphs (scheduling on top, completion on the bottom) highlights the ability of PSD to account explicitly for clinical system interactions. For each implementation plan, stakeholders received an a priori estimate of how restructuring would differentially affect referrals, scheduling, or appointment completion. This is valuable because each component of this process directly influences staff day-to-day workflows. The gap between scheduled and completed EBP sessions is driven only in part by patient behavior. On average, the no-show rate in this setting, approximately 21 %, is consistent with national averages for VA mental health. However, the historically observed percentage of missed psychotherapy appointments varied significantly across teams, from a low of 11.8 % to a high of 30.2 % (see Fig. 2). Within teams, missed appointments also varied across services (e.g., therapy versus care coordination versus intakes). The model accounted for this variation. The average daily team supply of each service also varied (see Fig. 2 table), and the model examined the impacts of these sources of variability.
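The following Python sketch illustrates why team-level no-show rates, rather than a single system average, matter when converting scheduled sessions into completed ones. The 11.8 %, 30.2 %, and approximately 21 % rates come from the text. The 100 added sessions are hypothetical. Treating every scheduled session as independently missed at the team rate is a simplifying assumption.

```python
# Team-specific no-show arithmetic (rates from the text; session counts hypothetical).
TEAM_NO_SHOW = {"lowest-rate team": 0.118, "highest-rate team": 0.302}
SYSTEM_AVERAGE_NO_SHOW = 0.21


def expected_completed(scheduled: float, no_show_rate: float) -> float:
    """Expected completed sessions if each scheduled session is missed at no_show_rate."""
    return scheduled * (1.0 - no_show_rate)


added_sessions = 100.0  # hypothetical number of newly scheduled EBP sessions
for team, rate in TEAM_NO_SHOW.items():
    print(team, round(expected_completed(added_sessions, rate), 1))
print("system average", round(expected_completed(added_sessions, SYSTEM_AVERAGE_NO_SHOW), 1))
```

With these inputs, the same 100 added sessions yield about 88 completed sessions in the lowest-rate team and about 70 in the highest-rate team, compared with 79 under the pooled average. Where new EBP capacity lands therefore changes the completed-session estimate.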

The second hypothesis staff tested was to streamline PTSD specialty program referrals (PCT; also shown in the bottom of Fig. 3). The PTSD Streamline simulation estimated that the supply of PTSD EBP sessions would increase by 16 % (496 sessions) in the PCT, and that the supply of depression EBP sessions would increase by 29 % (262 sessions) among generalist BHIP teams. Staff were surprised that, due to system interdependencies, both PTSD and depression supply were likely to increase, summing to an increase of 758 EBP sessions over one year. With these additional EBP sessions, 63 more patients could complete a full course of EBP in the streamlined scenario. This indicates that approximately 30 more Veterans would be likely to remit or recover under this alternative EBP implementation (Karlin et al. 2012; Eftekhari et al. 2013). Simulating the estimated clinical benefit for local patients may do more to motivate stakeholders to sustain a potentially difficult restructuring change than looking at implementation outcomes alone or relying heavily on data from other settings. Knowing that improvement would be observed around week 21 (see x-axis in Fig. 3) should also help stakeholders avoid abandoning an effective improvement strategy before it bears fruit.
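The PTSD Streamline figures can be cross-checked with a few lines of Python. The session counts, percentages, 63 completing patients, and 30 remitting Veterans are taken from the paragraph above. The 12-sessions-per-course divisor is an assumption based on the Discussion's description of a full course as up to 12 weekly sessions. It reproduces the reported 63, but the authors do not state it as their method. The baselines implied by the rounded percentages, and the implied remission share, are derived here rather than reported.

```python
# Arithmetic cross-check of the PTSD Streamline figures (inputs from the text).
ptsd_gain, ptsd_pct = 496, 0.16  # additional PTSD EBP sessions in the PCT; % increase
dep_gain, dep_pct = 262, 0.29    # additional depression EBP sessions in BHIP; % increase

total_gain = ptsd_gain + dep_gain             # total additional EBP sessions
implied_ptsd_baseline = ptsd_gain / ptsd_pct  # approximate: percentages are rounded
implied_dep_baseline = dep_gain / dep_pct

SESSIONS_PER_FULL_COURSE = 12  # ASSUMPTION: a full course is about 12 weekly sessions
full_courses = total_gain / SESSIONS_PER_FULL_COURSE

patients_completing, patients_remitting = 63, 30  # as reported in the text
implied_remission_share = patients_remitting / patients_completing

print(total_gain, round(implied_ptsd_baseline), round(implied_dep_baseline),
      round(full_courses, 1), round(implied_remission_share, 2))
```

This gives 758 total sessions, implied baselines of roughly 3,100 PTSD and 900 depression EBP sessions per year, about 63.2 full courses, and an implied remission share of about 0.48 among patients completing a course.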

Discussion

This outpatient system provides a valuable case study of systems-level implementation planning using PSD. Local EBP adoption and capacity for EBP delivery had already been established through high-quality provider training. In addition, a high level of leadership support and an organizational culture of quality improvement were already in place. Local leadership sought improved EBP implementation and invited the principal investigator and a modeling expert colleague into the outpatient system. Leaders also met with the facilitator and modeling team regularly, shared data transparently and communicated responsively. Staff met bi-weekly and contributed actively to the project, frequently sharing suggestions and ideas with the facilitator. This type of collaborative engagement is a known precondition for improving organizational implementation (Damschroder et al. 2009; Weiner 2009). Yet, despite this fertile ground, EBP implementation goals had not been achieved, and the local outpatient mental health system fell behind national averages on EBP initiation (reach). Therefore, we believe that implementation plans solely targeting changes in provider attitudes and skills, leadership support or organizational climate would not have improved EBP reach in this setting.

Due to the complexity of day-to-day workflows in a mental health system, it is difficult to choose EBP implementation plans that achieve optimal EBP reach without unanticipated effects. Conventional change efforts using PDSA cycles require making changes and then adjusting efforts based on post hoc evaluation of their impacts, which can result in wasted effort or staff disengagement. As a result, there is increasing attention to the application of systems engineering methods and simulations for guiding improvements in health care access and quality (CAMH 2015). PSD emerged from the application of engineering approaches to management in complex dynamic systems (Forrester 1961), but few healthcare professionals or local leaders are familiar with systems science approaches (Mabry et al. 2013). Even so, over the few months of this pilot, frontline staff with no prior system dynamics training engaged in the process and reported that PSD was useful. Restructuring (i.e., re-aligning system resources to improve EBP referrals or adjust EBP provider scheduling) requires considerable stakeholder support (Powell et al. 2012). PSD works to develop stakeholder buy-in through high levels of engagement, testing stakeholders’ assumptions, and enabling empirical, pre-enactment comparison of options (Damschroder et al. 2009).

All True Improvement is Local

PSD empowers frontline stakeholders with a process and tool for addressing local implementation needs (Forrester 1985). System dynamics modeling has been used to examine system-wide mental health scenarios and system-wide VA interventions. To our knowledge, however, this report describes the first attempt to use PSD for engaging frontline mental health staff with simulations during implementation planning. While the VA Uniform Mental Health Services Handbook and the VA/DOD Clinical Practice Guidelines establish VA priorities, strategies for achieving their objectives are not fully controlled by a central decision-making body. Rather, local decisions regarding procedures are made based on local resources, according to the best information available to managers.

Despite multiple data systems available in large integrated health systems, for data to become locally actionable, it must be synthesized into tools that illuminate local stakeholders’ needs. VA makes a number of data-based dashboards available, but they are not integrated, and they do not mathematically account for interdependence the way a system dynamics model does. During our formative evaluation, we learned that there is a tendency among some clinicians to see data-driven VA performance measures as justification for “punishing staff” without providing locally useful information. This can breed cynicism and contribute to burnout and staff turnover (Garcia et al. 2015). At the same time, we found providers had several EBP implementation questions that they wanted to evaluate empirically. Many staff desired increased use of data to guide decisions and sought transparency regarding their own and their colleagues’ workflows.

Among VA mental health providers, a sense of psychological safety (e.g., willingness to take interpersonal risks and speak up) is associated with error reporting, greater job satisfaction and lower intention to leave VA (Derickson et al. 2015; Yanchus et al. 2015). Research on PSD indicates that PSD modeling increases understanding of system dynamics and improves organizational performance, and that this boost is mediated by psychological safety and willingness to share information (Bendoly 2014). PSD implementation planning aligns system resources to meet patient demands, improves clinical processes, integrates available data tools, and empowers local leaders (CAMH 2015). Locally enacted, PSD enriches staff’s general capacity and motivation for making team-based, collaborative implementation improvements (Scaccia et al. 2015).

Modeling System Capacity for EBP Implementation

As a multi-method process, PSD is also unique in specifying and rigorously evaluating a local system’s specific capacity for EBP implementation (Scaccia et al. 2015) or the fit of EBPs in context (Damschroder et al. 2009; Glasgow et al. 2012). We began model building by asking stakeholders questions to elicit the dynamics that facilitate or interfere with EBP reach, and we modeled the other types of services that providers supply. Clinic managers and staff had questions regarding the trade-offs of different approaches to organizing limited staff resources to achieve the interdependent goals of (1) timely access and (2) access to appropriate, high-quality, evidence-based care. PSD results should be interpreted as provisional answers to these questions rather than definitive results. The PSD facilitator encouraged stakeholders to assume that the model is “wrong” in some way and to keep working toward more accurate approximations. This uncertainty, inherent in the modeling process, does not impede decision-making. Rather, it encourages stakeholders to remain cautious and to stay open to changing course if or when new evidence becomes available. We found that clinicians and managers can be disabused of ineffectual notions more efficiently once they have developed confidence in the usefulness of the model than by simply discussing policies and procedures without modeling. During this study, staff were motivated to evaluate restructuring plans in the “virtual world” of the PSD model. They saw this as a considerable advantage compared with implementation followed by post hoc evaluation in the real world (Sterman 2000).

Multidisciplinary Teams and Patient Flows

We showed stakeholders exactly how the multidisciplinary composition of BHIP and specialty care teams was influencing the supply of EBP appointments. Prior research on PTSD EBP implementation in VA indicates that program structure, workloads and team functioning may all be associated with provider EBP use and adherence (Chard et al. 2012; Cook et al. 2013; Finley et al. 2015). Challenges to increasing EBP reach also include clinic planning and management to provide patients a full course of an EBP (up to 12 weekly sessions). We therefore modeled continuity of care over time, or the “dynamic complexity” of implementing a timely and complete course of therapy. PSD helps local systems ensure that patient demand for EBPs, in terms of both referral flow and clinical processes, is optimally aligned with the allocation of professional resources (Masnick and McDonnell 2010). Some PSD projects prioritize elucidating feedback loops by generating causal loop diagrams. Consistent with other system dynamics projects (Homer et al. 2004), we did not focus first on feedback loops. Instead, we observed that stakeholders found the stock-and-flow model most intuitive for understanding how patient flows and stocks, such as EBP session completion (reach), interrelate (Warren 2004; Warren 2005).

Capitalizing on Widely Available Health System Data to Scale

Use of PSD to improve implementation is facilitated by the near-ubiquitous use of health information systems. We have established a partnership with the national VA Program Evaluation Resource Center (PERC). Our next steps include building our model data synthesis onto existing daily PERC data extraction and re-purposing existing data infrastructure (servers and code), which provides the efficiency and cost-effectiveness needed to scale PSD to multiple sites or even nationally. The preliminary working version of our SD model uses parameter estimates derived from common electronic health record information. These parameter values define concrete scenarios that are easy for stakeholders to interpret.

Why Can’t This Be Simpler?

Implementation of an innovation is complex in any multilayered organization (Aarons et al. 2011). Aligning stakeholders around PSD embeds research on implementation into the structure of the local system, or inner setting (Aarons et al. 2011). To date, many mental health implementation efforts in VA have focused on necessary but narrower implementation settings (e.g., specialty programs, rather than referrals across the mental health outpatient system) or implementation goals (e.g., provider training and adoption, fidelity to a specific EBP). This is so even though effective implementation strategies are likely to be multi-component and address multiple factors (Aarons et al. 2011). From a systems perspective, decision-making without local engagement and modeling resources is expected to engender policy resistance and other counter-productive system behaviors (Meadows and Robinson 1985). We incorporated several tools (i.e., mathematical equations) to understand delays and non-linearities in the system. These are very difficult for any one person, or even one organizational unit, to understand without a visual model and empirical tests of that model.
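One of the simplest equations used to represent delays in system dynamics is the first-order material delay: a stock that drains at a rate equal to its contents divided by an average delay time. The Python sketch below is a generic illustration, not a structure taken from the authors' model. The step input of 10 extra referrals per week and the 4-week delay are hypothetical. The sketch shows how an immediate policy change produces an output that rises gradually rather than all at once.

```python
# First-order material delay (a standard system dynamics structure); values hypothetical.
def first_order_delay(inflow: list[float], delay_weeks: float) -> list[float]:
    """Outflow of a stock drained at rate stock/delay_weeks (Euler, 1-week steps)."""
    stock = 0.0
    outflow = []
    for x in inflow:
        out = stock / delay_weeks  # outflow depends on what has accumulated so far
        stock += x - out           # stock integrates inflow minus outflow
        outflow.append(out)
    return outflow


step_input = [10.0] * 52  # hypothetical: 10 extra referrals/week from week 0 onward
delayed = first_order_delay(step_input, delay_weeks=4.0)
print([round(v, 1) for v in delayed[:8]])
```

With a 4-week delay, the outflow reaches only about two thirds of the new input level after four weeks and then approaches it asymptotically. This is one reason a simulated improvement may not become visible until weeks after a change.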

Implications of Participatory System Dynamics Modeling for Implementation Science

Implementation science investigates multi-level processes to advance generalizable knowledge of how and why implementation processes are effective (Proctor et al. 2012). Rather than simply describing these system complexities, PSD formalizes the local mechanisms by which systems produce EBP implementation outcomes (Luke and Stamatakis 2012). PSD capitalizes on the flexibility, resourcefulness and reorganizing properties of human systems. Implementation scientists count on these properties but rarely model them explicitly. PSD is well suited to addressing the complexities of healthcare problems in a manageable but realistic way, and it conceptualizes both the big picture and specific system components simultaneously (Caro et al. 2012; Mabry 2014). For these reasons, PSD helps address a central challenge of implementation science: developing explanatory, nomothetic insights from processes that are idiographic and locally specific (Damschroder and Lowery 2015). Dynamic models offer another substantial advantage by estimating when implementation improvement should be observed (Sterman 2000).

There are also several advantages of PSD over traditional statistical analyses. Many implementation phenomena and theoretical models, such as Rogers’ Diffusion of Innovation (2003), explicitly address the role of time. They describe lagged effects and accumulations derived from non-linear associations and non-normal distributions (e.g., the S-curve of diffusion; Luke and Stamatakis 2012; Rogers 2003). Different variables may also play crucial roles at different points in the implementation process (Aarons et al. 2011). PSD may be relatively efficient compared with approaches that move from implementation planning to implementation without modeling. The computational capabilities of PSD help build consensus among stakeholders as they examine their workflows and patient encounters, including their synergistic, cumulative and compounding effects (Sterman 2006).
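The S-curve mentioned above is commonly represented by a logistic function. The Python sketch below uses that standard form with hypothetical rate and midpoint parameters. It is not a specification taken from Rogers or from the authors' model. It shows the non-linearity at issue: equal time steps produce very different numbers of new adopters depending on where the system is on the curve.

```python
import math


def logistic_adoption(t: float, rate: float = 0.3, midpoint: float = 20.0,
                      ceiling: float = 1.0) -> float:
    """Cumulative adopter share at time t on a logistic S-curve (hypothetical parameters)."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


cumulative = [logistic_adoption(t) for t in range(41)]
# New adopters per period: the change in cumulative adoption between periods.
new_adopters = [b - a for a, b in zip(cumulative, cumulative[1:])]
peak_period = max(range(len(new_adopters)), key=new_adopters.__getitem__)
print(round(cumulative[20], 2), peak_period)
```

Cumulative adoption is 0.5 at the midpoint. New adoption per period peaks there and is small at both tails, a pattern that a single linear slope would not capture.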

Limitations

Menlo Park was in the active implementation phase. Many providers had received EBP training, and programs had been working on improving EBP referrals for some time. Our PSD simulations may have operated differently during EBP exploration or preparation phases (Aarons et al. 2011). On the other hand, PSD may be particularly valuable to systems at the outset of selecting EBPs for implementation. Future research should compare PSD in settings starting implementation across EPIS Model phases. PSD may also have been received differently in a setting with lower readiness to change and less support from leadership (Weiner 2009; Aarons et al. 2014). Future PSD evaluations should assess PSD operation in settings that vary on these domains. We also benefitted from an outer setting in which a substantial EBP dissemination effort was already underway (Karlin and Cross 2014), whereas other settings may need to focus primarily on increasing provider adoption of and fidelity to EBPs. However, we believe that PSD was useful in this context as a system-level implementation planning strategy. Even in contexts with considerable EBP resources in place, limited general resources are the norm and limited stakeholder agreement is likely. Based on our involvement in national VA implementation efforts, we expect many sites would benefit from PSD approaches to achieving consensus and to coordinating workflows and referrals to maximize EBP-specific capacity. Finally, it is a limitation that these plans have not yet been implemented. Our future work will compare a priori simulation output against a posteriori implementation results.

Future Directions

We observed high levels of staff participation and positive evaluations of PSD phases 1–3, demonstrating acceptability. Demonstrating feasibility, we were also able to synthesize data, build models and evaluate implementation scenarios via simulation in less than six months. Next, we plan to complete PSD phases 4–6 and evaluate the effectiveness of PSD for improving EBP reach, continuing our partnership with a pilot that expands to other systems.

Conclusions

Identifying the best ways to allocate limited EBP resources is critical in VA and all healthcare systems. Learning from national VA investments to promote EBP adoption and infrastructure system-wide, the PSD approach recognizes the need for generalizable implementation strategies applied in local systems. This PSD pilot is innovative in identifying new uses for existing data that empower frontline staff to reach greater consensus and precision in their EBP implementation plans. PSD was feasible and acceptable to staff and generated useful estimates of implementation plan impacts. Stakeholders estimated the number of EBP sessions their plans would yield and estimated the clinical benefit to patients. We know of no other resources with this level of specificity for system-level implementation planning.

PSD meets several needs for advancing the field of implementation science. A primary innovation is the potential generalizability of one systems approach that can be tailored to operational mechanisms in local settings. PSD can be applied to any EBP that requires coordination among multidisciplinary providers and multiple appointments. PSD is also responsive to the need to improve implementation of multiple evidence-based practices in one system. Embedding high levels of stakeholder engagement in developing implementation strategies improves general capacity, including buy-in, leadership development, information sharing and systems thinking (Bendoly 2014; CAMH 2015; Morecroft and Sterman 1994).

Eliciting stakeholders’ ‘mental models’ is necessary, but it is not sufficiently precise for aligning EBP implementation with patients’ needs (Simon 1991; Sterman 1994). Empirically quantifying the mechanisms of implementation ‘barriers and facilitators’ allows stakeholders to make causal attributions about specific EBP capacities, validated with parameters calibrated from health system data (Martinez-Moyano and Richardson 2013). Unlike linear statistics, PSD addresses non-linearities, such as patient accumulations and service delays. Rather than relying on guesswork, stakeholders test their hypotheses about system impacts before making changes.

Early stages of system dynamics modeling projects emphasize problem definition and often begin by using the modeler’s own substantive knowledge to generate a set of elements (i.e., constructs, variables) related to the problem of interest. Our PSD process incorporated such expertise from stakeholders, supporting them in more rigorous ‘systems thinking’ (Richardson and Pugh 1981). Without a working simulation model, implementation plans can only be improved via trial-and-error in the real world. Our preliminary stock-and-flow model fostered important initial insights about how to improve EBP reach. After implementation, modeling to explore how best to adapt or make ongoing improvements (PSD phases 4–6) may also increase the sustainability of EBP implementation efforts amid ongoing system change (Chambers et al. 2013).

Acknowledgments

This project is a collaboration among local and national mental health stakeholders in the Veterans Health Administration (VA). We would like to acknowledge the hard work and dedication of the mental health staff in outpatient services who are working to provide Veterans high-quality care. Staff are actively engaged in this process and are committed to improving the timing and reach of evidence-based mental health care. We would also like to acknowledge the contributions of the Veterans Advisory Partnership for Operations and Research (VAPOR). VAPOR is an advisory council of Veterans with lived experience in recovery as VA mental health patients who now work as patient advocates and health care navigators. We are grateful for their expertise and ongoing partnership in this improvement effort. We acknowledge project support contributions from McKenzie Javorka, B.A., Cora Bernard, M.S. and Dan Wang, Ph.D. Finally, we acknowledge the expertise of two national program offices, the Program Evaluation Resource Center (PERC) and the National Center for PTSD, Dissemination and Training Division. The views and opinions of authors expressed in this manuscript do not necessarily state or reflect those of the United States Government or the Department of Veterans Affairs.

Funding This pilot study was funded with in-kind contributions from the National Center for PTSD, Department of Veterans Affairs.

Footnotes

Conflict of Interest All the authors declare that they have no conflict of interest.

Ethical Approval This article does not contain any studies with human participants performed by any of the authors.

References

- Aarons GA, Ehrhart MG, & Farahnak LR (2014). The implementation leadership scale (ILS): Development of a brief measure of unit level implementation leadership. Implementation Science, 9(1), 45. doi: 10.1186/1748-5908-9-45

- Aarons GA, Hurlburt M, & Horwitz SM (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research, 38, 4–23. doi: 10.1007/s10488-010-0327-7

- Bendoly E (2014). System dynamics understanding in projects: Information sharing, psychological safety, and performance effects. Production and Operations Management, 23(8), 1352–1369. doi: 10.1111/poms.12024

- Caro JJ, Briggs AH, Siebert U, & Kuntz KM (2012). Modeling good research practices—overview: A report of the ISPOR-SMDM modeling good research practices task force-1. Value in Health, 15, 796–803. doi: 10.1016/j.jval.2012.06.012

- Centers for Medicare & Medicaid Services Alliance to Modernize Healthcare (CAMH). (2015). Independent Assessment of the Health Care Delivery Systems and Management Processes of the Department of Veterans Affairs (Volume 1: Integrated Report). Retrieved October, 2015 from http://www.va.gov/opa/choiceact/documents/assessments/Integrated_Report.pdf

- Chambers D, Glasgow R, & Stange K (2013). The dynamic sustainability framework: Addressing the paradox of sustainment amid ongoing change. Implementation Science, 8, 117. doi: 10.1186/1748-5908-8-117

- Chard KM, Ricksecker EG, Healy ET, Karlin BE, & Resick PA (2012). Dissemination and experience with cognitive processing therapy. Journal of Rehabilitation Research and Development, 49, 667–678. doi: 10.1682/JRRD.2011.10.0198

- Cook JM, O’Donnell C, Dinnen S, Bernardy N, Rosenheck R, & Hoff R (2013). A formative evaluation of two evidence-based psychotherapies for PTSD in VA residential treatment programs. Journal of Traumatic Stress, 26, 56–63. doi: 10.1002/jts.21769

- Damschroder L, Aron D, Keith R, Kirsh S, Alexander J, & Lowery J (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4(1), 50. doi: 10.1186/1748-5908-4-50

- Damschroder L & Lowery J (2015). Efficient synthesis: Using qualitative comparative analysis (QCA) and the CFIR across diverse studies. Plenary presentation at the 2015 Society for Implementation Research Collaboration, Seattle, WA.

- DelliFraine JL, Langabeer JR, & Nembhard IM (2010). Assessing the evidence of Six Sigma and Lean in the health care industry. Quality Management in Healthcare, 19(3), 211–225. doi: 10.1097/QMH.0b013e3181eb140e

- Department of Veterans Affairs. (2008). Uniform mental health services in VA medical centers and clinics. In Veterans Health Administration (Ed.), VHA handbook 1160.01. Washington, DC.

- Department of Veterans Affairs. (2014). VA mental health services: public report. Retrieved November, 2014 from http://www.mentalhealth.va.gov/docs/Mental_Health_Transparency_Report_11-24-14.pdf

- Department of Veterans Affairs and Department of Defense. (2009a). The management of MDD working group. VA/DOD clinical practice guideline for management of major depressive disorder (MDD). Retrieved from http://www.healthquality.va.gov/guidelines/MH/mdd/MDDFULL053013.pdf

- Department of Veterans Affairs and Department of Defense. (2009b). VA/DoD clinical practice guideline for the management of substance use disorders. Retrieved from http://www.healthquality.va.gov/guidelines/MH/sud/sud_full_601f.pdf

- Department of Veterans Affairs and Department of Defense. (2010). VA/DoD clinical practice guideline for the management of posttraumatic stress. Retrieved from http://www.healthquality.va.gov/guidelines/MH/ptsd/cpgPTSDFULL201011612c.pdf

- Derickson R, Fishman J, Osatuke K, Teclaw R, & Ramsel D (2015). Psychological safety and error reporting within veterans health administration hospitals. Journal of Patient Safety, 11(1), 60–66. doi: 10.1097/PTS.0000000000000082

- Doty DH, Glick WH, & Huber GP (1993). Fit, equifinality, and organizational effectiveness: a test of two configurational theories. Academy of Management Journal, 36(6), 1196–1250. doi: 10.2307/256810

- Eftekhari A, Ruzek JI, Crowley JJ, Rosen CS, Greenbaum MA, & Karlin BE (2013). Effectiveness of national implementation of prolonged exposure therapy in Veterans Affairs care. JAMA Psychiatry, 70, 949–955.

- Elbogen EB, Wagner HR, Johnson SC, Kinneer P, Kang H, Vasterling JJ, et al. (2013). Are Iraq and Afghanistan veterans using mental health services? New data from a national random-sample survey. Psychiatric Services, 64, 134–141. doi: 10.1176/appi.ps.004792011

- Finley EP, Garcia HA, Ketchum NS, McGeary DD, McGeary CA, Stirman SW, et al. (2015). Utilization of evidence-based psychotherapies in Veterans Affairs posttraumatic stress disorder outpatient clinics. Psychological Services, 12(1), 73. doi: 10.1037/ser0000014

- Flaspohler P, Stillman L, Duffy JL, Wandersman A, & Maras M (2008). Unpacking capacity: The intersection of research to practice and community centered models. American Journal of Community Psychology, 41(3–4), 182–196. doi: 10.1007/s10464-008-9162-3

- Forrester JW (1961). Industrial Dynamics. Cambridge: MIT Press.

- Forrester JW (1985). The model versus a modeling process. System Dynamics Review, 1, 133–134. doi: 10.1002/sdr.4260010112

- Garcia HA, McGeary CA, Finley EP, Ketchum NS, McGeary DD, & Peterson AL (2015). Burnout among psychiatrists in the Veterans Health Administration. Burnout Research, 2(4), 108–114. doi: 10.1016/j.burn.2015.10.001

- Glasgow RE, Vinson C, Chambers D, Khoury MJ, Kaplan RM, & Hunter C (2012). National Institutes of Health approaches to dissemination and implementation science: Current and future directions. American Journal of Public Health, 102(7), 1274–1281. doi: 10.2105/AJPH.2012.300755

- Glasser S, Ellis W, Chin J, Glazner C, & Kane V (2014). A model for eliminating veteran homelessness in the USA. Presentation at the 32nd International Conference of the System Dynamics Society, Delft, The Netherlands.

- Harris AH, Humphreys K, Bowe T, Kivlahan DR, & Finney JW (2009). Measuring the quality of substance use disorder treatment: Evaluating the validity of the Department of Veterans Affairs continuity of care performance measure. Journal of Substance Abuse Treatment, 36(3), 294–305. doi: 10.1016/j.jsat.2008.05.011

- Hermes ED, Hoff R, & Rosenheck RA (2014). Sources of the increasing number of Vietnam era veterans with a diagnosis of PTSD using VHA services. Psychiatric Services, 65(6), 830–832. doi: 10.1176/appi.ps.201300232

- Homer J, Jones A, Seville D, Essien J, Milstein B, & Murphy D (2004). The CDC’s diabetes systems modeling project: developing a new tool for chronic disease prevention and control. In 22nd International Conference of the System Dynamics Society (pp. 25–29). Retrieved from http://www.donellameadows.org/wp-content/userfiles/Diabetes_SystemISDC041.pdf

- Hovmand P (2014). Community-based system dynamics modeling. New York: Springer.

- Karlin BE, Brown GK, Trockel M, Cunning D, Zeiss AM, & Taylor CB (2012). National dissemination of cognitive behavioral therapy for depression in the Department of Veterans Affairs health care system: therapist and patient-level outcomes. Journal of Consulting and Clinical Psychology, 80, 707–718. doi: 10.1037/a0029328

- Karlin B, & Cross G (2014). From the laboratory to the therapy room: National dissemination and implementation of evidence-based psychotherapies in the U.S. Department of Veterans Affairs Health Care System. American Psychologist, 69(1), 19–33. doi: 10.1037/a0033888

- Köpetz C, Faber T, Fishbach A, & Kruglanski AW (2011). The multifinality constraints effect: How goal multiplicity narrows the means set to a focal end. Journal of Personality and Social Psychology, 100(5), 810–826. doi: 10.1037/a0022980

- Lich KH, Tian Y, Beadles CA, Williams LS, Bravata DM, Cheng EM, et al. (2014). Strategic planning to reduce the burden of stroke among Veterans using simulation modeling to inform decision-making. Stroke, 45, 2078–2084. doi: 10.1161/STROKEAHA.114.004694

- Luke DA, & Stamatakis KA (2012). Systems science methods in public health: Dynamics, networks, and agents. Annual Review of Public Health, 33, 357–376. doi: 10.1146/annurev-publhealth-031210-101222

- Lyon AR, Maras MA, Pate CM, Igusa T, & Vander Stoep A (2015). Modeling the impact of school-based universal depression screening on additional service capacity needs: A system dynamics approach. Administration and Policy in Mental Health and Mental Health Services Research. doi: 10.1007/s10488-015-0628-y

- Mabry P (2014). Simulation as a tool to inform health policy. Part 1: Introduction to health systems simulations for policy. Academy Health Webinar.

- Mabry PL, Milstein B, Abraido-Lanza AF, Livingood WC, & Allegrante JP (2013). Opening a window on systems science research in health promotion and public health. Health Education & Behavior, 40, 5S–8S. doi: 10.1177/1090198113503343

- Martinez-Moyano IJ, & Richardson GP (2013). Best practices in system dynamics modeling. System Dynamics Review, 29, 102–123. doi: 10.1002/sdr.1495

- Masnick K, & McDonnell G (2010). A model linking clinical workforce skill mix planning to health and health care dynamics. Human Resources for Health, 8, 11. doi: 10.1186/1478-4491-8-11

- Mazzocato P, Savage C, Brommels M, Aronsson H, & Thor J (2010). Lean thinking in healthcare: A realist review of the literature. Quality and Safety in Health Care, 19(5), 376–382. doi: 10.1136/qshc.2009.037986

- Meadows DH, & Robinson JM (1985). The Electronic Oracle: Computer models and social decisions. New York: Wiley.

- Meadows DH, & Wright D (2008). Thinking in systems: A primer. White River Junction: Chelsea Green Publishing.

- Morecroft J, & Sterman J (1994). Modeling for learning organizations. Portland, OR: Productivity Press.

- Mott J, Hundt N, Sansgiry S, Mignogna J, & Cully J (2014). Changes in psychotherapy utilization among veterans with depression, anxiety, and PTSD. Psychiatric Services, 65(1), 106–112. doi: 10.1176/appi.ps.201300056

- Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, et al. (2012). A compilation of strategies for implementing clinical innovations in health and mental health. Medical Care Research and Review, 69(2), 123–157. doi: 10.1177/1077558711430690

- Proctor EK, Powell BJ, Baumann AA, Hamilton AM, & Santens RL (2012). Writing implementation research grant proposals: Ten key ingredients. Implementation Science, 7(1), 96. doi: 10.1186/1748-5908-7-96

- Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38(2), 65–76. doi: 10.1007/s10488-010-0319-7

- Rahmandad H, Repenning N, & Sterman J (2009). Effects of feedback delay on learning. System Dynamics Review, 25, 309–338. doi: 10.1002/sdr.427

- Richardson GP, & Pugh AL (1981). Introduction to system dynamics modeling with dynamo. Cambridge: MIT Press.

- Rogers EM (2003). Diffusion of innovations. New York: Free Press.

- Ruzek J, Karlin BE, & Zeiss A (2012). Implementation of evidence-based psychological treatments in the veterans health administration. In McHugh RK & Barlow DH (Eds.), Dissemination of evidence-based psychological treatments. New York: Oxford University Press.

- Rycroft-Malone J (2004). The PARIHS framework—a framework for guiding the implementation of evidence-based practice. Journal of Nursing Care Quality, 19(4), 297–304.

- Rycroft-Malone J, Kitson A, Harvey G, McCormack B, Seers K, Titchen A, et al. (2002). Ingredients for change: Revisiting a conceptual framework. Quality and Safety in Health Care, 11(2), 174–180. doi: 10.1136/qhc.11.2.174

- Scaccia JP, Cook BS, Lamont A, Wandersman A, Castellow J, Katz J, et al. (2015). A practical implementation science heuristic for organizational readiness: R = MC². Journal of Community Psychology, 43(4), 484–501. doi: 10.1002/jcop.21698

- Senge PM (2006). The fifth discipline: The art and practice of the learning organization. Vancouver, BC: Broadway Business.

- Shiner B, D’Avolio LW, Nguyen TM, Zayed MH, Young-Xu Y, Desai RA, et al. (2013). Measuring use of evidence based psychotherapy for posttraumatic stress disorder. Administration and Policy in Mental Health and Mental Health Services Research, 40(4), 311–318. doi: 10.1007/s10488-012-0421-0

- Simon H (1991). Bounded rationality and organizational learning. Organization Science, 2(1), 125–134. doi: 10.1287/orsc.2.1.125

- Sterman J (1994). Learning in and about complex systems. System Dynamics Review, 10, 291–330. doi: 10.1002/sdr.4260100214

- Sterman JD (2000). Business dynamics: Systems thinking and modeling for a complex world (Vol. 19). Boston: Irwin/McGraw-Hill.

- Sterman JD (2006). Learning from evidence in a complex world. American Journal of Public Health, 96(3), 505–514. doi: 10.2105/AJPH.2005.066043

- Stetler C, Legro M, Rycroft-Malone J, Bowman C, Curran G, Guihan M, et al. (2006). Role of “external facilitation” in implementation of research findings: A qualitative evaluation of facilitation experiences in the Veterans Health Administration. Implementation Science, 1, 23. doi: 10.1186/1748-5908-1-23

- System Dynamics Review. Hoboken: John Wiley & Sons, Inc. Retrieved from http://onlinelibrary.wiley.com/journal/10.1002/(ISSN)1099-1727

- U.S. Department of Housing and Urban Development. (2014). The 2014 Annual Homeless Assessment Report (AHAR) to congress: PART 1 point-in-time estimates of homelessness. Retrieved October 1, 2015 from https://www.hudexchange.info/resources/documents/2014-AHAR-Part1.pdf

- U.S. Interagency Council on Homelessness. (2013). Ending homelessness among veterans: A report by the United States interagency council on homelessness. Retrieved October, 2015 from http://usich.gov/resources/uploads/asset_library/USICH_Ending_Homelessness_Among_Veterans_Rpt_February_2013_FINAL.pdf

- Vennix J (1996). Group model building: facilitating team learning using system dynamics. Chichester: Wiley.

- Ventana Systems, Inc. (2014). Vensim® Version 6.3.

- Vest JR, & Gamm LD (2009). A critical review of the research literature on Six Sigma, Lean and StuderGroup’s Hardwiring Excellence in the United States: the need to demonstrate and communicate the effectiveness of transformation strategies in healthcare. Implementation Science, 4(1), 35. doi: 10.1186/1748-5908-4-35

- Warren K (2004). Why has feedback systems thinking struggled to influence strategy and policy formulation? Suggestive evidence, explanations and solutions. Systems Research and Behavioral Science, 21(4), 331–347. doi: 10.1002/sres.651

- Warren K (2005). Improving strategic management with the fundamental principles of system dynamics. System Dynamics Review, 21(4), 329–350. doi: 10.1002/sdr.325

- Watts BV, Shiner B, Zubkoff L, Carpenter-Song E, Ronconi JM, & Coldwell CM (2014). Implementation of evidence-based psychotherapies for posttraumatic stress disorder in VA specialty clinics. Psychiatric Services, 65, 648–653. doi: 10.1176/appi.ps.201300176

- Weiner BJ (2009). A theory of organizational readiness for change. Implementation Science, 4, 67. doi: 10.1186/1748-5908-4-67

- Yanchus NJ, Periard D, Moore SC, Carle AC, & Osatuke K (2015). Predictors of job satisfaction and turnover intention in VHA mental health employees: A comparison between psychiatrists, psychologists, social workers, and mental health nurses. Human Service Organizations: Management, Leadership & Governance, 39(3), 219–244. doi: 10.1080/23303131.2015.1014953