Significance

Mean-field games (MFGs) form an emerging field that models large populations of agents. They play a central role in many disciplines, such as economics, data science, and engineering. Since many applications come in the form of high-dimensional stochastic MFGs, numerical methods that use spatial grids are prone to the curse of dimensionality. To address this, we exploit the variational structure of potential MFGs and reformulate them as a generative adversarial network (GAN) training problem. This reformulation mitigates the curse of dimensionality when solving high-dimensional MFGs in the stochastic setting: it avoids spatial grids and uniform sampling in high dimensions and instead uses the structure of the MFG and its connection with GANs.

Keywords: mean-field games, generative adversarial networks, Hamilton–Jacobi–Bellman, optimal control, optimal transport

Abstract

We present APAC-Net, an alternating population and agent control neural network for solving stochastic mean-field games (MFGs). Our algorithm is geared toward high-dimensional instances of MFGs that are not approachable with existing solution methods. We achieve this in two steps. First, we take advantage of the underlying variational primal-dual structure that MFGs exhibit and phrase it as a convex–concave saddle-point problem. Second, we parameterize the value and density functions by two neural networks, respectively. By phrasing the problem in this manner, solving the MFG can be interpreted as a special case of training a generative adversarial network (GAN). We show the potential of our method on up to 100-dimensional MFG problems.

Mean-field games (MFGs) are a class of problems that model large populations of interacting agents. They have been widely used in economics (1–4), finance (2, 5–7), industrial engineering (8–10), swarm robotics (11, 12), epidemic modeling (13, 14), and data science (15–17). In MFGs, a continuum population of small rational agents plays a noncooperative differential game on a time horizon . At the optimum, the agents reach a Nash equilibrium, where they can no longer unilaterally improve their objectives. Given the initial distribution of agents , where is the space of all probability densities, the solution to an MFG is obtained by solving the system of partial differential equations (PDEs),

| [1.1] |

which couples a Hamilton–Jacobi–Bellman (HJB) equation and a Fokker–Planck (FP) equation. Here, is the value function, i.e., the policy that guides the agents; is the Hamiltonian, which describes the physics of the environment; is the distribution of agents at time ; denotes the interaction between the agents and the population; and is the terminal condition, which guides the agents to the final distribution. Under standard assumptions, i.e., convexity of in the second variable, and monotonicity of and —namely, that

and similarly for —then the solution to Eq. 1.1 exists and is unique. See refs. 18–20 for more details. Although there are a plethora of fast solvers for the solution of Eq. 1.1 in two and three dimensions (20–25), numerical methods for solving Eq. 1.1 in high dimensions are practically nonexistent due to the need for grid-based spatial discretization. These grid-based methods are prone to the curse of dimensionality, i.e., their computational complexity grows exponentially with spatial dimension (26). Thus, grid-based methods cannot be tractably used on, e.g., modeling an energy-efficient heating, ventilation, and air-conditioning system in a complex building, where the dimensions can be as high as 1,000 (27).
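To make the exponential growth concrete, the following minimal Python sketch counts the nodes of a uniform tensor-product grid. The resolution of 32 points per axis and the function name `grid_points` are illustrative assumptions, not figures or names from the text.

```python
def grid_points(points_per_axis: int, dim: int) -> int:
    """Number of nodes in a uniform tensor-product grid with the
    given number of points along each of `dim` axes."""
    return points_per_axis ** dim


# Illustrative resolution (an assumption): 32 points per axis.
for d in (2, 3, 10, 100):
    n = grid_points(32, d)
    # Print in scientific notation so the growth is easy to read.
    print(f"dim={d:3d}  grid points ~ {float(n):.3e}")
```

Even at this modest resolution, the node count in 100 dimensions is far beyond anything that can be stored, which is why the approach in this paper works with samples instead of grids.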

Our Contribution.

We present APAC-Net, an alternating population and agent control neural network approach geared toward high-dimensional MFGs in the deterministic and stochastic case. To this end, we phrase the MFG problem as a saddle-point problem (19, 22, 28) and parameterize the value function and the density function. This formulation provides some alleviation to the curse of dimensionality by avoiding the use of spatial grids or uniformly sampling in high dimensions. While spatial grids for MFGs are also avoided in ref. 29, their work is limited to the deterministic setting . APAC-Net models high-dimensional MFGs in the stochastic setting . It does this by drawing from a natural connection between MFGs and generative adversarial neural networks (GANs) (30) (Section 3), a powerful class of generative models that have shown remarkable success on various types of datasets (30–35).

1. Variational Primal-Dual Formulation of MFGs

We derive the mathematical formulation of MFGs for our framework; in particular, we arrive at a primal-dual convex–concave formulation tailored for our alternating networks approach. An MFG system Eq. 1.1 is called potential, if there exist functionals such that

| [2.1] |

where

| [2.2] |

That is, there exist functionals such that their variational derivatives with respect to are the interaction and terminal costs and from Eq. 1.1. A critical feature of potential MFGs is that the solution to Eq. 1.1 can be formulated as the solution to a convex–concave saddle-point optimization problem. To this end, we begin by stating Eq. 1.1 as a variational problem (19, 22) akin to the Benamou–Brenier formulation for the Optimal Transport (OT) problem:

| [2.3] |

where is the Lagrangian function corresponding to the Legendre transform of the Hamiltonian , are mean-field interaction terms, and is the velocity field. Next, setting as a Lagrange multiplier, we insert the PDE constraint into the objective to get

| [2.4] |

Finally, integrating by parts and minimizing with respect to to obtain the Hamiltonian via , we obtain

| [2.5] |

Here, our approach follows that of refs. 22, 36, and 37. This formula can also be obtained in the context of HJB equations in density spaces (23) or by integrating the HJB and the FP equations in Eq. 1.1 with respect to and , respectively (28). In ref. 28, it was observed that all MFG systems admit an infinite-dimensional two-player general-sum game formulation, and the potential MFGs are the ones that correspond to zero-sum games. In this interpretation, Player 1 represents the mean field or the population as a whole, and their strategy is the population density . Furthermore, Player 2 represents the generic agent, and their strategy is the value function . The aim of Player 2 is to provide a strategy that yields the best response of a generic agent against the population. This interpretation is in accord with the intuition behind GANs, as the key observation is that under mild assumptions on and , each spatial integral is really an expectation from . The formulation Eq. 2.5 is the cornerstone of our method.
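As a worked instance of the Legendre-transform step used in this derivation, consider a quadratic Lagrangian. The sign convention below (a supremum of $-p\cdot v - L$) and the symbols $x$, $v$, $p$ are assumptions made for this illustration; conventions differ between references.

```latex
\begin{aligned}
L(x,v) &= \tfrac{1}{2}\|v\|^{2}, \\
H(x,p) &= \sup_{v}\left\{ -p\cdot v - \tfrac{1}{2}\|v\|^{2} \right\}
        = \tfrac{1}{2}\|p\|^{2},
\qquad \text{attained at } v^{*} = -p .
\end{aligned}
```

Under this convention, setting $p$ equal to the spatial gradient of the value function gives an optimal velocity that points along the negative gradient, which is the sense in which the value function guides the agents.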

2. Connections to GANs

GANs.

In GANs (30), we have a discriminator and generator, and the goal is to obtain a generator that is able to produce samples from a desired distribution. The generator does this by taking samples from a known distribution and transforming them into samples from the desired distribution. Meanwhile, the purpose of the discriminator is to aid the optimization of the generator. Given a generator network and a discriminator network , the original GAN objective is to find an equilibrium to the minimax problem

| [3.1] |

Here, the discriminator acts as a classifier that attempts to distinguish real images from fake/generated images, and the goal of the generator is to produce samples that “fool” the discriminator.
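For reference, the minimax objective introduced in ref. 30 is commonly written as follows. The symbols (generator $G$, discriminator $D$, data distribution $p_{\mathrm{data}}$, latent distribution $p_{z}$) follow common usage in the GAN literature and are assumed here.

```latex
\min_{G}\,\max_{D}\;
\mathbb{E}_{x\sim p_{\mathrm{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z\sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The first term rewards the discriminator for assigning high probability to real samples, and the second rewards it for assigning low probability to generated ones; the generator minimizes the second term.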

Wasserstein GANs.

In Wasserstein GANs (31), the motivation is drawn from OT theory, where now the objective function is changed to the Wasserstein-1 (W1) distance in the Kantorovich–Rubinstein dual formulation

| [3.2] |

and the discriminator is required to be 1-Lipschitz. In this setting, the goal of the discriminator is to compute the W1 distance between the distribution of and . In practice, using the W1 distance helps prevent the generator from suffering “mode collapse,” a situation where the generator produces samples from only one mode of the distribution ; for instance, if is the distribution of images of handwritten digits, then mode collapse entails producing only, say, the 0 digit. Originally, weight clipping was used to enforce the Lipschitz condition of the discriminator network (31), but an improved method using a penalty on the gradient was introduced in ref. 32.
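To give a feel for the W1 distance itself, the sketch below uses a standard fact: in one dimension, for two empirical distributions with the same number of equally weighted samples, W1 equals the mean absolute difference between the sorted samples. This is only a one-dimensional illustration with a hypothetical function name; it is not how a discriminator estimates the distance in high dimensions.

```python
def w1_empirical_1d(xs, ys):
    """W1 distance between two 1D empirical distributions with the
    same number of equally weighted samples.

    In 1D the optimal transport plan matches sorted samples, so the
    distance is the mean absolute difference of the order statistics.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty samples of equal size")
    pairs = zip(sorted(xs), sorted(ys))
    return sum(abs(a - b) for a, b in pairs) / len(xs)


# Shifting every sample by 1 moves the distribution a W1 distance of 1.
print(w1_empirical_1d([0, 1, 2], [1, 2, 3]))
```

Because the distance grows smoothly as the two sample sets move apart, it gives a usable training signal even when the distributions do not overlap.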

GANs MFGs.

A Wasserstein GAN can be seen as a particular instance of a deterministic MFG (19, 22, 38). Specifically, consider the MFG Eq. 2.5 in the following setting. Let , be a hard constraint with target measure (as in OT), let = 0, and let be the Hamiltonian defined by

| [3.3] |

where we note that this Hamiltonian arises when the Lagrangian is given by . Then, Eq. 2.5 reduces to,

| [3.4] |

where we note that the optimization in leads to . And since , we have that , and for all . We observe that the above is precisely the W1 distance in the Kantorovich–Rubinstein duality (39).

3. APAC-Net

Rather than discretizing the domain and solving for the function values at grid points, APAC-Net parameterizes the function and solves for the function itself. We remark that this echoes the historical move from the Riemann to the Lebesgue integral: The former focused on grid points of the domain, whereas the latter focused on the function values.

The training process for our MFG is similar to that of GANs. We initialize the neural networks and . We then let

| [4.1] |

where are samples drawn from the initial distribution. Thus, we set , i.e., the push-forward of . We make a small comment that this idea of sampling to solve a PDE is similar in spirit to that of the Feynman–Kac approach. In this setting, we train to produce samples from . We note that and automatically satisfy the terminal and initial condition, respectively. In particular, produces samples from at .
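One way to make a parameterized function satisfy a boundary condition by construction is to blend a free network with the prescribed data in time. The sketch below assumes a unit time horizon and the specific linear blends shown; `n_phi` and `n_gen` stand in for neural networks and `g` for the terminal cost. These names and the exact blending form are assumptions for illustration, not a transcription of Eq. 4.1.

```python
def make_value_function(n_phi, g):
    """Return phi(x, t) that equals g(x) at t = 1 by construction.

    n_phi: stand-in for a neural network, (x, t) -> float (assumed).
    g:     terminal cost, x -> float.
    """
    def phi(x, t):
        return (1.0 - t) * n_phi(x, t) + t * g(x)
    return phi


def make_generator(n_gen):
    """Return G(z, t) that equals z at t = 0 by construction, so the
    generated samples start at the initial samples z.

    n_gen: stand-in for a neural network, (z, t) -> list of floats.
    """
    def G(z, t):
        out = n_gen(z, t)
        return [(1.0 - t) * zi + t * oi for zi, oi in zip(z, out)]
    return G


# Toy stand-ins for the networks and the terminal cost (hypothetical).
toy_n_phi = lambda x, t: sum(xi * xi for xi in x) + t
toy_n_gen = lambda z, t: [zi + 1.0 for zi in z]
toy_g = lambda x: sum(abs(xi - 2.0) for xi in x)

phi = make_value_function(toy_n_phi, toy_g)
G = make_generator(toy_n_gen)
```

With this kind of construction, the optimizer never has to learn the initial or terminal condition, because no choice of weights can violate them.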

Our strategy for training consists of alternately training (the population) and (the value function for an individual agent). Intuitively, this means we are alternating the population and agent control neural networks (APAC-Net) in order to find the equilibrium. Specifically, we train by first sampling a batch from the given initial density and uniformly from ; so, we are really sampling from the products of the densities and . Next, we compute the push-forward for . We then compute the loss,

| [4.2] |

where we can optionally add a regularization term

| [4.3] |

to penalize deviations from the HJB equations (29, 40). This extra regularization term has also been found effective in, e.g., Wasserstein GANs (32), where the norm of the gradient (i.e., the HJB equations) is penalized. Finally, we back-propagate the loss to the weights of . To train the generator, we again sample and as before and compute

| [4.4] |

Finally, we back-propagate this loss with respect to the weights of (see Algorithm [Alg.] 1).

Algorithm 1: APAC-Net

| Require: diffusion parameter, terminal cost, Hamiltonian, interaction term. |

| Require: Initialize neural networks and , batch size |

| Require: Set and as in Eq. 4.1 |

| while not converged do |

| train : |

| Sample batch where and |

| for . |

| Back-propagate the loss to weights. |

| train : |

| Sample batch where and |

| Back-propagate the loss to weights. |
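The alternating structure of Algorithm 1 can be illustrated on a toy problem. The sketch below is not the APAC-Net loss: it applies alternating gradient ascent and descent to the scalar convex–concave function f(x, y) = x²/2 + xy − y²/2, whose unique saddle point is (0, 0). Here `y` plays the role of the maximizing player (the value function) and `x` the minimizing player (the population); the function, step size, and iteration count are all assumptions chosen for illustration.

```python
def alternating_saddle_solve(x0, y0, lr=0.1, iters=500):
    """Alternating gradient ascent/descent on
    f(x, y) = 0.5*x**2 + x*y - 0.5*y**2 (convex in x, concave in y)."""
    x, y = x0, y0
    for _ in range(iters):
        # Step 1: update the maximizing player with x held fixed.
        grad_y = x - y
        y = y + lr * grad_y
        # Step 2: update the minimizing player using the new y.
        grad_x = x + y
        x = x - lr * grad_x
    return x, y


x_star, y_star = alternating_saddle_solve(1.0, 1.0)
print(x_star, y_star)  # both values are very close to zero
```

In the full method, each scalar update is replaced by a stochastic gradient step on a sampled batch, but the ordering is the same: one step for the value function, then one step for the generator.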

4. Related Works

High-Dimensional MFGs and Optimal Control.

To the best of our knowledge, the first work to solve MFGs efficiently in high dimensions () was done in ref. 29. Their work consisted of using Lagrangian coordinates and parameterizing the value function using a neural network. Finally, to estimate the densities, they used the instantaneous change of variables formula (41). This combination allowed them to successfully avoid using spatial grids when solving deterministic MFG problems with quadratic Hamiltonians. Besides only computing MFGs with , another limitation is that for nonquadratic Hamiltonians, the instantaneous change of variables formula may lead to high computational costs when estimating the density. APAC-Net circumvents this limitation by rephrasing the MFG as a saddle-point problem Eq. 2.5 and using a GAN-based approach to train two neural networks instead. For problems involving high-dimensional optimal control and differential games, spatial grids were also avoided (20, 23, 24, 42, 43). However, these methods are based on generating individual trajectories per agent and cannot be directly applied to MFGs without spatial discretization of the density, thus limiting their use in high dimensions.

Reinforcement Learning.

Our work bears connections with multiagent reinforcement learning (RL), where neither the Lagrangian nor the dynamics (constraint) in Eq. 2.3 are known. Here, a key difference is that multiagent RL generally considers a finite number of players. Ref. 44 proposes a primal-dual distributed method for multiagent RL. Refs. 16 and 45 propose a Q-Learning approach to solve these multiagent RL problems. Ref. 17 studies the convergence of policy gradient methods on mean-field RL problems, i.e., problems where the agents try instead to learn the control, which is socially optimal for the entire population. Ref. 46 uses an inverse RL approach to learn the MFG model along with its reward function. Ref. 47 proposes an actor–critic method for finding the Nash equilibrium in linear-quadratic MFGs and establishes linear convergence.

Generative Modeling with OT.

There is a class of works that focus on using OT, a class of MFGs, to solve problems arising in data science and, in particular, GANs. Ref. 48 presents a tractable method to train large-scale generative models using the Sinkhorn distance, which consists of loss functions that interpolate between Wasserstein (OT) distance and Maximum Mean Discrepancy (MMD). Ref. 49 proposes a mini-batch MMD-based distance to improve training GANs. Ref. 50 proposes a class of regularized Wasserstein GAN problems with theoretical guarantees. Ref. 51 uses a trained discriminator from GANs to further improve the quality of generated samples. Ref. 52 phrases the adversarial problem as a matching problem in order to avoid solving a minimax problem. Finally, ref. 53 provides an excellent survey on recent numerical methods for OT and their applications to GANs.

GAN-Based Approach for MFGs.

Our work is most similar to ref. 38, where a connection between MFGs and GANs is also made. However, APAC-Net differs from ref. 38 in two fundamental ways. First, instead of choosing the value function to be the generator, we set the density function as the generator. This choice is motivated by the fact that the generator outputs samples from a desired distribution. It is also aligned with other generative modeling techniques arising in continuous normalizing flows (40, 54–56). Second, rather than setting the generator/discriminator losses as the residual errors of Eq. 1.1, we follow the works of refs. 19, 22, 23, and 28 and utilize the underlying variational primal-dual structure of MFGs (see Eq. 2.5); this allows us to arrive at the Kantorovich–Rubinstein dual formulation of Wasserstein GANs (39).

5. Numerical Experiments

We demonstrate the potential of APAC-Net on a series of high-dimensional MFG problems. We note that the examples below would not have been possible with previous grid-based methods, as the number of grid points required to even set up the problem grows exponentially with the dimension. We also illustrate the behavior of the MFG solutions for different values of and use an analytical solution to assess the accuracy of APAC-Net. Additional high-dimensional results are provided in Appendix B.

Experimental Setup.

We assume without loss of generality , unless otherwise stated. In all experiments, our neural networks have three hidden layers, with 100 hidden units per layer. We use a residual neural network (ResNet) for both networks, with skip connection weight 0.5. For , we use the Tanh activation function, and for , we use the ReLU activation function. For training, we use ADAM with , learning rate for , learning rate for , weight decay of for both networks, batch size 50, and (the HJB penalty parameter) in Alg. 1.

The Hamiltonians in our experiments have the form

| [6.1] |

where varies with the environment (either avoiding obstacles or avoiding congestion, etc.), and is a constant (that represents maximal speed). Furthermore, we choose as terminal cost

| [6.2] |

which is the distance between the population and a target destination. To allow for verification of the high-dimensional solutions, we set the obstacle and congestion costs to only affect the first two dimensions. In Figs. 1–4 and 6, time is represented by color. Specifically, blue denotes starting time, red denotes final time, and the intermediate colors denote intermediate times. We also plot the HJB residual error—that is, in Alg. 1—on 4,096 fixed sampled points, which helps us monitor the convergence of APAC-Net. As in standard machine-learning methods, all of the plots in this section are generated by using validation data—i.e., data not used in training—in order to gauge generalizability of APAC-Net. Further details, as well as additional experiments, can be found in the appendix.

Fig. 1.

Comparison of 2D solutions for different values of . The agents start at the blue points and end at the red points .

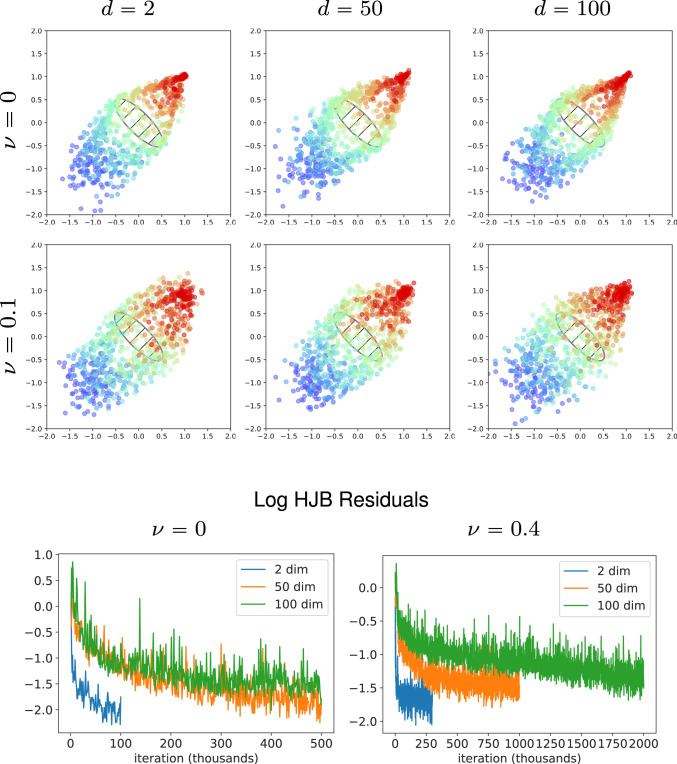

Fig. 4.

Computation of the congestion problem with a bottleneck in dimensions (dim) 2, 50, and 100 with stochasticity parameter and 0.1. For dimensions 50 and 100, we plot the first two dimensions.

Fig. 6.

Computation of the control of the fully nonlinear multiagent quadcopter (12 dimensions). This experiment represents a real-world example of control, where we move the agents from one point to another, with the constraint of having a velocity of zero at the destination. We compute examples with and without noise () and with and without congestion. The HJB residuals (Eq. 4.3) are plotted in the graph.

Effect of Stochasticity Parameter .

We investigate the effect of the stochasticity parameter on the behavior of the MFG solutions. In Fig. 1, we show the solutions for two-dimensional (2D) MFGs using , and 0.6. As increases, the density of agents widens along the paths due to the added diffusion term in the HJB and FP equations in Eq. 1.1. The hatched markings are obstacles and are described in Eqs. 6.4 and 6.5. These results are consistent with those in ref. 57.

Obstacles.

We compute the solution to an MFG where the agents are required to avoid obstacles. In this case, we let

| [6.3] |

with , and denoting with , , and , then

| [6.4] |

Similarly, we let

| [6.5] |

The obstacles and are shown in hatched markings in Fig. 2. Our initial density is a Gaussian centered at with SD , and the terminal function is . We chose in Eq. 6.1. The numerical results are shown in Fig. 2. Observe that results are similar across dimensions, verifying our high-dimensional computation. We run the 2D problem for 200,000 (200 k) iterations and the 50- and 100-dimensional problems for 300 k iterations.

Fig. 2.

Computation of the obstacle problem in dimensions (dim) 2, 50, and 100 with stochasticity parameter and 0.4. For dimensions 50 and 100, we plot the first two dimensions.

Congestion.

We choose the interaction term to penalize congestion, so that the agents are encouraged to spread out. In particular, we have

| [6.6] |

which is the (bounded) inverse squared distance, averaged over pairs of agents. Computationally, we sample from twice and then calculate the integrand. Here, our initial density is a Gaussian centered at with SD , the terminal function is , and we chose in Eq. 6.1. Results are shown in Fig. 3, where we see qualitatively similar results across dimensions. We run the 2D problem for 100 k iterations and the 50- and 100-dimensional problems for 500 k iterations.

Fig. 3.

Computation of the congestion problem in dimensions (dim) 2, 50, and 100 with stochasticity parameter and 0.4. For dimensions 50 and 100, we plot the first two dimensions.

Congestion with Bottleneck Obstacle.

We combine the congestion problem with a bottleneck obstacle. The congestion penalization is the same as Eq. 6.6, and the obstacle represents a bottleneck—thus, agents are encouraged to spread out, but must squeeze together to avoid the obstacle. The initial density, terminal functions, in Eq. 6.1, and the expression penalizing congestion are the same as in the congestion experiment above. The obstacle is chosen to be

| [6.7] |

with . As intuitively expected, the agents spread out before and after the bottleneck, but squeeze together in order to avoid the obstacle (Fig. 4). We run the 2D problem for 100 k iterations and the 50- and 100-dimensional problems for 500 k iterations. We observe similar results across dimensions.

Analytic Comparison.

We verify our method by comparing it to an analytic solution for dimensions 2, 50, and 100 with congestion and without congestion . For

| [6.8] |

and in Eq. 1.1, the explicit formula for is given by

| [6.9] |

where . For the case, we use Kernel Density Estimation (58, 59) to estimate from samples of the generator. The derivation of the analytic solution can be found in Appendix A.

For , we compute the relative error on a grid of size , where we discretize the spatial domain with points and the time domain with 16 points. Note here that the grid-points are validation points—i.e., points that were not used in training. For and , we use 4,096 sampled points for validation since we cannot build a grid. We run a total of 30 k and 60 k iterations for and , respectively. We validate every 1 k iterations. Fig. 5 shows that our learned model approaches the true solution across all dimensions for both values of , indicating that APAC-Net generalizes well.

Fig. 5.

Log relative errors in 2, 50, and 100 dimensions (dim) and for . Here, means no interaction. For the case, we compute the validation on a grid over 16 uniformly spaced timesteps with the true from Eq. 6.9. For the and 100 cases, we compute the validation on 4,096 points sampled from the initial density.

A Realistic Example: The Quadcopter.

In this experiment, we examine a realistic scenario where the dynamics are that of a Quadcopter (also known as Quadrotor craft), which is an aerial vehicle with four rotary wings (similar to many of the consumer drones seen today). The dynamics of the quadrotor craft are given as,

where , , and are the usual Euclidean spatial coordinates, and , , and are the angular coordinates of roll, pitch, and yaw, respectively. The constant is the mass, to which we put 0.5 (kilogram), and is the gravitation acceleration constant on Earth, to which we put 9.81 (meters per second squared). The variables , , , are the controls representing thrust and angular acceleration. In order to fit a control framework, the above second-order system is turned into a first-order system:

which we will compactly denote as , where is the right-hand side, is the state, and is the control. As can be observed, the above is a highly nonlinear 12-dimensional system. In the stochastic case, we add a noise term to the dynamics: , where denotes a Wiener process (standard Brownian motion). The interpretation here is that we are modeling the situation when the quadcopter suffers from noisy measurements.
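Controlled dynamics with an added Wiener-process term are typically simulated with the Euler–Maruyama scheme. The sketch below is a generic illustration under stated assumptions: the drift `f`, the noise scale `sigma`, the step size, and all function names are hypothetical, and the toy drift used at the end is not the quadcopter model.

```python
import math
import random


def euler_maruyama_step(x, u, f, dt, sigma, rng):
    """One step of x <- x + f(x, u) dt + sigma * sqrt(dt) * N(0, I)."""
    drift = f(x, u)
    return [
        xi + fi * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        for xi, fi in zip(x, drift)
    ]


def simulate(x0, u, f, dt, steps, sigma=0.0, seed=0):
    """Integrate the controlled dynamics from x0 for `steps` steps.
    With sigma = 0 this reduces to the deterministic forward Euler method."""
    rng = random.Random(seed)
    x = list(x0)
    for _ in range(steps):
        x = euler_maruyama_step(x, u, f, dt, sigma, rng)
    return x


# Toy drift (an assumption): constant unit velocity, control ignored.
unit_drift = lambda x, u: [1.0 for _ in x]
print(simulate([0.0], None, unit_drift, dt=0.1, steps=10))
print(simulate([0.0], None, unit_drift, dt=0.1, steps=10, sigma=0.5))
```

The increment of a Wiener process over a step of length `dt` has standard deviation `sqrt(dt)`, which is why the noise is scaled by the square root of the step size and not by the step size itself.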

In our experiments, we set our Lagrangian cost function to be , and, thus, our Hamiltonian becomes . Our initial density is a Gaussian in the spatial coordinates centered at with SD 0.5, and we set all other initial coordinates to zero (i.e., initial velocity, initial angular position, and initial angular velocity are all set to zero). We set our terminal cost to be a simple norm difference between the agent’s current position and the position , and we also want the agents to have zero velocity, i.e.,

In our experiments, we set the final time to be .

We also add a congestion term to our experiments where the congestion is in the spatial positions, so as to encourage agents to spread out (and thus making midair collisions less likely):

where in our experiments we put .

For hyperparameters, we use the same setup as before, except we raise the batch size to 150. Our results are shown in Fig. 6. We see that with congestion, the agents spread out more as expected. Furthermore, in the presence of noise, the agents’ sensors are noisy, and so at terminal time, the agents do not get as close to the terminal point of . This noise also adds an envelope of uncertainty, so we do see that the agents are not as streamlined as in the noiseless cases.

We note that most previous attempts at modeling the behavior of quadcopters relied on linearization techniques, but here, we solve the fully nonlinear problem with neural networks. Moreover, our approach solves the quadcopter problem in both the deterministic and the stochastic MFG settings, where we consider many quadcopters and their interactions with each other. This multiagent modeling of the quadcopter would be practically infeasible for grid-based methods, as such a grid would need to discretize a high-dimensional space.

6. Conclusion

We present APAC-Net, an alternating population-agent control neural network approach for tackling high-dimensional stochastic MFGs. To this end, our algorithm avoids the use of spatial grids by parameterizing the controls, and , using two neural networks, respectively. Consequently, our method is geared toward high-dimensional instances of these problems that are beyond reach with existing grid-based methods. APAC-Net therefore sets the stage for solving realistic high-dimensional MFGs arising in, e.g., economics (1–4), swarm robotics (11, 12), and, perhaps most important/relevant, epidemic modeling (13, 14). Our method also has natural connections with Wasserstein GANs, where acts as a generative network and acts as a discriminative network. Unlike GANs, however, APAC-Net incorporates the structure of MFGs via Eqs. 2.5 and 4.1, which absolves the network from learning an entire MFG solution from the ground up. Our experiments show that our method is able to solve 100-dimensional MFGs.

Since our method was presented solely in the setting of potential MFGs, a natural extension is the nonpotential MFG setting, where the MFG can no longer be written in a variational form. Instead, one would have to formulate the MFG as a monotone inclusion problem (60). Moreover, convergence properties of the training process of APAC-Net may be investigated following the techniques presented in, e.g., ref. 61. Finally, a practical direction involves examining guidelines on the design of more effective network architectures, e.g., PDE-based networks (62, 63), neural ordinary differential equations (41), or sorting networks (64).

Appendix A. Derivation of Analytic Solution

We derive explicit formulas used to test our approximate solutions in Section 6. Assume that and

| [A.1] |

Then, Eq. 1.1 becomes

| [A.2] |

We find solutions to this system by searching for stationary solutions first:

| [A.3] |

and then writing

| [A.4] |

The second equation in Eq. A.3 yields , where is chosen so that . Plugging this in the first equation in Eq. A.3, we obtain

| [A.5] |

Now, we make an ansatz that . Then, we have that , and obtain

| [A.6] |

Therefore, we have that

| [A.7] |

From the first equation, we obtain that

| [A.8] |

On the other hand, we have that

| [A.9] |

so

| [A.10] |

Summarizing, we get that for any , the following is a solution for Eq. A.2:

| [A.11] |

where is given by Eq. A.8, and

| [A.12] |

Choosing , Eq. A.11 gives the analytic solution used in Section 6.

Appendix B. Details on Numerical Results and More Experiments

Congestion.

Here, we elaborate on how we compute the congestion term,

| [B.1] |

We do this by first using the batch , which was used for training (and sampled from ), and then compute another batch , again sampled from . Letting be a batch of time points uniformly sampled in , we estimate the interaction cost with,

| [B.2] |
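The two-batch estimate described here can be sketched as follows. The kernel 1/(‖x − y‖² + 1) is our reading of "bounded inverse squared distance" and is an assumption, as are the function names, the index-wise pairing of the two batches, and the omission of any weighting constant and of the time samples.

```python
def congestion_kernel(x, y):
    """Bounded inverse squared distance between two agents
    (assumed form: 1 / (||x - y||^2 + 1), which never exceeds 1)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return 1.0 / (sq_dist + 1.0)


def estimate_congestion(batch_x, batch_y):
    """Monte Carlo estimate of the pairwise congestion cost.

    batch_x and batch_y are two independently drawn batches of
    positions from the same density; samples are paired index-wise
    and the kernel values are averaged.
    """
    if len(batch_x) != len(batch_y) or not batch_x:
        raise ValueError("need two non-empty batches of equal size")
    total = sum(congestion_kernel(x, y) for x, y in zip(batch_x, batch_y))
    return total / len(batch_x)


# Two agents at the same place contribute 1; agents one unit apart, 0.5.
print(estimate_congestion([[0.0, 0.0], [0.0, 0.0]],
                          [[0.0, 0.0], [1.0, 0.0]]))
```

Because the estimate only needs samples, its cost scales with the batch size and not with the number of grid points, so it remains usable in 50 or 100 dimensions.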

Congestion with Bottleneck Obstacle and Higher Stochasticity.

When choosing a stochasticity parameter , the stochasticity dominates the dynamics, and the agents do not interact as much with the obstacle. We plot these results in Fig. 7, where for two dimensions, we trained for 150 k iterations, and for 50 and 100 dimensions, we trained for 800 k iterations. All environment and training parameters are the same as in the Congestion with Bottleneck Obstacle, except that now .

Fig. 7.

Computation of the congestion problem with a bottleneck in dimensions (dim) 2, 50, and 100 with stochasticity parameter . For dimensions 50 and 100, we plot the first two dimensions.

Analytic Comparison.

Here, we mention specifically how we performed Kernel Density Estimation. Namely, in order to estimate the density , we take a batch of samples (during training, this is the training batch). Then, at uniformly spaced time points , we estimate the density with the formula,

| [B.3] |

where we choose , is the dimension, and , in accordance with Scott’s rule for multivariate Kernel Density Estimation (65).
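A minimal sketch of kernel density estimation with a Scott-type bandwidth is given below. It assumes an isotropic Gaussian kernel and a single scalar bandwidth; Scott's rule scales the bandwidth by n^(−1/(d+4)) for n samples in d dimensions. The function names and the choice of spread passed in as `sigma` are illustrative assumptions, not a transcription of Eq. B.3.

```python
import math


def scott_factor(n, d):
    """Scott's rule scaling factor n**(-1/(d+4))."""
    return n ** (-1.0 / (d + 4))


def gaussian_kde(samples, bandwidth):
    """Return a density estimate x -> p(x) built from isotropic
    Gaussian kernels of width `bandwidth` centered at each sample."""
    n = len(samples)
    d = len(samples[0])
    # Normalizing constant of n Gaussians in d dimensions.
    norm = n * (math.sqrt(2.0 * math.pi) * bandwidth) ** d

    def density(x):
        total = 0.0
        for s in samples:
            sq_dist = sum((a - b) ** 2 for a, b in zip(x, s))
            total += math.exp(-0.5 * sq_dist / bandwidth ** 2)
        return total / norm

    return density


# Toy 2D sample set (hypothetical) with an assumed spread sigma = 1.
pts = [[0.0, 0.0], [0.5, -0.5], [-0.5, 0.5], [0.2, 0.1]]
sigma = 1.0
h = sigma * scott_factor(len(pts), d=2)
p = gaussian_kde(pts, h)
print(p([0.0, 0.0]), p([5.0, 5.0]))
```

The exponent −1/(d+4) means the bandwidth shrinks very slowly with the sample count in high dimensions, which is one reason density estimates are harder to validate there.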

Density Splitting Via Symmetric Obstacle.

Here, we compute an example where we have a symmetric obstacle, and, thus, the generator will learn to split the density. Agents will go left or right of the obstacle, depending on their starting position. Here, we chose the obstacle as,

| [B.4] |

and we choose . The environment and training parameters are the same as in the obstacles example in the main text, except that we choose the HJB penalty in Alg. 1 to be 0.1. Qualitatively, we see that the solution agrees with our intuition: The agents will go left or right depending on their starting position. Note that the results are similar across dimensions, verifying our computation. For the , case, we trained for 100 k iterations; for the , case, we trained for 300 k iterations; for the and , case, we trained for 500 k iterations; for the case, we trained for 1,000 k iterations; and for the , case, we trained for 2,000 k iterations. In Fig. 8, we plot the agents moving through the symmetric obstacles, as well as the HJB residuals.

Fig. 8.

Computation of the obstacle problem where the obstacle is symmetric. We plot the results for dimensions (dim) 2 and 100, and for and .

Acknowledgments

This work was supported by Air Force Office of Scientific Research (AFOSR) Multidisciplinary University Research Initiative Grant FA9550-18-1-0502, AFOSR Grant FA9550-18-1-0167, and Office of Naval Research Grant N00014-18-1-2527.

Footnotes

The authors declare no competing interest.

Data Availability

To promote access and progress, we provide our PyTorch implementation at GitHub (https://github.com/atlin23/apac-net).

References

- 1.Achdou Y., Buera F. J., Lasry J.-M., Lions P.-L., Moll B., Partial differential equation models in macroeconomics. Philos. Trans. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 372, 20130397 (2014). [DOI] [PubMed] [Google Scholar]

- 2.Achdou Y., Han J., Lasry J.-M., Lions P.-L., Moll B.. “Income and wealth distribution in macroeconomics: A continuous-time approach.” (NBER Working Paper 23732, National Bureau of Economic Research, Cambridge, MA, 2017). https://www.nber.org/papers/w23732. Accessed 4 July 2021.

- 3.Guéant O., Lasry J.-M., Lions P.-L., “Mean field games and applications” in Paris-Princeton Lectures on Mathematical Finance 2010, Carmona R., et al., Eds. (Lecture Notes in Mathematics, Springer, Berlin, Germany, 2011), vol. 2003, pp. 205–266. [Google Scholar]

- 4.Gomes D. A., Nurbekyan L., Pimentel E. A., Economic Models and Mean-Field Games Theory (IMPA Mathematical Publications, Rio de Janeiro, Brazil, 2015). [Google Scholar]

- 5.Firoozi D., Caines P. E., “An optimal execution problem in finance targeting the market trading speed: An MFG formulation” in 2017 IEEE 56th Annual Conference on Decision and Control (CDC) (IEEE, Piscataway, NJ, 2017), pp. 7–14. [Google Scholar]

- 6. Cardaliaguet P., Lehalle C.-A., Mean field game of controls and an application to trade crowding. Math. Financ. Econ. 12, 335–363 (2018).

- 7. Casgrain P., Jaimungal S., Algorithmic trading in competitive markets with mean field games. SIAM News 52, 1–2 (2019).

- 8. De Paola A., Trovato V., Angeli D., Strbac G., A mean field game approach for distributed control of thermostatic loads acting in simultaneous energy-frequency response markets. IEEE Trans. Smart Grid 10, 5987–5999 (2019).

- 9. Kizilkale A. C., Salhab R., Malhamé R. P., An integral control formulation of mean field game based large scale coordination of loads in smart grids. Automatica 100, 312–322 (2019).

- 10. Gomes D. A., Saúde J., A mean-field game approach to price formation in electricity markets. arXiv [Preprint] (2018). https://arxiv.org/abs/1807.07088 (Accessed 4 July 2021).

- 11. Liu Z., Wu B., Lin H., “A mean field game approach to swarming robots control” in 2018 Annual American Control Conference (ACC) (IEEE, Piscataway, NJ, 2018), pp. 4293–4298.

- 12. Elamvazhuthi K., Berman S., Mean-field models in swarm robotics: A survey. Bioinspiration Biomimetics 15, 015001 (2019).

- 13. Lee W., Liu S., Tembine H., Osher S., Controlling propagation of epidemics via mean-field games. SIAM J. Appl. Math. 81, 190–207 (2021).

- 14. Chang S. L., Piraveenan M., Pattison P., Prokopenko M., Game theoretic modelling of infectious disease dynamics and intervention methods: A review. J. Biol. Dynam. 14, 57–89 (2020).

- 15. Weinan E., Han J., Li Q., A mean-field optimal control formulation of deep learning. Res. Math. Sci. 6, 10 (2019).

- 16. Guo X., Hu A., Xu R., Zhang J., “Learning mean-field games” in NeurIPS 2019: The 33rd Annual Conference on Neural Information Processing Systems, Wallach H., et al., Eds. (Advances in Neural Information Processing Systems, 2019), vol. 32, pp. 4967–4977.

- 17. Carmona R., Laurière M., Tan Z., Linear-quadratic mean-field reinforcement learning: Convergence of policy gradient methods. arXiv [Preprint] (2019). https://arxiv.org/abs/1910.04295 (Accessed 4 July 2021).

- 18. Lasry J.-M., Lions P.-L., Jeux à champ moyen. II–Horizon fini et contrôle optimal. Compt. Rendus Math. 343, 679–684 (2006).

- 19. Lasry J.-M., Lions P.-L., Mean field games. Jpn. J. Math. 2, 229–260 (2007).

- 20. Chow Y. T., Darbon J., Osher S., Yin W., Algorithm for overcoming the curse of dimensionality for time-dependent non-convex Hamilton–Jacobi equations arising from optimal control and differential games problems. J. Sci. Comput. 73, 617–643 (2017).

- 21. Achdou Y., Capuzzo-Dolcetta I., Mean field games: Numerical methods. SIAM J. Numer. Anal. 48, 1136–1162 (2010).

- 22. Benamou J.-D., Carlier G., Santambrogio F., “Variational mean field games” in Active Particles, Bellomo N., Degond P., Tadmor E., Eds. (Modeling and Simulation in Science, Engineering, and Technology, Springer, Cham, Switzerland, 2017), vol. 1, pp. 141–171.

- 23. Chow Y. T., Li W., Osher S., Yin W., Algorithm for Hamilton–Jacobi equations in density space via a generalized Hopf formula. J. Sci. Comput. 80, 1195–1239 (2019).

- 24. Chow Y. T., Darbon J., Osher S., Yin W., Algorithm for overcoming the curse of dimensionality for certain non-convex Hamilton–Jacobi equations, projections and differential games. Annal. Math. Sci. Appl. 3, 369–403 (2018).

- 25. Jacobs M., Léger F., Li W., Osher S., Solving large-scale optimization problems with a convergence rate independent of grid size. SIAM J. Numer. Anal. 57, 1100–1123 (2019).

- 26. Bellman R., Dynamic programming. Science 153, 34–37 (1966).

- 27. Li S., Li S. E., Deng K., “Mean-field control for improving energy efficiency” in Automotive Air Conditioning, Zhang Q., Li S. E., Deng K., Eds. (Springer, Cham, Switzerland, 2016), pp. 125–143.

- 28. Cirant M., Nurbekyan L., The variational structure and time-periodic solutions for mean-field games systems. Minimax Theory Appl. 3, 227–260 (2018).

- 29. Ruthotto L., Osher S. J., Li W., Nurbekyan L., Fung S. W., A machine learning framework for solving high-dimensional mean field game and mean field control problems. Proc. Natl. Acad. Sci. U.S.A. 117, 9183–9193 (2020).

- 30. Goodfellow I., et al., “Generative adversarial nets” in NIPS’14: Proceedings of the 27th International Conference on Neural Information Processing Systems, Ghahramani Z., Welling M., Cortes C., Lawrence N. D., Weinberger K. Q., Eds. (MIT Press, Cambridge, MA, 2014), vol. 2, pp. 2672–2680.

- 31. Arjovsky M., Chintala S., Bottou L., “Wasserstein generative adversarial networks” in ICML’17: Proceedings of the 34th International Conference on Machine Learning, Precup D., Teh Y. W., Eds. (JMLR, 2017), vol. 70, pp. 214–223.

- 32. Gulrajani I., Ahmed F., Arjovsky M., Dumoulin V., Courville A. C., “Improved training of Wasserstein GANs” in NIPS’17: Proceedings of the 31st International Conference on Neural Information Processing Systems, von Luxburg U., Guyon I., Bengio S., Wallach H., Fergus R., Eds. (Curran Associates, Inc., Red Hook, NY, 2017), pp. 5767–5777.

- 33. Lin A. T., Li W., Osher S., Montúfar G., Wasserstein proximal of GANs. UCLA CAM [Preprint] (2018). ftp://ftp.math.ucla.edu/pub/camreport/cam18-53.pdf (Accessed 4 July 2021).

- 34. Dukler Y., Li W., Lin A., Montúfar G., “Wasserstein of Wasserstein loss for learning generative models” in Proceedings of the 36th International Conference on Machine Learning, Chaudhuri K., Salakhutdinov R., Eds. (Proceedings of Machine Learning Research, PMLR, 2019), vol. 97, pp. 1716–1725.

- 35. Chu K. F. C., Minami K., Smoothness and stability in GANs. arXiv [Preprint] (2020). https://arxiv.org/abs/2002.04185 (Accessed 4 July 2021).

- 36. Cardaliaguet P., Graber P. J., Mean field games systems of first order. ESAIM Control Optim. Calc. Var. 21, 690–722 (2015).

- 37. Cardaliaguet P., Graber P. J., Porretta A., Tonon D., Second order mean field games with degenerate diffusion and local coupling. Nonlin. Diff. Equ. Appl. 22, 1287–1317 (2015).

- 38. Cao H., Guo X., Laurière M., Connecting GANs and MFGs. arXiv [Preprint] (2020). https://arxiv.org/abs/2002.04112 (Accessed 4 July 2021).

- 39. Villani C., Topics in Optimal Transportation (Graduate Studies in Mathematics, American Mathematical Society, Providence, RI, 2003), vol. 58.

- 40. Onken D., Fung S. W., Li X., Ruthotto L., OT-Flow: Fast and accurate continuous normalizing flows via optimal transport. arXiv [Preprint] (2020). https://arxiv.org/abs/2006.00104 (Accessed 4 July 2021).

- 41. Chen T. Q., Rubanova Y., Bettencourt J., Duvenaud D. K., “Neural ordinary differential equations” in NIPS’18: Proceedings of the 32nd International Conference on Neural Information Processing Systems, Bengio S., Wallach H. M., Larochelle H., Grauman K., Cesa-Bianchi N., Eds. (Curran Associates, Red Hook, NY, 2018), pp. 6572–6583.

- 42. Lin A. T., Chow Y. T., Osher S. J., A splitting method for overcoming the curse of dimensionality in Hamilton–Jacobi equations arising from nonlinear optimal control and differential games with applications to trajectory generation. Commun. Math. Sci. 16, 1933–1973 (2018).

- 43. Darbon J., Osher S., Algorithms for overcoming the curse of dimensionality for certain Hamilton–Jacobi equations arising in control theory and elsewhere. Res. Math. Sci. 3, 19 (2016).

- 44. Wai H.-T., Yang Z., Wang Z., Hong M., “Multi-agent reinforcement learning via double averaging primal-dual optimization” in NIPS’18: Proceedings of the 32nd International Conference on Neural Information Processing Systems, Bengio S., Wallach H. M., Larochelle H., Grauman K., Cesa-Bianchi N., Eds. (Curran Associates, Red Hook, NY, 2018), pp. 9649–9660.

- 45. Guo X., Hu A., Xu R., Zhang J., A general framework for learning mean-field games. arXiv [Preprint] (2020). https://arxiv.org/abs/2003.06069 (Accessed 4 July 2021).

- 46. Yang J., Ye X., Trivedi R., Xu H., Zha H., “Deep mean field games for learning optimal behavior policy of large populations” in 6th International Conference on Learning Representations (ICLR, 2018).

- 47. Fu Z., Yang Z., Chen Y., Wang Z., “Actor-critic provably finds Nash equilibria of linear-quadratic mean-field games” in 8th International Conference on Learning Representations (ICLR, 2020).

- 48. Genevay A., Peyré G., Cuturi M., “Learning generative models with Sinkhorn divergences” in Proceedings of the Twenty-First International Conference on Artificial Intelligence and Statistics, Storkey A., Perez-Cruz F., Eds. (Proceedings of Machine Learning Research, PMLR, 2018), vol. 84, pp. 1608–1617.

- 49. Salimans T., Zhang H., Radford A., Metaxas D., “Improving GANs using optimal transport” in 6th International Conference on Learning Representations (ICLR, 2018).

- 50. Sanjabi M., Ba J., Razaviyayn M., Lee J. D., “On the convergence and robustness of training GANs with regularized optimal transport” in NIPS’18: Proceedings of the 32nd International Conference on Neural Information Processing Systems, Bengio S., Wallach H. M., Larochelle H., Grauman K., Cesa-Bianchi N., Eds. (Curran Associates, Red Hook, NY, 2018), pp. 7091–7101.

- 51. Tanaka A., “Discriminator optimal transport” in NeurIPS 2019: The 33rd Annual Conference on Neural Information Processing Systems, Wallach H., et al., Eds. (Advances in Neural Information Processing Systems, 2019), vol. 32, pp. 6813–6823.

- 52. Lin J., Lensink K., Haber E., Fluid flow mass transport for generative networks. arXiv [Preprint] (2019). https://arxiv.org/abs/1910.01694 (Accessed 4 July 2021).

- 53. Peyré G., et al., Computational optimal transport. Found. Trends Mach. Learn. 11, 355–607 (2019).

- 54. Finlay C., Jacobsen B.-H., Nurbekyan L., Oberman A. M., How to train your neural ODE. arXiv [Preprint] (2020). https://arxiv.org/abs/2002.02798 (Accessed 4 July 2021).

- 55. Grathwohl W., Chen R. T. Q., Bettencourt J., Sutskever I., Duvenaud D., “FFJORD: Free-form continuous dynamics for scalable reversible generative models” in International Conference on Learning Representations 2019 (ICLR, 2019).

- 56. Onken D., Ruthotto L., Discretize-optimize vs. optimize-discretize for time-series regression and continuous normalizing flows. arXiv [Preprint] (2020). https://arxiv.org/abs/2005.13420 (Accessed 4 July 2021).

- 57. Parkinson C., Arnold D., Bertozzi A. L., Osher S., A model for optimal human navigation with stochastic effects. arXiv [Preprint] (2020). https://arxiv.org/abs/2005.03615 (Accessed 4 July 2021).

- 58. Rosenblatt M., Remarks on some nonparametric estimates of a density function. Ann. Math. Statist. 27, 832–837 (1956).

- 59. Parzen E., On estimation of a probability density function and mode. Ann. Math. Statist. 33, 1065–1076 (1962).

- 60. Liu S., Nurbekyan L., Splitting methods for a class of non-potential mean field games. arXiv [Preprint] (2020). https://arxiv.org/abs/2007.00099 (Accessed 4 July 2021).

- 61. Cao H., Guo X., Approximation and convergence of GANs training: An SDE approach. arXiv [Preprint] (2020). https://arxiv.org/abs/2006.02047 (Accessed 4 July 2021).

- 62. Haber E., Ruthotto L., Stable architectures for deep neural networks. Inverse Probl. 34, 014004 (2017).

- 63. Ruthotto L., Haber E., Deep neural networks motivated by partial differential equations. J. Math. Imag. Vis. 62, 1–13 (2019).

- 64. Anil C., Lucas J., Grosse R., “Sorting out Lipschitz function approximation” in International Conference on Machine Learning, Chaudhuri K., Salakhutdinov R., Eds. (Proceedings of Machine Learning Research, PMLR, 2019), pp. 291–301.

- 65. Scott D. W., Sain S. R., Multidimensional density estimation. Handb. Stat. 24, 229–261 (2005).
