Significance

This study examines a fundamental aspect of human cognition: how listeners learn musical systems. It provides evidence that certain types of symmetry featured in musical scales help listeners process melodic and tonal information more easily. We propose that this cognitive benefit is the reason for the prevalence of unevenly spaced notes in scales across musical cultures. More broadly, this work offers a cognitive account of the trade-off between cross-cultural diversity and fundamental similarity in music, a universal human activity.

Keywords: universals, syntactic learning, expectancies, musical scale, musical cultures

Abstract

Despite the remarkable variability music displays across cultures, certain recurrent musical features motivate the hypothesis that fundamental cognitive principles constrain the way music is produced. One such feature concerns the structure of musical scales. The vast majority of musical cultures use scales that are not uniformly symmetric—that is, scales that contain notes spread unevenly across the octave. Here we present evidence that the structure of musical scales has a substantial impact on how listeners learn new musical systems. Three experiments were conducted to test the hypothesis that nonuniformity facilitates the processing of melodies. Novel melodic stimuli were composed based on artificial grammars using scales with different levels of symmetry. Experiment 1 tested the acquisition of tonal hierarchies and melodic regularities on three different 12-tone equal-tempered scales using a finite-state grammar. Experiments 2 and 3 used more flexible Markov-chain grammars and were designed to generalize the effect to 14-tone and 16-tone equal-tempered scales. The results showed that performance was significantly enhanced by scale structures that specified the tonal space by providing unique intervallic relations between notes. These results suggest that the learning of novel musical systems is modulated by the symmetry of scales, which in turn may explain the prevalence of nonuniform scales across musical cultures.

Music is a fascinating phenomenon from the perspective of human cognition: It is ubiquitous in human cultures (1, 2) and serves a role in everyday life for most individuals (3) but displays extraordinary variety in its timbral (4), rhythmic (5), and tonal characteristics (6) as well as social functions (7–9). Whether music possesses universal properties remains debated: The claim has long faced skepticism (10–12), but in light of past studies, it appears increasingly plausible (6, 13–19).

Until recently, discussions of musical universals were largely uninformed by systematically gathered, quantitative data. However, a growing body of ethnomusicological data (6, 20), together with modern tools of statistical analysis inspired by evolutionary biology (21), has recently shed light on the question of musical universals. By quantitatively determining the prevalence of particular musical features (6, 19, 22), this line of research has confirmed that music is found in every culture, fulfills social needs, and exhibits what Western listeners have identified as a form of tonality (i.e., the existence of a tonal center among the set of discrete pitches) (6).

More specifically, a certain property of musical scales has been reported to be among the most recurrent musical features across cultures. Savage et al. (19) examined 32 candidate features of “musical universality” and found that “nonequidistant scales” (scales composed of intervals of different sizes) constitute the second-most common feature. In line with this, one of the oldest instruments ever found, a bone flute presumably constructed by Neanderthals 43,000 y ago, was designed to play unevenly spaced scale notes (23). In contrast, “equal-step” scales have been observed only rarely across musical cultures, most notably in the famous Slendro scale from Java (18), in some Western art music by Claude Debussy and other 20th-century composers (24), and in Western serial compositions using the 12-tone chromatic scale (25).*

The pervasiveness of certain features in an activity as ubiquitous as music may be explained by the interaction of cross-cultural influences with sensory and cognitive constraints that shape the way music is enjoyed and hence produced. This study aims to examine those cognitive constraints for the particular case of musical scales. Music theorists have noted that common scales, such as the pentatonic scale and the Western diatonic scale, display structural properties in the positioning of their tones along the octave that could promote the processing of musical information, in particular the locating of a tonal center (27, 28). Most known scales contain intervals of different sizes (whole tones and semitones in the Western system), positioned around the octave in a way that maximizes uniqueness through intervallic, nonuniform structures (29). A pitch set satisfies the property of uniqueness if “each of its elements has a unique set of relations with the others and therefore has the potentiality for a unique musical role or ‘dynamic quality’” (ref. 30, p. 8). In turn, scale structures fulfilling uniqueness could enhance “position finding,” that is, having a sense of the position of each tone relative to the other tones (27). As noted by Balzano (29), the Western diatonic scale possesses an axis of symmetry (i.e., the sequence of intervals formed by starting at a particular tone and moving both clockwise and counterclockwise around the circle is identical); however, it still fulfills the uniqueness property because pitch perception is directional (low/high). This type of symmetry, which we term reflective symmetry, and the concept of uniqueness have also been reported in rhythms, mostly from West Africa (31), where they may likewise help listeners orient themselves in a cyclical space.
In contrast, the whole-tone scale and other uniform scales do not satisfy the uniqueness property because they are completely symmetric. Finally, intermediate levels of uniqueness can be found in certain scales that are transpositionally limited (32) and thus display rotational symmetry. Balzano (29) remarks that with regard to these scales, “it is the very symmetry and apparent elegance of the set that is its undoing with respect to Uniqueness” (ref. 29, p. 326).
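The symmetry categories above can be made concrete by treating a scale as a set of pitch classes on a circle of n equal steps (n-TET). The Python sketch below is our own illustration, not the authors' procedure: it operationalizes Balzano's "unique set of relations" as the sorted list of upward intervals (mod n) from each tone to every other tone, so that the directionality of pitch is respected, and it tests for transpositional (rotational) and inversional (reflective) invariance. All function names are hypothetical, and the example scales are illustrative rather than the ones used in the experiments.

```python
from typing import List, Sequence, Tuple


def normalize(scale: Sequence[int], n: int) -> List[int]:
    """Sorted, deduplicated pitch classes (steps 0..n-1) in n-TET."""
    return sorted({p % n for p in scale})


def interval_profile(scale: List[int], n: int, tone: int) -> Tuple[int, ...]:
    """Upward intervals (in steps, mod n) from `tone` to every other scale tone."""
    return tuple(sorted((p - tone) % n for p in scale if p != tone))


def satisfies_uniqueness(scale: Sequence[int], n: int) -> bool:
    """True if no two tones share the same interval profile (our operationalization)."""
    s = normalize(scale, n)
    profiles = [interval_profile(s, n, t) for t in s]
    return len(set(profiles)) == len(profiles)


def is_uniform(scale: Sequence[int], n: int) -> bool:
    """All adjacent steps around the octave, including the wraparound, are equal."""
    s = normalize(scale, n)
    steps = [b - a for a, b in zip(s, s[1:])] + [n - s[-1] + s[0]]
    return len(set(steps)) == 1


def is_rotational(scale: Sequence[int], n: int) -> bool:
    """Invariant under some nontrivial transposition p -> p + k (mod n)."""
    s = set(normalize(scale, n))
    return any({(p + k) % n for p in s} == s for k in range(1, n))


def is_reflective(scale: Sequence[int], n: int) -> bool:
    """Invariant under some inversion p -> a - p (mod n), i.e., has an axis."""
    s = set(normalize(scale, n))
    return any({(a - p) % n for p in s} == s for a in range(n))


def classify(scale: Sequence[int], n: int) -> str:
    """Label from most to least symmetric; overlaps resolve to the first match."""
    if is_uniform(scale, n):
        return "uniform"
    if is_rotational(scale, n):
        return "rotational"
    if is_reflective(scale, n):
        return "reflective"
    return "asymmetric"


# Illustrative 12-TET examples (not the experimental scales)
examples = {
    "diatonic major": [0, 2, 4, 5, 7, 9, 11],
    "whole-tone": [0, 2, 4, 6, 8, 10],
    "Messiaen mode 5": [0, 1, 5, 6, 7, 11],
    "irregular hexachord (hypothetical)": [0, 1, 2, 4, 7, 9],
}
for name, scale in examples.items():
    print(f"{name}: {classify(scale, 12)}, unique={satisfies_uniqueness(scale, 12)}")
# diatonic major: reflective, unique=True
# whole-tone: uniform, unique=False
# Messiaen mode 5: rotational, unique=False
# irregular hexachord (hypothetical): asymmetric, unique=True
```

The outputs mirror the argument in the text: the diatonic scale has an axis of symmetry yet keeps uniqueness once intervals are measured upward, whereas any transpositional symmetry forces tones related by that transposition to share identical profiles. Messiaen mode 5 is also reflective; the sketch labels it rotational because that is the symmetry that breaks uniqueness.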

Despite this rich theoretical literature on intervallic structures in scales and their potential to facilitate cognitive processing of music, only one previous study to our knowledge has explored the cognitive advantage of certain scale features from an experimental psychology perspective. Using an out-of-tune note detection task, Trehub et al. (33) showed that it was easier for infants to detect out-of-tune notes in the context of an unfamiliar nonequal step scale compared to an unfamiliar equal step scale. This effect was not shown for adults, although both infants and adults were better at detecting out-of-tune notes in the context of a familiar nonequal step scale (the Western major scale). Although detecting out-of-tune notes is an important aspect of music perception, other processes, such as encoding a tonal hierarchy or syntactic regularities from a set of melodies, constitute higher-level core elements of music processing (34).

A tonal hierarchy results from a distribution of notes that creates the perception that certain tones are more prominent, stable, or structurally significant (35–37). This representation develops early in life through musical exposure (38, 39) but can also form rapidly and incidentally for unfamiliar musical systems (40, 41). Previous studies suggest that the brain can encode, from the note sequences of a corpus of melodies, a set of rules that define well-formed melodies (42). Loui et al. (43) demonstrated that listeners can learn syntactic regularities and develop melodic expectancies from a highly unconventional musical system (featuring nonoctave-repeating scales) after only a short exposure period. Nevertheless, some features of melodic structure, such as those identified by Narmour’s implication–realization model (44), may facilitate the learning of unfamiliar musical systems and the formation of melodic expectancies, suggesting that cognitive constraints shape musical systems (45).

In this study, we tested the hypothesis that the intervallic structure of musical scales also affects the ease with which listeners acquire knowledge of unfamiliar musical systems. Given the rarity of uniformly symmetric scales in musical cultures, we hypothesized that this intervallic structure might hinder music processing. Specifically, we sought to examine the effect of intervallic uniqueness by testing four different types of symmetry structures that entail various degrees of uniqueness (29). Asymmetric scales (examined in experiments 1, 2, and 3) and reflective–symmetric scales (examined in experiment 2) both satisfy the uniqueness property. Rotational–symmetric scales (experiments 1 and 3) possess an intermediate level of uniqueness: Each tone has a unique set of relations with only some of the other tones. Uniform–symmetric scales (experiments 1, 2, and 3) fail to satisfy the uniqueness property. We hypothesized that musical systems built from asymmetric and reflective–symmetric scales would result in better learning of musical structure than those built from rotational–symmetric and uniform–symmetric scales because the former fully satisfy the uniqueness property. By contrasting listener performance on melodic processing (namely, tonal hierarchy and melodic regularity learning) as a function of intervallic organization, this work sought to demonstrate that cognitive constraints actively shape the structure of musical scales (46).

Results

Three experiments were conducted to test our hypothesis concerning the facilitating effects of nonuniform scales. All three experiments explored listener processing of artificial, unfamiliar musical systems using scales with three different levels of symmetry: One scale was uniformly symmetric, one was either rotationally or reflectively symmetric, and the last scale was asymmetric. Experiments 2 and 3 addressed certain problems with the stimulus design of experiment 1, expanded the musical systems beyond standard 12-tone equal temperament (12-TET), and streamlined the experimental procedure. The “middle” symmetry condition varied across experiments; it was rotational in experiments 1 and 3 and reflective in experiment 2. Schematic diagrams of the scales used in all three experiments are shown in Fig. 1.

Fig. 1.

Schematic representations of experiment scales. (A) Circular diagrams of the three 12-tone equal-tempered (12-TET) scales used in experiment 1. (B) Circular diagrams of the six scales used in experiment 2. Top shows 12-TET scales. Bottom shows 14-TET scales. (C) Circular diagrams of the six scales used in experiment 3. Top shows 12-TET scales. Bottom shows 16-TET scales.

The general paradigm for the experiments consisted of presenting exposure melodies (all composed of pure tones) to participants to provide them an opportunity to implicitly learn features of the musical systems. The general assumption was that listeners would demonstrate enhanced learning in proportion to the degree of uniqueness of the scale. In experiments 2 and 3, listeners were asked to indicate whether a melody sounded familiar or not after the exposure phase. The design of experiment 1 was more complex and included a probe-tone task and a set of recognition–generalization tasks to assess how much of the novel musical structure listeners had learned from the exposure phase. Both recognition and generalization tasks were included in experiment 1 to match the experimental design of prior work (43). The different types of tasks corresponded to the three aspects of music cognition that were examined in experiment 1: tonal hierarchy, melodic memory, and melodic expectation. Tonal hierarchy corresponds to the fact that listeners, when exposed to a musical system, build representations of the relative fit of each note in the scale (47). This can be observed with a probe-tone task, where listeners provide “goodness-of-fit” ratings for probe tones preceded by a tonal context (36). Here, an exposure phase presented a set of melodies in which tones had a specific density distribution. This exposure phase was bookended by probe-tone ratings that could demonstrate how much participants had learned about the distribution of pitches in the exposure phase and how that learning was affected by the scale structure.
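The density distribution that the probe-tone ratings are related to can be approximated from symbolic melodies by counting note events. The sketch below assumes melodies are lists of integer pitch steps and substitutes simple event counting for the audio-based MELODIA analysis the authors report; the function names and example melodies are hypothetical. The second function applies the 0-to-10 rescaling described for the ordinal model, so that one unit corresponds to a 10% probability.

```python
from collections import Counter
from typing import Dict, Sequence


def pitch_probabilities(melodies: Sequence[Sequence[int]], n: int) -> Dict[int, float]:
    """Relative frequency of each of the n pitch classes over all note events."""
    counts = Counter(note % n for melody in melodies for note in melody)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no notes in the melody corpus")
    # Counter returns 0 for pitch classes that never occur
    return {pc: counts[pc] / total for pc in range(n)}


def rescale_for_model(probs: Dict[int, float]) -> Dict[int, float]:
    """Map 0-1 probabilities to 0-10, so one model unit = 10% probability."""
    return {pc: 10.0 * p for pc, p in probs.items()}


# Hypothetical exposure melodies in 12-TET steps (not the experimental stimuli)
melodies = [[0, 2, 4, 2, 0], [4, 5, 7, 5, 4], [0, 4, 7, 4, 0]]
probs = pitch_probabilities(melodies, 12)
print({pc: round(p, 3) for pc, p in probs.items() if p > 0})
# {0: 0.267, 2: 0.133, 4: 0.333, 5: 0.133, 7: 0.133}
```

Counting events ignores note durations; a duration-weighted count would be closer to an audio-derived density if the stimuli used unequal note lengths.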

The recognition task tested memory for novel melodies composed from different scale structures. While we expected overall poor performance (48, 49), we hypothesized that memory performance could be modulated by the type of scale, favoring scales that allow for better encoding of intervallic relationships (50). The generalization task in experiment 1, and experiments 2 and 3 as a whole, addressed another aspect of music cognition: the processing of regularities and the formation of melodic expectations (using artificial grammars; see Fig. 2). The ability to predict melodic sequences has been shown to play a central role in music perception across different cultures (51, 52) and has been successfully tracked from cortical activity (53). After exposure to melodies composed in a novel musical system, listeners were presented with melodies that were either consistent or inconsistent with the grammar of the system. In experiment 1, these melodies were presented in pairs and listeners had to choose the one that sounded more familiar. In experiments 2 and 3, melodies were presented individually and listeners had to report whether they were familiar or not.

Fig. 2.

Schematic representation of the grammars. (A) Experiment 1: The finite-state grammar designed to create melodies with a set of possible, equally probable transitions between notes of the scale. In this schematic representation, numbers refer to the position of notes in the scale. (B) The first-order Markov-chain grammar used to generate 12-TET melodies from six-note scales in experiments 2 and 3. Nodes represent scale notes; gray and green arrows represent the transitions between nodes used to generate exposure melodies; and red arrows represent two possible examples of “incorrect” transitions used to generate half of the test melodies. (C) The first-order Markov-chain grammar used to generate 14-TET melodies from seven-note scales in experiment 2. (D) The first-order Markov-chain grammar used to generate 16-TET melodies from eight-note scales in experiment 3. (E) Musical notation for two example melodies generated by a simplified implementation of the 12-TET Markov-chain grammar containing only correct transitions (Left) and one incorrect transition (Right).

Experiment 1.

Probe-tone task.

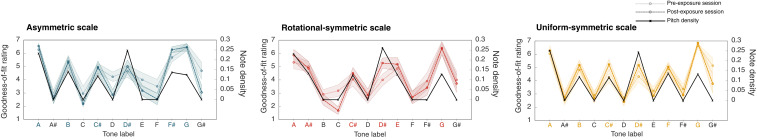

Listeners rated, on a seven-point Likert scale, how well each note of the Western chromatic scale (12-TET) fit the context of each of the three test scales (asymmetric, rotational–symmetric, and uniform–symmetric). Each probe tone was presented twice in both the pre- and postexposure sessions and was preceded by all of the scale tones played in ascending or descending order. Fig. 3 shows the probe-tone fitness ratings averaged across participants for each pitch, each scale condition, and the pre- and postexposure phases.

Fig. 3.

Results of experiment 1, probe-tone task. Shown is the average rating across participants for each note for preexposure (dotted line) and postexposure (dashed line) by scale session. Error shading corresponds to standard error. The black solid line displays the density of each note across all melodies for each of the scales, computed using MELODIA, a Sonic Visualiser plugin (54).

At first glance, the preexposure, postexposure, and pitch density profiles (Fig. 3) suggest that 1) participants were mostly able to differentiate between probe tones that were part of the scale and those that were not and 2) responses differed after the exposure phase. Average ratings from the preexposure phase (lighter shade, dotted line) suggest that listeners could already extract scale membership before exposure, from the scale context preceding each probe tone.

An ordinal mixed-effects model analysis was conducted to determine the factors that predicted fitness ratings. The model included participant as a random effect and session (pre- or postexposure), scale (uniform–symmetric, rotational–symmetric, asymmetric), and probability (of the probe tone) as fixed effects. To make the model more interpretable, probability values were rescaled from a 0-to-1 range to a 0-to-10 range, so that a one-unit increase corresponds to a 10% increase in probability. The results of the ordinal model can be found in Table 1. The baseline references for the two categorical factors are preexposure for session and uniform–symmetric for scale. The most intuitive result is the proportional odds ratio (POR; exp(β) in Table 1) associated with probability, which indicated that for every 10% increase in probe-tone probability, the odds of giving a higher fitness rating were multiplied by 2.66 (i.e., increased by 166%). Because pitch probabilities derived from the exposure melodies would be expected to affect only postexposure ratings, this relationship should be stronger after exposure, and indeed the interaction between session and probability was significant: In the postexposure session, the odds ratio associated with a 10% increase in probability was 1.57 times as large as in the preexposure session. Furthermore, the odds of a fitness rating being one point higher increased by 64% for asymmetric scale trials compared to uniform–symmetric trials (there was no significant difference between rotational–symmetric and the other two scale conditions). A detailed account of the model evaluation is provided in Materials and Methods.
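Because Table 1 reports coefficients on the log-odds scale, converting them to odds ratios is a single exponentiation, and the percentage change in odds is (exp(β) − 1) × 100. The Python sketch below (helper name hypothetical) assumes Wald 95% intervals, exp(β ± 1.96 SE), which reproduce the tabulated CIs to two decimals; it makes explicit that a POR of 2.66 means the odds are multiplied by 2.66, an increase of 166%.

```python
import math

Z_95 = 1.959963984540054  # 97.5th percentile of the standard normal


def odds_ratio_summary(beta: float, se: float) -> dict:
    """Turn a proportional-odds (log-odds) coefficient into an odds ratio,
    the percentage change in odds, and a Wald 95% CI on the odds-ratio scale."""
    odds_ratio = math.exp(beta)
    return {
        "odds_ratio": odds_ratio,
        "percent_change": (odds_ratio - 1.0) * 100.0,
        "ci_95": (math.exp(beta - Z_95 * se), math.exp(beta + Z_95 * se)),
    }


# Coefficients and standard errors copied from Table 1
for name, beta, se in [
    ("Probability", 0.980, 0.118),
    ("Session[PostExp]*Probability", 0.449, 0.171),
    ("Scale[Asymmetric]", 0.495, 0.195),
]:
    s = odds_ratio_summary(beta, se)
    lo, hi = s["ci_95"]
    print(f"{name}: OR = {s['odds_ratio']:.2f}, change = {s['percent_change']:+.0f}%, "
          f"95% CI = [{lo:.2f}, {hi:.2f}]")
# Probability: OR = 2.66, change = +166%, 95% CI = [2.11, 3.36]
# Session[PostExp]*Probability: OR = 1.57, change = +57%, 95% CI = [1.12, 2.19]
# Scale[Asymmetric]: OR = 1.64, change = +64%, 95% CI = [1.12, 2.40]
```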

Table 1.

Ordinal mixed-effects model for probe-tone task

| Variable | Variance | SD | β | SE | z | P | exp(β) (POR) | 95% CI |
|---|---|---|---|---|---|---|---|---|
| Random effects | | | | | | | | |
| Subject | 0.56 | 0.75 | | | | | | |
| Fixed effects | | | | | | | | |
| Session[PostExp] | | | −0.342 | 0.197 | −1.741 | 0.082 | 0.71 | [0.48, 1.04] |
| Scale[Asymmetric] | | | 0.495 | 0.195 | 2.536 | 0.011* | 1.64 | [1.12, 2.40] |
| Scale[RotationalSymmetric] | | | 0.051 | 0.194 | 0.265 | 0.791 | 1.05 | [0.72, 1.54] |
| Probability | | | 0.980 | 0.118 | 8.272 | <0.001* | 2.66 | [2.11, 3.36] |
| Session[PostExp]*Scale[Asymmetric] | | | −0.426 | 0.276 | −1.541 | 0.123 | 0.65 | [0.38, 1.12] |
| Session[PostExp]*Scale[RotationalSymmetric] | | | −0.077 | 0.280 | −0.276 | 0.783 | 0.93 | [0.53, 1.60] |
| Session[PostExp]*Probability | | | 0.449 | 0.171 | 2.635 | 0.008* | 1.57 | [1.12, 2.19] |
| Scale[Asymmetric]*Probability | | | 0.004 | 0.170 | 0.026 | 0.979 | 1.00 | [0.72, 1.40] |
| Scale[RotationalSymmetric]*Probability | | | −0.197 | 0.166 | −1.183 | 0.237 | 0.82 | [0.59, 1.14] |
| Session[PostExp]*Scale[Asymmetric]*Probability | | | 0.073 | 0.244 | 0.298 | 0.765 | 1.08 | [0.67, 1.74] |
| Session[PostExp]*Scale[RotationalSymmetric]*Probability | | | 0.013 | 0.241 | 0.055 | 0.956 | 1.01 | [0.63, 1.62] |

Number of observations = 1,727. PostExp, postexposure; probability, probability of probe tone; CI, confidence interval; POR, proportional odds ratio. *P < 0.05.

Recognition–generalization task.

Mean d′ values for each scale condition, broken down by task type, are shown in Fig. 4. There were two types of trials, one testing recognition and the other testing generalization. In each trial, one of the two melodies presented was taken from the exposure phase. The other was either a new melody generated from a different grammar (generalization task) or a new melody generated from the original grammar (recognition task). Participants were asked to choose whether the first or the second melody sounded more familiar. Listeners performed poorly on the recognition task, as indicated by the low d′ values in all symmetry conditions.

Fig. 4.

Results of experiment 1, recognition and generalization task. Shown are d′ values for recognition (Left) and generalization (Right) tasks. d′ values were computed and averaged across participants for asymmetric (blue), rotational–symmetric (red), and uniform–symmetric (yellow) scales. Error bars correspond to standard error.

A generalized linear mixed-effects model (GLMM) with a probit link function was used to further analyze the data. To frame the analysis in terms of signal detection theory, the actual response (as opposed to correct/incorrect) served as the binomial dependent variable (labeled ChoseSecond, indicating whether the first or the second melody was chosen as familiar). The glmer function in the R package lme4 was used to implement the model. The model included participant as a random effect and scale, IsSecond (whether or not the familiar melody was presented second), and task (generalization, recognition) as fixed effects. The baseline references for the model were Scale[UniformSymmetric] and Task[Generalization]. The results are shown in Table 2. A detailed account of the model evaluation is provided in Materials and Methods.

Table 2.

Mixed-effects model for the recognition–generalization task

| Variable | Variance | SD | β | SE | z | P | 95% CI |
|---|---|---|---|---|---|---|---|
| Random effects | | | | | | | |
| Participant | 0.003 | 0.057 | | | | | |
| Fixed effects | | | | | | | |
| Intercept | | | −0.002 | 0.029 | −0.07 | 0.945 | [−0.06, 0.06] |
| IsSecond | | | 0.611 | 0.048 | 12.68 | <0.001* | [0.52, 0.71] |
| Scale[Asymmetric] | | | −0.032 | 0.059 | −0.55 | 0.585 | [−0.15, 0.08] |
| Scale[RotationalSymmetric] | | | −0.006 | 0.059 | −0.10 | 0.919 | [−0.12, 0.11] |
| Task[Recognition] | | | 0.096 | 0.048 | 1.98 | 0.048* | [0.001, 0.19] |
| IsSecond*Scale[Asymmetric] | | | 0.494 | 0.118 | 4.20 | <0.001* | [0.26, 0.72] |
| IsSecond*Scale[RotationalSymmetric] | | | 0.387 | 0.117 | 3.30 | <0.001* | [0.16, 0.62] |
| IsSecond*Task[Recognition] | | | −0.931 | 0.097 | −9.63 | <0.001* | [−1.12, −0.74] |

Number of observations = 2,880; C statistic = 0.67; Somers’ Dxy = 0.34. *P < 0.05.

The intercept corresponds to a measure of the observer’s criterion or response bias. In this case, it was not significant, indicating that listeners were not inclined to choose either the first or the second melody more often. The IsSecond coefficient of 0.61 corresponds to sensitivity, or overall d′, for the task. The Scale[Asymmetric] and Scale[RotationalSymmetric] coefficients correspond to bias toward choosing either the first or the second melody in their respective conditions, and neither was significant. There was a significant effect of Task[Recognition], indicating slightly less bias toward choosing the second melody in the recognition task than in the generalization task; in other words, there was a relatively smaller proportion of false alarms to misses in the recognition condition. The interactions are of primary interest in this model: They indicate that d′ values for the asymmetric and rotational–symmetric scale conditions were both significantly higher than for the uniform–symmetric condition. However, there was no significant difference between the asymmetric and rotational–symmetric conditions (as determined by refitting the model with Scale[Asymmetric] instead of Scale[UniformSymmetric] as the baseline reference). The last interaction, between IsSecond and Task[Recognition], was also significant and indicated that sensitivity was significantly lower for the recognition task than for the generalization task; in other words, participants found the recognition task more difficult.

Experiment 1 produced promising results, but its methodology left several problems unresolved. First, the scales were all derived from the 12-TET tuning system on which Western music is based. Previous studies suggest that after a short exposure, listeners are able to learn new intervals from non–12-TET musical systems (55, 56). Accordingly, experiments 2 and 3 featured scales based on 14-TET and 16-TET systems to extend the main finding of a scale-structure effect to non-Western tonal systems and to reduce any unwanted effects of scale familiarity.

Another possible confound in experiment 1 was an artifact of how the melodies were generated: The same grammar, with a fixed assignment of scale notes to grammar nodes, was used for all participants and thus produced a repeated set of distinct, fixed melodic intervals. Some intervals, such as the tritone (six semitones) or perfect fifth (seven semitones), are highly distinctive or familiar and could potentially influence melodic learning. The transition between notes 1 and 4 in the grammar was a tritone for all three scales, and the transition between notes 2 and 5 was a tritone for the uniform–symmetric and rotational–symmetric scales. The perfect fifth was present between notes 2 and 5 of the asymmetric scale and notes 1 and 5 of the rotational–symmetric scale but was never present in melodies derived from the uniform–symmetric scale. Given the relative (yet not systematic) prevalence of consonant intervals across musical cultures (13), these intervals could also have facilitated melodic learning in the asymmetric and rotational–symmetric cases.

Altogether, the way the grammar was implemented in experiment 1 created a fixed distribution of intervals that consisted of frequent and distinct melodic leaps that might have served as more obvious “signposts” for recognition and generalization rather than the scale structure itself. In experiments 2 and 3, the note/node combinations in the grammar were systematically randomized to produce a varied set of intervals for each participant, thus preventing unwanted effects from recurring intervals. Another issue was that the finite-state grammar used in experiment 1 produced noticeably unmusical melodies. In experiments 2 and 3, this problem was mitigated by using a first-order Markov chain to generate melodies, resulting in more complex transition probabilities that in turn yielded more ecologically valid melodies. Finally, the parallel designs of experiments 2 and 3 allowed for more direct comparisons between rotational and reflective symmetry in addition to the baseline uniform–symmetric and asymmetric comparisons.
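The generation scheme of experiments 2 and 3 (a first-order Markov chain over abstract nodes, with nodes randomly assigned to scale notes for each participant, and test melodies containing an "incorrect" transition) can be sketched as follows. This is a minimal illustration under stated assumptions: the six-node transition structure `GRAMMAR`, the equal transition probabilities, the example scale, and the choice to place the single violation on the final transition are all hypothetical and do not reproduce the grammars of Fig. 2.

```python
import random
from typing import Dict, List, Optional, Sequence

# Hypothetical first-order grammar over six abstract nodes: each node lists the
# successors it may move to (chosen with equal probability in this sketch).
GRAMMAR: Dict[int, List[int]] = {
    0: [1, 2], 1: [2, 3], 2: [0, 4], 3: [4, 5], 4: [1, 5], 5: [0, 3],
}


def assign_nodes(scale: Sequence[int], rng: random.Random) -> Dict[int, int]:
    """Randomly map grammar nodes 0..k-1 onto the k scale notes (per participant)."""
    notes = list(scale)
    rng.shuffle(notes)
    return dict(enumerate(notes))


def generate_path(length: int, grammar: Dict[int, List[int]], rng: random.Random,
                  start: Optional[int] = None) -> List[int]:
    """Random walk of `length` nodes that uses only grammatical transitions."""
    node = rng.choice(sorted(grammar)) if start is None else start
    path = [node]
    while len(path) < length:
        node = rng.choice(grammar[node])  # next node depends only on the current one
        path.append(node)
    return path


def with_violation(path: List[int], grammar: Dict[int, List[int]],
                   rng: random.Random) -> List[int]:
    """Copy of `path` whose final transition is one the grammar does not allow."""
    prev = path[-2]
    forbidden = [m for m in grammar if m not in grammar[prev] and m != prev]
    return path[:-1] + [rng.choice(forbidden)]


rng = random.Random(1)
scale = [0, 2, 3, 5, 7, 10]  # hypothetical six-note 12-TET scale
mapping = assign_nodes(scale, rng)
good = generate_path(8, GRAMMAR, rng)
bad = with_violation(good, GRAMMAR, rng)
print("grammatical:  ", [mapping[v] for v in good])
print("one violation:", [mapping[v] for v in bad])
```

Because the note-to-node mapping is reshuffled per participant, the same abstract grammar yields different melodic intervals for different listeners, which is the property the text relies on to avoid recurring "signpost" intervals.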

Experiment 2.

Similar to the experiment 1 recognition–generalization task, scales with three levels of symmetry were used to generate melodies in experiment 2. In this experiment, the middle symmetry condition differed, featuring reflective symmetry instead of rotational symmetry (Fig. 1B). Additionally, two different tuning systems were used, 12-TET and 14-TET, resulting in six different scales used to generate melodies for six separate exposure/test sessions that took place on two different days (one for each tuning system). As in experiment 1, an exposure phase preceded a test phase in which participants were presented with new melodies derived from either the exposure grammar or a slightly different one (Fig. 2 B and C). Listeners were asked to report whether each test melody seemed familiar or unfamiliar with respect to what they had heard in the exposure phase. The mean d′ values for all symmetry levels in each tuning system are shown in Fig. 5A. For both tuning systems, the asymmetric condition had the highest d′ values and the uniform–symmetric condition the lowest.
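The nonstandard tunings change both the step size and the available interval sizes. The sketch below computes the step size of n-TET (1,200/n cents), pure-tone frequencies for a given number of steps, and the n-TET interval closest to a 3:2 perfect fifth. The 440-Hz reference frequency and all function names are assumptions for illustration; the section does not state the stimulus frequencies. The output shows that 14-TET and 16-TET offer no close counterpart to the familiar 12-TET fifth, which is consistent with the aim of reducing familiarity effects.

```python
import math


def step_cents(n: int) -> float:
    """Size of one step of n-tone equal temperament, in cents."""
    return 1200.0 / n


def tet_frequency(steps: int, n: int, ref_hz: float = 440.0) -> float:
    """Frequency `steps` equal steps above ref_hz in n-TET (ref_hz is an assumption)."""
    return ref_hz * 2.0 ** (steps / n)


def nearest_step(target_cents: float, n: int) -> int:
    """Number of n-TET steps whose total size is closest to target_cents."""
    return round(target_cents / step_cents(n))


JUST_FIFTH_CENTS = 1200.0 * math.log2(3 / 2)  # about 701.96 cents

for n in (12, 14, 16):
    k = nearest_step(JUST_FIFTH_CENTS, n)
    print(f"{n}-TET: step = {step_cents(n):.2f} cents; closest to a 3:2 fifth: "
          f"{k} steps = {k * step_cents(n):.1f} cents")
# 12-TET: step = 100.00 cents; closest to a 3:2 fifth: 7 steps = 700.0 cents
# 14-TET: step = 85.71 cents; closest to a 3:2 fifth: 8 steps = 685.7 cents
# 16-TET: step = 75.00 cents; closest to a 3:2 fifth: 9 steps = 675.0 cents
```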

Fig. 5.

Results of experiments 2 and 3. d′ values (calculated independently of the models) are averaged across participants by symmetry condition: asymmetric (blue), reflective–symmetric (purple), rotational–symmetric (red), and uniform–symmetric (yellow). Error bars correspond to standard error. (A) Experiment 2 d′ values for the 12-TET and 14-TET scales. (B) Experiment 3 d′ values for the 12-TET and 16-TET scales.

As in the analysis of the recognition–generalization task in experiment 1, a GLMM with response as the dependent variable was used to analyze the data in experiment 2. The model included participant as a random effect and IsFamiliar (familiar grammar or new grammar), scale (uniform–symmetric, reflective–symmetric, asymmetric), and tuning (12-TET or 14-TET) as fixed effects. The results of the model are shown in Table 3. The baseline references for the model were Scale[ReflectiveSymmetric] and Tuning[12TET]. A detailed account of the model evaluation is provided in Materials and Methods.
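The d′ values in Fig. 5 were computed independently of the models, but the section does not give the formula; the sketch below assumes the standard yes/no definitions d′ = z(H) − z(F) and c = −[z(H) + z(F)]/2, where H is the rate of "familiar" responses to familiar-grammar melodies and F the rate for new-grammar melodies. It also shows why the IsFamiliar coefficient of a probit GLMM can be read as d′: if IsFamiliar is coded +0.5/−0.5 (an assumption; the text does not state the coding), the probit model is solved exactly by slope = d′ and intercept = −c, so a positive intercept means a bias toward "familiar". Under 0/1 coding the slope is still d′, but the intercept becomes z(F). The log-linear correction, the function names, and the example counts are our assumptions.

```python
from statistics import NormalDist
from typing import Tuple

_Z = NormalDist()  # standard normal distribution


def rates(hits: int, n_signal: int, false_alarms: int, n_noise: int,
          loglinear: bool = True) -> Tuple[float, float]:
    """Hit and false-alarm rates; the log-linear correction (+0.5 / +1)
    keeps rates away from 0 and 1 so that z-scores stay finite."""
    if loglinear:
        return (hits + 0.5) / (n_signal + 1), (false_alarms + 0.5) / (n_noise + 1)
    return hits / n_signal, false_alarms / n_noise


def d_prime_and_c(h: float, f: float) -> Tuple[float, float]:
    """Yes/no sensitivity d' = z(H) - z(F) and criterion c = -(z(H) + z(F)) / 2."""
    zh, zf = _Z.inv_cdf(h), _Z.inv_cdf(f)
    return zh - zf, -(zh + zf) / 2


def probit_coefficients(h: float, f: float) -> Tuple[float, float]:
    """Intercept a and slope b of probit(P("familiar")) = a + b * x when x is
    coded +0.5 for familiar-grammar melodies and -0.5 for new-grammar ones."""
    d, c = d_prime_and_c(h, f)
    return -c, d


# Hypothetical listener: 80/100 "familiar" responses to familiar-grammar
# melodies and 30/100 to new-grammar melodies
h, f = rates(80, 100, 30, 100, loglinear=False)
d, c = d_prime_and_c(h, f)
print(f"d' = {d:.3f}, c = {c:.3f}")  # d' = 1.366, c = -0.159
```

A negative c corresponds to a liberal criterion, i.e., a tendency to answer "familiar", which is the direction of bias the significant intercepts in Tables 3 and 4 are interpreted as showing.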

Table 3.

Experiment 2 results

| Variable | Variance | SD | β | SE | z | P | 95% CI |
|---|---|---|---|---|---|---|---|
| Random effects | | | | | | | |
| Participant | 0.141 | 0.375 | | | | | |
| Fixed effects | | | | | | | |
| Intercept | | | 0.306 | 0.083 | 3.68 | <0.001* | [0.14, 0.48] |
| IsFamiliar | | | 1.113 | 0.028 | 39.86 | <0.001* | [1.06, 1.17] |
| Scale[UniformSymmetric] | | | −0.081 | 0.033 | −2.44 | 0.015* | [−0.15, −0.02] |
| Scale[Asymmetric] | | | −0.096 | 0.034 | −2.80 | 0.005* | [−0.16, −0.03] |
| Tuning[14TET] | | | 0.135 | 0.028 | 4.88 | <0.001* | [0.08, 0.19] |
| IsFamiliar*Scale[UniformSymmetric] | | | −0.271 | 0.067 | −4.07 | <0.001* | [−0.40, −0.14] |
| IsFamiliar*Scale[Asymmetric] | | | 0.368 | 0.069 | 5.35 | <0.001* | [0.23, 0.50] |
| IsFamiliar*Tuning[14TET] | | | −0.005 | 0.055 | −0.09 | 0.930 | [−0.11, 0.10] |

Number of observations = 10,080; C statistic = 0.78; Somers’ Dxy = 0.56. *P < 0.05.

The intercept was significant, indicating that participants were generally biased toward responding “familiar.” The coefficient for IsFamiliar (1.11) corresponds to the overall d′. The bias coefficients for Scale[Asymmetric], Scale[UniformSymmetric], and Tuning[14TET] were also significant, indicating slightly less bias in responses to melodies in the asymmetric and uniform–symmetric scale conditions than in the reflective–symmetric condition, and more bias in the 14-TET condition than in the 12-TET condition. However, there was no significant difference in sensitivity between the two tuning conditions. The significant interactions show that sensitivity differed among all three scale conditions, with uniform–symmetric having the lowest d′, asymmetric the highest, and reflective–symmetric in between. Consistent with experiment 1, sensitivity in the uniform–symmetric condition was again significantly lower than in the two other conditions.

Experiment 3.

Experiment 3 was conducted as a complement to experiment 2. The procedure was identical to that of experiment 2, but, as in experiment 1, it featured a rotational–symmetric scale instead of a reflective–symmetric one, in addition to the uniform–symmetric and asymmetric scales (Fig. 1C). The nonstandard tuning condition in experiment 3 was 16-TET instead of 14-TET, because it was not possible to create a rotational–symmetric scale that matched the seven-note uniform–symmetric scale in 14-TET. The 16-TET system allowed for eight-note uniform–symmetric and rotational–symmetric scales. The grammars used to generate the melodies in experiment 3 are shown in Fig. 2 B and D.
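The tuning constraint mentioned above follows from modular arithmetic. Transposing by k steps in n-TET splits the n steps into cycles of length n/gcd(n, k), and a rotationally symmetric scale must be a union of such cycles. In 14-TET the possible cycle lengths are 14, 7, and 2, so a seven-note scale can only be a single 7-cycle, which is the uniform scale; in 16-TET, cycles of length 2 (k = 8) allow nonuniform eight-note scales. The brute-force Python check below, a sketch with hypothetical helper names, confirms this by enumerating every scale of the given size.

```python
from itertools import combinations
from typing import Sequence


def is_uniform(s: Sequence[int], n: int) -> bool:
    """Equal steps all the way around the octave (s must be sorted)."""
    steps = [b - a for a, b in zip(s, s[1:])] + [n - s[-1] + s[0]]
    return len(set(steps)) == 1


def is_rotational(s: Sequence[int], n: int) -> bool:
    """Invariant under some nontrivial transposition by k steps (mod n)."""
    pcs = set(s)
    return any({(p + k) % n for p in pcs} == pcs for k in range(1, n))


def count_nonuniform_rotational(n: int, size: int) -> int:
    """Number of `size`-note subsets of n-TET that are rotational but not uniform."""
    return sum(1 for s in combinations(range(n), size)  # tuples arrive sorted
               if is_rotational(s, n) and not is_uniform(s, n))


for n, size in [(12, 6), (14, 7), (16, 8)]:
    print(f"{n}-TET, {size} notes: {count_nonuniform_rotational(n, size)}")
# The 14-TET, 7-note count is 0; the other two counts are positive.
```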

The mean d′ values for all symmetry levels in each tuning system are shown in Fig. 5B. Again, for both tuning systems, the asymmetric condition had the highest d′ values. For the 12-TET scales, d′ was higher for the rotational–symmetric than for the uniform–symmetric scale, whereas for the 16-TET scales, the reverse was true. The same mixed-effects model from experiment 2 was used to analyze the data from experiment 3. The model included participant as a random effect and IsFamiliar (familiar grammar or new grammar), scale (uniform–symmetric, rotational–symmetric, asymmetric), and tuning (12-TET or 16-TET) as fixed effects. The results of the model are shown in Table 4. The baseline references in this case were Scale[Asymmetric] and Tuning[12TET]. A detailed account of the model evaluation is provided in Materials and Methods.

Table 4.

Experiment 3 results

| Random effects | Variance | SD | | | |
|---|---|---|---|---|---|
| Participant | 0.181 | 0.425 | | | |
| Fixed effects | Estimate | SE | z | P | 95% CI |
| Intercept | 0.481 | 0.096 | 5.01 | <0.001* | [0.28, 0.68] |
| IsFamiliar | 0.785 | 0.029 | 27.43 | <0.001* | [0.73, 0.84] |
| Scale[UniformSymmetric] | 0.050 | 0.035 | 1.43 | 0.152 | [−0.02, 0.12] |
| Scale[RotationalSymmetric] | 0.042 | 0.035 | 1.20 | 0.230 | [−0.03, 0.11] |
| Tuning[16TET] | −0.071 | 0.028 | −2.50 | 0.012* | [−0.127, −0.02] |
| IsFamiliar*Scale[UniformSymmetric] | −0.242 | 0.070 | −3.48 | <0.001* | [−0.38, −0.11] |
| IsFamiliar*Scale[RotationalSymmetric] | −0.228 | 0.070 | −3.28 | <0.001* | [−0.36, −0.09] |
| IsFamiliar*Tuning[16TET] | −0.287 | 0.057 | −4.04 | <0.001* | [−0.40, −0.18] |

Number of observations = 9,600; C statistic = 0.74 (Somers's Dxy = 2C − 1). CI = confidence interval. *P < 0.05.

The intercept was significant, indicating that participants were biased toward responding “familiar.” The overall d′ was 0.79, lower than in experiment 2. There was no significant difference in bias between any of the scale conditions (including between Scale[RotationalSymmetric] and Scale[UniformSymmetric], which was tested by refitting the model with Scale[UniformSymmetric] as the baseline). There were significant differences in both bias and sensitivity between the two tunings: less bias and a marked decrease in sensitivity for 16-TET. The remaining interactions indicate significantly higher sensitivity for the asymmetric scale than for both the uniform–symmetric and rotational–symmetric scales. There was no significant difference in sensitivity between the uniform–symmetric and rotational–symmetric conditions (again tested with Scale[UniformSymmetric] as the baseline).
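Why a refit is needed for the uniform- versus rotational-symmetric comparison can be shown with the Table 4 estimates. Assuming treatment coding, both interaction coefficients are contrasts against the asymmetric baseline, so their difference gives the point estimate of the new contrast; its standard error, however, also depends on the covariance between the two coefficients, which the table does not report. The function name below is illustrative.

```python
# Fixed-effect estimates copied from Table 4 (experiment 3).
ISFAM_X_UNIFORM = -0.242     # IsFamiliar*Scale[UniformSymmetric]
ISFAM_X_ROTATIONAL = -0.228  # IsFamiliar*Scale[RotationalSymmetric]


def relevel_estimate(coef_level, coef_new_baseline):
    """Point estimate of (level - new baseline) for a treatment-coded factor.

    Both inputs are contrasts against the original baseline (Asymmetric), so
    their difference is the contrast against the new baseline. Its SE needs
    Var(a) + Var(b) - 2 Cov(a, b), and Cov(a, b) is not in Table 4.
    """
    return coef_level - coef_new_baseline


rot_vs_uni = relevel_estimate(ISFAM_X_ROTATIONAL, ISFAM_X_UNIFORM)
print(f"{rot_vs_uni:.3f}")  # 0.014
```

Refitting the same model with Scale[UniformSymmetric] as the baseline reparameterizes it without changing the fit, so it reproduces this point estimate while also supplying the standard error and test.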

Discussion

The findings we present suggest that the prevalence of nonuniform scales across musical cultures may be grounded in the cognitive benefits such scales confer on the position finding of tones (27, 28). Butler and Brown (28) suggest that the intervallic structure of scales provides perceptual anchors that help listeners orient themselves in a circular tonal space (35) and that these anchors arise from the balance between rare and common intervals. This could be an epiphenomenon of the uniqueness property of a scale: each tone in such a scale has a unique set of relations with the others and therefore a potentially unique musical role (30). Balzano noted that “a melody based on a scale satisfying Uniqueness should be easier for a perceiver to deal with because the notes of the melody are individuated not only by their particular frequency locations, but also by their interrelations with one another” (ref. 29, p. 326).

The results of the current work suggest that the uniqueness property of musical scales does indeed enhance performance on a task central to music cognition: the encoding of melodic expectancies. This study also explored the impact of scale structure on the perception of tonal hierarchies. When listening to music, people are exposed to statistical distributions of musical events (e.g., notes or chords) and implicitly build a representation of the hierarchical system of tones (47). These representations are acquired rapidly when listeners are exposed to a new musical system (40, 41, 57), even when the system's structural properties are not found in any known musical culture (43). Results from the probe-tone task in experiment 1 are consistent with these previous findings: listeners were able to extract some information about the tonal structure from hearing the scale alone, even before the exposure phase. Ratings appeared to improve more from pre- to postexposure for the asymmetric scale, but this effect was not strong enough to support the claim that the difference stemmed from a higher degree of uniqueness.

The results of the recognition–generalization test in experiment 1 showed that overall performance was better for the two nonuniform scales than for the uniform one. This advantage was driven in part by strong performance on the generalization task. The recognition task results, on the other hand, revealed that listeners did not form robust memories of particular melodies. This is consistent with the results reported by Loui et al. (43) (second experiment), who noted that musicians performed poorly on the recognition task compared with the generalization task. For the asymmetric scale, performance on the recognition task differed significantly from chance. This might have resulted from either the structure of the scale or the particular set of intervals defined jointly by the scale and the grammar; in other words, melodies generated from the asymmetric scale may have displayed a pattern of intervals that was easier to memorize. Dowling's model of memory for melodies proposes that the formation of robust representations of melodies depends on both scale structure and the set of intervals (50). Dowling and Bartlett (58) also demonstrated that intervals are better stored in long-term memory than other features of melodic sequences. This possible confound motivated the design of experiments 2 and 3, in which sets of intervals were randomly generated for each participant to better assess the effect of scale structure alone.

The results of experiments 2 and 3 supported the hypothesis that the uniqueness property facilitates the learning of musical structure. When listeners were asked whether melodies generated by the exposure grammar or by a new grammar sounded familiar, their performance was significantly better for the scales that exhibited intervallic uniqueness (asymmetric and reflective–symmetric) than for the scales that did not meet or only partially met the criterion (uniform–symmetric and rotational–symmetric). Furthermore, this pattern of performance for the asymmetric and reflective–symmetric scales was similar regardless of tuning context (12-TET vs. 14-TET): a more complex model that included a three-way interaction between tuning, response, and scale condition did not fit the data better than the model that included only the interactions between response and the other factors (see Materials and Methods for details on model evaluation). The difference between the reflective–symmetric and asymmetric conditions observed in experiment 2 could originate from the associations that listeners make between pitch patterns and their inversions (59): it might be more difficult to orient oneself in a space that looks identical whether one moves clockwise or counterclockwise, as in a reflective–symmetric scale. Listeners implicitly identify intervallic patterns with their inversions, and this type of association increases with musical training (60, 61). Finally, the overall lower sensitivity observed in experiment 3, driven mostly by lower d′ values for the 16-TET scales, could result from the larger number of tones in these octatonic scales.

Making predictions about upcoming events is a crucial ability shared across multiple cognitive domains (62). Music provides a unique perspective on how the mind extracts regularities from past input to form expectations about future events. This learning occurs through passive exposure (63) and is critical to a listener's enjoyment of music; the interplay between the fulfillment and frustration of expectations is believed to give rise to emotional responses to music (62, 64). The rules recruited to produce musical predictions derive from the joint contributions of Gestalt principles, shared with other cognitive domains, and statistical learning resulting from an individual's specific exposure (65). For the most part, accurate predictions rely on a stable and well-characterized tonal space that allows listeners to orient themselves in the inherently cyclical space of tonality that results from octave equivalence (66). Here, we provided evidence that nonuniform scales, and more precisely their uniqueness property, support perceptual anchoring by specifying the tonal space in which listeners are immersed. To illustrate this interpretation, imagine people placed in a circular room who must remember a specific path between points distributed around it. The task would be easier if the points were arranged so that each point's relation to all of the other points was uniquely defined; the entire space could then be anchored relative to a specific point (analogous to the tonic in a musical context).

However, other factors beyond the scope of this study could also facilitate the processing of musical structure and in turn help explain the prevalence of the uniqueness property in existing scales. For example, the presence of consonant intervals might play an important role (67), or spectral qualities of pitch combinations might influence scale structures (68, 69). Maximal evenness, a music–theoretic principle describing the maximal spreading of tones around the octave (a concept not equivalent to uniform symmetry) (70), could likewise play a facilitating role; it has also been observed in musical rhythms (71). Finally, the balance between common and rare intervals (respectively, the perfect fourth and the tritone in the Western diatonic scale) could enhance position finding (27, 28).

Overall, these results shed further light on the thorny question of how basic cognitive and sensory constraints intersect with cultural influences and result in universal features in human production (72, 73). From a cross-domain perspective, Chomsky’s (74) claim that innate principles underlie the many manifestations of language (the concept of a universal grammar) provided a framework for such a question and, in the process, ignited an unprecedented scientific explosion in the field of human cognition. The discovery of structural universals in language, such as the architecture of phonological structures (75), has tremendously impacted our understanding of the human mind (76). In the domain of music cognition, such efforts have been more limited but will surely benefit from the recent development of corpus-based statistical analysis (6, 19).

One example of how innate, sensory principles shape musical features concerns how the smallest intervals found between structural tones (e.g., semitones) (13) reflect typical frequency discrimination abilities (77). More recently, Jacoby and McDermott (16) found that listeners from different cultural backgrounds produce complex rhythms converging on integer–ratio temporal intervals. On the other hand, this line of research can also demonstrate the opposite—that seemingly pervasive elements of musical structure fail to point to universal aspects of perception and production (78, 79).

Ultimately, the difficulty in identifying putative musical universals lies in the definition of what is considered “universal.” Strictly speaking, no properties of language or music are universal per se; even in the absence of known counterexamples, it is not prudent to claim that exceptions do not exist or have never existed. The definition of universality then depends on one's view of exceptions, that is, on whether they invalidate or support a rule. Ellis's research on tonality (80) is a good example of how different interpretations can be drawn from the same empirical evidence, depending on how exceptions to universality are viewed. Ellis measured the tunings of dozens of instruments from various cultures in Europe and Asia. He found that the octave, fifth, and fourth were present in all of the tuning systems except those of instruments from Java, and concluded that this exception invalidated the idea of universal intervallic preferences. Although this exception was worth reporting and echoes recent empirical findings (78), it does not follow that the striking prevalence of octaves, fifths, and fourths across musical cultures should be entirely disregarded (81). The concept of a “statistical universal” (also referred to as a “typological generalization” in linguistics) is preferable, as it offers the flexibility needed when working with empirical data (19, 82).

The results of the current study provide evidence that the statistical universality of certain scale structures may result from a cognitive advantage in processing complex melodic patterns and encoding musical regularities. The symmetry properties discussed here merit serious consideration as a cognitive constraint that defines structural aspects of music. Although more behavioral and neurophysiological studies would be needed to further strengthen this hypothesis, the current findings strongly suggest that nonuniformity, which might enhance the position finding of tones in an octave diatonic context, provides cognitive benefits that could explain its pervasiveness. This study opens an avenue of research in the field of music cognition that should encourage systematic empirical investigations of recently identified statistical universals.

Materials and Methods

Experiment 1.

Experiment 1 was divided into three separate four-part sessions, one session per scale. The first and last parts of each session consisted of a probe-tone task to assess listeners’ perception of goodness of fit for all chromatic pitches in the context of the scale. In the second, listening-only part, participants were exposed to melodies generated from the scale by an artificial grammar. In the third part, listeners were tested on their ability to rapidly form explicit memories of melodies from the prior exposure phase (recognition) and implicitly acquire syntactic rules governing the transitions between notes in the melodies (generalization).

Participants.

Fourteen participants with self-reported normal hearing took part in experiment 1. One was excluded for participating only in the first session, and another for not following instructions and systematically giving the same response throughout one part of the experiment. Among the 12 remaining participants (5 females and 7 males, mean age = 24.33 y, ), 8 were enrolled in music performance and music technology programs at New York University; they all had formal musical training, had practiced an instrument for more than 7 y, and were still engaged in daily musical practice. None of the other four participants reported having received formal training in music. This binary split between musicians and nonmusicians was not planned; it reflected the sample of participants who volunteered for the experiment. All gave informed consent to participate in the study and were paid for their participation. The protocols for experiment 1 as well as experiments 2 and 3 were approved by the New York University institutional review board (University Committee on Activities Involving Human Subjects).

Scales.

Throughout the experiment, participants listened to melodies derived from one of three hexatonic 12-TET scales, commonly known as the Prometheus, tritone, and whole-tone scales. The Prometheus scale is asymmetric, the tritone scale is rotationally symmetric, and the whole-tone scale is uniformly symmetric. Their intervallic structures are depicted in Fig. 1A. The uniform–symmetric scale did not satisfy the uniqueness property: every tone had the same set of intervallic relations with the other tones. The asymmetric scale satisfied the uniqueness property: each tone had a unique set of relations with the other tones in the scale. The rotational–symmetric scale had partial uniqueness: each tone had a unique set of relations with only half of the other tones.
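The uniqueness property can be checked mechanically. The sketch below operationalizes a tone's “set of relations” as the sorted list of upward intervals from that tone to every other scale tone (an assumption about the intended definition) and counts how many tones share each profile. The pitch-class sets are the standard textbook forms of the three scales, in semitones above the first tone; they may be rotations of the versions drawn in Fig. 1A.

```python
def interval_profile(scale, tone, n):
    """Sorted upward intervals (in n-TET steps) from `tone` to every other scale tone."""
    return tuple(sorted((p - tone) % n for p in scale if p != tone))


def profile_multiplicities(scale, n):
    """For each tone, the number of scale tones sharing its profile (1 = unique)."""
    profiles = [interval_profile(scale, t, n) for t in scale]
    return [profiles.count(p) for p in profiles]


def satisfies_uniqueness(scale, n):
    """True if no two tones share an interval profile."""
    return all(m == 1 for m in profile_multiplicities(scale, n))


# Standard pitch-class forms (semitones above the first tone); assumed, and
# possibly rotated relative to Fig. 1A.
PROMETHEUS = [0, 2, 4, 6, 9, 10]  # asymmetric
TRITONE = [0, 1, 4, 6, 7, 10]     # rotationally symmetric (maps onto itself at +6)
WHOLE_TONE = [0, 2, 4, 6, 8, 10]  # uniformly symmetric

for name, scale in [("Prometheus", PROMETHEUS), ("tritone", TRITONE), ("whole-tone", WHOLE_TONE)]:
    print(name, profile_multiplicities(scale, 12))
# Prometheus [1, 1, 1, 1, 1, 1]
# tritone [2, 2, 2, 2, 2, 2]
# whole-tone [6, 6, 6, 6, 6, 6]
```

On this reading, two tones share a profile exactly when the scale maps onto itself under the transposition that carries one tone to the other. The tritone scale therefore pairs each tone with the tone a tritone away, and the whole-tone scale gives all six tones the same profile.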

Grammar.

Melodies were composed of the notes within a given scale, and their construction was determined by a finite-state grammar inspired by Loui et al. (43). A schematic representation of this grammar is shown in Fig. 2A. Each circle corresponds to the position of a note in the scale. For example, “1” corresponds to the first note of the current scale (A = 220 Hz for all scales) and “2” to the second note of the scale (B for the uniform–symmetric and asymmetric scales and B-flat for the rotational–symmetric scale). Arrows connecting circles determine the permissible transitions between notes; all permissible transitions from a given note were equally probable.
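A finite-state grammar of this kind can be run as a random walk over scale degrees. The transition table below is a hypothetical stand-in (the grammar of Fig. 2A is not reproduced here), and the frequency mapping assumes degree 1 sits at A = 220 Hz with the Prometheus scale in its standard form.

```python
import random

# Hypothetical finite-state grammar for illustration only (NOT Fig. 2A):
# each scale degree lists the degrees that may follow it. Melodies start on
# degree 1 and end when they return to degree 1.
TRANSITIONS = {
    1: [2, 3],
    2: [3, 4],
    3: [4, 5],
    4: [5, 6],
    5: [6, 1],
    6: [1],
}

# Prometheus scale on A, in semitones above A (A B C# D# F# G).
PROMETHEUS_ON_A = [0, 2, 4, 6, 9, 10]


def generate_melody(rng, start=1):
    """Random walk through the grammar; every permitted transition is equally likely."""
    melody = [start]
    while True:
        nxt = rng.choice(TRANSITIONS[melody[-1]])
        melody.append(nxt)
        if nxt == start:
            return melody


def degree_to_hz(degree, scale_steps, n_tet=12, ref_hz=220.0):
    """Frequency of a 1-based scale degree in n-TET, with degree 1 at ref_hz (A = 220 Hz)."""
    return ref_hz * 2.0 ** (scale_steps[degree - 1] / n_tet)


rng = random.Random(0)
melody = generate_melody(rng)
print(melody, [round(degree_to_hz(d, PROMETHEUS_ON_A), 1) for d in melody])
```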

Melodies.

All melodies were composed of 300-ms sine tones with 50-ms onset ramps, separated by 500-ms silent intervals. In the probe-tone phases, each of the 12 tones of the equal-tempered chromatic scale was used as a probe tone twice: once preceded by an ascending version of the current scale and once preceded by a descending version. The melody preceding the probe tone was therefore constant in terms of the pitches it contained. In the recognition–generalization test phase, melodies were generated using the current scale and the corresponding finite-state grammar.
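The probe-tone design above yields 24 trials per scale. The sketch below builds that trial list and computes a trial's duration from the reported tone and silence lengths; it assumes that the scale context consists of the six scale tones without an added octave, which this section does not specify.

```python
import random

N_TET = 12
TONE_MS, GAP_MS = 300, 500  # tone and silence durations reported for experiment 1


def probe_tone_trials(scale_steps, rng):
    """Each chromatic probe (0-11 semitones above the first scale tone) appears
    twice: once after the ascending scale and once after the descending scale."""
    ascending = list(scale_steps)
    descending = ascending[::-1]
    trials = [(context, probe) for probe in range(N_TET) for context in (ascending, descending)]
    rng.shuffle(trials)  # trials are presented in random order
    return trials


def trial_duration_ms(n_tones):
    """Duration of n_tones tones separated by silent gaps (no trailing gap)."""
    return n_tones * TONE_MS + (n_tones - 1) * GAP_MS


trials = probe_tone_trials([0, 2, 4, 6, 8, 10], random.Random(0))  # whole-tone scale
print(len(trials), trial_duration_ms(6 + 1))  # 24 5100
```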

Procedure.

Prior to participation, listeners were assigned to two counterbalanced groups, each exposed to a different version of the finite-state grammar. For participants in group 1, the grammar was used to generate melodies in a forward (left-to-right) direction. Participants in group 2 were presented with stimuli generated by the same grammar in a backward (right-to-left) direction. Since the two grammars differed only in their intermediate notes, the beginnings and endings of melodies generated by grammars 1 and 2 were identical. In other words, melodies from grammar 2 were simply grammar 1 melodies played backward.
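The backward grammar amounts to reversing every arrow of the forward one. The sketch below uses a hypothetical forward table (not Fig. 2A) and checks that a forward melody played backward is valid under the reversed grammar but not under the original.

```python
# Hypothetical forward grammar for illustration (NOT Fig. 2A): node -> successors.
FORWARD = {1: [2, 3], 2: [3, 4], 3: [4, 5], 4: [5, 6], 5: [6, 1], 6: [1]}


def reverse_grammar(transitions):
    """Backward grammar: every permitted transition a -> b becomes b -> a."""
    backward = {node: [] for node in transitions}
    for src, successors in transitions.items():
        for dst in successors:
            backward.setdefault(dst, []).append(src)
    return backward


def is_valid(melody, transitions):
    """True if every consecutive pair of notes is a permitted transition."""
    return all(b in transitions.get(a, []) for a, b in zip(melody, melody[1:]))


BACKWARD = reverse_grammar(FORWARD)
forward_melody = [1, 2, 4, 6, 1]
backward_melody = forward_melody[::-1]  # the same melody played backward
print(is_valid(forward_melody, FORWARD), is_valid(backward_melody, BACKWARD))  # True True
```

Because reversal leaves each melody's notes unchanged, the two groups hear every note equally often, which is the property the probe-tone analysis relies on.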

The melodies were generated using Max/MSP (a visual programming language for sound and music) and then presented to listeners using a MATLAB interface. Using this fixed set of pregenerated melodies ensured that all participants were exposed to the same complexity of note transitions during the recognition and generalization tasks. It also ensured that the frequency of occurrence of each note was the same regardless of which grammar was assigned, a crucial factor for the probe-tone ratings analysis. Participants were tested individually in a double-walled sound-isolation booth. Audio files of the stimuli were encoded at 16-bit resolution and 44.1-kHz sampling rate and presented on Sennheiser HD 650 headphones. The sound presentation level was above 60 dB sound pressure level (SPL), A-weighted. Instructions were displayed on a computer screen and participants' responses were collected with a keyboard and mouse.

Listeners were first asked to assess goodness of fit for all 12 chromatic pitches in the context of the featured scale (probe-tone task). The stimuli were presented in random order, and participants rated how well the final pitch of each melody fit the preceding context on a scale from 1 to 7 (1 = lowest fit, 7 = highest fit). In the subsequent exposure phase, 100 melodies generated with the designated grammar in the given scale were played once each in random order; participants advanced through the melodies by clicking a “Continue” button. This was followed by a test phase (recognition–generalization test), in which listeners were presented with pairs of melodies and asked to judge which melody sounded more familiar. In each trial, one melody was taken from the set presented in the exposure phase and the other was either a new melody generated from a different grammar (generalization task) or a new melody generated from the original grammar (recognition task). The presentation order of the two melodies in each trial and the type of task (generalization vs. recognition) were systematically shuffled and counterbalanced across participants. Informal debriefing of pilot participants suggested that all scales were perceived as equally unfamiliar. Formal familiarity ratings were therefore not collected after each session, to avoid drawing unnecessary attention to the familiarity of the scales in subsequent sessions.
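One simple way to ask whether a listener's two-alternative choices depart from chance (0.5) is an exact binomial test on the number of trials in which the exposure melody was chosen. This is an illustrative sketch only: the analysis reported for experiment 1 used a mixed-effects model, and the trial counts below are hypothetical.

```python
from math import comb


def binomial_two_sided_p(k, n, p=0.5):
    """Exact two-sided binomial test of k successes in n trials against rate p.

    Sums the probabilities of all outcomes that are no more likely than the
    observed one (a small relative tolerance absorbs floating-point noise).
    """
    pmf = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    cutoff = pmf[k] * (1 + 1e-7)
    return min(1.0, sum(q for q in pmf if q <= cutoff))


# Hypothetical listener: chose the exposure melody on 15 of 20 trials.
print(round(binomial_two_sided_p(15, 20), 4))  # 0.0414
```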

Evaluation of the mixed-effects models.

Probe-tone task.

The proportional odds (parallel slopes) assumption of the ordinal model was evaluated by fitting a series of binary logistic regressions to the fitness ratings and checking the equality of coefficients across cut points. Each cut point transformed the original ordinal ratings into a binary variable equal to 0 if the rating was below that fitness value and 1 if it was greater than or equal to it (cut points ranged from 2 to 7 on the rating scale). This analysis indicated that the parallel slopes assumption held in all cases, with the possible exception of probe-tone probability. Given that the fitness values were (arguably) equally spaced and encompassed more than five categories, a linear model was also fitted alongside the ordinal model. Its results were similar to those of the ordinal model and are not reported here (the only difference was one additional significant interaction between session and Scale[Asymmetric]). The ordinal model was evaluated by comparing it with a reduced model consisting only of the intercept and the random effect. A likelihood-ratio test indicated that the full model fit better than the reduced model [, ], and pseudo-R² values were calculated to describe relative fit (McFadden = 0.086, Cox and Snell = 0.274, Nagelkerke = 0.281).
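The cut-point transformation and the three pseudo-R² measures have standard definitions, sketched below. The per-cut-point logistic regressions themselves are not shown, and the ratings are hypothetical; the log-likelihoods would come from whatever software fitted the models.

```python
import math


def dichotomize(ratings, cut):
    """1 if a 1-7 fitness rating is >= cut, else 0 (one binary variable per cut point)."""
    return [1 if r >= cut else 0 for r in ratings]


def pseudo_r2(ll_null, ll_full, n):
    """McFadden, Cox-Snell, and Nagelkerke pseudo-R^2 from log-likelihoods and n observations."""
    mcfadden = 1.0 - ll_full / ll_null
    cox_snell = 1.0 - math.exp(2.0 * (ll_null - ll_full) / n)
    nagelkerke = cox_snell / (1.0 - math.exp(2.0 * ll_null / n))  # rescaled to a max of 1
    return mcfadden, cox_snell, nagelkerke


ratings = [1, 3, 7, 2, 5]  # hypothetical ratings
binary_by_cut = {cut: dichotomize(ratings, cut) for cut in range(2, 8)}
print(binary_by_cut[3])  # [0, 1, 1, 0, 1]
```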

Recognition–generalization model evaluation.

The GLMM was evaluated by comparing it with a null model consisting only of the intercept and the random effect, and with a basic model containing the intercept, the random effect, and IsSecond. The χ2 tests comparing the selected model with the other models were all significant [null model, ; basic model, ]. The Bayesian information criterion (BIC) and Akaike information criterion (AIC) values were lower for the selected model than for the other models, indicating a better fit. The C statistic (area under the receiver operating characteristic curve) for the model was 0.67, indicating somewhat poor discriminability. Multicollinearity was tested using the kappa.mer and vifs.mer functions in the mer-utils R library, with kappa = 1.8 and all variance inflation factors (VIFs) < 1.3, indicating no issues.
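The fit and discrimination measures used in these model evaluations have standard definitions. The sketch below computes them from a model's log-likelihood, parameter count, and predicted probabilities; it is not tied to any particular R package, and whether the reported C statistics used predictions that include the random effects is not stated here.

```python
import math


def c_statistic(scores, labels):
    """Area under the ROC curve via pairwise concordance (ties count 1/2).

    scores: model-predicted probabilities; labels: observed 0/1 responses.
    O(n_pos * n_neg); a rank-based formula is faster for large data sets.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need both outcomes")
    concordant = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return concordant / (len(pos) * len(neg))


def somers_dxy(c):
    """Somers's Dxy = 2(C - 0.5)."""
    return 2.0 * (c - 0.5)


def aic(loglik, k):
    return 2 * k - 2 * loglik


def bic(loglik, k, n):
    return k * math.log(n) - 2 * loglik


def lr_statistic(ll_reduced, ll_full):
    """Likelihood-ratio chi-square; df = difference in parameter counts."""
    return 2.0 * (ll_full - ll_reduced)
```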

Experiment 2.

Experiment 2 utilized a more flexible artificial grammar and extended the results to the learning of non-Western musical systems.

Participants.

Twenty-two participants with self-reported normal hearing took part in the experiment. One was excluded because the experimenter made an error in the presentation protocol. Among the 21 remaining participants (14 males and 7 females, mean age = 24.3 y, ), 15 had 5 or more y of formal musical training and all were still engaged in daily musical practice. None of the six other participants reported having formal training, although two of them reported regularly performing as disc jockeys. This distribution of musical experience was a function of the sample of participants who volunteered for the study. All gave informed consent to participate in the study and were paid for their participation.

Scales.

Participants were presented with melodies generated from hexatonic or heptatonic scales in each of the three structure conditions: asymmetric, reflective–symmetric, and uniform–symmetric. Each hexatonic scale was composed of six tones in 12-TET, and each heptatonic scale of seven tones in 14-TET. The unconventional octave division used for the heptatonic scales ensured that the melodies would be highly unfamiliar to all listeners, since 14-TET is not used in Western music. The three scale conditions were obtained by positioning tones in the 12-TET or 14-TET space so as to conform to the different intervallic structural properties, as illustrated in Fig. 1B. The reflective–symmetric scales possessed an axis of symmetry: the sequence of intervals formed by starting at a particular tone and moving both clockwise and counterclockwise halfway around the circle (i.e., until meeting in the middle) was identical. However, each tone had a unique set of relations with all of the other tones when moving from one tone to another in the same direction (clockwise/counterclockwise or up/down) across the octave span, thus satisfying the uniqueness property.
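The distinction between reflective symmetry and directional uniqueness can be illustrated numerically. The 14-TET scale below is hypothetical (chosen to be mirror-symmetric about pitch class 0, not taken from Fig. 1B): its upward interval profiles are all distinct, yet each tone's downward profile equals the upward profile of its mirror partner.

```python
def up_profile(scale, tone, n):
    """Intervals from `tone` to the other tones, measured upward (clockwise)."""
    return tuple(sorted((p - tone) % n for p in scale if p != tone))


def down_profile(scale, tone, n):
    """Intervals from `tone` to the other tones, measured downward (counterclockwise)."""
    return tuple(sorted((tone - p) % n for p in scale if p != tone))


def has_reflection_axis(scale, n):
    """True if some reflection x -> (t - x) mod n maps the scale onto itself."""
    s = set(scale)
    return any({(t - p) % n for p in s} == s for t in range(n))


# Hypothetical 7-note scale in 14-TET, mirror-symmetric about pitch class 0
# (for illustration only; not necessarily the scale in Fig. 1B).
REFLECTIVE_14 = [0, 1, 3, 6, 8, 11, 13]

ups = [up_profile(REFLECTIVE_14, t, 14) for t in REFLECTIVE_14]
print(has_reflection_axis(REFLECTIVE_14, 14))  # True
print(len(set(ups)) == len(ups))               # True: unique in one direction
print(down_profile(REFLECTIVE_14, 1, 14) == up_profile(REFLECTIVE_14, 13, 14))  # True
```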

Grammar.

Melodies were composed of the tones within a given scale, and their construction was determined by a first-order Markov chain inspired by Rohrmeier et al. (83). Since two scale types were used (hexatonic 12-TET and heptatonic 14-TET), two different grammars were used as well, each including all of the tones in the corresponding scale. However, the complexity of the two grammars was kept constant with respect to the transition probabilities used to generate the melodies. Schematic representations of the hexatonic and heptatonic grammars are shown in Fig. 2 B and C. Each node corresponds to a note in the scale; the correspondence between nodes and notes was randomized for each participant and for each structure condition. Arrows connecting nodes determine the permissible transitions between notes, along with their transition probabilities. The “correct” version of the grammar was determined for each listener prior to the exposure phase. The “incorrect” version was obtained by swapping nodes 3 and 4 and nodes 5 and 6, which introduced 10 possible wrong transitions. Melodies generated with the incorrect grammar contained a set of three transitions between tones that were never part of the melodies generated with the correct grammar (see simplified examples of melodies with correct and incorrect transitions in Fig. 2E).
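The generation scheme can be sketched as follows: sample a path through a weighted Markov chain, attach notes to nodes through a per-participant random mapping, and derive the incorrect grammar by exchanging the notes of nodes 3/4 and 5/6. The grammar here is a hypothetical toy (not Fig. 2B), with start and end nodes chosen outside the swapped pairs; the 15-tone limit and the requirement to reach the final node come from the text.

```python
import random

# Hypothetical weighted grammar for illustration (NOT Fig. 2B): node -> {next: prob}.
# Melodies start at node 1 and end at node 2, so the swapped nodes are interior.
GRAMMAR = {
    1: {3: 0.5, 4: 0.5},
    3: {4: 0.4, 5: 0.6},
    4: {5: 0.3, 6: 0.7},
    5: {6: 0.5, 3: 0.2, 2: 0.3},
    6: {3: 0.4, 2: 0.6},
}
START, END, MAX_TONES = 1, 2, 15


def generate(grammar, rng, start=START, end=END, max_tones=MAX_TONES):
    """Sample a node sequence from the first-order Markov chain, retrying until
    it reaches `end` within `max_tones` tones."""
    while True:
        seq = [start]
        while seq[-1] != end and len(seq) < max_tones:
            options = grammar[seq[-1]]
            seq.append(rng.choices(list(options), weights=list(options.values()))[0])
        if seq[-1] == end:
            return seq


def random_mapping(scale_notes, rng):
    """Per-participant random assignment of scale notes to nodes 1..len(scale_notes)."""
    notes = list(scale_notes)
    rng.shuffle(notes)
    return dict(zip(range(1, len(notes) + 1), notes))


def incorrect_mapping(mapping, swaps=((3, 4), (5, 6))):
    """'Incorrect' grammar: exchange the notes attached to nodes 3/4 and 5/6."""
    swapped = dict(mapping)
    for a, b in swaps:
        swapped[a], swapped[b] = swapped[b], swapped[a]
    return swapped


def note_transitions(grammar, mapping):
    """Set of (note, next_note) pairs that the grammar can produce."""
    return {(mapping[a], mapping[b]) for a, nexts in grammar.items() for b in nexts}


rng = random.Random(1)
correct = random_mapping([0, 2, 4, 6, 9, 10], rng)  # hypothetical 12-TET scale steps
wrong = incorrect_mapping(correct)
melody = [correct[node] for node in generate(GRAMMAR, rng)]
new_pairs = note_transitions(GRAMMAR, wrong) - note_transitions(GRAMMAR, correct)
print(melody, len(new_pairs))
```

In this toy grammar the swap creates five note transitions that the correct grammar never produces; the corresponding count for the actual grammars depends on the structure shown in Fig. 2.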

Melodies.

All melodies were composed of 500-ms sine tones to which a tapered-cosine (Tukey) window was applied. Tones were not separated by silent intervals. During the exposure phase, 100 melodies were generated in real time and presented to listeners. Melodies were produced using the current tuning condition (hexatonic 12-TET or heptatonic 14-TET), the current structure condition (asymmetric, reflective–symmetric, or uniform–symmetric), and the correct version of the grammar. Melodies were constrained so that they did not exceed 15 tones and reached the final note defined by the grammar (Fig. 2 B and C). During the test phase, half of the melodies were produced in the same way with the correct grammar and half with the incorrect grammar.
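At the reported 44.1-kHz sampling rate, each 500-ms tone spans 22,050 samples. The sketch below renders a melody as back-to-back Tukey-windowed sine tones; the taper fraction ALPHA = 0.1 is an assumption, since the window parameter is not reported, and amplitude scaling and file writing are omitted.

```python
import math

SR = 44_100    # sampling rate (Hz) reported for the stimuli
TONE_S = 0.5   # 500-ms tones, concatenated without silent gaps
ALPHA = 0.1    # ASSUMED Tukey taper fraction; the value used is not reported


def tukey(n_samples, alpha):
    """Tapered-cosine (Tukey) window: raised-cosine tapers spanning alpha/2 of
    the window at each end, flat (1.0) in between."""
    alpha = min(max(alpha, 0.0), 1.0)
    if alpha == 0.0 or n_samples < 2:
        return [1.0] * n_samples
    edge = alpha * (n_samples - 1) / 2.0
    last = n_samples - 1
    window = []
    for i in range(n_samples):
        if i < edge:
            window.append(0.5 * (1.0 - math.cos(math.pi * i / edge)))
        elif i > last - edge:
            window.append(0.5 * (1.0 - math.cos(math.pi * (last - i) / edge)))
        else:
            window.append(1.0)
    return window


def render_melody(freqs_hz, sr=SR, dur_s=TONE_S, alpha=ALPHA):
    """Concatenate Tukey-windowed sine tones (one per frequency) with no gaps."""
    n = int(round(sr * dur_s))
    win = tukey(n, alpha)
    samples = []
    for f in freqs_hz:
        samples.extend(w * math.sin(2.0 * math.pi * f * i / sr) for i, w in enumerate(win))
    return samples
```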

Procedure.

The experiment consisted of two separate sessions, one for the hexatonic 12-TET scales and another for the heptatonic 14-TET scales, held on 2 different days separated by 1 wk on average. The order of sessions was systematically counterbalanced across participants. Each session was divided into three parts, each corresponding to one symmetry condition; the order of these parts was randomized. In each part, listeners first completed an exposure phase during which they listened to 100 melodies, generated in real time with the designated scale and only the correct version of the grammar. Listeners simply clicked a mouse to play the next melody. The test phase immediately followed the exposure phase. During this phase, 80 melodies were generated on the fly; half of them were generated using the correct version of the grammar and the other half using the incorrect version. After each melody was played, listeners reported whether it seemed familiar or unfamiliar with respect to what they had heard in the exposure phase. Participants were tested individually in a double-walled sound-isolation booth. Audio files of the stimuli were encoded at 16-bit resolution and 44.1-kHz sampling rate and presented on Sennheiser HD 650 headphones. The presentation level was above 60 dB SPL (A-weighted). Instructions were displayed on a computer screen and participants' responses were collected with a keyboard and mouse. As in experiment 1, informal debriefing indicated that all scales were perceived as equally unfamiliar, although the heptatonic scales were described as sounding unconventional. No formal ratings of familiarity were collected after each session.
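The session structure can be summarized as a per-participant plan. In the sketch below, alternating session order by participant index is an assumed way of counterbalancing (the exact scheme is not described). Condition order within a session is shuffled, and each test phase mixes 40 correct-grammar and 40 incorrect-grammar melodies.

```python
import random

CONDITIONS = ("asymmetric", "reflective-symmetric", "uniform-symmetric")
SESSIONS = ("hexatonic 12-TET", "heptatonic 14-TET")


def session_plan(participant_index, rng, n_exposure=100, n_test=80):
    """Per-participant plan for experiment 2 (the counterbalancing rule is an assumption)."""
    order = SESSIONS if participant_index % 2 == 0 else SESSIONS[::-1]
    plan = []
    for session in order:
        conditions = list(CONDITIONS)
        rng.shuffle(conditions)  # randomized order of symmetry conditions
        for condition in conditions:
            test = ["correct"] * (n_test // 2) + ["incorrect"] * (n_test // 2)
            rng.shuffle(test)
            plan.append({"session": session, "condition": condition,
                         "n_exposure": n_exposure, "test": test})
    return plan


plan = session_plan(1, random.Random(0))
print(len(plan), sum(len(part["test"]) for part in plan))  # 6 480
```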

Evaluation of the mixed-effects model.

The GLMM was evaluated by comparing it with 1) a null model consisting only of the intercept and the random effect; 2) a basic model with the intercept, the random effect, and IsFamiliar; and 3) a three-way interaction model with all of the terms. The χ2 tests comparing the selected model with the simpler models were significant [null model, ; basic model, ]. There was no significant difference between the selected model and the full interaction model (). The BIC values were lower for the selected model than for all other models, indicating a better fit. The AIC was also lower for the selected model than for the null and basic models and nearly the same as for the full interaction model (11,262 for the selected model versus 11,261 for the interaction model). The C statistic for the model was 0.78, indicating that the model's discriminability was very good. Multicollinearity was assessed, with kappa = 1.7 and all VIFs < 1.4, indicating no issues.

Experiment 3.

The procedure in experiment 3 was identical to that in experiment 2, but a rotational–symmetric scale instead of a reflective–symmetric scale was used in addition to the uniform–symmetric and asymmetric scales.

Participants.

Twenty participants with self-reported normal hearing took part in the experiment (12 males and 8 females, mean age = 25 y, ). Among them, 12 had 5 or more y of formal musical training and 9 were still engaged in daily musical practice. This distribution of musical experience was a function of the sample of participants who volunteered for the study. All gave informed consent to participate in the study and were paid for their participation.

Scales.

Participants were presented with melodies generated from hexatonic or octatonic scales in each of the three symmetry conditions: asymmetric, rotational–symmetric, and uniform–symmetric. Each hexatonic scale was composed of six tones in 12-TET, and each octatonic scale was composed of eight tones in 16-TET. The unconventional 16-TET served the same purpose as in experiment 2: to test the effect of scale structure in an unfamiliar tuning system. The three scale conditions were obtained by positioning tones in the 12-TET or 16-TET space to obtain different intervallic structural properties, as illustrated in Fig. 1C. As in experiment 1, the rotational–symmetric scales had partial uniqueness: Each tone had a unique set of relations with only half of the other tones.

Grammar.

In experiment 3, the Markov-chain grammars were designed as described for experiment 2. Since two scale types were used (hexatonic 12-TET and octatonic 16-TET), two different grammars were used as well, each including all of the tones in the corresponding scale. However, the complexity of the two grammars was kept constant with respect to the transition probabilities used to generate the melodies. The grammar used for the hexatonic 12-TET melodies was the same as in experiment 2 and is shown in Fig. 2B. The grammar for the octatonic 16-TET melodies is shown in Fig. 2D.

Melodies.

The melodies were generated as described for experiment 2. For all tuning (hexatonic 12-TET and octatonic 16-TET) and structure conditions (asymmetric, rotational–symmetric, and uniform–symmetric), melodies were constrained so that they did not exceed 15 tones and had to reach the final note, as defined by the grammar. During the test phase, half of the melodies were produced using the correct grammar and half of the melodies were produced using the incorrect grammar.

Procedure.

The procedure was identical to that in experiment 2. Participants completed two sessions on different days, separated by 1 wk on average: one session for the hexatonic 12-TET scales and the other for the octatonic 16-TET scales. As before, informal debriefing indicated that participants perceived all scales as equally unfamiliar, although they reported the task with the 16-TET tuning as particularly difficult. No formal familiarity ratings were collected after either session.

Evaluation of the mixed-effects model.

The GLMM was evaluated by comparing it with 1) a null model containing only the intercept and the random effect; 2) a basic model containing the intercept, the random effect, and IsFamiliar; and 3) a three-way interaction model containing all of the terms. χ² tests comparing the selected model with each of the other models were all significant [null model, ; basic model, ; full interaction model, ]. BIC was lower for the selected model than for all other models, indicating a better fit. AIC was also lower for the selected model than for the null and basic models, but higher than for the full interaction model (difference = ). Because BIC penalizes additional parameters more heavily than AIC, the selected model was preferred over the full interaction model on the basis of the BIC difference (). The statistic for the model was 0.74, indicating that the model's discriminability was quite good. Multicollinearity was assessed with kappa (1.7) and variance inflation factors (all VIFs 1.4), indicating no collinearity problems.
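The comparison logic behind these statistics can be sketched with standard formulas: the likelihood-ratio (χ²) test for nested models, AIC = 2k − 2 ln L, and BIC = k ln n − 2 ln L, where k is the number of parameters and n the number of observations. The Python sketch uses invented log-likelihoods and parameter counts (not the study's values) to show how AIC can favor a larger model while BIC favors a smaller one, which is the pattern reported for the full interaction model. It uses only the standard library, so the χ² tail probability is computed from a closed-form recurrence valid for integer degrees of freedom.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FittedModel:
    """Summary of a fitted model; all numbers used below are hypothetical."""
    name: str
    log_lik: float  # maximized log-likelihood
    n_params: int   # fixed effects plus random-effect variance parameters
    n_obs: int      # number of trials (observations)


def aic(m: FittedModel) -> float:
    return 2 * m.n_params - 2 * m.log_lik


def bic(m: FittedModel) -> float:
    # Each parameter costs ln(n_obs) instead of 2, so BIC favors smaller models
    # more strongly than AIC whenever n_obs > e**2 (about 7.4).
    return m.n_params * math.log(m.n_obs) - 2 * m.log_lik


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail chi-square probability for a positive integer df (stdlib only)."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    # Start at df = 2 (even) or df = 1 (odd), then step up by 2 using
    # Q(x; k + 2) = Q(x; k) + (x/2)**(k/2) * exp(-x/2) / Gamma(k/2 + 1).
    if df % 2 == 0:
        q, k = math.exp(-half), 2
    else:
        q, k = math.erfc(math.sqrt(half)), 1
    while k < df:
        q += half ** (k / 2) * math.exp(-half) / math.gamma(k / 2 + 1)
        k += 2
    return q


def likelihood_ratio_test(reduced: FittedModel, full: FittedModel) -> tuple[float, int, float]:
    """Chi-square test for nested models: returns (statistic, df, p-value)."""
    stat = 2 * (full.log_lik - reduced.log_lik)
    df = full.n_params - reduced.n_params
    return stat, df, chi2_sf(stat, df)


# Hypothetical fits, chosen only to show how AIC and BIC can disagree.
selected = FittedModel("selected", log_lik=-1500.0, n_params=8, n_obs=2000)
full = FittedModel("full interaction", log_lik=-1495.0, n_params=12, n_obs=2000)

print("AIC(selected) - AIC(full):", aic(selected) - aic(full))
print("BIC(selected) - BIC(full):", round(bic(selected) - bic(full), 2))
print("LRT (stat, df, p):", likelihood_ratio_test(selected, full))
```

With these numbers the full model has an AIC 2 points lower, yet a BIC about 20 points higher, because each of its four extra parameters costs ln 2000 ≈ 7.6 under BIC but only 2 under AIC.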

Acknowledgments

We thank Natalie Wu and Emma Ning for help with data collection. This work has also benefited from discussions with Shihab Shamma, Fred Lerdahl, and Michael Seltenreich. We thank David Poeppel for critical comments on the manuscript.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission. C.L.K. is a guest editor invited by the Editorial Board.

*Debussy’s use of the whole-tone scale was reportedly inspired by his visit to the Exposition Universelle held in Paris in 1889, which featured gamelan music from Java (26). The whole-tone scale has also been used in compositions by Béla Bartók, Olivier Messiaen, Maurice Ravel, and Lili Boulanger.

Data Availability

The datasets collected and analyzed in the reported studies, as well as examples of the stimuli used, are available on the Open Science Framework (OSF) (DOI: 10.17605/OSF.IO/XEQPA). Behavioral data, sound files, code, and data tables have also been deposited in the OSF archive (84).

References

- 1. Merriam A. P., Merriam V., The Anthropology of Music (Northwestern University Press, 1964).

- 2. Nettl B., The Study of Ethnomusicology: Thirty-Three Discussions (University of Illinois Press, 2015).

- 3. Blacking J., Venda Children’s Songs: A Study in Ethnomusicological Analysis (University of Chicago Press, 1995).

- 4. Fourer D., Rouas J.-L., Hanna P., Robine M., “Automatic timbre classification of ethnomusicological audio recordings” in Proceedings of the International Society for Music Information Retrieval Conference (ISMIR) (ISMIR, 2014), pp. 295–300.

- 5. Polak R., et al., Rhythmic prototypes across cultures: A comparative study of tapping synchronization. Music Percept. 36, 1–23 (2018).

- 6. Mehr S. A., et al., Universality and diversity in human song. Science 366, eaax0868 (2019).

- 7. Brown D. E., Human Universals (McGraw-Hill, New York, NY, 1991).

- 8. Hagen E. H., Bryant G. A., Music and dance as a coalition signaling system. Hum. Nat. 14, 21–51 (2003).

- 9. Trehub S. E., The developmental origins of musicality. Nat. Neurosci. 6, 669–673 (2003).

- 10. Herzog G., Music’s dialects: A non-universal language. Independent J. Columb. Univ. 6, 1–2 (1939).

- 11. List G., On the non-universality of musical perspectives. Ethnomusicology 15, 399–402 (1971).

- 12. Meyer L. B., Universalism and relativism in the study of ethnic music. Ethnomusicology 4, 49–54 (1960).

- 13. Ellis A. J., On the musical scales of various nations. J. Soc. Arts 33, 485–527 (1885).

- 14. Fritz T., et al., Universal recognition of three basic emotions in music. Curr. Biol. 19, 573–576 (2009).

- 15. Honing H., Cate C. T., Peretz I., Trehub S. E., Biology, cognition and origins of musicality [special issue]. Philos. Trans. Biol. Sci. 370, 1664 (2015).

- 16. Jacoby N., McDermott J. H., Integer ratio priors on musical rhythm revealed cross-culturally by iterated reproduction. Curr. Biol. 27, 359–370 (2017).

- 17. Juslin P. N., Sloboda J. A., “The past, present, and future of music and emotion research” in Handbook of Music and Emotion: Theory, Research, Applications, Juslin P. N., Sloboda J. A., Eds. (Oxford University Press, New York, NY, 2010), pp. 933–955.

- 18. Nettl B., An ethnomusicologist contemplates universals in musical sound and musical culture. Origins Music 3, 463–472 (2000).

- 19. Savage P. E., Brown S., Sakai E., Currie T. E., Statistical universals reveal the structures and functions of human music. Proc. Natl. Acad. Sci. U.S.A. 112, 8987–8992 (2015).

- 20. Panteli M., et al., Learning a Feature Space for Similarity in World Music (ISMIR, 2016).

- 21. Harvey P. H., et al., The Comparative Method in Evolutionary Biology (Oxford University Press, Oxford, UK, 1991), vol. 239.

- 22. Brown S., Jordania J., Universals in the world’s musics. Psychol. Music 41, 229–248 (2013).

- 23. Fink R., “The neanderthal flute and origin of the scale: Fang or flint? A response” in Studies in Music Archaeology III, Hickmann E., Kilmer A. D., Eichmann R., Eds. (Verlag Marie Leidorf, Rahden, 2003), pp. 83–87.

- 24. Whittall A., Tonality and the whole-tone scale in the music of Debussy. Music Rev. 36, 261–271 (1975).

- 25. Perle G., Serial Composition and Atonality: An Introduction to the Music of Schoenberg, Berg, and Webern (University of California Press, 1972).

- 26. Tamagawa K., Echoes from the East: The Javanese Gamelan and Its Influence on the Music of Claude Debussy (Lexington Books, 2019).

- 27. Browne R., Tonal implications of the diatonic set. Theory Only 5, 3–21 (1981).

- 28. Butler D., Brown H., Tonal structure versus function: Studies of the recognition of harmonic motion. Music Percept. 2, 6–24 (1984).

- 29. Balzano G. J., “The pitch set as a level of description for studying musical pitch perception” in Music, Mind, and Brain, Clynes M., Ed. (Plenum Press, New York and London, 1982), pp. 321–351.

- 30. Pearce M., The Group-Theoretic Description of Musical Pitch Systems (City University, London, UK, 2002).

- 31. Pressing J., Cognitive isomorphisms between pitch and rhythm in world musics: West Africa, the Balkans and Western tonality. Stud. Music 17, 38–61 (1983).

- 32. Messiaen O., Technique de Mon Langage Musical (Alphonse Leduc, 1944), vol. 1.

- 33. Trehub S. E., Schellenberg E. G., Kamenetsky S. B., Infants’ and adults’ perception of scale structure. J. Exp. Psychol. Hum. Percept. Perform. 25, 965 (1999).

- 34. Graves J. E., Micheyl C., Oxenham A. J., Expectations for melodic contours transcend pitch. J. Exp. Psychol. Hum. Percept. Perform. 40, 2338 (2014).

- 35. Krumhansl C. L., The psychological representation of musical pitch in a tonal context. Cognit. Psychol. 11, 346–374 (1979).

- 36. Krumhansl C. L., Shepard R. N., Quantification of the hierarchy of tonal functions within a diatonic context. J. Exp. Psychol. Hum. Percept. Perform. 5, 579 (1979).

- 37. Krumhansl C. L., Kessler E. J., Tracing the dynamic changes in perceived tonal organization in a spatial representation of musical keys. Psychol. Rev. 89, 334 (1982).

- 38. Krumhansl C. L., Keil F. C., Acquisition of the hierarchy of tonal functions in music. Mem. Cognit. 10, 243–251 (1982).

- 39. Corrigall K. A., Trainor L. J., Enculturation to musical pitch structure in young children: Evidence from behavioral and electrophysiological methods. Dev. Sci. 17, 142–158 (2014).

- 40. Castellano M. A., Bharucha J. J., Krumhansl C. L., Tonal hierarchies in the music of north India. J. Exp. Psychol. Gen. 113, 394 (1984).

- 41. Rohrmeier M., Widdess R., Incidental learning of melodic structure of north Indian music. Cognit. Sci. 41, 1299–1327 (2017).

- 42. Moldwin T., Schwartz O., Sussman E. S., Statistical learning of melodic patterns influences the brain’s response to wrong notes. J. Cognit. Neurosci. 29, 2114–2122 (2017).

- 43. Loui P., Wessel D. L., Kam C. L. H., Humans rapidly learn grammatical structure in a new musical scale. Music Percept. 27, 377–388 (2010).

- 44. Narmour E., The “genetic code” of melody: Cognitive structures generated by the implication-realization model. Contemp. Music Rev. 4, 45–63 (1989).

- 45. Rohrmeier M., Cross I., Artificial grammar learning of melody is constrained by melodic inconsistency: Narmour’s principles affect melodic learning. PloS One 8, e66174 (2013).

- 46. Stevens C. J., Music perception and cognition: A review of recent cross-cultural research. Topic. Cognit. Sci. 4, 653–667 (2012).

- 47. Krumhansl C. L., Cuddy L. L., “A theory of tonal hierarchies in music” in Music Perception, Jones M. R., Fay R. R., Popper A. N., Eds. (Springer, 2010), pp. 51–87.

- 48. Lange K., Czernochowski D., Does this sound familiar? Effects of timbre change on episodic retrieval of novel melodies. Acta Psychol. 143, 136–145 (2013).

- 49. Halpern A. R., Bartlett J. C., “Memory for melodies” in Music Perception, Jones M. R., Fay R. R., Popper A. N., Eds. (Springer, New York, NY, 2010), pp. 233–258.

- 50. Dowling W. J., Scale and contour: Two components of a theory of memory for melodies. Psychol. Rev. 85, 341 (1978).

- 51. Eerola T., The Dynamics of Musical Expectancy: Cross-Cultural and Statistical Approaches to Melodic Expectations (Jyväskylän Yliopisto, 2003), vol. 9.

- 52. Pearce M. T., Wiggins G. A., Auditory expectation: The information dynamics of music perception and cognition. Topic. Cognit. Sci. 4, 625–652 (2012).

- 53. Di Liberto G. M., et al., Cortical encoding of melodic expectations in human temporal cortex. eLife 9, e51784 (2020).

- 54. Cannam C., Landone C., Sandler M., “Sonic Visualiser: An open source application for viewing, analysing, and annotating music audio files” in Proceedings of the 18th ACM International Conference on Multimedia, del Bimbo A., Chang S., Smeulders A., Eds. (Association for Computing Machinery, New York, NY, 2010), pp. 1467–1468.

- 55. Zatorre R. J., Delhommeau K., Zarate J. M., Modulation of auditory cortex response to pitch variation following training with microtonal melodies. Front. Psychol. 3, 544 (2012).

- 56. Leung Y., Dean R. T., The difficulty of learning microtonal tunings rapidly: The influence of pitch intervals and structural familiarity. Psychomusicol. Music Mind Brain 28, 50 (2018).

- 57. Creel S. C., Newport E. L., “Tonal profiles of artificial scales: Implications for music learning” in Proceedings of the 7th International Conference on Music Perception and Cognition, Stevens C., Burnham D., McPherson G., Schubert E., Renwick J., Eds. (Causal Productions, Adelaide, 2002), pp. 281–284.

- 58. Dowling W. J., Bartlett J. C., The importance of interval information in long-term memory for melodies. Psychomusicol. J. Res. Music Cognit. 1, 30 (1981).

- 59. Dowling W. J., Recognition of melodic transformations: Inversion, retrograde, and retrograde inversion. Percept. Psychophys. 12, 417–421 (1972).

- 60. Krumhansl C. L., Sandell G. J., Sergeant D. C., The perception of tone hierarchies and mirror forms in twelve-tone serial music. Music Percept. 5, 31–77 (1987).

- 61. Dienes Z., Longuet-Higgins C., Can musical transformations be implicitly learned? Cognit. Sci. 28, 531–558 (2004).

- 62. Huron D. B., Sweet Anticipation: Music and the Psychology of Expectation (MIT Press, 2006).

- 63. Tillmann B., Bharucha J. J., Bigand E., “Implicit learning of regularities in Western tonal music by self-organization” in Connectionist Models of Learning, Development and Evolution, French R. M., Sougné J. P., Eds. (Springer, 2001), pp. 175–184.

- 64. Salimpoor V. N., Zald D. H., Zatorre R. J., Dagher A., McIntosh A. R., Predictions and the brain: How musical sounds become rewarding. Trends Cognit. Sci. 19, 86–91 (2015).

- 65. Morgan E., Fogel A., Nair A., Patel A. D., Statistical learning and gestalt-like principles predict melodic expectations. Cognition 189, 23–34 (2019).

- 66. Kallman H. J., Octave equivalence as measured by similarity ratings. Percept. Psychophys. 32, 37–49 (1982).

- 67. Huron D., Interval-class content in equally tempered pitch-class sets: Common scales exhibit optimum tonal consonance. Music Percept. 11, 289–305 (1994).

- 68. Gill K. Z., Purves D., A biological rationale for musical scales. PloS One 4, e8144 (2009).