Abstract

COVID-19 is an ongoing pandemic that continues to spread daily and has reached significant community transmission. X-ray images, computed tomography (CT) images and RT-PCR test kits are three readily available options for detecting this infection. Compared with screening for COVID-19 from X-ray and CT images, RT-PCR test kits face problems such as long analysis time, a high false-negative rate, and poor sensitivity and specificity. Radiological signatures detectable on X-rays have been found in COVID-19-positive patients. Radiologists can examine these signatures, but the process is time-consuming and error-prone (riddled with intra-observer variability). The chest X-ray analysis process therefore needs to be automated, and AI-driven tools have proven to be a strong choice for increasing accuracy and reducing analysis time, especially in medical image analysis. We shortlisted four datasets and 20 CNN-based models and tested and validated them in 16 detailed experiments with fivefold cross-validation. The two proposed models, an ensemble deep transfer learning CNN model and a hybrid LSTMCNN, perform the best. The ensemble CNN achieved an accuracy of up to 99.78% (96.51% on average), an F1-score of up to 0.9977 (0.9682 on average) and an AUC of up to 0.9978 (0.9583 on average). The LSTMCNN achieved an accuracy of up to 98.66% (96.46% on average), an F1-score of up to 0.9974 (0.9668 on average) and an AUC of up to 0.9856 (0.9645 on average). These two best-performing pre-trained transfer learning-based detection models can contribute clinically by providing patients with correct and rapid predictions.

Keywords: COVID-19 detection, Deep learning, Chest X-ray images, Ensemble models, Convolutional neural network

Introduction

In December 2019, the world observed the first human cases of infection with a zoonotic virus of Rhinolophus bat origin, named severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), in Wuhan City, China. The city soon became an epicentre of coronavirus disease (COVID-19). The epidemic later spread around the globe, resulting in millions of human deaths and economic losses of billions of dollars. Many people have lost their jobs, and the GDP of economically sound countries such as the USA, Italy, Spain, India and many others is experiencing negative growth. Most countries were not prepared financially, medically or socially for a situation in which the disease, due to its transmissible nature, enters the stage of community spread. This resulted in complete lockdowns in many nations, contributing to an adverse psychological effect on people's health and minds.

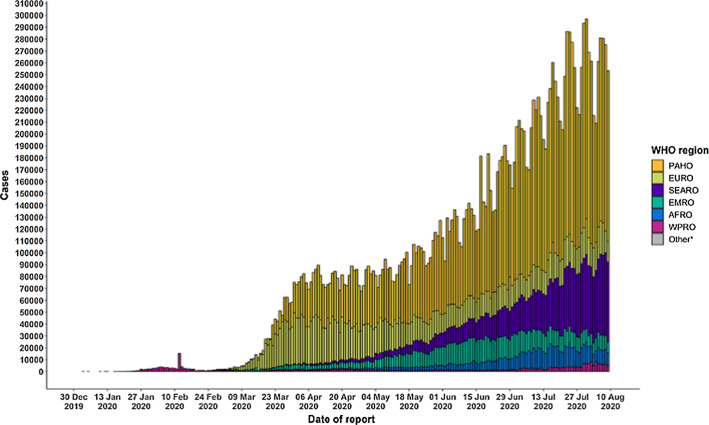

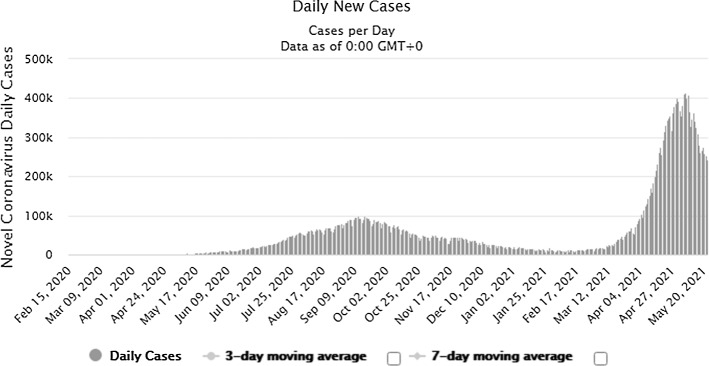

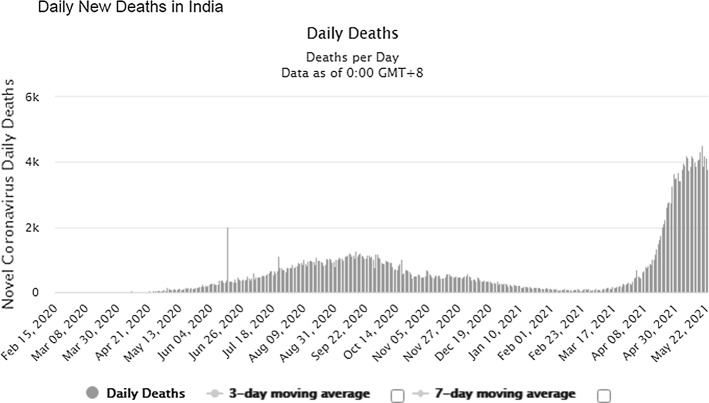

The pandemic continues to spread across the world and has reached community-level transmission. The number of patients increased from 2,810,325 confirmed cases and 193,825 deaths in April 2020 to 16,114,449 confirmed cases and 646,641 deaths by July 27, 2020. By August 17, 2020, 21.59 million confirmed cases and 777.6 thousand deaths had been reported [1], and by September 17, 2020, there were 29.87 million confirmed cases and 940.72 thousand deaths (refer to Fig. 1). On 05.04.2021, there were approximately 131 million patients worldwide, and the death count had reached 2.85 million. On 03.04.2021, India observed 93,249 new cases (the 7-day average was 73,412), and on May 22 it recorded 240,766 new COVID-19 cases and 3,736 deaths (refer to Figs. 2 and 3). The country has so far reported a total of 26,528,846 cases and 299,296 deaths (as of 22.05.2021) (refer to Figs. 2 and 3). India leads the world in the daily average number of new infections reported, accounting for one in every two infections reported worldwide each day. As of this date, the top five countries affected by this disease are the USA, India, Brazil, France and Turkey.

Fig. 1.

Number of confirmed COVID-19 cases, by date of report and WHO region, 30 December 2019 through 10 August 2020 [1]

Fig. 2.

Number of COVID-19 cases reported in India during the last 18 months [https://www.worldometers.info/]

Fig. 3.

Number of COVID-19 deaths reported in India during the last 18 months [https://www.worldometers.info/]

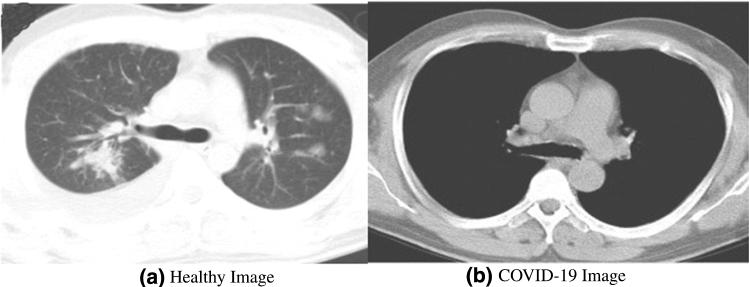

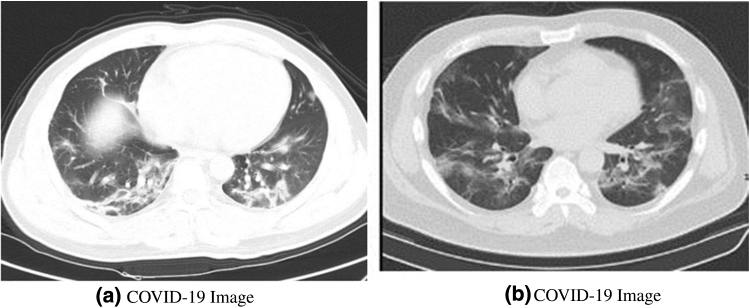

COVID-19 symptoms include acute respiratory disease, fever, dry cough, sore throat, chest pain or pressure, loss of speech, myalgia, weakness, loss of taste and nasal blockage; severe cases may progress to breathing difficulty requiring critical care and to death. The virus is mainly transmitted through respiratory droplets. It can also be found on surfaces and in the environment. Patients aged more than 50 years and those with diabetes, cancer, cardiovascular diseases or chronic respiratory diseases are advised to follow precautions and preventive measures strictly. Many studies indicate that 80% of mild-to-moderate patients recovered without hospitalization. Some specific patterns, such as bilateral involvement, peripheral distribution and basal-predominant ground-glass opacity, multifocal patchy consolidation, vascular thickening and a crazy-paving pattern with peripheral distribution, are seen in chest CT images of positive patients [2].

There is no known exact cure; only preventive measures such as washing hands, covering the mouth and nose, and social isolation are available. Early diagnosis allows for quarantining patients, rapid intubation in specialized hospitals and monitoring of the disease's spread. There is a great need for a system that identifies infection quickly in prospective patients, because early diagnosis and immediate treatment can help minimize the death rate. The demand for medical equipment (PPE kits, ventilators, beds and masks) will increase if the disease spreads further. The most appropriate molecular assay is RT-PCR, which detects SARS-CoV-2 RNA using nasal or throat swabs, but these kits have some significant disadvantages: potential methodological shortcomings, high resource requirements, low production, lower sensitivity than CT images, and a diagnosis time of 6–9 h [3–6]. PCR tests also involve several stages at which errors may occur.

Although thoracic radiological examination has been accepted as key to diagnosing potential COVID-19 patients, the approach has its own difficulties. Studies have shown that in non-COVID-19 patients with community-acquired infections caused by agents such as Streptococcus pneumoniae, Mycoplasma pneumoniae and Chlamydia pneumoniae [7], the CT findings are identical to those found in patients with COVID-19. Symptoms such as fever, cough and tiredness are not peculiar to COVID-19 pneumonia and are also seen in pneumonia caused by other pathogens. In addition, clinical practice has shown no abnormality in the CT images of certain COVID-19 patients, which increases the complexity of diagnosing new coronavirus pneumonia infections [7]. RT-PCR testing may not be accessible for all cases because of the rapid dissemination of COVID-19 pneumonia [7]. A detailed diagnosis of COVID-19 pneumonia in patients with clinical symptoms and CT signs by an easy-to-implement approach would help to implement selective and efficient isolation. Another type of test is the antibody test, which is a slow process and takes about 9–28 days to validate the patient as 'positive.' There is a risk that the infected person spreads the infection during this period if the quarantine is not successful.

Radiologists play a key role in early (and timely) diagnosis, in treatment and in distinguishing COVID-19 from other similar viral diseases (such as MERS). In a pandemic situation of this kind, a substantial increase in the number of chest CT examinations is necessary. This requires highly experienced radiologists, but there is a shortage of them [8, 9]. Providing them with a computer-aided alternative would help improve their performance. Moreover, the manual approach has its own issues: the COVID-19 diagnosis is based on the radiologist's assessment and evaluation of CT and X-ray images, work that can be time-consuming and is often riddled with intra-observer variability. To overcome these limitations, we need an advanced Artificial Intelligence system that is reliable and reproducible. With such systems, we may reduce the dependence on radiologists. From another perspective, the World Health Organization (WHO) has strongly recommended exhaustive testing of potential suspects and citizens living in hotspot areas, but the guidelines are not strictly followed because of the scarcity of resources, personnel and testing kits. It is therefore an immediate priority to develop and test a fast and robust Artificial Intelligence (AI)-based alternative approach that can help limit the rate of spread, because prior studies on lung cancer detection [10], heart disease prediction [62] and liver lesions in CT scans [63] have justified the performance of AI-based models in clinical practice. These AI-assisted models have high accuracy, which will aid clinicians in decision making. Prior studies [11, 12] conclude that manual examination of X-ray images recognizes the disease correctly in 60–70% of cases, and that manual analysis of CT images performs between 50 and 75%. Experienced radiologists decide on the basis of prior clinical experience; however, such a verdict is easily influenced by subjective factors and individual proficiency.
More automated and intelligent methods are needed globally, especially in regions with limited resources. Deep learning has been a cornerstone of AI in healthcare and medicine for over a decade. Hence, there should be a computer-based (deep learning) alternative for diagnosing the disease that supports users in COVID-19 screening. A further issue with manual methods for evaluating chest X-rays is their low sensitivity to ground-glass opacity (GGO) nodules, which makes early detection of the disease through manual X-ray reading difficult. Deep learning can distinguish nodules that an untrained human would not detect.

The factors discussed above inspire researchers to look for an artificial intelligence-based alternative health system solution that can diagnose the disease more cheaply, produce results in less time and be highly accurate. X-ray imaging uses a low patient exposure, which makes soft tissues or diseased areas in the thorax hard to diagnose. Computer-aided diagnosis (CAD) systems help overcome these limitations of chest X-rays and help radiologists detect potential conditions automatically in low-contrast X-ray images. Such systems make use of advanced computing components and recent image processing algorithms. Deep learning has recently become the most advanced approach in AI. Deep learning models learn from previously labeled examples, as in facial expression recognition by computers. A new subfield of computer vision involves using neural networks to analyze medical images. CT findings cannot be interpreted reproducibly when a large part of the lungs is affected, and AI-based systems can provide a more complete analysis.

With the rapid advancement of computers and their applications in multidisciplinary fields, researchers have actively introduced computational techniques into the medical field, for tasks such as organ segmentation and image enhancement and repair, thereby supporting medical diagnosis [13, 14]. By serving as additional supporting instruments for clinicians, machine learning has proved its applicability in the medical field [15, 16].

Deep learning methods, such as CNNs, have been shown to predict clinical results in skin and breast cancer classification, glaucoma detection, lung segmentation, detection of lung-related abnormalities such as cancer and nodules, chest X-ray pneumonia detection, classification of brain disease, detection of arrhythmias, viral infections and biomedical studies [11, 17, 18]. CNN models have also shown their ability to achieve human-like accuracy, or better, in a short time on image classification problems. Diagnostic expertise can be captured by digitizing and standardizing image knowledge to help medical professionals make well-informed decisions [19].

The above discussion motivates us to develop a Computer-Assisted Diagnosis (CAD) framework based on deep learning to predict COVID-19 infection. The system must deliver an appropriate screening method that can distinguish the CT and X-ray images of potential COVID-19 patients from those of related viral pneumonia, SARS and MERS. The CAD concept was introduced in 1966 [17] and has been fully applied since 1980 [18]. CAD systems have demonstrated their ability to support radiologists in making their decisions with high confidence (for example in triage, quantification and follow-up of suspected cases). They also have advantages over radiologists, such as reproducibility and the detection of subtle changes that are not apparent on visual examination.

The paper begins with a brief overview of COVID-19 and the motivation for constructing a diagnostic method based on artificial intelligence. The literature on deep learning methods applied to COVID-19 chest X-ray and CT images is reviewed in Sect. 2. Information on the shortlisted datasets and on the models selected for experimentation is provided in Sect. 3, along with a discussion of the settings and procedural phases. Section 4 reports the method of investigation, along with results in tabular and graphical form. Section 5 provides a discussion of the findings and a comparison with recent works. We present a detailed discussion of the practical applicability and outlook of our work in Sect. 6. Finally, we conclude in Sect. 7.

The main contributions of this paper are as follows:

In this paper, X-ray and CT images are used to overcome the RT-PCR sensitivity problem. COVID-19 reveals some signatures that can easily be observed in X-ray images, and deep learning approaches can analyze chest X-rays automatically, which can shorten the investigation time. We apply multiple pre-trained convolutional neural networks proposed by the scientific community, together with our own proposed models, which are selective and reliable, to achieve the best classification of pulmonary diseases in X-ray images. The results are highly encouraging and indicate that deep learning, and more specifically transfer learning with CNNs, is successful in the automatic detection of abnormal X-ray images related to COVID-19. The study is an end-to-end solution for the diagnosis of COVID-19 that can be converted into a low-priced, quick, robust and reliable intelligent screening tool to reduce the error rate in binary classification between COVID-19 patients and non-COVID-19 cases (Non-COVID Pneumonia and Normal).

Extensive experimentation (16 experiments) and evaluation have been conducted using 20 pre-trained transfer learning deep models on 4 datasets to validate the best model performance for COVID-19 detection. Performance is measured by standard metrics such as accuracy, AUC and F1-score. Based on 21 high-quality COVID-19 studies recently published in SCI-indexed journals, to the best of our knowledge we are the first to conduct such an extensive experiment with so many models.

We have also designed three novel CNN models (one built from scratch, one hybrid and one ensemble model) based on a deep transfer learning system with different pre-trained CNN architectures to detect COVID-19 in patients using X-ray and CT images. The models are fast and achieve high accuracy.

This study contributes to scientific research by recommending two novel best classifier models with over 98% accuracy in the detection of COVID-19 cases in all 15 experiments for the binary class task. These two best-performing deep learning models would help radiologists and doctors to diagnose and decide on the disease, serving both as an aid and as a second opinion.
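The evaluation metrics named above (accuracy, F1-score and AUC) and the "up to" versus "on average" reporting over cross-validation folds can be illustrated with a minimal sketch. This is not the authors' evaluation code: the function names `binary_metrics`, `roc_auc` and `summarize_folds` are hypothetical, labels are assumed to be 1 for COVID-19 and 0 for non-COVID, and AUC is computed with the standard pairwise-ranking definition.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for labels in {0, 1} (1 = COVID-19)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def roc_auc(y_true, scores):
    """AUC as the probability that a random positive sample receives a
    higher score than a random negative sample (ties count as 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def summarize_folds(values):
    """Best and mean value of one metric across cross-validation folds."""
    return {"best": max(values), "mean": sum(values) / len(values)}
```

For example, `summarize_folds` applied to the five per-fold accuracies of one experiment yields the two kinds of figures quoted for each model: the best fold and the average over folds.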

Literature Survey

We examined several research studies in world-renowned journals to present what scientists have recently implemented to diagnose COVID-19 infection from X-ray and/or CT images using different deep learning approaches. Of these, 25 recently published studies from highly indexed journals were shortlisted (10 from Springer, 14 from Elsevier and the remaining 1 from the Romanian Journal of Information Science and Technology). Below is a concise summary of the key points of these studies. Table 1, displayed at the end of this section, presents the key points of the selected studies.

Table 1.

Comparative chart of the prior studies

| Study | Selected dataset and its size | Models applied | Best model |
|---|---|---|---|
| Butt et al. [20] | 618 transverse section CT samples | ResNet-23 and self-crafted ResNet-18-based CNN model | ResNet-23 and self-crafted |
| Das et al. [21] | Approximately public 627 images [28] | Inception V3, AlexNet, ResNet50, VGGNet, CNN, Deep CNN and Xception-based self-crafted model | Deep CNN and Xception-based self-crafted model |
| Alakus et al. [22] | 18 laboratory findings of the 600 patients | ANN, CNN, LSTM, RNN, CNNLSTM, CNNRNN | CNNLSTM |
| Ardakani et al. [23] | Private dataset of 1020 images | AlexNet, VGG-16, VGG-19, SqueezeNet, GoogLeNet, MobileNet-V2, ResNet-18, ResNet-50, ResNet-101, Xception | ResNet-101 and Xception |
| Singh et al. [24] | Public dataset of 1419 images | Modified XceptionNet | Modified XceptionNet |
| Panwar et al. [25] | Publicly available 337 images | VGG-16 inspired nCOVnet | VGG-16 inspired nCOVnet |
| Wang et al. [26] | Publicly available 3545 images | ResNet50 + FPN inspired model | ResNet50 + FPN inspired model |
| Abraham et al. [27] | 531 COVID-19 images; total 1100 images | 25 pretrained networks (10 basic and 15 hybrid) | SqueezeNet + DarkNet-53 + MobileNetV2 + Xception + ShuffleNet |
| Toraman et al. [28] | Approximately public 731 images | Convolutional capsule network architecture | Convolutional capsule network architecture |
| Ozturk et al. [29] | Approximately public 627 images | Darknet inspired model | Darknet inspired model |
| Xu et al. [19] | Private 618 images | ResNet-18-based classification model | ResNet-18-based classification model |
| Khan et al. [30] | 1200 images of two public datasets | CNN model based on Xception architecture pre-trained on ImageNet dataset | CNN model based on Xception architecture pre-trained on ImageNet dataset |
| Ucar et al. [31] | 2800 images (including 45 images of COVID-19) from two public datasets | Deep Bayes-SqueezeNet inspired model | Deep Bayes-SqueezeNet inspired model |
| Nour et al. [2] | 2905 images (including 219 images of COVID-19) | CNN-Machine Learning-Bayesian Optimization-based model | CNN-Machine Learning-Bayesian Optimization-based model |
| Brunese et al. [32] | 6523 images (including 250 images of COVID-19) | VGG inspired model | VGG inspired model |
| Panwar et al. [33] | Private dataset of 526 images and public dataset of 1300 images | Grad-CAM technique applied in VGG-19 inspired model | Grad-CAM technique applied in VGG-19 inspired model |
| Goel et al. [34] | 800 COVID-19 images; total 2600 images | Self-created CNN-based OptCoNet model | Self-created CNN-based OptCoNet model |
| Jain et al. [35] | 490 COVID-19 images; total 6432 images | Inception V3, Xception and ResNeXt | Xception |
| Abbas et al. [36] | 105 COVID-19 images; total 200 images | Self-composed deep CNN-based DeTraC model (uses AlexNet, VGG-19, GoogleNet, ResNet and SqueezeNet for the transfer learning stage in DeTraC) | VGG-19 in DeTraC |
| Zebin et al. [37] | 202 COVID-19 images; total 802 images | VGG-16, ResNet50 and EfficientNetB0 | EfficientNetB0 |
| Punn et al. [38] | 108 COVID-19 images; total 1200 images | ResNet, Inception-v3, InceptionResNet-v2, DenseNet169 and NASNetLarge | NASNetLarge |

Butt et al. [20] examined radiographic patterns in CT images of potential COVID-19 patients using a CNN-based approach. The authors evaluated two CNN models: one was ResNet-23, and the other was self-designed, with ResNet-18 used to extract image features. On the CT image dataset, the approach achieved an overall accuracy of 86.7%. Das et al. [21] selected X-ray images to identify COVID-19 infection with a deep transfer learning approach based on a modified Inception model (Xception). Eleven existing machine and deep learning models/algorithms (including the proposed one) were compared; a maximum accuracy of 97.40% supports the superiority of the proposed model. Alakus et al. [22] applied four different types of deep learning models and two hybrid models to 18 laboratory findings from 600 COVID-19 patients. With tenfold cross-validation, the maximum accuracy was achieved by LSTM (86.66%), and with the train-test split approach, the maximum accuracy was achieved by the CNNLSTM hybrid model (92.30%). Ardakani et al. [23] compared ten convolutional neural networks for diagnosing COVID-19 from CT images. A private dataset of over 1000 images was selected for training and testing. The authors conclude that ResNet-101 and Xception achieved the best performance among their shortlisted models. Singh et al. [24] used a modified XceptionNet to identify COVID-19. The dataset contains 1419 images, with fewer than 132 images of COVID cases. Compared with three other competitive models, the proposed modified model achieved the best accuracy (95.80%). Panwar et al. [25] proposed a new VGG-16-based deep learning neural network, nCOVnet, for detection from X-rays. Only 142 COVID-19 images were selected for training and testing, and the proposed model's performance was not compared with any other pre-trained or modified model. With this limited data, an overall accuracy of 88.10% was achieved. Wang et al. [26] applied deep learning to chest X-ray images for two purposes (discrimination and localization).
For COVID-19 discrimination, the first objective was to extract lung features, and the second objective was to identify infected pulmonary tissue in each detected chest X-ray. Abraham et al. [27] selected X-ray images to investigate COVID-19. The authors chose 10 CNNs and 15 multi-CNNs for performance evaluation. The best result was obtained by combining five pre-trained CNNs: SqueezeNet, DarkNet-53, MobileNetV2, Xception and ShuffleNet. The authors also used correlation-based feature selection and a BayesNet classifier to identify infection. BayesNet's performance was compared with that of seven other classifiers. The authors also showed that BayesNet's accuracy (91.15%) is equivalent to that of the best combination of five CNNs.

Toraman et al. [28] suggested a novel network with a small number of layers, Convolutional CapsNet, for diagnosis from X-rays. The model was evaluated with tenfold cross-validation in two settings: binary classification (COVID-19 and No-Findings) with 97.24% accuracy and multi-class classification (COVID-19, No-Findings and Pneumonia) with 84.22% accuracy. Ozturk et al. [29] used deep neural networks to find COVID-19 infection in X-ray images of potential patients. The presented model builds on the You Only Look Once (YOLO) real-time object detection system. The DarkNet-based model performed binary classification with 98.08% accuracy and multi-class classification with 87.02% accuracy under fivefold cross-validation. The number of COVID-19 images selected for training and testing is small, and the study implements only one model. Xu et al. [19] selected CT images for screening coronavirus disease with the support of a ResNet-based deep learning system. Initially, deep learning models were used to segment infection regions. Using a local-attention classification model, these images were then categorized into three classes (including COVID-19). As a final step, the infection type and overall confidence score were calculated using a Noisy-or Bayesian function. Khan et al. [30] introduced CoroNet, a new deep neural network architecture based on Xception, to detect COVID-19 infection from X-ray images. They selected 284 COVID images (1,600 images in total) for performance evaluation. The system classifies images in 2-class (99% accuracy), 3-class (95% accuracy) and 4-class (89.6% accuracy) settings. Ucar et al. [31] explored a novel Deep Bayes-SqueezeNet-based COVIDiagnosis-Net for COVID-19 screening from chest X-rays. The dataset contains 76 COVID-19 images.
The authors demonstrated their model's superiority in accuracy over other existing network designs using fine-tuned hyperparameters and augmented datasets. They justify the performance by reporting an accuracy of 100% (single recognition of COVID-19 among other classes) and 98.3% (among Normal, COVID and Pneumonia). Nour et al. [2] presented a new system for detecting COVID-19 infection from chest X-rays based on deep features and Bayesian optimization. The model was trained from scratch instead of using a transfer learning approach. The deep features were extracted from a self-designed five-layer CNN model and finally fed into Machine Learning (ML) classifiers. Model hyperparameters were optimized using the Bayesian optimization algorithm. However, the text lacks a detailed description of the extracted features. Brunese et al. [32] applied a customized VGG16 deep learning model for COVID-19 detection from chest X-rays. The approach consists of three phases: the first detects pneumonia; if pneumonia is found, the second distinguishes between COVID-19 and other pneumonia; and the last locates the areas in the X-ray that indicate the presence of COVID-19. In the subsequent study by Panwar et al. [33], a fusion of deep learning (a VGG-19 inspired model) and a Grad-CAM-based color visualization approach was employed on chest X-ray and CT-scan images for COVID-19 infection detection in the form of binary image classification. The Grad-CAM-based color visualization approach was used to interpret and explain the detections on radiology images. The system attains an overall accuracy of 95.61%.

Goel et al. [34] proposed an optimized image classification model, OptCoNet, with six CNN architecture layers to detect COVID-19 automatically from chest X-ray images. The Grey Wolf Optimizer algorithm was used to optimize the hyperparameters required for training the CNN layers. Jain et al. [35] compared three models, Inception V3, Xception and ResNeXt, to examine their efficacy in detecting COVID-19 infections from patients' radiological images. Approximately 575 COVID-19 images were selected from a public repository; the Xception model was the most accurate. Abbas et al. [36] suggested a deep CNN, DeTraC (Decompose, Transfer and Compose), for the classification of COVID-19 chest X-ray images. Five different CNN models pre-trained on ImageNet were used for DeTraC's transfer learning phase. The combination of VGG-19 and DeTraC achieved the highest accuracy, 97.35%. For detection from X-ray images, Zebin et al. [37] used VGG-16, ResNet50 and EfficientNetB0. Punn et al. [38] used five pre-trained CNN models to identify infection in chest X-rays of patients, with 153 shortlisted COVID-19 images. Gianchandani et al. [5] proposed two different ensemble deep transfer learning models to predict the disease and to classify patient images in binary and multi-class problems. The researchers selected two different datasets to validate the proposed models. The performance of the proposed ensemble model (VGG16 + DenseNet) was compared with that of VGG16, ResNet152V2, InceptionResNetV2 and DenseNet201 and was found to be the best, with 96.15% accuracy on the binary dataset. Later, Kaur et al. [39] used the same dataset as [5] for their experimentation.
The novelty of their work lies in a modified AlexNet architecture for feature extraction and classification. The Strength Pareto Evolutionary Algorithm-II (SPEA-II) was employed to tune the hyperparameters of this modified network. The proposed model's performance was compared with nine models, among which it performed the best, with more than 99% accuracy. The model was tested on classifying patient images into four classes. Another approach was developed by the researchers in [40] by ensembling the deep transfer learning models ResNet152V2, DenseNet101 and VGG16. They gathered approximately 11,000 images and classified them into four classes (COVID-19, Pneumonia, Tuberculosis and Healthy). The model's performance was compared with six existing models, and the results reveal that the proposed one was the best, with a score of more than 98% on all measures (Accuracy, AUC, F-measure, sensitivity and specificity). Jaiswal et al. [41] presented a pre-trained deep learning architecture (DenseNet201) to classify COVID-19-infected patients on the basis of their CT scans. They compared its performance with three other competitive models (VGG16, InceptionResNet and DenseNet) on five performance measures. The authors conclude that their presented model performs best, with a score of more than 96% on all measures (Precision, Recall, F-Score, Specificity and Accuracy). In a recently published study, Tiwari et al. [42] suggested a system based on a visual geometry group capsule network (VGG-CapsNet) to screen for COVID-19 from input images. The composed dataset contains around 2900 images belonging to three classes (normal, pneumonia and COVID-19). Their model achieved an accuracy of 97% for separating COVID-19 samples from non-COVID-19 samples and 92% for separating COVID-19 samples from normal and other viral pneumonia samples.

Materials and Models

The datasets and the methodology used are explained in the following sections.

Dataset Description

The first three datasets selected for validating the shortlisted models are open-access, publicly available repositories. The fourth is a self-crafted dataset whose images (both X-ray and CT) were collected from the internet and private hospitals. To the best of our knowledge (gathered from the shortlisted prior studies), we are the first to experiment with four datasets. Very few studies have tested on as many COVID-19 images; although the number of COVID-19 images is not very large, it is still greater than in the experiments performed by previous researchers. Regarding the first dataset, to the best of our knowledge none of the earlier studies has experimented on it; the dataset belongs to the University of California, where an open coding competition was under way (01.09.2020). Finally, the practitioners in these studies treated the image types (X-ray or CT) separately, selecting either X-ray images or CT images for experimentation. We have overcome this separation by creating a customized dataset consisting of both X-ray and CT images of COVID and non-COVID patients. The details of the images are given in Table 2.

Table 2.

Datasets used in the study

| Dataset serial number | Total number of images | Count and nature (COVID/Non-COVID) | Address (last access date 01/10/2020) | X-ray/CT images |
|---|---|---|---|---|
| 1 | 746 | COVID: 349; Non-COVID: 397 | https://github.com/UCSD-AI4H/COVID-CT/tree/master/Images-processed | X-ray images |
| 2 | 516 | COVID: 278; Non-COVID: 238 | https://ieee-dataport.org/open-access/COVID19action-radiology-cxr | X-ray images |
| 3 | 418 | COVID: 210; Non-COVID: 218 | https://www.kaggle.com/bachrr/COVID-chest-x-ray?select=images | X-ray images |
| 4 | 770 | COVID: 142 (CT images) and 183 (X-ray); Non-COVID: 207 (CT images) and 238 (X-ray) | Private customized dataset. Images collected from different resources available on the internet and from private hospitals located in the author's hometown | X-ray images and CT images |

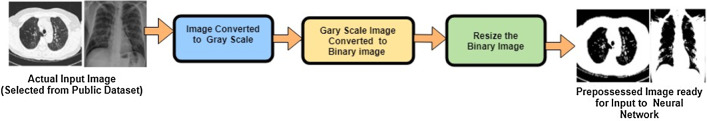

Preprocessing

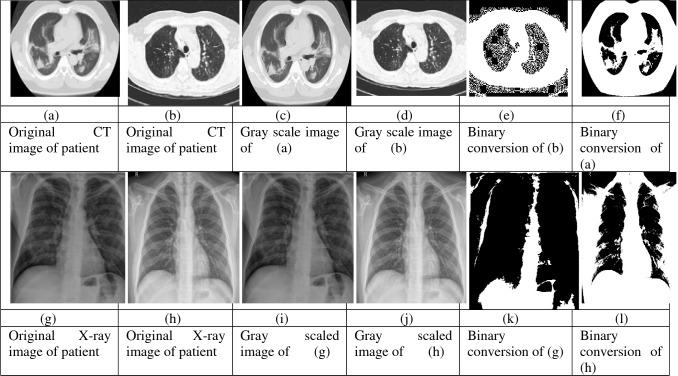

Images in the dataset may be of different sizes, so we pre-process them using the steps displayed in Figs. 4 and 5. Initially, all the images are passed through the preprocessing module, where they are converted to grayscale using OpenCV. The grayscale images are then converted to binary images. We then resize each image to 224 × 224 pixels for the subsequent processing steps in the deep learning pipeline. The outputs of these conversions are shown in Fig. 5. Finally, we apply different flips and rotations as data augmentation, since additional images help training when only a limited number are available.

Fig. 4.

Pictorial representation of the initial stage

Fig. 5.

Outputs of the various processes implemented during initial stage
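The preprocessing steps described above can be sketched without any imaging library. The study itself uses OpenCV; the snippet below is only a dependency-free illustration on nested lists. The luma weights, the threshold of 128 and nearest-neighbour resizing are assumptions, since the text does not state them, and all function names are hypothetical.

```python
def to_gray(rgb_image):
    """Convert an H x W image of (R, G, B) tuples to grayscale ints in 0-255."""
    # ITU-R BT.601 luma weights (an assumption; the text does not give the weights).
    return [[int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
            for row in rgb_image]


def to_binary(gray_image, threshold=128):
    """Threshold a grayscale image to 0/255. The threshold value is hypothetical."""
    return [[255 if px >= threshold else 0 for px in row] for row in gray_image]


def resize_nearest(image, out_h=224, out_w=224):
    """Resize with nearest-neighbour sampling (the interpolation is an assumption)."""
    in_h, in_w = len(image), len(image[0])
    return [[image[(y * in_h) // out_h][(x * in_w) // out_w] for x in range(out_w)]
            for y in range(out_h)]


def horizontal_flip(image):
    """Mirror the image left to right, one of the augmentation flips."""
    return [row[::-1] for row in image]


def preprocess(rgb_image):
    """Grayscale -> binary -> 224 x 224, in the order described in the text."""
    return resize_nearest(to_binary(to_gray(rgb_image)))


# A tiny 2 x 2 synthetic image: bright left column, dark right column.
tiny = [[(250, 250, 250), (10, 10, 10)],
        [(200, 200, 200), (0, 0, 0)]]
out = preprocess(tiny)
print(len(out), len(out[0]))    # 224 224
print(out[0][0], out[0][223])   # 255 0
```

In practice a library routine would replace each helper; the point of the sketch is only the order of operations and the fixed 224 × 224 output size.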

Deep Learning, CNN, Transfer Learning, Fine-Tuning and Ensemble Method

Deep learning (DL) is a branch of machine learning concerned with approaches based on learning data representations. Deep learning with convolution is an effective way to simplify data processing: computer models learn to perform classification tasks directly from pictures, text and sound. Using several neural network layers, DL methods can extract and learn specific characteristics from the data. These models are reliable, sometimes outperforming humans, and are trained on a wide range of data. In deep learning, model performance tends to improve as the quantity of data increases, whereas with traditional methods performance plateaus after an initial gain. With vast volumes of data, deep learning can therefore be a strong alternative to current classification methods. Additionally, CNN-based deep learning has attractive advantages such as low processing time, superior performance, high computing power and reliable outcomes (prediction). Recent advancements in neural network architecture design and training have allowed researchers to solve learning tasks that were previously intractable. Deep learning algorithms are often contrasted with traditional algorithms. Convolutional neural networks (CNNs) are one of the most popular DL approaches in medical imaging. A CNN is a stack of layers: convolutional layers, nonlinear layers, max or average pooling layers, and a softmax layer. Pooling layers follow convolutional layers to increase invariance and reduce the computational cost of the feature maps. A pooling layer subsamples the output of the convolutional layer to reduce the feature map's size by computing the maximum or the average over each window; the two variants are called max pooling and average pooling, respectively. The spacing, in pixels, between successive pooling windows is called the stride.
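To make the pooling and stride description concrete, the following is a minimal sketch of max and average pooling over a small 2D feature map. The function name `pool2d` and the example values are illustrative only and are not taken from the study.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Apply max or average pooling to a 2D feature map given as a list of lists."""
    h, w = len(feature_map), len(feature_map[0])
    out = []
    # Slide a size x size window over the map, moving `stride` pixels each step.
    for top in range(0, h - size + 1, stride):
        row = []
        for left in range(0, w - size + 1, stride):
            window = [feature_map[top + i][left + j]
                      for i in range(size) for j in range(size)]
            row.append(max(window) if mode == "max" else sum(window) / len(window))
        out.append(row)
    return out


fmap = [[1, 3, 2, 4],
        [5, 6, 1, 0],
        [7, 2, 9, 8],
        [1, 0, 3, 4]]
print(pool2d(fmap, mode="max"))  # [[6, 4], [7, 9]]
print(pool2d(fmap, mode="avg"))  # [[3.75, 1.75], [2.5, 6.0]]
```

With a 2 × 2 window and stride 2, the 4 × 4 map shrinks to 2 × 2; a stride of 1 would instead give overlapping windows and a 3 × 3 output.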

The number of parameters in a model grows as networks deepen to improve learning performance, and deep learning models therefore require large volumes of data. Deeper networks also lead to more complicated computation and make training more difficult. We do not have many images with which to train, validate and test the models; the collection is too small to train a deep CNN from scratch, so we turn to transfer learning. Transfer learning takes a CNN model trained on an extensive database and adapts it to the target task (e.g., diagnosis of COVID-19 from X-ray and CT scans) with limited training data. It is the mechanism by which previously acquired knowledge is passed to a different task: we transfer knowledge (learned descriptive and general feature representations) to our problem, for which data are insufficient. One concern with this technique is that the large image collections belong primarily to the general domain, with classes such as pet, dog and chair, while our images are X-rays and CTs (of COVID-19) with a different visual appearance. As a result, the visual representations learned from these large image collections may not represent CT images correctly, which makes the network's feature extraction biased towards the source data and less generalizable to the target data. Nevertheless, pre-trained models are well suited to our current data collection with its small number of images. In other words, our task is to adapt the pre-trained CNN structures. Usually, this approach is much easier than conventional CNN training from random weight initialization, which requires a great deal of data to train the enormous number of CNN parameters correctly. We use transfer learning to mitigate the scarcity of such datasets and produce improved performance. Various CNN architectures are trained on a vast number of different types of images and are then treated as pre-trained CNN models. However, these models need a lot of data to prevent overfitting.

To solve this dilemma, transfer learning techniques are applied. Transfer learning (TL) takes a standard neural architecture with weights trained on large datasets and then adapts those weights to the target task using limited training data. It is a machine learning approach in which a model developed for one task is used as the starting point for a model on a different task. Given the large computing and time resources needed to build neural network models for these problems, and the large gains in skill that pre-trained models provide on related problems, it is common in deep learning to use pre-trained models as the starting point for computer vision and natural language processing tasks. When modeling the second task, transfer learning is an optimization that allows faster progress or improved performance: it enhances the learning of a new task by transferring knowledge from a previously learned, related task. TL is related to multi-task learning and concept drift, and it is not solely a field of research for deep learning. Nevertheless, transfer learning is common in deep learning because of the immense resources needed to train deep learning models and the large and challenging datasets on which they are trained. Transfer learning only works in deep learning if the model features learned from the first task are general. It entails first training a base network on a base dataset and task, and then repurposing or transferring the learned features to a second, target network trained on a target dataset and task. This process is more likely to succeed if the features are general, that is, suitable for both the base and target tasks, rather than specific to the base task. By reducing errors, an ensemble of transfer learning networks can be a stable solution, since it combines the outputs of the integrated networks to obtain the fewest errors possible.

The convolutional neural network architecture is designed using pre-trained models after data preprocessing. Researchers applied the same approach in recently published studies on COVID-19 prediction [5, 41, 43, 44]. The authors of [5] designed ensemble deep transfer learning models to predict this disease from chest X-ray images. They emphasized that TL uses the feature extraction capabilities of models pre-trained on large datasets such as ImageNet, capturing class boundaries well, and that TL not only eliminates the need for a large dataset but also provides quick results. This motivated them to incorporate these techniques into their proposed deep learning framework for COVID-19 classification. The authors of [43] employed a deep convolutional neural network as the backbone and suggested three improvements: (i) stochastic pooling to replace the classical average pooling and max pooling methods; (ii) a conv block combining a conv layer and batch normalization; and (iii) a fully connected block combining a dropout layer and a fully connected layer. In [44], Zhang et al. developed a novel method combining DenseNet with an optimization of transfer learning setting (OTLS) strategy for COVID-19 prediction. They suggest that transfer learning is a solution to (i) accelerate CNN development and (ii) avoid overfitting, and that with this technique researchers can rapidly develop a better-performing deep neural network. The authors of [41] argue that hyperparameter tuning of deep transfer learning (DTL) models can improve the results further; a DTL model with DenseNet201 was suggested in that study.

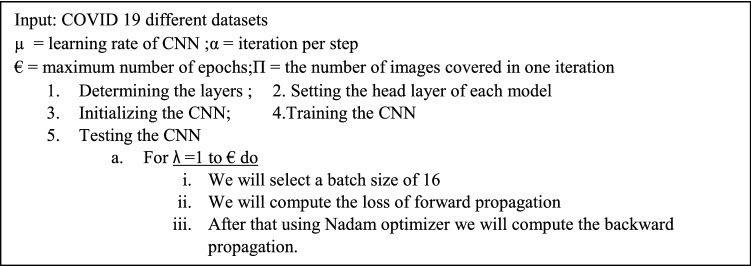

TL acquires knowledge from solving one problem and applies that knowledge to a different but related problem. The authors further noted that three elements are paramount in supporting the transfer: (i) the success of pre-trained models relieves the user of hyperparameter tuning; (ii) the initial layers in pre-trained models can be thought of as feature descriptors that extract low-level features; and (iii) the target model may only need to re-train the last several layers of the pre-trained model. In this technique, we take one pre-trained model, for example VGG-16, and freeze all of its layers; we then add our own layers on top and train only that part, using the weights of VGG-16 for the frozen layers. This is a solid and robust technique for increasing the efficiency of the model. In other words, our approach is to take a deep network that has learned comprehensive visual features from large datasets and then transfer the weights of this network to the target task on a small dataset. Fine-tuning is used to refine the model after transfer learning; we used two mechanisms for fine-tuning, early stopping and reducing the learning rate on plateau. The latter helps to smooth the training curves and reduce ridges in them. Early stopping halts training when the validation accuracy reaches its best value and the validation loss is at its minimum. For example, if we have set 50 epochs to train the model, training is likely to stop before 50 epochs, once minimum loss and maximum accuracy have been reached. A general algorithm for all the models can be visualized as given below.
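The interaction of early stopping and learning-rate reduction on plateau can be illustrated by replaying a list of per-epoch validation losses. This is a simplified sketch, not the callbacks used in the study: the stopping patience of 5 and the initial learning rate of 0.001 follow the text, while `lr_patience`, `lr_factor` and the loss values are hypothetical.

```python
def run_callbacks(val_losses, lr=0.001, stop_patience=5, lr_patience=2,
                  lr_factor=0.1, min_delta=0.0):
    """Replay validation losses through early stopping and LR reduction.

    Returns (epoch at which training ended, best epoch, final learning rate).
    Epochs are 1-indexed. lr_patience and lr_factor are illustrative values.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait_stop = 0  # epochs since the last improvement (early stopping)
    wait_lr = 0    # epochs since the last improvement or LR reduction
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            wait_stop = wait_lr = 0
        else:
            wait_stop += 1
            wait_lr += 1
            if wait_lr >= lr_patience:
                lr *= lr_factor  # plateau detected: shrink the learning rate
                wait_lr = 0
            if wait_stop >= stop_patience:
                return epoch, best_epoch, lr  # stop before the epoch budget
    return len(val_losses), best_epoch, lr


# Hypothetical validation losses: improvement stalls after epoch 4.
losses = [0.9, 0.7, 0.6, 0.55, 0.56, 0.57, 0.56, 0.58, 0.57, 0.59, 0.6]
stopped, best, final_lr = run_callbacks(losses)
print(stopped, best)  # 9 4
```

Here training ends at epoch 9, five epochs after the best epoch, and the learning rate has been reduced twice along the way; in a real run the weights from the best epoch would normally be restored.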

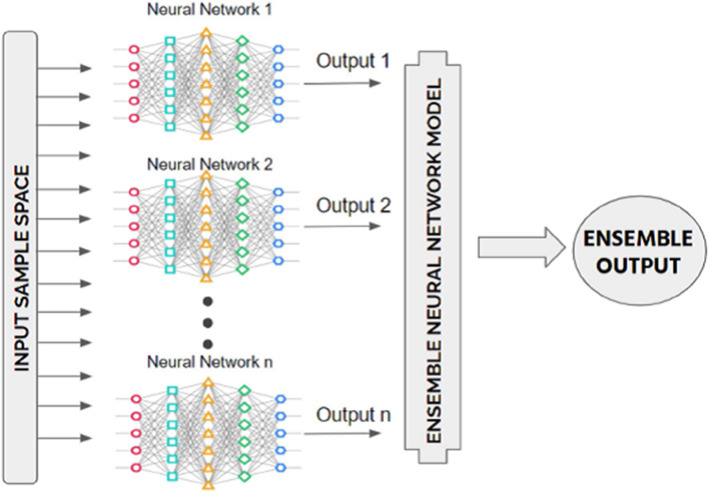

Neural networks are nonlinear methods. This means they can learn complex nonlinear relationships in the data. The disadvantage of this flexibility is that they are sensitive to initial conditions, both in terms of the initial random weights and the statistical noise in the training dataset. Since the learning algorithm is stochastic, each time a neural network model is trained it can learn a slightly (or dramatically) different version of the mapping function from inputs to outputs, resulting in different performance on the training and holdout datasets. As such, we should consider a neural network to be a method with low bias and high variance. Even when trained on large datasets to compensate for the high variance, having any variance in a final model that is intended to make predictions can be frustrating.

The high variance of neural networks can be addressed by training several models and combining their predictions. The aim is to combine the predictions of many good yet different models. A good model has skill, which means that its predictions are better than random chance. Importantly, the models must be good in different ways; they must make different prediction errors. Combining the predictions from several neural networks adds a bias, which in turn counters the variance of a single trained neural network model. The result is predictions that are less sensitive to the specifics of the training data, the choice of training scheme, and the luck of a single training run. In addition to reducing the variance of the predictions, the ensemble can provide better predictions than any single model. This method belongs to a broader class of techniques known as "ensemble learning," which describes methods that aim to make the best use of predictions from several models prepared for the same problem. In general, ensemble learning entails training several networks on the same dataset, then using each of the trained models to make a prediction before combining the predictions in some manner to produce a final outcome. Indeed, model ensembling is a common technique in applied machine learning to ensure the most stable and best possible prediction. The main reason for using the ensemble approach is thus to reduce the variance of neural network models.

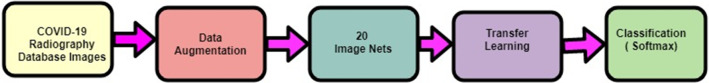

Ensemble learning is a method in which the models are combined using either soft or hard voting; for this, we use a voting classifier. The decision boundary of a single model is not as smooth as the one the ensemble produces, and the ensemble's boundary separates the COVID and non-COVID cases better than that of a single model. As a result, we used ensemble learning with a voting classifier to reduce our model's errors. The voting classifier also helps to reduce the likelihood of overfitting, thus improving the models' overall accuracy. Ensemble approaches are among the most appealing techniques: they connect distinct base models through the ensemble framework and generate a new, improved predictive model. Bagging is a form of ensemble method that uses bootstrapping and aggregation to create the ensemble. Bootstrapping selects random subsets of the dataset, and a decision tree is built for each random subset. Once a decision tree has been built for each subset, an algorithm aggregates the decision trees to construct the most effective predictor. The ensemble method's main aim is to generate the best predictor by combining various models. By reducing errors, an ensemble of transfer learning networks can be a robust solution, producing results from the combined networks with the fewest errors possible (refer to Fig. 6).

Fig. 6.

Block diagram representation of the proposed work
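The difference between the soft and hard voting mentioned above can be shown with a small sketch. It assumes each model outputs a probability per class; the class order (index 0 = non-COVID, index 1 = COVID) and the probabilities are hypothetical and are not results from the study.

```python
from collections import Counter


def soft_vote(prob_lists):
    """Average the per-class probabilities across models, then take the argmax."""
    n_models = len(prob_lists)
    n_classes = len(prob_lists[0])
    mean = [sum(p[c] for p in prob_lists) / n_models for c in range(n_classes)]
    return max(range(n_classes), key=lambda c: mean[c]), mean


def hard_vote(prob_lists):
    """Each model votes for its own argmax class; the most common class wins."""
    votes = [max(range(len(p)), key=lambda c: p[c]) for p in prob_lists]
    # On a tie, Counter keeps the class that was voted for first.
    return Counter(votes).most_common(1)[0][0]


# Three hypothetical models scoring one image as [P(non-COVID), P(COVID)].
predictions = [[0.45, 0.55],
               [0.48, 0.52],
               [0.95, 0.05]]
hard_label = hard_vote(predictions)
soft_label, soft_probs = soft_vote(predictions)
print(hard_label)  # 1
print(soft_label)  # 0
```

Two weakly confident models outvote one highly confident model under hard voting, whereas soft voting lets the confident model dominate; which behaviour is preferable depends on how well calibrated the individual networks are.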

Hyperparameter tuning is a machine learning technique that can improve a classical neural network's overall performance. This optimization method chooses the best values of the parameters that describe the model architecture and training, known as hyperparameters. Hyperparameters are tuned on the basis of the entire training process, the resulting accuracy, and the necessary modifications: the model is revised repeatedly to find the combination that best meets the challenge. Tuning hyperparameters can improve classification performance. Common hyperparameters include the number of hidden layers, learning rate, number of epochs, batch size and activation functions.
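One simple way to organize such tuning is to enumerate candidate configurations. The sketch below is an assumption about how a search could be laid out, not a description of the procedure followed in this study; the optimizer names, learning rates and batch size are values that appear in the model descriptions of this paper, and the helper `grid` is hypothetical.

```python
from itertools import product

# Candidate values taken from the model descriptions; the grid itself is illustrative.
search_space = {
    "optimizer": ["SGD", "Nadam", "AdaGrad"],
    "learning_rate": [0.01, 0.001, 0.0001],
    "batch_size": [16],
}


def grid(space):
    """Return every combination of the candidate values as a list of dicts."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]


configs = grid(search_space)
print(len(configs))  # 9
print(configs[0])    # {'optimizer': 'SGD', 'learning_rate': 0.01, 'batch_size': 16}
```

Each configuration would then be trained and scored on validation data, and the best-scoring one retained.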

Motivation

The rapid spread of the novel coronavirus (COVID-19), which has since become an ongoing pandemic, has necessitated fast detection methods to avoid further virus spread and mortality. Globally, three methods are used for screening COVID-19 patients: chest X-ray images, chest CT images, and the RT-PCR test. Because of the many issues associated with the RT-PCR test, it is not always recommended on its own, and many studies have proposed using CT/X-ray and RT-PCR in parallel. Meanwhile, the scarcity and overburdening of expert radiologists who can diagnose infection from CT/X-ray images is a severe problem.

Furthermore, identifying radiological signatures in a suspected patient's images is a time-consuming and error-prone operation (riddled with intra-observer variability), so X-ray/CT image analysis must be automated. The development and application of a fast and accurate early-stage screening method is therefore critical, and deep learning models may be used, as one of the efficient alternatives available, to meet this requirement. The scientific community has made numerous serious attempts to identify suspected patients using deep transfer learning models. However, these models are still plagued by the overfitting problem. In this paper, one novel hybrid model and another created by ensembling deep transfer learning models are developed for classifying suspected cases into two categories (COVID-19 infected patients and healthy people). We have worked on four datasets to validate the best-performing model(s). The best-performing model should perform well on all datasets and their combinations and should not be a dataset-specific performer.

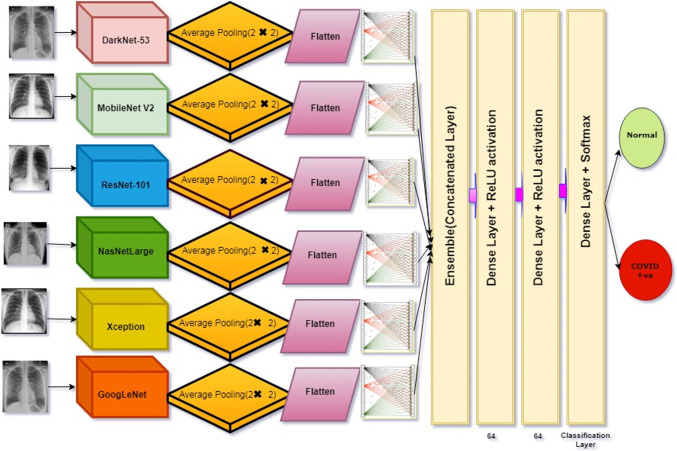

Model Formulation

For this experimentally intensive study, we shortlisted different state-of-the-art ImageNet pre-trained CNN networks that have previously proven their performance. We propose three models of our own: the first was built from scratch (called the Proposed Model in this study), the second is a hybrid model called LSTMCNN, and the last is an ensemble deep transfer learning model called “DarkNet-53 + MobileNetV2 + ResNet-101 + NASNetLarge + Xception + GoogLeNet” in this paper. It should be noted that, to the best of the authors' knowledge, this is the first attempt to use the LSTMCNN model for COVID-19 detection from X-ray and CT images, and possibly the first attempt in medical image analysis.

Moreover, three models are adapted from previous studies in which they showed strong performance: the first, named “COVID research paper”, is adapted as such from [2]; the second, “U-Net(Glaucoma)”, is adapted exactly from [45], where it showed promising performance for binary glaucoma classification on a very large dataset; and the last, named “SqueezeNet + DarkNet-53 + MobileNetV2 + Xception + ShuffleNet”, is adapted from [27], where this hybrid model yielded impressive performance in COVID-19 classification. The complete list is displayed in Table 3. Next, we discuss the necessary settings, pre-trained backbones and procedural stages, along with an abstract-level discussion of all the models (including abstract-level pictorial representations of some). The block diagram of the proposed work is shown in Fig. 6.

Table 3.

List of models implemented in the study

| Model | Inspired/Adapted | Model | Inspired/Adapted | Model | Inspired/Adapted |
|---|---|---|---|---|---|
| VGG-16 | [60] | VGG-19 | [23] | MobileNet | [47] |
| InceptionResNetV2 | [48] | InceptionV3 | [48] | ResNet-101 | [49] |
| ResNet50V2 | [50] | Xception | [51] | SqueezeNet | [52] |
| DarkNet-53 | [53] | SqueezeNet + DarkNet-53 + MobileNetV2 + Xception + ShuffleNet | [27] (Adapted) | EfficientNetB7 | [54] |
| DCGAN | [55] | LSTMCNN | Our proposed | U-Net(Glaucoma) (Adapted) | [45] |
| Proposed Model | (Self-Made Model) | NASNetLarge | [38, 56] | ResNet-101V2 | [61] |
| COVID research paper | [2] (Adapted) | DarkNet-53 + MobileNetV2 + ResNet-101 + NASNetLarge + Xception + GoogLeNet | (Self-made ensemble deep transfer learning model) | | |

VGG-16 has been applied in transfer learning fashion. First, a pre-trained VGG-16 model is downloaded and used as the base model; our own layers are then placed on top of it, consisting of a 2D average pooling layer with pool size (4, 4), a Flatten layer, a Dense layer of 64 neurons with ReLU activation, a Dropout layer with rate 0.5, and a Dense layer of 2 neurons with Softmax activation. These layers have been fine-tuned using early stopping and reducing the learning rate on plateau. Because of the small number of images, the images are passed through data augmentation with batch size 16, which flips the images, with the rotation angle set to 15 degrees and the fill mode set to nearest. The optimizers tested are SGD, Nadam and AdaGrad; Nadam fits the data best for each dataset. For early stopping the patience level is 5, and the learning rate for the optimizer is 0.001. The experiment has been performed for up to 50 epochs.

Total params: 14,747,650

Trainable params: 32,962

Non-trainable params: 14,714,688.
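The parameter counts reported for VGG-16 can be reproduced by hand from the described layers. The check below assumes a 224 × 224 input, the standard VGG-16 convolutional output of 7 × 7 × 512, and a pooling stride equal to the pool size (the usual default); these details are assumptions, as the text does not state them explicitly.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


# Frozen convolutional base: the non-trainable count reported above.
VGG16_BASE_PARAMS = 14_714_688

# Five 2 x 2 max-pooling stages reduce 224 x 224 to 7 x 7, with 512 channels.
feature_h = feature_w = 224 // 32
channels = 512

# Average pooling with pool size (4, 4) and stride 4: floor(7 / 4) = 1.
pooled_h, pooled_w = feature_h // 4, feature_w // 4
flat = pooled_h * pooled_w * channels  # Flatten gives 512 features

# Pooling, Flatten and Dropout have no parameters; only the Dense layers count.
head_params = dense_params(flat, 64) + dense_params(64, 2)
print(head_params)                      # 32962
print(VGG16_BASE_PARAMS + head_params)  # 14747650
```

Both values agree with the reported trainable and total counts, which is consistent with the whole pre-trained base being frozen and only the added layers being trained.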

ResNet-101 has been applied in transfer learning fashion. The following layers are placed on top of the base model: Flatten, Dense(64), Batch Normalization, Activation tanh, Dropout 0.25, Dense 64, Batch Normalization, Activation tanh, Dropout 0.25, Dense 32, Batch Normalization, Activation tanh, Dropout 0.5, Dense 2, Activation Softmax. These layers have been fine-tuned, and data augmentation with batch size 16 and a rotation angle of 15 degrees is used to increase the number of images. For fine-tuning we used early stopping with a patience level of 5. Here too we used the Nadam optimizer, with a learning rate of 0.01. The experiment has been performed for up to 50 epochs.

Total params: 49,088,898

Trainable params: 6,430,338

Non-trainable params: 42,658,560.

Xception is used for more precise classification of the images, with different layers added on top of the pre-trained network, which is obtained in the same way as described above. The added layers are Global Average Pooling 2D, Dense 200, Activation ReLU, Dropout 0.4, Dense 170, Activation ReLU, Dense 2 and Activation Softmax. This network also uses data augmentation with the same rotation angle of 15 degrees and the fill mode set to nearest, early stopping with a patience value of 6 that keeps the best weights, and reducing the learning rate on plateau to smooth the training curves and increase the accuracy on each dataset on which we have worked. The optimizer used here is Nadam, which again performed best. The experiment has been performed for up to 50 epochs.

Total params: 21,305,792

Trainable params: 21,251,264

Non-trainable params: 54,528.

InceptionV3 is a highly rated network and has been applied in transfer learning fashion, with added layers of Flatten, Dense 1024, Dropout 0.25, Dense 2 and Activation Softmax. This model also used data augmentation and fine-tuning with early stopping, with a patience level of 5 and a batch size of 16; the optimizers tested were nada, Nadam, SGD and AdaGrad. The experiment has been performed for up to 50 epochs.

Total params: 74,234,658

Trainable params: 52,431,874

Non-trainable params: 21,802,784.

InceptionResNetV2 was used in transfer learning fashion with data augmentation and fine-tuning, using early stopping and reducing the learning rate on plateau, with batch size 16, the Nadam optimizer, a learning rate of 0.001 and a patience level of 5. Its added layers are: Flatten, Dense(64), Batch Normalization, Activation tanh, Dropout 0.25, Dense 64, Batch Normalization, Activation tanh, Dropout 0.25, Dense 64, Batch Normalization, Activation tanh, Dropout 0.25, Dense 64, Batch Normalization, Activation tanh, Dropout 0.25, Dense 32, Batch Normalization, Activation tanh, Dropout 0.5, Dense 2, Activation Softmax. The experiment has been performed for up to 50 epochs.

Total params: 56,802,530

Trainable params: 2,465,410

Non-trainable params: 54,337,120

In the case of EfficientNetB7, to improve accuracy, the layers added for transfer learning are Batch Normalization, Activation tanh, Dropout 0.25, Dense 32, Batch Normalization, Activation tanh, Dropout 0.5, Dense 2 and Activation Softmax. The experiment has been performed for up to 50 epochs.

Total params: 72,134,041

Trainable params: 8,035,970

Non-trainable params: 64,098,071.

VGG-19 has been used with data augmentation, fine-tuning and transfer learning, with early stopping at a patience level of 5, batch size 16, and the Nadam optimizer with binary cross-entropy loss. The added layers are a 2D average pooling layer with pool size (4, 4), a Flatten layer, a Dense layer of 64 neurons with ReLU activation, a Dropout layer with rate 0.5 and a Dense layer of 2 neurons with Softmax activation. The experiment has been performed for up to 50 epochs.

Total params: 20,057,346

Trainable params: 32,962

Non-trainable params: 20,024,384.

ResNet50V2 has been used in transfer learning fashion with the following added layers: Flatten, Dense(64), Batch Normalization, Activation tanh, Dropout 0.25, Dense 64, Batch Normalization, Activation tanh, Dropout 0.25, Dense 64, Batch Normalization, Activation tanh, Dropout 0.25, Dense 64, Batch Normalization, Activation tanh, Dropout 0.25, Dense 32, Batch Normalization, Activation tanh, Dropout 0.5, Dense 2, Activation Softmax. The Nadam optimizer is applied with a learning rate of 0.001. Early stopping is used with a patience level of 5, keeping the best weights. This model is the best compared with the other models, with less data leakage than the others. It also used data augmentation with a rotation angle of 15 degrees, a batch size of 16 and 50 epochs. The number of epochs is the same in all cases, namely 50.

Total params: 29,995,522

Trainable params: 6,430,338

Non-trainable params: 23,565,184.

MobileNet is another deep learning network that has been applied in transfer learning fashion, with MobileNet as the pre-trained network and the following added layers: Global Average Pooling 2D, Dense 1024, Activation ReLU, Dense 1024, Activation ReLU, Dense 512, Activation ReLU, Dense 2 and Activation Softmax. The optimizer used here is Nadam with a learning rate of 0.001, with early stopping at a patience level of 5, batch size 16 and 50 epochs. Data augmentation is applied with a rotation angle of 15 degrees. We have also used the best fit in this case.

Total params: 23,301,729

Trainable params: 5,627,522

Non-trainable params: 17,674,207.

DarkNet-53 has also been trained in transfer learning fashion, with the optimizers Adam, AdaGrad and SGD all tested.

Total params: 120,466

Trainable params: 119,154

Non-trainable params: 1,312.

The DCGAN model comprises two networks, a discriminator and a generator. The discriminator is used to discriminate between the right and wrong images; the architectures of the discriminator and generator are given below.

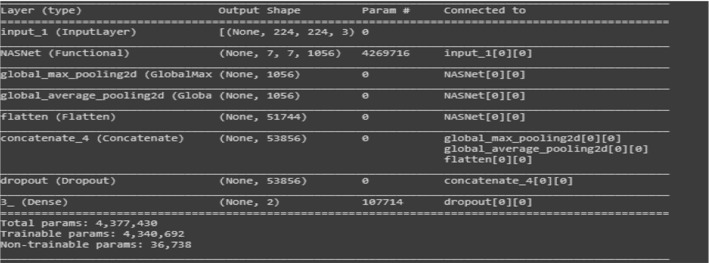

NASNetLarge has been implemented in transfer learning fashion, and the vital details are shown in the screenshot. The batch size is 16, and the Nadam optimizer is used to compile the whole model with a learning rate of 0.0001.

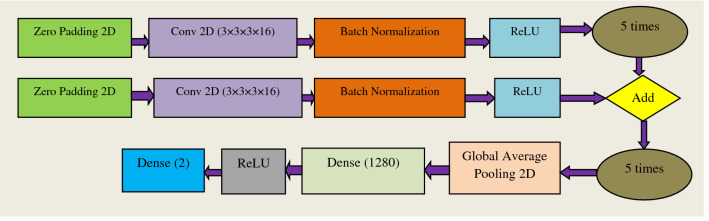

Most of the models we use are available either in pre-installed libraries or online; we apply transfer learning to them by adding our own small neural network. Building a model of our own from scratch (Fig. 7) is a much harder task, and this is where the novelty lies. To build our own model, we first made four blocks of zero padding, Conv2D and Batch Normalization with ReLU activation, and then added one more block of the same type; we arranged this configuration so that it fits every dataset on which we tested our network.

Fig. 7.

Abstract form of Proposed Model (Built from Scratch)

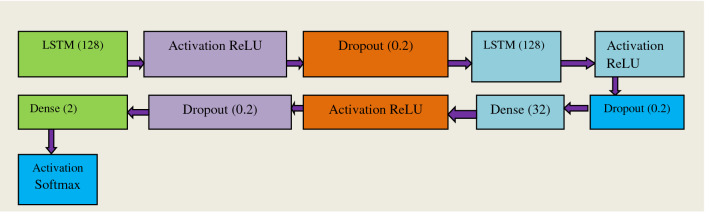

LSTMs (long short-term memory networks) [60] are rarely used for image classification, but it has been found that, after incorporating certain convolutional neural network layers, they can be used to classify images. As can be observed from the diagram of the proposed model, certain layers have been added to the LSTM network to aid in classifying images into COVID-19 and Non-COVID. Such modifications are highly practical for detection, as LSTM runs faster than any CNN network. LSTMs have mostly been used for regression tasks, but after some modification in the transfer learning phase they can be used for image classification; most aspects remain the same as in the other models, and the basic difference in this model is the base model used here, which differs considerably from the others. To apply the model for classification, we used the following steps with a CNN model: Input, CNN model, LSTM, Dense 2 and Activation Softmax. This is how LSTMCNN (Fig. 8) is used as a tool to classify images; this model was expected to give good results on the images. To the best of our knowledge, we are the first to use this LSTMCNN model for COVID-19 detection from X-ray and CT images (Fig. 9).

Fig. 8.

Abstract form of LSTMCNN Model

Fig. 9.

Block diagram of an ensemble learning model by considering n number of artificial neural networks[5]

Total params: 213,301

Trainable params: 213,301

Non-trainable params: 0

As can be observed from Fig. 10, we propose an ensemble deep transfer learning model (DarkNet-53 + MobileNetV2 + ResNet-101 + NASNetLarge + Xception + GoogLeNet), whose constituent models have displayed a range of performances when classifying similar types of problems. We combined all the models and then applied a dense layer of 1024 units with the ReLU activation function. Next, we applied a dense layer of 2 units with the Softmax activation function, and finally we compiled the model. Here we trained the best model first, saved its weights and used those weights for another model; from that model we again saved weights and used them in the next model. We conclude that performance may decrease at intermediate stages, but passing on the weights of the best model sharpens the slopes and ultimately increases accuracy. For each phase, we trained the model with batch size 16 and Nadam as the optimizer. Although the accuracy of a single model may be lower, the accuracy of the combined models comes out best. Broadly, default parameter settings were used for all the pre-trained networks used to create this multi-CNN ensemble deep transfer learning model.

Fig. 10.

Detailed representation of proposed ensemble deep transfer learning model

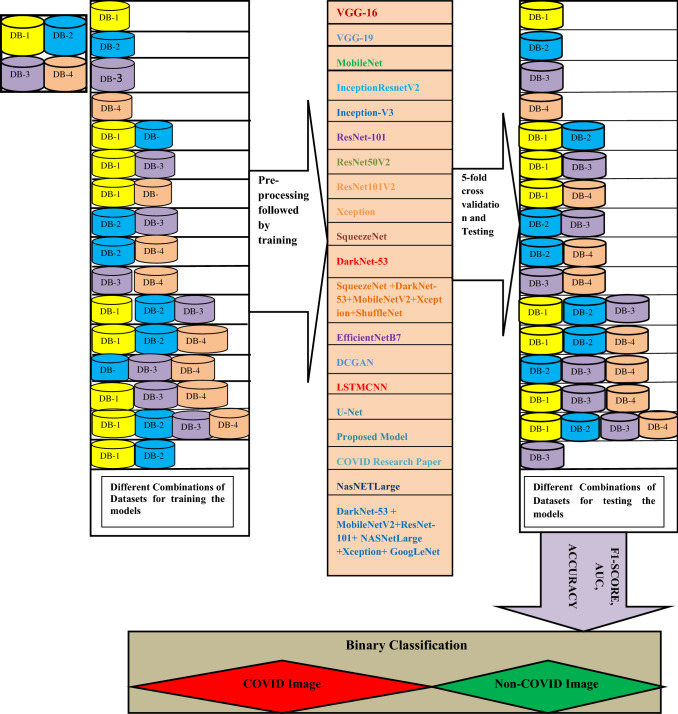

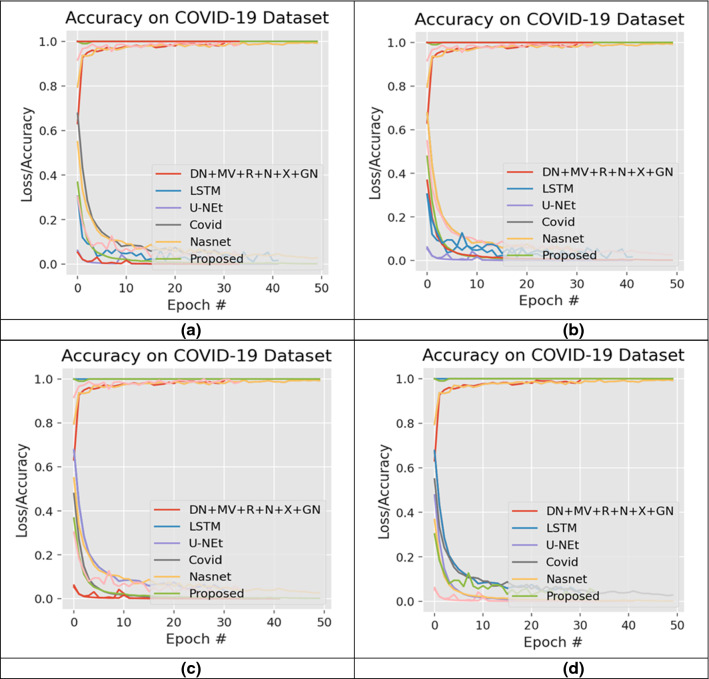

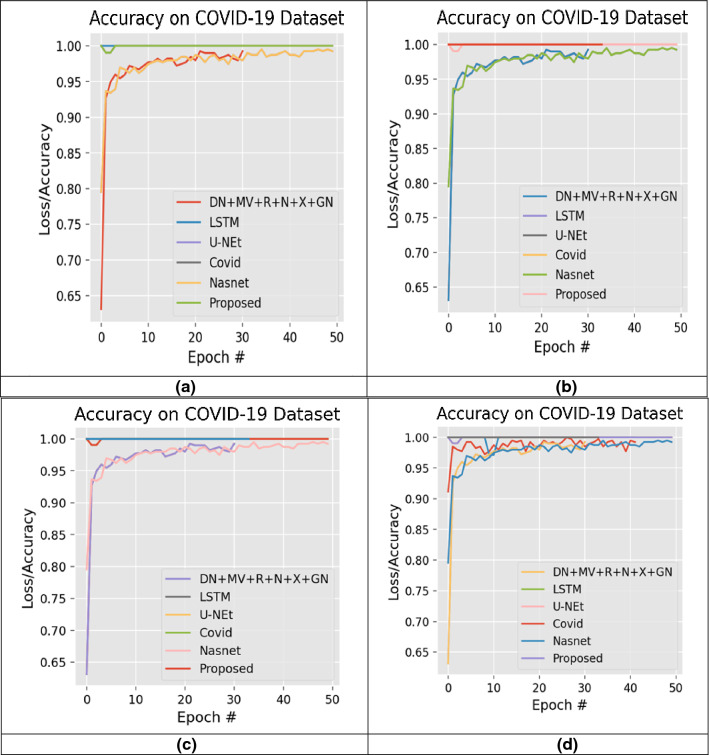

Experimental Results and Models Evaluation

Experimental Setup

We planned 16 experiments to demonstrate each model's robustness, using various combinations of the selected datasets. Fifteen experiments are known examples, while the remaining one is an unknown example. A known example means that the data for training and testing come from the same dataset(s), while unknown means that training is carried out on one or more datasets and testing is carried out on a different dataset. The specifics of the experimental setup are given in Table 4. In all 16 experiments we carried out fivefold cross-validation. The experiments were run on a Core i7 machine with a 6 GB GTX 1060 GPU, and the code was implemented in Python 3.8. Figures 11 and 12 display the confusion matrix used and the complete layout of the proposed study, respectively.

Table 4.

Details of the requisite experimental setup

| Experiment Number | Dataset Used to Train the model(s) | Dataset used for Testing | Cross-validation rate (number of folds) | Example Type |
|---|---|---|---|---|
| 1 | (1) | (1) | 5 | Known Examples |
| 2 | (2) | (2) | 5 | Known Examples |
| 3 | (3) | (3) | 5 | Known Examples |
| 4 | (4) | (4) | 5 | Known Examples |
| 5 | (1,2) | (1,2) | 5 | Known Examples |
| 6 | (1,3) | (1,3) | 5 | Known Examples |
| 7 | (1,4) | (1,4) | 5 | Known Examples |
| 8 | (2,3) | (2,3) | 5 | Known Examples |
| 9 | (2,4) | (2,4) | 5 | Known Examples |
| 10 | (3,4) | (3,4) | 5 | Known Examples |
| 11 | (1,2,3) | (1,2,3) | 5 | Known Examples |
| 12 | (1,2,4) | (1,2,4) | 5 | Known Examples |
| 13 | (2,3,4) | (2,3,4) | 5 | Known Examples |
| 14 | (1,3,4) | (1,3,4) | 5 | Known Examples |
| 15 | (1,2,3,4) | (1,2,3,4) | 5 | Known Examples |
| 16 | (1,2) | (3) | 5 | Unknown Example |
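The fifteen known-example experiments correspond to every non-empty combination of the four datasets, and each is evaluated with fivefold cross-validation. The sketch below enumerates the combinations and shows one simple way to form five folds; the helper names are hypothetical, the folds are contiguous and unshuffled for brevity, and the study does not state how its folds were actually drawn.

```python
from itertools import combinations

DATASETS = (1, 2, 3, 4)


def known_experiments(datasets=DATASETS):
    """All non-empty dataset combinations (training set equals testing set)."""
    return [combo for r in range(1, len(datasets) + 1)
            for combo in combinations(datasets, r)]


def k_fold_indices(n_samples, k=5):
    """Yield (train_idx, test_idx) pairs for k contiguous folds."""
    # The first (n_samples % k) folds receive one extra sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


experiments = known_experiments()
print(len(experiments))  # 15

# 746 is the image count of dataset 1 in Table 2.
folds = list(k_fold_indices(746))
print([len(test) for _, test in folds])  # [150, 149, 149, 149, 149]
```

The enumeration yields the same fifteen combinations as Table 4, although the order of the three-dataset rows differs slightly from the table.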

Fig. 11.

Representation of the Confusion Matrix used in proposed work

Fig. 12.

Layout of the complete process executed in this study for early detection of COVID-19

Performance Indicators, Evaluation Metrics and Generated Results

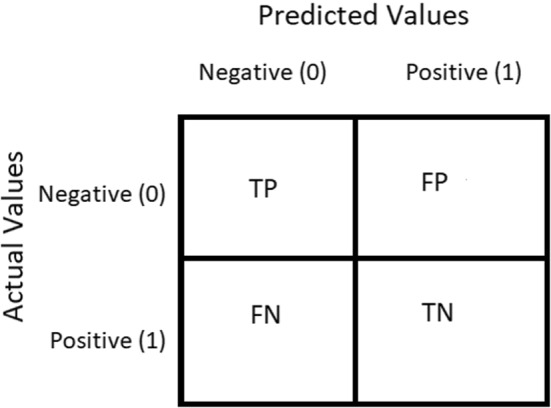

This subsection presents the performance of the shortlisted models in classifying the images as COVID-19 or Non-COVID. The confusion matrix is a performance measurement for classification problems in deep learning where the output can be two or more classes (refer to Table 5). It is a table composed of the four distinct combinations of predicted and actual values; for this binary problem, the confusion matrices are of order 2 × 2. It is typically used to portray how a particular classification network performs on a test dataset for which the actual values are already known. The confusion matrix consists of four values: True Positive (TP), False Positive (FP), True Negative (TN) and False Negative (FN). In this analysis, standard performance assessment metrics such as accuracy, precision, recall, F-score and AUC have been computed, all of which are based on these four terms. TP means that the prediction is positive and the person is COVID-19 positive, which is the desired outcome. FP means that the prediction is positive although the person is actually negative, a false alarm. Similarly, TN means that the person does not have the infection and the prediction is negative. FN means that the prediction is negative although the person is COVID-19 positive, which is the worst case.

Table 5.

Confusion matrix

| Actual cases | Predicted: COVID-19 | Predicted: Healthy (Non-COVID) |
|---|---|---|
| Positive | True Positive (TP) | False Negative (FN) |
| Negative | False Positive (FP) | True Negative (TN) |

Figure 11 shows the confusion matrix for proposed work where Negative (0) represents normal and Positive (1) represents COVID-19.
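With the label convention above (0 for normal, 1 for COVID-19), the four confusion-matrix entries can be counted directly from the actual and predicted labels. The sketch below uses made-up labels purely for illustration; `confusion_counts` is a hypothetical helper name.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, TN and FN for a binary problem."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted COVID-19, actually COVID-19
            else:
                fp += 1  # predicted COVID-19, actually normal (false alarm)
        else:
            if actual == positive:
                fn += 1  # predicted normal, actually COVID-19 (missed case)
            else:
                tn += 1  # predicted normal, actually normal
    return {"TP": tp, "FP": fp, "TN": tn, "FN": fn}

# Illustrative labels only (not taken from the experiments).
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
counts = confusion_counts(y_true, y_pred)
print(counts)  # {'TP': 3, 'FP': 1, 'TN': 3, 'FN': 1}
```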

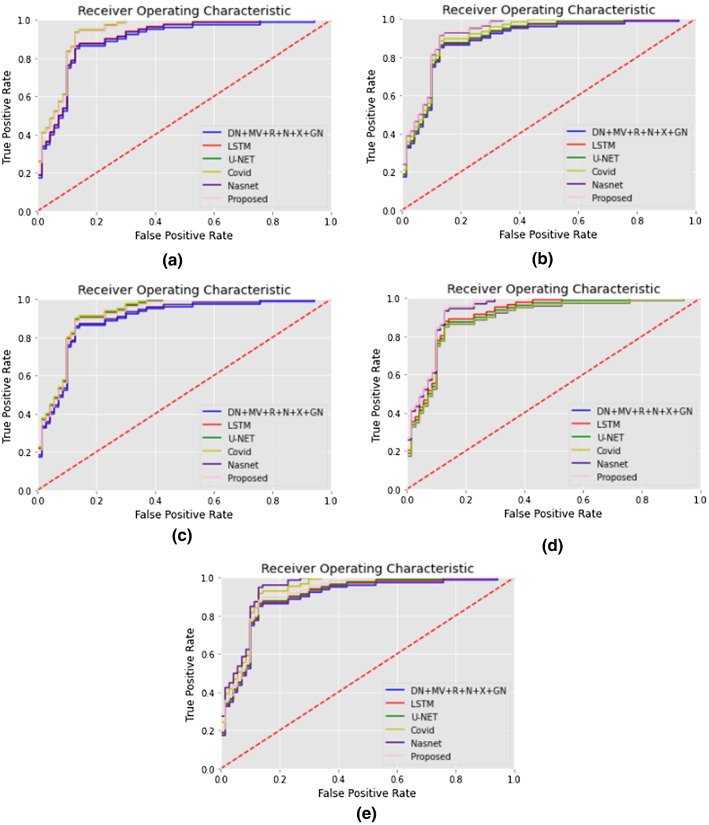

Sensitivity, also called recall or the true positive rate, is one of the preeminent measures of diagnostic performance. It is the number of true positives divided by the sum of the number of true positives and false negatives, TP / (TP + FN), and shows the frequency at which the CNN correctly predicts COVID-19 infection. The false negative rate, FN / (TP + FN), is its complement, so sensitivity and the false negative rate sum to 1. Specificity is the number of true negatives divided by the sum of the number of true negatives and false positives, TN / (TN + FP). The sensitivity measure thus indicates how many of the positive cases were correctly predicted, while the specificity measure indicates how many of the negative cases were correctly predicted; a high specificity shows that the CNN's reporting is reliable for individuals who do not have COVID-19 infection.

Precision is the number of true positives divided by the sum of the number of true positives and false positives, TP / (TP + FP), that is, the fraction of positive predictions that are correct. The F1-score is a metric used to gauge model accuracy that combines the precision and recall of the model into a single value, their harmonic mean. Accuracy is the most critical metric used to measure the overall correctness (trueness) of the classifier model; it is the ratio of correctly classified images to the total number of images in the dataset, (TP + TN) / (TP + FP + TN + FN). Sensitivity and specificity scores help to assess the performance in more detail.

A receiver operating characteristic (ROC) curve is a graph that shows how well a classification model performs at all classification thresholds. It plots the True Positive Rate (sensitivity) against the False Positive Rate (1 - specificity) at different thresholds; lowering the threshold classifies more images as positive, which increases both the true positives and the false positives. The ROC analysis gives a reliable assessment of COVID-19 classification, and the area under the curve summarizes it in a single value that does not depend on any one threshold. By measuring the degree of separability between the classes, the ROC curve reflects the model's diagnostic ability, that is, how well our CNN can discriminate between normal and COVID-19.
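The metrics defined above follow directly from the four confusion-matrix counts. The sketch below shows the standard formulas; the counts passed in the example are illustrative and not taken from any experiment, and `classification_metrics` is a hypothetical helper name.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    precision = tp / (tp + fp) if tp + fp else 0.0    # TP / (TP + FP)
    recall = tp / (tp + fn) if tp + fn else 0.0       # sensitivity, TP / (TP + FN)
    specificity = tn / (tn + fp) if tn + fp else 0.0  # TN / (TN + FP)
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / total if total else 0.0    # (TP + TN) / all samples
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }

# Illustrative counts only.
metrics = classification_metrics(tp=45, fp=5, tn=40, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

For these counts, accuracy is 0.85, precision is 0.90 and the F1-score is 90/105, about 0.857. The guards against empty denominators return 0.0, which is one common convention rather than a universal rule.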

The area under the receiver operating characteristic curve (AUC) is used to assess the model's performance; it is the entire two-dimensional area under the ROC curve from (0,0) to (1,1). The greater the AUC, the better the model separates the classes. Ideally, the AUC should be 1.0; in the worst case its value is 0, and a value of 0.5 means that the model is equivalent to random guessing. The AUC is thus an aggregate indicator of performance across all possible classification thresholds.
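As a worked illustration of the definition above, the ROC points can be obtained by sweeping the decision threshold over the predicted scores, and the AUC by applying the trapezoidal rule to those points. The labels and scores below are a small made-up example; `roc_points` and `auc` are hypothetical helper names, and the sketch assumes both classes are present.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) points obtained by lowering the decision threshold."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after all samples sharing this score are counted.
        if i + 1 == len(ranked) or ranked[i + 1][0] != score:
            points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under a piecewise-linear curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

# Illustrative labels (1 = COVID-19) and predicted scores.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
curve = roc_points(y_true, scores)
print(curve)       # [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
print(auc(curve))  # 0.75
```

A classifier that ranks every positive above every negative yields a curve through (0, 1) and an AUC of 1.0.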


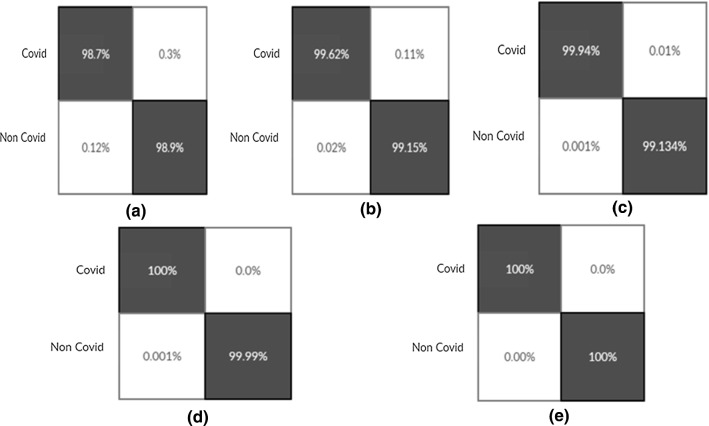

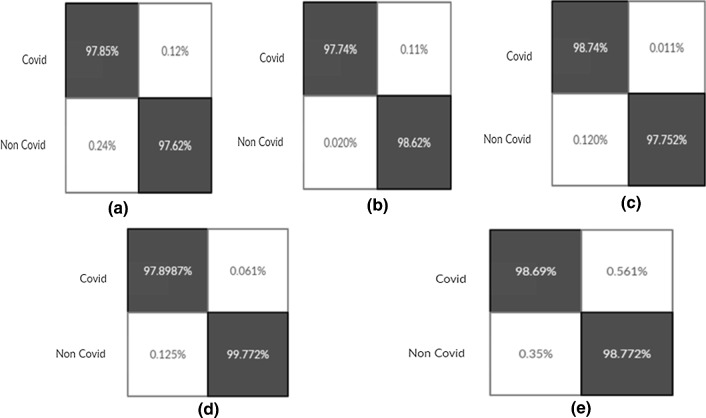

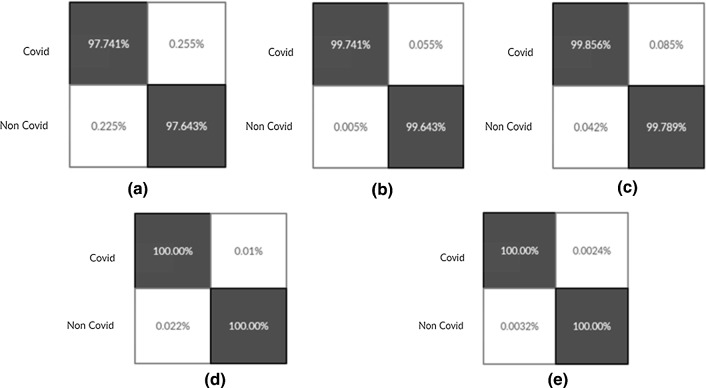

The confusion matrices for the binary classification problem of detecting COVID-19 positive cases are shown in Figs. 16, 17 and 18. Tables 6, 7, 8, 9, 10, 11 and 12 compile the results produced in each experiment. Table 6 shows the performance of all 20 selected models in the first experiment; owing to space constraints, we present only the best 9 models (top 50 percent) for experiments 2, 3, 11, 13, 14, 15 and 16 (refer to Tables 7, 8, 9 and 10). After that, a cumulative average table was generated in which the results of each model over all 16 experiments are averaged for each performance metric (Table 11). Table 12, derived from this average table, highlights the final best five models for each metric. In each table, bold numbers highlight the best score produced for each metric in the particular experiment (refer to Tables 6, 7, 8, 9, 10, 11 and 12).

Fig. 16.

Confusion matrices for the proposed model built from scratch (Experiment 14 (Fig-a), 15 (Fig-b), 16 (Fig-c), 17 (Fig-d), 18 (Fig-e))

Fig. 17.

Confusion matrices for the LSTMCNN model (Experiment 14 (Fig-a), 15 (Fig-b), 16 (Fig-c), 17 (Fig-d), 18 (Fig-e))

Fig. 18.

Confusion matrices for the proposed ensemble model (Experiment 14 (Fig-a), 15 (Fig-b), 16 (Fig-c), 17 (Fig-d), 18 (Fig-e))

Table 6.

Comparative analysis of the classification results of the 20 models during experiment 1

| Experiment-1 | Metric-1 | Metric-2 | Metric-3 |

|---|---|---|---|

| Image nets | F1 Score | AUC | Accuracy |

| VGG-16 | 0.79330 | 0.79375 | 0.82350 |

| VGG-19 | 0.83333 | 0.83482 | 0.77240 |

| MobileNet | 0.90000 | 0.90000 | 0.91000 |

| InceptionResNetV2 | 0.83000 | 0.84000 | 0.90000 |

| InceptionV3 | 0.53000 | 0.50000 | 0.52100 |

| ResNet-101 | 0.79000 | 0.79330 | 0.86000 |

| ResNet-101V2 | 0.9012 | 0.9147 | 0.9167 |

| ResNet50V2 | 0.82410 | 0.82660 | 0.83000 |

| Xception | 0.79333 | 0.79370 | 0.73790 |

| SqueezeNet | 0.52000 | 0.53000 | 0.53000 |

| DarkNet-53 | 0.53450 | 0.54230 | 0.53240 |

| SqueezeNet + DarkNet-53 + MobileNetV2 + Xception + ShuffleNet | 0.89650 | 0.90120 | 0.87450 |

| EfficientNetB7 | 0.51230 | 0.52330 | 0.53000 |

| DCGAN | 0.82145 | 0.82569 | 0.90000 |

| LSTMCNN | 0.84123 | 0.82365 | 0.87456 |

| U-Net(Glaucoma) | 0.83645 | 0.82654 | 0.88975 |

| Proposed Model | 0.98746 | 0.98654 | 0.99655 |

| COVID research paper | 0.87645 | 0.88875 | 0.86457 |

| NASNet Large | 0.87457 | 0.88570 | 0.98746 |

| DarkNet-53 + MobileNetV2 + Resnet-101 + NASNet Large + Xception + GoogLeNet | 0.84563 | 0.87163 | 0.86347 |

Table 7.

Comparative analysis of the classification results of the best performing 9 models during experiments 2 and 3

| Experiment-2 | Metric-1 | Metric-2 | Metric-3 | Experiment-3 | Metric-1 | Metric-2 | Metric-3 |

|---|---|---|---|---|---|---|---|

| Image nets | F1 | AUC | Accuracy | Image nets | F1 | AUC | Accuracy |

| VGG-19 | 0.98756 | 0.96587 | 0.99634 | InceptionResNetV2 | 0.96359 | 0.97584 | 0.96359 |

| InceptionResNetV2 | 0.96548 | 0.96387 | 0.96471 | ResNet-101 | 0.99785 | 0.97458 | 0.98745 |

| Xception | 0.96348 | 0.94127 | 0.96547 | ResNet50V2 | 0.96547 | 0.95412 | 0.98745 |

| DCGAN | 0.93647 | 0.94226 | 0.96785 | DCGAN | 0.97465 | 0.96548 | 0.97845 |

| LSTMCNN | 0.96355 | 0.98564 | 0.97459 | LSTMCNN | 0.96359 | 0.97855 | 0.96359 |

| Proposed Model | 0.92940 | 0.96245 | 0.99245 | U-Net(Glaucoma) | 0.93235 | 0.96235 | 0.96984 |

| COVID research paper | 0.97459 | 0.96326 | 0.96458 | Proposed Model | 0.95487 | 0.95055 | 0.96358 |

| NASNet Large | 0.97847 | 0.98636 | 0.97414 | COVID research paper | 0.97423 | 0.98746 | 0.98632 |

| ResNet-101V2 | 0.8865 | 0.8659 | 0.8737 | ResNet-101V2 | 0.8963 | 0.9147 | 0.9213 |

| DarkNet-53 + MobileNetV2 + Resnet-101 + NASNet Large + Xception + GoogLeNet | 0.99483 | 0.93584 | 0.98742 | DarkNet-53 + MobileNetV2 + Resnet-101 + NASNet Large + Xception + GoogLeNet | 0.97478 | 0.99789 | 0.97779 |

Table 8.

Comparative analysis of the classification results of the best performing 9 models during experiments 11 and 13

| Experiment-11 | Metric-1 | Metric-2 | Metric-3 | Experiment-13 | Metric-1 | Metric-2 | Metric-3 |

|---|---|---|---|---|---|---|---|

| Image nets | F1 | AUC | Accuracy | Image nets | F1 | AUC | Accuracy |

| ResNet50V2 | 0.80426 | 0.84035 | 0.89550 | MobileNet | 0.98763 | 0.97856 | 0.98565 |

| Xception | 0.84660 | 0.86301 | 0.89206 | InceptionResNetV2 | 0.96547 | 0.95635 | 0.98615 |

| DCGAN | 0.93642 | 0.93348 | 0.93476 | ResNet50V2 | 0.98236 | 0.96584 | 0.99655 |

| LSTMCNN | 0.97457 | 0.97895 | 0.97456 | LSTMCNN | 0.97845 | 0.98746 | 0.97855 |

| U-Net(Glaucoma) | 0.96805 | 0.98069 | 0.98660 | U-Net(Glaucoma) | 0.89233 | 0.98698 | 0.98524 |

| Proposed Model | 0.98630 | 0.98604 | 0.98936 | Proposed Model | 0.89975 | 0.98555 | 0.92126 |

| COVID research paper | 0.97456 | 0.95479 | 0.96470 | COVID research paper | 0.96325 | 0.98464 | 0.97456 |

| ResNet-101V2 | 0.8924 | 0.9124 | 0.9010 | ResNet-101V2 | 0.8947 | 0.9214 | 0.9007 |

| NASNet Large | 0.96548 | 0.94756 | 0.94752 | NASNet Large | 0.94365 | 0.96412 | 0.97413 |

| DarkNet-53 + MobileNetV2 + Resnet-101 + NASNet Large + Xception + GoogLeNet | 0.95686 | 0.97845 | 0.98875 | DarkNet-53 + MobileNetV2 + Resnet-101 + NASNet Large + Xception + GoogLeNet | 0.97878 | 0.98747 | 0.99785 |

Table 9.

Comparative analysis of the classification results of the best performing 9 models during experiments 14 and 15

| Experiment-14 | Metric-1 | Metric-2 | Metric-3 | Experiment-15 | Metric-1 | Metric-2 | Metric-3 |

|---|---|---|---|---|---|---|---|

| Image nets | F1 | AUC | Accuracy | Image nets | F1 | AUC | Accuracy |

| VGG-19 | 0.89641 | 0.84563 | 0.94567 | ResNet-101 | 0.94563 | 0.93654 | 0.96413 |

| MobileNet | 0.95675 | 0.95641 | 0.96875 | ResNet50V2 | 0.92146 | 0.96285 | 0.96421 |

| DCGAN | 0.98745 | 0.93647 | 0.97456 | DCGAN | 0.94569 | 0.93215 | 0.97459 |

| LSTMCNN | 0.97855 | 0.97465 | 0.96578 | LSTMCNN | 0.94742 | 0.97456 | 0.96458 |

| U-Net(Glaucoma) | 0.98523 | 0.96785 | 0.96875 | U-Net(Glaucoma) | 0.99455 | 0.99214 | 0.98669 |

| Proposed Model | 0.97854 | 0.96874 | 0.98756 | Proposed Model | 0.93298 | 0.94783 | 0.98547 |

| ResNet-101V2 | 0.8938 | 0.8997 | 0.9045 | ResNet-101V2 | 0.8932 | 0.8897 | 0.8740 |

| COVID research paper | 0.98631 | 0.96346 | 0.97852 | COVID research paper | 0.93648 | 0.97413 | 0.98746 |

| NASNet Large | 0.96875 | 0.98215 | 0.99785 | NASNet Large | 0.96335 | 0.94758 | 0.96354 |

| DarkNet-53 + MobileNetV2 + Resnet-101 + NASNet Large + Xception + GoogLeNet | 0.98634 | 0.98632 | 0.94578 | DarkNet-53 + MobileNetV2 + Resnet-101 + NASNet Large + Xception + GoogLeNet | 0.97459 | 0.98875 | 0.97879 |

Table 10.

Comparative analysis of the classification results of the best performing 7 models during experiment 16

| Experiment-16 | Metric-1 | Metric-2 | Metric-3 |

|---|---|---|---|

| Image nets | F1 | AUC | Accuracy |

| DenseNet53 + MobileNetv2 + ResNet-101 + NASNetLarge + Xception + GoogLeNet | 0.9156 | 0.9063 | 0.9074 |

| LSTMCNN | 0.8765 | 0.91368 | 0.8936 |

| ResNet-101V2 | 0.9111 | 0.8993 | 0.9054 |

| U-Net(Glaucoma) | 0.8623 | 0.8836 | 0.8845 |

| COVID research paper | 0.9012 | 0.90014 | 0.8856 |

| NASNetLarge | 0.8745 | 0.91141 | 0.8763 |

| Self-Made Model | 0.9123 | 0.91256 | 0.8934 |

Table 11.

Average-wise performance comparison of all the models

| Model NAME | All experiments average F1 | All experiments average AUC | All experiments average accuracy |

|---|---|---|---|

| VGG-16 | 0.920733 | 0.888575 | 0.914426 |

| VGG-19 | 0.915139 | 0.903742 | 0.921005 |

| MobileNet | 0.911087 | 0.919878 | 0.925677 |

| InceptionResNetV2 | 0.904686 | 0.913151 | 0.920659 |

| InceptionV3 | 0.896025 | 0.885667 | 0.882325 |

| ResNet-101 | 0.899679 | 0.900224 | 0.899411 |

| ResNet50V2 | 0.880875 | 0.910234 | 0.919958 |

| ResNet-101V2 | 0.8971 | 0.9036 | 0.8989 |

| Xception | 0.917826 | 0.902268 | 0.91461 |

| SqueezeNet | 0.521689 | 0.530245 | 0.51925 |

| DarkNet-53 | 0.529491 | 0.54358 | 0.532157 |

| SqueezeNet + DarkNet-53 + MobileNetV2 + Xception + ShuffleNet | 0.897339 | 0.902605 | 0.894416 |

| EfficientNetB7 | 0.513148 | 0.532155 | 0.532913 |

| DCGAN | 0.949983 | 0.940607 | 0.957902 |

| LSTMCNN | 0.966804 | 0.964544 | 0.964641 |

| U-Net(Glaucoma) | 0.962745 | 0.959091 | 0.950293 |

| Proposed Model | 0.945833 | 0.960826 | 0.946373 |

| COVID research paper | 0.961163 | 0.957247 | 0.962947 |

| NASNetLarge | 0.959736 | 0.957844 | 0.954607 |

| DarkNet-53 + MobileNetV2 + Resnet-101 + NASNetLarge + Xception + GoogLeNet | 0.968263 | 0.95837 | 0.965129 |

Table 12.

Average-wise best performing 5 models on three efficiency measuring parameters

| Ranking of Models | On the basis of Accuracy | On the basis of AUC | On the basis of F1-SCORE |

|---|---|---|---|

| First | DarkNet-53 + MobileNetV2 + Resnet-101 + NASNetLarge + Xception + GoogLeNet | LSTMCNN | DarkNet-53 + MobileNetV2 + Resnet-101 + NASNet Large + Xception + GoogLeNet |

| Second | LSTMCNN | Proposed Model | LSTMCNN |

| Third | COVID Research Paper | U-Net(Glaucoma) | U-Net(Glaucoma) |

| Fourth | U-Net(Glaucoma) | DarkNet-53 + MobileNetV2 + Resnet-101 + NASNet Large + Xception + GoogLeNet | COVID Research Paper |

| Fifth | NASNetLarge | NASNetLarge | NASNetLarge |
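The rankings in Table 12 can be reproduced by sorting the averages of Table 11 for each metric. The sketch below copies the seven highest-scoring rows of Table 11 and ranks them by AUC and by F1-score; the dictionary layout and the helper name `top_models` are assumptions made for illustration.

```python
ENSEMBLE = "DarkNet-53 + MobileNetV2 + Resnet-101 + NASNetLarge + Xception + GoogLeNet"

# (average F1, average AUC, average accuracy), copied from Table 11.
averages = {
    "DCGAN": (0.949983, 0.940607, 0.957902),
    "LSTMCNN": (0.966804, 0.964544, 0.964641),
    "U-Net(Glaucoma)": (0.962745, 0.959091, 0.950293),
    "Proposed Model": (0.945833, 0.960826, 0.946373),
    "COVID research paper": (0.961163, 0.957247, 0.962947),
    "NASNetLarge": (0.959736, 0.957844, 0.954607),
    ENSEMBLE: (0.968263, 0.95837, 0.965129),
}

METRIC_COLUMN = {"f1": 0, "auc": 1, "accuracy": 2}

def top_models(metric, n=5):
    """Return the n model names with the highest average for the given metric."""
    column = METRIC_COLUMN[metric]
    ranked = sorted(averages, key=lambda name: averages[name][column], reverse=True)
    return ranked[:n]

top_auc = top_models("auc")
top_f1 = top_models("f1")
print(top_auc)
print(top_f1)
```

The AUC and F1-score orderings match the corresponding columns of Table 12. Applied to the accuracy column, the same sort places DCGAN (0.957902) fourth, ahead of NASNetLarge and U-Net(Glaucoma), which differs from the accuracy column of Table 12.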