Abstract

Due to COVID-19, the demand for Chest Radiographs (CXRs) has increased sharply. We therefore present a novel, fully automatic, modified Attention U-Net (CXAU-Net) deep model for multi-class segmentation that can detect common findings of COVID-19 in CXR images. The architectural design of this model includes three novelties: first, an Attention U-Net model with channel and spatial attention blocks that precisely localizes multiple pathologies; second, dilated convolutions that improve the sensitivity of the model to foreground pixels by enlarging its receptive fields; and third, a newly proposed hybrid loss function that combines area and size information to optimize the model. For multi-class segmentation on the ChestX-ray14 dataset, the proposed model achieves average accuracy, DSC, and Jaccard index scores of 0.951, 0.993, and 0.984 with the image-based approach and 0.921, 0.985, and 0.973 with the patch-based approach. For binary-class segmentation on the Japanese Society of Radiological Technology (JSRT) CXR dataset, it achieves average DSC and Jaccard index scores of 0.998 and 0.989, respectively. These results show that the proposed model outperforms state-of-the-art segmentation methods.

Keywords: Deep learning, Semantic segmentation, Attention U-Net, COVID-19 dataset

1. Introduction

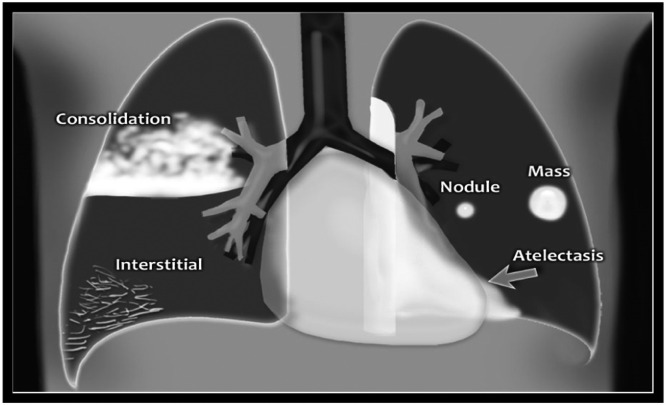

In 2020, Coronavirus Disease 2019 (COVID-19) became a serious health issue that severely affected the lives of people worldwide. The Reverse Transcription Polymerase Chain Reaction (RT-PCR) clinical test and CXR, the first-line screening tool, are the main means of early diagnosis of this disease. COVID-19 CXR images most often show abnormalities such as Ground-Glass Opacities (GGOs), consolidation, cardiomegaly, and infiltrates [1]. Fig. 1 illustrates the visual appearance of various lung abnormalities. As Fig. 1 shows, consolidation is an area of increased density caused by alveoli filled with fluid, pus, etc.; it produces ill-defined opacities and indicates pneumonia. Reticular opacities arising from an interstitial pattern can indicate pulmonary edema, a mass or nodule lesion caused by a fluid-filled sac may indicate lung cancer, and atelectasis, i.e., a collapsed lung, may be associated with pneumothorax. Similarly, cardiomegaly, an often idiopathic enlargement of the heart, presents with symptoms such as chest pain and edema, and infiltration, which occurs when a substance denser than air lingers within the lungs, may indicate tuberculosis [2]. Reading a CXR image requires the high-level knowledge of radiologists and years of expertise, and its interpretation takes time. Although numerous research systems segment specific pathologies such as nodules or tuberculosis in CXR images, there is still a need for an architecture embedded with expert domain knowledge that can efficiently localize and segment the multiple abnormality classes of COVID-19 in CXR images.

Fig. 1.

Visual appearance of various lung abnormalities [2].

This has motivated us to propose a novel Deep Learning (DL) segmentation method that automatically localizes and segments lung abnormalities, specifically GGOs, consolidation, cardiomegaly, and infiltrates, because these abnormalities are inherently interrelated. Once trained, the system can help radiologists detect COVID-19 findings easily, support the fight against the pandemic, and help them make correct decisions about further treatment.

The main strategies used in the proposed CXAU-Net model are summarized as:

- We modify the architecture of the Attention U-Net model so that it extracts rich image features and structures along lung field boundaries. The model uses attention blocks in the encoder and decoder of U-Net. These blocks apply channel and spatial attention, which provides information about small areas (i.e., low-level feature maps) and enables the architecture to detect multiple lung pathologies.

- We apply dilated convolution in all layers, which expands the receptive field (i.e., the field of view of the convolution kernel) and maintains the spatial dimensions of the feature maps without increasing the number of model parameters. This extracts multiscale features through multiple convolution paths and improves the sensitivity of the model.

- We introduce a hybrid loss function based on the Active Contour (AC) loss and the Dice Similarity Coefficient (DSC) loss. This loss function combines pixel and geometric information for optimizing the model, updating its weights, and reducing the segmentation error.

- Besides the hybrid loss function, the model is trained and tested with other loss functions such as the Categorical Cross-Entropy (CCE), Tversky, and DSC losses. Of all these loss functions, the hybrid loss function yields the highest DSC and overlap scores because it better controls small-scale edges and regions of the segmentation contours.

- Moreover, we use different training strategies and apply both image-based and patch-based approaches to segment sub-regions and detect multiple lung pathologies.

- We also check the convergence of the proposed model with different activation functions: Rectified Linear Unit (ReLU), Exponential Linear Unit (ELU), Scaled Exponential Linear Unit (SELU), Leaky ReLU, and Parametric ReLU (PReLU).

- In addition to multi-class segmentation on the COVID-19 dataset, the model performs binary-class segmentation of lung fields on the JSRT dataset.
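The activation functions compared in the convergence experiments have standard closed forms. As a minimal NumPy sketch (not the authors' code), they can be written as below; the Leaky ReLU slope of 0.01 and the ELU α of 1 are common defaults assumed here, and the PReLU slope, which a network would learn, is passed in by hand. ReLU returns zero, with zero gradient, for every negative input (the source of the dying ReLU problem), whereas the other four keep a non-zero response there.

```python
import numpy as np

# Standard SELU constants (Klambauer et al., 2017).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805

def relu(x):
    return np.maximum(x, 0.0)

def elu(x, alpha=1.0):
    # np.minimum avoids overflow in expm1 for large positive x (that branch is unused).
    return np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0.0)))

def selu(x):
    return SELU_SCALE * np.where(x > 0, x, SELU_ALPHA * np.expm1(np.minimum(x, 0.0)))

def leaky_relu(x, slope=0.01):
    # Fixed small slope for negative inputs (0.01 is an assumed default).
    return np.where(x > 0, x, slope * x)

def prelu(x, slope):
    # Same form as Leaky ReLU, but `slope` is a learned parameter in a real network.
    return np.where(x > 0, x, slope * x)

x = np.array([-1.0, 0.0, 2.0])
print(relu(x), elu(x), selu(x), leaky_relu(x), prelu(x, 0.25))
```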

The rest of this paper is organized as follows: Section 2 reviews related work on CXR images in tabular form. Section 3 describes the methodology of the proposed architecture. Section 4 evaluates the experimental results and compares them with other state-of-the-art methods. Finally, Section 5 concludes this paper.

2. Related work

In the literature, many studies have segmented CXR images with DL methods, but their solutions vary according to their toolsets and targets. This section presents existing DL methods for the segmentation of medical images, particularly CXR and COVID-19 images.

The most commonly used architecture in medical image segmentation is U-Net, introduced by Ronneberger et al. [3]. It consists of a contracting path built from convolutional and pooling layers that extracts high-level features, which are then concatenated with low-level features in the expanding path. Kalinovsky et al. [4] designed a modified encoder-decoder Convolutional Neural Network (CNN) optimized with the limited-memory Broyden-Fletcher-Goldfarb-Shanno (L-BFGS) algorithm for lung segmentation. Although it achieved a DSC score of 96.2%, the model needs to be validated on a different dataset, because the same dataset was used for training and testing. Table 1 lists some of the important DL-based methods for the segmentation of CXR images reported in the literature. These methods use multiple processing layers with linear and non-linear transformations to abstract features at multiple levels. Table 2 summarizes studies on the detection of COVID-19 in CXR and CT images, which illustrate the importance of DL-based image analysis for detecting COVID-19 infections. Most of these studies employed CXR as the primary screening method, whereas cases with severe GGOs and consolidation used CT image analysis. Although these attempts have shown promising results for segmentation tasks, there is still a crucial need to explore new directions, because factors such as overfitting due to small datasets, the vanishing gradient problem, and the dying ReLU problem affect the performance of DL-based models. Motivated by these challenges in existing segmentation networks, we adapted the Attention U-Net architecture and applied different training strategies to improve the detection and segmentation of different lung abnormalities.

Table 1.

DL based methods for segmentation of CXR images.

| Approaches | Strategy | Dataset & Results | Pros/Cons |
|---|---|---|---|
| Novikov et al. [5] | Modified U-Net | JSRT CXR dataset; Overlap: lungs 95%, heart 88.2%, clavicles 86.8% | Experimental results could be improved with more data |
| Wang [6] | Multi-task Fully Convolutional Networks | JSRT CXR dataset; Overlap: lungs 96%, heart 94.3%, clavicles 90.6% | More data could be used to improve the performance of the model |
| Arbabshirani et al. [7] | Multi-scale CNN | PACS and JSRT datasets; Overlap: 91% | Patch-based approach applied, but performance is low compared with other methods |
| Jiang et al. [8] | CNN model with recurrent layers | JSRT dataset; quantitative results not given | Only qualitative results are given |
| Xie et al. [9] | Multi-view knowledge-based collaborative (MV-KBC) model using ResNet-50 and U-Net | LIDC-IDRI dataset; DSC: 80.23% | Low performance; patch-based approach applied |
| Eslami et al. [10] | Multi-task organ segmentation using the conditional Generative Adversarial Network (GAN) pix2pix network | JSRT dataset; Overlap: 98.4%, DSC: 99.2% | Outperforms other multi-class segmentation methods |
| Park et al. [11] | Deep CNN model for segmentation of Diffuse Interstitial Lung Diseases (DILD) | High-Resolution Computed Tomography (HRCT) dataset; Overlap: 96.76%, DSC: 98.84% | The model is applied to different diseases |
| Gaál et al. [12] | Adversarial Attention U-Net model for lung segmentation | JSRT and MC datasets; DSC: 97.5% | Good performance for lung segmentation |
| Wang et al. [13] | Multitask Dense U-Net (MDU-Net) model for rib and clavicle segmentation | CXR dataset; DSC: 88.38% | Small dataset of only 88 CXR images; no data augmentation used |

Table 2.

DL based methods for segmentation of COVID-19 images.

| Approaches | Strategy | Dataset & Results | Pros/Cons |
|---|---|---|---|
| Chen et al. [14] | Residual Attention U-Net for multi-class segmentation of COVID-19 CT images | CT images; DSC: 83% | Low DSC score |
| Oh et al. [15] | Fully Convolutional (FC) DenseNet103 for segmentation of lungs and heart | JSRT and MC datasets; DSC: 84.4% | Poor segmentation of images with severe consolidation and opacities |
| Alom et al. [16] | NABLA-3 built with encoding and decoding units for segmentation of chest regions | JSRT and MC datasets; Overlap: 86.50%, DSC: 88.46% | The model needs to be trained and tested on more data |
| Amyar et al. [17] | Multi-task DL model with two decoders for segmentation and image reconstruction | CT images; DSC: 88.0% | Segmentation network requires modification |
| Qiu et al. [18] | MiniSeg DL model made of Attentive Hierarchical Spatial Pyramid (AHSP) modules for segmentation of COVID-19 data | COVID-19 CT dataset; Speed: 516.3 fps, DSC: 80.06% | Achieves results with high speed and efficiency |
| Teixeira et al. [19] | U-Net architecture for lung segmentation | JSRT, MC, and COVID-19 datasets; DSC: 98.2% | Poor performance for segmented images |
| Kim et al. [20] | DL model for segmentation of four lung regions in COVID-19 CXR images | RSNA pneumonia and JSRT datasets; DSC: 90% | The model can be evaluated on data from multiple sites |
| Chen et al. [21] | U-Net++ DL model for segmentation of COVID-19 pneumonia | CT dataset; Accuracy: 92.59% | Useful resource for fighting COVID-19 pneumonia |
| Shan et al. [22] | VB-Net for segmentation of COVID-19 infection regions | CT dataset; DSC: 91.6% | Generalization of the model is not evaluated |

3. Methods

This section explains in detail all the steps used to design the proposed CXAU-Net model for the segmentation and detection of COVID-19 chest pathologies. First, patches of CXR images are extracted from the COVID-19 dataset. Then, the proposed CXAU-Net is constructed, trained, and tested for multi-class and binary-class segmentation. Table 3 details the datasets used by this model.

Table 3.

Detail of datasets used by the proposed CXAU-Net model.

| Segmentation type | Approach used | Dataset type | Dataset | Number of images | Number of augmented images used | Size of images |
|---|---|---|---|---|---|---|
| Multi-class | Image-based | Training | COVID-19 dataset | 105 images | 840 images | 256×256×3 |
| Multi-class | Image-based | Testing | Chest X-ray 14 dataset | 640 images (160 of each case) | – | 256×256×3 |
| Multi-class | Patch-based | Training | COVID-19 dataset | 210 patches | 1680 patches | 128×128×3 |
| Multi-class | Patch-based | Testing | Chest X-ray 14 dataset | 640 patches (160 of each case) | – | 128×128×3 |
| Binary-class | Image-based | Training | NLM-China and MC CXR datasets | 704 images | 5632 images | 256×256×1 |
| Binary-class | Image-based | Testing | JSRT dataset | 247 images | – | 256×256×1 |

3.1. Datasets

The four readily accessible CXR datasets used for multi-class and binary-class segmentation and detection of chest pathologies are as follows:

1) COVID-19 Dataset: For the study of multi-class segmentation and detection of COVID-19 infections, we selected the COVID-19 dataset [23], which contains 105 CXR images of COVID-19-positive patients from different countries. All these images are resized to 256×256×3 pixels and stored in Portable Network Graphics (PNG) format. They show radiological signs of COVID-19 such as GGOs, consolidation, cardiomegaly, and infiltrates. An expert thoracic radiologist generated masks for these images with the labelme Python library for image annotation, following the RTOG 1106 guidelines.

2) NLM Shenzhen and Montgomery County (MC) CXR Datasets: Together, they consist of 800 CXR images, 394 with tuberculosis and 406 normal cases. Gold-standard lung segmentation masks are available for only 704 of these 800 images, so the remaining 96 images are discarded when training the proposed CXAU-Net model [24], [25].

3) ChestX-ray14 and Japanese Society of Radiological Technology (JSRT) Datasets: To test the performance of the model for multi-class segmentation, we used the publicly available ChestX-ray14 dataset [26], from which a total of 640 images are used, 160 for each case, i.e., GGOs, consolidation, cardiomegaly, and infiltrates. The model is separately tested for binary-class segmentation on the JSRT CXR dataset [27], which consists of 247 images with both lung and heart segmentation masks. The JSRT images are resized to 256×256×1 pixels.

3.2. Pre-processing operations

The contrast of the low-contrast CXR images is improved with the Contrast Limited Adaptive Histogram Equalization (CLAHE) method [28]. This technique adaptively equalizes the intensity values of the image in the range [0, 255] so that the Cumulative Distribution Function (CDF) of the intensities approaches that of the uniform distribution, while limiting contrast amplification. The image quality is further enhanced by gamma correction with a gamma value of 0.5. In addition, to improve the performance of the proposed model, the COVID-19 dataset is enlarged eightfold with data augmentation. We used the Albumentations Python library to augment these images. The augmented images and their corresponding masks are obtained with transformations such as flip, random contrast, transpose, crop, elastic transform, and grid distortion.
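The two intensity operations can be sketched in NumPy as follows. This is a simplified, assumption-laden illustration rather than the pipeline used here: the equalization is applied globally with a single clip-and-redistribute pass, whereas full CLAHE (for example OpenCV's `cv2.createCLAHE`) applies the same idea per tile and interpolates between tiles. The clip limit of 1% of the pixels per bin and the convention `out = 255 * (in / 255) ** gamma` are assumptions.

```python
import numpy as np

def clip_limited_equalize(img, clip_limit=0.01, n_bins=256):
    """Global contrast-limited histogram equalization of a 2-D uint8 image.

    clip_limit is the maximum fraction of all pixels allowed in one bin (assumed value).
    Counts above the limit are clipped and redistributed uniformly in a single pass.
    """
    hist = np.bincount(img.ravel(), minlength=n_bins).astype(np.float64)
    limit = clip_limit * img.size
    excess = np.clip(hist - limit, 0.0, None).sum()
    hist = np.minimum(hist, limit) + excess / n_bins
    cdf = np.cumsum(hist)
    span = cdf[-1] - cdf[0]
    if span == 0:                                   # degenerate histogram: nothing to stretch
        return img.copy()
    cdf = (cdf - cdf[0]) / span                     # map the CDF onto [0, 1]
    lut = np.round(cdf * 255.0).astype(np.uint8)    # intensity look-up table
    return lut[img]

def gamma_correct(img, gamma=0.5):
    """Gamma correction via a look-up table: out = 255 * (in / 255) ** gamma."""
    lut = np.round(255.0 * (np.arange(256) / 255.0) ** gamma).astype(np.uint8)
    return lut[img]

def preprocess(img):
    return gamma_correct(clip_limited_equalize(img), gamma=0.5)
```

Under this convention, γ = 0.5 brightens mid-tones (an input of 64 maps to 128) while leaving 0 and 255 unchanged.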

3.3. Patch extraction

Because the proposed CXAU-Net model is also implemented with a patch-based approach for multi-class segmentation of COVID-19 infections, patches of the cropped left-lung and right-lung ROIs are extracted based on their relative positions. The extracted ROI patches are resized to 128×128×3 pixels and used for training and testing the model; augmentation expands the training patches to 1680. This approach helps the model focus on small regions and produce segmentations that closely match the ground truth. Because the model runs through every patch, including overlapping patches, processing is slower; however, the resulting outputs detect and segment the COVID-19 findings of GGOs, consolidation, cardiomegaly, and infiltrates effectively.
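A minimal NumPy sketch of ROI patch extraction is given below. It assumes, for illustration only, that a binary lung mask is available for each image and that the two lungs are separated by the vertical midline of the image; the function names are hypothetical. The same bounding box is applied to the image and to its label map, and nearest-neighbour resizing is used so that the class IDs in the label patch stay valid.

```python
import numpy as np

def resize_nearest(arr, size=128):
    """Nearest-neighbour resize of the first two axes; keeps label values intact."""
    h, w = arr.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return arr[rows][:, cols]

def lung_boxes(lung_mask):
    """Bounding boxes (y0, y1, x0, x1) of lung pixels in the image-left and image-right halves."""
    h, w = lung_mask.shape
    mid = w // 2
    boxes = []
    for lo, hi in ((0, mid), (mid, w)):
        ys, xs = np.nonzero(lung_mask[:, lo:hi])
        if ys.size:  # skip a half that contains no lung pixels
            boxes.append((ys.min(), ys.max() + 1, xs.min() + lo, xs.max() + 1 + lo))
    return boxes

def extract_patches(image, label, lung_mask, size=128):
    """Crop the same ROI from the image and its label map and resize both to size x size."""
    pairs = []
    for y0, y1, x0, x1 in lung_boxes(lung_mask):
        pairs.append((resize_nearest(image[y0:y1, x0:x1], size),
                      resize_nearest(label[y0:y1, x0:x1], size)))
    return pairs
```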

3.4. Proposed method

In this paper, the CNN architecture is designed to balance the network size against the amount of training data. The architectures used for multi-class and binary-class segmentation are described in the following subsections.

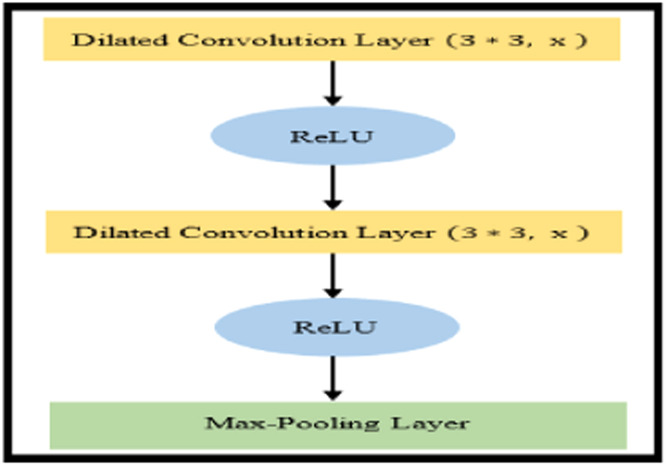

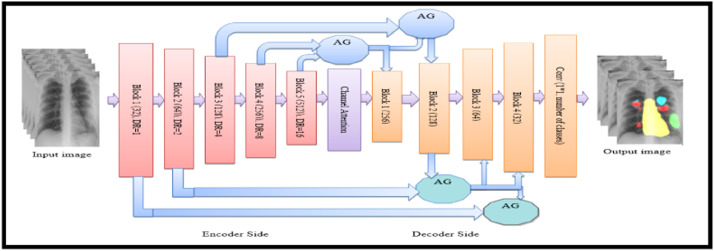

3.4.1. Multi-class segmentation

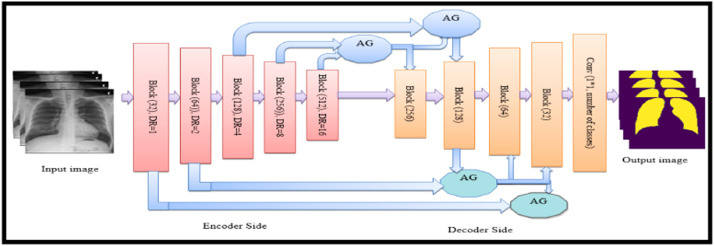

The proposed CXAU-Net model modifies the Attention U-Net model for the segmentation task. The original Attention U-Net consists of two paths, the encoder and the decoder, also known as the contracting and expanding paths, respectively. The contracting path extracts low-level features from the input image by repeatedly applying two 3×3 convolution layers, each followed by a ReLU activation, and a 2×2 max-pooling operation. After each downsampling operation in the contracting path, the image size is reduced while the number of feature channels doubles. The decoder has a symmetrical architecture, except that the number of feature channels is halved at each upsampling operation. The decoder concatenates the high-resolution low-level features from the downsampling path with the high-level features from the upsampling path, which produces more accurate segmentation masks [3]. In the modified Attention U-Net, by contrast, the encoder consists of 5 blocks of dilated convolution layers with dilation rates of 1, 2, 4, 8, and 16, respectively, each followed by a ReLU activation and a 2×2 max-pooling operation, as shown in Fig. 2. A dilated convolution with dilation rate x enlarges the effective filter size by inserting x-1 zeros between consecutive filter values. This enlarges the receptive fields of the network, so that it extracts more discriminative high-level features and performs better [29]. In this model, the decoder incorporates soft additive Attention Gates (AGs) [30] that perform an element-wise weighted aggregation of the outputs of the previous two layers of the encoder network. These learnable weights are implemented by convolutional layers and are applied to the feature values at different positions.
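The effect of the dilation rates 1, 2, 4, 8, and 16 can be checked with standard receptive-field arithmetic. The plain-Python sketch below assumes, for illustration only, that each encoder block contains two 3×3 convolutions with stride 1 followed by a 2×2 max-pooling with stride 2, as in the original U-Net; the actual number of convolutions per block may differ. It also shows that the weight count of a convolution does not depend on its dilation rate.

```python
def effective_kernel(k, rate):
    """Effective size of a k x k kernel with dilation `rate` (rate - 1 zeros between taps)."""
    return k + (k - 1) * (rate - 1)

def encoder_receptive_field(rates, k=3, convs_per_block=2, pool=2):
    """Theoretical receptive field (pixels) after the encoder blocks, where each block is
    `convs_per_block` dilated k x k convs (stride 1) then a pool x pool max-pool (stride pool)."""
    rf, jump = 1, 1  # jump = input-pixel distance between neighbouring feature-map cells
    for r in rates:
        for _ in range(convs_per_block):
            rf += (effective_kernel(k, r) - 1) * jump
        rf += (pool - 1) * jump
        jump *= pool
    return rf

def conv_params(k, c_in, c_out):
    # Dilation leaves the weight count unchanged: k*k*c_in*c_out weights plus c_out biases.
    return k * k * c_in * c_out + c_out

print(encoder_receptive_field([1, 1, 1, 1, 1]))   # undilated encoder
print(encoder_receptive_field([1, 2, 4, 8, 16]))  # dilated encoder
```

Under these assumptions, the undilated encoder sees 156 pixels, whereas the theoretical receptive field of the dilated encoder reaches 1396 pixels, larger than the 256-pixel input, at the same parameter cost.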

Fig. 2.

Block consisting of dilated convolutions with dilation rate x, followed by ReLU and a 2×2 max-pooling operation, used in the CXAU-Net model.

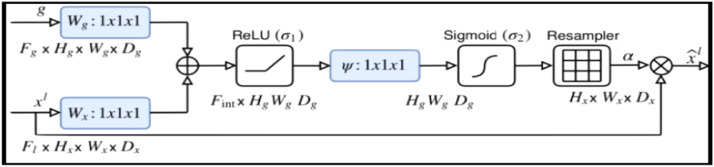

The resultant vector is passed through the ReLU activation function and upsampled to the original dimensions using bilinear interpolation. This step is repeated for the output of each of the 5 encoder blocks, as shown in Fig. 4. The AG, shown in Fig. 3, preserves only salient features by multiplying the input feature maps by multi-dimensional attention coefficients $\alpha_i$, which allows multiple classes to be segmented. Here, the gating vector $g \in \mathbb{R}^{F_g}$ provides contextual information, and it is combined with the high-dimensional local features $x^l$ through channel-wise 1×1×1 convolutions of the input tensors. This linear transformation is followed by a non-linear ReLU and a sigmoid activation function, which normalizes the attention coefficients at the output. The AGs capture non-linear low-level inter-spatial features, such as the contours and edges of abnormal chest areas, ignore irrelevant features, and increase the generalization power of the model. Mathematically, the attention mechanism is represented by Eq. (1):

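$$q_{att}^{l} = \psi^{T}\left(\sigma_{1}\left(W_{x}^{T}x_{i}^{l} + W_{g}^{T}g_{i} + b_{g}\right)\right) + b_{\psi}, \qquad \alpha_{i}^{l} = \sigma_{2}\left(q_{att}^{l}\right), \qquad \hat{x}_{i}^{l} = \alpha_{i}^{l}\, x_{i}^{l} \tag{1}$$

where σ1 and σ2 are the ReLU and sigmoid activation functions, ψ is a linear transformation (a 1×1×1 convolution) from the intermediate space to the attention coefficients, W_x and W_g are the convolutions applied to the input tensor x^l and the gating signal g, b_g and b_ψ are bias terms, and x̂_i^l is the gated output feature.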
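Eq. (1) can be sketched with NumPy by treating each 1×1 convolution as a matrix product over the channel axis. In this sketch the weights are passed in explicitly (a trained network would learn them), and the gating signal is assumed to have already been resampled to the spatial grid of x; the shapes and names are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def attention_gate(x, g, W_x, W_g, b_g, psi, b_psi):
    """Additive attention gate following the form of Eq. (1).

    x:   skip-connection features x^l, shape (H, W, F_l)
    g:   gating signal, shape (H, W, F_g); assumed already resampled to x's grid
    W_x: (F_l, F_int) and W_g: (F_g, F_int) act as 1x1 convolutions
    b_g: (F_int,) bias; psi: (F_int,) maps to one channel; b_psi: scalar bias
    Returns the gated features and the attention map alpha, shape (H, W).
    """
    q = np.maximum(x @ W_x + g @ W_g + b_g, 0.0)  # sigma_1 = ReLU on the additive combination
    alpha = sigmoid(q @ psi + b_psi)               # sigma_2 = sigmoid -> coefficients in [0, 1]
    return x * alpha[..., None], alpha             # scale every channel of x by alpha
```

With `psi` set to zero every coefficient becomes 0.5, so the gate simply halves its input; learned weights instead push the coefficients toward 1 on salient regions and toward 0 elsewhere.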

Fig. 4.

Architecture of the proposed CXAU-Net model for multi-class segmentation with encoder and decoder blocks and channel and spatial attention with Attention Gates (AGs). The output image indicates cardiomegaly with a yellow mask, GGOs with red, consolidation with green, and infiltrates with sky blue masks. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

Fig. 3.

Schematic diagram of the Attention Gate (AG). The input features x^l and the gating signal g are processed by both activation functions and scaled by the attention coefficients αi, which provide spatial and contextual information about the salient regions [30].

In addition, the last layer of the encoder network incorporates a channel attention module [31]. It aggregates the complex features extracted from different channels, in the same way that spatial attention aggregates the features extracted from different layers of the encoder network. Together, the spatial and channel attention processes segment multiple abnormal areas with aligned weights and suppress regions with unaligned weights in the encoder-decoder network.
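The exact design of the module in [31] is not reproduced here. As an assumption, the NumPy sketch below uses squeeze-and-excitation style channel attention: the feature map is globally average-pooled per channel, passed through a small bottleneck, and turned into per-channel weights that rescale the input. The bottleneck shapes are illustrative.

```python
import numpy as np

def channel_attention(feat, W1, b1, W2, b2):
    """Squeeze-and-excitation style channel attention (assumed form).

    feat: (H, W, C); W1: (C, C_r), b1: (C_r,); W2: (C_r, C), b2: (C,)
    Returns the re-weighted features and the per-channel weights.
    """
    s = feat.mean(axis=(0, 1))                 # squeeze: global average pool -> (C,)
    z = np.maximum(s @ W1 + b1, 0.0)           # bottleneck fully connected layer + ReLU
    w = 1.0 / (1.0 + np.exp(-(z @ W2 + b2)))   # excitation: per-channel weights in [0, 1]
    return feat * w, w                         # broadcast over H and W
```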

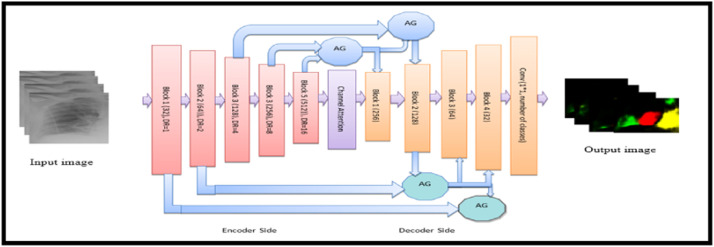

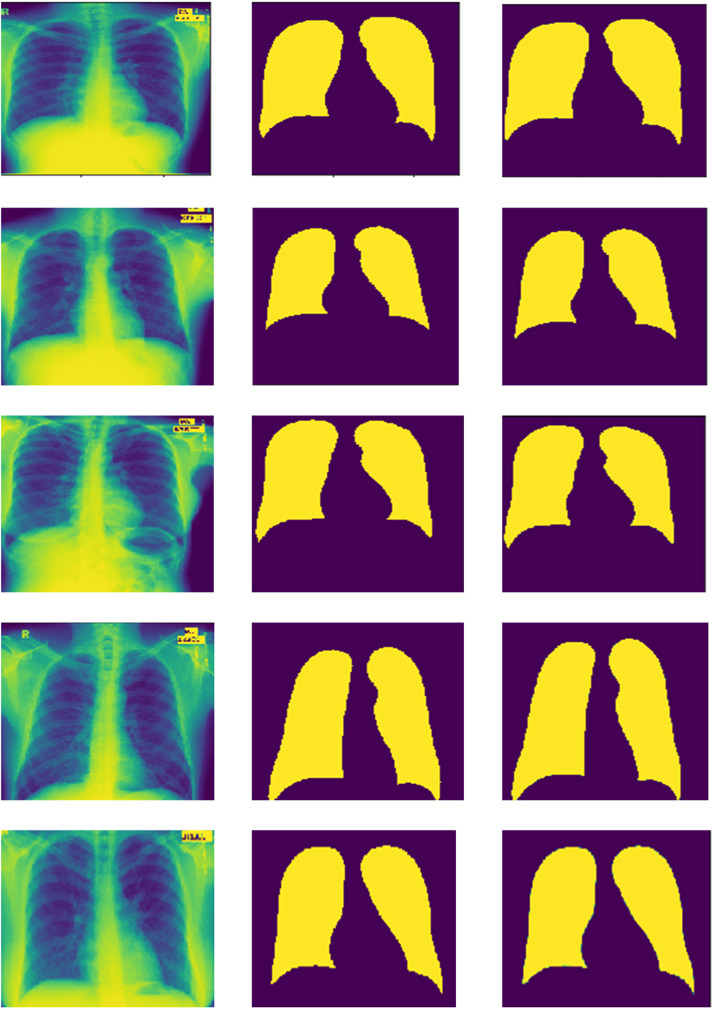

3.4.2. Binary-class segmentation

The proposed CXAU-Net model is further validated by performing binary-class segmentation of the lung fields, i.e., the right and left lungs, in CXR images. The CXAU-Net model is trained on 704 grayscale images and masks from the NLM Shenzhen and MC tuberculosis CXR datasets, resized to 256×256 pixels. Because grayscale images have only one channel, only the spatial attention module performs aggregation. This module concatenates the low-level spatial features extracted by the encoder with the upsampled high-level features of the decoder and segments the lung fields, as shown in Fig. 5.

Fig. 5.

Architecture of the proposed CXAU-Net model for binary-class segmentation with encoder and decoder blocks and spatial attention with Attention Gates (AGs). The output image segments the left and right lung fields with yellow masks and the background with the purple region. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

3.4.3. Patch-based multi-class segmentation

In addition to image-based multi-class and binary-class segmentation, the network is trained on more data by extracting patches of the left and right lungs from the full-scale images of the COVID-19 dataset, as shown in Fig. 6, so that it can better learn the characteristics of chest abnormalities. The 2D patches of 128×128 pixels with three channels are obtained by cropping. The proposed CXAU-Net architecture analyses the additional local information, i.e., the ROIs marked by the radiologist on these patches, and efficiently characterizes and localizes dense predictions such as GGOs, cardiomegaly, consolidation, and infiltrates in CXR images. To avoid overfitting, 1680 patches are generated by applying data augmentation to the training patches. The model is trained end-to-end by minimizing the cross-entropy loss. It is then tested on 640 unseen patches obtained from the ChestX-ray14 dataset and segments the COVID-19 findings effectively.
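Augmenting segmentation data requires applying every geometric transform identically to the image and its mask, while intensity changes such as random contrast touch the image only. The NumPy sketch below illustrates this with flips, a transpose, and a contrast change; it is a simplified stand-in for the Albumentations pipeline (which also applies crops, elastic transforms, and grid distortion), and the probabilities and contrast range are assumptions. Generating 8 augmented copies per patch turns 210 training patches into 1680, matching Table 3.

```python
import numpy as np

def augment_pair(image, mask, rng):
    """Same random geometric transform for image (H, W, C) and mask (H, W);
    random contrast for the image only. Probabilities and ranges are assumed."""
    if rng.random() < 0.5:                       # horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    if rng.random() < 0.5:                       # vertical flip
        image, mask = image[::-1], mask[::-1]
    if rng.random() < 0.5:                       # transpose (swap rows and columns)
        image, mask = np.swapaxes(image, 0, 1), np.swapaxes(mask, 0, 1)
    factor = rng.uniform(0.8, 1.2)               # random contrast around the image mean
    mean = image.mean()
    image = np.clip((image.astype(np.float64) - mean) * factor + mean, 0, 255).astype(np.uint8)
    return image, mask.copy()

def expand_dataset(images, masks, copies=8, seed=0):
    """Return `copies` augmented versions of every (image, mask) pair."""
    rng = np.random.default_rng(seed)
    out_images, out_masks = [], []
    for img, msk in zip(images, masks):
        for _ in range(copies):
            a, b = augment_pair(img, msk, rng)
            out_images.append(a)
            out_masks.append(b)
    return out_images, out_masks
```

With Albumentations itself, the same pairing is obtained by calling a composed transform as `transform(image=image, mask=mask)`, which returns both transformed outputs.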

Fig. 6.

Architecture of the proposed CXAU-Net model for patch-based multi-class segmentation. The inputs are ROI patches, i.e., the left and right lung fields, and the outputs are ROI masks indicating cardiomegaly with a yellow mask, GGOs with red, consolidation with green, and infiltrates with sky blue masks. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

3.5. Loss functions

The loss (or cost) function plays a significant role in optimizing the model and obtaining high-precision segmentation results. The loss functions used with the proposed CXAU-Net model, including the newly proposed hybrid loss function, are briefly reviewed below:

1) Categorical Cross-Entropy (CCE) Loss Function: It is the most commonly used loss function for classification and segmentation tasks [3]. It is expressed as:

$$L_{CCE} = -\sum_{i=1}^{n} a_i \log\left(\frac{e^{s_i}}{\sum_{j=1}^{n} e^{s_j}}\right) \tag{2}$$

where n is the number of classes, a_i is the actual probability of class i, and s_i is the score predicted for class i, which the softmax converts into a predicted probability.

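2) Dice Similarity Coefficient (DSC) Loss Function: DSC measures the pixel-wise overlap between the actual and predicted segmentation masks, i.e., twice their intersection divided by the sum of their sizes. The DSC loss is expressed as:

$$L_{DSC} = 1 - \frac{2\sum_{i=1}^{n} a_i p_i}{\sum_{i=1}^{n} a_i + \sum_{i=1}^{n} p_i} \tag{3}$$

where n is the number of classes and a_i and p_i are the actual and predicted probabilities for each class.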

3) Tversky Loss Function: In medical images, models often become biased toward background pixels because of class imbalance. To avoid this, the Tversky loss can weight False Negatives (FNs) more heavily than False Positives (FPs) during training, unlike the DSC loss, which weights FNs and FPs equally. The Tversky loss therefore enables the network to detect the boundaries of small regions precisely [32]. It is expressed as:

$$L_{T} = 1 - \frac{\sum_{i} x_i y_i}{\sum_{i} x_i y_i + \alpha \sum_{i} x_i y'_i + \beta \sum_{i} x'_i y_i} \tag{4}$$

where x_i and x'_i are the probabilities that pixel i is infected and not infected, respectively, y_i is 1 for infected pixels and 0 otherwise, y'_i is its complement (1 for non-infected pixels and 0 otherwise), and α and β are the weights of FPs and FNs, respectively.

Although the CCE, DSC, and Tversky losses tend to improve segmentation results, they do not consider the geometric information of regions. Therefore, a novel hybrid loss function is introduced that incorporates semantic information, i.e., size and area information for every pixel in an image, as well as the similarity and diversity of the predicted and ground-truth masks.
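Eqs. (2)-(4) can be sketched in NumPy as follows. This is an illustrative implementation, not the training code: the sums run over all pixels and classes, a small `eps` guards against division by zero and log(0), and the Tversky weights α = 0.3 and β = 0.7 are assumed values that weight FNs more heavily than FPs.

```python
import numpy as np

def softmax(logits, axis=-1):
    z = logits - logits.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cce_loss(y_true, logits, eps=1e-7):
    """Mean categorical cross-entropy; y_true is one-hot (..., n), logits are (..., n)."""
    p = np.clip(softmax(logits), eps, 1.0)
    return float(-(y_true * np.log(p)).sum(axis=-1).mean())

def dice_loss(y_true, y_pred, eps=1e-7):
    """Soft DSC loss on probabilities, pooled over all pixels and classes."""
    inter = (y_true * y_pred).sum()
    return float(1.0 - (2.0 * inter + eps) / (y_true.sum() + y_pred.sum() + eps))

def tversky_loss(y_true, y_pred, alpha=0.3, beta=0.7, eps=1e-7):
    """Tversky loss: alpha weights false positives, beta weights false negatives."""
    tp = (y_pred * y_true).sum()
    fp = (y_pred * (1.0 - y_true)).sum()
    fn = ((1.0 - y_pred) * y_true).sum()
    return float(1.0 - (tp + eps) / (tp + alpha * fp + beta * fn + eps))
```

Setting α = β = 0.5 reduces the Tversky index to the DSC, which gives a quick sanity check of the two implementations.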

4) Hybrid Loss Function: A new hybrid loss function is introduced by combining the AC and DSC losses; it measures the segmentation error, which is back-propagated to optimize the weights of all layers. This loss function aims to detect small region boundaries precisely and to segment ROIs accurately. The AC loss function is briefly reviewed below.

3.5.1. Active contour (AC) loss function

The idea behind the AC loss function is to minimize the global energy function of the Active Contours Without Edges (ACWE) model. The AC loss combines a boundary-length term with inside and outside region terms, which allows it to predict irregular edges and contours of different shapes [33]. The area and length functions are defined as:

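$$\text{Region} = \left|\sum_{i,j \in \Omega} u_{i,j}\left(v_{i,j} - c_{1}\right)^{2}\right| + \left|\sum_{i,j \in \Omega}\left(1 - u_{i,j}\right)\left(v_{i,j} - c_{2}\right)^{2}\right| \tag{5}$$

$$\text{Length} = \sum_{i,j \in \Omega} \sqrt{\left|\left(\nabla u_{x_{i,j}}\right)^{2} + \left(\nabla u_{y_{i,j}}\right)^{2}\right| + \varepsilon} \tag{6}$$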

where u and v denote the predicted and actual images, respectively. The symbols x_i, y_j, and Ω denote pixel coordinates in the horizontal direction, the vertical direction, and the whole image domain, respectively. The parameters c1 and c2 are the inside and outside energy terms of the regions, respectively.

The length term takes the square root of the squared gradients of the predicted pixels in the horizontal and vertical directions, with ε > 0 preventing the argument of the square root from being 0. The hybrid loss function is defined as:

| (7) |
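The AC terms in Eqs. (5) and (6) and their combination with the DSC loss can be sketched in NumPy for a single-channel soft prediction u and a binary ground truth v. Several choices here are assumptions: forward differences approximate the gradients, the terms are averaged rather than summed so that they stay on a scale comparable to the Dice term, c1 = 1 and c2 = 0 as for a binary target, and the weights `lam`, `w_ac`, and `w_dsc` are hypothetical placeholders, since the coefficients of Eq. (7) are not reproduced here.

```python
import numpy as np

def length_term(u, eps=1e-8):
    """Boundary length in the spirit of Eq. (6), using forward differences of the prediction."""
    dx = u[1:, :-1] - u[:-1, :-1]   # difference along rows
    dy = u[:-1, 1:] - u[:-1, :-1]   # difference along columns
    return float(np.mean(np.sqrt(dx ** 2 + dy ** 2 + eps)))

def region_term(u, v, c1=1.0, c2=0.0):
    """Inside/outside energy in the spirit of Eq. (5) for prediction u and ground truth v."""
    inside = np.abs(np.mean(u * (v - c1) ** 2))
    outside = np.abs(np.mean((1.0 - u) * (v - c2) ** 2))
    return float(inside + outside)

def ac_loss(u, v, lam=1.0):
    # `lam` balances length against region energy (hypothetical value).
    return length_term(u) + lam * region_term(u, v)

def soft_dice_loss(u, v, eps=1e-7):
    return float(1.0 - (2.0 * np.sum(u * v) + eps) / (np.sum(u) + np.sum(v) + eps))

def hybrid_loss(u, v, w_ac=1.0, w_dsc=1.0):
    # Hypothetical weighting of the AC and DSC terms.
    return w_ac * ac_loss(u, v) + w_dsc * soft_dice_loss(u, v)
```

A perfect prediction leaves only the length term, which penalizes the boundary of the mask; an inverted prediction keeps the same length but adds the full region energy and a Dice loss close to 1.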

5) Other Loss Functions: The weighted cross-entropy loss computes the error for each class from the cross-entropy function scaled by a per-class weight.

$$L_{WCE} = -\sum_{i=1}^{n} w_i\, a_i \log\left(p_i\right) \tag{8}$$

where w_i is the weight of class i.

The focal loss, originally proposed for object detection with imbalanced classes, adds a modulating factor with a focusing parameter to the cross-entropy function.

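$$L_{FL} = -\sum_{i=1}^{n} \alpha\, a_i \left(1 - p_i\right)^{\gamma} \log\left(p_i\right) \tag{9}$$

where (1 - p_i)^γ is the modulating factor, γ ≥ 0 is the focusing parameter, and α is a balancing weight.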

The log-cosh Dice loss applies the log-cosh function, commonly used in regression problems, to the Dice loss.

$$L_{lcDSC} = \log\left(\cosh\left(L_{DSC}\right)\right) \tag{10}$$

where L_DSC is the DSC loss.
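Eqs. (8)-(10) translate directly into NumPy. In this sketch, `y_prob` holds class probabilities (for example softmax outputs), and the focal-loss defaults γ = 2 and α = 0.25 are values commonly used in the object-detection literature, assumed here rather than taken from this work.

```python
import numpy as np

def weighted_cce(y_true, y_prob, class_weights, eps=1e-7):
    """Cross-entropy with a per-class weight; y_true one-hot (..., n), y_prob (..., n)."""
    p = np.clip(y_prob, eps, 1.0)
    return float(-(class_weights * y_true * np.log(p)).sum(axis=-1).mean())

def focal_loss(y_true, y_prob, gamma=2.0, alpha=0.25, eps=1e-7):
    """Cross-entropy scaled by the modulating factor (1 - p)^gamma and a balancing alpha."""
    p = np.clip(y_prob, eps, 1.0)
    return float(-(alpha * y_true * (1.0 - p) ** gamma * np.log(p)).sum(axis=-1).mean())

def log_cosh_dice(y_true, y_prob, eps=1e-7):
    """log(cosh(DSC loss)): a smoothed version of the Dice loss."""
    dsc = 1.0 - (2.0 * np.sum(y_true * y_prob) + eps) / (np.sum(y_true) + np.sum(y_prob) + eps)
    return float(np.log(np.cosh(dsc)))
```

With γ = 0 and α = 1 the focal loss reduces to the plain cross-entropy, and log-cosh is always smaller than the Dice loss it wraps.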

4. Results and discussion

This section presents a quantitative and qualitative analysis of the proposed CXAU-Net model for both multi-class and binary-class segmentation. The subsections report the performance of the model with both the image-based and patch-based approaches and compare it with four state-of-the-art segmentation methods, i.e., U-Net, Link-Net, FCN, and FPN. All experiments are performed on a system equipped with an Intel Xeon W-2255 CPU @ 3.7 GHz and an NVIDIA Quadro P5000 GPU with 16 GB of memory, using Keras on top of TensorFlow.

4.1. Evaluation metrics

The performance of the CXAU-Net model is evaluated with the accuracy, DSC score, and Jaccard index metrics defined in Table 4. There, a True Positive (TP) is a pixel that truly belongs to an abnormality and is predicted as such, a True Negative (TN) is a pixel truly without abnormality that is predicted as normal, a FP is a normal pixel falsely predicted as abnormal, and a FN is an abnormal pixel falsely predicted as normal. All these metrics range over [0, 1], and values close to 1 indicate near-perfect segmentation.

Table 4.

Detail of metrics used by the proposed CXAU-Net model.

| Metrics | Description |
|---|---|
| Accuracy | Accuracy is the ratio of correct predictions to the total number of predictions. A = (TP+TN) / (TP+TN+FP+FN) |
| DSC | DSC-score measures similarity between target and predicted masks. DSC = (2TP) / (2TP+FP+FN) |
| Jaccard Index | Jaccard index is the intersection-over-union of the target mask and prediction mask. Jaccard index = (TP) / (TP+FP+FN) |
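The three metrics in Table 4 can be computed from a predicted and a ground-truth mask as sketched below. Returning 1.0 when both masks are empty and averaging per-class scores over the abnormality classes are assumptions of this sketch.

```python
import numpy as np

def confusion_counts(pred, target):
    """Pixel counts (TP, TN, FP, FN) for two binary masks of the same shape."""
    pred, target = np.asarray(pred, bool), np.asarray(target, bool)
    tp = int(np.sum(pred & target))
    tn = int(np.sum(~pred & ~target))
    fp = int(np.sum(pred & ~target))
    fn = int(np.sum(~pred & target))
    return tp, tn, fp, fn

def segmentation_metrics(pred, target):
    """Accuracy, DSC, and Jaccard index as defined in Table 4."""
    tp, tn, fp, fn = confusion_counts(pred, target)
    acc = (tp + tn) / (tp + tn + fp + fn)
    dsc = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 1.0  # both masks empty -> 1.0
    jac = tp / (tp + fp + fn) if (tp + fp + fn) else 1.0
    return acc, dsc, jac

def mean_multiclass_metrics(pred_labels, true_labels, class_ids):
    """Average the per-class (one-vs-rest) metrics over the given class IDs."""
    scores = [segmentation_metrics(pred_labels == c, true_labels == c) for c in class_ids]
    return tuple(float(np.mean(col)) for col in zip(*scores))
```

Because DSC = 2J / (1 + J), the DSC is never smaller than the Jaccard index for the same prediction.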

4.2. Multi-class segmentation with hybrid loss function

As mentioned above, the proposed CXAU-Net model is evaluated with both the image-based and patch-based approaches. The experimental results of both schemes are reported below.

4.2.1. Image-based multi-class segmentation

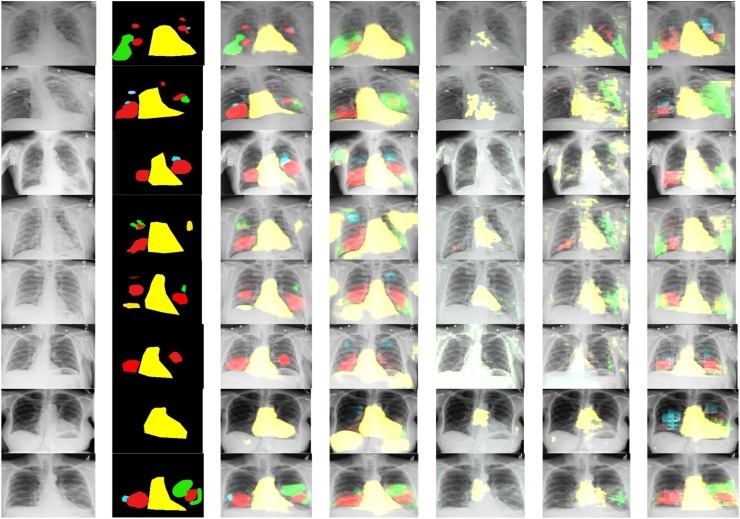

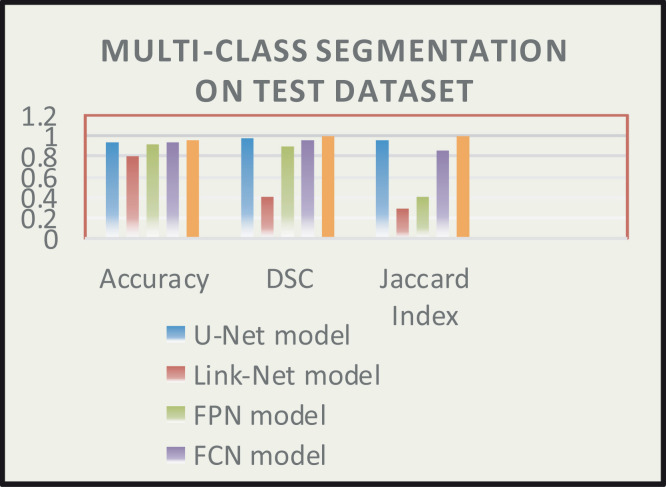

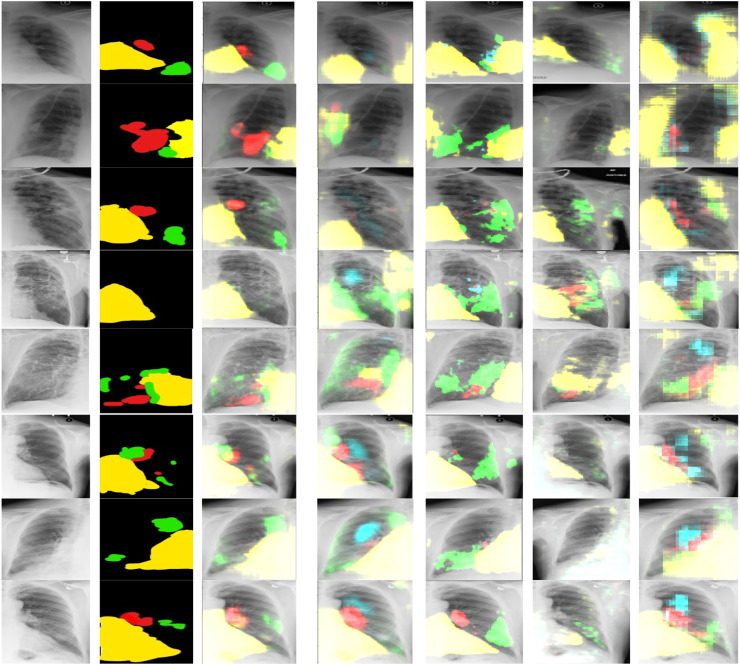

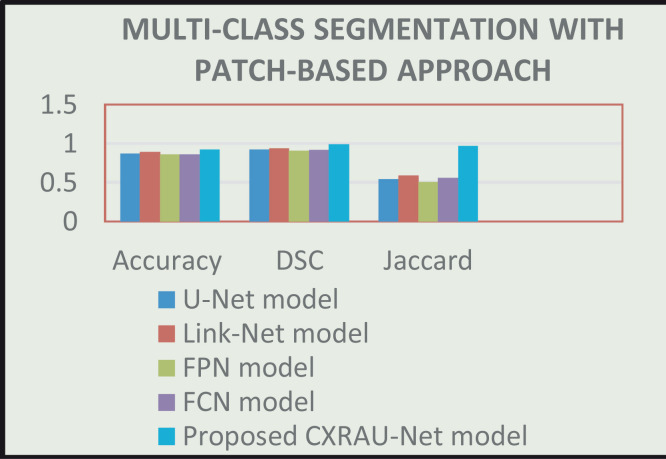

First, the proposed CXAU-Net model and four other state-of-the-art segmentation models are trained on full-scale images of the COVID-19 dataset. All the models are trained for 500 epochs with a batch size of 5 and optimized with the Adam optimizer at a learning rate of 0.0001. The trained models are then evaluated on unseen full-scale test images from the ChestX-ray14 dataset. Fig. 7 visualizes the segmentation results of all models on 8 randomly selected test images, showing regions with abnormalities, i.e., GGOs, cardiomegaly, consolidation, and infiltrates. The predicted regions are obtained by applying an empirically chosen threshold of 0.3 to the predicted pixel values of the test images. Table 5 compares the proposed CXAU-Net model with the four segmentation models in terms of accuracy, DSC score, and Jaccard index on the test dataset, and Fig. 8 presents these values from Table 5 as a bar graph. These results show that the proposed CXAU-Net model outperforms the four state-of-the-art segmentation models.
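One way to turn the network outputs into the colour-coded masks of Fig. 7 is sketched below. The text specifies only the 0.3 threshold; the per-class probability layout, the rule of taking the most probable class among those above the threshold, the class order, and the exact RGB values chosen for the colour legend are all hypothetical.

```python
import numpy as np

# Hypothetical class order and RGB values for the colour legend used in the figures.
CLASS_COLOURS = {
    1: (255, 255, 0),    # cardiomegaly: yellow
    2: (255, 0, 0),      # GGOs: red
    3: (0, 255, 0),      # consolidation: green
    4: (135, 206, 235),  # infiltrate: sky blue
}

def probs_to_labels(probs, threshold=0.3):
    """probs: (H, W, K) foreground probabilities for classes 1..K.
    Each pixel gets its most probable class if that probability reaches the
    threshold, otherwise background (0)."""
    best = probs.argmax(axis=-1) + 1
    keep = probs.max(axis=-1) >= threshold
    return np.where(keep, best, 0)

def colourise(labels):
    """Map a label image to an RGB overlay; background stays black."""
    rgb = np.zeros(labels.shape + (3,), dtype=np.uint8)
    for cls, colour in CLASS_COLOURS.items():
        rgb[labels == cls] = colour
    return rgb
```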

Fig. 7.

Visual segmentation results. Columns from left to right are (a) input images, (b) ground truths, and (c)–(g) results of the proposed CXAU-Net, U-Net, Link-Net, FPN, and FCN models, respectively. The outputs indicate cardiomegaly with a yellow mask, GGOs with red, consolidation with green, and infiltrates with sky blue masks. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

Table 5.

Summary of evaluation metrics of the proposed CXAU-Net model with different segmentation models.

Fig. 8.

Comparison of all models in terms of accuracy, DSC, and Jaccard index values (x-axis) on the ChestX-ray14 test dataset for multi-class segmentation.

Table 6 further compares the CXAU-Net model with other multi-class segmentation models from the literature, which were designed for CXR image datasets obtained from different sources. The proposed CXAU-Net model achieves the best DSC and Jaccard index scores, 99.3% and 98.4%, respectively. Table 7 compares the accuracy of the proposed CXAU-Net model with that of other models applied to COVID-19 datasets; its accuracy of 95.15% is comparable to that of the other methods.

Table 6.

Comparison of the proposed CXAU-Net model with other multi-class segmentation models.

| Methods/Metrics | CXR image dataset | DSC | Jaccard Index |
|---|---|---|---|
| U-Net [3] | JSRT dataset | 0.983 | 0.966 |
| Pix2pix MTdG [10] | JSRT dataset | 0.992 | 0.984 |
| Inverted Net [37] | JSRT dataset | 0.941 | 0.89 |
| Adv. ATTN [38] | JSRT dataset | 0.983 | – |
| MDU-Net [13] | Constructed own CXR image dataset | 0.913 | 0.838 |
| Proposed CXAU-Net | COVID-19, Chest X-ray 14 dataset | 0.993 | 0.98 |

Table 7.

Comparison of the proposed CXAU-Net model with other segmentation models applied to COVID-19 datasets.

4.2.2. Patch-based multi-class segmentation

As in the image-based approach, all models are trained individually, here on patches of the COVID-19 dataset, for 500 epochs with a batch size of 5 using the Adam optimizer with a learning rate of 0.0001. The patch data are also labelled separately by an expert radiologist, and the masks generated from these labels are used to train the models. The trained models are then evaluated on patches of the unseen ChestX-ray14 test dataset. Fig. 9 shows the segmented abnormal regions produced by all models for eight randomly selected test patches. The predicted regions are obtained by applying a threshold of 0.3 to the pixel-wise outputs for the test patches. Table 8 summarizes the evaluation metrics of all the above-mentioned models, and Fig. 10 presents them as a bar graph.
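Splitting an image and its mask into non-overlapping patches can be sketched as below. The patch size is not stated in this section, so the sizes used here are hypothetical, and dropping border pixels that do not fill a whole patch is an assumption (padding the image would be an alternative). The function name `extract_patches` is illustrative; the same call would be applied to each image and to its mask so that patches stay aligned.

```python
from typing import List, TypeVar

T = TypeVar("T")


def extract_patches(image: List[List[T]], size: int) -> List[List[List[T]]]:
    """Split a 2-D grid into non-overlapping size x size patches in row-major order.

    Border rows/columns that cannot fill a complete patch are dropped (assumption).
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height - size + 1, size):
        for left in range(0, width - size + 1, size):
            patches.append([row[left:left + size] for row in image[top:top + size]])
    return patches


if __name__ == "__main__":
    toy = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 toy image
    for patch in extract_patches(toy, 2):
        print(patch)
```

Because neighbouring patches share no pixels, a lesion that crosses a patch border is seen only in pieces, which is one plausible reason why patch-level predictions have less context than full-image predictions.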

Fig. 9.

Visual patch-based segmentation results. Columns from left to right show (a) input images, (b) ground truths, and (c)–(g) results of the proposed CXRAU-Net, U-Net, Link-Net, FPN, and FCN models, respectively. The output patches indicate cardiomegaly in yellow, GGOs in red, consolidation in green, and infiltrates in sky blue. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

Table 8.

Summary of evaluation metrics of the proposed CXAU-Net model compared with other segmentation models for the patch-based approach.

Fig. 10.

Comparison of all models in terms of accuracy, DSC, and Jaccard index (x-axis) on patches of the ChestX-ray14 test dataset for multi-class segmentation.

As illustrated in Table 8 and Fig. 9, the proposed CXRAU-Net model achieves better multi-class segmentation results on the test patches than the four state-of-the-art segmentation models. Compared with the image-based approach, however, the predictions on these non-overlapping patches are less accurate: they are affected by poorly differentiated abnormalities (GGOs and consolidation) and by the global and local features of other dense classes such as infiltrates. Performance is further degraded by misclassified areas, such as the mid-axillary, midclavicular, and axillary regions, within patches that contain GGOs, consolidation, or cardiomegaly. Table 9 compares the proposed CXRAU-Net model with patch-based segmentation models from the literature, which were trained and tested on images of different modalities and datasets. The proposed model obtains the best Jaccard index (0.95) among these models, although its accuracy is slightly lower than that of [44]. Accuracy, however, is not the best measure for segmentation models, especially on class-imbalanced datasets, where background pixels dominate and a model can reach high accuracy while missing small lesions. The results indicate that the proposed model extracts high-resolution global and edge features and can therefore localize and segment small infiltrate regions with high confidence, a challenging task given their large visual variation. In contrast, U-Net tends to over-detect because its skip connections repeatedly transfer low-resolution information in the feature maps, and it shows greater uncertainty in learning edge information, which leads to poor decisions at region boundaries when segmenting cardiomegaly and consolidation. The Link-Net model produces unfocused and inaccurate segmentations, despite its VGG16 backbone, because it under-utilizes the network parameters and has limited upsampling capability.
The FPN model also uses VGG16 as its backbone but fails to extract high-level global features and to optimize the network for accurate segmentation of ROIs. The FCN model produces blurred and incorrect segmentation results due to uneven overlapping of the decoder outputs.
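Why accuracy can mislead on class-imbalanced segmentation data can be checked with a small worked example. The numbers are hypothetical and not taken from the experiments: a flattened 100 x 100 patch with 100 lesion pixels (1% foreground), scored for a model that predicts no lesion at all and for one that finds half of the lesion with no false positives.

```python
from typing import Sequence, Tuple


def accuracy_and_dice(pred: Sequence[int], truth: Sequence[int]) -> Tuple[float, float]:
    """Pixel accuracy and DSC for flattened binary masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if t and not p)
    accuracy = (tp + tn) / len(truth)
    denom = 2 * tp + fp + fn
    dice = 2 * tp / denom if denom else 1.0  # both masks empty: perfect agreement
    return accuracy, dice


# Hypothetical flattened 100x100 patch: 10,000 pixels, 100 of them lesion (1 %).
truth = [1] * 100 + [0] * 9900
all_background = [0] * 10000          # predicts no lesion anywhere
half_lesion = [1] * 50 + [0] * 9950   # finds half the lesion, no false alarms

if __name__ == "__main__":
    print(accuracy_and_dice(all_background, truth))
    print(accuracy_and_dice(half_lesion, truth))
```

The empty prediction scores 99% accuracy but a DSC of 0, while the partial prediction improves accuracy only to 99.5% yet raises DSC to about 0.67; overlap measures such as DSC and the Jaccard index are far more sensitive to how well the small foreground class is segmented.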

Table 9.

Comparison of the proposed CXAU-Net model with other multi-class patch-based segmentation models.

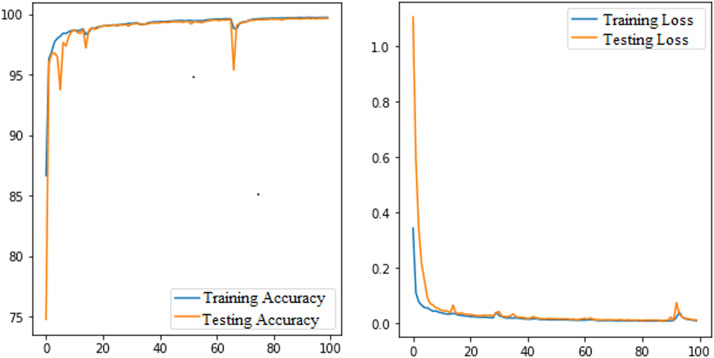

4.3. Image-based binary-class segmentation with hybrid loss function

To further validate the proposed model, it is also trained and tested on a binary-class segmentation task, i.e., segmentation of the lung fields. The model is trained on the NLM-China and MC CXR datasets, for which segmentation masks of the left and right lung fields are readily available, for 100 epochs with a batch size of 5 using the Adam optimizer with a learning rate of 0.0001. The trained model is then evaluated on the JSRT dataset. Fig. 11 shows five randomly selected input images with their ground-truth and predicted masks, where yellow areas denote lung fields and purple areas denote background. The left and right lung fields are segmented by applying an empirically determined threshold of 0.1 to the pixel-wise outputs for the test images. Fig. 12 plots the loss and accuracy curves against epochs to illustrate the effect of the hybrid loss function. The average training and testing losses are 0.0087 and 0.0108, respectively, and the corresponding average accuracies are 0.9967 and 0.9961.

Fig. 11.

Visual segmentation results. Columns from left to right show the input image, the ground-truth mask, and the lung-field mask predicted by the proposed CXRAU-Net model. (For interpretation of the references to colour in this figure, the reader is referred to the web version of this article.)

Fig. 12.

Loss and accuracy curves of the proposed CXRAU-Net model for training and testing as a function of epochs.

Table 10 summarizes the DSC and Jaccard index values of the proposed model and of other segmentation models evaluated on the JSRT dataset. The proposed model achieves the highest DSC of 0.998 and a Jaccard index of 0.989.

Table 10.

Comparison of evaluation metrics of the proposed CXAU-Net model and other segmentation models evaluated on the JSRT dataset.

Overall, the experimental results indicate that the proposed CXRAU-Net model yields better results than the compared segmentation models for both image-based and patch-based approaches and for both multi-class and binary-class segmentation.

4.4. Effect of different hyperparameters

Motivated by the work of Novikov et al. [37], this section evaluates the performance of the proposed CXRAU-Net model under different hyperparameters. Hyperparameters shape DL models and strongly affect their performance. Experiments are therefore conducted by training the proposed model with different activation functions and loss functions, and by evaluating it with the different metrics described in Section 2.E. The model is trained on the CXR dataset images for 500 epochs with the hybrid loss function, except in the experiments that vary the loss function.

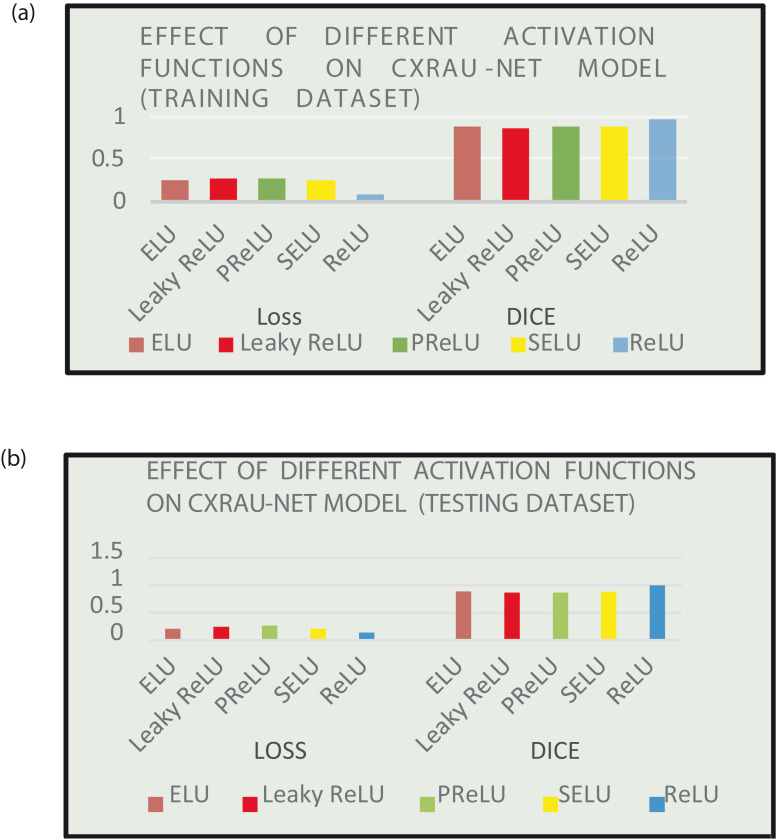

Table 11 and Figs. 13(a) and 13(b) show the effect of different activation functions, namely the Rectified Linear Unit (ReLU), Exponential Linear Unit (ELU), Leaky ReLU, Parametric ReLU (PReLU), and Scaled Exponential Linear Unit (SELU), on the loss and DSC score for the training and testing datasets, respectively. The results show that the model performs best with the ReLU activation function.
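For reference, the five activation functions compared above can be written element-wise as in the sketch below. The default parameters are assumptions, since this section does not state the values used: ELU with alpha = 1, a Leaky ReLU slope of 0.01, and a fixed PReLU slope passed in explicitly (in a network it is a learned parameter). The SELU constants are the standard values from its original definition.

```python
import math

# Standard SELU constants (fixed, not learned).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def relu(x: float) -> float:
    """ReLU: zero for negative inputs, identity otherwise."""
    return max(0.0, x)


def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: smooth exponential saturation towards -alpha for negative inputs."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def leaky_relu(x: float, slope: float = 0.01) -> float:
    """Leaky ReLU: small fixed slope for negative inputs (0.01 is an assumption)."""
    return x if x > 0 else slope * x


def prelu(x: float, a: float) -> float:
    """PReLU: like Leaky ReLU, but the negative slope `a` is learned during training."""
    return x if x > 0 else a * x


def selu(x: float) -> float:
    """SELU: scaled ELU with constants chosen for self-normalizing activations."""
    return SELU_SCALE * (x if x > 0 else SELU_ALPHA * (math.exp(x) - 1.0))


if __name__ == "__main__":
    for name, fn in [("ReLU", relu), ("ELU", elu), ("Leaky ReLU", leaky_relu), ("SELU", selu)]:
        print(name, [round(fn(v), 4) for v in (-2.0, -0.5, 0.0, 1.5)])
    print("PReLU (a=0.25)", [prelu(v, 0.25) for v in (-2.0, -0.5, 0.0, 1.5)])
```

All five agree for positive inputs; they differ only in how negative pre-activations are handled, which is the design choice the comparison in Table 11 probes.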

Table 11.

Effect of different activation functions on the loss and DSC score of the proposed CXRAU-Net model.

| Activation function | Training loss | Training DSC | Testing loss | Testing DSC |
|---|---|---|---|---|
| ReLU | 0.0892 | 0.9510 | 0.1340 | 0.9932 |
| ELU | 0.2562 | 0.8707 | 0.2078 | 0.8853 |
| Leaky ReLU | 0.2752 | 0.8630 | 0.2393 | 0.8637 |
| PReLU | 0.2642 | 0.8666 | 0.2683 | 0.8686 |
| SELU | 0.2412 | 0.8750 | 0.2076 | 0.8806 |

Fig. 13.

(a) Performance comparison of different activation functions in terms of loss and DSC score on the training dataset; (b) the same comparison on the testing dataset.

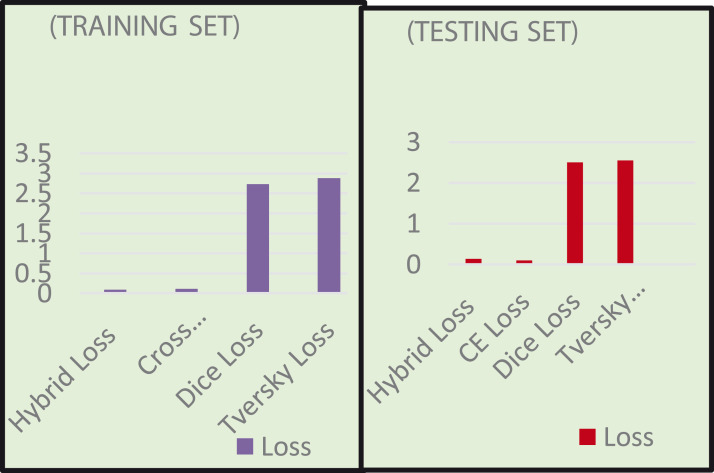

Table 12 and Fig. 14 show the effect of different loss functions on the loss and DSC score of the CXRAU-Net model for the training and testing datasets.

Table 12.

Effect of different loss functions on the loss and DSC score of the proposed CXRAU-Net model.

| Loss function | Training loss | Training DSC | Testing loss | Testing DSC |
|---|---|---|---|---|
| Hybrid loss | 0.0892 | 0.9510 | 0.1340 | 0.9407 |
| Dice loss | 2.7278 | 0.8586 | 2.4995 | 0.8581 |
| Tversky loss | 2.8798 | 0.8305 | 2.5568 | 0.8241 |
| CE loss | 0.1084 | 0.8827 | 0.0998 | 0.8912 |

Fig. 14.

Performance comparison of different loss functions in terms of loss and DSC score on the training and testing datasets.

The results indicate that the proposed hybrid loss function yields the minimum loss and the maximum DSC score on the training dataset. On the testing dataset, CE loss yields the minimum loss, while the hybrid loss function yields the maximum DSC score. Because loss values produced by different loss functions are not on a common scale, the DSC score is the more meaningful basis for comparison. It is also important to distinguish between a metric, which evaluates the model after training, and a loss function, which is optimized during training. The model is therefore also evaluated with several loss functions used as metrics.
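The three baseline losses in Table 12 can be sketched for a single class on flattened soft predictions as follows. This is an illustration under stated assumptions, not the authors' implementation: the smoothing constant of 1 and the Tversky weights (0.3 on false positives, 0.7 on false negatives) are assumed values, and the paper's hybrid loss is not reproduced here because its definition is not part of this section.

```python
import math
from typing import Sequence


def dice_loss(y_true: Sequence[float], y_pred: Sequence[float], smooth: float = 1.0) -> float:
    """Soft Dice loss: 1 - (2*sum(t*p) + s) / (sum(t) + sum(p) + s)."""
    inter = sum(t * p for t, p in zip(y_true, y_pred))
    return 1.0 - (2.0 * inter + smooth) / (sum(y_true) + sum(y_pred) + smooth)


def tversky_loss(y_true: Sequence[float], y_pred: Sequence[float],
                 w_fp: float = 0.3, w_fn: float = 0.7, smooth: float = 1.0) -> float:
    """Soft Tversky loss; w_fn > w_fp penalizes missed foreground more than false alarms.

    With w_fp = w_fn = 0.5 and smooth = 0 it equals the Dice loss.
    """
    tp = sum(t * p for t, p in zip(y_true, y_pred))
    fp = sum((1.0 - t) * p for t, p in zip(y_true, y_pred))
    fn = sum(t * (1.0 - p) for t, p in zip(y_true, y_pred))
    return 1.0 - (tp + smooth) / (tp + w_fp * fp + w_fn * fn + smooth)


def bce_loss(y_true: Sequence[float], y_pred: Sequence[float], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(y_true)


if __name__ == "__main__":
    t = [1, 1, 0, 0]              # toy ground truth (flattened)
    p = [0.9, 0.6, 0.2, 0.1]      # toy predicted probabilities
    print(dice_loss(t, p), tversky_loss(t, p), bce_loss(t, p))
```

Dice and Tversky losses measure region overlap and are largely insensitive to the number of background pixels, whereas cross-entropy averages a per-pixel penalty, which is why the two families behave differently on imbalanced masks.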

Table 13 shows that the log-cosh Dice loss yields the lowest values for the proposed CXRAU-Net model.
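Three of the loss metrics in Table 13 can be sketched for a single class as below. The parameter values are assumptions, since this section does not state them: focal loss with gamma = 2 and alpha = 0.25 (the commonly used defaults), a positive-class weight of 2 for weighted cross-entropy, and a Dice smoothing constant of 1. The log-cosh Dice loss is taken here as log(cosh(Dice loss)).

```python
import math
from typing import Sequence


def dice_loss(y_true: Sequence[float], y_pred: Sequence[float], smooth: float = 1.0) -> float:
    """Soft Dice loss on flattened masks."""
    inter = sum(t * p for t, p in zip(y_true, y_pred))
    return 1.0 - (2.0 * inter + smooth) / (sum(y_true) + sum(y_pred) + smooth)


def log_cosh_dice_loss(y_true: Sequence[float], y_pred: Sequence[float], smooth: float = 1.0) -> float:
    """log(cosh(Dice loss)): behaves like x**2 / 2 for small Dice loss x."""
    return math.log(math.cosh(dice_loss(y_true, y_pred, smooth)))


def focal_loss(y_true: Sequence[int], y_pred: Sequence[float],
               gamma: float = 2.0, alpha: float = 0.25, eps: float = 1e-7) -> float:
    """Binary focal loss: down-weights well-classified pixels by (1 - p_t) ** gamma."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        p_t = p if t == 1 else 1.0 - p
        alpha_t = alpha if t == 1 else 1.0 - alpha
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(y_true)


def weighted_bce_loss(y_true: Sequence[float], y_pred: Sequence[float],
                      pos_weight: float = 2.0, eps: float = 1e-7) -> float:
    """Cross-entropy with an extra weight on the (usually rare) foreground term."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        total -= pos_weight * t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(y_true)


if __name__ == "__main__":
    t = [1, 1, 0, 0]
    p = [0.9, 0.6, 0.2, 0.1]
    print(dice_loss(t, p), log_cosh_dice_loss(t, p), focal_loss(t, p), weighted_bce_loss(t, p))
```

Because log(cosh(x)) is close to x squared over 2 for small x, the log-cosh Dice value is always smaller than the Dice loss it is computed from, so the rows of Table 13 are on different scales and their raw magnitudes should be compared with care.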

Table 13.

Comparison of results of different loss metrics for the proposed CXRAU-Net model.

| Metric | Training set loss | Testing set loss |
|---|---|---|
| Weighted CE loss | 2.3635 | 2.1204 |
| Focal loss | 0.0534 | 0.0414 |
| Log cosh dice loss | 0.0093 | 0.0097 |
| Dice loss | 0.1350 | 0.1336 |
| Tversky loss | 0.1137 | 0.1135 |

5. Conclusion

This paper proposed the CXRAU-Net model for automatic multi-class segmentation of COVID-19 findings, namely GGOs, consolidation, cardiomegaly, and infiltrates, in the COVID-19 CXR image dataset. The model modifies the Attention U-Net by adding spatial and channel attention and dilated convolution, and it is trained with the proposed hybrid loss function. The proposed model and four other state-of-the-art segmentation models were trained and tested on full-scale images and on image patches from different CXR datasets. To assess generalization, the proposed model was also applied to binary-class segmentation, i.e., segmentation of the left and right lung fields, on the NLM-China, MC, and JSRT datasets. Moreover, the model was evaluated under different hyperparameters, including different activation functions, loss functions, and metrics. Overall, by extracting high-resolution global, edge, and multiscale features and by incorporating pixel and geometrical information in the hybrid loss function, the proposed CXRAU-Net model achieves better results than the compared state-of-the-art models for both multi-class and binary-class segmentation tasks and for both image-based and patch-based approaches.

Declaration of Competing Interest

We declare that we have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

1. Wong H.Y.F., Lam H.Y.S., Fong A.H.-T., Leung S.T., Chin T.W.-Y., Lo C.S.Y., Lui M.M., Lee J., Chiu K.W., Chung T.W., Lee E., Wan E., Hung I., Lam T., Kuo M.D., Ng M.Y. Frequency and distribution of chest radiographic findings in patients positive for COVID-19. Radiology. 2020;296:E72–E78. doi: 10.1148/radiol.2020201160.

2. Radiology Assistant. Available: https://radiologyassistant.nl/chest/chest-x-ray/lung-disease.

3. Ronneberger O., Fischer P., Brox T. U-Net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2015. pp. 234–241.

4. Kalinovsky A., Kovalev V. Lung image segmentation using deep learning methods and convolutional neural networks. In: XIII International Conference on Pattern Recognition and Information Processing. Minsk: Publishing Center of BSU; 2016.

5. Novikov A.A., Major D., Lenis D., Hladůvka J., Wimmer M., Bühler K. Fully convolutional architectures for multi-class segmentation in chest radiographs. arXiv preprint arXiv:1701.08816, 2017.

6. Wang C. Segmentation of multiple structures in chest radiographs using multi-task fully convolutional networks. In: Sharma P., Bianchi F.M., editors. Image Analysis. Cham, Switzerland: Springer; 2017. pp. 282–289.

7. Arbabshirani M.R., Dallal A.H., Agarwal C., Patel A., Moore G. Accurate segmentation of lung fields on chest radiographs using deep convolutional networks. In: SPIE Medical Imaging. International Society for Optics and Photonics; 2017. p. 1013305.

8. Jiang F., Grigorev A., Rho S., Tian Z., Fu Y., Jifara W., Khan A., Liu S. Medical image semantic segmentation based on deep learning. Neural Comput. Appl. 2018;29:1257–1265. doi: 10.1007/s00521-017-3158-6.

9. Xie Y., Xia Y., Zhang J., Song Y., Feng D., Fulham M., Cai W. Knowledge-based collaborative deep learning for benign-malignant lung nodule classification on chest CT. IEEE Trans. Med. Imaging. 2018;38(4):991–1004. doi: 10.1109/TMI.2018.2876510.

10. Eslami M., Tabarestani S., Albarqouni S., Adeli E., Navab N., Adjouadi M. Image-to-images translation for multi-task organ segmentation and bone suppression in chest X-ray radiography. IEEE Trans. Med. Imaging. 2020;39(7):2553–2565. doi: 10.1109/TMI.2020.2974159.

11. Park B., Park H., Lee S.M., Seo J.B., Kim N. Lung segmentation on HRCT and volumetric CT for diffuse interstitial lung disease using deep convolutional neural networks. J. Digit. Imaging. 2019;32(6):1019–1026. doi: 10.1007/s10278-019-00254-8.

12. Gaál G., Maga B., Lukács A. Attention U-Net based adversarial architectures for chest X-ray lung segmentation. arXiv preprint arXiv:2003.10304, 2020.

13. Wang W., Feng H., Bu Q., Cui L., Xie Y., Zhang A., Chen Z. MDU-Net: a convolutional network for clavicle and rib segmentation from a chest radiograph. J. Healthc. Eng. 2020;2020. doi: 10.1155/2020/2785464.

14. Chen X., Yao L., Zhang Y. Residual attention U-Net for automated multi-class segmentation of COVID-19 chest CT images. arXiv preprint arXiv:2004.05645, 2020.

15. Oh Y., Park S., Ye J.C. Deep learning COVID-19 features on CXR using limited training data sets. IEEE Trans. Med. Imaging. 2020;39(8):2688–2700. doi: 10.1109/TMI.2020.2993291.

16. Alom M.Z., Rahman M.M., Nasrin M.S., Taha T.M., Asari V.K. COVID_MTNet: COVID-19 detection with multi-task deep learning approaches. arXiv preprint arXiv:2004.03747, 2020.

17. Amyar A., Modzelewski R., Li H., Ruan S. Multi-task deep learning-based CT imaging analysis for COVID-19 pneumonia: classification and segmentation. Comput. Biol. Med. 2020;126. doi: 10.1016/j.compbiomed.2020.104037.

18. Qiu Y., Liu Y., Xu J. MiniSeg: an extremely minimum network for efficient COVID-19 segmentation. arXiv preprint arXiv:2004.09750, 2020.

19. Teixeira L.O., Pereira R.M., Bertolini D., Oliveira L.S., Nanni L., Costa Y.M. Impact of lung segmentation on the diagnosis and explanation of COVID-19 in chest X-ray images. arXiv preprint arXiv:2009.09780, 2020.

20. Kim Y.G., Kim K., Wu D., Ren H., Tak W.Y., Park S.Y., Li Q. Deep learning-based four-region lung segmentation in chest radiography for COVID-19 diagnosis. arXiv preprint arXiv:2009.12610, 2020.

21. Chen Y., Ma B., Xia Y. α-UNet++: a data-driven neural network architecture for medical image segmentation. In: Domain Adaptation and Representation Transfer, and Distributed and Collaborative Learning. Cham: Springer; 2020. pp. 3–12.

22. Shan F., Gao Y., Wang J., Shi W., Shi N., Han M., Shi Y. Lung infection quantification of COVID-19 in CT images with deep learning. arXiv preprint arXiv:2003.04655, 2020.

23. Cohen J.P., Morrison P., Dao L. COVID-19 image data collection. arXiv preprint arXiv:2003.11597, 2020. Available: https://github.com/ieee8023/covid-chestxray-dataset.

24. Jaeger S., Karargyris A., Candemir S., Folio L., Siegelman J., Callaghan F., Xue Z., Palaniappan K., Singh R.K., Antani S., Thoma G., Wang Y.X., Lu P.X., McDonald C.J. Automatic tuberculosis screening using chest radiographs. IEEE Trans. Med. Imaging. 2014;33(2):233–245. doi: 10.1109/TMI.2013.2284099. PMID: 24108713.

25. Candemir S., Jaeger S., Palaniappan K., Musco J.P., Singh R.K., Xue Z., Karargyris A., Antani S., Thoma G., McDonald C.J. Lung segmentation in chest radiographs using anatomical atlases with nonrigid registration. IEEE Trans. Med. Imaging. 2014;33(2):577–590. doi: 10.1109/TMI.2013.2290491. PMID: 24239990.

26. Wang X., Peng Y., Lu L., Lu Z., Bagheri M., Summers R.M. ChestX-ray8: Hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017. pp. 2097–2106.

27. Shiraishi J., Katsuragawa S., Ikezoe J. Development of a digital image database for chest radiographs with and without a lung nodule: receiver operating characteristic analysis of radiologists' detection of pulmonary nodules. Am. J. Roentgenol. 2000;174(1):71–74. doi: 10.2214/ajr.174.1.1740071.

28. Reza A.M. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004;38(1):35–44. doi: 10.1023/B:VLSI.0000028532.53893.82.

29. Oktay O., Schlemper J., Le Folgoc L., Lee M., Heinrich M., Misawa K., Mori K., McDonagh S., Hammerla N.Y., Kainz B., et al. Attention U-Net: Learning where to look for the pancreas. arXiv preprint arXiv:1804.03999, 2018.

30. Schlemper J., Oktay O., Schaap M., Heinrich M., Kainz B., Glocker B., Rueckert D. Attention gated networks: Learning to leverage salient regions in medical images. Med. Image Anal. 2019;53:197–207. doi: 10.1016/j.media.2019.01.012.

31. Zhao P., Zhang J., Fang W., Deng S. SCAU-Net: spatial-channel attention U-Net for gland segmentation. Front. Bioeng. Biotechnol. 2020;8:670. doi: 10.3389/fbioe.2020.00670.

32. Salehi S.S.M., Erdogmus D., Gholipour A. Tversky loss function for image segmentation using 3D fully convolutional deep networks. In: International Workshop on Machine Learning in Medical Imaging. Cham: Springer; 2017. pp. 379–387.

33. Chen X., Williams B.M., Vallabhaneni S.R., Czanner G., Williams R., Zheng Y. Learning active contour models for medical image segmentation. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. June 2019. pp. 11624–11632.

34. Zhou L., Zhang C., Wu M. D-LinkNet: LinkNet with pre-trained encoder and dilated convolution for high-resolution satellite imagery road extraction. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. 2018. pp. 182–186.

35. Seferbekov S., Iglovikov V., Buslaev A., Shvets A. Feature pyramid network for multi-class land segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. 2018. pp. 272–275.

36. Long J., Shelhamer E., Darrell T. Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2015. pp. 3431–3440.

37. Novikov A.A., Lenis D., Major D., Hladůvka J., Wimmer M., Bühler K. Fully convolutional architectures for multiclass segmentation in chest radiographs. IEEE Trans. Med. Imaging. 2018;37(8):1865–1876. doi: 10.1109/TMI.2018.2806086.

38. Gaál G., Maga B., Lukács A. Attention U-Net based adversarial architectures for chest X-ray lung segmentation. arXiv preprint arXiv:2003.10304, 2020.

39. Apostolopoulos I.D., Bessiana T. COVID-19: Automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. arXiv preprint arXiv:2003.11617.

40. Wang L., Wong A. COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest radiography images. arXiv preprint arXiv:2003.09871, 2020.

41. Sethy P.K., Behera S.K. Detection of coronavirus disease (COVID-19) based on deep features. 2020.

42. Hemdan E.E.D., Shouman M.A., Karar M.E. COVIDX-Net: A framework of deep learning classifiers to diagnose COVID-19 in X-ray images. arXiv preprint arXiv:2003.11055, 2020.

43. Li J., Sarma K.V., Ho K.C., Gertych A., Knudsen B.S., Arnold C.W. A multi-scale U-Net for semantic segmentation of histological images from radical prostatectomies. In: AMIA Annual Symposium Proceedings. American Medical Informatics Association; 2017. p. 1140.

44. Nardelli P., Jimenez-Carretero D., Bermejo-Pelaez D., Washko G.R., Rahaghi F.N., Ledesma-Carbayo M.J., Estépar R.S.J. Pulmonary artery–vein classification in CT images using deep learning. IEEE Trans. Med. Imaging. 2018;37(11):2428–2440. doi: 10.1109/TMI.2018.2833385.

45. Wang S., Yu L., Yang X., Fu C.-W., Heng P.-A. Patch-based output space adversarial learning for joint optic disc and cup segmentation. IEEE Trans. Med. Imaging. 2019;38(11):2485–2495. doi: 10.1109/TMI.2019.2899910.

46. van Ginneken B., Stegmann M.B., Loog M. Segmentation of anatomical structures in chest radiographs using supervised methods: a comparative study on a public database. Med. Image Anal. 2006;10(1):19–40. doi: 10.1016/j.media.2005.02.002.

47. Seghers D., Loeckx D., Maes F., Vandermeulen D., Suetens P. Minimal shape and intensity cost path segmentation. IEEE Trans. Med. Imaging. 2007;26(8):1115–1129. doi: 10.1109/TMI.2007.896924.