Abstract

Sensitivity to the scope of public good provision is an important indication of validity for the contingent valuation method. An online survey was administered to an opt-in non-probability sample panel to estimate the willingness-to-pay to protect hemlock trees from a destructive invasive species on federal land in North Carolina. We collected survey responses from 907 North Carolina residents. We find evidence that attribute non-attendance (ANA) is a factor when testing for sensitivity to scope. When estimating the model with stated ANA, the ecologically and socially important scope coefficients become positive and statistically significant with economically significant marginal willingness-to-pay estimates.

1. Introduction

The contingent valuation method (CVM) is a stated preference approach to the valuation of non-market goods (Johnston et al. 2017). Since the National Oceanic and Atmospheric Administration (NOAA) Panel’s report on contingent valuation (Arrow et al. 1993), the scope test, for which stated preference willingness-to-pay (WTP) estimates are expected to increase with the scope of a policy, has been an important validity test and a significant source of controversy. Tests of sensitivity to scope fall into two categories. External scope tests rely on between-respondent variation either through split sample designs or by restricting estimation to just one valuation question per respondent.1 Internal scope tests allow within-respondent variation to inform the test by using multiple responses from each survey participant.2

Not every CVM study passes the scope test (Desvousges, Mathews and Train 2012). One explanation may be attribute non-attendance (ANA), a deviation from neoclassical theory and an example of preference heterogeneity.3 ANA arises when survey respondents ignore one or more of the choice attributes for a variety of reasons (Alemu et al. 2013). Generally, ANA is handled empirically by restricting estimated attribute coefficients to zero for individuals who do not have fully compensatory preferences. Stated ANA models rely on survey respondent statements about which attributes they ignored. Inferred ANA models allow the empirical model to provide clues about ANA.

The survey we use to test for sensitivity to scope in the presence of ANA presents respondents with plans to manage an invasive species in public forests and builds on the analysis of Moore, Holmes, and Bell (2011). Respondents are asked if they would be willing to pay additional taxes to support a forest protection plan described using three attributes: treatment of ecologically important acreage, treatment of socially important acreage, and treatment method. We employ a split sample design to perform our scope tests on two different CVM response formats. One subsample receives a multiple bounded dichotomous choice survey in which respondents can respond to up to three tax amounts with other attributes held fixed, with follow-up tax amounts dependent on the response to the previous amount. The other subsample receives a repeated dichotomous choice survey in which all attributes can change over three questions.

This study design allows us to test for both external and internal sensitivity to scope. Because the first question for both samples follows the same single binary choice (SBC) format, those responses can be used to estimate a marginal WTP surface that relies on between-respondent variation only. A statistically significant and positive marginal WTP for a given attribute is an indication of external sensitivity to scope.4 Next, by examining the sequential valuation questions, we test for internal scope.5 We repeat both scope tests using stated ANA models and random parameters logit to examine the impact of non-attendance and preference heterogeneity on scope effects. We also extend this line of tests to include inferred ANA using latent class logit models.

To our knowledge, this is the first paper to treat CVM binary choice sequential data as a discrete choice experiment (DCE) and test for the effects of stated and inferred ANA. We find evidence that ANA is a factor when testing for sensitivity to scope. When estimating the model with stated attribute non-attendance, the ecologically and socially important scope coefficients become positive and statistically significant. We find several differences between the multiple-bounded and repeated sequential contingent valuation data samples that support the use of the repeated sequential design. Our results suggest that accounting for attribute non-attendance is one factor that identifies a core group of CVM respondents who exhibit sensitivity to scope. These results could have implications for the interpretation of past CVM studies that find insensitivity to scope and for the implementation of public policy.

2. Literature review

Contingent valuation method and discrete choice experiments

In this paper we address the CVM scope test with strategies adopted by DCEs, which have proliferated in the environmental economics literature (Mahieu et al. 2017). The scope test validity issue is not an empirical concern for DCE researchers in the same way that it is for contingent valuation researchers. In short, CVM studies tend to conduct external scope tests and DCE studies tend to conduct internal scope tests. Discrete choice experiments employ more complicated designs by increasing the number of choice alternatives, increasing the number of choice questions, or separating the description of the public good into a number of varying attributes (Carson and Louviere 2011). Each of these may contribute to the finding of sensitivity to scope in, to our knowledge, all published DCEs. In this paper we consider increasing the number of binary choice questions and separating the description of the public good into several varying attributes as in Boyle et al. (2016). While several studies have compared internal and external scope tests in the contingent valuation literature (e.g., Giraud, Loomis, and Johnson 1999; Veisten et al. 2004), to our knowledge only one DCE article has addressed external scope. Lew and Wallmo (2011) find that most differences in willingness-to-pay estimates are statistically significant in external scope test comparisons. Most DCE researchers have focused on internal scope tests. Sequential binary choice question formats have been used to examine ordering effects (Holmes and Boyle 2005; Day et al. 2012; Nguyen, Robinson, and Kaneko 2015) but have not been explicitly concerned with a comparison of internal and external scope tests.

The SBC, or referendum, form of the contingent valuation method presents survey respondents with a policy description and a randomly assigned policy cost (e.g., a tax). Respondents reveal their preferences by indicating if they would be willing to pay the randomly assigned cost in the hypothetical setting. Carson and Groves (2007, 2011) and Carson, Groves, and List (2014) describe the situations in which an SBC should lead to a truthful revelation of preferences. If the survey respondent believes the survey to be consequential and the policy description is for a public good, then the best response to the single binary choice is truth-telling (Zawojska and Czajkowski 2017). A consequential survey is one in which respondents care about the outcome and believe their answers are important to the policy process, as in an advisory referendum.

Our paper is similar to Christie and Azevedo (2009), Siikamäki and Larson (2015) and Petrolia, Interis and Hwang (2014, 2018). All four compared valuation questions from two separate samples of respondents. In Christie and Azevedo (2009), one sample received a survey with three CVM questions while the other sample was presented with eight DCE questions. The authors found scope effects with the CVM and DCE questions but willingness-to-pay differed across question format.6 Similarly, Siikamäki and Larson (2015) asked two sets of “two and one-half bounded” binary choice valuation questions for an improvement in water quality. Their conditional logit models failed the scope test but a mixed logit model that accounted for preference heterogeneity found statistically and economically significant scope effects.7 Petrolia et al. (2014) compared single binary choice and single multinomial choice valuation questions in split samples of survey respondents. The authors found scope effects for all three attributes in the multinomial choice version of the survey.8 Finally, Petrolia et al. (2018) compared single multinomial choice and sequential multinomial choice question versions with and without consideration of attribute non-attendance. They found scope effects in both survey versions and similarity in the magnitudes of scope effects across versions. In addition, Petrolia et al. (2018) made a comparison of external and internal scope tests but with multinomial choice data.

Attribute non-attendance

A standard assumption made when analyzing stated preference survey data is that respondents have unlimited substitutability (fully compensatory preferences) between attributes (Scarpa et al. 2009). Recent research, however, suggests that respondents often employ simplified heuristics such as threshold rules, attribute aggregation, or attribute non-attendance when making choices (Swait 2001; Caparros, Oviedo, Campos 2008; Greene and Hensher 2008; Hensher 2006, 2008; Puckett and Hensher 2008). ANA occurs when respondents fail to consider a particular attribute from a stated preference discrete choice (Alemu et al. 2013). Such discontinuous preference orderings violate the “continuity axiom” and thus pose problems for neoclassical analysis (Gowdy and Mayumi 2001; McIntosh and Ryan 2002; Rosenberger et al. 2003; Lancsar and Louviere 2006; Scarpa et al. 2009). In this context, ANA models can be thought of as an extension of other empirical methods that account for preference heterogeneity, such as latent class and random parameters models.

Economic literature on ANA began appearing in the early 2000s (McIntosh and Ryan 2002; Sælensminde 2002; Lancsar and Louviere 2006; Hensher, Rose, and Greene 2005; Hensher 2006; Rose, Hensher, Greene 2005; Campbell, Hutchinson, Scarpa 2008; Scarpa et al. 2009). In our study, the use of a relatively inexpensive opt-in sample may raise the potential for attribute non-attendance (i.e., insensitivity to scope for two of our three attributes) as respondents may pay less attention to survey details (Baker et al. 2010; Johnston et al. 2017). Attribute non-attendance tends to cause statistical insignificance of attribute coefficients or bias them toward zero. Perhaps most importantly, this body of literature has found that WTP and willingness-to-accept (WTA) estimates are lower when ANA is accounted for (McIntosh and Ryan 2002; Hensher et al. 2005; Hole 2011; Rose et al. 2005; Campbell, Hutchinson, and Scarpa 2008; Scarpa et al. 2009; Scarpa et al. 2011; Scarpa et al. 2012; Hensher and Greene 2010; Puckett and Hensher 2008; Koetse 2017).9 These inaccurate estimates could subsequently influence policy through benefit transfer (Glenk et al. 2015).

Several empirical strategies have arisen to account for ANA (Scarpa et al. 2009). There are two primary methods for identifying ANA: stated and inferred. In the former, a survey explicitly asks respondents to indicate the degree of attention they paid to each attribute that described the choice alternative. The researcher will then use these data to group respondents into an attendance class (Hensher et al. 2005; Carlsson, Kataria, Lampi 2010; Hensher, Rose, Greene 2012; Scarpa et al. 2012; Alemu et al. 2013; Kragt 2013). Attribute non-attendance may be inferred by use of latent class methods to separate respondents into different groups based on their preference orderings (Scarpa et al. 2009; Hensher et al. 2012; Scarpa et al. 2012; Kragt 2013; Glenk et al. 2015; Koetse 2017). Inferred models are important for two main reasons. First, not all surveys explicitly ask attribute attendance questions. Thus analysis of ANA implications using past data is possible with inferred models. Second, there is evidence that respondents may not respond accurately to stated attribute attendance questions (Armitage and Conner 2001; Ajzen, Brown, Carvajal 2004; Hensher et al. 2012; Scarpa et al. 2011; Kragt 2013; Carlsson et al. 2010; Hess and Hensher 2010; Scarpa et al. 2012; Alemu et al. 2013). Regardless of the empirical method chosen, estimated attribute coefficients are constrained to zero for cases of non-attendance (Hensher et al. 2012).

Our work also builds on the body of literature that has investigated preference heterogeneity of responses from choice survey data (Provencher, Baerenklau and Bishop, 2002; Boxall and Adamowicz 2002; Scarpa and Thiene 2005; Hensher and Greene 2003). Specifically, this paper is most closely related to Kragt (2013) and Koetse (2017) in that we explore whether stated or inferred ANA methods with respect to the scope attributes can lead to statistically significant scope effects. Like Koetse (2017) we focus on a particular subset of attribute non-attendance, and following Kragt (2013) explore several different empirical specifications. In addition, our analysis is similar to Thiene, Scarpa, and Louviere (2015) in that we compare various numbers of potential classes.

3. Survey and data

Our application is to the control of an invasive species, hemlock woolly adelgid (HWA), in public forests in North Carolina. We developed the binary choice question format with randomly assigned attributes for the SurveyMonkey online survey platform and pretested the survey with 62 respondents. In order to collect a large sample of data at relatively low cost we conducted an internet survey with a non-probability panel of respondents. So-called “opt-in” panels are becoming popular in social science research, but their ability to adequately represent sample populations and obtain high quality data is still unresolved (Hays, Liu, and Kapteyn 2015). Yeager et al. (2011) found that non-probability internet samples are less accurate than more representative probability samples for socioeconomic variables. Lindhjem and Navrud (2011) reviewed the stated preference literature and found that internet panel data quality is no lower than that of more traditional survey modes and that internet panel willingness-to-pay estimates are lower.

Our Southern Appalachian Forest Management Survey (SAFMS) was administered in September 2017. More than 8400 individuals were invited to take the online survey and roughly 13 percent opted to be panelists. About 83 percent of those panelists completed the survey for a total of 974 respondents. We use a sample of 907 respondents who answered each of the choice questions. The survey questions can be divided into three categories. First, we asked preliminary questions about the respondents’ prior knowledge of HWA and recreational experiences in Pisgah National Forest, Nantahala National Forest, and Great Smoky Mountains National Park (see appendix figure A1). Second, we asked a series of either two or three referendum questions (called “situations” in the survey, and in the rest of this paper) depending on the respondent’s survey treatment. Finally, we asked debriefing questions about consequentiality, attribute non-attendance, and individual-specific characteristics.

Respondents were then led through a series of education materials and instructions. First, they were led through descriptions of ecologically important and socially important areas of hemlock dominated forest and asked about the importance of each. Ecologically important areas contribute to natural diversity, provide habitat for rare plants and animals, and tend to be in remote areas of the forest. Socially important areas are used by visitors for recreation and are near parking areas or accessible by trail. Then, biological and chemical treatment methods were described to respondents, who were asked whether they agreed with their use. Finally, respondents were informed that each choice situation would be described by treatment options and costs, and that they would face multiple situations.

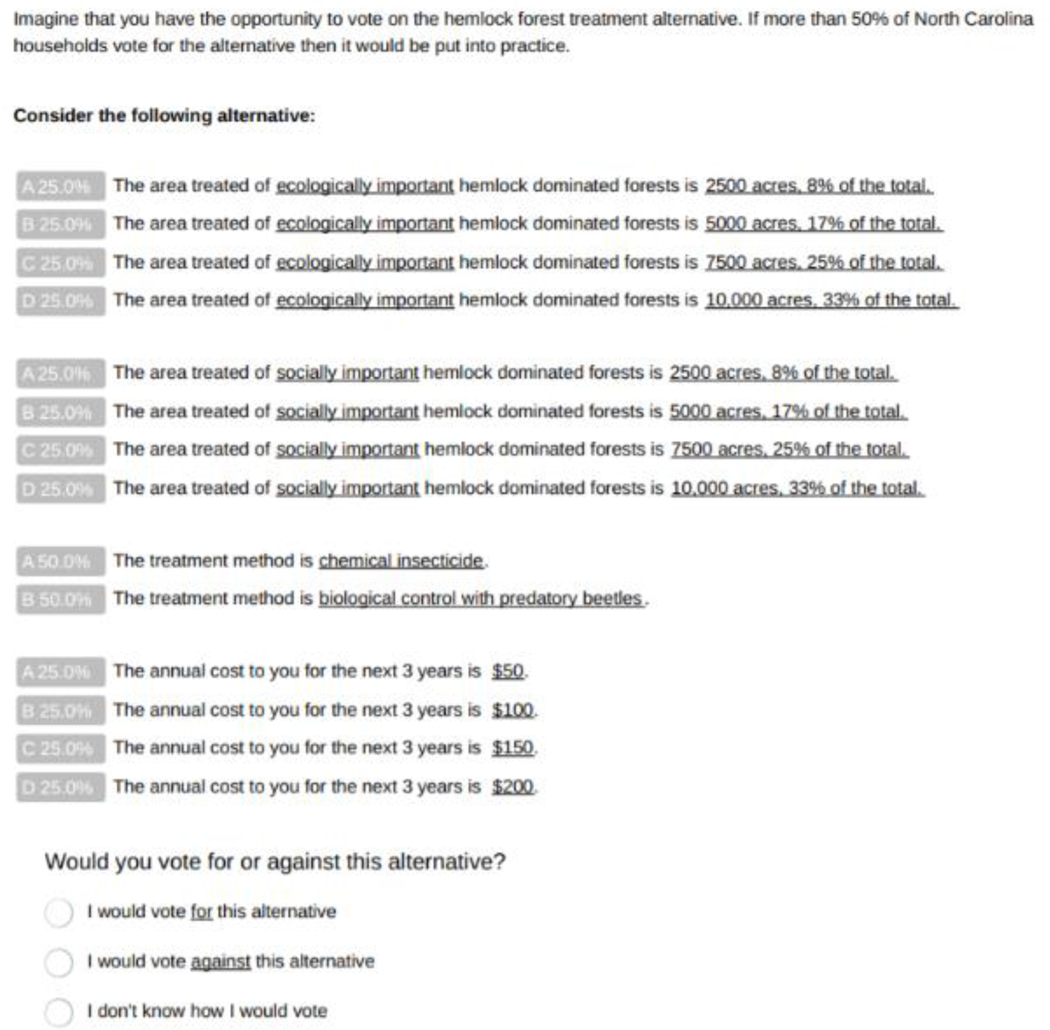

Figure 1 shows two important features of our survey. First, it shows how the four attributes (ecologically important acreage, socially important acreage, treatment method, and annual cost over the next three years) describing the referendum policy varied in the first situation. There were four levels of acreage for each type of hemlock dominated forest: 2500, 5000, 7500 and 10,000. There were also four cost amounts in the first situation: $50, $100, $150 and $200. Second, figure 1 shows the wording of the referendum questions and the answer choices for all situations. Respondents were asked how they would vote for the referendum and given three choices: For, Against, or Don’t know.10 In the remainder of the paper we combine the “Against” and “Don’t know” votes and treat the responses to choice situations as binary.11

Figure 1. Binary choice question

After the first choice situation respondents were randomly assigned to either a bounded or repeated referendum treatment.12 In the repeated treatment all four attributes randomly varied in each situation.13 Conversely, in the bounded treatment only the cost attribute varied; the other three attributes remained constant throughout the survey. For example, respondents in the bounded treatment who answered affirmatively in the first situation were then asked in the second situation if they would vote for the same acreage to be treated using the same method if the cost was $250. Fifty-five percent of those respondents voted “For” the referendum at the higher cost. Alternatively, respondents who did not answer affirmatively in the first situation were then asked how they would vote if the cost was $25. Fifty percent of those respondents voted “For” the referendum at the lower cost.

Table 1 reports the referendum responses. In the bounded sample the percentage of “For” votes falls from 66 percent to 45 percent as the cost amount increases from $50 to $200.14 In the repeated sample the percentage of “For” votes falls from 60 percent to 36 percent as the cost amount rises. Table 1 also shows that in the repeated treatment the cost attribute’s range expanded to include the new costs in the bounded treatment.15 In the second choice situation of the repeated treatment the “For” votes fell from 62 percent to 41 percent as the cost amount increased from $25 to $250. Table 1 also shows some evidence of non-monotonicity and fat tails, which can cause the range of WTP estimates measured with different approaches to be wide and the standard errors to be large.

Table 1: Binary choice sequence referendum votes

| Situation | Cost ($) | Bounded: For votes | Bounded: Sample size | Bounded: % for | Repeated: For votes | Repeated: Sample size | Repeated: % for |
|---|---|---|---|---|---|---|---|
| First | 50 | 72 | 109 | 66% | 55 | 92 | 60% |
| First | 100 | 65 | 123 | 53% | 64 | 118 | 54% |
| First | 150 | 51 | 113 | 45% | 64 | 118 | 54% |
| First | 200 | 56 | 124 | 45% | 40 | 110 | 36% |
| First | Total | 244 | 469 | 52% | 223 | 438 | 51% |
| Second | 25 | 113 | 225 | 50% | 38 | 61 | 62% |
| Second | 50 | | | | 40 | 76 | 53% |
| Second | 100 | | | | 35 | 74 | 47% |
| Second | 150 | | | | 26 | 63 | 41% |
| Second | 200 | | | | 31 | 83 | 37% |
| Second | 250 | 134 | 244 | 55% | 33 | 81 | 41% |
| Second | Total | 247 | 469 | 53% | 203 | 438 | 46% |
| Third | 5 | 35 | 112 | 31% | 43 | 67 | 64% |
| Third | 25 | | | | 38 | 55 | 69% |
| Third | 50 | | | | 24 | 44 | 55% |
| Third | 100 | | | | 29 | 57 | 51% |
| Third | 150 | | | | 22 | 58 | 38% |
| Third | 200 | | | | 15 | 54 | 28% |
| Third | 250 | | | | 16 | 53 | 30% |
| Third | 300 | 94 | 134 | 70% | 16 | 50 | 32% |
| Third | Total | 129 | 246 | 52% | 203 | 438 | 46% |

The third choice situation of the bounded sample was designed like the second situation. Respondents who voted for the policy at $250 were asked if they would vote for the referendum at $300. Seventy percent of those respondents voted “For” the referendum at the higher cost. Conversely, respondents who voted against (or “Don’t know”) the policy at $25 were asked how they would vote if the cost was $5. Thirty-one percent of those respondents voted “For” the referendum at the lowest cost. In the third situation of the repeated sample the “For” votes decreased from 64 percent to 32 percent as the cost amount increased from $5 to $300.
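The follow-up rule in the bounded treatment can be sketched as a small function. This is only an illustration: the function name is hypothetical, and the assumption that respondents with mixed answers (For then not For, or the reverse) received no third question is inferred from the third-situation sample size in table 1 (134 + 112 = 246), not stated directly in the survey description.

```python
from typing import List, Optional


def next_bounded_cost(votes_for: List[bool]) -> Optional[int]:
    """Return the next cost (in dollars) shown in the bounded treatment.

    votes_for holds the respondent's earlier answers in order, where True
    means a "For" vote and False means "Against" or "Don't know".
    Returns None when no further question is asked (assumed for mixed answers).
    """
    if len(votes_for) == 1:
        # Second situation: raise the cost after "For", lower it otherwise.
        return 250 if votes_for[0] else 25
    if len(votes_for) == 2:
        if votes_for[0] and votes_for[1]:
            return 300  # voted "For" at the first cost and at $250
        if not votes_for[0] and not votes_for[1]:
            return 5    # did not vote "For" at the first cost or at $25
    return None


print(next_bounded_cost([True]))          # second-situation cost after a "For" vote
print(next_bounded_cost([False, False]))  # third-situation cost after two non-"For" votes
```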

Survey respondents were also asked how much attention they paid to each of the four attributes and given four choices: “a lot,” “some,” “not much,” and “none.” Respondents who chose “none” and “not much” are classified as not attending to the attribute. Overall, about 13 percent of respondents did not attend to the “size of the ecologically important area treated” (see appendix table A1). Nearly 25 percent of respondents did not attend to the “size of the socially important area treated.” The “treatment method (chemical or biological)” was not attended to by about 15 percent of respondents. Almost 17 percent of respondents did not attend to the “cost over the next 3 years.”

4. Empirical models

We employ several increasingly general models to analyze our data and incorporate ANA for our hypothesis tests. Our first test of external scope, for which only the first response is used in estimation, can be conducted with a standard discrete choice model. If the estimated coefficients on protection of ecological and social areas are positive and statistically significant, then our data exhibit external sensitivity to scope. Estimating the model based on repeated choice data provides the means to test for internal scope effects. Estimation of the coefficients in both cases is based on a linear utility function:

$$U_{nsj} = V_{nsj} + \varepsilon_{nsj} = \beta' a_{nsj} + \varepsilon_{nsj} \tag{1}$$

The observable portion of individual $n$’s utility from choosing alternative $j$ in situation $s$ is a linear function of the attribute vector $a_{nsj}$ and coefficient vector $\beta$. Total utility is the sum of observable utility ($V_{nsj}$) and an additive component that is unobservable to the researcher ($\varepsilon_{nsj}$).

A respondent will choose the alternative that yields the highest utility but their choice may also depend on characteristics of that individual, such as income and education, which are placed in a vector $x_n$. Using the parameter vectors $\alpha$ and $\gamma$ to capture these additional effects, and assuming the unobservable portion of utility $\varepsilon_{nsj}$ is distributed type 1 extreme value (Gumbel), the probability that individual $n$ will choose alternative $j$ in situation $s$ is:

$$P_{nsj} = \frac{\exp(\alpha_j + \beta' a_{nsj} + \gamma_j' x_n)}{\sum_{i} \exp(\alpha_i + \beta' a_{nsi} + \gamma_i' x_n)} \tag{2}$$

Our first departure from the standard model in equation 2 is the stated ANA model which relies on debriefing questions about attribute attendance to place respondents into classes. Each class is defined by a set of parameter restrictions, setting coefficients equal to zero when a respondent indicated they did not attend to the corresponding attributes (Hensher et al. 2012; Kragt 2013; Koetse 2017). Generalizing our standard model to allow for classes of non-attending respondents yields the choice probabilities given by:

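$$P_{nsj|c} = \frac{\exp(\alpha_j + \beta_c' a_{nsj} + \gamma_j' x_n)}{\sum_{i} \exp(\alpha_i + \beta_c' a_{nsi} + \gamma_i' x_n)} \tag{3}$$

where the $\beta$ vector is now indexed by class $c$ indicating which elements of $\beta$ are restricted to zero.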
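A minimal sketch of how the stated ANA restriction enters a choice probability is given below. It assumes a binary "For" versus status quo choice with status quo utility normalized to zero and no individual characteristics, treats "none" and "not much" responses as non-attendance, and uses hypothetical coefficient and attribute values. It is not the estimation code used in the paper.

```python
import math

# Attention responses treated as non-attendance (assumed classification rule).
NON_ATTENDING = {"none", "not much"}


def restricted_beta(beta, attention):
    """Set a coefficient to zero when the respondent did not attend to it."""
    return {k: (0.0 if attention[k] in NON_ATTENDING else b)
            for k, b in beta.items()}


def prob_for(beta, attributes, attention):
    """Binary logit probability of a "For" vote under stated ANA.

    Assumes the utility of the status quo is normalized to zero.
    """
    beta_c = restricted_beta(beta, attention)
    v = sum(beta_c[k] * attributes[k] for k in beta_c)
    return 1.0 / (1.0 + math.exp(-v))


# Hypothetical values, for illustration only.
beta = {"cost": -0.005, "ecol": 0.04, "social": 0.02}
attributes = {"cost": 100.0, "ecol": 5.0, "social": 5.0}
attends_all = {"cost": "a lot", "ecol": "some", "social": "some"}
ignores_cost = {"cost": "none", "ecol": "some", "social": "some"}

print(prob_for(beta, attributes, attends_all))
print(prob_for(beta, attributes, ignores_cost))
```

With a negative cost coefficient, a respondent who ignores cost has a higher predicted probability of voting "For" than an otherwise identical respondent who attends to it.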

For $k$ attributes describing a discrete alternative there are a total of $2^k$ possible attribute (non-)attendance classes (Hensher et al. 2012; Thiene et al. 2015; Glenk et al. 2015). Thus, for our empirical setting with four attributes (cost over the next three years, ecologically important acreage, socially important acreage, and treatment method) there are 16 possible classes of attribute (non-)attendance to which individual $n$ may belong. We abstract away from the full set of possibilities and largely focus on two classes: total attendance, and non-attendance to at least one attribute. Such an assumption is not unconventional given our empirical focus. In fact, Koetse (2017) investigates how accounting for non-attendance to the cost attribute both corrects hypothetical bias, and decreases the WTA-WTP disparity. Related to this issue is the relationship between attribute non-attendance and consequentiality. Koetse (2017) argues that consequentiality, or rather the lack thereof, is the most important reason for respondents ignoring the cost attribute. While Scarpa et al. (2009) discuss how cost non-attendance is often correlated with non-attendance to other attributes, Thiene et al. (2015) focus on non-attendance to a single attribute due to data limitations. Hensher et al. (2016) also agree that investigating all $2^k$ possible classes is not ideal.
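The count of possible classes can be checked by enumerating every attend/ignore pattern over the four attributes. The sketch below is illustrative; the attribute labels are shorthand introduced here.

```python
from itertools import product

# Shorthand labels for the four attributes (names are illustrative).
ATTRIBUTES = ("cost", "ecological acreage", "social acreage", "treatment method")

# Each class is a tuple of booleans: True = attended, False = ignored.
classes = list(product((True, False), repeat=len(ATTRIBUTES)))

# Collapse to the two groups emphasized in the text.
total_attendance = [c for c in classes if all(c)]
some_non_attendance = [c for c in classes if not all(c)]

print(len(classes), len(total_attendance), len(some_non_attendance))
```

The enumeration yields 16 patterns, of which one is total attendance and 15 involve non-attendance to at least one attribute.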

We further generalize the stated ANA model by estimating a random parameters logit model which allows coefficients that are not restricted to zero to vary over respondents (Train 2003). In the random parameters logit, the $\beta$ vector is distributed multivariate normal so that $\beta_{nk} = \beta_k + \sigma_k v_{nk}$ if $a_k$ is attended to by respondent $n$, and $\beta_{nk} = 0$ otherwise. $\beta_k$ represents the mean of the distribution of marginal utilities across the sample population, $\sigma_k$ represents the spread of preferences around the mean, and $v_{nk}$ is the random draw taken from the assumed distribution (Hensher et al. 2015).
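The random coefficient construction can be sketched with a simple simulation that averages the logit probability over draws. This is a sketch under stated assumptions: independent standard normal draws for each coefficient (a diagonal covariance matrix), a binary "For" versus status quo choice with status quo utility normalized to zero, and hypothetical parameter values. The function names are introduced here for illustration.

```python
import math
import random


def draw_coefficients(mean, sd, attends, rng):
    """Draw mean + sd * v for attended attributes; zero for ignored ones."""
    return {k: (mean[k] + sd[k] * rng.gauss(0.0, 1.0)) if attends[k] else 0.0
            for k in mean}


def simulated_prob_for(mean, sd, attends, attributes, n_draws=1000, seed=0):
    """Average the binary logit probability of a "For" vote over random draws."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_draws):
        b = draw_coefficients(mean, sd, attends, rng)
        v = sum(b[k] * attributes[k] for k in b)
        total += 1.0 / (1.0 + math.exp(-v))
    return total / n_draws


# Hypothetical values, for illustration only.
mean = {"cost": -0.01, "ecol": 0.1}
sd = {"cost": 0.005, "ecol": 0.05}
attributes = {"cost": 100.0, "ecol": 5.0}
attends = {"cost": True, "ecol": False}  # respondent ignored ecological acreage

print(simulated_prob_for(mean, sd, attends, attributes))
```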

Concerns about the accuracy of this self-reported information have led to the use of latent class (LC) models to separate individuals into classes and motivate our inferred ANA model (Scarpa et al. 2009, 2012; Hensher et al. 2012; Kragt 2013; Glenk et al. 2015; Thiene et al. 2015). In this framework class membership is unknown to the analyst and is instead treated probabilistically. Estimation requires specifying the ANA class probabilities, $\pi_{nc}$, which are the probabilities that individual $n$ belongs to class $c$ (Hensher et al. 2015). These probabilities can be specified by the logit formula and estimated as a function of the choice-invariant characteristics in $x_n$:

$$\pi_{nc} = \frac{\exp(\theta_c' x_n)}{\sum_{l=1}^{C} \exp(\theta_l' x_n)} \tag{4}$$

where $\theta_c$ is a vector of estimated parameters and $C$ is the number of classes specified by the analyst. The class membership probabilities can be combined with the conditional probability of equation 3 to express the unconditional probability of individual $n$’s response via the Law of Total Probability:

$$P_{nsj} = \sum_{c=1}^{C} \pi_{nc} \, P_{nsj|c} \tag{5}$$
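The two steps of the inferred ANA model, logit class membership probabilities followed by a probability-weighted mixture of class-conditional choice probabilities, can be sketched as follows. The parameter values and function names are hypothetical and serve only to illustrate the arithmetic.

```python
import math


def class_probabilities(theta, x):
    """Logit class membership probabilities for one respondent.

    theta is a list with one parameter vector per class; x holds the
    respondent's choice-invariant characteristics.
    """
    scores = [sum(t * xi for t, xi in zip(theta_c, x)) for theta_c in theta]
    top = max(scores)  # subtract the maximum for numerical stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


def unconditional_probability(pi, conditional):
    """Law of Total Probability: weight each class-conditional probability."""
    return sum(p * q for p, q in zip(pi, conditional))


# Hypothetical two-class example: class 2 is the normalized reference class.
theta = [[0.5, -0.2], [0.0, 0.0]]
x = [1.0, 2.0]             # e.g., a constant and one characteristic
conditional = [0.70, 0.40]  # class-conditional "For" probabilities (illustrative)

pi = class_probabilities(theta, x)
print(pi, unconditional_probability(pi, conditional))
```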

5. Results

We estimate the probability of voting for the hemlock woolly adelgid treatment policy as a function of its attributes using conditional logit, latent class, and random parameters logit models.16 The coefficients from the conditional logits are shown in table 2. 17 For the repeated sample, the estimated coefficient on cost is negative and statistically significant, as one would expect. The bounded sample, however, exhibits some strange behavior on the cost attribute. When considering only the first choice situation the coefficient for the cost attribute has the correct sign, but its significance disappears when both the first and second choice situations are included. The statistical significance reappears when all three choice situations are included in the model. Most strikingly, table 2 shows no robust evidence of scope effects. Thus, our simple models show limited evidence of the repeated sample passing the internal scope test. Furthermore, our simple models fail the external scope test. In other words, the coefficients on the ecologically important and socially important acreage amounts are not statistically different from zero.

Table 2: Conditional logit models

| | 1st situation: Bounded | 1st situation: Repeated | 1st and 2nd situations: Bounded | 1st and 2nd situations: Repeated | 1st, 2nd, and 3rd situations: Bounded | 1st, 2nd, and 3rd situations: Repeated |
|---|---|---|---|---|---|---|
| COST | −0.00537*** | −0.00597*** | −0.00050 | −0.00449*** | 0.00189*** | −0.00511*** |
| | (0.00172) | (0.00183) | (0.00078) | (0.00104) | (0.00062) | (0.00076) |
| ECOL | 0.00257 | 0.01212 | −0.01589 | 0.03211 | −0.02382 | 0.04061* |
| | (0.03330) | (0.03457) | (0.02378) | (0.02395) | (0.02223) | (0.02095) |
| SOCIAL | −0.04875 | 0.04592 | −0.02042 | 0.01434 | −0.01744 | −0.00680 |
| | (0.03411) | (0.03410) | (0.02379) | (0.02481) | (0.02146) | (0.02071) |
| BIOL | 1.25593*** | 0.63952 | 0.50122** | 0.46297* | 0.14702 | 0.55832** |
| | (0.36772) | (0.40075) | (0.25122) | (0.27797) | (0.21959) | (0.23139) |
| CHEM | 0.83718** | 0.22553 | 0.26585 | −0.01324 | 0.01785 | 0.16084 |
| | (0.37275) | (0.38325) | (0.25644) | (0.26739) | (0.22778) | (0.22458) |
| AIC | 641.0 | 599.8 | 1303.2 | 1189.7 | 1633.0 | 1758.5 |

Notes: Estimated coefficients are reported with their clustered standard errors in parentheses. Statistical significance is reported using the following convention: * p<0.05, ** p<0.01, *** p<0.001.
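As a worked illustration of how a scope coefficient translates into a marginal willingness-to-pay, the sketch below applies the standard ratio for a linear utility function, the negative of the attribute coefficient divided by the cost coefficient, to the table 2 estimates for the repeated sample with all three situations. Treating this ratio as the marginal WTP measure is an assumption made here for illustration; the result is in dollars per unit of the acreage variable as coded in the model, and that unit is not stated in this section.

```python
def marginal_wtp(beta_attribute: float, beta_cost: float) -> float:
    """Marginal WTP under linear utility: -beta_attribute / beta_cost."""
    if beta_cost == 0.0:
        raise ValueError("cost coefficient must be nonzero")
    return -beta_attribute / beta_cost


# Table 2, repeated sample, 1st, 2nd, and 3rd situations.
beta_ecol = 0.04061
beta_cost = -0.00511

mwtp_ecol = marginal_wtp(beta_ecol, beta_cost)
print(round(mwtp_ecol, 2))  # roughly 7.95 dollars per coded unit of ECOL
```

Because the ratio is only as precise as its two inputs, a weakly significant attribute coefficient yields a correspondingly imprecise marginal WTP.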

Table 3 reports estimated coefficients from the random parameters logit model. As in Siikamäki and Larson (2015), the evidence for scope effects becomes stronger when we account for preference heterogeneity. In addition, the evidence of scope effects for ecologically important acreage in the repeated sample appears when the first two choice situations are included in the model. We also estimated latent class (LC) models that show evidence of scope effects in the repeated sample. For example, in a two-class non-equality constrained latent class (non-ECLC) model, we observe positive and statistically significant scope effects in the dominant class, class 1 (see appendix table A2).18 The coefficient for ecologically important acreage, however, is not distinguishable from zero unless all three choice situations are included in the model. Like the random parameters logit models, the two-class non-ECLC model yields a smaller Akaike Information Criterion (AIC) than the conditional logit model. Thus, we have evidence that accounting for preference heterogeneity improves the fit of the model and that the data exhibit internal scope effects.

Table 3: Random parameters logit models

| | 1st situation: Bounded | 1st situation: Repeated | 1st and 2nd situations: Bounded | 1st and 2nd situations: Repeated | 1st, 2nd, and 3rd situations: Bounded | 1st, 2nd, and 3rd situations: Repeated |
|---|---|---|---|---|---|---|
| Means | | | | | | |
| COST | −0.96750 | | −0.01565*** | −0.01343*** | −0.01664*** | −0.01257*** |
| | (40.07943) | | (0.00585) | (0.00379) | (0.00531) | (0.00197) |
| ECOL | 0.30392 | | −0.01911 | 0.11972* | −0.03402 | 0.09724** |
| | (13.78935) | | (0.06675) | (0.06557) | (0.06441) | (0.03869) |
| SOCIAL | −6.71789 | | −0.04884 | 0.03110 | −0.05546 | 0.00392 |
| | (279.6456) | | (0.06258) | (0.06138) | (0.06313) | (0.03687) |
| BIOL | 212.462 | | 2.77834** | 1.14801 | 3.04692*** | 1.07004** |
| | (8806.537) | | (1.09939) | (0.70789) | (1.04466) | (0.42025) |
| CHEM | 119.209 | | 2.06624** | −0.27417 | 2.43398** | 0.19143 |
| | (4944.689) | | (0.97498) | (0.67697) | (0.95249) | (0.39494) |
| Standard deviations | | | | | | |
| COST | 1.87494 | | 0.01324*** | 0.01717*** | 0.01077*** | 0.01483*** |
| | (77.65121) | | (0.00446) | (0.00597) | (0.00307) | (0.00243) |
| ECOL | 3.78483 | | 0.08309 | 0.04716 | 0.11504 | 0.09731 |
| | (156.1534) | | (0.22499) | (0.35066) | (0.13442) | (0.08603) |
| SOCIAL | 0.12290 | | 0.00978 | 0.19717 | 0.00037 | 0.07550 |
| | (9.82321) | | (0.11575) | (0.15094) | (0.11984) | (0.11423) |
| BIOL | 280.770 | | 2.50185*** | 2.85834*** | 2.80256*** | 1.99260*** |
| | (11628.52) | | (0.94452) | (0.92091) | (0.80142) | (0.44627) |
| CHEM | 52.0507 | | 2.37414** | 3.09622*** | 2.67901*** | 1.58960*** |
| | (2150.484) | | (0.99621) | (1.03889) | (0.77203) | (0.44627) |
| AIC | 648.5 | singular | 1286.8 | 1119.9 | 1594.1 | 1620.5 |

Notes: Estimated coefficients are reported with their clustered standard errors in parentheses. Statistical significance is reported using the following convention: * p<0.05, ** p<0.01, *** p<0.001.

We next investigate several ways of allowing for attribute non-attendance. First, we compare the effects of accounting for stated ANA on the conditional logit and random parameters logit models. Second, we examine a number of inferred ANA models.

There are $2^k$ possible attribute (non-)attendance classes in an equality constrained latent class (ECLC) model framework. In our application, $k$ is equal to 4 and refers to the four attributes that describe the referenda (cost, ecologically important acreage, socially important acreage, and treatment). Table A3 in the appendix illustrates how, under the full $2^k$ (16) class ECLC model, we estimate only 5 different coefficients (cost over the next three years, ecologically important acreage, socially important acreage, biological HWA treatment, and chemical HWA treatment). The estimated coefficient on attribute $i$ ($\beta_i$) is the same for all classes that attend to the attribute. If the attribute is not attended to, then the coefficient is restricted to be equal to zero. The same conditions apply to models with fewer classes. Table A3 also shows that 59 percent of respondents exhibit total attribute attendance, or the continuity axiom of neoclassical theory.19 About 10 percent of the respondents ignored only the social scope of the policy, and about four percent ignored treatment. Almost 15 percent of respondents ignored a combination of attributes. Unlike in many studies, socially important acreage, and not “cost,” was the most commonly ignored attribute (Kragt 2013). Similar to Thiene et al. (2015) and Koetse (2017), we do not attempt to investigate the $2^k$ classes.20 We limit our analysis and consider three specific cases of stated ANA: scope non-attendance, cost non-attendance, and cost and scope non-attendance.
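The class structure described above can be sketched in a few lines of Python. This is only an illustration of the equality constraint, not the estimation code used for the paper: the enumeration order does not follow the class numbering in appendix table A3, and the coefficient values in `beta` are hypothetical placeholders.

```python
from itertools import product

# Attribute groups named in the text. Treatment is one attribute in the
# design but carries two coefficients (biological and chemical).
COEFFICIENTS_BY_ATTRIBUTE = {
    "cost": ["COST"],
    "ecological acreage": ["ECOL"],
    "social acreage": ["SOCIAL"],
    "treatment": ["BIOL", "CHEM"],
}


def attendance_classes(attributes):
    """Enumerate all 2^k attend/ignore patterns over k attributes."""
    attributes = list(attributes)
    return [
        dict(zip(attributes, pattern))
        for pattern in product([True, False], repeat=len(attributes))
    ]


def restricted_coefficients(pattern, beta):
    """Apply the equality constraint for one class.

    Attended attributes share the common coefficient; ignored attributes
    have their coefficient(s) restricted to zero.
    """
    restricted = {}
    for attribute, attended in pattern.items():
        for name in COEFFICIENTS_BY_ATTRIBUTE[attribute]:
            restricted[name] = beta[name] if attended else 0.0
    return restricted


# Hypothetical coefficient values, for illustration only.
beta = {"COST": -0.01, "ECOL": 0.1, "SOCIAL": 0.05, "BIOL": 0.5, "CHEM": -0.2}

classes = attendance_classes(COEFFICIENTS_BY_ATTRIBUTE)
print(len(classes))
for pattern in classes[:3]:
    print(restricted_coefficients(pattern, beta))
```

With four attributes the enumeration yields 16 classes, while the number of distinct free coefficients stays at five regardless of how many classes are retained.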

We defined stated attribute non-attendance using the respondent’s answer to a Likert scale question. Specifically, the survey asked, “When you were making your decisions about the different alternatives, how much influence did each of the following have on your voting decision?” Respondents were able to answer either “a lot,” “some,” “not much,” “none,” or they could decline to answer. We report estimated coefficients for models where ANA has been defined as either “none” or “not much” influence (see appendix table A1). Later we will discuss the sensitivity of our results to our definition.21

Table 4 reports the conditional logit model that accounts for stated non-attendance to the scope attributes (ecologically and socially important acreage). The model is conceptually similar to an equality constrained latent class model in that there are four ($2^2$) possible latent classes. Specifically, the four classes are: 1) total attendance (class 16 in appendix table A3), 2) non-attendance to ecologically important acreage (class 2 in appendix table A3), 3) non-attendance to socially important acreage (class 3 in appendix table A3), and 4) non-attendance to both ecologically and socially important acreage (class 8 in appendix table A3).
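One common way to impose stated ANA in a conditional logit is to set an ignored attribute to zero for the respondent who ignored it, which removes that coefficient from the respondent's utility index. The sketch below illustrates that idea for the two scope attributes. It is an assumption about implementation, not a description of the authors' code; the function names, the treatment of declined answers as attendance, and the attribute levels in `situation` are all hypothetical.

```python
# Baseline definition reported in the text: "none" or "not much" influence.
BASELINE_ANA = frozenset({"none", "not much"})


def ignored(response, definition=BASELINE_ANA):
    """True if a Likert response counts as stated non-attendance.

    Assumption: a declined answer (None) is treated as attendance.
    """
    return response is not None and response.lower() in definition


def scope_class(ecol_response, social_response, definition=BASELINE_ANA):
    """Assign a respondent to one of the four scope attendance classes."""
    ignores_ecol = ignored(ecol_response, definition)
    ignores_social = ignored(social_response, definition)
    if ignores_ecol and ignores_social:
        return "non-attendance to both scope attributes"
    if ignores_ecol:
        return "non-attendance to ecologically important acreage"
    if ignores_social:
        return "non-attendance to socially important acreage"
    return "total attendance"


def mask_scope(attributes, ecol_response, social_response, definition=BASELINE_ANA):
    """Return a copy of the attribute levels with ignored scope levels zeroed."""
    masked = dict(attributes)
    if ignored(ecol_response, definition):
        masked["ECOL"] = 0.0
    if ignored(social_response, definition):
        masked["SOCIAL"] = 0.0
    return masked


# Hypothetical attribute levels for one choice situation.
situation = {"COST": 150.0, "ECOL": 5.0, "SOCIAL": 3.0, "BIOL": 1.0, "CHEM": 0.0}
print(scope_class("a lot", "not much"))
print(mask_scope(situation, "a lot", "not much"))
```

Passing a broader `definition`, for example one that also contains "some", gives the kind of sensitivity check on the ANA definition that the text discusses.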

Table 4: Conditional logit models allowing for stated ANA on the scope attributes

| | 1st situation: Bounded | 1st situation: Repeated | 1st and 2nd situations: Bounded | 1st and 2nd situations: Repeated | 1st, 2nd, and 3rd situations: Bounded | 1st, 2nd, and 3rd situations: Repeated |
|---|---|---|---|---|---|---|
| COST | −0.00601*** | −0.00599*** | −0.00076 | −0.00457*** | 0.00167*** | −0.00528*** |
| | (0.00173) | (0.00186) | (0.00079) | (0.00105) | (0.00062) | (0.00077) |
| ECOL | 0.05970** | 0.06910** | 0.04088** | 0.07004*** | 0.03530* | 0.08695*** |
| | (0.02931) | (0.02919) | (0.02062) | (0.02221) | (0.01945) | (0.01974) |
| SOCIAL | 0.05605** | 0.06986** | 0.04284** | 0.04545** | 0.03790** | 0.02836 |
| | (0.02726) | (0.02818) | (0.01928) | (0.02158) | (0.01727) | (0.01866) |
| BIOL | 0.50862* | 0.33524 | −0.07800 | 0.18810 | −0.41441** | 0.19645 |
| | (0.30132) | (0.32266) | (0.18427) | (0.22520) | (0.16662) | (0.19298) |
| CHEM | 0.04034* | −0.13598 | −0.34983* | −0.31165 | −0.57802*** | −0.20600 |
| | (0.30501) | (0.32528) | (0.18327) | (0.22796) | (0.16490) | (0.18819) |
| AIC | 632.2 | 586.4 | 1292.7 | 1170.5 | 1623.7 | 1728.3 |

Notes: The estimated coefficients are reported above with their clustered standard errors in parentheses (st. er.). The statistical significance is reported using the following convention: * p<0.05, ** p<0.01, *** p<0.001.

Unlike our previous results, tables 4 and 5 show that we find robust external and internal scope effects for both ecologically and socially important acreage in both the bounded and repeated samples. This is true for both the conditional logit (table 4) and random parameters logit (table 5) specifications.22 We find qualitatively similar results (robust scope effects in both bounded and repeated samples) when we account for both cost and scope stated attribute non-attendance. However, as previously discussed, the estimated coefficient on the cost attribute is not always statistically significant in the bounded sample. In addition, table 4 shows that we estimate a positive and statistically significant coefficient on cost using the bounded sample and all choice situations.23 When we account for stated ANA on the cost attribute alone, we find no evidence of scope effects in the bounded sample and non-robust evidence of scope effects for ecologically important acreage in the repeated sample. Based on the AIC, the best fit is provided by the random parameters logit model that accounts for ANA to the scope attributes only.

Table 5: Random parameters logit models allowing for stated ANA on the scope attributes

| | 1st situation: Bounded | 1st situation: Repeated | 1st and 2nd situations: Bounded | 1st and 2nd situations: Repeated | 1st, 2nd, and 3rd situations: Bounded | 1st, 2nd, and 3rd situations: Repeated |
|---|---|---|---|---|---|---|
| Means | | | | | | |
| COST | | | −0.01708** | −0.01392*** | −0.01738*** | −0.01263*** |
| | | | (0.00683) | (0.00383) | (0.00605) | (0.00195) |
| ECOL | | | 0.13246* | 0.19745*** | 0.13306* | 0.17089*** |
| | | | (0.07203) | (0.06548) | (0.07285) | (0.03798) |
| SOCIAL | | | 0.12342** | 0.14222** | 0.12284** | 0.06926** |
| | | | (0.06261) | (0.05865) | (0.05760) | (0.03472) |
| BIOL | | | 1.30989* | 0.37306 | 1.33916* | 0.44609 |
| | | | (0.78863) | (0.54599) | (0.75729) | (0.34823) |
| CHEM | | | 0.52594 | −1.03015* | 0.64885 | −0.40213 |
| | | | (0.61366) | (0.59986) | (0.62843) | (0.32398) |
| Standard deviations | | | | | | |
| COST | | | 0.01414*** | 0.01885*** | 0.01178*** | 0.01499*** |
| | | | (0.00501) | (0.00519) | (0.00392) | (0.00236) |
| ECOL | | | 0.15785 | 0.02545 | 0.13622 | 0.00265 |
| | | | (0.15403) | (0.03262) | (0.17306) | (0.00746) |
| SOCIAL | | | 0.00376 | 0.04158 | 0.01450 | 0.05388 |
| | | | (0.04836) | (0.07351) | (0.02769) | (0.12494) |
| BIOL | | | 2.41250** | 2.82772*** | 2.69285*** | 2.02659*** |
| | | | (1.04564) | (0.84316) | (0.87180) | (0.40029) |
| CHEM | | | 2.40761** | 3.31496*** | 2.63348*** | 1.64282*** |
| | | | (1.18825) | (0.95997) | (0.86165) | (0.42421) |
| AIC | singular | singular | 1273.9 | 1100.1 | 1581.5 | 1593.1 |

Notes: The estimated coefficients are reported above with their clustered standard errors in parentheses (st. er.). The statistical significance is reported using the following convention: * p<0.05, ** p<0.01, *** p<0.001.
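The AIC comparison across tables 3, 4, and 5 can be tabulated with a short script. The sketch below copies the AIC values from those three tables and, for each sample and set of choice situations, picks the specification with the smallest AIC among those that could be estimated. The dictionary layout and function name are illustrative choices, and the conditional logit without ANA is omitted because its AIC values are not reported in this section.

```python
# Column order: (sample, number of choice situations included).
COLUMNS = [
    ("bounded", 1), ("repeated", 1),
    ("bounded", 2), ("repeated", 2),
    ("bounded", 3), ("repeated", 3),
]

# AIC values copied from tables 3, 4, and 5. None marks a model reported
# as "singular" (no usable variance matrix).
AIC = {
    "RPL (table 3)": [648.5, None, 1286.8, 1119.9, 1594.1, 1620.5],
    "CL, stated scope ANA (table 4)": [632.2, 586.4, 1292.7, 1170.5, 1623.7, 1728.3],
    "RPL, stated scope ANA (table 5)": [None, None, 1273.9, 1100.1, 1581.5, 1593.1],
}


def best_by_aic(column):
    """Return (model, AIC) with the smallest AIC for one table column."""
    index = COLUMNS.index(column)
    candidates = {
        model: values[index]
        for model, values in AIC.items()
        if values[index] is not None
    }
    model = min(candidates, key=candidates.get)
    return model, candidates[model]


for column in COLUMNS:
    print(column, best_by_aic(column))
```

AIC values are only comparable within a column, since each column is estimated on a different sample or set of choice situations. Wherever the random parameters logit with stated scope ANA could be estimated, it has the smallest AIC of the three.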

Our results do not change qualitatively as we change our measurement of stated ANA. We do observe less peculiar behavior in the estimated coefficient on the cost attribute for the bounded data as we broaden our definition from “none” to “none,” “not much,” and “some.” The most substantial difference in the results occurs when we extend our definition of stated ANA to include “some” influence. We find that the statistical significance of socially important acreage begins to decrease.

Because there is evidence in the literature that respondents may not answer ANA questions accurately, we estimate equality constrained latent class (ECLC) models (Armitage and Conner 2001; Ajzen et al. 2004; Carlsson et al. 2010; Hess and Hensher 2010; Hensher et al. 2012; Scarpa et al. 2011; Scarpa et al. 2012; Alemu et al. 2013; Kragt 2013). We examine whether or not these inferred ANA models will show statistically significant scope effects. We examine several different cases of latent classes: 16 classes (1–16 on appendix table A3), 9 classes (1–4, 8, and 14–16 on appendix table A3), 5 classes (3, 4, 8, 15, and 16 on appendix table A3), 4 classes (3, 8, 15, and 16 on appendix table A3), 3 classes (3, 8, and 16 on appendix table A3), and 2 classes (8 and 16, and 1 and 16 on appendix table A3). We find that when only the first situation is included in the model, the AIC generally decreases as the number of classes falls for both the bounded and repeated samples, suggesting that fewer classes are better. These results are noisy when both the first and second situations are included in the model, but generally speaking the AICs still decrease. Interestingly, the AIC seems to increase as ECLC models with fewer classes are estimated when all three choice situations are included. Furthermore, these findings are confounded by the fact that we also often estimate singular variance matrices.24

We report the inferred scope non-attendance and inferred cost non-attendance models in table A4 of the appendix. The estimated coefficients in this table once again illustrate the robustness of the lack of scope effects.25 The first specification in table A4 assumes one class does not attend to both ecologically and socially important acreage (scope ANA). The second of these two inferred ANA models, cost ANA, comes from Koetse (2017). Specifically, in the second specification we set the estimated coefficient for the cost attribute to be zero in the non-attending class. Unlike Kragt (2013) and Koetse (2017), our inferred models do not exhibit scope effects.

Table 6 reports the marginal WTP estimates calculated from coefficients presented in the previous tables. It once again illustrates our two main points. First, there is a lack of scope effects in models that do not account for attribute non-attendance. Second, accounting for preference heterogeneity using either stated ANA or random parameters logit models can reveal scope effects. In general, when using stated ANA in conditional logit models, we find that respondents are willing to pay between about $9 and $16 per acre. Our negative WTP estimates for treatment of ecologically and socially important acreage are the result of peculiarities in the bounded data. Specifically, the positive coefficient estimated on cost, shown in table 4, causes this puzzling result. Random parameters logit models, however, suggest a lower range of marginal WTP of $5 to $14 per acre.

Table 6: Marginal willingness-to-pay

| | 1st situation: Bounded | 1st situation: Repeated | 1st and 2nd situations: Bounded | 1st and 2nd situations: Repeated | 1st, 2nd, and 3rd situations: Bounded | 1st, 2nd, and 3rd situations: Repeated |
|---|---|---|---|---|---|---|
| Conditional logit | | | | | | |
| ECOL | $0 | $0 | $0 | $0 | $0 | $7.95* |
| SOCIAL | $0 | $0 | $0 | $0 | $0 | $0 |
| Random parameters logit | | | | | | |
| ECOL | | | $0 | $8.91* | $0 | $7.74** |
| SOCIAL | | | $0 | $0 | $0 | $0 |
| Conditional logit using stated ANA on the scope attributes | | | | | | |
| ECOL | $9.93** | $11.54** | $0 | $15.33*** | −$21.14* | $16.47*** |
| SOCIAL | $9.33** | $11.66** | $56.37** | $9.95** | −$22.69** | $0 |
| Random parameters logit using stated ANA on the scope attributes | | | | | | |
| ECOL | | | $7.76* | $14.18** | $7.66* | $13.53*** |
| SOCIAL | | | $7.23** | $10.21** | $7.07** | $5.48** |

Notes: Marginal willingness-to-pay estimates are presented above, followed by stars that indicate statistical significance. The stars correspond to the minimum statistical significance between the cost attribute and scope attribute. The statistical significance is reported using the following convention: * p<0.05, ** p<0.01, *** p<0.001. A zero indicates that our estimated coefficients were not statistically significant, and a missing value indicates that we did not estimate that model.
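The marginal WTP entries follow from the usual ratio of an attribute coefficient to the negative of the cost coefficient. As a minimal sketch, the snippet below applies that ratio to three pairs of coefficients copied from table 4; the function name is illustrative, and the rounding to cents is only for comparison with table 6.

```python
def marginal_wtp(beta_attribute, beta_cost):
    """Marginal WTP per unit of an attribute: -beta_attribute / beta_cost."""
    if beta_cost == 0:
        raise ValueError("cost coefficient must be non-zero")
    return -beta_attribute / beta_cost


# Table 4, repeated sample, first situation (COST = -0.00599).
wtp_ecol_repeated = marginal_wtp(0.06910, -0.00599)
wtp_social_repeated = marginal_wtp(0.06986, -0.00599)

# Table 4, bounded sample, all three situations: the cost coefficient is
# positive (0.00167), so the ratio turns negative.
wtp_ecol_bounded = marginal_wtp(0.03530, 0.00167)

print(round(wtp_ecol_repeated, 2))
print(round(wtp_social_repeated, 2))
print(round(wtp_ecol_bounded, 2))
```

The first two values match the $11.54 and $11.66 entries for the repeated sample, and the third matches the −$21.14 entry, which shows directly how the positive cost coefficient in the bounded data produces the negative WTP estimates.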

5. Conclusions

We have shown that like many other contingent valuation studies, the 2017 Southern Appalachian Forest Management Survey data generally fails internal and external scope tests when using naive models that assume fully compensatory preferences. This result is robust to the number of choice situations in the survey and empirical specification. We employ several methods to account for preference heterogeneity: 1) stated attribute non-attendance, 2) inferred attribute non-attendance, 3) equality constrained latent class models, 4) random parameters models, and 5) combinations of the four. Accounting for stated attribute non-attendance allows our models to pass both internal and external scope tests, shedding light on a major criticism of contingent valuation. As noted by other researchers, such a result could have large policy implications (Scarpa et al. 2009; Kragt 2013; Glenk et al. 2015; Koetse 2017).

We compare bounded and repeated valuation questions and employ a number of different specifications to search for scope effects in single or sequential binary choice situations. While some models, like the random parameters logit and 2-class non-ECLC models, find scope effects for ecologically important acreage in the repeated data when we include all three choice situations, such results are not robust to specification. Robust scope effects appear only when we account for stated ANA on scope attributes. The preferred models are stated ANA random parameters logit models using the repeated data, which have a better statistical fit than those using the bounded data. The bounded sample models typically have inferior goodness of fit relative to the repeated sample models for the same specifications, except when all three choice situations are included in our models. There is also less robust evidence of scope effects with the bounded sample. As we change our definition of ANA, the qualitative results do not change substantially; however, we begin to see less evidence of scope effects for socially important acreage. Our results conflict with Kragt (2013) and Koetse (2017). While they favor the inferred models, the results of our analysis favor stated models. A striking result from our investigation into the inferred models is that we find no evidence of scope effects on the bounded survey treatment sample, regardless of the number of latent classes we assume. If researchers are unable to rely on inferred ANA via latent class models, then economic survey design should routinely include questions that capture stated ANA.

Discovery of a factor that provides a rationale for a longstanding criticism of contingent valuation, insensitivity to scope, warrants further investigation on the effects of ANA and implications of discontinuous preference ordering among survey respondents. Our results provide evidence of a need and an opportunity to revisit old data that exhibits insensitivity to scope using new techniques. It is possible that use of ANA methods could lead to some of these old studies passing scope tests. Our results also highlight the need for research focused on the determinants of ANA and ways to mitigate it. Our analysis suggests that accounting for stated attribute non-attendance for scope attributes could help overcome other CVM problems like fat tails, temporal insensitivity and others.

While the primary aim of our analysis does not require scaling household values up to the population level, such as for benefit-cost analysis, our results raise the question of how to treat respondents who do not attend to certain attributes, cost in particular, in that scaling exercise. To our knowledge the existing literature has not addressed this question directly but, generally, the difference between naive models and those that account for ANA is viewed as a bias arising from process heterogeneity (Glenk et al. 2015; Hensher et al. 2015). Taking that point of view, one would conclude that WTP values found with models that correct for that bias could be applied to the larger population to estimate total social benefits. A more conservative approach would only apply positive WTP to the percentage of the population corresponding to the proportion of the sample that fully attended to the attributes, or just the cost attribute if that is the primary concern. Alternatively, if a latent class model is used to correct for ANA and the class probability functions rely on data that are available for the larger population, those probabilities could be estimated for the population, thereby scaling the likelihood of non-attendance to the population as well. No doubt there are other possibilities that could provide other population-level estimates to generate lower and upper bounds for comparison with total cost for program evaluations.
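The first two scaling approaches can be contrasted with a short, purely illustrative calculation. The household count and per-household WTP below are hypothetical placeholders, not estimates from this study; only the 59 percent attendance share is taken from the text (table A3).

```python
def aggregate_benefits(wtp_per_household, households, attending_share=1.0):
    """Scale household WTP to a population total.

    attending_share = 1.0 applies WTP to every household (the
    bias-correction view); a smaller share applies positive WTP only to
    the fraction assumed to attend to the attributes (the conservative view).
    """
    if not 0.0 <= attending_share <= 1.0:
        raise ValueError("attending_share must lie between 0 and 1")
    return wtp_per_household * households * attending_share


HOUSEHOLDS = 1_000_000        # hypothetical population of households
WTP_PER_HOUSEHOLD = 100.0     # hypothetical household WTP in dollars
FULL_ATTENDANCE_SHARE = 0.59  # share with total attendance reported in the text

upper_bound = aggregate_benefits(WTP_PER_HOUSEHOLD, HOUSEHOLDS)
lower_bound = aggregate_benefits(
    WTP_PER_HOUSEHOLD, HOUSEHOLDS, attending_share=FULL_ATTENDANCE_SHARE
)
print(upper_bound, lower_bound)
```

Under these assumptions the two totals bracket the population-level benefit estimate, which is the kind of lower and upper bound the paragraph above suggests comparing with total program cost.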


6. Acknowledgements

Previous versions of this paper were presented at the 2018 Society for Benefit-Cost Analysis meetings in Washington, DC and the 2018 Appalachian Experimental & Environmental Economics Workshop in Blowing Rock, North Carolina. The authors thank participants in those sessions, Dan Petrolia and Matt Interis, and two journal referees for helpful comments.

The views and opinions expressed in this article are those of the authors and do not necessarily reflect the official policy or position of any agency of the United States (U.S.) government.

Footnotes

1. If the estimated willingness to pay is greater for the larger policy intervention, the study passes an external scope test (Carson, Flores and Meade 2001).

2. When individuals are asked multiple CVM questions, the response format is known as a discrete choice experiment and permits tests of internal scope (Carson and Louviere 2011; Carson and Czajkowski 2014). Internal scope tests verify consistency of preferences within an individual’s set of responses (Carson and Mitchell 1995).

3. Carson and Mitchell (1995) and Carson et al. (2001) review several other reasons why willingness-to-pay may be insensitive to scope.

4. The economic importance of the scope test can be assessed with scope elasticity (Whitehead 2016). We include a table of marginal WTP estimates. In an attribute-based CVM experiment design the marginal WTP estimates serve as the slope of the WTP function, which is a ray from the origin. In our case and most others in the DCE literature, the scope elasticity is equal to one. In other words, the percentage change in treated acreage is equal to the percentage change in WTP.

5. While Carson and Groves (2007) discuss how sequential choice questions generally violate incentive compatibility, Vossler et al. (2012) identify cases where such a survey design maintains the important property. See also Boyle et al. (2016).

6. Christie and Azevedo (2009) combine the CVM and DCE treatments, test for parameter equality, and find evidence of convergent validity between the two types of choice questions. Their comparison, however, is confounded by the number of valuation questions presented to respondents (three in repeated CVM and eight in the choice experiment) and the number of choice alternatives (two in the repeated CVM and three in the choice experiment), creating potentially large differences in cognitive burden between samples.

7. Siikamäki and Larson (2015) find scope effects with bounded sequential questions but do not compare their results with those from the single binary choice and do not ask repeated questions.

8. Petrolia et al. (2014) did not conduct a scope test in their single binary choice question.

9. Meyerhoff and Liebe (2009) and Carlsson et al. (2010) did not find that WTP values declined when accounting for ANA. DeShazo and Fermo (2004) and Hensher et al. (2007), however, find higher marginal WTP when accounting for ANA.

10. In the first choice situation, for the bounded survey sample, 52 percent voted “For” the treatment referendum, 23 percent voted “Against” it, and 25 percent did not know how they would vote. Similarly, for the repeated survey sample in the first situation, 55 percent voted “For” the treatment referendum, 22 percent voted “Against” it, and 23 percent did not know how they would vote. These differences are not statistically significant across bounded and repeated survey samples. This is not surprising since there were no differences in the first situation between the two samples.

11. Carson et al. (1996) discuss how the 1993 replication of an Alaskan survey introduced the “would-not-vote” option, which did not have an effect on stated choices. Additionally, they mention that “‘would-not-vote’ and ‘don’t know’ responses were treated as choices against the plan (a conservative decision recommended by Schuman (1996)).”

12. Our survey design is referred to as a binary choice sequence (BC-Seq) by Carson and Louviere (2011).

13. We do not use an efficient survey design. In the repeated treatment the attributes all vary independently from one another.

14. In dichotomous choice CVM the “fat tails” problem results when survey respondents, in the aggregate, fail to respond rationally to increases in the cost of the policy (Ready and Hu 1995). This is often a problem when the subsample sizes are small. Our data exhibits a fat tail from $150 (“For” = 45.13 percent, n=113) to $200 (“For” = 45.16 percent, n=124) with the repeated data. When the data on the first question are pooled we no longer see the fat tail problem, as the percent of “For” votes falls from 49.78 at $150 (n=231) to 41.03 at $200 (n=234). The difference is statistically significant at the 0.05 level in a one-tailed test.

15. We find no evidence of anchoring in our repeated treatment; however, data from the bounded treatment do exhibit anchoring. This suggests CVM researchers should move towards the repeated referendum type question with random cost and other attributes.

16. We find no differences in results for those who meet the conditions for survey consequentiality and those who do not (Petrolia et al. 2014). Also, similar binary choice sequence question formats have been used to examine ordering effects (Holmes and Boyle 2005; Day et al. 2012; Nguyen et al. 2015). We find little evidence of ordering effects in our data.

17. While there is some evidence of incentive incompatibility and starting point bias, it is not a result that is robust enough to be cause for concern. For example, we generally only observe such evidence in the bounded data when controlling for both issues, but do find some evidence of starting-point bias in the first two situations of the bounded data when we do not control for incentive compatibility.

18. We purposely omit the results from the first situation, and the first and second situation. The variance matrix for the repeated sample is singular in both cases, and the bounded sample does not exhibit scope effects for the first situation. Including both the first and second situation for the bounded sample yields statistically significant scope effects for ecological acreage in one class, while the estimated coefficient is negative and statistically significant at the 10 percent level in the other.

19. This is comparable to Hensher (2008) and Kragt (2013). Sixty-two percent of Hensher’s sample exhibit total attribute attendance and 55 percent of Kragt’s respondents attended to all attributes.

20. Our primary motivation for this is the small dataset that is likely to yield singular variance estimates in the $2^k$ classes case. For example, large numbers of latent classes lead to identification problems such as singular Hessians (Hensher et al. 2015). In fact, using our data the $2^k$-class LC model and several specifications of the $2^k$-class ECLC model often fail to converge or are singular. While Scarpa et al. (2009) discuss how cost attribute non-attendance is often correlated with non-attendance to other attributes, due to data limitations Thiene et al. (2015) and Koetse (2017) focus on non-attendance to a single attribute.

21. Our qualitative results are robust to our definition of ANA (none, none and not much, none and not much and some). We chose to report answers of either not much or none as our baseline definition.

22. Our random parameters model uses 1,000 standard Halton sequence draws from normally distributed attribute parameters. The relative size of our estimated standard deviations ranges from 0.02 times smaller (social) than the mean to 19.26 times larger (social) than the mean. On average the standard deviations of the scope parameters are 2.53 times larger than the means.

23. Such peculiar results could be caused by a number of factors including model misspecification. In our context it is likely the result of our survey design and its effect on respondents’ answers. For example, the cost amount provided in follow-up situations may have changed relative to the other static attributes in a manner that is not seen as realistic. Thus follow-up situations may not be incentive compatible, leading to yea-saying behavior (Whitehead 2002).

24. We were unable to estimate a $2^k$ non-equality constrained latent class model due to data limitations.

25. We also tried to estimate a 16-class (non-equality constrained) latent class model, but it did not converge.

Contributor Information

Chris Giguere, 13223 Black Walnut Court, Silver Spring, MD 20906.

Chris Moore, National Center for Environmental Economics, U.S. Environmental Protection Agency 1200 Pennsylvania Avenue, NW (1809T), Washington, DC 20460.

John C. Whitehead, Appalachian State University, 416 Howard Street, Room 3092 Peacock Hall, Boone, NC 28608-2051.

7. Works cited

- Ajzen Icek, Brown Thomas C., and Carvajal Franklin. 2004. “Explaining the discrepancy between intentions and actions: The case of hypothetical bias in contingent valuation.” Personality and Social Psychology Bulletin 30(9): 1108–1121. [DOI] [PubMed] [Google Scholar]

- Alemu Mohammed H., Mørkbak Morten R., Olsen Soren B., and Jensen Carsten L.. 2013. “Attending to the Reasons for Attribute Non-attendance in Choice Experiments.” Environmental and Resource Economics 54(3): 333–359. [Google Scholar]

- Armitage Christopher J., and Conner Mark. 2001. “Efficacy of the theory of planned behaviour: A meta-analytic review.” British Journal of Social Psychology 40(4): 471–499. [DOI] [PubMed] [Google Scholar]

- Arrow Kenneth, Solow Robert, Portney Paul R., Leamer Edward E., Radner Roy, and Schuman Howard. 1993. “Report of the NOAA Panel on Contingent Valuation.” Federal Register 58(10): 4601–4614. [Google Scholar]

- Baker Reg, Blumberg Stephen J., Brick J. Michael, Couper Mick P., Courtright Melanie, Dennis J. Michael, Dillman Don, Frankel Martin R., Garland Philip, Groves Robert M., Kennedy Courtney, Krosnick Jon, and Lavrakas Pazul J. 2010. “AAPOR Report on Online Panels.” The Public Opinion Quarterly 74(4): 711–781. [Google Scholar]

- Boxall Peter C., and Adamowicz Wiktor L.. 2002. “Understanding heterogeneous preferences in random utility models: a latent class approach.” Environmental and Resource Economics 23(4): 421–446. [Google Scholar]

- Boyle Kevin J.. Paterson Robert, Carson Richard, Leggett Christopher, Kanninen Barbara, Molenar John, and Neumann James. 2016. “Valuing shifts in the distribution of visibility in national parks and wilderness areas in the United States.” Journal of Environmental Management 173: 10–22. [DOI] [PubMed] [Google Scholar]

- Campbell Danny, Hutchinson W. George, and Scarpa Riccardo 2008. “Incorporating discontinuous preferences into the analysis of discrete choice experiments.” Environmental and Resource Economics 41(3): 401–417. [Google Scholar]

- Caparros Alejandro, Oviedo Jose L., and Campos Pablo. 2008. “Would you choose your preferred option? Comparing choice and recoded ranking experiments.” American Journal of Agricultural Economics 90(3): 843–855. [Google Scholar]

- Carlsson Fredrik; Kataria Mitesh, and Lampi Elina. 2010. “Dealing with ignored attributes in choice experiments on valuation of Sweden’s environmental quality objectives.” Environmental and Resource Economics 47(1): 65–89. [Google Scholar]

- Carson Richard T., and Louviere Jordan J.. 2011. “A common nomenclature for stated preference elicitation approaches.” Environmental and Resource Economics 49(4): 539–559. [Google Scholar]

- Carson Richard T., and Czajkowski Mikolaj. 2014. “The discrete choice experiment approach to environmental contingent valuation.” In Handbook of Choice Modelling, ed. Hess Stephanie, and Daly Andrew, 202–235. Cheltenham, UK: Edward Elgar Publishing Co. [Google Scholar]

- Carson Richard T., Flores Nicholas E., and Meade Norman F.. 2001. “Contingent valuation: controversies and evidence.” Environmental and Resource Economics 19(2): 173–210. [Google Scholar]

- Carson Richard T., Hanemann W. Michael, Kopp Raymond J., Krosnick Jon A., Mitchell Robert C., Presser Stanley, Rudd Paul A., and Smith V. Kerry, with Conaway Michael, and Martin Kerry. 1996. “Was the NOAA Panel Correct about Contingent Valuation?” Discussion Paper 96–20, Washington, DC: Resources for the Future. [Google Scholar]

- Carson Richard T., and Groves Theodore. 2007. “Incentive and informational properties of preference questions.” Environmental and Resource Economics 37(1): 181–210. [Google Scholar]

- Carson Richard T., and Groves Theodore. 2011. “Incentive and Information Properties of Preference Questions: Commentary and Extensions” in International Handbook of Non-Market Environmental Valuation, ed. Jeff Bennett. Northampton, MA: Edward Elgar Publishing Co. [Google Scholar]

- Carson Richard T., Groves Theodore, and List John A.. 2014. “Consequentiality: A theoretical and experimental exploration of a single binary choice.” Journal of the Association of Environmental and Resource Economists 1(1/2): 171–207. [Google Scholar]

- Carson Richard T., and Mitchell Robert Cameron. 1995. “Sequencing and Nesting in Contingent Valuation Surveys.” Journal of Environmental Economics and Management 28(2): 155–173. [Google Scholar]

- Christie Mike, and Azevedo Christopher D.. 2009. “Testing the consistency between standard contingent valuation, repeated contingent valuation and choice experiments.” Journal of Agricultural Economics 60(1): 154–170. [Google Scholar]

- Day Brett, Bateman Ian J., Carson Richard T., Dupont Diane, Louviere Jordan J., Morimoto Sanae, Scarpa Riccardo, and Wang Paul. 2012. “Ordering effects and choice set awareness in repeat-response stated preference studies.” Journal of Environmental Economics and Management 63(1): 73–91. [Google Scholar]

- DeShazo JR, and Fermo G.. 2004. “Implications of Rationally-Adaptive Pre-choice Behavior for the Design and Estimation of Choice Models.” Working paper. University of California, Los Angeles, School of Public Policy and Social Research. [Google Scholar]

- Desvousges William, Mathews Kristy, and Train Kenneth. 2012. “Adequate responsiveness to scope in contingent valuation.” Ecological Economics 84(1): 121–128. [Google Scholar]

- Giraud KL, Loomis John B., and Johnson RL. 1999. “Internal and external scope in willingness-to-pay estimates for threatened and endangered wildlife.” Journal of Environmental Management 56(3): 221–229. [Google Scholar]

- Glenk Klaus, Martin-Ortega Julia, Pulido-Velazquez Manuel, and Potts Jacqueline. 2015. “Inferring Attribute Non-attendance from Discrete Choice Experiments: Implications for Benefit Transfer.” Environmental and Resource Economics 60(4): 497–520. [Google Scholar]

- Gowdy John M., and Mayumi Kozo. 2001. “Reformulating the foundations of consumer choice theory and environmental valuation.” Ecological Economics 39(2): 223–237. [Google Scholar]

- Greene William H., and Hensher David A.. 2008. “Ordered choice, heterogeneity attribute processing.” Working paper. The University of Sydney, Institute of Transport and Logistics Studies. [Google Scholar]

- Hays Ron D., Liu Honghu, Kapetyn Arie. 2015. “Use of Internet Panels to Conduct Surveys.” Behavior Research Methods 47(2): 685–690. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hensher David A. 2006. “How do respondents process stated choice experiments? Attribute consideration under varying information load.” Journal of Applied Econometrics 21(6): 861–878. [Google Scholar]

- Hensher David A. 2008. “Joint estimation of process and outcome in choice experiments and implications for willingness-to-pay.” Journal of Transport Economics and Policy 42(2): 297–322.

- Hensher David A., and Greene William H. 2003. “A latent class model for discrete choice analysis: contrasts with mixed logit.” Transportation Research Part B: Methodological 37(8): 681–698.

- Hensher David A., and Greene William H. 2010. “Non-attendance and Dual Processing of Common-Metric Attributes in Choice Analysis: A Latent Class Specification.” Empirical Economics 39(2): 413–426.

- Hensher David A., Rose John M., and Bertoia Tony. 2007. “The Implications on willingness-to-pay of a Stochastic Treatment of Attribute Processing in Stated Choice Studies.” Transportation Research Part E: Logistics and Transportation Review 43(2): 73–89.

- Hensher David A., Rose John M., and Greene William H. 2005. “The implications on willingness-to-pay of respondents ignoring specific attributes.” Transportation 32(3): 203–222.

- Hensher David A., Rose John M., and Greene William H. 2012. “Inferring attribute non-attendance from stated choice data: Implications for willingness-to-pay estimates and a warning for stated choice experiment design.” Transportation 39(2): 235–245.

- Hensher David A., Rose John M., and Greene William H. 2015. Applied Choice Analysis, 2nd Edition. Cambridge, United Kingdom: Cambridge University Press.

- Hess Stephane, and Hensher David A. 2010. “Using Conditioning on Observed Choices to Retrieve Individual-Specific Attribute Processing Strategies.” Transportation Research Part B: Methodological 44(6): 781–790.

- Hole Arne R. 2011. “A discrete choice model with endogenous attribute attendance.” Economics Letters 110(3): 203–205.

- Holmes Thomas P., and Boyle Kevin J. 2005. “Dynamic learning and context-dependence in sequential, attribute-based, stated-preference valuation questions.” Land Economics 81(1): 114–126.

- Johnston Robert J., Boyle Kevin J., Adamowicz Wiktor, Bennett Jeff, Brouwer Roy, Cameron Trudy Ann, Hanemann W. Michael, Hanley Nick, Ryan Mandy, Scarpa Riccardo, Tourangeau Roger, and Vossler Christian A. 2017. “Contemporary guidance for stated preference studies.” Journal of the Association of Environmental and Resource Economists 4(2): 319–405.

- Koetse Mark J. 2017. “Effects of payment vehicle non-attendance in choice experiments on value estimates and the WTA–WTP disparity.” Journal of Environmental Economics and Policy 6(3): 225–245.

- Kragt Marit. 2013. “Stated and Inferred Attribute Attendance Models: A Comparison with Environmental Choice Experiments.” Journal of Agricultural Economics 64(3): 719–736.

- Lancsar Emily, and Louviere Jordan J. 2006. “Deleting ‘irrational’ responses from discrete choice experiments: a case of investigating or imposing preferences?” Health Economics 15(8): 797–811.

- Lew Daniel K., and Wallmo Kristy. 2011. “External tests of scope and embedding in stated preference choice experiments: an application to endangered species valuation.” Environmental and Resource Economics 48(1): 1–23.

- Lindhjem Henrik, and Navrud Ståle. 2011. “Are Internet surveys an alternative to face-to-face interviews in contingent valuation?” Ecological Economics 70(9): 1628–1637.

- Mahieu Pierre-Alexandre, Andersson Henrik, Beaumais Olivier, Crastes dit Sourd Romain, Hess Stephane, and Wolff François-Charles. 2017. “Stated preferences: a unique database composed of 1657 recent published articles in journals related to agriculture, environment, or health.” Review of Agricultural, Food and Environmental Studies 98(3): 1–20.

- McIntosh E, and Ryan M. 2002. “Using discrete choice experiments to derive welfare estimates for the provision of elective surgery: Implications of discontinuous preferences.” Journal of Economic Psychology 23(2): 367–382.

- Meyerhoff J, and Liebe U. 2009. “Discontinuous preferences in choice experiments: Evidence at the choice task level.” Presented at the 17th Annual Conference of the European Association of Environmental and Resource Economists, Amsterdam, The Netherlands, June 2009.

- Moore Christopher C., Holmes Thomas P., and Bell Katherine P. 2011. “An attribute-based approach to contingent valuation of forest protection programs.” Journal of Forest Economics 17(1): 35–52.

- Nguyen Thanh Cong, Robinson Jackie, and Kaneko Shinji. 2015. “Examining ordering effects in discrete choice experiments: A case study in Vietnam.” Economic Analysis and Policy 45(1): 39–57.

- Petrolia Daniel R., Interis Matthew G., and Hwang Joonghyun. 2014. “America’s wetland? A national survey of willingness-to-pay for restoration of Louisiana’s coastal wetlands.” Marine Resource Economics 29(1): 17–37.

- Petrolia Daniel R., Interis Matthew G., and Hwang Joonghyun. 2018. “Single-Choice, Repeated-Choice, and Best-Worst Scaling Elicitation Formats: Do Results Differ and by How Much?” Environmental and Resource Economics 69(2): 365–393.

- Provencher Bill, Baerenklau Kenneth A., and Bishop Richard C. 2002. “A finite mixture model of recreational angling with serially correlated random utility.” American Journal of Agricultural Economics 84(4): 1066–1075.

- Puckett Sean M., and Hensher David A. 2008. “The Role of Attribute Processing Strategies in Estimating the Preferences of Road Freight Stakeholders.” Transportation Research Part E: Logistics and Transportation Review 44(3): 379–395.

- Ready Richard C., and Hu Dayuan. 1995. “Statistical Approaches to the Fat Tail Problem for Dichotomous Choice Contingent Valuation.” Land Economics 71(4): 491–499.

- Rose John M., Hensher David A., and Greene William H. 2005. “Recovering costs through price and service differentiation: Accounting for exogenous information on attribute processing strategies in airline choice.” Journal of Air Transport Management 11(6): 400–407.

- Rosenberger Randall S., Peterson George L., Clarke Andrea, and Brown Thomas C. 2003. “Measuring dispositions for lexicographic preferences of environmental goods: integrating economics, psychology and ethics.” Ecological Economics 44(1): 63–76.

- Sælensminde Kjartan. 2002. “The impact of choice inconsistencies in stated choice studies.” Environmental and Resource Economics 23(4): 403–420.

- Scarpa Riccardo, Gilbride Timothy J., Campbell Danny, and Hensher David A. 2009. “Modelling attribute non-attendance in choice experiments for rural landscape valuation.” European Review of Agricultural Economics 36(2): 151–174.

- Scarpa R, Raffaelli R, Notaro S, and Louviere J. 2011. “Modelling the effects of stated attribute non-attendance on its inference: An application to visitors benefits from the Alpine grazing commons.” Presented at the International Choice Modelling Conference 2011, Oulton, Leeds, July 4–6.

- Scarpa Riccardo, and Thiene Mara. 2005. “Destination choice models for rock climbing in the Northeastern Alps: a latent-class approach based on intensity of preference.” Land Economics 81(3): 426–444.

- Scarpa Riccardo, Zanoli Raffaele, Bruschi Viola, and Naspetti Simona. 2012. “Inferred and Stated Attribute Non-attendance in Food Choice Experiments.” American Journal of Agricultural Economics 95(1): 165–180.

- Schuman Howard. 1996. “The Sensitivity of CV Outcomes to CV Survey Methods,” in The Contingent Valuation of Environmental Resources: Methodological Issues and Research Needs, eds. Bjornstad DJ and Kahn JR. Cheltenham, UK: Edward Elgar Publishing Co.

- Siikamäki Juha, and Larson Douglas M. 2015. “Finding sensitivity to scope in nonmarket valuation.” Journal of Applied Econometrics 30(2): 333–349.

- Swait Joffre D. 2001. “A non-compensatory choice model incorporating attribute cutoffs.” Transportation Research Part B: Methodological 35(10): 903–928.

- Thiene Mara, Scarpa Riccardo, and Louviere Jordan J. 2015. “Addressing Preference Heterogeneity, Multiple Scales and Attribute Attendance with a Correlated Finite Mixing Model of Tap Water Choice.” Environmental and Resource Economics 62(3): 637–656.

- Train Kenneth. 2003. Discrete Choice Methods and Simulation, 1st ed. New York: Cambridge University Press.

- Veisten Knut, Hoen Hans Fredrik, Navrud Ståle, and Strand Jon. 2004. “Scope insensitivity in contingent valuation of complex environmental amenities.” Journal of Environmental Management 73(4): 317–331.

- Vossler Christian A., Doyon Maurice, and Rondeau Daniel. 2012. “Truth in Consequentiality: Theory and Field Evidence on Discrete Choice Experiments.” American Economic Journal: Microeconomics 4(4): 145–171.

- Whitehead John C. 2002. “Incentive Incompatibility and Starting-Point Bias in Iterative Valuation Questions.” Land Economics 78(2): 285–297.

- Whitehead John C. 2016. “Plausible Responsiveness to Scope in Contingent Valuation.” Ecological Economics 128: 17–22.

- Yeager David S., Krosnick Jon A., Chang Linchiat, Javitz Harold S., Levendusky Matthew S., Simpser Alberto, and Wang Rui. 2011. “Comparing the Accuracy of RDD Telephone Surveys and Internet Surveys Conducted with Probability and Non-Probability Samples.” Public Opinion Quarterly 75(4): 709–747.
