Abstract

Most humans can walk effortlessly across uniform terrain even when they do not pay much attention to it. However, most natural terrain is far from uniform, and we need visual information to maintain stable gait. Recent advances in mobile eye-tracking technology have made it possible to study, in natural environments, how terrain affects gaze and thus the sampling of visual information. However, natural environments provide only limited experimental control, and some conditions cannot safely be tested. Typical laboratory setups, in contrast, are far from natural settings for walking. We used a setup consisting of a dual-belt treadmill, 240° projection screen, floor projection, three-dimensional optical motion tracking, and mobile eye tracking to investigate eye, head, and body movements during perturbed and unperturbed walking in a controlled yet naturalistic environment. In two experiments (N = 22 each), we simulated terrain difficulty by repeatedly inducing slipping through accelerating either of the two belts rapidly and unpredictably (Experiment 1) or sometimes following visual cues (Experiment 2). We quantified the distinct roles of eye and head movements for adjusting gaze on different time scales. While motor perturbations mainly influenced head movements, eye movements were primarily affected by the presence of visual cues. This was true both immediately following slips and, to a lesser extent, over the course of entire 5-min blocks. We found that gaze parameters had already adapted after the first perturbation in each block, with little transfer between blocks. In conclusion, gaze–gait interactions in experimentally perturbed yet naturalistic walking are adaptive, flexible, and effector specific.

Keywords: virtual reality, gait stability, treadmill perturbations, motion tracking, eye movements, walking

Introduction

Walking is a complex action that depends on a myriad of dynamic factors regarding the body in motion as well as its surroundings, yet humans typically walk effortlessly and without giving it much thought. Walking has also been shown to be robust to a variety of perturbations and missing information, as successful locomotion has been found in conditions that include walking over obstacles (Weerdesteyn, Nienhuis, Hampsink, & Duysens, 2004), slipping (Marigold & Patla, 2002), and walking without continuous vision (Laurent & Thomson, 1988). In nonhuman models, even deafferented cats can walk (Brown, 1911), and indeed human locomotion is controlled on a variety of different levels from reflexes (Belanger & Patla, 1987; Capaday & Stein, 1986; Moore, Hirasaki, Raphan, & Cohen, 2001) to cognitive control (Hausdorff, Yogev, Springer, Simon, & Giladi, 2005) and uses many different sensory inputs and dynamics (Gibson, 1958), including but not restricted to vestibular (Jahn, Strupp, Schneider, Dieterich, & Brandt, 2000), haptic (Ferris, Louie, & Farley, 1998), and many different visual cues (Laurent & Thomson, 1988). Thus, on the one hand, humans use a huge variety of sensory information and control mechanisms for walking; on the other hand, most of the time, they apparently do not depend on this information. This raises the question: How do we sample the visual information around us to facilitate walking, and how does this change under more difficult conditions?

The most common model of walking mechanics is that of a double-inverted pendulum (Mochon & McMahon, 1980) in which each foot is a pivot and the pelvis is the bob, which also coincides with the walker's center of mass (Whittle, 1997). This model has been very successful in explaining walking under a variety of conditions. These include unperturbed walking over flat, uniform surfaces, but typical responses to perturbations can also be quantified within this model. For example, adjusting the center of mass is a typical response to different kinds of perturbations to walking (Barton, Matthis, & Fajen, 2019; Marigold & Patla, 2002) as well as terrain difficulty (Kent, Sommerfeld, & Stergiou, 2019) and explains much of the variance in gait patterns (Wang & Srinivasan, 2014). Step length, on the other hand, is also sensitive to perturbations (Rand, Wunderlich, Martin, Stelmach, & Bloedel, 1998; Weerdesteyn, Nienhuis, Hampsink, & Duysens, 2004) and changes with irregular terrain (Warren, Young, & Lee, 1986).

Adjustments to locomotion parameters need to be based on sensory information that walkers have available. Among this information, vision plays a special role (Patla, 1997), being the only sensory information that is available at a distance and critical for online control of walking (Fajen & Warren, 2003). Vision is perhaps especially important in perturbed walking since, as Warren and colleagues put it, in the context of slipping and stumbling, “prevention is better than cure” (Warren, Young, & Lee, 1986); in other words, knowing of potential obstacles in advance (and adjusting gait accordingly) is preferable to simply reacting. Correspondingly, seminal work has shown a central role of vision when steps need to be adjusted toward a target (Laurent & Thomson, 1988; Warren, Young, & Lee, 1986). On difficult terrain, humans tend to fixate where the most information regarding potential sources of instability is found (Marigold & Patla, 2007): close to where they step (Hollands, Marple-Horvat, Henkes, & Rowan, 1995), as well as toward obstacles (Rothkopf, Ballard, & Hayhoe, 2007; Tong, Zohar, & Hayhoe, 2017) and transition regions between surfaces. Indeed, even unperturbed steps are less precise when visual information is lacking completely (Reynolds & Day, 2005b), with the importance of vision differing by step phase (Matthis, Barton, & Fajen, 2017). Conversely, fixating relevant objects directly leads to improved performance in both reaching and avoiding locations on the walking surface (Tong, Zohar, & Hayhoe, 2017).

It comes as no surprise, then, that eye and body movements tend to be coupled: Not only do the eyes interact with how the body and the head move (Guitton, 1992; Imai, Moore, Raphan, & Cohen, 2001; Moore, Hirasaki, Raphan, & Cohen, 2001; Solman, Foulsham, & Kingstone, 2017), but they have also been shown to move in coordinated fashion with the feet in a stepping task (Hollands & Marple-Horvat, 2001). In walking more generally, higher terrain difficulty correlates with a lowered gaze ('t Hart & Einhäuser, 2012), a relationship that holds not just with respect to terrain difficulty but also to the walker's assessment of the terrain (Thomas, Gardiner, Crompton, & Lawson, 2020). Recent work has suggested that such effects may reflect walkers’ strategy of fixating a position ahead of themselves by a roughly constant offset when navigating terrains of varying difficulty, not just in terms of the number of steps (Hollands, Marple-Horvat, Henkes, & Rowan, 1995) but also time (Matthis, Yates, & Hayhoe, 2018). Questions remain, however, for example, about whether and how participants learn to direct their gaze as they do in other tasks (Dorr, Martinetz, Gegenfurtner, & Barth, 2010; Hayhoe & Rothkopf, 2010) and as they learn to adjust their gait (Kent, Sommerfeld, & Stergiou, 2019; Malone & Bastian, 2010; Nashner, 1976; Rand, Wunderlich, Martin, Stelmach, & Bloedel, 1998).

Another key issue is methodological. So far, we have touched only briefly on the fact that the aforementioned studies used distinct settings: the laboratory (Barton, Matthis, & Fajen, 2019; Fajen & Warren, 2003; Jahn, Strupp, Schneider, Dieterich, & Brandt, 2000; Marigold & Patla, 2007; Rothkopf, Ballard, & Hayhoe, 2007; Weerdesteyn, Nienhuis, Hampsink, & Duysens, 2004) or the real world (Matthis, Yates, & Hayhoe, 2018; 't Hart & Einhäuser, 2012), with some also using fully or partially virtual environments (Barton, Matthis, & Fajen, 2019; Fajen & Warren, 2003; Matthis, Barton, & Fajen, 2017; Rothkopf, Ballard, & Hayhoe, 2007). These studies also investigated different classes of locomotion: walking (Fajen & Warren, 2003; Marigold & Patla, 2002, 2007; Rothkopf, Ballard, & Hayhoe, 2007; 't Hart & Einhäuser, 2012; Thomas, Gardiner, Crompton, & Lawson, 2020; Weerdesteyn, Nienhuis, Hampsink, & Duysens, 2004), running (Ferris, Louie, & Farley, 1998; Jahn, Strupp, Schneider, Dieterich, & Brandt, 2000; Lee, Lishman, & Thomson, 1982; Warren, Young, & Lee, 1986), or stepping (Barton, Matthis, & Fajen, 2019; Hollands & Marple-Horvat, 2001; Hollands, Marple-Horvat, Henkes, & Rowan, 1995; Matthis, Barton, & Fajen, 2017; Reynolds & Day, 2005b). These distinctions regarding settings are, however, critical. There is a trade-off between the experimental control afforded by a laboratory and the ecological validity of more real-world-like settings. This trade-off applies to behavioral studies in general but has also been debated specifically for studies on locomotion (Multon & Olivier, 2013) and on eye movements (Hayhoe & Rothkopf, 2010).

In the present study, we combined a high-performance dual-belt treadmill, a 240° virtual reality projection, high-precision real-time motion capture, and mobile eye tracking to achieve a much more naturalistic setting for walking than most previous lab-based studies while maintaining full experimental control over visual stimulation and terrain difficulty (Figure 1; Supplementary Movies S1 and S2, Figures 2 and 3). We applied slip-like perturbations to walking in unimpaired participants and measured how such perturbations affected body and eye movements. The analysis considered two different time scales: 8-s time windows around each perturbation as well as whole 5-min blocks of the same conditions. In two experiments, we manipulated the frequency and intensity (Experiment 1), as well as, through visual cues (transparent blueish rectangles on the virtual road), the predictability of perturbations (Experiment 2). This allowed us to tell apart the effects of walking under difficult conditions on different parameters and on multiple time scales. Based on previous real-world work, we expected differences between conditions in the cumulative eye movement data, in particular, lowered gaze when gait is perturbed ('t Hart & Einhäuser, 2012), especially for perturbations visible ahead of time (Matthis, Yates, & Hayhoe, 2018). With respect to rapid adjustments (i.e., differences between successive slips in the same condition and carryover across blocks), predictions were less clear. While gait-stability investigations have shown substantial learning from the first perturbation (Marigold & Patla, 2002) and individual differences in how strongly and quickly gait is adjusted (Potocanac & Duysens, 2017), such data are lacking when it comes to eye movements.
To address these questions, we assessed (a) immediate effects in a 3-s time window after each perturbation, (b) adaptive changes to the perturbation condition in each 5-min block, and (c) persistent changes between blocks, each with respect to eye, head, and body movements.

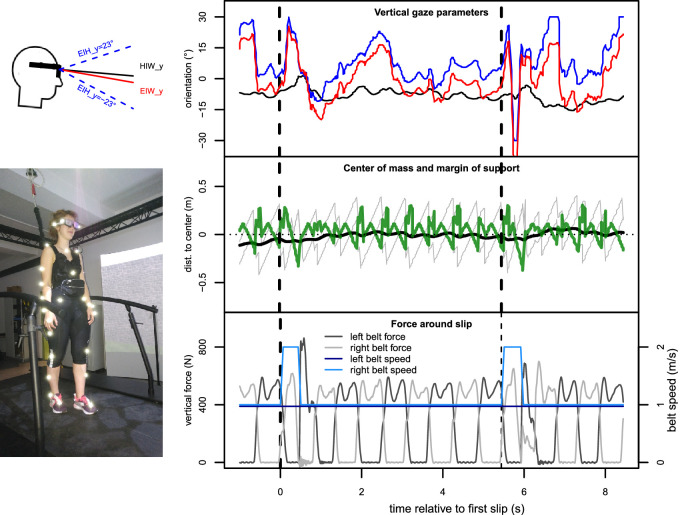

Figure 1.

Our setup and the main variables recorded. Top left: Schematic side view of a head wearing SMI glasses to illustrate gaze parameters. The four markers on the glasses were used to calculate head orientation (the vertical component of which is plotted here in degrees as “head-in-world,” or HiW_y) and the position of the cyclopean eye. Knowing the field of view of the SMI glasses (46° vertically and 60° horizontally for the head camera, as seen in the videos, and 60°/80° for gaze tracking) allowed us to add the “eye-in-head” or EiH_y gaze vector (also in degrees) to this vector, giving us “eye-in-world,” EiW_y, as the sum of the two parameters. Bottom left: Setup for our experiment. Participant wearing 39 retro-reflective markers and SMI glasses on a dual-belt treadmill, looking at a virtual road presented on a 240° screen. Right: Gaze and gait parameters over two slip events from Experiment 1 as an example of the measured data. Top panel: Gaze-related parameters, namely the vertical components of head-in-world (black), eye-in-world (red), and eye-in-head (blue). Time axis is relative to the initiation of one slip (i.e., a perturbation event); y-axis shows the y-component of each parameter in degrees. Dashed vertical lines indicate time of perturbation. Middle: Movement-adjusted center of mass (black) compared to anterior and posterior base of support (gray), giving us the anterior–posterior margin of support (MoS, green, in m; higher values indicate higher gait stability). Bottom: Vertical force in N on the left and right belt, respectively, which was used to detect steps online. Light blue and dark blue lines show the respective nominal belt speeds.

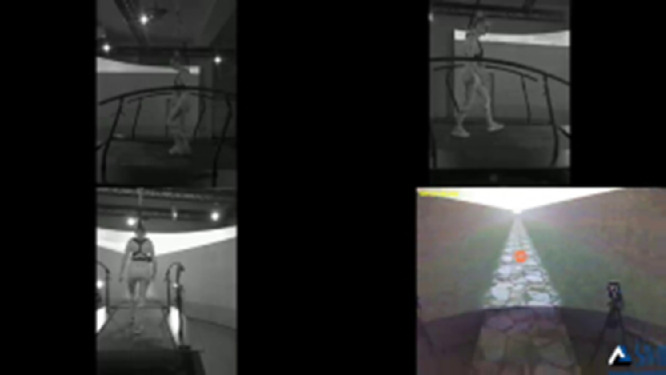

Figure 2.

Supplementary Movie S1 (https://osf.io/73cbm/), a participant walking and slipping from three angles (from behind and side views), as well as the participant's head-cam view. Footage from one of the first slips of this participant, in Experiment 1 (i.e., without visual cues).

Figure 3.

Supplementary Movie S2 (https://osf.io/teumk/), head-cam view of a participant in Experiment 2 walking with perturbations and visual cues (v1m1 condition). As the participant traverses each of the two blueish rectangles, one belt of the treadmill accelerates to induce a motor perturbation.

Method

Participants

For Experiment 1, we invited a total of 26 participants into the lab for testing. Two of these were tested as a replacement for the first two participants, in whom we had noticed issues with stimulus display; for two further participants, we later discovered that recordings were incomplete (data from eye tracking, in one case, and motion tracking in the other case), leaving us with complete data sets from N = 22 participants who were included in the analyses. These included 16 women and 6 men with average age 22.5 years (between 18 and 37), average height 169 ± 9 cm, average body mass 63 ± 10 kg, and average leg length 91 ± 6 cm. Participants received either course credit or a monetary reimbursement of 6€/h.

For Experiment 2, we again invited 26 participants into the lab. Two were replacements for participants whose data were incomplete (in one case due to a computer crash, in the other because the participant's uncorrected visual acuity was insufficient). Again, one data set turned out to be incomplete, and one participant's data were excluded due to an excessive proportion of missing data (over 25%), leaving us with a set of N = 22 participants included in analysis (13 women, 9 men; average age 25.6 years, between 19 and 38; average height 170 ± 12 cm, average body mass 64 ± 11 kg, average leg length 84 ± 6 cm). Participants were reimbursed with course credit or 8€/h.

For each experiment, our desired sample size was N = 24, a sample that at α = .05 and Cohen's f = 0.25 (roughly the effect size we expected for changes in gaze allocation based on previous results such as 't Hart & Einhäuser, 2012) would give us 80% power (Cohen, 1988). Participants for both experiments were recruited via an online mailing list and invited to the lab if they self-reported normal or corrected-to-normal vision without needing glasses (contact lenses were permitted), no neurological or walking impairments, and weighing 130 kg or less. Prior to the experiment, all participants gave written, informed consent but were naive to the hypotheses. They also filled in a questionnaire asking biographical details, handedness, visual and auditory impairments, current state of being awake, and whether they felt in good health. Biometric measurements were taken that were required for the motion-tracking model. Participant data were protected following the guidelines of the 2013 Declaration of Helsinki. Participants were debriefed after the experiment. All procedures were approved by the Chemnitz University of Technology, Faculty of Behavioural and Social Sciences ethics committee (V-314-PHKP-WET-GRAIL01-17012019).

Perturbations and the virtual environment

We used a dual-belt treadmill (GRAIL; Motek Medical, Amsterdam, Netherlands) capable of accelerating each belt independently at up to 15 m/s² (Sessoms et al., 2014) to induce perturbations. These started when the participants put their foot down on the to-be-perturbed belt (force > 100 N) and ended when the same foot was lifted off the belt (force < 50 N). On average, perturbations lasted 643 ± 318 ms when the belt was accelerated to 2 m/s and 695 ± 312 ms when it was accelerated to 1.5 m/s. The visual environment was a simple endless road (see Supplementary Movie S1, Figure 2), displayed at 60 Hz on a 240° screen 2.5 m in front of the center of the treadmill with a virtual horizon at 1.25 m height, rendered from the perspective of a virtual camera positioned at 1.6 m height at the x-y origin. Thirty-nine retro-reflective markers were placed on the subjects’ body segments (see Figure 1) to facilitate motion capture of the subjects’ gait using a Vicon Plug-In Gait full-body model (Vicon Motion Systems, Yarnton, UK). Markers were placed directly on subjects’ skin or on tight-fitting athletic apparel and always applied by the same experimenters within each experiment to increase reliability (McGinley, Baker, Wolfe, & Morris, 2009). Head orientation was captured using four head markers. Marker positions were recorded at 250 Hz by 10 infrared cameras positioned at different angles and heights around the treadmill. Force plates below the belts recorded ground-reaction-force time series at 1,000 Hz, used to compute stride data, with 50 N vertical force as a threshold for ground contact. Eye positions were recorded at 60 Hz using SMI glasses (SensoMotoric Instruments, Teltow, Germany) with a gaze-position accuracy of 0.5° according to the manufacturer.
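The two force thresholds described above amount to a simple hysteresis rule. The following is a minimal sketch of that rule, not the treadmill's actual control code: the function name `perturbation_windows` and the toy force trace are hypothetical, and the sketch assumes that the step has already been selected for perturbation.

```python
def perturbation_windows(force, on_threshold=100.0, off_threshold=50.0):
    """Return (start, end) sample indices of perturbation windows.

    A window opens at the first sample where the vertical force exceeds
    on_threshold (foot placed on the belt) and closes at the first later
    sample where it drops below off_threshold (foot lifted off the belt).
    """
    windows = []
    start = None
    for i, f in enumerate(force):
        if start is None and f > on_threshold:
            start = i
        elif start is not None and f < off_threshold:
            windows.append((start, i))
            start = None
    return windows


# Toy vertical-force trace in N (hypothetical values, two steps).
force = [0, 20, 120, 600, 700, 80, 60, 40, 0, 150, 300, 30]
windows = perturbation_windows(force)

# Durations in ms, assuming the 1,000 Hz force-plate sampling rate.
durations_ms = [(end - start) * 1000.0 / 1000.0 for start, end in windows]
```

Using a lower threshold for offset than for onset keeps brief force fluctuations during stance from ending the perturbation prematurely.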

Procedure

First, motion-tracking cameras were calibrated, anthropometric measurements including height and leg length were taken, and markers were applied. Participants who reported being unfamiliar with walking on treadmills were given up to a 1-min practice that consisted of unperturbed walking at 1 m/s. Following this, experimenters calibrated the motion-capture model using a standard set of movements (T-pose and ca. 10 s of walking). SMI glasses were then calibrated using a three-point calibration; this eye-tracking calibration was repeated each time the participant took a break.

Prior to each block, participants were instructed whether they were in a baseline or perturbation block and were asked to walk normally at the speed imposed by the treadmill for ca. 5 min, until it came to a stop. No further information about the experimental condition was given. Each block was preceded by a 20-point validation of the eye tracker (Supplementary Movie S3, Figure 4). This would have enabled us to retroactively exclude participants with unusable data (none were identified). Moreover, we could check the precision, accuracy, and stability of calibration independent of the device. We found a comparatively large error (median 5.5°), which, however, was consistent across the visual field within each participant. This allowed us to apply a blockwise correction procedure, reducing the error to 2.2° for the region in which over 90% of gaze was directed (see Appendix for details and definition of these measures). Importantly, this corrected calibration was stable across a block (0.3° shift between blocks). Note that most of our measures consider eye-position changes over a short interval and are therefore unaffected by gradual drift. After a countdown of 5 s (Supplementary Movie S4, Figure 5), treadmill speed was increased to the baseline speed of 1 m/s over 5 s in steps of 0.2 m/s. Deceleration at the end of blocks followed the same stepwise pattern.

Figure 4.

Supplementary Movie S3 (https://osf.io/6yntb/), head-cam view of the eye-tracker validation procedure. As 20 red dots were presented on the screen in a predefined order, the participant was asked to always fixate the one that was visible. Head movements were explicitly allowed. These recordings were used to validate that the eye tracker was able to record data of sufficient quality for further analysis.

Figure 5.

Supplementary Movie S4 (https://osf.io/gvhu2/), head-cam view of the countdown to walking and the participant starting to walk. This countdown was always displayed after the validation and always showed the participant number, block number, and how many seconds were left until the treadmill would start. The word “Los” is German for “Go.”

The main experiment started with a baseline block of another 5 min (Experiment 1) or 2:30 min (Experiment 2) of unperturbed walking. After this, participants completed perturbation blocks of 5 min each, during which one of the belts accelerated (at 15 m/s²) on certain steps. These perturbations simulated, and were subjectively experienced as akin to, slipping on ice. In Experiment 1, these perturbations occurred quasi-randomly with a probability of either .05 or .1 on every step (with a minimum distance of five steps between perturbations) depending on the experimental block (factor perturbation probability); see Table 1. The perturbation strength (i.e., the target speed of the acceleration) was either 1.5 m/s or 2.0 m/s (factor perturbation strength), giving us 2 × 2 = 4 conditions that were presented to each participant with the order counterbalanced between participants. In Experiment 2, we fixed the frequency and speed of perturbation but also included visual cues: transparent blue 1-m × 1-m squares on the road spaced between 12 m and 20 m apart (16 m on average, for a median 19.5 perturbations per block; see Supplementary Movie S2, Figure 3) that were present in half of the blocks (factor visual cue, denoted as “v1” and “v0” for visual cues being present or not present, respectively). Motor perturbations were always accelerations to 2.0 m/s, triggered when participants stepped into one of the 1-m × 1-m squares (visible in the “v1m1” condition and invisible in “v0m1”) and applied to the leg with which they first stepped into the square. They, too, were present in only half of the blocks (the two factor levels present and not present named “m1” and “m0” following the same logic used for visual cues; a summary of our conditions can be seen in Table 1), again giving us a 2 × 2 design. This allowed us to isolate the respective contributions of seeing (and potentially tracking) a visual cue, on the one hand, and, on the other hand, experiencing a slip-like motor perturbation.
For example, the condition with the motor perturbation coinciding with the visual display of ice on the road that could be seen approaching from the distance (Supplementary Movie S2, Figure 3) was referred to as “v1m1” and allowed participants to know in advance not just that perturbations would occur but also when, since in such blocks, visual cues and motor perturbations always occurred together. Each condition was presented twice, with each half of the experiment containing each condition once, the second half in the reverse order of the first, counterbalanced between participants. In both Experiment 1 and Experiment 2, this was followed by another block of unperturbed walking that was identical to the first block.
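The quasi-random schedule of Experiment 1 can be illustrated with a short simulation. This is a sketch under stated assumptions rather than the procedure actually run on the treadmill: the function name `schedule_perturbations` is hypothetical, and the "minimum distance of five steps" is interpreted here as requiring that two perturbed steps be at least five steps apart.

```python
import random


def schedule_perturbations(n_steps, probability, min_gap=5, seed=None):
    """Return the indices of perturbed steps.

    Each eligible step is perturbed with the given probability; steps
    closer than min_gap to the previous perturbation are not eligible.
    """
    rng = random.Random(seed)
    perturbed = []
    last = None
    for step in range(n_steps):
        if last is not None and step - last < min_gap:
            continue  # too close to the previous perturbation
        if rng.random() < probability:
            perturbed.append(step)
            last = step
    return perturbed


# Example: a block of 500 steps (hypothetical count) at probability .1.
example = schedule_perturbations(500, 0.1, seed=1)
gaps = [b - a for a, b in zip(example, example[1:])]
```

Because ineligible steps are skipped, the realized proportion of perturbed steps is somewhat lower than the nominal per-step probability, more so at .1 than at .05.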

Table 1.

Conditions in our experiments, their basic characteristics with respect to slips, and proportion of missing eye-tracking data.

| Exp | Condition | Velocity | Probability | Visual cues | Slips | Missing eye data, % | Missing eye data around slips, % | Missing mocap data, % | Missing mocap data around slips, % |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 1.5 m/s * 0.05 | 1.5 m/s | .05 | No | 20.5 | 1.1 | 1.1 | 0.3 | 0.3 |
| 1 | 2.0 m/s * 0.05 | 2.0 m/s | .05 | No | 23.0 | 1.2 | 1.5 | 0.3 | 0.4 |
| 1 | 1.5 m/s * 0.1 | 1.5 m/s | .1 | No | 37.5 | 1.4 | 1.4 | 0.3 | 0.3 |
| 1 | 2.0 m/s * 0.1 | 2.0 m/s | .1 | No | 40.5 | 1.6 | 1.8 | 0.3 | 0.3 |
| 2 | v0m0 | 2.0 m/s | − | No | NA | 1.4 | 0 | 0.1 | 0 |
| 2 | v0m1 | 2.0 m/s | ca. .05 | No | 19.0 | 1.5 | 1.4 | 0.1 | 0.1 |
| 2 | v1m0 | 2.0 m/s | − | Yes | 19.0 | 1.4 | 1.4 | 0 | 0 |
| 2 | v1m1 | 2.0 m/s | With cue: 1 | Yes | 20.0 | 1.5 | 1.5 | 0.2 | 0.2 |

Data processing and variables

Eye-tracking data were exported to text files using BeGaze (SensoMotoric Instruments, Teltow, Germany) and synchronized with motion-capture data by using the time stamp of the countdown preceding each block, which also involved down-sampling motion-capture data to 60 Hz to match eye-tracking data. We then cleaned the data by interpolating missing values with a cubic spline and filtering them with a third-order Savitzky–Golay filter (Savitzky & Golay, 1964) with a window of just under 100 ms. This procedure was applied to both eye-tracking data (blockwise median: 1.4% missing values, ranging from 0.08% to 9.5%; this included blinks as detected by BeGaze) and motion-tracking data (blockwise median: 0.2% missing values for markers included in analyses, ranging from 0% to 18.6%; high values typically indicated an occluded hip marker or, in rare cases, a foot marker falling off). We found very similar proportions of missing values in 8-s windows around slips (medians: 0.2% and 1.6% for motion capturing and eye tracking, respectively), indicating that missing values did not cluster around those events; see Table 1.
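The cleaning steps can be sketched in a few lines. The sketch below deliberately simplifies the procedure described above: it fills gaps by linear rather than cubic-spline interpolation, assumes a five-sample filter window (about 83 ms at 60 Hz, consistent with "just under 100 ms"), and leaves the first and last two samples unfiltered. The function names are hypothetical.

```python
# Standard 5-point Savitzky-Golay smoothing coefficients for a
# quadratic/cubic polynomial fit.
SG_SMOOTH_5 = (-3 / 35, 12 / 35, 17 / 35, 12 / 35, -3 / 35)


def fill_gaps_linear(samples):
    """Replace None entries by linear interpolation between valid neighbors.

    Simplification: the analysis described in the text used a cubic spline.
    Leading and trailing gaps are filled with the nearest valid value.
    """
    valid = [i for i, v in enumerate(samples) if v is not None]
    if not valid:
        raise ValueError("need at least one valid sample")
    filled = list(samples)
    for i in range(valid[0]):
        filled[i] = samples[valid[0]]
    for i in range(valid[-1] + 1, len(samples)):
        filled[i] = samples[valid[-1]]
    for left, right in zip(valid, valid[1:]):
        for i in range(left + 1, right):
            t = (i - left) / (right - left)
            filled[i] = samples[left] + t * (samples[right] - samples[left])
    return filled


def savgol5(samples):
    """Smooth with a 5-point, third-order Savitzky-Golay filter.

    Assumption: the two samples at each edge are returned unfiltered.
    """
    out = list(samples)
    for i in range(2, len(samples) - 2):
        out[i] = sum(c * samples[i + k - 2] for k, c in enumerate(SG_SMOOTH_5))
    return out


# Toy signal with missing samples (e.g., blinks), in arbitrary units.
raw = [0.0, 1.0, None, 3.0, 4.0, None, None, 7.0, 8.0, 9.0]
cleaned = savgol5(fill_gaps_linear(raw))
```

A third-order filter reproduces any cubic trend exactly at interior samples, so it removes high-frequency noise without flattening slower gaze or head movements.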

Our main dependent variables (see Figure 1) were (a) the head orientation (“head-in-world”), defined as the mean slope, in degrees, of the two vectors between the back-head markers and the front-head markers, and (b) the point of regard relative to the field of view of the SMI glasses (“eye-in-head”), also in degrees. From these, we calculated (c) the gaze orientation relative to the real-world coordinate system (“eye-in-world”). We restricted quantitative analysis to the vertical dimension for two reasons: First, the setting is symmetric relative to the vertical meridian of the display, and second, all relevant information for further step placement, which is where humans tend to look (Hollands, Marple-Horvat, Henkes, & Rowan, 1995; Matthis, Yates, & Hayhoe, 2018), arises from the line of progression, which is along the vertical as participants walk straight ahead. For gait stability, we computed (d) the anterior–posterior margin of support ($\mathrm{MoS}$) as the minimum distance between the bases of support (most anterior and most posterior foot marker touching the ground) and the center of mass ($\mathrm{CoM}$, estimated as the mean position of the hip markers; see Whittle, 1997). The $\mathrm{CoM}$ was then adjusted for its movement (its temporal derivative $\dot{\mathrm{CoM}}$, estimated through the same Savitzky–Golay filter used for smoothing) and the angular frequency of the pendulum (Hof, Gazendam, & Sinke, 2005; McAndrew Young, Wilken, & Dingwell, 2012) derived from heel–pelvis distance $l$ and gravity $g$ to give us the adjusted center of mass $\mathrm{CoM}_{\mathrm{adj}}$, calculated as

$$\mathrm{CoM}_{\mathrm{adj}} = \mathrm{CoM} + \frac{\dot{\mathrm{CoM}}}{\sqrt{g/l}} \tag{1}$$
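As a worked illustration of the stability measure, the sketch below computes the movement-adjusted center of mass and the anterior–posterior margin of support for a single sample. It assumes the standard formulation of Hof, Gazendam, and Sinke (2005), in which the center-of-mass velocity is divided by the pendulum's angular frequency, the square root of g/l. The function names and all numerical values are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def adjusted_com(com, com_velocity, leg_length, g=G):
    """Movement-adjusted center of mass (m) along the walking direction.

    com: anterior-posterior center-of-mass position in m
    com_velocity: its temporal derivative in m/s
    leg_length: heel-pelvis distance l in m
    """
    omega = math.sqrt(g / leg_length)  # angular frequency of the pendulum
    return com + com_velocity / omega


def margin_of_support(adj_com, posterior_bos, anterior_bos):
    """Minimum distance (m) from the adjusted center of mass to the
    posterior and anterior borders of the base of support.

    Positive values mean the adjusted center of mass lies within the
    base of support; larger values indicate higher stability.
    """
    return min(anterior_bos - adj_com, adj_com - posterior_bos)


# Hypothetical sample: center of mass at 0 m moving forward at 0.3 m/s,
# leg length 0.91 m, base of support from -0.30 m to 0.35 m.
xcom = adjusted_com(0.0, 0.3, 0.91)
mos = margin_of_support(xcom, -0.30, 0.35)
```

A slip that carries the stance foot forward moves the base of support relative to the center of mass, which shows up as a sharp drop in this margin.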

Eye-tracking and motion capture data, as well as analysis scripts, are available via the Open Science Framework: https://doi.org/10.17605/OSF.IO/UMW5R.

Results

In each of two experiments, we asked participants to walk on the treadmill at a moderate speed while viewing a virtual world whose motion was synchronized to treadmill motion (Figure 1, Supplementary Movies S1 and S2). Quasi-randomly, the belt below one foot would accelerate rapidly at the time of foot placement on some steps; speed returned to standard for the next step. In Experiment 1, we manipulated the rate at which these perturbations occurred and the strength of the perturbation. In Experiment 2, we fixed these parameters. Instead, we independently manipulated on a blockwise basis whether perturbations were present or not and whether there were visual cues indicating a possible perturbation. Reflecting this, our primary analyses were 2 × 2 repeated-measures analyses of variance (ANOVAs) to evaluate each parameter in each experiment, with factors perturbation strength and perturbation probability in Experiment 1, and visual cue and motor perturbation in Experiment 2. Note that the presentation of our results is ordered by variables first, rather than by experiments.
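Because every factor in these 2 × 2 within-subject designs has two levels, each main effect and the interaction has one numerator degree of freedom, and its F(1, N − 1) value equals the squared one-sample t statistic of a per-participant contrast score. The sketch below illustrates this equivalence; it is not the analysis script used for the reported results, and the function name and toy data are hypothetical.

```python
import math
from statistics import mean, stdev

# Contrast weights for cells ordered (a1b1, a1b2, a2b1, a2b2).
MAIN_A = (1, 1, -1, -1)
MAIN_B = (1, -1, 1, -1)
INTERACTION = (1, -1, -1, 1)


def contrast_f(cells, weights):
    """Return (F, (df1, df2)) for one effect in a 2 x 2 within-subject design.

    cells: one tuple of four condition means per participant.
    The F value is the squared one-sample t statistic of the weighted
    contrast scores, tested against zero.
    """
    scores = [sum(w * x for w, x in zip(weights, row)) for row in cells]
    n = len(scores)
    t = mean(scores) / (stdev(scores) / math.sqrt(n))
    return t ** 2, (1, n - 1)


# Toy data for four hypothetical participants.
cells = [(1, 2, 3, 5), (2, 2, 4, 4), (0, 1, 3, 6), (1, 3, 2, 5)]
f_a, df_a = contrast_f(cells, MAIN_A)
```

With N = 22 participants, this gives the denominator degrees of freedom of 21 seen throughout the reported statistics.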

Event-related gaze patterns around slips

First, we verified that our perturbations induced slipping as intended by calculating the anterior–posterior margin of support (MoS) and determining the difference between its maximum and minimum in an 8-s time window around each perturbation event (from 5 s prior to 3 s after, chosen generously to not miss effects of approaching visual cues and not overlap with a following slip). This peak–trough difference of values in a given time window provided a measure of how strongly a parameter varied during that time, a marker of that parameter responding to the perturbation. We found that, as expected (Bogaart, Bruijn, Dieën, & Meyns, 2020; Madehkhaksar et al., 2018), the MoS was sensitive to our perturbation, as there was significantly more variability around slips, with perturbation strength in Experiment 1 (F(1, 21) = 102.51, p < 0.001, repeated-measures ANOVA) and motor perturbation in Experiment 2 (F(1, 21) = 331.88, p < 0.001) being the deciding factors (other main effects and interactions p > 0.15). This, along with inspection of Figure 6, verified that our experimental manipulation worked as intended.
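The peak–trough measure can be written down compactly. The following sketch assumes data sampled at 60 Hz and skips events whose 8-s window would extend beyond the recording; the latter is an assumption, as the handling of such cases is not described here. The function names are hypothetical.

```python
def peak_trough_range(signal, event_index, fs=60.0, pre_s=5.0, post_s=3.0):
    """Maximum minus minimum of signal in a window around one event.

    The window runs from pre_s seconds before to post_s seconds after
    the event. Returns None if the window does not fit in the recording.
    """
    start = event_index - int(round(pre_s * fs))
    stop = event_index + int(round(post_s * fs))
    if start < 0 or stop >= len(signal):
        return None
    window = signal[start:stop + 1]
    return max(window) - min(window)


def mean_range(signal, event_indices, **kwargs):
    """Average the peak-trough ranges over all events with a full window."""
    ranges = [peak_trough_range(signal, e, **kwargs) for e in event_indices]
    ranges = [r for r in ranges if r is not None]
    return sum(ranges) / len(ranges) if ranges else None


# Toy example at 1 Hz so that the window spans samples 0 to 8.
ramp = [float(v) for v in range(11)]
single = peak_trough_range(ramp, 5, fs=1.0)
average = mean_range(ramp, [2, 5, 6], fs=1.0)
```

Averaging these ranges per participant and condition yields the values that enter the repeated-measures ANOVAs.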

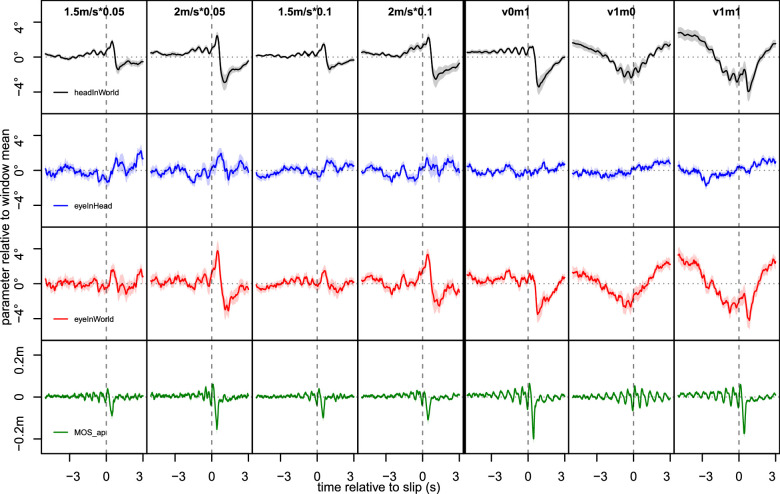

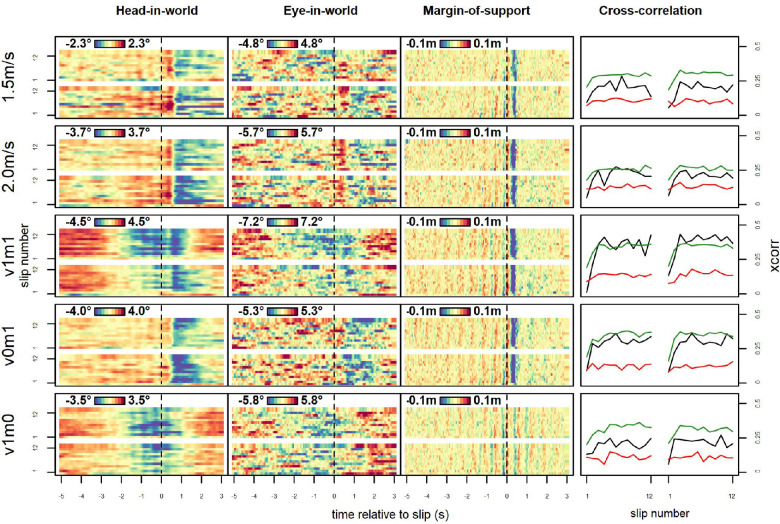

Figure 6.

Average gaze and gait parameters relative to slips. Average trajectories across slips and participants shown for vertical head-in-world (top row), eye-in-head (second row), and eye-in-world (third row), as well as anterior–posterior margin of support (bottom row). Shaded areas indicate between-subject standard error of the mean (SEM). Noticeable patterns include slip-related peak-dip-recovery profiles in head-in-world and eye-in-world, as well as continuously lowered gaze when visual cues were present. The margin of support shows a sharp decrease following the slip, indicating the loss of stability, as well as oscillatory patterns close to the slip, likely caused by the fact that, as the slip was always locked to a step, steps were more in sync closer to slip events. A similar (albeit much weaker) pattern of oscillations can be seen in head-in-world. Panels ordered column-wise by perturbation strength and probability for Experiment 1 (1.5 m/s or 2.0 m/s and .05 or .1 on each step, respectively), and by whether visual cues and motor perturbations were present for Experiment 2 (visual cue absent/present: v0/v1; motor perturbation absent/present: m0/m1; note that v0m0 is not shown as no events could be defined).

We analyzed gaze behavior by looking at head-in-world, eye-in-head, and eye-in-world (see Figure 1, top row). For each parameter, we computed peak–trough differences per perturbation event in the same way as for the margin of support and averaged them to give us mean values per participant and condition (see Table 2 and Figure 6, right).

Table 2.

Mean peak–trough ranges for slips (left), and block-means with slips excluded (right), for all gaze parameters (head-in-world, HiW; eye-in-head, EiH; eye-in-world, EiW), along the y-axis.

| Experiment | Condition | HiW, mean range per slip | EiH, mean range per slip | EiW, mean range per slip | HiW, mean per block | EiH, mean per block | EiW, mean per block |
|---|---|---|---|---|---|---|---|
| 1 | 1.5 m/s * 0.05 | 13.1 | 30.7 | 34.9 | −5.0 | 2.7 | −2.3 |
| 1 | 2.0 m/s * 0.05 | 15.8 | 30.7 | 36.8 | −5.5 | 2.3 | −3.2 |
| 1 | 1.5 m/s * 0.1 | 12.7 | 29.5 | 33.8 | −4.9 | 2.0 | −2.9 |
| 1 | 2.0 m/s * 0.1 | 17.7 | 32.0 | 39.7 | −6.0 | 2.8 | −3.3 |
| 2 | v0m0 | 11.4 | 30.8 | 35.4 | −8.9 | −4.1 | −13.0 |
| 2 | v0m1 | 15.4 | 30.8 | 38.0 | −10.8 | −3.7 | −14.5 |
| 2 | v1m0 | 13.9 | 31.3 | 37.7 | −9.3 | −2.8 | −12.1 |
| 2 | v1m1 | 18.0 | 33.3 | 41.6 | −10.1 | −2.2 | −12.3 |

Head-in-world

Our first main analysis concerned whether and how perturbations affected head movements. We quantified this by measuring peak–trough differences for the head-in-world orientation around perturbations. For Experiment 1, we found that head-in-world parameters responded strongly to perturbation strength (F(1, 21) = 23.19, p < 0.001) but not to perturbation probability (F(1, 21) = 1.01, p = 0.326). This means that stronger perturbations led to stronger head responses, but more frequent perturbations did not. In Experiment 2, where we introduced visual cues and made motor perturbations either present or absent, we found main effects of motor perturbation (F(1, 21) = 29.17, p < 0.001) and visual cue (F(1, 21) = 4.37, p = 0.049). This confirms that head orientation responds to perturbations and is to some extent influenced by the presence of a visual cue. In both experiments, there were no interactions between factors (all p > 0.07).

Eye-in-head

Next, we considered vertical eye movements relative to the head, that is, the signal measured by the eye-tracking device. Unlike head-in-world orientation, eye-in-head depended clearly neither on perturbation strength (F(1, 21) = 0.95, p = 0.342) nor on perturbation probability (F(1, 21) = 0.01, p = 0.947) in Experiment 1 (with an interaction: F(1, 21) = 7.42, p = 0.013, showing that there was a notable difference between perturbation strengths mainly when perturbations were relatively frequent). In Experiment 2, on the other hand, eye-in-head depended not on the presence of a motor perturbation (F(1, 21) = 1.78, p = 0.196) but on whether there were visual cues (F(1, 21) = 6.41, p = 0.019), with no significant interaction (F(1, 21) = 3.77, p = 0.066). Together, both experiments show that the presence of visual cues affected vertical eye movements, while motor perturbations had comparably little effect on eye-in-head orientation.

Eye-in-world

The previous analysis suggests that motor perturbations primarily affect head movements, while visual cues primarily affect eye movements. Gaze (“eye-in-world”) is a combination of these variables. In Experiment 1, eye-in-world parameters were sensitive to perturbation strength (F(1, 21) = 11.16, p = 0.003), with an interaction with perturbation probability (F(1, 21) = 7.38, p = 0.013) indicating that the larger variation of gaze in real-world coordinates around stronger perturbations was clearer in blocks with more frequent perturbations. There was, however, no main effect of perturbation probability (F(1, 21) = 0.25, p = 0.624). In Experiment 2, eye-in-world differed depending on both visual cue (F(1, 21) = 5.85, p = 0.025) and motor perturbation (F(1, 21) = 12.45, p = 0.002), with no interaction (F(1, 21) = 1.24, p = 0.279); each manipulation increased peak–trough differences when it was present (see Table 2).

Considering all three head and gaze parameters, we thus see that visual information and motor perturbations both affected gaze in the world but through different effectors: Visual information affected gaze primarily via eye movements, motor perturbations primarily via head movements. In all conditions with a motor perturbation (i.e., all of both experiments except “v0m0” and “v1m0”), we observed a clear event-based modulation of all gaze measures, with a short slight upward shift of gaze followed by a longer and pronounced downward movement that scales with the perturbation speed. Slips with a visual cue showed a steady lowering of gaze (mostly through head movements) prior to the slip, indicative of participants tracking the cue as it approached them.

Gaze and gait

Finally, to see whether less stable gait and more variable gaze tended to occur together, that is, whether some perturbations just had overall stronger effects on the participants, we calculated Pearson correlations between peak–trough ranges for gaze and gait parameters. Across all measures, correlations between gaze and gait were on average positive but small and with very wide ranges: Mean within-participant correlations in Experiment 1 were r = .21, ranging from −.45 to .59, and r = .13 (−.19, .40); in Experiment 2, these were r = .18 (−.41, .48) and r = .06 (−.24, .31). This indicates that perturbations that destabilize gait more effectively do not necessarily exert a stronger effect on gaze parameters than less effective perturbations. This (near) absence of an event-by-event correlation also renders trivial explanations of perturbation effects on gaze, such as a direct coupling of body posture and gaze with the head dip as a biomechanical consequence of slipping, exceedingly unlikely, as they predict stronger slips to cause larger dips.
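As an illustration of this kind of summary, the following sketch computes one Pearson correlation per participant across that participant's events and then reports the mean and range across participants. The data layout (one pair of lists per participant) and all names are assumptions made for the example, not the original analysis code.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def summarize(per_participant):
    """Mean, minimum, and maximum of the within-participant correlations.

    per_participant: list of (gaze_ranges, gait_ranges) pairs, one pair per
    participant, each holding per-event peak-trough ranges (assumed layout).
    """
    rs = [pearson_r(gaze, gait) for gaze, gait in per_participant]
    return mean(rs), min(rs), max(rs)


# Hypothetical per-event ranges for three participants
data = [
    ([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]),
    ([1.0, 2.0, 3.0], [3.0, 2.0, 1.0]),
    ([1.0, 2.0, 3.0, 4.0], [1.0, 3.0, 2.0, 4.0]),
]
print(summarize(data))
```

Computing the correlation within each participant before averaging keeps between-participant differences in overall response size from inflating the event-by-event relationship.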

Effects of perturbation per block

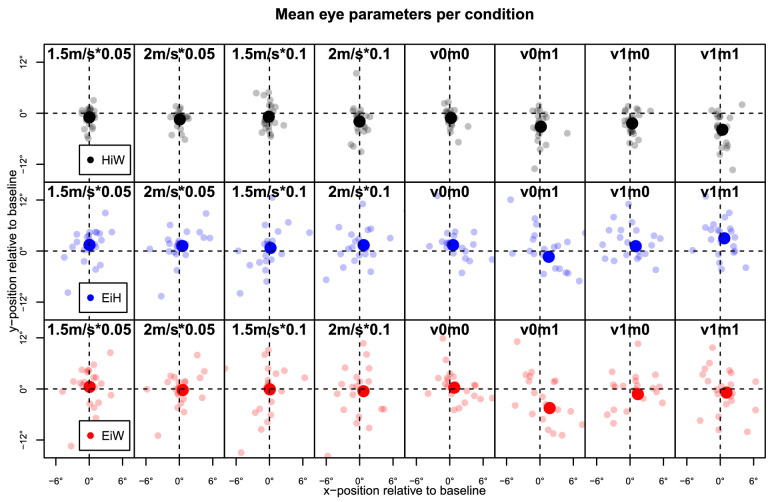

Having found clear gaze adjustments around perturbation-induced slips, we investigated whether participants’ gaze showed longer-lasting adjustment by averaging parameters over entire blocks, excluding 8-s periods (5 s before and 3 s after) around perturbations (Figure 7, Table 2) to look at longer-lasting changes independent of immediate effects.
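A minimal sketch of this blockwise averaging is given below. It assumes time-stamped samples of one parameter and a list of perturbation onset times; samples falling within 5 s before to 3 s after any perturbation are dropped before averaging. The function name, the inclusive window boundaries, and the example data are assumptions for illustration only.

```python
from statistics import mean


def block_mean_excluding(times, values, slip_times, pre=5.0, post=3.0):
    """Mean of a parameter over a block, excluding windows around perturbations.

    A sample at time t is dropped if it lies within [s - pre, s + post]
    for any perturbation onset s (boundaries treated as inclusive here).
    """
    kept = [
        v
        for t, v in zip(times, values)
        if not any(s - pre <= t <= s + post for s in slip_times)
    ]
    return mean(kept)


# Hypothetical 20-s recording sampled at 1 Hz with one slip at t = 10 s
times = [float(t) for t in range(20)]
values = [float(t) for t in range(20)]
print(block_mean_excluding(times, values, slip_times=[10.0]))
```

Baseline correction as in Figure 7 would then amount to subtracting the same quantity computed for the unperturbed baseline blocks.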

Figure 7.

Mean gaze parameters for each type of block, relative to baseline (unperturbed blocks of walking at beginning and end of each experiment). Plotted are baseline-corrected means of head-in-world, eye-in-head, and eye-in-world for the entire duration of each block type, in degrees. Each small dot represents one participant; large dots indicate overall means. As expected, variability was primarily along the vertical axis, where most information was found. Columns are arranged in the same order as in Figure 6; those on the left show blocks from Experiment 1; columns on the right show blocks from Experiment 2.

Head-in-world

On average, throughout a block, vertical head-in-world position was not affected by perturbation strength in Experiment 1 (F(1, 21) = 3.20, p = 0.088) or by perturbation probability (F(1, 21) = 0.16, p = 0.698), with no interaction (F(1, 21) = 0.52, p = 0.477). When visual cues as well as blocks without any motor perturbation were introduced (Experiment 2), head-in-world differed depending on motor perturbation (F(1, 21) = 12.16, p = 0.002) but not visual cue (F(1, 21) = 0.05, p = 0.829), with a statistically significant interaction (F(1, 21) = 5.00, p = 0.036), which indicated that the effects of motor perturbations were somewhat stronger when no visual cues were present. Descriptively, we saw lower gaze for faster perturbations in Experiment 1 (mean difference −0.9°) and when motor perturbations were present in Experiment 2 (−1.3°), indicating that the head was lowered.

Eye-in-head

Neither perturbation strength (F(1, 21) = 0.16, p = 0.694) nor perturbation probability (F(1, 21) = 0.19, p = 0.668) affected vertical eye-in-head position in Experiment 1. Correspondingly, the presence or absence of a motor perturbation in Experiment 2 did not significantly affect eye-in-head position, either (F(1, 21) = 0.08, p = 0.783). The presence or absence of visual cues did, on the other hand (F(1, 21) = 11.37, p = 0.003), with no interaction between visual cues and motor perturbation (F(1, 21) = 3.41, p = 0.079). Specifically, gaze was raised (on average by 2.5° of visual angle) when visual cues were present. Thus, eye movements were impacted by visual cues but not by motor perturbations. This held during slip responses, as well as during regular walking between perturbations.

Eye-in-world

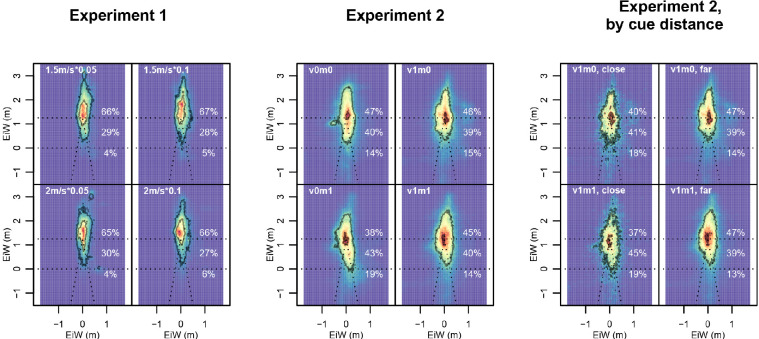

Similar to eye-in-head, vertical eye-in-world did not differ significantly depending on either perturbation strength (F(1, 21) = 0.67, p = 0.423) or perturbation probability (F(1, 21) = 0.36, p = 0.556) in Experiment 1. In Experiment 2, we again saw an effect of visual cues (F(1, 21) = 10.65, p = 0.004, with an effect magnitude of 2.6°) but not of motor perturbations (F(1, 21) = 3.27, p = 0.085), but an interaction (F(1, 21) = 5.23, p = 0.033) indicative of a lowered gaze specifically in v0m1 blocks. This pattern in the two gaze variables also likely indicates some form of tracking of visual cues (for an example, see Supplementary Movie S2), which were relatively far away (and thus high on the screen) for the majority of the time. To visualize this, we computed aggregated gaze maps, shown in Figure 8. These are based on two-dimensional (2D) densities of gaze (eye-in-world) using bivariate normal kernels. For both experiments, data are split up by type of condition (Figure 8, left and middle columns). The gaze maps underline the finding that gaze was lowered especially for blocks with perturbation but without visual cues (v0m1). For Experiment 2, we also split up data from blocks with visual cues by whether the most proximal cue was displayed on the treadmill (“close”) or further away on the screen (“far”; Figure 8, right column). The maps suggest that in blocks with visual cues, gaze was lowered when the cue was close. Gaze also became more variable in this case, in particular if the close cue signaled that a perturbation was imminent (v1m1).
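To make the idea of a gaze map concrete, the sketch below evaluates a 2D kernel density estimate on a grid, placing one bivariate normal kernel on each gaze sample. It assumes an isotropic kernel with a single, freely chosen bandwidth; the bandwidth, the grid, and all names are hypothetical, since the text does not specify the parameters used for Figure 8.

```python
from math import exp, pi


def gaze_density(samples, grid_x, grid_y, bandwidth):
    """2D kernel density estimate with isotropic bivariate normal kernels.

    samples: list of (x, y) gaze positions; grid_x, grid_y: coordinates at
    which the density is evaluated. Returns density[row][col] for
    (grid_y[row], grid_x[col]).
    """
    norm = 1.0 / (2.0 * pi * bandwidth ** 2 * len(samples))
    density = []
    for gy in grid_y:
        row = []
        for gx in grid_x:
            total = sum(
                exp(-((gx - x) ** 2 + (gy - y) ** 2) / (2.0 * bandwidth ** 2))
                for x, y in samples
            )
            row.append(norm * total)
        density.append(row)
    return density


# Hypothetical gaze samples (in m) clustered near the center of the display
samples = [(0.0, 1.0), (0.1, 1.1), (-0.1, 0.9), (0.0, 0.2)]
grid = [i / 10.0 for i in range(-10, 21)]
density_map = gaze_density(samples, grid, grid, bandwidth=0.2)
```

Contours such as those containing 10% and 90% of the data could then be derived from the sorted density values; that step is omitted here.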

Figure 8.

Distribution of gaze orientation depending on experimental condition. Eye-in-world is plotted in absolute coordinates (units of meters). Colors show relative density over entire blocks, from blue (lowest) to dark red (highest). Contours delineate areas containing 10% and 90% of data. Dotted lines indicate the outlines of the treadmill belt, bottom of the screen, and virtual horizon. Numbers on the right in white indicate what proportion of the time gaze was directed (a) above the virtual horizon, (b) on the screen below the virtual horizon, and (c) on the treadmill belt or its extension in front of the screen. Left: Experiment 1; middle: Experiment 2; right: Blocks of Experiment 2 in which visual cues were given, split up by whether this visual cue was on the treadmill belt (left column) or further away, that is, above the belt (right column). We see the highest density centrally close to the virtual horizon and most variation along the line of progression. Also visible are small local peaks close to the bottom of the screen, roughly 0.5 m (Experiment 1) or 1 m (Experiment 2) off center, where motion-capture cameras were located. Crucially, we see that participants directed their gaze toward the treadmill much more when this was where the visual cue was (second-to-right column) than when the cue was farther away (rightmost column). We also see that even in conditions where no visual cue was present, participants’ gaze patterns in Experiment 2 were much more focused around the vanishing point (and consequently lower) than in Experiment 1.

In sum, our results show blockwise changes of eye and head movements that were neither clearly complementary nor compensatory, and each effector responded to different kinds of stimuli: the head mostly to motor influences, the eyes mostly to visual cues. Eye-in-world positions, which depend on both head and eye movements, also differed mainly depending on whether visual cues were present and less due to motor perturbations.

Short-term and long-term differences

A key question when investigating any perception-action loop is how adaptive actions are learned—how we adjust our behavior when we do something more than once. Effects of terrain on gait stability measures are known to vary over time of exposure (Kent, Sommerfeld, & Stergiou, 2019), as do fixation patterns toward movement targets (Rienhoff, Tirp, Strauß, Baker, & Schorer, 2016), but whether this is also the case for gaze patterns has remained open. To show the change of the measured parameters across slip responses, we averaged events across participants sorted by slip number within the block and split by condition (i.e., taking the average of all participants’ first, second, up until the twelfth slip in a given type of block; Figure 9).

Figure 9.

Gaze and gait parameters relative to slips, by slip number. The x-axis shows time relative to the slip; the y-axis shows slip number. Colors indicate vertical gaze parameters and margin of support relative to the mean of each window, in m, with shading relative to the range of each parameter. Plotted are the means for the first 12 events (the minimum number of perturbations presented in a block) of each of the two blocks in which each condition was presented to each participant, with each row showing one condition. As each condition was presented to each participant in two separate blocks, the bottom half of each panel shows the first block of the corresponding condition and variable, while the top half (above the white line) shows the second block. All colors are adjusted for the range within each variable. In addition to clear patterns of decreases (blue) and increases (red) that may in some instances decrease over time, oscillations are also visible (as striation) in margin of support. Note that for Experiment 1, data are collapsed across blocks of different perturbation probabilities. Critically, patterns visible across virtually all slips were absent in first slips for head-in-world. Rightmost column: Median cross-correlation (maximum lag: 0.2 s) for each slip with all other slips of the same participant within the same condition, indicating how typical each slip's trajectory was. Plotted are head-in-world (black), eye-in-world (red), and margin of support (green).

The pattern for most slips was similar to the one seen in the aggregates shown in Figure 6, as gaze parameters (left and middle columns) showed a short peak, then a sharp decline followed by a recovery after motor perturbations, and a steady decline until shortly before the slip in blocks with visual cues. This pattern was somewhat noisier for eye-in-world than for head-in-world, as the former measure was computed from two variables (head-in-world and eye-in-head) that were not complementary and responded to different variables. Margin of support, on the other hand, showed a sharp decline postslip, as well as some striation indicating steps that became clearer close to the slip, as data were time-locked to the slip event, which in turn was triggered by a step.

For all of these parameters, we make a critical observation: The very first slip in a block was qualitatively different from all others. No clear pattern emerged in the across-subject average, as all participants responded strongly but not as uniformly as for subsequent slips. To quantify this effect, we measured how typical each slip parameter's trajectory was. We computed median cross-correlations (Figure 9, right) between each slip and all other slips (a leave-one-out approach) of the same participant and slip condition (highest cross-correlation with a maximum lag of 0.2 s, which was chosen to make sure that trajectories were not separated by a full step). Median cross-correlations were moderate, ranging from .12 for eye-in-world to .23 for head-in-world and .31 for margin of support when collapsed across trials and conditions. Within conditions, we saw a noticeable jump from the first slip of each block to all others, as values for these first slips (with medians between .08 for head-in-world in blocks with motor perturbation and .20 for margin of support in blocks without motor perturbation) fell outside the ranges for other slips in almost all types of blocks, but we saw virtually no increase afterward (linear slopes ranging from −.001 to .004). Unsurprisingly, while the v1m0 blocks without motor perturbations had the lowest median cross-correlations, first slips of each block in this condition showed the highest levels of similarity to other slips.
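The typicality measure can be sketched as follows. The example assumes equally long, equally sampled traces, expresses the maximum lag in samples (0.2 s times a sampling rate that is not stated here), and uses the Pearson correlation of the overlapping segments at each lag as the cross-correlation; these choices and all names are assumptions rather than the original implementation.

```python
from math import sqrt
from statistics import mean, median


def pearson_r(x, y):
    """Pearson correlation; returns 0.0 if either sequence is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    denom = sqrt(sxx * syy)
    return sxy / denom if denom > 0 else 0.0


def max_xcorr(a, b, max_lag):
    """Highest correlation between a and b over lags of -max_lag..max_lag samples."""
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            xa, xb = a[lag:], b[:len(b) - lag]
        else:
            xa, xb = a[:len(a) + lag], b[-lag:]
        best = max(best, pearson_r(xa, xb))
    return best


def typicality(traces, max_lag):
    """Leave-one-out median of the maximal cross-correlation for each trace."""
    return [
        median(max_xcorr(t, o, max_lag) for j, o in enumerate(traces) if j != i)
        for i, t in enumerate(traces)
    ]


# Hypothetical slip-locked traces: two similar (shifted by one sample), one atypical
a = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 0.0]
b = [0.0, 0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0]
c = [3.0, 2.0, 1.0, 0.0, 0.0, 1.0, 2.0, 3.0]
print(typicality([a, b, c], max_lag=1))
```

In this toy example the atypical trace receives the lowest value, which mirrors how a first slip that differs from later slips would stand out.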

The first slip's special role has been pointed out before (Marigold & Patla, 2002), but what is more surprising is that in the second block of each slip type (top half of each panel), the same also applied, despite the fact that participants had already adjusted their response. Thus, we observe only minimal, if any, retention of adjustments across blocks, even when the kind of slip did not differ at all. We note that participants were unaware of the order of blocks (which was counterbalanced across participants) but aware of which block they would be in after the first perturbation, which may have played a role as contextual information (Gredin, Bishop, Broadbent, Tucker, & Williams, 2018). That said, participants tracked visual cues even with the knowledge that they would not signal a motor perturbation (v1m0; see third row of Figure 6).

Summary: Quick and effector-specific gaze and gait changes

We found effects on gaze and gait measures that scaled with perturbation intensity but not with perturbation frequency. Notably, gaze adjustments by head movements and eye movements were dissociable, with the former responding primarily to motor perturbations, while the latter was sensitive mostly to visual cues. Subtle but significant changes were observed within an experimental block: Blocks containing perturbations showed lowered gaze on average relative to unperturbed walking, again driven primarily by changes in head orientation. The presence of visual cues resulted in a raised gaze on average. We observed few meaningful adjustments persisting between blocks; adjustments of eye, head, and body parameters occurred mainly within blocks.

Discussion

In our experiments, we combined quantitative experimental control over terrain difficulty with continuous walking in a visually complex environment. In concordance with real-world studies, we found that walking on an unreliable surface prompted participants to look down as gaze was directed toward potentially relevant visual cues. In addition, our unique experimental setup allowed us to isolate the effects around perturbation events contributing to the surface's (un)reliability. Right around perturbations, even clearer patterns emerged, and distinctly so for each condition. We observed distinct roles of head and eyes in gaze adjustment, the former being more sensitive to motor perturbations and the latter to visual cues. Interestingly, we observed an almost complete lack of carryover between blocks: Adjustments of gaze parameters to motor perturbations started anew with each block of the same condition, which suggests that in the context of gaze for walking, much of the adjustment happens rapidly and with a high degree of flexibility.

Our results show that walking on a treadmill in virtual reality behaves in many ways similarly to real-world walking: Difficult terrain leads to lowered gaze (Marigold & Patla, 2007; Matthis, Yates, & Hayhoe, 2018; 't Hart & Einhäuser, 2012) and lasting changes to eye and head orientation, participants tend to look where they are most likely to find task-relevant information (Marigold & Patla, 2007), and gait is adapted to perturbations (Kent, Sommerfeld, & Stergiou, 2019; Rand, Wunderlich, Martin, Stelmach, & Bloedel, 1998). Such consistent patterns are important to establish, as of course even high-fidelity virtual reality environments are never perfect both with respect to the visual presentation and the necessarily somewhat restricted movement (e.g., in our experiments, we limited both walking and perturbations to the anterior–posterior dimension), and differences in gait parameters between walking on a treadmill and walking in the real world have been shown to exist (Dingwell, Cusumano, Cavanagh, & Sternad, 2001).

By having full experimental control over the timing of perturbation events despite the naturalistic setting, our setup provides additional information, especially with respect to the time scales of gaze and gait adjustments: We show the distinct immediate adjustments made as responses to perturbations and slips (Figure 6) within a regular walking task. We see distinct patterns for eye movements and head movements that contribute to gaze responses to our slip perturbations, characterized by a brief increase and then a sharp dip of head movements, while eye movements were much less systematically related to slips. Judging from the time course of the slip responses, the brief initial upward movement typically occurring within approximately 200 ms of the slip could potentially be reflex based (Nashner, 1976), whereas the characteristic looking-down action that followed would clearly be on a different time scale, occurring on average a few hundred milliseconds after the perturbation and lasting well over a second. This time course, along with the only weak coupling of gait and gaze on a per-slip basis (i.e., very mild correlations), points toward the lowered gaze being a deliberate action to direct gaze, rather than due to reflexes or the passive biomechanical slip response.

Isolating those events also allows us to demonstrate that changes in parameters for entire blocks are not driven just by immediate reactions to events but persist when those are excluded. This is especially relevant for the observed dissociations between eye-in-head and head-in-world, which changed as a function of visual and motor perturbations, respectively. Looking only at average data of entire blocks, the latter could very well have been interpreted as an artifact of motor responses to slips. However, these patterns persist over entire blocks, even when postslip time windows are excluded. This confirms that we do indeed see robust and stimulus-specific changes in each parameter. We may speculate why participants exhibited different changes in head and in eye orientation: Unnecessary changes in head orientation might be avoided for comfort and thus not displayed in response to just visual cues, or this may indicate a strategy in which orienting the head according mainly to the felt properties of the surface and using the eyes to scan for possible new information allows observers more flexible responses. The fact that participants readjusted to similar patterns in each of two blocks for each condition, specifically for head and body movements, is consistent with this conjecture (Figure 9, right). Finally, it should be noted that while participants adjusted their gaze to track visual cues, these gaze changes were generally smaller than the changes in position for the visual cues (Figure 8); in other words, the cues were not tracked perfectly and not fixated throughout. This is consistent with work showing that difficult terrain is fixated not directly under but at a certain distance in front of one's own feet (Matthis, Yates, & Hayhoe, 2018) and that fixating visual targets may not be an optimal strategy for action when the scene is predictable (Vater, Williams, & Hossner, 2020).

We refrained from analyzing fixations toward our visual cue due to technical challenges: Mobile eye tracking tends to be less precise and accurate than stationary eye tracking, in particular when there are necessarily strong head movements. This is the case in our paradigm, resulting in a mean spatial error of approximately 2.2° as assessed by our validation procedure; see Appendix. This could have been an issue for fixation analyses toward a small target in a dynamic environment, which would require high precision and accuracy at any given time. In contrast, our analysis is based on within-participant data using relative eye-position trajectories (for slips) and blockwise averages. These measures are robust against absolute position errors, and therefore our results and conclusions are unlikely to be affected by this kind of error. We note also that our visual environment was somewhat reduced, consisting of a simple road with walls on each side and in some conditions schematic visual cues. Investigating gaze patterns while walking through a more complex environment could be an interesting issue for future research.

Furthermore, we analyzed changes over time for event responses specifically (Figure 9), which shows several interesting findings: First slip events are qualitatively different from later ones, not just overall within conditions but also in the second block of each condition. This shows that while adjustments are strong within blocks, participants were also quick to revert. Of course, this may well be a good adaptive strategy: Perhaps adjustments that can be taken up very quickly do not need to be maintained for long. Another option is that the reversion back to unadjusted parameters in the first slip of the second block of each condition might simply be due to uncertainty about the condition, given that participants had information about which block they were in only during unperturbed blocks. If this was the case, however, it would be interesting that participants would not err on the side of caution—preparing for a slip when a visual cue is approaching that has previously occurred with a motor perturbation seems like a more prudent strategy than not doing so. Nevertheless, not knowing whether there would be slips remains a possible cause, given the role of uncertainty in other tasks involving eye movements (Domínguez-Zamora, Gunn, & Marigold, 2018; Sullivan, Johnson, Rothkopf, & Ballard, 2012; Tong, Zohar, & Hayhoe, 2017). It is worth pointing out that for our young and healthy participants, the costs of falling, to be weighed against the costs of large and lasting changes to gait, would not be as high as they would be, for example, for older participants, for whom the costs of a potential fall are huge (Hadley, Radebaugh, & Suzman, 1985). This group indeed displayed noticeably different eye movement patterns in real-world situations (Dowiasch, Marx, Einhäuser, & Bremmer, 2015), as well as smaller adjustments than younger participants in other locomotor tasks (Potocanac & Duysens, 2017). 
Testing how gaze adjustments to gait difficulty vary across age and between individuals in a controlled and safe setting may therefore be an exciting avenue for future research.

Acknowledgments

The authors thank Julia Trojanek and Marvin Uhlig for their help collecting data and Lorelai Kästner, Christiane Breitkreutz, Elisa-Maria Heinrich, and Melis Koç for their help annotating videos. The data reported here were also presented at the 2020 Vision Sciences Society Meeting.

Funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) project number 222641018 SFB/TRR 135. Funders had no role in the design or analysis of the research.

Commercial relationships: none.

Corresponding author: Karl Kopiske.

Email: karl.kopiske@physik.tu-chemnitz.de.

Address: Cognitive Systems Lab, Institute of Physics, Chemnitz University of Technology, Reichenhainer Str. 70, D-09126 Chemnitz, Germany.

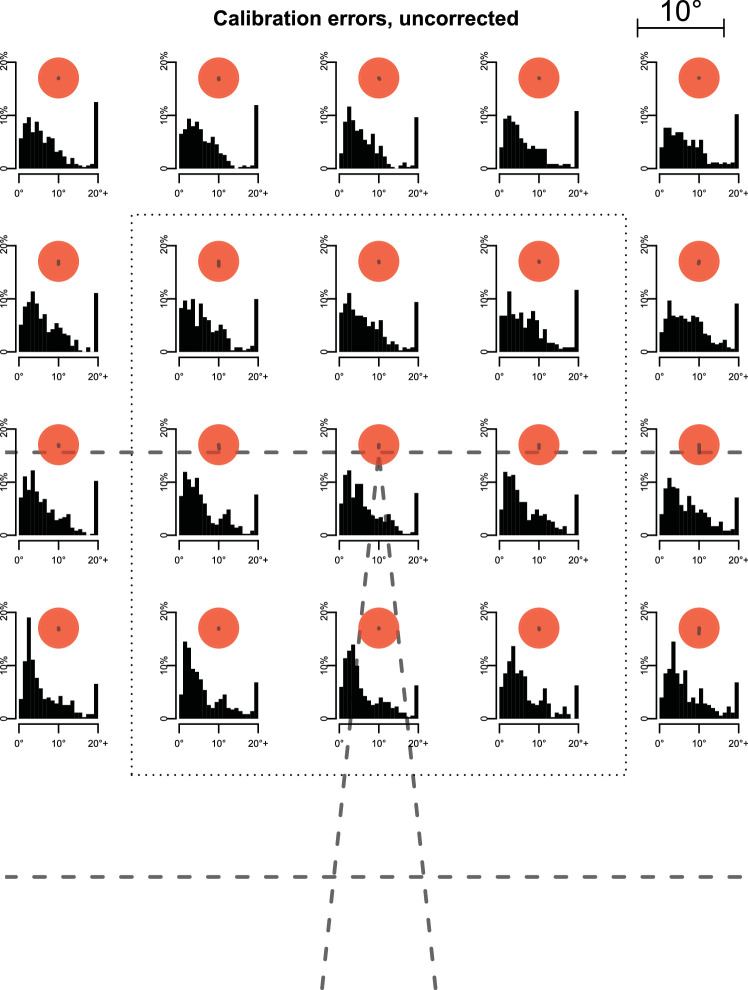

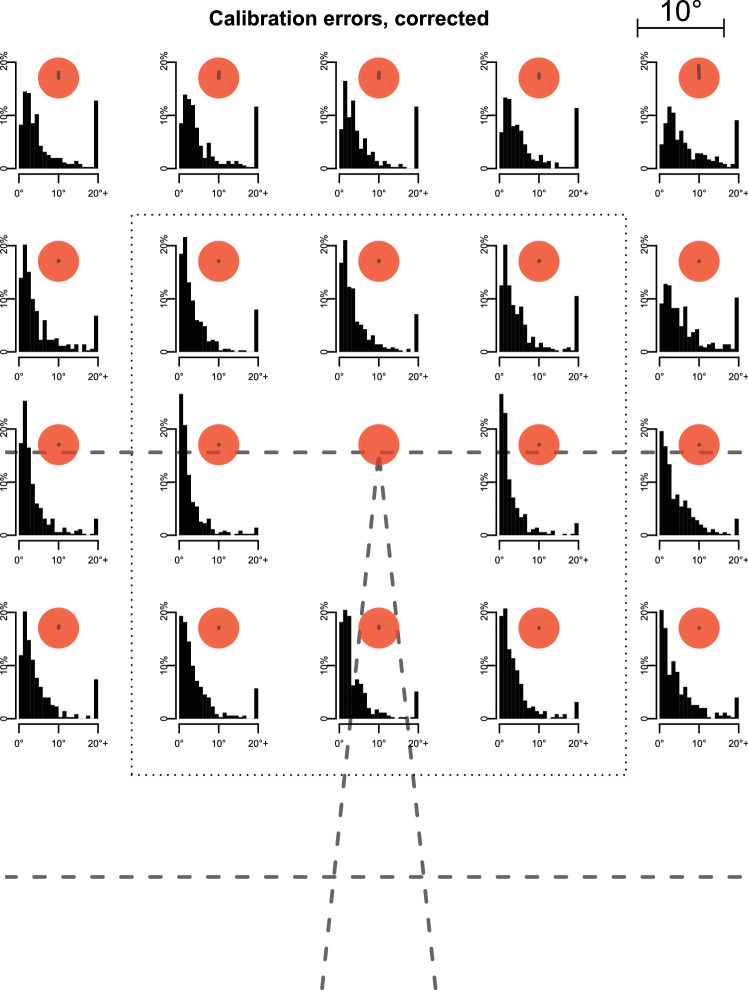

Appendix

The eye-tracking device was calibrated once at the start of the session and whenever the participants removed it in a break. To test the accuracy, precision, and stability of this calibration, we introduced an independent validation procedure. Each block was preceded by a 20-point validation procedure (Supplementary Movie S3). The validation error was rather large for these 20 points (median over all data: 5.5°; Figure 10). However, within each participant, direction and size of the error were consistent across the visual field, such that when we corrected for an overall shift of the pattern using the central point, the error across all points reduced to 2.8° (Figure 11) and to 2.2° for the central area of the display, which accounted for over 90% of gaze directions (Figure 8). Over the course of a block, the thus corrected calibration did not drift to a relevant extent. We quantified this by applying the corrected calibration to the validation grid of the subsequent block and found the shift to be only 0.3° on average from the start of one block to the next.
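The shift correction described above can be illustrated with a short sketch. It assumes that measured gaze positions and target positions are available as (x, y) pairs in degrees for a single validation run, and that the offset measured at one central point is subtracted from all points; the names and example values are hypothetical, and the per-participant median across blocks mentioned in the caption of Figure 11 is not modeled here.

```python
from math import hypot
from statistics import median


def validation_errors(gaze, targets):
    """Euclidean distance between measured gaze and target for each point."""
    return [hypot(gx - tx, gy - ty) for (gx, gy), (tx, ty) in zip(gaze, targets)]


def correct_shift(gaze, targets, center_index):
    """Subtract the offset measured at the central point from all gaze positions."""
    dx = gaze[center_index][0] - targets[center_index][0]
    dy = gaze[center_index][1] - targets[center_index][1]
    return [(gx - dx, gy - dy) for gx, gy in gaze]


# Hypothetical validation data: a constant shift of (3, 4) plus a small residual
targets = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
gaze = [(3.0, 4.0), (13.0, 4.0), (3.0, 15.0)]

raw = validation_errors(gaze, targets)
corrected = validation_errors(correct_shift(gaze, targets, center_index=0), targets)
print(median(raw), median(corrected))
```

A correction of this kind removes a constant offset only, which is why within-participant measures that rely on relative positions are less affected by the remaining error.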

Figure 10.

Distributions of calibration errors. As no motion-capturing data were recorded during the calibration phase, we compared eye-in-head gaze positions with the position of red calibration dots retrieved from the head-cam videos. Periods of fixation were selected from the video by independent annotators for each fixation point separately. Within the thus identified period, we selected the 100-ms interval in which gaze was closest to the fixation point and took the maximal Euclidean distance within this interval as error measure for the respective block and participant. Shown are data across all blocks in both experiments combined. Bins for 20+ may include dots where the automatic detection did not work as intended, so that error medians are likely slightly overestimated. Dashed lines indicate the outlines of the treadmill and virtual road (visible during calibration), bottom of the screen, and virtual horizon. Size of the red dots is scaled approximately as in the actual display, with degrees of visual angle shown in the top right corner. Errors were sometimes considerable, especially further from the center of the screen. Lines from the center of each dot outward depict mean bias (in the same scale), which was minimal. Median absolute error was 5.5°, virtually the same in the center (within the dotted rectangle; over 90% of gaze was allocated here; see Figure 8) and in the periphery (outside the rectangle), at 5.6° and 5.4°, respectively.

Figure 11.

Distributions of calibration errors, corrected for each participant's bias. Errors were computed as described above and then corrected for the median error in x- and y-directions of the respective participant for the dot appearing at the vanishing point. We see a markedly improved accuracy compared to the uncorrected data (median error: 2.8°), indicating that within-participant effects were unproblematic for the measures and analyses considered. This improvement was especially marked in the center of the display (2.2°) compared to the periphery (3.4°), which is unsurprising given our choice of correcting for the error at the lower central dot. Notation as in Figure 10.

Supplementary Material

Four movies are available at https://doi.org/10.17605/OSF.IO/UMW5R:

Supplementary Movie S1. A participant walking and slipping from three angles (from behind and side views), as well as the participant's head-cam view. Footage from one of the first slips of this participant, in Experiment 1 (i.e., without visual cues).

Supplementary Movie S2. Head-cam view of a participant in Experiment 2 walking with perturbations and visual cues (v1m1 condition). As the participant traverses each of the two blueish rectangles, one belt of the treadmill accelerates to induce a motor perturbation.

Supplementary Movie S3. Head-cam view of the eye-tracker validation procedure. As 20 red dots are presented on the screen in a predefined order, the participant was asked to always fixate the one that was visible. Head movements were explicitly allowed. These recordings were used to validate that the eye tracker was able to record data of sufficient quality for further analysis.

Supplementary Movie S4. Head-cam view of the countdown to walking and the participant starting to walk. This countdown was always displayed after the validation and always showed the participant number, block number, and how many seconds were left until the treadmill would start.

References

- Barton, S. L., Matthis, J. S., & Fajen, B. R. (2019). Control strategies for rapid, visually guided adjustments of the foot during continuous walking. Experimental Brain Research, 237(7), 1673–1690, 10.1007/s00221-019-05538-7. [DOI] [PubMed] [Google Scholar]

- Belanger, M., & Patla, A. E. (1987). Phase-dependent compensatory responses to perturbation applied during walking in humans. Journal of Motor Behavior, 19(4), 434–453, 10.1080/00222895.1987.10735423. [DOI] [PubMed] [Google Scholar]

- Bogaart, M. van den, Bruijn, S. M., Dieën, J. H. van, & Meyns, P. (2020). The effect of anteroposterior perturbations on the control of the center of mass during treadmill walking. Journal of Biomechanics, 103, 109660, 10.1016/j.jbiomech.2020.109660. [DOI] [PubMed] [Google Scholar]

- Brown, T. G. (1911). The intrinsic factors in the act of progression in the mammal. Proceedings of the Royal Society B: Biological Sciences, 84(572), 308–319, 10.1098/rspb.1911.0077. [DOI] [Google Scholar]

- Capaday, C., & Stein, R. B. (1986). Amplitude modulation of the soleus H-reflex in the human during walking and standing. Journal of Neuroscience, 6(5), 1308–1313, 10.1523/jneurosci.06-05-01308.1986. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). New York, NY: Psychology Press. [Google Scholar]

- Dingwell, J. B., Cusumano, J. P., Cavanagh, P. R., & Sternad, D. (2001). Local dynamic stability versus kinematic variability of continuous overground and treadmill walking. Journal of Biomechanical Engineering, 123, 27–32, 10.1115/1.1336798. [DOI] [PubMed] [Google Scholar]

- Domínguez-Zamora, F. J., Gunn, S. M., & Marigold, D. S. (2018). Adaptive gaze strategies to reduce environmental uncertainty during a sequential visuomotor behaviour. Scientific Reports, 8(1), 14112, 10.1038/s41598-018-32504-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dorr, M., Martinetz, T., Gegenfurtner, K. R., & Barth, E. (2010). Variability of eye movements when viewing dynamic natural scenes. Journal of Vision, 10(10):28, 1–17, 10.1167/10.10.28.

- Dowiasch, S., Marx, S., Einhäuser, W., & Bremmer, F. (2015). Effects of aging on eye movements in the real world. Frontiers in Human Neuroscience, 9, Article 46, 10.3389/fnhum.2015.00046.

- Fajen, B. R., & Warren, W. H. (2003). Behavioral dynamics of steering, obstacle avoidance, and route selection. Journal of Experimental Psychology: Human Perception and Performance, 29(2), 343–362, 10.1037/0096-1523.29.2.343.

- Ferris, D. P., Louie, M., & Farley, C. T. (1998). Running in the real world: Adjusting leg stiffness for different surfaces. Proceedings of the Royal Society B: Biological Sciences, 265, 989–994, 10.1098/rspb.1998.0388.

- Gibson, J. J. (1958). Visually controlled locomotion and visual orientation in animals. British Journal of Psychology, 49(3), 182–194, 10.1111/j.2044-8295.1958.tb00656.x.

- Gredin, N. V., Bishop, D. T., Broadbent, D. P., Tucker, A., & Williams, A. M. (2018). Experts integrate explicit contextual priors and environmental information to improve anticipation efficiency. Journal of Experimental Psychology: Applied, 24(4), 509–520, 10.1037/xap0000174.

- Guitton, D. (1992). Control of eye-head coordination during orienting gaze shifts. Trends in Neurosciences, 15(5), 174–179, 10.1016/0166-2236(92)90169-9.

- Hadley, E., Radebaugh, T. S., & Suzman, R. (1985). Falls and gait disorders among the elderly: A challenge for research. Clinics in Geriatric Medicine, 1(3), 497–500, 10.1016/S0749-0690(18)30919-4.

- Hamill, J., Lim, J., & Emmerik, R. van. (2020). Locomotor coordination, visual perception and head stability during running. Brain Sciences, 10, Article 174.

- Hausdorff, J. M., Yogev, G., Springer, S., Simon, E. S., & Giladi, N. (2005). Walking is more like catching than tapping: Gait in the elderly as a complex cognitive task. Experimental Brain Research, 164(4), 541–548, 10.1007/s00221-005-2280-3.

- Hayhoe, M. M., & Rothkopf, C. A. (2010). Vision in the natural world. Wiley Interdisciplinary Reviews: Cognitive Science, 2(2), 158–166, 10.1002/wcs.113.

- Hof, A. L., Gazendam, M. G. J., & Sinke, W. E. (2005). The condition for dynamic stability. Journal of Biomechanics, 38(1), 1–8, 10.1016/j.jbiomech.2004.03.025.

- Hollands, M. A., & Marple-Horvat, D. E. (2001). Coordination of eye and leg movements during visually guided stepping. Journal of Motor Behavior, 33(2), 205–216, 10.1080/00222890109603151.

- Hollands, M. A., Marple-Horvat, D. E., Henkes, S., & Rowan, A. K. (1995). Human eye movements during visually guided stepping. Journal of Motor Behavior, 27(2), 155–163.

- Imai, T., Moore, S. T., Raphan, T., & Cohen, B. (2001). Interaction of the body, head, and eyes during walking and turning. Experimental Brain Research, 136, 1–18, 10.1007/s002210000533.

- Jahn, K., Strupp, M., Schneider, E., Dieterich, M., & Brandt, T. (2000). Differential effects of vestibular stimulation on walking and running. NeuroReport, 11(8), 1745–1748.

- Kent, J. A., Sommerfeld, J. H., & Stergiou, N. (2019). Changes in human walking dynamics induced by uneven terrain are reduced with ongoing exposure, but a higher variability persists. Scientific Reports, 9, Article 17664, 10.1038/s41598-019-54050-z.

- Laurent, M., & Thomson, J. A. (1988). The role of visual information in control of a constrained locomotor task. Journal of Motor Behavior, 20(1), 17–37.

- Lee, D. N., Lishman, J. R., & Thomson, J. A. (1982). Regulation of gait in long jumping. Journal of Experimental Psychology: Human Perception and Performance, 8(3), 448–459, 10.1037/0096-1523.8.3.448.

- Madehkhaksar, F., Klenk, J., Sczuka, K., Gordt, K., Melzer, I., & Schwenk, M. (2018). The effects of unexpected mechanical perturbations during treadmill walking on spatiotemporal gait parameters, and the dynamic stability measures by which to quantify postural response. PLoS ONE, 13(4), e0195902, 10.1371/journal.pone.0195902.

- Malone, L. A., & Bastian, A. J. (2010). Thinking about walking: Effects of conscious correction versus distraction on locomotor adaptation. Journal of Neurophysiology, 103(4), 1954–1962, 10.1152/jn.00832.2009.

- Marigold, D. S., & Patla, A. E. (2002). Strategies for dynamic stability during locomotion on a slippery surface: Effects of prior experience and knowledge. Journal of Neurophysiology, 88, 339–353, 10.1152/jn.00691.2001.

- Marigold, D. S., & Patla, A. E. (2007). Gaze fixation patterns for negotiating complex ground terrain. Neuroscience, 144, 302–313, 10.1016/j.neuroscience.2006.09.006.

- Matthis, J. S., Barton, S. L., & Fajen, B. R. (2017). The critical phase for visual control of human walking over complex terrain. Proceedings of the National Academy of Sciences of the United States of America, 114(32), E6720–E6729, 10.1073/pnas.1611699114.

- Matthis, J. S., Yates, J. L., & Hayhoe, M. M. (2018). Gaze and the control of foot placement when walking in natural terrain. Current Biology, 28, 1224–1233.e5, 10.1016/j.cub.2018.03.008.

- McAndrew Young, P. M., Wilken, J. M., & Dingwell, J. B. (2012). Dynamic margins of stability during human walking in destabilizing environments. Journal of Biomechanics, 45(6), 1053–1059, 10.1016/j.jbiomech.2011.12.027.

- McGinley, J. L., Baker, R., Wolfe, R., & Morris, M. E. (2009). The reliability of three dimensional kinematic gait measurements: A systematic review. Gait and Posture, 29, 360–369, 10.1016/j.gaitpost.2008.09.003.

- Mochon, S., & McMahon, T. A. (1980). Ballistic walking. Journal of Biomechanics, 13, 49–57.

- Moore, S. T., Hirasaki, E., Raphan, T., & Cohen, B. (2001). The human vestibulo-ocular reflex during linear locomotion. Annals of the New York Academy of Sciences, 942, 139–147, 10.1111/j.1749-6632.2001.tb03741.x.

- Multon, F., & Olivier, A. H. (2013). Biomechanics of walking in real world: Naturalness we wish to reach in virtual reality. In F. Steinicke, Y. Visell, J. L. Campos, & A. Lécuyer (Eds.), Human walking in virtual environments: Perception, technology, and applications (pp. 55–77). New York, NY: Springer.

- Nashner, L. M. (1976). Adapting reflexes controlling the human posture. Experimental Brain Research, 26, 59–72, 10.1007/BF00235249.

- Patla, A. E. (1997). Understanding the roles of vision in the control of human locomotion. Gait and Posture, 5, 54–69, 10.1016/S0966-6362(96)01109-5.

- Potocanac, Z., & Duysens, J. (2017). Online adjustments of leg movements in healthy young and old. Experimental Brain Research, 235, 2329–2348, 10.1007/s00221-017-4967-7.

- Rand, M. K., Wunderlich, D. A., Martin, P. E., Stelmach, G. E., & Bloedel, J. R. (1998). Adaptive changes in responses to repeated locomotor perturbations in cerebellar patients. Experimental Brain Research, 122, 31–43, 10.1007/s002210050488.

- Reynolds, R. F., & Day, B. L. (2005a). Rapid visuo-motor processes drive the leg regardless of balance constraints. Current Biology, 15(2), R48–R49, 10.1016/j.cub.2004.12.051.

- Reynolds, R. F., & Day, B. L. (2005b). Visual guidance of the human foot during a step. Journal of Physiology, 569(2), 677–684, 10.1113/jphysiol.2005.095869.

- Rienhoff, R., Tirp, J., Strauß, B., Baker, J., & Schorer, J. (2016). The ‘Quiet Eye’ and motor performance: A systematic review based on Newell's constraints-led model. Sports Medicine, 46(4), 589–603, 10.1007/s40279-015-0442-4.

- Rothkopf, C. A., Ballard, D. H., & Hayhoe, M. M. (2007). Task and context determine where you look. Journal of Vision, 7(14):16, 1–20, 10.1167/7.14.16.

- Savitzky, A., & Golay, M. J. E. (1964). Smoothing and differentiation of data by simplified least squares procedures. Analytical Chemistry, 36(8), 1627–1639, 10.1021/ac60214a047.

- Sessoms, P. H., Wyatt, M., Grabiner, M., Collins, J. D., Kingsbury, T., Thesing, N., & Kaufman, K. (2014). Method for evoking a trip-like response using a treadmill based perturbation during locomotion. Journal of Biomechanics, 47, 277–280, 10.1016/j.jbiomech.2013.10.035.

- Solman, G. J. F., Foulsham, T., & Kingstone, A. (2017). Eye and head movements are complementary in visual selection. Royal Society Open Science, 4, 160569, 10.1098/rsos.160569.

- Sullivan, B. T., Johnson, L., Rothkopf, C. A., & Ballard, D. (2012). The role of uncertainty and reward on eye movements in a virtual driving task. Journal of Vision, 12(13):19, 10.1167/12.13.19.

- 't Hart, B. M., & Einhäuser, W. (2012). Mind the step: Complementary effects of an implicit task on eye and head movements in real-life gaze allocation. Experimental Brain Research, 223(2), 233–249, 10.1007/s00221-012-3254-x.

- 't Hart, B. M., Vockeroth, J., Schumann, F., Bartl, K., Schneider, E., König, P., & Einhäuser, W. (2009). Gaze allocation in natural stimuli: Comparing free exploration to head-fixed viewing conditions. Visual Cognition, 17(6–7), 1132–1158, 10.1080/13506280902812304.

- Thomas, N. D. A., Gardiner, J. D., Crompton, R. H., & Lawson, R. (2020). Keep your head down: Maintaining gait stability in challenging conditions. Human Movement Science, 73, 102676, 10.1016/j.humov.2020.102676.

- Tong, M. H., Zohar, O., & Hayhoe, M. M. (2017). Control of gaze while walking: Task structure, reward, and uncertainty. Journal of Vision, 17(1):28, 1–19, 10.1167/17.1.28.

- Vater, C., Williams, A. M., & Hossner, E. J. (2020). What do we see out of the corner of our eye? The role of visual pivots and gaze anchors in sport. International Review of Sport and Exercise Psychology, 13(1), 81–103, 10.1080/1750984X.2019.1582082.

- Wang, Y., & Srinivasan, M. (2014). Stepping in the direction of the fall: The next foot placement can be predicted from current upper body state in steady-state walking. Biology Letters, 10, 20140405, 10.1098/rsbl.2014.0405.

- Warren, W. H., Young, D. S., & Lee, D. N. (1986). Visual control of step length during running over irregular terrain. Journal of Experimental Psychology: Human Perception and Performance, 12(3), 259–266, 10.1037/0096-1523.12.3.259.

- Weerdesteyn, V., Nienhuis, B., Hampsink, B., & Duysens, J. (2004). Gait adjustments in response to an obstacle are faster than voluntary reactions. Human Movement Science, 23, 351–363, 10.1016/j.humov.2004.08.011.

- Whittle, M. W. (1997). Three-dimensional motion of the center of gravity of the body during walking. Human Movement Science, 16, 347–355, 10.1016/S0167-9457(96)00052-8.

Associated Data
