Abstract

The purposes of this methods article are to (a) discuss how integration can occur through a connecting approach in explanatory sequential mixed methods studies, (b) describe a connecting strategy developed for a study testing a conceptual model to predict lung cancer screening, and (c) describe three analytic products developed by subsequent integration procedures enabled by the connecting strategy. Connecting occurs when numeric data from a quantitative strand of a study are used to select a sample to be interviewed for a subsequent qualitative strand. Because researchers often do not fully exploit numeric data for this purpose, we developed a multi-step systematic sampling strategy that produced an interview sample of eight subgroups of five persons (n = 40) whose profiles converged with or diverged from the conceptual model in specified ways. The subgroups facilitated the development of tailored interview guides, in-depth narrative summaries, and exemplar case studies to expand the quantitative findings.

Keywords: mixed methods, explanatory sequential designs, connecting, interview guides

Mixed methods (MM) research is widely used to answer complex questions in the health and social sciences. MM research is “research that intentionally combines the perspectives, approaches, data forms, and analyses associated with quantitative and qualitative research to develop nuanced and comprehensive findings” (Plano Clark, 2019, p. 107). MM researchers use a wide range of MM designs depending on the purpose for mixing methods, the timing of the quantitative and qualitative strands, the relative priority given to each strand, and the level of interaction (i.e., dependence or independence) of each strand (Creswell & Plano Clark, 2018).

Creswell and Plano Clark (2018) describe three common types of MM designs. Their typology emphasizes the intent of the designs rather than the timing of the strands or the weight given to either strand. One type is the explanatory sequential design, in which collection and analysis of quantitative data precede collection and analysis of qualitative data. This design is used primarily to explain or expand quantitative results. A second type is the exploratory sequential design, in which collection and analysis of qualitative data precede collection and analysis of quantitative data. This design is often used to develop an instrument, identify a new variable in a conceptual model, or inform intervention development. The third type is the convergent design, in which qualitative and quantitative data are compared or combined. This design is used to obtain a comprehensive understanding of a phenomenon or to validate one set of findings.

Integration is a process in MM research in which the qualitative and quantitative strands of a study “come into conversation with each other” (Plano Clark, 2019, p. 108). Bazeley (2018) argues that purposeful interdependence between data sources and methodological strategies distinguishes MM designs from multimethod designs, in which integration occurs only when conclusions are drawn. Points of interface in MM research can occur at the design, methods, interpretation, or reporting levels (Fetters et al., 2013). Integration at the methods level involves linking the data collection or analysis procedures of one strand to those of the other strand (Fetters et al., 2013). Four approaches are used for integration at the methods level: (a) connecting, in which data from one strand inform sampling for the other strand; (b) building, in which data from one strand inform data collection for the other strand; (c) merging, in which data from both strands are linked through analysis; and (d) embedding, in which data from both strands are linked in a variety of ways at several points throughout a study (Fetters et al., 2013).

Meaningful integration is the hallmark of high-quality MM research (Guetterman et al., 2015). Several organizations, including the American Psychological Association (Levitt et al., 2018), National Institutes of Health Office of Behavioral and Social Sciences (2018), and Patient-Centered Outcomes Research Institute (PCORI; 2019) have provided guidelines for conducting MM research that call for intentional and well-described integration strategies. The PCORI standards for MM research, for example, instruct researchers to do the following:

Identify the order of study components and the points of integration. State who will conduct the integration; describe how their qualifications, training, and expertise equip them to understand and address the complexities and challenges unique to MM analysis; and state how integrated analyses will proceed in terms of the qualitative and quantitative components (p. 16).

Despite the emphasis placed on rigorous integration strategies as a standard for MM research, some mixed methodologists argue that genuine integration remains limited and that MM researchers continue to present separate qualitative and quantitative findings without true integration (Fetters et al., 2013; Guetterman et al., 2015; Plano Clark, 2019). Bazeley (2018) argues that without rigorous integration in MM research “questions are left unanswered and possibilities for deeper insights unexplored. Worse, inappropriate conclusions might be drawn” (p. 13). Fetters and Freshwater (2015) advocate “pushing the integration envelope” (p. 116) to ensure that MM research produces comprehensive answers to salient questions in a manner that is not possible with either a quantitative or a qualitative approach alone. However, as Plano Clark (2019) points out, the goal of improving integration in MM research is impeded because the MM literature contains few detailed descriptions of practical strategies that can be used to achieve meaningful integration in a variety of ways.

The purposes of this methods article, therefore, are to (a) discuss how integration can occur through a connecting approach in explanatory sequential MM studies, (b) describe a connecting strategy developed for a study testing a conceptual model to predict lung cancer screening, and (c) describe three analytic products developed by subsequent integration procedures enabled by the connecting strategy. We first describe how a connecting approach is used to select persons from a primary quantitative sample to participate in subsequent qualitative data collection. We then describe several connecting strategies and how they have been used in published studies. Next, we describe a multi-step systematic strategy that our research team developed in an explanatory sequential MM study of lung cancer screening to select an interview sample from the primary sample in the quantitative strand. Lastly, we describe how our sampling strategy enabled the development of tailored interview guides, an in-depth narrative description to explain unexpected quantitative findings, and an exemplar case study to extend expected quantitative findings.

Connecting in Explanatory Sequential MM Designs

The explanatory sequential design has two distinct strands that are implemented consecutively: a quantitative strand, in which numeric data are collected and analyzed, followed by a qualitative strand, in which textual data are collected and analyzed (Ivankova et al., 2006). The quantitative strand is often prioritized, and the two strands can be linked in a variety of ways at an intermediate stage (Ivankova et al., 2006). The purpose of an explanatory sequential design is often to use narrative data to explain or interpret numeric findings, especially those that are unexpected (Creswell et al., 2003). For example, study hypotheses are tested statistically and then, using merging procedures, in-depth participant narratives are used to provide a more thorough understanding of the statistical findings (Fetters et al., 2013). Another purpose of the explanatory sequential design, and the one we focus on here, is to use quantitative data to sample purposively, rather than randomly, for the qualitative strand of a study (Creswell et al., 2003).

Participants for a qualitative strand have been selected in a variety of ways in studies claiming an explanatory sequential design (Creswell & Plano Clark, 2018). In some studies, all participants from the quantitative strand are invited to participate in follow-up interviews, and the final qualitative sample consists of those who volunteer. For example, in an explanatory sequential study of employment goals, expectations, and migration intentions of nursing graduates in a Canadian border city, Freeman and colleagues (2012) conducted a web-based self-report survey of graduating baccalaureate nursing students and then conducted semi-structured interviews with those participants who responded to an email invitation and volunteered to be interviewed. In other studies, information about demographic characteristics collected in a quantitative strand is used to purposively select an interview sample that represents the demographic composition of the quantitative sample. For example, in an explanatory sequential study, Howells et al. (2019a, 2019b) conducted an online survey of speech-language therapists about dysphagia services and practices for adults in community settings in Australia. They then conducted semi-structured interviews with survey participants who were selected by purposive sampling to ensure representation from a range of locations, service types, settings, and states.

Some studies select participants for the qualitative strand whose scores on quantitative variables differ in specific ways, such as having scores near the mean of a variable to allow exploration of typical manifestations of a phenomenon of interest or having scores at the extremes to allow targeted exploration of outlying manifestations of the phenomenon of interest. For example, in an explanatory sequential study of health-promoting behaviors, Baheiraei et al. (2014) conducted a quantitative survey of health-promoting behaviors and determinants with Iranian women of reproductive age and followed up with interviews with participants who had extreme (10th and 90th percentiles) health-promoting behavior scores. In some studies, subgroups are formed from the primary quantitative sample based on a combination of high and low scores on one or more quantitative variables, and participants are randomly selected from those groups for follow-up interviews. For example, in an explanatory sequential study of nurses’ perceptions of implementing smoking cessation guidelines, Katz et al. (2016) conducted a survey assessing positive and negative attitudes (pros and cons) toward the delivery of smoking cessation assistance. The investigators grouped the participants into four possible subgroups (high pros, low cons; high pros, high cons; low pros, high cons; low pros, low cons) and randomly selected participants from each of these groups for interviews.

The choice of connecting strategies to select interview participants can determine the extent to which quantitative and qualitative data can be later integrated. Interview samples that include all participants who volunteer, represent the demographic make-up of the quantitative sample, or have extreme or typical scores may not be able to provide the most relevant information to explain or extend specific quantitative findings. For example, we assume that participants who scored low on a predictor variable but high on an outcome variable, when the two variables were predicted to correlate positively, would likely be able to provide more relevant information to describe this discordance than participants chosen because of their demographic profiles or because they volunteered. Based on this assumption, we developed a strategy to create an interview sample of participants with specific profile scores who could provide the most germane information to explain or expand quantitative findings. The following is a description of how we used this strategy in our study of lung cancer screening.

Connecting in a Study of Lung Cancer Screening

To provide context for the strategy we developed to select the interview sample, we first briefly summarize the lung cancer screening study (Carter-Harris et al., 2019; Draucker et al., 2019) conducted by our research team (hereafter referred to as the parent study). All strands of the parent study received approval from the Institutional Review Board (IRB) of the investigators’ institution.

Parent Study

Although screening for lung cancer with low-dose computed tomography (LDCT) decreases lung cancer deaths by 20% (Aberle et al., 2011), screening rates are low, and little is known about the variables that influence screening participation (American Cancer Society, 2016; Carter-Harris et al., 2016). The parent study, which used an explanatory sequential MM design to identify these variables, included a quantitative strand that was emphasized and implemented first and a qualitative strand that followed to explain and extend the quantitative findings.

Quantitative Strand.

In the quantitative strand of the study, a conceptual model developed by the research team to predict lung cancer screening was tested using path analysis. The antecedent variables in the model are psychological variables, demographic and health status characteristics, knowledge about lung cancer and lung cancer screening, healthcare provider recommendation for screening (y/n), and social and environmental influences. The mediating variables are lung cancer screening health beliefs, and the outcome variable is lung cancer screening participation (y/n).

An 88-item self-report web-based survey was developed to measure variables in the model. A sample of 515 persons in the United States who currently or formerly smoked long-term and who were eligible for lung cancer screening per the United States Preventive Services Task Force (USPSTF) guidelines (American Cancer Society, 2016) (hereafter referred to as survey participants) were recruited by nationwide Facebook targeted advertisements. The conceptual model and the findings of the path analysis have been published (Carter-Harris et al., 2019). In summary, the path analysis revealed that a healthcare provider recommendation to screen, higher self-efficacy, and lower medical mistrust were directly associated with screening participation (p < .05). The link between self-efficacy and screening participation was fully mediated by cancer fatalism, lung cancer fear, family history of lung cancer, income, knowledge of lung cancer and lung cancer screening, a healthcare provider recommendation to screen, and social influences.

Qualitative Strand.

Phone interviews based on a semi-structured interview guide were conducted with 40 participants (hereafter referred to as interview participants) who had completed the survey. The interviews, which were conducted by undergraduate nursing honors students, were recorded and transcribed. Interview participants were asked to describe their decisions regarding screening with LDCT as well as to discuss some of their responses on the quantitative survey in greater depth. Initial results of this strand of the study have been published (Draucker et al., 2019). In summary, a content analysis of the interview transcripts revealed that the interview participants’ screening decisions ranged from firm decisions not to screen because they deemed it to be a waste of time to enthusiastic decisions to screen because they believed screening was essential to their health and well-being. The findings also revealed that receiving a healthcare provider recommendation was a key factor in a decision to screen and that many participants had a number of misconceptions regarding LDCT, including that a scan is needed only if one is symptomatic or had not had a chest x-ray (Draucker et al., 2019).

Points of Integration.

The integration of quantitative and qualitative data of the parent study occurred at three points of interface. First, using a connecting approach (Fetters et al., 2013), we used quantitative data from the survey to develop individual participant profiles in order to selectively sample participants for the qualitative interviews. Second, using a building approach (Fetters et al., 2013), we used the participant profiles to tailor the interview guides for the qualitative interviews. Third, using a merging approach (Fetters et al., 2013), we created a data display to link major quantitative and qualitative findings to identify points of convergence and divergence. The steps used to implement the connecting approach at the first point of interface are described as follows.

A Multi-step Systematic Strategy for Selectively Sampling Interview Participants

Our aims for the qualitative strand of the study were to obtain robust descriptions of variables associated with lung cancer screening participation and to elucidate the relationships among the variables in the conceptual model. Because the number of participants we could interview was limited by available resources, we sought to maximize the relevance of the information obtained during each interview. Therefore, we used the numeric scores that participants obtained on scales measuring select variables to develop participant profiles that guided selection of the interview sample. This strategy ensured that we obtained narratives from participants with varied characteristics thought to predict screening behaviors. To explain and extend the findings of the path analysis, we chose to interview participants whose experiences converged with, as well as diverged from, our conceptual model. The narratives of the participants whose experiences converged with the model would provide additional information about the significant paths in the model. Conversely, participants whose experiences diverged from the model could help explain why predicted paths were not significant, as well as reveal novel variables, not included in the model, that influenced screening decisions.

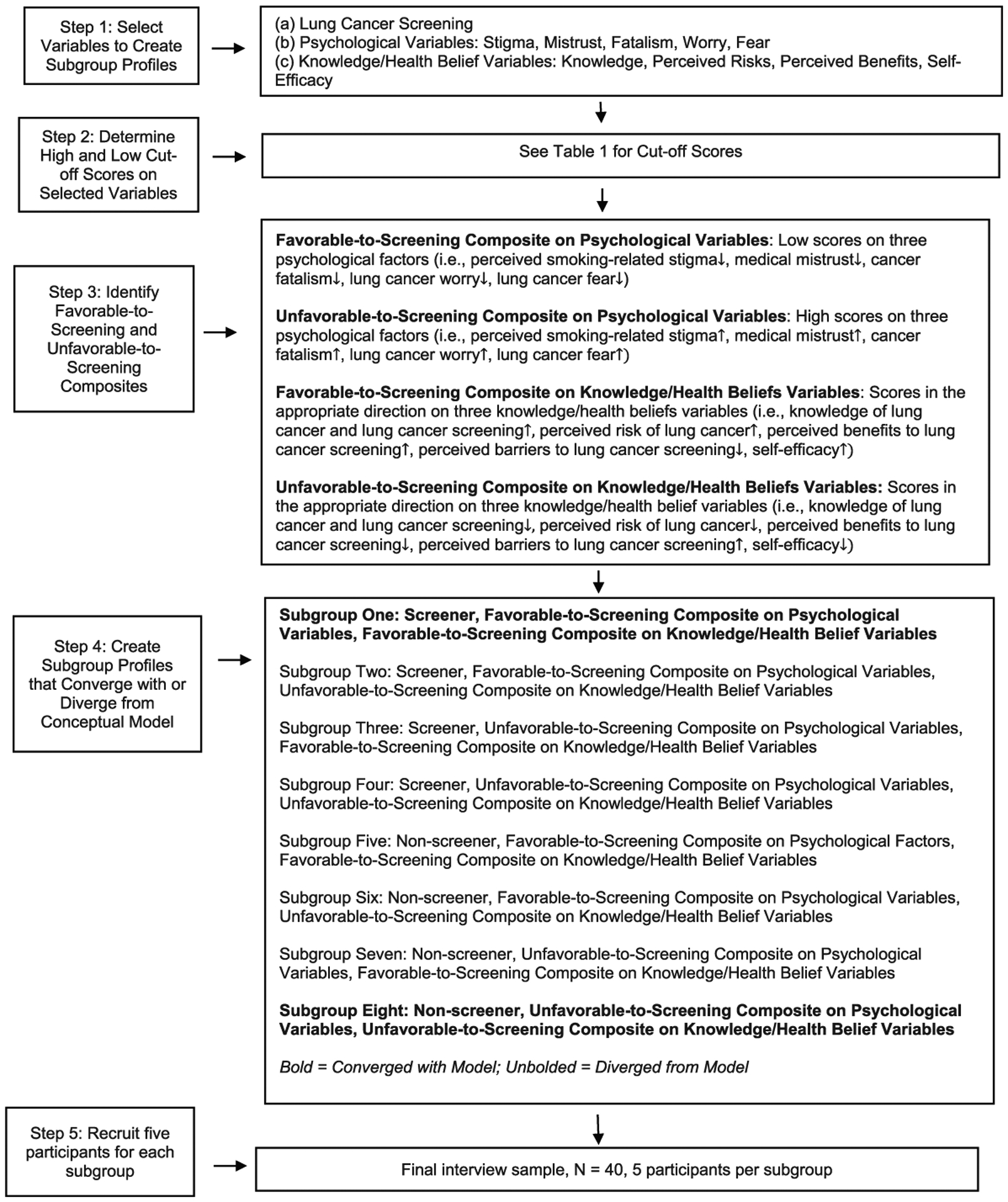

To create an interview sample of participants whose scores converged with or diverged from the conceptual model in a variety of ways, we developed profiles for each participant based on (a) whether or not they had screened, (b) whether their combination of scores on the psychological variables was favorable or unfavorable to screening, and (c) whether their combination of scores on the knowledge/health belief variables was favorable or unfavorable to screening. We then created eight subgroups that represented all combinations of these three sets of scores. The strategy comprised five steps, which are described in the following section and presented in Figure 1.

Figure 1.

A multi-step systematic strategy for selectively sampling interview participants.

Step 1: Select Variables to Create Subgroups.

Because we were interested in understanding variables that influenced screening decisions, the profiles were based, in part, on the dichotomous outcome variable of screening participation. Using participant responses to the self-reported lung cancer screening participation item (Y/N), we determined that half of the subgroups would be composed of participants who had screened and half of participants who had not.

Although we aimed to include participants whose profiles converged with or diverged from the conceptual model, we needed to limit the profiles to a manageable and interpretable number of predictor variables. Of all the predictor variables in the model, we chose to focus on the psychological variables and on a combination of the knowledge of lung cancer and lung cancer screening variable and the health belief variables (hereafter referred to as knowledge/health belief variables). We chose these variables because they (a) reflected personal characteristics of the participants (as opposed to external forces such as healthcare provider recommendations and social and environmental influences) and (b) are considered modifiable traits (as opposed to demographic variables or family history of lung cancer). These characteristics, therefore, would potentially have the greatest relevance for future intervention development for persons at risk for lung cancer.

Step 2: Determine High and Low Cutoff Scores on the Selected Variables.

We set high and low cutoff points for scales measuring the variables to be used in selecting interview participants. Team members most familiar with the measures made decisions about cutoff points based on clinical relevance and the psychometric properties of the scales. The cutoff scores are displayed in Table 1.

Table 1.

Cutoff Scores for Determining Scores in the Favorable-to-Screening or Unfavorable-to-Screening Direction on the Psychological or Knowledge/Health Belief Factors (Draucker et al., 2019).

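| Characteristics | Measures and Cutoff Scores |
|---|---|
| Psychological factors: favorable to screening | Participants who meet cutoff scores (low scores) on at least 3 of the following 5 scales: perceived smoking-related stigma, medical mistrust, cancer fatalism, lung cancer worry, and lung cancer fear |
| Psychological factors: unfavorable to screening | Participants who meet cutoff scores (high scores) on at least 3 of the following 5 scales: perceived smoking-related stigma, medical mistrust, cancer fatalism, lung cancer worry, and lung cancer fear |
| Knowledge/health belief factors: favorable to screening | Participants who meet cutoff scores in the favorable-to-screening direction on at least 3 of the following 5 scales: knowledge of lung cancer and lung cancer screening, perceived risk of lung cancer, perceived benefits of lung cancer screening, perceived barriers to lung cancer screening, and self-efficacy |
| Knowledge/health belief factors: unfavorable to screening | Participants who meet cutoff scores in the unfavorable-to-screening direction on at least 3 of the following 5 scales: knowledge of lung cancer and lung cancer screening, perceived risk of lung cancer, perceived benefits of lung cancer screening, perceived barriers to lung cancer screening, and self-efficacy |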

Investigator-developed measure.
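As a rough illustration of Step 2, the Python sketch below turns raw scale scores into high/low labels. It is a minimal sketch, not the team's procedure: the function name `apply_cutoffs`, the scale keys, and the rules that boundaries are inclusive and that scores falling between the two cutoffs receive no label are assumptions. The actual cutoff values in Table 1 are not reproduced here.

```python
from typing import Dict, Optional, Tuple


def apply_cutoffs(scores: Dict[str, float],
                  cutoffs: Dict[str, Tuple[float, float]]) -> Dict[str, Optional[str]]:
    """Label each scale score 'low', 'high', or None (between the two cutoffs).

    `cutoffs` maps a (hypothetical) scale name to (low_cutoff, high_cutoff).
    ASSUMPTION: scores at or below the low cutoff count as 'low' and scores
    at or above the high cutoff count as 'high'.
    """
    levels: Dict[str, Optional[str]] = {}
    for scale, score in scores.items():
        low, high = cutoffs[scale]
        if low >= high:
            raise ValueError(f"{scale}: low cutoff must be below high cutoff")
        if score <= low:
            levels[scale] = "low"
        elif score >= high:
            levels[scale] = "high"
        else:
            levels[scale] = None  # mid-range score: counts toward neither composite
    return levels
```

For instance, with hypothetical cutoffs of (8, 12) for a stigma scale, a score of 13 would be labeled high, a score of 5 low, and a score of 10 would be left unlabeled.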

Step 3: Identify Favorable-to-Screening and Unfavorable-to-Screening Composites.

The cutoff scores allowed us to identify participants whose combinations of scores (hereafter referred to as composites) on the psychological and knowledge/health belief variables were predicted to be favorable or unfavorable to screening. Participants were considered to have a favorable-to-screening composite on the psychological variables if they scored low on at least three of the five relevant scales (i.e., perceived smoking-related stigma, medical mistrust, cancer fatalism, lung cancer worry, lung cancer fear) and to have an unfavorable-to-screening composite if they scored high on at least three of these scales. For example, an individual with a score of 13 on the Cataldo Lung Cancer Stigma Smoking Subscale (Cataldo et al., 2011), 20 on the Patient Trust in the Medical Profession Scale (Dugan et al., 2005), and 18 on the Lung Cancer Fear Scale (adapted from Champion et al., 2004) would be classified as having an unfavorable-to-screening composite on the psychological variables. Feeling blamed for being a smoker, mistrusting one’s healthcare provider, and feeling threatened by what it would mean to be diagnosed with lung cancer are likely to discourage a person from screening. Participants were considered to have a favorable-to-screening composite on the knowledge/health belief variables if they scored in the appropriate direction on at least three of the five relevant scales (i.e., knowledge of lung cancer and lung cancer screening, perceived risk of lung cancer, perceived benefits of lung cancer screening, perceived barriers to lung cancer screening, self-efficacy) and to have an unfavorable-to-screening composite if they scored in the opposite direction on at least three of these scales.
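The three-of-five rule in Step 3 can be expressed as a small counting function. The Python sketch below assumes each scale has already been labeled 'low', 'high', or unlabeled against its cutoffs, and the scale keys are hypothetical. Because the text says only that knowledge/health belief scores had to fall in "the appropriate direction," the favorable direction assigned to each of those scales is an assumption; the directions for knowledge, perceived benefits, and perceived barriers match the Subgroup 7 example described later. The sketch also assumes the mistrust label is oriented so that 'high' means more mistrust.

```python
from typing import Dict, Optional

# Favorable-to-screening level for each psychological scale.
# The text states that LOW scores on these five scales favor screening.
PSYCHOLOGICAL_FAVORABLE: Dict[str, str] = {
    "smoking_stigma": "low",
    "medical_mistrust": "low",  # ASSUMPTION: 'high' means more mistrust
    "cancer_fatalism": "low",
    "lung_cancer_worry": "low",
    "lung_cancer_fear": "low",
}

# Favorable-to-screening level for each knowledge/health belief scale.
# ASSUMPTION: the text says only "the appropriate direction".
KNOWLEDGE_BELIEF_FAVORABLE: Dict[str, str] = {
    "knowledge": "high",
    "perceived_risk": "high",
    "perceived_benefits": "high",
    "perceived_barriers": "low",
    "self_efficacy": "high",
}

OPPOSITE = {"low": "high", "high": "low"}


def composite(levels: Dict[str, Optional[str]],
              favorable: Dict[str, str],
              threshold: int = 3) -> Optional[str]:
    """Return 'favorable', 'unfavorable', or None for one set of scales.

    `levels` maps scale name -> 'low', 'high', or None (mid-range or missing).
    Missing scales simply do not count toward either direction.
    """
    n_favorable = sum(levels.get(s) == d for s, d in favorable.items())
    n_unfavorable = sum(levels.get(s) == OPPOSITE[d] for s, d in favorable.items())
    if n_favorable >= threshold:
        return "favorable"
    if n_unfavorable >= threshold:
        return "unfavorable"
    return None


# The 64-year-old participant described later in the text (Subgroup 7):
# high stigma, mistrust, and fatalism; high knowledge and perceived
# benefits; low perceived barriers.
psych_levels = {"smoking_stigma": "high", "medical_mistrust": "high", "cancer_fatalism": "high"}
belief_levels = {"knowledge": "high", "perceived_benefits": "high", "perceived_barriers": "low"}
print(composite(psych_levels, PSYCHOLOGICAL_FAVORABLE))      # prints: unfavorable
print(composite(belief_levels, KNOWLEDGE_BELIEF_FAVORABLE))  # prints: favorable
```

Because a favorable composite needs at least three of five scales in one direction and an unfavorable composite needs at least three in the other, no participant can satisfy both; participants with mixed or mid-range scores receive no composite.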

Step 4: Create Subgroup Profiles that Converged with or Diverged from the Model.

We then created profiles for all participants based on their screening decisions and whether they had favorable-to-screening or unfavorable-to-screening composites on the psychological and knowledge/health belief variables, and we divided these profiles based on whether they converged with or diverged from the model. For example, the Subgroup 1 profile converged with the model because it identified participants who had screened and had favorable-to-screening composites on both the psychological and the knowledge/health belief variables. The Subgroup 8 profile also converged with the model because it identified participants who had not screened and had unfavorable-to-screening composites on both the psychological and the knowledge/health belief variables. The Subgroup 2–7 profiles diverged from the model because they identified participants whose composites on the psychological and/or knowledge/health belief variables were inconsistent with their screening decisions. For example, the Subgroup 2 profile diverged from the model because it identified participants who had screened and had favorable-to-screening composites on the psychological variables but unfavorable-to-screening composites on the knowledge/health belief variables. Similarly, the Subgroup 7 profile diverged from the model because it identified participants who had not screened and had unfavorable-to-screening composites on the psychological variables but favorable-to-screening composites on the knowledge/health belief variables.
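Step 4 amounts to a lookup from three two-level attributes (screened or not, psychological composite, knowledge/health belief composite) to one of eight subgroups. In the Python sketch below, the numbers for Subgroups 1, 2, 5, 7, and 8 follow the descriptions in the text, whereas the numbering of Subgroups 3, 4, and 6 is inferred from the same ordering and is an assumption. The text also does not say how participants without a determinate composite were handled, so the sketch simply leaves them unassigned; the function names are hypothetical.

```python
from typing import Dict, Optional, Tuple

# (screened, psychological composite, knowledge/health belief composite) -> subgroup.
# Subgroups 1, 2, 5, 7, 8 follow the text; 3, 4, 6 are an ASSUMED ordering.
SUBGROUPS: Dict[Tuple[bool, str, str], int] = {
    (True, "favorable", "favorable"): 1,
    (True, "favorable", "unfavorable"): 2,
    (True, "unfavorable", "favorable"): 3,
    (True, "unfavorable", "unfavorable"): 4,
    (False, "favorable", "favorable"): 5,
    (False, "favorable", "unfavorable"): 6,
    (False, "unfavorable", "favorable"): 7,
    (False, "unfavorable", "unfavorable"): 8,
}

# Profiles consistent with the conceptual model; Subgroups 2-7 diverge.
CONVERGENT = {1, 8}


def assign_subgroup(screened: bool,
                    psych: Optional[str],
                    knowledge: Optional[str]) -> Optional[int]:
    """Return the subgroup number, or None if either composite is undetermined."""
    if psych is None or knowledge is None:
        return None
    return SUBGROUPS[(screened, psych, knowledge)]


def converges_with_model(subgroup: int) -> bool:
    """True if the subgroup's profile is the one the model predicts."""
    return subgroup in CONVERGENT


print(assign_subgroup(False, "unfavorable", "favorable"))  # prints: 7
```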

Step 5: Recruit Five Participants for Each of the Eight Subgroups.

We created participant profiles for the 236 survey participants who had agreed to participate in the qualitative strand of the study based on whether they screened or not and their composites on the psychological and the knowledge/health belief variables. We used these profiles to randomly select five participants for each subgroup to comprise the final interview sample of 40 participants. If a selected participant could not be reached or declined to be interviewed, we replaced that person with a randomly chosen person from the same subgroup until the subgroups for the interview sample were filled. Given that the pool from which interview participants were drawn was large and the profile criteria were broad (high versus low) and flexible (high/low scores on 3 out of 5 scales), we had little trouble compiling the final interview sample.
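The random selection and replacement procedure in Step 5 can be sketched as walking through a random permutation of each subgroup's pool until five people agree to be interviewed; this is equivalent to drawing each replacement at random from the remaining members of the same subgroup. The function name `fill_subgroups`, the `agrees_to_interview` callback standing in for the recruitment contact, and the optional seed are hypothetical details added for illustration.

```python
import random
from typing import Callable, Dict, List, Optional


def fill_subgroups(pools: Dict[int, List[str]],
                   agrees_to_interview: Callable[[str], bool],
                   per_group: int = 5,
                   seed: Optional[int] = None) -> Dict[int, List[str]]:
    """Randomly enroll `per_group` willing participants from each subgroup pool.

    Each pool is visited in a random order. A candidate who cannot be reached
    or declines (callback returns False) is skipped, so the next randomly
    ordered candidate from the same subgroup takes that place.
    """
    rng = random.Random(seed)
    sample: Dict[int, List[str]] = {}
    for subgroup, pool in pools.items():
        enrolled: List[str] = []
        for candidate in rng.sample(pool, len(pool)):  # random permutation of the pool
            if len(enrolled) == per_group:
                break  # subgroup filled; stop contacting candidates
            if agrees_to_interview(candidate):
                enrolled.append(candidate)
        if len(enrolled) < per_group:
            raise ValueError(f"Subgroup {subgroup}: only {len(enrolled)} willing participants")
        sample[subgroup] = enrolled
    return sample
```

Raising an error when a pool runs out makes a shortfall visible rather than silently producing an unbalanced sample; in the study, the large pool meant the subgroups filled without difficulty.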

Integration Procedures Facilitated by the Subgroups

The connecting strategy that resulted in the eight subgroups facilitated several subsequent integration procedures that enabled the development of a number of analytic products needed to expand or explain quantitative findings. Here we provide examples of the development of three such products: (a) tailored interview guides, (b) in-depth narrative descriptions to explain unexpected quantitative findings, and (c) exemplar case studies to extend expected quantitative findings.

Using Subgroups to Develop Tailored Interview Guides.

Using a building integration approach (Fetters et al., 2013), tailored interview guides were created for each interview participant based on his or her subgroup membership. The questions on the guide corresponded to each participant’s screening decision and their composites on the psychological and knowledge/health belief variables in order to obtain targeted information about the variables. The items invited participants to elaborate on their thoughts about the variables, describe their day-to-day experiences related to the variables, and consider how these experiences influenced their screening decisions.

The following example demonstrates how the guides were developed. A 64-year-old male participant who currently smoked and who had not screened for lung cancer scored high on stigma, medical mistrust, cancer fatalism, knowledge, and perceived benefits and low on perceived barriers. He would be a member of Subgroup 7 because he did not screen and had an unfavorable-to-screening composite on the psychological variables but a favorable-to-screening composite on the knowledge/health belief variables. An interview guide developed for this participant would first have items focused on his thoughts and experiences related to the psychological variables on which he scored high. For example, items addressing smoking-related stigma would include the following: “You indicated you often feel blamed for smoking. Tell me more about those experiences. Describe a time in particular that you felt blamed for smoking. Are there any ways you can think of that being blamed for smoking influenced your decision not to get a lung scan?” Similar items would address the psychological variables of medical mistrust and cancer fatalism. These items would be followed by items focused on his thoughts and experiences related to the knowledge/health belief variables. For example, he would be asked to elaborate on what benefits and barriers he associates with screening and to discuss his decision not to screen despite these views. These guides, therefore, allowed us to obtain the most relevant information from each participant based on his or her scores on the quantitative survey.

Using Subgroups to Develop an In-depth Narrative to Explain Unexpected Quantitative Findings.

Using a merging integration approach (Fetters et al., 2013), we created narrative summaries for each subgroup. Because the subgroups allowed us to identify participants whose scores diverged from the model in specific ways, the summaries provide a more thorough understanding of non-significant findings. To construct the summaries, we used standard content analytic techniques (Miles et al., 2014), focusing on remarks related to the influence of psychological and knowledge/health belief variables on screening decisions.

For example, Subgroup 5 diverged from the model and represented persons who would be of particular clinical interest. These participants had favorable-to-screening composites on both the psychological and knowledge/health belief variables but, contrary to what the model predicted, had not screened. By considering these participants as a group, we explored what might have influenced them not to screen despite their favorable-to-screening composites.

Narrative Summary of Subgroup 5

The participants in this subgroup ranged in age from 55 to 70 years. Four were female; one was male. Four were Caucasian; one was African American. Two were widowed, two were married, and one was divorced. Three currently smoked, and two formerly smoked. Other than their screening decisions, the narratives of the five participants in this subgroup were primarily consistent with their numeric profiles. Overall, they tended to speak highly of their healthcare providers, suggesting they were collaborative, trustworthy, and informative; did not report feeling much smoking-related stigma, other than blaming themselves for having smoked; and did not endorse the notion of fatalism. Despite not having screened themselves, most advocated for screening generally because it would allow one to “catch it [cancer] early” or “pinpoint where [you are] at.” Moreover, they tended to suggest that they were knowledgeable about lung cancer and were active in seeking information about it; one was a registered nurse, several routinely scoured the internet for information on health-related issues, and some said they were knowledgeable about lung cancer because family members had had the disease. Despite favorable-to-screening composites, the participants provided reasons in their narratives for why they had not screened. Two were quite adamant that the reason they had not screened was the cost. One stated she “would do it [screening] tomorrow, if it didn’t cost anything,” and another was convinced that Medicare would pay only a portion of the cost and was sure she could not afford the scan because she was not currently working due to an injury. All five of the participants in this group also said they did not screen because it had not been recommended by a healthcare provider, and, in fact, one participant stated that her provider had recommended against screening because the participant had no pulmonary symptoms.
Moreover, despite having knowledge of lung cancer and lung cancer screening, two participants in the group made statements that represented lung cancer screening misconceptions. The nurse in the group stated that her knowledge about screening led her to not screen because she had “no signs or symptoms,” and another participant stated that “when something is wrong, there is always a sign.” One participant who shared that she had strong religious beliefs stated that “God would let me know if I had cancer” and therefore screening was unnecessary.

The narrative summary of Subgroup 5 led to several working hypotheses about why persons who had favorable-to-screening composites might not have screened. These hypotheses warrant further examination but have implications for refining the model. First, although lung cancer screening carries a Grade B recommendation from the USPSTF and thus has no copayment for Medicare beneficiaries, persons eligible for screening may be misinformed about its cost despite having other knowledge about lung cancer screening. Second, despite a psychological propensity toward screening, knowledge regarding lung cancer and lung cancer screening, and health beliefs that support screening, the lack of a healthcare provider recommendation may override these person-centered characteristics. Third, despite feeling informed about lung cancer and lung cancer screening, people may hold misconceptions that interfere with screening participation. Fourth, spiritual beliefs, or other personal worldviews not included in the model, may be a deciding factor in the decision to screen. These hypotheses can help explain some non-significant quantitative findings and led to a refinement of the conceptual model.

Using Subgroups to Select an Exemplar Case Study to Expand on Expected Quantitative Findings.

Also using a merging integration approach (Fetters et al., 2013), we created exemplar case studies to elaborate on expected quantitative findings. The use of exemplars is an analytic approach in which individual cases are intentionally chosen for closer examination because they exhibit a phenomenon of particular interest and relevance to the research question (Bronk et al., 2013). The subgroup profiles directed our choice of exemplar cases for deeper exploration of relevant textual data. Subgroup 8, for example, was of particular interest to the research team. These participants’ profiles converged with the model because they had not screened and had unfavorable-to-screening composites on both the psychological and knowledge/health belief variables. Because participants in this subgroup were particularly resistant to screening, their narratives provided information-rich explanations of their reluctance to screen. We present one case exemplar from this subgroup, referred to as Participant A, chosen because she provided rich discussion and detail and many examples of unfavorable-to-screening thoughts and experiences.

Participant A: Subgroup 8

Participant A was a 55-year-old Caucasian woman who was a former smoker. She had not been screened with LDCT. She was living with a partner and working part-time for pay. She met the cut-off criteria for Subgroup 8. On the quantitative survey, she scored high on the stigma, mistrust, fear, and worry scales (unfavorable-to-screening psychological variables), and she scored low on the knowledge and perceived risk scales and high on the perceived barriers scale (unfavorable-to-screening knowledge/health belief variables).

Participant A clearly stated that she had not screened for lung cancer because of fear. She stated, “[I have not screened] because I am scared. And it’s stupid, but I’ve always been like that, real scared of doctors. I don’t know why. I guess because my dad was sick a lot and every time he went to the doctor, it seemed like it was awful. So I don’t know. I kind of have a fear of doctors. It’s dumb but that is what I have.” Later in the interview, she again stated that she had avoided screening because she feared finding out something terrible, did not want to die, and needed to work up the courage to screen.

When asked about worrying about lung cancer, she said she worries about “having it [cancer], suffering, and dying.” She connected worry and fear. She stated, in answer to a question about worry, “I know it’s crazy, but just fear again. I am going to go [to get screened] but right now I haven’t done. I’m trying to work up the courage.” She described her fears as “terrible” and “awful.”

Moreover, when asked about feeling confident about getting a lung scan, she again discussed her fear. She later suggested that she is “not the only one” who is fearful of doctors. She stated, “I think a lot of people have fear like I do and we need to get over it and be screened. I know I’ve talked to other people that feel the same way I do. Oh, I know, I am scared to death. A lot of fear out there for that. Then there are other people who just love to run to the doctor and have all these scans. It makes them feel better. I wish I were like that.”

She felt blamed for smoking and shared a story in which she was asked at a family gathering to “move away” when she smoked in the presence of others. Family members complained that they did not like the smell of cigarettes and did not want to be near her if she smoked. She indicated, however, that this blame did not influence her decision not to screen.

Participant A also felt blamed for smoking by doctors and revealed that, because of this, she had downplayed the amount she actually smoked (a pack a day). She did not trust doctors because she felt they often give people the wrong medications or medications they do not need.

She reported that she has shortness of breath that comes and goes, which is why she quit smoking. She said she believes she may have “COPD” because that is what her mother had. Her shortness of breath influenced her thoughts about getting a scan but not her actions. She indicated that she thought she should get a scan to find the cause of her shortness of breath, but had not done so because the symptom had improved.

Participant A acknowledged that her risk of lung cancer was affected by smoking but stressed that lung cancer was also caused by genetics. She revealed that although some of her uncles had died of lung cancer, she did not believe this affected her risk because she did not share their “bloodline.” She stated, “I think a lot of it is hereditary, maybe a gene.”

In addition to fear, she indicated that a barrier to screening was not having a family practitioner as she used an urgent care center for her healthcare needs. She indicated that without a regular practitioner, she would not know “where to begin” to get a lung scan.

She again returned to the topic of fear when ending her interview. She shared that perhaps she should see a psychiatrist to get over her fear. She stated, “It’s just like it’s a doom for me. If I go, I just feel like it’s going to be the worst things and it’s just not a good way to be but that is how I am.”

Although Participant A’s profile as a member of Subgroup 8 converged with the model, her narrative illuminates in a more nuanced way why she did not screen. In particular, her story adds to our understanding of how two psychological variables, cancer fear and cancer worry, contributed to her thoughts about screening.

Participant A focused extensively on her fear throughout the interview and tended to return to this topic regardless of what else was asked. This suggests that although several variables might coalesce to dissuade persons from screening, in some cases one factor can most clearly drive the decision. Participant A was fearful not just of finding cancer but of medical care (doctors) more generally. She suggested that her fear was so intense that she might need psychiatric intervention, rather than education about lung cancer risk, to address it and make it possible for her to be screened. Participant A’s forthright narrative suggests that fear of medical care may not only dissuade persons from preventive screening but also keep them from seeking any healthcare.

Participant A’s narrative also sheds light on lung cancer worry. She pointed out that some people who worry a great deal about cancer, like herself, avoid screening, whereas others “run to the doctor” to have “all these scans.” Her narrative thus suggests that lung cancer worry is a psychological response to the threat of illness that can have opposing effects on screening choices: some persons seek out screening to quell their worries, whereas others reject screening to avoid confronting them. These complexities suggest that the construct of lung cancer worry may need to be conceptualized in a more nuanced way.

Discussion

This methods article addresses calls for elaboration of best practices in MM research (National Institutes of Health Office of Behavioral and Social Sciences, 2018) by providing an in-depth discussion of an integration strategy in which data from a primary quantitative strand are used to select persons for follow-up interviews who can provide relevant and targeted information needed to address study aims. Moreover, in response to calls for transparent descriptions of practical strategies that are useful in achieving optimal integration in MM studies (Plano Clark, 2019), we detailed a five-step process in which this strategy was used in an explanatory sequential MM study of lung cancer screening. The process, which we believe could be applied to any study in which the goal is to test and refine an explanatory conceptual model to advance intervention or instrument development, includes creating participant profiles based on numeric scores on measures of the outcome variables and of carefully selected predictor variables from the model, and then creating subgroups with shared profiles from which participants are selected for an interview sample. This strategy can enable several subsequent integration opportunities in which analytic products needed to explain or expand quantitative findings can be developed. The findings from the quantitative strand (e.g., significant and non-significant paths) can be merged with findings from the qualitative strand (e.g., summaries of participant narratives reflecting on personal experiences related to those paths) to provide a comprehensive description of the phenomena the model seeks to explain.
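The profile-and-subgroup step can be sketched in code. The Python below is a minimal illustration under stated assumptions, not the procedure this study used: the composite scores, the cutoff of 0 on standardized composites, the subgroup numbering, the "converges/diverges/mixed" labels, and the seeded random draw of five persons per subgroup are all hypothetical choices. The numbering was picked only so that it agrees with the two subgroups described in this article (Subgroup 5: not screened, favorable composites; Subgroup 8: not screened, unfavorable composites). The study itself used a multi-step systematic sampling strategy, so the random draw here is a stand-in.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

# HYPOTHETICAL cutoff: composites are assumed to be standardized scores in which
# higher means more favorable to screening; the study's actual cutoffs are not shown here.
FAVORABLE_CUTOFF = 0.0

Profile = Tuple[bool, bool, bool]  # (screened, psych favorable, knowledge/belief favorable)


@dataclass
class Participant:
    pid: str
    screened: bool              # outcome: self-reported LDCT screening
    psych_composite: float      # hypothetical psychological composite score
    knowledge_composite: float  # hypothetical knowledge/health belief composite score


def profile(p: Participant) -> Profile:
    """Dichotomize the outcome and the two composites into a profile."""
    return (
        p.screened,
        p.psych_composite >= FAVORABLE_CUTOFF,
        p.knowledge_composite >= FAVORABLE_CUTOFF,
    )


def subgroup_number(prof: Profile) -> int:
    """Map the 2 x 2 x 2 profile to a subgroup number from 1 to 8.

    Hypothetical convention: screened profiles get 1-4, unscreened get 5-8,
    and within each block favorable composites come first.
    """
    screened, psych_fav, know_fav = prof
    base = 1 if screened else 5
    return base + (0 if psych_fav else 2) + (0 if know_fav else 1)


def fit_to_model(prof: Profile) -> str:
    """Label how a profile relates to a model that links favorable composites to screening."""
    screened, psych_fav, know_fav = prof
    if psych_fav == know_fav:  # both composites point the same way
        return "converges" if screened == psych_fav else "diverges"
    return "mixed"  # composites disagree, so the model makes no single prediction


def select_interview_sample(
    participants: List[Participant], per_group: int = 5, seed: int = 2019
) -> Dict[int, List[Participant]]:
    """Group participants by subgroup, then draw up to `per_group` from each."""
    groups: Dict[int, List[Participant]] = defaultdict(list)
    for p in participants:
        groups[subgroup_number(profile(p))].append(p)
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    sample: Dict[int, List[Participant]] = {}
    for number in range(1, 9):
        members = groups.get(number, [])
        sample[number] = rng.sample(members, min(per_group, len(members)))
    return sample
```

Under this layout, the profile (False, False, False), an unscreened participant with unfavorable composites on both sets of variables, maps to subgroup 8 and is labeled as converging with the model, while (False, True, True) maps to subgroup 5 and is labeled as diverging. Replacing the random draw with a rule such as choosing the most extreme composite scores would be a straightforward change to `select_interview_sample`.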

Although we outlined one strategy for creating subgroups, we recognize that other strategies can be used for this purpose. For example, we assigned participants to subgroups without statistical procedures or software, but such tools have been used in other studies with explanatory sequential designs. Jessup et al. (2018), for instance, conducted a two-step explanatory sequential MM study to describe a process that involved inviting staff and patients to develop health literacy interventions. In the first step, hospitalized patients completed a health literacy survey, and a hierarchical cluster analysis was performed on the survey data to place participants in mutually exclusive groups with profiles of health literacy scores that reflected different patterns of health literacy needs and strengths. The literacy profiles were used for purposive critical sampling to identify participants who represented a range of literacy profiles and demographic characteristics for the follow-up second step, which included workshops in which patients and clinicians co-designed literacy interventions and provided information about their experiences. The literacy profiles were also used to develop clinical vignettes for the workshops.

Although the connecting approach we described yielded ample information to answer a number of analytic questions, we encountered several challenges that are common to explanatory sequential designs (Creswell & Plano Clark, 2018). The first challenge was the extended time needed to complete the study: the quantitative data were collected in a relatively short time, but the qualitative work took longer, so participants needed to remain accessible for a protracted period. We addressed this challenge by budgeting ample time to conduct the qualitative interviews and by contacting the participants who had agreed to an interview as soon as the quantitative analyses were complete and we had constructed the participant profiles. A second challenge discussed by Creswell and Plano Clark (2018) is obtaining IRB approval for the qualitative strand, given that researchers cannot specify in advance which participants will be interviewed. To address this challenge, our IRB application indicated that we would conduct qualitative interviews with participants who had specific profiles based on numeric data from the quantitative strand. That is, we did not specify a priori whom we would interview but did provide a detailed plan by which we would select the interview sample once we had obtained the quantitative data needed to do so.

Another challenge occurred when interview participants denied some of their survey responses or were puzzled when we reminded them how they had answered particular items (e.g., “You indicated on your survey that . . .”). We addressed this challenge in several ways. A few participants indicated on the survey that they had screened with LDCT but denied having been screened when interviewed. We opted to gently remind these participants what an LDCT entails and then asked them again whether they had had one. We tailored follow-up questions on screening to their response to this probe, because we judged it inadvisable to challenge participants further about the discrepancy, and we left open the possibility that the discrepancy reflected careless responding to the survey item rather than an attempt to mask their actual screening choices. Similarly, discrepancies occurred in responses to questions designed to explore participants’ high or low scores on the psychological and knowledge/health belief variables. In these instances, however, the discrepant responses seemed to reflect the complexity of the phenomena measured in the quantitative component rather than a reversal of the original responses. Therefore, we handled these responses analytically by merging participants’ numeric and narrative responses to better understand their experiences with the phenomena in question.

Despite such challenges, MM research continues to gain momentum as a well-established and rigorous approach for examining a variety of health-related phenomena. To advance the approach, it continues to be important for researchers to provide detailed and transparent descriptions of the integration approaches they use in MM studies. The intentional, systematic, and transparent implementation of integration strategies will allow researchers to conduct high-quality MM research that provides comprehensive and novel answers to pressing health-related questions.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: The research was supported by the National Cancer Institute (1R15 CA208543).

Footnotes

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

References

- Aberle DR, Adams AM, Berg CD, Black WC, Clapp JD, Fagerstrom RM, Gareen IF, Gatsonis C, Marcus PM, & Sicks JD (2011). Reduced lung-cancer mortality with low-dose computed tomographic screening. The New England Journal of Medicine, 365(5), 395–409. 10.1056/NEJMoa1102873

- American Cancer Society. (2016). Cancer facts and figures 2015. http://www.cancer.org/acs/groups/content/@editorial/documents/document/acspc-044552.pdf

- Baheiraei A, Mirghafourvand M, Charandabi SM-A, Mohammadi E, & Nedjat S (2014). Health-promoting behaviors and social support in Iranian women of reproductive age: A sequential explanatory mixed methods study. International Journal of Public Health, 59(3), 465–473. 10.1007/s00038-013-0513-y

- Bazeley P (2018). Integrating analysis in mixed methods research. SAGE.

- Bronk KC, King PE, & Matsuba K (2013). An introduction to exemplar research. New Directions for Child and Adolescent Development, 2013(142), 1–12. 10.1002/cad.20045

- Carter-Harris L, Comer RS, Goyal A, Vode EC, Hanna N, Ceppa D, & Rawl SM (2017). Development and usability testing of a computer-tailored decision support tool for lung cancer screening: Study protocol. JMIR Research Protocols, 6(11), e225. 10.2196/resprot.8694

- Carter-Harris L, Slaven JE 2nd, Monahan PO, Draucker CB, Vode E, & Rawl SM (2019). Understanding lung cancer screening behaviour using path analysis. Journal of Medical Screening. 10.1177/0969141319876961

- Carter-Harris L, Tan ASL, Salloum RG, & Young-Wolff KC (2016). Patient-provider discussions about lung cancer screening pre- and post-guidelines: Health Information National Trends Survey (HINTS). Patient Education and Counseling, 99(11), 1772–1777. 10.1016/j.pec.2016.05.014

- Cataldo JK, Slaughter R, Jahan TM, Pongquan VL, & Hwang WJ (2011). Measuring stigma in people with lung cancer: Psychometric testing of the Cataldo Lung Cancer Stigma Scale. Oncology Nursing Forum, 38(1), E46–E54. 10.1188/11.ONF.E46-E54

- Champion VL, Skinner CS, Menon U, Rawl S, Giesler RB, Monahan P, & Daggy J (2004). A breast cancer fear scale: Psychometric development. Journal of Health Psychology, 9(6), 753–762. 10.1177/1359105304045383

- Creswell JW, Plano Clark VL, Gutmann ML, & Hanson WE (2003). Advanced mixed methods research design. In Tashakkori A & Teddlie C (Eds.), Handbook of mixed methods in social and behavioral research (pp. 209–240). SAGE.

- Creswell JW, & Plano Clark VL (2018). Designing and conducting mixed methods research. SAGE.

- Draucker CB, Rawl SM, Vode E, & Carter-Harris L (2019). Understanding the decision to screen for lung cancer or not: A qualitative analysis. Health Expectations: An International Journal of Public Participation in Health Care and Health Policy, 22(6), 1314–1321. 10.1111/hex.12975

- Dugan E, Trachtenberg F, & Hall MA (2005). Development of abbreviated measures to assess patient trust in a physician, a health insurer, and the medical profession. BMC Health Services Research, 5, 64. 10.1186/1472-6963-5-64

- Fetters MD, Curry LA, & Creswell JW (2013). Achieving integration in mixed methods designs—Principles and practices. Health Services Research, 48(6, Pt 2), 2134–2156. 10.1111/1475-6773.12117

- Fetters MD, & Freshwater D (2015). The 1 + 1 = 3 integration challenge. Journal of Mixed Methods Research, 9(2), 115–117. 10.1177/1558689815581222

- Freeman M, Baumann A, Akhtar-Danesh N, Blythe J, & Fisher A (2012). Employment goals, expectations, and migration intentions of nursing graduates in a Canadian border city: A mixed methods study. International Journal of Nursing Studies, 49(12), 1531–1543. 10.1016/j.ijnurstu.2012.07.015

- Guetterman TC, Fetters MD, & Creswell JW (2015). Integrating quantitative and qualitative results in health science mixed methods research through joint displays. Annals of Family Medicine, 13(6), 554–561. 10.1370/afm.1865

- Hay JL, Buckley TR, & Ostroff JS (2005). The role of cancer worry in cancer screening: A theoretical and empirical review of the literature. Psycho-Oncology, 14(7), 517–534. 10.1002/pon.864

- Howells SR, Cornwell PL, Ward EC, & Kuipers P (2019a). Dysphagia care for adults in the community setting commands a different approach: Perspectives of speech-language therapists. International Journal of Language & Communication Disorders, 54(6), 971–981. 10.1111/1460-6984.12499

- Howells SR, Cornwell PL, Ward EC, & Kuipers P (2019b). Understanding dysphagia care in the community setting. Dysphagia, 34(5), 681–691. 10.1007/s00455-018-09971-8

- Ivankova NV, Creswell JW, & Stick SL (2006). Using mixed-methods sequential explanatory design: From theory to practice. Field Methods, 18(1), 3–20. 10.1177/1525822X05282260

- Jessup RL, Osborne RH, Buchbinder R, & Beauchamp A (2018). Using co-design to develop interventions to address health literacy needs in a hospitalised population. BMC Health Services Research, 18(1), 989. 10.1186/s12913-018-3801-7

- Katz DA, Stewart K, Paez M, Holman J, Adams SL, Vander Weg MW, Battaglia CT, Joseph AM, Titler MG, & Ono S (2016). “Let me get you a nicotine patch”: Nurses’ perceptions of implementing smoking cessation guidelines for hospitalized veterans. Military Medicine, 181(4), 373–382. 10.7205/MILMED-D-15-00101

- Levitt HM, Bamberg M, Creswell JW, Frost DM, Josselson R, & Suárez-Orozco C (2018). Journal article reporting standards for qualitative primary, qualitative meta-analytic, and mixed methods research in psychology: The APA Publications and Communications Board task force report. American Psychologist, 73(1), 26–46. 10.1037/amp0000151

- Mayo RM, Ureda JR, & Parker VG (2001). Importance of fatalism in understanding mammography screening in rural elderly women. Journal of Women & Aging, 13(1), 57–72.

- Miles MB, Huberman AM, & Saldaña J (2014). Qualitative data analysis: A methods sourcebook (3rd ed.). SAGE.

- National Institutes of Health Office of Behavioral and Social Sciences. (2018). Best practices for mixed methods research in the health sciences (2nd ed.). National Institutes of Health.

- Patient-Centered Outcomes Research Institute. (2019). PCORI methodology standards. https://www.pcori.org/sites/default/files/PCORI-Methodology-Standards.pdf

- Plano Clark VL (2019). Meaningful integration within mixed methods studies: Identifying why, what, when, and how. Contemporary Educational Psychology, 57, 106–111. 10.1016/j.cedpsych.2019.01.007