Abstract

Objectives

To determine the associations between a care coordination intervention (the Transitions Program) targeted to patients after hospital discharge and 30 day readmission and mortality in a large, integrated healthcare system.

Design

Observational study.

Setting

21 hospitals operated by Kaiser Permanente Northern California.

Participants

1 539 285 eligible index hospital admissions corresponding to 739 040 unique patients from June 2010 to December 2018. 411 507 patients were discharged post-implementation of the Transitions Program; 80 424 (19.5%) of these patients were at medium or high predicted risk and were assigned to receive the intervention after discharge.

Intervention

Patients admitted to hospital were automatically assigned to be followed by the Transitions Program in the 30 days post-discharge if their predicted risk of 30 day readmission or mortality was greater than 25% on the basis of electronic health record data.

Main outcome measures

Non-elective hospital readmissions and all cause mortality in the 30 days after hospital discharge.

Results

Difference-in-differences estimates indicated that the intervention was associated with significantly reduced odds of 30 day non-elective readmission (adjusted odds ratio 0.91, 95% confidence interval 0.89 to 0.93; absolute risk reduction −2.5%, 95% confidence interval −3.1% to −2.0%) but not with the odds of 30 day post-discharge mortality (1.00, 0.95 to 1.04). Based on the regression discontinuity estimate, the association with readmission was of similar magnitude (absolute risk reduction −2.7%, −3.2% to −2.2%) among patients at medium risk near the risk threshold used for enrollment. However, the regression discontinuity estimate of the association with post-discharge mortality (−0.7%, −1.4% to −0.0%) was significant and suggested benefit in this subgroup of patients.

Conclusions

In an integrated health system, the implementation of a comprehensive readmissions prevention intervention was associated with a reduction in 30 day readmission rates. Moreover, there was no association with 30 day post-discharge mortality, except among medium risk patients, where some evidence for benefit was found. Altogether, the study provides evidence to suggest the effectiveness of readmission prevention interventions in community settings, but further research might be required to confirm the findings beyond this setting.

Introduction

In April 2010, the Centers for Medicare and Medicaid Services (CMS) introduced the readmission rate as a publicly reported hospital quality measure, which was followed by the Hospital Readmissions Reduction Program (HRRP) two years later.1 The penalties imposed as part of the HRRP were intended to incentivize improvements in care coordination among Medicare fee-for-service members. Among health systems also caring for Medicare Advantage members, HRRP penalties play a relatively smaller role, although these health systems are still strongly incentivized to decrease readmission rates, owing to their participation in the CMS five star quality rating system.2 Hospitals with excess readmissions might experience a drop in their rating, resulting in substantial decreases both in reimbursement and in their ability to attract patients.

After the implementation of the HRRP, readmission rates among Medicare fee-for-service members seemed to modestly improve,3 4 5 6 although potentially at the cost of increased 30 day mortality.4 7 Among Medicare Advantage members, HRRP implementation might also be associated with reductions in readmission rates, although the rates appear to remain higher than those of Medicare fee-for-service members.8 Moreover, evidence is also growing for the existence of a spillover effect of the HRRP among patients with Medicaid and private insurance9 as well as among uninsured people10 and patients with conditions not targeted by the HRRP, including stroke11 and certain types of surgery.12 Many previous evaluations have aimed to characterize the impacts of interventions launched in response to the HRRP on readmission, mortality, and other outcomes.13 14 15 16 17 18 19 20 Estimating the precise impact of these interventions, however, can be challenging. Administrative and claims data do not identify which patients are treated, nor do they measure patient acuity—which is essential for accurate risk adjustment. Conversely, single institution studies cannot guarantee complete outcome follow-up, and such studies might also exhibit limited external validity,13 partly owing to a lack of cross site heterogeneity in implementation and care processes.

In 2015, Kaiser Permanente Northern California (KPNC), an integrated healthcare delivery system, developed a predictive model for the composite outcome of non-elective readmission or death within 30 days after hospital discharge,21 taking advantage of existing digital infrastructure developed for a hospital early warning system.22 23 24 25 After the development of this model, KPNC’s leadership decided to deploy it as the basis of an initiative, referred to as the Transitions Program, to standardize readmission prevention efforts across all 21 KPNC hospitals. Because the Transitions Program relied on dedicated implementation teams—a limited resource—its deployment was staggered and non-randomized, with KPNC hospitals going live sequentially over an 18 month period. In this report, we describe a hybrid quasi-experimental evaluation of the associations between implementation of this systems level care coordination intervention enabled by predictive analytics26 and 30 day readmission and mortality outcomes.

Methods

The reporting of this study conforms to the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) Statement.27

We conducted a retrospective cohort study with a hybrid difference-in-differences and regression discontinuity design approach, with the objective of characterizing associations between the implementation of the Transitions Program and 30 day readmission and mortality outcomes. Our approach aimed to emulate the analogous target trial,28 with a randomized stepped wedge design and following the protocol as described in supplementary table SM1.

Data collection

Our setting consisted of the 21 KPNC hospitals.21 29 30 31 32 33 Index hospital discharges had to meet several criteria: the patient was discharged alive from hospital between 1 June 2010 and 31 December 2018, the patient was aged ≥18 years at hospital admission, and the admission was not for childbirth (although post-delivery complications were included) or for same day surgery. We linked hospital stays for transferred patients and identified all deaths using public and internal sources.9 13 14 15 16 We assigned 30 day readmission and mortality to the discharging hospital and followed patients to the end of January 2019.
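The inclusion criteria above can be expressed as a simple filter. The following is a minimal sketch, not the study's code: the function and argument names are hypothetical, and `is_childbirth` is assumed to flag the delivery admission itself (post-delivery complications would not be flagged).

```python
from datetime import date

# Study window for index discharges, as stated in the text.
STUDY_START = date(2010, 6, 1)
STUDY_END = date(2018, 12, 31)


def is_index_discharge(
    discharge_date: date,
    discharged_alive: bool,
    age_at_admission: int,
    is_childbirth: bool,        # hypothetical flag: admission for delivery itself
    is_same_day_surgery: bool,  # hypothetical flag
) -> bool:
    """Return True when a discharge meets all stated inclusion criteria."""
    return (
        discharged_alive
        and STUDY_START <= discharge_date <= STUDY_END
        and age_at_admission >= 18
        and not is_childbirth
        and not is_same_day_surgery
    )
```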

Given that KPNC practice standards discourage direct admission to hospital from the outpatient setting, we considered readmissions to be non-elective if they began in the emergency department, if they began in the outpatient setting and the patient had elevated severity of illness (mortality risk ≥7.2% based on a risk score utilizing acute physiology alone),21 or if the principal diagnosis was an ambulatory care sensitive condition.34 All other hospital admissions that did not meet these criteria were classed as elective.
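The three criteria for a non-elective readmission can be written as a single rule in which any one criterion suffices. This is an illustrative sketch only; the class and field names are hypothetical and do not correspond to the study's variables.

```python
from dataclasses import dataclass

# Severity cut-off quoted in the text: mortality risk >= 7.2%
# from a risk score based on acute physiology alone.
SEVERITY_THRESHOLD = 0.072


@dataclass
class Readmission:
    # All field names are hypothetical.
    began_in_emergency_department: bool
    began_in_outpatient_setting: bool
    physiology_mortality_risk: float   # probability between 0 and 1
    ambulatory_care_sensitive: bool    # principal diagnosis is such a condition


def is_non_elective(readmission: Readmission) -> bool:
    """Apply the three stated criteria; meeting any one is enough."""
    severe_outpatient_start = (
        readmission.began_in_outpatient_setting
        and readmission.physiology_mortality_risk >= SEVERITY_THRESHOLD
    )
    return (
        readmission.began_in_emergency_department
        or severe_outpatient_start
        or readmission.ambulatory_care_sensitive
    )
```

Admissions for which `is_non_elective` returns `False` would be classed as elective under the definition above.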

Examining the membership histories of patients admitted to hospital also enabled us to determine whether their discharge satisfied National Committee for Quality Assurance-Healthcare Effectiveness Data and Information Set criteria. These criteria stipulate continuous health plan membership in the 12 months before hospital discharge and 30 days after hospital discharge.35 Finally, we captured whether a hospital admission was as an inpatient or for observation only, and we classified episodes that began as observational but led to an inpatient admission as inpatient admissions. Supplementary file appendix A provides further information on the patient level covariates collected and used in this study.

Intervention

Before implementation of the Transitions Program, individual KPNC hospitals employed non-standardized criteria to determine which admitted patients were eligible for discrete care processes targeting non-elective readmission. Examples of such processes, which varied considerably across the 21 hospitals, included proactive scheduling of follow-up appointments, face-to-face medication reconciliation with a pharmacist, and individualized patient instruction by nurses. After implementation of the Transitions Program at each KPNC hospital, the discharge process was standardized based on patients’ risk estimates generated every morning at 0600. These risk estimates were used to target a primarily telephone based care coordination intervention scheduled by trained Transitions Program case managers (table 1).

Table 1.

The Transitions Program readmission prevention intervention

| TSL risk level | Initial assessment | Week 1 | Week 2 | Week 3 | Week 4 |
|---|---|---|---|---|---|
| High (≥45%) | Phone follow-up within 24 to 48 hours and follow-up visit to a primary care physician within 2 to 5 days | Phone follow-up every other day | ≥2 phone follow-ups (more as needed) | Once weekly phone follow-up (more as needed) | Once weekly phone follow-up (more as needed) |
| Medium (25-45%) | Phone follow-up within 24 to 48 hours and follow-up visit to a primary care physician within 2 to 5 days | Once weekly phone follow-up (more as needed) | Once weekly phone follow-up (more as needed) | Once weekly phone follow-up (more as needed) | Once weekly phone follow-up (more as needed) |
| Low (<25%) | Usual care at discretion of discharging physician | Usual care at discretion of discharging physician | Usual care at discretion of discharging physician | Usual care at discretion of discharging physician | Usual care at discretion of discharging physician |

TSL=Transitions Support Level.

Patients with a predicted risk of the composite outcome <25% were considered at low risk and were assigned to receive usual care at the discretion of the discharging physician. Weeks 1 to 4 are relative to the discharge date, and the initial assessment occurs immediately after hospital discharge at the beginning of week 1.

The predictive algorithm used to assign the Transitions Program intervention is a logistic regression model that has been described previously,21 and which is now referred to as the transition support level score. It was developed using KPNC data from 2010 to 2013 and uses five predictors: a laboratory based acuity of illness score computed at admission (LAPS2), a comorbidity score (COPS2), length of stay, an indicator that the patient was “full code” status, and a variable with four levels denoting the pattern of previous hospital admissions in the 30 days preceding the current admission. Supplementary file appendix E describes the predictor variables in more detail, including coefficient estimates and standard errors. As originally developed, the transition support level score (also referred to as the RTCO30D model in Escobar et al21) exhibited fair calibration and discrimination (C statistic=0.756), improving on the discriminative ability of the LACE (length of stay, acuity of admission, comorbidities based on Charlson comorbidity score, and number of emergency visits in the last six months) score36 (C statistic=0.729) in a validation study comparing both models in the same KPNC dataset.

The first KPNC hospital went “live” with the Transitions Program on 7 January 2016, and implementation was completed across all 21 hospitals on 31 May 2017. Other than a training period, no ramp-up phase preceded the go live date at each hospital—that is, the implementation was applicable for all eligible patients awaiting discharge on or after the go live date for that hospital. Patients with an estimated risk of 30 day readmission or death of ≥25% (medium risk) computed on their discharge day were followed post-discharge by the Transitions Program. Furthermore, patients with an estimated risk of ≥45% at discharge were considered at high risk and were assigned to receive a version of the intervention with intensified telephone outreach (table 1). Stakeholders, including regional and hospital leadership and the KPNC Division of Research, chose these risk thresholds based on available staffing resources.

Patients with a predicted risk <25% received usual care, which varied across hospitals. Clinicians could, however, request that specific patients be assigned to the Transitions Program if, for example, they had additional information on social determinants of health associated with increased risk, such as homelessness, that are not easily captured by electronic health record data. Training on the intervention components was provided to KPNC service area staff, as well as to the relevant staff at individual hospitals immediately preceding each hospital’s go live date. Supplementary file appendix F provides additional details on the intervention, including standard workflows and example scripts used by Transitions Program staff.

In our analyses, we considered patients to be at increased risk and thus to have been assigned to the intervention if their predicted risk exceeded 25% and their admission occurred after the go live date for the discharging hospital. Hence, in keeping with the approach to emulate the target trial (supplementary table SM1), in our analyses we primarily estimate the observational analog of the intention-to-treat effect in a randomized trial. Compliance data were available for patients whose index discharges took place after July 2017 and were derived from a care management platform (KP CareLinx) through which case managers followed up on Transitions Program referrals and coordinated telephone outreach. After discharge of a flagged patient, Transitions Program case managers were directed to make up to three initial outreach phone calls to attempt to enroll the patient. If this initial outreach proved unsuccessful, then the referral was closed and the patient was not considered enrolled in the Transitions Program.
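The assignment rule used in the analyses can be sketched as follows. This is a hedged illustration rather than the study's implementation: the function names are hypothetical, the thresholds follow table 1 (≥25% medium, ≥45% high), and the comparison of the discharge date with the go live date using "on or after" is an assumption, because the text describes the timing in slightly different ways.

```python
from datetime import date

# Risk thresholds taken from table 1.
MEDIUM_THRESHOLD = 0.25
HIGH_THRESHOLD = 0.45


def transition_support_level(predicted_risk: float) -> str:
    """Map a predicted 30 day risk (0 to 1) to a support level."""
    if predicted_risk >= HIGH_THRESHOLD:
        return "high"
    if predicted_risk >= MEDIUM_THRESHOLD:
        return "medium"
    return "low"


def assigned_to_intervention(
    predicted_risk: float,
    discharge_date: date,
    hospital_go_live: date,
) -> bool:
    """Intention-to-treat style assignment: elevated risk and post go live.

    Assumption: discharges on the go live date itself count as post-implementation.
    """
    is_post_implementation = discharge_date >= hospital_go_live
    return is_post_implementation and transition_support_level(predicted_risk) != "low"
```

For example, a patient with a predicted risk of 0.30 discharged after the hospital's go live date would be counted as assigned, regardless of whether outreach later succeeded.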

Outcomes

The primary outcomes were non-elective readmission and post-discharge mortality in the 30 days after the index hospital discharge. For the non-elective readmission outcome, a hospital admission either as an inpatient or for observation only after the index discharge could count as a readmission. As a secondary outcome, we also assessed 30 day post-discharge mortality out of hospital—that is, deaths that occurred at a location other than a KPNC hospital within the 30 days post-discharge.

Statistical analyses

To characterize associations between the implementation of the Transitions Program and post-discharge outcomes, we applied two complementary quasi-experimental methods: two group two period difference-in-differences analyses and regression discontinuity designs.37 We also assessed longitudinal trends in the observed to expected ratio of the composite outcome of 30 day readmission or post-discharge mortality, where the expected number of such events was computed using the same statistical model used to assign the Transitions Program intervention.
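The observed to expected ratio mentioned above divides the number of observed events by the sum of the model's predicted risks. The sketch below assumes that each discharge carries a binary outcome and a predicted probability; the function name and the toy values are hypothetical.

```python
from typing import Sequence


def observed_to_expected(outcomes: Sequence[int], predicted_risks: Sequence[float]) -> float:
    """Observed events divided by expected events (sum of predicted risks)."""
    if len(outcomes) != len(predicted_risks):
        raise ValueError("outcomes and predicted_risks must have the same length")
    expected = sum(predicted_risks)
    if expected == 0:
        raise ValueError("expected number of events is zero")
    return sum(outcomes) / expected


# Toy data (hypothetical): two events among four discharges.
toy_ratio = observed_to_expected([1, 0, 0, 1], [0.5, 0.25, 0.25, 0.5])
```

A ratio below 1 means fewer events were observed than the model predicted; a downward trend after implementation is the pattern the analysis looks for in the medium and high risk group.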

In an observational study, a difference-in-differences analysis accounts for underlying secular trends that could potentially influence outcomes. This analysis relies on two main assumptions: the parallel trends assumption—that trends in the outcomes between the treated and non-treated groups are the same before the intervention, and the common shocks assumption—that unrelated effects during or after implementation affect both groups to the same extent.38 As the Transitions Program was rolled out to the 21 KPNC hospitals in a staggered, non-random fashion, in our target trial emulation approach, we conceptualized the data as if it had been generated by a non-randomized stepped wedge design,39 and we estimated associations using generalized linear mixed effects models that clustered random effects at the hospital and year levels.

This model included a binary term indicating if an admission occurred during the post-implementation period for that hospital, in addition to a binary term indicating medium or high (≥25%) risk. The model also included an interaction between these two terms, representing treatment assignment, and we interpreted its coefficient as the association between the Transitions Program intervention and the outcome. This model also adjusted for patient level covariates, including comorbidity burden and acuity at admission and discharge, as well as secular trends in the outcome over the study period through the random effect for the year of an index discharge. Supplementary file appendix B provides a full specification of this model, including details on implementation using the R programming language.
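To show what the interaction term captures, the sketch below computes an unadjusted difference-in-differences odds ratio from four cells of counts. It is a simplification and not the model described above: it omits patient level covariates and the hospital and year random effects, and all counts are invented for illustration. In a logistic model containing only the period term, the risk group term, and their interaction, the exponentiated interaction coefficient equals this ratio of odds ratios.

```python
import math
from typing import Dict, Tuple

Cell = Tuple[int, int]  # (events, total discharges)


def odds(events: int, total: int) -> float:
    """Odds of the outcome within one cell."""
    return events / (total - events)


def did_odds_ratio(cells: Dict[Tuple[str, str], Cell]) -> float:
    """Ratio of the post/pre odds ratio in the elevated risk group
    to the post/pre odds ratio in the low risk group."""
    or_elevated = odds(*cells[("elevated", "post")]) / odds(*cells[("elevated", "pre")])
    or_low = odds(*cells[("low", "post")]) / odds(*cells[("low", "pre")])
    return or_elevated / or_low


# Hypothetical counts, chosen only to illustrate the calculation.
toy_cells = {
    ("elevated", "pre"): (270, 1000),
    ("elevated", "post"): (250, 1000),
    ("low", "pre"): (90, 1000),
    ("low", "post"): (90, 1000),
}

ratio = did_odds_ratio(toy_cells)
interaction_coefficient = math.log(ratio)  # log odds scale
```

A ratio below 1 indicates that the odds of the outcome fell more (or rose less) in the elevated risk group than in the low risk group after implementation.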

To complement the difference-in-differences analyses,37 we also pooled the post-implementation data from all 21 hospitals to fit regression discontinuity designs,40 41 taking advantage of the existence of a continuous forcing variable—in this case, the transition support level risk score—with a threshold (25%) used to assign treatment. A regression discontinuity design estimates a local average treatment effect as the difference in the outcomes among the two groups of patients immediately near either side of this threshold. In our analyses, we assumed that treatment is completely determined by the forcing variable, representing a sharp regression discontinuity design. As a sensitivity analysis for the two primary outcomes, we also employed a fuzzy regression discontinuity design, which adjusts for individuals’ propensity to self-select into treatment.

The local average treatment effect estimate from a regression discontinuity design can be interpreted as the causal effect of the intervention for patients near the risk threshold, and this contrasts with the difference-in-differences approach, which estimates an average association across the whole cohort. Linear regressions were specified for readmission and local linear regressions for mortality, with the optimal bandwidth chosen by the Imbens-Kalyanaraman method.42 The regression discontinuity design makes several mild assumptions, which are further described in supplementary file appendix C.
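The idea behind a sharp regression discontinuity estimate can be sketched with two straight-line fits, one on each side of the threshold, compared at the threshold itself. This sketch makes several assumptions that differ from the analysis described above: it uses a fixed, hand-picked bandwidth rather than the Imbens-Kalyanaraman method, a uniform kernel, and noiseless toy outcome rates instead of binary patient outcomes. All names are hypothetical.

```python
from typing import List, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares fit; returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def sharp_rd_estimate(
    risks: Sequence[float],
    outcomes: Sequence[float],
    cutoff: float = 0.25,
    bandwidth: float = 0.05,
) -> float:
    """Difference between the right and left fitted values at the cutoff."""
    # Center the forcing variable so each intercept is the fitted value at the cutoff.
    left: List[Tuple[float, float]] = [
        (r - cutoff, y) for r, y in zip(risks, outcomes) if cutoff - bandwidth <= r < cutoff
    ]
    right: List[Tuple[float, float]] = [
        (r - cutoff, y) for r, y in zip(risks, outcomes) if cutoff <= r <= cutoff + bandwidth
    ]
    left_intercept, _ = fit_line(*zip(*left))
    right_intercept, _ = fit_line(*zip(*right))
    return right_intercept - left_intercept


# Toy data (hypothetical): outcome rate rises with risk and drops by 0.03 at the cutoff.
toy_risks = [i / 1000 for i in range(200, 301, 5)]
toy_rates = [0.10 + 0.6 * r - (0.03 if r >= 0.25 else 0.0) for r in toy_risks]
estimate = sharp_rd_estimate(toy_risks, toy_rates, cutoff=0.25, bandwidth=0.06)
```

Because patients at or above the cutoff are the ones assigned to the intervention, a negative estimate corresponds to a lower outcome rate among assigned patients near the threshold.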

As a sensitivity analysis, we also replicated these analyses in two additional cohorts: inpatient hospital admissions only (excluding observation stays), and only those hospital admissions meeting the Healthcare Effectiveness Data and Information Set criteria, which represent a subset of the inpatient only cohort. In these sensitivity analyses, we used the same definition of the primary readmission outcome as in the main analysis: both inpatient admissions and observation stays in the 30 days post-discharge were counted as readmissions.

Patient and public involvement

No patients or members of the public were involved in the conceptualization or design of this study, nor in the interpretation of the results, primarily due to the lack of funding allotted for such purposes.

Results

Among patients discharged from any of the 21 KPNC hospitals during the study period, 1 539 285 discharges corresponding to 739 040 unique patients met the inclusion criteria, of which 1 269 460 discharges (82.5%) followed inpatient hospital admissions and 269 825 discharges (17.5%) followed stays for observation. Overall, 1 127 778 (73.3%) patients were discharged during the pre-implementation period and 411 507 (26.7%) after implementation. Table 2 summarizes all discharges across both periods and stratified by period.

Table 2.

Characteristics of cohort over study period (June 2010-December 2018) and before and after implementation of the Transitions Program, including both index and non-index hospital admissions. Values are percentages unless stated otherwise (numbers in parentheses denote the range across all 21 study hospitals)

| Characteristics | Total | Pre-implementation | Post-implementation | P value | Standardized mean difference |
|---|---|---|---|---|---|
| No of hospital admissions | 1 584 902 | 1 161 452 | 423 450 | | |
| No of patients | 753 587 | 594 053 | 266 478 | | |
| Inpatient* | 82.8 (69.7-90.6) | 84.4 (73.1-90.7) | 78.5 (57.7-90.3) | <0.001 | −0.151 |
| Observation | 17.2 (9.4-30.3) | 15.6 (9.3-26.9) | 21.5 (9.7-42.3) | <0.001 | 0.151 |
| Inpatient stay <24 hours† | 5.2 (3.3-6.7) | 5.1 (3.8-6.5) | 5.6 (1.8-9.1) | <0.001 | 0.041 |
| Transport-in‡ | 4.5 (1.4-8.7) | 4.5 (1.7-8.8) | 4.5 (0.4-8.4) | 0.56 | −0.001 |
| Mean age | 65.3 (62.2-69.8) | 65.1 (61.9-69.6) | 65.8 (62.8-70.4) | <0.001 | 0.038 |
| Men | 47.5 (43.4-53.8) | 47.0 (42.4-53.5) | 48.9 (45.3-54.9) | <0.001 | 0.037 |
| Kaiser Foundation health plan member | 93.5 (75.3-97.9) | 93.9 (80.0-98.0) | 92.5 (61.7-97.6) | <0.001 | −0.052 |
| Met strict membership definition§ | 80.0 (63.4-84.9) | 80.6 (67.6-85.5) | 78.5 (51.3-83.8) | <0.001 | −0.053 |
| Met regulatory definition§ | 61.9 (47.2-69.7) | 63.9 (50.2-72.2) | 56.5 (38.7-66.6) | <0.001 | −0.152 |
| Admission through emergency department | 70.4 (56.7-82.0) | 68.9 (56.0-80.3) | 74.4 (58.4-86.6) | <0.001 | 0.121 |
| Median Charlson comorbidity score¶ | 2.0 (2.0-3.0) | 2.0 (2.0-2.0) | 3.0 (2.0-3.0) | <0.001 | 0.208 |
| Charlson comorbidity score ≥4 | 35.2 (29.2-40.7) | 33.2 (28.2-39.8) | 40.9 (33.0-46.2) | <0.001 | 0.161 |
| Mean COPS2** | 45.6 (39.1-52.4) | 43.5 (38.4-51.5) | 51.2 (39.7-55.8) | <0.001 | 0.159 |
| COPS2 ≥65 | 26.9 (21.5-32.0) | 25.3 (21.0-31.6) | 31.1 (22.5-35.4) | <0.001 | 0.129 |
| Mean admission LAPS2†† | 58.6 (48.0-67.6) | 57.6 (47.4-65.8) | 61.3 (50.2-72.8) | <0.001 | 0.092 |
| Mean discharge LAPS2 | 46.7 (42.5-50.8) | 46.3 (42.5-50.8) | 47.6 (42.3-52.9) | <0.001 | 0.039 |
| LAPS2 ≥110 | 12.0 (7.8-16.0) | 11.6 (7.5-15.2) | 12.9 (8.3-18.4) | <0.001 | 0.039 |
| Full code at discharge | 84.4 (77.3-90.5) | 84.5 (77.7-90.5) | 83.9 (75.9-90.5) | <0.001 | −0.016 |
| Mean length of stay (days) | 4.8 (3.9-5.4) | 4.9 (3.9-5.4) | 4.7 (3.9-5.6) | <0.001 | −0.034 |
| Discharge disposition‡‡: | | | | | 0.082 |
| Regular discharge | 72.7 (61.0-86.2) | 73.3 (63.9-85.9) | 71.0 (52.1-86.9) | <0.001 | |
| Home health | 16.1 (6.9-23.3) | 15.2 (6.9-22.6) | 18.5 (7.0-34.5) | <0.001 | |
| Regular skilled nursing facility | 9.9 (5.9-14.3) | 10.0 (6.0-15.2) | 9.5 (5.6-12.4) | <0.001 | |
| Custodial skilled nursing facility | 1.3 (0.7-2.5) | 1.5 (0.8-2.7) | 0.9 (0.4-1.8) | <0.001 | |
| Hospice referral | 2.6 (1.7-4.4) | 2.6 (1.7-4.6) | 2.7 (1.5-4.0) | <0.001 | 0.007 |
| Outcomes§§: | | | | | |
| Inpatient mortality | 2.8 (2.1-3.3) | 2.8 (2.1-3.3) | 2.8 (1.8-3.3) | 0.17 | −0.003 |
| 30 day mortality | 6.0 (4.0-7.3) | 6.1 (4.1-7.6) | 5.9 (3.9-6.8) | <0.01 | −0.006 |
| Any readmission | 14.5 (12.7-17.2) | 14.3 (12.3-17.3) | 15.1 (13.3-17.0) | <0.001 | 0.021 |
| Any non-elective readmission | 12.4 (10.4-15.4) | 12.2 (10.2-15.5) | 13.1 (10.8-15.4) | <0.001 | 0.029 |
| Non-elective inpatient readmission | 10.5 (8.2-12.6) | 10.4 (8.1-12.8) | 10.8 (8.6-12.9) | <0.001 | 0.012 |
| Non-elective observation readmission | 2.4 (1.4-3.7) | 2.2 (1.2-3.4) | 3.0 (1.9-5.6) | <0.001 | 0.049 |
| 30 day post-discharge mortality | 4.0 (2.6-5.2) | 4.1 (2.7-5.4) | 3.9 (2.3-4.9) | <0.001 | −0.007 |
| Composite outcome | 15.2 (12.9-18.8) | 15.0 (12.9-19.1) | 15.8 (13.3-18.0) | <0.001 | 0.023 |

COPS2=comorbidity point score, version 2; LAPS2=laboratory based acute physiology score, version 2.

*Rates employ hospital admission episodes (which can include linked stays for patients who were transported) as the denominator.

†Hospital episodes where patients transitioned from observation to inpatient status are classified as inpatients.

‡Refers to patients whose linked hospital admission episode began at a hospital not owned by Kaiser Foundation Hospitals. These hospital admissions, involving 60 326 patients, had increased inpatient (4.9%) and 30 day (9.2%) mortality, compared with 2.8% and 6.0% in the rest of the Kaiser Permanente Northern California cohort.

§The Healthcare Effectiveness Data and Information Set (HEDIS) membership definition restricts the denominator to patients with continuous health plan membership in the 12 months preceding and the 30 days after hospital discharge, with a maximum gap in coverage of 45 days in the preceding 12 months. The public reporting definition only includes patients meeting HEDIS membership criteria and excludes hospital admissions for observation and inpatient admissions with length of stay <24 hours.

¶Scores were computed using the methodology of Deyo et al.43

**COPS2 is assigned based on all diagnoses incurred by a patient in the 12 months preceding the index hospital admission. The univariate relationship of COPS2 with 30 day mortality is: 0-39, 1.7%; 40-64, 5.2%; ≥65, 9.0%.

††LAPS2 is assigned based on a patient’s worst vital signs, pulse oximetry, neurological status, and 16 laboratory test results in the preceding 24 hours (discharge LAPS2) or 72 hours (admission LAPS2). The univariate relationship of an admission LAPS2 with 30 day mortality is: 0-59, 1.0%; 60-109, 5.0%; ≥110, 13.7%; for discharge LAPS2, the relationship is 0-59, 2.2%; 60-109, 8.1%; ≥110, 20.5%.

‡‡Disposition among patients discharged alive from hospital. Hospice referral is independent of discharge disposition.

§§Only patients who survived to discharge are included in the post-discharge outcomes. Non-elective readmissions are those which began in the emergency department, or were experienced by patients with an ambulatory care sensitive condition or with an admission LAPS2 score ≥60, as described in Escobar et al.21 The composite outcome corresponds to non-elective readmission to hospital or death within 30 days after discharge. These figures do not include transports-in (see ‡).

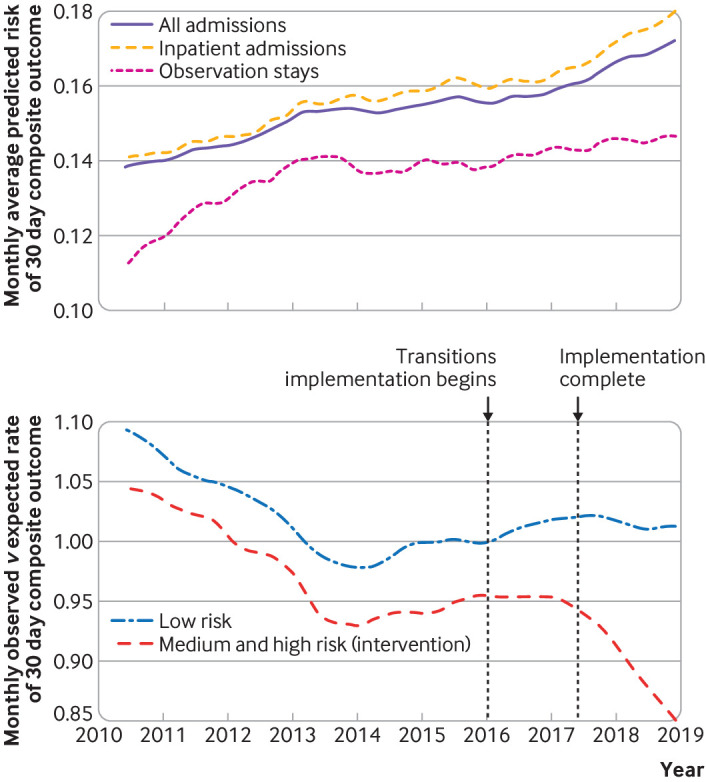

Among the 739 040 included patients, the mean age was 65.0 years and 52.5% were women. The overall 30 day non-elective readmission rate among the index discharges was 12.4%, and 30 day post-discharge mortality was 4.0%. The 30 day non-elective readmission rate increased over the study period, from 12.1% in 2010 to 13.3% in 2018, which paralleled an increase in the predicted risk of the composite outcome at discharge (fig 1). This increase in predicted risk appeared to be driven by both increased acuity at admission and comorbidity burden across levels of predicted risk over this period, whereas acuity at discharge appeared largely unchanged (supplementary tables SR6-SR8). In addition, the mean monthly discharge rate also decreased from 64.5% to 52.6% over this period. Moreover, the calibration of the transition support level risk estimates used to assign the Transitions Program intervention appeared stable over the study period, although these estimates tended to overpredict actual risk among patients with a predicted risk exceeding 40% (supplementary figure SR9).

Fig 1.

(Top) Monthly average predicted risk of composite outcome of 30 day post-discharge non-elective readmission or mortality among all Kaiser Permanente Northern California (KPNC) hospital discharges, June 2010 to December 2018, for three groups. (Bottom) Monthly observed to expected rate of 30 day composite outcome for discharged patients with predicted low risk (<25%) or predicted medium and high risk (≥25%), June 2010 to December 2018. These subgroups correspond to the non-treated and intervention groups for the Transitions Program intervention, respectively. Vertical lines denote start of the implementation period, when first KPNC hospital went “live” with the Transitions Program (7 January 2016); and implementation complete, when last KPNC hospital went “live” (31 May 2017). All time series were smoothed using a three month moving average, and were seasonally adjusted using the X11 method.44

Of the 411 507 patients with index discharges taking place post-implementation, 80 424 (19.5%) had a predicted readmission risk that was medium or high (elevated) and thus were assigned to be followed by the Transitions Program. Among these discharged patients, there were 21 355 (26.5%) non-elective readmissions and 11 073 (13.8%) deaths within 30 days. The observed to expected ratio of the 30 day composite outcome appeared to significantly decrease (P=0.006 for a differential trend post-implementation) after the completion of the Transitions Program implementation in mid-2017 in the medium and high risk subgroup but not in the low risk subgroup (P=0.37; fig 1).

Initial outreach and enrollment were successful in 14 239 of 39 853 (35.7%) patients at medium and high risk with index discharges from July 2017 to December 2018 for whom tracking data were available. Compared with non-enrollees, on average enrollees carried a higher comorbidity burden (COPS2 score 125.2 points for enrollees v 117.1 for non-enrollees; P<0.001 by t test) but were slightly younger (73.0 v 74.6 years; P<0.001) and were somewhat less acutely ill at discharge (discharge LAPS2 score 60.6 v 62.6 points; P<0.001). Among 221 400 patients with low risk index discharges in this same period, an additional 2924 (1.3%) were manually upgraded to medium risk by their inpatient care team before hospital discharge (but were not considered assigned to the medium and high risk group in our analysis), of which 1257 (42.9%) were successfully enrolled in the Transitions Program.

Enrollees in the Transitions Program at high risk underwent an average of 5.82 documented outreach encounters in the 30 days after discharge, compared with 5.64 for enrollees at medium risk; the actual frequency of outreach was not statistically significantly different between these groups (P=0.23 by the Mann-Whitney U-test). In this same period, 89.3% (2120 of 2373 such enrollees with data recorded) of high risk enrollees received any referral to a primary care physician, compared with 88.2% (9195 of 10 423) of enrollees at medium risk; this difference was not significant (P=0.13 by χ2 test).
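The comparison of primary care referral proportions can be reproduced approximately from the counts reported above. The sketch below applies a Pearson χ2 test to the 2×2 table without a continuity correction; whether the original analysis used such a correction is not stated, so a small difference from the reported P value would be expected. The function name is hypothetical.

```python
import math
from typing import Tuple


def two_proportion_chi_square(
    events_a: int, total_a: int, events_b: int, total_b: int
) -> Tuple[float, float]:
    """Pearson chi-square statistic (1 degree of freedom) and P value for a 2x2 table."""
    observed = [
        [events_a, total_a - events_a],
        [events_b, total_b - events_b],
    ]
    row_totals = [total_a, total_b]
    col_totals = [observed[0][0] + observed[1][0], observed[0][1] + observed[1][1]]
    grand_total = total_a + total_b

    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed[i][j] - expected) ** 2 / expected

    # Survival function of a chi-square variable with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Counts reported in the text: referrals among high risk and medium risk enrollees.
statistic, p_value = two_proportion_chi_square(2120, 2373, 9195, 10423)
```

With these counts the P value is above 0.05, in line with the reported absence of a significant difference between the groups.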

30 day non-elective readmissions

Overall, the implementation of the Transitions Program was statistically significantly associated with reduced odds of 30 day non-elective readmission (adjusted odds ratio 0.91, 95% confidence interval 0.89 to 0.93; table 3), corresponding to an absolute risk reduction of −2.5% (95% confidence interval −3.1% to −2.0%; number needed to treat 39.7, 95% confidence interval 32.3 to 49.1) given an estimated control event rate of 26.9% among participants with index discharges and predicted risk >25% during the pre-implementation period. Moreover, using the post-implementation data to fit a regression discontinuity yielded a local average treatment effect estimate of the absolute risk reduction of −2.7% (95% confidence interval −3.2% to −2.2%) for patients with medium risk index discharges near the risk threshold of 25% used for enrollment (table 4). Finally, when accounting for compliance by fitting a fuzzy regression discontinuity, the local average treatment effect estimate of the absolute risk reduction for 30 day readmission was −3.8% (−5.1% to −2.5%).
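The number needed to treat is the reciprocal of the absolute risk reduction. The sketch below applies that relationship to the rounded estimates quoted above; the reported values (39.7, 32.3 to 49.1) differ slightly from what the rounded inputs give, presumably because they were computed from unrounded estimates. The function name is hypothetical.

```python
def number_needed_to_treat(absolute_risk_reduction: float) -> float:
    """Reciprocal of the magnitude of an absolute risk reduction given as a proportion."""
    if absolute_risk_reduction == 0:
        raise ValueError("number needed to treat is undefined when the risk difference is zero")
    return 1.0 / abs(absolute_risk_reduction)


# Rounded estimates from the text: -2.5% (95% confidence interval -3.1% to -2.0%).
nnt_point = number_needed_to_treat(-0.025)
nnt_lower = number_needed_to_treat(-0.031)  # larger reduction, fewer patients needed
nnt_upper = number_needed_to_treat(-0.020)  # smaller reduction, more patients needed
```

Note that the bounds swap order: the larger risk reduction yields the smaller number needed to treat.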

Table 3.

Difference-in-differences estimates of associations between implementation of the Transitions Program and 30 day post-discharge outcomes by cohort

| 30 day outcome | All admissions | Inpatient only | HEDIS regulatory definition only | High risk only* |
|---|---|---|---|---|
| Non-elective readmission | 0.91 (0.89 to 0.93) | 0.89 (0.87 to 0.91) | 0.90 (0.87 to 0.92) | 0.97 (0.89 to 1.06) |
| Post-discharge mortality | 1.00 (0.95 to 1.04) | 0.99 (0.95 to 1.04) | NA† | 0.96 (0.88 to 1.05) |
| Out-of-hospital mortality | 1.02 (0.97 to 1.06) | 1.01 (0.96 to 1.06) | NA† | 1.00 (0.91 to 1.10) |

Values are adjusted odds ratios (95% CI).

HEDIS=Healthcare Effectiveness Data and Information Set; NA=not applicable.

*Patients with ≥45% predicted risk of composite outcome.

†Excludes patients with <30 days of follow-up, and hence also excludes patients who died in this period.
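As a rough illustration of the difference-in-differences logic behind table 3, the sketch below contrasts the pre to post change in an intervention group with the change in a comparison group, on both the risk difference and the odds ratio scales. This is a minimal unadjusted 2×2 version, not the adjusted regression model used in the study. All four rates are hypothetical, except that the pre-implementation rate in the intervention group is set to the 26.9% control event rate quoted in the text.

```python
def odds(p: float) -> float:
    """Convert a probability to odds."""
    return p / (1.0 - p)


def did_risk_difference(treated_pre, treated_post, control_pre, control_post):
    """Change in the treated group minus change in the comparison group."""
    return (treated_post - treated_pre) - (control_post - control_pre)


def did_odds_ratio(treated_pre, treated_post, control_pre, control_post):
    """Ratio of the post v pre odds ratios (treated over comparison)."""
    treated_or = odds(treated_post) / odds(treated_pre)
    control_or = odds(control_post) / odds(control_pre)
    return treated_or / control_or


# Hypothetical 30 day readmission rates, for illustration only.
rates = dict(
    treated_pre=0.269,   # predicted risk >25%, before implementation (from the text)
    treated_post=0.250,  # hypothetical
    control_pre=0.080,   # predicted risk <=25%, before implementation (hypothetical)
    control_post=0.081,  # hypothetical
)

did_rd = did_risk_difference(**rates)
did_or = did_odds_ratio(**rates)
print(f"risk difference scale: {did_rd:+.3f}")
print(f"odds ratio scale: {did_or:.3f}")
```

Subtracting the change in the comparison group removes secular trends shared by both groups, which is why the estimate relies on the parallel trends assumption.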

Table 4.

Regression discontinuity estimates of associations between implementation of the Transitions Program and 30 day post-discharge outcomes

| 30 day outcome | All admissions | Inpatient only | HEDIS regulatory definition only† |
|---|---|---|---|
| Non-elective readmission | −2.7 (−3.2 to −2.2) | −2.9 (−3.4 to −2.3) | −2.5 (−3.1 to −1.9) |
| Post-discharge mortality | −0.7 (−1.4 to −0.0) | −0.9 (−1.7 to −0.1) | NA† |
| Out-of-hospital mortality | −0.4 (−1.1 to 0.2) | −0.5 (−1.2 to 0.2) | NA† |

Values are absolute risk reductions* (%, 95% CI).

HEDIS=Healthcare Effectiveness Data and Information Set; NA=not applicable.

Regression discontinuity design fits the estimates only near 25% risk threshold used to assign the Transitions Program intervention; hence, these local average treatment effect (LATE) estimates do not generalize to higher or lower risk levels.

Estimate for LATE.

Excludes patients with <30 days of follow-up, and hence also excludes patients who died in this period.
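To make the regression discontinuity estimates in table 4 more concrete, the sketch below fits a separate straight line on each side of a risk cut-off within a fixed bandwidth and reports the gap between the two fitted values at the cut-off. It is an assumption-laden toy example. The data are simulated, the outcome is a continuous noisy rate rather than a binary event, the kernel is uniform, and the bandwidth of 0.05 is arbitrary. None of these choices is taken from the study.

```python
import random


def ols_intercept(xs, ys):
    """Intercept of a simple least squares line y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x


def sharp_rd_jump(risk, outcome, cutoff, bandwidth):
    """Local linear estimate of the outcome discontinuity at the cutoff.

    Risk is centred at the cutoff so that each fitted intercept is the
    predicted outcome exactly at the threshold.
    """
    below = [(r - cutoff, y) for r, y in zip(risk, outcome)
             if cutoff - bandwidth <= r < cutoff]
    above = [(r - cutoff, y) for r, y in zip(risk, outcome)
             if cutoff <= r <= cutoff + bandwidth]
    fit_below = ols_intercept(*zip(*below))
    fit_above = ols_intercept(*zip(*above))
    return fit_above - fit_below


# Simulated data: outcome rises with predicted risk and drops by 0.03
# at the 25% threshold where the (simulated) intervention is assigned.
rng = random.Random(0)
risk = [rng.uniform(0.0, 0.6) for _ in range(20000)]
outcome = [0.05 + 0.8 * r - (0.03 if r >= 0.25 else 0.0) + rng.gauss(0, 0.05)
           for r in risk]

jump = sharp_rd_jump(risk, outcome, cutoff=0.25, bandwidth=0.05)
print(f"estimated jump at cutoff: {jump:+.3f}")
```

Because only observations close to the cut-off enter the fit, the estimate describes patients near the threshold, which is the sense in which it is a local average treatment effect.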

In a hospital stratified difference-in-differences analysis (supplementary figure SR10), with one exception (hospital D), all KPNC hospitals showed either statistically significantly reduced odds of readmission or a trend towards the same. Regression discontinuity estimates stratified by hospital were consistent with this pattern: 11 of the 21 hospital specific estimates were statistically significant and negative, suggesting reductions in readmission rates among relatively lower risk patients near the 25% risk cut-off (supplementary table SR11).

When restricting the denominator to patients with inpatient index hospital admissions (thus excluding observation admissions), implementation was also statistically significantly associated with reduced odds of any 30 day non-elective readmission (adjusted odds ratio 0.89, 95% confidence interval 0.87 to 0.91; local average treatment effect estimate of absolute risk reduction −2.9%, 95% confidence interval −3.4% to −2.3%; table 3 and table 4). Similarly, restricting the denominator further to consider only patients with discharges after HEDIS eligible inpatient hospital admissions as index events yielded comparable estimates of the odds ratio (adjusted odds ratio 0.90, 95% confidence interval 0.87 to 0.92) and the absolute risk reduction (local average treatment effect −2.5%, 95% confidence interval −3.1% to −1.9%; table 3 and table 4).

However, this benefit appeared attenuated for high risk patients with index discharges, among whom implementation of the Transitions Program was not significantly associated with the odds of any 30 day non-elective readmission (adjusted odds ratio 0.97, 95% confidence interval 0.89 to 1.06), nor was the regression discontinuity estimate using the high risk threshold of 45% as a cut-off statistically significant (local average treatment effect estimate of absolute risk reduction −1.2%, 95% confidence interval −2.6% to 0.1%).

30 day post-discharge mortality

The implementation of the Transitions Program did not appear to be statistically significantly associated with the overall odds of 30 day post-discharge mortality when considering patients with discharges after both inpatient hospital admissions and observation only stays as index events (adjusted odds ratio 1.00, 95% confidence interval 0.95 to 1.04) or those after inpatient index hospital admissions only (0.99, 0.95 to 1.04). A similar trend was observed for high risk patients with index discharges (0.96, 0.88 to 1.05; local average treatment effect estimate of absolute risk reduction at 45% high risk threshold −0.6%, 95% confidence interval −1.8% to 0.6%).

When these estimates were stratified by KPNC hospital, only three facilities had statistically significant regression discontinuity estimates; at two facilities, the value of the local average treatment effect estimate was positive, suggesting increased mortality, whereas for the third hospital, the treatment effect estimate was negative, implying decreased mortality (supplementary table SR11). Across the hospital specific difference-in-differences estimates, only one hospital had statistically significantly increased odds of 30 day post-discharge mortality; for all others, the estimated associations with mortality were non-significant (supplementary figure SR12).

The regression discontinuity analysis, however, yielded some evidence for an association of the Transitions Program with 30 day post-discharge mortality for medium risk patients with index discharges near the 25% risk threshold, as shown by local average treatment effect estimates of the absolute risk reduction of −0.7% (95% confidence interval −1.4% to −0.0%) when including index discharges after both inpatient and observation hospital admissions in the denominator, and −0.9% (−1.7% to −0.1%) for index discharges after inpatient hospital admissions only (table 4). Accounting for compliance by estimating a fuzzy regression discontinuity yielded a local average treatment effect estimate of the absolute risk reduction for 30 day post-discharge mortality of −0.6% (−3.1% to 0.9%).

No evidence was found for any association between implementation of the Transitions Program and out-of-hospital mortality in the 30 days after discharge, either for all patients with index discharges (adjusted odds ratio 1.02, 95% confidence interval 0.97 to 1.06; local average treatment effect estimate of absolute risk reduction −0.4%, 95% confidence interval −1.1% to 0.2%) or for patients with index discharges after inpatient hospital admissions (adjusted odds ratio 1.01, 95% confidence interval 0.96 to 1.06; local average treatment effect estimate of absolute risk reduction −0.5%, 95% confidence interval −1.2% to 0.2%; table 3 and table 4).

Other associations with the Transitions Program implementation

In a complementary difference-in-differences analysis, the implementation of the Transitions Program was also associated with a mean change in hospital length of stay of −12.1 hours (95% confidence interval −13.4 to −10.9 hours) among medium and high risk patients during the hospital admission leading to an index discharge. However, no significant association was found between implementation and the severity of illness at the beginning of a readmission, as measured by the LAPS2 score at admission among patients who were assigned to be followed by the Transitions Program but who were subsequently readmitted (mean LAPS2 decrease −0.66 points, 95% confidence interval −1.36 to 0.03). Likewise, implementation was not significantly associated with the odds of in-hospital death during a readmission among patients who were assigned to be followed by the Transitions Program but who were subsequently readmitted (adjusted odds ratio 1.02, 95% confidence interval 0.93 to 1.11).

Discussion

This observational evaluation of a care coordination intervention to prevent hospital readmission in a community setting found that implementation was statistically significantly associated with decreased 30 day readmission rates. Moreover, implementation did not seem to be statistically significantly associated with 30 day post-discharge mortality, except among patients at relatively lower risk, among whom our analyses found some evidence of benefit. Our evaluation relied on three primary pieces of evidence: longitudinal trends in risk adjusted rates in the intervention and non-treated groups, together with estimates of association derived from two complementary quasi-experimental methods. These results were replicated across three different cohort definitions, as well as across KPNC facilities, all of which except one showed statistically significantly reduced odds of readmission associated with implementation of the Transitions Program, or a trend towards the same.

Strengths and weaknesses of this study

Our study has several strengths. First, we took advantage of laboratory based acuity of illness measures available at admission and discharge—these are often not available in claims based and other analyses—which represent a unique strength of our analysis insofar as these measures could yield more accurate risk adjustment. Second, given KPNC’s status as an integrated health system and its extent of information exchange with other hospitals and health systems in northern California and elsewhere, the degree of outcome follow-up in our study, although not necessarily guaranteed to be complete, might be greater than that of single institution studies and others more limited in scope. Third, unlike in many other studies, we were able to stratify the data by KPNC hospital, which allowed us to study the potential heterogeneity in the associations between implementation and outcomes across all 21 hospitals. With one or two exceptions, the estimated hospital specific associations appeared to recapitulate the overall findings.

Fourth, in conjunction with the more detailed acuity of illness and other patient level data, our study is also notable for its large and relatively heterogeneous cohort compared with other studies. Finally, the design of both the predictive model and the intervention itself means it could easily be applied in other settings if the requisite digital infrastructure is available, primarily to both provide real time risk estimates and assign referrals for patients to be followed post-discharge. In particular, the transition support level predictive model used to assign the intervention is simple, consisting of only five predictors. Although this model has not yet been externally validated, its components, including the LAPS and COPS scores, have been successfully computed (see supplementary file for full details) and used for predictive purposes in other populations outside of KPNC.45 46 47

Our study also has several limitations. First, the rate of compliance, as measured by successful enrollment into the Transitions Program, was relatively low. At 35.7% of eligible hospital discharges, however, this rate was higher than that observed (25.2%) in a randomized trial of a comparable intervention.20 Moreover, the data used to assess compliance were only available for a subset of patients discharged during the latter half of the post-implementation period. While this phenomenon could have attenuated the true associations between implementation and outcomes, the fuzzy regression discontinuity estimate appeared to recapitulate our primary findings for the readmission outcome and suggested that the association of implementation with reduced risk of readmission might have been stronger among patients who were actually enrolled.
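The intuition for why a fuzzy regression discontinuity estimate can exceed the sharp one under partial compliance can be sketched with the standard ratio (Wald type) estimator, in which the jump in the outcome at the threshold is divided by the jump in the probability of actually receiving the intervention. The numbers below are entirely hypothetical and the function name is illustrative. The study's own fuzzy estimates were based on the subset of discharges with compliance data, so they cannot be reproduced from the figures quoted in this section.

```python
def fuzzy_rd_late(outcome_jump: float, uptake_jump: float) -> float:
    """Ratio estimator for a fuzzy regression discontinuity.

    outcome_jump: discontinuity in the outcome at the threshold
    uptake_jump:  discontinuity in the probability of receiving the
                  intervention at the threshold (between 0 and 1)
    """
    if uptake_jump == 0:
        raise ValueError("no discontinuity in uptake; estimator is undefined")
    return outcome_jump / uptake_jump


# Hypothetical inputs: a 2 percentage point drop in the outcome at the
# threshold, with enrollment rising by 50 percentage points there.
late = fuzzy_rd_late(outcome_jump=-0.02, uptake_jump=0.5)
print(f"local average treatment effect among compliers: {late:+.3f}")
```

With full compliance the uptake jump equals 1 and the fuzzy and sharp estimates coincide.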

Second, given the observational nature of our study, our ability to ascribe causality to the observed associations is necessarily somewhat limited. In particular, we were not able to exclude any potential contribution of unobserved confounding, although we performed multiple checks of the key assumptions required for the validity of both the difference-in-differences and the regression discontinuity analyses, and the results of these checks appeared consistent with these assumptions. Furthermore, we were able to replicate the observed associations across different cohort definitions, as well as across nearly all KPNC hospitals. This system-wide replication reduces a potential concern that the observed overall association might have been isolated to several hospitals that were unusually effective at implementation, or otherwise might have arisen as a result of some other form of idiosyncratic variation across KPNC hospitals. Finally, while the transition support level risk model used for treatment assignment appeared well calibrated for low risk patients as well as for those near the medium risk (25%) assignment threshold, it tended to overpredict actual risk for patients with predicted risks exceeding 40%. However, the overall extent of bias introduced by this observed miscalibration is likely negligible, owing largely to these higher risk patients comprising a small proportion (roughly 5%) of the post-implementation cohort.
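A calibration check of the kind described above can be sketched by grouping patients into bands of predicted risk and comparing the mean predicted risk with the observed event rate in each band. The example below uses a small made-up dataset in which the highest band is deliberately overpredicted. The band edges, the data, and the function name are assumptions chosen for illustration and do not come from the study.

```python
def calibration_by_bin(predicted, observed, edges):
    """Compare mean predicted risk with the observed event rate per risk band.

    Returns one tuple per non-empty band:
    (lower_edge, upper_edge, n, mean_predicted, observed_rate).
    Bands are half open [lower, upper), except the last, which includes
    its upper edge.
    """
    rows = []
    last_edge = edges[-1]
    for lower, upper in zip(edges[:-1], edges[1:]):
        members = [
            i for i, p in enumerate(predicted)
            if lower <= p < upper or (upper == last_edge and p == upper)
        ]
        if not members:
            continue
        mean_predicted = sum(predicted[i] for i in members) / len(members)
        observed_rate = sum(observed[i] for i in members) / len(members)
        rows.append((lower, upper, len(members), mean_predicted, observed_rate))
    return rows


# Made-up data: 10 patients per band, with 1, 3, and 3 events respectively.
predicted = [0.10] * 10 + [0.30] * 10 + [0.50] * 10
observed = ([1] + [0] * 9) + ([1] * 3 + [0] * 7) + ([1] * 3 + [0] * 7)

rows = calibration_by_bin(predicted, observed, edges=(0.0, 0.25, 0.40, 1.0))
for lower, upper, n, mean_predicted, observed_rate in rows:
    print(f"{lower:.2f} to {upper:.2f}: n={n}, "
          f"predicted={mean_predicted:.2f}, observed={observed_rate:.2f}")
```

In this toy output the top band shows a predicted risk above the observed rate, which is the pattern of overprediction described for patients with predicted risks exceeding 40%.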

Third, the full extent of the generalizability of our findings is unclear. Compared to other settings, the KPNC cohort studied here comprises an insured population receiving care within a highly integrated health system. While other systems exist in the US and elsewhere exhibiting a similarly high degree of integration, not all settings possess the information technology capability required to provide real time and customized risk estimates. Thus, the extent of reduction in readmission rates observed here might not be replicable in all settings. This could be compounded by KPNC’s status as a capitated system, which incentivizes preventing hospital admission more generally, and which might also partly explain why readmission rates in our study appeared to improve while mortality did not increase, in contrast with the findings of studies such as by Wadhera et al.4 Finally, the rate of admission for observation at KPNC was relatively high and increased over time, but this finding is in line with underlying secular trends in observation use in the United States.48 49

Strengths and weaknesses in relation to other studies

Our findings appear to align closely with those of several recently published studies that also evaluated the use of a predictive model to target similarly designed care coordination interventions to high risk patients.17 20 50 51 Notably, the Aiming to Improve Readmissions Through InteGrated Hospital Transitions (AIRTIGHT) trial20 estimated both intention-to-treat and per protocol effects of that intervention on readmission, which were roughly similar in magnitude (10% and 20% reductions in relative risk, respectively) to the observational analogs of those estimates yielded by our analyses. In addition, AIRTIGHT is also notable in that patients with predicted risks >20% were targeted, comparable to the 25% risk threshold used by the Transitions Program. Similarly, Dhalla et al17 and Low et al50 also observed analogous reductions in readmission rates, although those studies enforced more stringent eligibility criteria (and thus potentially reached more engaged patient populations) compared with AIRTIGHT or our study, and in the case of Dhalla et al the estimated effect was not statistically significant.
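For a rough sense of scale, the overall absolute risk reduction reported in the results can be expressed as a relative risk reduction by dividing it by the control event rate. The sketch below does this with the rounded values from the results (−2.5% and 26.9%). This back-of-envelope figure is not reported in the study itself, and the function name is illustrative.

```python
def relative_risk_reduction(arr_percent: float, control_rate_percent: float) -> float:
    """Relative risk reduction = |absolute risk reduction| / control event rate."""
    if control_rate_percent <= 0:
        raise ValueError("control event rate must be positive")
    return abs(arr_percent) / control_rate_percent


# Rounded values quoted in the results for 30 day non-elective readmission.
rrr = relative_risk_reduction(arr_percent=-2.5, control_rate_percent=26.9)
print(f"relative risk reduction: {rrr:.1%}")
```

The result, a little over 9%, is of the same order as the roughly 10% intention-to-treat reduction cited for AIRTIGHT.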

Another similarity our study shares with the results of the AIRTIGHT trial is the observation of a relatively low rate of compliance with the intervention. In our study, only 35.7% of eligible patients were successfully enrolled into the Transitions Program post-discharge, compared with 25.2% into the Transition Services program evaluated in AIRTIGHT. These rates reflect the challenges of real world implementation of these care coordination interventions and could have attenuated the estimates of the intention-to-treat effect and their observational analogs. However, the patterns of self-selection into treatment in our study appeared to differ from those observed in AIRTIGHT; enrollees in the Transitions Program were only 1.6 years younger than non-enrollees, on average (73.0 v 74.6 years) compared with 4.7 years younger for enrollees (54.8 v 59.5) in AIRTIGHT. Moreover, whereas AIRTIGHT did not characterize the comorbidity burden of participants, enrollees in the Transitions Program on average had a higher comorbidity burden compared with non-enrollees (COPS2 score 125.2 v 117.1 points), although they were slightly less acutely ill at discharge (discharge LAPS2 score 60.6 v 62.6 points).

In a broader context, our study is also notable for being a systems level evaluation of a care coordination intervention as applied in a large population in a community setting, of which few such evaluations currently seem to exist in the literature. The UK National Health Service has been an early adopter of readmission (and emergency admission) prediction models at various levels, having commissioned the development and validation of the patients at risk for rehospitalisation (PARR) model and combined predictive model in NHS England,52 53 54 Scottish patients at risk of readmission and admission (SPARRA) in NHS Scotland, and predictive risk stratification model (PRISM) in NHS Wales. These efforts culminated in a 2014 NHS England enhanced service55 that provided funding for general practices and primary care trusts to implement risk stratification tools and create registries of patients at high risk for emergency hospital admission. However, despite high uptake (>95% of general practices) of these tools and plans to carry out a national evaluation of their impacts on outcomes, no such evaluation seems to have been performed to date.56

Similarly, NHS Wales also provided funding under the Quality and Outcomes Framework to general practices to invest in risk stratification tools to identify patients at high risk of emergency admission.56 One evaluation of the impacts of these efforts—the predictive risk stratification model: a randomised stepped-wedge trial in primary care (PRISMATIC)—has been published to date, which studied 32 general practices in Wales as the PRISM tool was implemented from 2013 to 2014.56 It found that use of the PRISM tool was associated with a modest increase in the rate of emergency hospital admissions and resource utilization, particularly among those at highest risk, while having no apparent effect on mortality. However, it is unclear how to contextualize these results in relation to those of our study, owing to the decentralized nature of PRISM implementation—participating practices were given broad latitude in how and when to use the risk estimates to manage care—and that in practice NHS predictive models appear to target the riskiest 1% to 2%,57 whereas in our study KPNC targeted roughly the riskiest 20%.

Furthermore, previous work has raised the concern that upcoding could be contributing in large part to the apparent decrease in risk adjusted readmission rates observed in some analyses on the effects of HRRP implementation.5 58 Upcoding—where claims submitted to payers, including Medicare, might include diagnosis codes that inaccurately overstate a patient’s underlying severity of illness, whether intentionally done or as a side effect of more comprehensive coding practices—has the potential to overestimate the extent of reductions in readmissions, if any, achieved by such initiatives. In our study, we did not find any evidence for increased rates of upcoding at KPNC over time.

In particular, although we found that the predicted risk of the composite outcome in KPNC patients admitted to hospital tended to increase over the study period, paralleling the increase in comorbidity burden, we also found evidence that this increase in risk appeared to track with a concomitant increase in patients’ acuity of illness at admission. Notably, particularly in the context of the difference-in-differences analysis, both comorbidity burden and predicted risk were observed to increase in roughly the same proportion in both the non-treated group and the intervention group. Finally, we also found that patient acuity at discharge was largely unchanged over the study period, together with a decrease in the hospital discharge rate. These observations suggest that longitudinal changes in hospital discharge patterns might not have confounded our results—that is, through the reversion of a trend toward prematurely discharging patients that might have existed earlier in the study period.

Unanswered questions and future research

An important question left unanswered by this study begins from the premise that patients at risk of readmission might exhibit heterogeneous responses to interventions designed to prevent readmission. This heterogeneity could arise from variation in patients’ levels of activation, socioeconomic status, extent of social support, and causal risk factors related to underlying comorbidity status,59 as well as heterogeneity across their levels of predicted risk.60 Our analysis, in its current form, although finding limited evidence for heterogeneity in the associations between implementation and readmission and mortality outcomes across levels of predicted risk, might not be able to capture the full extent of whatever treatment effect heterogeneity might exist.61

Indeed, in our analysis we found that the regression discontinuity estimates of the extent of association between implementation and outcomes for patients at relatively lower risk (25%) tended to be larger than those from the difference-in-differences estimates, which represent an overall association that includes all patients at increased risk (25% to 100%), including high risk patients. This discrepancy was particularly pronounced for post-discharge mortality, where the regression discontinuity estimate suggesting benefit at a relatively lower risk level was statistically significant, but not the overall difference-in-differences estimate. In addition, a separate difference-in-differences analysis carried out in a high risk subgroup also suggested that any benefit of the intervention for either of the primary outcomes could have been attenuated in that subgroup.

Taken together, our results might point to a larger effect for the Transitions Program intervention among patients at medium risk compared with those at higher risk, which is consistent with some previous observations in the literature on readmissions.59 60 62 Although predicted risk may serve as a useful proxy measure that can be used to target interventions, in this context it might not reliably identify preventable readmissions even among patients at high risk.60 A fuller understanding of this distinction could have implications for policy and the design of such readmission reduction programs. Future predictive modeling studies and related quality improvement initiatives should instead consider focusing on identifying those patients most likely to benefit from these interventions (that is, the most impactible patients, among whom the intervention will potentially have the most impact on outcomes), while designing alternative interventions for the riskiest patients, among whom the intervention may have the least impact.60 63

Conclusions

A standardized and comprehensive care coordination intervention targeted using a predictive algorithm was associated with a statistically significant reduction in the risk of 30 day non-elective hospital readmissions in this study of outcomes using data from more than 1.5 million people discharged from hospital in a large integrated health system. No association was found between the intervention and 30 day post-discharge mortality, except possibly among patients at medium risk, where some evidence for benefit was observed.

What is already known on this topic

The effectiveness of hospital readmission reduction programs appears mixed

Previous studies have found reductions in readmission rates but potentially at the cost of increased mortality

Many initiatives are targeted to patients with the highest predicted risk, although it is unclear whether this subgroup is also the one most likely to benefit

What this study adds

The implementation of a standardized care coordination intervention in an integrated health system was statistically significantly associated with a reduced risk of 30 day readmission but not 30 day mortality, although some evidence for mortality benefit among patients at lower predicted risk was observed

With a few exceptions, these observed associations were replicated across nearly all 21 hospitals in the health system, as well as across different cohort definitions

The observed rate of compliance with the intervention was somewhat low but higher than that reported by a recent randomized trial of a similar intervention (35.7% v 25.2%)

Acknowledgments

We thank Tracy Lieu, Diane Brown, and Angela Wahleithner for reviewing the manuscript; Christine Robisch, Philip Madvig, and Stephen Parodi for administrative support; Heather Everett for facilitating access to patient intervention tracking data; and Kathleen Daly for help with formatting the manuscript.

Web extra.

Extra material supplied by authors

Supplementary information: supplementary tables and figures and appendices A-E

Contributors: BJM, GJE, MTB, and AS designed the study. BJM, CCP, and AS performed statistical analysis. BJM, GJE, MTB, VXL, and AS interpreted the data and results of analyses. BJM and GJE drafted the manuscript, and BJM, GJE, MTB, VXL, CCP, and AS revised the manuscript for critical content. GJE and VXL acquired funding. CCP provided technical assistance. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted. BJM, GJE, and AS are the guarantors.

Funding: This work was funded by The Permanente Medical Group and Kaiser Foundation Hospitals. BJM was supported by a predoctoral training fellowship (T15LM007033) from the National Library of Medicine of the National Institutes of Health (NIH) as well as by a Stanford University School of Medicine dean’s fellowship. MTB was supported by grant KHS022192A from the Agency for Healthcare Research and Quality. VXL was supported by NIH grant R35GM128672. The funders played no role in the study design, data collection, analysis, reporting of the data, writing of the report, or the decision to submit the manuscript for publication. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Competing interests: All authors have completed the ICMJE uniform disclosure form at www.icmje.org/coi_disclosure.pdf and declare: support from the National Institutes of Health (NIH) National Library of Medicine, the Agency for Healthcare Research and Quality, the NIH National Institute for General Medical Sciences, Kaiser Foundation Hospitals, and The Permanente Medical Group for the submitted work. BJM received consulting fees from Kaiser Foundation Hospitals; otherwise, he and the other authors declare no financial relationships with any organizations that might have an interest in the submitted work in the previous three years, nor other relationships or activities that could appear to have influenced the submitted work.

The manuscript’s guarantors (BJM, GJE, and AS) affirm that the manuscript is an honest, accurate, and transparent account of the study being reported; that no important aspects of the study have been omitted; and that any discrepancies from the study as planned (and, if relevant, registered) have been explained.

Dissemination to participants and related patient and public communities: Owing to the deidentified nature of the data used in this study, it is not possible to disseminate the results of this research to the participants directly. However, the results of this study have previously been disseminated in an oral presentation at the 2020 AcademyHealth Annual Research Meeting. We also anticipate that further dissemination will occur through a KP press release as well as news articles (on both internal and external websites), which will also be shared through Division of Research and KPNC social media accounts. These media will be accessible to Kaiser Permanente members and the public.

Provenance and peer review: Not commissioned; externally peer reviewed.

Ethics statements

Ethical approval

Ethical approval: This study was approved by the Kaiser Permanente Northern California institutional review board for the protection of human subjects (study ID 1279154-7), which waived the requirement for individual informed consent.

Data availability statement

Data sharing: No additional data available.

References

- 1. Centers for Medicare and Medicaid Services. Hospital Readmissions Reduction Program (HRRP). https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/AcuteInpatientPPS/Readmissions-Reduction-Program.html. 2019. Accessed July 23, 2020.

- 2. CMS.gov. Health Insurance Exchange Quality Ratings System 101. https://www.cms.gov/newsroom/fact-sheets/health-insurance-exchange-quality-ratings-system-101. 2019. Accessed July 23, 2020.

- 3. Dharmarajan K, Qin L, Lin Z, et al. Declining admission rates and thirty-day readmission rates positively associated even though patients grew sicker over time. Health Aff (Millwood) 2016;35:1294-302. doi:10.1377/hlthaff.2015.1614

- 4. Wadhera RK, Joynt Maddox KE, Wasfy JH, Haneuse S, Shen C, Yeh RW. Association of the Hospital Readmissions Reduction Program With Mortality Among Medicare Beneficiaries Hospitalized for Heart Failure, Acute Myocardial Infarction, and Pneumonia. JAMA 2018;320:2542-52. doi:10.1001/jama.2018.19232

- 5. Ody C, Msall L, Dafny LS, Grabowski DC, Cutler DM. Decreases In Readmissions Credited To Medicare’s Program To Reduce Hospital Readmissions Have Been Overstated. Health Aff (Millwood) 2019;38:36-43. doi:10.1377/hlthaff.2018.05178

- 6. Khera R, Wang Y, Bernheim SM, Lin Z, Krumholz HM. Post-discharge acute care and outcomes following readmission reduction initiatives: national retrospective cohort study of Medicare beneficiaries in the United States. BMJ 2020;368:l6831. doi:10.1136/bmj.l6831

- 7. Huckfeldt P, Escarce J, Sood N, Yang Z, Popescu I, Nuckols T. Thirty-Day Postdischarge Mortality Among Black and White Patients 65 Years and Older in the Medicare Hospital Readmissions Reduction Program. JAMA Netw Open 2019;2:e190634. doi:10.1001/jamanetworkopen.2019.0634

- 8. Panagiotou OA, Kumar A, Gutman R, et al. Hospital Readmission Rates in Medicare Advantage and Traditional Medicare: A Retrospective Population-Based Analysis. Ann Intern Med 2019;171:99-106. doi:10.7326/M18-1795

- 9. Ferro EG, Secemsky EA, Wadhera RK, et al. Patient Readmission Rates For All Insurance Types After Implementation Of The Hospital Readmissions Reduction Program. Health Aff (Millwood) 2019;38:585-93. doi:10.1377/hlthaff.2018.05412

- 10. Gai Y, Pachamanova D. Impact of the Medicare hospital readmissions reduction program on vulnerable populations. BMC Health Serv Res 2019;19:837. doi:10.1186/s12913-019-4645-5

- 11. Bambhroliya AB, Donnelly JP, Thomas EJ, et al. Estimates and Temporal Trend for US Nationwide 30-Day Hospital Readmission Among Patients With Ischemic and Hemorrhagic Stroke. JAMA Netw Open 2018;1:e181190. doi:10.1001/jamanetworkopen.2018.1190

- 12. Mehtsun WT, Papanicolas I, Zheng J, Orav EJ, Lillemoe KD, Jha AK. National Trends in Readmission Following Inpatient Surgery in the Hospital Readmissions Reduction Program Era. Ann Surg 2018;267:599-605. doi:10.1097/SLA.0000000000002350

- 13. Hansen LO, Young RS, Hinami K, Leung A, Williams MV. Interventions to reduce 30-day rehospitalization: a systematic review. Ann Intern Med 2011;155:520-8. doi:10.7326/0003-4819-155-8-201110180-00008

- 14. Hesselink G, Schoonhoven L, Barach P, et al. Improving patient handovers from hospital to primary care: a systematic review. Ann Intern Med 2012;157:417-28. doi:10.7326/0003-4819-157-6-201209180-00006

- 15. Rennke S, Nguyen OK, Shoeb MH, Magan Y, Wachter RM, Ranji SR. Hospital-initiated transitional care interventions as a patient safety strategy: a systematic review. Ann Intern Med 2013;158:433-40. doi:10.7326/0003-4819-158-5-201303051-00011

- 16. Kripalani S, Theobald CN, Anctil B, Vasilevskis EE. Reducing hospital readmission rates: current strategies and future directions. Annu Rev Med 2014;65:471-85. doi:10.1146/annurev-med-022613-090415

- 17. Dhalla IA, O’Brien T, Morra D, et al. Effect of a postdischarge virtual ward on readmission or death for high-risk patients: a randomized clinical trial. JAMA 2014;312:1305-12. doi:10.1001/jama.2014.11492

- 18. Leppin AL, Gionfriddo MR, Kessler M, et al. Preventing 30-day hospital readmissions: a systematic review and meta-analysis of randomized trials. JAMA Intern Med 2014;174:1095-107. doi:10.1001/jamainternmed.2014.1608

- 19. Kansagara D, Chiovaro JC, Kagen D, et al. So many options, where do we start? An overview of the care transitions literature. J Hosp Med 2016;11:221-30. doi:10.1002/jhm.2502

- 20. McWilliams A, Roberge J, Anderson WE, et al. Aiming to Improve Readmissions Through InteGrated Hospital Transitions (AIRTIGHT): a Pragmatic Randomized Controlled Trial. J Gen Intern Med 2019;34:58-64. doi:10.1007/s11606-018-4617-1

- 21. Escobar GJ, Ragins A, Scheirer P, Liu V, Robles J, Kipnis P. Nonelective Rehospitalizations and Postdischarge Mortality: Predictive Models Suitable for Use in Real Time. Med Care 2015;53:916-23. doi:10.1097/MLR.0000000000000435

- 22. Escobar GJ, Dellinger RP. Early detection, prevention, and mitigation of critical illness outside intensive care settings. J Hosp Med 2016;11(Suppl 1):S5-10. doi:10.1002/jhm.2653

- 23. Escobar GJ, Turk BJ, Ragins A, et al. Piloting electronic medical record-based early detection of inpatient deterioration in community hospitals. J Hosp Med 2016;11(Suppl 1):S18-24. doi:10.1002/jhm.2652

- 24.Kipnis P, Turk BJ, Wulf DA, et al. Development and validation of an electronic medical record-based alert score for detection of inpatient deterioration outside the ICU. J Biomed Inform 2016;64:10-9. 10.1016/j.jbi.2016.09.013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Escobar GJ, Liu VX, Schuler A, Lawson B, Greene JD, Kipnis P. Automated Identification of Adults at Risk for In-Hospital Clinical Deterioration. N Engl J Med 2020;383:1951-60. 10.1056/NEJMsa2001090. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Van Calster B, Wynants L, Timmerman D, Steyerberg EW, Collins GS. Predictive analytics in health care: how can we know it works? J Am Med Inform Assoc 2019;26:1651-4. 10.1093/jamia/ocz130. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP, STROBE Initiative . Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. BMJ 2007;335:806-8. 10.1136/bmj.39335.541782.AD. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Hernán MA, Robins JM. Using Big Data to Emulate a Target Trial When a Randomized Trial Is Not Available. Am J Epidemiol 2016;183:758-64. 10.1093/aje/kwv254. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Escobar GJ, Greene JD, Scheirer P, Gardner MN, Draper D, Kipnis P. Risk-adjusting hospital inpatient mortality using automated inpatient, outpatient, and laboratory databases. Med Care 2008;46:232-9. 10.1097/MLR.0b013e3181589bb6. [DOI] [PubMed] [Google Scholar]

- 30.Escobar GJ, Greene JD, Gardner MN, Marelich GP, Quick B, Kipnis P. Intra-hospital transfers to a higher level of care: contribution to total hospital and intensive care unit (ICU) mortality and length of stay (LOS). J Hosp Med 2011;6:74-80. 10.1002/jhm.817. [DOI] [PubMed] [Google Scholar]

- 31.Liu V, Kipnis P, Rizk NW, Escobar GJ. Adverse outcomes associated with delayed intensive care unit transfers in an integrated healthcare system. J Hosp Med 2012;7:224-30. 10.1002/jhm.964. [DOI] [PubMed] [Google Scholar]

- 32.Escobar GJ, Gardner MN, Greene JD, Draper D, Kipnis P. Risk-adjusting hospital mortality using a comprehensive electronic record in an integrated health care delivery system. Med Care 2013;51:446-53. 10.1097/MLR.0b013e3182881c8e. [DOI] [PubMed] [Google Scholar]

- 33.Escobar GJ, Plimier C, Greene JD, Liu V, Kipnis P. Multiyear Rehospitalization Rates and Hospital Outcomes in an Integrated Health Care System. JAMA Netw Open 2019;2:e1916769. 10.1001/jamanetworkopen.2019.16769. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Agency for Healthcare Research and Quality, U.S. Department of Health and Human Services. Ambulatory Care Sensitive Conditions: Quality Indicators Software Instructions, 2014. Accessed August 14, 2019, http://www.qualityindicators.ahrq.gov/Downloads/Software/SAS/V45/Software_Instructions_SAS_V4.5.pdf

- 35.National Committee for Quality Assurance . HEDIS 2019. Vol 2. Technical Specifications, 2014. [Google Scholar]

- 36.van Walraven C, Dhalla IA, Bell C, et al. Derivation and validation of an index to predict early death or unplanned readmission after discharge from hospital to the community. CMAJ 2010;182:551-7. 10.1503/cmaj.091117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Walkey AJ, Bor J, Cordella NJ. Novel tools for a learning health system: a combined difference-in-difference/regression discontinuity approach to evaluate effectiveness of a readmission reduction initiative. BMJ Qual Saf 2020;29:161-7. 10.1136/bmjqs-2019-009734. [DOI] [PubMed] [Google Scholar]

- 38.Dimick JB, Ryan AM. Methods for evaluating changes in health care policy: the difference-in-differences approach. JAMA 2014;312:2401-2. 10.1001/jama.2014.16153. [DOI] [PubMed] [Google Scholar]

- 39.Hemming K, Haines TP, Chilton PJ, Girling AJ, Lilford RJ. The stepped wedge cluster randomised trial: rationale, design, analysis, and reporting. BMJ 2015;350:h391. 10.1136/bmj.h391. [DOI] [PubMed] [Google Scholar]

- 40.Moscoe E, Bor J, Bärnighausen T. Regression discontinuity designs are underutilized in medicine, epidemiology, and public health: a review of current and best practice. J Clin Epidemiol 2015;68:122-33. 10.1016/j.jclinepi.2014.06.021. [DOI] [PubMed] [Google Scholar]

- 41.Walkey AJ, Drainoni ML, Cordella N, Bor J. Advancing Quality Improvement with Regression Discontinuity Designs. Ann Am Thorac Soc 2018;15:523-9. 10.1513/AnnalsATS.201712-942IP. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Imbens G, Kalyanaraman K. Optimal Bandwidth Choice for the Regression Discontinuity Estimator. Rev Econ Stud 2012;79:933-59. 10.1093/restud/rdr043. [DOI] [Google Scholar]

- 43.Deyo RA, Cherkin DC, Ciol MA. Adapting a clinical comorbidity index for use with ICD-9-CM administrative databases. J Clin Epidemiol 1992;45:613-9. 10.1016/0895-4356(92)90133-8. [DOI] [PubMed] [Google Scholar]

- 44.Shiskin J, Young AH, Musgrave JC. The X-11 Variant of the Census Method II Seasonal Adjustment Program. U.S. Department of Commerce, Bureau of Economic Analysis, 1967. [Google Scholar]

- 45.Lagu T, Pekow PS, Shieh MS, et al. Validation and Comparison of Seven Mortality Prediction Models for Hospitalized Patients With Acute Decompensated Heart Failure. Circ Heart Fail 2016;9:e002912. 10.1161/CIRCHEARTFAILURE.115.002912. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.van Walraven C, Escobar GJ, Greene JD, Forster AJ. The Kaiser Permanente inpatient risk adjustment methodology was valid in an external patient population. J Clin Epidemiol 2010;63:798-803. 10.1016/j.jclinepi.2009.08.020. [DOI] [PubMed] [Google Scholar]

- 47.Rawal S, Kwan JL, Razak F, et al. Association of the Trauma of Hospitalization With 30-Day Readmission or Emergency Department Visit. JAMA Intern Med 2019;179:38-45. 10.1001/jamainternmed.2018.5100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Lind KD, Noel-Miller CM, Sangaralingham LR, et al. Increasing Trends in the Use of Hospital Observation Services for Older Medicare Advantage and Privately Insured Patients. Med Care Res Rev 2019;76:229-39. 10.1177/1077558717718026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Wright B, O’Shea AM, Ayyagari P, Ugwi PG, Kaboli P, Vaughan Sarrazin M. Observation Rates At Veterans’ Hospitals More Than Doubled During 2005-13, Similar To Medicare Trends. Health Aff (Millwood) 2015;34:1730-7. 10.1377/hlthaff.2014.1474. [DOI] [PubMed] [Google Scholar]

- 50.Low LL, Tan SY, Ng MJ, et al. Applying the Integrated Practice Unit Concept to a Modified Virtual Ward Model of Care for Patients at Highest Risk of Readmission: A Randomized Controlled Trial. PLoS One 2017;12:e0168757. 10.1371/journal.pone.0168757. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Berkowitz SA, Parashuram S, Rowan K, et al. Johns Hopkins Community Health Partnership (J-CHiP) Team . Association of a Care Coordination Model With Health Care Costs and Utilization: The Johns Hopkins Community Health Partnership (J-CHiP). JAMA Netw Open 2018;1:e184273. 10.1001/jamanetworkopen.2018.4273. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Billings J, Dixon J, Mijanovich T, Wennberg D. Case finding for patients at risk of readmission to hospital: development of algorithm to identify high risk patients. BMJ 2006;333:327-30. 10.1136/bmj.38870.657917.AE. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Wennberg D, Siegel M, Darin B, et al. Combined Predictive Model: Final Report and Technical Documentation. 2006. https://www.kingsfund.org.uk/sites/default/files/Combined%20Predictive%20Model%20Final%20Report%20and%20Technical%20Documentation.pdf

- 54.Billings J, Mijanovich T, Dixon J, et al. Case finding algorithms for patients at risk or re-hospitalisation: PARR1 and PARR2, 2006. https://www.kingsfund.org.uk/sites/default/files/field/field_document/PARR-case-finding-algorithms-feb06.pdf

- 55.NHS England. Enhanced Service Specification: avoiding unplanned admissions: proactive case finding and patient review for vulnerable people 2014-2015, 2014. https://www.england.nhs.uk/wp-content/uploads/2014/08/avoid-unplanned-admissions.pdf

- 56.Snooks H, Bailey-Jones K, Burge-Jones D, et al. Effects and costs of implementing predictive risk stratification in primary care: a randomised stepped wedge trial. BMJ Qual Saf 2019;28:697-705. 10.1136/bmjqs-2018-007976. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Hutchings HA, Evans BA, Fitzsimmons D, et al. Predictive risk stratification model: a progressive cluster-randomised trial in chronic conditions management (PRISMATIC) research protocol. Trials 2013;14:301. 10.1186/1745-6215-14-301. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Ibrahim AM, Dimick JB, Sinha SS, Hollingsworth JM, Nuliyalu U, Ryan AM. Association of Coded Severity With Readmission Reduction After the Hospital Readmissions Reduction Program. JAMA Intern Med 2018;178:290-2. 10.1001/jamainternmed.2017.6148. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Lindquist LA, Baker DW. Understanding preventable hospital readmissions: masqueraders, markers, and true causal factors. J Hosp Med 2011;6:51-3. 10.1002/jhm.901. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Steventon A, Billings J. Preventing hospital readmissions: the importance of considering ‘impactibility,’ not just predicted risk. BMJ Qual Saf 2017;26:782-5. 10.1136/bmjqs-2017-006629. [DOI] [PubMed] [Google Scholar]

- 61.Marafino BJ, Schuler A, Liu VX, Escobar GJ, Baiocchi M. Predicting preventable hospital readmissions with causal machine learning. Health Serv Res 2020;55:993-1002. 10.1111/1475-6773.13586. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Finkelstein A, Zhou A, Taubman S, Doyle J. Health Care Hotspotting - A Randomized, Controlled Trial. N Engl J Med 2020;382:152-62. 10.1056/NEJMsa1906848. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Lewis GH. “Impactibility models”: identifying the subgroup of high-risk patients most amenable to hospital-avoidance programs. Milbank Q 2010;88:240-55. 10.1111/j.1468-0009.2010.00597.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

Supplementary Materials

Supplementary information: supplementary tables and figures and appendices A-E

Data Availability Statement

Data sharing: No additional data available.